ORIGINAL RESEARCH article

Front. Psychol., 24 December 2020

Sec. Quantitative Psychology and Measurement

Volume 11 - 2020 | https://doi.org/10.3389/fpsyg.2020.608677

The Resilience Scale for Adolescents (READ) is a highly rated scale for measuring protective factors of resilience. Even though the READ has been validated in several different cultural samples, no studies have validated the German version of the READ across samples from Switzerland and Germany. The purpose of this study was to explore the construct validity of the German READ version in two samples from two different countries and to test the measurement invariance between those two samples. A German sample (n = 321, M = 12.74, SD = 0.77) and a German-speaking Swiss sample (n = 349, M = 12.67, SD = 0.69) of seventh graders completed the READ, Hopkins Symptom Checklist (HSCL-25), Rosenberg Self-Esteem Scale (RSE), General Self-Efficacy Scale, and Satisfaction with Life Scale (SWL). The expected negative correlations between the READ and the HSCL-25 and the expected positive correlations between the READ and the RSE, self-efficacy, and SWL were supported. Furthermore, the measurement invariance analysis demonstrated that the originally proposed five-dimensional structure is equal in the German and Swiss samples, and it can be assumed that the same construct was assessed once one item was excluded. The five-factor, 27-item solution is a valid and reliable self-report measure of protective factors in two German-speaking samples.

According to the World Health Organization (WHO, 2019), half of all mental health conditions start by the age of 14, and 15% of all adolescents (aged 10–19) are affected by mental health disorders. However, most cases remain undetected and untreated, which might be problematic because extended mental health conditions can deteriorate physical and psychological health and limit opportunities to lead a fulfilling life as an adult (WHO, 2019).

Until the late 1970s, the concept of pathogenesis, pioneered and developed by Williamson and Pearse (1980), was predominant in medicine and health-care systems (Antonovsky, 1996). This approach aims to determine the origin and cause of certain diseases and retrospectively try to avoid, manage, or terminate the disorder (Becker et al., 2010). In contrast, Antonovsky’s (1979) salutogenesis approach focused on preventing mental disorders. Psychological ill-being should be absent, and the presence of psychological well-being is needed. Therefore, the main aim is to maintain or even improve health and psychological well-being by fostering health-promoting factors (Langeland and Vinje, 2017). Such factors are salutogenic factors, resilience factors, positive mental health, a supportive social system, and life satisfaction, which act in protective health-promoting processes (Bibi et al., 2020). Thus, it is crucial to determine, measure, foster, and strengthen protective factors and understand the risks associated with the physical, social, and economic aspects and vulnerability (Proag, 2014). These protective factors underlie positive psychological development (Masten, 2011) and help individuals resist in times of risk and adversity or balance the risk to which they are exposed (Rutter, 1985, 2012).

In this regard, resilience has gained interest in research and applied practice over the last three decades because it is an essential source of subjective well-being (Snyder et al., 2011; Estrada et al., 2016). Resilient resources can buffer the negative consequences of facing adversity and maintain (Connor and Davidson, 2003) or even improve psychological and physical health (Ryff and Singer, 2000). In the scientific literature, various definitions of resilience can be found. Its complexity has been widely acknowledged, and as a result there is no universal operational definition of resilience (Luthar et al., 2000; Kaplan, 2002; Masten, 2007). However, five key concepts of resilience can be identified in the empirical literature (Aburn et al., 2016). The first concept focuses on a certain ‘ability’ of individuals to rise above and overcome adversities (e.g., Rutter, 1990; Garmezy, 1991; Bonanno, 2004). The second links resilience to positive adaptation and adjustment (e.g., Rutter, 1987; Werner and Smith, 1992; Luthar, 2006). The third, which has been cited by many studies (e.g., Dowrick et al., 2008; Canvin et al., 2009; Janssen, 2011), is Masten’s ‘ordinary magic’: resilience is a common phenomenon that arises from the interplay between an individual’s strengths and the support of family, friends, and the external social environment (Masten, 2001). The fourth rests on a method used by several researchers, namely measuring the absence or low incidence of mental illness despite ongoing adversity. Thus, good mental health despite significant adversity is considered a proxy for resilience (e.g., Masten et al., 1999; Bonanno et al., 2002; Connor and Davidson, 2003). Finally, the fifth key concept is the ability to bounce back from adversity, based on the word’s Latin origin ‘resilire,’ meaning to jump back (e.g., Werner, 1990; Luthar et al., 2000; Luthar, 2006).
Notwithstanding the difference in the key concepts, they all include a stable trajectory and outcome of individual and communal healthy functioning after a highly adverse event (Southwick et al., 2014). Furthermore, because it is a dynamic adaptation and development process, it changes throughout a lifetime and is multidimensional (Rutter, 2000; Scheithauer et al., 2000; Opp et al., 2008; Wustmann, 2008). Masten (2014, 6) defines resilience broadly as “the capacity of a dynamic system to adapt successfully to disturbances that threaten system function, viability, or development.” According to Hjemdal et al. (2006, 84), resilience can be defined as “the protective factors, processes, and mechanisms that contribute to a good outcome despite experiences with stressors shown to carry significant risks for developing psychopathology.”

Even though some exposure to adversity is important for adolescents’ growth and development of resilience (Masten, 2014), it is well established that adolescence is perceived as a crucial stage in human life and development and thus, being exposed to severe stress, trauma and adversities can lead to a set of negative neurodevelopmental, psychological and physiological outcomes (Putnam, 2006; Brady and Back, 2012). These adolescents are at risk of suffering from posttraumatic stress disorder (PTSD), depression, anxiety, disruptive behaviors, and substance abuse (McLaughlin and Lambert, 1970). Especially in times of the coronavirus disease 2019 (COVID-19) there is high prevalence of psychological distress in adolescents (e.g., Havnen et al., 2020; Prime et al., 2020; Ran et al., 2020).

Three overarching categories of resilience are generally accepted by researchers in adolescence: positive individual factors, social family support, and characteristics of the supportive environment outside the family (Garmezy, 1983; Werner, 1989, 1993; Rutter, 1990; Werner and Smith, 1992; Hjemdal, 2007). Firstly, positive individual factors include self-system variables, such as positive self-concept, motivation, internal locus of control, sense of coherence, engaging temperament, sociability, and low emotionality (Werner, 2000, 118). Additionally, Olsson et al. (2003, 5) mention robust neurobiology, intelligence, and communication skills. Secondly, perceived social family support, parental warmth, encouragement, and assistance, as well as close relationships with parents, and cohesion and care within the family are crucial (Masten et al., 1988; Olsson et al., 2003; Leidy et al., 2010). Thirdly, the broader social environment, such as the neighborhood, work, and school, plays a significant role in the last category: supportive environment outside the family. Adolescents who use social support systems effectively and form strong bonds with peers and teachers are considered more resilient. Having nurturing, competent, supportive, and prosocial individuals around is vital for overcoming adversities (Masten, 2009), because such support is associated with several psychological and behavioral mechanisms, including motivation, feelings of being understood, increased self-esteem, and the use of active coping strategies (Southwick et al., 2016).

The lack of a clear definition and the interpretation of resilience as a conceptual umbrella for factors that can modify the impact of adversities (Hjemdal et al., 2006) have led to the development of a number of scales (Windle et al., 2011). However, there are several significant critiques regarding the wide variety of (indirect) measures assessing resilience. First, most of these instruments have been used to assess adults’ resilience, and only a few scales are available for young children and adolescents. For example, Youth Resiliency: Assessing Developmental Strengths (Donnon et al., 2003; Donnon and Hammond, 2007), Adolescent Resilience Scale (Oshio et al., 2003), The Resilience Scale (Wagnild and Young, 1993), Psychological Resilience (Windle et al., 2008), and Ego Resiliency (Bromley et al., 2006) can be applied to children and adolescents. Even though the READ (Hjemdal et al., 2006) was originally adapted from the Resilience Scale for Adults (RSA; Friborg et al., 2003), its wording and response format have been simplified, and the scale has been shortened and validated; it is therefore considered adequate for use in adolescent samples (see Development and Validation of the READ). Secondly, most of these instruments do not cover all three introduced overarching categories of resilience (positive individual factors, social family support, and characteristics of the supportive environment outside the family), and they differ significantly and focus mainly on individual factors. Among the scales just mentioned, only the Youth Resiliency: Assessing Developmental Strengths (YR: ADS) measures dimensions apart from individual factors, such as family, community, and peers. Nevertheless, this scale includes 10 dimensions and 94 items, which might be too lengthy and extensive for certain research applications. Thirdly, some of these scales are in the early stages of development and all need further validation (Windle et al., 2011).
Thus, these aspects hamper the possibilities of making valid comparisons. However, the READ (Hjemdal et al., 2006) is the only direct measure of resilience for adolescents that incorporates all three overarching protective factors (positive individual factors, social family support, supportive environment outside the family; Kelly et al., 2017), in a five-factor scale (Personal Competence, Social Competence, Structured Style, Family Cohesion, Social Resources). It has been validated in different countries and with different samples (von Soest et al., 2010; Stratta et al., 2012; Ruvalcaba-Romero et al., 2014; Kelly et al., 2017; Moksnes and Haugan, 2018; Askeland et al., 2019; Pérez-Fuentes et al., 2020).

The present study explores the validity and reliability of the READ (Hjemdal et al., 2006), a measure of protective factors of resilience, in two independent German-speaking samples in Germany and Switzerland. Even though the scale has been previously validated and thus is a valid and reliable instrument in several countries and languages, it has not been validated in a German-speaking sample so far. Furthermore, the samples differ in sizes, ages, and linguistic and cultural backgrounds. Therefore, following a literature review, the validation process and results will be outlined, and finally, the use of the READ in practice and empirical applications will be addressed.

The READ (Hjemdal et al., 2006) was developed in Norway and adapted from the Resilience Scale for Adults (RSA; Friborg et al., 2003). Initially, the RSA consisted of 41 items, which were simplified for the READ by changing two critical aspects. First, the semantic differential-type response format was changed to a five-point Likert format. Secondly, the wording was simplified, and phrases were only positively formulated to improve interpretation and completion of the survey. Furthermore, two items were excluded because they were considered irrelevant. With the remaining 39 items, a structural equation post hoc modeling and a confirmatory factor analysis (CFA) were conducted, with a sample of 421 adolescents aged 13–15 years, resulting in a 28-item, five-factor structure with acceptable model fit indices. The five factors of the READ comprised all three generally accepted higher categories of resilience, and the READ scale was organized into five factors, which are represented in the six factors of the RSA. The first overarching factor, positive individual factors, is represented by the three dimensions, Personal Competence, Social Competence, and Structured Style. The second and third overarching factors, social family support and supportive environment outside the family are covered by the subscales Family Cohesion and Social Resources.

The Personal Competence factor includes items that measure several individual aspects, such as self-esteem, self-efficacy, ability to uphold daily routines, or the ability to plan and organize. The Social Competence factor focuses mainly on excellent communication skills and flexibility in social matters. The Structured Style factor measures the preference of an individual to plan and structure their daily routines. The Family Cohesion factor measures family support and the family’s attitude toward life in times of adversity. Lastly, the Social Resources factor measures perceived access to a social environment outside the family, such as relatives and friends, and the availability of their support (Hjemdal et al., 2006). The READ has been validated in five countries (Norway, Italy, Mexico, Ireland, and Spain); these validations do not all support the original factor structure and have raised questions about the factor-item patterns. So far, seven further validations of the READ (von Soest et al., 2010; Stratta et al., 2012; Ruvalcaba-Romero et al., 2014; Kelly et al., 2017; Moksnes and Haugan, 2018; Askeland et al., 2019; Pérez-Fuentes et al., 2020) have been published in English-language journals, showing that the five-factor, 28-item solution might be problematic. The number of factors differs, as do the number of items and which item(s) should be removed.

von Soest et al. (2010) conducted exploratory factor analysis, using a sample of 6,723 adolescents, aged 18–20, and based in Norway, which supported a five-factor solution. Nevertheless, the subsequent confirmatory factor analysis, although supporting the findings of the exploratory factor analysis, indicated a need for further improvement. Therefore, five items were removed, and an acceptable fit for all factors and the overall model was obtained. Stratta et al. (2012) collected data from 446 students in their final year of high school (18–20 years) in Italy, and found a four-dimensional structure by using principal component analyses and confirmatory factor analyses. Personal Competence and Structured Style factors were combined. However, cross-loadings on 17 of 28 items were > 0.40, indicating the items were not contributing to measuring the construct itself. Similarly to Stratta et al. (2012), Ruvalcaba-Romero et al. (2014) conducted a principal component analysis but removed six items in their five-factor solution. The resulting five-factor structure differed from the original structure in two dimensions. Social Competence, Family Cohesion, and Social Resources remained largely the same, whereas Personal Competence comprised only four instead of eight items, measuring mainly self-confidence. Furthermore, the remaining Personal Competence items and a few items of the Structured Style factor were combined in a new factor called Goal–Orientation. These data were collected from a sample of 840 adolescents (12–17 years) in Mexico.

Just like von Soest et al. (2010) and Ruvalcaba-Romero et al. (2014), Moksnes and Haugan (2018) excluded several items for their final solution by using confirmatory factor analysis. However, they kept the original five-factor structure, and the 20-item version showed good model fit indices. The sample was based on 1,183 adolescents, aged 13–18 years, based in Norway.

Criticizing the use of confirmatory factor analyses and exploratory factor analyses, Askeland et al. (2019) conducted, in addition to these two analyses, exploratory structural equation modeling with a sample of 9,596 students, aged 16–19 years, from Norway. The exploratory factor analysis model, as well as the exploratory structural equation model, showed good model fit indices for a 28-item, five-factor solution, whereas the results of the confirmatory factor analysis for the same model were poor. Finally, Pérez-Fuentes et al. (2020) tested the Spanish 22-item, five-factor version of Ruvalcaba-Romero et al. (2014) with 317 students (13–18 years) in Spain by using a principal component analysis followed by confirmatory factor analysis. Results indicated that the structure originally proposed by Ruvalcaba-Romero et al. (2014) was the best model and showed good fit indices. However, they tested the 22-item version instead of the original five-factor, 28-item structure.

There is only one study so far that could confirm the original structure by using a confirmatory factor analysis without any modifications (Kelly et al., 2017). The study was conducted in Ireland, and 6,030 students, aged 12–18 years, participated. Unlike previous studies (e.g., von Soest et al., 2010), Kelly et al. (2017) used a confirmatory approach and a common factor analysis framework in validating the READ, overcoming some of the methodological limitations in previous studies. For example, the exploratory approach (e.g., EFA) is used when the latent structure of a measure is unknown or when a confirmatory approach fails to reproduce an initial measurement model. It is unclear why EFA preceded CFA in validating a known latent structure of the READ in the studies conducted by von Soest et al. (2010), Stratta et al. (2012), and Ruvalcaba-Romero et al. (2014). As principal component analysis (PCA) is not a common factor analytic framework, it fails to account for measurement unreliability (Brown, 2015); thus, previous studies using PCA (Stratta et al., 2012; Ruvalcaba-Romero et al., 2014; Pérez-Fuentes et al., 2020) did not overcome the limitations of measurement unreliability. Three studies investigated measurement invariance across gender (Kelly et al., 2017; Moksnes and Haugan, 2018; Askeland et al., 2019), resulting in acceptable model fit for both genders. However, only Askeland et al. (2019) showed metric and partial scalar invariance for their newly built five-factor solution by conducting an ESEM.

The construct validity of the READ has been supported by negative correlations with, on the one hand, depression (Hjemdal et al., 2006; Hjemdal, 2007; Skrove et al., 2013; Moksnes and Haugan, 2018) and anxiety (e.g., von Soest et al., 2010; Hjemdal et al., 2011, 2015; Skrove et al., 2013). Both constructs have been well investigated and provide evidence for validity (McLaughlin and Lambert, 1970). On the other hand, self-esteem (e.g., Ruvalcaba-Romero et al., 2014; Kelly et al., 2017; Moksnes and Haugan, 2018), and self-efficacy (Sagone and Caroli, 2013), are well-known indicators for construct validity. However, satisfaction with life has, to the authors’ knowledge, not been included in previous validation studies yet, but is according to Seligman (2002) positively connected to resilience, and thus, included in the present study.

The literature review has demonstrated several strengths in previous validations, such as sample sizes and age ranges. However, certain methodological weaknesses need to be addressed. There is a lack of correspondence between the results of the confirmatory factor analysis (CFA), exploratory factor analysis (EFA), and exploratory structural equation modeling (ESEM) because the factor analysis techniques may not be fully comparable (van Prooijen and van der Kloot, 2001). CFA is usually used for testing an instrument’s theoretical structure when the latent structure is already known, and it is the most widely used method for investigating factorial invariance (Byrne et al., 1989). Items are only allowed to load on one factor. EFA, which has been the most common approach to validating the READ scale, presupposes no structure, and the number of cross-loadings is not limited. This exploratory approach can also be used in cases of unsatisfactory confirmatory analyses. ESEM combines the CFA and EFA approaches by allowing unrestricted estimation of all factor loadings (Fischer and Karl, 2019). According to Mai et al. (2018), ESEM might be a necessary method for large samples and for models with substantive cross-loadings that cannot be ignored. Nevertheless, it remains unclear why exploratory approaches have been used to validate the READ in previous papers, and therefore, comparisons to previous studies should be made cautiously.

Previous validations have shown that there is not only a lack of methodological clarity but also a lack of a validated version of the READ in any German-speaking country. German is the native language of more than 100 million people and is mainly spoken in Central Europe (Germany, Austria, Switzerland, Liechtenstein, and some parts of Italy, Belgium, Luxembourg, and Poland). Even though these countries and regions share a common language, they differ in their cultural backgrounds and dialects (Krieger et al., 2020). Some words and phrases might not be understood or might have a different meaning in another country or region. However, irrespective of the cultural, educational, or individual differences of these countries and regions, it is indispensable to have an adequately adapted questionnaire that is understandable in all German-speaking regions.

Therefore, this study examines the psychometric properties and the factorial validity of the German READ in a German and Swiss sample of adolescents in seventh grade by conducting a multi-group confirmatory factor analysis (MGCFA).

Even though most studies have used exploratory approaches to validate the READ, Kelly et al. (2017) were able to replicate the original five-factor structure proposed by Hjemdal et al. (2006). Thus, we hypothesize that,

(i) the German version of the READ is a reliable and valid instrument, and the five-factor structure of the READ is consistent with the model proposed by the developers (Hjemdal et al., 2006) and the replication by Kelly et al. (2017).

Furthermore, Pérez-Fuentes et al. (2020) were able to replicate the five-factor structure proposed by Ruvalcaba-Romero et al. (2014), showing that even though the scale has been shortened, the structure replicates in two independent samples sharing a common language but having different cultures. Therefore, in this study, it was expected that,

(ii) not only form invariance but also metric invariance can be expected, indicating the equivalence of factor loadings across the German and Swiss samples.

However, the equivalence in residual variances was not expected or regarded as a requirement for a valid use of the READ within the German-speaking countries (Hjemdal et al., 2015).

Lastly, several studies (e.g., Hjemdal et al., 2006, 2011, 2015; Hjemdal, 2007; von Soest et al., 2010; Skrove et al., 2013; Moksnes and Haugan, 2018) have found that the READ correlates negatively with depression and anxiety, on the one hand. On the other hand, the READ correlates positively with self-esteem (e.g., Ruvalcaba-Romero et al., 2014; Kelly et al., 2017; Moksnes and Haugan, 2018), self-efficacy (Sagone and Caroli, 2013), and satisfaction with life (Seligman, 2002). This leads to the hypothesis that,

(iii) the READ correlates negatively with anxiety and depression but positively with self-esteem, self-efficacy, and satisfaction with life.

Due to the rapid pace of globalization, the worldwide population diversity has increased and calls for a need to ensure that assessment tools function equivalently across contexts and that the same construct manifests similarly and, therefore, is measured similarly. Researchers and clinicians conduct more multinational and multicultural studies and have to adapt instruments for use in other languages and cultures (Beaton et al., 2000). However, the adaptation of longer scales is challenging when trying to maintain the meaning of the original measurement and keeping a relevant and comprehensible form (Sperber et al., 1994; Sousa and Rojjanasrirat, 2011). Cross-cultural or cross-national studies try to examine the validity of an instrument across cultures and nations. A specific hypothesis will not be tested; rather, the demonstration of equivalence (the absence of a construct, method, and item bias) will be explored (Matsumoto and van de Vijver, 2011).

However, these cross-cultural studies vary according to the contexts (Beaton et al., 2000), and even though the same language is used in a questionnaire, cultural backgrounds need to be taken into account. Therefore, two independent samples using the same language version of the questionnaire can help identify cultural differences in certain items for one version of a scale.

Substantive differences in the meaning of resilience and how resilience manifests across the two independent samples could restrict the generalizability of findings. This could be the result of differences in the scale locations or the starting points that different people use to scale their responses on the READ scale despite having the same values on the latent resilience construct. Measurement invariance examines whether the same construct has been measured in the same way across different people, contexts, and cultures (Meredith, 1993; Steenkamp and Baumgartner, 1998; Chen, 2007; Marsh et al., 2010; Millsap, 2011). Measurement invariance can pinpoint any sources of non-invariance across a hierarchy of levels, ranging from equal form to equal intercepts. These levels are required for comparing scale means across independent samples when differences exist in the way the construct has been measured or interpreted by independent samples (Milfont and Fischer, 2010).

This cross-sectional sample is based on the National Centres of Competence in Research (NCCR) project On the Move—The Migration-Mobility Nexus Overcoming Inequalities with Education—School and Resilience, funded by the Swiss National Science Foundation (SNSF). Six hundred and seventy seventh graders from lower secondary education classes (ISCED 2) in Germany and Switzerland completed the German-language web-based survey of risk and protective factors of mental health. The mean age of the random sample in Germany (n = 321), ranging from 11 to 16 years, was 12.74 (SD = 0.77), and 44.2% of the participants were female. The mean age of the random sample in Switzerland (n = 349), ranging from 11 to 15 years, was 12.67 (SD = 0.69), and 45.6% of the participants were female (gender was not identified for seven participants).

The research was conducted in accordance with the World Medical Association’s Declaration of Helsinki. The Ministry of Baden-Württemberg (Germany), the Cantonal Bureau for Education in the Cantons of Aargau, Basel-City, and Solothurn, and the Ethics Committee (for psychological and related research) of the Faculty of Arts and Social Sciences of the University of Zürich endorsed the data collection. First, the students’ questionnaires were translated into seven languages (Arabic, English, Farsi, French, German, Greek, and Turkish). A first translator translated the questionnaire into the target language, and a second independent translator checked the translation via back-translation. In the end, they agreed on a consensus questionnaire. For the present study, only German-language questionnaires were considered, as German is also the primary language in the three participating Swiss cantons. Afterward, schools in rural and urban areas in the Federal State of Baden-Württemberg and the Cantons of Aargau, Basel-City, and Solothurn were asked to participate in the study. The headmaster of each school and the teachers approved participation in the survey. All parents and students received information letters explaining the procedure, and participation and data collection were voluntary, anonymous, and confidential. Participants were free to withdraw at any point in the study. Written informed consent to participate in the study was provided by the students and by their legal guardians. Students were asked to fill out the web-based questionnaires individually at their convenience on tablets. Teachers helped to administer the surveys during a regular school session of 90 min. Those not present while the study was carried out were asked to participate later.

Gender and age were collected as single-item indicators for demographical information.

The READ (Hjemdal et al., 2006) is a 28-item self-report scale composed of only positively phrased items and a 5-point Likert-type response scale, ranging from 1 (totally disagree) to 5 (totally agree). Higher scores indicate higher levels of resilience (Hjemdal et al., 2006). The scale with its five subscales (Personal Competence, Social Competence, Structured Style, Family Cohesion, and Social Resources) is a valid and reliable measurement for resilience.

The Hopkins Symptom Checklist (HSCL-25; Mattsson et al., 1969; Derogatis et al., 1974) is a self-report mental health screening questionnaire for measuring depression and anxiety. The scale has been used before for construct validity of the READ and includes 25 items (15 items about depression and 10 items about anxiety), originally derived from the 90-item Symptom Checklist (SCL-90; Derogatis and Savitz, 1999). It is a four-point Likert scale, ranging from 1 (Not bothered) to 4 (Extremely bothered), with a high internal consistency. One item was omitted (“Loss of sexual interest or pleasure”) due to the participants’ age range.

The Rosenberg Self-Esteem Scale (RSE; Rosenberg, 1965) measures global self-worth by using ten positively, as well as negatively, phrased items. The RSE allows adolescents to rate items on a four-point Likert scale, ranging from 1 (Strongly disagree) to 4 (Strongly agree). Higher scores on the unidimensional high internal consistency scale (Cronbach’s alpha coefficients are usually above 0.80, and values above 0.90 have been reported) indicate a higher level of self-esteem (Heatherton and Wyland, 2003).

To measure general self-efficacy, Schwarzer and Jerusalem’s (1995) scale has been used. With its good internal reliability, the 10-item psychometric scale assesses optimistic self-beliefs on a 4-point Likert scale, ranging from 1 (Not at all true) to 4 (Exactly true). The total score of the scale, which is available in 33 languages, is calculated by finding the overall sum. The total score ranges between 10 and 40, indicating more self-efficacy with a higher score.

The Satisfaction with Life Scale (SWL; Pavot and Diener, 1993) is a brief self-report scale consisting of five items and a 7-point Likert response scale, ranging from 1 (Strongly disagree) to 7 (Strongly agree). A score of 20 represents a neutral point on the scale, whereas scores of 5–9 indicate extreme dissatisfaction, and scores of 31–35 indicate extreme satisfaction with life. According to Pavot and Diener (1993), the scale has high internal consistency and good test–retest correlations (0.84, 0.80 over a month interval).
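The SWL scoring described above can be illustrated with a short sketch. It assumes the total score is the plain sum of the five item responses, which is what the stated 5–35 range implies; the function names `swl_total` and `swl_band` are hypothetical, and only the bands named in the text are labeled.

```python
def swl_total(responses):
    """Sum five SWL item responses, each an integer from 1 to 7."""
    if len(responses) != 5:
        raise ValueError("the SWL has five items")
    if any(r not in range(1, 8) for r in responses):
        raise ValueError("each response must be an integer from 1 to 7")
    return sum(responses)


def swl_band(total):
    """Label only the score bands mentioned in the text."""
    if 5 <= total <= 9:
        return "extreme dissatisfaction"
    if total == 20:
        return "neutral"
    if 31 <= total <= 35:
        return "extreme satisfaction"
    # Intermediate bands are not described in the text, so none is assigned.
    return "band not specified in the text"


if __name__ == "__main__":
    answers = [4, 4, 4, 4, 4]  # hypothetical respondent choosing the midpoint
    total = swl_total(answers)
    print(total, swl_band(total))
```

A respondent who picks the midpoint of every item lands exactly on the neutral score of 20, which is why that value separates the satisfied from the dissatisfied half of the scale.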

For the confirmatory factor and measurement invariance analyses, Mplus version 8 (Muthén and Muthén, 1998–2017) was used. First, confirmatory factor analysis was conducted, using robust maximum likelihood estimation with robust standard error procedures to account for non-normality and the five categories of the READ (Rhemtulla et al., 2012). The model fit was assessed using the Comparative Fit Index (CFI), the Tucker-Lewis Index (TLI), the Root Mean Square Error of Approximation (RMSEA), and the Standardized Root Mean Square Residual (SRMR). CFI and TLI values higher than 0.90 indicate an acceptable fit, whereas values greater than 0.95 correspond to an excellent fit (Hu and Bentler, 1999). According to Hu and Bentler (1999) and Bühner (2011), an RMSEA between 0.08 and 0.10 is considered acceptable. The same applies to the SRMR (Browne and Cudeck, 1992). If the model fit was not acceptable, modification indices were inspected to identify possible adjustments to the measurement model.

Secondly, configural, metric, and scalar invariance for the CFA model across the two national samples were examined (Cieciuch and Davidov, 2016). Configural invariance, which also represents the baseline model, was tested first. It is satisfied when the latent structure is invariant across groups (in this case, across a German and a Swiss sample), thus supporting the idea of an identical number of factors and an identical pattern of factor-item relations across the two groups (Brown, 2015). According to Putnick and Bornstein (2016), metric (weak) invariance is met when different groups respond to items in the same way, resulting in comparable ratings. This test can be conducted by constraining all factor loadings to be equal across groups. Scalar (strong) invariance provides information about the comparison of latent constructs across groups by implying that participants “with the same value on the latent construct should have equal values on the observed variable” (Hong et al., 2003, p. 641). It is tested by constraining the intercepts of items to be equal across groups. However, scalar invariance is rarely supported (Marsh et al., 2018).

The chi-square test statistic is sensitive to sample size (Hayduk et al., 2007); therefore, a complementary test of measurement invariance suited to larger samples was conducted (Cheung and Rensvold, 2002). According to Chen (2007) and Putnick and Bornstein (2016), a ΔCFI of ≤ 0.01 supplemented by a ΔRMSEA of ≤ 0.015 indicates invariance when testing weak invariance.
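The ΔCFI and ΔRMSEA decision rule can be sketched as follows. The function name and the fit values are hypothetical and chosen only for illustration; the study's actual values are those listed in Table 2. The sketch assumes that ΔCFI is the drop in CFI and ΔRMSEA the rise in RMSEA when moving from the less constrained to the more constrained model.

```python
def invariance_supported(cfi_less, cfi_more, rmsea_less, rmsea_more,
                         max_delta_cfi=0.01, max_delta_rmsea=0.015):
    """Apply the delta-CFI / delta-RMSEA rule to two nested models.

    *_less: fit of the less constrained model (e.g., configural)
    *_more: fit of the more constrained model (e.g., metric)
    """
    delta_cfi = cfi_less - cfi_more        # loss of fit shows as a CFI drop
    delta_rmsea = rmsea_more - rmsea_less  # loss of fit shows as an RMSEA rise
    return delta_cfi <= max_delta_cfi and delta_rmsea <= max_delta_rmsea


# Hypothetical fit values, not taken from the study.
print(invariance_supported(0.915, 0.911, 0.040, 0.041))  # small change in fit
print(invariance_supported(0.915, 0.890, 0.040, 0.060))  # large change in fit
```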

Thirdly, reliability and validity were evaluated by using IBM SPSS Statistics, version 24 (IBM Corp, 2016). Cronbach’s alpha and McDonald’s (1970) omega were computed, and convergent validity was assessed by correlating the five factors of the READ with the HSCL depression and anxiety dimensions, as well as the RSE, self-efficacy, and SWL, where certain patterns of correlation were expected. These correlation coefficients, retrieved from the two independent samples, were compared to test whether the correlations differed significantly between the two cohorts. For this purpose, the z test statistic was calculated.
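The article does not give the formula behind the z-values. One common way to compare correlations from two independent samples is Fisher's r-to-z transformation, sketched below under that assumption. The function name and the two correlation values are hypothetical; only the sample sizes (321 and 349) come from the study.

```python
import math


def compare_independent_correlations(r1, n1, r2, n2):
    """Return the z statistic for the difference between two independent
    Pearson correlations, using Fisher's r-to-z transformation."""
    z1 = math.atanh(r1)  # Fisher transformation of the first correlation
    z2 = math.atanh(r2)  # Fisher transformation of the second correlation
    standard_error = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    return (z1 - z2) / standard_error


# Hypothetical correlations; sample sizes as reported for Germany and Switzerland.
z = compare_independent_correlations(r1=0.30, n1=321, r2=0.50, n2=349)
print(round(z, 2))
```

With the German sample entered first, a negative z means the correlation is higher in the Swiss sample, which matches the sign convention of the z-values reported in the Results.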

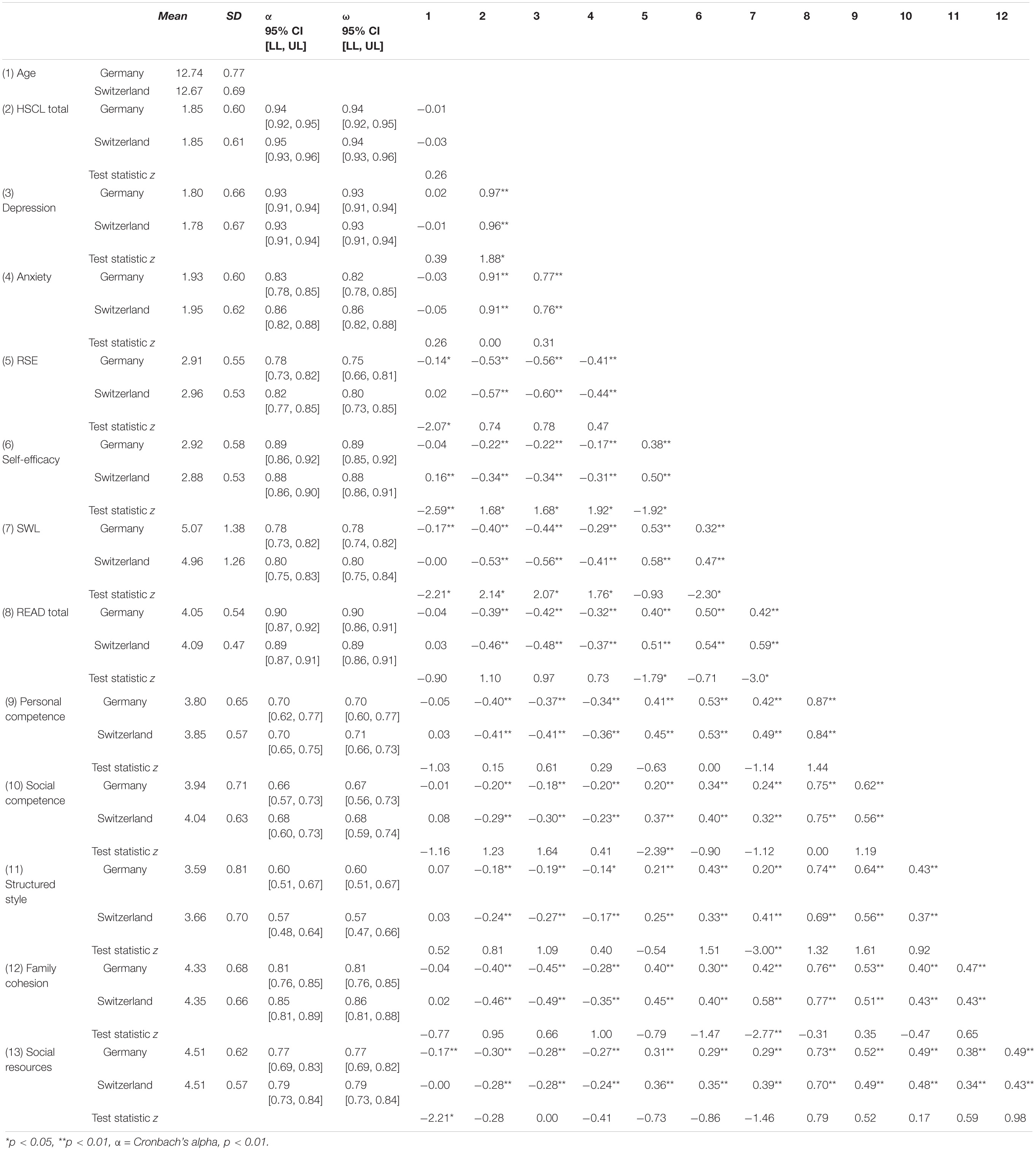

Table 1 presents the means, standard deviations, reliability estimates, and z-values of all measurement instruments and their subscales. The sum scores of the following scales and subscales were higher in the Swiss sample than in the German sample: HSCL-25 Anxiety, RSE, READ total, Personal Competence, Social Competence, Structured Style, Family Cohesion, and Social Resources. Furthermore, both countries show mean scores in the HSCL-25 scale above the cut-off value for “caseness,” which is defined at 1.75 for the original English version (Winokur et al., 1984) and is widely used for several other languages (Mollica et al., 1987).

Table 1. Means, standard deviations, reliability, Pearson’s correlations between HSCL-25, RSE, self-efficacy, SWL, and READ scores for Germany (N = 321), and Switzerland (N = 349).

The original 28-item, five-factor model of the READ was tested through a confirmatory factor analysis (CFA) across the two independent samples. The results showed that the fit indices yielded a fit with room for improvement in the German sample (χ2(340) = 581.934, p < 0.001; RMSEA = 0.047 [90% CI = 0.041–0.054]; SRMR = 0.058; CFI = 0.876; TLI = 0.862), which was also the case for the Swiss sample (χ2(340) = 595.875, p < 0.001; RMSEA = 0.047 [90% CI = 0.040–0.053]; SRMR = 0.060; CFI = 0.882; TLI = 0.868).
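To show how the reported χ2, degrees of freedom, and sample size relate to the RMSEA, the following sketch applies the standard point-estimate formula. It is an approximation under stated assumptions: it uses N − 1 in the denominator (some software uses N) and ignores any scaling correction from the robust estimator, so it is not a reproduction of the Mplus computation. The function name is hypothetical.

```python
import math


def rmsea_point_estimate(chi_square, df, n):
    """Approximate RMSEA from a model chi-square, its df, and sample size n."""
    excess = max(chi_square - df, 0.0)  # misfit beyond what df alone predicts
    return math.sqrt(excess / (df * (n - 1)))


# Values reported above for the original 28-item model.
germany = rmsea_point_estimate(581.934, 340, 321)
switzerland = rmsea_point_estimate(595.875, 340, 349)
print(round(germany, 3), round(switzerland, 3))
```

Under these assumptions, both estimates round to the reported value of 0.047.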

After freely estimating the error covariances for two item pairs in the German sample (items 28–14 and 16–11) and three item pairs in the Swiss sample (items 7–1, 15–5, and 8–2), the fit indices still showed room for improvement. These covariances were freed on statistical grounds and because the items are similarly worded. The modification indices further demonstrated one problematic item: item 4 (“I am satisfied with my life up till now”). Therefore, it was removed, which resulted in acceptable fit indices for both samples: Germany (χ2(312) = 460.87, p < 0.001; RMSEA = 0.039 [90% CI = 0.031–0.046]; SRMR = 0.053; CFI = 0.918; TLI = 0.907), and Switzerland (χ2(311) = 491.237, p < 0.001; RMSEA = 0.041 [90% CI = 0.034–0.048]; SRMR = 0.057; CFI = 0.912; TLI = 0.900). Hence, these results confirm the original five-factor structure of the READ after excluding one item, leaving a 27-item solution (M1a and M1b in Table 2).

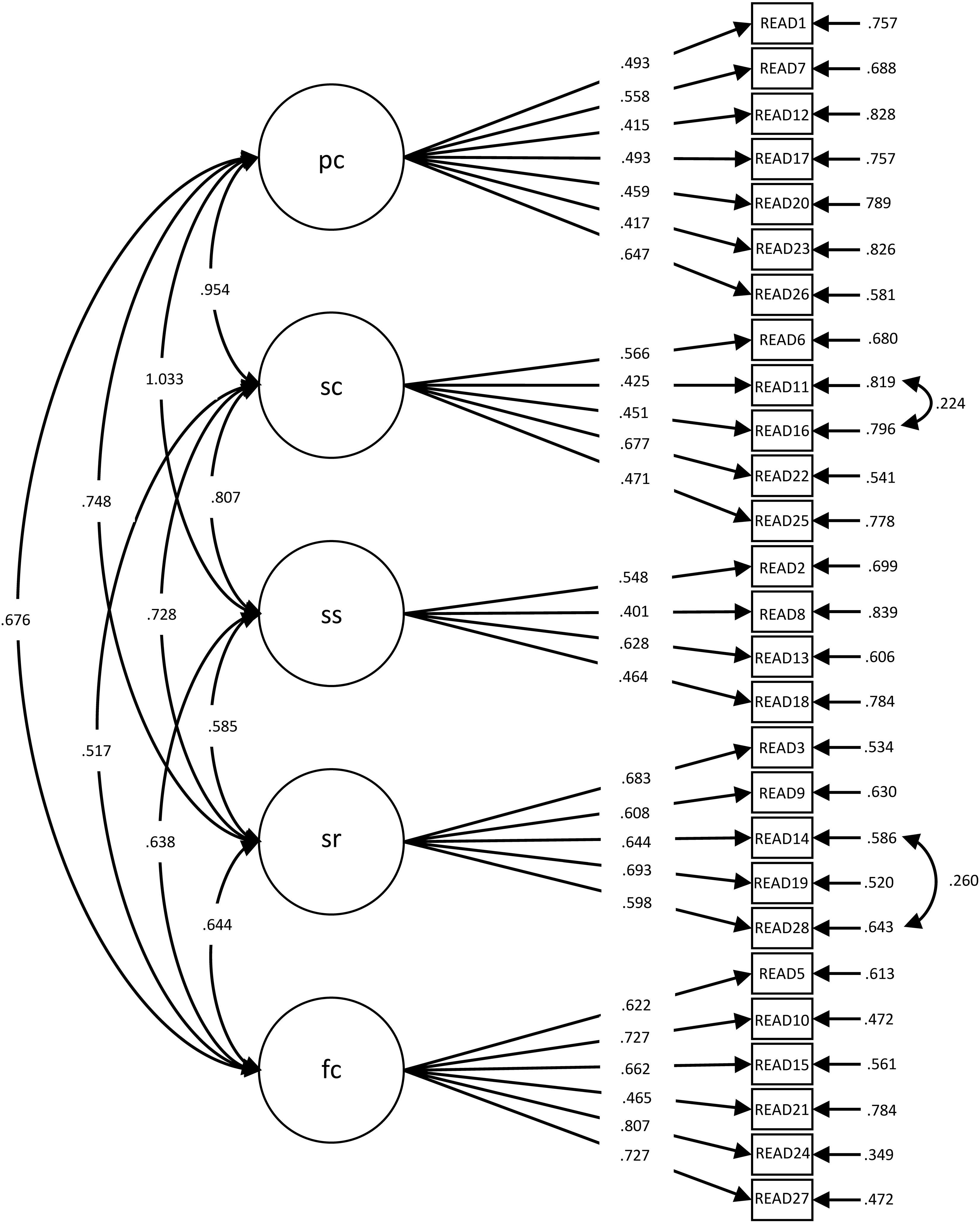

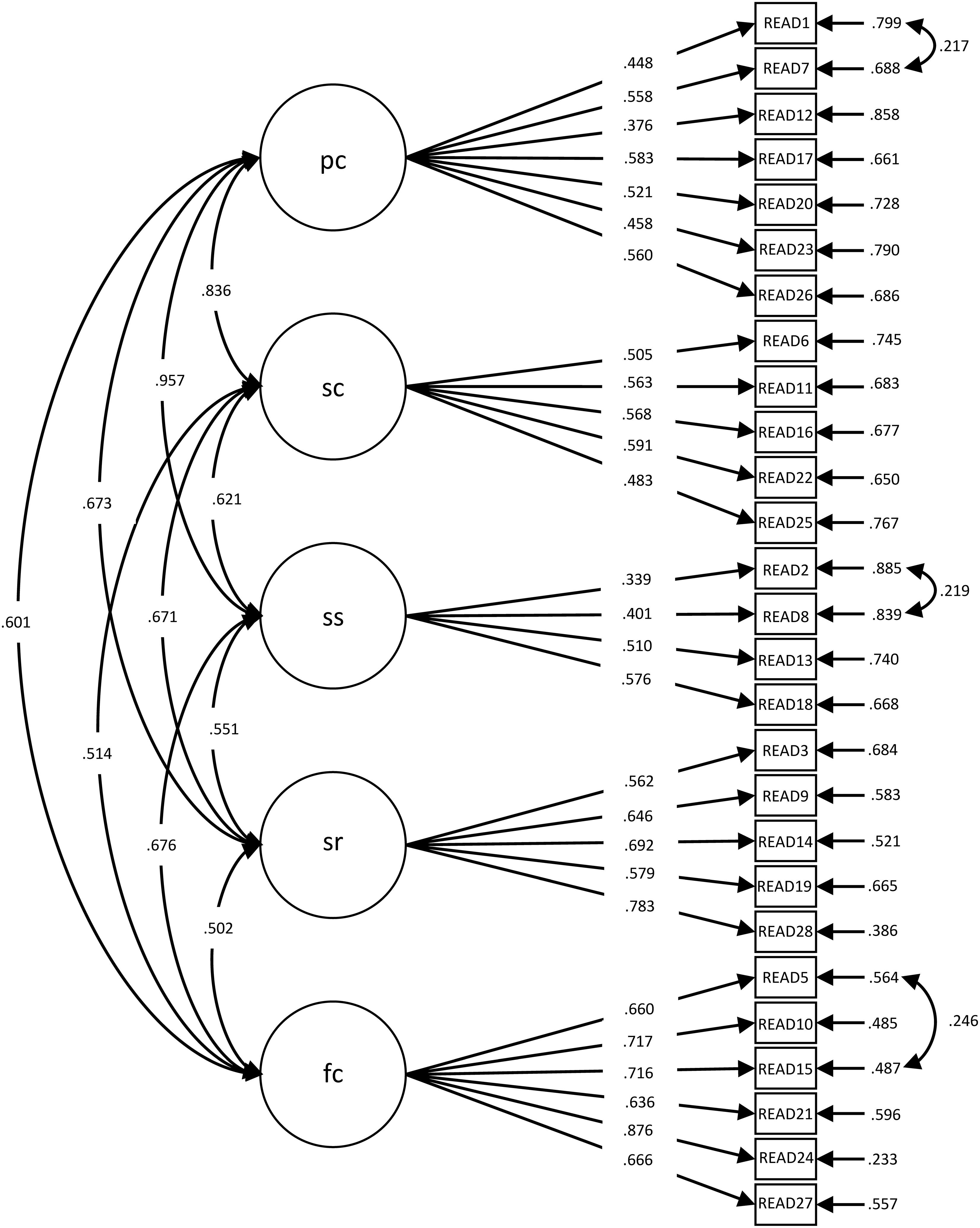

The configural invariance (the baseline model, M2 in Table 2) was supported for the READ in both national samples. This model was then compared with a model in which the factor loadings were constrained to be equal across both groups (M3) to test metric invariance, which, together with configural invariance, is considered the most important test of measurement invariance (Meredith, 1993; Meade and Lautenschlager, 2004; Vleeschouwer et al., 2014). The difference between the CFI values of the two models was below 0.01, and the differences between the RMSEA and SRMR values were below 0.015. Thus, the metric invariance was supported. The standardized factor loadings are presented in Table 3 and in Figures 1, 2. When the intercepts were constrained to be the same across both groups, the χ2 value increased, and the fit of the scalar invariance model decreased in comparison to the metric invariance model, as expected. However, the differences in the CFI, RMSEA, and SRMR values were below the cutoffs for rejecting invariance and, therefore, still supported scalar invariance.

Figure 1. Factor loadings for the five-factor, 27-item model (Germany: N = 321). pc, personal competence; sc, social competence; ss, structured style; sr, social resources; fc, family cohesion.

Figure 2. Factor loadings for the five-factor, 27-item model (Switzerland: N = 349). pc, personal competence; sc, social competence; ss, structured style; sr, social resources; fc, family cohesion.

To validate the READ, all five factors of the 27-item version were correlated with several psychological variables to investigate its convergent validity (see Table 1). As expected, the READ total score and subscales showed significant negative correlations of low to moderate size with the HSCL-25 in both countries. The READ, as well as its subscales, correlated significantly and positively with the RSE (ranging from r = 0.20 to 0.51), self-efficacy (ranging from r = 0.29 to 0.54), and SWL (ranging from r = 0.20 to 0.59). The highest negative correlations were between HSCL-25 and the READ factors Personal Competence and Family Cohesion. The same subscale factors had the highest associations with RSE, self-efficacy, and SWL. In general, most of the correlations between all measurements were higher in the Swiss sample than in the German sample. However, testing the significance of the differences between these correlations showed that only a few z-values were significant. The correlations between the total READ scale and RSE (z = −1.79, p < 0.05), as well as the SWL (z = −3.0, p < 0.05), were significantly higher in Switzerland than in Germany. The same applies to the subscale Social Competence and RSE (z = −2.39, p < 0.01), Structured Style and SWL (z = −3.00, p < 0.01), and Family Cohesion and SWL (z = −2.77, p < 0.01). The comparison of the correlations between the subscale Social Resources and age showed a significant z-value of −2.21 (p < 0.05), with the correlation being more negative for the German sample than for the Swiss sample.
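The article reports p-value thresholds for these z-values without stating whether the tests were one- or two-tailed. As a hedged illustration, the sketch below converts a z-value into both kinds of p-value using the standard normal distribution; the function names are hypothetical.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def p_values(z):
    """Return (one-tailed, two-tailed) p-values for a z statistic."""
    one_tailed = normal_cdf(-abs(z))
    return one_tailed, 2.0 * one_tailed


for z_value in (-1.79, -2.21, -3.00):
    one, two = p_values(z_value)
    print(z_value, round(one, 4), round(two, 4))
```

For z = −1.79, only the one-tailed p-value falls below 0.05, so the reported significance for that comparison would be consistent with a one-tailed test.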

The READ is an approved scale that continues to be used widely as a measure of protective factors of resilience (e.g., Windle et al., 2011). The primary aim of this first cross-cultural validation of the READ was to investigate the psychometric properties, measurement invariance, and construct validity. The main question was whether the READ is a valid scale to measure a similar latent construct of resilience across a German and a Swiss sample of seventh graders.

The first finding of the present study was the support for the five-factor structure of the READ, even though one item had to be removed, thus supporting configural invariance. Item four, which has typically loaded poorly or inconsistently in previous studies of the READ, has been excluded several times (e.g., von Soest et al., 2010; Ruvalcaba-Romero et al., 2014; Moksnes and Haugan, 2018; Pérez-Fuentes et al., 2020). “I am satisfied with my life up till now” seems to be a problematic item, and its exclusion was justified statistically by high cross-loadings with the Family Cohesion factor and high modification indices. The item was designed to measure Personal Competence but cannot be clearly allocated to this factor on statistical grounds. It can only be assumed that being satisfied with one's own life might be strongly influenced by the family in this specific age range. The argument is founded on research by Szcześniak and Tułecka (2020), who demonstrated high positive correlations between satisfaction with life and family adaptability and cohesion. On the other hand, adolescents’ family stressors and life satisfaction correlate significantly and negatively (Chappel et al., 2014), showing that family influences an adolescent’s satisfaction with life. Therefore, item four seems to tap into this duality, which is not in line with classical test theory. Although the item may yield interesting information about this relation, it should be used with caution, particularly when exploring the factors in question. Future replication studies with broader age ranges are needed to determine whether it would be better to leave it out for German or Swiss adolescent samples. Nonetheless, it appears that seventh graders from Germany and Switzerland conceptualize protective factors of resilience similarly, as reflected by the five factors.

The most important test of invariance, metric invariance, was also supported, showing that the factor loadings are equal for both groups. Thus, participants in Germany and Switzerland understand the items similarly and, therefore, interpret the wording of the items equally; their scores on the scale are comparable. Thus, it allowed simple regression analyses to predict comparable changes in criterion-related outcome variables across the two samples. Even scalar invariance was confirmed and therefore demonstrated that participants with the same value on the latent construct have identical values on the observed variable in both countries. These results show that the comparisons across the German and Swiss sample are meaningful and valid, direct mean comparisons are possible, and it can be assumed the same construct was assessed.

The internal consistency was supported by Cronbach’s alpha, which was 0.90 for the total READ score in the German sample and 0.89 in the Swiss sample but varied in both countries for the five READ factor subscales between 0.57 and 0.85. While the reliability of four subscales is satisfactory, the subscale Structured Style is rather low. This factor was the weakest one in previous studies (Hjemdal et al., 2006; von Soest et al., 2010; Kelly et al., 2017; Moksnes and Haugan, 2018). This alpha value could be caused by a low number of items (four items), poor interrelatedness between these items, or a heterogeneous construct (Tavakol and Dennick, 2011). Hence, this factor should be used with caution and be investigated further.
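For readers less familiar with the coefficient, the following sketch computes Cronbach's alpha from an item-response matrix using the standard formula (number of items, sum of item variances, variance of the total score). The data are hypothetical responses to a four-item subscale and are not taken from the study; the function name is likewise an assumption.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of the respondents' total scores.
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses of six adolescents to four items (1-5 scale).
responses = [
    [4, 5, 4, 3],
    [2, 3, 3, 2],
    [5, 5, 4, 5],
    [1, 2, 2, 1],
    [3, 3, 4, 3],
    [4, 4, 5, 4],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 2))
```

Because alpha depends on both the number of items and how strongly they covary, a short subscale with weakly related items yields a low value, in line with the explanations offered above for Structured Style.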

The construct validity of the READ was supported in the German as well as in the Swiss sample, as the READ total score correlated significantly and negatively with the HSCL-25 total score and its subscales that measured anxiety and depression, and significantly and positively with self-esteem, self-efficacy, and the newly integrated aspect of satisfaction with life. These correlations were low to strong in size, with the expected directionality, and therefore supported construct validity. These findings are in accordance with previous studies. However, the significant differences in the correlation coefficients need to be further evaluated, as there are no studies available that have investigated similar differences. One possible explanation is that the significant differences are related to higher levels of resilience and self-esteem in the Swiss sample, which could also mean that the Swiss sample may have relatively more access to resilience resources to boost their self-esteem. Thus, it is not surprising that the Swiss sample scored relatively higher on Personal Competence than the German sample because higher self-esteem contributes to higher personal competence. As mentioned, these findings are to be used with caution because more studies are needed for further evaluations.

Moreover, the READ is also relevant in practical and applied settings. Compared to other resilience scales (e.g., Ego Resiliency by Bromley et al., 2006, including 102 items), the READ requires considerably less time and thus might have lower rates of non-responses and missing data. Beyond showing that the scale is a valid and reliable instrument to measure protective factors, providing test-retest information to indicate the instrument’s stability would be helpful. This would also give teachers or clinicians, for example, the possibility to use the scale to identify an individual’s weaknesses and strengths in times of adversity and to foster their resilience. Especially in times of Covid-19, where the mental health among adolescents could very likely decrease (Guessoum et al., 2020), knowing the availability of assets and resources could guide a framework for intervention (Windle et al., 2011).

Several limitations in this study need to be recognized. First, the sample consists of only seventh graders in one federal state of Germany and three cantons of Switzerland. We suggest that further measurement invariance analyses, ideally cross-cultural analyses, across different school levels and other regions, are needed to assess the generalizability of the READ scale. The study showed that seventh graders in the Federal State Baden-Württemberg and three cantons of Switzerland (Aargau, Basel-City, and Solothurn) understood the instrument in a similar fashion. On all measurement invariance levels, the findings of both samples did not differ significantly. Nonetheless, the samples have not been further investigated. Therefore, it remains unclear how these two samples differ according to their cultural, educational and individual backgrounds. Further studies could shed light on the comparability of a German and a Swiss sample.

Additionally, a further cross-cultural validation across two completely different samples (cultural and linguistic) would be of great benefit. As Germany and Switzerland are culturally similar (Kopper, 1993) and the language is almost the same, the question arises of whether these results will be supported by a comparison between completely different cultures and two language versions, for example, between a Norwegian and a Swiss sample. A study among 3,419 undergraduate students in 24 countries showed that language has an influence on response patterns. Half of all students in a country received an English-language questionnaire, whereas the other half filled out the same survey in their native language. Findings showed that cultural accommodations were present, and respondents altered their responses according to the culture of the language and, thus, the cultural values (Harzing, 2005). This strongly supports the idea of translating questionnaires and making several language versions available. For these translations to be usable across nations and cultures, validations are essential.

Secondly, according to Hjemdal et al. (2006), a sample of individuals who have already dealt with long-term adversity and have overcome these adversities should have been chosen. Whether the students have dealt with such adversity has not been examined in detail. Even so, the mean values for the HSCL-25 total in both samples were above the cut-off value of M = 1.75 (Winokur et al., 1984): in the German sample M = 1.85 (SD = 0.60), and in the Swiss sample M = 1.85 (SD = 0.61). In addition, the sum scores of the subscales indicated “caseness,” but no specific sample facing adversity was investigated. Therefore, it would be interesting to have a closer look at a specific subsample with HSCL-25 values above 1.75 to examine the psychometric properties and factorial validity in a sample truly facing adversities. Furthermore, adolescents could have been categorized as depressed/non-depressed or anxious/non-anxious based on the HSCL-25 to verify the difference in the READ scores among these groups. Examining whether resilience as measured by the READ negatively predicts the HSCL-25 total score over and above self-esteem and self-efficacy could be a further way of showing the incremental validity of the READ.

Thirdly, self-report measures always bear the risk of social desirability, especially when carrying out a study in the classroom (Althubaiti, 2016). To reduce this bias, students filled out the questionnaires separately in class on tablets, without seeing their peers’ answers. Furthermore, if a substantial bias were present, either the findings would differ between the two samples or it can be assumed that the bias is similar in each sample. Finally, the cross-sectional nature of the present design must be noted. It is suggested that future research examine the consistency of the findings (test–retest reliability). However, the present study is the first cross-cultural validation study and the first validation study of the READ in adolescent samples from Germany and Switzerland. Further studies testing the reliability and validity in other countries and cultures in a cross-cultural design, focusing additionally on gender and age invariance, would be of great benefit to the READ.

In conclusion, the first cross-cultural validation of the READ with samples from Germany and Switzerland provides further evidence on the psychometric properties of the READ as a valuable tool for the assessment of protective factors of resilience. The five-factor, 27-item model resulted in a good fit for the German and Swiss samples and has potential for future research. Participants from both countries understood and interpreted these items in a similar and comparable fashion, which allows prospective studies to investigate adolescents’ mental health. However, the modifications indicate a need for further investigation of whether the findings of the existing latent structure are replicable in different samples, countries, and cultures.

The datasets presented in this article are not readily available because the raw data supporting the conclusions of this article will be made available by the authors after 2023, when the project and dissertations will be completed. Requests to access the datasets should be directed to Clarissa Janousch.

The studies involving human participants were reviewed and approved by the Ministry of Baden-Württemberg (Germany); the Cantonal Bureau for Education in the Cantons of Aargau, Basel-City, and Solothurn; and the Ethics Committee (for psychological and related research) of the Faculty of Arts and Social Sciences of the University of Zürich. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

CJ collected the data, conducted the data analysis, wrote the first draft of the manuscript, and continuously revised and developed the final version based on the feedback received. FA, OH, and CH provided substantial feedback on the manuscript and data analysis. All authors contributed to the article and approved the submitted version.

This study was funded by the Swiss National Science Foundation (SNSF) through the National Centres of Competence in Research (NCCR) project On the Move—The Migration-Mobility Nexus Overcoming Inequalities with Education—School and Resilience. Furthermore, the University of Applied Sciences and Arts Northwestern Switzerland (FHNW) generously supported the study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank the participating schools, teachers, and students of the project Overcoming Inequalities with Education—School and Resilience.

Aburn, G., Gott, M., and Hoare, K. (2016). What is resilience? An integrative review of the empirical literature. J. Adv. Nurs. 72, 980–1000. doi: 10.1111/jan.12888

Althubaiti, A. (2016). Information bias in health research: definition, pitfalls, and adjustment methods. J. Multidiscip. Healthc. 9, 211–217. doi: 10.2147/JMDH.S104807

Antonovsky, A. (1996). The salutogenic model as a theory to guide health promotion. Health Promot. Int. 11, 11–18. doi: 10.1093/heapro/11.1.11

Askeland, K. G., Hysing, M., Sivertsen, B., and Breivik, K. (2019). Factor structure and psychometric properties of the resilience scale for adolescents (READ). Assessment 27, 1575–1587. doi: 10.1177/1073191119832659

Beaton, D. E., Bombardier, C., Guillemin, F., and Ferraz, M. B. (2000). Guidelines for the process of cross-cultural adaptation of self-report measures. Spine 25, 3186–3191. doi: 10.1097/00007632-200012150-00014

Becker, C. M., Glascoff, M. A., and Felts, W. M. (2010). Salutogenesis 30 years later: where do we go from here. Int. Electronic J. Health Educ. 13, 25–32.

Bibi, A., Lin, M., and Margraf, J. (2020). Salutogenic constructs across Pakistan and Germany: a cross sectional study. Int. J. Clin. Health Psychol. 20, 1–9. doi: 10.1016/j.ijchp.2019.10.001

Bonanno, G. A. (2004). Loss, Trauma, and human resilience: have we underestimated the human capacity to thrive after extremely aversive events? Am. Psychol. 59, 20–28. doi: 10.1037/0003-066X.59.1.20

Bonanno, G. A., Wortman, C. B., Lehman, D. R., Tweed, R. G., Haring, M., Sonnega, J., et al. (2002). Resilience to loss and chronic grief: a prospective study from preloss to 18-months postloss. J. Pers. Soc Psychol. 83, 1150–1164. doi: 10.1037/0022-3514.83.5.1150

Brady, K. T., and Back, S. E. (2012). Childhood trauma, posttraumatic stress disorder, and alcohol dependence. Alcohol Res. 34, 408–413.

Bromley, E., Johnson, J. G., and Cohen, P. (2006). Personality strengths in adolescence and decreased risk of developing mental health problems in early adulthood. Compr. Psychiatry 47, 315–324. doi: 10.1016/j.comppsych.2005.11.003

Brown, T. A. (2015). Confirmatory Factor Analysis for Applied Research, Second Edn. New York, NY: The Guilford Press.

Browne, M. W., and Cudeck, R. (1992). Alternative ways of assessing model fit. Sociol. Methods Res. 21, 230–258. doi: 10.1177/0049124192021002005

Bühner, M. (2011). Einführung in Die Test- und Fragebogenkonstruktion. 3., aktualisierte und erweiterte Auflage. München: Pearson.

Byrne, B. M., Shavelson, R. J., and Muthén, B. (1989). Testing for the equivalence of factor covariance and mean structures: the issue of partial measurement invariance. Psychol. Bull. 105, 456–466. doi: 10.1037/0033-2909.105.3.456

Canvin, K., Marttila, A., Burstrom, B., and Whitehead, M. (2009). Tales of the unexpected? Hidden resilience in poor households in Britain. Soc. Sci. Med. 69, 238–245. doi: 10.1016/j.socscimed.2009.05.009

Chappel, A. M., Suldo, S. M., and Ogg, J. A. (2014). Associations between adolescents’ family stressors and life satisfaction. J. Child Fam. Stud. 23, 76–84. doi: 10.1007/s10826-012-9687-9

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Struct. Equ. Model. Multidiscip. J. 14, 464–504. doi: 10.1080/10705510701301834

Cheung, G. W., and Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Struct. Equ. Model. Multidiscip. J. 9, 233–255. doi: 10.1207/S15328007SEM0902_5

Cieciuch, J., and Davidov, E. (2016). Establishing measurement invariance across online and offline samples. A tutorial with the software packages AMOS and Mplus. Stud. Psychol. 14:83. doi: 10.21697/sp.2015.14.2.06

Connor, K. M., and Davidson, J. R. (2003). Development of a new resilience scale: the Connor-Davidson resilience scale (CD-RISC). Depress. Anxiety 18, 76–82. doi: 10.1002/da.10113

Derogatis, L. R., Lipman, R. S., Rickels, K., Uhlenhuth, E. H., and Covi, L. (1974). The Hopkins symptom checklist (HSCL): a self-report symptom inventory. Behav. Sci. 19, 1–15. doi: 10.1002/bs.3830190102

Derogatis, L. R., and Savitz, K. L. (1999). “The SCL-90-R, brief symptom inventory, and matching clinical rating scales,” in The Use of Psychological Testing for Treatment Planning and Outcomes Assessment, 2nd Edn, ed. M. E. Maruish (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 679–724.

Donnon, T., and Hammond, W. (2007). A psychometric assessment of the self-reported youth resiliency: assessing developmental strengths questionnaire. Psychol. Rep. 100, 963–978. doi: 10.2466/pr0.100.3.963-978

Donnon, T., Hammond, W., and Charles, G. (2003). Youth resiliency: assessing students’ capacity for success at school. Teach. Learn. 1, 23–28. doi: 10.26522/tl.v1i2.109

Dowrick, C., Kokanovic, R., Hegarty, K., Griffiths, F., and Gunn, J. (2008). Resilience and depression: perspectives from primary care. Health (London) 12, 439–452. doi: 10.1177/1363459308094419

Estrada, A. X., Severt, J. B., and Jiménez-Rodríguez, M. (2016). Elaborating on the conceptual underpinnings of resilience. Ind. Organ. Psychol. 9, 497–502. doi: 10.1017/iop.2016.46

Fischer, R., and Karl, J. A. (2019). A primer to (Cross-Cultural) multi-group invariance testing possibilities in R. Front. Psychol. 10:1507. doi: 10.3389/fpsyg.2019.01507

Friborg, O., Hjemdal, O., Rosenvinge, J., and Martinussen, M. (2003). A new rating scale for adult resilience: what are the central protective resources behind healthy adjustment? Int. J. Methods Psychiatric Res. 12, 65–76. doi: 10.1002/mpr.143

Garmezy, N. (1983). “Stressors of childhood,” in Stress, Coping, and Development in Children, eds N. Garmezy and M. Rutter (Baltimore, MD: Johns Hopkins University Press), 43–84.

Garmezy, N. (1991). Resiliency and vulnerability to adverse developmental outcomes associated with poverty. Am. Behav. Sci. 34, 416–430. doi: 10.1177/0002764291034004003

Guessoum, S. B., Lachal, J., Radjack, R., Carretier, E., Minassian, S., Benoit, L., et al. (2020). Adolescent psychiatric disorders during the COVID-19 pandemic and lockdown. Psychiatry Res. 291:113264. doi: 10.1016/j.psychres.2020.113264

Harzing, A.-W. (2005). Does the use of english-language questionnaires in cross-national research obscure national differences? Int. J. Cross Cult. Manage. 5, 213–224. doi: 10.1177/1470595805054494

Havnen, A., Anyan, F., Hjemdal, O., Solem, S., Gurigard Riksfjord, M., and Hagen, K. (2020). Resilience moderates negative outcome from stress during the COVID-19 pandemic: a moderated-mediation approach. Int. J. Environ. Res. Public Health 17:6461. doi: 10.3390/ijerph17186461

Hayduk, L., Cummings, G., Boadu, K., Pazderka-Robinson, H., and Boulianne, S. (2007). Testing! testing! one, two, three – testing the theory in structural equation models! Pers. Individ. Differ. 42, 841–850. doi: 10.1016/j.paid.2006.10.001

Heatherton, T. F., and Wyland, C. L. (2003). “Assessing self-esteem,” in Positive Psychological Assessment: A Handbook of Models and Measures, eds S. J. Lopez, and C. R. Snyder (Washington, DC: American Psychological Association), 219–233. doi: 10.1037/10612-014

Hjemdal, O. (2007). Measuring protective factors: the development of two resilience scales in Norway. Child Adolesc. Psychiatric Clin. North Am. 16, 303–321. doi: 10.1016/j.chc.2006.12.003

Hjemdal, O., Friborg, O., Stiles, T. C., Martinussen, M., and Rosenvinge, J. H. (2006). A new scale for adolescent resilience: grasping the central protective resources behind healthy development. Meas. Eval. Counsel. Dev. 39, 84–96. doi: 10.1080/07481756.2006.11909791

Hjemdal, O., Roazzi, A., Dias, M., and Friborg, O. (2015). The cross-cultural validity of the resilience scale for adults: a comparison between Norway and Brazil. BMC Psychol. 3:1–9. doi: 10.1186/s40359-015-0076-1

Hjemdal, O., Vogel, P. A., Solem, S., Hagen, K., and Stiles, T. C. (2011). The relationship between resilience and levels of anxiety, depression, and obsessive-compulsive symptoms in adolescents. Clin. Psychol. Psychother. 18, 314–321. doi: 10.1002/cpp.719

Hong, S., Malik, M. L., and Lee, M.-K. (2003). Testing configural, metric, scalar, and latent mean invariance across genders in sociotropy and autonomy using a non-western sample. Educ. Psychol. Meas. 63, 636–654. doi: 10.1177/0013164403251332

Hu, L., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Janssen, M. (2011). Resilience and adaptation in the governance of social-ecological systems. Int. J. Commons 5:340. doi: 10.18352/ijc.320

Kaplan, H. B. (2002). “Toward an understanding of resilience,” in Resilience and Development: Positive Life Adaptations, eds M. D. Glantz, and J. L. Johnson (Boston, MA: Springer US), 17–83. doi: 10.1007/0-306-47167-1_3

Kelly, Y., Fitzgerald, A., and Dooley, B. (2017). Validation of the resilience scale for adolescents (READ) in Ireland: a multi-group analysis: validation of READ with adolescents in Ireland. Int. J. Methods Psychiatr. Res. 26:e1506. doi: 10.1002/mpr.1506

Kopper, E. (1993). Swiss and Germans: similarities and differences in work-related values, attitudes, and behavior. Int. J. Intercult. Relat. 17, 167–184. doi: 10.1016/0147-1767(93)90023-2

Krieger, B., Schulze, C., Boyd, J., Amann, R., Piškur, B., Beurskens, A., et al. (2020). Cross-cultural adaptation of the participation and environment measure for children and youth (PEM-CY) into German: a qualitative study in three countries. BMC Pediatr. 20:492. doi: 10.1186/s12887-020-02343-y

Langeland, E., and Vinje, H. F. (2017). “The application of salutogenesis in mental healthcare settings,” in The Handbook of Salutogenesis, eds M. B. Mittelmark, M. Eriksson, G. F. Bauer, J. M. Pelikan, B. Lindström, and G. A. Espnes (Cham: Springer), 299–305. doi: 10.1007/978-3-319-04600-6_28

Leidy, M. S., Guerra, N. G., and Toro, R. I. (2010). Positive parenting, family cohesion, and child social competence among immigrant Latino families. J. Fam. Psychol. 24, 252–260. doi: 10.1037/a0019407

Luthar, S. (2006). Resilience in development: a synthesis of research across five decades. Dev. Psychopathol. 3, 739–795. doi: 10.1002/9780470939406.ch20

Luthar, S. S., Cicchetti, D., and Becker, B. (2000). The construct of resilience: a critical evaluation and guidelines for future work. Child Dev. 71, 543–562. doi: 10.1111/1467-8624.00164

Mai, Y., Zhang, Z., and Wen, Z. (2018). Comparing exploratory structural equation modeling and existing approaches for multiple regression with latent variables. Struct. Equ. Model. Multidiscip. J. 25, 737–749. doi: 10.1080/10705511.2018.1444993

Marsh, H. W., Guo, J., Parker, P. D., Nagengast, B., Asparouhov, T., Muthén, B., et al. (2018). What to do when scalar invariance fails: the extended alignment method for multi-group factor analysis comparison of latent means across many groups. Psychol. Methods 23, 524–545. doi: 10.1037/met0000113

Marsh, H. W., Lüdtke, O., Muthén, B., Asparouhov, T., Morin, A. J. S., Trautwein, U., et al. (2010). A new look at the big five factor structure through exploratory structural equation modeling. Psychol. Assess. 22, 471–491. doi: 10.1037/a0019227

Masten, A. S. (2001). Ordinary magic: resilience processes in development. Am. Psychol. 56, 227–238. doi: 10.1037/0003-066X.56.3.227

Masten, A. S. (2007). Resilience in developing systems: progress and promise as the fourth wave rises. Dev. Psychopathol. 19, 921–930. doi: 10.1017/S0954579407000442

Masten, A. S. (2009). Ordinary magic: lessons from research on resilience in human development. Educ. Can. 49, 28–32.

Masten, A. S. (2011). Resilience in children threatened by extreme adversity: frameworks for research, practice, and translational synergy. Dev. Psychopathol. 23, 493–506. doi: 10.1017/S0954579411000198

Masten, A. S., Garmezy, N., Tellegen, A., Pellegrini, D. S., Larkin, K., and Larsen, A. (1988). Competence and stress in school children: the moderating effects of individual and family qualities. J. Child Psychol. Psychiatry 29, 745–764. doi: 10.1111/j.1469-7610.1988.tb00751.x

Masten, A. S., Hubbard, J. J., Gest, S. D., Tellegen, A., Garmezy, N., and Ramirez, M. (1999). Competence in the context of adversity: pathways to resilience and maladaptation from childhood to late adolescence. Dev. Psychopathol. 11, 143–169. doi: 10.1017/s0954579499001996

Matsumoto, D. R., and van de Vijver, F. J. R. (eds). (2011). Cross-cultural Research Methods in Psychology. New York, NY: Cambridge University Press.

Mattsson, N. B., Williams, H. V., Rickels, K., Lipman, R. S., and Uhlenhuth, E. H. (1969). Dimensions of symptom distress in anxious neurotic outpatients. Psychopharm. Bull. 5, 19–32.

McDonald, R. P. (1970). The theoretical foundations of principal factor analysis, canonical factor analysis, and alpha factor analysis. Br. J. Math. Stat. Psychol. 23, 1–21. doi: 10.1111/j.2044-8317.1970.tb00432.x

McLaughlin, K. A., and Lambert, H. K. (2017). Child trauma exposure and psychopathology: mechanisms of risk and resilience. Curr. Opin. Psychol. 14, 29–34. doi: 10.1016/j.copsyc.2016.10.004

Meade, A. W., and Lautenschlager, G. J. (2004). A Monte Carlo study of confirmatory factor analytic tests of measurement equivalence/invariance. Struct. Equ. Model. Multidiscip. J. 11, 60–72. doi: 10.1207/S15328007SEM1101_5

Meredith, W. (1993). Measurement invariance, factor analysis and factorial invariance. Psychometrika 58, 525–543. doi: 10.1007/BF02294825

Milfont, T. L., and Fischer, R. (2010). Testing measurement invariance across groups: applications in cross-cultural research. Int. J. Psychol. Res. 3, 111–130. doi: 10.21500/20112084.857

Millsap, R. E. (2011). Statistical Approaches to Measurement Invariance. New York, NY: Routledge Taylor & Francis Group. doi: 10.4324/9780203821961

Moksnes, U. K., and Haugan, G. (2018). Validation of the resilience scale for adolescents in Norwegian adolescents 13-18 years. Scand. J. Caring Sci. 32, 430–440. doi: 10.1111/scs.12444

Mollica, R. F., Wyshak, G., and Lavelle, J. (1987). The psychosocial impact of war trauma and torture on Southeast Asian refugees. Am. J. Psychiatry 144, 1567–1572. doi: 10.1176/ajp.144.12.1567

Muthén, L. K., and Muthén, B. O. (1998-2017). Mplus User’s Guide. 8th Edn. Los Angeles, CA: Muthén & Muthén.

Olsson, C., Bond, L., Burns, J., Vella-Brodrick, D., and Sawyer, S. (2003). Adolescent resilience: a concept analysis. J. Adolesc. 26, 1–11. doi: 10.1016/S0140-1971(02)00118-5

Opp, G., Fingerle, M., and Bender, D. (eds). (2008). Was Kinder stärkt: Erziehung zwischen Risiko und Resilienz. 3. Aufl. München: Reinhardt.

Oshio, A., Kaneko, H., Nagamine, S., and Nakaya, M. (2003). Construct validity of the adolescent resilience scale. Psychol. Rep. 93, 1217–1222. doi: 10.2466/pr0.2003.93.3f.1217

Pavot, W., and Diener, E. (1993). Review of the satisfaction with life scale. Psychol. Assess. 5, 164–172. doi: 10.1037/1040-3590.5.2.164

Pérez-Fuentes, M. del C., Molero Jurado, M. del M., Barragán Martín, A. B., Mercader Rubio, I., et al. (2020). Validation of the resilience scale for adolescents in high school in a Spanish population. Sustainability 12:2943. doi: 10.3390/su12072943

Prime, H., Wade, M., and Browne, D. T. (2020). Risk and resilience in family well-being during the COVID-19 pandemic. Am. Psychol. 75, 631–643. doi: 10.1037/amp0000660

Proag, V. (2014). The concept of vulnerability and resilience. Proc. Econ. Finance 18, 369–376. doi: 10.1016/S2212-5671(14)00952-6

Putnam, F. W. (2006). The impact of trauma on child development. Juvenile Fam. Court J. 57, 1–11. doi: 10.1111/j.1755-6988.2006.tb00110.x

Putnick, D. L., and Bornstein, M. H. (2016). Measurement invariance conventions and reporting: the state of the art and future directions for psychological research. Dev. Rev. 41, 71–90. doi: 10.1016/j.dr.2016.06.004

Ran, L., Wang, W., Ai, M., Kong, Y., Chen, J., and Kuang, L. (2020). Psychological resilience, depression, anxiety, and somatization symptoms in response to COVID-19: a study of the general population in China at the peak of its epidemic. Soc. Sci. Med. 262:113261. doi: 10.1016/j.socscimed.2020.113261

Rhemtulla, M., Brosseau-Liard, P. É., and Savalei, V. (2012). When can categorical variables be treated as continuous? A comparison of robust continuous and categorical SEM estimation methods under suboptimal conditions. Psychol. Methods 17, 354–373. doi: 10.1037/a0029315

Rosenberg, M. (1965). Society and the Adolescent Self-image. Princeton, NJ: Princeton University Press.

Rutter, M. (1985). Resilience in the face of adversity: protective factors and resistance to psychiatric disorder. Br. J. Psychiatry 147, 598–611. doi: 10.1192/bjp.147.6.598

Rutter, M. (1987). Psychosocial resilience and protective mechanisms. Am. J. Orthopsychiatry 57, 316–331. doi: 10.1111/j.1939-0025.1987.tb03541.x

Rutter, M. (1990). “Psychosocial resilience and protective mechanisms,” in Risk and Protective Factors in the Development of Psychopathology, eds J. E. Rolf, A. S. Masten, D. Cicchetti, K. H. Nuechterlein, and S. Weintraub (New York, NY: Cambridge University Press), 181–214. doi: 10.1017/CBO9780511752872.013

Rutter, M. (2000). “Resilience reconsidered: conceptual considerations, empirical findings, and policy implications,” in Handbook of Early Childhood Intervention, eds J. P. Shonkoff and S. J. Meisels (New York, NY: Cambridge University Press), 651–682. doi: 10.1017/CBO9780511529320.030

Rutter, M. (2012). Resilience as a dynamic concept. Dev. Psychopathol. 24, 335–344. doi: 10.1017/S0954579412000028

Ruvalcaba-Romero, N. A., Gallegos-Guajardo, J., and Villegas-Guinea, D. (2014). Validation of the resilience scale for adolescents (READ) in Mexico. J. Behav. Health Soc. Issues 6, 21–34. doi: 10.5460/jbhsi.v6.2.41180

Ryff, C. D., and Singer, B. (2000). Interpersonal flourishing: a positive health agenda for the new millennium. Pers. Soc. Psychol. Rev. 4, 30–44. doi: 10.1207/S15327957PSPR0401_4

Sagone, E., and Caroli, M. E. D. (2013). Relationships between resilience, self-efficacy, and thinking styles in Italian middle adolescents. Proc. Soc. Behav. Sci. 92, 838–845. doi: 10.1016/j.sbspro.2013.08.763

Scheithauer, H., Niebank, K., and Petermann, F. (2000). “Biopsychosoziale Risiken in der frühkindlichen Entwicklung: das Risiko- und Schutzfaktorenkonzept aus entwicklungspsychopathologischer Sicht,” in Risiken in der Frühkindlichen Entwicklung. Entwicklungspsychopathologie der Ersten Lebensjahre, eds F. Petermann, K. Niebank, and H. Scheithauer (Göttingen: Hogrefe Verl. für Psychologie), 65–97.

Schwarzer, R., and Jerusalem, M. (1995). “Generalized self-efficacy scale,” in Measures in Health Psychology: A User’s Portfolio. Causal and Control Beliefs, eds J. Weinman, S. Wright, and M. Johnston (Windsor: NFER-NELSON), 35–37.

Seligman, M. E. P. (2002). Authentic Happiness: using the New Positive Psychology to Realize your Potential for Lasting Fulfillment. New York, NY: Free Press.

Skrove, M., Romundstad, P., and Indredavik, M. (2013). Resilience, lifestyle and symptoms of anxiety and depression in adolescence: the young-HUNT study. Soc. Psychiatry Psychiatric Epidemiol. 48, 407–416. doi: 10.1007/s00127-012-0561-2

Snyder, C. R., Lopez, S. J., and Pedrotti, J. T. (2011). Positive Psychology: the Scientific and Practical Explorations of Human Strengths, 2nd Edn. Thousand Oaks, CA: SAGE.

Sousa, V. D., and Rojjanasrirat, W. (2011). Translation, adaptation and validation of instruments or scales for use in cross-cultural health care research: a clear and user-friendly guideline: validation of instruments or scales. J. Eval. Clin. Pract. 17, 268–274. doi: 10.1111/j.1365-2753.2010.01434.x

Southwick, S. M., Bonanno, G., Masten, A. S., Panter-Brick, C., and Yehuda, R. (2014). Resilience definition, theory and challenges. Eur. J. Psychotraumatol. 5, 1–14. doi: 10.3402/ejpt.v5.25338

Southwick, S. M., Sippel, L., Krystal, J., Charney, D., Mayes, L., and Pietrzak, R. (2016). Why are some individuals more resilient than others: the role of social support. World Psychiatry 15, 77–79. doi: 10.1002/wps.20282

Sperber, A. D., Devellis, R. F., and Boehlecke, B. (1994). Cross-cultural translation: methodology and validation. J. Cross Cult. Psychol. 25, 501–524. doi: 10.1177/0022022194254006

Steenkamp, J. E. M., and Baumgartner, H. (1998). Assessing measurement invariance in cross-national consumer research. J. Consum. Res. 25, 78–107. doi: 10.1086/209528

Stratta, P., Riccardi, I., Di Cosimo, A., Cavicchio, A., Struglia, F., Daneluzzo, E., et al. (2012). A validation study of the Italian version of the resilience scale for adolescents (READ): resilience scale for adolescents Italian validation. J. Community Psychol. 40, 479–485. doi: 10.1002/jcop.20518

Szcześniak, M., and Tułecka, M. (2020). Family functioning and life satisfaction: the mediatory role of emotional intelligence. Psychol. Res. Behav. Manag. 13, 223–232. doi: 10.2147/PRBM.S240898

Tavakol, M., and Dennick, R. (2011). Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2, 53–55. doi: 10.5116/ijme.4dfb.8dfd

van Prooijen, J.-W., and van der Kloot, W. A. (2001). Confirmatory analysis of exploratively obtained factor structures. Educ. Psychol. Meas. 61, 777–792. doi: 10.1177/00131640121971518

Vleeschouwer, M., Schubart, C. D., Henquet, C., Myin-Germeys, I., van Gastel, W. A., Hillegers, M. H. J., et al. (2014). Does assessment type matter? A measurement invariance analysis of online and paper and pencil assessment of the community assessment of psychic experiences (CAPE). PLoS One 9:e84011. doi: 10.1371/journal.pone.0084011

von Soest, T., Mossige, S., Stefansen, K., and Hjemdal, O. (2010). A validation study of the resilience scale for adolescents (READ). J. Psychopathol. Behav. Assess. 32, 215–225. doi: 10.1007/s10862-009-9149-x

Werner, E. E. (1989). High-risk children in young adulthood: a longitudinal study from birth to 32 years. Am. J. Orthopsychiatry 59, 72–81. doi: 10.1111/j.1939-0025.1989.tb01636.x

Werner, E. E. (1990). “Antecedents and consequences of deviant behavior,” in Health Hazards in Adolescence, eds F. Lösel, and K. Hurrelmann (Oxford: De Gruyter), 219–231. doi: 10.1515/9783110847659-012

Werner, E. E. (1993). Risk, resilience, and recovery: perspectives from the Kauai longitudinal study. Dev. Psychopathol. 5, 503–515. doi: 10.1017/S095457940000612X

Werner, E. E. (2000). “Protective factors and individual resilience,” in Handbook of Early Childhood Intervention, 2nd Edn, eds J. P. Shonkoff, and S. J. Meisels (New York, NY: Cambridge University Press), 115–132. doi: 10.1017/CBO9780511529320.008

Werner, E. E., and Smith, R. S. (1992). Overcoming the Odds: High Risk Children from Birth to Adulthood. Ithaca: Cornell University Press.

WHO (2019). Adolescent Mental Health. Available online at: https://www.who.int/news-room/fact-sheets/detail/adolescent-mental-health (accessed January 15, 2020).

Williamson, G. S., and Pearse, I. H. (1980). Science, Synthesis, and Sanity: an Inquiry into the Nature of Living. Edinburgh: Scottish Academic Press.

Windle, G., Bennett, K. M., and Noyes, J. (2011). A methodological review of resilience measurement scales. Health Qual. Life Outcomes 9:8. doi: 10.1186/1477-7525-9-8

Windle, G., Markland, D. A., and Woods, R. T. (2008). Examination of a theoretical model of psychological resilience in older age. Aging Ment. Health 12, 285–292. doi: 10.1080/13607860802120763

Winokur, A., Winokur, D. F., Rickels, K., and Cox, D. S. (1984). Symptoms of emotional distress in a family planning service: stability over a four-week period. Br. J. Psychiatry 144, 395–399. doi: 10.1192/bjp.144.4.395

Keywords: Germany, Switzerland, protective factors, factor analysis, resilience, Resilience Scale for Adolescents, validation

Citation: Janousch C, Anyan F, Hjemdal O and Hirt CN (2020) Psychometric Properties of the Resilience Scale for Adolescents (READ) and Measurement Invariance Across Two Different German-Speaking Samples. Front. Psychol. 11:608677. doi: 10.3389/fpsyg.2020.608677

Received: 21 September 2020; Accepted: 17 November 2020; Published: 24 December 2020.

Edited by:

Daniela Fadda, University of Cagliari, Italy

Reviewed by:

Aurelio José Figueredo, University of Arizona, United States

Copyright © 2020 Janousch, Anyan, Hjemdal and Hirt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Clarissa Janousch

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
