ORIGINAL RESEARCH article

Front. Psychol., 30 October 2020

Sec. Cognition

Volume 11 - 2020 | https://doi.org/10.3389/fpsyg.2020.578562

This article is part of the Research Topic Coronavirus Disease (COVID-19): Psychological Reactions to the Pandemic

Prior research suggests that the pandemic coronavirus pushes all the “hot spots” for risk perceptions, yet both governments and populations have varied in their responses. As the economic impacts of the pandemic have become salient, governments have begun to slash their budgets for mitigating other global risks, including climate change, likely imposing increased future costs from those risks. Risk analysts have long argued that global environmental and health risks are inseparable at some level, and must ultimately be managed systemically, to effectively increase safety and welfare. In contrast, it has been suggested that we have worry budgets, in which one risk crowds out another. “In the wild,” our problem-solving strategies are often lexicographic; we seek and assess potential solutions one at a time, even one attribute at a time, rather than conducting integrated risk assessments. In a U.S. national survey experiment in which participants were randomly assigned to coronavirus or climate change surveys (N = 3203) we assess risk perceptions, and whether risk perception “hot spots” are driving policy preferences, within and across these global risks. Striking parallels emerge between the two. Both risks are perceived as highly threatening, inequitably distributed, and not particularly controllable. People see themselves as somewhat informed about both risks and have moral concerns about both. In contrast, climate change is seen as better understood by science than is pandemic coronavirus. Further, individuals think they can contribute more to slowing or stopping pandemic coronavirus than climate change, and have a greater moral responsibility to do so. Survey assignment influences policy preferences, with higher support for policies to control pandemic coronavirus in pandemic coronavirus surveys, and higher support for policies to control climate change risks in climate change surveys. 
Across all surveys, age groups, and policies to control either climate change or pandemic coronavirus risks, support is highest for funding research on vaccines against pandemic diseases, which is the only policy that achieves majority support in both surveys. Findings bolster both the finite worry budget hypothesis and the hypothesis that supporters of policies to confront one threat are disproportionately likely also to support policies to confront the other threat.

Rarely has humanity faced two powerful environmental threats to global well-being simultaneously. For decades scholars have been studying how people perceive climate change and what they are doing, would do, and want their governments to do to address the threat posed by climate change to themselves as individuals, to their nations, and to global well-being (e.g., Fischhoff and Furby, 1983; Löfstedt, 1991; O’Connor et al., 1999; Böhm and Pfister, 2001; Leiserowitz, 2005; Bostrom et al., 2012; Lee et al., 2015). With the emergence of the pandemic coronavirus at the close of 2019, scholars are asking how people are protecting themselves and what they want their governments to do to address the threat posed by pandemic coronavirus. Both lines of research are providing useful information on the political, psychological, and social determinants of attitudes toward these risks. Little research, however, compares the two threats in the public mind or looks at how the presence of a second potentially calamitous threat influences attitudes toward the other threat.

This paper reports results from an April 2020 survey of 3,203 respondents in the United States to identify the fundamental similarities and differences in how the public understands these threats and how these views of the nature of the threat influence the level of concern and willingness to act in the public interest. Policy preferences flow from levels of dread (Fischhoff et al., 1978), but also from efficacy judgments (both for personal actions and government policies; Bostrom et al., 2019) and moral responsibility assessments (Doran et al., 2019).

The presence of two powerful threats at the same time may influence what people are willing to support differently than if there were only one threat. One logical hypothesis is that people who are deeply concerned about addressing either climate change or the coronavirus pandemic are part of a cultural community that is likely to view the threat as systemic and needing a coherent institutional response. The idea is that people who demand a strong governmental response to the coronavirus pandemic threat are more likely also to demand a strong governmental response to the threat from climate change (and vice versa) because they understand that these sorts of threats require a strong governmental response. This is a “crowding-in” phenomenon, by which recognition that one of the threats needs a strong centralized policy response makes an individual more likely to perceive that the other threat also needs a strong centralized policy response. In contrast to “crowding-in,” which leads to systemic thinking in general and recognition that the world and national communities must act, the “crowding-out” hypothesis argues that people have a “worry budget,” so that great concern for one threat reduces concern for and willingness to confront the other threat (Linville and Fischer, 1991; Weber, 2006; Huh et al., 2016). The idea is that people can devote only so much energy to caring about and addressing problems, so that the increase in concern for pandemics would limit concern for climate change (and vice versa).

In summary, after describing the materials, procedures and methods of data acquisition and treatment, this paper compares the psychometrics for each threat, identifies the determinants of support for policies to address the threat, assesses the finite pool of worry thesis, and concludes with a discussion of the significance of the findings.

The study is a paywall-intercept (also called “survey wall”) survey experiment conducted in the United States through the Google Surveys publisher network, to achieve a representative sample of internet users. Google Surveys samples tens of millions of internet users daily using a “river sampling” or “web intercept” sampling approach, through a network of publishers on more than 1,500 sites publishing a variety of content, including 74% News, 5% Reference, 4% Arts and Entertainment, and 17% other (McDonald et al., 2012; Sostek and Slatkin, 2018). Google Surveys pays these publishers. Surveys are kept extremely short, up to a maximum of 10 questions, with formats restricted to minimize response burdens. All responses are anonymous. The surveys are offered by publishers to internet users, who can choose to pay for accessing the publisher’s content instead of answering questions, or can skip the survey. Internet users are selected through a computer-algorithm-driven stratified-sampling process to create an internet-user sample that matches the national internet-using population in age, gender, and location. Users cannot opt into surveys; they are assigned a random survey from those available (Keeter and Christian, 2012). For paywall intercept surveys run on the Google Surveys platform in the first half of 2018, the response rate was 25% (Sostek and Slatkin, 2018). Although the Google Surveys publisher network does not offer a population random sample, comparative analyses have concluded that it provides a sample of adult internet users that is as representative as others available, appropriate and sufficiently accurate for survey experiments (Keeter and Christian, 2012; McDonald et al., 2012; Santoso et al., 2016), and that the platform is useful given its affordability and ease of survey implementation (e.g., Tanenbaum et al., 2013). 
In comparative studies Google Surveys samples have been found to be highly representative of the internet-user population in the United States, for example including more conservatives (40%) than a Pew Research survey sample (36%) (Keeter and Christian, 2012, p. 9).

The study was reviewed by the University of Washington Human Subjects Division and determined to be exempt from federal human subjects regulations (IRB ID STUDY00009946). Data collection took place in mid-April 2020 (April 12–17, 2020).

A total of N = 3203 U.S. adults completed all 10 questions in the survey block they were offered (see below), out of 4,570 who answered the first question. The drop-off rate (after the first question) was 29.3% on average for the pandemic survey blocks, and 30.5% on average for the climate survey blocks. Age and gender were inferred by Google; for the 818 participants who had opted out in the Ads setting (which applies to Google survey as well) age and gender are unknown. The estimated distribution across the age categories 18–24, 25–34, 35–44, 45–54, 55–64, 65+ years was 243, 371, 422, 383, 478, and 488, respectively. Gender was not inferred for 714 participants; 1,295 participants identified as female, 1,194 as male. For the publisher network samples, Google estimates response biases for each survey, comparing age, gender, and region to provide weights for a representative sample, and reports the bias in the sample as Root Mean Square Error estimated across these characteristics, for each question. RMSE varied from 2 to 4.4% for questions in our surveys. In general our samples slightly overestimate those aged 55–64 and 65+, and those living in the Midwestern U.S. Because weights are not calculated for those opting out, weighted samples are much smaller. Our sensitivity analyses comparing results on analyses conducted with weighted versus unweighted data revealed no noteworthy differences in results (e.g., differences in percentages supporting policies between the weighted and unweighted data were in the tenth of a percent range), for which reason we report analyses using unweighted data.
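The counts reported above can be cross-checked with simple arithmetic. The following sketch uses only the figures stated in the text; the variable names are illustrative and not taken from the authors' analysis code.

```python
# Counts reported in the text (no other data are assumed).
completed = 3203   # respondents who completed all 10 questions
started = 4570     # respondents who answered the first question

# Estimated age distribution: 18-24, 25-34, 35-44, 45-54, 55-64, 65+.
age_counts = [243, 371, 422, 383, 478, 488]
gender_counts = {"female": 1295, "male": 1194}

# Respondents for whom age or gender was not available.
age_unknown = completed - sum(age_counts)
gender_unknown = completed - sum(gender_counts.values())

# Pooled drop-off after the first question, across all blocks.
overall_dropoff = (started - completed) / started

print(age_unknown, gender_unknown, round(100 * overall_dropoff, 1))
```

The pooled drop-off of roughly 29.9% falls between the reported block averages of 29.3% (pandemic) and 30.5% (climate), as expected.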

The questionnaire consisted of measures on psychometric judgments, policy preferences, and political orientation. Age and gender were inferred by Google where available.

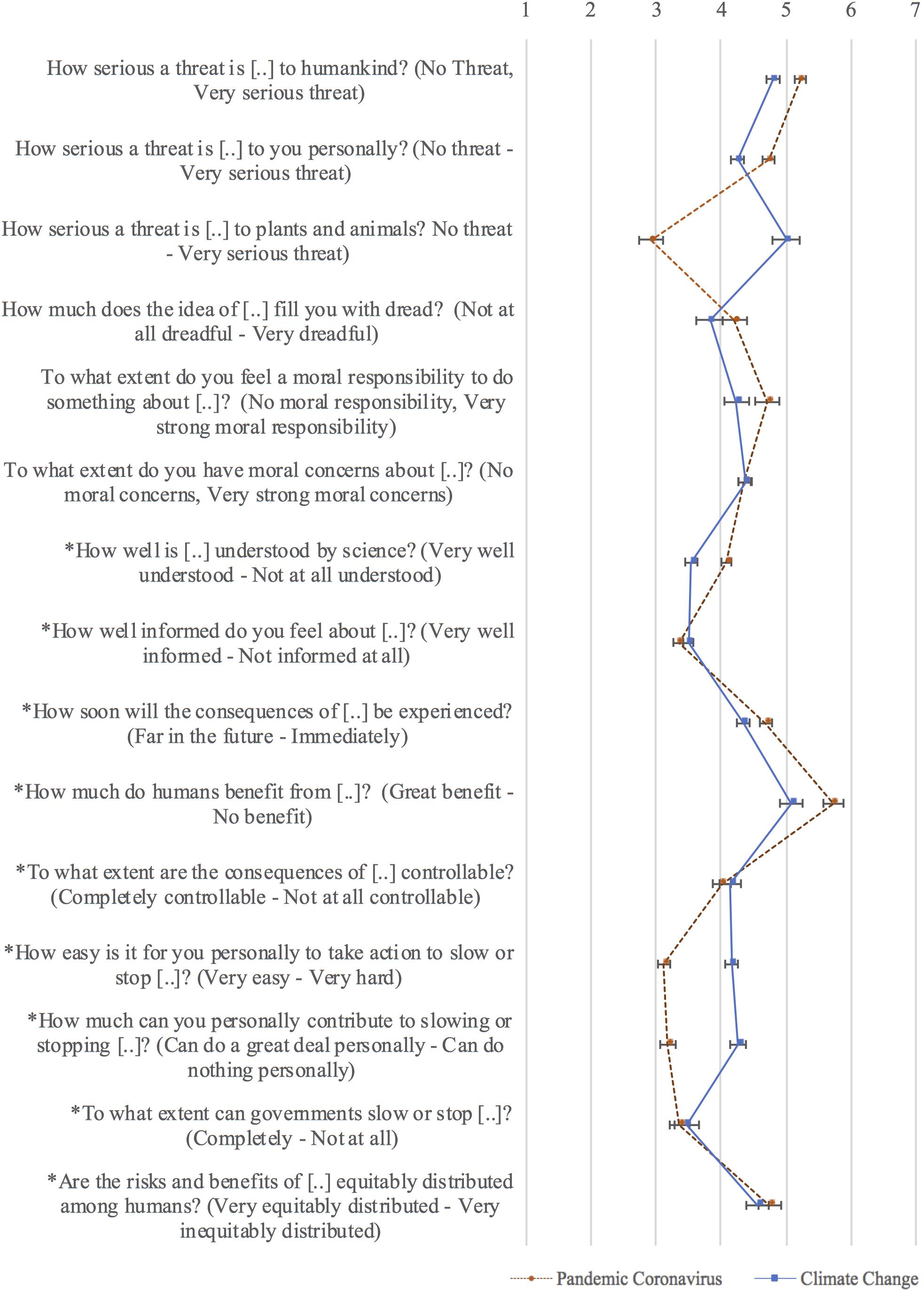

The total set of survey items included 15 psychometric risk judgments adapted from prior risk perception research on what is often referred to in risk research as the psychometric paradigm (Slovic, 1987; Bostrom et al., 2012, 2020). Each item has a seven-point rating scale with labeled endpoints. The psychometric judgments tapped into the following facets of perceived risk: threat and dread, known risk, morality, controllability and efficacy, and human benefits. Depending on the experimental condition (i.e., version of the survey), respondents provided psychometric judgments with respect to either global climate change or the pandemic coronavirus. Figure 1 and Table 1 list the complete wording and response scale labels.

Figure 1. Average psychometric risk ratings from the raw data (no imputations included), by risk, with 95% confidence intervals for the means. Sample sizes vary from 400 to 1,601 per mean, as seven of these survey questions were presented in only one block, one in two blocks, one in three, and six in all four blocks. *Indicates that the item has been reverse coded for purposes of this figure, so that the response scale is in the direction indicated in parentheses; this way higher numbers imply higher perceived risk consistently for all items.
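The reverse coding and the confidence intervals described in the caption can be sketched as follows. This is a minimal illustration, assuming that the seven-point scales are coded 1 to 7 and that the intervals use a normal approximation (mean plus or minus 1.96 standard errors); neither detail is confirmed by the text, and the ratings below are hypothetical.

```python
import math
import statistics


def reverse_code(rating, scale_min=1, scale_max=7):
    """Flip a rating so that higher values imply higher perceived risk.

    Assumes numeric coding from scale_min to scale_max (hypothetical).
    """
    return scale_min + scale_max - rating


def mean_ci95(ratings):
    """Return (mean, lower, upper) using a normal-approximation 95% CI."""
    n = len(ratings)
    mean = statistics.fmean(ratings)
    standard_error = statistics.stdev(ratings) / math.sqrt(n)
    half_width = 1.96 * standard_error
    return mean, mean - half_width, mean + half_width


# Hypothetical raw ratings for one reverse-coded item.
raw = [1, 2, 2, 3, 7, 6, 5, 4]
recoded = [reverse_code(r) for r in raw]
print(mean_ci95(recoded))
```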

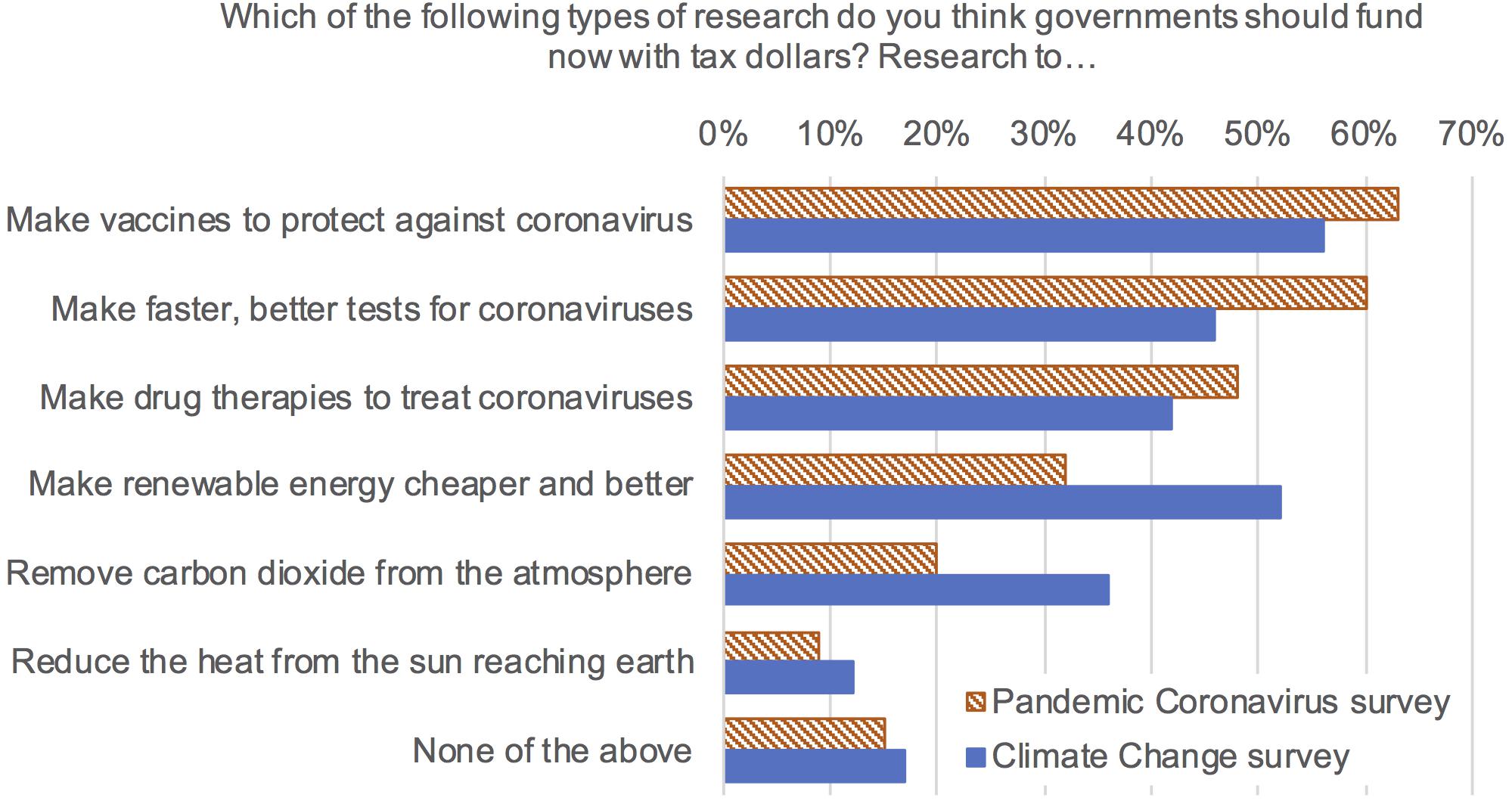

Preferences with regard to supporting or not supporting each of six policies were posed to all participants in block D in a check-all-that-apply survey question (response order randomized, with an explicit “None of the above” option presented last). Three of these referred to policies regarding climate change, the other three to policies addressing the coronavirus pandemic. For each risk issue, we selected a policy that is popular (e.g., research on renewable energy, to address climate change; Howe et al., 2015), and a policy associated with some contention or disagreement (e.g., funding research on solar radiation management to address climate change). Figure 2 provides the exact wording for the six policies and percentages supporting each of them.

Figure 2. Between survey comparison of percentage of respondents selecting to support each category of research (N = 800, 400 per survey).

Political orientation was measured with the prompt “Would you describe yourself as” and a seven-point rating scale with the verbal endpoint anchors “Extremely liberal” and “Extremely conservative.”

In order to fit the constraints of Google’s survey platform, which allows a maximum of 10 questions per survey, we implemented a sparse matrix design which randomized respondents to one of eight distinct blocks, four on pandemic coronavirus, four on climate change. From the set of measures on psychometric judgments and policy support, we grouped questions into four distinct blocks of 10 questions each, with a few core questions asked across all blocks, following existing guidance for sparse matrix designs (e.g., Rhemtulla and Hancock, 2016). The order of the questions varied by block, but each block was exactly the same across the two risks (pandemic coronavirus and climate change). The check-all-that-apply survey question about policy preferences appeared only in block D and was presented as the last question in that block. Participants were randomly assigned to answer survey items from a single block.
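The assignment logic of this design can be illustrated with a short sketch. In the study the randomization was handled by the survey platform; the code below is only a hypothetical stand-in. The block labels A to C are assumed by analogy with block D, and equal assignment probabilities and the seed are likewise assumptions.

```python
import random

# Two risks crossed with four question blocks gives eight survey versions.
RISKS = ("pandemic coronavirus", "climate change")
BLOCKS = ("A", "B", "C", "D")  # only "D" is named in the text; A-C assumed
CONDITIONS = [(risk, block) for risk in RISKS for block in BLOCKS]


def assign_condition(rng):
    """Assign one respondent to a single (risk, block) survey version."""
    return rng.choice(CONDITIONS)


rng = random.Random(2020)  # fixed seed so the illustration is reproducible
assignments = [assign_condition(rng) for _ in range(3203)]

# Each respondent answers items from exactly one block, so items that
# appear only in other blocks are missing by design for that respondent.
policy_block_n = sum(1 for _, block in assignments if block == "D")
print(len(CONDITIONS), policy_block_n)
```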

The sparse matrix survey design results in systematically missing data as respondents could not answer the items that were not included in their randomly assigned survey block. We resolved this issue by using multiple imputation to construct a complete dataset that could then be analyzed. In particular, we constructed 100 imputed datasets using the Amelia package in R (Honaker et al., 2019). The Amelia algorithm assumes data are jointly multivariate normal, and uses a bootstrapped Expectation-Maximization (EM)-algorithm to generate complete datasets from the posterior distributions (Honaker and King, 2010). While the missing survey data contain dichotomous and seven-point Likert items that do not match the assumption of multivariate normality, research has shown that this imputation method works nearly as well for handling these data types as imputation methods that are more specialized but also less robust (Kropko et al., 2014). We imputed missing data only if they were missing due to the survey block randomization, fulfilling the requirement that imputed data be missing at random (Rubin, 1976).

The imputation procedure incorporated all survey data, including all psychometric judgments, policy support, and political orientation, as well as risk comparison questions that asked respondents how more familiar risks compare to management of coronavirus and climate change, respectively, and a question asking participants to rate how similar managing pandemic coronavirus is to managing climate change (or vice versa, for the climate change survey). The imputation procedures also included demographic information from Google, such as gender, age category, and categorical geographic information. Prior to imputation we centered all non-binary variables and dichotomized respondents’ categorical geographic information which we included in the imputation only if at least 10 respondents shared a particular location.

We used the mean variance-covariance matrix across all 100 imputations to conduct the principal components analysis (Van Ginkel, 2010; Van Ginkel and Kroonenberg, 2014), and the confirmatory factor analyses.
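Averaging the variance-covariance matrix across imputed datasets amounts to an element-wise mean. A minimal sketch, using two tiny hypothetical "imputed datasets" rather than the authors' data or their R code:

```python
def covariance_matrix(rows):
    """Sample variance-covariance matrix of a list of observation rows."""
    n = len(rows)
    p = len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(p)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in rows)
            / (n - 1)
            for j in range(p)
        ]
        for i in range(p)
    ]


def mean_matrix(matrices):
    """Element-wise mean of equally sized square matrices."""
    m = len(matrices)
    p = len(matrices[0])
    return [
        [sum(mat[i][j] for mat in matrices) / m for j in range(p)]
        for i in range(p)
    ]


# Two hypothetical completed datasets (rows = respondents, columns = items).
imputed_1 = [[1, 2], [2, 4], [3, 6]]
imputed_2 = [[1, 1], [2, 2], [3, 3]]

pooled_cov = mean_matrix(
    [covariance_matrix(imputed_1), covariance_matrix(imputed_2)]
)
print(pooled_cov)
```

The pooled matrix would then serve as the input to the principal components and confirmatory factor analyses, in place of a covariance matrix from any single imputation.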

The regression analyses were estimated by bootstrapping each regression model equally across all imputations and limiting the regression to fit only the observations for which the dependent variable is not imputed. We imputed age and gender data for any respondents for which Google was unable to infer this information. We included the imputed gender values in the regression, but only included the non-imputed age due to apparently poor quality of the imputed age values. Our results reported in the following section are not sensitive to these choices. Nor are the results substantially different if we round the ordinal categorical imputations or restrict the imputed data to the initial data range.

We report the results in three sections. First, we report results concerning the psychometric scales. A description of the profiles of climate change and coronavirus pandemic on the psychometric scales is followed by factor analyses that were undertaken in order to inspect the correlational structure of the psychometric items. The last two sections of the results then focus on testing the worry budget versus crowding-in hypotheses more specifically. This is first done by reporting regression analyses to account for policy preferences then by analyzing the effects of the survey context (climate change versus pandemic coronavirus) on perceived risk and policy preferences.

Participants rated pandemic coronavirus as slightly more dreadful and threatening to humankind and to themselves personally than climate change, but far less threatening to plants and animals, as might be expected (Figure 1). Despite feeling almost equally well informed about both risks, participants rated pandemic coronavirus as less understood by science than climate change. They also judged it harder to take action on, and to personally contribute to slowing or stopping, climate change than pandemic coronavirus, and felt a greater moral responsibility to do something about pandemic coronavirus. Nevertheless, they reported similar levels of moral concerns across both risks.

In order to investigate the dimensional structure of the psychometric judgments, we conducted principal component and confirmatory factor analyses. For all factor analyses we used the R statistical environment (R Core Team, 2020).

For comparative purposes, we started by following the procedures introduced in risk research by the psychometric paradigm in the 1970s and 1980s (e.g., Slovic, 1987), which entail conducting exploratory principal component analyses (PCA) with varimax rotation. We conducted these analyses separately for each of the two risk issues pandemic coronavirus and climate change. Based on the scree test and Kaiser criterion (Eigenvalue of at least 1.0), we inspected the 2-, 3-, and 4-factor solutions. The results turned out to be unsatisfactory. While the first factor could generally be interpreted as a threat/dread and morality factor, the other items did not form a clearly interpretable factor structure and showed substantial cross-loadings. Also, in the case of climate change, the known risk items did not form a separate factor, as has been found previously in the psychometric literature, but instead loaded on the first factor together with threat/dread and morality (see online supplement for additional details).

We therefore proceeded by trying to identify a consistent factor structure in a confirmatory rather than exploratory manner. We derived three factor models from the literature (see Table 1), two from empirical work by Bostrom et al. (2020), who used a set of psychometric items almost identical to ours, and one from the seminal work by Slovic and colleagues on the psychometric paradigm (specifically, from Slovic, 1987).

Similar to our study, Bostrom et al. (2020) measured perceived risk concerning climate change and pandemic influenza (within-subjects, in contrast to our between-subjects design) on psychometric items that correspond to 12 of our 15 items. They report (a) a two-factor solution that they computed separately for climate change and pandemic influenza and which replicated across the two risks, and (b) a four-factor solution that was computed analyzing both risks together. Models 1 and 2 (Table 1) were specified according to Bostrom et al.’s two- and four-factor solutions, respectively. These two models use all 15 items. The three items that our questionnaire included in addition to Bostrom et al.’s twelve items were allocated to the factor that matched them conceptually.

A robust finding in the literature on the psychometric paradigm is that two factors have emerged across various risk domains and respondent populations: Dread Risk and Known Risk. We specified Model 3 (Table 1) by selecting the marker items of these two traditional factors from our items, resulting in a two-factor model using seven of our items.

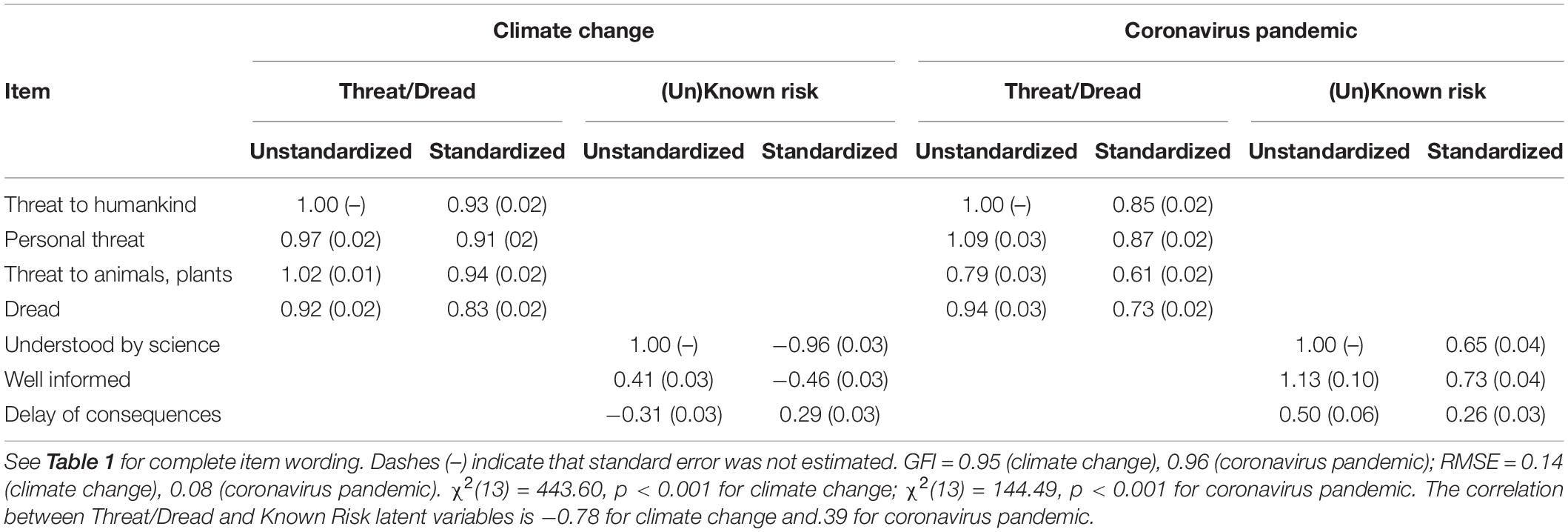

We estimated all models separately for pandemic coronavirus and climate change, and with both orthogonal and correlated factors. Table 2 shows the goodness-of-fit indices of the models (two models could not be estimated, see note to Table 2). The only model that approached acceptable fit measures was Model 3. For climate change, it could only be estimated with correlated factors; for pandemic coronavirus, the fit is better with correlated than with orthogonal factors. We therefore display loadings only for Model 3 with correlated factors (see Table 3).

Table 3. Unstandardized loadings (standard errors) and standardized loadings for Model 3 (correlated) confirmatory factor analyses of climate change (n = 1,601) and coronavirus pandemic (n = 1,602).

In sum, we find supportive evidence for the two traditional psychometric factors: Dread and Known Risk in their pure form, that is, using only marker items for each of these two factors. For the remaining items, we could not identify a consistent factorial structure. For Model 3 the structure of the Known Risk factor differs for climate change and pandemic coronavirus (Table 3). For climate change, the loadings of the extent to which the risk issue is understood by science and of how well informed the respondent feels, on the one hand, and of how delayed the consequences are perceived to be, on the other hand, have different signs. That is, respondents believe that the risk issue is less understood by science and feel less informed themselves the more delayed they perceive the consequences of climate change to be. For pandemic coronavirus, in contrast, the loadings of these three items on the Known Risk factor have the same sign. Hence, for pandemic coronavirus, respondents believe that the risk issue is better understood by science and feel better informed the more delayed the perceived consequences are. One potential explanation of this difference may lie in the fact that climate change and pandemic coronavirus differ in familiarity. Climate change is by now an “old” risk and people may believe that science knows a great deal about it. Temporal delay of consequences may then be associated with greater uncertainty of predictions. Pandemic coronavirus, in contrast, is a new risk that just emerged a couple of months before our survey was administered. Albeit with concerted and prolific research efforts, science had just started to investigate the virus at the time of the survey. In such a situation, people may regard delayed consequences as providing the opportunity for science to accumulate more insights.

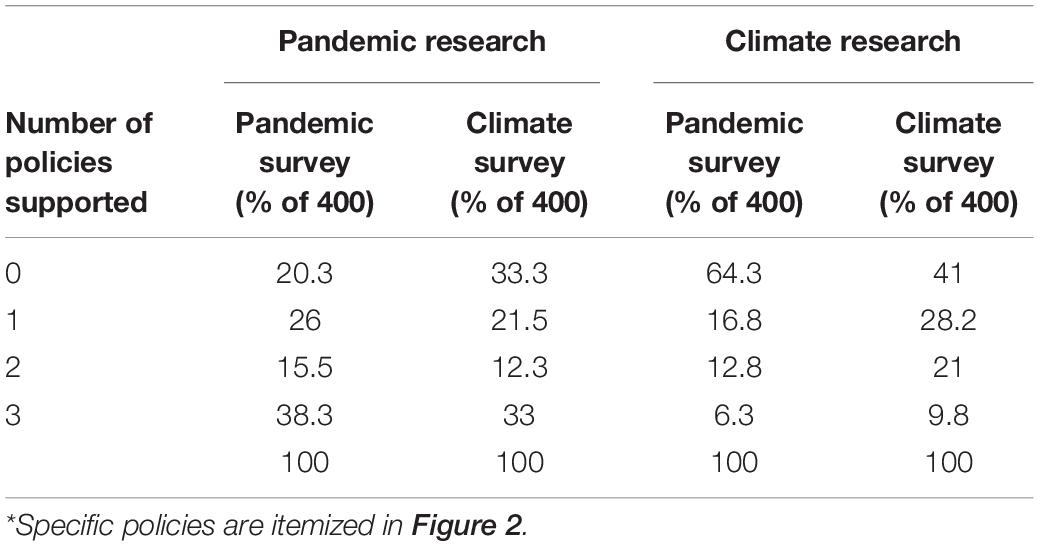

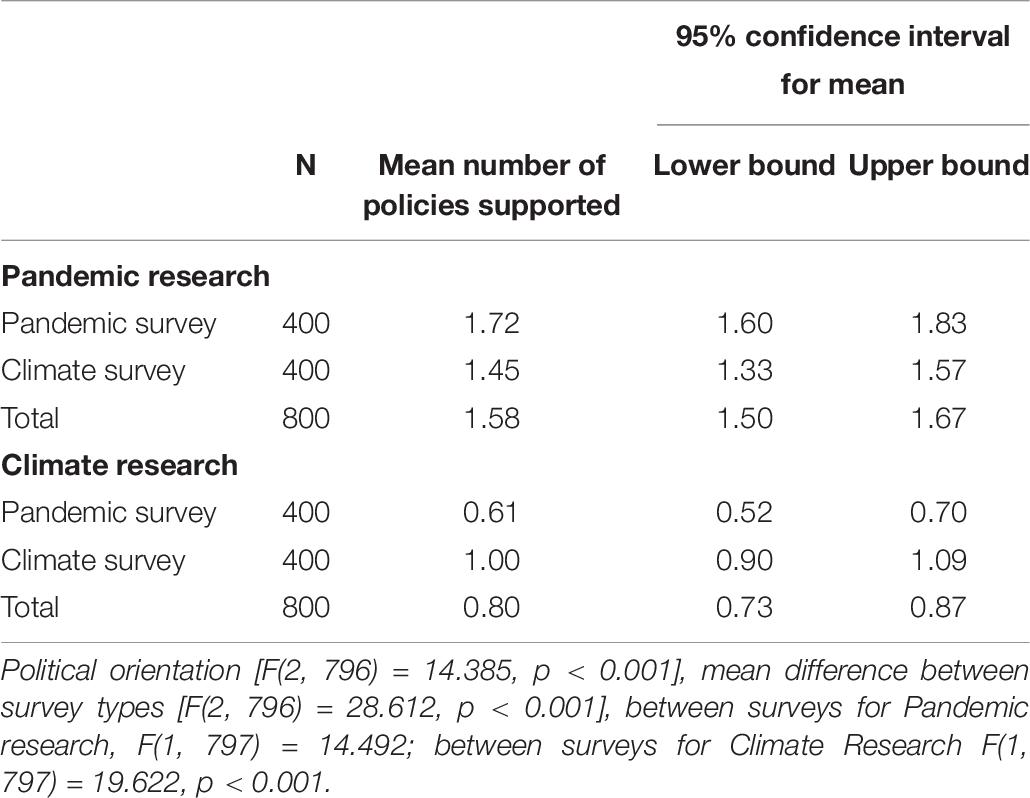

The numbers of policies supported by survey context and risk type are reported in Table 4. Figure 2 shows the percentage of each sample supporting each policy.

Table 4. Percentage of survey participants supporting none, 1, 2 or all 3 research policies*, for each risk and by risk survey.

To examine whether risk perceptions as measured on psychometric scales are associated with risk policy support, we created additive scales corresponding to the factors we hypothesized. Based on the factor analyses reported in section “Dimensional structure of psychometric judgments,” we calculate the average of four items to create a Threat scale (Cronbach’s alpha 0.91): threat to humankind, personal threat, threat to plants and animals, and dread. The Known Risk factor that emerged from confirmatory factor analysis was not reliable by common standards, for which reason we calculate the average of two items (Cronbach’s alpha 0.62)–understood by science and well informed–to represent this factor in the regression analyses. Averaging the items measuring moral responsibility and moral concerns produces a Moral scale with a Cronbach’s alpha of 0.86. Efficacy is measured with the average of four items (Cronbach’s alpha 0.82): ease of personal action, personal contribution to slowing or stopping the risk, the extent to which government can slow or stop the risk, and the controllability of the risk.
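For reference, Cronbach's alpha for a scale of $k$ items is $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $s_i^2$ is the variance of item $i$ and $s_T^2$ is the variance of the summed score. The sketch below computes alpha and the additive (mean) scale score in plain Python; the ratings are hypothetical and the function names are illustrative, not the authors' code.

```python
import statistics


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores is a list of per-respondent item lists."""
    k = len(item_scores[0])
    item_variances = [
        statistics.variance([row[j] for row in item_scores]) for j in range(k)
    ]
    total_variance = statistics.variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def scale_score(row):
    """Additive scale score: the mean of a respondent's item ratings."""
    return statistics.fmean(row)


# Hypothetical ratings on a two-item scale (e.g., the two Known Risk items).
ratings = [[5, 4], [3, 3], [6, 7], [2, 3], [4, 5]]
print(round(cronbach_alpha(ratings), 2), [scale_score(r) for r in ratings])
```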

Ordinal probit models predicting the number of policies supported were estimated separately for each risk. Consistently across both coronavirus and climate change risks, greater perceived threat was associated with greater support for government funding research on addressing pandemic disease and climate change (Tables 5, 6 show the mean coefficients estimated across 100 imputed datasets for Block D of each risk, in which no dependent variable data are imputed). In the climate change survey, both higher perceived threat and greater moral concerns correlate with supporting more investments in government funding for research to address climate change, controlling for all else. Although the estimated mean coefficient for the Moral scale does not quite rise to standard levels of significance, the coefficient magnitude is relatively large compared to other coefficients in the model.
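To make the model form concrete: in an ordinal probit, the probability of supporting exactly $k$ policies is $\Phi(c_{k+1} - x\beta) - \Phi(c_k - x\beta)$, where $\Phi$ is the standard normal CDF and the $c$ values are ordered cutpoints. The sketch below evaluates these probabilities for the four outcome categories (0 to 3 policies). The cutpoints and linear predictor values are hypothetical, not estimates from Tables 5 and 6.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def ordinal_probit_probs(linear_predictor, cutpoints):
    """Category probabilities for an ordinal probit model.

    cutpoints must be sorted in increasing order; with three cutpoints
    the outcome has four categories (0, 1, 2, or 3 policies supported).
    """
    bounds = [-math.inf] + list(cutpoints) + [math.inf]
    cdf = [normal_cdf(b - linear_predictor) for b in bounds]
    return [cdf[i + 1] - cdf[i] for i in range(len(bounds) - 1)]


# Hypothetical cutpoints and two hypothetical values of x * beta,
# e.g. a respondent with lower versus higher perceived Threat.
cutpoints = [-1.0, 0.0, 1.0]
low_threat = ordinal_probit_probs(0.0, cutpoints)
high_threat = ordinal_probit_probs(1.0, cutpoints)
print([round(p, 3) for p in low_threat])
print([round(p, 3) for p in high_threat])
```

A positive coefficient on the Threat scale raises the linear predictor, shifting probability mass toward supporting more policies, which is the pattern the regression results describe.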

For the two most popular policies, research on vaccines and research on renewable energy, binary probit regressions were also estimated for each of the 100 imputed datasets for each risk, restricted to Block D. Here again, perceived Threat from pandemic coronavirus is positively associated with being more likely to support research on vaccines for pandemic diseases, and perceived Threat from climate change is positively associated with favoring government support for research on renewable energy (Tables 7, 8).

Policy preferences are stronger within a same-topic context; in other words, the average number of pandemic disease mitigation research policies supported in the pandemic coronavirus survey is higher than the average number of pandemic disease mitigation policies supported in the climate change survey. Similarly, the average number of climate change policies supported in the climate change survey is higher than the average number of climate change policies supported in the pandemic coronavirus survey context. Additionally, overall, respondents support more research funded by tax dollars to address pandemic diseases than they do to address climate change, controlling for political orientation (Tables 4, 9). These results support a “worry budget” narrative, although support for research on risk mitigation of pandemic diseases does not completely crowd out support for research on approaches to reducing the risks of climate change. In fact, the number of policies supported for research to mitigate the risks of pandemic diseases is positively correlated with the number of policies supported for research to mitigate the risks of climate change (r = 0.407, p < 0.001 partial correlation, controlling for political orientation).
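The partial correlation reported above removes the linear association that each policy count shares with political orientation. With a single control variable $z$, the first-order formula is $r_{xy \cdot z} = \frac{r_{xy} - r_{xz} r_{yz}}{\sqrt{(1 - r_{xz}^2)(1 - r_{yz}^2)}}$. A minimal sketch on toy data follows; the data and function names are hypothetical, and the authors' actual computation is not shown in the text.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    r_xy = pearson_r(x, y)
    r_xz = pearson_r(x, z)
    r_yz = pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))


# Toy data: x and y are correlated only through their shared dependence
# on z, so the partial correlation is zero even though r_xy is 0.5.
z = [-1, -1, 1, 1]
x = [0, -2, 2, 0]
y = [0, -2, 0, 2]
print(pearson_r(x, y), partial_corr(x, y, z))
```

In the study the partial correlation remains clearly positive (r = 0.407) after controlling for political orientation, so the association between support for the two sets of policies is not explained by political orientation alone.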

Table 9. One-way ANCOVA of the survey context effect and differences between risks, controlling for political orientation.

On average, respondents supported more than one policy for each risk, with the exception that in the pandemic survey a majority (64.3%) preferred that governments support none of the three research approaches proposed to address climate change. While it is possible that the solar radiation management and carbon removal policies are more contentious than any of the proposed research for pandemic diseases (on vaccines, tests, and treatments), extensive polling has demonstrated recent strong public support for renewable energy (Howe et al., 2015; Steentjes et al., 2017), which was also one of the climate change mitigation research policy options.

The psychometric risk perception profiles, including moral concerns, for pandemic coronavirus and climate change demonstrate that people see the two risks as similar in many ways. Our results demonstrate that risk perceptions matter; we find that threat and dread form a single dimension in the exploratory factor analysis, as found in much previous work. The extent to which individuals feel informed about the risk and to which they see the risk as understood by science also correlate positively. These two judgments clearly form one dimension. Our confirmatory factor analysis shows that these two judgments load together with the perceived immediacy of consequences on a single factor. However, immediacy had a low loading and was not a reliable component of an additive scale; for these reasons only the first two items were used in the Known scale as a predictor in our regression analyses. In sum, the role of perceived immediacy of risk consequences in the dimension of Known risks is less clear.

A robust result of the regression analyses is that perceived threat is positively and consistently correlated with support for government expenditures on research to reduce risk, for both pandemic coronavirus and climate change, controlling for judgments of efficacy, how well the risk is known, moral concerns and responsibility, political orientation, and demographics.

The idea of the “finite pool of worry” or “worry budget” is that people have limited cognitive capabilities (e.g., Achen and Bartels, 2016), so the emergence of a new, potentially calamitous concern such as pandemic coronavirus must necessarily lead people to worry less about “old” concerns such as climate change. A different view of the consequences of the emergence of a new threat is that the new threat may actually increase overall attention to communal threats as people understand that responding effectively to both these threats requires systemic thinking and cooperative actions at individual, organizational, and national levels. In other words, learning about what needs to be done to control the pandemic coronavirus has a spillover effect: people learn that climate change similarly requires the enactment and implementation of new policies. Although we lack the longitudinal data necessary to test these hypotheses, our data definitively demonstrate that people are more likely to support policies that address the threat on which they were encouraged to focus than they are to support policies to address the other threat. The data also show a significant relationship between policy support for the two threats (i.e., people with higher levels of support for policies to address the pandemic coronavirus are more likely also to support policies to address climate change). Perhaps our questions tap three different cognitive realities: a finite pool of worry, acceptance that policy resources are finite, and general support for policies to address communal threats. How people link (or fail to link) their perceptions of the risks from two potentially calamitous threats as well as preferences for policies to address these threats seems to us worthy of extensive further research. The concept of threat fatigue may be a useful addition to future research designs.

A final note of caution regards the survey methods in this study, and the potential threats to validity they pose. The short survey format poses minimal burdens on respondents, and thus is likely to have tapped into spontaneous reactions regarding the risks investigated, pandemic coronavirus and climate change. This can be seen as a positive, to the extent it mitigates response biases, and reduces context biases that might be induced by longer surveys. On the other hand, there is little deliberation, and respondents may not have thought deeply before answering the 10 questions posed to them. To accommodate the short format we implemented a sparse matrix design and used imputation to fill in responses missing completely at random. Imputation methods take full advantage of the information value of the available raw data and are conducted only on responses missing completely at random. Nevertheless, they are less informative than actual responses would be and may underrepresent actual response variability. Another caution is that while the survey is likely representative of internet users in the United States, it is not a true probability sample. Further, although the vast majority of adults in the United States are now internet users, not all are. It follows that the results may be subject to biases stemming from the sampling and survey platform. Finally, we focus here on risk perception in the United States, and these results are not necessarily generalizable to other countries where culture and political attitudes may differ.

This survey provides an empirical snapshot of comparative risk perceptions of pandemic coronavirus and climate change in the initial months of the COVID-19 pandemic in the United States, at a time when comparisons between risks from the pandemic coronavirus and climate change had begun to attract risk analysts’ attention (Bostrom et al., 2020). Further, the study contributes to insights on worry budgets. While this study does not provide within-individual comparative measures of perceived threat, the psychometric results indicate that collectively climate change is still perceived as a threat by the U.S. public, even as the threat of pandemic coronavirus impinges on daily lives. The manifest support for policies to address both pandemic coronavirus and climate change demonstrates that immediate contexts—both the overwhelming presence of the pandemic in April 2020, as well as the immediate pandemic coronavirus survey context—do not completely crowd out concerns about and interests in addressing climate change.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation, to any qualified researcher.

The studies involving human participants were reviewed and approved by the University of Washington Human Subjects Committee (IRB ID STUDY00009946). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

AB, GB, and RO’C contributed to the conception and design of the study. AB managed the survey and wrote the first draft of the manuscript. AB, AH, and GB performed the statistical analysis, with AH specifically contributing to imputation. GB, RO’C, and AH wrote sections of the manuscript. All authors contributed to iterations of manuscript revision, and read and approved the submitted version.

The survey was funded by AB from the Weyerhaeuser endowed Professorship in Environmental Policy.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Achen, C., and Bartels, L. (2016). Democracy for Realists: Why Elections Do Not Produce Responsive Government. Princeton NJ: Princeton University Press. doi: 10.1515/9781400882731

Böhm, G., and Pfister, H. R. (2001). Mental representation of global environmental risks. Res. Soc. Probl. Public Policy 9, 1–30. doi: 10.1016/s0196-1152(01)80022-3

Bostrom, A., Böhm, G., O’Connor, R. E., Hanss, D., Bodi-Fernandez, O., and Halder, P. (2020). Comparative risk science for the coronavirus pandemic. J. Risk Res. doi: 10.1080/13669877.2020.1756384 [Epub ahead of print].

Bostrom, A., Hayes, A. L., and Crosman, K. M. (2019). Efficacy, action, and support for reducing climate change risks. Risk Anal. 39, 805–828. doi: 10.1111/risa.13210

Bostrom, A., O’Connor, R. E., Böhm, G., Hanss, D., Bodi, O., Ekström, F., et al. (2012). Causal thinking and support for climate change policies: international survey findings. Glob. Environ. Change 22, 210–222. doi: 10.1016/j.gloenvcha.2011.09.012

Doran, R., Böhm, G., Pfister, H. R., Steentjes, K., and Pidgeon, N. (2019). Consequence evaluations and moral concerns about climate change: insights from nationally representative surveys across four European countries. J. Risk Res. 22, 610–626. doi: 10.1080/13669877.2018.1473468

Fischhoff, B., and Furby, L. (1983). “Psychological dimensions of climatic change,” in Social Science Research and Climate Change, eds R. S. Chen, E. Boulding, and S. H. Schneider (Dordrecht: Springer), 177–203. doi: 10.1007/978-94-009-7001-4_10

Fischhoff, B., Slovic, P., Lichtenstein, S., Read, S., and Combs, B. (1978). How safe is safe enough? A psychometric study of attitudes towards technological risks and benefits. Policy Sci. 9, 127–152. doi: 10.1007/bf00143739

Honaker, J., and King, G. (2010). What to do about missing values in time-series cross-section data. Am. J. Polit. Sci. 54, 561–581. doi: 10.1111/j.1540-5907.2010.00447.x

Howe, P. D., Mildenberger, M., Marlon, J. R., and Leiserowitz, A. (2015). Geographic variation in opinions on climate change at state and local scales in the USA. Nat. Clim. Chang. 5, 596–603. doi: 10.1038/nclimate2583

Huh, B., Li, Y., and Weber, E. (2016). “A finite pool of worry,” in NA – Advances in Consumer Research, eds P. Moreau and S. Puntoni (Duluth, MN: Association for Consumer Research), 44.

Keeter, S., and Christian, L. (2012). A Comparison of Results from Surveys by the Pew Research Center and Google Consumer Surveys. Washington, DC: Pew Research Center.

Kropko, J., Goodrich, B., Gelman, A., and Hill, J. (2014). Multiple imputation for continuous and categorical data: comparing joint multivariate normal and conditional approaches. Polit. Anal. 22, 497–519. doi: 10.1093/pan/mpu007

Lee, T. M., Markowitz, E. M., Howe, P. D., Ko, C. Y., and Leiserowitz, A. A. (2015). Predictors of public climate change awareness and risk perception around the world. Nat. Clim. Chang. 5, 1014–1020. doi: 10.1038/nclimate2728

Leiserowitz, A. A. (2005). American risk perceptions: is climate change dangerous? Risk Anal. 25, 1433–1442. doi: 10.1111/j.1540-6261.2005.00690.x

Linville, P. W., and Fischer, G. W. (1991). Preferences for separating or combining events. J. Pers. Soc. Psychol. 60, 5–23. doi: 10.1037/0022-3514.60.1.5

Löfstedt, R. E. (1991). Climate change perceptions and energy-use decisions in Northern Sweden. Glob. Environ. Change 1, 321–324. doi: 10.1016/0959-3780(91)90058-2

McDonald, P., Mohebbi, M., and Slatkin, B. (2012). Comparing Google Consumer Surveys to Existing Probability and Non-probability Based Internet Surveys. Mountain View, CA: Google Inc.

O’Connor, R. E., Bard, R. J., and Fisher, A. (1999). Risk perceptions, general environmental beliefs, and willingness to address climate change. Risk Anal. 19, 461–471. doi: 10.1111/j.1539-6924.1999.tb00421.x

R Core Team (2020). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Rhemtulla, M., and Hancock, G. R. (2016). Planned missing data designs in educational psychology research. Educ. Psychol. 51, 305–316. doi: 10.1080/00461520.2016.1208094

Rubin, D. B. (1976). Inference and missing data. Biometrika 63, 581–592. doi: 10.1093/biomet/63.3.581

Santoso, P., Stein, R., and Stevenson, R. (2016). Survey experiments with Google Consumer Surveys: promise and pitfalls for academic research in social science. Polit. Anal. 24, 356–373. doi: 10.1093/pan/mpw016

Sostek, K., and Slatkin, B. (2018). How Google Surveys Work. White Paper. Available online at: https://services.google.com/fh/files/misc/white_paper_how_google_surveys_works.pdf (accessed June, 2018).

Steentjes, K., Pidgeon, N., Poortinga, W., Corner, A., Arnold, A., Böhm, G., et al. (2017). European Perceptions of Climate Change: Topline Findings of A Survey Conducted in Four European countries in 2016. Cardiff: Cardiff University.

Tanenbaum, E. R., Parvati, K., and Michael, S. (2013). “How representative are Google Consumer Surveys? Results from an analysis of a Google Consumer Survey question relative national level benchmarks with different survey modes and sample characteristics,” in Proceedings of the American Association for Public Opinion Research (AAPOR) 68th Annual Conference, Boston, MA.

Van Ginkel, J. R. (2010). Investigation of multiple imputation in low-quality questionnaire data. Multivariate Behav. Res. 45, 574–598. doi: 10.1080/00273171.2010.483373

Van Ginkel, J. R., and Kroonenberg, P. M. (2014). Using generalized procrustes analysis for multiple imputation in principal component analysis. J. Classif. 31, 242–269. doi: 10.1007/s00357-014-9154-y

Keywords: pandemic, coronavirus, climate change, risk perception, risk management, worry budget

Citation: Bostrom A, Böhm G, Hayes AL and O’Connor RE (2020) Credible Threat: Perceptions of Pandemic Coronavirus, Climate Change and the Morality and Management of Global Risks. Front. Psychol. 11:578562. doi: 10.3389/fpsyg.2020.578562

Received: 30 June 2020; Accepted: 29 September 2020;

Published: 30 October 2020.

Edited by:

Joanna Sokolowska, University of Social Sciences and Humanities, Poland

Reviewed by:

Pierluigi Cordellieri, Sapienza University of Rome, Italy

Copyright © 2020 Bostrom, Böhm, Hayes and O’Connor. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ann Bostrom, abostrom@uw.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
