BRIEF RESEARCH REPORT article

Front. Psychol., 30 September 2020

Sec. Developmental Psychology

Volume 11 - 2020 | https://doi.org/10.3389/fpsyg.2020.02011

This article is part of the Research Topic: Sociomateriality in Children with Typical and/or Atypical Development

Federico Manzi1*†; Giulia Peretti1†; Cinzia Di Dio1; Angelo Cangelosi2; Shoji Itakura3; Takayuki Kanda4,5; Hiroshi Ishiguro5,6; Davide Massaro1; Antonella Marchetti1

Recent technological developments in robotics have driven the design and production of different humanoid robots. Several studies have highlighted that the presence of human-like physical features could lead both adults and children to anthropomorphize the robots. In the present study we aimed to compare the attribution of mental states to two humanoid robots, NAO and Robovie, which differed in the degree of anthropomorphism. Children aged 5, 7, and 9 years were required to attribute mental states to the NAO robot, which presents more human-like characteristics compared to the Robovie robot, whose physical features look more mechanical. The results on mental state attribution as a function of children’s age and robot type showed that 5-year-olds have a greater tendency to anthropomorphize robots than older children, regardless of the type of robot. Moreover, the findings revealed that, although children aged 7 and 9 years attributed a certain degree of human-like mental features to both robots, they attributed greater mental states to NAO than Robovie compared to younger children. These results generally show that children tend to anthropomorphize humanoid robots that also present some mechanical characteristics, such as Robovie. Nevertheless, age-related differences showed that robots should be endowed with physical characteristics closely resembling human ones to increase older children’s perception of human likeness. These findings have important implications for the design of robots, which also needs to consider the user’s target age, as well as for the generalizability issue of research findings that are commonly associated with the use of specific types of robots.

Currently, we are witnessing an increasing deployment of social robots (Bartneck and Forlizzi, 2004) in various contexts, from occupational to clinical to educational (Murashov et al., 2016; Belpaeme et al., 2018; Marchetti et al., in press). Humanoid social robots (HSRs), in particular, have proven to be effective social partners, possibly due to their physical human likeness (Dario et al., 2001). Humanoid social robots can vary in the degree of their anthropomorphic physical characteristics, often depending on the target user (children, adults, elderly, students, clinical populations, etc.) and the context (household, education, commercial, and rehabilitation). For example, the humanoid KASPAR robot, which resembles a young child (with face, arms and hands, legs and feet), was specifically built for children with autism spectrum disorder (Dautenhahn et al., 2009; Wainer et al., 2014). In other instances, however, the same HSRs are used for different purposes and with different populations, like the NAO robot, which is largely used with both clinical and non-clinical populations (Shamsuddin et al., 2012; Mubin et al., 2013; Begum et al., 2016; Belpaeme et al., 2018), or the Robovie robot, which is employed with both adults and children (Shiomi et al., 2006; Kahn et al., 2012). A recent review of the literature by Marchetti et al. (2018) showed that different physical characteristics of HSRs may significantly affect the quality of interaction between humans and robots at different ages. 
The construction of robots that integrate and expand the specific biological abilities of our species led to two different directions in robotic development based on different, though related, theoretical perspectives: developmental cybernetics (DC; Itakura, 2008; Itakura et al., 2008; Moriguchi et al., 2011; Kannegiesser et al., 2015; Okanda et al., 2018; Di Dio et al., 2019; Wang et al., 2020; Manzi et al., 2020a) and developmental robotics (DR; De La Cruz et al., 2014; Cangelosi and Schlesinger, 2015, 2018; Lyon et al., 2016; Morse and Cangelosi, 2017; Vinanzi et al., 2019; Zhong et al., 2019; Di Dio et al., 2020a, b). The first perspective (DC) consists of creating a human-like system, by simulating human psychological processes and prosthetic functions in the robot (enhancing the function and lifestyle of persons) to observe people’s behavioral response toward the robot. The second perspective (DR) is related to the development of cognitive neural networks in the robot that would allow it to autonomously gain sensorimotor and mental capabilities with growing complexity, starting from intricate evolutionary principles. From these premises, the next two paragraphs briefly outline current findings concerning the effect that physical features of the HSRs have on human perception, thus outlining the phenomenon of anthropomorphism, and a recent methodology devised to measure it.

Anthropomorphism is a widely observed phenomenon in human–robot interaction (HRI; Fink et al., 2012; Airenti, 2015; Złotowski et al., 2015), and it is also greatly considered in the design of robots (Dario et al., 2001; Kiesler et al., 2008; Bartneck et al., 2009; Sharkey and Sharkey, 2011; Zanatto et al., 2016, 2020). In psychological terms, anthropomorphism is the tendency to attribute human characteristics, physical and/or psychological, to non-human agents (Duffy, 2003; Epley et al., 2007). Several studies have shown that humans may perceive non-anthropomorphic robots as anthropomorphic, such as Roomba (a vacuum cleaner with a semi-autonomous system; Fink et al., 2012). Although anthropomorphism seems to be a widespread phenomenon, the attribution of human traits to anthropomorphic robots is significantly greater compared to non-anthropomorphic robots. A study by Krach et al. (2008) compared four different agents (computer, functional robot, anthropomorphic robot, and human confederate) in a Prisoner’s Dilemma Game, and showed that the more the interactive partner displayed human-like characteristics, the more the participants appreciated the interaction and ascribed intelligence to the game partner. What characteristics of anthropomorphic robots (i.e., the HSRs) increase the perception of anthropomorphism? The HSRs can elicit the perception of anthropomorphism mainly at two levels: physical and behavioral (Marchetti et al., 2018). Working on the physical level is clearly easier than on intrinsic psychological features, and – although anthropomorphic physical features of robots are not the only answer to enhance the quality of interactions with humans – the implementation of these characteristics can positively affect HRIs (Duffy, 2003; for a review see Marchetti et al., 2018). 
It should be stated, however, that extreme human-likeness can result in the known uncanny valley effect, according to which HRIs are negatively influenced by robots that are too similar to the human (Mori, 1970; MacDorman and Ishiguro, 2006; Mori et al., 2012). Thus, the HSRs’ appearance represents an important social affordance for HRIs, as further demonstrated by the psychological research on racial and disability prejudice (Todd et al., 2011; Macdonald et al., 2017; Sarti et al., 2019; Manzi et al., 2020b). The anthropomorphic features of the HSRs can increase humans’ perception of humanness, such as mind attribution and personality, and influence other psychological mechanisms and processes (Kiesler and Goetz, 2002; MacDorman et al., 2005; Powers and Kiesler, 2006; Bartneck et al., 2008; Broadbent et al., 2013; Złotowski et al., 2015; Marchetti et al., 2020).

The design of the physical characteristics of HSRs and their classification have already been investigated in HRI, but not systematically. A pioneering study by DiSalvo et al. (2002) explored the perception of humanness using 48 images of different heads of HSRs, and showed that three features are particularly important for the robot’s design: the nose, eyes, and mouth. Furthermore, a study by Duffy (2003) categorized different robots’ heads in a diagram composed of three extremities: “human head” (as-close-as-possible to a human head), “iconic head” (a very minimum set of features) and “abstract head” (a more mechanistic design with minimal human-like aesthetics). Also, in this instance, human likeness was associated with greater mental abilities. Furthermore, a study by MacDorman (2006) analyzed the categorization of 14 types of robots (mainly androids and humanoids) in adults. It was shown that humanoid robots displaying some mechanical characteristics – such as the Robovie robot – were classified average on a “humanness” scale and rated lower on the uncanny valley scale. Recent studies compared one of the most widely used HSRs, the NAO robot, with different types of robots. It was shown that the NAO robot is perceived as less human-like than an android, which is a highly anthropomorphic robot in both appearance and behavior (Broadbent, 2017), but more anthropomorphic than a mechanical robot, i.e., the Baxter robot (Yogeeswaran et al., 2016; Zanatto et al., 2019). However, there were no differences in perceived ability to perform physical and mental tasks between NAO and the android (Yogeeswaran et al., 2016), indicating that human-likeness (and not “human-exactness”) is sufficient to trigger the attribution of psychological features to a robot. In addition, a database has recently been created that collects more than 200 HSRs classified according to their level of human likeness (Phillips et al., 2018). 
In that database, the NAO robot was classified with a score of about 45/100, in particular thanks to the characteristics of its face and body. Robovie and other similar robots were classified with a score ranging between 27 and 31/100, deriving mainly from body characteristics. These findings corroborate the hypothesis that NAO and Robovie are two HSRs with different levels of human-likeness due to their physical anthropomorphic features.

The effect of robots’ physical characteristics on the attribution of intentions, understanding, and emotions has also been investigated in children (Bumby and Dautenhahn, 1999; Woods et al., 2004; Woods, 2006). In particular, a study by Woods (2006), comparing 40 different robots, revealed that children experience greater discomfort with robots that look too similar to humans, favoring robots with mixed human-mechanical characteristics. These results were confirmed in a recent study by Tung (2016) showing that children preferred robots with not too many human-like features over robots with many human characteristics. Overall, these results suggested that an anthropomorphic design of HSRs may increase children’s preference toward them. Still, an excessive implementation of human features can negatively affect the attribution of positive qualities to the robot, again in line with the Uncanny Valley effect described above.

Different scales have been developed to measure psychological anthropomorphism toward robots in adults. These scales typically assess the attribution of intelligence, personality, and emotions, to mention only a few. In particular, the attribution of internal states to the robot, i.e., of having a mind, is widely used and very promising in HRI (Broadbent et al., 2013; Stafford et al., 2014).

In psychology, the ability to ascribe mental states to others is defined as the Theory of Mind (ToM). Theory of mind is the ability to understand one’s own and others’ mental states (intentions, emotions, desires, beliefs), and to predict and interpret one’s own and others’ behaviors on the basis of such meta-representation (Premack and Woodruff, 1978; Wimmer and Perner, 1983; Perner and Wimmer, 1985). Theory of mind abilities develop around four years of age, becoming more sophisticated with development (Wellman et al., 2001). Theory of mind is active not only during humans’ relationships but also during interactions with robots (for a review, see Marchetti et al., 2018).

Recent studies have shown that adults tend to ascribe greater mental abilities to robots that have a human appearance (Hackel et al., 2014; Martini et al., 2016). This tendency to attribute human mental states to robots was also observed in children. Generally, children are inclined to anthropomorphize robots by attributing psychological and biological characteristics to them (Katayama et al., 2010; Okanda et al., 2019). Still, they do differentiate between humans’ and robots’ abilities. A pioneering study by Itakura (2008) investigating the attribution of mental verbs to a human and a robot showed that children did not attribute the epistemic verb “think” to the robot. More recent studies have further shown that, already from three years of age, children differentiate fairly well between a human and a robot in terms of mental abilities (Di Dio et al., 2020a), although younger children appear to be more inclined to anthropomorphize robots compared to older children. This effect may be due to the phenomenon of animism, particularly active at three years of age (Di Dio et al., 2020a, b).

The present study aimed to investigate the attribution of mental states (AMS) in children aged 5–9 years to two humanoid robots, NAO and Robovie, varying in their anthropomorphic physical features (DiSalvo et al., 2002; Duffy, 2003). Differences in the attribution of mental qualities to the two robots were then explored using the robots’ degree of physical anthropomorphism and the child’s chronological age. The two humanoid robots, NAO and Robovie, were selected for two main reasons: (1) in relation to their physical appearance, both robots belong to the category of HSRs, but differ in their degree of anthropomorphism (for a detailed description of the robots, see section “Materials”); (2) both robots are largely used in experiments with children (Kanda et al., 2002; Kose and Yorganci, 2011; Kahn et al., 2012; Shamsuddin et al., 2012; Okumura et al., 2013a, b; Tielman et al., 2014; Cangelosi and Schlesinger, 2015, 2018; Hood et al., 2015; Di Dio et al., 2020a, b).

In light of previous findings associated with the use of these specific robots described above, we hypothesized the following: (1) independent of age, children would distinguish between humans and robots in terms of mental states by ascribing lower mental attributes to the robots; (2) children would tend to attribute greater mental qualities to NAO compared to Robovie because of its greater human-likeness; and (3) younger children would tend to attribute more human characteristics to robots (i.e., to anthropomorphize more) than older children.

Data were acquired from 189 Italian children of kindergarten and primary school age. The children were divided into three age groups for each robot as follows (M and SD refer to age in months): (1) for the NAO robot, 5 years (N = 24, 13 females; M = 68.14; SD = 3.67); 7 years (N = 25, 13 females; M = 91.9; SD = 3.43); and 9 years (N = 23, 12 females; M = 116.38, SD = 3.91); (2) for the Robovie robot, 5 years (N = 33, 13 females; M = 70.9, SD = 2.95); 7 years (N = 49, 26 females; M = 93.4, SD = 3.62); and 9 years (N = 35, 15 females; M = 117.42, SD = 4.44). The initial inhomogeneity between sample sizes in the NAO and Robovie conditions was corrected by randomly selecting children in the Robovie condition, taking care to balance by gender. Accordingly, the sample for the Robovie condition used for statistical analysis was composed as follows: 5 years (N = 24, 8 females; M = 70.87, SD = 3.1); 7 years (N = 25, 14 females; M = 92.6, SD = 3.73); and 9 years (N = 23, 10 females; M = 117.43, SD = 4.62). The children’s parents received a written explanation of the procedure of the study and of the measurement items, and gave their written consent. The children were not reported by teachers or parents for learning and/or socio-relational difficulties. The study was approved by the Local Ethic Committee (Università Cattolica del Sacro Cuore, Milan).

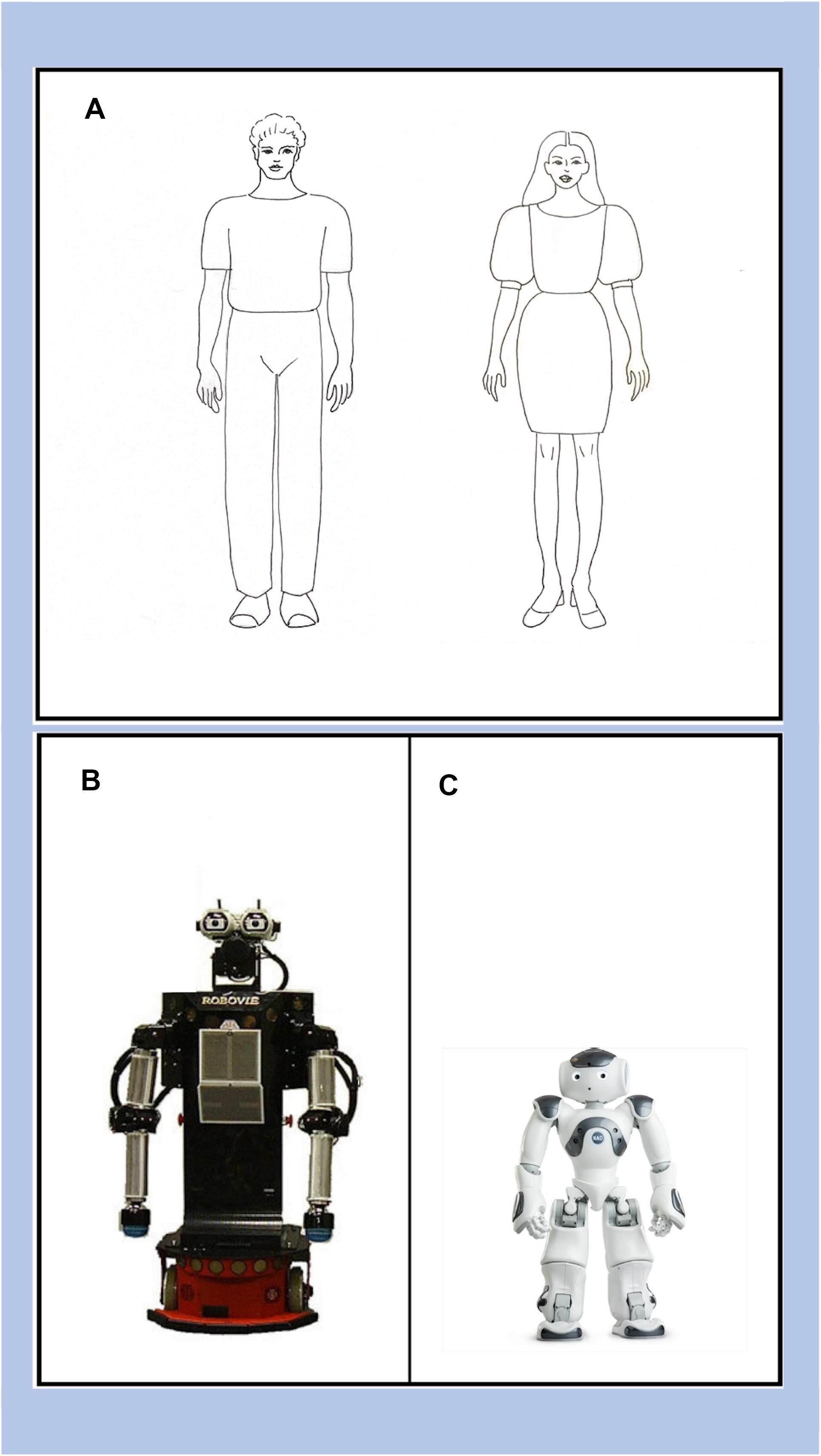

The two HSRs selected for this study were the Robovie robot (Hiroshi Ishiguro Laboratories, ATR; Figure 1B) and the NAO robot (Aldebaran Robotics, Figure 1C). We chose these two robots because, although they both belong to the category of HSRs, they differ in their degree of anthropomorphic features (DiSalvo et al., 2002; Duffy, 2003; MacDorman, 2006; Zhang et al., 2008; Phillips et al., 2018). Robovie is an HSR with more abstract anthropomorphic features: no legs but two driving wheels to move, and two arms without hands. In particular, the head can be considered “abstract” (Duffy, 2003) because of two important human-like features: two eyes and a microphone that looks like a mouth (DiSalvo et al., 2002). Robovie is an HSR that can be rated as average in the continuum of mechanical-humanlike (Ishiguro et al., 2001; Kanda et al., 2002; MacDorman, 2006). NAO is an HSR with more pronounced anthropomorphic features compared to Robovie: two legs, two arms, and two hands with three moving fingers (Figure 1C). Besides, the face can be classified as “iconic” and consists of three cameras suggesting two eyes and a mouth. However, considering the whole body and the more detailed shape of the face, NAO is an HSR that can be rated as more human-like than Robovie (DiSalvo et al., 2002; MacDorman, 2006; Phillips et al., 2018).

Figure 1. The AMS images: (A) the Human condition (male and female), (B) Robovie robot, and (C) the NAO robot.

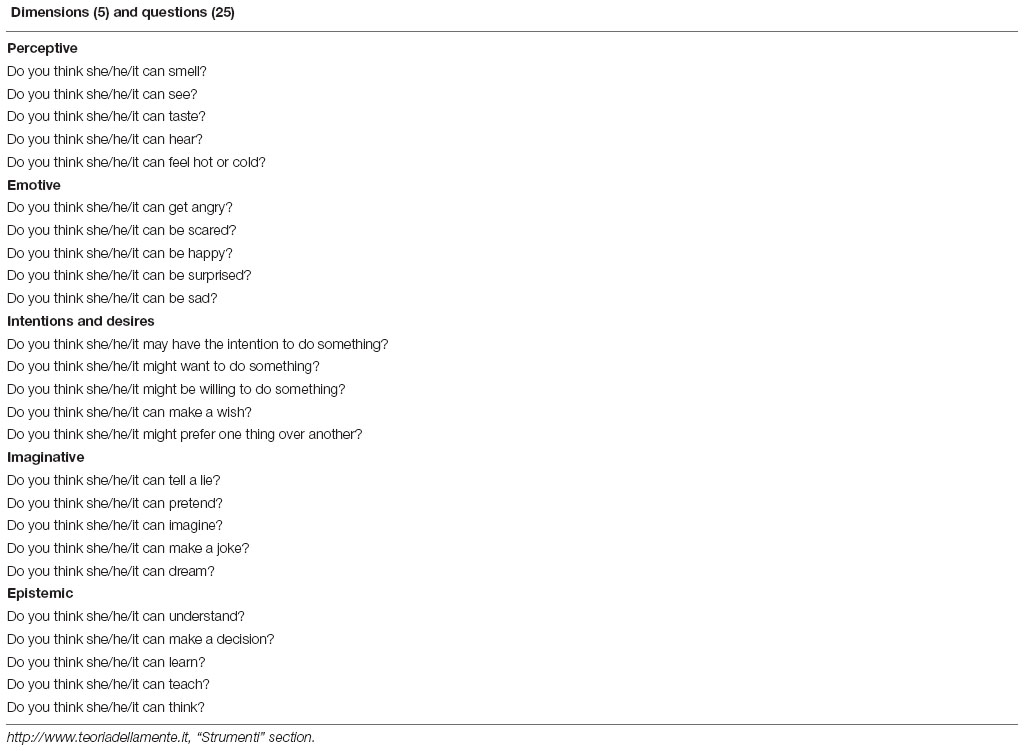

The AMS questionnaire is a measure of the mental states that participants attribute to specific characters when they look at images depicting them, in this case a human (female or male based on the participant’s gender; Figure 1A), and, according to the group condition, the Robovie or the NAO robot (Figures 1B,C). The AMS questionnaire was inspired by the methodology described in Martini et al. (2016) and has already been used in several experiments with children (Manzi et al., 2017; Di Dio et al., 2019, 2020a, b). The construction of the content of the questionnaire is based on the theoretical model of Slaughter et al. (2009) on the categorization of children’s mental verbs resulting from communication exchanges between mother and child. This classification divides mental verbs into four categories: perceptive, volitional, cognitive, and dispositional. For the creation of the AMS questionnaire, an additional category related to imaginative verbs was added. We considered it necessary to distinguish between cognitive, epistemic, and imaginative states, since – especially for the robot – this specification enables the analysis of different psychological processes in terms of development. The AMS therefore consists of five dimensions: Perceptive, Emotive, Intentions and Desires, Imaginative, and Epistemic.

The human condition was used as a baseline measure to evaluate children’s ability to attribute mental states. In fact, as described in the results below, children scored quite high when ascribing mental attributes to the human character, thus supporting children’s competence in performing the mental states attribution task. Also, the human condition was used as a comparison measure against which the level of psychological anthropomorphism of NAO and Robovie was evaluated. The Cronbach’s alpha for each category is as follows: Perceptive (α = 0.8), Emotive (α = 0.8), Intentions and Desires (α = 0.8), Imaginative (α = 0.8), and Epistemic (α = 0.7).
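For readers unfamiliar with the reliability index reported above, the following is a minimal sketch of how Cronbach’s alpha can be computed from item-level scores, using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the summed score). The function name, the use of sample variances, and the toy data are assumptions made for illustration; the source does not describe how alpha was computed or which software was used.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents.

    `rows` is a list of per-respondent lists of item scores
    (e.g. 0/1 answers), all of the same length k >= 2.
    Sample variances (n - 1 denominator) are used throughout.
    """
    if len(rows) < 2:
        raise ValueError("need at least two respondents")
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("every respondent needs the same k >= 2 items")

    # Variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Variance of the summed score across respondents.
    total_var = variance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("summed scores have zero variance; alpha is undefined")

    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical answers of four children to three yes/no items
    # (illustrative only, not data from the study).
    toy = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
    print(f"alpha = {cronbach_alpha(toy):.2f}")
```

In this toy example the items agree perfectly, which is the upper limit of internal consistency; real questionnaire data would give lower values, such as those reported for the AMS categories.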

Children answered 25 questions grouped into the five different state categories described above (see Appendix 1 for the specific items). The child had to answer “Yes” or “No” to each question, obtaining 1 when the response is “Yes” and 0 when the response is “No”. The sum of all responses (range = 0–25) gave the total score (α = 0.9); the five partial scores were the sum of the responses within each category (range = 0–5).
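The scoring rule just described can be sketched as follows. This is an illustrative reconstruction, not the authors’ scoring script: the function name and the input layout (a dictionary of answers per category) are assumptions, and the actual items are those listed in Appendix 1.

```python
# The five AMS categories named in the text, five items each (25 in total).
CATEGORIES = [
    "Perceptive",
    "Emotive",
    "Intentions and Desires",
    "Imaginative",
    "Epistemic",
]
ITEMS_PER_CATEGORY = 5


def score_ams(responses):
    """Score one child's AMS answers for one character.

    `responses` maps each category name to a list of five "Yes"/"No"
    answers (hypothetical input layout). "Yes" counts 1, "No" counts 0.
    Returns the partial score per category (0-5) and the total (0-25).
    """
    partial = {}
    for category in CATEGORIES:
        answers = responses[category]
        if len(answers) != ITEMS_PER_CATEGORY:
            raise ValueError(f"{category}: expected {ITEMS_PER_CATEGORY} answers")
        if any(answer not in ("Yes", "No") for answer in answers):
            raise ValueError(f"{category}: answers must be 'Yes' or 'No'")
        partial[category] = sum(1 for answer in answers if answer == "Yes")
    return {"partial": partial, "total": sum(partial.values())}


if __name__ == "__main__":
    # Hypothetical answers, for illustration only.
    example = {category: ["Yes", "Yes", "No", "Yes", "No"] for category in CATEGORIES}
    result = score_ams(example)
    print(result["partial"])
    print(result["total"])
```

Each child would be scored twice under this scheme, once for the human image and once for the robot image, giving the two agent-level scores compared in the analysis.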

The children were tested individually in a quiet room inside their school. Data acquisition was carried out by a single researcher during the normal school activities.

The experimenter showed each child two images on paper, one depicting a gender-matched human and the other depicting one of the two robots, NAO or Robovie. The presentation order of the images (human and robot) was randomized. Afterward, the experimenter asked children the questions on the five categories of the AMS (Perceptive, Emotive, Intentions and Desires, Imaginative, and Epistemic). The presentation order of the five categories was also randomized. The total time required to complete the test was approximately 10 min.

To evaluate the effect of age, gender, states, agent, and type of robot on children’s mental state attribution to robots, a GLM analysis was carried out with five levels of states (Perceptive, Emotive, Intentions and Desires, Imaginative, and Epistemic) and two levels of agent (Human, Robot) as within-subjects factors, and age (5-, 7-, 9-year-olds), gender (Male, Female), and robot (Robovie, NAO) as between-subjects factors. The Greenhouse-Geisser correction was used for violations of Mauchly’s Test of Sphericity (p < 0.05). Post hoc comparisons were Bonferroni corrected.
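As a brief illustration of the Bonferroni correction mentioned above, the sketch below multiplies each p-value by the number of comparisons in the family and caps the result at 1. The function name and the p-values are hypothetical; the source does not report how many comparisons entered each family or which software applied the correction.

```python
def bonferroni_adjust(p_values):
    """Return Bonferroni-adjusted p-values.

    Each raw p-value is multiplied by the number of comparisons
    in the family and capped at 1.0.
    """
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    # Hypothetical raw p-values for three pairwise age comparisons
    # (5 vs 7, 5 vs 9, 7 vs 9); not values from the study.
    raw = [0.004, 0.020, 0.500]
    for p, p_adj in zip(raw, bonferroni_adjust(raw)):
        print(f"raw p = {p:.3f} -> adjusted p = {p_adj:.3f}")
```

An adjusted p-value is then compared against the usual threshold (here 0.05), which is equivalent to testing each raw p-value against 0.05 divided by the number of comparisons.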

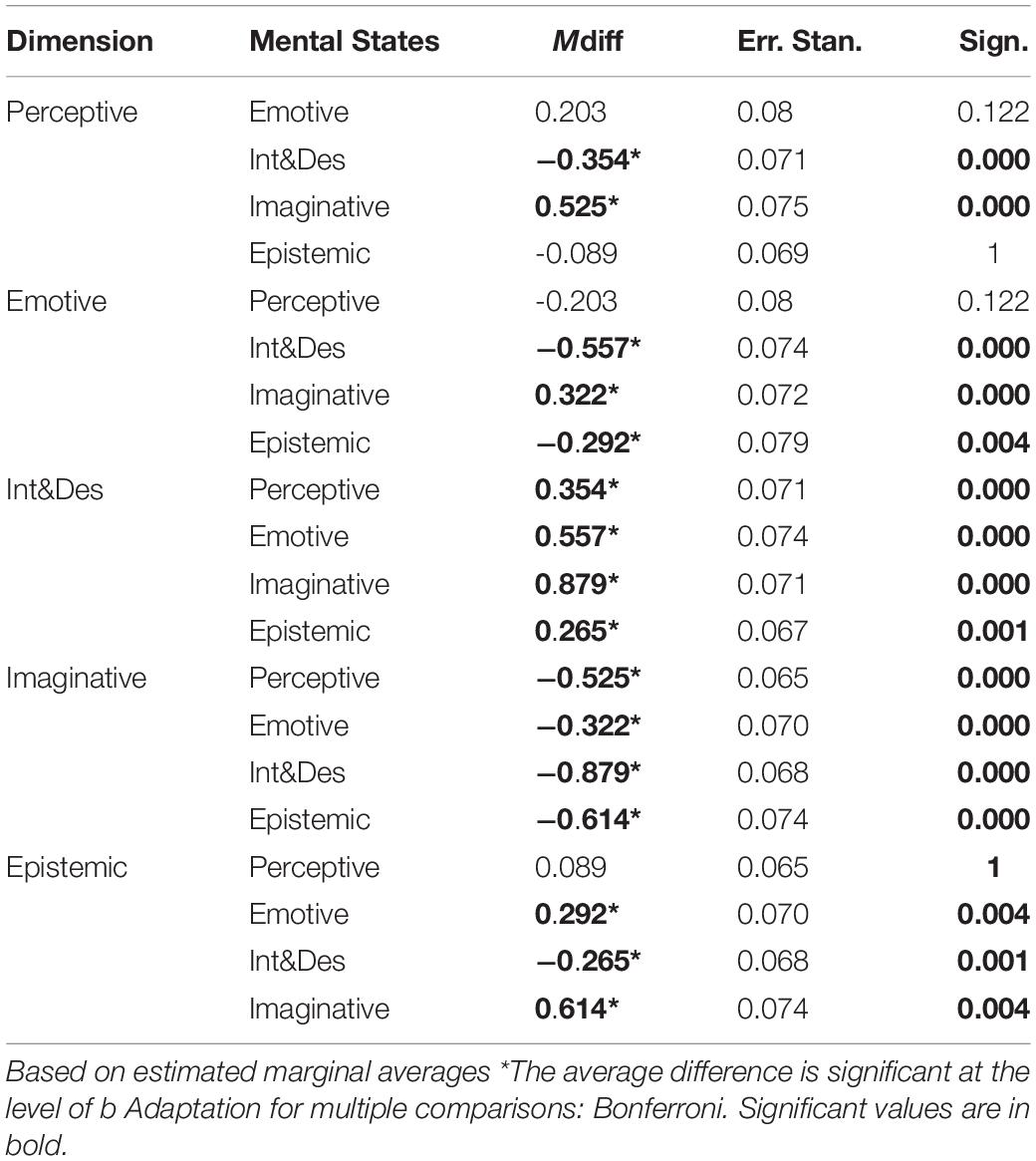

The results showed (1) a main effect of agent, F(1,126) = 570.9, p < 0.001, partial η² = 0.819, δ = 1, indicating that children attributed greater mental states to the human (M = 4.6, SD = 0.27) compared to the robot (M = 2.7, SD = 0.21; Mdiff = 1.75, SE = 0.087); (2) a main effect of states, F(4,504) = 40.33, p < 0.001, partial η² = 0.243, δ = 1, mainly indicating that children attributed greater intentions and desires and lower imaginative states (for a full description of the statistics, see Table 1); (3) a main effect of robot, F(1,126) = 39.4, p < 0.001, partial η² = 0.238, δ = 1, showing that children attributed greater mental states to NAO (M = 3.98, SD = 0.17) compared to Robovie (M = 3.4, SD = 0.14; Mdiff = 0.568, SE = 0.099).
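The effect sizes reported for the three main effects can be related to the F statistics through the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies that identity to the reported values; the function name is an assumption, and the calculation is a reader-side consistency check rather than part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


if __name__ == "__main__":
    # (label, F, df_effect, df_error, reported partial eta squared),
    # taken from the main effects reported in the text.
    main_effects = [
        ("agent", 570.9, 1, 126, 0.819),
        ("states", 40.33, 4, 504, 0.243),
        ("robot", 39.4, 1, 126, 0.238),
    ]
    for label, f_value, df1, df2, reported in main_effects:
        computed = partial_eta_squared(f_value, df1, df2)
        print(f"{label}: computed = {computed:.3f}, reported = {reported:.3f}")
```

For these three effects the computed values agree with the reported ones to within about 0.001, the small residual being attributable to rounding of the published F values.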

Table 1. Statistics comparing the attribution of all AMS dimensions (Perceptive, Emotive, Intentions and Desires, Imaginative, Epistemic).

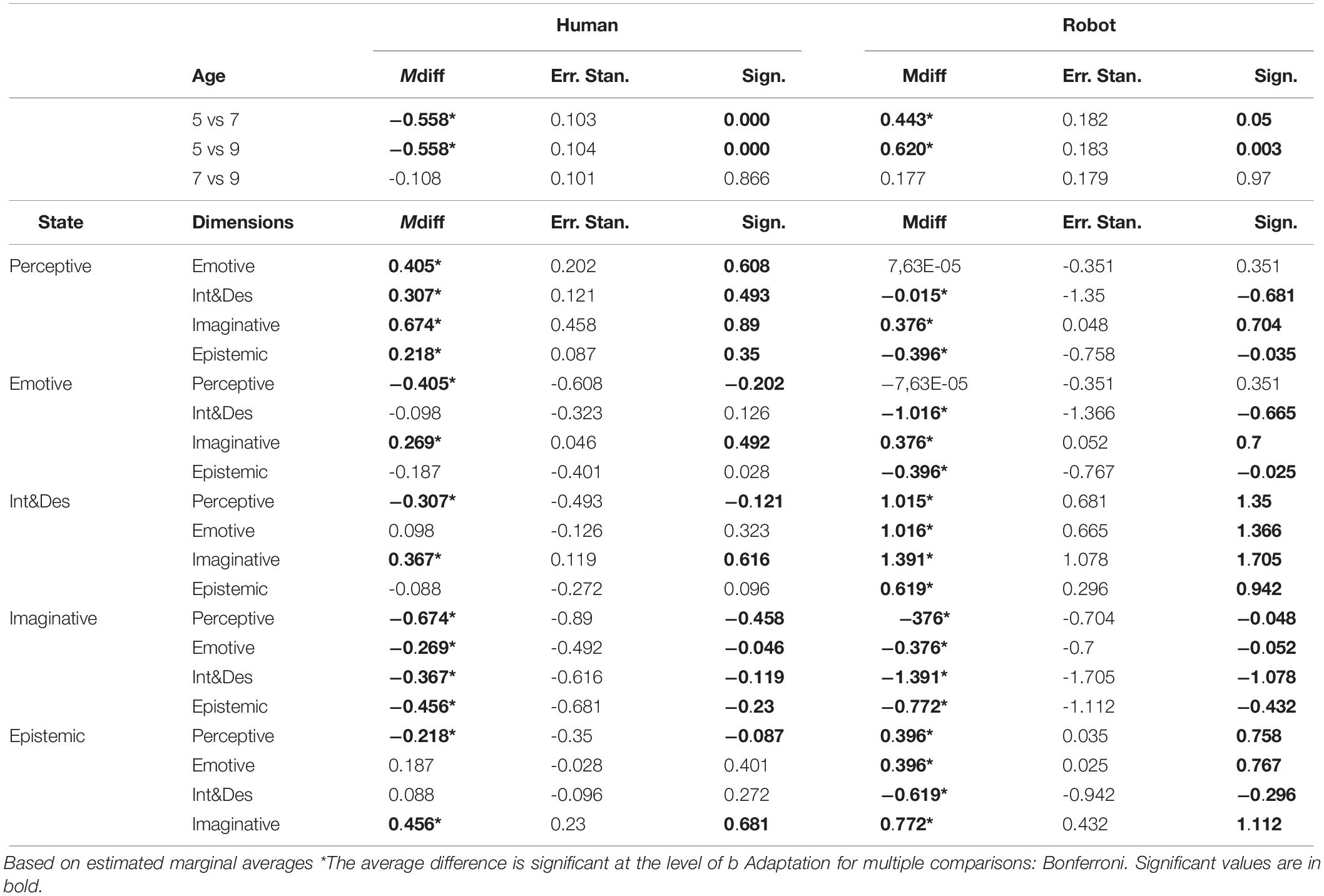

Two-way interactions were also found between (1) states and agent, F(1,126) = 16.51, p < 0.001, partial η² = 0.183, δ = 1 (for a detailed description of the differences see Table 2), and (2) agent and age, F(2,126) = 25.17, p < 0.001, partial η² = 0.285, δ = 1, showing that 5-year-old children attributed greater mental states to the robotic agents compared to older children (see Table 2).

Table 2. Statistics comparing the attribution of all AMS dimensions (Perceptive, Emotive, Intentions and Desires, Imaginative, Epistemic) and the AMS for the two agents (Human, Robot) across ages (5-, 7- and 9-years).

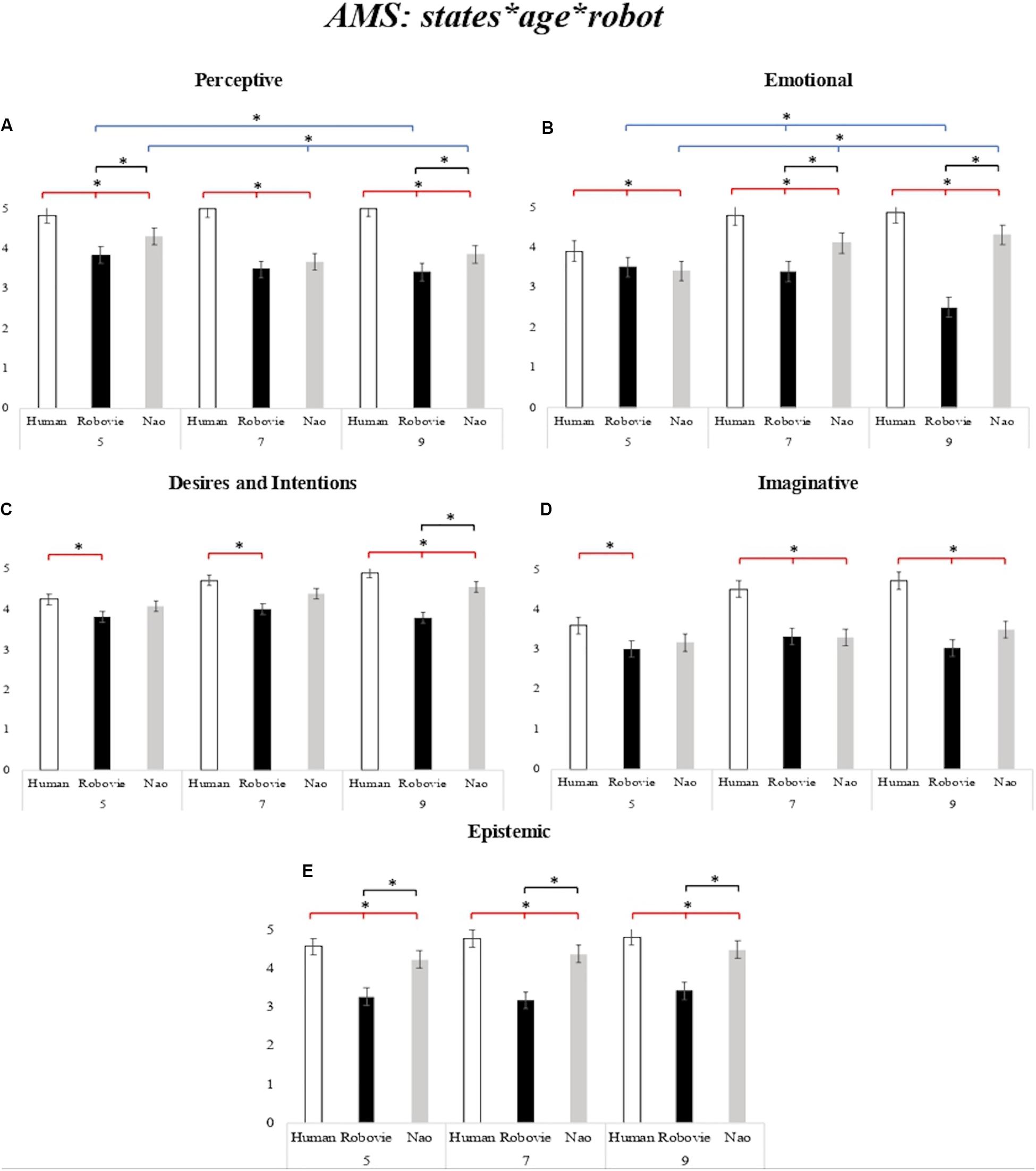

Additionally, we found a three-way interaction between states, age, and robot, F(8,126) = 4.95, p < 0.001, partial η² = 0.073, δ = 1. The planned comparisons on the three-way interaction revealed that children attributed greater mental states to NAO compared to Robovie, with the youngest children differentiating on the Perceptive and Epistemic dimensions, and with this difference spreading to all dimensions except Imaginative in the older children (see Figure 2).

Figure 2. (A–E) Children’s scores on the attribution of mental states (AMS) scale. AMS mean scores for the Human (white bar), for Robovie robot (black bar), and NAO robot (gray bar) for each state (Perceptive, Emotions, Intentions and Desires, Imagination, and Epistemic) as a function of age group (5-, 7-, and 9-year-olds). The bars represent the standard error of the mean. *Indicates significant differences. The red lines indicate the differences between agents (Human, Robot); the blue lines indicate the differences between ages (5-, 7-, and 9-year-olds); the black lines indicate the differences between robots (Robovie, NAO).

In the present study we compared the AMS in children aged 5–9 years between two HSRs, NAO and Robovie, also with respect to a human. The aim was to explore children’s patterns of mental attribution to different types of HSRs, varying in their degree of physical anthropomorphism, from a developmental perspective.

Our results on the AMS to the human and robot generally confirmed the tendency of children to ascribe lower human mental qualities to the robots, thus supporting previous findings (Manzi et al., 2017; Di Dio et al., 2018, 2019, 2020a, b). In addition, children generally attributed greater mental states to the NAO robot than to the Robovie robot, although differences were found in the quality of mental states attribution as a function of age, with older children discriminating more between the types of robots than the younger ones. As a matter of fact, the important role played by the type of robot in influencing children’s AMS can be appreciated by evaluating differences in state attribution developmentally.

Firstly, 5-year-old children generally attributed greater human-like mental states to the robotic agents compared to older children. Additionally, while 5-year-old children discriminated between robots’ mental attribution only on the perceptive and epistemic dimensions (with the NAO robot being regarded as more anthropomorphic than Robovie), children aged 7 and 9 years were particularly sensitive to the type of robot, and attributed greater mental states to NAO than Robovie on most of the tested mental state dimensions. From a developmental perspective, the tendency of younger children to anthropomorphize HSRs could be reasonably explained by the phenomenon of animism (Piaget, 1929). Piaget had already suggested in 1929 that children younger than 6 years tend to attribute consciousness to objects, i.e., the phenomenon of animism, and that this fades around 9 years of age. Recently, this phenomenon has been defined as a cognitive error in children (Okanda et al., 2019), i.e., animism error, characterized by a lack of differentiation between living and non-living things. In this respect, several studies showed that, although children are generally able to discriminate between humans and robots, children aged 5–6 years tend to overuse animistic interpretations for inanimate things, and to attribute biological and psychological properties to robots (Katayama et al., 2010; Di Dio et al., 2019, 2020a, b), in line with the results of this study. Interestingly, we further found a difference in emotional attribution to NAO between 5-year-olds and 7- and 9-year-old children: younger children attributed lower emotions to NAO compared to the older ones. 
This result may seem counterintuitive in light of what we discussed above; however, by looking closely at the scores obtained from the 5-year-olds for each single emotional question, we found that younger children attributed significantly lower negative emotions to NAO compared to the other age groups, favoring positive emotions (χ2 < 0.01). This resulted in an overall decrease of scores in the emotional dimension for the young children. Therefore, not only does this result not contradict the idea of a greater tendency to anthropomorphize robots in younger children compared to older ones, but it also highlights that 5-year-olds perceive NAO as a positive entity that cannot express negative emotions such as anger, sadness, and fear: the “good” play-partner.

From the age of 7, children’s beliefs about the robots’ minds are significantly affected by a sensitivity to the type of robot, as shown by differences between NAO and Robovie on most mental dimensions, except for Imaginative. The lack of differences between robots on the Imaginative dimension (for all age groups) suggests that imagination, which encompasses psychological processes like pretending and making jokes, is regarded by children as a human prerogative. Interestingly, this result supports findings from a previous study (Di Dio et al., 2018) that compared 6-year-old children’s mental state attribution to different entities (human, dog, robot, and God). Also, in that study, imagination was specific to the human entity.

Generally, the findings for older children indicate that the robot’s appearance does affect mental state attribution to the robot, and this is increasingly evident with age. However, the judgment of older children could also be significantly influenced by the robot’s behavioral characteristics, as demonstrated in a long-term study conducted with children aged 10–12 years (Ahmad et al., 2016). In that study, children played a snakes and ladders game three times across 10 days with a NAO robot whose behavior, in terms of personality for a social robot in education, was adapted to create and maintain long-term engagement and acceptance. It was found that children reacted positively to the use of the robot in education, stressing a need to implement robots that are able to adapt based on previous experiences in real time. Of course, this is very much in line with the great vision of disciplines such as DR (Cangelosi and Schlesinger, 2015) and DC (Itakura, 2008). In this respect, it is also important to consider further aspects related to the effectiveness in human relations of constructs such as understanding the perspective of others (e.g., Marchetti et al., 2018) and empathy, on which several research groups are actively working. For example, in an exploratory study Serholt et al. (2014) highlighted the perceived need both for teachers and learners to deal with robots showing such a competence.

In the same vein, other studies that used Robovie as an interactive partner in educational contexts have also shown that, when the robot is programed to facilitate interactional dynamics with children, it can be considered by the children as a group member and even part of the friendship circle. In these studies, the robot is typically programed to act as an effective social communicative partner using strategies such as calling children by their name or adapting the interactive behaviors for each child by means of behavioral patterns drawn from developmental psychology (Kanda et al., 2007; see also, Kahn et al., 2012). The study by Kahn et al. (2012) further showed that after interacting with Robovie, most children believed that Robovie had mental states (e.g., was intelligent and had feelings) and was a social being (e.g., could be a friend, offer comfort, and be trusted with secrets).

The above studies highlight the prospective use of robots, particularly in the educational field. However, in reality, today’s robots are not yet able to sustain autonomous behavior in the long term, even though research is actively laying a good foundation for this. What we can certainly work on with direct effects on children’s perception of the mental abilities of robots are their physical attributes. By outlining differences in mental states attribution to different types of humanoid robots across ages based on robots’ physical appearance, our findings could help map the design of humanoid robots for children: in early ages, robots can display more abstract and mechanical features (possibly also due to the phenomenon of animism as described above); conversely, in older ages, the tendency to anthropomorphize robots is at least partially affected by the design of the robot. However, it has to be kept in mind that excessive human-likeness may be felt as uncomfortable, as suggested by findings showing that children experience less discomfort with robots displaying both human and mechanical features compared to robots whose physical features markedly evoke human ones (Bumby and Dautenhahn, 1999; Woods et al., 2004; Woods, 2006). Excessive resemblance to the human triggers the Uncanny Valley effect (the more the appearance of robots is similar to humans, the higher the sense of eeriness). These data suggest that a well-designed HSR for children should combine both human and mechanical dimensions, which, in our study, seems to be better represented by the NAO robot.

This study enabled us to analyze the AMS to two types of HSRs, highlighting how different types of robots can evoke different attributions of mental states in children. More specifically, our findings suggest that children’s age is an important factor to consider when designing a robot, and they provide at least two important insights: one concerning the phenomenon of anthropomorphism from a developmental perspective, the other concerning the design of HSRs for children. Anthropomorphism seems to be a widespread phenomenon in 5-year-olds, whereas in older children it becomes more dependent on the physical features of the robot, with a preference for the NAO robot, which is perceived as more human-like. This effect may in turn inform the design of robots, which can be more flexible in terms of physical features, as with Robovie, when targeted at young children.

Overall, our results suggest that the assessment of HSRs in terms of mental states attribution may represent a useful measure for studying the effect of different robot designs on children. However, it has to be noted that the current study involved only two types of HSRs. Therefore, future studies will have to evaluate the attribution of mental states to a greater variety of robots, also comparing anthropomorphic and non-anthropomorphic robots, and across different cultures. In addition, in future studies it will be important to assess children’s socio-cognitive abilities, such as language, executive functions, and ToM, to analyze the effect of these abilities on the AMS to robots developmentally. Finally, this study explored mental attributions through images depicting robots. Future studies should include a condition in which children interact with the robots in vivo to explore the interaction between the robot’s physical appearance and its behavioral patterns. This would enable us to highlight the relative weight of each factor on children’s perception of the robots’ mental competences.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by the Ethic Committee, Università Cattolica del Sacro Cuore, Milano, Italy. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

All the authors conceived and designed the experiment. FM and GP conducted the experiments in schools. AM, FM, and GP secured ethical approval. FM and CDD carried out the statistical analyses. All authors contributed to the writing of the manuscript.

This publication was funded by Università Cattolica del Sacro Cuore of Milan.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Ahmad, M. I., Mubin, O., and Orlando, J. (2016). “Children views’ on social robot’s adaptations in education,” in Proceedings of the 28th Australian Conference on Computer-Human Interaction (Tasmania: University Of Tasmania), 145–149.

Airenti, G. (2015). The cognitive bases of anthropomorphism: from relatedness to empathy. Int. J. Soc. Robot. 7, 117–127. doi: 10.1007/s12369-014-0263-x

Bartneck, C., Croft, E., and Kulic, D. (2008). “Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots,” in Proceedings of the Metrics for Human-Robot Interaction Workshop in affiliation with the 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI 2008), Technical Report 471 (Piscataway, NJ: IEEE), 37–44.

Bartneck, C., and Forlizzi, J. (2004). “A design-centered framework for social human-robot interaction,” in Proceedings of the RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (Piscataway, NJ: IEEE), 591–594.

Bartneck, C., Kanda, T., Mubin, O., and Al Mahmud, A. (2009). Does the design of a robot influence its animacy and perceived intelligence? Int. J. Soc. Robot. 1, 195–204. doi: 10.1007/s12369-009-0013-7

Begum, M., Serna, R. W., and Yanco, H. A. (2016). Are robots ready to deliver autism interventions? A comprehensive review. Int. J. Soc. Robot. 8, 157–181. doi: 10.1007/s12369-016-0346-y

Belpaeme, T., Kennedy, J., Ramachandran, A., Scassellati, B., and Tanaka, F. (2018). Social robots for education: a review. Sci. Robot. 3:eaat5954. doi: 10.1126/scirobotics.aat5954

Broadbent, E. (2017). Interactions with robots: the truths we reveal about ourselves. Annu. Rev. Psychol. 68, 627–652. doi: 10.1146/annurev-psych-010416-043958

Broadbent, E., Kumar, V., Li, X., Sollers, J., Stafford, R. Q., MacDonald, B. A., et al. (2013). Robots with display screens: A robot with a more humanlike face display is perceived to have more mind and a better personality. PLoS One 8:e72589. doi: 10.1371/journal.pone.0072589

Bumby, K., and Dautenhahn, K. (1999). “Investigating children’s attitudes towards robots: A case study,” in Proceedings of the CT99, The 3rd International Cognitive Technology Conference, San Francisco, 391–410.

Cangelosi, A., and Schlesinger, M. (2015). Developmental Robotics: from Babies to Robots. London: MIT Press.

Cangelosi, A., and Schlesinger, M. (2018). From babies to robots: the contribution of developmental robotics to developmental psychology. Child Dev. Perspect. 12, 183–188. doi: 10.1111/cdep.12282

Dario, P., Guglielmelli, E., and Laschi, C. (2001). Humanoids and personal robots: design and experiments. J. Robot. Syst. 18, 673–690. doi: 10.1002/rob.8106

Dautenhahn, K., Nehaniv, C. L., Walters, M. L., Robins, B., Kose-Bagci, H., Mirza, N. A., et al. (2009). KASPAR–a minimally expressive humanoid robot for human–robot interaction research. Appl. Bionics Biomech. 6, 369–397. doi: 10.1155/2009/708594

De La Cruz, V. M., Di Nuovo, A., Di Nuovo, S., and Cangelosi, A. (2014). Making fingers and words count in a cognitive robot. Front. Behav. Neurosci. 8:13. doi: 10.3389/fnbeh.2014.00013

Di Dio, C., Isernia, S., Ceolaro, C., Marchetti, A., and Massaro, D. (2018). Growing up Thinking of God’s Beliefs: Theory of Mind and Ontological Knowledge. Thousand Oaks, CA: Sage Open, 1–14. doi: 10.1177/2158244018809874

Di Dio, C., Manzi, F., Itakura, S., Kanda, T., Ishiguro, H., Massaro, D., et al. (2019). It does not matter who you are: fairness in pre-schoolers interacting with human and robotic partners. Int. J. Soc. Robot. 1–15. doi: 10.1007/s12369-019-00528-9

Di Dio, C., Manzi, F., Peretti, G., Cangelosi, A., Harris, P. L., Massaro, D., et al. (2020a). Come i bambini pensano alla mente del robot. Il ruolo dell’attaccamento e della Teoria della Mente nell’attribuzione di stati mentali ad un agente robotico. Sistemi Intell. 32, 41–56. doi: 10.1422/96279

Di Dio, C., Manzi, F., Peretti, G., Cangelosi, A., Harris, P. L., Massaro, D., et al. (2020b). Shall I trust you? From child human-robot interaction to trusting relationships. Front. Psychol. 11:469. doi: 10.3389/fpsyg.2020.00469

DiSalvo, C. F., Gemperle, F., Forlizzi, J., and Kiesler, S. (2002). “All robots are not created equal: The design and perception of humanoid robot heads,” in Proceedings of the 4th Conference on Designing Interactive Systems: Processes, Practices, Methods, and Techniques (New York, NY: ACM).

Duffy, B. R. (2003). Anthropomorphism and the social robot. Robot. Auton. Syst. 42, 177–190. doi: 10.1016/s0921-8890(02)00374-3

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295x.114.4.864

Fink, J., Mubin, O., Kaplan, F., and Dillenbourg, P. (2012). “Anthropomorphic language in online forums about Roomba, AIBO and the iPad,” in Proceedings of the 2012 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), Munich: IEEE, 54–59.

Hackel, L. M., Looser, C. E., and Van Bavel, J. J. (2014). Group membership alters the threshold for mind perception: the role of social identity collective identification and intergroup threat. J. Exp. Soc. Psychol. 2014, 15–23. doi: 10.1016/j.jesp.2013.12.001

Hood, D., Lemaignan, S., and Dillenbourg, P. (2015). “When children teach a robot to write: An autonomous teachable humanoid which uses simulated handwriting,” in Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (Piscataway, NJ: IEEE) 83–90.

Ishiguro, H., Ono, T., Imai, M., Kanda, T., and Nakatsu, R. (2001). Robovie: an interactive humanoid robot. Int. J. Industrial Robot 28, 498–503. doi: 10.1108/01439910110410051

Itakura, S. (2008). Development of mentalizing and communication: from viewpoint of developmental cybernetics and developmental cognitive neuroscience. IEICE Trans. Commun. 2109–2117. doi: 10.1093/ietcom/e91-b.7.2109

Itakura, S., Ishida, H., Kanda, T., Shimada, Y., Ishiguro, H., and Lee, K. (2008). How to build an intentional android: infants’ imitation of a robot’s goal-directed actions. Infancy 13, 519–532. doi: 10.1080/15250000802329503

Kahn, P. H. Jr., Kanda, T., Ishiguro, H., Freier, N. G., Severson, R. L., Gill, B. T., et al. (2012). “Robovie, you’ll have to go into the closet now”: children’s social and moral relationships with a humanoid robot. Dev. Psychol. 48:303. doi: 10.1037/a0027033

Kanda, T., Ishiguro, H., Ono, T., Imai, M., and Mase, K. (2002). “Development and Evaluation of an Interactive Robot “Robovie”,” in Proceedings of the IEEE International Conference on Robotics and Automation, Washington, DC, 1848–1855. doi: 10.1109/ROBOT.2002.1014810

Kanda, T., Sato, R., Saiwaki, N., and Ishiguro, H. (2007). A two-month field trial in an elementary school for long-term human–robot interaction. IEEE Trans. Robot. 23, 962–971. doi: 10.1109/TRO.2007.904904

Kannegiesser, P., Itakura, S., Zhou, Y., Kanda, T., Ishiguro, H., and Hood, B. (2015). The role of social eye-gaze in children’s and adult’s ownership attributions to robotic agents in three cultures. Interact. Stud. 16, 1–28. doi: 10.1075/is.16.1.01kan

Katayama, N., Katayama, J. I., Kitazaki, M., and Itakura, S. (2010). Young children’s folk knowledge of robots. Asian Cult. Hist. 2:111.

Kiesler, S., and Goetz, J. (2002). “Mental models of robotic assistants,” in Proceedings of the CHI’02 Extended Abstracts on Human Factors in Computing Systems (New York, NY: ACM), 576–577.

Kiesler, S., Powers, A., Fussell, S. R., and Torrey, C. (2008). Anthropomorphic interactions with a robot and robot–like agent. Soc. Cogn. 26, 169–181. doi: 10.1521/soco.2008.26.2.169

Kose, H., and Yorganci, R. (2011). “Tale of a robot: Humanoid robot assisted sign language tutoring,” in Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots (Piscataway, NJ: IEEE), 105–111.

Krach, S., Hegel, F., Wrede, B., Sagerer, G., Binkofski, F., and Kircher, T. (2008). Can machines think? Interaction and perspective taking with robots investigated via fMRI. PLoS One 3:e2597. doi: 10.1371/journal.pone.0002597

Lyon, C., Nehaniv, L. C., Saunders, J., Belpaeme, T., Bisio, A., Fischer, K., and Cangelosi, A. (2016). Embodied language learning and cognitive bootstrapping: methods and design principles. Int. J. Adv. Robotic Syst. 13:105. doi: 10.5772/63462

Macdonald, S. J., Donovan, C., and Clayton, J. (2017). The disability bias: understanding the context of hate in comparison with other minority populations. Disabil. Soc. 32, 483–499. doi: 10.1080/09687599.2017.1304206

MacDorman, K. F. (2006). “Subjective ratings of robot video clips for human likeness, familiarity, and eeriness: An exploration of the uncanny valley,” in Proceedings of the ICCS/CogSci-2006 Long Symposium: Toward social Mechanisms of Android Science, Vancouver, 26–29.

MacDorman, K. F., and Ishiguro, H. (2006). The uncanny advantage of using androids in cognitive and social science research. Interact. Stud. 7, 297–337. doi: 10.1075/is.7.3.03mac

MacDorman, K. F., Minato, T., Shimada, M., Itakura, S., Cowley, S., and Ishiguro, H. (2005). “Assessing human likeness by eye contact in an android testbed,” in Proceedings of the 27th annual meeting of the cognitive science society (Austin, TX: CSS) 21–23.

Manzi, F., Ishikawa, M., Di Dio, C., Itakura, S., Kanda, T., Ishiguro, H., et al. (2020a). The understanding of congruent and incongruent referential gaze in 17-month-old infants: An eye-tracking study comparing human and robot. Sci. Rep. 10:11918. doi: 10.1038/s41598-020-69140-6

Manzi, F., Massaro, D., Kanda, T., Tomita, K., Itakura, S., and Marchetti, A. (2017). “Teoria della Mente, bambini e robot: l’attribuzione di stati mentali,” in Proceedings of the Abstract de XXX Congresso Nazionale, Associazione Italiana di Psicologia, Sezione di Psicologia dello Sviluppo e dell’Educazione (Messina, 14-16 September 2017), Italy: Alpes Italia srl, 65–66. Available online at: http://hdl.handle.net/10807/106022

Manzi, F., Savarese, G., Mollo, M., and Iannaccone, A. (2020b). Objects as communicative mediators in children with autism spectrum disorder. Front. Psychol. 11:1269. doi: 10.3389/fpsyg.2020.01269

Marchetti, A., Di Dio, C., Manzi, F., and Massaro, D. (in press). “Robotics in clinical and developmental psychology,” in Comprehensive Clinical Psychology, 2nd Edn, Amsterdam: Elsevier.

Marchetti, A., Di Dio, C., Manzi, F., and Massaro, D. (2020). The psychosocial fuzziness of fear in the COVID-19 era and the role of robots. Front. Psychol. 11:2245. doi: 10.3389/fpsyg.2020.02245

Marchetti, A., Manzi, F., Itakura, S., and Massaro, D. (2018). Theory of Mind and humanoid robots from a lifespan perspective. Z. Psychol. 226, 98–109. doi: 10.1027/2151-2604/a000326

Martini, M. C., Gonzalez, C. A., and Wiese, E. (2016). Seeing minds in others – Can agents with robotic appearance have human-like preferences? PLoS One 11:e0146310. doi: 10.1371/journal.pone.0146310

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robot. Autom. Mag. 19, 98–100. doi: 10.1109/mra.2012.2192811

Moriguchi, Y., Kanda, T., Ishiguro, H., Shimada, Y., and Itakura, S. (2011). Can young children learn words from a robot? Interact. Stud. 12, 107–119. doi: 10.1075/is.12.1.04mor

Morse, A. F., and Cangelosi, A. (2017). Why are there developmental stages in language learning? A developmental robotics model of language development. Cognitive Sci. 41, 32–51. doi: 10.1111/cogs.12390

Mubin, O., Stevens, C. J., Shahid, S., Al Mahmud, A., and Dong, J. J. (2013). A review of the applicability of robots in education. J. Technol. Educ. Learn. 1:13.

Murashov, V., Hearl, F., and Howard, J. (2016). Working safely with robot workers: Recommendations for the new workplace. J. Occup. Environ. Hyg. 13, D61–D71.

Okanda, M., Taniguchi, K., and Itakura, S. (2019). “The role of animism tendencies and empathy in adult evaluations of robot,” in Proceedings of the 7th International Conference on Human-Agent Interaction, HAI ’19 (New York, NY: Association for Computing Machinery), 51–58. doi: 10.1145/3349537.3351891

Okanda, M., Zhou, Y., Kanda, T., Ishiguro, H., and Itakura, S. (2018). I hear your yes–no questions: Children’s response tendencies to a humanoid robot. Infant Child Dev. 27:e2079. doi: 10.1002/icd.2079

Okumura, Y., Kanakogi, Y., Kanda, T., Ishiguro, H., and Itakura, S. (2013a). Can infants use robot gaze for object learning? The effect of verbalization. Interact. Stud. 14, 351–365. doi: 10.1075/is.14.3.03oku

Okumura, Y., Kanakogi, Y., Kanda, T., Ishiguro, H., and Itakura, S. (2013b). Infants understand the referential nature of human gaze but not robot gaze. J. Exp. Child Psychol. 116, 86–95. doi: 10.1016/j.jecp.2013.02.007

Perner, J., and Wimmer, H. (1985). “John thinks that Mary thinks that.” attribution of second-order beliefs by 5- to 10-year- old children. J. Exp. Child Psychol. 39, 437–471. doi: 10.1016/0022-0965(85)90051-7

Phillips, E., Zhao, X., Ullman, D., and Malle, B. F. (2018). “What is human-like? Decomposing robots’ human-like appearance using the anthropomorphic roBOT (ABOT) database,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction. (Piscataway, NJ: IEEE).

Powers, A., and Kiesler, S. (2006). “The advisor robot: tracing people’s mental model from a robot’s physical attributes,” in Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction (New York, NY: ACM), 218–225.

Premack, D., and Woodruff, G. (1978). Does the chimpanzee have a theory of mind. Behav. Brain Sci. 1, 515–526. doi: 10.1017/s0140525x00076512

Sarti, D., Bettoni, R., Offredi, I., Tironi, M., Lombardi, E., Traficante, D., et al. (2019). Tell me a story: socio-emotional functioning, well-being and problematic smartphone use in adolescents with specific learning disabilities. Front. Psychol. 10:2369. doi: 10.3389/fpsyg.2019.02369

Serholt, S., Barendregt, W., Leite, I., Hastie, H., Jones, A., Paiva, A., et al. (2014). “Teachers’ views on the use of empathic robotic tutors in the classroom,” in Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication (Piscataway, NJ: IEEE), 955–960.

Shamsuddin, S., Yussof, H., Ismail, L. I., Mohamed, S., Hanapiah, F. A., and Zahari, N. I. (2012). Initial response in HRI-a case study on evaluation of child with autism spectrum disorders interacting with a humanoid robot Nao. Procedia Eng. 41, 1448–1455. doi: 10.1016/j.proeng.2012.07.334

Sharkey, A., and Sharkey, N. (2011). Children, the elderly, and interactive robots. IEEE Robot. Autom. Mag. 18, 32–38. doi: 10.1109/mra.2010.940151

Shiomi, M., Kanda, T., Ishiguro, H., and Hagita, N. (2006). “Interactive humanoid robots for a science museum,” in Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-robot Interaction (New York, NY: ACM) 305–312.

Slaughter, V., Peterson, C. C., and Carpenter, M. (2009). Maternal mental state talk and infants’ early gestural communication. J. Child Lang. 36, 1053–1074. doi: 10.1017/S0305000908009306

Stafford, R. Q., MacDonald, B. A., Jayawardena, C., Wegner, D. M., and Broadbent, E. (2014). Does the robot have a mind? Mind perception and attitudes towards robots predict use of an eldercare robot. Int. J. Soc. Robot. 6, 17–32. doi: 10.1007/s12369-013-0186-y

Tielman, M., Neerincx, M., Meyer, J. J., and Looije, R. (2014). “Adaptive emotional expression in robot-child interaction,” in Proceedings of the 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Piscataway, NJ: IEEE), 407–414.

Todd, A. R., Bodenhausen, G. V., Richeson, J. A., and Galinsky, A. D. (2011). Perspective taking combats automatic expressions of racial bias. J. Pers. Soc. Psychol. 100, 1027–1042. doi: 10.1037/a0022308

Tung, F. W. (2016). Child perception of humanoid robot appearance and behavior. Int. J. Hum. Comput. Interact. 32, 493–502. doi: 10.1080/10447318.2016.1172808

Vinanzi, S., Patacchiola, M., Chella, A., and Cangelosi, A. (2019). Would a robot trust you? Developmental robotics model of trust and theory of mind. Philos. Tr. R. Soc. B 374:20180032. doi: 10.1098/rstb.2018.0032

Wainer, J., Robins, B., Amirabdollahian, F., and Dautenhahn, K. (2014). Using the humanoid robot KASPAR to autonomously play triadic games and facilitate collaborative play among children with autism. IEEE Trans. Auton. Ment. Dev. 6, 183–199. doi: 10.1109/tamd.2014.2303116

Wang, Y., Park, Y. H., Itakura, S., Henderson, A. M. E., Kanda, T., Furuhata, N., et al. (2020). Infants’ perceptions of cooperation between a human and robot. Infant Child Dev. 29:e2161. doi: 10.1002/icd.2161

Wellman, H. M., Cross, D., and Watson, J. (2001). Meta-analysis of theory-of-mind development: the truth about false belief. Child Dev. 72, 655–684. doi: 10.1111/1467-8624.00304

Wimmer, H., and Perner, J. (1983). Beliefs about beliefs: Representation and constraining function of wrong beliefs in young children’s understanding of deception. Cognition 13, 103–128. doi: 10.1016/0010-0277(83)90004-5

Woods, S. (2006). Exploring the design space of robots: Children’s perspectives. Interact. Comput. 18, 1390–1418. doi: 10.1016/j.intcom.2006.05.001

Woods, S., Dautenhahn, K., and Schulz, J. (2004). “The design space of robots: Investigating children’s views,” in Proceedings of the 13th IEEE International Workshop on Robot and Human Interactive Communication (Piscataway, NJ: IEEE), 47–52.

Yogeeswaran, K., Złotowski, J., Livingstone, M., Bartneck, C., Sumioka, H., and Ishiguro, H. (2016). The interactive effects of robot anthropomorphism and robot ability on perceived threat and support for robotics research. J. Hum. Robot Interact. 5, 29–47. doi: 10.5898/jhri.5.2.yogeeswaran

Zanatto, D., Patacchiola, M., Cangelosi, A., and Goslin, J. (2020). Generalisation of Anthropomorphic stereotype. Int. J. Soc. Robot. 12, 163–172. doi: 10.1007/s12369-019-00549-4

Zanatto, D., Patacchiola, M., Goslin, J., and Cangelosi, A. (2016). “Priming anthropomorphism: can the credibility of humanlike robots be transferred to non-humanlike robots?” in Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch: IEEE, 543–544. doi: 10.1109/HRI.2016.7451847

Zanatto, D., Patacchiola, M., Goslin, J., and Cangelosi, A. (2019). Investigating cooperation with robotic peers. PLoS One 14:e0225028. doi: 10.1371/journal.pone.0225028

Zhang, T., Zhu, B., Lee, L., and Kaber, D. (2008). “Service robot anthropomorphism and interface design for emotion in human-robot interaction,” in Proceedings of the 2008 IEEE International Conference on Automation Science and Engineering (Piscataway, NJ: IEEE), 674–679.

Zhong, J., Ogata, T., Cangelosi, A., and Yang, C. (2019). Disentanglement in conceptual space during sensorimotor interaction. Cogn. Comput. Syst. 1, 103–112. doi: 10.1049/ccs.2019.0007

Złotowski, J., Proudfoot, D., Yogeeswaran, K., and Bartneck, C. (2015). Anthropomorphism: opportunities and challenges in human–robot interaction. Int. J. Soc. Robot. 7, 347–360. doi: 10.1007/s12369-014-0267-6

I will show you an image of a girl/boy/robot (to be selected depending on condition). I will ask you some questions about her/him/it (depending on condition). You can answer Yes or No to the questions.

Keywords: child–robot interaction (cHRI), social robots, humanoid and anthropomorphic robots, differences among robots, children, anthropomorphism, mental states attribution

Citation: Manzi F, Peretti G, Di Dio C, Cangelosi A, Itakura S, Kanda T, Ishiguro H, Massaro D and Marchetti A (2020) A Robot Is Not Worth Another: Exploring Children’s Mental State Attribution to Different Humanoid Robots. Front. Psychol. 11:2011. doi: 10.3389/fpsyg.2020.02011

Received: 20 December 2019; Accepted: 20 July 2020;

Published: 30 September 2020.

Edited by: Emily Mather, University of Hull, United Kingdom

Reviewed by: Muneeb Imtiaz Ahmad, Edinburgh Centre for Robotics, Heriot-Watt University, United Kingdom

Copyright © 2020 Manzi, Peretti, Di Dio, Cangelosi, Itakura, Kanda, Ishiguro, Massaro and Marchetti. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Federico Manzi

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
