ORIGINAL RESEARCH article

Front. Psychol., 26 July 2019

Sec. Emotion Science

Volume 10 - 2019 | https://doi.org/10.3389/fpsyg.2019.01715

Spontaneous facial mimicry is modulated by many factors, and often needs to be suppressed to comply with social norms. The neural basis for the inhibition of facial mimicry was investigated in a combined functional magnetic resonance imaging and electromyography study in 39 healthy participants. In an operant conditioning paradigm, face identities were associated with reward or punishment and were later shown expressing dynamic smiles and anger expressions. Face identities previously associated with punishment, compared to reward, were disliked by participants overall, and their smiles generated less mimicry. Consistent with previous research on the inhibition of finger/hand movements, the medial prefrontal cortex (mPFC) was activated when previous conditioning was incongruent with the valence of the expression. On such trials there was also greater functional connectivity of the mPFC with insula and premotor cortex as tested with psychophysiological interaction, suggesting inhibition of areas associated with the production of facial mimicry and the processing of facial feedback. The findings suggest that the mPFC supports the inhibition of facial mimicry, and support the claim of theories of embodied cognition that facial mimicry constitutes a spontaneous low-level motor imitation.

People have the tendency to spontaneously imitate others’ actions, postures, and facial expressions (Brass et al., 2000; Heyes, 2011; Wood et al., 2016). For the face, this phenomenon is termed facial mimicry.

According to theories of embodied cognition, facial mimicry is a low-level motor imitation that occurs spontaneously and can facilitate and/or speed up the recognition of the observed expression through afferent feedback to the brain (Niedenthal, 2007; Barsalou, 2008; Wood et al., 2016). This view is sometimes called the “matched motor” hypothesis (Hess and Fischer, 2013). Facial mimicry can occur without conscious perception of the stimulus face (Dimberg et al., 2000; Mathersul et al., 2013; but see Korb et al., 2017), and is difficult to suppress voluntarily (Dimberg et al., 2002; Korb et al., 2010). Mimicry influences judgments of expression authenticity (Hess and Blairy, 2001; Korb et al., 2014), and the blocking of facial mimicry compromises the processing of facial expressions by reducing recognition speed and accuracy as measured with behavior (Niedenthal et al., 2001; Oberman et al., 2007; Stel and van Knippenberg, 2008; Maringer et al., 2011; Rychlowska et al., 2014; Baumeister et al., 2016), and electroencephalography (EEG; Davis et al., 2017), as well as reducing activation in the brain’s emotion centers, such as the amygdala (Hennenlotter et al., 2009).

Although of automatic origin, facial mimicry can be increased or decreased in a top-down fashion. Indeed, facial mimicry has been found to be modulated by several factors including the expresser–observer relationship (Seibt et al., 2015; Kraaijenvanger et al., 2017). For example, mimicry of happiness is reduced for faces associated with losing money, compared to faces associated with winning money (Sims et al., 2012). Such findings suggest that people spontaneously generate facial mimicry, which is then increased or inhibited depending on whether they like or dislike the person they are interacting with. Related behavioral work demonstrated that the automatic tendency to imitate seen hand gestures (thumbs up and middle finger) is modulated by their pro-social or anti-social meaning (Cracco et al., 2018). Consistent with this, in a delayed cued counter-imitative task, activation of the primary motor cortex first reflects a stimulus-congruent “mirror” response, and only later a rule-based “nonmirror” response (Ubaldi et al., 2015).

Research employing functional magnetic resonance imaging (fMRI) and transcranial magnetic stimulation (TMS) has suggested that facial mimicry originates in lateral and medial cortical, as well as subcortical motor, premotor, and somatosensory areas (van der Gaag et al., 2007; Schilbach et al., 2008; Keysers et al., 2010; Likowski et al., 2012; Korb et al., 2015b; Paracampo et al., 2016). But which neural circuits are necessary to inhibit facial mimicry in a top-down manner? We aimed to address this question in the present study.

Several lines of research suggest that the modulation of facial mimicry may originate in the medial prefrontal cortex (mPFC). First, areas of the PFC are involved in cognitive control and emotion regulation (Miller, 2000; Ochsner and Gross, 2005; Korb et al., 2015a), and patients with prefrontal lesions often lack inhibitory control of prepotent response tendencies, such as action imitation, and can even display over-imitation of words (echolalia) and actions (echopraxia) (Lhermitte et al., 1986; De Renzi et al., 1996; Brass et al., 2003). Second, the mPFC is involved in suppressing motor imitation of hand actions, as shown by Brass et al. (2001, 2005) using a simple task in which participants executed predefined finger movements while observing congruent or incongruent finger movements. In this task, increased activation is observed on incongruent compared to congruent trials in a network encompassing the mPFC and the temporo-parietal junction (TPJ). In this network, the right TPJ and the neighboring supramarginal gyrus allow participants to differentiate between their own and the observed movements (Silani et al., 2013), and the mPFC likely underlies the inhibition of spontaneously arising imitation tendencies. For example, Wang et al. (2011) and Wang and Hamilton (2012) found the mPFC to be a key structure for the inhibition of finger and hand movement imitation, and the integration of eye contact. Based on the findings of Wang and Hamilton (2012), the social top-down response modulation (STORM) model specifically proposes that imitation and mimicry are modulated top-down by the mPFC in order to increase the person’s social advantage, e.g., by making her better liked by other people who unconsciously register being mimicked. Similarly, in a review paper focusing on the modulation of facial mimicry by neuroendocrine factors, Kraaijenvanger et al. (2017) attribute to prefrontal cortices, including the mPFC, a central role for the processing and mimicry of emotional faces in context. However, most previous brain imaging research has focused on the imitation of hand and finger gestures; no study so far has investigated the neural correlates of the inhibition of facial mimicry.

In the current experiment we investigated the role of the mPFC in the inhibition of facial mimicry of smiles. Smile mimicry was induced using dynamic stimuli showing facial expressions of happiness and verified with facial electromyography (EMG) of the zygomaticus major (ZM) muscle, while brain activity was sampled using fMRI. The expected inhibition of facial mimicry was induced by first conditioning participants to associate specific identities with winning or losing money. Although this task does not require the inhibition of facial mimicry per se – as in the face version of the finger-tapping task developed by Brass et al. (2001, 2005; Korb et al., 2010) – it was used to prevent the occurrence of stimulus-locked head movements, which are likely to occur during the production of voluntary facial expressions, and which would have jeopardized the quality of the fMRI recordings. Based on prior research using the same paradigm and stimuli (Sims et al., 2012; but see also Hofman et al., 2012), we predicted greater ZM activation for happy faces associated with winning (congruent condition) than with losing money (incongruent condition). In light of previous research investigating the neural correlates of the inhibition of finger or hand movements (Brass et al., 2005), we also expected the mPFC to inhibit facial mimicry, and thus to be more activated for happy faces associated with losing than with winning money. Finally, psychophysiological interaction (PPI) analyses were used to establish the functional connectivity of the mPFC with the rest of the brain.

Thirty-nine right-handed, fluent English-speaking, female participants were recruited via flyers posted on campus. Inclusion criteria were right-handedness, normal or corrected vision, absence of a diagnosis of psychiatric conditions, no personal history of seizures or a family history of hereditary epilepsy, and no consumption of prescribed psychotropic medication. Moreover, participants were screened with an MRI safety questionnaire. Data from three participants were excluded from analyses due to excessive head movement (over 2 mm on any of the three axes), and one participant was excluded because she misunderstood instructions and responded on every trial during the fMRI task. The final sample included in the analyses comprised 35 participants with a mean age of 22.9 years (SD = 5.1).

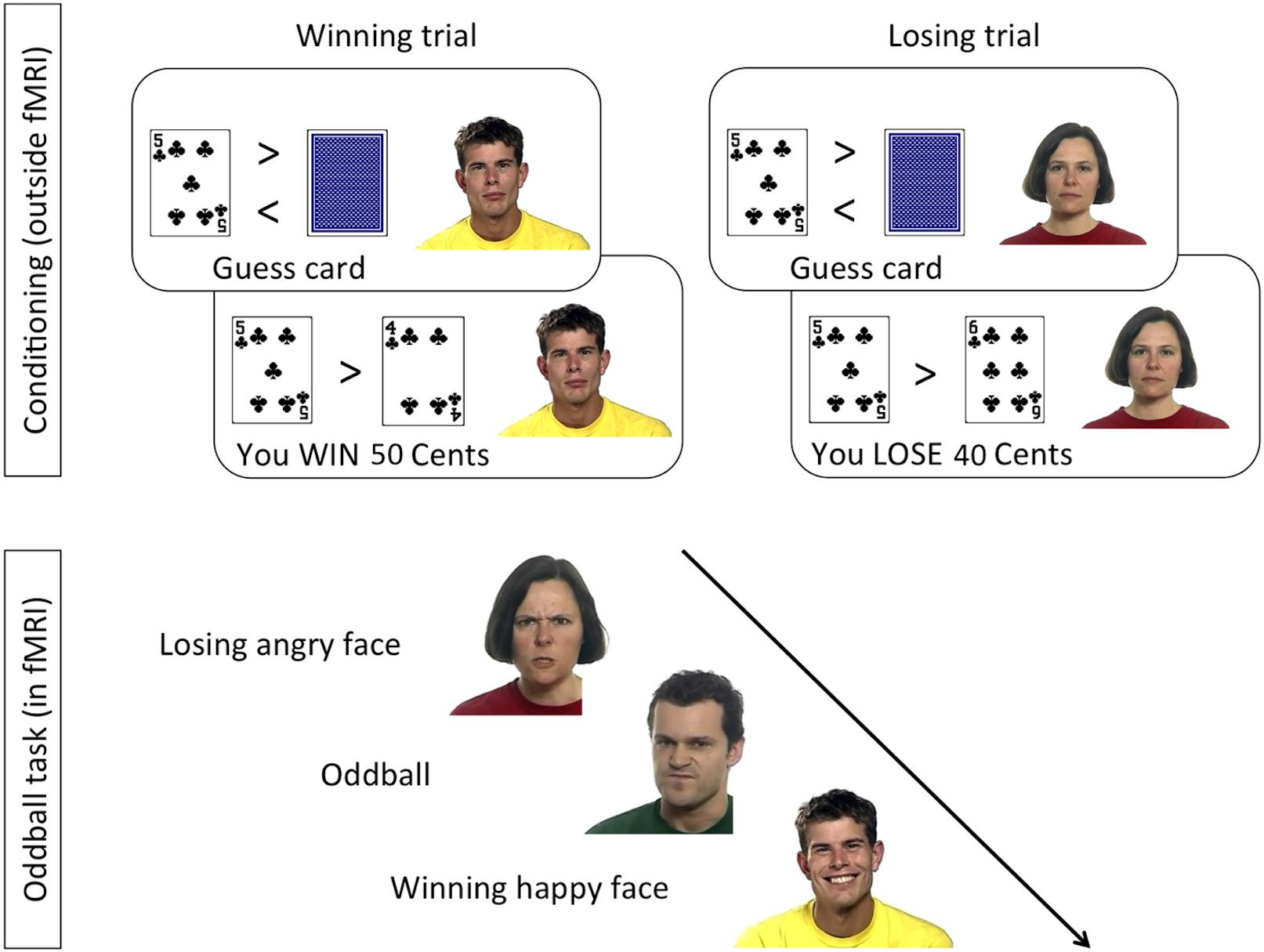

Stimuli were taken from the “Mindreading set” (Baron-Cohen et al., 2004), as described in Sims et al. (2014). In the conditioning phase, static pictures of three faces with neutral facial expression were shown next to two playing cards (Figure 1). In the oddball task, completed inside the MR scanner, 4-s long video clips showing dynamic emotional facial expressions of anger and happiness were shown. Faces used in the oddball task included those used in the conditioning phase as well as novel (previously unseen) faces.

Figure 1. Design of the conditioning and oddball tasks, following the procedure by Chakrabarti and colleagues (Sims et al., 2012, 2014).

All participants signed informed consent. The study was approved by the Health Sciences IRB of the University of Wisconsin–Madison (FWA00005399). The procedure was modeled after a paradigm developed by Sims et al. (2014). All tasks were programmed and displayed with E-Prime 2.0 (Psychology Software Tools, Sharpsburg, PA, United States). Participants completed the conditioning and rating tasks in a behavioral testing room, had EMG electrodes attached, and were moved into the scanner, where they completed the oddball task.

In the conditioning task (Figure 1, top), a card-guessing game was used to condition one face identity with the experience of winning money, another identity with losing money, and two more identities with neither winning nor losing money. Contingencies were counterbalanced across participants. Specifically, on each trial a task-irrelevant face with a neutral expression was displayed next to task-relevant playing cards. To make the presence of the faces plausible, participants were told that the faces would be part of a later memory game. Participants were seated 60 cm from a 17-inch computer screen.

A blank screen initiated a trial and was shown for 250 ms, followed by the presentation of an open card, next to a neutral face. After 1.5 s a closed card appeared next to the open one. Participants pressed the “m” or “n” buttons (counterbalanced across participants) on the keyboard to indicate if they guessed the closed card to be higher or lower than the open card. The card then turned face up, and the outcome of the trial was indicated in the lower part of the screen. The outcome, which was displayed for 4 s, could be “You Win (+50 Cents),” “You Lose (−40 Cents),” or “Draw.” The outcome of all trials was programmed in advance, and adaptively showed a higher or lower card depending on the participant’s choice. All participants saw 120 trials in a different semi-random order, with a maximum of three consecutive trials with the same outcome. There were three types of trials: (i) a Win face, associated with winning in 27 (90%), and with losing in three (10%) trials; (ii) a Lose face, associated with losing in 27 (90%), and with winning in three (10%) trials; and (iii) two Neutral faces, each shown in 30 trials and equally associated with winning, losing, or draws. At the end of the task, all participants were informed that they had won $4.70, which they immediately received in cash. The conditioning task was preceded by six practice trials, with the same procedure, but a different face identity.
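The semi-random ordering constraint described above can be illustrated with a short sketch. It is not the original E-Prime implementation: the face labels, the split of each Neutral face into 10 win, 10 lose, and 10 draw trials (one reading of "equally associated"), and the draw-and-retry strategy are all assumptions made for illustration.

```python
import random
from collections import Counter

# Outcome counts per face identity, following the task description.
# Face labels are illustrative; the 10/10/10 split for Neutral faces is assumed.
DESIGN = {
    "win_face": {"win": 27, "lose": 3},
    "lose_face": {"lose": 27, "win": 3},
    "neutral_face_1": {"win": 10, "lose": 10, "draw": 10},
    "neutral_face_2": {"win": 10, "lose": 10, "draw": 10},
}
MAX_RUN = 3  # at most three consecutive trials with the same outcome


def build_schedule(rng):
    """Return a list of (face, outcome) trials, or None if the draw gets stuck."""
    pool = [(face, outcome)
            for face, outcomes in DESIGN.items()
            for outcome, n in outcomes.items()
            for _ in range(n)]
    schedule = []
    while pool:
        recent = [outcome for _, outcome in schedule[-MAX_RUN:]]
        # An outcome is blocked once it has just occurred MAX_RUN times in a row.
        blocked = recent[0] if len(recent) == MAX_RUN and len(set(recent)) == 1 else None
        candidates = [i for i, (_, outcome) in enumerate(pool) if outcome != blocked]
        if not candidates:
            return None  # only blocked outcomes remain; caller retries
        schedule.append(pool.pop(rng.choice(candidates)))
    return schedule


def make_schedule(seed=None):
    """Draw schedules until one satisfies the run-length constraint."""
    rng = random.Random(seed)
    while True:
        schedule = build_schedule(rng)
        if schedule is not None:
            return schedule


schedule = make_schedule(seed=1)
print(Counter(outcome for _, outcome in schedule))
```

Each participant would receive a different seed, giving "a different semi-random order" while keeping the per-face contingencies fixed.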

The rating task followed the card-guessing game. Participants rated the valence and the intensity of emotional expressivity of the previously seen faces, shown in random order. The computer mouse was used to select a point on a horizontal 100-point Likert scale ranging from “Do not like it at all” on the left to “Like it a lot” on the right. Similarly, participants were asked on a separate screen to rate the intensity of the emotion displayed by the face from “No emotion at all” to “Very strong emotional expression.”

In the oddball task (Figure 1, bottom), which took place inside the MR scanner, participants watched 4-s long video clips showing dynamic facial expressions of anger or happiness by the previously seen Win and Lose faces, and by faces they had never seen before. The combination of facial identity and expression resulted in congruent trials (HappyWin, AngryLose) and incongruent trials (HappyLose, AngryWin). The task was presented in 3 runs of 61 trials, lasting 10.4 min each. Participants were allowed to rest between runs. Each run comprised 28 videos of the Win face (50% happy, 50% angry), 28 videos of the Lose face (50% happy, 50% angry), and 5 “oddballs,” i.e., faces never seen before. On oddball trials participants had to press, as quickly and accurately as possible, a button on an MRI-compatible button box using their right index finger. Each trial was composed of a central fixation cross (2–3 s, mean 2.5 s), the video clip (4 s), and a blank screen (2–5 s, mean 3.5 s).

Bipolar EMG was acquired over the left ZM and corrugator supercilii (CS) muscles. Because data quality of the CS was poor, only the ZM was included in the analyses. An EMG100C Biopac amplifier and EL510 MRI-compatible single-use electrodes were used, placed according to guidelines (Fridlund and Cacioppo, 1986). To provide a ground, an EL509 electrode was placed on the participant’s left index finger and attached to the negative pole of an EDA100C amplifier. Sampling rate was set to 10 kHz. Data were preprocessed offline, using Biopac’s AcqKnowledge program, and custom scripts in Matlab (version R2014b) partially using the EEGLAB toolbox (Delorme and Makeig, 2004). Data were filtered with a comb stop filter to remove frequencies around 20 Hz and all harmonics up to 5 kHz. Then a high-pass filter of 20 Hz and a low-pass filter of 500 Hz were applied. Data were downsampled to 500 Hz, segmented from 500 ms before to 4 s after stimulus onset, rectified, and smoothed with a 40 Hz filter. Separately for each fMRI run and participant, trials were excluded if their average amplitude in the baseline or post-stimulus-onset period exceeded by more than two SDs the average amplitude of all baselines or trials (for a similar procedure see Korb et al., 2015b). An average of 34 trials (SD = 2.8) per participant and condition (HappyWin, HappyLose, AngryWin, AngryLose) were available for statistical analyses. The average number of oddball trials was 11.5 (SD = 2.2).
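The trial-exclusion rule can be sketched as follows. This is a minimal illustration rather than the authors' Matlab code: the function name, the use of the sample SD, and the example amplitudes are assumptions, and the rule is read as one-sided (only unusually high amplitudes are flagged).

```python
from statistics import mean, stdev


def flag_outlier_trials(baseline_means, post_means, n_sd=2.0):
    """Indices of trials whose mean amplitude exceeds mean + n_sd * SD.

    The cutoff is computed separately for the baseline and the
    post-stimulus period, across all trials of one run and participant.
    """
    def too_high(values):
        cutoff = mean(values) + n_sd * stdev(values)
        return {i for i, v in enumerate(values) if v > cutoff}

    return sorted(too_high(baseline_means) | too_high(post_means))


# Made-up mean amplitudes for ten trials (arbitrary units).
baseline = [1.0, 1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 5.0, 1.1, 0.9]
post = [1.2, 1.3, 1.1, 1.0, 1.4, 1.0, 1.1, 1.2, 1.3, 6.0]
rejected = flag_outlier_trials(baseline, post)
print(rejected)  # [7, 9]
```

Trial 7 is rejected for a noisy baseline and trial 9 for a noisy post-stimulus period; either criterion alone is enough to exclude a trial.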

Neuroimaging data were collected using a General Electric 3-T scanner with an eight-channel head coil. Functional images were acquired using a T2*-weighted gradient echo-planar imaging (EPI) sequence [40 sagittal slices/volume, 4 mm thickness, and 0 mm slice spacing; 64 × 64 matrix; 240-mm field of view; repetition time (TR) = 2000 ms, echo time (TE) = 24 ms, flip angle (FA) = 60°; voxel size = 3.75 × 3.75 × 4 mm; 311 whole-brain volumes per run]. To allow for the equilibration of the blood oxygenation level-dependent (BOLD) signal, the first six volumes (12 s) of each run were discarded. A high-resolution T1-weighted anatomical image was acquired in the beginning (T1-weighted inversion recovery fast gradient echo; axial scan with frequency direction A/P; 256 × 256 in-plane resolution; 256-mm field of view; FA = 12°; TI = 450; Receiver Bandwidth = 31.25; 160 slices of 1 mm).
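As a consistency check, the run length implied by the acquisition parameters can be compared with the trial structure of the oddball task. The sketch below only restates numbers given in the text; that the discarded volumes preceded the first trial is an assumption.

```python
TR_S = 2.0               # repetition time in seconds
VOLUMES_PER_RUN = 311
DISCARDED_VOLUMES = 6
TRIALS_PER_RUN = 61
RUNS = 3
# Mean durations of fixation cross, video clip, and blank screen (seconds).
MEAN_FIXATION_S, VIDEO_S, MEAN_BLANK_S = 2.5, 4.0, 3.5

run_duration_s = VOLUMES_PER_RUN * TR_S                      # 622 s
mean_trial_s = MEAN_FIXATION_S + VIDEO_S + MEAN_BLANK_S      # 10 s
task_time_s = TRIALS_PER_RUN * mean_trial_s                  # 610 s
discarded_s = DISCARDED_VOLUMES * TR_S                       # 12 s
analysed_volumes = VOLUMES_PER_RUN - DISCARDED_VOLUMES       # 305

# 28 videos per face and run, half happy and half angry, over three runs.
trials_per_condition = (28 // 2) * RUNS                      # 42

print(round(run_duration_s / 60, 1), "min per run")
print(task_time_s + discarded_s == run_duration_s)
print(trials_per_condition, "trials per condition before EMG rejection")
```

Under these assumptions, 61 trials of 10 s on average plus 12 s of discarded volumes account exactly for the 622 s (about 10.4 min) of each run, and the average of 34 retained EMG trials corresponds to roughly 81% of the 42 trials presented per condition.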

Blood oxygenation level-dependent data were processed with SPM12 (the Wellcome Department of Cognitive Neurology, London, United Kingdom). To correct for head motion, the functional images were spatially realigned to the mean image of each run. Each participant’s anatomical images were co-registered to the mean EPI functional image, which was then normalized to the Montreal Neurological Institute (MNI) template. The parameters generated to normalize the mean EPI were used to normalize the functional images. Normalized images were resampled to 2 × 2 × 2 mm voxel size and spatially smoothed with a three-dimensional Gaussian filter (full-width at half maximum, 8 mm).
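For readers more used to thinking of Gaussian kernels in terms of their standard deviation, the 8 mm full-width at half maximum (FWHM) can be converted with the standard relation FWHM = 2·sqrt(2·ln 2)·σ. The variable names below are illustrative.

```python
import math

FWHM_MM = 8.0    # smoothing kernel reported in the text
VOXEL_MM = 2.0   # resampled voxel size reported in the text

# Standard relation between FWHM and standard deviation of a Gaussian.
sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))
sigma_vox = sigma_mm / VOXEL_MM

print(f"sigma = {sigma_mm:.2f} mm = {sigma_vox:.2f} voxels")
```

The kernel therefore has a standard deviation of about 3.4 mm, or roughly 1.7 voxels at the resampled resolution.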

The preprocessed functional data were analyzed using a general linear model (GLM) with boxcar functions defined by the onsets and duration (4 s) of the videos and convolved with the canonical hemodynamic response function. Separate regressors were created for each condition (Anger-Win, Anger-Lose, Happy-Win, Happy-Lose) and temporal derivatives were included. An additional regressor was used to model all other events, including trials with rejected EMG data, oddball trials with button presses (hits), oddball trials without button presses (misses), and other button presses (false alarms). Finally, six motion correction parameters were included as regressors of no interest. A high-pass filter (128 s) was applied to remove low-frequency signal drifts, and serial correlations were corrected using an autoregressive AR(1) model.
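The construction of a single task regressor can be sketched in plain Python. This is an approximation for illustration, not SPM's implementation: the double-gamma function below uses shape values of 6 and 16 with an undershoot ratio of 1/6, which are assumed here to resemble the canonical response, and the temporal derivative, high-pass filter, and AR(1) correction are omitted. The onset and number of volumes in the usage example are made up.

```python
import math

TR_S = 2.0     # repetition time (from the text)
DT_S = 0.1     # fine time grid used for the convolution (assumption)
VIDEO_S = 4.0  # boxcar duration (from the text)


def double_gamma_hrf(t):
    """Approximate haemodynamic response: a peak gamma minus a scaled undershoot."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def make_regressor(onsets_s, n_volumes, duration_s=VIDEO_S):
    """Boxcar at the given onsets, convolved with the HRF and sampled once per TR."""
    n_fine = int(round(n_volumes * TR_S / DT_S))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / DT_S))
        stop = min(n_fine, int(round((onset + duration_s) / DT_S)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [double_gamma_hrf(k * DT_S) for k in range(int(round(32.0 / DT_S)))]
    convolved = [0.0] * n_fine
    for i, b in enumerate(boxcar):
        if b:
            for k, h in enumerate(kernel):
                if i + k < n_fine:
                    convolved[i + k] += b * h * DT_S
    step = int(round(TR_S / DT_S))
    return convolved[::step]


# One video starting 10 s into a 30-volume stretch of data.
regressor = make_regressor(onsets_s=[10.0], n_volumes=30)
peak_volume = regressor.index(max(regressor))
print(peak_volume, peak_volume * TR_S)
```

The predicted response is zero before video onset and peaks several seconds after it, which is why the 4 s videos are modeled with a convolved boxcar rather than with the raw stimulus timing.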

Single-subject contrasts were taken to second level random effects analyses to find significant clusters across the subject sample that can be generalized to the population. A region of interest (ROI) approach was used based on functional coordinates published in previous studies investigating the prefrontal modulation of spontaneous imitation of finger or hand movements (Brass et al., 2001, 2005). Additional whole-brain analyses were also performed and are provided in the Supplementary Material (see Supplementary Tables S1, S2). All activations are reported with a significance threshold of p < 0.001, uncorrected, and a cluster extent of k = 10. It should be noted that this thresholding only provides unquantified control of family-wise error (Poldrack et al., 2008; but see Lieberman and Cunningham, 2009), resulting in a high false-positive risk, and inferences based on them should be considered as preliminary (for stricter statistical thresholding with FWE correction, see right column in tables). Unthresholded whole-brain summaries for the main contrasts of interest are available at https://neurovault.org/collections/INQEMKIF/.

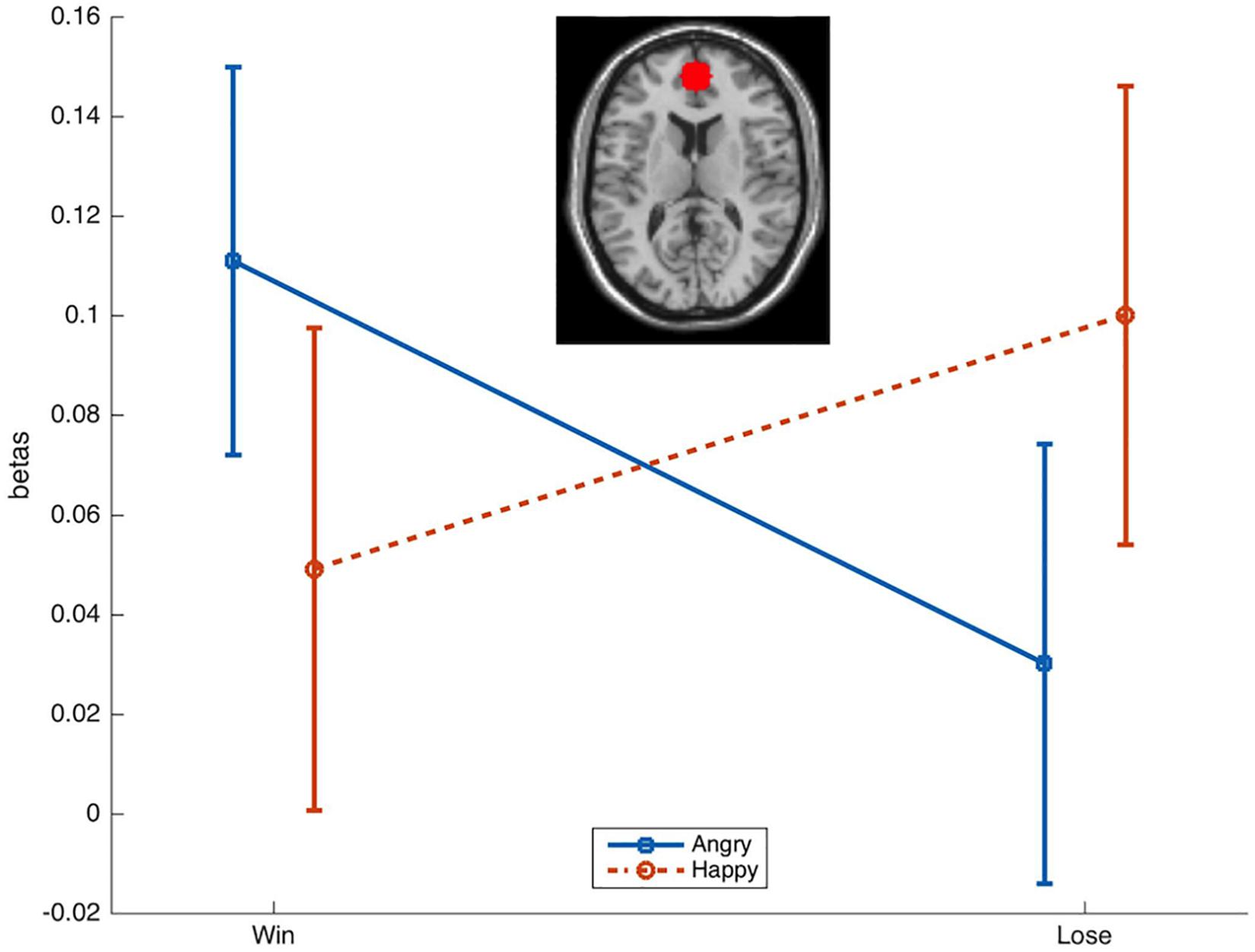

A spherical ROI with a radius of 6 mm was created with the wfu_pickatlas toolbox (Maldjian et al., 2003) and placed around the coordinates reported by Brass et al. (2005; MNI: 1, 51, 12) to be the peak of a cluster activated during the inhibition of spontaneous imitation of finger movements. Beta-values were extracted and averaged over all voxels with the REX script (Duff et al., 2007), and averaged over sessions. Mean beta-values were analyzed in an rmANOVA with the factors Emotion (Happy, Angry) and Reward (Win, Lose).
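The geometry of such a spherical ROI can be sketched without any imaging toolbox. The code below is not the wfu_pickatlas procedure: it assumes a 2 mm grid whose voxel centres fall on even MNI coordinates, which is a hypothetical alignment chosen for illustration, so the exact voxel count may differ from the ROI used in the study.

```python
import math

CENTER_MNI = (1.0, 51.0, 12.0)  # peak reported by Brass et al. (2005)
RADIUS_MM = 6.0
VOXEL_MM = 2                    # resampled voxel size; grid alignment is assumed


def sphere_voxels(center, radius, step):
    """MNI coordinates of grid points (multiples of `step`) within `radius` of `center`."""
    reach = int(math.ceil(radius / step)) + 1
    base = [int(round(c / step)) * step for c in center]
    voxels = []
    for dx in range(-reach, reach + 1):
        for dy in range(-reach, reach + 1):
            for dz in range(-reach, reach + 1):
                point = (base[0] + dx * step,
                         base[1] + dy * step,
                         base[2] + dz * step)
                dist_sq = sum((p - c) ** 2 for p, c in zip(point, center))
                if dist_sq <= radius ** 2:
                    voxels.append(point)
    return voxels


roi = sphere_voxels(CENTER_MNI, RADIUS_MM, VOXEL_MM)
print(len(roi), "voxels in the ROI")
```

The mean beta-value for a condition would then be the average of the beta image over these voxel coordinates, computed per session and averaged across sessions.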

To further rule out the possibility that specific experimental conditions were correlated with participants’ head movements, a similar method was used as in Johnstone et al. (2006). Specifically, for each participant and session the four regressors of interest (HappyWin, HappyLose, AngryWin, and AngryLose) were extracted from the SPM matrix and correlated with each of the six movement regressors. This resulted in 4 (regressors) × 6 (movement) × 3 (sessions) = 72 correlations per participant. We summed the number of significant correlations (p < 0.05, uncorrected) across movement regressors and sessions, and analyzed the resulting 35 (subjects) × 4 (conditions) matrix in a rmANOVA with the factors Emotion (Angry, Happy) and Reward (Win, Lose). No significant main or interaction effects were found (all F < 1.5, all p > 0.2), suggesting that conditions were not differentially correlated with movement in any specific direction (see Supplementary Figure S1). Head movement was thus comparable across all experimental conditions.

Psychophysiological interaction analyses were computed to estimate condition-related changes in functional connectivity between brain areas (Friston et al., 1997; O’Reilly et al., 2012). In PPI analyses the time course of the functional activity in a specified seed region is used to model the activity in target brain regions. A model is created by multiplying the time course activity in the seed region (i.e., the physiological variable) with a binary comparison of task conditions (“1” and “−1”) (i.e., the psychological variable). Individual participants are modeled as additional conditions of no interest. Functional connectivity with the seed region is assumed if the brain activity in one or more target regions can be explained by the model. PPI analyses were used to estimate stimulus-related changes in functional connectivity with the mPFC (6 mm sphere centered at MNI: x 1, y 51, z 12). We tested the whole-brain functional connectivity in two contrasts of interest (Table 1).
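The core of the interaction term can be illustrated with a few lines of code. This is a simplified sketch under stated assumptions: the seed signal is mean-centred before multiplication, the example values are made up, and the deconvolution step that toolboxes such as SPM typically apply before forming the interaction is omitted.

```python
from statistics import mean


def ppi_regressor(seed_timecourse, psych):
    """Element-wise product of the mean-centred seed signal and the +1/-1 task vector."""
    if len(seed_timecourse) != len(psych):
        raise ValueError("seed and psychological vectors must have equal length")
    centre = mean(seed_timecourse)
    return [(s - centre) * p for s, p in zip(seed_timecourse, psych)]


# Made-up seed signal; +1 marks Incongruent and -1 Congruent time points.
seed = [0.2, 0.5, 0.9, 0.4, 0.1, 0.3]
psych = [1, 1, 1, -1, -1, -1]
ppi = ppi_regressor(seed, psych)
print([round(v, 2) for v in ppi])
```

A target region whose activity tracks this product, over and above the seed signal and the task vector themselves, is interpreted as changing its coupling with the seed between conditions.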

Ratings of liking, provided after the conditioning experiment, were analyzed in a one-way rmANOVA with the levels Win, Lose, No change. A significant effect of Reward [F(2,80) = 28.52, p < 0.001, ηp² = 0.451] was found. Post hoc t-tests revealed that faces associated with losing money were rated significantly lower than those associated with winning and with no change (all t > 5.7, all p < 0.001). Ratings for Win and No change faces did not differ [t(34) = 0.9, p = 0.34]. An identical ANOVA carried out on the ratings of intensity did not result in a significant effect of condition [F(2,80) = 0.4, p = 0.67, ηp² = 0.01].

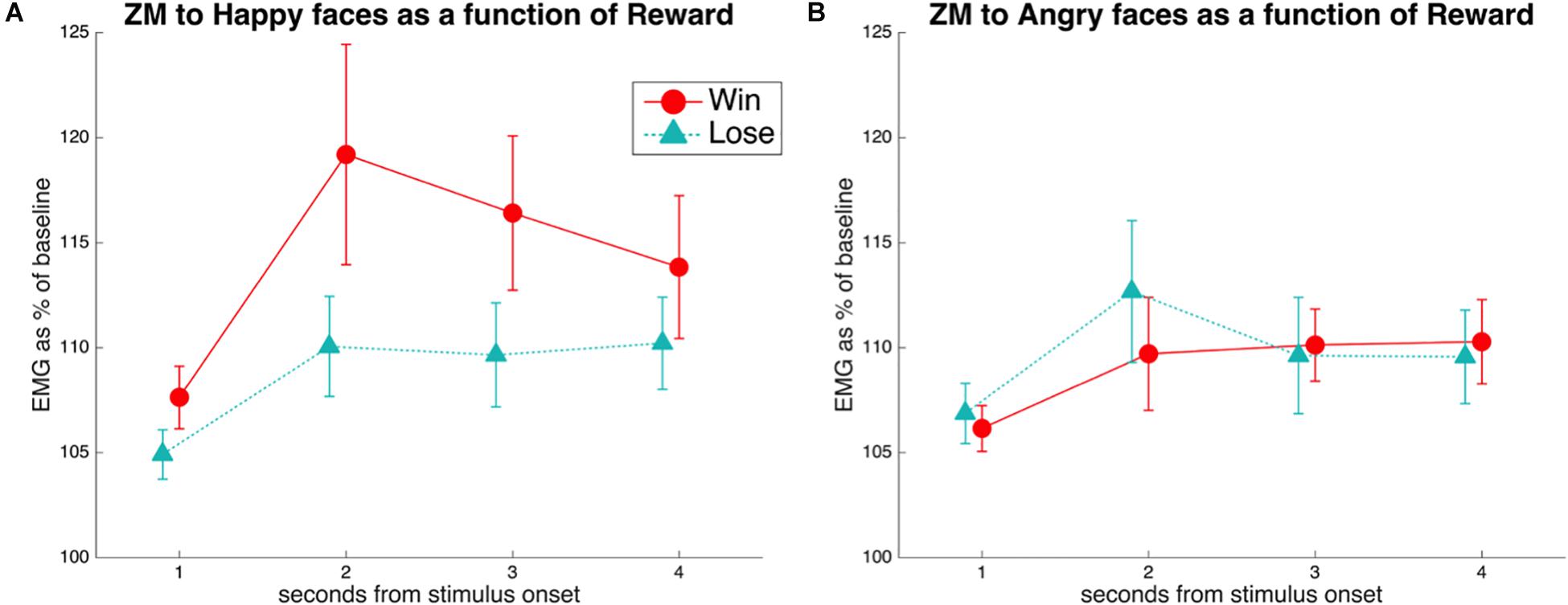

Electromyography of the ZM was analyzed in an Emotion (Happy, Angry) × Reward (Win, Lose) × Time (seconds 1–4 from stimulus onset) rmANOVA. This resulted in significant effects of Reward [F(1,38) = 4.8, p = 0.035, ηp² = 0.11], Time [F(3,114) = 4.9, p = 0.015, ηp² = 0.11], Emotion × Reward [F(1,38) = 4.8, p = 0.034, ηp² = 0.11], Emotion × Time [F(3,114) = 3.3, p = 0.050, ηp² = 0.08], and Emotion × Reward × Time [F(3,114) = 3.2, p = 0.036, ηp² = 0.08]. The main effect of Emotion was not significant (F < 1.9, p > 0.18). As the three-way interaction was significant, we proceeded by running separate analyses for each emotion.
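The effect sizes that accompany each F value can be recovered from the F statistic and its degrees of freedom, assuming they are partial eta squared. The sketch below applies the standard identity ηp² = F·df_effect / (F·df_effect + df_error) to the values reported above; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


reward_effect = partial_eta_squared(4.8, 1, 38)      # main effect of Reward
time_effect = partial_eta_squared(4.9, 3, 114)       # main effect of Time
three_way_effect = partial_eta_squared(3.2, 3, 114)  # Emotion x Reward x Time

print(round(reward_effect, 2), round(time_effect, 2), round(three_way_effect, 2))
# 0.11 0.11 0.08
```

The recomputed values agree with the reported effect sizes to two decimals, which supports reading them as partial eta squared.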

An rmANOVA on the EMG to happy stimuli, with the factors Reward (Win, Lose) and Time (seconds 1–4 from stimulus onset), resulted (Figure 2A) in a significant main effect of Reward [F(1,38) = 7.8, p = 0.008, ηp² = 0.17], with greater ZM activation to Win faces (M = 113.2, SE = 2.8) than to Lose faces (M = 108.1, SE = 1.7). The main effect of Time was also significant [F(3,114) = 6.8, p = 0.004, ηp² = 0.15], with lowest values in the first second after stimulus onset (M = 106.1, SE = 1.1), and higher values in the three following seconds (respectively, M = 113.6, 112.0, 110.8, SE = 3.3, 2.5, 2.3). The Reward × Time interaction was not significant [F(3,114) = 1.9, p = 0.13, ηp² = 0.05]. Exploratory t-tests suggested a difference in ZM activation to happy stimuli for Win vs. Lose in the first to third second [respectively, t(38) values = 3.3, 2.8, 2.2; p-values = 0.002, 0.008, 0.034], but not in the last second after stimulus onset [t(38) = 1.31, p = 0.196].

Figure 2. (A) EMG of the ZM in response to happiness in faces associated with winning or losing money. A main effect of Reward was found, due to reduced mimicry of smiles when the face was associated with losing compared to winning money. This difference was significant at Times 1–3, as shown by pairwise comparisons. (B) EMG of the ZM in response to anger in faces associated with winning or losing money. No significant differences were found.

A similar rmANOVA of the ZM in response to angry faces (Figure 2B) revealed a barely significant Time × Reward interaction [F(3,114) = 2.78, p = 0.05, ηp² = 0.068]. However, none of the pairwise comparisons between reward levels at individual time points was significant (all t < 1.7, all p > 0.1).

We hypothesized that the mPFC would be more activated for trials involving an incongruent combination of expression valence and conditioned association (HappyLose and AngryWin) than for trials with congruent stimuli (HappyWin and AngryLose). The reason is that in such cases spontaneous facial mimicry should be inhibited in a top-down manner.

Activation in the a priori defined ROI was compared across conditions with rmANOVAs with the factors Emotion (Happy, Angry) and Reward (Win, Lose). This resulted (Figure 3) in a significant Emotion × Reward interaction [F(1,34) = 4.7, p = 0.036, ηp² = 0.12]. T-tests only resulted in a near-to-significant difference [t(34) = 1.9, p = 0.07] between AngryWin faces (M = 0.11, SE = 0.04) and AngryLose faces (M = 0.3, SE = 0.04). Other comparisons were not significant (all t < 1.5, all p > 0.17).

Figure 3. Mean (SE) beta-values from the ROI in the mPFC. A significant Emotion × Reward interaction was characterized by greater activation to incongruent (AngryWin, HappyLose) compared to congruent (AngryLose, HappyWin) trials.

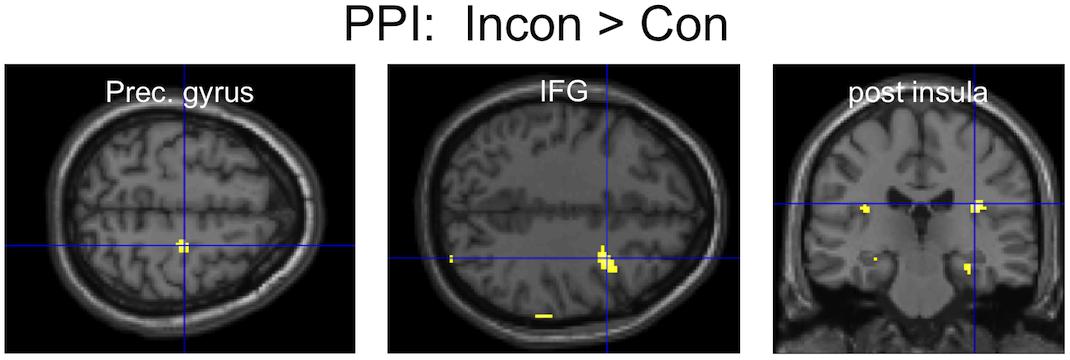

An area of the mPFC (MNI: 1, 51, 12), previously reported to be involved in the inhibition of finger movement imitation (Brass et al., 2005), showed greater functional connectivity during Incongruent than Congruent trials with several brain areas, most notably (Figure 4) including the bilateral posterior insula and the right motor cortex (precentral gyrus) and inferior frontal gyrus (IFG). The opposite contrast only showed greater connectivity with the parahippocampal gyrus (Table 1).

Figure 4. PPI results: three clusters of interest showing increased functional connectivity with the mPFC for the contrast Incongruent > Congruent.

The reported fMRI and facial EMG study investigated, for the first time, the neural correlates of the inhibition of spontaneous facial mimicry.

As expected, facial mimicry of happiness was reduced for disliked faces (i.e., faces associated with losing money), compared to liked faces (i.e., faces associated with winning money). This replicates previous findings in which participants were conditioned to associate specific facial identities with positive or negative monetary outcomes (Hofman et al., 2012; Sims et al., 2012). Of particular interest is the comparison with the study by Sims et al. (2012), from which we borrowed the stimuli and most of the procedure (excluding the 60% faces). Despite the difficulties linked to recording low-amplitude facial EMG in the fMRI scanner, the ZM pattern in response to HappyWin and HappyLose is very similar across the two studies (compare Figure 2A here with their Figure 2). If anything, the effect size of the main effect of Reward was greater in our study (ηp² = 0.16) than in that by Sims and colleagues (ηp² = 0.09) – although the data processing and analysis procedures are not entirely comparable.

The replication of previously published reports that the amplitude of facial mimicry is modulated by the observer’s liking/disliking of the sender is relevant for several reasons. First, it is significant in light of recent failures to replicate high-profile findings, especially in the literature on embodied cognition (Wagenmakers et al., 2016; but see Noah et al., 2018), which have highlighted the need to replicate experimental results in psychology. Second, the findings are significant because we replicate past effects even with the additional difficulty of recording, during MRI, the faint changes in facial muscle contraction that are typical of facial mimicry. To the best of our knowledge, very few published studies have successfully recorded facial EMG and BOLD data simultaneously (Heller et al., 2011; Likowski et al., 2012; Rymarczyk et al., 2018). Thus, the present report adds to the growing literature demonstrating the technical feasibility of monitoring facial EMG during fMRI scanning. Usage of dynamic face stimuli, as opposed to static pictures, is advisable in such EMG–fMRI combined experiments, because they are known to elicit stronger facial mimicry (Sato et al., 2008).

It is important to point out that, although we employed the same task and stimuli as Sims et al. (2012), and also replicate their EMG findings, our interpretation of the change in smile mimicry between HappyWin and HappyLose expressions differs. Specifically, Sims and colleagues interpret the difference in smile mimicry, between faces associated with winning and losing money, as indicating that the reward value of the face leads to increased mimicry. In their view, reward augments facial mimicry of smiles. In contrast, in our view, incongruence between the valence of a facial expression (Happy, Angry) and the memory (Win, Loss) attached to it results in the inhibition of facial mimicry. Accordingly, we interpret the smaller ZM activation for HappyLose compared to HappyWin faces as inhibiting spontaneous facial mimicry. This difference in focus is not due to a fundamental disparity in belief about the mechanisms underlying facial mimicry and its modulation, but instead to the lack of a neutral association condition in this paradigm. Previous studies (Likowski et al., 2011; Hofman et al., 2012; Seibt et al., 2013) have indeed shown that facial mimicry can be modulated by both positive associations (e.g., fair monetary offers, cooperation priming) and negative associations (e.g., unfair monetary offers, competition priming). When compared with a neutral condition, effects of negative associations on facial mimicry may be even slightly more frequent (Likowski et al., 2011; Hofman et al., 2012; Seibt et al., 2013), which supports our interpretation of the difference in ZM activation to HappyWin and HappyLose faces as the result of reduced facial mimicry to HappyLose faces. The effects of reward and punishment on facial mimicry are however not mutually exclusive, and in fact we have interpreted the results based on the congruence/incongruence between the valence of the expression and the valence of the monetary contingency associated with it.

Functional magnetic resonance imaging results show that the mPFC became more active during incongruent trials (i.e., happy faces associated with losing and angry faces associated with winning) than during congruent trials. This was expected in light of brain imaging studies reporting that the mPFC is part of a network underlying the capacity to differentiate between one’s own and others’ movements, and to inhibit the imitation of perceived finger/hand movements (Brass et al., 2001, 2005, 2009; Brass and Haggard, 2007; Kühn et al., 2009; Wang et al., 2011; Wang and Hamilton, 2012). The finding is also consistent with the fact that patients who suffered lesions in prefrontal areas of the brain have difficulty inhibiting prepotent response tendencies, and show an increased tendency to imitate other people’s actions (Lhermitte et al., 1986; De Renzi et al., 1996; Brass et al., 2003). In a slightly more dorsal area, cortical thickness of the mPFC was found to correlate with the tendency to suppress emotional expressions in everyday life, as measured by questionnaire (Kühn et al., 2011). Areas of the mPFC were also shown to underlie other aspects of social cognition, including the monitoring of eye gaze, person perception, and mentalizing (Amodio and Frith, 2006). The results reported here suggest that the mPFC is also involved in the top-down inhibition of spontaneous facial mimicry. In line with this, the mPFC has been proposed to be a key node in a distributed brain network underlying the processing of and responding to facial expressions in their context (Kraaijenvanger et al., 2017). Yet, the brain circuitry supporting the inhibition of facial mimicry could still differ from that supporting the inhibition of finger movements. Therefore, further research, including with other neuroscientific methods (e.g., lesion studies, TMS), is necessary. For example, it remains unclear if the tendency of prefrontal patients to over-imitate movements and actions of the limbs (De Renzi et al., 1996; Brass et al., 2003) also extends to facial expressions (McDonald et al., 2010).

Incongruent trials also resulted in increased functional connectivity between the mPFC, right motor cortices (precentral gyrus and IFG), and limbic areas (insula), as suggested by PPI analyses. Although results were only apparent at more liberal statistical thresholding, and PPI might not be the best tool to reveal the direction of functional connectivity, it can be speculated that during incongruent trials the mPFC inhibits motor and limbic areas, which are involved in the generation of facial mimicry and the judgment of facial expressions, as suggested by recent TMS studies (Korb et al., 2015b; Paracampo et al., 2016), as well as brain models (Kraaijenvanger et al., 2017). Indeed, right somatomotor areas appear to be crucially involved in the production of facial mimicry and in the integration of facial feedback for the recognition of emotional facial expressions (Adolphs et al., 2000; Pourtois et al., 2004; Pitcher et al., 2008; Keysers et al., 2010; Korb et al., 2015b; Paracampo et al., 2016). The IFG was repeatedly shown to underlie the imitation of gestures and finger movements (Heyes, 2001). Interestingly, in a task requiring the inhibition of incongruent hand gestures, a nearby area of the mPFC was also found to inhibit the IFG, in addition to the STS, as shown by dynamic causal modeling (Wang et al., 2011). The insula has also been associated with the readout of facial feedback, as well as interoception in general (Adolphs et al., 2000; Critchley et al., 2004). Therefore, one can speculate that the increased connectivity between the mPFC and the bilateral insula (albeit posterior rather than anterior) reflects the prefrontal inhibition of emotional responses to stimuli with incongruent valence of expression and contingency.

The finding of increased activation of the mPFC during incongruent trials, and of its likely inhibition of somatomotor and interoceptive areas, is relevant in light of a long-standing debate in social psychology/neuroscience. In fact, based on numerous reports of the modulation of facial mimicry by context and other factors (for recent reviews see Seibt et al., 2015; Kraaijenvanger et al., 2017), opponents of the “matched motor hypothesis” have often dismissed the view that facial mimicry consists of a spontaneous and fast (reflex-like) motor response (Hess and Fischer, 2014), which takes place when encountering other people’s emotional expressions. Here, however, we reveal that activation of an area of the mPFC, previously shown to be crucially involved in the inhibition of the tendency to imitate finger movements, also accompanies the inhibition of smile mimicry of disliked faces. This finding suggests that facial mimicry does indeed arise quickly and spontaneously (be this reaction inborn or automatized through early associative learning and repeated performance, see for example Heyes, 2001), but can be inhibited or otherwise modulated in a top-down manner through the activity of regulatory prefrontal cortices. Similarly, the STORM model proposed that the mPFC connects to and controls areas of the MNS, from where imitation arises (Wang and Hamilton, 2012).

It is important to point out that opponents of the “matched motor hypothesis” have argued that evidence of a situation-dependent modulation of facial mimicry, and other forms of imitation, necessarily rules out the hypothesis that these behaviors arise in a quick “reflex-like” way. We, on the other hand, are less categorical, and would like to remind the field that proof of mimicry modulation cannot, by any means, be considered sufficient evidence against the quickness and spontaneity of a behavior or physiological reaction. After all, even spinal reflexes can be inhibited in a, literally, top-down fashion. In line with definitions of automaticity proposed by Kahneman and Treisman (1984) and Kornblum et al. (1990), we would like to argue that facial mimicry has at least the qualities of partial automaticity.

A limitation of this study is the lack of a neutral condition, in which facial expressions of familiar faces are shown that are not associated with winning or with losing. Inclusion of this neutral condition would have provided a baseline, to which the changes in facial mimicry for congruent and incongruent trials could have been compared (e.g., see Hofman et al., 2012). It should also be noted that the fMRI results reported here were based on relatively liberal statistical thresholds, which increase the false-positive risk (for stricter statistical thresholding with FWE correction, see right column in tables), and inferences based on them should be considered as preliminary evidence awaiting replication in a larger sample. However, the pattern of BOLD responses in the mPFC shown here corresponds to predictions based on an extensive literature investigating brain responses during the observation of motor actions and their inhibition (finger tapping incongruent to one’s own). This work also adds to the burgeoning literature of concurrent EMG and fMRI of facial mimicry.

In conclusion, viewing disliked compared to liked smiling faces resulted in reduced facial mimicry, as shown with EMG. Cortical activity and connectivity, as measured with fMRI and PPI, suggested that the reduction in facial mimicry is caused by inhibition of somatomotor and insular cortices originating from the mPFC. The same medial prefrontal area thus allows the inhibition of imitative finger/hand movements, and of facial mimicry.

The datasets generated for this study are available on request to the corresponding author.

All participants signed an informed consent form. The study was approved by the Health Sciences IRB of the University of Wisconsin–Madison (FWA00005399).

SK and PN designed the experiment. SK and RG acquired and analyzed the data. SK, RG, RD, and PN wrote the manuscript.

This work was partly supported by the Swiss National Science Foundation (Early Postdoc Mobility scholarship, PBGEP1-139870) (to SK) and by the National Science Foundation grant (BCS-1251101 to PN).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank Michael Anderle, Ron Fisher, and Josselyn Velasquez for help in data collection; Nate Vack and Steven Kecskemeti for help in task preparation and scanner sequence programming; and Sascha Frühholz and Giorgia Silani for advice on data analysis and for commenting on an earlier version of the manuscript.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.01715/full#supplementary-material

Adolphs, R., Damasio, H., Tranel, D., Cooper, G., and Damasio, A. R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690. doi: 10.1523/jneurosci.20-07-02683.2000

Amodio, D. M., and Frith, C. D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 7, 268–277. doi: 10.1038/nrn1884

Baron-Cohen, S., Golan, O., Wheelwright, S., and Hill, J. (2004). Mindreading: The Interactive Guide to Emotions. London: Jessica Kingsley.

Baumeister, J.-C., Papa, G., and Foroni, F. (2016). Deeper than skin deep - the effect of botulinum toxin-A on emotion processing. Toxicon 118, 86–90. doi: 10.1016/j.toxicon.2016.04.044

Brass, M., Bekkering, H., Wohlschläger, A., and Prinz, W. (2000). Compatibility between observed and executed finger movements: comparing symbolic, spatial, and imitative cues. Brain Cogn. 44, 124–143. doi: 10.1006/brcg.2000.1225

Brass, M., Derrfuss, J., Matthes-von Cramon, G., and von Cramon, D. Y. (2003). Imitative response tendencies in patients with frontal brain lesions. Neuropsychology 17, 265–271. doi: 10.1037/0894-4105.17.2.265

Brass, M., Derrfuss, J., and von Cramon, D. Y. (2005). The inhibition of imitative and overlearned responses: a functional double dissociation. Neuropsychologia 43, 89–98. doi: 10.1016/j.neuropsychologia.2004.06.018

Brass, M., and Haggard, P. (2007). To do or not to do: the neural signature of self-control. J. Neurosci. 27, 9141–9145. doi: 10.1523/JNEUROSCI.0924-07.2007

Brass, M., Ruby, P., and Spengler, S. (2009). Inhibition of imitative behaviour and social cognition. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 364, 2359–2367. doi: 10.1098/rstb.2009.0066

Brass, M., Zysset, S., and von Cramon, D. Y. (2001). The inhibition of imitative response tendencies. Neuroimage 14, 1416–1423. doi: 10.1006/nimg.2001.0944

Cracco, E., Genschow, O., Radkova, I., and Brass, M. (2018). Automatic imitation of pro- and antisocial gestures: is implicit social behavior censored? Cognition 170, 179–189. doi: 10.1016/j.cognition.2017.09.019

Critchley, H. D., Wiens, S., Rotshtein, P., Öhman, A., and Dolan, R. J. (2004). Neural systems supporting interoceptive awareness. Nat. Neurosci. 7, 189–195. doi: 10.1038/nn1176

Davis, J. D., Winkielman, P., and Coulson, S. (2017). Sensorimotor simulation and emotion processing: impairing facial action increases semantic retrieval demands. Cogn. Affect. Behav. Neurosci. 17, 652–664. doi: 10.3758/s13415-017-0503-2

De Renzi, E., Cavalleri, F., and Facchini, S. (1996). Imitation and utilisation behaviour. J. Neurol. Neurosurg. Psychiatry 61, 396–400. doi: 10.1136/jnnp.61.4.396

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dimberg, U., Thunberg, M., and Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychol. Sci. 11, 86–89. doi: 10.1111/1467-9280.00221

Dimberg, U., Thunberg, M., and Grunedal, S. (2002). Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn. Emot. 16, 449–472.

Duff, E. P., Cunnington, R., and Egan, G. F. (2007). REX: response exploration for neuroimaging datasets. Neuroinformatics 5, 223–234. doi: 10.1007/s12021-007-9001-y

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x

Friston, K. J., Buechel, C., Fink, G. R., Morris, J., Rolls, E., and Dolan, R. J. (1997). Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6, 218–229. doi: 10.1006/nimg.1997.0291

Heller, A. S., Greischar, L. L., Honor, A., Anderle, M. J., and Davidson, R. J. (2011). Simultaneous acquisition of corrugator electromyography and functional magnetic resonance imaging: a new method for objectively measuring affect and neural activity concurrently. Neuroimage 58, 930–934. doi: 10.1016/j.neuroimage.2011.06.057

Hennenlotter, A., Dresel, C., Castrop, F., Ceballos-Baumann, A. O., Baumann, A. O. C., Wohlschläger, A. M., et al. (2009). The link between facial feedback and neural activity within central circuitries of emotion–new insights from botulinum toxin-induced denervation of frown muscles. Cereb. Cortex 19, 537–542. doi: 10.1093/cercor/bhn104

Hess, U., and Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. Int. J. Psychophysiol. 40, 129–141. doi: 10.1016/s0167-8760(00)00161-6

Hess, U., and Fischer, A. (2013). Emotional mimicry as social regulation. Pers. Soc. Psychol. Rev. 17, 142–157. doi: 10.1177/1088868312472607

Hess, U., and Fischer, A. (2014). Emotional mimicry: why and when we mimic emotions. Soc. Pers. Psychol. Compass 8, 45–57. doi: 10.1111/spc3.12083

Heyes, C. (2001). Causes and consequences of imitation. Trends Cogn. Sci. 5, 253–261. doi: 10.1016/S1364-6613(00)01661-2

Hofman, D., Bos, P. A., Schutter, D. J. L. G., and van Honk, J. (2012). Fairness modulates non-conscious facial mimicry in women. Proc. Biol. Sci. 279, 3535–3539. doi: 10.1098/rspb.2012.0694

Johnstone, T., Ores Walsh, K. S., Greischar, L. L., Alexander, A. L., Fox, A. S., Davidson, R. J., et al. (2006). Motion correction and the use of motion covariates in multiple-subject fMRI analysis. Hum. Brain Mapp. 27, 779–788. doi: 10.1002/hbm.20219

Kahneman, D., and Treisman, A. (1984). “Changing views of attention and automaticity,” in Varieties of Attention, eds R. Parasuraman and D. R. Davis (Orlando, FL: Academic Press), 29–61.

Keysers, C., Kaas, J. H., and Gazzola, V. (2010). Somatosensation in social perception. Nat. Rev. Neurosci. 11, 417–428. doi: 10.1038/nrn2833

Korb, S., Frühholz, S., and Grandjean, D. (2015a). Reappraising the voices of wrath. Soc. Cogn. Affect. Neurosci. 10, 1644–1660. doi: 10.1093/scan/nsv051

Korb, S., Malsert, J., Rochas, V., Rihs, T. A., Rieger, S. W., Schwab, S., et al. (2015b). Gender differences in the neural network of facial mimicry of smiles - An rTMS study. Cortex 70, 101–114. doi: 10.1016/j.cortex.2015.06.025

Korb, S., Grandjean, D., and Scherer, K. R. (2010). Timing and voluntary suppression of facial mimicry to smiling faces in a Go/NoGo task–an EMG study. Biol. Psychol. 85, 347–349. doi: 10.1016/j.biopsycho.2010.07.012

Korb, S., Osimo, S. A., Suran, T., Goldstein, A., and Rumiati, R. I. (2017). Face proprioception does not modulate access to visual awareness of emotional faces in a continuous flash suppression paradigm. Conscious. Cogn. 51, 166–180. doi: 10.1016/j.concog.2017.03.008

Korb, S., With, S., Niedenthal, P. M., Kaiser, S., and Grandjean, D. (2014). The perception and mimicry of facial movements predict judgments of smile authenticity. PLoS One 9:e99194. doi: 10.1371/journal.pone.0099194

Kornblum, S., Hasbroucq, T., and Osman, A. (1990). Dimensional overlap: cognitive basis for stimulus-response compatibility–a model and taxonomy. Psychol. Rev. 97, 253–270. doi: 10.1037//0033-295x.97.2.253

Kraaijenvanger, E. J., Hofman, D., and Bos, P. A. (2017). A neuroendocrine account of facial mimicry and its dynamic modulation. Neurosci. Biobehav. Rev. 77, 98–106. doi: 10.1016/j.neubiorev.2017.03.006

Kühn, S., Gallinat, J., and Brass, M. (2011). “Keep Calm and Carry On”: structural correlates of expressive suppression of emotions. PLoS One 6:e16569. doi: 10.1371/journal.pone.0016569

Kühn, S., Haggard, P., and Brass, M. (2009). Intentional inhibition: how the “veto-area” exerts control. Hum. Brain Mapp. 30, 2834–2843. doi: 10.1002/hbm.20711

Lhermitte, F., Pillon, B., and Serdaru, M. (1986). Human autonomy and the frontal lobes. Part I: imitation and utilization behavior: a neuropsychological study of 75 patients. Ann. Neurol. 19, 326–334. doi: 10.1002/ana.410190404

Lieberman, M. D., and Cunningham, W. A. (2009). Type I and Type II error concerns in fMRI research: re-balancing the scale. Soc. Cogn. Affect. Neurosci. 4, 423–428. doi: 10.1093/scan/nsp052

Likowski, K. U., Mühlberger, A., Gerdes, A. B. M., Wieser, M. J., Pauli, P., and Weyers, P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Front. Hum. Neurosci. 6:214. doi: 10.3389/fnhum.2012.00214

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2011). Processes underlying congruent and incongruent facial reactions to emotional facial expressions. Emotion 11, 457–467. doi: 10.1037/a0023162

Maldjian, J. A., Laurienti, P. J., Kraft, R. A., and Burdette, J. H. (2003). An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage 19, 1233–1239. doi: 10.1016/s1053-8119(03)00169-1

Maringer, M., Krumhuber, E. G., Fischer, A. H., and Niedenthal, P. M. (2011). Beyond smile dynamics: mimicry and beliefs in judgments of smiles. Emotion 11, 181–187. doi: 10.1037/a0022596

Mathersul, D., McDonald, S., and Rushby, J. A. (2013). Automatic facial responses to briefly presented emotional stimuli in autism spectrum disorder. Biol. Psychol. 94, 397–407. doi: 10.1016/j.biopsycho.2013.08.004

McDonald, S., Li, S., De Sousa, A., Rushby, J., Dimoska, A., James, C., et al. (2010). Impaired mimicry response to angry faces following severe traumatic brain injury. J. Clin. Exp. Neuropsychol. 33, 17–29. doi: 10.1080/13803391003761967

Miller, E. K. (2000). The prefrontal cortex and cognitive control. Nat. Rev. Neurosci. 1, 59–65. doi: 10.1038/35036228

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., and Innes-Ker, Å. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cogn. Emot. 15, 853–864. doi: 10.1080/02699930143000194

Noah, T., Schul, Y., and Mayo, R. (2018). When both the original study and its failed replication are correct: feeling observed eliminates the facial-feedback effect. J. Pers. Soc. Psychol. 114, 657–664. doi: 10.1037/pspa0000121

Oberman, L. M., Winkielman, P., and Ramachandran, V. S. (2007). Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci. 2, 167–178. doi: 10.1080/17470910701391943

Ochsner, K. N., and Gross, J. J. (2005). The cognitive control of emotion. Trends Cogn. Sci. 9, 242–249. doi: 10.1016/j.tics.2005.03.010

O’Reilly, J. X., Woolrich, M. W., Behrens, T. E. J., Smith, S. M., and Johansen-Berg, H. (2012). Tools of the trade: psychophysiological interactions and functional connectivity. Soc. Cogn. Affect. Neurosci. 7, 604–609. doi: 10.1093/scan/nss055

Paracampo, R., Tidoni, E., Borgomaneri, S., Pellegrino, G. D., and Avenanti, A. (2016). Sensorimotor network crucial for inferring amusement from smiles. Cereb. Cortex 27, 5116–5129. doi: 10.1093/cercor/bhw294

Pitcher, D., Garrido, L., Walsh, V., and Duchaine, B. C. (2008). Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. J. Neurosci. 28, 8929–8933. doi: 10.1523/JNEUROSCI.1450-08.2008

Poldrack, R. A., Fletcher, P. C., Henson, R. N., Worsley, K. J., Brett, M., and Nichols, T. E. (2008). Guidelines for reporting an fMRI study. Neuroimage 40, 409–414. doi: 10.1016/j.neuroimage.2007.11.048

Pourtois, G., Sander, D., Andres, M., Grandjean, D., Reveret, L., Olivier, E., et al. (2004). Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. Eur. J. Neurosci. 20, 3507–3515. doi: 10.1111/j.1460-9568.2004.03794.x

Rychlowska, M., Cañadas, E., Wood, A., Krumhuber, E. G., Fischer, A., and Niedenthal, P. M. (2014). Blocking mimicry makes true and false smiles look the same. PLoS One 9:e90876. doi: 10.1371/journal.pone.0090876

Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., and Szatkowska, I. (2018). Neural correlates of facial mimicry: simultaneous measurements of EMG and BOLD responses during perception of dynamic compared to static facial expressions. Front. Psychol. 9:52. doi: 10.3389/fpsyg.2018.00052

Sato, W., Fujimura, T., and Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70, 70–74. doi: 10.1016/j.ijpsycho.2008.06.001

Schilbach, L., Eickhoff, S. B., Mojzisch, A., and Vogeley, K. (2008). What’s in a smile? Neural correlates of facial embodiment during social interaction. Soc. Neurosci. 3, 37–50. doi: 10.1080/17470910701563228

Seibt, B., Mühlberger, A., Likowski, K., and Weyers, P. (2015). Facial mimicry in its social setting. Front. Psychol. 6:1122. doi: 10.3389/fpsyg.2015.01122

Seibt, B., Weyers, P., Likowski, K. U., Pauli, P., Mühlberger, A., and Hess, U. (2013). Subliminal interdependence priming modulates congruent and incongruent facial reactions to emotional displays. Soc. Cogn. 31, 613–631. doi: 10.1521/soco.2013.31.5.613

Silani, G., Lamm, C., Ruff, C. C., and Singer, T. (2013). Right supramarginal gyrus is crucial to overcome emotional egocentricity bias in social judgments. J. Neurosci. 33, 15466–15476. doi: 10.1523/JNEUROSCI.1488-13.2013

Sims, T. B., Neufeld, J., Johnstone, T., and Chakrabarti, B. (2014). Autistic traits modulate frontostriatal connectivity during processing of rewarding faces. Soc. Cogn. Affect. Neurosci. 9, 2010–2016. doi: 10.1093/scan/nsu010

Sims, T. B., Van Reekum, C. M., Johnstone, T., and Chakrabarti, B. (2012). How reward modulates mimicry: EMG evidence of greater facial mimicry of more rewarding happy faces. Psychophysiology 49, 998–1004. doi: 10.1111/j.1469-8986.2012.01377.x

Stel, M., and van Knippenberg, A. (2008). The role of facial mimicry in the recognition of affect. Psychol. Sci. 19, 984–985. doi: 10.1111/j.1467-9280.2008.02188.x

Ubaldi, S., Barchiesi, G., and Cattaneo, L. (2015). Bottom-up and top-down visuomotor responses to action observation. Cereb. Cortex 25, 1032–1041. doi: 10.1093/cercor/bht295

van der Gaag, C., Minderaa, R. B., and Keysers, C. (2007). Facial expressions: what the mirror neuron system can and cannot tell us. Soc. Neurosci. 2, 179–222. doi: 10.1080/17470910701376878

Wagenmakers, E.-J., Beek, T., Dijkhoff, L., Gronau, Q. F., Acosta, A., Adams, R. B., et al. (2016). Registered replication report: Strack, Martin, & Stepper (1988). Perspect. Psychol. Sci. 11, 917–928. doi: 10.1177/1745691616674458

Wang, Y., and Hamilton, A. F. de C. (2012). Social top-down response modulation (STORM): a model of the control of mimicry in social interaction. Front. Hum. Neurosci. 6:153. doi: 10.3389/fnhum.2012.00153

Wang, Y., Ramsey, R., and Hamilton, A. F. de C. (2011). The control of mimicry by eye contact is mediated by medial prefrontal cortex. J. Neurosci. 31, 12001–12010. doi: 10.1523/JNEUROSCI.0845-11.2011

Keywords: facial mimicry, inhibition, reward, electromyography, fMRI, medial prefrontal cortex

Citation: Korb S, Goldman R, Davidson RJ and Niedenthal PM (2019) Increased Medial Prefrontal Cortex and Decreased Zygomaticus Activation in Response to Disliked Smiles Suggest Top-Down Inhibition of Facial Mimicry. Front. Psychol. 10:1715. doi: 10.3389/fpsyg.2019.01715

Received: 27 April 2019; Accepted: 09 July 2019;

Published: 26 July 2019.

Edited by:

Seung-Lark Lim, University of Missouri–Kansas City, United States

Reviewed by:

Peter A. Bos, Leiden University, Netherlands

Copyright © 2019 Korb, Goldman, Davidson and Niedenthal. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sebastian Korb

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
