- 1 Cognitive Science Department, University of California, Irvine, Irvine, CA, United States

- 2 Cognitive Science Department, University of California, Riverside, Riverside, CA, United States

Social and personality psychology have been criticized for overreliance on potentially biased self-report variables. In well-being science, researchers have called for more “objective” physiological and cognitive measures to evaluate the efficacy of well-being-increasing interventions. This may now be possible with the recent rise of cost-effective, commercially available wireless physiological recording devices and smartphone-based cognitive testing. We sought to determine whether cognitive and physiological measures, coupled with machine learning methods, could quantify the effects of positive interventions. The current two-part study used a college sample (N = 245) to contrast the cognitive (memory, attention, construal) and physiological (autonomic, electroencephalogram) effects of engaging in one of two randomly assigned writing activities (i.e., prosocial or “antisocial”). In the prosocial condition, participants described an interaction when they acted in a kind way, then described an interaction when they received kindness. In the “antisocial” condition, participants instead wrote about an interaction when they acted in an unkind way, then about an interaction when they received unkindness. Our study replicated previous research on the beneficial effects of recalling prosocial experiences as assessed by self-report. However, we did not detect an effect of the positive or negative activity intervention on either cognitive or physiological measures. More research is needed to investigate under what conditions cognitive and physiological measures may be applicable, but our findings lead us to conclude that they should not be unilaterally favored over the traditional self-report approach.

Introduction

Most people report wanting to be happy (Diener, 2000) – that is, to feel satisfied with their lives and to experience frequent positive emotions and infrequent negative emotions (Diener et al., 1999). A growing number of studies show that happiness correlates with, predicts, and causes many positive outcomes, including success in work (e.g., performance and salary), social relationships (e.g., number of friends and social support), and health and coping [e.g., physical symptoms and longevity (Prochaska et al., 2012)]; for reviews, see (Lyubomirsky et al., 2005; Diener et al., 2017; Walsh et al., 2018). Happy people also tend to show more positive perceptions of themselves and others, greater sociability and likability, more prosocial behavior, and superior creativity (Lyubomirsky et al., 2005).

According to Google Trends (Google Trends, 2019), searches for well-being have increased more than 500% since collection began in 2004. Given the clear benefits of happiness, it is unsurprising to see rising interest in personal well-being and methods to increase it. Literature in the growing area of well-being science points toward positive activity interventions (PAIs), such as writing letters of gratitude (e.g., Emmons and McCullough, 2003; Lyubomirsky et al., 2011), and practicing kindness (e.g., Dunn et al., 2008; Chancellor et al., 2018), as simple behavioral strategies to promote well-being, many of which have been empirically validated (Sin and Lyubomirsky, 2009; Bolier et al., 2013; Layous and Lyubomirsky, 2014). These PAIs have the potential to improve affect, and in turn, promote positive health and well-being outcomes without the use of drugs, costly or stigmatizing treatment, or significant behavioral changes. However, for such interventions to become useful and trusted tools for clinical or public use, the ability to detect their efficacy is critical.

The current state-of-the-art measurement of happiness, and of PAI efficacy, is through self-report. However, self-report variables, even those with decent reliability and validity, are notoriously biased (Schwarz, 1999; Dunning et al., 2004), especially toward socially desirable responses (Velicer et al., 1992; Mezulis et al., 2004; Van De Mortel, 2008), for example, toward appearing to be happier. Further, individual differences such as sex and culture serve as moderators or create additional variance (Lindsay and Widiger, 1995; Beaten et al., 2000). This has led researchers to search for “objective” measures of happiness that do not rely on self-report, like Facebook status updates (Chen et al., 2017) or Duchenne smiles (Harker and Keltner, 2001). Measures of physiology like electroencephalography (EEG) or heart rate monitoring, as well as cognitive tasks that tap into domains like memory, attention, and perception, are considered less biased by the social influences that plague self-report. Although these measures can be noisy, some regard them as more objective measures of underlying emotional and cognitive processes (Calvo and Mello, 2015).

Here we aimed to leverage recent advancements in low-cost, readily available physiological measurement devices and a large body of cognitive psychology research to determine the practical utility of cognitive and physiological measures in assessing the effects of a positive versus negative activity intervention. We aimed to verify whether these measures were indeed simple to implement, robust, and predictive for well-being research, thereby providing alternative measures to self-reported well-being levels.

In the current study, students were administered either a PAI (writing about gratitude and recalling a kind act) or a negative activity intervention (writing about ingratitude and recalling an unkind act). Before and after the intervention, the participants completed standard psychological measures of their current affective states, as well as several tasks designed to quantify the cognitive domains of memory, attention, and high-level perception. Additionally, physiological measurements of the central (EEG) and autonomic (heart rate, skin temperature, galvanic skin conductance) nervous systems were recorded. This work builds upon our previous findings (Revord et al., 2019), which reported the self-report psychological outcomes of the same positive and negative activity interventions.

Cognitive Outcomes of Positive Activities

Although the well-being outcomes of positive activities like gratitude and kindness are widely reported (see Layous and Lyubomirsky, 2014, for a review), the cognitive outcomes of positive activities have been largely unexplored. Here we focus on the cognitive domains of attention, memory, and situation construal (i.e., high-level perception).

Numerous studies have investigated how attention may be biased toward emotionally salient information that matches one’s current emotional state. For example, in one study, induced negative affect generated an attentional bias that favored sad faces (Gotlib et al., 2004). Similarly, individuals high in trait anxiety have been found to pay more attention to threat (Derryberry and Reed, 1998; Fox et al., 2001). However, these results are muddied by differing effects across age groups (Mather and Carstensen, 2003), and unreliable tasks, such as the dot probe (Neshat-Doost et al., 2000; Schmukle, 2005). Positive activities such as practicing gratitude or kindness may involve deeper, more meaningful, or more complex (e.g., self-reflective or other-focused) emotions, and hence other outcomes than those induced by simple positive affect inductions may be expected. Furthermore, attention is multi-faceted, with multiple components (Mirsky et al., 1991), and support for modulation of other attentional processes with positive interventions is scarce. For example, one study reported no effect of happiness or sadness inductions on alerting, orienting, or executive attention, but found that participants induced to feel sadness exhibited reduced intrinsic alertness (Finucane et al., 2010).

Additionally, it is unclear what other aspects of cognition, such as perception and memory, may be impacted by PAIs. Certainly, trait levels of some positive constructs are related to cognitive biases. For example, those with higher subjective happiness appear to show a self-enhancing bias (J. Y. Lee, 2007). Happy people are also more likely to report more frequent and intense daily happy experiences (Otake et al., 2006), to recall more positive life events and fewer negative life events (Seidlitz and Diener, 1993), and to report more intense and enduring reactions to positive events (Seidlitz et al., 1997). Even when they do not report more positive events, happy people tend to think about negative events in more adaptive terms and to describe new situations in more positive ways (Lyubomirsky and Tucker, 1998). Individuals with higher levels of life satisfaction show superior ability to accurately retain and update positive memory (Pe et al., 2013). Other studies have revealed people high in trait gratitude to have a positive memory bias (Watkins et al., 2004), and those high in trait optimism to show a greater attentional bias for positive than negative stimuli (Segerstrom, 2001). On the opposite side of the spectrum, people who are depressed tend to exhibit a memory bias that favors negative words (Denny and Hunt, 1992).

Although trait-level affect appears to impact cognition, some experimental work also links positive activities with changes in cognition. For example, a 3-day loving-kindness meditation training impacted how easily participants associated positivity with neutral stimuli (Hunsinger et al., 2013). Furthermore, the find-remind-and-bind theory postulates that gratitude shifts cognitive perspective – including situation appraisals, short-term changes in cognition, motivations, and behaviors (Algoe, 2012).

We hypothesized that engaging in a prosocial writing activity, relative to an antisocial writing activity, would drive cognitive biases in several areas: (1) leading people to attend more to positive stimuli and less to negative stimuli; (2) prompting people to have better memory for positive stimuli and worse memory for negative stimuli; and (3) leading people to construe a variety of positive, negative, and neutral situations more positively.

Physiological Outcomes of Positive Activities

Detecting brain and body changes due to PAIs would further validate their efficacy. Presumably objective measures, such as physiological recording, provide a less biased alternative to self-report emotional state measures, which can reflect personal beliefs or correlate due to shared method variance. Here we aimed to use low cost, commercially available, wireless devices to detect changes in the central nervous system (electroencephalogram) and autonomic nervous system (ANS) (skin conductance, skin temperature, blood volume pulse). These devices are readily feasible to use outside of the lab environment, and thereby potentially scalable in field settings, such as clinical, organizational, and educational contexts.

Electroencephalography is a physiological technique in which voltage-measuring electrodes are placed at various locations on the scalp surface. While this technique has high temporal resolution, its spatial resolution is low: signals represent the combined activation of millions of cortical neurons in a broad area under each electrode, as filtered through the skull and scalp. Although much research focuses on sub-cortical areas (inaccessible to EEG) in the generation, expression, and regulation of emotion (see Phelps and LeDoux, 2005, for review), there is evidence linking prefrontal cortical regions, and their resulting EEG patterns, to emotional processing (Davidson, 2004). Multiple studies report that alpha power asymmetries between frontal electrodes are correlated with self-report variables of affect, such that greater left activation is linked to positive affect, and greater right activation is linked to more negative affect (Tomarken et al., 1992; Wheeler et al., 2007). Emotions induced by positive (joy) versus negative (sad) music are also detectable from these frontal electrodes (Schmidt and Trainor, 2001; Naji et al., 2014). Similar to the interventions used in the current study, Hinrichs and Machleidt (1992) asked participants to recall positive and negative life events while recording EEG activity. In contrast to the aforementioned studies, they found less asymmetry in alpha activity across hemispheres at frontal, temporal, and occipital sites during the recall of joyful events, and more alpha asymmetry at the same electrode positions during recall of sad and anxiety-provoking events.

Although findings regarding the effects of emotion on EEG lateralization are mixed, the consensus in the field is that lateralization in frontal sites is related to the personal experience of emotion (rather than the perception of others’ emotion), and that perception, regardless of valence, may be visible in posterior electrode sites (Davidson, 1993). In this paper, we investigate the efficacy of using EEG asymmetries to gauge affective state changes in response to positive and negative activity interventions. We also take a more exploratory, data-driven, machine learning approach to identify possible new EEG correlates of the effects of such interventions.
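Frontal asymmetry is commonly summarized as ln(right alpha power) − ln(left alpha power). The text does not specify the exact index used here, so the following is a minimal sketch under several assumptions: a plain FFT periodogram (no windowing or segment averaging), an 8–13 Hz alpha band, and a caller-chosen pair of homologous frontal channels. The function names are hypothetical. Because alpha power is usually interpreted as inversely related to cortical activity, a positive value suggests relatively greater left-frontal activation.

```python
import numpy as np

def band_power(signal, fs, lo, hi):
    """Sum of (unnormalized) periodogram power in the [lo, hi) Hz band."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                          # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2    # periodogram, up to a constant factor
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    mask = (freqs >= lo) & (freqs < hi)
    return spectrum[mask].sum()

def frontal_alpha_asymmetry(left, right, fs, band=(8.0, 13.0)):
    """ln(right alpha power) - ln(left alpha power); band limits are an assumption."""
    return np.log(band_power(right, fs, *band)) - np.log(band_power(left, fs, *band))

# Synthetic check: 4 s at 128 Hz, right-channel alpha amplitude twice the left.
fs = 128
t = np.arange(0, 4, 1 / fs)
left = np.sin(2 * np.pi * 10 * t)
right = 2 * np.sin(2 * np.pi * 10 * t)
print(round(frontal_alpha_asymmetry(left, right, fs), 3))  # prints 1.386 (= ln 4)
```

Doubling the amplitude quadruples the power, so the index equals ln 4. On real data a Welch-style average over artifact-free segments would usually replace the single periodogram.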

The ANS regulates body functions not under conscious control, such as respiratory, cardiac, exocrine, and endocrine activity. The ANS is in continuous balance between a more sympathetic (fight or flight) and a more parasympathetic (rest and digest) state. The profile of ANS activity is influenced by emotion: positive affect is characterized by increased parasympathetic nervous system activity, and negative affect results in parasympathetic withdrawal and sympathetic activity (McCraty et al., 1995). The state of the ANS may be indexed by various physiological variables, such as heart rate, heart rate variability (extracted from electrocardiogram or blood volume pulse measures), skin temperature, skin conductance, and respiration rate/depth.

Considerable debate over the years has focused on the effect of emotions on the ANS. Some theories, such as that of Schachter and Singer (1962), state that only the arousal level (i.e., intensity) of an emotion can be detected in the periphery, while others theorize that both emotional valence (happy/sad) and arousal induce detectable ANS changes (Lazarus, 1991). Here, we pose a similar question: Does a positive versus negative activity intervention lead to ANS changes as measured by skin conductance, skin temperature, and blood volume pulse?

Mounting evidence suggests this is the case. In pioneering work by Ekman et al. (2013), people were asked to recall autobiographical events that elicited one of six emotions (surprise, disgust, sadness, anger, fear, and happiness) while their physiology was recorded. Significant differences in skin temperature, heart rate, and skin conductance were observed among these emotions. Similarly, Rainville et al. (2006) asked participants to vividly recall emotional events while collecting cardiac and respiratory activity. They found that happiness, sadness, fear, and anger each drove a separate pattern of autonomic activity; a univariate analytic approach revealed that anger-fear and fear-sadness were the most discriminable pairs of discrete emotions. Kop et al. (2011) noted increased low frequency heart rate variability (LF-HRV) but not high frequency heart rate variability (HF-HRV) in response to recall of happy memories, as well as increased alpha power in right frontal EEG. A correlation was also observed between ANS (HF/LF HRV ratio) and CNS (EEG right frontal alpha) measures. Haiblum-Itskovitch et al. (2018) used an art-making intervention to induce positive emotion, and found decreases in parasympathetic arousal, as indexed by HF-HRV.

Across studies, different variables (HF-HRV, LF-HRV, LF/HF ratio, skin temperature, skin conductance) have been found to track emotion changes, suggesting a complex, multivariate, and non-linear relation between emotion and physiology and the need for more advanced analytic techniques. Advancements in affective computing, which fuses multimodal physiological signals using a data-driven machine learning approach (Jerritta et al., 2011; D’mello and Kory, 2015), have helped bridge this gap. For example, Kim et al. (2004) used heart rate measures, skin temperature, and skin conductance to classify three emotions with an accuracy of 78%, and Wagner et al. (2005) classified four emotions at 92% accuracy using features derived from dimensionality reduction of skin conductance, electromyogram, respiration, and blood pressure volume. Many methods also include EEG features as predictors, with accuracies ranging from 55 to 82% (Koelstra et al., 2010; Verma and Tiwary, 2014; Yin et al., 2017) for emotions induced by music, and 70% for recalled emotional events (Chanel et al., 2009). Work from this field stresses the importance of large sample sizes, filtering artifacts, and comparing an emotional state to a within-subjects baseline, as variance across participants can be large, and ceiling and floor effects are expected in many physiological parameters (Calvo and Mello, 2015).
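A within-subjects baseline comparison can be as simple as expressing each task-period sample in standard-deviation units of the same participant's baseline, which removes much of the between-participant offset and scale. The sketch below assumes one baseline recording per participant; `baseline_zscore` is a hypothetical helper, not part of any cited toolbox.

```python
from statistics import mean, stdev

def baseline_zscore(baseline, task):
    """Express task samples relative to the participant's own baseline mean and SD."""
    mu, sd = mean(baseline), stdev(baseline)
    if sd == 0:
        raise ValueError("baseline has zero variance")
    return [(x - mu) / sd for x in task]

# A participant whose baseline skin temperature hovers around 12 (arbitrary units)
print(baseline_zscore([10, 12, 14], [12, 16]))  # prints [0.0, 2.0]
```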

In our second experiment, we aimed to investigate the effects of a positive and a negative intervention on the brain and body through physiological measures of electroencephalogram (EEG), galvanic skin conductance (GSC), skin temperature (ST), and blood volume pulse (BVP), which contains heart rate variability (HRV) information. We explicitly test two hypotheses motivated by previous research and theory, namely, expecting (1) greater frontal EEG asymmetry in the positive condition and (2) lower HF-HRV and higher LF-HRV in the positive condition. We also present analyses that follow a more exploratory approach, where machine learning is used to elucidate the effect of the two interventions on central and ANS measures.
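The exploratory machine learning analyses are not specified at this point in the text. As a hypothetical stand-in, the sketch below estimates how well pre-to-post change scores separate the two conditions, using a leave-one-participant-out nearest-centroid classifier. The classifier and the function name are illustrative assumptions and do not represent the authors' pipeline. Standardization is fit on the training participants only, so a held-out participant never influences its own prediction.

```python
import numpy as np

def loo_nearest_centroid_accuracy(features, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier.

    features: (n_participants, n_features), e.g. post-minus-pre change scores
    labels:   (n_participants,), e.g. 0 = antisocial, 1 = prosocial
    """
    X = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i            # hold out participant i
        Xtr, ytr = X[train], y[train]
        mu, sd = Xtr.mean(axis=0), Xtr.std(axis=0)
        sd[sd == 0] = 1.0                         # avoid dividing by zero
        Ztr, zi = (Xtr - mu) / sd, (X[i] - mu) / sd
        classes = np.unique(ytr)
        centroids = np.array([Ztr[ytr == c].mean(axis=0) for c in classes])
        pred = classes[np.argmin(np.linalg.norm(centroids - zi, axis=1))]
        correct += int(pred == y[i])
    return correct / len(y)
```

With two balanced conditions, chance performance is around 0.5. In practice, an observed accuracy would be compared with a permutation distribution built by shuffling the condition labels.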

Materials and Methods

Participants

Two hundred forty-five undergraduates (Experiment 1: N = 159, mean age = 19.66, SD = 3.08; Experiment 2: N = 86, mean age = 19.32, SD = 2.02) were recruited from a participant pool across introductory psychology courses at the University of California, Riverside. Students were invited to participate via an online portal and received course credit for their time. Written informed consent was obtained in accordance with the University of California, Riverside Institutional Review Board. Inclusion criteria required that all participants be over age 18, be fluent in English, have no history of mental illness, and not currently be taking any medication. Pooling across all participants, 64.8% were female, and 91.1% were right-handed. They reported their ethnicity as 1.6% American Indian/Alaskan Native, 17.9% Asian, 15.2% Black/African American, 0.8% Hawaiian/Pacific Islander, 7% White, 34% Hispanic/Latino, 17% more than one, and 5% other. None of the reported demographics was significantly related to intervention condition.

Procedure

Experiment 1 involved a single in-lab session in which participants filled out an online baseline questionnaire, including a consent form, demographic information, and psychological measures. They then completed baseline cognitive tasks, including word memory recall, word memory recognition, situation construal, and a “word find” with positive, negative, and neutral words. After completing the pre-intervention baseline measures, participants were randomly assigned to one of two intervention conditions: a positive writing condition (i.e., the prosocial writing activity; n = 78) or a negative writing condition (i.e., the antisocial writing activity; n = 81). Each writing condition involved a free response to a recalled gratitude (vs. ingratitude) prompt and a recalled prosocial (vs. “antisocial”) prompt of the same valence. The exact wording of each prompt appears in the Supplementary Material (S1 Text). After each prompt was shown, students were unable to advance to the next screen until at least 5 min had passed and were limited to 10 min total. All subjects were able to complete the writing prompt within the 10-min limit. Furthermore, they were instructed to describe a target act (e.g., a kind or unkind act someone did to them) using specific language, discuss how it is still affecting them, and report how often they think about the act. Participants were also instructed to use any format and not to worry about perfect grammar and spelling. At post-intervention, they completed the same psychological and cognitive measures (in the same order) as at baseline and were then debriefed. Questions and tasks proceeded in the same order for all participants. The total time for the experimental session (after initial participant setup) was approximately 1 h, with 40 min between pre- and post-test.

Experiment 2 involved one online session and one in-lab session over an 8-day period. On Day 1, students completed a demographics and trait affect questionnaire (not analyzed). On Day 8, they came into the lab and were fitted with EEG recording equipment and the Feel™ wristband (which records galvanic skin conductance [GSC], skin temperature [ST], and blood volume pulse [BVP]). The rest of the lab session proceeded as in Experiment 1. To control for possible order effects in Experiment 2, the order of tasks and questionnaires (and of items within questionnaires) was counterbalanced within condition using a Williams design, which balances first-order carryover effects.
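A Williams design orders n conditions so that each condition immediately follows every other condition equally often. The following is a minimal sketch of the standard construction: an interleaved first row (0, 1, n−1, 2, n−2, ...), its cyclic shifts, and, when n is odd, the mirror-image square. The function names are hypothetical, and the caller decides how the integers map onto the actual tasks or questionnaires.

```python
from collections import Counter

def williams_design(n):
    """Return condition orders (lists of 0..n-1) forming a Williams design."""
    # First row interleaves low and high labels: 0, 1, n-1, 2, n-2, ...
    first, lo, hi = [0], 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
        take_low = not take_low
    # Cyclic shifts of the first row give a Latin square.
    rows = [[(c + shift) % n for c in first] for shift in range(n)]
    # For odd n, the mirrored square is also needed to balance carryover.
    if n % 2 == 1:
        rows += [row[::-1] for row in rows]
    return rows

def carryover_counts(rows):
    """Count each ordered (previous, next) pair occurring in adjacent positions."""
    return Counter((r[i], r[i + 1]) for r in rows for i in range(len(r) - 1))

for order in williams_design(4):
    print(order)
```

For n = 4 this prints [0, 1, 3, 2], [1, 2, 0, 3], [2, 3, 1, 0], and [3, 0, 2, 1]. Every ordered pair of conditions appears exactly once in adjacent positions. For odd n the design needs 2n sequences, and each pair then appears twice.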

Materials

All questionnaires, cognitive tasks, and writing prompts for the in-lab session were presented using Psychtoolbox (Kleiner et al., 2007) for MATLAB™ (The MathWorks Inc., 2015). All online questionnaires were presented via Qualtrics (Qualtrics, 2019).

Positive and Negative Activity Intervention

Students were randomly assigned to either a positive (prosocial) writing condition or a negative (“antisocial”) writing condition. The prosocial writing condition was designed to elicit a strong positive emotional response, and included both recalling something kind that someone else had done and recalling something kind that the participants themselves had done (cf. Layous and Lyubomirsky, 2014). The antisocial writing condition was designed to complement the prosocial condition, and included the opposite prompts – namely, recalling something unkind that someone else had done and recalling something unkind that the participants themselves had done.

Self-Report Variables

Validated questionnaires addressed multiple psychological states, including measures of positive and negative affect (Affect-Adjective Scale; Emmons and Diener, 1985), elevation (Haidt, 2003), gratitude (Gratitude Adjective Checklist; McCullough et al., 2002), negative social emotions (i.e., guilt, indebtedness), optimism (Life Orientation Test-Revised; Scheier and Carver, 1985), state self-esteem (State Self-Esteem; Rosenberg, 1965), meaning in life (Meaning in Life Questionnaire; Steger et al., 2006), and psychological needs (i.e., connectedness, autonomy, and competence; Balanced Measure of Psychological Needs; Sheldon and Hilpert, 2012). Questionnaires were chosen based on their successful use in previous research (Sheldon and Lyubomirsky, 2006; Nelson et al., 2015, 2016; Layous et al., 2017) in order to track changes in constructs that were expected to shift after the positive and negative writing tasks. Another candidate questionnaire, the Gallup-Healthways Well-Being Index, was omitted because it focuses on the more trait-like aspects of well-being, which were not expected to change over the relatively short experimental timeline. Each questionnaire was administered before and after the writing intervention. Full results of these self-report variables are presented elsewhere (Revord et al., 2019), but they are compared to the cognitive and physiological measures here.

Cognitive Variables

Word memory

To assess memory for words of different valence, both before and after the writing intervention tasks, participants were serially presented with 16 positive words (e.g., cheer, merry, beautiful), 16 negative words (e.g., deserter, poverty, devil), and 16 neutral words (e.g., statue, inhabitant, scissors) and instructed to remember them (passive memorization, with no response requested). A different set of words was used pre- and post-intervention. This segment of the task lasted approximately 1.5 min, with each word presented for 1 s followed by a jittered 2.75–3.25 s inter-stimulus interval (to facilitate the physiological analyses). Words were selected from the Affective Norms for English Words data set (ANEW; Bradley and Lang, 1999), such that positive words had an average valence of 7.25, negative words had an average valence of 2.75, and neutral words had an average valence of 5.00. Average arousal ranged from 5.08 to 5.12 across valence conditions.

Ten minutes after word presentation, recognition memory was tested by presenting the 48 original words in random order, together with 48 distractor words (16 positive, 16 negative, 16 neutral), in an 8 × 12 grid. Students were asked to click on all the words on the screen that they recognized. They could correctly click on old words (a “hit”), correctly choose not to click on new words (a “correct rejection”), mistakenly click on new words (a “false alarm”), or fail to click on old words (a “miss”). The average completion time for this segment of the task was 5 min. D-prime (d’) and C, common measures of sensitivity and response bias from signal detection theory (see Nevin, 1969, for review), were used to quantify recognition memory and bias for each word valence separately. Briefly, d’ estimates the distance between the distribution of responses to old items (signal) and the distribution of responses to new items (noise), computed from the hit and false alarm rates. C measures how biased a participant is, on average, to respond “old” versus “new.” For calculation purposes, when the hit or false alarm rate was equal to 0, the rate was set to 1/(2N), and when it was equal to 1, the rate was set to 1 − 1/(2N), where N is the number of items on which that rate is based. Larger d’ scores indicate greater accuracy.
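Both signal detection measures follow directly from the hit rate H and false alarm rate F: d’ = z(H) − z(F) and C = −(z(H) + z(F))/2, where z is the inverse of the standard normal CDF. The sketch below applies the 1/(2N) correction for rates of 0 or 1 before converting them. The function names are hypothetical.

```python
from statistics import NormalDist

def corrected_rate(count, n):
    """Proportion count/n, replacing 0 with 1/(2n) and 1 with 1 - 1/(2n)."""
    p = count / n
    if p == 0:
        return 1 / (2 * n)
    if p == 1:
        return 1 - 1 / (2 * n)
    return p

def dprime_and_c(hits, n_old, false_alarms, n_new):
    """Sensitivity (d') and criterion (C) for one word valence."""
    z = NormalDist().inv_cdf                  # inverse standard normal CDF
    h = corrected_rate(hits, n_old)           # hit rate
    f = corrected_rate(false_alarms, n_new)   # false alarm rate
    d_prime = z(h) - z(f)
    c = -(z(h) + z(f)) / 2                    # > 0 means a tendency to answer "new"
    return d_prime, c

# e.g. 12 of 16 old positive words and 4 of 16 new positive words clicked
print(dprime_and_c(12, 16, 4, 16))
```

For this example, d’ is about 1.35 and C is about 0 (unbiased). If all 16 old words are clicked and none of the new ones, the correction keeps d’ finite (about 3.7) rather than infinite.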

Situation construal

In the situation construal task, participants were presented with 6 photos: two positive situations (e.g., a couple kissing), two negative situations (e.g., a person wading through a polluted ditch), and two neutral or ambiguous situations (e.g., bikers jumping train tracks at high speed, with a train approaching in the background). Images were selected from the International Affective Picture System (IAPS; Lang, 2005). On the IAPS valence ratings (1 = most negative, 9 = most positive), the negative images had an average valence of 2.5, the ambiguous images had an average valence of 5.0, and the positive images had an average valence of 7.5. A different set of 6 images was presented at each test (stimuli randomly assigned to either test). Positive images consisted of slides 2352, 4597, 8090, and 8300; negative images consisted of slides 9342, 9530, 9042, and 6561; and ambiguous images consisted of slides 8475, 2595, 2749, and 8160. The time to complete the task was approximately 4 min.
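The 12 slides were randomly split between the two tests, with two images per valence at each test. The following is a minimal sketch of such a valence-stratified split. The text does not say whether the split was drawn once for everyone or separately for each participant, so the seed handling here is an assumption, and the function name is hypothetical.

```python
import random

# IAPS slide numbers as listed in the text
SLIDES = {
    "positive": [2352, 4597, 8090, 8300],
    "negative": [9342, 9530, 9042, 6561],
    "ambiguous": [8475, 2595, 2749, 8160],
}

def split_into_tests(slides, seed=None):
    """Randomly assign half of each valence's slides to the pre test, half to post."""
    rng = random.Random(seed)
    pre, post = [], []
    for valence, ids in slides.items():
        shuffled = ids[:]                 # copy so the input lists are untouched
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        pre += [(valence, s) for s in shuffled[:half]]
        post += [(valence, s) for s in shuffled[half:]]
    return pre, post

pre_set, post_set = split_into_tests(SLIDES, seed=1)
print(pre_set)
print(post_set)
```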

Participants were asked to rate each of these situations using 10 items from the Riverside Situational Q-sort (Funder et al., 2000; Funder, 2016) (1 = not at all, 7 = extremely). They included five positive items (i.e., “situation is playful,” “situation is potentially enjoyable,” “situation might evoke warmth or compassion,” “situation is humorous or potentially humorous (if one finds that sort of thing funny),” and “situation might evoke warmth or compassion”) and five negative items (i.e., “situation contains physical threats,” “someone is being abused or victimized,” “someone is unhappy or suffering,” “situation would make some people tense and upset,” and “someone is under threat”). No time limit was imposed, and images were displayed on screen until all 10 questions were complete. Across measurements, Cronbach’s α ranged from 0.82 to 0.85 for the positive items and from 0.85 to 0.86 for the negative items. Two measures were extracted for each image valence:
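Cronbach’s α, reported above for the positive and negative item sets, compares the sum of the item variances with the variance of the summed scale: α = k/(k − 1) × (1 − Σ var(item) / var(total)), where k is the number of items. A minimal standard-library sketch follows. The function name is hypothetical.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: k lists of item scores, each holding one score per rated case."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    totals = [sum(scores) for scores in zip(*items)]   # summed scale score per case
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / total_var)

# Two items rated for three cases
print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 4))  # prints 0.6667
```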

Response normalized

The response to each item (question) was normalized from the 1–7 range to the range −1 to +1. Negative items were reversed so that all values above 0 represent a positive appraisal of the image. Within each image valence, we took the average of all normalized responses. This gave us a measure of how positively each participant construed each image valence, where +1 is most positive and −1 is most negative. If the positive intervention increases positive construal, we expect an increase in this measure across all image valences.
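The normalization can be written as the linear map (r − 4)/3, which sends 1 to −1, 4 to 0, and 7 to +1, with the sign reversed for negative items. The text does not state that the map is linear, so this is an assumption. The helper names are hypothetical. The lists passed to `construal_score` are all item responses for the images of a single valence.

```python
def normalize_response(rating, negative_item):
    """Map a 1-7 rating onto [-1, +1]; flip negative items so > 0 = positive appraisal."""
    if not 1 <= rating <= 7:
        raise ValueError("rating must be between 1 and 7")
    value = (rating - 4) / 3
    return -value if negative_item else value

def construal_score(positive_ratings, negative_ratings):
    """Average normalized response over all items for one image valence."""
    values = [normalize_response(r, False) for r in positive_ratings]
    values += [normalize_response(r, True) for r in negative_ratings]
    return sum(values) / len(values)

# Maximal endorsement of positive items and none of negative items
print(construal_score([7] * 5, [1] * 5))  # prints 1.0
```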

Reaction time

Alternatively, the intervention may increase or decrease how quickly participants respond to each item. Therefore, the reaction time, averaged within each image valence, is also reported.

Word find

To assess the degree to which participants attended to positive versus negative content (Mirsky et al., 1991; Raz and Buhle, 2006), they completed a “word find” task. On a sheet of paper, all participants were presented with the same 15 × 15 word find. The nine words in the word search comprised three positive (e.g., sweetheart), three negative (e.g., slave), and three neutral (e.g., fabric) words, each displayed in pseudorandom positions on screen. A different set of words was used pre- and post-intervention. When a word was found, participants were instructed to circle the word, then click the word on the screen. This procedure allowed us to randomize the order in which participants saw the to-be-found words and eliminate effects of systematic searching in the order of word presentation. Subjects completed the task in approximately 6 min.

Average order

The first primary outcome of the word find was the average order in which words of each valence type were found. Each of the nine words is assigned its position in the search order (1 = first word found, 9 = last word found), so the mean position across all nine words is always 5. By averaging the positions within each valence group, we obtained an estimate of how early that valence group was found, with a number below 5 signifying earlier than average and a number above 5 signifying later than average.

Average time found

The second outcome of the word find was the average time taken from beginning the task to find the words in a valence group. Lower average time found indicates that a valence group was found more quickly, as measured in milliseconds.
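Both word-find outcomes can be computed from the sequence of found words. The sketch below assumes that all nine words were found and that each entry records the word's valence and the elapsed time in milliseconds since the task began. The text does not describe how unfound words were handled, and the function name is hypothetical.

```python
from statistics import mean

def word_find_scores(found):
    """found: (valence, time_ms) tuples in the order the words were found.

    Returns (average order per valence, average time found per valence).
    """
    order, times = {}, {}
    for rank, (valence, t_ms) in enumerate(found, start=1):
        order.setdefault(valence, []).append(rank)
        times.setdefault(valence, []).append(t_ms)
    return ({v: mean(r) for v, r in order.items()},
            {v: mean(t) for v, t in times.items()})

found = [("positive", 1000), ("positive", 2000), ("positive", 3000),
         ("neutral", 4000), ("neutral", 5000), ("neutral", 6000),
         ("negative", 7000), ("negative", 8000), ("negative", 9000)]
avg_order, avg_time = word_find_scores(found)
print(avg_order)  # prints {'positive': 2, 'neutral': 5, 'negative': 8}
```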

Physiological Measures

ANS variables

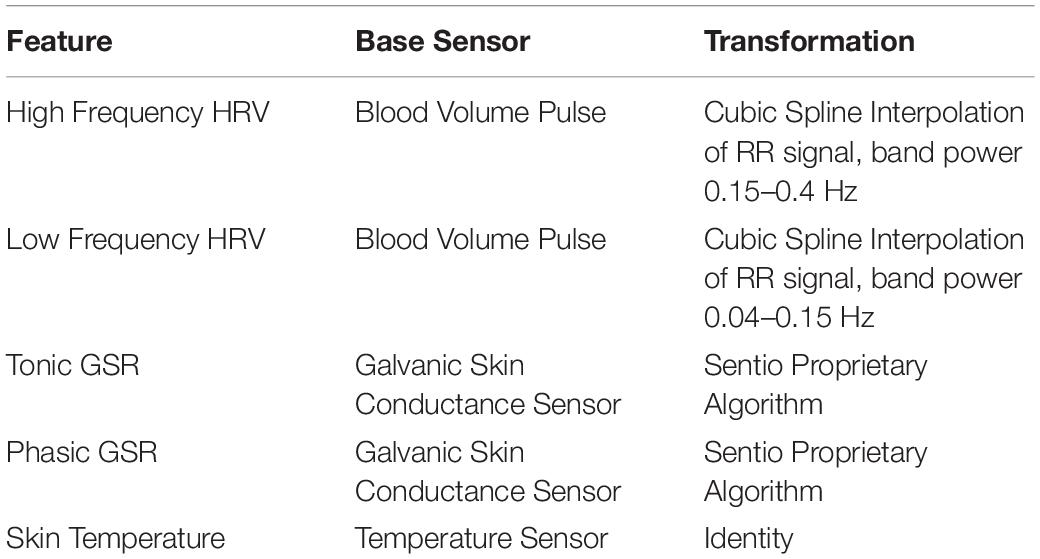

To measure autonomic activity, we used the Feel™ wristband, a to-be-released commercial “emotion tracker” (Sentio, Athens, Greece). The device contains skin conductance, skin temperature, and blood volume pulse sensors, as well as a 3-axis accelerometer/gyroscope, all sampled at 500 Hz. Signals were denoised for movement, sweat, and other artifacts using Sentio’s proprietary algorithms. Along with the denoised signals, the wristband also provided the interpolated inter-beat interval, as extracted from the BVP sensor (4 Hz sample rate), and the tonic/phasic decomposition of the skin conductance signal (20 Hz). The wristband’s internal clock was synchronized to the clock of the stimulus presentation computer, which allowed event-driven analysis. Raw signals were transformed into 5 “feature” signals (see Table 1 for details).
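The hypothesis about LF-HRV and HF-HRV can be tested on the 4 Hz interpolated inter-beat interval series that the wristband provides. The sketch below integrates an FFT periodogram over the conventional LF (0.04–0.15 Hz) and HF (0.15–0.40 Hz) bands. It is simplified in two ways that are assumptions: there is no windowing, and there is no Welch averaging. The function name is hypothetical. Absolute powers are in arbitrary units, so only comparisons and the LF/HF ratio are meaningful.

```python
import numpy as np

LF_BAND = (0.04, 0.15)   # Hz, conventional low-frequency HRV band
HF_BAND = (0.15, 0.40)   # Hz, conventional high-frequency HRV band

def hrv_band_powers(ibi, fs=4.0):
    """LF power, HF power, and LF/HF ratio of an evenly resampled IBI series."""
    x = np.asarray(ibi, dtype=float)
    x = x - x.mean()                                   # remove mean IBI
    power = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)  # periodogram, arbitrary units
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    lf = power[(freqs >= LF_BAND[0]) & (freqs < LF_BAND[1])].sum()
    hf = power[(freqs >= HF_BAND[0]) & (freqs < HF_BAND[1])].sum()
    return lf, hf, lf / hf

# Synthetic 5-min IBI series (s) with a 0.1 Hz and a weaker 0.25 Hz oscillation
t = np.arange(0, 300, 0.25)
ibi = 0.8 + 0.05 * np.sin(2 * np.pi * 0.1 * t) + 0.02 * np.sin(2 * np.pi * 0.25 * t)
lf, hf, ratio = hrv_band_powers(ibi)
print(round(ratio, 2))  # prints 6.25, i.e. (0.05 / 0.02) ** 2
```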

Physiological recording took place throughout the experiment, providing many possible events on which to base physiological analysis. Following the emotion classification paradigm of Calvo and Mello (2015) and Calvo et al. (2016), we chose events with low motion artifacts (i.e., not during writing or during the word find). As a result, the analyzed events were (1) emotional memory word presentation, (2) situation construal question presentation, and (3) presentation of each question during the self-report psychological measures. For periods when the stimulus was on screen, the amplitude of the signal was extracted for each feature, as well as its peak-to-peak amplitude. We then tested whether the pattern of changes in physiology across the intervention (as measured at specific event times) differed by intervention type.
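The event-locked extraction can be sketched as slicing a fixed window after each stimulus onset and recording the level and the peak-to-peak range within that window. Several details here are assumptions: the window length, the reading of “amplitude” as the mean level within the window, and the function names. In this sketch, a participant's pre-to-post change is the difference between the per-feature averages at the two tests.

```python
import numpy as np

def event_features(signal, fs, onsets_s, window_s):
    """Mean level and peak-to-peak range of `signal` in a window after each onset."""
    x = np.asarray(signal, dtype=float)
    n = int(round(window_s * fs))
    rows = []
    for onset in onsets_s:
        start = int(round(onset * fs))
        seg = x[start:start + n]
        if seg.size < n:            # window runs past the end of the recording
            continue
        rows.append((seg.mean(), np.ptp(seg)))
    return np.array(rows).reshape(-1, 2)

def change_score(pre_features, post_features):
    """Post-minus-pre difference of the per-feature averages across events."""
    return np.mean(post_features, axis=0) - np.mean(pre_features, axis=0)

# Toy ramp signal sampled at 10 Hz, with events at 1 s and 5 s
feats = event_features(np.arange(100.0), 10, [1.0, 5.0], 1.0)
print(feats)                             # rows: [mean level, peak-to-peak]
print(change_score(feats[:1], feats[1:]))
```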

Electroencephalogram

A 14-channel wireless EEG device (Emotiv EPOC; Emotiv, 2019) was modified to include an infracerebral electrode cap, higher-quality Ag/AgCl electrodes, a more comfortable form factor, and alternate electrode placement locations corresponding to the 10–20 placement system. Modifications were completed in the lab using an upgrade kit and instructions from EASYCAP (Debener et al., 2012; Easycap GmbH, n.d.). During initial piloting, we noticed that the EEG device was dropping samples, sometimes at random, which made it impossible to synchronize the signal to stimulus presentation. To rectify this, we wired a photodiode into two channels of the EEG device (“sync channels”) and placed this photodiode on the stimulus presentation computer. For each stimulus presentation, a small dark square was flashed under the diode, which registered as a sharp rising or falling edge on these sync channels. The 12 remaining channels were placed at F4, F7, Fz, F5, F8, C3, Cz, P7, P3, Pz, P4, P8, Oz (with the common mode sense and driven right leg reference sensors at AFz and FCz). The sampling rate was 128 Hz.
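The photodiode synchronization described above can be sketched as simple threshold-crossing detection. The sketch assumes that the two sync channels are combined into one trace whose level jumps when the square flashes. The combination shown here, taking their difference as a bipolar channel, is hypothetical. The midpoint threshold is also an illustrative choice, not the study's documented setting.

```python
import numpy as np


def detect_sync_edges(sync, threshold=None):
    """Return sample indices of rising and falling edges on a sync trace.

    sync:      1-D photodiode trace (e.g., the difference of the two sync channels).
    threshold: level separating "dark" from "light"; defaults to the midpoint
               of the trace's range (an illustrative assumption).
    """
    sync = np.asarray(sync, dtype=float)
    if threshold is None:
        threshold = (sync.max() + sync.min()) / 2.0
    high = (sync > threshold).astype(int)
    change = np.diff(high)                      # +1 at rising, -1 at falling
    rising = np.flatnonzero(change == 1) + 1    # first sample above threshold
    falling = np.flatnonzero(change == -1) + 1  # first sample below threshold
    return rising, falling


FS = 128.0  # EEG sampling rate reported above
ch_a = np.array([0, 0, 0, 5, 5, 5, 0, 0, 5, 0], dtype=float)
ch_b = np.zeros_like(ch_a)
rising, falling = detect_sync_edges(ch_a - ch_b)
event_times_s = rising / FS  # sample indices converted to seconds
```

Because the markers are read from the EEG's own sample stream, dropped samples shift the markers along with the data. A wall-clock timestamp, by contrast, would drift relative to the data.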

EEG signals were band-pass filtered between 0.1 and 60 Hz. The rising and falling edges of the sync channels were detected and assigned stimulus event markers, and these channels were then removed. Channel data were visually inspected and any bad channels were removed; the remaining channels were then re-referenced to the average of all good channels. Noise from movement, sweat, and other sources was a concern, especially given the relatively low signal-to-noise ratio of this device. To remove eyeblink artifacts, we transformed the data using Independent Component Analysis (ICA), and if a component with a clear eyeblink artifact pattern was observed, it was removed. Data were then transformed back to the original channel domain.
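The filtering and re-referencing steps can be sketched with SciPy. The filter order is an assumption, since the text gives only the pass band; the sketch uses a 4th-order Butterworth filter applied forward and backward for zero phase. The ICA eyeblink step is omitted here. It would follow re-referencing.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def preprocess_eeg(data, fs=128.0, band=(0.1, 60.0), bad_channels=()):
    """Band-pass filter, drop bad channels, and re-reference to the average.

    data:         array of shape (n_channels, n_samples), in microvolts.
    bad_channels: indices of channels rejected by visual inspection.
    Returns (re-referenced data for good channels, indices of good channels).
    """
    # Zero-phase band-pass (filter order is an assumption, not from the study).
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    filtered = sosfiltfilt(sos, data, axis=-1)

    bad = set(bad_channels)
    good = np.array([i for i in range(data.shape[0]) if i not in bad])
    kept = filtered[good]

    # Common average reference computed over good channels only.
    rereferenced = kept - kept.mean(axis=0, keepdims=True)
    return rereferenced, good


rng = np.random.default_rng(0)
raw = rng.normal(scale=10.0, size=(12, 1280))  # 12 channels x 10 s at 128 Hz
clean, good = preprocess_eeg(raw, bad_channels=(4,))
```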

To remove other artifact segments from a channel (e.g., sweat, movement, electrode disconnection, electromagnetic interference), filtered versions of each channel were created in the delta (1–4 Hz), theta (4–7 Hz), alpha (8–15 Hz), and beta (16–31 Hz) bands (filter channels). The envelope of each of these filter channels was also extracted using a Hilbert transform (filter envelope channels). Segments were marked as artifacts, separately for each channel, if any of the following conditions held within a 0.3 s sliding window: (1) the absolute value of the original channel exceeded 400 μV, (2) the peak-to-peak amplitude exceeded 400 μV, or (3) the amplitude of any filter envelope channel exceeded 40 μV. These thresholds were chosen to minimize the inclusion of artifacts while maximizing the retained good signal, as determined by visual inspection.
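The three rejection rules can be sketched as follows for a single channel. The band edges and thresholds are those given above. The Butterworth filter order and the one-sample window step are assumptions.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt

BANDS_HZ = {"delta": (1, 4), "theta": (4, 7), "alpha": (8, 15), "beta": (16, 31)}


def mark_artifacts(x, fs=128.0, win_s=0.3, abs_uv=400.0, p2p_uv=400.0, env_uv=40.0):
    """Boolean mask of artifact samples for one channel (values in microvolts)."""
    x = np.asarray(x, dtype=float)
    # Filter channels and their Hilbert envelopes, one row per band.
    envelopes = np.vstack([
        np.abs(hilbert(sosfiltfilt(
            butter(4, edges, btype="bandpass", fs=fs, output="sos"), x)))
        for edges in BANDS_HZ.values()
    ])
    win = max(1, int(round(win_s * fs)))  # 0.3 s -> 38 samples at 128 Hz
    bad = np.zeros(x.size, dtype=bool)
    for start in range(x.size - win + 1):  # slide the window one sample at a time
        seg = slice(start, start + win)
        w = x[seg]
        if (np.abs(w).max() > abs_uv                  # rule 1: absolute value
                or w.max() - w.min() > p2p_uv         # rule 2: peak-to-peak
                or envelopes[:, seg].max() > env_uv):  # rule 3: band envelope
            bad[seg] = True
    return bad


rng = np.random.default_rng(0)
channel = rng.normal(scale=5.0, size=1280)  # 10 s of low-amplitude "EEG"
channel[640] = 500.0                        # a single large artifact spike
mask = mark_artifacts(channel)
```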

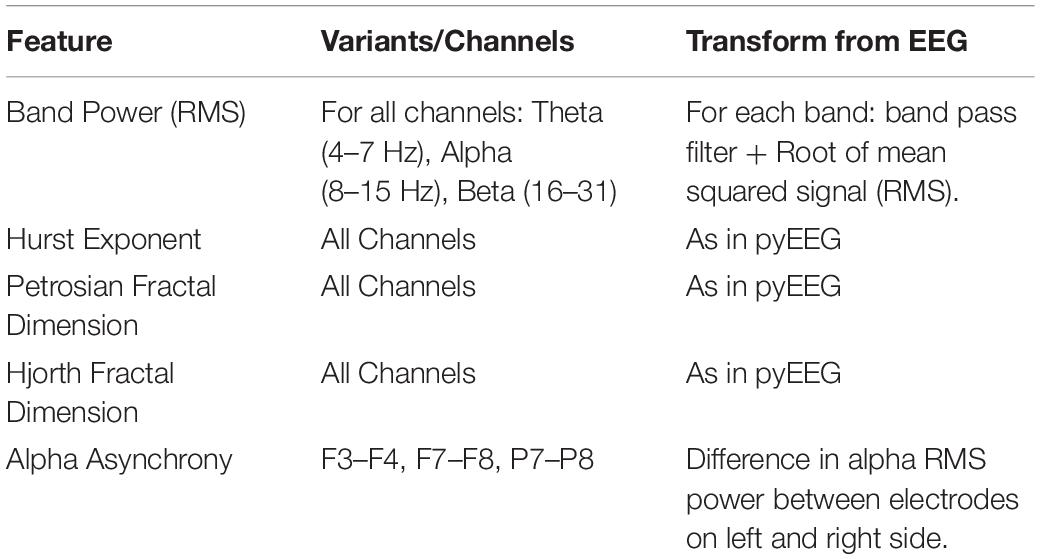

From each channel, and for each of the “stimulus on” events of the situation construal task, emotional memory task, and self-report measures (as for the ANS measures), we extracted a number of features (Table 2). The Python toolbox PyEEG (Bao et al., 2011) was used to extract some of the more complex features. These features were averaged for each participant and channel group, where channel groups were defined as F = [F7, F8, Fz], C = [Cz, C3, C4], P = [P3, P4, P7, P8, Pz], and O = [Oz]. However, given the number of variables and the small sample size, overfitting was a concern. Principal component analysis (PCA) was therefore employed to reduce the number of variables from 24 to 5 components (per task, per subject). Applying PCA directly to the ungrouped channels produced the same results. Asymmetry measures, for which prior theory makes specific predictions, were not reduced by PCA and were analyzed separately.
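Below is a minimal sketch of the channel-group averaging and PCA reduction. It assumes six features per channel, so that four groups yield the 24 variables mentioned above; the actual feature list is in Table 2. It also assumes that PCA is fit across participants after standardization. The helper names are hypothetical, and scikit-learn's `PCA` stands in for whatever implementation was used.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

CHANNEL_GROUPS = {
    "F": ["F7", "F8", "Fz"],
    "C": ["Cz", "C3", "C4"],
    "P": ["P3", "P4", "P7", "P8", "Pz"],
    "O": ["Oz"],
}


def group_average(features, channel_names):
    """Average per-channel features within each channel group.

    features:      array (n_channels, n_features) for one participant and task.
    channel_names: labels for the rows of `features`.
    Returns a flat vector of length n_groups * n_features (F, C, P, O order).
    """
    row_of = {name: i for i, name in enumerate(channel_names)}
    parts = []
    for members in CHANNEL_GROUPS.values():
        rows = [row_of[m] for m in members if m in row_of]
        if rows:
            parts.append(features[rows].mean(axis=0))
        else:  # no channel of this group survived artifact rejection
            parts.append(np.full(features.shape[1], np.nan))
    return np.concatenate(parts)


def reduce_features(X, n_components=5):
    """Standardize a participant-by-variable matrix X and project onto its top PCs."""
    return PCA(n_components=n_components).fit_transform(StandardScaler().fit_transform(X))


names = ["F7", "F8", "Fz", "Cz", "C3", "C4", "P3", "P4", "P7", "P8", "Pz", "Oz"]
rng = np.random.default_rng(0)
X = np.vstack([group_average(rng.normal(size=(12, 6)), names) for _ in range(35)])
components = reduce_features(X)  # shape (35, 5)
```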

Analysis

We took a multi-faceted approach to our analyses, employing both standard regression models and several machine learning techniques. All cognitive variables were initially analyzed using univariate regressions (with measures first z-score normalized), in which the difference between the pre- and post-intervention values (“Difference”) of each cognitive measure was predicted by the intervention condition (“Intervention,” dummy coded: positive = 1) and an intercept. Because the negative condition is the reference category, the intercept estimates the mean pre-post change in the negative condition. A significant positive intercept (for positively coded measures) may therefore reflect a general increase attributable to test-retest effects and is not of primary interest, whereas a significant negative intercept indicates a reduction in that measure in the negative intervention condition. A significant “Intervention” coefficient represents a significant difference in pre-post change between the negative and positive intervention conditions, and thus evidence for an effect of the intervention on that measure. A Bonferroni familywise error correction was applied within each variable group (cognitive, autonomic, EEG).
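Below is a minimal sketch of one such regression, written with NumPy and SciPy. It assumes that "z-score normalized" means scaling each measure by its pooled pre/post standard deviation before differencing. That interpretation and the helper names are assumptions. With positive = 1, the intercept is the mean change in the negative condition and the slope is the between-condition difference in change.

```python
import numpy as np
from scipy import stats


def bonferroni_alpha(n_tests, alpha=0.05):
    """Per-test alpha after Bonferroni correction."""
    return alpha / n_tests


def difference_regression(pre, post, positive):
    """OLS of standardized (post - pre) on an intercept and a 0/1 condition dummy.

    Returns (coefficients [intercept, intervention], two-sided p-values).
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    sd = np.concatenate([pre, post]).std(ddof=1)  # pooled scale (assumption)
    diff = (post - pre) / sd                      # z-scored change; the pooled mean cancels
    X = np.column_stack([np.ones_like(diff), np.asarray(positive, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, diff, rcond=None)
    resid = diff - X @ beta
    dof = diff.size - X.shape[1]
    cov = (resid @ resid / dof) * np.linalg.inv(X.T @ X)
    t = beta / np.sqrt(np.diag(cov))
    p = 2 * stats.t.sf(np.abs(t), dof)
    return beta, p


rng = np.random.default_rng(1)
positive = np.repeat([0, 1], 50)
pre = rng.normal(size=100)
post = pre + 1.0 * positive + rng.normal(scale=0.5, size=100)  # simulated effect
beta, p = difference_regression(pre, post, positive)
significant = p[1] < bonferroni_alpha(18)  # 18 cognitive measures -> alpha ~ 0.0028
```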

We built on the standard analysis with a number of machine learning techniques. Generally, the goal of machine learning is to learn a (possibly non-linear) mapping between a set of inputs X and a set of outputs Y. This framework has an advantage over simpler modeling techniques (such as ordinary least squares regression) in that many variables can be included in the predictor set X. Techniques such as cross-validation and regularization then help select the variables (and their interactions) most relevant for predicting Y, while controlling for overfitting.

If cognitive or physiological variables are differentially affected by the intervention conditions, then they should show opposing patterns of change across the intervention. We therefore used the change in each set of variables to classify whether a participant was in the positive or negative intervention condition. If classification accuracy is above chance, it can be concluded that the intervention produced a differential response in those variables. Further, in simpler models such as logistic regression, the predictive contribution of each variable is reflected in its coefficient.

We ran four types of machine learning models: logistic regression, support vector machines (SVM; Corinna and Vladimir, 1995), adaptive boosted SVM (Freund and Schapire, 1999), and random forests (Liaw and Wiener, 2002). L1 regularization (Ng, 2004) was applied to all models to limit overfitting. Additionally, for logistic regression, L1 regularization drives the coefficients of non-predictive variables to zero. If a variable's coefficient is driven to zero, that variable does not help predict intervention type, which suggests that it is unaffected by the intervention.

A cross-validation scheme was used to limit overfitting and pick the optimal amount of regularization. The procedure is outlined below:

1. The data were split into training (80%) and test (20%) sets.

2. The training set was then split into thirds.

3. On two-thirds of the training data, we trained a set of models with different regularization values (e.g., 10⁻³, 10⁻², 10⁻¹, 10⁰, 0, 10¹) and validated them on the remaining third.

4. The thirds were then rotated so that each third served once as the validation set, with the other two used for training.

5. The regularization value that led to the best validation accuracy across steps 3 and 4 was selected, and a model with that value was then fit to the entire training set and evaluated on the test set.

6. The entire process (1–5) was repeated 20 times to ensure that different training/test splits did not bias results.
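The procedure above can be sketched with scikit-learn for the L1-penalized logistic regression. This is an approximation, not the study's code, and several details are assumptions. scikit-learn's `C` is the inverse of regularization strength, so the grid is inverted. The 0 (no regularization) value is omitted because `C` must be positive. Stratified splitting and standardization inside the pipeline are also our additions. `GridSearchCV` with `cv=3` performs steps 2 to 4, and by default it refits the best setting on the full training set (step 5).

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def _se(values):
    """Standard error of the mean across runs."""
    return float(np.std(values, ddof=1) / np.sqrt(len(values)))


def repeated_l1_logistic(X, y, n_repeats=20, strengths=(1e-3, 1e-2, 1e-1, 1e0, 1e1), seed=0):
    """Repeat an 80/20 split with 3-fold inner CV over L1 strength (steps 1-6)."""
    rng = np.random.default_rng(seed)
    train_acc, test_acc, coefs = [], [], []
    for _ in range(n_repeats):
        # Step 1: fresh 80/20 train/test split on each repeat.
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.2, stratify=y, random_state=int(rng.integers(1_000_000)))
        model = make_pipeline(
            StandardScaler(), LogisticRegression(penalty="l1", solver="liblinear"))
        # Steps 2-4: 3-fold CV over the grid; C = 1 / regularization strength.
        search = GridSearchCV(
            model, {"logisticregression__C": [1.0 / s for s in strengths]},
            cv=3, scoring="accuracy")
        # Step 5: refit on the whole training set (refit=True) and test.
        search.fit(X_tr, y_tr)
        train_acc.append(search.score(X_tr, y_tr))
        test_acc.append(search.score(X_te, y_te))
        coefs.append(search.best_estimator_[-1].coef_.ravel())
    return {
        "train_mean": float(np.mean(train_acc)), "train_se": _se(train_acc),
        "test_mean": float(np.mean(test_acc)), "test_se": _se(test_acc),
        "coefs": np.array(coefs),  # L1 drives uninformative coefficients to 0
    }


# Simulated example: only the first of ten variables carries condition information.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = (X[:, 0] + 0.3 * rng.normal(size=200) > 0).astype(int)
result = repeated_l1_logistic(X, y, n_repeats=5)
```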

We report the mean and standard error of training and test accuracy (from step 5) across the 20 runs. For logistic regression, the parameters of the best-fitting model among the 20 runs are reported, although parameter values changed minimally across runs.

An alternative way to validate the physiological measures is to consider the concurrent validity between physiological and self-report measures across our intervention. We therefore ran ordinary least squares regression models with each of the 14 self-report measures as the dependent variable (DV). The independent variables (IVs) were all variables from either (a) the cognitive measures (both studies combined) or (b) the physiological measures during each task, with separate models for the variables from the Situation Construal, Questionnaire, and Emotional Memory tasks. Model fit was quantified with the F statistic, and only p-values below the Bonferroni-corrected alpha level of 0.0036 (0.05/14) were considered significant. Note, however, that well-being is a complex construct, and a simple relation between a set of physiological/cognitive measures and a single self-report measure gives an incomplete picture of physiological/cognitive efficacy. We therefore gave more weight to the machine learning approach.
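The overall F test for one such model can be sketched as follows. It compares the fitted model against an intercept-only model via R². The function name is hypothetical, and the example data are simulated.

```python
import numpy as np
from scipy import stats


def ols_overall_f(X, y):
    """Overall F statistic and p-value for an OLS model of y on the columns of X.

    X: array (n_participants, k_predictors); an intercept is added internally.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    ss_res = np.sum((y - design @ beta) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    f = (r2 / k) / ((1.0 - r2) / (n - k - 1))
    return f, stats.f.sf(f, k, n - k - 1)


ALPHA = 0.05 / 14  # Bonferroni across the 14 self-report DVs (~0.0036)
rng = np.random.default_rng(2)
physio = rng.normal(size=(80, 3))                  # simulated predictors
affect = 2.0 * physio[:, 0] + rng.normal(size=80)  # simulated self-report DV
f, p = ols_overall_f(physio, affect)
keep = p < ALPHA
```

With a single predictor, the overall F equals the squared t statistic of the slope, which provides a quick sanity check.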

Results

Univariate Regression Analysis

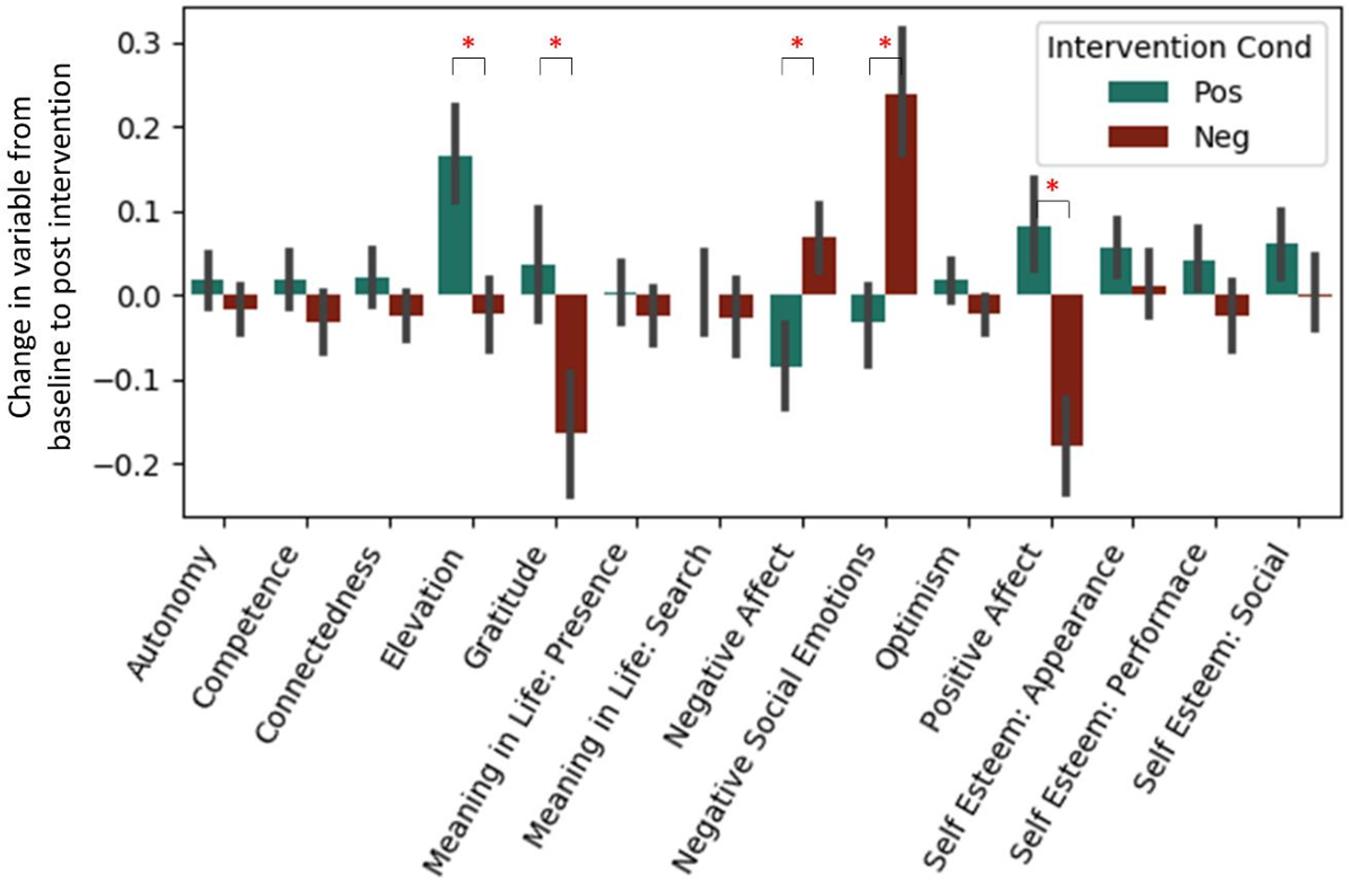

Psychological Self Report Variables

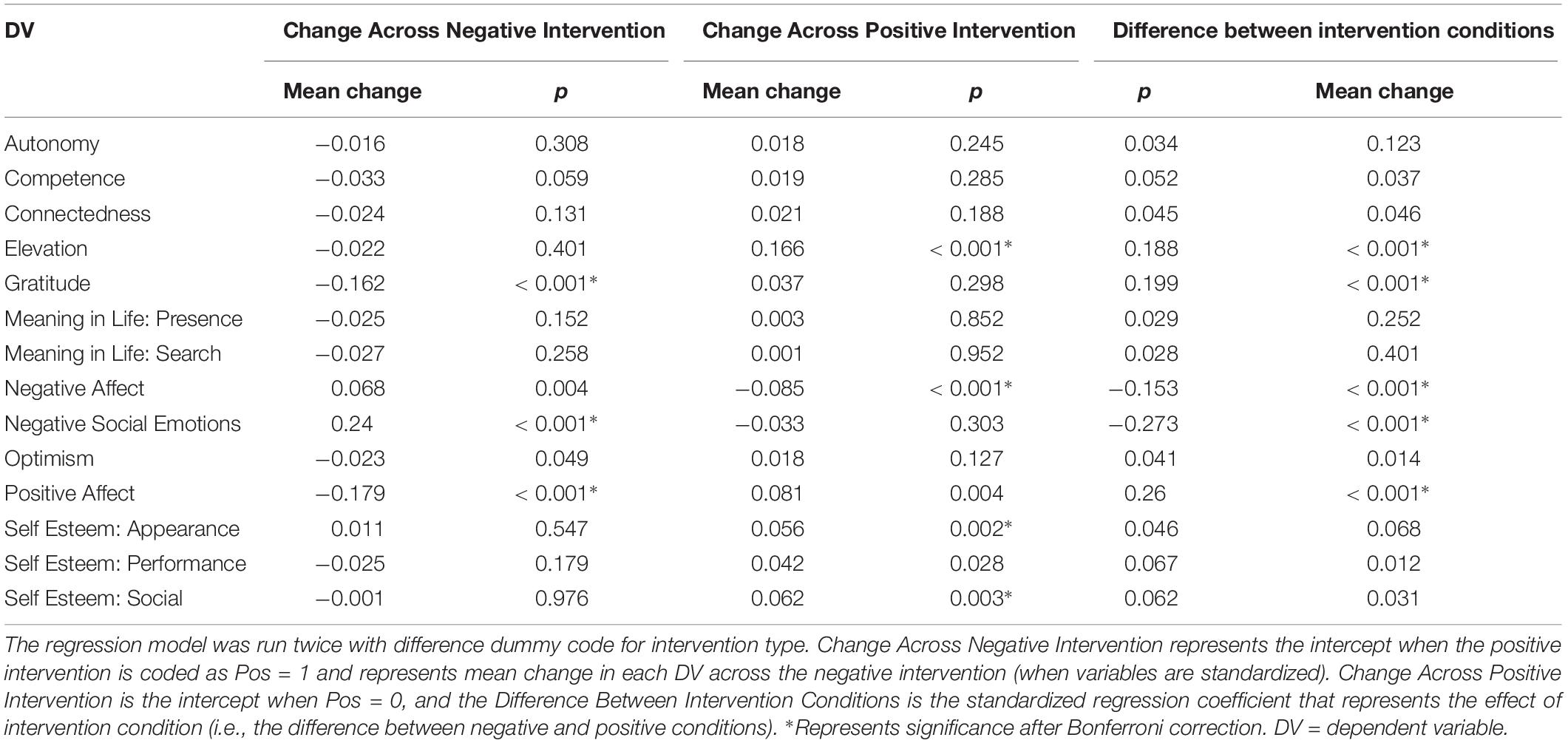

Analyses of our psychological self-report variables appear in a previous article (Revord et al., 2019) but are briefly summarized here (see Figure 1). A regression predicting the change in each measure from baseline to post-test from intervention type was run for both studies combined (Table 3). After a Bonferroni correction for the 14 measures considered (α = 0.0036), we found significant increases in elevation and self-esteem (appearance and social subscales), as well as a significant decrease in negative affect, in the positive intervention condition. In the negative condition, we observed significant decreases in gratitude and positive affect and a significant increase in negative social emotions. The positive and negative intervention conditions differed significantly in pre-post change for elevation, gratitude, negative affect, negative social emotions, and positive affect.

Figure 1. Standardized change between pre- and post-intervention for each self-report variable, shown separately for each intervention type. Data from Experiments 1 and 2. *Represents a significant difference in pre→post change between intervention types after Bonferroni correction.

Cognitive Variables

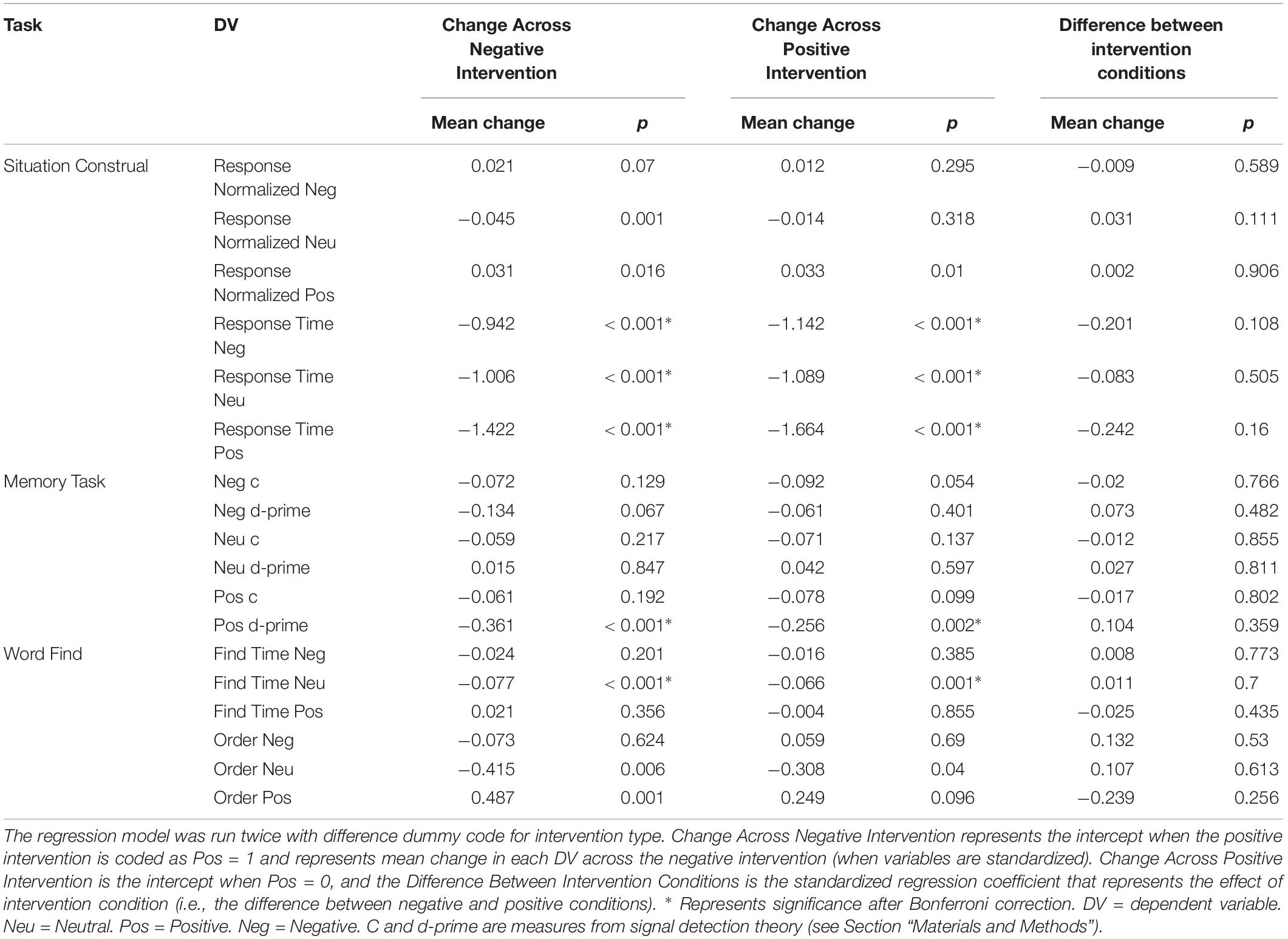

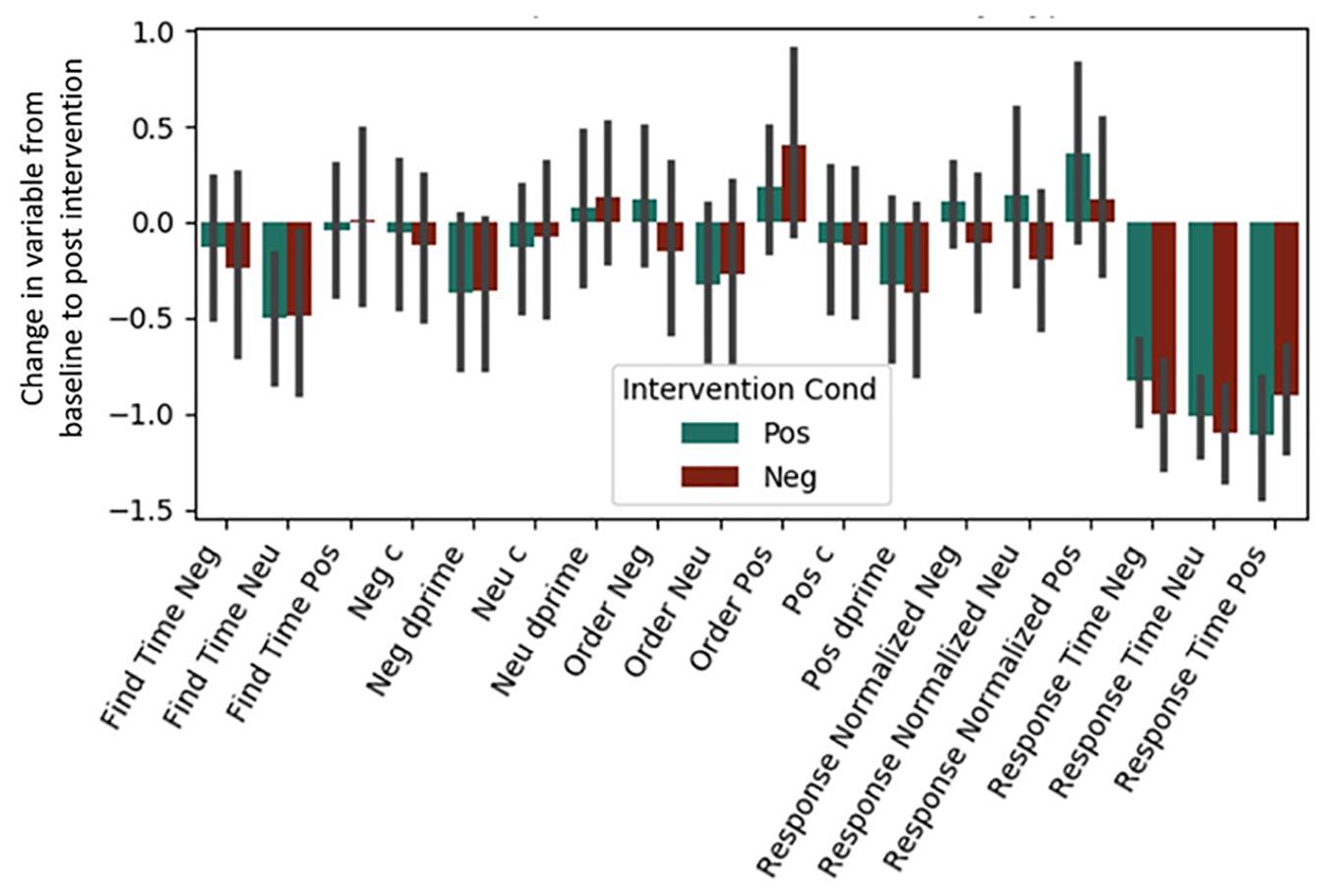

Both Experiments 1 and 2 included cognitive variables, and the regressions presented below were fit to the combined dataset (see Table 4 and Figure 2). Regressions for the two individual datasets are presented in the Supplementary Material (see Supplementary Figures S1, S2 and Supplementary Tables S1, S2). Bonferroni corrections were made for the 18 measures considered (α = 0.0028). Due to test-retest effects, increases in the general speed and accuracy of responses were expected across both conditions. We observed these effects for (1) d-prime for positive words, (2) response time in the situation construal task, and (3) find times for negative words in the word find (all p < 0.0028). A significant intervention coefficient would have provided compelling evidence that the intervention produced differences in cognitive outcomes; however, no significant intervention effects were found.

Figure 2. Post-intervention changes in cognitive variables by intervention type. Neu = Neutral. Pos = Positive. Neg = Negative. No significant differences were found across intervention conditions. C and d-prime are measures from signal detection theory (see Section “Materials and Methods”).
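For reference, d-prime and the criterion C are conventionally computed from hit and false-alarm rates as d′ = z(H) − z(FA) and C = −[z(H) + z(FA)]/2, where z is the inverse standard normal CDF. The sketch below applies a log-linear correction (adding 0.5 to each count) to avoid infinite z-scores when a rate is 0 or 1. That correction is an assumption and may not be the one used in the study.

```python
from scipy.stats import norm


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, c) from recognition counts, with a log-linear correction."""
    # Adding 0.5 to each cell keeps rates strictly between 0 and 1 (assumed correction).
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z_hit, z_fa = norm.ppf(hit_rate), norm.ppf(fa_rate)
    d_prime = z_hit - z_fa     # sensitivity
    c = -0.5 * (z_hit + z_fa)  # response bias; positive = conservative
    return float(d_prime), float(c)


# Hypothetical counts: 15 of 20 old words recognized, 5 of 20 new words
# falsely recognized (a symmetric pattern, so c is about 0).
d_prime, c = sdt_measures(hits=15, misses=5, false_alarms=5, correct_rejections=15)
```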

Physiology Variables

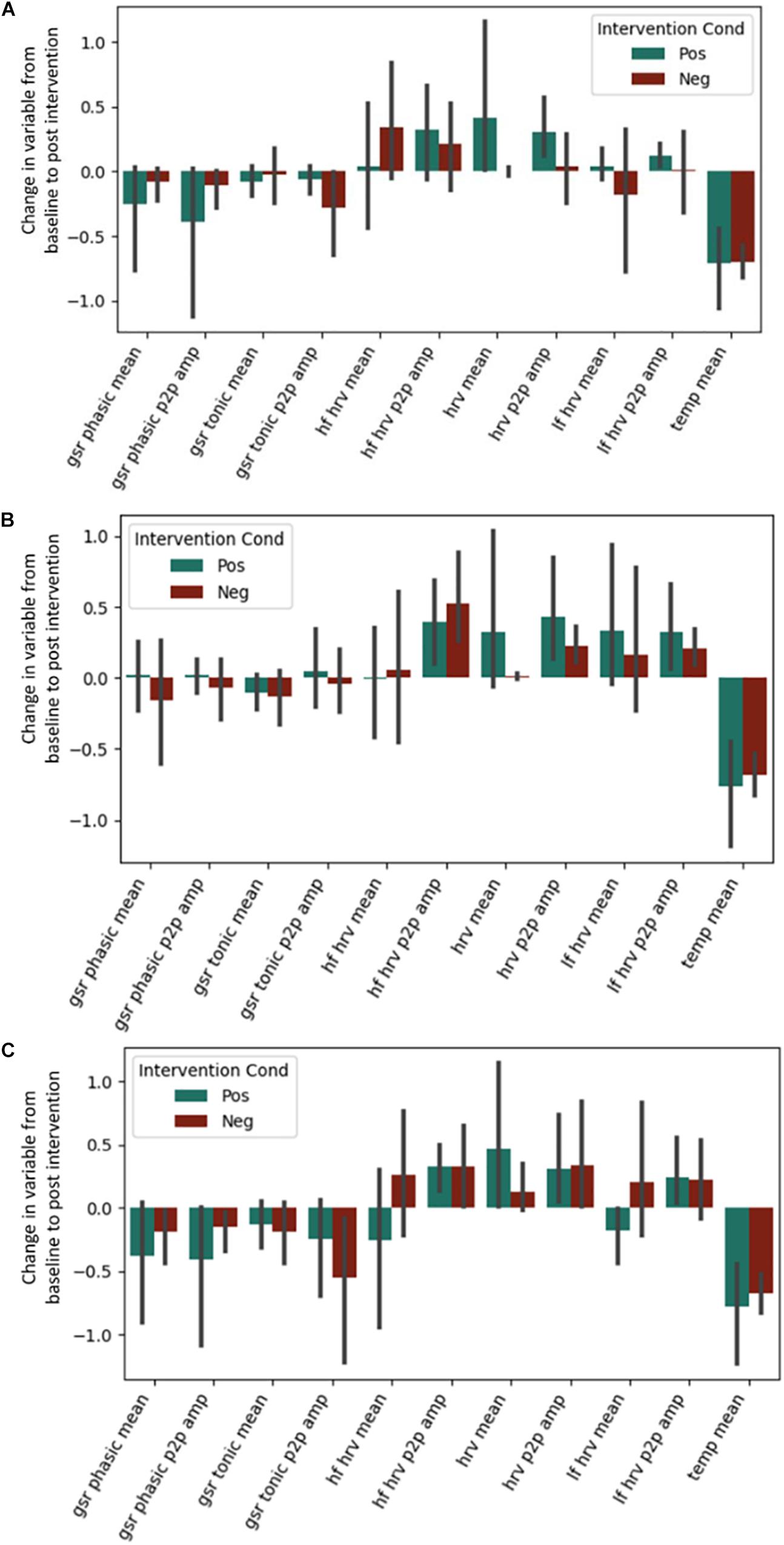

No effects of the intervention were observed for any of the autonomic physiological measures (see Figure 3). This includes the predicted High Frequency HRV, which was expected to be greater in the positive condition. Exact coefficients and p-values are available in Supplementary Material (see Supplementary Tables S3–S5).

Figure 3. Post-intervention changes in autonomic variables during the (A) Questionnaire Task, (B) Emotional Memory Task, and (C) Situation Construal Task, by intervention type. GSR = Galvanic Skin Response. LF = Low Frequency. HF = High Frequency. HRV = Heart Rate Variability. Temp = Temperature. P2P = Peak to peak. No significant differences were found across intervention conditions.

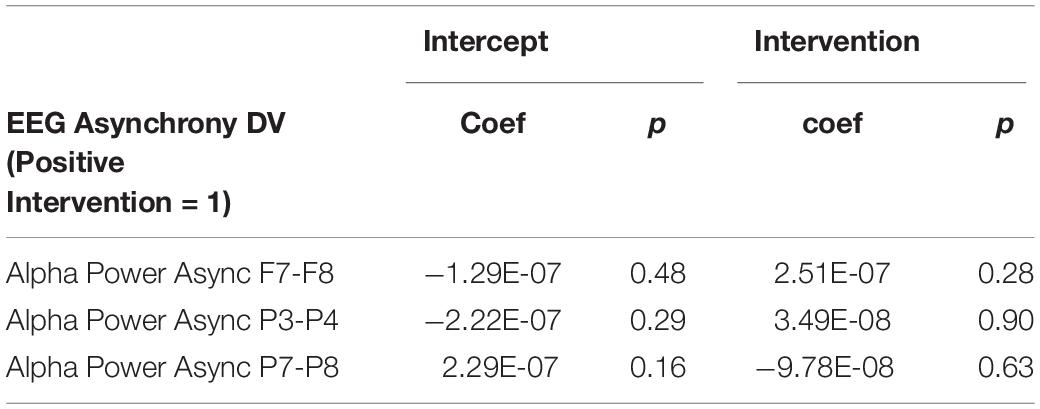

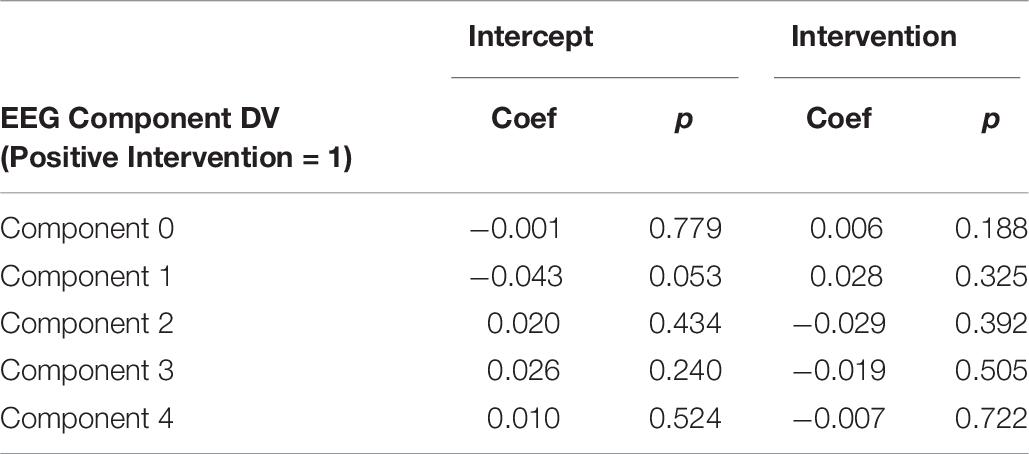

EEG Variables

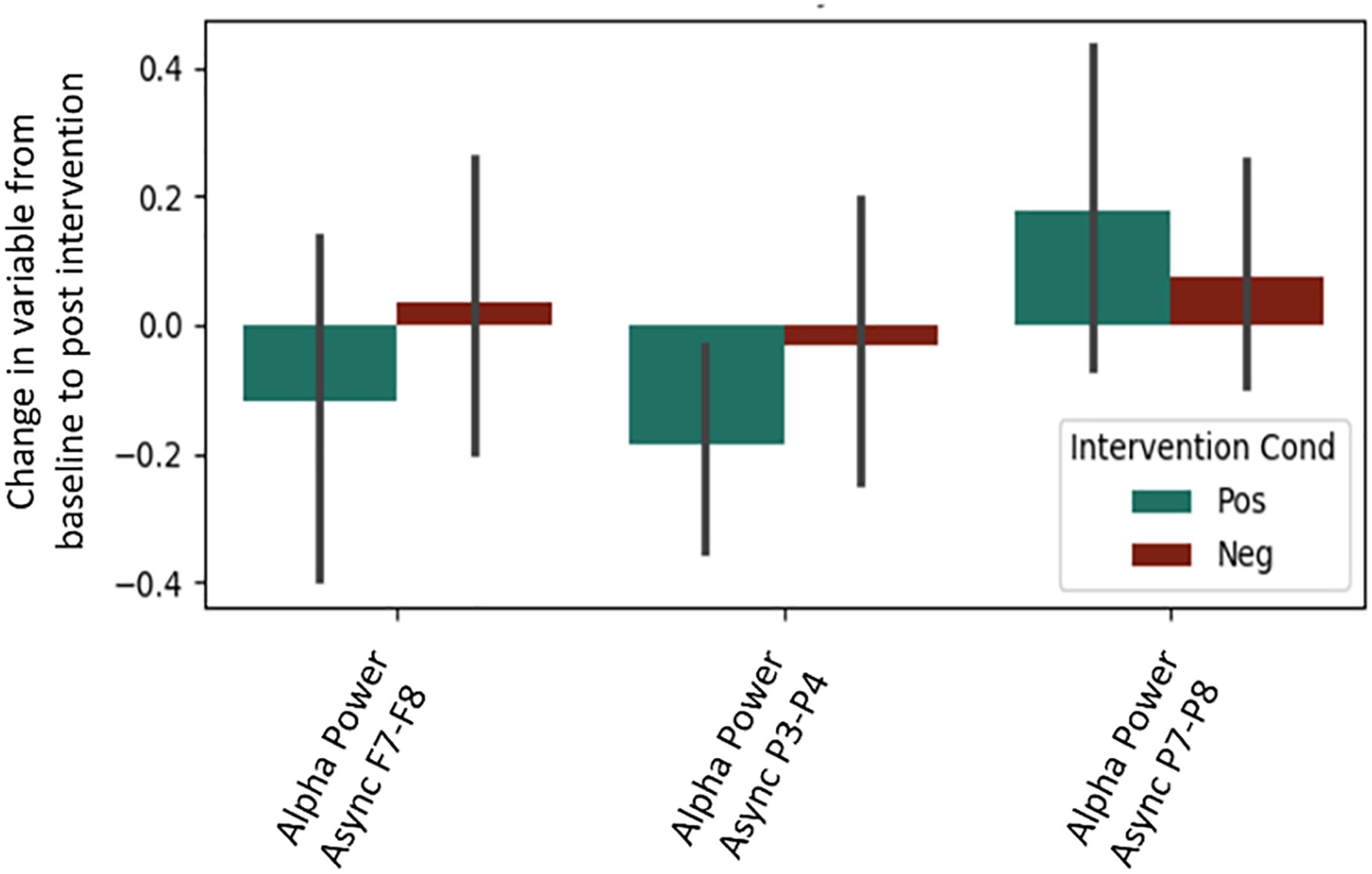

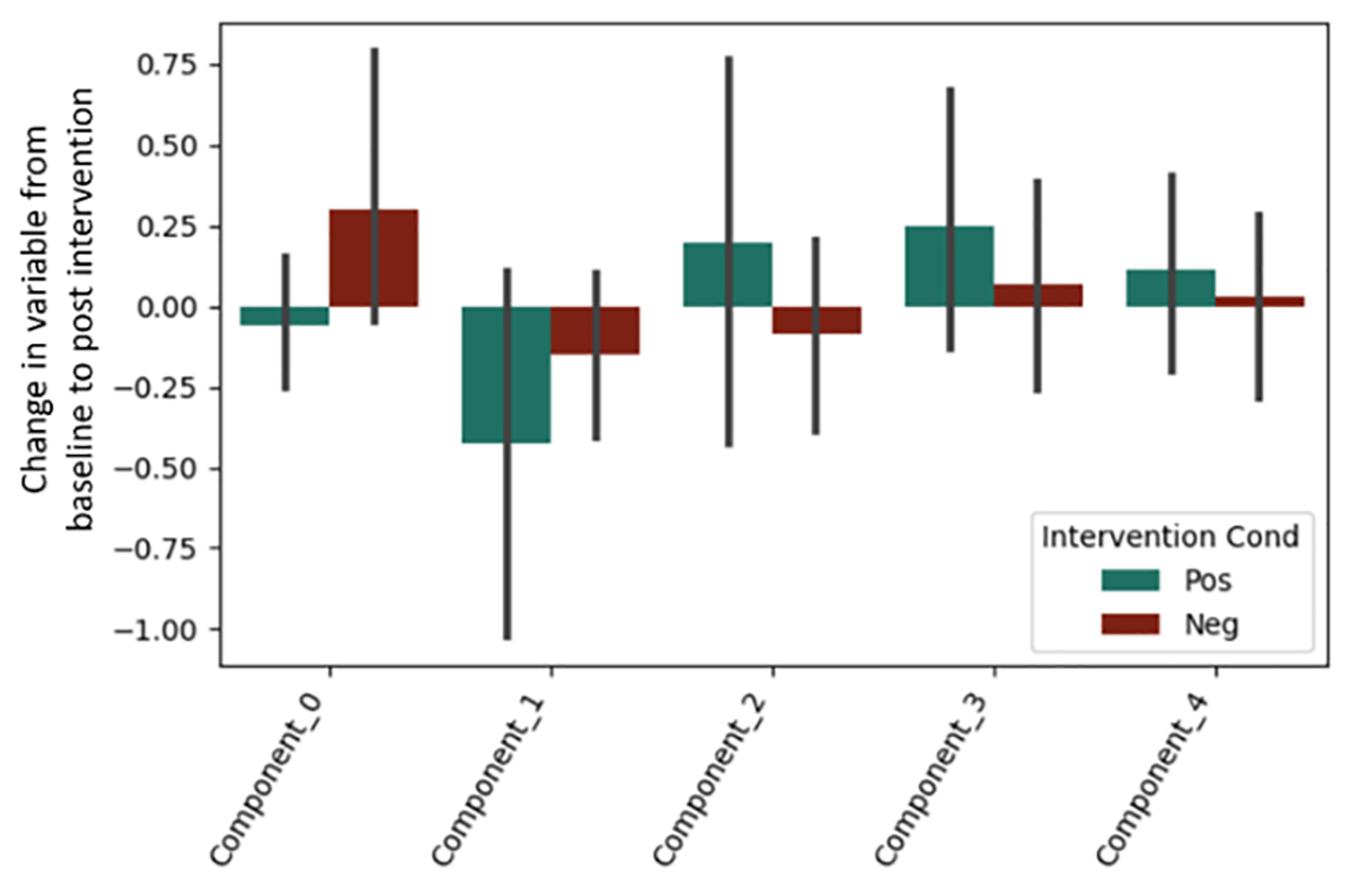

It is important to note that a substantial quantity of EEG data was lost due to experimenter error, poor signal connection, and/or artifact contamination. Only 35 participants (out of 86 total) are included in the EEG analysis; hence, these results should be treated as exploratory. We began by testing EEG frontal alpha asymmetry, a measure that has been used to quantify affect in the past (Coan and Allen, 2004; Wheeler et al., 2007). Prediction of the pre-post intervention difference from intervention type yielded no significant effects for asymmetry (Table 5 and Figure 4). However, the direction of the effect matched that reported in previous literature, and the lack of significance may be due to low power. Analysis of the 5 PCA components extracted from all EEG channels and measures also showed no differences between intervention conditions (Table 6 and Figure 5).
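Frontal alpha asymmetry is conventionally scored as ln(right alpha power) − ln(left alpha power) at a homologous electrode pair. Because alpha power is inversely related to cortical activity, higher scores indicate relatively greater left-frontal activity. This section does not state the exact electrode pair or band used, so the sketch assumes the F8/F7 pair and an 8–13 Hz alpha band. Both are illustrative choices.

```python
import numpy as np
from scipy.signal import welch


def band_power(x, fs=128.0, band=(8.0, 13.0)):
    """Welch estimate of power in `band` (Hz) for one channel."""
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), 256))
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return float(psd[mask].sum() * (freqs[1] - freqs[0]))


def frontal_alpha_asymmetry(right, left, fs=128.0):
    """ln(right alpha) - ln(left alpha); e.g., right = F8, left = F7 (assumed pair)."""
    return float(np.log(band_power(right, fs)) - np.log(band_power(left, fs)))


fs = 128.0
t = np.arange(0, 10, 1 / fs)
rng = np.random.default_rng(3)
f8 = 2.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)
f7 = 1.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)
score = frontal_alpha_asymmetry(f8, f7)  # about ln(4): more right-frontal alpha
```

The pre-post outcome analyzed above would then be the post-intervention score minus the baseline score for each participant.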

Figure 4. Post-intervention changes in EEG asymmetry variables by intervention type. No significant differences were found across intervention conditions.

Figure 5. Post-intervention changes in EEG PCA components by intervention type. No significant changes were found across intervention conditions.

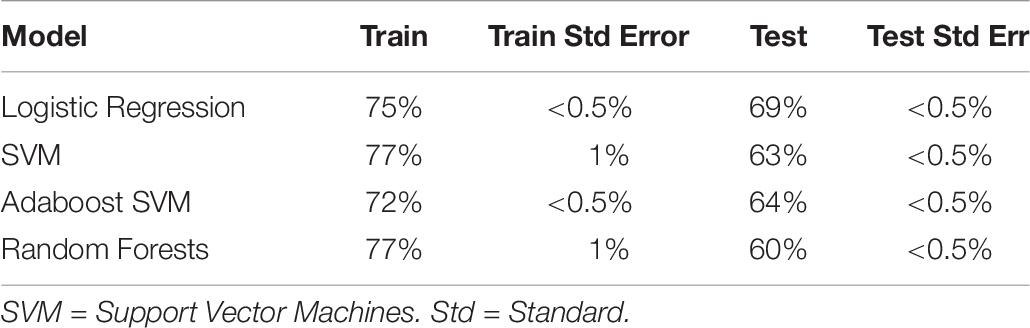

Machine Learning

Self-Report Variables

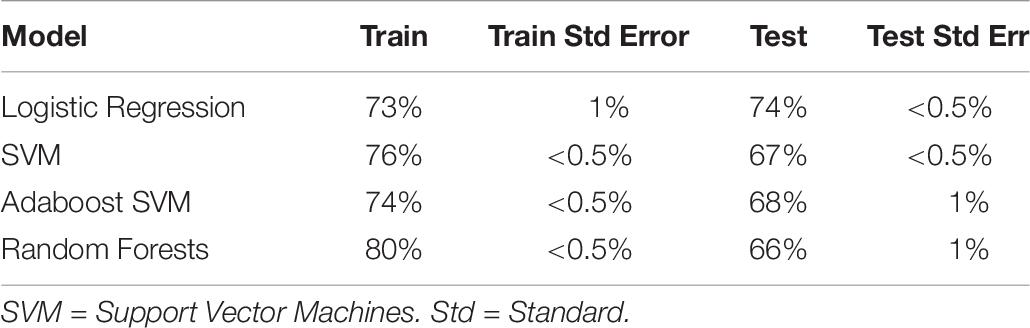

We then turned to a machine learning analysis, in which we predicted the intervention condition from the change in measures between baseline and post-test. We followed a hierarchical approach, starting with only self-report variables and then adding cognitive, autonomic, and EEG variables in a stepwise manner. Prediction accuracy using self-report variables served as a baseline. Any increase above this accuracy with the addition of new variables would mean that those variables helped predict intervention condition and were hence differentially affected by intervention type. Using self-report variables alone (with Studies 1 and 2 combined; see Table 7), we found logistic regression to be the best classifier, predicting the intervention condition with 74% test accuracy.

Table 7. Train and test accuracy for machine learning models of self-report variables for Studies 1 and 2 combined.

The L1 regularization of logistic regression forces the beta weights of weakly predictive or highly collinear measures to zero, thereby performing feature selection. Unsurprisingly, we found that the measures that were predictive in the univariate analysis were also predictive here: negative social emotions (β = –0.62), positive affect (β = 0.46), gratitude (β = 0.23), and elevation (β = 0.23). Optimism, which did not survive familywise error correction in the original regression analysis, was also found to be predictive (β = 0.16). Other weakly predictive measures were competence, self-esteem: social, and meaning in life: search (β = 0.01, 0.01, and 0.02, respectively). Due to its high collinearity with positive affect and negative social emotions, negative affect, which was significant in the regression analysis, was not predictive here (β = 0).

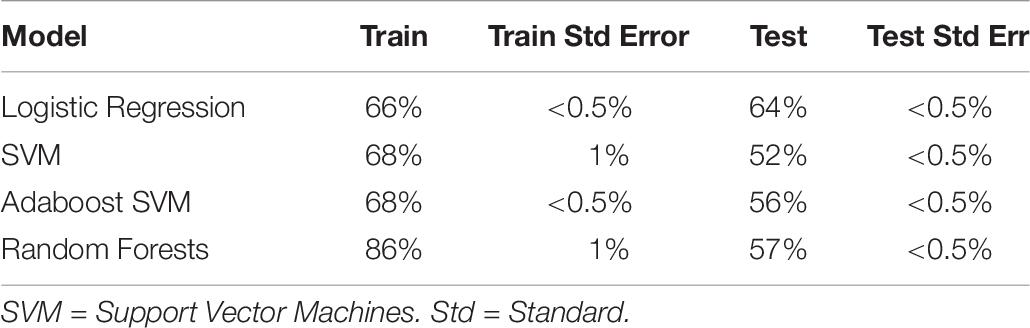

We also ran the above analysis for Experiment 2 only, to aid later comparison with the physiological measures (see Table 8). Interestingly, classifiers trained on Experiment 2 generally had worse accuracy than those trained on both experiments combined (best: 64% test accuracy). This was not due to the reduction in sample size, as an equivalent sample size (86 subjects, sampled randomly) produced accuracy equivalent to that of both datasets combined. The cause of this discrepancy is unknown. One possibility is that the discomfort, unfamiliarity, length, and solitude of the EEG procedure and electrode cap dissipated the psychological effects of the positive and negative interventions.

Table 8. Train and test accuracy for machine learning models of self-report variables (Experiment 2 only).

Adding Cognitive Variables

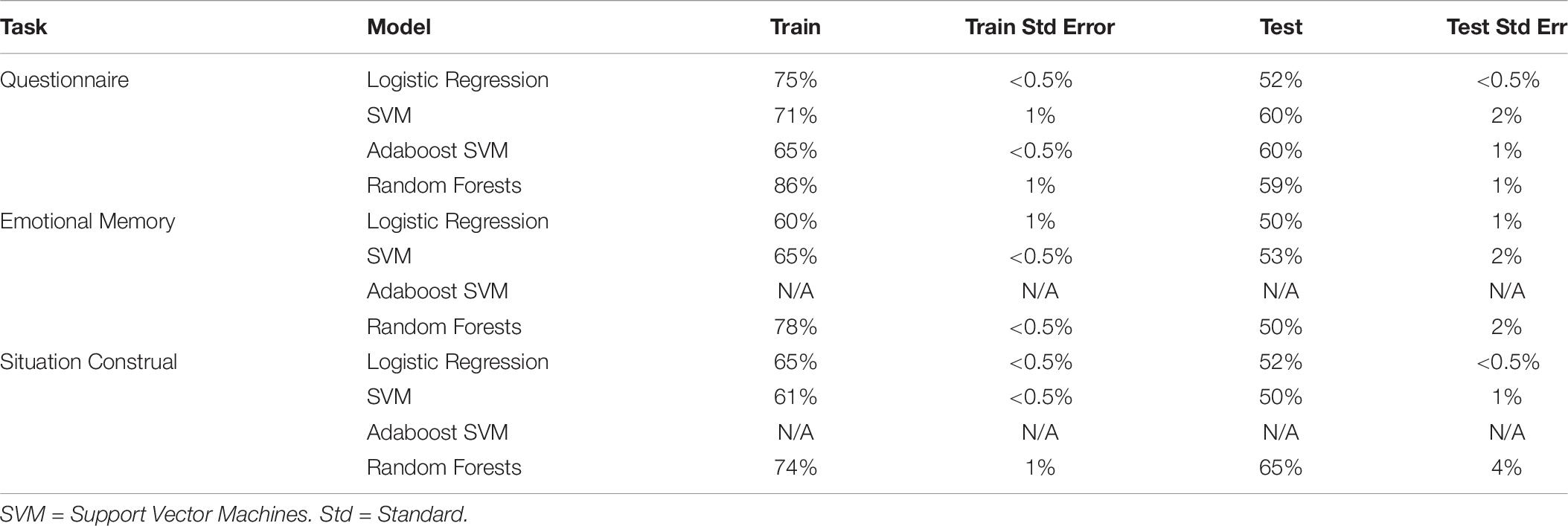

Next, we added cognitive variables to determine whether they aided in detecting an intervention effect. However, because adding predictor variables led to overfitting, we first reduced the original set of 15 self-report variables to 5 using PCA. Using those five self-report PCA components alone, test accuracy was reduced by only 1% (to 73%). Adding the 18 cognitive variables did not increase classification accuracy and instead reduced it (see Table 9).

Given the reduction in performance, overfitting was likely, so we reduced the number of predictors by also performing PCA on the cognitive variables and then added these components to the self-report PCA components. The best test accuracy was again observed for logistic regression, but it only matched that of the self-report PCA components alone (72 ± 1%). Predicting intervention condition from cognitive variables alone resulted in chance performance at test for all classifiers. We conclude that, as in the regression analysis, the machine learning analysis did not find evidence that cognitive variables were impacted by the positive or negative activity manipulation.
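One way to keep this block-wise reduction from leaking test information is to fit the PCA steps inside the classification pipeline, so that components are re-estimated on each training fold. The sketch below does this with scikit-learn's `ColumnTransformer`. The text does not state whether PCA was fit inside or outside cross-validation, so this arrangement, the column layout, and the helper name are all assumptions.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline, make_pipeline
from sklearn.preprocessing import StandardScaler


def blockwise_pca_classifier(n_self_report, n_cognitive, n_components=5, C=1.0):
    """Standardize + PCA each variable block separately, then L1 logistic regression.

    Assumes columns [0, n_self_report) hold self-report changes and the next
    n_cognitive columns hold cognitive changes.
    """
    self_report_cols = list(range(n_self_report))
    cognitive_cols = list(range(n_self_report, n_self_report + n_cognitive))
    blocks = ColumnTransformer([
        ("self_report", make_pipeline(StandardScaler(), PCA(n_components)), self_report_cols),
        ("cognitive", make_pipeline(StandardScaler(), PCA(n_components)), cognitive_cols),
    ])
    return Pipeline([
        ("blocks", blocks),
        ("clf", LogisticRegression(penalty="l1", solver="liblinear", C=C)),
    ])


# Simulated data: self-report items share one condition-related factor;
# cognitive variables are pure noise.
rng = np.random.default_rng(4)
condition = np.repeat([0, 1], 60)
factor = condition - 0.5 + 0.3 * rng.normal(size=120)
self_report = factor[:, None] + rng.normal(size=(120, 14))
cognitive = rng.normal(size=(120, 18))
X = np.hstack([self_report, cognitive])
scores = cross_val_score(blockwise_pca_classifier(14, 18), X, condition, cv=5)
```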

Adding Autonomic Variables

Physiological measures were collected during Experiment 2 only. To test whether autonomic variables added predictive power beyond self-report, we added 5 PCA components extracted from the autonomic variables to the self-report PCA components and then predicted intervention condition. Physiology was extracted from three tasks (questionnaire, emotional memory, situation construal), and each was added to the self-report variables in separate models (see Table 10). No model that included both physiology and self-report (best test accuracy: 64 ± 4%) performed better than self-report alone (test accuracy: 64 ± 0.5%), and most resulted in chance performance. Further, models trained only on physiology resulted in chance test performance.

Table 10. Train and test accuracy for machine learning models of autonomic variables during the questionnaire task.

EEG

Prediction of the intervention condition from EEG components yielded chance performance for all models. This is not surprising given the low sample size.

Relation Between Cognitive, Physiological and Self Report Variables

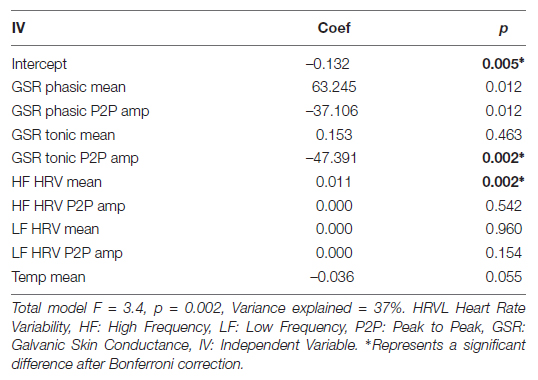

To investigate whether cognitive and physiological measures are correlated with self-report variables in the context of our intervention, we ran a series of models predicting each self-report measure from cognitive or physiological measures. We found one significant model, in which Positive Affect was predicted by physiological variables during the emotional memory task (Table 11). Significant predictors of Positive Affect were Tonic GSR (Peak to Peak Amplitude) and the mean of High Frequency HRV.

Table 11. Regression coefficients for predicting positive affect from physiological variables during emotional memory task.

Conclusion and Discussion

Well-being science, and social psychology in general, have traditionally relied on self-report to measure target variables (Baumeister et al., 2007). However, the potential bias in these measures, even when they are rigorously validated, has propelled interest in alternative, more objective approaches. Here we investigated the efficacy of cognitive and physiological measures in detecting the effect of positive versus negative activity interventions on well-being. While self-report variables produced significant results, we were unable to detect robust effects of well-being change in any cognitive or physiological measure. Our null results may have been the result of chance, low power, or methodological limitations (see below), and we cannot conclusively establish whether our intervention had no cognitive or physiological effect. Additionally, we found limited evidence for a relation between any individual cognitive or physiological measure and any specific self-report variable. If power issues limited our physiological or cognitive machine learning findings, then the lack of relationships between self-report and physiological/cognitive measures suggests that self-report captures a component of well-being that is orthogonal to these alternative measures. In conclusion, we suggest that these relatively more “objective” measures are not the silver bullet, set to revolutionize social psychological and well-being science, that some have purported them to be (Pietro et al., 2014).

Although we did not detect significant effects of positive versus negative activity manipulations using cognitive and physiological measures, it is important to note some of the limitations of our approach and outline recommendations for future work.

Physiological Data Collection Is Noisy and Restrictive and May Not Map to Affective Valence

While considered more objective, biological indicators are not themselves unambiguous measures of human happiness or unhappiness (Oswald and Wu, 2010). Previous theoretical work has argued that only arousal levels, and not the valence of emotion, are detectable via physiology (Schachter and Singer, 1962). In our design, we pitted induced negative affect against positive affect, and, while the valences were clearly different, the arousal levels may have been similar, resulting in a null effect. We quantified physiological measures while subjects performed cognitive tasks, thereby allowing for event-driven analysis. Although we strove for low motion artifacts, task-relevant cognition may have produced “artifacts” that masked our ability to detect affect change. We therefore recommend that future studies also record physiological measures during a prolonged period of low arousal, in which no stimulus is present. Further, physiological signals are inherently noisy and prone to interference from movement, sweat, or electromagnetic sources. Future investigators should be cognizant of their low signal-to-noise ratio and the need for substantial preprocessing (e.g., filtering, artifact rejection, dimensionality reduction, and feature extraction). Each preprocessing step demands careful consideration of a number of method “tuning” parameters, which reduces the accessibility of these methods to the average social scientist. Furthermore, reducing noise sources (e.g., via electrostatically shielded rooms or limited participant movement) may place undue restrictions on lab-based PAI experimentation, and would certainly limit field studies.

Research-Grade Devices Should Be Preferred

The devices used in our research (Feel wristband, modified Emotiv EPOC), while low-cost and wireless, were found to be limiting for rigorous research methodology. Neither device had the ability to embed stimulus triggers. The Feel device was synchronized with the stimulus computer, and we assumed that its internal clock was robust and remained synchronized throughout the experimental procedure. Furthermore, piloting revealed that the Emotiv device became unsynchronized during the experimental procedure. This was overcome by co-opting two EEG channels as a bipolar stimulus channel, driven by a photodiode connected to the stimulus presentation computer. It is possible that the synchronizing photodiode added artifacts that were not removed by our artifact rejection procedure, and this may have influenced our results. Further, the low sample rate of the device (128 Hz) may have hidden effects. The combination of numerous operational errors with the device and excessive noise resulted in more than half of participants’ EEG data being lost. Hence, the lack of significant EEG results may be due to low sample size, poor device quality, or artifacts. While considerable care was taken to ensure that all signals were synchronized and denoised, the process was time-consuming, and many of these problems could have been mitigated with dedicated research-grade devices.

Limitations of Measures

Although we selected self-report, cognitive, and physiological measures based on their prior utility and theory, our goal was not to develop a model that unifies self-report and physiological measures. However, we note the lack of any published theoretical model linking these measures. We also recognize that because the manipulation changes many correlated constructs, it is hard to draw conclusions about the specific relationship between any one self-report construct and any specific physiological/cognitive measure. A useful addition to the field would be a set of repeated-measures studies to determine the relationship between these measures in a variety of contexts, focusing on measure reliability as well as their convergent and discriminant validity.

Appropriate Control of Overfitting Should Be Employed

Due to the exploratory nature of this study, we took a model-free analysis approach, in which variables were combined using a number of machine learning techniques to predict the intervention (positive vs. negative) condition. We made heavy use of cross-validation to reduce the possibility of overfitting (or false positives). We highlight this as especially important because, given the sheer number of variables produced by each device and the number of statistical comparisons that could be made, false positives are highly probable. Previous literature highlights the inconsistency of physiology in the detection of affect, as well as the possibility of Type I errors (given that a different set of variables was found to be predictive in each experiment; Calvo and Mello, 2015). Therefore, we recommend that future studies use appropriate methods to protect against spurious results, coupled with appropriate power analysis. Another promising avenue is model-based analysis, such as that employed in Bayesian cognitive modeling (M. D. Lee and Wagenmakers, 2014). With this approach, a number of specific theories that build in biologically plausible relations as priors (as parameterized by models) can be compared. The conclusions drawn are graded (one theory is favored X times more than another), as opposed to the all-or-nothing approach of null hypothesis testing.

Effect Is Small and Highly Variable

We found evidence of small effect sizes in our research, such that machine learning conducted on psychological variables could predict the intervention condition with only 64% accuracy (Revord et al., 2019). If we take the self-report variables as unbiased, and a small effect as given, then this small effect may simply have been insufficient to produce a detectable cognitive and physiological response. Future studies should increase power via a larger sample size or attempt to increase the intervention effect. One way is to include additional well-being activities [e.g., counting one’s blessings (Emmons and McCullough, 2003), practicing optimism (King, 2001), performing random acts of kindness (Nelson et al., 2016), using one’s strengths in a new way (Seligman et al., 2005), affirming one’s most important values (Nelson and Lyubomirsky, 2014), or meditating on positive feelings toward oneself (Fredrickson et al., 2008)]. Another is to expand the design longitudinally and thereby detect the cumulative effects of a weaker intervention. The small effect size may also reflect high variance in our measures. Previous investigators have noted that physiological responses are trait-like (O’Gorman and Horneman, 1979; Pinna et al., 2007) and may be dampened or exaggerated in some people (Gerritsen et al., 2003), which may have contributed to increased variance and the lack of a discernible effect. We recommend adding a baseline task in future studies, in which the physiological response to a large number of highly emotionally arousing stimuli is recorded in the same participants and used to verify a detectable physiological change. This approach would also allow for the exclusion of subjects with low physiological responses, as well as for individual tuning of methods (with the caveat that the results would not generalize to the full population).
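As a rough planning aid, the number of participants per group needed to detect a standardized mean difference d between two conditions can be approximated with the normal formula n = 2[(z₁₋α/₂ + z₁₋β)/d]². The sketch below is a textbook approximation, not an analysis from this study. It slightly underestimates the exact two-sample t-test answer (e.g., 63 rather than 64 per group for d = 0.5, α = 0.05, power = 0.80).

```python
import math

from scipy.stats import norm


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants per condition for a two-sided, two-sample test."""
    z_alpha = norm.ppf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = norm.ppf(power)           # quantile giving the desired power
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


print(n_per_group(0.5))                   # 63
print(n_per_group(0.5, alpha=0.05 / 14))  # a Bonferroni-corrected alpha needs more
```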
We include a table of means and standard deviations of all measures in the Supplementary Material (Supplementary Tables S6–S11) so that future investigators can estimate the expected effect size and hence the required sample size of future studies. Further, no neutral condition was included in which, for example, participants might be asked to recall memories without any strong emotional component. As a result, we could only compare positive and negative conditions. If some measures respond only to the arousal component of emotion (as argued previously), then we would have been unable to detect a difference.

In conclusion, our study replicated previous research on the beneficial effects of writing about prosocial events as quantified by self-report. Although we did not clearly demonstrate cognitive effects of a PAI, neither did we obtain conclusive evidence for a lack of cognitive or physiological effects. Our study introduces a new line of inquiry about the robustness and durability of the effects of positive interventions on the brain and body, which could lead to insights in determining the optimal “dosage” of kindness and gratitude interventions. We believe this paper represents a first step in introducing more cognitive paradigms into the positive activity literature and sets a precedent for the use of more objective measures in such research. However, in light of the limitations of the methods and study design, we recommend that the field consider these measures while bearing in mind our recommendations for future work. More research is needed to investigate the conditions under which these measures may be feasible and useful, but we stress that they should not be unilaterally favored over the traditional self-report approach.

Data Availability

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

Students reviewed and signed a consent form which detailed the study procedures. This consent form was reviewed and approved by the University of California, Riverside Institutional Review Board.

Author Contributions

BY contributed to conceptualization, methodology, data curation, formal analysis, investigation, literature review, visualization, and manuscript preparation. JR contributed to conceptualization, methods, methodology, investigation, and literature review. SM contributed to conceptualization, methodology, and investigation. SL and AS initially conceived the study, administered the study, and oversaw methods and investigation. All authors edited and approved the final manuscript.

Funding

BY was supported by an NSF GRFP Fellowship.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Panagiotis Fatouros, Haris Tsirbas, and George Eleftheriou at Sentio for their donation of the Feel device and continual support. The authors would also like to thank the research assistants at the University of California, Riverside for their hard work in collecting the data.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.01630/full#supplementary-material

References

Algoe, S. B. (2012). Find, remind, and bind: the functions of gratitude in everyday relationships. Soc. Personal. Psychol. Compass 6, 455–469. doi: 10.1111/j.1751-9004.2012.00439.x

Bao, F. S., Liu, X., and Zhang, C. (2011). PyEEG: an open source python module for EEG/MEG feature extraction. Comput. Intell. Neurosci. 2011:406391. doi: 10.1155/2011/406391

Baumeister, R. F., Vohs, K. D., and Funder, D. C. (2007). Psychology as the science of self-reports and finger movements: whatever happened to actual behavior? Perspect. Psychol. Sci. 2, 396–403. doi: 10.1111/j.1745-6916.2007.00051.x

Beaton, D. E., Bombardier, C., Guillemin, F., and Ferraz, M. B. (2000). Guidelines for the process of cross-cultural adaptation of self-report measures. Spine 25, 3186–3191. doi: 10.1097/00007632-200012150-00014

Bolier, L., Haverman, M., Westerhof, G. J., Riper, H., Smit, F., and Bohlmeijer, E. (2013). Positive psychology interventions: a meta-analysis of randomized controlled studies. BMC Public Health 13:119. doi: 10.1186/1471-2458-13-119

Bradley, M. M., and Lang, P. J. (1999). Affective Norms for English Words (ANEW): Instruction Manual and Affective Ratings. Gainesville, FL: University of Florida.

Calvo, R. A., and D’Mello, S. (2010). Affect detection: an interdisciplinary review of models, methods, and their applications. IEEE Trans. Affect. Comput. 1, 18–37. doi: 10.1109/T-AFFC.2010.1

Calvo, R. A., Vella-Brodrick, D., Desmet, P., and Ryan, R. M. (2016). Editorial for “Positive computing: a new partnership between psychology, social sciences and technologists.” Psychol. Well Being 6:10. doi: 10.1186/s13612-016-0047-1

Chancellor, J., Margolis, S., Jacobs Bao, K., and Lyubomirsky, S. (2018). Everyday prosociality in the workplace: the reinforcing benefits of giving, getting, and glimpsing. Emotion 18, 507–517. doi: 10.1037/emo0000321

Chanel, G., Kierkels, J. J. M., Soleymani, M., and Pun, T. (2009). Short-term emotion assessment in a recall paradigm. Int. J. Hum. Comp. Stud. 67, 607–627. doi: 10.1016/j.ijhcs.2009.03.005

Chen, L., Gong, T., Kosinski, M., Stillwell, D., and Davidson, R. L. (2017). Building a profile of subjective well-being for social media users. PLoS One 12:e0187278. doi: 10.1371/journal.pone.0187278

Coan, J. A., and Allen, J. J. B. (2004). Frontal EEG asymmetry as a moderator and mediator of emotion. Biol. Psychol. 67, 7–50. doi: 10.1016/j.biopsycho.2004.03.002

Cortes, C., and Vapnik, V. (1995). Support-vector networks. Mach. Learn. 20, 273–297. doi: 10.1007/BF00994018

Davidson, R. J. (1993). Cerebral asymmetry and emotion: conceptual and methodological conundrums. Cogn. Emot. 7, 115–138. doi: 10.1080/02699939308409180

Davidson, R. J. (2004). What does the prefrontal cortex “do” in affect: perspectives on frontal EEG asymmetry research. Biol. Psychol. 67, 219–234. doi: 10.1016/j.biopsycho.2004.03.008

Debener, S., Minow, F., Emkes, R., Gandras, K., and de Vos, M. (2012). How about taking a low-cost, small, and wireless EEG for a walk? Psychophysiology 49, 1617–1621. doi: 10.1111/j.1469-8986.2012.01471.x

Denny, E. B., and Hunt, R. R. (1992). Affective valence and memory in depression: dissociation of recall and fragment completion. J. Abnorm. Psychol. 101, 575–580. doi: 10.1037//0021-843x.101.3.575

Derryberry, D., and Reed, M. A. (1998). Anxiety and attentional focusing: trait, state and hemispheric influences. Personal. Individ. Differ. 25, 745–761. doi: 10.1016/s0191-8869(98)00117-2

Diener, E. (2000). Subjective well-being: the science of happiness and a proposal for a national index. Am. Psychol. 55, 34–43. doi: 10.1037//0003-066x.55.1.34

Diener, E., Pressman, S. D., Hunter, J., and Delgadillo-Chase, D. (2017). If, why, and when subjective well-being influences health, and future needed research. Appl. Psychol. Health Well Being 9, 133–167. doi: 10.1111/aphw.12090

Diener, E., Suh, E. M., Lucas, R. E., and Smith, H. L. (1999). Subjective well-being: three decades of progress. Psychol. Bull. 125, 276–302. doi: 10.1037//0033-2909.125.2.276

D’Mello, S. K., and Kory, J. (2015). A review and meta-analysis of multimodal affect detection systems. ACM Comput. Surv. 47, 1–36. doi: 10.1145/2682899

Dunn, E. W., Aknin, L. B., and Norton, M. I. (2008). Spending money on others promotes happiness. Science 319, 1687–1688. doi: 10.1126/science.1150952

Dunning, D., Heath, C., and Suls, J. M. (2004). Flawed self-assessment: implications for health, education, and the workplace. Psychol. Sci. Public Interest 5, 69–106. doi: 10.1111/j.1529-1006.2004.00018.x

Easycap GmbH (n.d.). Step-by-Step Instructions to Rework Emotiv, Build a Mobile Cap, and Join Both Together. V.26.07.2013.

Ekman, P., Levenson, R. W., and Friesen, W. V. (1983). Autonomic nervous system activity distinguishes among emotions. Science 221, 1208–1210. doi: 10.1126/science.6612338

Emmons, R. A., and Diener, E. (1985). Personality correlates of subjective well-being. Personal. Soc. Psychol. Bull. 11, 89–97. doi: 10.1177/0146167285111008

Emmons, R. A., and McCullough, M. E. (2003). Counting blessings versus burdens: experimental studies of gratitude and subjective well-being. J. Personal. Soc. Psychol. 84, 377–389. doi: 10.1037//0022-3514.84.2.377

Finucane, A. M., Whiteman, M. C., and Power, M. J. (2010). The effect of happiness and sadness on alerting, orienting, and executive attention. J. Attent. Disord. 13, 629–639. doi: 10.1177/1087054709334514

Fox, E., Russo, R., Bowles, R., and Dutton, K. (2001). Do threatening stimuli draw or hold visual attention in subclinical anxiety? J. Exp. Psychol. Gen. 130, 681–700. doi: 10.1037//0096-3445.130.4.681

Fredrickson, B. L., Cohn, M. A., Coffey, K. A., Pek, J., and Finkel, S. M. (2008). Open hearts build lives: positive emotions, induced through loving-kindness meditation, build consequential personal resources. J. Personal. Soc. Psychol. 95, 1045–1062. doi: 10.1037/a0013262

Freund, Y., and Schapire, R. (1999). A short introduction to boosting. J. Jpn. Soc. Artif. Intell. 14, 771–780.

Funder, D. C. (2016). Taking situations seriously: the situation construal model and the Riverside Situational Q-Sort. Curr. Dir. Psychol. Sci. 25, 203–208. doi: 10.1177/0963721416635552

Funder, D. C., Furr, R. M., and Colvin, C. R. (2000). The Riverside Behavioral Q-sort: a tool for the description of social behavior. J. Personal. 68, 451–489. doi: 10.1111/1467-6494.00103

Gerritsen, J., TenVoorde, B. J., Dekker, J. M., Kingma, R., Kostense, P. J., Bouter, L. M., et al. (2003). Measures of cardiovascular autonomic nervous function: agreement, reproducibility, and reference values in middle age and elderly subjects. Diabetologia 46, 330–338. doi: 10.1007/s00125-003-1032-9

Gotlib, I. H., Krasnoperova, E., Yue, D. N., and Joormann, J. (2004). Attentional biases for negative interpersonal stimuli in clinical depression. J. Abnorm. Psychol. 113, 121–135. doi: 10.1037/0021-843x.113.1.121

Haiblum-Itskovitch, S., Czamanski-Cohen, J., and Galili, G. (2018). Emotional response and changes in heart rate variability following art-making with three different art materials. Front. Psychol. 9:968. doi: 10.3389/fpsyg.2018.00968

Haidt, J. (2003). “Elevation and the positive psychology of morality,” in Flourishing: Positive Psychology and the Life Well-Lived, eds C. L. M. Keyes and J. Haidt (Washington, DC: American Psychological Association), 275–289. doi: 10.1037/10594-012

Harker, L., and Keltner, D. (2001). Expressions of positive emotion in women’s college yearbook pictures and their relationship to personality and life outcomes across adulthood. J. Personal. Soc. Psychol. 80, 112–124. doi: 10.1037//0022-3514.80.1.112

Hinrichs, H., and Machleidt, W. (1992). Basic emotions reflected in EEG-coherences. Int. J. Psychophysiol. 13, 225–232. doi: 10.1016/0167-8760(92)90072-J

Hunsinger, M., Livingston, R., and Isbell, L. (2013). The impact of loving-kindness meditation on affective learning and cognitive control. Mindfulness 4, 275–280. doi: 10.1007/s12671-012-0125-2

Jerritta, S., Murugappan, M., Nagarajan, R., and Wan, K. (2011). “Physiological signals based human emotion recognition: a review,” in Proceedings of the 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, (Penang), 410–415. doi: 10.1109/CSPA.2011.5759912

Kim, K. H., Bang, S. W., and Kim, S. R. (2004). Emotion recognition system using short-term monitoring of physiological signals. Med. Biol. Eng. Comput. 42, 419–427. doi: 10.1007/BF02344719

King, L. A. (2001). The health benefits of writing about life goals. Personal. Soc. Psychol. Bull. 27, 798–807. doi: 10.1177/0146167201277003

Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., Broussard, C., et al. (2007). What’s new in psychtoolbox-3. Perception 36:1.

Koelstra, S., Yazdani, A., Soleymani, M., Mühl, C., Lee, J.-S., Nijholt, A., et al. (2010). “Single trial classification of EEG and peripheral physiological signals for recognition of emotions induced by music videos,” in Brain Informatics, eds Y. Yao, R. Sun, T. Poggio, J. Liu, N. Zhong, and J. Huang (Berlin: Springer), 89–100. doi: 10.1007/978-3-642-15314-3_9

Kop, W. J., Synowski, S. J., Newell, M. E., Schmidt, L. A., Waldstein, S. R., and Fox, N. A. (2011). Autonomic nervous system reactivity to positive and negative mood induction: the role of acute psychological responses and frontal electrocortical activity. Biol. Psychol. 86, 230–238. doi: 10.1016/j.biopsycho.2010.12.003

Lang, P. J. (2005). International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-8. Gainesville, FL: University of Florida.

Layous, K., and Lyubomirsky, S. (2014). The How, Why, What, When, and Who of Happiness: Mechanisms Underlying the Success of Positive Activity Interventions. New York: Oxford University Press, 473–495.

Layous, K., Sweeny, K., Armenta, C., Na, S., Choi, I., and Lyubomirsky, S. (2017). The proximal experience of gratitude. PLoS One 12:e0179123. doi: 10.1371/journal.pone.0179123

Lazarus, R. S. (1991). Progress on a cognitive-motivational-relational theory of emotion. Am. Psychol. 46, 819–834. doi: 10.1037//0003-066x.46.8.819

Lee, J. Y. (2007). Self-enhancing bias in personality, subjective happiness, and perception of life-events: a replication in a Korean aged sample. Aging Ment. Health 11, 57–60. doi: 10.1080/13607860600736265

Lee, M. D., and Wagenmakers, E.-J. (2014). Bayesian Cognitive Modeling: A Practical Course. Cambridge: Cambridge University Press.

Liaw, A., and Wiener, M. (2002). Classification and regression by randomForest. R News 2, 18–22.

Lindsay, K. A., and Widiger, T. A. (1995). Sex and gender bias in self-report personality disorder inventories: item analysis of the MCMI-II, MMPI, and PDQ-R. J. Personal. Assess. 65, 100–116. doi: 10.1207/s15327752jpa6501

Lyubomirsky, S., Dickerhoof, R., Boehm, J. K., and Sheldon, K. M. (2011). Becoming happier takes both a will and a proper way: an experimental longitudinal intervention to boost well-being. Emotion 11, 391–402. doi: 10.1037/a0022575

Lyubomirsky, S., King, L., and Diener, E. (2005). The benefits of frequent positive affect: does happiness lead to success? Psychol. Bull. 131, 803–855. doi: 10.1037/0033-2909.131.6.803

Lyubomirsky, S., and Tucker, K. L. (1998). Implications of individual differences in subjective happiness for perceiving, interpreting, and thinking about life events. Motiv. Emot. 22, 155–186.

Mather, M., and Carstensen, L. L. (2003). Aging and attentional biases for emotional faces. Psychol. Sci. 14, 409–414.

McCraty, R., Atkinson, M., Tiller, W. A., Rein, G., and Watkins, A. D. (1995). The effects of emotions on short-term power spectrum analysis of heart rate variability. Am. J. Cardiol. 76, 1089–1093. doi: 10.1016/s0002-9149(99)80309-9

McCullough, M. E., Emmons, R. A., and Tsang, J.-A. (2002). The grateful disposition: a conceptual and empirical topography. J. Personal. Soc. Psychol. 82, 112–127. doi: 10.1037//0022-3514.82.1.112

Mezulis, A. H., Abramson, L. Y., Hyde, J. S., and Hankin, B. L. (2004). Is there a universal positivity bias in attributions? A meta-analytic review of individual, developmental, and cultural differences in the self-serving attributional bias. Psychol. Bull. 130, 711–747. doi: 10.1037/0033-2909.130.5.711

Mirsky, A. F., Anthony, B. J., Duncan, C. C., Ahearn, M. B., and Kellam, S. G. (1991). Analysis of the elements of attention: a neuropsychological approach. Neuropsychol. Rev. 2, 109–145. doi: 10.1007/bf01109051

Naji, M., Firoozabadi, M., and Azadfallah, P. (2014). Classification of music-induced emotions based on information fusion of forehead biosignals and electrocardiogram. Cogn. Comput. 6, 241–252. doi: 10.1007/s12559-013-9239-7

Nelson, S. K., Della Porta, M. D., Jacobs Bao, K., Lee, H. C., Choi, I., and Lyubomirsky, S. (2015). ‘It’s up to you’: experimentally manipulated autonomy support for prosocial behavior improves well-being in two cultures over six weeks. J. Posit. Psychol. 10, 463–476. doi: 10.1080/17439760.2014.983959

Nelson, S. K., Layous, K., Cole, S. W., and Lyubomirsky, S. (2016). Do unto others or treat yourself? The effects of prosocial and self-focused behavior on psychological flourishing. Emotion 16, 850–861. doi: 10.1037/emo0000178

Nelson, S. K., and Lyubomirsky, S. (2014). “Finding happiness: tailoring positive activities for optimal well-being benefits,” in Handbook of Positive Emotions, eds M. M. Tugade, M. N. Shiota, and L. D. Kirby (New York, NY: Guilford Press), 275–293.

Neshat-Doost, H. T., Moradi, A. R., Taghavi, M. R., Yule, W., and Dalgleish, T. (2000). Lack of attentional bias for emotional information in clinically depressed children and adolescents on the dot probe task. J. Child Psychol. Psychiatry Allied Discipl. 41, 363–368. doi: 10.1017/S0021963099005429

Nevin, J. A. (1969). Signal detection theory and operant behavior: a review of David M. Green and John A. Swets’ Signal detection theory and psychophysics. J. Exp. Anal. Behav. 12, 475–480. doi: 10.1901/jeab.1969.12-475

O’Gorman, J. G., and Horneman, C. (1979). Consistency of individual differences in non-specific electrodermal activity. Biol. Psychol. 9, 13–21. doi: 10.1016/0301-0511(79)90019-X

Oswald, A. J., and Wu, S. (2010). Objective confirmation of subjective measures of human well-being: evidence from the U.S.A. Science 327, 576–579. doi: 10.1126/science.1180606

Otake, K., Shimai, S., Tanaka-Matsumi, J., Otsui, K., and Fredrickson, B. L. (2006). Happy people become happier through kindness: a counting kindnesses intervention. J. Happiness Stud. 7, 361–375. doi: 10.1007/s10902-005-3650-z

Pe, M. L., Koval, P., and Kuppens, P. (2013). Executive well-being: updating of positive stimuli in working memory is associated with subjective well-being. Cognition 126, 335–340. doi: 10.1016/j.cognition.2012.10.002

Phelps, E. A., and LeDoux, J. E. (2005). Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48, 175–187. doi: 10.1016/j.neuron.2005.09.025

Cipresso, P., Serino, S., and Riva, G. (2014). The pursuit of happiness measurement: a psychometric model based on psychophysiological correlates. Sci. World J. 2014:139128. doi: 10.1155/2014/139128

Pinna, G. D., Maestri, R., Torunski, A., Danilowicz-Szymanowicz, L., Szwoch, M., La Rovere, M. T., et al. (2007). Heart rate variability measures: a fresh look at reliability. Clin. Sci. 113, 131–140. doi: 10.1042/CS20070055

Prochaska, J. O., Evers, K. E., Castle, P. H., Johnson, J. L., Prochaska, J. M., Rula, E. Y., et al. (2012). Enhancing multiple domains of well-being by decreasing multiple health risk behaviors: a randomized clinical trial. Popul. Health Manag. 15, 276–286. doi: 10.1089/pop.2011.0060