
ORIGINAL RESEARCH article

Front. Psychol., 27 March 2019

Sec. Emotion Science

Volume 10 - 2019 | https://doi.org/10.3389/fpsyg.2019.00701

This article is part of the Research Topic Dynamic Emotional Communication.

Real-life faces are dynamic by nature, particularly when expressing emotion. Increasing evidence suggests that the perception of dynamic displays enhances facial mimicry and induces activation in widespread brain structures considered to be part of the mirror neuron system, a neuronal network linked to empathy. The present study is the first to investigate the relations among facial muscle responses, brain activity, and empathy traits while participants observed static and dynamic (video) facial expressions of fear and disgust. During display presentation, the blood-oxygen-level-dependent (BOLD) signal and muscle reactions of the corrugator supercilii and levator labii were recorded simultaneously from 46 healthy individuals (21 females). Both fear and disgust faces elicited activity in the corrugator supercilii muscle, while the perception of disgust additionally produced activity in the levator labii muscle, supporting a specific pattern of facial mimicry for these emotions. Moreover, individuals with higher empathy traits showed greater activity in the corrugator supercilii and levator labii muscles than individuals with lower empathy traits; however, these responses did not differ between static and dynamic modes. Conversely, neuroimaging data revealed activation of motion- and emotion-related brain structures in response to dynamic rather than static stimuli among high-empathy individuals. In line with this, there was a correlation between electromyography (EMG) responses and brain activity, suggesting that the mirror neuron system, the anterior insula, and the amygdala might constitute the neural correlates of automatic facial mimicry for fear and disgust. These results revealed that the dynamic property of (emotional) stimuli facilitates the emotion-related processing of facial expressions, especially among those with high trait empathy.

In the last decade, researchers have focused on empathy as an essential component of human social interaction. The term ‘empathy’, derived from the Greek empatheia (‘passion’), denotes a multifaceted construct that is thought to involve both cognitive (i.e., understanding another’s beliefs and feelings) and affective (i.e., the ability to share another’s feelings) components (Jankowiak-Siuda et al., 2011; Betti and Aglioti, 2016). It is believed that people empathize with others by simulating their mental states or feelings. According to the Perception-Action Model (PAM) of empathy, simulative processes discovered and defined in the domain of actions “result from the fact that the subject’s representations of the emotional state are automatically activated when the subject pays attention to the emotional state of the object” (Preston and de Waal, 2002, p. 1; de Waal and Preston, 2017). Paying attention to another’s emotional state, in turn, leads to related autonomic and somatic responses (Preston, 2007). Consistent with this model, a positive association between emotional empathy and somatic responses has been observed for both skin conductance (Levenson and Ruef, 1992; Blair, 1999; Hooker et al., 2008) and cardiac activation (Krebs, 1975; Hastings et al., 2000). This might indicate that more empathic persons react with stronger affective sharing. Recent studies also suggest that empathic traits relate to variation in facial mimicry (FM) (Sonnby-Borgström, 2002; Sonnby-Borgström et al., 2003; Dimberg et al., 2011; Balconi and Canavesio, 2013a, 2014; Rymarczyk et al., 2016b).

Facial mimicry is the spontaneous, unconscious mirroring of others’ emotional facial expressions, which leads to congruent facial muscle activity (Dimberg, 1982). This phenomenon is usually measured with electromyography (EMG; e.g., Dimberg, 1982; Larsen et al., 2003). Evidence for FM has been most consistently reported when viewing happy (Dimberg and Petterson, 2000; Weyers et al., 2006; Rymarczyk et al., 2011) and angry (Dimberg et al., 2002; Sato et al., 2008) facial expressions. Interestingly, angry facial expressions induce greater activity than happy faces in the corrugator supercilii (CS, the muscle involved in frowning), whereas happy facial expressions induce greater activity in the zygomaticus major (ZM, the muscle involved in smiling) and decreased CS activity. In addition, a few EMG studies also support FM for other emotions: for fear, with increased activity of the CS (e.g., van der Schalk et al., 2011a) or the frontalis muscle (e.g., Rymarczyk et al., 2016b), and for disgust, with increased activity of the CS (e.g., Lundquist and Dimberg, 1995) or the levator labii (LL) (e.g., Vrana, 1993). Furthermore, the magnitude of FM has been shown to depend on many factors (for a review see Seibt et al., 2015), including empathic traits (Sonnby-Borgström, 2002; Sonnby-Borgström et al., 2003; Dimberg et al., 2011; Balconi and Canavesio, 2013a; Balconi et al., 2014; Rymarczyk et al., 2016b). For example, Dimberg et al. (2011) found that more empathic individuals showed greater CS contraction to angry faces and greater ZM contraction to happy faces than less empathic individuals. Similar patterns were observed in response to fearful facial expressions, where more empathic individuals exhibited larger CS reactions (Balconi and Canavesio, 2016). Recently, Rymarczyk et al. (2016b) found that emotional empathy moderates activity in other muscles, for instance the levator labii in response to disgust and the lateral frontalis in response to fearful facial expressions.
Results of these studies suggest that more empathic individuals are more sensitive to the emotions expressed by others at the level of facial mimicry. It has been suggested that FM has important consequences for social behavior (Kret et al., 2015) because it facilitates understanding of emotion by inducing an appropriate empathic response (Adolphs, 2002; Preston and de Waal, 2002; Decety and Jackson, 2004).

On the neuronal level, the PAM assumes that observing the actions of another individual stimulates the same action in the observer by activating the brain structures involved in executing that behavior (Preston, 2007). It has been suggested that the Mirror Neuron System (MNS) represents the neural basis of the PAM (Gallese et al., 1996; Rizzolatti and Sinigaglia, 2010). Indeed, the first evidence of mirror neurons (localized in monkeys in the ventral sector of area F5) came from experiments in which the same neurons fired both when monkeys performed a goal-directed action (e.g., holding, grasping, or manipulating objects) and when they observed another individual (monkey or human) execute the same action (Gallese et al., 1996; Rizzolatti and Craighero, 2004; Gallese et al., 2009). Similarly, studies in humans have shown that the MNS is activated during imagination or imitation of simple or complex hand movements (Ruby and Decety, 2001; Iacoboni et al., 2005; Iacoboni and Dapretto, 2006). Furthermore, neuroimaging studies have shown that pure observation and imitation of emotional facial expressions engage the MNS, particularly regions of the inferior frontal gyrus (IFG) and the inferior parietal lobule (IPL) (Rizzolatti et al., 2001; Carr et al., 2003; Rizzolatti and Craighero, 2004; Iacoboni and Dapretto, 2006), which are considered core regions of the MNS in humans.

Apart from the core regions of the MNS, the insula and the amygdala, structures of the limbic system, have been proposed to be involved in processing emotional facial expressions (Iacoboni et al., 2005). For example, amygdala activation has been shown for fear expressions (Carr et al., 2003; Ohrmann et al., 2007; van der Zwaag et al., 2012), and anterior insula (AI) activation for disgust expressions (Jabbi and Keysers, 2008; Seubert et al., 2010). In addition, the insula and the dorsal anterior cingulate cortex, together with a set of limbic and subcortical structures (including the amygdala), have been described as constituting the brain’s salience network (Seeley et al., 2007). The salience network is thought to mediate the detection and integration of behaviorally relevant stimuli (Menon and Uddin, 2010), including stimuli that elicit fear (Liberzon et al., 2003; Zheng et al., 2017).

Taking into account the involvement of the MNS in social mirroring and the phenomenon of facial mimicry, an interaction between the MNS and the limbic system has been postulated (Iacoboni et al., 2005). It has been proposed that during observation and imitation of emotional expressions, the core regions of the MNS (i.e., IFG and IPL) activate the insula, which in turn activates other limbic structures, such as the amygdala (Jabbi and Keysers, 2008). However, it should be emphasized that the specific function of the amygdala in affective resonance is still under debate (Adolphs, 2010). For example, van der Gaag et al. (2007) found bilateral anterior insula activation during perception of happy, disgusted, and fearful facial expressions compared to non-emotional facial expressions; however, they did not find any amygdala activation. A number of studies have shown that the amygdala is activated during conscious imitation rather than pure observation of emotional facial expressions (Lee et al., 2006; van der Gaag et al., 2007; Montgomery and Haxby, 2008). Moreover, the extent of amygdala activation could be predicted by the extent of movement during imitation of facial expressions (Lee et al., 2006). Some authors have proposed that amygdala activation during imitation, but not observation, of emotional facial expressions might reflect increased autonomic activity or feedback from facial muscles to the amygdala (Pohl et al., 2013).

To sum up, there is general agreement among researchers that the insula is involved in affective resonance. Furthermore, the insula and the amygdala have been proposed to be part of an emotional perception-action matching system (Iacoboni and Dapretto, 2006; Keysers and Gazzola, 2006) and therefore to “extend” the classical MNS during emotion processing (van der Gaag et al., 2007; Likowski et al., 2012; Pohl et al., 2013). The mirror mechanism is believed to be responsible for motor simulation of facial expressions (core MNS, i.e., IFG and IPL) (Carr et al., 2003; Wicker et al., 2003; Grosbras and Paus, 2006; Iacoboni, 2009) and for affective imitation (extended MNS, i.e., insula) (van der Gaag et al., 2007; Jabbi and Keysers, 2008). However, the exact role of the amygdala in these processes is not clear.

According to the Perception-Action Model, facial mimicry is an automatic matched motor response based on a perception-behavior link (Chartrand and Bargh, 1999; Preston and de Waal, 2002). However, other authors have proposed that FM is not simply a motor reaction but also the result of more generic processes of interpreting the expressed emotion (Hess and Fischer, 2013, 2014). Some evidence for this proposition comes from two studies that used simultaneous measures of blood-oxygen level-dependent (BOLD) and facial electromyography (EMG) signals in an MRI scanner (Likowski et al., 2012; Rymarczyk et al., 2018). Likowski et al. (2012) found that, for facial expressions of happiness, sadness, and anger, facial EMG correlated with BOLD activity in parts of the core MNS (i.e., IFG) as well as in areas responsible for emotion processing (i.e., AI). Similar results were obtained in a separate study that additionally used videos of happy and angry facial expressions (Rymarczyk et al., 2018). In that study, activation in the core MNS and MNS-related structures was more frequently observed when dynamic rather than static emotional expressions were presented. The authors concluded that dynamic emotional facial expressions might be a clearer signal for inducing motor simulation processes in the core MNS as well as affective resonance processes in limbic structures, i.e., the insula. It is worth noting that dynamic stimuli, as compared to static ones, selectively activate structures related to motion and biological motion perception (Arsalidou et al., 2011; Foley et al., 2012; Furl et al., 2015), as well as MNS brain structures (Sato et al., 2004; Kessler et al., 2011; Sato et al., 2015). The results of the aforementioned EMG-fMRI studies suggest that the core MNS and MNS-related limbic structures (e.g., insula) may constitute the neuronal correlates of FM.
Furthermore, it appears that FM comprises a motor and an emotional component, each represented by a specific network of brain structures whose activity correlates with facial muscle responses during the perception of emotions. The motor component is attributed to structures of the core MNS (e.g., the inferior frontal gyrus), which are involved in the observation and execution of motor actions. The insula, an MNS-related limbic structure, is involved in emotion-related processes. It should be noted that this assumption is restricted to FM for happiness, sadness, and anger, the emotions examined in previous EMG-fMRI studies.

Furthermore, several studies have linked empathic traits to neural activity in the MNS, indicating that individuals with higher activity in the MNS also score higher on emotional aspects of empathy (Kaplan and Iacoboni, 2006; Jabbi et al., 2007; Pfeifer et al., 2008). For example, Jabbi et al. (2007) found a positive correlation between empathy scores and activation of the bilateral anterior insula and frontal operculum when subjects observed video clips displaying pleased or disgusted facial expressions. To sum up, there is some evidence that the MNS underpins empathy and that subsystems of the MNS support motor and affective simulation. However, to date there is no empirical evidence for a link among the MNS, empathy, and simulation processes.

In our study, EMG and BOLD signals were recorded simultaneously during the perception of facial stimuli. We selected natural static and dynamic facial expressions (neutral, fear, and disgust) from the Amsterdam Dynamic Facial Expression Set (ADFES) (van der Schalk et al., 2011b), based on studies showing that dynamic stimuli more truly reflect real-life situations (Krumhuber et al., 2013; Sato et al., 2015; Rymarczyk et al., 2016a). Empathy levels were assessed with the Questionnaire Measure of Emotional Empathy (QMEE), wherein empathy is defined as a “vicarious emotional response to the perceived emotional experiences of others” (Mehrabian and Epstein, 1972, p. 1). Following the reasoning outlined above, our EMG-fMRI investigation had two main goals.

Firstly, we wanted to explore whether the neuronal bases of FM established for socially related stimuli, i.e., anger and happiness, would be the same for more biologically relevant ones, i.e., fear and disgust. We predicted that, similarly to anger and happiness, the core MNS (i.e., IFG and IPL) and MNS-related limbic structures (i.e., insula and amygdala) would be involved in the perception of emotional facial expressions. Since there is evidence that the perception of dynamic emotional stimuli elicits greater brain activity than static stimuli (Arsalidou et al., 2011; Kessler et al., 2011; Foley et al., 2012; Furl et al., 2015), we expected stronger activation in all structures of the MNS subsystems for dynamic compared to static emotional facial expressions.

Secondly, based on evidence that empathy traits modulate facial mimicry for fear (Balconi and Canavesio, 2016) and disgust (Balconi and Canavesio, 2016; Rymarczyk et al., 2016b), and on the assumption that the MNS underpins empathy processes, we wanted to test whether there are relations among facial mimicry, empathy, and the mirror neuron system. We predicted that highly empathic people would be characterized by greater activation of extended MNS sites, i.e., the insula and amygdala, and that these activations would be correlated with stronger facial reactions. Finally, given neuroimaging evidence that dynamic emotional stimuli are a stronger signal for social communication than static ones (Bernstein and Yovel, 2015; Wegrzyn et al., 2015), we explored whether the relations among facial mimicry, empathy, and subsystems of the MNS also depend on the modality of the stimuli.

Forty-six healthy individuals (25 males, 21 females, mean ± standard deviation age = 23.8 ± 2.5 years) participated in this study. The subjects had normal or corrected-to-normal vision, and none reported neurological diseases. This study was carried out in accordance with the recommendations of the Ethics Committee of the Faculty of Psychology at the University of Social Sciences and Humanities, and the protocol was approved by the Ethics Committee at the SWPS University of Social Sciences and Humanities. All subjects gave written informed consent in accordance with the Declaration of Helsinki after the experimental procedures had been clearly explained. After the scanning session, subjects were informed of the aims of the study.

Empathy scores were measured with the Questionnaire Measure of Emotional Empathy (QMEE), wherein empathy is defined as a “vicarious emotional response to the perceived emotional experiences of others” (Mehrabian and Epstein, 1972, p. 1). The QMEE contains 33 items rated on a 9-point scale from -4 (very strong disagreement) to +4 (very strong agreement). It was selected because it has a Polish adaptation (Rembowski, 1989) and has been shown to be a useful measure in FM research (Sonnby-Borgström, 2002; Dimberg et al., 2011). For analysis purposes, subjects were split into High Empathy (HE) and Low Empathy (LE) groups based on the median QMEE score.
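The scoring and median split described above can be sketched as follows. This is a minimal illustration, not the authors’ scoring procedure: it assumes each participant’s 33 ratings are already keyed so that higher values mean more empathy (reverse-keyed QMEE items are not modeled), and it assumes that participants scoring exactly at the median go to the LE group, since the text does not say how ties were handled. The function names are hypothetical.

```python
from statistics import median

N_ITEMS = 33                    # QMEE length
MIN_RATING, MAX_RATING = -4, 4  # 9-point scale


def qmee_total(responses):
    """Sum of the 33 item ratings (assumes items are already keyed)."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    if any(not MIN_RATING <= r <= MAX_RATING for r in responses):
        raise ValueError("ratings must lie between -4 and +4")
    return sum(responses)


def median_split(scores):
    """Map participant id -> 'HE' or 'LE' relative to the sample median.

    Scores equal to the median are assigned to LE (an assumption).
    """
    cut = median(scores.values())
    return {pid: ("HE" if s > cut else "LE") for pid, s in scores.items()}
```

With 33 items on this scale, totals range from -132 to +132. For four hypothetical participants scoring 70, 12, 45, and 30, the median is 37.5, so the first and third would be assigned to HE.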

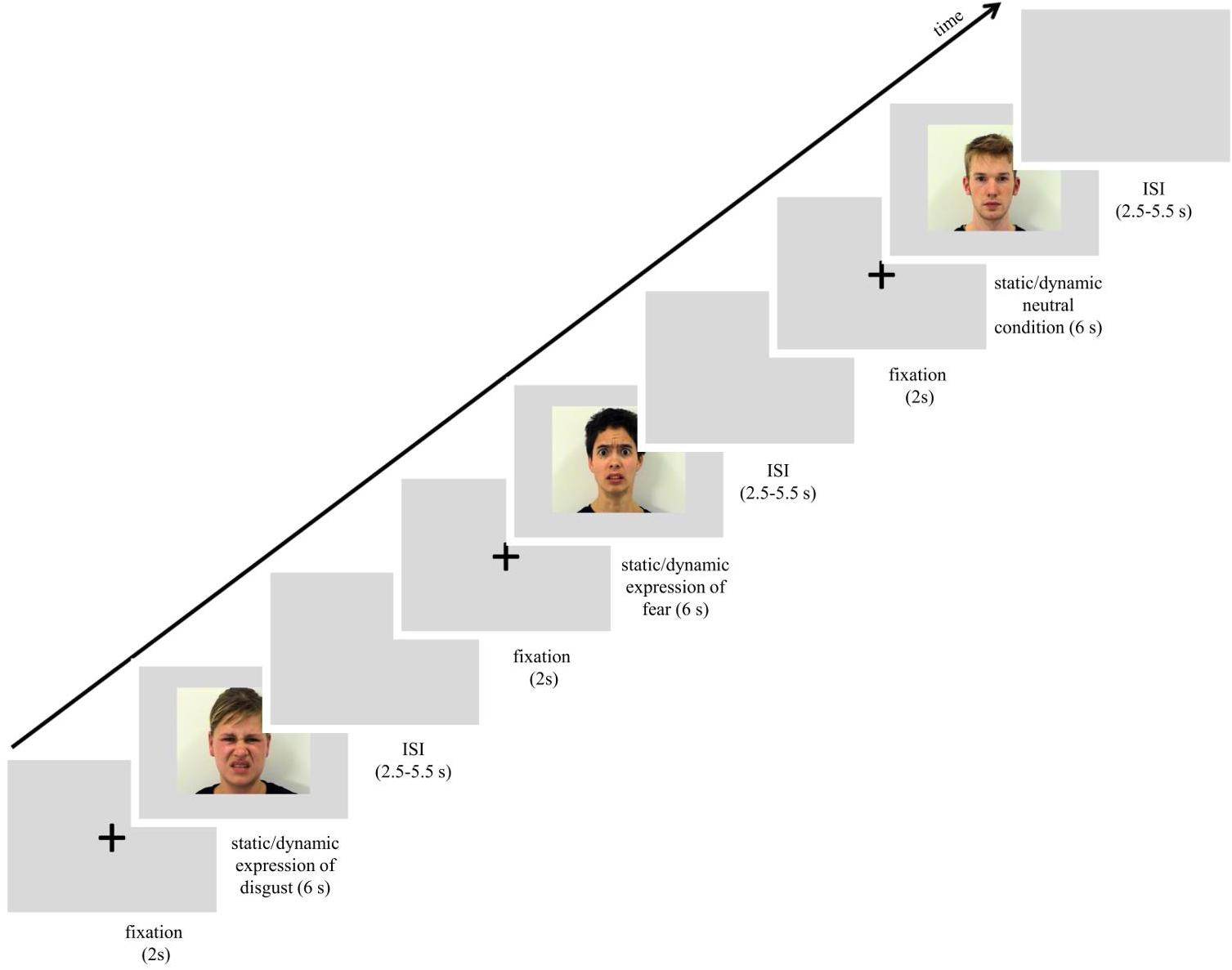

Facial expressions of disgust and fear were taken from the Amsterdam Dynamic Facial Expression Set (van der Schalk et al., 2011b). Additionally, neutral expressions of the same actors were used, showing no visible action units specific to emotional facial expressions. Stimuli (actors F02, F04, F05, M02, M08, and M12) consisted of forward-facing facial expressions presented as static and dynamic displays. Each static stimulus was a single frame taken from the corresponding dynamic video clip; for static fear and disgust, the selected frame represented the peak of the facial expression. In the neutral dynamic clips, motion was still apparent because actors either closed their eyes or slightly changed the position of their head. Stimuli were 720 pixels wide and 576 pixels high and were presented on a gray background. For an overview of the procedure and stimuli, see Figure 1.

Figure 1. Scheme of procedure used in the study. Images used in the figure were obtained and published with permission of the copyright holder of the Amsterdam Dynamic Facial Expression Set (van der Schalk et al., 2011b).

Electromyography data were acquired using an MRI-compatible Brain Products BrainCap consisting of two bipolar electrode pairs and one reference electrode. Electrodes 2 mm in diameter were filled with electrode paste and positioned in pairs over the CS and LL on the left side of the face (Cacioppo et al., 1986; Fridlund and Cacioppo, 1986). A reference electrode, 6 mm in diameter, was filled with electrode paste and attached to the forehead. Before the electrodes were attached, the skin was cleaned with alcohol; this procedure was repeated until electrode impedance was reduced to 5 kΩ or less. The EMG signals were recorded using a BrainAmp MR plus ExG amplifier and BrainVision Recorder and were low-pass filtered at 250 Hz during acquisition. Finally, data were digitized at a sampling rate of 5 kHz and stored on a computer running MS Windows 7 for offline analysis.

The MRI data were acquired on a Siemens Trio 3 T MR scanner equipped with a 12-channel phased-array head coil. Functional MRI images were collected using a T2∗-weighted EPI gradient-echo pulse sequence with the following parameters: TR = 2,000 ms; TE = 25 ms; flip angle = 90°; FOV = 250 mm; matrix = 64 × 64; voxel size = 3.5 mm × 3.5 mm × 3.5 mm; interleaved even acquisition; slice thickness = 3.5 mm; 39 slices.

Each volunteer was introduced to the experimental procedure and signed a consent form. To conceal the true purpose of the facial electromyography recordings, participants were told that sweat gland activity was being recorded while they watched the faces of actors selected for commercials by an external marketing company. After the electrodes of the FaceEMGCap-MR were attached, participants were reminded to observe the actors presented on the screen carefully and were positioned in the scanner. They were verbally encouraged to feel comfortable and behave naturally.

The scanning session started with a reminder of the subject’s task. During the session, subjects were presented with 72 trials lasting approximately 15 min in total. Each trial started with a white fixation cross, 80 pixels in diameter, visible for 2 s in the center of the screen. Next, a stimulus showing a facial expression (disgust, fear, or neutral, each presented either as a static image or as a dynamic video clip) was presented for 6 s. The expression was followed by a blank gray screen presented for 2.75–5.25 s (see Figure 1). All stimuli were presented in the center of the screen. In summary, each stimulus was repeated once, for a total of 6 presentations within a type of expression (e.g., 6 dynamic presentations of disgust). Stimuli were presented in an event-related design, pseudo-randomized trial by trial with the constraints that no two consecutive trials showed the same actor and that no more than two actors of the same sex, or of the same emotion, were presented consecutively. In total, six randomized event-related sequences satisfying these constraints were balanced across subjects. The procedure was controlled using Presentation® software running on a computer with a Microsoft Windows operating system and was displayed on a 32-inch NNL LCD MRI-compatible monitor with a mirror system (1920 × 1080 pixel resolution; 32-bit color depth; 60 Hz refresh rate) at a viewing distance of approximately 140 cm.
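The ordering constraints can be illustrated with a simple greedy generator that restarts whenever it reaches a dead end. This is a hypothetical sketch, not the authors’ Presentation® script. It assumes that the 72 trials comprise the six listed actors × three expressions × two modes with each stimulus shown twice (36 × 2 = 72), that an actor’s sex is given by the F/M prefix of the actor code, and that neutral counts as an “emotion” for the run-length rule. It also assumes, for the timing check, that the 2.75–5.25 s blank interval is uniformly distributed.

```python
import itertools
import random

# Actor codes from the text; sex taken from the F/M prefix (assumption).
ACTORS = {"F02": "F", "F04": "F", "F05": "F", "M02": "M", "M08": "M", "M12": "M"}
EXPRESSIONS = ("disgust", "fear", "neutral")
MODES = ("dynamic", "static")
REPEATS = 2  # assumption: each stimulus shown twice -> 6 * 3 * 2 * 2 = 72 trials

FIXATION_S, STIMULUS_S = 2.0, 6.0
BLANK_RANGE_S = (2.75, 5.25)


def build_trials():
    """All (actor, expression, mode) trials, including repeats."""
    return [
        (actor, expression, mode)
        for actor, expression, mode, _ in itertools.product(
            ACTORS, EXPRESSIONS, MODES, range(REPEATS)
        )
    ]


def allowed(sequence, trial):
    """True if appending `trial` keeps every ordering constraint satisfied."""
    actor, expression, _ = trial
    if sequence and sequence[-1][0] == actor:
        return False  # same actor twice in a row
    if len(sequence) >= 2:
        last_two = sequence[-2:]
        if all(ACTORS[a] == ACTORS[actor] for a, _, _ in last_two):
            return False  # would be a third consecutive actor of the same sex
        if all(e == expression for _, e, _ in last_two):
            return False  # would be a third consecutive trial of the same expression
    return True


def pseudo_randomize(seed=None, max_attempts=10_000):
    """Greedy random ordering that restarts from scratch on a dead end."""
    rng = random.Random(seed)
    trials = build_trials()
    for _ in range(max_attempts):
        remaining = list(trials)
        sequence = []
        while remaining:
            candidates = [i for i, t in enumerate(remaining) if allowed(sequence, t)]
            if not candidates:
                break  # dead end: start a new attempt
            sequence.append(remaining.pop(rng.choice(candidates)))
        if not remaining:
            return sequence
    raise RuntimeError("no valid order found; increase max_attempts")


def expected_session_seconds(n_trials):
    """Mean session length, taking the blank interval as uniform (assumption)."""
    mean_blank = sum(BLANK_RANGE_S) / 2
    return n_trials * (FIXATION_S + STIMULUS_S + mean_blank)
```

Under these assumptions a trial lasts 12 s on average, so 72 trials take about 864 s (14.4 min), in line with the approximately 15 min session. Calling `pseudo_randomize(seed=k)` for six different seeds would give six distinct orders that could then be counterbalanced across subjects.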

Pre-processing was carried out using BrainVision Analyzer 2 (version 2.1.0.327). First, EPI gradient-echo pulse artifacts were removed using the average artifact subtraction (AAS) method (Allen et al., 2000) implemented in BrainVision Analyzer. This method subtracts an artifact template computed as a sliding average over 11 consecutive functional volumes marked in the data logs. Synchronization hardware and MR trigger markers allowed the AAS method to remove MR-related artifacts from the data successfully. Next, standard EMG processing was carried out, including a 30 Hz high-pass filter. The EMG data were subsequently rectified, integrated over 125 ms, and resampled to 10 Hz. EMG artifacts were detected using two methods. First, when the activity of a single muscle exceeded 8 μV during baseline (i.e., while the fixation cross was visible) (Weyers et al., 2006; Likowski et al., 2008, 2011), the trial was classified as an artifact and excluded from further analysis. Second, all remaining trials were blind-coded and visually checked for artifacts. Trials were then baseline corrected, such that the EMG response was measured as the difference between the mean signal during stimulus presentation (6 s) and during the baseline period (2 s). Finally, the signal was averaged for each condition and participant, and these averages were imported into SPSS 21 for statistical analysis.
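The processing chain above can be sketched with NumPy. This is a simplified illustration under stated assumptions, not BrainVision Analyzer’s implementation: the AAS step subtracts a plain 11-epoch sliding-average template and omits the upsampling and trigger-alignment refinements used in practice; the 30 Hz high-pass is a brick-wall FFT filter, because the text does not specify the filter type; “integration” is taken to be a 125 ms moving average; signals are assumed to be in μV; and the 8 μV rejection rule is applied to the peak baseline value. All function names are hypothetical.

```python
import numpy as np


def aas(signal, onsets, epoch_len, window=11):
    """Simplified average artifact subtraction with a sliding-window template.

    Assumes every volume epoch [onset, onset + epoch_len) lies inside the
    recording and is already aligned to the MR trigger.
    """
    signal = np.asarray(signal, dtype=float)
    onsets = np.asarray(onsets, dtype=int)
    epochs = np.stack([signal[o:o + epoch_len] for o in onsets])
    cleaned = signal.copy()
    n, half = len(onsets), window // 2
    for k, onset in enumerate(onsets):
        # Centered window of `window` epochs, shifted inward at the run edges.
        lo = max(0, min(k - half, n - window))
        template = epochs[lo:lo + window].mean(axis=0)
        cleaned[onset:onset + epoch_len] -= template
    return cleaned


def highpass_fft(x, fs, cutoff=30.0):
    """Brick-wall high-pass: zero every frequency bin below `cutoff` Hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[freqs < cutoff] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def emg_envelope(x, fs=5000, win_ms=125, out_fs=10):
    """High-pass, rectify, 125 ms moving average, then downsample to out_fs."""
    rectified = np.abs(highpass_fft(x, fs))
    win = int(fs * win_ms / 1000)
    integrated = np.convolve(rectified, np.ones(win) / win, mode="same")
    return integrated[:: fs // out_fs]


def trial_response(envelope, out_fs=10, baseline_s=2.0, stim_s=6.0, max_baseline_uv=8.0):
    """Mean stimulus-period minus mean baseline; None if the baseline is artifactual."""
    nb, ns = int(baseline_s * out_fs), int(stim_s * out_fs)
    baseline, stimulus = envelope[:nb], envelope[nb:nb + ns]
    if baseline.max() > max_baseline_uv:
        return None  # trial rejected as an artifact
    return float(stimulus.mean() - baseline.mean())
```

Applied to one 8 s trial sampled at 5 kHz (2 s baseline plus 6 s stimulus), `emg_envelope` returns 80 samples at 10 Hz, which `trial_response` reduces to a single baseline-corrected value; averaging those values per condition gives the per-participant scores entered into the ANOVA.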

Differences in EMG responses were examined using a three-way mixed-model ANOVA with expression (disgust, fear, and neutral) and stimulus mode (dynamic and static) as within-subjects factors and empathy group [low empathy (LE), high empathy (HE)] as the between-subjects factor¹. Separate ANOVAs were calculated for each muscle and reported with Bonferroni correction, and with Greenhouse-Geisser correction when the sphericity assumption was violated. To confirm that EMG activity changed from baseline and that FM occurred, EMG data for each significant effect were tested for a difference from zero (baseline) using one-sample, two-tailed t-tests.
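For reference, the one-sample test against baseline uses t = x̄ / (s / √n) with n − 1 degrees of freedom, where s is the sample standard deviation, and the Bonferroni adjustment multiplies each p-value by the number of comparisons, capping it at 1; this cap is presumably why several comparisons below are reported as p > 0.999. The minimal standard-library sketch below computes only the statistics. p-values would come from the t distribution (e.g., via a statistics package) and are not computed here. Function names are hypothetical.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, mu=0.0):
    """t statistic and df for H0: mean == mu (mu = 0 is the EMG baseline here)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t = (mean(values) - mu) / (stdev(values) / math.sqrt(n))
    return t, n - 1


def bonferroni(p_values):
    """Bonferroni-adjusted p-values, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For example, baseline-corrected responses of 1, 2, 3, 4, and 5 give t(4) = 3√2 ≈ 4.24, and raw p-values of 0.01, 0.04, and 0.5 across three comparisons become 0.03, 0.12, and 1.0.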

Image processing and analysis were carried out using SPM12 (revision 6470) running in MATLAB 2013b (The MathWorks Inc., 2013). Standard pre-processing steps, i.e., motion correction and co-registration to the mean functional image, were applied to the functional images. The independent SPM segmentation module was used to divide structural images into tissue classes [gray matter, white matter, and non-brain (cerebrospinal fluid, skull)]. Next, based on the segmented structural images, a study-specific template was created and affine-registered to MNI space using the DARTEL algorithm. The functional images were then warped to MNI space based on the DARTEL priors, resliced to 2 mm × 2 mm × 2 mm isotropic voxels, and smoothed with an 8 mm × 8 mm × 8 mm full-width at half maximum (FWHM) Gaussian kernel. Single-subject design matrices were constructed with six experimental conditions, corresponding to dynamic and static trials for each of the three expression conditions (disgust, fear, and neutral). These conditions were modeled with a standard hemodynamic response function, along with covariates of no interest: head-movement parameters and regressors, produced by the Artifact Detection Toolbox (ART), that excluded other fMRI artifacts. The same set of contrasts of interest (listed in the “Results” section, i.e., fMRI data) was then calculated for each subject and entered into group-level analyses (i.e., one-sample t-tests) for statistical region of interest (ROI) analysis. The analysis was performed for the individual ROIs using the MarsBar toolbox (Brett et al., 2002). ROIs consisted of anatomical masks derived from the WFU PickAtlas (Wake Forest University, 2014) and the SPM Anatomy Toolbox (Eickhoff, 2016). The STS was defined as a set of overlapping spheres with a radius of 8 mm centered on activation peaks reported in the literature (Van Overwalle, 2009). Each ROI value was extracted as the mean across the voxels of the mask.
Statistics of brain activity in each contrast were reported with Bonferroni correction.
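To make the first-level model concrete, the sketch below builds one condition’s regressor by convolving a 6 s boxcar for each trial with a double-gamma HRF and sampling the result once per 2 s TR. The HRF parameters (response shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale) are assumed to approximate SPM’s canonical HRF but are not taken from SPM’s code, and the onsets in the usage note are hypothetical. In the actual analysis, SPM builds these regressors internally.

```python
import math

import numpy as np


def double_gamma_hrf(dt, length_s=32.0, a1=6.0, a2=16.0, ratio=1.0 / 6.0):
    """Difference of two unit-scale gamma densities, normalized to sum to 1."""
    t = np.arange(0.0, length_s, dt)
    g1 = t ** (a1 - 1) * np.exp(-t) / math.gamma(a1)  # main response
    g2 = t ** (a2 - 1) * np.exp(-t) / math.gamma(a2)  # late undershoot
    hrf = g1 - ratio * g2
    return hrf / hrf.sum()


def condition_regressor(onsets_s, duration_s, n_scans, tr=2.0, dt=0.1):
    """Boxcar of trial epochs convolved with the HRF, sampled once per scan."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + duration_s) / dt))
        boxcar[start:stop] = 1.0
    convolved = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return convolved[::step]
```

For a single hypothetical 6 s stimulus starting at 10 s, `condition_regressor([10.0], 6.0, n_scans=40)` stays at zero until the onset and peaks roughly 8 to 9 s later. One such column per condition (six in total), plus the motion and ART covariates, forms the single-subject design matrix.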

To examine the relationship between brain activity and facial muscle activity, and to reveal which ROIs are directly related to FM, bootstrapped (BCa, 1000 samples) Pearson correlation coefficients were calculated between brain activity contrasts (disgust dynamic, disgust static, fear dynamic, and fear static) and the corresponding mimicry responses.
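The bias-corrected and accelerated (BCa) bootstrap named above can be sketched as follows. This is an illustrative implementation, not necessarily the routine the authors used. It resamples participants (x, y pairs) with replacement 1000 times, estimates the bias correction z0 from the proportion of bootstrap correlations below the observed r, and estimates the acceleration from leave-one-out jackknife correlations. Variable names are hypothetical; `x` would hold, e.g., one ROI contrast value per participant and `y` the matching baseline-corrected EMG response.

```python
from statistics import NormalDist

import numpy as np


def pearson_r(x, y):
    return float(np.corrcoef(x, y)[0, 1])


def bca_pearson_ci(x, y, n_boot=1000, alpha=0.05, seed=None):
    """Pearson r with a bias-corrected and accelerated (BCa) bootstrap CI."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    n = len(x)
    rng = np.random.default_rng(seed)
    r_obs = pearson_r(x, y)

    # Resample participants with replacement; drop degenerate resamples.
    idx = rng.integers(0, n, size=(n_boot, n))
    boot = np.array([pearson_r(x[i], y[i]) for i in idx])
    boot = boot[np.isfinite(boot)]

    norm = NormalDist()
    # Bias correction: where r_obs falls within the bootstrap distribution.
    b = len(boot)
    prop = np.clip(np.mean(boot < r_obs), 1.0 / (2 * b), 1 - 1.0 / (2 * b))
    z0 = norm.inv_cdf(float(prop))

    # Acceleration from the jackknife (leave-one-participant-out) estimates.
    jack = np.array([pearson_r(np.delete(x, i), np.delete(y, i)) for i in range(n)])
    diff = jack.mean() - jack
    denom = 6.0 * np.sum(diff ** 2) ** 1.5
    a = float(np.sum(diff ** 3) / denom) if denom > 0 else 0.0

    def adjusted(q):
        z = norm.inv_cdf(q)
        return norm.cdf(z0 + (z0 + z) / (1 - a * (z0 + z)))

    lo, hi = np.quantile(boot, [adjusted(alpha / 2), adjusted(1 - alpha / 2)])
    return r_obs, float(lo), float(hi)
```

A correlation would then be read as reliable when the resulting 95% interval excludes zero. The percentile positions are adjusted rather than fixed at 2.5% and 97.5%, which matters for correlations near ±1, where the bootstrap distribution is skewed.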

Each ROI was represented by a single value, the mean of all voxels in that anatomical mask in each hemisphere. Muscle activity was defined as the baseline-corrected EMG response of the same muscle in trials of the same type. Correlations were computed for pairs of EMG and fMRI variables, e.g., the CS response to static disgust faces with the fMRI response in the left insula to static disgust faces.
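Extracting one value per ROI and hemisphere, as described above, amounts to averaging a contrast image over the masked voxels on each side of the midline. The sketch assumes that the contrast image and the binary mask share a voxel grid with a 4 × 4 voxel-to-MNI affine (as NIfTI images do) and splits the hemispheres at MNI x = 0, leaving out voxels exactly on the midline. In the study, the masks came from the WFU PickAtlas and the SPM Anatomy Toolbox, and MarsBar performed the extraction. The function name is hypothetical.

```python
import numpy as np


def hemisphere_roi_means(contrast, mask, affine):
    """Mean contrast value within a binary ROI mask, split at MNI x = 0.

    contrast, mask: 3D arrays on the same voxel grid.
    affine: 4x4 voxel-to-MNI matrix; voxels at x == 0 are excluded.
    """
    ijk = np.argwhere(mask > 0)                   # voxel indices inside the ROI
    homogeneous = np.c_[ijk, np.ones(len(ijk))]   # (n, 4) for the affine
    x_mm = (homogeneous @ affine.T)[:, 0]         # MNI x of each voxel
    values = contrast[tuple(ijk.T)]
    left, right = values[x_mm < 0], values[x_mm > 0]
    return {
        "left": float(left.mean()) if left.size else float("nan"),
        "right": float(right.mean()) if right.size else float("nan"),
    }
```

Each returned value would then be paired with the EMG response of the matching condition, e.g., the left-insula value for static disgust with the CS response to static disgust faces.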

The QMEE scores of the two groups differed significantly [t(44) = 9.583, p < 0.001; HE: M = 69.4, SE = 3.7; LE: M = 14.64, SE = 4.3]. The HE group included 13 males (M = 61.38, SE = 4.86) and 11 females (M = 78.91, SE = 4.42), and the LE group consisted of 12 males (M = 12.83, SE = 6.35) and 10 females (M = 16.8, SE = 6.18).

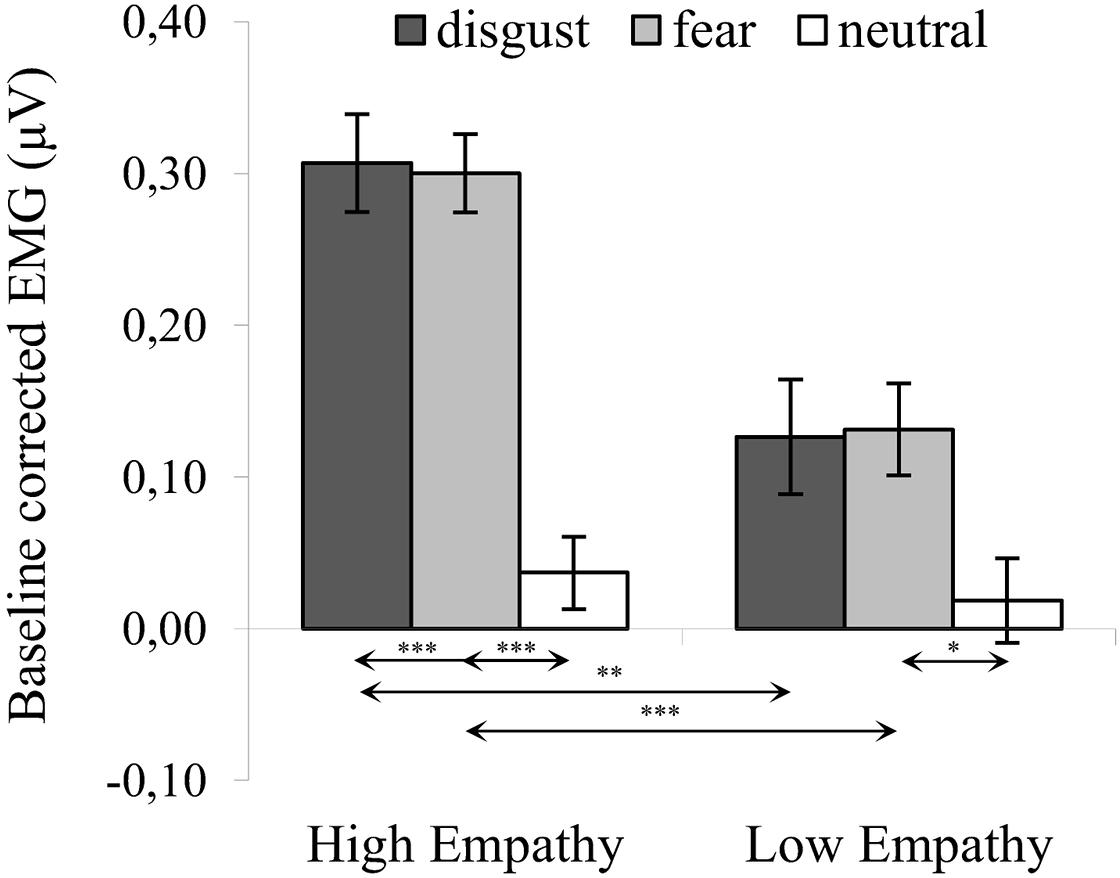

ANOVA² showed a significant main effect of expression [F(2,72) = 26.527, p < 0.001, η² = 0.424], indicating that CS activity for disgust (M = 0.217, SE = 0.025) was similar to that for fear [M = 0.216, SE = 0.020; t(36) = 0.036, p > 0.999] and that CS activity for both fear and disgust was higher than for neutral expressions [M = 0.028, SE = 0.018; disgust vs. neutral: t(36) = 5.559, p < 0.001; fear vs. neutral: t(36) = 6.714, p < 0.001]. The between-subject effect of empathy was also significant [F(1,36) = 24.813, p < 0.001, η² = 0.408], with CS activity generally higher in the HE (M = 0.215, SE = 0.016) than in the LE (M = 0.092, SE = 0.019) group.
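The reported effect sizes are consistent with partial eta squared, which can be recovered from an F statistic and its degrees of freedom as η²p = (F · df_effect) / (F · df_effect + df_error). The short check below applies this formula to the corrugator values reported above; it is a consistency check written for illustration, not the authors’ computation.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Corrugator supercilii values reported above.
print(round(partial_eta_squared(26.527, 2, 72), 3))  # expression -> 0.424
print(round(partial_eta_squared(24.813, 1, 36), 3))  # empathy group -> 0.408
print(round(partial_eta_squared(4.583, 2, 72), 3))   # expression x empathy -> 0.113
```

Because the formula ties the effect size to the error degrees of freedom, the same check can flag a mistyped degrees-of-freedom value in a reported F test.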

The significant expression × empathy group interaction [F(2,72) = 4.583, p = 0.013, η² = 0.113] revealed that, in the HE group, CS activity for disgust (M = 0.307, SE = 0.032) was similar to that for fear [M = 0.300, SE = 0.026; t(36) = 0.194, p > 0.999] and higher for both emotions than for neutral expressions [M = 0.037, SE = 0.024; disgust vs. neutral: t(36) = 6.136, p < 0.001; fear vs. neutral: t(35) = 7.306, p < 0.001]. In the LE group, in contrast, CS activity was higher for fear (M = 0.131, SE = 0.030) than for neutral expressions [M = 0.019, SE = 0.028; t(36) = 2.690, p = 0.034], and no other pairwise differences were observed [disgust: M = 0.126, SE = 0.038; disgust vs. neutral: t(36) = 2.118, p = 0.128; disgust vs. fear: t(36) = 0.119, p > 0.999]. CS activity was higher in the HE group than in the LE group for disgust [t(36) = 3.620, p = 0.001] and fearful faces [t(36) = 4.225, p < 0.001]. No group difference was observed for neutral expressions [t(36) = 0.486, p = 0.621] (see Figure 2).

Figure 2. Mean (±SE) EMG activity changes and corresponding statistics for corrugator supercilii during presentation conditions. Separate asterisks indicate significant differences between conditions (simple effects) in EMG responses: ∗p < 0.05, ∗∗p < 0.01, ∗∗∗p < 0.001. SE, standard error.

There was no significant main effect of modality [F(1,36) = 0.169, p = 0.683, η² = 0.005], and the following interactions did not reach significance: modality × empathy [F(1,36) = 0.044, p = 0.834, η² = 0.001], expression × modality [F(2,72) = 0.013, p = 0.987, η² = 0.000], and expression × modality × empathy [F(2,72) = 0.039, p = 0.962, η² = 0.001].

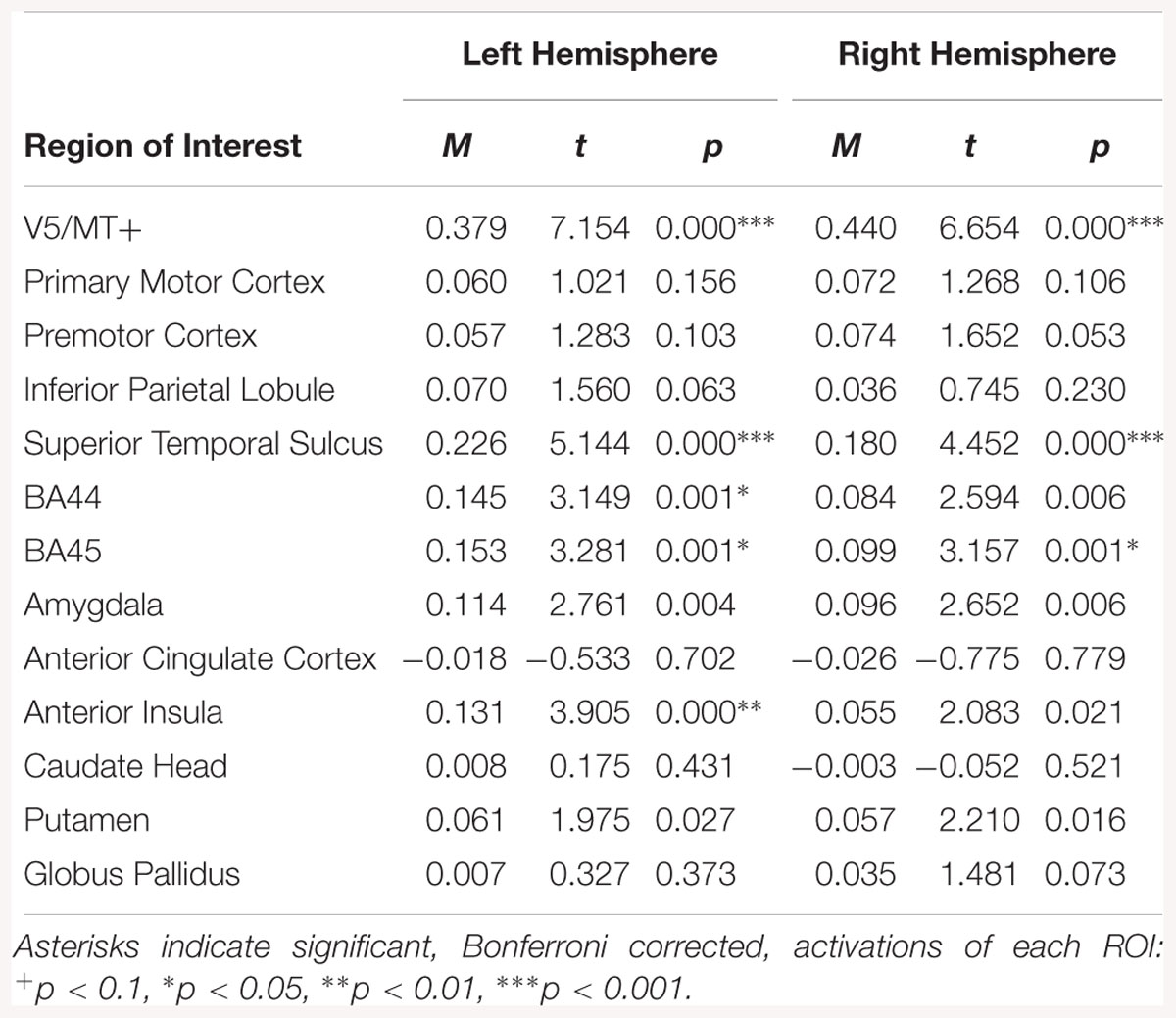

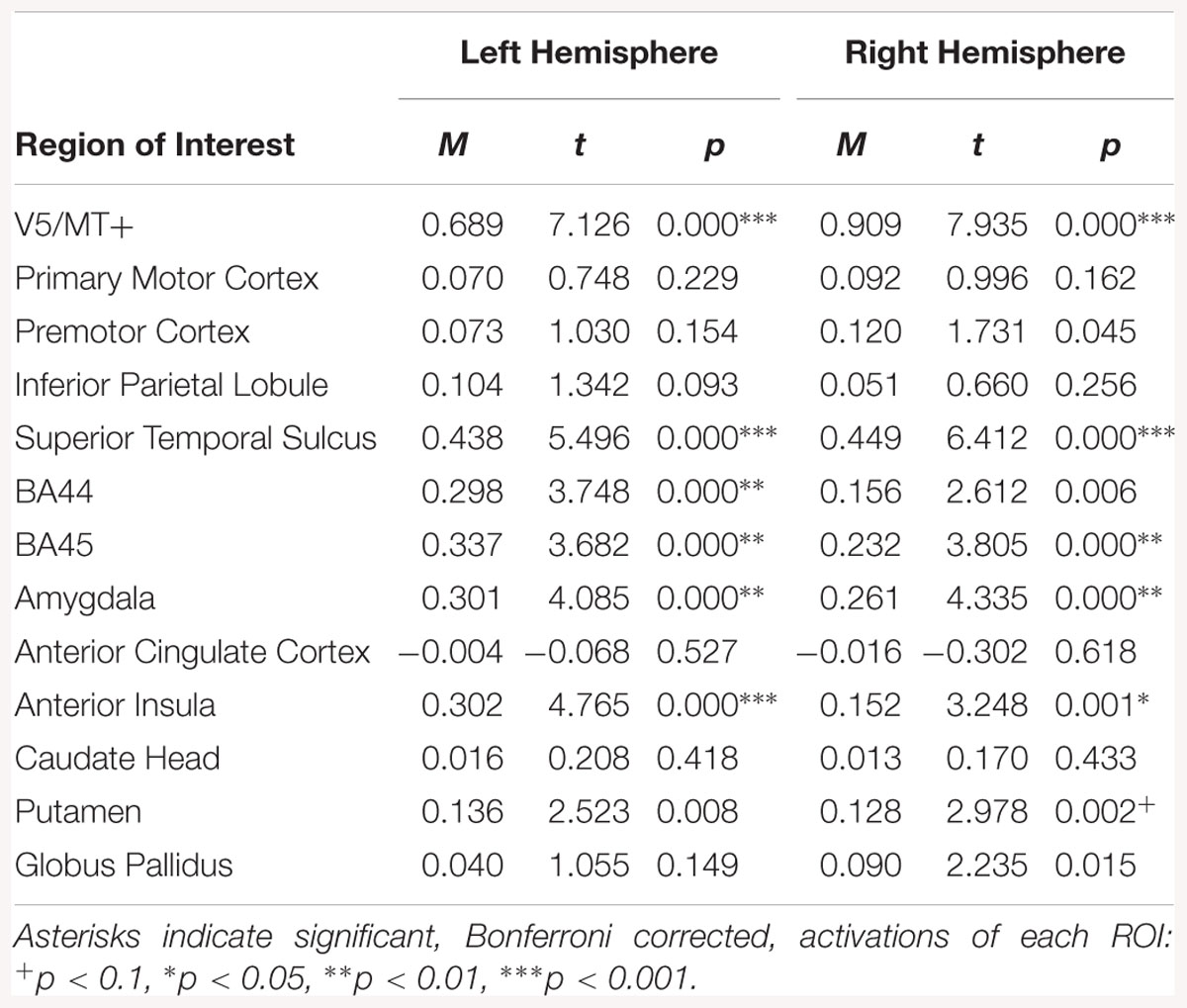

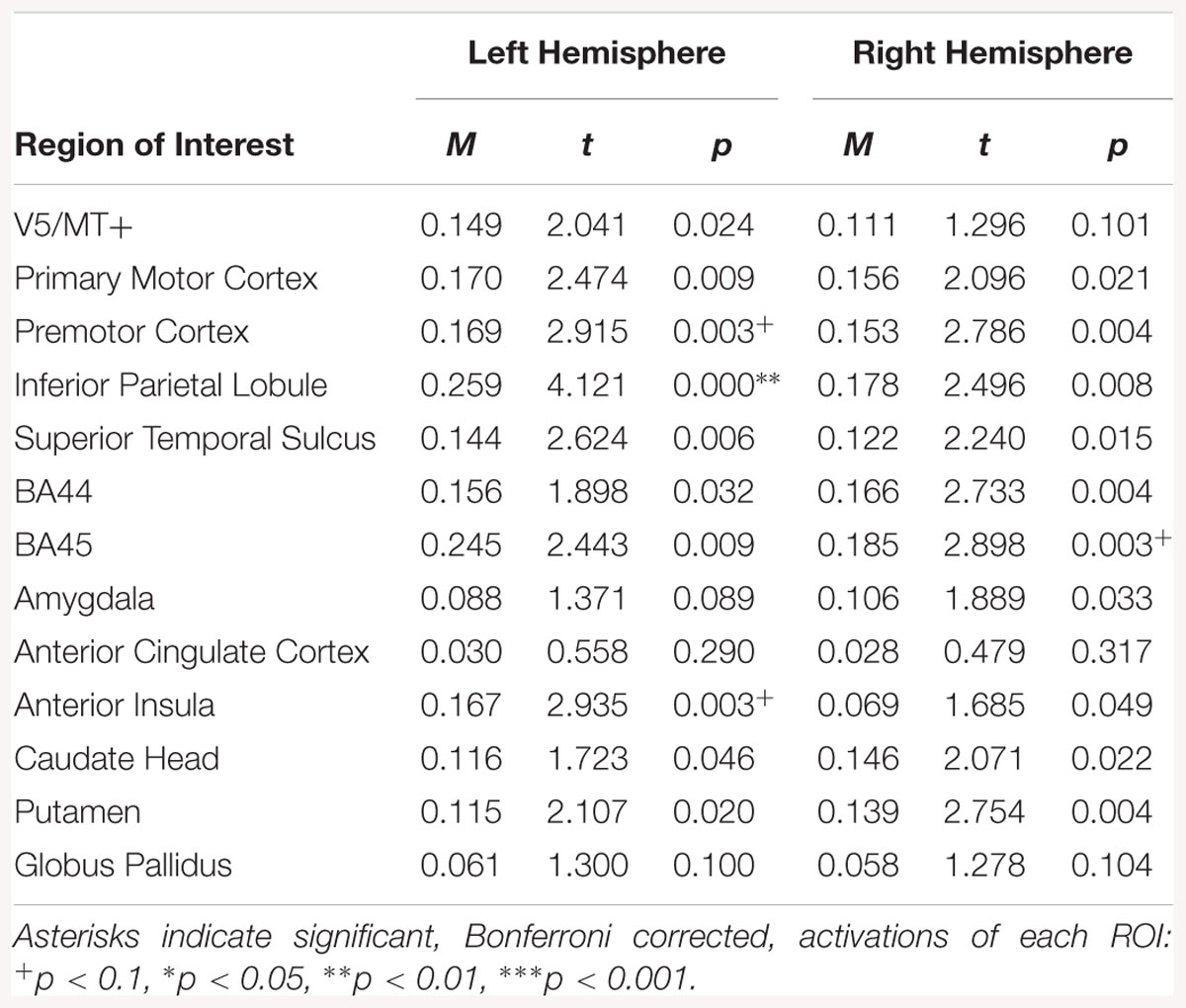

One-sample t-tests in HE and LE groups revealed a significant increase in CS activity for all disgust and fear conditions (see Table 1). There was no significant difference in CS activity from baseline in response to neutral expressions.

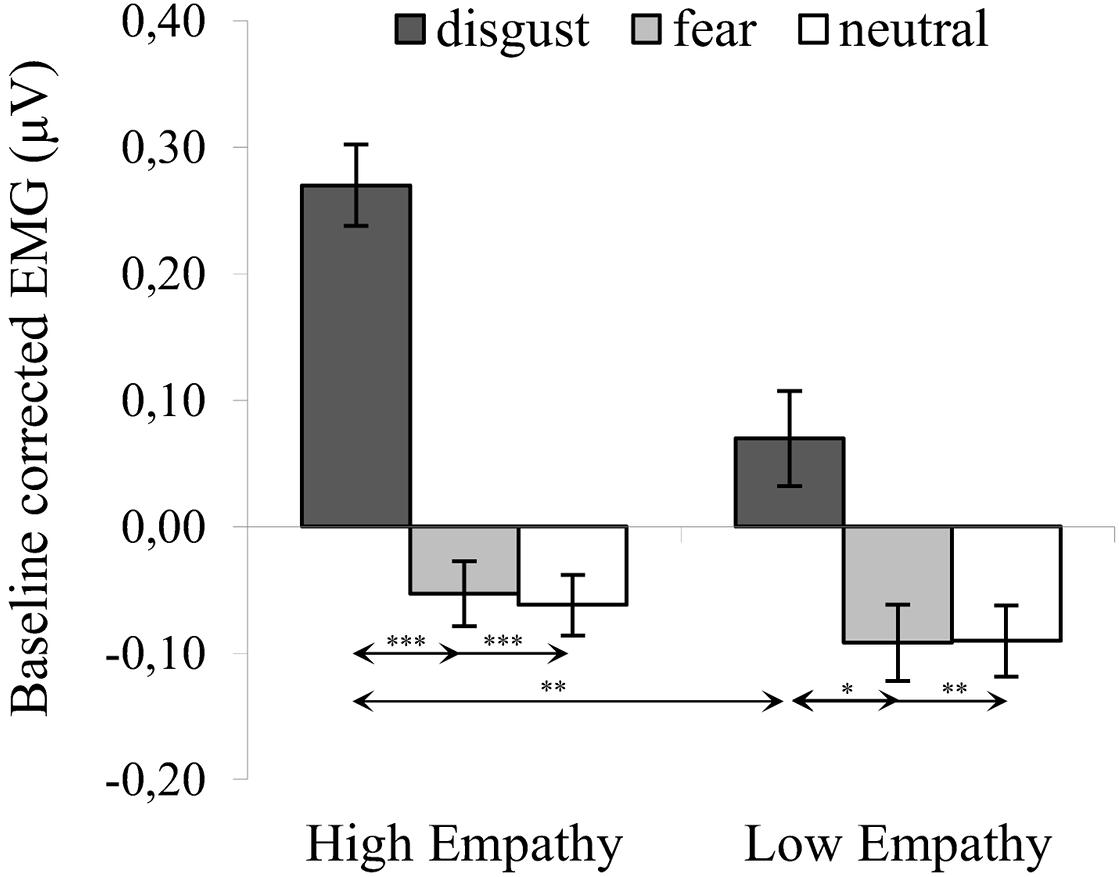

ANOVA³ showed a significant main effect of expression [F(2,72) = 33.989, p < 0.001, η² = 0.486], indicating that LL activity was higher for disgust (M = 0.170, SE = 0.022) than for both fear [M = -0.073, SE = 0.031; t(36) = 6.914, p < 0.001] and neutral expressions [M = -0.073, SE = 0.025; t(36) = 8.483, p < 0.001]. There was no difference in LL activity between fear and neutral conditions [t(36) = 0.105, p > 0.999]. The between-subject effect of empathy was significant [F(1,36) = 6.579, p = 0.015, η² = 0.155], such that LL activity was higher in the HE (M = 0.052, SE = 0.023) than in the LE (M = -0.038, SE = 0.026) group.

The significant expression × empathy group interaction [F(2,72) = 3.980, p = 0.023, η² = 0.100] revealed that, in the HE group, LL activity was higher for disgust (M = 0.270, SE = 0.028) than for both fear [M = -0.053, SE = 0.040; t(36) = 7.022, p < 0.001] and neutral expressions [M = -0.062, SE = 0.033; t(36) = 8.973, p < 0.001]. Similarly, in the LE group, LL activity was higher for disgust (M = 0.070, SE = 0.033) than for fear [M = -0.092, SE = 0.030; t(36) = 2.981, p = 0.014] and neutral expressions [M = -0.090, SE = 0.039; t(36) = 3.636, p = 0.003] (see Figure 3). Within groups, there was no difference in LL activity between fear and neutral expressions [HE: t(36) = 0.184, p > 0.999; LE: t(36) = 0.034, p > 0.999].

Figure 3. Mean (±SE) EMG activity changes and corresponding statistics for levator labii during presentation conditions. Separate asterisks indicate significant differences between conditions (simple effects) in EMG responses: ∗p < 0.05, ∗∗p < 0.01, ∗∗∗p < 0.001. SE, standard error.

The main effect of modality was not significant [F(1,36) = 1.315, p = 0.259, η2 = 0.035] and the following interactions did not reach significance: modality × empathy [F(1,36) = 0.000, p = 0.995, η2 = 0.000], expression × modality [F(2,72) = 0.458, p = 0.634, η2 = 0.013] and expression × modality × empathy [F(2,72) = 0.238, p = 0.789, η2 = 0.007].
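The effect sizes in these ANOVA reports can be checked against the F statistics and degrees of freedom. The reported η2 values behave like partial eta squared, which is recoverable from F alone. That interpretation is an assumption, since the symbol is not defined in this section. The sketch below recomputes the three non-significant LL effects listed above.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic.

    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)
    """
    return (f * df_effect) / (f * df_effect + df_error)


# F values and degrees of freedom copied from the LL analysis in the text.
for label, f, df1, df2 in [
    ("modality", 1.315, 1, 36),
    ("expression x modality", 0.458, 2, 72),
    ("expression x modality x empathy", 0.238, 2, 72),
]:
    print(f"{label}: {partial_eta_squared(f, df1, df2):.3f}")
```

The printed values (0.035, 0.013, 0.007) match those reported. The same formula is a quick consistency check on any reported F, df, and η2 triple.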

One-sample t-tests in HE and LE groups revealed higher LL activity for all disgust conditions as compared to baseline (see Table 2). There were no differences in LL activity from baseline in response to fear and neutral expressions.

Region-of-interest (ROI) analyses were carried out for contrasts comparing brain activation while viewing dynamic versus static facial expressions and emotional versus neutral expressions, resulting in eleven contrasts of interest: disgust dynamic > disgust static, fear dynamic > fear static, neutral dynamic > neutral static, emotion dynamic > emotion static (emotion dynamic: pooled dynamic disgust and fear conditions; emotion static: pooled static disgust and fear conditions), all dynamic > all static (all dynamic: pooled dynamic disgust, fear, and neutral conditions; all static: the corresponding static pooling), disgust dynamic > neutral dynamic, disgust static > neutral static, fear dynamic > neutral dynamic, fear static > neutral static, emotion dynamic > neutral dynamic, and emotion static > neutral static. These contrasts address two types of questions. The contrasts emotion/disgust/fear dynamic/static > neutral dynamic/static address the neural correlates of FM for emotional, disgust, and fear expressions. The remaining contrasts (i.e., emotion/disgust/fear/neutral/all dynamic > the corresponding static condition) relate to differences in processing between dynamic and static stimuli. Because there were no group differences between HE and LE subjects, we report fMRI ROI results only for all subjects combined (for the corresponding whole-brain analysis, see Supplementary Tables).
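The pooled contrasts above can be written as weight vectors over the condition regressors. The sketch below assumes a hypothetical first-level GLM with one regressor per condition, in the order given by `CONDITIONS`. The study's actual design matrix, regressor names, and weighting scheme are not given here. Each side of a contrast is averaged, so pooled conditions share a weight of 1/k.

```python
from fractions import Fraction

# Hypothetical regressor order (one per condition); the real design may differ.
CONDITIONS = [
    "disgust_dynamic", "fear_dynamic", "neutral_dynamic",
    "disgust_static", "fear_static", "neutral_static",
]


def contrast(positive: list[str], negative: list[str]) -> list[Fraction]:
    """Weights testing mean(positive) > mean(negative).

    Each side is averaged ("pooled"), so the positive weights sum to +1 and
    the negative weights sum to -1.
    """
    weights = {c: Fraction(0) for c in CONDITIONS}
    for c in positive:
        weights[c] += Fraction(1, len(positive))
    for c in negative:
        weights[c] -= Fraction(1, len(negative))
    return [weights[c] for c in CONDITIONS]


EMOTION_DYN = ["disgust_dynamic", "fear_dynamic"]
EMOTION_STA = ["disgust_static", "fear_static"]
ALL_DYN = EMOTION_DYN + ["neutral_dynamic"]
ALL_STA = EMOTION_STA + ["neutral_static"]

# The eleven contrasts of interest listed in the text.
CONTRASTS = {
    "disgust dynamic > disgust static": contrast(["disgust_dynamic"], ["disgust_static"]),
    "fear dynamic > fear static": contrast(["fear_dynamic"], ["fear_static"]),
    "neutral dynamic > neutral static": contrast(["neutral_dynamic"], ["neutral_static"]),
    "emotion dynamic > emotion static": contrast(EMOTION_DYN, EMOTION_STA),
    "all dynamic > all static": contrast(ALL_DYN, ALL_STA),
    "disgust dynamic > neutral dynamic": contrast(["disgust_dynamic"], ["neutral_dynamic"]),
    "disgust static > neutral static": contrast(["disgust_static"], ["neutral_static"]),
    "fear dynamic > neutral dynamic": contrast(["fear_dynamic"], ["neutral_dynamic"]),
    "fear static > neutral static": contrast(["fear_static"], ["neutral_static"]),
    "emotion dynamic > neutral dynamic": contrast(EMOTION_DYN, ["neutral_dynamic"]),
    "emotion static > neutral static": contrast(EMOTION_STA, ["neutral_static"]),
}

for name, w in CONTRASTS.items():
    assert sum(w) == 0  # every contrast is balanced
    print(f"{name:36s} {[str(x) for x in w]}")
```

Averaging each side keeps pooled and single-condition contrasts on a comparable scale. Unscaled weights such as [1, 1, 0, -1, -1, 0] test the same hypothesis but double the contrast estimate.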

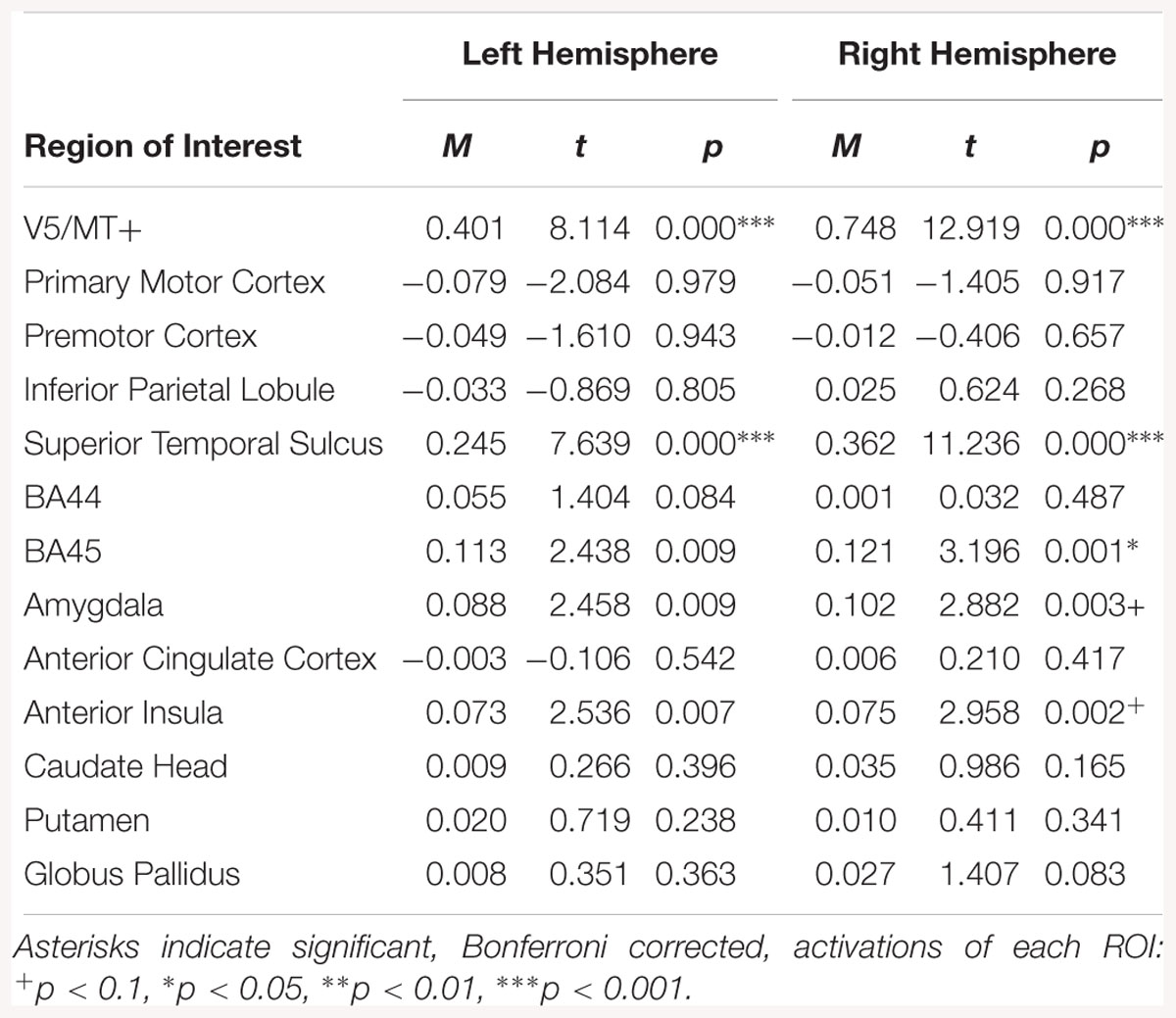

Regions of interest analyses identified activation in the right hemisphere for the disgust dynamic > disgust static contrast (see Table 3).

Table 3. Summary statistics for activation in each ROI across all participants for disgust dynamic > disgust static contrast.

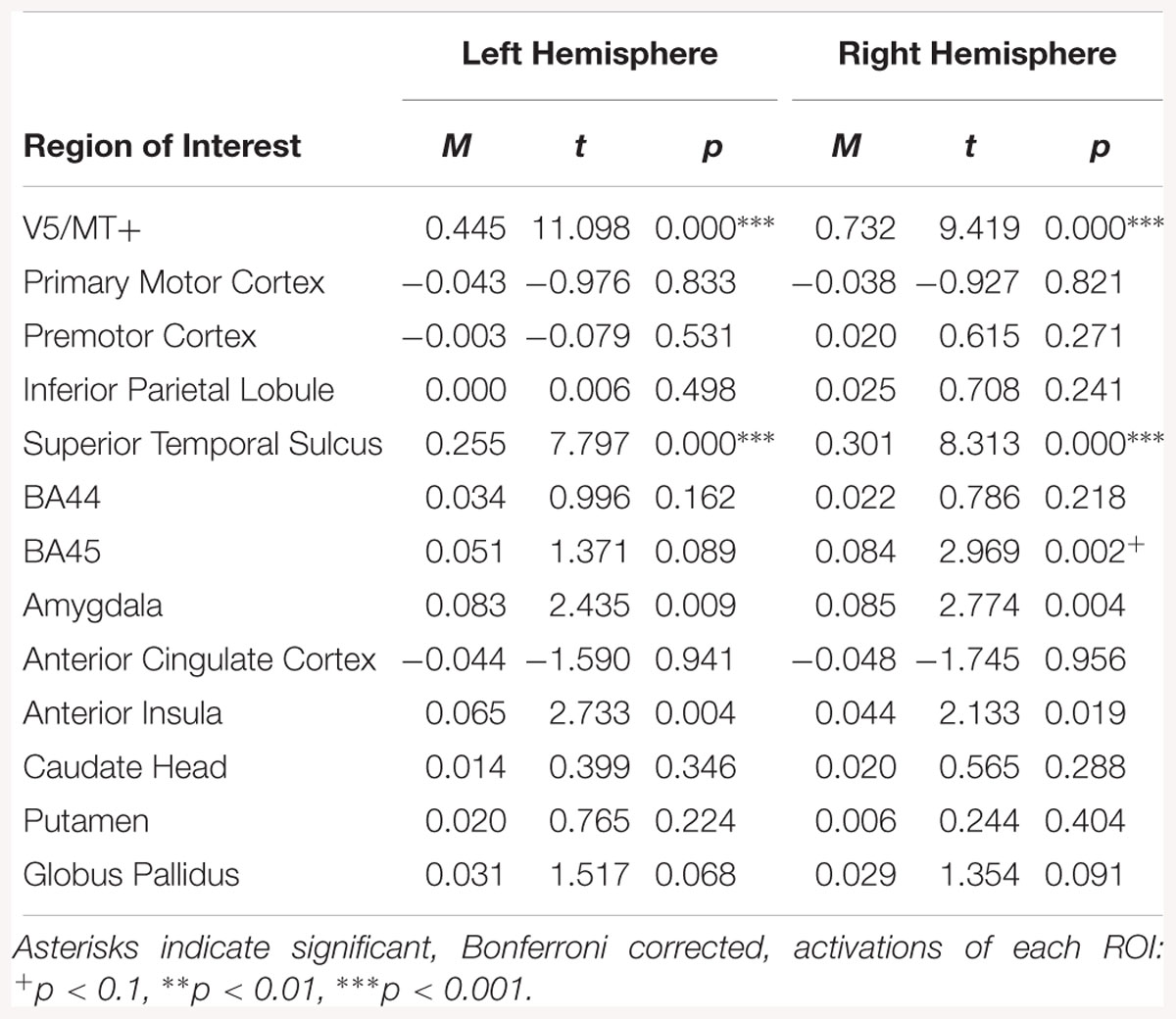

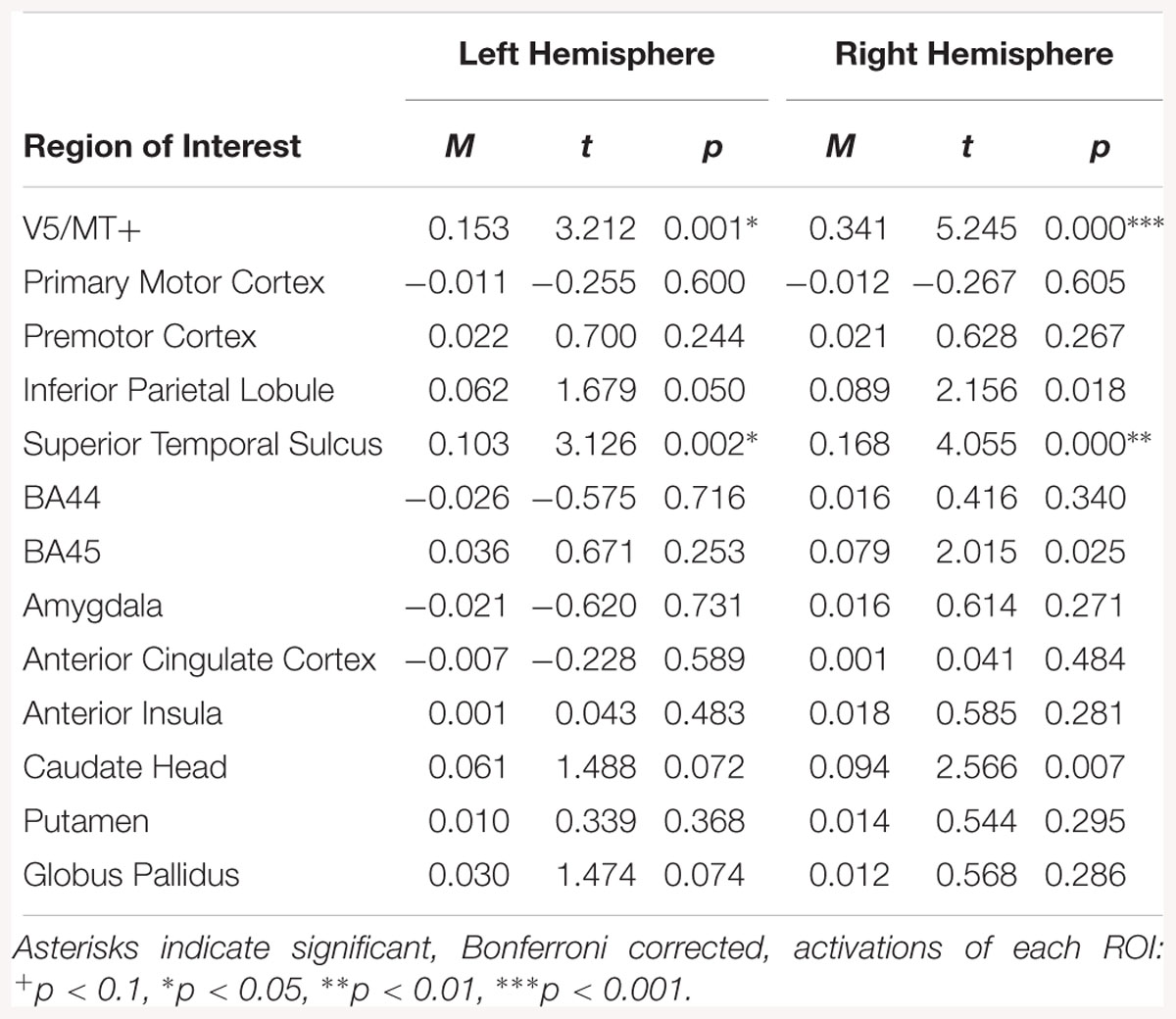

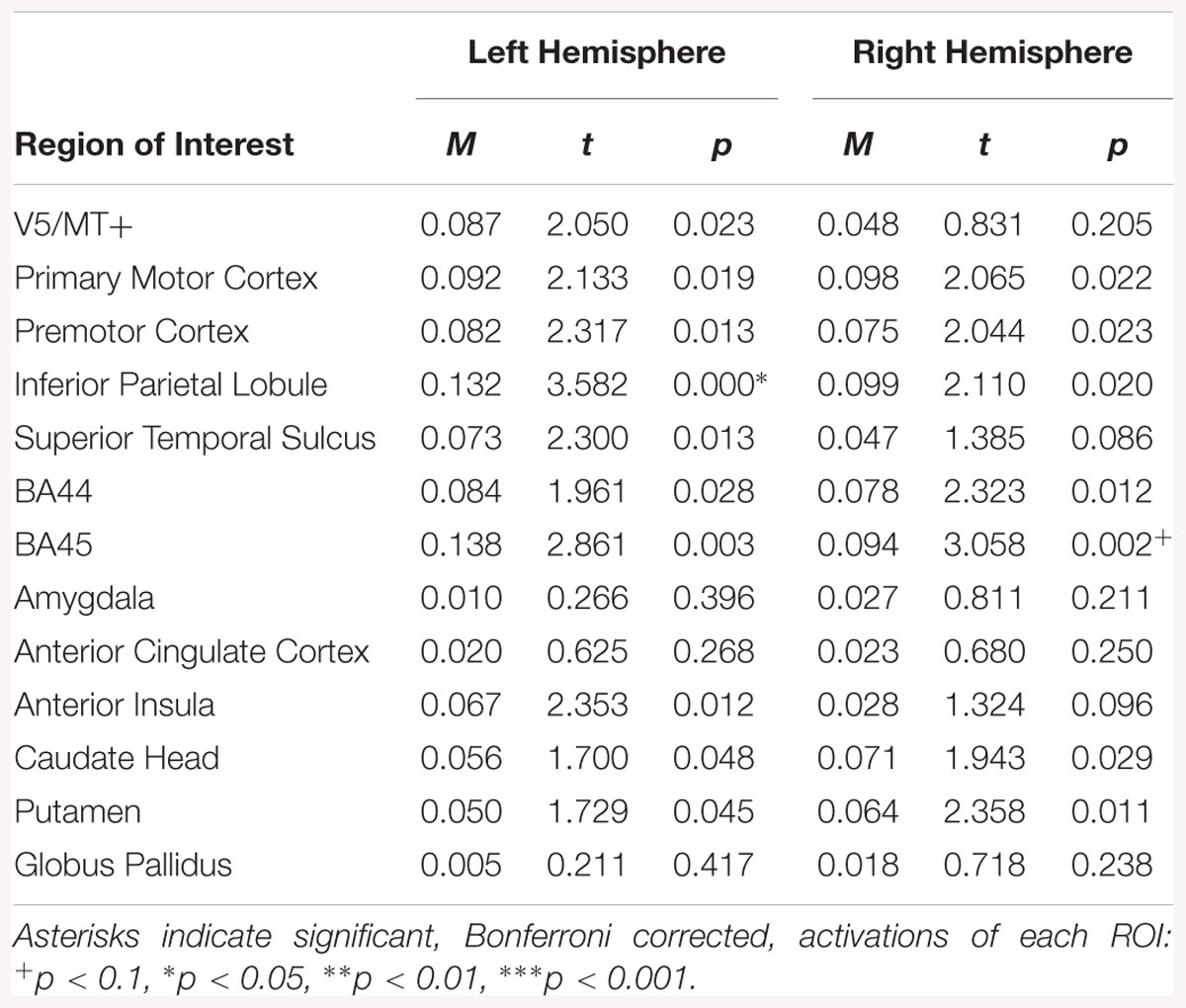

Bilateral activation was observed in the V5/MT+ and STS for the fear dynamic > fear static contrast. Moreover, the right BA45, amygdala, and AI were activated (see Table 4).

Table 4. Summary statistics for activation in each ROI across all participants for fear dynamic > fear static contrast.

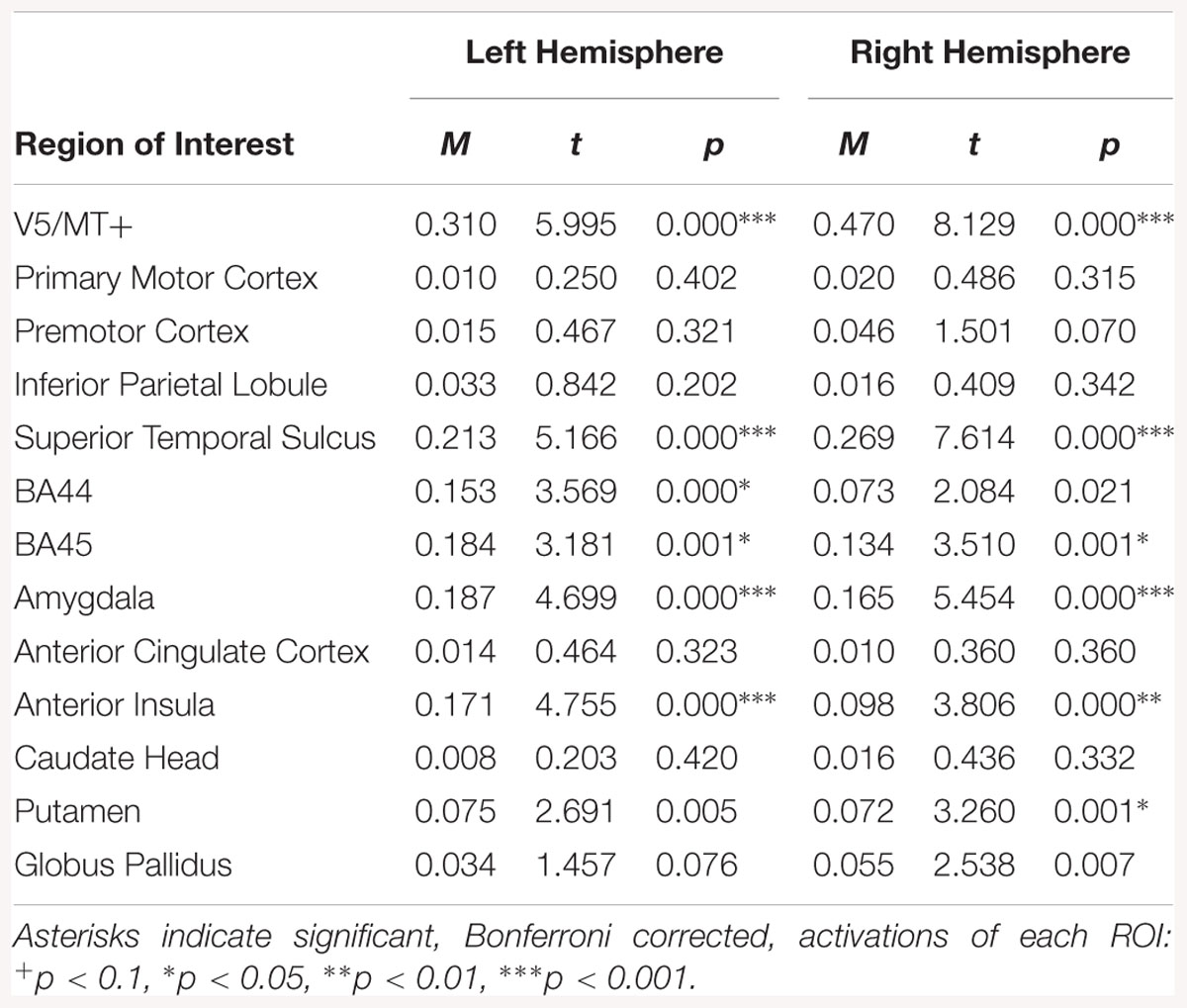

For the neutral dynamic > neutral static contrast, only V5/MT+ and STS were activated bilaterally (see Table 5).

Table 5. Summary statistics for activation in each ROI across all participants for neutral dynamic > neutral static contrast.

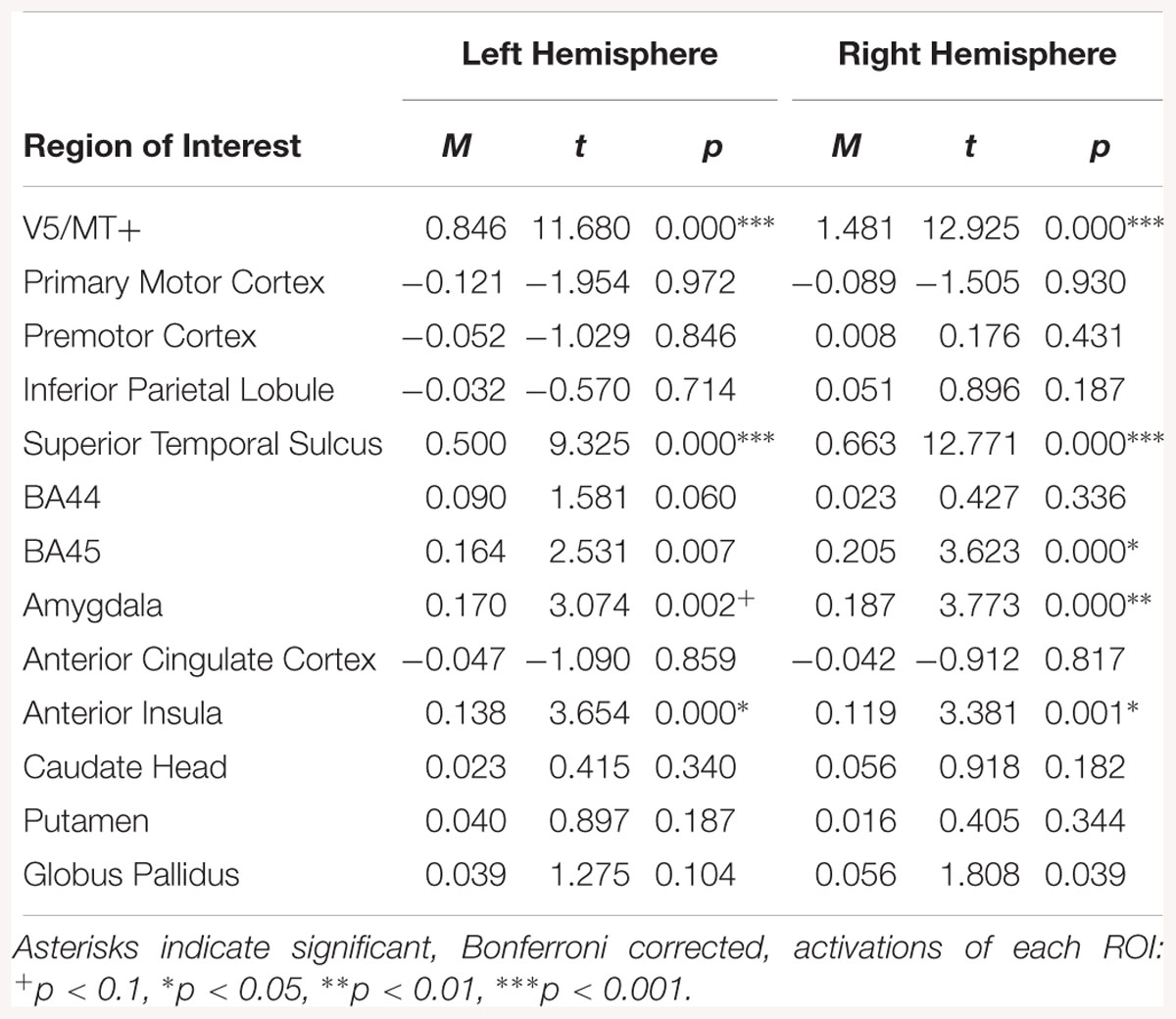

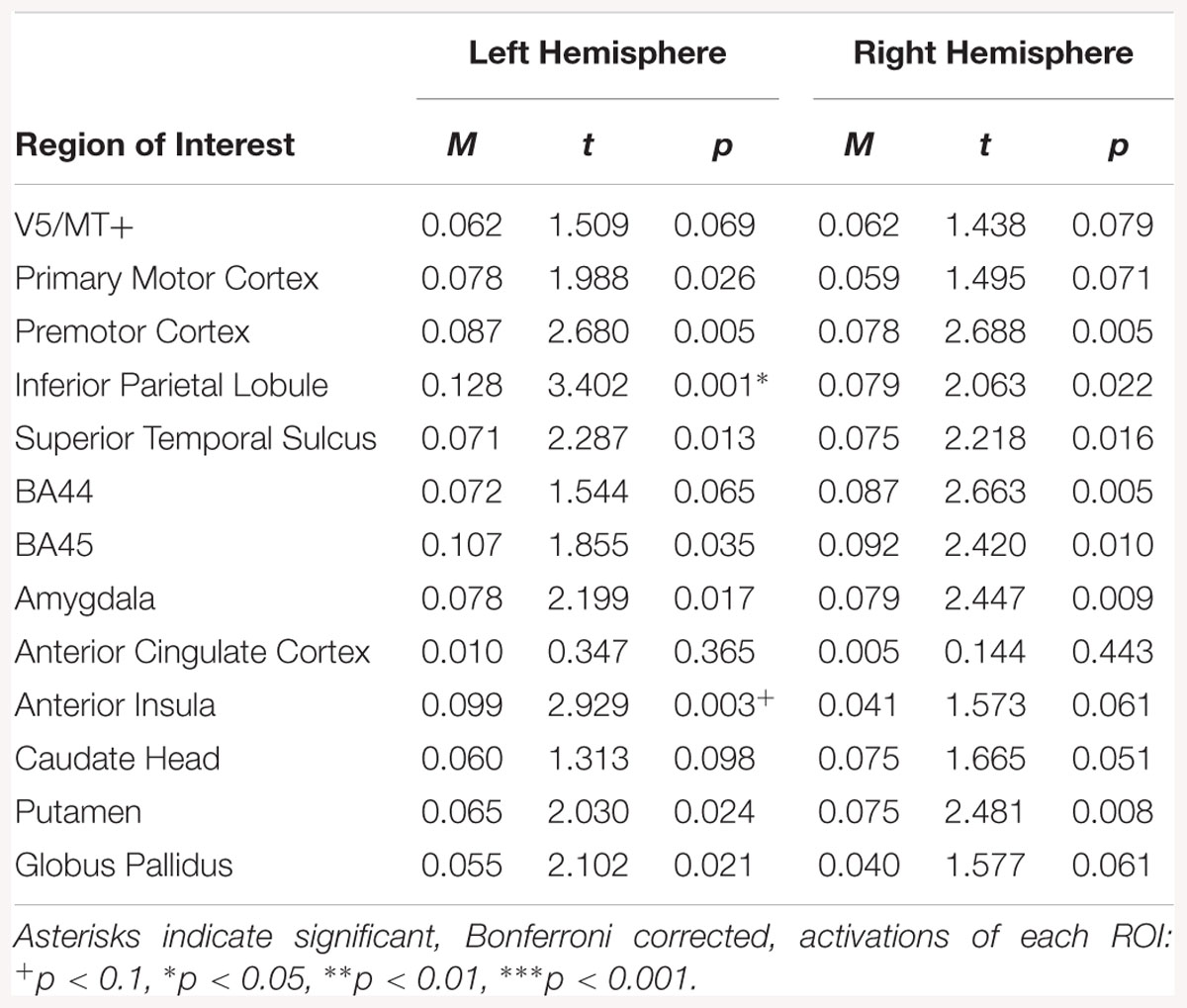

Regions of interest analysis for the emotion dynamic > emotion static contrast revealed bilateral activations in V5/MT+, STS, AI, and amygdala. Other structures activated by this contrast were the right BA45 and left AI (see Table 6).

Table 6. Summary statistics for activation in each ROI across all participants for emotion dynamic > emotion static contrast.

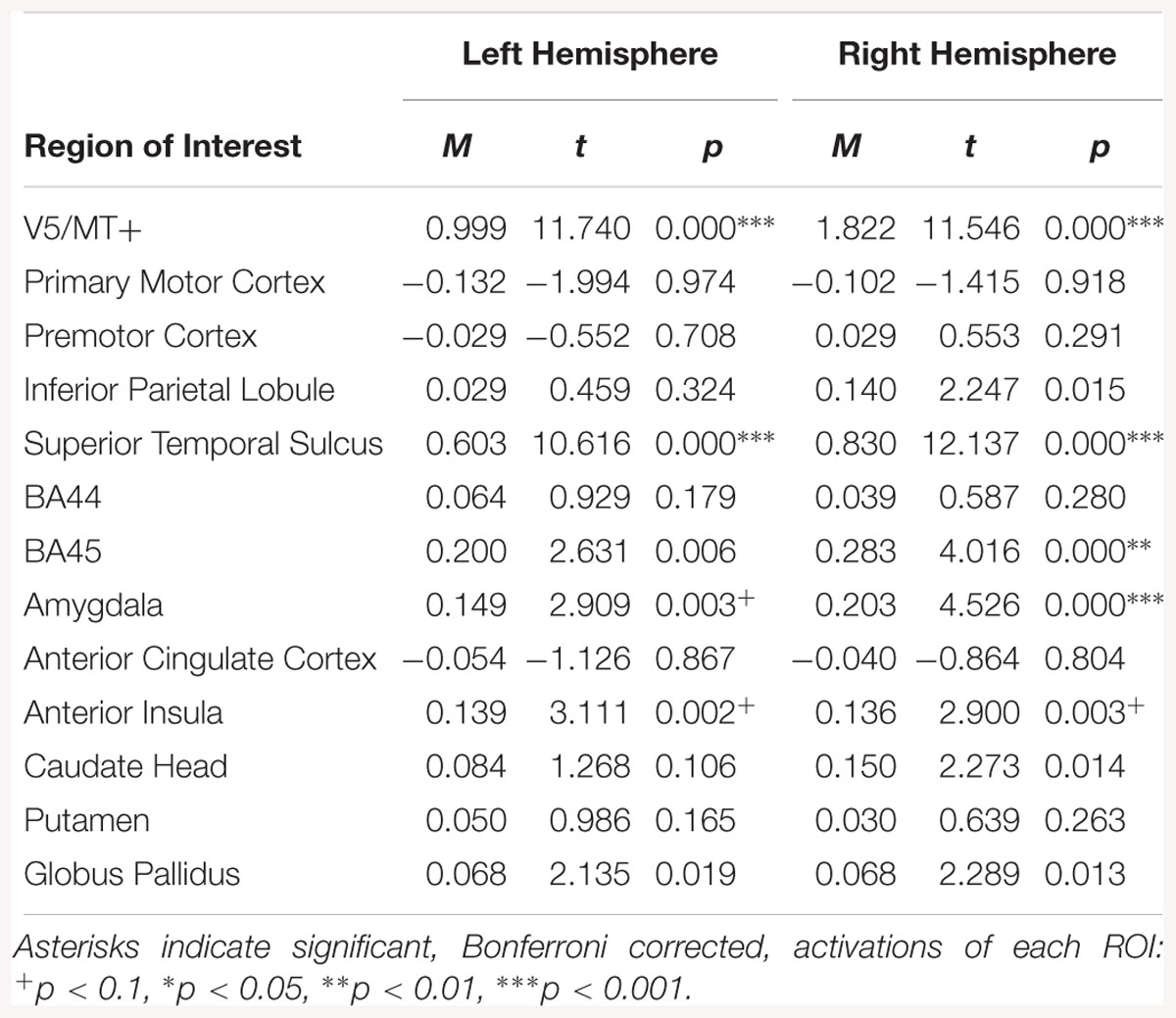

The all dynamic > all static contrast indicated bilateral activations in V5/MT+, STS, amygdala, and AI. The right BA45 was also activated (see Table 7).

Table 7. Summary statistics for activation in each ROI across all participants for all dynamic > all static expressions contrast.

Regions of interest analysis for the disgust dynamic > neutral dynamic contrast revealed bilateral activations in V5/MT+, STS, and BA45. Other structures revealed by this contrast were the left BA44 and left AI (see Table 8).

Table 8. Summary statistics for activation in each ROI across all participants for disgust dynamic > neutral dynamic contrast.

Regions of interest analysis for the disgust static > neutral static contrast showed activations in the left IPL and right BA45 (see Table 9).

Table 9. Summary statistics for activation in each ROI across all participants for disgust static > neutral static contrast.

For the fear dynamic > neutral dynamic contrast, activations were visible bilaterally in V5/MT+, STS, BA45, amygdala and AI. Activation was also noted in the left BA44 and right putamen (see Table 10).

Table 10. Summary statistics for activation in each ROI across all participants for fear dynamic > neutral dynamic contrast.

For the fear static > neutral static contrast, activations were observed in the left IPL and left AI (see Table 11).

Table 11. Summary statistics for activation in each ROI across all participants for fear static > neutral static contrast.

The emotion dynamic > neutral dynamic contrast indicated bilateral activations in V5/MT+, STS, BA45, amygdala and AI. Activation was also observed in the left BA44 and right putamen for this contrast (see Table 12).

Table 12. Summary statistics for activation in each ROI across all participants for emotion dynamic > neutral dynamic contrast.

The emotion static > neutral static contrast was associated with activation in the left premotor cortex, left IPL, and right BA45 (see Table 13).

Table 13. Summary statistics for activation in each ROI across all participants for emotion static > neutral static contrast.

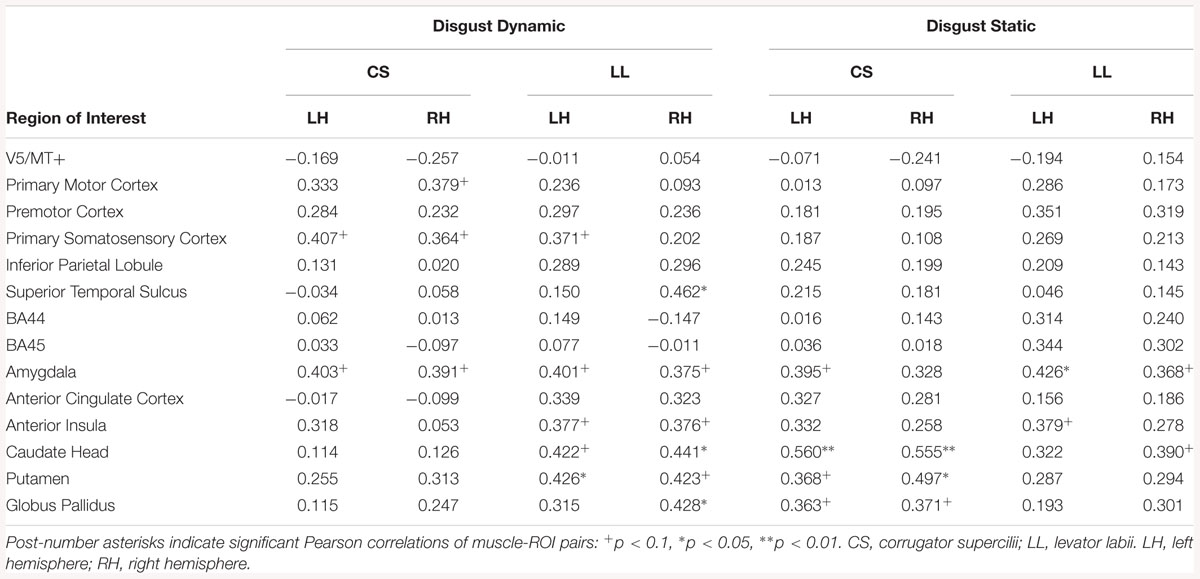

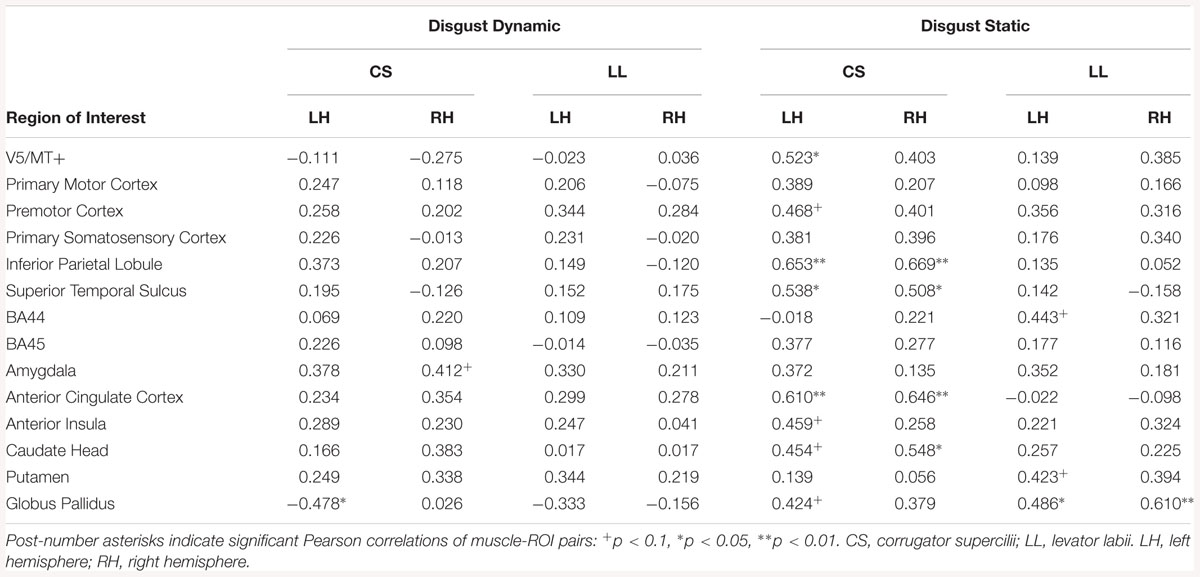

Correlation analyses in all subjects revealed a linear relationship between LL activity and activation of the left AI in the disgust dynamic condition. In the disgust static condition, LL activity was positively related to activation of the right premotor cortex and right caudate head and, in the left hemisphere, to activation in BA44, BA45, and AI (see Table 14).

Positive relationships between CS and brain activity were found in the right hemisphere in the caudate head and globus pallidus, as well as in various regions of the left hemisphere (IPL, STS, ACC, AI, caudate head, globus pallidus) (see Table 14).
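The muscle-brain analyses relate, across participants, an EMG measure to an ROI activation measure within a condition. The text describes these as linear relationships but does not name the coefficient. The sketch below assumes Pearson's r, computed over one EMG change score and one ROI contrast estimate per participant. It also shows the usual t-test of r against zero. Variable names and data are hypothetical.

```python
import math
import statistics


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two paired samples of equal length."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two paired samples of length >= 3")
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing r against 0; undefined for |r| == 1."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical per-participant values (not study data): LL change scores and
# left-AI contrast estimates for the same eight participants.
ll = [0.21, 0.05, 0.33, 0.12, 0.27, 0.02, 0.18, 0.30]
ai = [0.40, 0.10, 0.55, 0.22, 0.35, 0.05, 0.31, 0.47]
r = pearson_r(ll, ai)
print(f"r = {r:.3f}, t({len(ll) - 2}) = {r_to_t(r, len(ll)):.3f}")
```

When many ROIs are tested per condition, the resulting p-values would normally also need a multiple-comparison correction. Whether and how one was applied is not stated in this section.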

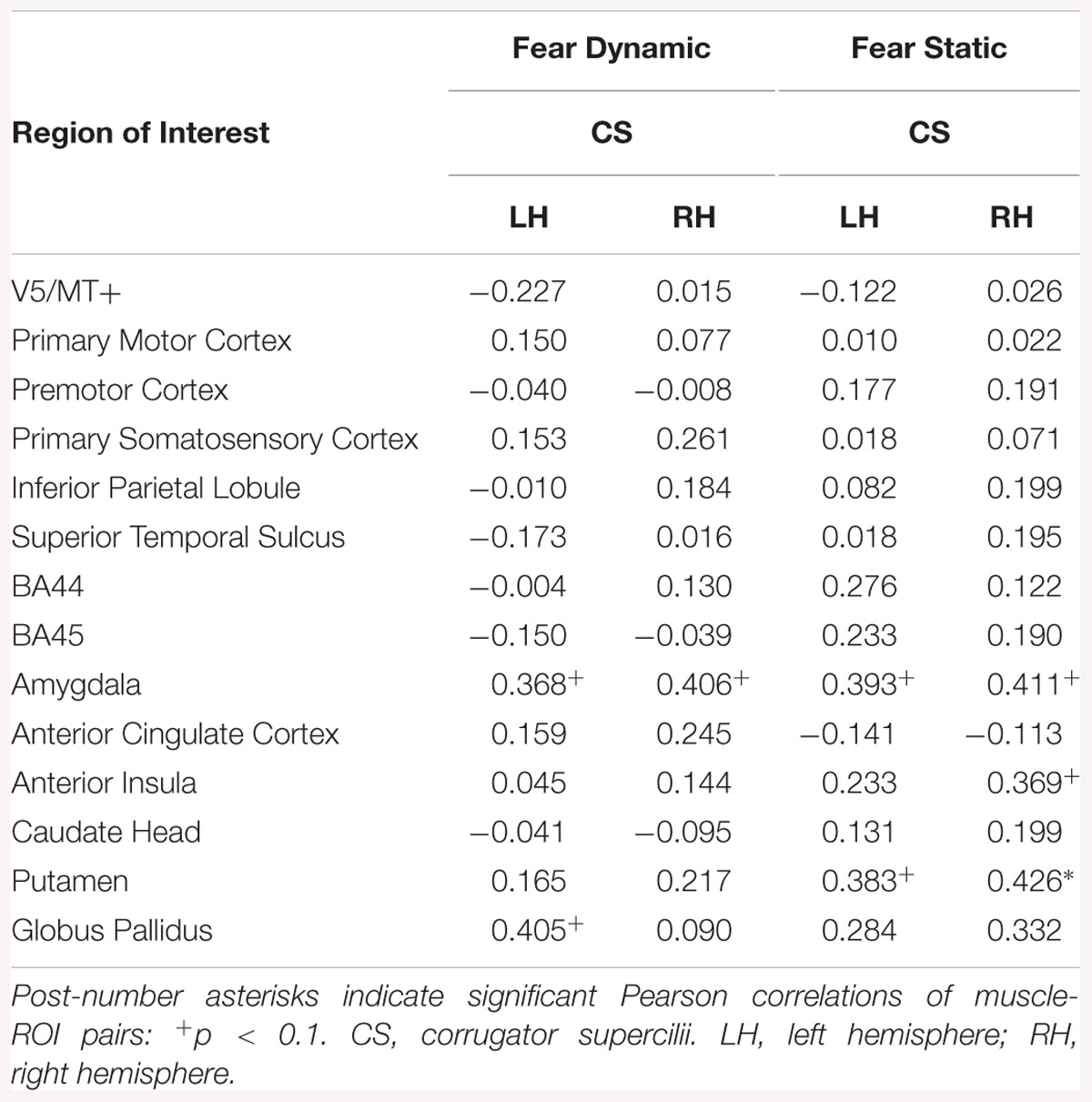

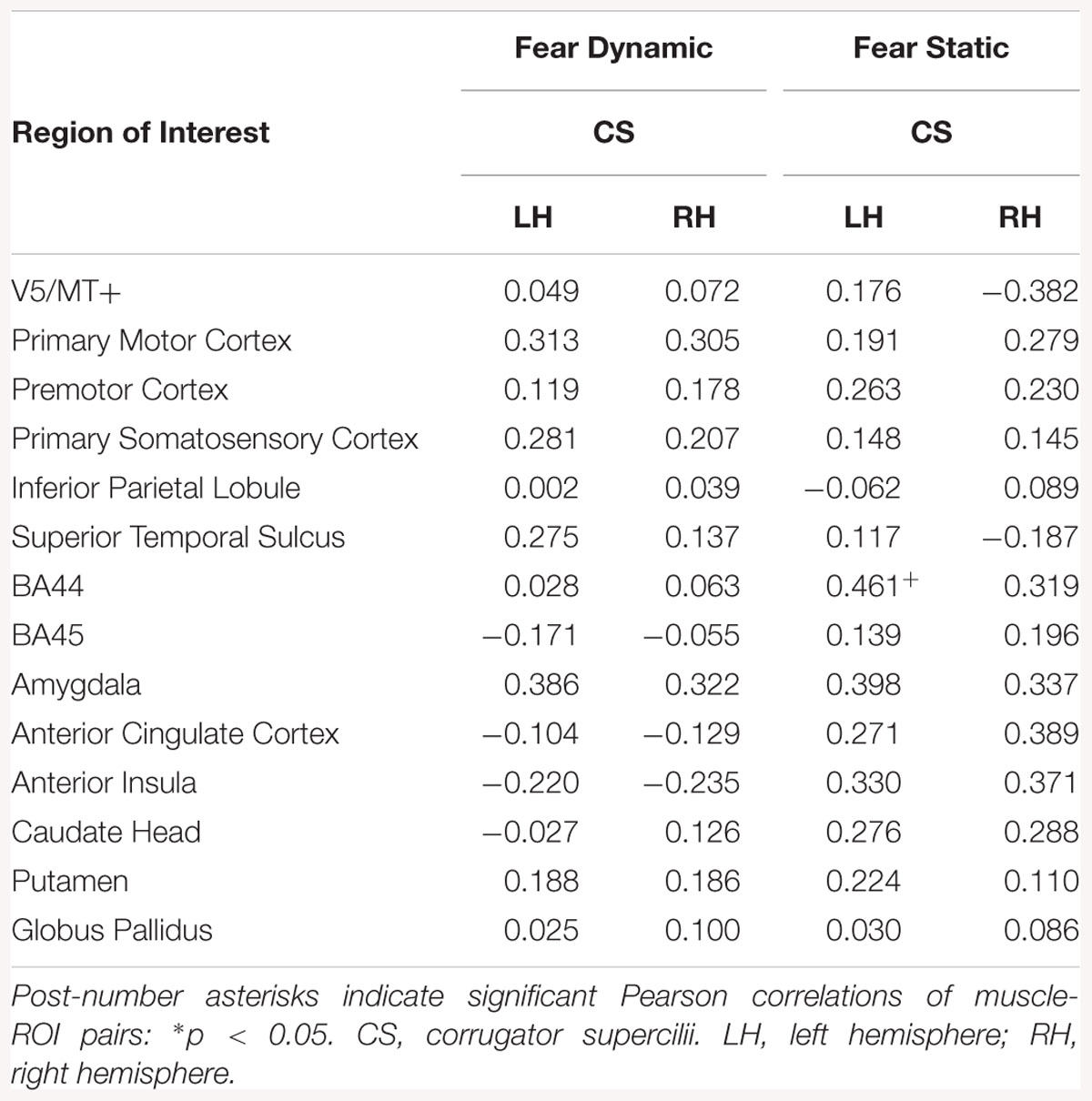

Correlation analyses in all subjects revealed a positive relationship between CS and activation in the left BA44, right BA45, and AI for the static fear condition. In the dynamic fear condition, there was a positive relationship between CS and activation in the left globus pallidus (see Table 15).

Correlation analyses of dynamic disgust in HE subjects revealed a positive relationship between LL and brain activity in several regions of the right hemisphere (STS, amygdala, AI, caudate head, putamen, globus pallidus) and the left hemisphere (amygdala, AI, caudate head, putamen). For static disgust in HE subjects, the relationship between LL and brain activity was significant for the left AI, right caudate head, and bilateral amygdalae (see Table 16).

Table 16. Muscles-brain correlations of dynamic and static disgust conditions in high empathic subjects.

Correlation analyses of dynamic disgust in HE subjects revealed no relationship between CS and brain activations. For the static disgust in HE subjects, the relationship between CS and brain activity was significant in the regions of the right (caudate head, putamen, globus pallidus) and left hemispheres (amygdala, caudate head, putamen, globus pallidus) (see Table 16).

Correlation analyses of dynamic fear in HE subjects revealed a positive relationship between CS and brain activity in the amygdalae bilaterally and the left globus pallidus. For static fear in HE subjects, the relationship between CS and brain activity was significant for the bilateral amygdalae and putamen and the right AI (see Table 17).

Table 17. Muscles-brain correlations of dynamic and static fear conditions in high empathic subjects.

In LE subjects, a relationship between LL and brain activity was found only in the disgust static condition, for the left BA44 and for the putamen and globus pallidus bilaterally (see Table 18).

Table 18. Muscles-brain correlations of dynamic and static disgust conditions in low empathic subjects.

Correlation analyses of dynamic disgust in LE subjects revealed a positive relationship between CS and activity in the right amygdala, and a negative relationship between this muscle and the left globus pallidus. For the static disgust condition, there was a positive relationship between CS and brain activity in the right hemisphere (IPL, STS, ACC, and caudate head) and in the left hemisphere (V5/MT+, premotor cortex, IPL, STS, ACC, AI, caudate head, globus pallidus) among LE subjects (see Table 18).

In LE subjects, there was a relationship between CS and brain activity only in the static fear condition, for the left BA44 (see Table 19).

Table 19. Muscles-brain correlations of dynamic and static fear conditions in low empathic subjects.

In the present study, static and dynamic stimuli were used to investigate facial reactions and brain activation in response to emotional facial expressions. To assess neuronal structures involved in automatic, spontaneous mimicry during perception of fear and disgust facial expressions, we collected simultaneous recordings of the EMG signal and BOLD response during the perception of stimuli. Additionally, to explore whether empathic traits are linked with facial muscle and brain activity, we divided participants into low and high empathy groups (i.e., LE and HE) based on the median score on a validated questionnaire.
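Participants were assigned to LE and HE groups by a median split of questionnaire scores. The sketch below shows one way to do this. The questionnaire is not named in this section, and the scores are hypothetical. Tie handling is an assumption: this version drops participants who score exactly at the median, which is one of several conventions in use.

```python
import statistics


def median_split(scores: dict[str, float]) -> tuple[list[str], list[str]]:
    """Split participants into (low, high) groups at the median score.

    Assumption: participants scoring exactly at the median are excluded.
    Another common convention assigns them to one of the two groups.
    """
    med = statistics.median(scores.values())
    low = [pid for pid, s in scores.items() if s < med]
    high = [pid for pid, s in scores.items() if s > med]
    return low, high


# Hypothetical questionnaire totals keyed by participant ID.
scores = {"p01": 41, "p02": 55, "p03": 48, "p04": 60, "p05": 48, "p06": 37}
low, high = median_split(scores)
print("LE:", low, "HE:", high)
```

In this example the median is 48. Participants p03 and p05 therefore fall into neither group, which shows how the tie rule can change group sizes.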

The EMG analysis revealed CS activity while viewing both fear and disgust facial displays, whereas perception of disgust specifically induced LL activity. Moreover, the HE group showed larger responses in the CS and LL muscles than the LE group; however, these responses did not differ between static and dynamic stimuli.

For the BOLD data, we used ROI analyses. Dynamic emotional expressions elicited higher activation than static emotional expressions in the bilateral STS, V5/MT+, and amygdalae and in the right BA45. As expected, the opposite contrast (static > dynamic) yielded no significant activations.

Using combined EMG-fMRI analysis, we found significant correlations between brain activity and facial muscle reactions during perception of both dynamic and static emotional stimuli. Correlated structures such as the amygdala and AI appeared more frequently in the HE than in the LE group.

The main result from the EMG recordings is that both fear and disgust increased corrugator muscle reactions, whereas levator labii activity was more pronounced in response to disgust than to fearful expressions. Before discussing this result, it should be emphasized that fear and disgust expressions have opposite biological functions: fear is thought to enhance perception of danger, whereas disgust dampens it (Susskind et al., 2008). Accordingly, the two emotions are characterized by opposite features, e.g., faster eye movements and higher inspiratory air velocity for fear than for disgust (Susskind et al., 2008). It has been suggested that fear and disgust involve opposite psychological mechanisms at the physiological level (Krusemark and Li, 2011). Based on these findings, we anticipated different patterns of facial muscle reactions for the two emotions. Our finding of CS contraction for both negative emotions is congruent with earlier studies reporting CS activity during perception of anger (Sato et al., 2008; Dimberg et al., 2011), fear, and disgust (Murata et al., 2016; Rymarczyk et al., 2016b). Moreover, Topolinski and Strack (2015) demonstrated that highly surprising events, compared with less surprising ones, specifically elicited CS activity.

In addition, Neta et al. (2009) suggested that CS activity could reflect the participants’ bias, i.e., tendency to rate surprise as either positive or negative. Thus, it is proposed that CS reactions could be an indicator of a global negative affect (Bradley et al., 2001; Larsen et al., 2003) as well as a tool to measure individual differences in emotion regulatory ability (Lee et al., 2012).

Furthermore, we found increased LL activity for disgust facial expressions but no evidence of LL activity for fear presentations. There is some evidence that perception of disgust faces (Vrana, 1993; Lundquist and Dimberg, 1995; Cacioppo et al., 2007; Rymarczyk et al., 2016b), disgusting pictures related to contamination (Yartz and Hawk, 2002), or tasting an unpleasant substance (Chapman et al., 2009) leads to specific contraction of the LL muscle. Moreover, LL reactions have been shown to occur not only for biological but also for moral disgust, i.e., during violations of moral norms (Whitton et al., 2014). Taken together, these results support the reliability of LL as an indicator of disgust experience (Armony and Vuilleumier, 2013, p. 62).

Regarding stimulus modality, we did not observe any differences in the magnitude of facial reactions between static and dynamic stimuli. Similar results were found in our earlier study (Rymarczyk et al., 2016b), in which reactions of the CS, LL, and lateral frontalis muscles were measured. There, dynamic stimuli had only a weak effect on the strength of facial reactions to fear expressions, and this effect was apparent only in the lateral frontalis muscle, which was not measured in the present study. It should be noted that most studies have reported higher EMG responses during perception of dynamic than static emotional facial expressions (Weyers et al., 2006; Sato et al., 2008; Rymarczyk et al., 2011); however, most of these studies tested the role of dynamic presentation in FM for happiness and anger. Taken together, the role of dynamic stimuli in FM for more biologically embedded emotions needs further research.

Our data also provide some evidence for a relationship between the intensity of FM and trait emotional empathy. HE subjects showed stronger CS and LL activity for fear and disgust than LE subjects did; however, the pattern of FM was the same in the HE and LE groups. Our results are in agreement with previous EMG studies showing that, compared with LE subjects, HE subjects show greater mimicry of emotional expressions of happiness and anger (Sonnby-Borgström, 2002; Sonnby-Borgström et al., 2003; Dimberg et al., 2011), as well as of fear (Balconi and Canavesio, 2016; Rymarczyk et al., 2016b) and disgust (Balconi and Canavesio, 2013b; Rymarczyk et al., 2016b). Together, these results suggest that FM and emotional empathy are interrelated phenomena (Hatfield et al., 1992; McIntosh, 2006). Moreover, the magnitude of FM may be a strong predictor of empathy. According to PAM (de Waal, 2008), HE people exhibit stronger FM for emotional stimuli because, at the neuronal level, they engage brain areas related to the representation of their own feelings, e.g., the AI (Preston, 2007).

Neuroimaging data revealed that observation of dynamic emotional stimuli, compared with dynamic neutral stimuli, engaged a distributed brain network consisting of the bilateral STS, V5/MT+, amygdala, AI, and BA45; the left BA44 and right putamen were also activated. In contrast, perception of static emotional faces, compared with static neutral faces, elicited activity in the left IPL, right BA45, left AI, and left premotor cortex.

Apart from the STS and V5/MT+, the dynamic vs. static fear contrast showed greater activity in the right BA45, right amygdala, and right AI. Dynamic versus static disgust faces induced greater activity in the right BA45. Our findings in the bilateral visual area V5/MT+ and STS corroborate previous results confirming the importance of these structures in motion and biological motion perception, respectively (Robins et al., 2009; Arsalidou et al., 2011; Foley et al., 2012; Furl et al., 2015). It has been suggested that, because of their complexity, dynamic facial characteristics require enhanced visual analysis in V5/MT+, which might result in widespread activation patterns (Vaina et al., 2001).

Previous studies have reported STS activation for facial motion during speech production (Hall et al., 2005) and for facial expressions of happiness and anger (Kilts et al., 2003; Rymarczyk et al., 2018), fear (LaBar et al., 2003), and disgust (Trautmann et al., 2009). Moreover, STS activation was reported during detection of movements of natural faces (Schultz and Pilz, 2009), but not of computer-generated faces (Sarkheil et al., 2013). According to the neurocognitive model of face processing (Haxby et al., 2000), STS activity could be related to enhanced perceptual and/or cognitive processing of the dynamic characteristics of faces (Sato et al., 2004). To summarize, our results, together with those of others, support the use of dynamic stimuli to study the neuronal correlates of emotional facial expressions (Fox et al., 2009; Zinchenko et al., 2018).

In our study, we found activity in brain areas typically implicated in simulation processes, namely the IFG and IPL (Carr et al., 2003; Jabbi and Keysers, 2008). It has been proposed that understanding the behavior of others is based on direct mirroring of somatosensory or motor representations of the observed action in the observer's brain (Gazzola et al., 2006; van der Gaag et al., 2007; Jabbi and Keysers, 2008). For example, activation of these MNS structures was found during observation and imitation of others' actions, e.g., hand movements (Gallese et al., 1996; Rizzolatti and Craighero, 2004; Molnar-Szakacs et al., 2005; Vogt et al., 2007). Moreover, IFG activity was greater during observation of actions embedded in a context than of context-free actions, suggesting that this structure plays a role not only in recognizing actions but also in coding the intentions of others (Iacoboni et al., 2005) and in contemplating others' mental states (for a meta-analysis, see Mar, 2011). Neuroimaging studies have shown involvement of the IFG and IPL during observation of both dynamic and static (Carr et al., 2003) facial stimuli, for example, when comparing dynamic faces to dynamic objects (Fox et al., 2009), dynamic faces to dynamic scrambled faces (Sato et al., 2004; Schultz and Pilz, 2009), and dynamic faces to static faces (Arsalidou et al., 2011; Foley et al., 2012; Rymarczyk et al., 2018). Interestingly, in our study, static emotional images compared with static neutral images also activated the IPL and IFG. It is possible that brain areas involved in motor imagery are activated even in the absence of the biological movement that typically accompanies emotional, but not neutral, facial expressions. Accordingly, Kilts et al. (2003) reported that judging emotion intensity during perception of both angry and happy static expressions, compared with neutral expressions, activated motor and premotor cortices.
Those authors proposed that during perception of static emotional images “decoding for emotion content is accomplished by the covert motor simulation of the expression prior to attempts to match the static percept to its dynamic mental representation” (Kilts et al., 2003, p. 165). To summarize, growing neuroimaging evidence confirms the role of the frontal and parietal dorsal streams in the processing of both static (Carr et al., 2003) and dynamic emotional stimuli (Sarkheil et al., 2013), including fear and disgust (Schaich Borg et al., 2008). Since facial emotional expressions are strong cues in social interactions, it has been proposed that natural stimuli (Schultz and Pilz, 2009), especially dynamic ones, may be powerful signals for activating simulation processes within the MNS.

In our study, activity in several regions correlated with facial reactions. For fear expressions, CS reactions correlated with activation in the right amygdala, right AI, and left BA44 for static displays, and in the left globus pallidus for dynamic ones. A similar pattern was observed for disgust displays: for static displays, CS reactions correlated with activation in the left AI, the left IPL, and the globus pallidus and caudate head bilaterally. Moreover, for static disgust displays, LL reactions correlated with activation in the left BA44, left BA45, left AI, and bilateral premotor cortex, whereas LL correlations for dynamic displays were observed primarily in the left AI (see Table 14).

In almost all conditions (i.e., during perception of fear and disgust, with both static and dynamic stimuli), facial reactions correlated with activity in brain regions related to motor simulation of facial expressions (i.e., IFG and IPL), as discussed above, as well as in the AI. Similar results were obtained in other studies that recorded EMG and BOLD signals simultaneously during stimulus perception (Likowski et al., 2012; Rymarczyk et al., 2018). For example, Likowski et al. (2012) found that ZM reactions to static happy expressions and CS reactions to static angry faces correlated with activations in the right IFG. Moreover, Rymarczyk et al. (2018) observed such correlations mainly for dynamic stimuli. Altogether, these studies emphasize the role of the IFG and IPL in intentional imitation of emotional expressions and suggest that these regions, which are sensitive to goal-directed actions, may constitute the neuronal correlates of FM [for a review, see Bastiaansen et al., 2009].

The AI activation observed in our study during perception of disgust and fear is in line with the results of other studies (Phan et al., 2002). For example, the AI has been shown to respond during experience of unpleasant odors (Wicker et al., 2003) and tastes (Jabbi et al., 2007), and during perception of disgust-inducing pictures (Shapira et al., 2003) as well as disgusted faces (Chen et al., 2009). However, the AI seems to be engaged in processing not only negative but also positive emotions, e.g., during smile execution (Hennenlotter et al., 2005). Furthermore, most researchers agree that the AI, which is considered an extension of the MNS, may underlie the simulation of emotional feeling states (van der Gaag et al., 2007; Jabbi and Keysers, 2008). These assumptions correspond with findings of other simultaneous EMG-fMRI studies showing correlations between insula activity and facial reactions during perception of emotional expressions. For example, Likowski et al. (2012) showed that CS reactions to angry faces were associated with activity in the right insula, while Rymarczyk et al. (2018) found such relationships for happiness expressions with ZM and orbicularis oculi responses. It should be noted that, more recently, the AI has been considered a key brain region involved in the experience of emotions (Menon and Uddin, 2010), among other processes such as judgments of trustworthiness or sexual arousal [for a review see (Bud) Craig, 2009].

Next, we found correlations between amygdala activity and CS reactions during perception of fear stimuli. These results parallel findings of other neuroimaging studies that revealed amygdala activity during observation (Carr et al., 2003) as well as execution of fear and other negative facial expressions (van der Gaag et al., 2007). A number of studies emphasize the role of the amygdala in social-emotional recognition (Adolphs, 2002; Adolphs and Spezio, 2006), in particular in the processing of salient face stimuli under unpredictable circumstances (Adolphs, 2010). Moreover, it has been suggested that the amygdala contributes to the detection of relevant stimuli (Sander et al., 2003). Therefore, it is possible that, owing to increased vigilance when observing the dynamically changing salient features of faces, processing the dynamic aspects of faces requires amygdala activation.

Furthermore, our EMG-fMRI analysis revealed correlations between activity of the basal ganglia (i.e., globus pallidus and caudate head) and facial reactions for fear and disgust expressions. One interpretation of this result might be that the caudate nucleus and the globus pallidus, which are involved in motor control (Salih et al., 2009), also play a role in motor control during automatic FM. On the other hand, clinical studies (Sprengelmeyer et al., 1996; Calder et al., 2016) and neuroimaging data (Sprengelmeyer et al., 1998) suggest that both the globus pallidus and the caudate nuclei play an important role in processing of disgust expressions. Moreover, the globus pallidus seems to be involved in aversive responses to fear and anxiety (Talalaenko et al., 2008), as well as in affect regulation (Murphy et al., 2003).

A further innovative feature of our study was to test whether empathy traits modulate the neuronal correlates of FM. As discussed above, the high empathy group, compared with the low empathy group, showed a distinct pattern of EMG responses consistent with typical FM, i.e., greater CS reactions for fear and disgust and greater LL reactions for disgust. Importantly, correlations between FM and activity in emotion-related brain structures (e.g., AI, amygdala) were more evident in the HE group. Our finding of anterior insula activity is partially consistent with the few neuroimaging studies that used disgust stimuli (for a review, see Baird et al., 2011). For example, observing film clips of people drinking liquids and displaying disgusted faces was shown to evoke activity in a neural circuit consisting of the AI, IFG, and cingulate cortex, but only in high empathic persons. It seems that activations related to disgust were more frequently observed for high-arousing stimuli, such as pictures of painful situations (Jackson et al., 2006) or facial pain expressions (Botvinick et al., 2005; Saarela et al., 2007). However, in our study, we found no differences in brain activity between low and high empathic subjects during perception of fear and disgust facial expressions. This may result from the different kinds of stimuli used in our study and in others: while most studies used high-arousing stimuli, such as pain-inducing situations, our study used low-arousing stimuli. In other words, the perception of emotional facial expressions, compared with the perception of pain-inducing situations, may not be sufficient to reveal brain differences related to low and high empathic traits.

Regarding the correlation between facial reactions to fear and disgust stimuli and amygdala activity, our results agree with the assumption that the amygdala, together with the AI, IFG, and IPL, constitutes the neuronal structures required for complex empathic processes (Bzdok et al., 2012; Decety et al., 2012; Marsh, 2018). Taken together, it is proposed that activity of the amygdala, together with activity of the insula, may constitute the neuronal basis of affective simulation; however, the specific role of the amygdala in affective resonance requires further clarification. As noted by Preston and de Waal (2002): “So, if the mirror neurons represent emotional behavior, then the insula may relay information from the premotor mirror neurons to the amygdala” (see Augustine, 1996).

Our results from simultaneously recorded EMG and BOLD signals during perception of fear and disgust confirm that, as for anger and happiness (Likowski et al., 2012; Rymarczyk et al., 2018), the MNS may constitute the neuronal basis of FM. In particular, the core MNS structures (i.e., IFG and IPL) are thought to be responsible for motor simulation, while MNS-related limbic regions (e.g., AI) seem to be related to affective resonance. In line with this, FM may include both a motor and an emotional component; however, their mutual relations require further study. For example, motor imitation may lead to emotional contagion or vice versa, among other factors that play an important role in social interactions.

Our study is the first to explore the relation between facial mimicry, activity of subsystems of the MNS, and level of emotional empathy. We found that high empathic people showed stronger facial reactions and, notably, that these reactions were correlated with stronger activation of core MNS structures and MNS-related limbic structures. In other words, it appears that high empathic people imitate the emotions of others more than low empathic people do. Additionally, we have shown that the processes of motor imitation and affective contagion were more evident for dynamic (more natural) than for static emotional facial expressions.

Regarding stimulus modality, our study confirmed the general agreement among researchers that dynamic facial expressions are a valuable source of information in social communication. This was evident in greater activation of the neural network during dynamic than during static facial expressions of fear and disgust. Moreover, stimulus dynamics appeared to be an important factor in eliciting emotion, especially for fear.

As noted in the introduction, increased CS or LL activity in response to emotional facial expressions is not specific to a single emotion, i.e., neither to fear nor to disgust. Some studies found increased CS activity during perception of various negative emotions (Murata et al., 2016). Likewise, increased LL activity has been found not only in disgust mimicry but also in pain expressions, together with increased CS activity (Prkachin and Solomon, 2008). Therefore, our inferences about brain-muscle relationships are limited by the non-specificity of the CS and LL as indicators of FM for fear and disgust.

Next, there is some evidence that increased activity of another facial muscle, the lateral frontalis, may be related to fear expressions (Van Boxtel, 2010). In our previous work, we showed that fear presentations induced activity in this muscle (Rymarczyk et al., 2016b). However, in the current work, we did not measure the activity of this muscle because the cap intended for EMG measurements in the MRI environment was not designed for that purpose.

KR, KJ-S, and ŁŻ conceived and designed the experiments. KR and ŁŻ performed the experiments, analyzed the data, and contributed materials. KR, ŁŻ, KJ-S, and IS wrote the manuscript.

This study was supported by grant no. 2011/03/B/HS6/05161 from the Polish National Science Centre provided to KR and grant no. WP/2018/A/22_2018_2019 from SWPS University of Social Sciences and Humanities.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.00701/full#supplementary-material

Adolphs, R. (2002). Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12, 169–177. doi: 10.1016/S0959-4388(02)00301-X

Adolphs, R. (2010). What does the amygdala contribute to social cognition? Ann. N. Y. Acad. Sci. 1191, 42–61. doi: 10.1111/j.1749-6632.2010.05445.x

Adolphs, R., and Spezio, M. (2006). Role of the amygdala in processing visual social stimuli. Prog. Brain Res. 156, 363–378. doi: 10.1016/S0079-6123(06)56020-0

Allen, P. J., Josephs, O., and Turner, R. (2000). A method for removing imaging artifact from continuous EEG recorded during functional MRI. Neuroimage 12, 230–239. doi: 10.1006/nimg.2000.0599

Armony, J., and Vuilleumier, P. (eds). (2013). The Cambridge Handbook of Human Affective Neuroscience. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511843716

Arsalidou, M., Morris, D., and Taylor, M. J. (2011). Converging evidence for the advantage of dynamic facial expressions. Brain Topogr. 24, 149–163. doi: 10.1007/s10548-011-0171-4

Augustine, J. R. (1996). Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res. Rev. 22, 229–244. doi: 10.1016/S0165-0173(96)00011-2

Baird, A. D., Scheffer, I. E., and Wilson, S. J. (2011). Mirror neuron system involvement in empathy: a critical look at the evidence. Soc. Neurosci. 6, 327–335. doi: 10.1080/17470919.2010.547085

Balconi, M., and Canavesio, Y. (2013a). Emotional contagion and trait empathy in prosocial behavior in young people: the contribution of autonomic (facial feedback) and balanced emotional empathy scale (BEES) measures. J. Clin. Exp. Neuropsychol. 35, 41–48. doi: 10.1080/13803395.2012.742492

Balconi, M., and Canavesio, Y. (2013b). High-frequency rTMS improves facial mimicry and detection responses in an empathic emotional task. Neuroscience 236, 12–20. doi: 10.1016/j.neuroscience.2012.12.059

Balconi, M., and Canavesio, Y. (2014). Is empathy necessary to comprehend the emotional faces? The empathic effect on attentional mechanisms (eye movements), cortical correlates (N200 event-related potentials) and facial behaviour (electromyography) in face processing. Cogn. Emot. 30, 210–224. doi: 10.1080/02699931.2014.993306

Balconi, M., and Canavesio, Y. (2016). Empathy, approach attitude, and rTMs on left DLPFC affect emotional face recognition and facial feedback (EMG). J. Psychophysiol. 30, 17–28. doi: 10.1027/0269-8803/a000150

Balconi, M., Vanutelli, M. E., and Finocchiaro, R. (2014). Multilevel analysis of facial expressions of emotion and script: self-report (arousal and valence) and psychophysiological correlates. Behav. Brain Funct. 10:32. doi: 10.1186/1744-9081-10-32

Bastiaansen, J. A. C. J., Thioux, M., and Keysers, C. (2009). Evidence for mirror systems in emotions. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 364, 2391–2404. doi: 10.1098/rstb.2009.0058

Bernstein, M., and Yovel, G. (2015). Two neural pathways of face processing: a critical evaluation of current models. Neurosci. Biobehav. Rev. 55, 536–546. doi: 10.1016/j.neubiorev.2015.06.010

Betti, V., and Aglioti, S. M. (2016). Dynamic construction of the neural networks underpinning empathy for pain. Neurosci. Biobehav. Rev. 63, 191–206. doi: 10.1016/j.neubiorev.2016.02.009

Blair, R. J. (1999). Psychophysiological responsiveness to the distress of others in children with autism. Pers. Individ. Dif. 26, 477–485. doi: 10.1016/S0191-8869(98)00154-8

Botvinick, M., Jha, A. P., Bylsma, L. M., Fabian, S. A., Solomon, P. E., and Prkachin, K. M. (2005). Viewing facial expressions of pain engages cortical areas involved in the direct experience of pain. Neuroimage 25, 312–319. doi: 10.1016/j.neuroimage.2004.11.043

Bradley, M. M., Codispoti, M., Cuthbert, B. N., and Lang, P. J. (2001). Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion 1, 276–298. doi: 10.1037//1528-3542.1.3.276

Brett, M., Anton, J. L., Valabregue, R., and Poline, J. B. (2002). “Region of interest analysis using an SPM toolbox,” in Paper Presented at the 8th International Conference on Functional Mapping of the Human Brain, Sendai.

Bzdok, D., Schilbach, L., Vogeley, K., Schneider, K., Laird, A. R., Langner, R., et al. (2012). Parsing the neural correlates of moral cognition: ALE meta-analysis on morality, theory of mind, and empathy. Brain Struct. Funct. 217, 783–796. doi: 10.1007/s00429-012-0380-y

Cacioppo, J. T., Petty, R. E., Losch, M. E., and Kim, H. S. (1986). Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol. 50, 260–268. doi: 10.1037//0022-3514.50.2.260

Cacioppo, J. T., Tassinary, L. G., and Berntson, G. G. (eds). (2007). Handbook of Psychophysiology, 3rd Edn. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511546396

Calder, A. J., Keane, J., Manes, F., Antoun, N., and Young, A. W. (2016). Facial Expression Recognition: Selected Works of Andy Young. Abingdon: Routledge. doi: 10.4324/9781315715933

Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C., and Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. U.S.A. 100, 5497–5502. doi: 10.1073/pnas.0935845100

Chapman, H. A., Kim, D. A., Susskind, J. M., and Anderson, A. K. (2009). In bad taste: evidence for the oral origins of moral disgust. Science 323, 1222–1226. doi: 10.1126/science.1165565

Chartrand, T. L., and Bargh, J. A. (1999). The chameleon effect: the perception-behavior link and social interaction. J. Pers. Soc. Psychol. 76, 893–910. doi: 10.1037/0022-3514.76.6.893

Chen, Y. H., Dammers, J., Boers, F., Leiberg, S., Edgar, J. C., Roberts, T. P. L., et al. (2009). The temporal dynamics of insula activity to disgust and happy facial expressions: a magnetoencephalography study. Neuroimage 47, 1921–1928. doi: 10.1016/j.neuroimage.2009.04.093

Craig, A. D. (2009). How do you feel — now? The anterior insula and human awareness. Nat. Rev. Neurosci. 10, 59–70. doi: 10.1038/nrn2555

de Waal, F. B. M. (2008). Putting the altruism back into altruism: the evolution of empathy. Annu. Rev. Psychol. 59, 279–300. doi: 10.1146/annurev.psych.59.103006.093625

de Waal, F. B. M., and Preston, S. D. (2017). Mammalian empathy: behavioural manifestations and neural basis. Nat. Rev. Neurosci. 18, 498–509. doi: 10.1038/nrn.2017.72

Decety, J., and Jackson, P. L. (2004). The functional architecture of human empathy. Behav. Cogn. Neurosci. Rev. 3, 71–100. doi: 10.1177/1534582304267187

Decety, J., Norman, G. J., Berntson, G. G., and Cacioppo, J. T. (2012). A neurobehavioral evolutionary perspective on the mechanisms underlying empathy. Prog. Neurobiol. 98, 38–48. doi: 10.1016/j.pneurobio.2012.05.001

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology 19, 643–647. doi: 10.1111/j.1469-8986.1982.tb02516.x

Dimberg, U., Andréasson, P., and Thunberg, M. (2011). Emotional empathy and facial reactions to facial expressions. J. Psychophysiol. 25, 26–31. doi: 10.1027/0269-8803/a000029

Dimberg, U., and Petterson, M. (2000). Facial reactions to happy and angry facial expressions: evidence for right hemisphere dominance. Psychophysiology 37, 693–696. doi: 10.1111/1469-8986.3750693

Dimberg, U., Thunberg, M., and Grunedal, S. (2002). Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn. Emot. 16, 449–471. doi: 10.1080/02699930143000356

Foley, E., Rippon, G., Thai, N. J., Longe, O., and Senior, C. (2012). Dynamic facial expressions evoke distinct activation in the face perception network: a connectivity analysis study. J. Cogn. Neurosci. 24, 507–520. doi: 10.1162/jocn_a_00120

Fox, C. J., Iaria, G., and Barton, J. J. S. (2009). Defining the face processing network: optimization of the functional localizer in fMRI. Hum. Brain Mapp. 30, 1637–1651. doi: 10.1002/hbm.20630

Fridlund, A. J., and Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology 23, 567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x

Furl, N., Henson, R. N., Friston, K. J., and Calder, A. J. (2015). Network interactions explain sensitivity to dynamic faces in the superior temporal sulcus. Cereb. Cortex 25, 2876–2882. doi: 10.1093/cercor/bhu083

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain 119(Pt 2), 593–609. doi: 10.1093/brain/119.2.593

Gallese, V., Rochat, M., Cossu, G., and Sinigaglia, C. (2009). Motor cognition and its role in the phylogeny and ontogeny of action understanding. Dev. Psychol. 45, 103–113. doi: 10.1037/a0014436

Gazzola, V., Aziz-Zadeh, L., and Keysers, C. (2006). Empathy and the somatotopic auditory mirror system in humans. Curr. Biol. 16, 1824–1829. doi: 10.1016/j.cub.2006.07.072

Grosbras, M.-H., and Paus, T. (2006). Brain networks involved in viewing angry hands or faces. Cereb. Cortex 16, 1087–1096. doi: 10.1093/cercor/bhj050

Hall, D. A., Fussell, C., and Summerfield, A. Q. (2005). Reading fluent speech from talking faces: typical brain networks and individual differences. J. Cogn. Neurosci. 17, 939–953. doi: 10.1162/0898929054021175

Hastings, P. D., Zahn-Waxler, C., Robinson, J., Usher, B., and Bridges, D. (2000). The development of concern for others in children with behavior problems. Dev. Psychol. 36, 531–546. doi: 10.1037/0012-1649.36.5.531

Hatfield, E., Cacioppo, J. T., and Rapson, R. L. (1992). “Primitive emotional contagion,” in Emotion and Social Behavior, ed. M. S. Clark (Thousand Oaks, CA: Sage Publications), 151–177.

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hennenlotter, A., Schroeder, U., Erhard, P., Castrop, F., Haslinger, B., Stoecker, D., et al. (2005). A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage 26, 581–591. doi: 10.1016/j.neuroimage.2005.01.057

Hess, U., and Fischer, A. (2013). Emotional mimicry as social regulation. Pers. Soc. Psychol. Rev. 17, 142–157. doi: 10.1177/1088868312472607

Hess, U., and Fischer, A. (2014). Emotional mimicry: why and when we mimic emotions. Soc. Pers. Psychol. Compass 8, 45–57. doi: 10.1111/spc3.12083

Hooker, C. I., Verosky, S. C., Germine, L. T., Knight, R. T., and D’Esposito, M. (2008). Mentalizing about emotion and its relationship to empathy. Soc. Cogn. Affect. Neurosci. 3, 204–217. doi: 10.1093/scan/nsn019

Iacoboni, M. (2009). Imitation, empathy, and mirror neurons. Annu. Rev. Psychol. 60, 653–670. doi: 10.1146/annurev.psych.60.110707.163604

Iacoboni, M., and Dapretto, M. (2006). The mirror neuron system and the consequences of its dysfunction. Nat. Rev. Neurosci. 7, 942–951. doi: 10.1038/nrn2024

Iacoboni, M., Molnar-Szakacs, I., Gallese, V., Buccino, G., Mazziotta, J. C., and Rizzolatti, G. (2005). Grasping the intentions of others with one’s own mirror neuron system. PLoS Biol. 3:e79. doi: 10.1371/journal.pbio.0030079

Jabbi, M., and Keysers, C. (2008). Inferior frontal gyrus activity triggers anterior insula response to emotional facial expressions. Emotion 8, 775–780. doi: 10.1037/a0014194

Jabbi, M., Swart, M., and Keysers, C. (2007). Empathy for positive and negative emotions in the gustatory cortex. Neuroimage 34, 1744–1753. doi: 10.1016/j.neuroimage.2006.10.032

Jackson, P. L., Brunet, E., Meltzoff, A. N., and Decety, J. (2006). Empathy examined through the neural mechanisms involved in imagining how I feel versus how you feel pain. Neuropsychologia 44, 752–761. doi: 10.1016/j.neuropsychologia.2005.07.015

Jankowiak-Siuda, K., Rymarczyk, K., and Grabowska, A. (2011). How we empathize with others: a neurobiological perspective. Med. Sci. Monit. Int. Med. J. Exp. Clin. Res. 17, RA18–RA24. doi: 10.12659/MSM.881324

Kaplan, J. T., and Iacoboni, M. (2006). Getting a grip on other minds: mirror neurons, intention understanding, and cognitive empathy. Soc. Neurosci. 1, 175–183. doi: 10.1080/17470910600985605

Kessler, H., Doyen-Waldecker, C., Hofer, C., Hoffmann, H., Traue, H. C., and Abler, B. (2011). Neural correlates of the perception of dynamic versus static facial expressions of emotion. Psychosoc. Med. 8:Doc03. doi: 10.3205/psm000072

Keysers, C., and Gazzola, V. (2006). Towards a unifying neural theory of social cognition. Prog. Brain Res. 156, 379–401. doi: 10.1016/S0079-6123(06)56021-2

Kilts, C. D., Egan, G., Gideon, D. A., Ely, T. D., and Hoffman, J. M. (2003). Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage 18, 156–168. doi: 10.1006/nimg.2002.1323

Krebs, D. (1975). Empathy and altruism. J. Pers. Soc. Psychol. 32, 1134–1146. doi: 10.1037//0022-3514.32.6.1134

Kret, M. E., Fischer, A. H., and De Dreu, C. K. W. (2015). Pupil mimicry correlates with trust in in-group partners with dilating pupils. Psychol. Sci. 26, 1401–1410. doi: 10.1177/0956797615588306

Krumhuber, E. G., Kappas, A., and Manstead, A. S. R. (2013). Effects of dynamic aspects of facial expressions: a review. Emot. Rev. 5, 41–46. doi: 10.1177/1754073912451349

Krusemark, E. A., and Li, W. (2011). Do all threats work the same way? Divergent effects of fear and disgust on sensory perception and attention. J. Neurosci. 31, 3429–3434. doi: 10.1523/JNEUROSCI.4394-10.2011

LaBar, K. S., Crupain, M. J., Voyvodic, J. T., and McCarthy, G. (2003). Dynamic perception of facial affect and identity in the human brain. Cereb. Cortex 13, 1023–1033. doi: 10.1093/cercor/13.10.1023

Larsen, J. T., Norris, C. J., and Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40, 776–785. doi: 10.1111/1469-8986.00078

Lee, H., Heller, A. S., van Reekum, C. M., Nelson, B., and Davidson, R. J. (2012). Amygdala–prefrontal coupling underlies individual differences in emotion regulation. Neuroimage 62, 1575–1581. doi: 10.1016/j.neuroimage.2012.05.044

Lee, T. W., Josephs, O., Dolan, R. J., and Critchley, H. D. (2006). Imitating expressions: emotion-specific neural substrates in facial mimicry. Soc. Cogn. Affect. Neurosci. 1, 122–135. doi: 10.1093/scan/nsl012

Levenson, R. W., and Ruef, A. M. (1992). Empathy: a physiological substrate. J. Pers. Soc. Psychol. 63, 234–246. doi: 10.1037/0022-3514.63.2.234

Liberzon, I., Phan, K. L., Decker, L. R., and Taylor, S. F. (2003). Extended amygdala and emotional salience: a PET activation study of positive and negative affect. Neuropsychopharmacology 28, 726–733. doi: 10.1038/sj.npp.1300113

Likowski, K. U., Mühlberger, A., Gerdes, A. B. M., Wieser, M. J., Pauli, P., and Weyers, P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Front. Hum. Neurosci. 6:214. doi: 10.3389/fnhum.2012.00214

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2008). Modulation of facial mimicry by attitudes. J. Exp. Soc. Psychol. 44, 1065–1072. doi: 10.1016/j.jesp.2007.10.007

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., and Weyers, P. (2011). Processes underlying congruent and incongruent facial reactions to emotional facial expressions. Emotion 11, 457–467. doi: 10.1037/a0023162

Lundquist, L.-O., and Dimberg, U. (1995). Facial expressions are contagious. J. Psychophysiol. 9, 203–211.

Mar, R. A. (2011). The neural bases of social cognition and story comprehension. Annu. Rev. Psychol. 62, 103–134. doi: 10.1146/annurev-psych-120709-145406

Marsh, A. A. (2018). The neuroscience of empathy. Curr. Opin. Behav. Sci. 19, 110–115. doi: 10.1016/j.cobeha.2017.12.016

McIntosh, D. N. (2006). Spontaneous facial mimicry, liking and emotional contagion. Pol. Psychol. Bull. 37, 31–42.