ORIGINAL RESEARCH article

Front. Psychol., 27 February 2019

Sec. Cognition

Volume 10 - 2019 | https://doi.org/10.3389/fpsyg.2019.00352

Research on the metaphorical mapping of valenced concepts onto space indicates that positive, neutral, and negative concepts are mapped onto upward, midward, and downward locations, respectively. More recently, this mapping has been tested for the very first time in 3D physical space. The findings corroborate the mapping of valenced concepts onto the vertical space as described above but further show that positive and negative concepts are placed close to and away from the body, respectively; neutral concepts are placed midway. The current study aimed at investigating whether valenced perceptual stimuli are positioned in 3D space akin to the way valenced concepts are positioned. By using a unique device known as the cognition cube, participants placed visual, auditory, tactile, and olfactory stimuli in 3D space. The results mimicked the placing of valenced concepts onto 3D space; i.e., positive percepts were placed in upward and close-to-the-body locations and negative percepts were placed in downward and away-from-the-body locations; neutral percepts were placed midway. This pattern of results was more pronounced in the case of visual stimuli, followed by auditory, tactile, and olfactory stimuli.

Significance Statement

Just recently, a unique device called “the cognition cube” (CC) made it possible to show that positive words are mapped onto upward and close-to-the-body locations and negative words are mapped onto downward and away-from-the-body locations; neutral words are placed midway. This way of placing words in relation to the body is consistent with an approach-avoidance effect such that “good” and “bad” things are kept close to and away from one’s body, respectively. We demonstrate for the very first time that this same pattern emerges when visual, auditory, tactile, and olfactory perceptual stimuli are placed in 3D physical space. We believe these results are significant in that the CC can be used as a new tool to diagnose emotion-related disorders.

The valence-space metaphor contends that stimuli’s valence is mapped onto physical space. In the case of the vertical plane, various studies have shown that positive and negative stimuli are associated with high and low locations, respectively (Meier and Robinson, 2004; Xie et al., 2014, 2015; Montoro et al., 2015; Sasaki et al., 2015). A handful of studies suggest that positive and negative items can be associated with rightward and leftward locations, respectively, when the task is performed by right-handed participants and the pattern reverses when the task is performed by left-handed participants (Casasanto, 2009; Casasanto and Chrysikou, 2011; Freddi et al., 2016). More recent evidence suggests, however, that no mapping occurs in this horizontal plane (e.g., Amorim and Pinheiro, 2018) and that factors such as age, gender, language, handedness, and, most importantly, valence exert no effect (Marmolejo-Ramos et al., 2017).

The stimuli used in those studies consisted of visual, verbal, or auditory stimuli (e.g., pictures, words, sounds) while at the same time being restricted to the mapping of one or at best two dimensions at a time (e.g., mapping of valence to vertical and/or horizontal space via computer screens or by paper-pencil tasks). In contrast, studies of valence-to-space mapping using olfactory or tactile stimuli are virtually lacking, as are studies that target metaphorical mapping onto our physical reality of height, length, and width simultaneously. Recently, a study showed for the very first time how valenced concepts are placed onto 3D physical space. In that study, Marmolejo-Ramos et al. (2018) crafted a specific device (“the cognition cube”) that enabled allocating items in space such that X, Y, and Z Cartesian coordinates could be estimated. These authors not only confirmed the mapping of valenced concepts onto the vertical plane, but also disconfirmed the effects of various factors, including valence, on the horizontal plane. More importantly, their results showed that in the “depth” plane (Z-axis) positively and negatively valenced concepts are placed closer to and away from the body, respectively. This result is in line with an approach-avoidance effect (Solarz, 1960; Phaf et al., 2014; see also Meier et al., 2012; Topolinski et al., 2014; Godinho and Garrido, 2016).

Research on embodied cognition argues that concepts are built during the sensorimotor experience with the environment (Smith and Colunga, 2012; Martin, 2016). But concepts are more elaborate in that those perceptual and motor experiences are colored by the social (and, in turn, emotional) context in which experiences ensue (e.g., Marmolejo-Ramos et al., 2017a; see also Pecher, 2018). As to the specific case of perceptual experience, humans have built-in circuitry that enables processing such type of information via sensory systems (i.e., vision, audition, touch, smell, and taste) and the mental representation of the sensory input is known as percept (see Jepson and Richards, 1993). Thus percepts can be understood as a form of primitive concepts in that they are closer to sensory-to-perceptual than to social experiences1.

Despite percepts being primitive concepts, they do have associated valence. As recent studies indicate, tastes and shapes have associated valences such that, for example, sweet tastes and round shapes are associated with a positive valence (Velasco et al., 2014a,b, 2016). There is also evidence for associations between percepts and 2D space such that, for example, high-pitched sounds, which also have an associated positive valence, are mapped onto high spatial locations (Salgado-Montejo et al., 2016; but see Rusconi et al., 2006). By merging these results with those recently found by Marmolejo-Ramos et al. (2018), we hypothesized that percepts are placed in 3D space in the same fashion as are valenced concepts. That is, positive percepts will be placed in high locations (Y-axis) and near the participant’s body (Z-axis), negative percepts will be placed in low locations and far from the participant’s body, and neutral percepts will be placed in between these percepts regarding both planes. As to the X-axis, neither a consistent or straightforward effect of valence nor any other effect is expected. Perceptual stimuli from four sensory modalities are used and, as has been shown, some modalities dominate over others (see Colavita, 1974; Koppen and Spence, 2007; Rach et al., 2011). It is therefore expected that the modality of the percept, with the visual modality most likely to dominate overall, can lead to differences in the strength of associations between percepts and space.

Forty-four undergraduate and graduate students participated in the experiment (29 females; median age of females = 26 ± 4.44 MAD, age range of females = 18–52 years, 2 left-handed; median age of males = 25 ± 5.93 MAD, age range of males = 19–33 years, 1 left-handed). This sample size was suggested by a power analysis for a general linear model with five fixed predictors that, as a full model, explained at least 25% of the variance in the dependent variable under a 5% Type I error (α) and 80% power. (For details regarding the power estimation, see Marmolejo-Ramos et al., 2018.) None of the participants reported any known visual, tactile, olfactory, auditory, or related sensory impairment. Forty percent of the participants were non-native Swedish speakers who reported good-to-excellent command of the English language. All participants received course credit or cinema tickets or participated voluntarily. The study’s protocol was approved by the ethics committee of the Department of Psychology at Stockholm University (experiment code 67/16). All subjects gave written informed consent in accordance with the Declaration of Helsinki (World Medical Association [WMA], 2013).
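The "median ± MAD" summaries used here can be illustrated with a minimal sketch. It assumes the MAD is the median absolute deviation around the median, multiplied by the conventional normal-consistency constant of about 1.4826; the text does not state this constant, but the reported spreads (e.g., 4.44 and 5.93) are close to integer multiples of it. The ages in the test values are hypothetical.

```python
from statistics import median

# Assumption: conventional scaling constant that makes the MAD comparable
# to a standard deviation under normality; not stated in the article.
MAD_SCALE = 1.4826


def scaled_mad(values):
    """Median absolute deviation around the median, times MAD_SCALE."""
    center = median(values)
    deviations = [abs(v - center) for v in values]
    return MAD_SCALE * median(deviations)


def median_and_mad(values):
    """Return the pair (median, scaled MAD) used in 'Mdn ± MAD' summaries."""
    return median(values), scaled_mad(values)
```

For example, `median_and_mad([22, 23, 26, 29, 31])` gives a median of 26 and a scaled MAD of three times the constant.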

Nine highly familiar stimuli (3 positive, 3 neutral, and 3 negative) were selected for each of the sensory modalities vision, audition, touch, and smell (see Table 1). The images were selected from the IAPS data set (Lang et al., 2008; items’ codes: 1710, 1440, 1460, 7705, 7547, 7011, 1111, 7380, and 3019). The sounds were selected from a large sound database available on CDs (BBC Sound Effects Library – Original Series, United Kingdom) and from an online collaborative sound database (Freesound2, item’s codes: bbc 74, bbc 19, bbc76, bbc 101, bbc 98, bbc 88, bbc 100, bbc 32, bbc 01). The sounds were edited to a duration of 3 s, an intensity of 50 dB, and converted to stereo by using a digital audio editor and recording program (Audacity; see Cornell Kärnekull et al., 2016). The textures and the smells were selected from previous work where valence ratings were available (Ekman et al., 1965; Etzi et al., 2014; Cornell Kärnekull et al., 2016). Although the valence of all stimuli was assessed in previous studies, their valence was corroborated in the present study via rating scales (positive: images = 9 ± 1.48, sounds = 8 ± 1.48, textures = 7.1 ± 2.14, smells = 7.55 ± 1.85; neutral: images = 5 ± 1.48, sounds = 5 ± 1.48, textures = 4.9 ± 2.22, smells = 5.05 ± 3.26; negative: images = 3 ± 2.96, sounds = 2 ± 2.96, textures = 4.95 ± 2.29, smells = 2.2 ± 2.29)3. A computer-based version of VAS (visual-analog scales) was implemented in PsychoPy (Peirce, 2007) for the olfactory and tactile stimuli, and a paper-pencil version was used for the visual and auditory stimuli4.

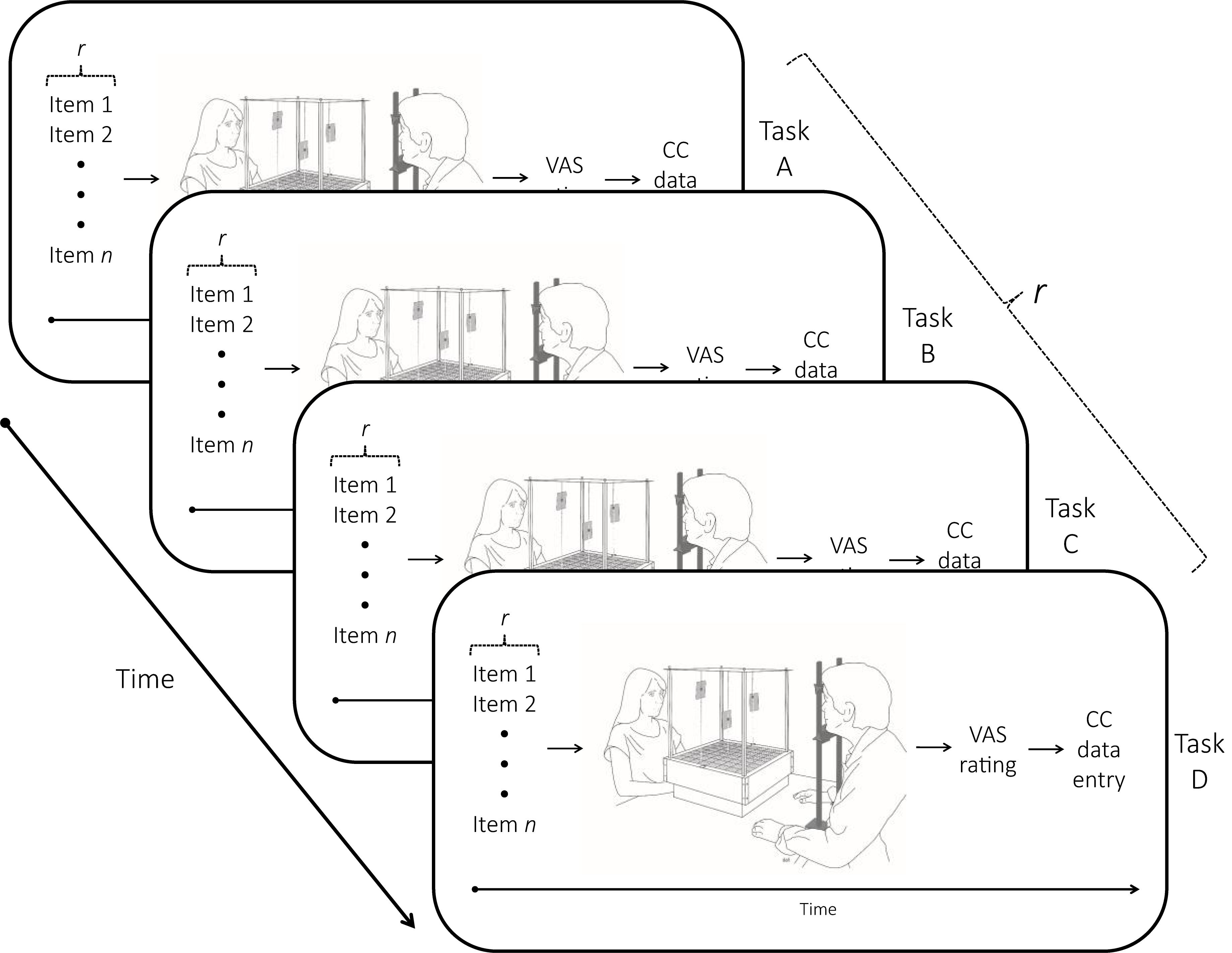

The cognition cube was used to measure the allocation of the different sensory stimuli in 3D space (see Marmolejo-Ramos et al., 2018, including its Supplementary Material, for details of this new device). Each stimulus had a unique code name that was printed and placed within a small plastic badge with magnets that could be positioned onto a vertical metal rod (see Figure 1). In this way, the horizontal and depth location of a stimulus could be pinpointed by moving the metal rod perpendicularly to the floor of the cube while the vertical (Y) coordinate was given by the location of the badge on the rod itself.

Figure 1. Illustration of the experimental sequence. Presentation of the four modalities (visual, auditory, tactile, olfactory) was counterbalanced, and the respective modality items (images, sounds, smells, textures for Tasks A ∼ D) were randomly presented in each modality set (r). The following fixed sequence of events occurred within each task: (i) stimuli were presented one by one (item 1… n); (ii) stimuli were allocated spatially within the cognition cube (CC); (iii) stimuli were rated (VAS rating); and (iv) the X, Y, and Z coordinates of each stimulus were measured and recorded.

All participants were tested individually and by the same experimenter. First, participants were provided with the following written instruction which also was orally presented by the experimenter.

“We are interested in knowing how people allocate sensory stimuli in space. In this task, this [pointing to the cube] will be your space. This space is confined within these eight edges [pointing to the internal corners of the cube] and is bounded by this farthest limit [pointing to the farthest limit in the Z plane], this closest limit [pointing to the section of the cube closest to the participant], this lowest limit [pointing to the floor of the cube], this highest limit [pointing to the ceiling of the cube], this rightmost limit [pointing to the rightmost internal edge of the cube] and this leftmost limit [pointing to the leftmost internal edge of the cube]. You will be presented with images, sounds, smells, and textures; each in separate blocks and item by item. Your task is to allocate each sensory stimulus anywhere within this cube [the experimenter made clear that any location within the space can be used] by pointing to where you would place it [the experimenter asked the participant to use his/her dominant hand]. Please make your location choice while looking at the cube [this happened while the person’s head rested on a chin rest]. Once you have decided where to place it, please point at the desired location. If you cannot reach the location, you can stand up and point at it. In order to translate your decision into the cube, please hold your hand in the spot you decided to place the stimulus [at this point the experimenter attached a badge with the item’s code name to a metallic rod]. After allocating all of the items in a modality task, you will be asked to rate the pleasantness of the stimuli. Then, the same procedure will be applied for the next modality.”

The participant sat in front of the cube with the chin rest at a distance (∼40 cm) that allowed him/her to perceive and reach all corners within the cube. This procedure ensured that the visual field of the participant could cover each area in the cube equally well, i.e., similar ocular movements for looking to rightward-leftward and upward-downward directions. Also, the experimenter reminded the participant that the goal of the study was to know how people put “objects” in space with no connotation of the valence of the stimuli. The participant was reminded he/she could choose any location within the cube and could do so by pointing to that location. By means of a chin rest, the participant’s eye level was aligned to the center of the cube. The experimenter always sat opposite to the participant (see Figure 1).

Spatial allocations were performed for the four sensory modalities (visual, auditory, touch, and olfactory) separately comprising a total of 36 stimuli. Modality order presentation was counterbalanced, and presentation of the items within each modality was randomized. For each modality item presented, the experimenter asked the participant to spatially allocate the stimulus in the cube. When the participant pointed to the selected location, he/she was asked to hold their hand in the selected position while the experimenter positioned the badge with the code name of the specific stimulus (see Table 1). The code names were printed in black ink on 8 cm (W) × 3 cm (L) white paper and placed in a transparent plastic badge. Once all modality items had been placed within the cube, the participant was asked to rate the valence of the stimuli presented. (Items were presented in random order). Finally, the experimenter recorded the X, Y, and Z coordinates for each allocated item before the next modality task was presented. The entire experimental session lasted between 45 and 60 min (see Figure 1). At the end of each test session, all participants were asked whether they were aware of the purpose of the study. None of the participants reported knowledge of the study’s aims.
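The presentation scheme described above (counterbalanced modality order, randomized item order within each modality) could be generated along the following lines. This is only a sketch: the text does not say which counterbalancing scheme was used, so cycling through all 24 modality permutations by participant index is an assumption, and the item labels are hypothetical placeholders.

```python
import itertools
import random

MODALITIES = ("visual", "auditory", "tactile", "olfactory")
ITEMS_PER_MODALITY = 9  # 3 positive, 3 neutral, 3 negative per modality


def session_plan(participant_index, seed=None):
    """Return a list of (modality, shuffled item labels) for one session."""
    rng = random.Random(seed)
    # Assumed counterbalancing: cycle through every possible modality order.
    orders = list(itertools.permutations(MODALITIES))
    modality_order = orders[participant_index % len(orders)]
    plan = []
    for modality in modality_order:
        # Hypothetical item labels; the study used printed code names.
        items = [f"{modality}_{i}" for i in range(1, ITEMS_PER_MODALITY + 1)]
        rng.shuffle(items)  # random item order within the modality block
        plan.append((modality, items))
    return plan
```

Each plan contains four blocks and 36 items in total, matching the design described in the text.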

The dependent variables were the Cartesian 3D coordinates. The X, Y, and Z coordinates of each stimulus took integer values between −20 and 20. The subjective valence ratings of the stimuli took values between 0 (negative) and 10 (positive). The valence ratings were examined in relation to the location of the stimuli in each of the 3D coordinates.
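One possible way to represent a single observation with the value ranges just described is sketched below. The class name and field names are hypothetical and are not taken from the authors' materials; only the ranges (integer coordinates between −20 and 20, ratings between 0 and 10) come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    """One stimulus placement plus its valence rating (hypothetical layout)."""
    participant: int
    modality: str
    item: str
    x: int  # horizontal coordinate
    y: int  # vertical coordinate
    z: int  # depth coordinate
    rating: float  # 0 (negative) to 10 (positive)

    def __post_init__(self):
        # Coordinates are integers between -20 and 20, as stated in the text.
        for axis in ("x", "y", "z"):
            value = getattr(self, axis)
            if not isinstance(value, int) or not -20 <= value <= 20:
                raise ValueError(f"{axis} must be an integer in [-20, 20]")
        # Ratings range from 0 to 10.
        if not 0 <= self.rating <= 10:
            raise ValueError("rating must lie in [0, 10]")
```

Validating at construction time keeps out-of-range coordinates from reaching later analyses.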

The independent variables were the stimuli’s valence (positive, neutral, negative), sensory modality (visual, auditory, touch, and olfactory), the interaction between these two factors, participants, and stimuli. These last two factors were treated as random effects. The model with all main, interaction, and random effects was assessed via a robust linear mixed-effects model (here LMMr; implemented in the function “rlmer” in the R package “robustlmm;” Koller, 2016). The amount of variance explained by the full model (i.e., fixed and random effects) was estimated via the pseudo-R2 for (generalized and linear) mixed-effect models with random intercepts (Nakagawa and Schielzeth, 2013; for an extension of this method to random slopes models, see Johnson, 2014). This method is implemented in the function “r.squaredGLMM” in the “MuMIn” R package. Its output provides both the R2 of the fixed effects (the marginal R2, labeled R2m in the function’s output) and the R2 of the full mixed model (the conditional R2, labeled R2c). These values are here reported as percentages. ANOVA results for the main effects and interactions were obtained via a rank-based test statistic (Brunner et al., 2017). (This test is implemented in the function “rankFD” in the “rankFD” R package.)
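For orientation, the fixed-effects and full-model pseudo-R2 values of Nakagawa and Schielzeth (2013) for a linear mixed model with random intercepts can be written as below. The subscripts are labels introduced here for illustration and are not the article's notation: f denotes the variance of the fixed-effects predictions, p and s the random-intercept variances for participants and stimuli, and ε the residual variance.

```latex
R^2_{\text{fixed}} =
  \frac{\sigma^2_{f}}
       {\sigma^2_{f} + \sigma^2_{p} + \sigma^2_{s} + \sigma^2_{\varepsilon}},
\qquad
R^2_{\text{full}} =
  \frac{\sigma^2_{f} + \sigma^2_{p} + \sigma^2_{s}}
       {\sigma^2_{f} + \sigma^2_{p} + \sigma^2_{s} + \sigma^2_{\varepsilon}}
```

The two quantities share a denominator, so the full-model value can never be smaller than the fixed-effects value.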

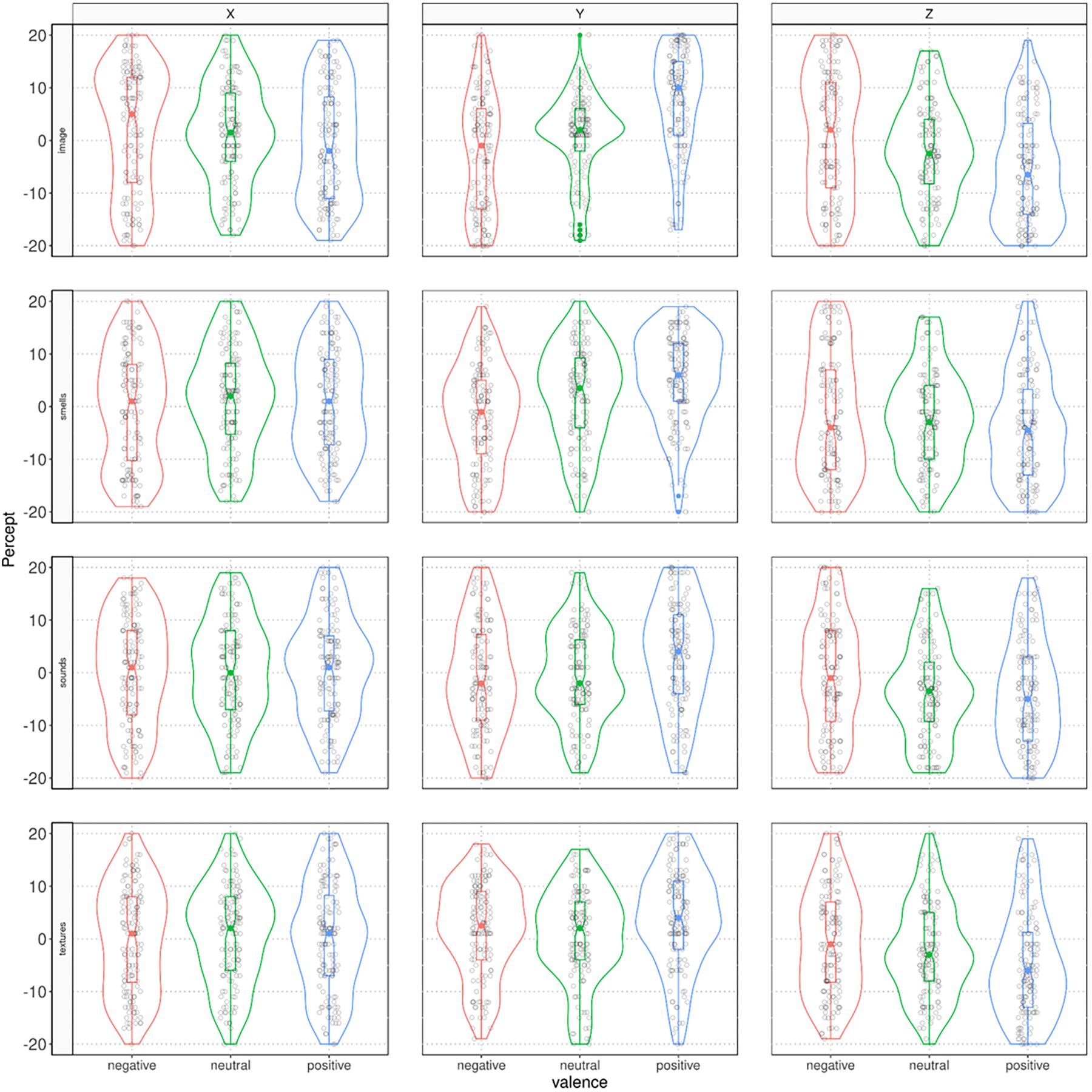

Supplementary analyses were performed on the X, Y, and Z values of each sensory modality in order to assess the effects of other covariates (see Supplementary Material). Post hoc pairwise comparisons of dependent measures were performed on 20% bootstrapped trimmed means (via the function “pairdepb” in the “WRS2” R package; see Wilcox, 2017). For these pairwise comparisons, the average differences and the 95% CIs around them are reported. (Significant differences are evidenced by 95% CIs that do not contain the value of 0.) The results are represented via 3D and violin plots (Hintze and Nelson, 1998).
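To make the idea of a bootstrapped 20% trimmed-mean comparison concrete, here is a minimal sketch for two dependent measures. It deliberately swaps in a simple percentile bootstrap on the paired difference scores; it is not a reimplementation of “pairdepb”, whose internals may differ. The function names, the number of resamples, and the seed are illustrative assumptions.

```python
import random
from statistics import mean


def trimmed_mean(values, proportion=0.2):
    """Mean after dropping the lowest and highest `proportion` of values."""
    ordered = sorted(values)
    cut = int(proportion * len(ordered))  # observations trimmed per tail
    return mean(ordered[cut:len(ordered) - cut])


def bootstrap_trimmed_diff(first, second, n_boot=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap CI for the trimmed mean of paired differences."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(first, second)]
    # Resample the difference scores with replacement and re-estimate.
    estimates = sorted(
        trimmed_mean(rng.choices(diffs, k=len(diffs))) for _ in range(n_boot)
    )
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return trimmed_mean(diffs), (lower, upper)
```

Under the decision rule stated in the text, a comparison would count as significant when the returned interval excludes 0.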

Associations among locations in the X, Y, and Z coordinates and the items’ valence ratings were assessed via the maximal information coefficient [here rMIC; this criterion measures relationships ranging between 0 (no relationship) and 1 (noiseless functional relationships)], (Reshef et al., 2011) accompanied by the p-value of the percentage bend correlation; here ppb (the function “mine” in the “minerva” R package performs the rMIC, and the function “pbcor” in the “WRS2” R package performs the percentage bend correlation; Wilcox, 2017).

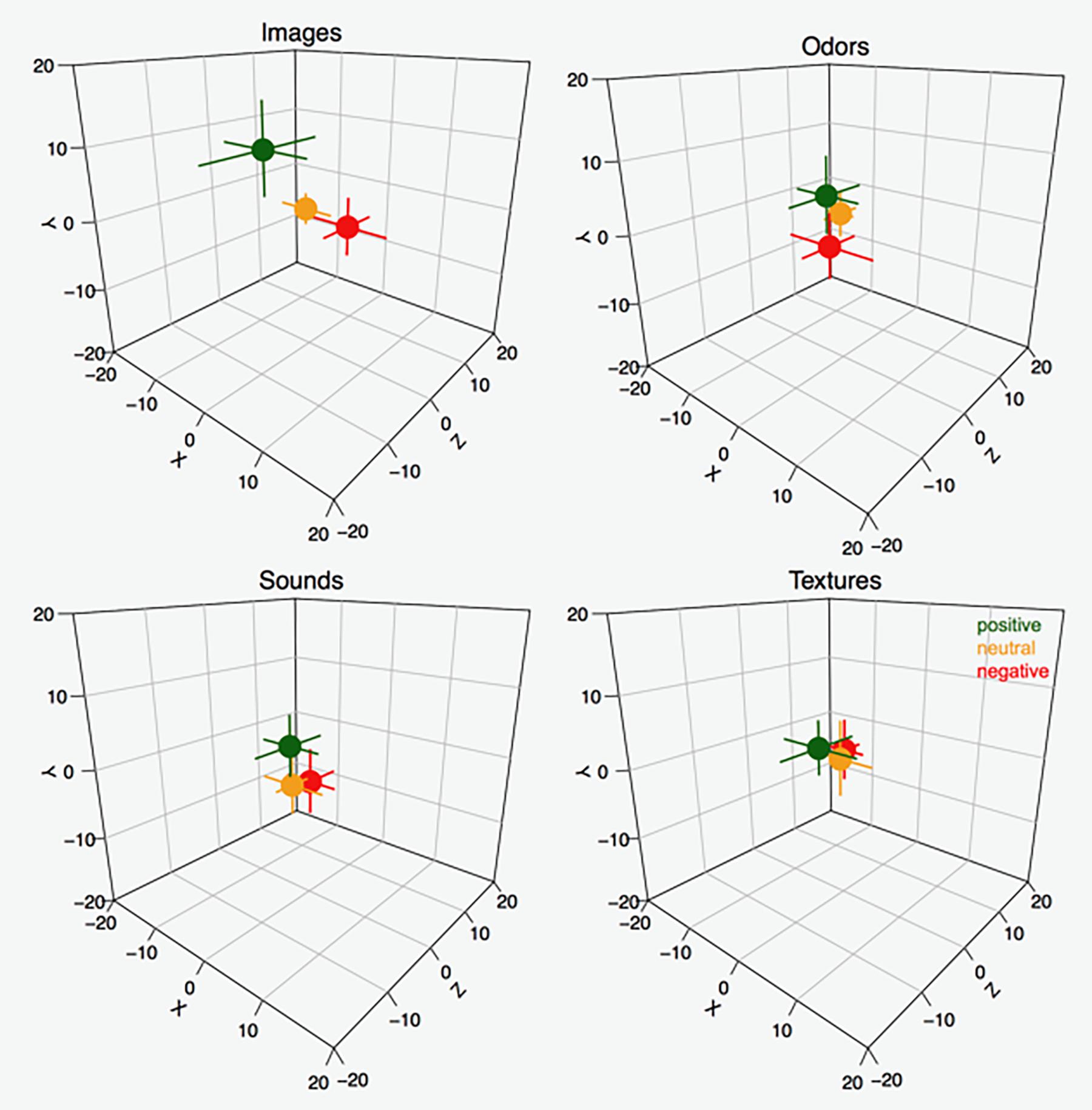

The effect of valence-to-space mapping was in accordance with our hypothesis. The analyses of the associations among locations in the three axes and the subjective valence ratings for the stimuli suggested that the more positively items were rated, the higher their location in vertical (Y) space (Images: rMIC = 0.21, ppb < 0.0001; Sounds: rMIC = 0.13, ppb = 1.79e−7; Smells: rMIC = 0.13, ppb < 0.001; Textures: rMIC = 0.15, ppb = 2.71e−6). The more negatively items were rated, the further away from the body they were placed in the depth (Z) plane (Images: rMIC = 0.10, ppb = 0.002; Sounds: rMIC = 0.12, ppb = 4.66e−5; Smells: rMIC = 0.14, ppb = 0.015; Textures: rMIC = 0.14, ppb = 3.86e−6). In the horizontal (X) plane, no associations were reliable (all ppb’s > 0.08).

The results from the ANOVA indicated significant main effects of valence, F(1.99, 1538.73) = 51.10, p < 0.00019 (average differences with 95% CIs: negative vs. neutral = −3.42 [−9.33, 2.47], negative vs. positive = −7.89 [−14.13, −1.65], and neutral vs. positive = −4.46 [−9.68, 0.75]), and sensory modality, F(2.99, 1538.73) = 2.85, p = 3.59e−2 (the only significant pairwise differences were smells vs. images = −7.21 [−12.78, −1.64] and smells vs. textures = −8.32 [−14.91, −1.73]), in the Y axis, although no significant interaction effect between valence and sensory modality was observed [F(5.94, 1538.73) = 5.21, p = 2.67e−5]. Although the test suggested that in the Z axis there was an effect of valence, F(1.98, 1538.50) = 21.14, p = 9.94e−10, post hoc comparisons did not support that claim in that the 95% CIs of the average differences in all pairwise comparisons contained 0. The effects of sensory modality, F(2.99, 1538.50) = 0.32, p = 0.80, and the interaction between valence and modality in the Z axis were also non-significant, F(5.93, 1538.50) = 1.08, p = 0.37. Moreover, and in accordance with our hypothesis, the ANOVA-type results indicated that no main effect or interaction was evident in the X axis for either valence or modality [valence: F(1.99, 1546.63) = 0.65, p = 0.52; sensory modality: F(2.99, 1546.63) = 0.24, p = 0.86; and their interaction: F(5.95, 1546.63) = 1.04, p = 0.39] (see Table 2 for results of the LMMr and Figures 2, 3).

Figure 2. Median X, Y, and Z positions for positive, neutral, and negative stimuli in the four sensory modalities. In order to understand the spatial distribution of the data in relation to the participants’ perspective, assume the participants were facing the cube from the left angle; i.e., in front of the X axis. Error bars represent 95% CIs around the median (estimated as ±1.58 ⋅ IQR/√n, where IQR = interquartile range and n = sample size).
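The error bars in Figure 2 are described in terms of the interquartile range, the sample size, and the constant 1.58. Assuming this is the usual approximate interval for a median, ±1.58 · IQR/√n, the computation can be sketched as follows. The quartile definition (Python's "inclusive" method) is an assumption; other definitions give slightly different interquartile ranges.

```python
import math
from statistics import median, quantiles


def median_ci(values):
    """Approximate 95% CI around the median: median ± 1.58 * IQR / sqrt(n)."""
    # Assumed quartile definition; the article does not specify one.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    half_width = 1.58 * (q3 - q1) / math.sqrt(len(values))
    center = median(values)
    return center - half_width, center + half_width
```

For the values 1 to 9, the quartiles are 3 and 7, so the interval is 5 ± 1.58 · 4/3.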

Figure 3. Violin-plots displaying the distribution of locations of valenced stimuli in each sensory modality in the X, Y, and Z spatial axes.

Supplementary analyses (see Supplementary Material) further indicate that the valence of the sensory stimuli is a major factor for predicting the allocation variation across the coordinates. In general, valence proved a stronger predictor than modality when allocating sensory stimuli in 3D space. Evidence of this is that the valence of the sensory modality was ranked as the most important variable in most cases across tasks and axes and exhibited the largest associated t-values (see Supplementary Table S1). The percentage of variance explained by all models considered suggests that the allocation of valenced modalities is more salient in the Y axis, followed by the Z and X axes. (See also results of the ANOVA above). Finally, the relationships among valenced modalities and space proved more salient for the visual stimuli, followed by auditory, tactile, and olfactory stimuli. (See also Figures 2, 3).

This study had the goal of examining the allocation of valenced percepts in four sensory modalities in 3D space. The results showed that positive percepts are placed in high locations, negative percepts are placed in low locations, and neutral percepts fall in between. Positive percepts are placed closer to the body, negative percepts are placed farther from the body, and neutral percepts fall in between. In the horizontal plane, the effect of a percept’s valence does not manifest. Overall, these results agree with those reported in a recent unique study in which valenced concepts were allocated in 3D space (see Marmolejo-Ramos et al., 2018).

The present study also showed that the effect of the percepts’ valence on their allocation in space was largest in the vertical plane (Y axis), followed by the “depth” and horizontal planes (Z and X axes, respectively). This result also chimes with the study of Marmolejo-Ramos et al. (2018), which is the first and only available study on the allocation of valenced concepts onto 3D space. Finding that covariates such as handedness, gender, age, language, and valence play no role in how valenced percepts are placed on the horizontal plane (see Supplementary Material) is also in line with studies which consistently show this same situation in the allocation of concepts in 2D (e.g., Marmolejo-Ramos et al., 2013, 2017) and, more recently, 3D (Marmolejo-Ramos et al., 2018) space. In the current study, the null effect of valence and other factors in the X axis was manifest across all sensory modalities. Only in the case of valenced images did negative images tend to be placed rightward while positive images tended to be placed leftward. The non-significant associations between the items’ ratings and the X axis values provide further evidence against any link between percepts’ valence and the horizontal plane in the sensory modalities studied.

Results in the Z plane are in line with an approach-avoidance paradigm such that positive percepts tended to be placed toward the body while negative percepts were placed away from the body. This pattern was evident across sensory modalities (except for the case of smells). In the vertical plane, positive percepts are placed in high locations while negative percepts are placed in low locations (except for the case of textures). The significant associations between the percepts’ valence ratings and the stimuli’s coordinates in the Y and Z axes nonetheless support these claims. Differences in the allocation of percepts in each modality might be due to the dominance that some modalities have over others, as has been shown in the case of vision over olfaction (see Sakai et al., 2005; Sugiyama et al., 2006).

This study extends the results of Marmolejo-Ramos et al. (2018) to the case of percepts and, by the same token, validates the cognition cube as a suitable device for the study of valenced items and their association with 3D space. Specifically, the current study indicates that people map affective visual, auditory, olfactory, and tactile information onto physical space in a systematic manner that reflects conceptual metaphors (see Lakoff and Johnson, 1980; Lakoff, 2014; for a recent proposal on this topic see Marmolejo-Ramos et al., 2017a). This study thus suggests that in normal adult samples, valenced concepts and percepts are placed onto 3D space differentially (as stated in the discussion). Future work is needed to investigate whether neurocognitive (e.g., alexithymia) and developmental factors (e.g., children) are reflected in how concepts and percepts are allocated in space. Likewise, we believe the cognition cube could be used as a novel approach to diagnose emotion-related disorders (we are indeed working on this front and some preliminary results indicate this to be the case).

FM-R and AA designed the study. FM-R wrote the manuscript with input from AA, CT, and ML. CT administered the experiments. FM-R and RO carried out the data analyses.

This work was funded by grants from The Swedish Foundation for Humanities and Social Sciences to ML (ML: M14-0375:1).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

FM-R thanks Iryna Losyeva and Alexandra Marmolejo-Losyeva for their permanent support, and Matías Salibián-Barrera and Jorge I. Vélez for clarifying some statistical matters. The authors thank Susan Brunner for proofreading the manuscript. RO thanks CNPq and FACEPE Brazil. The authors also thank the referees for their comments and suggestions.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.00352/full#supplementary-material

Amorim, M., and Pinheiro, A. P. (2018). Is the sunny side up and the dark side down? Effects of stimulus type and valence on a spatial detection task. Cogn. Emot. [Epub ahead of print]. doi: 10.1080/02699931.2018.1452718

Brunner, E., Konietschke, F., Pauly, M., and Puri, M. (2017). Rank-based procedures in factorial designs: hypotheses about non-parametric treatment effects. J. Royal Stat. Soc. Stat. Methodol. 79, 1463–1485. doi: 10.1111/rssb.12222

Casasanto, D. (2009). Embodiment of abstract concepts: good and bad in right- and left handers. J. Exp. Psychol. 138, 351–367. doi: 10.1037/a0015854

Casasanto, D., and Chrysikou, E. (2011). When left is “right”: motor fluency shapes abstract concepts. Psychol. Sci. 22, 419–422. doi: 10.1177/0956797611401755

Colavita, F. B. (1974). Human sensory dominance. Perc. Psychophys. 16, 409–412. doi: 10.3758/BF03203962

Cornell Kärnekull, S., Arshamian, A., Nilsson, M. E., and Larsson, M. (2016). From perception to metacognition: auditory and olfactory functions in early blind, late blind, and sighted individuals. Front. Psychol. 7:1450. doi: 10.3389/fpsyg.2016.01450

Ekman, G., Hosman, J., and Lindström, B. (1965). Roughness, smoothness, and preference: a study of quantitative relations in individual subjects. J. Exp. Psychol. 70:18. doi: 10.1037/h0021985

Etzi, R., Spence, C., and Gallace, A. (2014). Textures that we like to touch: an experimental study of aesthetic preferences for tactile stimuli. Conscious. Cogn. 29, 178–188. doi: 10.1016/j.concog.2014.08.011

Freddi, S., Brouillet, T., Cretenet, J., Heurley, L., and Dru, V. (2016). A continuous mapping between space and valence with left-and right-handers. Psychon. Bull. Rev. 23, 865–870. doi: 10.3758/s13423-015-0950-0

Godinho, S., and Garrido, M. V. (2016). Oral approach-avoidance: a replication and extension for european-portuguese phonation. Eur. J. Soc. Psychol. 46, 260–264. doi: 10.1002/ejsp.2172

Hintze, J., and Nelson, R. (1998). Violin plots: a box plot-density trace synergism. Am. Stat. 52, 181–184.

Jepson, A. D., and Richards, W. (1993). What is a Percept? Technical Reports on Research in Biological and Computational Vision, RBCV-TR-93-43. Toronto: University of Toronto.

Johnson, P. C. D. (2014). Extension of Nakagawa & Schielzeth’s R2GLMM to random slopes models. Methods Ecol. Evol. 5, 944–946. doi: 10.1111/2041-210X.12225

Koller, M. (2016). Robustlmm: an R package for robust estimation of linear mixed-effects models. J. Stat. Software 75, 1–24. doi: 10.18637/jss.v075.i06

Koppen, C., and Spence, C. (2007). Audiovisual asynchrony modulates the colavita visual dominance effect. Brain Res. 1186, 224–232. doi: 10.1016/j.brainres.2007.09.076

Lakoff, G. (2014). Mapping the brain’s metaphor circuitry: metaphorical thought in everyday reason. Front. Hum. Neurosci. 8:958. doi: 10.3389/fnhum.2014.00958

Lakoff, G., and Johnson, M. (1980). The metaphorical structure of the human conceptual system. Cogn. Sci. 4, 195–208. doi: 10.1207/s15516709cog0402_4

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-8. Gainesville, FL: University of Florida.

Marmolejo-Ramos, F., Correa, J. C., Sakarkar, G., Ngo, G., Ruiz-Fernández, S., Butcher, N., et al. (2017). Placing joy, surprise and sadness in space. A cross-linguistic study. Psychol. Res. 81, 750–763. doi: 10.1007/s00426-016-0787-9

Marmolejo-Ramos, F., Elosúa, M. R., Yamada, Y., Hamm, N., and Noguchi, K. (2013). Appraisal of space words and allocation of emotion words in bodily space. PLoS One 8:e81688. doi: 10.1371/journal.pone.0081688

Marmolejo-Ramos, F., Khatin-Zadeh, O., Yazdani-Fazlabadi, B., Tirado, C., and Sagi, E. (2017a). Embodied concept mapping: blending structure-mapping and embodiment theories. Pragma. Cogn. 24, 164–185. doi: 10.1075/pc.17013.mar

Marmolejo-Ramos, F., Tirado, C., Arshamian, E., Vélez, J., and Arshamian, A. (2018). The allocation of valenced concepts onto 3D space. Cogn. Emot. 32, 709–718. doi: 10.1080/02699931.2017.1344121

Martin, A. (2016). GRAPES – grounding representations in action, perception and emotion systems: how object properties and categories are represented in the human brain. Psychon. Bull. Rev. 23, 979–990. doi: 10.3758/s13423-015-0842-3

Mather, G. (2016). Foundations of Sensation and Perception. London: Routledge. doi: 10.4324/9781315672236

Meier, B., and Robinson, M. (2004). Why the sunny side is up: associations between affect and vertical position. Psychol. Sci. 15, 243–247. doi: 10.1111/j.0956-7976.2004.00659.x

Meier, B., Schnall, S., Schwarz, N., and Bargh, J. (2012). Embodiment in social psychology. Topics Cogn. Sci. 4, 705–716. doi: 10.1111/j.1756-8765.2012.01212.x

Miller, T., Schmidt, T., Blankenburg, F., and Pulvermüller, F. (2018). Verbal labels facilitate tactile perception. Cognition 171, 172–179. doi: 10.1016/j.cognition.2017.10.010

Montoro, P., Contreras, M., Elosúa, M., and Marmolejo-Ramos, F. (2015). Cross-modal metaphorical mapping of spoken emotion words onto vertical space. Front. Psychol. 6:1205. doi: 10.3389/fpsyg.2015.01205

Nakagawa, S., and Schielzeth, H. (2013). A general and simple method for obtaining R2 from generalized linear mixed-effects models. Methods Ecol. Evol. 4, 133–142. doi: 10.1111/j.2041-210x.2012.00261.x

Peirce, J. W. (2007). PsychoPy-psychophysics software in Python. J. Neurosci. Methods 162, 8–13. doi: 10.1016/j.jneumeth.2006.11.017

Phaf, R. H., Mohr, S. E., Rotteveel, M., and Wicherts, J. M. (2014). Approach, avoidance, and affect: a meta-analysis of approach-avoidance tendencies in manual reaction time tasks. Front. Psychol. 5:378. doi: 10.3389/fpsyg.2014.00378

Prinz, J. J. (2006). “Beyond appearances: the content of sensation and perception,” in Perceptual Experience, eds T. Gendler and J. P. Hawthorne (Oxford: Oxford University Press).

Rach, S., Diederich, A., and Colonius, H. (2011). On quantifying multisensory interaction effects in reaction time and detection rate. Psychol. Res. 75, 77–94. doi: 10.1007/s00426-010-0289-0

Reshef, D., Reshef, Y., Finucane, H., Grossman, S., McVean, G., Turnbaugh, P., et al. (2011). Detecting novel associations in large datasets. Science 334, 1518–1524. doi: 10.1126/science.1205438

Rusconi, E., Kwan, B., Giordano, B., Umiltà, C., and Butterworth, B. (2006). Spatial representation of pitch height: the SMARC effect. Cognition 99, 113–129. doi: 10.1016/j.cognition.2005.01.004

Sakai, N., Imada, S., Saito, S., Kobayakawa, T., and Deguchi, Y. (2005). The effect of visual images on perception of odors. Chem. Senses 30(Suppl. 1), i244–i245. doi: 10.1093/chemse/bjh205

Salgado-Montejo, A., Marmolejo-Ramos, F., Alvarado, J. A., Arboleda, J. C., Suarez, D. R., and Spence, C. (2016). Drawing sounds: representing tones and chords spatially. Exp. Brain Res. 234, 3509–3522. doi: 10.1007/s00221-016-4747-9

Sasaki, K., Yamada, Y., and Miura, K. (2015). Post-determined emotion: motor action retrospectively modulates emotional valence of visual images. Proc. Biol. Sci. 282:1805. doi: 10.1098/rspb.2014.0690

Schneider, I., Veenstra, L., van Harreveld, F., Schwarz, N., and Koole, S. (2016). Let’s not be indifferent about neutrality: neutral ratings in the international affective picture system (IAPS) mask mixed affective responses. Emotion 16, 426–430. doi: 10.1037/emo0000164

Smith, L. B., and Colunga, E. (2012). “Developing categories and concepts,” in The Cambridge Handbook of Psycholinguistics, eds M. J. Spivey, K. McRae, and M. F. Joanisse (Cambridge: Cambridge University Press), 283–307. doi: 10.1017/CBO9781139029377.015

Solarz, A. K. (1960). Latency of instrumental responses as a function of compatibility with the meaning of eliciting verbal signs. J. Exp. Psychol. 59, 239–245. doi: 10.1037/h0047274

Sugiyama, H., Ayabe-Kanamura, S., and Kikuchi, T. (2006). Are olfactory images sensory in nature? Perception 35, 1699–1708.

Topolinski, S., Maschmann, I., Pecher, D., and Winkielman, P. (2014). Oral approach–avoidance: affective consequences of muscular articulation dynamics. J. Pers. Soc. Psychol. 106:885. doi: 10.1037/a0036477

Treue, S. (2003). Climbing the cortical ladder from sensation to perception. Trends Cogn. Sci. 7, 469–471. doi: 10.1016/j.tics.2003.09.003

Velasco, C., Salgado-Montejo, A., Elliot, A. J., Alvarado, J., and Spence, C. (2016). The shapes associated with approach/avoidance words. Motiv. Emot. 40, 689–702. doi: 10.1371/journal.pone.0140043

Velasco, C., Salgado-Montejo, A., Marmolejo-Ramos, F., and Spence, C. (2014a). Predictive packaging design: tasting shapes, typefaces, names, and sounds. Food Qual. Prefer. 34, 88–95. doi: 10.1016/j.foodqual.2013.12.005

Velasco, C., Balboa, D., Marmolejo-Ramos, F., and Spence, C. (2014b). Crossmodal effect of music and odor pleasantness on olfactory quality perception. Front. Psychol. 5:1352. doi: 10.3389/fpsyg.2014.01352

Wilcox, R. R. (2017). Introduction to Robust Estimation and Hypothesis Testing, 4th Edn. New York: Academic Press.

World Medical Association [WMA]. (2013). Ethical principles for medical research involving human subjects. J. Am. Med. Assoc. 310, 2191–2194. doi: 10.1001/jama.2013.281053

Xie, J., Huang, Y., Wang, R., and Liu, W. (2015). Affective valence facilitates spatial detection on vertical axis: shorter time strengthens effect. Front. Psychol. 6:277. doi: 10.3389/fpsyg.2015.00277

Keywords: embodied cognition, metaphorical mapping, valence-space metaphor, cognition cube, approach-avoidance

Citation: Marmolejo-Ramos F, Arshamian A, Tirado C, Ospina R and Larsson M (2019) The Allocation of Valenced Percepts Onto 3D Space. Front. Psychol. 10:352. doi: 10.3389/fpsyg.2019.00352

Received: 18 July 2018; Accepted: 05 February 2019;

Published: 27 February 2019.

Edited by:

Yann Coello, Université Lille Nord de France, France

Reviewed by:

Ana Pinheiro, University of Minho, Portugal

Copyright © 2019 Marmolejo-Ramos, Arshamian, Tirado, Ospina and Larsson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fernando Marmolejo-Ramos; Maria Larsson

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
