ORIGINAL RESEARCH article

Front. Psychol., 10 January 2019

Sec. Cognitive Science

Volume 9 - 2018 | https://doi.org/10.3389/fpsyg.2018.02687

Despite the sparse visual information and paucity of self-identifying cues provided by point-light stimuli, as well as a dearth of experience in seeing our own-body movements, people can identify themselves solely based on the kinematics of body movements. The present study found converging evidence of this remarkable ability using a broad range of actions with whole-body movements. In addition, we found that individuals with a high degree of autistic traits showed worse performance in identifying own-body movements, particularly for simple actions. A Bayesian analysis showed that action complexity modulates the relationship between autistic traits and self-recognition performance. These findings reveal the impact of autistic traits on the ability to represent and recognize own-body movements.

The concept of the “self” has widely been considered to play a crucial role in supporting the human ability to communicate with others (Anderson, 1984). Ornitz and Ritvo (1968) suggested that children with autism spectrum disorder (ASD) may suffer from a basic impairment in self-awareness, which interferes with the development of social interaction and communication with others. To test this hypothesis, a number of experimental studies have investigated whether individuals with ASD exhibit impairments in visual self-recognition. The prototypical task used to assess visual self-recognition in children with ASD is the mirror self-recognition procedure, which examines children's responses to their reflections in mirrors. Specifically, a child views his or her reflection in a mirror after a small amount of rouge has been secretly applied to the child's nose (Neuman and Hill, 1978; Dawson and McKissick, 1984; Spiker and Ricks, 1984). These studies have largely found that most children with ASD behaved similarly to children in control groups: they touched their nose or verbally referred to the rouge on it, demonstrating recognition of their own faces in the mirror. Hence, based on evidence from the mirror-mark test, it appears that self-recognition may be intact in ASD (although a developmental delay in autism has been reported, e.g., Ferrari and Matthews, 1983).

However, other researchers have pointed out that simply passing the mirror-mark test is not sufficient to establish self-identification ability. For example, many infants who pass the mirror-mark test also attempt to wipe a non-existent mark off their own face when they observe that other people have a mark on their face (Lewis and Brooks-Gunn, 1979; Johnson, 1983; Mitchell, 1993). These findings raise the possibility that the mirror-mark test may not probe the core perceptual representation underlying self-recognition, and hence is not sensitive enough to detect the impact of ASD on self-recognition from visual input.

Another concern is that the stimuli used in previous studies of self-recognition may not assess self-representation alone. For example, daily life gives us rich experience of our own faces and our own speech, as we often see our faces in mirrors or photos and hear our own voices in conversation. To investigate the perceptual representation of the self, we need stimuli that decouple self-recognition ability from high perceptual familiarity. Own-body actions are a good candidate: they form an extensive stimulus set that we rarely see in daily life. Accordingly, tasks requiring identification of the self from own-body movements can shed light on the core of self-recognition ability without relying on visual familiarity. In addition, the recognition of human body movements involves an especially tight coupling between perception and action, as these processes share a common representational platform (Prinz, 1997; Casile and Giese, 2006). Studying self-recognition of own-body movements therefore provides a unique window onto the interplay between perception, action, and self-recognition ability.

Several studies have reported that humans are able to identify their own-body movements, even when the actions are reduced to a dozen discrete dots representing joint movements in point-light displays (Loula et al., 2005). In fact, previous research suggests that people are even more accurate in identifying themselves from own-body movements than in recognizing familiar friends from their actions (Cutting and Kozlowski, 1977; Loula et al., 2005). In addition, visual representations of own-body movements have been found to be object-centered. Jokisch et al. (2006) used point-light displays of walking to demonstrate that self-recognition of one's own walking is viewpoint-invariant, whereas recognition of a familiar friend's walking is viewpoint-dependent, favoring the frontal view. This characteristic reveals a fundamental difference between the action representations of the self and of others.

The ability to extract the “self” and infer “identity” from impoverished point-light displays highlights the remarkable capacity of the human visual system for biological motion perception, the ability to derive rich social information from the kinematics of body movements (Adolphs, 2003; Thurman and Lu, 2014). Several studies have investigated biological motion perception in autism. Individuals with ASD show poorer performance in extracting emotional content from body movements (Moore et al., 1997; Hubert et al., 2007; Parron et al., 2008; Nackaerts et al., 2012), reduced adaptation in action categorization (van Boxtel et al., 2016), and impairments in some action detection and discrimination tasks (e.g., Blake et al., 2003; Koldewyn et al., 2009; Annaz et al., 2010; Nackaerts et al., 2012).

Behavioral differences in the processing of biological motion information have been reported not only when comparing ASD and control groups, but also in typical populations with varying degrees of autistic traits. The Autism-Spectrum Quotient (AQ) questionnaire is the most common measure of self-reported autistic traits (Baron-Cohen et al., 2001). Recent evidence has identified an overlapping genetic and biological etiology underlying ASD and autistic traits (Bralten et al., 2017), in addition to behavioral overlap (Baron-Cohen et al., 2001; Robertson and Simmons, 2013). Several studies of biological motion perception have reported an association between AQ scores and performance on various tasks. For example, people with more autistic traits show impairments in interpreting social actions (Bailey et al., 1995; Kaiser et al., 2010; Ahmed and Vander Wyk, 2013; van Boxtel et al., 2017). Individuals with more autistic traits also perform worse in judging the facing direction of a walker, a task requiring contextual integration (Miller and Saygin, 2013). In addition, people with more autistic traits show less perceptual adaptation to actions (van Boxtel et al., 2016) and less neural adaptation in the right posterior superior temporal sulcus, a key brain region for perceiving and understanding human actions (Thurman et al., 2016).

However, previous work has only examined action recognition or categorization and compared such ability between people with different degrees of autistic traits. Therefore, it remains unknown whether the ability to identify own-body movements is systematically impacted by the degree of autistic traits. If people with a high degree of autistic traits lack a clear perceptual representation of their own-body movements, we hypothesize that they may exhibit worse performance in self-identification than people with a low degree of autistic traits.

In the present study, we recorded body movements of individual participants and conducted a self-recognition task after a considerable delay (~2.75 months). We then examined the relation between autistic traits and self-recognition performance, and further examined whether this relation was modulated by action complexity (as more complex actions involve increased motor planning and distinctive movement styles for different individuals).

Forty-three undergraduate students from the University of California, Los Angeles (UCLA) with normal or corrected-to-normal vision were enrolled in the study. Seven students were excluded because they participated in the first session but either did not show up for the second session or could not be contacted after ~2 months to schedule it. In addition, two participants were excluded from the analysis due to technical difficulties (they were not tested with the correct action sets for their own-body movements). Hence, a total of 34 participants (21 female and 13 male) were included in the analyses reported in the present paper.

Participants gave informed consent as approved by the UCLA Institutional Review Board and were paid $10 cash per session ($20 for completing both sessions), except for two participants who requested course credit instead. All participants were naïve to the hypothesis under investigation and were not told about the self-recognition test until the final task of the study (i.e., in the second session).

Participants completed the 50-item Autism-Spectrum Quotient (AQ) questionnaire, which measures the degree to which individuals with normal intelligence and development show traits associated with the autism spectrum (Baron-Cohen et al., 2001). Group assignment was based on AQ scores, with a cutoff of <15 for the low-AQ group and >24 for the high-AQ group. These cutoffs were based on previous work in our lab (van Boxtel and Lu, 2013; Thurman et al., 2016) and on previous measurements of these traits in the general population (Ruzich et al., 2015). They correspond to the lowest 15% and the highest 25% of the AQ score distribution in a large sample of 496 UCLA undergraduate students who had previously completed the questionnaire in unrelated studies. From this pool of participants with recorded AQ scores, we contacted individuals whose scores fell within the cutoff ranges and recruited them for this study. The high-AQ group consisted of 15 participants (mean age = 21.3 years, SD = 1.8; 11 female) with an average AQ score of 26.4, at the 91st percentile (SD = 1.5, range = 25–30). The low-AQ group consisted of 19 participants (mean age = 21.9 years, SD = 3.2; 10 female) with an average AQ score of 10.1, at the 5th percentile (SD = 2.6, range = 4–14).
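The group-assignment rule can be expressed as a small function. This is a minimal sketch assuming integer AQ scores on the 0–50 scale; the names `assign_aq_group`, `LOW_CUTOFF`, and `HIGH_CUTOFF` are illustrative and not taken from the study's materials.

```python
from typing import Optional

# Cutoffs stated in the text: AQ < 15 -> low-AQ group, AQ > 24 -> high-AQ group.
LOW_CUTOFF = 15
HIGH_CUTOFF = 24


def assign_aq_group(aq_score: int) -> Optional[str]:
    """Return 'low', 'high', or None for scores between the two cutoffs."""
    if not 0 <= aq_score <= 50:
        raise ValueError("AQ scores range from 0 to 50")
    if aq_score < LOW_CUTOFF:
        return "low"
    if aq_score > HIGH_CUTOFF:
        return "high"
    return None  # scores of 15-24 were not recruited into either group


# The reported score ranges (low-AQ 4-14, high-AQ 25-30) fall inside their groups.
print({assign_aq_group(s) for s in range(4, 15)})   # {'low'}
print({assign_aq_group(s) for s in range(25, 31)})  # {'high'}
```

Returning `None` for intermediate scores makes the buffer band between the two groups explicit.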

The sample size of the present study exceeded the typical samples (N = 6–12) used in previous research on self-recognition of body movements (Loula et al., 2005; Jokisch et al., 2006). Compared with sample sizes in previous studies examining the impact of autism spectrum disorder on biological motion perception, the number of high-AQ and low-AQ participants in the present study is within the typical range (N = 12 ASD participants in Blake et al., 2003; N = 16 in Murphy et al., 2009; N = 30 in Koldewyn et al., 2009; and N = 16 in van Boxtel et al., 2016). Additionally, in our previous studies examining the relation between autistic traits and adaptation to biological motion, a similar number of students (N = 30) participated in a behavioral study (van Boxtel and Lu, 2013), and 12 students participated in an fMRI study (Thurman et al., 2016). Hence, the sample size in the present study is consistent with those used in previous studies in the relevant literature.

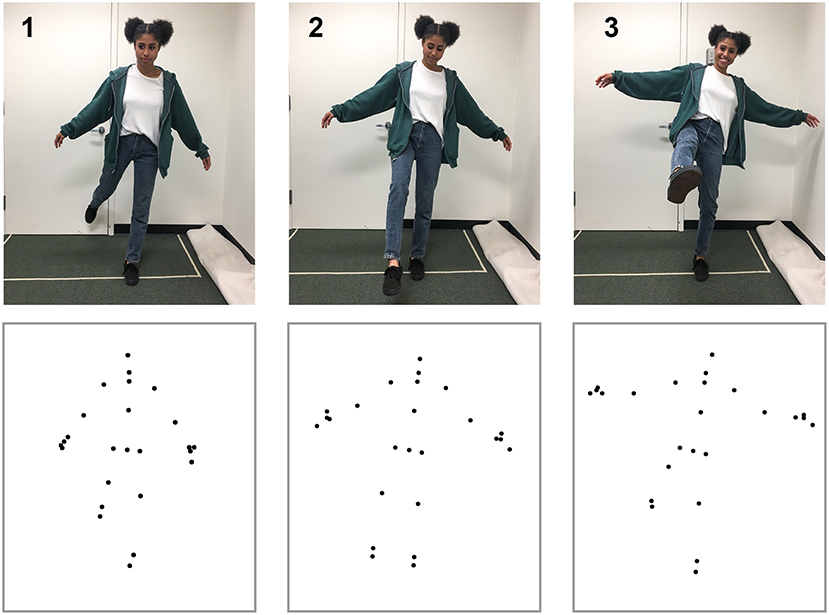

To create the action stimuli, we used the Microsoft Kinect V2.0 and Kinect SDK (Shotton et al., 2011; Zhang, 2012) to capture three-dimensional coordinates for 18 points (3 points for each limb, 3 for the torso, 2 for the hands, and 1 for the head) at a rate of 30 Hz. We reduced noise in the recorded movements by applying a double exponential adaptive smoothing filter (LaViola, 2003), which mainly serves to remove recording errors from the Kinect system (e.g., a joint missing due to occlusion, or small jitter in some points). We trimmed the recordings to present each action segment in point-light display format (van Boxtel and Lu, 2015) for the self-recognition task. Motion capture took place in a 3 × 4 m space to allow for a range of movement. The Kinect sensor was placed 1.2 m above the floor and 2.5 m from the participant. Figure 1 illustrates the action recording process. Testing took place in a dark, quiet room to minimize distractions. Using a chin rest, participants viewed point-light displays of actions from a fixed distance of 34.5 cm. Each of the 18 dots used for point-light displays subtended a visual angle of 0.15°, and the monitor subtended 53.1° × 40.7° (width × height). All videos of the point-light displays can be found at the website “http://cvl.psych.ucla.edu/self_recognition_videos.html.”
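The filter described by LaViola (2003) is built on double exponential smoothing. As a rough illustration only, the sketch below applies plain (non-adaptive) Holt double exponential smoothing to a single coordinate of one joint across frames. It omits the adaptive jitter handling of the Kinect SDK filter, and the function name and the `alpha`/`gamma` values are assumptions, not the settings used in the study.

```python
from typing import List, Optional


def double_exponential_smooth(samples: List[Optional[float]],
                              alpha: float = 0.5,
                              gamma: float = 0.5) -> List[float]:
    """Holt double exponential smoothing of one joint coordinate across frames.

    alpha weights the new observation against the predicted level; gamma
    weights the newest level change against the running trend estimate.
    Missing samples (None, e.g. an occluded joint) are replaced by the
    one-step-ahead prediction (level + trend).
    """
    if not samples or samples[0] is None:
        raise ValueError("the series must start with an observed sample")
    level, trend = float(samples[0]), 0.0
    smoothed = [level]
    for x in samples[1:]:
        prediction = level + trend
        observed = prediction if x is None else x
        new_level = alpha * observed + (1.0 - alpha) * prediction
        trend = gamma * (new_level - level) + (1.0 - gamma) * trend
        level = new_level
        smoothed.append(level)
    return smoothed


# Hypothetical x coordinates of one joint, with one occluded frame.
print(double_exponential_smooth([0.10, 0.12, None, 0.15, 0.14]))
```

Lowering `alpha` smooths jitter more aggressively at the cost of lag, while the trend term keeps the estimate from trailing a steadily moving joint.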

Figure 1. Motion capture data for the simple action kicking. The top row shows three image frames from the action sequence, and the bottom row shows the corresponding point-light display that was captured from the Kinect device. Not all of the points shown were used for display during the self-recognition task. The depicted individual has given written, informed consent to use her image in the publication.

Each participant completed two sessions. Motion capture took place in the first session and the self-recognition test in the second, with an average gap of 2.76 months between the two sessions (in days, M = 84.1, SD = 31.8, range = 59–154). A long delay between the motion recording session and the recognition session has been used previously in a study of self-recognition of body movements (Loula et al., 2005) to minimize the likelihood that participants would remember the specific movements they had performed during recording.

We recorded whole-body movements from each participant while they performed a total of 18 different actions. Participants were given verbal instructions, e.g., “please perform the action of grabbing.” This wording was general and did not specify how to perform the action. Based on previous findings that humans are adept at recognizing various action categories from whole-body movements (Dittrich, 1993; van Boxtel and Lu, 2011), we asked participants to perform a variety of actions and later tested self-recognition across this range of actions. We categorized nine actions as simple and the other nine as complex. Actions with simple goals (such as grabbing) and locomotor actions (such as jumping) were categorized as simple, and goal-directed actions (such as arguing) as complex. In the present study, simple actions consisted of grabbing, hammering, jumping, kicking, lifting, pointing, punching, pushing, and waving. Complex actions consisted of arguing, cleaning, dancing, playing a sport, fighting, getting someone's attention, hurrying, playing a musical instrument, and stretching. We imposed a maximum time limit of 5 s for performing each action. Participants were asked to think about each action before performing it, in order to avoid spontaneous and unrelated movements. None of the participants showed any problem in understanding the verbal instructions.

The first session of action recording took approximately 45 min. An additional, separate group of six students (three female and three male) was recorded performing the same set of actions. The recorded actions from these six actors were used solely as distractors during testing in the second session; these six students did not participate in the second session of the study.

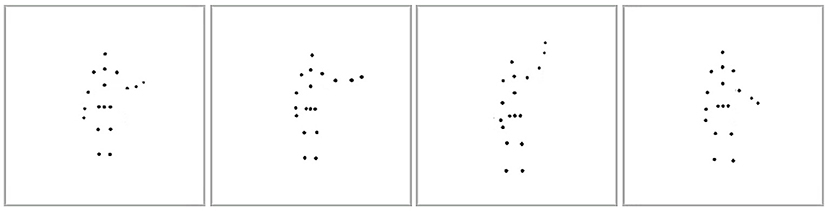

After a considerable delay (~2.75 months), we measured participants' self-recognition ability during the second session by presenting them with four different point-light stimuli showing the same action performed by four individuals. One point-light stimulus consisted of a participant's own-body movements from previous recordings, and the three others were movements generated by the distractor actors. The distractors matched the participant's gender to avoid gender-specific biases in recognizing body movements. All the displayed action stimuli were normalized according to the participant's body height and width to avoid recognition based on body form. The actions were spread out horizontally along the center of the screen, as shown in Figure 2. Participants were instructed to identify their own action. Each action was looped until the participant made a response by clicking one of the four boxes with the mouse, or until a 40 s timeout. Feedback was not provided. Within each trial, all four point-light stimuli were displayed from the same viewing angle. In the test session, four viewpoints were used to show each action. Specifically, the actors were rotated around the vertical axis to show the action at 0° (facing back), 135° (facing front right), 180° (facing front), and 247° (facing back left). We did not use profile views (directly facing left or right), since some actions (e.g., jumping) induced severe self-occlusion of moving point-lights at these views. There were 72 test trials in total in the self-recognition session (four viewpoints for each of the 18 actions).
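The viewpoint manipulation and size normalization can be sketched with NumPy as follows. This is a hypothetical implementation: it assumes coordinates stored as an array of shape (frames, points, 3) with y as the vertical axis, and it assumes that normalization means scaling each actor so that the first-frame height and width match the participant's. The study does not specify these details, and the function names are illustrative.

```python
import numpy as np

VIEWPOINTS_DEG = (0, 135, 180, 247)  # test viewpoints listed in the text


def normalize_body_size(points: np.ndarray, target_height: float,
                        target_width: float) -> np.ndarray:
    """Scale (frames, points, 3) coordinates so first-frame extents match targets.

    Height is the y (vertical) extent and width is the x extent. The depth
    axis z gets the horizontal scale factor so later rotations stay undistorted.
    """
    first = points[0]
    height = first[:, 1].max() - first[:, 1].min()
    width = first[:, 0].max() - first[:, 0].min()
    sx = target_width / width
    sy = target_height / height
    return points * np.array([sx, sy, sx])


def rotate_about_vertical(points: np.ndarray, degrees: float) -> np.ndarray:
    """Rotate (frames, points, 3) coordinates about the vertical y axis."""
    theta = np.deg2rad(degrees)
    c, s = np.cos(theta), np.sin(theta)
    rotation = np.array([[c, 0.0, s],
                         [0.0, 1.0, 0.0],
                         [-s, 0.0, c]])
    return points @ rotation.T  # applies the rotation to every point in every frame
```

Within a trial, all four actors would be passed through the same `rotate_about_vertical` call so that viewpoint is matched; 18 actions × 4 viewpoints gives the 72 test trials.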

Figure 2. Point-light displays of the self-recognition task. The image shows the subject and three distractor actors performing the waving action.

In addition to measuring self-recognition accuracy, we recorded participants' response times and their confidence in their self-recognition judgments. For confidence assessment, we adopted the comparative confidence judgment design (De Gardelle and Mamassian, 2014). Trial order was constrained so that each successive pair of trials contained one simple action and one complex action; the order and selection of actions were otherwise randomized. After each pair of self-recognition trials, participants were shown two side-by-side boxes and indicated in which trial they were more confident of having identified their own-body movements: box “1” for the first of the two trials, and box “2” for the second. There were 36 confidence measurements in total.
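One way to build a trial sequence that satisfies this pairing constraint is sketched below. It is a minimal, hypothetical sketch: the function name and the (action, viewpoint, type) tuple layout are assumptions, and the study's actual randomization procedure is not described beyond the constraint itself. The action lists and viewpoints are those given in the text.

```python
import itertools
import random

# Action sets and viewpoints as listed in the text.
SIMPLE_ACTIONS = ["grabbing", "hammering", "jumping", "kicking", "lifting",
                  "pointing", "punching", "pushing", "waving"]
COMPLEX_ACTIONS = ["arguing", "cleaning", "dancing", "playing a sport", "fighting",
                   "getting someone's attention", "hurrying",
                   "playing a musical instrument", "stretching"]
VIEWPOINTS_DEG = [0, 135, 180, 247]


def build_trial_pairs(rng: random.Random):
    """Return 36 (trial, trial) pairs, each holding one simple and one complex trial.

    A trial is an (action, viewpoint, action_type) tuple; a comparative
    confidence judgment would follow each pair.
    """
    simple = [(a, v, "simple") for a, v in itertools.product(SIMPLE_ACTIONS, VIEWPOINTS_DEG)]
    complex_ = [(a, v, "complex") for a, v in itertools.product(COMPLEX_ACTIONS, VIEWPOINTS_DEG)]
    rng.shuffle(simple)
    rng.shuffle(complex_)
    pairs = []
    for s_trial, c_trial in zip(simple, complex_):
        pair = [s_trial, c_trial]
        rng.shuffle(pair)  # randomize whether the simple or complex trial comes first
        pairs.append(tuple(pair))
    return pairs


pairs = build_trial_pairs(random.Random(0))
print(len(pairs))  # 36
```

Because 36 simple and 36 complex trials are zipped together, the 72 trials yield exactly the 36 confidence judgments reported.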

Overall self-recognition accuracy (proportion correct) for each of the 18 actions (including all participants) ranged from 0.43 to 0.86, significantly above the chance level of 0.25. This result is consistent with prior research showing that humans are able to identify themselves from walking actions (Cutting and Kozlowski, 1977; Loula et al., 2005; Jokisch et al., 2006). Hence, the present findings provide converging evidence that people can recognize themselves solely from kinematic information in body movements, even for actions less commonly encountered than walking.
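The paper does not state which test was used against chance. As one illustration, an exact one-sided binomial test compares the number of correct choices with the 0.25 chance rate of a four-alternative choice. The sketch below is hypothetical: it pools trials across participants (34 participants × 4 viewpoints = 136 trials per action) and therefore ignores dependence between trials from the same participant, which the hierarchical model described later accounts for.

```python
from math import comb


def binomial_upper_tail(k: int, n: int, p: float = 0.25) -> float:
    """P(X >= k) for X ~ Binomial(n, p): an exact one-sided test against chance."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Illustration with the lowest per-action accuracy reported (0.43),
# assuming 136 pooled trials for that action.
n_trials = 34 * 4
k_correct = round(0.43 * n_trials)  # 58 correct responses
p_value = binomial_upper_tail(k_correct, n_trials)
print(k_correct, p_value)
```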

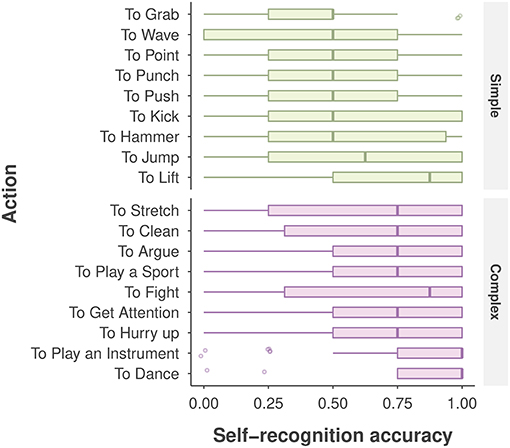

We first conducted a repeated-measures ANOVA to examine the effects of AQ group (low vs. high, a between-subjects factor) and action type (simple vs. complex, a within-subjects factor) on self-recognition performance. Figure 3 depicts the average self-recognition accuracy as a function of action complexity and AQ groups. We found a significant main effect of action type [F(1, 32) = 40.59, p < 0.001, ηp² = 0.56], with better self-recognition performance for complex than for simple actions. This analysis revealed a marginal main effect of AQ group [F(1, 32) = 3.80, p = 0.060, ηp² = 0.11], suggesting that the degree of autistic traits may impact self-recognition performance. The low-AQ group performed significantly better at self-recognition of simple actions than did the high-AQ group [t(32) = 2.23, p = 0.033, ηp² = 0.13], but these group differences were not observed for complex actions [t(32) = 1.34, p = 0.256, ηp² = 0.04]. The classic repeated-measures ANOVA did not reveal a significant interaction effect between AQ group and action type [F(1, 32) = 1.12, p = 0.298].
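The partial eta-squared values for the main effects and the simple-action group comparison can be recovered from the test statistics with the standard conversions ηp² = F·df_effect / (F·df_effect + df_error) and, for a t statistic, ηp² = t² / (t² + df). The sketch below assumes these are the conversions behind the reported values; the function names are illustrative.

```python
def partial_eta_sq_from_f(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


def partial_eta_sq_from_t(t: float, df: int) -> float:
    """Partial eta squared from a t statistic (equivalent to F = t**2 with df_effect = 1)."""
    return t**2 / (t**2 + df)


# Values reported in the text for action type, AQ group, and simple actions.
print(round(partial_eta_sq_from_f(40.59, 1, 32), 2))  # 0.56
print(round(partial_eta_sq_from_f(3.80, 1, 32), 2))   # 0.11
print(round(partial_eta_sq_from_t(2.23, 32), 2))      # 0.13
```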

Figure 3. A boxplot of self-recognition accuracy by AQ group and action type. The boxplot shows the median accuracy and the first/third quartiles. The points denote the average accuracy of individual participants. Note that data points for participants yielding the same accuracy level may overlap in the plot.

As depicted in Figure 4, self-recognition accuracy varied considerably across individual action stimuli. For example, accuracy was 0.86 for the dancing action but 0.65 for the stretching action, even though both actions were categorized as complex; for the two simple actions lifting and grabbing, accuracy was 0.72 and 0.43, respectively. Such variability across individual actions and viewpoints, as well as the influence of other extraneous variables such as gender, age, and AQ score, was not captured by the ANOVA reported above.

Figure 4. Self-recognition accuracy for each action, categorized by action type. The boxplot displays the median (the central vertical line), the 25th and 75th percentiles (the left and right edges of the box), and whiskers extending up to 1.5 times the interquartile range (the box width) beyond the box edges. The points denote outliers (values beyond the whiskers).

To take these potential sources of variability across individual actions and participants into account, we conducted a Bayesian analysis of a hierarchical logistic regression model with the binary response (correct/incorrect) on each trial as the dependent measure (Carpenter et al., 2017). The model included fixed effects as well as effects that varied across participants and across actions. To identify the factors that determine self-recognition accuracy, we first compared three models. The full model, Model 1, included fixed effects of action type (simple vs. complex), AQ group (low vs. high), and the interaction between action type and AQ group. At the participant level, it included random intercepts and effects of action type; at the action level, it included random intercepts and effects of viewpoint. We also included participant age, gender, and AQ score as group-level predictors.
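To make the model structure concrete, the sketch below simulates trial outcomes from a simplified version of such a hierarchical logistic model using NumPy. It is a hypothetical illustration rather than the study's model: all parameter values are invented, the viewpoint, age, gender, and AQ-score terms are omitted, and it only generates data. Fitting the model, for example in Stan (Carpenter et al., 2017), is not shown.

```python
import numpy as np


def simulate_trials(rng: np.random.Generator, n_participants: int = 34,
                    n_actions: int = 18, n_viewpoints: int = 4):
    """Simulate correct/incorrect outcomes from a simplified hierarchical logistic model."""
    # Hypothetical fixed effects on the logit scale (not estimates from the study).
    b0, b_complex, b_high_aq, b_complex_x_high_aq = 0.3, 0.9, -0.5, 0.3
    # Hypothetical standard deviations of the varying effects.
    sd_int_participant, sd_slope_participant, sd_int_action = 0.5, 0.3, 0.4

    high_aq = rng.random(n_participants) < 0.5           # group label per participant
    is_complex = np.arange(n_actions) >= n_actions // 2  # first half simple, second half complex

    u0 = rng.normal(0.0, sd_int_participant, n_participants)    # participant intercepts
    u1 = rng.normal(0.0, sd_slope_participant, n_participants)  # participant action-type slopes
    v0 = rng.normal(0.0, sd_int_action, n_actions)              # action intercepts

    # Index grids with shape (participant, action, viewpoint).
    p, a, _ = np.meshgrid(np.arange(n_participants), np.arange(n_actions),
                          np.arange(n_viewpoints), indexing="ij")
    cx = is_complex[a].astype(float)
    hq = high_aq[p].astype(float)

    logit = (b0 + b_complex * cx + b_high_aq * hq + b_complex_x_high_aq * cx * hq
             + u0[p] + u1[p] * cx + v0[a])
    prob_correct = 1.0 / (1.0 + np.exp(-logit))
    return rng.random(prob_correct.shape) < prob_correct, high_aq, is_complex


correct, high_aq, is_complex = simulate_trials(np.random.default_rng(0))
print(correct.shape, correct.mean())
```

In this parameterization the AQ-group effect is `b_high_aq` for simple actions and `b_high_aq + b_complex_x_high_aq` for complex actions, so a positive interaction term encodes a smaller group difference for complex actions.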

We compared Model 1 with two alternative models: Model 2 excluded the interaction between action type and AQ group but was otherwise identical, and Model 3 excluded the action type and AQ factors. The three models were compared using approximate leave-one-out cross-validation (LOO) with the Pareto-smoothed importance sampling (PSIS) method (Vehtari et al., 2017). PSIS-LOO estimates the expected log pointwise predictive density for new data, which amounts to penalizing the within-sample fit by the effective number of parameters. This method is considered an improvement over the Deviance Information Criterion (DIC) for Bayesian models. According to the obtained LOO values, Model 1 provided a better fit to human performance than either alternative model: Model 1 vs. Model 2 yielded ΔLOO = 15.9, SE = 5.6, CI = [4.9, 27.0]; Model 1 vs. Model 3 yielded ΔLOO = 17.6, SE = 6.0, CI = [5.9, 29.3]. These results indicate that, after accounting for variability from extraneous variables (e.g., individual actions and viewpoints), AQ group, action complexity, and the interaction between these two factors all play important roles in accounting for self-recognition of body movements. Details of the Bayesian analysis are included in the Supplementary Materials.
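For reference, the difference between two models and its standard error can be computed from pointwise elpd_loo values (which PSIS-LOO software such as the R `loo` package reports) following Vehtari et al. (2017): the difference is the sum of the pointwise differences, and its standard error is √n times the standard deviation of those differences. The sketch below also forms a normal-approximation interval (±1.96 SE); the function names are hypothetical, and whether the reported intervals were computed this way is an assumption.

```python
import math
from typing import Sequence, Tuple


def elpd_difference(pointwise_a: Sequence[float],
                    pointwise_b: Sequence[float]) -> Tuple[float, float]:
    """Return (sum of pointwise elpd_a - elpd_b, standard error of that sum)."""
    if len(pointwise_a) != len(pointwise_b):
        raise ValueError("both models must be evaluated on the same observations")
    diffs = [a - b for a, b in zip(pointwise_a, pointwise_b)]
    n = len(diffs)
    mean = sum(diffs) / n
    variance = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance
    return sum(diffs), math.sqrt(n * variance)


def normal_interval(diff: float, se: float, z: float = 1.96) -> Tuple[float, float]:
    """Approximate interval diff +/- z * se."""
    return diff - z * se, diff + z * se
```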

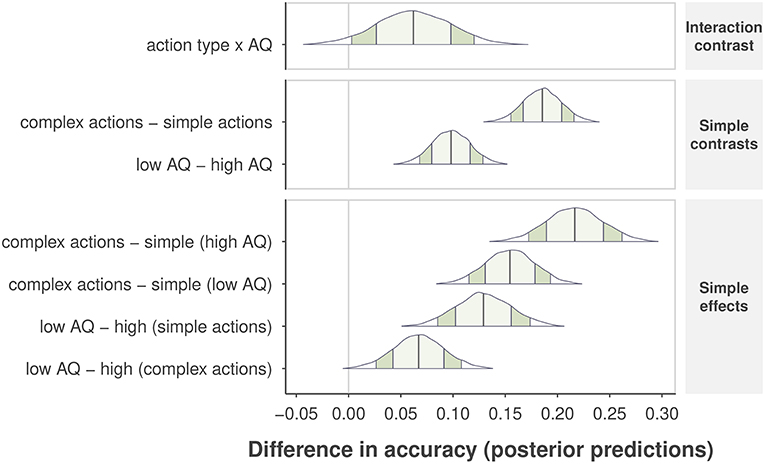

Next, we used Model 1 to obtain posterior distributions of predicted accuracy for the effects of AQ group and action complexity. We report 90% Bayesian credible intervals for each effect (Morey et al., 2016), together with p-values indicating the proportion of the posterior distribution lying on the far side of the null value (i.e., no difference within a single contrast). The Bayesian analysis confirmed that complex actions were easier to identify than simple actions, with an increase in accuracy of 0.19, CI = [0.15, 0.22], p < 0.001, as shown in the second row of Figure 5. Because it accounts for variability at the level of participants and individual actions, the Bayesian analysis had more power to reveal a main effect of AQ group on self-recognition accuracy: the high-AQ group was less accurate than the low-AQ group by 0.10, CI = [0.07, 0.13], p < 0.001, as shown in the third row of Figure 5. We also found an interaction effect, such that the impact of AQ group on performance was larger for simple actions than for complex actions: for simple actions, high-AQ participants showed a further reduction in accuracy of 0.06, CI = [0.003, 0.12], p = 0.044, relative to low-AQ participants. These results indicate that individuals with higher levels of autistic traits perform worse than low-AQ individuals when identifying themselves from simple actions. We observed no difference in accuracy between males and females in the high-AQ group, but males were 0.07, CI = [0.04, 0.12], more accurate than females in the low-AQ group.
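Given posterior draws for a contrast, summaries of this kind can be computed as in the sketch below. It is a hypothetical illustration: the draws are simulated rather than taken from Model 1, the interval is an equal-tailed 90% credible interval, and the p-value is taken to be the proportion of draws lying on the opposite side of zero from the posterior mean.

```python
import numpy as np


def summarize_contrast(draws, interval: float = 0.90):
    """Posterior mean, equal-tailed credible interval, and posterior tail proportion."""
    draws = np.asarray(draws, dtype=float)
    tail = (1.0 - interval) / 2.0
    lower, upper = np.quantile(draws, [tail, 1.0 - tail])
    mean = draws.mean()
    # Share of the posterior on the other side of zero from the mean.
    p = np.mean(draws <= 0.0) if mean > 0 else np.mean(draws >= 0.0)
    return mean, (lower, upper), p


# Simulated draws standing in for one contrast (e.g., complex minus simple accuracy).
rng = np.random.default_rng(1)
fake_draws = rng.normal(0.19, 0.02, size=4000)
mean, (lower, upper), p = summarize_contrast(fake_draws)
print(round(mean, 2), round(lower, 2), round(upper, 2), p)
```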

Figure 5. Differences in posterior predictive accuracy are shown for specific sets of contrasts and effects along the vertical axis. Posterior predictions are determined from the observed data and by comparing the mean predicted value at specific levels of one or more factors. The “no difference” marker is indicated by the vertical line at value zero. The shape of the posterior distribution is shown for each contrast, with 90% intervals indicated by the darker shaded region inside the distribution, and 1 SD of the posterior by the lighter, center shaded region. Posterior means are indicated by the center vertical lines inside each distribution.

We also examined the two other dependent measures, response time (RT) and confidence. For the RT analysis, we removed the 10 trials, out of 2,448 (34 participants × 72 trials), on which a response was not made within 40 s; these trials came from eight participants and were counted as incorrect in the accuracy analysis. We fit the same effects as in Model 1, but with normalized log response time as the outcome and a Gaussian likelihood. RTs showed main effects similar to those observed for accuracy: the high-AQ group responded 5.5 s faster than the low-AQ group (p < 0.001), and simple actions yielded a median RT 6.1 s shorter than complex actions (p < 0.001). However, the RT data did not show an interaction between AQ group and action type (see the Supplementary Materials for posterior medians of each contrast effect in the RT analysis). We emphasize that accuracy was the primary measure in this study, and the relatively low overall accuracy makes the RT data more difficult to interpret.
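The RT preprocessing can be sketched as follows. The paper does not say whether log RTs were normalized within each participant or across the whole sample, so this hypothetical helper simply z-scores whatever list it is given (for example, one participant's trials) after dropping trials that reached the 40 s timeout.

```python
import math
import statistics
from typing import List, Optional

TIMEOUT_S = 40.0  # response window stated in the text


def normalized_log_rts(rts: List[Optional[float]]) -> List[float]:
    """Drop timed-out trials, log-transform the remaining RTs, and z-score them.

    A trial is treated as timed out if its RT is None or at least TIMEOUT_S.
    """
    kept = [rt for rt in rts if rt is not None and rt < TIMEOUT_S]
    logs = [math.log(rt) for rt in kept]
    mu = statistics.mean(logs)
    sd = statistics.stdev(logs)  # sample standard deviation; needs at least 2 trials
    return [(x - mu) / sd for x in logs]


# Hypothetical RTs in seconds; None marks a trial with no response.
print(normalized_log_rts([3.2, 12.5, None, 7.9, 21.0]))
```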

Finally, for the confidence measure, we computed the proportion of complex action selections over simple action selections and analyzed these proportions by AQ group. The mean proportion for complex action confidence was 0.612 (SE = 0.04) for the low AQ group, and 0.609 (SE = 0.03) for the high AQ group. There was no significant group difference between the two AQ groups. This finding suggests that participants were more confident in their choice of own-body movements for complex actions than for simple ones, regardless of the degree of autistic traits.

Although humans rarely see their own body movements, people can still identify themselves solely from the kinematics of these movements, even when these are reduced to the sparse visual information and scant self-identifying cues provided by point-light stimuli. Our results are consistent with prior research showing that humans are able to identify themselves from walking actions (Cutting and Kozlowski, 1977; Loula et al., 2005; Jokisch et al., 2006). Because the present study used a variety of actions, our results generalize these findings by showing that people can recognize themselves solely from body movements even for actions less familiar than walking.

The present study is the first to report individual differences in the ability to recognize oneself from body movements. We found clear evidence that self-recognition performance is influenced both by extrinsic stimulus characteristics, such as the complexity of the performed actions, and by intrinsic traits of observers, such as autistic traits. Self-recognition performance was significantly better for complex actions than for simple actions. This effect of action complexity may result from the increased motor planning and greater individualization of movement involved in performing complex actions. For example, different people may generate quite different movements for a complex action such as getting someone's attention. Although simple actions (e.g., walking, jumping) are commonly observed and performed in daily life, their biometric identity cues may not be readily apparent to the human visual system, making it harder to distinguish individuals and thus to recognize oneself.

Intrinsic traits of observers can also affect the ability of self-recognition of body movements. A key finding in the present study is that participants with a high degree of autistic traits showed poorer performance for self-recognition of body movements than did people with fewer autistic traits. This finding is consistent with previous findings that individuals with autism show impairments on various tasks involving motor skills and social perception (e.g., Bailey et al., 1995; Rinehart et al., 2006; Kaiser et al., 2010; Ahmed and Vander Wyk, 2013; van Boxtel et al., 2016). What underlying mechanisms may contribute to the impact of autistic traits on self-recognition of body movements? We discuss a few possibilities below.

It is well documented that autism is associated with motor skill impairments (e.g., Rinehart et al., 2006; Ming et al., 2007). The impact of autistic traits on self-recognition performance in our study may therefore result from differences in motor movements between the high-AQ and low-AQ groups. To examine this possibility, we compared a general characteristic of motor movements, their average speed, to assess whether people with high AQ scores moved faster or slower than people with low AQ scores. Using the recorded 3D motion capture coordinates, we calculated the inter-frame displacement of each point, computed the average speed across frames and points for each action, and compared movement speeds between the high-AQ and low-AQ groups. Only for the jumping action did people in the high-AQ group move significantly slower than people in the low-AQ group (mean movement speed: 0.7 inches per frame for the high-AQ group vs. 1.1 inches per frame for the low-AQ group; p = 0.01). None of the other 17 actions showed a speed difference between the two groups. Hence, we found no clear evidence that the two AQ groups performed actions systematically differently, which weakens the possibility that motor skill differences between the groups account for the present findings.
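The speed measure described above can be sketched in NumPy as follows, assuming coordinates stored in an array of shape (frames, points, 3); the function names are hypothetical and the units are whatever the coordinates are recorded in. At the 30 Hz capture rate, the reported 0.7 and 1.1 inches per frame correspond to about 21 and 33 inches per second.

```python
import numpy as np

FRAME_RATE_HZ = 30  # Kinect capture rate reported in the text


def mean_speed_per_frame(points: np.ndarray) -> float:
    """Average inter-frame displacement over all frames and points.

    points: array of shape (frames, points, 3) holding xyz coordinates.
    """
    step = np.diff(points, axis=0)                # (frames - 1, points, 3)
    displacement = np.linalg.norm(step, axis=-1)  # Euclidean distance per point and frame
    return float(displacement.mean())


def per_second(speed_per_frame: float) -> float:
    """Convert a per-frame speed to a per-second speed."""
    return speed_per_frame * FRAME_RATE_HZ
```

Speeds computed this way for each participant and action could then be compared between the AQ groups; the paper does not specify which statistical test was used for that comparison.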

Since we rarely see our own body movements, self-recognition of actions is unlikely to be purely vision-based; it more likely relies on links between action perception and the motor system. Motor representations of own-body movements must be converted into visual representations that can be used in the self-recognition task. People with a high degree of autistic traits may have a weaker connection between visual and motor representations of body movements, resulting in poorer self-recognition performance in the high-AQ group. One possible explanatory mechanism involves the mirror neuron network, which includes neurons that discharge both when an action is perceived and when it is executed (Gallese et al., 1996; Rizzolatti et al., 1996; Rizzolatti and Fabbri-Destro, 2010). Mirror neurons may play a critical role in a coding scheme for the “self” and “other,” serving to transform a motor representation of own-body movements into a visual representation. Accordingly, a weakened mirror neuron network in the high-AQ group may have led to poorer self-recognition of body movements.

This conjecture is consistent with the proposal by Williams (2008) that a deficient mirror neuron system may give rise to impairments in self-other matching in individuals with ASD. However, whether mirror-neuron abnormalities exist in autism remains controversial and is an active area of research. Some research suggests that deficits in the development of mirror neuron networks in children with ASD may lie at the root of perceptual and social behavior impairments (Pineda, 2005; Dapretto et al., 2006; Le Bel et al., 2009). Others have argued that impairments in brain systems beyond the mirror neuron system underlie poor social ability in autism (see the review by Southgate and Hamilton, 2008). Future work is clearly needed to pin down the relation between the mirror system and self-recognition ability. The experimental paradigm developed in the present study provides a new stimulus set that could be used to investigate the mirror system in autism. In addition, the Kinect motion capture system makes it much more feasible to film complex actions performed by children with autism. Although the Kinect system is less precise than other motion capture systems, the present study showed that its recording quality is adequate to support the self-recognition task.

Finally, the poorer self-recognition by high-AQ individuals in our study may also result from reduced attention to social stimuli. According to the social motivation theory of autism proposed by Chevallier et al. (2012), a dysfunction of social motivational mechanisms may constitute a primary deficit in autism and may lead to an unbalanced tradeoff between attention to external cues and attention to internal cues. For example, individuals with ASD are less susceptible to the rubber-hand illusion and show disproportionate attention to internal body cues (Schauder et al., 2015). Such reduced attention and social motivation may be linked to impairments in the early stages of action encoding, which can in turn affect kinematic memory (Zalla et al., 2010) and weaken the top-down mechanisms involved in biological motion perception (Lu et al., 2006).

The construct of a “self” is highly complex, involving several subcomponents such as awareness of oneself (and consequently one's own actions), a sense of agency, and body ownership (Van Den Bos and Jeannerod, 2002). The ability to self-recognize is a necessary subcomponent of human social interaction and communication, helping to establish oneself as an independent agent (Jeannerod, 2003). The apparent ease and automaticity of self-recognition belies a highly complex integrative process, in which a conscious self-representation stems from the interplay between perceptual and motor systems. Extending self-recognition to own-body movements highlights the important contribution of motor experience to self-recognition, providing a new experimental paradigm to examine the core construct of the “self.”

Experimental design contributed by HL and JB. Data collection and data processing contributed by TS and JB. Data analysis and manuscript preparation contributed by JB, HL, and AK.

This research was supported by NSF grant BCS-165530.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank Steven Thurman and Yixin Zhu for helping with the setup of the motion capture system.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.02687/full#supplementary-material

Adolphs, R. (2003). Cognitive neuroscience of human social behaviour. Nat. Rev. Neurosci. 4, 165–178. doi: 10.1038/nrn1056

Ahmed, A. A., and Vander Wyk, B. C. (2013). Neural processing of intentional biological motion in unaffected siblings of children with autism spectrum disorder: an fMRI study. Brain Cogn. 83, 297–306. doi: 10.1016/j.bandc.2013.09.007

Anderson, J. R. (1984). The development of self-recognition: a review. Dev. Psychobiol. 17, 35–49. doi: 10.1002/dev.420170104

Annaz, D., Remington, A., Milne, E., Coleman, M., Campbell, R., Thomas, M. S., et al. (2010). Development of motion processing in children with autism. Dev. Sci. 13, 826–838. doi: 10.1111/j.1467-7687.2009.00939.x

Bailey, A., Le Couteur, A., Gottesman, I., Bolton, P., Simonoff, E., Yuzda, E., et al. (1995). Autism as a strongly genetic disorder: evidence from a British twin study. Psychol. Med. 25, 63–77. doi: 10.1017/S0033291700028099

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., and Clubley, E. (2001). The autism-spectrum quotient (AQ): evidence from asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 31, 5–17. doi: 10.1023/A:1005653411471

Blake, R., Turner, L. M., Smoski, M. J., Pozdol, S. L., and Stone, W. L. (2003). Visual recognition of biological motion is impaired in children with autism. Psychol. Sci. 14, 151–157. doi: 10.1111/1467-9280.01434

Bralten, J., van Hulzen, K. J., Martens, M. B., Galesloot, T. E., Vasquez, A. A., Kiemeney, L. A., et al. (2017). Autism spectrum disorders and autistic traits share genetics and biology. Mol. Psychiatry 23, 1205–1212. doi: 10.1038/mp.2017.98

Carpenter, B., Gelman, A., Hoffman, M., Lee, D., Goodrich, B., Betancourt, M., et al. (2017). Stan: a probabilistic programming language. J. Stat. Softw. 76, 1–32. doi: 10.18637/jss.v076.i01

Casile, A., and Giese, M. A. (2006). Nonvisual motor training influences biological motion perception. Curr. Biol. 16, 69–74. doi: 10.1016/j.cub.2005.10.071

Chevallier, C., Kohls, G., Troiani, V., Brodkin, E. S., and Schultz, R. T. (2012). The social motivation theory of autism. Trends Cogn. Sci. 16, 231–239. doi: 10.1016/j.tics.2012.02.007

Cutting, J. E., and Kozlowski, L. T. (1977). Recognizing friends by their walk: gait perception without familiarity cues. Bull. Psychon. Soc. 9, 353–356. doi: 10.3758/BF03337021

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., et al. (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nat. Neurosci. 9, 28–30. doi: 10.1038/nn1611

Dawson, G., and McKissick, F. C. (1984). Self-recognition in autistic children. J. Autism Dev. Disord. 14, 383–394. doi: 10.1007/BF02409829

De Gardelle, V., and Mamassian, P. (2014). Does confidence use a common currency across two visual tasks? Psychol. Sci. 25, 1286–1288. doi: 10.1177/0956797614528956

Dittrich, W. H. (1993). Action categories and the perception of biological motion. Perception 22, 15–22. doi: 10.1068/p220015

Ferrari, M., and Matthews, W. S. (1983). Self-recognition deficits in autism: syndrome-specific or general developmental delay? J. Autism Dev. Disord. 13, 317–324. doi: 10.1007/BF01531569

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain 119, 593–609. doi: 10.1093/brain/119.2.593

Hubert, B., Wicker, B., Moore, D. G., Monfardini, E., Duverger, H., Da Fonseca, D., et al. (2007). Brief report: recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J. Autism Dev. Disord. 37, 1386–1392. doi: 10.1007/s10803-006-0275-y

Jeannerod, M. (2003). The mechanism of self-recognition in humans. Behav. Brain Res. 142, 1–15. doi: 10.1016/S0166-4328(02)00384-4

Johnson, D. B. (1983). Self-recognition in infants. Infant Behav. Dev. 6, 211–222. doi: 10.1016/S0163-6383(83)80028-9

Jokisch, D., Daum, I., and Troje, N. F. (2006). Self recognition versus recognition of others by biological motion: viewpoint-dependent effects. Perception 35, 911–920. doi: 10.1068/p5540

Kaiser, M. D., Delmolino, L., Tanaka, J. W., and Shiffrar, M. (2010). Comparison of visual sensitivity to human and object motion in autism spectrum disorder. Autism Res. 3, 191–195. doi: 10.1002/aur.137

Koldewyn, K., Whitney, D., and Rivera, S. M. (2009). The psychophysics of visual motion and global form processing in autism. Brain 133, 599–610. doi: 10.1093/brain/awp272

LaViola, J. J. (2003). “An experiment comparing double exponential smoothing and Kalman filter-based predictive tracking algorithms,” in Virtual Reality, 2003. Proceedings IEEE (Los Angeles, CA: IEEE), 283–284.

Le Bel, R. M., Pineda, J. A., and Sharma, A. (2009). Motor–auditory–visual integration: the role of the human mirror neuron system in communication and communication disorders. J. Commun. Disord. 42, 299–304. doi: 10.1016/j.jcomdis.2009.03.011

Lewis, M., and Brooks-Gunn, J. (1979). Toward a theory of social cognition: the development of self. N. Direct. Child Adolesc. Dev. 1979, 1–20. doi: 10.1002/cd.23219790403

Loula, F., Prasad, S., Harber, K., and Shiffrar, M. (2005). Recognizing people from their movement. J. Exp. Psychol. Hum. Percept. Perform. 31, 210–220. doi: 10.1037/0096-1523.31.1.210

Lu, H., Tjan, B. S., and Liu, Z. (2006). Shape recognition alters sensitivity in stereoscopic depth discrimination. J. Vision 6, 75–86. doi: 10.1167/6.1.7

Miller, L. E., and Saygin, A. P. (2013). Individual differences in the perception of biological motion: links to social cognition and motor imagery. Cognition 128, 140–148. doi: 10.1016/j.cognition.2013.03.013

Ming, X., Brimacombe, M., and Wagner, G. (2007). Prevalence of motor impairment in autism spectrum disorders. Brain Dev. 29, 565–570. doi: 10.1016/j.braindev.2007.03.002

Mitchell, R. W. (1993). Mental models of mirror-self-recognition: two theories. N. Ideas Psychol. 11, 295–325. doi: 10.1016/0732-118X(93)90002-U

Moore, D. G., Hobson, R. P., and Lee, A. (1997). Components of person perception: an investigation with autistic, non-autistic retarded and typically developing children and adolescents. Br. J. Dev. Psychol. 15, 401–423. doi: 10.1111/j.2044-835X.1997.tb00738.x

Morey, R. D., Hoekstra, R., Rouder, J. N., Lee, M. D., and Wagenmakers, E.-J. (2016). The fallacy of placing confidence in confidence intervals. Psychon. Bull. Rev. 23, 103–123. doi: 10.3758/s13423-015-0947-8

Murphy, P., Brady, N., Fitzgerald, M., and Troje, N. F. (2009). No evidence for impaired perception of biological motion in adults with autistic spectrum disorders. Neuropsychologia 47, 3225–3235. doi: 10.1016/j.neuropsychologia.2009.07.026

Nackaerts, E., Wagemans, J., Helsen, W., Swinnen, S. P., Wenderoth, N., and Alaerts, K. (2012). Recognizing biological motion and emotions from point-light displays in autism spectrum disorders. PLoS ONE 7:e44473. doi: 10.1371/journal.pone.0044473

Neuman, C. J., and Hill, S. D. (1978). Self-recognition and stimulus preference in autistic children. Dev. Psychobiol. 11, 571–578. doi: 10.1002/dev.420110606

Ornitz, E. M., and Ritvo, E. R. (1968). Perceptual inconstancy in early infantile autism: the syndrome of early infant autism and its variants including certain cases of childhood schizophrenia. Arch. Gen. Psychiatry 18, 76–98. doi: 10.1001/archpsyc.1968.01740010078010

Parron, C., Da Fonseca, D., Santos, A., Moore, D. G., Monfardini, E., and Deruelle, C. (2008). Recognition of biological motion in children with autistic spectrum disorders. Autism 12, 261–274. doi: 10.1177/1362361307089520

Pineda, J. A. (2005). The functional significance of mu rhythms: translating “seeing” and “hearing” into “doing”. Brain Res. Rev. 50, 57–68. doi: 10.1016/j.brainresrev.2005.04.005

Prinz, W. (1997). Perception and action planning. Eur. J. Cogn. Psychol. 9, 129–154. doi: 10.1080/713752551

Rinehart, N. J., Bellgrove, M. A., Tonge, B. J., Brereton, A. V., Howells-Rankin, D., and Bradshaw, J. L. (2006). An examination of movement kinematics in young people with high-functioning autism and Asperger's disorder: further evidence for a motor planning deficit. J. Autism Dev. Disord. 36, 757–767. doi: 10.1007/s10803-006-0118-x

Rizzolatti, G., and Fabbri-Destro, M. (2010). Mirror neurons: from discovery to autism. Exp. Brain Res. 200, 223–237. doi: 10.1007/s00221-009-2002-3

Rizzolatti, G., Fadiga, L., Gallese, V., and Fogassi, L. (1996). Premotor cortex and the recognition of motor actions. Cogn. Brain Res. 3, 131–141. doi: 10.1016/0926-6410(95)00038-0

Robertson, A. E., and Simmons, D. R. (2013). The relationship between sensory sensitivity and autistic traits in the general population. J. Autism Dev. Disord. 43, 775–784. doi: 10.1007/s10803-012-1608-7

Ruzich, E., Allison, C., Smith, P., Watson, P., Auyeung, B., Ring, H., et al. (2015). Measuring autistic traits in the general population: a systematic review of the Autism-Spectrum Quotient (AQ) in a nonclinical population sample of 6,900 typical adult males and females. Mol. Autism 6:2. doi: 10.1186/2040-2392-6-2

Schauder, K. B., Mash, L. E., Bryant, L. K., and Cascio, C. J. (2015). Interoceptive ability and body awareness in autism spectrum disorder. J. Exp. Child Psychol. 131, 193–200. doi: 10.1016/j.jecp.2014.11.002

Shotton, J., Fitzgibbon, A., Blake, A., Kipman, A., Finocchio, M., Moore, B., et al. (2011). “Real-time human pose recognition in parts from a single depth image,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Colorado Springs, CO: IEEE). doi: 10.1109/CVPR.2011.5995316

Southgate, V., and Hamilton, A. F. (2008). Unbroken mirrors: challenging a theory of autism. Trends Cogn. Sci. 12, 225–229. doi: 10.1016/j.tics.2008.03.005

Spiker, D., and Ricks, M. (1984). Visual self-recognition in autistic children: developmental relationships. Child Dev. 55, 214–225. doi: 10.2307/1129846

Thurman, S. M., and Lu, H. (2014). Perception of social interactions for spatially scrambled biological motion. PLoS ONE 9:e112539. doi: 10.1371/journal.pone.0112539

Thurman, S. M., van Boxtel, J. J., Monti, M. M., Chiang, J. N., and Lu, H. (2016). Neural adaptation in pSTS correlates with perceptual aftereffects to biological motion and with autistic traits. Neuroimage 136, 149–161. doi: 10.1016/j.neuroimage.2016.05.015

van Boxtel, J., and Lu, H. (2013). Impaired global, and compensatory local, biological motion processing in people with high levels of autistic traits. Front. Psychol. 4:209. doi: 10.3389/fpsyg.2013.00209

van Boxtel, J. J., Dapretto, M., and Lu, H. (2016). Intact recognition, but attenuated adaptation, for biological motion in youth with autism spectrum disorder. Autism Res. 9, 1103–1113. doi: 10.1002/aur.1595

van Boxtel, J. J., and Lu, H. (2011). Visual search by action category. J. Vision 11, 19–19. doi: 10.1167/11.7.19

van Boxtel, J. J., and Lu, H. (2015). Joints and their relations as critical features in action discrimination: evidence from a classification image method. J. Vision 15, 20–20. doi: 10.1167/15.1.20

van Boxtel, J. J., Peng, Y., Su, J., and Lu, H. (2017). Individual differences in high-level biological motion tasks correlate with autistic traits. Vision Res. 141, 136–144. doi: 10.1016/j.visres.2016.11.005

Van Den Bos, E., and Jeannerod, M. (2002). Sense of body and sense of action both contribute to self-recognition. Cognition 85, 177–187. doi: 10.1016/S0010-0277(02)00100-2

Vehtari, A., Gelman, A., and Gabry, J. (2017). Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC. Stat. Comput. 27, 1413–1432. doi: 10.1007/s11222-016-9696-4

Williams, J. H. G. (2008). Self-other relations in social development and autism: multiple roles for mirror neurons and other brain bases. Autism Res. 1, 73–90. doi: 10.1002/aur.15

Zalla, T., Daprati, E., Sav, A.-M., Chaste, P., Nico, D., and Leboyer, M. (2010). Memory for self-performed actions in individuals with asperger syndrome. PLoS ONE 5:e13370. doi: 10.1371/journal.pone.0013370

Keywords: autism-spectrum quotient, self-recognition, body movements, biological motion, visual perception

Citation: Burling JM, Kadambi A, Safari T and Lu H (2019) The Impact of Autistic Traits on Self-Recognition of Body Movements. Front. Psychol. 9:2687. doi: 10.3389/fpsyg.2018.02687

Received: 02 August 2018; Accepted: 13 December 2018;

Published: 10 January 2019.

Edited by:

Matthias Gamer, Universität Würzburg, Germany

Reviewed by:

Anthony P. Atkinson, Durham University, United Kingdom

Copyright © 2019 Burling, Kadambi, Safari and Lu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joseph M. Burling, jmburling@ucla.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
