
ORIGINAL RESEARCH article

Front. Psychol., 30 November 2018

Sec. Environmental Psychology

Volume 9 - 2018 | https://doi.org/10.3389/fpsyg.2018.02274

This article is part of the Research Topic “The Cognitive Psychology of Climate Change.”

Avoiding dangerous climate change requires ambitious emissions reduction. Scientists agree on this, but policy-makers and citizens do not. This discrepancy can be partly attributed to faulty mental models, which cause individuals to misunderstand the carbon dioxide (CO2) system. For example, in the Climate Stabilization Task (hereafter, “CST”) (Sterman and Booth-Sweeney, 2007), individuals systematically underestimate the emissions reduction required to stabilize atmospheric CO2 levels, which may lead them to endorse ineffective “wait-and-see” climate policies. Thus far, interventions to correct faulty mental models in the CST have failed to produce robust improvements in decision-making. Here, in the first study to test a group-based intervention, we found that success rates on the CST markedly increased after participants deliberated with peers in a group discussion. The group discussion served to invalidate the faulty reasoning strategies used by some individual group members, thus increasing the proportion of group members who possessed the correct mental model of the CO2 system. Our findings suggest that policy-making and public education would benefit from group-based practices.

To avoid dangerous climate change, average global temperature must not exceed a critical threshold, defined in the Paris Agreement as 1.5–2°C above pre-industrial levels (UNFCCC, 2015). However, countries' current climate pledges are guaranteed to overshoot this threshold (Mauritsen and Pincus, 2017), indicating that current national emissions policies are grossly inadequate. In a democracy, implementing effective mitigation policy is a two-step challenge: policy-makers must craft appropriate policies and those policies must then receive political and electoral support (Dreyer et al., 2015). Both steps require policy-makers, politicians, and citizens to understand the CO2 system. Unfortunately, most individuals lack this knowledge and consequently underestimate the measures required to mitigate climate change (e.g., Sterman and Booth-Sweeney, 2002; Martin, 2008; Guy et al., 2013).

To reason about emissions policy (in the context of mitigating climate change), an individual must understand how CO2 emissions contribute to climate change. For example, someone who accepts the scientific consensus would: (1) recognize that global temperature is increasing, (2) attribute that increase to human CO2 emissions, and (3) predict that emitting more CO2 will further increase temperature. This knowledge structure is called a “mental model” (Sterman, 1994; Doyle and Ford, 1998). A mental model represents the causal relationships within a system, and is used to describe, explain, and predict system behavior (Sterman, 1994; Doyle and Ford, 1998). Although crucial for decision-making, mental models are constrained by cognitive limits (Doyle and Ford, 1998; Sterman and Booth-Sweeney, 2002) and can never represent the full complexity of the real world. The human decision-maker is thus likely to make imperfect decisions about complex problems.

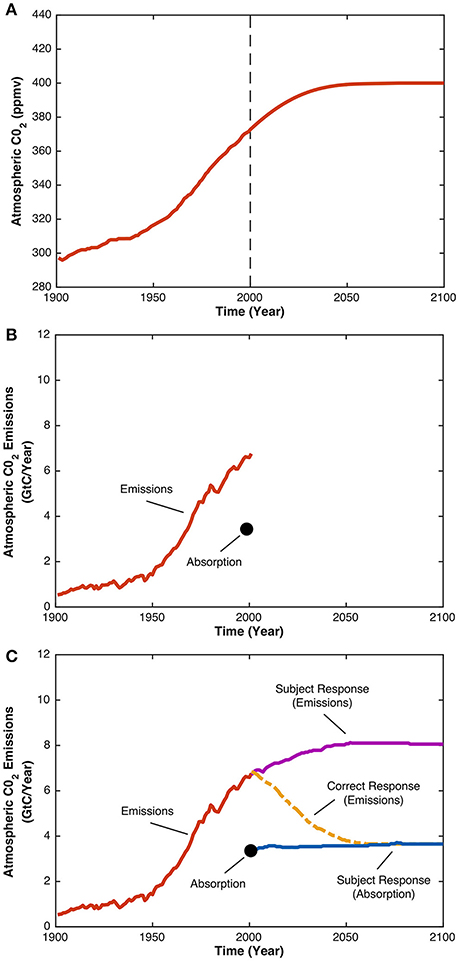

The Climate Stabilization Task (hereafter, “CST”) represents the complex problem of choosing the appropriate level of climate change mitigation. The CST is a decision-making task in which participants are told that atmospheric CO2 concentration is increased by CO2 emissions (largely from human activities), decreased by CO2 absorption (largely by oceans and plants), and stabilized when the rate of CO2 emissions equals the rate of CO2 absorption. Participants are also told that atmospheric CO2 concentration has increased since the Industrial Revolution, because the rate of CO2 emissions has increased to double the rate of CO2 absorption. Participants are then presented with a hypothetical scenario (Figure 1A) in which atmospheric CO2 concentration gradually rises to 400 ppm, then stabilizes by the year 2100. Next, participants must sketch trajectories of CO2 emissions and CO2 absorption that would correspond with this hypothetical scenario (Figure 1B).

Figure 1. Graphical illustration of the CST. Participants are presented with the graph in (A) showing the increase of atmospheric CO2 concentration from 1900 to 2000. After 2000, the graph depicts a hypothetical scenario in which atmospheric CO2 concentration increases to 400 ppm before stabilizing by the year 2100. Next, participants are presented with the graph in (B) and asked to sketch the trajectories of CO2 emissions and CO2 absorption from 2000 to 2100 that they believe would be consistent with the hypothetical scenario. The graph in (C) shows a typical participant's response to the CST, where the blue line represents the participant's estimate of CO2 absorption and the purple line represents the participant's estimate of CO2 emissions. Because the rate of CO2 emissions exceeds the rate of CO2 absorption, atmospheric CO2 concentration would continue to increase rather than stabilize. This is an example of the so-called “pattern-matching” heuristic, whereby the pattern of CO2 emissions is assumed to “match” the pattern of atmospheric CO2 concentration. The correct CO2 emissions trajectory, given the participant's estimate of CO2 absorption, is depicted by the dashed yellow line. The rate of CO2 emissions decreases to equal the rate of CO2 absorption, an equilibrium that would stabilize atmospheric CO2 concentration. This response is consistent with the principle of accumulation, which states that a stock rises or falls according to the difference between its inflow and its outflow.

The “principle of accumulation” states that the level of an accumulating stock (in this case, atmospheric CO2 concentration) changes over time according to the difference between its inflow (rate of CO2 emissions) and its outflow (rate of CO2 absorption): the stock rises when inflow exceeds outflow, falls when outflow exceeds inflow, and remains constant only when the two are equal (Martin, 2008). Thus, to stabilize atmospheric CO2 concentration, the rate of CO2 emissions must decrease to equal the rate of CO2 absorption. However, participants often erroneously assert that stabilizing CO2 emissions is sufficient to stabilize atmospheric CO2 concentration (Figure 1C). Known as “pattern-matching,” this occurs when participants ignore CO2 absorption, believing that the pattern of atmospheric CO2 concentration should “match” the pattern of CO2 emissions. Repeated studies find that only 6–44% of participants answer the CST correctly, with many falling prey to the above-mentioned pattern-matching heuristic (Sterman and Booth-Sweeney, 2002, 2007; Moxnes and Saysel, 2009; Boschetti et al., 2012; Guy et al., 2013; Newell et al., 2015). Low success rates on the CST are observed not only for members of the general public (Boschetti et al., 2012), but also for individuals who are a good proxy for policy-makers, namely stakeholders of a project researching climate change impacts (Boschetti et al., 2012) and Master's students studying system dynamics at the Massachusetts Institute of Technology (Sterman and Booth-Sweeney, 2002).
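The principle of accumulation can be illustrated with a minimal stock-and-flow sketch. It is a simple step-by-step update (level += (inflow - outflow) * dt) using hypothetical, unitless flow values chosen for illustration only. The function name `simulate_stock` and all numbers are assumptions, not part of the CST materials. Holding emissions constant at twice the absorption rate (the pattern-matching intuition) leaves the stock rising indefinitely, whereas cutting emissions to equal absorption holds it constant.

```python
def simulate_stock(initial_level, inflows, outflows, dt=1.0):
    """Integrate one stock: at each step, level += (inflow - outflow) * dt."""
    if len(inflows) != len(outflows):
        raise ValueError("inflows and outflows must have the same length")
    levels = [initial_level]
    for inflow, outflow in zip(inflows, outflows):
        levels.append(levels[-1] + (inflow - outflow) * dt)
    return levels


# Hypothetical, unitless values chosen for illustration only.
YEARS = 100
absorption = [4.0] * YEARS          # constant outflow
emissions_flat = [8.0] * YEARS      # emissions held at twice absorption
emissions_balanced = [4.0] * YEARS  # emissions cut to equal absorption

pattern_matching = simulate_stock(400.0, emissions_flat, absorption)
mass_balance = simulate_stock(400.0, emissions_balanced, absorption)

print(pattern_matching[-1])  # 800.0: the stock keeps rising
print(mass_balance[-1])      # 400.0: the stock stays constant
```

Only the gap between inflow and outflow moves the stock, which is why stabilizing emissions at a level above absorption cannot stabilize concentration.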

Most interventions to correct decision-makers' mental models of the CO2 system—as indexed by responses on the CST—have been unsuccessful (e.g., Pala and Vennix, 2005; Reichert et al., 2015). Using analogies (e.g., a bathtub in which the water level represents atmospheric CO2 concentration) (Moxnes and Saysel, 2009; Guy et al., 2013; Newell et al., 2015) and promoting “global thinking” over “local thinking” (Fischer and Gonzalez, 2015; Weinhardt et al., 2015) have produced minor improvements in CST performance. A formal university course in system dynamics was more successful (Pala and Vennix, 2005; Sterman, 2010), but this intervention is too resource-intensive to be applied on a large scale. Although these results seem discouraging, all interventions so far share the limitation of characterizing decision-makers as individuals. However, real-world decision-making is a social, group-based process informed by the beliefs of others (Tranter, 2011). Previous research shows that groups are better able than individuals to attenuate cognitive biases and decision heuristics (Kugler et al., 2012; Schulze and Newell, 2016), as well as identify, evaluate, and resolve competing hypotheses (Trouche et al., 2015; Larrick, 2016). These benefits notwithstanding, it is important to note that groups do not outperform individuals in all tasks. However, groups do perform consistently better than individuals on intellective, “truth-wins” problems in which the sole correct answer can be determined through logic, and then explained to convince others (i.e., the truth “wins”) (Davis, 1973; Laughlin et al., 2006). The CST is one such problem, as understanding the principle of accumulation leads to only one demonstrably correct solution (i.e., the rate of emissions equaling the rate of absorption).

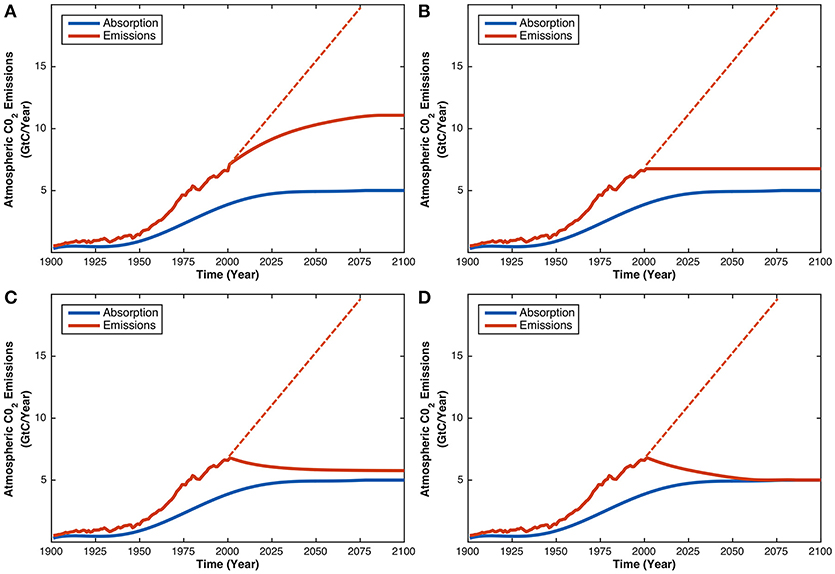

The aim of the current study was to test whether an intervention involving group decision-making can improve performance on the CST. To address this question, we administered a computerized version of the CST to staff and students from the University of Western Australia (N = 141). Participants were given background information about the CO2 system, and then presented with the hypothetical scenario in which atmospheric CO2 concentration stabilizes by the year 2100 (Figure 1A). The decision-making component was administered at two time points, Time 1 (T1) and Time 2 (T2). At T1, participants were presented with four graphs (Figure 2) and asked to select the graph that would produce the hypothetical scenario described. After selecting a graph, participants typed a brief explanation for their decision. At T2, participants were randomly allocated to one of three experimental conditions, before entering an anonymized online chatroom for 10 minutes. In the individuals condition (N = 21), participants reflected on their initial answer and explanation by themselves before making a decision about the four graphs again. In the dyads (N = 40) and groups (N = 80) conditions, either two or four participants, respectively, inspected each other's initial answers and explanations, then engaged in a discussion to reach a consensus decision on which of the four graphs is correct.

Figure 2. The four response alternatives in the multiple-choice version of the CST. All graphs show the same CO2 absorption trajectory with a different CO2 emissions trajectory. Graph (A) depicts the typical pattern-matching response in which CO2 emissions rise and then stabilize. Graph (B) is a less obvious form of pattern-matching in which CO2 emissions immediately stabilize. Graph (C) approximates the correct answer as CO2 emissions decrease, but not to the level required to achieve stabilization. Graph (D) is the correct response, because CO2 emissions decrease to equal CO2 absorption, thus stabilizing atmospheric CO2.

There were three key predictions. Firstly, it was predicted that the individual reflection would have no effect on decision-making, such that the success rates of individuals would not increase from T1 to T2. Secondly, it was predicted that the dyad discussion would benefit decision-making, such that the success rates of dyads would increase from T1 to T2. Thirdly, it was predicted that the group discussion would benefit decision-making to a greater extent than the dyad discussion, such that the success rates of groups would increase from T1 to T2, and this increase would be greater than that observed for dyads.

A secondary aim of the current study was to examine whether individual performance on the CST can be explained by a person's (1) demographic characteristics, (2) climate change knowledge and attitudes, and/or (3) personality and cognitive style. These constructs influence performance on comparable tasks that tap similar reasoning skills, but their effects on CST performance are unclear. Participants therefore completed a pre-test questionnaire assessing several individual differences measures. This was an exploratory feature of the current study and accordingly we made no specific predictions about the relationships between the following variables and CST performance.

Most studies find no relationship between demographic variables and performance on tasks similar to the CST (e.g., Moxnes and Saysel, 2004; Sterman, 2008). However, other studies find that younger participants (Browne and Compston, 2015), males (Ossimitz, 2002; Browne and Compston, 2015; Reichert et al., 2015), or students studying STEM degrees (Booth-Sweeney and Sterman, 2000; Browne and Compston, 2015) perform better than older participants, females, or students studying non-STEM degrees. Age, sex, and field of education were therefore included in the questionnaire.

The questionnaire also included a measure of “climate change knowledge,” as task-specific knowledge is associated with better performance on some stock-flow tasks (Strohhecker and Größler, 2013), but appears unrelated to performance on the CST (Moxnes and Saysel, 2004). A measure of “climate change attitudes” was also included to rule out the possibility that participants choose a graph based on their own ideology, rather than stock-flow reasoning. For the same reason, a measure of “environmental worldview,” or one's beliefs about humanity's relationship with nature (Price et al., 2014), was also included.

Two personality variables are related to the ability to overcome bias by prior belief (Homan et al., 2008; West et al., 2008), and may therefore benefit performance on the CST. “Active open-mindedness” describes an individual's tendency to spend sufficient time on a problem before giving up, and to consider new evidence and the beliefs of others (Haran et al., 2013). “Need for cognition” is the psychological need to structure the world in meaningful and integrated ways, and is associated with expending greater mental effort and enjoying analytical activity (Cacioppo and Petty, 1982).

Lastly, three aspects of cognitive style may be relevant to task performance. “Cognitive reflection” is the ability to resist reporting the first answer that comes to mind (Frederick, 2005), and may therefore protect against pattern-matching. “Global processing” is a way of perceiving the world that favors the organized whole, whereas “local processing” favors component parts and details (Weinhardt et al., 2015). Previous studies have produced conflicting results on the relationship between processing style and stock-flow reasoning (Fischer and Gonzalez, 2015; Weinhardt et al., 2015). “Systems thinking” refers to the tendency to understand phenomena as emerging from complex, dynamic, and nested systems (Thibodeau et al., 2016). It is positively related to the ability to comprehend causal complexity and dynamic relationships (Thibodeau et al., 2016), as well as pro-environmental attitudes (Davis and Stroink, 2016; Lezak and Thibodeau, 2016).

A third and final aim relates to Sterman's (2008) contention that the widespread, global preference for “wait-and-see” or “go-slow” approaches to emissions reduction can be linked to misunderstanding the complex CO2 system. We therefore included a policy preference question in the pre-test questionnaire, which was subsequently repeated at post-test, after completion of the CST. Participants answered the question, “Which of these comes closest to your view on how we should address climate change?” with one of three options: “wait-and-see” (wait until we are sure that climate change is really a problem before taking significant economic action), “go-slow” (we should take low-cost action as climate change effects will be gradual), or “act-now” (climate change is a serious and pressing problem that requires significant action now). If poor understanding of the climate system is indeed responsible for complacent attitudes toward emissions reduction, then we expect participants who answer the CST incorrectly to be more likely to prefer “wait-and-see” or “go-slow” policies at post-test. Conversely, those who answer the CST correctly should be more likely to select the “act-now” option.

Ethical approval to conduct the experiment was granted by the Human Ethics Office at the University of Western Australia (UWA) (RA/4/1/6298).

One hundred and forty-one members of the campus community at the UWA were recruited to take part in the experiment using the Online Recruitment System for Economic Experiments (ORSEE; Greiner, 2015), an open-source web-based recruitment platform for running decision-making experiments. The ORSEE database at UWA contains a pool of over 1,500 staff and students from a range of academic disciplines. Participants were recruited by issuing electronic invitations to randomly selected individuals in the ORSEE database to attend one of several advertised experimental sessions. Participants' ages ranged from 17 to 74 (Mdn = 21.00, M = 23.80, SD = 7.34) and just over two thirds of participants were female (69.5%). About half studied a degree-specific major under the Faculty of Science (54.5%), but the Business School (15.7%), Engineering, Computing, and Mathematics (14.2%), and Arts (13.4%) faculties were also well represented. Participants were paid AUD $10 for attending the experiment.

The experiment manipulated two independent variables: group size (individuals [n = 1] vs. dyads [n = 2] vs. groups [n = 4]) and time (T1 vs. T2). Group size was a between-participants variable, whereas time was a within-participants variable. Participants were allocated to the different group size conditions in a quasi-random fashion (see below). There was a minimum of 20 cases per group size: 21 × 1 = 21 participants in the individuals condition; 20 × 2 = 40 participants in the dyads condition; and 20 × 4 = 80 participants in the groups condition.

The experiment was conducted between May and August 2016 in the Behavioral Economics Laboratory at the UWA (http://bel-uwa.github.io), a computerized laboratory designed for carrying out collective decision-making experiments. There were 27 experimental sessions in total, with a minimum of two and a maximum of eight participants per session. Group sizes were randomly pre-determined before each session but were subject to change in the event that some participants failed to attend. For example, if eight participants were invited to a session, the goal was often to run two groups of four participants. If, however, only six participants attended, then four participants were allocated to the groups condition, and two participants were allocated at random either to the dyads condition or the individuals condition.

As participants arrived at each experimental session, they were randomly seated at a workstation containing two computer terminals. This random seating allocation in turn determined the group size condition to which the participants were allocated. The workstations were separated from each other by privacy blinds to prevent participants from observing one another's responses, and participants knew that face-to-face communication was prohibited. Participants read an information sheet and provided written informed consent, after which the experimenter provided an overview of the structure of the session. Using the left computer terminal on their workstation, the participants then completed the individual differences questionnaire (see Supplementary Materials, Section Individual Differences Questionnaire), which was executed on an internet browser using Qualtrics survey software. The questionnaire took approximately 20 minutes to complete.

Once all participants had completed the questionnaire, they received verbal instructions from the experimenter to minimize their internet browser, which revealed the electronic instructions for the CST (see Supplementary Materials, Section Instructions for CST). The first page foreshadowed what the task would involve. The second page defined CO2, CO2 emissions, atmospheric CO2 concentration, and CO2 absorption. The third page described how CO2 emissions and CO2 absorption, respectively, increase and decrease atmospheric CO2 concentration, and why atmospheric CO2 concentration has increased since the Industrial Revolution. The final page presented the decision-making situation. It described a hypothetical scenario in which atmospheric CO2 concentration rises from its current level of 400 ppm to stabilize at 420 ppm by the year 2100. Participants were then confronted with four graphs depicting the same trajectory of CO2 absorption, but different trajectories of CO2 emissions (Figure 2), and were required to choose the graph that would give rise to the hypothetical scenario. Graph D (Figure 2D) is the correct response, as it is the only graph that depicts the rate of CO2 emissions decreasing to equal the rate of CO2 absorption. We used a multiple-choice format because it is less cognitively taxing than the version of the CST in which participants sketch trajectories. In Sterman and Booth-Sweeney (2007), a multiple-choice condition with seven textual response alternatives produced equivalent results to conditions requiring participants to sketch graphs.

The decision-making component of the CST was executed as a z-Tree (Fischbacher, 2007) program, which was administered on the right computer terminal of each participant's workstation. The CST required a decision at two different points in time: T1 and T2. At T1, all participants completed the task individually, irrespective of the group size condition to which they had been allocated. The experimental procedure at this time point was therefore identical across all three group size conditions. Participants first read the electronic instructions on the left computer terminal, before indicating on the right computer terminal which of the four graphs they believed would stabilize atmospheric CO2 concentration (Figure 2). There was no time limit for this component of the task.

Once participants had registered their T1 graph choice, a text field appeared on screen with a prompt to use the keyboard to type out a brief explanation for why they thought that the graph they had chosen was correct. Participants were allocated 5 minutes to complete this component of the task and a counter in the top right-hand corner of the terminal display indicated the time remaining for participants to supply their written explanations.

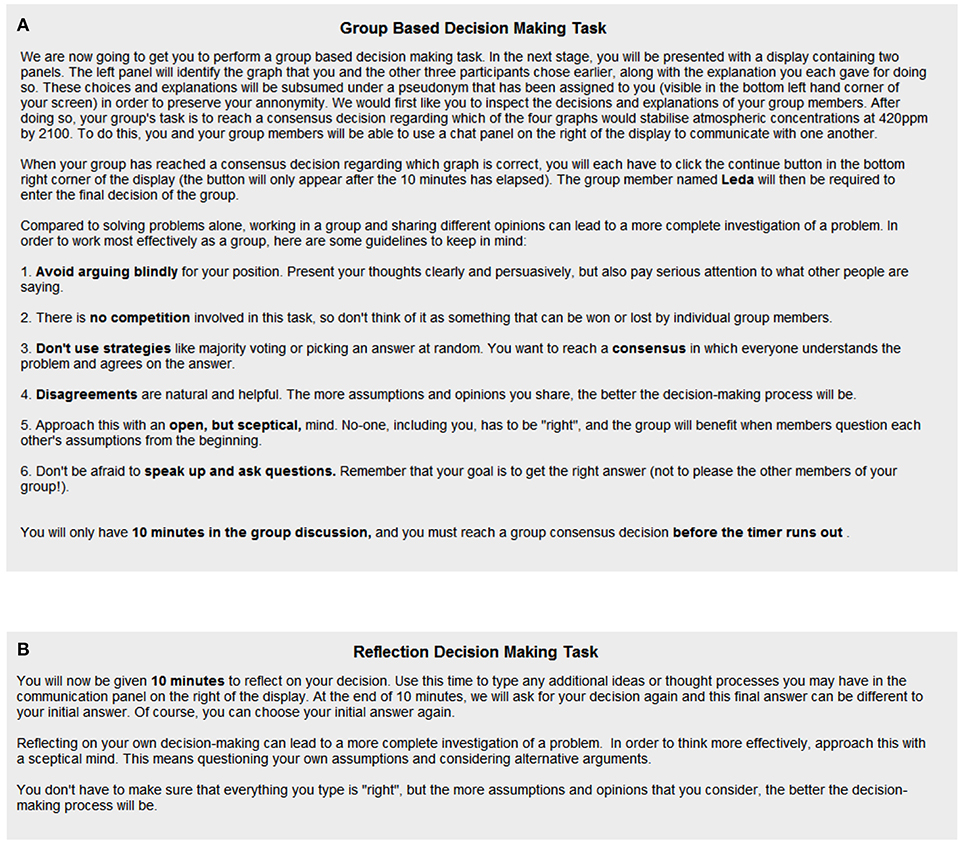

At T2, participants were informed of their group size condition allocation. Participants assigned to the dyads or groups conditions were required to discuss the decision problem with their one partner or three group members, respectively, for a fixed period of 10 minutes in order to reach a consensus decision regarding the correct solution. They were first given six guidelines for a productive group discussion (adapted from a study by Schweiger et al., 1986), as shown in Figure 3A. They then entered an online chatroom in which they could communicate with one another. The chatroom interface was divided into two panels: the Player Decisions Panel and the Communication Panel (Figure 4). The Player Decisions Panel, to the left of the terminal display, presented the T1 graph choices and explanations of each group or dyad member under a pseudonym (Leda, Triton, Portia, or Sinope) to preserve participant anonymity. In the Communication Panel, to the right of the terminal display, dyad and group members could communicate with one another by typing messages into a text entry field. These messages were posted in the Communication Panel under the group or dyad member's designated pseudonym. A timer in the top right corner of the terminal display showed how much time remained. After 10 minutes had elapsed, one group or dyad member was chosen randomly by the computer to register the group's or dyad's consensus decision.

Figure 3. The instructions given to participants in the groups and dyads conditions (A) and individuals condition (B) at T2.

The procedure at T2 was different in the individuals condition. Participants in this condition were instructed to reflect on their T1 decision for 10 minutes, alone. They were instructed to approach this reflection with a skeptical mind, to question their original assumptions, and to consider alternative explanations (Figure 3B). The chatroom interface was once again divided into two panels, this time labeled the Decision Panel and the Reflections Panel. In the Decision Panel, to the left of the terminal display, participants could inspect their T1 decision and explanation. In the Reflections Panel, to the right of the terminal display, participants were able to record reflections on their T1 decision. This panel was essentially the same as the Communication Panel for participants in the groups and dyads conditions, except that it was used to record self-reflections, rather than to communicate with group or dyad members. A timer in the top right corner of the terminal display once again indicated how much time remained. After 10 minutes had elapsed, participants were required to indicate once again which graph they deemed to be correct.

After submitting the T2 decision, all participants completed a post-test questionnaire. Participants in the dyads or groups conditions were asked to choose one of the four CST graphs again, in response to the question: “If the group answer is not what you would have chosen, which answer would you have chosen?” The post-test questionnaire also contained the climate change knowledge and attitudes questions asked in the individual differences questionnaire at the beginning of the experiment.

The CST took approximately 30 minutes to complete, and the entire experimental session lasted approximately 60 minutes.

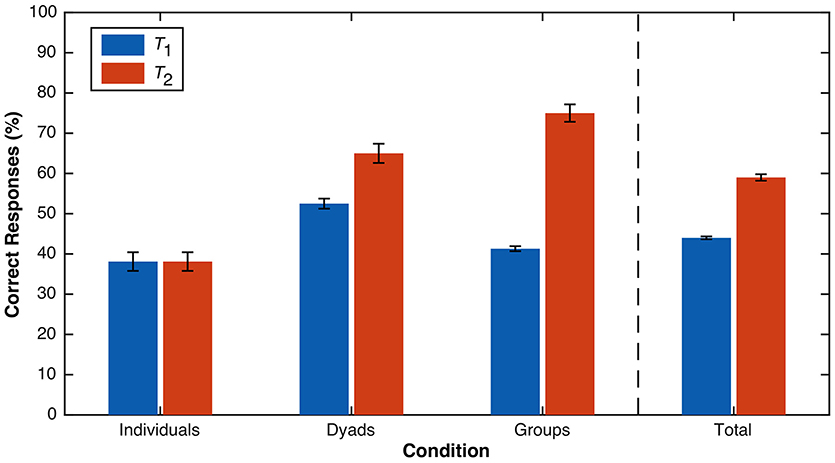

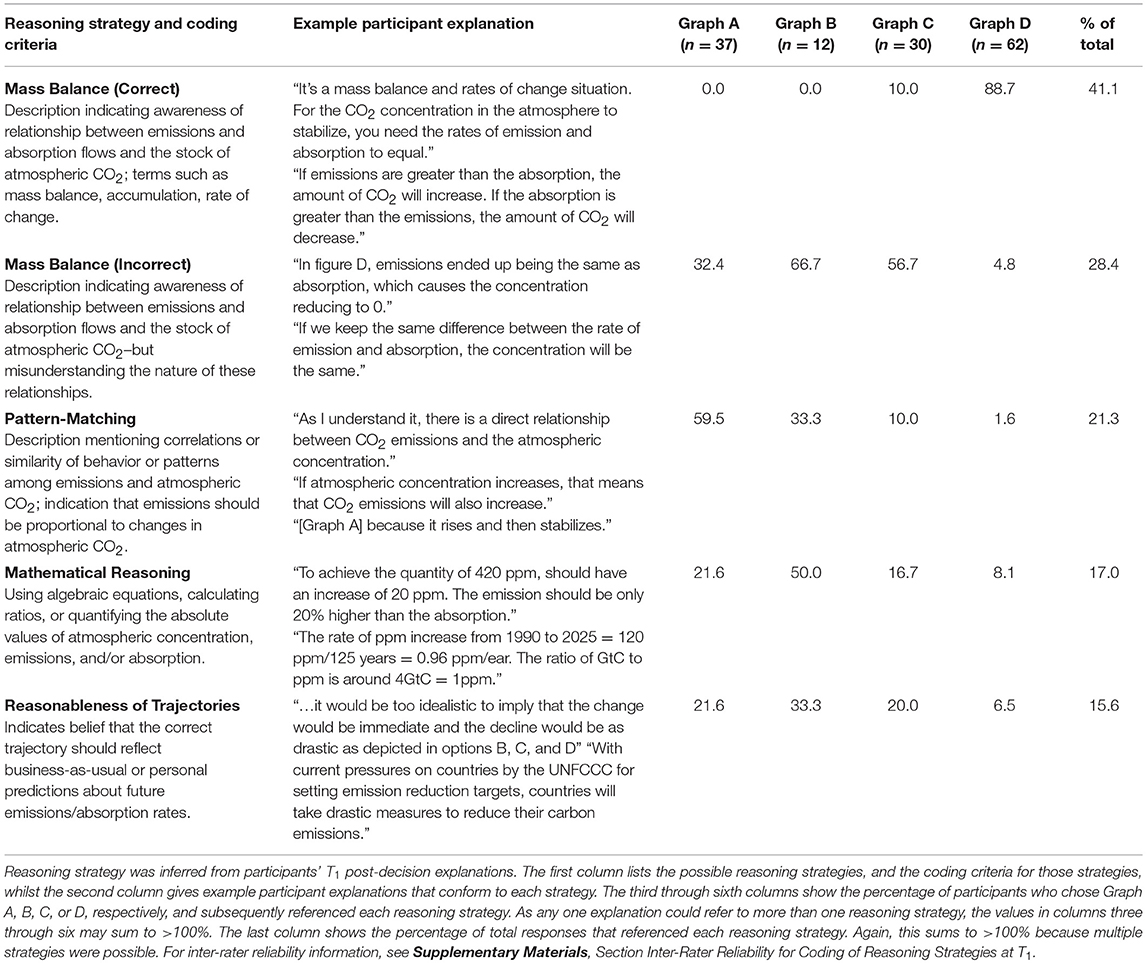

The success rates at T1 (blue bars; Figure 5) did not differ significantly across the three conditions (χ²(2) = 1.72, p = .424, two-sided), and the overall success rate was 44%. This is consistent with the highest previously reported success rate using the CST (Sterman and Booth-Sweeney, 2002). Table 1 shows the frequency with which participants used various reasoning strategies at T1 to justify their graph choice. For example, Graph D was frequently accompanied by an explanation correctly describing mass balance principles (88.7% of Graph D responses). Although other strategies were referenced by participants who selected Graph D, every other reasoning strategy was more frequently used to justify an incorrect graph.
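The T1 test statistic can be checked with a short sketch. The per-condition counts are reconstructed from percentages reported in the Results (8 of 21 individuals, 21 of 40 dyad members, and 33 of 80 group members chose Graph D), which is an assumption. The helper `chi_square_independence` is hypothetical and computes a Pearson χ² without continuity correction. For two degrees of freedom, the χ² survival function reduces to exp(-χ²/2), so no statistics library is needed.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# [correct, incorrect] at T1, reconstructed from reported percentages (assumption).
t1 = [
    [8, 13],   # individuals: 8 / 21
    [21, 19],  # dyad members: 21 / 40 (52.5%)
    [33, 47],  # group members: 33 / 80 (41.3%)
]

stat, df = chi_square_independence(t1)
p = math.exp(-stat / 2)  # chi-square survival function, valid only for df = 2
overall = sum(row[0] for row in t1) / sum(sum(row) for row in t1)
print(f"chi2({df}) = {stat:.2f}, p = {p:.3f}, overall = {overall:.0%}")
```

With these counts the sketch gives χ²(2) ≈ 1.72, p ≈ .424, and an overall success rate of 44%, in line with the values reported above.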

Figure 5. Percentage of correct (Graph D) responses to the CST as a function of time (T1 vs. T2) and condition (individuals vs. dyads vs. groups). The bars for T1 (and T2 for the individuals condition) represent the percentage of correct individual responses, whereas the bars for the dyads and groups conditions at T2 represent the proportion of correct dyad and group consensus decisions, respectively. Error bars represent standard errors.

Table 1. The frequency (%) with which different reasoning strategies were adopted, as a function of Graph A, B, C, and D choices at T1.

The full coding scheme consisted of five strategies from Sterman and Booth-Sweeney (2007) and their associated coding criteria, plus four additional categories created post hoc to capture reasoning strategies that did not conform to any previously defined category. The strategies taken from Sterman and Booth-Sweeney (2007) were: pattern-matching, mass balance, technology, sink saturation, and CO2 fertilization (for details see Table 7 in Sterman and Booth-Sweeney, 2007). Two additional categories defined in their coding scheme (energy balance and inertia/delays) were not used by any of our participants, and therefore were not included here. Two of the new categories were simply the reverse of the categories identified by Sterman and Booth-Sweeney (2007): mass balance—incorrect (incorrect understandings of mass balance) and technology—reverse (technology will increase emissions, rather than enable emissions reduction). The final two categories, mathematical reasoning and reasonableness of trajectories, were created by the authors on the basis of an analysis of participants' responses. In this paper, we only report on the five most popular strategies (technology, sink saturation, CO2 fertilization, and technology—reverse were each used by < 3% of participants and were therefore excluded from the current analysis).
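A table like Table 1 can be produced by tallying coded explanations by T1 graph choice. The sketch below is one possible way to do this, not the authors' procedure. It assumes that one explanation can carry several strategy codes, so percentages within a graph need not sum to 100. It uses hypothetical snake_case identifiers for the five reported categories and runs on invented toy data rather than the study's responses.

```python
from collections import Counter

# Hypothetical identifiers for the five strategies reported in Table 1.
STRATEGIES = (
    "pattern_matching",
    "mass_balance",
    "mass_balance_incorrect",
    "mathematical_reasoning",
    "reasonableness_of_trajectories",
)


def strategy_percentages(coded_responses):
    """Percent of responses per graph that were coded with each strategy.

    coded_responses is a list of (graph, set_of_codes) pairs. A response may
    carry several codes, so percentages for one graph need not sum to 100.
    """
    responses_per_graph = Counter(graph for graph, _ in coded_responses)
    code_counts = Counter(
        (graph, code) for graph, codes in coded_responses for code in codes
    )
    return {
        graph: {s: 100 * code_counts[(graph, s)] / n for s in STRATEGIES}
        for graph, n in sorted(responses_per_graph.items())
    }


# Invented toy data, not the study's responses.
toy = [
    ("A", {"pattern_matching"}),
    ("A", {"pattern_matching", "mathematical_reasoning"}),
    ("B", {"reasonableness_of_trajectories"}),
    ("D", {"mass_balance"}),
]
table = strategy_percentages(toy)
print(table["A"])
```

Percentages are computed within each graph choice, which matches how the text reports figures such as “88.7% of Graph D responses.”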

The literature tends to attribute incorrect answers on the CST to the “pattern-matching” heuristic (e.g., Sterman and Booth-Sweeney, 2007; Sterman, 2008; Cronin et al., 2009). Pattern-matching was indeed the most popular reasoning strategy for participants who selected the typical pattern-matching graph of Graph A (Figure 2A). However, pattern-matching was not the most popular incorrect reasoning strategy overall. As shown in the last column of Table 1, across all responses, incorrect mass balance principles were applied more frequently than pattern-matching. “Mathematical reasoning” and “reasonableness of trajectories” were also common, especially for participants who chose Graph B. The popularity of these strategies suggests that errors on the CST are not exclusively caused by rash, heuristic decisions (i.e., pattern-matching)—even participants who used deliberate and effortful approaches (e.g., unsuccessfully trying to relate CO2 emissions with CO2 absorption, or calculating ratios) failed to reach the correct answer.

There was more heterogeneity in success rates across conditions at T2 (orange bars; Figure 5), and the overall success rate of 59% was marginally higher than at T1. The success rate for dyads (65.0%) did not differ significantly from that for individuals (38.1%) (χ²(1) = 2.97, p = .121, two-sided) or groups (75.0%) (χ²(1) = 0.48, p = .731, two-sided). However, the success rate for groups was significantly higher than for individuals (χ²(1) = 5.67, p = .028, two-sided). A more diagnostic set of comparisons involves contrasting the difference in success rates between T1 and T2 for each condition, separately. For individuals, there was no change in success rates over time (p = 1.00, McNemar, two-sided). For dyads, the success rate of dyad consensus decisions at T2 (65.0%) was numerically, but not significantly, higher than that of individual dyad member decisions at T1 (52.5%) (p = .125, McNemar, two-sided). Recall that after dyads submitted their consensus decision at T2, individual dyad members were prompted for the answer they would have chosen, regardless of what the consensus decision was. Again, there was no difference in success rates between answers given by individual dyad members at T1 and then at this post-test stage (both 52.5%) (p = 1.00, McNemar, two-sided). For groups, the success rate of group consensus decisions at T2 (75.0%) was significantly higher than that of individual group member decisions at T1 (41.3%) (p < .001, McNemar, two-sided). Furthermore, the success rate of individual group members' post-test answers (61.3%) was significantly higher than the success rate of individual group members at T1 (41.3%) (p = .002, McNemar, two-sided). Thus, the group discussion reliably improved CST success rates, while the individual reflection and dyad discussion did not.
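The between-condition comparisons at T2 can be checked the same way. The sketch reconstructs unit-level counts from the reported percentages (8 of 21 individuals, 13 of 20 dyads, and 15 of 20 groups correct), which is an assumption, and computes Pearson χ² statistics without continuity correction. `chi_square_2x2` and `mcnemar_exact` are hypothetical helpers. The sketch reproduces 2.97 and 0.48, and gives 5.66 rather than the reported 5.67 for groups versus individuals, a rounding-level difference. It does not attempt to reproduce the reported p-values, because the text does not state how they were obtained. The McNemar helper shows one common form of that test (the exact binomial version). The paper does not report discordant-pair counts, so the helper is not applied to the study data.

```python
from math import comb


def chi_square_2x2(correct_a, total_a, correct_b, total_b):
    """Pearson chi-square (df = 1, no continuity correction) for two success rates."""
    n = total_a + total_b
    correct = correct_a + correct_b
    stat = 0.0
    for hits, total in ((correct_a, total_a), (correct_b, total_b)):
        for observed, col_total in ((hits, correct), (total - hits, n - correct)):
            expected = total * col_total / n
            stat += (observed - expected) ** 2 / expected
    return stat


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from discordant counts b and c (binomial, p = 0.5)."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Correct T2 decisions per unit, reconstructed from reported percentages (assumption).
individuals = (8, 21)  # 38.1%
dyads = (13, 20)       # 65.0%
groups = (15, 20)      # 75.0%

print(f"dyads vs individuals: {chi_square_2x2(*dyads, *individuals):.2f}")
print(f"dyads vs groups: {chi_square_2x2(*dyads, *groups):.2f}")
print(f"groups vs individuals: {chi_square_2x2(*groups, *individuals):.2f}")
print(mcnemar_exact(0, 4))  # 0.125: four discordant pairs, all in one direction
```

The exact McNemar test uses only the participants whose answers changed between T1 and T2, which is why it can reach p = 1.00 even when a few answers change.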

Analyzing the content of T2 reflections and discussions (using the same coding scheme as for the T1 explanations) sheds light on how groups derived their decision-making advantage over individuals. Despite explicit instructions to “approach this with a skeptical mind,” “question your own assumptions,” and “consider alternative arguments,” 80% of participants in the individuals condition did not type anything during the 10-minute reflection period. Of 21 individuals, only one typed an alternative argument, and only three changed their answers at T2. Individuals thus failed to self-reflect, which prevented them from recognizing that their answer was incorrect or considering why a different answer might be correct. This is consistent with previous research characterizing individuals as “cognitively lazy” decision-makers who rarely challenge an answer that “feels” right (Trouche et al., 2015; Larrick, 2016).

By contrast, all groups entertained at least two reasoning strategies in their group discussions (except one group in which all group members had selected Graph D at T1). Group discussions contained a mean of 2.30 different reasoning strategies, compared with 1.15 for dyad discussions and 0.24 for individual reflections (χ²(2) = 33.48, p < .001, two-sided). Furthermore, we have tentative evidence that group members helped correct other members' faulty reasoning strategies. For example, in one group, one participant's misunderstanding of mass balance principles (“If emission rate gets close to absorption, concentration will decrease below 400[ppm]”) was corrected by two other participants who explained the principle of accumulation (“…but when emissions is greater than absorption, then concentration will increase”). Groups were more likely than individuals (χ²(1) = 23.89, p < .001, two-sided) and dyads (χ²(1) = 8.29, p = .010, two-sided) to refer to the correct reasoning strategy of mass balance (even if no group member had referenced mass balance in their T1 explanation). This supports previous findings that exposure to diverse perspectives motivates group members to critically evaluate all arguments (Trouche et al., 2015), thus increasing the likelihood that the correct decision will be discussed and judged to be correct (Schulz-Hardt et al., 2006).
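The disagreement in this exchange comes down to the mass balance identity at the heart of the CST: the stock of atmospheric CO2 changes each year by emissions minus absorption, so the concentration keeps rising for as long as emissions exceed absorption, however close the two flows are. The short Python sketch below makes this concrete. All numbers are hypothetical and uncalibrated, absorption is held constant purely for simplicity, and the function and scenario names are illustrative rather than part of the study's materials.

```python
def simulate_concentration(start_ppm, emissions, absorption):
    """Apply the mass balance identity year by year:
    concentration[t + 1] = concentration[t] + emissions[t] - absorption.
    Flows are in ppm per year; returns the whole concentration path."""
    path = [start_ppm]
    for e in emissions:
        path.append(path[-1] + e - absorption)
    return path


ABSORPTION = 2.0  # hypothetical constant absorption (ppm/year)
YEARS = 10

scenarios = {
    "emissions well above absorption": [4.0] * YEARS,
    "emissions falling toward absorption": [4.0 - 0.15 * t for t in range(YEARS)],
    "emissions equal to absorption": [2.0] * YEARS,
    "emissions below absorption": [1.5] * YEARS,
}

for name, emissions in scenarios.items():
    path = simulate_concentration(400.0, emissions, ABSORPTION)
    print(f"{name}: {path[0]:.1f} -> {path[-1]:.2f} ppm")
```

Only the scenario in which emissions equal absorption holds the concentration steady; when emissions fall toward, but stay above, absorption, the concentration still rises every year, which is the point the two group members made in correcting their peer.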

The data seem to speak against, but do not rule out, the alternative explanation that groups benefited only from the increased probability of containing a member who knew the correct answer. Eight individuals, 15 dyads, and 15 groups contained at least one member who chose Graph D at T1. If the effect were due merely to the presence of a correct member, we would expect dyads and groups to perform equally, and dyads to outperform individuals at T2 (which they did not; χ²(1) = 2.97, p = .121, two-sided). Furthermore, two groups gave the correct consensus decision at T2 despite having no members who gave the correct decision at T1. This is an example of “process gain,” in which interpersonal interaction among multiple individuals yields an outcome better than that of any single individual, or even the sum of all individuals (Hackman and Morris, 1975).
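The alternative explanation can be stated as a simple "truth-wins" baseline (in the spirit of the social decision schemes of Davis, 1973), under which a dyad or group answers correctly whenever at least one member does. If, purely as an illustrative assumption, members answered independently and each was correct with the same probability p, the chance that a unit of n members contains at least one correct member would be 1 - (1 - p)^n, which grows with n. The Python sketch below evaluates this for a hypothetical p; it is not fitted to the study's data. Under this baseline the relevant quantity is the share of units containing a correct member, and because the same number of dyads and groups (15 each) contained such a member, the baseline predicts equal performance for the two.

```python
def p_contains_correct_member(p, n):
    """Probability that at least one of n independent members is correct,
    when each member is correct with probability p (truth-wins baseline)."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 - (1.0 - p) ** n


p_member = 0.4  # hypothetical per-member success probability
for label, size in [("individual", 1), ("dyad", 2), ("group of four", 4)]:
    print(f"{label}: {p_contains_correct_member(p_member, size):.3f}")
```

With p = 0.4 this prints 0.400, 0.640, and 0.870, showing why larger units would be expected to do better under this baseline even without any benefit of discussion.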

We were also able to rule out effects of individual differences. Binary logistic regression analyses were conducted to determine whether the individual difference variables measured at pre-test predicted performance on the CST at T1. To satisfy the requirement of a dichotomous dependent variable, CST performance was coded as either incorrect (selecting Graph A, B, or C) or correct (selecting Graph D). Age, actively open-minded thinking, and support for the policy of “government regulation of CO2 as a pollutant” were the only significant independent predictors (p < .05). These three variables were then entered together into a further binary logistic regression analysis. The full model was statistically significant compared with the constant-only model, χ²(3) = 21.47, p < .001, Nagelkerke's R² = 0.19. Overall prediction accuracy was 61.9% (50.8% for correct responses, 70.5% for incorrect responses). Thus, even the full model with these three predictors was barely above chance at predicting correct answers. This poor predictive performance is noteworthy because it reveals that CST performance is largely immune to the influence of demographic, attitudinal, personality, and cognitive style variables.
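Two of the summary statistics above can be computed directly from a fitted model. Nagelkerke's R² rescales the Cox and Snell R², 1 - exp(2(LL_null - LL_full)/n), by its maximum attainable value, 1 - exp(2 LL_null/n), where LL_null and LL_full are the log-likelihoods of the constant-only and full models and n is the number of cases; the model χ² is 2(LL_full - LL_null). Prediction accuracy comes from a classification table, commonly built with a 0.5 cutoff on the predicted probability. The Python sketch below implements both; the cutoff convention (probabilities at or above the cutoff count as "correct") and the toy inputs are assumptions for illustration, not the study's data.

```python
import math


def model_chi2(ll_null, ll_full):
    """Likelihood-ratio chi-square of the full model against the constant-only model."""
    return 2.0 * (ll_full - ll_null)


def nagelkerke_r2(ll_null, ll_full, n):
    """Nagelkerke's R^2: Cox and Snell R^2 divided by its maximum possible value."""
    cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_full) / n)
    max_cox_snell = 1.0 - math.exp(2.0 * ll_null / n)
    return cox_snell / max_cox_snell


def classification_rates(y_true, y_prob, cutoff=0.5):
    """Overall accuracy and per-class hit rates.

    A case is predicted 'correct' (1) when its probability is >= cutoff.
    Returns (overall accuracy, hit rate for 1s, hit rate for 0s).
    """
    predicted = [1 if p >= cutoff else 0 for p in y_prob]
    pairs = list(zip(y_true, predicted))
    ones = [pred for true, pred in pairs if true == 1]
    zeros = [pred for true, pred in pairs if true == 0]
    overall = sum(true == pred for true, pred in pairs) / len(pairs)
    hit_ones = sum(ones) / len(ones)
    hit_zeros = sum(1 - pred for pred in zeros) / len(zeros)
    return overall, hit_ones, hit_zeros


# Toy inputs (hypothetical), not the study's data.
print(round(nagelkerke_r2(ll_null=-65.0, ll_full=-55.0, n=100), 3))
print(classification_rates([1, 1, 1, 0, 0], [0.8, 0.6, 0.3, 0.2, 0.7]))
```

The overall accuracy is the average of the two class-specific hit rates weighted by the share of cases in each class, which is why a model can post a moderate overall figure while performing near chance on one class.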

Recall that the individual difference questions about climate change knowledge and attitudes were presented again at the post-test phase. CST performance did not significantly predict answers to any of these items. However, in light of the third aim of our study, the answers to the policy preference question, “Which of these comes closest to your view on how we should address climate change?”, merit discussion. At pre-test, 69.1% of participants answered “act-now,” 30.9% answered “go-slow,” and no participant selected the “wait-and-see” option. Excluding the wait-and-see option, answering the CST correctly did not significantly predict post-test responses (χ²(3) = 7.19, p = .066, two-sided). However, the percentage of participants who selected “act-now” increased from pre-test to post-test across all conditions (overall, 80.9% “act-now” at post-test).

Repeated studies employing the CST reveal that individuals systematically underestimate the emissions reduction required to stabilize atmospheric CO2 levels. So far, interventions to increase success rates on the CST have been individual-focused and largely ineffective. We sought to examine whether group reasoning could increase success rates on this task. It was predicted that success rates from T1 to T2 would (1) not increase for individuals, (2) increase for dyads, and (3) increase for groups to a greater extent than for dyads. The first and third predictions were statistically supported, but there was only qualitative support for the second prediction. The 10-minute group discussions among four participants significantly improved success rates, whereas 10 minutes of individual reflection or dyad discussion did not. By analyzing individual justifications at T1, and reflections and discussions at T2, we found that groups benefited from exposure to multiple perspectives and the opportunity to communicate, which facilitated the falsification of incorrect reasoning strategies. We also found that incorrect reasoning strategies were numerous, and not limited to the oft-reported pattern-matching strategy. Lastly, we rejected two alternative explanations—groups did not improve merely due to a size advantage in the number of members who knew the correct response, nor were individual differences in demographics, climate change knowledge, personality, or cognitive style responsible for any given individual's CST success.

There are some potential limitations of the current study that merit consideration. Firstly, with a minimum of 20 cases in each condition as units for the statistical analysis, our experiment may have had insufficient statistical power to detect a significant improvement in success rates for dyads from T1 to T2. A larger sample may reveal that the numerical, yet non-significant, increase in success rates observed for our dyads reflects a real benefit of dyad discussion. Secondly, the group advantage observed in the current study was obtained using a multiple-choice response format, which differs from the conventional CST procedure in which participants must sketch trajectories of emissions and absorption. It therefore remains open whether the results reported here would generalize to an experimental scenario employing this more complicated response format. It is possible, for example, that the uncharacteristically low rates of susceptibility to the pattern-matching heuristic observed in the current study are an artifact of our unorthodox response format. We may therefore expect higher initial rates of pattern-matching when returning to the original CST procedure, but it remains to be seen whether this would eliminate or attenuate the group advantage witnessed here. Thirdly, our intervention at T2 in the dyad and group conditions afforded more than merely the opportunity for individuals to communicate with one another: participants could also read the T1 explanations of their dyad partner or group members. Although we believe that the opportunity to communicate was instrumental to the group decision-making advantage, we cannot preclude the possibility that mere exposure to the alternative perspectives of others confers an advantage in itself, compared with individual reasoning alone. A group condition in which participants are exposed to their group members' T1 decision explanations, without engaging in any subsequent discussion, would reveal whether mere exposure to multiple perspectives can produce a group benefit. Finally, we could not provide strong evidence for Sterman's (2008) argument that policy-makers' and citizens' deficient mental models of the climate system are responsible for complacent attitudes toward emissions reduction. Answers to the CST did not predict subsequent policy preferences. However, all participants in our sample believed that we must take action against climate change (“act-now” or “go-slow”), even before completing the CST. We were therefore unable to test the hypothesis that participants who answer the CST incorrectly also deny the need for emissions reduction (“wait-and-see”). Nevertheless, the overall increase in “act-now” responses, relative to “go-slow” responses, from pre-test to post-test suggests some diffuse benefit of merely completing the CST. Future studies employing a sample with more heterogeneous pre-existing policy beliefs would provide a stronger test of the hypothesized link between accurate mental models of the CO2 system and support for urgent emissions reduction.
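The power concern raised in the first limitation can be quantified. For n paired answers (T1 and T2), let p10 be the probability that a pair moves from incorrect to correct and p01 the probability that it moves from correct to incorrect; the exact power of a two-sided exact McNemar test is the total probability of all outcomes the test rejects. The Python sketch below enumerates those outcomes. The effect and sample sizes in the usage lines are hypothetical, the sketch assumes independent pairs and the exact binomial form of McNemar's test, and it is not a reanalysis of the present data.

```python
from math import comb


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar p-value from discordant counts b and c."""
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    return min(1.0, 2 * sum(comb(n, i) for i in range(k + 1)) / 2 ** n)


def mcnemar_power(n_pairs, p10, p01, alpha=0.05):
    """Exact power of the two-sided exact McNemar test.

    p10: P(incorrect at T1, correct at T2); p01: P(correct at T1, incorrect at T2).
    Sums the multinomial probability of every (b, c) outcome the test rejects.
    """
    p_same = 1.0 - p10 - p01
    if min(p10, p01, p_same) < 0:
        raise ValueError("probabilities must be non-negative and sum to at most 1")
    power = 0.0
    for b in range(n_pairs + 1):
        for c in range(n_pairs - b + 1):
            if mcnemar_exact_p(b, c) <= alpha:
                ways = comb(n_pairs, b) * comb(n_pairs - b, c)
                power += ways * p10 ** b * p01 ** c * p_same ** (n_pairs - b - c)
    return power


# Hypothetical effect: 20% of pairs improve, 5% get worse.
for n in (20, 40, 80):
    print(f"n = {n}: power = {mcnemar_power(n, 0.20, 0.05):.2f}")
```

Because the exact test only rejects on lopsided splits of the discordant pairs, its power depends mainly on how many discordant pairs a sample is likely to contain, which is small when only around 20 units are tested.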

Given the abovementioned concerns about the generality of our results, one direction for future work is to determine whether and how our findings generalize to other stock-flow tasks, especially the original CST procedure, in which participants sketch trajectories of emissions and absorption by themselves. Furthermore, although we have shown a benefit on CST performance of reasoning in groups of four members, an additional avenue for future work will be to examine whether this advantage extends to larger groups. On the one hand, we might expect that increasing the number of group members will improve CST performance, because of an increase in information-processing capacity and diversity of perspectives (Cohen and Thompson, 2011; Charness and Sutter, 2012). On the other hand, we might expect that as the group size reaches some critical point, CST performance will begin to decline as the aforementioned benefits of group decision making will be outweighed by the costs of coordinating opinions and resolving disputes within the group (Orlitzky and Hirokawa, 2001; Lejarraga et al., 2014). Identifying the optimal group size for solving the CST will permit more robust recommendations about how group-based practices should be incorporated into the decision-making process.

In closing, we note that the final success rate of group consensus decisions at T2 (75%) is considerably higher than the success rates previously reported with the CST. The success of our group-based intervention suggests that group-based decision-making may help facilitate the two-step implementation of effective emissions policy. First, crafting appropriate mitigation policy requires comprehensive and accurate decision-making, and our results suggest small groups are best-suited for this task. Second, rallying political and electoral support for such policy requires a well-informed population that comprehends the scale of the emissions problem. The general public presently endorses high levels of belief in anthropogenic climate change, but low levels of concern and urgency about climate change mitigation (Akter and Bennett, 2011; Reser et al., 2012). In order to bridge this gap between what the public believes about the climate change problem (that it is real and caused by human activities), and the solutions they are willing to support (immediate and significant emissions reduction), their mental models must be changed. Group-based programs, whether informal conversations about climate change or formal public education initiatives, could establish the correct mental model and help mobilize support for effective mitigation policy.

This study was carried out in accordance with the recommendations of the National Statement on Ethical Conduct in Human Research, Human Ethics Office at the University of Western Australia. The protocol was approved by the Human Ethics Office at the University of Western Australia. All subjects gave written informed consent in accordance with the Declaration of Helsinki.

All authors contributed to the conception and design of the study. MH created the software for running the experiment and produced the figures for the paper. BX conducted the experimental sessions, performed the data analysis, and wrote the paper. MH and IW edited the paper and reviewed the results.

The work reported here was supported by a grant from the Climate Adaptation Flagship of the Commonwealth Scientific and Industrial Research Organization (CSIRO) of Australia awarded to MH and IW.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2018.02274/full#supplementary-material

Akter, S., and Bennett, J. (2011). Household perceptions of climate change and preferences for mitigation action: the case of the carbon pollution reduction scheme in Australia. Clim. Change 109, 417–436. doi: 10.1007/s10584-011-0034-8

Booth-Sweeney, L., and Sterman, J. D. (2000). Bathtub dynamics: initial results of a systems thinking inventory. Syst. Dyn. Rev. 16, 249–286. doi: 10.1002/sdr.198

Boschetti, F., Richert, C., Walker, I., Price, J., and Dutra, L. (2012). Assessing attitudes and cognitive styles of stakeholders in environmental projects involving computer modelling. Ecol. Modell. 247, 98–111. doi: 10.1016/j.ecolmodel.2012.07.027

Browne, C., and Compston, P. (2015). “Rethinking intuition of accumulation,” in Proceedings of the 33rd International Conference of the System Dynamics Society (Cambridge, MA), 463–490. Available online at: http://www.systemdynamics.org/conferences/2015/proceed/papers/P1091.pdf.

Cacioppo, J. T., and Petty, R. E. (1982). The need for cognition. J. Person. Soc. Psychol. 42, 116–131. doi: 10.1037/0022-3514.42.1.116

Charness, G., and Sutter, M. (2012). Groups make better self-interested decisions. J. Econ. Perspect. 26, 157–176. doi: 10.1257/jep.26.3.157

Cohen, T. R., and Thompson, L. (2011). “When are teams an asset in negotiations and when are they a liability?” in Research on Managing Groups and Teams: Negotiation and Groups, Vol. 14, eds E. Mannix, A. Neale, and J. Overbeck (Bingley, UK: Emerald Group Publishing), 3–34.

Cronin, M. A., Gonzalez, C., and Sterman, J. D. (2009). Why don't well-educated adults understand accumulation? A challenge to researchers, educators, and citizens. Org. Behav. Hum. Decision Process. 108, 116–130. doi: 10.1016/j.obhdp.2008.03.003

Davis, A. C., and Stroink, M. L. (2016). The relationship between systems thinking and the new ecological paradigm. Syst. Res. Behav. Sci. 33, 575–586. doi: 10.1002/sres.2371

Davis, J. H. (1973). Group decision and social interaction: a theory of social decision schemes. Psychol. Rev. 80, 97–125. doi: 10.1037/h0033951

Doyle, J. K., and Ford, D. N. (1998). Mental models concepts for system dynamics research. Syst. Dyn. Rev. 14, 3–29.

Dreyer, S. J., Walker, I., McCoy, S. K., and Teisl, M. F. (2015). Australians' views on carbon pricing before and after the 2013 federal election. Nat. Clim. Change 5, 1064–1067. doi: 10.1038/nclimate2756

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Exp. Econ. 10, 171–178. doi: 10.1007/s10683-006-9159-4

Fischer, H., and Gonzalez, C. (2015). Making sense of dynamic systems: how our understanding of stocks and flows depends on a global perspective. Cogn. Sci. 40, 496–512. doi: 10.1111/cogs.12239

Frederick, S. (2005). Cognitive reflection and decision making. J. Econ. Perspect. 19, 25–42. doi: 10.1257/089533005775196732

Greiner, B. (2015). Subject pool recruitment procedures: organizing experiments with ORSEE. J. Econ. Sci. Assoc. 1, 114–125. doi: 10.1007/s40881-015-0004-4

Guy, S., Kashima, Y., Walker, I., and O'Neill, S. (2013). Comparing the atmosphere to a bathtub: effectiveness of analogy for reasoning about accumulation. Clim. Change 121, 579–594. doi: 10.1007/s10584-013-0949-3

Hackman, J. R., and Morris, C. G. (1975). “Group tasks, group interaction process, and group performance effectiveness: a review and proposed integration,” in Advances in Experimental Social Psychology, Vol. 8, ed L. Berkowitz (New York, NY: Academic Press, Inc), 45–99.

Haran, U., Ritov, I., and Mellers, B. A. (2013). The role of actively open-minded thinking in information acquisition, accuracy, and calibration. Judg. Decision Making 8, 188–201. Available online at: https://search.proquest.com/docview/1364598283?accountid=12763

Homan, A. C., Hollenbeck, J. R., Humphrey, S. E., Van Knippenberg, D., Ilgen, D. R., and Van Kleef, G. A. (2008). Facing differences with an open mind: openness to experience, salience of intragroup differences, and performance of diverse work groups. Acad. Manage. J. 51, 1204–1222. doi: 10.5465/amj.2008.35732995

Kugler, T., Kausel, E. E., and Kocher, M. G. (2012). Are groups more rational than individuals? A review of interactive decision making in groups. Wiley Interdiscip. Rev. Cogn. Sci. 3, 471–482. doi: 10.1002/wcs.1184

Larrick, R. P. (2016). The social context of decisions. Annu. Rev. Org. Psychol. Org. Behav. 3, 441–467. doi: 10.1146/annurev-orgpsych-041015-062445

Laughlin, P. R., Hatch, E. C., Silver, J. S., and Boh, L. (2006). Groups perform better than the best individuals on letters-to-numbers problems: effects of group size. J. Person. Soc. Psychol. 90, 644–651. doi: 10.1037/0022-3514.90.4.644

Lejarraga, T., Lejarraga, J., and Gonzalez, C. (2014). Decisions from experience: how groups and individuals adapt to change. Memory Cogn. 42, 1384–1397. doi: 10.3758/s13421-014-0445-7

Lezak, S. B., and Thibodeau, P. H. (2016). Systems thinking and environmental concern. J. Environ. Psychol. 46, 143–153. doi: 10.1016/j.jenvp.2016.04.005

Martin, J. F. (2008). Improving Understanding of Climate Change Dynamics Using Interactive Simulations. Cambridge, MA: Master of Science in Technology and Policy; Massachusetts Institute of Technology. Available online at: http://dspace.mit.edu/bitstream/handle/1721.1/43742/263169453-MIT.pdf?sequence=2

Mauritsen, T., and Pincus, R. (2017). Committed warming inferred from observations. Nat. Clim. Change 7, 652–655. doi: 10.1038/nclimate3357

Moxnes, E., and Saysel, A. K. (2004). “Misperceptions of global climate change: information policies,” in Working Papers in System Dynamics. Available online at: http://bora.uib.no/bitstream/handle/1956/1981/WPSD1.04Climate.pdf?sequence=1

Moxnes, E., and Saysel, A. K. (2009). Misperceptions of global climate change: information policies. Clim. Change 93, 15–37. doi: 10.1007/s10584-008-9465-2

Newell, B. R., Kary, A., Moore, C., and Gonzalez, C. (2015). Managing the Budget: stock-flow reasoning and the CO2 accumulation problem. Topics Cogn. Sci. 8, 138–159. doi: 10.1111/tops.12176

Orlitzky, M., and Hirokawa, R. Y. (2001). To err is human, to correct for it divine: a meta-analysis of research testing the functional theory of group decision-making effectiveness. Small Group Res. 32, 313–341. doi: 10.1177/104649640103200303

Ossimitz, G. (2002). “Stock-flow-thinking and reading stock-flow-related graphs: an empirical investigation in dynamic thinking abilities,” in 2002 International System Dynamics Conference (Palermo). Available online at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.379.6653&rep=rep1&type=pdf

Pala, Ö., and Vennix, J. A. (2005). Effect of system dynamics education on systems thinking inventory task performance. Syst. Dyn. Rev. 21, 147–172. doi: 10.1002/sdr.310

Price, J. C., Walker, I. A., and Boschetti, F. (2014). Measuring cultural values and beliefs about environment to identify their role in climate change responses. J. Environ. Psychol. 37, 8–20. doi: 10.1016/j.jenvp.2013.10.001

Reichert, C., Cervato, C., Niederhauser, D., and Larsen, M. D. (2015). Understanding atmospheric carbon budgets: teaching students conservation of mass. J. Geosci. Educ. 63, 222–232. doi: 10.5408/14-055.1

Reser, J. P., Bradley, G. L., Glendon, A. I., Ellul, M., and Callaghan, R. (2012). Public Risk Perceptions, Understandings and Responses to Climate Change in Australia and Great Britain. Gold Coast, QLD. Available online at: https://terranova.org.au/repository/nccarf/public-risk-perceptions-understandings-and-responses-to-climate-change-in-australia-and-great-britain-final-report/reser_2012_public_risk_perceptions_final.pdf

Schulze, C., and Newell, B. R. (2016). More heads choose better than one: group decision making can eliminate probability matching. Psychon. Bull. Rev. 23, 907–914. doi: 10.3758/s13423-015-0949-6

Schulz-Hardt, S., Brodbeck, F. C., Mojzisch, A., Kerschreiter, R., and Frey, D. (2006). Group decision making in hidden profile situations: dissent as a facilitator for decision quality. J. Person. Soc. Psychol. 91, 1080–1093. doi: 10.1037/0022-3514.91.6.1080

Schweiger, D. M., Sandberg, W. R., and Ragan, J. W. (1986). Group approaches for improving strategic decision making: a comparative analysis of dialectical inquiry, devil's advocacy, and consensus. Acad. Manage. J. 29, 51–71. doi: 10.2307/255859

Sterman, J. D. (1994). Learning in and about complex systems. Syst. Dyn. Rev. 10, 291–330. doi: 10.1002/sdr.4260100214

Sterman, J. D. (2008). Risk communication on climate: mental models and mass balance. Science 322, 532–533. doi: 10.1126/science.1162574

Sterman, J. D. (2010). Does formal system dynamics training improve people's understanding of accumulation? Syst. Dyn. Rev. 26, 316–334. doi: 10.1002/sdr.447

Sterman, J. D., and Booth-Sweeney, L. (2002). Cloudy skies: assessing public understanding of global warming. Syst. Dyn. Rev. 18, 207–240. doi: 10.1002/sdr.242

Sterman, J. D., and Booth-Sweeney, L. (2007). Understanding public complacency about climate change: Adults' mental models of climate change violate conservation of matter. Clim. Change 80, 213–238. doi: 10.1007/s10584-006-9107-5

Strohhecker, J., and Größler, A. (2013). Do personal traits influence inventory management performance?—the case of intelligence, personality, interest and knowledge. Int. J. Product. Econ. 142, 37–50. doi: 10.1016/j.ijpe.2012.08.005

Thibodeau, P. H., Frantz, C. M., and Stroink, M. L. (2016). Situating a measure of systems thinking in a landscape of psychological constructs. Syst. Res. Behav. Sci. 33, 753–769. doi: 10.1002/sres.2388

Tranter, B. (2011). Political divisions over climate change and environmental issues in Australia. Environ. Polit. 20, 78–96. doi: 10.1080/09644016.2011.538167

Trouche, E., Johansson, P., Hall, L., and Mercier, H. (2015). The selective laziness of reasoning. Cogn. Sci. 40, 2122–2136. doi: 10.1111/cogs.12303

UNFCCC (2015). Paris Agreement. Paris. Available online at: http://unfccc.int/files/meetings/paris_nov_2015/application/pdf/paris_agreement_english_.pdf

Weinhardt, J. M., Hendijani, R., Harman, J. L., Steel, P., and Gonzalez, C. (2015). How analytic reasoning style and global thinking relate to understanding stocks and flows. J. Operat. Manage. 39–40, 23–30. doi: 10.1016/j.jom.2015.07.003

Keywords: climate stabilization task, mental models, group decision-making, carbon dioxide accumulation, stock-flow tasks, emissions reduction

Citation: Xie B, Hurlstone MJ and Walker I (2018) Correct Me if I'm Wrong: Groups Outperform Individuals in the Climate Stabilization Task. Front. Psychol. 9:2274. doi: 10.3389/fpsyg.2018.02274

Received: 09 May 2018; Accepted: 01 November 2018;

Published: 30 November 2018.

Edited by:

Marc Glenn Berman, University of Chicago, United States

Reviewed by:

Christin Schulze, Max-Planck-Institut für Bildungsforschung, Germany

Copyright © 2018 Xie, Hurlstone and Walker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Belinda Xie, belinda.xie@unsw.edu.au

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
