ORIGINAL RESEARCH article

Front. Psychol., 30 June 2017

Sec. Perception Science

Volume 8 - 2017 | https://doi.org/10.3389/fpsyg.2017.01110

This article is part of the Research Topic New Boundaries Between Aging, Cognition, and Emotions.

This study used event-related potentials (ERPs) to investigate the effects of age on the neural temporal dynamics of processing task-relevant facial expressions and their relationship to cognitive functions. Negative (sad, afraid, angry, and disgusted), positive (happy), and neutral faces were presented to 30 older and 31 young participants who performed a facial emotion categorization task. Behavioral and ERP indices of facial emotion processing were analyzed. Relative to their younger counterparts, older adults showed an enhanced N170 for negative faces, together with an intact right-hemispheric N170 for positive faces. Moreover, older adults demonstrated an attenuated within-group N170 laterality effect for neutral faces, whereas younger adults showed the opposite pattern. Furthermore, older adults exhibited a sustained temporo-occipital negativity deflection over the time range of 200–500 ms post-stimulus, whereas young adults showed a posterior positivity followed by emotion-specific frontal negativity deflections. In older adults, decreased accuracy for labeling negative faces was associated with lower Montreal Cognitive Assessment scores, and accuracy for labeling neutral faces was negatively correlated with age. These findings suggest that older people may exert more effort in the structural encoding of negative faces and that different facial emotions elicit different response patterns during categorization. Cognitive functioning may be related to the facial emotion categorization deficits observed in older adults. These deficits may not be attributable to positivity effects; rather, they may represent a selective deficit in processing negative facial expressions in older adults.

Facial emotion processing is affected by aging (Di Domenico et al., 2015) and by clinical conditions (Gur et al., 2007; Weiss et al., 2008; Wieser et al., 2012; Altamura et al., 2016). Numerous behavioral studies have identified an age-related decline in labeling negative facial expressions and a preserved ability to label happy expressions (Isaacowitz et al., 2007; Ruffman et al., 2008; Horning et al., 2012; West et al., 2012; Kessels et al., 2014). Neuroimaging studies have also found an age-related decline in neural activity to negative stimuli and a relatively invariant response to positive stimuli across the adult life span (Gunning-Dixon et al., 2003; Tessitore et al., 2005; Fischer et al., 2010). It has been argued that older adults show attention (Mather and Carstensen, 2003) and memory (Charles et al., 2003) biases toward positive versus negative stimuli, the so-called “positivity effect” (Mather, 2012; Nashiro et al., 2012). From a neurobiological perspective, the interaction between noradrenergic activity and emotional memory enhancement in older adults is considered relevant (Mammarella et al., 2016). This perspective has been extended to the perception and identification of other people’s expressions (Bucks et al., 2008; Isaacowitz et al., 2009; Kaszniak and Menchola, 2012). A competing perspective attributes age-related changes in labeling negative expressions to a general decline in cognitive function (Suzuki and Akiyama, 2013) or to atrophy in specific brain regions (Calder et al., 2003).

The processing of facial expression information is fast and efficient (Vuilleumier, 2005), and may involve substantial temporal dispersion of evoked responses that enables ‘high-level’ regions to respond with surprisingly short latencies (Kawasaki et al., 2001; Pessoa and Adolphs, 2010). With a temporal resolution on the order of milliseconds, event-related potentials (ERPs) are well suited as neural markers of the early involvement of perceptual face knowledge (Rossion, 2014). Affective facial stimuli elicit particular ERP components (Eimer and Holmes, 2007; Luo et al., 2010; Leleu et al., 2015), such as: (a) the P1, a positive potential with a peak latency of 70 to 130 ms after stimulus onset over occipital scalp sites, indicating selective spatial attention toward emotional cues (Luck et al., 2000; Bekhtereva et al., 2015); (b) the N170, a prominent negative waveform over occipito-temporal scalp sites peaking at approximately 170 ms post-stimulus, representing an early neural marker of the pre-categorical structural encoding of an emotional face (Rossion, 2014); (c) the posterior P2, a positive deflection observed over occipito-temporal regions at approximately 200–280 ms post-stimulus (Latinus and Taylor, 2006; Brenner et al., 2014), which has been suggested to be a kind of stimulus-driven call for processing resources (van Hooff et al., 2011); and (d) the N250, an affect-related negative component peaking at approximately 250 ms post-stimulus over occipito-temporal scalp sites (Streit et al., 2000; Williams et al., 2006).

Event-related potential studies have used diverse paradigms to examine age-related changes in facial emotion processing, including passive viewing (Smith et al., 2005; Hilimire et al., 2014; Mienaltowski et al., 2015) and emotion recognition memory tasks (Schefter et al., 2012). Hilimire et al. (2014) suggested that there is an age-related shift away from negative faces toward positive faces within an early (110–130 ms) and a late (225–350 ms) period in a checkerboard probe go/no-go task requiring passive processing of task-irrelevant emotional faces. However, a study of young adults showed that the processing of negative faces was enhanced at early perceptual stages only when the faces were task-irrelevant (e.g., passive viewing), whereas happy faces received enhanced processing only when they were task-relevant (e.g., naming the emotional expression) (Rellecke et al., 2012). To further test the effect of age on facial emotion processing, it is therefore necessary to manipulate the task relevance of the facial expressions. This would allow researchers to determine whether older adults show enhanced processing of positive expressions during explicit facial emotion identification tasks, and whether greater processing of positive faces is associated with stronger cognitive functioning.

The goal of the present study was to revisit the effect of age on specific ERP components using a facial emotion categorization paradigm. To shed light on the controversy regarding the mechanism underlying these age effects, we also explored correlations between cognitive functions and brain responsivity to specific emotional faces, as well as correlations between cognitive functions and performance in labeling facial emotions. We used a reference sample of healthy younger adults to test the specificity of the effects in the older population. Given the two directly competing perspectives discussed above, no specific directional hypothesis was possible. If the positivity effects observed in older adults reflect the voluntary engagement of available cognitive resources (Isaacowitz et al., 2009), higher cognitive abilities should be associated with reduced or enhanced neural responsivity to specific emotional faces. If impaired performance in labeling negative facial expressions is an unintended consequence of the general decline of cognitive function associated with old age (Suzuki and Akiyama, 2013), weaker cognitive abilities should correlate with poorer performance in labeling negative faces in older adults (but not in young adults), but not necessarily with performance in labeling positive faces.

Thirty healthy older adults (58–79 years of age; 17 female) and 31 young adults (22–26 years of age; 19 female) were recruited. All participants were right-handed and had normal or corrected-to-normal vision and normal hearing. A battery of neuropsychological tests, including the Montreal Cognitive Assessment (MoCA), the Auditory Verbal Learning Test (AVLT), the Logical Memory Test (LMT), and the forward and backward digit span test, was used to confirm that participants were within normal ranges of cognitive functioning according to published norms (Guo et al., 2009). In addition, older adults completed the Instrumental Activities of Daily Living scale (IADL) and the Geriatric Depression Scale (GDS), while young adults completed the Center for Epidemiologic Studies Depression Scale (CES-D).

For both groups, inclusion criteria were no self-reported history of neuropsychological impairment or of any disorder affecting the central nervous system, no previous head injury, and no current treatment for depression or anxiety. Exclusion criteria were (a) impaired general cognition and activities of daily living; (b) subjective memory impairment, and scoring more than 1.5 standard deviations below the age- and education-adjusted mean on the immediate or delayed AVLT or LMT; (c) a GDS score > 20 for older adults (Chan, 1996); and (d) a CES-D score > 28 for young adults (Cheng et al., 2006). Participants’ characteristics are shown in Table 1.

Participants were recruited from the Southern Medical University (Guangzhou, China) and the nearby community via advertisements, and received monetary compensation. All participants provided written informed consent in accordance with the Declaration of Helsinki. The study was approved by the Medical Ethics Committee of Nanfang Hospital of Southern Medical University (NFEC-201511-K2).

Ninety-six digitally reworked black-and-white face photographs were selected from the Chinese Facial Affective Picture System database (Luo et al., 2010). The faces expressed positive (happiness; 32 pictures), neutral (32 pictures), and negative (32 pictures; fear, anger, sadness, and disgust, eight pictures each) emotions; they were balanced for gender and matched for luminance and contrast. Only closed-mouth expressions were used. Positive and negative faces were equated in terms of emotional intensity (t = -1.34, p = 0.187): mean emotional intensity was 5.85 (SEM = 0.27) for positive faces and 6.29 (SEM = 0.18) for negative faces. Each participant saw each face once. The pictures used in the practice stage differed from those used in the formal experiment.
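The intensity comparison above can be roughly checked from the published summary statistics. The sketch below is not the authors’ analysis; it assumes a pooled-variance independent-samples t-test with each of the 32 pictures per valence treated as one observation, and recovers each SD as SEM × √n. A small discrepancy from the reported t = -1.34 is expected because the published means and SEMs are rounded.

```python
import math

def t_from_summary(mean1, sem1, n1, mean2, sem2, n2):
    """Pooled-variance independent-samples t computed from means and SEMs.

    Each SD is recovered as SEM * sqrt(n). With equal group sizes the
    pooled and Welch standard errors of the difference coincide.
    """
    sd1, sd2 = sem1 * math.sqrt(n1), sem2 * math.sqrt(n2)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se_diff, df

# Positive vs. negative faces, 32 pictures each (assumed unit of analysis).
t, df = t_from_summary(5.85, 0.27, 32, 6.29, 0.18, 32)
print(f"t({df}) = {t:.2f}")  # prints t(62) = -1.36
```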

The stimuli were presented in random order for 500 ms each against a black background, subtending a visual angle of about 11° to 15° at a viewing distance of 75 cm. Participants were instructed to classify each displayed facial expression using a multiple-choice scale that listed the names of the six facial expressions as discrete categories arranged horizontally on the screen. The scale appeared after a delay of 1000 ms (to avoid confounding motor effects) and remained on the screen until a classification had been made, or for a maximum of 8000 ms. A random interval of 1600–2200 ms separated the participant’s response from the onset of the next trial. Emotions were classified by clicking the corresponding label with a computer mouse. The task paradigm has been described previously (Woelwer et al., 2012).
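As an illustration of the procedure above, the following sketch converts the reported visual angles into on-screen size at 75 cm and builds a shuffled trial list with the stated timing parameters. It is a hypothetical reconstruction, not the authors’ presentation script: the function names are invented, the interval is assumed to be drawn uniformly from 1600–2200 ms, and the text does not say whether the 1000-ms scale delay runs from face onset or offset.

```python
import math
import random

VIEWING_DISTANCE_CM = 75.0

def size_for_visual_angle(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def build_trials(face_ids, seed=None):
    """Shuffle the faces and attach the timing parameters given in the text.

    Hypothetical helper: iti_ms is drawn uniformly from 1600-2200 ms
    (an assumption), and scale_delay_ms is stored without specifying
    whether it is measured from face onset or offset.
    """
    rng = random.Random(seed)
    order = list(face_ids)
    rng.shuffle(order)
    return [
        {
            "face": face,
            "face_duration_ms": 500,
            "scale_delay_ms": 1000,
            "max_response_ms": 8000,
            "iti_ms": rng.uniform(1600, 2200),
        }
        for face in order
    ]

print(round(size_for_visual_angle(11), 1), round(size_for_visual_angle(15), 1))
# prints 14.4 19.7
trials = build_trials(range(96), seed=1)  # 96 faces, each shown once
```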

EEG data were recorded from 32 electrodes (impedance < 5 kΩ) placed according to the extended 10/20 system. Recordings were made with a Brain-Amp-DC amplifier and controlled through Brain Vision Recorder 2.0 (sampling rate: 250 Hz; recording reference: left mastoid) (Brain Products, Munich, Germany). Data were analyzed with Brain Vision Analyzer 2.0 (Brain Products), filtered offline (band-pass 0.1–70 Hz with a 50-Hz notch filter), re-referenced to Cz, corrected for horizontal and vertical ocular artifacts, and baseline-corrected using the 200-ms pre-stimulus interval. Trials with a sample-to-sample voltage step exceeding 50 μV or an amplitude exceeding ±80 μV were automatically rejected.
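The re-referencing, baseline correction, and automatic rejection steps described above can be sketched with NumPy as follows. This is only an approximation of what Brain Vision Analyzer does: it assumes epochs that start 200 ms before face onset, omits filtering and ocular correction, and applies both rejection criteria to the whole epoch across all channels.

```python
import numpy as np

FS_HZ = 250          # sampling rate reported in the text
BASELINE_MS = 200    # epochs assumed to start 200 ms before face onset
MAX_STEP_UV = 50.0   # sample-to-sample transition threshold
MAX_ABS_UV = 80.0    # absolute amplitude criterion (+/-80 microvolts)

def preprocess(epochs, ref_index):
    """epochs: array (n_trials, n_channels, n_samples) in microvolts.

    Re-references every channel to the channel at ref_index (e.g. Cz),
    subtracts the mean of the pre-stimulus baseline per trial and channel,
    and returns the kept epochs plus a boolean mask of rejected trials.
    """
    rereferenced = epochs - epochs[:, ref_index:ref_index + 1, :]
    n_base = int(BASELINE_MS * FS_HZ / 1000)  # 50 samples at 250 Hz
    baseline = rereferenced[:, :, :n_base].mean(axis=2, keepdims=True)
    corrected = rereferenced - baseline
    # Largest voltage step between consecutive samples, per trial.
    step = np.abs(np.diff(corrected, axis=2)).max(axis=(1, 2))
    # Largest absolute voltage, per trial.
    amplitude = np.abs(corrected).max(axis=(1, 2))
    rejected = (step > MAX_STEP_UV) | (amplitude > MAX_ABS_UV)
    return corrected[~rejected], rejected
```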

The automatic rejection was verified by visually inspecting the remaining trials for artifacts. The number of artifact-free trials did not differ between groups (F1,59 = 0.81, p = 0.78). Mean numbers of artifact-free trials per valence were as follows: in older adults, positive faces = 31.97 (SD = 0.18), neutral faces = 31.97 (SD = 0.18), and negative faces = 31.93 (SD = 0.37); in young adults, positive faces = 31.94 (SD = 0.36), neutral faces = 31.90 (SD = 0.54), and negative faces = 32.19 (SD = 0.18).

Amplitudes (measured as peak-to-baseline values) of the P100 (70–130 ms) were measured at occipital sites (O1 and O2), and those of the N170 (130–200 ms) at parietal sites (left: average of P3 and P7; right: average of P4 and P8). The time windows were chosen by visually inspecting the time course of each component. Peaks were measured within a ±30-ms window centered on the maximum of the grand-average waveform (Itier and Taylor, 2004). To further test whether task-relevant happy faces elicit a positive deflection in older adults within the P100 time window, the ERP data were also re-referenced to the average reference (average of all scalp electrodes) and reanalyzed using the method reported by Hilimire et al. (2014).
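The two-stage peak measurement described above (locate the grand-average peak inside the component window, then take each participant’s peak within ±30 ms of that latency) might look like the sketch below. It assumes baseline-corrected waveforms at 250 Hz with epochs starting at -200 ms; the function names are hypothetical and the code is not the authors’ Analyzer pipeline.

```python
import numpy as np

FS_HZ = 250
EPOCH_START_MS = -200  # assumed epoch start relative to face onset

def ms_to_index(ms):
    """Sample index for a latency in ms relative to face onset."""
    return int(round((ms - EPOCH_START_MS) * FS_HZ / 1000))

def peak_amplitudes(subject_waves, window_ms, polarity, half_width_ms=30):
    """subject_waves: (n_subjects, n_samples) at one electrode or cluster.

    1. Find the grand-average peak inside window_ms, e.g. (130, 200) for N170.
    2. For each subject, take the peak within +/- half_width_ms of it.
    polarity is +1 for positive components (P100), -1 for negative (N170).
    Because epochs are baseline corrected, the values are peak-to-baseline.
    """
    lo, hi = ms_to_index(window_ms[0]), ms_to_index(window_ms[1])
    grand = subject_waves.mean(axis=0)
    peak_idx = lo + int(np.argmax(polarity * grand[lo:hi + 1]))
    half = int(round(half_width_ms * FS_HZ / 1000))
    seg = subject_waves[:, max(peak_idx - half, 0):peak_idx + half + 1]
    idx = np.argmax(polarity * seg, axis=1)
    return seg[np.arange(len(seg)), idx]
```

For example, calling `peak_amplitudes(waves, (130, 200), polarity=-1)` on a left-cluster array would return one N170 amplitude per participant.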

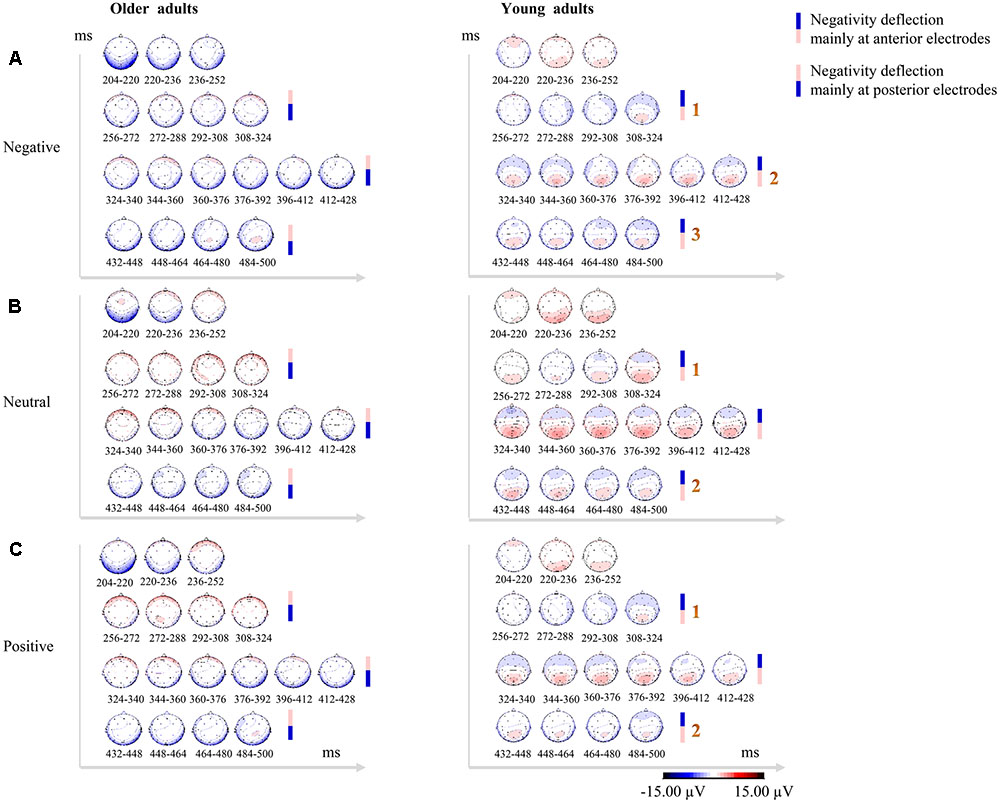

It was difficult to compare the P2 and N250 components quantitatively between the two age groups, because the older adults showed a clearly delayed P2 (older adults: 270–330 ms; young adults: 200–260 ms) and a near-absent N250 (or one merged into the later component). Therefore, we qualitatively compared between-group differences in brain responsivity after the N170 using topographical maps covering 200 to 500 ms post-stimulus. Multiple re-entrant feedback signals along the visual ventral stream are thought to be surprisingly fast, with each processing stage adding approximately 10 ms to the overall latency (Pessoa and Adolphs, 2010). Because the shortest available time bin for the present topographical maps was 16 ms, spline-interpolated topographical maps of scalp voltage across the 32 electrodes were computed in consecutive 16-ms bins from 200 to 500 ms post-stimulus.
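The binning step behind these maps can be sketched as follows: at 250 Hz a 16-ms bin spans 4 samples, and each map is the mean voltage per electrode within one bin. The sketch assumes epochs starting at -200 ms and keeps only complete bins, giving 18 maps covering 200–488 ms; how the final partial bin was handled is not stated in the text, and the spline interpolation onto the scalp is not shown.

```python
import numpy as np

FS_HZ = 250
EPOCH_START_MS = -200  # assumed epoch start relative to face onset
BIN_MS = 16

def binned_maps(erp, start_ms=200, stop_ms=500, bin_ms=BIN_MS):
    """erp: (n_channels, n_samples) condition-average waveform.

    Returns (n_channels, n_bins) mean voltages, one column per
    topographical map. Only complete bins are kept (an assumption).
    """
    samples_per_bin = int(bin_ms * FS_HZ / 1000)  # 4 samples at 250 Hz
    first = int((start_ms - EPOCH_START_MS) * FS_HZ / 1000)
    last = int((stop_ms - EPOCH_START_MS) * FS_HZ / 1000)
    n_bins = (last - first) // samples_per_bin
    segment = erp[:, first:first + n_bins * samples_per_bin]
    # Group consecutive samples into bins, then average within each bin.
    return segment.reshape(erp.shape[0], n_bins, samples_per_bin).mean(axis=2)
```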

ERP amplitudes at the corresponding electrodes were analyzed separately and entered into repeated-measures ANOVAs, with the Greenhouse-Geisser epsilon correction applied when sphericity was violated. The between-participant factor was “Group” (older vs. young adults), and the within-participant factors were “Hemisphere” (two levels: left and right) and “Emotion” (three levels: positive, neutral, and negative). When an interaction involving Group and Emotion was significant, post hoc tests were Bonferroni-corrected for multiple comparisons. Independent-samples t-tests and chi-square tests assessed group differences in demographic variables.
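The two corrections named above can be illustrated with a short sketch. The Greenhouse-Geisser epsilon is computed from the double-centred covariance matrix of the within-subject levels; ANOVA degrees of freedom are then multiplied by epsilon. The Bonferroni adjustment multiplies each p-value by the number of comparisons. This is a simplified illustration for a single group: in a mixed design such as Group × Emotion, statistical packages use the pooled within-group covariance instead.

```python
import numpy as np

def greenhouse_geisser_epsilon(data):
    """data: (n_subjects, k) scores for one within-subject factor.

    eps = tr(S_dc)^2 / ((k - 1) * tr(S_dc @ S_dc)), where S_dc is the
    double-centred covariance matrix. eps = 1 under sphericity and its
    lower bound is 1 / (k - 1).
    """
    k = data.shape[1]
    s = np.cov(data, rowvar=False)
    centering = np.eye(k) - np.ones((k, k)) / k
    s_dc = centering @ s @ centering
    return np.trace(s_dc) ** 2 / ((k - 1) * np.trace(s_dc @ s_dc))

def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```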

Multiple linear regression analyses (forward selection) were performed for each group to test the associations between cognitive functions and ERPs, and between cognitive functions and task performance. In the first set of analyses, P100 and N170 amplitudes were entered separately as dependent variables, and scores on the MoCA, AVLT, LMT, forward and backward digit span, and semantic fluency, together with age, were entered as independent variables. In the second set, performance data (accuracy rates for negative, positive, and neutral faces) were entered separately as dependent variables, with the same independent variables.
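A forward-selection regression of this kind can be sketched as below. Note a swapped-in criterion: statistical packages typically admit a predictor when its F-to-enter p-value falls below a threshold, whereas this sketch, to stay dependency-light, adds the predictor that most increases adjusted R² and stops when no candidate improves it. Variables are z-scored so the returned coefficients are standardized betas. The predictor names in the usage note are illustrative only.

```python
import numpy as np

def adjusted_r2(y, X):
    """Adjusted R^2 of an OLS fit of y on X (intercept added)."""
    n, p = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    r2 = 1 - resid @ resid / np.sum((y - y.mean()) ** 2)
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

def forward_select(y, X, names, min_gain=0.0):
    """Greedy forward selection on z-scored data (adjusted-R^2 criterion).

    Returns the selected predictor names, in order of entry, and their
    standardized betas from the final model.
    """
    zy = (y - y.mean()) / y.std(ddof=1)
    zX = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    chosen, best = [], 0.0  # the intercept-only model has adjusted R^2 = 0
    remaining = list(range(X.shape[1]))
    while remaining:
        scores = {j: adjusted_r2(zy, zX[:, chosen + [j]]) for j in remaining}
        j_best = max(scores, key=scores.get)
        if scores[j_best] - best <= min_gain:
            break
        best = scores[j_best]
        chosen.append(j_best)
        remaining.remove(j_best)
    if not chosen:
        return [], np.array([])
    # Data are centred, so no intercept is needed for the standardized fit.
    betas, *_ = np.linalg.lstsq(zX[:, chosen], zy, rcond=None)
    return [names[j] for j in chosen], betas
```

For instance, `forward_select(accuracy, scores, ["MoCA", "AVLT", "age"])` would return the entered predictors and their standardized betas, with `accuracy` and `scores` standing in for one group’s data.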

For accuracy, a repeated-measures ANOVA yielded a significant Group × Emotion interaction (F2,118 = 11.32, p < 0.001, ηp² = 0.16). Older adults labeled negative faces less accurately than young adults (t = -6.20, p < 0.001), whereas the groups did not differ significantly for positive and neutral faces (p > 0.10). In both groups, accuracy for labeling negative faces was significantly lower than for labeling positive faces (older adults: t = -10.59, p < 0.001; young adults: t = -8.25, p < 0.001) and neutral faces (older adults: t = -8.87, p < 0.001; young adults: t = -6.84, p < 0.001).

Reaction times mirrored the accuracy pattern, with a significant Group × Emotion interaction (F2,118 = 9.47, p = 0.001, ηp² = 0.14). After adjusting for reaction time in the practice phase preceding the formal experiment, older adults were slower than young adults to label negative faces (F1,58 = 9.92, p = 0.003, ηp² = 0.15), whereas the groups did not differ significantly in labeling positive and neutral faces (p > 0.10). Detailed behavioral results are reported in Table 2.
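The effect sizes reported with these F tests are consistent with partial eta squared, which can be recovered from an F statistic and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The short check below is illustrative rather than part of the original analysis; it reproduces the reported values from the F ratios alone.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)

for label, f, d1, d2 in [
    ("accuracy, Group x Emotion", 11.32, 2, 118),
    ("RT, Group x Emotion", 9.47, 2, 118),
    ("RT, negative faces, Group", 9.92, 1, 58),
]:
    print(f"{label}: {partial_eta_squared(f, d1, d2):.2f}")
# prints 0.16, 0.14, and 0.15 respectively
```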

For P100 amplitudes, no interaction involving Group × Emotion was statistically significant (F < 1). Descriptive statistics for the ERP variables are given in Supplementary Tables S1 and S2.

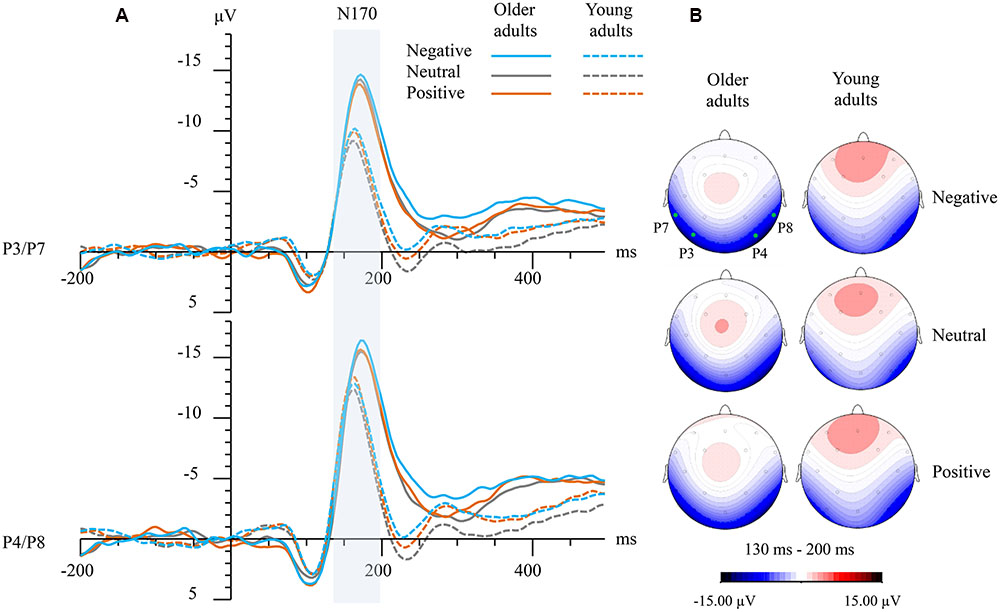

For N170 amplitudes, a significant Group × Hemisphere × Emotion interaction was observed (F2,118 = 3.08, p = 0.05, ηp² = 0.05). Group differences were detected over the left hemisphere (negative faces: t = -3.31, p = 0.002; positive faces: t = -3.29, p = 0.002; neutral faces: t = -4.00, p < 0.001) and the right hemisphere (negative faces: t = -2.24, p = 0.003; neutral faces: t = -2.12, p = 0.04). No group difference was found for right-hemispheric N170 amplitudes elicited by positive faces (p > 0.10).

Bonferroni-corrected post hoc tests showed no significant within-group emotion effect in older adults. In contrast, in young adults the N170 elicited by emotional faces was larger than that elicited by neutral faces over the left hemisphere (negative vs. neutral: t = -2.99, p = 0.02; positive vs. neutral: t = -2.90, p = 0.02), and the N170 elicited by positive faces was larger than that elicited by neutral faces over the right hemisphere (t = -2.94, p = 0.02). Moreover, within-group analysis in older adults showed that the right-hemispheric N170 was significantly larger than the left-hemispheric N170 for emotional faces (negative faces: t = -2.34, p = 0.026; positive faces: t = -2.61, p = 0.014), whereas the N170 laterality effect was not significant for neutral faces (p = 0.07). By contrast, the N170 laterality effect was observed for all emotions in young adults (negative faces: t = -5.93, p < 0.001; neutral faces: t = -5.54, p < 0.001; positive faces: t = -4.69, p < 0.001). See also Figure 1.

FIGURE 1. (A) Grand-averaged event-related potential (ERP) waveforms elicited at left (averaged from P3 and P7) and right temporo-occipital electrodes (averaged from P4 and P8) for both groups and all emotions. The vertical bar marks the time window analyzed as the N170 in both age groups. (B) Topographical maps showing a top view of scalp distributions over the N170 time window for negative faces (top), neutral faces (middle), and positive faces (bottom). Note: the solid green points indicate electrodes P3, P4, P7, and P8.

Older adults exhibited a sustained temporo-occipital negativity deflection (mainly at posterior electrodes) over the time range of 200–500 ms post-stimulus. By contrast, young adults showed a clear posterior positivity followed by a frontal negativity deflection (mainly at anterior electrodes). Moreover, between 256 and 500 ms post-stimulus, the frontal negativity in young adults was enhanced three times for negative faces (scalp voltage increased gradually in three successive steps) and twice for neutral and positive faces (two successive steps; see Figure 2).

FIGURE 2. Topographical maps in consecutive 16-ms bins over the time range of 200–500 ms post-stimulus, showing qualitative between-group differences in scalp distributions across 32 electrodes for negative faces (A), neutral faces (B), and positive faces (C). Older adults exhibited sustained temporo-occipital negativity deflections (mainly at posterior electrodes), whereas young adults showed a clear posterior positivity over the P2 time window followed by frontal negativity deflections (mainly at anterior electrodes). In young adults, the negativity was enhanced three times for negative faces (scalp voltage increased gradually in three successive steps) and twice for neutral and positive faces (two successive steps). Red indicates enhanced positivity and blue indicates enhanced negativity. The scale (–15/+15 μV) was adapted to better display topographical similarities and differences.

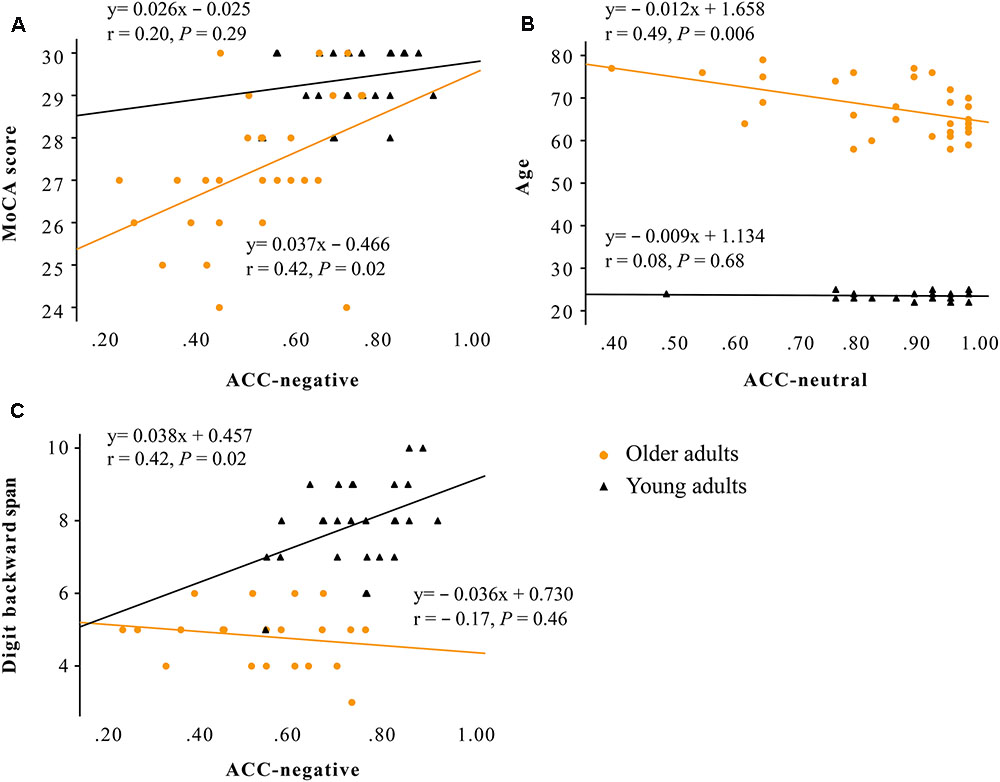

No correlations were observed between cognitive functions (MoCA, AVLT, LMT, forward and backward digit span, and semantic fluency scores) and ERPs (P100 and N170 amplitudes) in either group (p > 0.05). Regarding cognitive functions and task performance (accuracy for labeling negative, positive, and neutral faces), in older adults lower accuracy for labeling negative faces was associated with lower MoCA scores (adjusted R² = 0.15, standardized β = 0.42, p = 0.020; Figure 3A), and lower accuracy for labeling neutral faces was associated with older age (adjusted R² = 0.21, standardized β = -0.49, p = 0.006; Figure 3B). In young adults, lower accuracy for labeling negative faces was associated with lower backward digit span (adjusted R² = 0.15, standardized β = 0.42, p = 0.019; Figure 3C).
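Each final model above reports a single predictor. In a one-predictor regression the standardized β equals Pearson’s r, so R² = β² and adjusted R² = 1 − (1 − R²)(n − 1)/(n − 2). The consistency check below assumes n = 30 older and n = 31 young participants (the full samples); it is an illustration, not part of the original analysis, and it reproduces the reported adjusted R² values.

```python
def adjusted_r2_single(beta, n):
    """Adjusted R^2 for a one-predictor model, where standardized beta = r."""
    r2 = beta ** 2
    return 1 - (1 - r2) * (n - 1) / (n - 2)

print(f"{adjusted_r2_single(0.42, 30):.2f}")   # older, MoCA -> 0.15
print(f"{adjusted_r2_single(-0.49, 30):.2f}")  # older, age -> 0.21
print(f"{adjusted_r2_single(0.42, 31):.2f}")   # young, backward span -> 0.15
```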

FIGURE 3. Scatter plots showing that (A) lower accuracy for labeling negative faces was associated with lower MoCA scores only in older adults (adjusted R² = 0.15, standardized β = 0.42, p = 0.020); (B) lower accuracy for labeling neutral faces was associated with older age only in older adults (adjusted R² = 0.21, standardized β = -0.49, p = 0.006); and (C) lower accuracy for labeling negative faces was associated with lower backward digit span only in young adults (adjusted R² = 0.15, standardized β = 0.42, p = 0.019). Older adults are marked with circles and young adults with triangles. Note: ACC, accuracy rate; MoCA, Montreal Cognitive Assessment.

A large-sample, internet-based explicit emotion identification study suggests an inverted U-shaped trajectory across ages 6–91 years, with the highest identification accuracy in the middle decades (20–49 years) and a progressive decline from 50 to 91 years (Williams et al., 2009). The behavioral results of the present study are consistent with this trajectory and with previous findings (Ruffman et al., 2008; Kessels et al., 2014): older adults showed reduced accuracy and slower identification of negative faces, together with relatively preserved identification of positive and neutral faces. It is possible that labeling negative facial emotions is more difficult than labeling positive and neutral faces. In contrast, happy faces are easier to recognize because no other positive emotions are present as possible distractors (Kessels et al., 2014), and a smile may be a shortcut for quick and accurate categorization (Beltran and Calvo, 2015).

The P100 has previously been associated with attention-related enhancement in processing intrinsically salient stimuli (Di Russo et al., 2003). In the present study, the absence of a significant group difference in P100 amplitudes suggests that early detection of facial emotions remains relatively intact in older adults. We did not observe an attention bias toward positive faces or away from negative faces within the P100 time window. A recent ERP study reported an age-related stronger positive deflection for task-irrelevant happy faces at frontal scalp sites within the P100 time window during a checkerboard probe go/no-go task (Hilimire et al., 2014). When we reanalyzed our ERP data using the method reported by Hilimire et al. (2014), no such positive deflection was observed. One potential explanation for this inconsistency is that task relevance may modulate the effect of age on facial emotion processing.

The N170 component has excellent test-retest reliability, and as few as 10 or 20 artifact-free trials per condition for each participant may be sufficient to attain adequate reliability (Huffmeijer et al., 2014). The N170 is linked to pre-categorical structural encoding of emotional faces, in which holistic internal face representations are generated and then used in subsequent, finer-grained expression categorization (Calvo and Beltran, 2014; Rossion, 2014; Bekhtereva et al., 2015). In line with our behavioral results, N170 amplitudes elicited by negative faces were enhanced in older adults relative to their younger counterparts, whereas the group difference in the right-hemispheric N170 elicited by positive faces was non-significant. This suggests that older adults may exert more effort in the structural encoding of facial features in negative faces, while their structural encoding of positive faces may be relatively preserved. Recent visual evoked potential studies suggest that emotional cue extraction for faces might be completed within the N170 phase (Bekhtereva et al., 2015), and that N170 amplitudes can distinguish emotional from neutral faces in younger adults (Zhang et al., 2013). Accordingly, the compromised within-group emotion effect on N170 amplitudes observed in our older participants may help explain their decreased differentiation of emotional from neutral faces.

Interestingly, the present study also observed a decreased hemispheric laterality effect for the N170 elicited by neutral faces in older adults, whereas young adults exhibited a clear N170 laterality effect for all facial emotions. It has been suggested that facial expression processing is both holistic and analytic (Tanaka et al., 2012). Holistic processing is thought to be preferentially executed by the right hemisphere, whereas the left hemisphere is regarded as more involved in part-based processing (Ramon and Rossion, 2012). The left-hemispheric N170 was previously found to be greater for featural than for configural changes (Calvo and Beltran, 2014), whereas the right-hemispheric N170 shows the opposite pattern (Scott, 2006). Therefore, the non-significant group difference in the right-hemispheric N170 for positive faces may suggest that holistic processing of positive faces remains relatively intact in older adults, while the attenuated N170 laterality effect for neutral faces may reflect compensatory engagement of additional regions during pre-categorical structural encoding of facial emotions in older adults.

Because of the clear latency delay of the P2 and the near-absent N250 in older adults, it was difficult to compare the P2 and the N250 quantitatively between the two age groups. By using topographical maps in consecutive 16-ms bins from 200 to 500 ms post-stimulus to compare scalp distributions qualitatively between groups for the three valence conditions, the present study revealed a sustained temporo-occipital negativity deflection in older adults and frontal negativity deflections in young adults over this time range. Moreover, the frontal negativity in young adults was enhanced three times in response to negative faces and twice in response to neutral and positive faces. These findings suggest that the two age groups may have recruited different frontal-parietal networks during the facial emotion labeling task and adopted different strategies for processing facial expressions.

Prefrontal and temporal-lobe structures that are important in recognizing and naming facial emotional stimuli in general (Kober et al., 2008; Ruffman et al., 2008) were found to be the earliest and most strongly affected by advancing age (Petit-Taboue et al., 1998). These brain regions are implicated in conceptualization (categorical perception of discrete emotions), language (representation of feature-based information for abstract categories), and executive attention (volitional attention and working memory), suggesting that more “cognitive” functions play a routine role in constructing perceptions of facial emotions (Lindquist and Barrett, 2012), especially when one explicitly evaluates and holds affective information in mind to categorize it.

In line with this view, the present study found that older adults’ impaired performance in labeling negative faces was associated with lower MoCA scores, suggesting that declining cognitive function may contribute to impaired identification of negative faces in older adults. Moreover, older adults’ performance in labeling neutral faces was negatively associated with age, which may partially explain the decreased N170 laterality effect for neutral faces in our older participants. Furthermore, cognitive functioning was not correlated with any early neural responses to emotional faces, suggesting that older adults may not, voluntarily or involuntarily, employ cognitive resources to modulate emotion perception during early visual processing of task-relevant emotional faces.

Several limitations of this study should be considered. First, we included all artifact-free trials in the ERP analysis, rather than only correct trials, because accuracy differed significantly between groups. Supplementary Figure S1 shows that, in older adults, the waveform for correct trials overlapped with that for all trials and was slightly higher than that for incorrect trials. Including all trials may allow a better understanding of the actual early processing of valence-specific emotions in older adults. Second, the pictures used in the present study were mainly of younger faces, which raises the concern of an “own-age bias” in face processing (Ebner et al., 2011). However, the processing of negative faces has also been reported to override age-of-face effects in facial expression identification tasks (Ebner et al., 2013). Future studies should use stimuli depicting both young and older adults.

The present findings suggest that older people may exert more effort during structural encoding and show different response patterns during emotional decoding of facial expressions, especially negative faces. Aging might therefore be associated with a selective deficit in processing negative facial expressions. Further investigations in this area may facilitate the detection of at-risk individuals at the early stage of mild cognitive impairment and enable targeted early interventions.

XL designed the study, collected and analyzed the data, and drafted the manuscript. KW contributed to the experiment design and data interpretation. KL participated in the participants’ enrollment and cognitive assessment. XZ and RC conceived of and designed the study, and reviewed the manuscript. All authors have read and approved the final manuscript.

This work was supported by the National Natural Science Foundation of China (81400868), Science and Technology Planning Project of Guangdong Province, China (2014A020212201), the National Social Science Foundation of China (14ZDB259), and the University and College Humanities and Social Sciences Project of Guangdong Province, China (2012GXM-0006). Raymond Chan was supported by the Beijing Training Project for the Leading Talents in S & T (Z151100000315020), the Key Laboratory of Mental Health, Institute of Psychology, and the CAS/SAFEA International Partnership Program for Creative Research Teams (Y2CX131003). These funding agents had no role in the study design; collection, analysis, and interpretation of the data; writing of the manuscript; or decision to submit the paper for publication. The authors would like to thank Dr. Yuejia Luo and two reviewers for their helpful comments on a previous version of this manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2017.01110/full#supplementary-material

FIGURE S1 | Waveforms for correct trials overlapped with those for all trials and were slightly higher than those for incorrect trials.

Altamura, M., Padalino, F. A., Stella, E., Balzotti, A., Bellomo, A., Palumbo, R., et al. (2016). Facial emotion recognition in bipolar disorder and healthy aging. J. Nerv. Ment. Dis. 204, 188–193. doi: 10.1097/NMD.0000000000000453

Bekhtereva, V., Craddock, M., and Muller, M. M. (2015). Attentional bias to affective faces and complex IAPS images in early visual cortex follows emotional cue extraction. Neuroimage 112, 254–266. doi: 10.1016/j.neuroimage.2015.03.052

Beltran, D., and Calvo, M. G. (2015). Brain signatures of perceiving a smile: time course and source localization. Hum. Brain Mapp. 36, 4287–4303. doi: 10.1002/hbm.22917

Brenner, C. A., Rumak, S. P., Burns, A. M., and Kieffaber, P. D. (2014). The role of encoding and attention in facial emotion memory: an EEG investigation. Int. J. Psychophysiol. 93, 398–410. doi: 10.1016/j.ijpsycho.2014.06.006

Bucks, R. S., Garner, M., Tarrant, L., Bradley, B. P., and Mogg, K. (2008). Interpretation of emotionally ambiguous faces in older adults. J. Gerontol. B Psychol. Sci. Soc. Sci. 63, 337–343.

Calder, A. J., Keane, J., Manly, T., Sprengelmeyer, R., Scott, S., Nimmo-Smith, I., et al. (2003). Facial expression recognition across the adult life span. Neuropsychologia 41, 195–202. doi: 10.1016/S0028-3932(02)00149-145

Calvo, M. G., and Beltran, D. (2014). Brain lateralization of holistic versus analytic processing of emotional facial expressions. Neuroimage 92, 237–247. doi: 10.1016/j.neuroimage.2014.01.048

Chan, A. C. (1996). Clinical validation of the geriatric depression scale (GDS): Chinese version. J. Aging Health 8, 238–253. doi: 10.1177/089826439600800205

Charles, S. T., Mather, M., and Carstensen, L. L. (2003). Aging and emotional memory: the forgettable nature of negative images for older adults. J. Exp. Psychol. Gen. 132, 310–324. doi: 10.1037/0096-3445.132.2.310

Cheng, S. T., Chan, A. C., and Fung, H. H. (2006). Factorial structure of a short version of the center for epidemiologic studies depression scale. Int. J. Geriatr. Psychiatry 21, 333–336. doi: 10.1002/gps.1467

Di Domenico, A., Palumbo, R., Mammarella, N., and Fairfield, B. (2015). Aging and emotional expressions: is there a positivity bias during dynamic emotion recognition? Front. Psychol. 6:1130. doi: 10.3389/fpsyg.2015.01130

Di Russo, F., Martinez, A., and Hillyard, S. A. (2003). Source analysis of event-related cortical activity during visuo-spatial attention. Cereb. Cortex 13, 486–499.

Ebner, N. C., He, Y., Fichtenholtz, H. M., McCarthy, G., and Johnson, M. K. (2011). Electrophysiological correlates of processing faces of younger and older individuals. Soc. Cogn. Affect. Neurosci. 6, 526–535. doi: 10.1093/scan/nsq074

Ebner, N. C., Johnson, M. R., Rieckmann, A., Durbin, K. A., Johnson, M. K., and Fischer, H. (2013). Processing own-age vs. other-age faces: neuro-behavioral correlates and effects of emotion. Neuroimage 78, 363–371. doi: 10.1016/j.neuroimage.2013.04.029

Eimer, M., and Holmes, A. (2007). Event-related brain potential correlates of emotional face processing. Neuropsychologia 45, 15–31. doi: 10.1016/j.neuropsychologia.2006.04.022

Fischer, H., Nyberg, L., and Backman, L. (2010). Age-related differences in brain regions supporting successful encoding of emotional faces. Cortex 46, 490–497. doi: 10.1016/j.cortex.2009.05.011

Gunning-Dixon, F. M., Gur, R. C., Perkins, A. C., Schroeder, L., Turner, T., Turetsky, B. I., et al. (2003). Age-related differences in brain activation during emotional face processing. Neurobiol. Aging 24, 285–295. doi: 10.1016/S0197-4580(02)00099-94

Guo, Q., Zhao, Q., Chen, M., Ding, D., and Hong, Z. (2009). A comparison study of mild cognitive impairment with 3 memory tests among Chinese individuals. Alzheimer Dis. Assoc. Disord. 23, 253–259. doi: 10.1097/WAD.0b013e3181999e92

Gur, R. E., Calkins, M. E., Gur, R. C., Horan, W. P., Nuechterlein, K. H., Seidman, L. J., et al. (2007). The consortium on the genetics of schizophrenia: neurocognitive endophenotypes. Schizophr. Bull. 33, 49–68. doi: 10.1093/schbul/sbl055

Hilimire, M. R., Mienaltowski, A., Blanchard-Fields, F., and Corballis, P. M. (2014). Age-related differences in event-related potentials for early visual processing of emotional faces. Soc. Cogn. Affect. Neurosci. 9, 969–976. doi: 10.1093/scan/nst071

Horning, S. M., Cornwell, R. E., and Davis, H. P. (2012). The recognition of facial expressions: an investigation of the influence of age and cognition. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 19, 657–676. doi: 10.1080/13825585.2011.645011

Huffmeijer, R., Bakermans-Kranenburg, M. J., Alink, L. R., and van Ijzendoorn, M. H. (2014). Reliability of event-related potentials: the influence of number of trials and electrodes. Physiol. Behav. 130, 13–22. doi: 10.1016/j.physbeh.2014.03.008

Isaacowitz, D. M., Allard, E. S., Murphy, N. A., and Schlangel, M. (2009). The time course of age-related preferences toward positive and negative stimuli. J. Gerontol. B Psychol. Sci. Soc. Sci. 64, 188–192. doi: 10.1093/geronb/gbn036

Isaacowitz, D. M., Lockenhoff, C. E., Lane, R. D., Wright, R., Sechrest, L., Riedel, R., et al. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol. Aging 22, 147–159. doi: 10.1037/0882-7974.22.1.147

Itier, R. J., and Taylor, M. J. (2004). Face recognition memory and configural processing: a developmental ERP study using upright, inverted, and contrast-reversed faces. J. Cogn. Neurosci. 16, 487–502. doi: 10.1162/089892904322926818

Kaszniak, A. W., and Menchola, M. (2012). Behavioral neuroscience of emotion in aging. Curr. Top. Behav. Neurosci. 10, 51–66. doi: 10.1007/7854_2011_163

Kawasaki, H., Kaufman, O., Damasio, H., Damasio, A. R., Granner, M., Bakken, H., et al. (2001). Single-neuron responses to emotional visual stimuli recorded in human ventral prefrontal cortex. Nat. Neurosci. 4, 15–16. doi: 10.1038/82850

Kessels, R. P., Montagne, B., Hendriks, A. W., Perrett, D. I., and de Haan, E. H. (2014). Assessment of perception of morphed facial expressions using the emotion recognition task: normative data from healthy participants aged 8-75. J. Neuropsychol. 8, 75–93. doi: 10.1111/jnp.12009

Kober, H., Barrett, L. F., Joseph, J., Bliss-Moreau, E., Lindquist, K., and Wager, T. D. (2008). Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage 42, 998–1031. doi: 10.1016/j.neuroimage.2008.03.059

Latinus, M., and Taylor, M. J. (2006). Face processing stages: impact of difficulty and the separation of effects. Brain Res. 1123, 179–187. doi: 10.1016/j.brainres.2006.09.031

Leleu, A., Godard, O., Dollion, N., Durand, K., Schaal, B., and Baudouin, J. Y. (2015). Contextual odors modulate the visual processing of emotional facial expressions: an ERP study. Neuropsychologia 77, 366–379. doi: 10.1016/j.neuropsychologia.2015.09.014

Lindquist, K. A., and Barrett, L. F. (2012). A functional architecture of the human brain: emerging insights from the science of emotion. Trends Cogn. Sci. 16, 533–540. doi: 10.1016/j.tics.2012.09.005

Luck, S. J., Woodman, G. F., and Vogel, E. K. (2000). Event-related potential studies of attention. Trends Cogn. Sci. 4, 432–440.

Luo, W., Feng, W., He, W., Wang, N. Y., and Luo, Y. J. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49, 1857–1867. doi: 10.1016/j.neuroimage.2009.09.018

Mammarella, N., Di Domenico, A., Palumbo, R., and Fairfield, B. (2016). Noradrenergic modulation of emotional memory in aging. Ageing Res. Rev. 27, 61–66. doi: 10.1016/j.arr.2016.03.004

Mather, M. (2012). The emotion paradox in the aging brain. Ann. N. Y. Acad. Sci. 1251, 33–49. doi: 10.1111/j.1749-6632.2012.06471.x

Mather, M., and Carstensen, L. L. (2003). Aging and attentional biases for emotional faces. Psychol. Sci. 14, 409–415. doi: 10.1111/1467-9280.01455

Mienaltowski, A., Chambers, N., and Tiernan, B. (2015). Similarity in older and younger adults’ emotional enhancement of visually-evoked N170 to facial stimuli. J. Vis. 15:133. doi: 10.1167/15.12.133

Nashiro, K., Sakaki, M., and Mather, M. (2012). Age differences in brain activity during emotion processing: reflections of age-related decline or increased emotion regulation? Gerontology 58, 156–163. doi: 10.1159/000328465

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Petit-Taboue, M. C., Landeau, B., Desson, J. F., Desgranges, B., and Baron, J. C. (1998). Effects of healthy aging on the regional cerebral metabolic rate of glucose assessed with statistical parametric mapping. Neuroimage 7, 176–184. doi: 10.1006/nimg.1997.0318

Ramon, M., and Rossion, B. (2012). Hemisphere-dependent holistic processing of familiar faces. Brain Cogn. 78, 7–13. doi: 10.1016/j.bandc.2011.10.009

Rellecke, J., Sommer, W., and Schacht, A. (2012). Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biol. Psychol. 90, 23–32. doi: 10.1016/j.biopsycho.2012.02.002

Rossion, B. (2014). Understanding face perception by means of human electrophysiology. Trends Cogn. Sci. 18, 310–318. doi: 10.1016/j.tics.2014.02.013

Ruffman, T., Henry, J. D., Livingstone, V., and Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: implications for neuropsychological models of aging. Neurosci. Biobehav. Rev. 32, 863–881. doi: 10.1016/j.neubiorev.2008.01.001

Schefter, M., Knorr, S., Kathmann, N., and Werheid, K. (2012). Age differences on ERP old/new effects for emotional and neutral faces. Int. J. Psychophysiol. 85, 257–269. doi: 10.1016/j.ijpsycho.2011.11.011

Scott, L. S. (2006). Featural and configural face processing in adults and infants: a behavioral and electrophysiological investigation. Perception 35, 1107–1128.

Smith, M. L., Cottrell, G. W., Gosselin, F., and Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychol. Sci. 16, 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

Streit, M., Wolwer, W., Brinkmeyer, J., Ihl, R., and Gaebel, W. (2000). Electrophysiological correlates of emotional and structural face processing in humans. Neurosci. Lett. 278, 13–16.

Suzuki, A., and Akiyama, H. (2013). Cognitive aging explains age-related differences in face-based recognition of basic emotions except for anger and disgust. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 20, 253–270. doi: 10.1080/13825585.2012.692761

Tanaka, J. W., Kaiser, M. D., Butler, S., and Le Grand, R. (2012). Mixed emotions: holistic and analytic perception of facial expressions. Cogn. Emot. 26, 961–977. doi: 10.1080/02699931.2011.630933

Tessitore, A., Hariri, A. R., Fera, F., Smith, W. G., Das, S., Weinberger, D. R., et al. (2005). Functional changes in the activity of brain regions underlying emotion processing in the elderly. Psychiatry Res. 139, 9–18. doi: 10.1016/j.pscychresns.2005.02.009

van Hooff, J. C., Crawford, H., and van Vugt, M. (2011). The wandering mind of men: ERP evidence for gender differences in attention bias towards attractive opposite sex faces. Soc. Cogn. Affect. Neurosci. 6, 477–485. doi: 10.1093/scan/nsq066

Vuilleumier, P. (2005). Cognitive science: staring fear in the face. Nature 433, 22–23. doi: 10.1038/433022a

Weiss, E. M., Kohler, C. G., Vonbank, J., Stadelmann, E., Kemmler, G., Hinterhuber, H., et al. (2008). Impairment in emotion recognition abilities in patients with mild cognitive impairment, early and moderate Alzheimer disease compared with healthy comparison subjects. Am. J. Geriatr. Psychiatry 16, 974–980. doi: 10.1097/JGP.0b013e318186bd53

West, J. T., Horning, S. M., Klebe, K. J., Foster, S. M., Cornwell, R. E., Perrett, D., et al. (2012). Age effects on emotion recognition in facial displays: from 20 to 89 years of age. Exp. Aging Res. 38, 146–168. doi: 10.1080/0361073X.2012.659997

Wieser, M. J., Klupp, E., Weyers, P., Pauli, P., Weise, D., Zeller, D., et al. (2012). Reduced early visual emotion discrimination as an index of diminished emotion processing in Parkinson’s disease? - Evidence from event-related brain potentials. Cortex 48, 1207–1217. doi: 10.1016/j.cortex.2011.06.006

Williams, L. M., Mathersul, D., Palmer, D. M., Gur, R. C., Gur, R. E., and Gordon, E. (2009). Explicit identification and implicit recognition of facial emotions: I. Age effects in males and females across 10 decades. J. Clin. Exp. Neuropsychol. 31, 257–277. doi: 10.1080/13803390802255635

Williams, L. M., Palmer, D., Liddell, B. J., Song, L., and Gordon, E. (2006). The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. Neuroimage 31, 458–467. doi: 10.1016/j.neuroimage.2005.12.009

Woelwer, W., Brinkmeyer, J., Stroth, S., Streit, M., Bechdolf, A., Ruhrmann, S., et al. (2012). Neurophysiological correlates of impaired facial affect recognition in individuals at risk for schizophrenia. Schizophr. Bull. 38, 1021–1029. doi: 10.1093/schbul/sbr013

Keywords: aging, cognitive function, event-related potential, N170, emotion

Citation: Liao X, Wang K, Lin K, Chan RCK and Zhang X (2017) Neural Temporal Dynamics of Facial Emotion Processing: Age Effects and Relationship to Cognitive Function. Front. Psychol. 8:1110. doi: 10.3389/fpsyg.2017.01110

Received: 04 March 2017; Accepted: 15 June 2017;

Published: 30 June 2017.

Edited by:

Rocco Palumbo, Schepens Eye Research Institute, United States

Reviewed by:

Emilia Iannilli, Technische Universität Dresden, Germany

Copyright © 2017 Liao, Wang, Lin, Chan and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoyuan Zhang, zhxy@smu.edu.cn; Xiaoyan Liao, liaoxy@smu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
