MINI REVIEW article

Front. Psychol., 20 February 2017

Sec. Emotion Science

Volume 8 - 2017 | https://doi.org/10.3389/fpsyg.2017.00226

David W. Frank1,2,3* Dean Sabatinelli2,3,4

Research has consistently revealed enhanced neural activation corresponding to attended cues coupled with suppression to unattended cues. This attention effect depends both on the spatial features of stimuli and internal task goals. However, a large majority of research supporting this effect involves circumscribed tasks that possess few ecologically relevant characteristics. By comparison, natural scenes have the potential to engage an evolved attention system, which may be characterized by supplemental neural processing and integration compared to mechanisms engaged during reduced experimental paradigms. Here, we describe recent animal and human studies of naturalistic scene viewing to highlight the specific impact of social and affective processes on the neural mechanisms of attention modulation.

Attention to the surrounding environment allows us to achieve our internally directed goals. Neuronal activation within early visual regions, such as the inferotemporal cortex (IT) and V4, corresponding to attended stimuli is often enhanced while neuronal activity in these areas corresponding to distracting information is suppressed, in part through the influence of regions such as the frontal eye fields (FEFs) and intraparietal sulci (IPS; Kastner and Ungerleider, 2000; Baluch and Itti, 2011; Carrasco, 2011; Chelazzi et al., 2011). Classically, perceptually salient or unexpected stimuli can involuntarily draw attention in an exogenous, “bottom–up” (BU) fashion (Yantis and Jonides, 1990; Theeuwes, 1992, 2004). In contrast, “top–down” (TD) attention reflects how we voluntarily select items in the environment that merit re-orienting (Posner, 1980; Connor et al., 2004). These two processes may be characterized by different neural mechanisms using networks that ultimately converge and influence one another. The convergence of BU and TD attention can be described as one, or several, priority maps where stimuli compete for attentional resources (Kusunoki et al., 2000; Bisley and Goldberg, 2010), resulting in one environmental item that draws attention in a “winner take all” fashion. It is important to note that a complex environmental event, as discussed below, may act on both attentional systems and that the activation of these processes is not necessarily binary; engagement of endogenous and exogenous attention may lie on a continuum, with specific events assigning different weights to each.
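The notion of a priority map that weights BU and TD input can be illustrated with a toy computation. The sketch below is not a model taken from any of the cited studies: the function names, the linear weighted sum, and all numerical values are assumptions chosen purely for illustration.

```python
def priority_map(bottom_up, top_down, w_bu=0.5, w_td=0.5):
    """Combine BU salience and TD relevance per location (assumed linear sum)."""
    return [w_bu * b + w_td * t for b, t in zip(bottom_up, top_down)]


def winner_take_all(priority):
    """Return the index of the single location with the highest priority."""
    return max(range(len(priority)), key=lambda i: priority[i])


# Hypothetical values for three locations in a scene.
salience = [0.9, 0.2, 0.4]   # BU: location 0 is the most perceptually salient
relevance = [0.1, 0.8, 0.3]  # TD: location 1 best matches the current goal

# The same scene yields different winners as the weights shift along
# the exogenous-endogenous continuum.
stimulus_driven = winner_take_all(priority_map(salience, relevance, w_bu=0.8, w_td=0.2))
goal_driven = winner_take_all(priority_map(salience, relevance, w_bu=0.2, w_td=0.8))
print(stimulus_driven, goal_driven)
```

Under these assumed values, heavier BU weighting selects the salient location and heavier TD weighting selects the goal-relevant one, which is one simple way to picture a continuum rather than a binary switch between the two systems.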

Through learning we establish expectations and rules about the nature of objects within our environment, such that incoming visual information is continuously compared against these expectancies (Summerfield and De Lange, 2014). In this way, we can predictively focus on subsets of the local context and shift attention rapidly should something unexpected occur. Feedforward processing of visual information is monitored via constant feedback from frontal cortex (Miller and Cohen, 2001; Barbas et al., 2011). One such processing stream involves the prefrontal cortex (PFC), which maintains functional connections with FEFs that modulate saccade planning, which in turn projects to the IPS for planning attentional deployment, and continues to primary and secondary visual-processing regions V1 and V2 (Corbetta et al., 2008; Corbetta and Shulman, 2011; Spreng et al., 2013). Additionally, the orbitofrontal cortex (OFC) innervates thalamus and amygdala (Cavada et al., 2000), potentially reflecting affective regulatory functions, which in turn project to ventral visual regions IT, V4, and primary visual cortex for object discrimination (Freese and Amaral, 2005; Tomasi and Volkow, 2011). These regional linkages may enable prior experience to enhance the efficiency and speed of perceptual processing.

Studies of visual attention often involve cue stimuli with little complexity, typically consisting of only a few shapes with solid colors, or motion contrasts on a fixed, blank background (Posner and Cohen, 1984; Wolfe and Horowitz, 2004). While these studies are tractable and extremely valuable in exploring the essential nature of visual attention, they do not resemble the intricacy of naturalistic scenes we encounter in life. To develop a more ecologically representative model of attention processing, it is useful to consider how stimuli that represent realistic daily experiences may affect attentional deployment.

While expectation clearly directs our attentional spotlight in sparse experimental paradigms, we are also interested in how attention circuits differ when processing natural scenes, as contextual cuing incorporates prior experience to expedite visual search (Le-Hoa Võ and Wolfe, 2015). Here, we review recent studies of naturalistic input to the attention process, such as environmental complexity, social stimuli, and affective stimuli. Additionally, we briefly discuss limitations of naturalistic experimental techniques and posit several research problems regarding our understanding of the primate attention network.

Humans are exceptionally skilled at rapid detection of other, potentially dangerous, animals in the natural environment. When participants are given prior instruction, scalp event-related potentials (ERPs) can differentiate briefly presented (<25 ms) natural scenes containing animals from comparable scenes containing no animals within 150 ms of stimulus onset (Thorpe et al., 1996; Codispoti et al., 2006). Individuals are also able to discriminate peripheral naturalistic images during cognitively demanding tasks, although this ability does not extend to artificial, but visually salient, stimuli (Li et al., 2002). The efficiency of search concerning natural scenes is, therefore, likely a reflection of our expertise in navigating the world.

Prior experience with the environment can inform our search strategy to determine where in space to deploy attention. For instance, when searching for a human in an urban context, individuals will first fixate on areas in which humans are typically found; searchers will look for people on a sidewalk before they look on a roof (Ehinger et al., 2009). In the laboratory, individuals will also use prior memory of a novel scene to speed search, engaging both frontoparietal attention mechanisms and the hippocampus (Summerfield et al., 2006). Real-world search is also relatively resistant to the number of distractors. Wolfe et al. (2011) conducted a study in which individuals were asked to find a particular object (e.g., a lamp) located within a natural scene (e.g., a living room) or within a search array (various objects randomly situated on a blank surface). When the target was placed within a natural scene, each additional searchable item added approximately 5 ms to the total search time. However, when targets were placed in an artificial array, each additional distractor added approximately 40 ms to search time. In other words, individuals were much better at disregarding distractors that were logically placed within a natural scene, thus speeding search for the target. Additionally, natural objects placed in locations and orientations typically viewed in the environment reduce cognitive competition compared to items positioned in novel ways (Kaiser et al., 2014). These data demonstrate the considerable impact of contextual cues in real-world search. Scene context, supported by prior experience, appears to guide TD attention via multiple brain regions, including hippocampus, parahippocampal and occipital place areas, retrosplenial cortex, and IPS (Dilks et al., 2013; Preston et al., 2013; Peelen and Kastner, 2014). In this way, canonical late-stage visual and memory systems are integrated with the attention network, providing regions such as the FEF and IPS with information to significantly modulate visual search.
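The set-size effect reported by Wolfe et al. (2011) can be made concrete with simple arithmetic. The sketch below assumes a strictly linear relationship between the number of items and search time and introduces a hypothetical intercept parameter (`base_ms`); only the approximate slopes of 5 ms and 40 ms per item come from the text above.

```python
def search_time_ms(n_items, slope_ms_per_item, base_ms=0.0):
    """Assumed linear search function: intercept plus a fixed cost per item."""
    return base_ms + slope_ms_per_item * n_items


SCENE_SLOPE_MS = 5.0   # approximate cost per item within a natural scene
ARRAY_SLOPE_MS = 40.0  # approximate cost per item within an artificial array

# Added search time attributable to set size alone (intercept left at zero).
for n_items in (10, 20, 40):
    scene_cost = search_time_ms(n_items, SCENE_SLOPE_MS)
    array_cost = search_time_ms(n_items, ARRAY_SLOPE_MS)
    print(f"{n_items} items: scene +{scene_cost:.0f} ms, array +{array_cost:.0f} ms")
```

With these slopes, the per-item cost in an array is eight times that in a scene regardless of set size, so the absolute gap between the two conditions widens as more items are added.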

As we navigate the world, our attentional focus must be continually updated to attain the current goal while inhibiting past goals. Accordingly, the ventral visual cortex has been shown to be actively suppressed when attending to previously relevant (but now irrelevant) stimuli (Seidl et al., 2012). The ability to rapidly attend to a searched-for object in the environment is influenced by neural preparatory activity from visual regions such as IT. One study has demonstrated that when a person anticipates the presentation of a human in a natural scene, this foreknowledge will enhance IT activation and predict the speed at which the target will be identified. Importantly, this enhanced activity occurs even if no scene is presented, reflecting the preparatory nature of IT in scene perception (Peelen and Kastner, 2011). These data suggest that previous knowledge primes IT, resulting in a more successful search. Additionally, prior knowledge that is no longer useful, and can thus interfere with the task, must be suppressed.

Taken together, the use of naturalistic stimuli in studies of visual search enables a more evolutionarily meaningful examination of attentional processing and its modulation. Attention is also highly efficient when searching quotidian scenes; context derived from experience allows more refined search that directs our focus toward goal-related target areas. The additional information from more realistic stimuli improves visual search and attentional capture by incorporating additional brain regions involved in facial recognition irrespective of emotion (e.g., the fusiform face area, FFA), scene representation (e.g., parahippocampal place area, occipital place area, and retrosplenium), and object location (e.g., parietal cortex). Thus, as we move away from highly controlled laboratory tasks and take a more ecologically valid approach, we may then consider the interaction of other neural systems, such as those involved in affective processing, while investigating their effects on attention.

Although previous experience with contextual cues and episodic memory help guide TD attention, the presence of emotionally evocative cues in a scene has the potential to bias both endogenous and exogenous re-orienting. The attention-grabbing nature of an affectively arousing stimulus is of course a result of natural selection, as rapid orientation to a potentially dangerous (or life sustaining) object will enhance an organism’s likelihood of survival (Lang et al., 1997). Even a weak association of reward can enhance attentional capture by colored singletons in relatively circumscribed laboratory paradigms (Kristjánsson et al., 2010). The communicative value of emotionally expressive faces also modulates attention, as monkeys and humans are faster to attend to threatening images of conspecifics than non-threatening ones (Bethell et al., 2012; Lacreuse et al., 2013; Carretie, 2014). Moreover, averted gaze of conspecifics can be more arousing than viewer-directed gaze, signaling an important environmental stimulus outside of view (Hoffman et al., 2007). Similarly, humans are faster to locate angry face targets, as opposed to happy faces, among neutral stimuli within search arrays of various set sizes (Fox et al., 2000; Eastwood et al., 2001; Tipples et al., 2002). Affective attentional capture has also been illustrated in an emotion-induced attentional blink, where targets are less often detected following an emotional stimulus than a neutral stimulus (Anderson and Phelps, 2001; Keil and Ihssen, 2004; Most et al., 2005; Keil et al., 2006; Arnell et al., 2007). Thus, in situations in which perceptual information is often missed, both emotionally arousing faces and scenes are effective at exogenously capturing attention and are more likely to undergo further visual processing. Taken together, these are but a few illustrations of how affectively arousing stimuli reflexively modulate visual attention.

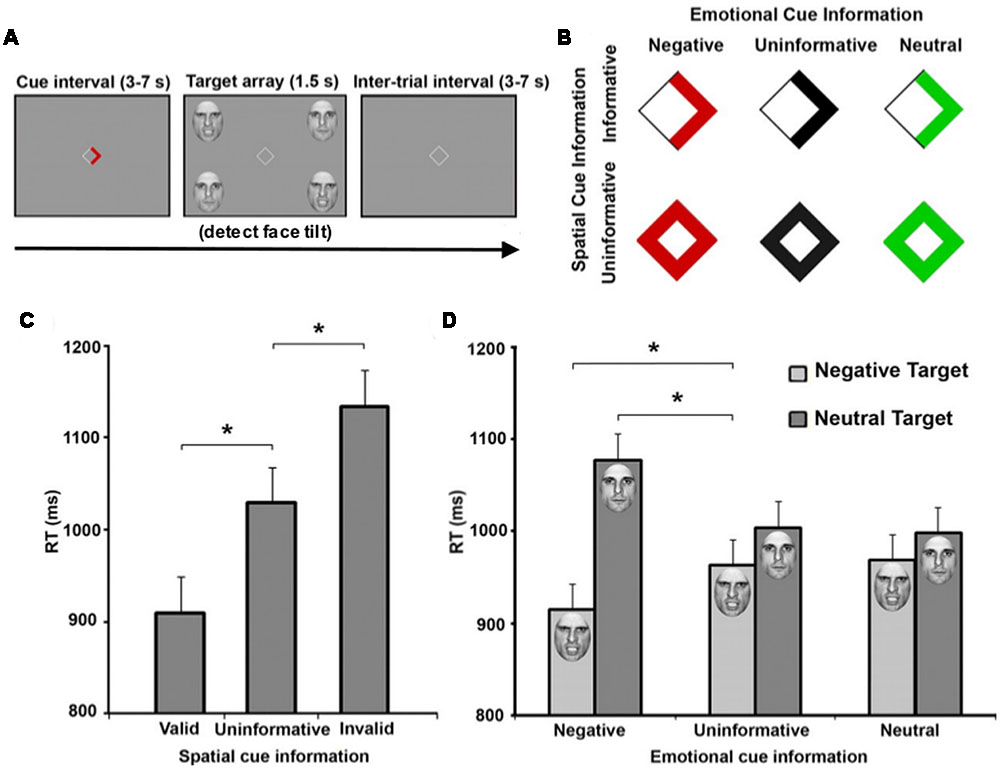

While emotional attention research has often focused on the ability of an arousing object to redirect attention without explicit instruction, other work has also shown that affective stimuli can modulate goal-directed TD processing. Using a modified Posner paradigm, Mohanty et al. (2009) employed emotionally arousing and non-arousing faces as targets (Figure 1). They then manipulated both the spatial location and emotional valence of targets, and both valid spatial cues (arrow direction) and valid emotional cues (arrow color) were displayed to independently speed target detection. Within the emotional cue condition, aversive cues enhanced attention, while both uninformative and neutral cues resulted in no attentional benefit. In fact, uninformative and neutral cues shared similar reaction times. Imaging results indicated enhanced activation in regions including FEF, IPS, and IT in response to spatial cues, while emotional cues additionally evoked amygdala activation. Additive spatial- and emotion-driven effects were found in FEF, IPS, and IT, and functional connectivity between amygdala and IT also increased during emotionally-cued stimuli. These data suggest that the amygdala provides input to an attention network, enhancing our ability to detect affectively arousing targets. Therefore, a set of affective regions, in addition to areas facilitating memory retrieval (e.g., hippocampus), integrate with attention structures commonly identified in controlled experimental paradigms to allow for more efficient and environmentally adaptive behavior.

FIGURE 1. Emotional and spatial foreknowledge enhances target detection speed. (A) Participants view a central cue that may provide emotional information, spatial information, or both about an upcoming target. Participants were instructed to detect the direction of a tilted face among three vertical faces. (B) Cues could provide spatial information about the location of an upcoming target, indicated by an arrow, or no information, indicated by a diamond. Cues could also provide emotional information about the face of the upcoming target by the color red, indicating an angry face; the color green, indicating a neutral face; or provide no emotional information, indicated by black symbols. (C) Mean response time (RT) of valid (cue-directed attention toward target), uninformative (cue did not direct spatial attention), and invalid trials (cue-directed attention away from target). (D) Mean RT of negative, uninformative, and neutral emotional cues when target facial expressions were either negative or neutral. Asterisks indicate statistically significant differences (p < 0.05). Adapted with permission from Mohanty et al. (2009).

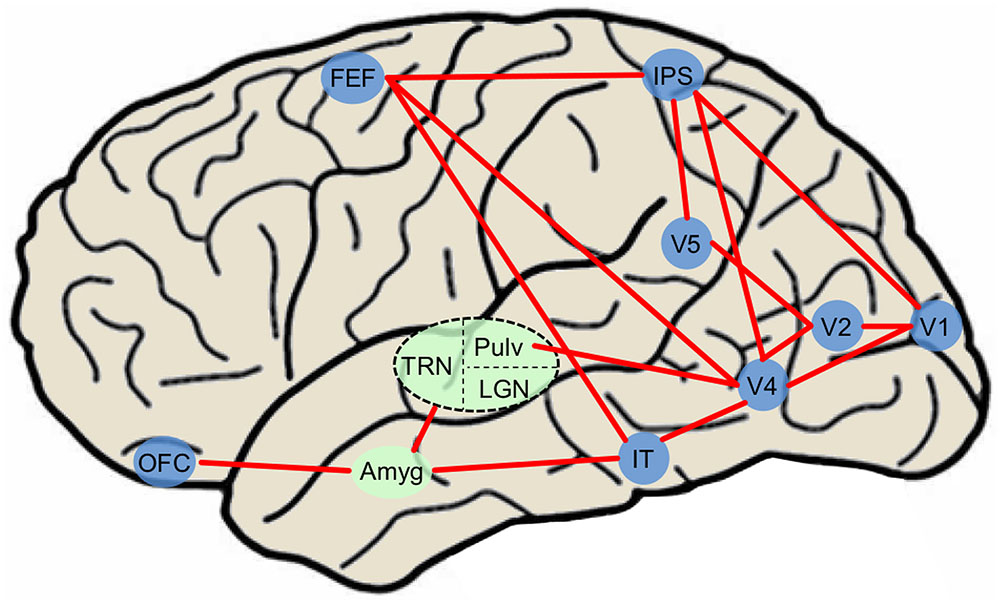

Current visual attention network maps (Thompson and Bichot, 2005; Corbetta et al., 2008; Noudoost et al., 2010; Peelen and Kastner, 2014) typically include only canonical visual-processing regions within the dorsal and ventral pathways. In a common attention network model (Pessoa and Adolphs, 2010), the majority of visual stimuli project to primary visual cortex (while some information is sent directly to the superior colliculus). BU processing occurs as visual information progresses throughout the ventral pathway into V2, V4, IT cortex, and synapses on thalamic nuclei such as the medial dorsal nucleus, thalamic reticular nucleus, and pulvinar nucleus that project diffusely throughout the cortex. BU processing also occurs as visual information progresses from V1 along the dorsal pathway to the parietal cortex and FEFs. Meanwhile, PFC exerts TD control over thalamic nuclei and FEF. It is likely that subcortical regions including amygdala modulate BU processing via the current re-entrant model by synapsing onto early ventral visual regions while influencing TD processing through connections with OFC. Due to the ability of emotional stimuli to both exogenously capture and endogenously guide attention, emotionally evocative aspects of stimuli may be incorporated to provide a more accurate picture of an evolved attention system. Structures such as the amygdala have previously been shown to feed into ventral visual cortex creating a re-entrant loop of emotionally enhanced perceptual processing (Amaral and Price, 1984; Freese and Amaral, 2005; Sabatinelli et al., 2009; Sabatinelli et al., 2014), influencing early BU visual attention regions. The amygdala also transacts with regions that influence TD attention such as orbitofrontal and cingulate cortex (Ghashghaei et al., 2007; Pessoa and Adolphs, 2010; Salzman and Fusi, 2010; Saalmann and Kastner, 2011), and may exert control over both TD and BU systems via thalamic connectivity (Pessoa and Adolphs, 2010; Saalmann and Kastner, 2011). Finally, since PFC can attenuate amygdala activity (Rosenkranz and Grace, 2001), TD attention processing originating in OFC may indirectly suppress the effects of emotionally weighted BU attention via amygdala circuitry. Therefore, the interconnected nature of the amygdala allows it to emotionally “tag” stimuli through a variety of neural pathways, and ultimately contributes to the likelihood of orienting to any stimulus in the environment (Figure 2). Thus, the inclusion of amygdala and other subcortical structures including regions of the thalamus (Rudrauf et al., 2008; Frank and Sabatinelli, 2014), may serve to refine circuit maps modeling naturalistic visual attention processing. While here we focus particularly on amygdala, this is only one region in a network; other regions likely contribute to affectively modulated attention processing in real-world contexts.

FIGURE 2. Simplified diagram of major attention network nodes with the inclusion of affectively modulated regions in a human brain. Blue nodes denote cortical regions and green nodes denote subcortical nuclei. The dashed oval is subdivided into three thalamic nuclei. Amyg, amygdala; FEF, frontal eye field; IT, inferotemporal cortex; LGN, lateral geniculate nucleus; IPS, intraparietal sulcus; OFC, orbitofrontal cortex; Pulv, pulvinar nucleus; TRN, thalamic reticular nucleus.

The explicit incorporation of emotion into attention models may also foster greater clinical translation using affective attention tasks to assess emotion-based disorders. For instance, patients with generalized anxiety disorder exhibit stronger emotional attentional blink to threatening stimuli compared to healthy controls (Olatunji et al., 2011). More recently, researchers using emotion-modulated attentional blink tasks have found that soldiers with post-traumatic stress disorder (PTSD) display stronger attentional capture by combat images than do healthy controls or peers not suffering from PTSD (Olatunji et al., 2013). Future attention studies involving the use of real-world stimuli may benefit clinical populations through potential cognitive and neurophysiological attentional redirection techniques, in addition to aiding clinicians with the identification of affective attention biomarkers.

During naturalistic viewing, attentional deployment to a region of space depends not only on internal goals and the physical impact of light on the retina, but also on the context of the scene and experience with the targets involved in the current task. Moreover, the emotional relevance of items in our visual field also impacts attention allocation across exogenous and endogenous pathways. Evolution has resulted in neural mechanisms to discriminate a variety of emotional stimuli, and the guidance of attention by these stimuli likely contributed to human survival; humans can rapidly attend to potential threats or life-sustaining comestibles. Recently, naturalistic attention has been conceptualized as templates to help predict how and where attention will be deployed within our natural world (Peelen and Kastner, 2014). Within this burgeoning area of work, few models explicitly incorporate emotional relevance of targets (Pessoa, 2010). Furthermore, of those studying affective attention, many employ expressive faces, absent of context. It is also true that emotional stimuli can influence both TD and BU attention systems. In fact, some authors have argued that dividing attention into TD and BU divisions is overly simplistic and a third category, namely selection history, should be added (Awh et al., 2012). This point is particularly salient considering affective stimuli can possess both learned and evolved response tendencies. However, it may be the case that the level of TD or BU engagement is dependent on the particular task at hand; context may determine which system is engaged by emotional stimuli.

While the use of naturalistic stimuli will likely open new avenues of research, there are limitations to this methodology. For instance, the perceptual characteristics of scene stimuli, such as image complexity, depth of field, and spatial frequency distribution, can heavily influence neural activity and act as a confounding variable in an experimental paradigm (Bradley et al., 2007). When addressing the emotional modulation of brain activity, one should recognize that control of hedonic scene content is advantageous (Lang et al., 2008), considering arousal and pleasantness vary across picture categories. As technology advances and virtual reality scene presentation becomes more prevalent, it is possible that more researchers will take advantage of this capability to add another level of ecological validity to their experimental paradigms (Iaria et al., 2008; Nardo et al., 2011). However, this may come at a cost, since a greater number of uncontrolled variables are likely to emerge as an experiment more closely approximates the natural world. It should also be noted that when evaluating emotional attention in non-human primate data, it is often difficult to disentangle attention and emotion, as an animal’s behavior is inherently shaped through reward (Maunsell, 2004). Thus, any conclusions that attempt to differentiate attention and emotion should be taken with caution due to this behavior–reward association, and the inherently intertwined nature of their evolutionary origin.

While social stimuli are powerful cues, as the faces of our peers are effective at communicating dangers and desires, emotion is multi-faceted and there are countless open questions regarding the impact of naturalistic affective stimuli on attention. For example, how do naturalistic affective stimuli differentially modulate BU and TD attention? How do context and individual differences modulate the impact of appetitive and aversive scene processing? What are the limits of TD control on emotional attention? Are variations in these limits associated with disorders of emotion? Multiple studies have demonstrated that a subject’s emotional state influences endogenous attention, speeding reorienting to affective stimuli (Garner et al., 2006; Bar-Haim et al., 2007; Vogt et al., 2011); how does attention-modulation by heightened emotional arousal compare to attention-modulation by declarative knowledge of the upcoming stimulus? These and other questions may be clarified by naturalistic scene research of attentional processing in the real world.

DF and DS both made substantial contributions to the conception and design of the work and to drafting the present study, will grant final approval of the version to be published, and agree to be accountable for all aspects of the work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Amaral, D. G., and Price, J. L. (1984). Amygdalo-cortical projections in the monkey (Macaca fascicularis). J. Comp. Neurol. 230, 465–496. doi: 10.1002/cne.902300402

Anderson, A. K., and Phelps, E. A. (2001). Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature 411, 305–309. doi: 10.1038/35077083

Arnell, K. M., Killman, K. V., and Fijavz, D. (2007). Blinded by emotion: target misses follow attention capture by arousing distractors in RSVP. Emotion 7, 465. doi: 10.1037/1528-3542.7.3.465

Awh, E., Belopolsky, A. V., and Theeuwes, J. (2012). Top-down versus bottom-up attentional control: a failed theoretical dichotomy. Trends Cogn. Sci. 16, 437–443. doi: 10.1016/j.tics.2012.06.010

Baluch, F., and Itti, L. (2011). Mechanisms of top-down attention. Trends Neurosci. 34, 210–224. doi: 10.1016/j.tins.2011.02.003

Barbas, H., Zikopoulos, B., and Timbie, C. (2011). Sensory pathways and emotional context for action in primate prefrontal cortex. Biol. Psychiatry 69, 1133–1139. doi: 10.1016/j.biopsych.2010.08.008

Bar-Haim, Y., Lamy, D., Pergamin, L., Bakermans-Kranenburg, M. J., and van IJzendoorn, M. H. (2007). Threat-related attentional bias in anxious and nonanxious individuals: a meta-analytic study. Psychol. Bull. 133, 1–24. doi: 10.1037/0033-2909.133.1.1

Bethell, E. J., Holmes, A., MacLarnon, A., and Semple, S. (2012). Evidence that emotion mediates social attention in rhesus macaques. PLoS ONE 7:e44387. doi: 10.1371/journal.pone.0044387

Bisley, J. W., and Goldberg, M. E. (2010). Attention, intention, and priority in the parietal lobe. Annu. Rev. Neurosci. 33, 1–21. doi: 10.1146/annurev-neuro-060909-152823

Bradley, M. M., Hamby, S., Low, A., and Lang, P. J. (2007). Brain potentials in perception: picture complexity and emotional arousal. Psychophysiology 44, 364–373. doi: 10.1111/j.1469-8986.2007.00520.x

Carrasco, M. (2011). Visual attention: the past 25 years. Vis. Res. 51, 1484–1525. doi: 10.1016/j.visres.2011.04.012

Carretie, L. (2014). Exogenous (automatic) attention to emotional stimuli: a review. Cogn. Affect. Behav. Neurosci. 14, 1228–1258. doi: 10.3758/s13415-014-0270-2

Cavada, C., Tejedor, J., Cruz-Rizzolo, R. J., and Reinoso-Suárez, F. (2000). The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cereb. Cortex 10, 220–242. doi: 10.1093/cercor/10.3.220

Chelazzi, L., Della Libera, C., Sani, I., and Santandrea, E. (2011). Neural basis of visual selective attention. Wiley Interdiscip. Rev. Cogn. Sci. 2, 392–407. doi: 10.1002/wcs.117

Codispoti, M., Ferrari, V., Junghöfer, M., and Schupp, H. T. (2006). The categorization of natural scenes: brain attention networks revealed by dense sensor ERPs. Neuroimage 32, 583–591. doi: 10.1016/j.neuroimage.2006.04.180

Connor, C. E., Egeth, H. E., and Yantis, S. (2004). Visual attention: bottom-up versus top-down. Curr. Biol. 14, R850–R852. doi: 10.1016/j.cub.2004.09.041

Corbetta, M., Patel, G., and Shulman, G. L. (2008). The reorienting system of the human brain: from environment to theory of mind. Neuron 58, 306–324. doi: 10.1016/j.neuron.2008.04.017

Corbetta, M., and Shulman, G. L. (2011). Spatial neglect and attention networks. Annu. Rev. Neurosci. 34, 569–599. doi: 10.1146/annurev-neuro-061010-113731

Dilks, D. D., Julian, J. B., Paunov, A. M., and Kanwisher, N. (2013). The occipital place area is causally and selectively involved in scene perception. J. Neurosci. 33, 1331–1336. doi: 10.1523/JNEUROSCI.4081-12.2013

Eastwood, J. D., Smilek, D., and Merikle, P. M. (2001). Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept. Psychophys. 63, 1004–1013. doi: 10.3758/BF03194519

Ehinger, K. A., Hidalgo-Sotelo, B., Torralba, A., and Oliva, A. (2009). Modelling search for people in 900 scenes: a combined source model of eye guidance. Vis. Cogn. 17, 945–978. doi: 10.1080/13506280902834720

Fox, E., Lester, V., Russo, R., Bowles, R., Pichler, A., and Dutton, K. (2000). Facial expressions of emotion: are angry faces detected more efficiently? Cogn. Emot. 14, 61–92. doi: 10.1080/026999300378996

Frank, D. W., and Sabatinelli, D. (2014). Human thalamic and amygdala modulation in emotional scene perception. Brain Res. 1587, 69–76. doi: 10.1016/j.brainres.2014.08.061

Freese, J. L., and Amaral, D. G. (2005). The organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. J. Comp. Neurol. 486, 295–317. doi: 10.1002/cne.20520

Garner, M., Mogg, K., and Bradley, B. P. (2006). Orienting and maintenance of gaze to facial expressions in social anxiety. J. Abnorm. Psychol. 115, 760–770. doi: 10.1037/0021-843X.115.4.760

Ghashghaei, H., Hilgetag, C., and Barbas, H. (2007). Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage 34, 905–923. doi: 10.1016/j.neuroimage.2006.09.046

Hoffman, K. L., Gothard, K. M., Schmid, M. C., and Logothetis, N. K. (2007). Facial-expression and gaze-selective responses in the monkey amygdala. Curr. Biol. 17, 766–772. doi: 10.1016/j.cub.2007.03.040

Iaria, G., Fox, C. J., Chen, J. K., Petrides, M., and Barton, J. J. S. (2008). Detection of unexpected events during spatial navigation in humans: bottom-up attentional system and neural mechanisms. Eur. J. Neurosci. 27, 1017–1025. doi: 10.1111/j.1460-9568.2008.06060.x

Kaiser, D., Stein, T., and Peelen, M. V. (2014). Object grouping based on real-world regularities facilitates perception by reducing competitive interactions in visual cortex. Proc. Natl. Acad. Sci. U.S.A. 111, 11217–11222. doi: 10.1073/pnas.1400559111

Kastner, S., and Ungerleider, L. G. (2000). Mechanisms of visual attention in the human cortex. Annu. Rev. Neurosci. 23, 315–341. doi: 10.1146/annurev.neuro.23.1.315

Keil, A., and Ihssen, N. (2004). Identification facilitation for emotionally arousing verbs during the attentional blink. Emotion 4, 23–35. doi: 10.1037/1528-3542.4.1.23

Keil, A., Ihssen, N., and Heim, S. (2006). Early cortical facilitation for emotionally arousing targets during the attentional blink. BMC Biol. 4:23. doi: 10.1186/1741-7007-4-23

Kristjánsson, Á., Sigurjónsdóttir, Ó., and Driver, J. (2010). Fortune and reversals of fortune in visual search: reward contingencies for pop-out targets affect search efficiency and target repetition effects. Attent. Percept. Psychophys. 72, 1229–1236. doi: 10.3758/APP.72.5.1229

Kusunoki, M., Gottlieb, J., and Goldberg, M. E. (2000). The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vis. Res. 40, 1459–1468. doi: 10.1016/S0042-6989(99)00212-6

Lacreuse, A., Schatz, K., Strazzullo, S., King, H. M., and Ready, R. (2013). Attentional biases and memory for emotional stimuli in men and male rhesus monkeys. Anim. Cogn. 16, 861–871. doi: 10.1007/s10071-013-0618-y

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (1997). “Motivated attention: affect, activation, and action,” in Attention and Orienting: Sensory and Motivational Processes, eds P. J. Lang, R. F. Simons, and M. T. Balaban (Hillsdale, NJ: Lawrence Erlbaum Associates), 97–135.

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-8. Gainesville, FL: University of Florida.

Le-Hoa Võ, M., and Wolfe, J. M. (2015). The role of memory for visual search in scenes. Ann. N. Y. Acad. Sci. 1339, 72–81. doi: 10.1111/nyas.12667

Li, F. F., VanRullen, R., Koch, C., and Perona, P. (2002). Rapid natural scene categorization in the near absence of attention. Proc. Natl. Acad. Sci. U.S.A. 99, 9596–9601. doi: 10.1073/pnas.092277599

Maunsell, J. H. (2004). Neuronal representations of cognitive state: reward or attention? Trends Cogn. Sci. 8, 261–265. doi: 10.1016/j.tics.2004.04.003

Miller, E. K., and Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202. doi: 10.1146/annurev.neuro.24.1.167

Mohanty, A., Egner, T., Monti, J. M., and Mesulam, M.-M. (2009). Search for a threatening target triggers limbic guidance of spatial attention. J. Neurosci. 29, 10563–10572. doi: 10.1523/JNEUROSCI.1170-09.2009

Most, S. B., Chun, M. M., Widders, D. M., and Zald, D. H. (2005). Attentional rubbernecking: cognitive control and personality in emotion-induced blindness. Psychon. Bull. Rev. 12, 654–661. doi: 10.3758/BF03196754

Nardo, D., Santangelo, V., and Macaluso, E. (2011). Stimulus-driven orienting of visuo-spatial attention in complex dynamic environments. Neuron 69, 1015–1028. doi: 10.1016/j.neuron.2011.02.020

Noudoost, B., Chang, M. H., Steinmetz, N. A., and Moore, T. (2010). Top-down control of visual attention. Curr. Opin. Neurobiol. 20, 183–190. doi: 10.1016/j.conb.2010.02.003

Olatunji, B. O., Armstrong, T., McHugo, M., and Zald, D. H. (2013). Heightened attentional capture by threat in veterans with PTSD. J. Abnorm. Psychol. 122, 397–405. doi: 10.1037/a0030440

Olatunji, B. O., Ciesielski, B. G., Armstrong, T., Zhao, M., and Zald, D. H. (2011). Making something out of nothing: neutral content modulates attention in generalized anxiety disorder. Depress. Anxiety 28, 427–434. doi: 10.1002/da.20806

Peelen, M. V., and Kastner, S. (2011). A neural basis for real-world visual search in human occipitotemporal cortex. Proc. Natl. Acad. Sci. U.S.A. 108, 12125–12130. doi: 10.1073/pnas.1101042108

Peelen, M. V., and Kastner, S. (2014). Attention in the real world: toward understanding its neural basis. Trends Cogn. Sci. 18, 242–250. doi: 10.1016/j.tics.2014.02.004

Pessoa, L. (2010). Emotion and cognition and the amygdala: from “what is it?” to “what’s to be done?” Neuropsychologia 48, 3416–3429. doi: 10.1016/j.neuropsychologia.2010.06.038

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Posner, M. I. (1980). Orienting of attention. Q. J. Exp. Psychol. 32, 3–25. doi: 10.1080/00335558008248231

Posner, M. I., and Cohen, Y. (1984). “Components of visual orienting,” in Attention and Performance X: Control of Language Processes, eds H. Bouma and D. Bouwhuis (Hillsdale, NJ: Erlbaum), 531–556.

Preston, T. J., Guo, F., Das, K., Giesbrecht, B., and Eckstein, M. P. (2013). Neural representations of contextual guidance in visual search of real-world scenes. J. Neurosci. 33, 7846–7855. doi: 10.1523/JNEUROSCI.5840-12.2013

Rosenkranz, J. A., and Grace, A. A. (2001). Dopamine attenuates prefrontal cortical suppression of sensory inputs to the basolateral amygdala of rats. J. Neurosci. 21, 4090–4103.

Rudrauf, D., David, O., Lachaux, J.-P., Kovach, C. K., Martinerie, J., Renault, B., et al. (2008). Rapid interactions between the ventral visual stream and emotion-related structures rely on a two-pathway architecture. J. Neurosci. 28, 2793–2803. doi: 10.1523/JNEUROSCI.3476-07.2008

Saalmann, Y. B., and Kastner, S. (2011). Cognitive and perceptual functions of the visual thalamus. Neuron 71, 209–223. doi: 10.1016/j.neuron.2011.06.027

Sabatinelli, D., Frank, D. W., Wanger, T. J., Dhamala, M., Adhikari, B. M., and Li, X. (2014). The timing and directional connectivity of human frontoparietal and ventral visual attention networks in emotional scene perception. Neuroscience 277C, 229–238. doi: 10.1016/j.neuroscience.2014.07.005

Sabatinelli, D., Lang, P. J., Bradley, M. M., Costa, V. D., and Keil, A. (2009). The timing of emotional discrimination in human amygdala and ventral visual cortex. J. Neurosci. 29, 14864–14868. doi: 10.1523/JNEUROSCI.3278-09.2009

Salzman, C. D., and Fusi, S. (2010). Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annu. Rev. Neurosci. 33, 173–202. doi: 10.1146/annurev.neuro.051508.135256

Seidl, K. N., Peelen, M. V., and Kastner, S. (2012). Neural evidence for distracter suppression during visual search in real-world scenes. J. Neurosci. 32, 11812–11819. doi: 10.1523/JNEUROSCI.1693-12.2012

Spreng, R. N., Sepulcre, J., Turner, G. R., Stevens, W. D., and Schacter, D. L. (2013). Intrinsic architecture underlying the relations among the default, dorsal attention, and frontoparietal control networks of the human brain. J. Cogn. Neurosci. 25, 74–86. doi: 10.1162/jocn_a_00281

Summerfield, C., and De Lange, F. P. (2014). Expectation in perceptual decision making: neural and computational mechanisms. Nat. Rev. Neurosci. 15, 745–756. doi: 10.1038/nrn3838

Summerfield, J. J., Lepsien, J., Gitelman, D. R., Mesulam, M. M., and Nobre, A. C. (2006). Orienting attention based on long-term memory experience. Neuron 49, 905–916. doi: 10.1016/j.neuron.2006.01.021

Theeuwes, J. (1992). Perceptual selectivity for color and form. Attent. Percept. Psychophys. 51, 599–606. doi: 10.3758/BF03211656

Theeuwes, J. (2004). Top-down search strategies cannot override attentional capture. Psychon. Bull. Rev. 11, 65–70. doi: 10.3758/BF03206462

Thompson, K. G., and Bichot, N. P. (2005). A visual salience map in the primate frontal eye field. Prog. Brain Res. 147, 251–262. doi: 10.1016/S0079-6123(04)47019-8

Thorpe, S., Fize, D., and Marlot, C. (1996). Speed of processing in the human visual system. Nature 381, 520–522. doi: 10.1038/381520a0

Tipples, J., Atkinson, A. P., and Young, A. W. (2002). The eyebrow frown: a salient social signal. Emotion 2, 288–296. doi: 10.1037/1528-3542.2.3.288

Tomasi, D., and Volkow, N. D. (2011). Association between functional connectivity hubs and brain networks. Cereb. Cortex 21, 2003–2013. doi: 10.1093/cercor/bhq268

Vogt, J., Lozo, L., Koster, E. H., and De Houwer, J. (2011). On the role of goal relevance in emotional attention: disgust evokes early attention to cleanliness. Cogn. Emot. 25, 466–477. doi: 10.1080/02699931.2010.532613

Wolfe, J. M., Alvarez, G. A., Rosenholtz, R., Kuzmova, Y. I., and Sherman, A. M. (2011). Visual search for arbitrary objects in real scenes. Attent. Percept. Psychophys. 73, 1650–1671. doi: 10.3758/s13414-011-0153-3

Wolfe, J. M., and Horowitz, T. S. (2004). What attributes guide the deployment of visual attention and how do they do it? Nat. Rev. Neurosci. 5, 495–501. doi: 10.1038/nrn1411

Keywords: emotion, IPS, LIP, FEF, amygdala

Citation: Frank DW and Sabatinelli D (2017) Primate Visual Perception: Motivated Attention in Naturalistic Scenes. Front. Psychol. 8:226. doi: 10.3389/fpsyg.2017.00226

Received: 14 December 2016; Accepted: 06 February 2017; Published: 20 February 2017.

Edited by:

Maurizio Codispoti, University of Bologna, Italy

Reviewed by:

Laura Miccoli, University of Granada, Spain

Copyright © 2017 Frank and Sabatinelli. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: David W. Frank, david-frank@ouhsc.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
