ORIGINAL RESEARCH article

Front. Psychol., 07 September 2016

Sec. Human-Media Interaction

Volume 7 - 2016 | https://doi.org/10.3389/fpsyg.2016.01341

Despite their best intentions, people struggle with the realities of privacy protection and will often sacrifice privacy for convenience in their online activities. Individuals show systematic, personality-dependent differences in their privacy decision-making, which makes these differences interesting for those who seek to design ‘nudges’ to manipulate privacy behaviors. We explore such effects in a cookie decision task. Two hundred and ninety participants were given an incidental website review task that masked the true aim of the study. At the task outset, they were asked whether they wanted to accept a cookie in a message that either contained a social framing ‘nudge’ (they were told that either a majority or a minority of users like themselves had accepted the cookie) or contained no information about social norms (control). At the end of the task, participants were asked to complete a range of personality assessments (impulsivity, risk-taking, willingness to self-disclose and sociability). We found social framing to be an effective behavioral nudge, reducing cookie acceptance in the minority social norm condition. Further, we found personality effects: those scoring highly on risk-taking and impulsivity were significantly more likely to accept the cookie. Finally, we found that the application of a social nudge could attenuate the personality effects of impulsivity and risk-taking. We explore the implications for those working in the privacy-by-design space.

People value their privacy but do not readily protect it, a phenomenon known as the privacy paradox (Smith et al., 2011). There are a variety of explanations for this: people may not be aware of the various ways in which they leave themselves vulnerable online; they may not know how to protect themselves; they may find the act of establishing and maintaining privacy protection online too onerous; or they may simply be willing to sacrifice their privacy in some kind of implicit or explicit trade, whether for convenience, for goods or simply for a better, personalized service.

Privacy is a fundamental issue for those involved in human–computer interaction. Human–computer interaction researchers may seek to build privacy considerations into their work from the outset – adopting a privacy by design approach (Brown, 2014) that embeds privacy thinking into the entire R&D lifecycle, ensuring that privacy is a core component in the final design. Or they may consider privacy ‘bolt-ons’ to final systems in the form of new functionality, new forcing functions or choice architectures that attempt to ‘nudge’ users to behave in ways that offer greater privacy protection. The latter is often discussed in terms of the ‘economics of privacy’ (e.g., Acquisti et al., 2013), recognizing that users’ privacy decisions are not necessarily stable over time, but rather reflect the economic and social costs and benefits of protecting privacy within a particular context. The wider context for privacy decision-making may include those personality variables that directly affect an individual’s ability to tolerate task interruptions as well as a range of task framing effects that make privacy risks more or less salient. In other words: “what people decide their data is worth depends critically on the context in which they are asked - specifically, how the problem is framed” (Acquisti et al., 2013, p.32).

This paper explores some of the framing and personality factors that influence privacy-related decision-making, specifically in the context of accepting or rejecting cookies, a known privacy issue. Participants were presented with an online travel shopping task and asked to judge the trustworthiness, familiarity and likelihood of using four different sites, with the intention of masking the true aim of the study, i.e., cookie acceptance. Within this task, the acceptance or rejection of cookies was framed by presenting population norms for this specific behavior (a social framing manipulation). The paper also considers the influence of different personality traits on responses to these framing manipulations. Nudges presently give little attention to personality differences, implicitly assuming that all individuals are homogeneous. The idea is that choices can be presented in such a way as to ‘nudge’ people toward a particular decision (Thaler and Sunstein, 2008), and that these nudges can take account of personality traits that make people more or less susceptible to them. Nudges that use social norm references are of particular interest as they can influence individuals to mimic the behaviors and decisions of a group by appealing to the need for group affiliation and social conformity.

Social norms have been used to influence behavior across a variety of contexts, including health decision-making (Jetten et al., 2012), energy conservation (e.g., Allcott, 2011) and tax compliance (Posner, 2000). For example, using descriptive social norms (statements about how other individuals have acted in the given situation), researchers have compared numerical majority norms (e.g., 73 or 74%) to minority norms (e.g., 27 or 37%) and found that these simple manipulations can increase fruit consumption (e.g., Stok et al., 2012) or voting participation amongst students (Glynn et al., 2009). These studies have effectively demonstrated that individuals will use information about others like themselves to help make decisions. Majority norms are believed to be particularly effective as they refer to how most people behave in a certain situation and provide consensus information to individuals about what the ‘correct’ behavior is perceived to be (Thibaut and Kelley, 1959). However, a study by Besmer et al. (2010) suggests that minority norms may be more effective in influencing privacy decisions.

Social norms have also been used to influence the adoption of available privacy or security solutions (Das et al., 2014), although results here have been mixed. Social framing has been used to improve cookie management (Goecks and Mynatt, 2005), the creation and maintenance of personal firewalls (Goecks et al., 2009) and peer-to-peer file sharing (DiGioia and Dourish, 2005). However, social nudges are not always associated with the strongest effects on behavior change. For example, Patil et al. (2011) explored social influences on privacy settings in Instant Messenger, finding that social cues were influential as a secondary manipulation but showed very small effect sizes in comparison to other privacy manipulations. Further, social nudges can work both ways: they can also lead to a greater willingness to divulge sensitive information when people are informed that others have made similar disclosures (Acquisti et al., 2012). Further work is required in the privacy domain to resolve the role and effectiveness of social norm framing for privacy decisions, in order to clarify the circumstances in which social framing might have limited or no influence (Anderson and Agarwal, 2010; Patil et al., 2011; Knijnenburg and Kobsa, 2013) and the conditions under which minority vs. majority social norms are effective (Besmer et al., 2010). One particularly neglected condition is the role of personality in decision-making.

Decisions can be mapped to personality in a number of ways (Riquelme and Román, 2014). For example, collaborative decision-making can reflect the extent to which individuals exhibit social or individual value orientations (e.g., Steinel and De Dreu, 2004) or their collaborative vs. competitive tendencies (McClintock and Liebrand, 1988). Risky decisions are more likely to be made by individuals who score highly on openness to experience, while those who score highly on neuroticism are more likely to be risk-averse, at least in the domain of gains (Lauriola and Levin, 2001). Existing research also suggests that personality factors may interact with social manipulations to influence decision-making, as personality traits may often affect behavior indirectly by influencing normative determinants of behavior (Rosenstock, 1974; Ajzen, 1991). In the security and privacy domains, a range of candidate personality traits can be identified that are likely to affect decision-making, including impulsivity, risk-taking/risk propensity (Grossklags and Reitter, 2014), willingness to self-disclose information and sociability. These are described in more detail below.

Impulsivity, or impulsiveness, describes the tendency of individuals to give insufficient attention to the consequences of an action before carrying it out, or to act without being aware of the risk involved. Impulsivity may lead individuals to make decisions without having all the facts when processing information (Eysenck et al., 1985; Dickman, 1990; Franken and Muris, 2005). This lack of premeditation and deliberation (Halpern, 2002; Magid and Colder, 2007), together with greater distractibility (Stanford et al., 2009), may result in decisions that not only lead to regret but also increase the more impulsive individual’s vulnerability to risk. Research indicates that impulsive behavior may be inhibited under negative normative evaluations and heightened under positive normative evaluations (Rook and Fisher, 1995). Higher impulsivity is associated with lower self-control (Romer et al., 2010; Chen and Vazsonyi, 2011). When self-control resources are low, people are more likely to conform to social persuasion (Wills et al., 2010; Jacobson et al., 2011), especially when self-control is depleted and resistance has broken down (Burkley et al., 2011). Without the self-control needed to fend off persuasive appeals, people may become more susceptible to social influence.

Risk-taking is another trait of interest. It is influenced by past experience and also varies with age (Mata et al., 2011) and gender (Rolison et al., 2012). While impulsive people act on the spur of the moment without being aware of the risks involved, risk takers are aware of the risk and are prepared to take the chance (Dahlbäck, 1990). Risk takers are less concerned about the repercussions of their actions than risk-averse individuals, who are more likely to be concerned about subsequent regret (Zeelenberg et al., 1996). In addition, risk takers are more likely to make decisions ‘in the moment’ and to be influenced by emotional cues or mood (e.g., boredom, stress, disengagement) rather than by rational calculation (Figner and Weber, 2011; Visschers et al., 2012). Perhaps unsurprisingly, risk takers have also been shown to be less concerned about privacy (Egelman and Peer, 2015).

The third trait of interest is willingness to self-disclose, reflecting the fact that individuals vary in their willingness to share information about the self. Evidence for this comes from social networking research (Kaplan and Haenlein, 2010), health research (Esere et al., 2012) and also consumer research (Moon, 2010). A greater willingness to self-disclose information may render individuals vulnerable to privacy and security risks, as they may not think through the consequences of this exposure (Acquisti and Gross, 2006). Individuals are more willing to disclose information when under social influence (Sánchez et al., 2014) and pay less attention to potential privacy risks when under this influence (Cheung et al., 2015).

The fourth and final trait of interest is sociability – a facet of extraversion (McCrae and Costa, 1985) and a trait that correlates positively with consumer trust in online retailers (Riquelme and Román, 2014). Sociability may be associated with a greater interest in relationship management and information sharing, motives that have also been examined in relation to voluntary self-disclosure (Lee et al., 2008). Extraverts are particularly sensitive to social attention (Lucas et al., 2000) but less likely to yield to social pressure (Sharma and Malhotra, 2007) and slower to learn social norms (Eysenck, 1990).

Finally, social norm information has been shown to moderate the relationship between personality and behavior. Rook and Fisher (1995) showed that social normative evaluations influenced consumers’ impulsive buying behavior: they found a significant relationship between impulsivity and buying behavior only when consumers believed that acting on impulse was appropriate. With regard to risk-taking, social norms are often communicated to those exhibiting risky health behaviors in order to reduce such behavior (e.g., Scholly et al., 2005; Baumgartner et al., 2010). Showing such vulnerable individuals that they are in the minority helps convince them that the behavior is inappropriate.

Cookie acceptance is a form of privacy behavior that has been relatively understudied in existing research and represents an opportunity to explore the effects of social framing, and the potential interacting role of personality, on an objective behavior. A cookie is a small piece of data sent from a website, which is then stored by the user’s browser and transmitted back to the website with each subsequent request the browser makes to that site. Cookies are promoted as necessary to enhance the user’s experience by aiding navigation, identifying preferences, allowing personalization and targeted advertising, and remembering login credentials. However, cookies also raise a number of privacy and anonymity issues, as they track user behavior and collect user-specific information within and across websites (see BBC, 2011; Opentracker, 2014). Cookies cannot contain viruses or malware, but they can be associated with a number of security issues, as they can hold information such as passwords used in authentication, as well as credit card details, addresses and similar data used to support the automatic completion of online forms. The security of the information contained within a cookie depends on the security of the website, the security of the user’s web browser and computer, and whether or not the data is strongly encrypted.
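The round trip described above (the site sends a cookie, the browser stores it and returns it on later requests) can be sketched with Python’s standard `http.cookies` module. The cookie name `user_pref`, its value and the one-hour lifetime are hypothetical, chosen only for illustration; real sites set their own cookies with their own names and attributes.

```python
from http.cookies import SimpleCookie

# Server side: build the Set-Cookie header the website sends with its response.
# The name "user_pref" and the value "travel" are hypothetical examples.
server_cookie = SimpleCookie()
server_cookie["user_pref"] = "travel"
server_cookie["user_pref"]["path"] = "/"       # return the cookie for every path on the site
server_cookie["user_pref"]["max-age"] = 3600   # browser keeps it for one hour (in seconds)
set_cookie_header = server_cookie["user_pref"].output()
print(set_cookie_header)  # Set-Cookie: user_pref=travel; Max-Age=3600; Path=/

# Browser side: on later requests the stored name=value pair is sent back in a
# Cookie header, which the server parses to recognize the returning user.
cookie_header_value = "user_pref=travel"
returned = SimpleCookie(cookie_header_value)
print(returned["user_pref"].value)  # travel
```

Anything the server chooses to place in the value (preferences, identifiers, form data) travels back and forth in this way, which is why the privacy and security concerns above depend on what is stored and how it is protected.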

In 2002, an EU directive was introduced which required online providers to seek consent for the use of cookies, and in 2011 it became law. This initially meant that users had to give their explicit consent for cookie use before continuing with any web-based interaction, and European users quickly became familiar with a cookie dialog in which they would accept or reject cookies as an integral component of online exchange. The Commission went so far as to develop a ‘Cookie Consent Kit’ with the necessary JavaScript to enable the easy adoption of a cookie dialog on any website (Information Provider’s Guide, 2015). Subsequent legislation gave limited exemptions to certain forms of interaction. Amid criticisms about how users are tracked and how systems extract personal data (see review by Peacock, 2014), data protection concerns have further increased, and new EU legislation on data protection (the General Data Protection Regulation) will come into force in 2018 as part of the European data privacy framework. This regulation is also expected to improve privacy protection for users through a drive toward more widespread adoption of a privacy-by-design philosophy. The framework will also make privacy impact assessments mandatory, while setting the stage for fines for companies that breach data protection law (Great Britain, 1998). This also means that companies are responsible for the safe-keeping of the data they obtain from various sources (Hawthorn, 2015) and for securing appropriate consent. The information collected via cookies is likely to fall under this new remit.

At present, explicit cookie acceptance typically involves a dialog that requires the user to click an “accept” or “proceed” button to gain access to a website. This dialog structure is similar to others that signal the acceptance of terms and conditions, grant permission for a software download, or allow mobile applications to access information on a mobile device. We know that users habituate to such messages over time (i.e., the more frequently they see a message, the less attention they give it; Böhme and Köpsell, 2010; Raja et al., 2010). Further, we know that dialog boxes often interrupt users as they pursue their primary goal. This habituation to dialogs, coupled with users’ relentless focus on the primary task, can create security vulnerabilities, for example when users automatically accept cookies or select an ‘update later’ button that lets them continue with the job at hand (Zhang-Kennedy et al., 2014). It is useful, then, to consider cookie dialogs more carefully, both in terms of the design of the dialog itself and in terms of any personality dimensions that might weaken privacy decision-making (such as impulsively accepting anything that looks like a cookie request). The current study attempts to address these issues.

In this paper the following research questions are explored. First, to what extent can social framing manipulations influence privacy decision-making, expressed here in terms of cookie acceptance? Second, are there personality factors that determine how users are likely to respond in a privacy context? Third, might these lead to differential responses to social nudges? The associated hypotheses are as follows.

In comparison to a base rate (control) which is likely to lean toward acceptance of cookies, participants’ responses will be affected by a social nudge, such that a low social norm (minority) reference will effectively nudge participants away from accepting cookies.

Cookies are more likely to be accepted by individuals who are more impulsive, more willing to share information, greater risk takers and more sociable.

Differences in cookie acceptance attributed to personality may be qualified by the effects of the social framing manipulation.

The study design involved an incidental task in which participants were asked to review a series of travel websites and make a series of judgments about them. Before clicking on the sites to review, participants were presented with one of three cookie dialogs, representing three social framing conditions (control, minority social norm, majority social norm). The dependent measure for the true task was cookie acceptance.

The social framing manipulations were constructed as follows. The original (control) text in the cookie dialog box was similar to that recommended by the EU cookie directive and read as follows: “Our use of cookies. This cookie stores basic user information on your computer, potentially improving the browsing experience and helping us deliver more relevant information to you.” Two experimental conditions were then generated with additional text that made reference to either a minority or a majority social norm, as follows: “37% (74%) of MTURKers like yourself have used this option.” All cookie dialogs concluded with: “Do you want to use this option? Accept/Don’t accept” (see Figure 1). Allocation to each of the three conditions (control, minority norm, majority norm) was randomized. Note that the social norm values (37% vs. 74%) for the two experimental conditions follow those selected by Glynn et al. (2009) and Stok et al. (2012). The use of the phrase “MTURKers like yourself” was included to enhance the group reference (cf. Terry and Hogg, 1996). Referencing the behavior of members of this online community was expected to increase potential adherence to social norms as identification with the norm referent group is important for compliance (Turner, 1991).
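The three dialog variants and the random allocation can be sketched as follows. The dialog wording is taken from the text above; the helper names `build_dialog` and `allocate`, the use of simple (unblocked) randomization via Python’s `random` module, and the seed are assumptions for illustration only, since the study does not describe how its randomization was implemented.

```python
import random

# Dialog wording reproduced from the study description.
BASE_TEXT = ("Our use of cookies. This cookie stores basic user information on your "
             "computer, potentially improving the browsing experience and helping us "
             "deliver more relevant information to you.")
NORM_RATES = {"minority": 37, "majority": 74}  # social norm percentages per condition
CLOSING = "Do you want to use this option? Accept/Don't accept"
CONDITIONS = ("control", "minority", "majority")


def build_dialog(condition: str) -> str:
    """Return the cookie dialog text for one framing condition (hypothetical helper)."""
    parts = [BASE_TEXT]
    if condition in NORM_RATES:
        parts.append(f"{NORM_RATES[condition]}% of MTURKers like yourself have used this option.")
    elif condition != "control":
        raise ValueError(f"unknown condition: {condition}")
    parts.append(CLOSING)
    return " ".join(parts)


def allocate(participant_ids, seed=None):
    """Assign each participant to a condition by simple randomization (assumed scheme)."""
    rng = random.Random(seed)
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}


if __name__ == "__main__":
    for pid, condition in allocate(range(1, 6), seed=42).items():
        print(pid, condition)
    print(build_dialog("minority"))
```

Simple randomization does not guarantee equal group sizes; a blocked or shuffled-list scheme would, but which approach the platform used is not reported.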

Data collection was via the Mechanical Turk platform. This platform was chosen for three reasons. First, all users of the platform are regular users of the Internet. Second, these participants are predominantly driven by financial gain and fast task completion, a situation encountered by most individuals working online. Such users will be driven by their primary goal (task completion), which presents an ideal opportunity to explore the choice architecture around privacy and security nudges. Third, using participants from the same occupational group allowed us to ensure that participants would identify with the referent group mentioned in the social manipulations, in this case MTurkers.

To ensure a sample size adequate for the inferential statistics (chi-square and ANCOVA) used to test the three hypotheses (VanVoorhis and Morgan, 2007), we recruited 309 participants. Nineteen cases were excluded because of duplication, errors in the data or missing data, leaving a final dataset of 290 cases. Participants’ mean age was 35.30 years (SD = 11.96), ranging from 18 to 71 years. Just over half of the participants were women (53.1%, n = 154) and 45.9% (n = 133) were men (gender was missing for three participants).

Before this study began, full ethical approval was received from the Faculty of Health and Life Sciences Ethics Committee at Northumbria University. All participants accessed the study via a link listed on Amazon’s Mechanical Turk platform, where they were notified of a flat rate of $1 for participation. Once they had accepted the task and completed the consent form, they were randomly allocated to one of the three cookie conditions. Regardless of their response in the cookie dialog, all participants received the same content thereafter: they were shown images of the welcome screens of four travel websites and answered a series of questions about those sites. This was followed by a questionnaire assessing impulsivity, risk-taking, self-disclosure and sociability. Control questions and demographics were presented toward the end, followed by a debriefing statement.

Following the incidental task, participants completed several scales designed to measure impulsivity, risk-taking, self-disclosure and sociability, followed by demographic questions, with the following instructions: “Thank you for completing the task. We would hereby like to ask you a few questions about how you generally make decisions and share information about yourself.” The measures were matched to the constructs of interest discussed in the introduction (risk-taking, impulsivity, self-disclosure and sociability). Note that at various points we used subscales or slightly shortened measures. The advantages of this were (i) a shorter total time on task, with the expectation of reduced dropout due to survey length (Hoerger, 2010), and (ii) improved task relevance. Shorter scales with three or more items often perform as well as longer scales when the items have more than three response options (Peterson, 1994). In each case, the focus was on using well-established, readily accessible measures to enable other researchers to replicate the work. Further, we used measures that did not require extensive rewording for an online setting. When shortening the scales, we retained those items that were readily applicable to the online context and to our study. Cronbach’s alpha for each measure is reported below.

Impulsivity was measured using five items from the impulsiveness scale by Eysenck et al. (1985), which specifically separates impulsivity from risk-taking. Instructions asked participants: “Please tell us how you tend to go about making decisions on a day-to-day basis.” The focus was on retaining appropriate item stems. Because scales with dichotomous response options and fewer items tend to have much lower reliability (Peter, 1979), we rephrased the original questions as personal statements and replaced the original dichotomous response options with Likert-type options. An example item is: “I buy things on impulse.” Each item had five response options capturing how frequently participants engaged in the behavior, ranging from (1) “never” to (5) “always.” The fourth item was reverse-scored and the fifth item was excluded (α = 0.74, M = 2.27, SD = 0.58).
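Reverse-scoring and the internal-consistency coefficient (Cronbach’s α) reported for each scale can be sketched as below, using the standard formula α = k/(k − 1) · (1 − Σσᵢ² / σ²_total) for k items. The helper names are hypothetical, reverse-scoring on a 1 to 5 scale is assumed to map x to 6 − x, and the response matrix is invented purely to exercise the code; it is not study data.

```python
from statistics import mean, variance


def reverse_score(value, low=1, high=5):
    """Reverse a Likert response on a low..high scale (e.g., 2 -> 4 on a 1-5 scale)."""
    return low + high - value


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    if len(responses) < 2 or len(responses[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    k = len(responses[0])
    items = list(zip(*responses))  # transpose rows (people) into columns (items)
    item_variances = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)


def score_scale(raw, reverse_items=(), drop_items=()):
    """Reverse-score and drop items (0-based indices); return (alpha, per-person means)."""
    cleaned = [
        [reverse_score(v) if i in reverse_items else v
         for i, v in enumerate(row) if i not in drop_items]
        for row in raw
    ]
    return cronbach_alpha(cleaned), [mean(row) for row in cleaned]


# Invented 1-5 responses to five items: item 4 (index 3) is reverse-keyed and
# item 5 (index 4) is dropped, mirroring the impulsivity scoring described above.
raw = [
    [2, 3, 2, 4, 1],
    [1, 1, 2, 5, 3],
    [4, 4, 3, 2, 2],
    [3, 2, 3, 3, 5],
    [5, 4, 5, 1, 4],
]
alpha, person_means = score_scale(raw, reverse_items={3}, drop_items={4})
print(round(alpha, 2), person_means)
```

Scale scores are taken here as the mean of the retained items, which keeps them on the original 1 to 5 metric; whether the study used item means or sums is an assumption.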

Risk-taking was measured using three items from Dahlbäck (1990). This scale was chosen because it considers risky behavior in relation to other people’s behavior, which was relevant to a study on social influence. An example item is “I think that I am often less cautious than people in general.” Response options ranged from (1) “strongly disagree” to (5) “strongly agree.” The third item was reverse-scored (α = 0.62, M = 2.31, SD = 0.73).

Self-disclosure was measured with four items from the International Personality Item Pool (IPIP, 2014) and one item from Wheeless (1978). The instructions were as follows: “Please tell us to what extent you share personal information about yourself on a day-to-day basis.” An example item is: “I share and express my private thoughts to others.” Response options ranged from (1) “never” to (5) “always” (α = 0.77, M = 2.93, SD = 0.60).

Sociability was measured using four items from Aluja et al. (2010), retaining the original instructions. An example item is: “I have a rich social life.” Response options ranged from (1) “strongly disagree” to (4) “agree strongly” (α = 0.85, M = 2.47, SD = 0.73).

Control questions included the following: “Do you normally accept cookies on websites?” Participants could select either “Yes” or “No.” Demographics were also collected, including age and gender (each with a “prefer not to say” option), in order to describe the sample.

To explore the hypotheses, we first conducted separate analyses for each hypothesis and then collectively modeled the influence of social framing and personality using logistic regression.

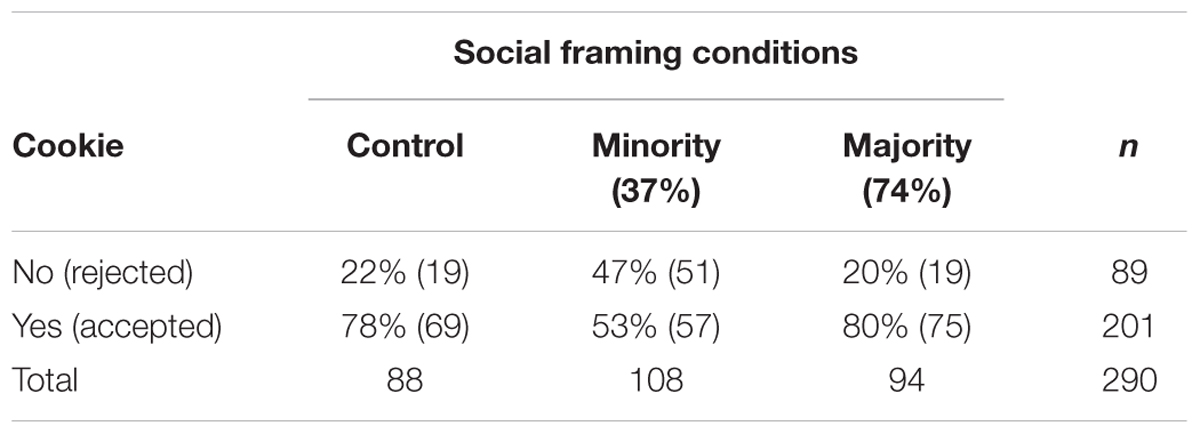

As predicted, the use of social norms in the cookie dialog had an effect on cookie acceptance (see Table 1). It was hypothesized that people would reject the cookie if they believed that like-minded others were doing the same, and our findings support this prediction. A chi-square analysis revealed a statistically significant effect across the three conditions [χ2(2) = 22.15, p < 0.001], and Cramer’s V (V = 0.276, within the 0.25–0.30 range) indicates a moderately strong relationship between social framing and subsequent acceptance. The cookie acceptance data in Table 1 show that fewer people accepted the cookie when a minority norm was present, a significant effect as measured by a pairwise comparison [χ2(1) = 13.87, p < 0.001; φ = -0.266]. Note, however, that there was no statistically significant difference between the control group and the majority norm group [χ2(1) = 0.05, p = 0.819]. This is unproblematic: default settings typically urge participants to accept cookies, so the control condition would simply reflect this bias toward cookie acceptance. For completeness, the two social framing groups also differed significantly from each other [χ2(1) = 16.19, p < 0.001; φ = 0.283].
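The effect size quoted here can be recovered from the reported test statistic. The sketch below computes Pearson’s χ² (without continuity correction) for a table of counts, and Cramér’s V from χ², N and the table dimensions. Because the per-condition counts in Table 1 are not reproduced in this text, the usage line plugs in only the reported χ2(2) = 22.15 and N = 290; the helper names are illustrative.

```python
from math import sqrt


def chi_square(table):
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    return stat


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramer's V = sqrt(chi2 / (n * (min(r, c) - 1))); equals |phi| for a 2 x 2 table."""
    return sqrt(chi2 / (n * (min(n_rows, n_cols) - 1)))


# Reported omnibus test: 3 conditions x 2 responses (accept / decline), N = 290.
print(round(cramers_v(22.15, 290, 3, 2), 3))  # 0.276
```

For the 2 × 2 pairwise comparisons the same formula reduces to |φ| = √(χ²/n), where n is the number of participants in the two groups being compared.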

TABLE 1. Cookie responses based on social group references (percentages reflect distribution of participants by condition).

We predicted that four personality variables would affect cookie acceptance: impulsivity, risk-taking, self-disclosure and sociability (see means in Table 2). To explore this, we divided participants into two groups, those who accepted and those who declined the cookie, and compared the groups on each of these traits using analysis of covariance (controlling for age and gender; p-values in these analyses were adjusted for multiple comparisons). As predicted, participants in the cookie acceptance group had higher impulsivity scores [F(1,288) = 6.35, p = 0.012, η²p = 0.02] and higher risk-taking scores [F(1,288) = 11.66, p = 0.001, η²p = 0.04] than those in the cookie rejection group. These effect sizes suggest a small to moderate effect of personality. No significant differences were obtained for either willingness to disclose information [F(1,288) = 0.03, p = 0.857] or sociability [F(1,288) = 0.32, p = 0.571].
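The effect sizes attached to these F tests are consistent with partial eta squared, which can be recovered from an F ratio and its degrees of freedom as η²p = F·df₁ / (F·df₁ + df₂). The sketch below assumes that this is the statistic being reported and reproduces both values from F(1,288); the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


for label, f in [("impulsivity", 6.35), ("risk-taking", 11.66)]:
    print(label, round(partial_eta_squared(f, 1, 288), 2))
# impulsivity 0.02
# risk-taking 0.04
```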

In this section we explore whether people with different personality characteristics respond differently to the experimental manipulations in terms of cookie acceptance. As cookie acceptance was associated with greater impulsivity and risk-taking, we focused on group differences in these two factors. Median splits were used to create high and low groups for impulsivity (median = 2.25) and for risk-taking (median = 2.33) (Table 3).
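A median split of the kind used here can be sketched in a few lines. How the study treated participants scoring exactly at the median is not reported, so the tie rule below (ties go to the low group) is an assumption, and the scores in the example are invented.

```python
from statistics import median


def median_split(scores):
    """Label each score 'high' (above the median) or 'low' (at or below it).

    Assigning ties at the median to the low group is an assumption; the
    study does not say how scores equal to the median were handled.
    """
    cut = median(scores)
    return cut, ["high" if s > cut else "low" for s in scores]


# Invented scale means (not study data).
cut, groups = median_split([1.75, 2.0, 2.25, 2.5, 3.0])
print(cut, groups)  # 2.25 ['low', 'low', 'low', 'high', 'high']
```

With short scales, many participants can share the median score, so the tie rule can noticeably change group sizes; this is one reason median splits are often treated as a descriptive convenience rather than a substitute for modeling the continuous score.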

Looking first at the high vs. low impulsive individuals depicted in column 1 (control condition), we see that the impulsivity effect was reliable in the control condition, with highly impulsive individuals being much more likely to accept the cookie than less impulsive individuals [χ2(1) = 4.930, p < 0.05; φ = 0.237]. However, there was no statistically reliable effect of impulsivity in either the minority [χ2(1) = 3.141, p = 0.058; φ = 0.171] or the majority social framing condition [χ2(1) = 0.774, p = 0.271; φ = 0.091].

Turning next to high vs. low risk takers, the pattern is slightly different. There was no significant difference in cookie acceptance between high and low risk takers in either the control [χ2(1) = 2.498, p = 0.094; φ = 0.168] or the majority framing condition [χ2(1) = 0.324, p = 0.377; φ = 0.059]. However, there was a significant difference between high and low risk takers in the minority social framing condition [χ2(1) = 5.302, p < 0.05; φ = 0.222], with 64% of high risk takers accepting the cookie compared to 41% of low risk takers.

These findings suggest that behavioral nudges serve to attenuate individual differences in privacy decision-making that derive from two personality characteristics: impulsivity and risk-taking, although clearly more research would be required to understand the factors at play here.

To investigate these findings further, we carried out a hierarchical logistic regression using the Enter method, in which Model 1 included the control variables (age and gender), Model 2 explored the main effect of social frame, Model 3 explored the effect of personality and, finally, Model 4 explored interactions between the personality variables and social frames. See Table 4 for model coefficients in predicting cookie acceptance.
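Each step of a hierarchical logistic regression is typically evaluated with a likelihood-ratio χ², twice the gain in log-likelihood over the previous model, with degrees of freedom equal to the number of added parameters; we assume that is the statistic reported for each model here. The sketch below shows that statistic and a closed-form upper-tail probability that holds only for even df. As a check, it reproduces the p-values reported for Model 1 [χ2(2) = 0.94, p = 0.625] and Model 4 [χ2(14) = 10.73, p = 0.707]. The helper names are hypothetical; in practice a statistics package would supply both quantities for any df.

```python
from math import exp


def lr_statistic(loglik_reduced, loglik_full):
    """Likelihood-ratio chi-square for nested models: 2 * (LL_full - LL_reduced)."""
    return 2 * (loglik_full - loglik_reduced)


def chi2_sf_even(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with even df.

    For even df the survival function has the closed form
    exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)**k / k!.
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive even df")
    half = x / 2
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k  # builds (x/2)**k / k! incrementally
        total += term
    return exp(-half) * total


# Checks against the block statistics reported for Models 1 and 4.
print(round(chi2_sf_even(0.94, 2), 3))    # 0.625
print(round(chi2_sf_even(10.73, 14), 3))  # 0.707
```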

The first model, including the control variables (age and gender), showed a poor fit to the data [χ2(2) = 0.94, p = 0.625]. After controlling for age and gender, social framing in Model 2 was found to significantly improve the model [χ2(4) = 20.00, p < 0.05], explaining between 6 and 10% of the variance in cookie acceptance (Cox and Snell R2 = 0.07; Nagelkerke R2 = 0.10; Hosmer and Lemeshow R2 = 0.06). Overall, 69.3% of cases were correctly classified by this model, and the Wald statistic showed that only the minority social frame contributed significantly to the prediction of cookie acceptance (Wald = 12.44, b = -1.15, df = 1, p < 0.001). This is because the control group was used as the reference group in the model, and our earlier findings showed that the control and majority groups accepted cookies in a similar pattern. The minority framing has a significant negative coefficient, indicating that the presence of a minority social referent makes cookie acceptance less likely. Its odds ratio is less than 1, meaning that allocation to this group decreases the odds of accepting the cookie. Conversely, the odds ratio for the majority social referent is 1.07, indicating that the odds of accepting the cookie in the majority frame were 1.07 times those in the control condition; however, the majority social referent does not significantly predict cookie acceptance.

Model 3 introduced the personality factors, which further improved the model fit [χ2(4) = 12.09, p < 0.05], explaining between 9 and 15% of the variance in cookie acceptance (Cox and Snell R2 = 0.11; Nagelkerke R2 = 0.15; Hosmer and Lemeshow R2 = 0.09), and raised the proportion of correctly classified cases to 71.4%. The Wald statistics showed that only one personality factor, risk taking, contributed significantly to the prediction of cookie acceptance (Wald = 5.84, b = 0.63, df = 1, p < 0.05).

Model 4 did not significantly improve the model [χ2(14) = 10.73, p = 0.707], indicating no significant interactions either between social framing and personality or among the personality factors. Model 3 was therefore the best-fitting model for the sampled data. Risk taking has a significant positive coefficient and an odds ratio of 1.88, indicating that individuals higher in risk taking had higher odds of accepting the cookie.
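The p-values for the block-wise chi-square tests reported above can be recomputed from each statistic and its degrees of freedom. This sketch relies on the closed form of the chi-square survival function that holds for even degrees of freedom, so it needs only the Python standard library; odd df (such as the single-df Wald tests) would need a general routine such as `scipy.stats.chi2.sf`. It recovers p = 0.625 for Model 1 and p = 0.707 for Model 4, and confirms that the Model 2 and Model 3 statistics fall below 0.05.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with even df.

    For df = 2k the survival function has the closed form
    exp(-x/2) * sum_{i=0}^{k-1} (x/2)**i / i!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only covers positive even df")
    half = x / 2.0
    total = sum(half ** i / math.factorial(i) for i in range(df // 2))
    return math.exp(-half) * total


# Chi-square statistics and df exactly as reported in the text
blocks = {
    "Model 1 (age, gender)": (0.94, 2),
    "Model 2 (social frame)": (20.00, 4),
    "Model 3 (personality)": (12.09, 4),
    "Model 4 (interactions)": (10.73, 14),
}

for name, (chi2, df) in blocks.items():
    print(f"{name}: chi2({df}) = {chi2:.2f}, p = {chi2_sf_even_df(chi2, df):.3f}")
```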

The logistic regression results present a slightly different picture from our earlier analyses. They confirm our chi-square findings regarding differences in social framing. However, only risk taking contributes significantly to the prediction of cookie acceptance in the regression model. The non-significant result for impulsivity is likely due to two factors: (1) the tendency of logistic regression to reduce the unique contribution of correlated predictors and (2) a potential suppression effect [the correlation between impulsivity and risk taking is significant (r = 0.589, p < 0.001)]. Chi-square analyses avoid these limitations by isolating the influence of each of the two factors. Impulsivity significantly predicts acceptance when risk taking is removed, reflecting the high inter-correlation between the two. Lastly, we were unable to identify interactions between personality and social framing. The large number of predictors in the logistic regression, together with their inter-correlations, means that any such interactions may have been masked in the regression.
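To give a sense of scale for the overlap between impulsivity and risk taking, the reported correlation can be converted into shared variance and a variance inflation factor (VIF). This is an illustrative diagnostic added here, not part of the reported analysis; it uses the two-predictor formula VIF = 1/(1 − r²). Because the regression also contained other predictors, and adding predictors can only raise the R² on which a VIF is based, this pairwise value is a lower bound on the VIF for either trait in the full model.

```python
def shared_variance(r: float) -> float:
    """Proportion of variance two predictors share (the squared correlation)."""
    return r ** 2


def vif_two_predictors(r: float) -> float:
    """VIF for either of two predictors correlated at r, ignoring all other predictors."""
    return 1.0 / (1.0 - r ** 2)


r_impulsivity_risk = 0.589  # correlation reported in the text

print(f"shared variance = {shared_variance(r_impulsivity_risk):.3f}")
print(f"VIF (two-predictor lower bound) = {vif_two_predictors(r_impulsivity_risk):.2f}")
```

With r = 0.589 the two traits share roughly 35% of their variance, which is the overlap that competes for explanatory credit when both enter the model together.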

In this study we measured cookie acceptance directly rather than relying on self-reported behavior. This is important because stated intentions do not translate well into actual behaviors, which Crossler et al. (2013) identify as a major concern for human-centric studies in privacy and security. We explored social framing effects by invoking different social norms in a cookie dialog and found that norms indicating low cookie acceptance were able to drive people away from the ‘default’ position of accepting the cookie. We also explored the personality characteristics of risk-taking, impulsivity, sociability and self-disclosure, and found that the first two influenced cookie acceptance, with risk taking the more reliable predictor. We did not find evidence for an interaction between the personality factors and the social norm conditions. We discuss our predictions and the interpretation of our findings in more detail in the next section, before turning to the limitations and implications of the work and our conclusions.

The first hypothesis predicted that a social nudge might be capable of moving people away from accepting cookies as the normative or ‘control behavior.’ This hypothesis was supported. When a cookie dialog referred to a low cookie acceptance rate as a social norm, participants were nudged away from accepting the cookie. These results are in line with previous findings that illustrate behavior change in the face of social nudges (Stok et al., 2012) and with studies showing the effects of social nudges on privacy behavior (Goecks and Mynatt, 2005; Besmer et al., 2010). Note that the presence of a majority social acceptance norm in the cookie message did not produce any systematic change in behavior compared with the control condition, presumably because the ‘majority norm’ already reflected default acceptance behavior. We note the implications here for current and future European Union directives on the use of cookies: cookie acceptance is currently recognized as the default, but more detailed information about the privacy implications of cookies may be introduced in future. Our research thus contributes to current understanding of ‘social proof’, building on the work of Das et al. (2014) and recognizing evidence from other contexts (see Glynn et al., 2009; Stok et al., 2012). Taken together, these studies show how perceived social norms can directly affect decision-making.

The second hypothesis focused on the role of personality in decision-making, following work by Riquelme and Román (2014). We hypothesized that participants would be more likely to accept cookies if they were also (i) more impulsive, (ii) more willing to share information, (iii) greater risk takers and (iv) more sociable. This hypothesis was supported for only two of the four traits (impulsivity and risk-taking), and most strongly for risk taking. There was some indication that more impulsive individuals were more likely to accept cookies, which fits with findings that impulsive individuals are less likely to deliberate on their options (see Halpern, 2002; Magid and Colder, 2007). Risk taking was a stronger predictor of cookie acceptance than impulsivity in this study, and it has previously been found to predict other security behaviors (see Grossklags and Reitter, 2014). This is consistent with findings by Zeelenberg et al. (1996) that risk takers are less concerned about the repercussions of their actions and less likely to consider any subsequent regret.

No evidence was found to link cookie acceptance to either self-disclosure or sociability. Perhaps the link between cookie acceptance, on the one hand, and self-disclosure and privacy concern, on the other, was too tenuous. Participants may not have recognized that cookies can also involve sharing potentially identifying and/or personal information. This suggests that context plays an important role, as different environmental cues may influence the disclosure of private information (John et al., 2011).

Nurse et al. (2011) proposed that it is imperative to design systems and communication in a way that caters to individual strengths. Personality traits may shape user strengths and weaknesses in relation to privacy and security behaviors. Based on our results, risk taking and impulsivity may be unfavorable traits in security-related decision-making when no nudge is present. Our third hypothesis focused on the intersection between personality differences and social framing, which we explored for impulsivity and risk-taking by investigating group differences. We do not wish to put too much weight on these findings, as our analysis relied on median splits into high vs. low impulsivity and high vs. low risk-taking groups of uneven sizes; however, we would draw attention to the fact that nudges were effective for vulnerable cohorts such as high risk-takers. This is important when considering privacy-by-design approaches that rely upon an understanding of human behavior, as the ways in which behavioral nudges might be targeted at specific personality types are rarely considered. For example, nudges could be employed to move risk-taking individuals away from problematic default behaviors. Such a social manipulation might improve the privacy and security behaviors of risk-takers without unnecessarily triggering the concerns of those who are already risk-averse.
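The caveat about uneven group sizes can be made concrete. The text does not say how the median splits were computed or how ties were handled, so the sketch below is a hypothetical illustration: the function `median_split` and its tie rule (scores equal to the median go to the low group) are our assumptions, and the scores are invented rather than taken from this study. It shows one way a median split can yield unequal groups when several scores sit exactly at the median.

```python
from statistics import median


def median_split(scores: list[float]) -> list[str]:
    """Label each score 'high' if it lies above the sample median, otherwise 'low'.

    Hypothetical tie rule: scores exactly at the median go to the 'low' group.
    When many scores sit at the median, this alone makes the groups unequal.
    """
    cut = median(scores)
    return ["high" if s > cut else "low" for s in scores]


# Hypothetical risk-taking scores, invented for illustration (not study data)
scores = [2, 3, 3, 4, 4, 4, 4, 5, 6, 7]
labels = median_split(scores)
print("low:", labels.count("low"), "high:", labels.count("high"))
```

Here the median is 4, so the four tied scores join the low group, giving 7 low and 3 high; crossing such groups with three framing conditions can make the resulting cells more uneven still.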

Our study does have its limitations. Because we measured cookie acceptance behavior directly, we were limited to a binary acceptance decision, which restricted our ability to perform a meaningful parametric assessment of interaction effects between personality and social framing. Also, while we found that social nudging was effective, our interventions may have produced only short-term behavioral change; other forms of enquiry would be needed to explore longer-term, enduring behavior change and norm internalization (e.g., Mols et al., 2015). Finally, the study took place in a research environment in which security may not have been perceived as a concern. MTurk workers may have assumed that the study posed no danger to them, reducing their concern about potential security risks. This may not be representative of other groups or contexts and may limit the generalizability of the findings beyond MTurk (Casler et al., 2013; Paolacci and Chandler, 2014).

To date, a number of researchers have exploited known cognitive biases and habits to help users make more informed choices (Acquisti, 2009). For example, privacy nudges have been employed to reduce unintended disclosure of information to a wider audience on social media sites (Wang et al., 2014), to influence privacy settings on mobile phones and similar devices (Choe et al., 2013; Turland et al., 2015), and to nudge individuals away from privacy-invasive apps (see Choe et al., 2013). In the related security domain, nudges have also been used to reduce the “update later” response to security updates (Zhang-Kennedy et al., 2014) and to encourage more secure passwords (Forget et al., 2008). We have added to this work, and we offer the following recommendations for future work. Firstly, nudges appear to be most effective when they are present at the point at which a decision is made, but we know relatively little about how their influence may wane over time. Secondly, social norm nudges ought to be credible, and care must be taken over the way that they map onto existing social norms (e.g., Stok et al., 2012). In our own data we saw little evidence for the influence of a ‘majority norm’ and have interpreted this in terms of existing default behaviors. However, the data presented in Table 3 raise the possibility of a personality-linked baseline effect, in which more conservative individuals with a relatively low cookie acceptance rate might be influenced to accept cookies when presented with a majority social norm. Baseline behaviors are important when exploring norm-based nudges, as has been observed in relation to the use of social nudges in other contexts (Allcott, 2011). Norm-based nudges are therefore likely to be most useful when the default behavior is well known.

A variety of other future research avenues are also worth considering. First, work on affect and risk communication (Visschers et al., 2012) may provide further ideas for nudging in the area of information security. Second, the effect of framing may also be influenced by individual characteristics not investigated in this study (Tversky and Kahneman, 1981; Kühberger, 1998), including conscientiousness, agreeableness (Levin et al., 2002), risk preferences and gender (Druckman, 2001; Levin et al., 2002). More research on framing effects and the role of individual differences in decision-making (see Levin et al., 2002) may help us to better understand under what circumstances and for whom framing is effective. This would then allow practitioners to develop choice architectures capable of exerting the greatest influence. We note here that behavioral researchers working in other contexts have shown the importance of individual differences in tailoring a behavioral nudge appropriately. For example, Costa and Kahn (2013), working in the field of energy conservation, found that providing reports on one's own and one's peers' energy consumption was an effective nudge for political liberals but was ineffective with political conservatives. Finally, the effectiveness of social norms is likely to depend on the extent to which the individual identifies with the referent group (Stok et al., 2012). Future research may wish to explore this in more depth as part of a wider effort to understand how tailored social information may influence an individual.

The authors worked as a team and made contributions throughout. PB and LC conceived the research program as part of the original funding bid and led the team, contributing to the design, the interpretation of data and the final stages of writing up. DJ, as lead researcher, contributed to the design, supervised the running of the project, conducted the statistical analyses together with JB and wrote the first draft of the manuscript. JT was responsible for designing and building the data collection platform.

The work presented in this paper was funded through the Choice Architecture for Information Security (ChAIse) project (EP/K006568/1) by the Engineering and Physical Sciences Research Council (EPSRC), UK, and Government Communications Headquarters (GCHQ), UK, as part of the Research Institute in Science of Cyber Security.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors would further like to thank colleagues from Computing Science at Newcastle University for their support and contributions to this research. We would also like to thank the reviewers for their feedback.

Acquisti, A. (2009). Nudging Privacy: the behavioral economics of personal information. IEEE Secur. Priv. Mag. 7, 82–85. doi: 10.1109/MSP.2009.163

Acquisti, A., and Gross, R. (2006). “Imagined communities: awareness, information sharing, and privacy on the Facebook,” in Proceedings of the 6th International Conference on Privacy Enhancing Technologies, Cambridge, 36–58. doi: 10.1007/11957454_3

Acquisti, A., John, L., and Loewenstein, G. (2013). What is privacy worth? J. Leg. Stud. 42, 249–274. doi: 10.1086/671754

Acquisti, A., John, L. K., and Loewenstein, G. (2012). The impact of relative standards on the propensity to disclose. J. Mark. Res. 49, 160–174. doi: 10.1509/jmr.09.0215

Ajzen, I. (1991). The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211. doi: 10.1016/0749-5978(91)90020-T

Allcott, H. (2011). Social norms and energy conservation. J. Public Econ. 95, 1082–1095. doi: 10.1016/j.jpubeco.2011.03.003

Aluja, A., Kuhlman, M., and Zuckerman, M. (2010). Development of the Zuckerman–Kuhlman–Aluja Personality Questionnaire (ZKA–PQ): a factor/facet version of the Zuckerman–Kuhlman Personality Questionnaire (ZKPQ). J. Pers. Assess. 92, 416–431. doi: 10.1080/00223891.2010.497406

Anderson, C., and Agarwal, R. (2010). Practicing safe computing: a multimethod empirical examination of home computer user security behavioral intentions. MIS Q. 34, 613–643.

Baumgartner, S. E., Valkenburg, P. M., and Peter, J. (2010). Assessing causality in the relationship between adolescents’ risky sexual online behavior and their perceptions of this behavior. J. Youth Adolesc. 39, 1226–1239. doi: 10.1007/s10964-010-9512-y

BBC (2011). New Net Rules Set to Make Cookies Crumble. Available at: http://www.bbc.co.uk/news/technology-12668552 [accessed April 23, 2016]

Besmer, A., Watson, J., and Lipford, H. R. (2010). “The impact of social navigation on privacy policy configuration,” in Proceedings of the Sixth Symposium on Usable Privacy and Security - SOUPS ’10, (New York, NY: ACM Press), doi: 10.1145/1837110.1837120

Böhme, R., and Köpsell, S. (2010). “Trained to accept?,” in Proceedings of the 28th International Conference on Human factors in Computing Systems - CHI ’10, (New York, NY: ACM Press), doi: 10.1145/1753326.1753689

Brown, I. (2014). Britain’s smart meter programme: a case study in privacy by design. Int. Rev. Law Comput. Technol. 28, 172–184. doi: 10.1080/13600869.2013.801580

Burkley, E., Anderson, D., and Curtis, J. (2011). You wore me down: self-control strength and social influence. Soc. Pers. Psychol. Compass 5, 487–499. doi: 10.1111/j.1751-9004.2011.00367.x

Casler, K., Bickel, L., and Hackett, E. (2013). Separate but equal? A comparison of participants and data gathered via Amazon’s MTurk, social media, and face-to-face behavioral testing. Comput. Hum. Behav. 29, 2156–2160. doi: 10.1016/j.chb.2013.05.009

Chen, P., and Vazsonyi, A. T. (2011). Future orientation, impulsivity, and problem behaviors: a longitudinal moderation model. Dev. Psychol. 47, 1633–1645. doi: 10.1037/a0025327

Cheung, C., Lee, Z. W. Y., and Chan, T. K. H. (2015). Self-disclosure in social networking sites. Internet Res. 25, 279–299. doi: 10.1108/IntR-09-2013-0192

Choe, E. K., Jung, J., Lee, B., Fisher, K., and Kotzé, P. (2013). “Nudging people away from privacy-invasive mobile apps through visual framing,” in Human-Computer Interaction – INTERACT 2013: Lecture Notes in Computer Science, eds G. Marsden, G. Lindgaard, J. Wesson, and M. Winckler (Berlin: Springer), 74–91. doi: 10.1007/978-3-642-40477-1_5

Costa, D. L., and Kahn, M. E. (2013). Energy conservation “nudges” and environmentalist ideology: evidence from a randomized residential electricity field experiment. J. Eur. Econ. Assoc. 11, 680–702. doi: 10.1111/jeea.12011

Crossler, R. E., Johnston, A. C., Lowry, P. B., Hu, Q., Warkentin, M., and Baskerville, R. (2013). Future directions for behavioral information security research. Comput. Secur. 32, 90–101. doi: 10.1016/j.cose.2012.09.010

Dahlbäck, O. (1990). Personality and risk-taking. Pers. Individ. Dif. 11, 1235–1242. doi: 10.1016/0191-8869(90)90150-P

Das, S., Kramer, A. D. I., Dabbish, L. A., and Hong, J. I. (2014). “Increasing security sensitivity with social proof?: a large-scale experimental confirmation,” in Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, (New York, NY: ACM Press), 739–749. doi: 10.1145/2660267.2660271

Dickman, S. J. (1990). Functional and dysfunctional impulsivity: personality and cognitive correlates. J. Pers. Soc. Psychol. 58, 95–102. doi: 10.1037/0022-3514.58.1.95

DiGioia, P., and Dourish, P. (2005). “Social navigation as a model for usable security,” in Proceedings of the 2005 Symposium On Usable Privacy and Security - SOUPS ’05, (New York, NY: ACM Press), 101–108. doi: 10.1145/1073001.1073011

Druckman, J. N. (2001). Evaluating framing effects. J. Econ. Psychol. 22, 91–101. doi: 10.1016/S0167-4870(00)00032-5

Egelman, S., and Peer, E. (2015). Predicting privacy and security attitudes. ACM SIGCAS Comput. Soc. 45, 22–28. doi: 10.1145/2738210.2738215

Esere, M. O., Omotosho, J. A., and Idowu, A. I. (2012). “Self-disclosure in online counselling,” in Online Guidance and Counseling. Towards effectively applying technology, eds B. I. Popoola and O. F. Adebowale (Hershey, PA: IGI Global), 180–189.

Eysenck, H. (1990). “Biological dimensions of personality,” in Handbook of Personality: Theory and Research, ed. L. A. Pervin (New York, NY: Guilford), 244–276.

Eysenck, S. B. G., Pearson, P. R., Easting, G., and Allsopp, J. F. (1985). Age norms for impulsiveness, venturesomeness and empathy in adults. Pers. Individ. Dif. 6, 613–619. doi: 10.1016/0191-8869(85)90011-X

Figner, B., and Weber, E. U. (2011). Who takes risks when and why?: determinants of risk taking. Curr. Dir. Psychol. Sci. 20, 211–216. doi: 10.1177/0963721411415790

Forget, A., Chiasson, S., van Oorschot, P. C., and Biddle, R. (2008). “Persuasion for stronger passwords: motivation and pilot study,” in Proceedings of the 3rd International Conference on Persuasive Technology PERSUASIVE ’08, (Berlin: Springer-Verlag), 140–150. doi: 10.1007/978-3-540-68504-3_13

Franken, I. H. A., and Muris, P. (2005). Individual differences in decision-making. Pers. Individ. Dif. 39, 991–998. doi: 10.1016/j.paid.2005.04.004

Glynn, C. J., Huge, M. E., and Lunney, C. A. (2009). The influence of perceived social norms on college students’ intention to vote. Polit. Commun. 26, 48–64. doi: 10.1080/10584600802622860

Goecks, J., Edwards, W. K., and Mynatt, E. D. (2009). “Challenges in supporting end-user privacy and security management with social navigation,” in Proceedings of the 5th Symposium on Usable Privacy and Security - SOUPS ’09, (New York, NY: ACM Press), doi: 10.1145/1572532.1572539

Goecks, J., and Mynatt, E. D. (2005). “Supporting privacy management via community experience and expertise,” in Communities and Technologies 2005, eds P. Van Den Besselaar, G. De Michelis, J. Preece, and C. Simone (Dordrecht: Springer), 397–417. doi: 10.1007/1-4020-3591-8_21

Grossklags, J., and Reitter, D. (2014). “How task familiarity and cognitive predispositions impact behavior in a security game of timing,” in 2014 IEEE 27th Computer Security Foundations Symposium, (Piscataway, NJ: IEEE), 111–122. doi: 10.1109/CSF.2014.16

Halpern, D. F. (2002). Thought and Knowledge: An Introduction to Critical Thinking. Abingdon: Routledge.

Hawthorn, N. (2015). 10 Things You Need to Know about the new EU Data Protection Regulation. Available at: http://www.computerworlduk.com/security/10-things-you-need-know-about-new-eu-data-protection-regulation-3610851/ [accessed April 23, 2016]

Hoerger, M. (2010). Participant dropout as a function of survey length in internet-mediated university studies: implications for study design and voluntary participation in psychological research. Cyberpsychol. Behav. Soc. Netw. 13, 697–700. doi: 10.1089/cyber.2009.0445

Information Provider’s Guide (2015). Information Provider’s Guide. Available at: http://ec.europa.eu/ipg/basics/legal/cookies/index_en.htm#section_4 [accessed April 23, 2016]

IPIP (2014). Self-Disclosure. International Personality Item Pool. Available at: http://ipip.ori.org/newTCIKey.htm#Self-Disclosure

Jacobson, R. P., Mortensen, C. R., and Cialdini, R. B. (2011). Bodies obliged and unbound: differentiated response tendencies for injunctive and descriptive social norms. J. Pers. Soc. Psychol. 100, 433–448. doi: 10.1037/a0021470

Jetten, J., Haslam, C., and Haslam, S. A. (eds) (2012). The Social Cure: Social Identity, Health and Well-Being. Hove: Psychology Press.

John, L. K., Acquisti, A., and Loewenstein, G. (2011). Strangers on a plane: context-dependent willingness to divulge sensitive information. J. Consum. Res. 37, 858–873. doi: 10.1086/656423

Kaplan, A. M., and Haenlein, M. (2010). Users of the world, unite! The challenges and opportunities of social media. Bus. Horiz. 53, 59–68. doi: 10.1016/j.bushor.2009.09.003

Knijnenburg, B. P., and Kobsa, A. (2013). Making decisions about privacy. ACM Trans. Interact. Intell. Syst. 3, 1–23. doi: 10.1145/2499670

Kühberger, A. (1998). The influence of framing on risky decisions: a meta-analysis. Organ. Behav. Hum. Decis. Process. 75, 23–55. doi: 10.1006/obhd.1998.2781

Lauriola, M., and Levin, I. P. (2001). Personality traits and risky decision-making in a controlled experimental task: an exploratory study. Pers. Individ. Dif. 31, 215–226. doi: 10.1016/S0191-8869(00)00130-6

Lee, D.-H., Im, S., and Taylor, C. R. (2008). Voluntary self-disclosure of information on the Internet: a multimethod study of the motivations and consequences of disclosing information on blogs. Psychol. Mark. 25, 692–710. doi: 10.1002/mar.20232

Levin, I. P., Gaeth, G. J., Schreiber, J., and Lauriola, M. (2002). A new look at framing effects: distribution of effect sizes, individual differences, and independence of types of effects. Organ. Behav. Hum. Decis. Process. 88, 411–429. doi: 10.1006/obhd.2001.2983

Lucas, R. E., Diener, E., Grob, A., Suh, E. M., and Shao, L. (2000). Cross-cultural evidence for the fundamental features of extraversion. J. Pers. Soc. Psychol. 79, 452–468. doi: 10.1037/0022-3514.79.3.452

Magid, V., and Colder, C. R. (2007). The UPPS impulsive behavior scale: factor structure and associations with college drinking. Pers. Individ. Dif. 43, 1927–1937. doi: 10.1016/j.paid.2007.06.013

Mata, R., Josef, A. K., Samanez-Larkin, G. R., and Hertwig, R. (2011). Age differences in risky choice: a meta-analysis. Ann. N. Y. Acad. Sci. 1235, 18–29. doi: 10.1111/j.1749-6632.2011.06200.x

McClintock, C. G., and Liebrand, W. B. (1988). Role of interdependence structure, individual value orientation, and another’s strategy in social decision making: a transformational analysis. J. Pers. Soc. Psychol. 55, 396–409. doi: 10.1037/0022-3514.55.3.396

McCrae, R. R., and Costa, P. T. (1985). Comparison of EPI and psychoticism scales with measures of the five-factor model of personality. Pers. Individ. Dif. 6, 587–597. doi: 10.1016/0191-8869(85)90008-X

Mols, F., Haslam, S. A., Jetten, J., and Steffens, N. K. (2015). Why a nudge is not enough: a social identity critique of governance by stealth. Eur. J. Polit. Res. 54, 81–98. doi: 10.1111/1475-6765.12073

Moon, Y. (2010). Elicit self-disclosure from consumers. J. Consum. Res. 26, 323–339. doi: 10.1111/j.1740-0929.2010.00817.x

Nurse, J. R. C., Creese, S., Goldsmith, M., and Lamberts, K. (2011). “Trustworthy and effective communication of cybersecurity risks: a review,” in Proceedings of International Workshop on Socio-Technical Aspects in Security and Trust (STAST), (New York, NY: IEEE), 60–68. doi: 10.1109/STAST.2011.6059257

Opentracker (2014). Third-party cookies vs first-party cookies. Available at: http://www.opentracker.net/article/third-party-cookies-vs-first-party-cookies [accessed April 23, 2016].

Paolacci, G., and Chandler, J. (2014). Inside the Turk: understanding Mechanical Turk as a participant pool. Curr. Dir. Psychol. Sci. 23, 184–188. doi: 10.1177/0963721414531598

Patil, S., Page, X., and Kobsa, A. (2011). “With a little help from my friends,” in Proceedings of the ACM 2011 Conference on Computer Supported Cooperative Work – CSCW 11, (New York, NY: ACM Press), 391. doi: 10.1145/1958824.1958885

Peacock, S. E. (2014). How web tracking changes user agency in the age of Big Data: the used user. Big Data Soc. 2, 1–11.

Peter, J. P. (1979). Reliability: a review of psychometric basics and recent marketing practices. J. Mark. Res. 16, 6–17. doi: 10.2307/3150868

Peterson, R. A. (1994). A meta-analysis of Cronbach’s coefficient alpha. J. Consum. Res. 21, 381–391. doi: 10.1086/209405

Posner, E. A. (2000). Law and social norms: the case of tax compliance. Va. Law Rev. 86, 1781–1819. doi: 10.2307/1073829

Raja, F., Hawkey, K., Jaferian, P., Beznosov, K., and Booth, K. S. (2010). “It’s too complicated, so I turned it off!: expectations, perceptions, and misconceptions of personal firewalls,” in Proceedings of the 3rd ACM Workshop on Assurable and Usable Security Configuration, (New York, NY: ACM Press), 53–62. doi: 10.1145/1866898.1866907

Riquelme, P. I., and Román, S. (2014). Is the influence of privacy and security on online trust the same for all type of consumers? Electron. Mark. 24, 135–149. doi: 10.1007/s12525-013-0145-3

Rolison, J. J., Hanoch, Y., and Wood, S. (2012). Risky decision making in younger and older adults: the role of learning. Psychol. Aging 27, 129–140. doi: 10.1037/a0024689

Romer, D., Duckworth, A. L., Sznitman, S., and Park, S. (2010). Can adolescents learn self-control? Delay of gratification in the development of control over risk taking. Prev. Sci. 11, 319–330. doi: 10.1007/s11121-010-0171-8

Rook, D. W., and Fisher, R. J. (1995). Normative influences on impulsive buying behavior. J. Consum. Res. 22, 305–313. doi: 10.1086/209452

Rosenstock, I. M. (1974). The health belief model and preventive health behavior. Health Educ. Behav. 2, 354–386. doi: 10.1177/109019817400200405

Sánchez, A. R., Cortijo, V., and Javed, U. (2014). Students’ perceptions of Facebook for academic purposes. Comput. Educ. 70, 138–149. doi: 10.1016/j.compedu.2013.08.012

Scholly, K., Katz, A. R., Gascoigne, J., and Holck, P. S. (2005). Using social norms theory to explain perceptions and sexual health behaviors of undergraduate college students: an exploratory study. J. Am. Coll. Health 53, 159–166. doi: 10.3200/JACH.53.4.159-166

Smith, H. J., Dinev, T., and Xu, H. (2011). Information privacy research: an interdisciplinary review. MIS Q. 35, 989–1015.

Stanford, M. S., Mathias, C. W., Dougherty, D. M., Lake, S. L., Anderson, N. E., and Patton, J. H. (2009). Fifty years of the barratt impulsiveness scale: an update and review. Pers. Individ. Dif. 47, 385–395. doi: 10.1016/j.paid.2009.04.008

Steinel, W., and De Dreu, C. K. W. (2004). Social motives and strategic misrepresentation in social decision making. J. Pers. Soc. Psychol. 86, 419–434. doi: 10.1037/0022-3514.86.3.419

Stok, F. M., de Ridder, D. T. D., de Vet, E., and de Wit, J. B. F. (2012). Minority talks: the influence of descriptive social norms on fruit intake. Psychol. Health 27, 956–970. doi: 10.1080/08870446.2011.635303

Terry, D. J., and Hogg, M. A. (1996). Group norms and the attitude-behavior relationship: a role for group identification. Pers. Soc. Psychol. Bull. 22, 776–793. doi: 10.1177/0146167296228002

Thaler, R. H., and Sunstein, C. R. (2008). Nudge: Improving Decisions about Health, Wealth and Happiness. Westminster: Penguin.

Thibaut, J., and Kelley, H. (1959). The Social Psychology of Groups. New York, NY: John Wiley & Sons, Inc.

Turland, J., Coventry, L., Jeske, D., Briggs, P., and van Moorsel, A. (2015). “Nudging towards security: developing an application for wireless network selection for android phones,” in Proceedings of the 2015 British HCI Conference on – British HCI ’15, (New York, NY: ACM Press), 193–201. doi: 10.1145/2783446.2783588

Tversky, A., and Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science 211, 453–458. doi: 10.1126/science.7455683

VanVoorhis, C., and Morgan, B. (2007). Understanding power and rules of thumb for determining sample sizes. Tutor. Quant. Methods Psychol. 3, 43–50.

Visschers, V. H. M., Wiedemann, P. M., Gutscher, H., Kurzenhäuser, S., Seidl, R., Jardine, C. G., et al. (2012). Affect-inducing risk communication: current knowledge and future directions. J. Risk Res. 15, 257–271. doi: 10.1080/13669877.2011.634521

Wang, Y., Leon, P. G., Acquisti, A., Cranor, L. F., Forget, A., and Sadeh, N. (2014). “A field trial of privacy nudges for Facebook,” in Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems – CHI ’14, (New York, NY: ACM Press), 2367–2376. doi: 10.1145/2556288.2557413

Wheeless, L. R. (1978). A follow-up study of the relationships among trust, disclosure, and interpersonal solidarity. Hum. Commun. Res. 4, 143–157. doi: 10.1111/j.1468-2958.1978.tb00604.x

Wills, T. A., Gibbons, F. X., Sargent, J. D., Gerrard, M., Lee, H.-R., and Dal Cin, S. (2010). Good self-control moderates the effect of mass media on adolescent tobacco and alcohol use: tests with studies of children and adolescents. Health Psychol. 29, 539–549. doi: 10.1037/a0020818

Zeelenberg, M., Beattie, J., van der Pligt, J., and de Vries, N. K. (1996). Consequences of regret aversion: effects of expected feedback on risky decision making. Organ. Behav. Hum. Decis. Process. 65, 148–158. doi: 10.1006/obhd.1996.0013

Zhang-Kennedy, L., Chiasson, S., and Biddle, R. (2014). “Stop clicking on ‘update later’: persuading users they need up-to-date antivirus protection,” in Persuasive Technology: Lecture Notes in Computer Science, eds A. Spagnolli, L. Chittaro, and L. Gamberini (New York, NY: Springer International Publishing), doi: 10.1007/978-3-319-07127-5_27

Keywords: privacy, cookie, nudge, risk-taking, impulsivity, social norms

Citation: Coventry LM, Jeske D, Blythe JM, Turland J and Briggs P (2016) Personality and Social Framing in Privacy Decision-Making: A Study on Cookie Acceptance. Front. Psychol. 7:1341. doi: 10.3389/fpsyg.2016.01341

Received: 25 May 2016; Accepted: 22 August 2016;

Published: 07 September 2016.

Edited by:

Mohamed Chetouani, Pierre-and-Marie-Curie University, France

Reviewed by:

Evangelos Karapanos, Cyprus University of Technology, Cyprus

Copyright © 2016 Coventry, Jeske, Blythe, Turland and Briggs. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lynne M. Coventry, lynne.coventry@northumbria.ac.uk; Pam Briggs, p.briggs@northumbria.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
