- 1Department of Cognitive Neuroscience, Maastricht University, Maastricht, Netherlands

- 2Department of Psychiatry and Mental Health, University of Cape Town, Cape Town, South Africa

- 3Department of Medical and Clinical Psychology, Tilburg University, Tilburg, Netherlands

- 4Laboratory for Translational Neuropsychiatry, Department of Neurosciences, KU Leuven, Leuven, Belgium

- 5Old Age Psychiatry, University Hospitals Leuven, Leuven, Belgium

There are many ways to assess face perception skills. In this study, we describe a novel task battery FEAST (Facial Expressive Action Stimulus Test) developed to test recognition of identity and expressions of human faces as well as stimulus control categories. The FEAST consists of a neutral and emotional face memory task, a face and shoe identity matching task, a face and house part-to-whole matching task, and a human and animal facial expression matching task. The identity and part-to-whole matching tasks contain both upright and inverted conditions. The results provide reference data of a healthy sample of controls in two age groups for future users of the FEAST.

Introduction

Face recognition is one of the most ubiquitous skills. The neural underpinnings of face perception are still a matter of debate. This is not surprising when one realizes that a face has a broad range of attributes. Identity is but one of these, and it is not yet clearly understood how a deficit in that area affects perception and recognition of other aspects of the face. Prosopagnosia, or the absence of normal face identity recognition, is one of the most peculiar neuropsychological symptoms, and it has shed some light on the nature of face perception (de Gelder and Van den Stock, 2015). The term originally referred to loss of face recognition ability in adulthood following brain damage (Bodamer, 1947). Prosopagnosia can have a profound impact on social life, as in extreme cases patients have difficulty recognizing the face of their spouse or child. More recently it has also been associated with neurodegenerative syndromes like frontotemporal lobar degeneration (FTLD) (Snowden et al., 1989) and neurodevelopmental syndromes like cerebellar hypoplasia (Van den Stock et al., 2012b). In addition to the acquired variant, there is now general consensus on the existence of a developmental form, i.e., developmental prosopagnosia (DP). A recent prevalence study reported an estimate of 2.5% (Kennerknecht et al., 2006) and indicated that DP typically shows a hereditary profile with an autosomal dominant pattern.

In view of the rich information carried by the face, an assessment of specific face processing skills is crucial. Two questions are central: first, which specific dimension of facial information are we focusing on, and second, is its loss specific to faces? To date, there is no consensus or gold standard regarding the best tool and performance level that allows diagnosing individuals with face recognition complaints as “prosopagnosic.” Several tests and tasks have been developed, such as the Cambridge Face Memory Test (Duchaine and Nakayama, 2006), the Benton Facial Recognition Test (Benton et al., 1983), the Cambridge Face Perception Task (Dingle et al., 2005), the Warrington Recognition Memory Test (Warrington, 1984) and various tests using famous faces (such as adaptations of the Bielefelder famous faces test, Fast et al., 2008). Each of these provides a measure or a set of measures relating to particular face processing abilities, e.g., matching facial identities, or relies on memory for facial identities, which is exactly what is problematic in people with face recognition disorders. More generally, beyond the difference between perception and memory, there is not yet a clear understanding of how the different aspects of normal face perception are related, so testing of face skills should cast the net rather wide. A test battery suitable for the assessment of prosopagnosia should take some additional important factors into account. Firstly, to assess the face specificity of the complaints, the test battery should include not only tasks with faces, but also an equally demanding condition with control stimuli that are visually complex. Secondly, an important finding classically advanced to argue for a specialization for faces concerns the configural way in which we seem to process faces, so the tasks should enable the measurement of configural processing of faces and objects. 
The matter of configuration perception has also been tackled in several different ways, such as with the composite face task (Young et al., 1987), the whole-part face superiority effect (Tanaka and Farah, 1993) or, more recently, gaze-contingency (Van Belle et al., 2011). We chose to focus on the classical face inversion effect (Yin, 1969; Farah et al., 1995), whose simple method lends itself very well to studying object inversion effects. Besides using the inversion effect, configuration- vs. feature-based processing can also be investigated more directly by part-to-whole matching tasks (de Gelder et al., 2003). Furthermore, previous studies have found positive relationships between the ability to process faces configurally and face memory (Richler et al., 2011; Huis in ‘t Veld et al., 2012; Wang et al., 2012; DeGutis et al., 2013), indicating that configural processing might facilitate memory for faces.

Additionally, there is accumulating evidence in support of an interaction between face identity and face emotion processing (Van den Stock et al., 2008; Chen et al., 2011; Van den Stock and de Gelder, 2012, 2014) and there is increasing evidence that configuration processing is positively related to emotion recognition ability (Bartlett and Searcy, 1993; Mckelvie, 1995; Calder et al., 2000; White, 2000; Calder and Jansen, 2005; Durand et al., 2007; Palermo et al., 2011; Tanaka et al., 2012; Calvo and Beltrán, 2014). We therefore extended our test battery with tasks targeting emotion recognition and emotion effects on face memory, by adding an emotional face memory task and a facial expression matching task. To stay with the rationale of our test that each skill tested with faces must also be tested with a selected category of control objects, we used canine face expressions.

Taking all these aspects into account, we constructed a face perception test battery labeled the Facial Expressive Action Stimulus Test (FEAST). The FEAST is designed to provide a detailed assessment of multiple aspects of face recognition ability. Most of the subtests have been extensively described and validated on the occasion of prosopagnosia case reports and small group studies (de Gelder et al., 1998, 2000, 2003; de Gelder and Rouw, 2000a,b,c, 2001; Hadjikhani and de Gelder, 2002; de Gelder and Stekelenburg, 2005; Righart and de Gelder, 2007; Van den Stock et al., 2008, 2012a, 2013; Huis in ‘t Veld et al., 2012). But so far the test battery was not presented systematically as it had not been tested on a large sample of participants receiving the full set of subtests. Here, we report a new set of normative data for the finalized version of the FEAST, analyze the underlying relationships of the tasks, and freely provide the data and stimulus set to the research community for scientific purposes.

Materials and Methods

Subjects

The participants were recruited between 2012 and 2015 from acquaintances of lab members and research students. Participation was voluntary and no monetary reward was offered. The following inclusion criteria were applied: right-handed, at least 18 years old, normal or corrected-to-normal vision and normal basic visual functions as assessed by the Birmingham Object Recognition Battery (line length, size, orientation, gap, minimal feature match, foreshortened view, and object decision) (Riddoch and Humphreys, 1992). Exclusion criteria were a history of psychiatric or neurological problems, any other medical condition or medication use that would affect performance, and a history of concussion. This study was carried out in accordance with the recommendations and guidelines of the Maastricht University ethics committee, the “Ethische Commissie Psychologie” (ECP). The protocol was approved by the Maastricht University ethics committee (ECP-number: ECP-128 12_05_2013).

In total, 61 people participated in the study. Three subjects were 80, 81, and 82 years old. Even though they met all inclusion criteria, they were excluded from the analyses as age outliers (more than 2 standard deviations from the mean). The sample thus consisted of 58 participants between 18 and 62 years old (M = 38, SD = 15). Of those, 26 were men between 19 and 60 years old (M = 38, SD = 15) and 32 were women between 18 and 62 years old (M = 39, SD = 16). There was no difference in age between the genders [t(56) = −0.474, p = 0.638].

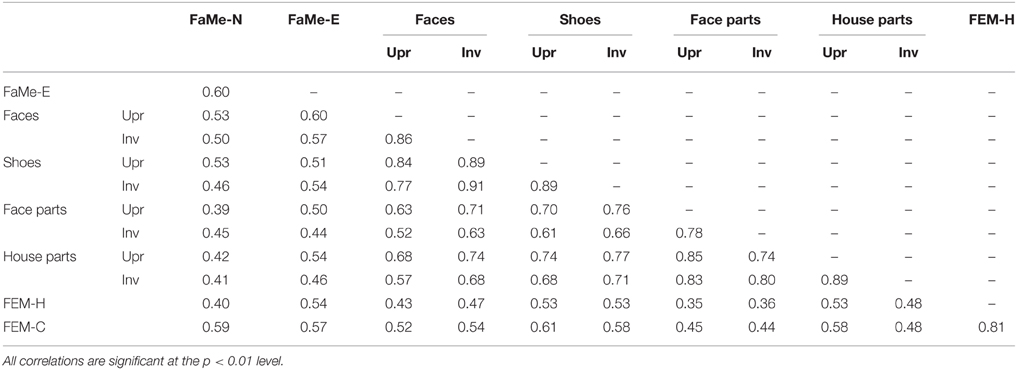

However, an age distribution plot (see Figure 1) reveals a gap, where there are only 6 participants between 35 and 49. Therefore, the sample is split in two: one “young adult” group, younger than 42 and a “middle aged” group of participants between 47 and 62 years old. The young adult age group consisted of 15 men between 19 and 37 years old, (M = 26, SD = 6) and 17 women between 18 and 41 years old (M = 26, SD = 8). The middle aged group consisted of 11 men between 47 and 60 years old (M = 53, SD = 4) and 15 women between 50 and 62 years old (M = 55, SD = 3).

Figure 1. Age distribution of the sample with the young adult group between 18 and 41 years old, and a middle aged group between 47 and 62 years old.

Experimental Stimuli and Design

The face and shoe identity matching task, face and house part-to-whole matching task, Neutral and Emotion Face Memory task (FaMe-N and FaMe-E) have been previously described including figures of stimulus examples (Huis in ‘t Veld et al., 2012).

Face and Shoe Identity Matching Task and the Inversion Effect

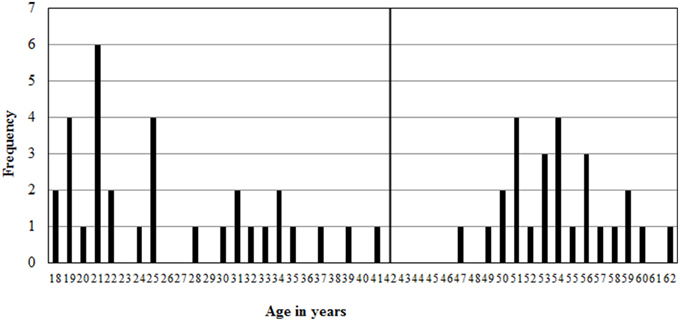

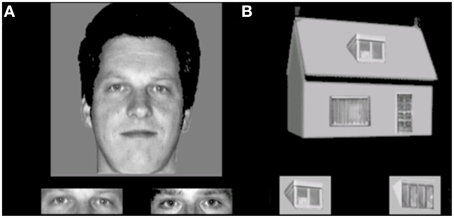

The face and shoe identity-matching task (de Gelder et al., 1998; de Gelder and Bertelson, 2009) was used to assess identity recognition and the inversion effect for faces and objects. The test contained 4 conditions with a 2 category (faces and shoes) × 2 orientation (upright and inverted) factorial design. The materials consisted of greyscale photographs of shoes (8 unique shoes) and faces (4 male, 4 female; neutral facial expression) with frontal view and ¾ profile view. A stimulus contained three pictures: one frontal view picture on top and two ¾ profile view pictures underneath. One of the two bottom pictures (target) was of the same identity as the one on top (sample) and the other was a distracter. The target and distracter pictures of the faces were matched for gender and hairstyle. Each stimulus was presented for 750 ms and participants were instructed to indicate by a button press which of the two bottom pictures represented the same exemplar as the one on top. Participants were instructed to answer as quickly but also as accurately as possible, and responses during stimulus presentation were collected. Following the response, a black screen with a fixation cross was shown for a variable duration (800–1300 ms). The experiment consisted of four blocks (one block per condition). In each block, 16 stimuli were presented 4 times in a randomized order, adding up to a total of 64 trials per block. Each block was preceded by 4 practice trials, during which the participants received feedback about their performance (see Figure 2).
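As an illustration only, the block structure described above (16 stimuli, each presented 4 times in random order, followed by a jittered fixation screen) could be sketched as follows. The stimulus labels, the function name `build_block`, and the uniform sampling of the fixation duration are assumptions made for this sketch; the original presentation software and its randomization scheme are not specified in the text.

```python
import random


def build_block(stimuli, repetitions=4, fixation_range_ms=(800, 1300), seed=None):
    """Return a shuffled trial list in which every stimulus occurs `repetitions` times."""
    rng = random.Random(seed)
    order = [stim for stim in stimuli for _ in range(repetitions)]
    rng.shuffle(order)
    # Assumption: the fixation duration is drawn uniformly from the stated range.
    return [
        {
            "stimulus": stim,
            "stimulus_ms": 750,
            "fixation_ms": rng.randint(*fixation_range_ms),
        }
        for stim in order
    ]


# Hypothetical labels for the 16 stimuli of one condition (e.g., upright faces).
stimuli = [f"upright_face_{i:02d}" for i in range(1, 17)]
block = build_block(stimuli, seed=1)
print(len(block))  # 64 trials per block
```

Passing a fixed `seed` makes the order reproducible for a given participant, which is a design convenience rather than something the study reports.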

Figure 2. Stimulus example of (A) upright faces and (B) upright shoes in the face and shoe identity matching task. Some identities are different from the actual stimuli due to copyright and permissions.

Face and House Part-to-whole Matching Task

This task was developed to assess holistic processing. The test also consisted of 4 conditions, with a 2 category (faces and houses) × 2 orientation (upright and inverted) factorial design. Materials consisted of grayscale pictures of eight faces (four male; neutral facial expression, photographed in front view and with direct gaze) and eight houses. From each face, part-stimuli were constructed by extracting the rectangle containing the eyes and the rectangle containing the mouth. House-part stimuli were created using a similar procedure, but the parts consisted of the door or a window. The trial procedure was similar to the face and shoe identity matching task: a whole face or house was presented on top (sample), with a target part-picture and a distracter part-picture presented underneath. Each trial was presented for 750 ms and participants were instructed to indicate by a button press which of the two bottom pictures represented the same exemplar as the one on top. Participants were instructed to answer as quickly but also as accurately as possible, and responses during stimulus presentation were collected. Following the response, a black screen with a fixation cross was shown for a variable duration (800–1300 ms). The experiment consisted of eight blocks (two blocks per condition). In each block, 16 stimuli were presented 2 times in a randomized order, adding up to a total of 32 trials per block and 64 trials per condition. Within blocks, the presentation of the two parts (eyes or mouth, window or door) was randomized in order to prevent participants from attending to only one specific feature. The first block of each condition was preceded by 4 practice trials, during which the participants received feedback about their performance (see Figure 3).

Figure 3. Stimulus examples of an (A) upright face and eyes and (B) upright house and windows trial in the face and house part-to-whole matching task.

Facial Expression Matching Task (FEM-H and FEM-C)

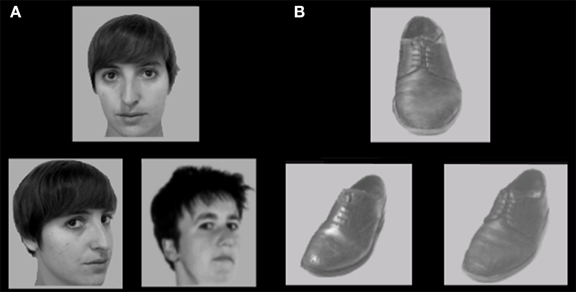

The FEM is a match-to-sample task used to measure emotion recognition ability in both human and canine faces. The experiment was divided into two parts. The first part consisted of human facial expressions (anger, fear, happy, sad, surprise, disgust). The materials consisted of grayscale photographs of facial expressions of 34 female identities and 35 male identities taken from the Karolinska Directed Emotional Faces (KDEF) (Lundqvist et al., 1998). This task has been used previously in Van den Stock et al. (2015). A stimulus consisted of three pictures: one picture on top (sample) and two pictures underneath. One of the two bottom pictures showed a face expressing the same emotion as the sample, the other was a distracter. The target and distracter pictures of the faces were matched for gender for the human stimuli. Each trial was presented until a response was given, but participants were instructed to answer as quickly and accurately as possible. Following the response, a black screen with a fixation cross was shown for a variable duration (800–1300 ms). Each emotional condition contained 10 trials (5 male) in which the target emotion was paired with a distracter from each of the other emotions once per gender, resulting in 60 trials in total. The first part was preceded by 4 practice trials, during which the participants received feedback about their performance.

The second part consisted of canine facial expressions. In total, 114 pictures of dogs that could be perceived as angry (17), fearful (27), happy (17), neutral (29), or sad (24) were collected from the internet by EH. These pictures were validated in a pilot study with 28 students of Tilburg University, who participated in exchange for course credit. For each photo, the participants indicated whether they thought the dog was expressing anger, fear, happiness, sadness or no emotion in particular (neutral) and, secondly, rated the intensity of the emotional expression on a scale from one to five. Twelve angry, twelve fearful, and twelve happy canine expressions were accurately recognized by more than 80% of the participants and were used in the experiment. The canine part consisted of 72 trials in total, 24 per emotion condition, in which each target emotion was paired with each of the distracter emotions 12 times. The experiment was preceded by 2 practice trials, during which the participants received feedback about their performance (see Figure 4).
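The trial counts of both parts of the FEM follow directly from the pairing scheme, which the short sketch below enumerates. It only reproduces the combinatorics of target and distracter emotions; the variable names are hypothetical and the assignment of specific photographs to trials is not modeled.

```python
from itertools import permutations

HUMAN_EMOTIONS = ["anger", "fear", "happy", "sad", "surprise", "disgust"]
CANINE_EMOTIONS = ["anger", "fear", "happy"]

# Human part: each target emotion meets each other emotion once per gender.
human_trials = [
    {"target": target, "distracter": distracter, "gender": gender}
    for target, distracter in permutations(HUMAN_EMOTIONS, 2)
    for gender in ("female", "male")
]

# Canine part: each target emotion meets each other emotion 12 times.
canine_trials = [
    {"target": target, "distracter": distracter}
    for target, distracter in permutations(CANINE_EMOTIONS, 2)
    for _ in range(12)
]

print(len(human_trials), len(canine_trials))  # 60 72
```

Each human target emotion therefore occurs in 10 trials (5 distracter emotions × 2 genders) and each canine target emotion in 24 trials (2 distracter emotions × 12 repetitions), matching the numbers given in the text.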

Figure 4. Example stimulus of the Facial Expression Matching Task with an angry target and happy distracter stimulus trial for the (A) human and (B) canine experiment.

Neutral Face Memory Task (FaMe-N)

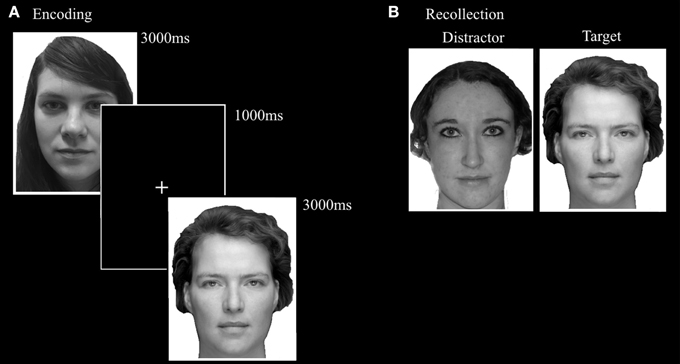

Based on the Recognition Memory Test (Warrington, 1984), the FaMe-N consists of an encoding and a recognition phase. The stimuli consist of 100 grayscale Caucasian faces (50 male) with a neutral facial expression, in front view, with frontal eye gaze. The stimuli were taken from a database created at Tilburg University. Trials in the encoding phase consisted of the presentation of a single stimulus for 3000 ms, followed by a black screen with a white fixation cross with a duration of 1000 ms. Participants were instructed to encode each face carefully and told that their memory for the faces would be tested afterwards. The encoding block consisted of 50 trials.

The recognition phase immediately followed the encoding phase. A trial in the recognition phase consisted of the simultaneous presentation of two adjacent faces. One was the target face, which had also been presented in the encoding phase. The other face had not been presented in the encoding phase and served as distracter. Fifty trials were presented in random order, and the presentation sides of target and distracter were evenly distributed. Participants were instructed to indicate as quickly and as accurately as possible which face had also been presented in the encoding phase. The stimulus pairs were matched for gender and hairstyle (see Figure 5).

Figure 5. Trial setup examples of the (A) encoding phase and (B) recognition phase of the FaMe-N. Identities are different from the actual stimuli due to copyright and permissions.

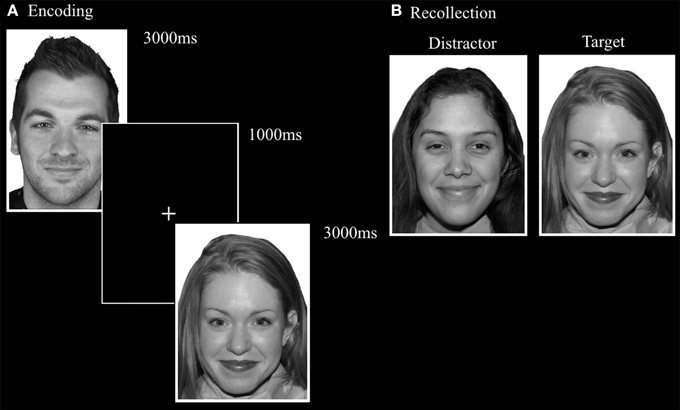

Emotional Face Memory Task (FaMe-E)

This task was designed by adapting the FaMe-N task by using stimuli containing emotional instead of neutral faces. Images were taken from the NimStim database (Tottenham et al., 2009) and stimuli created at Tilburg University. The stimuli consisted of 96 photographs (53 female) with direct eye gaze and frontal view. The individuals in the stimuli express fear, sadness, or happiness. There was no overlap in identities with the FaMe-N. The procedure was similar to the FaMe-N, but with 48 trials (16 per emotion) in both phases. The pictures making a stimulus pair were matched for emotion and hairstyle and in most trials also gender (see Figure 6).

Figure 6. Trial setup of a happy trial in the (A) encoding phase and (B) recognition phase of the FaMe-E. Some identities are different from the actual stimuli due to copyright and permissions.

Analyses

Accuracies were calculated as the proportion of correct responses, both for the total score of each task and for each condition separately. Average response times from stimulus onset were calculated for the correct responses only. For all tasks, reaction times faster than 150 ms were excluded from analyses. In addition, for the identity matching task and part-to-whole matching task, reaction times longer than 3000 ms were excluded from analyses. For the other tasks, reaction times longer than 5000 ms were excluded from analyses. The numbers of outliers are reported in the results. One control subject did not complete the face and house part-to-whole matching task. The SPSS dataset can be downloaded through the supplementary materials.
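The scoring rules above can be illustrated with a minimal sketch. The trial format (dictionaries with `rt_ms` and `correct`) and the function name `score_task` are hypothetical, and the sketch assumes that outlier trials are removed before both accuracy and mean reaction time are computed, which the text does not state explicitly.

```python
from statistics import mean


def score_task(trials, rt_min_ms=150, rt_max_ms=3000):
    """Trim reaction-time outliers, then compute accuracy and mean RT of correct trials.

    Use rt_max_ms=3000 for the identity and part-to-whole matching tasks
    and rt_max_ms=5000 for the other tasks.
    """
    kept = [t for t in trials if rt_min_ms <= t["rt_ms"] <= rt_max_ms]
    correct_rts = [t["rt_ms"] for t in kept if t["correct"]]
    return {
        "n_outliers": len(trials) - len(kept),
        # Assumption: accuracy is the proportion correct among retained trials.
        "accuracy": len(correct_rts) / len(kept) if kept else None,
        "mean_rt_ms": mean(correct_rts) if correct_rts else None,
    }


# Made-up trials for illustration; these are not study data.
trials = [
    {"rt_ms": 120, "correct": True},   # faster than 150 ms: excluded
    {"rt_ms": 640, "correct": True},
    {"rt_ms": 910, "correct": False},
    {"rt_ms": 760, "correct": True},
    {"rt_ms": 3400, "correct": True},  # longer than 3000 ms: excluded
]
result = score_task(trials)
print(result["n_outliers"], result["mean_rt_ms"])  # 2 700
```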

In addition, the internal consistency was assessed with the Kuder Richardson coefficient of reliability (KR 20), reported as ρKR20, which is analogous to Cronbach's alpha but suitable for dichotomous measures (Kuder and Richardson, 1937).
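For readers unfamiliar with the coefficient, KR 20 for k dichotomous items is k/(k − 1) × (1 − Σ p_i q_i / σ²), where p_i is the proportion of participants answering item i correctly, q_i = 1 − p_i, and σ² is the variance of the total scores. The sketch below implements this formula on a small made-up response matrix. It uses the population variance of the total scores, which is an assumption of this sketch; the variance convention applied in the reported analyses is not stated.

```python
from statistics import pvariance


def kr20(responses):
    """Kuder-Richardson 20 for a participants x items matrix of 0/1 scores."""
    n_participants = len(responses)
    n_items = len(responses[0])
    # Proportion of participants answering each item correctly.
    p = [sum(row[i] for row in responses) / n_participants for i in range(n_items)]
    sum_item_variances = sum(p_i * (1 - p_i) for p_i in p)
    # Assumption: population variance of the total scores (must be non-zero).
    total_variance = pvariance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum_item_variances / total_variance)


# Made-up responses of five participants to four items; not study data.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(responses), 3))  # 0.8
```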

The results were analyzed using repeated measures GLMs, with the experimental factors as within subject variables and age group and gender as between subject variables. Interaction effects were further explored using post-hoc paired samples t-tests. The assumption of equality of error variances was checked with a Levene's test. The assumption of normality was not formally tested, as the sample is larger than 30 and repeated measures GLMs are quite robust against violations of normality.

Inversion scores were calculated by subtracting the accuracy and reaction time scores on the inverted presentation condition from the upright condition. A positive score indicates that accuracy was higher, or the reaction time was longer, on the upright condition. A negative score indicates higher accuracy or reaction times for the inverted condition. To assess whether a stronger configuration processing as measured by a higher accuracy inversion effect is related to improved face memory and emotion recognition, multiple linear regression analyses were performed with accuracy scores on the FaMe-N, FaMe-E, and both FEM tasks as dependent variable and age, gender, and four inversion scores (face identity, shoe identity, face-part, and house-part) as predictors. In addition, correlations between all tasks were calculated.
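The sign convention of the inversion scores can be made concrete with a small sketch. The condition means below are made up for one hypothetical participant, and the names `inversion_score` and `conditions` are illustrative only.

```python
def inversion_score(upright, inverted):
    """Upright minus inverted: positive means higher accuracy (or longer RT) when upright."""
    return upright - inverted


# Made-up (accuracy, mean RT in ms) pairs per condition; not study data.
conditions = {
    "face_identity": {"upright": (0.90, 820.0), "inverted": (0.84, 790.0)},
    "shoe_identity": {"upright": (0.86, 760.0), "inverted": (0.88, 750.0)},
}

inversion = {
    task: {
        "accuracy": inversion_score(c["upright"][0], c["inverted"][0]),
        "rt_ms": inversion_score(c["upright"][1], c["inverted"][1]),
    }
    for task, c in conditions.items()
}

for task, scores in inversion.items():
    print(task, round(scores["accuracy"], 2), scores["rt_ms"])
```

In this example the face identity accuracy score is positive (an inversion effect), whereas the shoe identity accuracy score is negative (better performance on inverted shoes).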

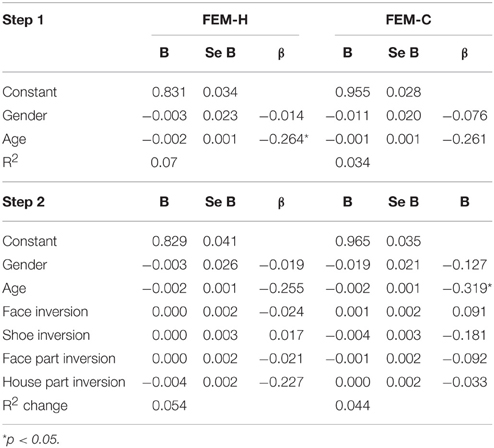

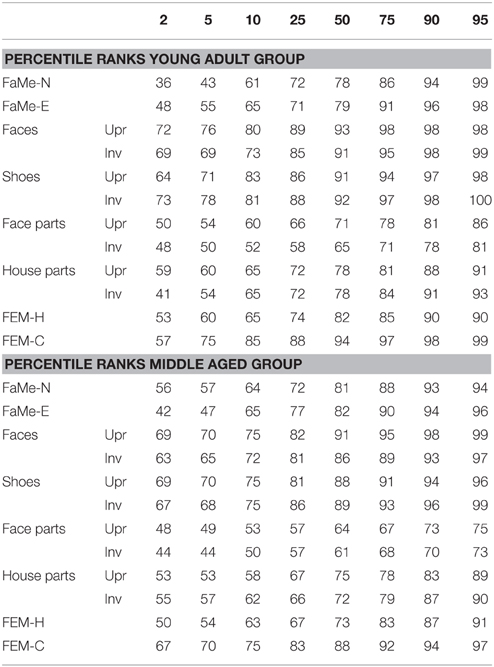

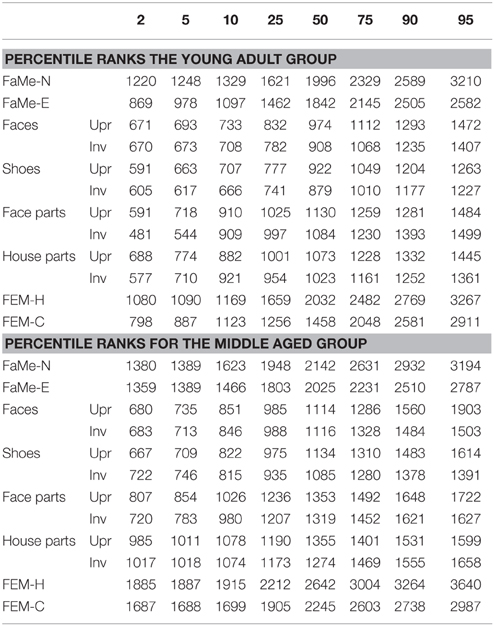

Lastly, percentile ranks of all tasks and correlations between all tasks were calculated and reported for both the accuracy scores and reaction times (see Tables 8–11).
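To illustrate how a new score might be located in such reference data, the sketch below computes a percentile rank against a list of normative scores. The definition used here (the percentage of reference scores at or below the given score) is one of several common conventions and is an assumption of this sketch, as the text does not specify which definition underlies the tables. The reference values are made up.

```python
def percentile_rank(score, reference_scores):
    """Percentage of reference scores that are at or below `score`."""
    at_or_below = sum(1 for s in reference_scores if s <= score)
    return 100 * at_or_below / len(reference_scores)


# Made-up normative accuracy scores (percentage correct); not study data.
reference = [60, 70, 75, 80, 85, 90, 95, 100]
print(percentile_rank(75, reference))  # 37.5
```

For reaction times, where lower values indicate better performance, the direction of interpretation is reversed.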

Results

Face and Shoe Identity Matching Task

The task has a good internal consistency of ρKR20 = 0.912. The following numbers of outliers were discarded: upright faces: a total of 0.86% outliers across ten participants (M = 3.2 trials, SD = 2.7, min = 1, max = 8); inverted faces: 0.7% across ten participants (M = 2.6 trials, SD = 2.7, min = 1, max = 10); upright shoes: 0.9% across 15 participants (M = 2.1 trials, SD = 2, min = 1, max = 7); and inverted shoes: 0.5% across four participants (M = 4.8 trials, SD = 5.7, min = 1, max = 13).

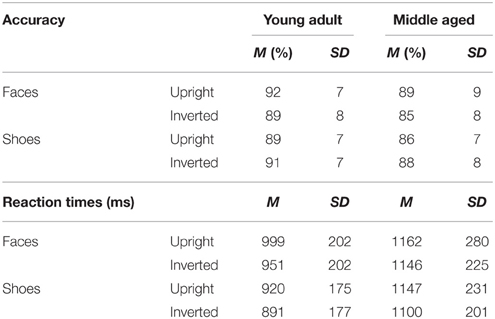

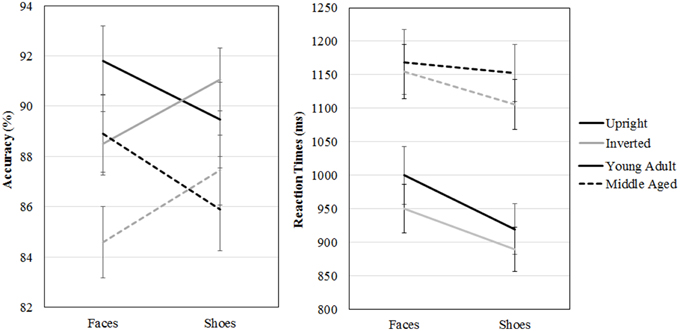

A repeated measures GLM on accuracy scores with category (faces, shoes) and orientation (upright, inverted) as within-subject factors and gender and age group as between-subject factors revealed a category by orientation interaction effect [F(1, 54) = 16.955, p < 0.001]. Paired samples t-tests show that upright faces are recognized more accurately than inverted faces [t(57) = 3.464, p = 0.001] and inverted shoes are recognized better than upright shoes [t(57) = −2.254, p = 0.028]. Also, the middle aged group is less accurate overall [F(1, 54) = 4.342, p = 0.042].

A repeated measures GLM with a similar design on reaction times showed that faces are matched slower than shoes [F(1, 54) = 16.063, p < 0.001, η2p = 0.23], upright faces and shoes are matched slower than inverted ones [F(1, 54) = 7.560, p = 0.008, η2p = 0.12] and the middle aged group responded slower [F(1, 54) = 15.174, p < 0.001, η2p = 0.22; see Figure 7 and Table 1].

Figure 7. Means and standard errors of the mean of the accuracy and reaction times on the face and shoe matching task, split by age group.

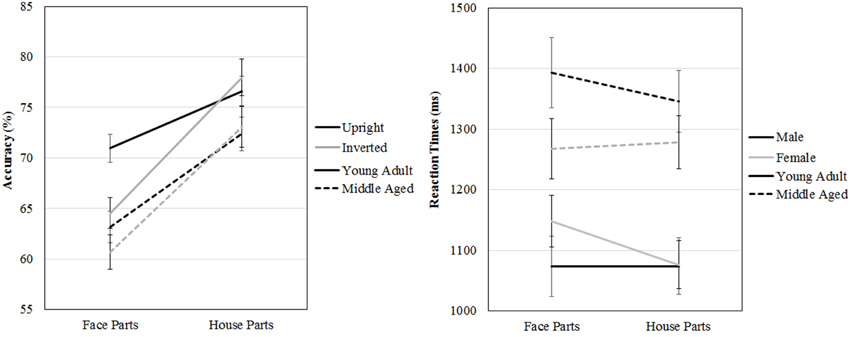

Face and House Part-to-whole Matching Task

The task has a good internal consistency of ρKR20 = 0.865. The following numbers of outliers were discarded: upright face parts: a total of 1.02% outliers across 38 participants (M = 2.7 trials, SD = 2.2, min = 1, max = 8); inverted face parts: 1.1% across 41 participants (M = 3.2 trials, SD = 3.2, min = 1, max = 13); upright house parts: 1.5% across 54 participants (M = 2.5 trials, SD = 2.8, min = 1, max = 12); and inverted house parts: 0.9% across 33 participants (M = 2.2 trials, SD = 1.6, min = 1, max = 6).

A repeated measures GLM on accuracy scores with category (faces, houses) and orientation (upright, inverted) as within-subject factors and gender and age group as between-subject factors revealed a three-way age group by category by orientation interaction effect [F(1, 53) = 5.413, p = 0.024]. Overall, both age groups are better at part-to-whole matching of houses [F(1, 53) = 153.660, p < 0.001]. However, the young adult group matches parts to wholes more accurately for upright than for inverted faces [t(31) = 5.369, p < 0.001], whereas the middle aged group does not [t(24) = 0.952, p = 0.351]. No such group differences are found for house inversion [young adult group: t(31) = −0.958, p = 0.345; middle aged group: t(24) = −0.490, p = 0.628].

The same repeated measures GLM on reaction times revealed a three-way gender by age group by category interaction effect [F(1, 53) = 5.539, p = 0.022, η2p = 0.10]. To assess this effect, the repeated measures GLM with category (faces, houses) and orientation (upright, inverted) as within-subject factors and age group as between-subject factor was run for males and females separately. For the female group, a category by age group interaction effect is found [F(1, 29) = 7.022, p = 0.013], whereas no significant effects were found for men (see Figure 8 and Table 2).

Figure 8. Means and standard errors of the mean of the accuracy and reaction times on the face and house part-to-whole matching task split by age group.

Table 2. Means and standard deviations on the face and house part-to-whole matching task by age group.

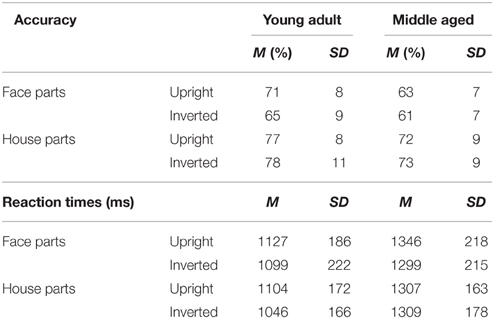

Facial Expression Matching Task

Human Facial Expressions (FEM-H)

The task has a reasonably good internal consistency of ρKR20 = 0.769. In total, 14% of the trials were discarded as outliers, across 47 participants (anger: 2.5%, disgust: 1.8%, fear: 3.4%, happy: 0.7%, sad: 3.5%, surprise: 2.2%; M = 10.4 trials, SD = 6.6, min = 1, max = 27).

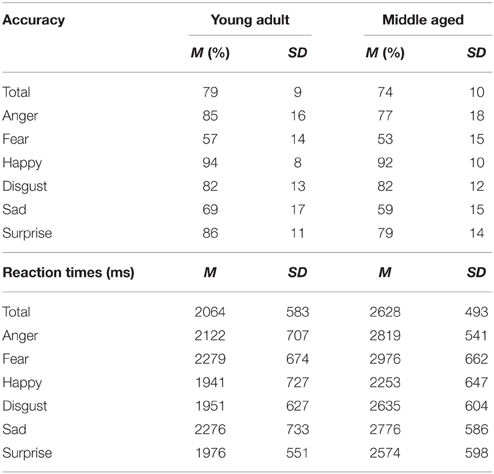

A repeated measures GLM on the accuracy scores with emotion (fear, sadness, anger, disgust, surprise, and happy) as within-subject variable and gender and age group as between-subject variables showed a main effect of emotion [F(5, 50) = 88.169, p < 0.001]. Post-hoc contrasts reveal that fear is recognized least accurately, worse than sadness [F(1, 54) = 15.998, p < 0.001, η2p = 0.23], on which accuracy rates are in turn lower than on anger [F(1, 54) = 63.817, p < 0.001, η2p = 0.54]. Also, happy is recognized best, with higher accuracy scores than surprise [F(1, 54) = 49.157, p < 0.001, η2p = 0.48].

The same repeated measures GLM on the reaction time data revealed a main effect of emotion [F(5, 50) = 15.055, p < 0.001, η2p = 0.60]. Happy was also recognized fastest [as compared to surprise, F(1, 54) = 7.873, p = 0.007, η2p = 0.13] and disgust was recognized slower than anger [F(1, 54) = 7.776, p = 0.007, η2p = 0.13]. Also, the middle aged group is slower overall [F(1, 54) = 15.280, p < 0.001, η2p = 0.22; see Figure 9 and Table 3].

Figure 9. Means and standard errors of the mean of the accuracy of the whole group and reaction times on the FEM-H split by age group. ***p < 0.001, **p < 0.05.

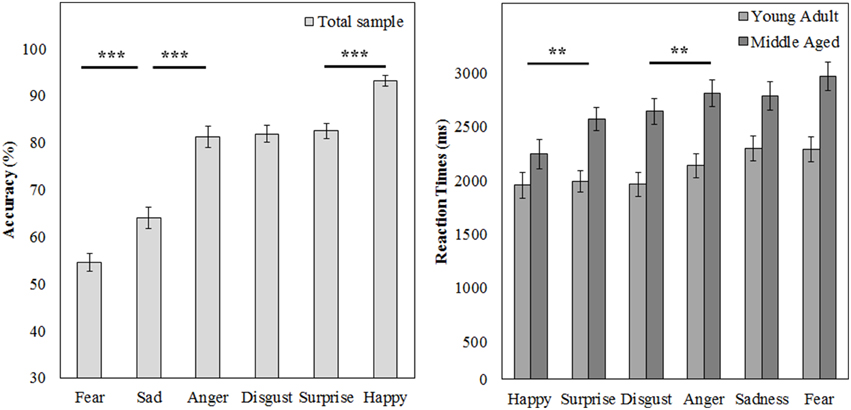

Canine Facial Expressions (FEM-C)

The task has a good internal consistency of ρKR20 = 0.847. From 35 participants, 5.3% of the trials were discarded (Anger: 1.1%, fear: 2.8%, happy: 1.4%, M = 6.3 trials, SD = 4.9, min = 1, max = 22).

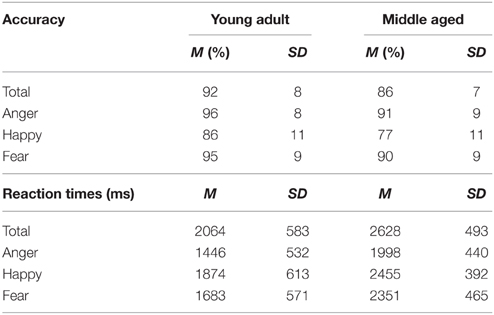

A repeated measures GLM on the accuracy scores with emotion (fear, anger, and happy) as within-subject variable and gender and age group as between-subject variables revealed a main effect of emotion [F(2, 53) = 37.049, p < 0.001, η2p = 0.58]. Fear was recognized least accurately [as compared to happy, F(1, 54) = 65.310, p < 0.001, η2p = 0.55]. Also, the middle aged group was less accurate at this task than the young adult group [F(1, 54) = 8.045, p = 0.006, η2p = 0.13].

Similarly, for reaction times a main effect of emotion [F(2, 53) = 66.335, p < 0.001, η2p = 0.72] was observed; anger is recognized quicker than happy [F(1, 54) = 74.880, p < 0.001, η2p = 0.58], which is in turn recognized faster than fear [F(1, 54) = 17.588, p < 0.001, η2p = 0.25]. Additionally, the middle aged group is again slower overall [F(1, 54) = 19.817, p < 0.001, η2p = 0.27; see Figure 10 and Table 4].

Figure 10. Means and standard errors of the mean of the accuracy and reaction times on the FEM-Canine split by age group. ***p < 0.001.

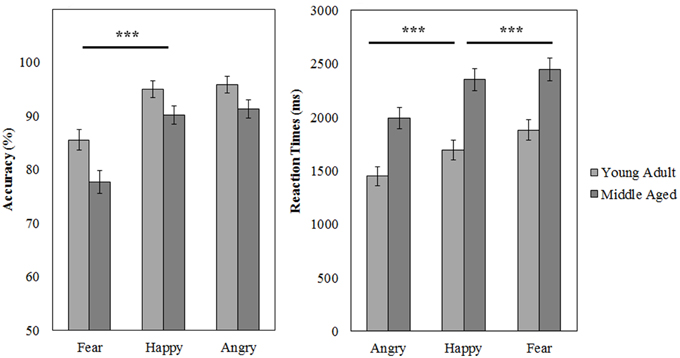

Neutral Face Memory Task (FaMe-N)

The task has a good internal consistency of ρKR20 = 0.808. In total 232 trials (8%) were outliers across 50 participants (M = 4.6, SD = 4.5, min = 1, max = 24).

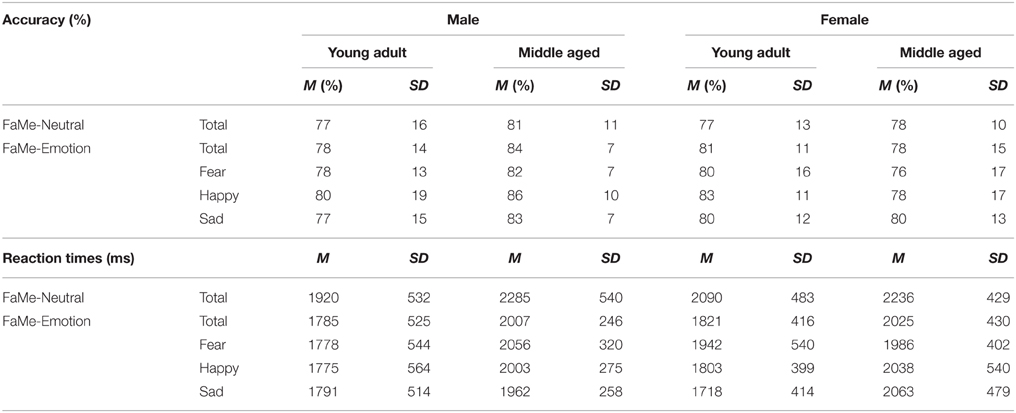

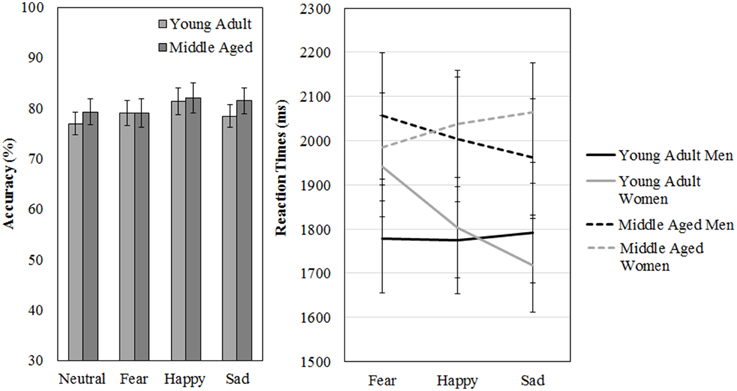

The participants scored on average 78% correct (SD = 12%) on the FaMe-N. No differences in accuracy scores on the FaMe-N are found for gender [F(1, 54) = 0.238, p = 0.628, η2p = 0.004] or age group [F(1, 54) = 0.469, p = 0.496, η2p = 0.009], nor is there any interaction effect.

The average reaction time was 2121 ms (SD = 501). No difference in reaction times was found for gender [F(1, 54) = 0.211, p = 0.648, η2p = 0.004], but the effect of age group approached significance [F(1, 54) = 3.768, p = 0.057, η2p = 0.065; see Figure 11 and Table 5].

Figure 11. Means and standard errors of the mean of the accuracy and reaction times on the FaMe-N and FaMe-E.

Emotional Face Memory Task (FaMe-E)

The task has a good internal consistency of ρKR20 = 0.799. In total 125 trials (4.5%) were outliers across 34 participants (M = 3.7, SD = 3.5, min = 1, max = 19).

A repeated measures GLM on accuracy scores and reaction times scores with emotion (fear, happy, sad) as within-subject factors and gender and age group as between subject variables revealed no significant effects.

However, a gender by age group by emotion three-way interaction effect was found for reaction times [F(2, 53) = 3.197, p = 0.049, η2p = 0.11]. Figure 11 shows that the pattern of results between men and women is reversed when the age groups are compared. Young adult women seem quicker to recognize sadness than middle aged women: indeed, when the repeated measures GLM is run for men and women separately, with emotion as within-subject variable and age group as between-subject variable, no effects of emotion or age group are found for men. For women, however, an emotion by age group interaction trend is found [F(2, 29) = 2.987, p = 0.066, η2p = 0.17; see Figure 11 and Table 5].

In addition, we directly compared the FaMe-N and FaMe-E using a repeated measures GLM on accuracy scores and reaction times scores on the neutral, fearful, happy, and sad conditions as within-subject factors and gender and age group as between subject variables, but no significant effects were found.

Relationships between Tasks

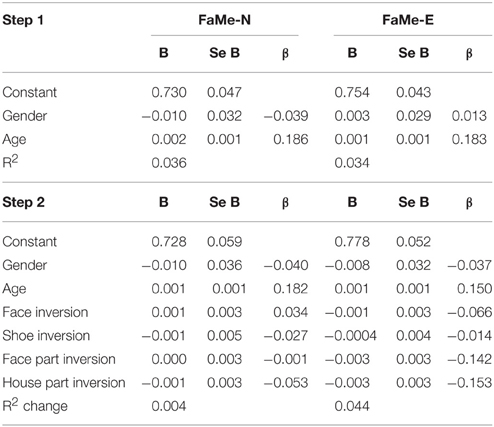

In the current sample, no significant predictive relationship between configuration processing as measured by the inversion effect and face memory scores was found (see Table 6).

Table 6. Regression coefficients of the inversion scores on the tasks for configural and feature-based processing on the total scores of the Face Memory–Neutral and the Face Memory–Emotion task.

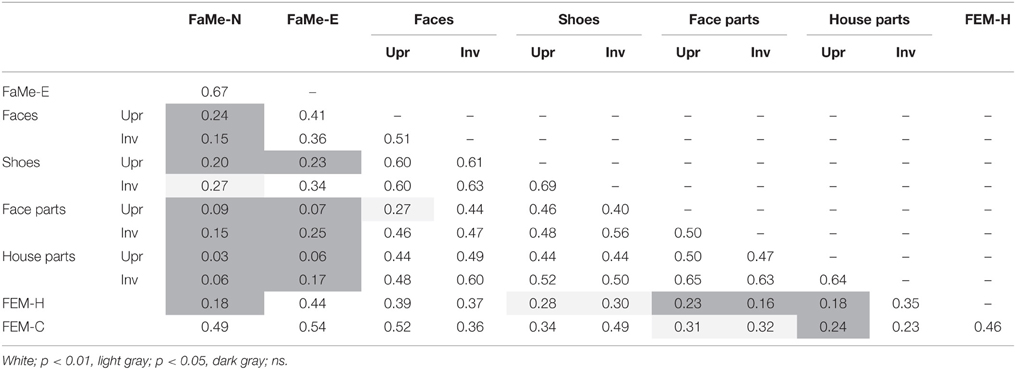

Similarly, no significant relationship between configuration processing and emotion recognition scores was found, aside from a negative effect of age on accuracy on the FEM-H and FEM-C (see Table 7). In addition, see Tables 10, 11 for correlations between all the tasks and subtasks of the FEAST.

Table 7. Regression coefficients of the inversion scores on the tasks for configural and feature-based processing on the total scores of the Facial Expression Matching- Human and Canine task.

Table 8. Percentile ranks corresponding to accuracy scores (as percentage correct) split by age group for all tasks and subtasks.

Table 9. Percentile ranks corresponding to reaction times split by age group for all tasks and subtasks.

Furthermore, percentile ranks for accuracy scores as percentage correct and the reaction times are reported in Tables 8, 9, and the correlations between all tasks are reported in Tables 10, 11.

Discussion

In this study, we provide normative data of a large group of healthy controls on several face and object recognition tasks, face memory tasks and emotion recognition tasks. The effects of gender and age were also reported. All tasks have a good internal consistency and an acceptable number of outliers.

Firstly, face and object processing and configuration processing were assessed. As expected, upright face recognition was more accurate than inverted face recognition, in line with the face inversion effect literature (Yin, 1969; Farah et al., 1995). Interestingly, even though the middle-aged group was less accurate than the young adult group, their response patterns regarding face and object inversion were comparable. Configurational processing, as measured by inversion scores (upright minus inverted), was not influenced by gender or age, which suggests that it is a stable effect in normal subjects. The absence of any interaction effects with age group or gender indicates that category-specific configuration effects are stable across gender and between young adulthood and middle age. This implies that the inversion score is a suitable index to evaluate in prosopagnosia assessment. Secondly, the face and house part-to-whole matching task seems to be harder than the whole face and shoe matching task, as indicated by overall lower accuracies. Young adults are more sensitive to inversion in this task.
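The inversion score referred to above (upright minus inverted) can be sketched as follows. This is a minimal illustration assuming trial-level correct/incorrect responses; the function names and the example trials are hypothetical and are not taken from the FEAST materials.

```python
def accuracy(trials):
    """Proportion correct for a list of 0/1 trial outcomes."""
    if not trials:
        raise ValueError("trials must not be empty")
    return sum(trials) / len(trials)


def inversion_score(upright_trials, inverted_trials):
    """Accuracy on upright trials minus accuracy on inverted trials.

    A positive value means inversion reduced accuracy.
    """
    return accuracy(upright_trials) - accuracy(inverted_trials)


# Hypothetical trial outcomes for one participant; NOT FEAST data.
faces_upright = [1, 1, 1, 0]
faces_inverted = [1, 0, 1, 0]
shoes_upright = [1, 1, 0, 1]
shoes_inverted = [1, 1, 0, 1]

print(inversion_score(faces_upright, faces_inverted))   # face inversion score
print(inversion_score(shoes_upright, shoes_inverted))   # object inversion score
```

Computing the score separately per stimulus category keeps the face and object inversion effects comparable within the same participant.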

Thirdly, we found that fear and sadness recognition on our FEM-H task was quite poor, but that anger, disgust, surprise and happiness were recognized with above 80% accuracy. Canine emotions were likewise recognized very well, although fear was again the worst recognized emotion and the older age group was slightly less accurate and slower on this task; this confirms that the subtest provides a good control.

Lastly, no effects of gender or age were found on neutral face memory, and participants scored quite well on the task, with an average of almost 80% correct. Similarly, no clear effects of age, gender or emotion were found on face memory as measured with the FaMe-E, except that middle-aged women seem to be slower to recognize previously seen identities when they expressed sadness. Interestingly, this is in line with the “age-related positivity effect” (Samanez-Larkin and Carstensen, 2011; Reed and Carstensen, 2012). In general, the results corroborate those from other studies on the effect of emotion on memory (Johansson et al., 2004), but a wide variety of results has been reported in the literature (Dobel et al., 2008; Langeslag et al., 2009; Bate et al., 2010; D'Argembeau and Van der Linden, 2011; Righi et al., 2012; Liu et al., 2014). In addition, we did not find any relationships between configuration perception and face memory. This may be because a sample of healthy controls shows less variability in inversion scores and memory scores than a sample containing both DPs and controls (i.e., most participants will not have configuration processing deficits similar to those of DPs and, in contrast to DPs, most controls are not severely limited on face memory).

The results indicate that age is most likely a modulating factor when studying face and object processing, as the responses of the middle-aged group are often slower. One explanation, besides a general cognitive decline with age, can be found in the literature on the effect of age on facial recognition, where an “own-age bias” is often found (Lamont et al., 2005; Firestone et al., 2007; He et al., 2011; Wiese, 2012). The “own-age bias” in face recognition refers to the notion that individuals are more accurate at recognizing faces of individuals belonging to the observer's own age category. For instance, children are better at recognizing child faces and adults are better at recognizing adult faces. Future researchers wishing to use the FEAST should compare the results of their participants with the appropriate age group, control for the effects of age or, ideally, test age-matched controls. Gender, on the other hand, does not seem as influential, but this article provides guidelines and data for both gender and age groups regardless.

Some limitations of the FEAST should be noted. One is the lack of a non-face memory control condition using stimuli of comparable complexity. However, a recent study with a group of 16 DPs showed that only memory for faces, in contrast to hands, butterflies and chairs, was impaired (Shah et al., 2014), so for this group this control condition might not be necessary. Also, the specific effects of all emotions, valence and arousal may be taken into account in future research. The face memory test could be complemented with test images that show the face in the test phase from a different angle than in the training phase, as is done in the matching tests. In addition, the low performance on fear recognition should be assessed. In short, the FEAST provides researchers with an extensive battery for neutral and emotional face memory, whole and part-to-whole face and object matching, configural processing and emotion recognition abilities.

Author Contributions

All authors contributed significantly to the concept and design of the work. EH collected and analyzed the data. All authors contributed to data interpretation. EH drafted the first version of the manuscript and BD and JV revised.

Funding

National Initiative Brain & Cognition; Contract grant number: 056-22-011. EU project TANGO; Contract grant number: FP7-ICT-2007-0 FETOpen. European Research Council under the European Union's Seventh Framework Programme (ERC); Contract grant number: FP7/2007–2013, agreement number 295673. JV is a post-doctoral research fellow for FWO-Vlaanderen.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2015.01609

References

Bartlett, J. C., and Searcy, J. (1993). Inversion and configuration of faces. Cogn. Psychol. 25, 281–316. doi: 10.1006/cogp.1993.1007

Bate, S., Haslam, C., Hodgson, T. L., Jansari, A., Gregory, N., and Kay, J. (2010). Positive and negative emotion enhances the processing of famous faces in a semantic judgment task. Neuropsychology 24, 84–89. doi: 10.1037/A0017202

Benton, A. L., Sivan, A. B., Hamsher, K., Varney, N. R., and Spreen, O. (1983). Contribution to Neuropsychological Assessment. New York, NY: Oxford University Press.

Calder, A. J., and Jansen, J. (2005). Configural coding of facial expressions: the impact of inversion and photographic negative. Visual Cogn. 12, 495–518. doi: 10.1080/13506280444000418

Calder, A. J., Young, A. W., Keane, J., and Dean, M. (2000). Configural information in facial expression perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 527–551. doi: 10.1037/0096-1523.26.2.527

Calvo, M. G., and Beltrán, D. (2014). Brain lateralization of holistic versus analytic processing of emotional facial expressions. Neuroimage 92, 237–247. doi: 10.1016/j.neuroimage.2014.01.048

Chen, W., Lander, K., and Liu, C. H. (2011). Matching faces with emotional expressions. Front. Psychol. 2:206. doi: 10.3389/fpsyg.2011.00206

D'Argembeau, A., and Van der Linden, M. (2011). Influence of facial expression on memory for facial identity: effects of visual features or emotional meaning? Emotion 11, 199–208. doi: 10.1037/A0022592

de Gelder, B., Bachoud-Lévi, A. C., and Degos, J. D. (1998). Inversion superiority in visual agnosia may be common to a variety of orientation polarised objects besides faces. Vision Res. 38, 2855–2861.

de Gelder, B., and Bertelson, P. (2009). A comparative approach to testing face perception: Face and object identification by adults in a simultaneous matching task. Psychol. Belgica 49, 177–190. doi: 10.5334/pb-49-2-3-177

de Gelder, B., Frissen, I., Barton, J., and Hadjikhani, N. (2003). A modulatory role for facial expressions in prosopagnosia. Proc. Natl. Acad. Sci. U.S.A. 100, 13105–13110. doi: 10.1073/pnas.1735530100

de Gelder, B., Pourtois, G., Vroomen, J., and Bachoud-Lévi, A. C. (2000). Covert processing of faces in prosopagnosia is restricted to facial expressions: evidence from cross-modal bias. Brain Cogn. 44, 425–444. doi: 10.1006/brcg.1999.1203

de Gelder, B., and Rouw, R. (2000a). Configural face processes in acquired and developmental prosopagnosia: evidence for two separate face systems? Neuroreport 11, 3145–3150. doi: 10.1097/00001756-200009280-00021

de Gelder, B., and Rouw, R. (2000b). Paradoxical configuration effects for faces and objects in prosopagnosia. Neuropsychologia 38, 1271–1279. doi: 10.1016/S0028-3932(00)00039-7

de Gelder, B., and Rouw, R. (2000c). Structural encoding precludes recognition of parts in prosopagnosia. Cogn. Neuropsychol. 17, 89–102. doi: 10.1080/026432900380508

de Gelder, B., and Rouw, R. (2001). Beyond localisation: a dynamical dual route account of face recognition. Acta Psychol. (Amst.) 107, 183–207. doi: 10.1016/S0001-6918(01)00024-5

de Gelder, B., and Stekelenburg, J. J. (2005). Naso-temporal asymmetry of the n170 for processing faces in normal viewers but not in developmental prosopagnosia. Neurosci. Lett. 376, 40–45. doi: 10.1016/j.neulet.2004.11.047

de Gelder, B., and Van den Stock, J. (2015). “Prosopagnosia,” in International Encyclopedia of the Social and Behavioral Sciences, 2nd Edn., Vol. 19, ed J. D. Wright (Oxford: Elsevier), 250–255.

DeGutis, J., Wilmer, J., Mercado, R. J., and Cohan, S. (2013). Using regression to measure holistic face processing reveals a strong link with face recognition ability. Cognition 126, 87–100. doi: 10.1016/j.cognition.2012.09.004

Dingle, K. J., Duchaine, B. C., and Nakayama, K. (2005). A new test for face perception [abstract]. J. Vision 5:40a. doi: 10.1167/5.8.40

Dobel, C., Geiger, L., Bruchmann, M., Putsche, C., Schweinberger, S. R., and Junghöfer, M. (2008). On the interplay between familiarity and emotional expression in face perception. Psychol. Res. 72, 580–586. doi: 10.1007/s00426-007-0132-4

Duchaine, B., and Nakayama, K. (2006). The cambridge face memory test: results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia 44, 576–585. doi: 10.1016/j.neuropsychologia.2005.07.001

Durand, K., Gallay, M., Seigneuric, A., Robichon, F., and Baudouin, J. Y. (2007). The development of facial emotion recognition: the role of configural information. J. Exp. Child Psychol. 97, 14–27. doi: 10.1016/j.jecp.2006.12.001

Farah, M. J., Wilson, K. D., Drain, H. M., and Tanaka, J. R. (1995). The inverted face inversion effect in prosopagnosia: evidence for mandatory, face-specific perceptual mechanisms. Vision Res. 35, 2089–2093.

Firestone, A., Turk-Browne, N. B., and Ryan, J. D. (2007). Age-related deficits in face recognition are related to underlying changes in scanning behavior. Aging Neuropsychol. Cogn. 14, 594–607. doi: 10.1080/13825580600899717

Hadjikhani, N., and de Gelder, B. (2002). Neural basis of prosopagnosia: an fmri study. Hum. Brain Mapp. 16, 176–182. doi: 10.1002/hbm.10043

He, Y., Ebner, N. C., and Johnson, M. K. (2011). What predicts the own-age bias in face recognition memory? Soc. Cogn. 29, 97–109. doi: 10.1521/soco.2011.29.1.97

Huis in ‘t Veld, E., Van den Stock, J., and de Gelder, B. (2012). Configuration perception and face memory, and face context effects in developmental prosopagnosia. Cogn. Neuropsychol. 29, 464–481. doi: 10.1080/02643294.2012.732051

Johansson, M., Mecklinger, A., and Treese, A. C. (2004). Recognition memory for emotional and neutral faces: an event-related potential study. J. Cogn. Neurosci. 16, 1840–1853. doi: 10.1162/0898929042947883

Kennerknecht, I., Grueter, T., Welling, B., Wentzek, S., Horst, J., Edwards, S., et al. (2006). First report of prevalence of non-syndromic hereditary prosopagnosia (hpa). Am. J. Med. Genet. A 140, 1617–1622. doi: 10.1002/ajmg.a.31343

Kuder, G. F., and Richardson, M. W. (1937). The theory of the estimation of test reliability. Psychometrika 2, 151–160.

Lamont, A. C., Stewart-Williams, S., and Podd, J. (2005). Face recognition and aging: effects of target age and memory load. Mem. Cogn. 33, 1017–1024. doi: 10.3758/Bf03193209

Langeslag, S. J. E., Morgan, H. M., Jackson, M. C., Linden, D. E. J., and Van Strien, J. W. (2009). Electrophysiological correlates of improved short-term memory for emotional faces (vol 47, pg 887, 2009). Neuropsychologia 47, 2013–2013. doi: 10.1016/j.neuropsychologia.2009.02.031

Liu, C. H., Chen, W., and Ward, J. (2014). Remembering faces with emotional expressions. Front. Psychol. 5:1439. doi: 10.3389/Fpsyg.2014.01439

Lundqvist, D., Flykt, A., and Öhman, A. (1998). The Karolinska Directed Emotional Faces - Kdef. Stockholm: Karolinska Institutet.

Mckelvie, S. J. (1995). Emotional expression in upside-down faces - evidence for configurational and componential processing. Br. J. Soc. Psychol. 34, 325–334.

Palermo, R., Willis, M. L., Rivolta, D., McKone, E., Wilson, C. E., and Calder, A. J. (2011). Impaired holistic coding of facial expression and facial identity in congenital prosopagnosia. Neuropsychologia 49, 1226–1235. doi: 10.1016/j.neuropsychologia.2011.02.021

Reed, A. E., and Carstensen, L. L. (2012). The theory behind the age-related positivity effect. Front. Psychol. 3:339. doi: 10.3389/Fpsyg.2012.00339

Richler, J. J., Cheung, O. S., and Gauthier, I. (2011). Holistic processing predicts face recognition. Psychol. Sci. 22, 464–471. doi: 10.1177/0956797611401753

Riddoch, M. J., and Humphreys, G. W. (1992). Birmingham Object Recognition Battery. Hove: Psychology Press.

Righart, R., and de Gelder, B. (2007). Impaired face and body perception in developmental prosopagnosia. Proc. Natl. Acad. Sci. U.S.A. 104, 17234–17238. doi: 10.1073/pnas.0707753104

Righi, S., Marzi, T., Toscani, M., Baldassi, S., Ottonello, S., and Viggiano, M. P. (2012). Fearful expressions enhance recognition memory: electrophysiological evidence. Acta Psychol. 139, 7–18. doi: 10.1016/j.actpsy.2011.09.015

Samanez-Larkin, G. R., and Carstensen, L. L. (2011). “Socioemotional functioning and the aging brain,” in The Oxford Handbook of Social Neuroscience, eds J. Decety and J. T. Cacioppo (New York, NY: Oxford University Press), 507–521.

Shah, P., Gaule, A., Gaigg, S. B., Bird, G., and Cook, R. (2014). Probing short-term face memory in developmental prosopagnosia. Cortex 64C, 115–122. doi: 10.1016/j.cortex.2014.10.006

Snowden, J. S., Goulding, P. J., and Neary, D. (1989). Semantic dementia: a form of circumscribed cerebral atrophy. Behav. Neurol. 2, 167–182.

Tanaka, J. W., and Farah, M. J. (1993). Parts and wholes in face recognition. Q. J. Exp. Psychol. A 46, 225–245.

Tanaka, J. W., Kaiser, M. D., Butler, S., and Le Grand, R. (2012). Mixed emotions: holistic and analytic perception of facial expressions. Cogn. Emot. 26, 961–977. doi: 10.1080/02699931.2011.630933

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The nimstim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249. doi: 10.1016/j.psychres.2008.05.006

Van Belle, G., Busigny, T., Lefèvre, P., Joubert, S., Felician, O., Gentile, F., et al. (2011). Impairment of holistic face perception following right occipito-temporal damage in prosopagnosia: converging evidence from gaze-contingency. Neuropsychologia 49, 3145–3150. doi: 10.1016/j.neuropsychologia.2011.07.010

Van den Stock, J., and de Gelder, B. (2012). Emotional information in body and background hampers recognition memory for faces. Neurobiol. Learn. Mem. 97, 321–325. doi: 10.1016/j.nlm.2012.01.007

Van den Stock, J., and de Gelder, B. (2014). Face identity matching is influenced by emotions conveyed by face and body. Front. Hum. Neurosci. 8:53. doi: 10.3389/fnhum.2014.00053

Van den Stock, J., de Gelder, B., De Winter, F. L., Van Laere, K., and Vandenbulcke, M. (2012a). A strange face in the mirror. Face-selective self-misidentification in a patient with right lateralized occipito-temporal hypo-metabolism. Cortex 48, 1088–1090. doi: 10.1016/j.cortex.2012.03.003

Van den Stock, J., de Gelder, B., Van Laere, K., and Vandenbulcke, M. (2013). Face-selective hyper-animacy and hyper-familiarity misperception in a patient with moderate alzheimer's disease. J. Neuropsychiatry Clin. Neurosci. 25, E52–E53. doi: 10.1176/appi.neuropsych.12120390

Van den Stock, J., De Winter, F. L., de Gelder, B., Rangarajan, J. R., Cypers, G., Maes, F., et al. (2015). Impaired recognition of body expressions in the behavioral variant of fronto-temporal dementia. Neuropsychologia 75, 496–504. doi: 10.1016/j.neuropsychologia.2015.06.035

Van den Stock, J., Vandenbulcke, M., Zhu, Q., Hadjikhani, N., and de Gelder, B. (2012b). Developmental prosopagnosia in a patient with hypoplasia of the vermis cerebelli. Neurology 78, 1700–1702. doi: 10.1212/WNL.0b013e3182575130

Van den Stock, J., van de Riet, W. A., Righart, R., and de Gelder, B. (2008). Neural correlates of perceiving emotional faces and bodies in developmental prosopagnosia: an event-related fmri-study. PLoS ONE 3:e3195. doi: 10.1371/journal.pone.0003195

Wang, R. S., Li, J. G., Fang, H. Z., Tian, M. Q., and Liu, J. (2012). Individual differences in holistic processing predict face recognition ability. Psychol. Sci. 23, 169–177. doi: 10.1177/0956797611420575

White, M. (2000). Parts and wholes in expression recognition. Cogn. Emot. 14, 39–60. doi: 10.1080/026999300378987

Wiese, H. (2012). The role of age and ethnic group in face recognition memory: ERP evidence from a combined own-age and own-race bias study. Biol. Psychol. 89, 137–147. doi: 10.1016/j.biopsycho.2011.10.002

Keywords: face recognition, face memory, emotion recognition, configural face processing, inversion effect, experimental task battery

Citation: de Gelder B, Huis in ‘t Veld EMJ and Van den Stock J (2015) The Facial Expressive Action Stimulus Test. A test battery for the assessment of face memory, face and object perception, configuration processing, and facial expression recognition. Front. Psychol. 6:1609. doi: 10.3389/fpsyg.2015.01609

Received: 22 July 2015; Accepted: 05 October 2015; Published: 29 October 2015.

Edited by:

Luiz Pessoa, University of Maryland, USA

Reviewed by:

Yawei Cheng, National Yang-Ming University, Taiwan

Wim Van Der Elst, Hasselt University, Belgium

Copyright © 2015 de Gelder, Huis in ‘t Veld and Van den Stock. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Beatrice de Gelder, b.degelder@maastrichtuniversity.nl
