- Department of Psychology, Faculty of Science and Technology, Bournemouth University, Poole, UK

The processing of facial identity and facial expression has traditionally been seen as independent, a hypothesis that has largely been informed by a key double dissociation between neurological patients with a deficit in facial identity recognition but not facial expression recognition, and those with the reverse pattern of impairment. The independence hypothesis is also reflected in more recent anatomical models of face-processing, although these theories permit some interaction between the two processes. Given that much of the traditional patient-based evidence has been criticized, a review of more recent case reports that are accompanied by neuroimaging data is timely. Further, the performance of individuals with developmental face-processing deficits has recently been considered with regard to the independence debate. This paper reviews evidence from both acquired and developmental disorders, identifying methodological and theoretical strengths and caveats in these reports, and highlighting pertinent avenues for future research.

Introduction

The human face conveys information regarding a person’s identity (e.g., Schyns and Oliva, 1999; Gosselin and Schyns, 2001), emotional state (e.g., Ekman and Friesen, 1976; Smith et al., 2005), gender (e.g., Brown and Perrett, 1993), age (e.g., George and Hole, 1995), and direction of attention (e.g., Sander et al., 2007). This information is rapidly filtered so complex perceptual categorizations can be performed (Schyns et al., 2009). Despite decades of research on how we extract various cues from faces, it is still widely debated whether identity and expression information is processed and represented within shared or independent systems.

One way to approach this question is to examine the type of basic visual information (e.g., spatial frequency ranges or spatial location of diagnostic cues) and perceptual processes (e.g., holistic and featural or analytic processing) that are used to make identity and expression judgments. Studies examining the effect of spatial frequency (i.e., coarse vs. fine visual information) do not show a strict dissociation between expression and identity judgments: although certain spatial frequency bands may be more conducive to identification or detection of individual emotions (e.g., Costen et al., 1996; Gao and Maurer, 2011; Kumar and Srinivasan, 2011), or may be used preferentially for different tasks (e.g., Schyns and Oliva, 1999; Deruelle and Fagot, 2005), both high and low spatial frequency bands carry enough information to convey expression and identity (e.g., Deruelle and Fagot, 2005), and biases toward spatial frequency bands are not fixed (Schyns and Oliva, 1999). As such, spatial frequency bands cannot be taken to represent dissociable pathways of visual processing for expression and identity. However, techniques such as the “Bubbles” task (Gosselin and Schyns, 2001) indicate that typical perceivers do focus or rely on subtly different areas of the face when making expression and identity judgments. For instance, perceivers use a variety of discrete facial regions for different expression judgments (e.g., Smith et al., 2005), whereas identity judgments rely on a more diffuse area of the face, encompassing the eyes, nose, and mouth (Gosselin and Schyns, 2001; Schyns et al., 2002).
Taken together, these findings are consistent with the idea that facial identity information is processed in a holistic manner (see Maurer et al., 2002; Piepers and Robbins, 2012; for a definition and discussion of holistic processing), encompassing both individual facial features and their precise spatial configuration; whereas expression judgments may rely more on processing of individual components or conjunctions of components. For example, research using the composite task has found that, in general, identity judgments rely quite strongly on the integration of information from the top and bottom halves of the face (see Rossion, 2013 for a review). Expression judgments also show this “composite effect,” suggesting that expressions are also processed holistically, but some authors have suggested that this process is independent of or different from the holistic processing that occurs for identity (Calder et al., 2000; White, 2000). Furthermore, other studies have suggested that componential or part-based processing is more efficient, and hence the default route of visual processing, for expression judgments (Tanaka et al., 2012; see also Ellison and Massaro, 1997). In sum, then, expression and identity judgments may rely on subtly different visual cues and processing styles, with identity judgments making use of more diffuse spatial areas of the face and a processing style that integrates information from across these areas, and expression judgments relying on smaller spatial areas (incorporating one or two facial features) and a more piecemeal or componential processing style. While this gives some indication that identity and expression may make use of similar, or at least overlapping, visual information, it still leaves open the question of whether individuals access these visual cues or processing styles separately for different tasks, or whether they are irrevocably intertwined. 
As such, this paper focuses on research into neuropsychological case studies, and the contribution they can make to this debate.

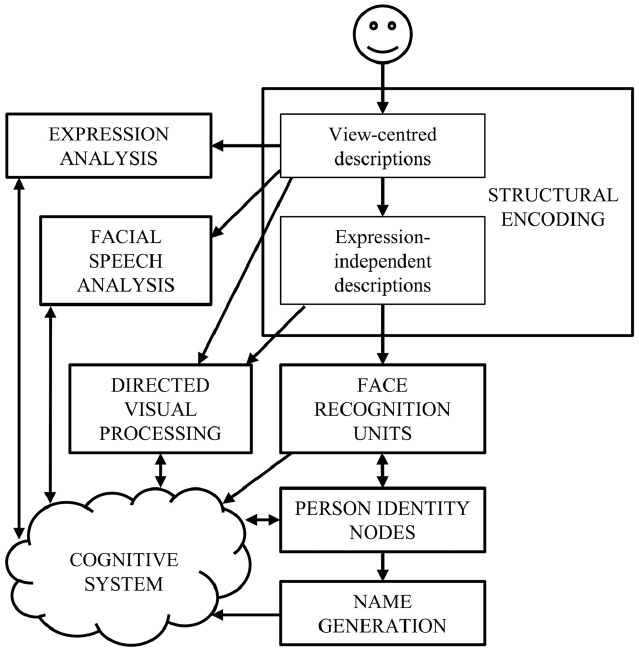

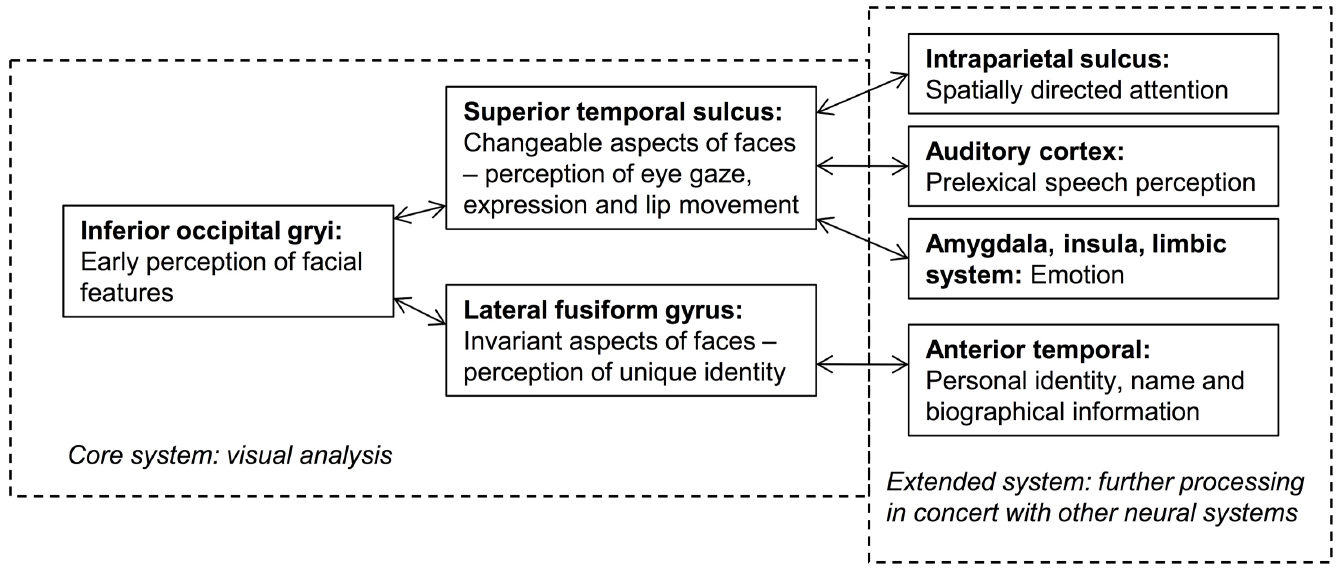

Current face-processing models support the segregation of identity and expression mechanisms. Functional models posit that identity and expression information is processed independently, although some interaction may be mediated by the wider cognitive system (e.g., Bruce and Young, 1986; see Figure 1). Anatomical models (e.g., Haxby et al., 2000; Gobbini and Haxby, 2007) distinguish between static structural properties of the face that relate to a single person (e.g., the shape and spacing of the facial features provide information on facial identity: Sergent, 1985), and dynamic variant information that is common to many individuals (e.g., positions of the muscles that convey an emotional state). Anatomically distinct brain regions are believed to analyze this information, with the lateral fusiform gyrus processing identity and the superior temporal sulcus (STS) expression (see Figure 2). While the anatomical model predicts this split occurs after an early stage of perceptual processing in the inferior occipital gyrus, other authors suggest this phase involves higher-level processing of both expression and identity (Calder and Young, 2005; Palermo et al., 2013). Both accounts are broadly consistent with the patterns of visual information use outlined above.

Figure 1. The cognitive model of face-processing proposed by Bruce and Young (1986).

Figure 2. An adaptation of the distributed model of face-processing proposed by Haxby et al. (2000).

Early evidence supporting the proposed independence of identity and expression processing (hereafter termed “the independence hypothesis”) came from a double dissociation between two neurological disorders. One half comes from individuals with prosopagnosia, who cannot recognize familiar people yet have preserved processing of facial expression (e.g., Tranel et al., 1988). The other half comes from patients who are impaired at recognizing emotional expressions despite intact identification abilities (e.g., Young et al., 1993; Hornak et al., 1996). While the independence hypothesis remains a dominant aspect of cognitive and anatomical models, much behavioral (e.g., Fox and Barton, 2007; Bate et al., 2009a; Campbell and Burke, 2009) and neuroimaging (e.g., Ganel et al., 2005; Fox et al., 2009) evidence has questioned the degree of separation between the two processes. Further, Calder and Young (2005) discounted the traditional patient-based evidence supporting the independence hypothesis, positing that a single model can achieve independent coding of identity and expression using the different types of visual information described above.

Since the publication of Calder and Young’s (2005) review, new patient reports have overcome the limitations of previous work. These papers also describe neuroanatomical data that complement behavioral performance, and some directly assess the use of perceptual information in expression and identity processing. Further, several developmental neuropsychological case studies have addressed the independence hypothesis. This paper summarizes the patient-based evidence reported since 2005, and presents a timely review of the contribution of neuropsychological case reports to the independence debate.

Acquired Deficits in Facial Identity Processing

One half of the neuropsychological double dissociation traditionally believed to support the independence hypothesis comes from individuals with prosopagnosia. This condition typically results from occipitotemporal lesions (Barton, 2008), and is characterized by a severe impairment in facial identity recognition. In some cases expression recognition appears to be preserved (i.e., performance was within the range of typical age-matched controls; Tranel et al., 1988; McNeil and Warrington, 1993; Young et al., 1993; Takahashi et al., 1995), supporting the independence hypothesis. Yet, Calder and Young (2005) argue that the bulk of this evidence should be discounted because the prosopagnosia is not visuoperceptual in origin, instead resulting from prosopamnesia (impaired recognition of faces encoded after but not before illness onset; e.g., Tranel et al., 1988), general amnesia, or more general semantic impairments (e.g., Etcoff, 1984). In fact, the authors suggested that only two cases truly appeared to have visuoperceptual deficits (Bruyer et al., 1983; Tranel et al., 1988), yet both investigations suffered from methodological or statistical limitations. The authors therefore concluded that no case of prosopagnosia published prior to their review provided convincing evidence in support of the independence hypothesis.
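
The criterion applied in these reports, performance "within the range of typical age-matched controls," can be made concrete with a single-case comparison. The following is a minimal sketch and not the procedure of any study cited here: it assumes a modified t-test of the kind often used to compare one patient with a small control sample, in which the control standard deviation is inflated by the factor sqrt((n + 1) / n). The function name and all scores are hypothetical and serve only as illustration.

```python
import math
from statistics import mean, stdev


def single_case_t(patient_score, control_scores):
    """Compare one patient's score with a small control sample.

    Returns (t, df), where
        t  = (patient - control mean) / (control SD * sqrt((n + 1) / n))
        df = n - 1
    This is an illustrative sketch, not the test used in the cited reports.
    """
    n = len(control_scores)
    if n < 2:
        raise ValueError("need at least two control scores")
    control_mean = mean(control_scores)
    control_sd = stdev(control_scores)  # sample SD (n - 1 denominator)
    if control_sd == 0:
        raise ValueError("control scores have zero variance")
    t = (patient_score - control_mean) / (control_sd * math.sqrt((n + 1) / n))
    return t, n - 1


# Hypothetical expression-recognition scores, for illustration only.
controls = [10, 12, 14]
patient = 6
t, df = single_case_t(patient, controls)
print(f"t({df}) = {t:.3f}")
```

The resulting statistic would be evaluated against a t distribution with n − 1 degrees of freedom. With very small control samples such a comparison has little power, so a score that falls "within the normal range" is at best weak evidence that the underlying process is preserved.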

However, since 2005, further instances of prosopagnosia with preserved expression recognition have been described. Riddoch et al. (2008) reported patient FB, who had damage to the right fusiform, right inferior temporal, right middle temporal and right inferior occipital gyri. FB had severe prosopagnosia yet scored within the typical range on an expression recognition test. Yet, the actual processing strategies used by FB were not assessed, and, in line with previous patient reports (e.g., Adolphs et al., 2005), the authors suggest that her normal performance on the expression task may be underpinned by atypical strategies. As mentioned above, expression processing may rely on both part-based analytical processing and some degree of holistic processing. While the possibility was not explicitly tested, the authors suggest that FB relied on part-based information to a greater extent than controls in the expression task, and could use this information to achieve a normal score (see also Baudouin and Humphreys, 2006).

Fox et al. (2011) used more sensitive measures of identity and expression perception in four individuals with prosopagnosia, alongside an fMRI-based functional localizer that identified preserved and impaired cortical regions. All four patients had selective difficulties in facial identity recognition, and, consistent with the anatomical model (Haxby et al., 2000), two had right inferotemporal lesions and two had damage within the anterior temporal lobes. Strikingly, the authors also described a fifth patient with selective damage to the posterior STS (pSTS), who presented with impaired expression recognition. Notably, however, these deficits extended to identity recognition when irrelevant variations in expression needed to be discounted. While evidence from the four prosopagnosic individuals supports the independence hypothesis, the latter participant suggests some overlap in the diagnostic facial information used in the two tasks, although exactly what diagnostic information is shared (e.g., processing style; separate visual cues) is currently unclear.

Converging evidence comes from patient HJA, who acquired damage to the ventral occipital and temporal lobes and was unable to recognize facial identity or static facial expressions at normal levels (Humphreys et al., 1993), potentially due to abnormal use of visual information during static expression processing (Baudouin and Humphreys, 2006). However, HJA performed significantly better when identifying moving facial expressions (Humphreys et al., 1993) and when matching moving faces based on identity (Lander et al., 2004). Although this movement advantage did not extend to overt identification or face learning, HJA’s use of movement cues in both identity and expression decisions suggests that (a) the neural mechanisms subserving facial movement processing—most likely the pSTS (Pitcher et al., 2011, 2014; Schultz et al., 2013)—can facilitate both processes, and (b) these neural mechanisms can be dissociated from those involved in static face-processing. This latter hypothesis is encompassed within the anatomical model (see Figure 2), although the role of the pSTS in identity recognition is less clear. O’Toole et al. (2002) suggest that the structure may also process “dynamic facial signatures” (characteristic patterns of motion that aid identification), although facial movement may also boost identity recognition by attracting attention and foveal fixation that guides stimulus-driven selective attention (O’Toole et al., 2002; Abrams and Christ, 2003). Regardless, this information may contribute to both expression and identity judgments, yet a smaller influence on the latter may facilitate identification only under limited circumstances (e.g., when the individual has trouble processing static facial cues; see O’Toole et al., 2002, for a discussion).

While increasing evidence supports independent mechanisms for dynamic and static facial information, there is less agreement about the stage at which this split occurs. Haxby et al. (2000) concur with Bruce and Young (1986) that the bifurcation occurs at an early stage (i.e., before the formation of view-independent structural descriptions in the functional model, and before processing in the lateral fusiform gyrus for identity and the STS for expression in the anatomical model). However, Palermo et al. (2011, 2013) posit a higher-order shared stage of processing that may involve holistic processing. A very recent report supports this hypothesis. Richoz et al. (2015) investigated the decoding of facial expression in patient PS, who had major lesions in the left mid-ventral and the right inferior occipital cortex, and minor lesions of the left posterior cerebellum and the right middle temporal gyrus. Previous work by Caldara et al. (2005) indicated that, in a facial identity task, PS used information in a sub-optimal manner, focusing on the mouth and external contours and avoiding the eye region. Yet, Richoz et al. (2015) found that PS used all the facial features to decode dynamic emotional expressions (with the exception of fear), and performed within the typical range when classifying those expressions. Despite this, PS had a general impairment in categorizing many static facial expressions, which, in line with the theory of Palermo et al. (2013), the authors attribute to the right inferior occipital gyrus lesion. They suggest that the preserved processing of dynamic expressions may occur via a direct and functionally distinct pathway connecting early visual cortex to the pSTS. Although this study did not investigate whether the patient also benefited from dynamic information in identity judgments, it indicates that the use of both dynamic and static information should be assessed in expression and identity tasks. 
Indeed, dissociable anatomic systems may exist for dynamic and static information, but there may be some overlap in the diagnostic information used for expression and identity judgments in each pathway.

Acquired Deficits in Facial Expression Recognition

There are fewer reports of acquired deficits in expression processing and it is not always clear whether facial identity recognition has been preserved. There is also some variation in lesion location (which is sometimes under-specified), with early studies reporting expression recognition difficulties following diffuse bilateral damage (Kurucz et al., 1980), right (Adolphs et al., 1996) or left (Young et al., 1993) hemisphere lesions, or selective amygdala damage (Adolphs et al., 1994; Brierley et al., 2004). These reports may be reconciled by findings that perceptual and recognition processes may be independently affected, and this may be related to lesion location. Studies have demonstrated deficits in expression matching but not naming following right hemisphere damage (Young et al., 1993), particularly to the right pSTS (Fox et al., 2011, patient R-ST1). Conversely, deficits in expression naming and emotional memory have been reported following unilateral (Adolphs et al., 1999; Brierley et al., 2004; Fox et al., 2011, patient R-AT1) or bilateral (Brierley et al., 2004) amygdala damage, respectively. Patient R-AT1 in Fox et al.’s (2011) report showed no pSTS damage and no difficulty performing expression matching tasks, suggesting dissociable roles for the pSTS and amygdala in expression processing. These findings converge neatly with the anatomical model.

The studies reviewed above mostly relied on categorization performance rather than examination of processing strategy. One exception is Adolphs et al.’s (1994) report of patient SM, who presented with amygdala damage and a selective deficit in fear recognition. In a later report, the authors found that she was unable to use diagnostic information from the eye region, irrespective of emotional expression (Adolphs et al., 2005). This had presented as a selective impairment in fear recognition because the eyes are the most important feature for identifying this emotion. As noted above for investigations examining individuals with prosopagnosia, categorization performance alone may obscure atypical use of visual information, influencing the theoretical conclusions that can be gleaned from patient reports.

Other criticisms suggest this half of the double dissociation has been over-simplified (Calder and Young, 2005). Evidence suggests that a single processing stream cannot process all expressions, as dissociable neural systems have been identified for particular emotions (e.g., Krolak-Salmon et al., 2003; although it is not clear if these dissociations are based on the emotion itself or simply perceptual features that are embedded within that expression, see Whalen et al., 2004). Instead, selective disruption of expression recognition may reflect damage to a more general emotion system (Calder and Young, 2005). Indeed, facial expression impairments are often associated with deficits in decoding vocal expression for specific emotions (Sprengelmeyer et al., 1999; Calder et al., 2000, 2001) and at a more general level (Adolphs et al., 2002).

Developmental Cases

Calder and Young (2005) rejected some patient-based evidence supporting the independence hypothesis on the grounds that it was developmental in origin. In line with other authors (e.g., Bishop, 1997; Karmiloff-Smith et al., 2003), they argue that individuals with developmental disorders may have had some form of atypical brain organization from birth. Thus, it is difficult to interpret developmental cases within cognitive models of the face-processing system, given that (a) the basic architecture of this system might not have developed, and (b) it is unknown if the system can be selectively disrupted in the same manner inferred for patients with acquired deficits. One could therefore argue that developmental rather than representational abnormalities provide a convincing explanation of the basis of face-processing impairments in these cases.

Despite these issues, several studies have investigated the independence hypothesis in developmental prosopagnosia. This disorder is typically viewed as a parallel condition to acquired prosopagnosia, and is similarly characterized by a severe and relatively selective deficit in facial identity recognition (e.g., Duchaine et al., 2007; Bate et al., 2009b; Garrido et al., 2009; Bate and Cook, 2012). However, these individuals have never experienced a brain injury, and do not have concurrent socio-emotional, intellectual or low-level perceptual difficulties. While some individuals with developmental prosopagnosia have deficits in facial expression recognition (Duchaine et al., 2006; Minnebusch et al., 2007), others appear to have a selective deficit only affecting the recognition of facial identity (Duchaine et al., 2003; Humphreys et al., 2007). Notably, however, Palermo et al. (2011) reported 12 developmental prosopagnosics who displayed normal levels of accuracy on a series of facial expression recognition tests, yet presented with impaired holistic coding of both facial expression and facial identity. The authors interpreted this finding as evidence that the prosopagnosics were relying on atypical strategies to achieve normal performance on the expression tasks. Thus, further work that examines actual processing strategy rather than accuracy and response times alone is required to clarify whether facial expression recognition is truly unaffected in some individuals with developmental prosopagnosia.

Evidence of selectively impaired expression recognition has been reported in socio-developmental disorders (SDDs), but findings are mixed. Many studies fail to find group-level deficits, with individuals falling into both typical and atypical ranges (for reviews, see Harms et al., 2010; Uljarevic and Hamilton, 2012). There is nevertheless some evidence that expression and identity processing can be dissociated (e.g., Hefter et al., 2005), although particular emotions (e.g., fear and surprise: Ashwin et al., 2007; Humphreys et al., 2007) appear to be disproportionately affected. As noted for brain-damaged patients, this evidence again suggests that deficits in expression recognition may be related to emotional processing more than face-processing. For instance, recent work suggests that facial (Cook et al., 2013) and vocal (Heaton et al., 2012) affect recognition deficits in autism may be attributed to co-occurring alexithymia rather than autism itself. Case reports of individuals with developmental visuoperceptual deficits in expression recognition without a concurrent SDD would provide more convincing evidence to support the independence hypothesis, yet no known case has been reported to date. This is unsurprising given that several authors suggest that facial expression recognition impairments inevitably lead to deficits in socio-emotional functioning, which may in turn lead to impairments in facial identity recognition (e.g., Schultz, 2005).

More consistent findings suggesting atypical processing of facial expression have been observed in studies that examine the use of diagnostic facial information in SDDs. Several reports suggest that, during expression recognition, high-functioning individuals with autism look less at the eye region (e.g., Pelphrey et al., 2002; Corden et al., 2008) or the inner facial features (eyes, nose, and mouth; Hernandez et al., 2009; Bal et al., 2010) than controls, or do not effectively use information from the upper-face when decoding expressions (Spezio et al., 2007; Gross, 2008).

Conclusion

While patient-based evidence supporting the independence of facial identity and expression processing was almost entirely discounted by Calder and Young (2005), more recent neuropsychological case studies have provided more convincing evidence supporting the independence hypothesis, particularly when accompanied by neuroanatomical data. Yet, reliance on recognition performance alone can clearly obscure atypicalities in the use of diagnostic visual information in both identity and expression recognition; and the use of dynamic and static information should be assessed in both processes, at both perceptual and mnemonic levels. When tested appropriately, neurological patients can provide invaluable contributions to theoretical debates such as the independence hypothesis. Although suitable patients are rare, supporting evidence may also be gleaned from more readily available developmental cases. While some authors have discounted the contribution of these individuals on theoretical grounds, observation of similar patterns of performance across acquired and developmental disorders would ultimately provide more convincing insights into the independence debate.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abrams, R. A., and Christ, S. E. (2003). Motion onset captures attention. Psychol. Sci. 14, 427–432. doi: 10.1111/1467-9280.01458

Adolphs, R., Damasio, H., Tranel, D., and Damasio, A. R. (1996). Cortical systems for the recognition of emotion in facial expressions. J. Neurosci. 16, 7678–7687.

Adolphs, R., Gosselin, F., Buchanan, T. W., Tranel, D., Schyns, P., and Damasio, A. R. (2005). A mechanism for impaired fear recognition after amygdala damage. Nature 433, 68–72. doi: 10.1038/nature03086

Adolphs, R., Tranel, D., and Damasio, H. (2002). Neural systems for recognizing emotion from prosody. Emotion 2, 23–51. doi: 10.1037/1528-3542.2.1.23

Adolphs, R., Tranel, D., Hamann, S., Young, A. W., Calder, A. J., Phelps, E. A., et al. (1999). Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia 37, 1111–1117. doi: 10.1016/S0028-3932(99)00039-1

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672. doi: 10.1038/372669a0

Ashwin, C., Baron-Cohen, S., Wheelwright, S., O’Riordan, M., and Bullmore, E. T. (2007). Differential activation of the amygdala and the “social brain” during fearful face-processing in Asperger syndrome. Neuropsychologia 45, 2–14. doi: 10.1016/j.neuropsychologia.2006.04.014

Bal, E., Harden, E., Lamb, D., Van Hecke, A. V., Denver, J. W., and Porges, S. W. (2010). Emotion recognition in children with autism spectrum disorders: relations to eye gaze and autonomic state. J. Autism Dev. Disord. 40, 358–370. doi: 10.1007/s10803-009-0884-3

Barton, J. J. S. (2008). Structure and function in acquired prosopagnosia: lessons from a series of 10 patients with brain damage. J. Neuropsychol. 2, 197–225. doi: 10.1348/174866407X214172

Bate, S., and Cook, S. (2012). Covert recognition relies on affective valence in developmental prosopagnosia: evidence from the skin conductance response. Neuropsychology 26, 670–674. doi: 10.1037/a0029443

Bate, S., Haslam, C., and Hodgson, T. L. (2009a). Angry faces are special too: evidence from the visual scanpath. Neuropsychology 23, 658–667. doi: 10.1037/a0014518

Bate, S., Haslam, C., Jansari, A., and Hodgson, T. L. (2009b). Covert face recognition relies on affective valence in congenital prosopagnosia. Cogn. Neuropsychol. 26, 391–411. doi: 10.1080/02643290903175004

Baudouin, J. Y., and Humphreys, G. W. (2006). Compensatory strategies in processing facial emotions: evidence from prosopagnosia. Neuropsychologia 44, 1361–1369. doi: 10.1016/j.neuropsychologia.2006.01.006

Bishop, D. V. M. (1997). Cognitive neuropsychology and developmental disorders: uncomfortable bedfellows. Q. J. Exp. Psychol. A 50, 899–923. doi: 10.1080/713755740

Brierley, B., Medford, N., Shaw, P., and David, A. S. (2004). Emotional memory and perception in temporal lobectomy patients with amygdala damage. J. Neurol. Neurosurg. Psychiatry 75, 593–599. doi: 10.1136/jnnp.2002.006403

Brown, E., and Perrett, D. I. (1993). What gives a face its gender? Perception 22, 829–840. doi: 10.1068/p220829

Bruce, V., and Young, A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

Bruyer, R., Laterre, C., Seron, X., Feyereisen, P., Strypstein, E., Pierrard, E., et al. (1983). A case of prosopagnosia with some preserved covert remembrance of familiar faces. Brain Cogn. 2, 257–284. doi: 10.1016/0278-2626(83)90014-3

Caldara, R., Schyns, P., Mayer, E., Smith, M. L., Gosselin, F., and Rossion, B. (2005). Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. J. Cogn. Neurosci. 17, 1–15. doi: 10.1162/089892905774597254

Calder, A. J., Burton, A. M., Miller, P., Young, A. W., and Akamatsu, S. (2001). A principal components analysis of facial expressions. Vision Res. 41, 1179–1208. doi: 10.1016/S0042-6989(01)00002-5

Calder, A. J., and Young, A. W. (2005). Understanding facial identity and facial expression recognition. Nat. Rev. Neurosci. 6, 641–653. doi: 10.1038/nrn1724

Calder, A. J., Young, A. W., Keane, J., and Dean, M. (2000). Configural information in facial expression perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 527–551. doi: 10.1037/0096-1523.26.2.527

Campbell, J., and Burke, D. (2009). Evidence that identity-dependent and identity-independent neural populations are recruited in the perception of five basic emotional facial expressions. Vision Res. 49, 1532–1540. doi: 10.1016/j.visres.2009.03.009

Cook, R., Brewer, R., Shah, P., and Bird, G. (2013). Alexithymia, not autism, predicts poor recognition of emotional facial expressions. Psychol. Sci. 24, 723–732. doi: 10.1177/0956797612463582

Corden, B., Chilvers, R., and Skuse, D. (2008). Avoidance of emotionally arousing stimuli predicts social-perceptual impairment in Asperger’s syndrome. Neuropsychologia 46, 137–147. doi: 10.1016/j.neuropsychologia.2007.08.005

Costen, N. P., Parker, D. M., and Craw, I. (1996). Effects of high-pass and low-pass spatial filtering on face identification. Percept. Psychophys. 58, 602–612.

Deruelle, C., and Fagot, J. (2005). Categorizing facial identities, emotions, and genders: attention to high- and low-spatial frequencies by children and adults. J. Exp. Child Psychol. 90, 172–184. doi: 10.1016/j.jecp.2004.09.001

Duchaine, B., Germine, L., and Nakayama, K. (2007). Family resemblance: ten family members with prosopagnosia and within-class object agnosia. Cogn. Neuropsychol. 24, 419–430. doi: 10.1080/02643290701380491

Duchaine, B., Parker, H., and Nakayama, K. (2003). Normal emotion recognition in a prosopagnosic. Perception 32, 827–838. doi: 10.1068/p5067

Duchaine, B., Yovel, G., Butterworth, E., and Nakayama, K. (2006). Prosopagnosia as an impairment to face-specific mechanisms: elimination of the alternative hypothesis in a developmental case. Cogn. Neuropsychol. 23, 714–747. doi: 10.1080/02643290500441296

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

Ellison, J. W., and Massaro, D. W. (1997). Featural evaluation, integration, and judgment of facial affect. J. Exp. Psychol. Hum. Percept. Perform. 23, 213–216. doi: 10.1037/0096-1523.23.1.213

Etcoff, N. (1984). Selective attention to facial identity and facial emotion. Neuropsychologia 22, 281–295. doi: 10.1016/0028-3932(84)90075-7

Fox, C. J., and Barton, J. J. (2007). What is adapted in face adaptation? The neural representations of expression in the human visual system. Brain Res. 1127, 80–89. doi: 10.1016/j.brainres.2006.09.104

Fox, C. J., Hanif, H. M., Iaria, G., Duchaine, B. C., and Barton, J. J. S. (2011). Perceptual and anatomic patterns of selective deficits in facial identity and expression processing. Neuropsychologia 49, 3188–3200. doi: 10.1016/j.neuropsychologia.2011.07.018

Fox, C. J., Moon, S. Y., Iaria, G., and Barton, J. J. (2009). The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage 44, 569–580. doi: 10.1016/j.neuroimage.2008.09.011

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y., and Goodale, M. A. (2005). The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43, 1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012

Gao, X., and Maurer, D. (2011). A comparison of spatial frequency tuning for the recognition of facial identity and facial expressions in adults and children. Vision Res. 51, 508–519. doi: 10.1016/j.visres.2011.01.011

Garrido, L., Furl, N., Draganski, B., Weiskopf, N., Stevens, J., Tan, G. C., et al. (2009). Voxel-based morphometry reveals reduced grey matter volume in the temporal cortex of developmental prosopagnosics. Brain 132, 3443–3455. doi: 10.1093/brain/awp271

George, P. A., and Hole, G. J. (1995). Factors influencing the accuracy of age estimates of unfamiliar faces. Perception 24, 1059–1073. doi: 10.1068/p241059

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Gosselin, F., and Schyns, P. G. (2001). Bubbles: a technique to reveal the use of information in recognition tasks. Vision Res. 41, 2261–2271. doi: 10.1016/S0042-6989(01)00097-9

Gross, T. F. (2008). Recognition of immaturity and emotional expressions in blended faces by children with autism and other developmental disabilities. J. Autism Dev. Disord. 38, 297–311. doi: 10.1007/s10803-007-0391-3

Harms, M. B., Martin, A., and Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol. Rev. 20, 290–322. doi: 10.1007/s11065-010-9138-6

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Heaton, P., Reichenbacher, L., Sauter, D., Allen, R., Scott, S., and Hill, E. (2012). Measuring the effects of alexithymia on perception of emotional vocalizations in autism spectrum disorder and typical development. Psychol. Med. 42, 2453–2459. doi: 10.1017/S0033291712000621

Hefter, R. L., Manoach, D. S., and Barton, J. J. S. (2005). Perception of facial expression and facial identity in subjects with social developmental disorders. Neurology 65, 1620–1625. doi: 10.1212/01.wnl.0000184498.16959.c0

Hernandez, N., Metzger, A., Magne, R., Bonnet-Brilhault, F., Roux, S., Barthelemy, C., et al. (2009). Exploration of core features of a human face by healthy and autistic adults analyzed by visual scanning. Neuropsychologia 47, 1004–1012. doi: 10.1016/j.neuropsychologia.2008.10.023

Hornak, J., Rolls, E. T., and Wade, D. (1996). Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia 34, 247–261. doi: 10.1016/0028-3932(95)00106-9

Humphreys, G. W., Donnelly, N., and Riddoch, M. J. (1993). Expression is computed separately from facial identity, and it is computed separately for moving and static faces: neuropsychological evidence. Neuropsychologia 31, 173–181. doi: 10.1016/0028-3932(93)90045-2

Humphreys, K., Minshew, N., Leonard, G. L., and Behrmann, M. (2007). A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia 45, 685–695. doi: 10.1016/j.neuropsychologia.2006.08.003

Karmiloff-Smith, A., Scerif, G., and Ansari, D. (2003). Double dissociation in developmental disorders? Theoretically misconceived, empirically dubious. Cortex 39, 161–163. doi: 10.1016/S0010-9452(08)70091-1

Krolak-Salmon, P., Henaff, M. A., Isnard, J., Tallon-Baudry, C., Guenot, M., Vighetto, A., et al. (2003). An attention modulated response to disgust in human ventral anterior insula. Ann. Neurol. 53, 446–453. doi: 10.1002/ana.10502

Kumar, D., and Srinivasan, N. (2011). Emotion perception is mediated by spatial frequency content. Emotion 11, 1144–1151. doi: 10.1037/a0025453

Kurucz, J., Soni, A., Feldmar, G., and Slade, W. (1980). Prosopo-affective agnosia and computerized tomography findings in patients with cerebral disorders. J. Am. Geriatr. Soc. 28, 475–478. doi: 10.1111/j.1532-5415.1980.tb01123.x

Lander, K., Humphreys, G., and Bruce, V. (2004). Exploring the role of motion in prosopagnosia: recognizing, learning and matching faces. Neurocase 10, 462–470. doi: 10.1080/13554790490900761

Maurer, D., Le Grand, R., and Mondloch, C. J. (2002). The many faces of configural processing. Trends Cogn. Sci. 6, 255–260. doi: 10.1016/S1364-6613(02)01903-4

McNeil, J. E., and Warrington, E. K. (1993). Prosopagnosia: a face-specific disorder. Q. J. Exp. Psychol. A 46, 1–10. doi: 10.1080/14640749308401064

Minnebusch, D. A., Suchan, B., Ramon, M., and Daum, I. (2007). Event-related potentials reflect heterogeneity of developmental prosopagnosia. Eur. J. Neurosci. 25, 2234–2247. doi: 10.1111/j.1460-9568.2007.05451.x

O’Toole, A. J., Roark, D. A., and Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends Cogn. Sci. 6, 261–266. doi: 10.1016/S1364-6613(02)01908-3

Palermo, R., O’Connor, K. B., Davis, J. M., Irons, J., and McKone, E. (2013). New tests to measure individual differences in matching and labelling facial expressions of emotion, and their association with ability to recognize vocal emotions and facial identity. PLoS ONE 8:e68126. doi: 10.1371/journal.pone.0068126

Palermo, R., Willis, M. L., Rivolta, D., McKone, E., Wilson, C. E., and Calder, A. J. (2011). Impaired holistic coding of facial expression and facial identity in congenital prosopagnosia. Neuropsychologia 49, 1226–1235. doi: 10.1016/j.neuropsychologia.2011.02.021

Pelphrey, K. A., Sasson, N. J., Reznick, J. S., Paul, G., Goldman, B. D., and Piven, J. (2002). Visual scanning of faces in autism. J. Autism Dev. Disord. 32, 249–261. doi: 10.1023/A:1016374617369

Piepers, D. W., and Robbins, R. A. (2012). A review and clarification of the terms “holistic,” “configural,” and “relational” in the face perception literature. Front. Psychol. 3:559. doi: 10.3389/fpsyg.2012.00559

Pitcher, D., Dilks, D. D., Saxe, R. R., Triantafyllou, C., and Kanwisher, N. (2011). Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage 56, 2356–2363. doi: 10.1016/j.neuroimage.2011.03.067

Pitcher, D., Duchaine, B., and Walsh, V. (2014). Combined TMS and fMRI reveal dissociable cortical pathways for dynamic and static face perception. Curr. Biol. 24, 2066–2070. doi: 10.1016/j.cub.2014.07.060

Richoz, A. R., Jack, R. E., Garrod, O. G., Schyns, P. G., and Caldara, R. (2015). Reconstructing dynamic mental models of facial expressions in prosopagnosia reveals distinct representations for identity and expression. Cortex 65, 50–64. doi: 10.1016/j.cortex.2014.11.015

Riddoch, M. J., Johnston, R. A., Bracewell, R. M., Boutsen, L., and Humphreys, G. W. (2008). Are faces special? A case of pure prosopagnosia. Cogn. Neuropsychol. 25, 3–26. doi: 10.1080/02643290801920113

Rossion, B. (2013). The composite face illusion: a whole window into our understanding of holistic face perception. Vis. Cogn. 21, 139–253. doi: 10.1080/13506285.2013.772929

Sander, D., Grandjean, D., Kaiser, S., Wehrle, T., and Scherer, K. R. (2007). Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. Eur. J. Cogn. Psychol. 19, 470–480. doi: 10.1080/09541440600757426

Schultz, J., Brockhaus, M., Bülthoff, H. H., and Pilz, K. S. (2013). What the human brain likes about facial motion. Cereb. Cortex 23, 1167–1178. doi: 10.1093/cercor/bhs106

Schultz, R. T. (2005). Developmental deficits in social perception in autism: the role of the amygdala and fusiform face area. Int. J. Dev. Neurosci. 23, 125–141. doi: 10.1016/j.ijdevneu.2004.12.012

Schyns, P. G., Bonnar, L., and Gosselin, F. (2002). Show me the features! Understanding recognition from the use of visual information. Psychol. Sci. 13, 402–409. doi: 10.1111/1467-9280.00472

Schyns, P. G., and Oliva, A. (1999). Dr. Angry and Mr. Smile: when categorization flexibly modifies the perception of faces in rapid visual presentations. Cognition 69, 243–265. doi: 10.1016/S0010-0277(98)00069-9

Schyns, P. G., Petro, L. S., and Smith, M. L. (2009). Transmission of facial expressions of emotion co-evolved with their efficient decoding in the brain: behavioral and brain evidence. PLoS ONE 4:e5625. doi: 10.1371/journal.pone.0005625

Sergent, J. (1985). Influence of task and input factors on hemispheric involvement in face-processing. J. Exp. Psychol. Hum. Percept. Perform. 11, 846–861. doi: 10.1037/0096-1523.11.6.846

Smith, M. L., Cottrell, G. W., Gosselin, F., and Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychol. Sci. 16, 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

Spezio, M. L., Adolphs, R., Hurley, R. S. E., and Piven, J. (2007). Abnormal use of facial information in high-functioning autism. J. Autism Dev. Disord. 37, 929–939. doi: 10.1007/s10803-006-0232-9

Sprengelmeyer, R., Young, A. W., Schroeder, U., Grossenbacher, P. G., Federlien, J., Buttner, T., et al. (1999). Knowing no fear. Proc. Biol. Sci. 266, 2451–2456. doi: 10.1098/rspb.1999.0945

Takahashi, N., Kawamura, M., Hirayama, K., Shiota, J., and Isono, O. (1995). Prosopagnosia: a clinical and anatomical study of four patients. Cortex 31, 317–329. doi: 10.1016/S0010-9452(13)80365-6

Tanaka, J. W., Kaiser, M. D., Butler, S., and Le Grand, R. (2012). Mixed emotions: holistic and analytic perception of facial expressions. Cogn. Emot. 26, 961–977. doi: 10.1080/02699931.2011.630933

Tranel, D., Damasio, A. R., and Damasio, H. (1988). Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology 38, 690–696. doi: 10.1212/WNL.38.5.690

Uljarevic, M., and Hamilton, A. (2012). Recognition of emotions in autism: a formal meta-analysis. J. Autism Dev. Disord. 43, 1517–1526. doi: 10.1007/s10803-012-1695-5

Whalen, P. J., Kagan, J., Cook, R. G., Davis, F. C., Kim, H., Polis, S., et al. (2004). Human amygdala responsivity to masked fearful eye whites. Science 306, 2061. doi: 10.1126/science.1103617

White, M. (2000). Parts and wholes in expression recognition. Cogn. Emot. 14, 39–60. doi: 10.1080/026999300378987

Keywords: face recognition, face-processing, emotional expression, facial identity, prosopagnosia

Citation: Bate S and Bennetts R (2015) The independence of expression and identity in face-processing: evidence from neuropsychological case studies. Front. Psychol. 6:770. doi: 10.3389/fpsyg.2015.00770

Received: 31 October 2014; Accepted: 22 May 2015;

Published: 09 June 2015.

Edited by:

Wenfeng Chen, Chinese Academy of Sciences, China

Copyright © 2015 Bate and Bennetts. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah Bate, Department of Psychology, Faculty of Science and Technology, Bournemouth University, Poole House, Fern Barrow, Poole BH12 5BB, UK, sbate@bournemouth.ac.uk