- 1 Sackler Centre for Consciousness Science, University of Sussex, Brighton, UK

- 2 Department of Informatics, University of Sussex, Brighton, UK

- 3 Department of Psychiatry, Brighton and Sussex Medical School, Brighton, UK

We describe a theoretical model of the neurocognitive mechanisms underlying conscious presence and its disturbances. The model is based on interoceptive prediction error and is informed by predictive models of agency, general models of hierarchical predictive coding and dopaminergic signaling in cortex, the role of the anterior insular cortex (AIC) in interoception and emotion, and cognitive neuroscience evidence from studies of virtual reality and of psychiatric disorders of presence, specifically depersonalization/derealization disorder. The model associates presence with successful suppression by top-down predictions of informative interoceptive signals evoked by autonomic control signals and, indirectly, by visceral responses to afferent sensory signals. The model connects presence to agency by allowing that predicted interoceptive signals will depend on whether afferent sensory signals are determined, by a parallel predictive-coding mechanism, to be self-generated or externally caused. Anatomically, we identify the AIC as the likely locus of key neural comparator mechanisms. Our model integrates a broad range of previously disparate evidence, makes predictions for conjoint manipulations of agency and presence, offers a new view of emotion as interoceptive inference, and represents a step toward a mechanistic account of a fundamental phenomenological property of consciousness.

Introduction

In consciousness science, psychiatry, and virtual reality (VR), the concept of presence is used to refer to the subjective sense of reality of the world and of the self within the world (Metzinger, 2003; Sanchez-Vives and Slater, 2005). Presence is a characteristic of most normal healthy conscious experience. However, theoretical models of the neural mechanisms responsible for presence, and its disorders, are still lacking (Sanchez-Vives and Slater, 2005).

Selective disturbances of conscious presence are manifest in dissociative psychiatric disorders such as depersonalization (loss of subjective sense of reality of the self) and derealization (loss of subjective sense of reality of the world). Depersonalization disorder (DPD), characterized by the chronic circumscribed expression of these symptoms (Phillips et al., 2001; Sierra et al., 2005; Simeon et al., 2009; Sierra and David, 2011), can therefore provide a useful model for understanding presence. In VR, presence is used in a subjective–phenomenal sense to refer to the sense of now being in a virtual environment (VE) rather than in the actual physical environment (Sanchez-Vives and Slater, 2005). These perspectives are complementary: While studies of DPD can help identify candidate neural mechanisms underlying presence in normal conscious experience, studies of VR can help identify how presence can be generated even in situations where it would normally be lacking. Here, we aim to integrate insights into presence from these different perspectives within a single theoretical framework and model.

Our framework is based on interoceptive predictive coding within the anterior insular cortex (AIC) and associated brain regions. Interoception refers to the perception of the physiological condition of the body, a process associated with the autonomic nervous system and with the generation of subjective feeling states (James, 1890; Critchley et al., 2004; Craig, 2009). Interoception can be contrasted with exteroception, which refers to (i) perception of the environment via the classical sensory modalities, and (ii) proprioception and kinesthesia reflecting the position and movement of the body in space (Sherrington, 1906; Craig, 2003; Critchley et al., 2004; Blanke and Metzinger, 2009). Predictive coding is a powerful framework for conceiving of the neural mechanisms underlying perception, cognition, and action (Rao and Ballard, 1999; Bubic et al., 2010; Friston, 2010). Simply put, predictive coding models describe counter-flowing top-down prediction (expectation) signals and bottom-up prediction error signals. Successful perception, cognition, and action are associated with successful suppression (“explaining away”) of prediction error. Applied to interoception, predictive coding implies that subjective feeling states are determined by predictions about the interoceptive state of the body, extending the James–Lange and Schachter–Singer theories of emotion (James, 1890; Schachter and Singer, 1962). Predictive coding models have previously been applied to the sense of agency (the sense that a person’s action is the consequence of his or her intention). Such models propose that disturbances of sensed agency, for example in schizophrenia, arise from imprecise predictions about the sensory consequences of actions (Frith, 1987; Blakemore et al., 2000; Synofzik et al., 2010; Voss et al., 2010). In one line of previous work, Verschure et al. (2003) proposed that presence in a VE is associated with good matches between expected and actual sensorimotor signals, leveraging a prediction-based model of behavior (“distributed adaptive control”; Bernardet et al., 2011). However, to our knowledge, computationally explicit predictive coding models have not been formally applied to presence, nor to interoceptive perceptions. Anatomically, we focus on the AIC because this region has been strongly implicated in interoceptive representation and in the associated generation of subjective feeling states (interoceptive awareness; Critchley et al., 2004; Craig, 2009; Harrison et al., 2010); moreover, AIC activity in DPD is abnormally low (Phillips et al., 2001).

In brief, our model proposes that presence is the result of successful suppression by top-down predictions of informative interoceptive signals evoked (directly) by autonomic control signals and (indirectly) by bodily responses to afferent sensory signals. According to the model, disorders of presence (as in DPD) follow from pathologically imprecise interoceptive predictive signals. The model integrates presence and agency while proposing that they are neither necessary nor sufficient for each other, offers a novel view of emotion as “interoceptive inference,” and is relevant to emerging models of selfhood based on proprioception and multisensory integration. Importantly, the model is testable via novel combinations of VR, neuroimaging, and manipulation of physiological feedback.

The model is motivated by several lines of theory and evidence, including: (i) general models of hierarchically organized predictive coding in cortex, following principles of Bayesian inference (Neal and Hinton, 1998; Lee and Mumford, 2003; Friston, 2009; Bubic et al., 2010); (ii) the importance of insular cortex (particularly the AIC) in integrating interoceptive and exteroceptive signals, and in generating subjective feeling states (Critchley et al., 2002, 2004; Craig, 2009; Harrison et al., 2010); (iii) suggestions and observations of prediction errors in insular cortex (Paulus and Stein, 2006; Gray et al., 2007; Preuschoff et al., 2008; Singer et al., 2009; Bossaerts, 2010); (iv) evidence of abnormal insula activation in DPD (Phillips et al., 2001; Sierra and David, 2011); (v) models of the subjective sense of “agency” (and its disturbance in schizophrenia) framed in terms of predicting the sensory consequences of self-generated actions (Frith, 1987, 2011; Synofzik et al., 2010; Voss et al., 2010); and (vi) theory and evidence regarding the role of dopamine in signaling prediction errors and in optimizing their precision (Schultz and Dickinson, 2000; Fiorillo et al., 2003; Friston et al., 2006; Fletcher and Frith, 2009).

In the remainder of this paper, we first define the concept of presence in greater detail. We then introduce the theoretical model before justifying its components with reference to each of the areas just described. We finish by extracting from the model some testable predictions, discussing related modeling work, and noting some potential challenges.

The Phenomenology of Presence

The concept of presence has emerged semi-independently in different fields (VR, psychiatry, consciousness science, philosophy) concerned with understanding basic features of normal and abnormal conscious experience. The concepts from each field partially overlap. In VR, presence has both subjective–phenomenal and objective–functional interpretations. In the former, presence is understood as the sense of now being in a VE while transiently unaware of one’s real location and of the technology delivering the sensory input and recording the motor output (Jancke et al., 2009); a more compact definition is simply “the sense of being there” (Lombard and Ditton, 1997) or “being now there” (Metzinger, 2003). The objective interpretation is based on establishing a behavioral/functional equivalence between virtual and real environments: “the key to the approach is that the sense of ‘being there’ in a VE is grounded on the ability to ‘do there’” (Sanchez-Vives and Slater, 2005, p. 333). In this paper we focus on the former interpretation as the one most relevant to the phenomenology of presence.

Within psychiatry, presence is often discussed with reference to its disturbance or absence in syndromes such as DPD and the early (prodromal) stages of psychoses. A useful characterization of DPD is provided by Ackner (1954): “a subjective feeling of internal and/or external change, experienced as one of strangeness or unreality.” A common description given by DPD patients is that their conscious experiences of the self and the world have an “as if” character; the objects of perception seem unreal and distant, or unreachable, “as if” behind a mirror or window. DPD patients do not normally suffer delusions or hallucinations, marking a clear distinction from full-blown psychoses such as schizophrenia; however, it is increasingly recognized that symptoms of DPD may characterize prodromal stages of psychosis (Moller and Husby, 2000), potentially providing diagnostic, prognostic, and explanatory value. There is a clear overlap between the usages of presence in DPD and VR in picking out the subjective feeling of “being there.” In the former case the sense of “being there” is lost; in the latter, its generation is desired. More generally, presence can be considered a constitutive property of conscious experience. Following Metzinger, a “temporal window of presence” can be understood as precipitating a subjective conscious “now” from the flow of objective time (Metzinger, 2003). Metzinger further connects the concept of presence to that of transparency, which refers to the fact that our perceptions of the world and of the self appear direct, unmediated by the neurocognitive mechanisms that in fact give rise to them. Here, we do not explicitly treat the temporal aspect of presence, and we treat transparency and presence as synonymous. Considered this way, although presence can vary in its intensity, it is a characteristic of conscious experiences generally and not an instance of any specific conscious experience (e.g., an experience of a red mug); in other words, presence can be considered to be a “structural property” of consciousness (Seth, 2009).

Considering these perspectives together, there is a natural ambiguity about whether it is presence itself, or its absence in particular conditions, that is the core phenomenological explanatory target. However, in either case it remains necessary to formulate a model describing the relevant neurocognitive constraints. We now introduce such a model.

An Interoceptive Predictive-Coding Model of Conscious Presence

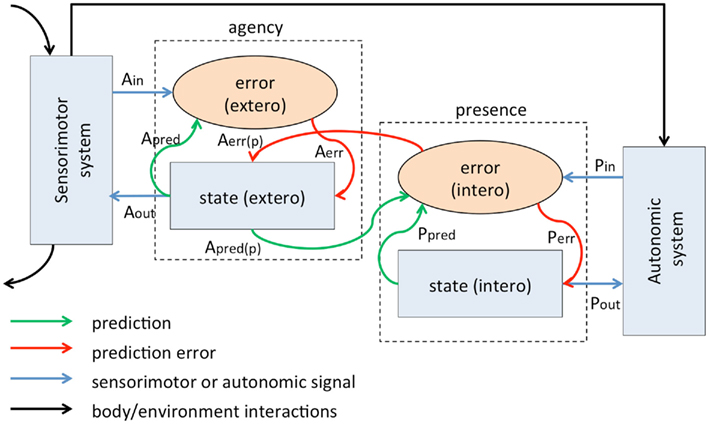

Figure 1 depicts the functional architecture of the proposed model. It consists of two primary components, an “agency component” and a “presence component,” which interact according to hierarchical Bayesian principles and are connected, respectively, with a sensorimotor system and an autonomic/motivational system. Each main component has a “state module” and an “error module.” The core concept of the model is that a sense of presence arises when informative interoceptive prediction signals are successfully matched to inputs so that prediction errors are suppressed. It is not sufficient simply for interoceptive prediction error signals to be zero, as could happen, for example, in the absence of any interoceptive signals, when a trivial prediction of “no signal” would be accurate. Rather, presence depends on a match between informative interoceptive signals and top-down predictions arising from a dynamically evolving brain–body–world interaction. The same considerations also apply to the agency component.
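To make the distinction between trivially zero error and a genuine match concrete, the following Python sketch scores a prediction by the fraction of signal variance it explains. The score and the function name `explained_fraction` are hypothetical illustrations, not part of the model as published: an exact prediction of a flat, uninformative signal scores zero, whereas an exact prediction of a fluctuating signal scores one.

```python
from statistics import fmean, pvariance


def explained_fraction(signal, prediction):
    """Hypothetical match score: fraction of signal variance explained by a prediction.

    An uninformative (constant) signal scores 0.0 even when the prediction is
    exact, so a trivial prediction of "no signal" earns no credit.
    """
    if len(signal) != len(prediction):
        raise ValueError("signal and prediction must have the same length")
    variance = pvariance(signal)
    if variance == 0.0:
        return 0.0  # nothing informative to explain
    mse = fmean((s - p) ** 2 for s, p in zip(signal, prediction))
    return max(0.0, 1.0 - mse / variance)


flat = [0.0] * 5
beat = [0.0, 1.0, 0.0, -1.0, 0.0]
print(explained_fraction(flat, flat))       # 0.0: zero error, but uninformative
print(explained_fraction(beat, beat))       # 1.0: informative signal, exact match
print(explained_fraction(beat, [0.0] * 5))  # 0.0: predicting "no signal" fails
```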

Figure 1. An interoceptive predictive coding model of conscious presence. Both agency and presence components comprise state and error units; state units generate control signals (Aout, Pout) and make predictions [Apred, Ppred, Apred(p)] about the consequent incoming signals (Ain, Pin); error units compare predictions with afferents, generating error signals [Aerr, Perr, Aerr(p)]. In the current version of the model the agency component is hierarchically located above the presence component, so that it generates predictions about the interoceptive consequences of sensory input generated by motor control signals.

The agency component is based on Frith’s well-established “comparator model” of schizophrenia (Frith, 1987, 2011; Blakemore et al., 2000), recently extended to a Bayesian framework (Fletcher and Frith, 2009). In the state module of this component, motor signals are generated which influence the sensorimotor system (Aout); these motor signals are accompanied by prediction signals (Apred) which attempt to predict the sensory consequences of motor actions via a forward model informed by efference copy and/or corollary discharge signals (Sommer and Wurtz, 2008). Predicted and afferent sensory signals are compared in the error module, generating a prediction error signal Aerr. In this model, the subjective sense of agency depends on successful prediction of the sensory consequences of action, i.e., suppression or “explaining away” of the exteroceptive prediction error Aerr. Following previous models (Fletcher and Frith, 2009; Synofzik et al., 2010), disturbances in sensed agency arise not simply from predictive mismatches, but from pathologically imprecise predictions about the sensory consequences of action. Predictive coding schemes necessarily involve estimates of precision (inverse variance), since prediction errors are otherwise meaningless: without an estimate of its precision, a prediction error cannot be weighted against competing evidence. Experimentally, it has been shown that imprecise predictions prompt patients to rely more strongly on (and therefore adapt more readily to) external cues, accounting for a key feature of schizophrenic phenomenology in which actions are interpreted as having external rather than internal causes (Synofzik et al., 2010). The precision of prediction error signals has been associated specifically with dopaminergic activity (Fiorillo et al., 2003), suggesting a proximate neuronal origin of schizophrenic symptomatology in terms of abnormal dopaminergic neurotransmission (Fletcher and Frith, 2009). Prediction error precision also features prominently in recent models of hierarchical Bayesian networks, discussed in Section “Prediction, Perception, and Bayesian Inference” (Friston et al., 2006; Friston, 2009).
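A minimal sketch of the precision-weighting idea, assuming Gaussian beliefs: the forward-model prediction and the external sensory cue are fused in proportion to their precisions. The names `Gaussian`, `fuse`, and `weighted_error` are illustrative assumptions rather than the comparator model's published implementation; the sketch only shows how lowering the precision of the prediction shifts the resulting estimate toward the external cue.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gaussian:
    mean: float
    precision: float  # inverse variance


def fuse(prediction: Gaussian, evidence: Gaussian) -> Gaussian:
    """Precision-weighted (Bayes-optimal) fusion of two Gaussian estimates."""
    total = prediction.precision + evidence.precision
    mean = (prediction.precision * prediction.mean
            + evidence.precision * evidence.mean) / total
    return Gaussian(mean, total)


def weighted_error(predicted: float, observed: float, precision: float) -> float:
    """Precision-weighted prediction error, e.g. A_err = precision * (A_in - A_pred)."""
    return precision * (observed - predicted)


# Hypothetical values: the forward model predicts no displacement (0.0),
# while an external cue reports a displacement of 1.0.
cue = Gaussian(mean=1.0, precision=1.0)
precise = fuse(Gaussian(0.0, 10.0), cue)   # precise prediction dominates
imprecise = fuse(Gaussian(0.0, 0.1), cue)  # imprecise prediction: cue dominates
print(round(precise.mean, 3), round(imprecise.mean, 3))  # 0.091 0.909
```

Lowering the prediction precision from 10 to 0.1 moves the fused estimate from near the predicted value to near the external cue, one simple way of expressing the increased reliance on external cues described above.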

In the presence component, the autonomic system is driven both by afferent sensory signals and by internally generated control signals from the state module (Pout), modulating the internal physiological milieu. The state module is responsible for the generation of subjective emotional (feeling) states in accordance with the principles of James and Lange, i.e., that subjective feelings arise from perceptions of bodily responses to emotive stimuli (Critchley et al., 2004; Craig, 2009), or equally in accordance with the Schachter and Singer model of emotion, in which emotional feelings arise through interpretation of interoceptive arousal signals within a cognitive context (e.g., Schachter and Singer, 1962; Critchley et al., 2002). Extending these principles, in our model emotional content is determined by the nature of the predictive signals Ppred, and not simply by the “sensing” of interoceptive signals per se (i.e., we apply the Helmholtzian perspective of perception as inference to subjective feeling states; see Section “Interoception As Inference: A New View of Emotion?”). As in the agency component, there is also an error module, which compares interoceptive signals predicted via a forward model (Ppred) with actual interoceptive signals (Pin), giving rise to an interoceptive prediction error Perr (Paulus and Stein, 2006). In our model, the sense of presence is underpinned by a match between informative predicted and actual interoceptive signals; disturbances of presence, as in DPD, arise from disturbances in this predictive mechanism. Again, by analogy with the agency component (Fletcher and Frith, 2009; Synofzik et al., 2010), we propose that these disturbances arise because of imprecise prediction signals Ppred.
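The comparison performed by the presence error module can be illustrated with a toy forward model. The Python sketch below assumes, purely for illustration, that heart rate relaxes toward a set point shifted by autonomic commands (Pout) with a first-order time constant; an efference copy of the same commands drives a forward model with its own gain, and Perr is the sample-by-sample difference between the two traces. The dynamics, parameter values, and function names are all hypothetical.

```python
def simulate_heart_rate(commands, gain, baseline=60.0, tau=5.0, dt=1.0):
    """Hypothetical first-order heart-rate response (bpm) to autonomic commands."""
    rate = baseline
    trace = []
    for u in commands:
        # Relax toward the commanded set point with time constant tau.
        rate += (dt / tau) * (baseline + gain * u - rate)
        trace.append(rate)
    return trace


def interoceptive_errors(commands, true_gain, model_gain):
    """P_err = P_in - P_pred, with P_pred computed from an efference copy of the commands."""
    actual = simulate_heart_rate(commands, true_gain)      # body: P_in
    predicted = simulate_heart_rate(commands, model_gain)  # forward model: P_pred
    return [a - p for a, p in zip(actual, predicted)]


commands = [0.0, 1.0, 1.0, 1.0, 0.0, 0.0]  # hypothetical P_out: a brief sympathetic burst
print(max(abs(e) for e in interoceptive_errors(commands, 20.0, 20.0)))  # 0.0
print(max(abs(e) for e in interoceptive_errors(commands, 20.0, 10.0)))  # about 4.88
```

With matched gains the interoceptive error is exactly zero; with a mis-specified gain a residual error persists while the commands have effects. When the commands are all zero the error vanishes whatever the gain, echoing the point that matching an uninformative signal is not evidence of successful prediction.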

In our model, the presence and agency components are interconnected. Importantly, this connection is not just analogical (i.e., justified with respect to shared predictive principles) but is based on several lines of evidence. First, disorders of agency and presence often (but not always) co-occur (Robertson, 2000; Sumner and Husain, 2008; Ruhrmann et al., 2010; Sierra and David, 2011; see Summary). Second, manipulations of perceived agency can influence reported presence, as shown in both healthy subjects and schizophrenic patients (Lallart et al., 2009; Gutierrez-Martinez et al., 2011). Third, as discussed below, abundant evidence points to interactions between interoceptive and exteroceptive processes, which in our model mediate interactions between the agency and presence components. In the present version of the model, agency is functionally localized at a higher hierarchical level than presence, such that the agency state module generates both sensorimotor predictions (Apred) and interoceptive predictions [Apred(p)]; correspondingly, interoceptive prediction error signals are conveyed to the agency state module [Aerr(p)] as well as to the presence state module. This arrangement is consistent with evidence showing that reported presence is modulated by perceived agency (Lallart et al., 2009; Gutierrez-Martinez et al., 2011). Interestingly, in this arrangement an additional generative component is needed to generate predictive interoceptive signals given the current state of both agency and presence components. We speculate that this integrative generative model may be a key component of a core sense of selfhood, in line with recent hierarchical models of the self (Northoff and Bermpohl, 2004; Feinberg, 2011) including those based on perceptual aspects of global body ownership (Blanke and Metzinger, 2009).
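The hierarchical arrangement, in which the agency state module issues interoceptive predictions [Apred(p)] and receives interoceptive errors [Aerr(p)], can be sketched as a two-hypothesis Bayesian model. Everything specific here is an assumption made for illustration: the two causes ("self" and "external"), their Gaussian predictions for sensory delay and arousal, and the function names. The agency level first infers the cause from an exteroceptive cue alone, predicts arousal from that belief, and then revises its belief once the interoceptive evidence is included.

```python
import math

# Hypothetical generative model: each cause predicts a sensory delay (ms) and an
# arousal response (arbitrary units), each given as (mean, standard deviation).
CAUSES = {
    "self": {"prior": 0.5, "delay": (0.0, 50.0), "arousal": (0.1, 0.2)},
    "external": {"prior": 0.5, "delay": (200.0, 100.0), "arousal": (1.0, 0.3)},
}


def gaussian_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def posterior(delay, arousal=None):
    """Agency state: p(cause | evidence); arousal is optional interoceptive evidence."""
    joint = {}
    for cause, spec in CAUSES.items():
        p = spec["prior"] * gaussian_pdf(delay, *spec["delay"])
        if arousal is not None:
            p *= gaussian_pdf(arousal, *spec["arousal"])
        joint[cause] = p
    total = sum(joint.values())
    return {cause: p / total for cause, p in joint.items()}


def agency_step(delay, arousal):
    """Exteroceptive inference, interoceptive prediction, error, then revision."""
    belief = posterior(delay)                                             # exteroceptive evidence only
    predicted = sum(belief[c] * CAUSES[c]["arousal"][0] for c in CAUSES)  # A_pred(p)
    error = arousal - predicted                                           # A_err(p)
    revised = posterior(delay, arousal)                                   # belief after A_err(p)
    return belief, predicted, error, revised


belief, predicted, error, revised = agency_step(delay=0.0, arousal=1.0)
print(round(belief["self"], 2), round(revised["external"], 3))  # 0.94 0.999
```

With no sensory delay the agency level initially favors a self-generated cause, but an unexpectedly large arousal response yields a large Aerr(p) and reverses the attribution; an arousal value close to the prediction leaves it intact.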

As just mentioned, in our model a connection between presence and agency mechanisms, whether hierarchical or reciprocal, requires interacting interoceptive and exteroceptive processes. Theory and evidence regarding such interactions have a long history, extending back at least as far as James (1890), and are prominent in modern neural theories of consciousness (e.g., Edelman, 1989; Humphrey, 2006; Craig, 2009). Consistent with our model, interoceptive responses have recently been argued to shape predictive inference during visual object recognition via affective predictions generated in the orbitofrontal cortex (Barrett and Bar, 2009). Intriguingly, susceptibility to the rubber-hand illusion (Botvinick and Cohen, 1998) is anticorrelated with interoceptive sensitivity (as measured by a heartbeat detection task; Tsakiris et al., 2011), suggesting an interaction between predictive models of body ownership and interoception. People with lower interoceptive predictive ability may more readily assimilate exteroceptive (e.g., correlated visual and tactile) cues in localizing interoceptive and proprioceptive signals, while people with good interoceptive predictive ability may rely less on these exteroceptive cues. Strikingly, rubber-hand illusory experiences are associated with cooling of the real hand, indicating an interaction between predictive mechanisms and autonomic regulation (Moseley et al., 2008).

Despite the connection proposed between agency and presence, our model implies that perceived agency is neither necessary nor sufficient for presence, and vice versa. This position is consistent with evidence that (i) experimental manipulations of perceived agency need not evoke changes in autonomic responses such as heart rate and skin conductance (David et al., 2011), (ii) these autonomic signals need not correlate with judgments of agency (David et al., 2011), and (iii) as already mentioned, disorders of agency and presence do not always co-occur (see Sections “Disorders of Agency and Presence” and “Summary”).

Brain Basis of the Model

The model implicates a broad network of brain regions for both the agency and the presence components. Neural correlates of the sense of agency have been studied extensively, primarily by manipulating spatial distortions or temporal delays to induce exteroceptive predictive mismatches. Regions identified include motor areas (ventral premotor cortex, supplementary and pre-supplementary motor areas, and basal ganglia), the cerebellum, the posterior parietal cortex, the posterior temporal sulcus, subregions of the prefrontal cortex, and the anterior insula (Haggard, 2008; Tsakiris et al., 2010; Nahab et al., 2011). Among these areas, the pre-supplementary motor area plays a key role in implementing complex, open decisions among alternative actions and has been suggested as a source of the so-called “readiness potential” identified in Libet’s classic experiments on volition (Haggard, 2008). The right angular gyrus of the inferior parietal cortex, and more generally the temporo-parietal junction, are associated specifically with awareness of the discrepancy between intended and actual movements (Farrer et al., 2008; Miele et al., 2011) and have been implicated in the multisensory integration underlying exteroceptive aspects of global body ownership relevant to selfhood (Blanke and Metzinger, 2009).

The presence component also implicates a broad neural substrate. We suggest that areas contributing to interoceptive predictive coding include specific brainstem (nucleus of the solitary tract, periaqueductal gray, locus coeruleus), subcortical (substantia innominata, nucleus accumbens, amygdala), and cortical (insular, orbitofrontal, and anterior cingulate) regions, potentially forming at least a loose hierarchy (Critchley et al., 2004; Tamietto and de Gelder, 2010). Among these areas, the insular cortex appears central to the integration of interoceptive and exteroceptive signals and to the generation of subjective feeling states. The posterior and mid insula support the primary cortical representation of interoceptive signals (Critchley et al., 2002, 2004; Harrison et al., 2010), with the anterior insula (AIC) operating as a comparator or error module (Paulus and Stein, 2006; Preuschoff et al., 2008; Palaniyappan and Liddle, 2011). Interestingly, the AIC is also differentially activated by changes in the sense of agency (Tsakiris et al., 2010; Nahab et al., 2011), supporting a link between mechanisms underlying agency and presence.

Autonomic control signals Pout are suggested to originate in regions of the anterior cingulate cortex (ACC), which can be interpreted as “visceromotor cortex” given their function in the autonomic modulation of bodily arousal to meet behavioral demand (Pool and Ransohoff, 1949; Critchley et al., 2003; Critchley, 2009). Equally, during motor behavior, premotor, supplementary, and primary motor cortices are direct generators of autonomic vascular changes through central command (Delgado, 1960), and a parallel, partly reciprocal, system of antisympathetic and parasympathetic efferent drive operates through subgenual cingulate and ventromedial prefrontal cortex (Nagai et al., 2004; Critchley, 2009; Wager et al., 2009). The neural basis of interoceptive prediction signals Ppred is suggested to overlap with these control mechanisms, with emphasis on the ACC and the orbitofrontal cortex. The ACC has been associated with autonomic “efference copy” signals (Harrison et al., 2010), and medial sectors of the orbitofrontal cortex have robust connections with limbic, hypothalamic, midbrain, brainstem, and spinal cord areas involved in internal state regulation (Barbas, 2000; Barbas et al., 2003; Barrett and Bar, 2009). It is noteworthy that ventromedial prefrontal cortices, including medial orbital cortex, also support primary and abstract representations of value and reward across modalities (Grabenhorst and Rolls, 2011).

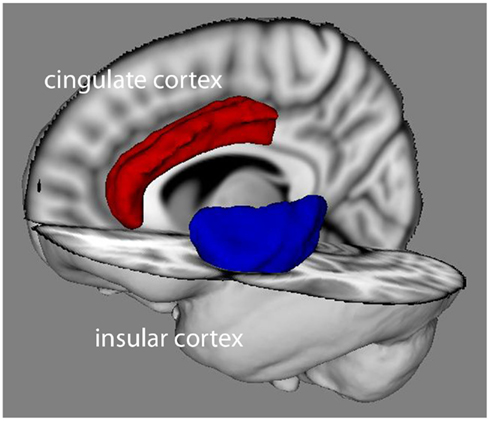

The AIC and the ACC (see Figure 2) are often coactivated despite being widely separated spatially, forming a “salience network” in conjunction with the amygdala and the inferior frontal gyrus (Seeley et al., 2007; Medford and Critchley, 2010; Palaniyappan and Liddle, 2011). The AIC and ACC are known to be functionally (Taylor et al., 2009) and structurally (van den Heuvel et al., 2009) connected. Interestingly, Craig has suggested that AIC–ACC connections are mediated via their distinctive populations of von Economo neurons, which have rapid signal propagation properties and are rich in dopamine D1 receptors (Hurd et al., 2001; Craig, 2009). These areas have also been broadly implicated in representations of reward expectation and reward prediction errors in reinforcement learning contexts (see Section “Disorders of Agency and Presence”; Rushworth and Behrens, 2008). A recent model of medial prefrontal cortex, and especially ACC, proposes that competing accounts in terms of error likelihood, conflict, volatility, and reward can be unified by a simple scheme involving population-based predictions of action–outcome pairings, whether good or bad (Alexander and Brown, 2011). ACC responses are also modulated by the effort associated with an expected reward (Croxson et al., 2009), implicating agency. These observations provide further support for considering the salience network as a central neural substrate of our model.

Figure 2. The human cingulate (red) and insular (blue) cortices. Image generated using Mango (http://ric.uthscsa.edu/mango/).

The Insular Cortex, Interoception, and Emotion

The human insular cortex is a large and highly interconnected structure, deeply embedded in the brain (see Figure 2; Augustine, 1996; Medford and Critchley, 2010; Deen et al., 2011). The insula has been divided into several subregions based on connectivity and cytoarchitectonic features (Mesulam and Mufson, 1982a,b; Mufson and Mesulam, 1982; Deen et al., 2011), with all subregions implicated in visceral representation. Posterior and mid insula support a primary representation of interoceptive information, relayed from brainstem centers (notably the nucleus of the solitary tract, which receives convergent visceral afferent inputs from cranial nerves, predominantly the vagus and glossopharyngeal nerves; Mesulam and Mufson, 1982b) and from the spinal cord, particularly the lamina-1 spinal tract (Craig, 2002). Blood-borne afferent signals may also reach posterior insula via the solitary nucleus due to its interaction with the area postrema (Shapiro and Miselis, 1985). A secondary (re-)representation of interoceptive information within AIC is proposed to arise from forward flow of information from posterior and mid insular cortices (Craig, 2002), augmented by direct input from ventroposteriomedial thalamus. Bidirectional connections with amygdala, nucleus accumbens, and orbitofrontal cortex further suggest that the AIC is well placed to receive input about (positive and negative) stimulus salience (Augustine, 1996). Generally, the AIC is considered the principal cortical site for the integration of interoceptive and exteroceptive signals.

The AIC is engaged across a wide range of processes that share visceral representation, interoception, and emotional experience as common factors (Craig, 2002, 2009; Critchley et al., 2004; Singer et al., 2009). The AIC is proposed to instantiate interoceptive representations that are accessible to conscious awareness as subjective feeling states (Critchley et al., 2004; Singer et al., 2009). Evidence for this view comes in part from a study in which individual differences in interoceptive sensitivity, as measured by heartbeat detection, could be predicted by AIC activation and morphometry (better performance associated with higher activation and greater gray matter volume), which in turn accounted for individual differences in reported emotional symptoms. These observations suggest a role for the AIC both in interoceptive awareness and in the generation of associated emotional feeling states (Critchley et al., 2004; though see Khalsa et al., 2009, who show that the AIC is not necessary for interoceptive sensitivity). Close topographical relationships between different qualities of subjective emotional experience and differences in visceral autonomic state have subsequently been reported within insula subregions (Harrison et al., 2010). The AIC is also activated by observation, experience, and imagination of a strong emotion (disgust), though with different functional connectivity patterns in each case (Jabbi et al., 2008). Most generally, Craig (2009) suggests the AIC as a “central neural correlate of consciousness,” drawing additional attention to its possible role in the perception of the flow of time.

Taken together, the evidence summarized so far underscores AIC involvement in interoceptive processing, its contribution (in particular with the ACC) to a wider salience network, and its role in the integration of exteroceptive signals with stimulus salience. These processes within the AIC appear to underlie subjective feeling states. Consistent with this interpretation, we propose that the AIC acts as a comparator underlying the sense of presence. Specific support for our model includes (i) evidence for predictive coding in the AIC; (ii) hypoactivation of the AIC in patients with DPD; and (iii) modulation of AIC activity by reported subjective presence in VR experiments. Before turning to this evidence, we discuss the principles of predictive coding in more detail.

Prediction, Perception, and Bayesian Inference

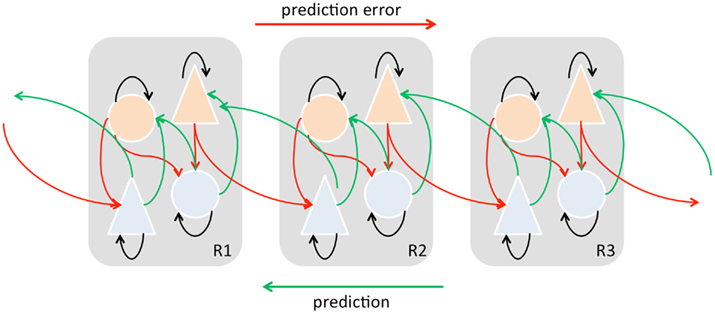

Following the early insights of von Helmholtz, there is now increasing recognition of the importance of prediction, and prediction error, in perception, cognition, and action (Hinton and Dayan, 1996; Rao and Ballard, 1999; Lee and Mumford, 2003; Egner et al., 2008; Friston, 2009; Summerfield and Egner, 2009; Bubic et al., 2010; Mathews et al., 2012). The concept of “predictive coding” overturns classical notions of perception as a largely bottom-up process of evidence-accumulation or feature-detection driven by impinging sensory signals, proposing instead that perceptual content is determined by top-down predictive signals arising from multi-level generative models of the external causes of sensory signals, which are continually modified by bottom-up prediction error signals communicating mismatches between predicted and actual signals across hierarchical levels (see Figure 3). In this view, even low-level perceptual content is determined via a cascade of predictions flowing from very general abstract expectations which constrain successively more detailed (fine-grained) predictions. We emphasize that in these frameworks bottom-up/feed-forward signals convey prediction errors, and top-down/feed-back signals convey predictions determining content. The great power of predictive coding frameworks is that they formalize the concept of inductive inference, just as classical logic formalizes deductive inference (Dorling, 1982; Barlow, 1990).
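A minimal two-level linear instance of this scheme, assuming scalar states, fixed weights, and Gaussian (squared-error) costs, is sketched below in Python. Each level's state is updated by gradient descent on the sum of precision-weighted squared prediction errors, so that bottom-up errors drive the states while top-down predictions constrain them. The function `settle` and its parameters are hypothetical and far simpler than the hierarchical models cited; the step size must also be small relative to the precisions for the descent to remain stable.

```python
def settle(x, prior_mean=0.0, w1=1.0, w2=1.0, pi=(1.0, 1.0, 1.0), lr=0.1, steps=500):
    """Gradient descent on F = sum of precision-weighted squared prediction errors.

    Level 1 state mu1 predicts the input x via w1 * mu1; level 2 state mu2
    predicts mu1 via w2 * mu2; mu2 is itself constrained by a prior mean.
    """
    mu1, mu2 = 0.0, 0.0
    for _ in range(steps):
        e0 = pi[0] * (x - w1 * mu1)      # bottom-up error at the input
        e1 = pi[1] * (mu1 - w2 * mu2)    # error between levels 1 and 2
        e2 = pi[2] * (mu2 - prior_mean)  # error against the top-level prior
        mu1 += lr * (w1 * e0 - e1)       # explain the input while obeying level 2
        mu2 += lr * (w2 * e1 - e2)       # explain level 1 while obeying the prior
    return mu1, mu2


print(settle(3.0))                      # approximately (2.0, 1.0)
print(settle(3.0, pi=(4.0, 1.0, 1.0)))  # approximately (2.667, 1.333)
```

Raising the precision of the input-level error (the first entry of `pi`) pulls both levels toward values that explain the data more closely, illustrating how precision sets the balance between bottom-up evidence and top-down expectation.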

Figure 3. A schematic of hierarchical predictive coding across three cortical regions; the “lowest” (R1) on the left and the “highest” (R3) on the right. Light blue cells represent state units, orange cells represent error units. Note that predictions and prediction errors are sent to and received from each level in the hierarchy. Feed-forward signals conveying prediction errors originate in superficial layers and terminate in the middle (granular) layer of their targets, are associated with gamma-band oscillations, and are mediated by GABA and fast AMPA receptor kinetics. Conversely, feedback signals conveying predictions originate in deep layers and project to superficial layers, are associated with beta-band oscillations, and are mediated by slow NMDA receptor kinetics. Adapted from (Friston, 2009; see also Wang, 2010).

Predictive coding models are now well-established in accounting for various features of perception (Rao and Ballard, 1999; Yuille and Kersten, 2006), cognition (Grush, 2004), and motor control (Wolpert and Ghahramani, 2000) (see Bubic et al., 2010 for a review). Two examples from visual perception are worth highlighting. In an early study, Rao and Ballard (1999) implemented a model of visual processing utilizing a predictive coding scheme. When exposed to natural images, simulated neurons developed receptive-field properties observed in simple cells of the visual cortex (e.g., oriented receptive fields) as well as non-classical receptive-field effects such as “end-stopping.” These authors pointed out that predictive coding is computationally and metabolically efficient since neural networks learn the statistical regularities embedded in their inputs, reducing redundancy by removing the predictable components of afferent signals and transmitting only residual errors. More recently, Egner and colleagues elegantly showed that repetition suppression (decreased cortical responses to familiar stimuli) is better explained by predictive coding than by alternative explanations based on adaptation or sharpening of representations. Their key finding is that repetition suppression can be abolished when the local likelihood of repetitions is manipulated so that repetitions become unexpected (Egner et al., 2008).
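The efficiency argument can be illustrated with a deliberately simplified stand-in for learned predictions: predict each sample of a signal from the previous sample and transmit only the residual. This "previous sample" predictor is our assumption for illustration, not the Rao and Ballard model, which learns its predictions from natural images. For a predictable signal the residual carries a small fraction of the original energy; for white noise, which has no regularities to exploit, nothing is saved.

```python
import numpy as np


def residual_energy_ratio(signal):
    """Energy of the transmitted residual relative to the raw signal."""
    prediction = np.concatenate([[0.0], signal[:-1]])   # naive "previous sample" prediction
    residual = signal - prediction                      # only the unpredicted part is sent
    return float(np.sum(residual ** 2) / np.sum(signal ** 2))


t = np.linspace(0, 4 * np.pi, 400)
smooth = np.sin(t)                                      # highly redundant input
noise = np.random.default_rng(0).normal(size=400)       # no statistical regularities

print(residual_energy_ratio(smooth))   # far below 1: redundancy removed
print(residual_energy_ratio(noise))    # roughly 2: nothing predictable to remove
```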

Theoretically, computational accounts of predictive coding have now reached high levels of sophistication (Dayan et al., 1995; Hinton and Dayan, 1996; Rao and Ballard, 1999; Lee and Mumford, 2003; Friston et al., 2006; Friston, 2009). These accounts leverage the hierarchical organization of cortex to show how generative models underlying top-down predictions can be induced empirically via hierarchical Bayesian inference. Bayesian methods provide a computational mechanism for estimating the probable causes of data (the posterior distribution) given the likelihood of the data under each candidate cause and the prior probability of those causes; in other words, Bayes’ theorem relates a conditional probability (which can be observed) to its inverse (which cannot be observed, but knowledge of which is desired).
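As a worked example of Bayes’ theorem, p(cause | data) = p(data | cause) p(cause) / p(data), consider two hypothetical causes of a sensation, "self" and "external", with made-up probabilities chosen only for illustration. The denominator p(data) is obtained by summing the numerator over all candidate causes, and the same likelihood yields different posteriors under different priors.

```python
def posterior(prior, likelihood):
    """Bayes' theorem over a discrete set of candidate causes.

    prior[c]      = p(c)
    likelihood[c] = p(data | c)
    returns       p(c | data)
    """
    joint = {c: prior[c] * likelihood[c] for c in prior}   # p(data, c)
    evidence = sum(joint.values())                           # p(data)
    return {c: p / evidence for c, p in joint.items()}


likelihood = {"self": 0.8, "external": 0.2}   # hypothetical p(data | cause)

flat = posterior({"self": 0.5, "external": 0.5}, likelihood)
skeptical = posterior({"self": 0.1, "external": 0.9}, likelihood)
print(flat["self"])                  # 0.8
print(round(skeptical["self"], 3))   # 0.308
```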

As illustrated in Figure 3, in these models each layer attempts to suppress activity in the layer immediately below, as well as within the same layer, and each layer passes prediction errors related to its own activity both internally and to the layer immediately above. From a Bayesian perspective, top-down influences constitute empirically induced priors on the causes of their input. Advances in machine learning theory based on hierarchical Bayesian inference (Dayan et al., 1995; Neal and Hinton, 1998; Lee and Mumford, 2003; Friston et al., 2006; Friston, 2009) show how these schemes may operate in practice. Recent attention has focused on Friston’s “free energy” principle (Friston et al., 2006; Friston, 2009) which, following earlier work by Hinton and colleagues (e.g., Hinton and Dayan, 1996; Neal and Hinton, 1998), shows how generative models can be hierarchically induced from data by assuming that the brain minimizes free energy, an upper bound on the surprise (negative log evidence) associated with its model of the data. The machine learning algorithms able to perform this minimization are based on so-called “variational Bayes,” worked out by Neal and Hinton (1998) among others; these algorithms have plausible neurobiological implementations, at least in cortical hierarchies (Hinton and Dayan, 1996; Lee and Mumford, 2003; Friston et al., 2006; Friston, 2009).
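The hierarchical message passing can be sketched with two linear levels. Assuming Gaussian noise with unit precisions, the free energy reduces (up to constants) to half the sum of squared prediction errors at each level; gradient descent on that quantity reproduces the scheme described above, with each state pushed by the error from the level below and pulled toward the prediction from the level above. The weights, sizes, and step size are hypothetical choices that keep this toy stable. It is not Friston’s full scheme, which also optimizes precisions and learns the weights.

```python
import numpy as np


def settle(x, W1, W2, n_steps=250, lr=0.2):
    """Two-level predictive coding: r2 predicts r1, and r1 predicts the input x."""
    r1 = np.zeros(W1.shape[1])
    r2 = np.zeros(W2.shape[1])
    free_energy = []
    for _ in range(n_steps):
        e0 = x - W1 @ r1           # error at the sensory level (sent up to level 1)
        e1 = r1 - W2 @ r2          # error at level 1 (sent up to level 2)
        free_energy.append(0.5 * float(e0 @ e0 + e1 @ e1))
        r1 = r1 + lr * (W1.T @ e0 - e1)   # driven by error below, pulled toward prediction above
        r2 = r2 + lr * (W2.T @ e1)        # top level: driven only by the error below
    return r1, r2, free_energy


rng = np.random.default_rng(1)
W1, _ = np.linalg.qr(rng.normal(size=(8, 4)))
W2, _ = np.linalg.qr(rng.normal(size=(4, 2)))
r2_true = np.array([1.5, -1.0])
x = W1 @ (W2 @ r2_true)            # input generated top-down from abstract causes

r1, r2, F = settle(x, W1, W2)
print(F[0], F[-1])                 # free energy falls as both levels explain the input
```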

Interestingly, the precision of prediction error signals plays a key role in these models, because hierarchical models of perception require optimization of the relative precision of top-down predictions and bottom-up evidence (Friston, 2009). This process corresponds to modulating the gain of error units at each level, implemented by neuromodulatory systems. For exteroception this may involve cholinergic neurotransmission via attention (Yu and Dayan, 2005), whereas for interoception, proprioception, and value-learning, prediction error precision is suggested to be encoded by dopamine (Fiorillo et al., 2003; Friston, 2009). The role of dopamine in our model is discussed further in Section “The Role of Dopamine.”
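For the simplest case of a Gaussian prediction and a Gaussian observation with known precisions (inverse variances), the effect of precision on prediction error can be written as a gain. The sketch below uses the standard conjugate-Gaussian (Kalman-style) update as a stand-in; it illustrates what "modulating the gain of error units" could mean computationally and makes no claim about how cholinergic or dopaminergic systems implement it.

```python
def precision_weighted_update(mu_prior, pi_prior, y, pi_sensory):
    """Combine a Gaussian prior (top-down prediction) with a Gaussian observation.

    The prediction error is scaled by a gain set by the relative precisions.
    """
    error = y - mu_prior                             # prediction error
    gain = pi_sensory / (pi_sensory + pi_prior)      # precision-dependent gain
    mu_post = mu_prior + gain * error                # posterior mean
    pi_post = pi_prior + pi_sensory                  # posterior precision
    return mu_post, pi_post


# Same prediction (0.0) and same input (1.0); only the sensory precision differs.
print(precision_weighted_update(0.0, 1.0, 1.0, pi_sensory=4.0))    # (0.8, 5.0)
print(precision_weighted_update(0.0, 1.0, 1.0, pi_sensory=0.25))   # (0.2, 1.25)
```

Identical prediction errors thus have very different consequences depending on their estimated precision.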

It is important to emphasize that in predictive coding frameworks, predictions and prediction errors interact over rapid (synchronic) timescales providing a constitutive basis for the corresponding perceptions, cognitions, and actions. This timescale is distinct from the longer (diachronic) timescales across which the brain might learn temporal relations among stimuli (Schultz and Dickinson, 2000), or form expectations about the timing and nature of future events (Suddendorf and Corballis, 2007).
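The two timescales can be separated in a toy model, assuming a linear generative model with one hidden cause: a fast inner loop settles the state for a single input while the weights stay fixed (synchronic inference), and a slow outer loop nudges the weights after each settled input (diachronic learning). The function names, learning rates, and data are hypothetical choices for illustration.

```python
import numpy as np


def infer(x, W, n_steps=30, lr_fast=0.5):
    """Synchronic loop: settle the state r for ONE input while W stays fixed."""
    r = np.zeros(W.shape[1])
    for _ in range(n_steps):
        r = r + lr_fast * (W.T @ (x - W @ r))
    return r, x - W @ r                      # settled state and residual error


def learn(X, W, n_epochs=10, lr_slow=0.01):
    """Diachronic loop: slowly reshape the generative model across many inputs."""
    W = W.copy()
    for _ in range(n_epochs):
        for x in X:
            r, e = infer(x, W)               # fast inference first...
            W += lr_slow * np.outer(e, r)    # ...then a small weight change
    return W


def mean_sq_error(X, W):
    return float(np.mean([np.sum(infer(x, W)[1] ** 2) for x in X]))


rng = np.random.default_rng(2)
X = np.outer(rng.normal(size=50), [1.0, 2.0])   # inputs all lie along one direction
W0 = np.array([[1.0], [0.0]])                   # initial model predicts the wrong direction
W1 = learn(X, W0)
print(mean_sq_error(X, W0), mean_sq_error(X, W1))   # error falls with learning
```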

In summary, predictive coding may capture a general principle of cortical functional organization. It fluently explains a broad range of evidence (though a key prediction, that of distinct “state” and “error” neurons in different cortical laminae, remains to be established) and has attractive computational properties, at least in the context of visual perception. It has been applied to agency: extending Frith’s comparator model, it suggests that disorders of agency arise from pathologically imprecise predictions about the sensory consequences of self-generated actions. However, the framework has not yet been formally applied to interoception or to presence.

Interoception as Inference: A New View of Emotion?

Predictive coding models of interoceptive processing have not yet been elaborated. Such a model forms a key component of our model of presence, offering a starting point for predictive models of interoception and emotion generally.

Interoceptive concepts of emotion were first crystallized by James and Lange, who argued that emotions arise from perception of physiological changes in the body. This basic idea has been influential over the last century, underpinning more recent frameworks for understanding emotion such as the “somatic marker hypothesis” of Damasio (2000), the “sentient self” model (Craig, 2002, 2009), and “interoceptive awareness” (Critchley et al., 2004). Despite the advances embedded in these frameworks, interoception remains generally understood along “feed-forward” lines, similar to classical feature-detection or evidence-accumulation theories of visual perception. However, it has long been recognized that cognitively explicit beliefs about the causes of physiological changes can influence subjective feeling states (Cannon, 1915). Some 50 years ago, Schachter and Singer (1962) famously demonstrated that injections of adrenaline, proximally causing a variety of significant physiological changes, could give rise to either anger or elation depending on the concurrent context (an irritated or elated confederate), an observation formalized in their “two factor” theory in which subjective emotions are determined by a combination of cognitive factors and physiological conditions.

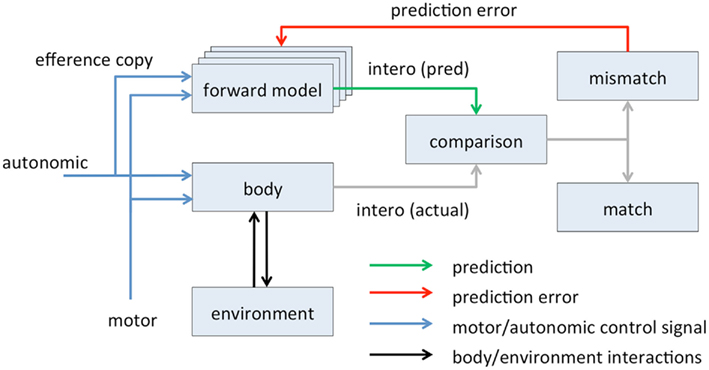

Though it involves expectations, Schachter and Singer’s theory falls considerably short of a full predictive coding model of emotion. Drawing a parallel with models of perception, predictive interoception would involve hierarchically cascading top-down interoceptive predictions counter-flowing with bottom-up interoceptive prediction errors, with subjective feeling states being determined by the joint content of the top-down predictions across multiple hierarchical levels. In other words, according to the model, emotional content is determined by a suite of hierarchically organized generative models predicting interoceptive responses to external stimuli and/or internal physiological control signals (Figure 4).

Figure 4. Predictive coding applied to interoception. Motor control and autonomic control signals evoke interoceptive responses [intero(actual)] either directly (autonomic control) or indirectly via the musculoskeletal system and the environment (motor control). These responses are compared to predicted responses [intero(pred)], which are generated by hierarchically organized forward/generative models informed by motor and autonomic efference copy signals. The comparison, which may take place in AIC, generates a prediction error which refines the generative models. Subjective feeling states are associated with predicted interoceptive signals intero(pred). The figure is adapted from a general schematic of predictive coding in Bubic et al. (2010).
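The comparator loop in Figure 4 can be caricatured in a few lines, assuming a single scalar "gain" relating an autonomic control signal to the resulting bodily response. The forward model predicts intero(pred) from the efference copy, the comparator computes the mismatch with intero(actual), and the error refines the model. At trial 50 the true mapping changes abruptly, producing a transient error spike followed by model revision; this switch is only loosely analogous to a false-feedback manipulation. All names and numbers are hypothetical.

```python
import numpy as np


def run_comparator(u, true_gain, g_hat=0.0, lr=0.1):
    """Toy interoceptive comparator.

    u          : autonomic control signals (efference copy available to the model)
    true_gain  : how strongly the body actually responds on each trial (hidden)
    g_hat      : the generative model's current estimate of that gain
    """
    predictions, errors = [], []
    for u_t, gain_t in zip(u, true_gain):
        intero_actual = gain_t * u_t            # intero(actual): bodily response
        intero_pred = g_hat * u_t               # intero(pred): from efference copy
        error = intero_actual - intero_pred     # comparator output
        g_hat += lr * error * u_t               # error refines the generative model
        predictions.append(intero_pred)
        errors.append(error)
    return np.array(predictions), np.array(errors), g_hat


u = np.ones(100)                                                   # constant autonomic drive
true_gain = np.concatenate([np.full(50, 2.0), np.full(50, 3.0)])   # mapping changes at trial 50
preds, errors, g_final = run_comparator(u, true_gain)
print(errors[[0, 49, 50, 99]].round(3))   # large, small, spike after the change, small again
```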

It is important to distinguish interoceptive predictive coding from more generic interactions between prediction and emotion. As already mentioned, predictive coding involves prediction at synchronic, fast timescales, such that predictions (and prediction errors) are constitutive of (emotional) content. Approaching this idea, Barrett and Bar (2009) propose that affective (interoceptive) predictions shape visual object recognition at fast timescales; however, they do not contend that such predictions are the constitutive basis of emotions in the full predictive coding sense. Many previous studies have examined how predictions can influence emotion over longer, diachronic timescales (Ploghaus et al., 1999; Porro et al., 2003; Ueda et al., 2003; Gilbert and Wilson, 2009); the brain networks involved in emotional predictions across time reliably include prefrontal cortex and the ACC.

Predictive Coding in the AIC

A key requirement of our model is that the AIC participates in interoceptive predictive coding. In a related influential model of anxiety, Paulus and Stein (2006) suggest that insular cortex compares predicted to actual interoceptive signals, with subjective anxiety associated with heightened interoceptive prediction error signals. In line with their model, highly anxious individuals show increased AIC activity during emotion processing (Paulus and Stein, 2006). AIC responses to stimuli are modulated by expectations: When participants are exposed to a highly aversive taste, while falsely expecting only a moderately aversive taste, they report less aversion than when having accurate information about the stimulus, with corresponding attenuation of evoked activity within AIC (and adjacent frontal operculum; Nitschke et al., 2006). Moreover, AIC responses to expected aversive stimuli are larger if expectations are uncertain (Sarinopoulos et al., 2010). The AIC is also activated by anticipation of painful (Ploghaus et al., 1999) and tactile stimuli (Lovero et al., 2009). Direct experimental evidence of insular predictive coding, though not specifically regarding interoceptive signals, comes from an fMRI study of a gambling task in which activity within spatially separate subregions of the AIC encoded both predicted risk and risk prediction error (Preuschoff et al., 2008). The risk prediction error signal exhibits a fast onset, whereas the risk prediction signal (localized to a slightly more superior and anterior AIC subregion) exhibits a slow onset; these dynamics are consistent with respective bottom-up and top-down origins in predictive coding frameworks (Preuschoff et al., 2008).
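The distinction between predicted risk and risk prediction error can be sketched for a hypothetical two-outcome gamble rather than the task used by Preuschoff et al. (2008). Following their definitions as we understand them, predicted risk is the expected variance of the reward, and the risk prediction error is the squared reward prediction error minus the predicted risk. When predicted risk is accurate, the risk prediction error averages to zero across outcomes.

```python
def gamble_signals(p_win, reward_if_win=1.0):
    """Reward and risk predictions, and their errors, for a simple two-outcome gamble."""
    expected_reward = p_win * reward_if_win                     # reward prediction
    predicted_risk = p_win * (1 - p_win) * reward_if_win ** 2    # variance of reward
    signals = {}
    for outcome, reward in (("win", reward_if_win), ("lose", 0.0)):
        reward_pe = reward - expected_reward                     # reward prediction error
        risk_pe = reward_pe ** 2 - predicted_risk                # risk prediction error
        signals[outcome] = (reward_pe, risk_pe)
    return expected_reward, predicted_risk, signals


er, risk, sig = gamble_signals(p_win=0.25)
mean_risk_pe = 0.25 * sig["win"][1] + 0.75 * sig["lose"][1]
print(sig)            # an unlikely win yields a positive risk prediction error
print(mean_risk_pe)   # zero on average when predicted risk is accurate
```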

Consistent with the above findings, during performance of the Iowa gambling task, AIC responses reflect risk prediction error while striatal responses reflect reward prediction errors (d’Acremont et al., 2009). During more classical instrumental learning both AIC and striatal responses reflect reward prediction error signals where, in contrast to striatal activity, AIC responses correlate negatively with reward prediction error and during “loss” trials only, possibly reflecting aversive prediction error (Pessiglione et al., 2006). Risk, reward, and interoception are clearly closely linked, as underlined by theories of decision-making and associated empirical data that emphasize the importance of internal physiological responses in shaping apparently rational behavior (Bechara et al., 1997; Damasio, 2000). These links are also implied by the structural and functional interconnectivity of AIC with the ACC and with orbitofrontal cortex and other reward-related and decision-making structures (see The Insular Cortex, Interoception, and Emotion).

Anterior insular cortex responses are implicated in other prediction frameworks: AIC responses occur for conscious but not unconscious errors made in an antisaccade task (Klein et al., 2007). The AIC is proposed to be specifically involved in updating previously existing prediction models in reward learning contexts (Palaniyappan and Liddle, 2011), and AIC activity elicited during intentional action is suggested to provide interoceptive signals essential for evaluating the affective consequences of motor intentions (Brass and Haggard, 2010). This view aligns with our model in emphasizing a connection between agency and presence.

A different source of evidence for interoceptive predictive coding comes from exogenous manipulations of interoceptive feedback. The experimental induction of mismatch between predicted and actual interoceptive signals by false physiological feedback enhances activation of right AIC (Gray et al., 2007), consistent with the region acting as a comparator. Moreover, this AIC activation, in conjunction with amygdala, is associated with an increased emotional salience attributed to previously unthreatening stimuli, consistent with revision of top-down interoceptive predictions in the face of unexplained error (Gray et al., 2007).

In summary, there is accumulating evidence for predictive signaling in AIC relevant to risk and reward, as well as limited evidence for interoceptive predictive coding from false-feedback studies. Direct evidence for interoceptive predictive coding in the AIC has not yet been obtained and stands as a key test of the present model.

Disorders of Agency and Presence

A useful model should be able to account for features of relevant disorders. As discussed, schizophrenic delusions of control are well explained by the comparator model of agency in terms of problems with kinematic and sensory aspects of the forward modeling component (Frith, 2011). Specifically, reduced precision of exteroceptive predictions coincides with greater delusions of control, consistent with abnormal dopaminergic neurotransmission (Synofzik et al., 2010; see also The Role of Dopamine). Other first-rank symptoms, for example thought insertion, are however less well accounted for by current comparator models (Frith, 2011). Here, we focus on the less extensively discussed disorders of presence.

Depersonalization, Derealization, and DPD

Depersonalization and derealization symptoms manifest as a disruption of conscious experience at a very basic, preverbal level, most colloquially as a “feeling of unreality,” which can equally be interpreted as the absence of normal feelings of presence (American Psychiatric Association, 2000). Depersonalization and derealization are common as brief transient phenomena in healthy individuals, but may occur as a chronic disabling condition, either as a primary disorder, DPD, or secondary to other neuropsychiatric illness such as panic disorder, post-traumatic stress disorder, and depression. Surveys of clinical populations suggest that depersonalization/derealization may be the third most common psychiatric symptom after anxiety and low mood (Stewart, 1964; Simeon et al., 1997), and that these symptoms are experienced by 1.2–2% of the general population in any given month (Bebbington et al., 1997; Hunter et al., 2004). The chronic expression of these symptoms in DPD is characterized by “alteration in the perception or experience of the self so that one feels detached from and as if one is an outside observer of one’s own mental processes” (American Psychiatric Association, 2000). Two recent studies of DPD phenomenology have shown that the condition is best considered a syndrome, as chronic depersonalization involves qualitative changes in subjective experience across a range of experiential domains (Sierra et al., 2005; Simeon et al., 2008), encompassing abnormalities of bodily sensation and emotional experience. Notably, DPD is often accompanied by alexithymia, a deficiency in understanding, processing, or describing emotions; more generally, a deficiency of conscious access to subjective emotional states (Simeon et al., 2009). In short, DPD can be summarized as a psychiatric condition marked by the selective diminution of the subjective reality of the self and world: a presence deficit.

Neuroimaging studies of DPD, though rare, reveal significantly lower activation in AIC (and bilateral cingulate cortex) as compared to normal controls when viewing aversive images (Phillips et al., 2001). It has been suggested that DPD is associated with a suppressive mechanism grounded in fronto-limbic brain regions, notably the AIC, which “manifests subjectively as emotional numbing, and disables the process by which perception and cognition become emotionally colored, giving rise to a subjective feeling of unreality” (Sierra and David, 2011). This mechanism may therefore also underlie comorbid alexithymia.

In our model, DPD symptoms correspond to abnormal interoceptive predictive coding dynamics. Whereas anxiety has been associated with heightened prediction error signals (Paulus and Stein, 2006), we suggest that DPD is associated with imprecise interoceptive prediction signals (Ppred), in analogy with predictive models of disorders of agency (Fletcher and Frith, 2009; Synofzik et al., 2010). Our model therefore extends that of Paulus and Stein (2006): chronically high anxiety may result from chronically elevated interoceptive prediction error signals, leading to overactivation in AIC as a result of inadequate suppression of these signals. In contrast, the imprecise interoceptive prediction signals associated with DPD may result in hypoactivation of AIC, since there is an excessive but undifferentiated suppression of error signals.

From Hallucination and Dissociation to Delusion

Both psychotic illness and dissociative conditions encompass disorders of perception and disorders of belief (delusions). In psychoses such as schizophrenia, disordered perception is manifest as hallucinations while delusions are characterized by bizarre or irrational self-referential beliefs such as thought insertion by aliens or government agencies (Maher, 1974; Fletcher and Frith, 2009). In dissociative disorders, disordered perceptions are characterized by symptoms of self disturbance as in DPD which can evolve into frankly psychotic delusional conditions such as the Cotard delusion in which patients believe that they are dead (Cotard, 1880; Young and Leafhead, 1996). Fletcher and Frith (2009) propose that, for positive symptoms in psychoses, a Bayesian perspective can accommodate hallucinations and delusions within a common framework. In their compelling account, a shift from hallucination to delusion reflects readjustment of top-down predictions within successively higher levels of cortical hierarchies, in successive attempts to explain away residual prediction errors.

A similar explanation can apply to a transition from non-delusional interoceptive dissociative symptoms in DPD to full-blown (psychotic) delusions such as the Cotard delusion and related conditions. To the extent that imprecise predictions at low levels of (interoceptive) hierarchies are unable to suppress interoceptive prediction error signals, imprecise predictions will percolate upward, eventually leading not only to generalized imprecision across cortical hierarchical levels but also to re-sculpting of abstract predictive models underlying delusional beliefs. This account augments the proposal of Corlett et al. (2010), who suggest that the lack of emotional engagement experienced by Cotard patients is surprising (in the Bayesian sense), engendering prediction errors and re-sculpting of predictive models; they do not, however, propose a role for interoceptive prediction error. The account is also consistent with Young and Leafhead (1996), who argued that the Cotard delusion develops as an attempt to explain (“explain away,” in our view) the experiential anomalies of severe depersonalization. Interestingly, in one case study DPD symptoms ceased once full-blown Cotard and Fregoli delusions were co-expressed (Lykouras et al., 2002); the Fregoli delusion is another rare misidentification delusion, in which different people are believed to be a single person in disguise. This case suggests that even a highly abstracted belief structure can be sufficient to suppress chronically aberrant perceptual signals.

The phenomenon of intentional binding is relevant in this context: actions and consequences accompanied by a sense of agency are perceived as closer together in time than they objectively are; conversely, if the consequence is not perceived as the result of the action, the events are perceived as more distant in time than they actually are (Haggard et al., 2002). Importantly, intentional binding has both a predictive and a retrospective component: schizophrenic patients with disorders of agency show stronger intentional binding than controls (Voss et al., 2010), with abnormalities most evident in the predictive component, reflecting indiscriminate (i.e., imprecise) predictions (Synofzik et al., 2010). In contrast, prodromal individuals (before development of frankly psychotic symptoms) show an increased influence of both predictive and retrospective components, consistent with elevated prediction error signals (Hauser et al., 2011). These results suggest a process through which abnormal prediction errors lead, over time, to imprecise (and eventually reformulated) top-down predictions. A similar account may apply to dissociative symptoms: as with psychosis, anxiety (associated with enhanced interoceptive prediction error) is often prodromal to DPD and is a typical general context for DPD symptoms (Paulus and Stein, 2006).

The Role of Dopamine

Dopaminergic neurotransmission is implicated at several points in the discussion so far, most prominently as encoding precisions within predictive coding. Here we expand briefly on the potential importance of dopamine for the present model.

Seminal early work relevant to predictive coding showed that dopaminergic responses to reward, recorded in the monkey midbrain, diminish when rewards become predictable over repeated phasic (diachronic) stimulus-reward presentations, suggesting that dopamine encodes a reward prediction error signal useful for learning (Schultz and Dickinson, 2000; Chorley and Seth, 2011). More recently, Pessiglione et al. (2006) found that reward prediction errors in humans are modulated by dopamine levels. Modulation was most apparent in the striatum but was also evident in the AIC. In considering this evidence it is important to distinguish the phasic diachronic role of dopamine in signaling reward prediction error (Schultz and Dickinson, 2000) from its synchronic role in modulating (or optimizing) the precision of prediction errors by modulating signal-to-noise response properties in neuronal signaling (Fiorillo et al., 2003; Friston, 2009, 2010). Although our model emphasizes the latter role, the learning function of dopamine may nonetheless mediate the transition from disordered perception to delusion. In this view, dopamine-modulated learning underlies the re-sculpting of generative models to accommodate persistently elevated prediction error signals (Corlett et al., 2010). Dopaminergic neurotransmission may therefore govern the balance between (synchronic) optimization of precisions at multiple hierarchical levels (for both agency and presence) and the reformulation of predictive models themselves, with both mechanisms contributing to delusion formation. This account is also compatible with an alternative interpretation of short-latency dopaminergic signaling in identifying aspects of environmental context and behavior potentially responsible for causing unpredicted events (Redgrave and Gurney, 2006). In this view, short-latency prediction error signals arising in the midbrain ventral tegmental area are implicated in discerning whether afferent sensory signals are due to self-generated actions or to external causes.
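The diachronic learning role of a reward prediction error can be sketched with a Rescorla-Wagner (delta-rule) update, a simpler stand-in for the temporal-difference models usually fitted to such recordings. The learning rate and reward schedule are hypothetical. The error starts large, shrinks toward zero as the reward becomes predictable, and turns strongly negative when the expected reward is omitted. The sketch captures only this learning role, not the synchronic modulation of precision.

```python
def rescorla_wagner(rewards, alpha=0.2, v=0.0):
    """Delta-rule learning of a reward prediction v; returns the prediction errors."""
    prediction_errors = []
    for r in rewards:
        delta = r - v              # reward prediction error
        v += alpha * delta         # learning: the prediction moves toward the reward
        prediction_errors.append(delta)
    return prediction_errors, v


rewards = [1.0] * 30 + [0.0]       # reward on 30 trials, then omitted once
deltas, v_final = rescorla_wagner(rewards)
print(round(deltas[0], 3), round(deltas[29], 3), round(deltas[30], 3))
```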

Abnormal dopaminergic neurotransmission is observed in the ACC of individuals with schizophrenia (Dolan et al., 1995; Takahashi et al., 2006). Although nothing appears to be known specifically about dopaminergic processing in the insula in individuals with either DPD or schizophrenia, the AIC is rich in dopamine D1 receptors (Williams and Goldman-Rakic, 1998), and both insula and the ACC also express high levels of extrastriatal dopamine transporters, indicating widespread synaptic availability of dopamine in these regions. Dopamine is also a primary neurochemical underpinning a set of motivational functions that engage the AIC, including novelty-seeking, craving, and nociception (Palaniyappan and Liddle, 2011). A more general role for dopamine in modulating conscious contents is supported by a recent study showing that dopaminergic stimulation increases both accuracy and confidence in the reporting of rapidly presented words (Lou et al., 2011).

Testing the Model

To recap, we propose that presence results from successful suppression by top-down predictions of informative interoceptive signals evoked (directly) by autonomic control signals and (indirectly) by bodily responses to afferent sensory signals. Testing this model requires (i) the ability to measure presence and (ii) the ability to experimentally manipulate predictions and prediction errors independently with respect to both agency and presence.

Measuring presence remains an important challenge. Subjective measures depend on self-report and can be formalized by questionnaires (Lessiter et al., 2001); however, these measures can be unstable in that prior knowledge can influence the results (Freeman et al., 1999). Directly asking about presence may also induce or reduce experienced presence (Sanchez-Vives and Slater, 2005). Alternatively, specific behavioral measures can test for equivalence between real environments and VEs. However, these measures are most appropriate for a behavioral interpretation of presence (Sanchez-Vives and Slater, 2005). Physiological measures can also be used to infer presence, for example by recording heart rate variability in stressful environments (Meehan et al., 2002). Presence can be measured indirectly by the extent to which participants are able to perform cognitive memory and performance tasks that depend on features of the VE (Bernardet et al., 2011), though again these measures may correspond to a behavioral rather than a phenomenal interpretation of presence. An alternative subjective approach involves asking subjects to modify aspects of a VE until they report a level of immersion equivalent to that of a “reference” VE (Slater et al., 2010a). Finally, presence could be inferred from the ability to induce so-called “breaks in presence,” which would not be possible if presence were lacking in the first place (Slater and Steed, 2000). In practice, a combination of the above strategies is likely to be the most useful.

Several technologies are available for experimentally manipulating predictions and prediction errors. Consider first manipulations of prediction error. In the agency component, these errors can be systematically manipulated by, for example, interposing a mismatch between actions and sensory feedback, either in VR (Nahab et al., 2011) or with standard psychophysical methods (Blakemore et al., 1999; Farrer et al., 2008). In the presence component, prediction errors could be manipulated by subliminal presentation of emotive stimuli prior to target stimuli (Tamietto and de Gelder, 2010) or by false physiological feedback (Gray et al., 2007). Manipulations of top-down expectations could be achieved by modifying the context in which subjects are tested. For example, expectations about self-generated versus externally caused action can be manipulated by introducing a confederate as a potential actor in a two-player game (Wegner, 2004; Farrer et al., 2008) or by explicitly presenting emotionally salient stimuli to induce explicit expectations of interoceptive responses.
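One common way to interpose a mismatch between action and feedback is a temporal delay. The sketch below assumes an idealized forward model that predicts feedback as an undelayed copy of the action, so any imposed delay appears directly as sensory prediction error, which grows with delay for a smooth movement. The movement, delays (in samples), and function name are hypothetical.

```python
import numpy as np


def delayed_feedback_error(action, delay):
    """Squared sensory prediction error when feedback lags the action by `delay` samples.

    An idealized forward model predicts the feedback as an undelayed copy of the action.
    """
    predicted = action                                            # forward-model prediction
    actual = np.concatenate([np.full(delay, action[0]),          # hold first value during lag
                             action[:len(action) - delay]])
    return float(np.sum((actual - predicted) ** 2))


t = np.arange(1000)
action = np.sin(2 * np.pi * t / 100)         # a smooth, repetitive movement
errors = [delayed_feedback_error(action, d) for d in (0, 5, 10, 20)]
print(errors)                                # zero without delay, then increasing
```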

Evidence from VR

Important constraints on neural models of presence come from experiments directly manipulating the degree of presence while measuring neural responses. VR technology, especially when used in combination with neuroimaging, offers a unique opportunity to perform these manipulations (Sanchez-Vives and Slater, 2005). In one study, a virtual rollercoaster ride was used to induce a sense of presence while brain activity was measured using fMRI. This study revealed a distributed network of brain regions, some of which were correlated and others anticorrelated with reported presence (Baumgartner et al., 2008). Areas showing higher activity during strong presence include extrastriate and dorsal visual areas, superior and inferior parietal cortex, parts of the ventral visual stream, premotor cortex, thalamic, brainstem, and hippocampal regions, and, notably, the AIC. Other relevant studies have examined behavioral correlates of presence as modulated by VR. In a non-clinical population, immersion in a VE enhances self-reported dissociative symptoms on subsequent re-exposure to the real environment, suggesting that VR does indeed modulate the neural mechanisms underpinning presence (Aardema et al., 2010). In another study, self-reported presence was anticorrelated with memory recall in a structured VE (Bernardet et al., 2011). Two recent studies speak to a connection between presence and agency. In the first, the ability to exert control over events in a VE substantially enhanced self-reported presence in healthy subjects (Gutierrez-Martinez et al., 2011). In the second, schizophrenic patients performing a sensorimotor task in a VE reported lower presence than controls, and, for control subjects only, presence was modulated by perceived agency, which was manipulated by altering visual feedback in the VE (Lallart et al., 2009). These results are consistent with our model, in which predictive signals emanating from the agency component influence presence.

Virtual reality has also been used to study the neural basis of experienced agency. For example, VR-based manipulation of the relationship between intended and (virtual) experienced hand movements, applied in combination with fMRI, revealed a network of brain regions that correlate with experienced agency, with the right supramarginal gyrus identified as the locus of mismatch detection (Nahab et al., 2011). Several recent studies have used VR to generalize the rubber-hand illusion to induce experiences of heautoscopy (Petkova and Ehrsson, 2008; Blanke and Metzinger, 2009) and body transfer into a VE (Slater et al., 2010b) [Heautoscopy is intermediate between autoscopy and full-blown out-of-body experience (Blanke and Metzinger, 2009)]. These studies have focused on body ownership and exteroceptive multisensory integration rather than on presence or agency directly (though see Kannape et al., 2010 with respect to agency). We speculate that one reason why these so-called “full-body illusions” are difficult to induce is that, despite converging exteroceptive cues, there remains an “interoceptive anchor” grounding bodily experience in the physical body.

Related Models

Here we briefly describe related theoretical models of presence and of insula function. Models of agency have already been mentioned (see An Interoceptive Predictive-Coding Model of Conscious Presence) and are discussed extensively elsewhere (David et al., 2008; Fletcher and Frith, 2009; Corlett et al., 2010; Synofzik et al., 2010; Voss et al., 2010; Frith, 2011; Hauser et al., 2011). Riva et al. (2011) interpret presence as “the intuitive perception of successfully transforming intentions into actions (enaction).” Their model differs from the present proposal in focusing on action and behavior, in assuming a much greater phenomenological and conceptual overlap between presence and agency, and in not considering the role of interoception or the AIC. Verschure et al. (2003) adopt a phenomenological interpretation of presence, associating it with predictive models of sensory input based on learned sensorimotor contingencies (Bernardet et al., 2011). While this model incorporates predictions, it does not involve interoception or propose any specific neuronal implementation. Baumgartner and colleagues propose a model based on activity within the dorsolateral prefrontal cortex (DLPFC), in which DLPFC activity downregulates activity in the dorsal visual stream, diminishing presence (Baumgartner et al., 2008). Conversely, decreased DLPFC activity leads to increased dorsal visual activity, which is argued to support attentive action preparation in the VE as if it were a real environment. Supporting their model, bilateral DLPFC activity was anticorrelated with self-reported presence in their virtual rollercoaster experiment (Baumgartner et al., 2008). However, transcranial direct current stimulation applied to right DLPFC to decrease its activity did not enhance reported presence (Jancke et al., 2009).

Models of insula function are numerous and cannot be covered exhaustively here. Among the most relevant is a model in which the AIC integrates exteroceptive and interoceptive signals with computations about their uncertainty (Singer et al., 2009). In this model, the AIC is assumed to engage in predictive coding for both risk-related and interoceptive signals; however, no particular mechanistic implementation is specified. The anxiety model of Paulus and Stein (2006) introduces the idea of interoceptive prediction errors in the AIC but neither specifies a computational mechanism nor develops the notion of interoceptive predictive coding as the constitutive basis of emotion. Palaniyappan and Liddle (2011) leverage the concept of a salience network (see The Insular Cortex, Interoception, and Emotion) to ascribe to the insula a range of functions, including detecting salient stimuli and modulating autonomic and motor responses by coordinating switches between large-scale brain networks implicated in externally oriented attention and in internally oriented cognition and control. In this model, psychotic hallucinations result from inappropriate proximal salience signals, which in turn may arise from heightened uncertainty regarding the (diachronic) predicted outcome of events. To our knowledge, no extant model proposes that the AIC engages in interoceptive predictive coding underlying conscious presence.

Summary

We have described a theoretical model of the mechanisms underpinning the subjective sense of presence, a basic property of normal conscious experience. The model is based on two parallel predictive coding schemes: one relating to agency, which reflects existing “comparator” models of schizophrenia (Frith, 1987, 2011), and a second based on interoceptive predictive coding. The model operationalizes presence as the suppression of informative interoceptive prediction error, where predictions (and corresponding errors) arise (i) directly, via autonomic control signals, and (ii) indirectly, via motor control signals that generate sensory inputs. By analogy with models of agency (Synofzik et al., 2010), the sense of presence is specifically associated with the precision of interoceptive predictive signals, potentially mediated by dopaminergic signaling. Importantly, presence in the model is associated with informative interoceptive afferent and predictive signals, and not with the absence of interoceptive prediction errors per se. The role of the agency component with respect to presence is critical: it provides predictions about future interoceptive states on the basis of a parallel predictive model of sensorimotor interactions. The joint activity of these predictive coding models may instantiate key features of an integrated self-representation, especially when considered alongside models of body ownership based on proprioception and multisensory integration (Blanke and Metzinger, 2009; Tsakiris, 2010). Converging evidence points to key roles for the AIC and the ACC in instantiating predictive models for both interoceptive and exteroceptive signals, in line with a growing view that the AIC is a core neural substrate for conscious selfhood (Critchley et al., 2004). In addition, the model suggests a novel perspective on emotion, namely as interoceptive inference along Helmholtzian lines. In this view, emotional states are constituted by interoceptive predictions when matched to inputs, extending early two-factor theories of emotion (Schachter and Singer, 1962) as well as more recent proposals that rapid affective predictions can shape exteroceptive perceptions (Barrett and Bar, 2009) or that interoceptive predictions can be useful for homeostatic regulation (Paulus and Stein, 2006).
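The notion of precision invoked here can be made concrete with the standard single-level Gaussian scheme used in the predictive coding literature. The sketch below is illustrative only and is not the authors' implementation: the function names, the single scalar interoceptive input, the learning rate, and the precision values are all assumptions. Gradient descent on precision-weighted prediction errors converges to the precision-weighted average of the interoceptive input and the top-down prediction, so the more precise the prediction, the more strongly it shapes the inferred interoceptive state.

```python
def infer_interoceptive_state(y, prior_mean, pi_sensory, pi_prior, lr=0.05, steps=2000):
    """Minimise precision-weighted prediction error for one Gaussian level.

    Illustrative assumptions (not from the model): a single scalar
    interoceptive channel, fixed precisions, and plain gradient descent.
    The objective, up to constants, is
        F(mu) = 0.5 * pi_sensory * (y - mu)**2 + 0.5 * pi_prior * (mu - prior_mean)**2
    The update is stable when lr * (pi_sensory + pi_prior) < 2.
    """
    mu = prior_mean  # start from the top-down prediction
    for _ in range(steps):
        eps_sensory = y - mu          # bottom-up (interoceptive) prediction error
        eps_prior = mu - prior_mean   # departure from the top-down prediction
        # Step along -dF/dmu: each error is weighted by its precision.
        mu += lr * (pi_sensory * eps_sensory - pi_prior * eps_prior)
    return mu


def posterior_mean(y, prior_mean, pi_sensory, pi_prior):
    """Closed-form minimiser of F: the precision-weighted average."""
    return (pi_sensory * y + pi_prior * prior_mean) / (pi_sensory + pi_prior)


if __name__ == "__main__":
    # Same interoceptive input (y = 1.0) and prediction (0.0); only the
    # precision of the prediction differs between the two runs.
    for pi_prior in (4.0, 0.25):
        mu = infer_interoceptive_state(1.0, 0.0, pi_sensory=1.0, pi_prior=pi_prior)
        print(f"pi_prior={pi_prior}: mu={mu:.3f}, "
              f"closed form={posterior_mean(1.0, 0.0, 1.0, pi_prior):.3f}")
```

With these illustrative values, the precise prediction (pi_prior = 4) holds the inferred state at 0.2, close to the prediction, whereas the imprecise one (pi_prior = 0.25) lets it move to 0.8, close to the input. Gradient descent is used here only because it mirrors the error-driven updating of predictive coding; the closed form gives the same fixed point.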

The model is consistent with the known neurobiology and phenomenology of disorders of presence and agency. Presence deficits are particularly apparent in DPD, which is known to involve hypoactivity in the AIC. Associating disturbances of presence with imprecise interoceptive predictions is also consistent with the alexithymia frequently comorbid in DPD patients. Anxiety, which is often prodromal to or comorbid with DPD, is also accommodated by the model in terms of enhanced prediction error signals, which, when sustained, could lead to the imprecise predictions underlying dissociative symptoms. The hierarchical predictive coding scheme may also account for transitions from disordered perception to delusion, as predictive mismatches percolate to successively more abstract representational levels, eventually leading to dopaminergically governed re-sculpting of the predictive models underlying delusional beliefs.

The model is amenable to experimental testing, especially by leveraging powerful combinations of VR, neuroimaging, and psychophysiology. However, these technological developments need to be accompanied by more sophisticated subjective scales that more accurately reflect the phenomenology of presence. A basic prediction of the model is that artificially induced imprecision in interoceptive predictions should lead to diminished conscious presence and abnormal AIC activity; by contrast, a simple elevation of interoceptive prediction error signals should instead lead to increased anxiety. As described in Section “Testing the Model,” these manipulations could be induced either by pre-exposure to emotionally ambiguous but salient stimuli or by direct pharmacological manipulation of dopaminergic neuromodulation in the AIC. A second basic prediction is that the AIC, as well as other areas involved in interoceptive processing, should show responses consistent with interoceptive predictive coding. For example, by analogy with studies of repetition suppression, the AIC should show reduced responses to well-predicted interoceptive signals and enhanced responses when expectations are violated. Third, the model predicts that distortions of presence need not lead to distortions of agency; they will do so only if agency-component predictions realign or change their precision or structure in order to suppress faulty interoceptive prediction errors. Further predictions can be based on the relative timing of activity. In the visual domain, expectations about upcoming sensory input reduce the latency of neuronal signatures differentiating seen from unseen stimuli (Melloni et al., 2011); in other words, expectations speed up conscious access. By analogy, an expected interoceptive signal may be perceived as occurring earlier than an unexpected one. This hypothesis could be tested by manipulations of physiological feedback (Gray et al., 2007). Potentially, VR experimental environments could be used not only for testing the model but also for therapeutic purposes with DPD patients.
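The repetition-suppression prediction can be illustrated with a toy delta-rule predictor. This is a minimal sketch under loudly stated assumptions, not part of the model as published: a single scalar interoceptive channel, a Rescorla-Wagner-style update with a fixed learning rate, and the absolute prediction error used as a stand-in for the AIC response. The function name and parameter values are hypothetical.

```python
def prediction_error_responses(signals, learning_rate=0.3, initial_prediction=0.0):
    """Toy stand-in for AIC responses under interoceptive predictive coding.

    Hypothetical assumptions: one scalar interoceptive channel, a delta-rule
    (Rescorla-Wagner-style) predictor with a fixed learning rate, and the
    absolute prediction error used as a proxy for the measured response.
    """
    prediction = initial_prediction
    responses = []
    for signal in signals:
        error = signal - prediction          # interoceptive prediction error
        responses.append(abs(error))         # proxy "response" on this trial
        prediction += learning_rate * error  # move the expectation toward the input
    return responses


if __name__ == "__main__":
    # Eight repetitions of the same interoceptive signal, then a deviant.
    signals = [1.0] * 8 + [2.0]
    for trial, r in enumerate(prediction_error_responses(signals), start=1):
        print(trial, round(r, 3))
```

In this run the proxy response falls from 1.0 on the first trial to about 0.08 by the eighth, as the repeated signal becomes well predicted, and then rises to about 1.06 on the deviant ninth trial, mirroring the suppression-then-violation pattern described above. A delta rule is chosen only for transparency; a hierarchical scheme would add precision weighting and multiple levels.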

Several challenges can be raised against the model as presently posed. First, in contrast to exteroceptive (particularly visual) processing (Felleman and Van Essen, 1991; Nassi and Callaway, 2009), evidence for hierarchical organization of interoceptive processing and autonomic control is less clear. Complicating any such interpretation are the multiple levels of autonomic control, including muscle reflex autonomic responses mediated at spinal levels, direct influences of motor cortex on sympathetic responses to muscle vasculature, varying degrees of voluntary influence on visceral state, and poorly understood effects of lateralization on both afferent and efferent signals (Delgado, 1960; Craig, 2005; Critchley et al., 2005; McCord and Kaufman, 2010). On the other hand, there is reasonable evidence for somatotopic coding in brainstem nuclei (e.g., the nucleus of the solitary tract and area postrema) and subsequently in the parabrachial nuclei, thalamic nuclei, and posterior insula (Craig, 2002), consistent with hierarchical organization. It nonetheless remains a challenge to establish the extent to which interoception can be described, anatomically and functionally, as hierarchical, for example in comparison with object representation in the visual or auditory systems.

A second challenge is that predictive coding schemes for visual perception are often motivated by the need for efficient processing of high-bandwidth and highly redundant afferent visual signals (Rao and Ballard, 1999). The functional architecture of interoception appears very different, undermining any direct analogy. However, interoceptive pathways involve dozens, and probably hundreds, of different dedicated receptors, often distributed broadly throughout the body (Janig, 2008), posing potentially even greater computational challenges.

Third, clinical experience suggests that disorders of agency and presence do not always coincide; for example, it is not possible to elicit reports of subjective disturbances of conscious presence from all patients with schizophrenia (Ruhrmann et al., 2010). Moreover, disorders of agency such as alien hand syndrome (Sumner and Husain, 2008) and Tourette syndrome (Robertson, 2000) are not generally associated with depersonalization or derealization. While our model specifically allows for independent effects, proposing that agency and presence are neither necessary nor sufficient for each other, additional research is needed to examine their interactions experimentally. Such studies could conceivably invert the hierarchical relationship between agency and presence (see Figure 1) or reframe it as a bidirectional, symmetric relationship. Further, lesions of the insular cortex do not always give rise to dissociative symptoms (Jones et al., 2010), raising the possibility that the predictive processes underlying presence play out across multiple brain regions, with key nodes potentially extending down into brainstem areas (Damasio, 2010). Alternatively, dissociative symptoms could in fact require an intact insula in order to generate the imprecise predictions underlying their subjective phenomenology.

Finally, our model remains agnostic as to whether presence itself, or the experience of its disturbance or absence, is the core phenomenological explanatory target. Arguably, disturbances of presence are more phenomenologically salient than the background of presence characterizing normal conscious experience, and reportable experience of presence per se may require additional reflective attention of the kind induced by subjective questionnaires. A full treatment of this issue, which would refer back at least as far as the phenomenological work of Heidegger and Husserl (Heidegger, 1962; Husserl, 1963) by way of Metzinger’s discussion of transparency (see The Phenomenology of Presence), lies well beyond the present scope. Nonetheless, by proposing specific neurocognitive constraints, our model provides a framework for understanding presence as a structural property of consciousness that is susceptible to breakdown (inducing an experience of the “absence of presence”) in particular and predictable circumstances.

Addressing the above challenges will require multiple research agendas. However, three key tests underpin many of them: (i) to search explicitly for signs of interoceptive predictive coding in the AIC, (ii) to establish the nature of the target representation of the discrete channels of afferent viscerosensory information instantiating such predictive coding schemes, and (iii) to correlate subjective disturbances in presence with experimental manipulations of interoceptive predictions and prediction errors. More prospectively, the model needs to be extended to address issues of selfhood explicitly. We believe considerable promise lies in integrating interoceptive predictive coding with existing proprioceptive and multisensory models of selfhood (Blanke and Metzinger, 2009; Tsakiris, 2010), potentially explaining the force of “interoceptive anchors” in grounding bodily experience.

In conclusion, our model integrates previously disparate theory and evidence from predictive coding, interoceptive awareness and the role of the AIC and ACC, dopaminergic signaling, DPD and schizophrenia, and experiments combining VR and neuroimaging. It develops a novel view of emotion as interoceptive inference and provides a computationally explicit, neurobiologically grounded account of conscious presence, a fundamental but understudied phenomenological property of conscious experience. We hope the model will motivate new experimental work designed to test its predictions and to address the challenges it faces. Such efforts are likely to generate important new findings in both basic and applied consciousness science.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors acknowledge the following funding sources: the Dr. Mortimer and Theresa Sackler Foundation (AKS, HDC); EU project CEEDS (FP7-ICT-2009-5, 258749; AKS, KS); and EPSRC Leadership Fellowship EP/G007543/1 (AKS). We are grateful to Neil Harrison, Nick Medford, Bjorn Merker, Thomas Metzinger, Natasha Sigala, Paul Verschure, and our reviewers for comments that helped improve the paper.

References