REVIEW article

Front. Psychol., 27 December 2011

Sec. Psychology of Language

volume 2 - 2011 | https://doi.org/10.3389/fpsyg.2011.00375

This article is part of the Research Topic The neurocognition of language production

Recent findings in the neurophysiology of language production have provided a detailed description of the brain network underlying this behavior, as well as some indications about the timing of operations. Despite their invaluable utility, these data generally suffer from limitations either in terms of temporal resolution, or in terms of spatial localization. In addition, studying the neural basis of speech is complicated by the presence of articulation artifacts such as electro-myographic activity that interferes with the neural signal. These difficulties are virtually absent in a powerful albeit much less frequent methodology, namely the recording of intra-cranial brain activity (intra-cranial electroencephalography). Such recordings are only possible under very specific clinical circumstances requiring functional mapping before brain surgery, most notably in patients who suffer from pharmaco-resistant epilepsy. Here we review the research conducted with this methodology in the field of language production, with explicit consideration of its advantages and drawbacks. The available evidence is shown to be diverse, both in terms of the tasks and the cognitive processes tested and in terms of the brain localizations being studied. Still, the review provides valuable information for characterizing the dynamics of the neural events occurring in the language production network. Following modality specific activities (in auditory or visual cortices), there is a convergence of activity in superior temporal sulcus, which is a plausible neural correlate of phonological encoding processes. Later, between 500 and 800 ms, inferior frontal gyrus (around Broca’s area) is involved. Peri-rolandic areas are recruited in the two modalities relatively early (200–500 ms window), suggesting a very early involvement of (pre-) motor processes. We discuss how some of these findings may be at odds with conclusions drawn from available meta-analyses of language production studies.

Speech is a basic skill that is used quite effortlessly in many daily life activities. Despite this apparent simplicity, speech is subtended by a complex set of cognitive processes and a wide network of brain structures, engaged and interacting in time within tens of milliseconds. Cognitive models of speech production generally include distinct levels of processing. These allow the retrieval and use of semantic information (i.e., the message to be conveyed), linguistic information (e.g., lexical and phonological representations), as well as pre-motor and motor commands (for articulating).

The network of brain areas engaged during speech production is relatively well described, based on evidence from neuropsychological populations (e.g., speakers suffering from various kinds of aphasia following a stroke) and from functional brain imaging experiments. For example, DeLeon et al. (2007) investigated the linguistic performance and neural integrity of patients within 24 h of acute ischemic stroke. They showed that a deficit in semantic processing (conceptual identification) is associated with dysfunction in anterior temporal brain areas (Brodmann areas BA 21–22–38), while a lexical dysfunction is associated with posterior temporal regions (BA 37–39). Indefrey and Levelt (2004) conducted a meta-analysis of brain activity studies of diverse types, including functional magnetic resonance imaging (fMRI), positron emission tomography (PET), electroencephalography (EEG), magneto-encephalography (MEG), and transcranial magnetic stimulation (TMS). The meta-analysis shows that the selection of a lexical item involves activation of the mid part of left middle temporal gyrus; accessing a word’s phonological code is linked to activation in Wernicke’s area; and post-phonological encoding (syllabification and metrical encoding) is linked to activation in left inferior frontal regions.

The techniques mentioned above provide complementary insights into neural processing. For instance, fMRI is productive in identifying areas that are central in different language tasks (Price, 2010) but has an indirect, temporally smeared, and poorly understood relationship to neural processing. In contrast, surface EEG and MEG are directly and instantaneously generated by synaptic and active currents in pyramidal apical dendrites. For this reason, they provide valuable information about the timing of neural events that can then be linked to cognitive operations. For example, the retrieval of lexical linguistic information mentioned above appears to be engaged around 200 ms post-stimulus in the classic picture naming task (Salmelin et al., 1994; Maess et al., 2002; Costa et al., 2009; see also Indefrey and Levelt, 2004; Salmelin, 2007). However, inferring and localizing the cortical sources of these processes is a technically complex problem, based on mathematical algorithms constrained by a priori hypotheses. The other constraint faced by surface EEG and MEG is that they are both highly sensitive to electro-myographic artifacts. These are electrical signals generated by articulatory muscles well before the onset of speech (Goncharova et al., 2003). They have a large amplitude compared to neural signals and thus interfere with the signal of interest. The presence of massive EMG limits the time windows and frequency bands of activity that can be analyzed fruitfully (although see McMenamin et al., 2009, 2010; De Vos et al., 2010).

Such caveats do not apply, however, when the neurophysiological signal is recorded intra-cranially (intracranial EEG, or iEEG), rather than on the surface of the scalp. iEEG can provide precise spatial resolution and physiological interpretations that are not possible with surface EEG or MEG. The signal is barely contaminated with electro-myographic artifacts because these do not propagate to intra-cranial electrodes. However, these investigations are limited to quite specific circumstances, such as some forms of epilepsy, where intra-cranial electrodes have to be implanted for clinical purposes.

Exceptional as they may be, intra-cranial neurophysiological recordings can contribute to our understanding of the neurocognition of language production, if the appropriate interpretative precautions are taken (see next section on Methodological Considerations). To illustrate this view, our focus here will be on revealing the dynamics of the neural events occurring in the language production network. The inclusion criterion we used when selecting the articles to be reviewed from publication databases was that they reported studies in which brain activity was recorded intra-cranially while participants were engaged in tasks requiring overt or covert language production. Below, we begin with a brief methodological primer, followed by a discussion of the empirical studies.

Patients with pharmacologically resistant epilepsy may be candidates for neurosurgical procedures during which epileptogenic zones are resected. For this procedure to be acceptable, it is of critical importance not only to identify cortical regions that produce seizure onsets but also regions with eloquent functional roles (e.g., motor, language) that should not be resected. Intra-cranial measures provide invaluable information for these decisions.

To achieve this, a classic method involves delivering mild intra-cranial electrical stimulations in different brain structures, and estimating their impact on simple cognitive tasks. Such electrical stimulation procedures are carried out routinely as part of the standard presurgical assessment (Chauvel et al., 1993). This technique can be conducted intraoperatively or extraoperatively. Its major limitation is the triggering of seizures (Hamberger, 2007). For additional pre-surgical evaluation, two main methodological approaches can be used, which we detail below.

The first method, iEEG, involves multi-contact depth electrodes that are implanted inside the brain. These enable measuring coherent activity of local neuronal populations in the vicinity of the recording sites. The electrodes can be stereotactically implanted. This means that they are placed in specific brain structures by reference to a standard atlas (Bancaud et al., 1965; Chauvel et al., 1996). This is the method used in two of the articles discussed in this review (Basirat et al., 2008; Mainy et al., 2008). Alternatively, the electrodes may be placed strictly on the basis of the patients’ MRI and its macroscopic structures (e.g., Heschl gyrus, or Broca’s area), and later referenced to a standard atlas. This is the method used by Sahin et al. (2009), also discussed in this review. The electrodes, generally between 5 and 15 of them, remain for durations between 1 and 3 weeks. The presence of these electrodes allows recordings over unaffected brain tissue during periods of normal activity in the patient’s room (i.e., not during the surgical procedure).

Electrocorticography (ECoG) is the alternative major technique, which was used in all the other studies we reviewed. In this case, subdural grids consisting of 2D arrays of 64 electrodes (8 × 8) are positioned directly on the lateral surface of the brain. The location of these electrode-grids with respect to underlying cortical gyral and sulcal anatomy is determined by coregistration of pre-implantation volumetric brain MRI with post-implantation volumetric brain CT. While subdural ECoG grids provide widespread cortical coverage and cortical maps of gyral activity, the iEEG electrodes record activity from both sulci and gyri and go beneath the cortical surface to deep cortical structures.

As is the case with surface EEG, cortical functional mapping can be based on various kinds of data-processing (for review, see Jacobs and Kahana, 2010). First, the signal as it unfolds in time can be averaged across trials, with the reference time being either the onset of stimulus (i.e., stimulus-locked average) or the onset of the overt response (i.e., response-locked average). This averaging yields cortical event-related potentials (ERP), which are electrical signals generated by neuronal networks in response to a behaviorally significant event. Depending on the location and size of the electrodes (including the reference electrodes), ERPs may integrate neural activity over a range of spatial scales: surface EEG integrates activity over centimeters whereas intracranial ECoG integrates activity on a submillimeter to millimeter scale. Intracranial ERPs are generally referred to as local field potentials (LFPs) in reference to their highly localized origin (Bressler, 2002). The second type of analysis that can be conducted requires decomposing the signal into its frequency components, and then averaging these components across trials. Depending on the types of neural activity that occur in specific language tasks, cortical networks may display different states of synchrony, causing the cortical signal to oscillate in different frequency bands, referred to as delta band (0–4 Hz), theta band (5–8 Hz), alpha band (9–12 Hz), and gamma band (typically between 40 and 100 Hz; Donner and Siegel, 2011). Analyzing the change in the power spectra within each of these frequency bands provides information on the functional process that generates them. Such analysis is ideally suited for linking neuronal activity to language functions (notably language production), because language related cortical activity is prominently reflected in sustained activities that are not phase locked to external events (i.e., it will be less apparent in ERPs than in oscillatory activity). This is because many linguistic processes result from intrinsic network interactions within the brain (in short, from top-down modulations) rather than from an external drive (in short, from bottom-up activity). In this context, the most commonly used spectral profile in language cartography is the variation in power in gamma band, especially in high gamma bands (γhigh = 80–100 Hz, or sometimes 70–160 Hz), which is known to be a robust correlate of local neural activation (Chang et al., 2010). γhigh has been shown to be useful for detecting regional processing differences across language tasks, as well as across a variety of cognitive functions (e.g., Crone, 2000; Crone and Hao, 2002; Towle et al., 2008). Recent findings further suggest that cognitive tasks or processing levels (including in tasks requiring the production of words) induce variations beyond the γ band (i.e., in lower frequency bands; Gaona et al., 2011).
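The two kinds of analysis described above can be illustrated with a minimal sketch on synthetic data. Everything in it is an assumption made for illustration rather than a procedure taken from the reviewed studies: the 1 kHz sampling rate, the 90 Hz burst with a random phase on each trial, the hypothetical helper names `epoch` and `band_power`, and the use of a plain Fourier transform instead of the time-frequency methods typically applied to iEEG. It shows why activity that is not phase locked largely cancels out in the trial-averaged ERP while remaining visible as an increase in high-gamma power.

```python
import numpy as np

rng = np.random.default_rng(0)
fs = 1000                      # sampling rate in Hz (assumed)
n_trials, burst_len = 50, 300  # 300 samples = 300 ms at 1 kHz
events = np.arange(500, 500 + n_trials * 2000, 2000)  # stimulus onsets (samples)

# Synthetic recording: white noise plus a 90 Hz burst after each stimulus.
# The burst phase is random on every trial, i.e. induced, not phase locked.
signal = rng.normal(0.0, 1.0, int(events[-1]) + 1000)
t = np.arange(burst_len) / fs
for s in events:
    phase = rng.uniform(0, 2 * np.pi)
    signal[s:s + burst_len] += 3.0 * np.sin(2 * np.pi * 90 * t + phase)

def epoch(x, onsets, pre, post):
    """Cut one window per trial, from `pre` samples before to `post` after onset."""
    return np.stack([x[s - pre:s + post] for s in onsets])

def band_power(epochs, fs, lo, hi):
    """Spectral power in [lo, hi] Hz, averaged over frequency bins and trials."""
    freqs = np.fft.rfftfreq(epochs.shape[1], d=1.0 / fs)
    power = np.abs(np.fft.rfft(epochs, axis=1)) ** 2
    in_band = (freqs >= lo) & (freqs <= hi)
    return power[:, in_band].mean()

epochs = epoch(signal, events, pre=burst_len, post=burst_len)
baseline, post_stim = epochs[:, :burst_len], epochs[:, burst_len:]

erp_wave = epochs.mean(axis=0)                   # stimulus-locked ERP
gamma_base = band_power(baseline, fs, 80, 100)   # high gamma before onset
gamma_post = band_power(post_stim, fs, 80, 100)  # high gamma after onset
```

Passing response onsets instead of stimulus onsets to `epoch` would give the response-locked counterpart of the same analysis.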

The combination of epilepsy surgery and cognitive neurophysiology has provided a unique window into brain–behavior interactions over the past 60 years. Recent general reviews are provided by Engel et al. (2005) and by Jacobs and Kahana (2010). This being said, there are critical limitations that have to be kept in mind when deriving inferences and generalizations to the healthy population.

First of all, it is critical that recordings are obtained from normal healthy brain tissue, as distant as possible from the epileptogenic zone. In addition, recordings should be obtained at significant temporal distance from the occurrence of seizures, to avoid acute effects linked to seizure activity.

In the spatial domain, iEEG data have a spatial resolution defined in millimeters yet the electrode implantation scheme only provides a restricted sampling of selected cerebral structures. A complete 3D coverage of the brain with a spatial resolution of 3.5 mm has been estimated to require about 10,000 recording sites (Halgren et al., 1998), while the number of sites that are typically recorded with iEEG is approximately 100. Moreover, iEEG data analysis is complicated by the fact that patient populations are often small, and that recording sites are highly variable across patients. A comprehensive view of the large-scale networks involved in various cognitive tasks would therefore require combining data from multiple subjects with both overlapping and complementary electrode positions (a constraint that applies similarly to ECoG grid techniques).
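The sampling figures quoted above imply some simple arithmetic, sketched here. The two site counts come from the text; the function `expected_coverage` is a hypothetical helper that assumes each patient's sites are drawn independently and uniformly at random, which is an idealization, since real implantation schemes follow clinical needs and tend to cluster in similar regions.

```python
import math

full_coverage_sites = 10_000  # estimate for 3.5 mm resolution (Halgren et al., 1998)
sites_per_patient = 100       # approximate number of iEEG sites in one patient

# Fraction of the full 3D coverage that a single patient provides.
fraction_sampled = sites_per_patient / full_coverage_sites

# Best case: no two patients share a site, so coverage adds up linearly.
min_patients = math.ceil(full_coverage_sites / sites_per_patient)

def expected_coverage(n_patients: int) -> float:
    """Expected covered fraction under independent, uniformly random placement.

    This placement model is an idealization used only for illustration.
    """
    return 1.0 - (1.0 - fraction_sampled) ** n_patients
```

Under these assumptions a single patient samples about 1% of the sites needed, and at least 100 patients with perfectly complementary implantations would be required for full coverage, which is why combining overlapping and complementary electrode positions across subjects matters.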

Turning to the time domain, data acquired with this methodology have a temporal resolution in milliseconds. To be interpretable, however, the data need to be aggregated across trials. Thus the time resolution of the phenomena that are described is rather on the order of tens or hundreds of milliseconds. Finally, a frequent practical limitation concerns the time-frame of participant availability for the cognitive tasks. Because this is often rather limited, the amount of data collected may be small. For this reason, it should always be kept in mind that the pattern of significant gamma band modulation is likely to be underestimated. Had there been more testing time, more electrodes might have shown significant effects.

Overall, however, when these limitations are dealt with carefully, the conclusions can be reasonably generalized beyond the population of epileptic patients. Many iEEG studies have provided spatio-temporal information about a wide range of cognitive processes (e.g., auditory perception, language, memory). These have been shown to be consistent with data from healthy participants, and have even provided the first threads of evidence later corroborated in healthy populations (Liégeois-Chauvel et al., 1989, 1994; Halgren et al., 2006; Axmacher et al., 2008; McDonald et al., 2010). For instance, Liégeois-Chauvel et al. (1999) demonstrated that enhanced sensitivity to temporal acoustic characteristics of sound in left auditory cortex underlies the left hemispheric dominance for language. This conclusion has been corroborated by observations from healthy participants (Trébuchon-Da Fonseca et al., 2005). Interestingly, the epileptic population under consideration includes patients suffering from mild to severe linguistic impairments, as well as patients with no apparent linguistic deficit (Mayeux et al., 1980; Hamberger and Seidel, 2003; Hamberger, 2007; Trébuchon-Da Fonseca et al., 2009). For these reasons, we argue that iEEG studies can provide detailed spatio-temporal information about the dynamics of language production, as discussed below.

Our review of empirical studies is primarily organized according to the modalities used to elicit language production responses: auditory or visual stimuli. This is motivated by theoretical and clinical considerations. Theoretically, this distinction is thought to provide the most appropriate classification for capturing the spatio-temporal dynamics at stake. Major differences in the speed at which the input is perceived and decoded across modalities may result in major differences in the brain dynamics underlying language production. Furthermore, the networks involved in auditory and visual language production tasks are significantly different. From the clinical perspective, electrode and subdural grid locations are guided by surgical considerations only, and turn out to be highly variable from one participant to another. This has a strong influence on the kind of tasks that a participant may be asked to perform (e.g., auditory but not visual tasks for a patient implanted in superior temporal gyrus).

In addition to the above, we also included studies in which task instructions did not explicitly require that language was produced, but in which the pattern of neural activity indicated that this was most likely to be the case. These are reviewed in the Section on “Other Experimental Tasks.”

Among patients suffering from pharmaco-resistant epilepsy, temporal regions are commonly involved, and the posterior part of the superior temporal gyrus is often explored to assess its possible involvement in seizures and/or to determine the posterior border of cortical excision. These explorations can be conducted by asking participants to repeat linguistic materials they hear (e.g., syllables, words, or sentences: Creutzfeldt et al., 1989; Crone et al., 2001; Crone and Hao, 2002; Towle et al., 2008; Fukuda et al., 2010; Pei et al., 2011), or to engage in a deeper processing of the auditory stimulus (e.g., in word association, definition, and verb generation tasks: Edwards et al., 2010; Thampratankul et al., 2010). These differences in tasks induce differences in the processes engaged to trigger the response, and in the corresponding brain activities. Most of the studies of this kind have shown reliable activity changes in γhigh time-frequency spectra. Note that these changes are very focal, and that event-related responses were clearly observed only in approximately one-fifth of the electrodes across the different studies. Here, and elsewhere, this should not be taken to indicate exclusive focal activity on these sites, given that the amount of testing and data collected is often small (see Previous Section and General Discussion). Such evidence should rather be thought of as providing a partial window on the activity of the underlying network.

Broadly speaking, perception and overt repetition of linguistic materials involve a network comprising posterior superior temporal gyrus (pSTG) and inferior rolandic gyri. The activation (i.e., increased γ activity) is first seen in pSTG, with a peak around 150 ms post-stimulus. The inferior rolandic gyri are activated somewhat later, around 200 ms before overt vocal response. In the course of response articulation, generally 100 ms after response onset, a second period of activity is seen in pSTG. We discuss these two sites in turn.

Activity in pSTG corresponds to auditory processing of the verbal stimulus, be it externally delivered (first cortical activity) or actually produced by the speaker (second cortical activity). For example, Fukuda et al. (2010) report a series of 15 patients in which STG is sequentially activated with a peak of γ oscillation 500 ms prior to the onset of articulation and another one 100 ms after speech onset. This second gamma (γ) activity, linked to overt repetition, was smaller than the first. In between, around the onset of syllable articulation, there was little modulation of gamma oscillations. Crone et al. (2001; see also Crone and Hao, 2002) also observed this sequence of two peaks of activity during oral word repetition at a comparable location. This study reported the single case of a right-handed woman, implanted with ECoG in left temporal, left peri-sylvian, and left basal temporal occipital areas. This patient was a bimodal bilingual (English and ASL) and was tested with oral and signed responses (for further evidence on signed responses see Knapp et al., 2005). In the case of signed responses, only the pre-response peak was present, which is consistent with the idea that it reflects auditory or phonological processing of the verbal stimulus. This pattern has also been observed in another series of 12 patients by Towle et al. (2008). These authors reported that activation in the γhigh band associated with word perception included pSTG and lateral parietal regions (i.e., Wernicke’s and surrounding areas). A posterior shift in the distribution of gamma activity is reported when the patients heard the word compared to when they spoke the word. An additional response in Broca’s area was also observed in this study, which started 800 ms before the voice onset time. However, this latter activity could be related to the conditions with which repetition was elicited. Only words that had been heard in a previous block were to be repeated. In this case, the frontal response could thus be tied to decision and discrimination processes rather than linguistic processing per se.

The pre-response peak repeatedly observed in pSTG allows for a more detailed interpretation. In Fukuda et al. (2010), the peak has different latencies in stimulus- vs. response-locked averages (i.e., depending on whether the analysis is aligned to the onset of the stimulus or to the onset of the response). Stimulus-locked, the peak is present shortly after stimulus (peak ∼260 ms). Response-locked, the peak starts around 500 ms pre-articulation, which is considerably later given that average response time was about 1000 ms. This suggests that this response not only reflects processing evoked by external auditory inputs but also longer lasting induced preparatory activity (Alain et al., 2007), for example phonological encoding processes. In this respect, the data from the single case reported by Crone et al. (2001) are somewhat different. pSTG activity is only present time-locked to stimulus for 500 ms, followed by a post-response activity at 1500 ms. In contrast, a more anterior recording site showed only pre-response (not post-response) activity, suggesting a role of the latter site only in phonological encoding and preparation processes. We come back to this anterior–posterior contrast in Section “General Discussion,” after we have presented the evidence from the other modalities in the following sections.
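The comparison between the two alignments reported for Fukuda et al. (2010) rests on a simple conversion, sketched below. The three numbers come from the text; the helper `to_stimulus_locked` is a hypothetical name, and placing a response-locked latency on the stimulus timeline by using the mean response time is an approximation that ignores trial-to-trial variability.

```python
def to_stimulus_locked(latency_re_response_ms: float, reaction_time_ms: float) -> float:
    """Express a response-locked latency (negative = before response) relative to stimulus onset."""
    return reaction_time_ms + latency_re_response_ms

mean_rt_ms = 1000.0            # average response time reported in the text
stim_locked_peak_ms = 260.0    # peak latency in the stimulus-locked average
resp_locked_onset_ms = -500.0  # activity starts ~500 ms before articulation

# Where the response-locked activity starts on the stimulus timeline, on average.
resp_onset_post_stimulus_ms = to_stimulus_locked(resp_locked_onset_ms, mean_rt_ms)

# Gap between the stimulus-locked peak and the start of response-locked activity.
lag_ms = resp_onset_post_stimulus_ms - stim_locked_peak_ms
```

With these values the response-locked activity begins roughly 500 ms after the stimulus, about 240 ms after the stimulus-locked peak, which is the gap that motivates interpreting it as preparatory rather than purely stimulus-evoked.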

No significant difference in the peak amplitude, onset latency, or peak latency of gamma activity has been reported between the left and right STG (Fukuda et al., 2010). In contrast, the later modulation of gamma oscillation recorded from inferior Rolandic sites displayed a left-hemisphere advantage. The peak of activation was earlier in left compared to right hemisphere. For simple syllables, this activity starts 200 ms before articulation onset and peaks 130 ms after it. Furthermore, a subset of inferior rolandic sites showed phoneme-specific patterns of gamma-augmentation, mostly located on the left side (for a thorough investigation of cortical signal classification to discriminate linguistic materials, i.e., words, see Kellis et al., 2010). Overall, then, these data suggest that primary sensorimotor area on the left side may have a predominant role in movement execution for phoneme articulation, in agreement with Chang et al. (2010; for comparison, Brooker and Donald, 1980, provide a critical discussion of lateralization effects observed at similar timings in surface recordings).

In some cases, participants are asked to engage in deeper processing of the stimulus to construct the response (e.g., word association task, verb generation task, response to definition; Brown et al., 2008; Edwards et al., 2010; Thampratankul et al., 2010). Quite expectedly, this leads to a broader pattern of activity. In the verb generation task, auditory stimulation is followed by gamma activation that shifts from pSTG (100 ms post-stimulus) to the posterior part of middle temporal gyrus (pMTG), the parietal operculum, the temporo-parietal junction (300 ms), and the precentral gyrus (superior portion of ventral pre-motor cortex svPM: 400 ms post-stimulus). The middle frontal gyrus and the left inferior frontal gyrus (IFG) are activated later, namely 700 ms after the stimulus onset and around 300 ms prior to verbal responses (Brown et al., 2008; Edwards et al., 2010; Thampratankul et al., 2010).

The propagation of pSTG activation to MTG has been primarily linked to semantic association processes (retrieving the color of a fruit or the answer to a question; Brown et al., 2008; Thampratankul et al., 2010). The more posterior portion, proceeding from the planum temporale and terminating in the mid-to-posterior superior temporal sulcus (STS) connected with the temporo-parietal junction, has been linked to verbal phonological working memory and word production (Edwards et al., 2010). The activity seen later in the left supramarginal gyrus (SMG) does not have a well defined function in this data set. Note however that functional imaging data associate fMRI BOLD responses in this area with phonological processes (Démonet et al., 2005; Vigneau et al., 2006). Finally, the medial pre-frontal cortices (including the supplementary motor area, the pre-supplementary motor area, and the cingulate gyrus) have been associated with voluntary control over the initiation of vocal utterances (Brown et al., 2008; see also Alario et al., 2006, for fMRI evidence).

One issue that runs across all of these studies is the attenuation of gamma responses in STG in speaking vs. listening conditions, which is also seen in non-human primates (Müller-Preuss and Ploog, 1981). In humans, Creutzfeldt et al. (1989) recorded single units while participants repeated sentences. STG neurons showed reduced responsiveness to self-produced speech compared to repetition and naming. This observation was also made by Fukuda et al. (2010), Towle et al. (2008), and Crone et al. (2001). Flinker et al. (2010) report a more specific investigation of this issue. With a phoneme repetition task, they showed that auditory cortex is not homogeneously suppressed but rather that there are fine grained spatial patterns of suppression. They conclude that every time we produce speech, auditory cortex responds with a specific pattern of suppressed and non-suppressed activity. This reduced responsiveness could be due to corollary discharges from motor speech commands preparing cortex for self-generated speech (Creutzfeldt et al., 1989; Towle et al., 2008). One difficulty when investigating this issue is to control for the volume of auditory stimuli in speaking vs. listening conditions. Yet this would be important because this intensity variable is known to affect the magnitude of the brain response (Liégeois-Chauvel et al., 1989).

The kinds of visual inputs most generally used include pictures of common objects to be named, overtly or covertly, and words to be recognized and read (Hart et al., 1998; Crone et al., 2001; Crone and Hao, 2002; Tanji et al., 2005; Usui et al., 2009; Edwards et al., 2010; Cervenka et al., 2011; Pei et al., 2011; Wu et al., 2011). In general, the visual modality engages the left baso-temporal region, often referred to as baso-temporal language area (BTLA), which includes fusiform gyrus (FG) and ITG. Activation starts in BTLA around 200 ms after picture presentation. This activity precedes pSTG activity, occurring around 200–600 ms for reading, and 400–750 ms for picture naming, which is much later than the comparable pSTG activity in auditory tasks described in the previous section (∼100 ms post-stimulus; Crone et al., 2001; Edwards et al., 2010). The BTLA presumably plays a crucial role in lexico-semantic processing and picture recognition (Crone et al., 2001; Edwards et al., 2010).

A detailed chronometric analysis of the involvement of BTLA is provided by Hart et al. (1998), on the basis of a pair of electrodes in a single patient. A functional response is recorded around 250–300 ms after visual presentation, and lasts between 450 and 750 ms, depending on the subjective familiarity of the object. Direct electrical cortical stimulation (ECS) delivered shortly after stimulus presentation caused a variety of language deficits (word-finding difficulties, empty speech, paraphasias, and speech arrest), the outcome of which was modulated by the timing of the stimulation. In particular, semantic disruptions were no longer present when the stimulation occurred 750 ms (or later) after the picture. Putting together the activation data with the so-called “time-slicing” cortical stimulation procedure provides lower and upper estimates for the engagement of BTLA in this task: in short, between 250 and 750 ms. These data were comparable in the two languages spoken by the patient.

Further information about the functional role(s) of left BTLA comes from the case of a Japanese speaker implanted with subdural electrodes on the basal temporal cortices bilaterally (Tanji et al., 2005), very close to the locations involved in Hart et al. (1998). The patient was asked to name pictures aloud, and to read silently Japanese words and pseudo-words, the latter being written in the syllabic script Kana or in the logographic and morphographic script Kanji. There were clear responses in the γhigh band in the three tasks. The pictures elicited bilateral responses on various recording sites, with a weak anterior–posterior distinction in the left response to animals and tools (animals leading to more intense signal on a more anterior site, and vice-versa). The written materials elicited left lateralized responses only, irrespective of script and lexicality. Pair-wise comparisons of the amount of activation between these conditions showed the following pattern: Kanji pseudo-words > Kanji words > Kana words ∼ Kana pseudo-words. On the basis of evoked potentials recorded at similar locations in two patients, Usui et al. (2009) suggested there may be a distinction between anterior and posterior responses to the two scripts in this brain region.

This global pattern does not lend itself to a simple interpretation, however. Increased activity for Kanji pseudo-words compared to Kanji words could reflect the involvement of BTLA in semantic processing, for example if participants effortfully attempted to reach a semantic interpretation of the pseudo-words (Tanji et al., 2005, p. 3291 bottom). The distinction between animals and tools in picture naming would be consistent with this view. However, the similar contrast between words and pseudo-words presented in the Kana script did not produce the same gamma activation difference. It is possible that, compared to logographic Kanji, syllabic Kana promotes phonological processing at the expense of semantic processing, and thus the semantic effect is blurred with this script. This would also explain why there was increased activity for Kanji compared to Kana script at this location. The authors conclude that the overall pattern reflects a role of BTLA at the lexical level, as a convergence zone midway between word form and word meaning. While this interpretation is consistent with the evidence, it is formulated in broad terms and a more detailed account may require further studies (see Wu et al., 2011, for some recent further ECoG evidence, and Usui et al., 2009, for more extensive data on electrical stimulation).

Most of the articles discussed above report activation in IFG without focusing on it. Sahin et al. (2009), however, focused on Broca’s area and neighboring regions, in an iEEG study involving three patients implanted in this region. The task they used involved processing a visual stimulus (e.g., word) according to a grammatical rule of English (e.g., transforming a singular noun “horse” to plural “horses,” or transforming a verb in present tense “watch” to past tense “watched”). Across all three patients, evoked potentials were recorded at ∼200, ∼320, and ∼450 ms post-target onset. The first 200 ms peak was modulated by lexical manipulations (frequency of use). The second 320 ms peak was modulated by the nature of the task (grammatical manipulations, see examples above). The third 450 ms peak was modulated by articulatory requirements (length of the response in syllables). These results indicate that distinct linguistic processes can be distinguished at a high temporal resolution within the same locus. They also suggest that Broca’s area is not dedicated to a single kind of linguistic representation but comprises adjacent but distinct circuits which implement different levels of processes. Although the authors favor a staged model in which these different processes are performed sequentially, these data by themselves do not argue against more integrated processing (e.g., in the form of cascading: Goldrick et al., 2009).

This section includes the studies in which participants were not explicitly asked to produce language, but in which language production processes can nonetheless be suspected to have been engaged. For example, patients may be asked to press buttons (rather than speak) on the basis of the linguistic materials they are currently processing (Basirat et al., 2008; Mainy et al., 2008; Chang et al., 2010). Note that, while these studies may provide valuable information about language processing in general, the inferences one draws with respect to language production processes themselves should be made cautiously. This is because previous comparisons between neural responses in overt and covert conditions, especially in fMRI, have shown notable differences. As would be expected, all reports agree that overt responses lead to greater involvement of motor cortices than covert production. A broader brain network is engaged in overt than in covert conditions, including mesial temporal lobe as well as sub-cortical structures (Rosen et al., 2000; Shuster and Lemieux, 2005; Forn et al., 2008; Kielar et al., 2011). Importantly, it has been argued that overt and covert networks show distinct patterns of activation (Borowsky et al., 2005; Shuster and Lemieux, 2005). Barch et al. (1999) concluded that covert conditions cannot be used as simple substitutes for overt verbal responses. One demonstration of such differences between activity linked to overt and covert responding in iEEG comes from Pei et al. (2011), reviewed above. These authors briefly compared these two conditions. They observed that a major difference was in the modulation of pSTG (Wernicke’s area) after acoustic processing, while other activations were increased in the covert modality (BA22, mid-STG, BA41/42, and the temporo-parietal junction).

In the first study reviewed in this section, Mainy et al. (2008) tested 10 patients on a hierarchy of judgments on visually presented words and pseudo-words, regarding either the meaning (living vs. non-living word categorization task), the visual properties (analysis of consonant strings) or the phonological make up (rhyme decision task on pseudo-words)1. Notably in the latter case, access to phonological information may approximate the processes engaged during language production, for example during overt word reading. Once again, the measure of interest that was analyzed in most detail is the change in spectral power within the γhigh band. The results for the early stages of processing are consistent with those reviewed above concerning the visual modality. A rather abrupt onset of activity occurred around 200 ms post-stimulus in associative visual areas, irrespective of the task. With about 100 ms delay, STG showed increased activity in the semantic and phonological tasks, not in the visual property judgment task. This specific response is presumably due to the fact that, while all the stimuli were visual, the materials were pronounceable only in the semantic and the phonological tasks. Relatively similar responses were recorded in the more anterior middle temporal gyrus on some patients. Finally, around 400 ms, a response peaked in IFG, being larger in the phonological than in the semantic task (occasionally the semantic response was larger in more anterior sites). Note that the semantic task involved words and the phonological task involved pseudo-words. The authors link the IFG and the STS gamma band responses to the phonological retrieval processes and/or inner speech production. The respective roles (and their precise interaction) of these two regions stand as an important issue to be clarified.

Chang et al. (2010) also asked patients to perform phonologically based decisions. In this case, participants heard sequences of syllables and had to press a button when they heard a pre-specified target. A secondary control task required patients to repeatedly produce a syllable (/pa/) or a vowel (/a/). In the perception task, an early activity (<120 ms post-stimulus) rises sharply in dorsal STG, both to target and non-target items. Shortly afterwards, activity in ventral STG becomes larger for targets. Around 120 ms later, high gamma activity is measured in superior ventral pre-motor cortex (svPM), again larger for target items. The articulation task elicits responses at these same two locations in reverse order: svPM activity starts rising before vocal onset, and STG is activated after vocal onset, presumably as a result of auditory feedback (see Chang et al., 2010, for details on the response decision processes recorded, notably, in pre-frontal cortex). This suggests that the svPM activity observed early on during speech perception is closely linked to speech-motor activities. The authors discuss how motor cortex may actively participate in sublexical speech perception, perhaps as pSTG accesses the articulatory network to compare externally driven auditory representations with internal motor representations. Additionally, it is noteworthy that STS regions were not active prior to articulation, as they were in various studies reviewed above. This could be due to the repetitive nature of the task, whereby the response is not encoded anew on every trial but rather stored ready elsewhere. The production data reported are too scarce to clarify this point.

Finally, Basirat et al. (2008) report a study whose methodology may be fruitful to investigate the monitoring processes that accompany language production (Postma and Oomen, 2005). The original motivation was to investigate so-called multistable perception, i.e., perceptual changes occurring while listening to a briefly cycling stretch of speech. Two patients with iEEG electrodes implanted in frontal, superior temporal, and parietal areas were asked to listen to sequences of repeated syllables. Two experimental conditions were contrasted. In the first one, the two syllables alternated regularly (e.g., /pata…/), and patients were asked to press a button whenever they perceived a change in the repeated utterance. In the second condition, the alternation was random (e.g., /…papapa…tatata…papapa…/), and patients were asked to detect transitions between /pa/ and /ta/. In the first condition the button presses are elicited by endogenous perceptual changes, while in the second one they are elicited by exogenous changes in the signal. Contrasting these two conditions right before a transition was detected revealed significant gamma band activation in the left inferior frontal and supramarginal gyri, but not in temporal sites. This activity could be attributed to the endogenous emergence of the varying speech forms. The authors note the involvement of phonological comparison and decision making in perceptual transitions. These are indeed two standard components of speech monitoring accounts (Christoffels et al., 2007; Möller et al., 2007; Riès et al., 2011).

This review encompasses articles in which intracranial recordings of patients involved in language production tasks are reported. The combination of the observations made in the different studies provides a patchy yet informative view of the spatio-temporal brain dynamics involved in language production. The review also provides some clues regarding the relative merits of different intracranial indicators of cognitive processing, and allows a number of considerations regarding the relationship between these measures and the gold-standard of brain-function mapping (i.e., brain stimulation). Below we discuss these points in turn. We then finish with some considerations about the amount of available evidence, and avenues for future research using this methodology.

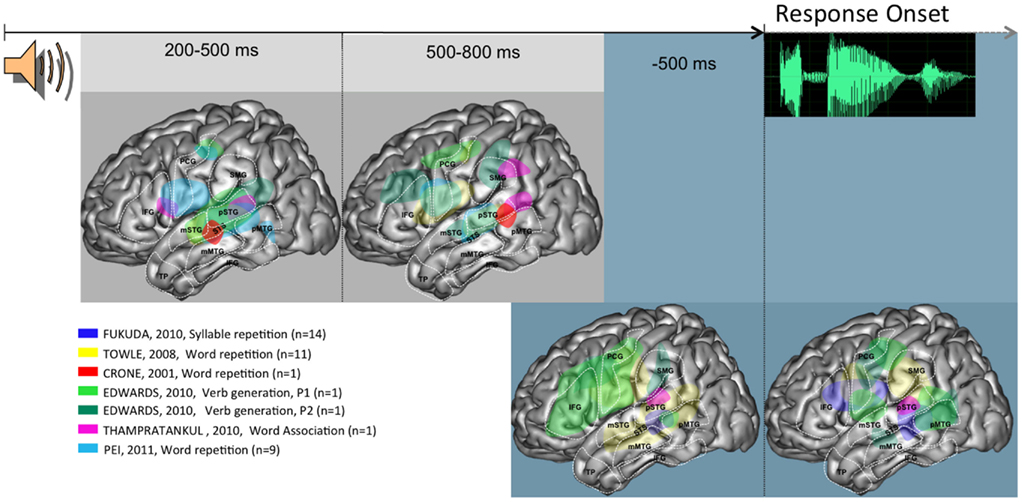

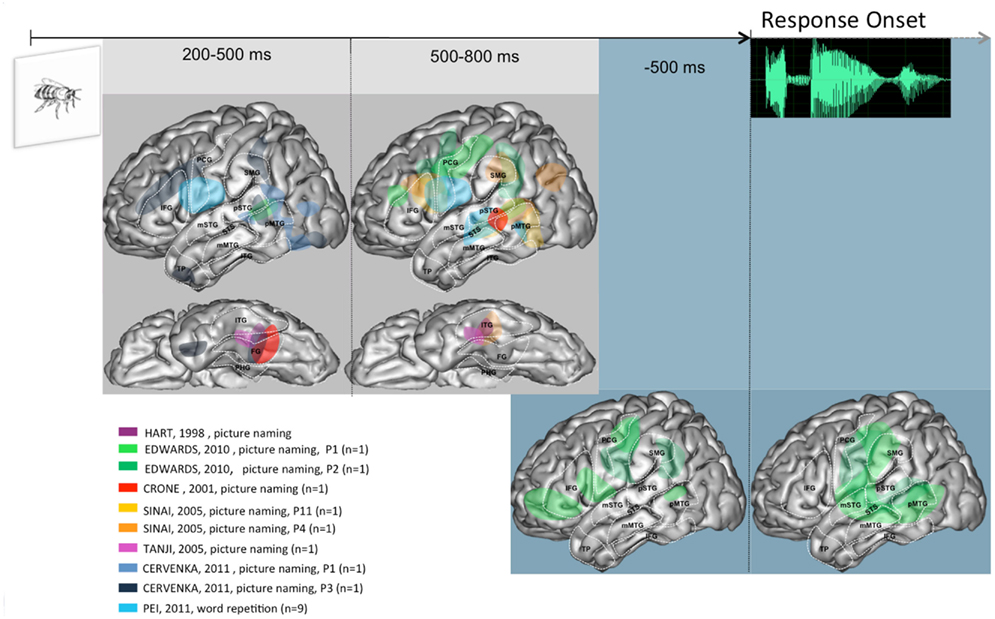

The view that emerges from the ECoG studies we have reviewed is summarized on Figure 1, for speech elicited auditorily, and on Figure 2, for speech elicited visually. These figures, as well as the discussion below, focus on the left hemisphere.

Figure 1. Summary of activities observed across patients and studies when language production is triggered auditorily, projected on a standardized left hemisphere. The two figures on the gray background represent activities time-locked to stimulus presentation, at the indicated timings. The two figures on the blue background represent activities time-locked to response onset. Further details are provided in the Section “Overview of the Spatio-Temporal Dynamics Uncovered” in the General Discussion. Abbreviations used: IFG, inferior frontal gyrus; STS, superior temporal sulcus; SMG, supra marginal gyrus; pSTG, posterior part of the superior temporal gyrus; mSTG, middle part of the superior temporal gyrus; pMTG, posterior part of the middle temporal gyrus; mMTG, middle part of the middle temporal gyrus; PCG, pre central gyrus; TP, temporal pole.

Figure 2. Summary of activities observed across patients and studies when language production is triggered visually, projected on a standardized left hemisphere. The two figures on the gray background represent activities time-locked to stimulus presentation, at the indicated timings; lateral and basal views shown. The two figures on the blue background represent activities time-locked to response onset. Further details are provided in the Section “Overview of the Spatio-Temporal Dynamics Uncovered” in the General Discussion. Abbreviations used: IFG, inferior frontal gyrus; STS, superior temporal sulcus; SMG, supra marginal gyrus; pSTG, posterior part of the superior temporal gyrus; mSTG, middle part of the superior temporal gyrus; pMTG, posterior part of the middle temporal gyrus; mMTG, middle part of the middle temporal gyrus; PCG, pre central gyrus; TP, temporal pole; FG, fusiform gyrus; ITG, inferior temporal gyrus.

In the auditory tasks, brain activity starts by being rather focused on the superior temporal gyrus and sulcus (middle and posterior parts), between 200 and 500 ms post-stimulus. At this point of time, a rather consistent activity is also seen in the inferior part of frontal peri-rolandic areas. Later, between 500 and 800 ms post-stimulus, a somewhat broader activation network involves STG and STS, as was the case previously, but also SMG, IFG, and a larger part of peri-rolandic areas. The relative involvement of the different parts of this network seems to be modulated by task demands (e.g., verb generation vs. word repetition). Time-locked to response onset, an overall stable network is observed both before and after speech onset. As in the previous epochs, this network is mostly focused around left peri-sylvian areas, with a larger area of activity in inferior frontal areas before response (notably in the verb generation task).

In the visual tasks, consistent activity is detected in the basal temporal region. This activity starts in its most posterior part (200–500 ms post-stimulus), and is seen later (500–800 ms) in the middle part. A much more anterior locus of activity (temporal pole) is seen unexpectedly early (200 ms post-stimulus), but only in one patient (Cervenka et al., 2011). Concomitantly with this baso-temporal progression, consistent activities have been reported in the posterior part of MTG and the middle and posterior parts of STS, but not in STG, which was clearly seen in the auditory tasks. The similarity between visual and auditory tasks, however, is clearly apparent within the 500–800 ms time window, in inferior frontal and peri-rolandic areas, as well as in SMG. Finally, time-locked to response onset, very few data are available. The pattern seen in the two patients reported by Edwards et al. (2010) is in keeping with the peri-sylvian network discussed for auditory tasks.

Overall, these data allow a number of general conclusions. There is a clear early modality effect, whereby auditory tasks recruit STG while visual tasks recruit basal and lateral temporal areas. The possible convergence between these modality specific activities may be in STS, which is a plausible neural correlate of phonological encoding processes (Edwards et al., 2010). These could also involve SMG, whose activity is also seen across modalities, but somewhat later (500–800 ms window). Peri-rolandic areas are recruited in the two modalities relatively early (200–500 ms window). This suggests a very early involvement of (pre-) motor processes, which is consistent with the hypothesis of a dorsal stream in verbal processing. Around and time-locked to response onset the broad peri-sylvian network is not easily characterized in specific cognitive terms, given the reviewed evidence.

What is also clear from this review is the great heterogeneity in the data sets available, across patients and tasks. Our discussion above therefore had to consider both very general phenomena that seem to be reproducible across patients or studies, and more specific hypotheses that have only been discussed or tested in specific studies, or with specific patients. We come back to this issue, in more general terms, in the section below on the limits of this methodology.

The number of articles published in this thread of research is remarkably limited. Despite our use of a rather broad scope, in accordance with the topic of this special issue, we could only find about 25 articles in which patients produced language in one way or another while their brain activity was recorded intra-cranially. The number of individuals sampled in each article is also relatively low. Only three of the articles report evidence from more than ten patients (Sinai et al., 2005; Towle et al., 2008; Fukuda et al., 2010). The remaining studies report evidence from four or fewer patients, and six of them focus on single cases (Hart et al., 1998; Crone et al., 2001; Crone and Hao, 2002; Tanji et al., 2005; Thampratankul et al., 2010). The single-case approach is undoubtedly appropriate in this context, in light of the inter-individual variability visible in the few studies reporting more than one patient. However, generalizations from these data to normal function should only be made when the reliability of a given phenomenon has been examined across individuals. Somewhat paradoxically, this relatively limited sample of data was obtained using a great variety of tasks and theoretical approaches, presumably because of the clinical motivations underlying a great share of the tasks used. While this provides a wide sampling of evidence, it also complicates the comparisons across studies whenever a fine-grained definition of cognitive processes is to be used. It remains to be seen whether future research will converge on some specific theoretical questions and experimental paradigms.

The networks revealed by the meta-analysis of Indefrey and Levelt (2004) on diverse brain imaging techniques, and by Price (2010) on fMRI only, already discussed in the Section “Introduction,” are largely consistent with one another. The main difference between these two reviews is that Price (2010) reports in more detail areas sub-tending input processes, and that only Price (2010) reports the involvement of medial frontal areas (e.g., pre-SMA) in volition, selection, and execution (see also Alario et al., 2006). The language production network is, by and large, consistent with the activation localizations reported on Figures 1 and 2. Likewise, the timing of language production operations that emerges from the reviews by Indefrey and Levelt (2004) and by Ganushchak et al. (2011) is consistent with what is reported here.

Given this context, it becomes interesting to compare in more detail the link between the spatial and temporal localization of cognitive events, in other words the spatio-temporal dynamics uncovered by these different meta-analyses and reviews. Here, some notable inconsistencies seem to emerge.

Regarding activity in the temporal gyrus, our review does not reveal early middle temporal activity (150–255 ms post-stimulus according to Indefrey and Levelt, 2004) but only posterior temporal gyrus activity. This activity is mostly present between 200 and 800 ms in the visual tasks, which is quite comparable with the 200–400 ms estimate of Indefrey and Levelt (2004). By contrast, this activity is almost absent (and if anything occurs earlier) in the auditory tasks. Thus, the data reviewed here do not support (but do not clearly contradict either) the anterior to posterior propagation along the middle temporal gyrus that Indefrey and Levelt (2004) associate with the lexical to phonological pathway. Note that recent MEG evidence suggests a “reverse” posterior to anterior propagation in picture naming (Liljeström et al., 2009; see also Edwards et al., 2010, for further discussion). Additionally, the most anterior part of STG, associated with monitoring and auditory object processing in Indefrey and Levelt’s (2004) meta-analysis, is not consistently activated in the studies we reviewed. Note that this region was commonly recorded and that monitoring was presumably engaged in the tasks that were used.

Another relevant point of inconsistency concerns the role of different frontal areas. The intracranial studies we reviewed consistently report very fast responses in peri-rolandic areas (around 200 ms post-stimulus), also seen in MEG during picture naming (Liljeström et al., 2009, Figure 3). This is much earlier than the articulatory timing specified by Indefrey and Levelt (2004). While the interpretation of this fast response remains to be settled, its existence seems to go against a very sequential view of the word production process (Goldrick et al., 2009). Finally, IFG is also activated during language production. However, while Price (2010) highlights its role in an early stage, namely selection processes, as we did when considering the data from Towle et al. (2008), Indefrey and Levelt (2004) associate this region with syllabification processes only.

This brief comparison of results across methodologies indicates that it is a real challenge to arrive at an integrated view of the dynamics of brain activity during language production that is consistent with data from the different available techniques (see also Jerbi et al., 2009; Liljeström et al., 2009). In this context, and with its inherent strengths and limitations, iEEG can provide a powerful method for testing specific explicit hypotheses derived from the meta-analyses of surface EEG and fMRI data, and thus for providing valuable details about the spatio-temporal dynamics of language production.

The primary motivation for using invasive brain-activity recording methods, such as iEEG and ECoG, is to help delineate dysfunctional from functionally eloquent tissue. Yet ECS is still considered the gold standard for this purpose, whereby elicited focal activity changes induce language task interruptions. It is of clinical importance to compare these methods because in some respects iEEG recordings have a number of advantages over brain stimulation (e.g., iEEG allows fast parallel recording of multiple sites vs. time-consuming sequential recording of individual sites; stimulation can inadvertently influence distant areas through axonal connections). The comparison is also interesting from a cognitive perspective. The two methods do not always provide exactly the same information about which areas are involved. Every patient in whom both methods are tested is bound to show sites with converging patterns (significant ECoG effect and disruption through ECS, or neither) as well as sites with diverging patterns (either an ECoG effect and no ECS disruption, or the opposite; see more on this below). This calls for caution when drawing inferences about the healthy brain (just as anatomo-functional correlations established with neuropsychological evidence and with brain activation data should be combined cautiously). In particular, the specific signal recorded in each study (e.g., the frequency ranges considered within or outside the gamma band) has an influence on the sites that may turn out to be significantly active.

Among the studies reviewed above, some report language production related gamma activity in areas that are largely concordant with those observed in brain stimulation (Towle et al., 2008; Fukuda et al., 2010). In contrast, Brown et al. (2008) or Thampratankul et al. (2010) found that the areas showing significant γhigh band augmentation were larger than the eloquent areas suggested by the electrical neuro-stimulation procedure. This discrepancy might in part be due to differences in the age of the population of interest, which was diverse across studies. Brown et al. (2008) point out various studies which report positive correlations between the age of patients and the number of sites where neuro-stimulation produced naming errors in language mapping (Ojemann et al., 2003; Schevon et al., 2007).

Two articles were directly devoted to a comparison between γhigh band recorded from ECoG and ECS for mapping the language production function. Sinai et al. (2005) probed ECS with different language production tasks, and focused on picture naming for the ECS–ECoG comparison. The primary goal was to test whether ECoG activity predicts ECS disruption. In their data, sites in which no ECoG activity is recorded are rather unlikely to disrupt naming during ECS. By contrast, recording ECoG activity in one site does not provide a reliable indication that the site will show disrupted performance during ECS (i.e., the ECoG recording could well be a “false alarm”). These authors also point out that ECS may sometimes overestimate the cortical territory that is critical to function, as shown by occasional good post-operative performance while ECS disrupting sites had to be resected. For these reasons, the authors suggest that, at least for now, ECoG seems to be suitable to provide a preliminary functional map from all implanted subdural electrodes, and to determine cortical sites of lower priority during ECS mapping (i.e., those that do not show ECoG activity in the tasks of clinical interest). It could also be valuable in those cases or sites where ECS is not feasible (because of clinical seizures, after-discharges, or pain). Following a similar logic, Cervenka et al. (2011) show a highly variable degree of overlap between ECS and ECoG sites across four bilingual speakers. In particular, the two methods provide contrastive results regarding the degree of cortical overlap between first and second language. They conclude, in rather general terms, that ECoG provides a useful complement to ECS, notably with bilingual speakers.
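The asymmetry described by Sinai et al. (2005), in which the absence of ECoG activity is informative while its presence may be a “false alarm,” corresponds to what is commonly called a high negative predictive value combined with a low positive predictive value. The following Python sketch illustrates how such values could be computed from per-site outcomes. It is only an illustration: the function name `predictive_values` and the ten-site data set are hypothetical, and this is not presented as the analysis actually performed in that study.

```python
def predictive_values(ecog_active, ecs_disrupts):
    """Return (PPV, NPV) of ECoG activity as a predictor of ECS disruption.

    Both arguments are equal-length sequences of booleans, one entry per
    recording site. All names here are illustrative assumptions.
    """
    if len(ecog_active) != len(ecs_disrupts):
        raise ValueError("one ECoG flag and one ECS flag are needed per site")
    pairs = list(zip(ecog_active, ecs_disrupts))
    tp = sum(1 for e, s in pairs if e and s)          # ECoG active, ECS disrupts
    fp = sum(1 for e, s in pairs if e and not s)      # ECoG "false alarm"
    tn = sum(1 for e, s in pairs if not e and not s)  # both methods negative
    fn = sum(1 for e, s in pairs if not e and s)      # ECS disrupts, no ECoG effect
    ppv = tp / (tp + fp) if tp + fp else float("nan")
    npv = tn / (tn + fn) if tn + fn else float("nan")
    return ppv, npv


# Hypothetical outcomes for ten sites (invented for illustration only).
ecog = [True, True, True, True, True, False, False, False, False, False]
ecs = [True, True, False, False, False, False, False, False, False, True]
ppv, npv = predictive_values(ecog, ecs)
print(f"PPV = {ppv:.2f}, NPV = {npv:.2f}")
```

With these invented counts, the negative predictive value is higher than the positive one, which is the qualitative pattern described in the text: ECoG-silent sites can reasonably be given lower priority during ECS mapping, whereas ECoG-active sites still need to be checked.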

ECoG could thus be, in principle, a useful peri-operative tool. Its use is made difficult, however, because of the requirement to conduct off-line statistical analysis on data collected from multiple trials (vs. the immediate “yes–no” answer stemming from ECS). This constraint may in part be relieved by conceiving a statistical procedure which can be implemented online during the surgical procedure. This is the goal of Roland et al. (2010), who seek to reduce the amount of data needed to obtain meaningful ECoG patterns by using an algorithm designed for real-time event detection. Signal modeling for real-time identification and event detection (SIGFRIED) provides such an algorithm, along with an interface aimed at non-expert users. These allow detecting online task-related modulations in the ECoG γhigh band while patients perform simple motor and speech tasks during awake craniotomy. Their findings indicate that a subset of areas identified by SIGFRIED correspond to those identified by stimulation mapping, without this identification taking much longer in the former case. This method may provide a realistic way, in peri-operative terms, to conduct preliminary mapping of functional sites prior to detailed stimulation mapping of predetermined ECoG eloquent areas. This could also be used to circumscribe brain regions during experimental testing.
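The general logic of online detection, namely characterizing a resting baseline once and then scoring each incoming sample against it, can be sketched in a few lines of Python. This sketch is not the SIGFRIED algorithm: it assumes, for simplicity, that baseline band power at one site is summarized by a single mean and standard deviation, and the class name `BaselineDetector`, the threshold of 3 standard deviations, and the numerical values are all hypothetical.

```python
import statistics


class BaselineDetector:
    """Flag samples whose band power departs from a resting baseline.

    Simplified stand-in for real-time event detection; every name and
    default value is an assumption made for illustration.
    """

    def __init__(self, baseline_power, threshold=3.0):
        if len(baseline_power) < 2:
            raise ValueError("at least two baseline samples are required")
        self.mean = statistics.fmean(baseline_power)
        self.sd = statistics.stdev(baseline_power)
        if self.sd == 0:
            raise ValueError("baseline has no variability")
        self.threshold = threshold

    def score(self, power):
        # Distance of the new sample from baseline, in baseline SD units.
        return (power - self.mean) / self.sd

    def is_active(self, power):
        # Decision available immediately, without accumulating many trials.
        return self.score(power) > self.threshold


# Hypothetical band-power values recorded at rest on one electrode.
detector = BaselineDetector([1.0, 1.2, 0.8, 1.1, 0.9])
print(detector.is_active(2.0))  # sample recorded during a task
print(detector.is_active(1.1))  # sample within the baseline range
```

The design choice illustrated here is that the statistical model is fitted before the task begins, so that each new observation yields an immediate decision, which is the property that makes such procedures attractive in a peri-operative setting.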

The primary measure in all the studies we have reviewed is the modulation of γhigh band activity linked to different processing stages. These studies establish that γhigh band (>70 Hz) provides a powerful means of cortical mapping and detection of task-specific activations (Crone et al., 2001; Crone and Hao, 2002; Canolty et al., 2007; Towle et al., 2008). Additionally, LFP (i.e., ERPs measured with iEEG, see Introduction) provide a much clearer view of the time course (e.g., Sahin et al., 2009).
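As a rough illustration of what a γhigh band modulation measure amounts to, the Python sketch below computes the mean spectral power of a signal within a frequency band and expresses the task-related change relative to a baseline in decibels. It is a minimal sketch under stated assumptions, not the pipeline of any reviewed study: the lower bound of 70 Hz comes from the text, whereas the upper bound of 150 Hz, the 500 Hz sampling rate, the function names, and the synthetic sinusoidal signals are assumptions. A direct discrete Fourier transform is used to keep the example free of external dependencies.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Mean spectral power of `signal` between f_lo and f_hi (in Hz)."""
    n = len(signal)
    total, count = 0.0, 0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            # Direct DFT coefficient for frequency bin k.
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            total += abs(coeff) ** 2 / n
            count += 1
    if count == 0:
        raise ValueError("no frequency bin falls inside the requested band")
    return total / count


def power_change_db(task, baseline, fs, f_lo=70.0, f_hi=150.0):
    """Task-related band power change relative to baseline, in dB."""
    return 10.0 * math.log10(band_power(task, fs, f_lo, f_hi)
                             / band_power(baseline, fs, f_lo, f_hi))


# Synthetic one-second signals sampled at 500 Hz (assumed values): a 10 Hz
# rhythm plus a 100 Hz component that is ten times larger during the task.
fs = 500
times = [i / fs for i in range(fs)]
baseline = [math.sin(2 * math.pi * 10 * t) + 0.1 * math.sin(2 * math.pi * 100 * t)
            for t in times]
task = [math.sin(2 * math.pi * 10 * t) + 1.0 * math.sin(2 * math.pi * 100 * t)
        for t in times]
change = power_change_db(task, baseline, fs)
print(f"high gamma change: {change:.1f} dB")
```

In this synthetic case the low-frequency rhythm is identical in both conditions and does not contribute to the measure, which only reflects the tenfold amplitude increase of the 100 Hz component.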

Recent intracranial studies of language production have mainly utilized high gamma power rather than ERPs, presumably because high gamma responses are more focal and are more direct indications of neural activation. However, frequency changes during cognitive tasks are not limited to gamma variations, and there could be important differences within the gamma band itself. Regarding the first point, Canolty et al. (2007) analyzed complex oscillatory responses and found that theta was the frequency that was most shared between electrodes. It seemed to be an important regulator of inter-regional communication during complex behavioral tasks (see also Korzeniewska et al., 2011, for a detailed analysis of functional connectivity). Regarding the second point, Gaona et al. (2011) provide evidence that modulations of different stretches of the γhigh band may show differential sensitivity to linguistic tasks and processing stages. Presumably, a complete picture of the spatio-temporal brain and cognitive dynamics involved in language production will only emerge from a full consideration of this intricate pattern of activities across frequency bands.

The evidence we have reviewed raises a number of unanswered questions regarding the spatio-temporal dynamics of the brain areas involved in language production. For example, activity in the visual tasks clearly engages lateral temporal areas, while this has not been described in auditory tasks. Note however the implicit “correlation” between the tasks used and the electrode implantation, whereby patients with more superior electrodes are more likely to be engaged in auditory tasks, while patients with lateral and basal electrodes are likely to be engaged in visual tasks. A more accurate description of the truly modality specific activities would benefit from cross-evidence where these two populations of patients are tested in both modalities. This would allow a more specific interpretation of the activity seen in STG and STS (see Edwards et al., 2010, for some hypotheses).

As another example, the early activity seen in peri-rolandic areas could suggest very early preparation of the response, or early engagement of motor areas in speech decoding, or both. This is an important issue, as it ties with the interaction between the perception and the action streams involved in language processing. Experimental tasks directly designed to clarify this kind of issue have yet to be tested in detail with this population. As a final example, the studies we have reviewed do not report (for lack of available data) any evidence about some brain structures that are known to be important for language production (e.g., temporal pole; Tsapkini et al., 2011). This leaves a number of open questions in our understanding of the brain dynamics in which they may be involved. They are potentially important testing grounds, should they be testable in a given patient.

Many specific aspects of word production remain largely unexplored with intracranial recordings. It is clear, however, that combining specific cognitive hypotheses with the temporal and spatial resolution of this technique can provide a powerful tool to uncover the dynamics of language production.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This research has received funding from the European Research Council under the European Community’s Seventh Framework Programme (FP7/2007-2013 Grant agreement n 263575).

Alain, C., Snyder, J. S., He, Y., and Reinke, K. S. (2007). Changes in auditory cortex parallel rapid perceptual learning. Cereb. Cortex 17, 1074–1084.

Alario, F.-X., Chainay, H., Lehericy, S., and Cohen, L. (2006). The role of the supplementary motor area (SMA) in word production. Brain Res. 1076, 129–143.

Axmacher, N., Schmitz, D. P., Wagner, T., Elger, C. E., and Fell, J. (2008). Interactions between medial temporal lobe, prefrontal cortex, and inferior temporal regions during visual working memory: a combined intracranial EEG and functional magnetic resonance imaging study. J. Neurosci. 28, 7304–7312.

Bancaud, J., Talairach, J., Bonis, A., Schaub, C., Szikla, G., Morel, P., and Bordas-Ferrer, M. (1965). La stéréoélectroencéphalographie dans l’Epilepsie: Informations Neurophysiopathologiques Apportées par l’Investigation Fonctionnelle Stéréotaxique. Paris: Masson.

Barch, D. M., Sabb, F. W., Carter, C. S., Braver, T. S., Noll, D. C., and Cohen, J. D. (1999). Overt verbal responding during fMRI scanning: empirical investigations of problems and potential solutions. Neuroimage 10, 642–657.

Basirat, A., Sato, M., Schwartz, J. L., Kahane, P., and Lachaux, J. P. (2008). Parieto-frontal gamma band activity during the perceptual emergence of speech forms. Neuroimage 42, 404–413.

Borowsky, R., Owen, W. J., Wile, T. L., Friesen, K. K., Martin, J. L., and Sarty, G. E. (2005). Neuroimaging of language processes: fMRI of silent and overt lexical processing and the promise of multiple process imaging in single brain studies. Can. Assoc. Radiol. J. 56, 204–213.

Bressler, S. L. (2002). “Event related potentials,” in The Handbook of Brain Theory and Neural Networks, ed. M. A. Arbib (Cambridge, MA: MIT Press), 412–415.

Brooker, B. H., and Donald, M. W. (1980). Contribution of the speech musculature to apparent human EEG asymmetries prior to vocalization. Brain Lang. 9, 226–245.

Brown, E. C., Rothermel, R., Nishida, M., Juhász, C., Muzik, O., Hoechstetter, K., Sood, S., Chugani, H. T., and Asano, E. (2008). In vivo animation of auditory-language-induced gamma-oscillations in children with intractable focal epilepsy. Neuroimage 41, 1120–1131.

Canolty, R. T., Soltani, M., Dalal, S. S., Edwards, E., Dronkers, N. F., Nagarajan, S. S., Kirsch, H. E., Barbaro, N. M., and Knight, R. T. (2007). Spatiotemporal dynamics of word processing in the human brain. Front. Neurosci. 1:1. doi:10.3389/neuro.01/1.1.014.2007

Cervenka, M. C., Boatman-Reich, D. F., Ward, J., Franaszczuk, P. J., and Crone, N. E. (2011). Language mapping in bilingual patients: electrocorticography and cortical stimulation during naming. Front. Hum. Neurosci. 5:13. doi:10.3389/fnhum.2011.00013

Chang, E. F., Edwards, E., Nagarajan, S. S., Fogelson, N., Dalal, S. S., Canolty, R. T., Kirsch, H. E., Barbaro, N. M., and Knight, R. T. (2010). Cortical spatio-temporal dynamics underlying phonological target detection in humans. J. Cogn. Neurosci. 23, 1437–1446.

Chauvel, P., Landre, E., Trottier, S., Vignal, J. P., Biraben, A., Devaux, B., and Bancaud, J. (1993). Electrical stimulation with intracerebral electrodes to evoke seizures. Adv. Neurol. 63, 115–121.

Chauvel, P., Vignal, J. P., Biraben, A., Badier, J. M., and Scarabin, J. M. (1996). “Stereoelectroencephalography,” in Focus Localization. Multimethodological Assessment of Focalization-Related Epilepsy, eds G. Pavlik and H. Stefan (Berlin: Thomas Wiese), 135–163.

Christoffels, I. K., Formisano, E., and Schiller, N. O. (2007). Neural correlates of verbal feedback processing: an fMRI study employing overt speech. Hum. Brain Mapp. 28, 868–879.

Costa, A., Strijkers, K., Martin, C., and Thierry, G. (2009). The time course of word retrieval revealed by event-related brain potentials during overt speech. Proc. Natl. Acad. Sci. U.S.A. 106, 21442–21446.

Creutzfeldt, O., Ojemann, G., and Lettich, E. (1989). Neuronal activity in the human lateral temporal lobe I. Responses to speech. Exp. Brain Res. 77, 476–489.

Crone, N. E., and Hao, L. (2002). Functional dynamics of spoken and signed word production: a case study using electrocorticographic spectral analysis. Aphasiology 16, 903–926.

Crone, N. E., Hao, L., Hart, J. Jr., Boatman, D., Lesser, R. P., Irizarry, R., and Gordon, B. (2001). Electrocorticographic gamma activity during word production in spoken and sign language. Neurology 57, 2045–2053.

De Vos, M., Riès, S., Vanderperren, K., Vanrumste, B., Alario, F. X., Van Huffel, S., and Burle, B. (2010). Removal of muscle artifacts from EEG recordings of spoken language production. Neuroinformatics 8, 135–150.

DeLeon, J., Gottesman, R. F., Kleinman, J. T., Newhart, M., Davis, C., Heidler-Gary, J., Lee, A., and Hillis, A. E. (2007). Neural regions essential for distinct cognitive processes underlying picture naming. Brain 130, 1408–1422.

Démonet, J.-F., Thierry, G., and Cardebat, D. (2005). Renewal of the neurophysiology of language: functional neuroimaging. Physiol. Rev. 85, 49–95.

Donner, T. H., and Siegel, M. (2011). A framework for local cortical oscillation patterns. Trends Cogn. Sci. 15, 191–199.

Edwards, E., Nagarajan, S. S., Dalal, S. S., Canolty, R. T., Kirsch, H. E., Barbaro, N. M., and Knight, R. T. (2010). Spatiotemporal imaging of cortical activation during verb generation and picture naming. Neuroimage 50, 291–301.

Engel, A. K., Moll, C. K. E., Fried, I., and Ojemann, G. A. (2005). Invasive recordings from the human brain: clinical insights and beyond. Nat. Rev. Neurosci. 6, 35–47.

Flinker, A., Chang, E. F., Kirsch, H. E., Barbaro, N. M., Crone, N. E., and Knight, R. T. (2010). Single-trial speech suppression of auditory cortex activity in humans. J. Neurosci. 30, 16643–16650.

Forn, C., Ventura-Campos, N., Belenguer, A., Belloch, V., Parcet, M. A., and Avila, C. (2008). A comparison of brain activation patterns during covert and overt paced auditory serial addition test tasks. Hum. Brain Mapp. 29, 644–650.

Fukuda, M., Rothermel, R., Juhász, C., Nishida, M., Sood, S., and Asano, E. (2010). Cortical gamma-oscillations modulated by listening and overt repetition of phonemes. Neuroimage 49, 2735–2745.

Ganushchak, L. Y., Christoffels, I. K., and Schiller, N. O. (2011). The use of electroencephalography in language production research: a review. Front. Psychol. 2:208. doi:10.3389/fpsyg.2011.00208

Gaona, C. M., Sharma, M., Freudenburg, Z. V., Breshears, J. D., Bundy, D. T., Roland, J., Barbour, D. L., Schalk, G., and Leuthardt, E. C. (2011). Nonuniform high-gamma (60–500 Hz) power changes dissociate cognitive task and anatomy in human cortex. J. Neurosci. 31, 2091–2100.

Goldrick, M., Dell, G. S., Kroll, J., and Rapp, B. (2009). Sequential information processing and limited interaction in language production. Science. [E-letter].

Goncharova, I. I., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2003). EMG contamination of EEG: spectral and topographical characteristics. Clin. Neurophysiol. 114, 1580–1593.

Halgren, E., Marinkovic, K., and Chauvel, P. (1998). Generators of the late cognitive potentials in auditory and visual oddball task. Electroencephalogr. Clin. Neurophysiol. 106, 156–164.

Halgren, E., Wang, C., Schomer, D. L., Knake, S., Marinkovic, K., Wu, J., and Ulbert, I. (2006). Processing stages underlying word recognition in the anteroventral temporal lobe. Neuroimage 30, 1401–1413.

Hamberger, M. J. (2007). Cortical language mapping in epilepsy: a critical review. Neuropsychol. Rev. 17, 477–489.

Hamberger, M. J., and Seidel, W. T. (2003). Auditory and visual naming tests: normative and patient data for accuracy, response time, and tip-of-the-tongue. J. Int. Neuropsychol. Soc. 9, 479–489.

Hart, J. Jr., Crone, N. E., Lesser, R. P., Sieracki, J., Miglioretti, D. L., Hall, C., Sherman, D., and Gordon, B. (1998). Temporal dynamics of verbal object comprehension. Proc. Natl. Acad. Sci. U.S.A. 95, 6498–6503.

Indefrey, P., and Levelt, W. J. M. (2004). The spatial and temporal signatures of word production components. Cognition 92, 101–144.

Jacobs, J., and Kahana, M. J. (2010). Direct brain recordings fuel advances in cognitive electrophysiology. Trends Cogn. Sci. 14, 162–171.

Jerbi, K., Ossandon, T., Hamame, C. M., Senova, S., Dalal, S. S., Jung, J., Minotti, L., Bertrand, O., Berthoz, A., Kahane, P., and Lachaux, J. P. (2009). Task-related gamma-band dynamics from an intracerebral perspective: review and implications for surface EEG and MEG. Hum. Brain Mapp. 30, 1758–1771.

Kellis, S., Miller, K., Thomson, K., Brown, R., House, P., and Greger, B. (2010). Decoding spoken words using local field potentials recorded from the cortical surface. J. Neural Eng. 7, 056007.

Kielar, A., Milman, L., Bonakdarpour, B., and Thompson, C. K. (2011). Neural correlates of covert and overt production of tense and agreement morphology: evidence from fMRI. J. Neurolinguistics 24, 183–201.

Knapp, H. P., Corina, D. P., Panagiotides, H., Zanos, S., Brinkley, J., and Ojemann, G. (2005). “Frontal lobe representations of American sign language, speech, and reaching: data from cortical stimulation and local field potentials. Program No. 535.7,” in Neuroscience Meeting Planner, Washington, DC: Society for Neuroscience.

Korzeniewska, A., Franaszczuk, P. J., Crainiceanu, C. M., Kus, R., and Crone, N. E. (2011). Dynamics of large-scale cortical interactions at high gamma frequencies during word production: event related causality (ERC) analysis of human electrocorticography (ECoG). Neuroimage 56, 2218–2237.

Liégeois-Chauvel, C., De Graaf, J. B., Laguitton, V., and Chauvel, P. (1999). Specialization of left auditory cortex for speech perception in man is based on temporal coding. Cereb. Cortex 9, 484–496.

Liégeois-Chauvel, C., Morin, C., Musolino, A., Bancaud, J., and Chauvel, P. (1989). Evidence for a contribution of the auditory cortex to audiospinal facilitation in man. Brain 112, 375–391.

Liégeois-Chauvel, C., Musolino, A., Badier, J. M., Marquis, P., and Chauvel, P. (1994). Evoked potentials recorded from the auditory cortex in man: evaluation and topography of the middle latency components. Electroencephalogr. Clin. Neurophysiol. 92, 204–214.

Liljeström, M., Hultén, A., Parkkonen, L., and Salmelin, R. (2009). Comparing MEG and fMRI views to naming actions and objects. Hum. Brain Mapp. 30, 1845–1856.

Maess, B., Friederici, A. D., Damian, M., Meyer, A. S., and Levelt, W. J. M. (2002). Semantic category interference in overt picture naming: sharpening current density localization by PCA. J. Cogn. Neurosci. 14, 455–462.

Mainy, N., Jung, J., Baciu, M., Kahane, P., Schoendorff, B., Minotti, L., Hoffmann, D., Bertrand, O., and Lachaux, J. P. (2008). Cortical dynamics of word recognition. Hum. Brain Mapp. 29, 1215–1230.

Mayeux, R., Brandt, J., Rosen, J., and Benson, D. F. (1980). Interictal memory and language impairment in temporal lobe epilepsy. Neurology 30, 120–125.

McDonald, C. R., Thesen, T., Carlson, C., Blumberg, M., Girard, H. M., Trongnetrpunya, A., Sherfey, J. S., Devinsky, O., Kuzniecky, R., and Doyle, W. K. (2010). Multimodal imaging of repetition priming: using fMRI, MEG, and intracranial EEG to reveal spatiotemporal profiles of word processing. Neuroimage 53, 707–717.

McMenamin, B. W., Shackman, A. J., Maxwell, J. S., Bachhuber, D. R., Koppenhaver, A. M., Greischar, L. L., and Davidson, R. J. (2010). Validation of ICA-based myogenic artifact correction for scalp and source-localized EEG. Neuroimage 49, 2416–2432.

McMenamin, B. W., Shackman, A. J., Maxwell, J. S., Greischar, L. L., and Davidson, R. J. (2009). Validation of regression-based myogenic correction techniques for scalp and source-localized EEG. Psychophysiology 46, 578–592.

Möller, J., Jansma, B. M., Rodriguez-Fornells, A., and Münte, T. F. (2007). What the brain does before the tongue slips. Cereb. Cortex 17, 1173–1178.

Müller-Preuss, P., and Ploog, D. (1981). Inhibition of auditory cortical neurons during phonation. Brain Res. 215, 61–76.

Ojemann, S. G., Berger, M. S., Lettich, E., and Ojemann, G. A. (2003). Localization of language function in children: results of electrical stimulation mapping. J. Neurosurg. 98, 465–470.

Pei, X., Leuthardt, E. C., Gaona, C. M., Brunner, P., Wolpaw, J. R., and Schalk, G. (2011). Spatiotemporal dynamics of electrocorticographic high gamma activity during overt and covert word repetition. Neuroimage 54, 2960–2972.

Postma, A., and Oomen, C. E. (2005). “Critical issues in speech monitoring,” in Phonological Encoding and Monitoring in Normal and Pathological Speech, eds R. Hartsuiker, Y. Bastiaanse, A. Postma, and F. Wijnen (Hove: Psychology Press), 157–166.

Price, C. J. (2010). The anatomy of language: a review of 100 fMRI studies published in 2009. Ann. N. Y. Acad. Sci. 1191, 62–88.

Riès, S., Janssen, N., Dufau, S., Alario, F. X., and Burle, B. (2011). General-purpose monitoring during speech production. J. Cogn. Neurosci. 23, 1419–1436.

Roland, J., Brunner, P., Johnston, J., Schalk, G., and Leuthardt, E. C. (2010). Passive real-time identification of speech and motor cortex during an awake craniotomy. Epilepsy Behav. 18, 123–128.

Rosen, H. J., Ojemann, J. G., Ollinger, J. M., and Petersen, S. E. (2000). Comparison of brain activation during word retrieval done silently and aloud using fMRI. Brain Cogn. 42, 201–217.

Sahin, N. T., Pinker, S., Cash, S. S., Schomer, D., and Halgren, E. (2009). Sequential processing of lexical, grammatical, and phonological information within Broca’s area. Science 326, 445–449.

Salmelin, R. (2007). Clinical neurophysiology of language: the MEG approach. Clin. Neurophysiol. 118, 237–254.

Salmelin, R., Hari, R., Lounasmaa, O. V., and Sams, M. (1994). Dynamics of brain activation during picture naming. Nature 368, 463–465.

Schevon, C. A., Carlson, C., Zaroff, C. M., Weiner, H. J., Doyle, W. K., Miles, D., Lajoie, J., Kuzniecky, R., Pacia, S., Vazquez, B., Luciano, D., Najjar, S., and Devinsky, O. (2007). Pediatric language mapping: sensitivity of neurostimulation and Wada testing in epilepsy surgery. Epilepsia 48, 539–545.

Shuster, L. I., and Lemieux, S. K. (2005). An fMRI investigation of covertly and overtly produced mono- and multisyllabic-words. Brain Lang. 93, 20–31.

Sinai, A., Bowers, C. W., Crainiceanu, C. M., Boatman, D., Gordon, B., Lesser, R. P., Lenz, F. A., and Crone, N. E. (2005). Electrocorticographic high gamma activity versus electrical cortical stimulation mapping of naming. Brain 128, 1556–1570.

Tanji, K., Suzuki, K., Delorme, A., Shamoto, H., and Nakasato, N. (2005). High-frequency γ-band activity in the basal temporal cortex during picture-naming and lexical-decision tasks. J. Neurosci. 25, 3287–3293.

Thampratankul, L., Nagasawa, T., Rothermel, R., Juhász, C., Sood, S., and Asano, E. (2010). Cortical gamma oscillations modulated by word association tasks: intracranial recording. Epilepsy Behav. 18, 116–118.

Towle, V. L., Yoon, H. A., Castelle, M., Edgar, J. C., Biassou, N. M., Frim, D. M., Spire, J. P., and Kohrman, M. H. (2008). ECoG gamma activity during a language task: differentiating expressive and receptive speech areas. Brain 131, 2013–2027.

Trébuchon-Da Fonseca, A., Giraud, K., Badier, J. M., Chauvel, P., and Liégeois-Chauvel, C. (2005). Hemispheric lateralization of voice onset time (VOT): comparison between depth and scalp EEG recordings. Neuroimage 27, 1–14.

Trébuchon-Da Fonseca, A., Guedj, E., Alario, F. X., Laguitton, V., Mundler, O., Chauvel, P., and Liégeois-Chauvel, C. (2009). Brain regions underlying word finding difficulties in temporal lobe epilepsy. Brain 132, 2772–2784.

Tsapkini, K., Frangakis, C. E., and Hillis, A. E. (2011). The function of the left anterior temporal pole: evidence from acute stroke and infarct volume. Brain 134, 3094–3105.

Usui, K., Ikeda, A., Nagamine, T., Matsuhashi, M., Kinoshita, M., Mikuni, N., Miyamoto, S., Hashimoto, N., Fukuyama, H., and Shibasaki, H. (2009). Temporal dynamics of Japanese morphogram and syllabogram processing in the left basal temporal area studied by event-related potentials. J. Clin. Neurophysiol. 26, 160–166.

Vigneau, M., Beaucousin, V., Hervé, P. Y., Duffau, H., Crivello, F., Houdé, O., Mazoyer, B., and Tzourio-Mazoyer, N. (2006). Meta-analyzing left hemisphere language areas: phonology, semantics, and sentence processing. Neuroimage 30, 1414–1432.

Keywords: electrocorticography, intra-cranial recording, lexical access, phonological encoding, articulation, speech, gamma band activity

Citation: Llorens A, Trébuchon A, Liégeois-Chauvel C and Alario F (2011) Intra-cranial recordings of brain activity during language production. Front. Psychology 2:375. doi: 10.3389/fpsyg.2011.00375

Received: 08 July 2011; Accepted: 28 November 2011;

Published online: 27 December 2011.

Edited by:

Albert Costa, University Pompeu Fabra, Spain

Reviewed by:

Olaf Hauk, MRC Cognition and Brain Sciences Unit, UK

Copyright: © 2011 Llorens, Trébuchon, Liégeois-Chauvel and Alario. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: F.-Xavier Alario, Laboratoire de Psychologie Cognitive, CNRS, Aix-Marseille Université, 3 place Victor Hugo (Bâtiment 9, Case D), 13331 Marseille Cedex 3, France. e-mail: francois-xavier.alario@univ-provence.fr