- 1 Laboratoire de Psychologie Cognitive, Université Lille Nord de France, Lille, France

- 2 Laboratoire de Psychologie Cognitive, UDL3, URECA, Villeneuve d’Ascq, France

- 3 Laboratoire de Psychologie Cognitive, Aix-Marseille University, Marseille, France

- 4 Laboratoire de Psychologie Cognitive, Centre National de la Recherche Scientifique, Marseille, France

It is now commonly accepted that orthographic information influences spoken word recognition in a variety of laboratory tasks (lexical decision, semantic categorization, gender decision). However, it remains a hotly debated issue whether or not orthography would influence normal word perception in passive listening. That is, the argument has been made that orthography might only be activated in laboratory tasks that require lexical or semantic access in some form or another. It is possible that these rather “unnatural” tasks invite participants to use orthographic information in a strategic way to improve task performance. To put the strategy account to rest, we conducted an event-related brain potential (ERP) study, in which participants were asked to detect a 500-ms-long noise burst that appeared on 25% of the trials (Go trials). In the NoGo trials, we presented spoken words that were orthographically consistent or inconsistent. Thus, lexical and/or semantic processing was not required in this task and there was no strategic benefit in computing orthography to perform this task. Nevertheless, despite the non-linguistic nature of the task, we replicated the consistency effect that has been previously reported in lexical decision and semantic tasks (i.e., inconsistent words produce more negative ERPs than consistent words as early as 300 ms after the onset of the spoken word). These results clearly suggest that orthography automatically influences word perception in normal listening even if there is no strategic benefit to do so. The results are explained in terms of orthographic restructuring of phonological representations.

Introduction

There is an accumulating amount of evidence in favor of the idea that learning to read and write changes the way people process and/or represent spoken language. Early evidence for a role of orthography on spoken language came from a variety of behavioral paradigms (for reviews, see Frost and Ziegler, 2007; Ziegler et al., 2008). For example, the finding that people find it harder to say that two words rhyme when they are spelled differently (e.g., rye–pie) than when they are spelled the same (pie–tie) suggested that orthography is used to make phonological judgments (Seidenberg and Tanenhaus, 1979).

Subsequently, the influence of orthography on spoken language was found in both lexical and semantic tasks (Ziegler and Ferrand, 1998; Peereman et al., 2009). In these experiments, the consistency of the sound–spelling mapping was manipulated: inconsistent words whose phonology can be spelled in multiple ways (e.g., /aIt/ can be spelled ITE, IGHT, or YTE) were typically found to take longer to process than consistent words whose phonology can only be spelled one way (e.g., /ʌk/ is always spelled UCK). This consistency effect was taken as a marker for the activation of orthography in spoken language. Importantly, in these paradigms, orthographic information is never presented explicitly, that is, participants simply hear spoken words and they are totally unaware of any orthographic manipulation.

The orthographic consistency effect has been replicated in a number of languages (Ventura et al., 2004; Pattamadilok et al., 2007; Ziegler et al., 2008). Importantly, it has been shown that the consistency effect is not present in pre-readers but develops as children learn to read and write (Goswami et al., 2005; Ventura et al., 2007; Ziegler and Muneaux, 2007). This finding can be taken as strong evidence that the effect results from the acquisition of literacy and is not due to some idiosyncratic or uncontrolled property of the spoken language material. In line with this finding, the consistency effect is absent in children with dyslexia who struggle to learn the orthographic code (Ziegler and Muneaux, 2007).

More recently, brain imaging data have been collected to study the time course and the localization of these orthographic effects in the brain. As concerns the time course of the effect, a number of studies using event-related brain potentials (ERPs) found that inconsistent words produced larger negativities than consistent words as early as 300 ms after the onset of the spoken word, at least for inconsistencies that occurred early in the word (Perre and Ziegler, 2008; Pattamadilok et al., 2009). Although an effect that starts at 300 ms does not seem particularly early, one has to keep in mind that spoken words unfold in time. With a mean duration of 550 ms per word in the experiments of Perre and Ziegler (2008), the consistency effect should be taken to occur relatively early in the course of spoken word recognition. Also, the consistency effect occurred in the same time window as the frequency effect (Pattamadilok et al., 2009), suggesting that the computation of orthographic information happens early enough to affect lexical access.

One elegant way to study the locus of orthographic effects on spoken language is to see what parts of the brain show differential activation for spoken words when comparing literate vs. illiterate participants (Castro-Caldas et al., 1998; Carreiras et al., 2009; Dehaene et al., 2010). Dehaene et al. (2010) showed reduced activation in literate compared with illiterate participants for spoken sentences in left posterior STS, left and right middle temporal gyri, and midline anterior cingulate cortex. These reductions were taken to reflect a facilitation of speech comprehension in literate participants. Structural brain differences in these regions were also reported by Carreiras et al. (2009), who conducted a voxel-based morphometry (VBM) comparison between literate and illiterate participants. Their results showed that literates had more gray matter than illiterates in several regions including the left supra-marginal and superior temporal areas associated with phonological processing. Converging evidence was obtained by Perre et al. (2009b), who showed that the cortical generators of the orthographic consistency effect obtained in ERPs in the 300–350-ms time window were localized in a left temporo-parietal area, including parts of the supra-marginal gyrus (BA40), the posterior superior temporal gyrus (BA22), and the inferior parietal lobule (BA40). Finally, Pattamadilok et al. (2010) showed that transcranial magnetic stimulation of the left supra-marginal gyrus (but not visual–orthographic areas in the occipital cortex) removed the consistency effect.

One fundamental outstanding question is whether orthography affects normal word perception in passive listening. That is, one can make the argument that orthography is only activated in laboratory tasks that require lexical or semantic access in some form or another. For instance, in a lexical decision task, participants are asked to discriminate words presented in isolation from non-words. It is possible that this rather unnatural laboratory task amplifies the effects of orthography because participants might be able to “strategically” improve performance by verifying whether the heard word is “present” not only in the phonological but also in the orthographic lexicon¹. Given that people who typically participate in these kinds of experiments (i.e., highly literate university students) read more words than they actually hear, such an orthographic strategy might lead to fast positive word responses in a lexical decision task, especially when spoken words have unambiguous consistent spellings. Such a strategy explanation was partially addressed by Pattamadilok et al. (2009), who used a semantic categorization task to investigate the effects of orthography on spoken language. In their task, participants were asked to decide whether words belonged to a given semantic category. This was the case in about 20% of the trials (Go trials). In the remaining trials, participants simply heard consistent and inconsistent words (which did not belong to the semantic category) and thus no response was required (NoGo trials). The authors replicated the orthographic consistency effect in ERPs previously found in lexical decision. Yet, one could still make the argument that strategic access to orthography might be beneficial for lexical access even in a semantic categorization task.

Thus, the goal of the present study was to rule out any “strategic” explanation of orthography effects in spoken language by removing the explicit lexical or semantic component of the task. That is, instead of asking participants to verify the lexical status of a word or to access its meaning (cf., Perre and Ziegler, 2008; Pattamadilok et al., 2009), we rendered the task “non-linguistic” by asking the participants to detect a 500-ms-long burst of white noise. The noise signal was present on 25% of the trials (Go trials). As in the study by Pattamadilok et al. (2009), the consistency manipulation was performed on the remaining trials, on which no response was required (NoGo trials). Clearly, detecting white noise is an extremely shallow task that can be resolved by simple auditory mechanisms located in primary auditory cortex. Given this, any strategic activation of orthography on NoGo trials would have absolutely no beneficial effect on task performance on Go trials. Therefore, if the orthographic consistency effect that was previously found at around 300 ms in ERPs in lexical or semantic tasks (Perre and Ziegler, 2008; Pattamadilok et al., 2009) were to be replicated in a noise detection task, this would be strong evidence for an automatic and non-strategic influence of orthography on spoken language processing.

To make the present study perfectly comparable to that of Pattamadilok et al. (2009), we used the same Go/NoGo procedure and the same consistent and inconsistent items (and the same audio recordings) as Pattamadilok et al. (2009). The study was conducted in the same electroencephalogram (EEG) laboratory using the same recording and stimulation setup. The only difference concerned the Go trials, for which we asked participants to detect a noise burst of 500 ms rather than a word referring to a body part as in Pattamadilok et al. (2009). We hypothesized that if orthographic information participates in lexical access of spoken words in an automatic fashion or if orthography has changed the nature of phonological representations, we should be able to find signatures of the orthographic consistency effect even in a noise detection task.

Materials and Methods

Participants

Eighteen students from the University of Provence (mean age 21 years; 14 females) took part in the experiment. All participants reported being right-handed native speakers of French with normal hearing. All reported being free of language or neurological disorders.

Materials

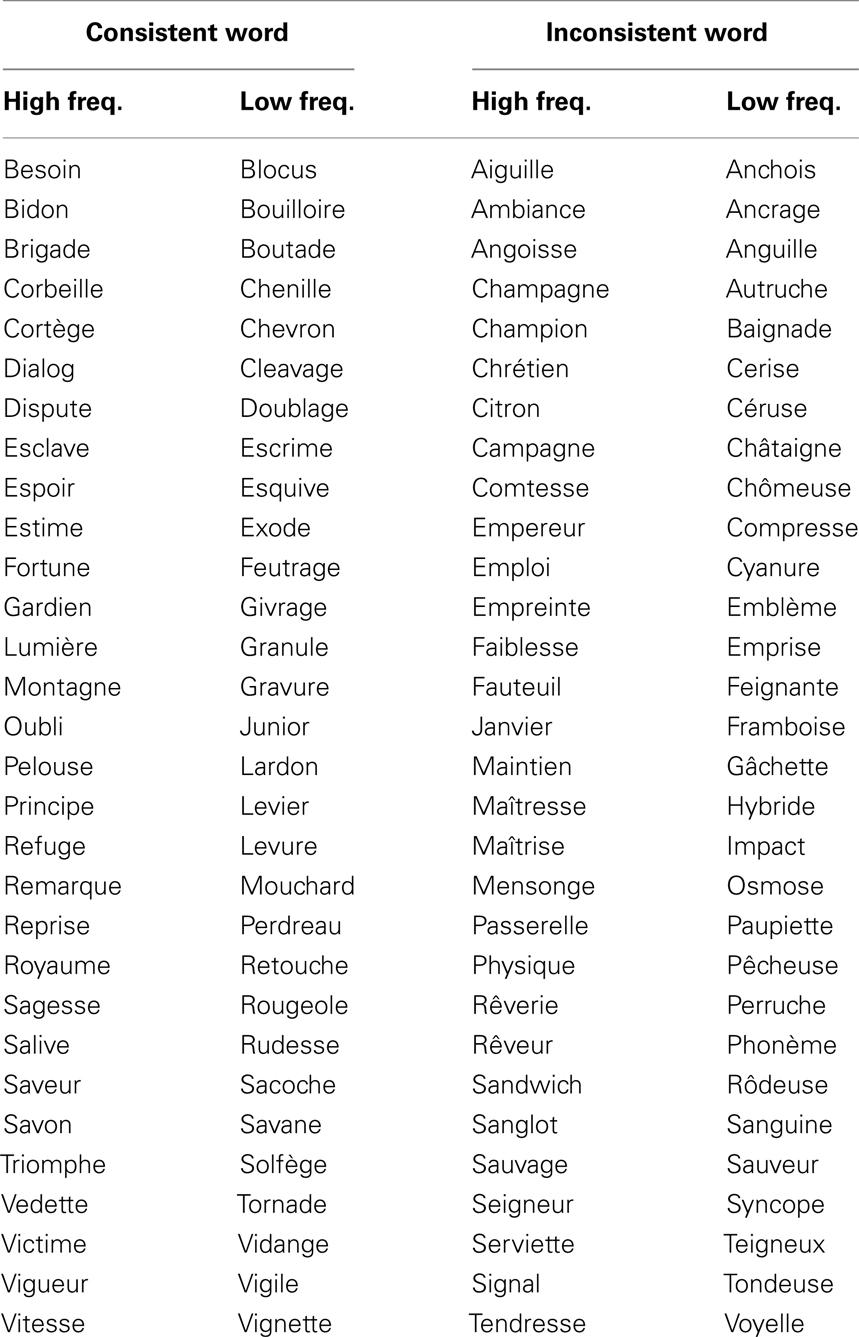

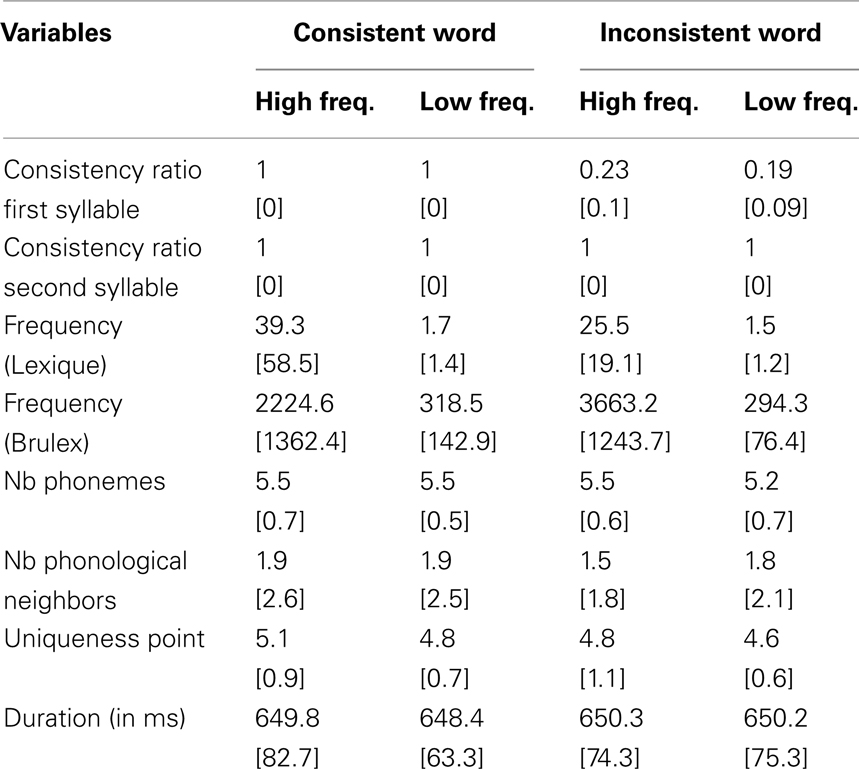

The critical stimuli (i.e., NoGo trials) were identical to those used by Pattamadilok et al. (2009). They consisted of 120 disyllabic French words that were divided equally into four experimental conditions (30 items per condition), which resulted from a manipulation of word frequency and orthographic consistency (see Table A1 in Appendix). Orthographic consistency was manipulated on the first syllable; the second syllable was always consistent. The four conditions were as follows: high- and low-frequency consistent words [e.g., in “montagne” (mountain), the first syllable “mon” has only one possible spelling in French] and high- and low-frequency inconsistent words [e.g., in “champagne” (champagne), the first syllable “cham” has more than one possible spelling].

The syllable consistency ratio² was computed from the disyllabic words in the BRULEX database (Content et al., 1990). The critical stimuli from the different conditions were matched on the following variables (see Table A2 in Appendix): number of phonemes, phonological uniqueness point, number of phonological neighbors, and mean duration (all ps > 0.23). Within each frequency class, the consistent and inconsistent stimuli were matched on two frequency counts: LEXIQUE (New et al., 2001) and BRULEX (Content et al., 1990).
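The consistency ratio described in the footnote to this paragraph (friends divided by friends plus enemies) can be illustrated with a minimal sketch. The function name `consistency_ratio`, the data layout, and the toy lexicon of invented pseudo-words are all hypothetical; they are not taken from BRULEX and do not reproduce the values used in the study.

```python
def consistency_ratio(lexicon, phon_syllable, orth_syllable, position=0):
    """Return friends / (friends + enemies) for one syllable at one position.

    lexicon: iterable of (phon_syllables, orth_syllables) pairs, one pair per
    disyllabic word (hypothetical format chosen for this sketch).
    """
    friends = 0  # same sound AND same spelling at this position
    total = 0    # same sound at this position (friends + enemies)
    for phon, orth in lexicon:
        if phon[position] != phon_syllable:
            continue
        total += 1
        if orth[position] == orth_syllable:
            friends += 1
    if total == 0:
        raise ValueError("syllable not found at this position")
    return friends / total


# Invented pseudo-words, for illustration only (not real lexical data).
toy_lexicon = [
    (("ka", "to"), ("ca", "to")),
    (("ka", "mi"), ("ka", "mi")),
    (("ka", "lu"), ("ca", "lu")),
    (("po", "ta"), ("po", "ta")),
]

ratio_inconsistent = consistency_ratio(toy_lexicon, "ka", "ca")  # 2 friends, 1 enemy
ratio_consistent = consistency_ratio(toy_lexicon, "po", "po")    # 1 friend, 0 enemies
```

In this toy example the first syllable "ka" has two spellings, so its ratio falls below 1, whereas "po" has a single spelling and reaches the maximum of 1.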

Stimuli were recorded by a female native speaker of French in a soundproof room on a digital audiotape recorder (Teac DA-P20) using a Sennheiser MD43 microphone. The speaker was completely naive as to the purpose of the study and had no information about which word belonged to which condition. Stimuli were digitized at a sampling rate of 48 kHz with 16-bit analog-to-digital conversion using a wave editor.

In addition to the critical stimuli described above, the material also included 40 Go trials that corresponded to a 500-ms-long burst of white noise. They represented 25% of all trials.

Procedure

A Go–NoGo paradigm was used (Holcomb and Grainger, 2006). Participants were asked to respond as quickly as possible by pushing a response button placed in their right hand when they heard a white noise sound and to withhold their response when this was not the case (NoGo). Participants were tested individually in a soundproof room. Stimuli were presented binaurally at a comfortable listening level through headphones. The session started with 12 practice trials to familiarize participants with the task. The 160 stimuli (40 Go trials and 120 NoGo trials) were divided into 5 blocks. Each block consisted of 8 Go trials and 24 NoGo trials (6 trials per condition) presented in random order. A trial consisted of the following sequence of events: a fixation point (+) was presented for 500 ms in the center of the screen, then the auditory stimulus was presented while the fixation point remained on the screen for 2000 ms. Participants were told not to blink or move while the fixation point was present. The inter-trial interval was 1500 ms (blank screen). No feedback was provided during the experiment.
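The block structure described above (5 blocks, each with 8 Go trials and 6 NoGo trials per condition, in random order) can be sketched as follows. This is only an illustration: the condition labels, the function name `build_blocks`, and the fixed random seed are assumptions, and the sketch does not assign specific words to trials.

```python
import random

# Hypothetical labels for the four NoGo conditions (frequency x consistency).
CONDITIONS = [
    "HF-consistent",
    "HF-inconsistent",
    "LF-consistent",
    "LF-inconsistent",
]


def build_blocks(n_blocks=5, go_per_block=8, nogo_per_condition=6, seed=0):
    """Return a list of blocks; each block is a shuffled list of (type, label)."""
    rng = random.Random(seed)  # seeded only to make the sketch reproducible
    blocks = []
    for _ in range(n_blocks):
        trials = [("Go", "noise")] * go_per_block
        for condition in CONDITIONS:
            trials += [("NoGo", condition)] * nogo_per_condition
        rng.shuffle(trials)  # random presentation order within the block
        blocks.append(trials)
    return blocks


blocks = build_blocks()
n_trials = sum(len(block) for block in blocks)
n_go = sum(1 for block in blocks for kind, _ in block if kind == "Go")
go_proportion = n_go / n_trials
```

With the default arguments this yields 160 trials in total, of which 40 are Go trials, matching the 25% proportion reported in the Materials section.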

EEG Recording Procedure

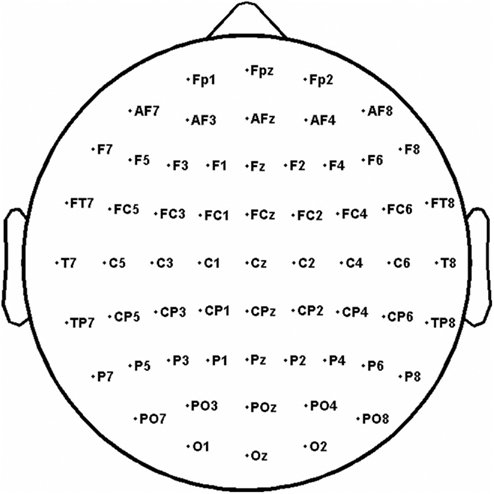

Continuous EEG was recorded from 64 Ag/AgCl active electrodes held in place on the scalp by an elastic cap (Electro-Cap International, Eaton, OH, USA). As illustrated in Figure 1, the electrode montage included 10 midline sites and 27 sites over each hemisphere (American Clinical Neurophysiology Society, 2006). Two additional electrodes (CMS/DRL near Pz) were used as an online reference (for a complete description, see www.biosemi.com; Schutter et al., 2006). Six other electrodes were attached over the left and right mastoids, below the right and left eyes (for monitoring vertical eye movements and blinks), and at the corner of the right and left eyes (for monitoring horizontal eye movements). Bioelectrical signals were amplified using an ActiveTwo Biosemi amplifier (DC-67 Hz bandpass, 3 dB/octave) and were continuously sampled (24-bit sampling) at a rate of 256 Hz throughout the experiment. EEG was filtered off-line (1 Hz high-pass, 40 Hz low-pass) and the signal from the left mastoid electrode was used off-line to re-reference the scalp recordings.
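Off-line re-referencing to a single electrode amounts to subtracting the reference signal from every other channel, sample by sample. The sketch below illustrates that operation only; the function name `rereference`, the dictionary layout, the channel label "M1" for the left mastoid, and the sample values are assumptions made for illustration and do not describe the software actually used.

```python
def rereference(data, ref_channel):
    """Subtract the reference channel from every other channel.

    data: dict mapping channel name -> list of samples (same length each).
    Returns a new dict without the reference channel itself.
    """
    reference = data[ref_channel]
    return {
        name: [value - ref for value, ref in zip(samples, reference)]
        for name, samples in data.items()
        if name != ref_channel
    }


# Made-up samples in microvolts; "M1" stands for the left mastoid (assumed label).
raw = {
    "Cz": [1.0, 2.0, 3.0],
    "Pz": [0.5, 0.5, 0.5],
    "M1": [0.5, 0.5, 0.5],
}
rereferenced = rereference(raw, "M1")
```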

Results

Behavioral Data

The accuracy data showed that participants performed the task perfectly well. The overall accuracy score was 100% on average and mean RT for Go decisions was 476 ms (SD = 94.8).

Electrophysiological Data

Averaged ERPs were formed off-line from correct trials free of ocular and muscular artifact (less than 10% of trials were excluded from the analyses). ERPs were calculated by averaging the EEG time-locked to a point 200 ms pre-stimulus onset and lasting 1000 ms post-stimulus onset. The 200-ms pre-stimulus period was used as the baseline. Separate ERPs were formed for each experimental condition and the Go trials. The EEG data of three participants were discarded because of excessive eye blink artifacts.
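The epoching and baseline steps described above can be sketched for a single channel as follows. The 256 Hz sampling rate, the 200 ms pre-stimulus baseline, and the 1000 ms post-stimulus window come from the text; the function names, the rounding of 200 ms to a whole number of samples, and the toy signal are assumptions, and artifact rejection is not shown.

```python
def extract_epoch(signal, onset_sample, srate=256, pre_ms=200, post_ms=1000):
    """Cut one epoch around stimulus onset and subtract the pre-stimulus mean."""
    pre = round(pre_ms * srate / 1000)    # 200 ms is about 51 samples at 256 Hz
    post = round(post_ms * srate / 1000)  # 1000 ms is 256 samples at 256 Hz
    start, stop = onset_sample - pre, onset_sample + post
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[start:stop]
    baseline = sum(epoch[:pre]) / pre     # mean of the pre-stimulus period
    return [value - baseline for value in epoch]


def average_erp(epochs):
    """Average baseline-corrected epochs sample by sample."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


# Toy signal: constant 2.0 before onset, 3.0 afterwards (made-up values).
toy_signal = [2.0] * 500 + [3.0] * 500
epoch = extract_epoch(toy_signal, onset_sample=500)
erp = average_erp([epoch, epoch])
```

After baseline correction the pre-stimulus samples of the toy epoch are 0 and the post-stimulus samples are 1, which is the step size in the made-up signal.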

The time course of the consistency and the frequency effect was assessed by comparing mean amplitude values for each condition in successive 100 ms epochs from 250 to 750 ms post-stimulus onset. To analyze the scalp distribution of ERP effects, we defined four quadrants of electrodes (see Figure 1): left centro-anterior (Fp1, Fpz, AF7, AF3, AFz, F7, F5, F3, F1, Fz, FT7, FC5, FC3, FC1, FCz, T7, C5, C3, C1, Cz), right centro-anterior (Fp2, Fpz, AF8, AF4, AFz, F8, F6, F4, F2, Fz, FT8, FC6, FC4, FC2, FCz, T8, C6, C4, C2, Cz), left centro-posterior (T7, C5, C3, C1, Cz, TP7, CP5, CP3, CP1, CPz, P7, P5, P3, P1, Pz, PO7, PO3, POz, O1, Oz), right centro-posterior (T8, C6, C4, C2, Cz, TP8, CP6, CP4, CP2, CPz, P8, P6, P4, P2, Pz, PO8, PO4, O2, Oz). To assess differences in mean amplitudes between each experimental condition, we performed ANOVAs which resulted from the combination of four factors: consistency (consistent vs. inconsistent), frequency (low vs. high), quadrant (4), and electrodes (20). The Geisser and Greenhouse (1959) correction was applied where appropriate. Because ERP waveforms generally vary from one electrode to another and from one quadrant to another, the main effect of these factors which was significant in nearly all analyses is not reported here.
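The successive 100 ms analysis windows and the mean amplitude within each window can be sketched as below. The window limits (250 to 750 ms) come from the text; the function names, the assumption that each averaged waveform starts 200 ms before stimulus onset and is sampled at 256 Hz, and the constant toy waveform are illustrative only.

```python
def analysis_windows(start_ms=250, stop_ms=750, width_ms=100):
    """Return successive (from_ms, to_ms) windows relative to stimulus onset."""
    return [(t, t + width_ms) for t in range(start_ms, stop_ms, width_ms)]


def mean_amplitude(erp, window, srate=256, pre_ms=200):
    """Mean of an ERP waveform inside one window.

    erp: list of samples whose first sample lies pre_ms before stimulus onset.
    """
    first = round((window[0] + pre_ms) * srate / 1000)
    last = round((window[1] + pre_ms) * srate / 1000)
    segment = erp[first:last]
    return sum(segment) / len(segment)


windows = analysis_windows()
toy_erp = [1.5] * 307  # made-up flat waveform covering -200 to 1000 ms
means = [mean_amplitude(toy_erp, window) for window in windows]
```

In an actual analysis one such mean would be computed per participant, condition, electrode, and window before entering the values into the ANOVAs.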

No main effect of consistency or frequency was observed in any of the windows (all ps > 0.26). Neither the consistency × frequency interaction nor the consistency × frequency × quadrant interaction was significant (all ps > 0.19). However, an interaction between consistency and quadrant was observed. This interaction was significant from 250 to 350 ms (F = 3.5, p < 0.05) and marginally significant from 350 to 450 ms (F = 2.9, p < 0.09). From 250 to 350 ms, there was also a marginally significant interaction between consistency and electrode (F = 2.4, p < 0.07). Finally, no interaction between frequency and quadrant or between frequency and electrode was observed in any of the windows (all ps > 0.18).

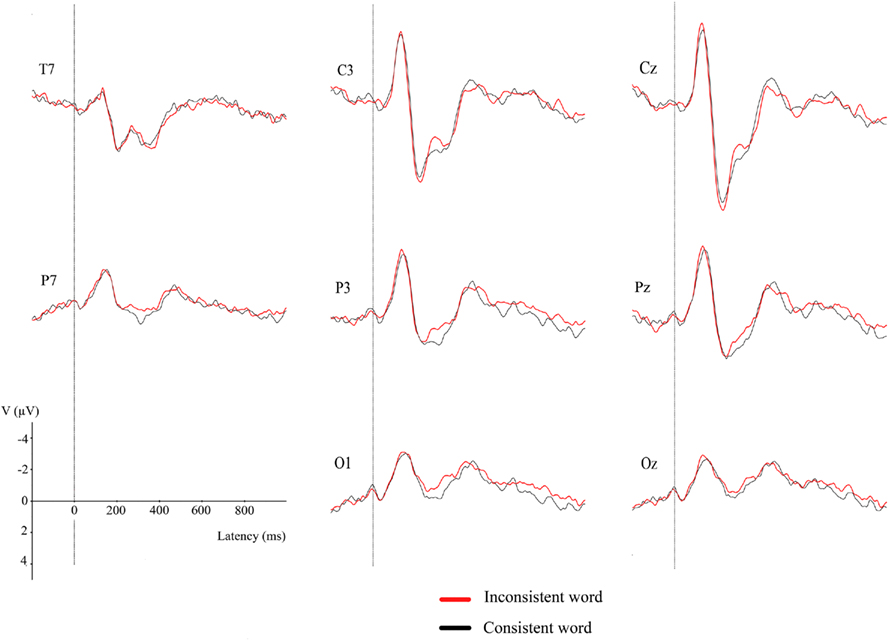

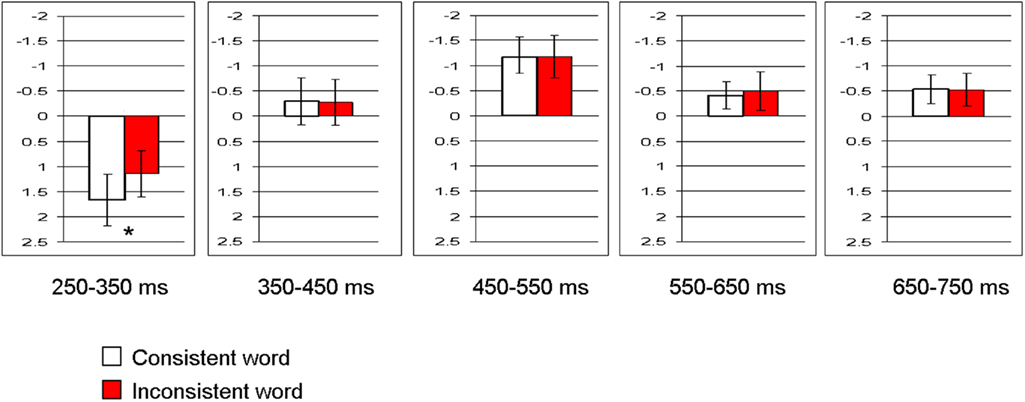

From 250 to 350 ms and from 350 to 450 ms, separate analyses were performed in each of the four quadrants of electrodes with consistency (consistent vs. inconsistent), frequency (low vs. high), and electrodes (20) as within-subject factors. As illustrated in Figures 2 and 3, a significant effect of consistency was observed in the left centro-posterior quadrant between 250 and 350 ms (F = 5.3, p < 0.05). ERPs to inconsistent words were more negative than ERPs to consistent words. In this time window, no frequency effect or interaction was observed in any quadrant (all ps > 0.11) except a marginally significant interaction between consistency and electrode in the right centro-anterior quadrant (F = 2.4, p < 0.07). From 350 to 450 ms, no main effect or interaction was observed in any of the four quadrants (all ps > 0.11).

Figure 2. Event-related brain potentials to consistent and inconsistent words from selected electrodes in the left centro-posterior quadrant.

Figure 3. Mean amplitudes (μV) and standard errors (SEs) within each time window for electrodes within the left centro-posterior quadrant.

Discussion

The main finding of the present study is that we replicated the orthographic consistency effect using the same items and paradigm as Pattamadilok et al. (2009) but replacing the semantic categorization task with a simple non-linguistic noise detection task. If the existence of the orthographic consistency effect resulted from strategic activation induced by the constraints of single-word lexical and semantic tasks, then no such effect should have been found in a noise detection task, as neither lexical access nor semantic processing was required to perform the task. In contrast, the fact that the orthographic consistency effect was replicated in an implicit passive listening condition supports the idea that the effects of orthography, at least the “early” ones associated with an enhanced ERP amplitude for inconsistent words around 300 ms, are automatic and non-strategic in nature.

A common caveat concerns the fact that the demonstration of the consistency effect necessarily relies on a between-item comparison that is subject to possibly uncontrolled differences between consistent and inconsistent items, such as subtle articulatory or semantic differences. However, there is now sufficient evidence to alleviate this concern. First, orthographic effects on spoken word perception have been found in the priming paradigm, in which lexical decisions are made to the same target words (Chereau et al., 2007; Perre et al., 2009a). Second, articulatory differences have been ruled out as a potential locus of the orthographic consistency effect in a study by Ziegler et al. (2004), in which the degree of spelling inconsistency was manipulated while rhyme phonology was held constant (wine vs. sign). Third, about a dozen studies published since the first report of the orthographic consistency effect in spoken word recognition have replicated the effect with different sets of items and in different languages (for a review, see Ziegler et al., 2008). Finally, and most importantly, orthographic consistency effects have recently been found in a word–learning paradigm (i.e., participants were trained to associate novel pictures and novel spoken words), which makes it possible to dispense with the between-item design by selecting a single set of spoken targets whose spelling–sound characteristics were manipulated across participants (Rastle et al., 2011). Thus, the replication of the orthographic consistency effect in the word–learning paradigm rules out an explanation in terms of a between-item confound.

The present finding calls into question earlier findings suggesting that the existence of an orthographic consistency effect in spoken language was contingent on explicit lexical processing. This claim was essentially based on the finding that orthographic consistency effects were found to be absent in shadowing tasks (Pattamadilok et al., 2007) presumably because shadowing is a relatively shallow task that can be performed on a non-lexical basis. Indeed, in an elegant study, Ventura et al. (2004) showed that orthographic consistency effects were absent in a classic shadowing task but re-appeared when the shadowing response was made contingent upon a lexical decision response (say out loud only if the item is a word). Given that we found an orthographic consistency effect in a task that is as shallow as the shadowing task (in both cases lexical access was not required to perform the task), it is tempting to conclude that the articulatory output processes of the shadowing task might mask potential consistency effects that should occur at a perceptual encoding stage. In the study cited above, Rastle et al. (2011) came to a similar conclusion suggesting that in a shadowing task, contrary to a picture naming task in which robust effects of orthography have been reported by these authors, “phonological activation can drive the process of speech production before there is much opportunity for orthographic feedback to exert an influence.”

One issue that needs to be addressed is why the orthographic consistency effect in speech perception appears to be more robust than the frequency effect. Note that there was no frequency effect in the present study although the same items produced a strong frequency effect in the semantic categorization task of Pattamadilok et al. (2009). One possibility might be that the frequency effect taps lexical access and selection (i.e., the mapping between phonological form and meaning), whereas the orthographic consistency effect taps access to the phonological word forms themselves (i.e., the mapping between acoustic input and phonological word forms). Whereas the noise detection task might block access to meaning (e.g., for a similar argument with letter search tasks, see Henik et al., 1983), it is possible that the mapping of acoustic input onto phonological word forms cannot be blocked strategically (e.g., cocktail party phenomenon). The idea that orthography plays a role at this phonological encoding stage is supported by the early time course of the effect (around 300 ms). Indeed, in this time window, Connolly and Phillips (1994) identified an ERP component, the “phonological mismatch negativity,” that has been interpreted as reflecting pre-lexical phonological processing of speech. Thus, the involvement of orthography at this stage would also explain why the consistency effect “survives” the frequency effect and why it is very little affected by task demands (i.e., the same early effect was found in lexical decision, semantic categorization, and noise detection, but see Pattamadilok et al., 2011).

Finally, how should this effect best be conceptualized? Do we see words whenever we hear spoken utterances? Our suggestion is that the robust and task-independent effect of consistency occurring around 300 ms results from restructuring of phonological representations, the idea being that words with consistent spellings develop more precise and stable phonological representations during the course of reading development (Goswami et al., 2005; Ziegler and Goswami, 2005). If so, we would not necessarily “see” words whenever we hear spoken utterances but access to the phonological word representations themselves would be facilitated for words with consistent spellings. Several studies are consistent with this hypothesis. First, Perre et al. (2009b) estimated, using sLORETA, the underlying cortical generators of the orthographic consistency effect that occurred around 300–350 ms and found differential activation between consistent and inconsistent words in phonological areas (e.g., supra-marginal gyrus) and not in visual–orthographic areas, such as left ventral occipitotemporal cortex (vOTC). Second, Pattamadilok et al. (2010) showed that transcranial magnetic stimulation of the same phonological areas identified by Perre et al. (2009b) abolished the orthographic consistency effect, whereas stimulation of the vOTC did not affect it. Third, in an fMRI study, Montant et al. (this issue) found no differential activation for inconsistent over consistent word pairs in vOTC but did find it in brain regions of the spoken language network (left inferior frontal gyrus). Fourth, in a passive listening task, Dehaene et al. (2010) found differences between literate and illiterate participants only in phonological areas, such as the planum temporale or the left superior temporal gyrus, but not in visual–orthographic areas. Differential activation between literate and illiterate subjects in vOTC was only found in the auditory lexical decision task, suggesting an additional, possibly strategic, activation of orthography in lexical decision tasks. The idea that there is an additional, possibly strategic, contribution of orthography in auditory lexical decision is also supported by the existence of a consistency effect on the N400 that appeared only in lexical decision tasks (Perre and Ziegler, 2008; Perre et al., 2009b) but not in semantic categorization or noise detection tasks.

In sum, the present study replicated the existence of an orthographic consistency effect that occurs early (around 300 ms) and that seems to be independent of task manipulations as it occurs even in a passive listening task. In line with the studies cited above, this effect seems to reflect restructuring of phonological word representations in the sense of Frith (1998), who compared learning to read to catching a virus: “This virus infects all speech processing, as now whole word sounds are automatically broken up into sound constituents. Language is never the same again” (p. 1011).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Julia Boisson for her help in data collection. Daisy Bertrand was supported by an ERC Advanced Grant awarded to Jonathan Grainger (no. 230313).

Footnotes

- 1 Separate orthographic and phonological lexica are typically postulated in many of the current word recognition models (Diependaele et al., 2010; Perry et al., 2010; Grainger and Ziegler, 2011).

- 2 The consistency ratio was calculated by dividing the number of disyllabic words sharing both the phonological and orthographic forms of a given syllable at a given position (friends) by the number of all disyllabic words that contain that syllable at the same position (friends and enemies, see Ziegler et al., 1996). The consistency ratio varies between 0 (totally inconsistent) and 1 (totally consistent) and thus reflects the degree of orthographic consistency.

References

Carreiras, M., Seghier, M. L., Baquero, S., Estevez, A., Lozano, A., Devlin, J. T., and Price, C. J. (2009). An anatomical signature for literacy. Nature 461, 983–986.

Castro-Caldas, A., Petersson, K. M., Reis, A., Stone-Elander, S., and Ingvar, M. (1998). The illiterate brain. Learning to read and write during childhood influences the functional organization of the adult brain. Brain 121(Pt 6), 1053–1063.

Chereau, C., Gaskell, M. G., and Dumay, N. (2007). Reading spoken words: orthographic effects in auditory priming. Cognition 102, 341–360.

Connolly, J. F., and Phillips, N. A. (1994). Event-related potential components reflect phonological and semantic processing of the terminal word of spoken sentences. J. Cogn. Neurosci. 6, 256–266.

Content, A., Mousty, P., and Radeau, M. (1990). BRULEX: a computerized lexical data base for the French language/BRULEX. Une base de données lexicales informatisée pour le français écrit et parlé. Année Psychol. 90, 551–566.

Dehaene, S., Pegado, F., Braga, L. W., Ventura, P., Nunes Filho, G., Jobert, A., Dehaene-Lambertz, G., Kolinsky, R., Morais, J., and Cohen, L. (2010). How learning to read changes the cortical networks for vision and language. Science 330, 1359–1364.

Diependaele, K., Ziegler, J. C., and Grainger, J. (2010). Fast phonology and the bimodal interactive activation model. Eur. J. Cogn. Psychol. 22, 764–778.

Frost, R., and Ziegler, J. C. (2007). “Speech and spelling interaction: the interdependence of visual and auditory word recognition,” in The Oxford Handbook of Psycholinguistics, ed. M. G. Gaskell (Oxford: Oxford University Press), 107–118.

Goswami, U., Ziegler, J. C., and Richardson, U. (2005). The effects of spelling consistency on phonological awareness: a comparison of English and German. J. Exp. Child. Psychol. 92, 345–365.

Grainger, J., and Ziegler, J. (2011). A dual-route approach to orthographic processing. Front. Psychol. 2:54.

Geisser, S., and Greenhouse, S. (1959). On methods in the analysis of profile data. Psychometrika 24, 95–112.

Henik, A., Kellogg, W., and Friedrich, F. (1983). The dependence of semantic relatedness effects upon prime processing. Mem. Cognit. 11, 366–373.

Holcomb, P. J., and Grainger, J. (2006). On the time course of visual word recognition: an event-related potential investigation using masked repetition priming. J. Cogn. Neurosci. 18, 1631–1643.

New, B., Pallier, C., Ferrand, L., and Matos, R. (2001). Une base de données lexicales du français contemporain sur internet: LEXIQUE. L’Année Psychologique 101, 447–462.

Pattamadilok, C., Knierim, I. N., Kawabata Duncan, K. J., and Devlin, J. T. (2010). How does learning to read affect speech perception? J. Neurosci. 30, 8435–8444.

Pattamadilok, C., Morais, J., Ventura, P., and Kolinsky, R. (2007). The locus of the orthographic consistency effect in auditory word recognition: further evidence from French. Lang. Cogn. Process. 22, 1–27.

Pattamadilok, C., Perre, L., Dufau, S., and Ziegler, J. C. (2009). On-line orthographic influences on spoken language in a semantic task. J. Cogn. Neurosci. 21, 169–179.

Pattamadilok, C., Perre, L., and Ziegler, J. C. (2011). Beyond rhyme or reason: ERPs reveal task-specific activation of orthography on spoken language. Brain Lang. 116, 116–124.

Peereman, R., Dufour, S., and Burt, J. S. (2009). Orthographic influences in spoken word recognition: the consistency effect in semantic and gender categorization tasks. Psychon. Bull. Rev. 16, 363–368.

Perre, L., Midgley, K., and Ziegler, J. C. (2009a). When beef primes reef more than leaf: orthographic information affects phonological priming in spoken word recognition. Psychophysiology 46, 739–746.

Perre, L., Pattamadilok, C., Montant, M., and Ziegler, J. C. (2009b). Orthographic effects in spoken language: on-line activation or phonological restructuring? Brain Res. 1275, 73–80.

Perre, L., and Ziegler, J. C. (2008). On-line activation of orthography in spoken word recognition. Brain Res. 1188, 132–138.

Perry, C., Ziegler, J. C., and Zorzi, M. (2010). Beyond single syllables: large-scale modeling of reading aloud with the connectionist dual process (CDP++) model. Cogn. Psychol. 61, 106–151.

Rastle, K., McCormick, S. F., Bayliss, L., and Davis, C. J. (2011). Orthography influences the perception and production of speech. J. Exp. Psychol. Learn. Mem. Cogn. [Epub ahead of print].

Schutter, D. J., Leitner, C., Kenemans, J. L., and van Honk, J. (2006). Electrophysiological correlates of cortico-subcortical interaction: a cross-frequency spectral EEG analysis. Clin. Neurophysiol. 117, 381–387.

Seidenberg, M. S., and Tanenhaus, M. K. (1979). Orthographic effects on rhyme monitoring. J. Exp. Psychol. Hum. Learn. Mem. 5, 546–554.

Ventura, P., Morais, J., and Kolinsky, R. (2007). The development of the orthographic consistency effect in speech recognition: from sublexical to lexical involvement. Cognition 105, 547–576.

Ventura, P., Morais, J., Pattamadilok, C., and Kolinsky, R. (2004). The locus of the orthographic consistency effect in auditory word recognition. Lang. Cogn. Process. 19, 57–95.

Ziegler, J. C., and Ferrand, L. (1998). Orthography shapes the perception of speech: the consistency effect in auditory word recognition. Psychon. Bull. Rev. 5, 683–689.

Ziegler, J. C., Ferrand, L., and Montant, M. (2004). Visual phonology: the effects of orthographic consistency on different auditory word recognition tasks. Mem. Cognit. 32, 732–741.

Ziegler, J. C., and Goswami, U. (2005). Reading acquisition, developmental dyslexia, and skilled reading across languages: a psycholinguistic grain size theory. Psychol. Bull. 131, 3–29.

Ziegler, J. C., and Muneaux, M. (2007). Orthographic facilitation and phonological inhibition in spoken word recognition: a developmental study. Psychon. Bull. Rev. 14, 75–80.

Ziegler, J. C., Petrova, A., and Ferrand, L. (2008). Feedback consistency effects in visual and auditory word recognition: where do we stand after more than a decade? J. Exp. Psychol. Learn. Mem. Cogn. 34, 643–661.

Appendix

Keywords: orthography, speech, literacy, ERP, consistency

Citation: Perre L, Bertrand D and Ziegler JC (2011) Literacy affects spoken language in a non-linguistic task: an ERP study. Front. Psychology 2:274. doi: 10.3389/fpsyg.2011.00274

Received: 20 July 2011; Accepted: 01 October 2011; Published online: 19 October 2011.

Edited by:

Regine Kolinsky, Fonds de la Recherche Scientifique, Belgium

Reviewed by:

Colin Davis, Royal Holloway University of London, UK

Ariel M. Goldberg, Tufts University, USA

Copyright: © 2011 Perre, Bertrand and Ziegler. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Johannes C. Ziegler, Laboratoire de Psychologie Cognitive, Pôle 3C, Case D, Centre National de la Recherche Scientifique, Université de Provence, 3 place Victor Hugo, 13331 Marseille, Cedex 3, France. e-mail: johannes.ziegler@univ-provence.fr