- 1 Department of Psychology, Florida State University, Tallahassee, FL, USA

- 2 Department of Psychology, Beckman Institute for Advanced Science and Technology, University of Illinois at Urbana-Champaign, Urbana, IL, USA

Frequent action video game players often outperform non-gamers on measures of perception and cognition, and some studies find that video game practice enhances those abilities. The possibility that video game training transfers broadly to other aspects of cognition is exciting because training on one task rarely improves performance on others. At first glance, the cumulative evidence suggests a strong relationship between gaming experience and other cognitive abilities, but methodological shortcomings call that conclusion into question. We discuss these pitfalls, identify how existing studies succeed or fail in overcoming them, and provide guidelines for more definitive tests of the effects of gaming on cognition.

Do Action Video Games Improve Perception and Cognition?

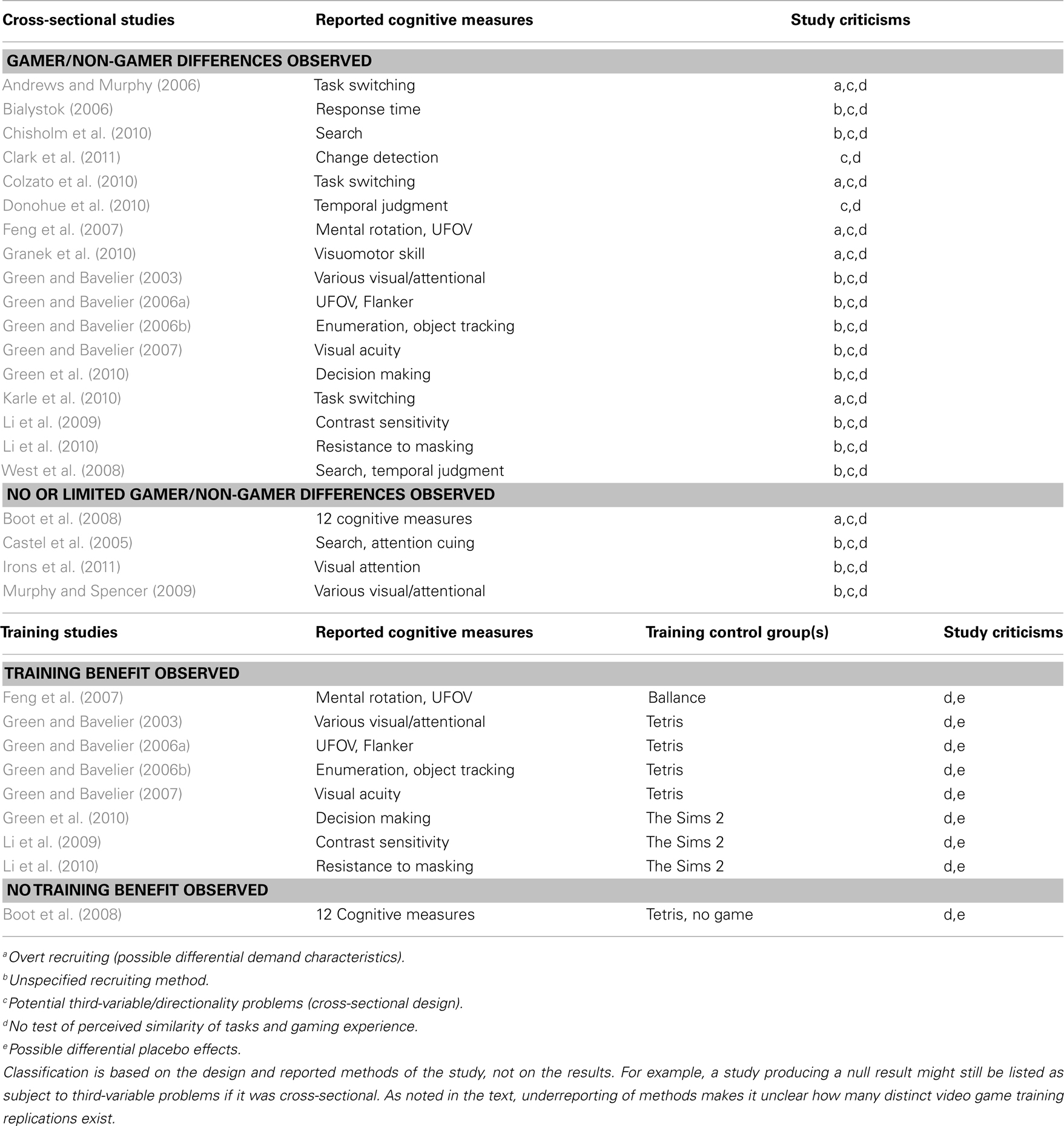

Frequent action game players outperform non-gamers on a variety of perceptual and cognitive measures, and some studies suggest that video game training enhances cognitive performance on tasks other than those specific to the game (Table 1). The possibility of broad transfer from game training to other aspects of cognition is exciting because it runs counter to an extensive literature showing that training on one task rarely improves performance on others (see Ball et al., 2002; Hertzog et al., 2009; Owen et al., 2010).

Although provocative, the conclusion that game training produces unusually broad transfer is weakened by methodological shortcomings common to most (if not all) of the published studies documenting gaming effects. The flaws we discuss are not obscure or esoteric – they are well-known pitfalls in the design of clinical trials and experiments on expertise. Most of these shortcomings are surmountable, but no published gaming study has successfully avoided them all. In this perspective piece, we delineate these flaws and provide guidelines for more definitive tests of game benefits.

We focus on gaming research for three reasons: first, the claims of broad transfer from game training diverge from typical findings in the cognitive training literature (Hertzog et al., 2009). Second, these claims have circulated widely in the popular media and thus have had a broad impact. Third, game training holds tremendous promise if the evidence for broad transfer of training bears out. We restrict our discussion to recent studies of the effects of action games on college-aged participants, but our criticisms apply to similar studies examining the effect of game experience on cognition in children and older adults, and to studies testing the efficacy of various “brain fitness” and cognitive aging interventions.

Cross-Sectional Studies: Comparing Gamers and Non-Gamers

Most game training studies are premised on evidence that expert gamers outperform non-gamers on measures of perception and cognition. Such differences are a necessary precondition for training studies – if experienced gamers perform comparably to non-gamers, then there is no reason to expect game training to enhance those abilities. Even if gamers do outperform non-gamers, the difference might not be caused by gaming: people may become action gamers because they have the types of abilities required to excel at these games, or a third factor might influence both cognitive abilities and gaming.

One possible factor that could lead to the spurious conclusion of gaming benefits on cognition is differential expectations for experts and novices. If gamers are recruited to a study because of their gaming experience, they might expect to perform well because of their expertise, and a belief that you should perform well can influence performance on measures as basic as visual acuity (Langer et al., 2010). Imagine that you are recruited to participate in a study because of your gaming expertise, and the study consists of game-like computer tasks. If you know you have been recruited because you are an expert, the demand characteristics of the experimental situation will motivate you to try to perform well. In contrast, a non-gamer selected without any mention of gaming will not experience such demand characteristics, and so will be less motivated. Any difference in task performance, then, would be analogous to a placebo effect.

Almost all studies comparing expert and novice gamers either neglect to report how subjects were recruited or make no effort to hide the nature of the study from participants. Many studies recruit experts through advertisements explicitly seeking people with game experience, thereby violating a core principle of experimental design and introducing the potential for differential demand characteristics (Boot et al., 2008; Colzato et al., 2010; Karle et al., 2010). The problem is amplified because gamers often are familiar with media and blog coverage of the benefits of gaming, so they expect to perform better when they have been recruited for their gaming expertise.

The danger that expectations, motivation, and prior knowledge drive expert/novice differences in basic task performance could be minimized by recruiting participants without mention of video games. We are aware of only two published expert/novice gaming studies adopting a covert recruitment strategy (Donohue et al., 2010; Clark et al., 2011). Encouragingly, both demonstrate a gamer advantage, although standard concerns of third-variable and directionality problems limit the strength of the conclusions that can be drawn from these studies (see Dye et al., 2009; Dye and Bavelier, 2010; Trick et al., 2005 for other instances of cross-sectional gamer differences using a covert recruitment strategy, in these cases with children as participants).

Covert recruitment is less efficient because it requires prescreening for videogame experience without any connection to a particular study or measuring experience only after the study, but it is the best way to avoid having the recruiting strategy itself contribute to group differences. Even then, expert gamers may be more motivated if they believe the tasks tap their expertise in gaming (e.g., the tasks are at all game-like). Only if subjects have no reason to link their gaming expertise to the tasks under study is it reasonable to assume that expert/novice comparisons were unaffected by such meta-level knowledge.

Even with optimal recruiting strategies, correlational and cross-sectional evidence for expert/novice differences is only suggestive of gaming benefits (i.e., studies that report only cross-sectional differences must be interpreted cautiously, e.g., Bialystok, 2006; West et al., 2008; Chisholm et al., 2010; Colzato et al., 2010; Donohue et al., 2010; Karle et al., 2010; Clark et al., 2011). Claims that gaming causes cognitive improvements require an experimental design akin to a clinical trial; in this case, a training experiment.

Video Game Training Studies

Game training studies recruit non-gamers and give them video game experience (or a specific type of game experience) to see whether gaming enhances performance on cognitive tasks. Because they randomly assign participants to treatment and control groups, training studies permit causal inferences in principle. But as for any clinical trial, the treatment effect must be evaluated against a suitable control group. Placebo baseline conditions are only effective if people do not know whether they are in the placebo or experimental condition. In a drug trial, if the drug induces side-effects and the placebo tastes of sugar, then the placebo control is inadequate – any differences between groups might come from that knowledge coupled with the belief that the experimental treatment should have an effect.

Commendably, most game training studies compare the effects of action game training to an active control group that receives game training on a different type of game (e.g., Green and Bavelier, 2003, 2006a,b, 2007). If action game training were instead compared to a group of participants who received no intervention, any differential improvement of the action game group could plausibly be attributed to a placebo effect. The issue of choosing a proper placebo condition is not always as straightforward as it first appears, however, and what constitutes an adequate placebo control for a gaming intervention is a thorny issue; unlike placebo pills, participants in game training studies know which training intervention they have received.

The problem comes when the treatment and control interventions produce differential placebo effects. Most game training studies just assume that placebo effects will be comparable in the control condition (e.g., playing Tetris) and the experimental group (e.g., playing a fast-paced action game), and none have explicitly measured differences in the perceived relatedness of the training task to the outcome measures.

Imagine a thought experiment with two training groups: one is trained extensively on Tetris and the other on a fast-paced, visually demanding action game (e.g., see Green and Bavelier, 2003). Following training, participants view (but do not perform) two transfer tasks: (a) a fast-paced task in which participants detect targets flashed in the visual periphery (the useful field of view, or UFOV) and (b) a task in which participants mentally rotate block-like shapes. The Tetris training group likely would predict that their training would improve their mental rotation performance and the action game training group likely would predict better UFOV performance. The perception of what each of these games should improve may drive group differences – a placebo effect (note that action game training might also produce a general placebo effect that could influence mental rotation ability, but we would predict that Tetris would produce a stronger placebo effect in this case).

The same criticism applies to interventions designed to offset cognitive aging. Even if the training had no effect, interventions that participants expect to help could produce a larger placebo effect. For example, the placebo effect on measures of auditory perception and memory likely would be greater following intensive practice on auditory tasks than following extended viewing of educational DVDs (Mahncke et al., 2006). Control groups that receive no training (Basak et al., 2008; Berry et al., 2010) are even less likely to experience any placebo effect, so any differences resulting from game training could well be due to a differential placebo effect.

No game training studies have taken the necessary precautions to avoid differential placebo effects across training conditions and outcome measures. In fact, no published studies have tested whether participants expect to improve as a result of training. Without an adequate control for placebo effects, any conclusion that game training caused cognitive improvements is premature – the benefit could be due to the expectation that a benefit should accrue.

Ability or Strategy Changes?

Even with an active control condition and explicit measures of what subjects expect will improve following training, game benefits might reflect shifts in strategy rather than changes in more basic cognitive or perceptual capacities. Short-term (Nelson and Strachan, 2009) and long-term game exposure does appear to produce strategy changes (Anderson et al., 2010). For example, experienced gamers search more thoroughly than non-gamers, leading to better change detection performance (Clark et al., 2011). Changes in how people approach a task are interesting and important, but without careful evaluation of strategy shifts, better expert performance might wrongly be attributed to more fundamental differences in perception and memory. Process tracing approaches such as think-aloud protocols, retrospective reports, and eye movement recording may be fruitful ways to explore the potential contributions of strategy to observed differences between gamers and non-gamers.

Inadequate Baseline for Transfer Effects

One of the most fundamental principles of learning is that performance improves with practice. Yet, a puzzling pattern emerges in studies claiming benefits of video game training on cognitive tasks: in many studies showing benefits of gaming, the control groups show no test-retest improvement when repeating the same tasks after training (Ackerman et al., 2010). Based on learning theory (and most studies using repeated testing), participants typically improve when performing a cognitive task for a second time. Given that the evidence for a training effect is based on differential improvement in the experimental and control group, a lack of any test-retest improvement in the control condition gives the appearance of a greater benefit of training in the experimental condition. Yet, the difference between the experimental and control conditions could be interpreted as an unexpected lack of improvement in the control condition rather than as a benefit of training in the experimental group. Studies that do not find an effect of video game training typically have found the expected test-retest improvements in both the control condition and the experimental condition – the improvements are just of equal magnitude (Boot et al., 2008; Ackerman et al., 2010). In order to draw strong inferences about training benefits, it is important to make sure that the control condition performs as expected and that it is not an anomalous baseline. A lack of improvement in the control condition is worrisome unless there is experimental evidence that a particular outcome measure typically does not show improvement upon retesting.
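The baseline argument can be made concrete with a small numerical sketch. The scores below are invented purely for illustration and come from no study; the function names `gain` and `training_effect` are likewise hypothetical. The sketch assumes the training effect is estimated as the experimental group's test-retest gain minus the control group's gain, and shows that an identical experimental gain yields an apparent benefit when the control group is flat but none when the control group shows the usual practice effect.

```python
from statistics import mean


def gain(pre, post):
    """Mean test-retest improvement (post minus pre) for one group."""
    return mean(post) - mean(pre)


def training_effect(exp_pre, exp_post, ctl_pre, ctl_post):
    """Differential improvement: experimental gain minus control gain."""
    return gain(exp_pre, exp_post) - gain(ctl_pre, ctl_post)


# Hypothetical percent-correct scores, invented for illustration only.
exp_pre = [60, 62, 58, 64]
exp_post = [70, 72, 68, 74]          # experimental group improves by 10 points

ctl_pre = [61, 59, 63, 57]
flat_ctl_post = [61, 59, 63, 57]     # anomalous baseline: no retest improvement
typical_ctl_post = [71, 69, 73, 67]  # expected practice effect: 10 points

# Same experimental data, two different control outcomes.
effect_with_flat_control = training_effect(
    exp_pre, exp_post, ctl_pre, flat_ctl_post
)
effect_with_typical_control = training_effect(
    exp_pre, exp_post, ctl_pre, typical_ctl_post
)

print(effect_with_flat_control)
print(effect_with_typical_control)
```

Under these assumed numbers, the entire "training effect" in the first comparison is produced by the control group's failure to improve, which is why reporting each group's raw test-retest gain alongside the difference is informative.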

The Importance of Independent Replication

Unlike cross-sectional studies, training studies are costly to conduct, often requiring as much as 50 h of training with dozens of participants coming to the lab regularly for weeks or months. Although such clinical trials are necessary to draw causal inferences about gaming benefits, the literature includes far fewer training studies than cross-sectional ones – few laboratories have the resources to conduct them.

Given the scope of a typical training study, the same training results often are split across multiple journal articles that each report a subset of the outcome measures while noting the use of overlapping training groups (e.g., the results of the ACTIVE trial exploring the effects of an intervention on cognitive aging have been published across many interlinked articles). With unlimited resources, an ideal study would test a single outcome measure for each trained group in order to avoid interactions among the outcome measures with repeated testing, but doing so is impractical.

Many training articles in the videogame literature adopt the approach of reporting different outcome measures in different papers (see Table 1). Unfortunately, those papers do not consistently report all of the outcome measures that were tested or the degree of overlap in the trained subjects across papers (Bavelier, personal communication). Game training studies should follow the lead of registered clinical trials by listing all outcome measures, even when the measures are reported across separate papers. Without doing so, it is unclear how many distinct replications exist.

Moreover, a few published studies have failed to find benefits of gaming, raising the specter of a file drawer problem (Castel et al., 2005; Boot et al., 2008; Murphy and Spencer, 2009; Irons et al., 2011). An early meta-analysis that predates three of these failures to replicate found significant publication bias and a much reduced effect of game experience on cognition once this bias was controlled for, although the corrected effect size was still significantly larger than zero (Ferguson, 2007).

Improved Evidence for Gaming Effects

Table 1 lists recently published studies of the relationship between video games and cognitive performance in college-aged subjects. Most are cross-sectional studies of expert/novice differences which provide only suggestive evidence of gaming effects. Table 1 also lists, for each published paper, the methodological concerns that potentially undermine the conclusion of gaming benefits. No study has adequately avoided all of these pitfalls, meaning that claims of gaming benefits should be taken as tentative. Future studies could readily avoid most of these pitfalls, and such definitive tests are needed. We recommend that the following methodological improvements be adopted in all future studies of the effects of video games on cognition.

(1) For studies comparing expert and novice gamers, recruiting should be covert. Experts should have no reason to suspect that they are in a study of gamers. Participants should only be asked about their video game experience at the end of the study or in a prescreening that is not linked in any obvious way to the laboratory doing the testing or to the particular experiment (e.g., prescreening could take place at the start of a semester as part of a large battery given to all participants in a subject pool).

(2) At the end of each study, participants should be asked whether they are familiar with research or media reports on the benefits of gaming (or brain training) in order to verify whether or not such knowledge influenced performance. They also should be asked whether they perceived a connection between the tasks and their gaming experiences.

(3) In training studies, experimental and control groups should be equally likely to expect improvements for each outcome measure. Such expectations could be measured by having other participants judge whether they think training should affect performance on each outcome measure. Ideally, studies could use outcome measures that people think are equally likely to show improvements from the experimental and control training manipulations. Where equal expectations are infeasible, studies should report the differential expectations.

(4) All method details, including recruiting strategies and the outcome measures included in the study should be reported fully. When multiple outcome measures of a single experiment are reported across multiple articles, the interdependence of the papers should be stated explicitly.
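Recommendation (3) could be put into practice along the following lines. This is only a sketch under stated assumptions: the 1 to 7 rating scale, the ratings themselves, and the names `ratings` and `expectation_gap` are all hypothetical, chosen to mirror the Tetris versus action game thought experiment described earlier. Independent raters judge how much each training regimen should improve each outcome measure, and the difference in mean ratings is the differential expectation a study would report.

```python
from statistics import mean

# Hypothetical ratings (1 = no improvement expected, 7 = large improvement
# expected) from independent raters; invented for illustration only.
ratings = {
    "UFOV": {"action": [6, 5, 6, 7], "tetris": [3, 2, 3, 4]},
    "mental_rotation": {"action": [3, 4, 3, 2], "tetris": [6, 6, 5, 7]},
}


def expectation_gap(by_condition, treatment="action", control="tetris"):
    """Mean expected improvement for treatment minus that for control."""
    return mean(by_condition[treatment]) - mean(by_condition[control])


# One gap per outcome measure; a gap near zero means matched expectations.
gaps = {measure: expectation_gap(r) for measure, r in ratings.items()}

for measure, gap in gaps.items():
    print(measure, gap)
```

A positive gap would indicate that raters expect the treatment game to help more on that measure, and a negative gap that they expect the control game to help more. Outcome measures with gaps near zero would be the preferred tests of transfer.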

These pitfalls are not unique to videogame studies. They apply equally to all clinical trials, training studies, and studies of expertise. We argue that, in the wake of exciting evidence for broad transfer from video games, they have been somewhat neglected. Future studies that adopt these new recommendations for recruiting, testing, and reporting could provide more definitive tests of the benefits of gaming.

Other techniques and approaches may also provide converging evidence for benefits and for the mechanisms that underlie them. For example, neuroimaging might provide evidence for a distinction between expectation-driven effects, strategy shifts, and improvements to core abilities. Preliminary work has found neurophysiological differences between action gamers and non-gamers (e.g., Granek et al., 2010; Mishra et al., 2011), but these studies are subject to the same concerns about the limits of expert/novice comparisons. The key will be to use neuroimaging to rule out other explanations for gaming effects by mapping performance differences onto brain regions with known functions.

Another approach would be to look for individual or group differences in the impact of training, perhaps looking at special populations. Although most expert/novice gaming studies recruit male subjects almost exclusively (expert action gamers are disproportionately male), training studies typically strive for equal representation of males and females (e.g., Green and Bavelier, 2006a,b, 2007; Feng et al., 2007). With large-scale studies, it should be possible to look for sex differences in training effectiveness (see Feng et al., 2007 for a small-scale attempt to do so). The same approach could look at the effects of aging or even personality differences as a way to predict who would improve most, and such differences in improvement might help characterize the mechanisms underlying gaming benefits.

In sum, game training holds great promise as one of the few training techniques to show transfer beyond the trained task. The number and diversity of findings we have discussed appear to provide converging evidence for gaming effects, although the extent of convergence is qualified by the issues we have raised. By adopting a set of clinical trial best practices, and by considering and eliminating alternative explanations for gaming effects, future studies could help define the full extent of the possible benefits of gaming for perception and cognition. Such definitive tests could have implications well beyond the laboratory, potentially helping researchers to develop game interventions to address disorders of vision and attention and remediate the effects of cognitive aging.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Ackerman, P. L., Kanfer, R., and Calderwood, C. (2010). Use it or lose it? Wii brain exercise practice and reading for domain knowledge. Psychol. Aging 25, 753–766.

Anderson, A. F., Bavelier, D., and Green, C. S. (2010). “Speed-accuracy tradeoffs in cognitive tasks in action game players,” in Presented at the 10th Meeting of the Vision Sciences Society, Sarasota, FL.

Andrews, G., and Murphy, K. (2006). “Does video-game playing improve executive function?” in Frontiers in Cognitive Sciences, ed. M. A. Vanchevsky (New York, NY: Nova Science Publishers, Inc.), 145–161.

Ball, K., Berch, D. B., Helmers, K. F., Jobe, J. B., Leveck, M. D., Marsiske, M., Morris, J. N., Rebok, G. W., Smith, D. M., Tennstedt, S. L., Unverzagt, F. W., Willis, S. L., Advanced Cognitive Training for Independent and Vital Elderly Study Group. (2002). Effects of cognitive training interventions with older adults: a randomized controlled trial. JAMA 288, 2271–2281.

Basak, C., Boot, W. R., Voss, M., and Kramer, A. F. (2008). Can training in a real-time strategy videogame attenuate cognitive decline in older adults? Psychol. Aging 23, 765–777.

Berry, A. S., Zanto, T. P., Clapp, W. C., Hardy, J. L., Delahunt, P. B., Mahncke, H. W., and Gazzaley, A. (2010). The influence of perceptual training on working memory in older adults. PLoS ONE 5, 1–8.

Bialystok, E. (2006). Effect of bilingualism and computer video game experience on the Simon task. Can. J. Exp. Psychol. 60, 68–79.

Boot, W. R., Kramer, A. F., Simons, D. J., Fabiani, M., and Gratton, G. (2008). The effects of video game playing on attention, memory, and executive control. Acta Psychol. (Amst.) 129, 387–398.

Castel, A. D., Pratt, J., and Drummond, E. (2005). The effects of action video game experience on the time course of inhibition of return and the efficiency of visual search. Acta Psychol. (Amst.) 119, 217–230.

Chisholm, J. D., Hickey, C., Theeuwes, J., and Kingstone, A. (2010). Reduced attentional capture in video game players. Atten. Percept. Psychophys. 72, 667–671.

Clark, K., Fleck, M. S., and Mitroff, S. R. (2011). Enhanced change detection performance reveals improved strategy use in avid action video game players. Acta Psychol. (Amst.) 136, 67–72.

Colzato, L. S., van Leeuwen, P. J. A., van den Wildenberg, W. P. M., and Hommel, B. (2010). DOOM’d to switch: superior cognitive flexibility in players of first person shooter games. Front. Psychol. 1, 1–5.

Donohue, S. E., Woldorff, M. G., and Mitroff, S. R. (2010). Video game players show more precise multisensory temporal processing abilities. Atten. Percept. Psychophys. 72, 1120–1129.

Dye, M. G. W., Green, C. S., and Bavelier, D. (2009). The development of attention skills in action video game players. Neuropsychologia 47, 1780–1789.

Dye, M. W., and Bavelier, D. (2010). Differential development of visual attention skills in school-age children. Vision Res. 50, 452–459.

Feng, J., Spence, I., and Pratt, J. (2007). Playing an action video game reduces gender differences in spatial cognition. Psychol. Sci. 18, 850–855.

Ferguson, C. J. (2007). The good, the bad, and the ugly: a meta-analytic review of positive and negative effects of violent video games. Psychiatr. Q. 78, 309–316.

Granek, J. A., Gorbet, D. J., and Sergio, L. E. (2010). Extensive video-game experience alters cortical networks for complex visuomotor transformations. Cortex 46, 1165–1177.

Green, C. S., and Bavelier, D. (2003). Action video game modifies visual selective attention. Nature 423, 534–537.

Green, C. S., and Bavelier, D. (2006a). Effect of action video games on the spatial distribution of visuospatial attention. J. Exp. Psychol. Hum. Percept. Perform. 1465–1468.

Green, C. S., and Bavelier, D. (2006b). Enumeration versus multiple object tracking: the case of action video game players. Cognition 101, 217–245.

Green, C. S., and Bavelier, D. (2007). Action video game experience alters the spatial resolution of attention. Psychol. Sci. 18, 88–94.

Green, C. S., Pouget, A., and Bavelier, D. (2010). A general mechanism for learning with action video games: improved probabilistic inference. Curr. Biol. 20, 1573–1579.

Hertzog, C., Kramer, A. F., Wilson, R. S., and Lindenberger, U. (2009). Enrichment effects on adult cognitive development. Psychol. Sci. Public Interest 9, 1–65.

Irons, J. L., Remington, R. W., and McLean, J. P. (2011). Not so fast: rethinking the effects of action video games on attentional capacity. Aust. J. Psychol. 63. [Epub ahead of print].

Karle, J. W., Watter, S., and Shedden, J. M. (2010). Task switching in video game players: benefits of selective attention but not resistance to proactive interference. Acta Psychol. (Amst.) 134, 70–78.

Langer, E., Djikic, M., Pirson, M., Madenci, A., and Donohue, R. (2010). Believing is seeing: using mindlessness (mindfully) to improve visual acuity. Psychol. Sci. 21, 661–666.

Li, R., Polat, U., Makous, W., and Bavelier, D. (2009). Enhancing the contrast sensitivity function through action video game training. Nat. Neurosci. 12, 549–551.

Li, R., Polat, U., Scalzo, F., and Bavelier, D. (2010). Reducing backward masking through action game training. J. Vis. 10, 33, 1–13.

Mahncke, H. W., Connor, B. B., Appelman, J., Ahsanuddin, O. N., Hardy, J. L., Wood, R. A., Joyce, N. M., Boniske, T., Atkins, S. M., and Merzenich, M. M. (2006). Memory enhancement in healthy older adults using a brain plasticity-based training program: a randomized, controlled study. Proc. Natl. Acad. Sci. U.S.A. 103, 12523–12528.

Mishra, J., Zinni, M., Bavelier, D., and Hillyard, S. A. (2011). Neural basis of superior performance of action videogame players in an attention-demanding task. J. Neurosci. 31, 992–998.

Murphy, K., and Spencer, A. (2009). Playing video games does not make for better visual attention skills. J. Articles Support Null Hypothesis 6, 1–20.

Nelson, R. A., and Strachan, I. (2009). Action and puzzle video games prime different speed/accuracy tradeoffs. Perception 38, 1678–1687.

Owen, A. M., Hampshire, A., Grahn, J. A., Stenton, R., Dajani, S., Burns, A. S., Howard, R. J., and Ballard, C. G. (2010). Putting brain training to the test. Nature 465, 775–779.

Trick, L. M., Jaspers-Fayer, F., and Sethi, N. (2005). Multiple-object tracking in children: the “catch the spies” task. Cogn. Dev. 20, 373–387.

Keywords: video games, cognitive training, transfer of training, perceptual learning

Citation: Boot WR, Blakely DP and Simons DJ (2011) Do action video games improve perception and cognition? Front. Psychology 2:226. doi: 10.3389/fpsyg.2011.00226

Received: 31 March 2011;

Accepted: 24 August 2011;

Published online: 13 September 2011.

Edited by:

Mattie Tops, University of Groningen, Netherlands

Reviewed by:

Mattie Tops, University of Groningen, Netherlands

Sarah E. Donohue, Duke University, USA

Copyright: © 2011 Boot, Blakely and Simons. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Walter R. Boot, Department of Psychology, Florida State University, 1107 W. Call Street, Tallahassee, FL 32306-4301, USA. e-mail: boot@psy.fsu.edu