ORIGINAL RESEARCH article

Front. Psychol., 02 February 2011

Sec. Emotion Science

volume 2 - 2011 | https://doi.org/10.3389/fpsyg.2011.00016

Body motion is a rich source of information for social cognition. However, gender effects in body language reading are largely unknown. Here we investigated whether, and, if so, how recognition of emotional expressions revealed by body motion is gender dependent. To this end, females and males were presented with point-light displays portraying knocking at a door performed with different emotional expressions. The findings show that gender affects accuracy rather than speed of body language reading. This effect, however, is modulated by the emotional content of actions: males surpass females in recognition accuracy of happy actions, whereas females tend to excel in recognition of hostile angry knocking. The advantage of women in recognition accuracy of neutral actions suggests that females are better tuned to the lack of emotional content in body actions. The study provides novel insights into gender effects in body language reading, and helps to shed light on gender vulnerability to neuropsychiatric and neurodevelopmental impairments in visual social cognition.

Body language reading is of immense importance for adaptive social behavior and non-verbal communication. This ability constitutes a central component of social competence. Healthy perceivers are able to infer emotions and dispositions of others represented by point-light body movements that minimize availability of other cues (Pollick et al., 2001; Atkinson et al., 2004; Heberlein et al., 2004; Clarke et al., 2005; Ikeda and Watanabe, 2009; Rose and Clarke, 2009). Perceivers can reliably judge emotional content of dance represented by a few moving dots placed on the dancer’s body (Dittrich et al., 1996). Visual sensitivity to camouflaged point-light human locomotion is modulated by the emotional content of gait with the highest sensitivity to angry walking (Chouchourelou et al., 2006). Observers can discriminate between deceptive and true intentions conveyed by body motion, and true information is precisely detected despite misleading endeavors (Runeson and Frykholm, 1983; Grèzes et al., 2004a,b).

But how do we know whom to trust or who is attracted to us? Such judgments are vital to social interaction, and men and women appear to show profound differences in the cues they attend to. Yet research on sex differences in visual social cognition has been mainly limited to static face images, in particular, still photographs. In accordance with widespread beliefs, females exhibit higher sensitivity to non-verbal cues: they better discriminate friendliness from sexual interest (Farris et al., 2008) and are more proficient in recognition of facial emotions (Montagne et al., 2005). Females with and without Asperger syndrome are better at recognizing emotions from dynamic faces than males (Golan et al., 2006). Moreover, females tend to better recognize emotions from faces than from voices, whereas males exhibit the opposite tendency. As a rule, however, facial expressions and static body postures can only signal emotional states and affect, but do not provide information about how to deal with them. Dynamic body expressions, gestures, and actions of others are a richer and more ecologically valid source of information for social interaction (De Gelder, 2006, 2009; Pavlova, 2009). The other important advantage of bodily expressions is that whereas facial expressions (similarly to a verbal information flow) are believed to be easily kept under control, body movements reveal our true feelings. When emotions expressed by faces and bodies are incongruent, recognition of facial expressions is affected by emotions revealed by the body (Meeren et al., 2005). Brain imaging indicates that emotions expressed by dynamic bodies as compared to faces elicit greater activation in a number of brain areas including the superior temporal sulcus (STS), a cornerstone of the social brain (Kret et al., 2010).
Experimental evidence obtained primarily in patients with lesions and cortical blindness favors the assumption that emotional body language can be processed automatically, without visual awareness and attention (for review, see Tamietto and de Gelder, 2010).

Surprisingly, however, the impact of gender on body language reading is largely unknown. A few studies conducted in the early 1980s and based on the profile of non-verbal sensitivity (PONS) test, which includes video clips of body motion (neck to knees), point to the superiority of females in body language reading (e.g., Blank et al., 1981). However, this test has some serious methodological limitations; for example, it is based on body motion video clips of only one female actor. Although sex differences represent a rather delicate topic, underestimation or exaggeration of possible effects can retard progress in the field.

The present work intends to make an initial step in filling the gap, and to clarify whether, and, if so, how the perceiver’s gender affects recognition of emotional expressions conveyed by actions of others. More specifically, we ask (i) whether gender affects recognition of emotions represented by body motion, or, in other words, whether females excel in recognition of emotional actions; and (ii) whether gender effects depend on the emotional content of actions. To this end, healthy young females and males were presented with point-light displays portraying knocking at a door with different emotional expressions (happy, neutral, and angry). We took advantage of a point-light technique that helps to isolate information revealed by motion from other cues (shape, color, etc.). Perceivers saw only a few bright dots placed on the main joints of an otherwise invisible arm (Figure 1), so that all cues other than motion characteristics were eliminated.

Figure 1. Three static frames taken from the dynamic sequence representing knocking motion by a set of dots placed on the arm joints, shoulder, and head of an otherwise invisible actor. Actors were seen facing right, in a sagittal view, and struck the surface directly in front of them.

Thirty-four healthy adults, students of the University of Tübingen Medical School (aged 20–36), were enrolled in the study. Mean age of females (20 participants) was 23.8 ± 3.7 years, and mean age of males (14 participants) was 22.9 ± 2.0 years. There was no age difference between female and male participants (t32 = 0.95, p = 0.35, ns). The groups were also comparable in terms of educational and socio-economic status. All participants had normal or corrected-to-normal vision and heterosexual orientation. None had a history of neurological or psychiatric disorders including autistic spectrum disorders (ASD), schizophrenia, head injuries, or medication for anxiety or depression. None had previous experience with such displays or tasks. Participants were tested individually. Informed written consent was obtained in accordance with the requirements of the local Ethical Committee at the University of Tübingen Medical School.

We used point-light displays portraying knocking arm motion (Pollick et al., 2001, 2002). Point-light displays were recorded during performance of knocking with different emotional content (happy, neutral, and angry). We chose to use animations with happy and angry motions, because happiness and anger are reported to be quite similar on the activation dimension, and these animations tended to have fast and jerky movements (Pollick et al., 2001). Display creation is described in detail elsewhere (Pollick et al., 2001). In brief, recording was performed using a 3D position measurement system at a rate of 60 Hz (Optotrak, Northern Digital Inc., Waterloo, ON, Canada). Each display consisted of six point-light dots placed on the head, shoulder, elbow, wrist, and the first and fourth metacarpal joints of an otherwise invisible right hand (Figure 1). Point-light actors were seen facing right, in a sagittal view, and struck the surface directly in front of them. The size of all point-light knocking stimuli was standardized in such a way that in the first frame, the distance from the head to the first metacarpal joint was identical for all actors. For each emotion, six different displays were created, with an equal number of knocking actions performed by female and male actors. By using the Presentation software (Neurobehavioral Systems Inc., Albany, CA, USA), each video was displayed five times per experimental session, resulting in 30 trials per emotion. The whole experimental session consisted of a set of 90 displays representing three emotions in a random order, and took about 15–20 min per participant. Each display was shown for 1 s. We used a three-alternative forced-choice paradigm. On each trial, participants indicated (by pressing with their dominant hand one of three respective keys on a computer keyboard) whether a display portrayed happy, neutral, or angry knocking. Positions of the keys were counterbalanced between participants. Participants were instructed to perform the task as accurately as possible. No immediate feedback was given regarding performance.
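The trial structure described above (three emotions, six displays per emotion, five repetitions each, random order) can be sketched in a few lines. This is only an illustration of the arithmetic behind the 90 trials; the experiment itself was run in the Presentation software, and the names `build_trial_list`, `EMOTIONS`, and the seed are hypothetical.

```python
import random

# Values taken from the text; the identifiers themselves are illustrative.
EMOTIONS = ("happy", "neutral", "angry")
DISPLAYS_PER_EMOTION = 6  # six different displays per emotion
REPETITIONS = 5           # each video shown five times per session


def build_trial_list(seed=None):
    """Return a randomly ordered list of (emotion, display_index) trials."""
    rng = random.Random(seed)
    trials = [
        (emotion, display)
        for emotion in EMOTIONS
        for display in range(DISPLAYS_PER_EMOTION)
        for _ in range(REPETITIONS)
    ]
    rng.shuffle(trials)  # three emotions presented in a random order
    return trials


trials = build_trial_list(seed=0)
trials_per_emotion = {
    emotion: sum(1 for shown, _ in trials if shown == emotion)
    for emotion in EMOTIONS
}
print(len(trials), trials_per_emotion)
```

With three response alternatives, the chance level for a single trial in such a design is 1/3.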

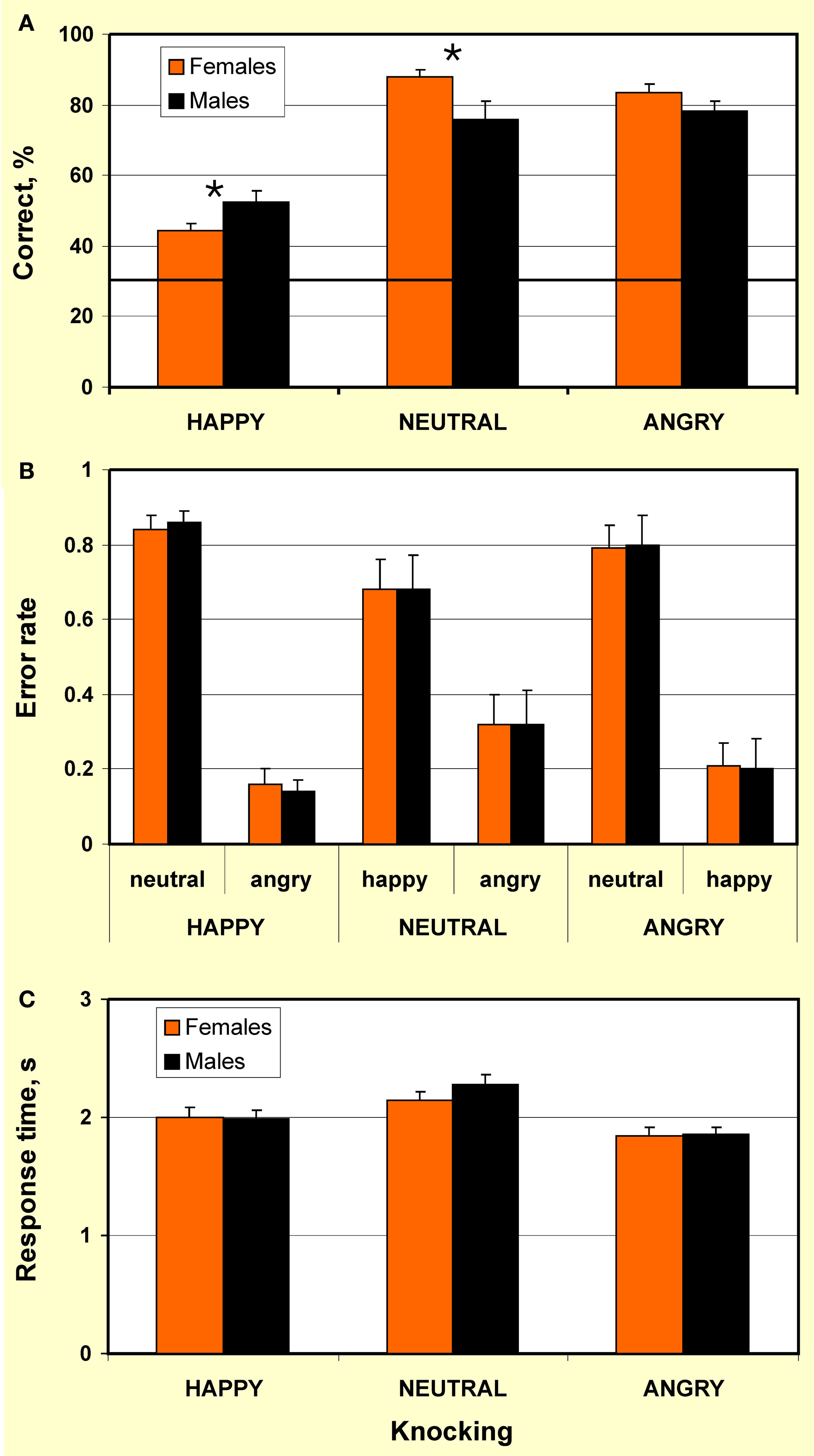

Percentage correct in recognition of emotions conveyed by knocking is represented in Figure 2A. In both females and males, recognition of all emotional expressions was above chance level (p < 0.001). However, recognition of happy knocking was less accurate than of neutral and angry actions. This is consistent with the outcome of previous studies on emotion recognition through point-light human locomotion (Chouchourelou et al., 2006; Ikeda and Watanabe, 2009) and dance (Dittrich et al., 1996) that show better recognition of angry over happy motion.
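The text does not state which test was used for the comparison against chance. As a rough illustration of what "above chance" means in a three-alternative task with 30 trials per emotion, the sketch below computes an exact one-sided binomial tail probability for a single hypothetical participant. The score of 18 correct responses is invented for the example and is not taken from the data.

```python
from math import comb


def binomial_tail(k, n, p):
    """Probability of k or more successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


N_TRIALS = 30              # trials per emotion, from the text
CHANCE = 1 / 3             # three response alternatives
hypothetical_correct = 18  # invented score, for illustration only

p_value = binomial_tail(hypothetical_correct, N_TRIALS, CHANCE)
print(f"P(X >= {hypothetical_correct}) under chance = {p_value:.4f}")
```

A group-level analysis would typically compare the distribution of individual scores with the chance level instead of testing each participant separately.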

Figure 2. Recognition of happy, neutral, and angry point-light knocking by females and males. (A) Percentage correct: Males outperformed in recognition of happy knocking (p < 0.015), whereas females excelled in recognition of neutral knocking (p < 0.016) and tended to over-perform in recognition of angry knocking (p < 0.07). Bold horizontal line indicates chance level. Significant differences are indicated by an asterisk; (B) Error rate: The lack of gender differences in error rate demonstrates that gender differences in recognition accuracy of emotional content of knocking were not caused by gender-related bias for mistaking one emotion for another. Each bar represents an average ratio of the number of errors of particular type to the overall number of errors made for a display type (e.g., leftmost bar represents an average ratio of number of trials when happy knocking was mistaken for neutral knocking to the number of trials when happy knocking was mistaken for both neutral and angry knocking); (C) Response time to happy, neutral, and angry point-light knocking by females and males. Females and males do not differ in response time. Vertical bars represent ± SE.

Individual number of correct responses was submitted to a 2 × 3 repeated-measures ANOVA (as assessed by the Shapiro–Wilk test, the data were normally distributed) with factors Gender (female/male) and Emotional expression of knocking (happy/neutral/angry). This analysis revealed no main effect of gender (F(1,32) = 0.21, p = 0.648, ns). However, a main effect of emotional expression (F(2,32) = 82.94, p < 0.0001) and the interaction between the factors Gender × Emotional expression (F(2,32) = 6.23, p < 0.003) were highly significant. Planned pair-wise comparisons indicated that males outperformed females in recognition of happy knocking (t32 = 2.58, p < 0.015, one-tailed, here and below Bonferroni corrected for multiple comparisons; d = 0.84), whereas females tended to outperform males in recognition of angry knocking (t32 = 1.87, p < 0.07, one-tailed) and excelled in recognition of neutral knocking (t32 = 2.54, p < 0.016, one-tailed, d = 0.88). The data, therefore, reveal no overall advantage of females in recognition accuracy. Instead, the findings indicate that sex effects in recognition accuracy are modulated by the emotional content of actions.
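For readers unfamiliar with the pair-wise statistics reported above, the following sketch shows one common way to compute an independent-samples t statistic and Cohen's d from two groups of scores, using the pooled standard deviation. The text does not specify which formula for d was used, so the pooled-SD variant is an assumption, and the two score lists are invented toy data.

```python
from math import sqrt
from statistics import mean, variance


def pooled_variance(group_a, group_b):
    """Pooled sample variance of two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    return ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (
        n_a + n_b - 2
    )


def cohens_d(group_a, group_b):
    """Standardized mean difference (pooled-SD variant, an assumption here)."""
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance(group_a, group_b))


def independent_t(group_a, group_b):
    """Student's t for two independent groups, df = n_a + n_b - 2."""
    n_a, n_b = len(group_a), len(group_b)
    standard_error = sqrt(pooled_variance(group_a, group_b) * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error


# Invented toy scores, not data from the study.
toy_a = [2, 4, 6]
toy_b = [1, 3, 5]
print(cohens_d(toy_a, toy_b), independent_t(toy_a, toy_b))
```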

Error analysis (Figure 2B) indicated that both females and males mistook happy knocking for neutral knocking in more than 80% of wrong responses (error rate 0.84 and 0.86 for females and males, respectively; gender difference: t32 = 0.42, p = 0.68, two-tailed, ns). Error rate was calculated as an average ratio of the number of errors of a particular type to the overall number of errors made for a display type. In turn, with no gender difference, neutral knocking was misperceived as happy in about 70% of error responses (error rate 0.68 and 0.67 for females and males, respectively; gender difference: t32 = 0.02, p = 0.99, two-tailed, ns). In about 80% of error trials in response to angry knocking, both females and males mistook angry knocking for neutral knocking (error rate 0.79 and 0.80 for females and males, respectively; gender difference: t32 = 0.14, p = 0.88, two-tailed, ns). The lack of gender differences in error rate suggests that gender effects in recognition accuracy of emotional content of knocking observed in the present study are not caused by gender-related bias for mistaking one emotion for another.
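The error rate defined above (errors of one type divided by all errors made for a display type) can be illustrated for a single participant as follows. The function name `error_type_ratio` and the response list are hypothetical; only the ratio itself follows the definition in the text.

```python
def error_type_ratio(responses, shown, mistaken_for):
    """Share of errors on `shown` displays that were `mistaken_for` responses.

    `responses` is a list of (shown_emotion, chosen_emotion) pairs.
    Returns None if the participant made no errors for that display type.
    """
    errors = [
        chosen
        for displayed, chosen in responses
        if displayed == shown and chosen != displayed
    ]
    if not errors:
        return None
    return errors.count(mistaken_for) / len(errors)


# Invented responses of one participant to happy displays: 25 correct,
# 4 mistaken for neutral, 1 mistaken for angry.
toy_responses = (
    [("happy", "happy")] * 25
    + [("happy", "neutral")] * 4
    + [("happy", "angry")] * 1
)
happy_as_neutral = error_type_ratio(toy_responses, "happy", "neutral")
print(happy_as_neutral)
```

The group values reported in the text would then correspond to the mean of such ratios across the participants of each gender.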

For response time analyses, a 2 × 3 repeated-measures ANOVA was performed on individual values (as assessed by the Shapiro–Wilk test, the data were normally distributed) with factors Gender (female/male) and Emotional expression (happy/neutral/angry). This analysis revealed neither an effect of gender (F(1,32) = 1.56, p = 0.22, ns) nor an interaction of the factors Gender × Emotional expression on response time (F(2,32) = 1.42, p = 0.25, ns; Figure 2C). However, a main effect of emotional expression was significant (F(2,32) = 35.16, p < 0.0001), with the fastest response to angry knocking, and the slowest response to neutral knocking (Figure 2C). This suggests that recognition of neutral knocking was more difficult than that of angry and happy knocking. Post hoc pair-wise comparisons showed no gender difference in response time to happy (t32 = 0.09, p = 0.93, two-tailed, ns; average 2.00 ± 0.39 and 1.99 ± 0.28 s from the stimulus onset, for females and males, respectively), neutral (t32 = 1.21, p = 0.24, two-tailed, ns; average 2.15 ± 0.33 and 2.28 ± 0.30 s, for females and males, respectively), and angry knocking (t32 = 0.14, p = 0.89, two-tailed, ns; average 1.84 ± 0.32 and 1.85 ± 0.28 s, for females and males, respectively). Taken together, the findings suggest that gender does not affect speed of body language reading. For both females and males, however, the swiftness of response to body language depends on the emotional content of actions. Since negative findings are difficult to interpret with a relatively small sample, which might be considered a limitation of the study, the lack of sex differences in error rate and response time has to be further explored.

The outcome of the study indicates that gender affects accuracy rather than speed of body language reading. To the best of our knowledge, the present work delivers the first evidence for sex effects in body language reading. The gender effect, however, is modulated by the emotional content of actions. Females tend to excel in recognition accuracy of angry knocking, whereas males over-perform in recognition of happy actions. Furthermore, females clearly surpass males in recognition of emotionally neutral knocking. The lack of gender differences in error rate suggests that gender effects in recognition accuracy are not caused by gender-related bias.

Based on popular wisdom, one can expect that while women possess soft skills in social perception including high sensitivity to positive emotional signals and subtle details, men might outperform in recognition of negative menacing expressions. This assumption is based on the different evolutionary and socio-cultural roles of both genders (e.g., Biele and Grabowska, 2006; Proverbio et al., 2008). High sensitivity of women to positive emotions has been related to their role as primary offspring care providers. Social cognition in men is presumably connected with active interactions and immediate reactions, and, therefore, emotion perception is likely associated with motor programs. Anger detection is usually associated with a need to act, for example, to escape from a person or prepare to confront a person. However, the available data are controversial. In the present study, males outperform females in recognition of emotionally positive happy actions. These data agree with findings showing that men appear to exhibit stronger brain activation in response to positive pictures (depicting landscapes, sport activities, families, and erotic scenes) than women (Wrase et al., 2003; Sabattineli et al., 2004; Gasbarri et al., 2007). Moreover, males are equally responsive to happiness conveyed through static and dynamic happy faces (males rate the intensity of dynamic and static expressions of happiness equally high), whereas females are less responsive to happiness in static faces (Biele and Grabowska, 2006). Presumably, this indicates that males are better tuned to subtle expressions of happiness in faces and actions. This might hold true, at least, for a population of young men with a high social status and educational level such as those who participated in the present study. The prominent outcome of the study is that females had a clear advantage in recognition of neutral knocking. This suggests that women are better tuned to the lack of emotional content in body actions.
Future research should clarify whether gender effects in body language reading occur with other repertoires of actions, and with other arrays of emotions.

What is the nature of gender effects in body language reading? One possibility is that gender differences have neurobiological sources (Cahill, 2006; Jazin and Cahill, 2010), and brain mechanisms underpinning body language reading are sex-specific. The social cognition network, commonly referred to as the social brain, primarily involves the parieto-temporal junction, temporal cortices including the fusiform face area and the STS, orbitofrontal cortices, the amygdala (Adolphs, 2003), and the left lateral cerebellum (Sokolov et al., 2010). The right STS is a cornerstone for processing of meaningful body motion (Grossman and Blake, 2002; Pavlova et al., 2004; Pelphrey et al., 2004). Is the social brain sex-specific? This is an open question.

To date, studies of sex effects on the social brain have been limited to investigation of face expressions or body actions represented in still photographs. Brain activation in females is reported to be more bilaterally distributed, presumably providing greater contribution of both hemispheres to identification of facial affect (Bourne, 2005; Proverbio et al., 2010). Females show stronger event-related potential (ERP) response to emotional faces (Orozco and Ehlers, 1998). However, the findings are controversial. Sex effects are found in the blood oxygen level dependent (BOLD) response of the amygdala to happy, but not to fearful faces (Killgore and Yurgelun-Todd, 2001). On the other hand, a significant correlation between functional magnetic resonance imaging (fMRI) activity of the amygdala and behavioral response to fearful faces is observed in males only (Derntl et al., 2009a). Both behavioral and amygdala responses to threat-related face expressions are correlated with testosterone level (Derntl et al., 2009b).

In accordance with widespread belief, it is reported that the female brain is more responsive to social stimuli represented in still images (Proverbio et al., 2009). Recent ERP findings indicate that in females, processing of actions’ goals occurs earlier (Proverbio et al., 2010). Neuroimaging reveals that gender effects are not evident in the neural circuitry underpinning visual processing of social interaction, but rather in the regions engaged in perceptual decision making: the neuromagnetic gamma response over the left prefrontal cortex peaks earlier in females (Pavlova et al., 2010a).

Gender effects at the behavioral level do not necessarily imply that there is a sex-related difference in brain activation subserving body language reading. Moreover, gender differences in performance on social cognition tasks can be impacted by socio-cultural stereotypes (Pavlova et al., 2010b). Several types of interrelations between behavioral measures and brain mechanisms engaged in social perception should be taken into account: (i) sex differences both in behavioral and brain responses; (ii) sex differences detectable either at the behavioral level or only in brain activation; and (iii) absence of sex differences both at behavioral and brain levels (Pavlova, 2009). Notably, gender-related dimorphism in the brain may not only elicit but also prevent behavioral differences if they are maladaptive (De Vries, 2004).

Future research should be directed at uncovering sex differences in brain activity during body language reading. Such investigation would also shed light on sex differences in neuropsychiatric conditions characterized by impairments in social cognition such as ASD, depression, and schizophrenia. It is known that males are more commonly affected by ASD than females, with a ratio of about 4:1 (Newschaffer et al., 2007). Females, however, are affected much more severely, and, therefore, in high-functioning autistic individuals this ratio is even higher. Although there is some behavioral evidence that individuals with ASD have difficulties in extracting information about emotions from point-light body movements (Moore et al., 1997; Hubert et al., 2007; Parron et al., 2008), it is unclear whether females and males with ASD differ in body language reading. The lack of studies in females with ASD calls for a thorough investigation of their profile. The other important issue for future research is sex differences in visual social cognition in survivors of premature birth. Males are at a 14–20% higher risk of premature birth (Melamed et al., 2010) and of its complications in the brain and cognition. Adolescents who were born prematurely are likely to exhibit difficulties in visual social cognition (Pavlova et al., 2008), but gender effects are largely unknown. Clarification of gender impact on body language reading and underlying brain networks would provide novel insights into gender vulnerability to neuropsychiatric and neurodevelopmental impairments in visual social cognition.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We greatly appreciate Frank E. Pollick for providing us with a set of point-light displays, and Alexander N. Sokolov for valuable comments. This work was supported by the Else Kröner-Fresenius-Foundation (Grants P63/2008 and P2010_92), the Werner Reichardt Center for Integrative Neuroscience (CIN) founded by the German Research Foundation (project 2009-24), and the Reinhold-Beitlich Foundation to Marina A. Pavlova.

Adolphs, R. (2003). Cognitive neuroscience of human social behaviour. Nat. Rev. Neurosci. 4, 165–178.

Atkinson, A. P., Dittrich, W. H., Gemmel, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746.

Biele, C., and Grabowska, A. (2006). Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 171, 1–6.

Blank, P. D., Rosenthal, R., Snodgrass, S. E., DePaulo, B. M., and Zuckerman, M. (1981). Sex differences in eavesdropping on non-verbal cues: developmental changes. J. Pers. Soc. Psychol. 41, 391–396.

Bourne, V. J. (2005). Lateralised processing of positive facial emotion: sex differences in strength of hemispheric dominance. Neuropsychologia 43, 953–956.

Chouchourelou, A., Toshihiko, M., Harber, K., and Shiffrar, M. (2006). The visual analysis of emotional actions. Soc. Neurosci. 1, 63–74.

Clarke, T. J., Bradshaw, M. F., Field, D. T., Hampson, S. E., and Rose, D. (2005). The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception 34, 1171–1180.

De Gelder, B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249.

De Gelder, B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R. Soc. Lond., B. 364, 3475–3484.

De Vries, G. J. (2004). Sex differences in adult and developing brains: compensation, compensation, compensation. Endocrinology 145, 1603–1608.

Derntl, B., Habel, U., Windischberger, C., Robinson, S., Kryspin-Exner, I., Gur, R. C., and Moser, E. (2009a). General and specific responsiveness of the amygdala during explicit emotion recognition in females and males. BMC Neurosci. 10, 91. doi: 10.1186/1471-2202-10-91

Derntl, B., Windischberger, C., Robinson, S., Kryspin-Exner, I., Gur, R. C., Moser, E., and Habel, U. (2009b). Amygdala activity to fear and anger in healthy young males is associated with testosterone. Psychoneuroendocrinology 34, 687–693.

Dittrich, W. H., Troscianko, T., Lea, S. E., and Morgan, D. (1996). Perception of emotion from dynamic point-light displays represented in dance. Perception 25, 727–738.

Farris, C., Treat, T. A., Vilken, R. J., and McFall, R. M. (2008). Perceptual mechanisms that characterize gender differences in decoding women’s sexual intent. Psychol. Sci. 19, 348–354.

Gasbarri, A., Arnone, B., Pompili, A., Pacitti, C., and Cahill, L. (2007). Sex-related hemispheric lateralization of electrical potentials evoked by arousing negative stimuli. Brain Res. 1138, 178–186.

Golan, O., Baron-Cohen, S., and Hill, J. (2006). The Cambridge Mindreading (CAM) Face-Voice Battery: the complex emotion recognition in adults with and without Asperger syndrome. J. Autism Dev. Dis. 36, 169–183.

Grèzes, J., Frith, C. D., and Passingham, R. E. (2004a). Brain mechanisms for inferring deceit in the actions of others. J. Neurosci. 24, 5500–5505.

Grèzes, J., Frith, C. D., and Passingham, R. E. (2004b). Inferring false beliefs from the actions of oneself and others: an fMRI study. Neuroimage 21, 744–750.

Grossman, E. D., and Blake, R. (2002). Brain areas active during visual perception of biological motion. Neuron 35, 1167–1175.

Heberlein, A. S., Adolphs, R., Tranel, D., and Damasio, H. (2004). Cortical regions for judgments of emotions and personality from point-light walkers. J. Cogn. Neurosci. 16, 1143–1158.

Hubert, B., Wicker, B., Moore, D. G., Monfardini, E., Duverger, H., Da Fonsega, D., and Deruelle, C. (2007). Brief report: recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J. Autism Dev. Dis. 37, 1386–1392.

Ikeda, H., and Watanabe, K. (2009). Anger and happiness are linked differently to the explicit detection of biological motion. Perception 38, 1002–1011.

Jazin, E., and Cahill, L. (2010). Sex differences in molecular neuroscience: from fruit flies to humans. Nat. Rev. Neurosci. 11, 9–17.

Killgore, W. D., and Yurgelun-Todd, D. A. (2001). Sex differences in amygdala activation during the perception of facial affect. Neuroreport 12, 2543–2547.

Kret, M. E., Pichon, S., Grèzes, J., and de Gelder, B. (2010). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762.

Meeren, H. K., van Heijnsbergen, C. C., and de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U.S.A. 102, 16518–16523.

Melamed, N., Yogev, Y., and Glezerman, M. (2010). Fetal gender and pregnancy outcome. J. Matern. Fetal. Neonatal. Med. 23, 338–344.

Montagne, B., Kessels, R. P., Frigerio, E., de Haan, E. H., and Perrett, D. I. (2005). Sex differences in perception of affective facial expressions: do men really lack emotional sensitivity? Cogn. Process. 6, 136–141.

Moore, D. G., Hobson, R. P., and Lee, A. (1997). Components of person perception: an investigation with autistic, non-autistic retarded and typically developing children and adolescents. Br. J. Dev. Psychol. 15, 401–423.

Newschaffer, C. J., Croen, L. A., Daniels, J., Giarelli, E., Grether, J. K., Levy, S. E., Mandell, D. S., Miller, L. A., Pinto-Martin, J., Reaven, J., Reynolds, A. M., Rice, C. E., Schendel, D., and Windham, G. C. (2007). The epidemiology of autism spectrum disorders. Annu. Rev. Publ. Health 28, 235–258.

Orozco, S., and Ehlers, C. L. (1998). Gender differences in electrophysiological responses to facial stimuli. Biol. Psychiatry 44, 281–289.

Parron, C., Da Fonsega, D., Santos, A., Moore, D. G., Monfardini, E., and Deruelle, C. (2008). Recognition of biological motion in children with autistic spectrum disorders. Autism 12, 2161–2174.

Pavlova, M. (2009). Perception and understanding of intentions and actions: does gender matter? Neurosci. Lett. 499, 133–136.

Pavlova, M., Guerreschi, M., Lutzenberger, W., Sokolov, A. N., and Krägeloh-Mann, I. (2010a). Cortical response to social interaction is affected by gender. Neuroimage 50, 1327–1332.

Pavlova, M., Wecker, M., Krombholz, K., and Sokolov, A. A. (2010b). Perception of intentions and actions: gender stereotype susceptibility. Brain Res. 1311, 81–85.

Pavlova, M., Lutzenberger, W., Sokolov, A., and Birbaumer, N. (2004). Dissociable cortical processing of recognizable and non-recognizable biological movement: analyzing gamma MEG activity. Cereb. Cortex 14, 181–188.

Pavlova, M., Sokolov, A. N., Birbaumer, N., and Krägeloh-Mann, I. (2008). Perception and understanding of others’ actions and brain connectivity. J. Cogn. Neurosci. 20, 494–504.

Pelphrey, K. A., Morris, J. P., and McCarthy, G. (2004). Grasping the intentions of others: the perceived intentionality of an action influences activity in the superior temporal sulcus during social perception. J. Cogn. Neurosci. 16, 1706–1716.

Pollick, F. E., Lestou, V., Ryu, J., and Cho, S. B. (2002). Estimating the efficiency of recognizing gender and affect from biological motion. Vision Res. 42, 2345–2355.

Pollick, F. E., Paterson, H. M., Bruderlin, A., and Sanford, A. J. (2001). Perceiving affect from arm movement. Cognition 82, B51–B61.

Proverbio, A. M., Riva, F., Martin, E., and Zani, A. (2010). Face coding is bilateral in the female brain. PLoS One 5, e11242. doi: 10.1371/journal.pone.0011242

Proverbio, A. M., Riva, F., and Zani, A. (2009). Observation of static pictures of dynamic actions enhances the activity of movement-related brain areas. PLoS One 4, e5389. doi: 10.1371/journal.pone.0005389

Proverbio, A. M., Riva, F., and Zani, A. (2010). When neurons do not mirror the agent’s intentions: sex differences in neural coding of goal-directed actions. Neuropsychologia 48, 1454–1463.

Proverbio, A. M., Zani, A., and Adorni, R. (2008). Neural markers of a greater female responsiveness to social stimuli. BMC Neurosci. 9, 56. doi: 10.1186/1471-2202-9-56

Rose, D., and Clarke, T. J. (2009). Look who’s talking: visual detection of speech from whole-body biological motion cues during emotive interpersonal conversation. Perception 38, 153–156.

Runeson, S., and Frykholm, G. (1983). Kinematic specification of dynamics as information basis for the person-and-action perception: expectation, gender recognition, and deceptive intention. J. Exp. Psychol. 112, 585–615.

Sabattineli, D., Flaisch, T., Bradley, M. M., Fitzsimmons, J. R., and Lang, P. J. (2004). Affective picture perception: gender differences in visual cortex? NeuroReport 15, 1109–1112.

Sokolov, A. A., Gharabaghi, A., Tatagiba, M., and Pavlova, M. (2010). Cerebellar engagement in an action observation network. Cereb. Cortex 20, 486–491.

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709.

Keywords: visual perception, biological motion, social cognition, gender

Citation: Sokolov AA, Krüger S, Enck P, Krägeloh-Mann I and Pavlova MA (2011) Gender affects body language reading. Front. Psychol. 2:16. doi: 10.3389/fpsyg.2011.00016

Received: 19 July 2010;

Accepted: 20 January 2011;

Published online: 02 February 2011.

Edited by:

Marco Tamietto, Tilburg University, Netherlands

Reviewed by:

Ute Habel, RWTH Aachen University, Germany

Copyright: © 2011 Sokolov, Krüger, Enck, Krägeloh-Mann and Pavlova. This is an open-access article subject to an exclusive license agreement between the authors and Frontiers Media SA, which permits unrestricted use, distribution, and reproduction in any medium, provided the original authors and source are credited.

*Correspondence: Marina A. Pavlova, Developmental Cognitive and Social Neuroscience Unit, Department of Paediatric Neurology and Child Development, Children’s Hospital, Medical School, Eberhard Karls University of Tübingen, Tübingen, Germany. e-mail: marina.pavlova@uni-tuebingen.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
