- 1 Wu Tsai Institute, Yale University, New Haven, CT, United States

- 2 Department of Computer Science, Yale University, New Haven, CT, United States

- 3 Brain Function Laboratory, Department of Psychiatry, Yale University, New Haven, CT, United States

- 4 Mila-Quebec AI Institute, Montreal, QC, Canada

- 5 Department of Genetics, Yale School of Medicine, New Haven, CT, United States

- 6 Department of Psychiatry, Yale University, New Haven, CT, United States

- 7 Department of Comparative Medicine, Yale University, New Haven, CT, United States

- 8 Department of Medical Physics and Biomedical Engineering, University College London, London, United Kingdom

- 9 Department of Neuroscience, Yale University, New Haven, CT, United States

Schizophrenia is a severe psychiatric disorder associated with a wide range of cognitive and neurophysiological dysfunctions and long-term social difficulties. Early detection is expected to reduce the burden of disease by enabling early treatment. In this paper, we test the hypothesis that integrating multiple simultaneously acquired neuroimaging, behavioral, and clinical measures predicts early psychosis better than unimodal recordings. We propose a novel framework to investigate the neural underpinnings of early psychosis symptoms (which can develop into schizophrenia with age) using multimodal acquisitions of neural and behavioral recordings, including functional near-infrared spectroscopy (fNIRS), electroencephalography (EEG), and facial features. Our data acquisition paradigm is based on live face-to-face interaction in order to study the neural correlates of social cognition in first-episode psychosis (FEP). We propose a novel deep representation learning framework, Neural-PRISM, for learning joint multimodal compressed representations that combine neural and behavioral recordings. These learned representations are subsequently used to describe and classify early psychosis and to predict its severity in patients, as measured by the Positive and Negative Syndrome Scale (PANSS) and Global Assessment of Functioning (GAF) scores. We found that incorporating joint multimodal representations from fNIRS and EEG along with behavioral recordings enhances classification between typical controls and FEP individuals (significant improvements of 10–20%). Additionally, our results suggest that geometric and topological features, such as curvatures and path signatures of the embedded trajectories of brain activity, enable detection of discriminatory neural characteristics in early psychosis.

1 Introduction

Schizophrenia is a complex mental disorder affecting millions of people worldwide. Individuals with this condition face significant cognitive and social impairments. Current diagnostic methods, often based on static or single-subject studies, fail to capture the dynamic nature of social cognition, especially the interpretation of facial expressions. This hampers early detection of the condition and the early interventions that could improve quality of life. Moreover, most existing methods analyze neuroimaging and behavioral modalities separately, missing the intricate interactions between neural activities and their relationships to behavior. To address this, we propose a novel approach that combines live social interactions with multimodal neuroimaging (fNIRS, EEG) and facial expression analysis. Our method captures dynamic neural correlates of live face-to-face interactions in first-episode psychosis (FEP) patients, using a deep recurrent geometric autoencoder framework that we call Neural-PRISM to learn joint representations from these modalities, offering new insights and early predictive capabilities for clinical outcomes.

According to the Global Burden of Disease 2019 Study (1, 2), schizophrenia affects 23.6 million individuals worldwide. It is marked by positive symptoms such as delusions, hallucinations, and disorganized thinking, as well as negative symptoms including reduced speech, social withdrawal, and diminished emotional expression. The wide spectrum of cognitive and neurophysiological dysfunctions associated with schizophrenia has a profound impact on quality of life and social functioning. Moreover, the estimated economic burden of schizophrenia in the USA doubled from 2013 to 2019, reaching $343.2 billion in 2019 (3). This underscores the importance of developing effective early diagnosis strategies and treatment options to better manage this challenging disorder. However, studying schizophrenia using only unimodal neuroimaging or behavioral data is challenging because each modality offers a limited perspective, making it difficult to fully understand and address the cognitive and social deficits associated with the disorder. EEG offers high temporal but low spatial resolution, whereas fNIRS provides better spatial but lower temporal resolution. Similarly, relying solely on behavioral data, such as facial expression analysis, does not reveal the neural mechanisms underlying the observed impairments in schizophrenia. Examples of studies based on unimodal neuroimaging recordings include resting-state functional magnetic resonance imaging (fMRI) (4–7) and resting-state scalp electroencephalography (EEG) (8, 9). Although schizophrenia is often associated with disordered social interactions, much of the current understanding of its underlying neurophysiology comes from studies of single brains without social interaction. To address this issue, we focus on dynamic behavior during social interactions.

Recently, an emerging focus on live social interactions between pairs of individuals, rather than on single subjects, has improved the understanding of dynamic face processing as a proxy for real-life social interactions (10–12). These foundational findings have provided a theoretical framework for studying live face-to-face interactions in autism spectrum disorder (ASD) (13), where social difficulties are a primary symptom. This research prompts new questions about atypical dynamic and interactive face processing as an indicator of the neurophysiology underlying social function and/or social disability in schizophrenia. We hypothesize that the neural systems of FEP patients, as compared to typically developing (TD) individuals, reflect characteristic atypical social functioning, and we suggest that they could serve as early indicators of risk, predictors of disease progression, and potential targets for interventions such as neuromodulation. Thus, here we apply this novel method of neural and behavioral recording during live social interactions to isolate fundamental neural correlates characteristic of atypical social cognition in schizophrenia.

Functional magnetic resonance imaging (fMRI) provides high spatial but limited temporal resolution (approximately 2 seconds). Moreover, fMRI is limited to single-subject tasks and other constraining conditions, and its high magnetic field limits simultaneous measurement of related behaviors. Functional near-infrared spectroscopy (fNIRS), like fMRI, measures the hemodynamic response function (HRF), but at much higher temporal resolution. A limitation of fNIRS relative to fMRI is its shallow signal penetration, which restricts measurements to superficial cortex. However, superficial cortical activity is assumed to reflect activity from deeper subcortical structures, and fNIRS technology adds the key dimension of live behaviors within live social interactions. Thus, the restriction to superficial cortex and the relatively low spatial resolution are balanced by the advantages of two-person social neuroscience, which extends conventional single-subject neuroscience to dyadic functions and live reciprocal social interactions that cannot be observed using conventional neuroimaging methods. Here we apply a live two-person interactive paradigm with simultaneous EEG and fNIRS recordings to investigate social cognitive mechanisms elicited by live (ecologically valid) facial expressions (14) in both typically developing (TD) and FEP participants. These investigations are not possible with fMRI because live face-to-face imaging is difficult and the high magnetic field prevents incorporating other imaging modalities simultaneously.

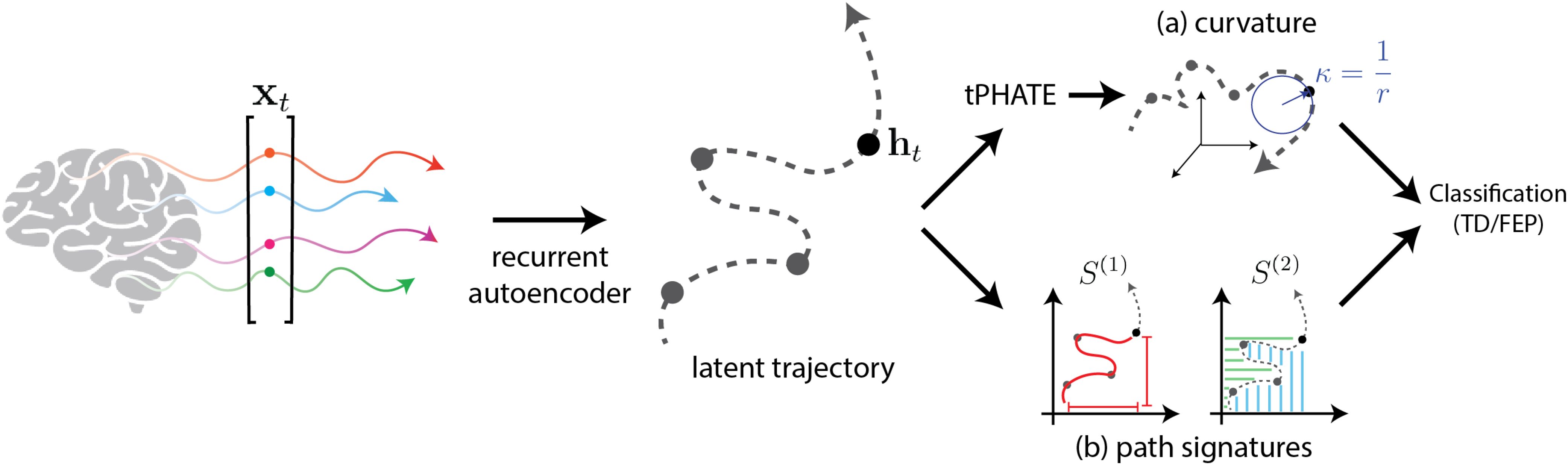

To gain insights from this multimodal data, in this paper we propose a novel multimodal representation learning framework called neural-PRISM (Path Representations for early Identification of Schizophrenia via Multimodal translation) for extracting signatures of brain activity in FEP. The proposed neural-PRISM is a recurrent geometric autoencoder framework that learns compressed and informative latent representations of multiple modalities, including fNIRS, EEG, and behavior in the form of facial action units (AUs) (15). These representations reveal a highly structured and temporally organized trajectory in 3-D, with high-curvature segments corresponding to transitions in brain activity between live interactions and rest. Both the encoder and decoder networks consist of multiple long short-term memory (LSTM) (16, 17) layers to learn latent representations from neuroimaging (EEG and fNIRS) as well as behavioral (FaceAU) modalities. These latent trajectories are used to distinguish between FEP patients and typically developing (TD) individuals, and to forecast clinical scores such as the Positive and Negative Syndrome Scale (PANSS) and Global Assessment of Functioning (GAF) scores (18, 19), which indicate the severity of psychosis. The learned representations are embedded with the nonlinear dimensionality-reduction method t-PHATE (20) to visualize the neural activity in three-dimensional Euclidean space. We call these time-lapse trajectories neural motifs, and we use them to compute geometric (curvature) and topological (path signature) features that discriminate between TD and FEP individuals.

To summarize, the contributions of this paper are as follows: (i) a novel live interactive paradigm with simultaneous fNIRS, EEG, and facial expression recordings to study the neural correlates of social interaction in FEP patients; (ii) a novel recurrent geometric autoencoder framework, called neural-PRISM, for learning joint representations of multiple modalities; and (iii) empirical results demonstrating effective representation learning via visualization, as well as classification results showing early FEP prediction.

2 Methods

2.1 Dataset and experimental setup

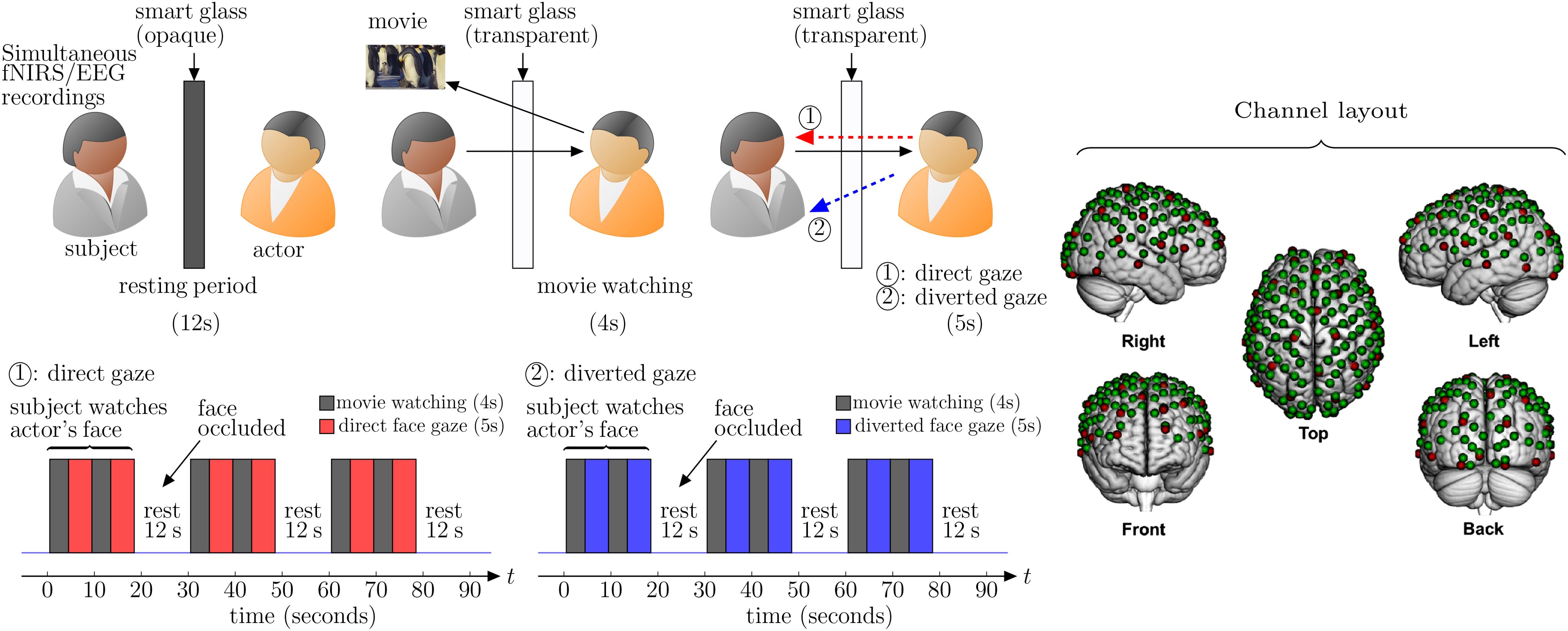

The proposed method employs dyads that include one individual who serves as the live expressive face stimulus and a partner (the subject) who is either a typically developing (TD) individual or a first-episode psychosis (FEP) patient. Dyads faced each other across a table at a distance of approximately 140 cm, and table-mounted eye-tracking systems were positioned to measure continuous eye movements of the subject. Synchronized fNIRS and EEG systems continuously acquired the subject’s hemodynamic and electrocortical responses during the experiment. The dyads were separated by a “smart glass” panel in the center of the table that controlled face gaze times (the glass was transparent during gaze periods) and “rest times” (the glass was opaque during rest) (12). The face gaze times were controlled according to the time series illustrated in Figure 1.

Figure 1. Experiment setup: the subject’s brain is being scanned with simultaneous fNIRS (functional near-infrared spectroscopy), EEG (electroencephalogram), and facial expression recordings. The actor watches a (positive/negative) movie for 4 seconds and then looks at the subject (eye contact/no eye contact) for 5 seconds. This 9-second sequence is repeated once more before the smart glass is made opaque for 12 seconds (rest period). This 30-second sequence is repeated three times in a single run. Channel layout for simultaneous EEG and fNIRS recordings: red dots represent the 32 EEG electrodes and green dots represent the 134 fNIRS channels.

2.1.1 Participants

Our study involved 14 FEP patients (2 females, 12 males; mean age: 24.2 ± 4.1 years) and 19 typical controls (8 females, 8 males and 3 identified as another gender; mean age: 25.1 ± 9.0 years). FEP patients were recruited from Connecticut Mental Health Center and Yale New Haven Hospital and the typically developing (TD) participants were recruited from the local community. All participants provided written informed consent in accordance with guidelines approved by the Yale University Human Investigation Committee (HIC # 1501015178).

2.1.2 Paradigm

The dyads were seated 140 cm apart across a table. A “Smart Glass” panel (glass that can alternate between opaque and transparent upon application of an appropriate voltage) was positioned in the middle of the table, 70 cm away from each participant. In both the direct and diverted face gaze conditions, the subject was instructed to gaze at the eyes of their partner, who watched emotionally valenced movie clips followed by direct or diverted gaze toward the subject’s face (Figure 1). In the direct face gaze condition, the dyad members had a direct face-to-face view of each other. In the diverted face gaze condition, by contrast, the partner (stimulus) looked at the subject’s shoulder.

The actor watched a 4-second movie (joyful or sad) and then looked at the subject’s eyes (direct gaze) or shoulder (diverted gaze) for 5 seconds. This sequence was repeated twice for each pair, followed by a 12-second rest period during which the smart glass was made opaque. This 30-second process was repeated three times for each condition. The subjects were instructed to watch the actor’s (stimulus) face at all times, and the actor was instructed to watch the short movies followed by direct or diverted gaze toward the subject.
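The block timing described above can be made concrete with a short schedule builder. This is a minimal sketch, assuming each block starts with the movie segment as in Figure 1; the names `Segment`, `build_block`, `switch_times`, and `switch_samples` are hypothetical and not part of the authors' code. It also recovers the four task-switching times (movie to gaze, gaze to movie, movie to gaze, gaze to rest) at which curvatures are later evaluated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    label: str       # "movie", "gaze", or "rest"
    onset: float     # seconds from block start
    duration: float  # seconds


def build_block(movie_s=4.0, gaze_s=5.0, rest_s=12.0, repeats=2):
    """Ordered segments of one block: (movie, gaze) repeated `repeats` times, then rest."""
    segments, t = [], 0.0
    for _ in range(repeats):
        for label, dur in (("movie", movie_s), ("gaze", gaze_s)):
            segments.append(Segment(label, t, dur))
            t += dur
    segments.append(Segment("rest", t, rest_s))
    return segments


def switch_times(segments):
    """Onsets at which the task changes, i.e. every segment boundary after the first."""
    return [s.onset for s in segments[1:]]


def switch_samples(segments, fs_hz):
    """Nearest sample index of each task switch at sampling rate fs_hz."""
    return [round(t * fs_hz) for t in switch_times(segments)]


if __name__ == "__main__":
    block = build_block()
    print(sum(s.duration for s in block))   # total block length in seconds
    print(switch_times(block))
    print(switch_samples(block, 8.13))      # fNIRS sampling rate
```

With the default durations the block lasts 30 s, the switches fall at 4, 9, 13, and 18 s, and at 8.13 Hz these map to samples 33, 73, 106, and 146.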

2.1.2.1 Movie library

Emotionally evocative videos (movies) intended to elicit natural facial expressions were collected from publicly accessible sources and trimmed into 3-5 second clips. The video stimuli were pretested and rated for emotive properties by 283 Amazon Mechanical Turk participants, who rated 134 videos. The inclusion criteria were that the videos be about 3-5 seconds in duration and have emotion-inducing properties in accordance with three categories that we refer to as adorables, creepies, and neutral landscapes; these informal category names avoid presuming emotional labels. This library of video clips has been employed previously to elicit dynamic and spontaneous facial expressions within a similar live-interaction paradigm (12). No video is repeated in any session.

2.1.3 Functional near-infrared spectroscopy signal acquisition

A Shimadzu LABNIRS system (Shimadzu Corp., Kyoto, Japan) was used to collect fNIRS data with a sampling interval of 123 ms (8.13 Hz). Forty emitters and forty detectors (80 optodes total) were placed in the cap in a 134-channel layout covering the frontal, parietal, temporal, and occipital lobes (see channel layout in Figure 1) (21). Each emitter transmitted three wavelengths of light (780, 805, and 830 nm), and each detector measured the amount of light that was not absorbed. The amount of light absorbed by the blood was converted to concentrations of OxyHb and deOxyHb using the Beer-Lambert equation. Custom-made caps with interspersed optode and electrode holders (Shimadzu Corp., Kyoto, Japan) were used to acquire concurrent fNIRS and EEG signals. The distance between optodes was 2.75 cm for participants with head circumferences less than 56.5 cm and 3 cm for those with head circumferences greater than 56.5 cm. Caps were placed such that the most anterior midline optode holder was approximately 2.0 cm above nasion, and the most posterior and inferior midline optode holder was on or below inion. A lighted fiber-optic probe (Daiso, Hiroshima, Japan) was used to clear hair from each optode holder before optode placement.
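The conversion from detected light to hemoglobin concentration changes can be sketched with the modified Beer-Lambert law, ΔOD(λ) = [ε_HbO(λ)·ΔHbO + ε_HbR(λ)·ΔHbR]·d·DPF(λ). With three wavelengths and two unknowns the system is overdetermined and can be solved by least squares. This is a generic illustration, not the LABNIRS internal algorithm; the extinction coefficients and differential pathlength factors (DPF) below are placeholder numbers chosen only for the example and would have to be replaced by published values for 780, 805, and 830 nm (the units of the result follow the units of ε).

```python
import numpy as np


def optical_density_change(intensity, baseline):
    """Change in optical density, -log10(I / I0), per wavelength (rows) and sample (columns)."""
    return -np.log10(np.asarray(intensity, dtype=float) / np.asarray(baseline, dtype=float))


def mbll_invert(delta_od, eps, distance_cm, dpf):
    """Least-squares inversion of the modified Beer-Lambert law.

    delta_od    : (n_wavelengths, n_samples) optical density changes
    eps         : (n_wavelengths, 2) extinction coefficients, columns [HbO, HbR]
    distance_cm : source-detector separation (e.g. 2.75 or 3 cm)
    dpf         : (n_wavelengths,) differential pathlength factors
    Returns (2, n_samples): row 0 is delta HbO, row 1 is delta HbR.
    """
    # Path-weighted extinction matrix, one row per wavelength.
    design = np.asarray(eps, dtype=float) * (distance_cm * np.asarray(dpf, dtype=float))[:, None]
    # Three equations, two unknowns per sample: solve in the least-squares sense.
    conc, *_ = np.linalg.lstsq(design, delta_od, rcond=None)
    return conc


# PLACEHOLDER values for illustration only (NOT real extinction coefficients or DPFs).
# Rows correspond to 780, 805, 830 nm; columns to [HbO, HbR].
EPS_PLACEHOLDER = np.array([[0.7, 1.1], [0.9, 0.8], [1.0, 0.7]])
DPF_PLACEHOLDER = np.array([6.0, 6.0, 6.0])
```

Using all three wavelengths in a least-squares fit, rather than picking two, averages out some measurement noise; with real coefficients the same code applies unchanged.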

2.1.4 Electroencephalography signal acquisition

A g.USBamp (g.tec medical engineering GmbH, Austria) system with 2 bio-amplifiers and 32 electrodes was used to collect EEG data at a sampling rate of 256 Hz. Electrodes were arranged in a layout similar to the 10-10 system; however, exact positioning was limited by the location of the electrode holders, which were held rigid between the optode holders. Electrodes were placed as closely as possible to the following positions: Fp1, Fp2, AF3, AF4, F7, F3, Fz, F4, F8, FC5, FC1, FC2, FC6, T7, C3, Cz, C4, T8, CP5, CP1, CP2, CP6, P7, P3, Pz, P4, P8, PO3, PO4, O1, Oz, and O2. Conductive gel was applied to each electrode to reduce impedance by ensuring contact between the electrodes and the scalp. As gel was applied, data were visualized with a bandpass filter passing frequencies between 1 and 60 Hz. The ground electrode was placed on the forehead between AF3 and AF4, and an ear clip was used as the reference.

2.1.5 Facial features acquisition

The behavioral data for the subjects were simultaneously acquired in the form of facial action units (AUs) using OpenFace (22) and Logitech C920 face cameras. OpenFace is one of several available platforms that provide algorithmically derived tracking of facial motion in both binary and continuous formats. Automatic detection of facial AUs with such tools has become a foundational method in facial expression analysis, where facial movements are characterized as dynamic patterns reflecting the anatomy of facial muscles. While a direct link between specific emotions and activation patterns has been proposed (23), this approach breaks facial expressions down into discrete muscular components and their dynamics without associating them with emotional labels. The facial AU data from OpenFace included 17 distinct classifications of anatomical configurations.

2.2 Representation learning and classification

Using data collected with the experimental setup described in the previous section, we propose a novel deep recurrent geometric autoencoder framework for classifying FEP patients and learning their neural motifs. Here, the term “neural motifs” refers to the underlying signatures of time-lapse neuroimaging data in a compressed low-dimensional space.

2.2.1 Latent trajectories from multimodal translation via recurrent autoencoders

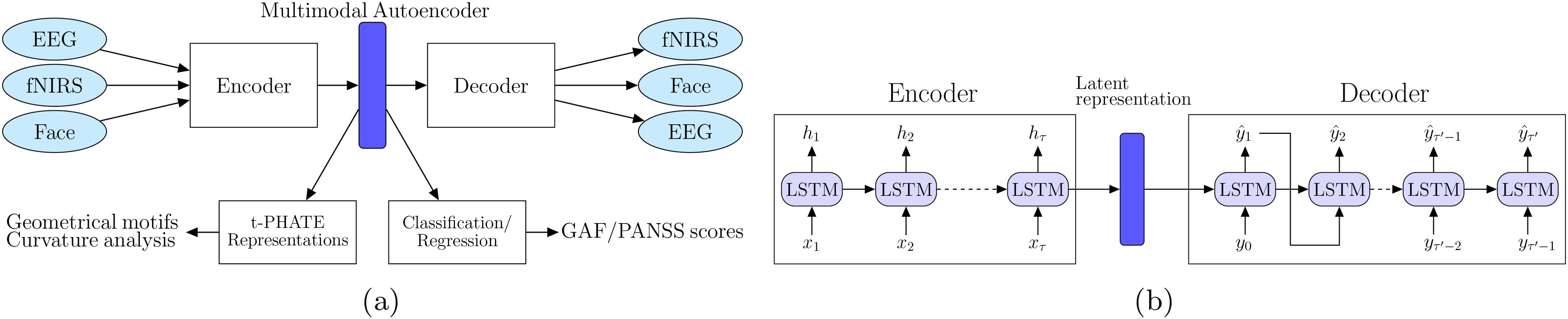

We propose a deep recurrent geometric autoencoder framework, neural-PRISM (Path Representations for early Identification of Schizophrenia via Multimodal translation), to learn unimodal and multimodal (joint) representations. Autoencoders are powerful machine learning models trained in a self-supervised fashion to reconstruct their inputs by learning abstract representations in a latent space. Besides learning representations of a single modality, the encoder-decoder framework can also learn joint representations of two modalities by feeding data from one modality to the encoder and training the decoder to output the other modality (Figure 2A). We use deep recurrent neural networks (RNNs) (24) for both the encoder and the decoder to capture the temporal dependencies in the neural recordings and facial action units. The encoder network encodes the multidimensional input neural recordings x (EEG or fNIRS) into latent representations:

$$h_t = \mathrm{Enc}\left(x_t, h_{t-1}\right), \qquad t = 1, \dots, \tau,$$

Figure 2. (A) Schematic of our neural-PRISM recurrent geometric autoencoder framework. Facial expressions are encoded in the form of action units (FaceAUs). (B) Architecture of the encoder and decoder networks. Both networks have long short-term memory (LSTM) recurrent neural network layers to learn latent trajectories.

where τ is the length of the input sequence. The input at each time point is $x_t \in \mathbb{R}^{134}$ for fNIRS, $x_t \in \mathbb{R}^{17}$ for facial action units, and $x_t \in \mathbb{R}^{32}$ for EEG. The latent embeddings $h_t$ represent the compressed, time-encoded information in the input. The final latent embedding $h_\tau$ encapsulates the temporal patterns present in the input and serves as the initialization for the decoder. The decoder network, whose hidden state $s_t$ is initialized with $s_0 = h_\tau$, generates the reconstructed data, with its outputs computed at each time step t:

$$\hat{y}_t = \mathrm{Dec}\left(y_{t-1}, s_{t-1}\right), \qquad t = 1, \dots, \tau',$$

where τ′ is the sequence length of the decoder output modality. In our setting, each 30-second block contains 7680 EEG samples (256 Hz × 30 s), while the fNIRS and facial AU recordings contain 244 samples. Note that we use the HbDiff signal (25) as the fNIRS recording.
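The sample counts above follow from the sampling rates: 256 Hz × 30 s = 7680 EEG samples, and 8.13 Hz × 30 s ≈ 244 fNIRS samples. The sketch below segments a continuous recording into 30-second blocks and forms an HbDiff channel. It assumes that the recording is already aligned to block onsets and that HbDiff is the oxyhemoglobin minus deoxyhemoglobin concentration change (a common definition, assumed here rather than taken from the text); the function names are hypothetical.

```python
import numpy as np

BLOCK_SECONDS = 30.0


def samples_per_block(fs_hz, block_s=BLOCK_SECONDS):
    """Number of samples in one block at sampling rate fs_hz, rounded to the nearest integer."""
    return int(round(fs_hz * block_s))


def split_blocks(signal, fs_hz, block_s=BLOCK_SECONDS):
    """Split a (n_samples, n_channels) recording aligned to block onsets into
    (n_blocks, samples_per_block, n_channels); trailing partial blocks are dropped."""
    n = samples_per_block(fs_hz, block_s)
    n_blocks = signal.shape[0] // n
    return signal[: n_blocks * n].reshape(n_blocks, n, *signal.shape[1:])


def hbdiff(hbo, hbr):
    """HbDiff, assumed here to be delta HbO minus delta HbR (element-wise)."""
    return np.asarray(hbo, dtype=float) - np.asarray(hbr, dtype=float)
```

For example, `samples_per_block(256)` gives 7680 and `samples_per_block(8.13)` gives 244, matching the block lengths stated above.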

A long short-term memory (LSTM) RNN, as shown in Figure 2B, was chosen over the vanilla RNN because the latter suffers from vanishing gradients during training, which prevents it from effectively leveraging context across time steps through its internal state. For the decoder network, the teacher forcing method (26, 27) was employed, in which the ground-truth samples $y_t$ are fed back into the model and conditioned on for the prediction of later outputs. These fed-back samples keep the RNN close to the ground-truth sequence. Three LSTM layers were used in both the encoder and decoder networks, with a latent dimension of 128. We used the root mean square error (RMSE) as the loss function for training the encoder and decoder networks, and the cross-entropy loss for training the classification models. The Adam optimizer was used along with ℓ2 regularization to prevent overfitting, and the learning rate and weight decay (ℓ2 regularization) hyperparameters were tuned through grid search.
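To make the encoder-decoder data flow concrete, here is a forward-pass-only NumPy sketch of a stacked LSTM encoder whose final state initializes a decoder run with teacher forcing, scored with RMSE. It is not the authors' implementation: weights are random and untrained, the decoder is seeded with the final encoder state of every layer (an assumption), a zero vector stands in for the "previous target" at the first step, and all function names are hypothetical. In practice one would use an autograd framework (for example, PyTorch's `torch.nn.LSTM`) and train with Adam.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm(rng, n_in, n_hidden, n_layers):
    """Random weights for a stacked LSTM; gate blocks are ordered input, forget, cell, output."""
    layers = []
    for layer in range(n_layers):
        d_in = n_in if layer == 0 else n_hidden
        layers.append({
            "W": rng.normal(0.0, 0.1, (4 * n_hidden, d_in)),
            "U": rng.normal(0.0, 0.1, (4 * n_hidden, n_hidden)),
            "b": np.zeros(4 * n_hidden),
        })
    return layers


def lstm_step(layers, x, state):
    """Advance every layer one time step; state is a list of (h, c) pairs, one per layer."""
    new_state, inp = [], x
    for p, (h, c) in zip(layers, state):
        i, f, g, o = np.split(p["W"] @ inp + p["U"] @ h + p["b"], 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        new_state.append((h, c))
        inp = h  # the next layer reads this layer's output
    return inp, new_state


def encode(enc, x_seq, n_hidden):
    """Run the encoder over x_1..x_tau; returns h_tau (top layer) and the full final state."""
    state = [(np.zeros(n_hidden), np.zeros(n_hidden)) for _ in enc]
    h_top = np.zeros(n_hidden)
    for x_t in x_seq:
        h_top, state = lstm_step(enc, x_t, state)
    return h_top, state


def decode_teacher_forced(dec, W_out, y_seq, state):
    """Predict y_1..y_tau' while feeding back the ground truth y_{t-1} (zeros at t = 1)."""
    prev = np.zeros(y_seq.shape[1])
    preds = []
    for y_t in y_seq:
        h_top, state = lstm_step(dec, prev, state)
        preds.append(W_out @ h_top)  # linear read-out to the output modality
        prev = y_t                   # teacher forcing: ground truth, not the prediction
    return np.stack(preds)


def rmse(pred, target):
    return float(np.sqrt(np.mean((pred - target) ** 2)))
```

With the paper's settings, `n_hidden` would be 128 and the number of layers 3, and the input size would match the encoded modality (134 fNIRS channels or 32 EEG electrodes, for example). Encoder and decoder must share `n_hidden` and the layer count for the state hand-off to work.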

The final latent embeddings $h_\tau$ are fed to a multilayer perceptron (MLP) (17) to classify FEP vs. TD individuals. The learned trajectories in the latent space are further analyzed topologically and geometrically, as described in the following section. Our recurrent geometric autoencoder framework also offers a foundational approach for translating between different modalities. While other studies, such as (28), have focused solely on modality translation using resting-state EEG and fNIRS data, our primary goal here is not to advance translation techniques; instead, translation between neuroimaging modalities (EEG and fNIRS) and behavioral modalities (FaceAU) is an additional outcome of our framework.

2.2.2 Topological and geometrical summarization of latent trajectories

We summarize the high-dimensional latent trajectories obtained from the recurrent autoencoder using path signatures, subsequently leveraging these path signatures for classification. Path signatures (29), as effective descriptors of ordered data, capture essential characteristics of trajectories and have been successfully applied in various domains of neuroscience. For instance, path signatures have been employed to predict Alzheimer’s diagnosis by modeling disease progression trajectories (30), to detect epileptic seizures by analyzing electroencephalogram (EEG) patterns (31), in early autism diagnosis through behavioral pattern recognition (32), and in seizure forecasting (33).

Next, we reduce the dimensionality of the latent representations using the manifold learning technique t-PHATE (20). t-PHATE preserves local and global structures in the data while also enabling us to visualize it in 3-D. By embedding the high-dimensional latent trajectories into a lower-dimensional space, we can compute and analyze geometric features of the resulting low-dimensional trajectories, such as curvature. Here we employ curvature as a feature for classification, as it encapsulates information about changes in trajectory direction. Curvature analysis of dynamic trajectories has been widely used in scientific machine learning, including shape analysis in computer vision (34), understanding particle movement in physics (35), and analyzing motor control and movement dynamics in neuroscience (36, 37).

Overall, our approach of using geometrical and topological summaries of latent trajectories (see Figure 3), described below, enables a nuanced classification framework that leverages both temporal ordering and geometric properties of brain activity.

Figure 3. Classification of subjects based on curvature and path signatures of the latent trajectories obtained from the recurrent autoencoder. (A) Curvature computation is performed using circle fitting in 3D t-PHATE coordinates. (B) Path signatures are directly computed from the latent trajectory.

Path signatures. The latent trajectory is first rescaled to zero mean and unit variance, reducing scale discrepancies among features. This is accomplished by standardizing each component of the path h(t) as follows:

$$h_i'(t) = \frac{h_i(t) - \mu_i}{\sigma_i},$$

where $\mu_i$ and $\sigma_i$ are the mean and standard deviation, respectively, of the i-th component across all time points. This normalization step ensures that each dimension contributes equally to the signature computation, minimizing bias toward features with larger scales.

To further address variability in the duration and sampling intervals across different modalities, we apply a time rescaling that standardizes the time interval of analysis. Specifically, we transform the time interval of interest [a, b] to the standard interval [0, 1]:

$$t' = \frac{t - a}{b - a}, \qquad t \in [a, b].$$

Following these preprocessing steps, we compute the k-th level path signatures $S_k(h'(t))$ for $k = 1, \dots, N$ (see Supplementary Material Appendix A for details). Each computed signature is then normalized:

The normalized path signatures are subsequently fed into a four-layer multilayer perceptron (MLP) for classification.
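The preprocessing and signature steps can be sketched for truncation level N = 2 using Chen's identity for piecewise-linear paths: each linear piece with increment Δ contributes Δ to level 1 and Δ⊗Δ/2 to level 2, plus a cross term with the running level-1 signature. Two choices here are assumptions not stated in the text: the rescaled time t′ is appended as an extra channel (signatures are invariant to reparametrization, so rescaling time only affects the result when time is part of the path), and each level is normalized by its Euclidean norm. Libraries such as `iisignature` or `esig` compute higher levels efficiently; this hand-written version, with hypothetical function names, is only for illustration.

```python
import numpy as np


def standardize(h, eps=1e-12):
    """Z-score each latent dimension over time: h'_i = (h_i - mu_i) / sigma_i."""
    return (h - h.mean(axis=0)) / (h.std(axis=0) + eps)


def rescale_time(t):
    """Map the time interval [a, b] = [t[0], t[-1]] onto [0, 1]."""
    t = np.asarray(t, dtype=float)
    return (t - t[0]) / (t[-1] - t[0])


def signature_levels_1_2(path):
    """Level-1 and level-2 signature of a piecewise-linear path of shape (T, d) via Chen's identity."""
    d = path.shape[1]
    s1, s2 = np.zeros(d), np.zeros((d, d))
    for delta in np.diff(path, axis=0):
        # Concatenating a linear piece: S2 <- S2 + S1 (x) delta + delta (x) delta / 2
        s2 += np.outer(s1, delta) + 0.5 * np.outer(delta, delta)
        s1 += delta
    return s1, s2


def signature_features(h, t, eps=1e-12):
    """Standardize, time-augment (assumption), compute levels 1-2, normalize each level."""
    path = np.column_stack([rescale_time(t), standardize(h)])
    s1, s2 = signature_levels_1_2(path)
    levels = [s1, s2.ravel()]
    return np.concatenate([lvl / (np.linalg.norm(lvl) + eps) for lvl in levels])
```

A useful sanity check is the shuffle identity S2 + S2ᵀ = S1 ⊗ S1, which holds for any path. For a 128-dimensional latent trajectory the time-augmented level-2 feature vector already has 129 + 129² = 16,770 entries, which is one reason to keep N small before the MLP.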

2.2.2.1 PHATE and t-PHATE

Traditional dimensionality-reduction techniques such as PCA, t-SNE (38), and UMAP (39) can be suboptimal for this purpose: depending on the method, they can be sensitive to noise, distort global structure, miss fine-grained local details, or scale poorly to large datasets (40). To overcome these challenges, PHATE (potential of heat-diffusion for affinity-based transition embedding) (40) provides a scalable dimensionality-reduction method that gives accurate, denoised visualizations of both local and global structures without imposing strong structural assumptions.
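The core PHATE steps (an adaptive alpha-decaying affinity kernel, a Markov diffusion operator, log-potential distances, and an MDS embedding) can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions: it uses dense pairwise distances, a fixed diffusion time t, and classical MDS only, whereas the published `phate` Python package selects t automatically and refines the embedding with metric MDS. Parameter defaults and function names here are illustrative.

```python
import numpy as np


def pairwise_distances(X):
    """Dense Euclidean distance matrix between the rows of X."""
    return np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)


def alpha_decay_kernel(X, knn=5, alpha=40.0):
    """Adaptive affinity: row i uses its distance to the knn-th neighbour as bandwidth."""
    D = pairwise_distances(X)
    eps = np.sort(D, axis=1)[:, knn] + 1e-12      # column 0 is the point itself
    ratio = np.minimum(D / eps[:, None], 100.0)   # clip to avoid overflow in the power
    K = np.exp(-ratio ** alpha)
    return 0.5 * (K + K.T)                         # average the two one-sided kernels


def classical_mds(dist, n_components):
    """Classical (Torgerson) MDS from a distance matrix."""
    n = dist.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (dist ** 2) @ J
    vals, vecs = np.linalg.eigh(B)
    order = np.argsort(vals)[::-1][:n_components]
    return vecs[:, order] * np.sqrt(np.maximum(vals[order], 0.0))


def phate_sketch(X, n_components=3, knn=5, alpha=40.0, t=10):
    K = alpha_decay_kernel(X, knn, alpha)
    P = K / K.sum(axis=1, keepdims=True)   # row-stochastic diffusion operator
    Pt = np.linalg.matrix_power(P, t)      # diffuse for t steps (denoising)
    U = -np.log(Pt + 1e-7)                 # log "potential" representation
    return classical_mds(pairwise_distances(U), n_components)
```

The log transform is what distinguishes PHATE from plain diffusion maps: distances between rows of −log(Pᵗ) keep far-apart structure visible instead of collapsing it, which helps preserve global organization in the embedding.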

By incorporating time-varying features, t-PHATE (20) extends the PHATE algorithm to model the temporal properties of input signals, capturing both temporal autocorrelation and stimulus-specific dynamics. When applied to fMRI data from cognitive tasks, it was shown to denoise the data and to enhance access to brain-state trajectories compared with voxel data and other embeddings such as PCA, UMAP, t-SNE, and PHATE (20). By integrating temporal relationships between LSTM states at different time points, t-PHATE generates a low-dimensional (3-D) embedding that captures both the spatial organization of the LSTM states and their temporal progression.
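The temporal component can be illustrated as follows, based on our reading of (20): an autocorrelation-based transition matrix P_T links time points up to the lag at which the average autocorrelation first drops to zero, and it is composed with a feature-space diffusion operator P_D as P = P_D P_T. This is a hedged sketch: a plain Gaussian kernel with median bandwidth stands in for PHATE's adaptive kernel to keep the example self-contained, the function names are hypothetical, and the exact kernel and cutoff rules of the published t-PHATE implementation may differ. The resulting operator would then go through the same potential-distance and embedding steps as PHATE.

```python
import numpy as np


def mean_autocorrelation(X, max_lag):
    """Autocorrelation at lags 0..max_lag, averaged over the feature columns of X (T, d)."""
    Xc = X - X.mean(axis=0)
    var = (Xc ** 2).sum(axis=0) + 1e-12
    T = X.shape[0]
    return np.array([np.mean((Xc[: T - k] * Xc[k:]).sum(axis=0) / var)
                     for k in range(max_lag + 1)])


def temporal_transition_matrix(X, max_lag=None):
    """Row-stochastic P_T: time points are linked, weighted by the ACF, up to the last
    lag before the average autocorrelation first becomes non-positive."""
    T = X.shape[0]
    max_lag = T - 1 if max_lag is None else max_lag
    acf = mean_autocorrelation(X, max_lag)
    nonpositive = np.flatnonzero(acf <= 0)
    cutoff = nonpositive[0] - 1 if nonpositive.size else max_lag
    lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    A = np.where(lags <= cutoff, acf[np.minimum(lags, max_lag)], 0.0)
    return A / A.sum(axis=1, keepdims=True)


def gaussian_diffusion(X):
    """Row-stochastic feature-space operator P_D (Gaussian kernel, median bandwidth)."""
    D2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    K = np.exp(-D2 / np.median(D2))
    return K / K.sum(axis=1, keepdims=True)


def dual_view_operator(X):
    """Compose feature-space and temporal diffusion: P = P_D @ P_T."""
    return gaussian_diffusion(X) @ temporal_transition_matrix(X)
```

Because both factors are row-stochastic, their product is again a valid Markov operator, so it can replace the PHATE diffusion operator without other changes.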

2.2.2.2 Geometrical feature extraction

One observation from the t-PHATE embeddings is that the rate of directional change of each trajectory over time appears to track the intensity of attention shifts during task-switch periods. This motivates the use of the three-dimensional t-PHATE embeddings for feature extraction in the form of curvature measures. Intuitively, the curvature at a point measures how quickly the direction of the curve changes there; for a curve parametrized by arc length, it equals the magnitude of the second derivative at that point. For a plane curve given by the Cartesian parametric equations x = x(t) and y = y(t), the curvature κ, sometimes also called the “first curvature” (41), is defined by

$$\kappa = \frac{\lvert x' y'' - y' x'' \rvert}{\left(x'^2 + y'^2\right)^{3/2}},$$

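where x′ and x″ (and likewise y′ and y″) denote first- and second-order derivatives with respect to t. Here we consider one-dimensional curves in three-dimensional Euclidean space and, at a given point, the circle x = r cos t, y = r sin t, lying in a local plane and tangent to the curve at that point. Substituting x′ = −r sin t, y′ = r cos t, x″ = −r cos t, and y″ = −r sin t, the curvature is then

$$\kappa = \frac{r^2 \sin^2 t + r^2 \cos^2 t}{\left(r^2 \sin^2 t + r^2 \cos^2 t\right)^{3/2}} = \frac{r^2}{r^3} = \frac{1}{r}.$$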
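As a numerical cross-check of the formula above, the planar expression generalizes to space curves as κ = |r′ × r″| / |r′|³, which reduces to the planar formula when the curve lies in the xy-plane. The sketch below evaluates it with central finite differences on a sampled 3-D trajectory. It is an alternative estimator shown only for illustration, not the circle-fitting procedure used in the paper, and it is more sensitive to noise because it differentiates twice; the function name is hypothetical.

```python
import numpy as np


def curvature_finite_diff(curve, t=None):
    """Curvature of a sampled 3-D curve (T, 3) using kappa = |r' x r''| / |r'|^3."""
    t = np.arange(len(curve), dtype=float) if t is None else np.asarray(t, dtype=float)
    d1 = np.gradient(curve, t, axis=0)   # first derivative (central differences inside)
    d2 = np.gradient(d1, t, axis=0)      # second derivative
    num = np.linalg.norm(np.cross(d1, d2), axis=1)
    den = np.linalg.norm(d1, axis=1) ** 3
    return num / den


if __name__ == "__main__":
    t = np.linspace(0.0, 2.0 * np.pi, 400)
    circle = np.column_stack([2.0 * np.cos(t), 2.0 * np.sin(t), np.zeros_like(t)])
    k = curvature_finite_diff(circle, t)
    print(k[3:-3].min(), k[3:-3].max())   # both close to 1 / r = 0.5
```

On a circle of radius 2 the interior estimates agree with κ = 1/r = 0.5 to within about 10⁻⁴; the first and last samples use one-sided differences and are less accurate.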

To estimate the curvature at a point p, we fit a circle of radius r to the points near p, within the local plane spanned by the two leading principal directions of that neighborhood of the 3-dimensional t-PHATE trajectory; the inverse radius 1/r gives the curvature at p. More precisely, at each point p of the curve C, we select a local neighborhood of points around p. The size of this neighborhood, a user-defined hyperparameter (set here to 8% of the total curve length), determines the number of points sampled symmetrically around p. The neighborhood is then centered by subtracting the mean of these points from each point, so that the analysis is performed relative to its center of mass. Next, singular value decomposition (SVD) is applied to the centered neighborhood, yielding two vectors that span the local plane and a normal vector perpendicular to this plane. A circle is then fitted to the points projected onto the local plane using a least-squares method. The curvature at p is calculated as the reciprocal of the radius (1/r) of the fitted circle, assuming that the trajectory locally approximates a circular arc. Repeating this procedure for all points along the trajectory gives a curvature profile across the entire curve. The four curvature values at the four task-switching times (including the switch from gaze to rest) are then fed into a three-layer MLP for classification.
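The local plane-plus-circle procedure described above can be sketched in NumPy as follows. Assumptions: the trajectory is roughly uniformly sampled, so 8% of the curve length is approximated by 8% of the points; the circle is fitted with the algebraic (Kåsa) least-squares method, which is one of several least-squares circle fits and may differ from the one used by the authors; and neighborhoods are truncated at the ends of the curve. Nearly straight neighborhoods typically yield very large fitted radii (curvature near zero), and exactly collinear points make the algebraic fit ill-posed. Function names are hypothetical.

```python
import numpy as np


def fit_circle_2d(uv):
    """Algebraic (Kasa) least-squares circle fit to (n, 2) points; returns centre and radius.

    Uses x^2 + y^2 = 2*cx*x + 2*cy*y + c with c = r^2 - cx^2 - cy^2.
    """
    A = np.column_stack([2.0 * uv[:, 0], 2.0 * uv[:, 1], np.ones(len(uv))])
    b = (uv ** 2).sum(axis=1)
    (cx, cy, c), *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.array([cx, cy]), float(np.sqrt(c + cx ** 2 + cy ** 2))


def local_curvature(curve, frac=0.08):
    """Curvature profile of a 3-D trajectory (T, 3) by local SVD plane + circle fitting."""
    T = len(curve)
    half = max(1, int(round(frac * T / 2)))   # neighbourhood of ~frac*T points centred on p
    kappa = np.full(T, np.nan)
    for i in range(T):
        lo, hi = max(0, i - half), min(T, i + half + 1)
        nbhd = curve[lo:hi]
        if len(nbhd) < 3:
            continue                          # a circle needs at least three points
        centred = nbhd - nbhd.mean(axis=0)    # work relative to the centre of mass
        _, _, Vt = np.linalg.svd(centred, full_matrices=False)
        uv = centred @ Vt[:2].T               # project onto the local plane
        _, r = fit_circle_2d(uv)
        kappa[i] = 1.0 / r
    return kappa
```

The classification features would then be `kappa[idx]` at the four switch samples; for a 244-sample block at 8.13 Hz, and assuming the block timing of Figure 1 (switches at 4, 9, 13, and 18 s), these are approximately indices 33, 73, 106, and 146.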

3 Results

We present our experimental results in two parts. First, we present the classification results from learned representations, followed by prediction of GAF and PANSS scores. Next, we present the jointly learned representations and show the distinction between TD and FEP individuals via geometric and topological data analysis techniques. Results on modality translation, which is an additional outcome of the neural-PRISM framework, are presented in Supplementary Material Appendix B.

3.1 Classification

We divide the dataset into 30-second blocks, so that each subject has 24 blocks of data: 6 blocks for each of the four conditions (positive/negative movie valence × direct/diverted gaze). To evaluate the performance of our method, we employ a leave-one-subject-out cross-validation scheme, in which the samples from one subject are used for testing while the samples from all other subjects form the training set. Note that if we instead randomly choose blocks from all the data samples and split them into training and test sets, we achieve close to 100% classification accuracy on test samples (with fNIRS recordings only), similar to previous studies (8, 9). This is because the training set then contains blocks from every subject, so the learned model can exploit subject-specific cues or signatures rather than generalizing to unseen individuals.
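The difference between the two evaluation schemes can be checked directly on subject labels. The sketch below, with hypothetical helper names, generates leave-one-subject-out splits and counts how many subjects are shared between training and test sets; for a random block-level split the overlap is almost always non-empty, which is the leakage described above. scikit-learn's `LeaveOneGroupOut` implements the same splitting rule.

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx); no subject appears in both sets."""
    subject_ids = np.asarray(subject_ids)
    for s in np.unique(subject_ids):
        yield s, np.flatnonzero(subject_ids != s), np.flatnonzero(subject_ids == s)


def random_block_split(n_blocks, test_frac, rng):
    """Random block-level split that ignores subject identity (the leaky scheme)."""
    perm = rng.permutation(n_blocks)
    n_test = int(round(test_frac * n_blocks))
    return perm[n_test:], perm[:n_test]


def shared_subjects(subject_ids, train_idx, test_idx):
    """Subjects that contribute blocks to both the training and the test set."""
    subject_ids = np.asarray(subject_ids)
    return set(subject_ids[train_idx].tolist()) & set(subject_ids[test_idx].tolist())


if __name__ == "__main__":
    ids = np.repeat(np.arange(33), 24)   # 14 FEP + 19 TD subjects, 24 blocks each
    leaks = [len(shared_subjects(ids, tr, te)) for _, tr, te in leave_one_subject_out(ids)]
    print(max(leaks))                    # 0: LOSO never shares a subject
    tr, te = random_block_split(len(ids), 0.2, np.random.default_rng(0))
    print(len(shared_subjects(ids, tr, te)))
```

With 33 subjects this yields 33 folds of 24 test blocks each, and every fold reports zero shared subjects.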

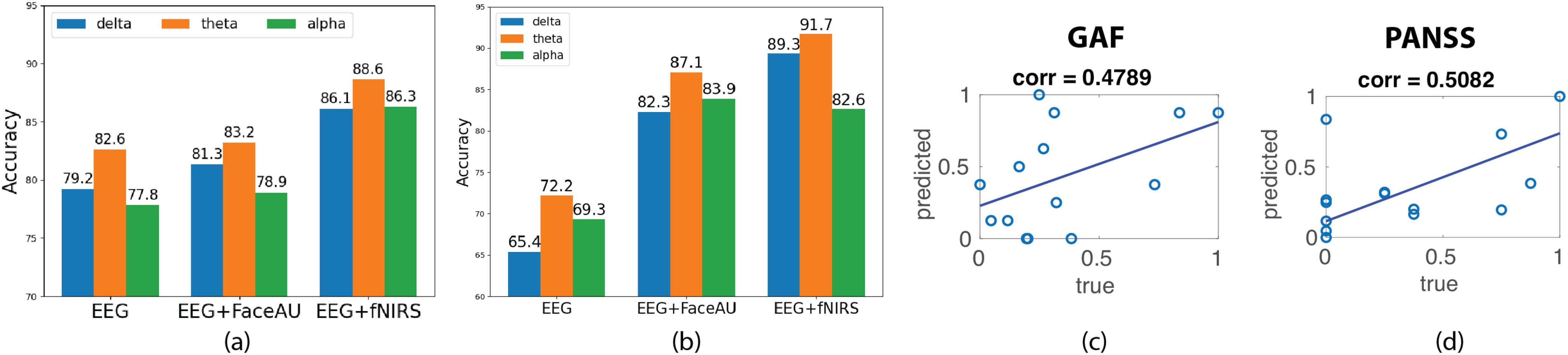

We train the encoder-decoder model with different EEG bands, namely delta [0.5–3 Hz], theta [4–7 Hz], and alpha [8–13 Hz], along with fNIRS and facial action units. Using fNIRS data, the classification accuracy on withheld subjects’ blocks was 85%, outperforming a traditional support vector machine (SVM) (71%) and a stand-alone MLP (67%). Incorporating multimodal joint representations improves classification, and fNIRS + EEG data yield the best classification accuracy of 88% (Figure 4A). A similar trend is observed when using path signatures of latent trajectories (Figure 4B).
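Band-limited EEG inputs of this kind are commonly produced with zero-phase Butterworth filters. The sketch below uses SciPy's `butter` (second-order-sections output) and `sosfiltfilt` with the band edges listed above; the filter order of 4 and the function name are assumptions, since the paper does not state how the bands were extracted.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

# Band edges (Hz) as listed in the text.
EEG_BANDS_HZ = {"delta": (0.5, 3.0), "theta": (4.0, 7.0), "alpha": (8.0, 13.0)}


def band_filter(eeg, band, fs=256.0, order=4):
    """Zero-phase Butterworth band-pass of (n_samples, n_channels) EEG for one named band."""
    low, high = EEG_BANDS_HZ[band]
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=0)   # forward-backward filtering: no phase shift


if __name__ == "__main__":
    fs = 256.0
    t = np.arange(7680) / fs                                   # one 30-s block
    x = np.sin(2 * np.pi * 6.0 * t) + np.sin(2 * np.pi * 20.0 * t)
    for name in EEG_BANDS_HZ:
        y = band_filter(x[:, None], name, fs)
        print(name, float(np.sqrt(np.mean(y[1280:-1280] ** 2))))
```

Second-order sections are used because a direct transfer-function form can become numerically unstable for a 0.5 Hz edge at 256 Hz; forward-backward filtering keeps the band signals time-aligned with the fNIRS and facial AU streams. In the demo, only the theta band passes the 6 Hz component (RMS near 0.707), while the edges of each block are trimmed to avoid filter start-up transients.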

Figure 4. Diagnosis of first-episode psychosis (FEP) patients using latent trajectories obtained from neural-PRISM and prediction of disease severity scores. (A) Classification of TD (typically developed) and FEP subjects using a 2-layer MLP (multi-layer perceptron) trained on latent trajectories derived from unimodal EEG (electroencephalogram) data as well as multimodal EEG + FaceAU (facial action units) and EEG + fNIRS (functional near-infrared spectroscopy) data. (B) Classification of TD and FEP subjects using a 4-layer MLP trained on path signatures of latent trajectories derived from unimodal and multimodal data. (C) Block-averaged probability scores obtained from the classifier in (A) are correlated with GAF (global assessment of functioning) scores. (D) Block-averaged probability scores obtained from the classifier in (A) are correlated with PANSS (positive and negative syndrome scale) positive symptom score. Note that the PANSS and GAF scores are normalized to [0,1] for clarity.

3.1.1 Predicting GAF and PANSS scores

The Global Assessment of Functioning (GAF) (19, 42) spans the range from positive mental health to severe psychopathology. It is an overall (global) measure of how patients are doing in their day-to-day lives. GAF measures the degree of mental illness by rating psychological, social, and occupational functioning (43). The Positive and Negative Syndrome Scale (PANSS) (44, 45) was developed as a well-defined instrument for assessing both the positive and negative symptoms of schizophrenia as well as general psychopathology.

The probability scores that the classification model outputs at test time were used to predict the PANSS and GAF scores. For each FEP patient, the probability scores of the 24 data blocks were averaged to obtain the predicted score. Note that the ground-truth scores were not used during training of the classification model. The correlation coefficient between the predicted scores and the true GAF role scores is 0.4789, and the correlation with the PANSS positive symptom scores is 0.5082 (Figures 4C, D). However, the predicted scores correlated poorly with the PANSS negative symptom scores (0.1630).
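The severity prediction has two steps: average the per-block probabilities for each patient, then correlate the averages with the clinical scores. The sketch below shows both steps with NumPy, assuming Pearson's r as the correlation coefficient (the text does not name it). All inputs are randomly generated toy values, unrelated to the reported results, and the helper names are hypothetical.

```python
import numpy as np


def subject_scores(block_probs: dict) -> dict:
    """Average the per-block FEP probabilities of each held-out subject."""
    return {sid: float(np.mean(p)) for sid, p in block_probs.items()}


def pearson_r(x, y) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


# Toy classifier outputs (24 blocks per patient) and toy clinical scores.
rng = np.random.default_rng(0)
block_probs = {f"P{i}": rng.uniform(0, 1, size=24) for i in range(5)}
clinical = {f"P{i}": 10.0 * i for i in range(5)}

predicted = subject_scores(block_probs)
ids = sorted(predicted)
r = pearson_r([predicted[s] for s in ids], [clinical[s] for s in ids])
```

Pearson's r is unchanged by a positive linear rescaling of either variable. Normalizing PANSS and GAF to [0,1], as in Figure 4, therefore does not change the coefficient.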

3.2 Learned representations

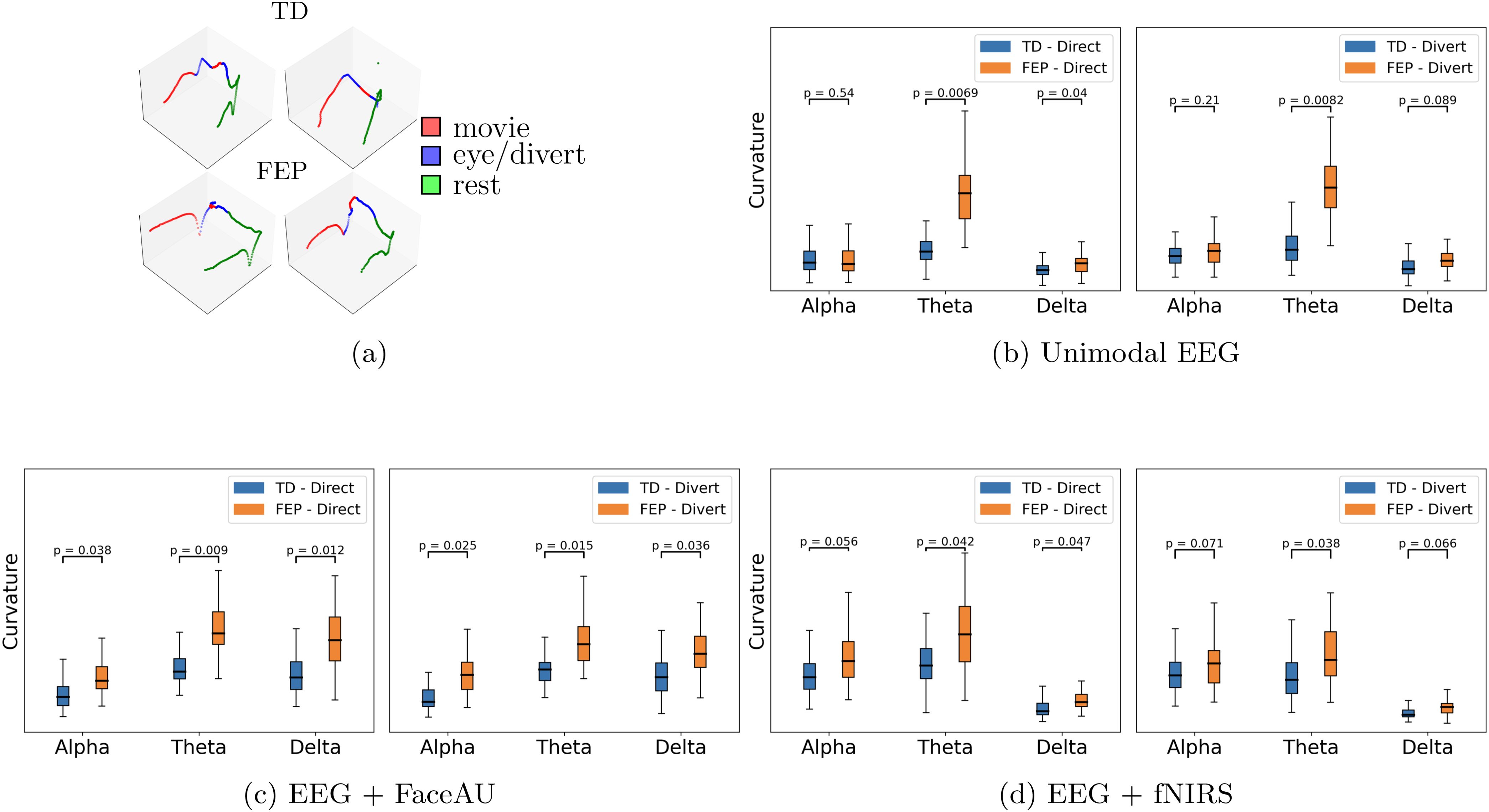

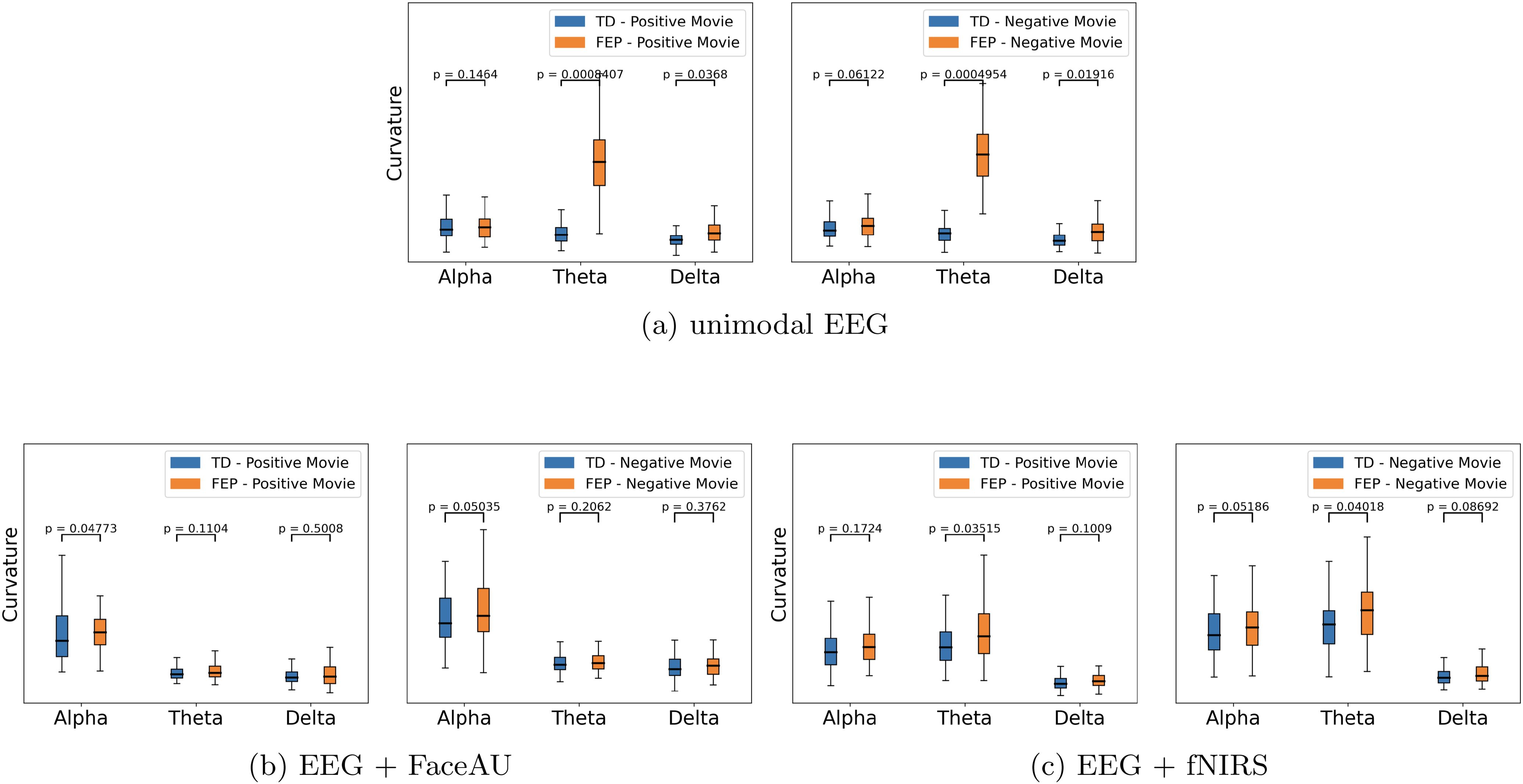

The learned latent representations of the unimodal and multimodal autoencoders are used to compute time-lapse t-PHATE trajectories. We then analyze these trajectories and compute their curvatures at different task-switching times. Visualizing the learned embeddings in 3-D space with t-PHATE makes it possible to identify the switches from movie watching to direct/diverted gaze and vice versa (Figure 5A shows example trajectories). The curvatures at these switching times were analyzed for both FEP and TD individuals. The curvatures for FEP patients are larger than those for TD individuals, indicating attentional dysregulation and sensitivity to the presence of emotional distractors in FEP patients (46, 47). With curvatures computed from unimodal EEG representations alone, the greatest distinction between TD and FEP individuals is in the theta band (Figure 5B). Integrating other modalities (fNIRS and FaceAU) with EEG yields clear discrimination in both the alpha and delta bands as well (Figures 5C, D). FEP patients also show higher curvatures in the direct gaze condition than in the diverted gaze condition, which suggests higher neural activity in FEP patients during direct gaze. Figure 6 shows the curvature values separately for positive and negative emotions. It shows that (i) the curvatures of FEP patients are larger than those of TD individuals, and (ii) the curvatures of FEP patients are larger for negative emotions elicited by negatively valenced movies.
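This section does not spell out the curvature estimator; the paper's exact definition may differ. One common discrete choice for a sampled 3-D trajectory is the Frenet formula κ = |r′ × r″| / |r′|³ with finite-difference derivatives, sketched below with NumPy. The function name is hypothetical, and the circle is only a sanity check with known curvature 1/R.

```python
import numpy as np


def discrete_curvature(traj: np.ndarray, dt: float = 1.0) -> np.ndarray:
    """Pointwise curvature |r' x r''| / |r'|^3 of a trajectory of shape (T, 3).

    Derivatives use np.gradient (central differences inside, one-sided at the
    ends), so the first and last two values are less accurate.
    """
    vel = np.gradient(traj, dt, axis=0)
    acc = np.gradient(vel, dt, axis=0)
    cross = np.cross(vel, acc)
    speed = np.linalg.norm(vel, axis=1)
    eps = 1e-12  # avoids division by zero where the trajectory stalls
    return np.linalg.norm(cross, axis=1) / np.maximum(speed, eps) ** 3


# Sanity check: a circle of radius 2 has curvature 1/2 everywhere.
theta = np.linspace(0, 2 * np.pi, 200)
circle = np.stack([2 * np.cos(theta), 2 * np.sin(theta), np.zeros_like(theta)], axis=1)
kappa = discrete_curvature(circle, dt=theta[1] - theta[0])
```

For the analysis described above, one would apply this to each 3-D t-PHATE trajectory and read off `kappa` at the indices of the task switches. Because this curvature formula does not depend on how the curve is parameterized, the choice of `dt` does not affect the result.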

Figure 5. (A) Example t-PHATE visualizations (geometrical motifs) of learned representations in 3-dimensional Euclidean space: the first row shows TD (typically developed) individuals and the second row FEP (first episode psychosis) patients. These examples are obtained from joint EEG (electroencephalogram) and fNIRS (functional near-infrared spectroscopy) representations. Curvatures of geometrical motifs: (B) unimodal EEG, (C) multimodal EEG + FaceAU (facial action units), and (D) multimodal EEG + fNIRS. p-values are obtained with the Mann–Whitney U test. Higher curvatures for FEP patients in the direct gaze condition than in the diverted gaze condition suggest higher neural activity in FEP patients during direct gaze.

Figure 6. Curvature comparison based on the positive/negative movie watching conditions. (A) unimodal EEG (electroencephalogram), (B) Multimodal EEG + FaceAU (facial action units), and (C) Multimodal EEG + fNIRS (functional near-infrared spectroscopy). We observe that (i) the curvatures of FEP (first episode psychosis) patients are larger than TD (typically developed) individuals and (ii) the curvatures of FEP patients are larger for negative emotions stimulated by negatively valenced movies.
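The p-values in Figure 5 come from the Mann–Whitney U test. The sketch below computes U and a two-sided p-value with the large-sample normal approximation, using only the Python standard library. It applies no tie or continuity correction, so it is an approximation and not necessarily the exact procedure the authors used. `scipy.stats.mannwhitneyu` handles small samples and ties more carefully. The curvature values are invented for illustration.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U_x, two-sided p) via the normal approximation.

    U_x counts pairs (x_i, y_j) with x_i > y_j, counting ties as 1/2.
    No tie or continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    u = sum(1.0 if xi > yj else 0.5 if xi == yj else 0.0 for xi in x for yj in y)
    mean = n1 * n2 / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # = 2 * (1 - Phi(|z|))
    return u, p


# Invented curvature values at switching times (not data from the study).
curv_fep = [0.42, 0.55, 0.61, 0.48, 0.70, 0.66]
curv_td = [0.31, 0.29, 0.40, 0.35, 0.27, 0.38]
u, p = mann_whitney_u(curv_fep, curv_td)
```

Because the test compares ranks rather than means, it does not assume normally distributed curvatures, which suits skewed quantities like these.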

4 Discussion

In this study, we introduced a deep recurrent geometric autoencoder framework for multimodal representation learning and classification of individuals with first episode psychosis. Our study is based on a live face-to-face interaction paradigm designed to investigate the neural correlates of social cognition in early psychosis. We hypothesized that combining multiple neuroimaging modalities (fNIRS and EEG) with behavioral recordings (facial tracking) would predict early psychosis symptoms better than unimodal recordings alone.

Our proposed Neural-PRISM framework consists of LSTM-based encoder and decoder networks, together with geometric and topological characterizations of the trajectory. The encoder network is trained to output latent trajectories over time, and the decoder network is trained to output the reconstructed modality conditioned on the encoder output. The networks are trained to minimize the difference between the predicted and recorded (ground truth) output modalities. In doing so, the autoencoder learns compressed joint embeddings of multimodal neural trajectories in the latent space (the encoder output). Classification between FEP and TD individuals is based on the embedding at the final time points. The entire learned trajectories, however, are used to capture geometrical features that help characterize early psychosis patients. Recurrent neural networks have been used to model brain dynamics (48). We chose a long short-term memory (LSTM) RNN over a vanilla RNN because the latter suffers from the vanishing-gradient problem during training. This problem prevents a vanilla RNN from effectively carrying context across time steps in its internal state. Transformers (49) are typically used to model very long-range dependencies, especially in large-scale tasks such as document summarization. They would be suitable for sleep studies or for recordings that arrive as a stream. Although transformers can be trained in parallel, LSTMs require fewer parameters. Transformers may also suit real-time decoding from EEG to fNIRS, or brain-computer interfaces that process live streams of neuroimaging data (50).
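The recurrence described above turns a multichannel recording into a latent trajectory. The sketch below shows that recurrence as the forward pass of a single-layer LSTM in NumPy. The weights are random and untrained, the class name and dimensions are hypothetical, and a linear readout stands in for the LSTM decoder. It is not the Neural-PRISM implementation.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


class LSTMEncoder:
    """Untrained single-layer LSTM that returns the hidden state at every step."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_dim)
        # Stacked gate weights, order: input, forget, cell candidate, output.
        self.W = rng.uniform(-scale, scale, size=(4 * hidden_dim, input_dim))
        self.U = rng.uniform(-scale, scale, size=(4 * hidden_dim, hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.hidden_dim = hidden_dim

    def __call__(self, x: np.ndarray) -> np.ndarray:
        """Map x of shape (T, input_dim) to a latent trajectory (T, hidden_dim)."""
        h = np.zeros(self.hidden_dim)
        c = np.zeros(self.hidden_dim)
        states = []
        for x_t in x:
            gates = self.W @ x_t + self.U @ h + self.b
            i, f, g, o = np.split(gates, 4)
            i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
            g = np.tanh(g)
            c = f * c + i * g   # additive cell update eases gradient flow
            h = o * np.tanh(c)
            states.append(h)
        return np.stack(states)

    def num_parameters(self) -> int:
        return self.W.size + self.U.size + self.b.size


# Hypothetical input: 100 time steps of a 20-channel recording, 8-D latent space.
x = np.random.default_rng(1).standard_normal((100, 20))
encoder = LSTMEncoder(input_dim=20, hidden_dim=8)
latent = encoder(x)
# Hypothetical, untrained linear readout standing in for the decoder.
readout = np.random.default_rng(2).standard_normal((20, 8)) * 0.1
reconstruction = latent @ readout.T
```

In this sketch, `latent[-1]` corresponds to the final-time-point embedding used for classification. The full `latent` array is the trajectory from which geometric features such as curvature would be computed. The parameter count, 4·h·(d + h) + 4·h, grows only linearly with the input dimension d.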

The classification and severity prediction results in Figure 4 and the trajectory curvature analysis of our embeddings in Figure 5 both support our hypothesis that multimodal representations discriminate FEP patients better. The curvatures of trajectories associated with the task-switching paradigm may indicate rapid transitions between events. Such transitions would be visible in the EEG data, which has higher temporal resolution. Moreover, the higher curvatures of FEP patients are consistent with attentional dysregulation and sensitivity to the presence of emotional distractors in FEP patients (46, 47), which supports the validity of our approach.

Our results confirm the potential of our framework for the classification and detection of early psychosis. Multimodal (EEG and fNIRS/FaceAU) joint representation learning achieves higher classification accuracy than fNIRS data alone, which demonstrates the value of multimodal data processing. Moreover, the scores derived from our paradigm and multivariate analysis correlate with early positive symptoms, which may help clinicians target interventions.

Although the number of participants in the study is small, the current set of data provides foundational results with multivariate analysis techniques for potential future studies on larger populations as well as application of these tools to additional populations including chronic schizophrenia. Further generalization across subjects will require a larger sample size with a primary emphasis on understanding FEP through neural recordings stimulated by live face processing.

fNIRS has been used extensively for neuroimaging in infants and children, but its use in adult cognitive research has been limited. The main reasons are sparse optode coverage and lower spatial resolution (around 3 cm) compared with fMRI. Nevertheless, fNIRS tolerates movement and avoids a strong magnetic field, restrictive physical conditions, the requirement to lie supine, and loud noise. These advantages make it a preferable alternative for live interactive studies involving two individuals (10–12). Although fNIRS cannot record brain activity from subcortical regions, many studies of social interaction have found that the superficial cortex, including the right temporoparietal junction, plays a major role in these behaviors (51). Combining fNIRS recordings with EEG provides additional information that may represent neural processing at deeper and subcortical levels.

Previous multimodal studies have applied multivariate machine learning classification to the diagnosis of psychosis. The data used include genomic data, patient records, EEG, structural neuroimaging, task-based fMRI, resting-state fMRI, and diffusion tensor imaging (DTI) (6, 52–56). These modalities were used either alone in univariate models or together in multivariate models. The goal was to identify which participants were at risk of developing schizophrenia, or who would benefit most from antipsychotic medication. In one study, a neural network model combined patient records and structural MRI to accurately predict which participants would benefit from clozapine (52). Another study used a convolutional neural network with layerwise relevance propagation to combine features from structural magnetic resonance imaging, fMRI, and genetic markers such as single nucleotide polymorphisms, in order to classify individuals with schizophrenia (53). A further study distinguished patients with bipolar disorder from patients with schizophrenia using an SVM with recursive feature elimination on a multimodal combination of structural MRI, resting-state fMRI, and DTI. It found that regional functional connectivity strength in the left inferior parietal area might serve as a specific biomarker for schizophrenia (54). Other studies have used multimodal EEG and fMRI recordings to distinguish individuals with schizophrenia from control participants. Interestingly, one recent study did not integrate EEG and fMRI into a single model, but instead examined how well each modality classified patients on its own (56). For the fMRI modalities, tuned convolutional neural networks and random forest models gave the best classification performance. For the EEG data, spectral entropy, Hjorth mobility, and Hjorth complexity were the most important features for the random forest classifier. One additional study focused on synthesizing fMRI signals from EEG within a multimodal predictive model for schizophrenia diagnosis. Although this study did not translate between the neuroimaging modalities, it did show that synthesizing multimodal signals is a valid approach for classifying schizophrenia patients (55).
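Hjorth mobility and complexity, cited above as the most important EEG features in (56), are simple variance ratios of a signal and its derivatives. The NumPy sketch below uses the standard definitions with first differences in place of derivatives. The function name, sampling rate, and test tone are hypothetical, and this is not the feature pipeline of (56).

```python
import numpy as np


def hjorth_parameters(x: np.ndarray) -> tuple:
    """Hjorth (activity, mobility, complexity) of a 1-D signal.

    activity   = var(x)
    mobility   = sqrt(var(x') / var(x))
    complexity = mobility(x') / mobility(x)
    Derivatives are first differences, so mobility is roughly the dominant
    angular frequency in radians per sample.
    """
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


fs = 256.0                            # hypothetical sampling rate (Hz)
t = np.arange(int(10 * fs)) / fs      # 10 s of signal
sine = np.sin(2 * np.pi * 10.0 * t)   # a pure 10 Hz tone
act, mob, comp = hjorth_parameters(sine)
```

A pure sinusoid has complexity close to 1. Broadband or irregular signals give larger values, which is why complexity can act as a compact marker of signal irregularity.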

Several recent studies have shown that support vector machines and other machine learning methods can classify psychosis and TD participants using both alpha and theta power spectra (57–60). Specific differences in these spectra have previously been shown to best predict a diagnosis of TD versus FEP. We therefore also applied spectral filtering to the EEG data to compare changes in specific frequency bands. These previous studies suggest a framework for unimodal EEG recordings in which alpha and theta power spectral features serve as a screening tool for the diagnosis of psychosis. One study compared low-frequency oscillation spectra using random forest, support vector machine, and Gaussian process classifiers (GPC). It demonstrated that resting-state power spectral density (PSD) is a practical way to distinguish FEP patients from healthy controls (58). Other studies compared alpha and theta power in first episode psychosis patients and TD subjects during cognitive control tasks, and found that both alpha and theta power differed between the two groups (59). Finally, a systematic review of the effects of antipsychotic treatment on resting-state EEG patterns reported the most relevant predictors of a poor response to antipsychotics. These included a change in theta power relative to healthy controls, together with high alpha power and connectivity (60).
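The alpha and theta power features discussed above are usually band integrals of a power spectral density estimate. As a dependency-free sketch, the code below computes relative band power from a single periodogram with NumPy. A Welch estimate, which averages over windows, would be less noisy. The band edges reuse those given for our EEG bands. The 0.5–45 Hz normalization range, the function name, and the test signal are assumptions.

```python
import numpy as np

BANDS = {"theta": (4.0, 7.0), "alpha": (8.0, 13.0)}


def relative_band_power(x: np.ndarray, fs: float, bands=BANDS, total=(0.5, 45.0)) -> dict:
    """Power in each band divided by power in the `total` range (periodogram).

    Constant scale factors of the periodogram cancel in the ratio.
    """
    x = x - x.mean()
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)

    def power(lo: float, hi: float) -> float:
        mask = (freqs >= lo) & (freqs <= hi)
        return float(psd[mask].sum())

    denom = power(*total)
    return {name: power(lo, hi) / denom for name, (lo, hi) in bands.items()}


fs = 250.0                         # hypothetical sampling rate (Hz)
t = np.arange(2500) / fs           # 10 s -> 0.1 Hz frequency resolution
eeg = np.sin(2 * np.pi * 10.0 * t) + 0.3 * np.sin(2 * np.pi * 5.0 * t)
rel = relative_band_power(eeg, fs)
```

Relative power is often preferred to absolute power because it removes differences in overall signal amplitude between recordings and subjects.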

In conclusion, this study demonstrated the potential of multivariate techniques to capture discriminatory patterns in neural and behavioral recordings of early psychosis. Our findings provide a foundation for exploring the mechanisms underlying these conditions and their interconnections.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors at Dryad https://doi.org/10.5061/dryad.gxd2547xn. All code to support the analyses will be available on GitHub at: https://github.com/KrishnaswamyLab/neural-PRISM.

Ethics statement

The studies involving humans were approved by Yale Human Research Protection Program. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study in accordance with protocol # HIC-2000028094 (typical subjects) and protocol # HIC-1501015178 (patients).

Author contributions

RS: Methodology, Visualization, Writing – original draft, Writing – review & editing, Formal analysis, Software. YZ: Methodology, Visualization, Writing – review & editing. DB: Writing – review & editing. VS: Conceptualization, Investigation, Resources, Validation, Writing – review & editing. CT: Conceptualization, Investigation, Resources, Validation, Writing – review & editing. XZ: Writing – review & editing, Data curation, Formal analysis. JN: Data curation, Writing – review & editing, Formal analysis. SK: Conceptualization, Funding acquisition, Investigation, Methodology, Resources, Supervision, Validation, Visualization, Writing – review & editing. JH: Conceptualization, Data curation, Funding acquisition, Investigation, Resources, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. RS is funded by the Wu Tsai Postdoctoral Fellowship from Yale University. DB is funded by the Kavli Institute for Neuroscience Postdoctoral Fellowship from Yale University. VS is funded by NIH grant R01MH103831 and the Gustavus and Louise Pfeiffer Research Foundation. CT is funded by the Gustavus and Louise Pfeiffer Research Foundation. SK is funded in part by the NIH (NIGMS R01GM135929, R01GM130847), NSF CAREER award IIS-2047856, NSF DMS grant 2327211, and NSF CISE grant 2403317. JH is funded by NIH grants (NIMH R01MH111629, NIMH R01MH107573, and NIMH R01MH119430) and the Gustavus and Louise Pfeiffer Research Foundation. The funding sources had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication. This work was also funded by the State of Connecticut, Department of Mental Health and Addiction Services. The content of this manuscript is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health, the National Science Foundation, the Department of Mental Health and Addiction Services, or the State of Connecticut. The views and opinions expressed are those of the authors.

Acknowledgments

We thank Nina Levine, LMSW, MPH, Deepa Purushothaman, MD, and Ya-jie Wang for their assistance in scanning FEP participants. We extend our sincere appreciation to Raymond Cappiello, for managing all the required documentation.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyt.2025.1518762/full#supplementary-material

References

1. Vos T, Lim SS, Abbafati C, Abbas KM, Abbasi M, Abbasifard M, et al. Global burden of 369 diseases and injuries in 204 countries and territories 1990– 2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet. (2020) 396:1204–22. doi: 10.1016/S0140-6736(20)30925-9

2. Solmi M, Seitidis G, Mavridis DM, Correll CU, Dragioti E, Guimond S, et al. Incidence, prevalence, and global burden of schizophrenia-data, with critical appraisal, from the Global Burden of Disease (GBD) 2019. Mol Psychiatry. (2023) 28:5319–5327. doi: 10.1038/s41380-023-02138-4

3. Kadakia A, Catillon M, Fan Q, Rhys Williams G, Marden JR, Anderson A, et al. The economic burden of schizophrenia in the United States. J Clin Psychiatry. (2022) 83:43278. doi: 10.4088/JCP.22m14458

4. Cai X-L, Xie D-J, Madsen KH, Wang Y-M, Bögemann SA, Cheung EFC, et al. Generalizability of machine learning for classification of schizophrenia based on resting-state functional MRI data. Hum Brain Mapp. (2020) 41:172–84. doi: 10.1002/hbm.24797

5. Li G, Han D, Wang C, Hu W, Calhoun VD, Wang Y-P. Application of deep canonically correlated sparse autoencoder for the classification of schizophrenia. Comput Methods Programs Biomed. (2020) 183:105073. doi: 10.1016/j.cmpb.2019.105073

6. Yassin W, Nakatani H, Zhu Y, Kojima M, Owada K, Kuwabara H, et al. Machine-learning classification using neuroimaging data in schizophrenia, autism, ultra-high risk and first-episode psychosis. Transl Psychiatry. (2020) 10:278. doi: 10.1038/s41398-020-00965-5

7. Lee L-H, Chen C-H, Chang W-C, Lee P-L, Shyu K-K, Chen M-H. Evaluating the performance of machine learning models for automatic diagnosis of patients with schizophrenia based on a single site dataset of 440 participants. Eur Psychiatry. (2022) 65:e1. doi: 10.1192/j.eurpsy.2021.2248

8. Sun J, Cao R, Zhou M, Hussain W, Wang B, Xue J, et al. A hybrid deep neural network for classification of schizophrenia using EEG Data. Sci Rep. (2021) 11:4706. doi: 10.1038/s41598-021-83350-6

9. De Miras JR, Ibáñez-Molina AJ, Soriano MF, Iglesias-Parro S. Schizophrenia classification using machine learning on resting state EEG signal. Biomed Signal Process Control. (2023) 79:104233. doi: 10.1016/j.bspc.2022.104233

10. Noah JA, Zhang X, Dravida S, Ono Y, Naples A, McPartland JC, et al. Real-time eye-to-eye contact is associated with cross-brain neural coupling in angular gyrus. Front Hum Neurosci. (2020) 14:19. doi: 10.3389/fnhum.2020.00019

11. Hirsch J, Zhang X, Noah JA, Dravida S, Naples A, Tiede MT, et al. Neural correlates of eye contact and social function in autism spectrum disorder. PloS One. (2022) 17:e0265798. doi: 10.1371/journal.pone.0265798

12. Hirsch J, Zhang X, Noah JA, Bhattacharya A. Neural mechanisms for emotional contagion and spontaneous mimicry of live facial expressions. Philos Trans R Soc B. (2023) 378:20210472. doi: 10.1098/rstb.2021.0472

13. Zhang X, Noah JA, Singh R, McPartland JC, Hirsch J. Support vector machine prediction of individual Autism Diagnostic Observation Schedule (ADOS) scores based on neural responses during live eye-to-eye contact. Sci Rep. (2024) 14:3232. doi: 10.1038/s41598-024-53942-z

14. Wild B, Erb M, Eyb M, Bartels M, Grodd W. Why are smiles contagious? An fMRI study of the interaction between perception of facial affect and facial movements. Psychiatry Research: Neuroimaging. (2003) 123:17–36. doi: 10.1016/S0925-4927(03)00006-4

15. Ekman P, Friesen WV. Facial action coding system. Environ Psychol Nonverbal Behav. (1978). doi: 10.1037/t27734-000

16. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. (1997) 9:1735–80. doi: 10.1162/neco.1997.9.8.1735

17. Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge, Massachusetts, United States: MIT Press (2016).

18. Jones SH, Thornicroft G, Coffey M, Dunn G. A brief mental health outcome scale: Reliability and validity of the Global Assessment of Functioning (GAF). Br J Psychiatry. (1995) 166:654–9. doi: 10.1192/bjp.166.5.654

19. Srihari VH, Tek C, Kucukgoncu S, Phutane VH, Breitborde NJK, Pollard J, et al. First-episode services for psychotic disorders in the US public sector: a pragmatic randomized controlled trial. Psychiatr Serv. (2015) 66:705–12. doi: 10.1176/appi.ps.201400236

20. Busch EL, Huang E, Benz A, Wallenstein T, Lajoie G, Wolf G, et al. Multi-view manifold learning of human brain-state trajectories. Nat Comput Sci. (2023) 3:240–53.

21. Dravida S, Ono Y, Noah JA, Zhang X, Hirsch J. Co-localization of theta-band activity and hemodynamic responses during face perception: simultaneous electroencephalography and functional near-infrared spectroscopy recordings. Neurophotonics. (2019) 6:045002–2. doi: 10.1117/1.NPh.6.4.045002

22. Baltrušaitis T, Robinson P, Morency L-P. OpenFace: an open source facial behavior analysis toolkit. In: 2016 IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE (2016). p. 1–10.

23. Ekman P. Facial expression and emotion. Am Psychol. (1993) 48:384. doi: 10.1037/0003-066X.48.4.384

24. Hermans M, Schrauwen B. Training and analysing deep recurrent neural networks. Adv Neural Inf Process Syst. (2013) 26.

25. Kirilina E, Jelzow A, Heine A, Niessing M, Wabnitz H, Brühl R, et al. The physiological origin of task-evoked systemic artefacts in functional near infrared spectroscopy. Neuroimage. (2012) 61:70–81. doi: 10.1016/j.neuroimage.2012.02.074

26. Williams RJ, Zipser D. A learning algorithm for continually running fully recurrent neural networks. Neural Comput. (1989) 1:270–80. doi: 10.1162/neco.1989.1.2.270

27. Lamb AM, Goyal A, Zhang Y, Zhang S, Courville AC, Bengio Y, et al. Professor forcing: A new algorithm for training recurrent networks. Adv Neural Inf Process Syst. (2016) 29.

28. Sirpal P, Damseh R, Peng K, Nguyen DK, Lesage F. Multimodal autoencoder predicts fNIRS resting state from EEG signals. Neuroinformatics. (2022) 20:537–58.

29. Chevyrev I, Kormilitzin A. A primer on the signature method in machine learning. arXiv. (2016). Available online at: https://arxiv.org/abs/1603.03788.

30. Moore PJ, Lyons TJ, Gallacher J. Using path signatures to predict a diagnosis of Alzheimer’s disease. PloS One. (2019) 14:e0222212. doi: 10.1371/journal.pone.0222212

31. Tang Y, Wu Q, Mao H, Guo L. Epileptic seizure detection based on path signature and Bi-LSTM network with attention mechanism. IEEE Trans Neural Syst Rehabil Eng. (2024) 32:304–13. doi: 10.1109/TNSRE.2024.3350074

32. Yin Z, Ding X, Zhang X, Wu Z, Wang L, Xu X, et al. Early autism diagnosis based on path signature and Siamese unsupervised feature compressor. Cereb Cortex. (2024) 34:72–83. doi: 10.1093/cercor/bhae069

33. Haderlein JF, Peterson ADH, Zarei Eskikand P, Cook MJ, Burkitt AN, Mareels IMY, et al. Path signatures for seizure forecasting. arXiv. (2023).

35. Thiel M. Osculatory dynamics: framework for the analysis of oscillatory systems. arXiv. (2024). Available online at: https://arxiv.org/abs/2407.00235.

36. Tschechne S, Neumann H. Hierarchical representation of shapes in visual cortex—from localized features to figural shape segregation. Front Comput Neurosci. (2014) 8. doi: 10.3389/fncom.2014.00093

37. Rocchi MBL, Sisti D, Albertini MC. Current trends in shape and texture analysis in neurology: Aspects of the morphological substrate of volume and wiring transmission. Brain Res Rev. (2007) 55:97–107. doi: 10.1016/j.brainresrev.2007.04.001

39. McInnes L, Healy J, Melville J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv. (2018). doi: 10.21105/joss.00861

40. Moon KR, van Dijk D, Wang Z, Gigante S, Burkhardt DB, Chen WS, et al. Visualizing structure and transitions in high-dimensional biological data. Nat Biotechnol. (2019) 37:1482–92. doi: 10.1038/s41587-019-0336-3

42. Aas IHM. Global Assessment of Functioning (GAF): properties and frontier of current knowledge. Ann Gen Psychiatry. (2010) 9:1–11. doi: 10.1186/1744-859X-9-20

43. Söderberg P, Tungström S, Armelius BA. Special section on the GAF: reliability of Global Assessment of Functioning ratings made by clinical psychiatric staff. Psychiatr Serv. (2005) 56:434–8. doi: 10.1176/appi.ps.56.4.434

44. Kay SR, Fiszbein A, Opler LA. The positive and negative syndrome scale (PANSS) for schizophrenia. Schizophr Bull. (1987) 13:261–76. doi: 10.1093/schbul/13.2.261

45. Leucht S, Kane JM, Kissling W, Hamann J, Etschel E, Engel RR. What does the PANSS mean? Schizophr Res. (2005) 79:231–8.

46. Nestor PG, O’Donnell BF. The mind adrift: attentional dysregulation in schizophrenia. In: Parasuraman R, editor. The attentive brain. Cambridge, Massachusetts, United States: The MIT Press (1998). p. 527–46.

47. Grave J, Madeira N, Morais S, Rodrigues P, Soares SC. Emotional interference and attentional control in schizophrenia-spectrum disorders: The special case of neutral faces. J Behav Ther Exp Psychiatry. (2023) 81:101892. doi: 10.1016/j.jbtep.2023.101892

48. Güçlü U, Van Gerven MAJ. Modeling the dynamics of human brain activity with recurrent neural networks. Front Comput Neurosci. (2017) 11:7. doi: 10.3389/fncom.2017.00007

49. Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is all you need. In: Guyon I, et al, editors. Advances in neural information processing systems, vol. 30. Red Hook, New York, United States: Curran Associates, Inc (2017).

50. Pfeffer MA, Ling SSH, Wong JKW. Exploring the frontier: transformer-based models in EEG signal analysis for brain-computer interfaces. Comput Biol Med. (2024) 178:108705. doi: 10.1016/j.compbiomed.2024.108705

51. Carter RM, Huettel SA. A nexus model of the temporal–parietal junction. Trends Cogn Sci. (2013) 17:328–36. doi: 10.1016/j.tics.2013.05.007

52. Panula JM, Gotsopoulos AG, Alho J, Suvisaari J, Lindgren M, Kieseppä T, et al. Multimodal prediction of the need of clozapine in treatment resistant schizophrenia; a pilot study in first-episode psychosis. Biomark Neuropsychiatry. (2024) 11:100102. doi: 10.1016/j.bionps.2024.100102

53. Kanyal A, Mazumder B, Calhoun VD, Preda A, Turner J, Ford J, et al. Multi-modal deep learning from imaging genomic data for schizophrenia classification. Front Psychiatry. (2024) 15:1384842. doi: 10.3389/fpsyt.2024.1384842

54. Chen M, Xia XW, Kang Z, Li Z, Dai J, Wu JY, et al. Distinguishing schizophrenia and bipolar disorder through a Multiclass classification model based on multimodal neuroimaging data. J Psychiatr Res. (2024) 172:119–128. doi: 10.1016/j.jpsychires.2024.02.024

55. Calhas D, Henriques R. EEG to fMRI synthesis for medical decision support: A case study on schizophrenia diagnosis. medRxiv. (2023), 2023–08. doi: 10.1101/2023.08.07.23293748

56. Alves CL, de O Toutain GL, Moura Porto JA, de Carvalho Aguiar PM, de Sena EP, Rodrigues FA, et al. Analysis of functional connectivity using machine learning and deep learning in different data modalities from individuals with schizophrenia. J Neural Eng. (2023) 20:056025. doi: 10.1088/1741-2552/acf734

57. Thilakavathi B, Devi SS, Malaiappan M, Bhanu K, Kannan PP. Identification of schizophrenia using alpha power and theta peak frequency during cognitive activity. Biomed Engineering: Applications Basis Commun. (2024), 2450037. doi: 10.4015/S1016237224500376

58. Redwan SM, Uddin MP, Ulhaq AA, Sharif MI, Krishnamoorthy G. Power spectral density-based resting-state EEG classification of first-episode psychosis. Sci Rep. (2024) 14:15154. doi: 10.1038/s41598-024-66110-0

59. Zhang Y, Yang T, He Y, Meng F, Zhang K, Jin X, et al. Abnormal theta and alpha oscillations in children and adolescents with first-episode psychosis and clinical high-risk psychosis. BJPsych Open. (2024) 10:e71.

Keywords: RNN - recurrent neural network, face processing, multimodal representation, path signature feature, representation learning, first episode psychosis (FEP)

Citation: Singh R, Zhang Y, Bhaskar D, Srihari V, Tek C, Zhang X, Noah JA, Krishnaswamy S and Hirsch J (2025) Deep multimodal representations and classification of first-episode psychosis via live face processing. Front. Psychiatry 16:1518762. doi: 10.3389/fpsyt.2025.1518762

Received: 28 October 2024; Accepted: 21 January 2025;

Published: 26 February 2025.

Edited by:

Amit Singhal, Netaji Subhas University of Technology, India

Reviewed by:

Yosuke Morishima, University of Bern, Switzerland

Umaisa Hassan, Netaji Subhas University of Technology, India

Copyright © 2025 Singh, Zhang, Bhaskar, Srihari, Tek, Zhang, Noah, Krishnaswamy and Hirsch. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Smita Krishnaswamy, smita.krishnaswamy@yale.edu; Joy Hirsch, joy.hirsch@yale.edu

†These authors share senior authorship
