ORIGINAL RESEARCH article

Front. Plant Sci., 09 January 2025

Sec. Plant Bioinformatics

Volume 15 - 2024 | https://doi.org/10.3389/fpls.2024.1502863

This article is part of the Research Topic Recent Advances in Big Data, Machine, and Deep Learning for Precision Agriculture, Volume II

Jianliang Wang1,2†, Chen Chen3†, Senpeng Huang1,2†, Hui Wang4, Yuanyuan Zhao1,2, Jiacheng Wang1,2, Zhaosheng Yao1,2, Chengming Sun1,2, Tao Liu1,2*

Real-time monitoring of rice-wheat rotation areas is crucial for improving agricultural productivity and ensuring the overall yield of rice and wheat. However, current monitoring methods mainly rely on manual recording and observation, leading to low monitoring efficiency. This study addresses the challenges of monitoring agricultural progress and the time-consuming and labor-intensive nature of the monitoring process. By integrating unmanned aerial vehicle (UAV) image analysis and deep learning techniques, we proposed a method for precise monitoring of agricultural progress in rice-wheat rotation areas. The method first extracts color, texture, and convolutional features from RGB images for model construction. Redundant features are then removed through feature correlation analysis. Additionally, activation layer features suitable for agricultural progress classification were proposed using the deep learning framework, enhancing classification accuracy. The results showed that the classification accuracies obtained by combining Color+Texture, Color+L08CON, Color+ResNet50, and Color+Texture+L08CON with the random forest model were 0.91, 0.99, 0.98, and 0.99, respectively. In contrast, the model using only color features had an accuracy of 0.853, which is significantly lower than that of the multi-feature combination models. Color feature extraction took the shortest processing time (0.19 s) for a single image. The proposed Color+L08CON method achieved high accuracy with a processing time of 1.25 s, much faster than directly using deep learning models. This method effectively meets the need for real-time monitoring of agricultural progress.

In rice-wheat rotation areas, strictly following the rotation schedule is essential for the full growth and maturity of both crops and for effective management of agricultural progress. Timely harvesting of rice is crucial for maximizing the use of seasonal and land resources for subsequent wheat planting. Late rice harvesting delays wheat sowing, thereby affecting the entire growth cycle of wheat, especially its growth and maturation stages. Conversely, early harvest of rice affects its yield (Zhang L. et al., 2024). Precise time management of agricultural progress ensures that crops are sown and harvested at optimal times, thereby improving overall yield and quality. As rice and wheat production scales up, tracking the agricultural progress of different fields becomes critical for improving overall work efficiency. A timely and accurate understanding of this progress is essential for effective agricultural management, enabling better planning and execution of key planting and harvesting activities to optimize crop production (Khormizi et al., 2024). Currently, agricultural progress monitoring is mainly conducted through manual surveys and records, which are labor-intensive and susceptible to subjective factors (Du et al., 2024).

In recent years, the application of UAV remote sensing technology in agriculture has gained widespread attention. UAVs have become important tools for agricultural monitoring owing to their high flexibility, high resolution, and low cost. Additionally, UAVs can be equipped with various sensors, such as RGB, multispectral, and thermal imaging cameras, to capture high-resolution images of fields (Colomina and Molina, 2014). These sensors can be used to monitor crop growth, detect pests and diseases, and assess soil conditions (Zhang and Kovacs, 2012). In crop growth monitoring, UAV images are widely used to assess the growth and health of rice and wheat. The growth status and biomass of crops can be assessed by analyzing vegetation indices (such as normalized difference vegetation index (NDVI)) (Li et al., 2019; Najafi et al., 2023). For example, studies have shown that multispectral images obtained using UAV can accurately assess the growth status and predict the yield of rice (Zhou et al., 2017). Additionally, UAV images can be used for wheat growth monitoring and for obtaining vegetation indices from high-resolution image data to assess the growth and health of wheat (Su et al., 2019). UAV images also play an important role in pest and weed detection. The application of UAVs to identify disease spots and pest traces on crop leaves provides early warning and effective control measures in agricultural management. Previous studies have shown that multispectral images from the UAV can be used to detect rice blast disease with an accuracy of over 85% (Cao et al., 2013). The application of UAV images is highly effective in identifying common diseases in wheat, such as rust and powdery mildew (Garcia-Ruiz et al., 2013). In addition, UAV remote sensing technology has been well applied in land type classification. By obtaining high-resolution image data, researchers can classify different types of land. 
Previous studies have classified different land types, such as farmland, water bodies, and buildings, using multispectral images obtained using UAVs (Tong et al., 2020). In recent years, the application of deep learning technology in UAV remote sensing image processing has received considerable attention. Deep learning technology is used to develop complex neural network models for automatically extracting features from large-scale image data, enabling efficient image classification and object detection. Studies have shown that convolutional neural networks (CNNs) can significantly improve the classification accuracy of UAV images (Kamilaris and Prenafeta-Boldú, 2018). In agricultural applications, deep learning technology can achieve precise classification of crop growth, pests, and land types. For example, the deep learning model ResNet50 can be used to accurately classify different growth stages and pest conditions of rice and wheat (Fuentes et al., 2017).

Although UAV remote sensing technology has been widely used in agricultural monitoring, there are still some limitations in monitoring farming progress (Zhang W. et al., 2024). Most studies focus on crop growth status or pest and disease monitoring at a single point in time and lack continuous monitoring of the entire farming process (Mulla, 2013). This shortcoming limits the comprehensive understanding of farming progress and reduces the precision of agricultural management. Traditional image processing methods lack accuracy and efficiency in complex farmland environments. Different crop types, soil conditions, and management practices across fields make it difficult for traditional methods to distinguish similar farming activities (Timsina and Connor, 2001). For example, ploughing and harrowing are two common farming activities with similar image features, making them difficult to distinguish using traditional image processing (Li et al., 2024). Moreover, manual labeling is not only time-consuming and labor-intensive but also prone to subjective influences, resulting in poor accuracy and consistency of data. This problem is especially common in large-scale applications, limiting the widespread use of UAV remote sensing technology in farming progress monitoring (Hunt Jr. et al., 2010).

Thus, this research utilized high-resolution image data captured by UAVs. Furthermore, these data were employed to extract image features using deep learning models, enabling precise classification of farming activities in rice-wheat rotation areas. This method improved the classification accuracy and reduced manual intervention, thereby enhancing the objectivity and consistency of the data. This study aimed 1) to identify different types of farming activities in rice-wheat rotation fields and their corresponding image features: By analyzing UAV images, image features of different farming activities, such as color, texture, and deep learning features, were extracted to achieve the classification of different farming activities; 2) to identify effective indices that can be used for farming activity classification: By comparing the classification effects of different image features, the most representative and capable indices were selected to provide data support for the subsequent construction of classification models; 3) to construct a precise and efficient farming activity classification model: Using the selected effective indices and combining them with deep learning technology, a precise and efficient farming activity classification model was developed to achieve real-time monitoring and management of farming activities in rice-wheat rotation areas. The research findings provide farmers and agricultural managers with more accurate information on plot farming activities, facilitating the optimization of agricultural management efficiency and scientific decision-making. In the context of precision agriculture management (Lu et al., 2023), this study enables real-time monitoring of farming activities, offering a scientific basis for adjusting planting and harvesting plans. This approach maximizes the utilization of land and seasonal resources, optimizes crop production systems, and enhances agricultural yield and quality. 
Such technological application holds not only scientific significance but also substantial practical value in advancing precision agriculture management.

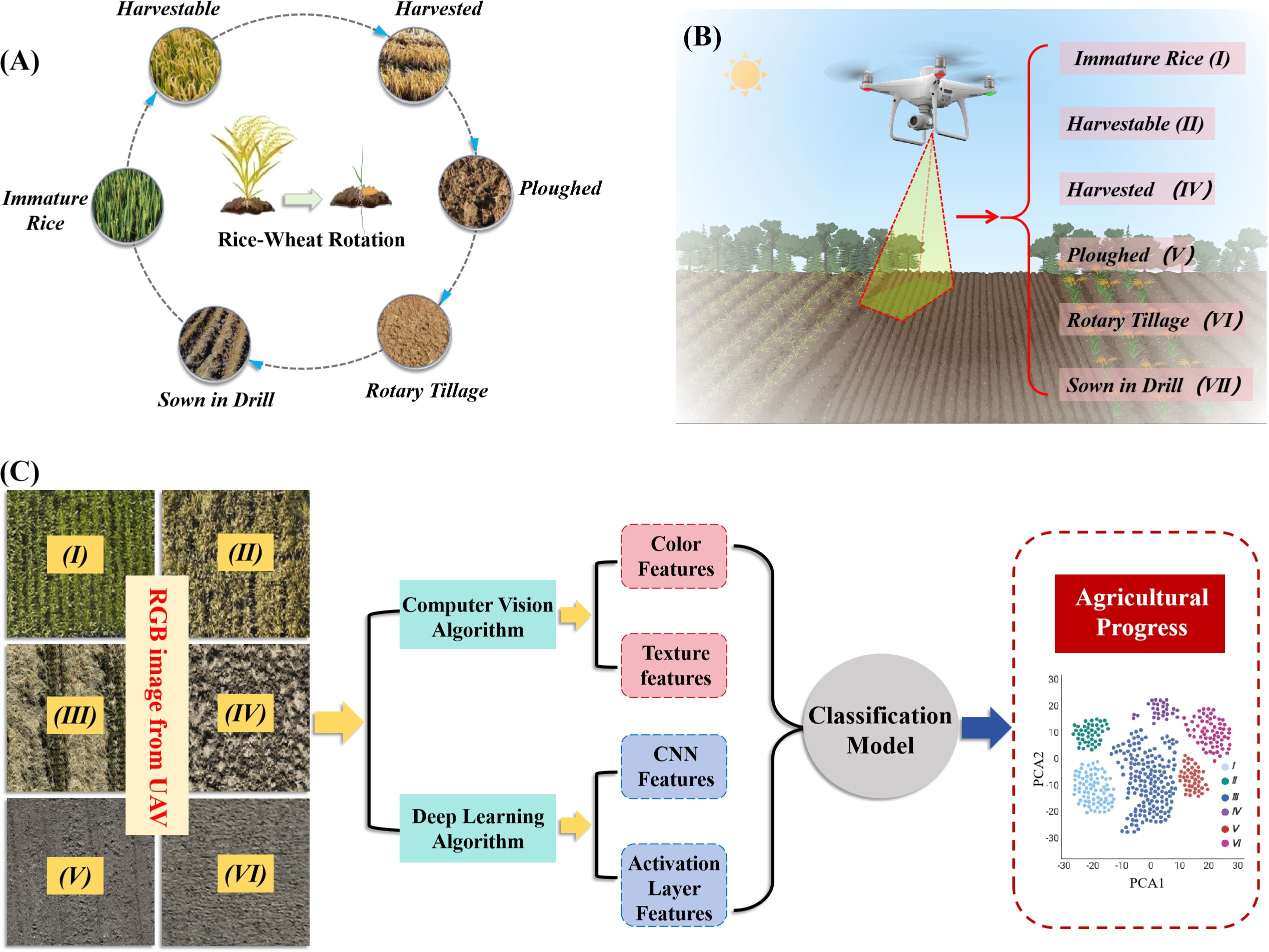

In this research, rice-wheat rotation areas were chosen as the focus, with the monitoring period spanning from the rice harvest to the completion of wheat planting. During this period, the fields were divided into six types based on farming progress: immature rice (I), harvestable rice (II), harvested rice (III), ploughed land (IV), rotary tillage land (V), and sown wheat (VI) (Figure 1A). High-resolution visible light images of the fields were obtained using a UAV equipped with a high-resolution camera (Figure 1B). The UAV followed a predetermined route and altitude under clear and windless conditions to ensure that the images covered the entire study area and maintained high quality and consistency. The obtained images were processed using computer vision and deep learning algorithms for field-type classification. First, color features, texture features, high-level convolutional features, and CNN activation layer features were extracted from the images, as shown in Figure 1C. These features were input to various classification models for field-type classification. By comparing the performance of different models, the optimal model was selected for final classification. To verify the accuracy of the classification results, the UAV image classification results were validated against ground survey data, ensuring consistency with the actual farming progress.

Figure 1. Technical flow of the study. (A) Depicts the six categories of agricultural operations in the rice-wheat rotation system; (B) offers a detailed classification of these operations: immature rice (I), harvestable rice (II), harvested rice (III), ploughed land (IV), rotary tillage land (V), and land where wheat was sown (VI); (C) illustrates the experimental methodology through a flowchart, comprising agricultural images acquired via RGB drones, an overview of the feature extraction algorithms, and the outputs of the classification model.

The experiment was conducted from 2020 to 2023 as a multi-year, multi-location field experiment at two sites (Figure 2): the Modern Agricultural Science and Technology Comprehensive Demonstration Base in Huai’an City, Jiangsu Province, China (33°35′ N, 118°51′ E) and the Yangzhou University Farm in Yangzhou City (32°23′ N, 119°24′ E). The true values of the field types were obtained through surveys conducted by experienced farm staff. Determining the maturity and harvestability of rice is challenging, so the following specific criteria were used: golden yellow grains, yellow and withering leaves, drooping ears, and a rice moisture content of approximately 20% measured with a moisture meter. If these criteria were met, the rice was considered harvestable; otherwise, it was classed as immature rice. Harvested rice refers to rice harvested using medium to large harvesters. Ploughed land refers to farmland ploughed using a ploughing tractor. Rotary tillage land refers to farmland tilled using a rotary tiller. Sown wheat refers to wheat planted using a strip seeder. A DJI Mavic 3E aerial survey UAV (Shenzhen DJI Innovation Technology Co., Ltd, China) was used to collect RGB images of the fields. The visible light camera had an effective pixel count of 20 million, and the flight altitude was set to 15 m. The images were collected on clear, sunny days.

In this study, the DJI Enterprise software (Shenzhen DJI Innovation Technology Co., Ltd, China) was used to complete image stitching and to obtain orthophotos. ArcMap10.8 (Esri Corporation, USA) was used to perform image alignment, geo-registration, experimental field clipping, and calibration of the UAV images. To obtain more accurate datasets based on the spatial resolution of the images, the growth conditions of the rice, and the preprocessing results of the true color images, the calibrated UAV images of the six types of fields were cut into 0.60 × 0.60 m images. During the plant target segmentation process, images smaller than 0.36 m² were excluded, resulting in a total of 30,000 0.36 m² field images. These images were manually classified into the six aforementioned datasets, with 50% of the images used for model training and 50% for model testing.
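The tiling and 50/50 split described above can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the function names, the orthophoto size, and the ground sampling distance (`gsd_m`, metres per pixel) are hypothetical values chosen for the example, and the random seed is an assumption.

```python
import random

def tile_origins(width_px, height_px, gsd_m, tile_m=0.60):
    """Return (x, y, size) pixel windows for full tile_m x tile_m tiles."""
    size = round(tile_m / gsd_m)  # tile edge length in pixels
    if size <= 0:
        raise ValueError("tile is smaller than one pixel")
    # Stepping only while a full tile fits drops partial edge tiles,
    # i.e. tiles whose ground area would be below tile_m * tile_m.
    return [(x, y, size)
            for y in range(0, height_px - size + 1, size)
            for x in range(0, width_px - size + 1, size)]

def split_half(items, seed=0):
    """Shuffle reproducibly and split 50/50 into (train, test)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]

# Hypothetical orthophoto: 1000 x 700 px at 5 mm per pixel (assumed values).
tiles = tile_origins(width_px=1000, height_px=700, gsd_m=0.005)
train, test = split_half(tiles)
```

In practice the split would usually be stratified by field type so that each of the six classes is represented equally in both halves; the sketch omits that step.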

In this study, based on preliminary experiments (Table A.1 of Appendix A), some features that were highly correlated with the six types of fields were selected, and 12 common color vegetation indices were calculated (Table 1). Moreover, texture features were selected, and the contrast (CON) of UAV images was extracted using a gray-level co-occurrence matrix (Liu et al., 2024). CON reflected the image clarity and the depth of the texture grooves. The deeper the texture grooves, the greater the contrast, resulting in a clearer effect. Conversely, with a smaller contrast value, the grooves are shallow, and the effect is blurry, making it suitable for classifying different types of fields. The calculation formula is as follows:

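$$\mathrm{CON} = \sum_{i}\sum_{j} (i - j)^{2}\, \mathrm{GLCM}(i, j)$$

where $i$ and $j$ represent the pixel values of the gray level. The value of $\mathrm{GLCM}(i, j)$ is the number of times that pixels with value $i$ are adjacent to pixels with value $j$ in the image.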
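The contrast feature described above can be illustrated with a small pure-Python sketch. It assumes a horizontal neighbour offset of one pixel and an image already quantized to integer gray levels; both choices, the function names, and the two toy images are assumptions made for illustration. The optional `normalize` flag divides by the number of pixel pairs, a common variant that is not stated in the text.

```python
def glcm(image, levels, dx=1, dy=0):
    """Count co-occurrences of gray levels (i, j) at pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    return counts

def contrast(counts, normalize=False):
    """Sum of (i - j)^2 weighted by the GLCM entries."""
    total = sum(map(sum, counts)) if normalize else 1
    if total == 0:
        return 0.0
    weighted = sum((i - j) ** 2 * n
                   for i, row in enumerate(counts)
                   for j, n in enumerate(row))
    return weighted / total

# Toy 4-level images (made up): a smooth surface and a strongly textured one.
smooth = [[0, 0, 1, 1],
          [0, 0, 1, 1]]
rough = [[0, 3, 0, 3],
         [3, 0, 3, 0]]
smooth_con = contrast(glcm(smooth, levels=4))
rough_con = contrast(glcm(rough, levels=4))
```

The textured image yields a much larger contrast than the smooth one, which matches the description that deeper texture grooves give higher CON values.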

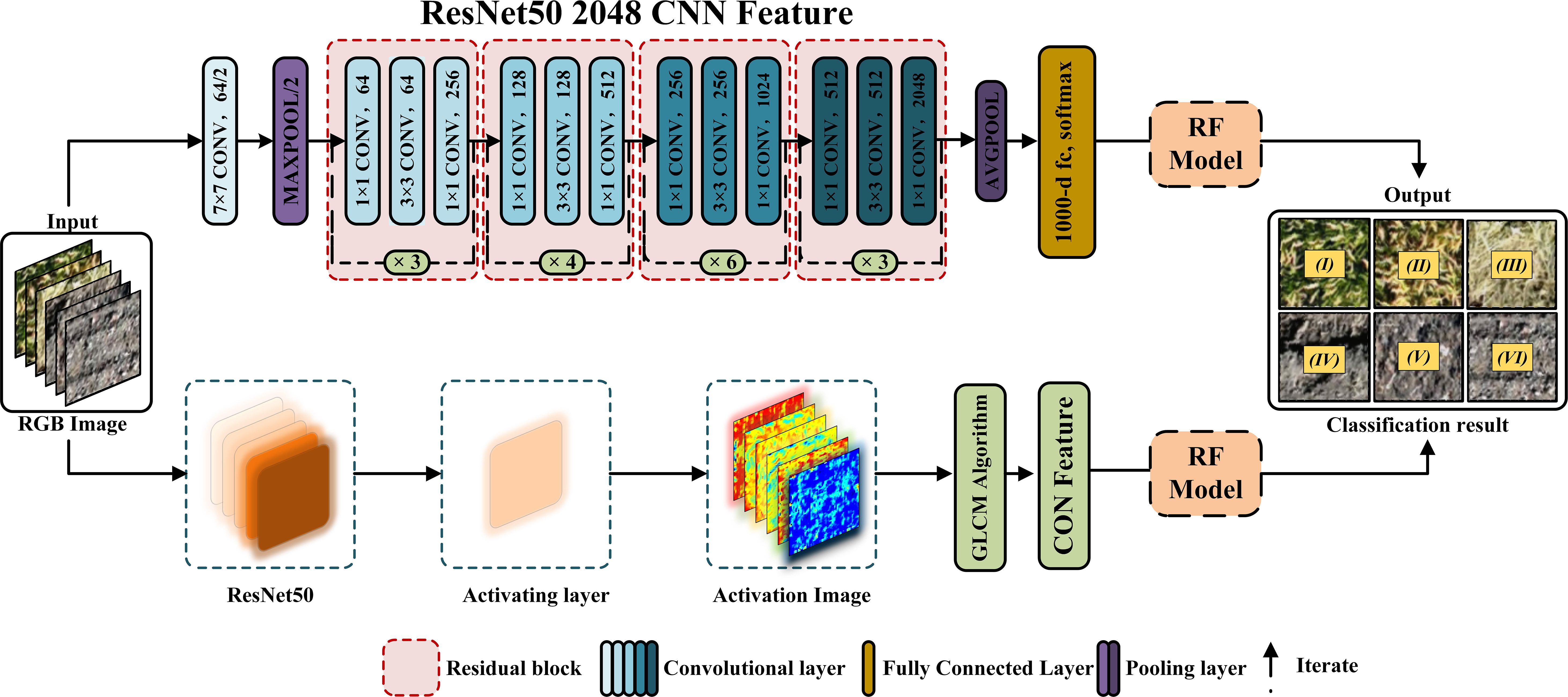

Traditional deep neural networks are prone to vanishing or exploding gradients as the number of layers increases, making training difficult. The Residual Network (ResNet) can effectively solve this problem (Hu et al., 2021). The most commonly used ResNets include ResNet50 and ResNet101. Among them, ResNet50 has better recognition accuracy and real-time performance (Shabbir et al., 2021). In this study, ResNet50 was used to extract features from UAV RGB images of the six types of fields for analysis and comparison; the network structure is shown in Figure 3.

Figure 3. Convolutional feature extraction network architecture. The upper part of the figure provides an overview of the ResNet50 network architecture, including the algorithms involved: convolutional layers (convolution operation), pooling layers (max pooling), activation layers (ReLU activation function), fully connected layers, Softmax, and addition operations (implementing the residual structure). The lower part of the figure illustrates the process of extracting convolutional features from RGB images.

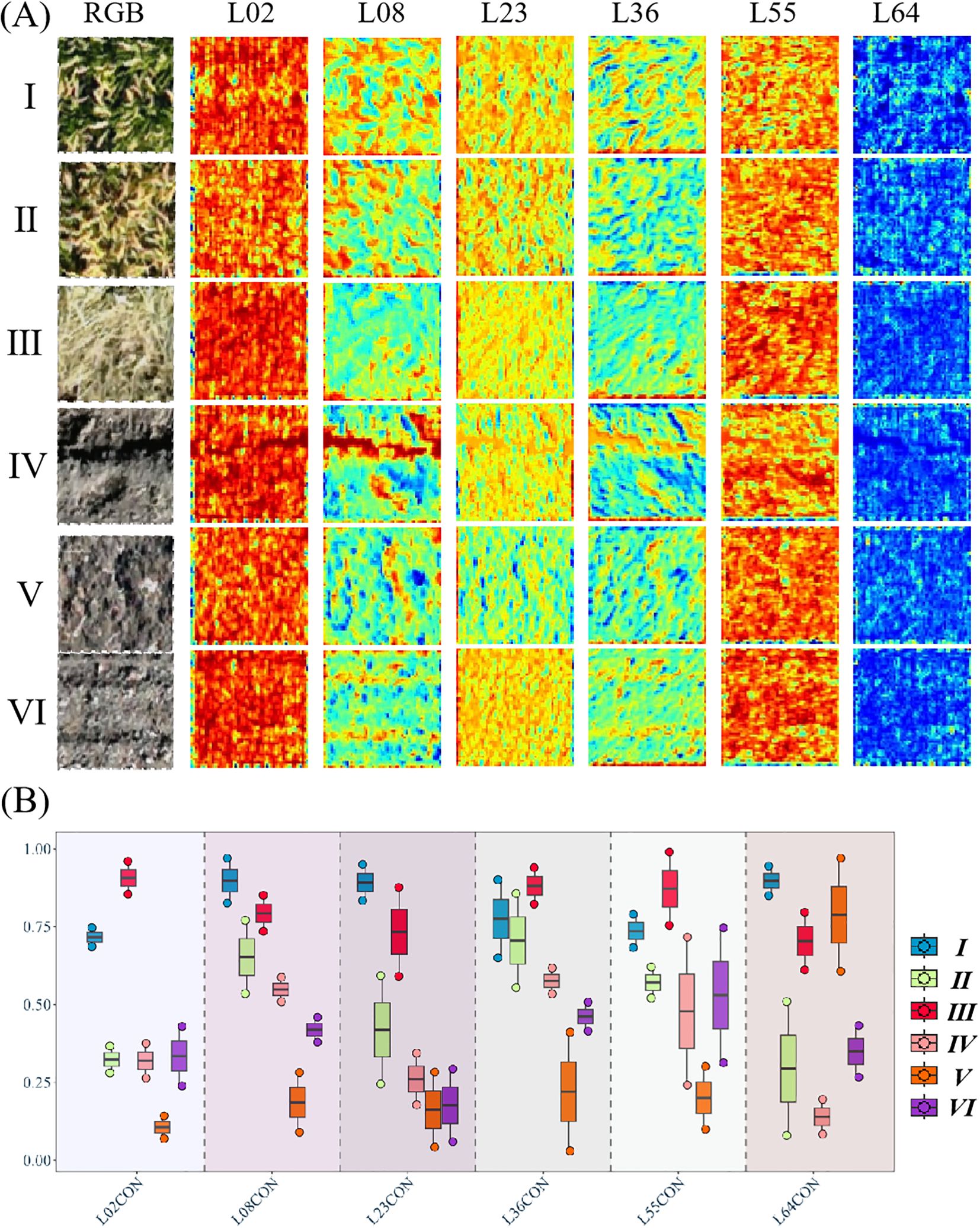

Based on the ResNet50 model, 64 activation layers were extracted, and texture feature analysis was conducted on the activation maps of each layer, as these layers reflect structural changes across six types of plots. The pre-experimental analysis methods included: 1) normalization preprocessing for each activation layer; 2) texture feature extraction using the gray-level co-occurrence matrix (GLCM) method; 3) correlation analysis to assess texture differences and classification performance across the six plot types for each activation layer; and 4) selection of activation layers that demonstrated superior texture differentiation and classification performance based on the pre-experimental findings. Six activation layers—L02, L08, L23, L36, L55, and L64—were identified in this experiment as exhibiting notable performance in texture differentiation and classification effectiveness, and their corresponding texture features were subsequently computed.

The random forest (RF) classification method was applied to classify the six plot types. RF is an ensemble classification algorithm proposed by Breiman. It can efficiently handle datasets with many features, and it assigns a category by aggregating the votes of many decision trees, each trained on a random subset of the samples and features. It has advantages such as fast training speed, insensitivity to sample size, high classification accuracy, and strong noise resistance. It is one of the machine learning algorithms widely used for intelligent learning from agricultural remote sensing big data. In the model validation stage, four metrics were used for evaluation: accuracy, recall, F1 score, and the confusion matrix (Chicco and Jurman, 2020; Powers, 2020; Wen et al., 2022). The running time of models built with the various methods was also measured to select the most accurate and efficient model. The formulas used are as follows:

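$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

where TP, FP, FN, and TN indicate true positive, false positive, false negative, and true negative cases, respectively. P and R represent precision and recall, respectively.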
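As a worked illustration of these metrics, the sketch below derives overall accuracy and per-class precision, recall, and F1 from a multi-class confusion matrix using the standard definitions. The function name and the 3-class matrix are made up for the example and are not results from this study.

```python
def per_class_metrics(cm):
    """cm[t][p] = number of samples of true class t predicted as class p."""
    n = len(cm)
    total = sum(map(sum, cm))
    accuracy = sum(cm[k][k] for k in range(n)) / total
    report = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                         # class k missed
        fp = sum(cm[t][k] for t in range(n)) - tp    # others predicted as k
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        report.append({"precision": p, "recall": r, "f1": f1})
    return accuracy, report

# Made-up confusion matrix for three classes (rows: true, columns: predicted).
toy_cm = [[8, 2, 0],
          [1, 9, 0],
          [0, 0, 10]]
accuracy, report = per_class_metrics(toy_cm)
```

For the first class of the toy matrix, TP = 8, FN = 2, and FP = 1, so recall is 0.8 and precision is 8/9.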

In this study, models were developed for the six types of plots using four different feature sets: color vegetation index features, color vegetation index + texture features, color + activation layer L08 features (L08CON), and color + ResNet50 features (2048 features). To limit model overfitting, the dataset was divided into training and test sets in a 5:5 ratio. SHapley Additive exPlanation (SHAP) values were calculated for the test set. SHAP is a method used to explain machine learning model predictions. It is based on Shapley values from game theory. It can analyze the importance of each feature in the model and quantify the contribution of each feature to the model's prediction for individual instances (Dikshit and Pradhan, 2021).
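To make the game-theoretic idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny toy value function. It is the textbook definition, not the `shap` library and not the authors' pipeline; the feature names are borrowed from the text, but the scores in `toy_value` are invented purely for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(features, value):
    """Exact Shapley values; value(frozenset) -> model output for a coalition."""
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

def toy_value(coalition):
    """Invented score: two additive effects plus one pairwise interaction."""
    score = 0.0
    if "ExG" in coalition:
        score += 2.0
    if "CON" in coalition:
        score += 1.0
    if "ExG" in coalition and "L08CON" in coalition:
        score += 0.5
    return score

names = ["ExG", "CON", "L08CON"]
phi = shapley_values(names, toy_value)
```

The interaction term is shared equally between the two features involved, and the values sum to the difference between the full-coalition score and the empty-coalition score. Exact enumeration grows exponentially with the number of features, which is why practical SHAP implementations rely on model-specific or sampling-based approximations.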

In the preliminary experiment (Table A.1 of Appendix A), image features correlated with the six types of plots were initially screened. A Pearson’s correlation analysis was then conducted on color and texture features commonly used in agricultural research to identify and filter out redundant features, thereby simplifying subsequent data processing and modeling. The analysis results, shown as a heatmap (Figure 4), revealed a high correlation (correlation coefficient of 0.94) between EXGR and NGBDI. Additionally, the correlation coefficient between RGBVI and MGRVI was 0.94. These high correlations suggest that, while these features may play an important role in monitoring vegetation growth and health, the features in each pair provide similar information, so only one feature per pair needs to be retained during data simplification and model building. In subsequent analyses, a representative feature was selected from each highly correlated pair to reduce model complexity and prevent multicollinearity issues.
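The redundancy screening described above can be sketched as a greedy filter on Pearson's correlation. This is an illustrative sketch only: the 0.9 threshold, the function names, and the feature values are assumptions (the text reports coefficients of 0.94 but does not state a cut-off), and keeping the first feature of each correlated pair is just one possible way of choosing the representative.

```python
from math import sqrt

def pearson(x, y):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0

def drop_redundant(columns, threshold=0.9):
    """Keep a feature only if |r| with every already-kept feature <= threshold."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept

# Made-up feature values for five images (not data from the study).
columns = {
    "EXGR": [1.0, 2.0, 3.0, 4.0, 5.0],
    "NGBDI": [2.1, 3.9, 6.2, 8.0, 9.9],   # nearly a linear copy of EXGR
    "CON": [5.0, 1.0, 4.0, 2.0, 3.0],
}
kept = drop_redundant(columns)
```

With these toy values the second feature is dropped because it is almost perfectly correlated with the first, while the weakly correlated third feature is retained.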

Figure 5 shows the distributions of the six features selected in the previous section across the six types of plots. CON shows a significant overlap between categories V and VI. The overlap indicates that the two categories are similar in the CON feature, making CON unsuitable for distinguishing between them. However, the CON values for categories I and II were relatively dispersed. The CON values show significant differences for categories III, IV, and V. The lower CON value for category IV might help distinguish it from other categories. ExG values were significantly high in category I, clearly distinguishing it from other categories. However, there were many overlapping areas for categories IV, V, and VI, making ExG prone to errors when used to classify these three types of plots. ExR exhibited the opposite pattern to ExG, but the difference between categories IV and V was significant, which can be used to improve the classification of these plots. The overall performance of INT was not as good as that of the previous three features: it showed significant differences between categories I and II but exhibited larger overlapping areas in the later categories. MGRVI showed high values only in category I, with varying degrees of overlap among the other five types of plots. RGBVI values for categories I and II were significantly higher than those for the other categories. However, considerable overlap was observed among the remaining four types of plots, especially between categories III and IV.

In summary, the six features show significant differences across the six types of plots. Specifically, categories I and II exhibited significant differences, making them easier to identify through color and texture features. Category III exhibited high texture features but small differences in color features compared with the other categories. Distinguishing categories IV, V, and VI was more challenging due to their small differences in both color and texture features.

To further improve the accuracy of plot classification, particularly for plots with similar color and texture features, we used ResNet50 to extract convolutional features (the mean and variance of the features extracted by the convolutional network) from images of the six types of plots. We also analyzed the 64 activation layers of ResNet50 and selected the L02, L08, L23, L36, L55, and L64 activation layers, which showed significant differences among the six types of plots, for further analysis and screening. The activation layers of the six types of plots are shown in Figure 6A. The activation layer images clearly distinguish changes in the surface structure of the plots, which is beneficial for plot differentiation. Further analysis of the contrast of the six activation layer images showed that the activation layer features of the six types of plots had significant differences compared with their color and texture features. As shown in Figure 6B, except for L23CON, significant differences were observed in the activation layer features of category V and VI plots. The observed difference can mitigate the difficulty in classifying these two types of plots using color and texture. Additionally, slight differences were observed among categories II, IV, V, and VI in L02CON, with some overlap with category IV. Moreover, slight differences were observed between categories I and II in L36CON. L55CON was similar to L02CON, with slight differences observed between categories V and VI, but with a larger overlap than L02CON. L64CON exhibited overall differences among the six types of plots, with some overlap observed only between categories III and V and between categories II and VI. In L08CON, differences were observed among the six types of plots with minimal overlap, except for small overlaps between categories II, III, and IV. Therefore, this feature was selected to establish the plot classification model.

Figure 6. Partial RGB image of six types of fields visualized with the eighth layer of features of the activation layer. (A) Presents RGB images alongside six classification images derived from activation layers L02, L08, L23, L36, L55, and L64 using the ResNet50 algorithm; (B) demonstrates the effectiveness of contrast features from the activation layers in field classification applications.

Principal Component Analysis (PCA) was conducted using color indices, combined color and texture indices, and convolutional features to examine the differences among data groups across six field categories (Figure 7). For the PCA based on color features, there was limited overlap between category I and the other categories, whereas significant overlap was observed among the remaining categories. This suggests that individual color indices exhibit limited discriminatory power. Additionally, the variance explained by the first two principal components was below 70%, indicating that the information is distributed across multiple components. When texture features were incorporated, the PCA results demonstrated improved category separation compared to those based solely on color features. Specifically, the boundary between category I and category IV became more distinct, and categories V and VI exhibited clearer clustering patterns. These improvements highlight the substantial contribution of texture features to the principal components, with the explained variance exceeding 80%. In contrast, convolutional features extracted using ResNet50 displayed a different pattern compared to color and texture features. Category III fields were distinctly separated from the others, and the boundary between categories V and VI was well-defined. However, performance for categories I and II was comparatively weaker. Notably, ResNet50 achieved an explained variance of 90–95%, reflecting the high concentration of inter-category differences in the low-dimensional space. The PCA findings further suggest that while color features are effective for rapid preliminary classification, auxiliary features may be necessary to distinguish complex or highly similar categories. Texture features contributed additional spatial information, enhancing the separation of categories with similar colors. 
Meanwhile, convolutional features provided information distinct from both color and texture, enabling effective differentiation among categories.

An RF classification model and different feature combinations were used to classify the plots. The confusion matrix is shown in Figure 8. When only color features were used for classification, both the overall misidentification and omission values were high, with significant errors. When color and texture were combined, the recognition accuracy improved, especially in reducing the misidentification and omission of category V plots. The introduction of ResNet50 features (2048 features) or L08CON features significantly improved accuracy, particularly in addressing the misidentification and omission issues between category V and VI plots, with L08CON performing better than ResNet50.

Further analysis of the overall accuracy, recall, F1 score, and run time for different combinations, as shown in Table 2, reveals that the overall accuracy is consistent with the confusion matrix analysis results. Both Color+L08CON and Color+ResNet50 achieved an accuracy of over 98%. The addition of texture on the basis of Color+L08CON did not significantly improve classification accuracy. In terms of classification time per image, the color feature extraction time was the shortest, at 0.19 s. The ResNet50 feature extraction time was the longest, exceeding 6 s; the texture feature extraction time was relatively long, exceeding 4 s. The L08CON extraction time was approximately 1.20 s. Although the Color+Texture+L08CON combination achieved the highest accuracy, its feature extraction time was relatively long, which is not conducive to real-time plot detection.

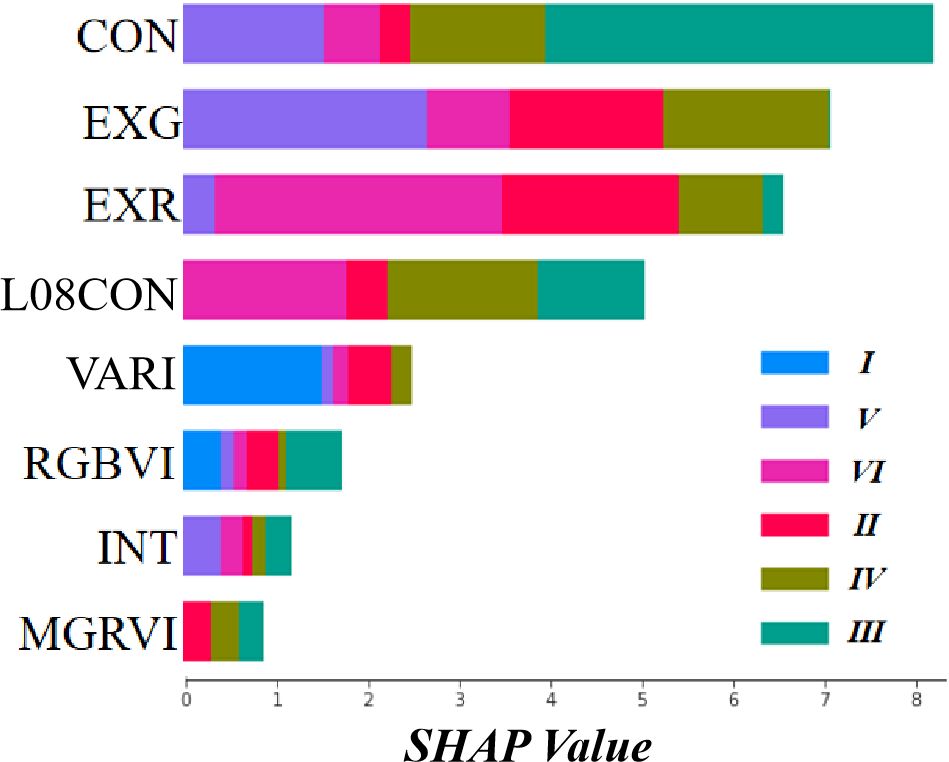

SHAP values were calculated on the test set for each feature in the RF classification models built with the four methods and were then visualized (Figure 9). The SHAP values reveal that the texture feature CON, the color features ExG and ExR, and L08CON contribute the most to the model. The features VARI, RGBVI, INT, and MGRVI have a smaller impact, while the contributions of the remaining features are even smaller and are not listed here.

Figure 9. Visualization of SHAP values for each group of features. In the legend, (I)–(VI) represent the six different plot types: immature rice (I), harvestable rice (II), harvested rice (III), ploughed land (IV), rotary tillage land (V), and sown wheat (VI). The colored rectangles indicate their respective contribution proportions in the model, with longer rectangles signifying greater contributions.

This research integrated UAV imaging technology with diverse feature extraction and classification methods to achieve precise monitoring of agricultural activities in rice-wheat rotation areas. The results showed that different image features have unique strengths and limitations in field classification. Initially, the feature correlation analysis demonstrated that several color and texture features (e.g., EXGR and NGBDI, RGBVI and MGRVI) showed strong correlations. This observation implies that redundant features were excluded during data simplification and modeling processes to reduce the complexity of the model, aligning with methodologies reported in previous studies (Mutlag et al., 2020; Xie and Yang, 2020). The retention of only one representative feature after screening improved the computational efficiency and the stability of the model. In analyzing common color vegetation index features in fields, although color features showed significant differences between certain categories, their effectiveness was limited in distinguishing similar categories (e.g., IV, V, and VI). These results reveal the issue that solely relying on a single type of feature in field classification may lead to classification inaccuracy, especially for post-rice harvest field images. To address this issue, in this study, we further introduced deep learning features. The convolutional features and activation layer features extracted with ResNet50 significantly improved the classification accuracy, especially in distinguishing categories with similar color and texture features (e.g., V and VI). The results showed that convolutional features had significant advantages in capturing surface structure changes in fields, compensating for the shortcomings of traditional color and texture features in distinguishing certain categories. PCA further confirmed the effectiveness of feature combinations. 
Although color features are suitable for rapid preliminary classification, texture features and convolutional features are needed to distinguish complex or similar categories. Convolutional features provided complementary information to color and texture features in classification, effectively enhancing the separation of different categories. The final classification results showed that the integrated model using color, texture, and convolutional features (e.g., Color+L08CON and Color+ResNet50) achieved an accuracy of over 98%, significantly higher than the classification results of single features. Although the Color+Texture+L08CON combination achieved the highest accuracy, its feature extraction time was relatively long, making it unsuitable for real-time field detection. Therefore, in practical applications, a balance must be established between classification accuracy and processing time.

This study evaluated and compared the classification performance of the widely used deep learning algorithm LeNet-5 on field plots (Figure 10). The findings revealed that, following extensive training, LeNet-5 demonstrated satisfactory classification accuracy, particularly for categories II, III, and IV, yielding results comparable to those of the method proposed in this study. However, for categories I and V, LeNet-5 exhibited significantly lower accuracy compared to the method introduced here. Deep learning models such as LeNet-5 demand extensive initial training, with their accuracy being heavily dependent on the diversity and comprehensiveness of the dataset. Consequently, achieving high-precision classification necessitates large and diverse training datasets, which can present practical challenges in terms of data collection and annotation. Furthermore, in terms of processing speed, LeNet-5 required approximately 3 seconds longer per image than the method employed in this study, potentially limiting its applicability for real-time detection in large-scale field monitoring. While LeNet-5 performed well in identifying certain categories, its elevated training and runtime requirements pose challenges. In contrast, the method proposed in this study offers a more balanced and efficient approach, optimizing classification performance, training effort, and runtime efficiency. Therefore, for practical applications, the method presented here ensures robust classification accuracy while maintaining superior real-time performance and operational feasibility. Future research could focus on further optimizing deep learning models and exploring their integration into agricultural progress monitoring within rice-wheat rotation systems. This effort could involve combining deep learning approaches with traditional image processing techniques to achieve enhanced efficiency in agricultural monitoring.

The method used in this study shares similarities with traditional remote sensing approaches to land type classification but also differs from them in significant ways. Traditional remote sensing extracts spectral, texture, and shape features of land cover types from satellite or UAV images and then applies classification algorithms to separate different land types (Al-Najjar et al., 2019). Similarly, the proposed method relies on high-resolution image data, extracts color, texture, and convolutional features, and uses an RF classification model for field classification. Both approaches essentially distinguish land cover types by analyzing the features of image data (Bai et al., 2021). However, because agricultural operations follow a tight schedule and the differences between arable land types are slight, traditional remote sensing classification alone is not ideal for agricultural progress classification. This study focused on real-time monitoring of agricultural progress rather than on static classification of land cover types. Traditional remote sensing research mostly targets large-scale land use and cover change monitoring, with low temporal resolution (Wang et al., 2022). In contrast, this study used UAV images acquired at high frequency, enabling monitoring at high temporal resolution and timely management of agricultural activities. A distinctive feature of this study is the use of convolutional features. Convolutional features extracted with deep learning models (such as ResNet50) proved more effective at distinguishing subtle changes within the same land cover type. This allowed the study to accurately differentiate subtle agricultural changes, such as differences in ploughing, tilling, and seeding land types, where traditional remote sensing classification methods are limited. Convolutional features provide richer spatial information and capture small changes in field surface structure, thus improving classification accuracy. In short, the study inherits classic remote sensing methods for land classification but improves real-time performance and classification accuracy by introducing UAV and deep learning technology, making it particularly suitable for agricultural progress monitoring in rice-wheat rotation areas.

This study achieved precise monitoring of agricultural progress in rice-wheat rotation areas by integrating UAV imaging technology with various feature extraction and classification methods. The findings demonstrate that different image features offer distinct advantages in plot classification. Combining color and texture features with convolutional features extracted through deep learning significantly improved classification accuracy. Integrated multi-feature models (such as Color+L08CON and Color+ResNet50) achieved an accuracy exceeding 98%, significantly reducing overall misclassification and omission rates compared to methods relying on a single feature. Specifically, the Color+L08CON model attained an accuracy of 98.76%, while the model using only color features achieved an accuracy of 80.54%. In terms of processing time for a single image, color feature extraction was the fastest at 0.19 seconds, followed by Color+L08CON at 1.25 seconds, whereas ResNet50 feature extraction took the longest, exceeding 6 seconds. The proposed Color+L08CON model therefore combined high accuracy with a short processing time per image, meeting the requirements for real-time land type detection. Overall, this study demonstrated that combining UAV imaging with multiple feature extraction and classification methods enables efficient and accurate monitoring of agricultural progress in rice-wheat rotation areas. By adjusting model parameters and expanding training datasets, this method can be adapted to complex field environments and diverse crop planting patterns, offering reliable technological support for precision agricultural management. Future research should focus on optimizing feature extraction and classification algorithms to enhance real-time monitoring efficiency and accuracy.
Additionally, integrating other remote sensing data and ground sensors would enable the development of a more comprehensive monitoring system, supporting scientific agricultural management and sustainable development.
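
For readers who wish to reproduce the accuracy, misclassification, and omission figures on their own plots, the following Python sketch shows one common way to derive them from a confusion matrix. It assumes that "misclassification" and "omission" correspond to the commission and omission errors used in remote sensing accuracy assessment, which the text does not state explicitly. The counts are hypothetical and are not results from this study; the function names are illustrative only.

```python
from typing import Dict, List

CLASSES = ["I", "II", "III", "IV", "V"]

# HYPOTHETICAL counts for illustration only, not results from the article.
# Rows are true categories, columns are predicted categories.
CONFUSION = [
    [18, 2, 0, 0, 0],
    [1, 19, 0, 0, 0],
    [0, 0, 20, 0, 0],
    [0, 0, 1, 19, 0],
    [0, 0, 0, 2, 18],
]


def overall_accuracy(matrix: List[List[int]]) -> float:
    """Fraction of all plots that were assigned to their true category."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def omission_rates(matrix: List[List[int]],
                   labels: List[str]) -> Dict[str, float]:
    """Share of each true category that was assigned to another category."""
    rates = {}
    for i, label in enumerate(labels):
        row_total = sum(matrix[i])
        missed = row_total - matrix[i][i]
        rates[label] = missed / row_total if row_total else 0.0
    return rates


def commission_rates(matrix: List[List[int]],
                     labels: List[str]) -> Dict[str, float]:
    """Share of each predicted category that truly belongs elsewhere."""
    rates = {}
    for j, label in enumerate(labels):
        col_total = sum(row[j] for row in matrix)
        wrong = col_total - matrix[j][j]
        rates[label] = wrong / col_total if col_total else 0.0
    return rates


if __name__ == "__main__":
    print("overall accuracy:", overall_accuracy(CONFUSION))
    print("omission:", omission_rates(CONFUSION, CLASSES))
    print("commission:", commission_rates(CONFUSION, CLASSES))
```

Reporting the per-category rates alongside overall accuracy makes it visible when a model performs well on average but poorly on individual categories.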

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

JLW: Formal analysis, Investigation, Software, Writing – original draft. CC: Funding acquisition, Resources, Writing – original draft. SH: Data curation, Investigation, Methodology, Writing – original draft. HW: Data curation, Investigation, Methodology, Writing – original draft. YZ: Conceptualization, Data curation, Formal analysis, Writing – original draft. JCW: Data curation, Methodology, Writing – original draft. ZY: Formal analysis, Supervision, Writing – original draft. CS: Supervision, Validation, Writing – original draft. TL: Software, Supervision, Validation, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was supported by the National Natural Science Foundation of China (32172110), the Key Research and Development Program (Modern Agriculture) of Jiangsu Province (BE2022342-2, BE2020319), the Central Public-interest Scientific Institution Basal Research Fund (JBYW-AII-2023-08), the Special Fund for Independent Innovation of Agriculture Science and Technology in Jiangsu, China (CX(22)3112), and the Postgraduate Research & Practice Innovation Program of Jiangsu Province (SJCX23_1973).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer SL declared a past co-authorship with the author JLW to the handling editor.

The author(s) declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2024.1502863/full#supplementary-material

Ahmad, I. S., Reid, J. F. (1996). Evaluation of colour representations for maize images. J. Agric. Eng. Res. 63, 185–195. doi: 10.1006/jaer.1996.0020

Al-Najjar, H. A., Kalantar, B., Pradhan, B., Saeidi, V., Halin, A. A., Ueda, N., et al. (2019). Land cover classification from fused DSM and UAV images using convolutional neural networks. Remote Sens. 11, 1461. doi: 10.3390/rs11121461

Bai, Y., Sun, G., Li, Y., Ma, P., Li, G., Zhang, Y. (2021). Comprehensively analyzing optical and polarimetric SAR features for land-use/land-cover classification and urban vegetation extraction in highly-dense urban area. Int. J. Appl. Earth Observ. Geoinform. 103, 102496. doi: 10.1016/j.jag.2021.102496

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., et al. (2015). Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Observ. Geoinform. 39, 79–87. doi: 10.1016/j.jag.2015.02.012

Cao, X., Luo, Y., Zhou, Y., Duan, X., Cheng, D. (2013). Detection of powdery mildew in two winter wheat cultivars using canopy hyperspectral reflectance. Crop Prot. 45, 124–131. doi: 10.1016/j.cropro.2012.12.002

Chicco, D., Jurman, G. (2020). The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics 21, 1–13. doi: 10.1186/s12864-019-6413-7

Colomina, I., Molina, P. (2014). Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 92, 79–97. doi: 10.1016/j.isprsjprs.2014.02.013

Dikshit, A., Pradhan, B. (2021). Interpretable and explainable AI (XAI) model for spatial drought prediction. Sci. Total Environ. 801, 149797. doi: 10.1016/j.scitotenv.2021.149797

Du, R., Xiang, Y., Zhang, F., Chen, J., Shi, H., Liu, H., et al. (2024). Combing transfer learning with the OPtical TRApezoid Model (OPTRAM) to diagnosis small-scale field soil moisture from hyperspectral data. Agric. Water Manage. 298, 108856. doi: 10.1016/j.agwat.2024.108856

Fuentes, A., Yoon, S., Kim, S. C., Park, D. S. (2017). A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 17, 2022. doi: 10.3390/s17092022

Garcia-Ruiz, F., Sankaran, S., Maja, J. M., Lee, W. S., Rasmussen, J., Ehsani, R. (2013). Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 91, 106–115. doi: 10.1016/j.compag.2012.12.002

Gitelson, A. A., Kaufman, Y. J., Stark, R., Rundquist, D. (2002). Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 80, 76–87. doi: 10.1016/S0034-4257(01)00289-9

Hu, Y., Tang, H., Pan, G. (2021). Spiking deep residual networks. IEEE Trans. Neural Networks Learn. Syst. 34, 5200–5205. doi: 10.1109/TNNLS.2021.3119238

Hunt, E. R., Jr., Hively, W. D., Fujikawa, S. J., Linden, D. S., Daughtry, C. S., McCarty, G. W. (2010). Acquisition of NIR-green-blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2, 290–305. doi: 10.3390/rs2010290

Jannoura, R., Brinkmann, K., Uteau, D., Bruns, C., Joergensen, R. G. (2015). Monitoring of crop biomass using true colour aerial photographs taken from a remote controlled hexacopter. Biosyst. Eng. 129, 341–351. doi: 10.1016/j.biosystemseng.2014.11.007

Kamilaris, A., Prenafeta-Boldú, F. X. (2018). Deep learning in agriculture: A survey. Comput. Electron. Agric. 147, 70–90. doi: 10.1016/j.compag.2018.02.016

Kerkech, M., Hafiane, A., Canals, R. (2018). Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 155, 237–243. doi: 10.1016/j.compag.2018.10.006

Khormizi, H. Z., Malamiri, H. R. G., Ferreira, C. S. S. (2024). Estimation of evaporation and drought stress of pistachio plant using UAV multispectral images and a surface energy balance approach. Horticulturae 10, 515. doi: 10.3390/horticulturae10050515

Li, C., Li, H., Li, J., Lei, Y., Li, C., Manevski, K., et al. (2019). Using NDVI percentiles to monitor real-time crop growth. Comput. Electron. Agric. 162, 357–363. doi: 10.1016/j.compag.2019.04.026

Li, J., Chen, L., Zhang, C., Ma, D., Zhou, G., Ning, Q., et al. (2024). Combining rotary and deep tillage increases crop yields by improving the soil physical structure and accumulating organic carbon of subsoil. Soil Tillage Res. 244, 106252. doi: 10.1016/j.still.2024.106252

Liu, H., Sun, H., Li, M., Iida, M. (2020). Application of color featuring and deep learning in maize plant detection. Remote Sens. 12, 2229. doi: 10.3390/rs12142229

Liu, T., Wang, J., Wang, J., Zhao, Y., Wang, H., Zhang, W., et al. (2024). Research on the estimation of wheat AGB at the entire growth stage based on improved convolutional features. J. Integr. Agric. doi: 10.1016/j.jia.2024.07.015

Lu, D., Ye, J., Wang, Y., Yu, Z. (2023). Plant detection and counting: Enhancing precision agriculture in UAV and general scenes. IEEE Access. 11, 116196–116205. doi: 10.1109/ACCESS.2023.3325747

Mulla, D. J. (2013). Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 114, 358–371. doi: 10.1016/j.biosystemseng.2012.08.009

Mutlag, W. K., Ali, S. K., Aydam, Z. M., Taher, B. H. (2020). “Feature extraction methods: a review,” in Journal of Physics: Conference Series 1591, 012028.

Najafi, P., Eftekhari, A., Sharifi, A. (2023). Evaluation of time-series Sentinel-2 images for early estimation of rice yields in south-west of Iran. Aircraft Eng. Aerospace Technol. 95, 741–748. doi: 10.1108/AEAT-06-2022-0171

Nie, S., Wang, C., Dong, P., Xi, X., Luo, S., Zhou, H. (2016). Estimating leaf area index of maize using airborne discrete-return LiDAR data. IEEE J. Selected Topics Appl. Earth Observ. Remote Sens. 9, 3259–3266. doi: 10.1109/JSTARS.2016.2554619

Powers, D. M. (2020). Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv. 2011, 37–63. doi: 10.48550/arXiv.2010.16061

Qi, H., Wu, Z., Zhang, L., Li, J., Zhou, J., Jun, Z., et al. (2021). Monitoring of peanut leaves chlorophyll content based on drone-based multispectral image feature extraction. Comput. Electron. Agric. 187, 106292. doi: 10.1016/j.compag.2021.106292

Shabbir, A., Ali, N., Ahmed, J., Zafar, B., Rasheed, A., Sajid, M., et al. (2021). Satellite and scene image classification based on transfer learning and fine tuning of ResNet50. Math. Problems Eng. 2021, 1–18. doi: 10.1155/2021/5843816

Su, J., Liu, C., Hu, X., Xu, X., Guo, L., Chen, W.-H. (2019). Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 167, 105035. doi: 10.1016/j.compag.2019.105035

Timsina, J., Connor, D. J. (2001). Productivity and management of rice–wheat cropping systems: issues and challenges. Field Crops Res. 69, 93–132. doi: 10.1016/S0378-4290(00)00143-X

Tong, X.-Y., Xia, G.-S., Lu, Q., Shen, H., Li, S., You, S., et al. (2020). Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 237, 111322. doi: 10.1016/j.rse.2019.111322

Torres-Sánchez, J., Peña, J. M., de Castro, A. I., López-Granados, F. (2014). Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 103, 104–113. doi: 10.1016/j.compag.2014.02.009

Wang, J., Bretz, M., Dewan, M. A. A., Delavar, M. A. (2022). Machine learning in modelling land-use and land cover-change (LULCC): Current status, challenges and prospects. Sci. Total Environ. 822, 153559. doi: 10.1016/j.scitotenv.2022.153559

Wang, X., Yan, S., Wang, W., Liubing, Y., Li, M., Yu, Z., et al. (2023). Monitoring leaf area index of the sown mixture pasture through UAV multispectral image and texture characteristics. Comput. Electron. Agric. 214, 108333. doi: 10.1016/j.compag.2023.108333

Wen, X., Jaxa-Rozen, M., Trutnevyte, E. (2022). Accuracy indicators for evaluating retrospective performance of energy system models. Appl. Energy 325, 119906. doi: 10.1016/j.apenergy.2022.119906

Xie, C., Yang, C. (2020). A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 178, 105731. doi: 10.1016/j.compag.2020.105731

Zhang, C., Kovacs, J. M. (2012). The application of small unmanned aerial systems for precision agriculture: a review. Precis. Agric. 13, 693–712. doi: 10.1007/s11119-012-9274-5

Zhang, L., Zhang, F., Zhang, K., Liao, P., Xu, Q. (2024). Effect of agricultural management practices on rice yield and greenhouse gas emissions in the rice–wheat rotation system in China. Sci. Total Environ. 916, 170307. doi: 10.1016/j.scitotenv.2024.170307

Zhang, W., Yu, Q., Tang, H., Liu, J., Wu, W. (2024). Conservation tillage mapping and monitoring using remote sensing. Comput. Electron. Agric. 218, 108705. doi: 10.1016/j.compag.2024.108705

Zhang, X., Zhang, F., Qi, Y., Deng, L., Wang, X., Yang, S. (2019). New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Observ. Geoinform. 78, 215–226. doi: 10.1016/j.jag.2019.01.001

Zhao, R., Tang, W., An, L., Qiao, L., Wang, N., Sun, H., et al. (2023). Solar-induced chlorophyll fluorescence extraction based on heterogeneous light distribution for improving in-situ chlorophyll content estimation. Comput. Electron. Agric. 215, 108405. doi: 10.1016/j.compag.2023.108405

Keywords: UAV image, agricultural progress, deep learning, rice-wheat rotation, classification

Citation: Wang J, Chen C, Huang S, Wang H, Zhao Y, Wang J, Yao Z, Sun C and Liu T (2025) Monitoring of agricultural progress in rice-wheat rotation area based on UAV RGB images. Front. Plant Sci. 15:1502863. doi: 10.3389/fpls.2024.1502863

Received: 27 September 2024; Accepted: 17 December 2024; Published: 09 January 2025.

Edited by:

Muhammad Fazal Ijaz, Melbourne Institute of Technology, Australia

Reviewed by:

Haikuan Feng, Beijing Research Center for Information Technology in Agriculture, China

Copyright © 2025 Wang, Chen, Huang, Wang, Zhao, Wang, Yao, Sun and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tao Liu, tliu@yzu.edu.cn

†These authors have contributed equally to this work
