- ¹Department of Electronics and Communications Engineering, Annamacharya Institute of Technology and Sciences (Autonomous), Rajampet, Andhra Pradesh, India

- ²Department of Computer Science, College of Science & Arts, Tanumah, King Khalid University, Abha, Saudi Arabia

- ³Department of Information Systems, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia

- ⁴Department of Information Technology, College of Computer and Information Sciences, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, Saudi Arabia

Lung cancer remains a critical health concern, and its global prevalence continues to rise. Despite advances in screening programs, patient selection and risk stratification still pose significant challenges. This study addresses the pressing need for early detection with a diagnostic approach built on image processing techniques. While computed tomography (CT) surpasses traditional X-ray methods, a comprehensive diagnosis requires a combination of imaging techniques. The methodology begins with histogram equalization, a crucial step that reduces artifacts in CT images sourced from a medical database. Accurate lung CT image segmentation, which is vital for cancer diagnosis, follows: Otsu thresholding, refined by Colliding Bodies Optimization (CBO), improves the precision of the segmentation process. A local binary pattern (LBP) is then used for feature extraction, enabling the identification of nodule sizes and precise locations. The resulting image is classified with the densely connected CNN (DenseNet) deep learning algorithm, which distinguishes between benign and malignant tumors. The proposed CBO+DenseNet CNN improves on traditional methods, with an accuracy of 98.17%, a specificity of 97.32%, a precision of 97.46%, and a recall of 97.89%, as evidenced by the results from the fractional randomized voting model (FRVM). These findings highlight the potential of the proposed model as an advanced diagnostic tool whose improved metrics promise more accurate tumor classification and localization.
The proposed model uniquely combines Colliding Bodies Optimization (CBO) with DenseNet CNN, enhancing segmentation and classification accuracy for lung cancer detection, setting it apart from traditional methods with superior performance metrics.

1 Introduction

Medical applications increasingly depend on biomedical imaging technologies, including ultrasound, MRI, CT, X-ray, SPECT, and PET, to reveal information that is imperceptible to the unaided eye, thereby assisting diagnosis and the formulation of treatment strategies. These imaging technologies offer an essential view of the body's interior structures, enabling physicians to detect anomalies such as tumors, evaluate their dimensions, volume, and vascular properties, and base clinical judgments on precise images (1, 2). Nevertheless, the growing dependence on diagnostic imaging has increased medical expenses and exposure to ionizing radiation, underscoring the need for more effective and safer diagnostic methods (1).

Like biomedical signal processing, biomedical image processing enhances and presents images such as X-ray, MRI, and CT scans, which are crucial for precise diagnosis (2). In lung cancer, the unregulated proliferation of lung nodules and the subsequent cellular injury can lead to the development of malignant tumors, which are the primary cause of cancer-related deaths in both males and females (3–5). Tumors in lung cancer can be classified as either benign (non-metastasizing and less harmful) or malignant (more aggressive and life-threatening). Given the high rates of death and recurrence associated with lung cancer, its treatment is frequently burdensome and expensive, emphasizing the urgent need for dependable and timely detection techniques.

Notwithstanding notable progress in imaging technology, there is still a dearth of efficient and easily available diagnostic methods for the early diagnosis of lung cancer. Approximately 80% of individuals with lung cancer receive a diagnosis at intermediate or advanced stages, mostly as a result of delays or uncertainty in the diagnosis process (6). Early and precise identification is essential for enhancing the survival rate of individuals with lung cancer, since it enables prompt intervention and therapy (6). Computer-Aided Diagnosis (CAD) systems have demonstrated potential in enhancing the identification of lung nodules during CT scans by offering automated assistance that significantly improves the precision and efficiency of image analysis (7–10).

Recent advancements in deep learning, particularly Convolutional Neural Networks (CNNs), have become potent instruments for the automated detection of diseases, including lung cancer. These methods have demonstrated significant efficacy in differentiating between malignant and benign lung tumors by using extensive datasets to train models that enhance diagnostic accuracy. Nevertheless, significant obstacles persist in standardizing these technologies across platforms and in guaranteeing the reproducibility of results, owing to differences in the software and methodology employed by various research teams.

To improve early detection and patient outcomes, this work introduces a novel approach that uses a densely connected Convolutional Neural Network (CNN) to accurately distinguish between malignant and benign lung tumors, thereby addressing the global increase in lung cancer incidence and mortality.

The research contributions of this paper include the following:

● Enhanced Diagnostic Accuracy: The integration of Colliding Bodies Optimization (CBO) and DenseNet CNN significantly increased diagnostic accuracy, ensuring more reliable and precise classification of lung tumors.

● Improved Specificity and Precision: The methodology demonstrated enhanced specificity and precision, which are critical for correctly recognizing true-negative cases, accurately identifying malignant tumors, and minimizing false positives.

● Elevated Recall Rates: The proposed model excels in recognizing and recalling instances of lung cancer, demonstrating its effectiveness in identifying malignancies, a crucial aspect for early intervention and treatment.

● Artifact Reduction through Histogram Equalization: By effectively reducing noise and artifacts in CT images, histogram equalization contributes to clearer and more accurate image data, laying the foundation for improved subsequent analysis and diagnosis.

● Precise Tumor Localization with LBP: LBP plays a pivotal role in precisely localizing tumors by extracting key features, aiding in determining the size and exact location of nodules. This contributes to accurate diagnosis and subsequent treatment planning.

● Optimized Segmentation Using CBO: CBO optimizes the segmentation process, ensuring a finer delineation of lung structures. This optimization contributes to more accurate and reliable identification of tumor boundaries.

● Advanced Classification with DenseNet CNN: The DenseNet CNN model enhances the classification stage, effectively distinguishing between benign and malignant tumors. This contributes to improved diagnostic capabilities, aiding in timely and accurate treatment decisions.

● Real-time Results Transmission via the IoT Module: The integration of IoT technology facilitates the real-time transmission of diagnostic results to remotely connected devices. This contributes to swift collaboration among healthcare professionals and enables timely evaluation for further analysis.

The remainder of this paper is organized as follows: Section 2 presents the related work and contextual background. Section 3 describes the proposed methodology, including data acquisition, preprocessing, and model architecture. Section 4 details the experimental setup and results, followed by a discussion of the findings in Section 5. Finally, Section 6 concludes the paper with a summary of contributions and suggestions for future work.

2 Related work

Computer-aided diagnosis (CAD) technology is an important advancement in medical diagnosis that has become essential to the practical use of medical imaging. Tumors can be benign or malignant. Malignant tumors invade neighboring tissue, allowing lung cancer to spread to distant organs, impair their function, and disrupt the patient's life. Benign tumors can reach fairly large sizes, but once removed with care, they pose little concern to the patient. Physicians use CAD systems as a second opinion to reach an accurate diagnosis and to improve the efficacy of treatment. According to Sluimer et al. (11), the majority of the procedures are signal thresholding algorithms based on contrast information (12, 13).

Jinsa et al. (14) reported a computer-aided lung classification approach built on an artificial neural network that uses statistical characteristics as classification features. Although the approach produced favorable results, it was not adequate for the problem at hand: it cannot zero in on a specific location and can only be used to perform a comprehensive search. Hengyang Jiang et al. (15) provided various methods for preparing CT scan images of the lungs before feeding them to a CNN architecture. Although a multitude of image processing methods were investigated, the researchers used straightforward, traditional CNNs, and the reported metric values were insufficient. Senthil et al. (16) studied lung cancer estimation using neural networks with a set of optimal features and reported an accuracy of 91.5% for their neural network model. To enhance image quality, simple preprocessing procedures are used to reduce the artifacts produced during image acquisition. Additionally, the use of neural networks during the postprocessing stage yields favorable results and increases precision. Q. Zhang et al. (17) noted that many lives can be saved if lung cancer is discovered in its early stages, yet early diagnosis of lung cancer nodules by radiologists is a difficult, time-consuming, and repetitive task. In (18), the authors presented an automated system that estimates the presence of nodules and their locations. The model was validated with an accuracy of 99.01%. In (19), the author created an artificial neural network (ANN) model to identify lung cancer in the human body.
Lung cancer is diagnosed on the basis of various symptoms that affect the respiratory system, ranging from slight wheezing to shortness of breath, which cause discomfort and prevent the patient from leading an easy and relaxed life. Rohit Y. Bhalerao developed an image-based method for identifying lung cancer that uses convolutional deep neural networks, which were straightforward to comprehend but time-consuming (20). The technique described in (21) is intended to detect early-stage lung cancer in two stages.

CNNs can perform cancer subtyping, which includes the detection of genetic phenotypes and the corresponding targetable receptors of use (22, 23), and numerous consistent models have been developed and trained to carry out automated grading and stage assessments for better prediction and treatment (24–27). Consequently, CNN algorithms have become increasingly promising for image categorization, which inspired the study presented in this article.

For this purpose, many related experiments have been carried out recently. In 2024, Nair et al. reported a sensitivity of 99.6% and a specificity of 94.7458% for lung cancer detection using neural networks combined with a random forest classifier (28). Similarly, Kumar et al. achieved an accuracy of 99.44% using the ResNet-50 model (29). Ma et al. employed the V-net segmentation technique and obtained a sensitivity of 92.7% and an accuracy of 94.9% (30). An attention pyramid pooling network (APPN) constructed by Wang et al. exhibited a sensitivity of 87.59%, a specificity of 90.46%, and an overall accuracy of 88.47% (31). Mary et al. used a deep pyramidal residual network, which achieved an accuracy of 95.06% (32). Nevertheless, one limitation common to these studies is the wide variability in sensitivity and specificity, which leaves room to improve balanced performance across metrics.

In a 2023 study, Srija et al. combined a neural network with logistic machine learning and achieved an accuracy of 98.49% (33). Gugulothu et al. employed a hybrid differential evolution-based neural network with an accuracy of approximately 96.39%, a sensitivity of approximately 95.25%, and a specificity of approximately 96.12% (34). Asiya customized the VGG16 model and reached a sensitivity of up to 95% (35). Tandon et al. used CapsNet and VGG16 in combination, achieving sensitivity and specificity as high as 98.25% (36). However, complex models may overfit and therefore may not perform well on new datasets.

In 2024, Kumar et al. (29) proposed unified deep learning models that leverage ResNet-50–101 and EfficientNet-B3 for lung cancer prediction using DICOM images. Their approach demonstrated significant improvements in predictive accuracy, showing that integrating multiple deep learning architectures can enhance diagnostic performance. However, the study’s limitations include the reliance on a single dataset, which may affect the generalizability of the results to other populations or imaging modalities.

Similarly, Shalini et al. (37) developed a deep learning framework for lung cancer detection and recognition within an IoT environment. Their methodology highlighted the potential of deep learning in healthcare applications by utilizing various IoT-driven data sources to improve detection rates. While their results were promising, the study faced challenges related to the integration of diverse data sources and the need for extensive validation across different IoT platforms to ensure robustness and accuracy.

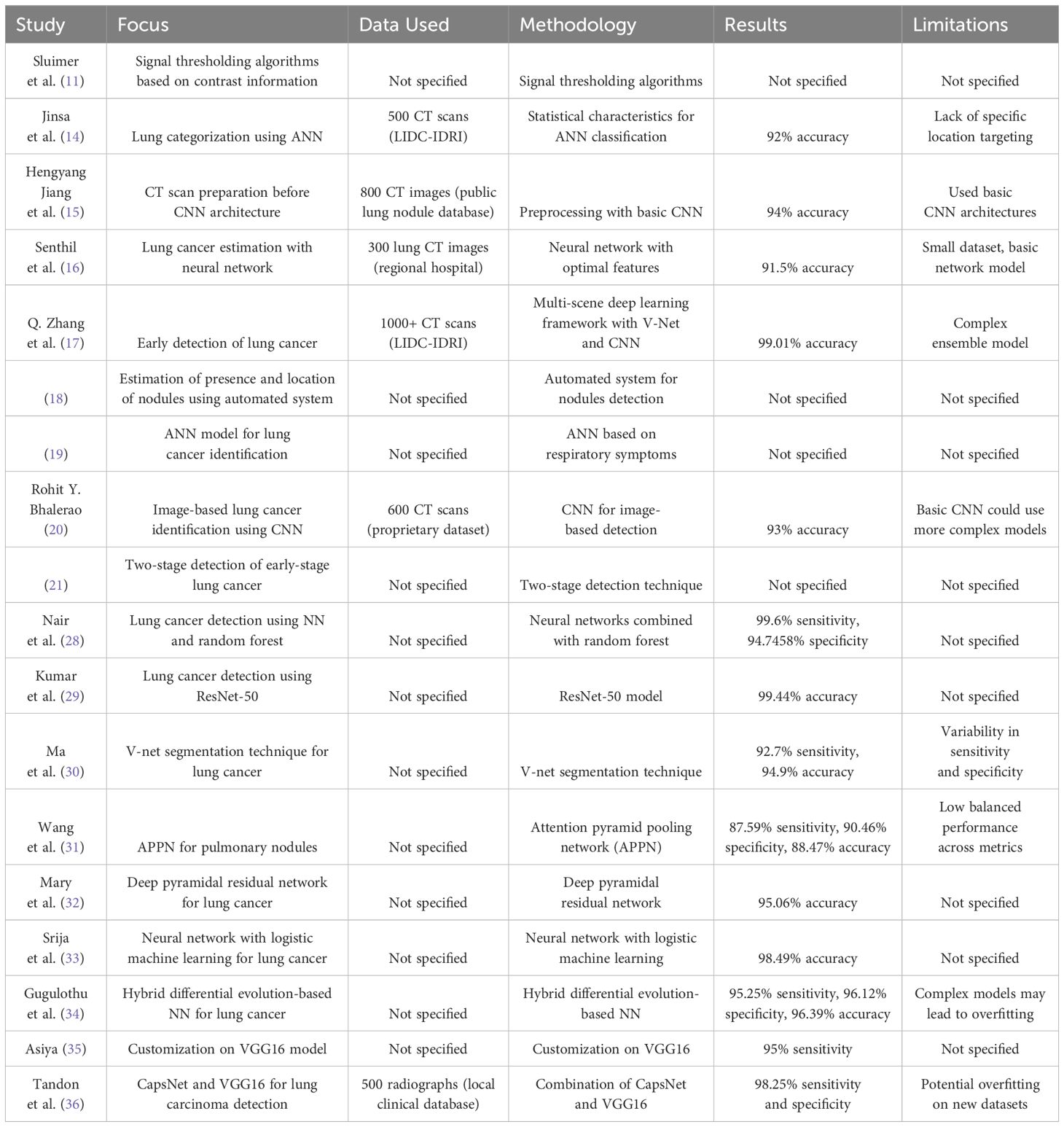

The literature reviewed above is summarized in Table 1, together with the methodologies, results, and limitations of the existing works.

3 Methodology

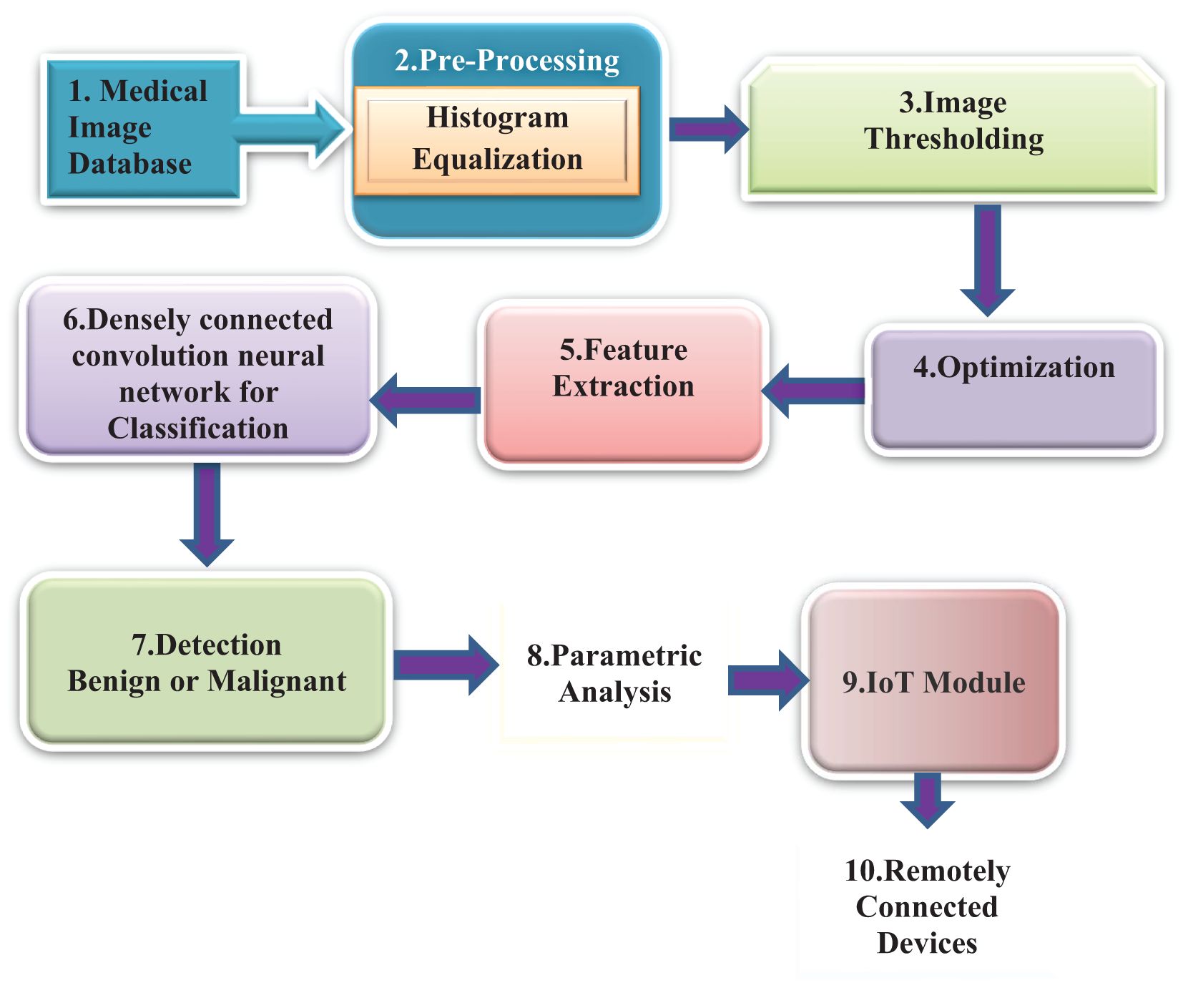

Figure 1 depicts the proposed methodology. The initial lung scan image is taken from a database and subjected to histogram equalization in the preprocessing step. This reduces noise and other artifacts introduced by the imaging modalities and storage procedures during image acquisition. As a result, some areas of the image are emphasized, which makes it simpler to discern the details.

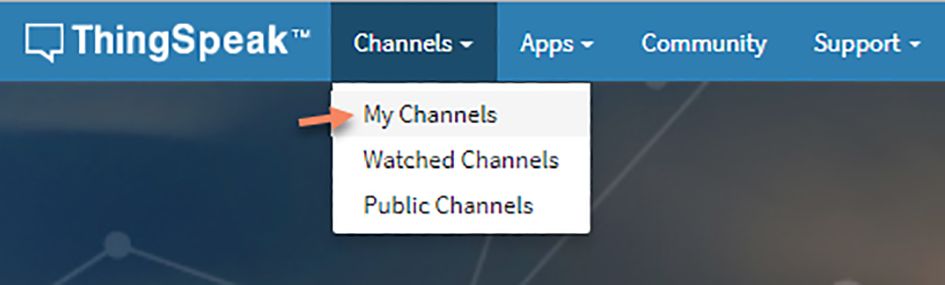

Following this step, the colliding bodies optimization (CBO) algorithm is applied to produce the most favorable result, and its output is used for feature extraction. In the feature extraction stage, the local binary pattern (LBP) method is used to acquire the key features needed to determine the exact location of the nodule. In the final step, DenseNet classification is used to classify tumors or nodules according to their potential for malignancy. Performance metrics are then provided to enable a more precise interpretation of the data. Finally, these results are communicated through the IoT module ThingSpeak to authorized, remotely linked devices so that physicians can analyze them further and accurately appraise the patient's stage.

The stepwise algorithm of the proposed method is presented below as a process flow.

Step 1: We use data from the Lung Cancer dataset provided by the Lung Image Database Consortium (LIDC), which contains 1,135 annotated CT images (38). In our study, we employ 70% (795 images) for training, 10% (114 images) for validation, and 20% (227 images) for testing. This partitioning enables us to train our models efficiently, fine-tune them using the validation set, and rigorously evaluate their performance with the testing set.

Step 2: Preprocessing with histogram equalization is applied to minimize the artifacts introduced during the image acquisition process.

Let f(x, y) denote the input image, represented as a matrix of integer pixel intensities ranging from 0 to L − 1. The gray values range from 0 to 255, so the number of possible intensity levels is L = 256.

Let Z denote the normalized histogram of f, with a bin for every feasible intensity:

$$Z_n = \frac{\text{number of pixels with intensity } n}{\text{total number of pixels}}$$

Here, n = 0, 1, …, L − 1.

The output image H produced by the histogram equalization operation is given by

$$H(x, y) = \left\lfloor (L - 1) \sum_{n=0}^{f(x, y)} Z_n \right\rfloor$$
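As an illustration of Step 2, the sketch below applies histogram equalization with NumPy: it builds the normalized histogram, accumulates it, and maps each gray level through the scaled cumulative sum. The function name `equalize_histogram` and the tiny 4-level test image are assumptions made for this example; this is not the authors' implementation.

```python
import numpy as np

def equalize_histogram(f, levels=256):
    """Histogram-equalize an integer image with gray values in [0, levels - 1]."""
    f = np.asarray(f)
    # Normalized histogram: fraction of pixels at each intensity.
    z = np.bincount(f.ravel(), minlength=levels) / f.size
    cdf = np.cumsum(z)
    # Look-up table: floor((L - 1) * cumulative sum); the small epsilon
    # guards against floating-point results such as 2.9999999.
    lut = np.floor((levels - 1) * cdf + 1e-9).astype(np.uint8)
    return lut[f]

# Hypothetical 2x2 image with 4 gray levels, used only to show the call.
image = np.array([[0, 0], [1, 3]], dtype=np.uint8)
print(equalize_histogram(image, levels=4))
```

For real CT slices stored with more than 8 bits per pixel, the intensities would first need to be rescaled to the chosen number of levels.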

Step 3: Colliding body optimization (CBO) algorithm for the optimal solution

Step 4: LBP process for feature extraction

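• For each pixel $(x_c, y_c)$ in the image, the H neighbors that surround the central pixel on a circle of radius R are selected. The coordinates of the neighbor with index h are

$$\left(x_c + R\cos\frac{2\pi h}{H},\; y_c - R\sin\frac{2\pi h}{H}\right), \quad h = 0, 1, \ldots, H - 1$$

• Set the corresponding bit to 1 if the adjacent pixel's value is greater than or equal to the center pixel's value, and to 0 otherwise.

• Now, we compute the LBP value

$$\mathrm{LBP}(x_c, y_c) = \sum_{h=0}^{H-1} G(g_h - g_c)\, 2^h$$

Here, $g_c$ is the intensity value of the central pixel and $g_h$ is the intensity of the neighboring pixel with index h.

H is the number of sampling points on a circle of radius R.

G is a function given by

$$G(z) = \begin{cases} 1, & z \ge 0 \\ 0, & z < 0 \end{cases}$$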
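The LBP steps above can be sketched in NumPy for the common case of 8 neighbors at radius 1. As a simplifying assumption, the sketch samples the 3×3 neighborhood directly instead of interpolating points on a circle, and the neighbor ordering in `OFFSETS` is a choice made for this example. The function names are hypothetical.

```python
import numpy as np

# (row, column) offsets of the 8 neighbours, index h = 0..7, starting at the
# right-hand neighbour and proceeding counter-clockwise (an assumed ordering).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]

def lbp_image(img):
    """Return the LBP code of every interior pixel of a 2-D gray image."""
    img = np.asarray(img, dtype=np.int32)
    rows, cols = img.shape
    center = img[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for h, (dy, dx) in enumerate(OFFSETS):
        neighbour = img[1 + dy: rows - 1 + dy, 1 + dx: cols - 1 + dx]
        # Bit h is 1 when the neighbour is >= the centre, weighted by 2**h.
        codes += (neighbour >= center).astype(np.int32) << h
    return codes

def lbp_histogram(img):
    """256-bin histogram of LBP codes, usable as a texture feature vector."""
    return np.bincount(lbp_image(img).ravel(), minlength=256)

# Hypothetical 3x3 patch: only the centre pixel has a full neighbourhood.
patch = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(lbp_image(patch))
```

The histogram of codes, rather than the code image itself, is what is usually passed to a classifier as the texture descriptor.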

Step 5: Image Classification Process Using DenseNet

i. The dataset is randomly divided into two parts, with the proper proportion of distribution. There are four folds for training and one fold for testing.

ii. At this stage, the corresponding dataset’s feature vectors and the classes that accompany them are trained.

iii. The output is the decision about whether the result is benign or malignant.

● Tumor localization is carried out by identifying whether a tumor is present in the image.

● The segmented area of the tumor was measured and displayed; otherwise, the skull was displayed.

Step 6: Parametric analysis of the obtained output images

Step 7: Transmission of relevant metrics via the IoT module to a remotely connected Mobile or PC.

3.1 Colliding bodies optimization

Colliding bodies optimization (CBO) is one of the most common population-based evolutionary techniques and is built on an analogy with the laws governing collisions between objects (39). CBO has obtained excellent results on many benchmark functions, both constrained and unconstrained, and on many single-objective engineering tasks (40). The formulation of the algorithm is straightforward; it makes no use of memory and has no parameters that need to be tuned.

Collisions serve two purposes: first, to improve the positions of the moving objects, and second, to force the stationary objects toward better positions. Following the collision laws, the new position of each colliding body (CB) is updated after the collision based on its newly computed velocity.

CBO Algorithm:

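Step i. The initial positions of the CBs are determined by random initialization of a population of n individuals in the search space:

$$x_i^0 = x_{\min} + \mathrm{rand}\,(x_{\max} - x_{\min}), \quad i = 1, 2, \ldots, n$$

Here, $x_i^0$ is the initial position of the i-th colliding body, $x_{\min}$ and $x_{\max}$ are the minimum and maximum limits of the search space, and rand is a random number in [0, 1].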

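Step ii. For each CB, the magnitude of the corresponding body mass must be specified according to the following equation:

$$m_k = \frac{1/\mathrm{fit}(k)}{\sum_{i=1}^{n} 1/\mathrm{fit}(i)}, \quad k = 1, 2, \ldots, n$$

Here, $m_k$ is the mass of the k-th colliding body, representing its fitness value relative to the others, fit(k) is the fitness value of the k-th colliding body, and n is the number of CBs.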

Step iii. The respective values of the corresponding CB’s objective functions are arranged in order of increasing values. The organized CBs are separated into 2 equal groups:

● The lower half of the respective CBs (also called stationary CBs) are good agents with zero velocity before impact.

● The upper half of the respective CBs (also called moving CBs): These CBs migrate downward. Each group’s agents with the possible maximum fitness value will collide.

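● After impact, the velocity of each moving CB is calculated as follows:

$$v_i' = \frac{(m_i - \varepsilon\, m_{i-n/2})\, v_i}{m_i + m_{i-n/2}}, \quad i = \frac{n}{2} + 1, \ldots, n$$

The velocity of each stationary CB after the collision is

$$v_i' = \frac{(m_{i+n/2} + \varepsilon\, m_{i+n/2})\, v_{i+n/2}}{m_i + m_{i+n/2}}, \quad i = 1, \ldots, \frac{n}{2}$$

Here, $v_i$ and $v_i'$ are the velocities of the i-th colliding body before and after the collision, $m_i$ is its mass, n is the number of CBs, and $\varepsilon$ is the coefficient (of restitution) used to adjust the velocity after the collision.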

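Step iv. After the collision, the resulting velocities are used to establish the new positions of the CBs. The new positions of each moving CB and each stationary CB are, respectively,

$$x_i^{new} = x_{i-n/2} + \mathrm{rand} \circ v_i', \quad i = \frac{n}{2} + 1, \ldots, n$$

$$x_i^{new} = x_i + \mathrm{rand} \circ v_i', \quad i = 1, \ldots, \frac{n}{2}$$

Here, rand is a random number used to introduce stochasticity in the position update, $v_i'$ is the velocity of the i-th colliding body after the collision, and $x_i^{new}$ is the new position of the colliding body after updating with that velocity.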

Step v. The optimization is repeated from Step ii until a termination requirement, such as the maximum number of iterations, is met.
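Steps i to v can be sketched as a short NumPy loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the objective is assumed non-negative, each moving body is assumed to travel toward its paired stationary body before impact, the coefficient of restitution is assumed to decay linearly to zero, and the random factors in the position update are drawn from [-1, 1]. The name `cbo_minimize` and the sphere test function are hypothetical.

```python
import numpy as np

def cbo_minimize(objective, lower, upper, n_bodies=20, n_iter=100, seed=0):
    """Minimize a non-negative objective with a basic CBO loop (sketch)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    half = n_bodies // 2
    # Step i: random initial positions inside the search space.
    x = lower + rng.random((2 * half, lower.size)) * (upper - lower)
    best_x, best_f = x[0].copy(), np.inf
    for it in range(1, n_iter + 1):
        cost = np.array([objective(p) for p in x])
        order = np.argsort(cost)              # Step iii: ascending objective
        x, cost = x[order], cost[order]
        if cost[0] < best_f:
            best_x, best_f = x[0].copy(), float(cost[0])
        # Step ii: mass proportional to 1 / fitness (epsilon avoids 1 / 0).
        inv = 1.0 / (cost + 1e-12)
        mass = inv / inv.sum()
        m_s, m_m = mass[:half, None], mass[half:, None]
        x_s, x_m = x[:half], x[half:]         # stationary / moving halves
        v_m = x_s - x_m                       # assumed pre-impact velocity
        eps = 1.0 - it / n_iter               # assumed restitution schedule
        v_m_new = (m_m - eps * m_s) * v_m / (m_m + m_s)
        v_s_new = (m_m + eps * m_m) * v_m / (m_s + m_m)
        # Step iv: both groups are repositioned relative to the stationary body.
        new_s = x_s + rng.uniform(-1.0, 1.0, x_s.shape) * v_s_new
        new_m = x_s + rng.uniform(-1.0, 1.0, x_m.shape) * v_m_new
        x = np.clip(np.vstack([new_s, new_m]), lower, upper)
    # Evaluate the final population once more before returning.
    cost = np.array([objective(p) for p in x])
    k = int(np.argmin(cost))
    if cost[k] < best_f:
        best_x, best_f = x[k].copy(), float(cost[k])
    return best_x, best_f

# Hypothetical test problem: the 2-D sphere function on [-5, 5]^2.
sphere = lambda p: float(np.sum(p ** 2))
print(cbo_minimize(sphere, [-5, -5], [5, 5]))
```

In a segmentation setting, the objective could score a candidate threshold or set of thresholds; that coupling is not specified here and would need to be defined separately.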

3.2 DenseNet CNN

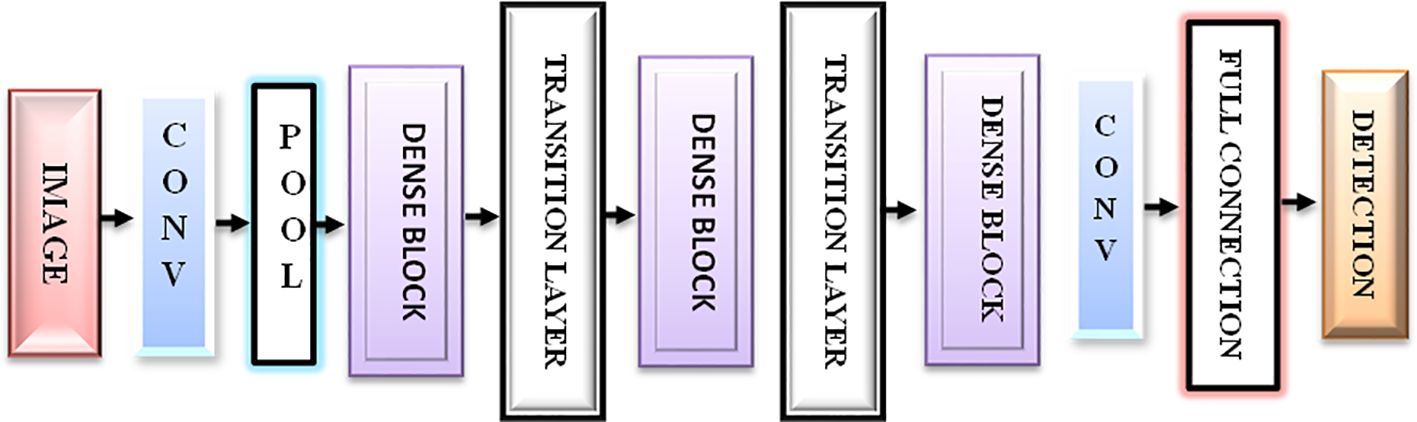

DenseNet is a densely connected CNN (41) that introduces feature sharing and arbitrary interlayer connections. By reusing feature maps from multiple layers, DenseNet reduces interdependence across layers, provides dense and diverse input features via shortcut connections of varying lengths, and mitigates the vanishing-gradient problem that makes deep networks difficult to optimize.

DenseNet is composed of dense blocks whose layers are densely interconnected. DenseNet's structure, as illustrated in Figure 2, comprises a dense block, a transition layer, a convolutional layer, and a fully connected layer. The ultimate goal is to integrate the features of all the layers to improve model performance and robustness.
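The dense connectivity pattern can be illustrated with a small NumPy sketch in which every layer receives the concatenation of all earlier feature maps. The random 1×1 convolution followed by ReLU is a hypothetical stand-in for a real DenseNet layer (batch normalization, ReLU, and convolution with learned weights), and the layer count and growth rate are arbitrary choices for the example.

```python
import numpy as np

def dense_block(x, n_layers=4, growth_rate=12, seed=0):
    """Toy dense block on a (channels, height, width) feature map."""
    rng = np.random.default_rng(seed)
    features = [x]
    for _ in range(n_layers):
        # Each layer sees the feature maps of ALL preceding layers.
        stacked = np.concatenate(features, axis=0)
        # Stand-in layer: random 1x1 convolution + ReLU producing
        # `growth_rate` new channels (not trained weights).
        w = rng.standard_normal((growth_rate, stacked.shape[0])) * 0.1
        new_maps = np.maximum(np.einsum("oc,chw->ohw", w, stacked), 0.0)
        features.append(new_maps)
    return np.concatenate(features, axis=0)

# Hypothetical input: 16 channels of 8x8 features.
x = np.ones((16, 8, 8))
print(dense_block(x).shape)
```

The output channel count grows linearly with depth (input channels plus layers times growth rate), which is why transition layers are used between dense blocks to compress the feature maps.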

3.3 IoT module

ThingSpeak is a cloud-based IoT analytics application for aggregating, visualizing, and analyzing live data streams (42). It provides a real-time display of the data sent to it by personal computers. Because commands and parameters can be executed within ThingSpeak, the data can be evaluated and processed digitally. ThingSpeak is also used for developing IoT systems that require analytics and for proof-of-concept testing (43). Any internet-connected device can send data directly to ThingSpeak. ioBridge first released ThingSpeak in 2010 as a support service for IoT applications. ThingSpeak integrates numerical computing software functionality, allowing users to analyze and display uploaded data (44).
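As a rough illustration of how a PC could push results to a ThingSpeak channel, the sketch below builds a request URL for ThingSpeak's HTTP update endpoint, which accepts a write API key and up to eight numbered fields per channel. The mapping of metrics to field numbers and the placeholder key are assumptions for this example; the paper does not specify its channel layout.

```python
from urllib.parse import urlencode

THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"

def build_update_url(write_api_key, fields):
    """Build a ThingSpeak update URL from a {field_number: value} mapping."""
    params = {"api_key": write_api_key}
    for index, value in sorted(fields.items()):
        if not 1 <= index <= 8:
            raise ValueError("ThingSpeak channels expose field1 to field8")
        params[f"field{index}"] = value
    return f"{THINGSPEAK_UPDATE_URL}?{urlencode(params)}"

# Placeholder key and a hypothetical field layout (1: accuracy, 2: specificity).
print(build_update_url("YOUR_WRITE_API_KEY", {1: 98.17, 2: 97.32}))
```

Sending the request (for example with `urllib.request.urlopen`) is left out so that the sketch runs without network access or a real channel key.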

4 Results and analysis

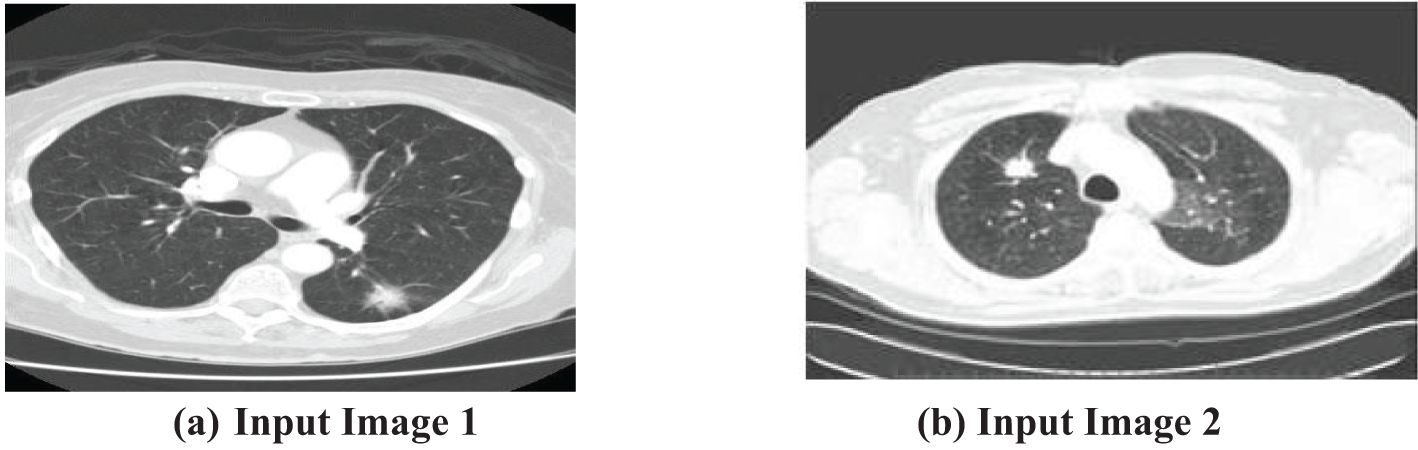

The first step of the proposed methodology consists of obtaining input images from a medical image database, as shown in Figures 3A, B. These images form the basis for later analysis. To improve their quality, an essential preprocessing procedure is applied. CT images frequently exhibit low-frequency noise and minimal distortion. To address these problems, the obtained images undergo histogram equalization, a procedure designed to reduce noise and enhance the overall quality of the image.

A histogram is a graphical representation of the distribution of a dataset. It displays the frequency of occurrence of each value or range of values in the dataset. Equalization plays a crucial role in the initial step of processing. By highlighting important sections of the images that may otherwise be hidden, this step minimizes distortions and ensures that the next stages of the process work with improved data. The importance of preprocessing lies in its capacity to improve the input images, rendering them more suitable for segmentation and optimization methods. Thorough preparation is crucial for ensuring the precision and efficiency of the subsequent analytical procedures.

After preprocessing, the enhanced images are prepared for subsequent phases of analysis without any disruptions produced by noise particles or distortions. This strategic approach guarantees that the data inputted into the following algorithms are of superior quality and free from unnecessary factors, establishing the foundation for strong and precise analytical results. The smooth progression from capturing images to preprocessing establishes a strong basis for later algorithmic interventions, highlighting the significance of careful data preparation in medical imaging applications.

An essential factor is the decrease in artifacts, which is accomplished by emphasizing important sections in the obtained pictures. This strategic methodology ensures that the findings are well prepared for smooth transition into subsequent phases of analysis, guaranteeing that the improved data may undergo additional processing without any disruptions. The focus on hiding artifacts and uncovering important image information enhances the strength of future analytical processes.

The preprocessing step is essential to the entire methodology. Its main goal is to improve the input images, making them more suitable for the segmentation and optimization algorithms. Intermediate segmentation errors can affect the overall performance of the proposed method by introducing inaccuracies in the feature extraction and classification stages. If the segmentation does not correctly identify the tumor boundaries, it may lead to incorrect feature extraction or misclassification. However, the proposed method's use of the Colliding Bodies Optimization (CBO) algorithm and DenseNet CNN aims to mitigate these errors by refining the features and improving classification accuracy despite initial segmentation challenges. The preprocessing stage improves the quality of the data by separating noise from the image content and suppressing it. This focus on reducing noise and enhancing image quality highlights the importance of the preprocessing stage within the wider analytical framework. Figures 4A, B show the equalized CT lung scan images. These images provide evidence that the preprocessing stage improves the visibility of important information while reducing the negative effects of artifacts. The equalization technique balances the distribution of image intensities, preparing the groundwork for later algorithmic analysis. The smooth incorporation of preprocessing outputs into the analytical pipeline reinforces the essential role of this stage in ensuring the precision and dependability of the entire technique.

To locate tumors in CT scans, the preprocessed image is segmented using the Otsu thresholding approach in conjunction with the optimization technique. The initial step segments the input lung CT scan image using basic Otsu thresholding, which selects the threshold that maximizes the between-class variance of the segmented classes and thus produces a result appropriate for subsequent processing. After thresholding, the result is optimized with the colliding bodies optimization (CBO) procedure, and features are then extracted using the local binary pattern (LBP) method.
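For reference, basic Otsu thresholding can be sketched in a few lines of NumPy: the threshold is the gray level that maximizes the between-class variance of the two resulting pixel classes. The function name `otsu_threshold` and the synthetic two-level image are assumptions for this example, and the sketch covers only the plain Otsu step, not its refinement by CBO.

```python
import numpy as np

def otsu_threshold(img, levels=256):
    """Return the gray level t that maximizes the between-class variance,
    where class 0 holds pixels <= t and class 1 holds pixels > t."""
    img = np.asarray(img)
    p = np.bincount(img.ravel(), minlength=levels) / img.size
    omega = np.cumsum(p)                     # probability of class 0
    mu = np.cumsum(p * np.arange(levels))    # cumulative mean of class 0
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros(levels)
    valid = denom > 1e-12                    # skip empty classes
    sigma_b[valid] = (mu_total * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b))

# Synthetic image: dark background (10) with a bright square (200).
image = np.full((8, 8), 10, dtype=np.uint8)
image[2:6, 2:6] = 200
t = otsu_threshold(image)
mask = image > t                             # binary segmentation
print(t, int(mask.sum()))
```

The resulting binary mask is the kind of intermediate segmentation that a later optimization stage could refine.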

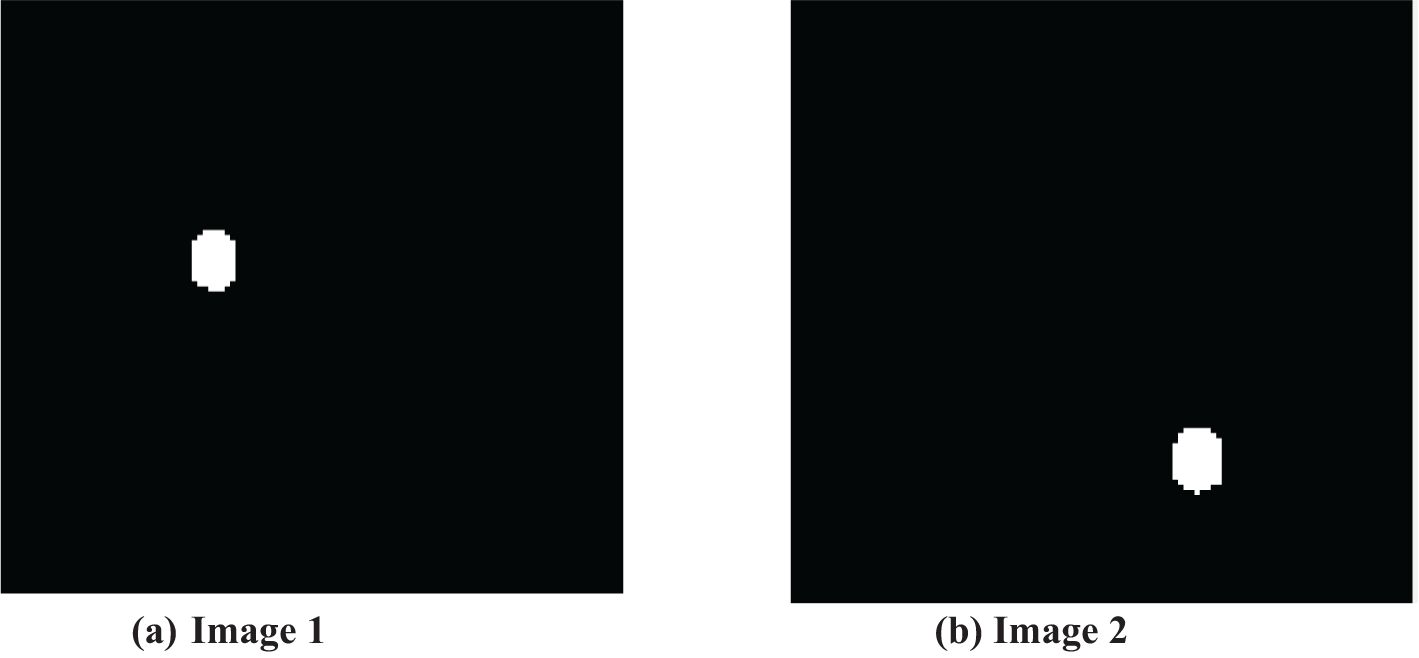

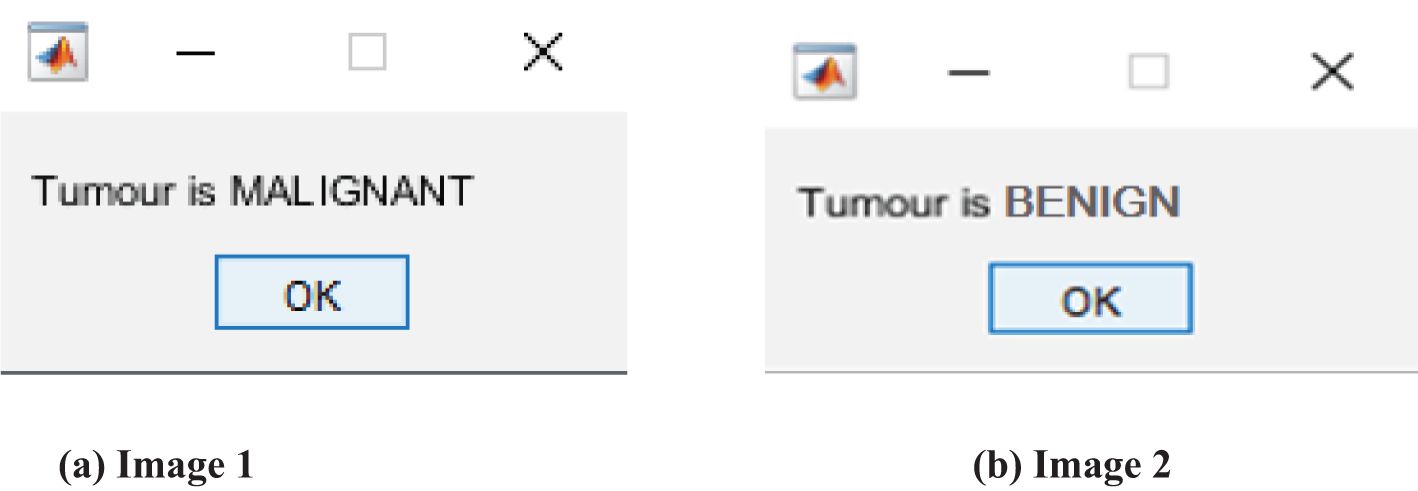

The thresholded and optimized results with the extracted features and the corresponding output images are shown in Figures 5A, B, respectively. After segmentation, the image is passed to the DenseNet CNN deep learning classifier, which classifies it as normal or abnormal and displays a message such as “Tumor is MALIGNANT” or “Tumor is BENIGN”, as shown in Figures 6A, B.

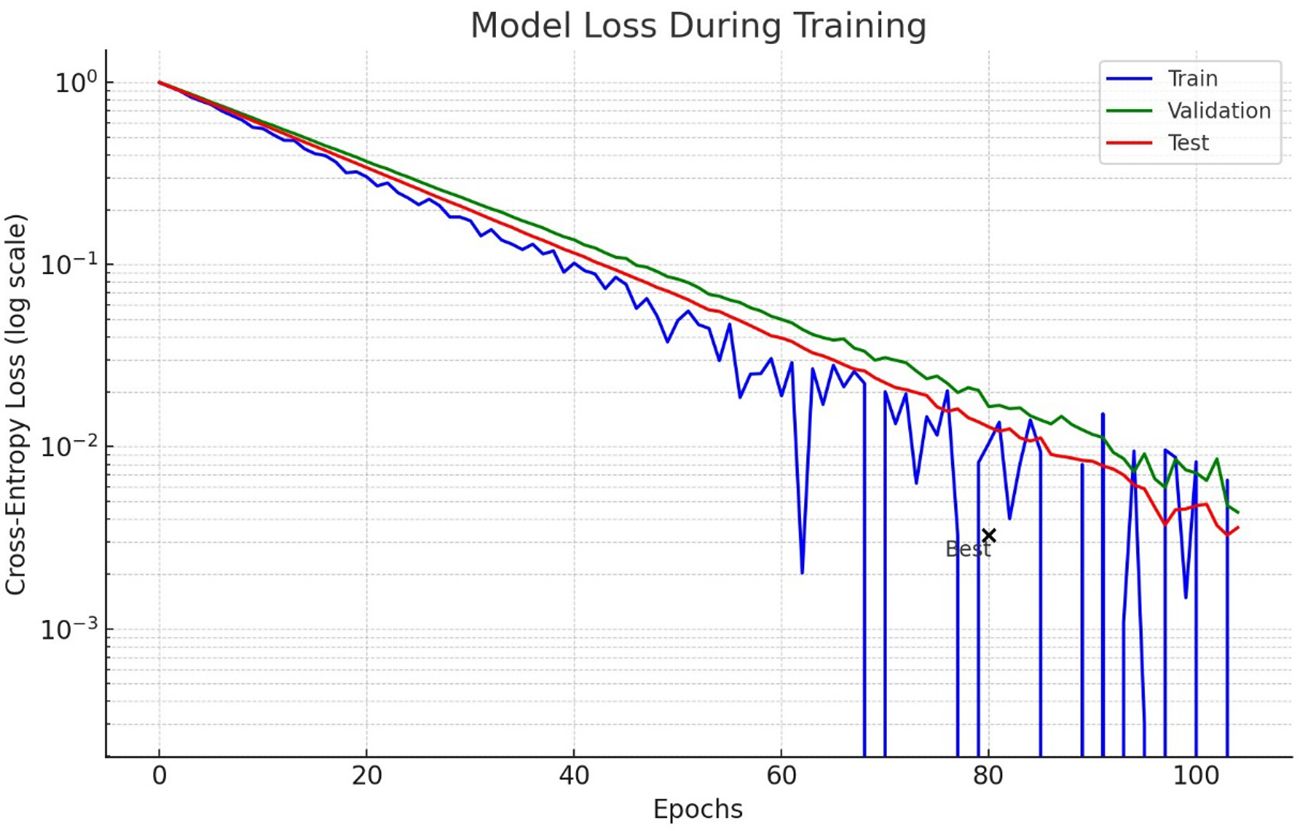

Figure 7 shows the loss curves for lung nodule detection using lung CT scan images during the training process. A logarithmic scale is used to highlight how the loss decreases over epochs for the training, validation, and test data. The test curve shows more fluctuations, which could indicate either overfitting or inconsistency in the test data. Importantly, the test loss starts to plateau and even slightly increases, implying that the model no longer learns from the available data.

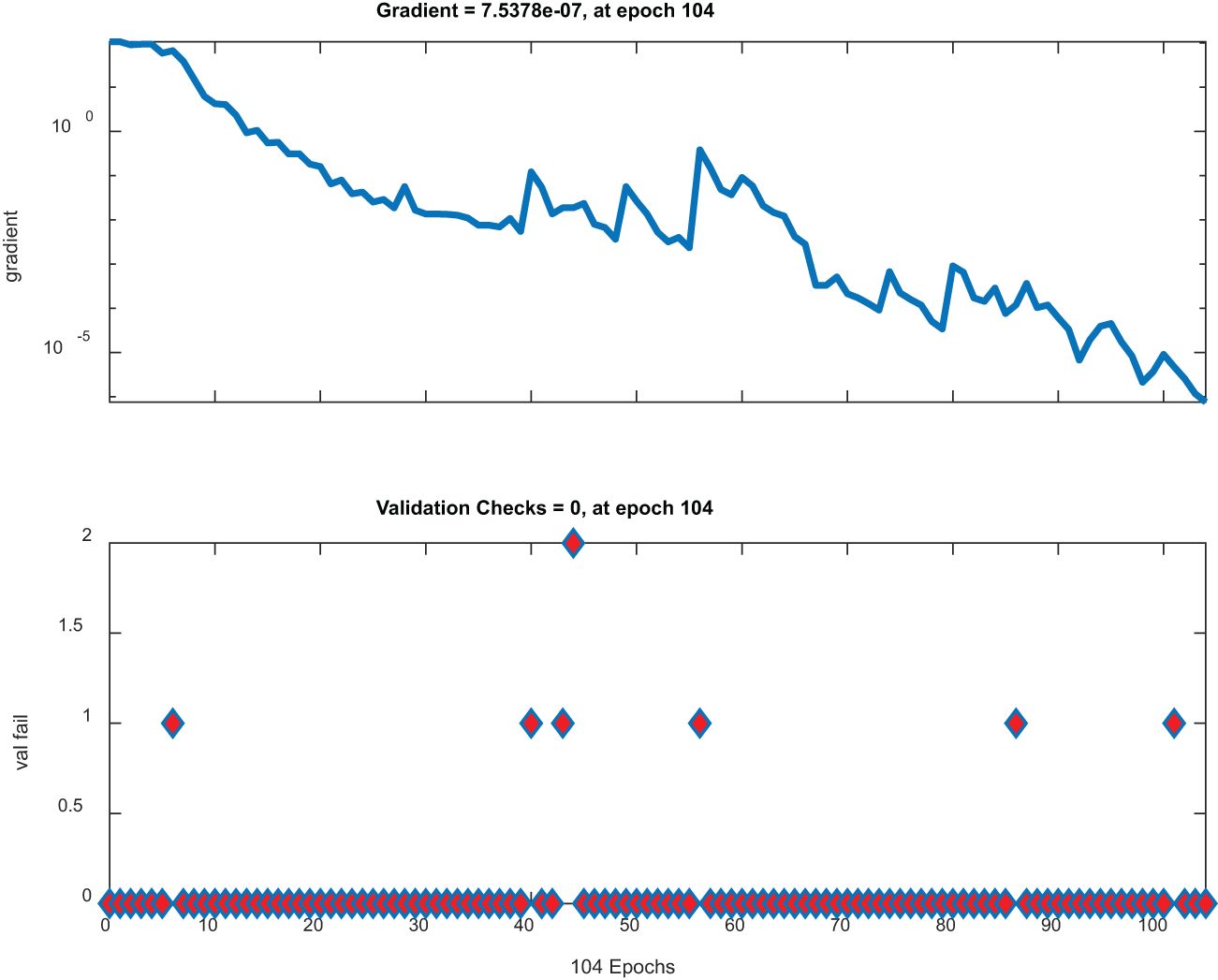

Figure 8 has two plots. The top plot shows the gradient across epochs; its significant dip indicates a model that effectively minimizes its loss function. The lower plot shows a stable, low number of validation checks, implying that the model parameters are stable and that no notable errors occurred during validation, which is the behavior expected of a robust, high-performing model.

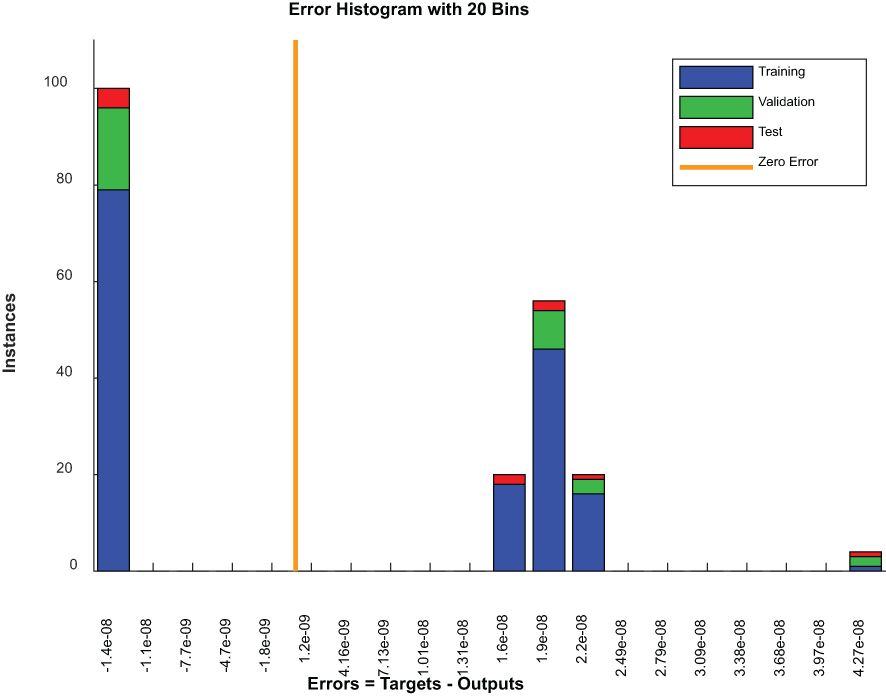

Figure 9 is an error histogram of a model where errors are categorized into bins for each dataset, including the training, validation and testing sets. For all datasets, a zero-centered concentration of errors implies excellent accuracy of predictions by a model. However, the presence of errors across other bins might indicate areas where these predictions deviate from actual results, possibly as a result of problematic examples or limitations in models.

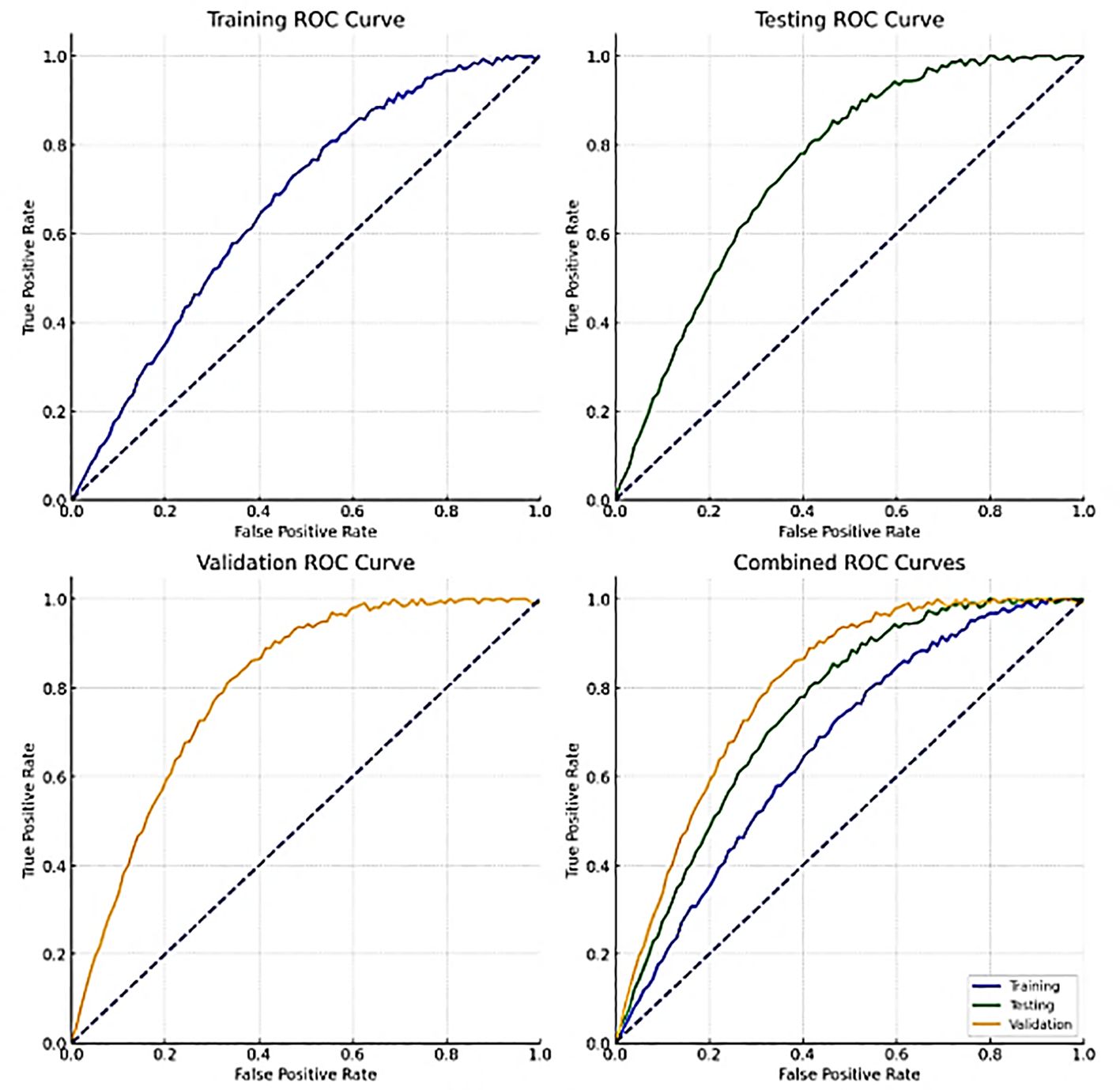

Figure 10 shows the ROC curves for the training, validation, testing, and combined data. These curves show how well the model can distinguish between benign and malignant lung nodules at different thresholds. A curve closer to the top left corner indicates better classifier performance. The area under the ROC curve (AUC) summarizes the model's overall ability to separate the two classes across all thresholds.
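To make the ROC and AUC quantities concrete, the sketch below computes the curve by sweeping the decision threshold over sorted scores and integrates it with the trapezoidal rule. The labels and scores are invented for illustration and are not results from the paper; the sketch also assumes distinct scores, since tied scores would need to be grouped.

```python
import numpy as np

def roc_curve_points(labels, scores):
    """False and true positive rates as the threshold sweeps from high to low."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")   # highest score first
    labels = labels[order]
    tps = np.cumsum(labels)                      # true positives so far
    fps = np.cumsum(~labels)                     # false positives so far
    tpr = np.concatenate([[0.0], tps / labels.sum()])
    fpr = np.concatenate([[0.0], fps / (~labels).sum()])
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

# Hypothetical labels (1 = malignant) and classifier scores.
y_true = [1, 1, 0, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
fpr, tpr = roc_curve_points(y_true, y_score)
print(auc(fpr, tpr))
```

An AUC of 1.0 corresponds to perfect separation of the two classes, while 0.5 corresponds to random ranking.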

5 Statistical analysis

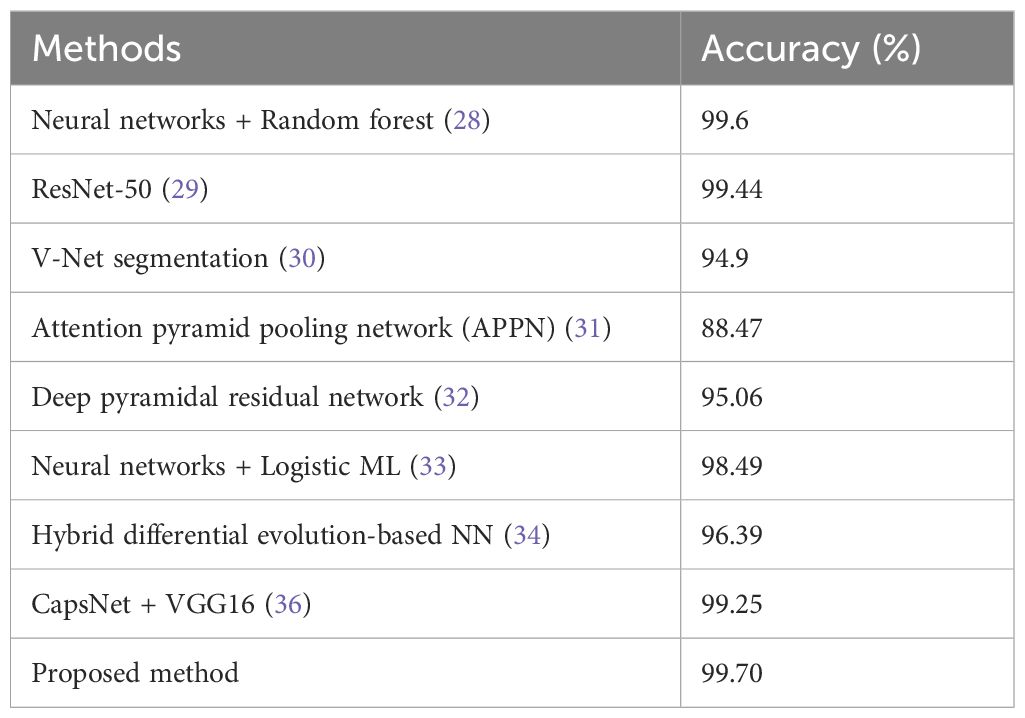

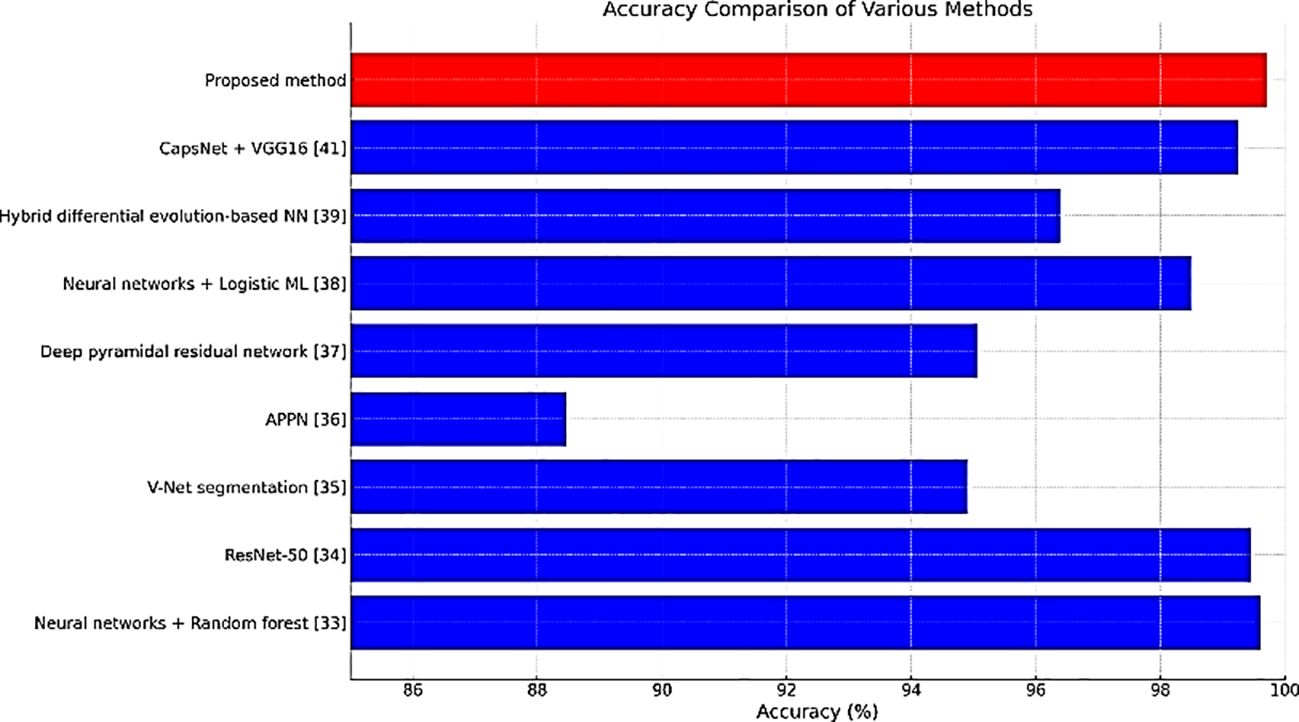

Table 2 compares the accuracy of different methods employed for detecting lung cancer. The accuracies of CapsNet + VGG16 and ResNet-50 were similar to that of our proposed method (99.70%).

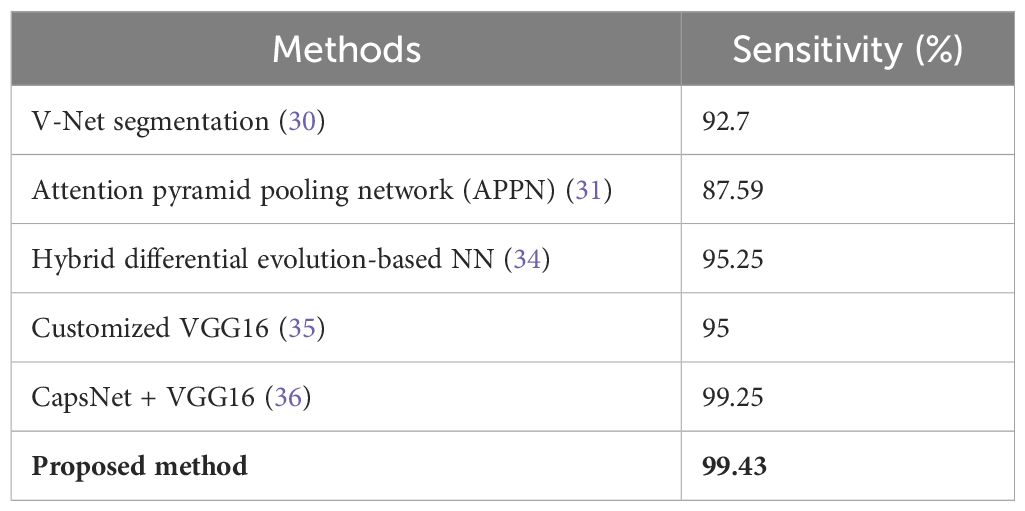

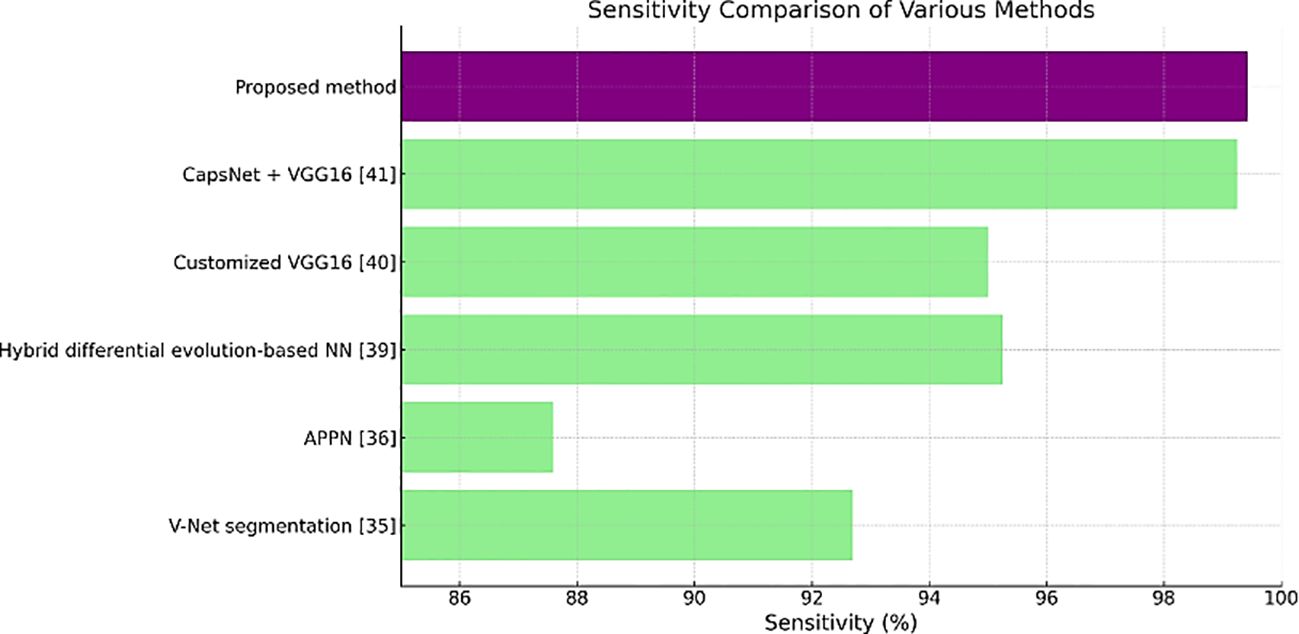

Table 3 shows the sensitivity values, where the proposed method has the highest value of 99.43%. High sensitivity means that the test identifies a large share of the true positive cases, which is essential in medical diagnostics.

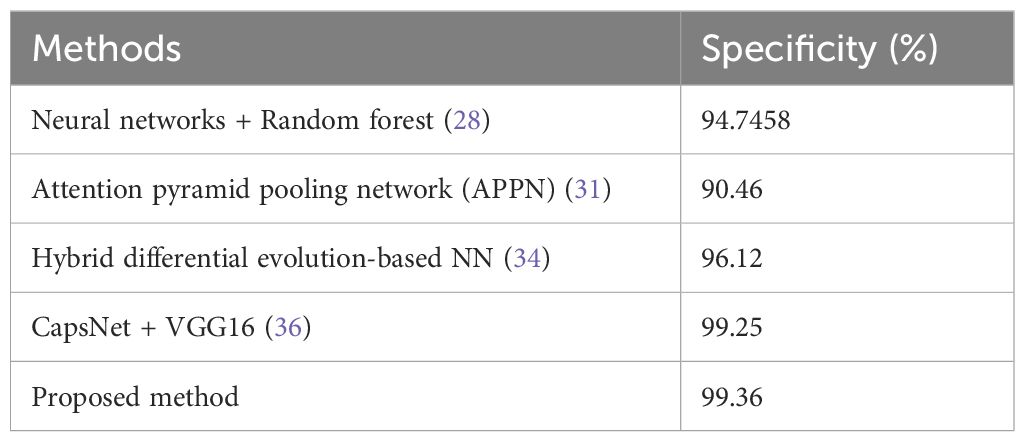

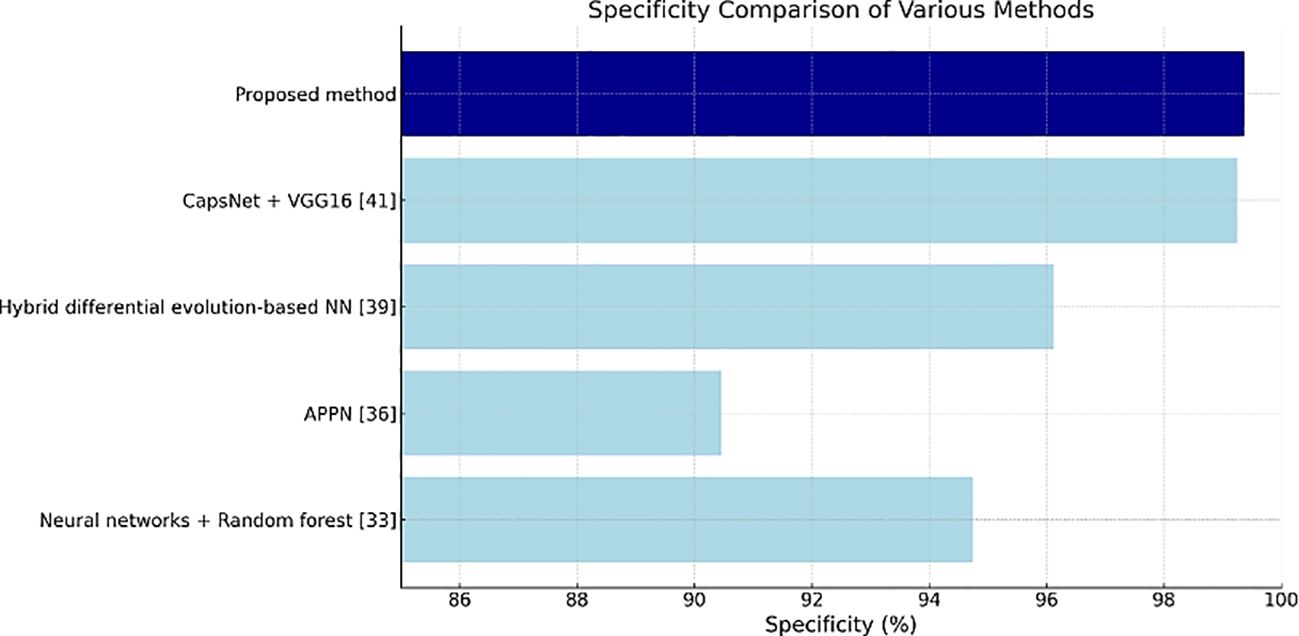

Table 4 shows the specificity values, and the proposed method has the highest value of 99.36%, thus indicating its effectiveness in correctly identifying negatives as well as reducing false positives in clinical settings.
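The metrics compared in Tables 2–4 follow from the entries of a binary confusion matrix. The sketch below states the standard definitions; the counts used in the example are hypothetical and are not taken from the paper's experiments.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive and negative counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),   # also called recall
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
    }

# Hypothetical counts, for illustration only.
for name, value in classification_metrics(tp=90, fp=5, tn=95, fn=10).items():
    print(f"{name}: {value:.4f}")
```

Sensitivity penalizes missed malignant cases (false negatives), whereas specificity penalizes benign cases flagged as malignant (false positives), which is why both are reported alongside accuracy.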

Figures 11–13 are bar plots derived from Tables 2–4, respectively, providing a visual representation of the numerical data. They make it easier to compare the performance measures across methods and show that the proposed method outperforms the others in accuracy, sensitivity, and specificity.

Finally, the obtained result is transmitted to ThingSpeak and shared only with authorized personnel on their mobile devices or personal computers. Figure 14 shows the ThingSpeak GUI, and Figure 15 shows how the data are shared.

ThingSpeak, a cloud-based IoT analytics platform (42), is a critical part of this research methodology for the detection and classification of lung nodules. Beyond its direct use in this project, ThingSpeak has broader practical applications in medical imaging and healthcare. In this research, ThingSpeak delivers the relevant parameters and vital statistics to authorized personnel so that they remain up to date even when they are far from the site. This capability allows healthcare professionals to work remotely by monitoring patient data, collaborating on diagnosis and treatment decisions, and improving workflow efficiency. The methodology builds on ThingSpeak's real-time data visualization and analytics features. As a result, it broadens accessibility and supports better-informed decision making in medical imaging.

ThingSpeak also offers considerable future opportunity in healthcare. As IoT applications grow, ThingSpeak could extend its functionality with data analysis, predictive modeling, and decision support systems to help improve the delivery of health services. Possible applications include home-based patient monitoring, remote diagnosis by videoconference, and the collation and management of public health data. In addition, machine learning and AI algorithms integrated with ThingSpeak could make it more efficient and useful in healthcare. Such developments could advance medical diagnosis through automated anomaly detection, machine learning for disease forecasting, and individualized treatment plans based on each patient's data.

In this effort, IoT integration is crucial since it enables real-time communication of diagnostic results via platforms such as ThingSpeak. This connectivity enables the seamless flow of data between diagnostic systems and healthcare practitioners, allowing for immediate analysis, feedback, and collaborative decision-making regardless of location. The incorporation of IoT in our method not only improves the efficiency of lung cancer diagnoses, but it also opens up new avenues for remote healthcare and telemedicine applications.

The suggested system uses IoT to continually monitor patient data, warn medical professionals in real time about significant findings, and keep a historical database of diagnostic information for continuing patient care. This feature is especially useful in situations where access to specialists is limited, as it enables prompt intervention and better patient outcomes. Furthermore, IoT integration enables scalable systems in which data from many diagnostic devices can be gathered, evaluated, and acted upon inside a single system, hence improving overall lung cancer care management.

This research provides an accurate technique for diagnosing lung nodules that combines histogram equalization, the Colliding Bodies Optimization (CBO) algorithm, local binary pattern (LBP) feature extraction, and DenseNet CNN classification. With this holistic strategy, image quality is improved, essential features are extracted, and tumors are classified efficiently, which makes the resulting data more reliable. Additionally, ThingSpeak, as an IoT analytics platform interfaced with data streams, simplifies information transmission and collective decision-making among doctors, facilitating remote access to critical information and timely care for patients.

Although the presented methodology is promising, some limitations must be considered. The validity of the current results may be limited by the particular dataset used in the experiments, so these studies should be extended to other datasets and clinical situations. Furthermore, computational complexity may be a problem when a deep network such as DenseNet CNN is applied, since it involves intensive resource requirements and long processing times. Sensitivity to input parameters and the need for model interpretability must also be assessed objectively before deep learning can be integrated into clinical practice. Overcoming these limitations through ongoing research and development will be important for the effective use and wider adoption of this methodology in healthcare settings.

6 Conclusion

With current segmentation approaches, early identification of cancer remains challenging, yet survival rates for lung cancer patients improve with early detection and accurate diagnosis. Diagnosis by radiologists is also time-consuming and requires significant expertise and careful analysis. Malignant and benign tissues can be distinguished on CT images using a convolutional neural network (CNN). Unlike traditional machine learning algorithms, deep learning algorithms perform feature engineering themselves: they analyze the data for related features and incorporate them so that learning proceeds more quickly, and they exploit spatial coherence in the input. Images are preprocessed, and feature selection and extraction are then performed before training and testing. The proposed method, denoted CBO+DenseNet CNN, uses CBO to find the optimal solution and the densely connected CNN (DenseNet) deep learning method to classify tumors as benign or malignant. This study uses a wide range of statistical measures for comparison. The collected data are sent to remote linked devices via the IoT cloud module for evaluation and future analysis. In the future, the model could be improved so that it not only indicates whether a patient has cancer but also shows where the tumors are located.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

SK: Writing – original draft, Writing – review & editing, Conceptualization, Investigation, Methodology. MK: Writing – original draft, Writing – review & editing, Project administration, Supervision, Visualization. FS: Formal analysis, Resources, Software, Writing – original draft, Writing – review & editing. BA: Funding acquisition, Methodology, Resources, Writing – original draft, Writing – review & editing. AA: Data curation, Resources, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2024R440), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Amis ES Jr., et al. American college of radiology white paper on radiation dose in medicine. J Am Coll Radiology. (2007) 4:272–84. doi: 10.1016/j.jacr.2007.03.002

2. Youngho, et al. Technological development and advances in single-photon emission computed tomography/computed tomography. Semin Nucl Med. (2008) 38(3):177–98. doi: 10.1053/j.semnuclmed.2008.01.001

3. Skourt BA, Hassani AEl, Majda A. Lung CT image segmentation using deep neural networks. In: Procedia computer science, vol. 127. (2018). p. 109–13. doi: 10.1016/j.procs.2018.01.104

4. Siegel RL, Miller KD, Goding Sauer A, Fedewa SA, Butterly LF, Anderson JC, et al. Colorectal cancer statistics, 2020. CA Cancer J Clin. (2020) 70:145–164. doi: 10.3322/caac.21601

5. Lortet-Tieulent J, Soerjomataram I, Ferlay J, Rutherford M, Weiderpass E, Bray F. International trends in lung cancer incidence by histological subtype: Adenocarcinoma stabilizing in men but still increasing in women. Lung Cancer. (2014) 84:13–22. doi: 10.1016/j.lungcan.2014.01.009

6. Blandin KS, et al. Progress and prospects of early detection in lung cancer. Open Biol. (2017) 7:170070. doi: 10.1098/rsob.170070

7. Doi K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput Med Imaging Graph. (2007) 31:198–211. doi: 10.1016/j.compmedimag.2007.02.002

8. Rubin GD, Lyo JK, Paik DS, Sherbondy AJ, Chow LC, Leung AN, et al. Pulmonary nodules on multidetector row CT scans: performance comparison of radiologists and computer-aided detection. Radiology. (2005) 234:274–83. doi: 10.1148/radiol.2341040589

9. Awai K, Murao K, Ozawa A, Komi M, Hayakawa H, Hori S, et al. Pulmonary nodules at chest CT: effect of computer-aided diagnosis on radiologists' detection performance. Radiology. (2004) 230:347–52. doi: 10.1148/radiol.2302030049

10. Yu K, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat BioMed Eng. (2018) 2:719–31. doi: 10.1038/s41551-018-0305-z

11. Sluimer I, Schilham A, Prokop M, van Ginneken B. Computer analysis of computed tomography scans of the lung: a survey. IEEE Trans Med Imaging. (2006) 25:385–405. doi: 10.1109/tmi.2005.862753

12. Nakagomi K, Shimizu A, Kobatake H, Yakami M, Fujimoto K, Togashi K. Multishape graph cuts with neighbor prior constraints and its application to lung segmentation from a chest CT volume. Med Image Anal. (2013) 17:62–77. doi: 10.1016/j.media.2012.08.002

13. Hu S, Hoffman EA, Reinhardt JM. Automatic lung segmentation for accurate quantitation of volumetric X-ray CT images. IEEE Trans Med Imaging. (2001) 20:490–8. doi: 10.1109/42.929615

14. Kuruvilla J, Gunavathi K. Lung cancer classification using neural networks for CT images. Comput Methods Programs Biomedicine. (2013) 202–9. doi: 10.1016/j.cmpb.2013.10.011

15. Jiang H, Ma He, Qian W, Gao M, Li Y. An automatic detection system of lung nodule based on multi-group patch-based deep learning network. IEEE J Biomed Health Inform. (2018) 22(4):1227–37. doi: 10.1109/JBHI.2017.2725903

16. Abu-Naser S, Al-Masri A, Sultan YA, Zaqout I. A prototype decision support system for optimizing the effectiveness of elearning in educational institutions. Int J Data Min Knowledge Manage Process (IJDKP). (2011) 1:1–13. doi: 10.5121/ijdkp.2011.1401

17. Zhang Q, Kong X. Design of automatic lung nodule detection system based on multi-scene deep learning framework. IEEE Access. (2020). doi: 10.1109/ACCESS.2020.2993872

18. Kweik OMA, Hamid MAA, Sheqlieh SO, Abu-Nasser BS, AbuNaser SS. Artificial neural network for lung cancer detection. Int J Acad Eng Res (IJAER). (2020) 4:27–35.

19. Nasser IM, Abu-Naser SS. Lung cancer detection using artificial neural network. Int J Eng Inf Syst (IJEAIS). (2019) 3:17–23. Available online at: https://ssrn.com/abstract=3369062.

20. Bhalerao RY, Jani HP, Gaitonde RK, Raut V. A novel approach for detection of Lung Cancer using Digital Image Processing and Convolution Neural Networks, in: 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India. IEEE Xplore (2019), pp. 577–83. doi: 10.1109/ICACCS.2019.8728348

21. Miah MBA, Yousuf MA. Detection of lung cancer from CT image using image processing and neural network, in: 2015 International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Savar, Bangladesh. IEEE Xplore (2015), pp. 1–6. doi: 10.1109/ICEEICT.2015.7307530

22. Woerl AC, Eckstein M, Geiger J, Wagner DC, Daher T, Stenzel P, et al. Deep learning predicts molecular subtype of muscle-invasive bladder cancer from conventional histopathological slides. Eur Urol. (2020) 78:256–64. doi: 10.1016/j.eururo.2020.04.023

23. Naik N, Madani A, Esteva A, Keskar NS, Press MF, Ruderman D, et al. Deep learning-enabled breast cancer hormonal receptor status determination from base-level H&E stains. Nat Commun. (2020) 11:5727. doi: 10.1038/s41467-020-19334-3

24. Ström P, Kartasalo K, Olsson H, Solorzano L, Delahunt B, Berney DM, et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: A population-based, diagnostic study. Lancet Oncol. (2020) 21:222–32. doi: 10.1016/s1470-2045(19)30738-7

25. Madabhushi A, Feldman MD, Leo P. Deep-learning approaches for Gleason grading of prostate biopsies. Lancet Oncol. (2020) 21:187–9. doi: 10.1016/s1470-2045(19)30793-4

26. Lokhande A, Bonthu S, Singhal N. Carcino-net: A deep learning framework for automated gleason grading of prostate biopsies, in: Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE Xplore, EMBS, Montreal, QC, Canada (2020), pp. 1380–3.

27. Bera K, Katz I, Madabhushi A. Reimagining T staging through artificial intelligence and machine learning image processing approaches in digital pathology. JCO Clin Cancer Inform. (2020) 4:1039–50. doi: 10.1200/CCI.20.00110

28. Nair SS, Devi VNM, Bhasi S. Enhanced lung cancer detection: Integrating improved random walker segmentation with artificial neural network and random forest classifier. Heliyon. (2024) 10:e29032. doi: 10.1016/j.heliyon.2024.e29032

29. Kumar V, Prabha C, Sharma P, et al. Unified deep learning models for enhanced lung cancer prediction with ResNet-50–101 and EfficientNet-B3 using DICOM images. BMC Med Imaging. (2024) 24:63. doi: 10.1186/s12880-024-01241-4

30. Ma X, Song H, Jia X, et al. An improved V-Net lung nodule segmentation model based on pixel threshold separation and attention mechanism. Sci Rep. (2024) 14:4743. doi: 10.1038/s41598-024-55178-3

31. Wang H, Zhu H, Ding L, Yang K. Attention pyramid pooling network for artificial diagnosis on pulmonary nodules. PloS One. (2024) 19:e0302641. doi: 10.1371/journal.pone.0302641

32. Angel Mary A, Thanammal KK. Lung cancer detection via deep learning-based pyramid network with honey badger algorithm. Measurement: Sensors. (2024) 31:100993. doi: 10.1016/j.measen.2023.100993

33. Srija GC, Usharani T. Classification of normal and nodule lung images from LIDC-IDRI datasets using K-NN and logistic classifiers, in: AIP Conference Proceedings (2023), Vol. 2587. doi: 10.1063/5.0151687

34. Gugulothu VK, Balaji S. An early prediction and classification of lung nodule diagnosis on CT images based on hybrid deep learning techniques. Multimed Tools Appl. (2024) 83:1041–61. doi: 10.1007/s11042-023-15802-2

35. Asiya S, Sugitha N. Automatically segmenting and classifying the lung nodules from CT images. Int J Intelligent Syst Appl Eng. (2023) 12:271–81.

36. Tandon R, Agrawal S, Chang A, Band SS. VCNet: hybrid deep learning model for detection and classification of lung carcinoma using chest radiographs. Front Public Health. (2022) 10:894920. doi: 10.3389/fpubh.2022.894920

37. Shalini A, Pankajam A, Talukdar V, Farhad S, Talele G, Muniyandy E, et al. Lung cancer detection and recognition using deep learning mechanisms for healthcare in ioT environment. Int J Intelligent Syst Appl Eng. (2024) 12:208–16. doi: 10.17762/ijisae.v121i6.6920

38. Armato SG III, McLennan G, Bidaut L, McNitt-Gray MF, Meyer CR, Reeves AP, et al. Data from LIDC-IDRI [Data set]. Cancer Imaging Arch. (2015). doi: 10.7937/K9/TCIA.2015.LO9QL9SX

39. Kaveh A, Massoudi MS. Multiobjective optimization using charged system search. Scientia Iranica. (2014) 21:1845–60.

40. Kaveh A, Mahdavi VR. Colliding bodies optimization; extensions and applications. In: Colliding Bodies Optimization. Springer, Cham. (2015) 11–38. doi: 10.1007/978-3-319-19659-6_2

41. Huang G, Liu Z, van der Maaten L, Weinberger KQ. Densely connected convolutional networks (2016). Available online at: https://arxiv.org/abs/1608.06993.

42. Raj GS, Selvan E, et al. IoT enabled lung cancer detection and routing algorithm using CBSOA-based shCNN. In: International journal of adaptive control and signal processing, vol. 36. (2022). p. 2183–201. doi: 10.1002/acs.3518

43. Sathiya T, Reenadevi R, Sathiyabhama B. Lung nodule classification in CT images using Gray Wolf Optimization algorithm. Ann R.S.C.B. (2021) 25:1495–511.

Keywords: lung cancer, optimization, CNN, ThingSpeak, IoT

Citation: Karimullah S, Khan M, Shaik F, Alabduallah B and Almjally A (2024) An integrated method for detecting lung cancer via CT scanning via optimization, deep learning, and IoT data transmission. Front. Oncol. 14:1435041. doi: 10.3389/fonc.2024.1435041

Received: 20 May 2024; Accepted: 16 September 2024;

Published: 07 October 2024.

Edited by:

Alice Chen, Consultant, Potomac, MD, United States

Reviewed by:

Yuanpin Zhou, Zhejiang University, China

Ramana Kadiyala, Chaitanya Bharathi Institute of Technology, India

Lucas Lins Lima, University of São Paulo, Brazil

Copyright © 2024 Karimullah, Khan, Shaik, Alabduallah and Almjally. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bayan Alabduallah; Mudassir Khan
