- ¹School of Future Technology, South China University of Technology, Guangzhou, China

- ²Guangdong Artificial Intelligence and Digital Economy Laboratory, Guangzhou, China

- ³School of Artificial Intelligence and Automation, Huazhong University of Science and Technology, Wuhan, China

Electroencephalogram-based brain-computer interfaces (BCIs) hold promise for healthcare applications but are hindered by cross-subject variability and limited data. This article proposes a multi-task (MT) classification model, AM-MTEEG, which integrates deep learning-based convolutional and impulsive networks with bidirectional associative memory (AM) for cross-subject EEG classification. AM-MTEEG treats the EEG classification of each subject as an independent task and utilizes common features across subjects. The model is built with a convolutional encoder-decoder and a population of impulsive neurons to extract shared features across subjects, as well as a Hebbian-learned bidirectional associative memory matrix to classify EEG within one subject. Experimental results on two BCI competition datasets demonstrate that AM-MTEEG improves average accuracy over state-of-the-art methods and reduces performance variance across subjects. Visualization of neuronal impulses in the bidirectional associative memory network reveals a precise mapping between hidden-layer neuron activities and specific movements. Given four motor imagery categories, the reconstructed waveforms resemble the real event-related potentials, highlighting the biological interpretability of the model beyond classification.

1 Introduction

The brain-computer interface (BCI) can be defined as a system that translates a user's brain activity patterns into messages or commands for interactive applications (Lotte et al., 2018). Efficient BCI systems can promote interactions between the brain and physical devices and have broad applications in medical rehabilitation and neuroscience research (Lebedev and Nicolelis, 2017). Most current BCI data come from neural electrical signals recorded by electroencephalogram (EEG), which enables researchers to measure and decode human brain activity. A classic BCI paradigm for motor imagery (MI) consists of five parts: EEG acquisition, EEG preprocessing, feature extraction, classification, and task execution (Lotte et al., 2015). One crucial step is to extract features from EEG signals and classify them into motion categories. To date, EEG classification tasks are still constrained by the following limitations.

• Since the EEG data has large variability and the representation of neural activity changes over time (Degenhart et al., 2020), EEG classification models trained on given sample datasets are difficult to generalize to other samples.

• With the development of biosignal sensors for use in home environments (Zhang et al., 2023), processing models for biosignals require increasingly robust performance. However, most EEG classification models have low cross-subject classification accuracy (Lawhern et al., 2018), where models are only trained and tested on a single subject's data (Hu et al., 2019b), resulting in low generalization in cross-subject tasks.

• Because BCI experiments are time-consuming (Shen et al., 2022) and are limited by the energy and time of the subjects, the amount of EEG data collected from a single subject is relatively small, which is detrimental to the training of computational models.

Data-driven deep learning has achieved remarkable results in image classification, speech recognition, and natural language processing, among others (Ma et al., 2020). Due to the above-mentioned limitations, however, current deep learning models cannot be directly applied to EEG classification tasks, particularly in the cross-subject setting. In view of ethical and safety considerations, the healthcare field places high requirements on the interpretability of deep learning models (Adadi and Berrada, 2018). Most existing models have poor interpretability because they are trained end to end, limiting their application in BCI. There is thus a need for new machine learning models that address the cross-subject variability of EEG through data sharing and enhance neuroscientific interpretability for wide application to BCI systems.

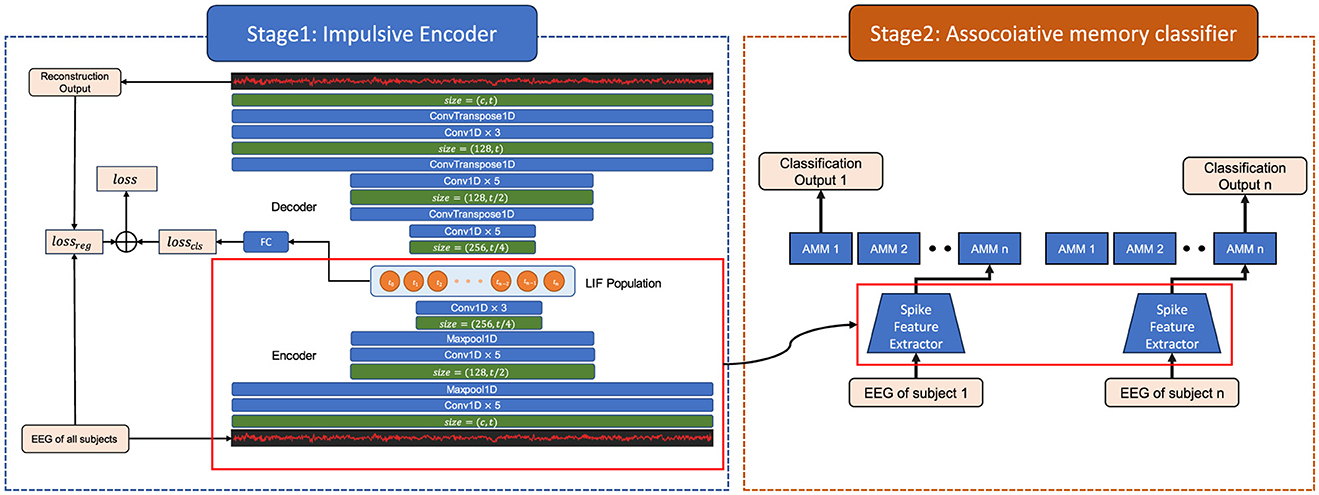

This article adopts multi-task learning (MTL) to cope with the large variability in EEG data. The EEG classification of each subject is defined as a task, common features are extracted among samples from various subjects to guarantee cross-subject training, and these features are mapped into the categories of each subject. Differing from closely related methods (Zheng et al., 2019; Wang et al., 2024; Lawhern et al., 2018), we incorporate associative memory into multi-task learning and propose the AM-MTEEG model by mixing Hebbian learning with deep learning (Figure 1). AM-MTEEG combines a deep convolutional model with an impulsive associative memory network, as inspired by the memory principle of human brains (Seitz, 2010), which can learn accurate mappings from very few demonstrations. AM-MTEEG includes a deep learning encoder-decoder counterpart trained across various samples, replacing the encoding mechanism of the brain. The model further incorporates a layer of impulsive neurons to encode the necessary neural signals for associative memory formation. For each subject, a bidirectional associative memory network is built to map impulsive signals to the motion categories. The original EEG signals can be reconstructed by decoding the impulsive signals associated with specific category labels, thereby enhancing the interpretability beyond the classification process.

Figure 1. The architecture of the associative memory multi-task EEG (AM-MTEEG) model. There are two stages in the model. Stage 1 includes a convolutional encoder, an impulsive neural population, and a decoder of transposed convolutions. Stage 2 is a bidirectional associative memory (BAM) classifier. Both the convolution and transposed convolution layers use a convolution kernel of length 5 and are activated through the ReLU function. The impulsive neural population is built with 200 leaky integrate-and-fire (LIF) neurons. The encoder, population and decoder are trained by backpropagation (BP) with a joint loss, while the BAM module is optimized by Hebbian learning.

The main contributions of this article are summarized as follows.

• To deal with the cross-subject variability, a multi-task EEG classification model is proposed, termed as AM-MTEEG, which integrates a deep learning-based convolutional encoder with a Hebbian-type bidirectional associative memory (AM) network. The convolutional encoder captures shared features across different samples, while the AM network alleviates the data variability for classification by directly mapping latent features to motion categories.

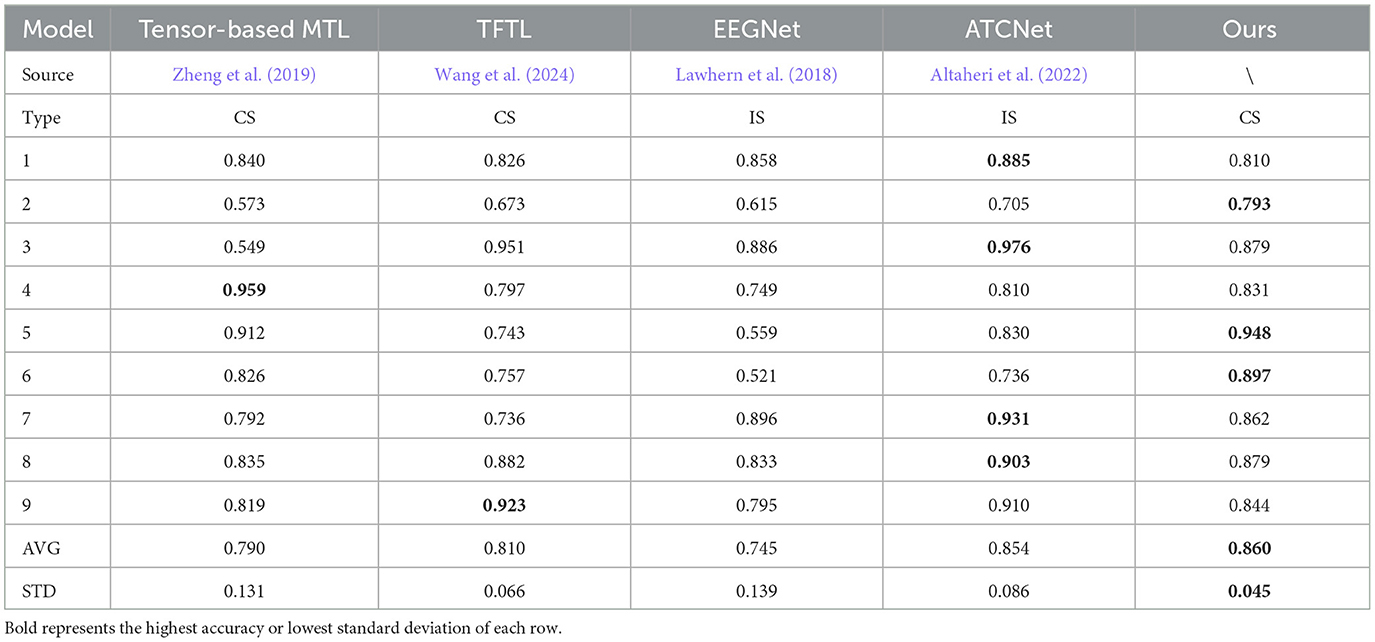

• AM-MTEEG achieves an average accuracy of 86% on the BCI Competition IV IIa dataset, surpassing state-of-the-art (SOTA) methods and exhibiting minimal performance variance across different samples. The decoder can reconstruct EEG signals from neural activities in the AM network, and these reconstructions resemble the real event-related potentials (ERPs) of each motion, thereby demonstrating the model interpretability.

• For any motor imagery EEG, impulsive neurons in AM-MTEEG exhibit specific firing patterns characterized by high synchrony. This firing synchrony suggests that neuronal activity is governed by a low-dimensional latent manifold, a feature consistent with the neural coding mechanisms observed in hippocampal neurons (Levy et al., 2023). This alignment indicates the neuro-inspired characteristic of the model, reflecting the biological plausibility.

2 Related work and research motivation

In EEG-based BCI tasks, one common approach is to utilize common spatial patterns (CSP) for feature extraction (Blankertz et al., 2007), followed by classification algorithms like LDA (linear discriminant analysis) and SVM (support vector machine). Although CSP helps to extract EEG features, traditional machine learning models are not adequate to recognize complex EEG patterns. Current deep learning models have demonstrated greater flexibility in EEG classification for complex BCI tasks (Lawhern et al., 2018; Altaheri et al., 2022). For example, Lawhern et al. (2018) proposed EEGNet, which applies separable two-dimensional convolutions to EEG classification problems. Liu et al. (2023) combine the same spatiotemporal convolution with filter banks and propose FBMSNet, which mixes deep convolution to extract temporal features at multiple scales and then performs spatial filtering to mitigate volume conduction. Altaheri et al. (2022) introduced ATCNet, a convolutional neural network with temporal attention mechanisms for EEG classification. ATCNet achieved an average accuracy of 85.4% on the BCI Competition IV IIa dataset, setting a new state-of-the-art performance on this dataset.

The accuracy of BCI decoding across subjects is constrained by the variability of emotion and experience between subjects (Huang et al., 2023). Waytowich et al. (2016) presented a spectral transfer model using information geometry to sort and combine the prediction results of information geometry classifier sets to achieve unsupervised transfer learning for single-trial detection. Zhi et al. (2024) proposed a generalization network using domain alignment and class regularization blocks of deep correlation alignment to establish domain-independent supervised contrastive learning. Another solution comes from multi-task learning, which allows different tasks to share common features. Compared to single-task learning models, MTL leverages more data from different tasks (Zhang and Yang, 2021), enabling the learning of more generalized representations. Moreover, MTL can be used to alleviate high subject variability and limited sample size in EEG-based BCI tasks. Zheng et al. (2019) developed an effective algorithm where each subject's sample is treated as a separate task, utilizing regularized tensors. In addition to MTL, ensemble learning can also reduce the variability of EEG. For example, Qi et al. (2022) used a dynamic ensemble Bayesian filter to assemble models to cope with variability in signals.

Spiking neural networks (SNNs), inspired by neural systems in the brain, offer computational advantages such as low power consumption and high interpretability, making them widely applicable across various tasks. Diehl and Cook (2015) implemented an SNN using unsupervised STDP learning for handwritten digit classification, achieving 95% accuracy on the MNIST dataset. Ma et al. (2020) used a spiking recurrent neural network to extract features and presented a neuromorphic approach for classifying electromyography (EMG) signals. Xu et al. (2023) proposed a spiking convolutional neural network for electromyography pattern recognition, which can be used in prosthesis control and human-computer interaction. The effectiveness of SNNs in multi-task learning has also been demonstrated. For instance, Cachi et al. (2023) proposed TM-SNN, which uses different spiking thresholds to represent different tasks while sharing the same structure and parameters across tasks. Conventional spiking networks cannot be used for EEG-based BCI tasks, since the training process would be hampered by the limited data and large variability across subjects.

Previous studies suggest that hybrid models that integrate associative memory networks with deep learning could perform better than conventional convolutional or spiking networks across tasks. Hu et al. (2019a) proposed that spiking neural networks using Hebbian learning can provide stable and fault-tolerant associative memory. Miconi et al. (2018) combined Hebbian rule-based associative memory with traditional backpropagation neural networks, achieving efficient learning on small-sample image datasets. Wu et al. (2022) applied a similar structure to spiking neural networks, where the network weights are updated through both global learning via backpropagation and local Hebbian learning. This hybrid method performed well in fault-tolerant learning and few-shot learning. The associative memory networks (Hu et al., 2019a; Kosko, 1988) could yield a short training time by directly mapping the spike representations to motion categories, thus reducing the calibration time of BCI systems on new subjects.

Based on these observations, this article develops a hybrid model of a deep convolutional network and an impulsive associative memory network, for the purpose of extracting cross-subject features. The training of the AM network has linear time complexity, which reduces the training time, while deep convolutional networks have flexible feature extraction capabilities. Moreover, the AM network can enhance the interpretability of BCI performance across different subjects, while the shared features extracted through multi-task learning improve classification stability across subjects. As a by-product, the proposed hybrid model enables data sharing across subjects and reduces the time to adapt to new subjects, which could be useful for dealing with limited data. When used for EEG-based BCI systems, the combination of AM and deep learning helps to cut the calibration time for new subjects and lessen the amount of data required for a single subject within a cross-subject dataset.

3 The AM-MTEEG model

Figure 1 demonstrates the proposed associative memory multi-task EEG (AM-MTEEG) model, consisting of an impulsive encoder and an associative memory classifier. The impulsive encoder utilizes a one-dimensional convolutional neural network to extract signal features, which are then fed into a population of spiking neurons, encoding the input into low-dimensional spiking representations. A convolutional neural network decoder is employed to reconstruct the EEG signals. The associative memory classifier assigns an associative memory matrix (AMM) to each classification task, mapping the encoded spikes to multi-task categories. The training of the AM-MTEEG model entails the following two stages.

• Stage 1: The impulsive encoder and decoder modules combine self-supervised learning with label-guided training to optimize the parameters. Multi-channel EEG signals are used simultaneously as both input and target, training the spiking encoder to reconstruct the one-dimensional EEG signals.

• Stage 2: The spiking encoder employs the pre-trained parameters from Stage 1. Only the associative memory network is trained for different tasks. The input and category label are represented as an input-output pattern pair {xi, yi}, where xi is the low-dimensional spiking representation vector from the spiking encoder, n is the number of neurons, t is the length of the time series from the convolutional encoder, and yi is the one-hot target vector. The associative memory network matches the input patterns to the corresponding output patterns by bidirectional hetero-associative memory.

3.1 Convolutional feature extractor

As shown in Figure 1, the convolutional module consists of an encoder E and a decoder D built with one-dimensional convolutions. The convolution kernel length of the convolutional layer is 5 and the ReLU (rectified linear unit) function is used as activation. Unlike the existing EEG classification models based on 2D convolution (Lawhern et al., 2018; Altaheri et al., 2022), we use only 1D convolution for feature extraction, so as to classify EEG data while maintaining the structure of the original EEG signal as much as possible. Therefore, the convolution kernel parameters to be trained are reduced from c×n² to c×n, where c is the number of signal channels and n is the convolution kernel size, which allows us to use larger convolution kernels. In the motor imagery task, we used the CNN model architecture as shown in Table 1.
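As a quick check of the parameter saving described above, the sketch below evaluates the paragraph's own counts, c×n² for a 2D kernel and c×n for a 1D kernel. The values c = 22 and n = 5 are taken from the channel count of BCI Competition IV IIa and the kernel length stated in this article. The function names are illustrative only, and biases and the number of output filters are ignored.

```python
def kernel_params_2d(channels: int, kernel_size: int) -> int:
    """Weights for one n-by-n kernel per input channel: c * n^2."""
    return channels * kernel_size ** 2


def kernel_params_1d(channels: int, kernel_size: int) -> int:
    """Weights for one length-n kernel per input channel: c * n."""
    return channels * kernel_size


c, n = 22, 5  # 22 EEG channels, kernel length 5
params_2d = kernel_params_2d(c, n)
params_1d = kernel_params_1d(c, n)
print(params_2d, params_1d, params_2d // params_1d)
```

Under these counts the saving factor equals the kernel size n, which is why a longer 1D kernel stays affordable.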

The input signal x ∈ R^(c×t) is downsampled to 1/4 of the original length by two one-dimensional max-pooling layers in the encoder to obtain the hidden signal

The encoded signal is fed directly into the spiking neurons as the input current through the fully connected layer, and the spike sequences emitted by the neurons are recorded. In the decoder stage, the hidden-layer spikes are mapped by the fully connected layer and then upsampled to the original length by two one-dimensional deconvolutions.

In this process, the encoder-decoder module and the impulsive neural population obtain a low-dimensional representation of EEG activity through autoregressive learning.
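The downsampling step can be illustrated with a minimal NumPy sketch. It covers only the two pooling stages, not the convolutions or the trained weights, and it assumes non-overlapping pooling windows of size 2, which is one way to reach 1/4 of the original length in two steps. The input shape follows the BCI Competition III dataset described later (118 channels, 3 s at 100 Hz), and `max_pool1d` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def max_pool1d(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling along the last (time) axis."""
    channels, length = x.shape
    trimmed = x[:, : length - length % size]  # drop a ragged tail, if any
    return trimmed.reshape(channels, -1, size).max(axis=2)


rng = np.random.default_rng(0)
x = rng.standard_normal((118, 300))   # 118 channels, 3 s at 100 Hz
hidden = max_pool1d(max_pool1d(x))    # two pooling stages -> 1/4 length
print(x.shape, hidden.shape)
```

The decoder would have to undo this factor of four, which matches the two transposed convolutions described in the text.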

3.2 Impulsive neural population

Let the encoder output signal be the current I, which is to be input into the leaky integrate-and-fire (LIF) neuronal population. The purpose is to convert the encoder output into a discrete spike train s. By the LIF mechanism (Ward and Rhodes, 2022), the membrane potential u(t) evolves as

where R is a constant resistance. When the membrane potential is greater than a given threshold uth, the neuron generates a spike, and then the membrane potential would be reset to 0. In computer simulation, we use the following differential form

where τ is the decay constant, wi is the synaptic weight of synapse i, st ∈ {0, 1} is the spike fired at time t, and I_i^{t−1} represents the input current of synapse i at time t−1. When the membrane potential is greater than uth, the neuron generates a spike, and the membrane potential is set to 0 at the next time t+1. As shown in Equation 4, this process uses the unit step function

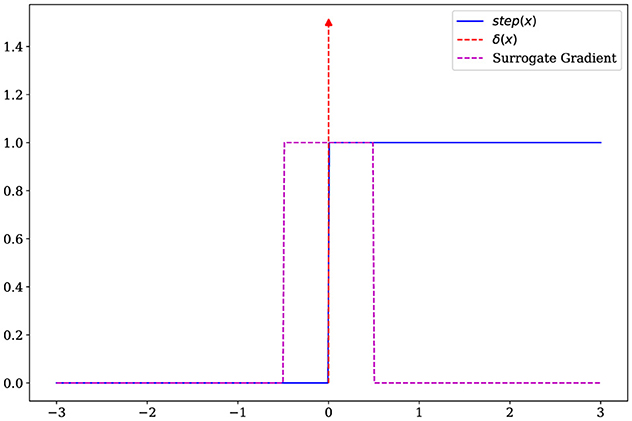

While training the encoder using backpropagation, calculating the gradient of the step function poses a challenge. Since the step function is discontinuous, its gradient results in an impulse response

The gradient of membrane potential is calculated as

Note that the impulse term makes it difficult to train the encoder. As shown in Figure 2, we use the surrogate gradient method (Neftci et al., 2019) to replace the impulse function with a rectangular window function

Then, using the surrogate gradient, the membrane potential gradient can be approximated by

At each time t, each signal processed by the convolutional neural network is treated as an input current (Figure 1), which is passed through a fully connected layer into multiple LIF neurons in the hidden layer. The hidden layer generates spikes, and these spike sequences encode essential information from the original EEG signal. Subsequently, we employ a self-supervision approach, using a convolutional neural network decoder to reconstruct the EEG signal. To ensure the identifiability of the impulses in signal reconstruction, the hidden layer neurons are linked to an auxiliary classifier for preliminary classification. Here, we use a trainable fully connected layer, with a joint loss function combining a reconstruction term Lreg and a classification term Lcls. The reconstruction loss Lreg is the mean square error (MSE) between x and x̂, where x is the vector of original EEG signals of all subjects and x̂ is the reconstructed one. The classification loss Lcls is the cross-entropy between the auxiliary classifier output and label, where label is the motion category of the MI task. The joint loss function has the following format:

where λ > 0 is a mixing factor. In our simulations, λ = 0.1, and Lcls is calculated from the hidden-layer spikes through the auxiliary classifier. Lreg makes the model more expressive, while Lcls enhances the identifiability of the latent space. When trained with the joint loss (Equation 8), AM-MTEEG can perform the classification and reconstruction tasks simultaneously.
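The mechanisms of this section can be sketched in a few lines of NumPy. The sketch is illustrative rather than the authors' implementation, and several choices are assumptions: the discrete update u ← τu + I with a reset to 0 after a spike, the values τ = 0.5 and uth = 1, a rectangular window of width 1 centred on the threshold, and a joint loss of the form Lreg + λLcls. Only λ = 0.1, the LIF reset rule, and the use of MSE and cross-entropy come from the text.

```python
import numpy as np


def lif_forward(current, tau=0.5, u_th=1.0):
    """Simulate LIF neurons on an input current of shape (T, N).

    Assumed update: u <- tau * u + current[t]; spike when u > u_th;
    a neuron that fired has its potential reset to 0 for the next step.
    """
    n_steps, n_neurons = current.shape
    u = np.zeros(n_neurons)
    spikes = np.zeros((n_steps, n_neurons))
    potentials = np.zeros((n_steps, n_neurons))
    for t in range(n_steps):
        u = tau * u + current[t]
        fired = u > u_th
        potentials[t] = u
        spikes[t] = fired
        u = np.where(fired, 0.0, u)
    return spikes, potentials


def rect_surrogate_grad(u, u_th=1.0, width=1.0):
    """Rectangular window standing in for the impulse d(spike)/d(u)."""
    return (np.abs(u - u_th) < width / 2).astype(float) / width


def joint_loss(x, x_rec, logits, label, lam=0.1):
    """Assumed form L = L_reg + lam * L_cls (MSE plus cross-entropy)."""
    l_reg = np.mean((x - x_rec) ** 2)
    shifted = logits - logits.max()          # stabilise the softmax
    log_probs = shifted - np.log(np.exp(shifted).sum())
    l_cls = -log_probs[label]
    return l_reg + lam * l_cls


# A constant current of 0.6 charges one neuron until it crosses threshold.
spikes, potentials = lif_forward(np.full((6, 1), 0.6))
print(spikes[:, 0])
print(rect_surrogate_grad(potentials[:, 0]))
```

In an autograd framework the rectangular window would replace the derivative of the step function in the backward pass only, while the forward pass keeps the hard threshold.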

3.3 Associative memory classifier

In order to promote efficient training and accurate classification, we build a bidirectional associative memory network as a classifier to map the impulse activities into labels of the EEG data. Let the input-output pattern (or task) pair be {xk, yk}, where xk is the input column vector, yk is the output one-hot vector, and k is the task index. In the memory retrieval stage, the iterative process of the pattern pair {xk, yk} is performed as in Kosko (1988)

where Wk is the associative memory matrix (AMM) and t is the time.

In the following, the index k is omitted for simplicity. Equation 9 can be written as the sum of all elements:

When the associative memory network is stable, one has yt+1 = yt, xt+1 = xt. Then, the above process is actually optimizing the energy function of the system (Kosko, 1988)

The gradient of Et with respect to wij is proportional to −yixj, and the energy function achieves its minimum by taking wij = sgn(yixj).

During the training stage, the associative memory matrix Wk of the task k is

This equation suggests that the bidirectional associative memory fits into the correlation-based Hebbian learning. When the pre and post-synaptic neurons emit spikes simultaneously, the synaptic connection would be strengthened. This process is similar to the long-term synaptic plasticity mechanism of hippocampal neurons (Kelso et al., 1986).
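The correlation-based Hebbian rule can be illustrated with a minimal sketch in which the associative memory matrix is the sum of outer products y xᵀ over the stored pairs. This is the classical Kosko-style construction and is assumed here, since the article's exact expression is not reproduced. The bipolar encoding of spikes, the toy patterns, and the helper names are likewise assumptions for illustration.

```python
import numpy as np


def to_bipolar(v):
    """Map {0, 1} entries to {-1, +1}."""
    return 2 * np.asarray(v) - 1


def hebbian_amm(patterns, labels, n_classes):
    """Sum of outer products y x^T over the stored (x, y) pairs."""
    n_features = patterns.shape[1]
    w = np.zeros((n_classes, n_features), dtype=int)
    for x, label in zip(patterns, labels):
        y = to_bipolar(np.eye(n_classes, dtype=int)[label])
        w += np.outer(y, to_bipolar(x))   # strengthen co-active pairs
    return w


# Two toy binary spike patterns (hypothetical), one per class.
patterns = np.array([[1, 1, 0, 0], [0, 0, 1, 1]])
labels = np.array([0, 1])
w = hebbian_amm(patterns, labels, n_classes=2)
print(w)
```

Training is a single pass over the stored pairs with no gradient computation, which is consistent with the linear training time claimed for the AM network.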

Next, we prove the convergence of the bidirectional associative memory network. Let Δxj and Δyi be the changes of xj and yi at time t. Then, the time difference of the energy function Et is

From Equation 11, it follows that

Substituting Equation 14 into Equation 13, one can get

where

Therefore, ΔE ≤ 0 holds, and the dynamic changes of the system will cause E to continue to decrease. Considering the use of the sgn function, the system will gradually converge to a stable value.
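The monotone decrease of the energy can also be checked numerically. The sketch below assumes the Kosko-style energy E = −yᵀWx and a sign function that keeps the previous state when its argument is exactly zero. The weights and initial states are arbitrary integers chosen for illustration, so the arithmetic is exact.

```python
import numpy as np


def sgn_keep(z, previous):
    """Sign of z, keeping the previous state wherever z is exactly 0."""
    return np.where(z > 0, 1, np.where(z < 0, -1, previous))


def energy(w, x, y):
    """BAM energy E = -y^T W x (assumed Kosko-style form)."""
    return -int(y @ w @ x)


rng = np.random.default_rng(0)
w = rng.integers(-3, 4, size=(4, 12))      # arbitrary integer weights
x = rng.choice([-1, 1], size=12)
y = rng.choice([-1, 1], size=4)

energies = [energy(w, x, y)]
for _ in range(5):
    y = sgn_keep(w @ x, y)                  # forward pass: x -> y
    energies.append(energy(w, x, y))
    x = sgn_keep(w.T @ y, x)                # backward pass: y -> x
    energies.append(energy(w, x, y))
print(energies)
```

Each half-step aligns one layer with its net input, so the recorded energies never increase. Because the energy is bounded below for a finite matrix, the sequence must settle.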

One-hot encoded yj is used as the output pattern. After applying the sgn function, the maximum value is set to 1, while the remaining values are set to -1. This system stabilizes after a single iteration. Therefore, during the testing phase, for a given task sample xi, the classification result is obtained using the associative memory matrix

Considering all the pattern pairs {xk, yk} to be stored, for any input xi in the prediction phase, it follows that

The above process is equivalent to computing a weighted average of the stored outputs yk, with weights given by the cosine similarity between the current input xi and each stored xk.
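This equivalence can be verified on toy data. The sketch assumes a Hebbian matrix built as the sum of outer products yk xkᵀ and bipolar vectors, for which the inner product equals the cosine similarity multiplied by the vector length. All patterns are random placeholders, not EEG data.

```python
import numpy as np

rng = np.random.default_rng(1)
n_pairs, n_features, n_classes = 6, 20, 4

# Hypothetical stored pairs: bipolar inputs x_k and bipolar one-hot labels y_k.
xs = rng.choice([-1, 1], size=(n_pairs, n_features))
classes = rng.integers(0, n_classes, size=n_pairs)
ys = 2 * np.eye(n_classes, dtype=int)[classes] - 1

w = ys.T @ xs                      # Hebbian AMM: sum_k y_k x_k^T

x_new = rng.choice([-1, 1], size=n_features)
direct = w @ x_new                 # recall through the matrix
similarities = xs @ x_new          # inner products <x_k, x_new>
weighted_vote = ys.T @ similarities

# For bipolar vectors every norm is sqrt(n_features), so the inner product
# is the cosine similarity scaled by n_features.
cosines = similarities / n_features
print(np.array_equal(direct, weighted_vote))
```

The matrix never needs to compare the input against each stored pattern explicitly; the similarity weighting is implicit in the single product W x.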

4 Experimental results

The proposed AM-MTEEG model is validated on two public datasets, fitting the classic motor imagery BCI paradigm. In the following experiments, we utilize the BCI Competition datasets and achieve an average accuracy over 94% and 86% on two of its subsets, respectively.

4.1 Dataset description

BCI Competition III IVa is a binary classification dataset, including right-hand and foot movement imagery tasks performed by 5 subjects. Each task includes 118 channels of EEG signals obtained at a sampling rate of 100 Hz within 3 s (Dornhege et al., 2004).

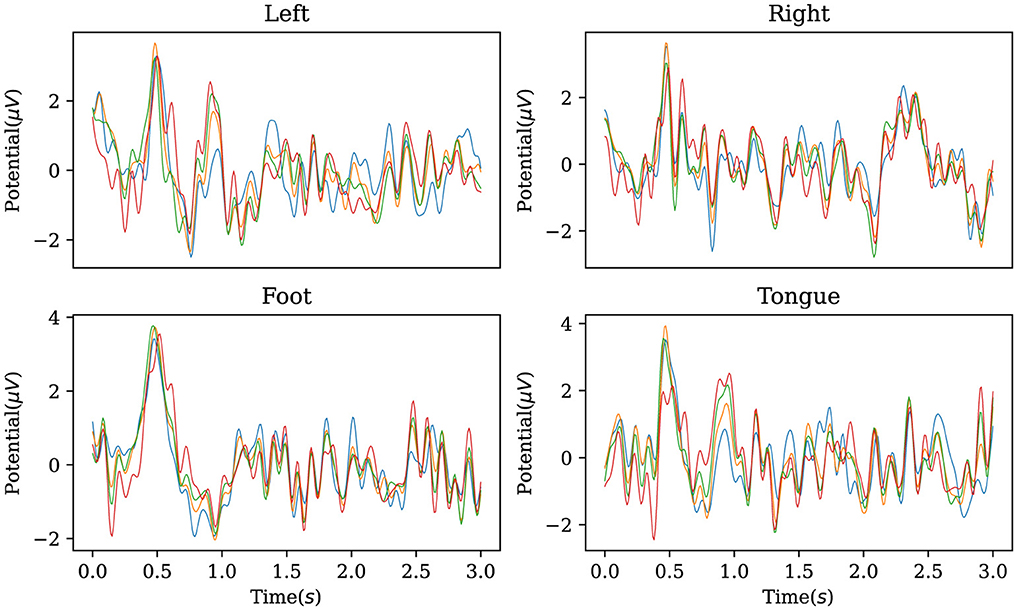

BCI Competition IV IIa is a four-category dataset, including motor imagery tasks of the left hand, right hand, feet, and tongue performed by 9 subjects. Each task includes 22 channels of EEG signals and 3 channels of EOG signals obtained at a sampling rate of 250 Hz within 3 s (Brunner et al., 2008).
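For orientation, the per-trial input shapes implied by these descriptions can be computed directly from the stated channel counts, sampling rates, and 3 s windows. The sketch assumes that the three EOG channels of BCI Competition IV IIa are excluded from the model input, which the text does not state; `samples_per_trial` is an illustrative helper.

```python
def samples_per_trial(sampling_rate_hz: int, duration_s: float) -> int:
    """Number of time samples in one trial window."""
    return int(round(sampling_rate_hz * duration_s))


# (channels, sampling rate in Hz, window in s), as stated in the text
datasets = {
    "BCI Competition III IVa": (118, 100, 3),
    "BCI Competition IV IIa": (22, 250, 3),   # EEG channels only, EOG excluded
}
shapes = {
    name: (channels, samples_per_trial(rate, seconds))
    for name, (channels, rate, seconds) in datasets.items()
}
print(shapes)
```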

4.2 Performance evaluation

4.2.1 Comparative studies

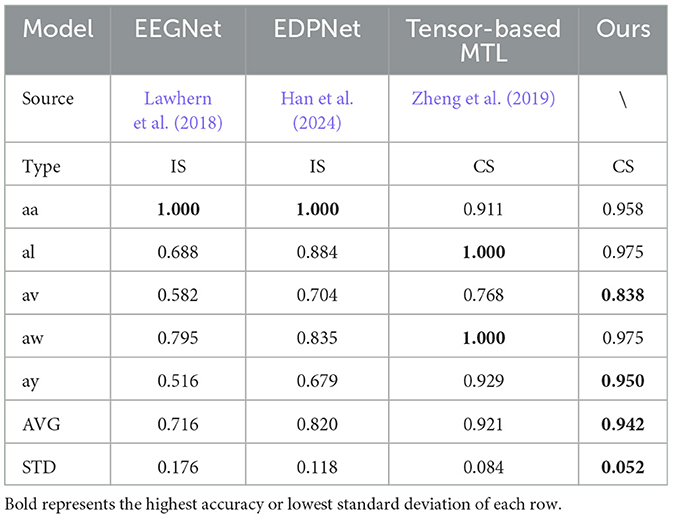

As shown in Tables 2, 3, we compare the AM-MTEEG model with other cross-subject (CS) or inner-subject (IS) models. In contrast to SOTA methods, AM-MTEEG achieves comparable accuracy and surpasses the current SOTA in terms of average accuracy on the BCI Competition IV IIa dataset. Compared to other multi-task models, the proposed model exhibits the smallest standard deviation in accuracy across different subjects, indicating its ability to provide stable classification performance across subjects. Additionally, when extending to new tasks, AM-MTEEG only requires retraining the associative memory matrix, and the Hebbian learning used in this process is highly efficient, demonstrating its good scalability.

Table 2. Accuracy comparisons on BCI competition IV IIa, including cross-subject (CS) model and inner-subject (IS) model.

Table 3. Accuracy comparisons on BCI competition III IVa, including cross-subject (CS) model and inner-subject (IS) model.

4.2.2 Ablation studies

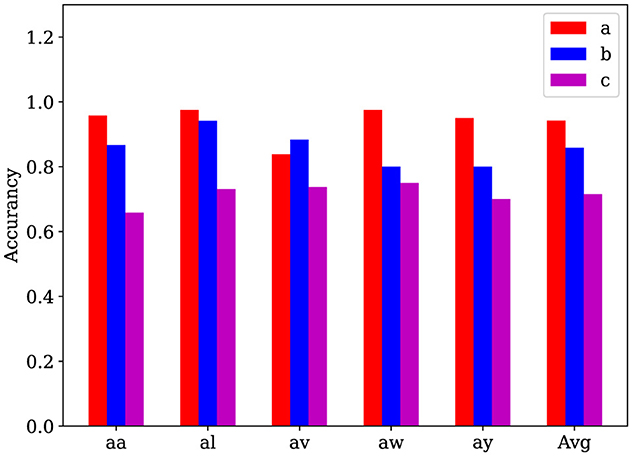

We evaluate the AM-MTEEG model on the BCI Competition III IVa dataset and compare the full model with two simplified models: (a) a model where the spiking neurons are replaced with the tanh function, and (b) a model where the associative memory matrix is replaced by a fully connected layer trained via gradient descent. The results, as shown in Figure 3, demonstrate that the full model outperforms the simplified models in terms of accuracy on most samples. These findings suggest that both the spiking computation and the bidirectional associative memory classifier used in AM-MTEEG contribute to the improved performance.

Figure 3. Ablation experiments on the BCI Competition III IVa binary classification dataset. (a) The full AM-MTEEG model; (b) Model with spiking neurons removed; (c) Model with a fully connected network using gradient descent instead of the associative memory classifier. These accuracy histograms show that the classification performance can be improved by incorporating the spiking neurons and the bidirectional associative memory networks in the AM-MTEEG model.

4.3 Model interpretability

Due to the reversibility of bidirectional associative memory, the classification label is fed into the associative memory matrix to obtain the characteristic impulse sequence corresponding to any motion category. Define

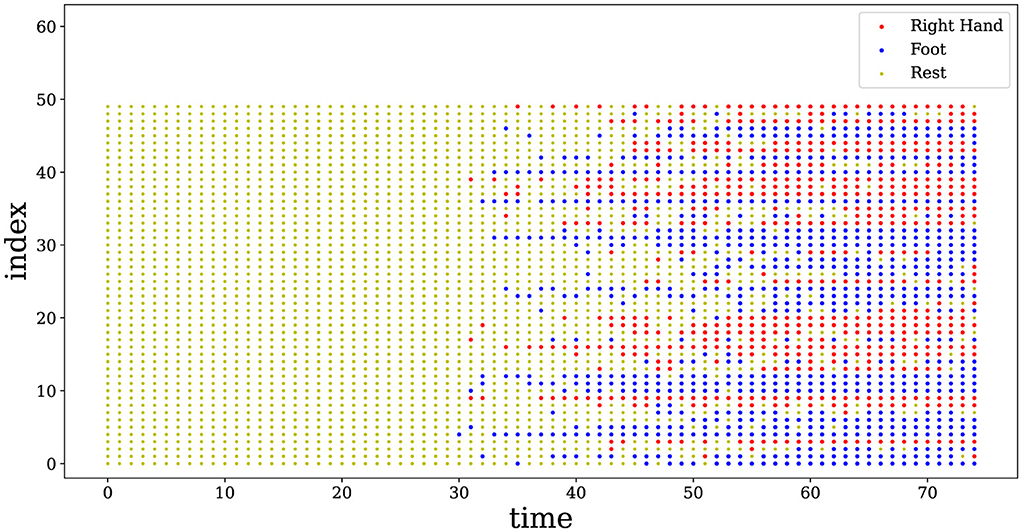

Figure 4 shows the impulse sequences obtained through the associative memory network on the BCI Competition III IVa dataset. Here, we visualize the spiking neuronal activities from subject aa performing motor imagery tasks with the right hand and foot, which reflect the neural coding behind the movements (Shen et al., 2023). It can be observed that the spikes exhibit a high degree of synchrony over time, and this synchronous behavior of neurons corresponds to a fixed category. This finding suggests that the impulsive neuronal population in the hidden layer fires in a pattern similar to that measured in the hippocampus of human brains (Levy et al., 2023).
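The reverse use of the memory can be sketched as follows: a label is fed through the transposed matrix and the sign of the result is read as a characteristic spike pattern. The construction assumes a Hebbian matrix formed from outer products, bipolar patterns with −1 meaning no spike, and ties at zero resolved to −1. The two stored patterns are hypothetical.

```python
import numpy as np


def sgn(z):
    """Bipolar sign; ties at 0 are mapped to -1 (no spike) by assumption."""
    return np.where(z > 0, 1, -1)


# Hypothetical stored pairs: two bipolar spike patterns and their labels.
xs = np.array([[1, 1, 1, -1, -1, -1],
               [-1, -1, 1, 1, 1, -1]])
ys = np.array([[1, -1],
               [-1, 1]])
w = ys.T @ xs                        # Hebbian AMM, shape (classes, features)

# Reverse pass: feed a label in and read a characteristic spike pattern out.
x_char = sgn(w.T @ ys[0])
print(x_char)
```

In this toy case the third neuron fires for both classes, so its contributions cancel and it drops out of the recalled pattern. The reverse pass therefore keeps only the class-discriminative neurons.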

Figure 4. Spike raster plot of neuronal activities corresponding to the two categories of motions. 50 neurons are randomly selected from the AM network. The spikes of neurons exhibit a high degree of synchrony over time, while the synchronous behavior represents a fixed category. Red represents the spike emission with a right hand movement, and blue shows the spike emission related to a foot movement. Yellow means no spike, i.e., neurons stay in the resting state.

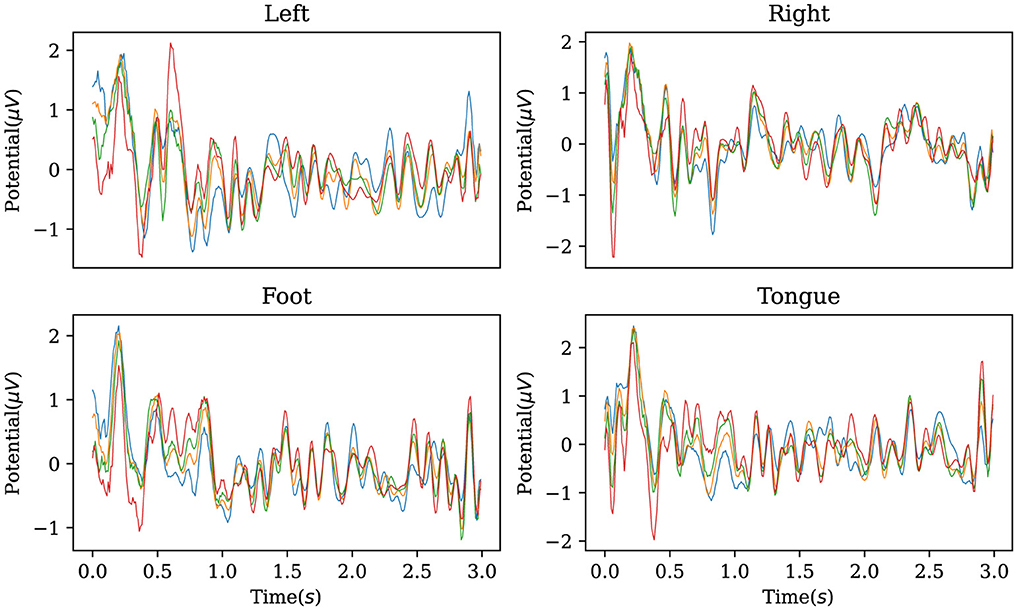

In addition, the established decoder is used to reconstruct the original EEG data corresponding to the labels in the BCI Competition IV IIa dataset, obtaining characteristic waveforms for the four motor imagery categories. As shown in Figure 5, the reconstructed EEG signals reveal distinct waveforms for each of the four categories. When comparing these waveforms with the ERP from the dataset, as illustrated in Figure 6, one can observe a similarity between the reconstructed waveforms and the ERP. The greater the similarity between these two waveforms, the higher the confidence in the model's precise classification. The R-square index is calculated by quantifying the normalized sum of squared differences between the characteristic waveforms and the ERP. Given the four MI tasks on the BCI Competition IV IIa dataset, the R-square satisfies

where ŷi is the reconstructed waveform, yi is the ERP, and ȳ is the average. The value of R² approaches the optimum of 1, indicating that the reconstructed waveforms of the model reflect the ERPs of the four motor imagery tasks to a high degree.
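The similarity measure can be sketched with the standard coefficient of determination, R² = 1 − Σ(yi − ŷi)² / Σ(yi − ȳ)², which matches the symbols defined above. Whether the article applies exactly this normalization is an assumption. The sine wave below is a synthetic stand-in for an ERP, not data from the study.

```python
import numpy as np


def r_squared(erp, reconstructed):
    """R^2 = 1 - sum((y - y_hat)^2) / sum((y - mean(y))^2)."""
    erp = np.asarray(erp, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    ss_res = np.sum((erp - reconstructed) ** 2)
    ss_tot = np.sum((erp - erp.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


t = np.linspace(0.0, 1.0, 200)
erp = np.sin(2 * np.pi * 3 * t)            # synthetic stand-in for an ERP
print(r_squared(erp, erp))                  # identical waveforms
print(r_squared(erp, np.full_like(erp, erp.mean())))  # flat mean waveform
```

A perfect reconstruction gives a score of 1, and a flat waveform at the ERP mean gives 0, so values near 1 indicate that the reconstruction tracks the ERP shape closely.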

Figure 5. Characteristic waveforms of four types of motor imagery tasks restored using the inverse AMM and transposed convolutional decoder on BCI Competition IV IIa. To be interpretable, the waveforms generated by the model should be similar to the real event-related potentials.

4.4 Discussion

The AM-MTEEG model is effective in EEG classification under cross-subject variability, as demonstrated through the above experiments on BCI Competition datasets. By incorporating the bidirectional associative memory network, the AM-MTEEG model enables rapid adaptation to new subjects once the encoder is pre-trained. For practical BCI systems, the proposed model could adapt to new subjects using only a small number of samples, facilitating the application of BCI devices for a wide range of users.

With the development of neuromorphic computing, specialized chips have been developed for deploying spiking neural networks (Davies et al., 2018; Pei et al., 2019). Compared to CPUs and GPUs based on the von Neumann architecture, neuromorphic circuits can be implemented using in-memory computing with digital or analog circuits. Such neuromorphic circuits offer advantages such as high parallel efficiency, low energy consumption, and compact size. As indicated in Figure 1, both stages of AM-MTEEG entail spiking neural networks. This impulsive neuronal attribute makes AM-MTEEG a potential candidate for edge-computing scenarios in the healthcare field. Hence, embedding the proposed model in neuromorphic circuits is a feasible route to domestic and miniaturized BCI devices.

5 Conclusion

This article has developed AM-MTEEG, a multi-task EEG classification model based on deep learning and impulsive associative memory. The model integrates impulsive neural representations from deep learning with bidirectional associative memory networks to alleviate challenges in BCI, such as high variability and limited data in EEG, and the lack of interpretability in end-to-end deep learning. By adopting multi-task learning, AM-MTEEG treats each subject's classification as an independent task and leverages cross-subject training to extract features shared across subjects. Experimental results show that the AM-MTEEG model surpasses state-of-the-art methods on the BCI Competition IV IIa dataset, while minimizing classification performance variance across different samples. Altogether, the AM-MTEEG model is effective in extracting common EEG features, capturing data variability, and handling cross-subject classification tasks. Future work will focus on integrating associative memory networks with deep convolutional networks to handle single-subject multi-task or multi-subject multi-task BCI scenarios, as well as exploring the joint learning of Hebbian rules and gradient descent for few-shot EEG decoding.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://www.bbci.de/competition/.

Author contributions

JL: Writing – original draft. BH: Writing – review & editing. Z-HG: Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported in part by the National Natural Science Foundation of China under Fund 62322311 and Fund 62233007, and by the Guangdong Artificial Intelligence and Digital Economy Laboratory (Guangzhou) under Fund PZL2023ZZ0001.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adadi, A., and Berrada, M. (2018). Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE Access 6, 52138–52160. doi: 10.1109/ACCESS.2018.2870052

Altaheri, H., Muhammad, G., and Alsulaiman, M. (2022). Physics-informed attention temporal convolutional network for EEG-based motor imagery classification. IEEE Trans. Indust. Inform. 19, 2249–2258. doi: 10.1109/TII.2022.3197419

Blankertz, B., Tomioka, R., Lemm, S., Kawanabe, M., and Muller, K.-R. (2007). Optimizing spatial filters for robust EEG single-trial analysis. IEEE Signal Process. Mag. 25, 41–56. doi: 10.1109/MSP.2008.4408441

Brunner, C., Leeb, R., Müller-Putz, G., Schlögl, A., and Pfurtscheller, G. (2008). “BCI Competition 2008-graz data set A,” in Institute for knowledge discovery (laboratory of brain-computer interfaces) (Graz: Graz University of Technology), 1–6.

Cachi, P. G., Soto, S. V., and Cios, K. J. (2023). “TM-SNN: threshold modulated spiking neural network for multi-task learning,” in International Work-Conference on Artificial Neural Networks (Cham: Springer), 653–663.

Davies, M., Srinivasa, N., Lin, T.-H., Chinya, G., Cao, Y., Choday, S. H., et al. (2018). Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99. doi: 10.1109/MM.2018.112130359

Degenhart, A. D., Bishop, W. E., Oby, E. R., Tyler-Kabara, E. C., Chase, S. M., Batista, A. P., et al. (2020). Stabilization of a brain-computer interface via the alignment of low-dimensional spaces of neural activity. Nat. Biomed. Eng. 4, 672–685. doi: 10.1038/s41551-020-0542-9

Diehl, P. U., and Cook, M. (2015). Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 9:99. doi: 10.3389/fncom.2015.00099

Dornhege, G., Blankertz, B., Curio, G., and Muller, K.-R. (2004). Boosting bit rates in noninvasive EEG single-trial classifications by feature combination and multiclass paradigms. IEEE Trans. Biomed. Eng. 51, 993–1002. doi: 10.1109/TBME.2004.827088

Han, C., Liu, C., Cai, C., Wang, J., and Qian, D. (2024). EDPNet: an efficient dual prototype network for motor imagery EEG decoding. arXiv [Preprint]. arXiv:2407.03177. doi: 10.48550/arXiv.2407.03177

Hu, B., Guan, Z.-H., Chen, G., and Lewis, F. L. (2019a). Multistability of delayed hybrid impulsive neural networks with application to associative memories. IEEE Trans. Neural Netw. Learn. Syst. 30, 1537–1551. doi: 10.1109/TNNLS.2018.2870553

Hu, X., Chen, J., Wang, F., and Zhang, D. (2019b). Ten challenges for EEG-based affective computing. Brain Sci. Adv. 5, 1–20. doi: 10.1177/2096595819896200

Huang, G., Zhao, Z., Zhang, S., Hu, Z., Fan, J., Fu, M., et al. (2023). Discrepancy between inter-and intra-subject variability in EEG-based motor imagery brain-computer interface: evidence from multiple perspectives. Front. Neurosci. 17:1122661. doi: 10.3389/fnins.2023.1122661

Kelso, S. R., Ganong, A. H., and Brown, T. H. (1986). Hebbian synapses in hippocampus. Proc. Nat. Acad. Sci. 83, 5326–5330. doi: 10.1073/pnas.83.14.5326

Kosko, B. (1988). Bidirectional associative memories. IEEE Trans. Syst. Man Cybern. 18, 49–60. doi: 10.1109/21.87054

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

Lebedev, M. A., and Nicolelis, M. A. (2017). Brain-machine interfaces: from basic science to neuroprostheses and neurorehabilitation. Physiol. Rev. 97, 767–837. doi: 10.1152/physrev.00027.2016

Levy, E. R. J., Carrillo-Segura, S., Park, E. H., Redman, W. T., Hurtado, J. R., Chung, S., et al. (2023). A manifold neural population code for space in hippocampal coactivity dynamics independent of place fields. Cell Rep. 42:113142. doi: 10.1016/j.celrep.2023.113142

Liu, K., Yang, M., Yu, Z., Wang, G., and Wu, W. (2023). FBMSNet: a filter-bank multi-scale convolutional neural network for EEG-based motor imagery decoding. IEEE Trans. Biomed. Eng. 70, 436–445. doi: 10.1109/TBME.2022.3193277

Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., et al. (2018). A review of classification algorithms for EEG-based brain-computer interfaces: a 10 year update. J. Neural Eng. 15:031005. doi: 10.1088/1741-2552/aab2f2

Lotte, F., Bougrain, L., and Clerc, M. (2015). “Electroencephalography (EEG)-based brain-computer interfaces,” in Wiley Encyclopedia of Electrical and Electronics Engineering (Bristol: IOP Publishing), 44.

Ma, Y., Chen, B., Ren, P., Zheng, N., Indiveri, G., and Donati, E. (2020). EMG-based gestures classification using a mixed-signal neuromorphic processing system. IEEE J. Emerg. Select. Topics Circ. Syst. 10, 578–587. doi: 10.1109/JETCAS.2020.3037951

Miconi, T., Stanley, K., and Clune, J. (2018). “Differentiable plasticity: training plastic neural networks with backpropagation,” in International Conference on Machine Learning (New York: PMLR), 3559–3568.

Neftci, E. O., Mostafa, H., and Zenke, F. (2019). Surrogate gradient learning in spiking neural networks: Bringing the power of gradient-based optimization to spiking neural networks. IEEE Signal Process. Mag. 36, 51–63. doi: 10.1109/MSP.2019.2931595

Pei, J., Deng, L., Song, S., Zhao, M., Zhang, Y., Wu, S., et al. (2019). Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111. doi: 10.1038/s41586-019-1424-8

Qi, Y., Zhu, X., Xu, K., Ren, F., Jiang, H., Zhu, J., et al. (2022). Dynamic ensemble bayesian filter for robust control of a human brain-machine interface. IEEE Trans. Biomed. Eng. 69, 3825–3835. doi: 10.1109/TBME.2022.3182588

Seitz, A. R. (2010). Sensory learning: rapid extraction of meaning from noise. Curr. Biol. 20, R643–R644. doi: 10.1016/j.cub.2010.06.017

Shen, L., Lu, X., Yuan, X., Hu, R., Wang, Y., and Jiang, Y. (2023). Cortical encoding of rhythmic kinematic structures in biological motion. Neuroimage 268:119893. doi: 10.1016/j.neuroimage.2023.119893

Shen, X., Liu, X., Hu, X., Zhang, D., and Song, S. (2022). Contrastive learning of subject-invariant EEG representations for cross-subject emotion recognition. IEEE Trans. Affect. Comp. 14, 2496–2511. doi: 10.1109/TAFFC.2022.3164516

Wang, Y., Wang, J., Wang, W., Su, J., Bunterngchit, C., and Hou, Z.-G. (2024). TFTL: a task-free transfer learning strategy for EEG-based cross-subject & cross-dataset motor imagery BCI. IEEE Trans. Biomed. Eng. 72, 810–821. doi: 10.1109/TBME.2024.3474049

Ward, M., and Rhodes, O. (2022). Beyond LIF neurons on neuromorphic hardware. Front. Neurosci. 16:881598. doi: 10.3389/fnins.2022.881598

Waytowich, N. R., Lawhern, V. J., Bohannon, A. W., Ball, K. R., and Lance, B. J. (2016). Spectral transfer learning using information geometry for a user-independent brain-computer interface. Front. Neurosci. 10:430. doi: 10.3389/fnins.2016.00430

Wu, Y., Zhao, R., Zhu, J., Chen, F., Xu, M., Li, G., et al. (2022). Brain-inspired global-local learning incorporated with neuromorphic computing. Nat. Commun. 13:65. doi: 10.1038/s41467-021-27653-2

Xu, M., Chen, X., Sun, A., Zhang, X., and Chen, X. (2023). A novel event-driven spiking convolutional neural network for electromyography pattern recognition. IEEE Trans. Biomed. Eng. 70, 2604–2615. doi: 10.1109/TBME.2023.3258606

Zhang, Y., and Yang, Q. (2021). A survey on multi-task learning. IEEE Trans. Knowl. Data Eng. 34, 5586–5609. doi: 10.1109/TKDE.2021.3070203

Zhang, Y., Yang, R., Yue, Y., Lim, E. G., and Wang, Z. (2023). An overview of algorithms for contactless cardiac feature extraction from radar signals: advances and challenges. IEEE Trans. Instrum. Meas. 72, 1–20. doi: 10.1109/TIM.2023.3300471

Zheng, Q., Wang, Y., and Heng, P. A. (2019). Multitask feature learning meets robust tensor decomposition for EEG classification. IEEE Trans. Cybern. 51, 2242–2252. doi: 10.1109/TCYB.2019.2946914

Keywords: electroencephalogram (EEG), brain-computer interface, bidirectional associative memory, impulsive neural network, multi-task learning

Citation: Li J, Hu B and Guan Z-H (2025) AM-MTEEG: multi-task EEG classification based on impulsive associative memory. Front. Neurosci. 19:1557287. doi: 10.3389/fnins.2025.1557287

Received: 10 January 2025; Accepted: 12 February 2025; Published: 06 March 2025.

Edited by:

Mei Liu, Multi-scale Medical Robotics Center Limited, China

Copyright © 2025 Li, Hu and Guan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bin Hu, huu@scut.edu.cn
