ORIGINAL RESEARCH article

Front. Neurosci., 15 January 2024

Sec. Neuroprosthetics

Volume 17 - 2023 | https://doi.org/10.3389/fnins.2023.1345770

This article is part of the Research Topic: Advances, Challenges, and Applications in Brain-Computer Interface

Introduction: Affective computing is central to making human-computer interfaces (HCIs) more intelligent, and electroencephalogram (EEG) based emotion recognition is one of its primary research directions. In the field of brain-computer interfaces, methods based on Riemannian manifolds are highly robust and effective. However, the requirement that features be symmetric positive definite (SPD) limits their application.

Methods: In the present work, we introduced the Laplace matrix to transform the functional connection features, i.e., phase locking value (PLV), Pearson correlation coefficient (PCC), spectral coherence (COH), and mutual information (MI), into positive semi-definite matrices, and a max operator to ensure that the transformed features are positive definite. An SPD network is then employed to extract deep spatial information, and a fully connected layer is employed to validate the effectiveness of the extracted features. In particular, a decision-layer fusion strategy is used to achieve more accurate and stable recognition results, and the differences in classification performance among feature combinations are studied. The optimal threshold applied to each functional connection feature is also studied.

Results: The public emotional dataset SEED is adopted to test the proposed method with a subject-dependent cross-validation strategy. The average accuracies for the four features indicate that PCC outperforms the other three features. The proposed model achieves its best accuracy of 91.05% for the fusion of PLV, PCC, and COH, followed by the fusion of all four features with an accuracy of 90.16%.

Discussion: The experimental results demonstrate that the optimal thresholds for the four functional connection features remain relatively stable within a fixed interval. In conclusion, the experimental results demonstrate the effectiveness of the proposed method.

Affective computing is a science involving multiple disciplines, such as psychology, biology, and philosophy, and research on affective models and on emotion recognition are two of its important branches. Affective models can be broadly categorized as discrete models and dimensional models. Discrete emotion theorists hold that people's emotions consist of several basic emotions, as in Ekman's (1992) six-basic-emotions model and Izard's (2007) 10-basic-emotions model. In contrast, dimensional emotion theorists hold that human emotions are continuous and have some special characteristics, as in the valence-arousal model (Russell, 1980) and Plutchik's (2001) Wheel of Emotions.

With the development of information technology and artificial intelligence technology, emotion recognition plays an increasingly important role in the field of human–computer interaction, because machines can become more intelligent through emotional interaction with humans (Picard, 2003). For this reason, emotion recognition has received widespread attention from researchers in various fields, such as emotion recognition based on facial expressions, emotion recognition based on speech, and emotion recognition based on EEG signals (Koolagudi and Rao, 2012; Ko, 2018; Abramson et al., 2020; Houssein et al., 2022; Yi et al., 2024). However, among the many directions of emotion recognition, EEG signals can establish a closer and more realistic mapping relationship with emotions due to their “unconcealability,” that is to say, people can easily disguise their true emotions by adjusting their expressions and voices, but it is difficult to change the corresponding EEG signals at the same time.

Feature extraction is one of the core modules in the EEG emotion recognition process, because highly discriminative features help improve the performance of emotion recognition models. In recent years, various features have been developed for EEG-based emotion recognition, and they can be roughly divided into two classes, i.e., single-channel features and multi-channel features. The calculation of a single-channel feature does not depend on other EEG channels; therefore, the features of each channel can be considered independent of each other. Duan et al. (2013) first developed differential entropy (DE) to decode EEG signals and classify different emotional states, and Zheng and Lu (2015) then verified experimentally that DE features have higher discrimination and robustness than other features on two public emotional datasets, i.e., SEED and DEAP (Koelstra et al., 2011). Moreover, sample entropy (SE) and approximate entropy (ApEn) are two common entropy-based features for measuring the uncertainty of emotional EEG signals (Zeng et al., 2019; Wang et al., 2022). In addition, wavelet energy and power spectral density (PSD) are two common features for measuring the frequency-domain information of EEG signals (Zheng and Lu, 2015; Mohammadi et al., 2017; Wang et al., 2022). Compared with single-channel features, multi-channel features, or functional connection features, are more concerned with measuring the interactive information between channels. Differential asymmetry (DASM) and rational asymmetry (RASM) are widely employed to measure the difference in EEG signals between the left and right hemispheres (Zheng and Lu, 2015; Zhang et al., 2021). Both Dasdemir et al. (2017) and Nguyen and Artemiadis (2018) used the phase-locking value (PLV) to build a functional brain network and perform the emotion recognition task. Khosrowabadi et al. (2010) utilized mutual information (MI) to establish a dynamic emotional system. In addition, the Pearson correlation coefficient (PCC), the spectral coherence coefficient (COH), the phase lag index (PLI), and the covariance matrix (COV) are also commonly adopted to represent the emotional interaction information between different EEG channels (Jadhav et al., 2017; Keelawat et al., 2021; Wu et al., 2022; Lin et al., 2023).

Furthermore, a large number of techniques have been developed to construct the mapping between EEG features and emotions. Zheng and Lu (2015) introduced the deep belief network (DBN) to decode the DE features and achieved 86.08% accuracy for positive, negative, and neutral states. Du et al. (2020) proposed an attention-based LSTM with Domain Discriminator (ATDD-LSTM) with DE as input features to solve the subject-dependent and subject-independent emotion recognition tasks on three public emotional datasets. Moon et al. (2020) employed convolutional neural networks (CNNs) to extract spatial-domain information from PLV, PCC, and transfer entropy (TE). Song et al. (2018) developed dynamical graph convolutional neural networks (DGCNNs) to model multichannel EEG features and perform the emotion recognition task, achieving average accuracies of 90.4% and 79.95% for subject-dependent and subject-independent cross-validation on SEED, respectively. Liu et al. (2023) combined the attention mechanism with a pre-trained convolutional capsule network to extract spatial information from the original emotional EEG signals. Zali-Vargahan et al. (2023) introduced a CNN to extract deep time-frequency features and employed several machine learning classifiers, such as decision trees, to classify different emotional states, achieving an average accuracy of 94.58% on SEED. Ma et al. (2023) developed transfer learning methods to reduce the distribution differences of emotional EEG signals between subjects, thereby enabling more robust cross-subject emotion recognition.

In recent years, Riemannian manifolds (RMs) have received a lot of attention in the field of brain–computer interfaces due to their simplicity, accuracy, robustness, and transfer learning capabilities (Congedo et al., 2017). Barachant et al. (2013) developed the Riemannian-based kernel support vector machine (RK-SVM) to solve the motor imagery task on a public brain–computer interface (BCI) competition dataset. However, since conventional deep learning models mainly use non-linear functions to map features located in Euclidean space, features located on an RM usually cannot be fed into a deep model directly: the non-linear function destroys the SPD property of the features, and the mapped features then lie in Euclidean space. Therefore, Huang and Van Gool (2017) designed an RM network architecture that combines bilinear and non-linear learning, and achieved state-of-the-art accuracies on three datasets. Yair et al. (2019) utilized parallel transport to achieve domain adaptation for symmetric positive definite (SPD) matrices on an RM, and achieved an accuracy of 78% on a four-class motor imagery task with leave-one-session-out cross-validation. In addition, Riemannian features can be mapped to Euclidean space by establishing a mapping between the Riemannian manifold and Euclidean space, after which deep learning methods can be applied (Wu et al., 2022). In the domain of affective BCIs in particular, Riemannian manifold approaches have been instrumental in feature extraction and classification tasks related to emotion recognition. The use of covariance matrices and manifold-based representations allows for a more nuanced understanding of the underlying neural patterns associated with different emotional states (Abdel-Ghaffar and Daoudi, 2020; Wang et al., 2021). However, the limitation of the Riemannian manifold is that its features must be symmetric and positive definite, which greatly limits the application of Riemannian methods.

In the present study, we adopted four functional connection features, i.e., PLV, PCC, COH, and MI, to perform the emotion recognition task on the SEED database. The main contributions of this study can be summarized as follows: (1) We introduced the Laplace matrix with a max operation to transform the four functional connection features into SPD matrices; (2) The four functional connection features achieved similar performance in recognizing emotions, especially PLV, PCC, and COH, which may indicate the stability of brain functional connections when emotions are evoked in subjects; (3) A decision fusion strategy is employed to fuse the four features to achieve higher accuracy and robustness, and a detailed comparison of the different feature combinations in emotion recognition is made.

This paper is organized as follows. Section 2 presents detailed descriptions of the four functional connection features, the SPDnet, the Laplace matrix, and an overview of the proposed model. Section 3 presents the experimental results, analysis, and discussion for the SEED dataset. Finally, Section 4 presents the conclusion of this study and a discussion of future work.

In this study, four functional connection features are adopted to measure the effectiveness of the proposed method and recognize emotional states. Specifically, denote $X_i \in \mathbb{R}^{T}$, i = 1, …, C, as the EEG signal of the i-th channel, where C and T represent the number of EEG channels and the time length, respectively. Table 1 displays the main variables and their meanings.

The PLV is a phase-based method, which measures the phase difference between two channel signals (Gysels and Celka, 2004). The calculation formula of PLV is defined as Equation (1),

$$\mathrm{PLV}_{m,n} = \left| \frac{1}{T} \sum_{t=1}^{T} e^{\,j\left(\phi_{m,t} - \phi_{n,t}\right)} \right| \tag{1}$$

where $\phi_{m,t}$ and $\phi_{n,t}$ represent the phase angles of signals $X_m$ and $X_n$, respectively, at time point t.
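As a minimal sketch of the phase locking computation described above, the function below averages the unit phasors of the phase difference and takes the magnitude. It assumes the phase angles have already been extracted (the text does not say how; a Hilbert transform is one common choice), and the name `plv` and the synthetic phase ramps are illustrative only.

```python
import cmath
import math


def plv(phase_m, phase_n):
    """Phase locking value of two equal-length sequences of phase angles (radians)."""
    if len(phase_m) != len(phase_n) or not phase_m:
        raise ValueError("phase sequences must be non-empty and of equal length")
    # Mean of the unit phasors of the instantaneous phase difference.
    mean_phasor = sum(
        cmath.exp(1j * (pm - pn)) for pm, pn in zip(phase_m, phase_n)
    ) / len(phase_m)
    return abs(mean_phasor)


# Synthetic 1-s phase ramp of a 10 Hz oscillation sampled at 200 Hz.
ramp = [2 * math.pi * 10 * k / 200 for k in range(200)]

# A constant phase lag gives perfect locking (value close to 1).
locked = plv(ramp, [p - math.pi / 4 for p in ramp])

# A phase difference that sweeps whole circles gives no locking (value close to 0).
drifting = plv(ramp, [2 * p for p in ramp])
```

Values near 1 indicate a stable phase relationship between the two channels, and values near 0 indicate none.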

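The PCC measures the degree of linear correlation between two channel signals in the time domain (Guevara and Corsi-Cabrera, 1996). The value range of PCC is [–1, 1]; hence, PCC can measure whether two signals are positively or negatively correlated. The calculation formula of PCC is defined as Equation (2),

$$\mathrm{PCC}_{m,n} = \frac{\sum_{t=1}^{T} \left(X_{m,t} - \bar{X}_m\right)\left(X_{n,t} - \bar{X}_n\right)}{\sqrt{\sum_{t=1}^{T} \left(X_{m,t} - \bar{X}_m\right)^2}\,\sqrt{\sum_{t=1}^{T} \left(X_{n,t} - \bar{X}_n\right)^2}} \tag{2}$$

where $\bar{X}_m$ and $\bar{X}_n$ are the expectations of $X_m$ and $X_n$, respectively.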
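The correlation described above can be sketched in a few lines of plain Python. This is an illustrative implementation of the standard sample Pearson coefficient, not the authors' code; the function name `pcc` and the toy signals are assumptions.

```python
import math


def pcc(x_m, x_n):
    """Pearson correlation coefficient of two equal-length signals."""
    n = len(x_m)
    if n != len(x_n) or n < 2:
        raise ValueError("signals must have equal length of at least 2")
    mean_m = sum(x_m) / n
    mean_n = sum(x_n) / n
    # Centered cross-product and the two centered sums of squares.
    cov = sum((a - mean_m) * (b - mean_n) for a, b in zip(x_m, x_n))
    var_m = sum((a - mean_m) ** 2 for a in x_m)
    var_n = sum((b - mean_n) ** 2 for b in x_n)
    if var_m == 0 or var_n == 0:
        raise ValueError("PCC is undefined for a constant signal")
    return cov / math.sqrt(var_m * var_n)


positive = pcc([1, 2, 3, 4], [2, 4, 6, 8])   # perfectly correlated
negative = pcc([1, 2, 3, 4], [8, 6, 4, 2])   # perfectly anti-correlated
```

Because the result is signed, any later thresholding step has to decide how negative correlations are treated; the text does not specify this.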

In contrast to PCC, the COH measures the degree of linear correlation between two channel signals in the frequency domain (Guevara and Corsi-Cabrera, 1996). The calculation formula is defined as Equation (3),

$$\mathrm{COH}_{m,n}(f) = \frac{\left|P_{m,n}(f)\right|^{2}}{P_{m,m}(f)\,P_{n,n}(f)} \tag{3}$$

where $P_{m,n}(f)$ is the cross-spectral density of EEG signals $X_m$ and $X_n$ at frequency f, while $P_{m,m}(f)$ and $P_{n,n}(f)$ are the power spectral densities of $X_m$ and $X_n$, respectively.

MI is an information-theory-based method whose advantage is that it can detect linear and non-linear correlations between two signals at the same time (Jeong et al., 2001). However, the accuracy of the MI calculation is easily affected by noise in the signal and by the length of the signal. The calculation formula is defined as Equation (4),

$$\mathrm{MI}_{m,n} = \sum_{x_m} \sum_{x_n} P_{X_m, X_n}(x_m, x_n)\, \log \frac{P_{X_m, X_n}(x_m, x_n)}{P_{X_m}(x_m)\, P_{X_n}(x_n)} \tag{4}$$

where $P_{X_m, X_n}$ is the joint probability distribution of $X_m$ and $X_n$, and $P_{X_m}$ and $P_{X_n}$ are the probability distributions of $X_m$ and $X_n$, respectively.
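One simple way to estimate the joint and marginal distributions mentioned above is a histogram over equal-width bins. The sketch below takes that route; the estimator, the bin count, and the name `mutual_information` are assumptions, since the text does not state how the probabilities were estimated.

```python
import math
from collections import Counter


def mutual_information(x_m, x_n, bins=8):
    """Histogram estimate of mutual information (in nats) between two signals."""
    if len(x_m) != len(x_n) or not x_m:
        raise ValueError("signals must be non-empty and of equal length")

    def discretize(x):
        lo, hi = min(x), max(x)
        width = (hi - lo) / bins or 1.0  # guard against a constant signal
        # The largest value would land in bin index `bins`, so clamp it.
        return [min(int((v - lo) / width), bins - 1) for v in x]

    a, b = discretize(x_m), discretize(x_n)
    n = len(a)
    joint, p_a, p_b = Counter(zip(a, b)), Counter(a), Counter(b)
    # Sum p(i, j) * log(p(i, j) / (p(i) * p(j))) over the occupied cells.
    return sum(
        (c / n) * math.log((c / n) / ((p_a[i] / n) * (p_b[j] / n)))
        for (i, j), c in joint.items()
    )


identical = mutual_information(list(range(64)), list(range(64)))
independent = mutual_information(
    [k % 8 for k in range(64)], [k // 8 for k in range(64)]
)
```

With eight equally filled bins, a signal paired with itself yields log 8 nats, while the two independent toy signals yield zero. Short or noisy signals make such estimates unreliable, which matches the caveat in the text.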

The SPD set is defined as Equation (5) as following

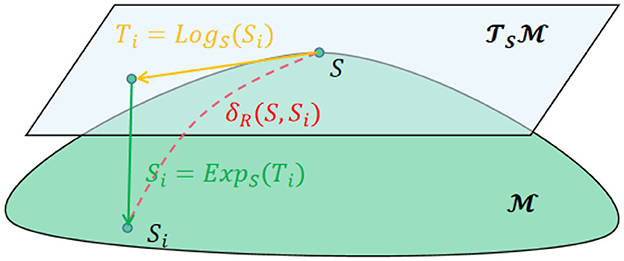

and lie on the Riemannian manifold (RM) rather than the Euclid space. However, There are two operators that can realize the mutual mapping between Riemannian manifold and Euclidean space. Concretely, suppose there is a point S∈Sym+, then the tangent space of S, denoted as , could be defined and belongs to the Euclid space. The logarithmicmap operator as following is defined to project the point to the shown in Equation (6),

where logm denotes the logarithm of a matrix calculated as Equation (7),

where U = [uij]C×C and V = diag{v1, v2, …, vC} are the eigenvector and eigenvalue of the matrix S. Moreover, a corresponding inverse operation, i.e., exponentialmap, is also defined to project the points in the to the shown in Equation (8),

where expm denotes the exponential of a matrix calculated as Equation (9),

Figure 1 shows the two operations between the RM and corresponding tangent space in S.

Figure 1. The two operations between the RM and corresponding tangent space in S. Particularly, δR represents the geodesic distance between S and Si where the calculation method can be found in Barachant et al. (2013).
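The two mappings between the manifold and the tangent space can be sketched with an eigendecomposition, following the standard affine-invariant form used in Barachant et al. (2013). This is a NumPy illustration under that assumption; the helper names (`_eig_fn`, `log_map`, `exp_map`) and the 2×2 example matrices are hypothetical.

```python
import numpy as np


def _eig_fn(S, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(S)
    # Scale each eigenvector column by fn(eigenvalue): U fn(V) U^T.
    return (vecs * fn(vals)) @ vecs.T


def log_map(S, S_i):
    """Project the SPD matrix S_i onto the tangent space at S."""
    S_half = _eig_fn(S, np.sqrt)
    S_inv_half = _eig_fn(S, lambda v: 1.0 / np.sqrt(v))
    return S_half @ _eig_fn(S_inv_half @ S_i @ S_inv_half, np.log) @ S_half


def exp_map(S, X_i):
    """Project the tangent vector X_i at S back onto the SPD manifold."""
    S_half = _eig_fn(S, np.sqrt)
    S_inv_half = _eig_fn(S, lambda v: 1.0 / np.sqrt(v))
    return S_half @ _eig_fn(S_inv_half @ X_i @ S_inv_half, np.exp) @ S_half


S = np.array([[2.0, 0.5], [0.5, 1.0]])      # reference point
S_i = np.array([[1.5, -0.2], [-0.2, 0.8]])  # another SPD matrix

tangent = log_map(S, S_i)
recovered = exp_map(S, tangent)  # should reproduce S_i
```

The round trip `exp_map(S, log_map(S, S_i))` returning `S_i` is a quick sanity check that the two operators are inverses of each other at the reference point.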

The SPD matrix network (SPDnet), as introduced by Huang and Van Gool (2017), operates analogously to the commonly employed convolutional network (ConvNet). It preserves the inherent geometric information of the SPD matrix, akin to the way ConvNets capture spatial features in other types of data. The SPDnet mainly consists of three kinds of layers, i.e., the bilinear mapping (BiMap) layer, the eigenvalue rectification (ReEig) layer, and the log eigenvalue (LogEig) layer. The BiMap layer transforms the SPD set into a new SPD set by a bilinear mapping; the ReEig layer rectifies the new SPD matrices with a non-linear function to ensure that they remain positive definite; and the LogEig layer performs the corresponding RM computation on the output SPD matrices. Let Sk−1 be the input SPD matrix and Sk be the output; then the calculation of the three layers can be defined as Equations (10)–(12),

$$S_k = f_b^{(k)}(S_{k-1}; W_k) = W_k S_{k-1} W_k^{T} \tag{10}$$

$$S_k = f_r^{(k)}(S_{k-1}) = U_{k-1} \max\left(\epsilon I, V_{k-1}\right) U_{k-1}^{T} \tag{11}$$

$$S_k = f_l^{(k)}(S_{k-1}) = \log(S_{k-1}) = U_{k-1} \log\left(V_{k-1}\right) U_{k-1}^{T} \tag{12}$$

where $f_b^{(k)}$, $f_r^{(k)}$, and $f_l^{(k)}$ are the k-th BiMap layer, the k-th ReEig layer, and the k-th LogEig layer, respectively. In addition, $W_k$ is the transformation matrix, Uk−1 and Vk−1 are calculated by eigenvalue decomposition (EIG), ϵ is a rectification threshold, and I is an identity matrix. Figure 2 shows a sample architecture of the SPDnet.
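A forward pass through the three layer types can be sketched in NumPy as follows. This is an illustration only: the matrix sizes (6 to 4), the threshold `eps`, the random input, and the semi-orthogonal choice for the transformation matrix are assumptions made for the example, and no training is shown.

```python
import numpy as np


def bimap(S, W):
    """BiMap layer: bilinear mapping W S W^T with W of shape (d_out, d_in)."""
    return W @ S @ W.T


def reeig(S, eps=1e-4):
    """ReEig layer: clamp eigenvalues from below at eps."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs * np.maximum(vals, eps)) @ vecs.T


def logeig(S):
    """LogEig layer: matrix logarithm via the eigendecomposition."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs * np.log(vals)) @ vecs.T


rng = np.random.default_rng(0)

# Hypothetical 6x6 SPD input.
A = rng.standard_normal((6, 6))
S0 = A @ A.T + 1e-3 * np.eye(6)

# Semi-orthogonal transformation matrix (4x6), obtained from a QR factorization.
Q, _ = np.linalg.qr(rng.standard_normal((6, 4)))
W = Q.T

S1 = reeig(bimap(S0, W))   # 4x4 SPD matrix
features = logeig(S1)      # symmetric matrix in the tangent (Euclidean) space
flat = features.reshape(-1)
```

The flattened output is what a fully connected classification layer would consume. Stacking several `bimap`/`reeig` pairs before a single `logeig` mirrors the layer ordering described in the text.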

The Laplace matrix has been widely employed to build brain functional connection networks in the brain–computer interface field and has achieved good performance. The Laplace matrix is a fundamental concept in graph theory and linear algebra and provides valuable insights into the connectivity and structural properties of a graph. Let A be the adjacency matrix of an undirected graph with C nodes, and D be the corresponding degree matrix; then the i-th diagonal element of D can be calculated as Equation (13),

$$D_{ii} = \sum_{j=1}^{C} A_{ij} \tag{13}$$

Then, the Laplace matrix L is defined as Equation (14),

$$L = D - A \tag{14}$$

An important property of the Laplace matrix is that it is symmetric and positive semi-definite. However, since the Laplace matrix is only positive semi-definite and does not fully satisfy the conditions of the Riemannian manifold, inspired by the ReEig layer in the SPDnet, we introduced the max operator to transform the Laplace matrix into a positive definite matrix, as shown in Equation (15),

$$\hat{L} = U \max\left(\epsilon I, V\right) U^{T} \tag{15}$$

where U and V are the eigenvector and eigenvalue matrices of L, ϵ is a rectification threshold, and I is an identity matrix.

Therefore, after the max operator, the Laplace matrix lies on the RM and can be fed into the SPDnet.
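The path from a connectivity matrix to an SPD Laplace matrix can be sketched as below. Several details are assumptions made for the illustration: the adjacency is binarized with a strict `>` comparison, self-loops are removed, and `eps` is set to 1e-4. The text does not specify these choices, and the 3×3 connectivity values are made up.

```python
import numpy as np


def spd_laplacian(conn, threshold, eps=1e-4):
    """Threshold a connectivity matrix, build L = D - A, and clamp its eigenvalues."""
    conn = np.asarray(conn, dtype=float)
    A = (conn > threshold).astype(float)   # binary adjacency (assumption)
    A = np.maximum(A, A.T)                 # enforce an undirected graph
    np.fill_diagonal(A, 0.0)               # no self-loops (assumption)
    D = np.diag(A.sum(axis=1))             # degree matrix
    L = D - A                              # symmetric, positive semi-definite
    vals, vecs = np.linalg.eigh(L)
    # Max operator: lift zero eigenvalues to eps so the result is positive definite.
    return (vecs * np.maximum(vals, eps)) @ vecs.T


# Toy connectivity between three channels.
conn = [[1.0, 0.8, 0.2],
        [0.8, 1.0, 0.6],
        [0.2, 0.6, 1.0]]

L_spd = spd_laplacian(conn, threshold=0.5)
eigenvalues = np.linalg.eigvalsh(L_spd)
```

For this toy graph (a path over three nodes) the unrectified Laplace matrix has eigenvalues 0, 1, and 3, so only the zero eigenvalue is lifted to `eps`.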

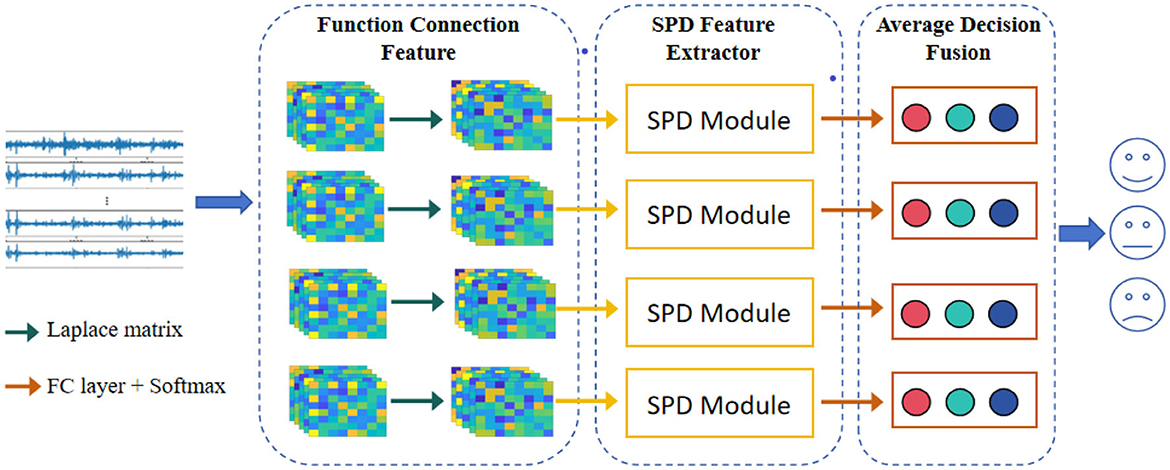

The overview of our model is displayed in Figure 3. As shown in the figure, the model mainly consists of three modules: the functional connection feature module, the deep SPD feature extractor, and the average decision fusion module. More concretely, in the functional connection feature module, four types of features (i.e., PLV, PCC, MI, and COH) are first extracted from the preprocessed EEG signals, a threshold value is used to transform the functional connection features into undirected graphs, and the Laplace matrices with the max operator are then calculated. In the SPD feature extractor module, four BiMap layers, four ReEig layers, and a LogEig layer are combined to further extract deep features from the Laplace matrices, and the output features are flattened into a vector. In the average decision fusion module, considering that different functional connection features carry different information, we used average decision-layer fusion to synthesize the information from the different features. The implementation details of the parameters of the proposed model are presented in Table 2.

Figure 3. The flowchart of the proposed model. The model mainly consists of three modules: the functional connection feature module, the deep SPD feature extractor, and the average decision fusion module.
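Average decision-layer fusion can be sketched as averaging the per-class outputs of the feature branches and taking the class with the highest mean. Whether the original model averages probabilities or some other score is not stated, so this is an assumption, and the probability values below are invented purely for illustration.

```python
def fuse_decisions(branch_probs):
    """Average per-class probabilities across branches and return (label, averages)."""
    if not branch_probs:
        raise ValueError("at least one branch is required")
    n_branches = len(branch_probs)
    n_classes = len(branch_probs[0])
    averaged = [
        sum(probs[c] for probs in branch_probs) / n_branches
        for c in range(n_classes)
    ]
    # Index of the class with the largest averaged probability.
    label = max(range(n_classes), key=averaged.__getitem__)
    return label, averaged


# Hypothetical outputs for one sample; classes: 0 negative, 1 neutral, 2 positive.
branch_outputs = {
    "PLV": [0.5, 0.3, 0.2],
    "PCC": [0.2, 0.6, 0.2],
    "COH": [0.3, 0.5, 0.2],
}

label, averaged = fuse_decisions(list(branch_outputs.values()))
```

Here the PLV branch alone would pick class 0, but the fused decision is class 1, which shows how averaging can override a single dissenting branch.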

In this study, the SEED dataset is employed to evaluate the effectiveness of our method. The SEED dataset is a publicly available emotional dataset that is widely used in emotion recognition. A total of 15 healthy subjects (7 male and 8 female participants; age mean: 23.27, std: 2.37), who were university or graduate students, were invited to watch 15 film clips with different emotional labels, i.e., positive, negative, and neutral emotional states. That is to say, each experiment contains 15 trials. Each subject participated in the experiment three times, with at least 1 week between two adjacent experiments. Sixty-two-channel EEG signals were recorded at a sampling frequency of 1,000 Hz. To save computing resources, the EEG signals were downsampled to 200 Hz, and artifacts were removed. To evaluate the proposed model, the EEG signals of the 15 trials are divided into training data and testing data, where the training data contain the first nine trials and the testing data contain the remaining six trials from the same experiment. The EEG signals were filtered between 1 and 47 Hz by a fourth-order Butterworth bandpass filter, and a 1-s non-overlapping window was applied. To keep the number of samples in different categories consistent, we only selected the EEG signals of the last 2 min of each trial. The classification performance of the model is evaluated by accuracy as Equation (16),

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{16}$$

where TP (True Positives) indicates the number of samples that the model correctly predicts as positive categories, TN (True Negatives) represents the number of samples that the model correctly predicts as negative categories, FP (False Positives) indicates the number of samples in which the model incorrectly predicts negative categories as positive categories, and FN (False Negatives) represents the number of samples in which the model incorrectly predicts positive categories as negative categories.
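The evaluation protocol described above (last 2 min of each trial, 1-s non-overlapping windows at 200 Hz, first nine trials for training) can be sketched as follows. The helper names and the 4-min trial length are hypothetical, and accuracy is computed in its multi-class form, i.e., the fraction of correctly classified windows.

```python
FS_HZ = 200          # sampling rate after downsampling
KEPT_SECONDS = 120   # only the last 2 min of each trial are used
WINDOW_SECONDS = 1   # non-overlapping window length


def window_bounds(n_samples, fs=FS_HZ, kept_s=KEPT_SECONDS, win_s=WINDOW_SECONDS):
    """Return (start, stop) sample indices of the windows covering the kept segment."""
    win = fs * win_s
    first = max(n_samples - fs * kept_s, 0)
    return [(start, start + win) for start in range(first, n_samples - win + 1, win)]


def split_trials(trials, n_train=9):
    """First n_train trials for training, the remaining trials for testing."""
    return trials[:n_train], trials[n_train:]


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


bounds = window_bounds(n_samples=240 * FS_HZ)        # hypothetical 4-min trial
train_trials, test_trials = split_trials(list(range(1, 16)))
toy_accuracy = accuracy([0, 1, 2, 1], [0, 1, 1, 1])  # three of four correct
```

Under these settings each trial contributes 120 windows, so one experiment yields 9 × 120 = 1,080 training samples and 6 × 120 = 720 testing samples.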

The experimental environment was built on a Windows 10 PC with a Core (TM) i7-10700 CPU and an NVIDIA GeForce RTX 3080Ti GPU, and the computing environment was PyTorch 1.10.1.

To test the effectiveness of the four functional connection features and the proposed method, we divide the input of the model into four modes:

• Only one type of feature: PLV, PCC, MI, and COH;

• The combination of two types of features: PLV+PCC, PLV+MI, PLV+COH, PCC+MI, PCC+COH, and MI+COH;

• The combination of three types of features: PLV+PCC+MI, PLV+PCC+COH, PLV+MI+COH, and PCC+MI+COH;

• The combination of all four types of features: PLV+PCC+MI+COH.
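The four input modes listed above are simply all non-empty subsets of the four features, which can be enumerated as in the short sketch below (the variable names are illustrative).

```python
from itertools import combinations

FEATURES = ("PLV", "PCC", "MI", "COH")

# Map the number of fused features to the list of feature modes of that size.
modes = {
    size: ["+".join(combo) for combo in combinations(FEATURES, size)]
    for size in range(1, len(FEATURES) + 1)
}

total_modes = sum(len(names) for names in modes.values())
```

This gives 4 + 6 + 4 + 1 = 15 feature modes in total, in the same order as the list above.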

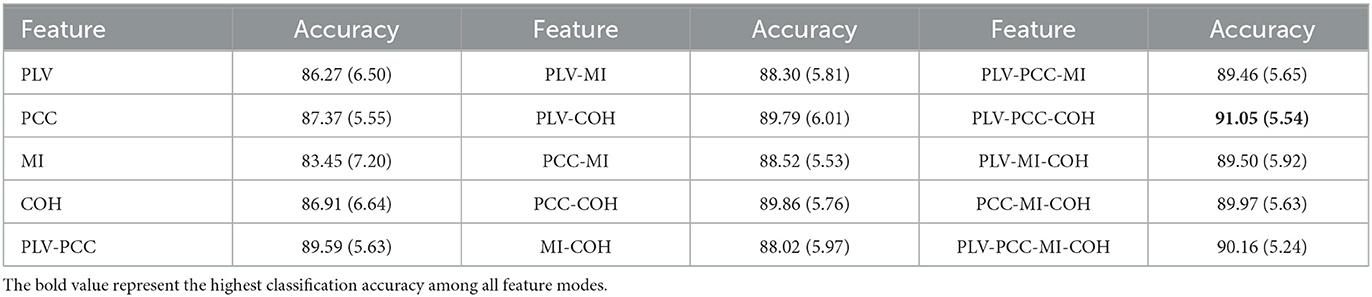

The experimental results are displayed in Table 3. As shown in the table, the results for every feature mode are acceptable, with all accuracies higher than 83%. From Table 3, it can also be concluded that: (1) For the mode with only one feature, MI achieved the lowest performance (83.45%/7.20%) among the four functional connection features, while PCC achieved the highest performance (87.37%/5.5%); that is to say, MI is worse in accuracy and robustness in identifying the emotions of different subjects, while PCC is better than the other three features in both respects. (2) For the mode with a combination of two features, PCC-COH achieved the best accuracy, followed by PLV-COH, PLV-PCC, PCC-MI, and PLV-MI, and MI-COH achieved the worst accuracy. Notably, the feature modes involving MI always achieved the worst average recognition performance. (3) For the mode with a combination of three features, PLV-PCC-COH achieved the best mean accuracy, followed by PCC-MI-COH, PLV-MI-COH, and PLV-PCC-MI. As in the two-feature mode, the feature modes involving MI still performed poorly. (4) For the mode with all four features combined, the mean accuracy and standard deviation (std) over the 15 subjects were 90.16% and 5.24%, respectively; the accuracy was the second highest among all feature modes, while the std was the lowest. This indicates that fusing all four features yields more robust performance in emotion recognition tasks.

Table 3. The mean (standard deviation) accuracy of the proposed model with the four different feature modes (%).

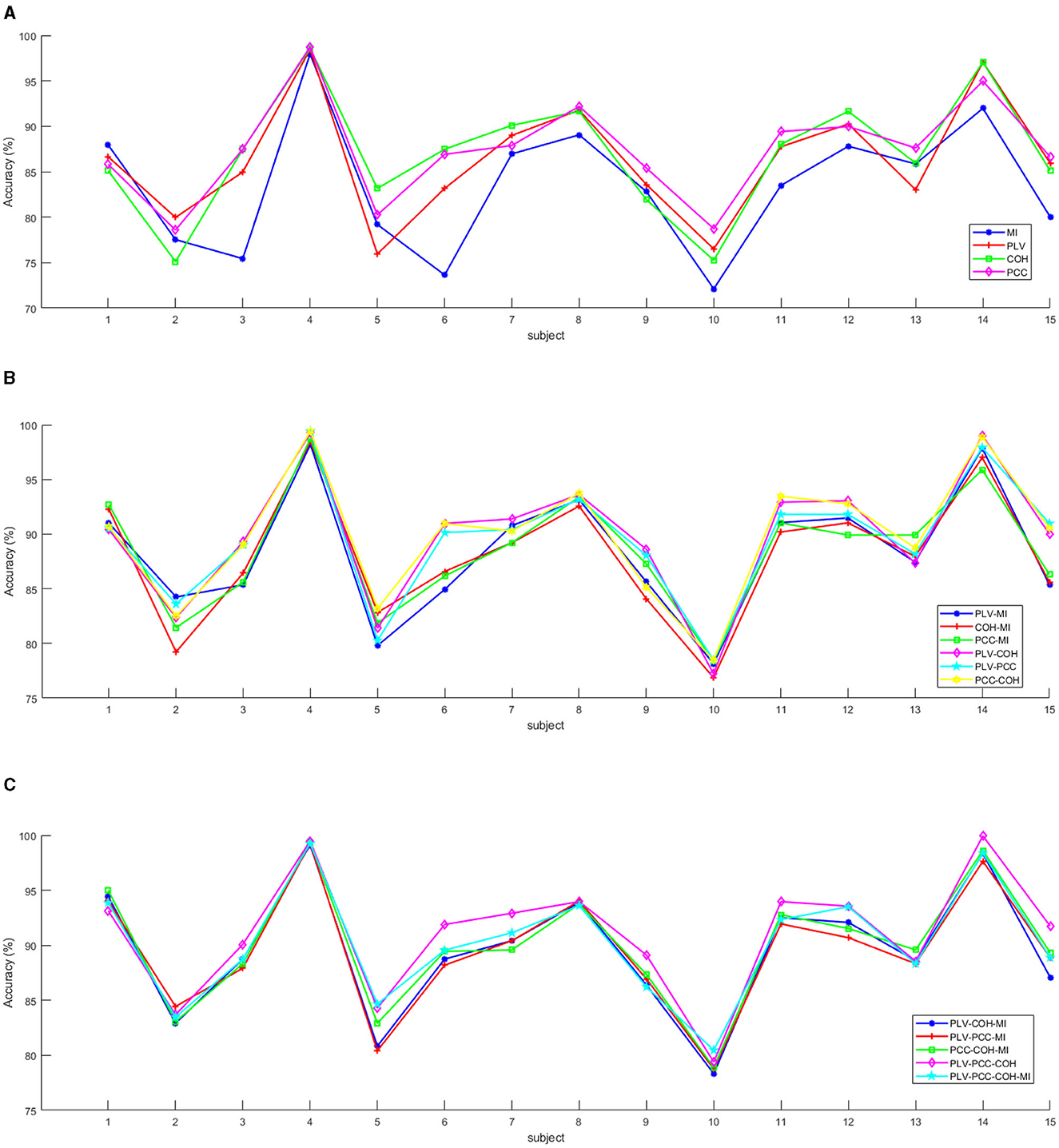

The detailed accuracies for all 15 subjects with all feature modes are shown in Figure 4. As displayed in Figure 4A, MI almost always achieved relatively low accuracies except for subject #1, whereas PCC, although it did not always achieve the best accuracy, remained stable and stayed in the top two among the four features. Moreover, as displayed in Figures 4B, C, for all 15 subjects, combining features effectively improves emotion recognition performance compared with a single feature mode. In addition, for each subject, the performance differences between feature combinations after decision-layer fusion are smaller than the differences between single feature modes. Nevertheless, PLV-PCC-COH almost always achieved the best accuracy across the 15 subjects. In summary, combining Table 3 and Figure 4, the ability of PCC to measure brain functional connectivity (i.e., the interactive information between EEG electrodes) may be better than that of the other three functional connectivity features. In addition, for the fusion between features, PLV-PCC-COH is optimal in overall recognition performance, but the performance achieved when all four features are combined is the most stable.

Figure 4. The mean accuracies for the 15 subjects with the different feature modes. (A) The mean accuracies for the 15 subjects with only one type of feature. (B) The mean accuracies for the 15 subjects with combinations of two types of features. (C) The mean accuracies for the 15 subjects with combinations of three or more types of features.

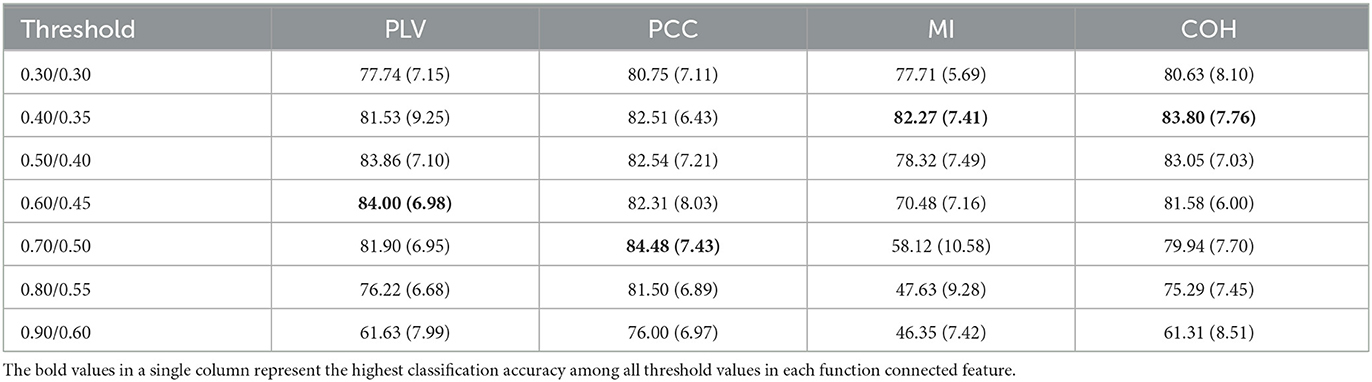

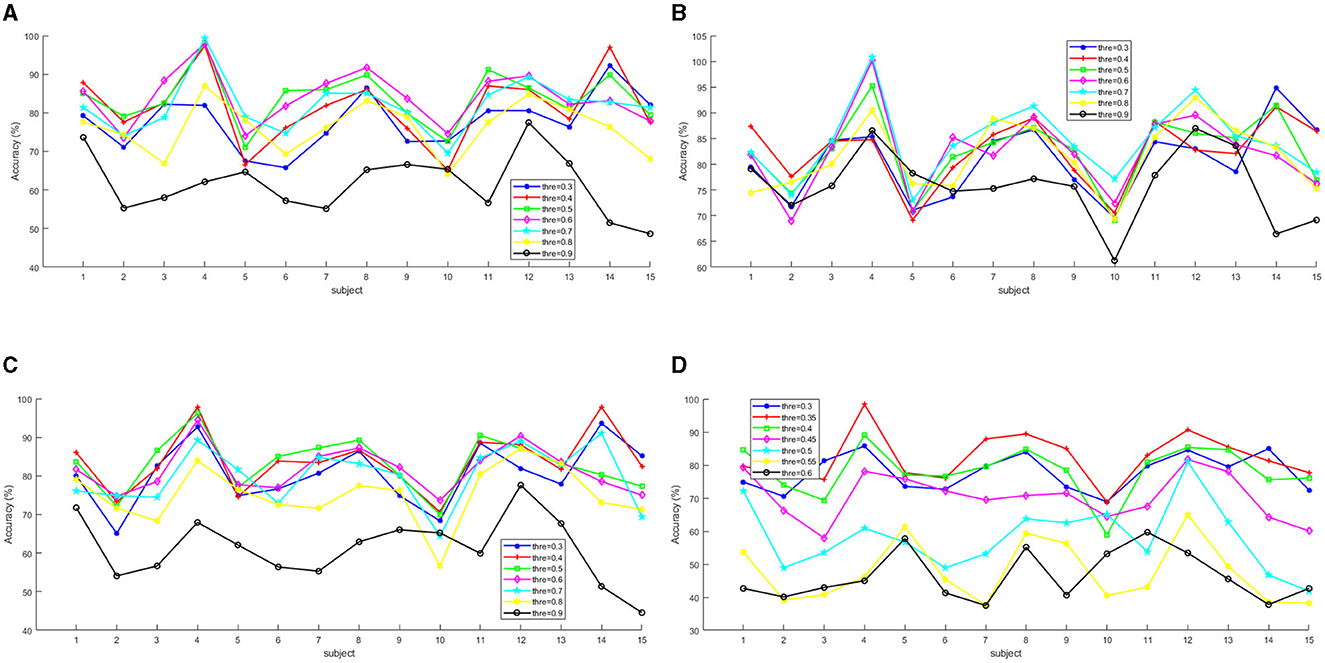

In this part, we investigate the influence of the threshold used to transform the functional connection features into the undirected graph of EEG channels. Since the value range of PLV and COH is [0, 1] and the value range of PCC is [–1, 1], we chose the threshold value for these three features from the set {0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9}. Since the maximum value of MI is around 0.65, we chose its threshold value from the set {0.3, 0.35, 0.4, 0.45, 0.5, 0.55, 0.6}. The average and detailed results among the 15 subjects are shown in Table 4 and Figure 5, respectively. As shown in the table, for each functional connection feature, the emotion recognition performance first increases as the threshold increases and then begins to decline once the threshold passes a certain value. More concretely, the classification accuracies of PLV, PCC, MI, and COH peak at thresholds of 0.6, 0.7, 0.35, and 0.4, respectively. This suggests that the optimal threshold values of the four features lie in the ranges [0.5, 0.7], [0.6, 0.8], [0.3, 0.4], and [0.3, 0.5], respectively.

Table 4. The mean (standard deviation) accuracy of the proposed model for the four functional connection features with different threshold values (%).

Figure 5. The accuracies of the 15 subjects for the four functional connection features with different threshold values. (A) PLV. (B) PCC. (C) COH. (D) MI.

In addition, according to Figure 5, the optimal threshold clearly differs between subjects, but the relative impact of the different thresholds on emotion recognition performance is fairly stable. In other words, for PLV, the accuracies achieved with thresholds of 0.5, 0.6, and 0.7 were almost always among the highest (Figure 5A); for PCC, the accuracies achieved with thresholds of 0.6, 0.7, and 0.8 were almost always among the highest (Figure 5B); for COH, the accuracies achieved with thresholds of 0.3, 0.4, and 0.5 were almost always among the highest (Figure 5C); and for MI, the accuracies achieved with thresholds of 0.3, 0.35, and 0.4 were almost always among the highest (Figure 5D).

Table 5 displays the classification accuracies of some state-of-the-art methods that use the same training-test partitioning strategy, i.e., nine trials as the training set and six trials as the testing set for each experiment. As shown in the table, although the proposed model does not achieve state-of-the-art performance, it still outperforms most methods, which to some extent demonstrates its effectiveness. In addition, the DE feature is used by most methods to recognize emotions, while functional connection features are employed relatively rarely. Therefore, the results also demonstrate the effectiveness of functional connectivity features in identifying different emotions.

In this study, we proposed a novel method that combines functional connection features, the Laplace matrix, and the SPDnet to perform EEG-based emotion recognition, where the Laplace matrix is used to transform the functional connection features and the SPDnet is used to extract deep spatial information from the transformed features. The proposed method achieved desirable performance on the SEED dataset for subject-dependent cross-validation, with the highest average accuracy of 91.05%/5.54% obtained by the fusion of PLV, PCC, and COH. In addition, the experimental results showed that PCC has the highest discriminability in identifying different emotions, while MI has the lowest. Although there are differences among the four functional connection features, their recognition performance was similar, especially for PLV, PCC, and COH, which may indicate that, when emotions are induced in subjects, the brain functional connections measured by different functions show a certain degree of stability. The experimental results obtained with different thresholds for the four functional connection features support a similar conclusion. Furthermore, the threshold experiment also indicated that, for each subject, the optimal thresholds for the four functional connection features remained relatively stable within a fixed interval.

However, the current study has certain limitations. We only tested the proposed model in the SEED dataset, which results in an inability to fully demonstrate the generalization performance of the model. Therefore, in future, we will test the model in more public datasets. In addition, this study briefly discusses the effectiveness of the proposed method. Of course, there is more information that can be mined, such as building a brain network and combining the proposed method with complex networks. Finally, we only considered the subject dependent cross-validation strategy, since there are differences between subjects that lead to inconsistent distribution of EEG data. Therefore, we will further combine the transfer learning to test the performance of the proposed model with the subject independent cross-validation strategy.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

MW: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Software, Writing – original draft, Writing – review & editing. RO: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – original draft. CZ: Investigation, Software, Validation, Visualization, Writing – original draft. ZS: Investigation, Software, Validation, Visualization, Writing – original draft. PL: Conceptualization, Funding acquisition, Project administration, Supervision, Writing – review & editing. FL: Conceptualization, Supervision, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the National Natural Science Foundation of China (NSFC) (No. 61972437), Excellent Youth Foundation of Anhui Scientific Committee (No. 2208085J05), the National Key Research and Development Program of China (No. 2021ZD0201502), Special Fund for Key Program of Science and Technology of Anhui Province (No. 202203a07020008), Natural Science Foundation of Anhui Province (No. 2108085MF207), the Open Research Projects of Zhejiang Lab (No. 2021KH0AB06), the Open Projects Program of National Laboratory of Pattern Recognition (No. 202200014), and the Open Fund of Key Laboratory of Flight Techniques and Flight Safety, CAAC (No. FZ2022KF15).

The authors wish to express their gratitude to the reviewers whose comprehensive comments and insightful suggestions have facilitated significant improvements to the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdel-Ghaffar, E. A., and Daoudi, M. (2020). “Emotion recognition from multidimensional electroencephalographic signals on the manifold of symmetric positive definite matrices,” in 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR) (IEEE), 354–359. doi: 10.1109/MIPR49039.2020.00078

Abramson, L., Petranker, R., Marom, I., and Aviezer, H. (2020). Social interaction context shapes emotion recognition through body language, not facial expressions. Emotion 21, 557. doi: 10.1037/emo0000718

Barachant, A., Bonnet, S., Congedo, M., and Jutten, C. (2013). Classification of covariance matrices using a Riemannian-based kernel for BCI applications. Neurocomputing 112, 172–178. doi: 10.1016/j.neucom.2012.12.039

Congedo, M., Barachant, A., and Bhatia, R. (2017). Riemannian geometry for EEG-based brain-computer interfaces; a primer and a review. Brain-Comput. Interf. 4, 155–174. doi: 10.1080/2326263X.2017.1297192

Dasdemir, Y., Yildirim, E., and Yildirim, S. (2017). Analysis of functional brain connections for positive–negative emotions using phase locking value. Cogn. Neurodyn. 11, 487–500. doi: 10.1007/s11571-017-9447-z

Du, X., Ma, C., Zhang, G., Li, J., Lai, Y.-K., Zhao, G., et al. (2020). An efficient LSTM network for emotion recognition from multichannel EEG signals. IEEE Trans. Affect. Comput. 13, 1528–1540. doi: 10.1109/TAFFC.2020.3013711

Duan, R.-N., Zhu, J.-Y., and Lu, B.-L. (2013). “Differential entropy feature for EEG-based emotion classification,” in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER) (IEEE), 81–84. doi: 10.1109/NER.2013.6695876

Ekman, P. (1992). An argument for basic emotions. Cogn. Emot. 6, 169–200. doi: 10.1080/02699939208411068

Guevara, M. A., and Corsi-Cabrera, M. (1996). EEG coherence or EEG correlation? Int. J. Psychophysiol. 23, 145–153. doi: 10.1016/S0167-8760(96)00038-4

Gysels, E., and Celka, P. (2004). Phase synchronization for the recognition of mental tasks in a brain-computer interface. IEEE Trans. Neural Syst. Rehabilit. Eng. 12, 406–415. doi: 10.1109/TNSRE.2004.838443

Houssein, E. H., Hammad, A., and Ali, A. A. (2022). Human emotion recognition from EEG-based brain-computer interface using machine learning: a comprehensive review. Neural Comput. Applic. 34, 12527–12557. doi: 10.1007/s00521-022-07292-4

Huang, Z., and Van Gool, L. (2017). “A Riemannian network for SPD matrix learning,” in Proceedings of the AAAI Conference on Artificial Intelligence, 31. doi: 10.1609/aaai.v31i1.10866

Izard, C. E. (2007). Basic emotions, natural kinds, emotion schemas, and a new paradigm. Perspect. Psychol. Sci. 2, 260–280. doi: 10.1111/j.1745-6916.2007.00044.x

Jadhav, N., Manthalkar, R., and Joshi, Y. (2017). Effect of meditation on emotional response: an EEG-based study. Biomed. Signal Proc. Control 34, 101–113. doi: 10.1016/j.bspc.2017.01.008

Jeong, J., Gore, J. C., and Peterson, B. S. (2001). Mutual information analysis of the EEG in patients with Alzheimer's disease. Clin. Neurophysiol. 112, 827–835. doi: 10.1016/S1388-2457(01)00513-2

Keelawat, P., Thammasan, N., Numao, M., and Kijsirikul, B. (2021). A comparative study of window size and channel arrangement on EEG-emotion recognition using deep CNN. Sensors 21, 1678. doi: 10.3390/s21051678

Khosrowabadi, R., Heijnen, M., Wahab, A., and Quek, H. C. (2010). “The dynamic emotion recognition system based on functional connectivity of brain regions,” in 2010 IEEE Intelligent Vehicles Symposium, 377–381. doi: 10.1109/IVS.2010.5548102

Ko, B. C. (2018). A brief review of facial emotion recognition based on visual information. Sensors 18, 401. doi: 10.3390/s18020401

Koelstra, S., Muhl, C., Soleymani, M., Lee, J.-S., Yazdani, A., Ebrahimi, T., et al. (2011). DEAP: a database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Koolagudi, S. G., and Rao, K. S. (2012). Emotion recognition from speech: a review. Int. J. Speech Technol. 15, 99–117. doi: 10.1007/s10772-011-9125-1

Li, P., Liu, H., Si, Y., Li, C., Li, F., Zhu, X., et al. (2019). EEG based emotion recognition by combining functional connectivity network and local activations. IEEE Trans. Biomed. Eng. 66, 2869–2881. doi: 10.1109/TBME.2019.2897651

Lin, X., Chen, J., Ma, W., Tang, W., and Wang, Y. (2023). EEG emotion recognition using improved graph neural network with channel selection. Comput. Methods Progr. Biomed. 231, 107380. doi: 10.1016/j.cmpb.2023.107380

Liu, S., Wang, Z., An, Y., Zhao, J., Zhao, Y., and Zhang, Y.-D. (2023). EEG emotion recognition based on the attention mechanism and pre-trained convolution capsule network. Knowl. Based Syst. 265, 110372. doi: 10.1016/j.knosys.2023.110372

Ma, Y., Zhao, W., Meng, M., Zhang, Q., She, Q., and Zhang, J. (2023). Cross-subject emotion recognition based on domain similarity of EEG signal transfer learning. IEEE Trans. Neural Syst. Rehabilit. Eng. 31, 936–943. doi: 10.1109/TNSRE.2023.3236687

Mohammadi, Z., Frounchi, J., and Amiri, M. (2017). Wavelet-based emotion recognition system using EEG signal. Neural Comput. Applic. 28, 1985–1990. doi: 10.1007/s00521-015-2149-8

Moon, S.-E., Chen, C.-J., Hsieh, C.-J., Wang, J.-L., and Lee, J.-S. (2020). Emotional EEG classification using connectivity features and convolutional neural networks. Neural Netw. 132, 96–107. doi: 10.1016/j.neunet.2020.08.009

Nguyen, C. H., and Artemiadis, P. (2018). EEG feature descriptors and discriminant analysis under Riemannian manifold perspective. Neurocomputing 275, 1871–1883. doi: 10.1016/j.neucom.2017.10.013

Picard, R. W. (2003). Affective computing: challenges. Int. J. Hum. Comput. Stud. 59, 55–64. doi: 10.1016/S1071-5819(03)00052-1

Plutchik, R. (2001). The nature of emotions: human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. Am. Sci. 89, 344–350. doi: 10.1511/2001.28.344

Russell, J. A. (1980). A circumplex model of affect. J. Person. Soc. Psychol. 39, 1161. doi: 10.1037/h0077714

Song, T., Zheng, W., Song, P., and Cui, Z. (2018). EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 11, 532–541. doi: 10.1109/TAFFC.2018.2817622

Wang, Y., Qiu, S., Ma, X., and He, H. (2021). A prototype-based SPD matrix network for domain adaptation EEG emotion recognition. Patt. Recogn. 110, 107626. doi: 10.1016/j.patcog.2020.107626

Wang, Z., Wang, Y., Hu, C., Yin, Z., and Song, Y. (2022). Transformers for EEG-based emotion recognition: a hierarchical spatial information learning model. IEEE Sensors J. 22, 4359–4368. doi: 10.1109/JSEN.2022.3144317

Wu, M., Hu, S., Wei, B., and Lv, Z. (2022). A novel deep learning model based on the ICA and Riemannian manifold for EEG-based emotion recognition. J. Neurosci. Methods 378, 109642. doi: 10.1016/j.jneumeth.2022.109642

Yair, O., Ben-Chen, M., and Talmon, R. (2019). Parallel transport on the cone manifold of SPD matrices for domain adaptation. IEEE Trans. Signal Proc. 67, 1797–1811. doi: 10.1109/TSP.2019.2894801

Yi, G., Fan, C., Zhu, K., Lv, Z., Liang, S., Wen, Z., et al. (2024). VLP2MSA: expanding vision-language pre-training to multimodal sentiment analysis. Knowl. Based Syst. 283, 111136. doi: 10.1016/j.knosys.2023.111136

Zali-Vargahan, B., Charmin, A., Kalbkhani, H., and Barghandan, S. (2023). Deep time-frequency features and semi-supervised dimension reduction for subject-independent emotion recognition from multi-channel EEG signals. Biomed. Signal Proc. Control 85, 104806. doi: 10.1016/j.bspc.2023.104806

Zeng, H., Wu, Z., Zhang, J., Yang, C., Zhang, H., Dai, G., et al. (2019). EEG emotion classification using an improved SincNet-based deep learning model. Brain Sci. 9, 326. doi: 10.3390/brainsci9110326

Zhang, G., Yu, M., Liu, Y.-J., Zhao, G., Zhang, D., and Zheng, W. (2021). SparseDGCNN: recognizing emotion from multichannel EEG signals. IEEE Trans. Affect. Comput. 14, 537–548. doi: 10.1109/TAFFC.2021.3051332

Zhang, T., Zheng, W., Cui, Z., Zong, Y., and Li, Y. (2018). Spatial-temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern. 49, 839–847. doi: 10.1109/TCYB.2017.2788081

Zheng, W.-L., and Lu, B.-L. (2015). Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Mental Dev. 7, 162–175. doi: 10.1109/TAMD.2015.2431497

Zheng, W.-L., Zhu, J.-Y., and Lu, B.-L. (2017). Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 10, 417–429. doi: 10.1109/TAFFC.2017.2712143

Keywords: emotion recognition, human-computer interface (HCI), electroencephalogram (EEG), functional connection feature, Riemannian manifold, decision fusion

Citation: Wu M, Ouyang R, Zhou C, Sun Z, Li F and Li P (2024) A study on the combination of functional connection features and Riemannian manifold in EEG emotion recognition. Front. Neurosci. 17:1345770. doi: 10.3389/fnins.2023.1345770

Received: 28 November 2023; Accepted: 26 December 2023; Published: 15 January 2024.

Edited by: Yongcheng Li, Chinese Academy of Sciences (CAS), China

Reviewed by: Kaiwen Wei, Chinese Academy of Sciences (CAS), China

Copyright © 2024 Wu, Ouyang, Zhou, Sun, Li and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ping Li, cGluZ2xpMDExMkBnbWFpbC5jb20=

†These authors share first authorship
