- 1 State Grid Hubei Power Supply Limited Company Ezhou Power Supply Company, Ezhou, China

- 2 School of Electrical and Electronic Engineering, Shandong University of Technology, Zibo, China

In this research, an image defogging algorithm is proposed for the electricity transmission line monitoring system in the smart city. In monitoring images, the electricity transmission line is thin and typically appears in the upper part of the frame. Because transmission lines are installed outdoors, the background frequently contains a sizable area of sky. Firstly, an optimized quadtree segmentation method for calculating the global atmospheric light is proposed, which gives a higher weight to the upper part of the image containing the sky region. This prevents interference from bright objects on the ground and ensures that the global atmospheric light is estimated in the sky region at the top of the image. Secondly, a transmission calculation method based on dark pixels is introduced. Finally, a detail-sharpening post-processing step based on the visibility level and the airlight level is introduced to enhance the details of electricity transmission lines in the defogged image. Experimental results indicate that the algorithm performs well in enhancing image details, preventing image distortion, and avoiding oversaturation.

1. Introduction

With the development of Internet of Things sensors and image processing technology, the monitoring requirements of the power system for transmission lines have gradually increased. Transmission lines can be equipped with image sensors to observe their running status in real time, which helps to identify potential risks in advance. As an important part of the power system, the electricity transmission line is a major channel for delivering power, and its operational stability has a direct impact on power quality. Monitoring electricity transmission lines is therefore a constant concern for guaranteeing their secure and reliable operation. Electricity transmission lines are installed outdoors and are exposed to fog, rain, dew, and other weather conditions for long periods, so they are strongly affected by the environment. Faults such as insulator defects may occur, which seriously affect the normal use of the line and reduce its service life. Once an electricity transmission line fails, accidents such as tripping and power outages may occur, resulting in human and economic losses. Regular inspection is therefore of great significance for ensuring reliable, safe, and efficient operation. Inspection has long been dominated by manual work, which requires staff to spend long periods outdoors; this not only yields poor monitoring efficiency and accuracy, but also poses safety hazards for staff. In recent years, monitoring electricity transmission lines through video monitoring systems has therefore been widely adopted, which is important for improving monitoring efficiency and speeding up the construction of smart cities.

In recent years, with rapid economic growth and accelerating urbanization, persistent fog has become one of the adverse weather conditions affecting the environment in which the power grid operates. Fog is a common atmospheric phenomenon. In foggy conditions, the air is dense with atmospheric particles that not only absorb and scatter the light reflected from the scene, but also scatter part of the light into the observation equipment (Xu et al., 2015). Therefore, in hazy weather, the images obtained by monitoring and vision systems are seriously degraded, with color shift, reduced visibility, and loss of details, which seriously affects image detection, tracking, recognition, and the use of the monitoring system (Su et al., 2020). For electricity transmission line monitoring in hazy weather, reduced contrast, chromatic aberration, and unclear details significantly impair the visual quality of the monitoring images, adversely affect transmission line monitoring, and can even cause misjudgment. Therefore, it is necessary to conduct defogging research for transmission line monitoring.

The two kinds of defogging algorithms most often used today are based on image enhancement and image restoration. As computer hardware has improved quickly in recent years, image defogging algorithms based on machine learning have also been proposed (Sharma et al., 2021). Enhancement-based defogging algorithms merely improve image contrast and other characteristics to achieve the defogging goal, and do not take into account the physical process of fog formation. Traditional image contrast enhancement methods include histogram redistribution (Zhou et al., 2016), intensity transformation (Sangeetha and Anusudha, 2017), homomorphic filtering (Seow and Asari, 2006), wavelet transform (Jun and Rong, 2013), and the Retinex algorithm (Jobson et al., 1997b). In Retinex theory, the image is composed of an incident component, which represents the brightness of the surroundings of the object, and a reflection component, which represents the reflectance of the object itself; the single-scale Retinex (SSR) algorithm was proposed on this basis. The multiscale Retinex (MSR) algorithm and the multiscale Retinex with color restoration (MSRCR) algorithm were then developed on the foundation of SSR (Jobson et al., 1997a). Restoration-based defogging algorithms are more commonly used at present. Such algorithms consider the physical process of fog formation and reasonably estimate the transmission and the atmospheric light; the restored image is then computed from the atmospheric scattering model. Note that the word “transmission” here does not have the same meaning as in “electricity transmission line” above: it is a parameter of the atmospheric scattering model that depends on the distance between the object in the image and the observation point (such as the camera). Unless otherwise stated, t hereafter denotes the transmission in the atmospheric scattering model.

Multiple-image defogging is mainly based on polarization. Schechner et al. (2001) proposed a defogging method that uses two polarized images taken in the vertical and horizontal directions. Miyazaki et al. (2013) suggested a fog removal method that uses the polarization data of two known photographs taken at different distances to estimate the characteristics of the fog. Shwartz and Schechner (2006) suggested a polarization defogging technique for images without sky areas that chooses two similar features in the scene to estimate the parameters of the atmospheric scattering model. However, polarization-based defogging algorithms need multiple polarized images taken under the same weather conditions, which is hard to achieve in practice.

Because of these limitations, multiple-image defogging has not been widely used. The more commonly used approach is restoration-based single-image defogging. To estimate the necessary parameters of the atmospheric scattering model, Fattal (2008) introduced the concept of surface shading and assumed that the transmission and the surface shading are uncorrelated. Based on the observation that foggy images have lower contrast than images taken in clear weather, Tan (2008) proposed a defogging algorithm that maximizes local contrast by optimizing the atmospheric scattering model with a Markov random field. Meng et al. (2013) offered a technique to estimate the transmission of unknown scenes by combining a boundary constraint for single-image defogging with context regularization based on a weighted L1 norm. He et al. (2010) proposed a defogging algorithm called the dark channel prior, which has become one of the most popular methods for single-image defogging. To reduce the halo and block artifacts generated by the coarse transmission estimate, He used “soft matting” to smooth the coarse transmission. However, soft matting is too time-consuming to apply in practical situations. To resolve this issue, He et al. (2012) proposed the guided filter, followed by the fast guided filter (He and Sun, 2015). The guided filter transfers the relationship between neighboring pixels of the hazy image to improve the smoothness of the airlight and the transmission. Nevertheless, the dark channel prior algorithm has some limitations. It is ineffective for sky regions and bright ground regions, where the defogging result is often oversaturated. It also handles depth-discontinuous regions poorly, so “halo” phenomena easily arise where the foreground and background of the image meet. Tarel and Hautiere (2009) proposed a median filter and its variants to replace soft matting, which improves the calculation speed. Ehsan et al. (2021) proposed a fog removal method that uses local patches of different sizes to calculate two transmission maps and refines the transmission map with gradient-domain guided image filtering. By training on the sum of squared residual error, Raikwar and Tapaswi (2020) suggested a method to determine the lower bound of the transmission based on the peak signal-to-noise ratio. Berman and Avidan (2016) assumed that an image can be approximated by a few hundred distinct colors that form tight clusters in RGB space, and thus proposed a non-local prior method for defogging.

With the advancement of neural networks and deep learning, more and more fog removal algorithms based on machine learning have been presented. Li et al. (2017) reformulated the atmospheric scattering model and then created the All-in-One Dehazing Network, which uses residual learning and a convolutional neural network to estimate the relevant fog parameters. GridDehazeNet, proposed by Liu et al. (2019), is an end-to-end trainable convolutional neural network for removing fog from a single image. It consists of pre-processing, backbone, and post-processing modules, and is an attention-based multi-scale defogging network. Cai et al. (2016) proposed a deep CNN structure for fog removal, named DehazeNet, to achieve end-to-end fog removal. Zhang and Patel (2018) proposed an end-to-end densely connected pyramid defogging network, named DCPDN, built on an edge-preserving densely connected encoder-decoder structure. Pang et al. (2020) suggested a binocular image dehazing network, which requires multiple images to be used simultaneously for defogging. Ren et al. (2018) suggested a Gated Fusion Network for image defogging, which fuses three inputs derived from the foggy image by preprocessing in order to avoid halo artifacts. Qin et al. (2020) proposed an attention-based feature fusion network for single-image dehazing, named FFA-Net.

In order to promote the construction of smart cities, we propose a defogging algorithm for electricity transmission line monitoring. The following are the paper’s contributions:

• In order to solve the problem of inaccurate calculation of global atmospheric light in the original dark channel prior algorithm, according to the assumption that the sky area of the electricity transmission line image is usually in the upper half of the image, an improved quadtree segmentation is proposed to calculate the global atmospheric light value. The algorithm can avoid the interference caused by the bright objects on the ground to the solution of the global atmospheric light;

• The concept of dark pixel is introduced for the problem that the dark channel prior is prone to the “halo” effect. Dark pixels are located using super pixel segmentation and a fidelity function is proposed to calculate the transmission;

• Because the electricity transmission line is thin in the image and difficult to observe, a detail-sharpening post-processing step based on visibility and airlight is introduced to improve the image details of the electricity transmission line.

The remainder of this paper is organized as follows. Section 2 (Related works) reviews atmospheric scattering models and the dark channel prior, and points out the limitations of the dark channel prior. Section 3 (Proposed method) presents a defogging method for electricity transmission line images based on improved quadtree segmentation and dark pixels, and enhances image details. Section 4 (Experimental results and discussion) evaluates the efficacy of the proposed method using both qualitative and quantitative analyses. Section 5 (Conclusion) summarizes the entire study.

2. Related works

2.1. Physical model

The physical model of atmospheric scattering based on Mie scattering theory was first proposed by McCartney (1976). Narasimhan and Nayar (2001) assumed that, in a uniform atmosphere, the scattering coefficient is independent of the wavelength of visible light, and proposed a simplified version of the atmospheric scattering model:

$$E(x) = I_{\infty}\,\rho(x)\,e^{-\beta d(x)} + I_{\infty}\left(1 - e^{-\beta d(x)}\right) \tag{1}$$

In formula (1), $E(x)$ is the observed brightness at the scene point x, $I_{\infty}$ is the brightness of the sky, ρ(x) denotes the normalized radiance of the scene point x, β is the scattering coefficient of the atmosphere, and d(x) is the scene depth. However, this model is still too complicated, so a further simplified atmospheric scattering model has been proposed. The simplified model used by He et al. (2010) is now the most widely used atmospheric scattering model for describing fog formation. It is shown in the following formula:

$$I(x) = J(x)\,t(x) + A\left[1 - t(x)\right] \tag{2}$$

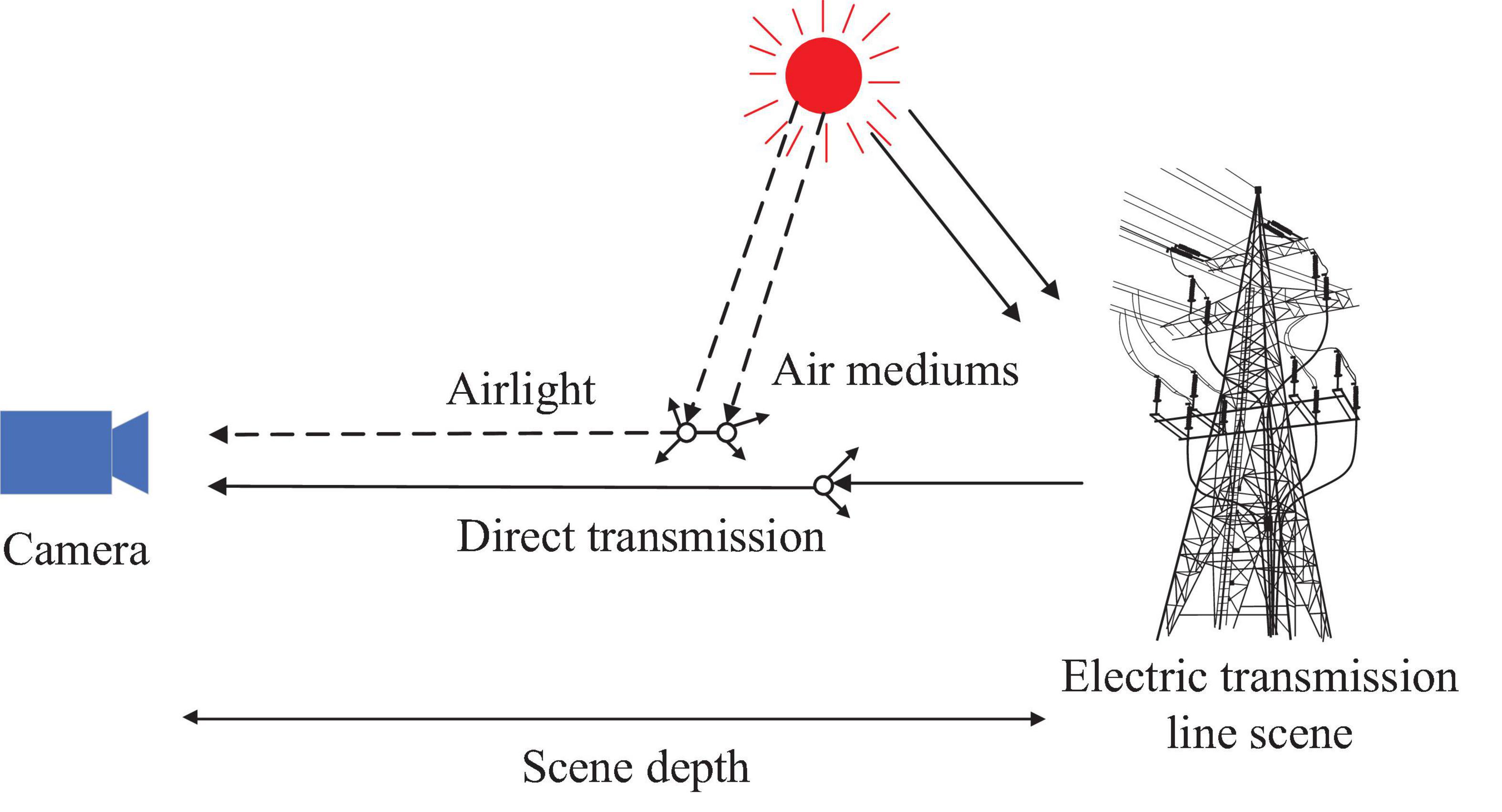

where A is the global atmospheric light, which represents the background illumination of the atmosphere, and I(x) and J(x) are the foggy image and the fog-free image, respectively. x = (m, n) is the pixel coordinate in the image. t(x) is the transmission: it represents the proportion of light that passes through the medium without being scattered and successfully enters the vision system, such as a monitoring system or a camera. According to atmospheric scattering theory, the scattering by atmospheric particles has two main components: the attenuation of the light reflected from the object surface on its way to the vision system, and the scattering of airlight into the vision system. In equation (2), J(x)t(x) is the direct transmission, and A[1 - t(x)] is the airlight, denoted as a(x). Direct transmission describes the attenuated scene light that passes through the air medium, and the airlight is generated by scattered light. The schematic diagram of the atmospheric scattering model is shown in Figure 1, where the solid line represents direct transmission and the dashed line represents airlight.

For the transmission t(x), we have:

$$t(x) = e^{-\beta d(x)} \tag{3}$$

In the above formula, d(x) is the scene depth and β is the atmospheric scattering coefficient. The formula shows that the transmission decays exponentially as the scene depth increases.
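As a minimal sketch of formulas (2) and (3), the following NumPy snippet synthesizes fog on a clear image from a depth map. The function names, the toy depth values, and the choices β = 1.0 and A = 0.9 are illustrative assumptions and do not come from this paper.

```python
import numpy as np

def transmission_from_depth(depth, beta):
    """t(x) = exp(-beta * d(x)) for non-negative depth and beta."""
    return np.exp(-beta * np.asarray(depth, dtype=np.float64))

def add_fog(J, depth, A, beta):
    """Apply I = J * t + A * (1 - t) to a clear image J with values in [0, 1]."""
    t = transmission_from_depth(depth, beta)[..., np.newaxis]  # broadcast over RGB
    return J * t + np.asarray(A, dtype=np.float64) * (1.0 - t)

# Toy scene (assumed values): a uniform dark-gray image whose depth grows to the right.
J = np.full((2, 3, 3), 0.2)
depth = np.array([[0.0, 1.0, 5.0],
                  [0.0, 1.0, 5.0]])
I = add_fog(J, depth, A=[0.9, 0.9, 0.9], beta=1.0)
```

At zero depth the foggy pixel equals the clear pixel, and as the depth grows the pixel value approaches the atmospheric light A, which is the behavior the model describes.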

Substituting the transmission t(x) and the global atmospheric light A into the atmospheric scattering model yields the defogged image J, which is the essential step in image defogging based on the atmospheric scattering model. Rearranging formula (2) gives:

$$J(x) = \frac{I(x) - A}{t(x)} + A \tag{4}$$

It can be seen from formula (4) that the key to calculating the defogging image is to reasonably estimate the transmission t(x) of the foggy image and the global atmospheric light value A. At present, the most commonly used method of defogging is the dark channel prior theory proposed by He et al. (2010).

2.2. Dark channel prior theory

He et al. (2010) derived a statistical rule from observing a large number of fog-free images: in most non-sky local patches, at least one color channel contains pixels whose intensity is so low that it is close to 0. The dark channel $J^{dark}(x)$ is therefore defined by the following formula:

$$J^{dark}(x) = \min_{y \in \Omega(x)} \left( \min_{C \in \{r, g, b\}} J^{C}(y) \right) \tag{5}$$

where Ω(x) is the local patch centered at x, y is a pixel in the local patch Ω(x), $J^{C}$ is color channel C of the fog-free image J, and C ranges over the r, g, and b (red, green, and blue) channels of the RGB image.

He drew the following conclusion from this observation: for an outdoor fog-free image J, because of the shadows cast by buildings in the city or leaves in natural landscapes, the surfaces of colored objects with low reflectivity, and the surfaces of dark objects, the dark channel intensity of J in non-sky regions is extremely low and close to zero. This gives the following formula:

$$J^{dark}(x) \to 0 \tag{6}$$

Combining the dark channel prior with the atmospheric scattering model above gives the following formula for the transmission:

$$t(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{C \in \{r, g, b\}} \frac{I^{C}(y)}{A^{C}} \right) \tag{7}$$

The role of ω is to retain some fog to make the image appear more natural. The value range of ω is (0, 1), and the value is generally set to 0.95.
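The dark channel and the transmission estimate of the dark channel prior can be sketched as follows. This is a plain NumPy illustration rather than the implementation used in this paper: the function names, the 15 × 15 patch size, and the edge padding are assumptions, and the patch size is assumed to be odd.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, patch=15):
    """Minimum over the RGB channels, then over a patch x patch window (patch is odd)."""
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")          # assumption: replicate borders
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))

def dcp_transmission(I, A, omega=0.95, patch=15):
    """t(x) = 1 - omega * dark_channel(I / A), with A given per color channel."""
    normalized = I / np.asarray(A, dtype=np.float64)
    return 1.0 - omega * dark_channel(normalized, patch)

# Toy input (assumed values): a bright hazy image with a single dark pixel.
I = np.full((20, 20, 3), 0.8)
I[10, 10, 0] = 0.0
t = dcp_transmission(I, A=[1.0, 1.0, 1.0])
```

In this toy input the single dark pixel raises the estimated transmission of every pixel whose patch contains it, which illustrates how the minimum filter spreads an estimate across a whole patch.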

The transmission obtained by this method is not accurate and is prone to the “halo” effect, which tends to appear in images after fog removal. Because the foreground is close to the observation point and the background is far from it, the scene depth differs greatly between positions in the image, especially at the junction of the foreground and background. The “halo” phenomenon is therefore usually generated at this junction, producing abnormal color distortion at the edges of the observed objects in the defogged image, and it gradually weakens with increasing distance from the edge. He therefore refined the transmission using “soft matting” to remove the “halo” effect. However, “soft matting” is very time-consuming, so it is not suitable for practical applications. He then proposed the guided filter, which greatly shortens the transmission refinement time and produces sharper image edges.

To prevent the image from being over-enhanced when the transmission is too small, a lower bound t0 is imposed on the transmission, which is usually set to 0.1. The final result is then obtained from the following equation:

$$J(x) = \frac{I(x) - A}{\max\left(t(x),\, t_{0}\right)} + A \tag{8}$$

It can be seen from formula (8) that, for a given foggy image I(x), only two unknown quantities need to be solved to obtain the defogged image J(x): the global atmospheric light A and the transmission t. Therefore, when the atmospheric scattering model is used for image defogging, the two most important steps are the calculation of the global atmospheric light A and the calculation of the transmission t.
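The recovery step with the lower bound t0 can be sketched in a few lines. The function name is hypothetical, and the final clipping to [0, 1] is an added assumption for display purposes that is not part of the formula.

```python
import numpy as np

def recover_scene(I, A, t, t0=0.1):
    """J = (I - A) / max(t, t0) + A for an RGB image I and a 2-D transmission map t."""
    t_clamped = np.maximum(t, t0)[..., np.newaxis]   # lower bound, broadcast over RGB
    A = np.asarray(A, dtype=np.float64)
    J = (I - A) / t_clamped + A
    return np.clip(J, 0.0, 1.0)                      # assumption: keep values displayable

# Toy input (assumed values): one pixel has an unrealistically small transmission.
I = np.full((2, 2, 3), 0.6)
t = np.array([[0.5, 0.5],
              [0.5, 0.01]])
J = recover_scene(I, A=[0.9, 0.9, 0.9], t=t)
```

For the pixels with t = 0.5 the sketch returns 0.3, which is the clear value that produces 0.6 under the scattering model with A = 0.9. For the pixel with t = 0.01 the bound t0 limits the amplification that would otherwise result from dividing by a very small transmission.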

2.3. Disadvantages of dark channel priors

In the dark channel prior algorithm, the global atmospheric light is chosen from the brightest region of the image. He first selects the brightest 0.1 percent of pixels in the dark channel and then picks, among them, the pixel with the highest intensity as the global atmospheric light. However, this process fails when the image contains large white objects or overly bright objects: the global atmospheric light is then misestimated, resulting in a color shift in the recovered image. Second, when the dark channel prior algorithm is applied to regions with discontinuous depth, a “halo” phenomenon commonly appears at the boundary between the foreground and background of the image. Finally, atmospheric scattering in real situations is multiple scattering, but because multiple scattering is difficult to compute, the single scattering model is the most widely used. As a result, the defogged images obtained by the dark channel prior algorithm are often too smooth and lack image details. This paper therefore optimizes the dark channel prior algorithm in these three respects.
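The first limitation can be reproduced with a small sketch of the selection rule described above. The function name and the synthetic scene are assumptions, and for brevity the dark channel is computed with a 1 × 1 patch, which means the per-pixel minimum over the color channels.

```python
import numpy as np

def atmospheric_light_dcp(I, dark, fraction=0.001):
    """Among the brightest `fraction` of dark-channel pixels, return the brightest input pixel."""
    n = max(1, int(dark.size * fraction))
    candidates = np.argpartition(dark.ravel(), -n)[-n:]   # indices of the n largest values
    flat_I = I.reshape(-1, 3)
    intensity = flat_I[candidates].sum(axis=1)
    return flat_I[candidates[np.argmax(intensity)]]

# Synthetic scene (assumed values): hazy sky on top, dark ground with a white object below.
sky = np.full((20, 50, 3), 0.7)
ground = np.full((20, 50, 3), 0.3)
ground[10:14, 5:9] = 1.0
I = np.vstack([sky, ground])
dark = I.min(axis=2)            # assumption: 1 x 1 patch for simplicity
A = atmospheric_light_dcp(I, dark)
```

In this scene the rule returns the white object on the ground rather than the hazy sky, which is the misestimation this subsection describes.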

3. Proposed method

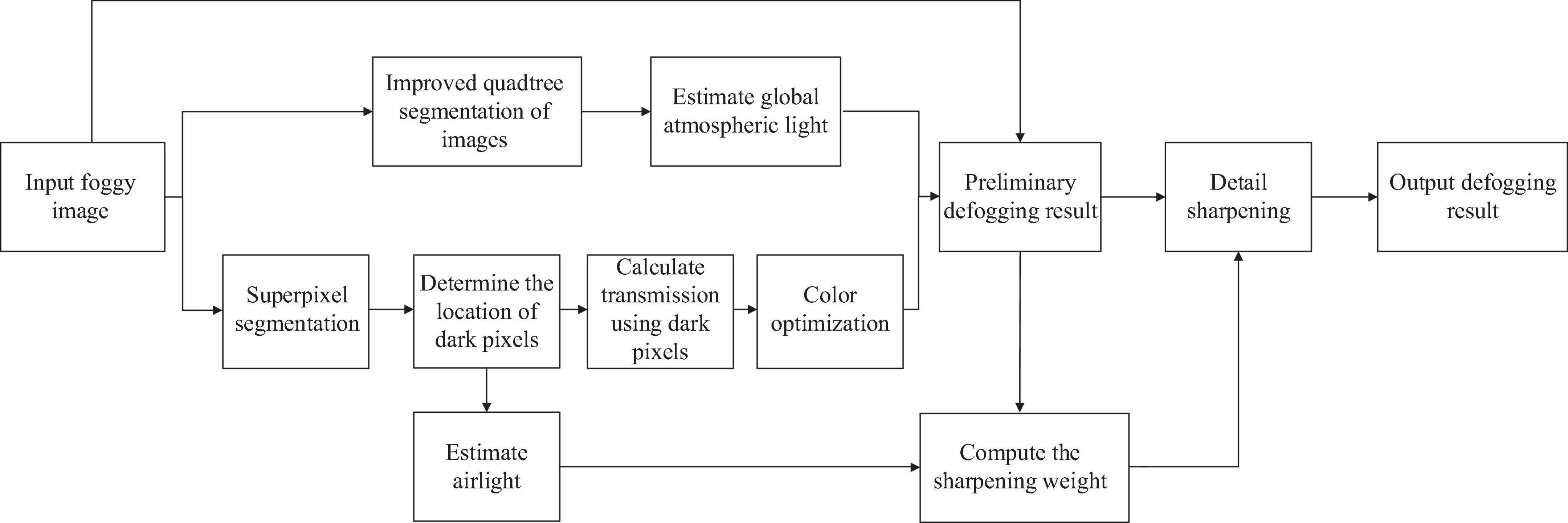

The flowchart of the proposed defogging algorithm is shown in Figure 2. Electricity transmission lines and power towers are located outdoors, and their images often contain large areas of sky. Based on the statistical observation that the sky usually lies in the upper part of the image, a global atmospheric light solution based on an optimized quadtree algorithm is proposed to avoid the erroneous estimation of the global atmospheric light caused by large white objects. We then define dark pixels, perform superpixel segmentation on the input foggy image, and locate the dark pixels in each superpixel block. The transmission is calculated through a fidelity function, and the resulting transmission is then color-corrected. The next step is to invert the atmospheric scattering model to produce a preliminary defogged image. Because the electricity transmission line is thin and hard to observe, and the preliminary defogged image lacks details, a detail-sharpening post-processing algorithm based on airlight and visibility constraints is applied to the preliminary defogged image to improve its texture details. Finally, the final defogged image J(x) is obtained.

3.1. Global atmospheric light estimation

The presence of fog in the image will lead to a brighter area in the image, so the global atmospheric light is usually selected in the bright area, usually in the sky area. However, white objects on the ground or high-brightness objects can easily induce an incorrect selection of the global atmospheric light, resulting in chromatic aberration in the fog-free image. Therefore, it is necessary to improve the selection of global atmospheric light.

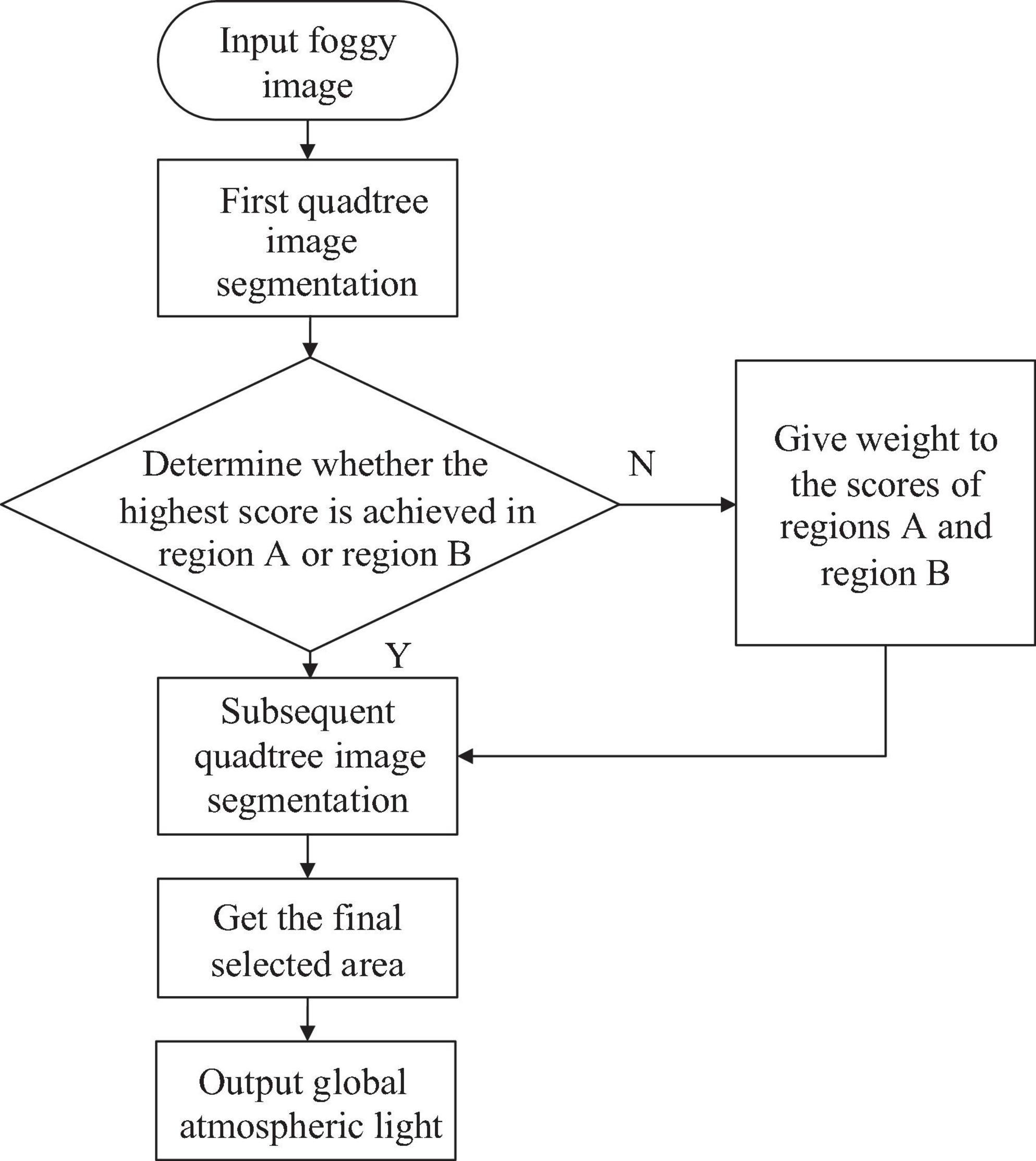

An optimized quadtree segmentation algorithm is used to estimate the global atmospheric light in this study. Based on the principle that the variance of pixel values is usually low in foggy areas, Kim et al. (2013) suggested an algorithm based on quadtree segmentation to select the global atmospheric light. The input image is first divided into four sections: the upper left area is named area A, the upper right area B, the lower left area C, and the lower right area D. For each region, we compute the standard deviation and the mean of the pixel values of the R, G, and B channels, and subtract the standard deviation from the mean to obtain a region score. The region with the highest score is selected and divided into four smaller parts, from which the region with the highest score is selected again. This procedure is repeated until the size of the selected area falls below a predetermined threshold. In the final selected area, the pixel whose value is closest to white is taken as the global atmospheric light. For an RGB image, white is the color whose R, G, and B channels are all 255. Hence the estimation of the global atmospheric light can be transformed into finding the pixel that minimizes the following formula:

$$\left\| \left( I_{r}(x),\, I_{g}(x),\, I_{b}(x) \right) - (255,\, 255,\, 255) \right\| \tag{9}$$

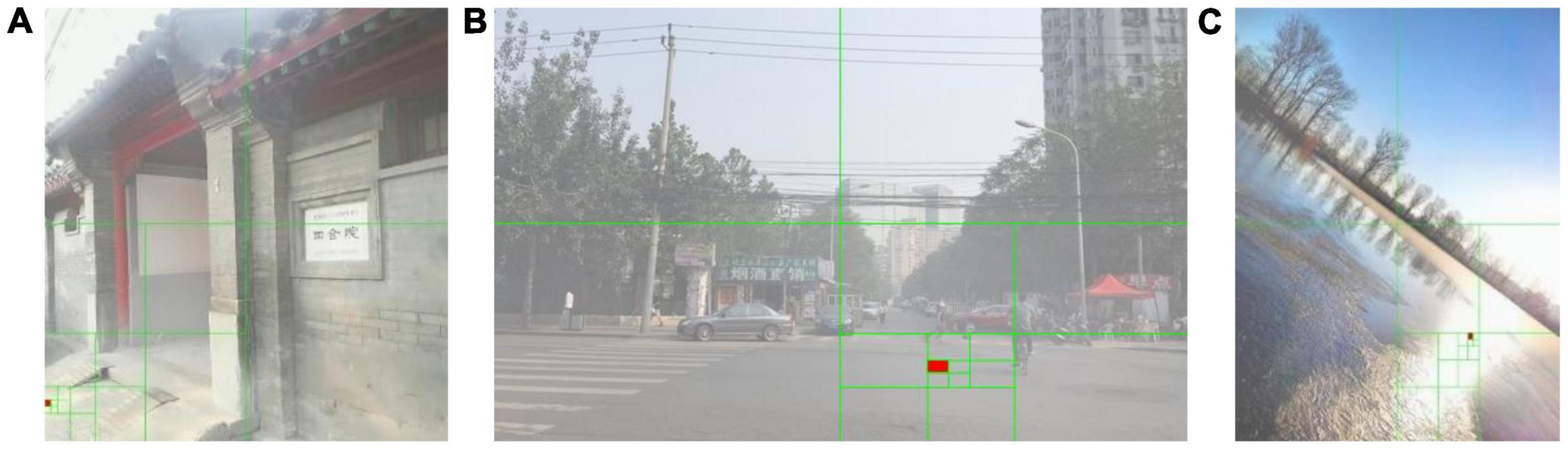

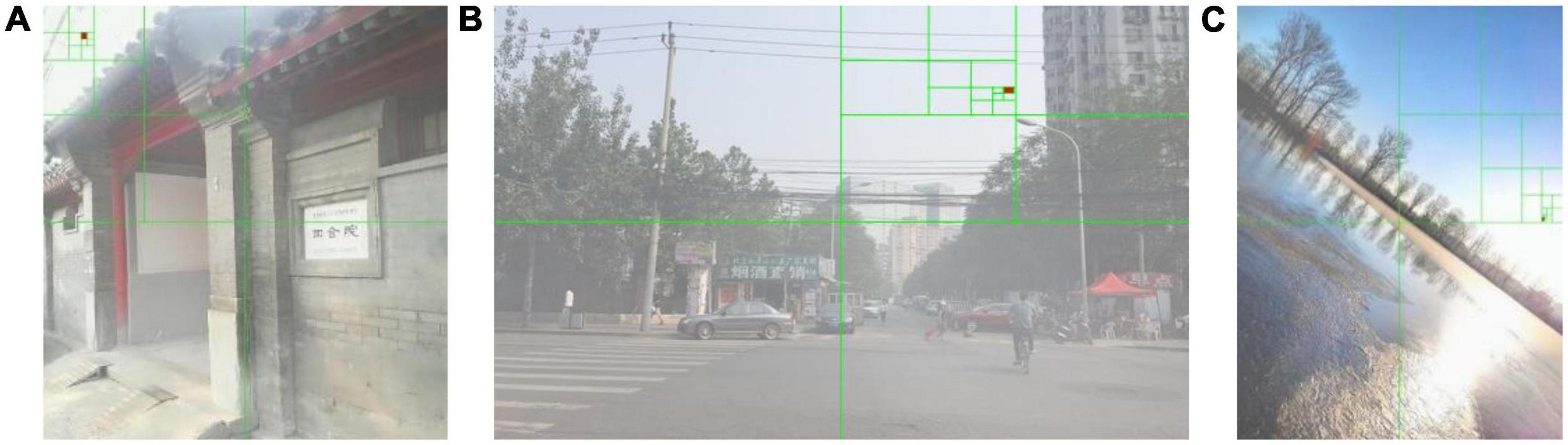

In formula (9), I represents the input image, r, g, and b represent the RGB color channels of the input image, x represents a pixel of the input image, and (255, 255, 255) represents white in RGB space. The quadtree segmentation method selects the global atmospheric light in as bright an area as possible. However, when there are large white objects or high-brightness objects in the non-sky area, the quadtree segmentation algorithm still selects the global atmospheric light in the non-sky area, as shown in Figure 3. The images are from the OTS subset of the Realistic Single Image Dehazing (RESIDE) dataset. The green lines in the image represent the quadtree segmentation process, and the red fill represents the final selected area. It can be seen from Figure 3 that the estimation of the global atmospheric light is disturbed by white objects on the ground, so the final result is chosen on the ground and the lake surface instead of the sky.
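The baseline quadtree selection described above can be sketched as follows. This is an illustration under stated assumptions, not the code of Kim et al. (2013): the function names and the 8-pixel stopping size are hypothetical, the per-channel scores are averaged into one region score, and the test image is synthetic.

```python
import numpy as np

def region_score(region):
    """Mean minus standard deviation per channel, averaged over R, G, B (assumed aggregation)."""
    pixels = region.reshape(-1, 3).astype(np.float64)
    return float((pixels.mean(axis=0) - pixels.std(axis=0)).mean())

def quadrants(y0, y1, x0, x1):
    """Bounds of areas A, B, C, D: upper left, upper right, lower left, lower right."""
    ym, xm = (y0 + y1) // 2, (x0 + x1) // 2
    return [(y0, ym, x0, xm), (y0, ym, xm, x1), (ym, y1, x0, xm), (ym, y1, xm, x1)]

def quadtree_atmospheric_light(img, min_size=8):
    """Repeatedly keep the best-scoring quadrant, then take the pixel closest to white."""
    y0, x0 = 0, 0
    y1, x1 = img.shape[:2]
    while min(y1 - y0, x1 - x0) > min_size:
        candidates = quadrants(y0, y1, x0, x1)
        scores = [region_score(img[a:b, c:d]) for a, b, c, d in candidates]
        y0, y1, x0, x1 = candidates[int(np.argmax(scores))]
    pixels = img[y0:y1, x0:x1].reshape(-1, 3).astype(np.float64)
    distance_to_white = np.linalg.norm(pixels - 255.0, axis=1)
    return pixels[np.argmin(distance_to_white)], (y0, y1, x0, x1)

# Synthetic image (assumed values): hazy sky on top, bright lake lower left, dark ground lower right.
img = np.full((64, 64, 3), 200.0)
img[32:, 32:] = 50.0
img[32:, :32] = 250.0
A, box = quadtree_atmospheric_light(img)
```

On this synthetic image the selected box lies in the lower half and the returned value is the lake color, mirroring the failure case shown in Figure 3.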

To address this issue, we optimize the quadtree segmentation used to estimate the global atmospheric light. For the four regions A, B, C, and D, we calculate the regional scores, record them as scoreA, scoreB, scoreC, and scoreD, and compare them. Because the sky is mostly concentrated in the upper portion of the image, if the region with the highest score in the first step lies in the upper half of the image, that is, scoreA or scoreB is the highest, the subsequent segmentation continues as usual. If the region with the highest score in the first step lies in the lower half of the image, that is, scoreC or scoreD is the highest, we assign the weights ξA and ξB to scoreA and scoreB, respectively, giving the weighted scores ξA⋅scoreA and ξB⋅scoreB. The process then returns to the first segmentation step and compares ξA⋅scoreA, ξB⋅scoreB, scoreC, and scoreD again, so that the global atmospheric light is located in the top half of the image in the first step. To make the recalculated ξA⋅scoreA and ξB⋅scoreB larger than scoreC and scoreD, the weight ξ is set to 1.5. Finally, the average value of the selected area is used as the global atmospheric light. Figure 4 depicts this process.
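A minimal sketch of the weighted first step is given below. The helper names, the 8-pixel stopping size, the score aggregation over channels, and the synthetic image are assumptions; only the weight ξ = 1.5, the first-step re-comparison, and the final averaging follow the description above.

```python
import numpy as np

def region_score(region):
    """Mean minus standard deviation per channel, averaged over R, G, B (assumed aggregation)."""
    pixels = region.reshape(-1, 3).astype(np.float64)
    return float((pixels.mean(axis=0) - pixels.std(axis=0)).mean())

def quadrants(y0, y1, x0, x1):
    """Bounds of areas A, B, C, D: upper left, upper right, lower left, lower right."""
    ym, xm = (y0 + y1) // 2, (x0 + x1) // 2
    return [(y0, ym, x0, xm), (y0, ym, xm, x1), (ym, y1, x0, xm), (ym, y1, xm, x1)]

def weighted_quadtree_atmospheric_light(img, xi=1.5, min_size=8):
    """Quadtree search whose first step favors the upper areas A and B by the weight xi."""
    y0, x0 = 0, 0
    y1, x1 = img.shape[:2]
    first_step = True
    while min(y1 - y0, x1 - x0) > min_size:
        candidates = quadrants(y0, y1, x0, x1)
        scores = np.array([region_score(img[a:b, c:d]) for a, b, c, d in candidates])
        if first_step and int(np.argmax(scores)) >= 2:
            scores[:2] *= xi          # area C or D won: re-compare with weighted A and B
        first_step = False
        y0, y1, x0, x1 = candidates[int(np.argmax(scores))]
    area = img[y0:y1, x0:x1].reshape(-1, 3).astype(np.float64)
    return area.mean(axis=0), (y0, y1, x0, x1)

# Synthetic image (assumed values): hazy sky on top, bright lake lower left, dark ground lower right.
img = np.full((64, 64, 3), 200.0)
img[32:, 32:] = 50.0
img[32:, :32] = 250.0
A, box = weighted_quadtree_atmospheric_light(img)
```

With the weight applied, the upper-left score becomes 300 and beats the lake score of 250, so the search moves into the sky and the returned value is the sky color.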

Figure 5 shows the global atmospheric light selection results of the optimized quadtree segmentation algorithm for the three foggy images in Figure 3. The selected area moves from the bottom half of each image to the sky area. This shows that, for foggy images containing sky, the method locates the global atmospheric light in the sky area in the upper half of the image and avoids locating it on the ground, on large white objects, or on other interfering objects.

Figure 5. Estimation of global atmospheric light using an optimized quadtree segmentation algorithm.

3.2. Transmission optimization

Since the dark channel prior uses the minimum filter to calculate the transmission, it is common for a depth discontinuity to emerge at the image’s boundaries. Hence it is easy to produce a “halo” phenomenon at the boundary between the foreground and background. When the foggy image is converted into a dark channel map to extract the clear part, the size of the local patch Ω is difficult to determine. When deriving the transmission calculation formula, the original dark channel prior method assumes that the transmission in the local patch Ω is a constant, which is not consistent with the real situation. To optimize transmission, a method combining super-pixel segmentation and the dark pixel is utilized in this research.

Zhu et al. (2019) pointed out that dark pixels are widespread. First, this paper defines dark pixels as follows:

Simple linear iterative clustering (SLIC) (Achanta et al., 2012) is used for superpixel segmentation of the images. Superpixel segmentation groups adjacent pixels with similar brightness and texture characteristics into irregular pixel blocks, so that a small number of superpixel blocks can replace the large number of pixels in the original image. When a superpixel block is too large, it spans depth discontinuities, and block artifacts and “halo” phenomena may still occur, so the size of the superpixel blocks needs to be chosen reasonably. We partition the foggy image into 1,000 superpixels in this study. Next, we locate the dark pixels in the generated superpixel blocks using the local constant assumption (Zhu et al., 2019). Note that the local constant assumption is only used to locate dark pixels, not to estimate the transmission. Each superpixel local patch Ω contains at least one dark pixel. Under the assumption that the transmission within the local patch Ω of each superpixel is constant, the dark pixel in each superpixel local patch Ω is found as the local minimum of $\min_{c}\left[I^{c}(x)/A^{c}\right]$, where $x \in \Omega$.
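Locating one dark-pixel candidate per superpixel can be sketched as follows. The function name is hypothetical, and the label map is assumed to come from any superpixel algorithm such as SLIC; here a hand-made two-block label map stands in for it so that the example stays self-contained.

```python
import numpy as np

def locate_dark_pixels(I, A, labels):
    """Return a mask marking, in each superpixel, the pixel that minimizes min_c I^c(x) / A^c."""
    m = (I / np.asarray(A, dtype=np.float64)).min(axis=2)
    flat_m, flat_labels = m.ravel(), labels.ravel()
    order = np.lexsort((flat_m, flat_labels))        # sort by label, then by m within a label
    sorted_labels = flat_labels[order]
    is_first = np.r_[True, sorted_labels[1:] != sorted_labels[:-1]]
    mask = np.zeros(m.size, dtype=bool)
    mask[order[is_first]] = True                     # first entry per label is its minimum
    return mask.reshape(m.shape), m

# Toy input (assumed values): two "superpixels", the left and right halves of a 4 x 4 image.
I = np.full((4, 4, 3), 0.8)
I[1, 0] = [0.8, 0.1, 0.8]
I[2, 3] = [0.2, 0.9, 0.9]
labels = np.zeros((4, 4), dtype=int)
labels[:, 2:] = 1
mask, m = locate_dark_pixels(I, A=[1.0, 1.0, 1.0], labels=labels)
```

The mask contains exactly one pixel per label, at the position of the smallest normalized channel value in that superpixel.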

For each dark pixel, there is the following formula:

A certain amount of fog should be preserved to give the image a more realistic appearance, so we take $J^{c}(z)/A^{c} = 0.05$ (Zhu et al., 2019). Substituting formula (10) into formula (11) gives the following formula:

For any pixel x, the closer $\min_{c}\left[I^{c}(x)/A^{c}\right]$ is to the minimum over its local patch, $\min_{\Omega,c}\left[I^{c}(x)/A^{c}\right]$, the more likely the pixel is to be a dark pixel (Zhu et al., 2019). To ensure that x is a dark pixel, the two values should be close enough. Therefore, we define the fidelity function F(x) for the dark pixel x as follows:

Formula (13) is the fidelity function. According to this formula, when the difference between $\min_{c}\left[I^{c}(x)/A^{c}\right]$ and $\min_{\Omega,c}\left[I^{c}(x)/A^{c}\right]$ is less than 0.001, the property of the logarithmic function gives F(x) = 1, and the two values can be regarded as equal. The pixel x can then be approximately regarded as the expected dark pixel, which gives the following formula:

The final transmission is obtained by optimizing the following energy function:

where and are weight coefficients, defined as:

The final transmission can be obtained from the above formula, as shown in the following formula:

where is the vector form of t, is the vector form of . And is a sparse diagonal matrix composed of elements in F, is the Laplace matrix. The value of λ is 0.02.

The restored fog-free image may have color offset problems such as too dark color in non-sky area and overexposure color in bright sky area. It is necessary to perform color correction on the obtained transmission. Color correction for transmission t is performed by the following formula:

where 0.2 is used as the value for σ.

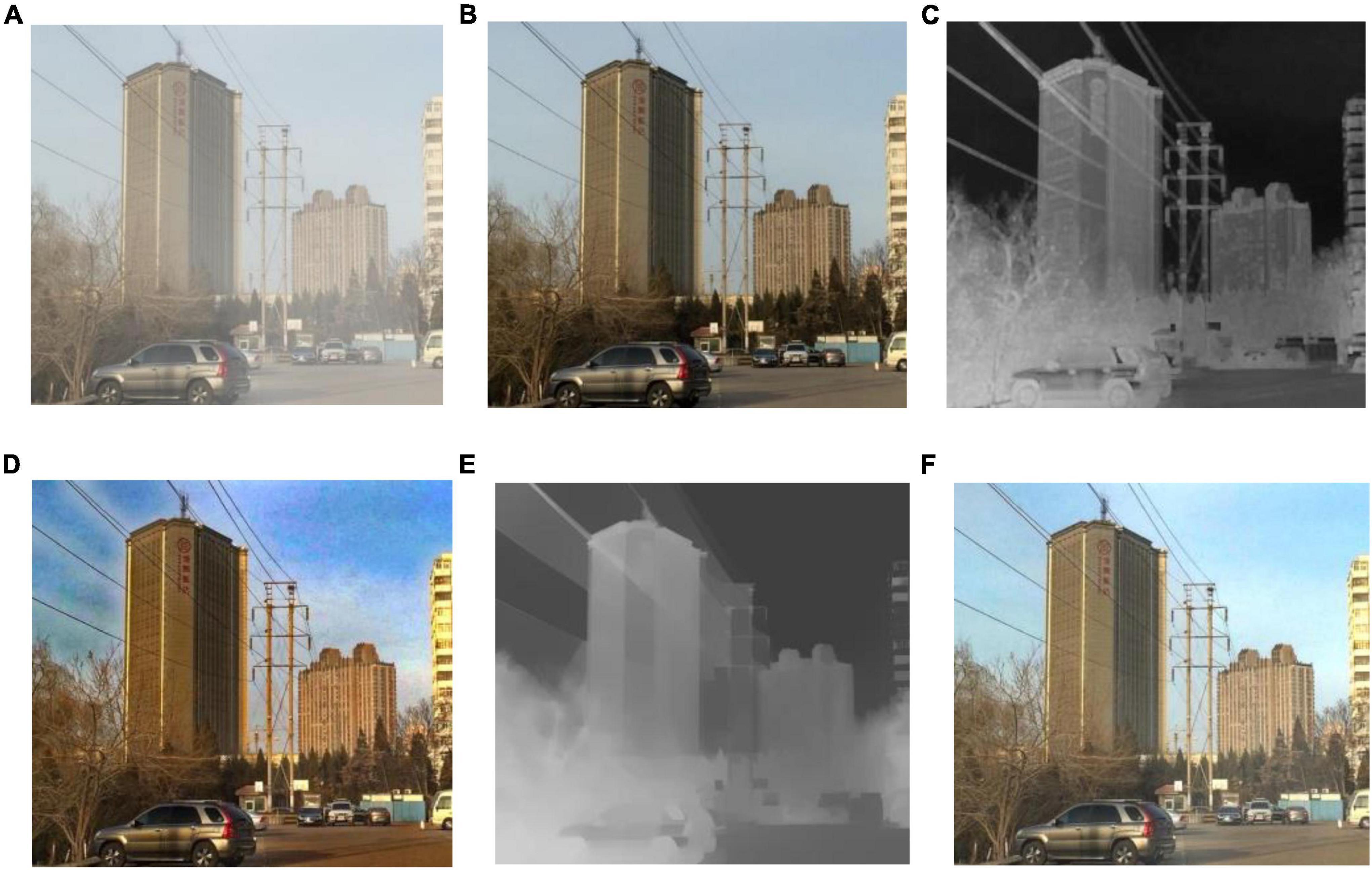

Figure 6 compares the dark channel prior method and the proposed method in terms of the transmission and the recovered images. As shown in Figure 6C, when the transmission is estimated using the dark channel prior algorithm, the dark channel map contains some depth-independent details. In addition, the transmission in the area around the thin overhead lines is overestimated, resulting in halo artifacts near the overhead lines in the defogging results. As shown in Figure 6E, the proposed method avoids overestimating the transmission of the overhead line, avoids halo artifacts near the overhead line, and provides a better transition at the junction of the overhead line and the sky. Compared with the ground truth in Figure 6B, the proposed method also avoids color oversaturation.

Figure 6. The effect of the proposed method on transmission optimization for electricity transmission line images. (A) Hazy image; (B) ground truth; (C) transmission of dark channel prior; (D) result of dark channel prior; (E) transmission of our method; (F) preliminary result of our method.

3.3. Detail sharpening

The transmission line is thin and difficult to observe, so the details of the defogged image need to be enhanced in order to observe the electricity transmission line better. Actual atmospheric scattering is multiple scattering, while the commonly used atmospheric scattering model considers only single scattering, which leads to a loss of details and blurring in the defogged image. Therefore, it is necessary to sharpen the details of the defogged image. Image blur caused by multiple scattering is mainly related to two factors: the visibility level and the airlight level. The visibility level is related to detail, and the airlight level is related to depth (Gao et al., 2018). If the airlight level in a certain area of the image is high, the image details in that area are smoother, so the degree of sharpening should increase with the airlight level. Conversely, where the visibility level is high, the details are less smoothed, so the degree of sharpening should decrease as the visibility level increases. The sharpening coefficient is defined as follows:

$$S(x, y) = S_{1}(x, y) \cdot S_{2}(x, y) \tag{20}$$

In formula (20), S(x, y) represents the sharpening coefficient matrix. The function determined by the airlight level is represented by S1(x, y), while the function determined by the visibility level is represented by S2(x, y). S(x, y) means the multiplication of the corresponding elements of the S1(x, y) and S2(x, y) matrices.
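The display equation is not reproduced in this text. From the description above, a form consistent with it would be the element-wise product below; the symbol ⊙ is a notational choice made here, not necessarily the authors' notation.

```latex
S(x, y) = S_1(x, y) \odot S_2(x, y)
```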

A sigmoid function can satisfy the requirement that the sharpening coefficient increases with the airlight level and decreases with the visibility level. We use the cumulative distribution function as the constraint function for both the airlight level and the visibility level. This function is a sigmoid function, expressed as follows:

The following formula is the error function erf(x):
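The two display equations are not reproduced in this text. Assuming the authors use the standard normal distribution (zero mean, unit variance), which the text does not state explicitly, the cumulative distribution function and the error function take their standard forms:

```latex
\Phi(x) = \frac{1}{2}\left[1 + \operatorname{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right],
\qquad
\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_{0}^{x} e^{-t^{2}}\,\mathrm{d}t
```

If a different variance is used, the argument x is simply rescaled; the sigmoid shape and the limits 0 and 1 are unchanged.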

The cumulative distribution function Φ(x) is a sigmoid function that increases monotonically with x: it approaches 0 as x approaches −∞ and 1 as x approaches ∞. Negating its argument yields a monotonically decreasing function with the same range. The cumulative distribution function can therefore serve as the constraint function for both quantities, increasing with the airlight level and decreasing with the visibility level. As a result, the following definitions apply to the airlight level and visibility level constraints:

where a(x, y) denotes the airlight level and C(x, y) represents the visibility level. a_ave is the average value of the airlight level, C_ave is the average value of the visibility level, and k1 and k2 are the slope control coefficients. The visibility level C is related to the Weber luminance contrast (Hautiere et al., 2008). The expression for the visibility level C is as follows:
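A minimal sketch of how the two constraints and the sharpening coefficient could be computed is given below. The exact constraint equations are not reproduced in this text, so the forms S1 = Φ(k1 (a − a_ave)) and S2 = Φ(−k2 (C − C_ave)) are assumptions that merely satisfy the stated monotonicity requirements; the function names are hypothetical, and the default values of k1 and k2 are those listed in Section 4.

```python
import math
import numpy as np

def normal_cdf(x):
    """Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))."""
    erf = np.vectorize(math.erf)
    return 0.5 * (1.0 + erf(np.asarray(x, dtype=float) / math.sqrt(2.0)))

def sharpening_coefficient(airlight, visibility, k1=25.0, k2=0.01):
    """Element-wise S = S1 * S2 under the assumed constraint forms."""
    # Assumption: S1 increases with the airlight level around its mean.
    s1 = normal_cdf(k1 * (airlight - airlight.mean()))
    # Assumption: S2 decreases with the visibility level around its mean.
    s2 = normal_cdf(-k2 * (visibility - visibility.mean()))
    return s1 * s2

# Toy maps: the right-hand pixel has the higher airlight level.
a = np.array([[0.2, 0.8]])
C = np.array([[10.0, 10.0]])
S = sharpening_coefficient(a, C)
print(S)
```

Because both factors lie in [0, 1], the resulting coefficient also lies in [0, 1], which keeps the later gain bounded.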

In the above formula, ΔL is the brightness difference between the preliminary defogging result and the background image, Lt is the brightness of the preliminary defogging result, and Lb is the brightness of the image background. RGB is the most commonly used color space and consists of three primary colors: red (R), green (G), and blue (B). YCbCr is another color space, consisting of a luminance component (Y), a blue chrominance component (Cb), and a red chrominance component (Cr). To calculate the brightness difference, the preliminary defogging result is converted from RGB space to YCbCr space and the luminance component is extracted as Lt; the preliminary defogging result is then low-pass filtered to produce Lb.
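The computation described above can be sketched as follows. The display equation is not reproduced in this text; the sketch assumes the Weber form C = |Lt − Lb| / Lb, takes the luminance with the ITU-R BT.601 weights in full range, and uses a box filter as the low-pass filter. The absolute value, the filter type, its radius, and the small constant `eps` are all assumptions made for illustration.

```python
import numpy as np

def luminance(rgb):
    """Y component of YCbCr (BT.601 weights, full range), rgb in [0, 1]."""
    return rgb @ np.array([0.299, 0.587, 0.114])

def box_blur(img, radius):
    """Simple low-pass filter: mean over a (2r+1) x (2r+1) window."""
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w))
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (size * size)

def visibility_level(rgb, radius=2, eps=1e-6):
    """Assumed Weber-type contrast between the image and its background."""
    lt = luminance(rgb)              # brightness of the preliminary result
    lb = box_blur(lt, radius)        # low-pass filtered background brightness
    return np.abs(lt - lb) / (lb + eps)

# Toy image: uniform sky with a one-pixel-wide dark line in column 10.
img = np.full((21, 21, 3), 0.8)
img[:, 10, :] = 0.2
C = visibility_level(img)
print(C[10, 10], C[10, 0])
```

In this toy image the visibility level is large on the thin line and zero in the flat sky far from it.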

The final enhancement result is as follows:

In formula (26), Jfinal(x, y) is the final defogging result, J(x, y) is the preliminary defogging result, and θ constrains the upper bound of the enhancement. T(x, y) is the high-frequency component of the preliminary result J(x, y), obtained by Gaussian filtering of J(x, y); using only the high-frequency component prevents excessive enhancement of flat areas such as the sky. The preliminary defogging result J(x, y) in formula (26) is the result obtained from formula (8) after the global atmospheric light A and the transmission t of the input image I(x) have been calculated with the methods of Section “3.1 Global atmospheric light estimation” and Section “3.2 Transmission optimization”, that is, the fog-free image without detail sharpening.
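A minimal sketch of this post-processing step is shown below. Since formula (26) is not reproduced in this text, the sketch assumes the form Jfinal = J + θ · S · T with T = J − G(J), where G is a Gaussian blur; with S in [0, 1], the gain θ · S is then bounded above by θ, which matches the description. The assumed form, the helper names, the Gaussian width used here, and the final clipping to [0, 1] are illustrative choices; θ = 3 is the value listed in Section 4.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at three standard deviations."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array with edge padding."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def sharpen(j, s, theta=3.0, sigma=2.0):
    """Assumed form: J_final = J + theta * S * T, with T the high-frequency layer."""
    t = j - gaussian_blur(j, sigma)          # T is zero in flat regions
    return np.clip(j + theta * s * t, 0.0, 1.0)

# Toy luminance image with a vertical step edge between columns 9 and 10.
j = np.full((21, 21), 0.3)
j[:, 10:] = 0.7
s = np.ones_like(j)                          # hypothetical sharpening coefficients
j_final = sharpen(j, s)
print(j_final[10, 9], j_final[10, 10])
```

Flat regions have T = 0 and are left unchanged, while the contrast across the edge increases.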

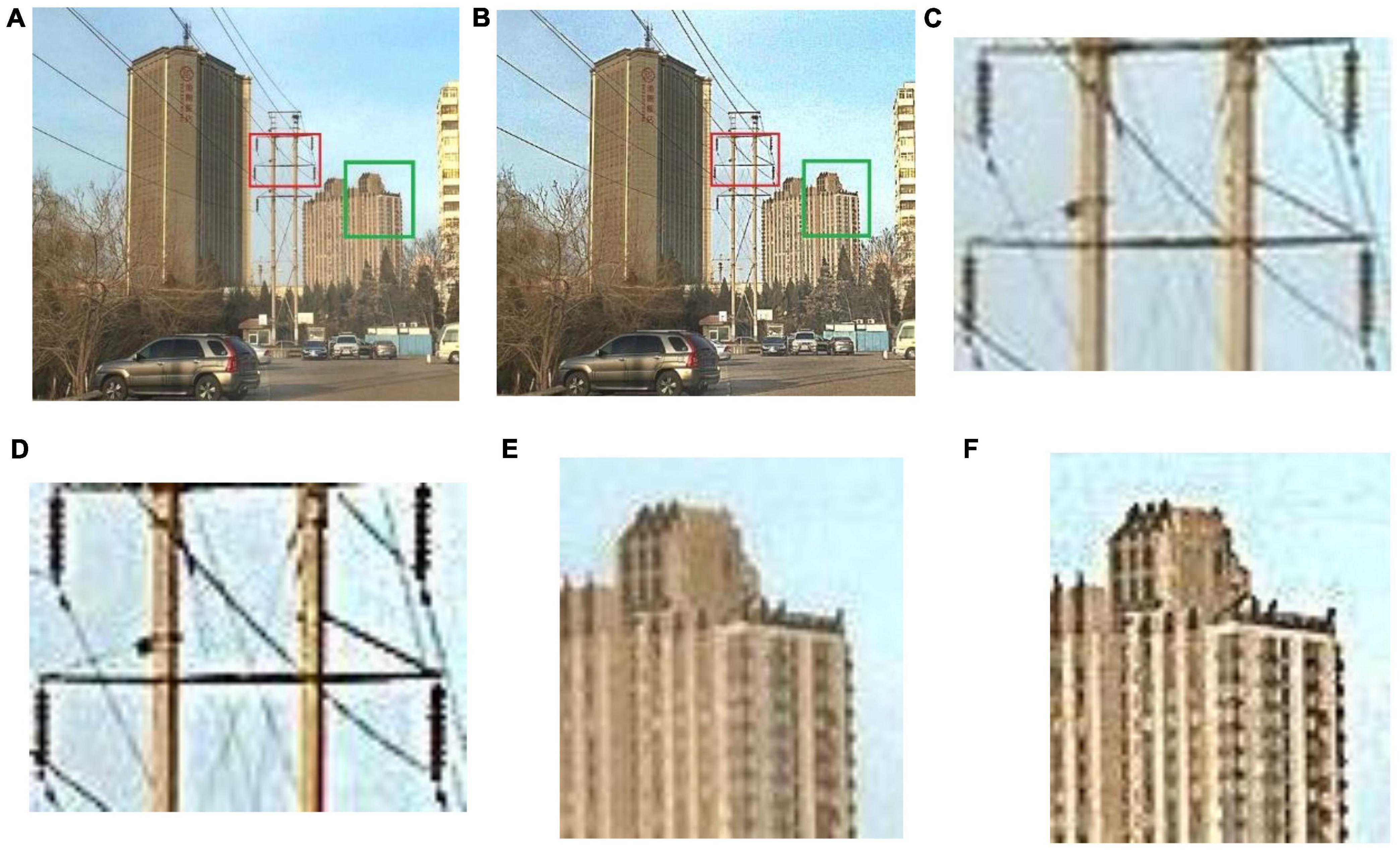

The final defogging result after detail sharpening has more accurate local details. Figure 7 compares the preliminary defogging result without sharpening and the final defogging result after sharpening. As shown in Figures 7C, D, the electricity transmission line and insulators show richer and more prominent details after sharpening. The details of distant buildings also become clearer, as shown in Figures 7E, F.

Figure 7. Comparison of local details between the two methods. (A) Preliminary defogging result without sharpening; (B) Final defogging result after sharpening; (C) Local detail of intermediate defogging result (insulators); (D) Local detail of final defogging result (insulators); (E) Local detail of intermediate defogging result (buildings); (F) Local detail of final defogging result (buildings).

4. Experimental results and discussion

The effectiveness of the proposed defogging algorithm is examined through qualitative and quantitative comparisons with widely used defogging methods. We select foggy images containing electricity transmission lines from the RESIDE dataset and compare the proposed method with those of He et al. (2010), Fattal (2008), Meng et al. (2013), Tarel and Hautiere (2009), Ehsan et al. (2021), Berman and Avidan (2016), and Raikwar and Tapaswi (2020). The following parameters are used in this study: ξ = 1.5, λ = 0.02, ε = 0.00001, σ = 0.2, k1 = 25, k2 = 0.01, θ = 3. The experimental platform is a laptop running 64-bit Windows 10, with an Intel(R) Core i7-11800H CPU clocked at 2.30 GHz, an NVIDIA RTX3060 GPU, and 40 GB of memory. The software platform is MATLAB 2021b.

4.1. Qualitative comparison

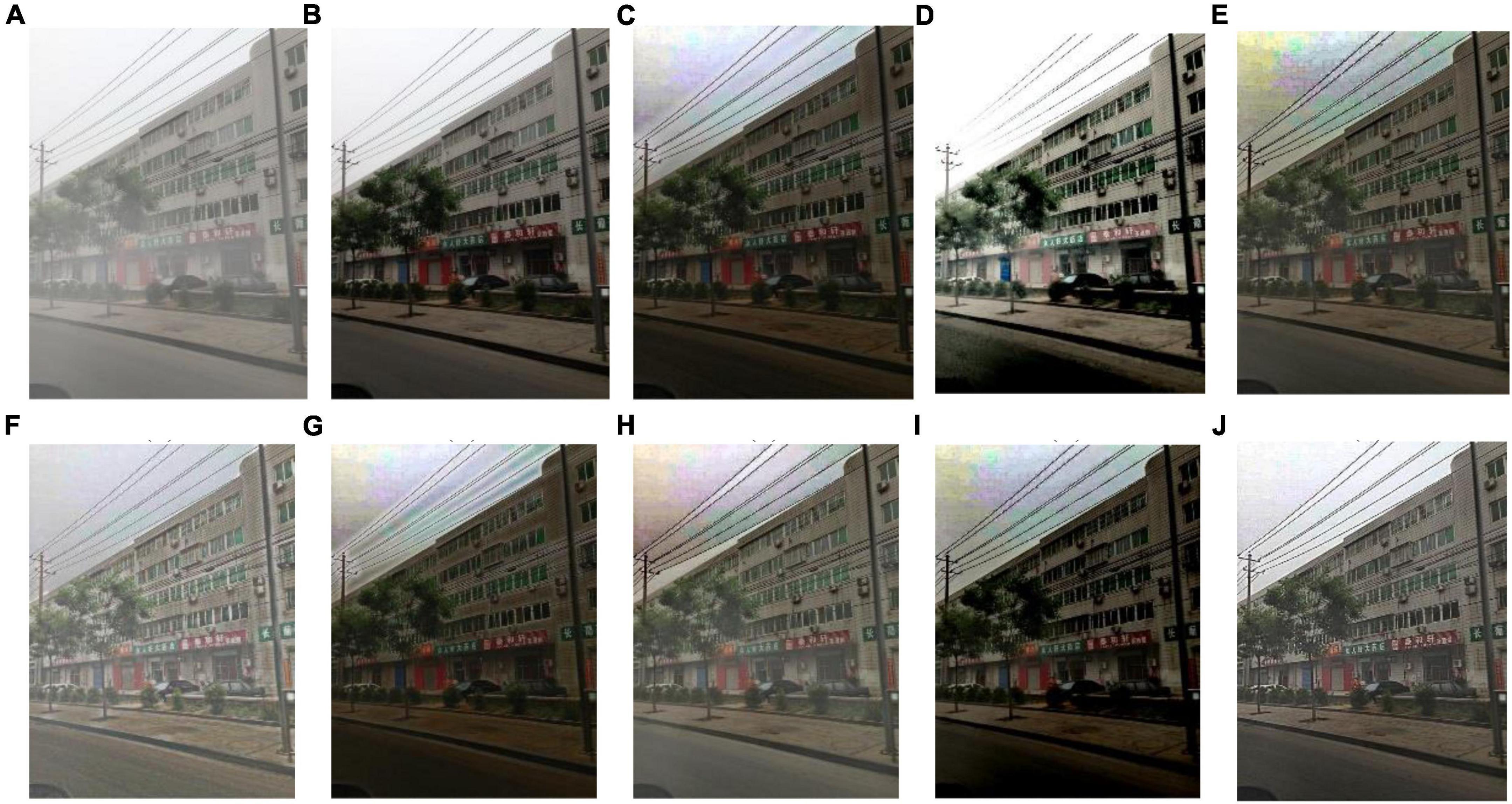

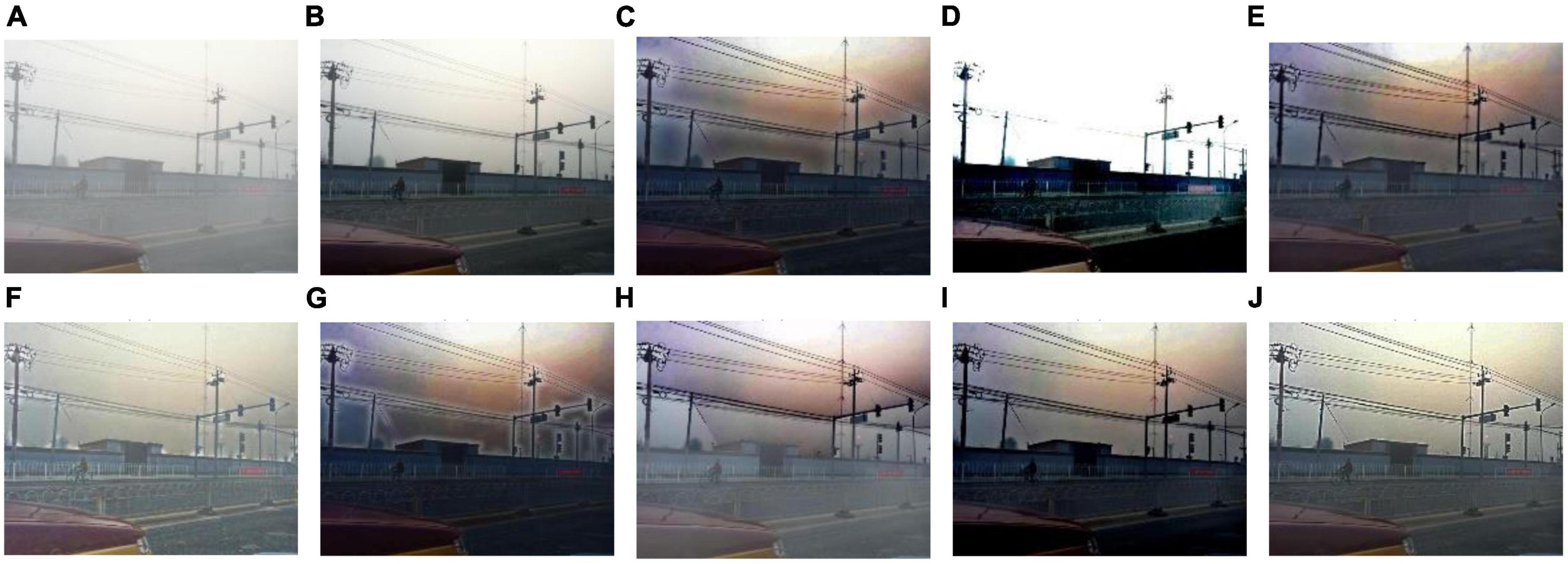

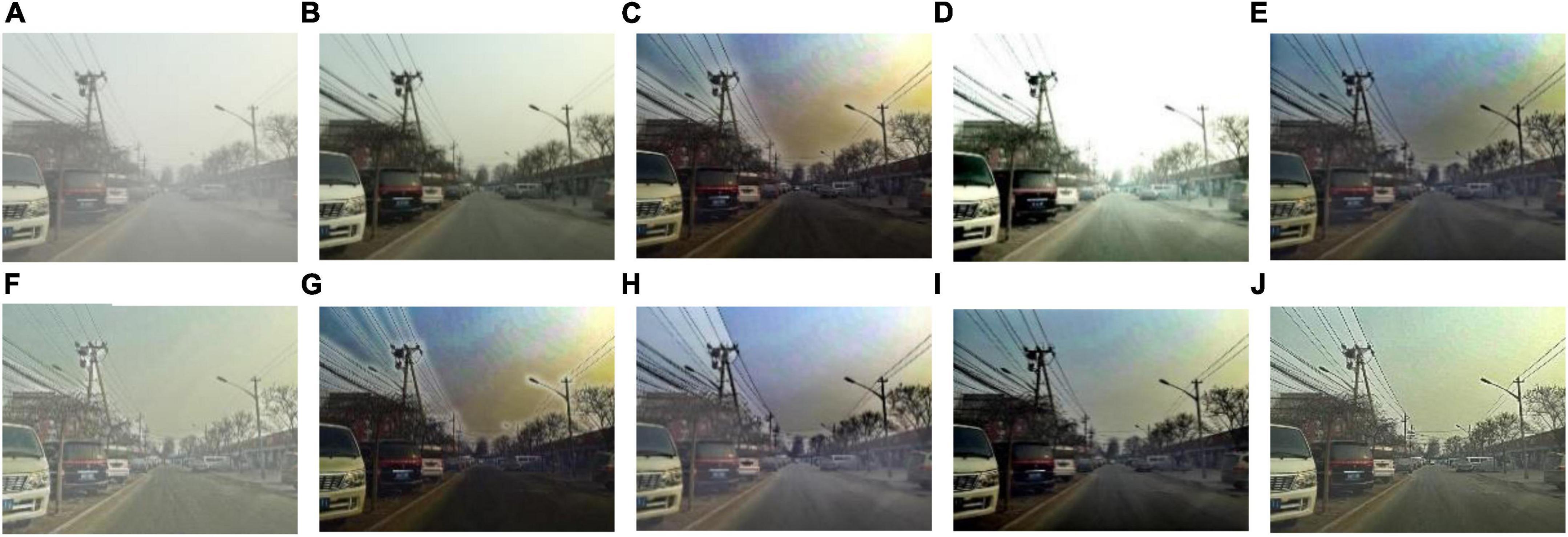

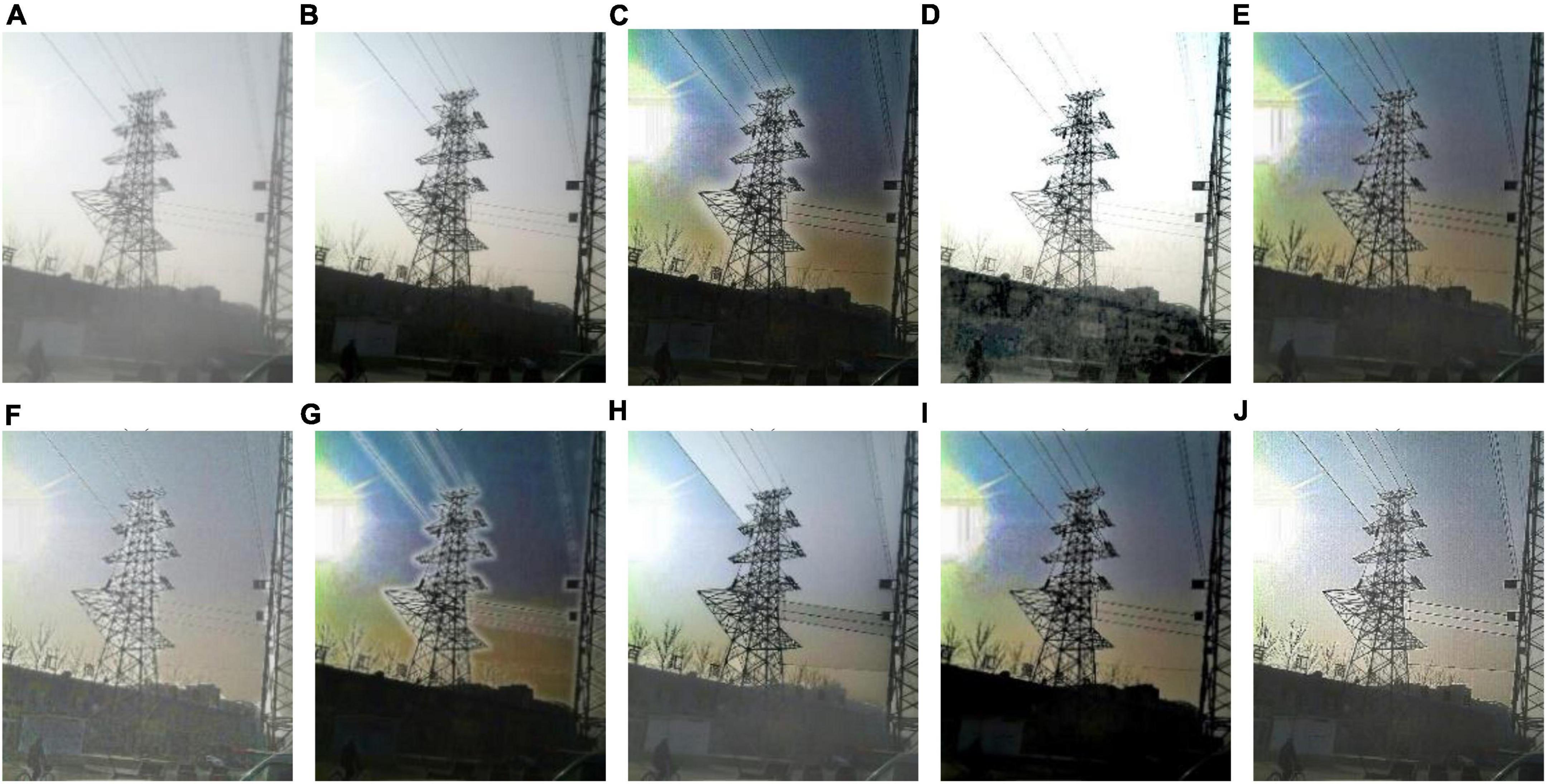

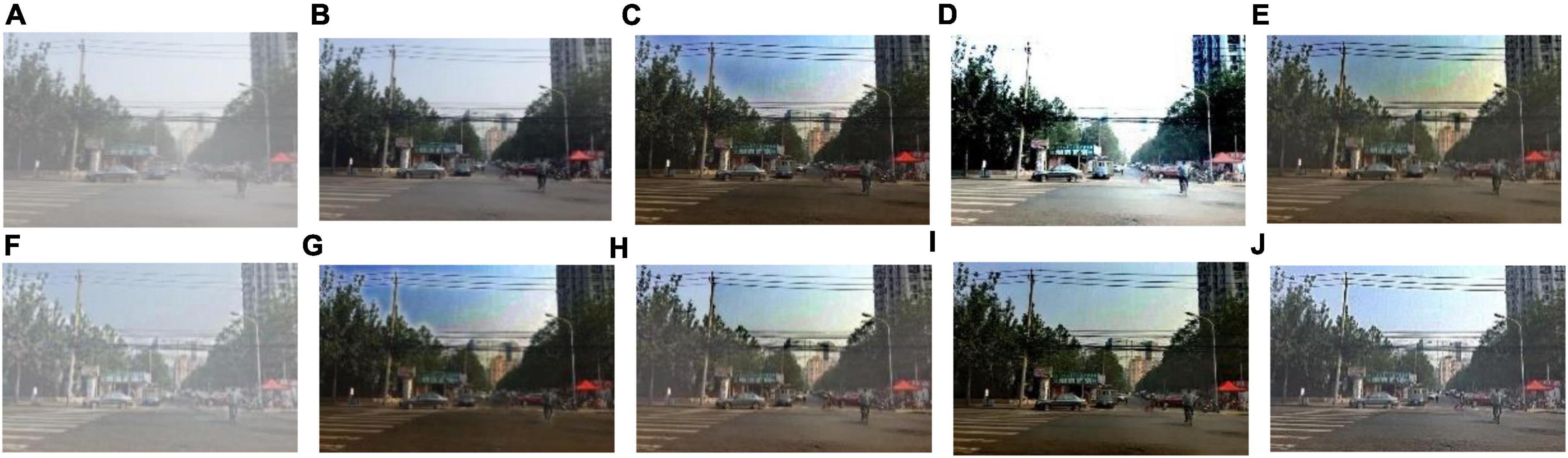

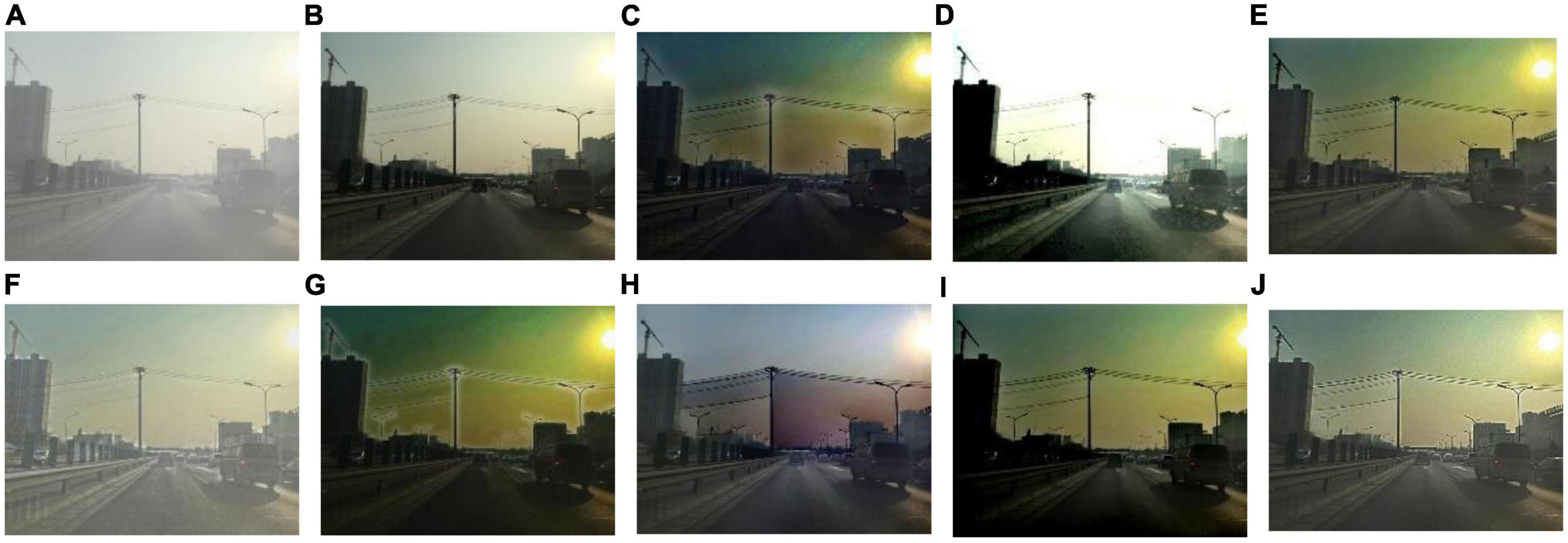

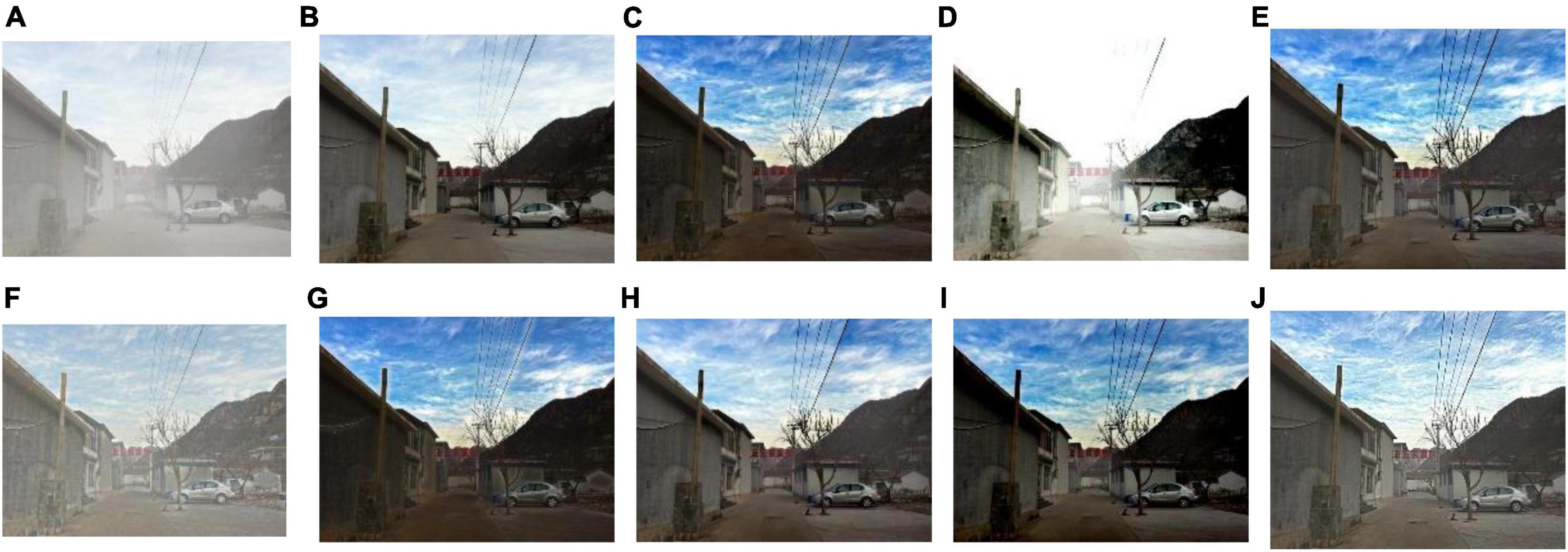

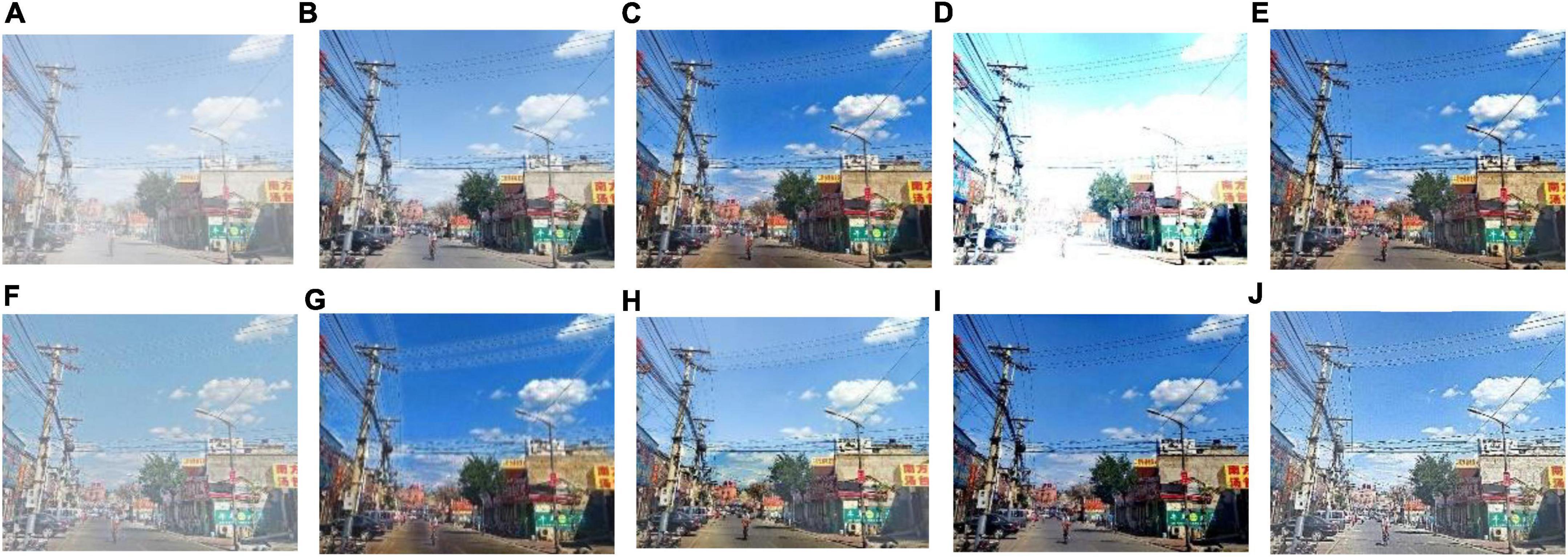

We select eight foggy images containing electricity transmission lines or power towers, with ground truth, from the OTS subset of the RESIDE dataset as research objects. In this section, these test images are labeled Figures 1–8; these labels are separate from the figure numbers of this article. The defogging results are shown in Figures 8–15. Note that the transmission post-processing in He’s method uses the guided filter rather than soft matting.

Figure 8. Experimental results of different methods for Figure 1: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 9. Experimental results of different methods for Figure 2: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 10. Experimental results of different methods for Figure 3: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 11. Experimental results of different methods for Figure 4: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 12. Experimental results of different methods for Figure 5: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 13. Experimental results of different methods for Figure 6: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 14. Experimental results of different methods for Figure 7: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figure 15. Experimental results of different methods for Figure 8: (A) input image; (B) ground truth; (C) dark channel prior; (D) Fattal et al.; (E) Meng et al.; (F) Tarel et al.; (G) Ehsan et al.; (H) Berman et al.; (I) Raikwar et al.; (J) proposed.

Figures 8–15 show that the dark channel prior and Meng’s method over-enhance the sky area, causing color deviation or oversaturation there, while the non-sky area becomes too dark. As a result, the defogged image differs markedly from the ground truth. The reason is that these methods often underestimate the transmission. In addition, when He’s method is used to defog images of electricity transmission lines, the power tower and the line form the foreground and the sky forms the background, so there is a depth discontinuity between them; this produces an obvious “halo” effect at the edge of the electricity transmission line, which hinders observation. The “halo” creates a transition band of color deviation between the observed object and the background and produces abnormal white or otherwise discolored fringes around the object. This phenomenon is particularly obvious in Figures 2, 3, 4, 8.

With Fattal’s method, the sky area in the defogging image is overexposed, resulting in chromatic aberration in the sky area. In addition, because the transmission line is tiny and occupies a small proportion of the entire image, the image information of the electricity transmission line is partially or completely lost in the defogging image, which hinders observation of the line. This is particularly evident in the results of Fattal’s method for Figures 5–8. Tarel’s method cannot completely remove the fog: part of the fog remains after defogging, and the overall image looks hazy with low saturation. With Ehsan’s method, there is a large chromatic aberration in the sky area and a “halo” effect around the electricity transmission line, which is particularly evident in the defogging images of Figures 1, 3, 4, 6, 8. With Berman’s method, serious color distortion and color shift occur in the sky area of some defogging images, as shown in Figures 2, 3, 5, which differ considerably from the ground truth. This is because the method requires a preset gamma value for each image, and the most suitable gamma value for a given image is unknown. When the best gamma value is unknown, Berman recommends setting the gamma value to the default of 1. Therefore, Berman’s algorithm cannot handle all situations and has limitations in practical use. Raikwar’s method eliminates the “halo” phenomenon effectively, but it still causes oversaturation in the sky area, resulting in color shift. The method also yields low contrast in the non-sky area, which darkens that part of the defogging image and affects the observation of power towers on the ground.

With our algorithm, the color saturation of the defogged image is moderate: the sky area is not oversaturated, the ground area is not too dark, and the “halo” effect is reduced. The visual effect is also the closest to the ground truth. In addition, because the proposed method sharpens and enhances the details of the defogging image, the power towers and electricity transmission lines retain clearer outlines and finer details, which makes them easier to observe. The qualitative comparison above indicates that our method produces better visual quality and a more realistic fog removal result than the compared methods.

4.2. Quantitative comparison

In this section, the proposed defogging algorithm is compared with previous defogging algorithms using several evaluation metrics: peak signal-to-noise ratio (PSNR), information entropy, structural similarity index measurement (SSIM), mean squared error (MSE), universal quality index (UQI), and average gradient (AG). Depending on whether a reference image is available, image evaluation methods can be divided into full reference and no reference image quality assessment. Full reference image quality assessment compares the image to be evaluated with an ideal image used as the reference. No reference image quality assessment calculates the visual quality of an image directly, without a reference image. PSNR, SSIM, MSE, and UQI are full reference metrics, while information entropy and AG are no reference metrics.

PSNR is the most commonly used image quality evaluation metric and is an objective measure of image distortion. The PSNR increases with the similarity between the defogged image and the ground truth, so a larger PSNR value means less distortion and a better defogging effect. SSIM objectively compares the brightness, contrast, and structure of two images to determine how similar they are. The value of SSIM lies in the range of 0 to 1, and the closer it is to 1, the more closely the defogging image resembles the ground truth image. The information entropy measures the average amount of information in an image: the more details and the richer the colors of the defogged image, the greater the information entropy. The UQI reflects the structural similarity between two images; the larger the UQI, the closer the two images are. The AG is related to the variation of the image detail texture and reflects sharpness; the clearer the image, the higher the AG. Because PSNR, MSE, SSIM, and UQI are full reference metrics, they must be calculated against a reference image. We therefore use the ground truth of the OTS dataset as the reference image and compare the defogging images obtained by the different methods with it to obtain the evaluation results.
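As an illustration of how several of these metrics can be computed, a minimal NumPy sketch follows. It is not the authors' evaluation code: the function names are hypothetical, images are assumed to be 8-bit grayscale arrays, the UQI is evaluated globally over the whole image rather than over sliding windows, and the average gradient uses one common forward-difference definition. SSIM is omitted because it requires a windowed implementation.

```python
import numpy as np

def mse(ref, img):
    """Mean squared error between a reference image and a test image."""
    return float(np.mean((ref.astype(float) - img.astype(float)) ** 2))

def psnr(ref, img, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(ref, img)
    return float("inf") if err == 0 else float(10.0 * np.log10(peak ** 2 / err))

def entropy(gray):
    """Shannon entropy in bits of an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def average_gradient(gray):
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    g = gray.astype(float)
    dx = g[:-1, 1:] - g[:-1, :-1]
    dy = g[1:, :-1] - g[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def uqi_global(ref, img):
    """Universal quality index computed over the whole image (simplification)."""
    x, y = ref.astype(float).ravel(), img.astype(float).ravel()
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return float(4 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2)))

# Toy data: a ramp containing every gray level once, and a copy with one changed pixel.
ref = np.arange(256, dtype=np.uint8).reshape(16, 16)
out = ref.copy()
out[0, 0] = 10
print(mse(ref, out), psnr(ref, out), entropy(ref), average_gradient(out), uqi_global(ref, out))
```

For color images, one option is to evaluate the full reference metrics per channel and average them; whether the reported tables were computed this way is not stated in the text.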

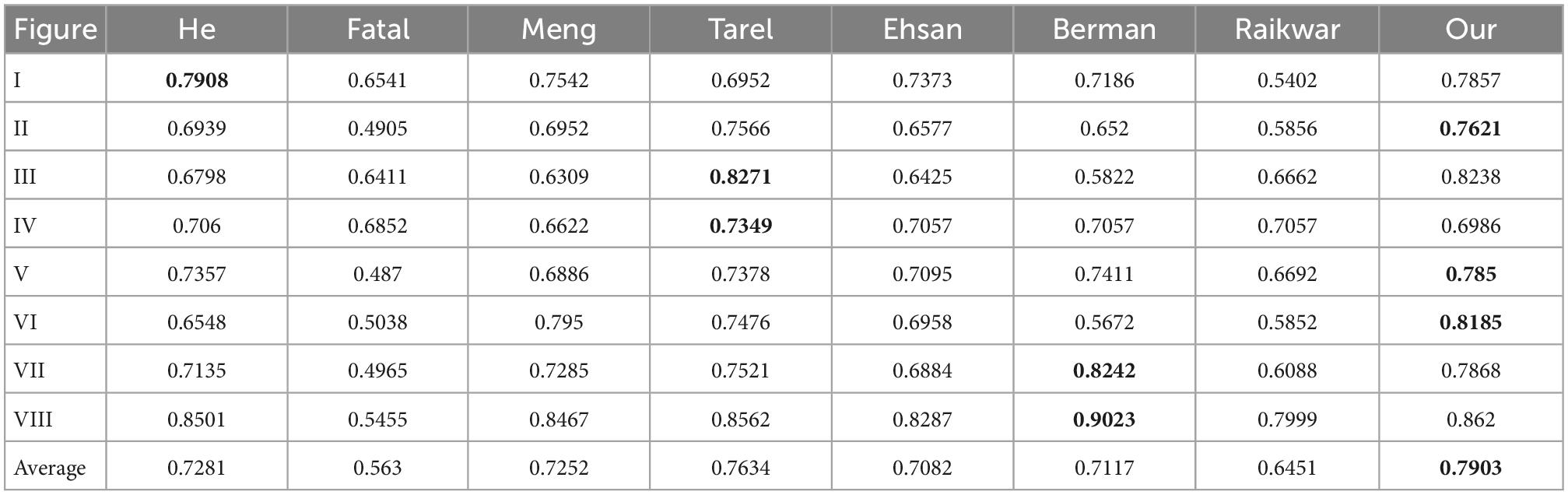

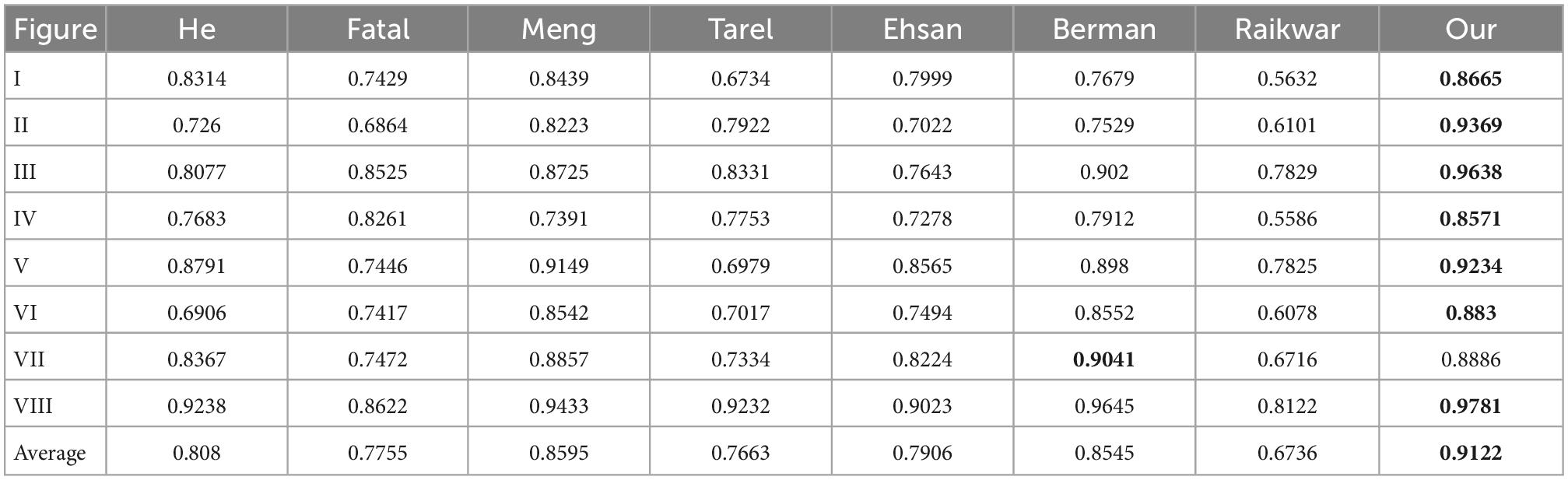

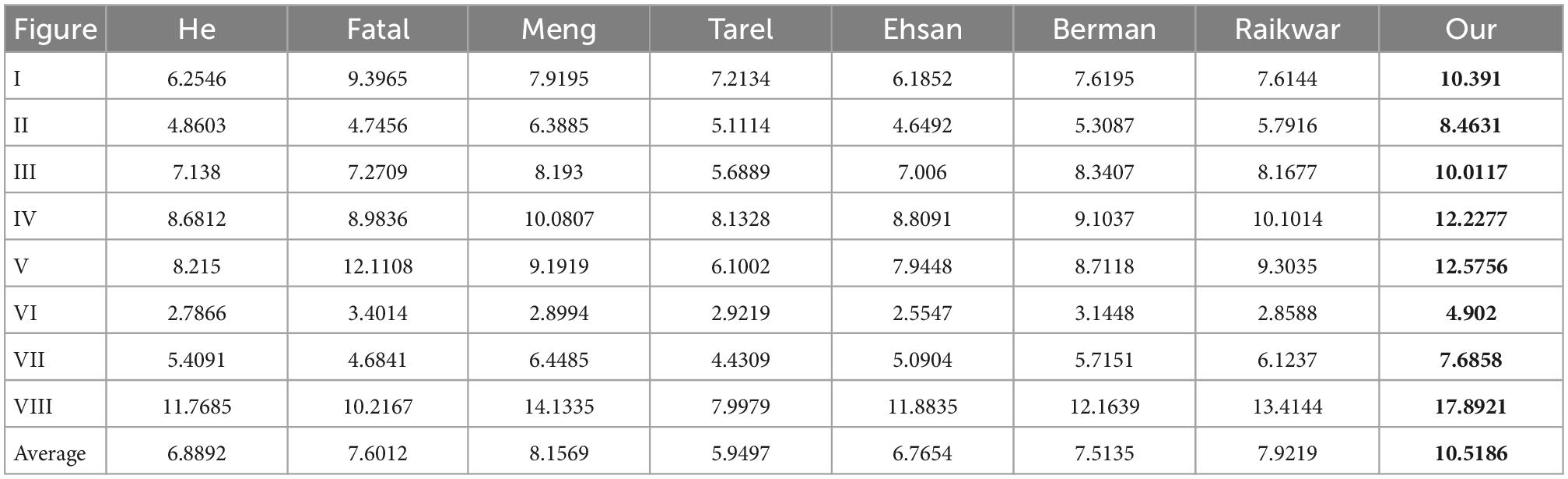

Tables 1–6 show the evaluation metrics obtained when the different defogging algorithms are applied to Figures 1–8. In each row of a table, the bold value marks the best result for that image, that is, the defogging algorithm that achieves the optimal value of the metric.

As shown in Tables 1–6, for PSNR, MSE, and AG the proposed algorithm achieves the best result on every image compared with the other algorithms, and consequently also the best average. For SSIM, information entropy, and UQI, the proposed method achieves the best or a relatively high score on individual images and the best average score. For SSIM, the average value obtained by the proposed method is 0.7903, which is 3.4% higher than that of Tarel, the second highest ranking method, and 28.76% higher than that of Fattal, the lowest ranking method. For information entropy, the proposed method achieves an average value of 7.5264, which is 0.99% higher than Berman’s method, the second highest ranking, and 25.34% higher than Fattal’s method, the lowest ranking. For UQI, the proposed method achieves an average value of 0.9122, which is 5.78% higher than that of Meng, the second highest ranking method, and 26.16% higher than that of Raikwar, the lowest ranking method. The quantitative analysis shows that the images obtained with the proposed method have better structural similarity, richer information content, and better color restoration and clarity, which is consistent with the good visual effect observed in the qualitative comparison.

Regarding the results on individual defogging images, Berman’s method and Raikwar’s method cause color shift and oversaturation in the sky area, making it more yellow or blue. Because information entropy reflects the richness of color, it is sometimes higher for Berman’s method and Raikwar’s method. At the same time, Berman’s method requires a gamma value to be set in advance, so its application scenarios are limited. Although Tarel’s method obtains the best SSIM on some images, it cannot completely remove the fog, and the details of electricity transmission lines in its defogging images are not distinct, which hinders observation.

In general, this method can enhance image details, avoid image distortion and color offset, and has a good defogging effect.

5. Conclusion

In this study, we propose an image defogging algorithm for power towers and electricity transmission lines in video monitoring systems. First, based on the observation that most outdoor electricity transmission line images contain a sky area in the upper part of the image, the proposed algorithm uses an improved quadtree segmentation algorithm to estimate the global atmospheric light and locates it in the sky area containing the electricity transmission line, which prevents white or bright objects on the ground from interfering with the calculation of the global atmospheric light. Second, to address the “halo” effect that arises when the transmission is computed with the dark channel prior, the algorithm introduces the concept of dark pixels, uses superpixel segmentation to locate the dark pixels, and uses a fidelity function to compute the transmission. Finally, because outdoor electricity transmission lines are tiny and difficult to observe, a detail enhancement post-processing step based on the visibility level and airlight level is introduced to enhance the details of defogging images. We assess the efficacy of the proposed method through quantitative and qualitative evaluation of defogging images of power towers and transmission lines obtained with various methods. The experimental results show that the defogging images restored by the proposed algorithm have better detail, structural similarity, and color reproduction, and that the algorithm removes fog effectively and outperforms the compared algorithms. In addition, the proposed algorithm can be used not only in power system online monitoring but can also be extended to community monitoring, UAV monitoring, automatic driving, industrial production, the Internet of Things, and other fields, giving it broad application potential. In future work, we will study image defogging combined with dark image enhancement to expand the application range of the algorithm.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/h4nwei/MEF-SSIMd

Author contributions

MZ and MG: conceptualization. ZS: methodology. JY: software, formal analysis, and writing—original draft preparation. JY and MG: validation and data curation. CY and ZJ: investigation. ZJ: resources. MG: writing—review and editing. YH: visualization and supervision. MZ and WC: project administration. MZ: funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

The authors declare that this study received funding from the Science and Technology Project of State Grid Hubei Power Supply Limited Company Ezhou Power Supply Company (Grant Number: B315F0222836). The funder was not involved in the study design, collection, analysis, interpretation of data, and the writing of this article or the decision to submit it for publication.

Acknowledgments

The authors would like to express sincere thanks to the colleagues from transmission line operation and maintenance, State Grid Hubei Power Supply Limited Company Ezhou Power Supply Company. Thanks to Prof. Gwanggil Jeon (employed at Shandong University of Technology) for his guidance on this manuscript.

Conflict of interest

MZ, ZS, CY, ZJ, and WC were employed by the State Grid Hubei Power Supply Limited Company Ezhou Power Supply Company.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Achanta, R., Shaji, A., Smith, K., Lucchi, A., Fua, P., and Süsstrunk, S. (2012). SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 34, 2274–2282. doi: 10.1109/TPAMI.2012.120

Berman, D., and Avidan, S. (2016). “Non-local image dehazing,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV. doi: 10.1109/CVPR.2016.185

Cai, B., Xu, X., Jia, K., Qing, C., and Tao, D. (2016). Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 25, 5187–5198. doi: 10.1109/TIP.2016.2598681

Ehsan, S. M., Imran, M., Ullah, A., and Elbasi, E. A. (2021). Single image dehazing technique using the dual transmission maps strategy and gradient-domain guided image filtering. IEEE Access 9, 89055–89063. doi: 10.1109/ACCESS.2021.3090078

Gao, Y., Hu, H. M., Li, B., Guo, Q., and Pu, S. (2018). Detail preserved single image dehazing algorithm based on airlight refinement. IEEE Trans. Multimedia 21, 351–362. doi: 10.1109/TMM.2018.2856095

Hautiere, N., Tarel, J. P., Aubert, D., and Dumont, E. (2008). Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. 27, 87–95. doi: 10.5566/ias.v27.p87-95

He, K., Sun, J., and Tang, X. (2010). Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. doi: 10.1109/TPAMI.2010.168

He, K., Sun, J., and Tang, X. (2012). Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1397–1409. doi: 10.1109/TPAMI.2012.213

Jobson, D. J., Rahman, Z., and Woodell, G. A. (1997b). Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 6, 451–462. doi: 10.1109/83.557356

Jobson, D. J., Rahman, Z., and Woodell, G. A. (1997a). A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6, 965–976. doi: 10.1109/83.597272

Jun, W. L., and Rong, Z. (2013). Image defogging algorithm of single color image based on wavelet transform and histogram equalization. Appl. Math. Sci. 7, 3913–3921. doi: 10.12988/ams.2013.34206

Kim, J. H., Jang, W. D., Sim, J. Y., and Kim, C. S. (2013). Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 24, 410–425. doi: 10.1016/j.jvcir.2013.02.004

Li, B., Peng, X., Wang, Z., Xu, J., and Feng, D. (2017). “Aod-net: All-in-one dehazing network,” in Proceedings of the IEEE international conference on computer vision, Venice, 22–29. doi: 10.1109/ICCV.2017.511

Liu, X., Ma, Y., Shi, Z., and Chen, J. (2019). “Griddehazenet: Attention-based multi-scale network for image dehazing,” in Proceedings of the IEEE/CVF international conference on computer vision, Seoul. doi: 10.1109/ICCV.2019.00741

McCartney, E. J. (1976). Optics of the atmosphere: Scattering by molecules and particles. New York, NY, 421.

Meng, G., Wang, Y., Duan, J., Xiang, S., and Pan, C. (2013). “Efficient image dehazing with boundary constraint and contextual regularization,” in Proceedings of the IEEE international conference on computer vision, Sydney, NSW. doi: 10.1109/ICCV.2013.82

Miyazaki, D., Akiyama, D., Baba, M., Furukawa, R., Hiura, S., and Asada, N. (2013). “Polarization-based dehazing using two reference objects,” in Proceedings of the IEEE international conference on computer vision workshops, Sydney, NSW. doi: 10.1109/ICCVW.2013.117

Narasimhan, S. G., and Nayar, S. K. (2001). “Removing weather effects from monochrome images,” in Proceedings of the 2001 IEEE computer society conference on computer vision and pattern recognition, Kauai.

Pang, Y., Nie, J., Xie, J., Han, J., and Li, X. (2020). “BidNet: Binocular image dehazing without explicit disparity estimation,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Seattle, WA. doi: 10.1109/CVPR42600.2020.00597

Qin, X., Wang, Z., Bai, Y., Xie, X., and Jia, H. (2020). “FFA-Net: Feature fusion attention network for single image dehazing,” in Proceedings of the AAAI conference on artificial intelligence, New York, NY. doi: 10.1609/aaai.v34i07.6865

Raikwar, S. C., and Tapaswi, S. (2020). Lower bound on transmission using non-linear bounding function in single image dehazing. IEEE Trans. Image Process. 29, 4832–4847. doi: 10.1109/TIP.2020.2975909

Ren, W., Ma, L., Zhang, J., Pan, J., Cao, X., Liu, W., et al. (2018). “Gated fusion network for single image dehazing,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City. doi: 10.1109/CVPR.2018.00343

Sangeetha, N., and Anusudha, K. (2017). “Image defogging using enhancement techniques,” in Proceedings of 2017 international conference on computer, communication and signal processing (ICCCSP), Chennai. doi: 10.1109/ICCCSP.2017.7944087

Schechner, Y. Y., Narasimhan, S. G., and Nayar, S. K. (2001). “Instant dehazing of images using polarization,” in Proceedings of the 2001 IEEE computer society conference on computer vision and pattern recognition, Kauai.

Seow, M. J., and Asari, V. K. (2006). Ratio rule and homomorphic filter for enhancement of digital colour image. Neurocomputing 69, 954–958. doi: 10.1016/j.neucom.2005.07.003

Sharma, N., Kumar, V., and Singla, S. K. (2021). Single image defogging using deep learning techniques: Past, present and future. Arch. Comput. Methods Eng. 28, 4449–4469. doi: 10.1007/s11831-021-09541-6

Shwartz, S., and Schechner, Y. Y. (2006). “Blind haze separation,” in Proceedings of 2006 IEEE computer society conference on computer vision and pattern recognition (CVPR’06), New York, NY.

Su, C., Wang, W., Zhang, X., and Jin, L. (2020). Dehazing with offset correction and a weighted residual map. Electronics 9:1419. doi: 10.3390/electronics9091419

Tan, R. T. (2008). “Visibility in bad weather from a single image,” in Proceedings of 2008 IEEE conference on computer vision and pattern recognition, Anchorage, AK. doi: 10.1109/CVPR.2008.4587643

Tarel, J. P., and Hautiere, N. (2009). “Fast visibility restoration from a single color or gray level image,” in Proceedings of 2009 IEEE 12th international conference on computer vision, Kyoto. doi: 10.1109/ICCV.2009.5459251

Xu, Y., Wen, J., Fei, L., and Zhang, Z. (2015). Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 4, 165–188. doi: 10.1109/ACCESS.2015.2511558

Zhang, H., and Patel, V. M. (2018). “Densely connected pyramid dehazing network,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, UT. doi: 10.1109/CVPR.2018.00337

Zhou, L., Bi, D. Y., and He, L. Y. (2016). Variational histogram equalization for single color image defogging. Math. Probl. Eng. 2016, 1–17. doi: 10.1155/2016/9897064

Keywords: smart city, power lines, atmospheric scattering model, global atmospheric light, dark pixels

Citation: Zhang M, Song Z, Yang J, Gao M, Hu Y, Yuan C, Jiang Z and Cheng W (2023) Study on the enhancement method of online monitoring image of dense fog environment with power lines in smart city. Front. Neurorobot. 16:1104559. doi: 10.3389/fnbot.2022.1104559

Received: 21 November 2022; Accepted: 12 December 2022;

Published: 06 January 2023.

Edited by:

Xin Jin, Yunnan University, China

Reviewed by:

Zhibin Qiu, Nanchang University, China

Ruohan Gong, Université de Lille, France

Zhixiang Chen, Minnan Normal University, China

Copyright © 2023 Zhang, Song, Yang, Gao, Hu, Yuan, Jiang and Cheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuanchao Hu
