- 1 Brain Cognition and Brain Disease Institute, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

- 2 Shenzhen-Hong Kong Institute of Brain Science-Shenzhen Fundamental Research Institutions, Shenzhen, China

- 3 Department of Brain and Cognitive Sciences, Massachusetts Institute of Technology, Cambridge, MA, United States

- 4 BIAI INC., Chelmsford, MA, United States

- 5 BIAI Intelligence Biotech LLC, Shenzhen, China

- 6 Department of Neurology, Harvard Medical School, Harvard University, Boston, MA, United States

- 7 Retina Division, Department of Ophthalmology, Boston University Eye Associates, Boston University, Boston, MA, United States

- 8 Center for High Performance Computing, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

- 9 Department of Affective Disorders and Academician Workstation of Mood and Brain Sciences, The Affiliated Brain Hospital of Guangzhou Medical University (Guangzhou Huiai Hospital), Guangzhou, China

- 10 Guangdong-Hong Kong-Macau Institute of Central Nervous System (CNS) Regeneration, Jinan University, Guangzhou, China

- 11 Key Laboratory for Nonlinear Mathematical Models and Methods, School of Mathematical Science, Fudan University, Shanghai, China

- 12 Depression Clinical and Research Program, Department of Psychiatry, Massachusetts General Hospital, Boston, MA, United States

- 13 The State Key Laboratory of Brain and Cognitive Sciences, Department of Ophthalmology, University of Hong Kong, Pok Fu Lam, Hong Kong

Real-time ocular responses are tightly associated with emotional and cognitive processing within the central nervous system. Patterns in saccades, pupillary responses, and spontaneous blinking, as well as retinal microvasculature and morphology visualized via office-based ophthalmic imaging, are potential biomarkers for the screening and evaluation of cognitive and psychiatric disorders. In this review, we outline multiple techniques by which ocular assessments may serve as a non-invasive approach for the early detection of various brain disorders, such as autism spectrum disorder (ASD), Alzheimer's disease (AD), schizophrenia (SZ), and major depressive disorder (MDD). In addition, rapid advances in artificial intelligence (AI) present a growing opportunity to use machine learning-based AI, especially computer vision (CV) with deep-learning neural networks, to shed new light on cognitive neuroscience, which may lead to novel evaluations and interventions for brain disorders. Hence, we highlight the potential of using AI to evaluate brain disorders based primarily on ocular features.

Introduction

The neurosensory retinas play a critical role in the functioning of our central nervous system (CNS), which processes our sensory input, motor output, emotion, cognition, and even consciousness (1). Multiple studies have shown that ocular evaluations can be used to assess CNS disorders (2). Many neurological and psychiatric disorders, such as glaucoma, stroke, Parkinson's disease (PD), autism spectrum disorder (ASD), Alzheimer's disease (AD), major depressive disorder (MDD), and schizophrenia (SZ), cause considerable personal suffering, financial costs, and social burden (3). Distinct ocular findings in these disorders suggest that ocular assessments could serve as early biomarkers (2, 4). Because brain disorders are among the most challenging problems facing modern medicine, novel approaches are needed to advance psychiatric medicine, especially objective cognitive measurements (5) and real-time interventions for cognitive problems (6).

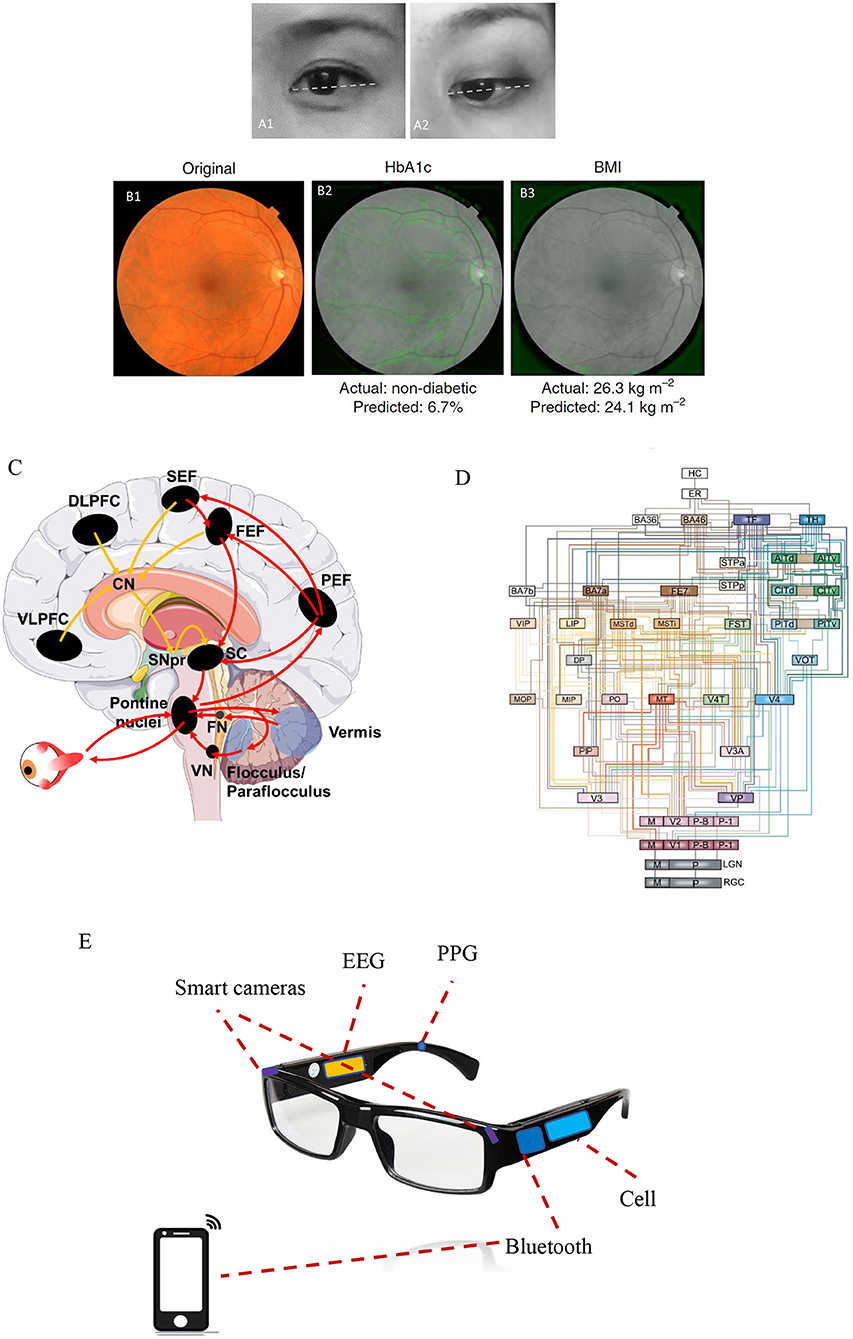

Recently, Google and DeepMind researchers applied artificial intelligence (AI) algorithms to retinal images to detect retinal diseases and cardiovascular risk factors (7, 8). Other studies have shown that AI-based detection of various diseases is feasible and that AI could shape the next generation of medical care (9). Given the growing body of data linking ocular parameters with brain disease states, applying AI algorithms to ocular patterns may help detect and evaluate these diseases, especially with the rapid advancement of computer vision (CV) and deep-learning algorithms [(9, 10); Figures 1A,B]. In this review, we discuss a novel approach that uses CV with advanced AI to evaluate human brain disorders based primarily on ocular responses.

Figure 1. The eye as a window onto the health of the brain. (A1) A restful, calm eye (positive state) compared with (A2) a stressed, anxious eye (negative state). Note that a positive state is more frequently associated with an upward gaze, whereas a negative state more often shows a downward gaze. The eye images are shown with the permission of the corresponding subjects. (B1) Example of a color retinal fundus image; (B2, B3) show the same retinal image in black and white. Machine learning predictions of diabetes and body mass index (BMI) rely mainly on features of the vasculature and optic disc, as indicated by the green soft-attention heat maps in these images. The images in (B1–B3) were adapted from Poplin et al. (8) (with permission). (C) Complex neural networks spanning cortical, subcortical, and cerebellar areas are involved in voluntary saccadic eye movements for attentional control. The image was modified from that of Johnson et al. (11). Red arrows indicate the direct pathway from the parietal eye field (PEF), frontal eye field (FEF), and supplementary eye field (SEF) to the superior colliculus (SC) and brainstem premotor regions, while yellow arrows indicate the indirect pathway to the SC and brainstem premotor regions via the basal ganglia (striatum, subthalamic nucleus, globus pallidus, and substantia nigra pars reticularis). (D) An architectural model of the hierarchy of visual cortical circuitry, modified from Felleman and Van Essen (12). There is a feedforward ascending pathway of the visual system from the retinas to the cortex, as well as a feedback descending pathway from the cortex to multiple downstream areas. (E) A potential application of eye–brain engineering designed to compute human brain states, mainly using smart cameras to detect ocular responses, combined with other biological signals including electroencephalography (EEG) and photoplethysmography (PPG).

Eye–Brain Connection

The eyes and the brain are intimately connected. It is often estimated that approximately 80% of the sensory input to the human brain comes from the visual system, which begins at the retinas (1, 2). The axons of retinal ganglion cells (RGCs) carry visual information collected by the neurosensory retinas to the CNS. At least 20 nuclei in the mammalian brain receive retinal projections (13, 14); for example, the lateral geniculate nucleus serves as a thalamic relay to the primary visual cortex, the superior colliculus is responsible for visuomotor processing, and the hypothalamic suprachiasmatic nucleus is involved in non-image-forming photoentrainment of circadian and hormonal rhythms (15).

The eyes are closely linked to facial expressions: eye widening can signal fear, whereas eye narrowing can signal disgust (16). Lee et al. demonstrated that eye widening enlarges the visual field, thereby improving stimulus detection, while eye narrowing increases visual acuity, which improves fine discrimination (17, 18). Moreover, visual attention, pupillary responses, and spontaneous blinking are regarded as non-invasive and complementary measures of cognition (19). Vision-based attentional control involves the planning and timing of precise eye movements, which are controlled by neural networks spanning cortical, subcortical, and cerebellar areas that have been extensively investigated in both humans and non-human primates [(20–22); Figure 1C]. Pupillary responses are primarily modulated by noradrenergic activity of the locus coeruleus, which regulates physiological arousal and attention (23–25), whereas spontaneous eye-blink rates are closely related to central dopaminergic activity and to processes underlying learning and goal-directed behavior [(26, 27); Figure 1D]. Below, we outline in detail the connections between ocular assessments and several brain disorders, including ASD, AD, SZ, and MDD.
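Spontaneous blink rate is among the simplest of these measures to extract from camera footage, such as that collected by the smart-camera setup in Figure 1E. The Python sketch below is a minimal illustration under stated assumptions, not the authors' pipeline: it assumes that some face-landmark model (not specified here) already provides a per-frame eye-openness score, and the threshold of 0.2 and the two-frame minimum blink duration are hypothetical values chosen only for illustration.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count blinks as runs of at least `min_frames` consecutive frames
    whose eye-openness score is below `threshold` (both values hypothetical)."""
    blinks = 0
    run = 0  # length of the current run of "closed" frames
    for value in openness:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the recording ends
        blinks += 1
    return blinks


def blink_rate_per_minute(openness: Sequence[float], fps: float,
                          threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blinks per minute for a recording sampled at `fps` frames per second."""
    if not openness or fps <= 0:
        raise ValueError("need a non-empty recording and a positive frame rate")
    blinks = count_blinks(openness, threshold, min_frames)
    # blinks / (n_frames / fps / 60 s), rearranged into a single expression
    return blinks * 60.0 * fps / len(openness)


# Hypothetical 1-second recording at 30 frames per second.
signal = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.15] * 1 + [0.3] * 6
print(count_blinks(signal))               # the single-frame dip is not counted
print(blink_rate_per_minute(signal, 30))
```

Requiring a minimum run length is a simple way to reject single-frame noise; a real system would also need to handle head motion and partial closures.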

Autism Spectrum Disorder: Without Normal Eye Contact

A lack of normal eye contact during social interaction is one of the main clinical features of ASD (28). Screening eye fixation in infants aged 2–6 months may enable early detection of, and even intervention for, ASD (29). Full-field electroretinography (ERG), which measures the function of specific retinal cell types, has further revealed reduced rod b-wave amplitudes in individuals with ASD (30). Several types of oculomotor dysfunction, such as saccade dysmetria (over- or undershooting of visual targets), loss of saccadic inhibition, and fixation impairment, have been documented in patients with ASD (11). Saccade dysmetria may be caused by dysfunction of the neural networks connecting the cerebellar vermal–fastigial circuitry to the brainstem premotor nuclei that regulate eye movements [(28, 29); Figure 1C]. A diminished ability to inhibit reflexive saccades during anti-saccade tasks is thought to be associated with the characteristic repetitive behaviors of patients with ASD (31, 32). In addition, fixation is often significantly impaired in ASD patients, possibly secondary to reduced top–down modulation of sensorimotor processing (33, 34).
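Saccade dysmetria is commonly quantified with saccadic gain, the ratio of the amplitude of the eye movement to the amplitude of the target step: a gain above 1 indicates overshoot (hypermetria) and a gain below 1 indicates undershoot (hypometria). The Python sketch below assumes amplitudes in degrees of visual angle and a hypothetical ±10% tolerance band around a gain of 1; published studies set their own cut-offs.

```python
from dataclasses import dataclass


@dataclass
class Saccade:
    target_amplitude_deg: float   # size of the target step, in degrees of visual angle
    saccade_amplitude_deg: float  # distance actually travelled by the eye, in degrees


def saccadic_gain(s: Saccade) -> float:
    """Ratio of saccade amplitude to target amplitude (1.0 = perfectly accurate)."""
    if s.target_amplitude_deg == 0:
        raise ValueError("target amplitude must be non-zero")
    return s.saccade_amplitude_deg / s.target_amplitude_deg


def classify(s: Saccade, tolerance: float = 0.1) -> str:
    """Label a saccade using a hypothetical symmetric tolerance around a gain of 1."""
    gain = saccadic_gain(s)
    if gain > 1 + tolerance:
        return "hypermetric"   # overshoot
    if gain < 1 - tolerance:
        return "hypometric"    # undershoot
    return "normometric"


for s in (Saccade(10.0, 12.5), Saccade(10.0, 7.0), Saccade(10.0, 10.5)):
    print(round(saccadic_gain(s), 2), classify(s))
```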

Alzheimer's Disease: Dementia Features in the Eyes

Ocular assessments of AD patients have demonstrated saccadic dysfunctions indicative of poor visual attention; in particular, AD patients have difficulty maintaining fixation on stationary objects (35). As early as 1997, Prettyman et al. reported a 75% longer latency of pupillary constriction in AD patients than in age-matched controls (36). Additionally, AD patients have markedly reduced visual contrast sensitivity, which is evident even at the early stage of the disease (37). Patients with AD also have altered retinal microvasculature, such as sparser and more tortuous retinal vessels and narrower retinal venules (38), as well as decreased retinal blood flow and blood-column diameter measured by laser Doppler flowmetry (39). Studies using optical coherence tomography (OCT) have shown a progressive decrease in retinal nerve fiber layer (RNFL) thickness, most prominent in the superior quadrants, from individuals without AD to patients with mild AD to those with severe AD (40, 41). Collectively, these specific ocular findings could serve as biomarkers and input features for machine learning algorithms that detect AD (42).
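Vessel tortuosity, one of the microvascular changes mentioned above, can be quantified in several ways; one simple index is the arc-chord ratio, the length of a vessel segment divided by the straight-line distance between its endpoints. The sketch below assumes that the vessel centerline has already been extracted from a segmented fundus image as a list of (x, y) points, and it is not necessarily the metric used in (38).

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def arc_length(centerline: Sequence[Point]) -> float:
    """Total length of the polyline through consecutive centerline points."""
    return sum(math.dist(p, q) for p, q in zip(centerline, centerline[1:]))


def arc_chord_tortuosity(centerline: Sequence[Point]) -> float:
    """Arc length divided by the chord (straight-line) distance between endpoints.
    1.0 means a perfectly straight segment; larger values mean more tortuous."""
    if len(centerline) < 2:
        raise ValueError("need at least two points")
    chord = math.dist(centerline[0], centerline[-1])
    if chord == 0:
        raise ValueError("endpoints coincide; chord length is zero")
    return arc_length(centerline) / chord


straight = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
zigzag = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
print(arc_chord_tortuosity(straight), round(arc_chord_tortuosity(zigzag), 3))
```

The ratio is scale-free, so it does not depend on image resolution, but it cannot distinguish one large bend from many small ones; other tortuosity indices exist for that reason.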

Schizophrenia: To See or Not to See

Visual processing impairments, including visual hallucinations and distortions of shape or light intensity, are commonly observed in patients with SZ (43). Abnormal retinal findings such as dilated retinal venules, RNFL thinning, and ERG abnormalities have been reported in SZ patients (44, 45). A twin study showed a positive correlation between wider retinal venules and more severe psychotic symptoms (46), suggesting that retinal venule diameter could serve as a biomarker for SZ. In addition, RNFL thinning, which corresponds to the loss of RGC axons, is seen in SZ patients (47, 48) as well as in patients with PD (49) and AD (50). ERG abnormalities in SZ indicate reduced function of rod and cone photoreceptors, bipolar cells, and RGCs, which may reflect dysregulation of neurotransmitters such as dopamine (51, 52). Furthermore, a portable handheld ERG device has been developed for screening and evaluating SZ in psychiatry clinics (53).

Major Depressive Disorder: A Gray World of Eyes

Reduced contrast sensitivity is frequently seen in individuals with MDD, both medicated and unmedicated (54). Retinal contrast gain measured by pattern electroretinography (PERG) even correlates strongly with depression severity (54, 55). Compared with healthy controls, patients with MDD often show higher error rates and longer reaction times in anti-saccade tasks (56, 57). Furthermore, patients with melancholic depression exhibit longer latencies, reduced peak velocities, and greater hypometria during saccadic eye movement tasks than both healthy controls and non-melancholic depressed patients (58). Patients with seasonal affective disorder (SAD, also known as winter depression) have a significantly reduced post-illumination pupillary response (PIPR), as demonstrated by infrared pupillometry (59, 60). These features are likely associated with dysfunction of melanopsin-expressing intrinsically photosensitive RGCs (ipRGCs), since ipRGCs contribute to the pupillary light response, particularly sustained pupillary constriction (61, 62). However, the altered PIPR in response to blue light stimuli was observed only in SAD patients carrying the OPN4 I394T genotype (59).
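The PIPR captures how long the pupil stays constricted after a light stimulus ends. Definitions vary between studies; the sketch below assumes one common form, the percentage reduction of mean pupil diameter within a post-offset window relative to the mean pre-stimulus baseline. The 5–7 s window is a hypothetical choice, not a parameter taken from (59, 60).

```python
from statistics import mean
from typing import Sequence, Tuple


def pipr_percent(baseline: Sequence[float],
                 times_after_offset_s: Sequence[float],
                 diameters: Sequence[float],
                 window_s: Tuple[float, float] = (5.0, 7.0)) -> float:
    """Sustained post-illumination constriction, as % of baseline diameter.

    baseline: pupil diameters recorded before the light stimulus.
    times_after_offset_s / diameters: samples recorded after light offset.
    window_s: (start, end) of the averaging window after offset (assumed values).
    """
    if len(times_after_offset_s) != len(diameters):
        raise ValueError("times and diameters must have the same length")
    start, end = window_s
    in_window = [d for t, d in zip(times_after_offset_s, diameters)
                 if start <= t <= end]
    if not baseline or not in_window:
        raise ValueError("no baseline samples or no samples inside the window")
    base = mean(baseline)
    return 100.0 * (base - mean(in_window)) / base


# Hypothetical recording in millimetres: 5.0 mm baseline, 4.0 mm inside the window.
print(pipr_percent([5.0, 5.0], [1, 3, 5, 6, 7, 9],
                   [3.0, 3.5, 4.0, 4.0, 4.0, 4.5]))
```

Under this definition, a reduced PIPR such as that reported in SAD would appear as a smaller percentage, because the pupil re-dilates toward baseline sooner after the light is switched off.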

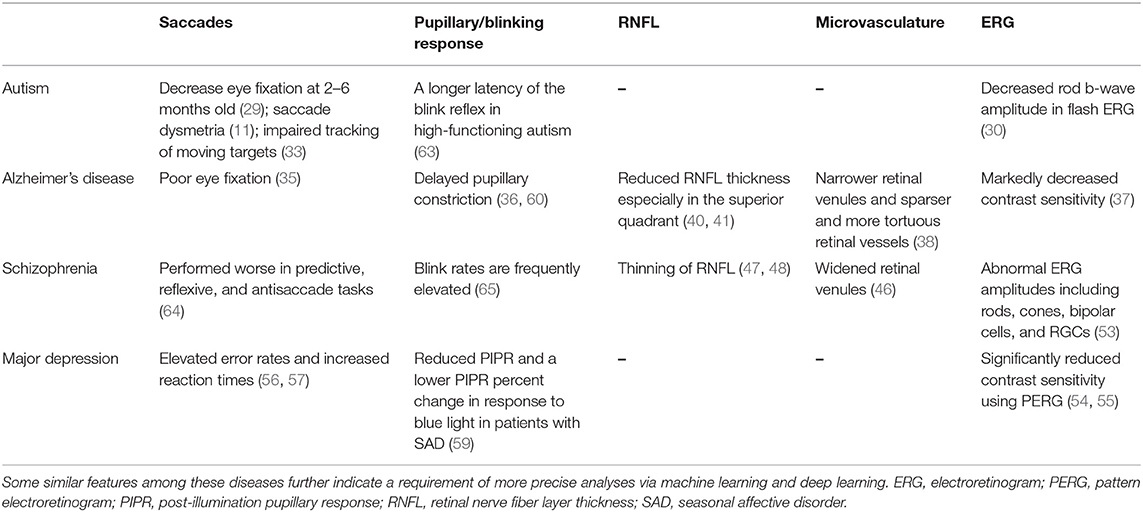

As outlined above, many brain disorders have ocular manifestations. These ocular features may be shared among multiple diseases or specific to a single disease, as detailed in Table 1. The heavy psychological and economic burden of brain disorders calls for more precise analyses, and combining these features with machine learning, particularly deep-learning algorithms, is a promising way to achieve them.

Table 1. Multiple changes in ocular parameters via ophthalmological assessments are associated with neurological disorders.

Computer Vision: With Advanced Artificial Intelligence

CV, one of the most powerful tools for bringing AI applications into healthcare, has shown a strong capability for automated screening of diseases such as skin cancer (66) and diabetic retinopathy (10, 67). With the rapid development of deep learning-based AI, CV now achieves performance close to, and in some tasks beyond, that of human vision (9, 68). Hence, CV is likely to drive AI to provide novel tools for brain disorders, as machines can now be trained to read human emotional and cognitive states, especially through automated detection of facial expressions such as fear and of states such as fatigue (18, 69). Individuals with brain disorders may benefit from CV-based AI applications in neurological healthcare, particularly for self-monitoring of symptoms and for real-time interventions that aid recovery of social and psychological abilities (70). Furthermore, automated detection and analysis of ocular responses could make CV-based AI recognition of human emotional and cognitive states more precise.

Nevertheless, currently available CV datasets for emotion recognition, such as JAFFE, FERA, and CK+, are usually based on thousands of facial images captured in the laboratory and often neglect eye movements and other primary ocular parameters, which contain abundant information on human affective states (16). Because Google AI applications focused on retinal and eye features have already shown that this approach can yield extensive physical health information non-invasively and conveniently (7, 10), such eye-focused CV-based AI should be explored further in clinical neuroscience. The eye, as a window into the brain, can thus provide health information not only about eye diseases but also about cardiovascular risk factors (8) and even brain disorders (Table 1). To achieve reliable detection of emotional and cognitive states in brain disorders, AI algorithms need a more quantitative representation of emotion. This will require more informative databases with dynamic features, such as eye movements captured during natural responses, as well as investigation of the neural mechanisms underlying the action units of facial expressions (71).

Discussion

Challenging medical problems are often tightly associated with big data, and AI algorithms have been shown to leverage such data to help solve them (9). AI applications in medicine are still at an early stage; however, AI-based automated diagnosis has already contributed to the identification of several types of cancer and retinal disease (10, 66, 67). Several reported AI applications in medical diagnosis rely on image recognition via supervised learning with deep neural networks, which has helped to interpret cancer slides (72), retinal images (73), and brain scans (74) effectively; nevertheless, most of these applications remain at a preliminary stage.

AI has been applied frequently in ophthalmology because retinal images are relatively easy to obtain non-invasively with fundus photography or OCT. Additionally, diagnostic standards for eye diseases have become better defined. Many eye diseases, including diabetic retinopathy (75), age-related macular degeneration (76), and congenital cataracts (77), have already been assessed with deep-learning neural networks, and many of these applications have achieved accuracies comparable with those of eye specialists (9). Because the neurosensory retina, a key component of the visual system, is a direct embryological extension of the CNS (1), it is reasonable to test the feasibility and efficacy of AI algorithms for detecting abnormal ocular responses associated with brain disorders (2, 78). CV-based AI algorithms for automated detection and analysis of ocular responses are promising tools for non-invasively detecting differences in ocular responses (16), particularly those associated with different types of brain disorders (78). Brain disorders tend to be more complicated than other medical problems and often impose a greater burden on society; for example, at least 350 million people worldwide currently suffer from major depression (79). AI has great potential to support patients with affective disorders and the caregivers who help them.

AI has begun to shed new light on brain disorders. Cognoa used clinical data from thousands of children at risk for ASD to train and develop an AI platform that may provide earlier diagnosis and personalized therapy for autistic children (80); the platform received FDA Breakthrough Device designation in 2018. For automated depression screening, Alhanai et al. trained a long short-term memory (LSTM) neural network on audio and text features and found its performance comparable with traditional evaluations based on depression questionnaires (81). Eye tracking-based assessments using videos shown on a monitor have already been developed to evaluate conditions such as ASD and AD (82, 83). Haque et al. trained a model on spoken language and 3D facial expressions, data that common smartphones can capture, to measure depression severity (84). Our team is currently developing an AI platform that uses ocular data to train a model to detect brain states under natural conditions. The platform is based mainly on real-time ocular responses and is intended to determine the emotional and cognitive states of individuals with brain disorders. This core function will be accessed through wearable smart glasses and an ordinary smartphone (Figure 1E; a related patent is in progress).

Nevertheless, several issues regarding AI implementation in healthcare require consideration. The first is how to effectively collect large, high-quality datasets with valid features for AI algorithms. For AI recognition of facial expressions, high-resolution imaging is often required, especially for applications to brain disorders. Ocular data should not be neglected in emotion recognition, since eye expressions play a key role in social communication (16). Parameter patterns such as saccades, pupillary responses, and blink rate (Table 1) contain detailed and rich information on human affective states (19). Micro-expression detection is also sometimes required to establish the ground truth of facial expressions (85). Some key features cannot be captured in a single image and instead require high-frame-rate video. In addition, high-resolution imaging may help obtain further health data via photoplethysmography (PPG) (86), such as heart rate variability (87). The second key issue is privacy protection when facial data are collected to develop AI algorithms (88). Participants must give informed consent for data collection. Investigators need to advise participants of their rights, summarize what participation in the study involves, and keep the data secure after the study (88). In our pilot design, all facial and ocular data will be obtained and stored by participants themselves on their smartphones, and participants will decide whether their data are shared, without personally identifying information. The third issue is the need to develop portable or wearable devices for collecting brain-health data. Such devices favor early detection of symptoms of brain disorders (70), rather than diagnosis at later, more severe stages with tools such as functional MRI for evaluating depression (89) or computed tomography (CT) for screening head trauma (90). The wearable smart glasses that we designed (Figure 1E) can collect many ocular-response parameters, such as saccades, pupillary responses, and blinking. Other biological data, such as electroencephalography (EEG) and PPG, will also be considered (Figure 1E). The system is intended as an integrated eye–brain engineering tool for human state recognition that enables real-time detection and evaluation of brain states with machine learning. It may benefit patients with various brain disorders, possibly including stroke and bipolar disorder.
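Heart rate variability, mentioned above as a PPG-derived measure, is often summarized with the time-domain metric RMSSD, the root mean square of successive differences between inter-beat intervals. This minimal Python sketch assumes that pulse peak times (in seconds) have already been detected in the PPG waveform; peak detection itself is not shown, and the example input is a toy sequence rather than physiological data.

```python
import math
from typing import List, Sequence


def inter_beat_intervals_ms(peak_times_s: Sequence[float]) -> List[float]:
    """Intervals between successive pulse peaks, in milliseconds."""
    return [1000.0 * (b - a) for a, b in zip(peak_times_s, peak_times_s[1:])]


def rmssd_ms(peak_times_s: Sequence[float]) -> float:
    """Root mean square of successive differences between inter-beat intervals."""
    ibis = inter_beat_intervals_ms(peak_times_s)
    if len(ibis) < 2:
        raise ValueError("need at least three pulse peaks")
    diffs = [b - a for a, b in zip(ibis, ibis[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Toy peak times (s); intervals of 1000, 500, and 1000 ms.
print(rmssd_ms([0.0, 1.0, 1.5, 2.5]))
```

RMSSD uses only beat-to-beat differences, so it is relatively insensitive to slow drifts in heart rate; it does, however, depend heavily on accurate peak detection, which is harder with camera-based PPG than with contact sensors.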

As AI is still at an early stage of integration into mental healthcare, the integration of human biological intelligence (BI) and machine learning-based AI needs to be further promoted. AI alone is insufficient for detecting brain disorders, because machine learning depends entirely on the available data. The quality of collected data is therefore vital, and ensuring it will require more knowledge from BI. Current AI representations of human facial expressions are often only qualitative, categorizing faces into seven basic expressions plus some compound expressions with an accuracy below 70% (91, 92). Raising these representations to a quantitative level will require more input from BI, especially cognitive neuroscience and neuro-ophthalmology. We may then better understand the threshold values that distinguish brain disorders from normal brain function, as well as the specific features of different brain disorders. Interestingly, visual interventions acting through ocular responses have also shown direct benefits for brain disorders, for example, blue-enriched light therapy for major depression (14, 93) and 40-Hz light flicker to attenuate AD-associated pathology (94, 95). Such vision-based therapies may enable non-invasive, timely prevention and even treatment of brain disorders in the near future. To this end, machine learning-based AI, especially deep-learning neural networks, will likely be instrumental in further advances in clinical neuroscience (96, 97).

Conclusion and Perspective

In general, brain disorders can be assessed by ocular detection, provided that primary eye diseases are excluded, a task in which well-trained AI can again offer support (7). Unlike gene therapy, which often aims to benefit future generations of patients, advanced AI can help patients with brain disorders directly and now. This can be achieved mainly through automated detection with AI algorithms, self-evaluation with wearable sensors, and timely intervention with brain–computer interfaces. May the force of AI be with patients with brain disorders, particularly through approaches based on real-time ocular responses.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Institutional Review Board of the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, China. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

XiaotL wrote the manuscript. FF drew Figures 1C,D. XC and K-FS revised the manuscript. JL and ZC collected data. LN and ZQ worked on machine learning and computer vision. KL and AY reviewed the section on brain disorders. XiaojL and ZC designed the engineering of the smart glasses. K-FS and LW supported and guided this project. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the Key-Area Research and Development Program of Guangdong Province (2018B030331001), International Postdoctoral Exchange Fellowship Program by the Office of China Postdoctoral Council (20160021), International Partnership Program of Chinese Academy of Sciences (172644KYS820170004), Commission on Innovation and Technology in Shenzhen Municipality of China (JCYJ20150630114942262), Hong Kong, Macao, and Taiwan Science and Technology Cooperation Innovation Platform in Universities in Guangdong Province (2013gjhz0002), and the National Key R&D Program of China (2017YFC1310503). In particular, we would like to thank the Venture Mentoring Service (VMS) at MIT, Cambridge, MA, USA.

Conflict of Interest

XL and JL were both core members of the start-up company BIAI INC. (USA)/BIAI LLC (China).

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Dowling JE. The Retina: An Approachable Part of the Brain. Cambridge: Harvard University Press (1987).

2. London A, Benhar I, Schwartz M. The retina as a window to the brain—from eye research to CNS disorders. Nat Rev Neurol. (2013) 9:44. doi: 10.1038/nrneurol.2012.227

3. Lopez AD, Murray CC. The global burden of disease, 1990–2020. Nat Med. (1998) 4:1241. doi: 10.1038/3218

4. Chiu K, Chan TF, Wu A, Leung IY, So KF, Chang RC. Neurodegeneration of the retina in mouse models of Alzheimer's disease: what can we learn from the retina? Age. (2012) 34:633–49. doi: 10.1007/s11357-011-9260-2

5. Adhikari S, Stark DE. Video-based eye tracking for neuropsychiatric assessment. Ann N Y Acad Sci. (2017) 1387:145–52. doi: 10.1111/nyas.13305

6. Shanechi MM. Brain-machine interfaces from motor to mood. Nat Neurosci. (2019) 22:1554–64. doi: 10.1038/s41593-019-0488-y

7. De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. (2018) 24:1342. doi: 10.1038/s41591-018-0107-6

8. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. (2018) 2:158. doi: 10.1038/s41551-018-0195-0

9. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. (2019) 25:44. doi: 10.1038/s41591-018-0300-7

10. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

11. Johnson BP, Lum JA, Rinehart NJ, Fielding J. Ocular motor disturbances in autism spectrum disorders: systematic review and comprehensive meta-analysis. Neurosci Biobehav Rev. (2016) 69:260–79. doi: 10.1016/j.neubiorev.2016.08.007

12. Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. (1991) 1:1–47. doi: 10.1093/cercor/1.1.1

13. Pickard GE, Sollars PJ. Intrinsically photosensitive retinal ganglion cells. Rev Physiol Biochem Pharmacol. (2012) 162:59–90. doi: 10.1007/112_2011_4

14. Li X, Li X. The antidepressant effect of light therapy from retinal projections. Neurosci Bull. (2018) 34:359–68. doi: 10.1007/s12264-018-0210-1

15. Hattar S, Kumar M, Park A, Tong P, Tung J, Yau KW, et al. Central projections of melanopsin-expressing retinal ganglion cells in the mouse. J Comp Neurol. (2006) 497:326–49. doi: 10.1002/cne.20970

16. Lee DH, Anderson AK. Reading what the mind thinks from how the eye sees. Psychol Sci. (2017) 28:494–503. doi: 10.1177/0956797616687364

17. Lee DH, Mirza R, Flanagan JG, Anderson AK. Optical origins of opposing facial expression actions. Psychol Sci. (2014) 25:745–52. doi: 10.1177/0956797613514451

18. Lee DH, Susskind JM, Anderson AK. Social transmission of the sensory benefits of eye widening in fear expressions. Psychol Sci. (2013) 24:957–65. doi: 10.1177/0956797612464500

19. Eckstein MK, Guerra-Carrillo B, Singley ATM, Bunge SA. Beyond eye gaze: what else can eyetracking reveal about cognition and cognitive development? Dev Cogn Neurosci. (2017) 25:69–91. doi: 10.1016/j.dcn.2016.11.001

20. Jamadar S, Fielding J, Egan G. Quantitative meta-analysis of fMRI and PET studies reveals consistent activation in fronto-striatal-parietal regions and cerebellum during antisaccades and prosaccades. Front Psychol. (2013) 4:749. doi: 10.3389/fpsyg.2013.00749

21. Hikosaka O, Takikawa Y, Kawagoe R. Role of the basal ganglia in the control of purposive saccadic eye movements. Physiol Rev. (2000) 80:953–78. doi: 10.1152/physrev.2000.80.3.953

22. Baier B, Stoeter P, Dieterich M. Anatomical correlates of ocular motor deficits in cerebellar lesions. Brain. (2009) 132:2114–24. doi: 10.1093/brain/awp165

23. Alnaes D, Sneve MH, Espeseth T, Endestad T, van de Pavert SH, Laeng B. Pupil size signals mental effort deployed during multiple object tracking and predicts brain activity in the dorsal attention network and the locus coeruleus. J Vis. (2014) 14:1. doi: 10.1167/14.4.1

24. Murphy PR, O'Connell RG, O'Sullivan M, Robertson IH, Balsters JH. Pupil diameter covaries with BOLD activity in human locus coeruleus. Hum Brain Mapp. (2014) 35:4140–54. doi: 10.1002/hbm.22466

25. Varazzani C, San-Galli A, Gilardeau S, Bouret S. Noradrenaline and dopamine neurons in the reward/effort trade-off: a direct electrophysiological comparison in behaving monkeys. J Neurosci. (2015) 35:7866–77. doi: 10.1523/JNEUROSCI.0454-15.2015

26. Jongkees BJ, Colzato LS. Spontaneous eye blink rate as predictor of dopamine-related cognitive function-A review. Neurosci Biobehav Rev. (2016) 71:58–82. doi: 10.1016/j.neubiorev.2016.08.020

27. van Bochove ME, Van der Haegen L, Notebaert W, Verguts T. Blinking predicts enhanced cognitive control. Cogn Affect Behav Neurosci. (2013) 13:346–54. doi: 10.3758/s13415-012-0138-2

28. Lord C, Rutter M, DiLavore PC, Risi S. Autism Diagnostic Observation Schedule-WPS (ADOS-WPS). Los Angeles, CA: Western Psychological Services (1999). doi: 10.1037/t17256-000

29. Jones W, Klin A. Attention to eyes is present but in decline in 2–6-month-old infants later diagnosed with autism. Nature. (2013) 504:427. doi: 10.1038/nature12715

30. Realmuto G, Purple R, Knobloch W, Ritvo E. Electroretinograms (ERGs) in four autistic probands and six first-degree relatives. Can J Psychiatry. (1989) 34:435–9. doi: 10.1177/070674378903400513

31. Agam Y, Joseph RM, Barton JJ, Manoach DS. Reduced cognitive control of response inhibition by the anterior cingulate cortex in autism spectrum disorders. Neuroimage. (2010) 52:336–47. doi: 10.1016/j.neuroimage.2010.04.010

32. Thakkar KN, Polli FE, Joseph RM, Tuch DS, Hadjikhani N, Barton JJ, et al. Response monitoring, repetitive behaviour and anterior cingulate abnormalities in autism spectrum disorders (ASD). Brain. (2008) 131:2464–78. doi: 10.1093/brain/awn099

33. Takarae Y, Minshew NJ, Luna B, Krisky CM, Sweeney JA. Pursuit eye movement deficits in autism. Brain. (2004) 127:2584–94. doi: 10.1093/brain/awh307

34. Lisberger SG, Morris E, Tychsen L. Visual motion processing and sensory-motor integration for smooth pursuit eye movements. Ann Rev Neurosci. (1987) 10:97–129. doi: 10.1146/annurev.ne.10.030187.000525

35. Javaid FZ, Brenton J, Guo L, Cordeiro MF. Visual and ocular manifestations of Alzheimer's disease and their use as biomarkers for diagnosis and progression. Front Neurol. (2016) 7:55. doi: 10.3389/fneur.2016.00055

36. Prettyman R, Bitsios P, Szabadi E. Altered pupillary size and darkness and light reflexes in Alzheimer's disease. J Neurol Neurosurg Psychiatry. (1997) 62:665–8. doi: 10.1136/jnnp.62.6.665

37. Risacher SL, WuDunn D, Pepin SM, MaGee TR, McDonald BC, Flashman LA, et al. Visual contrast sensitivity in Alzheimer's disease, mild cognitive impairment, and older adults with cognitive complaints. Neurobiol Aging. (2013) 34:1133–44. doi: 10.1016/j.neurobiolaging.2012.08.007

38. Cheung CY-l, Ong YT, Ikram MK, Ong SY, Li X, Hilal S, et al. Microvascular network alterations in the retina of patients with Alzheimer's disease. Alzheimers Dement. (2014) 10:135–42. doi: 10.1016/j.jalz.2013.06.009

39. Feke GT, Hyman BT, Stern RA, Pasquale LR. Retinal blood flow in mild cognitive impairment and Alzheimer's disease. Alzheimers Dement (Amst). (2015) 1:144–51. doi: 10.1016/j.dadm.2015.01.004

40. Valenti DA. Neuroimaging of retinal nerve fiber layer in AD using optical coherence tomography. Neurology. (2007) 69:1060. doi: 10.1212/01.wnl.0000280584.64363.83

41. Liu D, Zhang L, Li Z, Zhang X, Wu Y, Yang H, et al. Thinner changes of the retinal nerve fiber layer in patients with mild cognitive impairment and Alzheimer's disease. BMC Neurol. (2015) 15:14. doi: 10.1186/s12883-015-0268-6

42. Mutlu U, Colijn JM, Ikram MA, Bonnemaijer PW, Licher S, Wolters FJ, et al. Association of retinal neurodegeneration on optical coherence tomography with dementia: a population-based study. JAMA Neurol. (2018) 75:1256–63. doi: 10.1001/jamaneurol.2018.1563

43. Jurisic D, Cavar I, Sesar A, Sesar I, Vukojevic J, Curkovic M. New insights into schizophrenia: a look at the eye and related structures. Psychiatr Danub. (2020) 32:60–9. doi: 10.24869/psyd.2020.60

44. Silverstein SM, Rosen R. Schizophrenia and the eye. Schizophrenia Res. Cogn. (2015) 2:46–55. doi: 10.1016/j.scog.2015.03.004

45. Adams SA, Nasrallah HA. Multiple retinal anomalies in schizophrenia. Schizophrenia Res. (2018) 195:3–12. doi: 10.1016/j.schres.2017.07.018

46. Meier MH, Gillespie NA, Hansell NK, Hewitt AW, Hickie IB, Lu Y, et al. Retinal microvessels reflect familial vulnerability to psychotic symptoms: a comparison of twins discordant for psychotic symptoms and controls. Schizophrenia Res. (2015) 164:47–52. doi: 10.1016/j.schres.2015.01.045

47. Lee WW, Tajunisah I, Sharmilla K, Peyman M, Subrayan V. Retinal nerve fiber layer structure abnormalities in schizophrenia and its relationship to disease state: evidence from optical coherence tomography. Invest Ophthalmol Vis Sci. (2013) 54:7785–92. doi: 10.1167/iovs.13-12534

48. Cabezon L, Ascaso F, Ramiro P, Quintanilla M, Gutierrez L, Lobo A, et al. Optical coherence tomography: a window into the brain of schizophrenic patients. Acta Ophthalmol. (2012) 90. doi: 10.1111/j.1755-3768.2012.T123.x

49. Satue M, Garcia-Martin E, Fuertes I, Otin S, Alarcia R, Herrero R, et al. Use of Fourier-domain OCT to detect retinal nerve fiber layer degeneration in Parkinson's disease patients. Eye. (2013) 27:507. doi: 10.1038/eye.2013.4

50. Moschos MM, Markopoulos I, Chatziralli I, Rouvas A, Papageorgiou GS, Ladas I, et al. Structural and functional impairment of the retina and optic nerve in Alzheimer's disease. Curr Alzheimer Res. (2012) 9:782–8. doi: 10.2174/156720512802455340

51. Lavoie J, Illiano P, Sotnikova TD, Gainetdinov RR, Beaulieu JM, Hébert M. The electroretinogram as a biomarker of central dopamine and serotonin: potential relevance to psychiatric disorders. Biol Psychiatry. (2014) 75:479–86. doi: 10.1016/j.biopsych.2012.11.024

52. Lavoie J, Maziade M, Hébert M. The brain through the retina: the flash electroretinogram as a tool to investigate psychiatric disorders. Prog Neuropsychopharmacol Biol Psychiatry. (2014) 48:129–34. doi: 10.1016/j.pnpbp.2013.09.020

53. Demmin DL, Davis Q, Roché M, Silverstein SM. Electroretinographic anomalies in schizophrenia. J Abnorm Psychol. (2018) 127:417. doi: 10.1037/abn0000347

54. Bubl E, Kern E, Ebert D, Bach M, Van Elst TL. Seeing gray when feeling blue? Depression can be measured in the eye of the diseased. Biol Psychiatry. (2010) 68:205–8. doi: 10.1016/j.biopsych.2010.02.009

55. Bubl E, Ebert D, Kern E, van Elst LT, Bach M. Effect of antidepressive therapy on retinal contrast processing in depressive disorder. Br J Psychiatry. (2012) 201:151–8. doi: 10.1192/bjp.bp.111.100560

56. Harris MS, Reilly JL, Thase ME, Keshavan MS, Sweeney JA. Response suppression deficits in treatment-naive first-episode patients with schizophrenia, psychotic bipolar disorder and psychotic major depression. Psychiatry Res. (2009) 170:150–6. doi: 10.1016/j.psychres.2008.10.031

57. Malsert J, Guyader N, Chauvin A, Polosan M, Poulet E, Szekely D, et al. Antisaccades as a follow-up tool in major depressive disorder therapies: a pilot study. Psychiatry Res. (2012) 200:1051–3. doi: 10.1016/j.psychres.2012.05.007

58. Winograd-Gurvich C, Georgiou-Karistianis N, Fitzgerald P, Millist L, White O. Ocular motor differences between melancholic and non-melancholic depression. J Affect Disord. (2006) 93:193–203. doi: 10.1016/j.jad.2006.03.018

59. Roecklein K, Wong P, Ernecoff N, Miller M, Donofry S, Kamarck M, et al. The post illumination pupil response is reduced in seasonal affective disorder. Psychiatry Res. (2013) 210:150–8. doi: 10.1016/j.psychres.2013.05.023

60. La Morgia C, Carelli V, Carbonelli M. Melanopsin retinal ganglion cells and pupil: clinical implications for neuro-ophthalmology. Front Neurol. (2018) 9:1047. doi: 10.3389/fneur.2018.01047

61. Gamlin PD, McDougal DH, Pokorny J, Smith VC, Yau K-W, Dacey DM. Human and macaque pupil responses driven by melanopsin-containing retinal ganglion cells. Vis Res. (2007) 47:946–54. doi: 10.1016/j.visres.2006.12.015

62. Adhikari P, Pearson CA, Anderson AM, Zele AJ, Feigl B. Effect of age and refractive error on the melanopsin mediated post-illumination pupil response (PIPR). Sci Rep. (2015) 5:17610. doi: 10.1038/srep17610

63. Erturk O, Korkmaz B, Alev G, Demirbilek V, Kiziltan M. Startle and blink reflex in high functioning autism. Neurophysiol Clin. (2016) 46:189–92. doi: 10.1016/j.neucli.2016.02.001

64. Obyedkov I, Skuhareuskaya M, Skugarevsky O, Obyedkov V, Buslauski P, et al. Saccadic eye movements in different dimensions of schizophrenia and in clinical high-risk state for psychosis. BMC Psychiatry. (2019) 19:110. doi: 10.1186/s12888-019-2093-8

65. Karson CN, Dykman RA, Paige SR. Blink rates in schizophrenia. Schizophr Bull. (1990) 16:345–54. doi: 10.1093/schbul/16.2.345

66. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542:115. doi: 10.1038/nature21056

67. Ting DSW, Cheung CYL, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. (2017) 318:2211–23. doi: 10.1001/jama.2017.18152

69. Eriksson M, Papanikolopoulos NP. Eye-tracking for detection of driver fatigue. In: Proceedings of Conference on Intelligent Transportation Systems. Boston, MA: IEEE (1997).

70. Poh MZ, Loddenkemper T, Reinsberger C, Swenson NC, Goyal S, Sabtala MC, et al. Convulsive seizure detection using a wrist-worn electrodermal activity and accelerometry biosensor. Epilepsia. (2012) 53:e93–7. doi: 10.1111/j.1528-1167.2012.03444.x

71. Dolensek N, Gehrlach DA, Klein AS, Gogolla N. Facial expressions of emotion states and their neuronal correlates in mice. Science. (2020) 368:89–94. doi: 10.1126/science.aaz9468

72. Steiner DF, MacDonald R, Liu Y, Truszkowski P, Hipp JD, Gammage C, et al. Impact of deep learning assistance on the histopathologic review of lymph nodes for metastatic breast cancer. Am J Surg Pathol. (2018) 42:1636–46. doi: 10.1097/PAS.0000000000001151

73. Abràmoff MD, Lavin PT, Birch M, Shah N, Folk JC. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med. (2018) 1:39. doi: 10.1038/s41746-018-0040-6

74. Schnyer DM, Clasen PC, Gonzalez C, Beevers CG. Evaluating the diagnostic utility of applying a machine learning algorithm to diffusion tensor MRI measures in individuals with major depressive disorder. Psychiatry Res Neuroimaging. (2017) 264:1–9. doi: 10.1016/j.pscychresns.2017.03.003

75. Kanagasingam Y, Xiao D, Vignarajan J, Preetham A, Tay-Kearney M-L, Mehrotra A. Evaluation of artificial intelligence–based grading of diabetic retinopathy in primary care. JAMA Network Open. (2018) 1:e182665. doi: 10.1001/jamanetworkopen.2018.2665

76. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. (2017) 135:1170–6. doi: 10.1001/jamaophthalmol.2017.3782

77. Long E, Lin H, Liu Z, Wu X, Wang L, Jiang J, et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat Biomed Eng. (2017) 1:0024. doi: 10.1038/s41551-016-0024

78. Kersten HM, Roxburgh RH, Danesh-Meyer HV. Ophthalmic manifestations of inherited neurodegenerative disorders. Nat Rev Neurol. (2014) 10:349. doi: 10.1038/nrneurol.2014.79

79. Vos T, Flaxman AD, Naghavi M, Lozano R, Michaud C, Ezzati M, et al. Years lived with disability (YLDs) for 1160 sequelae of 289 diseases and injuries 1990-2010: a systematic analysis for the Global Burden of Disease Study 2010. Lancet. (2012) 380:2163–96. doi: 10.1016/S0140-6736(12)61729-2

80. Abbas H, Garberson F, Glover E, Wall DP. Machine learning approach for early detection of autism by combining questionnaire and home video screening. J Am Med Inform Assoc. (2017) 25:1000–7. doi: 10.1093/jamia/ocy039

81. Al Hanai T, Ghassemi M, Glass J. Detecting depression with audio/text sequence modeling of interviews. Proc Interspeech. (2018) 1716–20. doi: 10.21437/Interspeech.2018-2522

82. Pierce K, Conant D, Hazin R, Stoner R, Desmond J. Preference for geometric patterns early in life as a risk factor for autism. Arch Gen Psychiatry. (2011) 68:101–9. doi: 10.1001/archgenpsychiatry.2010.113

83. Oyama A, Takeda S, Ito Y, Nakajima T, Takami Y, Takeya Y, et al. Novel method for rapid assessment of cognitive impairment using high-performance eye-tracking technology. Sci Rep. (2019) 9:12932. doi: 10.1038/s41598-019-49275-x

84. Haque A, Guo M, Miner AS, Fei-Fei L. Measuring depression symptom severity from spoken language and 3D facial expressions. arXiv preprint arXiv:1811.08592 (2018).

85. Oh Y-H, See J, Le Ngo AC, Phan RC-W, Baskaran VM. A survey of automatic facial micro-expression analysis: databases, methods and challenges. Front Psychol. (2018) 9:1128. doi: 10.3389/fpsyg.2018.01128

86. McDuff D, Gontarek S, Picard RW. Improvements in remote cardiopulmonary measurement using a five band digital camera. IEEE Trans Biomed Eng. (2014) 61:2593–601. doi: 10.1109/TBME.2014.2323695

87. McDuff D, Gontarek S, Picard R. Remote measurement of cognitive stress via heart rate variability. In: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Chicago, IL: IEEE (2014). doi: 10.1109/EMBC.2014.6944243

88. Price WN, Cohen IG. Privacy in the age of medical big data. Nat Med. (2019) 25:37. doi: 10.1038/s41591-018-0272-7

89. Drysdale AT, Grosenick L, Downar J, Dunlop K, Mansouri F, Meng Y, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat Med. (2017) 23:28. doi: 10.1038/nm.4246

90. Chilamkurthy S, Ghosh R, Tanamala S, Biviji M, Campeau NG, Venugopal VK, et al. Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet. (2018) 392:2388–96. doi: 10.1016/S0140-6736(18)31645-3

91. Ko B. A brief review of facial emotion recognition based on visual information. Sensors. (2018) 18:401. doi: 10.3390/s18020401

92. Randhavane T, Bera A, Kapsaskis K, Bhattacharya U, Gray K, Manocha D. Identifying emotions from walking using affective and deep features. arXiv: Computer Vision and Pattern Recognition. (2019).

93. Huang L, Xi Y, Peng Y, Yang Y, Huang X, Fu Y, et al. A visual circuit related to habenula underlies the antidepressive effects of light therapy. Neuron. (2019) 102:128–42.e8. doi: 10.1016/j.neuron.2019.01.037

94. Iaccarino HF, Singer AC, Martorell AJ, Rudenko A, Gao F, Gillingham TZ, et al. Gamma frequency entrainment attenuates amyloid load and modifies microglia. Nature. (2016) 540:230–5. doi: 10.1038/nature20587

95. Singer AC, Martorell AJ, Douglas JM, Abdurrob F, Attokaren MK, Tipton J, et al. Noninvasive 40-Hz light flicker to recruit microglia and reduce amyloid beta load. Nat Protoc. (2018) 13:1850–68. doi: 10.1038/s41596-018-0021-x

96. Ullman S. Using neuroscience to develop artificial intelligence. Science. (2019) 363:692–3. doi: 10.1126/science.aau6595

Keywords: ocular assessment, retina, computer vision, cognitive neuroscience, brain disorders, eye-brain engineering

Citation: Li X, Fan F, Chen X, Li J, Ning L, Lin K, Chen Z, Qin Z, Yeung AS, Li X, Wang L and So K-F (2021) Computer Vision for Brain Disorders Based Primarily on Ocular Responses. Front. Neurol. 12:584270. doi: 10.3389/fneur.2021.584270

Received: 16 July 2020; Accepted: 15 March 2021;

Published: 21 April 2021.

Edited by:

Christine Nguyen, The University of Melbourne, Australia

Reviewed by:

Essam Mohamed Elmatbouly Saber, Benha University, Egypt

Mutsumi Kimura, Ryukoku University, Japan

Copyright © 2021 Li, Fan, Chen, Li, Ning, Lin, Chen, Qin, Yeung, Li, Wang and So. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaotao Li, xtli@mit.edu; Kwok-Fai So, hrmaskf@hkucc.hku.hk
