- 1 The Neuro-Interaction Innovation Lab, Kennesaw State University, Department of Electrical Engineering, Marietta, GA, United States

- 2 The Neuro-Interaction Innovation Lab, Kennesaw State University, Department of Engineering Technology, Marietta, GA, United States

As Artificial Intelligence (AI) proliferates across sectors such as healthcare, transportation, energy, and military applications, collaboration within human-AI teams is becoming increasingly critical. Understanding the interrelationships between the system elements, humans and AI, is vital to achieving the best outcomes within individual team members' capabilities. It is also crucial for designing better AI algorithms and for finding favorable scenarios for joint AI-human missions that capitalize on the unique capabilities of both elements. In this conceptual study, we introduce Intentional Behavioral Synchrony (IBS) as a synchronization mechanism between humans and AI to set up a trusting relationship without compromising mission goals. IBS aims to create a sense of similarity between AI decisions and human expectations, drawing on psychological concepts that can be integrated into AI algorithms. We also discuss the potential of using multimodal fusion to set up a feedback loop between the two partners. Our aim with this work is to start a research trend centered on exploring innovative ways of deploying synchrony within teams that include non-human members. Our goal is to foster a better sense of collaboration and trust between humans and AI, resulting in more effective joint missions.

1. Introduction

In line with the rapid growth of interest in Artificial Intelligence (AI) applications, it is inevitable that AI will play a vital part in humans' lives (Haenlein and Kaplan, 2019). As a result, the upcoming years will see various forms of human-machine teaming across several domains and applications, including those involved in high-sensitivity and complex tasks, such as military applications (National Intelligence Council, 2021), transportation (National Science and Technology Council United States Department of Transportation, 2020), and others. Studying the dynamics of human-AI teams is an interdisciplinary endeavor that involves technical concepts related to the intelligent part (such as computer science and engineering) and the human element (such as psychology). It also entails examining the interactions between humans and AI, which, in addition to the concepts above, encompass a wide range of considerations, including human factors, ethics, and policymaking. This area of research is becoming increasingly important because far fewer studies examine the interactions between humans and AI than focus solely on the individual elements. Thus, there is a growing interest in understanding how humans and AI can work together in a way that is safe, ethical, and effective.

Although AI is a new field, research into it does not have to begin from scratch. Rather, we view AI as a subdomain of the machine-based automation movement that started in the mid-1900s. Therefore, there is a wealth of research and knowledge that we can use to design better human-AI systems. Furthermore, when AI is viewed as an evolved, more sophisticated version of a rule-based machine or a robot, one of the key pillars in understanding the relationship between humans and AI is human trust, a topic that many studies have addressed over the years, some of which date back to the 1980s (Muir, 1987). From the literature, we define human trust in AI as the degree to which a user holds a positive attitude toward an AI team member's reliability, predictability, and dependability to perform the actions that contribute to delivering the overall task aims. Trust establishment is influenced by many factors, which can be divided into three categories: human factors (about humans), partner factors (about AI), and environmental factors (about context), all of which are interconnected (Hancock et al., 2011). Achieving the correct balance and alignment between human trust and AI capabilities requires accounting for all these factors, a concept known as “calibrated trust” (Lee and See, 2004). This work follows in the same vein by introducing a new concept that has the potential to help enhance our knowledge of human-AI team systems.

In a human-human team setting, the level of trust the trustor places in the trustee is influenced by the latter's ability to justify their decisions and explain the rationale behind them. This also extends to human-AI teams (Chen et al., 2016). However, this is a challenging feature to have in AI (Calhoun et al., 2019). Thus, instead of imposing the explainability load on AI, we propose shifting this burden to humans by having AI imitate their actions in low-stakes subtasks. By recognizing themselves in the actions of AI, humans can set up a connection with it. As a result, in decision-making instances where the best decision is unclear, humans can trust the AI's decision based on the trust established in earlier, more direct decision-making interactions. We call this model “Intentional Behavioral Synchrony (IBS),” and we define it as the intentional adoption of minor decision-making patterns by an AI agent to mimic those of its human teammate in scenarios where such decisions have a minimally noticeable impact on the mission's overall aims. This approach aims to enhance human-AI teaming, improving team performance in the long term. This paper introduces the concept and discusses its origin and the feasibility of implementing it in real-life applications.

2. Intentional behavioral synchrony

2.1. Theoretical foundation

The IBS concept draws inspiration from a psychological phenomenon seen in human-human interaction, known as interpersonal synchrony. Traditionally, interpersonal synchrony refers to physical and temporal coordination (Schmidt et al., 1990), but it has been found to involve several other behavioral mechanisms beyond movement (Mazzurega et al., 2011). It is well-established that synchrony is an indicator of social closeness between two humans (Tickle-Degnen and Rosenthal, 1987). This concept can be easily extrapolated to human-agent teams, but only for agents whose behavioral and cognitive abilities are similar or very closely matched to those of humans. Here, however, we are concerned with AI-powered machines, or agents, that can process complex sets of information, reason, make decisions, and take actions in pursuit of general high-level aims [definition inspired by Gabbay et al. (1998)]. These agents are not necessarily humanoid, and they might be embedded in a piece of software that is invisible to humans. This is why synchrony, in the traditional sense, cannot be used with such systems, and why we introduce the IBS concept. IBS can be thought of as an add-on to existing AI elements of different kinds. It is a trust-building strategy for humans working with AI teammates, which can be integrated into AI algorithms to enhance the team's overall performance.

The importance of trust comes from its being essential in building a personal relationship or companionship with a teammate. This human trait has been shown to advance team efforts in achieving their goals (Love et al., 2021). Humans strive to establish a sense of companionship with their possessions, as shown by the many individuals who name their belongings. This practice is prevalent across various domains, including sensitive environments like the military, where equipment is often named, such as the famous Big Dog robot. Therefore, by implementing IBS in AI algorithms, we can create a sense of harmony that aligns with human expectations, thereby fostering stronger connections between team members. This, in turn, allows humans to trust more sophisticated AI decisions whose optimality they may not be able to verify themselves.

2.2. Operational environments

The proposed strategy is fundamental in nature and, thus, can be implemented in a range of scenarios. Here, we offer some guidelines to illustrate how IBS can be effectively used.

2.2.1. Not limited to robots

IBS is meant to provide a connection between a decision-making element and a companion human to ease joint task performance. This connection is not restricted to robots or humanoid agents but can extend to any type of intelligent entity. For example, it could be a software application taking actions, whether visibly or invisibly, that affect the joint task. That said, IBS and other behavioral synchrony mechanisms are also expected to work effectively with humanoid robots, which may have greater potential for synchrony than invisible AI agents.

2.2.2. Not limited to specific types of interaction

Regardless of the freedom given to AI, and whether its role is to complement humans in making joint decisions or to make decisions independently, behavioral synchrony can contribute to trust. IBS can therefore be used in various applications regardless of how the AI interacts with the human partner. Furthermore, the significance of trust between humans and AI is not limited to specific domains where the human's emotional state is crucial to achieving team aims; it is a fundamental aspect of teamwork. Hence, it is essential to consider trust when refining the design of AI machines to maximize the overall benefits generated by the team.

2.2.3. A personalized fashion

Each human has a unique perspective and set of qualities that affect their ability to work effectively in teams and interact with AI, which is referred to as dispositional trust in the literature (Hoff and Bashir, 2015). Dispositional trust develops from human characteristics, such as the accumulated experiences and life events the individual has encountered up to the present time. These experiences significantly affect human behavior and can leave individuals vulnerable to intentional or unintentional biases, which can affect how they collaborate with AI in joint systems. As a result, IBS integration, like any trust-building mechanism, will need to be conducted on an individual basis. Nonetheless, clustering techniques could be used to find shared characteristics among humans, which could ease the process.
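As a rough illustration of how clustering might group humans with similar dispositions, the sketch below runs a plain k-means procedure on made-up two-dimensional profiles. The choice of k-means, the two features (prior experience with automation and risk tolerance), and all values are assumptions for illustration only; the text does not prescribe a specific technique or feature set.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20):
    """Minimal k-means with a deterministic start (first k points as centroids)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each profile to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical profiles: (prior experience with automation, risk tolerance), both in [0, 1].
profiles = [
    (0.90, 0.80), (0.10, 0.20), (0.85, 0.90),
    (0.20, 0.10), (0.80, 0.75), (0.15, 0.25),
]
labels, centroids = kmeans(profiles, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

In such a scheme, an IBS configuration could be initialized per cluster and then refined for each individual, rather than being learned from scratch for every new teammate.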

3. Practical framework

3.1. Description

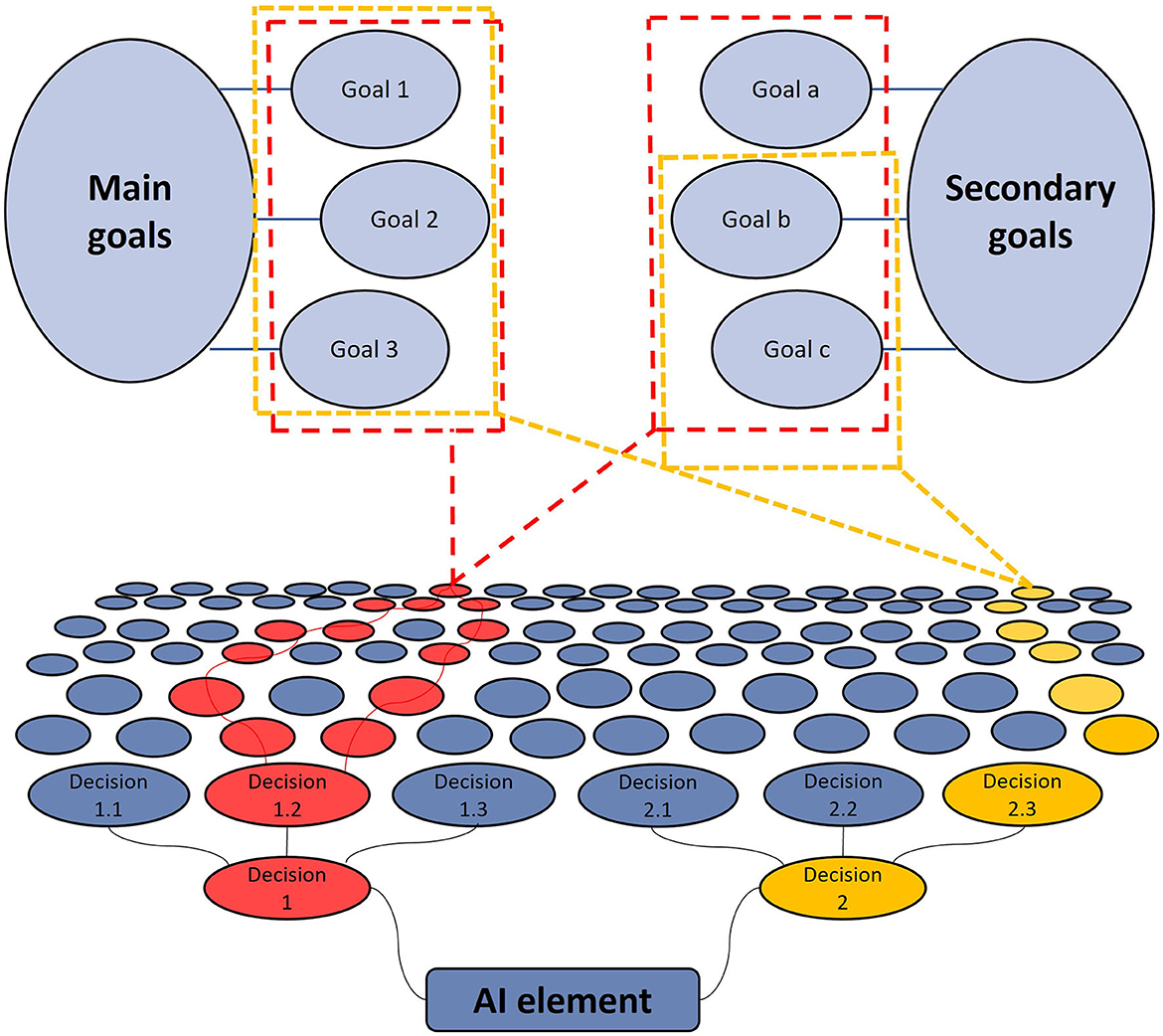

Our vision is to use IBS and behavioral synchrony in highly dynamic and complex environments where tasks are layered with multiple goals and aims. These environments offer many opportunities to test the effectiveness of our theory in real-world applications, because agents operating in them have the freedom to try alternative approaches (decisions) that can eventually achieve the same goals. To give a perspective on how such a strategy would work, consider the example diagram in Figure 1. The figure illustrates how an AI element is positioned to make decisions in a hierarchical manner, where each decision affects the later set of decisions. These decisions are interdependent and driven by a set of primary and secondary goals that the AI has been programmed to achieve. In the figure, the AI is one of many elements in a network that includes the human companion and other AI-human teams. This setup is ideal for applying IBS and similar algorithms.

At each step of the decision-making process, the decision that contributes to achieving the greatest number of goals is preferred. This is how intelligent elements should be programmed. In the figure, these are the paths shown in red. In such an environment, AI can carry out the goals set by humans in diverse ways by following any one of the red routes, so no goals or aims will be compromised by taking any of these paths. The proposed IBS method suggests prioritizing the path that is most like what a human would choose, not just at the first stage but throughout the decision-making process. This can be implemented by adding the IBS requirement to the list of secondary or even tertiary requirements of the system. While this is not an aim of the specific mission itself, it helps build a connection with the humans involved, which may eventually help achieve other strategic goals using the AI algorithms developed in this training mission.
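One way to read the selection rule above is as a lexicographic choice: first keep the options that achieve the most goals, then break ties by similarity to the human. The following is a minimal sketch under that reading. The `Option` type, the `human_similarity` score, and the example values are hypothetical; how such a similarity score would be obtained is left open here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    goals_met: int           # number of mission goals this decision supports
    human_similarity: float  # hypothetical score in [0, 1]; 1 = what the human would do


def choose_with_ibs(options):
    """Keep the options that meet the most goals, then prefer the most human-like one."""
    best_goals = max(o.goals_met for o in options)
    candidates = [o for o in options if o.goals_met == best_goals]  # the "red" paths
    # IBS acts only as a tie-breaker, so it never costs a mission goal.
    return max(candidates, key=lambda o: o.human_similarity)


options = [
    Option("red_path_a", goals_met=3, human_similarity=0.20),
    Option("red_path_b", goals_met=3, human_similarity=0.80),
    Option("yellow_path", goals_met=2, human_similarity=0.95),
]
chosen = choose_with_ibs(options)
print(chosen.name)  # red_path_b
```

Because similarity is consulted only among goal-equivalent options, the most human-like path overall (`yellow_path` in this toy data) is still rejected when it achieves fewer goals.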

However, certain decisions made by AI could compromise the delivery of all aims, as depicted by the yellow path in the figure. When the yellow path is much more intuitive to humans than the red paths, the challenge becomes one of weighing trade-offs between specific task aims (achieving as many goals as possible) and overall strategic aims (building robust AI algorithms that work efficiently and effectively with humans). This requires analyzing the associated risks and rewards for interconnected teams composed of many elements. There are two ways of integrating IBS to address situations where adding IBS criteria may compromise the quality of work. The first method involves integrating IBS during the training phase in an offline manner. Once sufficient training has been completed, humans should have enough confidence in the AI's reasoning abilities that the AI can make proper decisions without the need for real-time IBS. The second approach is to keep IBS active during actual task execution to provide the human with a continuous real-time sense of similarity, simply by avoiding actions that are out of the ordinary.
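The two integration modes can be illustrated with a simple weighted score in which the weight on human similarity changes over time. This is only a sketch under strong assumptions: the additive score, the linear annealing schedule, and every number below are invented for illustration and are not specified in the text.

```python
def ibs_weight(step, training_steps, initial_weight=0.5,
               keep_online=False, online_weight=0.1):
    """Weight on the similarity term at a given step (hypothetical linear schedule)."""
    final_weight = online_weight if keep_online else 0.0
    if step >= training_steps:
        # Deployment: no IBS term (offline mode) or a small residual one (real-time mode).
        return final_weight
    fraction_done = step / training_steps
    return initial_weight + (final_weight - initial_weight) * fraction_done


def score(goal_fraction, similarity, weight):
    """Task performance plus a weighted bonus for human-like behavior."""
    return goal_fraction + weight * similarity


# Hypothetical paths: (fraction of goals achieved, similarity to the human's choice).
paths = {"red": (1.0, 0.3), "yellow": (0.8, 0.9)}


def pick(step, training_steps=100, **schedule):
    weight = ibs_weight(step, training_steps, **schedule)
    return max(paths, key=lambda name: score(*paths[name], weight))


print(pick(0))                      # yellow: early training favors the intuitive path
print(pick(100))                    # red: after offline training, goals decide alone
print(pick(100, keep_online=True))  # red: a small real-time weight does not override goals
```

With these made-up numbers, the intuitive yellow path is accepted only early in training, when the strategic value of building trust is weighted heavily; once the weight shrinks, the goal-maximizing red path wins in both modes.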

3.2. Human reflections for feedback

To test the effect of AI decisions on humans, and whether a given decision is the ideal alternative from a set of options, one potential measurement method is the use of self-reported questionnaires. However, this method is susceptible to biases such as recall, interpretation, and social desirability biases, among others. An alternative approach is incorporating real-time human signals into the AI system. The use of physiological modalities in Human-Machine Interaction (HMI) applications is prevalent. In settings where signal acquisition devices are available, they could be used to set up a feedback loop from humans to the AI system to evaluate its decisions. However, this strategy has added constraints, most notably signal interpretation and the need to separate responses to outside events from responses to the targeted interactions with the machine. These obstacles may be outweighed by the insights physiological signals provide that cannot be gained through traditional approaches.

Several human physiological signals have long been established as reliable indicators of human emotions, including trust as one such emotion. Because of the established neural correlates of trust, Electroencephalography (EEG) has been one of the key modalities used to extract trust levels (Long et al., 2012). The earliest attempts were mostly confined to using Event-Related Potentials (ERPs) to extract the brain response of humans while completing specific cognitive activities (Boudreau et al., 2009; Long et al., 2012; Dong et al., 2015). The ERP approach is hard to integrate into practical systems, and thus we do not recommend it. In a study by Akash et al. (2018), EEG and Galvanic Skin Response (GSR) were used to find links with trust, and the authors were successful in doing so, with accuracy values in the 70% range. GSR was also shown to correlate with trust levels in studies of different contexts, such as the work of Montague et al. (2014) and Khawaji et al. (2015). Furthermore, there have been several recent studies examining EEG for detecting the neural markers of human trust, such as Wang et al. (2018), Blais et al. (2019), Jung et al. (2019), and Oh et al. (2020). Electrocardiography (ECG) is far less researched than EEG and GSR. We are not aware of any studies that examined using ECG to capture human trust in the context of intelligent machines. That said, some studies have shown a relationship between trust and heart rate or other ECG features in contexts not related to HMI, such as the work of Leichtenstern et al. (2011) and Ibáñez et al. (2016). There are also a few studies that examined the mapping between eye-tracking measures and trust, but in applications different from the one of interest here.

In addition to the physiological signals, there is a prospect of using facial expressions to detect trust and other emotions of the human in such systems. Deep neural networks trained on video footage, which is more widely available and easier to collect than certain physiological modalities, can classify human emotions. Detecting human emotional states from facial expressions is a broad area of research, and it is well-established to be highly effective (Ko, 2018; Naga et al., 2021).

As shown above, many inputs from humans have shown potential in detecting human trust. Some studies have also investigated the validity of multimodal fusion techniques in detecting human trust and emotions, such as Zheng et al. (2014), Huang et al. (2017), and Zhao et al. (2019). That said, multimodal fusion is still in the early stages of development. Still, it holds the potential for creating robust classification schemes, leaving room for further research on integrating different physiological modalities to quantify the trust between humans and AI.
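To make the idea of a fused feedback signal concrete, the sketch below combines per-modality trust estimates with a reliability-weighted average (a simple form of late fusion) and tolerates missing modalities. It assumes that separate classifiers already output a trust probability for each modality; the modality names, weights, and values are hypothetical, and the cited studies may use quite different fusion schemes.

```python
def fuse_trust(estimates, reliabilities):
    """Reliability-weighted average of per-modality trust probabilities.

    `estimates` maps a modality name to a probability in [0, 1], or to None
    when that signal is unavailable. Returns None if nothing usable remains.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for modality, probability in estimates.items():
        if probability is None:
            continue  # e.g., the face was out of the camera frame
        weight = reliabilities.get(modality, 0.0)
        weighted_sum += weight * probability
        weight_total += weight
    if weight_total == 0.0:
        return None
    return weighted_sum / weight_total


# Hypothetical classifier outputs for one AI decision.
estimates = {"eeg": 0.7, "gsr": 0.6, "face": None}
# Hypothetical reliabilities, e.g., derived from each classifier's validation accuracy.
reliabilities = {"eeg": 0.5, "gsr": 0.3, "face": 0.2}

fused = fuse_trust(estimates, reliabilities)
human_seems_to_trust = fused is not None and fused >= 0.5
print(human_seems_to_trust)  # True
```

A fused value of this kind could serve as the feedback signal that tells the AI whether its latest decision matched the human's expectations.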

3.3. Supporting evidence

By examining the relevant literature, one can investigate the soundness of the proposed approach. Here, two fundamental questions are discussed.

3.3.1. Can familiarity/similarity help establish trust between humans and AI?

Explainability is crucial for building trust with machines, but it is not always feasible. To address this, IBS is introduced as a tool to create similarity. Our aim is to replace the need for explainability with similarity, supported by the work of Brennen (2020), where users were found to build trust in AI through familiarity rather than detailed explanations of its working methodology. Furthermore, establishing trust based on behavioral imitation is a well-known human psychological trait. In the work of Over et al. (2013), children were shown to place greater trust in adults who had imitated their choices. Other studies have also examined the importance of shared traits in establishing trust. For instance, in the study of Clerke and Heerey (2021), higher levels of behavioral mimicry and similarity were shown to lead to greater trust between individuals, which further supports the validity of exploring the IBS method for establishing trust in human-AI systems.

3.3.2. Is it possible to have the same/similar output despite making different early decisions?

In simple applications with a single goal, such as a chess game, it is indeed possible to obtain the same or a similar outcome despite making different early decisions (Sutton and Barto, 1998); different openings can lead to the same outcome of winning. However, when a network of sub-goals is introduced, such as aiming to finish the game in the shortest time, the process becomes more complex. While concrete empirical evidence is necessary to provide a definitive answer, it is reasonable to expect similar outputs regardless of the early decisions made. This is because the number of possible paths (decision sequences) is highly likely to exceed the number of potential outcomes. As a result, some of these paths will ultimately lead to very similar outputs, providing many options for the machine to learn from while establishing a relationship with the human partner.
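The counting argument can be checked on a toy problem. In the sketch below, an agent makes four binary decisions ("L" or "R"), and the outcome is defined, purely for illustration, as its final position on a line. The task and the outcome definition are assumptions chosen for simplicity; the example demonstrates the pigeonhole reasoning only and is not evidence about real missions.

```python
from collections import defaultdict
from itertools import product

STEPS = 4  # number of sequential binary decisions in the toy task

# Group every possible decision sequence by the outcome it produces.
paths_by_outcome = defaultdict(list)
for path in product("LR", repeat=STEPS):
    outcome = path.count("R") - path.count("L")  # final position on the line
    paths_by_outcome[outcome].append("".join(path))

n_paths = sum(len(paths) for paths in paths_by_outcome.values())
n_outcomes = len(paths_by_outcome)
print(n_paths, n_outcomes)  # 16 5

# More paths than outcomes means some outcomes are reachable in several ways,
# including by paths that differ in their very first decision.
first_moves = {path[0] for path in paths_by_outcome[0]}
print(sorted(paths_by_outcome[0]))
# ['LLRR', 'LRLR', 'LRRL', 'RLLR', 'RLRL', 'RRLL']
print(sorted(first_moves))  # ['L', 'R']
```

Here 16 paths map onto only 5 outcomes, and six different paths reach outcome 0, three starting with each first move, so an agent could choose its opening decision to match the human's preference without changing the result.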

4. Conclusions

In this brief conceptual study, we have introduced the idea of Intentional Behavioral Synchrony (IBS) as an essential step toward building trust between humans and AI machines in joint systems. We have highlighted the significance and positive impact of synchrony between humans and AI elements while working together as a team, especially in highly dynamic and complex tasks. Although our study does not provide empirical evidence, it offers valuable insights for examining the impact of IBS in real systems and encourages further research to explore factors that have been overlooked or underestimated in setting up more effective human-AI teams. Future studies could investigate different mechanisms for measuring human reactions to AI in such dynamic applications. The work also paves the way for innovative ways of achieving synchrony among teams that include non-human members. Such an endeavor requires the collaborative effort of a multidisciplinary team of engineers, data scientists, and psychologists and must be executed in a context-dependent way.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MN conceived and authored the entire paper under the direction of SB. All authors contributed to the article and approved the submitted version.

Funding

This article is part of an ongoing collaborative effort between Kennesaw State University and U.S. DEVCOM Army Research Laboratory (ARL) Cognition and Neuro-ergonomics Collaborative Technology Alliance (Contract W911NF-10-2-0022; more information available at: https://www.arl.army.mil/cast/CaNCTA). This collaboration was supported in part by Kennesaw State University with funding from ARL's Human Autonomy Teaming Essential Research Program (Contract #W911NF-2020205).

Conflict of interest

SB reports financial support was provided by the US Army Research Laboratory.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akash, K., Hu, W. L., Jain, N., and Reid, T. (2018). A classification model for sensing human trust in machines using EEG and GSR. ACM Trans. Int. Syst. (TiiS) 8, 1–20. doi: 10.1145/3132743

Blais, C., Ellis, D. M., Wingert, K. M., Cohen, A. B., and Brewer, G. A. (2019). Alpha suppression over parietal electrode sites predicts decisions to trust. Soc. Neurosci. 14, 226–235. doi: 10.1080/17470919.2018.1433717

Boudreau, C., McCubbins, M. D., and Coulson, S. (2009). Knowing when to trust others: an ERP study of decision making after receiving information from unknown people. Soc. Cognit. Aff. Neurosci. 4, 23–34. doi: 10.1093/scan/nsn034

Brennen, A. (2020). “What Do People Really Want When They Say They Want ‘Explainable AI?’ We Asked 60 Stakeholders,” in Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, 1–7. Honolulu, HI: ACM.

Calhoun, C. S., Bobko, P., Gallimore, J. J., and Lyons, J. B. (2019). Linking precursors of interpersonal trust to human-automation trust: an expanded typology and exploratory experiment. J. Trust Res. 9, 28–46. doi: 10.1080/21515581.2019.1579730

Chen, J. Y., Barnes, M. J., Selkowitz, A. R., Stowers, K., Lakhmani, S. G., Kasdaglis, N., et al. (2016). “Human-autonomy teaming and agent transparency” in Companion Publication of the 21st International Conference on Intelligent User Interfaces, 28–31. Sonoma, CA: ACM.

Clerke, A. S., and Heerey, E. A. (2021). The influence of similarity and mimicry on decisions to trust. Collabra: Psychology. 7, 23441. doi: 10.1525/collabra.23441

Dong, S. Y., Kim, B. K., Lee, K., and Lee, S. Y. (2015). A preliminary study on human trust measurements by EEG for human-machine interactions. Int. Hum. Inter. 8, 265–268. doi: 10.1145/2814940.2814993

Gabbay, D. M., Hogger, C. J., and Robinson, J. A. (1998). Handbook of Logic in Artificial Intelligence and Logic Programming: Volume 5: Logic Programming. London: Clarendon Press.

Haenlein, M., and Kaplan, A. (2019). A brief history of artificial intelligence: on the past, present, and future of artificial intelligence. California Manage. Rev. 61, 5–14. doi: 10.1177/0008125619864925

Hancock, P. A., Billings, D. R., Oleson, K. E., Chen, J. Y., Visser, D., Parasuraman, E., et al. (2011). A Meta-Analysis of Factors Influencing the Development of Human-Robot Trust. doi: 10.1177/0018720811417254. Available online at: https://apps.dtic.mil/sti/pdfs/ADA556734.pdf

Hoff, K. A., and Bashir, M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 57, 407–434. doi: 10.1177/0018720814547570

Huang, Y., Yang, J., Liao, P., and Pan, J. (2017). Fusion of facial expressions and EEG for multimodal emotion recognition. Comput. Int. Neurosci. 2017, 1–8. doi: 10.1155/2017/2107451

Ibáñez, M. I., Sabater-Grande, G., Barreda-Tarrazona, I., Mezquita, L., López-Ovejero, S., Villa, H., et al. (2016). Take the money and run: Psychopathic behavior in the trust game. Front. Psychol. 7, 1866. doi: 10.3389/fpsyg.2016.01866

Jung, E. S., Dong, S. Y., and Lee, S. Y. (2019). Neural correlates of variations in human trust in human-like machines during non-reciprocal interactions. Sci. Rep. 9, 1–10. doi: 10.1038/s41598-019-46098-8

Khawaji, A., Zhou, J., Chen, F., and Marcus, N. (2015). Using galvanic skin response (GSR) to measure trust and cognitive load in the text-chat environment. Ann. Ext. Abstr. Hum. Comput. Syst. 15, 1989–1994. doi: 10.1145/2702613.2732766

Ko, B. C. (2018). A brief review of facial emotion recognition based on visual information. Sensors. 18, 401. doi: 10.3390/s18020401

Lee, J. D., and See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Hum. Factors 46, 50–80. doi: 10.1518/hfes.46.1.50.30392

Leichtenstern, K., Bee, N., André, E., Berkmüller, U., and Wagner, J. (2011). Physiological measurement of trust-related behavior in trust-neutral and trust-critical situations. IFIP Advances in Information and Communication Technology. Berlin, Heidelberg: Springer Berlin Heidelberg.

Long, Y., Jiang, X., and Zhou, X. (2012). To believe or not to believe: trust choice modulates brain responses in outcome evaluation. Neuroscience. 200, 50–58. doi: 10.1016/j.neuroscience.2011.10.035

Love, H. B., Cross, J. E., Fosdick, B., Crooks, K. R., VandeWoude, S., and Fisher, E. R. (2021). Interpersonal relationships drive successful team science: an exemplary case-based study. Hum. Soc. Sci. Commun. 8, 1–10. doi: 10.1057/s41599-021-00789-8

Mazzurega, M., Pavani, F., Paladino, M. P., and Schubert, T. W. (2011). Self-other bodily merging in the context of synchronous but arbitrary-related multisensory inputs. Exp. Brain Res. 213, 213–221. doi: 10.1007/s00221-011-2744-6

Montague, E., Xu, J., and Chiou, E. (2014). Shared experiences of technology and trust: an experimental study of physiological compliance between active and passive users in technology-mediated collaborative encounters. IEEE Trans. Hum. Mac. Syst. 44, 614–624. doi: 10.1109/THMS.2014.2325859

Muir, B. M. (1987). Trust between humans and machines, and the design of decision aids. Int. J. Man-Machine Stu. 27, 527–539. doi: 10.1016/S0020-7373(87)80013-5

Naga, P., Marri, S. D., and Borreo, R. (2021). Facial emotion recognition methods, datasets and technologies: a literature survey. Materials Today Proc. 80, 2824–2828. doi: 10.1016/j.matpr.2021.07.046

National Intelligence Council (2021). The Future of the Battlefield. NIC-2021-02493. Available online at: https://www.dni.gov/files/images/globalTrends/GT2040/NIC-2021-02493–Future-of-the-Battlefield–Unsourced−14May21.pdf (accessed May 14, 2021).

National Science and Technology Council United States Department of Transportation (2020). Ensuring American Leadership in Automated Vehicle Technologies. AV 4.0. Available online at: https://www.transportation.gov/sites/dot.gov/files/2020-02/EnsuringAmericanLeadershipAVTech4.pdf (accessed April 4, 2020).

Oh, S., Seong, Y., Yi, S., and Park, S. (2020). Neurological measurement of human trust in automation using electroencephalogram. Int. J. Fuzzy Logic Int. Syst. 20, 261–271. doi: 10.5391/IJFIS.2020.20.4.261

Over, H., Carpenter, M., Spears, R., and Gattis, M. (2013). Children selectively trust individuals who have imitated them. Social Dev. 22, 215–224. doi: 10.1111/sode.12020

Schmidt, R. C., Carello, C., and Turvey, M. T. (1990). Phase transitions and critical fluctuations in the visual coordination of rhythmic movements between people. J. Exp. Psychol. Hum. Percep. Perf. 16, 227. doi: 10.1037/0096-1523.16.2.227

Sutton, R. S., and Barto, A. G. (1998). Introduction to Reinforcement Learning. Cambridge, MA: MIT Press, 342.

Tickle-Degnen, L., and Rosenthal, R., (eds.). (1987). “Group Rapport and Nonverbal Behavior”, in Group Processes and Intergroup Relations (Sage Publications), 113–36.

Wang, M., Hussein, A., Rojas, R. F., Shafi, K., and Abbass, H. A. (2018). “EEG-based neural correlates of trust in human-autonomy interaction,” in 2018 IEEE Symposium Series on Computational Intelligence (SSCI), 350–57. Bangalore: IEEE.

Zhao, L. M., Li, R., Zheng, W. L., and Lu, B. L. (2019). “Classification of five emotions from EEG and eye movement signals: complementary representation properties,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), 611–614. San Francisco, CA, USA: IEEE.

Keywords: human-AI teaming, Intentional Behavioral Synchrony (IBS), human-machine trust, Human-Machine Interaction (HMI), multimodal fusion

Citation: Naser MYM and Bhattacharya S (2023) Empowering human-AI teams via Intentional Behavioral Synchrony. Front. Neuroergon. 4:1181827. doi: 10.3389/fnrgo.2023.1181827

Received: 07 March 2023; Accepted: 06 June 2023;

Published: 20 June 2023.

Edited by: Gememg Zhang, Mayo Clinic, United States

Reviewed by: Biao Cai, Tulane University, United States

Copyright © 2023 Naser and Bhattacharya. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sylvia Bhattacharya, SBhatta6@kennesaw.edu

Mohammad Y. M. Naser and Sylvia Bhattacharya2*