
ORIGINAL RESEARCH article

Front. Hum. Neurosci., 26 April 2023

Sec. Brain-Computer Interfaces

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1126938

This article is part of the Research Topic Insights in Brain-Computer Interfaces: 2022

Tinnitus is a neuropathological phenomenon in which an external sound is perceived even though it does not actually exist. Existing diagnostic methods for tinnitus rely on rather subjective and complicated medical examination procedures. The present study aimed to diagnose tinnitus using deep learning analysis of electroencephalographic (EEG) signals recorded while patients performed auditory cognitive tasks. We found that, during an active oddball task, patients with tinnitus could be identified with an area under the curve of 0.886 by a deep learning model (EEGNet) trained on EEG signals. Furthermore, an analysis of the EEGNet convolutional kernel feature maps for broadband (0.5–50 Hz) EEG signals revealed that alpha activity might play a crucial role in identifying patients with tinnitus. A subsequent time-frequency analysis of the EEG signals indicated that the tinnitus group had significantly reduced pre-stimulus alpha activity compared with the healthy group. These differences were observed in both the active and passive oddball tasks. Only the target stimuli in the active oddball task yielded significantly higher evoked theta activity in the healthy group than in the tinnitus group. Our findings suggest that task-relevant EEG features can be considered a neural signature of tinnitus symptoms and support the feasibility of an EEG-based deep-learning approach for diagnosing tinnitus.

Tinnitus is the illusory perception of sound in the absence of an external sound (Jastreboff and Sasaki, 1994; Baguley et al., 2013; Mohamad et al., 2016). People with tinnitus experience impaired cognitive efficiency and difficulties in mental concentration (Hallam et al., 2004). Based on functional imaging studies, it is generally accepted that tinnitus is associated with maladaptive neuroplasticity resulting from impairment in the auditory system (Schaette and McAlpine, 2011; Faber et al., 2012; Roberts et al., 2013; Kaya and Elhilali, 2014; Hong et al., 2016; Ahn et al., 2017). Most symptoms of tinnitus can be attributed to reorganization and hyperactivity in the central auditory nervous system (Muhlnickel et al., 1998; Kaltenbach and Afman, 2000; Salvi et al., 2000; Eggermont and Roberts, 2004). Tinnitus perception can be subject to top-down modulation of auditory processing (Mitchell et al., 2005) or to attentional bottom-up processes that are influenced by stimulus salience. We previously reported neurophysiological and neurodynamic evidence of differential top-down impairment along with deficits in bottom-up processing in patients with tinnitus (Hong et al., 2016). In addition, we observed that fronto-central cross-frequency coupling was absent during the resting state in patients with tinnitus (Ahn et al., 2017), suggesting that maladaptive neuroplasticity or abnormal reorganization occurs in their auditory default mode network.

Because tinnitus has many possible causes, such as abnormalities in top-down or bottom-up processing, and heterogeneous presentations, such as those associated with hearing loss or noise trauma, there is currently no universally effective clinical method for its diagnosis (Hall et al., 2016; Liu et al., 2022). At present, the diagnostic battery for tinnitus relies mainly on subjective assessments and self-reports, such as case history, audiometric tests, detailed tinnitus inquiry, tinnitus matching, and neuropsychological assessment (Basile et al., 2013; Tang et al., 2019).

Neuroimaging techniques are widely applied to monitor neural activity and diagnose brain disorders. Electroencephalography (EEG) has emerged as one of the most practical of these techniques: it provides insight into temporal neurodynamics with excellent temporal resolution (milliseconds or better), and it is portable and inexpensive to set up compared with other neuroimaging techniques, such as magnetoencephalography (MEG) or functional magnetic resonance imaging (fMRI) (Min et al., 2010). Many researchers have proposed that assessing abnormal neural activity with EEG may aid in the diagnosis of tinnitus, since this condition is often associated with changes in the brain. Specifically, subjective tinnitus is hypothesized to result from abnormal neural synchrony and spontaneous firing rates in the auditory system; therefore, an EEG-based diagnostic approach may offer an objective way to evaluate or predict tinnitus symptoms (Ibarra-Zarate and Alonso-Valerdi, 2020).

Here, to investigate whether patients with tinnitus can be identified using top-down or bottom-up EEG features, we compared an active oddball paradigm (a top-down directed task) with a passive oddball paradigm (a bottom-up directed task). Task-relevant modulations may be reflected in event-related oscillations and provide essential electrophysiological features for identifying patients with tinnitus. Thus, in the present study, we extracted top-down and bottom-up EEG cognitive signals and used them as discriminative features to identify patients with tinnitus with a novel deep-learning-based diagnostic tool.

Recent studies have used machine learning to reduce reliance on experts and mitigate the influence of personal factors in tinnitus diagnosis (Li P.-Z. et al., 2016; Wang et al., 2017; Sun et al., 2019). However, most machine-learning EEG studies of tinnitus used resting-state EEG and focused on model performance or methodology (Mohagheghian et al., 2019; Sun et al., 2019; Allgaier et al., 2021). There is therefore a need for further research on the diagnostic efficacy of EEG in task-based as well as resting-state studies (Mohagheghian et al., 2019; Allgaier et al., 2021). We hypothesized that brain activity during auditory cognitive tasks would differ between healthy individuals and patients with tinnitus, reflecting distinct brain processing mechanisms. To test this hypothesis, we used time-frequency analysis of EEG signals recorded during task performance and evaluated neurophysiological differences in EEG spectral activity between the healthy and tinnitus groups. Importantly, we discriminated patients with tinnitus from healthy individuals using a deep-learning decoding model and investigated whether the features learned by the model were consistent with task-relevant neurophysiological correlates.

Eleven patients with tinnitus (six women; mean age 32.1 years) and 11 age-matched healthy volunteers (five women; mean age 27.2 years) participated in the experiment. All patients had definite signs of chronic tinnitus lasting longer than 3 months but less than a year, and otherwise had normal hearing. Normal hearing was assessed with the following criteria: (i) the audiometric threshold was within 25 dB of the pure tone average at octave frequencies within 250–8,000 Hz; (ii) transient-evoked otoacoustic emissions (TEOAEs) were recorded in the external ear canal after stimulation with at least 5 dB signal-to-noise ratio (SNR), and distortion product otoacoustic emissions (DPOAEs) were recorded with at least 3 dB SNR; (iii) on click-evoked auditory brainstem responses (ABR) at 90 dB normalized hearing level, peak latencies were less than 2.4 ms for waves I–III and less than 6.2 ms for wave V; and (iv) the tympanic membrane had a normal appearance on otoscope examination. Note that normal wave I–III latencies typically suggest intact peripheral auditory nerves (Moller et al., 1981; Moller and Jannetta, 1982), and normal otoacoustic emissions typically suggest normally functioning cochlear hair cells (Kemp, 1978; Mills and Rubel, 1994). However, deafferentation may have been present in some of the tinnitus patients without being detectable by conventional tests (TEOAEs, DPOAEs, ABR, and otoscope examination).

In addition, we introduced the following exclusion criteria to match patients and healthy volunteers in cognitive abilities: (i) age older than 50 years; (ii) present or past diagnosis of vertigo, Meniere’s disease, noise exposure, hyperacusis, or psychiatric problems; (iii) exposure to ototoxic drugs; (iv) complex cases of tinnitus, for example, a failure of tinnitus pitch matching.

To assess tinnitus severity, all patients completed a tinnitus questionnaire with a 0–10 scale (0: no annoyance; 10: severe annoyance) and a Korean translation of the Tinnitus Handicap Inventory of the American Tinnitus Association (Newman et al., 1996). The healthy volunteers had normal hearing and no signs of tinnitus.

Note that the data in this study were previously collected and published by our group. Extended details on the participants, data acquisition, and audiometric and tinnitus tests are provided in our previous study (Hong et al., 2016).

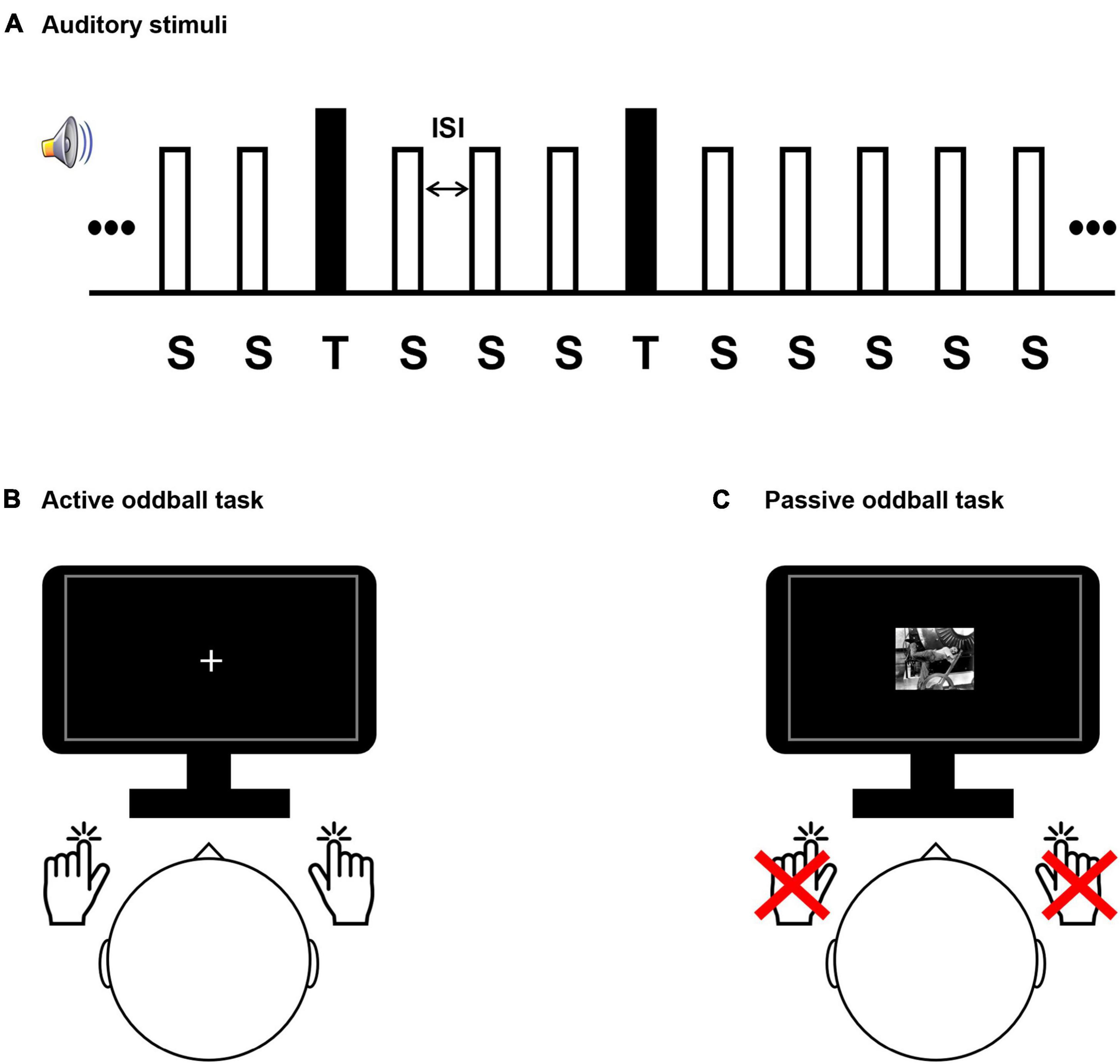

Participants performed auditory active and passive oddball tasks during EEG acquisition (Figure 1). During the active oddball task, two auditory stimuli (standard and target) were each presented for 200 ms in random order, with standard stimuli occurring more often than target stimuli at a ratio of 8:2. Participants were instructed to discriminate between the frequent standard stimuli and the infrequent target stimuli by pressing a button. We employed an active oddball task because the P300 component of the event-related potential (ERP) reflects fundamental cognitive processes (Donchin and Coles, 1988; Johnson, 1988; Picton, 1992; Polich, 1993, 2007) and is strongly elicited by this task (Katayama and Polich, 1999). Specifically, the P300 component is known to be involved in contextual updating (Polich, 2003). In the active oddball task, the P300 elicited by the target stimulus is a large positive potential that is strongest over the parietal electrodes and occurs at about 300 ms post-stimulus in healthy young adults. Because the active oddball task required active responses and therefore engaged cognitive decision-making processes, its results were interpreted as mostly reflecting auditory top-down effects. In contrast, the same stream of auditory stimuli used in the active oddball task was also heard passively by the participants, and the responses to it were interpreted as principally reflecting bottom-up processing, because auditory bottom-up attention is a sensory-driven selection mechanism that shifts perception toward a salient auditory subset within an auditory scene (Kaya and Elhilali, 2014). The passive oddball task elicits the mismatch negativity (MMN) component of the ERP. Because it is observed even when subjects do not perform a task on the stimulus stream, the MMN is considered a relatively preattentive and automatic response to an auditory stimulus that deviates from the preceding standard stimuli (Näätänen and Kreegipuu, 2012).
Deviant stimuli (physically identical to the target stimuli in the active oddball task) evoke a larger negative potential than standard stimuli, with a fronto-central scalp maximum around 200 ms post-stimulus; this difference is often isolated from the rest of the ERP as a deviant-minus-standard difference wave, which is called the MMN.

Figure 1. Experimental design. (A) The auditory stimulus sequence comprised a series of frequent standard “S” stimuli (80% occurrence probability; 500 Hz tones) and rare target “T” stimuli (20% occurrence probability; 8 kHz tones for healthy subjects and individual tinnitus-pitch-matched frequencies for patients). Stimuli were presented for 200 ms with a variable interstimulus interval (1,300–1,700 ms). Participants performed a sound discrimination task in the active oddball task (B) and passively watched a silent movie in the passive oddball task (C).

In the active oddball task, participants pressed a button with one hand when they detected a standard stimulus and another button with the opposite hand when they detected an infrequent target stimulus (Duncan-Johnson and Donchin, 1977; Polich, 1989; Verleger and Berg, 1991; Figure 1). During this task, they were instructed to fixate on a cross at the center of a screen to minimize possible distracting effects of shifts in visual attention. The passive oddball task presented the same auditory stimuli as the active oddball task, but participants remained still and did not press any button. In addition, to divert their attention from the auditory stimuli, participants in the passive oddball task watched a black-and-white silent movie (Charlie Chaplin’s “Modern Times”).

The distance between the participant and the monitor was 80 cm, and visual stimuli were displayed within a visual angle of 6.5° to avoid image formation in the blind spot. For the tinnitus group, the target-stimulus frequency was each patient’s individual tinnitus-pitch-matched frequency; for the healthy group, it was 8 kHz, the most common individual tinnitus frequency in the tinnitus group. It has been reported that tinnitus patients respond sensitively to tinnitus sound stimuli (Cuny et al., 2004; Li Z. et al., 2016; Milner et al., 2020). The standard-stimulus frequency for both groups was 500 Hz. The experimental paradigm consisted of 320 standard stimuli (80% occurrence probability) and 80 target stimuli (20% occurrence probability), presented in random order. To minimize temporal expectancies, the interstimulus intervals (ISIs) varied randomly between 1,300 and 1,700 ms, centered at 1,500 ms (Min et al., 2008). All auditory stimuli were generated with Adobe Audition software (version 3.0, Adobe Systems Incorporated, San Jose, CA, USA) and presented binaurally through insert earphones (EARTONE 3A®, 3M Company, Indianapolis, IN, USA). All participants performed the task in the same environment, and the acoustic intensity of all stimuli was set to 50 dB SPL (sound pressure level) using a sound level meter (Type 2250, Brüel & Kjær Sound and Vibration Measurement, Denmark).
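The stimulus schedule described above can be sketched as follows. This is a minimal NumPy illustration, not the authors' stimulus code (the tones themselves were generated in Adobe Audition); the uniform ISI distribution and the helper names `make_oddball_sequence` and `target_frequency` are assumptions for illustration, since the text gives only the ISI range and its center.

```python
import numpy as np

STANDARD_HZ = 500           # standard tone for both groups
HEALTHY_TARGET_HZ = 8000    # target tone for healthy controls


def make_oddball_sequence(n_standard=320, n_target=80,
                          isi_range_ms=(1300, 1700), seed=0):
    """Return shuffled stimulus labels ('S'/'T') and one jittered ISI (ms) per stimulus.

    ISIs are drawn uniformly from 1,300-1,700 ms (mean 1,500 ms); the uniform
    distribution is an assumption, the text only gives the range and centre.
    """
    rng = np.random.default_rng(seed)
    labels = np.array(["S"] * n_standard + ["T"] * n_target)
    rng.shuffle(labels)  # random presentation order, 80:20 overall
    isis = rng.uniform(isi_range_ms[0], isi_range_ms[1], size=labels.size)
    return labels, isis


def target_frequency(is_patient, matched_hz=None):
    """Patients hear their own pitch-matched frequency; controls hear 8 kHz."""
    if is_patient:
        if matched_hz is None:
            raise ValueError("patients need a pitch-matched frequency")
        return matched_hz
    return HEALTHY_TARGET_HZ


labels, isis = make_oddball_sequence()
print((labels == "T").sum(), "targets out of", labels.size)
```

Shuffling a fixed pool of 320 standards and 80 targets, rather than drawing each stimulus with probability 0.2, guarantees the exact 80:20 count per session.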

EEG signals were recorded using a BrainAmp DC amplifier (Brain Products, Germany) and a 32-electrode actiCAP with Ag/AgCl electrodes placed according to the 10–10 system. The ground electrode was AFz, and the reference electrode was placed on the tip of the nose. Electrode impedances were kept below 5 kΩ during setup. EEG data were collected at a sampling frequency of 1 kHz with an analog band-pass filter of 0.5–70 Hz. An electrooculography (EOG) electrode was placed below the left eye to track eye-movement artifacts; vertical and horizontal electrooculographic signals were then estimated from the Fp1–EOG and F7–F8 electrode pairs, respectively. EOG artifacts were removed using an independent component analysis algorithm (Makeig et al., 1997; Winkler et al., 2011). The Brainstorm software (Tadel et al., 2011) was used to extract peristimulus data from −500 ms (baseline) to +1,000 ms relative to stimulus onset. Each trial was baseline-corrected by subtracting each channel’s mean over −500 to 0 ms. Trials with a maximum amplitude exceeding ±100 μV or a maximal voltage gradient exceeding 50 μV/ms were excluded from further analyses.
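A minimal NumPy sketch of the epoching, baseline correction, and rejection criteria described above. It assumes continuous data already cleaned of EOG artifacts (the ICA step is not shown) and stored in μV as a channels × samples array; the function name `epoch_and_clean` is hypothetical, and the study itself performed this step in Brainstorm.

```python
import numpy as np

FS = 1000  # Hz, sampling rate given in the text


def epoch_and_clean(eeg, onsets, fs=FS, tmin=-0.5, tmax=1.0,
                    max_abs_uv=100.0, max_grad_uv_per_ms=50.0):
    """Cut epochs around stimulus onsets, baseline-correct, and reject artifacts.

    eeg    : (n_channels, n_samples) continuous EEG in microvolts
    onsets : stimulus-onset sample indices (each must leave room for the full epoch)
    Returns (kept_epochs, keep_mask); kept_epochs has shape (n_kept, n_channels, n_times).
    """
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = np.stack([eeg[:, o + start:o + stop] for o in onsets])

    # Baseline correction: subtract each channel's mean over -500..0 ms
    n_base = -start
    epochs = epochs - epochs[:, :, :n_base].mean(axis=2, keepdims=True)

    # Amplitude criterion: any sample outside +/-100 microvolts
    too_large = np.abs(epochs).max(axis=(1, 2)) > max_abs_uv
    # Gradient criterion: sample-to-sample change converted to microvolts per ms
    grad = np.abs(np.diff(epochs, axis=2)) * (fs / 1000.0)
    too_steep = grad.max(axis=(1, 2)) > max_grad_uv_per_ms

    keep = ~(too_large | too_steep)
    return epochs[keep], keep


# Toy example: two epochs, the second containing a 150 µV spike at stimulus onset
eeg = np.zeros((2, 5000))
eeg[0, 3000] = 150.0
kept, keep = epoch_and_clean(eeg, onsets=[1000, 3000])
print(kept.shape, keep)
```

At 1 kHz one sample equals 1 ms, so the gradient conversion factor is 1; it is kept explicit so that the threshold remains in μV/ms if the sampling rate changes.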

Two dominant ERP components were analyzed: the P300 and the MMN. Electrodes were chosen according to the regions of interest where each component was most prominent: four centro-parietal electrodes (Cz, CP1, CP2, and Pz) for the P300 (maximum peak at 200–400 ms post-stimulus) and four fronto-central electrodes (Fz, FC1, FC2, and Cz) for the MMN (minimum peak at 100–300 ms post-stimulus). All time windows were based on the grand averages while taking individual variations into account. Baseline correction used the −500 to 0 ms pre-stimulus interval. The amplitudes and latencies of each peak were compared between the healthy and tinnitus groups. For display of the ERP components, an offline 0.5–30 Hz filter was applied.
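The peak measures can be sketched as below. This assumes trial-averaged ERPs for the region-of-interest electrodes and that the channel average is taken before peak picking, which the text does not specify; `peak_in_window`, `p300`, and `mmn` are hypothetical helpers, and the 0.5–30 Hz display filter is omitted.

```python
import numpy as np


def peak_in_window(erp, times_ms, window_ms, polarity):
    """Peak amplitude (µV) and latency (ms) of the ROI-averaged ERP inside a window.

    erp      : (n_roi_channels, n_times) trial-averaged ERP
    times_ms : (n_times,) time axis relative to stimulus onset
    polarity : "pos" for a maximum (P300), "neg" for a minimum (MMN)
    """
    roi = erp.mean(axis=0)  # average over ROI electrodes (assumed order of operations)
    mask = (times_ms >= window_ms[0]) & (times_ms <= window_ms[1])
    seg, t = roi[mask], times_ms[mask]
    idx = np.argmax(seg) if polarity == "pos" else np.argmin(seg)
    return seg[idx], t[idx]


def p300(target_erp, times_ms):
    """P300: maximum at 200-400 ms; rows are Cz, CP1, CP2, Pz."""
    return peak_in_window(target_erp, times_ms, (200, 400), "pos")


def mmn(deviant_erp, standard_erp, times_ms):
    """MMN: minimum of the deviant-minus-standard wave at 100-300 ms; rows are Fz, FC1, FC2, Cz."""
    return peak_in_window(deviant_erp - standard_erp, times_ms, (100, 300), "neg")


# Toy ERPs on a 1 kHz time axis from -500 to 999 ms
times = np.arange(-500, 1000)
target = np.zeros((4, times.size))
target[:, times == 310] = 10.0
print(p300(target, times))
```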

We used the Morlet wavelet transform to compute time-frequency responses (Herrmann et al., 2005). For the estimation of total activity (which includes the combined contribution of both phase-locked and non-phase-locked responses to the stimulus), the Morlet wavelet transform was applied to individual trials, and the resulting power of individual trials was averaged to obtain total activity. For the estimation of evoked activity (response phase-locked to the stimulus), the individual trials were first averaged, and the Morlet wavelet transform was applied to the averaged (evoked) trial. Since alpha-band oscillatory activity is the most pronounced rhythm in the human brain during relaxed (mentally inactive) wakefulness, we studied whether alpha activity differed between the tinnitus and healthy groups, which could suggest differences in preparatory mental states between the two groups. Furthermore, we also studied EEG theta oscillations, which have been linked to top-down regulation of memory processes (Sauseng et al., 2008). We did not investigate other frequency bands because they did not exhibit observable differences across the experimental conditions. Since the dominant frequency in each frequency band varies per individual, we determined subject-specific frequencies for each band but confined them to be within 8 to 13 Hz for the alpha band and 4 to 8 Hz for the theta band.
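The distinction between total and evoked activity comes down to whether trials are averaged after or before the wavelet transform. A minimal sketch for one channel, assuming a unit-energy complex Morlet wavelet with 7 cycles (the cycle count is an assumption; the text does not state it) and SciPy's `fftconvolve`; `morlet_power` and `total_and_evoked` are hypothetical names.

```python
import numpy as np
from scipy.signal import fftconvolve


def morlet_power(x, fs, freqs, n_cycles=7):
    """Time-resolved power of a 1-D signal at each frequency, shape (n_freqs, n_times).

    The wavelet's spectral width is sigma_f = f / n_cycles, i.e. its temporal
    SD is n_cycles / (2*pi*f); n_cycles=7 is an assumed value.
    """
    power = np.empty((len(freqs), x.size))
    for i, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)                    # temporal SD (s)
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1.0 / fs)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t ** 2 / (2 * sigma_t ** 2))
        wavelet /= np.sqrt(np.sum(np.abs(wavelet) ** 2))      # unit energy
        # mode="same" returns an output as long as x, centred on the full convolution
        power[i] = np.abs(fftconvolve(x, wavelet, mode="same")) ** 2
    return power


def total_and_evoked(trials, fs, freqs, n_cycles=7):
    """trials: (n_trials, n_times) for one channel.

    total  : transform each trial, then average the power
             (phase-locked + non-phase-locked activity)
    evoked : average the trials first, then transform
             (phase-locked activity only)
    """
    total = np.mean([morlet_power(tr, fs, freqs, n_cycles) for tr in trials], axis=0)
    evoked = morlet_power(trials.mean(axis=0), fs, freqs, n_cycles)
    return total, evoked


# 50 toy trials of a 10 Hz oscillation with random phase on each trial
fs = 1000
t = np.arange(1500) / fs
rng = np.random.default_rng(1)
phases = rng.uniform(0, 2 * np.pi, size=50)
random_phase = np.sin(2 * np.pi * 10 * t + phases[:, None])
total, evoked = total_and_evoked(random_phase, fs, freqs=[10.0])
```

For oscillations whose phase varies randomly across trials, averaging before the transform cancels most of the signal, so the evoked estimate is far smaller than the total estimate; for perfectly phase-locked trials the two coincide.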

To calculate the pre-stimulus total activity in the alpha band, we averaged signal power in a baseline window −400 to −100 ms prior to stimulus onset. To calculate the evoked theta activity, we measured the maximum theta power in the time window between 0 and 500 ms after stimulus onset. All time windows were selected based on their grand-averages. Baseline correction was performed on the evoked theta activity using the pre-stimulus interval −400 to −100 ms prior to stimulus onset. No baseline correction was applied to the total alpha activity since we were interested in studying effects related to the pre-stimulus (i.e., baseline) total alpha activity (Min and Herrmann, 2007). Based on the areas of the brain where the EEG oscillatory activity was most pronounced, three parietal electrodes (Pz, P3, and P4) were selected for spectral analysis. The averaged amplitudes across the selected electrodes were analyzed at their dominant peaks within the corresponding time window (Heinrich et al., 2014; Karamacoska et al., 2019).
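The two window measures can be sketched as follows, operating on time-frequency power already averaged over Pz, P3, and P4. The subject-specific frequency is taken here as the in-band frequency with the largest value, and the evoked-theta baseline is subtracted; both are assumptions, since the text does not state exactly how the individual frequency was chosen or whether baseline correction was subtractive or relative. The function names are hypothetical.

```python
import numpy as np


def prestim_alpha(total_power, freqs, times_ms, band=(8, 13), win=(-400, -100)):
    """Pre-stimulus total alpha power (no baseline correction).

    total_power : (n_freqs, n_times) total activity averaged over Pz, P3, P4
    Returns (power, subject_frequency). The subject-specific frequency is the
    8-13 Hz bin with the largest mean power in the window (an assumption).
    """
    fmask = (freqs >= band[0]) & (freqs <= band[1])
    tmask = (times_ms >= win[0]) & (times_ms <= win[1])
    band_power = total_power[fmask][:, tmask].mean(axis=1)
    k = int(np.argmax(band_power))
    return band_power[k], freqs[fmask][k]


def evoked_theta(evoked_power, freqs, times_ms, band=(4, 8),
                 base=(-400, -100), win=(0, 500)):
    """Peak baseline-corrected evoked theta power within 0-500 ms.

    The mean over -400..-100 ms is subtracted (subtractive correction is an
    assumption). Returns (peak_power, subject_frequency).
    """
    fmask = (freqs >= band[0]) & (freqs <= band[1])
    bmask = (times_ms >= base[0]) & (times_ms <= base[1])
    wmask = (times_ms >= win[0]) & (times_ms <= win[1])
    p = evoked_power[fmask]
    p = p - p[:, bmask].mean(axis=1, keepdims=True)
    peaks = p[:, wmask].max(axis=1)
    k = int(np.argmax(peaks))
    return peaks[k], freqs[fmask][k]


# Toy maps: 1-30 Hz in 1 Hz steps, -500..999 ms at 1 kHz
freqs = np.arange(1, 31, dtype=float)
times = np.arange(-500, 1000)
total_map = np.ones((freqs.size, times.size))
total_map[freqs == 10, :] = 5.0
print(prestim_alpha(total_map, freqs, times))
```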

Deep learning has been tremendously successful, in large part because it enables the automatic learning of discriminative features from data (LeCun et al., 1989, 2015; Boureau et al., 2010; Glorot et al., 2011). Recently, there has been growing interest in adapting convolutional neural networks (CNNs) for EEG signal processing (Schirrmeister et al., 2017; Acharya et al., 2018; Lotte et al., 2018; Roy et al., 2019). Deep-learning approaches typically need large amounts of data because of the vast number of parameters that have to be learned (LeCun et al., 2015). Therefore, CNNs do not at first appear suitable for datasets with relatively few EEG trials. However, a compact CNN called EEGNet has recently been proposed that performs well on relatively small EEG datasets (Lawhern et al., 2018). EEGNet is designed to have a small number of learnable parameters and thus reduces the need for additional techniques for dealing with limited data, such as data augmentation (Dinarès-Ferran et al., 2018; Haradal et al., 2018; Ramponi et al., 2018; Zhang et al., 2019; Freer and Yang, 2020). That is, it performs well without data augmentation, which makes the model simpler to implement (Lawhern et al., 2018). In addition, it has been shown that neurophysiologically interpretable features, rather than artifacts and noise, can be extracted from the EEGNet model (Lawhern et al., 2018). For these reasons, we selected the EEGNet architecture over other deep-learning models. Although the basic EEGNet may not yield the best performance (Zancanaro et al., 2021), we opted to use it because it is a well-established architecture suitable for general applications, and interpretations of its features would not be confounded by complex modifications (Borra et al., 2021; Zhu et al., 2021).

For training and evaluation of the EEGNet model to detect tinnitus patients, we used the EEG time series (30 electrodes) of both the healthy and tinnitus groups (Lawhern et al., 2018). The architecture and parameters of EEGNet are listed in Supplementary Figure 1 and Supplementary Table 1. To investigate which frequency band of the EEG signals contributed most to identifying tinnitus patients, the EEGNet model was trained separately on EEG signals from each frequency band as well as on broadband data. The EEG data were band-pass filtered into the following frequency bands: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), gamma (30–50 Hz), and broadband (0.5–50 Hz). In each stimulus-type and task-type condition (i.e., four decoding conditions: target stimuli in the active oddball task, standard stimuli in the active oddball task, target stimuli in the passive oddball task, and standard stimuli in the passive oddball task), the filtered single-trial EEG inputs were used for training and evaluating the model. To prevent biases in classification performance, the same number of EEG trials was randomly sampled from the healthy and tinnitus groups. In addition, to compare decoding performance between the different types of auditory stimuli, the number of standard-stimulus trials was set equal to that of target-stimulus trials. To compare decoding performance between pre-stimulus and post-stimulus time windows, the EEG epochs (500 ms pre-stimulus to 1,000 ms post-stimulus) were additionally decoded in separate windows relative to stimulus onset: pre-stimulus (500 ms pre-stimulus to stimulus onset) and post-stimulus (stimulus onset to 1,000 ms post-stimulus).
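A sketch of this input preparation, assuming zero-phase fourth-order Butterworth filters applied to epoched data stored as trials × channels × samples at 1 kHz. The filter type and order, and whether filtering was done before or after epoching, are not stated in the text; `bandpass`, `balance`, and `split_windows` are hypothetical helpers. The trial count `n` passed to `balance` could be set to the smallest count available across groups and stimulus types so that all conditions are matched.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 1000  # Hz
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 50), "broadband": (0.5, 50)}


def bandpass(epochs, band, fs=FS, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis."""
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, epochs, axis=-1)


def balance(x_healthy, x_tinnitus, n, rng):
    """Randomly draw n trials per group without replacement; label tinnitus as 1."""
    ih = rng.choice(len(x_healthy), size=n, replace=False)
    it = rng.choice(len(x_tinnitus), size=n, replace=False)
    X = np.concatenate([x_healthy[ih], x_tinnitus[it]])
    y = np.r_[np.zeros(n, dtype=int), np.ones(n, dtype=int)]
    return X, y


def split_windows(epochs, fs=FS, tmin=-0.5):
    """Split -500..+1000 ms epochs into pre-stimulus and post-stimulus parts."""
    onset = int(round(-tmin * fs))  # sample index of stimulus onset
    return epochs[..., :onset], epochs[..., onset:]


# Example: filter toy epochs (trials x channels x samples) into every band
rng = np.random.default_rng(0)
epochs = rng.normal(size=(8, 30, 1500))
filtered = {name: bandpass(epochs, band) for name, band in BANDS.items()}
pre, post = split_windows(filtered["alpha"])
print(pre.shape, post.shape)
```

Filtering short 1.5 s epochs at 0.5 Hz produces edge effects; filtering the continuous recording before epoching would reduce them.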

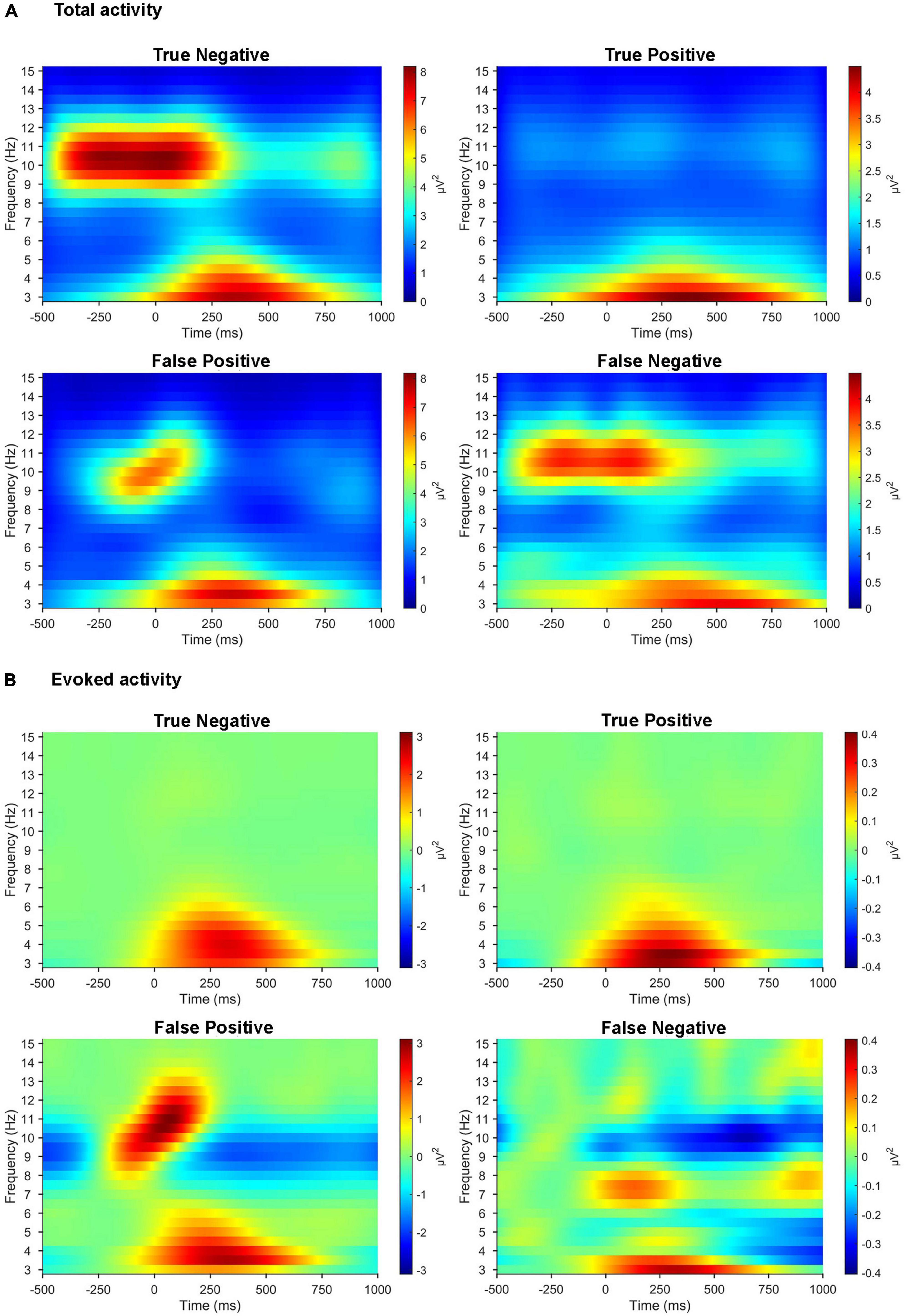

We trained the decoding model for up to 100 epochs, and the final model was taken from the epoch with the minimum validation loss. To evaluate performance, we obtained the mean area under the receiver operating characteristic (ROC) curve (AUC), sensitivity, specificity, and accuracy through 11-fold leave-one-pair-out cross-validation (Kohavi, 1995; Zou et al., 2007; Pedregosa et al., 2011), in which each pair consisted of one healthy individual and one patient with tinnitus. In each fold, nine of the 11 pairs were used to train the model, one of the remaining two pairs was used for validation, and the last pair was used for model evaluation. This process was repeated 11 times to obtain 11 AUCs, and model performance was evaluated as the average of these AUCs. Supplementary Tables 2–4 detail the classification performance of the model (sensitivity, specificity, accuracy, and AUC) for each frequency band during the pre-stimulus, post-stimulus, and entire trial periods, respectively. In addition, to investigate the misclassifications made by the deep-learning model, the misclassified trials from a sample pair of healthy/patient subjects were analyzed separately and compared with the correctly classified trials, using EEG alpha activity for the target stimuli in the active oddball task. After the 11-fold cross-validation, averaged time-frequency representations were computed across the EEG trials in each of the following outcome categories: true positive (tinnitus patients correctly classified as tinnitus patients), false positive (healthy individuals incorrectly classified as tinnitus patients), true negative (healthy individuals correctly classified as healthy individuals), and false negative (tinnitus patients incorrectly classified as healthy individuals).
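The fold structure of the leave-one-pair-out scheme, together with best-epoch selection and the outcome labels, can be sketched as below. The rule for choosing the validation pair (here, the next pair in cyclic order) is a hypothetical choice; the text says only that one of the two held-out pairs was used for validation. Model training itself is not shown, and all names are illustrative.

```python
import numpy as np

LABELS = {(1, 1): "TP", (0, 1): "FP", (0, 0): "TN", (1, 0): "FN"}  # 1 = tinnitus


def leave_one_pair_out(n_pairs=11):
    """Yield (train_pairs, val_pair, test_pair) for each of n_pairs folds.

    Each pair is one healthy participant and one patient. The test pair rotates
    through all pairs; the validation pair is the next pair in cyclic order,
    which is a hypothetical choice.
    """
    for test in range(n_pairs):
        val = (test + 1) % n_pairs
        train = [p for p in range(n_pairs) if p not in (test, val)]
        yield train, val, test


def best_epoch(val_losses):
    """Index of the training epoch (up to 100) with the lowest validation loss."""
    return int(np.argmin(val_losses))


def outcome(y_true, y_pred):
    """Confusion-matrix category of one trial (1 = tinnitus, 0 = healthy)."""
    return LABELS[(int(y_true), int(y_pred))]


for train, val, test in leave_one_pair_out():
    print(f"test pair {test:2d}, validation pair {val:2d}, {len(train)} training pairs")
```

Holding out whole pairs keeps every trial of a given participant in a single partition, so the test AUC measures generalization to unseen people rather than to unseen trials of known people.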

To compare the EEGNet-based decoding performance with that of a classical machine-learning technique, a support vector machine (SVM) classifier (Scholkopf et al., 1997; Manyakov et al., 2011) was applied to the current dataset. For the SVM, the alpha-band time series of the entire EEG trial at electrode Pz was used as the input feature, since alpha activity is generally predominant over the parietal region (Dockree et al., 2007; Cosmelli et al., 2011; Groppe et al., 2013). To investigate the contribution of the alpha band to SVM decoding performance, the AUC was also computed after removing the alpha band from the input signals with a band-stop filter. With this feature representation, we applied an SVM with a radial-basis-function kernel. The regularization parameter and the kernel parameter were chosen by grid search.
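A scikit-learn sketch of the SVM baseline. It assumes fourth-order Butterworth band-pass/band-stop filters at 8–13 Hz, feature standardization, and an illustrative C/gamma grid scored by ROC AUC; none of these settings are reported in the text, and `alpha_features` and `fit_rbf_svm` are hypothetical names. In the study design this search would run on the training pairs inside each leave-one-pair-out fold.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

FS = 1000  # Hz


def alpha_features(pz_trials, remove_alpha=False, fs=FS):
    """pz_trials: (n_trials, n_times) single-trial EEG at Pz.

    remove_alpha=False -> 8-13 Hz band-passed time series (the SVM input)
    remove_alpha=True  -> the same signal with 8-13 Hz band-stopped ("SVM NA")
    Filter type and order are assumptions.
    """
    btype = "bandstop" if remove_alpha else "bandpass"
    sos = butter(4, [8, 13], btype=btype, fs=fs, output="sos")
    return sosfiltfilt(sos, pz_trials, axis=-1)


def fit_rbf_svm(X, y):
    """Grid-search C and gamma of an RBF-kernel SVM, scored by ROC AUC.

    The grid values and the standardization step are illustrative assumptions.
    """
    grid = {"svc__C": [0.1, 1, 10, 100], "svc__gamma": ["scale", 1e-3, 1e-4]}
    model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
    return GridSearchCV(model, grid, scoring="roc_auc", cv=3).fit(X, y)


# Toy data: class 1 carries an added phase-locked 10 Hz component
rng = np.random.default_rng(0)
t = np.arange(1500) / FS
X0 = rng.normal(size=(20, 1500))
X1 = rng.normal(size=(20, 1500)) + 2 * np.sin(2 * np.pi * 10 * t)
y = np.r_[np.zeros(20), np.ones(20)]
search = fit_rbf_svm(alpha_features(np.vstack([X0, X1])), y)
print(search.best_params_, round(search.best_score_, 3))
```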

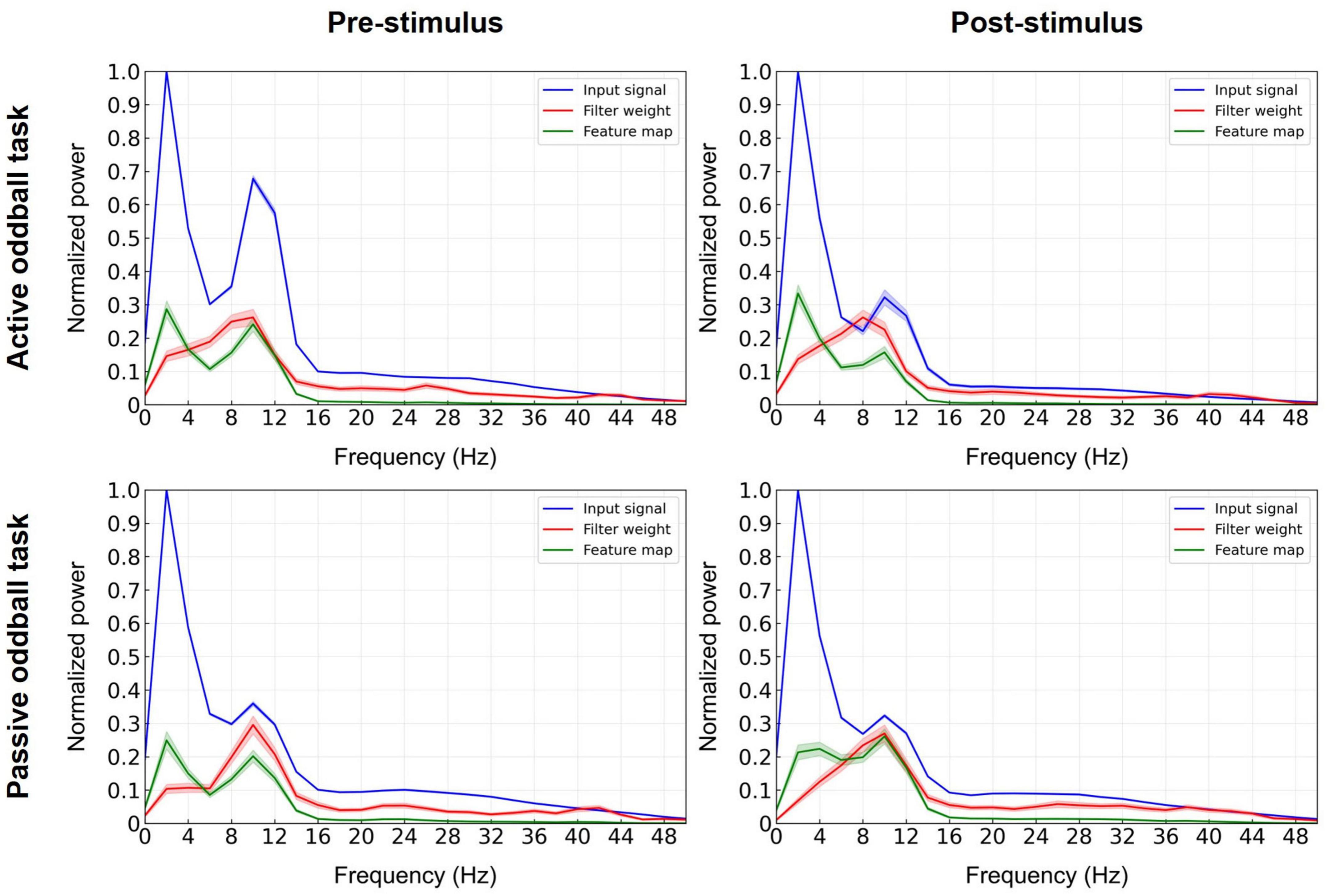

To investigate the contribution of each frequency band to model training, beyond assessing the classification performance of EEGNet trained on band-limited data, we also analyzed the first-layer convolutional filters of the EEGNet trained on broadband data (Deng et al., 2021; Riyad et al., 2021). The convolutional layer kernels of the EEGNet model used in this study act as temporal filters, playing a role similar to that of a filter bank (Ang et al., 2012). Because the EEG data were sampled at 1,000 Hz and the convolutional filter length was 500 samples, each filter spanned a 500 ms time window. To identify the most influential frequencies in the EEGNet model, the input signals, the learned filter weights of the first convolutional layer, and the corresponding feature maps were projected to the frequency domain using the fast Fourier transform (Deng et al., 2021; Riyad et al., 2021), and the normalized spectra were averaged over the 11 cross-validation folds. More specifically, each feature map was computed by convolving the input signal with the learned filter weights of the first convolutional layer of the EEGNet model. At each frequency point, the spectral power of each of the eight first-layer filters was normalized by the maximum power across all eight filters, and the results were averaged across the 11 folds. The spectra of the feature maps were computed in the same way.
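The spectral analysis of the first-layer kernels can be sketched with NumPy's FFT. This assumes the learned temporal weights have been exported as an 8 × 500 array per fold, and it implements one reading of the normalization described above (each frequency bin divided by the maximum across filters at that bin). With 500-sample kernels at 1 kHz, the FFT resolution is 1,000/500 = 2 Hz per bin. The function names are hypothetical, and two sinusoids stand in for learned weights.

```python
import numpy as np

FS = 1000          # Hz
KERNEL_LEN = 500   # samples, i.e. a 500 ms temporal kernel at 1 kHz


def normalized_spectra(signals, fs=FS):
    """Power spectra of each row, normalized per frequency bin by the maximum
    power across rows (one reading of the normalization described in the text).

    signals : (n_filters, n_samples), e.g. 8 first-layer kernels or their feature maps
    """
    power = np.abs(np.fft.rfft(signals, axis=-1)) ** 2
    freqs = np.fft.rfftfreq(signals.shape[-1], d=1.0 / fs)  # 2 Hz bins for 500 samples
    peak = power.max(axis=0, keepdims=True)
    return freqs, power / np.where(peak > 0, peak, 1.0)


def feature_maps(x, kernels):
    """Filter a single-channel input (n_times,) with each temporal kernel.

    Deep-learning 'convolution' layers compute cross-correlation; np.convolve
    flips the kernel, which leaves a real kernel's magnitude spectrum unchanged.
    """
    return np.stack([np.convolve(x, k, mode="same") for k in kernels])


def average_over_folds(per_fold):
    """Average normalized spectra across folds: (n_folds, n_filters, n_freqs) -> mean."""
    return np.mean(per_fold, axis=0)


# Toy kernels: two sinusoids standing in for learned weights from one fold
t = np.arange(KERNEL_LEN) / FS
kernels = np.stack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t)])
freqs, spec = normalized_spectra(kernels)
maps = feature_maps(np.random.default_rng(0).normal(size=1500), kernels)
map_freqs, map_spec = normalized_spectra(maps)
```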

Last, to investigate the robustness and stability of the deep neural network model, we additionally assessed decoding performance using a smaller number of filters in the first convolutional layer (five instead of eight filters) of the EEGNet architecture. This result is shown in the Supplementary Material.

Mann–Whitney U tests for independent samples were used to compare measures between the two groups (healthy and tinnitus) and to compare AUCs between the two decoding methods (EEGNet and SVM). To assess decoding performance statistically, we used Wilcoxon signed-rank tests (Z scores) to evaluate whether the AUC was significantly higher than chance level. All analyses and statistical processing were performed using MATLAB (ver. R2021a, MathWorks, Natick, MA, USA), Python (Python Software Foundation), or SPSS Statistics (ver. 26, IBM, Armonk, NY, USA).
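A SciPy sketch of the two tests, assuming two-sided Mann–Whitney tests and a one-sided Wilcoxon test of fold AUCs against 0.5 (the sidedness is not stated). U is reported as the smaller of the two U statistics, and the Z score uses the normal approximation without tie correction. With 11 folds that all exceed chance, W+ = 66, the null mean is 11·12/4 = 33, and the null variance is 11·12·23/24 = 126.5, so Z = 33/√126.5 ≈ 2.934, the value reported in the Results for several conditions. The function names are hypothetical.

```python
import numpy as np
from scipy.stats import mannwhitneyu, rankdata, wilcoxon


def compare_groups(healthy, tinnitus):
    """Two-sided Mann-Whitney U test; returns (U, p) with U = min(U1, U2)."""
    res = mannwhitneyu(healthy, tinnitus, alternative="two-sided")
    u1 = res.statistic
    u = min(u1, len(healthy) * len(tinnitus) - u1)
    return u, res.pvalue


def wilcoxon_z(diffs):
    """Normal-approximation Z of the Wilcoxon signed-rank statistic (no tie correction)."""
    d = np.asarray(diffs, dtype=float)
    d = d[d != 0]                      # zero differences are dropped
    n = d.size
    w_plus = rankdata(np.abs(d))[d > 0].sum()
    mean = n * (n + 1) / 4
    sd = np.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w_plus - mean) / sd


def auc_above_chance(fold_aucs, chance=0.5):
    """One-sided test that fold AUCs exceed chance; returns (Z, p)."""
    diffs = np.asarray(fold_aucs, dtype=float) - chance
    p = wilcoxon(diffs, alternative="greater").pvalue
    return wilcoxon_z(diffs), p


fold_aucs = 0.8 + 0.01 * np.arange(11)   # 11 illustrative fold AUCs, all above 0.5
z, p = auc_above_chance(fold_aucs)
print(round(z, 3))  # 2.934
```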

Significantly higher P300 amplitudes were observed in healthy controls than in patients with tinnitus during the active oddball task (healthy group, 18.673 μV; tinnitus group, 7.865 μV; U = 16, p < 0.005; Figure 2A). In contrast, MMN amplitudes did not differ significantly between the two groups during the passive oddball task (healthy group, −3.150 μV; tinnitus group, −3.092 μV; U = 54, n.s.; Figure 2B).

Figure 2. P300 and MMN responses. (A) Grand-averaged P300 topographies and ERP time courses at electrode Pz of both healthy controls (red line) and patients with tinnitus (blue line) for the target stimuli during the active oddball task. (B) Grand-averaged MMN topographies and ERP time courses at electrode Fz of both healthy controls (red line) and patients with tinnitus (blue line) for the target minus standard stimuli during the passive oddball task.

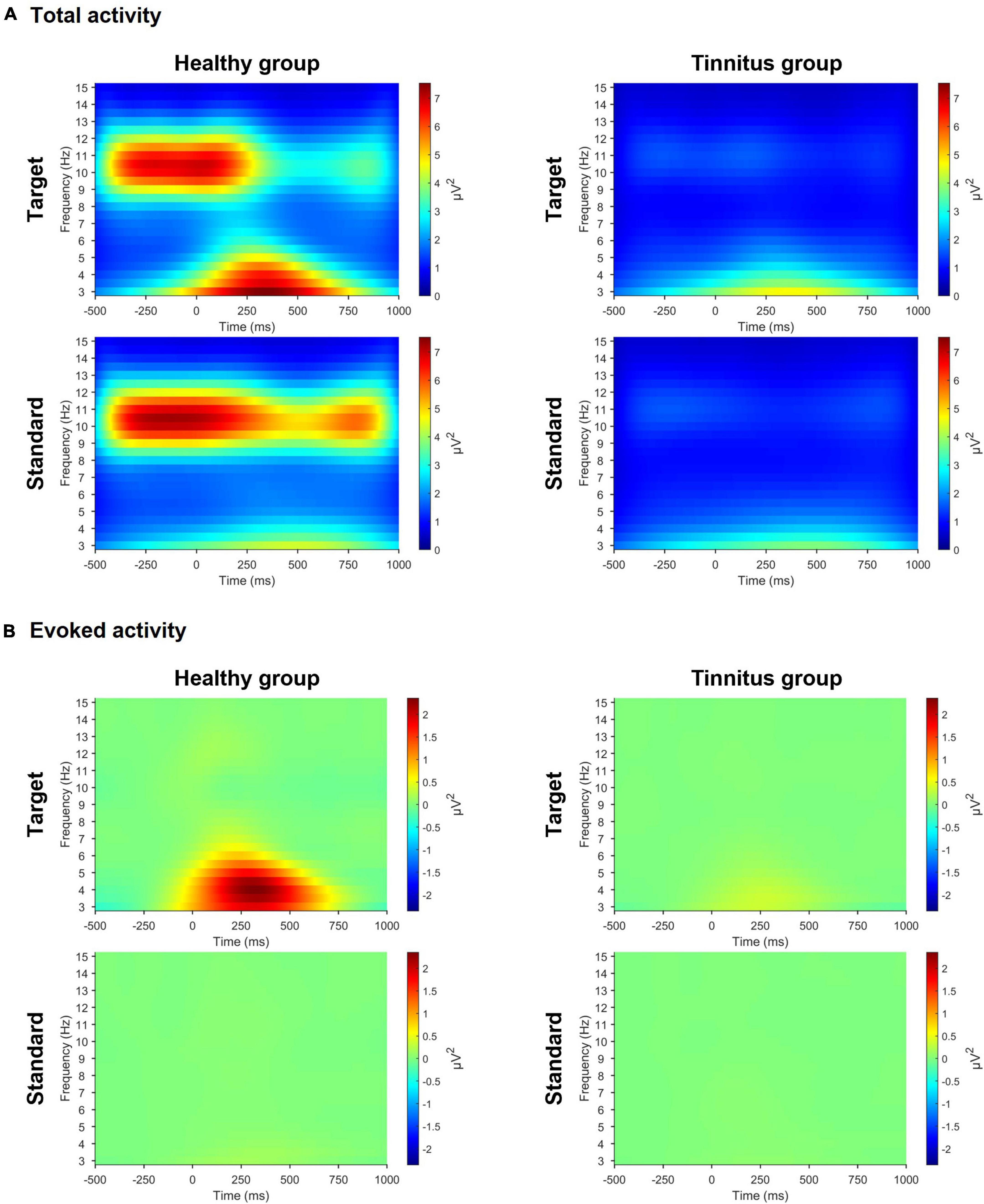

In the active oddball task, we observed significant differences in both pre-stimulus alpha and evoked theta activity between the two groups (Figure 3). For the target stimuli, the healthy group had stronger pre-stimulus alpha activity (healthy group, 4.521 μV²; tinnitus group, 1.187 μV²; U = 4, p < 0.0005) and stronger evoked theta activity (healthy group, 2.431 μV²; tinnitus group, 0.336 μV²; U = 16, p < 0.005) than the tinnitus group. For the standard stimuli, the healthy group exhibited stronger pre-stimulus alpha activity than the tinnitus group (healthy group, 4.747 μV²; tinnitus group, 1.235 μV²; U = 4, p < 0.0005), but the two groups did not differ significantly in evoked theta activity.

Figure 3. Time-frequency representations in the active oddball task. (A) Time-frequency representations of grand-averaged total activity across three parietal electrodes (Pz, P3, and P4) during the active oddball task. (B) Time-frequency representations of grand-averaged evoked activity across the same three parietal electrodes during the active oddball task. Note the pronounced pre-stimulus total alpha (8–13 Hz) and evoked theta (4–8 Hz) activities in the healthy group compared with the tinnitus group.

In the passive oddball task, for the target stimuli, the healthy group had stronger pre-stimulus alpha activity than the tinnitus group (healthy group, 1.702 μV²; tinnitus group, 0.958 μV²; U = 21, p < 0.01), but the two groups did not differ significantly in evoked theta activity. For the standard stimuli, the healthy group likewise exhibited stronger pre-stimulus alpha activity than the tinnitus group (healthy group, 1.693 μV²; tinnitus group, 0.969 μV²; U = 20, p < 0.01), with no significant group difference in evoked theta activity (Supplementary Figure 2).

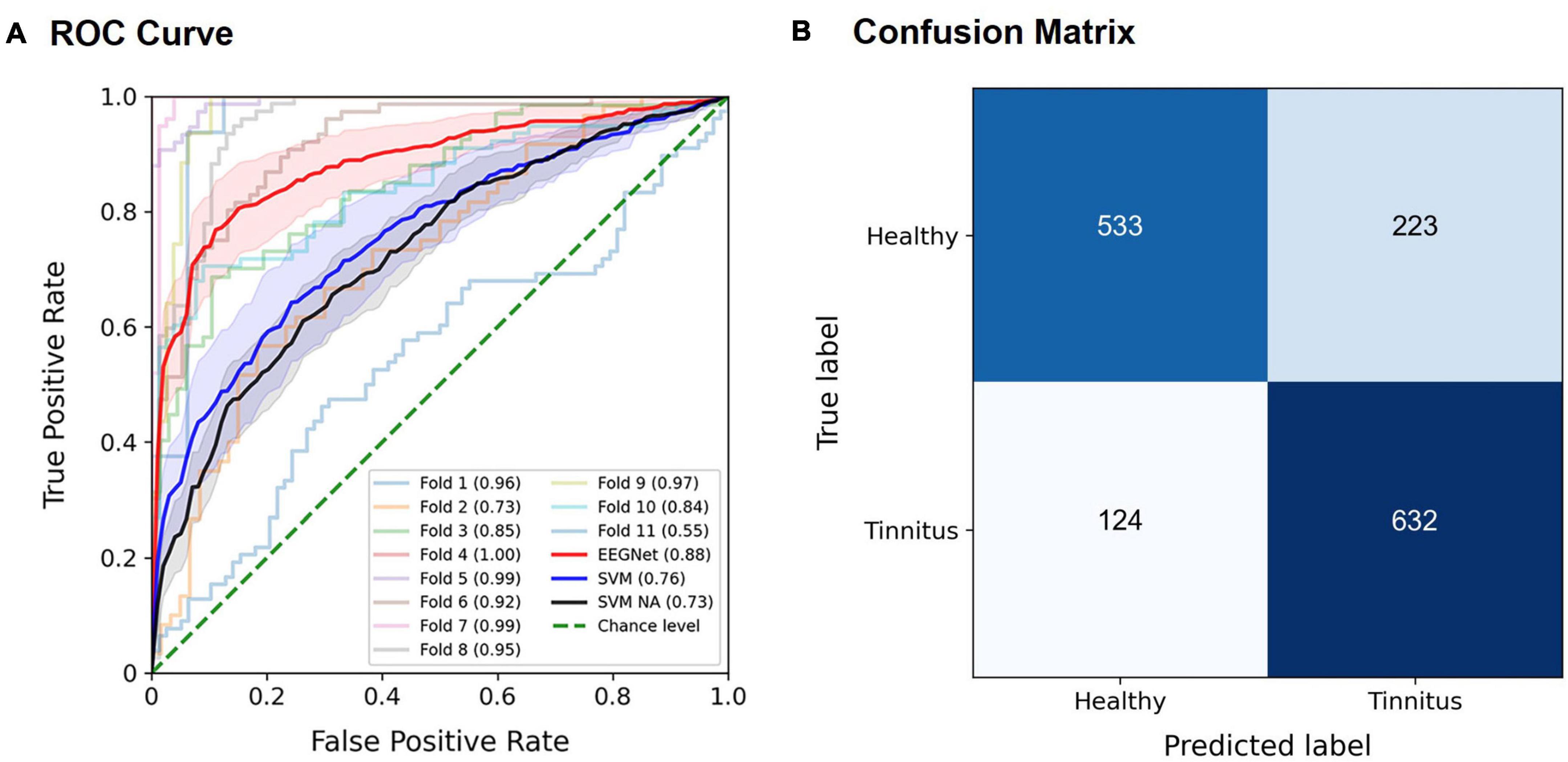

The EEGNet model effectively discriminated patients with tinnitus from healthy controls, most often achieving its best performance with alpha-band EEG signals. For example, when the entire trial period was used for the target stimuli in the active oddball task, the highest AUC of 0.886 ± 0.042 (mean ± standard error; Z = 2.934, p < 0.005) was achieved with the EEG alpha band (Figure 4A). EEGNet showed marginally better decoding performance than the SVM, although the difference was not significant (EEGNet, 0.886; SVM, 0.759; U = 34, p = 0.08; red and blue lines in Figure 4A). When the alpha band was removed from the input signals, the SVM-based AUC was 0.73 (black line in Figure 4A), which did not differ significantly from the SVM-based AUC with the alpha band (0.759; U = 50, n.s.). The associated confusion matrix of the EEGNet model is provided in Figure 4B. Similarly, for the standard stimuli in the active oddball task, the highest AUC of 0.858 ± 0.049 (Z = 2.934, p < 0.005) was also achieved with the EEG alpha band. In contrast, for the target stimuli in the passive oddball task, the highest AUC of 0.819 ± 0.068 (Z = 2.669, p < 0.01) was achieved with broadband EEG. For the standard stimuli in the passive oddball task, the highest AUC of 0.807 ± 0.058 (Z = 2.756, p < 0.01) was achieved with EEG alpha activity.

Figure 4. Classification performance of the EEGNet model for the target stimuli in the active oddball task using EEG alpha activity. (A) Receiver operating characteristic (ROC) curves and area under the curve (AUC) scores for each fold of the 11-fold leave-one-pair-out cross-validation, using EEG alpha activity for the target stimuli during the active oddball task. Each curve represents the ROC curve for one fold of the cross-validation, with the corresponding AUC score noted in the legend. The red solid line represents the EEGNet-based AUC averaged across the 11 folds, and the blue solid line represents the SVM-based AUC averaged across the 11 folds. The black solid line represents the averaged SVM-based AUC after removal of the alpha band from the input signals (labeled “SVM NA” in the legend). The green dotted line indicates the chance level. Error bands indicate standard errors of the mean (EEGNet in red, SVM in blue, and SVM NA in dark gray). (B) Confusion matrix of the EEGNet model classification results.
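In leave-one-pair-out cross-validation, each fold holds out one healthy subject and one patient together. No held-out subject's trials reach training, and every test fold contains both classes, so a per-fold AUC is defined. The sketch below generates such splits. Eleven pairs are implied by the 11 folds. How subjects were paired is not described, so pairing by list position is an assumption, and the names are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_pair_out(
    healthy: Sequence[str], patients: Sequence[str]
) -> Iterator[Tuple[List[str], List[str]]]:
    """Yield (train_subjects, test_subjects) for leave-one-pair-out CV.

    Fold k holds out healthy[k] and patients[k] together; pairing by list
    position is a hypothetical choice made for illustration.
    """
    if len(healthy) != len(patients):
        raise ValueError("leave-one-pair-out needs groups of equal size")
    for k in range(len(healthy)):
        test = [healthy[k], patients[k]]
        train = [s for i, s in enumerate(healthy) if i != k] + [
            s for i, s in enumerate(patients) if i != k
        ]
        yield train, test


if __name__ == "__main__":
    healthy_ids = [f"H{i:02d}" for i in range(1, 12)]  # hypothetical subject IDs
    patient_ids = [f"T{i:02d}" for i in range(1, 12)]
    for fold, (train, test) in enumerate(leave_one_pair_out(healthy_ids, patient_ids), 1):
        print(fold, test, len(train))
```

Trials are then gathered per subject, so all trials of the held-out pair form the test set for that fold.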

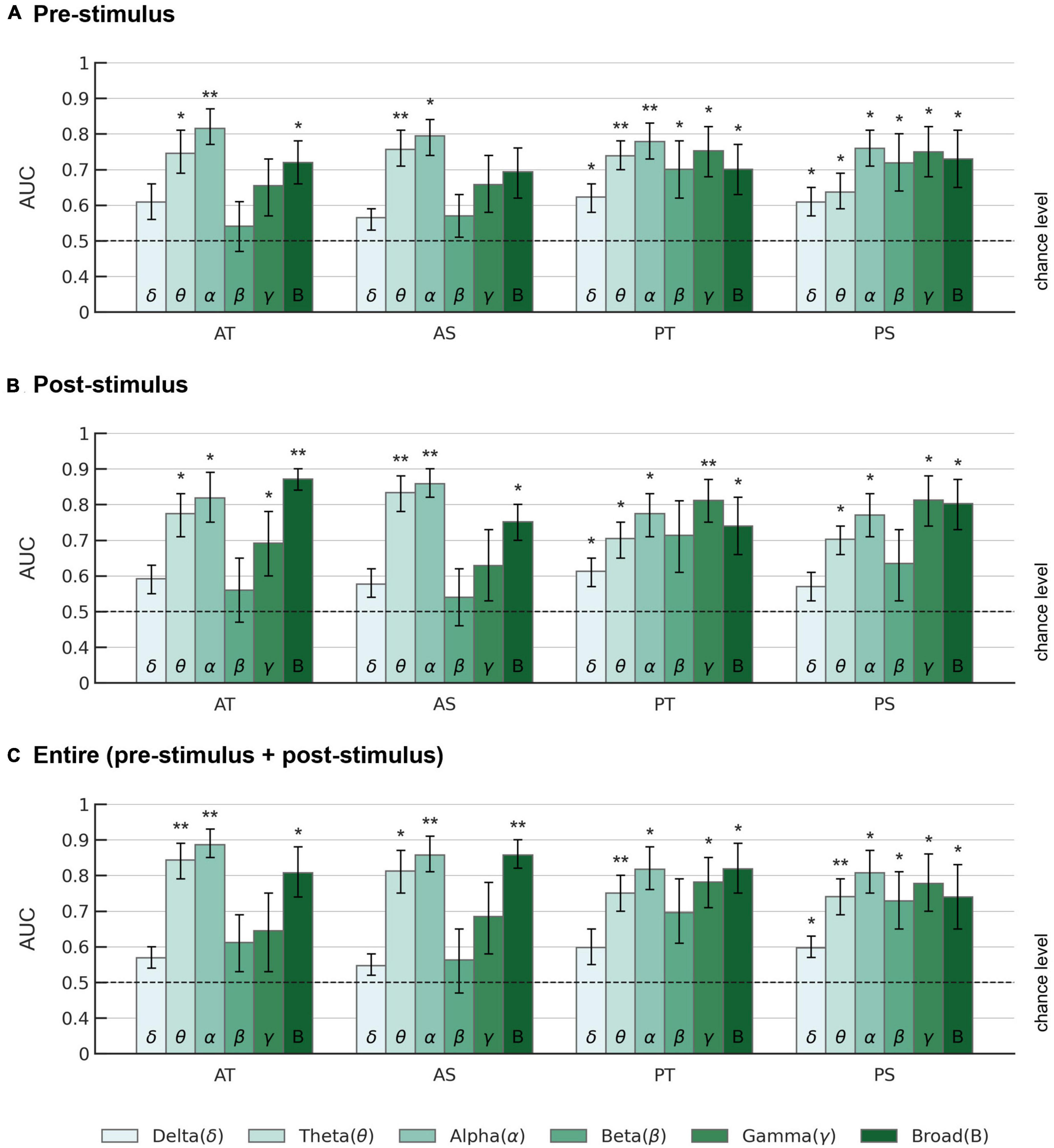

Further details for the classification performance of the EEGNet model across all frequency bands, different evaluation metrics (sensitivity, specificity, accuracy, AUC), and different time periods (pre-stimulus, post-stimulus, and entire trial) are provided in Figure 5 and Supplementary Tables 2–4.
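Of these metrics, the AUC is the least obvious. It equals the probability that a randomly chosen positive trial receives a higher classifier score than a randomly chosen negative trial, with ties counting one half. For binary labels, this is the same quantity returned by scikit-learn's `roc_auc_score`. The sketch below computes it directly, along with the mean ± standard error used to summarize fold-wise AUCs. Using the sample standard deviation (n − 1) for the standard error is an assumption.

```python
import math
from typing import List, Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC = probability that a random positive (label 1) trial scores higher
    than a random negative (label 0) trial, counting ties as one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC is undefined unless both classes are present")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(pos) * len(neg))


def mean_and_sem(values: List[float]) -> Tuple[float, float]:
    """Mean and standard error of the mean (sample SD with n - 1)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, math.sqrt(var / n)
```

The pairwise loop is quadratic in the number of trials. That is fine for a sketch; a sort-based rank formula gives the same value faster.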

Figure 5. AUC scores of the EEGNet model across different frequency bands. AUCs are displayed for (A) pre-stimulus, (B) post-stimulus, and (C) entire trial period in each frequency band. AT: active oddball task, target stimuli; AS: active oddball task, standard stimuli; PT: passive oddball task, target stimuli; PS: passive oddball task, standard stimuli. Error bars represent standard errors of the mean. The dotted lines indicate the chance level. Asterisks indicate statistical significance (*p < 0.05; **p < 0.005).

Overall, for the pre-stimulus period, EEG alpha activity showed the highest average AUC of 0.815 ± 0.048 (Z = 2.934, p < 0.005) for the target stimulus in the active oddball task (Figure 5A). For the post-stimulus period, EEG broadband activity showed the highest average AUC of 0.871 ± 0.032 (Z = 2.934, p < 0.005) for the target stimulus in the active oddball task (Figure 5B). For the entire trial period, EEG alpha band yielded the highest AUC of 0.886 ± 0.042 for the target stimulus in the active oddball task (Figure 5C). As shown in Supplementary Figure 3, these results were largely stable even when changing the number of filters in the first convolutional layer of the EEGNet architecture.

To identify which frequency band critically contributed to the performance of the EEGNet model trained on broadband EEG data, we computed the normalized spectral power of the input, of the learned filter weights of the first convolutional layer, and of the corresponding feature map (Deng et al., 2021; Riyad et al., 2021; Figure 6). The convolutional filters contributed most prominently in the alpha band. This suggests that the first layer of the EEGNet model enhanced the alpha band at the expense of other frequencies, as is also evident from the spectra of the input versus the output (feature map) of this layer.
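EEGNet's first layer is a bank of temporal convolution filters (Lawhern et al., 2018), so each kernel's frequency response can be read from its discrete Fourier transform. The sketch below uses a direct DFT in pure Python rather than an FFT library, which gives the same spectrum. It normalizes power to sum to 1, reports the peak frequency, and reports the fraction of power in an alpha range. The 128 Hz rate, 64-tap length, normalization choice, and synthetic 10 Hz kernel are all assumptions for illustration, not the trained weights behind Figure 6.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def normalized_spectrum(x: Sequence[float], fs: float) -> Tuple[List[float], List[float]]:
    """Power at the non-negative DFT frequencies of a real signal, scaled to sum to 1."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coef = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))
        freqs.append(k * fs / n)
        power.append(abs(coef) ** 2)
    total = sum(power)
    return freqs, [p / total for p in power]


def band_fraction(x: Sequence[float], fs: float, lo: float, hi: float) -> float:
    """Fraction of normalized power falling in [lo, hi] Hz."""
    freqs, power = normalized_spectrum(x, fs)
    return sum(p for f, p in zip(freqs, power) if lo <= f <= hi)


def peak_frequency(x: Sequence[float], fs: float) -> float:
    """Frequency (Hz) of the largest normalized power bin."""
    freqs, power = normalized_spectrum(x, fs)
    return freqs[max(range(len(power)), key=power.__getitem__)]


if __name__ == "__main__":
    fs, n_taps = 128.0, 64  # illustrative sampling rate and kernel length
    # Hypothetical "learned" temporal kernel: a Hann-windowed 10 Hz cosine.
    kernel = [
        (0.5 - 0.5 * math.cos(2 * math.pi * t / n_taps)) * math.cos(2 * math.pi * 10 * t / fs)
        for t in range(n_taps)
    ]
    print(peak_frequency(kernel, fs), round(band_fraction(kernel, fs, 8.0, 13.0), 3))
```

Applying the same function to an input channel and to the corresponding feature map gives the input-versus-output comparison described above.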

Figure 6. Decisive EEG spectral features in the decoding model. The input signals (blue lines), learned filter weights of the first convolutional layer (red lines), and corresponding feature maps (green lines) of the EEGNet model trained on broadband data were projected to the frequency domain using the fast Fourier transform (at electrode Pz), and the spectra were averaged over the 11 cross-validation folds. Error bands indicate standard errors of the mean.

We compared the time-frequency representations of correctly classified and misclassified EEG trials in a sample pair of healthy/patient subjects (Figure 7). After 11-fold cross-validation, averaged time-frequency representations were computed across all trials classified as true positive (N = 632), false positive (N = 223), true negative (N = 533), or false negative (N = 124). The time-frequency plots showed that the misclassified EEG trials clearly lacked the prominent features of the correctly classified EEG trials.
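Grouping trials into true/false positives and negatives also fixes the standard rates. The sketch below labels each trial's outcome, tallies the outcomes, and derives sensitivity, specificity, and accuracy from the counts. It treats tinnitus (label 1) as the positive class, which is our assumption, and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def outcome(label: int, prediction: int) -> str:
    """Name one trial's outcome, treating 1 (tinnitus) as the positive class."""
    if prediction == 1:
        return "TP" if label == 1 else "FP"
    return "TN" if label == 0 else "FN"


def tally(labels: Sequence[int], predictions: Sequence[int]) -> Dict[str, int]:
    """Count TP/FP/TN/FN outcomes over paired labels and predictions."""
    counts = Counter(outcome(y, p) for y, p in zip(labels, predictions))
    return {key: counts.get(key, 0) for key in ("TP", "FP", "TN", "FN")}


def rates(tp: int, fp: int, tn: int, fn: int) -> Tuple[float, float, float]:
    """Sensitivity, specificity, and accuracy from pooled confusion counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return sensitivity, specificity, accuracy


if __name__ == "__main__":
    # Trial counts reported above for the Figure 7 sample pair.
    sens, spec, acc = rates(tp=632, fp=223, tn=533, fn=124)
    print(f"sensitivity {sens:.3f}, specificity {spec:.3f}, accuracy {acc:.3f}")
```

With the counts above, the pooled values are 632/756 ≈ 0.836, 533/756 ≈ 0.705, and 1165/1512 ≈ 0.771. These are trial-pooled figures for one sample pair, not the fold-averaged metrics reported elsewhere in the paper.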

Figure 7. Time-frequency representations of misclassified EEG trials compared with those correctly classified. (A) Time-frequency representations of total activity averaged across three parietal electrodes (Pz, P3, and P4) for the target stimuli in the active oddball task. (B) Time-frequency representations of evoked activity averaged across the same three parietal electrodes for the target stimuli in the active oddball task. All plots are computed across all EEG trials from a sample pair of healthy/patient subjects, grouped separately into true positive, false positive, true negative, and false negative trials.
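Panels A and B differ in how trials are combined. Under the usual definitions (see wavelet-analysis tutorials such as Herrmann et al., 2005), total power averages the time-frequency power of single trials. Evoked power is the time-frequency power of the trial-averaged signal, so activity not phase-locked to the stimulus cancels out. The sketch below uses a unit-energy complex Morlet wavelet at a single frequency. The 7-cycle width and single-frequency design are assumptions, not the paper's analysis parameters.

```python
import cmath
import math
from typing import List, Sequence


def morlet_power(x: Sequence[float], fs: float, freq: float, n_cycles: float = 7.0) -> List[float]:
    """Power time course of x at one frequency via a unit-energy complex Morlet wavelet."""
    sigma_t = n_cycles / (2 * math.pi * freq)  # Gaussian width in seconds
    half = int(round(3 * sigma_t * fs))
    wavelet = [
        cmath.exp(2j * math.pi * freq * k / fs) * math.exp(-((k / fs) ** 2) / (2 * sigma_t ** 2))
        for k in range(-half, half + 1)
    ]
    norm = math.sqrt(sum(abs(w) ** 2 for w in wavelet))
    wavelet = [w / norm for w in wavelet]
    power = []
    for t in range(len(x)):
        acc = 0j
        for k, w in enumerate(wavelet):
            idx = t + k - half
            if 0 <= idx < len(x):  # samples outside the signal count as zero
                # Correlating with conj(w) equals convolving with w for this symmetric wavelet.
                acc += x[idx] * w.conjugate()
        power.append(abs(acc) ** 2)
    return power


def total_power(trials: Sequence[Sequence[float]], fs: float, freq: float) -> List[float]:
    """Average of single-trial power: keeps phase-locked and non-phase-locked activity."""
    per_trial = [morlet_power(tr, fs, freq) for tr in trials]
    return [sum(col) / len(per_trial) for col in zip(*per_trial)]


def evoked_power(trials: Sequence[Sequence[float]], fs: float, freq: float) -> List[float]:
    """Power of the trial average (ERP): keeps only phase-locked activity."""
    erp = [sum(col) / len(trials) for col in zip(*trials)]
    return morlet_power(erp, fs, freq)
```

If each trial carries an oscillation with a different phase, total power stays high while evoked power drops toward zero. If the phase is identical across trials, the two measures coincide.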

This study demonstrated that human EEG signals provide promising features for identifying tinnitus that could enable practical tinnitus-diagnostic applications. In the EEG spectral analysis, pre-stimulus alpha and evoked theta activities behaved differently during task performance in healthy controls and in patients with tinnitus, suggesting that these spectral components may be crucial features for EEG-based diagnosis of tinnitus. Notably, the tinnitus group showed significantly reduced pre-stimulus alpha activity compared with healthy participants. Since pre-stimulus alpha activity may reflect top-down preparation for upcoming stimuli (Min and Herrmann, 2007; Jensen and Mazaheri, 2010), the reduced pre-stimulus alpha power in the tinnitus group may indicate abnormal preparatory top-down processing in the pre-stimulus period. This is consistent with a prior study that found suppressed parietal alpha activity when patients with tinnitus focused on the tinnitus sound compared with when they focused on their own body (Milner et al., 2020). Since the classical P300 and MMN were clearly observed in the active and passive oddball tasks, respectively (Figure 2), the present experimental paradigm appears well suited for investigating top-down and bottom-up processing separately. Our results showed more pronounced differences in alpha activity in the active oddball task (i.e., top-down processing) than in the passive oddball task (i.e., bottom-up processing). This is probably because suppression of EEG alpha activity is associated with active cognitive processing (Sauseng et al., 2005; Klimesch et al., 2007; Min and Park, 2010), and top-down attention was not directed to the presented auditory stimuli in the passive oddball task (Sarter et al., 2001).
Furthermore, since evoked theta activity reflects post-stimulus top-down processing (Sauseng et al., 2008), the absence of evoked theta activity in the tinnitus group during the active oddball task (Figure 3B) also suggests that this group may have compromised top-down processing after stimulation (Hong et al., 2016). Notably, the time-frequency analyses also showed that the misclassified EEG trials clearly lacked the prominent features of the correctly classified EEG trials (Figure 7).

In agreement with the EEG spectral analysis, the spectral analysis of the first convolutional layer of the EEGNet model further implicated the EEG alpha band as the most decisive feature for classifying patients with tinnitus (Figure 6). Moreover, significant differences in EEG alpha activity between the healthy and tinnitus groups were observed in both the active and passive oddball tasks (see Supplementary Figure 2 for results on the passive oddball task). Overall, our findings consistently point to alterations in alpha band activity as a key discriminative feature in diagnosing tinnitus.

To investigate whether the healthy and tinnitus groups could be distinguished even before stimulus presentation, the EEGNet model was also trained and evaluated separately on the pre-stimulus, post-stimulus, and entire-trial segments of the EEG data (Figure 5). For the target stimuli in the active oddball task, the EEG alpha band in the entire trial period yielded the highest AUC of 0.886 (accuracy 0.774, sensitivity 0.827, and specificity 0.721), but the EEG alpha activity in the pre-stimulus period also showed a relatively high AUC of 0.815 (accuracy 0.748, sensitivity 0.833, and specificity 0.662). This observation is consistent with the significantly higher pre-stimulus alpha activity in the healthy group than in the tinnitus group (Figure 3A). Similarly, in the passive oddball task, pre-stimulus EEG alpha activity yielded a high AUC of 0.776 (accuracy 0.696, sensitivity 0.750, and specificity 0.642) for the target stimuli.
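The pre-stimulus analysis is informative because that model sees only samples recorded before stimulus onset, so its AUC cannot depend on stimulus-evoked responses. The sketch below splits one epoch at onset. The epoch window (−0.5 to 1.0 s) and the 250 Hz sampling rate are hypothetical; the text does not give them here. A multichannel epoch would be sliced the same way along its time axis.

```python
from typing import List, Sequence, Tuple


def split_epoch(epoch: Sequence[float], fs: float, t_start: float) -> Tuple[List[float], List[float]]:
    """Split an epoch whose first sample is at t_start seconds (negative = before
    stimulus onset) into pre-stimulus and post-stimulus segments."""
    onset = int(round(-t_start * fs))  # sample index of stimulus onset (t = 0)
    if not 0 <= onset <= len(epoch):
        raise ValueError("stimulus onset lies outside the epoch")
    return list(epoch[:onset]), list(epoch[onset:])


if __name__ == "__main__":
    fs, t_start, t_end = 250.0, -0.5, 1.0  # hypothetical epoch window
    epoch = [0.0] * int(round((t_end - t_start) * fs))
    pre, post = split_epoch(epoch, fs, t_start)
    print(len(pre), len(post))
```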

The SVM-based AUC obtained with the alpha band (0.759) did not differ significantly from that obtained after removing the alpha band (0.73) (Figure 4A). This suggests that other frequencies also contain relevant information, as confirmed by our EEGNet results in Figure 5, and EEGNet may likewise exploit this information to some extent. Although EEGNet-based decoding performed only marginally better than SVM-based decoding (Figure 4A), the deep learning-based EEGNet offers important practical advantages over classical approaches such as SVM. Deep learning methods can automatically learn complex patterns from data. This can improve performance over traditional hand-crafted features, whose selection (e.g., the choice of discriminative EEG channels and frequency bands) requires prior knowledge and expertise (Yang et al., 2022). In other words, EEGNet can work directly with raw EEG data, eliminating the need for manual feature extraction (Song et al., 2022).
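By contrast with EEGNet's end-to-end learning, a classical pipeline typically feeds an SVM with hand-crafted features chosen in advance. The sketch below computes one such feature set: mean periodogram power per channel and band. The text does not state which features the SVM used, so the band edges, the feature choice, and the function names are hypothetical. The resulting vectors could then be passed to a classifier such as scikit-learn's `SVC`.

```python
import cmath
import math
from typing import Dict, List, Sequence, Tuple

# Illustrative band edges in Hz (lower edge inclusive, upper edge exclusive).
BANDS: Dict[str, Tuple[float, float]] = {
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def periodogram(x: Sequence[float], fs: float) -> List[Tuple[float, float]]:
    """(frequency, |DFT|^2 / n) pairs for the non-negative DFT frequencies."""
    n = len(x)
    spectrum = []
    for k in range(n // 2 + 1):
        coef = sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))
        spectrum.append((k * fs / n, abs(coef) ** 2 / n))
    return spectrum


def band_power_features(channels: Sequence[Sequence[float]], fs: float,
                        bands: Dict[str, Tuple[float, float]] = BANDS) -> List[float]:
    """Hand-crafted feature vector: mean periodogram power per (channel, band)."""
    features = []
    for x in channels:
        spectrum = periodogram(x, fs)
        for lo, hi in bands.values():
            in_band = [p for f, p in spectrum if lo <= f < hi]
            features.append(sum(in_band) / len(in_band))
    return features
```

Every choice baked into `BANDS` and the channel list is the kind of prior knowledge that EEGNet's learned first-layer filters replace.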

Symptoms of tinnitus have been linked to hyperactivity and reorganization of the auditory central nervous system (Muhlnickel et al., 1998; Kaltenbach and Afman, 2000; Salvi et al., 2000; Eggermont and Roberts, 2004) and to the engagement of other non-auditory brain areas, including the dorsolateral prefrontal cortex (DLPFC; Schlee et al., 2009; Vanneste et al., 2010) and anterior cingulate cortex (ACC; Muhlau et al., 2006; Vanneste et al., 2010). The DLPFC subserves higher-order functions and domain-general executive control (Fuster, 1989; Miller and Cummings, 2007; McNamee et al., 2015). The ACC mediates specific functions, such as error detection, attention, and motivation (Johnston et al., 2007; Silton et al., 2010). Both the DLPFC and the ACC have also been found to be involved in auditory attention (Alain et al., 1998; Lewis et al., 2000; Voisin et al., 2006), thus playing a role in top-down modulation of auditory processing (Mitchell et al., 2005). There is evidence that tinnitus affects auditory selective attention (Andersson et al., 2000; Rossiter et al., 2006), with patients reporting concentration difficulties due to their tinnitus (Andersson et al., 1999; Heeren et al., 2014). This is consistent with a study suggesting that a failure of top-down inhibitory processes might play a causal role in tinnitus (Norena et al., 1999). Given that EEG alpha oscillations are linked to top-down processing (Min and Herrmann, 2007; Min and Park, 2010) and to inhibitory control of task-irrelevant processing (Klimesch et al., 2007; Min and Park, 2010), our findings provide interpretable neurophysiological correlates of tinnitus that are consistent with prior literature.

Thus, the present deep-learning method of EEG-based tinnitus diagnosis demonstrated the capability to extract and harness interpretable EEG features that generally correspond to known neurophysiological observations. The highest AUC in the alpha band (Figure 5) can be attributed to the difference in alpha activity between the healthy and tinnitus groups observed in the time-frequency analysis (Figure 3). These results were consistent irrespective of the type of experimental task (active or passive oddball). Taken together, these findings suggest that the EEGNet model learned tinnitus-related neurophysiological signatures, particularly those reflected in EEG alpha activity.

The proposed deep learning-based decoding approach for identifying tinnitus symptoms could become an effective technology for the diagnosis or prediction of tinnitus. EEGNet was trained on raw EEG data without prior knowledge of important features, which is an important advantage in practice. However, the present tinnitus-diagnostic approach can still be improved in subsequent studies. A critical limitation is the small sample size: a larger sample would have improved the statistical power of our study, but recruitment of healthy and patient participants was limited. Thus, although non-parametric statistical tests were used (e.g., Mann–Whitney U tests and Wilcoxon signed-rank tests), the limited statistical power should be carefully considered when interpreting our findings. Data-augmentation methods, such as generative adversarial networks (Haradal et al., 2018; Ramponi et al., 2018) or random transformations (e.g., rotation, jittering, scaling, or frequency warping; Freer and Yang, 2020), may alleviate the shortage of EEG data and provide training sets large enough for a deep-learning approach.
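Jittering and scaling are the simplest of the random transformations listed above. The sketch below assumes single-channel trials. Each trial is kept and joined by copies that are multiplied by a random factor and then perturbed with Gaussian noise, with labels duplicated to match. The noise level, scaling range, and function names are hypothetical. Augmentation should be applied only to the training subjects within each cross-validation fold, so that held-out subjects remain untouched.

```python
import random
from typing import List, Sequence, Tuple


def jitter(trial: Sequence[float], sigma: float, rng: random.Random) -> List[float]:
    """Add independent Gaussian noise with standard deviation sigma to every sample."""
    return [x + rng.gauss(0.0, sigma) for x in trial]


def scale(trial: Sequence[float], low: float, high: float, rng: random.Random) -> List[float]:
    """Multiply the whole trial by one random factor drawn from [low, high]."""
    factor = rng.uniform(low, high)
    return [x * factor for x in trial]


def augment(trials: Sequence[Sequence[float]], labels: Sequence[int], n_copies: int,
            sigma: float = 0.05, scale_range: Tuple[float, float] = (0.9, 1.1),
            seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Return the original trials plus n_copies scaled-and-jittered copies of each,
    with labels duplicated to match; a fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    low, high = scale_range
    out_trials: List[List[float]] = []
    out_labels: List[int] = []
    for trial, label in zip(trials, labels):
        out_trials.append(list(trial))
        out_labels.append(label)
        for _ in range(n_copies):
            out_trials.append(jitter(scale(trial, low, high, rng), sigma, rng))
            out_labels.append(label)
    return out_trials, out_labels
```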

Overall, our deep-learning approach offers several advantages over existing methods for tinnitus diagnosis. Our study shows that EEGNet can automatically identify robust task-relevant EEG features, which may facilitate the development of practical and ubiquitous EEG-based applications for disease diagnosis in cutting-edge clinical platforms. Looking ahead, as large amounts of data are progressively collected across heterogeneous tinnitus symptoms, deep-learning approaches such as the one presented here may prove effective in discovering further stable and generalizable features. Such features may capture the varying spectrum of tinnitus presentations, enabling accurate classification and stratification of patients.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The study was conducted in accordance with the ethical guidelines established by the Institutional Review Board of the Hallym University College of Medicine (IRB No. 2016-I013). The patients/participants provided their written informed consent to participate in this study.

B-KM conceptualized the study and designed the experimental paradigm to identify tinnitus symptoms. E-SH, H-SK, and B-KM performed the experiment and analyzed the data. E-SH, H-SK, SH, DP, and B-KM wrote the main manuscript text and reviewed the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the Institute of Brain and Cognitive Engineering at Korea University and the Convergent Technology R&D Program for Human Augmentation (grant number: 2020M3C1B8081319 to B-KM), which was funded by the Korean Government (MSICT) through the National Research Foundation of Korea.

We are thankful to Ji-Wan Kim and Jechoon Park for their kind assistance during the data acquisition and analysis.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2023.1126938/full#supplementary-material

Acharya, U. R., Oh, S. L., Hagiwara, Y., Tan, J. H., and Adeli, H. (2018). Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 100, 270–278. doi: 10.1016/j.compbiomed.2017.09.017

Ahn, M. H., Hong, S. K., and Min, B. K. (2017). The absence of resting-state high-gamma cross-frequency coupling in patients with tinnitus. Hear. Res. 356, 63–73. doi: 10.1016/j.heares.2017.10.008

Alain, C., Woods, D. L., and Knight, R. T. (1998). A distributed cortical network for auditory sensory memory in humans. Brain Res. 812, 23–37. doi: 10.1016/S0006-8993(98)00851-8

Allgaier, J., Neff, P., Schlee, W., Schoisswohl, S., and Pryss, R. (2021). “Deep learning end-to-end approach for the prediction of tinnitus based on EEG data,” in Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), (Piscataway, NJ: IEEE). doi: 10.1109/EMBC46164.2021.9629964

Andersson, G., Eriksson, J., Lundh, L. G., and Lyttkens, L. (2000). Tinnitus and cognitive interference: a stroop paradigm study. J. Speech Lang. Hear. Res. 43, 1168–1173. doi: 10.1044/jslhr.4305.1168

Andersson, G., Lyttkens, L., and Larsen, H. C. (1999). Distinguishing levels of tinnitus distress. Clin. Otolaryngol. Allied Sci. 24, 404–410. doi: 10.1046/j.1365-2273.1999.00278.x

Ang, K. K., Chin, Z. Y., Wang, C., Guan, C., and Zhang, H. (2012). Filter bank common spatial pattern algorithm on BCI competition IV datasets 2a and 2b. Front. Neurosci. 6:39. doi: 10.3389/fnins.2012.00039

Baguley, D., McFerran, D., and Hall, D. (2013). Tinnitus. Lancet 382, 1600–1607. doi: 10.1016/S0140-6736(13)60142-7

Basile, C.-É., Fournier, P., Hutchins, S., and Hébert, S. (2013). Psychoacoustic assessment to improve tinnitus diagnosis. PLoS One 8:e82995. doi: 10.1371/journal.pone.0082995

Borra, D., Fantozzi, S., and Magosso, E. (2021). A lightweight multi-scale convolutional neural network for P300 decoding: analysis of training strategies and uncovering of network decision. Front. Hum. Neurosci. 15:655840. doi: 10.3389/fnhum.2021.655840

Boureau, Y.-L., Bach, F., LeCun, Y., and Ponce, J. (2010). “Learning mid-level features for recognition,” in Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, (Piscataway, NJ: IEEE). doi: 10.1109/CVPR.2010.5539963

Cosmelli, D., López, V., Lachaux, J. P., López-Calderón, J., Renault, B., Martinerie, J., et al. (2011). Shifting visual attention away from fixation is specifically associated with alpha band activity over ipsilateral parietal regions. Psychophysiology 48, 312–322. doi: 10.1111/j.1469-8986.2010.01066.x

Cuny, C., Norena, A., El Massioui, F., and Chéry-Croze, S. (2004). Reduced attention shift in response to auditory changes in subjects with tinnitus. Audiol. Neurotol. 9, 294–302. doi: 10.1159/000080267

Deng, X., Zhang, B., Yu, N., Liu, K., and Sun, K. (2021). Advanced TSGL-EEGNet for motor imagery EEG-based brain-computer interfaces. IEEE Access 9, 25118–25130. doi: 10.1109/ACCESS.2021.3056088

Dinarès-Ferran, J., Ortner, R., Guger, C., and Solé-Casals, J. (2018). A new method to generate artificial frames using the empirical mode decomposition for an EEG-based motor imagery BCI. Front. Neurosci. 12:308. doi: 10.3389/fnins.2018.00308

Dockree, P. M., Kelly, S. P., Foxe, J. J., Reilly, R. B., and Robertson, I. H. (2007). Optimal sustained attention is linked to the spectral content of background EEG activity: greater ongoing tonic alpha (∼ 10 Hz) power supports successful phasic goal activation. Eur. J. Neurosci. 25, 900–907. doi: 10.1111/j.1460-9568.2007.05324.x

Donchin, E., and Coles, M. G. H. (1988). Is the P300 component a manifestation of context updating? Behav. Brain Sci. 11, 357–427. doi: 10.1017/S0140525X00058027

Duncan-Johnson, C. C., and Donchin, E. (1977). On quantifying surprise: the variation of event-related potentials with subjective probability. Psychophysiology 14, 456–467. doi: 10.1111/j.1469-8986.1977.tb01312.x

Eggermont, J. J., and Roberts, L. E. (2004). The neuroscience of tinnitus. Trends Neurosci. 27, 676–682. doi: 10.1016/j.tins.2004.08.010

Faber, M., Vanneste, S., Fregni, F., and De Ridder, D. (2012). Top down prefrontal affective modulation of tinnitus with multiple sessions of tDCS of dorsolateral prefrontal cortex. Brain Stimul. 5, 492–498. doi: 10.1016/j.brs.2011.09.003

Freer, D., and Yang, G.-Z. (2020). Data augmentation for self-paced motor imagery classification with C-LSTM. J. Neural Eng. 17:016041. doi: 10.1088/1741-2552/ab57c0

Fuster, J. M. (1989). The Prefrontal Cortex: Anatomy, Physiology, and Neuropsychology of the Frontal Lobe, 2nd Edn. New York, NY: Raven Press. doi: 10.1016/0896-6974(89)90035-2

Glorot, X., Bordes, A., and Bengio, Y. (2011). “Deep sparse rectifier neural networks,” in Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, (Fort Lauderdale, FL).

Groppe, D. M., Bickel, S., Keller, C. J., Jain, S. K., Hwang, S. T., Harden, C., et al. (2013). Dominant frequencies of resting human brain activity as measured by the electrocorticogram. Neuroimage 79, 223–233. doi: 10.1016/j.neuroimage.2013.04.044

Hall, D. A., Haider, H., Szczepek, A. J., Lau, P., Rabau, S., Jones-Diette, J., et al. (2016). Systematic review of outcome domains and instruments used in clinical trials of tinnitus treatments in adults. Trials 17, 1–19. doi: 10.1186/s13063-016-1399-9

Hallam, R. S., McKenna, L., and Shurlock, L. (2004). Tinnitus impairs cognitive efficiency. Int. J. Audiol. 43, 218–226. doi: 10.1080/14992020400050030

Haradal, S., Hayashi, H., and Uchida, S. (2018). “Biosignal data augmentation based on generative adversarial networks,” in Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), (Piscataway, NJ: IEEE). doi: 10.1109/EMBC.2018.8512396

Heeren, A., Maurage, P., Perrot, H., De Volder, A., Renier, L., Araneda, R., et al. (2014). Tinnitus specifically alters the top-down executive control sub-component of attention: evidence from the attention network task. Behav. Brain Res. 269, 147–154. doi: 10.1016/j.bbr.2014.04.043

Heinrich, H., Busch, K., Studer, P., Erbe, K., Moll, G. H., and Kratz, O. (2014). EEG spectral analysis of attention in ADHD: implications for neurofeedback training? Front. Hum. Neurosci. 8:611. doi: 10.3389/fnhum.2014.00611

Herrmann, C. S., Grigutsch, M., and Busch, N. A. (2005). “EEG oscillations and wavelet analysis,” in Event-Related Potentials: A Methods Handbook, ed. T. C. Handy (Cambridge, MA: The MIT Press).

Hong, S. K., Park, S., Ahn, M. H., and Min, B. K. (2016). Top-down and bottom-up neurodynamic evidence in patients with tinnitus. Hear. Res. 342, 86–100. doi: 10.1016/j.heares.2016.10.002

Ibarra-Zarate, D., and Alonso-Valerdi, L. M. (2020). Acoustic therapies for tinnitus: the basis and the electroencephalographic evaluation. Biomed. Signal Process. Control 59:101900. doi: 10.1016/j.bspc.2020.101900

Jastreboff, P. J., and Sasaki, C. T. (1994). An animal model of tinnitus: a decade of development. Am. J. Otol. 15, 19–27.

Jensen, O., and Mazaheri, A. (2010). Shaping functional architecture by oscillatory alpha activity: gating by inhibition. Front. Hum. Neurosci. 4:186. doi: 10.3389/fnhum.2010.00186

Johnson, R. (1988). The amplitude of the P300 component of the event-related potential: review and synthesis. Adv. Psychophysiol. 3, 69–137.

Johnston, K., Levin, H. M., Koval, M. J., and Everling, S. (2007). Top-down control-signal dynamics in anterior cingulate and prefrontal cortex neurons following task switching. Neuron 53, 453–462. doi: 10.1016/j.neuron.2006.12.023

Kaltenbach, J. A., and Afman, C. E. (2000). Hyperactivity in the dorsal cochlear nucleus after intense sound exposure and its resemblance to tone-evoked activity: a physiological model for tinnitus. Hear. Res. 140, 165–172. doi: 10.1016/S0378-5955(99)00197-5

Karamacoska, D., Barry, R. J., and Steiner, G. Z. (2019). Using principal components analysis to examine resting state EEG in relation to task performance. Psychophysiology 56:e13327. doi: 10.1111/psyp.13327

Katayama, J., and Polich, J. (1999). Auditory and visual P300 topography from a 3 stimulus paradigm. Clin. Neurophysiol. 110, 463–468. doi: 10.1016/S1388-2457(98)00035-2

Kaya, E. M., and Elhilali, M. (2014). Investigating bottom-up auditory attention. Front. Hum. Neurosci. 8:327. doi: 10.3389/fnhum.2014.00327

Kemp, D. T. (1978). Stimulated acoustic emissions from within human auditory-system. J. Acoust. Soc. Am. 64, 1386–1391. doi: 10.1121/1.382104

Klimesch, W., Sauseng, P., and Hanslmayr, S. (2007). EEG alpha oscillations: the inhibition-timing hypothesis. Brain Res. Rev. 53, 63–88. doi: 10.1016/j.brainresrev.2006.06.003

Kohavi, R. (1995). “A study of cross-validation and bootstrap for accuracy estimation and model selection,” in Proceedings of the IJCAI, (Montreal).

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

LeCun, Y., Boser, B., Denker, J. S., Henderson, D., Howard, R. E., Hubbard, W., et al. (1989). Backpropagation applied to handwritten zip code recognition. Neural Comput. 1, 541–551. doi: 10.1162/neco.1989.1.4.541

Lewis, J. W., Beauchamp, M. S., and DeYoe, E. A. (2000). A comparison of visual and auditory motion processing in human cerebral cortex. Cereb. Cortex 10, 873–888. doi: 10.1093/cercor/10.9.873

Li, P.-Z., Li, J.-H., and Wang, C.-D. (2016). “A SVM-based EEG signal analysis: an auxiliary therapy for tinnitus,” in Proceedings of the International Conference on Brain Inspired Cognitive Systems, (Berlin: Springer). doi: 10.1007/978-3-319-49685-6_19

Li, Z., Gu, R., Zeng, X., Zhong, W., Qi, M., and Cen, J. (2016). Attentional bias in patients with decompensated tinnitus: prima facie evidence from event-related potentials. Audiol. Neurotol. 21, 38–44. doi: 10.1159/000441709

Liu, Z., Li, Y., Yao, L., Lucas, M., Monaghan, J. J., and Zhang, Y. (2022). Side-aware meta-learning for cross-dataset listener diagnosis with subjective tinnitus. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 2352–2361. doi: 10.1109/TNSRE.2022.3201158

Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., et al. (2018). A review of classification algorithms for EEG-based brain–computer interfaces: a 10 year update. J. Neural Eng. 15:031005. doi: 10.1088/1741-2552/aab2f2

Makeig, S., Jung, T. P., Bell, A. J., Ghahremani, D., and Sejnowski, T. J. (1997). Blind separation of auditory event-related brain responses into independent components. Proc. Natl. Acad. Sci. U S A. 94, 10979–10984. doi: 10.1073/pnas.94.20.10979

Manyakov, N. V., Chumerin, N., Combaz, A., and Van Hulle, M. M. (2011). Comparison of classification methods for P300 brain-computer interface on disabled subjects. Comput. Intell. Neurosci. 2011:519868. doi: 10.1155/2011/519868

McNamee, D., Liljeholm, M., Zika, O., and O’Doherty, J. P. (2015). Characterizing the associative content of brain structures involved in habitual and goal-directed actions in humans: a multivariate fMRI Study. J. Neurosci. 35, 3764–3771. doi: 10.1523/JNEUROSCI.4677-14.2015

Miller, B. L., and Cummings, J. L. (2007). The Human Frontal Lobes: Functions and Disorders, 2nd Edn. New York, NY: Guilford Press.

Mills, D. M., and Rubel, E. W. (1994). Variation of distortion-product otoacoustic emissions with furosemide injection. Hear. Res. 77, 183–199. doi: 10.1016/0378-5955(94)90266-6

Milner, R., Lewandowska, M., Ganc, M., Nikadon, J., Niedziałek, I., Jędrzejczak, W. W., et al. (2020). Electrophysiological correlates of focused attention on low- and high-distressed tinnitus. PLoS One 15:e0236521. doi: 10.1371/journal.pone.0236521

Min, B.-K., and Herrmann, C. S. (2007). Prestimulus EEG alpha activity reflects prestimulus top-down processing. Neurosci. Lett. 422, 131–135. doi: 10.1016/j.neulet.2007.06.013

Min, B. K., Marzelli, M. J., and Yoo, S. S. (2010). Neuroimaging-based approaches in the brain-computer interface. Trends Biotechnol. 28, 552–560. doi: 10.1016/j.tibtech.2010.08.002

Min, B. K., and Park, H. J. (2010). Task-related modulation of anterior theta and posterior alpha EEG reflects top-down preparation. BMC Neurosci. 11:79. doi: 10.1186/1471-2202-11-79

Min, B.-K., Park, J. Y., Kim, E. J., Kim, J. I., Kim, J.-J., and Park, H.-J. (2008). Prestimulus EEG alpha activity reflects temporal expectancy. Neurosci. Lett. 438, 270–274. doi: 10.1016/j.neulet.2008.04.067

Mitchell, T. V., Morey, R. A., Inan, S., and Belger, A. (2005). Functional magnetic resonance imaging measure of automatic and controlled auditory processing. Neuroreport 16, 457–461. doi: 10.1097/00001756-200504040-00008

Mohagheghian, F., Makkiabadi, B., Jalilvand, H., Khajehpoor, H., Samadzadehaghdam, N., Eqlimi, E., et al. (2019). Computer-aided tinnitus detection based on brain network analysis of EEG functional connectivity. J. Biomed. Phys. Eng. 9:687. doi: 10.31661/JBPE.V0I0.937

Mohamad, N., Hoare, D. J., and Hall, D. A. (2016). The consequences of tinnitus and tinnitus severity on cognition: a review of the behavioural evidence. Hear. Res. 332, 199–209. doi: 10.1016/j.heares.2015.10.001

Moller, A. R., Jannetta, P., Bennett, M., and Moller, M. B. (1981). Intracranially recorded responses from the human auditory nerve: new insights into the origin of brain stem evoked potentials (BSEPs). Electroencephalogr. Clin. Neurophysiol. 52, 18–27. doi: 10.1016/0013-4694(81)90184-X

Moller, A. R., and Jannetta, P. J. (1982). Evoked potentials from the inferior colliculus in man. Electroencephalogr. Clin. Neurophysiol. 53, 612–620. doi: 10.1016/0013-4694(82)90137-7

Muhlau, M., Rauschecker, J. P., Oestreicher, E., Gaser, C., Rottinger, M., Wohlschlager, A. M., et al. (2006). Structural brain changes in tinnitus. Cereb. Cortex 16, 1283–1288. doi: 10.1093/cercor/bhj070

Muhlnickel, W., Elbert, T., Taub, E., and Flor, H. (1998). Reorganization of auditory cortex in tinnitus. Proc. Natl. Acad. Sci. U S A. 95, 10340–10343. doi: 10.1073/pnas.95.17.10340

Näätänen, R., and Kreegipuu, K. (2012). “The mismatch negativity (MMN),” in The Oxford handbook of event-related potential components, eds S. J. Luck and E. S. Kappenman (New York, NY: Oxford University Press), 143–157.

Newman, C. W., Jacobson, G. P., and Spitzer, J. B. (1996). Development of the tinnitus handicap inventory. Arch. Otolaryngol. Head Neck Surg. 122, 143–148. doi: 10.1001/archotol.1996.01890140029007

Norena, A., Cransac, H., and Chery-Croze, S. (1999). Towards an objectification by classification of tinnitus. Clin. Neurophysiol. 110, 666–675. doi: 10.1016/S1388-2457(98)00034-0

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830.

Picton, T. W. (1992). The P300 wave of the human event-related potential. J. Clin. Neurophysiol. 9, 456–479. doi: 10.1097/00004691-199210000-00002

Polich, J. (1989). Habituation of P300 from auditory stimuli. Psychobiology 17, 19–28. doi: 10.3758/BF03337813

Polich, J. (1993). Cognitive brain potentials. Curr. Dir. Psychol. Sci. 2, 175–179. doi: 10.1111/1467-8721.ep10769728

Polich, J. (2003). “Theoretical overview of P3a and P3b,” in Detection of Change, ed. J. Polich (Berlin: Springer). doi: 10.1007/978-1-4615-0294-4_5

Polich, J. (2007). Updating P300: an integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148. doi: 10.1016/j.clinph.2007.04.019

Ramponi, G., Protopapas, P., Brambilla, M., and Janssen, R. (2018). T-CGAN: conditional generative adversarial network for data augmentation in noisy time series with irregular sampling. arXiv [Preprint]

Riyad, M., Khalil, M., and Adib, A. (2021). MI-EEGNET: A novel convolutional neural network for motor imagery classification. J. Neurosci. Methods 353:109037. doi: 10.1016/j.jneumeth.2020.109037

Roberts, L. E., Husain, F. T., and Eggermont, J. J. (2013). Role of attention in the generation and modulation of tinnitus. Neurosci. Biobehav. Rev. 37, 1754–1773. doi: 10.1016/j.neubiorev.2013.07.007

Rossiter, S., Stevens, C., and Walker, G. (2006). Tinnitus and its effect on working memory and attention. J. Speech Lang. Hear. Res. 49, 150–160. doi: 10.1044/1092-4388(2006/012)

Roy, Y., Banville, H., Albuquerque, I., Gramfort, A., Falk, T. H., and Faubert, J. (2019). Deep learning-based electroencephalography analysis: a systematic review. J. Neural Eng. 16:051001. doi: 10.1088/1741-2552/ab260c

Salvi, R. J., Wang, J., and Ding, D. (2000). Auditory plasticity and hyperactivity following cochlear damage. Hear. Res. 147, 261–274. doi: 10.1016/S0378-5955(00)00136-2

Sarter, M., Givens, B., and Bruno, J. P. (2001). The cognitive neuroscience of sustained attention: where top-down meets bottom-up. Brain Res. Rev. 35, 146–160. doi: 10.1016/S0165-0173(01)00044-3

Sauseng, P., Klimesch, W., Doppelmayr, M., Pecherstorfer, T., Freunberger, R., and Hanslmayr, S. (2005). EEG alpha synchronization and functional coupling during top-down processing in a working memory task. Hum. Brain Mapp. 26, 148–155. doi: 10.1002/hbm.20150

Sauseng, P., Klimesch, W., Gruber, W. R., and Birbaumer, N. (2008). Cross-frequency phase synchronization: a brain mechanism of memory matching and attention. Neuroimage 40, 308–317. doi: 10.1016/j.neuroimage.2007.11.032

Schaette, R., and McAlpine, D. (2011). Tinnitus with a normal audiogram: physiological evidence for hidden hearing loss and computational model. J. Neurosci. 31, 13452–13457. doi: 10.1523/JNEUROSCI.2156-11.2011

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Schlee, W., Mueller, N., Hartmann, T., Keil, J., Lorenz, I., and Weisz, N. (2009). Mapping cortical hubs in tinnitus. BMC Biol. 7:80. doi: 10.1186/1741-7007-7-80

Scholkopf, B., Sung, K.-K., Burges, C. J., Girosi, F., Niyogi, P., Poggio, T., et al. (1997). Comparing support vector machines with Gaussian kernels to radial basis function classifiers. IEEE Trans. Signal Process. 45, 2758–2765. doi: 10.1109/78.650102

Silton, R. L., Heller, W., Towers, D. N., Engels, A. S., Spielberg, J. M., Edgar, J. C., et al. (2010). The time course of activity in dorsolateral prefrontal cortex and anterior cingulate cortex during top-down attentional control. Neuroimage 50, 1292–1302. doi: 10.1016/j.neuroimage.2009.12.061

Song, X., Yan, D., Zhao, L., and Yang, L. (2022). LSDD-EEGNet: An efficient end-to-end framework for EEG-based depression detection. Biomed. Signal Process. Control 75:103612. doi: 10.1016/j.bspc.2022.103612

Sun, Z.-R., Cai, Y.-X., Wang, S.-J., Wang, C.-D., Zheng, Y.-Q., Chen, Y.-H., et al. (2019). Multi-view intact space learning for tinnitus classification in resting state EEG. Neural Process. Lett. 49, 611–624. doi: 10.1007/s11063-018-9845-1

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., and Leahy, R. M. (2011). Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011:879716. doi: 10.1155/2011/879716

Tang, D., Li, H., and Chen, L. (2019). “Advances in understanding, diagnosis, and treatment of tinnitus,” in Hearing Loss: Mechanisms, Prevention and Cure, eds H. Li and R. Chai (Berlin: Springer). doi: 10.1007/978-981-13-6123-4_7

Vanneste, S., Plazier, M., van der Loo, E., Van de Heyning, P., Congedo, M., and De Ridder, D. (2010). The neural correlates of tinnitus-related distress. Neuroimage 52, 470–480. doi: 10.1016/j.neuroimage.2010.04.029

Verleger, R., and Berg, P. (1991). The waltzing oddball. Psychophysiology 28, 468–477. doi: 10.1111/j.1469-8986.1991.tb00733.x

Voisin, J., Bidet-Caulet, A., Bertrand, O., and Fonlupt, P. (2006). Listening in silence activates auditory areas: a functional magnetic resonance imaging study. J. Neurosci. 26, 273–278. doi: 10.1523/JNEUROSCI.2967-05.2006

Wang, S.-J., Cai, Y.-X., Sun, Z.-R., Wang, C.-D., and Zheng, Y.-Q. (2017). “Tinnitus EEG classification based on multi-frequency bands,” in Proceedings of the Neural Information Processing, eds D. Liu, S. Xie, Y. Li, D. Zhao, and E.-S. M. El-Alfy (Berlin: Springer International Publishing). doi: 10.1007/978-3-319-70093-9_84

Winkler, I., Haufe, S., and Tangermann, M. (2011). Automatic classification of artifactual ICA-components for artifact removal in EEG signals. Behav. Brain Funct. 7, 1–15. doi: 10.1186/1744-9081-7-30

Yang, Q., Yang, M., Liu, K., and Deng, X. (2022). “Enhancing EEG motor imagery decoding performance via deep temporal-domain information extraction,” in Proceedings of the 2022 IEEE 11th Data Driven Control and Learning Systems Conference (DDCLS), (Piscataway, NJ: IEEE). doi: 10.1109/DDCLS55054.2022.9858575

Zancanaro, A., Cisotto, G., Paulo, J. R., Pires, G., and Nunes, U. J. (2021). “CNN-based approaches for cross-subject classification in motor imagery: from the state-of-the-art to DynamicNet,” in Proceedings of the 2021 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), (Piscataway, NJ: IEEE). doi: 10.1109/CIBCB49929.2021.9562821

Zhang, Z., Duan, F., Solé-Casals, J., Dinarès-Ferran, J., Cichocki, A., Yang, Z., et al. (2019). A novel deep learning approach with data augmentation to classify motor imagery signals. IEEE Access 7, 15945–15954. doi: 10.1109/ACCESS.2019.2895133

Zhu, Y., Li, Y., Lu, J., and Li, P. (2021). EEGNet with ensemble learning to improve the cross-session classification of SSVEP based BCI from ear-EEG. IEEE Access 9, 15295–15303. doi: 10.1109/ACCESS.2021.3052656

Keywords: tinnitus, electroencephalography, diagnosis, classification, deep learning

Citation: Hong E-S, Kim H-S, Hong SK, Pantazis D and Min B-K (2023) Deep learning-based electroencephalic diagnosis of tinnitus symptom. Front. Hum. Neurosci. 17:1126938. doi: 10.3389/fnhum.2023.1126938

Received: 18 December 2022; Accepted: 11 April 2023;

Published: 26 April 2023.

Edited by: Gernot R. Müller-Putz, Graz University of Technology, Austria

Reviewed by: Gan Huang, Shenzhen University, China

Copyright © 2023 Hong, Kim, Hong, Pantazis and Min. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Byoung-Kyong Min, min_bk@korea.ac.kr

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
