- <sup>1</sup>Biocomplexity Institute, University of Virginia, Charlottesville, VA, United States

- <sup>2</sup>School of Data Science, University of Virginia, Charlottesville, VA, United States

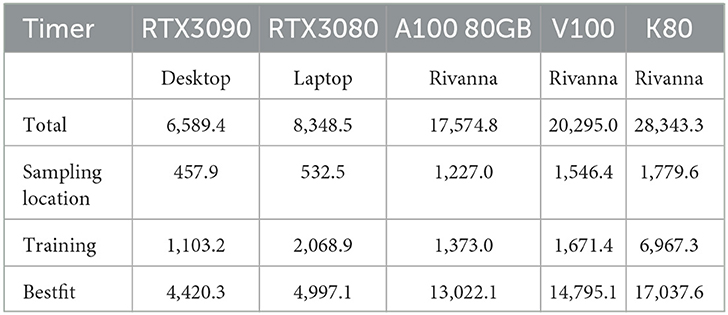

MLCommons is an effort to develop and improve the artificial intelligence (AI) ecosystem through benchmarks, public data sets, and research. It consists of members from start-ups, leading companies, academics, and non-profits from around the world. The goal is to make machine learning better for everyone. In order to increase participation by others, educational institutions provide valuable opportunities for engagement. In this article, we identify numerous insights obtained from different viewpoints as part of efforts to utilize high-performance computing (HPC) big data systems in existing education while developing and conducting science benchmarks for earthquake prediction. As this activity was conducted across multiple educational efforts, we project if and how it is possible to make such efforts available on a wider scale. This includes the integration of sophisticated benchmarks into courses and research activities at universities, exposing the students and researchers to topics that are otherwise typically not sufficiently covered in current course curricula, as we witnessed from our practical experience across multiple organizations. As such, we have outlined the many lessons we learned throughout these efforts, culminating in the need for benchmark carpentry for scientists using advanced computational resources. The article also presents the analysis of an earthquake prediction code benchmark while focusing on the accuracy of the results and not only on the runtime; notably, this benchmark was created as a result of our lessons learned. Energy traces were produced throughout these benchmarks, which are vital to analyzing the power expenditure within HPC environments. Additionally, one of the insights is that in the short time of the project with limited student availability, the activity was only possible by utilizing a benchmark runtime pipeline while developing and using software to generate jobs from the permutation of hyperparameters automatically. It integrates a templated job management framework for executing tasks and experiments based on hyperparameters while leveraging hybrid compute resources available at different institutions. The software is part of a collection called cloudmesh with its newly developed components, cloudmesh-ee (experiment executor) and cloudmesh-cc (compute coordinator).

1. Introduction

As today's academic institutions provide machine learning (ML), deep learning (DL), and high-performance computing (HPC) educational efforts, we attempt to identify if it is possible to leverage existing large-scale efforts from the MLCommons community (Thiyagalingam et al., 2022; MLCommons, 2023). We focus solely on challenges and opportunities cast by the MLCommons efforts to achieve this goal.

To provide a manageable entry point into answering this question, we summarize numerous insights that we obtained while improving and conducting earthquake benchmarks within the MLCommons™ Science Working Group and porting them to HPC big data systems. This includes insights into the usability and capability of HPC big data systems, the usage of the MLCommons benchmarking science applications (Thiyagalingam et al., 2022), and insights from improving their applicability in educational efforts.

Benchmarking is an important effort in exploring and using HPC big data systems. While using benchmarks, we can compare the performance of various systems. We can also evaluate the system's overall performance and identify potential areas for improvements and optimizations either on the system side or the algorithmic methods and their impact on the performance. Furthermore, benchmarking is ideal for enhancing the reproducibility of an experiment, where other researchers can replicate the performance and find enhancements to accuracy, modeling time, or other measurements.

For traditional HPC systems, pure computational power is measured, as exemplified by the TOP500 (Dongarra et al., 1997; Top500, 2023). However, it is also important to incorporate more sophisticated benchmarks that integrate different aspects of an application, such as file system performance, which can considerably impact the computation time. This is especially the case when fast GPUs are used that need to be fed with data at an adequate rate to perform well. If file systems are too slow, then the expensive specialized GPUs cannot be adequately utilized.
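To illustrate why file system performance belongs in a benchmark, the following minimal sketch measures sequential write and read throughput in a given directory. It is an assumption-laden illustration and not part of the benchmarks reported here: the function name `measure_io_throughput` and its parameters are hypothetical, and the read figure may be inflated by operating system caching.

```python
import os
import tempfile
import time


def measure_io_throughput(directory, size_mb=16, block_kb=1024):
    """Write and read back a temporary file; return (write_MBps, read_MBps)."""
    block = os.urandom(block_kb * 1024)
    n_blocks = max(1, (size_mb * 1024) // block_kb)
    total_mb = n_blocks * block_kb / 1024
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(n_blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to storage
        write_s = time.perf_counter() - start

        start = time.perf_counter()
        with open(path, "rb") as f:
            while f.read(block_kb * 1024):
                pass  # data may come from the page cache, not the device
        read_s = time.perf_counter() - start
    finally:
        os.remove(path)
    return total_mb / write_s, total_mb / read_s


write_mbps, read_mbps = measure_io_throughput(tempfile.gettempdir(), size_mb=4)
print(f"write: {write_mbps:.1f} MB/s, read: {read_mbps:.1f} MB/s")
```

Running the same function against a network file system path and a node-local path gives a first impression of how strongly data placement can affect a data-hungry GPU job.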

Benchmarks also offer a common way to communicate results to users so that expectations of what is possible are disseminated within the computing and educational communities. Students often have an easier time reproducing a benchmark and assessing the impact of modified parameters as part of exploring the behavior of an algorithm. This is especially the case in DL, where a variety of hyperparameters are typically modified to find the most accurate solution.

Such parameters should include not only those related to the algorithm itself but also system parameters, such as those impacting data access performance or even energy consumption.
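The combination of algorithm and system parameters can be sketched as a simple grid. The parameter names and values below are hypothetical and chosen only for illustration; they are not the settings used in the earthquake benchmark.

```python
from itertools import product

# Hypothetical search space: names and values are illustrative assumptions.
search_space = {
    "epochs": [2, 10, 30],                   # algorithm parameter
    "learning_rate": [1e-3, 1e-4],           # algorithm parameter
    "data_location": ["nfs", "local_nvme"],  # system parameter (data access)
    "gpu": ["a100", "v100"],                 # system parameter (hardware)
}


def expand(space):
    """Return one dict per combination of parameter values."""
    names = list(space)
    return [dict(zip(names, values)) for values in product(*space.values())]


experiments = expand(search_space)
print(len(experiments))  # 3 * 2 * 2 * 2 = 24 combinations
```

Each dictionary describes one experiment. The number of experiments grows multiplicatively with every added parameter, which is why automated job generation quickly becomes necessary.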

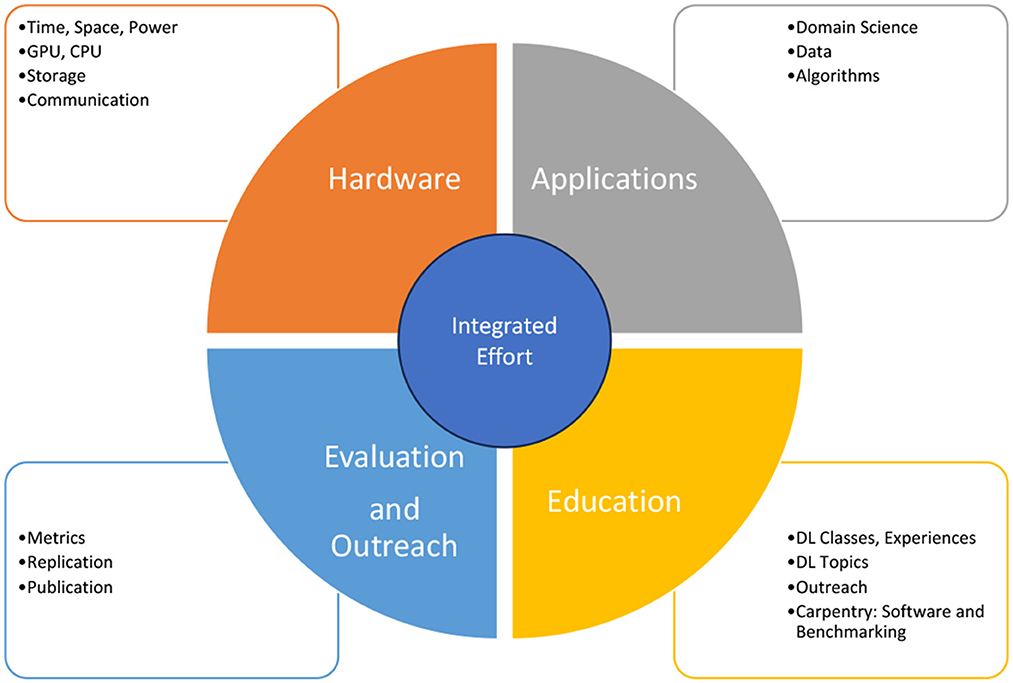

Within this article, we identify opportunities in several areas, as depicted in Figure 1, to enhance the MLCommons efforts we have been involved in as part of the MLCommons Science Working Group. This includes areas in hardware, applications, education, evaluation, and outreach. These areas intersect heavily with each other to create an integrated holistic benchmark effort for DL.

Figure 1. Overview of aspects of opportunities for an integrated educational effort for MLCommons while using applications from the Science Working Group. GPU, graphics processing unit; CPU, central processing unit; DL, deep learning.

Hence, we not only try to identify pathways and exemplars of how expertise from MLCommons can enhance educational efforts, but also consider the unique opportunities and limitations that apply to its use in educational settings.

In general, we look at opportunities and challenges regarding

• insights from MLCommons toward education and

• insights from education toward MLCommons.

The article is structured as follows: First, we provide an introduction to MLCommons (Section 1.1). Next, we provide some insights about ML in educational settings and the generalization of ML to other efforts (Section 2.1). We then specifically analyze which insights we gained from practically using MLCommons in educational efforts (Section 2.2). After this, we focus on the earthquake forecasting application, describe it (Section 3), and specifically identify our insights in the data management for this application (Section 3). As the application used is time-consuming and is impacted by policy limitations of the educational HPC data system, a special workflow framework has been designed to coordinate the many tasks needed to conduct a comprehensive analysis (Section 3). This includes the creation of an enhanced templated batch queue mechanism that bypasses the policy limitations but makes the management of the many jobs simple through convenient parameter management (Section 3). In addition, we developed a graphical compute coordinator that enables us to visualize the execution of the jobs in a generalized simple workflow system (Section 3). To showcase the performance (Section 4.2) of the earthquake forecasting application, we present data for the runtime (Section 4.2.2) and for the energy (Section 4.2.6). We complete the article with a brief discussion of our results (Section 5).

1.1. MLCommons

MLCommons is a non-profit organization with the goal of accelerating ML innovation to benefit everyone with the help of over 70 members from industry, academia, and government (MLCommons, 2023). Its main focus is developing standardized benchmarks for measuring the performance of systems using ML while applying them to various applications.

This includes, but is not limited to, application areas from healthcare, automotive, image analysis, and natural language processing (NLP). MLCommons is concerned with benchmarking training (Mattson et al., 2019) and validation algorithms to measure progress over time. Through this goal, MLCommons investigates ML efforts in the areas of benchmarking, data sets in support of benchmarking, and best practices that leverage ML.

MLCommons is organized into several working groups that address topics such as benchmarking related to training, training on HPC resources, and inference conducted on data centers, edge devices, mobile devices, and embedded systems. Best practices are explored in the areas of infrastructure and power. In addition, MLCommons also operates working groups in the areas of Algorithms, DataPerf, Dynabench, Medical, Science, and Storage.

The Science Working Group is concerned with improving the science beyond just a static benchmark (Thiyagalingam et al., 2022). The work reported here has been conducted as part of the MLCommons Science Working Group goals.

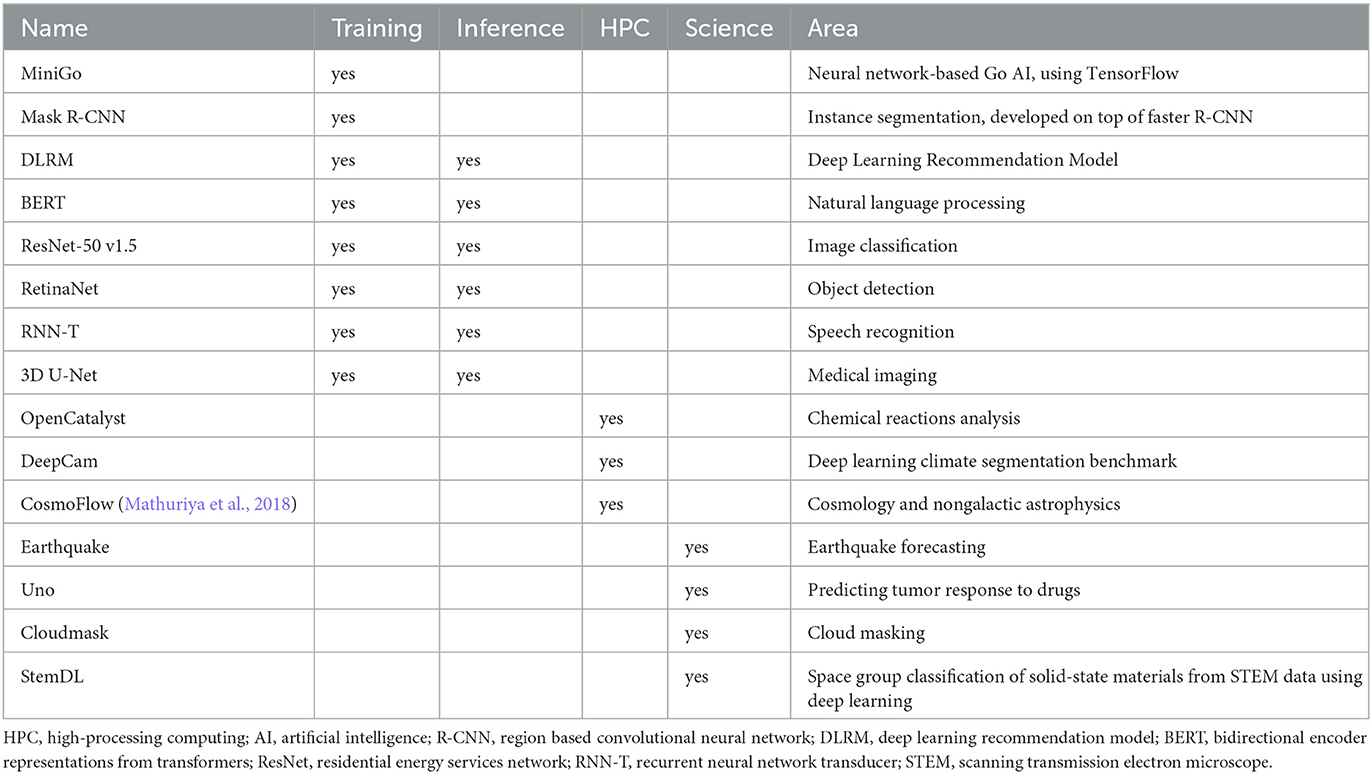

A list of selected benchmarks for the working groups focusing on inference, training, and science is shown in Table 1.

Due to the strong affiliation with industry as well as the integration of national labs and academic HPC centers, MLCommons provides a well-positioned starting point for academic participation. Over the years, we have participated significantly in MLCommons's efforts and integrated aspects of MLCommons into our educational activities. Hence, since its inception, we have leveraged the MLCommons activities and obtained a number of important educational insights that we discuss in this article.

Summary section 1.1:

• Challenges: The rigor of applying benchmarks requires special attention to reproducible experiments. Educational resources may be limited and a benchmark of a full HPC system may not be possible within an educational computing center while not interrupting other shared usage.

• Opportunities: MLCommons provides a rich set of benchmarks in a variety of areas that comprehensively encompass many aspects of DL applications that are of interest for educational efforts.

2. Insights for educational activities

Next, we discuss our insights while focusing on educational activities. This includes general observations about machine learning methods, libraries, tools and software carpentry, benchmark carpentry, and infrastructure. We then discuss in specific terms how MLCommons-related topics shape our insights. This includes insights of MLCommons while using it in educational settings leading to the potential to create a course curriculum. We then focus on the earthquake application while presenting lessons learned while improving such a large application as part of the code development, data management, and workflow to conduct extensive hyperparameter-based experiments. This leads us to develop tools that simplify monitoring (time and energy), as well as tools to manage jobs and computations while taking into account policy limitations at the HPC center.

2.1. Insights of ML in education

Before starting with the insights from MLCommons on our efforts, we will first consider some of our experience regarding topics taught in educational activities for ML in general. We distinguish ML methods, applications that use or can use ML, the libraries used to apply these methods for applications, software development tools, and finally the infrastructure that is needed to execute them. Understanding these aspects will allow other ML endeavors to benefit from the time-saving, state-of-the-art solutions we have identified, so that more time can be devoted to applying ML to real-world problems.

The aim is not to inundate students with all possible facets of machine learning but rather to guide students toward the completion of an interesting, memorable, applicable, real-world project that the student can take and apply to other projects. This necessitates finding a balance between automating the developer setup (providing a ready-to-go environment) and leaving such setup to the student, which can impart knowledge through self-learning. MLCommons provides an ideal starting point for a learning experience as it introduces the student to benchmarking, which is used within the earthquake application discussed later.

2.1.1. ML methods

We list some topics associated with traditional methods in machine learning (ML) and artificial intelligence (AI) that are frequently taught in classes. This includes clustering (exemplified via k-means), image classification, sentiment analysis, time-series prediction, surrogates (a new topic often not taught), and neural networks (with various standard architectures such as convolutional neural networks [CNNs], recurrent neural networks [RNNs], and artificial neural networks [ANNs]). More traditional methods also include modeling techniques such as random forests, decision trees, K-nearest neighbor, support vector machines, and genetic algorithms. These methods are frequently collected into three distinct algorithmic groups: supervised learning, unsupervised learning, and reinforcement learning.
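As an example of how compact such a classroom method can be, the following sketch implements k-means clustering (Lloyd's algorithm) for two-dimensional points using only the Python standard library. It is an illustrative assumption of what an introductory exercise might look like; in practice, courses typically rely on library implementations such as the one in scikit-learn.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's algorithm on a list of (x, y) tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k distinct points as initial centroids
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(
                range(k),
                key=lambda c: (p[0] - centroids[c][0]) ** 2
                + (p[1] - centroids[c][1]) ** 2,
            )
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
    return centroids, labels


# Two well-separated groups of points (made-up data for illustration).
points = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The first three points end up in one cluster and the last three in the other. Which cluster receives the label 0 depends on the random initialization.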

From this small list, we can already see that a comprehensive course curriculum needs to be carefully developed: it is arduous to cover the required topics with sufficient depth in a one-semester course, so the curriculum needs to span the duration of a student's studies in AI.

2.1.2. Libraries

There are several diverse libraries and tools that exist to support the development of AI and ML products. As an example, we list a subset of frequently used software libraries and tools that enable the ML engineer and student to write their applications.

First, we note that at the university level, the predominant programming language used for machine learning and data science is Python. This is evident from the success and popularity of sophisticated libraries such as scikit-learn, PyTorch, and TensorFlow. In recent years, we have seen a trend that PyTorch has become more popular at the university level than TensorFlow. Although the learning curve of these tools is significant, they provide invaluable opportunities while applying them to several different applications. As a result, we integrate these tools into our benchmarks and multi-use toolkit, cloudmesh.

In contrast, other specialized classes that focus on the development of faster, graphics processing unit (GPU)-based methods typically use C++ code leveraging the vendor's specialized libraries, such as NVIDIA CUDA, to interface with the GPUs.

2.1.3. Tools and software carpentry

Unfortunately, today's students are not sufficiently exposed to software carpentry at the beginning of their studies, as we found while working with four different student groups from three different universities, despite the university curriculum consisting of Python and AI classes.

To efficiently use the libraries and methods, as well as the infrastructure used to execute software on shared HPC computers, students need a basic understanding of software engineering tools such as a text editor and code management system. A subset of this is often referred to as software carpentry (Wilson et al., 2014). Topics of immediate importance include the ability to (1) obtain a moderate grasp of terminal use with Unix commands, (2) leverage the features of a professional integrated development environment (IDE), (3) be familiar with a code management system and version control, (4) ensure the availability of the code as open source, (5) understand how to collaborate with others, and (6) utilize queuing systems as used within shared resources managed in HPC centers.

It is vital to instill these industry-standard practices within apprentices new to AI utilization of HPC systems, beyond just the simplest example, to efficiently use the resources and plan benchmark experiments. These skills are key to evolving a beginner's research and class experience toward intermediate and advanced knowledge usable in the industry so they can further contribute to altruistic AI applications and the dissemination of work within academia. Moreover, these students will bring valuable and lucrative skill sets with them to their future professional careers.

Although many centers offer Jupyter as an interactive use of the HPC resources, such notebooks are often designed to be simple one-off experiments, not allowing for encapsulation or expansion into other code. Furthermore, the queuing system time limitations within HPC environments hinder the reproducibility of experiments, as the time requirements may only allow one experiment, as we have experienced with our application.

Pertaining to educational insights, we observed that most students own Microsoft Windows-based desktops and have never come in contact with a terminal using command-line tools. This is backed up by the fact that Microsoft's Windows 10 possesses 68.75% of the operating system (OS) market as of 2023 (Norem, 2023). Hence, the students often cannot navigate a Unix HPC environment, where ML is commonly conducted on a shared resource. This also exacerbates students' manual code expenditure, as Unix commands such as grep, find, and make are typically not known, and automation of the programs building the workflow to execute a benchmark experiment efficiently is limited.

However, as part of our efforts, we found an easy way to not only teach students these concepts but also access HPC machines via a terminal straight from the laptop or desktop. While built-in terminals and shells can be used on macOS and Linux, the ones on Windows are not Unix-like. Nevertheless, the use of the open-source, downloadable Git Bash on Windows systems provides a Unix-like environment. We also leverage Chocolatey, a package manager that mimics the Unix package tools. Alternatively, Windows Subsystem for Linux achieves the same result while directly being able to run Linux in a virtual machine on the computers. However, for students with older or resource-limited machines, the latter may not be an option. To efficiently use the terminal, the elementary use of commands needs to be taught, including the use of a simple command line editor. While leveraging bash on the command line, it becomes easy to develop tutorials and scripts that allow the formulation of simple shell scripts to access the HPC queuing system.

Furthermore, sophisticated programming tools that readily exist in cross-OS portable fashion on the laptop/desktop can be used to develop or improve the code quality of the software. This includes the availability of IDEs (such as PyCharm and VSCode) with advanced features such as syntax highlighting, code inspection, and refactoring. As part of this, applying uniform formatting such as promoted by PEP 8 (www, 2023) increases code readability and uniformity, thereby effortlessly improving collaboration on code by various team members.

Although such IDEs can become quite complex with the evolution of their corresponding toolchains (Fincher and Robins, 2019), in our case, we can restrict their use toward code development and management. As such, habits are immediately introduced that improve the code quality. Furthermore, these tools allow collaborative code development through group editing and group version control management. Together, they help students write correct code that meets industry standards and practices (Tan et al., 2023).

From our experience, this knowledge saves significant effort in time-intensive programs such as Research Experiences for Undergraduates, which typically only last one semester and require the completion of a student project. As part of this, we observed that integrating software carpentry together with IDEs benefits novice students as they are more likely to contribute to existing research activities related to scientific ML applications. Such sophisticated IDEs are offered as free community editions or are available in their professional version for free to students and open-source projects. Such IDEs also provide the ability to easily write markdown text and render the output while writing. This is very useful for writing documentation. Documentation is a necessity in ML research experiences as a lack thereof creates a barrier to entry (Königstorfer and Thalmann, 2022).

As previously mentioned, these tools have most recently also allowed writing code remotely, as well as in online group sessions fostering collaboration. Hence, peer programming has become a reality, even if the students work remotely with each other. This is further illustrated by free online IDEs such as Replit, where students can edit the same file simultaneously (Kovtaniuk, 2022). However, such features have now become an integral part of modern IDEs such as PyCharm and VSCode, so the use of external tools is unnecessary. Due to this, we noticed an uptake among students in using the remote editing capabilities of more advanced editors such as PyCharm and VSCode; given their superiority while developing code, a command-line editor on the HPC terminal was often avoided. However, this comes with an increased load on the login nodes, which is outweighed by the developers' convenience and code quality while using such advanced editors. HPC centers are advised to increase their capabilities significantly to support such tools while increasing the resources available to their customers for using them.

Finally, the common choice for collaborative code management is Git, with successful social coding platforms such as GitHub and GitLab. These code management systems are key for teams to share their developed code and enable collaborative code management. However, they require a significant learning curve. An important aspect is that the code management systems are typically hosted in the open, and the code is available for improvement at any time. We found that students who adopt the open-source philosophy perform considerably better than those who may store their code in a private repository. The openness fosters two aspects:

• First, the code quality improves as the students put more effort into the work due to its openness to the community. This allows students to share their code, improve other code, and gain networking opportunities. Also, perhaps most importantly, this allows scientists to replicate their experiments to ensure similar results and validity.

• Second, collaboration can include research experts from the original authors and researchers that would otherwise not be available at the university. Hence, the overall quality of the research experience for the student increases as the overall potential for success is implicitly accessible to the student.

An additional tool is JupyterLab, created by Project Jupyter. It provides a web browser interface for interactive Python notebooks (with file extension ipynb). The strength here is a rich external ecosystem that allows us to interactively run programs while integrating analysis components to utilize data frames and visualization to conduct data exploration. For example, this is possible by using web browser interfaces to either the HPC-hosted Jupyter notebook editor or Google Colab. The unfortunate disadvantage of using notebooks is that, while the segmentation of code into cells can provide debugging convenience, this format may break proper software engineering practices such as defining and using functions, classes, and self-defined Python libraries that lead to more sustainable and easier-to-manage code. An upside to Jupyter notebooks is that they possess an integrated markdown engine that can provide sophisticated documentation built in; we have also identified that students without access to capable local machines can leverage Google Colab, which is a free platform for using Jupyter notebooks. Jupyter notebooks accessing HPC queues are currently often made available through web-based access as part of on-demand interfaces to the HPC computing resource (uva, 2023).

Regrettably, live collaborative editing of Jupyter notebooks is not yet supported on some platforms such as Replit and PyCharm. However, VSCode does support this feature, even within the browser, eliminating the need to download a client. We expect that such features will eventually become available in other tools.

While topic-focused classes such as ML and DL are obviously in the foreground, we see a lack of introducing students to software carpentry and even to the understanding of HPC queuing systems in general. Tools such as Jupyter and Colab that are often used in such classes often deprive students of the needed underlying understanding of efficiently using shared GPU resources for ML and DL.

Hence, students are often ill prepared for the software carpentry needs that arise in more advanced applications of DL utilizing parallel and concurrent DL methodologies. Furthermore, programming language classes are often limited to teaching Python while only emphasizing the language aspects and not a sustainable, practical software engineering approach. Because ML is a relatively new venture in the computing field, there is not yet a definitive set of standards meant for beginning students. The lack of emphasis on standards as part of teaching activities such as these relates to a general problem at the university level.

We alleviate difficulties such as these encountered within research experience by leveraging a cross-platform cloud-computing toolkit named cloudmesh. This toolkit, alongside our use of professional IDEs and version control, allows students to focus less on manual code expenditures and operating system debugging, and more on HPC use and ML development on data sets such as from the Modified National Institute of Standards and Technology (MNIST), among others. We acknowledge the importance of saving time as it is a precious commodity in research experiences. The use of cloudmesh reduces the entry barrier surrounding the creation of machine learning benchmark workflow applications, as well as our standardized benchmarking system, MLCommons. This system is easily implemented as long as programmers can utilize the capabilities of an industry-standard IDE. Since we emphasize reproducibility and openness with other contributors, an open-source solution like MLCommons is necessary.

2.1.4. Benchmark carpentry

Benchmark carpentry is not yet a well-known concept. It focuses on applying software carpentry, common benchmark software, and experiment management to create reproducible results in research computing. To work toward a consolidated effort of benchmark carpentry, the experiences and insights documented in this article have recently been reported to the MLCommons Science Working Group. Throughout the discussion, we identified the need to develop an effort focusing on benchmark carpentry that goes beyond the aspects typically taught in software carpentry and addresses aspects of benchmarks that are not covered there. This includes a review of other benchmark efforts such as TOP500 and Green500, the technical discussion around system benchmarks including SPEC benchmarks, as well as tools and practices to better benchmark a system. Special effort needs to be placed not only on benchmarking the central processing unit (CPU) and GPU capabilities but also on the effect of the file system and the memory hierarchy. Such benchmarking supports reproducibility while leveraging the findability, accessibility, interoperability, and reusability (FAIR) principles. Furthermore, it is beneficial to use software that establishes immutable baseline environments, such as Singularity and Docker, as well as software that creates reproducible benchmark pipelines and workflows, such as cloudmesh-ee and cloudmesh-cc. Such efforts can also be included in university courses, and the material developed for and by the participants can significantly promote the concept of a standardized benchmarking system such as MLCommons's MLPerf.
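One basic practice that such an effort could teach is to time a workload repeatedly and report summary statistics instead of a single measurement. The following minimal sketch uses only the Python standard library; the function `benchmark` is a hypothetical helper for illustration and is not the interface of cloudmesh or MLPerf.

```python
import statistics
import time


def benchmark(func, repeats=5, warmup=1):
    """Time func() several times and return summary statistics in seconds."""
    for _ in range(warmup):
        func()  # warm caches and lazy initializers; not recorded
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return {
        "repeats": repeats,
        "min": min(samples),
        "mean": statistics.mean(samples),
        "stdev": statistics.stdev(samples) if repeats > 1 else 0.0,
    }


# Placeholder workload standing in for a training or inference step.
result = benchmark(lambda: sum(i * i for i in range(100_000)))
print(result)
```

Reporting the spread alongside the mean makes it easier to judge whether a difference between two systems or parameter settings exceeds the run-to-run noise.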

2.1.5. Infrastructure

An additional aspect ML students must have exposure to is the need for access to computational resources due to distinct hardware requirements resulting from using an advanced ML framework. One common way of dealing with this is to use preestablished ML environments like Google Colab, which is easy to access and use with limited capability for free (with the option of obtaining a larger computational capability with a paid subscription). However, as Colab is based on Jupyter notebooks, we experience the same disadvantages discussed in Section 2.1.3. Furthermore, benchmarking can become quite expensive using Google Colab depending on the benchmark infrastructure needs.

Another path to obtain resources for machine learning can be found in the cloud. This may include infrastructure-as-a-service and platform-as-a-service cloud service offerings from Amazon, Azure, Google Cloud, Salesforce, and others. In addition to the computational needs for executing neural networks and DL algorithms, we also find services that can be accessed mainly through REpresentational State Transfer Application Programming Interface (REST APIs) offering methods to integrate the technology into the application research easily. Most popular tools focus on NLP, such as translation and, more recently, on text analysis and responses through OpenAI's ChatGPT and Google's Bard.

However, many academic institutions have access to campus-level and national-level computing resources in their HPC centers. In the United States, this includes resources from the Department of Energy and the National Science Foundation (NSF). Such computing resources are accessed mostly through traditional batch scheduling solutions (such as Slurm SLURM, 2003), which allows for sharing limited resources with a large user community. For this reason, centers often implement a scheduling policy that puts significant restrictions on the computational resources that can be used simultaneously and for a limited period. The number of files and the access to a local disk on compute nodes constituting the HPC resources may also be limited. This provides a potential very high entry barrier as these policy restrictions may not be integrated into the application design from the start. Moreover, in some cases, these restrictions may provide a significant performance penalty when data are placed in a slow network file system (NFS) instead of directly in memory (often the data do not fit in memory) or in NVMe storage if it exists and is not restricted on the compute nodes. It is also important to understand that such nodes may also be shared with other users and it is important to provide the infrastructure requirements upfront regarding computation time, memory footprint, and file storage requirements accurately so that scheduling can be performed most expediently. Furthermore, the computing staff maintains the software on these systems and is typically tailored for the HPC environment. It is best to develop with the version provided, which may target outdated software versions. Container technologies reduce the impact of this issue by enabling users of the HPC center to provide their own custom software dependencies as an image.

One of the popular container frameworks for HPC centers is Singularity, and some centers offer Docker as an alternative. As images must bring all the software needed to run a task, they quickly become large in size, and it is not feasible to just copy the image from your local computer but to work with the center to create the image within the HPC infrastructure. This is especially true when a university requires all resources to be accessed through a virtual private network (VPN). Here, one can often see a factor of 10 or more slowdown in transfer and access speeds (Tovar et al., 2021).

All these elements must be learned; establishing an understanding of these subjects can take considerable time. Hence, using HPC resources has to be introduced with specialized educational efforts, often provided by the HPC center. However, these general courses are sometimes not targeted at running a particular version of PyTorch or TensorFlow with cuDNN but cover only the general aspects of accessing the queues. Although these efforts often fall under the offerings of software carpentry, the teaching objective may fall short as the focus is placed on a limited set of software packages supported by the center instead of teaching how to install and use the latest version of TensorFlow. Furthermore, the offered software may be limited if the underlying GPU drivers are outdated. Software benchmarks need not only the newest software libraries but also the newest device drivers, which can only be installed by the HPC support team.

Furthermore, specifically customized queues that demand particular allocations, partitions, and resource requirements may not be documented or communicated to their users, which places a burden on the faculty member to integrate this accurately into the course curriculum.

Access to national-scale infrastructure is often restricted to research projects that require following a detailed application process. The faculty supervisor conducts this process and not the student. Background checks and review of the project may delay the application. Additional security requirements, such as the use of Duo Mobile, SSH keys, and other multifactor authentication tools must be carefully taught.

In case the benchmark includes environmental monitoring such as temperatures on the CPU/GPU and power consumption, access may be enabled through default libraries and can be generalized while monitoring the environmental controls over time. However, HPC centers may not allow access to the overall power consumption of entire compute racks as it is often very tightly controlled and only accessible to the HPC operational support staff.

2.2. Insights of MLCommons in education

The MLCommons benchmarks provide a valuable starting point for educational material addressing various aspects of the machine and deep learning ecosystem. This includes benchmarks targeted to a variety of system resources from tiny devices to the largest research HPC and data systems in the world while being able to adapt and test them on platforms between these two extremes. Thus, they can become ideal targets for adaptation in AI classes that want to go beyond typical introductory applications such as MNIST that run in a small amount of time.

We have gained practical experience while adapting benchmarks from the MLCommons Science Working Group while collaborating with various universities and student groups from the University of Virginia (UVA), New York University, and Indiana University. Furthermore, it was used at Florida A&M University as a research experience for undergraduates (REU) and is now executed at the UVA as research activity by a past student from the REU (Fleischer et al., 2022). The examples provide value for classes, capstones, REUs, team project-oriented software engineering and computer science classes, and internships.

We observed that traditional classes limit their resource needs and the target application to a very short period so assignments can be conducted instantly. Some MLCommons benchmarks go well beyond this while confronting the students not only with the theoretical background of the ML algorithm but also with big data systems management, which is required to execute benchmarks due to their data and time requirements. This is especially the case when hyperparameters are to be identified to derive scientifically accurate examples. It is also beneficial in that it allows the students to explore different algorithms applied to these problems.

From our experiences with these various efforts, we found that the following lessons provided significant add-on learning experiences:

• Teamwork. Students benefit from focusing on the success and collaboration of the entire team rather than mere individualism, as after graduation, students may work in large teams. This includes not only the opportunity for pair programming but also the fact that careful time planning in the team is needed to succeed. This also includes how to collaborate with peers using professional, industry-standard coding software and management of code in a team through a version control system such as Git. As others point out (Raibulet and Fontana, 2018), we also see an increase in enthusiasm and appreciation of teamwork-oriented platforms when such aspects are employed in coding courses. While courses may still focus on the individual's progress, an MLCommons Benchmark benefits from focusing on grading the team and taking the entire project and team progress into a holistic grade evaluation.

• Interdisciplinary research. Many of the applications in MLCommons require interdisciplinary research between the domain scientists, ML experts, and information technology engineers. As part of the teamwork, students have the opportunity to participate not only within their discipline but learn about how to operate in an interdisciplinary team. Such multidisciplinary experience not only broadens their knowledge base but also strengthens their market viability, making them attractive candidates for diverse job possibilities and career opportunities in the ever-evolving technological landscape (Zeidmane and Cernajeva, 2011).

• System benchmarking versus science benchmarking. Students can learn about two different benchmarking efforts. The first is system-level benchmarking in which a system is compared based on a predefined algorithm and its parameters measuring system performance. The second is the benchmarking of a scientific algorithm in which the quality of the algorithms is compared with each other, where system performance parameters are a secondary aspect.

• Software ecosystem. Students are often using a course-provided, limited, custom-defined environment prepared explicitly for a course that makes course management for the teacher easier but does not expose the students to various ways of setting up and utilizing the large variety of software related to big data systems. This includes setting up Python beyond the use of Conda and Colab notebooks, the use of queuing systems, containers, and cloud computing software for AI, DL, and HPC experiments as well as other advanced aspects of software engineering. Benchmarking introduces these concepts to students in a variety of configurations and environments, providing them with a more research- and industry-like approach to managing software systems.

• Execution ecosystem. While in-class problems typically do not require as many computing resources, some of the examples in MLCommons require a significant organizational aspect to select and run meaningful calculations that enhance the accuracy of the results. Careful planning with workflows and the potential use of hybrid heterogeneous systems significantly improves the awareness to deal with not only the laptop but also the large available resources students may get access to while leveraging flagship-class computing resources, or their own local HPC system when available. Learning to navigate an HPC system is imperative to teach to students and can be augmented by professor-created toolkits and platforms (Zou et al., 2017). We found it necessary to provide additional documentation to address the staff-provided HPC manual while focusing on specific aspects that are not general in nature but are related to group and queue management specifically set up for us by staff. This includes documentation about the accounting for system policies, remote system access, and frugal planning of experiments through the prediction of runtimes and the planning of hyperparameter searches (Claesen and De Moor, 2015; von Laszewski et al., 2022). This can also include dealing with energy consumption and other environmental parameters.

• Parallelization. The examples provide a basis for learning about various parallelization aspects. This includes the parallelization on not only the job level and hyperparameters searches but also on the use of parallelization methods provided by large-scale GPU-based big data systems.

• Input/Output (IO) data management. One other important lesson is the efficient and effective use of data stores to execute. For example, DL algorithms require a large number of fast IO interactions. Having access to sufficient space to store potentially larger data sets is beneficial. Also, the time needed to send data from the external storage to the GPU should be small to ensure that the GPUs have sufficient data to perform well without bottleneck. Such management is vital to be taught within education as the entirety of ML depends on the organization of data (Shapiro et al., 2018).

• Data analysis. The examples provide valuable input to further enhance abilities to conduct non-trivial data analysis through advanced Python scripts while integrating them in coordinated runs to analyze log files that are created to validate the numerical stability of the benchmarks. This includes the utilization of popular data analysis libraries (such as Pandas) as well as visualization frameworks (such as Seaborn). It also allows students to focus on identifying a result that can be communicated in a professional manner.

• Professional and academic communication. The results achieved need to be communicated to a larger audience and the students can engage in a report, paper, and presentation writing opportunities addressing scientific and professional communities.

• Benefits to society. The MLCommons benchmarks include opportunities to improve the quality of ML algorithms that can be applied to societal tasks. Improving benchmarks such as earthquake forecasting is beneficial to society and can motivate students to participate in such educational opportunities.

2.2.1. MLCommons DL-based proposed course curriculum

In this section, we explore the idea of creating a course curriculum that utilizes the MLCommons effort. To keep the focus on MLCommons, the course can concentrate on DL while using examples from MLCommons benchmarks and adding enhancements on other topics that may not otherwise be covered.

In contrast to other courses that may only focus on DL techniques, this course will have the requirement to utilize significant computational resources that are, for example, available on many campuses as part of an HPC center or a national-scale facility such as NSF's ACCESS. Alternatively, Google Colab can be used; however, it will have the disadvantage of not using HPC resources from local or national HPC centers as discussed earlier.

1. Course overview and introduction: Here the overview of the course is provided. Goals and expectations are explained and an introduction to deep learning is provided. This includes the history and applications of DL, a basic introduction to optimization technologies and neural networks, and the connection to MLCommons applications.

2. Infrastructure and benchmarking: An overview of MLCommons-based DL applications and benchmarks is given, covering a wide variety of systems reaching from tiny devices to supercomputers and hyperscale clouds. Google Colab will be introduced. Practical topics such as using ssh and batch queues are discussed. An explicit effort is placed on using a code editor such as PyCharm or VSCode. Elementary software infrastructure is discussed while reviewing Python concepts for functions, classes, and code packaging with pip. The use of GitHub is introduced.

3. CNNs: A deeper understanding is taught by focusing on CNNs. The example of Mask R-CNN is explained.

4. RNNs: RNNs are taught and applications of RNNs are discussed. The RNN-T application focusing on speech recognition is presented and analyzed.

5. NLP: As NLP has such a big impact on industry and academia, additional lectures in that area are presented. This includes large language models, analyzing text, applications of NLP, language translation, and sentiment analysis. Practical examples are introduced while looking at ChatGPT. From MLCommons, the applications DLRM, BERT, and RNN-T are discussed.

6. Project presentations: The last part of the class is focused on a project presentation that students can conduct in a team or individually. It should showcase an application and performance results on one or multiple HPC data systems, or include an improvement to an existing MLCommons benchmark. It is expected that the students write a high-quality project report. Ideally, each team will submit its result to MLCommons. A good start here is the Science Working Group as it provides rolling submissions and its focus is accuracy and not speed, which is often a topic of interest in academia.

7. Submitting and expanding MLCommons benchmarks: The results obtained can also be submitted to MLCommons. Here we see two opportunities: first, the submission of results from standardized benchmarks provided by MLCommons and, second, the inclusion of new scientific application results submitted to the MLCommons Science Working Group.

Adaptations of this material are possible and can be made accordingly to stay up to date with community AI developments as well as efforts newly covered in MLCommons. The semester-long project is accompanied by biweekly practical mini-assignments showcasing selected results and implementations of a particular topic. The final outcome will be a project report. Grading and integration can be done based on the instructors and the university course requirements that university policies may govern. Practically, we believe that grading the project will be sufficient; however, we observed that weekly graded assignments may be needed to compete with other weekly homework-oriented graded classes that require immediate attention by the students. The curriculum can be divided into several sections that can be taught over a semester in either a graduate or undergraduate class or a combination thereof. The curriculum could be used in its entirety, or selected aspects could be taught.

Summary section 2:

• Challenges: Students lack knowledge of software carpentry despite taking programming and AI classes at universities. Software carpentry tools such as terminals, command line tools, and IDEs are not sufficiently utilized although they provide significant benefits for professional code development and management of shared resources. Today's DL students often know only Jupyter notebooks or Google Colab, resulting in one-cell-at-a-time-oriented programming rather than a more sophisticated software engineering approach. Using computing resources at HPC centers may pose a considerable on-ramp hurdle, especially when combined with queuing systems and container technologies that vary in their implementation between centers; specialized documentation must be available.

• Opportunities: Software carpentry could be offered as an additional class and made a prerequisite for taking AI classes, or become an integral part of the DL experience. This should include learning about terminal commands, accessing queuing systems, IDEs, code management, and collaborative code development going beyond the usage of Jupyter notebooks. Benchmark carpentry should be offered in addition to software carpentry while focusing on unique aspects of reviewing common benchmark practices and applying them to DL applications. Tools such as cloudmesh, used in several MLCommons applications, make it possible to create simple standardized interfaces to time-based benchmarks and to display the results in a human-readable form. Exposing students to knowledge about shared HPC resources used for DL rather than just reusing cloud resources offers a deeper understanding of efficient utilization in a resource-starved environment as well as of the associated costs, which have an impact on affordable benchmarks. MLCommons covers a wide variety of topics and it is conceivable to develop a comprehensive course curriculum around it that could be either used in its entirety or adapted based on interests as well as selectively taught. To address variations in the HPC technologies used, center documentation can be developed by an organization but may have to be adapted to simplify it while focusing on storage, compute, and container technologies and specifics offered. This course curriculum provides the opportunity to emphasize teamwork while focusing on a larger project.

3. Earthquake forecasting

While we have so far focused on the general applicability of MLCommons benchmarks as potential options to develop an educational curriculum, we focus next on an exemplar for a potential semester-long project and its insights toward the goal of using it as an educational tool.

Although MLCommons has many applications, we decided to use an application from the MLCommons Science Working Group as we work most closely with this group. It has four major benchmarks as documented in Thiyagalingam et al. (2022) and von Laszewski et al. (2023).

However, here we focus on the earthquake benchmark code that creates a Time Series Evolution Operator (TEvolOp) to be applied to several scientific applications such as hydrology and COVID-19 predictions. We focus on this application because, in contrast to other MLCommons applications, it is written as a Jupyter notebook and therefore intersects with many educational efforts using Jupyter notebooks. We restrict our report to the efforts related to earthquake forecasting as it is one of the first applications from the MLCommons Science Working Group that has been used in educational class projects.

The scientific objective of the earthquake benchmark is to extract the evolution of time series as applied to earthquake forecasting.

The earthquake benchmark uses a subset of the overall earthquake data set for the region of Southern California. While conventional forecasting methods rely on statistical techniques, we use ML for extracting the evolution and testing the effectiveness of the forecast. As a metric, we use the Nash-Sutcliffe Efficiency (NSE) (Nash and Sutcliffe, 1970). Other qualitative predictions are discussed in Fox et al. (2022).

One of the common tasks when dealing with time series is the ability to predict or forecast them in advance. Time series capture the variation of values against time and can have multiple dimensions. For example, with earthquake forecasting, we use geospatial data sets that have two dimensions based both on time and spatial position. The prediction is considerably easier when we can identify an evolution structure across dimensions. For example, by analyzing earthquake data, we find a strong correlation between nearby spatial points. Thus, nearby spatial points influence each other and simplify the time-series prediction for an area. However, as earthquake faults and other geometric features are not uniformly distributed, such correlations are often not clearly defined in spatial regions. Thus it is important to look not only at the region but also at the evolution in time series. This benchmark extracts the evolution of time series applied to earthquake forecasting.

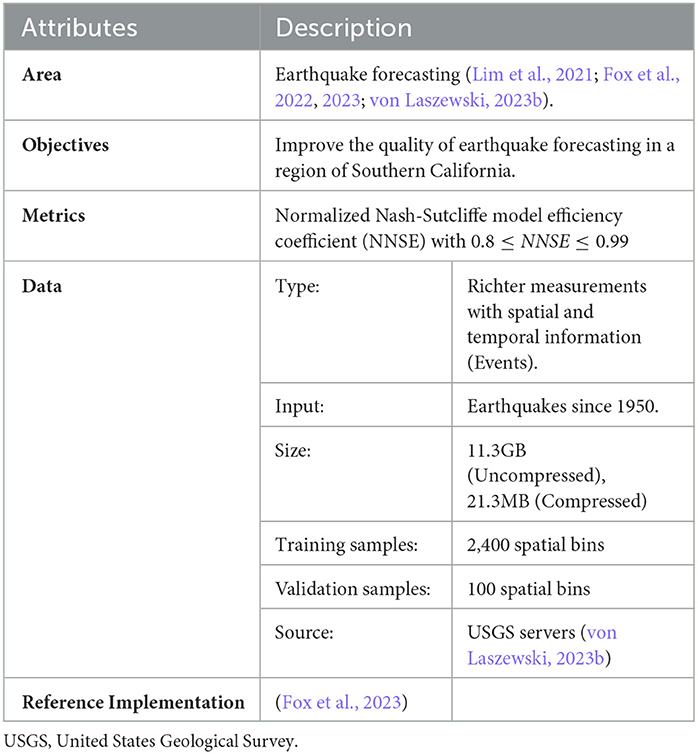

3.1. Earthquake data

The data for this earthquake benchmark are described in Thiyagalingam et al. (2022). It uses a subset of the earthquake data from the United States Geological Survey (USGS) focused on Southern California between latitude 32°N and 36°N and longitude 120°W and 114°W (that is, −120° to −114°). The data for this region cover all earthquakes since 1950. The data include four measurements per record: magnitude, spatial location, depth from the crust, and time. We curated the data set and reorganized it in different temporal and spatial bins. “Although the actual time lapse between measurements is one day, we accumulate this into fortnightly data. The region is then divided into a grid of 40 × 60 with each pixel covering an actual zone of 0.1° × 0.1°, or 11 km × 11 km. The dataset also includes an assignment of pixels to known faults and a list of the largest earthquakes in that region from 1950. We have chosen various samplings of the dataset to provide both input and predicted values. These include time ranges from a fortnight up to four years. Furthermore, we calculate summed magnitudes and depths and counts of significant quakes (magnitude > 3.29)” (Fox et al., 2022). Table 2 depicts the key features of the benchmark (Thiyagalingam et al., 2022).
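The spatial and temporal binning described above can be sketched as follows. This is a minimal illustration, not the benchmark's own preprocessing code: the function name `bin_event`, the start date of 1 January 1950, and the choice of latitude as the 40-cell axis and longitude as the 60-cell axis are assumptions made for the example.

```python
from collections import Counter
from datetime import date

# Region and cell size are taken from the text; everything else is assumed.
LAT_MIN, LAT_MAX = 32.0, 36.0
LON_MIN, LON_MAX = -120.0, -114.0
CELL_DEG = 0.1
N_ROWS = round((LAT_MAX - LAT_MIN) / CELL_DEG)  # 40 latitude cells
N_COLS = round((LON_MAX - LON_MIN) / CELL_DEG)  # 60 longitude cells
START = date(1950, 1, 1)  # assumed origin of the fortnightly bins
BIN_DAYS = 14


def bin_event(lat, lon, day):
    """Return (time_bin, row, col) for one event, or None if outside the region."""
    if not (LAT_MIN <= lat < LAT_MAX and LON_MIN <= lon < LON_MAX):
        return None
    # min() guards against floating-point round-up at the upper edge.
    row = min(int((lat - LAT_MIN) / CELL_DEG), N_ROWS - 1)
    col = min(int((lon - LON_MIN) / CELL_DEG), N_COLS - 1)
    time_bin = (day - START).days // BIN_DAYS
    return time_bin, row, col


def count_events(events):
    """Count events per (time_bin, row, col); events are (lat, lon, day) tuples."""
    counts = Counter()
    for lat, lon, day in events:
        key = bin_event(lat, lon, day)
        if key is not None:
            counts[key] += 1
    return counts
```

Because most space-time bins contain no event at all, a dictionary-like counter of this kind stays small even though the full grid has 2,400 pixels per fortnight.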

3.1.1. Implementation

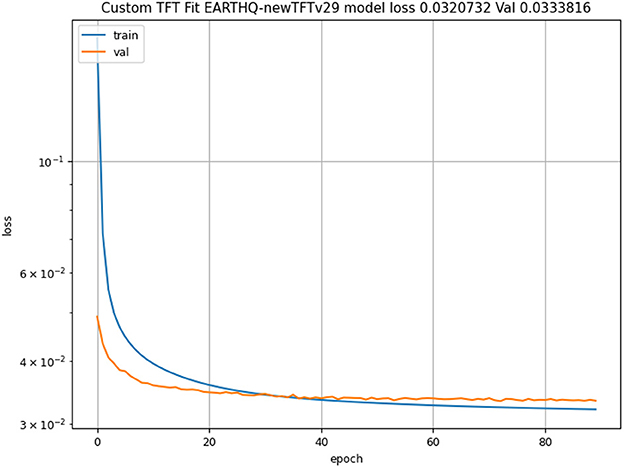

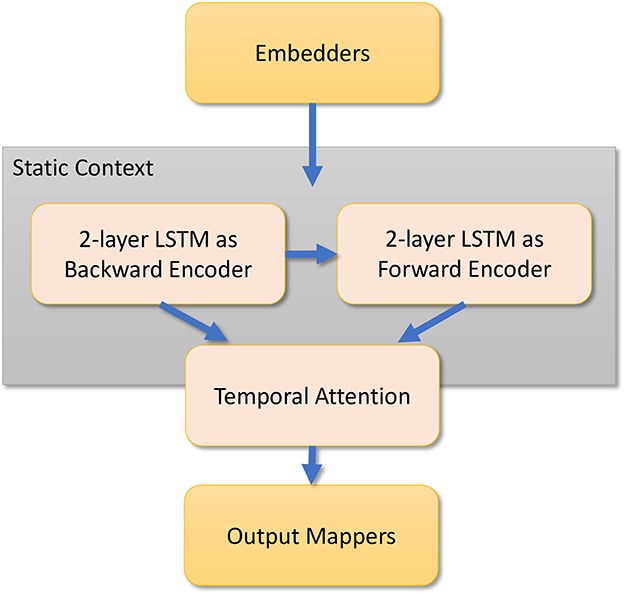

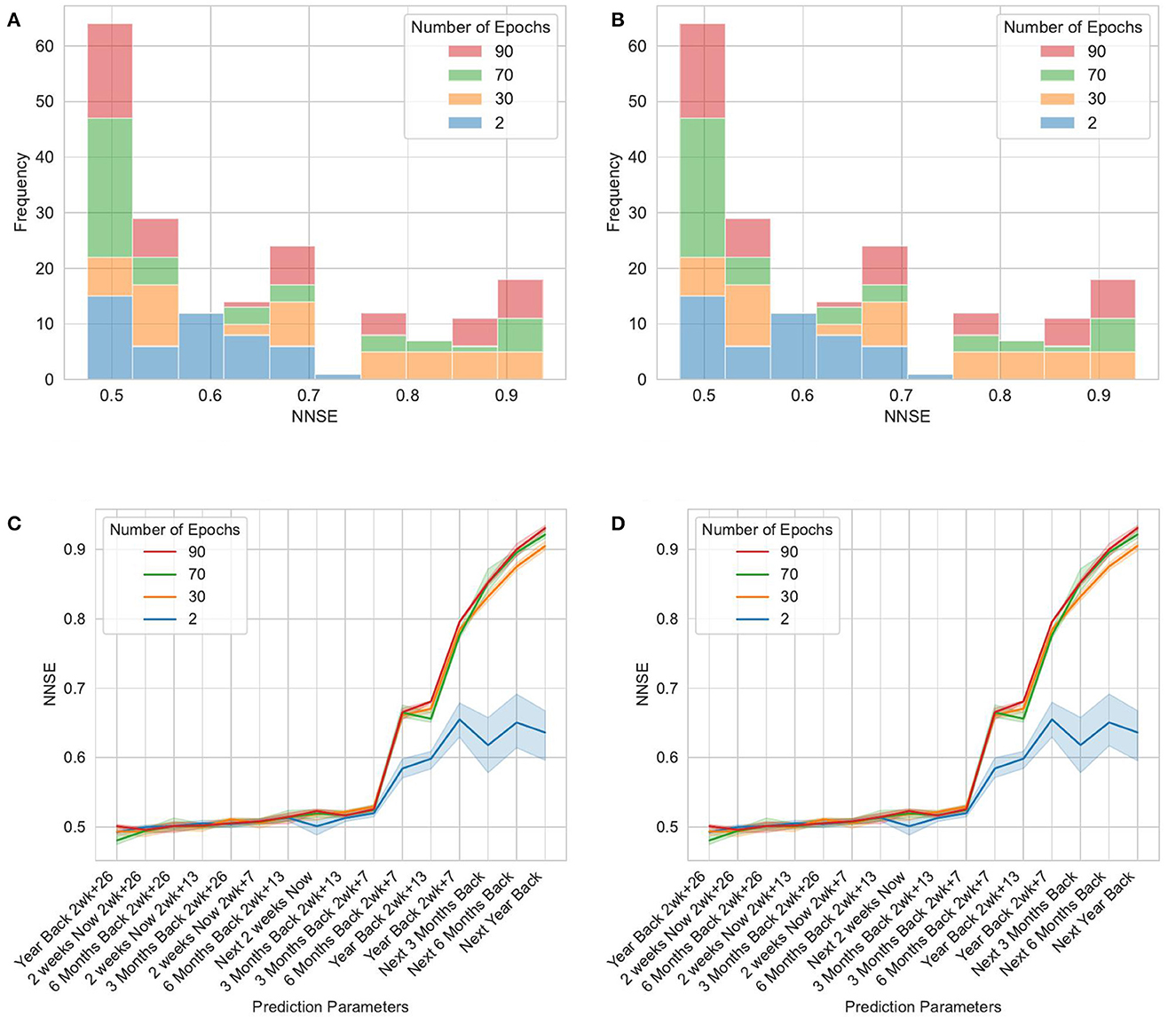

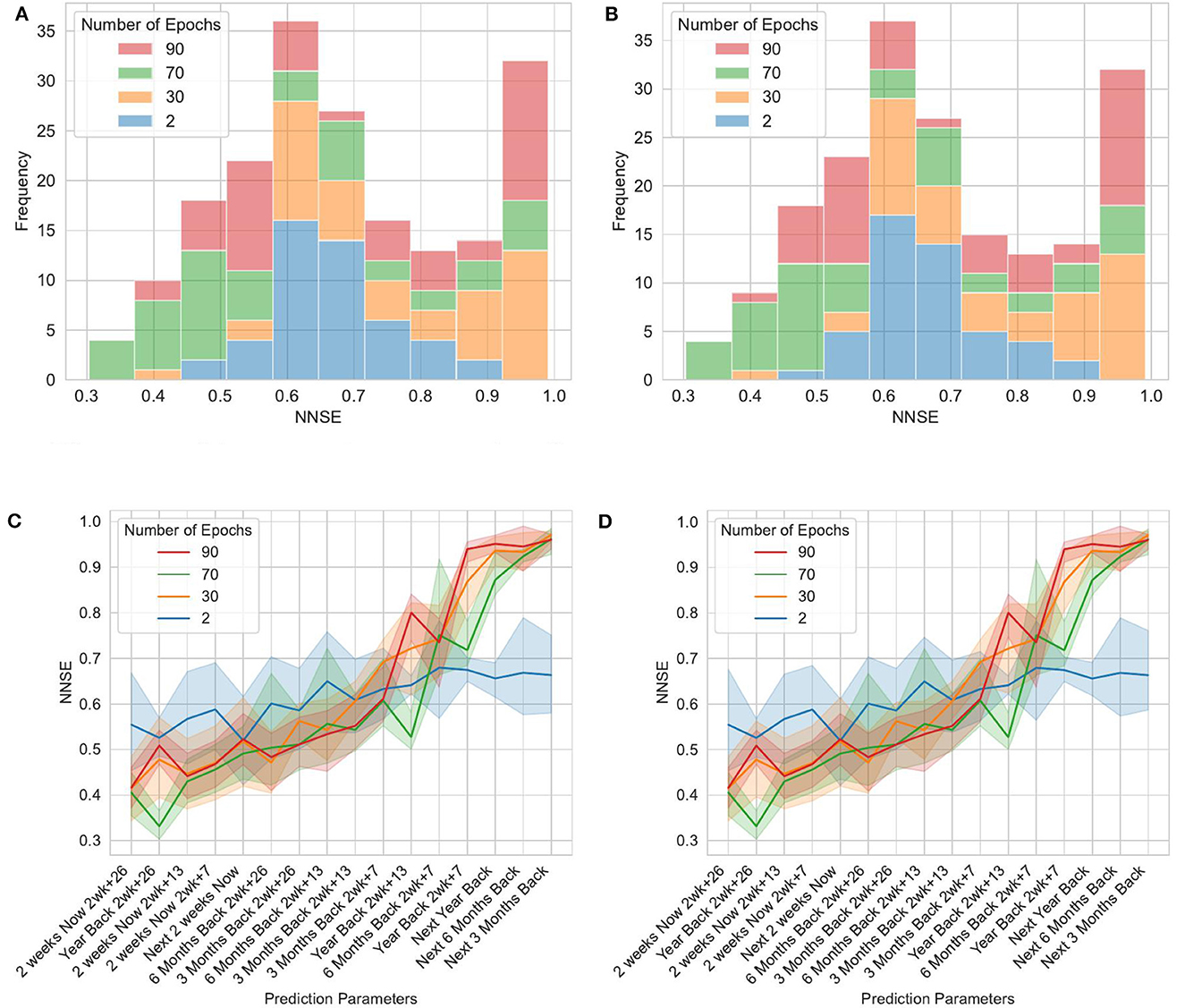

The reference implementation of the benchmark includes three distinct deep learning-based reference implementations. These are a long short-term memory (LSTM)–based model, a Google Temporal Fusion Transformer (TFT) (Lim et al., 2021)–based model, and a custom hybrid transformer model. The TFT-based model uses two distinct LSTMs, covering an encoder and a decoder with a temporal attention-based transformer. The custom model includes a space-time transformer for the decoder and a two-layer LSTM for the encoder. Figure 2 shows the TFT model architecture. Each model predicts NSE and generates visualizations illustrating the TFT for interpretable multi-horizon time-series forecasting (Lim et al., 2021).

Figure 2. Temporal fusion transformer model architecture (Fox et al., 2022). LSTM, long short-term memory.

For this article, we adopted the same calculations as defined in Fox et al. (2022): “We have chosen various samplings of the dataset to provide both input and predicted values. These include time ranges from a fortnight up to 4 years. Further, we calculate summed [according to Equation (1)] magnitudes and averaged depths [according to Equation (2)] and counts of significant earthquakes [magnitude > 3.29, Equation (3)]. We use the concept of energy averaging when there are multiple events in a single space-time bin. Therefore, the magnitude assigned to each bin is defined in Equation (1) as 'log(Energy)' where we sum over events of individual magnitudes $m_{event}$. We also use energy averaging defined in Equation (2) for quantities $Q_{bin}$ such as the depth of an earthquake that needs to be weighted by their importance when averaging over a bin.”
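The idea of energy averaging can be illustrated with a short sketch. The equations themselves are not reproduced in this section, so the code below rests on an assumption: that the energy of an event scales as 10^(1.5 m), the commonly used magnitude-energy relation. The function names are hypothetical, and the exact form used in the benchmark should be taken from Fox et al. (2022).

```python
import math

# Assumption: energy ~ 10 ** (1.5 * magnitude). Check Fox et al. (2022)
# for the exact definition used in the benchmark.
ENERGY_EXPONENT = 1.5


def bin_magnitude(magnitudes):
    """Magnitude of a bin as 'log(Energy)' summed over its events."""
    if not magnitudes:
        raise ValueError("a bin needs at least one event")
    total_energy = sum(10 ** (ENERGY_EXPONENT * m) for m in magnitudes)
    return math.log10(total_energy) / ENERGY_EXPONENT


def energy_weighted_average(values, magnitudes):
    """Average a quantity (e.g., depth) weighted by the energy of each event."""
    weights = [10 ** (ENERGY_EXPONENT * m) for m in magnitudes]
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def count_significant(magnitudes, threshold=3.29):
    """Number of events in the bin with magnitude above the threshold."""
    return sum(1 for m in magnitudes if m > threshold)
```

Under this assumption a single large event dominates both the bin magnitude and the averaged depth, which matches the stated intent of weighting quantities by their importance.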

In this article, we only focus on the TFT implementation. The TFT inputs and outputs are described next (Fox et al., 2022).

• Static known inputs (five inputs): four space-filling curve labels of fault grouping, linear label of pixel.

• Targets (four targets): $m_{bin}(F : \Delta t, t)$ for Δt = 2, 14, 26, 52 weeks. Calculated for t − 52 to t for the encoder and t to t + 52 weeks for the decoder in 2-week intervals. 104 predictions per sequence.

• Dynamic known inputs (13 inputs): $P_l(\text{cosFull})$ for l = 0 to 4; $\cos_{period}(t)$ and $\sin_{period}(t)$ for period = 8, 16, 32, 64.

• Dynamic unknown inputs (nine inputs): energy-averaged depth, multiplicity, multiplicity of m > 3.29 events, and $m_{bin}(B : \Delta t, t)$ for Δt = 2, 4, 8, 14, 26, 52 weeks.

These data can be input based on the time series. Backward data can be taken up to 1 year before the current date, and forward data can be taken up to 4 years into the future. The data are then enriched with the LSTM models on time and other factors such as spatial location, fault grouping, and energy produced at the location. Feature selection is done. The data are then fed into an attention learning module, which learns trends and more complex relationships based on the data across all time steps and can apply this knowledge to any number of time steps. More feature selection is done. Finally, the data are run through quantile regression. The loss is calculated as the mean absolute error (MAE). This repeats until all epochs are done, and the iteration that had the lowest loss is used to create predictions. Normalized Nash-Sutcliffe efficiency (NNSE) and mean squared error (MSE) are used as goodness-of-fit metrics.
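The goodness-of-fit metrics named above can be computed with a few lines of code. The sketch below uses the standard definitions of NSE, NNSE = 1 / (2 − NSE), and MSE on plain Python lists; it is an illustration of the metrics and not the code used in the benchmark notebook.

```python
def mse(observed, predicted):
    """Mean squared error between observations and predictions."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)


def nse(observed, predicted):
    """Nash-Sutcliffe efficiency: 1 is perfect, 0 equals predicting the mean."""
    mean_obs = sum(observed) / len(observed)
    residual = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - residual / variance


def nnse(observed, predicted):
    """Normalized NSE, mapping (-inf, 1] onto (0, 1]."""
    return 1.0 / (2.0 - nse(observed, predicted))
```

A model that always predicts the mean of the observations has NSE = 0 and NNSE = 0.5, so NNSE values above 0.5 indicate a forecast that is better than this trivial baseline.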

More details of the TFT model applied to the earthquake application are presented in Fox et al. (2022). More general details about TFT models can be found in Lim et al. (2021).

3.1.2. Insights into development of the code

The original code was developed with the goal of creating a DL method called TEvolOp to apply special time-series evolution for multiple applications including earthquake, hydrology, and COVID-19 prediction. The code was presented in a large Python Jupyter notebook on Google Colab. Due to the integration of multiple applications (hydrology and COVID-19), the code is complex and challenging to understand and maintain. For this reason, the hydrology and COVID-19 code was removed, which reduced the original 13,500 lines by more than 2,400 lines. At the same time, we restructured the code and reached a final length of about 11,100 lines of code. The code was kept as a Jupyter notebook rather than converted into a pure Python script in order to test the applicability of benchmarking applications presented as notebooks. The code included all definitions of variables and hyperparameters in the code itself. This means that the original code had to be edited before each run whenever a hyperparameter needed to be modified.

This code has some challenges that future versions ought to address. First, the code includes every aspect that is not covered by TensorFlow and contains a customized version of TFT. Second, because of this, the code is very large, and manipulating and editing it is time-consuming and error-prone. Third, as many code-related parameters are still managed in the code, running the same code with various parameters becomes cumbersome. In fact, multiple copies of the code need to be maintained when new parameters are chosen, instead of making such parameters part of a configuration file. Hence, we started simplifying the code by introducing libraries that can be pip installed and by gradually moving more parameters to configuration files that are used by the program.
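Moving parameters out of the code and into a configuration file can be sketched as follows. The parameter names, the default values, and the use of JSON are assumptions for illustration only; the project's actual configuration format and keys may differ.

```python
import json
from pathlib import Path

# Hypothetical defaults; the real notebook defines many more parameters.
DEFAULTS = {"epochs": 2, "gpu": "a100", "data_dir": "/localscratch"}


def load_config(path, defaults=DEFAULTS):
    """Merge overrides from a JSON file into the defaults.

    Unknown keys are rejected so that a typo in the file does not
    silently fall back to a default value.
    """
    overrides = json.loads(Path(path).read_text())
    unknown = set(overrides) - set(defaults)
    if unknown:
        raise KeyError(f"unknown configuration keys: {sorted(unknown)}")
    return {**defaults, **overrides}
```

With such a loader, a single copy of the code can be run with different parameters by pointing it at different files, for example `load_config("experiment-30-epochs.json")`, where the file name is again hypothetical.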

The advantage of using a notebook is that it can be augmented with many graphs that give updates on the progress and its measurement accuracy. It is infeasible for students to use and replicate the run of this notebook on their own computers as the runtime can be up to two days. Naturally, students use their computers for other purposes and need to be able to use them on the go, not having the luxury to dedicate such a prolonged time to running a single application. Hence, it is desirable to use academic HPC centers that provide interactive jobs in the batch queues in which Jupyter notebooks could be run. However, running such a time-consuming interactive job is also not possible in most cases. Instead, we opted to use Jupyter notebooks with a special batch script that internally uses Papermill (Papermill, 2020) and leverages an HPC queuing system to execute the notebook in the background. Papermill will execute the notebook and include all cells that have to be updated during runtime, including graphics, in a separate runtime copy. The script we developed needed to be run multiple times with different hyperparameters such as the number of epochs. As the HPC system is a heterogeneous GPU system with access to A100, V100, P100, and RTX2080 graphics cards, the choice of the GPU must be configurable. Hence, the batch script can also read the configuration file and adapt itself to the needed parameters, so that such parameters can be separated from the actual notebook. This is controlled by a sophisticated but simple batch job generator, which we discuss in Section 3.
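The combination of a batch script, Papermill, and a set of hyperparameters can be illustrated with a small generator. This sketch is not the job generator used in the project; the template, the job names, and the notebook file name are hypothetical, and the GPU type strings accepted by `--gres` are site-specific. It uses the Papermill command line form `papermill INPUT OUTPUT -p NAME VALUE` and forms the Cartesian product of all parameter values.

```python
import itertools

# Hypothetical Slurm template; partitions, accounts, and GPU names vary by center.
SLURM_TEMPLATE = """#!/bin/bash
#SBATCH --job-name={name}
#SBATCH --gres=gpu:{gpu}:1
#SBATCH --time={time_limit}

papermill {notebook} {name}.ipynb -p epochs {epochs}
"""


def generate_jobs(grid, notebook="earthquake.ipynb", time_limit="24:00:00"):
    """Return {job_name: batch_script} for every combination in the grid.

    The grid must contain the keys 'gpu' and 'epochs' used by the template.
    """
    keys = sorted(grid)
    jobs = {}
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        name = "eq-" + "-".join(f"{k}{params[k]}" for k in keys)
        jobs[name] = SLURM_TEMPLATE.format(
            name=name, notebook=notebook, time_limit=time_limit, **params
        )
    return jobs


jobs = generate_jobs({"gpu": ["a100", "v100"], "epochs": [2, 30, 70]})
```

Each generated script can be written to a file and submitted with `sbatch`. Keeping the executed copy of the notebook under the job name preserves the cell outputs and graphics of every run separately.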

Summary choosing the earthquake benchmark application:

• Opportunities: Using a scientific application as a project within the educational efforts allows students to identify pathways on how to apply DL knowledge to such applications.

Furthermore, we have chosen an application written as a Jupyter notebook to identify if students have an easier time with it and to see if benchmarks can be easily generated if notebooks are used instead of just Python programs. We identify that existing tools such as Papermill can provide the ability to run Jupyter notebooks in queuing systems while running them as tasks in the background and capturing cell output.

• Challenges: Understanding a scientific application can be quite complex. Even a full DL implementation of it still poses challenges, as data and algorithm dependencies need to be analyzed and domain knowledge needs to be communicated to gain a deeper understanding. It is important to separate the runtime environment variables as much as possible from the actual notebook. The coordination of such variables can be challenging, and tools such as cloudmesh-ee make such integration simple.

3.2. Insights into data management from the earthquake forecasting application

In data management, we are concerned with various aspects of the data set, the data compression and storage, and the data access speed. We discuss insights into each of them that we obtained while looking at the earthquake forecast application.

3.2.1. Data sets

When dealing with data sets, we typically encounter several issues. These issues are addressed by the MLCommons benchmarks and data management activities so that they provide ideal candidates for education without spending an exorbitant amount of time on data. Such issues typically include access to data without privacy restrictions, data preprocessing that makes the data suitable for DL, and data labeling in case they are part of a well-defined MLCommons benchmark. Other issues include data bias, noisy or missing data, as well as overfitting while using training data. Typically the MLCommons benchmarks will be designed to limit such issues. However, some benchmarks such as the science group benchmarks, which are concerned with improving the science, have the option to potentially address these issues in order to improve the accuracy. This could even include injecting new data and different preprocessing methods.

3.2.2. Data compression

An issue of utmost importance, especially for large data sets, is how the data are represented. For example, we found that the original data set was 11 GB for the earthquake benchmark. However, we found that the underlying data were a sparse matrix that could easily be losslessly compressed by a factor of 100. This is significant, as in this case the entire data set can be stored in GitHub or moved quickly into memory. The compressed xz archive file is only 21 MB, and downloading only the archive file using wget takes 0.253 s on the HPC. In case the data set and its repository are downloaded with Git, we note that the entire Git repository is 108 MB (von Laszewski, 2023b). On the Rivanna Supercomputer, downloading the repository with the compressed data set takes 7.723 s. Thus, it is preferable to just download the data using wget. In both cases, the data are compressed. Uncompressing the data takes an additional 1 min 2.522 s. However, if we were to download the data in uncompressed form, it would take ≈ 3 h 51 s. The reduction in time is due to the fact that the data are sparse, and the compression allows a significant reduction in the space needed to store, and thus the time needed to transfer, the data.
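The effect of sparsity on lossless compression can be reproduced on a small scale with Python's standard `lzma` module, which implements the same algorithm family as xz. The synthetic payload below is an assumption made for the demonstration and is unrelated to the actual earthquake data; the resulting ratio therefore says nothing about the ratio obtained for the benchmark.

```python
import lzma


def sparse_payload(n_bytes=1_000_000, every=10_000):
    """Build a mostly-zero byte string with one nonzero byte every `every` bytes."""
    data = bytearray(n_bytes)
    for i in range(0, n_bytes, every):
        data[i] = (i // every) % 255 + 1
    return bytes(data)


raw = sparse_payload()
packed = lzma.compress(raw)            # lossless, xz container format
restored = lzma.decompress(packed)

ratio = len(raw) / len(packed)
print(f"raw={len(raw)} B, packed={len(packed)} B, ratio={ratio:.0f}x")
```

The round trip returns the original bytes exactly, which is the property that makes it safe to store and transfer only the compressed archive.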

From this simple example, it is clear that MLCommons benchmarks can provide students insights into how data are managed and delivered to, for example, large-scale computing clusters with many nodes while utilizing compression algorithms. We next discuss insights into infrastructure management while using file systems in HPC resources. While often object stores are discussed to host such large data sets, it is imperative to identify the units of storage in such object stores. In our case, an object store that would host individual data records is not useful due to the vast number of data points. Therefore, the best way to store these data, even in an object store, is as a single entry of compressed overall data. Other MLCommons Science Working Group benchmarks have data sets in the order of 500 GB–12 TB. Other tools, such as Globus transfer, can be used to download larger data sets. Obviously, these sets need special considerations when placed on a computing system where the students' storage capacities may be limited by policy.

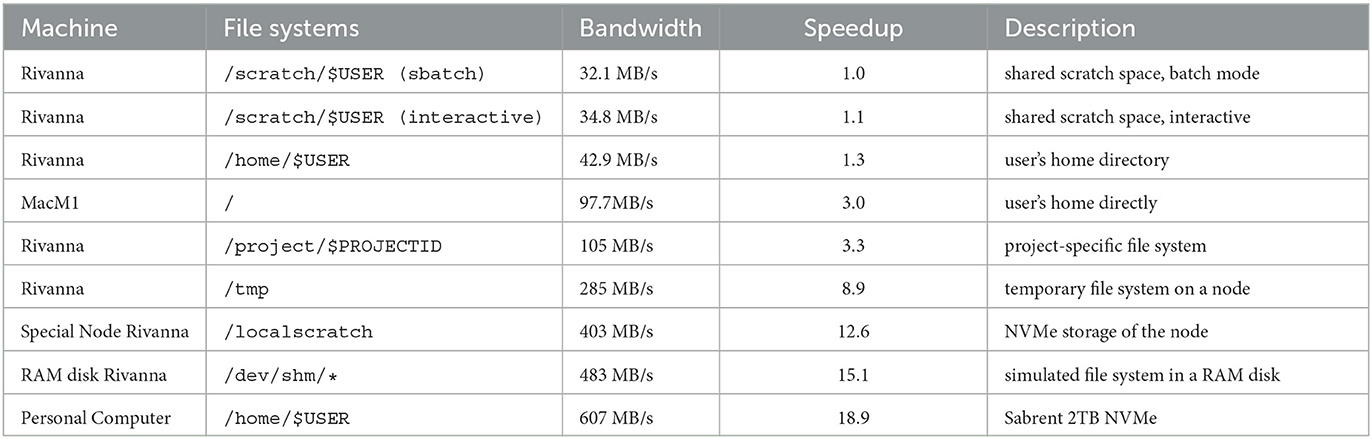

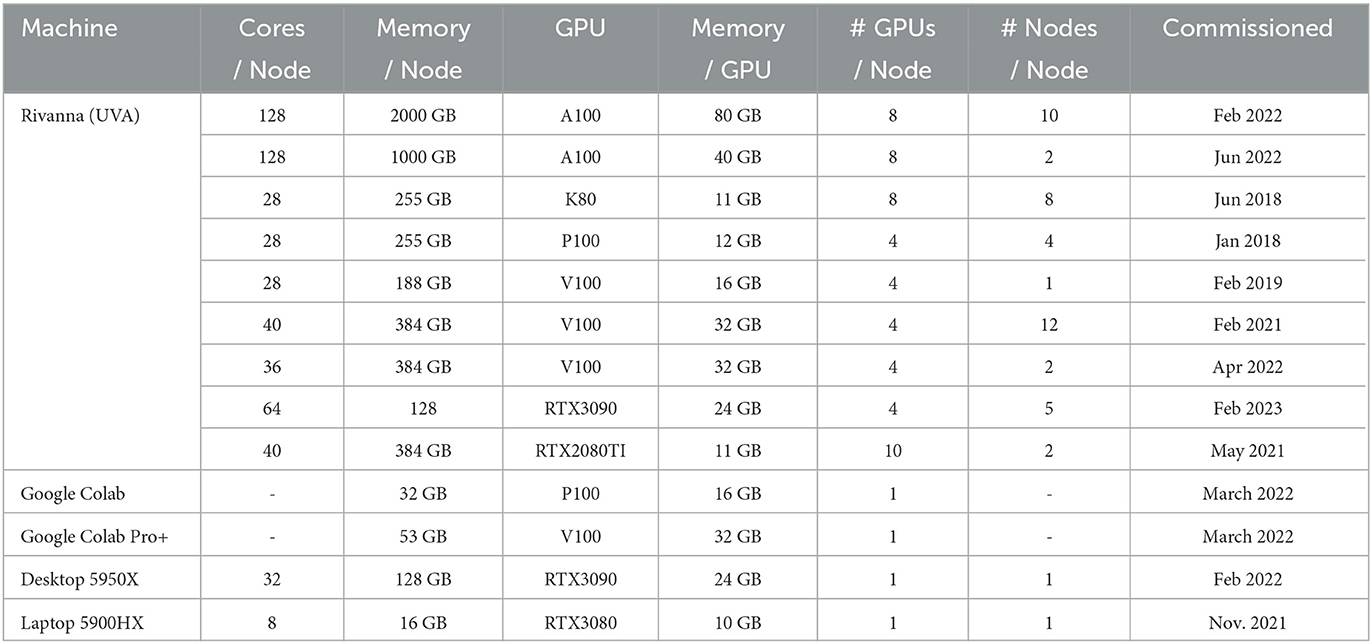

3.2.3. Data access

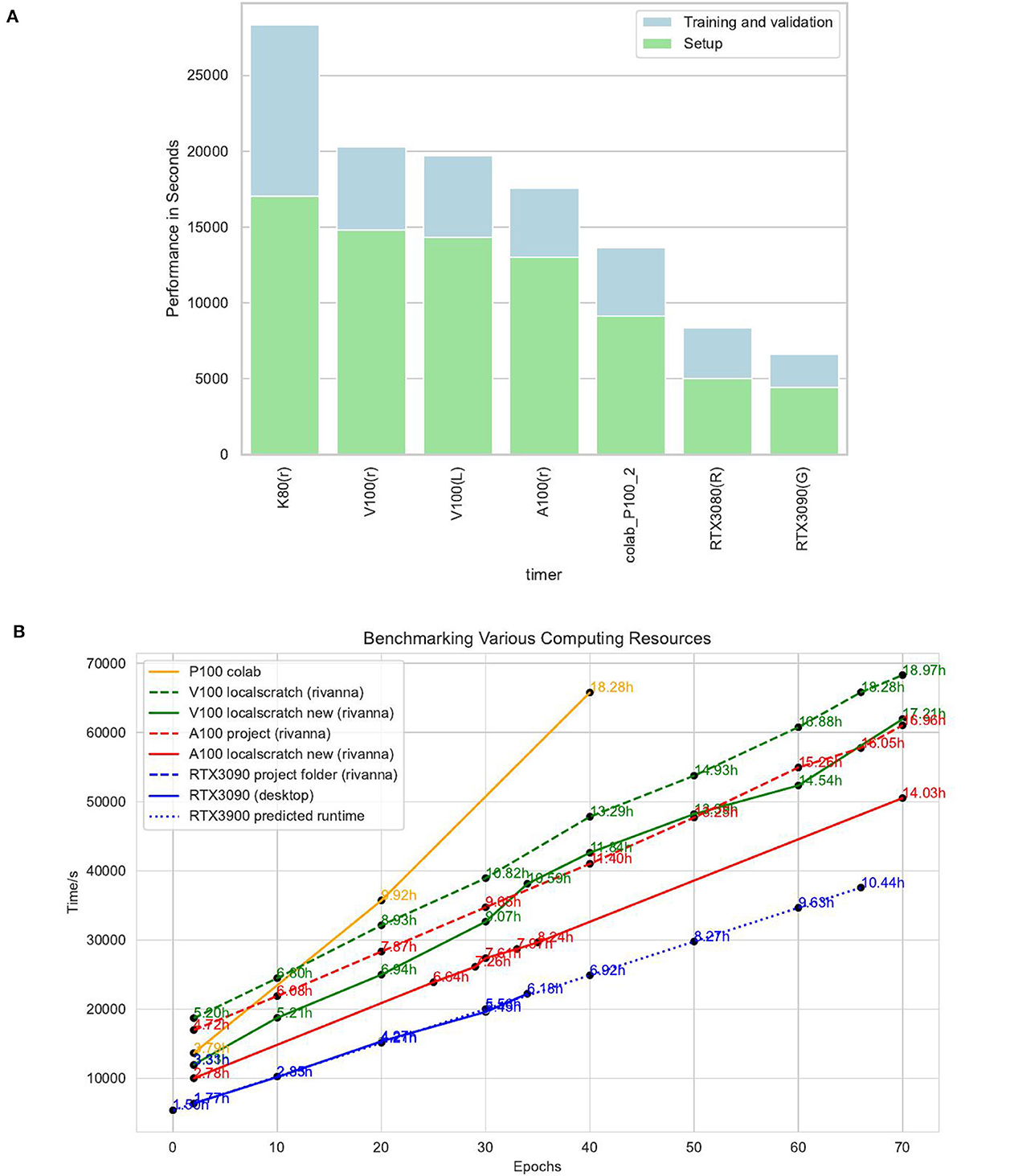

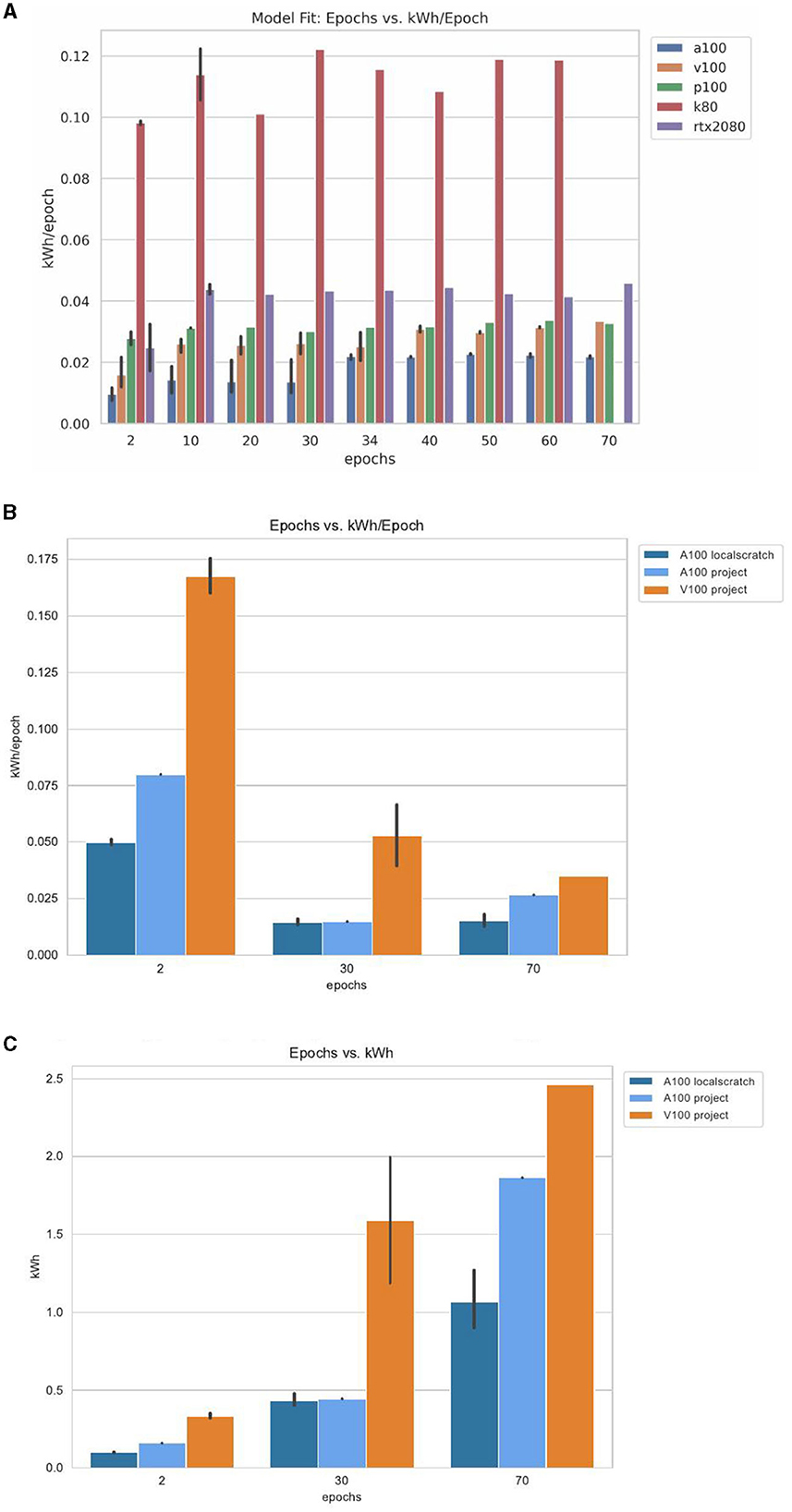

Besides having proper data and being able to download them efficiently from the location of storage, it is imperative to be able to access them in such a way that the GPUs used for DL are fed with enough data and do not sit idle. Our performance results were somewhat surprising: file access had a devastating effect on the overall execution time. We found that the performance was more than twice as fast on a personal computer using an RTX3090 than on the HPC center's recommended file systems using an A100. For this reason, we conducted a simple test and measured the read-access performance of the various file systems. The results are shown in Table 3, which includes not only various file systems on UVA's Rivanna HPC but also a comparison with a personal computer.

Based on this observation, it was a great disadvantage to run the earthquake benchmark on the regularly configured HPC nodes, as runs on some resources took almost 24 h, which is the policy limit the Rivanna system allows for one job. Hence, we were allowed to use a special compute node that has additional non-volatile memory express (NVMe) storage available and accessible to us. On those nodes (listed in the table as /localscratch), we were able to obtain a very suitable performance for this application, with a 10-fold increase in access speed in contrast to the scratch file system and almost double the performance given to us on the project file system. The /tmp system, although fast, was not sufficiently large for our application and performed slower than the /localscratch setup for us. In addition, we also conducted an experiment using a shared memory-based file system hosted in the node's random-access memory (RAM).

What we learn from this experience is that an HPC system must provide a fast file system locally available on the nodes to serve the GPUs adequately. The computer should be designed from the start to not only have the fastest possible GPUs for large data processing but also have a very fast file system that can keep up with the data input requirements presented by the GPU. Furthermore, in case updated GPUs are purchased, it is not sufficient to just reuse the previous-generation motherboard, CPU, and memory; instead, the other hardware components must also be updated so that the result is a state-of-the-art compute node. This often prevents repurposing a node by adding just GPUs, because the older hardware components cannot keep up with the GPU's capabilities.
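A read-access test of the kind described above can be approximated with a short script. The following is a minimal sketch and not the code used to produce Table 3; the function names, file size, and block size are assumptions, and only the system temporary directory is measured here. On an HPC system, the list of directories would be replaced with the file systems to compare.

```python
import os
import tempfile
import time


def read_throughput(path, block_size=1024 * 1024):
    """Return the sequential read throughput of `path` in MB/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return total / (1024 * 1024) / max(elapsed, 1e-9)


def benchmark_directory(directory, size_mb=16):
    """Write a temporary test file into `directory` and time reading it back."""
    with tempfile.NamedTemporaryFile(dir=directory, delete=False) as f:
        f.write(os.urandom(1024 * 1024) * size_mb)
        path = f.name
    try:
        return read_throughput(path)
    finally:
        os.remove(path)


# The directory list is an assumption; add the file systems to be compared.
results = {d: benchmark_directory(d) for d in [tempfile.gettempdir()]}
for directory, mb_per_s in results.items():
    print(f"{directory}: {mb_per_s:.1f} MB/s")
```

Because the test file is read immediately after it is written, the operating system's page cache may inflate the measured value; a careful comparison would use files larger than memory or drop caches between the write and the read.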

Summary of data management aspects:

• Challenges: Scientific applications at times require large-scale storage spaces that can be provided by HPC compute centers. The speed of accessing the data depends on where the data are, and can be, stored in the HPC system. Performance between systems can vary drastically, showcasing differences between shared, non-shared, NVMe-based, and in-memory storage volumes.

• Opportunities: Input data need to be placed on appropriate storage options, guided by benchmarks, to achieve the fastest possible access. As scientific data are often sparse, they can often be significantly compressed, and the time to move and uncompress them is often much shorter than the time one needs to load the uncompressed data. Access from a compute server to its local storage system is essential and must be provided by the HPC center. Instead of old-fashioned HDDs or even SSDs, the fastest NVMe storage should be provided.

3.3. Insights into DL benchmark workflows

As we are trying to benchmark various aspects of the applications and the systems utilizing DL, we need to be able to easily formulate runtime variables that take into account different control parameters either of the algorithm or the underlying system and hardware.

Furthermore, it is beneficial to be able to coordinate benchmarks on remote machines either on a single system or while using multiple systems in conjunction with hybrid and heterogeneous multi-HPC systems. Thus, if we change parameters for one infrastructure, it should be possible to apply them easily and automatically to another infrastructure to identify the impact on both. These concepts are similar to those found in cloud and grid computing for job services (von Laszewski et al., 2002) and for workflows (von Laszewski, 2005; von Laszewski et al., 2007). However, the focus here is on ensuring the services managing the execution are provided and controlled by the application user and not necessarily by the cloud or HPC provider. Thus, we distinguish the need for a workflow service that can utilize heterogeneous HPC systems while leveraging the same parameter set to conduct a benchmark for comparison by either varying parameters on the same or other systems. Such a framework is presented by von Laszewski et al. (2022, 2023) and is based on our earlier work on workflows in clouds and grids.

In addition, we need a mechanism to create various runs with different parameters. One of the issues we run into is that our runtime needs often exceed the time limit of a single job submission. Although job arrays and custom configurations exist, they often lead to longer runtimes that may not be permitted by the default policies used in educational settings. Thus, it is often more convenient to create jobs that fall within the limits of the HPC center's policies and split the benchmarking tasks across a number of jobs based on the parameter permutations. This also allows easier parallelization.

For this reason, von Laszewski et al. have implemented the cloudmesh Experiment Executor (cloudmesh-ee) that provides an easy-to-use batch job generator, creating parallel jobs based on a permutation of experiment parameters that are defined in a configuration file. The tool creates for each job its own subdirectory, copies the code and configuration files into it, and creates a shell script that lists all jobs to be submitted to the queuing system. This also has the advantage that Jupyter notebooks can easily be integrated into this workflow component, as a local copy is generated in each directory and the output for each cell is created during the program execution.
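The idea of generating one job per permutation of experiment parameters can be sketched in a few lines. This is only an illustration of the concept and not the cloudmesh-ee implementation or its configuration format; the parameter names, values, and the directory naming scheme are hypothetical.

```python
import itertools

# Hypothetical experiment parameters; names and values are illustrative only.
parameters = {
    "epochs": [2, 10, 30],
    "gpu": ["a100", "v100"],
    "repeat": [1, 2],
}


def generate_jobs(parameters):
    """Return one dict per combination of parameter values (Cartesian product)."""
    names = list(parameters)
    return [
        dict(zip(names, values))
        for values in itertools.product(*(parameters[n] for n in names))
    ]


def job_directory(job):
    """Build a unique subdirectory name for a job from its parameter values."""
    return "_".join(f"{name}_{value}" for name, value in job.items())


jobs = generate_jobs(parameters)
for job in jobs:
    # A real generator would copy code and configuration into this directory
    # and append a submission command to a shell script.
    print(f"{job_directory(job)}/job.sh")
```

With three, two, and two values per parameter, the sketch yields twelve independent jobs, each of which can be sized to fit within the time limit of a single job submission.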

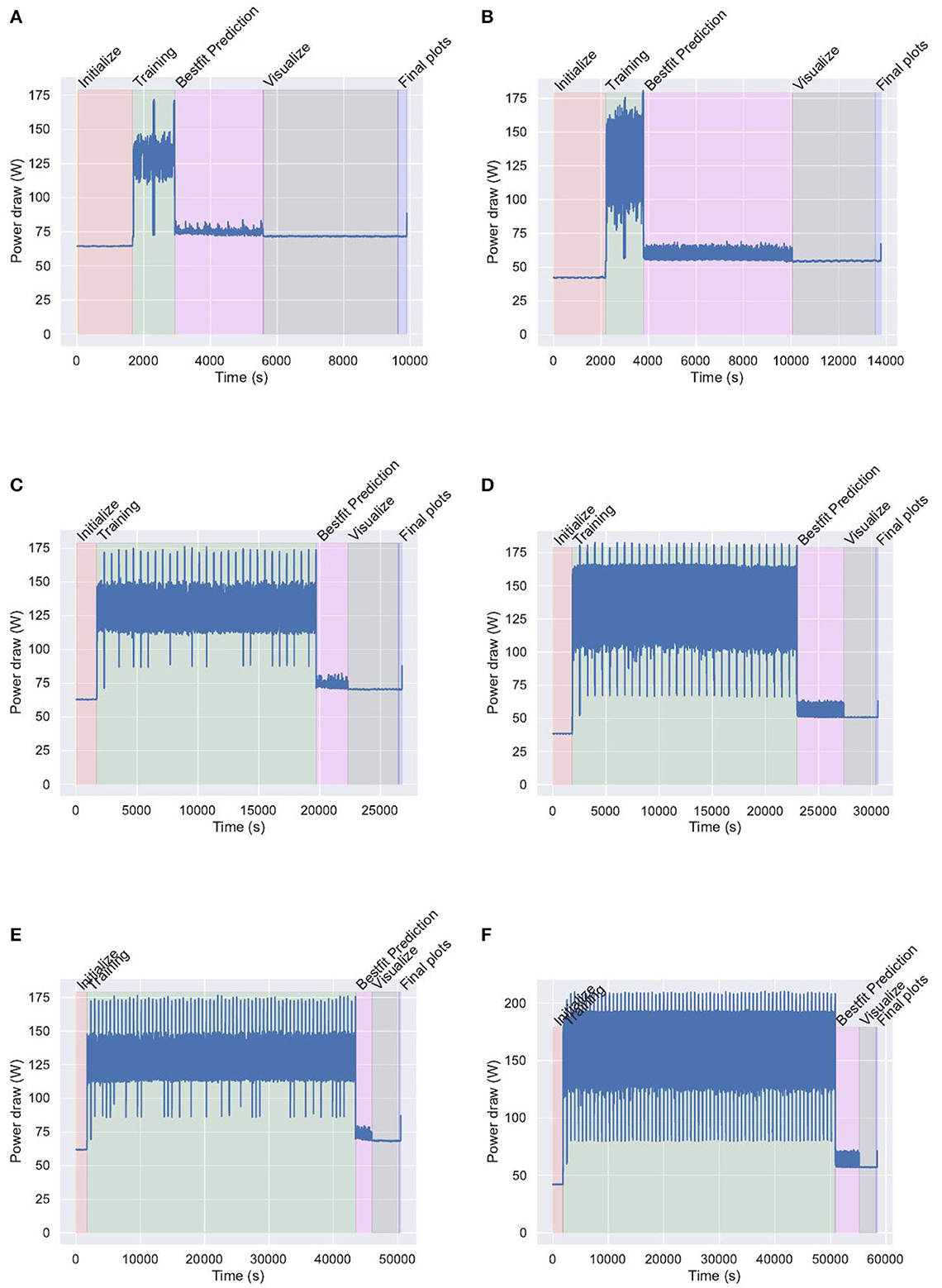

Furthermore, we need a simple system to measure the performance and energy, while communicating the data in an easy fashion to the users. This system was developed by von Laszewski and contains two components: (a) a general stopwatch and (b) a mechanism to monitor the GPU as discussed in Section 3.

We describe these systems briefly while focusing on their applicability for benchmarks.

3.3.1. Cloudmesh monitoring

For years, we have provided a convenient StopWatch package in Python to conduct time monitoring (von Laszewski, 2022a). It is very easy to use and is focused on runtime execution monitoring of time-consuming portions in a single-threaded Python application. Although MLCommons provides its own time-measuring component, called mllog, it is clear from the name that the focus is to create entries in a log file that is not easily readable by a human and may require postprocessing to make it usable. In contrast, our library contains not only simple labeled start and stop methods, but it also provides a convenient mechanism to print human-readable customizable performance tables. It is also possible to generate a result table in other formats such as comma-separated values (CSV), JavaScript object notation (JSON), YAML ain't markup language (YAML), plain text (TXT), and others. Human readability is especially important during a debugging phase when benchmarks are developed. Moreover, we also have developed a plugin interface to mllog that allows us to automatically create mllog entries in an additional log file, so the data may be used within MLCommons through specialized analytics programs. A use case is depicted next (we have omitted other advanced features such as function decorators for the StopWatch to keep the example simple).

    from cloudmesh.common.StopWatch import StopWatch
    from cloudmesh.common.StopWatch import StopWatchBlock

    # ...
    StopWatch.event("start")  # this is where the timer starts
    StopWatch.start("earthquake")  # this is when the main benchmark starts
    # ... run the earthquake code
    # ... additional timers could be used here
    with StopWatchBlock("calc"):  # this is how to use a block timer
        run_long_calculation()
    StopWatch.stop("earthquake")  # this is where the main benchmark ends
    StopWatch.benchmark()  # prints the current results

To also have direct access to MLCommons events, we have recently added the ability to call a StopWatch.event.

In addition to the StopWatch, we have developed a simple command line tool that can be used, for example, in batch scripts to monitor the GPU performance characteristics such as energy, temperature, and other parameters (von Laszewski, 2022b). The tool can be started in a batch script as follows and is currently supporting NVIDIA GPUs:

    cms gpu watch --gpu=0 --delay=0.5 --dense > gpu0.log &

Monitoring time and system GPU information can provide significant insights into the application's performance characteristics. Measurements from a subset of the planned experiments are also valuable for planning a time-effective schedule for the remaining parameter combinations.
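As an illustration of how periodically sampled power readings relate to energy, the following sketch integrates equally spaced power samples over time with the trapezoidal rule. It makes no assumption about the log format written by the monitoring tool; the function name and the sample values are hypothetical, and the sampling interval of 0.5 s simply mirrors the delay used in the command above.

```python
def energy_joules(power_watts, delay_s):
    """Integrate equally spaced power samples (W) with the trapezoidal rule."""
    if len(power_watts) < 2:
        return 0.0
    inner = sum(power_watts[1:-1])
    return delay_s * (0.5 * (power_watts[0] + power_watts[-1]) + inner)


# Hypothetical power draw in watts, one sample every 0.5 s.
samples = [60.0, 250.0, 255.0, 248.0, 70.0]
energy = energy_joules(samples, delay_s=0.5)
print(f"{energy:.1f} J = {energy / 3.6e6:.6f} kWh")
```

A shorter sampling delay gives a finer energy trace at the cost of larger log files and slightly more monitoring overhead.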

3.3.2. Analytics service pipelines

In many cases, a big data analysis is split up into multiple subtasks. These subtasks may be reusable in other analytics pipelines. Hence, it is desirable to be able to specify and use them in a coordinated fashion, allowing the reuse of the logic represented by the analysis. Users must have a clear understanding of what the analysis is doing and how it can be invoked and integrated.

The analysis must include an easy-to-understand specification that encourages reuse and provides sufficient details about its functionality, data dependency, and performance. Analytics services may have authentication, authorization, and access controls built-in that enable access by users controlled by the service providers.

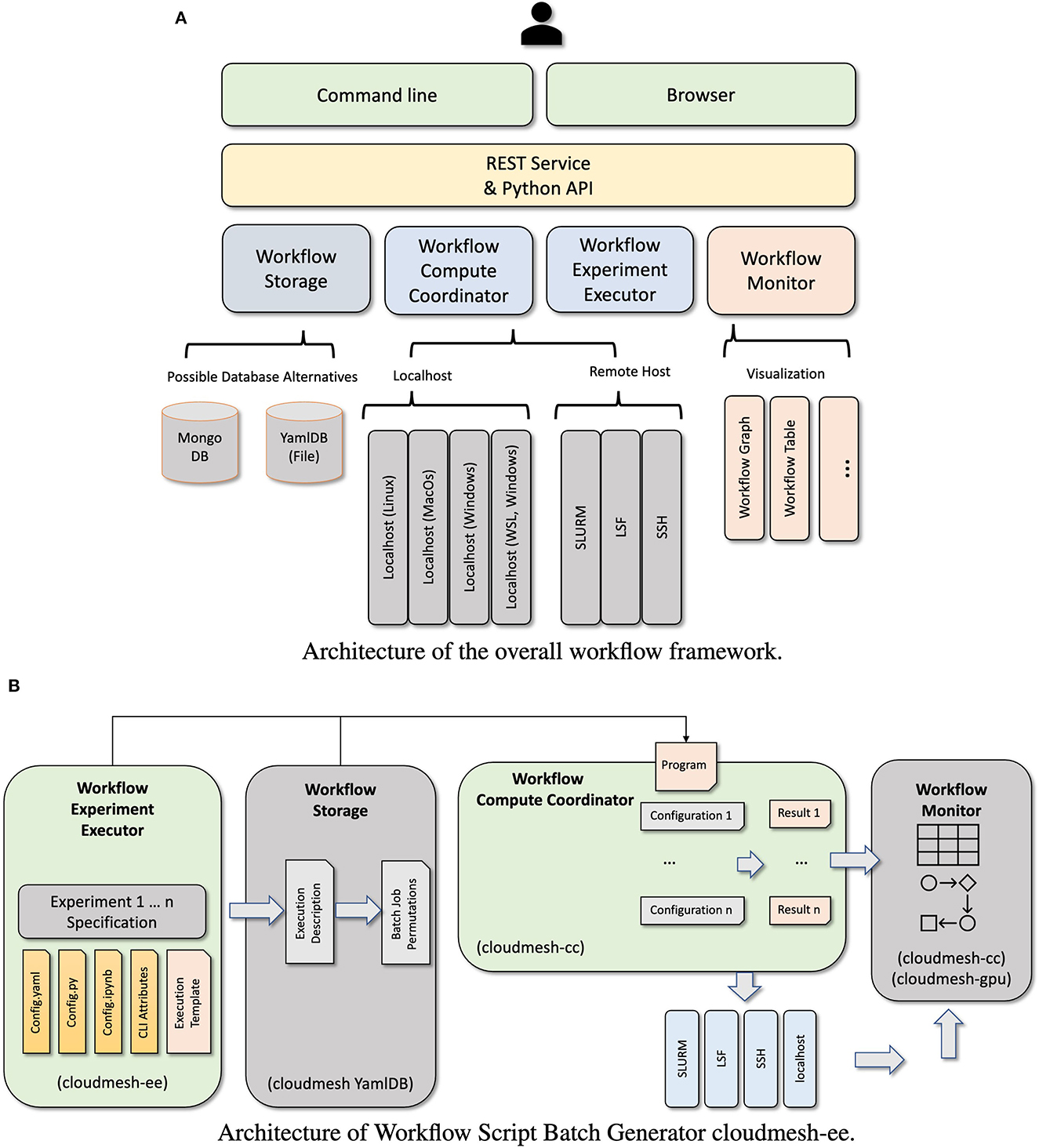

The overall architecture is depicted in Figure 3A. It showcases a layered architecture with components dealing with batch job generation, storage management, compute coordination, and monitoring. These components sit on top of other specialized systems that can easily be ported to other systems while using common system abstractions.

Figure 3. Architecture of the cloudmesh workflow service framework. (A) Architecture of the overall workflow framework. (B) Architecture of Workflow Script Batch Generator cloudmesh-ee. REST, REpresentational State Transfer; API, application programming interface; DB, database; WSL, windows subsystem for linux; SLURM, simple linux utility for resource management; LSF, load sharing facility; SSH, secure shell.

Rather than focusing on the details of this architecture, we concentrate on its high-level use, which we found to be very important in the educational activities and which also has implications for its use in research activities in general.

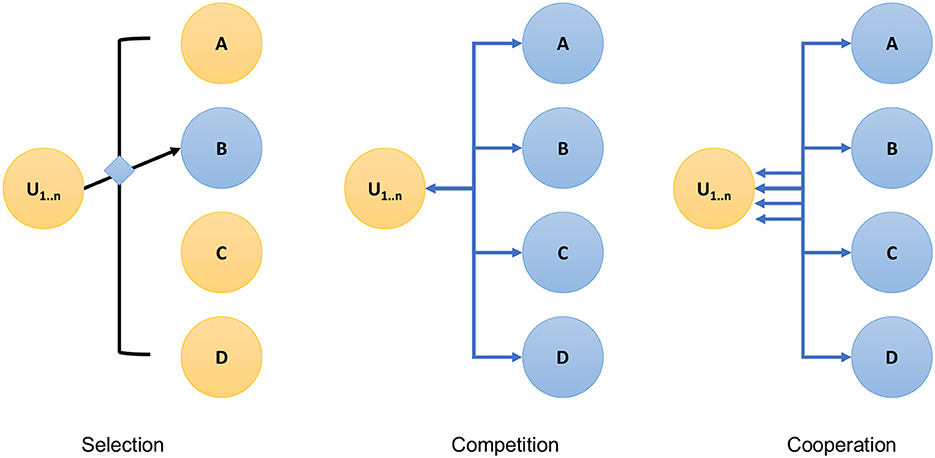

We identified three beneficial concepts as part of the analytics service pipelines (see Figure 4):

• Selection—Instead of performing all possible benchmarks, a specific parameter set is selected and only that is run.

• Competition—From a number of runs, a result is identified that is better than others. This may be, for example, the best of n benchmark runs.

• Cooperation—A number of analytics components are run (possibly in parallel) and the final result is a combination of the benchmark experiments run in cooperation. This, for example, could be that the job is split across multiple jobs due to resource limitations.

In the earthquake code, we observed that all three patterns are used in the benchmark process.
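The three patterns can be made concrete with a small sketch. The `run_benchmark` function below is a hypothetical stand-in that returns a loss-like score instead of running a real benchmark, and the parameter names and values are illustrative only.

```python
def run_benchmark(params):
    """Hypothetical stand-in for a benchmark run; returns a loss-like score."""
    return abs(params["epochs"] - 30) / 30 + params["lr"]


experiments = [
    {"epochs": e, "lr": lr} for e in (2, 10, 30, 70) for lr in (0.1, 0.01)
]

# Selection: run only a chosen subset of all possible parameter sets.
selected = [p for p in experiments if p["epochs"] <= 30]

# Competition: among several runs, keep the one with the best (lowest) score.
results = [(run_benchmark(p), p) for p in selected]
best_score, best_params = min(results, key=lambda r: r[0])

# Cooperation: split the work across several jobs and combine their results.
chunks = [selected[i::3] for i in range(3)]
partial = [[run_benchmark(p) for p in chunk] for chunk in chunks]
combined = sorted(score for part in partial for score in part)

print(f"selected {len(selected)} of {len(experiments)} experiments")
print(f"best parameters: {best_params}")
print(f"combined {len(combined)} scores from {len(chunks)} jobs")
```

In practice, each chunk in the cooperation step would correspond to a separately submitted job, and the combination step would read the results the jobs have written to storage.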

3.3.3. Workflow compute coordinator

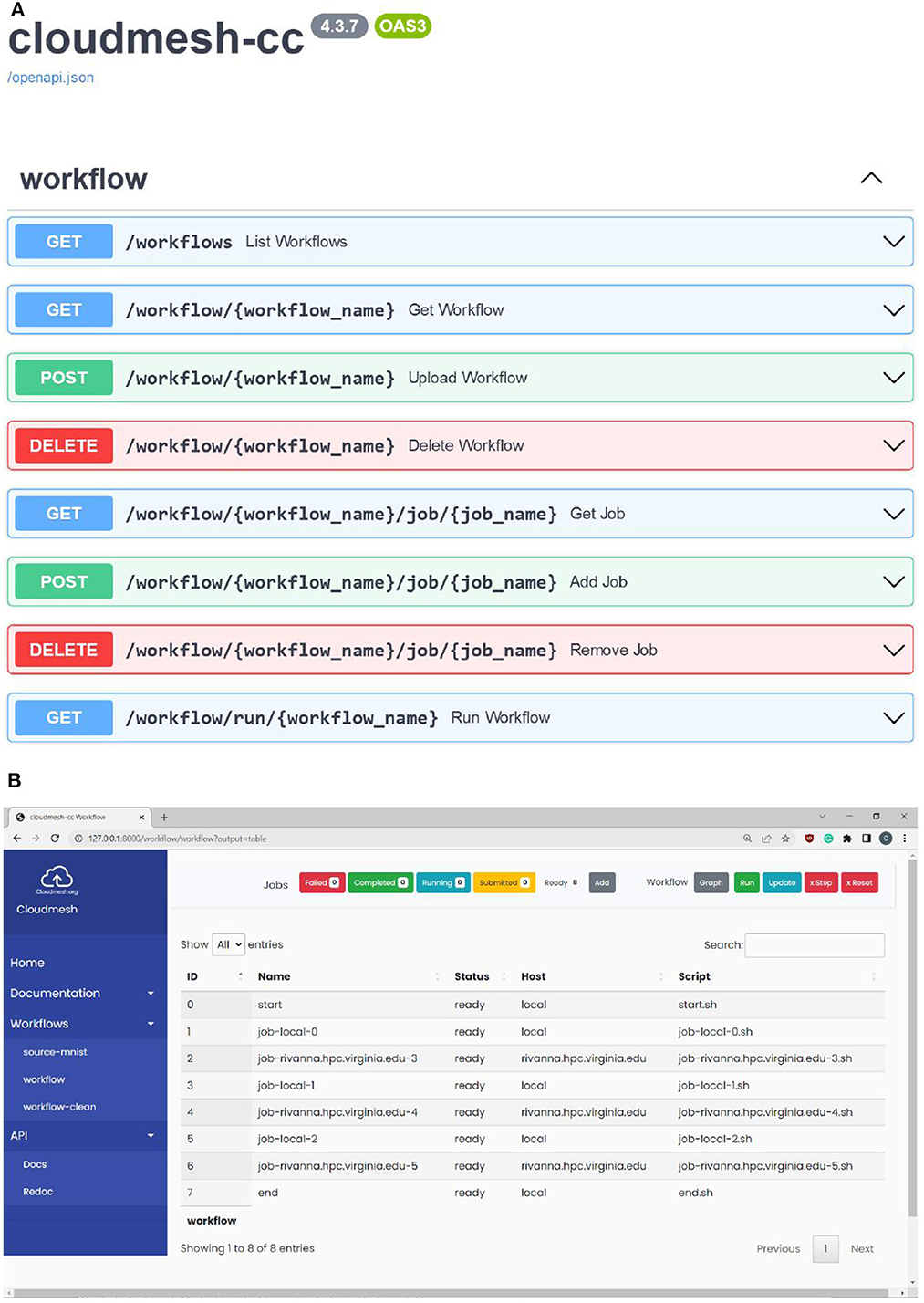

Within HPC environments, scientific tasks can leverage the processing power of a supercomputer so they can run at previously unobtainable high speeds or utilize specialized hardware for acceleration that otherwise is not available to the user. HPC can be used for analytic programs that leverage machine learning applied to large data sets to, for example, predict future values or to model current states. For such high-complexity projects, there are often multiple complex programs that may be running repeatedly in either competition or cooperation, as is also the case in the earthquake forecast application. This may even include resources in the same or different data centers on which the benchmarks are run. To simplify the execution on such infrastructures, we developed a hybrid multi-cloud analytics service framework to manage heterogeneous and remote workflows, queues, and jobs. It can be used through a Python API, the command line, and a REST service. It is supported on multiple operating systems, including macOS, Linux, and Windows 10 and 11. The workflow is specified via an easy-to-define YAML file. Specifically, we have developed a library called Cloudmesh Compute Coordinator (cloudmesh-cc) (von Laszewski et al., 2022) that adds workflow features to control the execution of jobs on remote compute resources while at the same time leveraging capabilities provided by the local compute environments to directly interface with graphical visualizations better suited for the desktop. The goal is to provide numerous workflows that in cooperation enhance the experience of the analytics tasks. This includes a REST service (see Figure 5A) and command line tools to interact with it.
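The core task of a workflow coordinator, running jobs in an order that respects their dependencies while allowing independent jobs to run in parallel, can be sketched with Python's standard `graphlib` module (Python 3.9 or later). This is not the cloudmesh-cc API or its YAML format; the job names and the dependency structure are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each job maps to the set of jobs it depends on.
workflow = {
    "fetch-data": set(),
    "uncompress": {"fetch-data"},
    "train-a100": {"uncompress"},
    "train-v100": {"uncompress"},
    "analyze": {"train-a100", "train-v100"},
}

sorter = TopologicalSorter(workflow)
sorter.prepare()

stages = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # jobs whose dependencies are complete
    stages.append(ready)                # these could be submitted in parallel
    sorter.done(*ready)                 # mark them finished (no real execution)

for number, stage in enumerate(stages, start=1):
    print(f"stage {number}: {', '.join(stage)}")
```

In this sketch the two training jobs land in the same stage, which reflects how runs on different systems can proceed side by side before a final analysis step combines their results.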

We have tested the framework while running various MNIST application examples, including multilayer perceptron, LSTM, auto-encoder, CNNs and RNNs, and distributed training. A much larger application using earthquake prediction has also been used. Recently, the framework was applied by students to all applications in the MLCommons Applications Working Group. Results of using it outside of the earthquake code are available in von Laszewski et al. (2023).

Figure 5A shows the REST specification and Figure 5B shows the graphical user interface.

3.3.4. Parameterized experiment workflow job generator

In traditional ML workflows, hyperparameter tuning and configuration are key elements in assessing and optimizing the performance of models. However, scaling hyperparameters for highly parallel execution with heterogeneous hardware is complex.