ORIGINAL RESEARCH article

Front. Educ., 05 March 2025

Sec. Assessment, Testing and Applied Measurement

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1523124

This article is part of the Research Topic: Student Voices in Formative Assessment Feedback

Formative assessment has been suggested as a means of supporting student motivation. However, empirical studies have shown mixed effects of formative assessment interventions on students’ motivation, making it necessary to understand the mechanisms underlying these effects. We analyzed a formative classroom practice implemented by a 10th-grade first-language teacher over 7 months. Teacher logs, classroom observations and a teacher interview were used to collect data for characterizing the formative assessment practice. Changes in students’ satisfaction regarding the basic psychological needs of perceived autonomy, competence and relatedness, as well as changes in student motivation manifesting as engagement in learning activities and autonomous types of motivation, were measured by pre- and post-questionnaires in the intervention class and four comparison classes. Since the intraclass correlation values ICC(1) and ICC(2) were low, we treated the comparison classes as one group, and t-tests were used to test the significance of the differences in changes in psychological needs satisfaction and motivation between the intervention class and the comparison classes. Path analysis was conducted to investigate whether a possible influence of the intervention on autonomous motivation and behavioral engagement would be mediated by basic psychological needs satisfaction. The analysis of the classroom practice in the intervention class shows that both teacher and students were proactive agents in formative assessment processes. The analysis of the quantitative data shows that students’ psychological needs satisfaction increased more in the intervention class than in the comparison classes, and that this needs satisfaction mediated an effect on students’ behavioral engagement and autonomous motivation.

Motivation is the driving force of human behavior and a prerequisite for student learning. It is therefore paramount to find ways to effectively promote students’ motivation, and formative assessment is a classroom practice that has been suggested to improve motivation (e.g., Clark, 2012). Some empirical evidence for positive effects of formative assessment on student motivation has been found, but the effects have varied substantially. To understand why some formative assessment practices have certain effects on motivation while others do not, it is necessary to understand the mechanisms underlying the effects and how they function for different characteristics of formative assessment practices.

One way formative assessment may affect students’ motivation is by enhancing students’ satisfaction with the three psychological needs of competence, autonomy and relatedness (e.g., Hondrich et al., 2018; Leenknecht et al., 2021; Pat-El et al., 2012). However, few studies have empirically investigated the three psychological needs as mediators of effects of formative assessment on students’ motivation. In particular, studies within an ecologically valid regular classroom environment are scarce (Hondrich et al., 2018). Furthermore, there is a lack of studies investigating the three psychological needs as mediators for the effects on motivations manifested both as behavioral engagement and type of motivation, and for the mediating role of a composite measure of the satisfaction of all three needs. Studies using a composite measure could provide further valuable insight into the role of students’ psychological needs satisfaction since, according to self-determination theory (Ryan and Deci, 2020), all three needs are important for students to be autonomously motivated.

In this study, we describe and analyze a formative assessment practice involving a 10th-grade first-language teacher and her students. This practice was carried out as a daily classroom practice for 7 months. We investigate changes in the students’ psychological needs satisfaction, measuring both all three needs individually and a composite of all three needs. We then compare these students’ changes with changes in four comparison classes. We also investigate whether the composite measure mediates an influence of the formative assessment practice on students’ behavioral engagement and autonomous motivation.

Given the critical role that motivation plays in student learning, it is important to find ways to effectively promote students’ motivation. Students’ motivation to learn may be manifested in their engagement in learning activities, that is, the extent to which they are actively involved in learning activities (Skinner et al., 2009). Engagement is a multidimensional construct comprising four distinct yet interrelated aspects. Behavioral engagement pertains to the extent of the student’s involvement in learning activities, reflecting their on-task attention and effort. Emotional engagement refers to the presence of positive emotions, such as enjoyment, during learning activities. Agentic engagement involves the student’s intentional, proactive, and constructive contributions to the teaching and learning activities, such as offering suggestions or expressing preferences. Finally, cognitive engagement relates to the student’s strategic approach to learning, involving the use of advanced learning techniques (Matos et al., 2018). Studies on engagement may include all aspects or only individual ones. In the latter case, naturally, the results cannot take the relationships between the aspects into account. However, a focus on individual aspects may sometimes be necessary, and many studies take this approach. In the present study, to keep the student questionnaires sufficiently short while also including measures of different types of motivation, we focus on one individual aspect of engagement: behavioral engagement. This choice is based on research that has consistently reported higher levels of student behavioral engagement to be associated with higher levels of achievement (Fredricks et al., 2004; Hospel et al., 2016), and other forms of engagement (e.g., emotional and cognitive) to be weaker predictors of achievement than behavioral engagement (Stefansson et al., 2016).

While engagement refers to a manifestation of motivation in terms of what the students do, how much they do it, and with what intensity they do it, students may also have different types of motivation; that is, they may be motivated for different reasons. Self-determination theory (Ryan and Deci, 2020) describes such different types of motivation. Students will be intrinsically motivated to engage in activities that they experience as inherently interesting or fun and that allow them to feel competent and autonomous. Extrinsic motivation, in contrast, does not require the activities to be of interest to the students; rather, this type of motivation refers to engaging in activities as a means to an end: students choose to engage in activities because they believe they will lead to positive outcomes or prevent negative outcomes. Extrinsic motivation differs in the extent to which the reasons for students’ actions are self-determined or autonomous. Students may engage in activities because of external rewards, to avoid discomfort or punishment, to avoid feeling guilty or to attain ego-enhancement or pride. Such motivation reflects external control and is termed controlled motivation. Students may also have a more autonomous form of extrinsic motivation, engaging in an activity because they personally find it valuable and have identified its regulation as their own. Both this latter form of extrinsic motivation and intrinsic motivation are termed autonomous types of motivation. A student’s motivation type has consequences for learning and well-being. Autonomous types of motivation have been shown to be associated with not only greater engagement but also higher quality learning and greater psychological well-being. Controlled motivation, in contrast, has been shown to be associated with negative emotions and a poorer ability to cope with failures (Ryan and Deci, 2020).

Thus, positive student outcomes can be expected from facilitating students’ motivation in terms of engagement in learning activities and autonomous types of motivation. However, successfully supporting such motivation is not easy. Studies have shown that student engagement often decreases and student motivation becomes less autonomous throughout the school years (Ryan and Deci, 2020; Wylie and Hodgen, 2012).

Formative assessment is a classroom practice that has been suggested as a possible way to support student motivation (e.g., Clark, 2012). However, as argued by Yan and Chiu (2022), only formative assessment with certain characteristics and implemented to a sufficient extent is likely to have a significant effect on students’ motivation. In addition, implementing high-quality formative assessment is associated with challenges and barriers to overcome (e.g., Heitink et al., 2016; Yan et al., 2021). Formative assessment can be defined as follows:

Practice in a classroom is formative to the extent that evidence about student achievement is elicited, interpreted, and used by teachers, learners, or their peers, to make decisions about the next steps in instruction that are likely to be better, or better founded, than the decisions they would have taken in the absence of the evidence that was elicited (Black and Wiliam, 2009, p. 9).

Thus, formative assessment is a teaching and learning practice. Such classroom practices may differ, but they are unified by the common core characteristics of teachers and/or students gathering information about the students’ learning and adapting teaching and/or learning to meet the identified learning needs. For example, the definition above (Black and Wiliam, 2009, p. 9) affords approaches to formative assessment with a focus on the teachers gathering evidence of student learning through classroom dialogue or short written tests and adapting feedback or subsequent learning activities to the students’ knowledge, skills and learning needs identified in these assessments. In two other approaches to formative assessment, students may play a more proactive role in the core formative assessment processes. The students may support each other’s learning through peer-assessment and subsequent peer-feedback, where the latter involves providing explanations and suggestions to peers on how they can act to reach their learning goals. Students may also be proactive agents in formative assessment processes as self-regulated learners who assess their own learning and take subsequent action to meet the identified learning needs. When conducting a formative assessment practice in which the students are proactive agents in the core formative assessment processes, the teacher’s role is to help the students become motivated and proficient in carrying out these processes. Formative assessment practices may also include a combination of all the above-mentioned approaches.

A few empirical studies have found positive relationships between formative assessment and grade 1–12 students’ behavioral engagement. In a cross-sectional study, Federici et al. (2016) found a positive association between students’ perception of their teachers’ formative assessment practice and aspects of behavioral engagement; moreover, intervention studies by Näsström et al. (2021), Palmberg et al. (2024) and Wong (2017) all found that formative assessment had a positive effect on students’ behavioral engagement. Relationships between formative assessment with grade 1–12 students and the students’ autonomous motivation have also been empirically investigated. Cross-sectional studies have found positive relationships between formative assessment and students’ autonomous types of motivation (e.g., Baas et al., 2020; Federici et al., 2016; Pat-El et al., 2012). However, findings from intervention studies on the effect of formative assessment practices on students’ autonomous motivation range from no effect to a moderate effect (e.g., Förster and Souvignier, 2014; Hondrich et al., 2018; Meusen-Beekman et al., 2016; Näsström et al., 2021; Palmberg et al., 2024).

To understand why some formative assessment practices have certain effects on motivation while others do not, it is necessary to understand the mechanisms underlying the effects and how they function for different characteristics of formative assessment practices. One way formative assessment may affect students’ motivation is by enhancing students’ satisfaction with the three psychological needs of competence, autonomy and relatedness. According to self-determination theory (Ryan and Deci, 2020) these psychological needs influence students’ autonomous types of motivation. According to the self-system model of motivational development, fulfilment of these psychological needs may also influence students’ engagement (Skinner et al., 2008). Moreover, it is hypothesized that an increase in autonomous motivation leads to greater student engagement (Ryan and Deci, 2020). Formative assessment may facilitate needs satisfaction in several ways. Teacher feedback helping students monitor their learning progress and providing support for how goals and criteria can be met may make the students’ learning progress more explicit, and recognizing learning gains would foster feelings of competence (Andrade and Brookhart, 2020; Hondrich et al., 2018). Teacher feedback focusing on students’ effort, task-solving processes and learning progress may also influence students’ sense of autonomy (Andrade and Brookhart, 2020). Heritage and Wylie (2018) emphasize the inclusion of students in these processes of assessment and feedback. They argue that supporting students as peer-assisted and self-regulated learners by arranging for information from self-assessment and peer-assessment to affect classroom practices would enhance students’ sense of both autonomy and relatedness.

Although the numbers of studies investigating the effects of formative assessment on psychological needs vary between these needs, positive associations and effects have been found for all three needs—that is, the need for a sense of competence (Granberg et al., 2021; Hondrich et al., 2018; Pat-El et al., 2012; Rakoczy et al., 2019; Wollenschläger et al., 2016), a sense of autonomy (Granberg et al., 2021; Pat-El et al., 2012) and a sense of relatedness (Pat-El et al., 2012). However, very few studies have empirically investigated the three psychological needs as mediators of effects of formative assessment on students’ behavioral engagement or type of motivation. In a cross-sectional questionnaire study, Pat-El et al. (2012) found that students’ perceived competence and relatedness mediated an association between formative assessment and autonomous motivation, while perceived autonomy did not. Hondrich et al. (2018) found an indirect effect of formative assessment on autonomous motivation via perceived competence (sense of autonomy and relatedness were not included in the study). Kiemer et al. (2015) investigated students’ perceptions of their teachers’ support of their psychological needs, rather than of the actual fulfilment of these needs. They found that students’ perceived support of both autonomy and competence mediated the association between formative assessment and students’ autonomous motivation. All three of these studies focused on formative assessment in the form of teachers’ tasks or questions and their feedback or adapted instruction, and not on practices in which students play a more proactive role in the core formative assessment processes as peer- or self-assessors. In addition, none of these studies included an investigation of how formative assessment affected students’ engagement. 
Furthermore, although some studies investigating research questions other than those in the present study have used a composite measure of the satisfaction of the three psychological needs (e.g., Haerens et al., 2019), to the best of our knowledge none have investigated the potentially mediating role of a composite measure of the satisfaction of the three basic psychological needs in the effects of formative assessment on either student engagement or autonomous motivation.

In summary, formative assessment has been proposed as a way of enhancing student motivation, but studies that have investigated the effects of formative assessment on motivation within an ecologically valid, regular classroom environment are scarce (Hondrich et al., 2018). In addition, existing studies show a substantial variation in the effects. However, studies empirically investigating the mechanisms underlying the effects are few. In particular, very few studies investigate the three psychological needs as mediators of the effects of formative assessment on behavioral engagement and type of motivation. In addition, we did not find any studies investigating such mediating effects from practices in which both the teacher and the students are proactive agents in the core formative assessment processes. Furthermore, there is a similar lack of studies investigating the mediating role of a composite measure of needs satisfaction.

In the present study we aim to contribute to filling the above-mentioned gaps in the literature by investigating the mediating effects of the three psychological needs on student motivation in an ecologically valid formative assessment practice in which both teacher and students are proactive agents in the formative assessment processes. In the investigation we will use a composite measure of needs satisfaction. To be able to design formative assessment practices with the largest effects on motivation, we need to understand why some formative assessment practices have certain effects on motivation while others do not. It is necessary to understand the mechanisms underlying the effects and how they function for different characteristics of formative assessment practices. Investigating the mediating effects of the psychological needs in practices where both teachers and students are proactive agents in the formative assessment processes is important, since such practices have the potential to provide more ways of influencing student motivation than practices in which the teacher is the main proactive agent (Palmberg et al., 2024). Using a composite measure of needs satisfaction in this investigation could complement previous insights about the role of students’ psychological needs satisfaction since, according to self-determination theory (Ryan and Deci, 2020), all three needs are important for students to be autonomously motivated, and existing studies have all used measurements of each individual need satisfaction.

In this study, we describe and analyze a classroom practice involving a 10th-grade first-language teacher (referred to using the pseudonym Jenny) and her students. Jenny aimed to engage every student in formative assessment activities, and the practice was carried out over 7 months. We investigate changes in the students’ psychological needs satisfaction, measuring both all three needs individually and a composite of all three needs. We then compare these students’ changes with changes in four comparison classes. We also investigate whether the composite measure mediates an influence of the formative assessment practice on students’ behavioral engagement and autonomous motivation. We ask the following research questions:

1. What are the characteristics of Jenny’s formative assessment practice?

2. Does satisfaction of the three psychological needs increase in students in the intervention class, and how do changes in psychological needs satisfaction in the intervention class compare with changes in the comparison classes?

3. Does students’ basic psychological needs satisfaction mediate an influence of the formative assessment practice on their behavioral engagement and autonomous motivation?

Research questions 2 and 3 are the main research questions in the study, but for the answers to these questions to be meaningful, it is essential to identify the characteristics of the implemented formative assessment practice (Research question 1).

Jenny had participated in a professional development programme (PDP) in formative assessment the previous year, and this year she aimed at implementing some of the activities she had learned from the PDP. Data used for the characterization of Jenny’s formative assessment practice was collected through teacher logs, classroom observations and a teacher interview. The students’ basic psychological needs satisfaction, behavioral engagement and autonomous motivation were measured using a questionnaire in all five classes at the beginning and end of the intervention (fall and spring). The intervention class and comparison classes were compared in terms of changes in the students’ responses to the questionnaire items before and after the intervention, and mediation was studied through path analysis. We were not given the opportunity to follow and analyze the practices of the teachers in the comparison classes. However, Jenny was asked not to implement any new formative assessment activities during the first 2 months of the term in order not to influence the students’ responses to the first questionnaire. This means that the first questionnaire can be seen as a baseline measurement that we used to compare the effects of the teaching in the comparison classes and Jenny’s teaching before she had implemented her formative assessment practice. Taking this baseline measurement into account, the second questionnaire was then used to compare the effects of Jenny’s implemented formative assessment activities with the effects of the teaching in the comparison classes, reducing effects from variables pertaining to the intervention teacher’s personal characteristics. The study design is a comparison between a changed practice and business-as-usual practices.

One intervention class taught by Jenny and four comparison classes at the same upper secondary school in Sweden participated in the present study. Jenny, who had a few years of teaching experience and had participated in a professional development program (PDP) in formative assessment the previous year, started to teach a Swedish language course for a class enrolled in the technology program and aimed to implement a formative assessment practice. The four comparison classes took the same Swedish language course, but their teachers had not participated in the PDP and continued to teach the same way they had done in previous school years. All students were approximately 17 years old, came from different social and cultural backgrounds, and were enrolled in academic programs that do not differ much regarding students’ prior academic achievement. Twenty students in the intervention class and 72 students in the comparison classes agreed to participate and completed both questionnaires. The distribution of students in the different classes is reported in Table 1.

Three sources of data (teacher logs, observations and an interview) were used for triangulation to ensure the validity and reliability of the description of the classroom practice.

To obtain data about the enactment of, intentions with and experiences from the formative assessment implementation, Jenny was asked to make log notes shortly after having a lesson or a series of lessons. The log notes were made in a web-based form, where the teacher provided descriptions of implemented activities, reasons for choosing the activities, evaluations of the implementations, descriptions of what had worked out well and what had not worked out, and further comments. Jenny wrote 14 logs during the 7-month period. She also spontaneously wrote five emails commenting on the logs. Since the comments sometimes clarified the logs, the emails were compiled into the log text.

The observations and interview were used to collect further examples and details about the implemented classroom practice. The framework by Wiliam and Thompson (2008) that operationalizes the definition of formative assessment by Black and Wiliam (2009) was used in the data analysis. Both the observation scheme and the interview guide were structured in accordance with the key strategies in the framework. These key strategies are: (KS 1) working with students to achieve a common interpretation of the learning goals; (KS 2) eliciting evidence of student learning; (KS 3) providing feedback that moves learners forward; (KS 4) activating students as instructional resources for one another (peer-assessment and peer-feedback); and (KS 5) activating students as the owners of their own learning (self-assessment and subsequent adjustment of learning).

Data was collected from six classroom observations (60–80 min). In addition to focusing on the five key strategies, the observation scheme included support questions such as: ‘How are the learning goals presented?’; ‘How is information about student learning gathered, and how is the information used?’; and ‘How can students identify their progress?’. Indications of how commonly the activities were used in the classroom were noted, for example, whether the students reacted with surprise or whether the material seemed to have been used before. The researcher took notes throughout the observation. If Jenny informally spoke to the researcher before, during or after the observation, that information was included in the field notes.

The interview conducted at the end of the intervention was 80 min long. It was audio-recorded and transcribed. During the interview, information from the teacher log and classroom observations was used to initiate or boost the conversation. Aside from asking for examples and details about the implemented classroom practice, the interview included questions about Jenny’s reasons for using those implemented activities. The interview guide can be found in the Supplementary Material.

To identify and describe the characteristics of the formative assessment practice used by Jenny, an analysis of the collected data was conducted in three steps. First, we identified activities that align with any of the five key strategies in the formative assessment framework by Wiliam and Thompson (2008) described above. Activities not including any characteristics of formative assessment were excluded from the next step of the analysis. In the second step, we also excluded activities that were not regularly used by the teacher; for example, activities were excluded if Jenny expressed or indicated that the activity was new or had only been tested a few times, or if data from the observations indicated that the activity was not commonly used (unused material, uncertain or surprised students, etc.). As a last step, we listed and rigorously described the identified regularly used activities using all available data (logs, observations, and interview) as the basis to characterize Jenny’s formative assessment practice. The four activities that most characterize Jenny’s practice are presented in the results section. The analysis was generally carried out by the first author, but with the assistance of the other authors at times of uncertainty.

Research questions two and three examine the possible effect of the intervention on changes in students’ basic psychological needs satisfaction and whether changes in needs satisfaction mediate a possible influence of formative assessment on students’ changes in behavioral engagement and autonomous motivation. Therefore, measures of the changes in each of these constructs were obtained by inviting students to answer a questionnaire before and after the intervention. The questionnaire comprised 27 items. All items measuring behavioral engagement and basic psychological needs satisfaction were statements the students could respond to on a scale from 1 (not at all) to 7 (fully agree). The items measuring students’ autonomous motivation were statements of reasons for working during lessons or for learning the course content. The students were asked to mark the extent to which these reasons were important on a scale from 1 (not at all a reason) to 7 (really important reason).

Five items measuring behavioral engagement were adaptations of items from Skinner et al.’s (2009) questionnaire items on behavioral engagement. Items measuring needs satisfaction of autonomy (four items), competence (four items) and relatedness (six items) were also adapted from previously used questionnaire items (Deci et al., 2001; Ilardi et al., 1993; Kasser et al., 1992). Eight items measuring autonomous motivation were adapted from Ryan and Connell’s (1989) Self-Regulation Questionnaire. The adaptations were made to suit the context of the participants, for example by changing from a work context to the school context. Before the study, these adaptations were piloted with students in four other classes of the same age group to ensure that the questions were easy to understand. The questionnaire used in this study, and subsets of it, have been used in several other studies (Granberg et al., 2021; Näsström et al., 2021; Hofverberg et al., 2022; Palmberg et al., 2024). A list of all questionnaire items can be found in the Supplementary Material. An example of an item measuring behavioral engagement is: ‘I am always focused on what I’m supposed to do during lessons’. Example items measuring need satisfaction of autonomy, competence and relatedness are, respectively: ‘I feel that, if I want to, I have the opportunity to influence what we do during lessons’; ‘I am sure I have the ability to understand the content in this subject’; and ‘My classmates care about me’. An example of an item measuring autonomous motivation is: ‘When I work during lessons with the tasks I have been assigned, I do it because I want to learn new things’. Cronbach’s alpha for each set of the items in spring/fall was 0.89/0.86 for behavioral engagement, 0.85/0.84 for need satisfaction of autonomy, 0.82/0.87 for need satisfaction of competence, 0.94/0.89 for need satisfaction of relatedness and 0.87/0.87 for autonomous motivation, indicating good internal consistency of the scales.
To assess unidimensionality of each scale, we conducted exploratory factor analysis on each set of items for each time point. We used principal axis factor as extraction method, and for each scale, at each time point, parallel analysis suggested that only one factor should be retained, indicating that answers to the items are influenced by the same latent factor. We chose not to do exploratory factor analysis on all items for each time point because the low subject to item ratio (<5:1) would make the risk of misclassifying items and not finding the correct factor structure high (Costello and Osborne, 2019). The mean of the items connected to each construct (students’ behavioral engagement; need satisfaction of autonomy, competence, and relatedness; and autonomous motivation) at each time point was used as a representation of that construct at the time point. The composite measure of students’ basic psychological needs satisfaction (BPNS) was calculated by adding the averages of each basic need satisfaction for each time point. Cronbach’s alpha for this measure – calculated for all items measuring the three different psychological needs – was 0.86 and 0.81, respectively, for before and after the intervention.
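
The scoring described above can be sketched in a few lines of Python. This is a minimal illustration only, not the authors' analysis script: the function names are hypothetical, the input layout (one row of item responses per student) is an assumption, and the alpha function uses the standard textbook formula for Cronbach's alpha.

```python
from statistics import mean, variance


def scale_mean(responses):
    """Mean of one student's item responses (1-7 scale) for one construct."""
    return mean(responses)


def composite_bpns(autonomy, competence, relatedness):
    """Composite needs satisfaction for one student at one time point:
    the sum of the three need-satisfaction scale means, as described in the text."""
    return scale_mean(autonomy) + scale_mean(competence) + scale_mean(relatedness)


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a scale.

    item_scores: list of rows, one row per student, one column per item
    (assumed layout; complete responses only).
    """
    k = len(item_scores[0])
    # Sample variance of each item across students.
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of the students' total scores.
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

With four autonomy items, four competence items and six relatedness items, `composite_bpns` can range from 3 to 21, since each scale mean lies between 1 and 7.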

To investigate the intervention class students’ changes in needs satisfaction of autonomy, competence and relatedness, independently or as a composite measure (RQ2), means and mean differences in the responses to the questionnaire items pertaining to these constructs between fall and spring were calculated for students in the intervention class and those in the comparison classes. To assess whether basic psychological needs satisfaction changed in the intervention class, for each need and as a composite measure, paired-samples t-tests were conducted and Hedges’ g (Hedges, 1981) was calculated as an indication of the size of the difference. Comparisons between the intervention class and the comparison classes (as one group) were performed through independent-samples t-tests. For the need satisfaction of relatedness, Welch’s t-test was used, since homogeneity of variances could not be assumed. For each comparison, Hedges’ g was again calculated as an indication of the size of the difference. Although the students were nested within classes, we treated the comparison classes as one group after having examined two types of intraclass correlations in accordance with Bliese (2000). Lam et al. (2015) suggest that multilevel analysis is warranted if ICC(1) exceeds 0.1 and if ICC(2) exceeds 0.7. In the comparison classes, ICC(1) < 0.03 and ICC(2) < 0.34 for all measures. The low ICC(1) means that between-class variation is very small and does not contribute much to the total variation of scores, and the low ICC(2) indicates that the class means are not reliable, that is, the classes cannot be reliably distinguished by their mean ratings.
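
The statistics named in this paragraph can be illustrated with a short Python sketch. It is not the authors' code, and several choices are assumptions: the function names are hypothetical, Hedges' g is shown only for two independent groups using the common approximate small-sample correction, and the ICC formulas are the one-way ANOVA versions usually attributed to Bliese (2000), with the average class size standing in for the group size when classes are unequal.

```python
from math import sqrt
from statistics import mean, variance


def hedges_g(x, y):
    """Standardized mean difference between two independent groups,
    with the approximate small-sample bias correction."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    d = (mean(x) - mean(y)) / sqrt(pooled_var)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return d * correction


def welch_t(x, y):
    """Welch's t statistic and its Welch-Satterthwaite degrees of freedom
    (no assumption of equal variances)."""
    a, b = variance(x) / len(x), variance(y) / len(y)
    t = (mean(x) - mean(y)) / sqrt(a + b)
    df = (a + b) ** 2 / (a ** 2 / (len(x) - 1) + b ** 2 / (len(y) - 1))
    return t, df


def icc1_icc2(classes):
    """ICC(1) and ICC(2) from a one-way ANOVA.

    classes: list of lists, one inner list of student scores per class.
    Assumption: average class size is used as the group size k.
    """
    scores = [s for c in classes for s in c]
    grand = mean(scores)
    n_groups = len(classes)
    ms_between = sum(len(c) * (mean(c) - grand) ** 2 for c in classes) / (n_groups - 1)
    ms_within = sum((s - mean(c)) ** 2 for c in classes for s in c) / (len(scores) - n_groups)
    k = len(scores) / n_groups
    icc1 = (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
    icc2 = (ms_between - ms_within) / ms_between
    return icc1, icc2
```

In a design like the one described, `x` and `y` would hold the fall-to-spring change scores of the intervention class and the pooled comparison classes, and `classes` would hold the scores of the four comparison classes separately.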

To investigate whether a possible influence of the intervention on autonomous motivation and behavioral engagement is mediated by basic psychological needs satisfaction (RQ3), we conducted path analysis with Mplus 8.4 on two models. The models were specified with relationships between changes in basic psychological needs satisfaction, autonomous motivation and behavioral engagement, as proposed by Ryan and Deci (2020) and Skinner et al. (2008) (see Section 1.4). First, we used a saturated model in which the intervention was specified to predict changes in the composite measure of basic psychological needs satisfaction (BPNS), students’ autonomous motivation and behavioral engagement, where changes in BPNS predict changes in students’ autonomous motivation and behavioral engagement, and where changes in autonomous motivation predict behavioral engagement. Then, we compared the first model with a more parsimonious model in which BPNS fully mediated the influence of the intervention. All analyses were run using the maximum likelihood estimator and bootstrapping for standard errors. Although the students were nested, the ICC(1) and ICC(2) values for the outcome measures (i.e., autonomous motivation and behavioral engagement) for the whole sample were very low (ICC(1) < 0.012, and ICC(2) < 0.17), indicating that multilevel analysis would be ill advised (e.g., Marsh et al., 2012). Change scores and the composite measure of basic psychological needs satisfaction were used in order to keep the ratio between parameters and sample size as low as possible for the path analysis.
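To make the logic of the mediation test concrete, the sketch below estimates an indirect effect as the product of two regression slopes (intervention → BPNS change, BPNS change → outcome change) and obtains a percentile bootstrap interval by resampling students. This is a simplified stand-in written in plain Python, not the Mplus procedure used in the study. The function names, the choice of a percentile interval and the toy data are assumptions made for illustration.

```python
import random
from statistics import mean


def slope(x, y):
    """Ordinary least squares slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate for the path x -> m -> y."""
    return slope(x, m) * slope(m, y)


def bootstrap_interval(x, m, y, n_boot=2000, seed=0):
    """Percentile bootstrap 95% interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ms = [m[i] for i in idx]
        ys = [y[i] for i in idx]
        # Skip resamples in which a predictor has no variation.
        if len(set(xs)) < 2 or len(set(ms)) < 2:
            continue
        estimates.append(indirect_effect(xs, ms, ys))
    estimates.sort()
    lower = estimates[int(0.025 * len(estimates))]
    upper = estimates[int(0.975 * len(estimates)) - 1]
    return lower, upper


# Hypothetical data: x = group (0/1), m = BPNS change, y = outcome change.
toy_x = [0, 0, 1, 1]
toy_m = [1, 2, 3, 4]
toy_y = [2, 4, 6, 8]
effect = indirect_effect(toy_x, toy_m, toy_y)
lower, upper = bootstrap_interval(toy_x, toy_m, toy_y, n_boot=200)
```

Resampling whole students keeps each student's group, mediator and outcome values together, which is what allows the interval to reflect the sampling variability of the product of the two slopes.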

One overall characteristic of Jenny’s formative assessment practice was the embeddedness of the activities – that is, the formative assessment activities were interwoven with each other and with other aspects of her teaching. Another characteristic was her way of providing the students with opportunities and support to become active agents in the core formative assessment processes by facilitating their motivation and proficiency in carrying out these processes. Below, we present four salient formative assessment activities that characterized and permeated Jenny’s classroom practice: (1) warmups, (2) ‘the thumb’, (3) study groups and (4) teacher feedback. Because Jenny used formative assessment with embeddedness, the activities can often be linked to more than one key strategy.

Jenny used the warmup activity at the beginning of a course module to achieve a mutual understanding of the learning goals and the progress criteria for having attained these goals (key strategy 1). In the warmup activity, Jenny first presented the learning goal (e.g., the knowledge and skills being aimed for, regarding a particular type of text) and then activated the students to collaborate and discuss the main aspects of and progress criteria for this goal. An example of a warmup comes from students’ work with the investigative text type. Jenny provided examples of ready-made texts for the students; the students then worked together in groups to assess the texts using a grading matrix and provided feedback on the texts. The feedback from all groups was discussed among the whole class with the aim of achieving a mutual understanding of what constitutes a high-quality investigative text type. During the rest of the course module, Jenny used the learning goals and progress criteria on a daily basis as a point of reference in her feedback (key strategy 3).

Jenny used ‘the thumb’ as a way of eliciting information about students’ learning (key strategy 2) and the relevance of the learning activities, in order to adjust the teaching and learning in the classroom when needed. But, although Jenny could get a hint of the students’ learning, the activity foremost aimed at supporting students in taking a proactive role in the formative assessment processes (key strategy 5). ‘The thumb’ meant that the students—in the whole class or in groups—responded to Jenny’s questions by pointing their thumbs up (positive), down (negative) or horizontally (as an in-between response). For example, in the data, Jenny asked questions such as: ‘How did the work go for you?’, ‘How did you use the time?’ and ‘What thumb would you like to give this activity?’, and then asked selected students to give the reason for their (thumb) response. ‘The thumb’ activity provided an opportunity for the students to reflect on their learning process and gave Jenny information about, for instance, students’ perceptions of the learning goals and their learning in relation to those goals. For example, between two seminars involving writing about language change (see below), Jenny asked the students about their experiences of the first seminar; together, they concluded that it had only worked for some groups. She then let the groups themselves identify their individual needs and the most helpful way of structuring the second seminar.

The study group activity eventually became an activity Jenny used in most course modules. The main purpose of the activity was to make the students take responsibility for their own learning, albeit with structured support from their peers (key strategies 4 and 5). The students were activated in formative assessment processes as self-regulated learners and through peer-assessment with subsequent peer-feedback. The study group activity was a more complex and long-lasting form of organizational activity than the warmup and ‘thumb’ activities. It included a structure of planned sub-activities that followed one another for several weeks, including: doing a joint exercise before working with individual assignments; sharing work in progress and giving each other feedback within the group; and evaluating the general learning progress of the group. These evaluations included feedback to the group or to Jenny, which was used to determine how to proceed.

In the study groups, the students could have individual assignments but supported each other in carrying out these assignments. Jenny supported the students by explicitly describing the purpose of the (sub-)activities and what the students’ roles were (e.g., assessors and feedback providers to themselves and peers). Furthermore, she modelled these roles, provided opportunities to practice the roles, and then reflected on the activities together with the students. She provided frames for the work that gave students possible choices within those frames. For example, in the course module ‘Language change in Sweden and the Nordic countries’, Jenny organized the groups and presented the learning goal, the most important progress criteria of the learning goal and the sub-activities (key strategy 1). The students could choose which genre of a text they wanted to use and how to present their work to the rest of the class. The students could consult Jenny while making their decisions, but Jenny encouraged the students to turn to each other in the study group. At the start of any study group activity, Jenny talked about the purpose of the activity and emphasized that the study group is there for students to raise issues, discuss and reflect together. To help the students successfully support each other, Jenny discussed and provided opportunities for practice on how to give helpful feedback. Another type of peer-feedback support was access to templates formulating the progress criteria for different types of texts. As the weeks went by, Jenny reminded the students of the purpose of the study group activity, the learning goals and the progress criteria, as well as how to provide helpful goal-related peer-feedback.

Jenny did provide feedback on subject-matter content, but her feedback focus was on helping students to become proactive agents in the formative assessment processes (key strategies 3, 4 and 5). As exemplified above, she planned for activities in which students self-assessed, peer-assessed and gave each other peer-feedback; she then observed her students carrying out these activities and provided feedback focused on these specific processes and on the students’ collaborative learning processes in general. The feedback she gave to students asking for her help was mostly focused on supporting them to be proactive agents in the formative assessment processes. This feedback was provided with the aim of making the students assess their own learning progress and reflect on the goal of the continued work and the reasons why they got stuck. Based on this assessment, she often asked the students to suggest their own strategies for making progress with the assignment. To assist their thinking, she encouraged the students to ask themselves questions and think aloud to find ways to move on. Sometimes she helped the students to take the first step and get started, such as by referring the students to previously successful methods or materials.

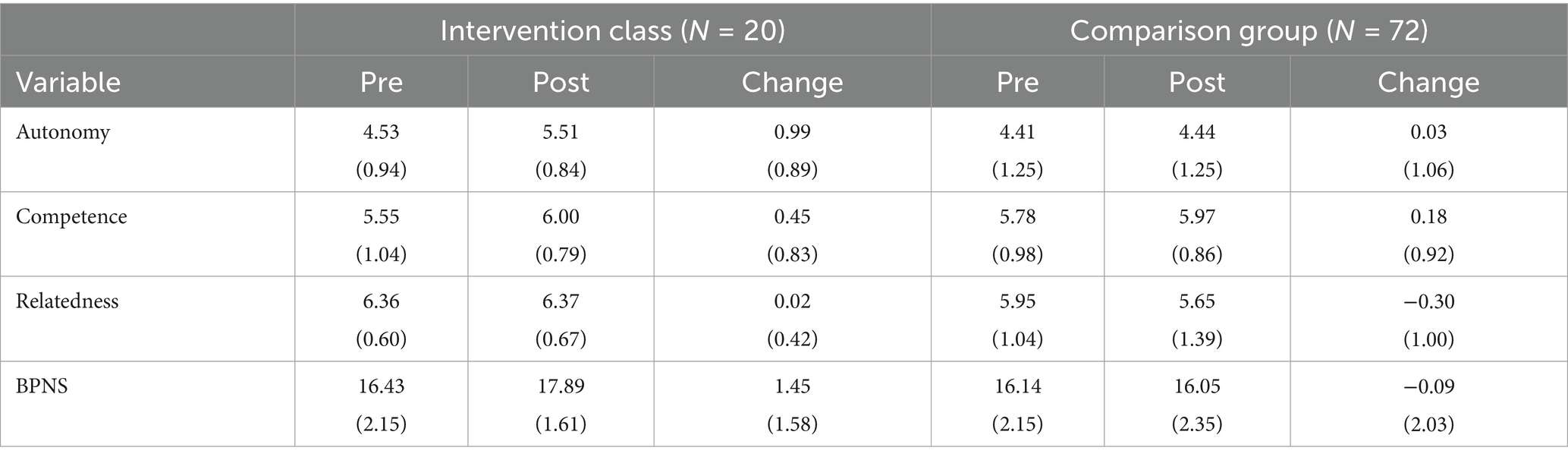

The satisfaction of all three individual basic psychological needs, and therefore also the composite measure of basic psychological needs satisfaction (BPNS), increased in the intervention class, although the increase for relatedness was small (see Table 2). The point estimate for the size of the change in the intervention class was large for autonomy [t(19) = 4.960, p < 0.001; g = 1.07], medium for competence [t(19) = 2.438, p = 0.025; g = 0.52], close to zero for relatedness [t(19) = 0.161, p = 0.87; g = 0.04], and large for the composite measure [t(19) = 4.117, p < 0.001; g = 0.88].

Table 2. Mean scores and standard deviations (in parentheses) for students’ basic psychological needs satisfaction.

Comparing the changes in the intervention class with the changes in the comparison group, t-tests reveal that the intervention class has a statistically significantly better development than the comparison group regarding satisfaction of the need for autonomy [t(90) = 3.688, p < 0.001], relatedness [t(76.248) = 2.086, p = 0.040] and BPNS [t(90) = 3.126, p = 0.002], but not for satisfaction of the need for competence [t(90) = 1.166, p = 0.247]. Effect size estimates indicate that the differences are large for the need satisfaction of autonomy (g = 0.92) and BPNS (g = 0.78). For the needs satisfaction of relatedness and competence, Hedges’ g is 0.34 and 0.30, respectively.
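The article does not state which variant of Hedges’ g was computed. One common route, shown in the sketch below, converts a t statistic to a standardized mean difference and applies the small-sample correction J = 1 − 3 / (4·df − 1). The function names are hypothetical. Under these assumptions, the reported t values and group sizes (20 intervention students, 72 comparison students) give values close to the reported effect sizes for autonomy.

```python
import math


def small_sample_correction(df):
    """Approximate correction factor J applied to d to obtain Hedges' g."""
    return 1 - 3 / (4 * df - 1)


def g_independent(t, n1, n2):
    """Hedges' g from an independent-samples t statistic (pooled variance)."""
    d = t * math.sqrt(1 / n1 + 1 / n2)
    return small_sample_correction(n1 + n2 - 2) * d


def g_paired(t, n):
    """Hedges' g from a paired-samples t statistic, based on difference scores."""
    d = t / math.sqrt(n)
    return small_sample_correction(n - 1) * d


# Autonomy, intervention vs. comparison group: t(90) = 3.688, n = 20 and 72.
g_between = g_independent(3.688, 20, 72)

# Autonomy, change within the intervention class: t(19) = 4.960, n = 20.
g_within = g_paired(4.960, 20)
```

This conversion applies to the pooled-variance t-tests only; for relatedness, where Welch’s t-test was used, the effect size would need to be computed from the group means and standard deviations instead.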

Zero-order correlations for the constructs used in the path analysis (Table 3) reveal significant relationships between all variables except between the intervention and autonomous motivation and between the intervention and behavioral engagement. Table 4 displays means and standard deviations for autonomous motivation and behavioral engagement.

Table 4. Mean scores and standard deviations (in parentheses) for students’ behavioral engagement and autonomous motivation.

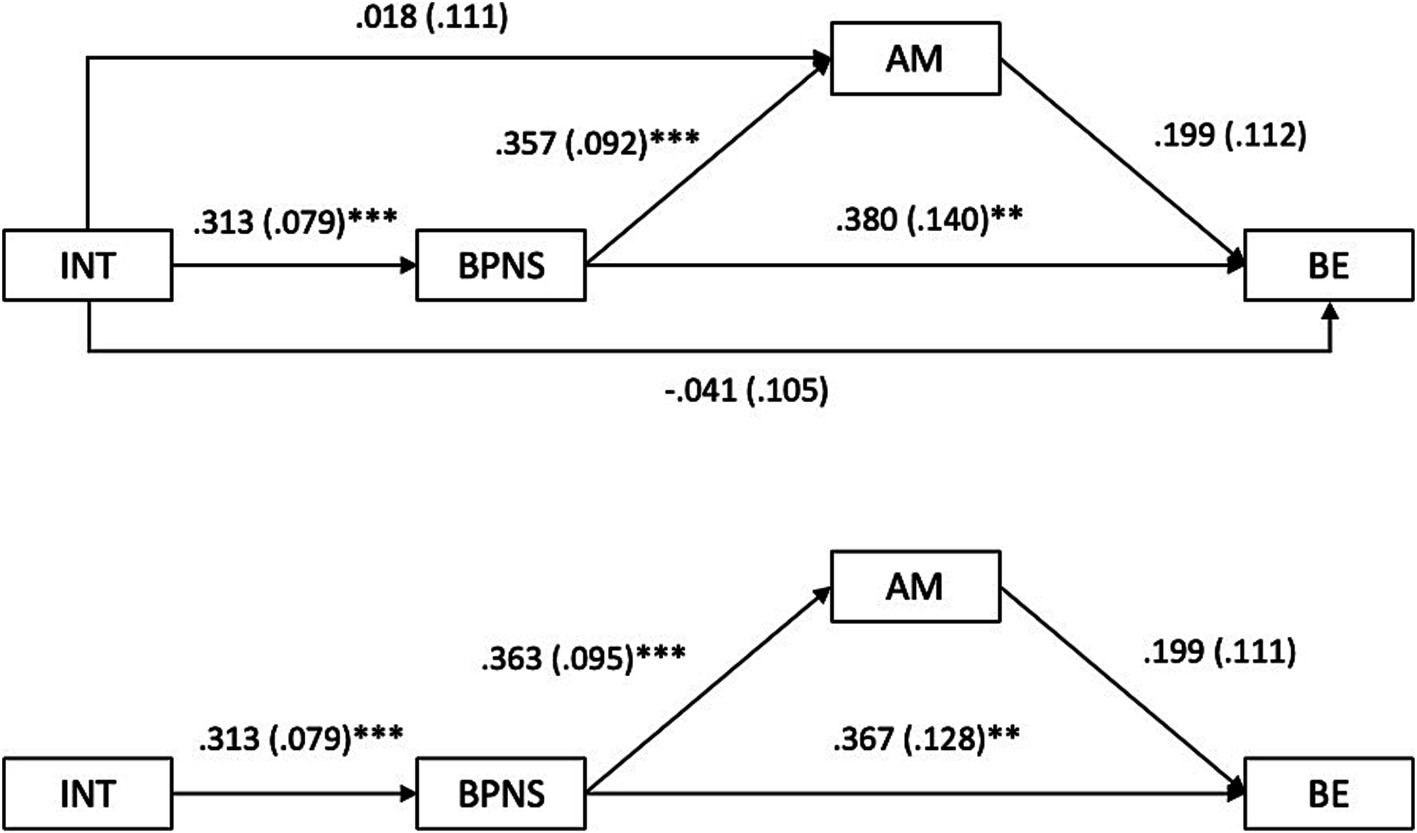

The results of the path analyses are depicted in Figure 1, with standardized path coefficients, standard error and significance level of coefficients for a saturated model and for a more parsimonious model in which the influence of the intervention on autonomous motivation and behavioral engagement is fully mediated by the composite measure of basic psychological needs satisfaction (BPNS). The more parsimonious model has excellent fit (Hu and Bentler, 1999; Steiger, 2007) [χ²(2, 92) = 0.211, p = 0.900; CFI = 1.00; RMSEA = 0.00; SRMR = 0.011] and, in the saturated model, the paths representing direct effects on autonomous motivation and behavioral engagement from the intervention have very small and nonsignificant path coefficients. This finding implies that any possible influence of the intervention is fully mediated by BPNS. Tests of indirect effects in the parsimonious model show that there is in fact a statistically significant indirect effect on behavioral engagement from the intervention through BPNS (β = 0.115, SE = 0.044, p = 0.009) but not via autonomous motivation (β = 0.023, SE = 0.014, p = 0.115). Furthermore, there is a similarly sized indirect effect on autonomous motivation from the intervention via BPNS (β = 0.114, SE = 0.047, p = 0.015). The hypothesized relations between basic psychological needs satisfaction and autonomous motivation and behavioral engagement are both significant and in the expected direction, whereas the relation between autonomous motivation and behavioral engagement is in the expected direction but not significant (p = 0.074).
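Two of the reported fit statistics can be checked by hand. For a chi-square statistic with 2 degrees of freedom, the upper-tail probability has the closed form p = exp(−χ²/2), and the RMSEA point estimate is zero whenever the chi-square value does not exceed its degrees of freedom. The sketch below uses hypothetical function names and assumes the RMSEA variant with N − 1 in the denominator; some software uses N instead.

```python
import math


def chi2_p_value_df2(chi2):
    """Upper-tail p value of a chi-square statistic with exactly 2 df."""
    return math.exp(-chi2 / 2)


def rmsea(chi2, df, n):
    """RMSEA point estimate (assumes the N - 1 variant of the formula)."""
    return math.sqrt(max(chi2 - df, 0) / (df * (n - 1)))


# Reported values for the parsimonious model: chi-square = 0.211, df = 2, N = 92.
p_value = chi2_p_value_df2(0.211)
rmsea_value = rmsea(0.211, 2, 92)
```

With a chi-square value this far below its degrees of freedom, the model-implied and observed covariances are nearly identical, which is why the approximate fit indices sit at their best possible values.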

Figure 1. Path diagrams for the saturated model (top) and parsimonious model (bottom) with estimated relationships between the manifest variables. INT represents whether students belong to the intervention group (1) or not (0); BPNS is basic psychological needs satisfaction; AM is autonomous motivation; and BE is behavioral engagement. Path coefficients are standardized with SE in parentheses, and asterisks indicate significance level as follows: *p < 0.05, **p < 0.01, and ***p < 0.001.

We began the results section by describing the characteristics of Jenny’s formative assessment practice as a means of making sense of the results of the study’s two main research questions (RQ2, RQ3). We conclude that Jenny’s practice includes activities where she acts as the main proactive agent in the core formative assessment processes (identifying students’ learning needs and providing feedback and learning activities adapted to these needs) as well as activities where she provides her students with opportunities and support to become proactive agents in the core formative assessment processes (peer-assessment with peer-feedback, and self-assessment with subsequent actions to meet identified learning needs). We will use this conclusion and the identified characteristics in the discussion below.

The results concerning research question 2 show that, in comparison with the comparison classes, psychological needs satisfaction increased in the intervention class in which the formative assessment practice was implemented, and the increase was statistically significant for all needs constructs except perceived competence. In addition, the increase was large for both perceived autonomy and the composite measure of all three needs. This increase in perceived autonomy compared with the comparison group is larger than the association between perceived autonomy and the formative assessment practice focusing on teacher feedback reported by Pat-El et al. (2012), but smaller than the reported increase from the practice described by Granberg et al. (2021) in which both the teacher and the students to a larger extent than in the present study were proactive agents in the formative assessment processes. The increase in students’ perceived relatedness compared with the comparison group is smaller than that of perceived autonomy, but the same size as the association between perceived relatedness and the formative assessment practice reported in Pat-El et al. (2012). The increase in perceived competence in this study is the same size as the increase in perceived competence accomplished by the formative assessments investigated by Hondrich et al. (2018) and Rakoczy et al. (2019), but smaller than the increase in perceived competence from the formative assessment studied by Wollenschläger et al. (2016). These three studies examined formative assessment with the teacher as the proactive agent. The increase in perceived competence in the present study was much smaller than the increase from the practice described by Granberg et al. (2021) in which both teacher and students to a very large extent were proactive agents in the formative assessment processes.

Thus, the results of this study complement the existing literature by showing that this type of formative assessment may produce effects on students’ satisfaction of their three individual psychological needs that are similar to, and for perceived autonomy even larger than, those of the formative assessment practices reported in the literature that focus on the teacher as the proactive agent in the formative assessment processes. However, the results also indicate that classroom practices that to an even larger extent include both the teacher and the students as proactive agents in the formative assessment practices, such as the one described in Granberg et al. (2021), may accomplish even larger effects. The large increase in students’ perceived autonomy in the intervention class may be understood through the many ways Jenny showed interest and trust in the students’ ideas and capability to take responsibility, as well as the many opportunities students were provided to make choices within given frames. That teacher activities with such characteristics would enhance students’ sense of autonomy is described within self-determination theory and has been empirically shown in several studies (Ryan and Deci, 2000; Ryan and Deci, 2020). The potential impact of these activities may also explain why this practice had a larger effect on students’ sense of autonomy than the practice focusing mostly on teacher feedback described by Pat-El et al. (2012), and less effect than the practice described by Granberg et al. (2021). The former practice did not include many of these types of activities while the latter included even more activities with these characteristics than Jenny’s practice.

Perceived competence may have been facilitated by the support Jenny gave the students in understanding the goals and progress criteria as well as in assessing and giving feedback to themselves and each other in order to recognize their learning and how to take the next learning step. Several researchers (e.g., Andrade and Brookhart, 2020; Hondrich et al., 2018) have argued that understanding a goal and having the experience that one can reach it can enhance a sense of competence, and this is also posited by self-determination theory (Ryan and Deci, 2020; Ryan and Deci, 2000). However, it is possible that the students’ perceived competence would have been further facilitated by complementing the students’ feedback with more frequent feedback from the teacher to clarify the learning the students had accomplished. Feedback may sometimes be experienced as more trustworthy if it comes from the teacher (Bader et al., 2024).

The students’ perceived relatedness decreased in the comparison classes, while remaining very high in the intervention class. This lack of decrease in the intervention class may have been due to all the collective discussions and group work incorporated in the classroom practice. Indeed, Heritage and Wylie (2018) argued that supporting students as peer-assisted and self-regulated learners by arranging for information from self-assessment and peer-assessment to affect classroom practices would enhance students’ sense of relatedness. However, Jenny’s practice did not increase the students’ perceptions of relatedness when comparing their responses to the questionnaire at the two timepoints. This may have been due to their very high perceptions of relatedness already at the time of the first questionnaire when many students had already responded with the highest possible response. However, although Jenny aimed to foster a classroom climate in which students helped each other, student–student interactions do not always accomplish mutual trust and feelings of care. Implementing a more comprehensive system to ensure that these interactions actually foster a sense of belonging and connection may have been necessary for greater enhancement of perceived relatedness. This could have entailed a larger focus on helping students to provide peer feedback with comments experienced as given out of care. Self-determination theory stresses that feeling respected and cared for is a central tenet of relatedness (Ryan and Deci, 2020; Ryan and Deci, 2000). It is also possible that a longer period of time would have been required for students to fully experience the benefits of peer feedback, as well as to develop trust in and appreciation for this type of feedback, which could have led to increased perceived relatedness.

Our third research question concerns the mechanisms underlying the effects of formative assessment on autonomous motivation and student engagement by focusing on psychological needs satisfaction as a mediator of these effects. The existing studies on the mediating effects on autonomous motivation—all of which involve the teacher as the proactive agent in the formative assessment processes—show mixed results for the individual needs satisfaction of perceived autonomy, competence and relatedness as mediators of the effects of formative assessment on autonomous motivation (Hondrich et al., 2018; Kiemer et al., 2015; Pat-El et al., 2012). The present study complements this research in two ways, by (1) providing empirical evidence that a composite construct of the satisfaction of all three psychological needs may mediate an increase in autonomous motivation from formative assessment; and (2) doing so by involving a practice that also includes students as proactive agents in the formative assessment processes. Moreover, this study shows that basic needs satisfaction can also mediate the effects of formative assessment on students’ engagement in learning activities, which to the best of our knowledge has not been previously empirically investigated. Thus, the results indicate that this type of formative assessment practice has the potential to enhance both students’ autonomous motivation and their engagement in learning activities by facilitating their satisfaction of the psychological needs of autonomy, competence and relatedness.

The effects on psychological needs satisfaction from the kinds of activities included in the implemented formative assessment practice fit well with self-determination theory, and the subsequent mediating effects on autonomous motivation and engagement are aligned with self-determination theory (Ryan and Deci, 2020; Ryan and Deci, 2000) and the self-system model of motivational development (Skinner et al., 2008), respectively. Self-determination theory also hypothesizes that an increase in autonomous motivation leads to greater engagement (Ryan and Deci, 2020). We therefore expected to find a relation between autonomous motivation and engagement. The estimate for this relation was in the anticipated direction, but not statistically significant. We would not argue that this necessarily implies that there is no real relation between autonomous motivation and behavioral engagement. Another possibility is that the relatively small number of students in the intervention group limits the possibilities to detect weaker relationships (see Section 6.5).

The results of the study imply that formative assessment may be used to enhance student motivation, and that practices in which both the teacher and the students are proactive agents in the formative assessment processes could accomplish larger effects on motivation than practices in which only the teacher acts as the proactive agent. The results also imply that it could be beneficial to design the activities so as to facilitate students’ satisfaction of the three psychological needs of autonomy, competence and relatedness. Such activities would include supporting students in understanding the learning goals and criteria for progress, providing feedback that recognizes the students’ learning progress, and supporting the students’ motivation and ability to autonomously and proactively assess their own and their peers’ learning and provide supportive peer- and self-feedback.

However, developing such practices is not an easy endeavor. These practices place great demands on the teacher because they both require students to take a more active role than they are used to and require the teacher to replace well-known activities with activities they may not feel comfortable with or competent in doing. Implementing formative assessment that enhances student motivation also seems to require making more well-grounded decisions than traditional teaching (Näsström et al., 2021), and although involving students as proactive agents in the formative assessment processes has the potential to provide more ways of influencing student motivation than practices in which only the teacher is the main proactive agent (Palmberg et al., 2024), those practices require additional decision-making and teaching competencies to carry out effectively. Thus, to successfully develop such formative assessment practices it is likely that teachers would need substantial professional development support. Yet implementing such practices has been found to be difficult even with professional development support (e.g., Heitink et al., 2016; Yan et al., 2021), and in particular with large-scale professional development initiatives (Anders et al., 2022). However, several studies have identified characteristics of teacher professional development programs that are important for teachers to be able to develop formative assessment practices (e.g., Andersson and Palm, 2018; Boström and Palm, 2020), and examples of professional development support that has accomplished formative assessment implementation with positive effects on student motivation do exist (e.g., Näsström et al., 2021; Palmberg et al., 2024).

A limitation of the study is the rather small sample consisting of 20 students in one intervention class and 72 students in four comparison classes. Thus, generalizations of the results of this study to other contexts must for several reasons be made with caution. The rather small, and unbalanced, sample also makes the study underpowered to detect smaller effects. Also, the inclusion of only one intervention class does not allow for variation in contextual factors (such as other teacher characteristics, school factors, and national policies) or in the characteristics of classroom practices grounded in the same formative assessment principles. Furthermore, the use of only one intervention class introduces the risk of bias connected to the teacher’s role. The teacher’s personal characteristics rather than the formative assessment practice might influence the results. However, administering the first questionnaire after 2 months of regular teaching in both the intervention class and the comparison classes creates a measurement of the effects of the teachers’ regular teaching in their respective student groups. Thus, when comparing the students’ responses to the questionnaire at time point 2 with their responses at time point 1, the only difference in teaching is the implemented formative assessment in the intervention class. Possible effects on students’ motivation pertaining to the intervention teacher’s personal characteristics and relationships with her students would likely be similar at both time points.

Another limitation is that we did not analyze the classroom practices in the comparison classes. If some of the teachers in the comparison classes had also developed formative assessment practices in significant ways, this could have influenced the results. However, teachers need extensive professional development support to implement formative assessment (Heitink et al., 2016), and since the teachers in the comparison classes had not received that kind of support it is unlikely that they would have developed such practices. Furthermore, if they had implemented some formative assessment practices similar to those of the intervention teacher, differences in formative assessment practices would have been smaller. In that case, logically, the effects of formative assessment we found would be underestimates rather than overestimates of the actual effects of the intervention teacher’s formative assessment practice in comparison with non-formative assessment practices. Finally, we chose to use a composite measure for the students’ psychological needs satisfaction. This complements existing studies that all have used measurements of each individual needs satisfaction when investigating psychological needs satisfaction as mediators of the effects of formative assessment on motivation. Neither kind of measure is better per se; they provide different kinds of valuable information. According to self-determination theory (Ryan and Deci, 2020) all three needs are important for the type of motivation students will develop, so effects on motivation may, for example, sometimes occur when there is an increase in all individual needs satisfaction although none of them are large. Using a composite measure might detect such effects, while using individual measures might not. On the other hand, a limitation of using a composite measure is that it might obscure variation in the influence of the satisfaction of individual needs.

Future studies using larger samples of intervention teachers and involving more thorough analyses of the classroom practices in the comparison groups would be valuable for making more generalizable conclusions about the effects of formative assessment on students’ psychological needs satisfaction and about psychological needs satisfaction as a mediator of the effects of formative assessment on students’ motivation type and engagement in learning activities. In such larger studies, there would be a greater chance to identify smaller but still meaningful effects, and it would also be possible to compare the mediating effects of each individual psychological need construct and a composite construct involving all three psychological needs, which could provide further insights into the mechanisms underlying the effects of formative assessment on different manifestations of motivation.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Written informed consent was not obtained from the minor(s)’ legal guardian/next of kin for the publication of any potentially identifiable images or data included in this article because all students in the study were older than 15 years. In Sweden, legal guardians’ informed consent is not necessary for students of that age. The written consent of the students is sufficient.

CA: Conceptualization, Formal analysis, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. CG: Conceptualization, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. BP: Conceptualization, Formal analysis, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. TP: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Validation, Writing – original draft, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research was funded by Umeå School of Education, Umeå University, Sweden.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1523124/full#supplementary-material

Anders, J., Foliano, F., Bursnall, M., Dorsett, R., Hudson, N., Runge, J., et al. (2022). The effect of embedding formative assessment on pupil attainment. J. Res. Educ. Effect. 15, 748–779. doi: 10.1080/19345747.2021.2018746

Andersson, C., and Palm, T. (2018). Reasons for teachers' successful development of a formative assessment practice through professional development – a motivation perspective. Assess. Educ. Principles Policy Pract. 25, 576–597. doi: 10.1080/0969594X.2018.1430685

Andrade, H., and Brookhart, S. (2020). Classroom assessment as the co-regulation of learning. Assess. Educ. Principles Policy Pract. 27, 350–372. doi: 10.1080/0969594X.2019.1571992

Baas, D., Vermeulen, M., Castelijns, J., Martens, R., and Segers, M. (2020). Portfolios as a tool for AfL and student motivation: are they related? Assess. Educ. Principles Policy Pract. 27, 444–462. doi: 10.1080/0969594X.2019.1653824

Black, P., and Wiliam, D. (2009). Developing the theory of formative assessment. Educ. Assess. Eval. Account. 21, 5–31. doi: 10.1007/s11092-008-9068-5

Bliese, P. D. (2000). “Within-group agreement, non-independence, and reliability: implications for data aggregation and analysis” in Multilevel theory, research, and methods in organizations: Foundations, extensions, and new directions. eds. K. J. Klein and S. W. J. Kozlowski (San Francisco, CA: Jossey-Bass), 349–381.

Boström, E., and Palm, T. (2020). Expectancy-value theory as an explanatory theory for the effect of professional development programmes in formative assessment on teacher practice. Teach. Dev. 24, 539–558. doi: 10.1080/13664530.2020.1782975

Bader, M., Hoem Iversen, S., and Borg, S. (2024). Student teachers’ reactions to formative teacher and peer feedback. Eur. J. Teach. Educ., 1–18. doi: 10.1080/02619768.2024.2385717

Clark, I. (2012). Formative assessment: assessment is for self-regulated learning. Educ. Psychol. Rev. 24, 205–249. doi: 10.1007/s10648-011-9191-6

Costello, A. B., and Osborne, J. (2019). Best practices in exploratory factor analysis: four recommendations for getting the most from your analysis. Pract. Assess. Res. Eval. 10:7. doi: 10.7275/jyj1-4868

Deci, E. L., Ryan, R. M., Gagné, M., Leone, D. R., Usunov, J., and Kornazheva, B. P. (2001). Need satisfaction, motivation, and well-being in the work organizations of a former Eastern Bloc country: a cross-cultural study of self-determination. Personal. Soc. Psychol. Bull. 27, 930–942. doi: 10.1177/0146167201278002

Federici, R. A., Caspersen, J., and Wendelborg, C. (2016). Students' perceptions of teacher support, numeracy, and assessment for learning: relations with motivational responses and mastery experiences. Int. Educ. Stud. 9, 1–15. doi: 10.5539/ies.v9n10p1

Förster, N., and Souvignier, E. (2014). Learning progress assessment and goal setting: effects on reading achievement, reading motivation and reading self-concept. Learn. Instr. 32, 91–100. doi: 10.1016/j.learninstruc.2014.02.002

Fredricks, J. A., Blumenfeld, P. C., and Paris, A. H. (2004). School engagement: potential of the concept, state of the evidence. Rev. Educ. Res. 74, 59–109. doi: 10.3102/00346543074001059

Granberg, C., Palm, T., and Palmberg, B. (2021). A case study of a formative assessment practice and the effects on students’ self-regulated learning. Stud. Educ. Eval. 68:100955. doi: 10.1016/j.stueduc.2020.100955

Haerens, L., Krijgsman, C., Mouratidis, A., Borghouts, L., Cardon, G., and Aelterman, N. (2019). How does knowledge about the criteria for an upcoming test relate to adolescents' situational motivation in physical education? A self-determination theory approach. Eur. Phys. Educ. Rev. 25, 983–1001. doi: 10.1177/1356336X18783983

Hedges, L. V. (1981). Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Stat. 6, 107–128. doi: 10.3102/10769986006002107

Heitink, M. C., Van der Kleij, F. M., Veldkamp, B. P., Schildkamp, K., and Kippers, W. B. (2016). A systematic review of prerequisites for implementing assessment for learning in classroom practice. Educ. Res. Rev. 17, 50–62. doi: 10.1016/j.edurev.2015.12.002

Heritage, M., and Wylie, C. (2018). Reaping the benefits of assessment for learning: achievement, identity, and equity. ZDM 50, 729–741. doi: 10.1007/s11858-018-0943-3

Hofverberg, A., Winberg, M., Palmberg, B., Andersson, C., and Palm, T. (2022). Relationships between basic psychological need satisfaction, regulations, and behavioral engagement in mathematics. Front. Psychol. 13:829958. doi: 10.3389/fpsyg.2022.829958

Hondrich, A. L., Decristan, J., Hertel, S., and Klieme, E. (2018). Formative assessment and intrinsic motivation: the mediating role of perceived competence. Z. Erzieh. 21, 717–734. doi: 10.1007/s11618-018-0833-z

Hospel, V., Galand, B., and Janosz, M. (2016). Multidimensionality of behavioural engagement: empirical support and implications. Int. J. Educ. Res. 77, 37–49. doi: 10.1016/j.ijer.2016.02.007

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Ilardi, B. C., Leone, D., Kasser, T., and Ryan, R. M. (1993). Employee and supervisor ratings of motivation: main effects and discrepancies associated with job satisfaction and adjustment in a factory setting. J. Appl. Soc. Psychol. 23, 1789–1805. doi: 10.1111/j.1559-1816.1993.tb01066.x

Kasser, T., Davey, J., and Ryan, R. M. (1992). Motivation and employee-supervisor discrepancies in a psychiatric vocational rehabilitation setting. Rehabil. Psychol. 37, 175–188. doi: 10.1037/h0079104

Kiemer, K., Gröschner, A., Pehmer, A.-K., and Seidel, T. (2015). Effects of a classroom discourse intervention on teachers’ practice and students’ motivation to learn mathematics and science. Learn. Instr. 35, 94–103. doi: 10.1016/j.learninstruc.2014.10.003

Lam, A. C., Ruzek, E. A., Schenke, K., Conley, A. M., and Karabenick, S. A. (2015). Student perceptions of classroom achievement goal structure: is it appropriate to aggregate? J. Educ. Psychol. 107, 1102–1115. doi: 10.1037/edu0000028

Leenknecht, M., Wijnia, L., Köhlen, M., Fryer, L., Rikers, R., and Loyens, S. (2021). Formative assessment as practice: the role of students’ motivation. Assess. Eval. High. Educ. 46, 236–255. doi: 10.1080/02602938.2020.1765228

Marsh, H. W., Lüdtke, O., Nagengast, B., Trautwein, U., Morin, A. J. S., Abduljabbar, A. S., et al. (2012). Classroom climate and contextual effects: conceptual and methodological issues in the evaluation of group-level effects. Educ. Psychol. 47, 106–124. doi: 10.1080/00461520.2012.670488

Matos, L., Reeve, J., Herrera, D., and Claux, M. (2018). Students’ agentic engagement predicts longitudinal increases in perceived autonomy-supportive teaching: the squeaky wheel gets the grease. J. Exp. Educ. 86, 579–596. doi: 10.1080/00220973.2018.1448746

Meusen-Beekman, K. D., Joosten-ten Brinke, D., and Boshuizen, H. P. (2016). Effects of formative assessments to develop self-regulation among sixth grade students: results from a randomized controlled intervention. Stud. Educ. Eval. 51, 126–136. doi: 10.1016/j.stueduc.2016.10.008

Näsström, G., Andersson, C., Granberg, C., Palm, T., and Palmberg, B. (2021). Changes in student motivation and teacher decision making when implementing a formative assessment practice. Front. Educ. 6, 1–17. doi: 10.3389/feduc.2021.616216

Palmberg, B., Granberg, C., Andersson, C., and Palm, T. (2024). A multi-approach formative assessment practice and its potential for enhancing student motivation: a case study. Cogent Educ. 11, 1–22. doi: 10.1080/2331186X.2024.2371169

Pat-El, R., Tillema, H., and Van Koppen, S. W. (2012). Effects of formative feedback on intrinsic motivation: examining ethnic differences. Learn. Individ. Differ. 22, 449–454. doi: 10.1016/j.lindif.2012.04.001

Rakoczy, K., Pinger, P., Hochweber, J., Klieme, E., Schütze, B., and Besser, M. (2019). Formative assessment in mathematics: mediated by feedback's perceived usefulness and students' self-efficacy. Learn. Instr. 60, 154–165. doi: 10.1016/j.learninstruc.2018.01.004

Ryan, R. M., and Connell, J. P. (1989). Perceived locus of causality and internalization: examining reasons for acting in two domains. J. Pers. Soc. Psychol. 57, 749–761. doi: 10.1037/0022-3514.57.5.749

Ryan, R. M., and Deci, E. L. (2020). Intrinsic and extrinsic motivation from a self-determination theory perspective: definitions, theory, practices, and future directions. Contemp. Educ. Psychol. 61:101860. doi: 10.1016/j.cedpsych.2020.101860

Ryan, R. M., and Deci, E. L. (2000). Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp. Educ. Psychol. 25, 54–67. doi: 10.1006/ceps.1999.1020

Skinner, E., Furrer, C., Marchand, G., and Kindermann, T. (2008). Engagement and disaffection in the classroom: part of a larger motivational dynamic? J. Educ. Psychol. 100, 765–781. doi: 10.1037/a0012840

Skinner, E. A., Kindermann, T. A., Connell, J. P., and Wellborn, J. G. (2009). “Engagement and disaffection as organizational constructs in the dynamics of motivational development” in Handbook of motivation in school. eds. K. Wentzel and A. Wigfield (New York: Routledge), 223–245.

Stefansson, K. K., Gestsdottir, S., Geldhof, G. J., Skulason, S., and Lerner, R. M. (2016). A bifactor model of school engagement: assessing general and specific aspects of behavioral, emotional and cognitive engagement among adolescents. Int. J. Behav. Dev. 40, 471–480. doi: 10.1177/0165025415604056

Steiger, J. H. (2007). Understanding the limitations of global fit assessment in structural equation modeling. Personal. Individ. Differ. 42, 893–898. doi: 10.1016/j.paid.2006.09.017

Wiliam, D., and Thompson, M. (2008). “Integrating assessment with instruction: what will it take to make it work?” in The future of assessment: Shaping teaching and learning. ed. C. A. Dwyer (Mahwah, NJ: Erlbaum), 53–82.

Wollenschläger, M., Hattie, J., Machts, N., Möller, J., and Harms, U. (2016). What makes rubrics effective in teacher-feedback? Transparency of learning goals is not enough. Contemp. Educ. Psychol. 44–45, 1–11. doi: 10.1016/j.cedpsych.2015.11.003

Wong, H. M. (2017). Implementing self-assessment in Singapore primary schools: effects on students’ perceptions of self-assessment. Pedagogies 12, 391–409. doi: 10.1080/1554480X.2017.1362348

Wylie, C., and Hodgen, E. (2012). “Trajectories and patterns of student engagement: evidence from a longitudinal study” in Handbook of research on student engagement. eds. S. L. Christenson, A. L. Reschly, and C. Wylie (Boston, MA: Springer), 585–599.

Yan, Z., and Chiu, M. M. (2022). The relationship between formative assessment and reading achievement: a multilevel analysis of students in 19 countries/regions. Br. Educ. Res. J. 49, 186–208. doi: 10.1002/berj.3837

Keywords: formative assessment, assessment for learning, motivation, basic psychological needs, behavioral engagement

Citation: Andersson C, Granberg C, Palmberg B and Palm T (2025) Basic psychological needs satisfaction as a mediator of the effects of a formative assessment practice on behavioral engagement and autonomous motivation. Front. Educ. 10:1523124. doi: 10.3389/feduc.2025.1523124

Received: 05 November 2024; Accepted: 19 February 2025; Published: 05 March 2025.

Edited by:

Mohd. Elmagzoub Eltahir, Ajman University, United Arab Emirates

Reviewed by:

Dalia Bedewy, Ajman University, United Arab Emirates

Copyright © 2025 Andersson, Granberg, Palmberg and Palm. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Catarina Andersson, Y2F0YXJpbmEuYW5kZXJzc29uQHVtdS5zZQ==

