ORIGINAL RESEARCH article

Front. Educ., 28 February 2025

Sec. Digital Education

Volume 10 - 2025 | https://doi.org/10.3389/feduc.2025.1517116

This article is part of the Research Topic: Generative AI Tools in Education and its Governance: Problems and Solutions

Background: The implementation of artificial intelligence (AI), and especially generative AI, is transforming many medical fields, while medical education faces new challenges in integrating AI into the curriculum and in responding to the rise of generative AI chatbots.

Objective: This survey study aimed to assess medical students’ attitudes toward AI in medicine in general, effects of AI in students’ career plans, and students’ use of generative AI in medical studies.

Methods: An anonymous and voluntary online survey was designed using SurveyMonkey and was sent out to medical students at Gothenburg University. It consisted of 25 questions divided into various sections aiming to evaluate the students’ prior knowledge of AI, their use of generative AI during medical studies, their attitude toward AI in medicine in general, and the effect of AI on their career plans.

Results: Of the 172 students who completed the survey, 74% were aware of AI in medicine, and 71% agreed or strongly agreed that AI will improve medicine. One-third were frightened of the increased use of AI in medicine. Radiologists and pathologists were perceived as most likely to be replaced by AI. Interestingly, 37% of the responders agreed or strongly agreed that they will exclude some field of medicine because of AI. More than half argued that AI should be part of medical training. Almost all responders (99%) were aware of generative AI chatbots, and 64% had taken advantage of these in their medical studies. Fifty-eight percent agreed or strongly agreed that the use of AI is supporting their learning as medical students.

Conclusion: Medical students show high expectations for AI’s impact on medicine, yet they express concerns about their future careers. Over a third would avoid fields threatened by AI. These findings underscore the need to educate students, particularly in radiology and pathology, about optimizing human-AI collaboration rather than viewing it as a threat. There is an obvious need to integrate AI into the medical curriculum. Furthermore, the medical students rely on AI chatbots in their studies, which should be taken into consideration while restructuring medical education.

Artificial intelligence (AI) encompasses a wide array of technologies aimed at enabling robots and computers to simulate human intelligence. The advancement of computer hardware and software applications in the medical field drives the evolution and utilization of AI in medicine (Wang and Preininger, 2019; Haug and Drazen, 2023). The implementation of AI and machine learning has revolutionized many fields of medicine, from automated diagnostics in the fields of radiology (Najjar, 2023; Pedersen et al., 2024) and pathology (Shafi and Parwani, 2023) to AI-assisted surgery (Rivero-Moreno et al., 2023). It is expected that further advancements in generative AI will permeate all aspects of medicine (Wiljer and Hakim, 2019; Rao et al., 2024). The AI and machine learning applications hold great promise for solving many global healthcare problems, including doctor shortages, by facilitating diagnostics, decision-making, big data analytics, and administration (Meskó et al., 2018). The development of AI and machine learning solutions in medicine offers clear advantages but also challenges, including ethical issues and physicians’ fear of replacement by AI (Briganti and Le Moine, 2020; Grunhut et al., 2022; Rajpurkar et al., 2022). As AI technologies continue to advance, including incorporating generative AI tools into medicine (Lu et al., 2024; Koohi-Moghadam and Bae, 2023; Singh et al., 2024), it is crucial for future physicians to develop the necessary knowledge and skills to effectively utilize and critically evaluate these AI applications, especially in order to catch possible hallucinations and false diagnostic suggestions (Pesapane et al., 2024; Hatem et al., 2023).

Meanwhile, medical education is facing new challenges as it incorporates AI into the medical curriculum to meet the growing desire among students to learn about it (Brouillette, 2019). It is imperative that medical schools adapt to the use of these advanced technologies in their curricula to equip future physicians with the knowledge and skills needed to use AI applications and ensure that professional values and rights are protected effectively and safely (Ghorashi et al., 2023). Several studies have explored medical students’ perceptions of AI (Pinto et al., 2019; Civaner et al., 2022; Angkurawaranon et al., 2024; Reeder and Lee, 2022). While medical students have overall positive attitudes toward AI in healthcare (Al Hadithy et al., 2023), they have also expressed fear of future unemployment (Civaner et al., 2022). Furthermore, the students fear that AI will devalue the medical profession (Civaner et al., 2022). However, limited information is available on AI’s effects on the students’ career plans and choice of specialty.

At the same time, medical education is undergoing fundamental changes after the introduction of generative AI chatbots in 2022 (ChatGPT, Copilot). The AI chatbots are programmed to process and generate human language. They can summarize and simplify complex concepts and interact with students and thus have the potential to enhance students’ learning (Ghorashi et al., 2023; Civaner et al., 2022). However, students may use these AI chatbots to generate content for assignments, which can lead to issues of plagiarism and detract from critical thinking skills (Ghorashi et al., 2023). Impressively, ChatGPT achieved the equivalent of a passing score for a third-year medical student in the United States Medical Licensing Examination (Gilson et al., 2023), which poses challenges to examination protocols. Even though the potential and threats of AI chatbots are widely discussed (Ghorashi et al., 2023), there is limited information about how much medical students currently use generative AI applications in their studies and about whether they are critically assessing the information provided by the chatbots (Biri et al., 2023).

This survey study aimed to assess medical students’ attitudes toward AI in medicine in general, effects of AI in students’ career plans, and students’ use of generative AI in medical studies. Specifically, we aimed to assess what medical students perceive as threats from AI to various medical specialties and how the development of AI influences medical students’ career plans and specialty choices. Furthermore, we aimed to assess how familiar the medical students are with generative AI chatbots and also to assess the extent to which students incorporate them into their medical studies. The further aim is to take advantage of this information when planning how to incorporate AI into medical education.

An anonymized and voluntary English-language online survey was prepared and distributed through SurveyMonkey® (San Mateo, CA, United States). The survey was sent to first- to sixth-year medical students at the University of Gothenburg. The survey opened on March 4, 2024, and closed on March 19, 2024 (14 survey days) and was sent only once. It was not possible for students to take the survey multiple times from the same device. The survey link was advertised via a closed university website platform (Canvas). It consisted of various sections aiming to evaluate the students’ prior knowledge of AI, their use of AI during medical studies, their attitude toward AI in medicine in general, and the effect of AI on their career plans (Supplementary Table S1). Furthermore, demographic data including age, gender, and year of medical studies were recorded. Participation was not compensated. Respondents’ anonymity was ensured. The inclusion criteria for this survey study were that the participants were students of medicine and that they completed the entire survey. Answers to all questions were required for the survey to be considered complete. Exclusion criteria were that participants were not students, were studying other subjects, or did not complete the survey.

The curriculum/study program for medical students at the University of Gothenburg was used to determine whether students had been exposed to specific fields of medicine by the time they answered the questionnaire (for example, by study year 3 all students had been exposed to pathology). Students’ expectations of AI as a threat to employment were recorded as ordered variables with answers strongly disagree, disagree, neutral, agree, and strongly agree to questions of the following type: “In the foreseeable future, <specialization> will be replaced by AI.” Students who opted to skip a question and students who answered “I do not know” were recorded as missing for the affected questions. An ordered logistic mixed model was fitted with students as random intercepts, using binary indicators for gender, the specializations, and whether the students had been exposed to the specializations. Ordered logistic models allow different “gaps” between adjacent categories, so that the difference between strongly disagree and disagree need not equal the difference between disagree and neutral, and so on. As such, ordered logistic models provide information about the direction of effects based on which coefficients increase or decrease agreement. However, without strong assumptions, ordered data do not have explicit magnitudes of effect. To facilitate interpretation of the regression coefficients, the attitudes regarding the different specializations were compared by ranking the influences on the log odds scale and calculating 95% credible intervals on these ranks. That is, if the 95% credible interval for a specialty is, say, [3, 5], then we are 95% certain that the true rank of said specialty lies between 3rd-least threatened and 5th-least threatened, provided that the sample of students is representative of the target population.
The model was fitted using R 4.2.2 with brms 2.18.0 (R Foundation for Statistical Computing, Vienna, Austria), using 4 chains for a total of 4,000 post-warm-up draws. Furthermore, an ordered logistic mixed model was fitted with students as random intercepts, using binary indicators for gender, the specializations, and whether the students had been exposed to the specialization. The year of study was included as a continuous variable.
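To make the “gaps” between adjacent answer categories concrete, the following minimal Python sketch shows how a cumulative logit (ordered logistic) model converts a linear predictor into probabilities for the five answer categories. It is an illustration only: the analysis in this study was run in R with brms, and the threshold and predictor values below are hypothetical, not estimates from the survey.

```python
import math

CATEGORIES = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]


def logistic(x: float) -> float:
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def category_probabilities(eta: float, thresholds: list[float]) -> list[float]:
    """Cumulative logit model: P(Y <= k) = logistic(threshold_k - eta).

    eta is the linear predictor (for example a specialty effect plus a
    student-specific random intercept). thresholds must be increasing and
    contain one value fewer than there are categories.
    """
    cumulative = [logistic(t - eta) for t in thresholds] + [1.0]
    probs = [cumulative[0]]
    for k in range(1, len(cumulative)):
        probs.append(cumulative[k] - cumulative[k - 1])
    return probs


# Hypothetical, unequally spaced thresholds: the "gaps" between categories differ.
thresholds = [-1.0, 0.5, 1.2, 3.0]

for eta in (0.0, 1.0):  # hypothetical linear predictors
    probs = category_probabilities(eta, thresholds)
    summary = ", ".join(f"{name}: {p:.2f}" for name, p in zip(CATEGORIES, probs))
    print(f"eta = {eta}: {summary}")
```

A larger linear predictor shifts probability mass toward agreement, which is why the sign of a coefficient indicates the direction of an effect even though the categories themselves carry no explicit magnitude.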

A total of 192 students responded. Of these, 172 (90%) completed the survey. However, individual questions could be skipped. The median time to complete the survey was 2 min 28 s. Ninety-six responders were females (56%) and 76 (44%) were males. Eighty-six percent (144/168) of the responders were aged between 18 and 24. Most (128/172, 72%) of the students who completed the survey were first- or second-year medical students. The responder characteristics are shown in Table 1. There was no evidence that gender or year of study affected perceptions regarding AI. Regression coefficients were 0.07 [−1.02; 1.16] for male versus female and 0.23 [−0.27; 0.74] per year of study. Answers per year of study are shown in Supplementary Table S1.

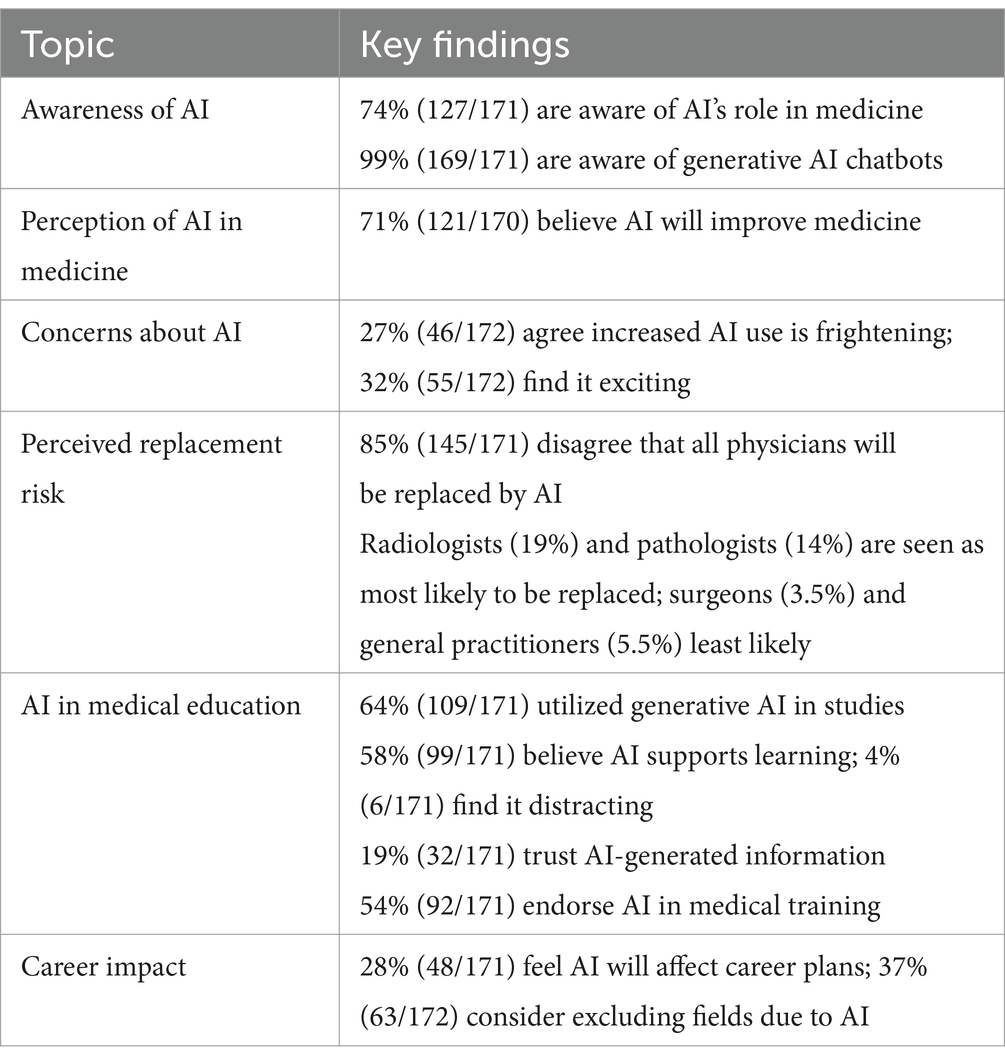

Table 2 summarizes the key findings from the survey. Ninety-nine percent (169/171) of the responders were aware of generative artificial intelligence (AI) chatbots. Sixty-four percent (109/171) had taken advantage of generative AI in their medical studies, and 67% (114/171) planned to use generative AI in their medical studies. Fifty-eight percent (99/171) agreed or strongly agreed that the use of AI is supporting their learning as medical students, while only 4% (6/171) agreed or strongly agreed that the use of generative AI is distracting their learning. However, only 19% (32/171) of the students agreed or strongly agreed that they trust information generated by AI.
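The percentages in this paragraph follow directly from the reported counts. As a small worked check, the Python sketch below recomputes them from the numerators and denominators given above; the helper name `percent` and the dictionary labels are our own illustrative choices.

```python
def percent(count: int, total: int) -> int:
    """Whole-number percentage, rounding halves up, using integer arithmetic."""
    return (200 * count + total) // (2 * total)


# (count, respondents answering the item, percentage reported in the text)
generative_ai_items = {
    "aware of generative AI chatbots": (169, 171, 99),
    "have used generative AI in medical studies": (109, 171, 64),
    "plan to use generative AI in medical studies": (114, 171, 67),
    "AI supports my learning": (99, 171, 58),
    "generative AI distracts my learning": (6, 171, 4),
    "trust AI-generated information": (32, 171, 19),
}

for label, (count, total, reported) in generative_ai_items.items():
    computed = percent(count, total)
    print(f"{label}: {count}/{total} = {computed}% (reported {reported}%)")
```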

Table 2. Key findings from the survey on medical students’ perceptions and attitudes toward artificial intelligence (AI).

Seventy-four percent (127/171) of the responders were aware of AI in medicine. Interestingly, 71% (121/170) agreed or strongly agreed that AI will improve medicine, while 59% (101/171) agreed or strongly agreed that AI will revolutionize medicine in general. A bit more than half of the responders (54%, 92/171) agreed or strongly agreed that AI should be part of medical training. Responses to the statement “A development with an increased use of AI in medicine in general frightens me” resulted in divided answers, with 27% agreeing or strongly agreeing, while 47% either disagreed or strongly disagreed. A similar trend was seen for the statement “Development with an increased use of AI makes medicine in general more exciting to me,” with 32% agreeing or strongly agreeing and 32% disagreeing or strongly disagreeing, while 35% neither agreed nor disagreed.

Eighty-five percent (145/171) disagreed or strongly disagreed that all physicians will be replaced by AI. In the students’ responses, radiologists were considered most likely to be replaced by AI (19% agreed or strongly agreed), followed by pathologists (14% agreed or strongly agreed), while surgeons and general practitioners were considered least likely to be replaced by AI (3.5 and 5.5% agreed or strongly agreed, respectively).

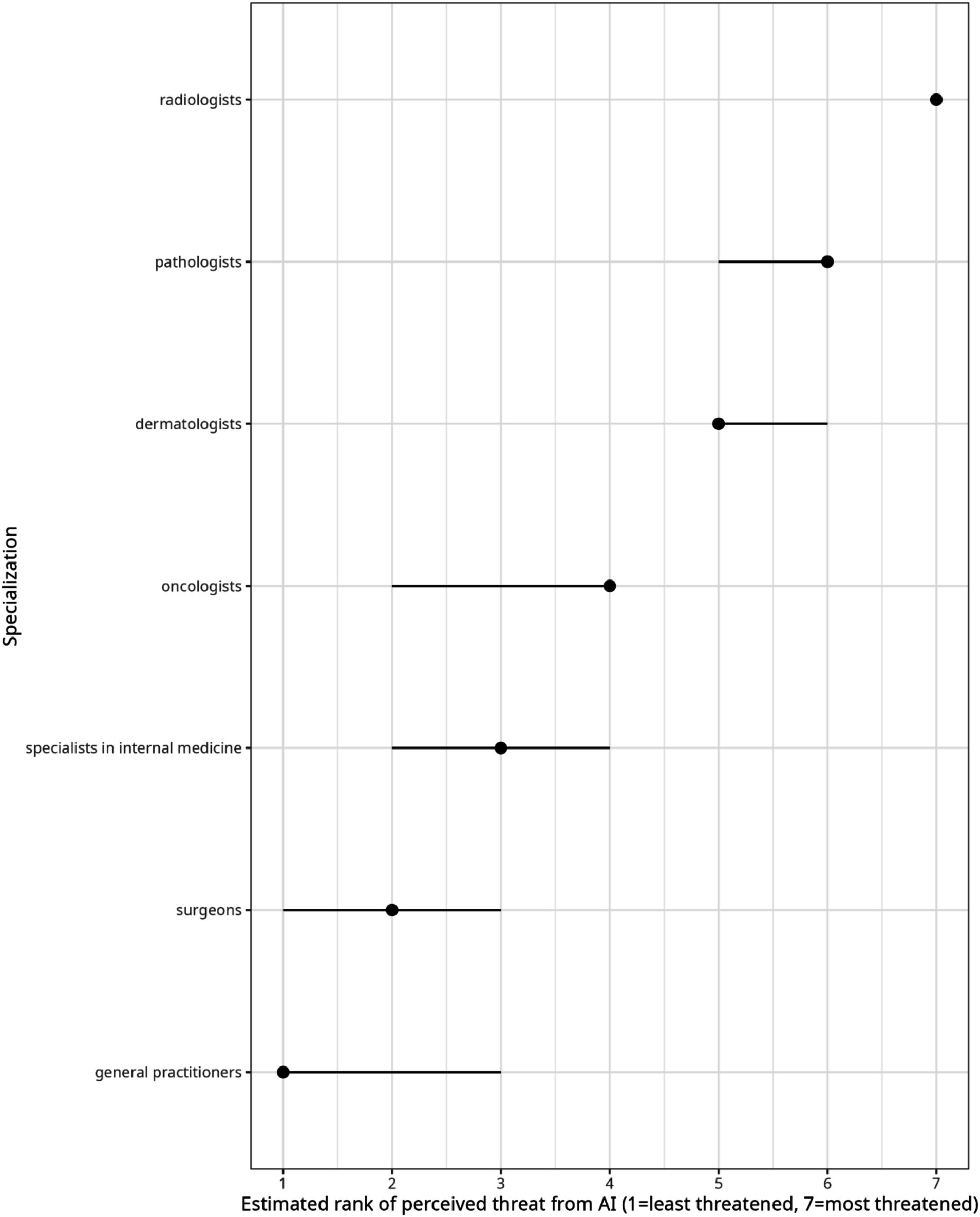

There was no evidence that exposure to specific fields of medicine during their medical studies affected the students’ perceptions regarding AI. Regression coefficients were 0.56 [−0.02; 1.13] for “exposed” versus not “exposed.” There was, however, substantial evidence for differences in perception of threat to specialties. Fitting a mixed ordered logistic regression model with and without specialty, the difference in expected log pointwise predictive density (elpd) was 150.9 with standard error 15.6; a ratio of 9.67 where generally a ratio of 2 is considered evidence in favor of the model with higher elpd. Figure 1 displays the ranks of the perceived threat to specialties, with 95% credible intervals on the rankings. Clearly, radiology was considered most threatened, rank 7 (with 97.5% certainty its rank is 7), while general practitioners and surgeons were least threatened.
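The ratio quoted above is simply the elpd difference divided by its standard error. A minimal Python sketch of that arithmetic, using only the two values reported in the text (the function name `elpd_ratio` is hypothetical):

```python
def elpd_ratio(elpd_diff: float, se_diff: float) -> float:
    """Ratio of an elpd difference to its standard error."""
    return elpd_diff / se_diff


# Values reported for the models with and without specialty.
ratio = elpd_ratio(150.9, 15.6)
print(f"elpd difference / SE = {ratio:.2f}")  # prints 9.67
print("exceeds the rule-of-thumb ratio of 2:", ratio > 2)
```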

Figure 1. Estimated rank of perceived threat from artificial intelligence per specialization. Surveyed students indicated agreement with statements of the type: “In the foreseeable future <specialization> will be replaced by AI.” Dots represent the expected rank of each specialization, and lines represent 95% credible intervals on the ranks, both obtained from a generalized linear mixed model with cumulative logit link. Non-overlapping credible intervals indicate evidence for the ordering in which specialization is less/more threatened, as perceived by medical students in Gothenburg in 2024.
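The expected ranks and credible intervals in Figure 1 can be understood as follows: within each posterior draw the specialty effects are ranked, and the distribution of those ranks across draws is then summarized. The Python sketch below illustrates this idea under stated assumptions. The posterior draws are simulated from hypothetical means and a hypothetical standard deviation, only four specialties are included, and the code is not the authors’ R/brms analysis.

```python
import math
import random


def rank_draw(effects: dict[str, float]) -> dict[str, int]:
    """Rank specialties within one posterior draw (1 = least threatened)."""
    ordered = sorted(effects, key=effects.get)
    return {name: position for position, name in enumerate(ordered, start=1)}


def rank_intervals(draws: list[dict[str, float]], level: float = 0.95):
    """Return {specialty: (expected rank, lower bound, upper bound)}."""
    ranks: dict[str, list[int]] = {name: [] for name in draws[0]}
    for draw in draws:
        for name, rank in rank_draw(draw).items():
            ranks[name].append(rank)
    tail = (1.0 - level) / 2.0
    summary = {}
    for name, values in ranks.items():
        values.sort()
        last = len(values) - 1
        lower = values[math.floor(tail * last)]
        upper = values[math.ceil((1.0 - tail) * last)]
        summary[name] = (sum(values) / len(values), lower, upper)
    return summary


# Hypothetical log-odds effects; real values would come from the fitted model.
hypothetical_means = {
    "radiology": 2.0,
    "pathology": 1.2,
    "general practice": 0.1,
    "surgery": 0.0,
}
rng = random.Random(0)  # fixed seed so the simulation is reproducible
draws = [
    {name: rng.gauss(mean, 0.4) for name, mean in hypothetical_means.items()}
    for _ in range(4000)
]

for name, (expected, lower, upper) in rank_intervals(draws).items():
    print(f"{name}: expected rank {expected:.2f}, 95% interval [{lower}, {upper}]")
```

Ranking within each draw, rather than ranking the posterior means once, is what lets the uncertainty in the coefficients carry through to uncertainty in the ordering.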

Just over a quarter (28%, 48/171) of responders agreed or strongly agreed that AI will affect their career plans; 37% (63/172) agreed or strongly agreed that they will exclude some field of medicine because of AI, while 38% (66/172) disagreed or strongly disagreed.

The results indicate that medical students have generally positive attitudes toward AI in medicine, and they have high expectations for AI improving medicine. At the same time, they express fear for their future careers as physicians. Interestingly, over one-third of students will exclude some fields of medicine threatened by the development of AI. Furthermore, the medical students express agreement that AI should be part of medical training, and they rely on AI chatbots in their studies, which should be taken into consideration while restructuring medical education. By combining several elements, including career impact, cross-specialty comparison, generative AI usage, and balanced perspectives, this survey study offers a comprehensive and timely snapshot of medical students’ attitudes toward AI, providing valuable insights for medical educators.

Previously, a large cross-sectional multi-center online survey was conducted among 3,018 Turkish medical students to examine the perceptions of future physicians on the possible influences of AI on medicine, and to determine the needs that could then be considered when restructuring the medical curriculum (Civaner et al., 2022). Most of the medical students perceived AI as an assistive technology that could facilitate physicians’ access to information (85.8%) and patients’ access to healthcare (76.7%) and that could reduce errors (70.5%). This is in line with the findings in our survey, where 71% agreed or strongly agreed that AI will improve medicine. Another cross-sectional study conducted in Malaysia surveyed 301 medical students from 17 universities about their attitudes and readiness regarding AI (Gilson et al., 2023). Similar to our findings, 87.36% of the Malaysian students agreed that AI will play an essential role in healthcare. It must be noted that even though AI solutions may facilitate patients’ access to healthcare, they also pose challenges for physician-patient interactions. In a previous study, medical students agreed that the use of AI in medicine could damage trust (45.5%) and negatively affect patient-physician relationships (42.7%), and even that it might cause violations of professional confidentiality (Civaner et al., 2022).

In this study, one-third of the medical students felt frightened about an increased use of AI in medicine. In a previous study, 45% of the medical students were worried about the possible reduction in the services of physicians, which could lead to unemployment (Civaner et al., 2022). This trend was supported by our survey, where large numbers of the students agreed that specialists in several fields may be replaced by AI. Not surprisingly, the specialists in diagnostic fields were considered most likely to be replaced by AI, including radiologists (19% agreed or strongly agreed) followed by pathologists (14% agreed or strongly agreed), while general surgeons and general practitioners were considered least likely to be replaced by AI. In fact, most of the previous studies regarding medical students’ attitudes focus on evaluating attitudes toward AI and radiology (Ghorashi et al., 2023; Tung and Dong, 2023), the first field in which AI has been applied in clinical practice (Topol, 2019). The majority of medical students participating in a 2019 survey agreed that AI will revolutionize and improve radiology (77 and 86%), while 17% agreed with the statement that human radiologists will be replaced by AI (Pinto et al., 2019). Interestingly, in a more recent study by Allam with 4,492 responders, 48.9% agreed that AI could reduce the need for radiologists (Allam et al., 2023). In another recent study the medical students selected radiology (72.6%) and pathology (58.2%) as the specialties most likely to be impacted by AI (Stewart et al., 2023). A multi-national study involving Arab medical students found that 72.6% perceived radiology and 58.2% perceived pathology as the specialties most likely to be impacted by AI (Alamer, 2023), which aligns with our results identifying radiologists (19%) and pathologists (14%) as the top two specialties perceived to be replaceable by AI. 
In a recent Nordic study, nearly 80% of the responding students and trained radiographers expressed interest in pursuing AI education (Pedersen et al., 2024). Thus, the findings in this study are in line with the previous studies regarding perceptions of the medical students.

Even though there are several previous studies exploring medical students’ fears and concerns about AI, there is limited research specifically investigating how AI influences their career plans and choice of medical specialty. In our survey, 37% of the students agreed or strongly agreed that they will exclude some field of medicine because of AI. In a large survey study across 32 US medical schools, AI significantly lowered students’ preference for ranking radiology, meaning that one-sixth of students who would have chosen radiology as their first choice did not do so because of AI (REF). A similar trend was seen in a Canadian study distributed to 17 Canadian medical schools, where half of the responders agreed that AI caused them anxiety when considering the radiology specialty. Furthermore, one-sixth of responders who would otherwise rank radiology as the first choice would not consider radiology because of their anxiety about AI (Reeder and Lee, 2022). Furthermore, 33% of Malaysian students reported being less likely to consider a career in radiology due to AI advancements (Tung and Dong, 2023). Another study from Saudi Arabia surveyed 476 medical students, with 34 considering radiology as their first specialty choice (Bin Dahmash et al., 2020). The study also highlighted that students’ concerns about AI displacing radiologists negatively influenced their consideration of radiology as a career, corroborating our finding that 37% would exclude certain medical fields threatened by AI. These findings underscore the importance of educating medical students, particularly in radiology and pathology, about the potential of human-AI collaboration and how to optimize it for benefit rather than perceiving it as a threat.

While this survey focused on medical students’ attitudes and perceptions regarding AI, it is important to compare the results with studies involving practicing medical specialists. In a previous study regarding pathologists’ attitudes toward AI with 718 responders worldwide, 72% of responders agreed or strongly agreed that AI would improve dermatopathology (Polesie et al., 2020). However, compared to the students’ response, a lower percentage of pathologists (6%) agreed that pathologists will be replaced by AI. A study by Jiang et al. surveying 280 radiologists in the United States found that, while 23% believed AI would make radiologists’ jobs redundant, 78% believed AI would increase their productivity (Jiang et al., 2017). This suggests that, while students perceive a threat to specific specialties, practicing specialists may have a more nuanced view, recognizing AI’s potential to augment their roles.

Medical students have previously expressed a need to revise the medical curriculum to adapt to the changing healthcare environment influenced by AI (Pinto et al., 2019; Civaner et al., 2022). However, the vast majority of medical students do not currently receive formal AI training during medical education (Allam et al., 2023; Stewart et al., 2023). In this survey, 54% of the responders agreed or strongly agreed that AI should be part of medical training, which is in line with the findings in previous surveys (Grunhut et al., 2022; Stewart et al., 2023; Kimmerle et al., 2023). In line with our findings, a survey of 221 clinical-year medical students at Sultan Qaboos University in Oman revealed positive perceptions and attitudes toward AI, with 78.7% believing AI training should be incorporated into medical curricula (Al Hadithy et al., 2023). In addition, in a survey among Thai medical students, nearly all students (93.6%) recognized the value of AI training for their careers and strongly advocated for its inclusion in the medical school curriculum (Angkurawaranon et al., 2024). Medical students need to be equipped with the knowledge and skills required to use AI effectively and ethically in their future practice. This includes understanding the limitations and potential biases of AI algorithms by teaching the sensible use of human oversight and continuous monitoring to catch errors in AI algorithms and ensure that final decisions are made by human clinicians (Kimmerle et al., 2023). Current students should understand the breadth of AI tools, the framework of engineering and designing AI solutions to clinical issues, and the role of data in the development of AI innovations. Study cases in the curriculum should include an AI recommendation that may present critical decision-making challenges. Finally, the ethical implications of AI in medicine must be at the forefront of any comprehensive medical education (Briganti and Le Moine, 2020). 
It is obvious that the curriculum for medical students needs to be revised to incorporate several aspects of AI.

In this survey, the majority (64%) of the responders had taken advantage of AI chatbots in their medical studies, and 58% agreed or strongly agreed that the use of AI is supporting their learning as medical students. The extent to which the students use AI chatbots is rarely assessed in current literature. In a recent study from 2023 among Indian medical students, the majority of the students rarely used AI chatbots for their teaching-learning purposes (Biri et al., 2023). There are studies regarding the clear benefits of using AI chatbots in medical education (Kaur et al., 2021; Li et al., 2021). AI chatbots offer some advantages, including their capability to summarize, simplify complex concepts, automate the creation of memory aids, and serve as interactive tutors. Engaging in conversations with chat-based AI can help students improve their language skills, including grammar, vocabulary, and communication proficiency. However, current chatbots rely on unverified internet sources and may therefore provide inaccurate medical information (Ghorashi et al., 2023). They should be reprogrammed using evidence-based resources to serve as trustworthy point-of-care references for medical education and patient care. Overreliance on chatbots may hinder critical thinking and self-reliance in students. Interestingly, in our study only 19% of students trusted AI-generated information, highlighting the need to teach critical assessment of such data. Medical training must emphasize validating information, distinguishing facts from rhetoric, and disseminating accurate content that adheres to scientific and ethical standards (Brouillette, 2019).

Incorporating generative AI into medicine has the potential to revolutionize various aspects of healthcare and medical education (Rao et al., 2024; Haug and Drazen, 2023). AI has a potential role in clinical decision-making, assisting physicians by providing rapid, data-driven insights for diagnosis and treatment leading to personalized patient care. In the best-case scenario, AI will not put health professionals out of business; rather, it will make it possible for health professionals to do their jobs better (Haug and Drazen, 2023). However, incorporating AI into medicine involves several ethical challenges, including uncertainty and distrust of AI predictions, regulation and governance of medical AI, shifts in responsibility, and concerns about data privacy and security (Rajpurkar et al., 2022). The use of generative AI may create hallucinations or misleading information (Hatem et al., 2023).

Based on this survey, we provide some recommendations for integrating AI into medical education. Given that more than half of students believe AI should be part of medical training, medical schools should develop comprehensive courses that cover the basics of AI, its applications in medicine, and ethical considerations. This could include modules on machine learning, data analytics, and the use of AI tools in clinical practice. There is also an obvious need for ethics courses that discuss the implications of AI in healthcare and the potential for AI to augment rather than replace human roles. In addition, educational strategies should implement training that emphasizes critical thinking, source verification, and the limitations of AI.

We should encourage students to cross-check AI-generated content with peer-reviewed medical literature and clinical guidelines. Given the potential for AI chatbots to generate content that could be used in exams, traditional examination formats may need to be revised to maintain academic integrity. To prevent the misuse of AI chatbots, some institutions might consider reverting to handwritten exams or supervised in-person assessments. Exams should also evaluate students’ ability to critically analyze and verify AI-generated information. One option is to incorporate practical components in exams where students use AI tools to solve clinical problems. Finally, there is a need to establish clear guidelines on the acceptable use of AI tools in academic work and ensure that students understand the importance of academic integrity and the ethical use of AI. Institutions should provide clear policies on the use of AI in assignments and exams, emphasizing the need for proper attribution and the avoidance of plagiarism.

Limitations of this study include a relatively small single-center setup as the survey was only distributed to medical students at the University of Gothenburg. Currently, the Sahlgrenska University Hospital in Gothenburg is facing economic challenges, often featured in the local news. The hospital’s goal is to utilize AI to overcome the challenges, which may have affected the students’ fears about their future careers. Furthermore, not all fields of medicine, for example, psychiatry, were included in the survey. Most of the participants were in the first 2 years of their medical education, which mostly includes preclinical studies. Their career plans may still change several times during their education. We found no evidence that exposure to specific fields of medicine during responders’ medical studies affected their perceptions regarding AI; however, it is unclear how much the early-years students know about different disciplines in medicine and AI’s possible effects on these. Furthermore, the combination of the survey remaining open during the ongoing semester and a recent restructuring of the curriculum introduced some misclassification of students’ exposure to each specialization. Some students had skipped some questions in the survey. While the mixed model approach is robust to missing at random, there can be bias due to missing not at random, both in general non-response and item-specific non-response. Furthermore, the answer “do not know” was recoded as missing, which could have biased analyses of the influence of knowledge of the specialization if “do not know” is related to whether students understand the specialization well enough as opposed to driven by how much they think they understand AI. Furthermore, the survey combined both machine learning in medicine and the use of AI chatbots, which may affect the interpretation of some results. 
For example, we are not entirely sure if the students feel threatened about the development of AI in medicine in general or about the introduction of AI chatbots, since this was not specified in the survey.

To conclude, in this survey the medical students have high expectations for AI improving medicine. At the same time, they expressed fear for their future careers as physicians. Interestingly, over one-third of students will exclude some fields of medicine threatened by the development of AI. These findings emphasize the need to educate medical students, especially in radiology and pathology, about the potential of human-AI collaboration and how to optimize it for positive outcomes instead of viewing it as a threat. There is an obvious need to integrate AI into the medical curriculum.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

NN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing.

The author declares that no financial support was received for the research, authorship, and/or publication of this article.

We wish to thank Koen Simons at the University of Gothenburg for helping with the statistical analyses.

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that no Generative AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2025.1517116/full#supplementary-material

AI, Artificial intelligence.

Al Hadithy, Z. A., Al Lawati, A., Al-Zadjali, R., and Al, S. H. (2023). Knowledge, attitudes, and perceptions of artificial intelligence in healthcare among medical students at Sultan Qaboos University. Cureus 15:e44887. doi: 10.7759/cureus.44887

Alamer, A. (2023). Medical students' perspectives on artificial intelligence in radiology: the current understanding and impact on radiology as a future specialty choice. Curr. Med. Imaging 19, 921–930. doi: 10.2174/1573405618666220907111422

Allam, A. H., Eltewacy, N. K., Alabdallat, Y. J., Owais, T. A., Salman, S., Ebada, M. A., et al. (2023). Knowledge, attitude, and perception of Arab medical students towards artificial intelligence in medicine and radiology: a multi-national cross-sectional study. Eur. Radiol. 34, 1–14. doi: 10.1007/s00330-023-10509-2

Angkurawaranon, S., Inmutto, N., Bannangkoon, K., Wonghan, S., Kham-ai, T., Khumma, P., et al. (2024). Attitudes and perceptions of Thai medical students regarding artificial intelligence in radiology and medicine. BMC Med. Educ. 24:1188. doi: 10.1186/s12909-024-06150-2

Bin Dahmash, A., Alabdulkareem, M., Alfutais, A., Kamel, A. M., Alkholaiwi, F., Alshehri, S., et al. (2020). Artificial intelligence in radiology: does it impact medical students preference for radiology as their future career? BJR Open 2:20200037. doi: 10.1259/bjro.20200037

Biri, S. K., Kumar, S., Panigrahi, M., Mondal, S., Behera, J. K., and Mondal, H. (2023). Assessing the utilization of large language models in medical education: insights from undergraduate medical students. Cureus 15:e47468. doi: 10.7759/cureus.47468

Briganti, G., and Le Moine, O. (2020). Artificial intelligence in medicine: today and tomorrow. Front. Med. (Lausanne) 7:27. doi: 10.3389/fmed.2020.00027

Brouillette, M. (2019). AI added to the curriculum for doctors-to-be. Nat. Med. 25, 1808–1809. doi: 10.1038/s41591-019-0648-3

Civaner, M. M., Uncu, Y., Bulut, F., Chalil, E. G., and Tatli, A. (2022). Artificial intelligence in medical education: a cross-sectional needs assessment. BMC Med. Educ. 22:772. doi: 10.1186/s12909-022-03852-3

Ghorashi, N., Ismail, A., Ghosh, P., Sidawy, A., and Javan, R. (2023). AI-powered chatbots in medical education: potential applications and implications. Cureus 15:e43271. doi: 10.7759/cureus.43271

Gilson, A., Safranek, C. W., Huang, T., Socrates, V., Chi, L., Taylor, R. A., et al. (2023). How does ChatGPT perform on the United States medical licensing examination (USMLE)? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. 9:e45312. doi: 10.2196/45312

Grunhut, J., Marques, O., and Wyatt, A. T. M. (2022). Needs, challenges, and applications of artificial intelligence in medical education curriculum. JMIR Med. Educ. 8:e35587. doi: 10.2196/35587

Hatem, R., Simmons, B., and Thornton, J. E. (2023). A call to address AI "hallucinations" and how healthcare professionals can mitigate their risks. Cureus 15:e44720. doi: 10.7759/cureus.44720

Haug, C. J., and Drazen, J. M. (2023). Artificial intelligence and machine learning in clinical medicine, 2023. N. Engl. J. Med. 388, 1201–1208. doi: 10.1056/NEJMra2302038

Jiang, F., Jiang, Y., Zhi, H., Dong, Y., Li, H., Ma, S., et al. (2017). Artificial intelligence in healthcare: past, present and future. Stroke Vasc. Neurol. 2, 230–243. doi: 10.1136/svn-2017-000101

Kaur, A., Singh, S., Chandan, J. S., Robbins, T., and Patel, V. (2021). Qualitative exploration of digital chatbot use in medical education: a pilot study. Digit. Health 7:20552076211038151. doi: 10.1177/20552076211038151

Kimmerle, J., Timm, J., Festl-Wietek, T., Cress, U., and Herrmann-Werner, A. (2023). Medical students' attitudes toward AI in medicine and their expectations for medical education. J. Med. Educat. Curri. Develop. 10:23821205231219346. doi: 10.1177/23821205231219346

Koohi-Moghadam, M., and Bae, K. T. (2023). Generative AI in medical imaging: applications, challenges, and ethics. J. Med. Syst. 47:94. doi: 10.1007/s10916-023-01987-4

Li, Y. S., Lam, C. S. N., and See, C. (2021). Using a machine learning architecture to create an AI-powered chatbot for anatomy education. Med. Sci. Educ. 31, 1729–1730. doi: 10.1007/s40670-021-01405-9

Lu, M. Y., Chen, B., Williamson, D. F. K., Chen, R. J., Zhao, M., Chow, A. K., et al. (2024). A multimodal generative AI copilot for human pathology. Nature 634, 466–473. doi: 10.1038/s41586-024-07618-3

Meskó, B., Hetényi, G., and Győrffy, Z. (2018). Will artificial intelligence solve the human resource crisis in healthcare? BMC Health Serv. Res. 18:545. doi: 10.1186/s12913-018-3359-4

Najjar, R. (2023). Redefining radiology: a review of artificial intelligence integration in medical imaging. Diagnostics (Basel) 13:2760. doi: 10.3390/diagnostics13172760

Pedersen, M. R. V., Kusk, M. W., Lysdahlgaard, S., Mork-Knudsen, H., Malamateniou, C., and Jensen, J. (2024). Nordic radiographers' and students' perspectives on artificial intelligence - a cross-sectional online survey. Radiography (Lond.) 30, 776–783. doi: 10.1016/j.radi.2024.02.020

Pesapane, F., Cuocolo, R., and Sardanelli, F. (2024). The Picasso's skepticism on computer science and the dawn of generative AI: questions after the answers to keep "machines-in-the-loop". Eur. Radiol. Exp. 8:81. doi: 10.1186/s41747-024-00485-7

Pinto, D., Giese, D., Brodehl, S., Chon, S. H., Staab, W., Kleinert, R., et al. (2019). Medical students' attitude towards artificial intelligence: a multicentre survey. Eur. Radiol. 29, 1640–1646. doi: 10.1007/s00330-018-5601-1

Polesie, S., McKee, P. H., Gardner, J. M., Gillstedt, M., Siarov, J., Neittaanmäki, N., et al. (2020). Attitudes toward artificial intelligence within dermatopathology: an international online survey. Front. Med. (Lausanne) 7:591952. doi: 10.3389/fmed.2020.591952

Rajpurkar, P., Chen, E., Banerjee, O., and Topol, E. J. (2022). AI in health and medicine. Nat. Med. 28, 31–38. doi: 10.1038/s41591-021-01614-0

Rao, S. J., Isath, A., Krishnan, P., Tangsrivimol, J. A., Virk, H. U. H., Wang, Z., et al. (2024). ChatGPT: a conceptual review of applications and utility in the field of medicine. J. Med. Syst. 48:59. doi: 10.1007/s10916-024-02075-x

Reeder, K., and Lee, H. (2022). Impact of artificial intelligence on US medical students' choice of radiology. Clin. Imaging 81, 67–71. doi: 10.1016/j.clinimag.2021.09.018

Rivero-Moreno, Y., Echevarria, S., Vidal-Valderrama, C., Pianetti, L., Cordova-Guilarte, J., Navarro-Gonzalez, J., et al. (2023). Robotic surgery: a comprehensive review of the literature and current trends. Cureus 15:e42370. doi: 10.7759/cureus.42370

Shafi, S., and Parwani, A. V. (2023). Artificial intelligence in diagnostic pathology. Diagn. Pathol. 18:109. doi: 10.1186/s13000-023-01375-z

Singh, Y., Hathaway, Q. A., and Erickson, B. J. (2024). Generative AI in oncological imaging: revolutionizing cancer detection and diagnosis. Oncotarget 15, 607–608. doi: 10.18632/oncotarget.28640

Stewart, J., Lu, J., Gahungu, N., Goudie, A., Fegan, P. G., Bennamoun, M., et al. (2023). Western Australian medical students' attitudes towards artificial intelligence in healthcare. PLoS One 18:e0290642. doi: 10.1371/journal.pone.0290642

Topol, E. J. (2019). High-performance medicine: the convergence of human and artificial intelligence. Nat. Med. 25, 44–56. doi: 10.1038/s41591-018-0300-7

Tung, A. Y. Z., and Dong, L. W. (2023). Malaysian medical students' attitudes and readiness toward AI (artificial intelligence): a cross-sectional study. J. Med. Educat. Curri. Develop. 10:23821205231201164. doi: 10.1177/23821205231201164

Wang, F., and Preininger, A. (2019). AI in health: state of the art, challenges, and future directions. Yearb. Med. Inform. 28, 16–26. doi: 10.1055/s-0039-1677908

Keywords: artificial intelligence, survey, medical students, medical education, generative AI, AI chatbots

Citation: Neittaanmäki N (2025) Swedish medical students’ attitudes toward artificial intelligence and effects on career plans: a survey. Front. Educ. 10:1517116. doi: 10.3389/feduc.2025.1517116

Received: 25 October 2024; Accepted: 11 February 2025; Published: 28 February 2025.

Edited by: Tisni Santika, Universitas Pasundan, Indonesia

Reviewed by: Jorge Cervantes, Nova Southeastern University, United States

Copyright © 2025 Neittaanmäki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Noora Neittaanmäki, noora.neittaanmaki@gu.se
