- ¹ Faculty of Graduate Studies, An-Najah National University, Nablus, Palestine

- ² Faculty of Humanities and Educational Sciences, An-Najah National University, Nablus, Palestine

The current study describes the development and validation of a rubric for assessing educational robotics skills among middle school students. A multiphase method was followed, comprising a literature review, expert consultation, content validation, pilot testing, reliability analysis, and construct validation. The resulting rubric has two categories, designing skills and programming skills, each elaborated through specific criteria and performance levels. Content validation identified seven key criteria: stability and durability, motors, innovation, code organization, dependability and reliability, movement and rotation, and innovative use of sensors. Inter-rater reliability analysis indicated high agreement for all criteria, with Krippendorff’s alpha ranging from 0.941 to 1.000. Construct validation using exploratory factor analysis supported a two-factor structure aligned with the intended domains of design and programming skills, explaining 67.4% of the total variance. The rubric is consistent with learning and teaching theories such as Bloom’s taxonomy, constructivist learning theory, and self-determination theory. It addresses the need for a dedicated assessment tool in educational robotics and supports educational practice by giving students detailed feedback on their performance. The study thus contributes to educational assessment and robotics education and can help improve teaching and learning outcomes in STEAM education across various settings.

Introduction

Robotics education has become an important component of the STEAM (Science, Technology, Engineering, Arts, and Mathematics) curriculum, promoting essential skills such as problem-solving, creativity, and cooperation (Eguchi, 2014). Middle school is an important stage for introducing robotics because it coincides with considerable cognitive and social growth. There is evidence that robotics activities increase students’ motivation to understand complex subject matter, preparing them for technological challenges in later studies (Elkin et al., 2018; Nugent et al., 2013). Although the value of robotics education is recognized, there is still no robust and reliable assessment tool that precisely captures students’ skills in designing, building, and programming robots. Conventional testing rarely goes beyond rote learning and theoretical knowledge, and it therefore cannot capture the multidimensional, practical problem-solving skills that educational robotics is meant to develop, particularly among middle school students (Zhu et al., 2023). This gap underscores the need for tools that assess students’ performance and skills through a more comprehensive and practical approach.

How can this be achieved? A rubric is an assessment tool that specifies the criteria and performance levels for a particular task, making it a detailed guide for both teachers and students. Rubrics clarify expectations, support effective instructional strategies and structured feedback, and guide students toward learning and improvement (Gallardo, 2020).

Rubrics are particularly needed in educational robotics. In robotics education, learners must integrate knowledge from several domains, including engineering, programming, and mathematics, through hands-on, project-based learning. By setting clear performance criteria and guiding students throughout the learning process, rubrics can effectively assess a wide range of skills (Reddy and Andrade, 2010). Beyond assessment, rubrics also enhance self-regulation and reflective learning: students can use them to see what is expected of them and where gaps remain. This self-assessment function is central to building the critical thinking and problem-solving skills that define robotics practice (Panadero and Jonsson, 2013). Several types of rubrics can be developed for general assessment purposes. Holistic rubrics yield a single overall score based on the general impression of a student’s performance. Analytic rubrics break performance into several criteria that are scored independently, each with its own score. Developmental rubrics track skill development over time. Finally, single-point rubrics provide detailed feedback for each criterion without specifying scores for the performance levels (Andrade, 2005). Rubrics are especially valuable in fields that involve the practical, hands-on application of learned skills and creativity. In robotics education, they help bridge theory and practice by ensuring that assessment targets realistic, real-world abilities and competencies (Moskal, 2000).

The present study was part of a larger study that investigated the effect of rubric-based instruction on improving robotics skills and academic self-regulation among Arab middle school students in Jerusalem. The ethical aspects of the broader study were approved by the PhD Program Committee in Teaching & Learning, Faculty of Graduate Studies, An-Najah National University, ensuring that all procedures adhered to high ethical standards. Written informed consent was obtained from each student and guardian after full verbal and written disclosure of the nature of the study and of their right to withdraw at any time; all collected data were kept confidential. This paper systematically describes the development and validation cycle of the robotics skills rubric for middle school students.

Literature review

Educational robotics in middle schools

Educational robotics has evolved considerably over the last two decades, from specialized programs to an integral part of middle school STEAM curricula. This shift reflects the growing emphasis on technology literacy and 21st-century skills in education (Wang et al., 2023). Research documents numerous benefits of robotics education for middle school students. A frequently reported outcome is improved problem-solving, developed through the critical and creative thinking required to build and program robots (Zhang et al., 2024). Collaborative robotics projects help learners develop social skills and work effectively in groups (Demetroulis et al., 2023). Robotics education is also associated with higher student motivation and engagement. Students who participate in robotics programs report greater overall motivation and interest in STEAM subjects, which is especially important in the middle school years, when students are forming their academic identities and first considering future career fields (Selcuk et al., 2024). However, educational robotics faces challenges, mainly because it is resource-intensive. Most schools lack essential resources such as funding, equipment, and access to technology, which prevents them from starting and sustaining a robotics program (Ateş and Gündüzalp, 2024). In addition, school robotics programs depend on the availability of trained, knowledgeable pre-service and in-service teachers, which requires professional development and continuous support for teachers (Sullivan and Bers, 2018). The integration of robotics into the middle school curriculum ranges from dedicated robotics courses to projects embedded in existing science or technology classes.
Successful integration aligns robotics activities with educational standards such as the Next Generation Science Standards (NGSS) and the International Society for Technology in Education (ISTE) standards, ensuring that robotics education supports broader learning objectives and students’ development of critical STEAM capabilities (Furse and Yoshikawa-Ruesch, 2023). Educational robotics also fosters a growth mindset by encouraging students to view challenges as learning opportunities. Through iterative design, building, and programming of robots, students learn to persist through difficulties and to value experimentation and innovation (Bers et al., 2014). Robotics programs have also been linked to better academic performance: participating students show improved problem-solving abilities and higher overall academic achievement (Anwar et al., 2019).

Robotics skills and competencies

Educational robotics skills encompass a wide variety of capabilities relevant to students’ development in STEAM and fall into two groups: technical skills and soft skills. Technical skills include programming/coding, mechanical design and construction, electronics, and sensors, which entail understanding physical principles, spatial relationships, engineering concepts, and basic electronics (Boya-Lara et al., 2022; Ouyang and Xu, 2024). Soft skills that robotics education can foster include problem-solving and logical thinking, collaboration and teamwork, creativity and innovation, persistence, and adaptability. These skills emerge from the iterative design and troubleshooting processes, the teamwork, and the experimental nature of robotics projects (Ouyang and Xu, 2024; Coufal, 2022; Graffin et al., 2022).

Assessment in educational robotics

In educational robotics, effective assessment fosters motivation and is needed to judge student progress, inform instructional practice, and provide feedback. Current assessment practices rely mainly on informal observation of students’ work processes and reviews of project outcomes, which are subjective measures of varying quality. Because robotics education combines cognitive, technical, and interpersonal skills, it requires assessments that can distinguish technical accuracy from creative processes (Wang et al., 2023). Authentic assessment tools, including project-based appraisal, performance tasks, and portfolios, help teachers gain insight into student learning and development. These assessments cover both the process and product domains of learning, including creativity, innovation, and the practical application of knowledge (Gedera, 2023). Rubrics also help score complex robotics skills clearly by providing specific criteria with descriptions of performance levels, which promotes consistency and objectivity in assessment. Whereas analytic rubrics break the assessment into parts, holistic rubrics yield an overall judgment of performance quality (Moskal, 2000). Despite significant progress in educational robotics, several gaps remain, particularly in assessing robotics skills among middle school students. These gaps include the lack of standardized, validated assessment tools; limited research on the long-term impact of robotics education; the transferability of robotics skills to other academic domains; issues of equity and access; teacher professional development; the assessment of soft skills; and alignment with educational standards (Gallardo, 2020; Ouyang and Xu, 2024; Darmawansah et al., 2023; Gao et al., 2020; Luo et al., 2019).

Theoretical framework

The development of rubric-based assessment tools for educational robotics skills draws on several foundational educational theories and models, including Bloom’s taxonomy, constructivist learning theory, self-determination theory (SDT), and authentic assessment principles. These theories explain how students learn in robotics education and inform the design of effective assessment tools (Black and Wiliam, 1998; Krathwohl, 2002; Adams and Krockover, 1999; Ryan and Deci, 2000; Darling-Hammond, 1995). Bloom’s taxonomy classifies learning goals into cognitive, affective, and psychomotor domains. Its original cognitive domain comprises six levels of learning objectives: knowledge, comprehension, application, analysis, synthesis, and evaluation. Together, these levels provide a framework for designing rubrics that tap the range of cognitive skills involved in robotics, from basic recall to the highest intellectual skills (Krathwohl, 2002). Constructivist learning theory holds that students construct knowledge through active engagement with their environment. In educational robotics, this theory emphasizes hands-on, experiential learning and therefore aligns well with formative assessment built on continuous feedback loops for iterative improvement. Rubrics based on this approach measure learning processes, such as problem-solving strategies, creativity, and reflection, in addition to the final product (Adams and Krockover, 1999). SDT addresses intrinsic motivation: students are more motivated to learn when they experience autonomy, competence, and relatedness. In educational robotics, project choice, competence development through challenging tasks, and collaborative work support these needs. A rubric designed around these elements rewards student autonomy, competence, and collaboration, fostering a motivating learning environment (Deci et al., 1991).
Authentic assessment examines students’ ability to apply knowledge and competencies in real-life scenarios, consistent with constructivist principles of learning. Examples of authentic assessment in educational robotics include project-based assessments, performance tasks, and portfolios, all of which can capture the depth of student learning. Rubrics for such assessments specify criteria for both learning processes and products, including creativity, innovation, and how well knowledge is put into practice (Darling-Hammond, 1995). Formative assessment provides feedback during learning, whereas summative assessment evaluates learning at the end of an instructional period. Both are crucial in educational robotics: formative assessment supports iterative design, test, and refinement cycles, while summative assessment evaluates overall project success. Rubrics should therefore support both, offering clear, useful feedback as well as comprehensive evaluation criteria (Black and Wiliam, 1998). By integrating Bloom’s taxonomy, constructivist learning theory, SDT, and authentic assessment with formative and summative evaluation, teachers can design rubrics that not only evaluate technical and cognitive skills but also support student motivation, engagement, and holistic development.

Methodology

The development and validation of a rubric for evaluating educational robotics skills among middle school students followed a systematic, multiphase approach intended to make the rubric comprehensive, reliable, and valid. The process began by defining the goal and selecting a rubric type. A literature review then identified critical skills and informed the initial rubric criteria. Expert consultation and content validation were used to refine these criteria. Pilot testing with middle school students provided practical insights, and reliability analysis examined inter-rater reliability. Finally, exploratory factor analysis (EFA) assessed whether the rubric had adequate construct validity, that is, whether it accurately measures the competencies being assessed. Each phase is explained below:

Phase 1: determining the goal of creating the rubric

First, we determined the purpose and goal of the rubric in consultation with teachers, curriculum developers, and robotics experts. The primary goal was to create a rubric that assesses middle school students’ skills in educational robotics, specifically designing and programming, grounded in established learning theories.

Phase 2: selection of rubric type

Once the goals were determined, an analytic rubric was chosen because it provides detailed feedback on several criteria, which is essential given the multifaceted nature of robotics skills.

Phase 3: literature review and theoretical frameworks

This phase involved a critical review of the literature on educational robotics, STEAM education, and assessment tools, grounded in established theoretical frameworks such as Bloom’s taxonomy and constructivist learning theory. Sources included peer-reviewed journals, academic reports, existing published rubrics, and well-known robotics platforms and competitions. The review identified a narrower set of skills and competencies required for effective robotics education: understanding concepts, applying principles, analyzing problems, creating solutions, and evaluating designs.

Phase 4: preliminary drafting of the criteria

Preliminary rubric criteria were developed from the literature review. The criteria were written to be clear and specific so that each skill would be observable and measurable. The rubric consisted of two major categories: designing skills and programming skills. The detailed criteria for each category are as follows:

Criteria of designing skills

The literature search yielded six key criteria for designing skills:

1. Simplicity vs. Complexity: Robot designs should balance simplicity and complexity so that robots are functional and efficient. Optimal kinematic design principles help achieve this balance, so that robots are neither overly simplistic nor encumbered with unnecessary complexity that renders them impractical and ineffective (Paden and Sastry, 1988; Angeles and Park, 2008; Laribi et al., 2023).

2. Stability and Durability: These features ensure that robots can endure operational stresses without frequent breakdowns. The Springer Handbook of Robotics, a comprehensive reference on designing robust robotic systems, addresses mechanical stability and operational durability as design specifications for robotic systems (Siciliano and Khatib, 2016).

3. Aesthetics: Aesthetics in robot design concerns the balance between form and function. Aesthetically pleasing designs can increase user interaction and overall appeal without compromising functionality (Xefteris, 2021).

4. Innovation: Innovation in robot design means equipping robots with mechanisms and features that distinguish them from conventional designs. Recent trends in innovative robot design have introduced new conceptual solutions and creative ideas that considerably extend the boundaries of robot capability (Carbone and Laribi, 2023).

5. Motors: Proper selection and integration of motors are essential because motors determine a robot’s movement and its performance on the tasks it is designed for (Angeles and Park, 2008).

6. Attachments: Attachments are mechanisms that extend a robot’s capabilities in specific respects. The literature on robot selection and optimization discusses in detail how attachments can be designed and integrated for specific tasks to enhance overall capabilities (Laribi et al., 2023).

Criteria of programming skills

Five key criteria were identified for programming skills:

1. Code organization: Robotic software requires proper organization so that it can be easily maintained and updated. Software engineering best practices emphasize a logical code structure that is well documented and easy to read (Siciliano and Khatib, 2016).

2. Advanced programming concepts: Loops, conditionals, and functions are central to effective and efficient robotic software. Reviews of advanced robot programming techniques show how such concepts can enhance robot capabilities and performance (Zhou et al., 2020; Kormushev et al., 2011).

3. Dependability and reliability: Robust software should perform dependably and reliably under different conditions (Pinto et al., 2021).

4. Movement and rotation: Accurate control of movement and rotation is required for smooth, precise motion, particularly in complex operations (Angeles and Park, 2008).

5. Innovative Sensor Use: Innovative use of sensors supports decision-making and adaptability in robotics. Recent work on sensor integration examines how creative sensor use enhances a robot’s functionality and its responsiveness to its surroundings (Carbone and Laribi, 2023).

Phase 5: selection of initial performance levels

Initial performance levels, each with a score, were defined for every criterion in the rubric, and detailed descriptors were provided for each level to ensure clear and consistent assessment. The three levels were nothing provided (0 points), poor performance (1 point), and achieves the goal (2 points).

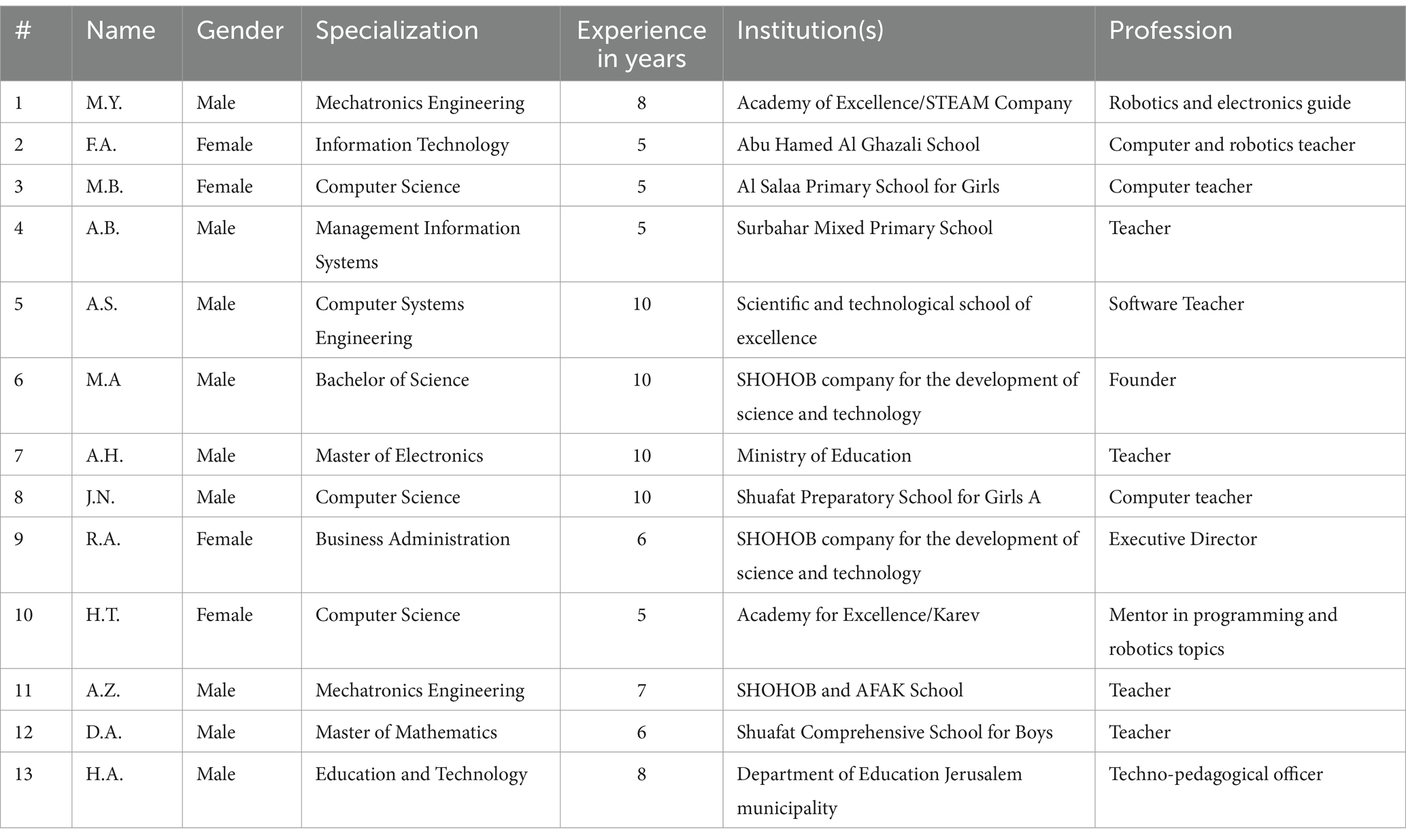

Phase 6: expert validation

We contacted a panel of experts to help develop the rubric. A questionnaire was administered to gather suggestions on the initial criteria and performance levels, and the rubric was revised based on this feedback to ensure that each item was relevant, clear, and accurate. The questionnaire presented each proposed criterion with its justification and an example, allowing experts to judge, from their professional perspective, the need for, usefulness of, and consequences of including it. Participation was voluntary, and informed consent was obtained from all experts. The panel comprised 13 experts in robotics education and evaluation in Jerusalem, with diverse backgrounds: mechatronics engineering, information technology, computer science, management information systems, and educational technology. This multidisciplinary composition ensured that the rubric was scrutinized from multiple professional perspectives. The experts also had 5–10 years of practical experience in robotics education, which added considerable value to the validation process and to the relevance and applicability of the rubric in educational settings. The details of the expert panel are summarized in Table A1. The panel’s expertise and opinions were influential in establishing the relevance and content validity of the rubric. For example, M.Y.’s background in mechatronics engineering and role as a guide in robotics and electronics contributed substantially to the technical accuracy of the design criteria. This work was strongly supported by F.A., with a background in IT, and M.B., with a background in computer science.
M.B. helped develop the programming criteria so that they would align with current learning standards in robotics. The panel’s diversity, not only professional but also in gender and institutional affiliation, was intended to ensure balanced discussion. This review process sharpened the criteria and made them better suited, both culturally and educationally, to robotics courses in Jerusalem. Experts such as H.A., whose work focuses on techno-pedagogical development, broadened the educational perspective, helping ensure that the rubric supports both pedagogical objectives and the assessment of technical skills. Because the panel drew on experts from various educational institutions and government bodies in Jerusalem, such as schools and ministries, the rubric is expected to be adaptable to many teaching environments, increasing its general usefulness and relevance.

Phase 7: content validation

Content validity was assessed with the content validity ratio (CVR) and the item-level content validity index (I-CVI). Experts rated each criterion as “Essential,” “Useful but not Essential,” or “Unnecessary,” and the modified kappa statistic (K*) was used to adjust for chance agreement among them. This process checked whether the rubric items were essential to, and representative of, the targeted skills. The CVR was computed as

$$\mathrm{CVR} = \frac{N_e - N/2}{N/2},$$

where $N_e$ is the number of experts rating the item “Essential” and $N$ is the total number of experts (Lawshe, 1975). CVR ranges from -1 to +1: it is positive when more than half of the experts rate an item essential and equals +1 when all of them do. The I-CVI is the proportion of experts rating an item “Essential” (Polit and Beck, 2006):

$$\text{I-CVI} = \frac{N_e}{N}.$$

For items rated “Essential,” chance-adjusted agreement was assessed with K*:

$$K^{*} = \frac{\text{I-CVI} - P_e}{1 - P_e},$$

where $P_e$, the probability of chance agreement, was taken as one-third because there were three response options (Polit et al., 2007). A criterion was included in the rubric if its CVR exceeded 0.53 and its I-CVI exceeded 0.76, values indicating strong expert consensus that the criterion is essential for evaluating robotics skills (Polit and Beck, 2006).
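The three indices and the inclusion rule can be computed directly from the number of “Essential” ratings a criterion receives. The sketch below assumes, as the text does, that the chance agreement is fixed at one-third; the function names are illustrative, and the tallies in the usage example are hypothetical rather than the study’s Table 2 data.

```python
"""Content validity indices for one rubric criterion (illustrative sketch).

Assumes Lawshe's CVR, I-CVI = Ne / N, and a modified kappa whose chance
agreement is fixed at 1/3 (three rating options), as described in the text.
"""


def content_validity(n_essential: int, n_experts: int, p_chance: float = 1 / 3):
    """Return (CVR, I-CVI, K*) for a criterion rated 'Essential' by n_essential experts."""
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("need n_experts > 0 and 0 <= n_essential <= n_experts")
    half = n_experts / 2
    cvr = (n_essential - half) / half            # Lawshe (1975)
    i_cvi = n_essential / n_experts              # proportion rating 'Essential'
    kappa = (i_cvi - p_chance) / (1 - p_chance)  # chance-adjusted agreement
    return cvr, i_cvi, kappa


def retain(cvr: float, i_cvi: float, cvr_min: float = 0.53, icvi_min: float = 0.76) -> bool:
    """Inclusion rule from the text: CVR > 0.53 and I-CVI > 0.76."""
    return cvr > cvr_min and i_cvi > icvi_min


if __name__ == "__main__":
    # Hypothetical tallies for a 13-expert panel (illustration only).
    for ne in (13, 10, 9):
        cvr, icvi, k = content_validity(ne, 13)
        print(f"Ne={ne:2d}  CVR={cvr:.2f}  I-CVI={icvi:.2f}  K*={k:.2f}  retain={retain(cvr, icvi)}")
```

With a 13-expert panel, ten “Essential” ratings give CVR ≈ 0.54, I-CVI ≈ 0.77, and K* ≈ 0.65, the lower ends of the ranges reported for the retained criteria; this is consistent with, though it does not establish, ten of thirteen experts endorsing the least-supported retained criterion. Nine ratings give CVR ≈ 0.38, below the 0.53 cutoff.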

Phase 8: pilot testing

The practicality of the rubric was tested in two pilot tests involving four practicing teachers and 40 middle school students attending robotics courses in Jerusalem. Feedback from the students and teachers led to two modifications: a revised rating scale and refined performance-level descriptors.

Phase 9: reliability analysis

To verify that the rubric would be applied consistently across raters, inter-rater reliability was tested. Three raters scored the same seven student projects using the rubric. Percent agreement and Krippendorff’s alpha (Krip.α) were computed to measure rating consistency. Percent agreement only measures how often raters give the same rating, whereas Krip.α corrects for agreement expected by chance and accommodates different measurement scales, making it suitable for complex judgments (Krippendorff, 2019). To ensure that the rubric was applied consistently, all raters received training. The session included a detailed walk-through of the rubric, with examples of student work and practice assessments that helped raters calibrate their scoring and thereby improve reliability.
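Both agreement statistics can be sketched in plain Python. This is a minimal illustration, not the software the authors used: percent agreement is assumed here to be the mean share of agreeing rater pairs per project, and Krippendorff’s alpha is computed from the coincidence matrix with either a nominal or an interval distance. The text does not state which measurement level was used, so the `metric` switch is an assumption, and the ordinal metric is omitted for brevity. The scores in the usage example are hypothetical.

```python
from collections import defaultdict
from itertools import combinations, permutations
from typing import List, Optional, Sequence

# ratings[rater][unit] is the score a rater gave a project; None marks a missing rating.
Ratings = Sequence[Sequence[Optional[int]]]


def _unit_values(ratings: Ratings, unit: int) -> List[int]:
    return [row[unit] for row in ratings if row[unit] is not None]


def percent_agreement(ratings: Ratings) -> float:
    """Mean share of rater pairs giving identical scores per unit (not chance-corrected)."""
    shares = []
    for u in range(len(ratings[0])):
        pairs = list(combinations(_unit_values(ratings, u), 2))
        if pairs:
            shares.append(sum(a == b for a, b in pairs) / len(pairs))
    return sum(shares) / len(shares)


def krippendorff_alpha(ratings: Ratings, metric: str = "nominal") -> float:
    """Krippendorff's alpha via the coincidence matrix (nominal or interval distance)."""
    delta = {
        "nominal": lambda c, k: 0.0 if c == k else 1.0,
        "interval": lambda c, k: float(c - k) ** 2,
    }[metric]
    coincidences = defaultdict(float)  # o_ck
    for u in range(len(ratings[0])):
        values = _unit_values(ratings, u)
        m = len(values)
        if m < 2:
            continue  # a unit rated by fewer than two raters is not pairable
        for c, k in permutations(values, 2):  # ordered pairs from different raters
            coincidences[(c, k)] += 1.0 / (m - 1)
    n_c = defaultdict(float)  # marginal total for each score value
    for (c, _), weight in coincidences.items():
        n_c[c] += weight
    n = sum(n_c.values())
    observed = sum(w * delta(c, k) for (c, k), w in coincidences.items()) / n
    expected = sum(n_c[c] * n_c[k] * delta(c, k) for c in n_c for k in n_c) / (n * (n - 1))
    if expected == 0:
        return float("nan")  # no variation in the data: alpha is undefined
    return 1.0 - observed / expected


if __name__ == "__main__":
    # Hypothetical 1-4 scores from three raters on seven projects (illustration only).
    scores = [
        [4, 3, 2, 4, 1, 3, 2],
        [4, 3, 2, 4, 1, 3, 3],
        [4, 3, 2, 4, 1, 3, 2],
    ]
    print(percent_agreement(scores), krippendorff_alpha(scores, "interval"))
```

The example shows why the two statistics differ: percent agreement ignores how often raters would agree by chance given the score distribution, whereas alpha rescales observed disagreement by the disagreement expected from the pooled scores.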

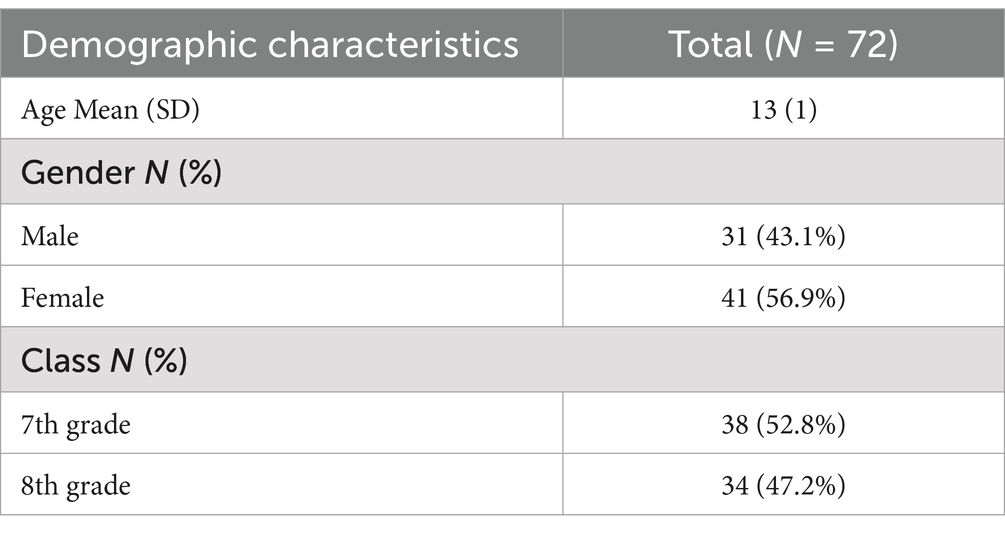

Phase 10: application of the rubric

This phase involved compiling all validation results and further refining the rubric. The rubric was then presented to educators, who were trained in its use, and implemented in an after-school robotics course with a sample of 72 students from three middle schools in Jerusalem during the 2023–2024 academic year. Table 1 presents the demographic characteristics of the participants.

Phase 11: construct validation

Construct validity was examined through EFA of the data obtained from applying the rubric to the participants. The aim was to identify the latent structure of the rubric and to test whether the items clustered into the expected skill categories (Tavakol and Wetzel, 2020), thereby helping to verify that the rubric measures the intended constructs. The EFA was conducted in JASP (version 0.18.3).

Results

Expert feedback and rubric tuning

Expert feedback strongly influenced the refinement of the rubric. For instance, the comment “The design for the robot should start simple and build in complexity only when necessary to perform specific tasks” led us to emphasize efficient, task-adaptive design. The observation that “without stability and durability, operational problems may result, which will reduce the effectiveness of the robot” reinforced the rationale for the stability criterion and added specific detail to its descriptors. One expert’s insight that “Innovation encourages exploration and development of new mechanisms, adding value to the robot” encouraged us to strengthen the innovation criterion. Several experts stressed the importance of well-organized code; one commented, “Well-organized code is easier to understand, modify, and develop,” which led us to create specific criteria for code structure and readability. The observation that “creative sensor use enables the robot to interact more with its environment” led to more nuanced descriptors for evaluating sensor integration. Although aesthetics did not meet the statistical thresholds to stand as a criterion in its own right, we incorporated elements of it into the innovation criterion based on the feedback that “Attention to aesthetic details could enhance positive interaction with the robot.” Together, these expert comments helped us fine-tune the rubric criteria and descriptors to the needs of real-world robotics education.

Content validation scores

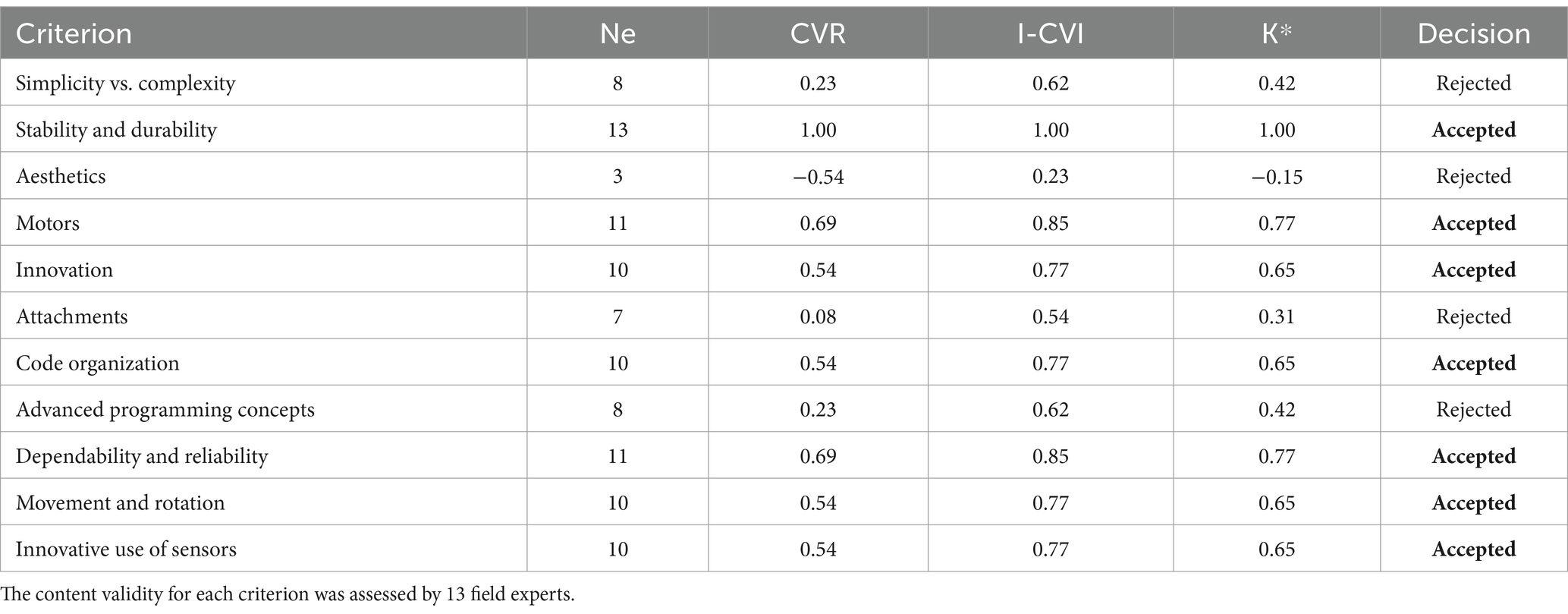

Table 2 presents the content validity scores obtained when the 13 experts evaluated each criterion for relevance and clarity.

The accepted criteria were stability and durability, motors, innovation, code organization, dependability and reliability, movement and rotation, and innovative use of sensors. These criteria showed high content validity, with CVR values ranging from 0.54 to 1.00, I-CVI values from 0.77 to 1.00, and K* values from 0.65 to 1.00, reflecting strong expert agreement about their importance and clarity. In contrast, simplicity vs. complexity, aesthetics, attachments, and advanced programming concepts were not retained because their CVR, I-CVI, and K* values were lower, indicating that the experts considered them less relevant or insufficiently clear. This validation process helps ensure that only the most pertinent and well-defined criteria are used to assess robotics skills.

Pilot testing outcomes

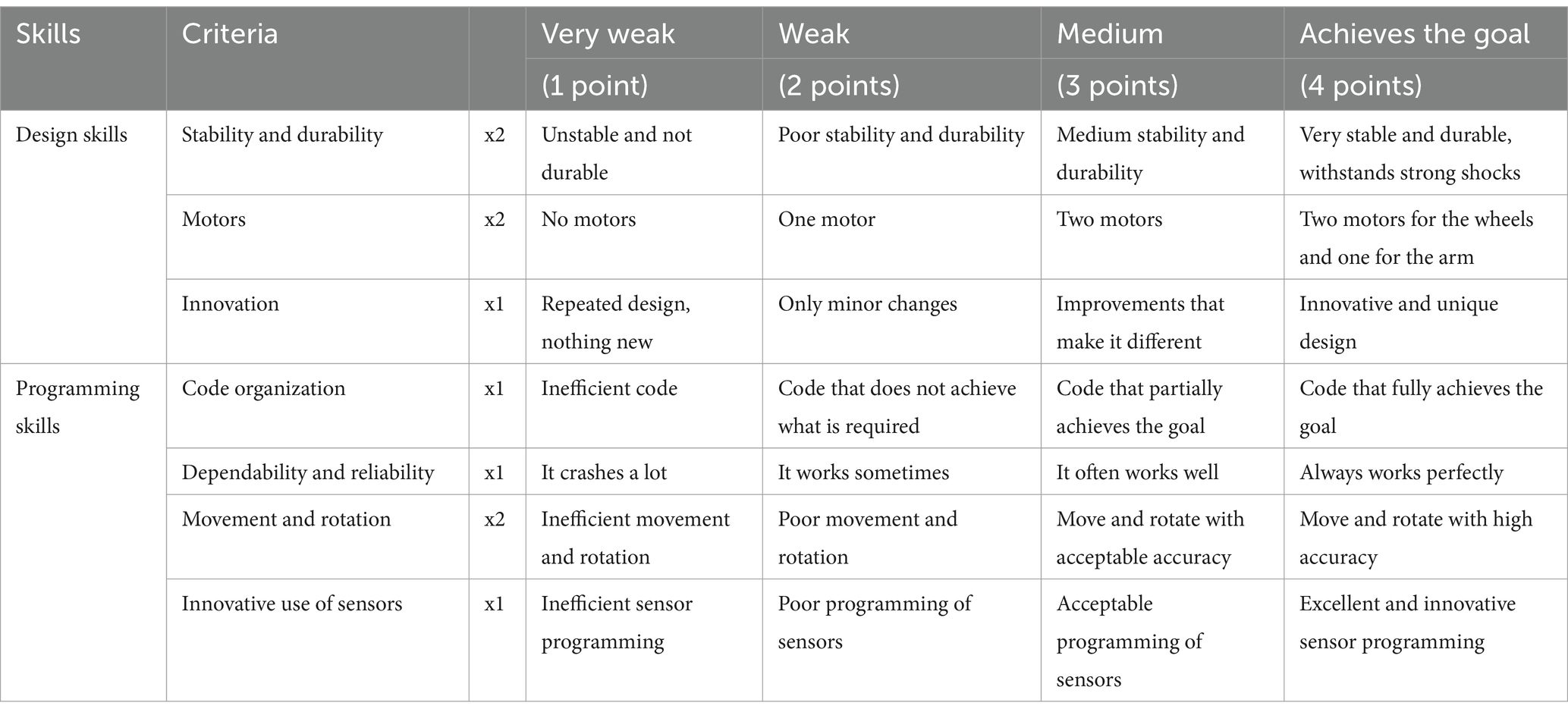

In the first pilot test, students repeatedly pointed out that the three-point scale often failed to capture differences between the robots they had designed. Teachers reported that the performance levels were vague and insufficiently specific and that grading was too uniform, and they suggested adding a multiplier for critical skills such as “stability and durability,” “motors,” and “movement and rotation.” These comments led to major changes. The performance levels were expanded from three to four: very weak (1 point), weak (2 points), medium (3 points), and achieves the goal (4 points). Zero points were eliminated following a teacher’s comment, in line with a no-zero grading policy, that a student who attended and observed the course without producing anything was still better off than a student who had not attended, and therefore should not receive a zero. A second pilot test with the new rating scale yielded positive results: students appreciated the clear guidance on how to attain higher scores, and teachers reported more consistent grading. After this stage, the revised rubric shown in Table 3 was judged to meet its educational goals without requiring further development for teaching and assessing robotics skills.
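The revised four-level scale and the teachers’ suggested multiplier can be illustrated with a short scoring sketch. It assumes, purely for illustration, a hypothetical multiplier of 2 on the three criteria the teachers named as critical; the weights actually used are given in Table 3 and are not reproduced here, and `weighted_total` is a hypothetical helper name.

```python
from typing import Dict, Tuple

# Four-level scale from the second pilot; zero is deliberately absent (no-zero policy).
LEVELS = {1: "very weak", 2: "weak", 3: "medium", 4: "achieves the goal"}

# The seven criteria retained after content validation.
CRITERIA = (
    "stability and durability", "motors", "innovation",
    "code organization", "dependability and reliability",
    "movement and rotation", "innovative use of sensors",
)

# Criteria the teachers flagged as critical.
CRITICAL = {"stability and durability", "motors", "movement and rotation"}


def weighted_total(scores: Dict[str, int], multiplier: int = 2) -> Tuple[int, int]:
    """Return (weighted score, maximum possible) for one student's robot.

    multiplier=2 is a HYPOTHETICAL weight, not the value from Table 3.
    """
    total = maximum = 0
    for name in CRITERIA:
        level = scores.get(name)
        if level not in LEVELS:  # rejects missing scores and zeros
            raise ValueError(f"{name!r} needs a level from 1 to 4, got {level!r}")
        weight = multiplier if name in CRITICAL else 1
        total += weight * level
        maximum += weight * max(LEVELS)
    return total, maximum


if __name__ == "__main__":
    # Hypothetical scores for a single student (illustration only).
    example = dict.fromkeys(CRITERIA, 3)
    example["motors"] = 4
    print(weighted_total(example))  # prints (32, 40)
```

Under this assumed weighting, a one-level gain on a critical criterion moves the total twice as far as the same gain on a non-critical one, which is the effect the teachers were asking for.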

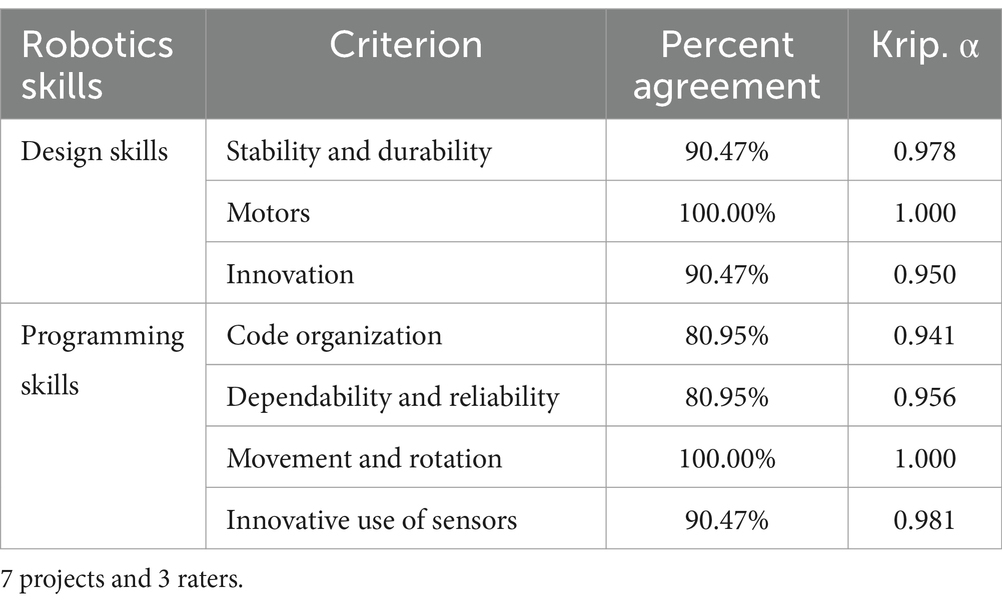

Reliability measures

Table 4 presents the percent agreement and Krippendorff’s α for each design and programming criterion.

Overall, inter-rater agreement was strong for all design and programming criteria. For the design criteria of stability and durability, motors, and innovation, Krippendorff’s alpha values were 0.978, 1.000, and 0.950, respectively, indicating near-perfect agreement among raters. Likewise, the programming criteria of code organization, dependability and reliability, movement and rotation, and innovative use of sensors were highly reliable, with alpha values ranging from 0.941 to 1.000. This strong inter-rater agreement reflects the clarity of the evaluation criteria and indicates that assessments of robotics skills made with the rubric are consistent and reliable.
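This section does not state which distance metric (nominal, ordinal, or interval) was used for Krippendorff’s alpha or which software computed it. The following from-scratch sketch illustrates how the two measures in Table 4 are defined. It implements percent agreement and the standard coincidence-matrix form of Krippendorff’s alpha (Krippendorff, 2019), using nominal and interval distances. The ordinal metric is omitted for brevity, and the scores are hypothetical.

```python
from collections import Counter
from itertools import permutations


def nominal_distance(c, k) -> float:
    """Nominal metric: every disagreement counts equally."""
    return 0.0 if c == k else 1.0


def interval_distance(c, k) -> float:
    """Interval metric: squared difference between scores."""
    return float(c - k) ** 2


def krippendorff_alpha(units, distance=nominal_distance) -> float:
    """Krippendorff's alpha from a list of units, each a list of raters' scores.

    Missing ratings are simply left out of a unit's list; units with fewer
    than two ratings cannot be paired and are skipped.
    """
    coincidences = Counter()
    for scores in units:
        m = len(scores)
        if m < 2:
            continue
        # Every ordered pair of ratings within a unit contributes 1 / (m - 1).
        for c, k in permutations(scores, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)
    marginals = Counter()
    for (c, _), weight in coincidences.items():
        marginals[c] += weight
    n = sum(marginals.values())
    observed = sum(w * distance(c, k) for (c, k), w in coincidences.items())
    expected = sum(
        marginals[c] * marginals[k] * distance(c, k)
        for c in marginals
        for k in marginals
    )
    if expected == 0:
        raise ValueError("alpha is undefined when every rating has the same value")
    return 1.0 - (n - 1) * observed / expected


def percent_agreement(rater_a, rater_b) -> float:
    """Share of units on which two raters gave identical scores."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


if __name__ == "__main__":
    # Hypothetical scores (1-4) from two raters on four student robots.
    rater_a = [1, 2, 3, 4]
    rater_b = [1, 2, 3, 3]
    units = [[a, b] for a, b in zip(rater_a, rater_b)]
    print(round(percent_agreement(rater_a, rater_b), 3))
    print(round(krippendorff_alpha(units), 3))
    print(round(krippendorff_alpha(units, interval_distance), 3))
```

For these hypothetical scores, the script prints 0.75, 0.696, and 0.889. The single disagreement (4 vs. 3) counts as a full mismatch under the nominal metric but only by its squared size under the interval metric, which is why the interval alpha is higher.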

Construct validity result

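An exploratory factor analysis (EFA) was conducted to examine the construct validity of the rubric by identifying its underlying factor structure. The chi-squared goodness-of-fit test gave χ2(8) = 15.772, p = 0.046. Because this p-value lies just below the conventional 0.05 threshold, the hypothesis of exact fit is formally rejected. However, the departure from exact fit is marginal, and the chi-squared test is sensitive to sample size. The evaluation below therefore also draws on the factor loadings, uniqueness values, and variance explained.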
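The reported degrees of freedom and p-value can be checked by hand. For a common-factor model with p observed items and m factors, the chi-squared test commonly reported in EFA has df = [(p − m)^2 − (p + m)]/2. For the rubric’s seven criteria and two factors this gives 8, which matches χ2(8). The sketch below assumes this standard df formula, since the extraction method is not named in this section. It uses the closed-form upper-tail probability of the chi-squared distribution, which holds only for even df; a general implementation would call a library routine such as SciPy’s `scipy.stats.chi2.sf`. Note that the null hypothesis of this test is that the model reproduces the item correlations exactly, so a small p-value counts against exact fit.

```python
import math


def efa_degrees_of_freedom(n_items: int, n_factors: int) -> int:
    """df of the chi-squared fit test for a factor model with p items and m factors."""
    p, m = n_items, n_factors
    return ((p - m) ** 2 - (p + m)) // 2


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail p-value of a chi-squared statistic; closed form valid for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form requires a positive even df")
    half_x = x / 2
    # P(X > x) = exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
    term, total = 1.0, 0.0
    for i in range(df // 2):
        if i > 0:
            term *= half_x / i
        total += term
    return math.exp(-half_x) * total


if __name__ == "__main__":
    df = efa_degrees_of_freedom(n_items=7, n_factors=2)
    p_value = chi2_sf_even_df(15.772, df)
    print(df, round(p_value, 3))
```

This prints `8 0.046`, reproducing the reported test. The 5% critical value for 8 df is about 15.51, so the observed statistic exceeds it only slightly.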

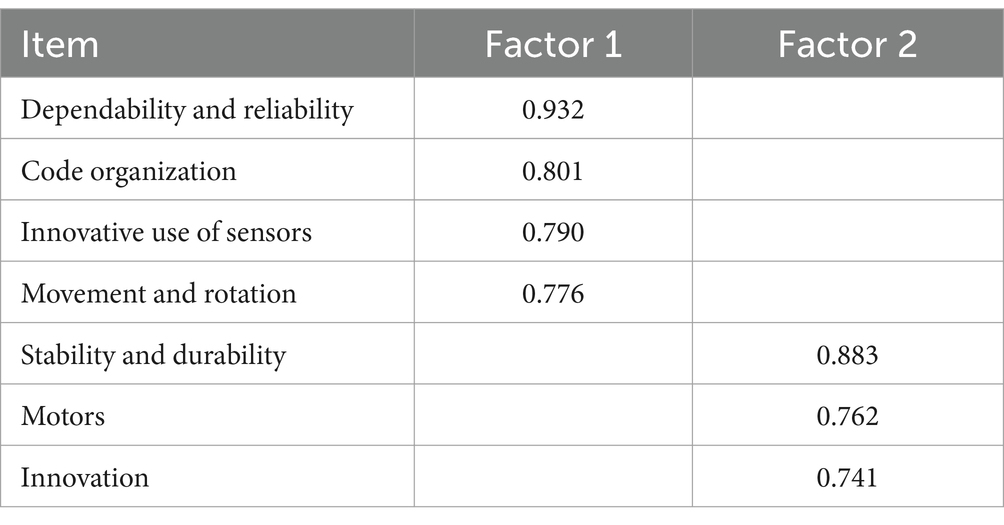

Table 5 presents the loadings of each item on the two factors. Items related to programming skills (code organization, dependability and reliability, movement and rotation, and innovative use of sensors) loaded highly on Factor 1, whereas items related to design skills (stability and durability, motors, and innovation) loaded highly on Factor 2.

The Promax rotation method was used to allow for correlations between factors. Factor 1 had an eigenvalue of 3.539 and explained 46.3% of the variance before rotation and 39.8% after rotation. Factor 2 had an eigenvalue of 1.814 and explained 21.1% of the variance before rotation and 27.6% after rotation. Together, these two factors explained 67.4% of the total variance in the data. The scree plot shown in Figure 1 supported the retention of two factors as indicated by the point where the eigenvalues began to level off.

The EFA results provide strong evidence for the construct validity of the rubric. The items loaded strongly on their intended factors, uniqueness values were low, and the two factors together accounted for a substantial portion (67.4%) of the variance, although the chi-squared test indicated a marginal departure from exact fit (p = 0.046). These findings show that the rubric measures the intended constructs of programming and design skills, supporting its use for assessing educational robotics skills among middle school students.

Discussion

The results of this study are consistent with prior research recommending the use of rubrics in educational settings. Rubrics are instrumental in making expectations explicit, providing structured feedback, and engaging students in self-regulation toward better performance (Panadero and Jonsson, 2013). Our findings support these conclusions, showing that the clarity and detailed guidance of the developed robotics skills rubric are essential for both students and teachers. They also show that rubrics play an essential role in evaluating complex skills in educational robotics, contributing to a deeper understanding of how such tools can be applied effectively in STEAM education, in line with Eguchi (2014). Although some prior studies highlight the difficulty of achieving high reliability and validity with rubric-based approaches (Gallardo, 2020), our study offers a contrasting perspective by demonstrating robust reliability and strong construct validity. Several factors may explain this difference. First, we adopted a comprehensive multiphase approach, including expert consultation, pilot testing, and statistical methods such as EFA, to ensure that the rubric was well defined and rigorously tested for reliability and validity. Second, the rubric assesses only distinct, well-defined robotics skills, which may have contributed to its high reliability and consistency. Third, the involvement of relevant, experienced experts with diverse educational and professional backgrounds increased the quality and relevance of the rubric, making it a robust assessment tool.

This study makes significant contributions to both educational assessment and robotics education. It provides a rigorously developed and validated rubric for rating the robotics skills of middle school students, filling a critical gap in current assessment tools. Its detailed methodology gives other researchers and educators a replicable model for developing and validating assessment instruments across various educational contexts. The multiphase process, including expert validation and pilot testing, supports the creation of reliable and valid rubrics.

The integration of educational theories enriches the design of the rubric, making it not only an assessment tool but also a means of enhancing students’ learning and motivation. The results indicate that the robotics skills rubric aligns well with several educational theories and models. The rubric draws on Bloom’s taxonomy by rating its criteria on increasing levels of cognitive complexity. For example, the lower levels of the rubric (“very weak” and “weak”) relate to simple remembering and understanding of robotics concepts, such as stability and durability. The higher levels (“medium” and “achieves the goal”) reflect application, analysis, and creation, as shown in students’ ability to design and develop innovative robots and to find efficient programming solutions. In line with Bloom’s taxonomy, the rubric not only measures current abilities but also guides students toward higher-order thinking skills (Krathwohl, 2002).

The rubric also supports constructivist learning by encouraging students to engage in hands-on activities. Criteria such as “innovation” and “innovative use of sensors” promote creative problem-solving in which theoretical knowledge is applied in a context that approximates reality. This approach is consistent with the constructivist view that learning is an active process in which students build new understanding on their prior knowledge and experiences.

Through clear and structured feedback, the rubric enables students to reflect on their learning and shows them how to improve, consistent with the constructivist emphasis on self-directed learning and continuous growth. The rubric also incorporates elements of self-determination theory (SDT) by providing clear criteria for success and opportunities to demonstrate competence at several levels. By emphasizing innovation and problem-solving, the rubric supports autonomy, giving students room to approach tasks creatively and develop their own solutions. It enhances students’ sense of competence, because detailed feedback on their performance helps them recognize their strengths and weaknesses. In addition, relatedness is fostered through collaboration on robotics projects, as students work together, exchange ideas, and learn from one another. In line with SDT, the rubric not only assesses students’ technical skills but also creates conditions that help sustain their motivation and engagement in learning (Deci et al., 1991).

As a formative assessment tool, the rubric provides detailed feedback on many aspects of students’ robotics skills. Specific criteria such as “code organization” and “dependability and reliability” help identify areas where students may need more practice or extra support. By applying the rubric consistently over time, educators can track students’ progress, identify recurring patterns of need, and adjust instruction for individual students. The rubric also supports self-assessment and reflection, helping students take responsibility for their learning and set goals for improvement. This formative approach reflects the principles of effective assessment and feedback described by Hattie and Timperley (2007), fostering a continuous cycle of feedback, reflection, and growth.

Beyond the quality of the rubric itself, several factors may have contributed to the positive findings of this study. One strength of the development and validation process was the involvement of highly experienced experts, which helped ensure the rubric’s relevance and comprehensiveness; their input and insights further refined the rubric to meet educational and practical needs. Iterative rounds of pilot testing, feedback, and refinement also allowed continuous improvement of the instrument, which may explain why it proved robust and efficient. Finally, students’ motivation and engagement in the robotics courses may have affected the results, since highly motivated students are likely to perform better and to give more constructive feedback during pilot testing.

Conclusion

The study successfully developed and validated a rubric to assess educational robotics skills in middle school students, with strong reliability and validity metrics demonstrating its effectiveness.

Implications for educational practice

The developed rubrics provide a standard way of assessing robotics skills, filling a significant gap in current educational practice. By offering clear criteria for design and programming skills, they help educators provide comprehensive, objective assessments of student performance. This improves the quality of the feedback students receive, helping to identify specific areas for improvement and to track progress over time. The rubrics can also guide curriculum development by identifying the core skills and competencies that students should acquire through robotics education, enabling educators to design instruction and curricula that target students’ learning needs and develop relevant competencies. Standardized assessment tools such as these rubrics could also increase equity in robotics education, because they apply consistent evaluation criteria to all students, regardless of background or prior experience. This can help address disparities in access to robotics education and give every student equal opportunities to develop critical STEAM competencies. Finally, the rubrics can serve as tools for teacher professional development. By setting out clear criteria and performance expectations, they show teachers what to look for in student projects and how to score them consistently, thereby improving instructional quality in robotics education and supporting teachers’ professional growth.

Contributions to the literature

This research makes three main contributions to the literature on educational robotics assessment. First, it develops rubrics for design and programming skills in educational robotics and establishes their validity and reliability. Second, it grounds the design and validation of these rubrics in multiple theoretical frameworks: Bloom’s taxonomy, constructivist learning theory, self-determination theory, and authentic assessment. Third, it adopts a robust mixed-methods approach that triangulates qualitative and quantitative data collection and analysis. Together, these features strengthen the significance, reliability, and validity of the results and provide a sound basis for further research and practice.

Limitations

The present study was limited to middle school students in the 2023–2024 academic year, which raises questions about the rubric’s usability in other contexts. Additionally, the study focused on specific robotics platforms, such as LEGO Mindstorms and Spike Prime, which may limit the application of the rubrics to other platforms or newer technologies. Further research is needed to extend the rubrics to a broader range of robotics platforms.

Implications for future research

Future research should expand participant diversity and test the rubric more systematically across different educational settings to improve the generalizability and strength of the findings. Research should also investigate the long-term effects of using this rubric on students’ educational trajectories, particularly in STEAM fields. Finally, further work is needed to develop and validate tools that reliably assess soft skills such as problem-solving, collaboration, and creativity, which are important for students’ all-round development and success in STEAM fields.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Ethics statement

This study was part of a larger study that investigated the effect of using RBI on robotics skills and academic self-regulation among middle school Arab students in Jerusalem. The ethical aspects of the larger study were approved by the PhD Program Committee in Teaching & Learning, An-Najah National University, in accordance with the Declaration of Helsinki (date of approval: 05 October 2023). Written consent was obtained from each student and guardian after a full verbal and written explanation of the study and of their right to withdraw at any time, and the data were kept confidential. The study was conducted in accordance with local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

AY: Conceptualization, Writing – original draft, Writing – review & editing. AA: Supervision, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

We thank the panel of leading experts in Jerusalem and the students and teachers who took part in the pilot test.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adams, P. E., and Krockover, G. H. (1999). Stimulating constructivist teaching styles through use of an observation rubric. J. Res. Sci. Teach. 36, 955–971.

Andrade, H. G. (2005). Teaching with rubrics: the good, the bad, and the ugly. Coll. Teach. 53, 27–31. doi: 10.3200/CTCH.53.1.27-31

Angeles, J., and Park, F. C. (2008). “Performance evaluation and design criteria,” in Springer Handbook of Robotics (Berlin, Heidelberg: Springer), 229–244. doi: 10.1007/978-3-540-30301-5_11

Anwar, S., Bascou, N. A., Menekse, M., and Kardgar, A. (2019). A systematic review of studies on educational robotics. J. Pre-Coll. Eng. Educ. Res. 9:2. doi: 10.7771/2157-9288.1223

Ateş, H., and Gündüzalp, C. (2024). A unified framework for understanding teachers’ adoption of robotics in STEM education. Educ. Inf. Technol. (Dordr) 29, 1–27. doi: 10.1007/S10639-023-12382-4

Bers, M. U., Flannery, L., Kazakoff, E. R., and Sullivan, A. (2014). Computational thinking and tinkering: exploration of an early childhood robotics curriculum. Comput. Educ. 72, 145–157. doi: 10.1016/j.compedu.2013.10.020

Black, P., and Wiliam, D. (1998). Assessment and classroom learning. Assess. Educ. 5, 7–74. doi: 10.1080/0969595980050102

Boya-Lara, C., Saavedra, D., Fehrenbach, A., and Marquez-Araque, A. (2022). Development of a course based on BEAM robots to enhance STEM learning in electrical, electronic, and mechanical domains. Int. J. Educ. Technol. High. Educ. 19, 1–23. doi: 10.1186/S41239-021-00311-9

Carbone, G., and Laribi, M. A. (2023). Recent trends on innovative robot designs and approaches. Appl. Sci. 13:1388. doi: 10.3390/APP13031388

Coufal, P. (2022). Project-based STEM learning using educational robotics as the development of student problem-solving competence. Mathematics 10:4618. doi: 10.3390/MATH10234618

Darling-Hammond, L. (1995). Setting standards for students: the case for authentic assessment. Educ. Forum 59, 14–21. doi: 10.1080/00131729409336358

Darmawansah, D., Hwang, G. J., Chen, M. R. A., and Liang, J. C. (2023). Trends and research foci of robotics-based STEM education: a systematic review from diverse angles based on the technology-based learning model. Int. J. STEM Educ. 10, 1–24. doi: 10.1186/S40594-023-00400-3

Deci, E. L., Vallerand, R. J., Pelletier, L. G., and Ryan, R. M. (1991). Motivation and education: the self-determination perspective. Educ. Psychol. 26, 325–346. doi: 10.1080/00461520.1991.9653137

Demetroulis, E. A., Theodoropoulos, A., Wallace, M., Poulopoulos, V., and Antoniou, A. (2023). Collaboration skills in educational robotics: a methodological approach—results from two case studies in primary schools. Educ. Sci. 13:468. doi: 10.3390/EDUCSCI13050468

Eguchi, A. (2014). Educational robotics for promoting 21st century skills. J. Autom. Mob. Robot. Intell. Syst. 8, 5–11. doi: 10.14313/JAMRIS_1-2014/1

Elkin, M., Sullivan, A., and Bers, M. U. (2018). “Books, butterflies, and ‘bots: integrating engineering and robotics into early childhood curricula,” in Early Engineering Learning, eds. L. English and T. Moore (Singapore: Springer Singapore), 225–248. doi: 10.1007/978-981-10-8621-2_11

Furse, J. S., and Yoshikawa-Ruesch, E. (2023). “Standards-based technology and engineering curricula in secondary education: the impact and implications of the standards for technological and engineering literacy” in Standards-based technology and engineering education: 63rd yearbook of the council on technology and engineering teacher education. eds. S. R. Bartholomew, M. Hoepfl, and P. J. Williams (Singapore: Springer Nature Singapore), 65–82.

Gallardo, K. (2020). Competency-based assessment and the use of performance-based evaluation rubrics in higher education: challenges towards the next decade. Probl. Educ. 21st Cent. 78, 61–79. doi: 10.33225/pec/20.78.61

Gao, X., Li, P., Shen, J., and Sun, H. (2020). Reviewing assessment of student learning in interdisciplinary STEM education. Int. J. STEM Educ. 7, 1–14. doi: 10.1186/S40594-020-00225-4

Gedera, D. (2023). A holistic approach to authentic assessment. Asian J. Assess. Teach. Learn. 13, 23–34. doi: 10.37134/AJATEL.VOL13.2.3.2023

Graffin, M., Sheffield, R., and Koul, R. (2022). ‘More than robots’: reviewing the impact of the FIRST® LEGO® League Challenge robotics competition on school students’ STEM attitudes, learning, and twenty-first century skill development. J. STEM Educ. Res. 5, 322–343. doi: 10.1007/S41979-022-00078-2

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Kormushev, P., Calinon, S., and Caldwell, D. G. (2011). Imitation learning of positional and force skills demonstrated via kinesthetic teaching and haptic input. Adv. Robot. 25, 581–603. doi: 10.1163/016918611X558261

Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: an overview. Theory Pract. 41, 212–218. doi: 10.1207/s15430421tip4104_2

Krippendorff, K. (2019). Content Analysis: An Introduction to Its Methodology. Thousand Oaks, CA: SAGE Publications. doi: 10.4135/9781071878781

Laribi, M. A., Carbone, G., and Zeghloul, S. (2023). Robot design: optimization methods and task-based design. Mech. Mach. Sci. 123, 97–115. doi: 10.1007/978-3-031-11128-0_5

Lawshe, C. H. (1975). A quantitative approach to content validity. Pers. Psychol. 28, 563–575. doi: 10.1111/J.1744-6570.1975.TB01393.X

Luo, W., Wei, H. R., Ritzhaupt, A. D., Huggins-Manley, A. C., and Gardner-McCune, C. (2019). Using the S-STEM survey to evaluate a middle school robotics learning environment: validity evidence in a different context. J. Sci. Educ. Technol. 28, 429–443. doi: 10.1007/S10956-019-09773-Z

Moskal, B. M. (2000). Scoring rubrics: what, when and how? Pract. Assess. Res. Eval. 7, 1–5. doi: 10.7275/A5VQ-7Q66

Nugent, G. C., Barker, B., and Grandgenett, N. (2013). “The impact of educational robotics on student STEM learning, attitudes, and workplace skills,” in Robotics: Concepts, Methodologies, Tools, and Applications, vol. 3, 1442–1459. doi: 10.4018/978-1-4666-4607-0.CH070

Ouyang, F., and Xu, W. (2024). The effects of educational robotics in STEM education: a multilevel meta-analysis. Int. J. STEM Educ. 11, 1–18. doi: 10.1186/S40594-024-00469-4

Paden, B., and Sastry, S. (1988). Optimal kinematic design of 6R manipulators. Int. J. Robot. Res. 7, 43–61. doi: 10.1177/027836498800700204

Panadero, E., and Jonsson, A. (2013). The use of scoring rubrics for formative assessment purposes revisited: a review. Educ. Res. Rev. 9, 129–144. doi: 10.1016/j.edurev.2013.01.002

Pinto, M. L., Wehrmeister, M. A., and de Oliveira, A. S. (2021). Real-time performance evaluation for robotics: an approach using the Robotstone benchmark. J. Intell. Robot. Syst. 101, 1–20. doi: 10.1007/S10846-020-01301-1

Polit, D. F., and Beck, C. T. (2006). The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res. Nurs. Health 29, 489–497. doi: 10.1002/NUR.20147

Polit, D. F., Beck, C. T., and Owen, S. V. (2007). Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res. Nurs. Health 30, 459–467. doi: 10.1002/NUR.20199

Reddy, Y. M., and Andrade, H. (2010). A review of rubric use in higher education. Assess. Eval. High. Educ. 35, 435–448. doi: 10.1080/02602930902862859

Ryan, R. M., and Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 55, 68–78. doi: 10.1037/0003-066X.55.1.68

Selcuk, N. A., Kucuk, S., and Sisman, B. (2024). Does really educational robotics improve secondary school students’ course motivation, achievement and attitude? Educ. Inf. Technol. (Dordr), 1–28. doi: 10.1007/s10639-024-12773-1

Siciliano, B., and Khatib, O. (2016). Springer Handbook of Robotics. Cham: Springer International Publishing. doi: 10.1007/978-3-319-32552-1

Sullivan, A., and Bers, M. U. (2018). Dancing robots: integrating art, music, and robotics in Singapore’s early childhood centers. Int. J. Technol. Des. Educ. 28, 325–346. doi: 10.1007/s10798-017-9397-0

Tavakol, M., and Wetzel, A. (2020). Factor analysis: a means for theory and instrument development in support of construct validity. Int. J. Med. Educ. 11, 245–247. doi: 10.5116/ijme.5f96.0f4a

Wang, K., Sang, G. Y., Huang, L. Z., Li, S. H., and Guo, J. W. (2023). The effectiveness of educational robots in improving learning outcomes: a meta-analysis. Sustainability 15:4637. doi: 10.3390/SU15054637

Xefteris, S. (2021). Developing STEAM educational scenarios in pedagogical studies using robotics: an undergraduate course for elementary school teachers. Eng. Technol. Appl. Sci. Res. 11, 7358–7362. doi: 10.48084/etasr.4249

Zhang, D., Wang, J., Jing, Y., and Shen, A. (2024). The impact of robotics on STEM education: facilitating cognitive and interdisciplinary advancements. Appl. Comput. Eng. 69, 7–12. doi: 10.54254/2755-2721/69/20241433

Zhou, Z., Xiong, R., Wang, Y., and Zhang, J. (2020). Advanced robot programming: a review. Curr. Robot. Rep. 1, 251–258. doi: 10.1007/S43154-020-00023-4

Zhu, S., Guo, Q., and Yang, H. H. (2023). Beyond the traditional: a systematic review of digital game-based assessment for students’ knowledge, skills, and affections. Sustainability 15:4693. doi: 10.3390/SU15054693

Appendix

Keywords: educational robotics, rubric development, assessment, middle school education, STEAM, reliability, validity, exploratory factor analysis

Citation: Yousef A and Ayyoub A (2024) Rubric development and validation for assessing educational robotics skills. Front. Educ. 9:1496242. doi: 10.3389/feduc.2024.1496242

Edited by:

Elizabeth Archer, University of the Western Cape, South Africa

Reviewed by:

Aušra Kazlauskienė, Vilnius University, Lithuania

Leonidas Gavrilas, University of Ioannina, Greece

Copyright © 2024 Yousef and Ayyoub. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ahmad Yousef, it.ahmadyousef@gmail.com

†ORCID: Ahmad Yousef, https://orcid.org/0000-0002-1924-1811

Abedalkarim Ayyoub, https://orcid.org/0000-0001-9111-4465
