- ¹Faculty of Business, Design, and Technology, Macromedia University, Munich, Germany

- ²Bavarian Association of Secondary School Teachers (Bayerischer Realschullehrerverband e.V.), Munich, Germany

- ³Secondary School Foundation, Munich, Germany

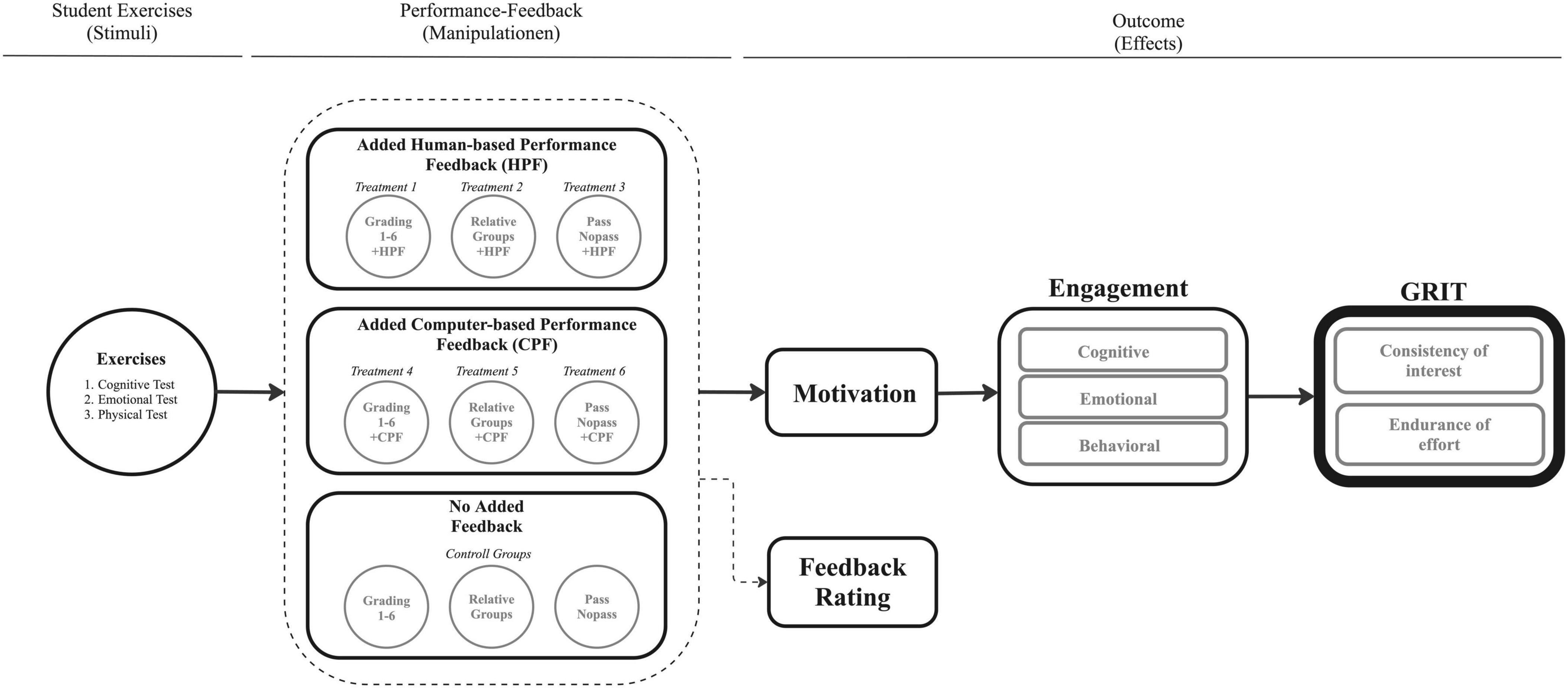

This study explored the impact of different feedback forms on the psychological parameters of learning success: motivation, engagement, and GRIT (GRIT: Growth, Resilience, Integrity, and Tenacity, a concept introduced to describe perseverance and passion for long-term goals) among 6th and 7th-grade students at secondary schools in Bavaria, Germany, employing a 2 × 3 factorial design. The factors included the type of feedback (human-based vs. computer-based), and the declaration of feedback type (grades/points anonymously vs. social comparison/group feedback vs. Pass/NoPass). Among 219 students, findings showed human-based feedback significantly improved feedback evaluations, while computer-based feedback notably increased GRIT. Additionally, feedback in the form of Pass/NoPass and social comparison positively affected GRIT. No significant impacts on motivation or engagement were detected. The results highlight the importance of tailored feedback strategies in fostering GRIT, though they suggest limited generalizability.

1 Introduction

School students’ lack of motivation is a common concern and challenge for educators: it affects their participation in class, the completion of tasks such as homework, their preparation for exams and, in the worst case, even their future careers. According to the Robert Bosch Foundation’s (2023) “German School Barometer”, 70% of students in Germany experienced motivation problems in 2023, a figure that is particularly high among secondary school students (p. 10). There are many reasons for this. Research shows that numerical grades, as one of the established forms of feedback and performance measurement, can play a central role in student motivation (cf. Heim, 2024). The usefulness and effectiveness of numerical grades as a widespread form of feedback for assessing student performance and increasing or decreasing motivation is the subject of controversial debate, both among the general public and in academic research (see, for example, Hübner et al., 2024; Bossard, 2023; Lerche, 2022; Kerbel, 2016; Harlen and Crick, 2002). While some authors emphasize that grades can negatively impact students’ performance and motivation (cf. Heim, 2024), others stress that grades are a proven form of performance feedback (cf. Bossard, 2023).

The rapid development of new technologies such as artificial intelligence (AI), and the opportunities and risks they pose for the school sector, puts the need for reform in testing and implementing alternative forms of performance feedback and measurement in schools in a new light. For example, the Bavarian State Ministry of Education and Cultural Affairs has launched a new media and AI budget specifically to “promote the pedagogically valuable use of digital media more strongly and to create financial relief for teaching staff and students” (Bavarian State Ministry of Education and Cultural Affairs, 2024). In times of increasing teacher shortages and more and more tasks that teachers have to deal with, taking them away from their core tasks of teaching and educating, using AI to provide performance feedback seems like the next logical step, but one that requires extensive research. Despite the growing interest in AI-assisted feedback in education, there is a research gap regarding the comparative effects of human-based and computer-based feedback on key psychological parameters of learning success, particularly in the context of secondary education in Germany. While studies have explored various feedback types individually, the specific effects of feedback source (human vs. computer) and feedback form (grades, social comparison, pass/no pass) on motivation, engagement, and GRIT remain underexplored.

Despite the increasing integration of information and communication technologies in the educational sector and the associated digitalization of learning processes, the impact of different feedback methods on student engagement and academic performance remains largely underexplored. Studies have shown that feedback, as a central component of the learning process, plays a significant role in the development of feedback literacy (Little et al., 2023). However, there is limited research on how various types of feedback—specifically human and computer-mediated feedback—affect student engagement and long-term motivation.

Some insights into the impact of online learning on academic performance have been established, with intrinsic motivation and academic engagement identified as key mediating factors (García-Machado et al., 2024). These studies indicate that the support students receive does not directly affect their academic performance unless it is mediated through intrinsic motivation and engagement. However, there is a lack of research on the specific mechanisms through which feedback types, particularly computer-mediated feedback, promote or hinder student engagement and motivation.

The implementation of new technologies, such as artificial intelligence, which could potentially enhance feedback in educational settings, has also been discussed in the literature (Dospinescu and Dospinescu, 2020). However, there is little research on how AI-based feedback systems are integrated into daily practice and what their concrete effects are on engagement and academic performance. Furthermore, no comprehensive assessment has been conducted in the empirical literature on feedback literacy to determine which elements of feedback interventions are effective and which aspects require further investigation (Little et al., 2023).

This research gap highlights the need for a detailed investigation into the specific effects of human and computer-mediated feedback on engagement, feedback evaluation, and long-term motivation (GRIT) in students, particularly concerning the development of feedback literacy and the use of modern technologies such as AI in education. Given the rapid advancements in technologies like GPT-4 (Parker et al., 2024) and their potential applications in feedback systems, it is crucial to evaluate their effectiveness in practice and compare them to traditional feedback methods in order to gain a comprehensive understanding of their impact on student engagement and performance.

To address this research gap, the primary objective of this study is to investigate the differential impacts of human-based and computer-based feedback, presented in various forms, on motivation, engagement, and GRIT among 6th and 7th-grade students in secondary schools (Realschulen) in Bavaria, Germany. Specifically, this research aims to:

(1) Evaluate how the source of feedback (human vs. computer) influences students’ engagement and feedback evaluation.

(2) Assess the effects of different feedback forms (grades/points, social comparison, pass/no pass) on these psychological parameters.

Based on these research questions, the following hypotheses were formulated:

H 1.1 (on engagement): 6th and 7th grade students who receive human feedback will show higher engagement compared to students who receive computerized feedback.

Rationale: Human feedback is often perceived as more personal and empathetic, which may promote student engagement.

H 1.2 (on feedback evaluation): Students will rate human feedback as more helpful and understandable than computerized feedback.

Rationale: Human feedback may be perceived by students as more credible or relevant, which reinforces the positive evaluation.

H 2.1 (on the effect of human feedback on GRIT): Human feedback will show a smaller effect on students’ GRIT scores than computer-based feedback.

Rationale: Human feedback can be more subjective and less standardized, which may lead it to influence GRIT values less systematically, as it is typically not available as frequently or consistently.

H 2.2 (on the effect of computer-based feedback on GRIT): Computerized feedback leads to a significant increase in students’ GRIT scores compared to human feedback.

Rationale: Computerized feedback could help students achieve their goals through direct, immediate feedback and provide a consistent, less subjective source of feedback.

This study investigates the impact of different feedback forms on motivation, engagement, and GRIT (persistence and passion for long-term goals) among 6th and 7th-grade students in secondary schools (Realschulen) in Bavaria, Germany. Motivation, engagement, and GRIT are key psychological elements that affect people’s actions and achievements and therefore provide a suitable approach to exploring feedback dynamics in the school context. They represent psychological parameters that contribute to later learning success, which makes them particularly important in the educational environment. The selected keywords (Human-Computer-based Feedback, Motivation, GRIT, Learning science, and classroom-experiment) are interconnected in this research as they collectively address the core aspects of the study. Human-Computer-based Feedback represents the different forms of feedback being investigated, while Motivation and GRIT are the psychological constructs being measured. Learning science provides the theoretical framework for understanding these interactions, and classroom-experiment denotes the methodological approach used in the study. Together, these keywords encapsulate the main elements of the research, combining technological innovations in education with psychological outcomes in a real-world classroom setting.

1.1 Motivation, engagement and GRIT

Energy, goal setting, perseverance, and the potential for varied endpoints are aspects of activation and intentionality, which collectively constitute motivation (cf. Ryan and Deci, 2000). The Self-Determination Theory (SDT) is a prominent theory in psychology that examines motivation, emphasizing the significance of internal, evolutionary resources for personality development and self-regulatory behavior (cf. Ryan et al., 1997; cf. Deci et al., 2017).

It delineates three distinct types of motivation: extrinsic, intrinsic, and amotivation (cf. Vallerand and Bissonnette, 1992). Extrinsic motivation is influenced by both internal and external factors, encompassing various regulations such as external, introjected, identified, and integrated regulations, heavily impacted by social context. Intrinsic motivation is self-determined, characterized by internal factors such as interest, pleasure, and satisfaction, yet it can be compromised by controlling conditions and perceived inefficacy. Amotivation is a non-self-determined reaction influenced by impersonal factors such as inability or lack of control. Supportive social contexts positively foster motivation, while excessive control and lack of connectedness can impair it (cf. Ryan and Deci, 2000).

Although it can be stated that motivation can be both self-determined and externally determined and depends on internal and external factors, the question of how strong these influences actually are remains unanswered. The simple assumption that both social and internal drives are equally decisive could underestimate or even oversimplify the complex interplay between these factors. It remains unclear to what extent individual differences or situational factors could have a stronger influence on perceived motivation.

Engagement can be defined as a state of optimal and enjoyable experiences characterized by appropriate challenge, immersion, control, freedom, clarity, immediate feedback, time insensitivity, and changes in one’s sense of identity (cf. Cowley et al., 2008).

Research distinguishes between cognitive, emotional, and behavioral engagement. Cognitive engagement focuses on conscious aspects such as effort and attention, while emotional engagement encompasses identification and emotions. Behavioral engagement is objective, concerning actions and involvement, neglecting physical components (cf. Doherty and Doherty, 2018; cf. Li and Lerner, 2012).

Studies show that game elements can increase behavioral engagement in digital learning tasks, reducing the likelihood that learners disengage entirely. Even simple game elements, like narrative structure, visual aesthetics, and a virtual reward system, can enhance learner engagement. Moreover, research on video game engagement categorizes it into six types: intellectual, physical, sensory, social, narrative, and emotional. Players may shift flexibly between these engagement types depending on game phase and content, and the desire to continue playing serves as a strong indicator of engagement (cf. Huber et al., 2023; cf. Schønau-Fog and Bjørner, 2012).

A combination of subjective and objective measurements is recommended for understanding engagement, as it has proven to be the most effective measurement approach in most cases (cf. Doherty and Doherty, 2018).

GRIT is a psychological trait associated with positive outcomes such as passion, motivation, and perseverance in pursuing long-term goals. It consists of two dimensions: “consistency of interests,” which pertains to how consistently individuals pursue their goals, and “perseverance of effort,” which relates to how well they can cope with challenges while maintaining their determination. “Perseverance of effort” correlates strongly with the well-being and personality strengths of the individual (cf. Disabato et al., 2018). GRIT can lead to higher academic performance, motivation, and engagement, as well as improved social and personal well-being (cf. Datu et al., 2016).

Markus and Kitayama’s (1991) Self-Construal Theory suggests that the cultural environment in which we are raised influences our ability to develop GRIT. Individuals from collectivist societies find it more challenging to exhibit GRIT compared to those from individualistic societies (cf. Datu et al., 2016; cf. Disabato et al., 2018). Even though GRIT is described as a malleable psychological trait that requires motivation, this simplification could distort the understanding of its origins and effects. The influence of the cultural environment is emphasized, but it remains unclear whether this environment actually plays a central role in all cultures. In addition, insufficient consideration is given to whether GRIT is equally relevant or helpful in all areas of life.

The assertion that these constructs are fundamental psychological factors that influence an individual’s behavior and outcomes may fall short. A close link between internal drives, social context and cultural influences is postulated, but it remains questionable to what extent this link applies always and everywhere. The complexity of individual success could be simplified by focusing on these factors while ignoring other, less obvious variables.

1.2 Feedback types

In the educational context, understanding feedback is crucial to address students’ needs (Hattie and Timperley, 2007). Following the discussion on motivation, engagement, and GRIT, it is necessary to examine various forms of feedback, as these can significantly contribute to fostering motivation and engagement and supporting the development of GRIT. Various forms of feedback need to be considered, including traditional grading systems, feedback generated with artificial intelligence (AI) assistance, student self-feedback, teacher feedback, oral feedback, and video feedback.

Traditional grading systems, such as report card grades, remain widespread. A recent development is AI-generated feedback. Hooda et al. (2022) identified I-FCN as effective software for machine learning and learning analytics, providing personalized feedback. Kochmar et al. (2020) demonstrated that AI feedback in the form of personalized hints and explanations significantly improves learning outcomes (Luckin et al., 2016).

Gan et al. (2023) emphasized the importance of student self-feedback, noting it as the strongest motivator for learning. This underscores the importance of students’ ability to self-assess and regulate their learning. This is supported by Panadero et al. (2017) who found that self-assessment practices improve student learning outcomes and self-regulation skills.

Teacher feedback also plays a central role in the learning process. Gan et al. (2021) showed that teacher feedback positively impacts course satisfaction but has little influence on motivation or exam results. Agricola et al. (2019) demonstrated that due to its direct and personal nature, oral feedback sharpens students’ perception but does not necessarily increase their self-efficacy or drive. These findings align with Wisniewski et al. (2020) who found that the effectiveness of feedback depends on various factors, including timing and delivery method.

Roure et al. (2019) conducted a study to investigate the impact of video feedback on students’ situational interest, which refers to the temporary engagement or curiosity a student experiences during a particular activity. The findings revealed that using video feedback in isolation was not sufficient to significantly enhance situational interest. The researchers argued that while video feedback can provide clear visual and auditory cues, its effectiveness is limited without the accompanying context and interpretive support traditionally offered by teachers. However, when combined with traditional teacher feedback, the impact on students’ situational interest was significantly enhanced. This suggests that the direct interaction and clarification provided by teachers play a crucial role in making video feedback more meaningful and engaging for students. Thus, the integration of video feedback with teacher commentary not only helps students better understand their progress but also sustains their interest by offering a more personalized learning experience. These findings underscore the importance of combining technological tools with human interaction to maximize their educational impact. This is consistent with Van Der Kleij et al. (2015) who found that elaborate feedback is more effective than simple correctness feedback.

In a study focusing on the role of reward systems in education, Hermes et al. (2021) examined how different types of rewards influence students’ motivation, effort, and academic performance, particularly in mathematics. The study found that introducing reward systems had a notably positive impact on students with lower prior achievements, boosting their motivation to participate and increasing their effort in mathematical tasks. This effect was attributed to the fact that rewards provide immediate and tangible recognition for students’ efforts, which can be particularly encouraging for those who struggle academically. Importantly, the study also found that the introduction of reward systems did not adversely affect the performance of higher-achieving students. This challenges the common criticism that rewards might only benefit struggling students at the expense of others. Instead, the results indicate that well-designed reward systems can create an inclusive learning environment that supports diverse student needs. Hermes et al. (2021) emphasize the careful calibration of reward systems to avoid promoting external incentives as the sole focus, and instead to use them as a supplementary strategy to foster a growth-oriented mindset. Similarly, Deci et al. (2001) found that positive feedback enhances intrinsic motivation, particularly when it conveys a sense of competence.

Beyond these individual feedback types, community involvement and feedback tailored to school environments also play a critical role in addressing student needs. Tripon (2024) highlighted that community-based learning and storytelling activities can create a more inclusive and supportive feedback environment, fostering a stronger connection between students and their communities. By incorporating service-learning opportunities, schools can better meet the diverse needs of their students, thereby enhancing their motivation and engagement.

To investigate the effects of different types of feedback, various experiments have been conducted. Hermes et al. (2021) implemented a five-week intervention using a mathematics e-learning platform where students could collect “gold coins” through task completion, later exchangeable for rewards. Kochmar et al. (2020) utilized artificial intelligence to provide personalized feedback on the Korbit platform. Faulconer et al. (2021) employed online quizzes where students received positive feedback for correct answers to difficult questions, whereas previously only feedback on errors was given. This approach is supported by Shute (2008) who emphasized the importance of formative feedback in enhancing learning and achievement. Additionally, Lili et al. (2016), Straub et al. (2023), Kuklick and Lindner (2023), and Roure et al. (2019) conducted studies capturing feedback through written assignments and surveys. Lili et al. (2016) had students write essays and subsequently surveyed them. Straub et al. (2023) combined questionnaires with creative and cognitive tasks as well as power manipulation experiments. Kuklick and Lindner (2023) utilized constructed response geometry tasks with tailored feedback, while Roure et al. (2019) divided participants into three groups to investigate different feedback methods – verbal feedback from the teacher, no feedback, or a combination of self-analysis and teacher feedback. These diverse methodologies align with Black and Wiliam (2018) who advocate for a variety of feedback approaches to cater to different learning styles and contexts. Lastly, Carless and Boud (2018) emphasize the importance of developing students’ feedback literacy, enabling them to understand, process, and use feedback effectively, which complements the findings of the other studies mentioned.

In conclusion, the various types of feedback exert complex and interrelated effects on students’ motivation and engagement. While some studies highlight the advantages of specific types of feedback, other research suggests that these effects are highly context-dependent and vary according to individual needs and perceptions of the learners. For instance, although the combination of oral feedback and teacher feedback can be effective in certain situations, it also carries the risk of fostering excessive reliance on external validation among students. Similarly, reward systems can boost motivation in the short term, but there are concerns that they may undermine intrinsic motivation over time.

Moreover, the studies reflect diverse methodological approaches and theoretical perspectives that are not always consistent with each other. These methodological and conceptual discrepancies complicate the task of drawing generalizable conclusions about the effectiveness of different feedback types. Therefore, making blanket recommendations or simplistic evaluations of specific feedback methods would be misguided. Instead, it is crucial to consider the specific context and the individual characteristics of learners and educators to make nuanced and evidence-based statements about feedback effectiveness.

This critical reflection highlights the need for further research to explore the long-term impacts of different feedback approaches on motivation, engagement, and GRIT in various educational settings (Figure 1).

To further delve into the nuances of feedback’s impact on student outcomes, the study addresses the following research questions:

(1) How do different forms of feedback (human-based vs. computer-based) impact the engagement and feedback evaluation of 6th and 7th-grade students in German secondary schools?

(2) What is the effect of human-based and computer-based feedback on the GRIT of 6th and 7th-grade students in German secondary schools?

2 Materials and methods

2.1 Participants

The participants of this study are students from 16 different Bavarian secondary school classes, comprising eight classes each from the sixth and seventh grades. Four classes per grade come from the same school; the names of the schools cannot be disclosed due to regulations from the Bavarian Foundation of Secondary School Teachers. Each class consists of 25–30 students, resulting in a theoretical sample size of N = 400–480. However, the actual number of participating students is N = 219 due to various factors, including parental consent and unforeseen circumstances such as illness or personal emergencies.

2.2 Data collection

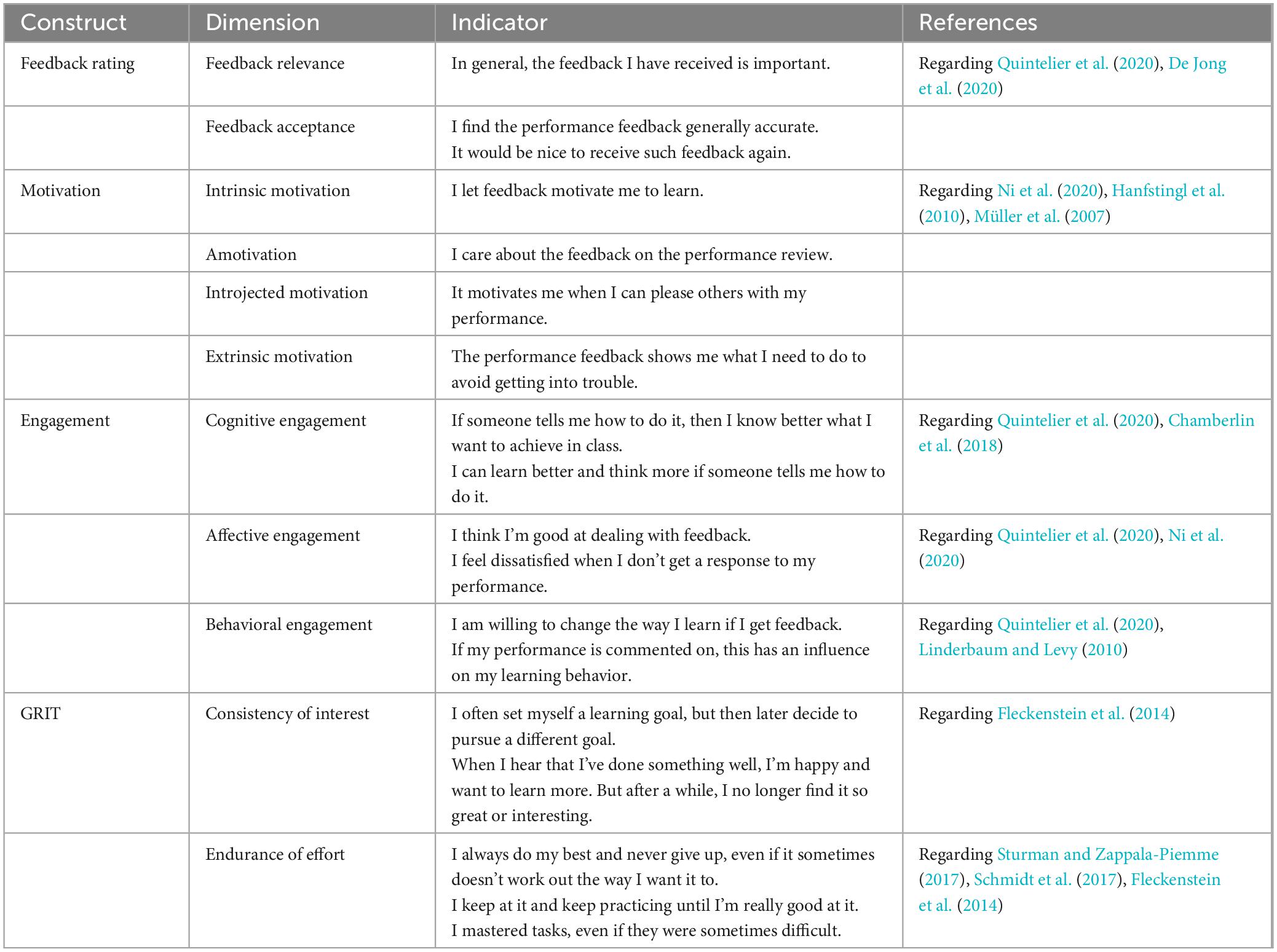

A questionnaire (see Table 1) was developed to systematically analyze the impact of performance feedback on student motivation, engagement, and GRIT. The questionnaire items were adapted to ensure clarity and appropriateness for a younger audience. In particular, the language was revised to make it more child-friendly and to simplify complex concepts without changing the core constructs being measured. For example, the questions assessing different dimensions of motivation were adapted based on the frameworks of Ni et al. (2020) for intrinsic motivation and amotivation, Hanfstingl et al. (2010) for introjected motivation and Müller et al. (2007) for extrinsic motivation. In addition, research into different forms of engagement was influenced by the findings of Quintelier et al. (2020), Chamberlin et al. (2018) and Ni et al. (2020). The GRIT construct comprising ‘constancy of interest’ and ‘persistence of effort’ was also adjusted in line with the research of Fleckenstein et al. (2014), Sturman and Zappala-Piemme (2017) and Schmidt et al. (2017). These adjustments aimed to maintain conceptual accuracy while ensuring that the items were accessible and appealing to younger respondents.

To evaluate students’ cognitive engagement in a manner suitable for children, a memory stimulus game is utilized (Table 1). In this task, participants are required to memorize images of Santas and subsequently identify the previously shown Santas after the displayed images have been modified. Emotional engagement is assessed through a multiple-choice quiz, wherein children are presented with images of human facial expressions and asked to match these expressions to the corresponding emotions from a list of options. Finally, students’ reaction times are measured via an online game. In this task, a red circle appears on the screen, and once it turns green, participants are instructed to tap or click the screen using the left mouse button as quickly as possible, assessing their response speed and reflexes.

2.3 Treatment procedures

The experimental 2 × 3 factorial design, with the factors Feedback Type (Human-based vs. Computer-based) and Declaration of Feedback Type (Anonymous School Grades/Credit Points vs. Social Comparison/Group Feedback vs. Pass/NoPass), is based on the guidelines outlined in “The Experiment in Communication and Media Studies” by Koch et al. (2019). First, the type of performance feedback varies (i.e., whether the feedback is Human-based Performance Feedback or Computer-based Performance Feedback). Second, depending on the treatment group, students receive feedback in the form of anonymous school grades/credit points, social comparison/group feedback, or Pass/NoPass. For the feedback variants where students receive computer-generated feedback after performing the three stimulus games, the software ChatGPT was used to evaluate students’ performance and provide constructive feedback. The feedback texts were written in German and relate to the students’ results in the three stimulus games. The human-generated feedback texts have the same content as the computer-generated feedback texts; the only difference lies in clearly communicating the origin of the feedback to the student.
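The structure of the 2 × 3 design can be made explicit by enumerating its cells. The following is a minimal sketch, assuming that each treatment cell is simply one combination of a feedback source and a feedback declaration; the names `FEEDBACK_SOURCE`, `FEEDBACK_DECLARATION`, and `design_cells` are hypothetical labels introduced for illustration and are not part of the study materials. The control group mentioned in the results is not modeled here.

```python
from itertools import product

# Factor levels as named in the design description (labels are illustrative).
FEEDBACK_SOURCE = ["human-based", "computer-based"]
FEEDBACK_DECLARATION = [
    "anonymous grades/credit points",
    "social comparison/group feedback",
    "Pass/NoPass",
]


def design_cells(sources, declarations):
    """Return every (source, declaration) combination of a factorial design."""
    return list(product(sources, declarations))


cells = design_cells(FEEDBACK_SOURCE, FEEDBACK_DECLARATION)
for number, (source, declaration) in enumerate(cells, start=1):
    print(f"Cell {number}: {source} feedback, {declaration}")
```

Crossing two levels with three levels yields six treatment cells, which is what the label "2 × 3" denotes.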

The students were guided through the experiment and, after completing all three stimulus games, were asked to fill out an anonymous questionnaire. This questionnaire contained child-friendly statements that could be rated on a 5-point scale ranging from “strongly agree” to “strongly disagree”. Standardizing response scales, such as using a 0–5 format, improves data comparability and reduces respondent confusion. Research supports that consistent scales minimize cognitive load and response bias, enhancing reliability and interpretability across survey items (cf. Westland, 2022). We assume reliability based on prior validation and testing of these scales by other researchers, which ensures consistency and comparability across studies (cf. Jebb et al., 2021). Due to the guidelines of the Bavarian Foundation of Secondary School Teachers, the questionnaires were printed out and filled in by hand, as online surveys were not allowed. The Bavarian Ministry of Education’s ethics committee strictly limited the inclusion of moderators and mediators in this study to safeguard the sensitive personal data of underage students. Due to privacy regulations, we were only permitted to analyze a limited set of variables, which restricted our ability to examine baseline equivalence for all potential factors, including GRIT scores, across the three groups (control, human-based, and GPT-based). These constraints ensured compliance with data protection standards specific to vulnerable populations, as mandated by the ethics review board.

2.4 Data analysis

To analyze the study results, t-tests were employed to determine whether there are statistically significant differences between the groups under investigation. In addition to the statistical testing, the results are presented visually using graphs, which makes key trends and differences easier to interpret and communicate. The decision to use t-tests instead of ANOVA in a 2 × 3 experimental design can be justified if the primary goal is to compare mean differences between control and test groups rather than to assess interaction effects. T-tests allow for direct pairwise comparisons, which are often more interpretable and straightforward, particularly when testing specific hypotheses about group differences without expecting interactions. This approach also minimizes the risk of Type I errors associated with multiple comparisons in ANOVA when interaction terms are not of primary interest (cf. Field, 2013; Cumming, 2013).
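A pairwise comparison of this kind can be sketched as follows. This is an illustration only: it assumes a Student's independent-samples t-test with pooled variance, which the text does not specify, and the function name `pooled_t` as well as the two small rating lists are hypothetical and are not data from this study.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic with pooled variance.

    Returns the t statistic and its degrees of freedom (n_a + n_b - 2).
    """
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error, df


# Hypothetical 5-point ratings, invented purely to show the calculation.
control_ratings = [2, 3, 3, 4, 3]
feedback_ratings = [4, 4, 5, 3, 4]

t_value, degrees_of_freedom = pooled_t(feedback_ratings, control_ratings)
print(f"t({degrees_of_freedom}) = {t_value:.2f}")
```

Under this pooled form, the degrees of freedom equal the combined group size minus two, so a comparison covering all 219 students would have 217 degrees of freedom. A p-value would additionally require the t distribution, which is available in statistical packages but is omitted here to keep the sketch dependency-free.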

2.5 Limitations

The presented study has notable implications for research and practice, summarized as follows. Despite valuable insights, several common limitations related to methodology and design emerged during the research process. This experiment was specifically designed to explore causality, which allows for causal analysis but not representative conclusions. Ethical constraints set by the Bavarian Ministry restricted the use of moderators and mediators, limiting the study to 6th and 7th graders in Bavarian secondary schools; thus, results cannot be generalized to other age groups, genders, parental backgrounds, academic performance, or cultural contexts. Expanding to other educational systems or age groups could clarify broader applicability, and a more representative methodology could be beneficial.

Another challenge was designing suitable online games that replicate the cognitive, emotional, and reactive aspects of physical games—a complex task requiring extensive testing and adjustments. While carefully chosen to assess specific skills, these games may not capture the full range of abilities relevant to motivation, engagement, and GRIT, which could limit findings based on game design or context. Self-report dependence introduces response biases, such as the Hawthorne effect, as perceptions of engagement, motivation, and GRIT can be swayed by external factors, impacting result accuracy. Survey items were adapted for the children’s context but not pre-tested for readability. Temporal limitations may also exist; effects observed post-experiment could diminish over time, impacting the relevance of findings for sustainable applications. Longitudinal studies may address this concern.

In summary, this study reflects classic limitations in psychological experiments and causal research. The methodology was deliberately chosen, and anticipated effects were managed. Future research would benefit from controlling for moderators and mediators as a key methodological enhancement.

3 Results

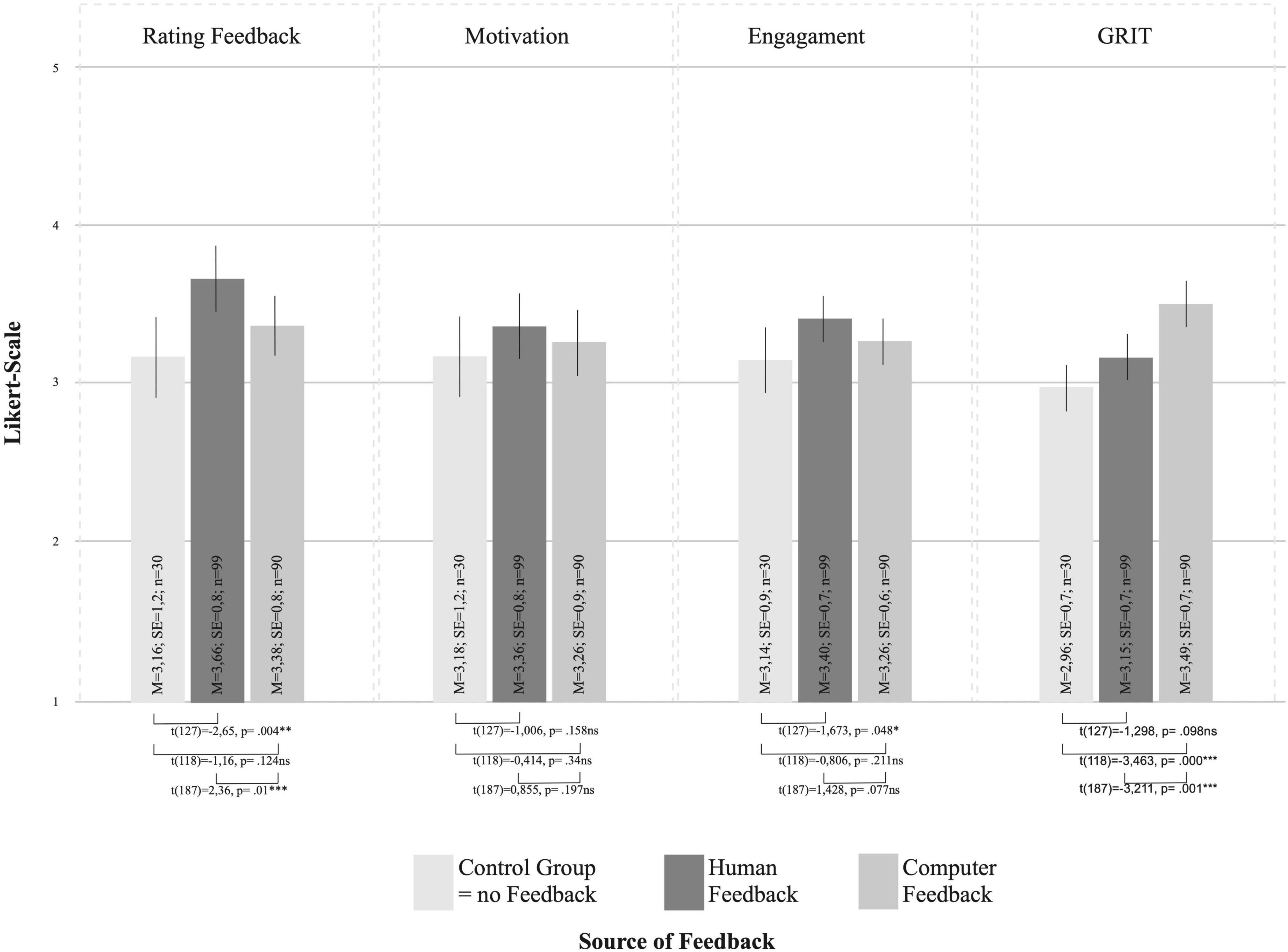

The results indicate that there is no significant effect of human-based or computer-based feedback on students’ motivation. Regarding the dependent variable “Feedback Evaluation”, it is observed that human-based feedback has a significantly positive influence on this variable (Figure 2).

A significant difference between the control group and human-based feedback was found, t(127) = 0.00, p < 0.05, with human-based feedback yielding more positive values. Additionally, human-based feedback has a significantly positive influence on the variable “Engagement”. A significant difference between the control group and human-based feedback was found, t(127) = 0.05, p < 0.05, with human-based feedback yielding more positive values.

On the other hand, computer-based feedback has a significantly positive influence on students’ GRIT. A significant difference between the control group and computer-based feedback was found, t(118) = 0.00, p < 0.05, with computer-based feedback yielding more positive values. With a Cohen’s d of 0.74, this is a medium effect size that approaches the conventional threshold of 0.8 for a large effect.
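To make the effect-size interpretation concrete, the sketch below computes Cohen's d from two groups and classifies it with the conventional cutoffs (0.2 small, 0.5 medium, 0.8 large). It assumes the pooled-standard-deviation form of d; the helper names `cohens_d` and `effect_size_label` are hypothetical, and the study's own computation may differ.

```python
import math
from statistics import mean, variance


def cohens_d(control, treatment):
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(control), len(treatment)
    pooled_var = ((n1 - 1) * variance(control) + (n2 - 1) * variance(treatment)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


def effect_size_label(d):
    """Classify |d| with Cohen's conventional cutoffs (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Effect sizes reported in this Results section; the labels are computed, not assumed.
for d in (0.74, 0.53, 0.64):
    print(d, effect_size_label(d))
```

Under these cutoffs, a value of 0.74 is classified as medium but lies close to the boundary for a large effect.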

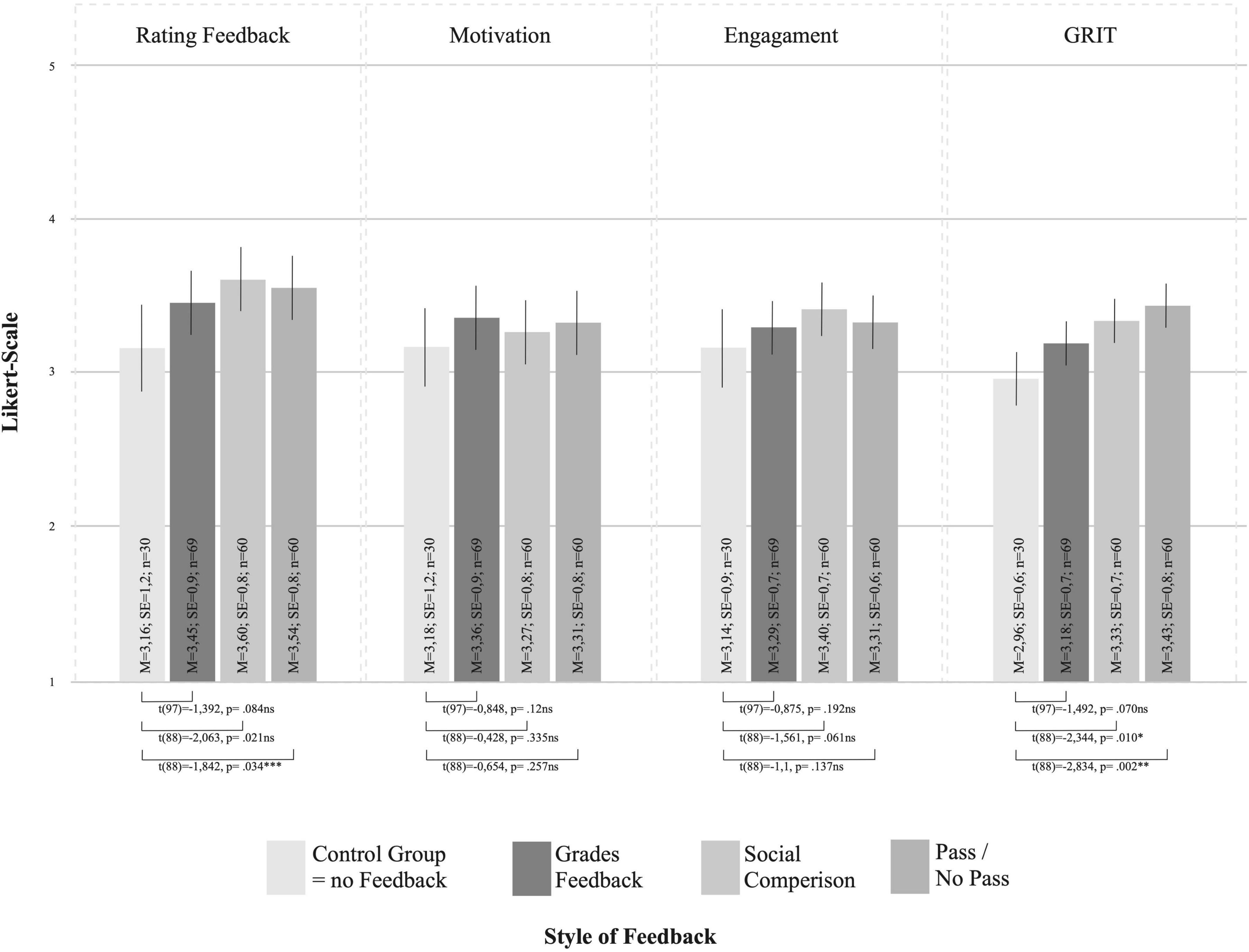

The results illustrate that the different types of feedback do not have a significant influence on the dependent variables “Motivation” and “Engagement”. Comparing “No Feedback” with “Feedback” shows that providing feedback has a significantly positive influence on the variable “Feedback Evaluation”. A significant difference between the control group and the feedback groups was found, t(217) = 0.02, p < 0.05, with feedback yielding more positive values. Additionally, “Pass/NoPass” has a significantly positive influence on “Feedback Evaluation”. A significant difference between the control group and the “Pass/NoPass” group was found, t(88) = 0.03, p < 0.05, with “Pass/NoPass” yielding more positive values (Figure 3).

Figure 3. Visual mean comparison of the declaration of feedback type (anonymous school grades/credit points vs. social comparison/group feedback vs. Pass/NoPass).

Regarding the dependent variable “GRIT”, it is evident that “Social Comparison” and “Pass/NoPass” have a significantly positive effect. The significance of the results is confirmed with F(3,281) = 0.05, p = 0.02. A significant difference between the control group and social comparison was found, t(88) = 0.01, p < 0.05, with social comparison yielding more positive values. This represents a medium effect size, as indicated by the Cohen’s d value 0.53.

Additionally, a significant difference between the control group and “Pass/NoPass” was found, t(88) = 0.00, p < 0.05, with “Pass/NoPass” yielding more positive values. This also corresponds to a medium effect size, with a Cohen’s d value of 0.64.

4 Discussion

The aim of this study was to investigate the effects of different forms of feedback on the psychological constructs of motivation, engagement and GRIT in 6th and 7th grade secondary school students in Bavaria, Germany. The research specifically aimed to address two main questions: 1) How do different forms of feedback (human-based vs. computer-based) impact the engagement and feedback evaluation of these students? and 2) What is the effect of human-based and computer-based feedback on the GRIT of these students?

Regarding the hypotheses:

H 1.1: 6th and 7th grade students who receive human feedback will show higher engagement compared to students who receive computerized feedback.

This hypothesis was partially supported. While human-based feedback significantly enhanced students’ feedback evaluation, it did not show a significant effect on engagement as initially hypothesized.

H 1.2: Students will rate human feedback as more helpful and understandable than computerized feedback.

This hypothesis was supported. The findings indicate that human-based feedback significantly improved students’ feedback evaluation, aligning with our prediction.

H 2.1: Human feedback will show a smaller effect on students’ GRIT scores than computer-based feedback.

This hypothesis was supported. The results show that computer-based feedback notably improved students’ GRIT, while human-based feedback did not have a significant effect on GRIT scores.

H 2.2: Computerized feedback leads to a significant increase in students’ GRIT scores compared to human feedback.

This hypothesis was supported. The findings demonstrate that computer-based feedback had a significant positive effect on students’ GRIT scores, as predicted.

The significant positive effect of human-based feedback on engagement and feedback evaluation aligns with the literature emphasizing the importance of personalized and direct feedback from teachers. This can be attributed to the relational and immediate nature of human interaction, which may foster a supportive learning environment, enhancing students’ perception of feedback. Contrary to our expectations, human-based feedback did not significantly impact engagement, suggesting that the relationship between feedback source and student engagement may be more complex than initially theorized.

On the other hand, the positive influence of computer-based feedback on GRIT suggests that automated feedback systems might effectively support students’ persistence and passion for long-term goals. This could be due to the consistent and objective nature of AI-generated feedback, which provides clear insights that help students focus on their progress and long-term development.

These findings are consistent with previous studies, such as Kochmar et al. (2020) and Hooda et al. (2022), which highlighted the efficacy of AI-generated feedback in improving learning outcomes. Similarly, Gan et al. (2021) underscored the limited impact of teacher feedback on motivation, which aligns with our finding that neither human-based nor computer-based feedback significantly influenced student motivation.

While human feedback can enhance student feedback evaluation, AI-generated feedback can support the development of perseverance and goal-oriented behaviors. The lack of significant effects of computer-based feedback on engagement and feedback evaluation, as well as the unexpected result regarding human feedback and engagement, raises questions about the complex interplay between feedback source and student outcomes.

Future research should explore ways to enhance the relational aspects of computer-based feedback to potentially improve its impact on student engagement and evaluation. Additionally, investigating the reasons behind the null results in motivation and the unexpected findings regarding engagement could provide valuable insights for developing more effective feedback strategies that address all aspects of student psychological constructs.

These results directly address our research questions and hypotheses, demonstrating that human-based feedback positively impacts feedback evaluation, while computer-based feedback enhances GRIT in the studied student population. However, the findings also highlight the need for further investigation into the nuanced effects of different feedback types on various psychological constructs in educational settings.

5 Implications

The presented findings have implications for research and practice, which are summarized below.

The practical implications of these findings are manifold, as this study highlights the significance of feedback in the educational context. Educators and policymakers should consider integrating both human-based and computer-based feedback into the classroom. The results suggest that while human feedback is well-received and promotes short-term motivation, it is insufficient for fostering long-term perseverance. In contrast, computer-based feedback, which strengthens GRIT, should be used to complement the effects of human feedback. Combining both types of feedback could thus harness the best of both worlds while reducing the burden on teachers, as AI-generated feedback is more intensive, longer, and more idea-rich in a shorter period. Depending on the subject matter, student needs, and orientation of the course, the feedback process should be adjusted accordingly.

Strengthening GRIT through the systematic implementation of computer-based feedback solutions positively impacts student performance and mental well-being. Regarding the implementation of AI feedback tools, it is also advisable to develop a strategic system model for the school context in each country, allowing education decision-makers to gain a better understanding and subsequently measure strategic educational goals. Wawrzinek et al. (2017) developed a strategic model for higher education, which could serve as a guide here and be systematically integrated into other learning contexts. The introduction of computer-based feedback solutions also represents an effective and significant relief for teachers. In times of increasing staff shortages in schools and an ever-growing number of tasks that need to be performed in addition to teaching and education, such support measures are in high demand and particularly valuable. Country-specific learning and development goals could also be centrally coordinated.

AI-generated computer-based feedback provides accurate, precise, and individualized feedback for all students, which has significant implications for the teacher’s role. The teacher is perceived as a learning coach rather than merely an evaluator, thus enabling new forms of engagement with students.

6 Conclusion

This study provides a valuable foundation for the systematic integration of AI-based feedback in daily school settings, making an important contribution to the research on AI’s role in educational processes. The findings demonstrate that combining human and AI-driven feedback not only offers practical advantages for educators but also enables differentiated support for students: while human feedback enhances student engagement, AI-based feedback strengthens perseverance and goal-oriented behaviors. This highlights the potential role of AI systems as a complementary tool in schools, not only to convey learning content but also to foster skills such as resilience and self-regulation.

The study has important implications for research and practice, though it faces several limitations. Its causal design enables causal analysis but prevents representative conclusions. Ethical restrictions confined the study to 6th and 7th graders in Bavarian secondary schools, limiting generalizability to other demographics or cultural contexts. Expanding the scope and using a more representative methodology could improve applicability. Designing online games that mirror the cognitive and emotional aspects of physical games posed challenges, as these may not fully capture skills relevant to motivation, engagement, and GRIT. Self-reports introduced potential response biases, and survey items were not pre-tested for readability. Additionally, observed effects may diminish over time, underscoring the need for longitudinal studies. The study highlights common limitations in psychological experiments, suggesting future research should focus on controlling for moderators and mediators.

Furthermore, the study establishes a basis for future research that could explore the long-term effects of AI feedback systems and examine ways to personalize and adapt these tools to meet individual student needs. These insights are particularly valuable with regard to future AI applications, which may increasingly address the emotional, cognitive, and social aspects of learning. Additionally, future research could focus on comparative studies across different periods of time to understand how the role and impact of AI evolve alongside technological advancements and changing pedagogical practices. Investigating cross-cultural differences could provide insights into how diverse educational systems and cultural contexts influence the acceptance, effectiveness, and ethical considerations of AI-based feedback. Similarly, comparative research among different age groups or developmental stages could shed light on how AI systems need to be tailored to support varying cognitive and emotional needs.

This study also sets a precedent for subsequent research into the practical implementation and ethical considerations of AI in educational contexts. Future studies might delve into questions of scalability, efficiency, and acceptance among students and educators, aiming to gain a comprehensive understanding of the sustainable integration of AI feedback systems in education. Such research could also examine how AI systems can be adapted to address equity issues, ensuring that they support learners from diverse socioeconomic and linguistic backgrounds effectively.

In this way, this study offers a solid foundation for the development of evidence-based strategies for employing AI in schools and encourages the research community, policymakers, and educators to further explore the potential of AI feedback in creating a dynamic and adaptive learning environment.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in this article/Supplementary material.

Ethics statement

The studies involving humans were approved by the Bavarian State Ministry of Education and Culture. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

LH: Writing – original draft, Writing – review and editing. VH: Writing – original draft, Writing – review and editing. VS: Writing – original draft, Writing – review and editing. DW: Writing – review and editing. UB: Writing – review and editing. GE: Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. We gratefully acknowledge the Foundation of Secondary School Teachers for providing funding to support Open Access Publishing.

Acknowledgments

We would like to express our gratitude to all participating schools, students, and families for their cooperation in this study. Special thanks are extended to the Bavarian Association and Foundation of Secondary School Teachers, as well as the Bavarian State Ministry of Education and Cultural Affairs, for their support and ethical approval, facilitating the successful execution of this research within Bavarian school settings. Their contributions have played a vital role in enabling this work. Additionally, we would like to acknowledge the reviewers for their valuable feedback and contributions to enhancing the academic rigor of this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1473727/full#supplementary-material

Footnotes

- ^ The Realschule is a type of secondary school in Bavaria, Germany, that builds on year 4 of primary school and comprises grades 5–10: “The Realschule provides a broad general and pre-vocational education. The Realschule is characterized by a self-contained educational programme that also includes vocational subjects. It thus lays the foundation for vocational training and later qualified employment in a wide range of professions with diverse theoretical and practical requirements. It creates the school prerequisites for the transition to further educational pathways up to university entrance qualification” (Bavarian State Chancellery, n.d.).

References

Agricola, B. T., Prins, F. J., and Sluijsmans, D. M. A. (2019). Impact of feedback request forms and verbal feedback on higher education students’ feedback perception, self-efficacy, and motivation. Assessm. Educ. Principles Policy Pract. 27, 6–25. doi: 10.1080/0969594x.2019.1688764

Bavarian State Chancellery, (n.d.). Bavarian Law on Education and Teaching (BayEUG): Art. 8 Die Realschule. Available online at: https://www.gesetze-bayern.de/Content/Document/BayEUG-8 (accessed June 20, 2024).

Bavarian State Ministry of Education and Cultural Affairs, (2024). Press Release no. 113 from 17.07.2024, Bavarian Minister of Education and Cultural Affairs Anna Stolz launches new media and AI budget (own translation). Available online at: https://www.km.bayern.de/meldung/kultusministerin-anna-stolz-bringt-neues-medien-und-ki-budget-an-den-start (accessed June 16, 2024).

Black, P., and Wiliam, D. (2018). Classroom assessment and pedagogy. Assessm. Educ. Principles Policy Pract. 25, 551–575. doi: 10.1080/0969594x.2018.1441807

Bossard, C. (2023). The Distress of School Grades - and Their Value (own translation): Society for Education and Knowledge e.V. (own translation). Available online at: https://bildung-wissen.eu/fachbeitraege/von-der-not-der-noten-und-ihrem-wert.html (accessed June 16, 2024).

Carless, D., and Boud, D. (2018). The development of student feedback literacy: enabling uptake of feedback. Assessm. Eval. High. Educ. 43, 1315–1325. doi: 10.1080/02602938.2018.1463354

Chamberlin, K., Yasué, M., and Chiang, I.-C. A. (2018). The impact of grades on student motivation. Active Learn. High. Educ. 24, 109–124. doi: 10.1177/1469787418819728

Cowley, B., Charles, D., Black, M., and Hickey, R. (2008). Toward an understanding of flow in video games. Comput. Entertainment 6, 1–27. doi: 10.1145/1371216.1371223

Datu, J. A. D., Yuen, M., and Chen, G. (2016). Grit and determination: a review of literature with implications for theory and research. J. Psychol. Counsellors Schools 27, 168–176. doi: 10.1017/jgc.2016.2

De Jong, C., Kühne, R., Peter, H., Van Straten, C. L., and Barco, A. (2020). Intentional acceptance of social robots: development and validation of a self-report measure for children. Int. J. Hum. Comput. Stud. 139:102426. doi: 10.1016/j.ijhcs.2020.102426

Deci, E. L., Koestner, R., and Ryan, R. M. (2001). Extrinsic rewards and intrinsic motivation in education: reconsidered once again. Rev. Educ. Res. 71, 1–27. doi: 10.3102/00346543071001001

Deci, E. L., Olafsen, A. H., and Ryan, R. M. (2017). Self-determination theory in work organizations: the state of a science. Annu. Rev. Organ. Psychol. Organ. Behav. 4, 19–43. doi: 10.1146/annurev-orgpsych-032516-113108

Disabato, D. J., Goodman, F. R., and Kashdan, T. B. (2018). Is grit relevant to well-being and strengths? Evidence across the globe for separating perseverance of effort and consistency of interests. J. Pers. 87, 194–211. doi: 10.1111/jopy.12382

Doherty, K., and Doherty, G. (2018). Engagement in HCI. ACM Comput. Surveys 51, 1–39. doi: 10.1145/3234149

Dospinescu, N., and Dospinescu, O. (2020). Information technologies to support education during COVID-19. Univers. Sci. Notes 3, 17–28. doi: 10.37491/unz.75-76.2

Duckworth, A. L., Peterson, C., Matthews, M. D., and Kelly, D. R. (2007). Grit: perseverance and passion for long-term goals. J. Pers. Soc. Psychol. 92:1087.

Faulconer, E., Griffith, J., and Gruss, A. (2021). The impact of positive feedback on student outcomes and perceptions. Assessm. Eval. High. Educ. 47, 259–268. doi: 10.1080/02602938.2021.1910140

Field, A. (2013). Discovering Statistics Using IBM SPSS Statistics, 4th Edn. Thousand Oaks, CA: Sage Publications.

Fleckenstein, J., Schmidt, F. T. C., and Möller, J. (2014). Who has teeth? Perseverance and persistent interest of student teachers. A German adaptation of the 12-Item Grit Scale (own translation). Psychol. Educ. Teach. 61:281. doi: 10.2378/peu2014.art22d

Gan, Z., An, Z., and Liu, F. (2021). Teacher feedback practices, student feedback motivation, and feedback behavior: how are they associated with learning outcomes? Front. Psychol. 12:697045. doi: 10.3389/fpsyg.2021.697045

Gan, Z., He, J., Zhang, L. J., and Schumacker, R. (2023). Examining the relationships between feedback practices and learning motivation. Meas. Interdiscip. Res. Perspect. 21, 38–50. doi: 10.1080/15366367.2022.2061236

García-Machado, J. J., Ávila, M. M., Dospinescu, N., and Dospinescu, O. (2024). How the support that students receive during online learning influences their academic performance. Educ. Inform. Technol. 29, 20005–20029. doi: 10.1007/s10639-024-12639-6

Hanfstingl, B., Andreitz, I., Müller, F. H., and Thomas, A. (2010). Are self-regulation and self-control mediators between psychological basic needs and intrinsic teacher motivation? J. Educ. Res. 2, 55–71. doi: 10.25656/01:4575

Harlen, W., and Crick, R. D. (2002). A Systematic Review of the Impact of Summative Assessment and Tests on Students’ Motivation for Learning. London: EPPI-Centre.

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Heim, H. (2024). Are School Grades Still Future-Proof? (own translation) Stuz. Available online at: https://www.stuz.de/2024/03/04/sind-noten-noch-zukunftsfaehig/ (accessed June 16, 2024).

Hermes, H., Huschens, M., Rothlauf, F., and Schunk, D. (2021). Motivating low achievers—Relative performance feedback in primary schools. J. Econ. Behav. Organ. 187, 45–59. doi: 10.1016/j.jebo.2021.04.004

Hooda, M., Rana, C., Dahiya, O., Rizwan, A., and Hossain, M. S. (2022). Artificial intelligence for assessment and feedback to enhance student success in higher education. Math. Probl. Eng. 2022, 1–19. doi: 10.1155/2022/5215722

Huber, S. E., Cortez, R., Kiili, K., Lindstedt, A., and Ninaus, M. (2023). Game elements enhance engagement and mitigate attrition in online learning tasks. Comput. Hum. Behav. 149:107948. doi: 10.1016/j.chb.2023.107948

Hübner, N., Jansen, M., Stanat, P., Bohl, T., and Wagner, W. (2024). Is it all a question of the federal state? A multi-level analysis of the limited comparability of school grades (own translation). J. Educ. Sci. 27, 517–549. doi: 10.1007/s11618-024-01216-9

Jebb, A. T., Ng, V., and Tay, L. (2021). A review of key Likert scale development advances: 1995–2019. Front. Psychol. 12:637547. doi: 10.3389/fpsyg.2021.637547

Kerbel, B. (2016). The Dilemma With School Grades (own translation), Federal Agency for Civic Education (bpb). Available online at: https://www.bpb.de/themen/bildung/dossier-bildung/213307/das-dilemma-mit-den-schulnoten/ (accessed June 16, 2024).

Koch, T., Peter, C., and Müller, P. (2019). The Experiment in Communication and Media Studies (own translation). Study Books on Communication and Media Studies (own translation). Wiesbaden: Springer VS. doi: 10.1007/978-3-658-19754-4

Kochmar, E., Vu, D. D., Belfer, R., Gupta, V., Serban, I. V., and Pineau, J. (2020). “Automated personalized feedback improves learning gains in an intelligent tutoring system,” in Lecture Notes In Computer Science, eds I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, and E. Millán (Cham: Springer), 140–146. doi: 10.1007/978-3-030-52240-7_26

Kuklick, L., and Lindner, M. A. (2023). Affective-motivational effects of performance feedback in computer-based assessment: does error message complexity matter? Contemp. Educ. Psychol. 73:102146. doi: 10.1016/j.cedpsych.2022.102146

Lerche, T. (2022). Performance Assessment in Schools (own translation). Munich: Chair of School Pedagogy, Ludwig-Maximilians-University Munich.

Li, Y., and Lerner, R. M. (2012). Interrelations of behavioral, emotional, and cognitive school engagement in high school students. J. Youth Adolesc. 42, 20–32. doi: 10.1007/s10964-012-9857-5

Lili, C., Loo, D. B., and Tajoda, H. (2016). Effects of students’ engagement on written corrective feedback on writing quality. APHEIT J. 5, 40–54.

Linderbaum, B. A., and Levy, P. E. (2010). The development and validation of the Feedback Orientation Scale (FOS). J. Manag. 36, 1372–1405. doi: 10.1177/0149206310373145

Little, T., Dawson, P., Boud, D., and Tai, J. (2023). Can students’ feedback literacy be improved? A scoping review of interventions. Assessm. Eval. High. Educ. 49, 39–52. doi: 10.1080/02602938.2023.2177613

Luckin, R., Holmes, W., Griffiths, M., and Forcier, L. B. (2016). Intelligence Unleashed: An Argument for AI in Education. London: Pearson, Scientific Research Publishing.

Markus, H. R., and Kitayama, S. (1991). Culture and the self: implications for cognition, emotion, and motivation. Psychol. Rev. 98, 224–253. doi: 10.1037/0033-295x.98.2.224

Müller, F. H., Hanfstingl, B., and Andreitz, I. (2007). Scales for Motivational Regulation in Student Learning, Scientific Contributions from the Institute for Teaching and School Development (own translation), Alpen-Adria-University. Available online at: https://ius-old.aau.at/wp-content/uploads/2016/01/486_IUS_Forschungsbericht_1_Motivationsskalen.pdf (accessed February 10, 2024).

Ni, X., Shao, X., Geng, Y., Qu, R., Niu, G., and Wang, Y. (2020). Development of the social media engagement scale for adolescents. Front. Psychol. 11:701. doi: 10.3389/fpsyg.2020.00701

Panadero, E., Jonsson, A., and Botella, J. (2017). Effects of self-assessment on self-regulated learning and self-efficacy: four meta-analyses. Educ. Res. Rev. 22, 74–98.

Parker, M. J., Anderson, C., Stone, C., and Oh, Y. (2024). A large language model approach to educational survey feedback analysis. Int. J. Artif. Intellig. Educ. doi: 10.1007/s40593-024-00414-0

Quintelier, A., De Maeyer, S., and Vanhoof, J. (2020). The role of feedback acceptance and gaining awareness on teachers’ willingness to use inspection feedback. Educ. Assessm. Eval. Account. 32, 311–333. doi: 10.1007/s11092-020-09325-9

Robert Bosch Foundation (2023). The German School Barometer: Current challenges from the Perspective of Teachers. Results of a Survey of Teachers in General and Vocational Schools (own translation). Stuttgart: Robert Bosch Foundation.

Roure, C., Méard, J., Lentillon-Kaestner, V., Flamme, X., Devillers, Y., and Dupont, J. P. (2019). The effects of video feedback on students’ situational interest in gymnastics. Technol. Pedag. Educ. 28, 563–574. doi: 10.1080/1475939x.2019.1682652

Ryan, R. M., and Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 55, 68–78. doi: 10.1037/0003-066x.55.1.68

Ryan, R. M., Kuhl, J., and Deci, E. L. (1997). Nature and autonomy: an organizational view of social and neurobiological aspects of self-regulation in behavior and development. Dev. Psychopathol. 9, 701–728. doi: 10.1017/s0954579497001405

Schmidt, F. T. C., Fleckenstein, J., Retelsdorf, J., Eskreis-Winkler, L., and Möller, J. (2017). Measuring grit. Eur. J. Psychol. Assessm. 35, 436–447. doi: 10.1027/1015-5759/a000407

Schønau-Fog, H., and Bjørner, T. (2012). Sure, I would like to continue. Bull. Sci. Technol. Soc. 32, 405–412. doi: 10.1177/0270467612469068

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Straub, L. M., Lin, E., Tremonte-Freydefont, L., and Schmid, P. C. (2023). Individuals’ power determines how they respond to positive versus negative performance feedback. Eur. J. Soc. Psychol. 53, 1402–1420. doi: 10.1002/ejsp.2985

Sturman, E. D., and Zappala-Piemme, K. (2017). Development of the grit scale for children and adults and its relation to student efficacy, test anxiety, and academic performance. Learn. Individ. Differ. 59, 1–10. doi: 10.1016/j.lindif.2017.08.004

Tripon, C. (2024). Bridging horizons: exploring STEM students’ perspectives on Service Learning and storytelling activities for community engagement and gender equality. Trends High. Educ. 3, 324–341. doi: 10.3390/higheredu3020020

Vallerand, R. J., and Bissonnette, R. (1992). Intrinsic, extrinsic, and amotivational styles as predictors of behavior: a prospective study. J. Pers. 60, 599–620. doi: 10.1111/j.1467-6494.1992.tb00922.x

Van Der Kleij, F. M., Feskens, R. C. W., and Eggen, T. J. H. M. (2015). Effects of feedback in a computer-based learning environment on students’ learning outcomes. Rev. Educ. Res. 85, 475–511. doi: 10.3102/0034654314564881

Wawrzinek, D., Ellert, G., and Germelmann, C. C. (2017). Value configuration in Higher education – intermediate tool development for teaching in complex uncertain environments and developing a higher education value framework. Athens J. Educ. 4, 271–290. doi: 10.30958/aje.4-3-5

Westland, J. C. (2022). Information loss and bias in likert survey responses. PLoS One 17:e0271949. doi: 10.1371/journal.pone.0271949

Keywords: Human-Computer-based Feedback, motivation, GRIT, learning science, classroom-experiment (A22)

Citation: Heindl L, Huber V, Schuricht V, Wawrzinek D, Babl U and Ellert G (2025) Exploring feedback dynamics: an experimental analysis of human and computer feedback on motivation, engagement, and GRIT in secondary school students. Front. Educ. 9:1473727. doi: 10.3389/feduc.2024.1473727

Received: 31 July 2024; Accepted: 20 November 2024;

Published: 07 January 2025.

Edited by:

Octavian Dospinescu, Alexandru Ioan Cuza University, Romania

Reviewed by:

Cristina Tripon, Politehnica University of Bucharest, Romania

Ting Zhao, Southwestern University of Finance and Economics, China

Claas Christian Germelmann, University of Bayreuth, Germany

Copyright © 2025 Heindl, Huber, Schuricht, Wawrzinek, Babl and Ellert. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lizzy Heindl
