- ¹Department of Mechanical and Industrial Engineering, College of Engineering, Qatar University, Doha, Qatar

- ²Department of Civil and Environmental Engineering, College of Engineering, Qatar University, Doha, Qatar

- ³Technology Innovation and Engineering Education Unit (TIEE), Qatar University, Doha, Qatar

Introduction: The learning experience has undergone significant changes recently, particularly with the adoption of advanced technology and online lectures to address challenges such as pandemics. In fields like engineering, where hands-on classes are essential, the online learning environment plays a crucial role in shaping students’ experiences and satisfaction.

Methods: This study aimed to explore the key factors affecting engineering students’ satisfaction with online learning. A structured survey was administered to 263 students across various engineering disciplines and academic levels, all of whom had experienced both in-person learning before the pandemic and online learning during the pandemic. Factor analysis and multiple linear regression were employed to analyze the data.

Results: The analysis identified interactions, services, and technology as the main factors positively influencing online learning satisfaction. The regression analysis further revealed that students’ satisfaction is significantly dependent on the availability and quality of online learning services, assessment and interaction tools, and technology.

Discussion: This study highlights the critical factors that enhance engineering students’ satisfaction with online learning. It offers strategies for educators to improve online learning environments, emphasizing the importance of quality services, assessment, and interaction tools. These findings can guide the development of more effective online learning experiences in engineering education.

1 Introduction

During the last decade, many worldwide disruptions have challenged the continuity of the in-class learning process. Since the outbreak of the COVID-19 pandemic, universities around the world have resorted to online learning as a temporary solution to education during the crisis. Online learning can be utilized not only during pandemics but also in response to other risks and disruptions that challenge the continuity of the educational process, such as earthquakes and other natural disasters. In such events, when educational buildings may be damaged and rendered unusable, online learning provides a resilient alternative to ensure the continuity of education.

Given that unforeseen events could disrupt face-to-face learning, the importance of online learning is expected to grow. For example, the spread of the pandemic forced more than 1.7 billion students worldwide to continue their education through online learning (Husain, 2021). Initially, because of the sudden change and the lack of necessary training, not all teachers coped with the situation, leading to unsatisfactory results (Na and Jung, 2021). However, the situation forced instructors to adopt online learning tools in a short time, which required them to be knowledgeable about technology and creative in delivering study material through online platforms (Amin et al., 2002). For example, the University of New South Wales in Sydney developed a pedagogy for construction engineering students that uses Augmented Reality to create an immersive learning experience with accessible tools such as smartphones (Sepasgozar, 2020).

The adoption of online learning in various educational institutes during outbreaks, especially during the pandemic, created a need for several electronic devices, leading to a significant change in the educational field (Hamad, 2022). However, problems such as difficulty or uncertainty in understanding the material or media, or in hearing the lecturer during online lectures, have been reported (Hermiza, 2020). Several studies have explored factors that affect the quality of the learning experience and students’ satisfaction with online learning in the pandemic era, including Palmer and Holt (2009), Zeng and Wang (2021), and Landrum et al. (2021). One of the quality measures of student learning in higher education is student satisfaction (Parahoo et al., 2016). Multiple studies concluded that learners’ perceptions of the effectiveness of a learning experience are a key factor in determining its overall effectiveness, and thus user satisfaction can be used in evaluating the study process (Violante and Vezzetti, 2015). The role of student satisfaction in evaluating the learning experience has been studied widely, for example by Cole et al. (2014), Nagy (2018), Dashtestani (2020), Yu (2022), and Maican et al. (2024).

Students in all parts of the world have experienced a swift transition in their learning environment, suddenly moving from a traditional face-to-face system to online lectures and assessments. After experiencing such a transition, it is crucial to learn and adapt the integration of technology into engineering education, enhancing the resilience of the education process. This adaptation ensures the ability to seamlessly execute potential transitions, preventing any negative experience that limits student learning.

This study is conducted to evaluate this transformative experience and improve it for future potential disruptions that can cause a shift from in-class learning to online modalities. As a result, in this paper, we investigate and develop a framework that represents the relationship between different factors that influence students’ experience of online learning and affect their satisfaction, particularly in engineering disciplines. The remainder of the paper includes a review of the literature, methodology, data analysis, results, discussion, and finally, a conclusion and limitations.

2 Review of the literature

Access to educational institutions is affected by disruptions like natural disasters. Organizations such as UNICEF make various efforts to ensure the continuity of education (Spond et al., 2022). Online learning has the potential to replace traditional methods because it can cope with uncontrollable disruptions of the learning process (Dhawan, 2020). This became especially evident after the COVID-19 pandemic, which caused a major disruption in higher education and resulted in a shift to online learning in many higher education institutes (Aristovnik et al., 2023). While previous studies have highlighted both the challenges and opportunities of online learning (Adedoyin and Soykan, 2020), significant gaps remain in measuring the success of the online learning process and in how to improve it, especially in math- and lab-based disciplines like engineering.

Bourne et al. (2005) identified key challenges in implementing online programs for engineering education, particularly the difficulty of conducting laboratory activities. Despite this, they concluded that online engineering education would eventually be widely accepted, offering quality equivalent to traditional education and broad accessibility. Historically, online engineering education was offered primarily at the graduate level because of the complexity of delivering mathematics and science courses online for undergraduates (Bourne et al., 2005). In fact, conducting laboratory activities online is especially challenging for undergraduate students, yet these activities are crucial for their education (Widharto et al., 2021).

As a result, many studies focus on measuring the effectiveness of online learning. Student satisfaction with online learning is used as an indicator of the quality of the knowledge gained and of the student’s perspective on the achieved success (Puška et al., 2020; Sampson et al., 2010). Student satisfaction is a comprehensive measure for improving learning quality, defined by efficiency and effectiveness (Puriwat and Tripopsakul, 2021), and it represents the difference between learners’ expectations and their actual experiences (Yu, 2022).

The literature covers different categories of factors that affect students’ satisfaction with online learning, including pedagogical factors, students’ demographic information, and technological factors (Adeniyi et al., 2024; Yu, 2022). Pedagogical and student demographic factors have been covered by different studies. For example, it was stated that self-regulation and teacher-student interactions significantly influence student motivation and satisfaction, with students experiencing a blend of dissatisfaction and satisfaction (Zhang and Liu, 2024). On the other hand, Said et al. (2022) applied machine learning and identified quality, interaction, and comprehension as key predictors of student satisfaction, while demographic factors like class, gender, and nationality were found to be insignificant regarding online learning. Blended learning and the use of multiple tools in the teaching process are effective strategies to engage students and support their educational development (Ayari et al., 2012). Puška et al. (2020) examined the relationship between independent factors (self-efficacy, metacognition, strategies, and goal setting) and dependent factors (social dimension and environmental structure). They concluded that these factors directly or indirectly contribute to student satisfaction. The study also noted the importance of considering other influences on student satisfaction, such as age, gender, and previous experience with technology. Alam et al. (2021) developed a framework with five factors to make online learning successful, including instructor, information, learner, system, and institutional factors. A study of Indian university students highlighted the positive impacts of instructor quality, course design, prompt feedback, and student expectations on satisfaction and performance (Gopal et al., 2021). Further research confirmed that self-regulation, self-efficacy, task value, and learning design are crucial for students’ satisfaction (Yalçın and Dennen, 2024). Gachigi et al. (2023) studied post-COVID e-learning and identified course delivery, modes of assessment, sense of belonging, and technological quality as significant predictors of student satisfaction, underscoring the importance of these factors in the design of effective online learning environments. Similarly, during the pandemic, a survey was conducted at the University of Bacau (Romania) to assess the quality of online education. The survey targeted engineering students who required various online learning activities, including lectures, labs, and experiments. It showed general satisfaction with online learning, though some students were dissatisfied due to communication difficulties with instructors and discomfort from prolonged monitor exposure (Radu et al., 2020).

On the other hand, technology emerges as a crucial factor that significantly influences the online learning experience. For example, Prasetya et al. (2020) stated that the speed and reliability of internet connectivity and the quality of the hardware affect students’ satisfaction with the online learning process. Similarly, Dinh and Nguyen (2020) noted that challenges like poor internet quality affect participation and satisfaction, and that students were dissatisfied with online interaction; however, they saw potential for adapting to online methods through improved engagement strategies.

Sun et al. (2008) showed that flexibility and technology play an important role in student satisfaction with online learning. Jiang et al. (2021) identified factors using the Technology Satisfaction Model to show that student satisfaction is strongly linked to their ability to manage computers and online learning platforms. Similarly, Njoroge et al. (2012) studied two aspects of technology in student learning that lead to satisfaction, identifying four key factors: preference, assessment, performance, and proficiency. The study emphasized that the availability and accessibility of technology are crucial when assessing student satisfaction with e-learning technology.

Other technological characteristics covered in the literature that influence students’ satisfaction with online learning include the ease of operating necessary software, streamlined procedures, user-friendly interfaces, and high-quality media (Piccoli et al., 2001; Sun et al., 2008; Suryani et al., 2021).

A non-exhaustive list of recent significant contributions in this area includes Yu (2022), Maican et al. (2024), and AlBlooshi et al. (2023), in addition to the literature reviews by Zhao et al. (2022), Nortvig et al. (2018), Zeng and Wang (2021), and Refae et al. (2021).

In the literature, various factors affecting students’ satisfaction with online learning have been identified. However, no study has yet provided a comprehensive framework that combines technology aspects with instructional and learning design specifically tailored to engineering students. This article aims to fill that research gap by mapping independent variables related to technology and classroom interaction that impact online learning in engineering disciplines. Engineering education involves extensive applications and practices in science, mathematics, and technology, requiring collaboration and engagement in diverse activities and projects. The main contribution of this study is to connect these variables to students’ satisfaction with online learning, using a real case study at a college of engineering in the Gulf Region, and to examine the influence of different characteristics on online education preferences.

3 Methodology

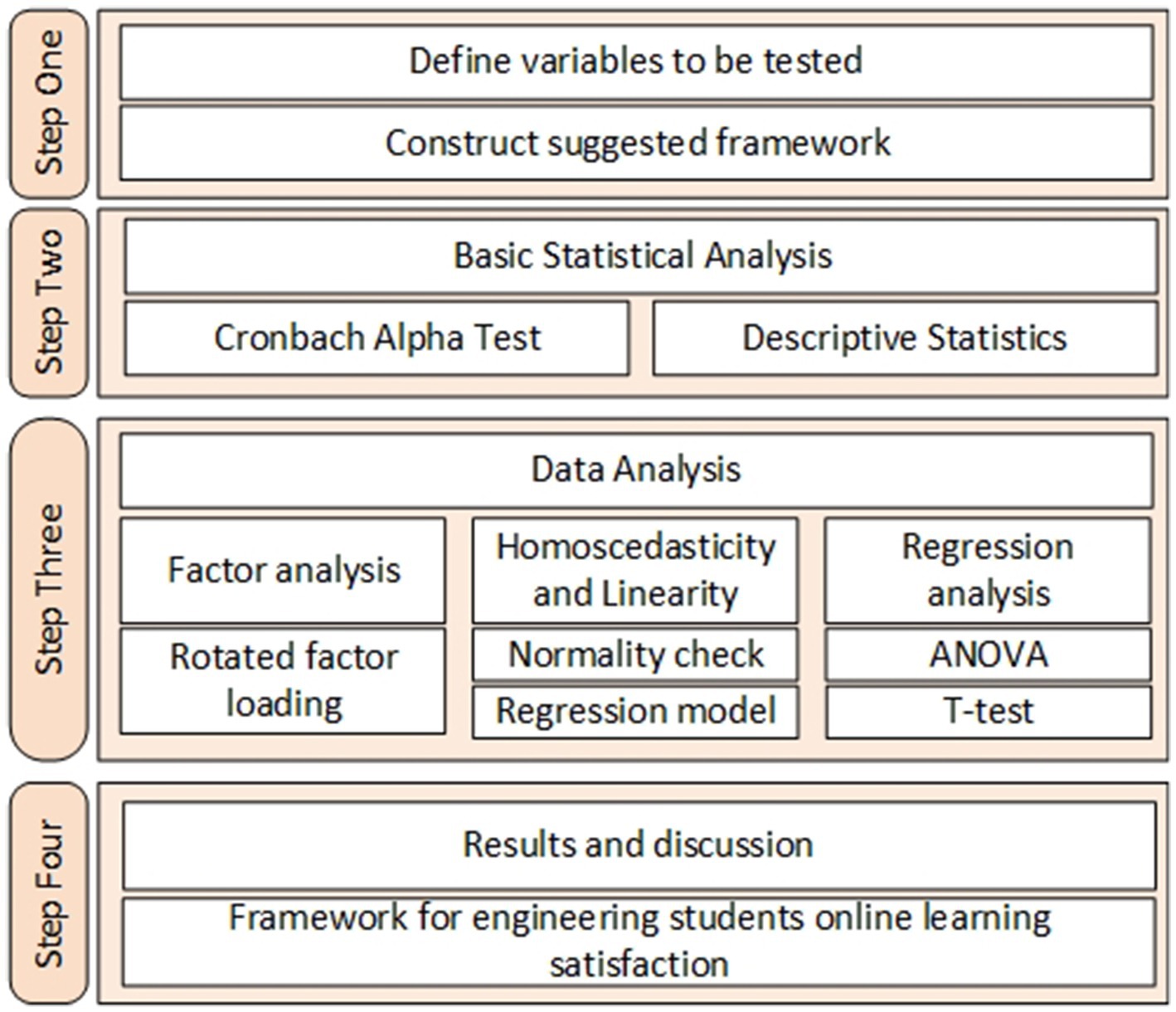

Based on the reviewed literature, seven key variables were identified as contributing to satisfaction with online learning methods. These variables were processed through the research methodology to pinpoint the critical factors affecting the satisfaction of engineering students. The methodology flow, represented in Figure 1, outlines the steps taken to achieve this objective.

Step one: defining variables and constructing the framework

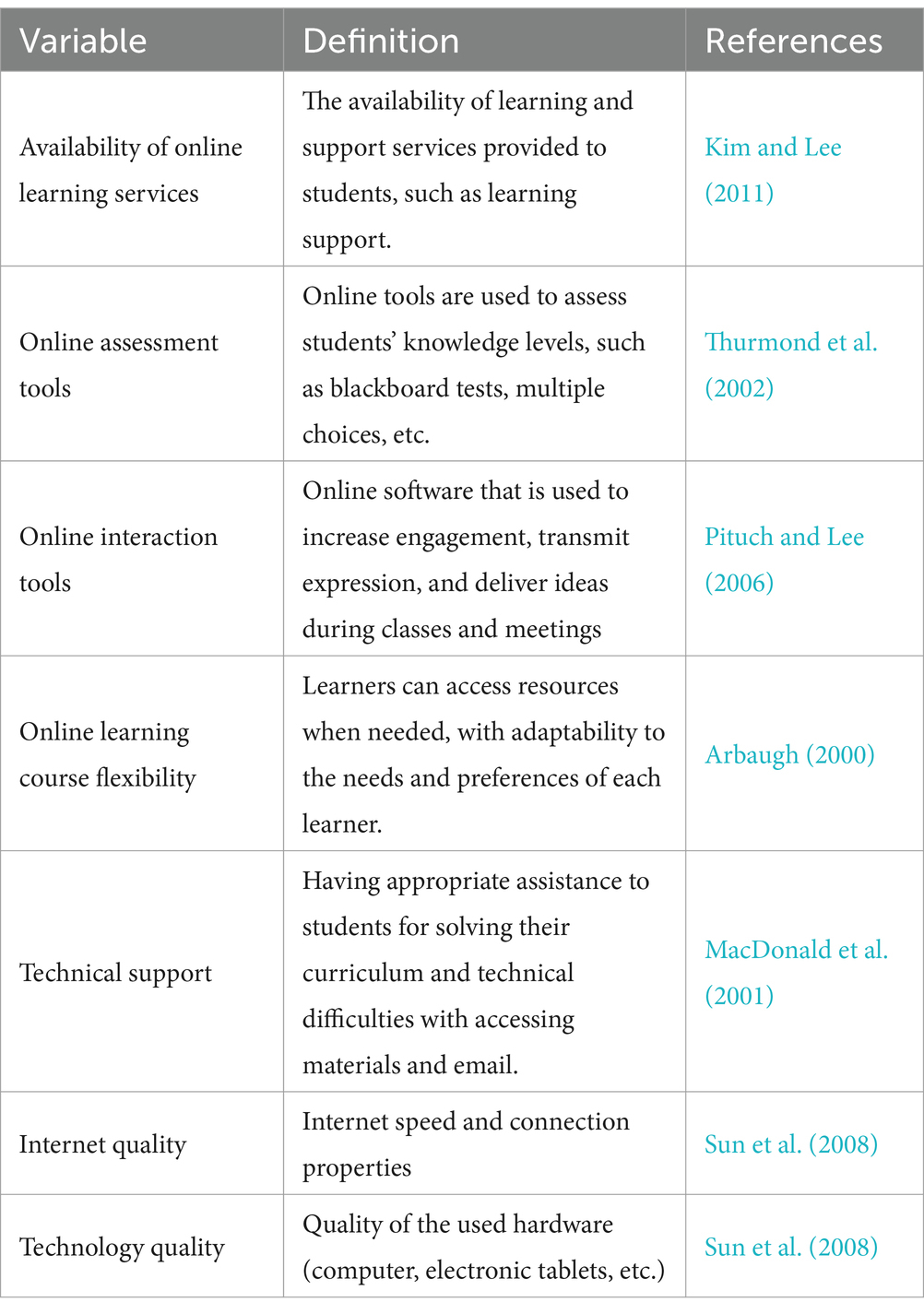

The first step involved defining the variables to be tested and constructing the suggested framework. Seven preliminary factors that potentially affect online learning satisfaction were identified: availability of online learning services, online assessment tools, online interaction tools, online learning course flexibility, technical support, internet quality, and technology quality. Table 1 presents each variable’s name, its definition, and the source from which it is adapted.

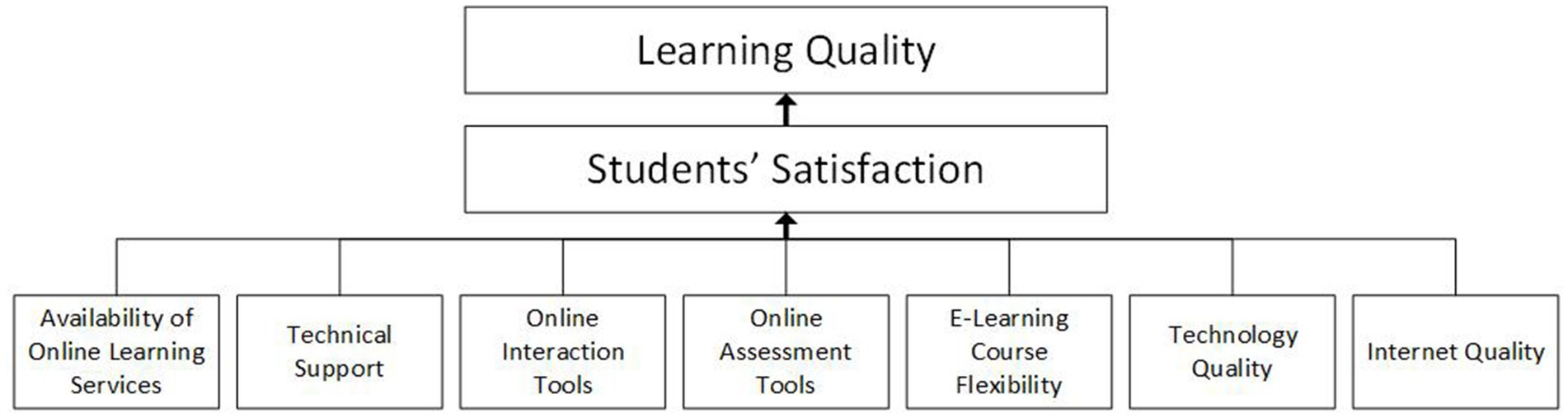

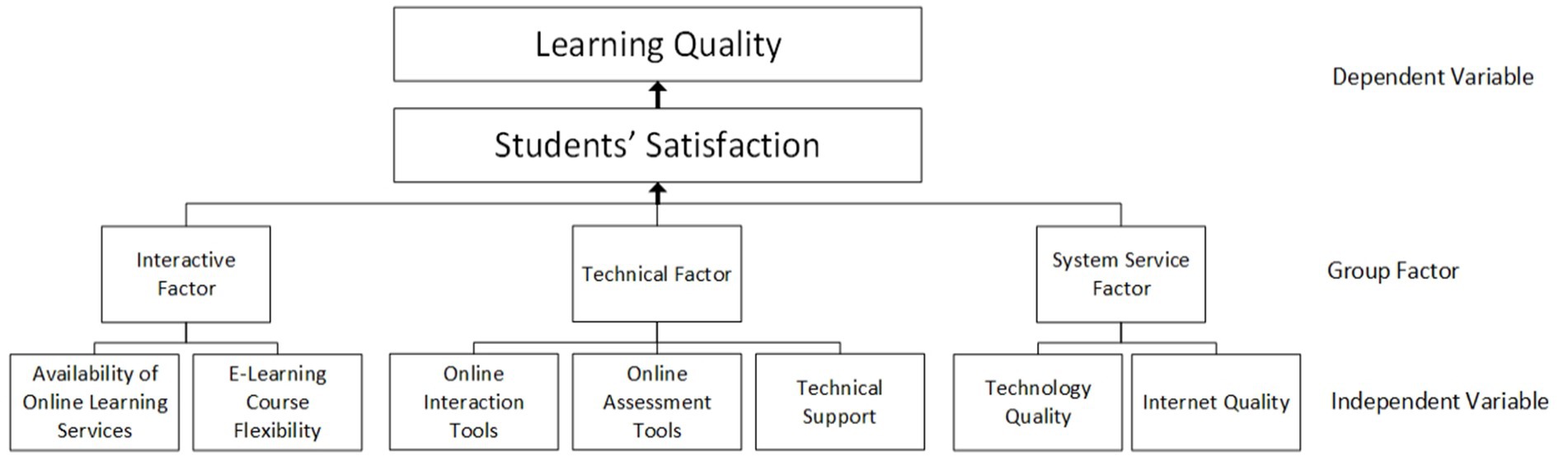

Based on the variable definitions and in line with the defined methodology, the preliminary research model is depicted in Figure 2, which maps the possible relationships between the independent and dependent variables.

Step two: basic statistical analysis

To ensure the validity of the identified variables, basic statistical analyses were conducted. This included Cronbach’s Alpha Test to measure internal consistency and descriptive statistics to provide a summary of the data.

Step three: data analysis

The data analysis phase involved four methods: factor analysis, assumption checks (normality, linearity, and homoscedasticity), regression analysis, and ANOVA and t-tests (Fox, 2015; Montgomery and Runger, 2010). The reason behind each method is as follows:

• Factor analysis: This was conducted to identify the latent factors behind the variables. Factor analysis helps in understanding the underlying relationships between the dependent and independent variables, which is exactly the purpose of this study. In addition, it helps in identifying hidden patterns and relationships between the variables by reducing the number of variables to a smaller set of factors, which makes the data easier to interpret and makes it possible to target these factors with improvement plans.

• Normality, linearity, and homoscedasticity check: These checks verify that the residuals follow a normal distribution, that the relationship between predictors and response is linear, and that the residual variance is constant, all of which are crucial for the validity of the regression model.

• Regression analysis: Multiple linear regression analysis was performed to determine the influence of the latent factors on student satisfaction. Regression analysis is essential for understanding how different variables impact the dependent variable.

• ANOVA and T-test: ANOVA and t-tests were conducted to compare groups stratified by demographic factors such as age, gender, and the number of semesters studied online. These tests help in identifying any significant differences between groups.

Step four: results and discussion

The final step is finalizing and presenting a framework for engineering online learning satisfaction. This framework was developed based on the identified factors and their impact on student satisfaction, providing insights into how to enhance the online learning experience for engineering students.

3.1 Data collection

Following the defined methodology, data collection was required to build a realistic framework. A new survey, designed specifically for this case study, used a structured questionnaire for data collection. The questionnaire is composed of two parts: the respondent information part (age, gender, major, and online learning experience) and the main question part, which uses a 5-point Likert scale (1 for the lowest satisfaction and 5 for the highest satisfaction). To ensure internal consistency in the instrument, three questions were assigned to each of the seven independent variables and to the one dependent variable (overall satisfaction). The reason for including three questions per variable is to facilitate the calculation of Cronbach’s Alpha. This approach is commonly practiced by many scholars, as evidenced by Taber (2018). This resulted in a total of 24 questions presented in the English language, consistent with the instructional language used at the university. Additionally, an open-ended question was included at the end of the survey to gather feedback or comments from the students. This allowed respondents to clarify the reasons behind their responses, facilitating further analysis. The distributed survey is presented in Appendix I.

Before the pandemic, the instruction method relied on the traditional face-to-face teaching method, encompassing both lectures and hands-on labs. The background of these students is mainly from traditional teaching method schools. On campus, students had access to various study areas that were equipped with computer devices tailored to the specific requirements of engineering coursework and featured uninterrupted WIFI connectivity. These designated spaces were accessible at any required time while the campus was open.

However, in the context of this study, students are reflecting on their experiences with online learning during the pandemic, a period in which the educational process shifted to a remote setting, and students engaged in learning activities from home using personal devices. To cope with these challenges, the university management helped by providing laptop devices available to those in need, ensuring that everyone had the necessary technology for their studies during the pandemic and access to materials.

The sampling technique used to collect responses was a combination of Stratified Sampling and Convenience Sampling. The survey was distributed electronically to university students, with access restricted to university email accounts and limited to a single submission per account to ensure the authenticity and relevance of the respondents. Additionally, the survey was specifically sent to students who were admitted before or during the pandemic to ensure they had experienced both online and in-person classes at the university. This criterion was essential to gather comprehensive insights into their experiences and preferences regarding different modes of learning. To collect responses, the student population was divided into distinct subgroups based on academic major and academic classification. This stratification ensured that each major and classification was adequately represented in the survey, so that the collected feedback reflected the population more accurately. Within each subgroup, a random selection process was initially intended to identify potential participants. However, given practical constraints such as time, accessibility, and the need to maximize response rates, Convenience Sampling was applied. This meant that responses were collected from those students who were willing to participate at the time of the survey distribution, and the survey was open for 2 weeks to collect responses. This hybrid approach allowed for a more efficient and practical data collection process while still striving to maintain a representative sample from each demographic group.

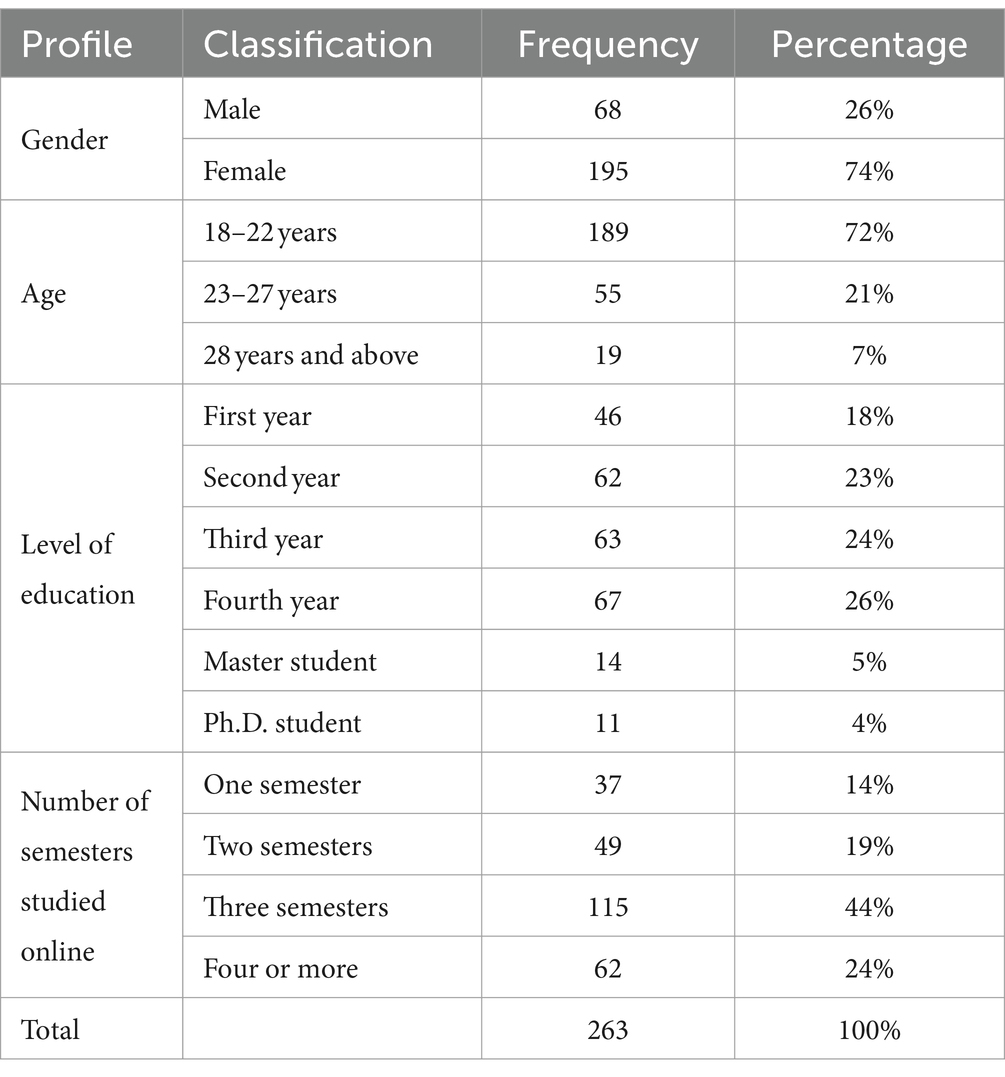

As a result, a total of 263 engineering students participated in the survey. These students are enrolled in the engineering programs at the university, which comprise nine undergraduate programs and 11 graduate programs. The undergraduate programs include Architecture, Chemical Engineering, Civil Engineering, Computer Science, Computer Engineering, Electrical Engineering, Industrial Engineering, Mechanical Engineering, and Mechatronics Engineering. The graduate programs include Architecture, Chemical Engineering, Civil Engineering, Computer Science, Computer Engineering, Electrical Engineering, Engineering Management, Environmental Engineering, Industrial Engineering, Material Science, and Mechanical Engineering.

As shown in Table 2, among the 263 participants, 238 were undergraduate students, 14 were master’s students, and 11 were PhD students, all enrolled in the College of Engineering. The participants were representative of the engineering student population, encompassing a wide range of disciplines and academic levels. However, there were some differences among the three groups in terms of their distribution across the various programs. Due to the significant variation in the number of participants from different classifications (undergraduate, master’s, and PhD), an analysis to identify differences between these groups was not conducted. The collected feedback reveals a significant gender disparity in responses, with 75% coming from female students and 25% from male students. This finding is further validated by examining the distribution of responses by major: 36% of the total responses were from Chemical Engineering students, and 34% were from Computer Science and Engineering students. These figures contrast with the actual population distributions in these majors, where approximately 75 and 60% of the students, respectively, are female.

3.2 Data analysis

Minitab 8.0 was used for data analysis. A Cronbach’s alpha test was first carried out to check the internal consistency of the questionnaire, which is a measure of reliability. As shown in Table 3, Cronbach’s alphas for all variables were >0.6, which indicates that the questionnaire is sufficiently reliable for analysis. Values greater than 0.6 are considered acceptable for exploratory research, while values above 0.7 are generally preferred for established research (Griethuijsen et al., 2015).
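As an illustration of the reliability check described above, the following is a minimal sketch of how Cronbach’s alpha could be computed for one variable measured by three questions. It uses the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). The function name `cronbach_alpha` and the response values are hypothetical and are not taken from the survey data; the study itself used Minitab.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    items: list of k lists, one per question, each holding one score
    per respondent (all lists must have the same length).
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("every item needs one score per respondent")
    # Summed score of each respondent across the k questions.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical 5-point Likert answers of six respondents to the three
# questions of one variable (illustrative values only).
q1 = [4, 5, 3, 4, 2, 5]
q2 = [4, 4, 3, 5, 2, 5]
q3 = [3, 5, 2, 4, 1, 4]
alpha = cronbach_alpha([q1, q2, q3])
print(f"alpha = {alpha:.3f}")
```

A value computed this way would then be compared against the 0.6 and 0.7 thresholds cited above.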

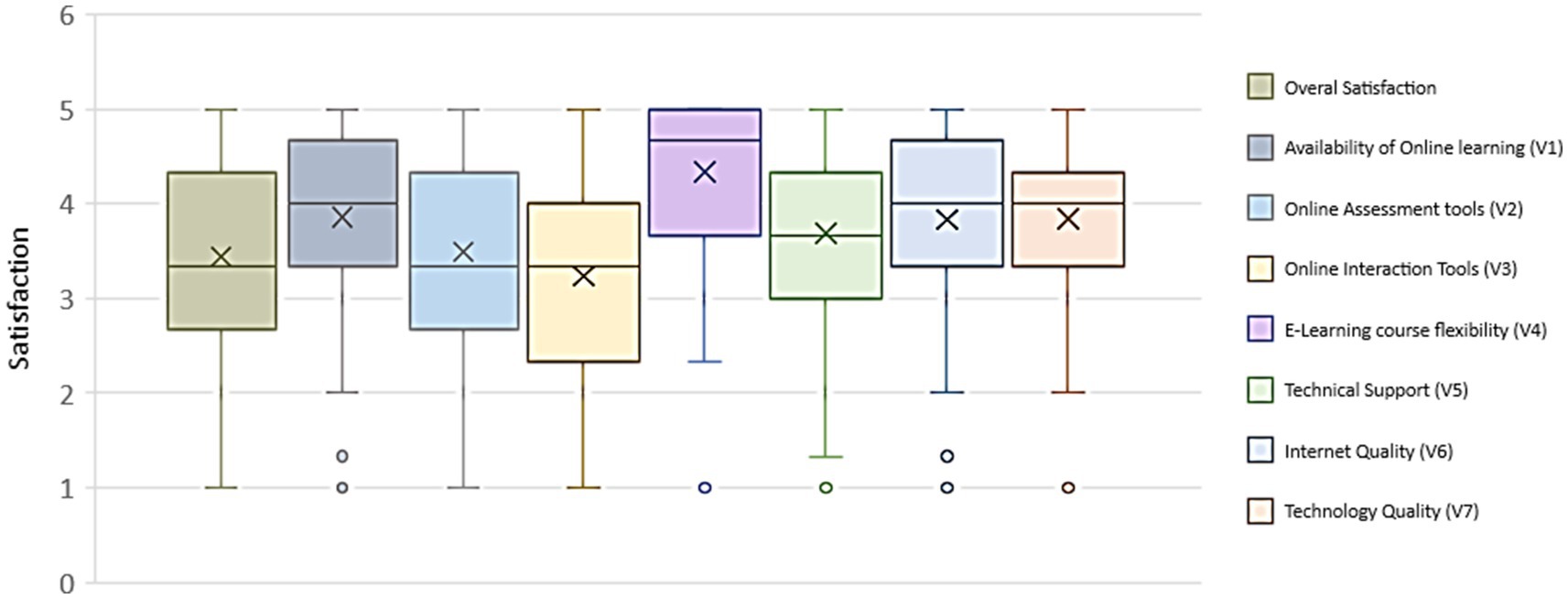

The mean scores for all variables were higher than 3 out of 5, showing that student satisfaction is above the neutral midpoint for all categories. The flexibility of the online learning course was rated highest (4.34) and the online interaction tool lowest (3.24). The overall satisfaction result was 3.44 out of 5. To obtain a clearer picture, a box plot was produced to show the distribution of the data. Figure 3 presents a positive trend in satisfaction with key variables, including the availability of online learning, the flexibility of e-learning courses, technical support, the quality of the Internet, and the quality of technology. Notably, 75% of the data points for these variables are distributed above the value of 3 on the satisfaction scale. This signifies that a substantial majority of respondents express satisfaction levels exceeding the neutral point, indicating positive feelings toward the evaluated variables.

To assess the impact of various student characteristics on satisfaction, we performed a two-sample t-test stratified by gender and a one-way ANOVA based on age group and number of semesters studied. The results indicate that there is no statistically significant effect of classification factors on overall student satisfaction.
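The group comparisons above can be sketched as follows. This is a minimal illustration under stated assumptions: it computes only the test statistics (p-values additionally need the t and F distributions, which a statistics package provides), the t statistic uses the pooled-variance form, which may differ from the setting used in Minitab, and the group scores are hypothetical.

```python
from statistics import mean


def one_way_anova_f(groups):
    """F statistic and degrees of freedom for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def pooled_t(a, b):
    """Two-sample t statistic assuming equal variances (an assumption)."""
    na, nb = len(a), len(b)
    ss = sum((x - mean(a)) ** 2 for x in a) + sum((x - mean(b)) ** 2 for x in b)
    pooled_var = ss / (na + nb - 2)
    return (mean(a) - mean(b)) / (pooled_var * (1 / na + 1 / nb)) ** 0.5


# Hypothetical overall-satisfaction scores (illustrative values only).
group_a = [3.7, 3.3, 4.0, 3.0, 3.7]
group_b = [3.3, 3.0, 4.3, 3.7]
group_c = [3.0, 3.7, 3.3, 4.0]

t_stat = pooled_t(group_a, group_b)
f_two, _, _ = one_way_anova_f([group_a, group_b])
f_three, df1, df2 = one_way_anova_f([group_a, group_b, group_c])
print(t_stat, f_three, df1, df2)
```

With exactly two groups, the ANOVA F statistic equals the square of the pooled t statistic, which is why the t-test is used for gender and ANOVA for factors with more than two levels.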

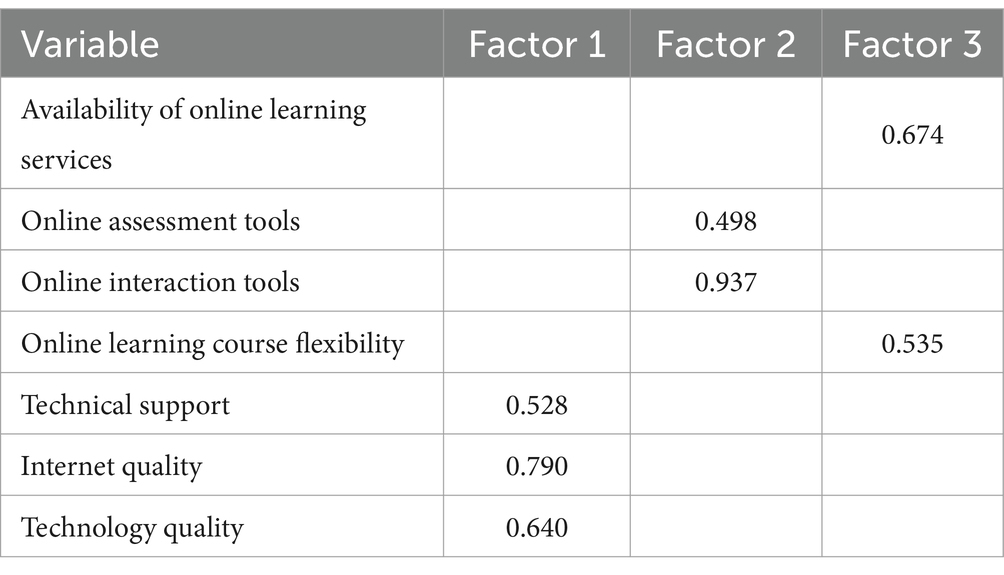

Then, exploratory factor analysis was conducted to identify the underlying structure of the data and to group related variables into factors, thereby simplifying subsequent analysis and interpretation. Exploratory factor analysis was chosen because the factor structure was not known beforehand and needed to be discovered, as suggested by the literature (Howard, 2023). Maximum likelihood extraction was performed to estimate the factors, and Varimax rotation was applied to achieve a simpler factor structure. A factor loading threshold of 0.4 was used to include only variables with significant relationships to the factors, following standard practices in factor analysis (Stevens, 2002). Based on this analysis, Table 4 shows that technical support and internet quality load on factor 1, online assessment tools and online interaction tools load on factor 2, and availability of online learning services and flexibility of online learning courses load on factor 3.
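The 0.4 loading threshold can be read as a simple assignment rule: a variable is attached to the factor on which it loads most strongly, provided the absolute loading reaches the threshold. The sketch below illustrates that rule only. The loadings are hypothetical placeholders and are not the values of Table 4; the names `assign_factors`, `item_a`, and so on are likewise assumptions for illustration.

```python
THRESHOLD = 0.4  # loading cut-off stated in the text


def assign_factors(loadings, threshold=THRESHOLD):
    """Map each variable to the 1-based index of its strongest factor.

    A variable whose largest absolute loading is below the threshold
    is mapped to None (not assigned to any factor).
    """
    assignment = {}
    for variable, row in loadings.items():
        strongest = max(range(len(row)), key=lambda j: abs(row[j]))
        if abs(row[strongest]) >= threshold:
            assignment[variable] = strongest + 1
        else:
            assignment[variable] = None
    return assignment


# Hypothetical rotated loadings on three factors (NOT the study's data).
example_loadings = {
    "item_a": [0.72, 0.15, 0.10],
    "item_b": [0.20, 0.65, 0.05],
    "item_c": [0.12, 0.08, -0.58],
    "item_d": [0.31, 0.28, 0.22],
}
result = assign_factors(example_loadings)
print(result)
```

In this illustration `item_d` stays unassigned because none of its loadings reaches 0.4, while `item_c` is assigned by the magnitude of its negative loading.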

Taking into account the common features of the variables in each factor, the latent factors are named as follows:

• Technical factor: This factor includes technical support, internet quality, and technology quality. These components are integral to the technical infrastructure and support required for effective online learning.

• Interactive factor: This factor includes online assessment tools and online interaction tools. Both elements are central to the interactive aspects of online learning, facilitating engagement, communication, and assessment.

• System service factor: This factor includes the availability of online learning services and the flexibility of online learning courses. These variables reflect the system’s ability to provide accessible and adaptable learning options to students.

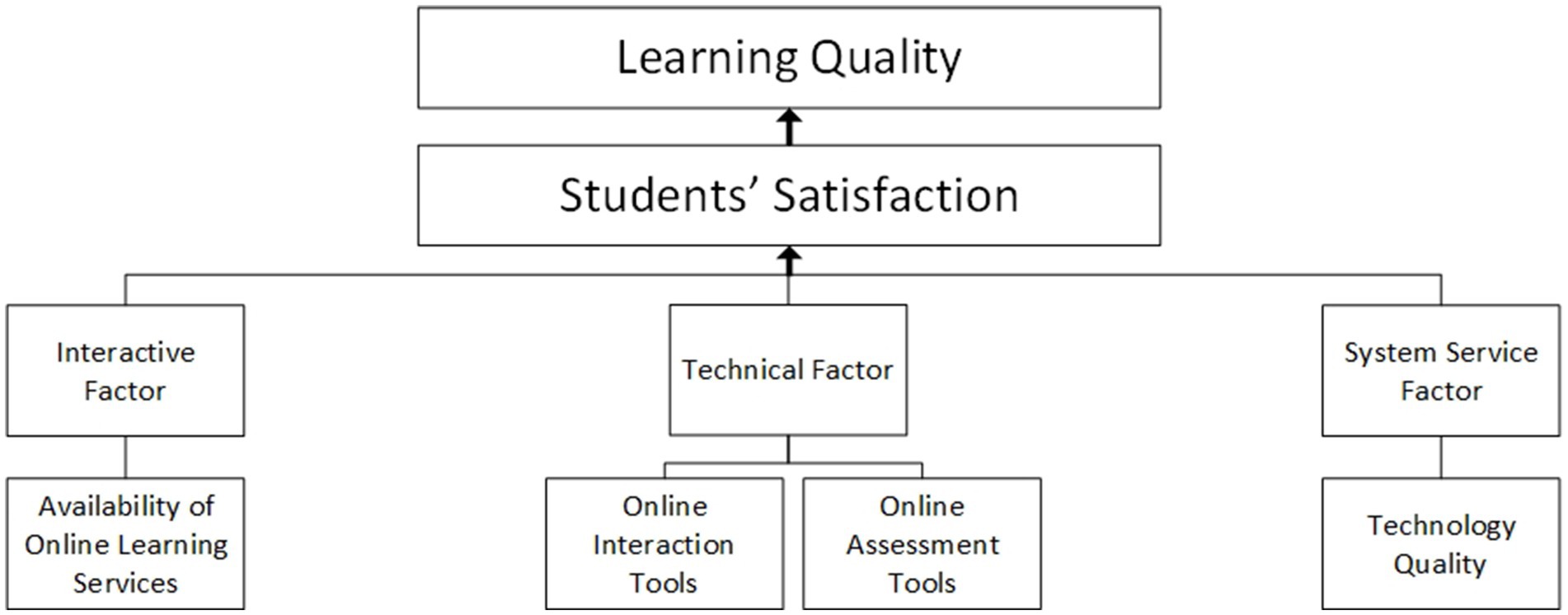

These factors are named according to the defining features of the variables they incorporate, ensuring clarity and relevance to the context of online learning. As a result, Figure 4 presents the updated framework, now incorporating factor analysis, and includes the associated variables to be tested in the upcoming regression analysis.

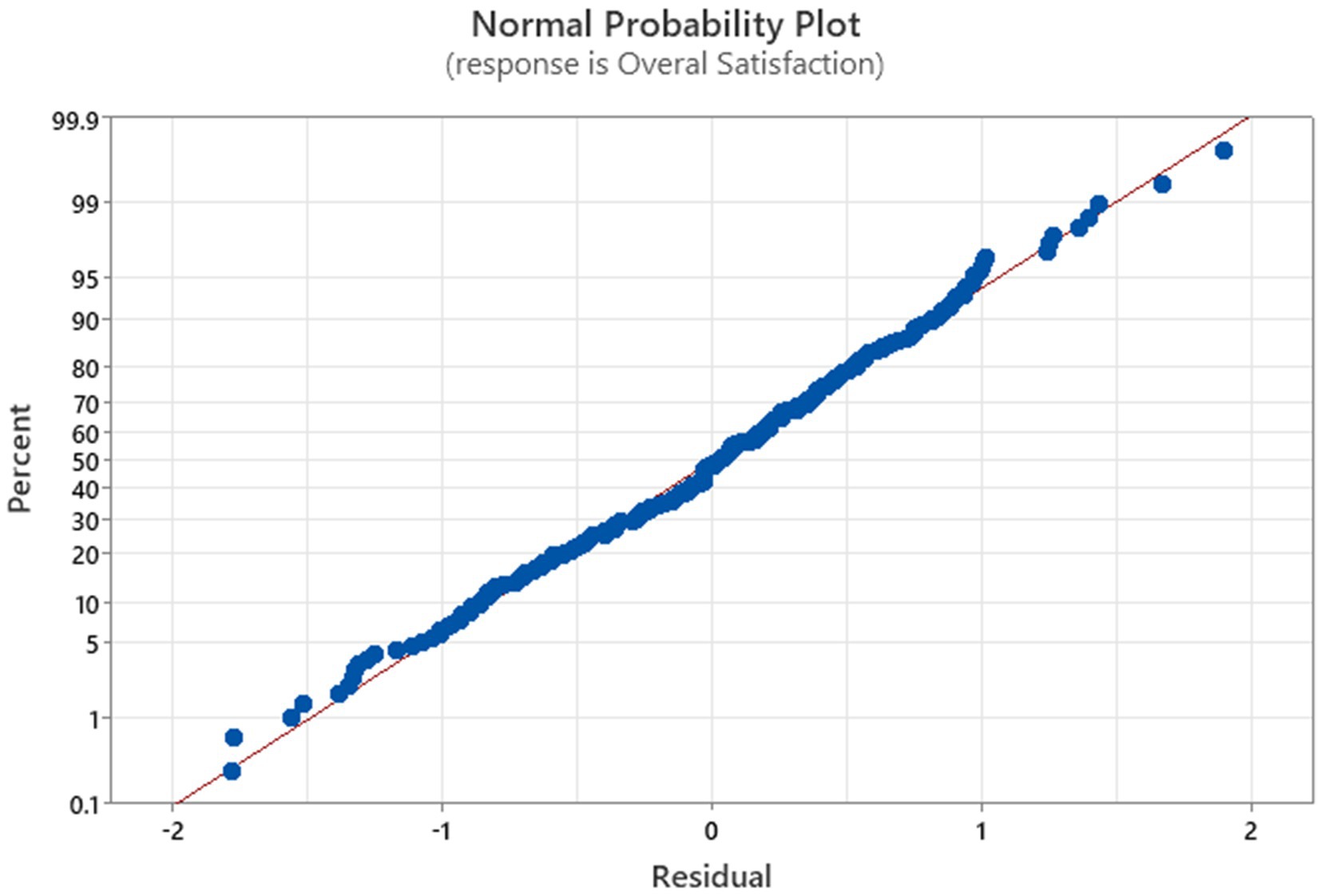

Then a multiple regression analysis was performed to examine the relationship between student satisfaction and the independent variables. As a pre-test on the residuals, a normality test was conducted at α = 0.05. As shown in Figure 5, the normality of the residuals was confirmed (p = 0.299), which supports the use of linear regression analysis.

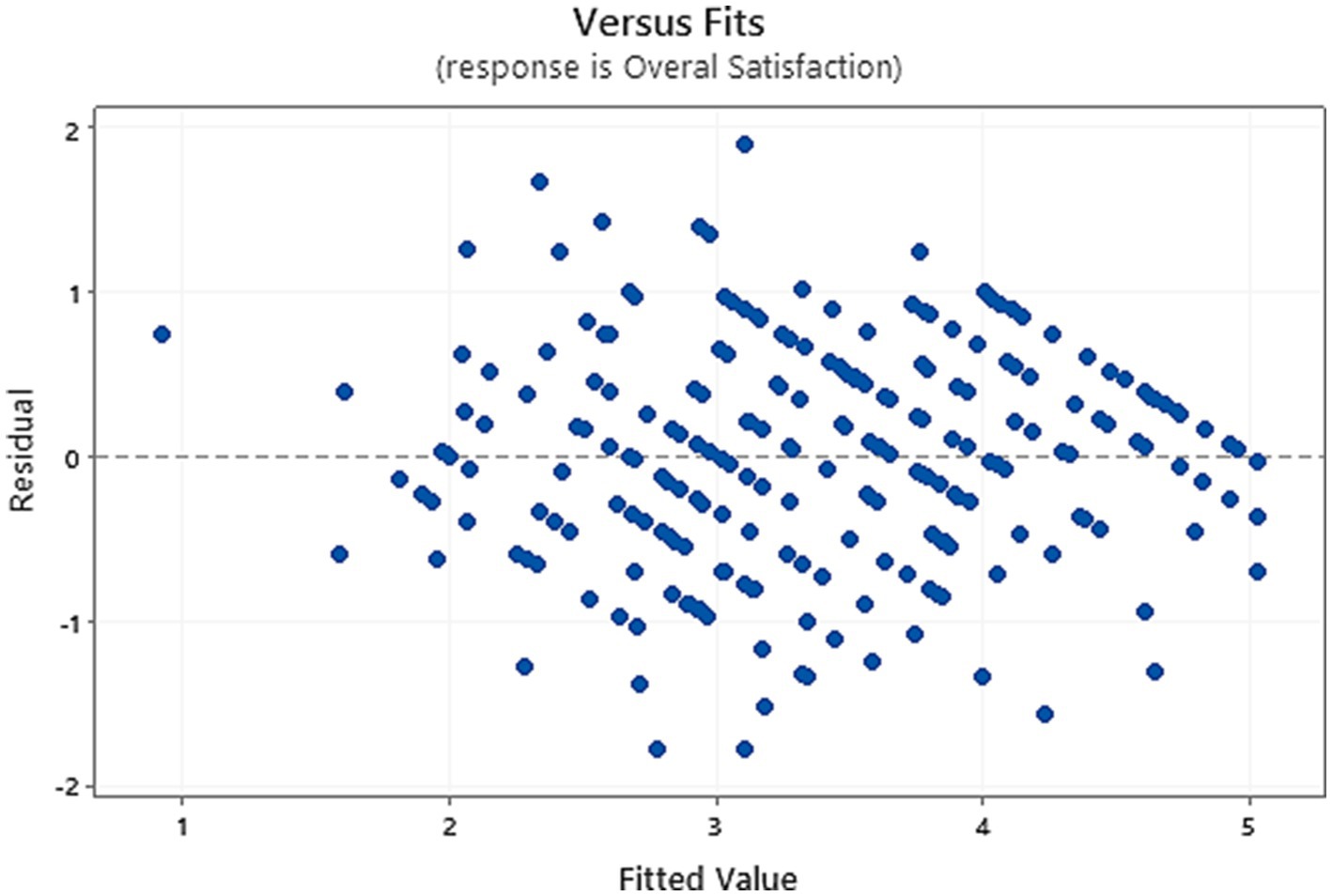

A homoscedasticity test was performed using a residual plot of overall satisfaction vs. fitted values as shown in Figure 6. The residuals were randomly scattered around the horizontal axis with no pronounced patterns, indicating randomness and centering around zero. This suggests that the model fits the data well. Additionally, the residual plot confirms that the assumption of linearity is largely satisfied. The random distribution of residuals around the zero line suggests that the relationship between the predictors and the response variable is well-represented by a linear model. Therefore, the data is suitable for regression analysis, meeting both homoscedasticity and linearity assumptions.

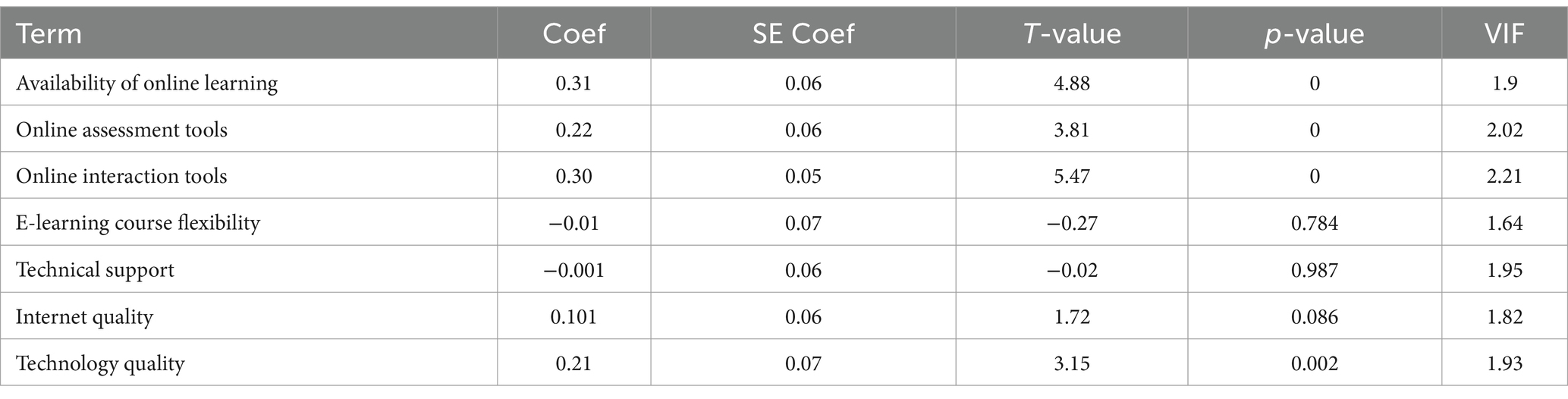

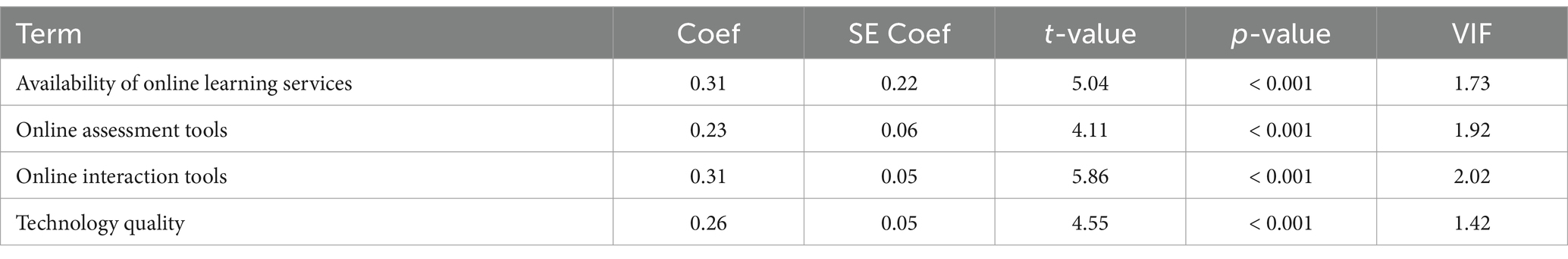

As shown in Table 5, the variance inflation factor (VIF) of every variable is <5, which indicates that there are no significant multicollinearity issues among the variables. However, three independent variables (online learning course flexibility, technical support, and internet quality) were found to be not significant (p > 0.05) in the regression model.
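For readers unfamiliar with the VIF check, the sketch below shows one standard way to obtain it: the VIF of predictor j equals the j-th diagonal element of the inverse of the predictors’ correlation matrix, which is equivalent to 1/(1 − R²ⱼ) from regressing predictor j on the others. The helper names and the predictor values are hypothetical; this is not the Minitab procedure used in the study.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif(predictors):
    """VIF per predictor: diagonal of the inverse correlation matrix."""
    names = list(predictors)
    corr = [[1.0 if a == b else pearson(predictors[a], predictors[b])
             for b in names] for a in names]
    inverse = invert(corr)
    return {name: inverse[i][i] for i, name in enumerate(names)}


# Hypothetical predictor scores (illustrative values only).
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
x3 = [5, 3, 6, 2, 4, 1]
vifs = vif({"x1": x1, "x2": x2, "x3": x3})
print(vifs)
```

Each resulting value would then be compared with the cut-off of 5 used in the text.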

After removing the insignificant variables from the model, the final regression model with four independent variables (availability of online learning services, online assessment tools, online interaction tools, and technology quality) was obtained, as shown in Equation 1.

(R² = 63.83% and R²(adjusted) = 63.13%)

Four independent variables are significant at α = 0.05 (p < 0.05) with no multicollinearity issue among the variables (VIF < 5), which shows the relevance of the regression model using the independent variables (Table 6).

According to the regression analysis, 63.83% of the variance in student satisfaction was explained by the regression model (R² = 63.83%). Because the difference between R² (63.83%) and R²(adjusted) (63.13%) is small, there is no indication of a significant over-fitting issue. Online interaction tools (0.3133) and availability of online learning services (0.3118) contributed more to student satisfaction with online learning than online assessment tools (0.2342) and quality of technology (0.2673).
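The coefficients quoted in the paragraph above can be collected into a single fitted equation. The form below is a reconstruction from those four values only. The intercept is not stated in the text and is therefore left as the symbol β₀, and the variable symbols are assumptions introduced here for readability.

```latex
\hat{S} = \beta_0
        + 0.3118\, x_{\text{availability}}
        + 0.2342\, x_{\text{assessment}}
        + 0.3133\, x_{\text{interaction}}
        + 0.2673\, x_{\text{technology}}
```

Here Ŝ denotes predicted overall satisfaction on the 5-point scale, and each x is the score of the corresponding variable. Read this way, and holding the other variables fixed, a one-point increase in the online interaction tools score is associated with an increase of about 0.31 points in predicted satisfaction.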

4 Results and discussion

First, from the analysis, we can conclude that student characteristics such as gender, age group, and number of semesters studied do not have a statistically significant impact on overall student satisfaction. This suggests that these classification factors do not contribute meaningfully to variations in satisfaction levels among students.

Secondly, we can see that several key factors significantly affect the satisfaction of engineering students with online learning. From the regression analysis, these variables include the availability of online learning services, online assessment tools, online interaction tools, and technology quality, resulting in the framework presented in Figure 7. The framework presents the grouping factors and the variables. The factor analysis was conducted to enhance the reliability of the framework by grouping variables into distinct latent factors. This approach provides a structured overview of the data but does not imply that only the identified variables influence these factors. Other variables may also affect the latent factors, which warrants further investigation. While incorporating a regression analysis with the latent factors as independent variables could yield a more comprehensive understanding of their relationships and effects, the primary focus of this study was on assessing the impact of individual variables. The survey questions were specifically designed to evaluate these variables directly, rather than the latent factors themselves.

In-depth exploration of the latent factors was beyond the scope of this paper. Our primary aim was to analyze the effects of the individual variables. The latent factors were included as additional insights to refine the framework and provide a foundation for future research by other scholars.

The significance of these four variables is justified, as they play a crucial role in shaping the overall perception of online lectures among engineering students. Furthermore, these variables directly influence the effective delivery and interpretation of educational content in online lectures that used to be delivered face-to-face. The other variables, namely technical support, flexibility of the online course, and quality of the Internet, were integral components of the educational process even before the pandemic.

The university has been providing students with comprehensive access to learning materials through platforms such as Blackboard. In addition, a robust technical support system, facilitated by an IT helpdesk, was available to students at all times. Additionally, the campus and various locations throughout the country, including study areas, coffee shops, and, in general, each residence, have consistently offered high-quality Internet access.

Therefore, the analysis suggests that the satisfaction of engineering students with online learning is closely related to these variables, emphasizing the importance of continued support and enhancement of these variables for an optimal online learning experience.

The results indicate that the quality of interactions, communications, and services between students and instructors affects the online learning experience as much as technical quality. This is consistent with the fact that online learning differs from offline learning primarily in terms of interactions, communication, and services, as opposed to the physical environment. Based on students’ feedback in the open-ended question (shown in Appendix I), it is beneficial to use similarity- or likeness-based metaphors of offline learning as much as possible. The advantage lies in reducing the disparity between face-to-face and online learning, particularly in mitigating the loss of facial expressions and interactive elements. This can be achieved by incorporating emoticons or animations in communications to mimic offline interactions, or by presenting a classroom or a meeting room with a graphic layout that conveys the affordances of the interaction tool in the environment. Furthermore, student feedback shows that they expect online communication to be similar to offline communication in classrooms, meeting rooms, and laboratories. The main effort to improve online learning should therefore go toward improving communication clarity and speed, considering that two main measures of communication quality are transmitted information (transmitted entropy) and channel capacity (Lehto and Landry, 2012). For example, a live support service, such as one built on WebEx Board, can help engineering students resolve misunderstandings as they occur. WebEx Board, an interactive whiteboard designed for virtual meetings and presentations, serves as a hub for online collaboration and communication. Upgrading the WebEx Board with a wireless presentation screen, a digital whiteboard, and an audio/video conferencing system can improve online interactions. This enhancement can also capture a virtual image of the room, facilitating nonverbal communication through body language or facial expressions, which is missing in applications such as WebEx or Teams that are only conferencing platforms.
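To make the first of these two measures concrete, the following minimal Python sketch computes transmitted information, T = H(sent) + H(received) - H(sent, received), from a table of sent versus received messages. The function names and the two count tables are hypothetical and purely illustrative; they are not drawn from the survey data.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits, of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def transmitted_information(joint_counts):
    """Transmitted information T = H(sent) + H(received) - H(sent, received).

    joint_counts[i][j] is how often message i was sent and message j
    was understood by the receiver.
    """
    total = sum(sum(row) for row in joint_counts)
    joint = [[c / total for c in row] for row in joint_counts]
    p_sent = [sum(row) for row in joint]            # row marginals
    p_received = [sum(col) for col in zip(*joint)]  # column marginals
    h_joint = entropy([p for row in joint for p in row])
    return entropy(p_sent) + entropy(p_received) - h_joint


# Hypothetical counts for a two-message channel (illustrative only).
clear_channel = [[50, 0], [0, 50]]    # every message understood correctly
noisy_channel = [[25, 25], [25, 25]]  # received message unrelated to sent one

print(transmitted_information(clear_channel))  # 1.0 bit per message
print(transmitted_information(noisy_channel))  # 0.0 bits per message
```

Channel capacity is the maximum rate at which such information can be transmitted over a channel, so a tool that raises transmitted information per exchange (clarity) or shortens each exchange (speed) moves communication closer to that limit.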

As a result, this adaptation makes it easier and more efficient to improve online learning satisfaction among engineering students. However, improving technical quality takes time and money. Moreover, enhancing online learning satisfaction presents a challenge in training users, both instructors and students, on the specialized tools and pedagogy tailored for online education. The use of multiple tools often leads to difficulties in navigation and utilization.

Linear regression analysis found that about two-thirds of the variation in online learning satisfaction is explained by the quality of online learning services, online assessment tools, online interaction tools, and quality of technology. Interestingly, software components such as the availability of online learning services and online interaction tools contribute more to online learning satisfaction than technical quality. Evaluation remains a challenge in online learning, even though it is one of the significant factors in the regression model. There is no perfect way to monitor a test because of issues such as cheating, privacy, and system failure, which depend on context, culture, or technology.
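As an illustration of what "about two-thirds" means in a regression setting, the sketch below fits an ordinary least squares model with four predictors and reports the coefficient of determination R^2, the share of variance in the response that the model explains. All data are synthetic: the 1 to 5 response scale, the weights, and the noise level are assumptions chosen for illustration and do not reproduce the study's dataset or coefficients. Only the number of simulated respondents (263) mirrors the sample size.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_ols(rows, y):
    """Return [intercept, b1, ..., bk] from the normal equations X'X b = X'y."""
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * yi for x, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


def r_squared(rows, y, coef):
    """R^2 = 1 - SS_residual / SS_total for the fitted coefficients."""
    pred = [coef[0] + sum(c * v for c, v in zip(coef[1:], r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, pred))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Synthetic survey: four predictors rated 1-5 by 263 simulated respondents.
random.seed(0)
names = ["services", "assessment", "interaction", "technology"]
weights = [0.4, 0.3, 0.3, 0.2]  # hypothetical effect sizes, not the paper's
rows = [[random.randint(1, 5) for _ in names] for _ in range(263)]
y = [0.5 + sum(w * v for w, v in zip(weights, r)) + random.gauss(0, 0.6)
     for r in rows]

coef = fit_ols(rows, y)
print("coefficients:", [round(c, 2) for c in coef])
print("R^2:", round(r_squared(rows, y, coef), 2))
```

An R^2 near 0.66 would correspond to the two-thirds figure: the four predictors together account for that share of the variance in satisfaction, and the remainder is left to factors outside the model.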

Technology improvements based on virtual reality, artificial intelligence, machine learning, and the fifth-generation mobile network (5G) are expected to partially reduce the aforementioned issues (communication barriers, assessment invigilation, etc.) (Kumar et al., 2022).

Although online learning is not new, it had not been implemented worldwide until the COVID-19 pandemic broke out. The pandemic pushed societies to utilize technological advancements. As at most universities around the world, online learning was implemented urgently and without prior preparation. This study showed that students were fairly satisfied with online learning, although it needs improvements in services, tools, technologies, and assessments. Without a doubt, online learning is not just a short-lived trend due to COVID-19, and it is expected to continue alongside offline learning, with each mode compensating for the other’s drawbacks. The role of online learning is expected to increase even after the pandemic ends.

Finally, following a comprehensive review of the relevant literature, we have identified a notable gap in research concerning the impact of various factors on engineering students, particularly those engaged in advanced mathematical and laboratory-based coursework. To date, there appears to be no study that specifically examines how variables such as the availability of online learning services, online assessment tools, online interaction tools, and technology quality affect engineering students who traditionally rely on face-to-face instruction due to the complex and hands-on nature of their studies. This gap is significant given that engineering education has always involved in-person interactions with the practical and mathematical aspects of the discipline. Therefore, any attempt to compare our findings with existing research may not be appropriate, as our study is the first of its kind in its exploration of this relatively unexplored area. The novelty of this study lies in its focus on understanding how engineering students interact with online learning environments and identifying critical factors that influence their online educational experience. Key areas of interest include the availability and effectiveness of online learning services, the utility of online assessment tools, the functionality of online interaction tools, and the overall quality of technology used in online learning settings. These factors are crucial for developing effective online learning strategies tailored to the needs of engineering students.

5 Conclusion and limitations

This research presents a framework for understanding the factors influencing online learning satisfaction in engineering disciplines. The study utilized real-case data from a college of engineering, with a total of 263 students participating from different engineering disciplines. These students engaged in both online and in-person learning throughout their studies. Various statistical methods were employed, including Cronbach’s alpha test, descriptive statistics, factor analysis, normality checks, regression modeling, ANOVA, and t-tests. The findings indicate that satisfaction with online learning was consistent across different student groups, with no significant differences based on demographic factors such as age, gender, or duration of online learning experience. Through exploratory factor analysis, the study identified technical factors, interactive factors, and system service factors as key variables enhancing online learning satisfaction. After conducting regression analysis and removing insignificant variables, the final model revealed that four key independent variables significantly impact satisfaction: availability of online learning services, online assessment tools, online interaction tools, and technology quality. This comprehensive framework, which integrates both factor and regression analyses, is designed to improve students’ satisfaction with online learning by focusing on these critical factors.
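Of the methods listed above, Cronbach’s alpha is the most compact to state: for a scale of k items, alpha = k / (k - 1) * (1 - sum of item variances / variance of the total score). The sketch below is a minimal illustration of that formula, assuming sample variances and a small table of made-up ratings; it is not the study's questionnaire or its reliability result.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                                   # number of items
    item_vars = [variance(col) for col in zip(*responses)]  # per-item variance
    total_var = variance([sum(r) for r in responses])       # variance of totals
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 ratings from five respondents on three items of one scale.
ratings = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 3, 2],
    [1, 2, 1],
]

print(round(cronbach_alpha(ratings), 3))
```

Values close to 1 indicate that the items move together and can reasonably be summed into a single score, which is why the test is typically run before factor analysis and regression.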

While this study offers valuable insights into factors affecting engineering students’ satisfaction with online learning, it has several limitations that should be addressed in future research. First, future research should include a broader range of variables, such as the impact of long-term technology use and its influence on student interactions. Second, the study’s focus on the Gulf Region may not be generalizable to other regions; thus, surveying a larger and more diverse population could validate the findings. Additionally, the study did not explore the perspectives of academic educators, which could offer valuable insights into the perceived effectiveness of online teaching methods. Moreover, a comparative study should examine these factors across various disciplines to identify any differences or similarities in their impact on student satisfaction. Finally, future research should examine personal and intellectual factors, such as individual traits and mental or physical challenges, which could significantly impact the online learning experience. Addressing these limitations will enhance understanding and contribute to the development of more effective online learning strategies.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The research protocol was reviewed and approved by the Institutional Review Board (IRB) at Qatar University under the reference number QU-IRB 042/2024-EM. Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

MA-K: Writing – original draft. AA: Writing – original draft. MT: Writing – original draft. AK: Writing – original draft. MA: Writing – review & editing. PC: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The initial draft of this paper was submitted as a partial fulfillment of the graduate course Applied Statistics Techniques DENG 604 offered at the College of Engineering, Qatar University, Doha, Qatar. The authors acknowledge the support of the Academic Advising Office in the College of Engineering of Qatar University for providing access to students’ contact information for survey distribution purposes.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1445885/full#supplementary-material

References

Adedoyin, O. B., and Soykan, E. (2020). COVID-19 pandemic and online learning: the challenges and opportunities. Interact. Learn. Environ. 31, 863–875. doi: 10.1080/10494820.2020.1813180

Adeniyi, I. S., Hamad, A., Mohd, N., Adewusi, O. E., Unachukwu, C. C., Osawaru, B., et al. (2024). Reviewing online learning effectiveness during the COVID-19 pandemic: a global perspective. Int. J. Sci. Res. Archive 11, 1676–1685.

Alam, M. M., Ahmad, N., Naveed, Q. N., Patel, A., Abohashrh, M., and Khaleel, M. A. (2021). E-learning services to achieve sustainable learning and academic performance: an empirical study. Sustainability 13:2653. doi: 10.3390/su13052653

AlBlooshi, S., Smail, L., Albedwawi, A., al, M., and AlSafi, M. (2023). The effect of COVID-19 on the academic performance of Zayed university students in the United Arab Emirates. Front. Psychol. 14:1199684. doi: 10.3389/fpsyg.2023.1199684

Amin, M., Sibuea, A. M., and Mustaqim, B. (2022). The effectiveness of online learning using E-learning during pandemic COVID-19. J. Educ. Technol. 6, 247–257. doi: 10.23887/jet.v6i2.44125

Arbaugh, B. (2000). Virtual classroom characteristics and student satisfaction with internet-based MBA courses. J. Manag. Educ. 24, 32–54. doi: 10.1177/105256290002400104

Aristovnik, A., Karampelas, K., Umek, L., and Ravšelj, D. (2023). Impact of the COVID-19 pandemic on online learning in higher education: a bibliometric analysis. Front. Educ. 8:1225834. doi: 10.3389/feduc.2023.1225834

Ayari, M. A., Ayari, S., and Ayari, A. (2012). Effects of use of technology on students' motivation. Journal of teaching and education 1, 407–412.

Bourne, J., Harris, D., and Mayadas, F. (2005). Online engineering education: learning anywhere, anytime. J. Eng. Educ. 94, 131–146. doi: 10.1002/j.2168-9830.2005.tb00834.x

Cole, M. T., Shelley, D. J., and Swartz, L. B. (2014). Online instruction, e-learning, and student satisfaction: a three year study. Int. Rev. Res. Open Distrib. Learning 15:1748. doi: 10.19173/irrodl.v15i6.1748

Dashtestani, R. (2020). Online courses in the higher education system of Iran: a stakeholder-based investigation of pre-service teachers’ acceptance, learning achievement, and satisfaction. Int. Rev. Res. Open Distrib. Learning 21, 117–142. doi: 10.19173/irrodl.v21i4.4873

Dhawan, S. (2020). Online learning: a panacea in the time of COVID-19 crisis. J. Educ. Technol. Syst. 49, 5–22. doi: 10.1177/0047239520934018

Dinh, L. P., and Nguyen, T. T. (2020). Pandemic, social distancing, and social work education: students’ satisfaction with online education in Vietnam. Soc. Work. Educ. 39, 1074–1083. doi: 10.1080/02615479.2020.1823365

Fox, J. (2015). Applied regression analysis and generalized linear models. London: Sage Publications.

Gachigi, P. N., Ngunu, S., Okoth, E., and Alulu, L. (2023). Predictors of students’ satisfaction in online learning. Int. J. Res. Innov. Soc. Sci. 7, 1657–1669.

Gopal, R., Singh, V., and Aggarwal, A. (2021). Impact of online classes on the satisfaction and performance of students during the pandemic period of COVID 19. Educ. Inf. Technol. 26, 6923–6947.

Griethuijsen, V., Ralf, A. L. F., Eijck, V., Michiel, H., Helen, D. B., Perry, S., et al. (2015). Global patterns in students’ views of science and interest in science. Res. Sci. Educ. 45, 581–603. doi: 10.1007/s11165-014-9438-6

Hamad, W. (2022). Understanding the foremost challenges in the transition to online teaching and learning during COVID-19 pandemic: a systematic literature review. J. Educ. Technol. Online Learning 5, 393–410.

Hermiza, M. (2020). The effect of online learning on university students' learning motivation. JPP 27, 42–47. doi: 10.17977/um047v27i12020p042

Howard, M. C. (2023). A systematic literature review of exploratory factor analyses in management. J. Bus. Res. 164:113969. doi: 10.1016/j.jbusres.2023.113969

Husain, A. S. (2021). Education: the future of digital education. Available at: https://www.dawn.com/news/1636577 (Accessed June 01, 2024).

Jiang, H., Islam, A. Y. M., Gu, X., and Spector, J. M. (2021). Online learning satisfaction in higher education during the COVID-19 pandemic: a regional comparison between eastern and Western Chinese universities. Educ. Inf. Technol. 26, 6747–6769. doi: 10.1007/s10639-021-10519-x

Kim, J. M., and Lee, W. G. (2011). Assistance and possibilities: analysis of learning-related factors affecting the online learning satisfaction of underprivileged students. Comput. Educ. 57, 2395–2405. doi: 10.1016/j.compedu.2011.05.021

Kumar, P. P., Thallapalli, R., Akshay, R., Sai, K. S., Sai, K. S., and Srujan, G. S. (2022). State-of-the-art: implementation of augmented reality and virtual reality with the integration of 5G in the classroom. AIP Conference Proceedings, Vol. 2418. (AIP Publishing).

Landrum, B., Bannister, J., Garza, G., and Rhame, S. (2021). A class of one: students’ satisfaction with online learning. J. Educ. Bus. 96, 82–88. doi: 10.1080/08832323.2020.1757592

Lehto, M. R., and Landry, S. J. (2012). Introduction to human factors and ergonomics for engineers. CRC Press.

MacDonald, C., Stodel, E., Farres, L., Breithaupt, K., and Gabriel, M. (2001). The demand-driven learning model: a framework for web-based learning. Internet High. Educ. 4, 9–30. doi: 10.1016/S1096-7516(01)00045-8

Maican, C. I., Cazan, A. M., Cocoradă, E., Dovleac, L., Lixăndroiu, R. C., Maican, M. A., et al. (2024). The role of contextual and individual factors in successful e-learning experiences during and after the pandemic–a two-year study. J. Comput. Educ. 14, 1–36. doi: 10.1007/s40692-024-00323-0

Montgomery, D. C., and Runger, G. C. (2010). Applied statistics and probability for engineers. New York, NY: John Wiley and Sons.

Na, S., and Jung, H. (2021). Exploring university instructors’ challenges in online teaching and design opportunities during the COVID-19 pandemic: a systematic review. Int. J. Learn. Teach. Educ. Res. 20, 308–327. doi: 10.26803/ijlter.20.9.18

Nagy, J. T. (2018). Evaluation of online video usage and learning satisfaction: an extension of the technology acceptance model. Int. Rev. Res. Open Distrib. Learn. 19:2886. doi: 10.19173/irrodl.v19i1.2886

Njoroge, J., Norman, A., Reed, D., and Suh, I. (2012). Identifying facets of technology satisfaction: measure development and application. J. Learn. Higher Educ. 8, 7–17.

Nortvig, A.-M., Petersen, A. K., and Balle, S. H. (2018). A literature review of the factors influencing e-learning and blended learning in relation to learning outcome, student satisfaction and engagement. Electr. J. E-learning 16, 46–55.

Palmer, S. R., and Holt, D. M. (2009). Examining student satisfaction with wholly online learning. J. Comput. Assist. Learn. 25, 101–113. doi: 10.1111/j.1365-2729.2008.00294.x

Parahoo, S. K., Santally, M. I., Rajabalee, Y., and Harvey, H. L. (2016). Designing a predictive model of student satisfaction in online learning. J. Mark. High. Educ. 26, 1–19. doi: 10.1080/08841241.2015.1083511

Piccoli, G., Ahmad, R., and Ives, B. (2001). Web-based virtual learning environments: a research framework and a preliminary assessment of effectiveness in basic IT skills training. MIS Q. 25:401. doi: 10.2307/3250989

Pituch, K., and Lee, Y.-k. (2006). The influence of system characteristics on e-learning use. Comput. Educ. 47, 222–244. doi: 10.1016/j.compedu.2004.10.007

Prasetya, T. A., Harjanto, C. T., and Setiyawan, A. (2020). Analysis of student satisfaction of e-learning using the end-user computing satisfaction method during the COVID-19 pandemic. J. Phys. Conf. Ser. 1700:012012. doi: 10.1088/1742-6596/1700/1/012012

Puriwat, W., and Tripopsakul, S. (2021). The impact of e-learning quality on student satisfaction and continuance usage intentions during covid-19. Int. J. Inf. Educ. Technol. 11, 368–374. doi: 10.18178/ijiet.2021.11.8.1536

Puška, A., Puška, E., Dragić, L., Maksimović, A., and Osmanović, N. (2020). Students’ satisfaction with E-learning platforms in Bosnia and Herzegovina. Technol. Knowl. Learn. 26, 173–191. doi: 10.1007/s10758-020-09446-6

Radu, M.-C., Schnakovszky, C., Herghelegiu, E., Ciubotariu, V.-A., and Cristea, I. (2020). The impact of the COVID-19 pandemic on the quality of educational process: a student survey. Int. J. Environ. Res. Pub. Health 17:7770. doi: 10.3390/ijerph17217770

Refae, E., Awad, G., Kaba, A., and Eletter, S. (2021). Distance learning during COVID-19 pandemic: satisfaction, opportunities and challenges as perceived by faculty members and students. Interactive Technol. Smart Educ. 18, 298–318. doi: 10.1108/ITSE-08-2020-0128

Said, B., Ahmed, A.-S., Abdel-Salam, G., Abu-Shanab, E., and Alhazaa, K. (2022). “Factors affecting student satisfaction towards online teaching: a machine learning approach” in Digital economy, business analytics, and big data analytics applications. ed. S. G. Yaseen (Springer), 309–318.

Sampson, P. M., Leonard, J., Ballenger, J. W., and Coleman, J. C. (2010). Student satisfaction of online courses for educational leadership. Online J. Dist. Learn. Admin. 13, 1–12.

Sepasgozar, S. M. E. (2020). Digital twin and web-based virtual gaming technologies for online education: a case of construction management and engineering. Appl. Sci. 10:4678. doi: 10.3390/app10134678

Spond, M., Ussery, V., Warr, A., and Dickinson, K. J. (2022). Adapting to major disruptions to the learning environment: strategies and lessons learnt during a global pandemic. Med. Sci. Educ. 32, 1173–1182. doi: 10.1007/s40670-022-01608-8

Stevens, J. (2002). Applied multivariate statistics for the social sciences, Vol. 4. Mahwah, NJ: Lawrence Erlbaum Associates.

Sun, P.-C., Tsai, R., Finger, G., Chen, Y.-Y., and Yeh, D. (2008). What drives a successful e-learning? An empirical investigation of the critical factors influencing learner satisfaction. Comput. Educ. 50, 1183–1202. doi: 10.1016/j.compedu.2006.11.007

Suryani, N. K., Sugianingrat, I. A., and Widani, P. (2021). Student E-learning satisfaction during the COVID-19 pandemic in Bali, Indonesia. J. Econ. 17, 141–151.

Taber, K. S. (2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi: 10.1007/s11165-016-9602-2

Thurmond, V., Wambach, K., Connors, H., and Frey, B. (2002). Evaluation of student satisfaction: determining the impact of a web-based environment by controlling for student characteristics. Am. J. Dist. Educ. 16, 169–190. doi: 10.1207/S15389286AJDE1603_4

Violante, M. G., and Vezzetti, E. (2015). Virtual interactive e-learning application: an evaluation of the student satisfaction. Comput. Appl. Eng. Educ. 23, 72–91. doi: 10.1002/cae.21580

Widharto, Y., Tahqiqi, M., Rizal, N., Denny, S., and Wicaksono, P. A. (2021). The virtual laboratory for turning machine operations using the goal-directed design method in the production system laboratory as simulation devices. IOP Conf. Series Mat. Sci. Eng. 1072:012076. doi: 10.1088/1757-899x/1072/1/012076

Yalçın, Y., and Dennen, V. P. (2024). An investigation of the factors that influence online learners’ satisfaction with the learning experience. Educ. Inf. Technol. 29, 3807–3836. doi: 10.1007/s10639-023-11984-2

Yu, Q. (2022). Factors influencing online learning satisfaction. Front. Psychol. 13:852360. doi: 10.3389/fpsyg.2022.852360

Zeng, X., and Wang, T. (2021). College student satisfaction with online learning during COVID-19: a review and implications. Int. J. Multidisciplinary Persp. Higher Educ. 6, 182–195. doi: 10.32674/jimphe.v6i1.3502

Zhang, H., and Liu, Y. (2024). Schrödinger's cat-parallel experiences: exploring the underlying mechanisms of undergraduates' engagement and perception in online learning. Front. Psychol. 15:1354641. doi: 10.3389/fpsyg.2024.1354641

Keywords: online learning experience, factor analysis, multiple linear regression, user experience, learning quality, engineering education

Citation: Al-Khatib M, Alkhatib A, Talhami M, Kashem AHM, Ayari MA and Choe P (2024) Enhancing engineering students’ satisfaction with online learning: factors, framework, and strategies. Front. Educ. 9:1445885. doi: 10.3389/feduc.2024.1445885

Edited by:

Silvia F. Rivas, Universidad de Salamanca, Spain

Reviewed by:

Mohammad Najib Jaffar, Islamic Science University of Malaysia, Malaysia

Qiangfu Yu, Xi’an University of Technology, China

Copyright © 2024 Al-Khatib, Alkhatib, Talhami, Kashem, Ayari and Choe. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed Arselene Ayari, arslana@qu.edu.qa
