- 1Department of Psychology, Laboratoire Interdisciplinaire en Neurosciences, Physiologie et Psychologie. Université Paris Nanterre, Paris, France

- 2Departement de Sciences de l’Éducation et de la Formation, Laboratoire d’Innovation et Numerique pour l’Education, Université Côte d’Azur, Nice, France

Introduction: This study looked at how an assessment instruction and test anxiety (TA) can influence divergent thinking (DT) components of creativity in a playful robotic problem-solving task.

Methods: We measured TA and creative performance (DT) under assessment and non-assessment conditions in 122 secondary school students engaged in creative problem solving (CPS).

Results: The aspects of DT (fluidity and originality) tended to be affected by the assessment instruction. Under non-assessment conditions, the learners showed higher fluidity and better originality in the first occurrence of the CPS task. In the second occurrence, time spent on CPS decreased. Moreover, originality was impaired in the second trial, and only students under assessment maintained their engagement in the activity. No correlation was found between TA and DT, and no gender or age differences were observed. The results suggest that TA does not influence the performance of students involved in creative problem-solving processes.

Discussion: We discuss the findings in relation to the specificities of game-based learning. The assessment instruction in playful activities can be perceived as a positive challenge, and even students showing higher levels of test anxiety do not perceive it as a threat. Furthermore, if time constraints are minimized, the impact of assessment instruction on creative performance might be further reduced. The findings of this study open promising perspectives for research on innovative forms of school assessment and creative problem solving.

Creativity and creative problem-solving

Creativity is an important component of the problem-solving process (Besançon et al., 2015; Nazzal and Kaufman, 2020; Runco, 2023). In educational tasks, creative problem-solving (CPS) engages complex cognitive processes including divergent thinking, convergent thinking, and the regulation of the CPS process toward the activity goal. CPS tasks are characterized by a certain level of uncertainty (Runco, 2022) and are often ill-defined (Mumford et al., 2020; Romero et al., 2022), in that they allow different degrees of creative freedom for progressing toward a solution. Greenwald (2000) characterizes ill-defined problems as being “unclear and raises questions about what is known, what needs to be known, and how the answer can be found. Because the problem is unclear, there are many ways to solve it, and the solutions are influenced by one’s vantage point and experience” (p. 28). In this sense, CPS is more complex than well-defined problem-solving tasks, in which the solution can be reached by applying logical reasoning (Jaarsveld et al., 2010). Another difficulty in the study of CPS is the diversity of the epistemological and methodological approaches of CPS studies: from the neuroscience level to the socio-cultural one, different levels of analysis are difficult to consider simultaneously. While cognitive neuroscience advances the understanding of how our brains function in creative tasks (Beaty et al., 2023; Daikoku et al., 2021), psychologists working on creativity have developed a diversity of approaches, including studies at the cognitive level (Berlin et al., 2023; Hao et al., 2021; Sternberg and Lubart, 2023), the behavioral level (Nemiro et al., 2017), the small group level (Cassone et al., 2021; Sarmiento and Stahl, 2008), and the organizational level (Selkrig and Keamy, 2017).
Researchers in the learning sciences often do not consider creativity at the (neuro)cognitive level; they focus on situated learning tasks in ecological contexts (Savic, 2016). In this study, we draw on both creativity studies and CPS studies in the learning sciences to inform the experimental design for the study of CPS.

In educational settings, assessment is an important factor to consider in the study of the learning processes. In this study, we analyze the impact of assessment in creative problem-solving (CPS) in educational settings. For this objective, we consider the assessment impact on both creative performance and divergent thinking (DT).

The Four-Stage Model of Creative Problem-Solving, Engineering Design, and Design Thinking (Besançon et al., 2015; Nazzal and Kaufman, 2020; Runco, 2022) distinguishes problem recognition, idea generation, idea evaluation, and solution validation in the CPS process. In this study, we focus on idea generation by evaluating the DT components of fluidity, flexibility, and originality (Guilford, 1967). The creative margin for idea generation and the corresponding DT scores can be influenced by the level of uncertainty of the task. When a CPS task is performed several times, the degree of uncertainty is reduced, and we can expect a consequent reduction in both the DT scores and the CPS time-on-task. The meta-review of Paek et al. (2021), based on 22 studies, shows an inverted J-shaped relationship between time-on-task and DT performance: DT performance increases with more time, but at some point the growth slows down.

Creativity and divergent thinking

Creativity is a complex human process that engages the participant in generating novel and useful ideas and solutions in a given situation. It comprises divergent thinking and convergent thinking processes (Zhang et al., 2020). Divergent thinking (DT) concerns idea generation, while convergent thinking (CT) focuses on the selection of these ideas in relation to the context of the activity (Wigert et al., 2022).

We consider the DT components of fluidity, flexibility, and originality defined by Guilford (1967) for the Alternate Uses Task (AUT). These three components are widely accepted for evaluating divergent thinking, not only in the AUT but also in other DT tasks. DT is considered an important aspect in the evaluation of creativity (Beaty et al., 2023; Runco and Acar, 2012). DT assessment has mostly been developed through tasks in which participants imagine solutions and express their ideas orally or in writing. Silvia et al. (2008) reviewed different tasks for the assessment of DT and observed that “in a typical verbal task, people are asked to generate unusual uses for common objects (e.g., bricks, knives, and newspapers), instances of common concepts (e.g., instances of things are round, strong, or loud), consequences of hypothetical events (e.g., what would happen if people went blind, shrank to 12 in. tall, or no longer needed to sleep), or similarities between common concepts (e.g., ways in which milk and meat are similar)” (p. 69). Divergent thinking tasks (such as the AUT) analyze a subject’s capacity to generate new ideas, mostly different uses of familiar objects. In these tasks, fluidity, flexibility, and originality are evaluated. The fluidity score refers to the total number of alternative uses found per object, whereas the flexibility score corresponds to the number of different ideas. Finally, the originality score refers to the rarity of each response compared to the total number of responses given by the group or reference sample (Guilford, 1967).

In this study, we focus on DT processes to characterize how negative emotions such as test anxiety can affect idea generation under assessment conditions. Test anxiety is a situation-specific trait: the tendency to appraise performance-evaluative situations as threatening and to react with elevated state anxiety (Spielberger and Vagg, 1995; Putwain et al., 2021; Endler and Kocovski, 2001).

Negative emotions and creative performance

School assessment situations and the exam-related threats generated by achievement-focused situations are known to have a negative influence on performance. Performance assessment instructions can trigger assessment stress and generate somatic reactions (stress response) to the threat of underperforming (Prokofieva et al., 2022). Depending on individual differences, students can experience a decrease in performance on difficult or complex tasks and show a particular behavioral pattern, namely an increase in the time spent on the activity (Prokofieva et al., 2022). However, some studies postulate that the impact of stress can differ according to the value a student attaches to the examination, and some studies focusing on the influence of stressors on creativity report less negative results. Thus, the meta-research examining the interaction of different stressors (mostly linked to evaluative context or time pressure) and creativity states that this impact is not always negative and can also take the form of a curvilinear or even positive relation (Byron and Khazanchi, 2011).

As performance-achievement situations are an integral part of the educational process, it seems especially relevant to study the influence of assessment instruction on the divergent aspects of creativity in problem-solving tasks.

Test anxiety and creativity

Considered a negative academic emotion (Pekrun et al., 2007), test anxiety (TA) is conceptualized as a multidimensional, complex phenomenon comprising cognitive, behavioral, and psychological (affective) aspects (Cassady and Johnson, 2002; Putwain et al., 2021). Most studies agree on the negative influence of test anxiety on school performance via processing deficits such as those of working memory (Owens et al., 2008; Hadwin et al., 2005; von der Embse and Witmer, 2014). The cognitive component of test anxiety (worry) has been shown to be a stronger predictor of impaired performance than the emotional one, whereas social aspects can have both negative and positive influences on performance (von der Embse and Witmer, 2014). Cognitive interference is produced by the evaluative threat, that is, the apprehension of a poor performance in achievement situations. The feeling of this threat produces an attentional bias toward task-irrelevant thoughts, which drains the cognitive resources needed for the efficient accomplishment of a task or resolution of a problem.

This is particularly significant as the capacity to generate new ideas is predominant in creative performance. Furthermore, the results of a meta-analysis (Byron and Khazanchi, 2011) show that anxiety is significantly and negatively related to creative performance, even if this influence can be moderated by different aspects such as the type of task (verbal or figural) or the type of anxiety (state or trait).

Research in the last century showed strong interest in the relationship between negative emotions such as stress or test anxiety and performance in general, and creative performance in particular (Krop et al., 1969; Baer, 1998; Amabile et al., 1990; Landon and Suedfeld, 1972). Most studies found a hindering effect of TA on creativity, especially on its divergent components (Krop et al., 1969; Vidler and Karan, 1975). TA is known to reduce the cognitive resources available for creative problem-solving. This, in turn, results in the use of simpler cognitive strategies requiring a narrow attentional focus (Eysenck et al., 2007). TA is also reported to affect associative memory, which is important for creativity (Madan et al., 2017; Roozendaal et al., 2009; Tejeda and O'Donnell, 2014; Williams et al., 2001).

The mediating role of TA in the relationship between a stressor (assessment instruction) and creative performance advanced by the research (Byron et al., 2010) will be examined in this study. We hypothesize that under assessment instructions, the learners with high test anxiety will show poorer creative performance and DT scores.

Present study

The aim of this study was to examine whether assessment instruction can modify creativity performance in a CPS task in terms of three components of divergent thinking. It also investigated whether TA correlates with the aspects of DT. Gender difference and age covariance were considered in all interactions. The following hypotheses were examined:

• H1. CPS time-on-task is expected to be lower in the second occurrence (A2) than in the first activity (A1).

• H2. Assessment instruction decreases DT scores (fluidity, flexibility, and originality).

• H3. Test anxiety (TA) predicts lower DT scores (fluidity, flexibility, and originality).

Materials and methods

Participants

The sample was drawn from a public secondary school situated in the urban zone of Nice, France. A total of 122 students (aged 11 to 15 years) participated in the study. The mean age of participants was 12.42 years (SD = 0.96). The study was approved by the research ethics committee of Université Côte d’Azur (n° CER-2019-6). Written consent was sought from all students participating in the study.

Materials

Divergent thinking components

In this study, we used an ill-defined CPS task named CreaCube to engage participants and evaluate their DT scores. In this task, DT is evaluated through its three main components (fluency, flexibility, and originality), based on an adaptation of the AUT. The task engages the participants in CPS with modular robotics (Leroy et al., 2021): they are invited to build a vehicle from four different robotic cubes. This ill-defined CPS task requires the participant to explore the material and build different configurations to understand the specific behavior of each robotic cube. The participant should assemble the four modular robotic cubes into a vehicle that moves from a starting point to a finishing point. Instead of asking the participants to imagine an unusual alternative use for familiar objects, as in the AUT (Guilford, 1967), the CreaCube task invites the participant to create a familiar object (a vehicle) using four novel, unfamiliar objects: the robotic cubes. In the CreaCube task, we operationalize the assessment of creativity based on the three main components of DT: fluency, flexibility, and originality (Guilford, 1967). Fluency is operationalized as the total number of different configurations, flexibility as the number of configurations with different shapes, and originality as the number of configurations created by fewer than 5% of the participants (Leroy et al., 2021).
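The operationalization above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the scoring code used in the study: the configuration labels, the `shape_of` mapping, and the function name `dt_scores` are hypothetical, and originality is assumed to be a count of a participant's configurations produced by fewer than 5% of the sample.

```python
from collections import Counter

def dt_scores(configs_by_participant, shape_of, rare_threshold=0.05):
    """Return {participant: (fluency, flexibility, originality)}.

    configs_by_participant: participant id -> list of coded configuration
        labels (hypothetical labels, e.g. taken from video coding).
    shape_of: configuration label -> shape category (hypothetical scheme).
    rare_threshold: share of participants below which a configuration
        counts as original (5% in the text above).
    """
    n_participants = len(configs_by_participant)
    # Count how many participants produced each configuration at least once.
    producers = Counter()
    for configs in configs_by_participant.values():
        producers.update(set(configs))

    scores = {}
    for pid, configs in configs_by_participant.items():
        distinct = set(configs)  # repeated configurations count once
        fluency = len(distinct)
        flexibility = len({shape_of[c] for c in distinct})
        originality = sum(
            1 for c in distinct
            if producers[c] / n_participants < rare_threshold
        )
        scores[pid] = (fluency, flexibility, originality)
    return scores

# Toy data: 24 participants build only "ABCD"; one explores further.
configs = {f"p{i}": ["ABCD"] for i in range(1, 25)}
configs["p0"] = ["ABCD", "ACBD", "DCBA", "ABCD"]
shapes = {"ABCD": "line", "ACBD": "line", "DCBA": "L"}
print(dt_scores(configs, shapes)["p0"])  # (3, 2, 2)
```

With 25 participants, a configuration built by a single participant has a share of 4% and therefore counts as original under the 5% rule.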

Test anxiety measure

Test anxiety was measured using the 16-item French version (Fenouillet et al., 2023) of the self-reported Multidimensional Test Anxiety Scale (MTAS) (Putwain et al., 2021). The scale measures two cognitive aspects, worry (e.g., “Before a test/examination, I am worried I will fail”) and cognitive interference (e.g., “During tests/examinations, I forget things that I have learnt”), and two affective-physiological aspects, namely tension (e.g., “Even when I have prepared for a test/examination, I feel nervous about it”) and physiological indicators (e.g., “My heart races when I take a test/examination”). Each subscale comprises four items. Participants were asked to respond on a five-point scale (1 for “strongly disagree,” 3 for “neither,” and 5 for “strongly agree”), with a higher score representing greater TA.
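As an illustration of how a questionnaire with this structure (four subscales of four items, rated 1 to 5) can be scored, the sketch below sums the items of each subscale. The item-to-subscale numbering and the use of sums rather than means are assumptions made for the example; they are not taken from the published MTAS scoring key.

```python
# Hypothetical item numbering; the published key may order items differently.
SUBSCALE_ITEMS = {
    "worry": [1, 2, 3, 4],
    "cognitive_interference": [5, 6, 7, 8],
    "tension": [9, 10, 11, 12],
    "physiological_indicators": [13, 14, 15, 16],
}

def score_mtas(responses):
    """Sum the ratings per subscale and overall.

    responses: dict mapping item number (1..16) to a rating from 1 to 5.
    Returns a dict with one entry per subscale plus a "total" entry.
    """
    if sorted(responses) != list(range(1, 17)):
        raise ValueError("expected ratings for items 1..16")
    if any(rating not in (1, 2, 3, 4, 5) for rating in responses.values()):
        raise ValueError("ratings must be integers from 1 to 5")
    scores = {
        name: sum(responses[item] for item in items)
        for name, items in SUBSCALE_ITEMS.items()
    }
    scores["total"] = sum(responses.values())
    return scores

# A participant answering "neither" (3) to every item.
print(score_mtas({item: 3 for item in range(1, 17)}))
```

Under this assumed scheme, each subscale ranges from 4 to 20 and the total from 16 to 80, with higher values indicating greater test anxiety.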

Creative problem-solving task

The CreaCube task is a creative problem-solving task engaging the participant in exploring and assembling four modular robotic Cubelets (Schweikardt, 2010). The configurations built during the task can be analyzed to operationalize the DT component scores. The CreaCube activity was proposed twice to test our first hypothesis (H1) about uncertainty reduction in the second task. Participants completed activity A1 under assessed or non-assessed conditions depending on group assignment (control or experimental). The second task (A2) used the same instruction, but we expected the A1 experience to lessen ambiguity. We also observed in A2 whether the participant searched for a new solution (a cube configuration that had never been tried) or kept the answer from A1 and reproduced the same efficient cube configuration.

Procedure

The experimenters explained to the students that there was no link between the activities they were going to carry out and the content of their mathematics class, so that math anxiety would not bias the results of the study. Self-reported data (MTAS) were collected, and the CPS tasks were conducted during mathematics classes at the school. Answering the paper-and-pencil questionnaires took approximately 5–7 min.

Students were randomly split into two subgroups: an experimental group (N = 61), which solved the CreaCube task under assessment instruction, and a control group (N = 61), which performed the task without assessment instruction. Every group was gender-randomized. During classes, the participants were taken out of class in pairs (one for each experimental condition) and were asked to come to a specially equipped room. The participants in the “assessment” condition listened to an instruction indicating that their performance on the task would be assessed. The control group’s participants received the regular CreaCube instruction (without any assessment instruction).

Participants were told that only their hands would be recorded by a static camera situated in front of them. Each participant signed an individual consent form. The videos were temporarily stored on iPads and then transferred via iCloud to the CreaCube Learning Analytics platform, where they were stored and analyzed: each configuration of the cubes was coded to obtain the DT scores.

Results

A between-subjects design with two assessment conditions (assessed vs. non-assessed) was combined with a within-subjects design comparing Activity 1 (A1) and Activity 2 (A2). G*Power was used to determine the required sample size (Faul et al., 2009). The power analysis was conducted for the two groups (assessed/non-assessed) in the two occurrences of the task (A1 and A2). With a power of 95%, an alpha of 0.05 (α = 5%), and an effect size of 0.25, the necessary sample size is 52. Our sample (N = 122) was more than double the required minimum sample size.

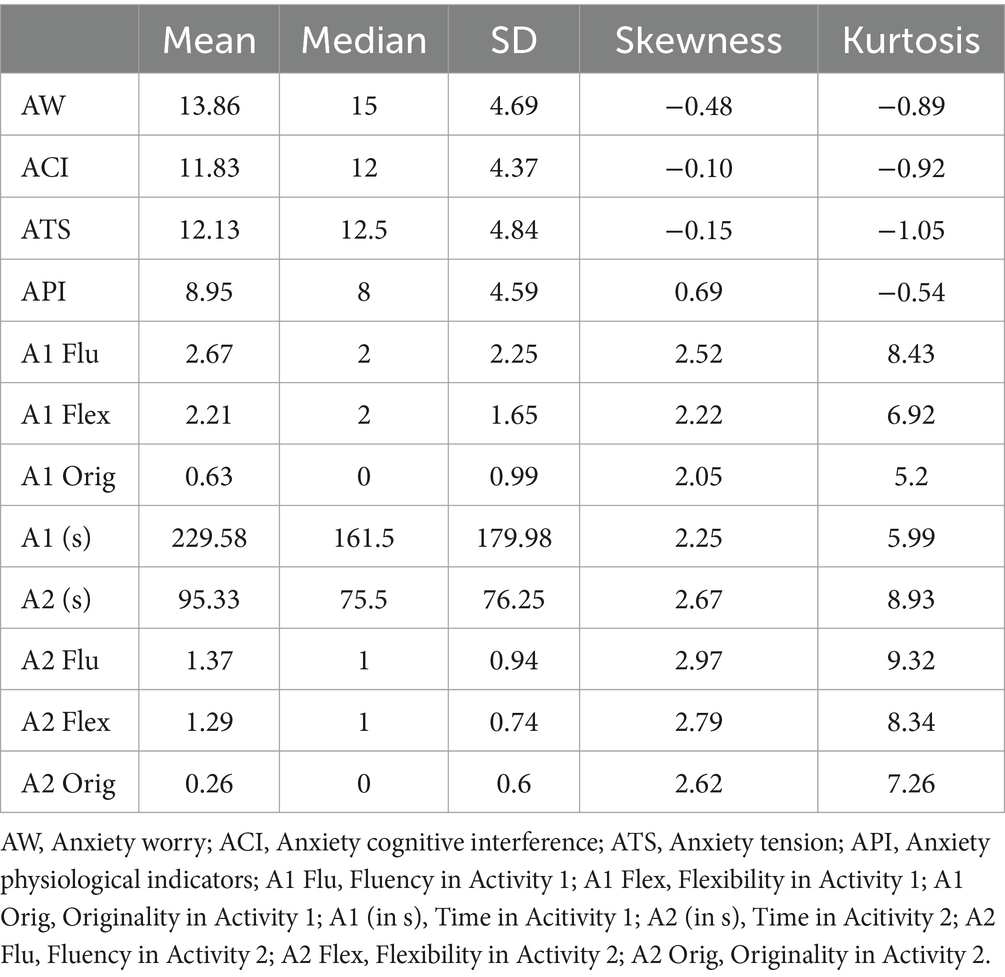

As can be seen in Table 1, the data for fluency (Flue), flexibility (Flex), and originality (Orig) in A1 and A2 were not normally distributed (skewness and kurtosis greater than 2). An inverse logarithmic transformation was applied, and as a result, skewness and kurtosis values were closer to normality (< 2) for all variables. The time-on-task for each occurrence of the CreaCube CPS task (A1 and A2) was transformed using squaring. Statistical analysis was based on the transformed variables.
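The screening step described above (checking skewness and kurtosis against a cutoff of 2, then transforming) can be sketched as follows. Several choices here are assumptions made for illustration: moments are population moments (statistical packages often apply a small-sample correction), kurtosis is reported as excess kurtosis, and a plain natural logarithm with an optional shift stands in for the transformation, whose exact form is not specified in the text.

```python
import math

def _central_moment(xs, k):
    """k-th central moment, dividing by n (population form)."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** k for x in xs) / len(xs)

def skewness(xs):
    return _central_moment(xs, 3) / _central_moment(xs, 2) ** 1.5

def excess_kurtosis(xs):
    return _central_moment(xs, 4) / _central_moment(xs, 2) ** 2 - 3.0

def outside_normal_range(xs, limit=2.0):
    """True if skewness or excess kurtosis exceeds the cutoff in absolute value."""
    return abs(skewness(xs)) > limit or abs(excess_kurtosis(xs)) > limit

def log_transform(xs, shift=1.0):
    """Natural log of (x + shift); the shift keeps zero counts defined."""
    return [math.log(x + shift) for x in xs]

# Hypothetical right-skewed scores: most values are low, one is extreme.
raw = [1] * 9 + [20]
print(outside_normal_range(raw))              # True
print(outside_normal_range([1, 2, 3, 4, 5]))  # False
```

The cutoff of 2 follows the text; whether it was applied to raw or excess kurtosis in the original analysis is not stated, so the `limit` parameter is left adjustable.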

Table 1. Descriptive statistics on DT score and MTAS dimensions: W (worry), CI (cognitive interference), TS (tension), and PI (physical indicator) in A1 and A2.

Time-on-task

The time-on-task was measured in seconds. A significant activity order effect was found (F[1,120] = 94.54, p < 0.001, η2p = 0.44): time-on-task decreased markedly in the second occurrence of the task, A2 (M = 95.33, SD = 76.25), compared with A1 (M = 229.58, SD = 179.98). However, there was no interaction between time and assessment (F[1,120] = 0.57, ns).
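For readers who want to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom through the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the F values reported in this Results section; the function name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (label, F, df_effect, df_error) as reported in the Results section.
reported = [
    ("time-on-task, order effect", 94.54, 1, 120),
    ("fluency, order effect", 53.54, 1, 118),
    ("flexibility, order effect", 40.15, 1, 119),
    ("originality, order effect", 16.04, 1, 120),
    ("originality, interaction", 6.35, 1, 120),
]
for label, f_value, df1, df2 in reported:
    print(f"{label}: {partial_eta_squared(f_value, df1, df2):.2f}")
```

Rounded to two decimals, these reproduce the η2p values given in the text (0.44, 0.31, 0.25, 0.12, and 0.05).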

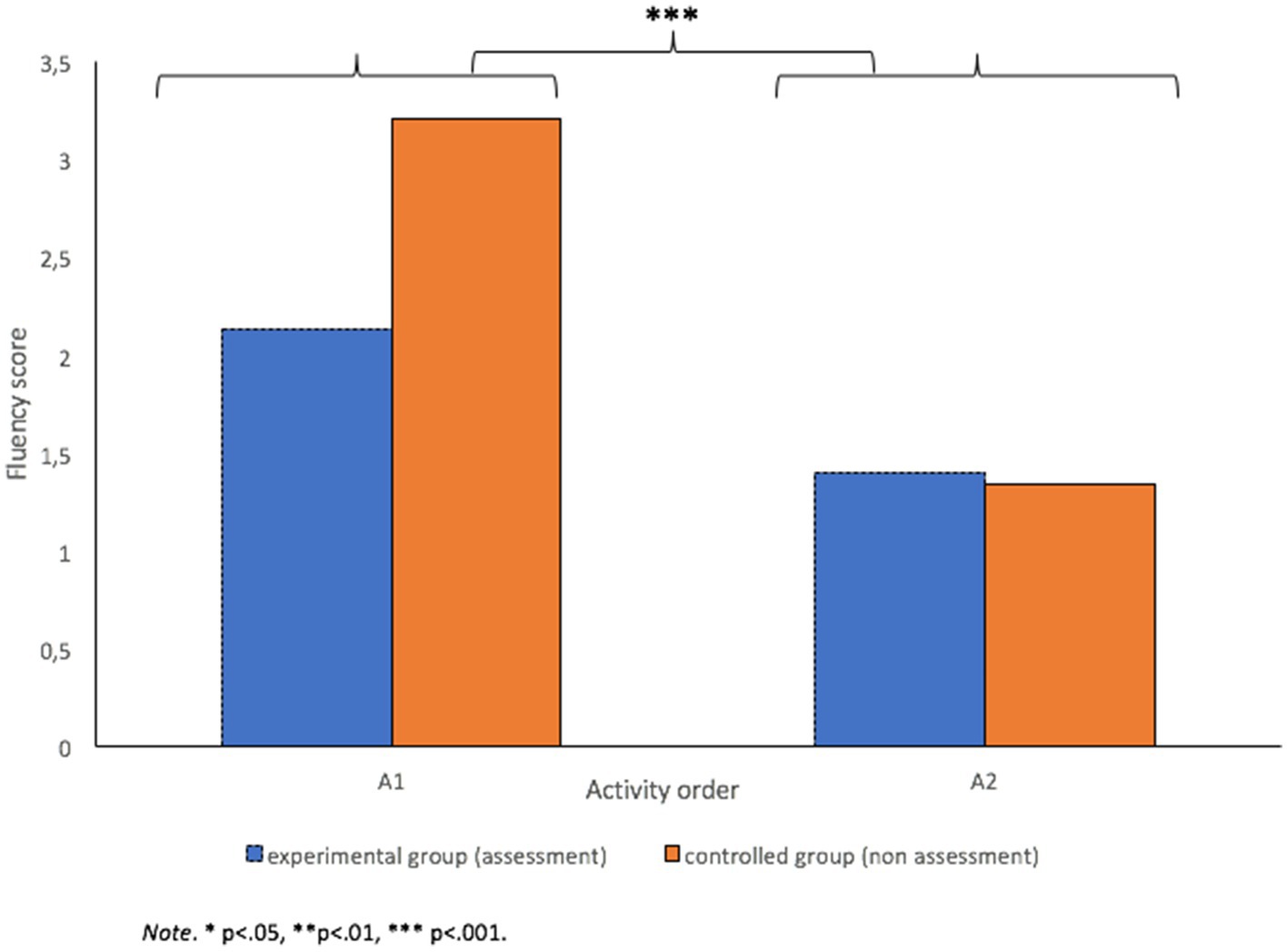

Fluency

A significant activity order effect was found (F[1,118] = 53.54, p < 0.001, η2p = 0.31), meaning that students showed better fluency performance in A1 (M = 2.67, SD = 2.25) than in A2 (M = 1.37, SD = 0.94).

A nearly significant interaction effect between assessment condition and activity order was found (F[1,118] = 3.58, p = 0.06, η2p = 0.03) (Figure 1), suggesting better fluency performance in A1 when students are not assessed. However, post-hoc comparisons indicated that there was no difference in fluency in A1 between assessed and non-assessed conditions [t holm (118) = 1.84, ns] nor in A2 [t holm (118) = 0.81, ns].
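The post-hoc comparisons above use Holm-corrected tests. As a reminder of how the Holm step-down adjustment works, here is a minimal sketch. The p-values in the example are hypothetical, since the text reports only t statistics and the family of comparisons is not spelled out.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    # Indices of the p-values from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted p never falls below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Hypothetical unadjusted p-values for three pairwise comparisons.
print(holm_adjust([0.01, 0.04, 0.03]))
```

A comparison is then declared significant when its adjusted p-value is below the chosen alpha, which controls the family-wise error rate less conservatively than a plain Bonferroni correction.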

Flexibility

As with fluency, flexibility was better in A1 (M = 2.21, SD = 1.65) than in A2 (M = 1.29, SD = 0.74) (F[1,119] = 40.15, p < 0.001, η2p = 0.25). Nevertheless, no interaction effect was observed (F[1,119] = 1.67, ns).

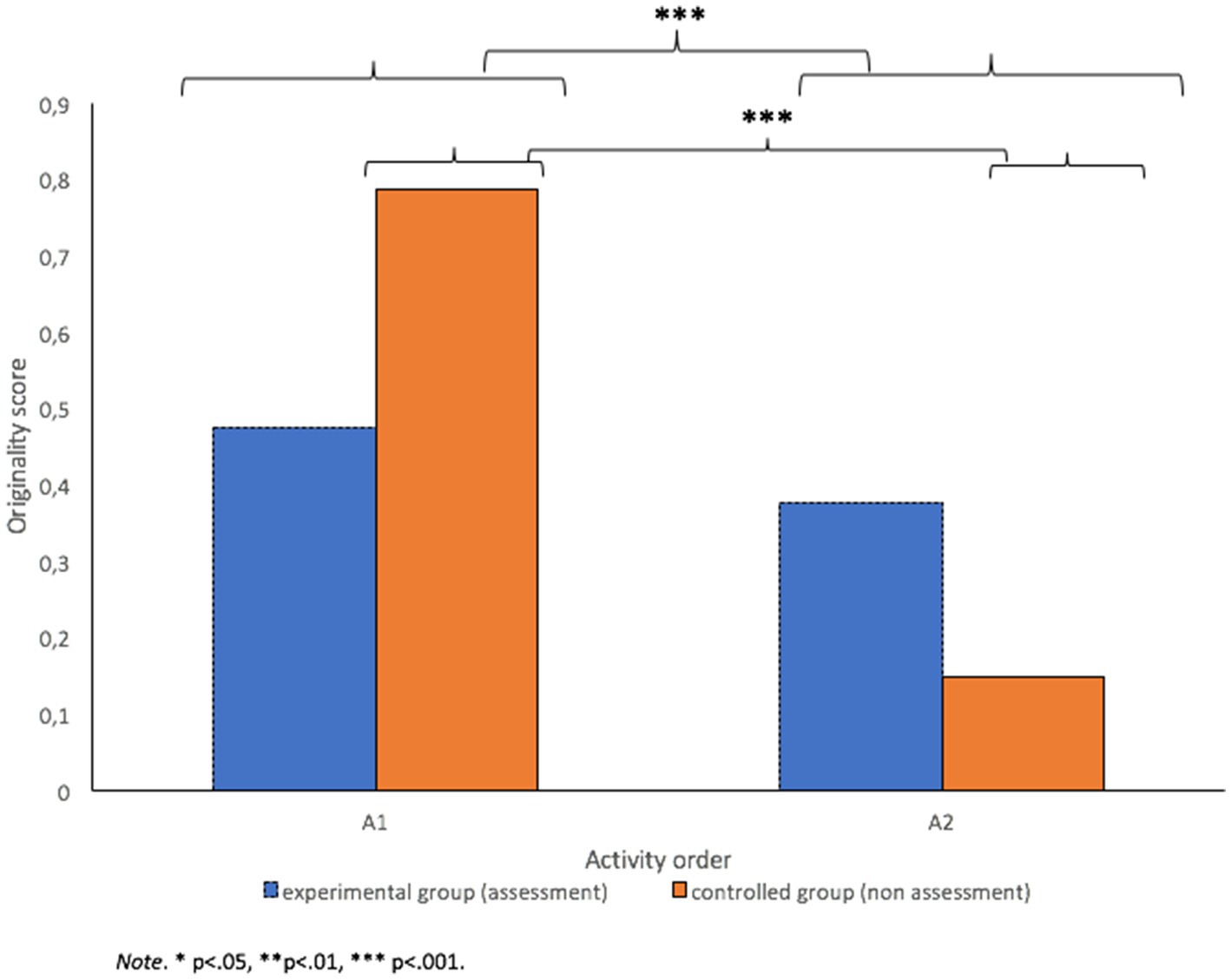

Originality

Originality performance was generally better (F[1,120] = 16.04, p < 0.001, η2p = 0.12) in A1 (M = 0.63, SD = 0.999) than in A2 (M = 0.26, SD = 0.60), but a significant interaction effect between activity order and assessment context was found (F[1,120] = 6.35, p = 0.013, η2p = 0.05) (Figure 2). The post-hoc results show that there was no difference in A1 between assessed and non-assessed conditions [t holm (120) = 1.17, ns] nor in A2 [t holm (120) = 2.28, ns]. On the other hand, under non-assessed conditions, there was a significant difference in originality [t holm (120) = 4.61, p < 0.001] between A1 (M = 0.79, SD = 1.18) and A2 (M = 0.15, SD = 0.44). Originality stayed stable under assessed conditions, and there was no significant difference [t holm (120) = 1.05, ns] between A1 (M = 0.48, SD = 0.72) and A2 (M = 0.38, SD = 0.71).

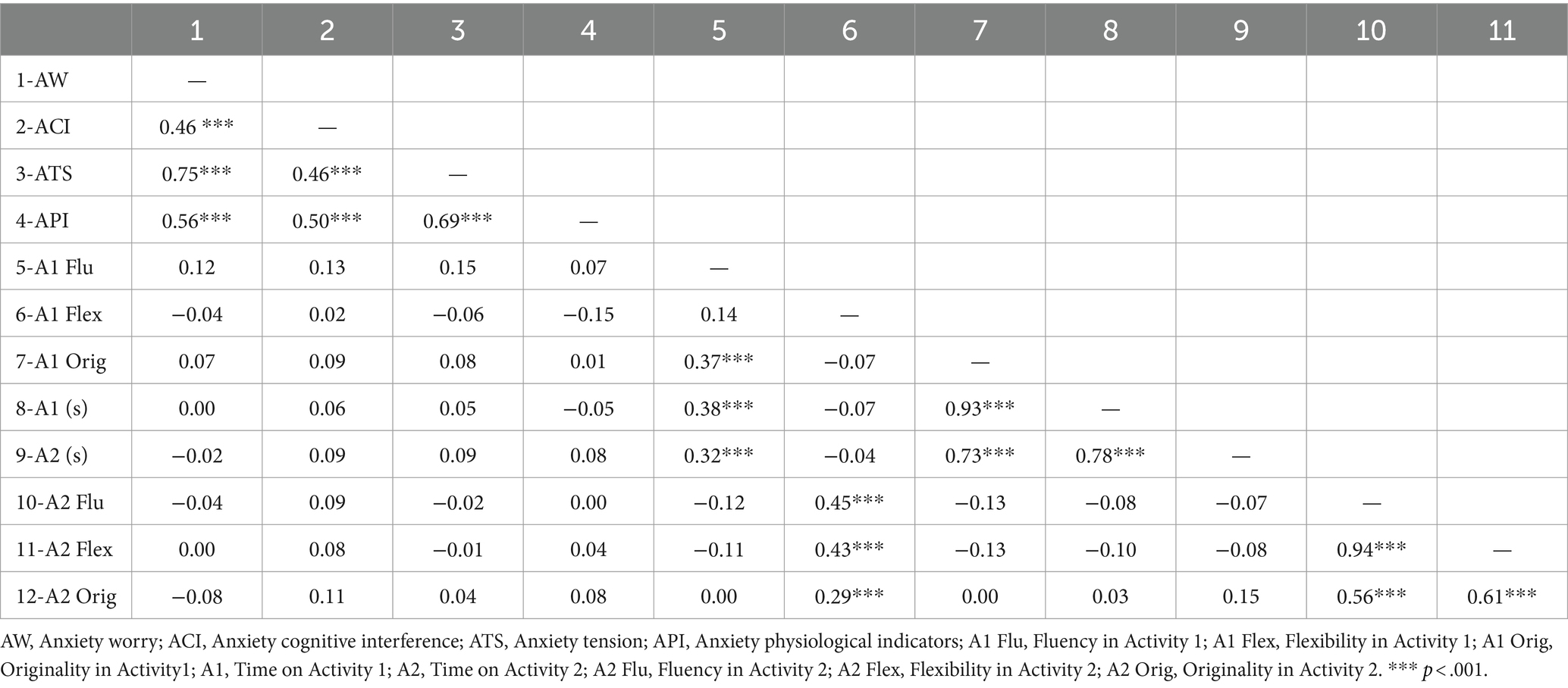

Our third hypothesis expected test anxiety (TA) to predict lower divergent thinking scores (DT) (fluidity, flexibility, and originality). However, the results show no correlation between test anxiety and DT scores (Table 2).

Table 2. Correlations (Spearman’s rho) between creativity indicators (Flue: fluency, Flex: flexibility, O: originality) and aspects of MTAS.

As shown in Table 2, there was no significant relationship between fluency in A1 (A1 Flu) and any aspect of test anxiety (worry, r = −0.12, ns; cognitive interference, r = −0.13, ns; tension, r = −0.15, ns; physical indicator, r = −0.07, ns). The absence of correlation was also observed for flexibility and originality in both A1 and A2.
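The correlations in Table 2 are Spearman rank correlations. As a brief illustration of the statistic, the sketch below computes Spearman's rho as the Pearson correlation of average ranks, which handles ties such as repeated questionnaire scores. The data in the example are hypothetical and do not come from the study.

```python
def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(xs, ys):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)

# Hypothetical worry scores and fluency counts for six students.
worry = [8, 12, 15, 9, 18, 12]
fluency = [3, 2, 2, 4, 1, 3]
print(round(spearman_rho(worry, fluency), 2))
```

A rank-based coefficient is a natural choice here because the DT scores are counts with skewed distributions and many ties.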

Discussion

This study focused on the analysis of divergent thinking (DT) scores in creative problem-solving (CPS) and their relation to assessment instruction and test anxiety (TA). Two occurrences of a robotic CPS task with the same goals were proposed to all students during mathematics class. Half of the secondary students were asked to carry out the robotic CPS task under assessment instruction, and the other half did the same task without any assessment instruction. Students’ test anxiety was measured using the MTAS instrument (Putwain et al., 2021; Fenouillet et al., 2023).

The results show changes in creative behavior depending on the order of activity. In accordance with our hypothesis (H1), the students spent less time-on-task in the second occurrence (A2) than in the first occurrence (A1). The decrease in time-on-task in A2 can be explained by a learning effect: in A2, the students were familiar with the task and with the CPS instruction to achieve the task goal. This familiarity could lead to lower uncertainty in the CPS task (Runco, 2022), which enabled the participants to solve the task more quickly. Moreover, better creative performance was shown in A1 than in A2. This suggests that the students did not use A2 to explore different ways of solving the CPS task but preferred to replicate what they had already found in A1. The reduction of uncertainty in A2 compromised the generation of new ideas and allowed the students to reuse existing solutions to achieve the goal more quickly. This can be interpreted as a default tendency to engage in temporal performance rather than in creative performance, which could require more time-on-task. We can observe a tension between a creative behavior that requires more time-on-task and a temporal performance-oriented behavior in which the DT scores are reduced by reusing an existing solution. The temporal performance-achievement behavior also seemed to produce a nearly significant negative effect of assessment on fluency, flexibility, and originality performance in the first occurrence (A1) for the assessed group, compared to the group of participants in the non-assessment condition (H2). Originally, we expected that the negative effect of assessment would be related to test anxiety (H3). However, an interesting finding of this study is the absence of any correlation between test anxiety and creativity, contrary to other studies (Runco, 2003; Byron and Khazanchi, 2011).
The lack of correlation may indicate, among other possible explanations, that the source of pressure was a potential association of assessment with temporal performance rather than a threat of evaluation. Indeed, traditional education values temporal performance and time optimization over creative behavior, which requires more time. Being assessed may be perceived by the participants as a temporal pressure that is self-induced in the assessment condition, even though the duration of the task was not assessed. This explanation is supported by the fact that the effect of assessment on performance disappears in A2, suggesting a certain automatization of the task.

The significant decrease in the originality score of DT under non-assessment instruction in A2 is another interesting result. The participants who are not under the assessment constraint do not make the effort of trying very different ideas, while participants in the assessment condition stay engaged in generating original configurations. This maintenance of engagement under assessment can be explained by the physiological arousal induced by assessment and by students’ increased extrinsic motivation. Moreover, these findings provide support for studies on creativity assessment suggesting that, under certain conditions, being evaluated can be experienced as a motivating challenge and can stimulate creative performance (Bullock Muir et al., 2024; Byron et al., 2010; Blascovich and Mendes, 2010). Contrary to A1, the assessment in A2 might have been perceived by the students as a milder pressure because of the repetition of the instruction and of the same task. However, it is important to emphasize that although students may feel motivated to continue solving creative problems, their creative performance does not improve under assessment.

As mentioned above, the absence of any correlation between test anxiety and creativity is an unexpected finding of this study. Although the literature is rather unanimous on the impairing effect of TA on creativity (Runco, 2003; Byron et al., 2010 for meta-analysis; Krop et al., 1969; Baer, 1998; Amabile et al., 1990; Landon and Suedfeld, 1972), our results are inconsistent with this hypothesis. Several explanations can account for these results. First, the playful perception of the manipulative modular robotic task might have attenuated the assessment threat and reduced the negative effect of assessment pressure on performance. Moreover, although some studies postulate that the anxiety-creative performance relationship depends on exposure to a stressor (Byron and Khazanchi, 2011), the playful nature of this interactive and manipulative task may have moderated test anxiety even in the students exposed to a stressor (assessment instruction). Furthermore, the near-zero effect of test anxiety on creative performance can also be explained by the nature of the task. According to Byron and Khazanchi (2011), the impairing effect of anxiety is stronger on verbal tasks and weaker on figural tasks. This is consistent with our study, as the CreaCube can be considered a figural task. However, our findings do not support the assertion that anxiety can increase creative performance on motor tasks (Weinberg, 1990).

In addition, no age or gender differences were observed in the study, either in the DT aspects of creativity or in behavior patterns. This suggests that the finding reported in studies on gender differences in test anxiety, namely that girls are more sensitive to assessment-focused threats than boys (Putwain and Daly, 2014), does not seem to hold in creative, playful problem-solving activities.

Conclusion and implications

The results of this study show the importance of assessment instructions in students’ perception of the task, especially in relation to temporal performance and DT scores. The playfulness of the task appears to reduce the negative impact of TA and proves to be a powerful tool to engage learners in assessment activities without hampering their performance. Furthermore, the impact of assessment on creative performance may be reduced if time constraints are minimized. Educators can adapt the way they introduce and communicate assessment instructions through playful tasks to reduce test anxiety and avoid its hindering effects on task performance, especially in CPS tasks. Moreover, introducing playful creative problem-solving tasks can help to moderate some types of anxiety (e.g., linked to problem-solving) in mathematics classes.

To better understand the impact of the assessment condition in CPS tasks, a further study could add a self-report scale measuring task-focused positive emotions to the protocol. This would make it possible to see whether positive emotions generated by the playful robotic task (joy, curiosity, and interest) can reduce test anxiety and enhance creative performance. Curiosity induced by novel material to play with has been discussed as a powerful engagement factor (Oudeyer et al., 2016). However, even if students do not seem to relate playful tasks to classical academic assessment, instructors and educators must moderate the use of assessment instructions, as these can hamper originality and performance in general.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the research ethics committee of Université Côte d’Azur (n° CER-2019-6). The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

VP: Writing – review & editing, Writing – original draft, Methodology, Formal analysis, Data curation, Conceptualization, Visualization. FF: Writing – original draft, Writing – review & editing, Validation, Visualization, Formal analysis. MR: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Resources, Project administration, Methodology, Funding acquisition, Formal analysis, Conceptualization.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study has been funded by Incubateurs Numériques (DRANE) and ANR CreaMaker (ANR-18-CE38-0001).

Acknowledgments

The authors would like to thank the teaching staff of the secondary school Collège International Joseph Vernier of Nice, France, and, particularly, Madame Ann-Gwenn Lorand for her assistance in carrying out the study. Special thanks to all the students who participated in this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amabile, T. M., Goldfarb, P., and Brackfield, S. C. (1990). Social influences on creativity: evaluation, coaction, and surveillance. Creat. Res. J. 3, 6–21. doi: 10.1080/10400419009534330

Baer, J. (1998). The case for domain specificity of creativity. Creat. Res. J. 11, 173–177. doi: 10.1207/s15326934crj1102_7

Beaty, R. E., Cortes, R. A., Merseal, H. M., Hardiman, M. M., and Green, A. E. (2023). Brain networks supporting scientific creative thinking. Psychol. Aesthet. Creat. Arts. doi: 10.1037/aca0000603

Berlin, N., Dul, J., Gazel, M., Lévy-Garboua, L., and Lubart, T. I. (2023). Creative cognition as a bandit problem. Centre d'économie de la Sorbonne.

Besançon, M., Fenouillet, F., and Shankland, R. (2015). Influence of school environment on adolescents’ creative potential, motivation and well-being. Learn. Individ. Differ. 43, 178–184. doi: 10.1016/j.lindif.2015.08.029

Blascovich, J., and Mendes, W. B. (2010). “Social psychophysiology and embodiment” in The Handbook of Social Psychology. eds. S. T. Fiske, D. T. Gilbert, and G. Lindzey. 5th ed (New York, NY: Wiley), 194–227.

Bullock Muir, A., Tribe, B., and Forster, S. (2024). Creativity on tap? The effect of creativity anxiety under evaluative pressure. Creat. Res. J., 1–10. doi: 10.1080/10400419.2024.2330800

Byron, K., and Khazanchi, S. (2011). A meta-analytic investigation of the relationship of state and trait anxiety to performance on figural and verbal creative tasks. Personal. Soc. Psychol. Bull. 37, 269–283. doi: 10.1177/0146167210392788

Byron, K., Khazanchi, S., and Nazarian, D. (2010). The relationship between stressors and creativity: a meta-analysis examining competing theoretical models. J. Appl. Psychol. 95, 201–212. doi: 10.1037/a0017868

Cassady, J. C., and Johnson, R. E. (2002). Cognitive test anxiety and academic performance. Contemp. Educ. Psychol. 27, 270–295. doi: 10.1006/ceps.2001.1094

Cassone, L., Romero, M., and Basiri Esfahani, S. (2021). Group processes and creative components in a problem-solving task with modular robotics. J. Comput. Educ. 8, 87–107. doi: 10.1007/s40692-020-00172-7

Daikoku, T., Fang, Q., Hamada, T., Handa, Y., and Nagai, Y. (2021). Importance of environmental settings for the temporal dynamics of creativity. Think. Skills Creat. 41:100911. doi: 10.1016/j.tsc.2021.100911

Endler, N. S., and Kocovski, N. L. (2001). State and trait anxiety revisited. J. Anxiety Disord. 15, 231–245. doi: 10.1016/S0887-6185(01)00060-3

Eysenck, M. W., Derakshan, N., Santos, R., and Calvo, M. G. (2007). Anxiety and cognitive performance: attentional control theory. Emotion 7, 336–353. doi: 10.1037/1528-3542.7.2.336

Faul, F., Erdfelder, E., Buchner, A., and Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Fenouillet, F., Prokofieva, V., Lorant, S., Masson, J., and Putwain, D. W. (2023). French study of multidimensional test anxiety scale in relation to performance, age, and gender. J. Psychoeduc. Assess. 41, 828–834. doi: 10.1177/07342829231186871

Hadwin, J. A., Brogan, J., and Stevenson, J. (2005). State anxiety and working memory in children: a test of processing efficiency theory. Educ. Psychol. 25, 379–393. doi: 10.1080/01443410500041607

Hao, X., Geng, F., Wang, T., Hu, Y., and Huang, K. (2021). Relations of creativity to the interplay between high-order cognitive functions: behavioral and neural evidence. Neuroscience 473, 90–101. doi: 10.1016/j.neuroscience.2021.08.015

Jaarsveld, S., Lachmann, T., Hamel, R., and van Leeuwen, C. (2010). Solving and creating Raven Progressive Matrices: reasoning in well- and ill-defined problem spaces. Creat. Res. J. 22, 304–319. doi: 10.1080/10400419.2010.503541

Krop, H. D., Alegre, C. E., and Williams, C. D. (1969). Effect of induced stress on convergent and divergent thinking. Psychol. Rep. 24, 895–898. doi: 10.2466/pr0.1969.24.3.895

Landon, P. B., and Suedfeld, P. (1972). Complex cognitive performance and sensory deprivation: completing the U-curve. Percept. Mot. Skills 34, 601–602. doi: 10.2466/pms.1972.34.2.601

Leroy, A., Romero, M., and Cassone, L. (2021). Interactivity and materiality matter in creativity: educational robotics for the assessment of divergent thinking. Interact. Learn. Environ. 31, 1–12. doi: 10.1080/10494820.2021.1875005

Madan, C. R., Fujiwara, E., Caplan, J. B., and Sommer, T. (2017). Emotional arousal impairs association-memory: roles of amygdala and hippocampus. NeuroImage 156, 14–28. doi: 10.1016/j.neuroimage.2017.04.065

Mumford, M. D., Martin, R., Elliott, S., and McIntosh, T. (2020). Creative failure: why can't people solve creative problems. J. Creat. Behav. 54, 378–394. doi: 10.1002/jocb.372

Nazzal, L. J., and Kaufman, J. C. (2020). The relationship of the quality of creative problem solving stages to overall creativity in engineering students. Think. Skills Creat. 38:100734. doi: 10.1016/j.tsc.2020.100734

Nemiro, J., Larriva, C., and Jawaharlal, M. (2017). Developing creative behavior in elementary school students with robotics. J. Creat. Behav. 51, 70–90. doi: 10.1002/jocb.87

Oudeyer, P. Y., Gottlieb, J., and Lopes, M. (2016). Intrinsic motivation, curiosity, and learning: theory and applications in educational technologies. Prog. Brain Res. 229, 257–284. doi: 10.1016/bs.pbr.2016.05.005

Owens, M., Stevenson, J., Norgate, R., and Hadwin, J. A. (2008). Processing efficiency theory in children: working memory as a mediator between trait anxiety and academic performance. Anxiety Stress Coping 21, 417–430. doi: 10.1080/10615800701847823

Paek, S. H., Alabbasi, A. M. A., Acar, S., and Runco, M. A. (2021). Is more time better for divergent thinking? A meta-analysis of the time-on-task effect on divergent thinking. Think. Skills Creat. 41:100894. doi: 10.1016/j.tsc.2021.100894

Pekrun, R., Frenzel, A. C., Goetz, T., and Perry, R. P. (2007). “The control-value theory of achievement emotions: an integrative approach to emotions in education” in Emotion in Education. eds. P. A. Schutz and R. Pekrun (Amsterdam: Academic Press), 13–36.

Prokofieva, V., Kostromina, S., Brandt-Pomares, P., Hérold, J. F., Fenouillet, F., and Velay, J. L. (2022). The relationship between assessment-related stress, performance, and gender in a class test. Mind Brain Educ. 16, 112–121. doi: 10.1111/mbe.12316

Putwain, D., and Daly, A. L. (2014). Test anxiety prevalence and gender differences in a sample of English secondary school students. Educ. Stud. 40, 554–570. doi: 10.1080/03055698.2014.953914

Putwain, D. W., von der Embse, N. P., Rainbird, E. C., and West, G. (2021). The development and validation of a new multidimensional test anxiety scale (MTAS). Eur. J. Psychol. Assess. 37, 236–246. doi: 10.1027/1015-5759/a000604

Romero, M., Freiman, V., and Rafalska, M. (2022). “Techno-creative problem-solving (TCPS) framework for transversal epistemological and didactical positions: the case studies of CreaCube and the Tower of Hanoi” in Mathematics and Its Connections to the Arts and Sciences (MACAS). eds. C. Michelsen, A. Beckmann, V. Freiman, U. T. Jankvist, and A. Savard (Cham: Springer International Publishing), 245–274.

Roozendaal, B., McEwen, B. S., and Chattarji, S. (2009). Stress, memory and the amygdala. Nat. Rev. Neurosci. 10, 423–433. doi: 10.1038/nrn2651

Runco, M. A. (2003). “Idea evaluation, divergent thinking and creativity” in Critical Creative Process. ed. M. A. Runco (Cresskill: Hampton).

Runco, M. A. (2022). “Uncertainty makes creativity possible” in Uncertainty: A Catalyst for Creativity, Learning and Development. eds. R. A. Beghetto and G. J. Jaeger (Cham: Springer International Publishing), 23–36.

Runco, M. A., and Acar, S. (2012). Divergent thinking as an indicator of creative potential. Creat. Res. J. 24, 66–75. doi: 10.1080/10400419.2012.652929

Sarmiento, J. W., and Stahl, G. (2008). Group creativity in interaction: collaborative referencing, remembering, and bridging. Int. J. Hum. Comp. Interact. 24, 492–504.

Savic, M. (2016). Mathematical problem-solving via Wallas’ four stages of creativity: implications for the undergraduate classroom. Math. Enthusiast 13, 255–278. doi: 10.54870/1551-3440.1377

Schweikardt, E. (2010). “Modular robotics studio” in Proceedings of the fifth international conference on Tangible, embedded, and embodied interaction. pp. 353–356.

Selkrig, M., and Keamy, K. (2017). Creative pedagogy: a case for teachers’ creative learning being at the centre. Teach. Educ. 28, 317–332. doi: 10.1080/10476210.2017.1296829

Silvia, P. J., Winterstein, B. P., Willse, J. T., Barona, C. M., Cram, J. T., Hess, K. I., et al. (2008). Assessing creativity with divergent thinking tasks: exploring the reliability and validity of new subjective scoring methods. Psychol. Aesthet. Creat. Arts 2, 68–85. doi: 10.1037/1931-3896.2.2.68

Spielberger, C. D., and Vagg, P. R. (1995). “Test anxiety: a transactional process model” in Test Anxiety: Theory, Assessment and Treatment. eds. C. D. Spielberger and P. R. Vagg (Bristol, UK: Taylor & Francis), 3–14.

Sternberg, R. J., and Lubart, T. (2023). Beyond defiance: an augmented investment perspective on creativity. J. Creat. Behav. 57, 127–137. doi: 10.1002/jocb.567

Tejeda, H. A., and O'Donnell, P. (2014). Amygdala inputs to the prefrontal cortex elicit heterosynaptic suppression of hippocampal inputs. J. Neurosci. 34, 14365–14374. doi: 10.1523/JNEUROSCI.0837-14.2014

Vidler, D. C., and Karan, V. E. (1975). A study of curiosity, divergent thinking, and test-anxiety. J. Psychol. 90, 237–243. doi: 10.1080/00223980.1975.9915781

von der Embse, N. P., and Witmer, S. E. (2014). High-stakes accountability: student anxiety and large-scale testing. J. Appl. Sch. Psychol. 30, 132–156. doi: 10.1080/15377903.2014.888529

Weinberg, R. S. (1990). Anxiety and motor performance: where to from here? Anxiety Res. 2, 227–242. doi: 10.1080/08917779008248731

Wigert, B., Murugavel, V., and Reiter-Palmon, R. (2022). The utility of divergent and convergent thinking in the problem construction processes during creative problem-solving. Psychol. Aesthet. Creat. Arts. doi: 10.1037/aca0000513

Williams, L. M., Phillips, M. L., Brammer, M. J., Skerrett, D., Lagopoulos, J., Rennie, C., et al. (2001). Arousal dissociates amygdala and hippocampal fear responses: evidence from simultaneous fMRI and skin conductance recording. NeuroImage 14, 1070–1079. doi: 10.1006/nimg.2001.0904

Keywords: creative problem-solving (CPS), divergent thinking, assessment instruction, test anxiety, creativity performance

Citation: Prokofieva V, Fenouillet F and Romero M (2024) The effects of assessment instruction and test anxiety on divergent components of creative problem-solving tasks. Front. Educ. 9:1440248. doi: 10.3389/feduc.2024.1440248

Edited by:

Fernanda Klein Marcondes, University of Campinas, Brazil

Reviewed by:

Angelo Cortelazzo, State University of Campinas, Brazil

Luís Montrezor, University of Araraquara, Brazil

Copyright © 2024 Prokofieva, Fenouillet and Romero. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Victoria Prokofieva, victoria.n.prokofieva@gmail.com
