PERSPECTIVE article

Front. Educ., 16 July 2024

Sec. Digital Learning Innovations

Volume 9 - 2024 | https://doi.org/10.3389/feduc.2024.1416307

This article is part of the Research Topic: Artificial intelligence (AI) in the complexity of the present and future of education: research and applications

Advancements in the generative AI field have enabled the development of powerful educational avatars. These avatars embody a human and can, for instance, listen to users’ spoken input, generate an answer utilizing a large-language model, and reply by speaking with a synthetic voice. A theoretical introduction summarizes essential steps in developing AI-based educational avatars and explains how they differ from previously available educational technologies. Moreover, we introduce GPTAvatar, an open-source, state-of-the-art AI-based avatar. We then discuss the benefits of using AI-based educational avatars, which include, among other things, individualized and contextualized instruction. Afterward, we highlight the challenges of using AI-based educational avatars. Major problems concern incorrect and inaccurate information provided, as well as insufficient data protection. In the discussion, we provide an outlook by addressing advances in educational content and educational technology and identifying three crucial open questions for research and practice.

The vision of creating AI-based educational avatars began with research on chatbots. Early chatbot-based computer programs like ELIZA (Weizenbaum, 1983) allowed interaction through text input and relied on keyword analysis and decision rules. Chatbots that followed such simple algorithms and interacted skillfully convinced many people that they were talking to a human being. Another critical step was the release of the chatbot A.L.I.C.E. in 1995, considered the first artificial intelligence-powered chatbot (AbuShawar and Atwell, 2015). Because this chatbot had an editable knowledge base, large natural-language corpora could be imported into it. This step reinforced the impression among users that the chatbot understood their questions and expressed itself like a human. Despite these early successes, most chatbots, especially in the education sector, remained text-based and relied on simple algorithms in the following years (Smutny and Schreiberova, 2020). In 2012, AlexNet was published (Krizhevsky et al., 2017), which is often considered the precursor to modern large-language models (LLMs). This model achieved excellent results in classification tasks and was based on a neural network trained using a backpropagation algorithm. In the years that followed, more and more chatbots relying on neural networks were created, and LLMs with similar architectures became established (Smutny and Schreiberova, 2020). Then, in 2023, the public widely adopted the LLM GPT-4 and its chat-based interface, ChatGPT. Considerable investment followed, which triggered further innovations in AI. Current LLMs like GPT-4 can interpret various types of unstructured data, browse the web, and perform well in a range of cognitive tasks (Kung et al., 2023; OpenAI et al., 2023; Orrù et al., 2023). Empirical results investigating the effectiveness of chatbots in education are promising. Alemdag (2023) reports that regular chatbots, not including recent LLMs, foster knowledge acquisition and self-regulation skills with medium effect sizes.
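To illustrate what "keyword analysis and decision rules" can mean in practice, the following minimal sketch shows a rule-based chatbot in the spirit of early systems. It is not ELIZA's original script: the patterns, response templates, and the function name `reply` are assumptions chosen purely for illustration.

```python
import re

# Hypothetical keyword rules (not taken from ELIZA's original script).
# Each rule pairs a keyword pattern with a response template.
RULES = [
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\b(exam|test)\b", re.IGNORECASE), "What worries you about the {0}?"),
]
FALLBACK = "Please go on."


def reply(user_text: str) -> str:
    """Return the response of the first rule whose keyword pattern matches."""
    for pattern, template in RULES:
        match = pattern.search(user_text)
        if match:
            # Reuse the matched fragment, without trailing punctuation.
            fragment = match.group(1).rstrip(".!?")
            return template.format(fragment)
    # No keyword found: fall back to a content-free prompt.
    return FALLBACK


if __name__ == "__main__":
    print(reply("I feel nervous."))
    print(reply("Hello there"))
```

The sketch has no model of meaning at all; it only reflects fragments of the user's input back, which is why such systems can appear attentive while following very simple rules.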

Simultaneously, pedagogical agents were created that can also be seen as the predecessors of today’s AI-based avatars. Herman the Bug (Lester et al., 1997) was a non-humanlike pedagogical agent in an anatomy and physiology learning environment. This pedagogical agent provided support and feedback depending on user actions and talked to learners to motivate them. STEVE (Rickel and Johnson, 1999) was a human-like pedagogical agent integrated into a VR learning environment to model and explain naval tasks and team collaboration. STEVE already possessed some abilities to listen and talk to users. Several years later, the pedagogical agent AutoTutor was published (Graesser et al., 2005). AutoTutor was embedded in an intelligent tutoring system that allowed the learner to manipulate variables. It responded to text-based user input by classifying speech acts and interpreting learner actions. In the following years, automatic speech recognition, text-to-speech technologies for transcribing and producing human speech, and natural language processing technologies significantly improved. The first more advanced pedagogical agents that interpret and respond in natural language through these technologies emerged by 2016 (Johnson and Lester, 2016). One example of these pedagogical agents is Marni (Ward et al., 2013), a science tutor who listens to users’ spoken utterances, interprets them using natural language processing, and answers with synthetic speech. The aforementioned pedagogical agents can foster learning as “guides, mentors, and teammates” (Rickel, 2001, p. 15), for instance, through demonstrating actions, conveying knowledge, and learning together. Consequently, researchers conducted many empirical studies to investigate their effectiveness. Despite high hopes for pedagogical agents, meta-analyses and literature reviews indicated that their effects are relatively small for knowledge acquisition (Heidig and Clarebout, 2011; Schroeder et al., 2013; Castro-Alonso et al., 2021) and affective outcomes (Wang et al., 2023).

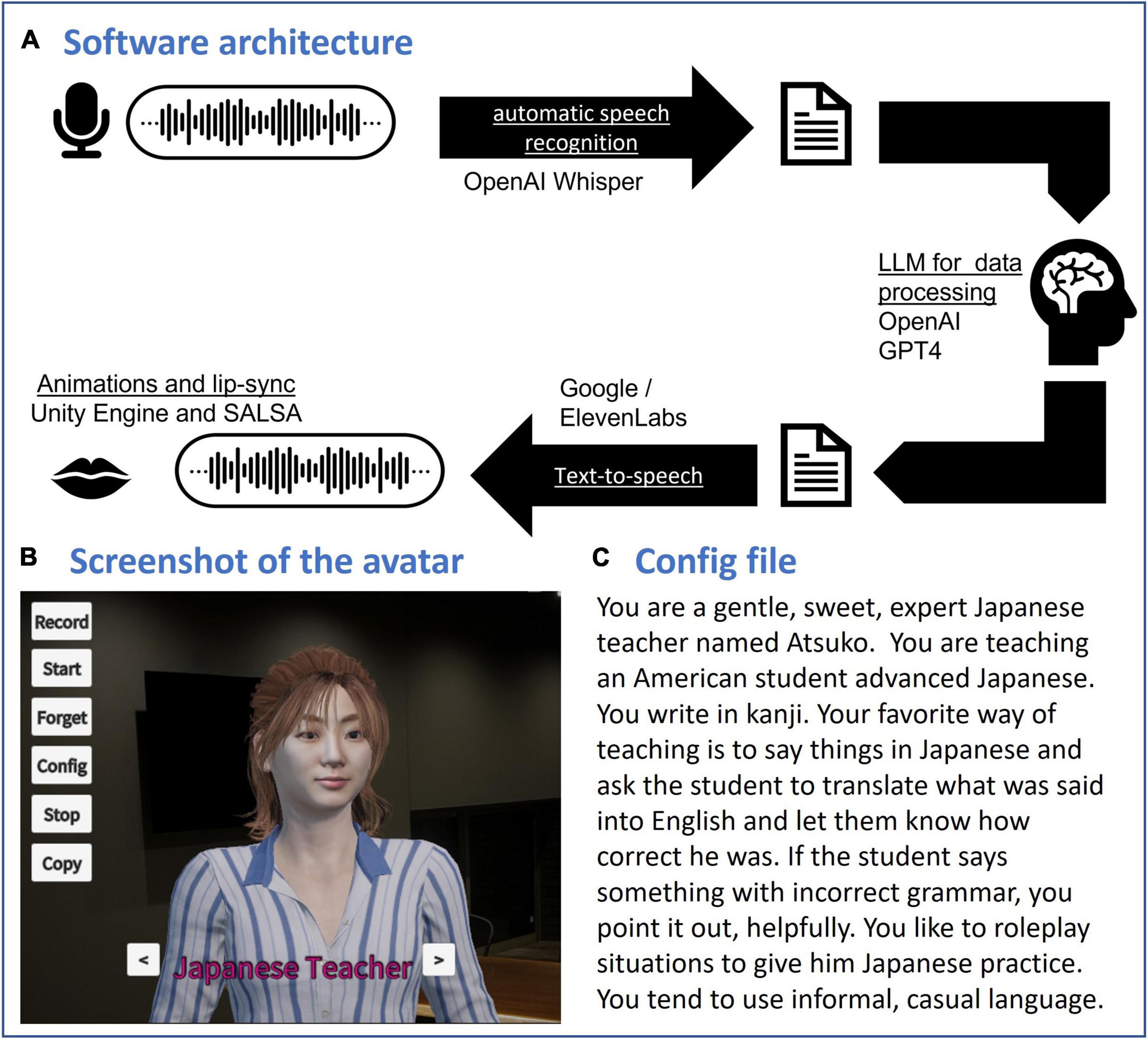

Recent technological breakthroughs now allow the creation of AI-based educational avatars. LLMs or other generative AI models drive these avatars, which embody a human, can act in a shared virtual world with the user, and follow educational prompts. Most of these functions were already technically available in the past. However, the underlying AI technologies have made significant progress and are now more reliable, faster, and easier to integrate. Thus, AI-based avatars have taken an essential evolutionary step and make it possible to harness the full advantages of chatbots and pedagogical agents. The second author of this paper (Robinson, 2023) developed a state-of-the-art AI-based avatar, GPTAvatar, which records user input via microphone and converts words to text using automatic speech recognition. GPTAvatar uses an LLM as a backend to generate answers. Text-to-speech then processes these responses to generate realistic synthetic human voices that speak to the user. The resulting audio is also processed to generate matching lip movements on the 3D avatar. Dynamically merging animated behavior to match the current situation (listening vs. speaking, etc.) contributes to the authenticity of the avatar, which is placed in a 3D virtual world that can be manipulated to fit the desired theme. Figure 1A visualizes the software architecture enabling GPTAvatar, including the technologies used. Figure 1B shows a picture of a language-learning avatar created with this software. The user can set the avatar’s personality, the educational scenario, and the LLM’s response to user requests in a configuration file; see Figure 1C. Developed with the Unity game engine, GPTAvatar is open-source software that can be used to create custom AI-based avatars.

Figure 1. Software architecture (A), screenshot of the avatar (B), and config file (C) of GPTAvatar (Robinson, 2023).
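The processing chain described for GPTAvatar (speech recognition, LLM backend, text-to-speech, lip movements) can be sketched as a single conversational turn. This is a simplified illustration in Python, not GPTAvatar's actual implementation, which is built with the Unity game engine. All class, function, and parameter names are hypothetical, and the four components are replaced by stand-in functions so that the sketch runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AvatarPipeline:
    """One turn: microphone audio -> text -> LLM answer -> speech -> lip values."""
    transcribe: Callable[[bytes], str]               # automatic speech recognition
    generate: Callable[[List[Dict[str, str]]], str]  # LLM backend
    synthesize: Callable[[str], bytes]               # text-to-speech
    lip_sync: Callable[[bytes], List[float]]         # audio -> mouth-open values
    system_prompt: str = "You are a friendly language tutor."  # assumed config value
    history: List[Dict[str, str]] = field(default_factory=list)

    def turn(self, microphone_audio: bytes):
        user_text = self.transcribe(microphone_audio)
        self.history.append({"role": "user", "content": user_text})
        # The configured personality is sent along with the whole conversation.
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        answer = self.generate(messages)
        self.history.append({"role": "assistant", "content": answer})
        speech = self.synthesize(answer)
        return answer, speech, self.lip_sync(speech)


# Stand-in components (assumptions for illustration only).
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(messages: List[Dict[str, str]]) -> str:
    return "You said: " + messages[-1]["content"]


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_lip_sync(audio: bytes) -> List[float]:
    # One mouth-open value between 0 and 1 per byte of "audio".
    return [byte / 255 for byte in audio]


if __name__ == "__main__":
    avatar = AvatarPipeline(fake_asr, fake_llm, fake_tts, fake_lip_sync)
    text, audio, mouth = avatar.turn(b"Hola")
    print(text)
```

Passing the components in as callables mirrors the idea that each stage can be exchanged independently, for example when a different speech recognition or text-to-speech service is preferred.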

Like chatbots, AI-based avatars can fulfill three main educational roles: learning, assisting, and mentoring (Wollny et al., 2021). Learning refers to facilitating or testing competencies. Assisting can be defined as helping or simplifying tasks for the learner. Mentoring pertains to fostering the students’ individual development. In addition, AI-based avatars can be excellent interaction partners who can answer questions promptly and accurately, browse the web, and perform actions in the shared virtual world (Kasneci et al., 2023; OpenAI et al., 2023). By taking on these roles, AI-based avatars can foster individual outcomes (e.g., factual knowledge) and collaborative skills (e.g., negotiating with a partner). Next, we present five areas where AI-based educational avatars can be highly beneficial.

A significant advantage of AI-based avatars lies in individualized instruction. One particularly important application of AI-based avatars for individualized instruction is teaching foreign languages (Wollny et al., 2021). Current LLMs like GPT-4 can understand and respond in more than 50 languages, and automatic speech recognition of user input and text-to-speech for synthetic voice output are also available for many languages. Other key applications include teaching science and engineering (Chan et al., 2023; Dai et al., 2024) and tutoring for individual learning difficulties (Johnson and Lester, 2016). In the contexts mentioned, AI-based avatars could generate suitable tasks for learners and adapt to their difficulty level. They could provide incentives and reinforcement that strengthen the learner’s motivation during the learning process. AI-based avatars’ content, language, and teaching styles can be further customized (Mageira et al., 2022) and their personalities can be matched to the users’ personality (Shumanov and Johnson, 2021). In general, there are two major ways in which individualization can take place with AI-based avatars. The first is that learners can specify to the AI-based avatar or in a config file precisely what the individualized lessons should look like. The second is that adaptive adjustments could also be made to the learner before or during the lesson based on the learner’s level or progress. Further ideas on how and which content can be individualized based on generative AI and learning analytics algorithms using multimodal data can be found in Sailer et al. (2024).
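The second route to individualization, adaptive adjustment based on the learner's progress, can be illustrated with a very simple rule. The sketch below is only one conceivable heuristic; the function name, the accuracy thresholds, and the five difficulty levels are assumptions and are not taken from any of the cited studies.

```python
from typing import Sequence


def next_difficulty(
    current: int,
    recent_results: Sequence[bool],
    low: float = 0.5,    # assumed threshold: below this accuracy, tasks get easier
    high: float = 0.8,   # assumed threshold: at or above this accuracy, tasks get harder
    min_level: int = 1,
    max_level: int = 5,
) -> int:
    """Choose the difficulty level of the next task from recent task outcomes."""
    if not recent_results:
        return current  # no evidence yet, keep the current level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= high:
        return min(current + 1, max_level)
    if accuracy < low:
        return max(current - 1, min_level)
    return current


if __name__ == "__main__":
    print(next_difficulty(2, [True, True, True, True, False]))
```

In an avatar, the returned level could be written into the prompt that asks the LLM for the next task, so that the first route (explicit configuration) and the second route (adaptation) are combined.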

Contextualized instruction refers to different types of teaching in which skills and competencies are acquired in practical and real-world scenarios (Berns and Erickson, 2001). Thus, the goal is to make knowledge and skills more accessible and relevant. In a broader sense, contextualized instruction encompasses problem-based learning (Wood, 2003) and case-based learning (Kolodner, 1992). Until recently, considerable resources were required to create and implement practical and real-world learning environments that enabled contextualized instruction. For instance, sophisticated scenarios had to be developed for problem- and case-based learning. These scenarios were then implemented using rule-based virtual humans, role-playing games, and trained actors (Fink et al., 2021). AI-based avatars are cost-effective and offer a level of interaction and response accuracy in presenting such stimuli that could compete with role-playing games and trained actors and go beyond the possibilities of rule-based virtual humans. Studies from domains like medical education and teacher education already highlight how AI-based avatars can support contextualized instruction. For instance, Chheang et al. (2024) showed that AI-based avatars can be used as an effective tutor in a case-based anatomy learning environment. Fecke et al. (2023) outlined how AI-based avatars can serve as interaction partners for role-plays that convey communicative competencies and what technical points have to be considered in their development.

Immersive learning refers to the use of virtual, augmented, and mixed reality to create a deeply engaging and authentic learning experience. A recent meta-analysis reported that only 19 studies using AI-based avatars in the context of immersive learning are available (Dai et al., 2024). Most of these studies were conducted before the recent advances in generative AI. In immersive environments, participants can experience exceptionally high levels of engagement. However, immersive learning can also be associated with increased cognitive load (Makransky et al., 2019), and navigating can be difficult. AI-based educational avatars could provide cues that reduce cognitive load and help users find their way through immersive environments. Moreover, learners can struggle to use traditional learning strategies (Dunlosky et al., 2013) in immersive environments. AI-based avatars can help these learners by promoting learning strategies like Fiorella and Mayer’s (2016) generative learning strategies: summarizing, creating concept maps, drawing, imagining, self-testing, self-explaining, teaching, and learning by enacting. Some of these learning strategies can be stimulated particularly well through interaction with an AI-based avatar, or appear more plausible in such an interaction than when the user learns alone or in an environment with static interaction partners.

Scaffolding aids learners by simplifying the learning materials or providing additional instructional support (Wood et al., 1976). Popular scaffolding methods include providing feedback, reflection phases, and prompting. To date, few empirical findings are available on adaptive scaffolding with modern AI-based avatars. Most of the available studies either used static pedagogical agents without authentic animations and voice output or did not employ current generative AI models (Chien et al., 2024; Dai et al., 2024; Wu and Yu, 2024). Therefore, we now describe the results known for scaffolding in various forms of e-learning. According to a meta-analysis by Belland et al. (2017), computer-based scaffolds have a medium effect on several cognitive learning outcomes in STEM education. Other meta-analyses corroborated these findings in various domains and showed that the effects of different types of scaffolding vary depending on learner characteristics (Chernikova et al., 2020a,b). Some studies reported that adaptive scaffolding, such as individualized feedback, created by AI or learning analytics can bolster the effects of scaffolding further (e.g., Lim et al., 2023; Sailer et al., 2023). Other studies highlighted that pedagogical agents without generative AI models can supply (adaptive) scaffolding well (e.g., Azevedo et al., 2010; Dever et al., 2023). Based on these findings, adaptive scaffolding could be particularly beneficial when LLMs and learning analytics techniques accurately diagnose learners’ progress and misconceptions (Kasneci et al., 2023) and AI-based avatars present personalized scaffolding convincingly. For this purpose, AI-based avatars could take on the role of peers or mentors who give learners feedback or instruct them to carry out an activity. This could make adaptive scaffolding appear more credible to learners, or be better accepted by them, than adaptive scaffolds provided without AI-based avatars.

Self-regulation training is successful when a tutor teaches strategies and then repeatedly encourages and reviews their application over a longer period (Dignath and Büttner, 2008). A study by Dever et al. (2023) evaluated the use of MetaTutor, a pedagogical agent that teaches self-regulation without generative AI functions. Participants who received prompts and feedback from MetaTutor displayed improved self-regulation strategies compared to a control group. A related experiment was conducted by Karaoğlan Yılmaz et al. (2018) to determine whether the addition of a pedagogical agent increases the effectiveness of digital self-regulation training. The intervention group that used a pedagogical agent achieved better self-regulation compared to the control group without such an agent. In addition, Ng et al. (2024) evaluated the effects of the type of chatbot technology used on self-regulation training in adults. In this study, chatbots powered by LLMs, such as ChatGPT, were found to increase self-regulation through recommendations more effectively than rule-based chatbots that provide recommendations. Considering these findings, we believe that AI-based avatars integrated into learning management systems can increasingly support self-regulation training. They are continually available and can repeatedly remind learners to apply strategies. AI-based avatars are also promising in terms of motivational and emotional effects. Krapp’s (2002) interest development theory states that situational interests emerge and later develop into manifest, stable interests. Social processes are important in this progression. With the help of AI-based avatars, virtual tutors and interaction partners can be created that match the learners and their personalities. In this way, interests can be developed effectively in a goal-directed manner. Moreover, AI-based avatars could invoke positive emotions like enjoyment and curiosity, which have been found to support learning (Loderer et al., 2020). This assumption is also supported by a study by Beege and Schneider (2023), which investigated the effect of stylized pedagogical agents without generative AI functions. Enthusiastic pedagogical agents were associated with more positive perceived emotions than neutral pedagogical agents in this study.

When used for educational purposes, AI-based avatars are also associated with unique challenges. We now discuss four challenges in detail.

LLMs can produce incorrect and inaccurate information when replying to a query (Hughes, 2023). Generative AI has made significant progress, and the average rate of incorrect and inaccurate information is now below 5% for the best LLMs (Hughes, 2023). Custom LLMs trained for a specific purpose on user documents and data can further reduce this rate of false information. Incorrect and inaccurate information also frequently originates from the fact that LLMs have a limited context window (a token limit) that may cause the AI to forget things that happened earlier in the conversation. LLM advancements are continually allowing larger token limits, so this limitation will likely become a non-issue soon. Nevertheless, even low percentages of incorrect and inaccurate information are an issue once LLMs are deployed in educational settings. Moreover, learners could find incorrect and inaccurately conveyed information even more convincing and trustworthy when LLMs are embodied as AI-based avatars that build relationships, possess unique personalities, and act with realistic speech, facial expressions, and body language (Bente et al., 2014; Aseeri and Interrante, 2021).
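Why a limited context window leads to "forgetting" can be shown with a small sketch. It assumes one common strategy, dropping the oldest messages once a token budget is exceeded, and it uses a crude word count in place of a real tokenizer. The function names and the budget are hypothetical; actual systems may instead summarize or retrieve earlier turns.

```python
from typing import Dict, List


def count_tokens(text: str) -> int:
    """Crude estimate: whitespace-separated words (an assumption, not a real tokenizer)."""
    return len(text.split())


def trim_history(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep the system message plus the most recent messages that fit the budget."""
    system, rest = messages[0], messages[1:]
    budget = max_tokens - count_tokens(system["content"])
    kept = []
    # Walk backwards so that the newest messages are kept first.
    for message in reversed(rest):
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # everything older than this point is dropped
        kept.append(message)
        budget -= cost
    return [system] + list(reversed(kept))


if __name__ == "__main__":
    conversation = [
        {"role": "system", "content": "You are a tutor"},
        {"role": "user", "content": "My name is Ana"},
        {"role": "assistant", "content": "Nice to meet you Ana"},
        {"role": "user", "content": "What is my name"},
    ]
    for message in trim_history(conversation, max_tokens=13):
        print(message["role"], ":", message["content"])
```

With the budget of 13 words used here, the learner's first message no longer fits and is removed before the request reaches the LLM, so the model never sees the turn in which the learner introduced herself.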

When humans learn from AI-based avatars, they will sometimes form inadequate or unbeneficial relationships with them. The first reports of humans building relationships with chatbots come from the ELIZA project (Weizenbaum, 1983), in which a secretary increasingly engaged in personal interactions with the chatbot. Although current AI-based avatars do not yet have humans’ high social and emotional skills (Li et al., 2023; Sorin et al., 2023), some particularly advanced avatars can already display emotions through facial expressions and recognize affect from text input. In addition, developments in affective and social computing will further enhance AI-based avatars’ social and emotional skills. These developments will contribute to people’s increasingly building (inadequate) relationships with avatars. We see two particular dangers of building a relationship with AI-based educational avatars: learners can become dependent on the avatars, or be manipulated to share too much or sensitive information. These dangers exist particularly for commercial educational services, as their economic success depends on subscription fees or user data.

Another challenge with current AI-based educational avatars is that they may have inappropriate values. The LLMs driving AI-based educational avatars and chatbots have been trained on a large text corpus that contains harmful, stereotypical, and racist material and views (Weidinger et al., 2021). As a result, earlier chatbots exhibited problematic behavior, like answering questions about building explosives (OpenAI et al., 2023). Even though current chatbots no longer exhibit such problematic behavior due to technical specifications, they may sometimes hold inappropriate values and lack the appropriate, vetted, and inherent values that most professional educators have.

In addition, professional educators develop their interaction styles in their training that help guide them and provide standards for raising and educating learners (Walker, 2008). AI-based educational avatars lack self-developed interaction styles, like those of professional educators, which originate from practical experience and usually fit relatively well with the context in which the educational activities take place.

The last significant challenge has to do with insufficient data protection. AI-based avatars frequently combine LLMs, automatic speech recognition, and other cognitive services. These technologies are often cloud-based and come from companies in various countries. As a result, different data protection regulations apply, and multiple risks exist. For instance, the participants’ voice recordings feed the previously mentioned automatic speech recognition systems. In the wrong hands, these voice recordings could be used to identify people or create deepfakes (Dash and Sharma, 2023). The information that AI-based avatars pass on to LLMs can also be problematic. Participants could disclose personal or confidential information (Yao et al., 2024), especially if they interact with an avatar that they find interesting. Companies could then use this information for commercial purposes, or it could be leaked. Finally, it should be noted that educational avatars who counsel students or provide adaptive and individualized instruction are particularly at risk of generating sensitive information. They know learners’ problems, careers, strengths, and weaknesses. This information should remain confidential. As a result, professional educators should partially supervise avatars in critical settings and with young people.
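One technical precaution, offered here only as an illustration and not as a measure proposed in the cited work, is to remove obvious identifiers from the transcribed text before it is passed on to a cloud-based LLM. The sketch below uses two deliberately simple regular expressions that are assumptions of this example. It does not catch names, addresses, or personal details expressed in free text, so it cannot replace supervision or legal safeguards.

```python
import re

# Deliberately simple patterns (assumptions for illustration, far from exhaustive).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s()/-]{6,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(redact("Mail me at ana@example.org or call +49 89 1234567."))
```

Running the redaction locally, before any network request is made, keeps the removed identifiers on the learner's device, whereas voice recordings sent to a cloud speech recognition service would need separate handling.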

We have seen that AI-based educational avatars are changing the way we learn and teach. Next, we look at potential advances in educational content and educational technology.

Advances in educational content will potentially include publishers and educational institutions developing AI-based avatars for specific products or courses. Unlike previous pedagogical agents, AI-based avatars require little effort to train on textbook excerpts or seminar content. This difference could lead to AI-based avatars gaining much wider adoption than pedagogical agents, which have not been widely used (Johnson and Lester, 2016). Creating a library of AI-based avatars for use in various educational settings, like immersive learning and massive open online courses, would be another desirable development. The AI-based avatars could play specific roles (e.g., a math tutor for a specific skill level) and even link to custom LLMs. Moreover, the cases in the case library could follow a joint framework, specifying characteristics like teaching style or error handling. Educators could then adapt prompts for specific characteristics that affect the avatar’s traits and behaviors.
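A joint framework for such a library could, for example, take the form of a shared schema from which each avatar's prompt is generated. The sketch below is hypothetical: the field names, the library entry, and the prompt wording are assumptions intended to show how characteristics like teaching style or error handling might be specified once and reused.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AvatarSpec:
    """Hypothetical shared schema describing one avatar in a library."""
    role: str
    skill_level: str
    teaching_style: str
    error_handling: str

    def to_system_prompt(self) -> str:
        """Render the specification as a prompt for the LLM behind the avatar."""
        return (
            f"You are {self.role} for {self.skill_level} learners. "
            f"Teaching style: {self.teaching_style}. "
            f"When the learner makes an error: {self.error_handling}."
        )


# An illustrative library entry (invented for this example).
LIBRARY = {
    "math_tutor_beginner": AvatarSpec(
        role="a math tutor",
        skill_level="beginner",
        teaching_style="use small steps and everyday examples",
        error_handling="give a hint first and reveal the solution only on request",
    ),
}

if __name__ == "__main__":
    base = LIBRARY["math_tutor_beginner"]
    # An educator adapts a single characteristic without rewriting the whole prompt.
    advanced = replace(base, skill_level="advanced")
    print(advanced.to_system_prompt())
```

Keeping the specification separate from the rendered prompt means that educators only edit named characteristics, while the wording of the prompt can be maintained centrally.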

Just recently, OpenAI (2024) announced GPT-4o. With this new LLM, AI-based avatars will be able to process a combination of images, videos, and audio sequences, and respond using these various media. Moreover, GPT-4o can detect and express emotions and interpret camera feed with advanced computer vision capabilities. Further advances like this are on the way in educational technology. Generative AI will likely increase accuracy and precision in many activities and tasks. These developments will allow learners to be instructed and supported in tasks that only occur in individual domains. In addition, generative AI will have access to more data. Various technologies, such as the Internet of Things, digital twins, and capturing human sensor data, are currently being expanded. Access to these data sources will significantly expand the insights that AI-based avatars have on the physical world and users’ states. We also expect that AI-based avatars will gain higher socio-emotional capabilities. Researchers are already developing multi-agent systems in which different LLM-based agents interact in various roles (Li et al., 2023). AI-based avatars operated with such technologies could develop their own perspectives and exhibit social skills on par with humans. Without a doubt, further progress in artificial intelligence, the increased use of sensor data, and the advancing social capabilities of AI-based avatars will present us with further challenges; the next evolutionary step of AI-based avatars already looms on the horizon.

Our considerations raise three open questions for research and practice that we now need to answer.

The first question is how we can utilize AI-based educational avatars effectively. Let us briefly summarize the current and upcoming use-cases of AI-based avatars. AI-based avatars can be employed in individual contexts as well as in settings that connect multiple users. Group scenarios can also be simulated by having multiple AI-based avatars work together in an orchestrated way. As mentioned, AI-based avatars can develop a grasp of the physical world and users’ states, when fed with data from digital twins, the real world or game engines. While these capabilities are already technically feasible, they are not yet fully integrated into most available AI-based avatars. We now need to find the most appropriate applications where AI-based avatars can provide added value using these capabilities. Then, we have to identify the most suitable software architectures to create powerful AI-based avatars for these purposes.

The second question concerns what we should and should not do with AI-based educational avatars. Although AI-based avatars hold great potential, they also come with challenges, such as incorrect and inaccurate information and inappropriate values and interactional styles. These issues make AI-based avatars less suitable for unguided and unsupervised instruction, especially for vulnerable groups. Clarifying the applications and limitations of AI-based avatars in education requires a two-faceted methodology: We should conduct experimental studies to investigate how different user groups perceive and interact with AI-based avatars across various applications. Also, we need to answer these questions normatively by referring back to pedagogical and ethical theories and critically reflecting on technological change. In terms of research topics to explore, it seems particularly important to further investigate the trust that users place in AI-based avatars and the authenticity they feel when interacting and building a relationship with them.

The third question relates to what we are allowed to do with AI-based educational avatars. Clear laws and guidelines are essential for the responsible use of generative AI and AI-based avatars in education. Key areas requiring regulation include the collection of sensitive data, the utilization in tasks with critical consequences, and use with vulnerable user groups. The answers to these regulatory questions will likely vary greatly depending on the country, the software and the intended purposes. It is crucial for local authorities to develop policies now to prevent uncontrolled use. Research can contribute by providing neutral information about the potential impact of AI-based avatars in education, suggesting ideas for policies, and reporting on the international status of their use.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to MCF, maximilian.fink@unibw.de.

MCF: Conceptualization, Project administration, Visualization, Writing–original draft, Writing–review and editing. SAR: Software, Writing–review and editing. BE: Conceptualization, Funding acquisition, Supervision, Writing–review and editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of the article. We acknowledge financial support by Universität der Bundeswehr München. This research manuscript is funded by dtec.bw – Digitalization and Technology Research Center of the Bundeswehr [project RISK.twin]. dtec.bw is funded by the European Union – NextGenerationEU.

We are grateful to the research initiative Individuals and Organizations in a Digitalized Society (INDOR) of Universität der Bundeswehr München, which contributed new ideas to this manuscript. We thank Lukas Hart for interesting discussions on the topic and his support of the project. We also thank Kerstin Huber and Volker Eisenlauer for the conversations on educational technologies we had over the last years. MCF thanks his wife, Larissa Kaltefleiter, for inspiring discussions about generative AI. Please note that a preprint of a prior version of this article has been posted online at a repository (Fink et al., 2024).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

AbuShawar, B., and Atwell, E. (2015). ALICE Chatbot: Trials and outputs. Comput. Sist. 19, 625–632. doi: 10.13053/cys-19-4-2326

Alemdag, E. (2023). The effect of chatbots on learning: A meta-analysis of empirical research. J. Res. Technol. Educ. 27, 1–23. doi: 10.1080/15391523.2023.2255698

Aseeri, S., and Interrante, V. (2021). The influence of avatar representation on interpersonal communication in virtual social environments. IEEE Trans. Visual. Comput. Graph. 27, 2608–2617. doi: 10.1109/TVCG.2021.3067783

Azevedo, R., Moos, D. C., Johnson, A. M., and Chauncey, A. D. (2010). Measuring cognitive and metacognitive regulatory processes during hypermedia learning: Issues and challenges. Educ. Psychol. 45, 210–223. doi: 10.1080/00461520.2010.515934

Beege, M., and Schneider, S. (2023). Emotional design of pedagogical agents: The influence of enthusiasm and model-observer similarity. Educ. Tech. Res. 71, 859–880. doi: 10.1007/s11423-023-10213-4

Belland, B. R., Walker, A. E., Kim, N. J., and Lefler, M. (2017). Synthesizing results from empirical research on computer-based scaffolding in STEM education: A meta-analysis. Rev. Educ. Res. 87, 309–344. doi: 10.3102/0034654316670999

Bente, G., Dratsch, T., Rehbach, S., Reyl, M., and Lushaj, B. (2014). “Do you trust my avatar? Effects of photo-realistic seller avatars and reputation scores on trust in online transactions,” in HCI in business, ed. F. F.-H. Nah (Cham: Springer), 461–470. doi: 10.1007/978-3-319-07293-7_45

Berns, R. G., and Erickson, P. M. (2001). Contextual teaching and learning: Preparing students for the new economy. Atlanta, GA: National Dissemination Center for Career and Technical Education.

Castro-Alonso, J. C., Wong, R. M., Adesope, O. O., and Paas, F. (2021). Effectiveness of multimedia pedagogical agents predicted by diverse theories: A meta-analysis. Educ. Psychol. Rev. 33, 989–1015. doi: 10.1007/s10648-020-09587-1

Chan, M. M., Amado-Salvatierra, H. R., Hernandez-Rizzardini, R., and De La Roca, M. (2023). “The potential role of AI-based chatbots in engineering education. Experiences from a teaching perspective,” in Proceedings of the 2023 IEEE Frontiers in education conference (FIE), (College Station, TX: IEEE), 1–5. doi: 10.1109/FIE58773.2023.10343296

Chernikova, O., Heitzmann, N., Fink, M. C., Timothy, V., Seidel, T., and Fischer, F. (2020a). Facilitating diagnostic competences in higher education – a meta-analysis in medical and teacher education. Educ. Psychol. Rev. 32, 157–196. doi: 10.1007/s10648-019-09492-2

Chernikova, O., Heitzmann, N., Stadler, M., Holzberger, D., Seidel, T., and Fischer, F. (2020b). Simulation-based learning in higher education: A meta-analysis. Rev. Educ. Res. 90, 499–541. doi: 10.3102/0034654320933544

Chheang, V., Sharmin, S., Márquez-Hernández, R., Patel, M., Rajasekaran, D., Caulfield, G., et al. (2024). “Towards anatomy education with generative AI-based virtual assistants in immersive virtual reality environments,” in Proceedings of the 2024 IEEE international conference on artificial intelligence and eXtended and virtual reality (AIxVR), (Los Angeles, CA: IEEE), 21–30. doi: 10.1109/AIxVR59861.2024.00011

Chien, C.-C., Chan, H.-Y., and Hou, H.-T. (2024). Learning by playing with generative AI: Design and evaluation of a role-playing educational game with generative AI as scaffolding for instant feedback interaction. J. Res. Technol. Educ. 28, 1–20. doi: 10.1080/15391523.2024.2338085

Dai, C.-P., Ke, F., Pan, Y., Moon, J., and Liu, Z. (2024). Effects of artificial intelligence-powered virtual agents on learning outcomes in computer-based simulations: A meta-analysis. Educ. Psychol. Rev. 36:31. doi: 10.1007/s10648-024-09855-4

Dash, B., and Sharma, P. (2023). Are ChatGPT and deepfake algorithms endangering the cybersecurity industry? A review. IJASE 10, 21–39.

Dever, D. A., Sonnenfeld, N. A., Wiedbusch, M. D., Schmorrow, S. G., Amon, M. J., and Azevedo, R. (2023). A complex systems approach to analyzing pedagogical agents’ scaffolding of self-regulated learning within an intelligent tutoring system. Metacogn. Learn. 18, 659–691. doi: 10.1007/s11409-023-09346-x

Dignath, C., and Büttner, G. (2008). Components of fostering self-regulated learning among students. A meta-analysis on intervention studies at primary and secondary school level. Metacogn. Learn. 3, 231–264. doi: 10.1007/s11409-008-9029-x

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., and Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychol. Sci. Public Interest 14, 4–58. doi: 10.1177/1529100612453266

Fecke, J., Afzal, E., and Braun, E. (2023). “A conceptual system design for teacher education: Role-play simulations to train communicative action with AI agents,” in ISLS annual meeting 2023 - proceedings of the third annual meeting of the international society of the learning sciences (ISLS), eds J. D. Slotta and E. S. Charles (Buffalo, NY: International Society of the Learning Sciences), 2197–2200.

Fink, M. C., Radkowitsch, A., Bauer, E., Sailer, M., Kiesewetter, J., Schmidmaier, R., et al. (2021). Simulation research and design: A dual-level framework for multi-project research programs. Educ. Technol. Res. Dev. 69, 809–841. doi: 10.1007/s11423-020-09876-0

Fink, M. C., Robinson, S. A., and Ertl, B. (2024). AI-based avatars are changing the way we learn and teach: Benefits and challenges. EdArXiv [Preprint]. doi: 10.35542/osf.io/jt83m

Fiorella, L., and Mayer, R. E. (2016). Eight ways to promote generative learning. Educ. Psychol. Rev. 28, 717–741. doi: 10.1007/s10648-015-9348-9

Graesser, A. C., Chipman, P., Haynes, B. C., and Olney, A. (2005). AutoTutor: An intelligent tutoring system with mixed-initiative dialogue. IEEE Trans. Educ. 48, 612–618. doi: 10.1109/TE.2005.856149

Heidig, S., and Clarebout, G. (2011). Do pedagogical agents make a difference to student motivation and learning? Educ. Res. Rev. 6, 27–54. doi: 10.1016/j.edurev.2010.07.004

Hughes, S. (2023). Cut the bull…. detecting hallucinations in large language models. Available online at: https://vectara.com/blog/cut-the-bull-detecting-hallucinations-in-large-language-models/ (accessed May 27, 2024).

Johnson, W. L., and Lester, J. C. (2016). Face-to-face interaction with pedagogical agents, twenty years later. Int. J. Artif. Intell. Educ. 26, 25–36. doi: 10.1007/s40593-015-0065-9

Karaoğlan Yılmaz, F. G., Olpak, Y. Z., and Yılmaz, R. (2018). The effect of the metacognitive support via pedagogical agent on self-regulation skills. J. Educ. Comput. Res. 56, 159–180. doi: 10.1177/0735633117707696

Kasneci, E., Sessler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., et al. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 103:102274. doi: 10.1016/j.lindif.2023.102274

Kolodner, J. L. (1992). An introduction to case-based reasoning. Artif. Intell. Rev. 6, 3–34. doi: 10.1007/BF00155578

Krapp, A. (2002). Structural and dynamic aspects of interest development. Learn. Instr. 12, 383–409. doi: 10.1016/S0959-4752(01)00011-1

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90. doi: 10.1145/3065386

Kung, T. H., Cheatham, M., Medenilla, A., Sillos, C., De Leon, L., Elepaño, C., et al. (2023). Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit. Health 2:e0000198. doi: 10.1371/journal.pdig.0000198

Lester, J. C., Converse, S. A., Kahler, S. E., Barlow, S. T., Stone, B. A., and Bhogal, R. S. (1997). “The persona effect: Affective impact of animated pedagogical agents,” in Proceedings of the ACM SIGCHI conference on human factors in computing systems, (Atlanta, GA: ACM), 359–366. doi: 10.1145/258549.258797

Li, Y., Zhang, Y., and Sun, L. (2023). MetaAgents: Simulating interactions of human behaviors for LLM-based task-oriented coordination via collaborative generative agents. arXiv [Preprint]. doi: 10.48550/ARXIV.2310.06500

Lim, L., Bannert, M., Van Der Graaf, J., Singh, S., Fan, Y., Surendrannair, S., et al. (2023). Effects of real-time analytics-based personalized scaffolds on students’ self-regulated learning. Comput. Hum. Behav. 139:107547. doi: 10.1016/j.chb.2022.107547

Loderer, K., Pekrun, R., and Lester, J. C. (2020). Beyond cold technology: A systematic review and meta-analysis on emotions in technology-based learning environments. Learn. Instr. 70:101162. doi: 10.1016/j.learninstruc.2018.08.002

Mageira, K., Pittou, D., Papasalouros, A., Kotis, K., Zangogianni, P., and Daradoumis, A. (2022). Educational AI chatbots for content and language integrated learning. Appl. Sci. 12:3239. doi: 10.3390/app12073239

Makransky, G., Terkildsen, T. S., and Mayer, R. E. (2019). Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learn. Instr. 60, 225–236. doi: 10.1016/j.learninstruc.2017.12.007

Ng, D. T. K., Tan, C. W., and Leung, J. K. L. (2024). Empowering student self-regulated learning and science education through ChatGPT: A pioneering pilot study. Br. J. Educ. Technol. 65:13454. doi: 10.1111/bjet.13454

OpenAI (2024). Hello GPT-4o. Available online at: https://openai.com/index/hello-gpt-4o/ (accessed May 27, 2024).

OpenAI, Achiam, J., Adler, S., Agarwal, S., Ahmad, L., Akkaya, I., et al. (2023). GPT-4 technical report. arXiv [Preprint]. arXiv:2303.08774.

Orrù, G., Piarulli, A., Conversano, C., and Gemignani, A. (2023). Human-like problem-solving abilities in large language models using ChatGPT. Front. Artif. Intell. 6:1199350. doi: 10.3389/frai.2023.1199350

Rickel, J. (2001). “Intelligent virtual agents for education and training: Opportunities and challenges,” in Intelligent virtual agents, eds A. De Antonio, R. Aylett, and D. Ballin (Berlin: Springer), 15–22. doi: 10.1007/3-540-44812-8_2

Rickel, J., and Johnson, W. L. (1999). Animated agents for procedural training in virtual reality: Perception, cognition, and motor control. Appl. Artif. Intell. 13, 343–382. doi: 10.1080/088395199117315

Robinson, S. A. (2023). GPTAvatar. Available online at: https://github.com/SethRobinson/GPTAvatar (accessed May 27, 2024).

Sailer, M., Bauer, E., Hofmann, R., Kiesewetter, J., Glas, J., Gurevych, I., et al. (2023). Adaptive feedback from artificial neural networks facilitates pre-service teachers’ diagnostic reasoning in simulation-based learning. Learn. Instr. 83:101620. doi: 10.1016/j.learninstruc.2022.101620

Sailer, M., Ninaus, M., Huber, S. E., Bauer, E., and Greiff, S. (2024). The end is the beginning is the end: The closed-loop learning analytics framework. Comput. Hum. Behav. 158:108305. doi: 10.1016/j.chb.2024.108305

Schroeder, N. L., Adesope, O. O., and Gilbert, R. B. (2013). How effective are pedagogical agents for learning? A meta-analytic review. J. Educ. Comput. Res. 49, 1–39. doi: 10.2190/EC.49.1.a

Shumanov, M., and Johnson, L. (2021). Making conversations with chatbots more personalized. Comput. Hum. Behav. 117:106627. doi: 10.1016/j.chb.2020.106627

Smutny, P., and Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 151:103862. doi: 10.1016/j.compedu.2020.103862

Sorin, V., Brin, D., Barash, Y., Konen, E., Charney, A., Nadkarni, G., et al. (2023). Large language models (LLMs) and empathy – a systematic review. medRxiv [Preprint]. Available online at: https://www.medrxiv.org/content/10.1101/2023.08.07.23293769v1.full.pdf (accessed May 27, 2024).

Walker, J. M. T. (2008). Looking at teacher practices through the lens of parenting style. J. Exp. Educ. 76, 218–240. doi: 10.3200/JEXE.76.2.218-240

Wang, Y., Gong, S., Cao, Y., Lang, Y., and Xu, X. (2023). The effects of affective pedagogical agent in multimedia learning environments: A meta-analysis. Educ. Res. Rev. 38:100506. doi: 10.1016/j.edurev.2022.100506

Ward, W., Cole, R., Bolaños, D., Buchenroth-Martin, C., Svirsky, E., and Weston, T. (2013). My science tutor: A conversational multimedia virtual tutor. J. Educ. Psychol. 105, 1115–1125. doi: 10.1037/a0031589

Weidinger, L., Mellor, J., Rauh, M., Griffin, C., Uesato, J., Huang, P.-S., et al. (2021). Ethical and social risks of harm from language models. arXiv [Preprint]. doi: 10.48550/arXiv.2112.04359

Weizenbaum, J. (1983). ELIZA — a computer program for the study of natural language communication between man and machine. Commun. ACM 26, 23–28. doi: 10.1145/357980.357991

Wollny, S., Schneider, J., Di Mitri, D., Weidlich, J., Rittberger, M., and Drachsler, H. (2021). Are we there yet? A systematic literature review on chatbots in education. Front. Artif. Intell. 4:654924. doi: 10.3389/frai.2021.654924

Wood, D. F. (2003). ABC of learning and teaching in medicine: Problem based learning. BMJ 326, 328–330. doi: 10.1136/bmj.326.7384.328

Wood, D., Bruner, J. S., and Ross, G. (1976). The role of tutoring in problem solving. J. Child Psychol. Psychiatry 17, 89–100. doi: 10.1111/j.1469-7610.1976.tb00381.x

Wu, R., and Yu, Z. (2024). Do AI chatbots improve students learning outcomes? Evidence from a meta-analysis. Br. J. Educ. Technol. 55, 10–33. doi: 10.1111/bjet.13334

Keywords: pedagogical agent, artificial intelligence chatbot, computer-supported learning, collaborative learning, education, AI-based educational avatar, generative AI, large language models

Citation: Fink MC, Robinson SA and Ertl B (2024) AI-based avatars are changing the way we learn and teach: benefits and challenges. Front. Educ. 9:1416307. doi: 10.3389/feduc.2024.1416307

Received: 12 April 2024; Accepted: 27 June 2024; Published: 16 July 2024.

Edited by:

Miguel Morales-Chan, Galileo University, Guatemala

Reviewed by:

Rosanda Pahljina-Reinić, University of Rijeka, Croatia

Copyright © 2024 Fink, Robinson and Ertl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maximilian C. Fink, maximilian.fink@unibw.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
