- Department of Chemistry and Biochemistry, University of Detroit Mercy, Detroit, MI, United States

The global pandemic forced educators at all levels to re-evaluate how they would engage content, generate relevance, and assess the development of their students. While alternative grading strategies were not necessarily new to the world of chemical education research (CER) in 2020, the pandemic accelerated the examination of such principles for instructors who wished to prioritize learning over compliance with course policies and eschew points for grading. This article describes the transformation of a traditional lecture organic chemistry course under new, standards-based principles. Working from a discrete set of grouped learning outcomes, these courses aim to clearly define standards, give helpful feedback, indicate semester-long student progress, and allow for reattempts without penalty using a token system. Students explore both core (all of which must be passed in order to show progress) and non-core (students can choose a majority of these to pass) learning outcomes in a variety of formative and summative assessment approaches. Unique to the reformatting of these courses is the use of collaborative, take-home assessments, integration of multiple-choice questions, ungrading of student-submitted summary notes, live solving of select problems with peer feedback, and a learning check system that reinforces the flipped nature of content delivery. Another distinctive feature of the courses is the requirement for students who reach a certain threshold of repeating learning outcomes to perform in-person problem solving for the instructor. Overall, students report that the structure of the courses reduces their general and specific anxiety, lowers the temptations to challenge their academic integrity, and increases their own learning self-monitoring, reflecting known pedagogies of metacognition. 
The instructor reports that alternative grading strategies take far less time, generate more meaningful feedback, and shift student attitudes away from final grading and toward genuine learning.

1 Introduction

“The most difficult academic year in the history of American higher education.” This was the social media post that stared back at me in early May 2022. I thought for a moment: how could any academic year be more difficult than 2019–2020, when we all had to pivot our teaching so severely that we were unsure what the nature of learning was anymore? And what about 2020–2021? That was the year of extreme social upheaval and a continuance of uncertainty. Semester after semester, the highly trained professors of the Academy found themselves completely unprepared. Student pushback against tried-and-true methods made the most experienced educator question their abilities, wherewithal, and dedication to the art of teaching and learning. Amid this, after 20 years of teaching organic chemistry, I had my worst semester ever.

Largely, I had the same students during Winter 2022 (Detroit Mercy refers to the January to April semester as “Winter”) that I’d had during Fall 2021. What had gone wrong? Student behavior had devolved into raised voices in public and direct confrontation of my teaching methods. Problems which would have barely challenged students only a few years back were labeled “impossible,” and students outright refused to do the work of the class. Students ignored official course announcements, even those posted online and through texting apps, while verbal assurances mattered less and less as daily attendance plummeted to less than 50% of enrollment. Instead of teaching course content, the majority of my job became explaining, over and over, how grades were calculated in the course. All of this occurred despite a fill-in-the-blank grade calculation worksheet from my syllabus that other colleagues had termed “babying” my students. I survived to the end of the term, but at the expense of my emotional and intellectual wellbeing. Over two decades of teaching, a personal metric for the success of a course was the number of students who “followed up” after grades were posted (emailed, called, or texted to complain about their final grade). By this measure, the course was a near-complete failure – 27 of my 115 students demanded 30-min meetings and a recount of every point gained and lost over the 16-week period.

A regular semester would have had only two or three such contacts. As the warm weather of spring took over, I was exhausted, and the prospect of licking my wounds and doing it all over again in fall seemed more than daunting.

“The most difficult academic year in the history of American higher education.” It was happening to other professors, as well. Maybe it wasn’t me? Certainly, the pandemic had affected students and their learning. They seemed ill-prepared, discourteous, and unwilling to adapt to the rigors of higher education. I retreated into the world of reading and reflecting, hoping that I could research my way out of the most serious existential crisis of my career as an organic chemical educator. As my previous confidence in my ability to do this job well faded, my belief that a better way must exist grew.

Contemplation brought me quickly to a number of conclusions: (1) of course the Covid-19 pandemic was affecting students, and it was my job, as the more experienced professional in the room, to help them navigate the rough waters; (2) many in higher education knew that the pandemic had not brought these issues anew, but had exacerbated issues that were already fomenting long before 2020 (McMurtrie, 2023); (3) any lost zeal I was projecting to students was being projected back to me, a dark cycle where good learning was breaking down. In the end, my reflections always brought me back to that fundamental, but unwritten rule of the classroom: that the professor is in charge of the content, methods, and environment of learning. Pandemic or not, I had let my students down by not responding appropriately to the trials of the period.

During the pandemic, it seemed certain that every semester had its own unique challenges so that each was different than the last. While responding nimbly to these ever-changing issues was easier said than done, I sensed that the time for change had arrived. It was time to fully overhaul my courses, and to do so in such a way that I stayed true to my core values of engaging content, generation of relevance, and assessment of student development over the length of the course. I discovered the Grading for Growth blog of Talbert and Clark (n.d.) and was immediately entranced by their no-nonsense take on cutting-edge pedagogical research surrounding alternative grading methods. At the heart of my reflection after a tough semester was finding a way to prioritize learning over compliance with course policies and eschew points for grading in my courses. I knew I had found what I was looking for when I read the story of the first-year calculus student.

In short, Talbert and Clark talked about an eager, first-year college calculus student who started the semester strong, but slowly ebbed in her work until, after failing a number of assessments and the final exam, she earned an F grade for the course. Upon examination of the student’s work over time, the professors noted that the student had a perfect mastery of the concepts of calculus that happened to always lag a few days or weeks behind the current topic coverage. Suddenly, the F grade was a metric of how well the student performed at the current topics, not a measure of 16 weeks of progress. In asking other faculty about this student as a hypothetical, Talbert and Clark found that many thought she deserved an A for her efforts – the grade farthest from that which she earned! After all, she was performing admirably, just a few lessons behind the rest of the class. This story spoke directly to me as I thought about how my own students earned their letter grades and how the assumption of learning connected to these grades has historically been viewed.

This article describes the transformation of a traditional lecture organic chemistry course under new, standards-based principles. First, a brief background on ABCDF grading will be described, followed by foundational principles for alternative grading strategies (AGS). Specific incorporation of these principles into an organic chemistry lecture and lab course will be explained, in conjunction with a number of preliminary outcomes from AGS for both student and instructor.

1.1 Background – a brief history of letter grades

According to Feldman (2018), the history of ABCDF grades in the United States is best viewed through the lens of the 20th century, even though the earliest recorded use of grading goes back to 1785 at Yale University. It was not until approximately 100 years later that ABCDF-style grades would become more widespread in the United States, and not until the 1940s that institutions of higher education began ABCDF grade usage extensively. Since 67% of US primary and secondary schools used letter grades by 1970, overall the concept is not a particularly old one; and for members of Generation X (like the author), grades fit comfortably into our lifetimes.

Arguably then, letter grading, along with student motivation in academic studies by such grading (and its close cousin, percentages), is a construct of the last 50 years of American educational history. Since the unwritten rule of education is that most teachers will teach the way they were taught (Oleson and Hora, 2014), it is not unexpected that these ideas have been passed down to following generations. Furthermore, when letter grading is married to concepts as powerful as admission, school ratings, faculty salaries, and district funding, instances of decoupling learning from grades are bound to increase. This brings us to Goodhart’s Law, first written about in 1975 (Goodhart, 1975): “Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.” In other words, “When a measure becomes a target, it ceases to be a good measure.” While Goodhart’s Law was written for modern-age British economics, its application to the 20th-century US phenomenon of standardized testing has been written about extensively. As funding for public schools, in particular, became more and more tied to student performance on mandated tests, a corollary of Goodhart’s Law came into view – Campbell’s Law (Campbell, 1979). Again, tied to the area of economics, this law states: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.” Taken together, Goodhart and Campbell paint a dark, but inevitable start to the 21st century in education. The more that important metrics like student test scores and grades count for decisions, especially funding and admissions, the more those processes will be susceptible to fraud for the sake of success.

Moreover, pondering these economic philosophies toward education cannot be complete without considering arguments about the meaning of academic rigor, a “sign of the times” in teaching for the first 20 years of the 21st century. Rigor is a hard concept to define: many educators and students alike know it exists, but do not know how to apply it or what it is actually good for. Student and faculty attitudes toward academic rigor have been studied since the early 2010s with both parties agreeing that rigor is multifaceted, but with students caring most about grades and faculty caring more for learning (Draeger et al., 2013, 2015). Nelson (2010) refers to the muddle of these conflicting viewpoints as “dysfunctional illusions of rigor” and chooses to recast the negativity of the fixed and deficit mindsets with challenges to the Academy. For example, someone might claim that hard courses “weeding out” students helps society rid itself of students with poor preparatory skills or lack of motivation, as in the widely publicized and debated organic chemistry classes of Prof. M. Jones (Supiano, 2022). A more realistic view is that poor or ineffective pedagogy is more likely to blame for student failure. At its core, argues Nelson, are two additional, dysfunctional illusions: (1) that traditional methods of instruction offer effective ways to teach undergrads, and (2) that massive grade inflation is a corruption of standards. He counter-argues that it is more realistic to view lecturing as considerably less effective than other methods and to distinguish between unjustifiably high grades and more effective pedagogy giving better student achievement. A turn toward the positive could lead any educator toward the central illusion of academic rigor: that faculty know enough in the modern era to revise their courses and curricula for the betterment of student learning. Nelson posits that teaching and curricular revision should be driven by pedagogical research and best practices, or DBER (discipline-based education research). In short, the burden has shifted – using what amounts to ancient methods now requires more rationalization than the existing data on best practices in education, including all that we know about ABCDF grading.

Adding issues of diversity, equity, and inclusion (DEI) to this conversation only bolsters the argument for a new philosophy of grading. Article after article finds traditional grading methods to be ineffective, harmful, and unjust (Ko, 2019). We must be purposeful about our instructional methods to reach as many students as possible, but could not possibly tailor individual instruction plans for each student. At my home institution, University of Detroit Mercy, we learn to place contemplatives into action as part of our dual charism Mission (University of Detroit Mercy Mission and Vision, n.d.). This translates into taking care of the whole person (“cura personalis”) and all the people (“cura apostolica”). In a recapitulation of the literature findings about rigor, we know that it exists, and faculty wish for our students to learn, so we must search for the most inclusive and equitable ways to do so.

As a final item of focus, many educators exploring alternate grading modes find “the question of the C student” to be the definitive threshold: when thinking about students who earn a C grade in your courses, would you rather they be able to do a small number of things very well, or do everything in the course at an average level? There is no correct response to this question – it is meant to be a frame from which educators can plan for what tasks or standards their students should be able to complete with mastery at the end of a lesson, unit, or course. As I considered this fundamental question, I realized I had taken the first steps on a journey of alternative grading, but I needed more input from experts to define my own, organic chemistry version of these varied principles.

1.2 Background – core philosophies for alternative grading

In preparation for the redesign of my course from an alternative grading perspective, I delved into the pedagogical philosophies of purposeful instruction in metacognition, specifications grading, ungrading, matters of grading equity, and evaluating overall student progress.

The work of McGuire (2015), a researcher in the discipline of chemistry, centers around her enthusiasm for metacognition and instructing students in the ways of their own learning. Such an element would be vital to convincing students of the value of a nontraditional evaluation scheme. I have used the “Study Cycle” graphic from her work to help my students plan for their daily and weekly work for over 10 years, and was eager to learn more about the learning habits of my students (Louisiana State University Center for Academic Success, n.d.). McGuire’s theories on how to aid students in thinking about their thinking were pivotal in my building an introductory module for my future students, especially in light of the graphical and conceptual nature of organic chemistry. McGuire’s discourse on treating separate subjects/disciplines as different when studying became the foundation for my own opening remarks to students at the beginning of the semester.

Nilson’s (2014) work on specifications grading was also an inspiration. Even before 2020, I had investigated the use of “all-or-nothing” style problems on my unit-ending assessments to emphasize the difference between full and partial skill mastery. Again, there are as many takes on “specs grading” as there are disciplines of study, but Nilson’s theories on connections among learning outcomes, grading criteria, and agreed-upon standards helped me converge on what was right for my students. Additionally, Prof. Susan D. Blum’s work in the area of “ungrading” and the disconnect between learning and schooling also brought insight to my ongoing course redesign (Blum, 2020). Her interpretation of the changing educational landscape and how to prepare students for the working world through timely, constructive feedback with no grade attached appealed to my affective side of teaching – why did I always feel guilty when grades were poor for a student? Making the correlation between these emotions and the role that I play in the classroom reinforced my confidence in being able to provide students meaningful feedback and set them on a trajectory of growth. Joe Feldman’s writing in the area of grading for equity also helped me understand my students’ previous experiences and how they shape their attitudes toward learning in higher education (Feldman, 2018). With knowledge stemming from K-12 experiences, Feldman differentiates between compliance with teacher demands and learning, with the goal of tearing down systemic achievement and opportunity gaps for students.

The main motivation for my journey was Talbert and Clark’s Grading for Growth blog and monograph (Feldman, 2018; Clark and Talbert, 2023). The sensible prose of their writing, in light of the theory and practice of alternative grading, drove home the need for an overhaul in my lecture and lab courses. Talbert and Clark’s work made the seemingly insurmountable task of course redesign feel more feasible and, in post-pandemic times, necessary for matters of inclusion and equity. My research was complete, and I was ready to decide the core values that would drive the overarching change in my organic chemistry courses.

1.3 Foundational principles of course redesign with AGS

Even in its nascent form, the amount of research literature surrounding the concepts of alternative grading can be overwhelming (Clark, n.d.; Townsley, n.d.). There have been a number of discipline-based articles, as well as writings on central concepts in the field. In addition, nearly every corner of modern education has been examined, from pre-school to graduate-level instruction. After my personal survey of the alternative grading landscape, I attempted to synthesize a smaller number of essential concepts for my courses, some more fundamental to my pedagogy, some more specific to the content of organic chemistry. This section will detail the “non-negotiables” which served as the guideposts for my course redesign.

Paramount amongst the ideas I was to explore was a commitment to experimentation in the classroom. A common criticism in the world of chemical education research (CER) is that scientific educators are only too eager, mostly from their training, to research in the laboratory, but loath to do so in the lecture hall. Such inertia is understandable; however, I have found that an enthusiasm for new methods, when explained to students at the start of the experiment, can re-invigorate the post-pandemic classroom environment. While some worry that altering content delivery and assessment methods negatively affects student learning, numerous studies [some in chemistry (Houchlei et al., 2023)] have shown students’ capacity for metacognition and resilience in the face of shifting pedagogies, even in the same subject area.

In addition, I aimed to hold fast to the following principles in my course redesign: eliminating points for assessment, grading major and minor assessments using specifications grading, monitoring placeholders in the gradebook for work that has not yet met specifications, and enacting all changes with an eye on inclusivity and equity for all students.

For over two (2) decades, I have awarded my students numerical points for correct responses on major and minor assessments. At the end of the course, one would simply need to divide the points earned by the points possible to determine a percentage, and therefore, letter grade. On paper, this is a simple calculation, especially in light of the worksheet I would attach to the last page of my syllabus: complete all assignments, record points earned, divide by maximum possible points. In the years leading up to and after the pandemic, I noticed that I was spending more of the last month of the semester walking my students through this calculation than discussing content or exercises. In addition, I had long wondered what awarding 12/15 versus 13/15 meant for my students, even with a detailed rubric. What good was this rubric if students did not read it and I could not quickly summarize it? During the first two (2) years of the pandemic, I experimented with specifications grading on one (1) problem per major assessment. Students were intrigued by the “all-or-nothing” nature of the grading associated with this problem, but in the end disliked it because there was no way to demonstrate partial knowledge. I liked it because it was quick to grade and allowed me to give richer feedback. The idea of not allowing students to earn traditional “zeroes” for missed assignments came to me after reading Feldman’s treatise on the message a zero sends students: earning a zero for work not done gets them off the hook and gives license to not learn the skill at hand (Feldman, 2018).

This concept fits into a cornerstone of alternative grading: recognition of the difference between qualitative and quantitative numeration. In other words, a zero can mean something else besides a fraction like 0/100 – it can mean “not done yet.” Lastly, I wanted all the changes in my courses to reflect a deep interlacing of inclusivity and equity for all my students. I was particularly concerned for neurodivergent students, whose learning preferences vary so drastically from the accepted norm and who are often excluded from the areas where neurotypical students are given access.

In summary, I appreciated the practical nature of Talbert and Clark’s “Four Pillars of Grading for Growth” (Clark and Talbert, 2023) and modified them to include the important points mentioned in this section:

1. Clearly defined standards (by way of pinpointed learning outcomes).

2. Give helpful feedback (verbal/written, not numeric).

3. Allow for reattempts without penalty (use a token system).

4. Indicate semester-long student progress (growth from unit to unit defines final grade).

Using these four values to guide my course re-design, I began to re-evaluate every course policy, exercise, assignment and major/minor assessment. The only thing that did not change in my courses was the content.

2 Planning the lecture course – organic chemistry I

Organic chemistry affects every person at every moment of their lives in ways that have only been scientifically examined for the last 150 years – in other words, it is a fascinating subject to learn. The recursive relationship between structure and reactivity drives the very engine of life and is the basis for many modern materials – clothes, building resources, electronics, and vehicles. There is merit in the study of this subject based on its graphical nature, its logical and creative approach to problem solving, and its vastness. Some have wondered about organic chemistry’s longstanding inclusion in the undergraduate pre-health curriculum, but few argue with its unique place in the world of higher education (Dixson et al., 2022). In many ways, I have found that organic chemistry’s distinctive place in the undergraduate science curriculum makes it ideally suited to experimentation with alternative grading strategies. The Fall 2022 semester would offer a chance to experiment with my Organic Chemistry I lecture course. This three (3)-credit class would have 75 total enrollees, mostly 2nd- and 3rd-year students, and would meet for 75 min twice a week, plus a 50 min recitation at the end of the week. Topic coverage would be traditional, including structure, spectroscopy, and introductory reactivity (acids/bases, substitution, elimination, rearrangement, electrophilic addition). But where to begin?

2.1 Clearly defined standards

First and foremost, there can be no “clearly defined standards” without a discrete set of learning outcomes. There are numerous models for how to map student learning onto a set of outcomes for the purposes of evaluation or grading. Upon first being introduced to Bloom’s taxonomy in my early years on the faculty, I was attracted to the order with which epistemological exercises could be categorized as learning (Bloom and Krathwohl, 1956). Moving from lower levels (knowledge, comprehension) to higher levels (synthesis, evaluation) gave students a sense of learning progress and educators a pathway to follow for instructional activities. This learning framework emphasizes what a student possesses at the end of an action and is basically the model for the modern learning outcome – i.e., any statement that begins with, “At the end of this lesson, a student should be able to XYZ if they have mastered the outcome.” Anderson and Krathwohl’s revision of Bloom’s Taxonomy preserves the order of the original, but it reframes the student’s role as demonstrator of a skill and rewrites the differing levels as active verbs (Anderson et al., 2001). Perry’s (1970) casting of a four-step model aimed to frame undergraduate student learning as growth and Wolcott and Lynch go one step further to specifically map grades to outcomes by way of students’ ability to re-envision information (Dixson et al., 2022).

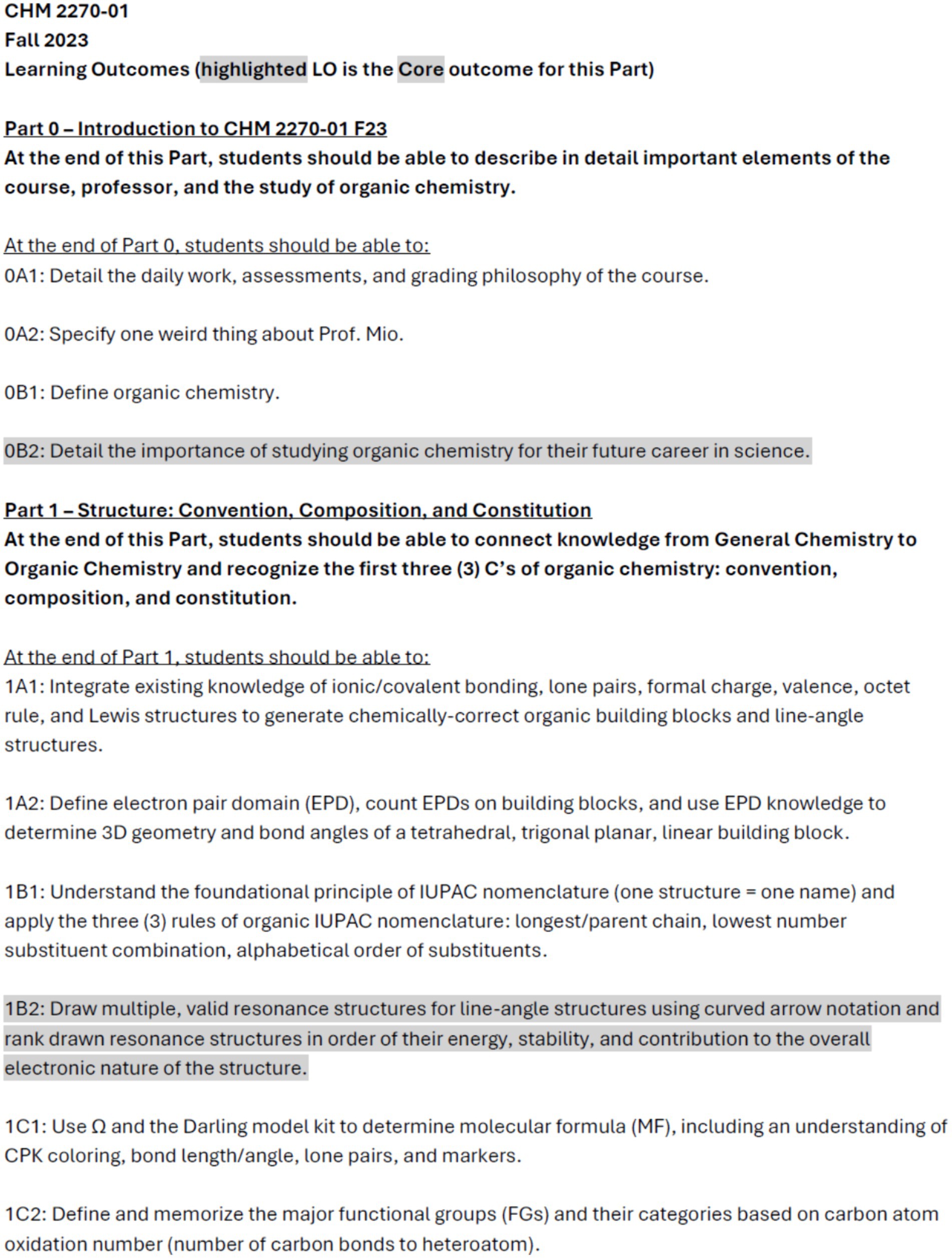

With these “maps” in hand, I began the culling of learning outcomes from a comprehensive list down to the particular learning units of both my Organic I and Organic II lecture courses. Before the pandemic, I had distilled my lecture notes into lists of micro-outcomes that better resembled a litany of individual facts than learning outcomes. In fact, there were over 300 of these, and this made the work untenable for a 16-week course (or two). I decided to group, rewrite, and reduce the total number of outcomes to fit into six (6) two-week parts. In addition, for Organic Chemistry I, I followed the advice of Talbert and Clark to consider “Core” learning outcomes for fundamental concepts in an introductory course. What resulted from this work became the superstructure of my Organic I Lecture course in Fall 2022:

Part 1 – Convention, Composition, and Constitution. Core Learning Outcome – drawing and ranking resonance structures

Part 2 – Constitution, Conformation, and Configuration. Core Learning Outcome – identifying isomeric relationships

Part 3 – Spectroscopy, Spectrometry, and Spectrophotometry. Core Learning Outcome – interpreting ¹H NMR data (symmetry)

Part 4 – Integrated Spectroscopy and Organic Reaction Basics. Core Learning Outcome – solving a structure from NMR, MS, IR data

Part 5 – Substitution and Elimination Pathways. Core Learning Outcome – using reaction criteria to determine SN and/or E pathways

Part 6 – Electrophilic Addition Pathways. Core Learning Outcome – solving retrosynthesis problems

The core learning outcomes were born out of a thought experiment many of us have considered – all details aside, what would we be horrified to find out our students did not learn at minimum in our courses? (Figure 1). I had already decided to make some of the non-Core learning outcomes in these Parts optional by way of what constituted a “Pass” for the main summative assessment at the end of the unit. In my first iteration of Organic II Lecture, I decided to abstain from having Core learning outcomes in favor of review outcomes that recapitulated the concepts of the first term.

2.2 Give helpful feedback

2.2.1 Take-home problem sets

Fast-forwarding to the end of a course unit (I call them “Parts”) from the student perspective, I planned to assign multi-day, collaborative take-home problem sets (THPS) as the main summative assessments of the course. For over two (2) decades, I experimented with recent, literature-based, multi-day, peer-collaborative, free-response problem sets to evaluate student learning. Primarily based on the CER (claim, evidence, reasoning) structure (Brunsell, 2024), these problem sets asked students to extend their skills past the elementary level and combine learned principles in new applications of problem solving. I retained this method of evaluation, but now linked each specific learning outcome to a single problem. With six (6) learning outcomes per THPS and one (1) of them being a Core learning outcome, students were challenged to complete the set in 4 days or less. If a student’s response did not pass on the Core learning outcome (vide supra 2.1) or on more than one (1) of the five (5) non-Core learning outcomes, the entire THPS would be termed “Try Again” and sent back to the student for editing or overhaul, depending on the number and magnitude of the misconceptions. Using tokens (vide infra 2.3), students could revise any problem not passed on the first try in an attempt to pass the THPS and earn a “badge” for that Part of the course. The number of badges a student accrues over the length of the course is reflected in their final grade. Badges were used to differentiate the successful completion of a THPS from an unsuccessful attempt.
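The pass rule for a THPS described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ProblemResult` type, the `evaluate_thps` function, and the outcome labels are hypothetical names invented for the sketch, not part of the course materials.

```python
from dataclasses import dataclass


@dataclass
class ProblemResult:
    """One THPS problem, linked to a single learning outcome (hypothetical type)."""
    outcome: str    # illustrative label for the learning outcome
    is_core: bool   # True for the Core learning outcome of the Part
    passed: bool    # all-or-nothing evaluation of the response


def evaluate_thps(results, max_noncore_misses=1):
    """Return "Pass" or "Try Again" for a whole problem set.

    Rule as stated in the text: the Core outcome must be passed, and no
    more than one of the non-Core outcomes may be missed.
    """
    core_ok = all(r.passed for r in results if r.is_core)
    noncore_misses = sum(1 for r in results if not r.is_core and not r.passed)
    if core_ok and noncore_misses <= max_noncore_misses:
        return "Pass"
    return "Try Again"


# Example: Core passed, one non-Core outcome missed
example = [ProblemResult("core outcome", True, True)] + [
    ProblemResult(f"non-core outcome {i}", False, i != 3) for i in range(1, 6)
]
print(evaluate_thps(example))
```

In this sketch a missed Core outcome returns the set regardless of how the other five problems went, which mirrors the idea that Core outcomes are the minimum every student must demonstrate.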

This style of assessment is time-consuming to write and evaluate. The second principle of “give helpful feedback” is based on a verbal, non-numeric model for constructive criticism. Therefore, I left numeric grading behind and went to an all-or-nothing evaluation style. Because this approach draws heavily on specifications grading, though, it is relatively easy to write “Pass” or “Try Again” with a sentence or two of review. For example – if no fewer than three (3) resonance contributors were asked for by the problem, but only two (2) were given by a student, this would immediately merit a “Try Again.” More fundamental misunderstandings (an incorrect charge on an atom or missing multiple bonds) would also merit “Try Again,” but with more direct feedback on what to change to make the response completely correct. In general, students understand these methods, but they do not like them when first introduced. Until they see the style of feedback offered a few times, they view “all-or-nothing” evaluation as punitive. To aid in students’ quick adjustment to the alternative grading model, I discuss with students what small errors (i.e., those that do not directly affect a student’s achievement of the learning outcome) were present and how to identify them. They quickly learn the best way to plan their responses to earn “Pass” on the first try.

Even if students do not earn a “Pass” on the first try for the THPS, tokens can be earned and used (vide infra 2.3) to review and revise their responses to full accuracy. In the spirit of the third and fourth ‘pillars’ (allow for reattempts without penalty and indicate semester-long student progress), students are allowed one (1) chance to correct on their own; any further attempts after this second try are performed in person as an oral exam. Speaking to pandemic positives, many of us in higher education have returned to the medieval roots of university education with oral exams, possibly the last bastion of extemporaneous evaluation in a world of Chegg and domyclasscholarly.com.
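One reading of the reattempt sequence above can be written as a small lookup. This is a hedged sketch: the function name `attempt_mode` and the mode labels are hypothetical, and token accounting is left out because it is described in a later section.

```python
def attempt_mode(attempt_number: int) -> str:
    """Map a THPS attempt number to how that attempt is carried out.

    Assumed reading of the policy: attempt 1 is the original take-home
    submission, attempt 2 is a revision the student completes on their own,
    and every attempt after the second is an in-person oral exam.
    """
    if attempt_number < 1:
        raise ValueError("attempt_number must be 1 or greater")
    if attempt_number == 1:
        return "take-home submission"
    if attempt_number == 2:
        return "independent revision"
    return "in-person oral exam"


for n in (1, 2, 3):
    print(n, attempt_mode(n))
```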

2.2.2 Skeleton notes, learning checks, and exercises

If learning outcomes are the first steps of a Part and take-home problem sets are the summative assessments, what occurs in the middle, in the domain of formative assessment? First, as a result of the older learning outcome catalog, I transformed my personal lecture notes into “skeleton notes” videos for students to watch for content introduction. In the wake of traditional lecture courses fading away, the clear, concise, engaging, and scrollable content introduction video reigns supreme. To ensure students engage with the videos, they are required to prepare enough to pass the next day’s learning check (LC) – a three-question quiz (multiple choice, short response) where the only requirement is that students take the LC, not that they give correct responses. The net result is a flipped classroom (Bergmann and Sams, 2012), wherein the vast majority of class time is spent formatively evaluating progress and running problems, with the vast majority of out-of-class work introducing new concepts. The LCs also allow for an easy attendance policy to be enacted.

While in class, students engage in think-pair-share, small-group, and discussion-based active learning exercises to extend their content knowledge beyond the introductory video. Students are always encouraged to attend office hours with more specific questions, but they are trained to bring broader concerns to the whole class during regular meetings. Homework, both online and written, is used to follow up on new topics after class meetings. In addition, submission of summarized notes at the end of the course Part is also used for content follow-up. Students are asked to fit the main concepts of the last topic set into a certain page space, and their submissions are then ungraded (Bergmann and Sams, 2012; Bergmann and Sams, 2014). As with many items in the course, the number of summarized notes turned in on time and meeting a minimum set of criteria affects a student’s final grade in the course.

2.2.3 Part summary quizzes and problem days

For the last class meeting of a course Part, Problem Day and a Part Summary Quiz are administered. Problem Day is a chance for students to think on their feet and solve smaller problems on the whiteboard. At the start of the term, students are pre-assigned multiple problem dates to reduce anxiety about this very out-in-the-open work. On the day of, students are randomly assigned a partner and are set to a problem, either as a “writer” or a “reviewer.” Writer pairs respond directly to the question after consultation with peers and the professor. They must draw out a detailed response next to the original problem on the board. Reviewer pairs work together to evaluate the response on the board and make edits as needed. They will make a 20- to 30-second presentation at the end to talk about the original problem, the writer response, and any changes they could make to the response. Again, the only tracked quantity for Problem Day is whether students participate based on their randomly assigned role. The activity gives a sense of finality to the set of learning outcomes in the past unit and prepares students for the collaborative nature of the Take-Home Problem Sets.

Part Summary Quizzes (PSQs) were generated as a response to the number of pre-health students at Detroit Mercy (essentially the majority of all science majors) who will take multiple-choice, organic chemistry-based admissions exams. An online course response system (CRS) is used to administer a multiple-choice quiz with one question per learning outcome at the very end of Problem Day. Students who participate in the PSQ earn credit toward their final grade, and those who “pass” with the same rubric as the Take-Home Problem Set (students must pass the Core learning outcome and 4/5 of the non-Core learning outcomes) can earn a token as a reward for keeping up with the material. Detroit Mercy also has a well-established tradition of recitation sessions for science lecture courses. These non-required sessions are independent problem-solving class meetings where new material cannot be covered, but skills can be practiced. The CRS is used to practice new material with multiple-choice mini quizzes that can earn students tokens for simply being present and taking them, as well as additional tokens for passing with a number of questions responded to correctly.
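As an illustration only, the pass rule shared by the PSQ and the Take-Home Problem Set (the Core learning outcome plus 4/5 of the non-Core learning outcomes) can be sketched in a few lines of Python. The function name, its parameters, and the assumption that each Part has exactly five non-Core outcomes are hypothetical and are not taken from the course materials.

```python
def part_passed(core_passed: bool,
                non_core_passed: list[bool],
                required_non_core: int = 4) -> bool:
    """Return True when the Core outcome is passed and at least
    `required_non_core` of the non-Core outcomes are passed.

    Assumption: five non-Core outcomes per Part, four of which must be passed.
    """
    return core_passed and sum(non_core_passed) >= required_non_core


# Core passed, four of five non-Core outcomes passed: the Part is passed.
print(part_passed(True, [True, True, True, True, False]))   # True

# Every non-Core outcome passed, but the Core outcome missed: not passed.
print(part_passed(False, [True, True, True, True, True]))   # False
```

The sketch makes the asymmetry of the rubric explicit: no number of non-Core passes can compensate for a missed Core outcome.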

2.3 Allow for reattempts without penalty

The next pillar of the alternative grading scheme requires what is commonly known as a token system. While the administration of the token system may seem like busywork for the educator, I assure you it’s no more complicated than the multiple weighted-point calculations that we are all used to with percentage grades! As a baseline, tokens are introduced to students as a system that will encourage them to become proficient in as many course learning outcomes as they choose. Students can redeem tokens: to extend a deadline for submission, to change a “Try Again” mark to “Pass” for a lower-level item (non-summative assessment, THPS), to replace an online homework submission, to allow for a revision of a Take-Home Problem Set where a badge was not earned, or for some other bending of the course rules agreed upon by the student and the professor.

Every student in the course starts with one (1) token. Students can earn more tokens throughout the semester by: (1) attending and participating in recitation, (2) getting a minimum of 2 out of 3 RQuiz questions correct at the end of recitation, (3) attending and writing a one-page reflection for a Detroit Mercy event approved ahead of time, (4) uploading complete THPS responses early, (5) attending in-person office hours once in the first two (2) weeks of the term, (6) enrolling in the course messaging app by Friday of the first week of classes, (7) passing the Part Summary Quiz, and (8) responding in a timely manner with regard to grade check-in. Some token-earning events are one-time-only; others can be completed multiple times during the semester. Some events earn partial tokens, while others earn full ones. In my courses, there was no maximum set on token earning and the average earned was approximately twelve (12).

Redeeming tokens is done through an online form, the link to which is displayed prominently on the course Learning Management System page. Once students submit this form, the student and the instructor receive an email receipt. This receipt serves as official approval, allowing students to immediately do the thing they redeemed the token for. Some items in the class require one (1) token to redeem (lower-level item replacement or deadline extension), while others need more than one (1) token (homework replacement, revision of a Take-Home Problem Set). The token total is updated in the professor’s gradebook.
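The bookkeeping described above can be pictured as a simple ledger. The following Python sketch is illustrative only: the class name, item names, and the two-token cost for homework replacement and THPS revision are assumptions (the text states only that these items need more than one token), and the actual course uses an online form and the professor’s gradebook rather than code.

```python
from dataclasses import dataclass, field
from fractions import Fraction

# Redemption costs in tokens. The one-token items come from the text;
# the value 2 for the other items is a HYPOTHETICAL placeholder.
REDEMPTION_COSTS = {
    "lower_level_replacement": Fraction(1),
    "deadline_extension": Fraction(1),
    "homework_replacement": Fraction(2),  # assumed cost
    "thps_revision": Fraction(2),         # assumed cost
}


@dataclass
class TokenLedger:
    """Tracks one student's tokens; tokens are never shared between students."""
    balance: Fraction = Fraction(1)  # every student starts with one token
    history: list = field(default_factory=list)

    def earn(self, event: str, amount: Fraction = Fraction(1)) -> None:
        """Record a token-earning event; partial tokens are allowed."""
        if amount <= 0:
            raise ValueError("earned amount must be positive")
        self.balance += amount
        self.history.append(("earn", event, amount))

    def redeem(self, item: str) -> None:
        """Spend tokens on an item, refusing if the balance is too low."""
        cost = REDEMPTION_COSTS[item]
        if cost > self.balance:
            raise ValueError(f"not enough tokens to redeem {item!r}")
        self.balance -= cost
        self.history.append(("redeem", item, cost))


ledger = TokenLedger()
ledger.earn("recitation attendance", Fraction(1, 2))  # a partial token
ledger.redeem("deadline_extension")
print(ledger.balance)  # 1/2
```

Using `Fraction` rather than floating-point numbers keeps partial-token arithmetic exact, which matters when balances are compared against redemption costs.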

As a matter of record, tokens cannot be shared, and unused tokens will be discarded at the end of the semester. The earning and redeeming of tokens have been shown to reduce student and instructor anxiety, as they allow for a universal correction coefficient for many of life’s unexpected twists and turns (Clark and Talbert, 2023). Tokens make the many iterations needed to master a learning outcome possible and can be used to make due dates flexible. In short, there are various ways to earn tokens and various items for which students can redeem them.

Students are instructed in the first week that this type of pedagogy nearly always generates a “token economy.” This term refers to the fact that students are advised to earn as many tokens as they think they will need to use. Some students may be late to class often (missing low-level Learning Checks) or need multiple revisions of their Take-Home Problem Sets. Statistics are supplied to current students based on the last term of token usage; for example, in Fall 2022, students used 77% of their tokens to revise THPS responses and earned 42% of their tokens by choosing to attend optional recitation.

Having used alternative grading in my courses for the past three semesters, I have noticed that tokens afford two (2) major benefits for the instructor. First, students tend to recognize the positive reward of a token early in the term, even though they may not have ever used the system before. In the Fall 2023 iteration of my course, over 89% of my 60 students responded during a week 1 assessment that they did not understand how the token system worked but had a favorable view of the concept. Bringing students on board with a token system may be the easiest part of making a transition to alternative grading. Secondly, tokens allow for a number of “normal” course operations to be incentivized. I have given students a colored notecard to hand to me during the first two (2) weeks of the term at office hours to earn a token reward. I have also asked students to self-determine their midterm and final grades with evidence to earn a token. This practice, in particular, opens up the lines of communication with students who over- or under-estimate their performance. Need students to sign up for your class messaging app? Offer them a token. The possibilities are endless and can apply to multiple modes of instruction.

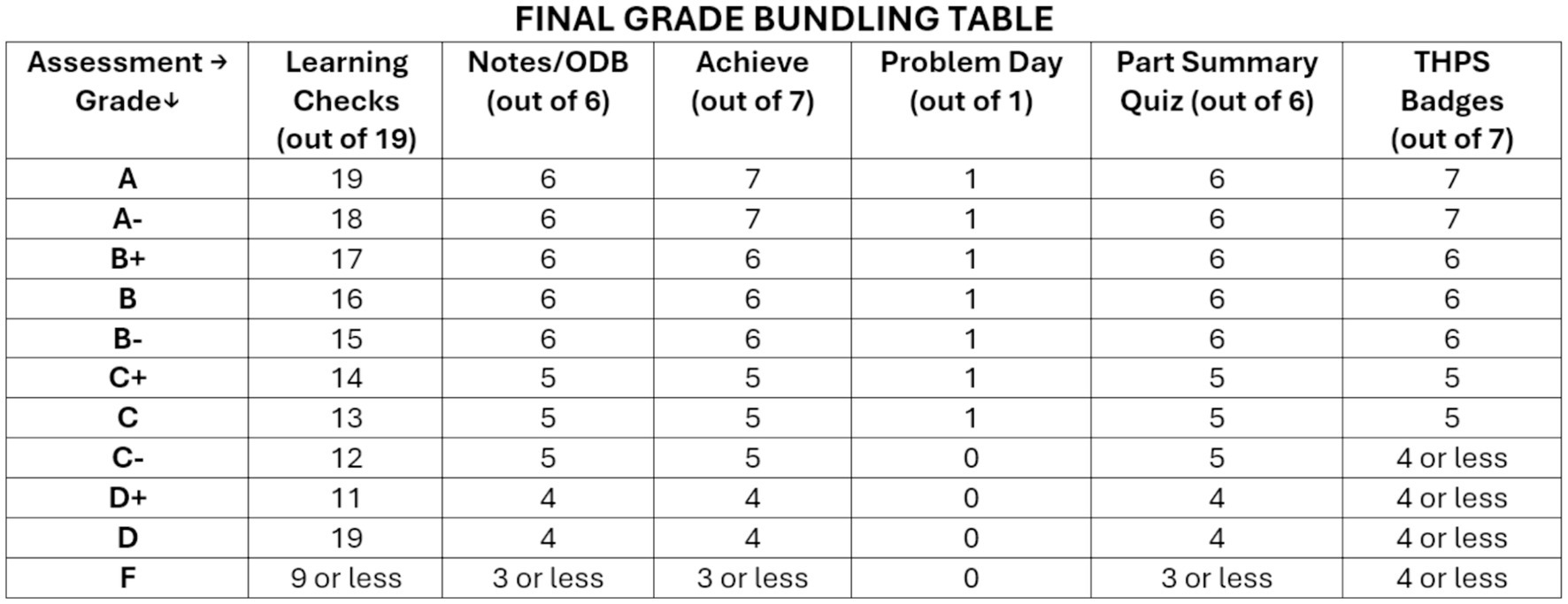

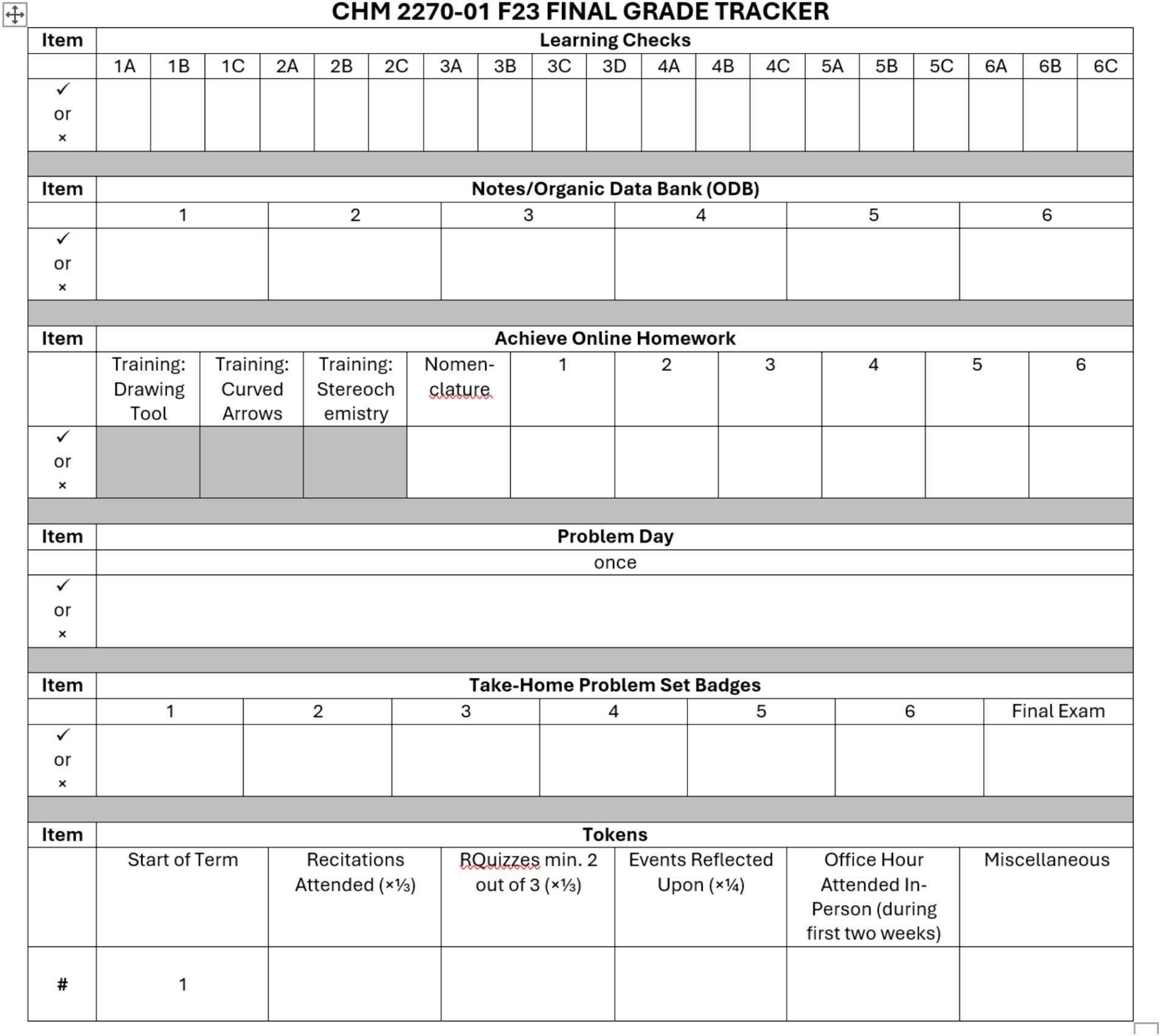

2.4 Indicate semester-long student progress

From the very start of the course, students are familiarized with the concept of the final grade bundling table (Figure 2). As previously mentioned for the multiple dimensions of the course, a minimum number of “passes” needs to be earned to reach a certain final letter grade level. Several key concepts of alternative grading are reflected directly or indirectly by this key component of the syllabus. First, students are presented with the fact that the course will not be based on accrued points being divided by possible points and then charted against the percentage grade scale. Practically speaking, this was the largest departure from traditional course operation for my students and needs special emphasis during the first weeks of class. Second, in most cases the final grade bundling table makes clear that students do not need to “do everything” in the course to earn an A final grade or pass with a D final grade. Theoretically, students can chart out a minimum number of passes on specific course dimensions to “dial in” their desired final grade. Of course, thanks to the token system there can always be another chance to pass critical items and bulk up weaker areas. In a world where, even before the pandemic, I was struggling to get my students to calculate a simple fraction to know their grade in the course, daily tracking of progress is very uncomplicated with a tracking sheet attached to the last page of the syllabus (Figure 3). Lastly, the final grade bundling table establishes an absolute metric for final grades and performance in the course. No more haggling over decimal places, rounding, or looking for extra credit. If a student earns only five (5) take-home problem set badges in the course, the best grade they can earn is a B+. That said, there is still a little nuance in a final grade bundling table, as different criteria can give different final grades that can be minimized, maximized, or averaged for reported final grades. 
The specific number chosen for each assessment type is meant to align with a specific fundamental academic skill: learning checks (attendance for engagement), notes/ODB (organic data bank, record-keeping), Achieve and Problem Day (exercises for practice), and THPS badges (summative assessment through collaboration).
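To make the logic of a bundling table concrete, here is a minimal Python sketch of the “minimized” option, in which the weakest course dimension determines the final grade. Every threshold below is a hypothetical placeholder rather than a value from Figure 2; the only constraint taken from the text is that five THPS badges cap the final grade at B+. The simplified grade scale and the dimension names are likewise assumptions.

```python
# Simplified grade scale, lowest to highest (an assumption for illustration).
GRADE_ORDER = ["F", "D", "C", "B", "B+", "A"]

# HYPOTHETICAL bundling table: minimum passes per dimension for each grade.
# Only "five THPS badges cap the grade at B+" is taken from the text.
BUNDLES = {
    "thps_badges": {"D": 2, "C": 3, "B": 4, "B+": 5, "A": 6},
    "learning_checks": {"D": 12, "C": 14, "B": 16, "B+": 18, "A": 20},
    "summary_notes": {"D": 2, "C": 3, "B": 4, "B+": 5, "A": 6},
}


def dimension_grade(dimension: str, passes: int) -> str:
    """Highest grade whose minimum number of passes is met in one dimension."""
    earned = "F"
    for grade in GRADE_ORDER[1:]:
        if passes >= BUNDLES[dimension][grade]:
            earned = grade
    return earned


def final_grade(passes: dict[str, int]) -> str:
    """Final grade under the 'minimized' rule: the weakest dimension decides."""
    per_dimension = [dimension_grade(d, passes[d]) for d in BUNDLES]
    return min(per_dimension, key=GRADE_ORDER.index)


student = {"thps_badges": 5, "learning_checks": 20, "summary_notes": 6}
print(final_grade(student))  # B+
```

Swapping `min` for `max`, or averaging the positions in `GRADE_ORDER`, would give the “maximized” or “averaged” variants mentioned above.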

2.5 Communicating expectations

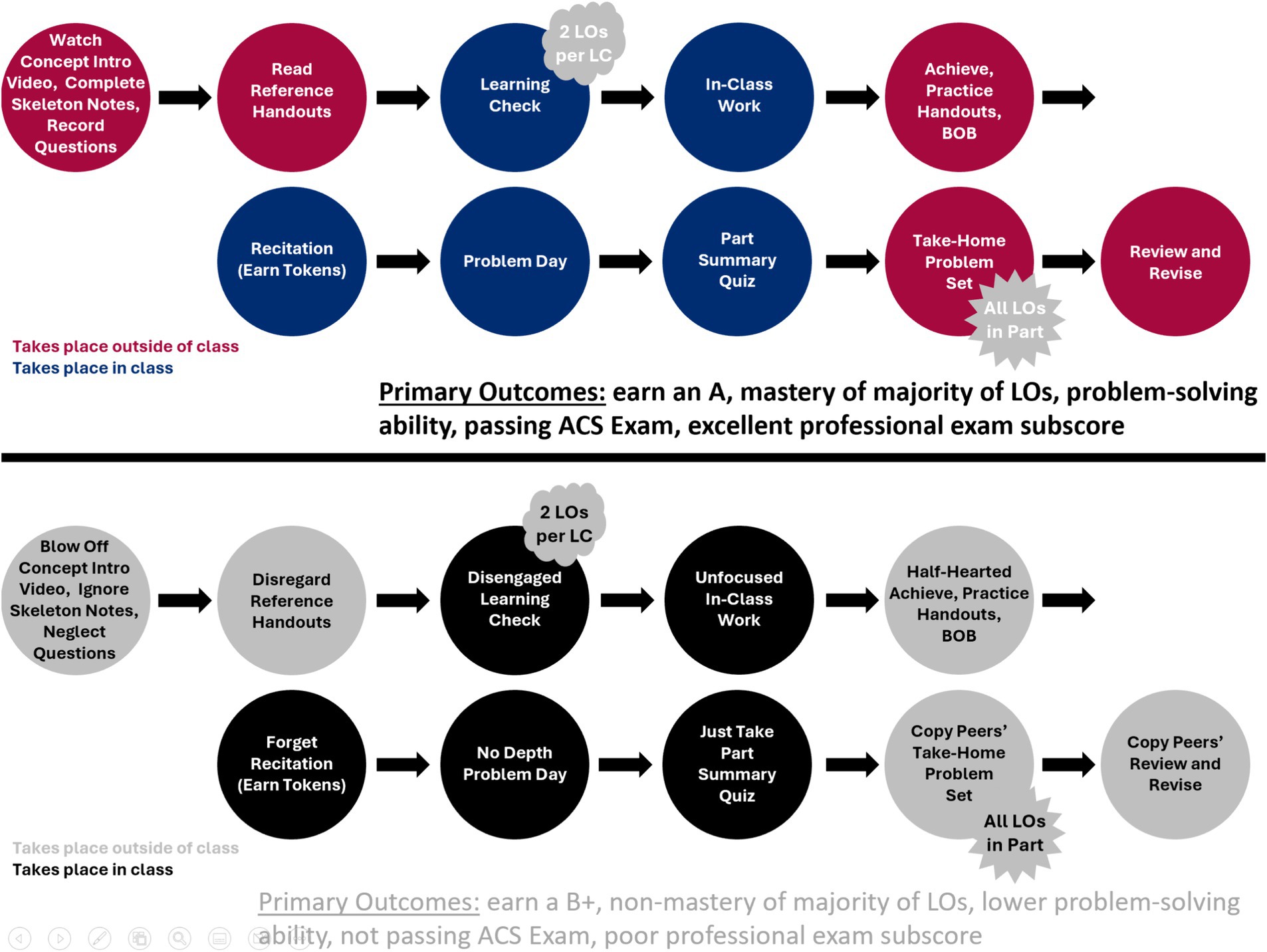

During the first iteration of the alternatively graded lecture course, a student information survey on the first day of class showed that zero students out of 75 reported having been part of an alternatively graded course in the past. In each subsequent iteration, the total number of students who claimed to understand alternative grading never exceeded 5% of total enrollment. Following a lead from the literature (McGuire, 2015), a large amount of time during the first week of classes was spent training the students in three (3) major areas: (1) the pedagogical principles upon which the course was based, (2) logistics of how to navigate the course, and (3) the general approach of metacognitive learning. In all iterations of the alternatively graded lecture course since the first, these simple investments did a great deal to quell student uneasiness and quickly prove the heart of the course was learning, not grades. Since there is no one recipe for how to change one’s teaching (Supiano, 2023) (and even perfectly executed best practices can easily backfire), I decided to vulnerably convey my own anxiety about these relatively untested methods by designing a graphic to guide students through the cycles of the course (Figure 4). On it, I lay bare the “right” and “wrong” ways to proceed through the course, harshly detailing ways to circumvent course policies and, therefore, deep learning. In short, my pledge to students was to meet them where they are (on screens, via video content introductions) and to gamify (owing to their love of social media and video games) the course. Anecdotally, students mentioned that this may have been the first course they took that cared enough to walk them through the process and expectations that undergirded their learning. 
I learned that it can be a common mistake to assume your students know even the basics of how to learn – showing up, taking notes, reviewing content, practicing with exercises, taking breaks, appropriate collaboration. Each of these skills is individually incentivized in the alternatively graded system, emphasizing skills beyond those of basic organic chemistry.

The key here is to encourage both a growth mindset and student agency in discovering that mindset for themselves (Torres, 2023). Students need to be placed into environments where they feel safe enough to take calculated risks, especially where livelihoods and lives are not yet on the line. When environmental conditions are changed, students’ struggles with new material can be normalized or overcome. We can also ask ourselves whether we treat failure as a natural part of revision and, ultimately, learning. Students all too often equate performance with identity and assume that most skills are innate. Since potential can be cultivated through different means, various methods of demonstrating mastery of learning outcomes should be designed for students. Additionally, student work should be responded to with the twin goals of affirmation and challenge. Most educators agree that it falls to the student to do the labor of learning. Instructors, however, can see from the 30,000-foot view and can parse out content and avoid feedback overload. Finally, we need to ask ourselves if students have the chance to digest, interpret, and apply the feedback we provide in our courses. Appropriate time for reflection and discernment is needed when moving from smaller, lower-stakes work to larger, higher-stakes assessment. Alternative grading removes many of the barriers to fulfilling these ideologies and casts the instructor-student relationship in a positive, outcome-centered, and self-determining light.

At the very end of the first iteration of the course, I was very pleased to find that my original goal had been achieved: ZERO students contacted me after final grades to discuss, barter, or complain about what they had earned. After all, they were directly interacting with the principles of alternative grading from the first day of class to the conclusion of the cumulative final exam. I observed that students were in touch with their final grades on a nearly day-to-day basis for the entirety of the 16-week course.

2.6 Planning the lab course – organic chemistry I

In the same semester I experimented with alternative grading strategies in my Organic Chemistry I lecture course, I did the same with my Organic Chemistry I laboratory course. This one (1)-credit class would have 24 total enrollees, mostly 2nd- and 3rd-year students, and would meet for 180 min once a week, plus a 50-min recitation that preceded the lab session. Because of previous tweaks to the AGS format, and perhaps also due to the more adaptable nature of practical laboratory courses, less overhaul was required. I had already designed a successful CURE (course-based undergraduate research experience) surrounding legal cannabinoids in over-the-counter products (Mio, 2022). Most scientific educators know that laboratory instruction takes a very different tack than theory courses, and in many cases, a much larger amount of prework. The alternative grading methods used for the lab course would have to follow the Four Pillars mentioned previously, in conjunction with a few corollaries: maximization of time in the lab and out-of-lab reflection, as well as ample time for engagement when lab is not center of mind (at Detroit Mercy, a one-hour lab recitation session occurs right before a three-hour lab session). All specific learning outcomes were arranged around the safe handling and bench chemistry of volatile organic compounds.

For the lab course, the focus became (a) what students will do to prepare for the lab session, (b) what students will accomplish during the lab session, and (c) what students will do to follow up after the lab session. Weekly prep work involved a lab notebook setup, along with CURE-based research into both materials and techniques. Elements of safety were always part of prep, in addition to short videos showing students performing the technique to be practiced. Upon arriving in lab, student pre-work was checked in and partners were assigned. Questioning all aspects of the bench work was encouraged by both instructor and teaching assistant (TA) throughout the session, and a quick partner evaluation was filled out at the end upon exit. After lab, a certain number of days were given to respond to post-lab questions, a task that “unlocked” a follow-up assessment on both the theory and the practice of the week’s experiment, including simple distillation, thin-layer chromatography (TLC), solubility, extraction, and instrumental (GC–MS, FT-IR, NMR) analysis methods. Any missed or less-than-satisfactory aspect of the course resulted in a call for discussion with the instructor within 48 h. A literature research project with weekly objectives brought about a group presentation at the end of the semester describing both data and social implications of the work. In short, as long as students showed up and completed their assigned work in good faith and a timely manner, they were rewarded with positive feedback. Missed items and unsatisfactory work, as measured against a “minimum expectations” rubric, caused short-term discussion and chances to redo the work. 
While preliminary outcomes will be discussed in the Discussion section of this work, students from two (2) iterations of this alternatively graded lab course anecdotally reported that they experienced reduced pressure from not taking quizzes/exams, found lab sessions more enjoyable because they felt more prepared, and thought their learning went deeper with more focused reflection outside of lab.

3 Discussion – preliminary outcomes

Although the timetable for innovation in alternative grading may seem relatively short, educational researchers have been publishing their findings for over 30 years (Clark, n.d.). Qualification and quantification of data in this area will serve to legitimize the field and attract more educators as the benefits of alternative grading are explored from pre-elementary all the way up to the graduate level.

Active scholars in the field have clustered four (4) student-centered, positive results of courses run with alternatively graded activities or formatted completely under the principles of alternative grading (Clark, n.d.): (1) task-related feedback encourages students’ intrinsic motivation and can improve performance; (2) students feel and exhibit less anxiety and more risk-taking; (3) instances of student academic misconduct are less likely; and (4) students who transition from AGS can do well in later AGS or traditional courses. In short, alternatively graded courses in higher education are not harmful; in fact, students report that the structure of such courses is beneficial to many aspects of their learning in the course.

In my few iterations of alternatively graded courses, I have found all of these initial findings to be true and have discovered many instructor-specific benefits along the way. While it is true that course redesign and preparation are always time-consuming, on the order of weeks, the investment pays off greatly: day-to-day activities take far less effort, and instructional nimbleness is at its highest. Since all activities of the course are fenced by the learning outcomes phrased as direct tasks, the writing of daily/unit assessments can morph around the specific strengths and weaknesses of the current cohort of students. In addition, more meaningful feedback can be generated more quickly because the assumed rubric of grading is “Did the student accomplish the task?”

In general, I have found that shifting student attitudes away from final grade and toward genuine learning is a benefit that nearly all educators would embrace. Again, I can report that this has occurred in my AGS courses in both day-to-day and overarching conversations. There is a sense among my students, especially after the course structure is unveiled on the first few days of classes, that having options for what to complete in a course does not equate to setting lower learning goals. As anecdotal evidence, in the Fall 2022 and 2023 versions of the Organic Chemistry I course, nearly all students revised all of their incorrect responses on Take-Home Problem Sets, even the ones not required to earn the “pass.” I have witnessed that student self-agency has overall increased in my AGS courses, and anecdotally, students report on end-of-term evaluations that they appreciate being put “in the driver seat” of their learning, not just being told what to do.

Finally, I have discovered that writing letters of recommendation for former students who have shared AGS courses with me is a far more straightforward endeavor. Each task in the lecture course, for example, is paired with a chief, non-organic chemistry learning goal: daily learning checks (keeping up with content), submission of summarized notes (ability to condense major topics), online homework (exercises to extend skills), extemporaneous problem-solving presentations (spontaneous exercise work), and biweekly, skills-based assessments (summative evaluation). Alignment of student work to these metrics allowed for a very simple structure to letters of recommendation that includes many of the non-content skills employers and graduate schools are looking for in undergraduate students. Speaking of final grades, detractors of AGS state that too many high grades will be assigned using these principles. In short, I have found that the number of A and B grades increases with the application of AGS. However, there may be a fallacy embedded in statements like, “massive grade inflation is a corruption of standards.” I have found that it is a more realistic view to distinguish between negative grade inflation, or unjustifiably high grades, and positive grade inflation, or more effective pedagogies resulting in higher achievement for more students. I find that I can now effortlessly focus on what my students can and cannot do, down to the number of times it takes them to “pass” an individual learning outcome. In fact, I am beginning to sense that there may be no difference between a student able to pass a learning outcome on the first versus more numerous tries. Accommodating more than one (1) learning preference in a course is always a supreme challenge for the educator, and AGS provides one very beneficial way to accomplish that.

3.1 Advice for those starting an alternative grading journey

The early returns on alternative grading delivering on promises of enhanced student learning and equity are strong. However, decades of traditional pedagogical methods will not submit overnight. In addition, the best laid plans often do not work out the way we educators wish they would or intend them to. Herein lies some advice for those looking to take the first steps in their AGS journey.

3.1.1 Matters of scale matter, both in the size of course enrollment and content

It is theoretically possible to incorporate AGS into an entire course, one unit of a course, one lesson of a course, or simply one class period. I have done this for smaller (24 students) and larger (116 students) courses. More so than traditional methods, I find AGS balances out in these enrollment realms with little cause for overhaul. I have also been fascinated by experienced faculty’s response to which of these is the better place to start. Some think that the only way to begin anew is to cast off all course logistics. Others state that starting small is always the lowest risk. I agree with both statements. As long as you are learning outcome-focused, an entire course or one class period can accommodate change. I would rephrase this as: Do something – anything – and tie its assessment to a discrete learning outcome. The two-point rubric is quite freeing – can the student do it or not? What do they need to do it? How can you, as an educator, support that need?

3.1.2 Building the plane while flying it is OK

Welcoming students to your experiment can have a calming effect on all involved: we do not know what will happen next, but we are excited about it! Interestingly, this was the advice given to me as I started my academic career with regard to laboratory research. As an organic chemist, I reflect often on the comment “Why are we so eager to experiment in the lab, but not in the classroom?” Any amount of effort could reap benefits in ways difficult to visualize at the outset. Critics may bring up the tyranny of content and the fact that too much gear-shifting can disorient students. A more positive take on these common critiques might be that exposing students to many different methods of instruction and assessment can bolster their learning toolbox. Ungrading and metacognitive instruction are the low-hanging fruit that can instantly transform instruction. Because all disciplines are distinct in what pedagogy works best for learning, and taking into account the diversity of learners swells the possibilities to incalculable numbers, we educators must simplify, simplify, simplify. Students learn lessons, then can practice to earn feedback unfettered by grading (ungrading). In the same vein, walking students through a “cycle” of the course and how to think about their own thinking (metacognition) in the discipline proves our dedication to reflection on learning, a skill we wish to instill in them.

3.1.3 As a cornerstone principle, pledge to decouple learning from fear of evaluation

Post-Covid students in American higher education have endured a global pandemic, massive social and political upheaval, and the advent of social media and AI, all within less than 10 years and in an era of near-instant information sharing. Our best students will recognize that in considering alternative grading, their educators are concerned for both their learning and mental health. When educators attempt to use anxiety as motivation with the current generation of college students, academic performance diminishes. Alternative grading strategies demonstrate, from first principles, that we care about a student’s learning and academic success. To paraphrase the writer and civil rights activist Maya Angelou, “I’ve learned that people will forget what you have said, people will forget what you did, but they will never forget how you made them feel.”

4 Conclusion

I hope that my writing has served to inspire you to pursue a path of alternative grading for your courses, organic chemistry or otherwise. The benefits of the pedagogy far outweigh the uncertainty and time involved in converting aspects of your course, or complete courses. With traditional lecturing hundreds of years old and ABCDF grading still relatively young, time will afford us little chance to await an educational sea change as substantial as the Covid-19 pandemic. I, like many members of the Academy, think that the global pandemic only accelerated and exacerbated issues that were already fomenting. We know our chosen disciplines so very well. The question has always been: How can we help students embrace the confusion of learning a brand-new set of concepts? Alternative grading, with its student-centered goal-setting, timely and applicable feedback, intent of clear learning outcome achievement, and reassessment of revised work, both meets and exceeds the needs of a new generation for academic evaluation. The winds have already changed, and the time has come to walk down a different road on the journey of teaching and learning.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

MM: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The author would like to thank his colleagues M. R. Livezey and M. Abdel Latif for inspiring and insightful conversations on the many topics of alternative grading. I would also like to thank my students for always being the best motivators and idea-machines for better pedagogy.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Anderson, L. W., and Krathwohl, D. R. (Eds.) (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Boston, MA (Pearson Education Group): Allyn & Bacon.

Bergmann, J., and Sams, A. (2012). Flip your classroom: Reach every student in every class every day. Washington, DC: International Society for Technology in Education.

Bloom, B. S., and Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals, by a committee of college and university examiners. Handbook I: Cognitive domain. NY: Longmans, Green.

Blum, S. D. (2020). Ungrading: why rating students undermines learning (and what to do instead). Morgantown, WV: West Virginia University Press.

Brunsell, E. Designing science inquiry: claim + evidence + reasoning = explanation. Edutopia, Available at: https://www.edutopia.org/blog/science-inquiry-claim-evidence-reasoning-eric-brunsell (Accessed March 1, 2024).

Campbell, D. T. (1979). Assessing the impact of planned social change. Eval. Program Plann. 2, 67–90. doi: 10.1016/0149-7189(79)90048-X

Clark, D. Bibliography of alternative grading research. Available at: https://docs.google.com/document/d/1zCpoFgrzN2JUXyTfAm5xWdfLeLWt8P6xu8oqySZflYc/edit#heading=h.34m439eanynn (Accessed March 1, 2024).

Clark, D. Four key research results: a summary of some important research on alternative grading. Available at: https://gradingforgrowth.com/p/four-key-research-results?utm_source=%2Fsearch%2Fbibliography&utm_medium=reader2 (Accessed March 1, 2024).

Dixson, L., Pomales, B., Hashemzadeh, M., and Hashemzadeh, M. (2022). Is organic chemistry helpful for basic understanding of disease and medical education? J. Chem. Educ. 99, 688–693. doi: 10.1021/acs.jchemed.1c00772

Draeger, J., del Prado Hill, P., Hunter, L. R., and Mahler, R. (2013). The anatomy of academic rigor: the story of one institutional journey. Innov. High. Educ. 38, 267–279. doi: 10.1007/s10755-012-9246-8

Draeger, J., del Prado Hill, P., and Mahler, R. (2015). Developing a student conception of academic rigor. Innov. High. Educ. 40, 215–228. doi: 10.1007/s10755-014-9308-1

Feldman, J. (2018). Grading for equity: what it is, why it matters, and how it can transform schools and classrooms. Thousand Oaks, CA: Corwin, 23.

Goodhart, C. (1975). “Problems of Monetary Management: the U.K. Experience” in Inflation, depression, and economic policy in the west. ed. A. S. Courakis (Totowa, New Jersey: Barnes and Noble Books), 116.

Houchlei, S. K., Crandell, O. M., and Cooper, M. M. (2023). “What about the students who switched course type?”: an investigation of inconsistent course experience. J. Chem. Educ. 100, 4212–4223. doi: 10.1021/acs.jchemed.3c00345

Ko, A. (2019). Grading is ineffective, harmful, and unjust—let’s stop doing it. Medium 16. Available at: Medium.com.

Louisiana State University Center for Academic Success. Available at: https://www.lsu.edu/cas/ (Accessed March 1, 2024).

McMurtrie, B. (2023). Students crossing boundaries: rudeness, disruptions, unrealistic demands. Where to draw the line? Chron. High. Educ. 14.

Mio, M. J. (2022). “Second-year, course-based undergraduate research experience (CURE) in the organic chemistry of cannabinoids” in Abstract of papers, ACS fall 2022 (Washington, DC: American Chemical Society).

Nelson, C. E. (2010). Dysfunctional illusions of rigor. Improve Acad. 28, 177–192. doi: 10.1002/j.2334-4822.2010.tb00602.x

Oleson, A., and Hora, M. T. (2014). Teaching the way they were taught? Revisiting the sources of teaching knowledge and the role of prior experience in shaping faculty teaching practices. High. Educ. 68, 29–45. doi: 10.1007/s10734-013-9678-9

Perry, W. G. Jr. (1970). Forms of intellectual and ethical development in the college years: A scheme. New York: Holt, Rinehart, and Winston.

Talbert, R., and Clark, D. Available at: gradingforgrowth.com (Accessed March 1, 2024).

Torres, J. T. (2023). 4 questions to ask to promote student learning. Inside High. Ed. (Accessed March 7, 2023).

Townsley, M. A comprehensive list of standards-based grading articles. Available at: http://mctownsley.net/a-comprehensive-list-of-standards-based-grading-articles/ (Accessed March 1, 2024).

University of Detroit Mercy Mission and Vision. Available at: https://www.udmercy.edu/about/mission-vision (Accessed March 1, 2024).

Keywords: alternative grading, ungrading, organic chemistry, chemistry education research, metacognition, specifications grading

Citation: Mio MJ (2024) Alternative grading strategies in organic chemistry: a journey. Front. Educ. 9:1400058. doi: 10.3389/feduc.2024.1400058

Edited by:

Michael Wentzel, Augsburg University, United States

Reviewed by:

Brian Woods, Saint Louis University, United States

Brandon J. Yik, University of Virginia, United States

Copyright © 2024 Mio. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matthew J. Mio
