- Educational Leadership & Policy Division, College of Education and Human Development, George Mason University, Fairfax, VA, United States

School systems have increasingly turned to continuous improvement (CI) processes because traditional school improvement plans (SIPs) have helped schools neither reach their goals nor maintain performance in challenging times. Improvement science is one way of enacting CI; it combines CI with networked improvement to encourage educational equity and build organizational resilience. This study examines the efforts of a school district in the United States to use improvement science to move its underperforming schools from static SIPs to a dynamic improvement process. Using a case study design with observations and interviews, we find that several sensemaking mechanisms mediated the relationship between organizational learning and authentic improvement science implementation. Given time and resource constraints before and during the COVID-19 pandemic, the complexity of improvement science often inhibited sensemaking, and participants frequently reported that improvement science was too inefficient for their needs. Schools that more successfully integrated improvement science into SIPs saw the value of a systematic approach to SIPs, had interest in distributed leadership, and saw improvement science as advancing equity. This study provides insight into the utility of improvement science as a tool to build organizational resilience as part of school improvement while documenting the many difficulties school improvement teams face in shifting away from static school improvement practices.

1 Introduction

Improvement science in education is lauded as a groundbreaking philosophy that can guide educational organizations to accelerate improvements through disciplined inquiry, networked improvement, and adapting research to local contexts (Biag and Sherer, 2021; Hinnant-Crawford, 2020; LeMahieu et al., 2017; Lewis, 2015). However, this paradigmatic shift in how school innovations are created and implemented could come with significant political and organizational challenges that inhibit improvement science's effectiveness (Bush-Mecenas, 2022; Lewis, 2015; Yurkofsky et al., 2020). Several common challenges can impede the effectiveness of improvement science in underperforming schools, such as external accountability pressures that prioritize outcomes over improvement processes, organizational cultures that are antagonistic toward admitting failure or the need to improve, and the difficulty of scheduling meetings in and across schools within the confines of contractual hours. Such impediments have recently been conceptualized as the relational elements of schools that incentivize leaders to resist change due to mistrust, reluctance, and teacher autonomy (Yurkofsky et al., 2020).

At the same time, school leaders and teachers often recognize that current practices are not resulting in schools meeting their improvement goals, incentivizing practitioners to implement processes like improvement science that can disrupt persistent problems of practice and lead to lasting change (Biag and Sherer, 2021; Bryk et al., 2015). Improvement science pursues these goals through six core principles: a problem-focused, user-centered approach to improvement (1), developing a deep understanding of variation in the problem (2) and the systems that create the problem (3), embedding measurement of implementation and outcomes (4), accelerating improvement through rapid cycles of continuous improvement (CI), often called plan-do-study-act (PDSA) cycles (5), and networked improvement across organizations (6). Yet, despite the robust theoretical framework undergirding improvement science, empirical research on the design, implementation, and effects of improvement science and other CI processes (e.g., Data Wise, design-based implementation research) is only beginning to emerge, particularly for tumultuous times like the years of the COVID-19 pandemic (Aaron et al., 2022; Yurkofsky, 2021). For instance, prior research has found that educational leaders can struggle to integrate improvement science into their work and, instead, fall back on typical routines (Bonney et al., 2024; Mintrop and Zumpe, 2019). Overall, we have little understanding of the extent to which improvement science could build schools' organizational resilience – their ability to navigate challenges to both cope successfully and make positive adaptations (Duchek, 2020; Vogus and Sutcliffe, 2007).

In this study, we explore how central office instructional leaders in one large, suburban school district in the United States sought to use improvement science (as operationalized through the six core principles) to transition school improvement from the creation of compliance-driven, static plans (the norm within this setting) into dynamic theories of action, updated throughout the school year in response to context-specific implementation efforts. The school district did so through the creation of a networked improvement community (NIC), an arrangement in which multiple school improvement teams (composed of school leaders and teachers) collaborate and share learning to accelerate improvement. This study focuses on a context in which acknowledging the need to improve was disincentivized by a desire not to upset the status quo, and in which the central office instructional leaders had no supervisory authority over the school improvement teams. Because adaptation and continuous innovation are fundamental aspects of organizational resilience (Duchek, 2020), this effort explicitly positioned improvement science as the method for encouraging more resilient school improvement, within a system in which central office instructional leaders needed to convince school improvement teams to participate.

Our analyses sought to understand whether improvement science encouraged underperforming schools' improvement teams (including school leaders and teachers) to adopt a more collaborative approach to school improvement and to transition from year-long to short-cycle school improvement planning. Unlike prior work that examines shifts in mindsets and school improvement plans (SIPs), we examine whether these initiatives meaningfully changed the school improvement process into a more resilient organizational routine (Aaron et al., 2022). We do so by distinguishing whether the integration of improvement science into school improvement was authentically aligned with improvement science principles, only partially aligned with them, or inauthentic (i.e., did not integrate improvement science into school improvement). This study concerns a pilot improvement science-driven school improvement NIC and addresses the following research questions:

1. How did school improvement team members describe their experiences with district-led organizational learning on improvement science?

2. How did school improvement team members make sense of improvement science in ways informed by their organizational learning?

3. How did school improvement team members describe the authenticity of their improvement science implementation into their school improvement processes and the ways in which authenticity of implementation was informed by organizational learning and their sensemaking?

In addition to the previously mentioned purposes of the study, we explore how sensemaking processes of beliefs, context, and messaging enabled or constrained improvement science implementation. We describe how sensemaking enabled school improvement teams at NIC schools to authentically integrate the six improvement science principles into their work, providing some positive evidence for the applicability of improvement science's theory of change in an educational context. However, we also document significant barriers practitioners encountered that challenged improvement science's theory of change and its ability to build organizational resilience. The primary contribution of this study is to build evidence on the processes necessary for authentic improvement science implementation and organizational resilience against the backdrop of schools' limited organizational capacity and serious political considerations.

2 Conceptual framework

In broad terms, educational research regarding CI in education settings falls along a continuum; one extreme attests to the transformative possibilities of CI, while the other questions whether CI is feasible in school settings (Aaron et al., 2022; Biag and Sherer, 2021; Bryk et al., 2015; Bush-Mecenas, 2022; Mintrop and Zumpe, 2019; Penuel et al., 2011; Peurach, 2016; Yurkofsky et al., 2020; Yurkofsky, 2021). Ultimately, much of this writing focuses on whether schools (including schools from our study setting) should adopt CI (e.g., Bryk et al., 2015). An emerging line of research is bringing evidence to bear upon this debate by seeking to understand CI implementation in educational contexts (see Yurkofsky et al., 2020); our study contributes to this emergent research. Below, we describe our conceptual framework, which connects organizational learning on improvement science to the authenticity of improvement science implementation, both directly and mediated through sensemaking processes of beliefs, context, and messaging.

2.1 Authenticity of improvement science implementation

Improvement science is one way of integrating CI into school improvement routines and educator mindsets. We define CI as the integration of iterative, short-cycle (45–90 day) plans that intentionally build on one another by proposing hypotheses about specific change practices, testing those hypotheses, and then adapting practice based on test results. For example, a school may aim to change how its math teachers help students build their understanding of the real-world contexts from which mathematical problems arise. A CI-focused approach to this innovation, often operationalized through PDSA cycles, might lead the school to propose hypotheses about the extent to which real-world, context-building instruction builds student mastery and to collect data to test those hypotheses. The school would then use the test results to inform future context-building instructional efforts, a process referred to as disciplined inquiry (Bryk et al., 2015).

Improvement science complements this principle, disciplined inquiry, with five other principles, yielding the six core principles of improvement science. Before engaging in disciplined inquiry through PDSA cycles, educators should deeply understand the problem they are seeking to address, integrating the perspectives of those with direct experience of that problem (i.e., principle 1 "be problem-focused and user-centered"). Improvement science uses data to understand variation in implementation and outcomes (i.e., principle 2 "attend to variability") and seeks to understand the ecosystem in which the improvement is to take place (i.e., principle 3 "see the system"). In order to engage in disciplined inquiry, educators need to "embrace measurement" (principle 4) as a tool for understanding implementation and intended outcomes. Finally, what educators learn through enacting these first five principles, particularly what they "learn through disciplined inquiry" (principle 5), is then accelerated when schools "organize as networks" (principle 6) by collaborating across multiple organizations within a NIC structure (Bryk et al., 2015).

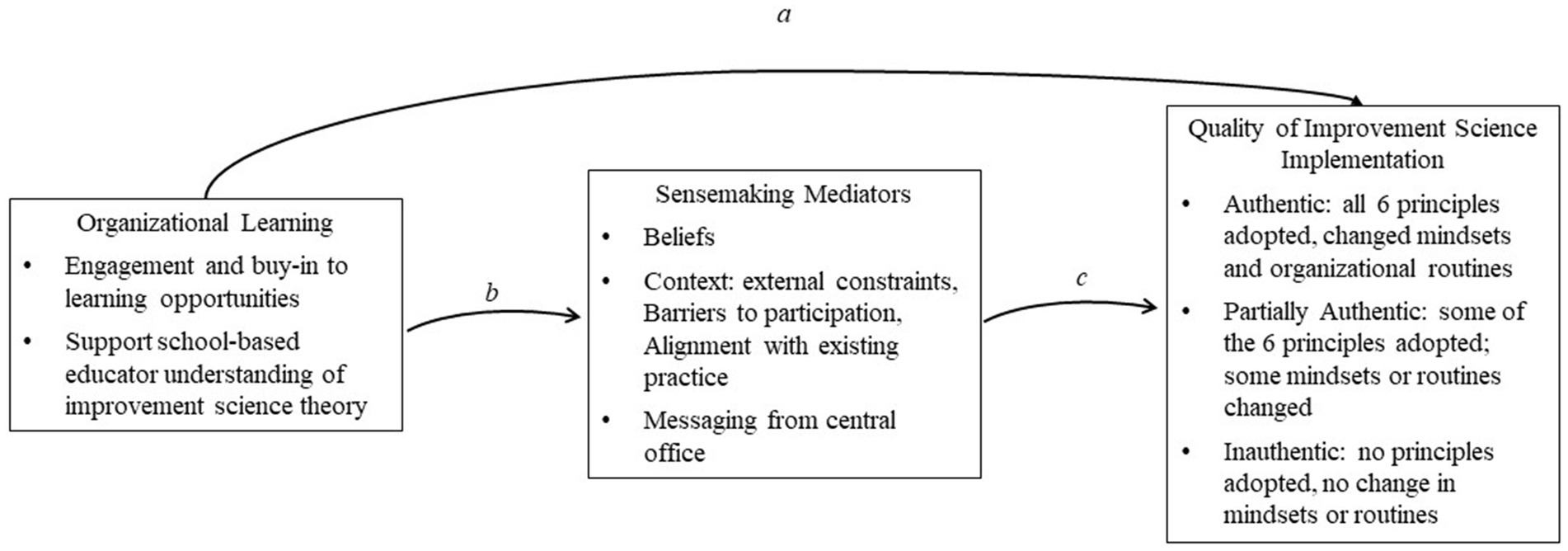

Notably, improvement science is not concerned with the fidelity of implementation of any particular change practice; instead, it focuses on strengthening school improvement processes that transcend specific change practices. In other words, improvement science seeks to change mindsets and organizational routines so that schools can improve regardless of the specific problem or change practices. "Authentic" integration of improvement science would enmesh its six core principles into day-to-day school improvement, as exhibited through a focus on understanding problems, attending to variability, seeing the system that produces the problems, embracing measurement, learning through disciplined inquiry, and networking learning (see rightmost box in Figure 1; Bryk et al., 2015; Hinnant-Crawford, 2020). We characterize schools that adopted only some of the six core principles, or changed only some school improvement-related mindsets or routines, as exhibiting "partially authentic" implementation (see rightmost box in Figure 1). For instance, a school might be "problem-focused and user-centered" in its approach to problems of practice but not engage in PDSA cycles. This example would be classified as partially authentic implementation of improvement science because the school integrated one improvement science principle but not another ("learn through disciplined inquiry"). Finally, schools that did not integrate any of the principles are labeled "inauthentic" (Figure 1). For instance, inauthentic implementation would occur if a principal stated that they did not have time to engage in improvement science or were uninterested in using it, and the school thus never used any of the improvement science principles in its school improvement work.

Figure 1. Conceptual framework linking engagement with organizational learning to the quality of improvement science implementation independently and through mediators.

Our conceptual framework for the present study extends this improvement science framework by recognizing the theoretical importance of organizational learning for learning about and building engagement in improvement science, along with key sensemaking mediators necessary for authentic improvement science implementation. We explicate the connections in the conceptual framework below, and our analysis examines how this framework applies within our study.

2.2 Organizational learning

We define organizational learning as the experiences, delivered by central office instructional leaders to school improvement teams (i.e., school-based teams), that aim to apply improvement science (Leger et al., 2023). While learning can be delivered by school-based leaders, outside vendors, and university partners, in this study all organizational learning was delivered by central office instructional leaders. Organizational learning opportunities include (in)formal meetings, coaching, and the physical or digital resources central office instructional leaders provide. We argue that participation in organizational learning is an antecedent of improvement science implementation, as organizational learning is often the primary mechanism through which schools adopt new improvement processes. Organizational learning can affect improvement science implementation if participants engage in these learning opportunities and agree that participating in them is worthwhile (i.e., buy-in), or if these opportunities successfully support learning of improvement science theory and of how to use improvement science organizational routines. Engaging with the content, buying into these opportunities, and understanding improvement science theory can then directly support implementation (path a, Figure 1) as well as enhance sensemaking (path b).

2.3 Sensemaking mediators

A novel aspect of this study is the conceptualization of "sensemaking mediators" between organizational learning and the authenticity of school-based improvement science implementation. We use the term mediators because we theorize that sensemaking is affected by antecedents (organizational learning) and, in turn, affects outputs (improvement science implementation). Previous literature has examined the relationship between learning about improvement science and implementation (see Leger et al., 2023) and how aspects of sensemaking influence improvement science implementation (see Bonney et al., 2024; Mintrop and Zumpe, 2019). However, this literature has focused on educational practitioners who are learning improvement science as part of their graduate-level university coursework, a situation that, we argue, is distinct from learning about improvement science through organizational learning provided within school districts. For instance, prior literature finds educators often struggle to implement what they learn about improvement science in graduate coursework within district contexts in which colleagues are not familiar with the six core principles and in which robust support for the use of improvement science is lacking (Bonney et al., 2024; Leger et al., 2023). In contrast, we study a context in which the school district itself encourages the use of improvement science and provides the organizational learning opportunities. Within this study, we hypothesize that district-provided organizational learning affects the proposed mediators (sensemaking) along path b in Figure 1, while the mediators (sensemaking) affect the authenticity of improvement science implementation via path c.

We examine three mediators that prior qualitative work suggests may enable or obstruct the integration of improvement science into school-based routines and mindsets. Other authors refer to such integration as "sensemaking"; hence, we apply the term "sensemaking mediators" (Spillane et al., 2011). First, prior research finds that individuals filter new information through existing beliefs about their work, schooling processes, and more (Benn, 2004; Carraway, 2012; Spillane et al., 2002). For example, individuals may compare improvement science practices to their prior beliefs about school improvement. Professional development research finds that effective organizational learning changes participants' beliefs (path b, Figure 1; Darling-Hammond et al., 2017; Guskey, 2000). Conceptually, the more closely improvement science aligns with participants' core beliefs about school improvement, the more likely they will be to authentically implement improvement science (path c, Figure 1; Redding and Viano, 2018).

Second, context offers essential clues for interpreting educator responses to innovations like improvement science. Understanding current school improvement routines (i.e., existing practice), as well as the available social (e.g., connections with others who can assist) and physical (e.g., available meeting rooms) resources, provides pivotal clues about how school leaders perceive improvement science as an alternative to business as usual (Benn, 2004; Coburn, 2001). Contexts can yield inauthentic improvement science implementation via external constraints and barriers; for example, extra-school factors that demand educator time may inhibit authentic improvement science implementation (path c, Figure 1). However, contexts can also enhance improvement science implementation through alignment with existing practice; for example, central office instructional leaders may show school improvement teams how to use centralized data management systems to support improvement science routines (path b), shaping data collection and reporting in ways that align better with authentic improvement science implementation (path c).

Finally, we consider messaging, or what is communicated to school improvement team members by those in positions of authority about improvement science. Our considerations of messaging focus on how central office instructional leaders communicated their expectations of school improvement team members and the school district's prioritization of improvement science relative to other reforms. Notably, we conceptualize messaging as distinct from organizational learning (Coburn, 2005; Spillane et al., 2002). Messaging can then affect the authenticity of implementation by encouraging (or discouraging) the use of improvement science (path c). For example, a principal supervisor who tells school leaders that they look forward to hearing more about their improvement science work would likely encourage the school to focus more on improvement science to please that supervisor.

2.4 Summary

As shown in Figure 1, this conceptual framework is organized around three major stages involved in improvement science implementation: organizational learning, sensemaking mediators, and the authenticity of improvement science implementation. The framework begins on the left-hand side with organizational learning (engagement/buy-in and understanding of improvement science theory), followed by the identified sensemaking mediators (beliefs, context, messaging), and concludes with the authenticity of improvement science implementation (authentic, partially authentic, inauthentic). We connect these three stages such that organizational learning is proposed to affect sensemaking and to affect the authenticity of implementation both directly and through its effect on sensemaking. Using a case study design, we seek to understand how the interrelated sensemaking processes of beliefs, context, and messaging could mediate the relationship between organizational learning and implementation of improvement science in schools.

3 This study

This study considers the efforts of a large, diverse, suburban school district (henceforth referred to as Confidential School District or CSD) to integrate improvement science into the annual school improvement planning and implementation process. School improvement planning is commonplace across the United States. However, school improvement is often criticized for having too little variation in planning by context, little accountability for implementing SIPs, and nonexistent expectations for meeting the goals set in those plans (Aaron et al., 2022; Meyers and VanGronigen, 2019; VanGronigen et al., 2023). CSD sought to address these challenges by encouraging the integration of improvement science into school improvement routines. Unlike prior studies of the implementation of CI in school improvement, in which mandated CI models led to "perverse coherence" or "technical ceremony" (see Aaron et al., 2022; Yurkofsky, 2021) or which focused on specific problems of practice (Bryk et al., 2015; Margolin et al., 2021), CSD did not mandate the integration of improvement science principles. Instead, CSD sought to improve organizational resilience in carefully selected underperforming schools by encouraging their school improvement teams to apply improvement science to the problems of practice in their schools. School improvement teams included teachers and school leaders (i.e., assistant principals and the principal).

CSD's history and characteristics were intimately connected to the decision to make implementation of improvement science optional. While many schools in CSD have significant gaps in opportunities and performance by race, socio-economic status, and language (including the schools under study), the setting's affluent nature encouraged leaders to avoid publicizing these inequities. Many families select this geographic area because of the perception that its school system has high-quality schools (Brasington and Hite, 2012; Kim et al., 2005). A prevalent conception in CSD is that if schools were to focus on opportunity or achievement gaps, they would risk losing these families, because publicizing problems might push families to seek schools they perceive as "better" (Holme, 2009). We argue that making improvement science optional is a symptom of a culture lacking urgency – where acknowledging problems is discouraged – in ways that likely undermine organizational resilience (Duchek, 2020). While previous studies have investigated the implementation of optional improvement science initiatives (e.g., Bush-Mecenas, 2022), this type of setting, in which schools have significant problems that need addressing but also have incentives to maintain the status quo, merits additional consideration. The contribution of this study centers on elucidating how improvement science is and is not positioned, politically and relationally, to guide school improvement efforts. Below, we review how CSD structured its implementation of the school improvement NIC and the resulting ways in which it affected school improvement practices.

4 Research methods

This study reflects on the work of central office instructional leaders to organize a school improvement NIC including approximately eight elementary schools (note that the number of elementary schools varied over time by implementation year and COVID-19-driven turbulence). CSD is racially diverse, with similar proportions of students who identify as White, Hispanic, and Asian American with smaller proportions of students who identify as Black or multiracial. About a third of CSD students are classified as economically disadvantaged and a fifth as English learners. The central office instructional leaders were from different departments and held a wide variety of job titles. What brought these central office instructional leaders together was a professional commitment to using improvement science to improve schools in pursuit of equitable educational outcomes. This led to a diverse array of central office instructional leaders including staff from offices focused on instructional support, Title I services, secondary literacy, school improvement, and leadership development. The central office instructional leaders had titles ranging from Executive Director to Administrative Coordinator, and the invitation to join this team was open to any staff in central office with an interest in improvement science.

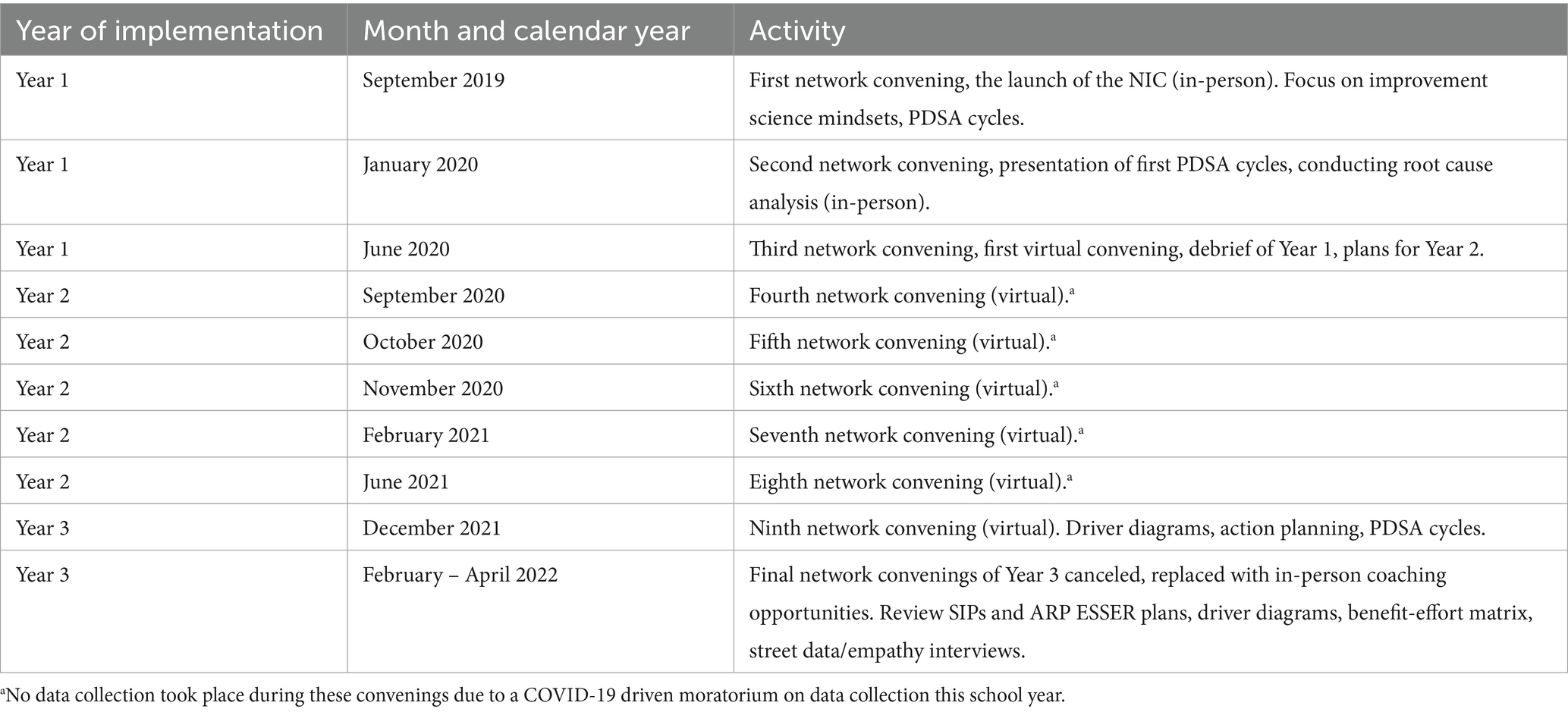

The NIC began in September 2019, although CSD's improvement science initiatives dated back a few years before the launch of the examined NIC. The NIC continued throughout the COVID-19 pandemic, and this study follows the NIC through the end of the 2021–22 school year; see Table 1 for a timeline of events across the 3 years of implementation. All NIC schools were at the elementary level and in close geographic proximity, with principals sharing a supervisor who was often involved in the NIC work. CSD chose to work with elementary schools because, during the NIC launch, secondary schools were engaged in a significant organizational change unrelated to improvement science. The central office instructional leaders selected schools within this specific geographic area because of regional superintendent interest. All schools either received federal Title I funding (indicative of a high population of economically disadvantaged students) or were close to qualifying for Title I funds, such that each had a significant population of economically disadvantaged students. In addition, each school had been part of a recently dissolved program that provided extra support to underperforming schools. Participation in the NIC and this study was voluntary, based on the principal's discretion, as is common in site-based management models in education (Addi-Raccah and Gavish, 2010). The school improvement teams had typical membership, consisting of classroom teachers and school leaders (principals and assistant principals).1

4.1 Design

This qualitative case design seeks to understand how central office instructional leaders sought to shift school improvement from static to dynamic processes through improvement science, and the results of these efforts, from the perspectives of members of the school improvement teams in the NIC (i.e., teachers and school leaders) and of the central office instructional leaders managing the NIC. A case design is appropriate for this task since we seek to develop an in-depth understanding of how users experienced and integrated improvement science processes into school improvement (Yin, 2009). This is a single case design that treats the central office instructional leaders and the NIC participants from each school as one unified case. While sensemaking might differ across NIC schools, we condense these phenomena with the understanding that all schools exist within a single NIC and a common organizational learning environment.

4.2 Data2

Our data include observations of NIC convenings (i.e., times when more than one school met concurrently) and within-school meetings, as well as interviews with members of the NIC and central office instructional leaders. We also took note of key artifacts like presentations, deliverables, and other documents created as part of this work. At the outset of the NIC, each school was assigned a central office instructional leader as a coach, with the intent that the coach would meet with the school improvement team monthly (actual meetings were closer to quarterly). We often observed coaching occurring within convenings and school improvement team meetings (i.e., meetings at a single school). We observed approximately 1,280 min over four convenings (September 2019, January 2020, June 2020, and December 2021). In the school improvement team meetings, we collected observation data using a standardized protocol in the 2019–20 (n = 7) and 2021–22 (n = 8) school years, totaling approximately 720 min. The meeting observations were designed to capture both organizational learning strategies and participant sensemaking; see the Supplementary materials for the protocols. We conducted the first round of interviews in December 2019 (n = 7) using a semi-structured interview protocol, with a second round in spring 2022 (n = 25; data collection was paused from July 2020 to August 2021).3 We interviewed members of the school improvement teams (both interview rounds) and central office instructional leaders (including coaches and the principal supervisor, all during the second round of interviews). We designed the meeting and interview protocols to align with the improvement science principles and our conceptual framework in order to capture the authenticity of participants' improvement science implementation and their perspectives on improvement science (i.e., their sensemaking).
For sample protocols, which include the prompts through which we elicited our data and the format for our meeting/convening protocols, see the Supplementary Appendix.

4.3 Analysis

Our analysis centered on a set of predetermined themes from our conceptual framework while allowing for emergent themes discovered through analysis (Lincoln and Guba, 1985; Noor, 2008). We used the constant comparative method to analyze transcripts and observation notes, coding for our predetermined and emergent themes (Corbin and Strauss, 2014; Miles and Huberman, 1994). The authors developed the coding framework and refined it collaboratively throughout. At least one author and a trained graduate research assistant (who had participated in data collection) double coded all transcripts and observation notes to seek fuller coverage toward the goal of crystallization. Crystallization is an alternative construct to triangulation that symbolizes a common understanding of the data that is multidimensional as opposed to triangular (Ellingson, 2009; Tracy, 2010). Throughout the coding process, coders periodically discussed their coding based on the predetermined themes and emergent codes. We compared our coding for the same transcripts (for double coders) and for different transcripts (Corbin and Strauss, 2014; Miles and Huberman, 1994). We then exported segments of transcripts and meeting observation notes by code. The authors summarized each code based on this export, noting illustrative quotes and major themes. These coding memos formed the basis for our results. We engaged in member checking on our results with CSD central office instructional leaders to verify the trustworthiness of our inferences (Tracy, 2010). Consequently, the major themes in these memos informed our results, and the quotes below illustrate examples of these major themes.

5 Results

Our results are organized according to the conceptual framework in Figure 1, with all headings matching the text in the boxes of Figure 1. We begin with our observations of NIC school improvement teams' responses to improvement science organizational learning opportunities and of their improvement science sensemaking. We then connect the teams' responses to organizational learning facilitated by central office instructional leaders, along with the sensemaking mediators, to improvement science implementation in order to identify patterns in the authenticity of improvement science integration into school improvement. Our results show that sensemaking was an important mediator between organizational learning and the authenticity of improvement science implementation. Specifically, we observe more authentic improvement science implementation when participants engaged in sensemaking as an enabling, rather than constraining, process.

5.1 Organizational learning

Organizational learning activities primarily consisted of networked convenings and coaching. The NIC launched with an in-person network convening in fall 2019. After an in-person convening in January 2020, the remainder of the convenings were virtual. Coaching took place across the 3 years of the NIC, with learning in the third COVID-19 school year primarily driven through coaching. Active learning (i.e., engagement with the improvement science lessons and tasks, see Bonwell and Eison, 1991) was the primary strategy for facilitating participant learning. Active learning activities focused on improvement science tool use, filling out forms, organizing information about school improvement strategies, collaborative planning for school improvement, and planning for school-based disciplined inquiry activities through PDSA cycles.

5.1.1 Engagement and buy-in to learning opportunities

Central office instructional leaders were, overall, very satisfied with the buy-in and engagement of the school improvement teams, although we observed significant differences across schools and time. School improvement teams were often engaged during network convenings and coaching sessions, particularly when using tools or materials to complete visual tasks. As was stated by a central office instructional leader, “Because all of our NIC schools opted into this process, you know, some of them are super gung-ho about using the improvement science tools. And so, then they took it upon themselves to do that.” In addition, including teacher leaders and school leaders on school improvement teams built engagement, as expressed in this illustrative school-based instructional coach interview:

We need to hear from the people who are doing this every day, it's easy to be like, I can write a goal. And we're going to do this and get all excited about it. And it's really easy to purchase a program and be like, “Okay, we're gonna use this,” but it's definitely the human resources that make it come to life.

Having teams of individuals from instructional and leadership roles helped to foster engagement in the NIC activities. At the same time, engagement varied over time, as is reflected in this quote from a principal:

I am finally ready to engage now. I wasn’t in December [2021] so we had to pull back, but now we are excited to do this and dig into the conversation, and getting into the leadership – getting a new model for us to process our thinking moving forward.

This quote highlights two key engagement patterns we noted in the NIC. First, schools communicated that they had narrow windows of time, determined by the pace of the school calendar, during which they were willing to engage fully in the NIC work. Second, turbulence led schools to cycle through periods of disengagement. This was most prominently caused by the COVID-19 pandemic, but schools also disengaged before the pandemic for reasons such as staff turnover, as reflected in this interview with a teacher in December 2019: “We’ve had a little issue with implementation … we lost our resource teacher. He took another position, which we are not happy, we are sad about. So, it’s been hard to kind of get that momentum going.” Engagement was tenuous for most of the schools in the NIC; many teams depended on particular staff members for their engagement, which left their involvement susceptible to turbulence caused by COVID-19 or other disturbances typical of schools.

5.1.2 Support school-based educator understanding of improvement science theory

Participant understanding of improvement science was driven by how central office instructional leaders organized the NIC. Approaching Year 2 and through Year 3, most schools applied several improvement science tools (e.g., creating and using a “driver diagram”) and maxims (e.g., “fail fast to learn quickly,” “small but mighty change”). However, it is unclear whether participants understood how these procedures and tools related to one another. For example, although participants used a tool called a “fishbone diagram” when coaches asked them to, it was unclear whether participants understood the diagram’s significance within improvement science in relation to the six core principles, specifically that fishbone diagrams are a tool to be “problem-focused” and “see the system.”

The introduction of some concepts during network convenings might have compounded participant misunderstanding. In the first convening, facilitators distributed one-page “cliff notes” on improvement science principles. Subsequently, central office instructional leaders often assumed that NIC participants would naturally make connections between discrete activities, but participants were rarely asked to demonstrate these connections. This might also explain why participants appreciated it when central office instructional leaders shared concrete examples of improvement science procedures during school improvement team meetings; examples might have clarified the purpose of improvement science for participants who had received only cursory overviews. The first convening might also have predisposed participants towards “solutionitis” (i.e., jumping to solutions without understanding the problem; Bryk, 2015; Hinnant-Crawford, 2020). For example, central office instructional leaders asked participants to plan a PDSA cycle before they had developed an understanding of the problem (e.g., by conducting a root cause analysis). Indeed, throughout the study, many schools either did not identify a problem they sought to address through PDSA cycles or did so only superficially. The first network convening also left some participants with very little understanding of improvement science as of December 2019, as in this teacher’s response when asked about improvement science:

No, no, no. It's called, it's something about how children's brain works and it talks about improvement science in those terms and what we know about children's learning. Also, I read a book this summer called [redacted] and it's about children in crisis and using improvement science to sort of help get them through their school day, learning how their brains work and how you can deescalate things. And that's all another piece of that.

To be clear, this participant is expressing confusion, as they are integrating the term “improvement science” into an unrelated construct. This quote reflects the considerable confusion about improvement science in the first year of the NIC. Over time, participants were less likely to have these kinds of misunderstandings. For instance, a principal in spring 2022 gave an astute summary of how the improvement science process differs from how she used to approach challenges:

Because when you aren't in that constant, reactive, fix it type of mode always came from a good place, you just want to be helpful, right? What I learned was, is that I made a lot of assumptions about things that one, were actually, you know, true, and improvement, for improvement to really sustain, it takes time to unpack and address and make the necessary shifts. And though I might have put a Band Aid on a problem prior to, it wasn't a sustainable solution. And I realized quickly that you can't have 30 band aids, you know, all over the place, because they're going to fall off, and then the problems going to exist again. So really taking the time to examine, evaluate and unpack with my team. Not in isolation just with my people. You're really addressing things and at a more effective way.

This principal noted how improvement science encouraged addressing the root causes of problems and taking time to understand the problem and the system before jumping to a solution, a process often referred to in improvement science as “starting slow to accelerate improvement.”

Although many participants made substantial progress in their understanding of measurement, it remained a persistent weakness. Participants’ understanding of measurement was often singularly focused on student assessments, limiting how they designed measurement plans. For example, team members at one school thought all PDSA cycles must use student achievement data. As stated by a principal, “We are using [state assessment] scores, because how else can we measure growth?” Relatedly, school improvement teams often made claims about outcomes or schooling processes without supporting evidence. For instance, schools made claims about instruction without any evidence, or made claims about student outcomes while citing only inputs as evidence (e.g., professional development occurred, meetings were held), as in this interview with a teacher:

But I think we did a good job of thoughtfully thinking about how we should be measuring things, we focus mostly on one goal about how like, what it means to bring [advanced topics] into the general education classroom. That was our one of our main goals. And so, we talked about how many, you know, what that looks like, what resources to use. How we could track it. And maybe it has been tracked? I'm just not sure, you know, to what extent, like the ending data has turned out.

Schools also struggled to understand the role of the PDSA cycle in the context of improvement science. Schools seemed to understand how to apply a single PDSA cycle. However, few grasped the concept of repeated PDSA cycles that iterate towards improvement, a weakness magnified by the misaligned measures school leaders embedded in PDSA cycles. Overall, we observed a wide range of understanding of improvement science theory, with some participants advancing in their improvement science knowledge over the study and others barely recognizing the six core principles by summer 2022.

5.2 Sensemaking mediators

5.2.1 Beliefs

By Year 3, participants often described the utility of improvement science more clearly, assigning more positive beliefs to the tools. Principals had a much better understanding of improvement science in spring 2022 than members of the school improvement team did in winter 2019, and many reflected on how their prior beliefs matched with improvement science theory. For instance, one assistant principal summarized their journey with improvement science over time this way:

Yeah, I think being at these meetings, and part of this group has helped me to really understand it a little bit better. And to keep those, like goals, on kind of like the forefront of my mind, I tried to, you know, sometimes it slips, but like, it helps me to, especially when we would have the meetings, we have like this kind of like rejuvenation of energy towards what we were doing.

Mirroring this increasingly positive view of improvement science over time, we first review beliefs that likely suppressed improvement science implementation (i.e., suppressing mediators) and then beliefs that could have enhanced implementation (i.e., enhancing mediators).

5.2.1.1 Beliefs as suppressing mediators

An improvement science approach to addressing problems of practice is complex by design. Many participants expressed that slow improvement processes were unappealing, especially in a constrained environment where improvement science was optional. As a central office instructional leader expressed when reflecting on the NIC’s challenges:

There's a lot of excitement around [improvement science]. However, I don't think there's time to kind of relish in that, because they know it's something that they can try but then a shiny object goes by … And they move away from [improvement science].

This illustrative quote expresses how, for some, improvement science could not compete with more appealing alternatives pitched as easy to implement and high in payoff (e.g., brief social-psychological interventions such as those teaching growth mindset through a short online module; see Yeager and Walton, 2011).

We also observed this pattern in school improvement team meetings and network convenings. Participants often did not attend, and teams tended to focus on easier deliverables, skipping others that were seen as too demanding. This resulted in many school improvement team members not understanding the purpose of the NIC.

5.2.1.2 Beliefs as enhancing mediators

Participants expressed many positive beliefs about improvement science, especially related to how improvement science complemented other processes schools were already implementing. Participants reported that their required SIP work tended to be scattered and unfocused. Improvement science helped the school improvement team to create a cohesive understanding of the problems in their schools. As a principal commented:

Improvement science, simply put in my mind, would be the intentional structures to use data to identify areas where our commitment to student success may not be as effective, impactful or beneficial for students as it can be. And then we've got tools to have an impact.

While improvement science is also “slower,” participants often reflected on how this pace allowed the school improvement team to be more intentional.

Another aspect of improvement science that participants had positive beliefs about was the implicit connection to a distributed leadership model that many were already interested in, especially in schools that had not yet made SIP reforms a school-wide priority. As reflected in this illustrative quote from a teacher leader:

I think a lot of times, the [SIP] is just a foreign topic for staff. And this process helped it come to light more, and I think we still have more work to do. But having different voices on the committee really helped to like, round out our thinking. And it's not just like a top-down approach. And I think that's one of the beauties of this process.

Schools might not have had the incentives or understood how to make school improvement a shared responsibility, and improvement science helped them make this transition by including more staff in processes like determining how goals (i.e., aims) are connected with SIP actions. Improvement science’s focus on collective sensemaking around SIP goals, actions, and improvement brought in more team members than had previously been involved in writing and enacting the static version of the SIP in place.

We also noted a theme connecting participants’ beliefs about equity with positive beliefs about improvement science. Participants who were interested in educational equity often expressed positive beliefs about improvement science because they believed it supported equity; as a principal stated, “I do not think we can achieve equity without improvement science.” Improvement science was sometimes seen as “an evidence-based way to work towards positive social change.” These positive beliefs about improvement science seemed to influence improvement science implementation in ways we explore further below.

5.2.2 Context

Various elements of the study context (i.e., the term for “setting” typical of sensemaking theory, indicative of not only the location but also the political nature of the case) were more likely to suppress improvement science implementation than enable it. We begin by discussing the suppressing mediators (i.e., external constraints and barriers to participation in the NIC) followed by how improvement science was aligned with other aspects of the setting to aid participant sensemaking.

5.2.2.1 External constraints and barriers to participation

NIC participants described several significant constraints on their time and energy that limited their ability to engage in sensemaking of improvement science. They often described time constraints that made it difficult to engage in the NIC work, as well as limited access to consistent coaching support. While many of the constraints related to COVID-19, participants also reported constraints before March 2020, including that schools were overwhelmed with addressing chronic shortages and dealing with staff burnout, as expressed in this quote from an assistant principal in December 2019:

It's always a challenge with time on, we know that we need to stop and we need to look at data and reflect and refine and move forward with the next steps. But it's always very challenging, especially at a school that's as large as ours is, to pull together all the necessary people without disrupting instruction and compromising the learning that's happening for our students. It is a challenge to add this layer on.

Participants valued in-person networking and learning from others; however, these activities were infrequent (approximately two to three times a year), and all convenings became virtual after March 2020. Lack of capacity and of the time needed for training, learning, and networking were all barriers to sensemaking. As expressed by a central office instructional leader:

Now, that goes back to the original problem, though, of there's no subs, there's no funding. So, this is just something like, “Hey, do you want to join us after school? On your own time with no compensation or no benefit to join a network improvement community?” So, I can't say that they're going to be successful? Different situation? They could be very successful. But the way that we have to approach it now? I'm not sure.

The size of this school district and the district’s site-based management model also constrained improvement science implementation. SIP requirements were not well aligned with the kinds of activities associated with improvement science. For instance, schools typically engaged in improvement planning over a few days during the summer, leaving little time for problem identification and seeing the system before selecting solutions. The de facto SIP had no requirements for iteration during implementation, discouraging the improvement science principle of disciplined inquiry. As one principal noted when asked whether the NIC work was aligned with the school’s required school improvement activities:

Yeah, I think it was an add on. It was something more extra to do. However, I think that we were able to see the benefits of it definitely in the past, and this year was not a year where my team was in the space to do those types of things.

Additionally, during the 2021–22 school year, schools were given substantial autonomy over their spending of American Rescue Plan Elementary and Secondary School Emergency Relief (ARP ESSER) funds, an approximately $122 billion investment by the United States federal government in schools’ recovery from the COVID-19 pandemic. However, CSD mandated the exact goals of the ARP ESSER funding and did not allow schools to create their own aims (as is customary in improvement science). School leaders noted the disconnect between what CSD required for spending ARP ESSER funds and what schools identified as their needs as part of the NIC. Meanwhile, the site-based management model made it easy for school leaders to disengage from the NIC during stressful periods, since they were under no requirement to maintain participation in most CSD programs. As one principal noted in spring 2022:

While we were initially quite energized, and quite excited, it was very hard. And just in full transparency, it was very hard to say kind of it was hard to go through, because priorities were just so all over the place, and we were just barely functioning. And so that, while you could make the argument that even more reason to need to do improvement science, we all felt like you needed stronger footing to be standing on to be able to do that work, and we were all walking around on very [unsettled] ground.

These constraints made it difficult for schools to focus on improvement science in productive ways.

5.2.2.2 Alignment with existing practice

While improvement science was not aligned with the required components of the de facto SIP (i.e., the official process on paper), improvement science was strongly aligned with the SIP’s underlying goals. Specifically, participants often saw improvement science as outlining the theory of change underlying the SIP in that it “helps create a pathway to get a really great [SIP] document,” in the illustrative words of an NIC principal. No one claimed that the SIP requirements were aligned with improvement science, but they tended to agree that improvement science supported SIP purposes in novel and potentially impactful ways. Improvement science “shows what the process could be” and “can bring [the SIP] to life” according to teacher leaders at two different NIC schools.

5.2.3 Messaging from central office

The most prevalent messages schools received about the NIC indicated that participation and improvement science implementation were optional, and schools should only consider NIC participation if they saw the value of improvement science to their SIP work. Participants often commented that it was hard for them to continue to engage in the improvement science work when it was seen as neither urgent nor required. When schools engaged, it was typically because central office instructional leadership convinced the schools that improvement science would show results, as one central office instructional leader reflected, “The principals are tired, assistant principals are tired, they do not want this extra thing to do. But now they are not seeing it as extra this year because we kind of couched it in terms of strategic planning.” Productive messaging reinforced that improvement science generally fits well with addressing inequities, offering some alignment with CSD initiatives. This messaging was often delivered by principal supervisors and school leaders who shared their successes with others.

5.3 Authenticity of improvement science implementation

We now reflect on the authenticity of improvement science implementation in NIC schools. We are not specifically concerned with implementation fidelity, as improvement science is more concerned with developing mindsets and local adaptation. Instead, we examined whether and how mindsets and routines had changed to align with the improvement science principles. We distinguish among authentic implementation, in which schools integrate all improvement science principles as the guiding methodology for school improvement work; partially authentic implementation, in which schools apply some of the core principles; and inauthentic implementation, which stems from either resistance to or confusion about improvement science principles.

5.3.1 Authentic improvement science implementation

Of the eight schools that participated at some point across the 3 years, only one authentically engaged in improvement science across all 3 years; one other school excelled in its application of improvement science principles by the end of Year 3. Notably, participants in these schools said improvement planning used to consist of looking at end-of-year, accountability-focused student achievement scores, writing academic goals, ignoring current or pre-existing practices that might contribute to or alleviate problems of practice, and recommending broad improvements like “more PD.” By the end of Year 3, these schools were considering specific school-based factors causing problems of practice, identifying more specific solutions, and revising SIPs in light of evidence collected throughout the year. For instance, one member of the school improvement team at one of these two schools commented:

Even though [teachers] might not have known how [to conduct a PDSA cycle], or felt uncomfortable doing it, once they start finding success and trying those new things. It's very motivating for them. So, I do think that trying the new things and the PDSA cycles, and getting teachers voice and choice in those things, is really impactful and shifting practices.

These schools recognized improvements in their school improvement planning strategies and felt that their authentic implementation of improvement science translated into noticeable changes in their instruction and student outcomes.

5.3.2 Partially authentic improvement science implementation

Mid-way through Year 1 (pre-COVID), several schools were in the process of integrating tools that are part of seeing the system (e.g., driver diagrams) and planning PDSA cycles. Of these, a few schools noted the value of including more role-group diversity on SIP teams (e.g., parents, teachers) and saw how seeing the system could move school improvement planning beyond a compliance-driven activity into a more justifiable and motivating exercise. Later in Year 1 and into Year 2, schools applied at least one PDSA cycle and engaged in core improvement science routines related to being problem-focused. These applications led to several insights into the reasons for persistent problems. Several participants reported that they valued this problem focus and the PDSA cycles because they illuminated where a school ought to focus its attention, as expressed by a teacher leader:

Making the work problems specific and user centered; yeah, I think that that was really pivotal, especially the first year for us. I think kind of in the past, like that idea of SIP was we're just focusing on something with math and something in reading, and we're going to make our scores better, and it's going to increase by this much, and it's going to be great. But there wasn't a ton of thought as to how or why, what can we actually do that's going to significantly impact [the outcome]. And I think that by focusing on specific things that we really needed as a school that really helped kind of refocus our conversation. I think that that was really important.

By the end of the study period (summer 2022), interviews with members of the school improvement teams and central office instructional leaders suggested that all schools had applied improvement science principles, procedures, and tools with at least partial authenticity. Several school improvement team members mentioned how much they valued some of the tools (e.g., fishbone and driver diagrams) for within-school communication. Most principals also valued SIP team role diversity (especially teacher leader membership), arguing it facilitated identifying problems and solutions and communicating improvement strategies to all faculty. School leaders often discussed how they embedded improvement science into pre-existing processes. Some schools integrated improvement science into pre-existing professional learning communities, classroom observation processes, and formal SIP processes. As shared by one assistant principal, “Improvement science is much more aligned with the processes now … improvement science has allowed us to use other components, so like classroom observations and coaching cycles [as part of the SIP].” Authentic engagement with improvement science often had to occur within already existing infrastructure, like integrating data into the SIP. The success of improvement science hinged on not adding to the school’s already overflowing schedules and required activities.

Interviews often illuminated SIP teams’ confusion about improvement science through team members’ inability to articulate how they had integrated improvement science into school improvement. Several schools struggled to understand whether their improvement efforts were aligned with improvement science. For instance, this school principal struggled at the end of Year 3 to describe what aspects of improvement science their school had implemented:

I think there's always been an education and need to improve. Right. I think that's been a hope. Hopefully, it's inherent in all of us leaders, right? We always want to recognize what we're doing well, and identify our areas of opportunity. I think we were always good at that. I think so the now what, you know, if we've already recognized a subgroup of students who were not making the progress we wanted, or we looked at data in terms of community engagement and things like that. I think we were good at just identifying strengths and areas of opportunity. But it was really the work that came after that, which was really an eye-opening experience for me, if that makes sense. What do you do with that data? And really, unpacking the root of challenges, concerns are things that need to change.

While the principal showed a strong interest in improving, they did not note anything specific about their improvement efforts that indicated authentic implementation of improvement science.

At times, principals could articulate the ways in which their implementation did not align with the intent of improvement science because improvement science work was seen as inefficient, a view reflecting the pre- and post-pandemic pressures many schools faced. As a principal explained when discussing why they had not participated in NIC activities over an extended period of time:

So [principals are] trusted to do our jobs. So [principals] don't have laid out, aside from very generic, you know, [identify a goal] and, you know, big picture areas, we don't have a whole lot of directive. It's more, “how can we support what you're doing.” There is this trust that [central office instructional leaders are] not going to get in our way. Because I'm trusted to do what I think is right, and if I think NIC and improvement science was never going to be helpful, nobody's going to question that.

This principal is reflecting on how the site-based management model allowed the school to disengage completely from the NIC and from using improvement science if the principal decided it would not be helpful, with no questions asked. This freedom allowed several schools to leave the NIC, temporarily or permanently, during the 3 years under study.

We also noted other ways that the culture of this school district limited participant involvement. Schools in this setting wanted to act fast and solve problems quickly instead of intentionally slowing down to engage in improvement science. As a teacher leader stated, “We want it to be efficient, and we want it to be collaborative. If not, everyone has had the opportunity to learn more about improvement science, it’s very difficult, because then it’s like, we are forcing a process sometimes.” This illustrative quote reflects the perceived inefficiency of collaborative leadership for CI efforts.

5.3.3 Inauthentic improvement science implementation

Data collected during Year 1 suggest that some participants were overwhelmed by the amount of information received at convenings. While participants understood discrete processes (e.g., how to implement PDSA cycles), they did not understand how individual processes connected to the improvement science principles. For instance, this assistant principal liked the convenings themselves but did not note any aspect of improvement science, not even networking, that they had integrated into their school improvement work: “I think I liked the ideas of the convenings. I mean, overall the approach was something that I thought was really interesting. I think the convenings though, was something I connected with.” This assistant principal liked having a day away from their building to focus on school improvement planning, which was not actually the primary goal of the convenings; they were meant to foster networked learning between schools.

Some schools struggled with writing evidence-informed goals and with using PDSA cycles or formative data analysis to inform improvement efforts. In some schools, the staff tasked with managing improvement science implementation departed or were assigned other, unrelated duties, crowding out their focus on improvement science. Other schools did not clearly identify who was responsible for managing improvement science, so implementation fell by the wayside. Finally, schools sometimes seemed to view improvement science and improvement science-related data analysis as an “add-on,” which reduced their willingness to engage in improvement science.

By 2022, external factors (COVID recovery, snow days, and an inability to hold teachers accountable) were affecting improvement science implementation. At least one school continued to struggle with measurement because its staff believed PDSA cycles could only collect student assessment data, and teachers were already overburdened by assessments. COVID-related issues (exhaustion, staff shortages, shifting priorities) also affected the central office instructional leaders responsible for managing the NIC, and some of these leaders and programs lost the capacity to manage it. One school and several central office instructional leaders noted that the lack of orchestration at the district level might have impeded accelerated school-to-school learning via networking. Participants also mentioned that the lack of common goals and improvement strategies undermined their motivation to participate in NIC activities. These struggles led at least six of the eight participating schools to go through long periods (6 months or more) without any engagement with improvement science during the 3 years of the NIC. One principal noted that after 3 years in the NIC, the school had yet to truly integrate improvement science into school improvement:

I mean, very honestly, like, it didn't really come to be, I think, between COVID and getting a new assistant principal and some other changes in our building. I think people were kind of overwhelmed. And it definitely took a backseat, unfortunately. So I know like that the work that we were doing that second year, and using these like drivers and things like it kind of came to a stop. And unfortunately, it didn't continue, quite honestly, in the third year. And then I think we kind of officially paused back in like January and said, like, we need to kind of pause and we can't continue at this point in time. So I think, you know, we can thank COVID for that, unfortunately. And I was definitely a bit disappointed because I saw the potential and the growth. But that was kind of where we were as a school is we didn't really we weren't in a place to move super forward with it.

Even with intentions to participate and excitement about improvement science, schools struggled to incorporate it into their school improvement routines and plans.

6 Discussion

In this study, we illuminated a school improvement NIC that experienced some successes in organizational learning, sensemaking, and authentic improvement science implementation focused on the six core principles of improvement science: Be problem-focused and user-centered (1), Attend to variability (2), See the system (3), Embrace measurement (4), Learn through disciplined inquiry (5), and Organize as networks (6). Below, we review our key results.

6.1 Sensemaking mediators between organizational learning and improvement science implementation

We observed that our proposed sensemaking mediators were helpful for understanding how and why schools engaged in the NIC for school improvement. First, we noted a substantial number of barriers to school improvement team members’ sensemaking of improvement science. Some basic elements of improvement science were themselves roadblocks to sensemaking. Participants sometimes chafed at the purposefully slow pace of improvement science. While improvement science is designed for improvement efforts to “start slow” in order to “learn quickly,” schools were often deterred by this concept because of the fast pace typical of school improvement, and they reported that slowing down felt inefficient. Participants also sometimes struggled with the complexity of improvement science, and the resulting confusion hindered sensemaking throughout the 3 years of the NIC.

Other mediators that suppressed improvement science sensemaking concerned context and messaging. Even before COVID-19, SIP team members felt that they had little time to engage in improvement science, and the timing of NIC activities often did not align with other required deadlines related to the SIP and other CSD activities. CSD’s site-based management model, combined with the optional nature of NIC participation, made it easy for schools to opt out when an obstacle or difficulty arose.

We also identified several enabling conditions that aided participant sensemaking. School leaders often noted that SIP work, while required, had in practice been confined to certain times of the year, with few people in the school engaged in what was intended to be school-wide improvement. Through improvement science, these school leaders were able to write a more cohesive SIP and make it a shared responsibility. They also often commented on how improvement science supported a distributed leadership model for the SIP that had been difficult to achieve without it. We also often heard about the alignment, in spirit, between the SIP and improvement science, as both aimed to “improve” schools and learning. This was especially the case for school leaders interested in educational equity, who became passionate about improvement science as a vehicle for increasing equity of opportunity and outcomes in their schools. Within a setting where equity concerns were often downplayed, school leaders interested in bucking that trend were also those more likely to implement improvement science authentically.

6.2 Improvement science and dynamic school improvement

The core goal of this study was to understand if and how improvement science could shift school improvement from a static to a dynamic process and, in doing so, bolster schools’ organizational resilience by enhancing their capacity to address future challenges. The evidence suggested that improvement science led some schools to adopt a more iterative, problem-focused, distributed school improvement methodology, building up organizational resilience. A common outcome of these efforts was becoming more problem-focused and, consequently, more proactive in addressing problems rather than only reacting to their symptoms as they arise. Such characteristics are indicative of more resilient organizations. Schools that engaged in organizational learning and held strong, positive beliefs about improvement science reported high engagement with improvement science in school improvement, even outside of direct engagement with the NIC. Broadly, the convenings inspired the most engagement but did not change organizational routines in most settings, which jeopardized these schools’ ability to build organizational resilience. One of the greatest challenges inhibiting authentic implementation, and consequently organizational resilience, was the perception that improvement science is inefficient. While theorists of improvement science in education argue that the process only begins slowly and pays off over time in improvement gains (Bryk et al., 2015), schools were often so concerned with allocating time and personnel that improvement science felt too risky an investment to integrate authentically into school improvement routines. These limiting beliefs undermined improvement science implementation and could also indicate that these schools are less likely to be resilient, struggling to make positive adaptations during challenging times.
How to encourage schools to take the time to improve is a significant challenge to building their resilience and to the theory of change embedded in improvement science.

6.3 Implications

This study both reassures school- and district-level leaders that they can successfully integrate some elements of improvement science into school improvement and illustrates the difficulty of integrating improvement science into traditional school improvement, during business-as-usual as well as during turbulent times like the pandemic. Some elements of this case are encouraging in that the successful integration of improvement science into school improvement aligns with prior work on effective school improvement practice and building organizational resilience. For instance, prior research has found that collaborative leadership in which teachers are actively involved in school-wide improvement increases teachers’ organizational commitment and the effectiveness of school improvement efforts (Hallinger and Heck, 2010; Lai and Cheung, 2015). We encourage school- and district-level leaders to similarly involve teachers in their improvement science initiatives. In this study, schools added teachers to their school improvement teams, a worthwhile change for schools that do not already include teachers in the SIP. For district-level leaders interested in improvement science, this study provides some assurance that improvement science can lead to more dynamic, effective school improvement, with the caveat that the networking aspect of improvement science can be difficult to include without a more strategic focus on collaboration between schools. This focus on collaboration was arguably the biggest challenge in a district with site-based management and optional improvement science implementation, and it is a difficulty that district-level leaders or others organizing a NIC hub should engage with head-on. While previous work on required CI initiatives found that implementation could be compliance-driven (Aaron et al., 2022; Yurkofsky, 2021), in this district not requiring implementation made networking infeasible.
District leaders should consider their context when identifying difficulties that could arise with networking. For instance, a school district with a culture of site-based management might similarly struggle with networking between schools, while districts with more centralized management structures might more easily convene schools regularly for networking.

We note several other implications for future research on improvement science or similar CI methodologies. First, our analysis proposes a theory of how organizational learning could lead to improvement science implementation as mediated through sensemaking, but we do not test this theory quantitatively or examine alternative theories of improvement science implementation. Future research might measure these constructs quantitatively, perhaps within a structural equation model, to assess whether they are causally related to each other and to test alternative explanations for implementation. Second, we only began to examine key questions in improvement research related to the goals of improvement science. We echo the call of Eddy-Spicer and Penuel (2022) to ask “improvement for what?” when seeking to understand why we are asking schools to integrate improvement science into school improvement. In schools that are already incentivized to focus on their accomplishments, does improvement science help to increase opportunities for students marginalized by this system? These core questions need to be addressed along with further interrogation of how improvement science does or does not support strong equity. Strong equity is defined as school improvement that “recognizes and then confront[s] the reproduction of inequities through the everyday structural and systematic aspects of schools” (Eddy-Spicer and Gomez, 2022, p. 90). Previous research on improvement science implementation is skeptical that these efforts produce strong equity (e.g., integrating recognition of the structural nature of inequality in problem definition) unless participants purposefully integrate racial equity logics into their continuous improvement practices (Bush-Mecenas, 2022).
While we found that equity orientations encouraged improvement science implementation, future work should continue to examine the relationship between improvement science implementation and equity. This relationship also presents an opportunity for educational leadership preparation programs with an equity orientation: faculty can consider how their work with educational leaders (either as part of leadership preparation or in partnership with districts) can encourage strong equity as part of improvement science practice. For example, educational leadership programs could review their syllabi or internship requirements for alignment with strong equity practices. Improvement science proponents have successfully spread these routines into schools internationally, but much remains to be done to understand whether these approaches can successfully navigate the relational elements of schools to produce the promised improvements.

Data availability statement

The datasets presented in this article are not readily available because the research agreement with the partner school district does not allow posting of the data. Requests to access the datasets should be directed to Samantha Viano, sviano@gmu.edu.

Ethics statement

The studies involving humans were approved by George Mason University Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written or electronic informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

SV: Writing – review & editing, Writing – original draft, Supervision, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Conceptualization. FS: Writing – review & editing, Writing – original draft, Formal analysis, Conceptualization. SH: Writing – review & editing, Writing – original draft, Methodology, Investigation, Formal analysis, Conceptualization.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was funded by the George Mason University College of Education and Human Development Seed Grant program.

Acknowledgments