- Department of Counseling, Clinical, and School Psychology, University of California, Santa Barbara, Santa Barbara, CA, United States

As the world becomes more aware of the prevalence and consequences of trauma for young people, the education sector is increasingly responsible for supporting students emotionally and academically. School-based mental health supports for students who have experienced trauma are crucial, as schools are often the only access point for intervention for many children and families. Given that over two-thirds of children in the U.S. will experience a traumatic event by age 16, it is imperative to better understand the mechanisms of implementing mental health support in schools. Despite the increasing need for trauma-informed practices in schools (TIPS), schools often struggle to provide them due to a myriad of barriers. More research is needed to understand how to implement and sustain TIPS. Researchers have begun exploring these questions, but there is still a shortage of research about how to best implement TIPS. We argue that the Consolidated Framework for Implementation Research (CFIR) is useful for organizing and advancing the implementation of TIPS. By consolidating findings from existing scholarship on TIPS, we identify themes and future directions within the CFIR framework. Based on our review, we also provide practical suggestions for schools seeking to implement TIPS.

Introduction

Many children and adolescents are exposed to a variety of traumatic events, both chronic and sudden (Bence, 2021). Specifically, around two-thirds of children will be exposed to one or more traumatic events by the time they reach 16 years old (Substance Abuse and Mental Health Services Administration & The National Child Traumatic Stress Network, 2022). Examples of chronic traumatic stressors, also referred to as adverse childhood experiences (ACES), include systemic racism, houselessness, community violence, food insecurity, and domestic violence within the home (Bence, 2021). ACES are associated with worse health outcomes in childhood and into adulthood (Felitti et al., 1998; Liu et al., 2020), meaning it is crucial to consider these kinds of stressors in trauma-informed models. In contrast to chronic traumatic stressors, examples of sudden traumatic stressors include natural disasters and school and community shootings (Bence, 2021). Children who experience trauma are at risk of experiencing mental health challenges, including difficulty building relationships, trouble managing emotions, and dissociation (National Child Traumatic Stress Network-a, n.d.; National Child Traumatic Stress Network-b, n.d.). Given that such a large proportion of students experience trauma and related mental health symptoms, it is important for schools to implement trauma-informed practices in schools (TIPS), which are systematic supports designed both to address traumatic stress resulting from chronic and/or sudden trauma and to promote students’ mental health and wellbeing.

Providing a consistent operationalization of TIPS is difficult, as there is not currently a uniform protocol or set of interventions that define them (Wassink-de Stigter et al., 2022). Broadly, TIPS are programs that involve school personnel being able to (1) recognize and identify symptoms of trauma, (2) understand the ramifications of trauma, (3) implement policies and procedures aimed at prevention and intervention for students, and (4) engage in actions that seek to reduce re-traumatization (Overstreet and Chafouleas, 2016; Maynard et al., 2019).

Ideally, trauma-informed schools integrate TIPS with Multi-Tiered Systems of Support (MTSS; California Department of Education, 2023; University of California, San Francisco, n.d.). MTSS is a framework for academic, behavioral, and social emotional interventions that provides supports at three levels, or tiers. Tier one provides universal supports for all students. Tier two provides more specialized, smaller group supports for about 15% of students who are not benefiting from tier one services. Tier three provides targeted, individualized support for about 5% of students with greater needs.

TIPS can be integrated in various ways across the MTSS continuum. Examples of tier one TIPS include personnel training in specific TIPS and universal screening (Overstreet and Chafouleas, 2016), promotion of staff wellness, and anti-racist practices (University of California, San Francisco, n.d.), as well as trauma-informed classrooms, which are microcosms of the broader school environment wherein teachers are trained in and implement TIPS. Tier two TIPS target early intervention and response and can include interventions such as group counseling delivered by school counselors, psychologists, or other school personnel (Center for Safe & Resilient Schools and Workplaces, 2023). Tier three TIPS involve targeted recovery after trauma and often include individual therapy with school psychologists or other school personnel. Multitiered supports in schools are interconnected and require consistent communication and re-evaluation among team members, who use data to make informed decisions that ensure students are progressing through the tiers in a manner that best supports them. For this reason, many TIPS can be implemented across tiers (Center on MTSS, n.d.).

Despite the increasing guidance on how to structure TIPS within schools, there is a need to better understand both the effectiveness of TIPS and mechanisms of successful TIPS implementation. Evaluation of TIPS implementation and effectiveness outcomes is lacking, as demonstrated by several systematic reviews (Berger, 2019; Maynard et al., 2019; Avery et al., 2021; Cohen and Barron, 2021). Each review only yielded between zero and thirteen studies to include in the analyses, indicating a lack of research on TIPS implementation. In addition to systematic reviews, scholars in the field have discussed their concerns regarding the lack of consistent evaluation of TIPS. A review by Chafouleas et al. (2021) explained that aims up to now have involved educators’ ability to realize and recognize the impact of trauma, and subsequently respond and avoid re-traumatization, with less of a focus on the role of TIPS more broadly. Additionally, much of the extant research has focused on individual students and small groups within a tier two and three approach (e.g., in providing direct counseling services to target trauma), with less attention paid to tier one TIPS (e.g., whole-school initiatives; Chafouleas et al., 2021).

To date, there is no one model of TIPS that has been tested and found effective. Moreover, TIPS are a whole-school, multi-tiered endeavor, which requires attention to implementation readiness. The goal of this paper is to critically analyze TIPS research and introduce the merits of applying an implementation framework to facilitate adaptation and the study of TIPS. First, we synthesize findings and lessons learned from recent effectiveness research on TIPS and provide key future directions and improvements for advancing the impact of TIPS. The second part of this paper will highlight how implementation science methods should be applied to enhance TIPS adoption and sustainment. In keeping with implementation science recommendations, we identify an implementation determinant framework, the Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009), and incorporate it into our recommendations for TIPS research. These recommendations align with the need to integrate effectiveness and implementation research to enhance the public health impact of interventions (Curran et al., 2012; Rudd et al., 2020).

Effectiveness of TIPS

As TIPS is still a relatively new area of research, its effectiveness needs to continue to be studied in several ways. Extant literature points to gaps in evaluation of professional development, student and school outcomes, and contextual factors, as well as a need for increased rigor in methodology and measurement.

Professional development

Chafouleas et al. (2016) noted the dearth of controlled studies demonstrating the effectiveness of professional development as it relates to building competence and consensus regarding TIPS. This is a crucial area of research, as existing literature points to the necessity for school staff consensus and competence around implementing TIPS. As Chafouleas et al. (2016) point out, professional development aimed at building staff consensus and competence is particularly important when considering implementation of a mental-health initiative such as TIPS because many educators do not obtain training in TIPS before starting to work in schools.

Student and school outcomes

More evaluation is also needed to assess student and school outcomes related to TIPS (Chafouleas et al., 2016). There is currently a very small body of research on the effectiveness of TIPS for creating positive student and school outcomes. Maynard et al. (2019) conducted a systematic review with this specific research question in mind and found that no studies met their inclusion criteria for analysis, which highlights the lack of efficacy research in this area. Several other reviews have identified promising student outcomes as a result of TIPS, such as improved academic achievement and behavior (Berger, 2019) and reduced trauma symptoms (Cohen and Barron, 2021). However, the small number of studies, as well as their methodological limitations and small sample sizes, means that the generalizability of these student outcomes is limited (Berger, 2019; Cohen and Barron, 2021).

It is unclear which components of TIPS are responsible for the positive outcomes identified to date. Thus, there is a need to parse out the specific elements of TIPS that are impactful and how various aspects of TIPS interact with one another (Avery et al., 2021). Because schools and teachers are perennially pressed for time to implement interventions given a multitude of competing demands, identifying which components of TIPS are the most beneficial to student and school outcomes would maximize the efficiency of TIPS interventions and reduce the burden on schools.

Contextual considerations

Another gap in the literature revolves around the lack of consideration for contextual factors. Extant attempts to implement TIPS generally involve a decontextualized approach to trauma, wherein considerations such as the developmental age of students or the social, geographical, and cultural settings of the schools are lacking (Chafouleas et al., 2016). For example, considerations of racism and oppression are not at the forefront (Chafouleas et al., 2021). This gap aligns with concerns around the dearth of studies in general in the area of TIPS; because so few studies have been done relative to other areas of psychological intervention, there is less known in general about how context intertwines with current knowledge. The existing suggestions on how to solve this gap revolve around establishing a greater consideration for context when conducting TIPS research. Drawing from implementation science literature is particularly useful in this regard, as several implementation outcomes (e.g., acceptability, appropriateness, feasibility, and adaptability) take context into account. For example, Chafouleas et al. (2016) suggest pulling from implementation research and asking whether TIPS are able to be adapted to fit the local context and culture of schools.

Methodological rigor and measurement

A major issue with the current state of TIPS effectiveness research is a lack of methodological rigor and consistent measurement, which reduces the generalizability of findings. Most of the evidence base to date includes uncontrolled and/or advocacy-driven studies (Overstreet and Chafouleas, 2016). Greater consistency across research methods and interventions is also needed for greater generalizability (Berger, 2019; Avery et al., 2021). Cohen and Barron (2021) advocated for larger sample sizes and the use of randomization with more diverse populations, given that many of the studies in their systematic review included fewer than 35 participants. Increasing the number of schools would allow consideration of school-level factors. However, it is difficult to conduct randomized or quasi-experimental designs within the context of a school, and there is an argument to be made for flexible frameworks (Avery et al., 2021). Chafouleas et al. (2016) suggested that single-subject methodology, such as multiple baseline designs, might be a way to increase methodological rigor that fits within the school context.

One suggestion from multiple researchers in the field revolves around improvement of the tools used for evaluation. Chafouleas et al. (2016) suggested developing more psychometrically sound tools for evaluating the impact of trauma-informed schools. Avery et al. (2021) specifically pointed to the need to develop tools to measure student, teacher, and family outcomes, as well as fidelity monitoring. Chafouleas et al. (2016) noted that researchers might consider being intentional about collecting data in multiple arenas, including context process data (context, input, and fidelity), and outcome data (impact). They also proposed that collecting data on actions that support implementation is important.

TIPS implementation research

Very few existing studies on TIPS have examined implementation factors. Implementation in schools has been defined as, “the process of putting a practice or program in place in the functioning of an organization, such as a school, and can be viewed as the set of activities designed to accomplish this” (Forman et al., 2013, p. 78). School-based implementation outcomes are different from intervention outcomes and specifically measure the success of implementation. Common implementation outcomes include acceptability (consumer satisfaction), adoption (intent to implement), appropriateness (alignment of intervention and users), costs, feasibility (degree to which something can be successfully used), fidelity (whether something is delivered as planned), penetration (integration into a setting), and sustainability (routine integration; Proctor et al., 2011; Hagermoser Sanetti and Collier-Meek, 2019).

A scoping review conducted by Wassink-de Stigter et al. (2022) identified 57 publications that had a focus on TIPS implementation. Only seven of the sources studied implementation empirically in a peer-reviewed project; examples of the other 50 sources included non-peer reviewed empirical studies, conceptual articles, and book chapters. The authors included all 57 publications in their synthesis and overall, results indicated that there were multiple factors associated with implementing TIPS. Specifically, professional development, implementation planning, leadership support, engaging users, and buy-in were all identified as drivers of implementation success (Wassink-de Stigter et al., 2022). As evidenced by the scant number of peer-reviewed, empirical studies on TIPS implementation, there is still a looming question of how to successfully implement TIPS. Additionally, because TIPS generally involve school-wide systems change, evaluating implementation success and isolating implementation components proves more challenging than when evaluating a single intervention (Bunting et al., 2019).

Suggestion for future research: the CFIR

Overall, research to date points to a need to conduct TIPS studies that focus on effectiveness outcomes, implementation outcomes, and contextual settings, while also increasing methodological rigor. This paper proposes that the CFIR is a helpful way to address these considerations for multiple reasons. This section will explain the benefits of using implementation frameworks, as well as explain how the CFIR has been a beneficial tool in school-based and trauma-focused research up to now.

Frequently, implementation science studies use a determinant framework to guide the process. Determinant frameworks are useful because they summarize variables that may impact implementation outcomes (Moullin et al., 2020). In some instances, these variables have already been shown to impact implementation, while others are hypothesized to do so (Hagermoser Sanetti and Collier-Meek, 2019). Most determinant frameworks consider the barriers and facilitators that are present across different levels of a system.

The CFIR is a particularly useful determinant framework for several reasons. First, it is well suited to center innovation recipients and consider determinants of equity in implementation (Damschroder et al., 2022a). Additionally, it is one of the most highly cited frameworks currently being used in implementation science (Damschroder et al., 2022a), making it widely recognized and relevant. As will be highlighted in subsequent sections of this paper, the CFIR has also been used numerous times to evaluate implementation drivers within schools, establishing it as an appropriate framework for the specificities and nuances of the educational setting.

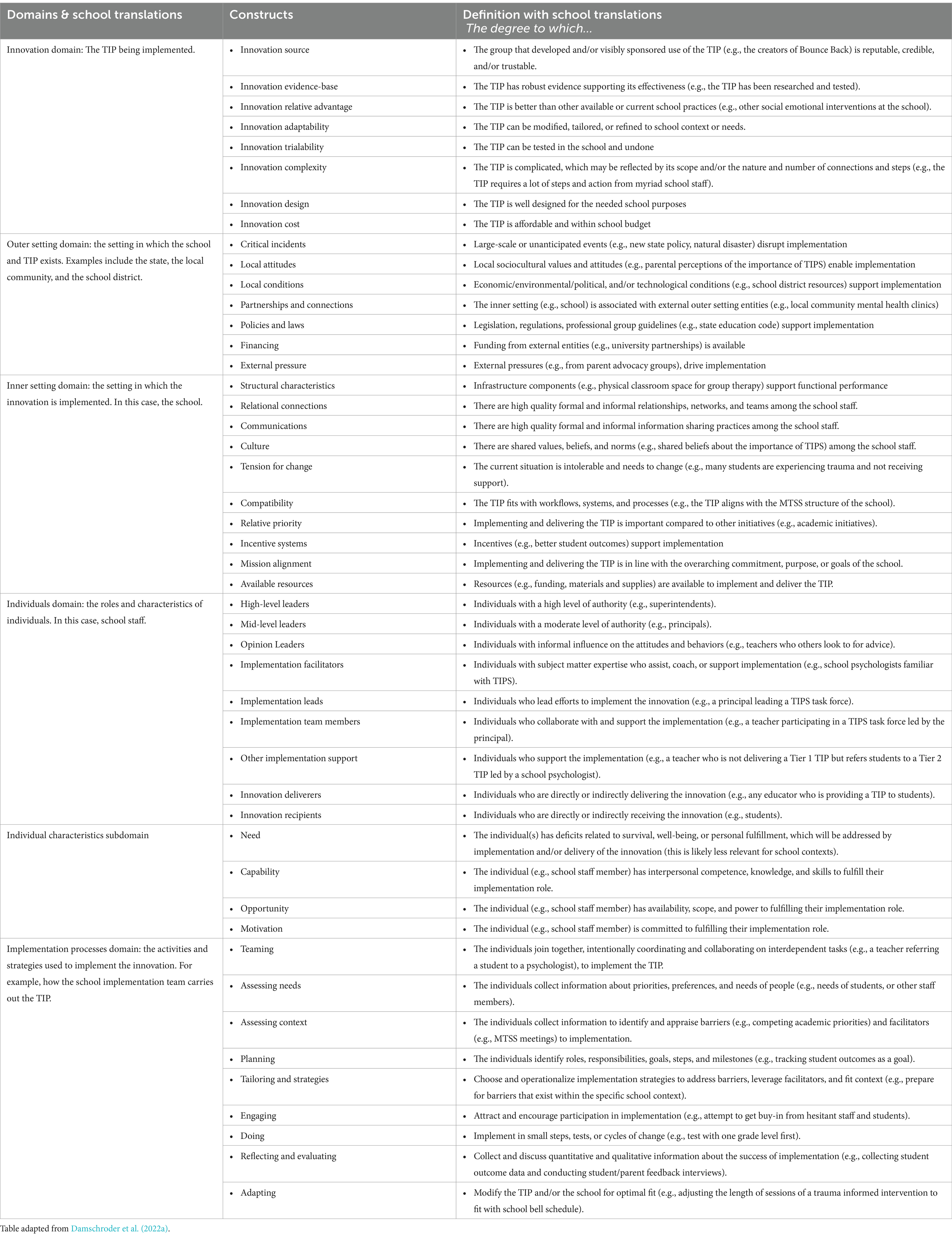

Overview of the CFIR domains

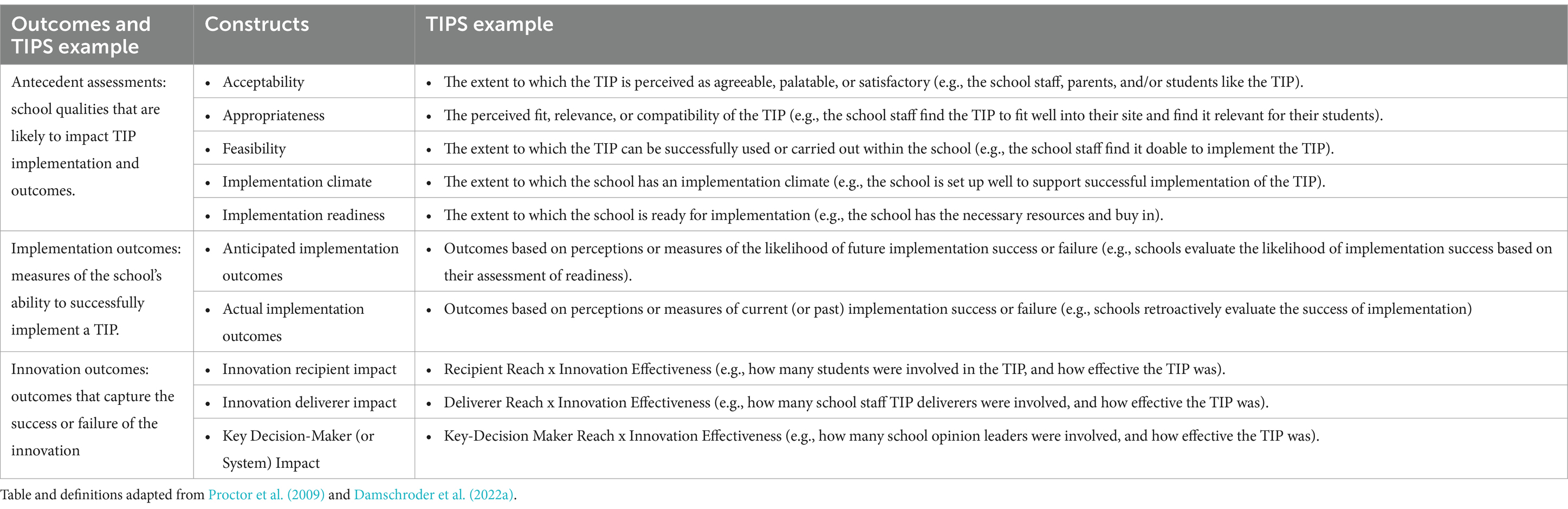

The CFIR is a determinant framework that comprises five domains: the innovation, outer setting, inner setting, individuals, and implementation process domains (Damschroder et al., 2009, 2022a). The innovation domain is the approach or set of practices being implemented (i.e., TIPS). The outer setting domain is the setting in which the inner setting exists. There can be multiple outer settings or multiple levels within the outer setting. In the context of implementing TIPS, the outer setting could include the school district, the county, or the state, as well as the local community culture. The inner setting domain is the setting where the innovation is implemented, which is the school when implementing TIPS. The individuals domain refers to the roles and characteristics of individuals involved in implementation. In the context of TIPS, this would include school-based implementers such as principals, teachers, school psychologists, and counselors. The implementation process domain includes the activities and strategies that are used in implementing the innovation. All of these domains and their constructs, in addition to outcome and implementation measures suggested within the CFIR, are found in Tables 1 and 2.

CFIR applicability for TIPS

One strength of the CFIR is its ability to map onto ecological systems. Researchers such as Atkins et al. (2016) have suggested that implementation research is best suited to communities when it takes into consideration Bronfenbrenner’s ecological systems theory (Bronfenbrenner, 1986). They propose a four-step process to consider when conducting implementation work in schools: (1) select a setting pertinent to development, (2) identify core goals and think about the goals’ mental health benefits, (3) identify key opinion leaders who will support the goals and promote implementation, and (4) identify the needed and available resources to implement and sustain the intervention (Atkins et al., 2016). Three CFIR domains align with these steps: the individuals domain aligns with identifying key opinion leaders (step three), and the inner and outer setting domains align with identifying available resources (step four).

CFIR applicability in school settings

The use of the CFIR in school-based implementation research is not completely novel. The CFIR has been used multiple times to evaluate the implementation of non-TIPS interventions in school contexts. For instance, it has been used to guide research on the implementation of drug prevention interventions (Eisman et al., 2022), health interventions (Leeman et al., 2018), sexual health referral programs (Leung et al., 2020), middle school sexual assault prevention programs (Orchowski et al., 2022), and physical activity programs (Wendt et al., 2023). The CFIR has also been used to evaluate the implementation of mental health interventions within schools. For example, the CFIR has been used to evaluate school-based implementation of the Modular Approach to Therapy for Children (MATCH) protocol (Corteselli et al., 2020) and a whole-school mindfulness program (Hudson et al., 2020).

To the authors’ knowledge, only one study has examined implementation of TIPS through a CFIR lens. Wittich et al. (2020) examined teacher and school staff reports of barriers and facilitators to implementation of a TIPS initiative that took place over the course of 3 years. Their results indicated that most barriers and facilitators of TIPS are found within the inner setting; access to knowledge and information, available resources, and implementation climate were the most commonly coded constructs across all domains in the study. The authors perceived there to be minor misfits between the CFIR and the school context and suggested that future use of the CFIR in schools might benefit from additional school-specific constructs. This study’s ability to successfully assess for implementation determinants in TIPS is a hopeful first step in incorporating the CFIR in subsequent TIPS research. The results of Wittich et al. (2020), taken in conjunction with the results from other school-based studies that used the CFIR, speak to the utility of the CFIR in school-based research. As will be demonstrated in the following sections, these studies indicate that the CFIR is helpful at various stages of the implementation evaluation process, and also that nearly all CFIR domains have been identified as relevant within school-based research.

Applicability across implementation phases

The CFIR is useful in that it can evaluate implementation determinants before, during, or after implementation has taken place; this speaks to its flexibility as a framework.

Pre-implementation evaluation

The CFIR is useful in conducting pre-implementation investigations of likely determinants that are particular to a given school context. Orchowski et al. (2022) conducted a qualitative analysis with various school-based community members (i.e., impacted groups and non-research partners such as principals and counselors) prior to rolling out a sexual assault prevention program. Their aim was to gain awareness of school and community factors that might be associated with whether or not implementation would be successful. Some predictors of implementation that school partners identified included the relative priority compared to other school demands, school climate, parent perspectives, and considerations of strategies for rollout (e.g., not disrupting existing schedules). The study was conducted before rollout of the intervention, so implementation success was not reported. Instead, the study served as an example of using the CFIR to predict and anticipate possible implementation barriers and facilitators ahead of an intervention rollout.

Ongoing implementation evaluation

It is also feasible to track implementation determinants over time using the CFIR. Hudson et al. (2020) conducted a longitudinal evaluation of a mindfulness program in schools in the United Kingdom. They gathered school member (e.g., teacher) perspectives on implementation determinants at two timepoints 6 months apart while implementation of the program was ongoing. Over the course of the implementation process, the researchers found that the CFIR was useful in capturing implementation challenges in the school setting, and that 74% of CFIR constructs were relevant in their data collection. This points to the CFIR’s utility in assessing for implementation success in a longitudinal manner.

Post-implementation evaluation

The CFIR can also be used after implementation of a school-based intervention has taken place. This method was used by Corteselli et al. (2020) as part of a larger randomized controlled trial (RCT) evaluating the use of the MATCH protocol in schools. In this study, researchers used the CFIR to guide interviews with school counselors at the conclusion of the RCT to reflect on implementation determinants. Results indicated that both barriers and facilitators to MATCH implementation were identified across all domains except the outer setting domain. The authors concluded by advocating for increased consideration of implementation determinants in the form of studies that integrate both effectiveness and implementation outcomes.

Relevance across CFIR constructs

The results from prior school-based CFIR research - regardless of whether it was conducted prior to, during, or after implementation - have identified many CFIR constructs as being relevant to school-based implementation.

Innovation domain

The innovation domain has been identified in multiple studies as an implementation consideration in schools. Several studies have identified innovation adaptability as being relevant. For instance, Leeman et al. (2018) evaluated school staff’s implementation of Centers for Disease Control and Prevention (CDC) tools for evidence-based interventions (these tools include items such as resources that summarize and organize information about interventions). One of the findings that emerged from this qualitative study was the need to adapt and shorten the tools to make them applicable for school staff implementation. The suggestions they posed included providing ready-to-use implementation tools (Leeman et al., 2018). Similarly, in their study of implementing the MATCH protocol in schools, Corteselli et al. (2020) identified the need to make MATCH adaptable to school settings in order to combat identified barriers such as lack of time and space. They specifically suggested implementing quick and/or group-based modular treatments (Corteselli et al., 2020).

Outer setting domain

Extant literature has also pointed to the importance of considering the outer setting domain while conducting school-based research. Orchowski et al. (2022) identified parents as likely to play a role in the successful implementation of a sexual assault prevention program. Additionally, both Leeman et al. (2018; in their evaluation of a physical health intervention using CDC tools) and Leung et al. (2020; in their evaluation of a sexual health referral system) identified collaboration with non-school-based community health providers and the role of state and federal policies as being associated with implementation success. For instance, Leung et al. (2020) found that when schools and community-based healthcare providers had shared visions of support and formalized agreements, the burden on school staff was reduced. Similarly, Leeman et al. (2018) found that school staff’s engagement with health-based outside organizations was associated with the use of tools. Leung et al. (2020) also found that prohibitive statewide policies were large barriers, whereas supportive policies were often not sufficient on their own to support implementation of the sexual health referral system. In contrast, Leeman et al. (2018) found that the presence of state and federal policies that supported use of the CDC tools was beneficial for staff implementation.

Inner setting domain

Various inner setting considerations have also been identified across studies, including school staff’s lack of knowledge of school policies (Leung et al., 2020), leveraging existing networks of communication (Leung et al., 2020), relative priority and the need to prioritize academics (Orchowski et al., 2022), and school culture and its alignment with the new intervention (Orchowski et al., 2022).

Individuals domain

The individuals domain has also proved to be relevant when considering school-based implementation. In their examination of a whole-school mindfulness program, Hudson et al. (2020) found that leadership was the most influential construct related to successful implementation. Leung et al. (2020) identified that knowledgeable school staff were the ones who were most actively involved in program implementation.

Acceptability addendum

Numerous studies have identified user acceptability as an important factor. This construct was added in the CFIR addendum (Damschroder et al., 2022b), which will be described in more detail in subsequent sections of this paper. Specifically, Eisman et al. (2022) found higher implementer acceptability to be associated with higher dose and fidelity of the Michigan Model for Health curriculum. Corteselli et al. (2020) also found that higher school counselor acceptability of certain MATCH modules (e.g., the depression module) increased the likelihood of implementing those modules, whereas modules the counselors found less acceptable (e.g., the trauma module) were implemented less.

The results of this extant school-based literature indicate that the CFIR will be useful in conceptualizing TIPS implementation determinants. First, previous studies that have used the CFIR to evaluate implementation have identified myriad CFIR domains and constructs as important for successful school-based implementation. Second, several researchers who have used the CFIR in school settings have directly addressed its utility in school-based implementation research. Leeman et al. (2018) suggested that the CFIR is useful in identifying factors to consider when designing intervention tools so that they align with user needs and school contexts. The CFIR has also been shown to be useful in identifying complex barriers and facilitators of school-based implementation (Hudson et al., 2020; Leung et al., 2020). Taken together, these studies provide a growing case for the utility of the CFIR in a school setting.

CFIR applicability in trauma-informed research

Although only one study to date has used the CFIR to evaluate TIPS, evidence from other contexts supports its utility in TIPS research. While TIPS can include specific trauma-informed interventions, they go beyond individual interventions and are designed as system-wide frameworks. Therefore, they may require different processes to examine implementation than individual interventions. Trauma-informed care in the medical and community setting is similarly complex (Piper et al., 2021), making it a good proxy for examining the potential utility of the CFIR for TIPS. Several studies have found that the CFIR is a relevant tool for evaluating trauma-informed care implementation across multiple CFIR domains and constructs.

A qualitative analysis of trauma-informed HIV care indicated that there were inner and outer setting constructs that impacted uptake of trauma-informed care (Piper et al., 2021). In a pre-implementation assessment with providers, staff, and administrators working at an urban HIV care center, researchers examined potential factors related to implementing a trauma-informed care model. The authors used the SAMHSA (2014) definition of trauma-informed care to guide their study. Inner setting implementation factors included staff and providers’ perceptions of the relative priority of these trauma-informed practices as compared to other priorities in supporting clients (relative priority construct), the fact that the trauma-informed care was aligned with the mission and services already offered (compatibility construct), lack of sufficient time with patients (available resources construct), lack of training on trauma (access to knowledge and information construct), and the helpful existence of service linkages and warm handoffs (networks and communications construct). Outer setting implementation factors included the lack of clear referral procedures to external organizations that may have been able to further support patients with trauma (cosmopolitanism construct) and barriers such as stigma and system navigation (patient needs/resources construct).

Furthermore, in a large-scale systematic review of individuals implementing trauma-informed care (again defined using the SAMHSA, 2014 definition) in human services, health, and education practice settings, Robey et al. (2021) used the CFIR to identify common implementation drivers. In particular, they found that most of the factors driving implementation cited by participants in these studies were located in the inner setting domain (Robey et al., 2021). Often, these factors were listed as implementation facilitators. Some specific examples of these inner setting factors included needing cost-effective structures, training access, consultation with knowledgeable professionals, and clear lines of communication. Both this review and the findings from Piper et al. (2021) demonstrate the utility of the CFIR for exploring the implementation of trauma-informed care in various contexts, making it a useful tool when examining TIPS.

Implications for the field

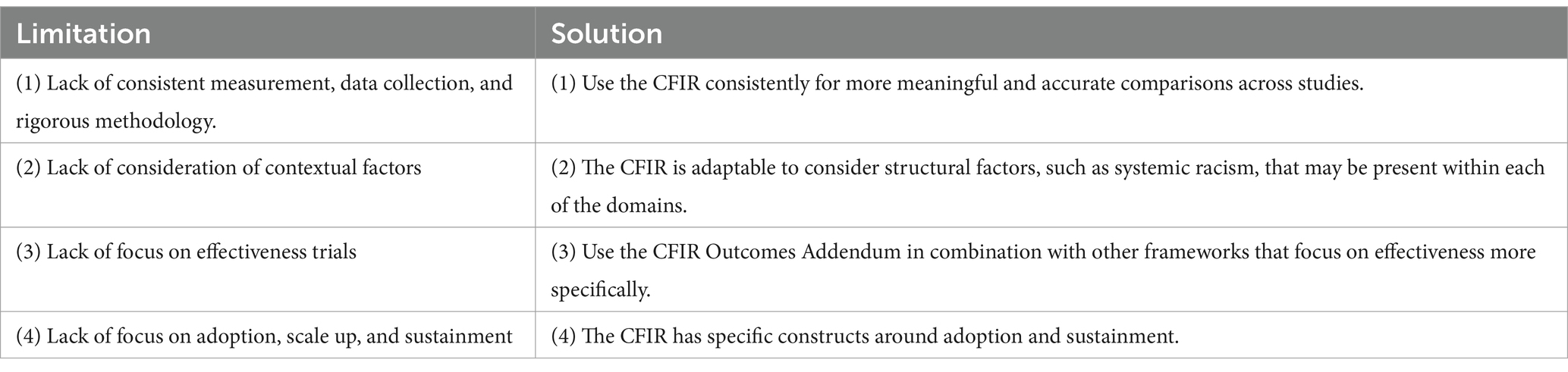

There is reason to believe that using the CFIR when conducting research related to the implementation of TIPS will be useful. This is evidenced by its extensive prior use in examining school-based interventions and community-based trauma informed interventions. It is therefore our recommendation that the CFIR be used for future TIPS research as well. We argue that many of the concerns and suggestions around the evaluation of TIPS – which have been presented by TIPS researchers and summarized previously in this paper – can be ameliorated by using the CFIR.

CFIR utility with consistent measurement, data collection, and rigorous methodology

One concern raised by multiple researchers was the lack of consistency in measurement, data collection, and methodology among existing TIPS research projects. It would be beneficial for future studies to use the CFIR because it is a broad and comprehensive framework. The CFIR provides a consistent taxonomy, terminology, and set of definitions (Damschroder et al., 2009) across projects, which would benefit TIPS implementation research. The consistency gained when multiple studies use the same framework would make findings easier to compare and results more generalizable.

CFIR utility with considering contextual factors

Another suggestion for improvement of TIPS research is to be more intentional about considering the contextual factors associated with trauma, including considerations of structural racism (Allen et al., 2021). The CFIR is also helpful in this arena because of its previously established utility in considering contextual factors in systems; it has already been used to examine community factors and their impacts on implementation. The CFIR has been used to measure contextual implementation determinants within the K-12 school setting. For example, Allen et al. (2021) identified several instances in which racism complicated implementation of a participatory intervention aimed at addressing school connectedness and improving academic and behavioral outcomes for youth who identified as Black, Indigenous, People of Color (BIPOC). Simultaneously, they identified contextual facilitators; for example, they found that leaders’ willingness to examine their BIPOC students’ and families’ experiences with discrimination and marginalization was associated with implementation uptake.

Previous uses of the CFIR to consider contextual factors such as systemic racism serve to indicate the framework’s utility for identifying how these factors are associated with TIPS implementation. Schools are an incredibly diverse ecosystem filled with many different students and staff who come from myriad cultural, racial, economic, and contextual backgrounds. Most schools have student bodies that have been differentially impacted by acute, chronic, and racial trauma. Therefore, it is imperative to understand how school contexts impact implementation.

CFIR utility with focusing on effectiveness trials

As there is still much to learn about the effectiveness of TIPS, it is important to consider how best to assess innovation outcomes in addition to implementation outcomes; though the CFIR is an implementation framework, it has utility in the realm of effectiveness as well. Damschroder et al. (2022a) are clear that outcome data are outside the scope of the CFIR as it is an implementation framework. However, a CFIR Outcomes Addendum was created in 2022 that includes guidance on how to measure important innovation outcome data (Damschroder et al., 2022b).

The addendum was created by pulling from existing CFIR constructs, as well as the Reach, Effectiveness, Adoption, Implementation, and Maintenance framework (RE-AIM; Glasgow et al., 2019) and the Implementation Outcomes Framework (IOF; Proctor et al., 2011). The research team pulled from these frameworks specifically when creating the addendum because multiple researchers who had used the CFIR in the past supplemented the model with these two frameworks. Damschroder et al. (2022b) explain that their goal was to broadly conceptualize implementation and innovation outcomes, rather than develop or add more specific outcome constructs. Many of the more specific outcome constructs that are used in other frameworks such as RE-AIM align with the broader CFIR outcome constructs.

The CFIR addendum presents three innovation outcome constructs: (1) Innovation Recipient Impact (defined as the combination of recipient reach and innovation effectiveness), (2) Innovation Deliverer Impact (defined as the combination of deliverer reach and innovation effectiveness), and (3) Key-Decision Maker/System Impact (defined as the combination of key-decision maker reach and innovation effectiveness). These outcomes serve as an indication of innovation success or failure via examining the innovation impact on users (Damschroder et al., 2022b). In the context of TIPS, there are multiple innovation outcomes with which implementers would be concerned. One area of interest is the reduction of symptoms in students who have experienced trauma. Innovation outcomes of interest may include a reduction in PTSD symptoms (where applicable), increased ability to concentrate, decreases in risky behaviors, and decreases in aggression, all of which relate to symptoms associated with trauma (National Child Traumatic Stress Network-a, n.d.; National Child Traumatic Stress Network-b, n.d.). Additionally, measures of school success are relevant innovation outcomes to examine. Childhood trauma can impact students’ attendance, grade point average, and assessment scores (National Child Traumatic Stress Network-a, n.d.; National Child Traumatic Stress Network-b, n.d.). Therefore, when evaluating TIPS innovation outcomes, these school measures are a crucial addition to consider.

It is important to clarify that measurement of effectiveness is still somewhat beyond the scope of the CFIR. As researchers think about how to measure outcome data such as reduction in trauma symptoms and increase in student academic success, it will be beneficial to pull from other frameworks that center effectiveness, such as RE-AIM. However, using the CFIR to conceptualize innovation outcomes – all of which consider effectiveness’s role in implementation – will be useful for examining the success of a given innovation.

CFIR utility with focusing on implementation outcomes

A key step forward in understanding TIPS is considering implementation more intentionally, including a focus on adoption, scale up, and sustainment. Here, too, the CFIR can be useful. Damschroder et al. (2009) specifically mention implementation and sustainment, speaking to the need for researchers to assess the effectiveness of implementation in specific contexts in order to optimize benefit and prolong sustainability, rather than just evaluating health outcomes.

Practical considerations for schools

In addition to its utility for career researchers, the CFIR would also be of use to school and district employees in evaluating their sites’ readiness for implementation of TIPS. The rising understanding of the negative ramifications of trauma for teachers and students alike, the potential benefits of TIPS, and the increase in governmental initiatives aimed at increasing the use of TIPS (Maynard et al., 2019) have combined to make it likely that many schools across the country will begin to implement TIPS in the future. Using the CFIR would allow the district staff who are responsible for the TIPS rollout to anticipate specific barriers and facilitators within the various domain levels and subsequently make changes before beginning implementation. We provide several considerations and suggestions about how school personnel might go about leveraging the CFIR.

Key implementation personnel

Among the educators who could lead TIPS implementation, school psychologists have expertise across a number of domains that equips them for this leadership role. Although implementation science is a nascent field within school psychology, the importance of studying implementation processes and outcomes has been identified as being in alignment with the mission of school psychology research and practice. Implementation is directly referenced in NASP practice model domains two (consultation and collaboration) and five (school-wide practices to promote learning; National Association of School Psychologists, 2020). Moreover, Division 16 of the American Psychological Association (APA) created a Working Group on Translating Science to Practice in schools, noting that implementation considerations are critical to school improvement (Forman et al., 2013). Thus, school psychologists are educators with the training and expertise to champion TIPS implementation in schools.

While school psychologists are highly qualified to facilitate the implementation of TIPS, it is also important to consider the utility of other school personnel such as nurses, teachers, administrators, counselors, and social workers. School psychologists are often preoccupied with tasks beyond consultation and school-wide practices to promote learning; over half of their weeks are taken up by assessment administration, report writing, and IEP meetings (Filter et al., 2013). These assessment-related obligations, in conjunction with other duties such as individual and group counseling, leave little time for leading and evaluating TIPS implementation initiatives. For this reason, it may be helpful to consider establishing multidisciplinary teams composed of school psychologists and other school personnel. The benefits of this approach are twofold. First, a multidisciplinary model would lessen the time and work burden on any one individual. Second, it would provide an opportunity for stakeholders who hold different and complementary skillsets (e.g., social worker knowledge of mental health interventions, administrator knowledge of district policies and procedures) to collaborate on implementing TIPS. Establishing these kinds of school-based, multidisciplinary workgroups aligns with suggestions provided by Yatchmenoff et al. (2017) in their paper on recommendations for how to implement trauma-informed care across various settings.

Methods for evaluating determinants

Due to the large number of domains and constructs to consider when embarking on a CFIR-based evaluation of TIPS implementation, it may feel overwhelming to know how to start evaluating each domain or construct of interest. As CFIR research continues to be conducted, there are an increasing number of measures that map onto specific domains and constructs. For example, the Society for Implementation Research Collaboration (SIRC) is currently conducting an Instrument Review Project that is advancing the use of implementation measures. Their repository houses various quantitative measures that map onto CFIR constructs and can be used by both researchers and school-based evaluators (Society for Implementation Research Collaboration, n.d.). The CFIR website itself provides suggested qualitative questions for many of the constructs that researchers and evaluators can use (Consolidated Framework for Implementation Research, n.d.). In addition, there are various evaluation tools for trauma-informed practices specifically that may prove helpful in evaluating TIPS implementation determinants, such as the Attitudes Related to Trauma-Informed Care scale (ARTIC; Baker et al., 2021) and the Trauma Responsive Schools Implementation Assessment (TRS-IA; National Center for School Mental Health, 2020). Evidently, there are many different existing tools to measure CFIR implementation determinants, which allows evaluation teams flexibility to identify and select the tools that fit best with their specific research questions and goals.

Leveraging implementation strategy matching tools

The CFIR is incredibly useful in identifying potentially relevant implementation determinants that are specific to each school site. Once these barriers and facilitators have been identified using the CFIR, school personnel will likely want to use specific implementation strategies to reduce the identified barriers and leverage identified facilitators. One tool for selecting such strategies is the CFIR-ERIC Implementation Strategy Matching Tool. The Expert Recommendations for Implementing Change (ERIC) project sought to compile a comprehensive list of implementation strategy terms and definitions (Powell et al., 2015). The resulting ERIC model comprises 73 strategies that teams can use to bolster implementation in their various contexts. The CFIR-ERIC Implementation Strategy Matching Tool can be used to link salient implementation determinants that have been identified using the CFIR with specific actionable strategies to address these determinants (Waltz et al., 2019).

The ERIC model was subsequently adapted to better fit the needs of the education sector in the SISTER project (Cook et al., 2019; Lyon et al., 2019). SISTER includes 75 school-adapted strategies for implementation. Examples of these strategies include developing educational materials and altering student and personnel obligations to enhance participation (Lyon et al., 2019). Based on the existing CFIR-ERIC Implementation Strategy Matching Tool, it is likely that school teams that are attempting to implement TIPS would benefit from use of the CFIR for evaluating and identifying implementation determinants, and the SISTER tool for selecting specific strategies to use to address these determinants.

Conclusion

The purpose of this paper was to synthesize existing literature on TIPS, including identifying extant knowledge and gaps, and in doing so advocate for the field to study the benefits of the CFIR in future research on TIPS. There is currently a multitude of gaps in the research surrounding the effectiveness and implementation of TIPS. As the school psychology research community continues to learn more about the complexities of implementing TIPS, it will be beneficial to be intentional about ways that researchers can support generalizability and consistency of findings. Our suggestion for how to accomplish this intentionality is to utilize the CFIR. This paper provided examples for the ways in which using the CFIR in future research on TIPS would support filling the gaps in the current literature (see Table 3 for a summary).

Using the CFIR allows for consistency across TIPS research projects while also maintaining researcher autonomy and variability. For example, researchers still have the ability to select domains and constructs of interest to their specific research questions. There are numerous constructs within each domain, and researchers almost never attempt to study all five domains within a single project. In fact, it is seldom possible to account for all domains or constructs in one project (Moullin et al., 2020). The CFIR developers suggest that researchers select whichever domains and constructs are most relevant to the research questions, as decided upon by team discussions, review of previous studies, and policy review (Damschroder et al., 2022a,b). Additionally, the CFIR is suited to qualitative, quantitative, and mixed methods methodology. Existing school-based and trauma-focused research projects have measured CFIR variables using a variety of methodologies. The flexibility of the CFIR – both in terms of the selection of variables and methodology – allows for researcher autonomy and tailoring, while still providing the consistent lexicon and continuity of project variables needed to further our understanding of TIPS implementation.

Author contributions

AM: Writing – original draft, Writing – review & editing. JS: Writing – review & editing. MB: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allen, M., Wilhelm, A., Ortega, L. E., Pergament, S., Bates, N., and Cunningham, B. (2021). Applying a race(ism)-conscious adaptation of the CFIR framework to understand implementation of a school-based equity-oriented intervention. Ethn. Dis. 31, 375–388. doi: 10.18865/ed.31.S1.375

Atkins, M. S., Rusch, D., Mehta, T. G., and Lakind, D. (2016). Future directions for dissemination and implementation science: aligning ecological theory and public health to close the research to practice gap. J. Clin. Child Adolesc. Psychol. 45, 215–226. doi: 10.1080/15374416.2015.1050724

Avery, J. C., Morris, H., Galvin, E., Misso, M., Savaglio, M., and Skouteris, H. (2021). Systematic review of school-wide trauma-informed approaches. J. Child Adolesc. Trauma 14, 381–397. doi: 10.1007/s40653-020-00321-1

Baker, C. N., Brown, S. M., Overstreet, S., and Wilcox, P. D. New Orleans Trauma-Informed Schools Learning Collaborative. (2021). Validation of the attitudes related to trauma-informed care scale ARTIC. Psychol. Trauma. 13, 505–513. doi: 10.1037/tra0000989

Bence, S. (2021). The difference between acute and chronic trauma. Very Well Health. Available at: https://www.verywellhealth.com/acute-trauma-vs-chronic-trauma-5208875

Berger, E. (2019). Multi-tiered approaches to trauma-informed care in schools: a systematic review. Sch. Ment. Heal. 11, 650–664. doi: 10.1007/s12310-019-09326-0

Bronfenbrenner, U. (1986). Ecology of the family as a context for human development: research perspectives. Dev. Psychol. 22, 723–742. doi: 10.1037/0012-1649.22.6.723

Bunting, L., Montgomery, L., Mooney, S., MacDonald, M., Coulter, S., Hayes, D., et al. (2019). Trauma informed child welfare systems - a rapid evidence review. Int. J. Environ. Res. Public Health. 16:2365. doi: 10.3390/ijerph16132365

California Department of Education. (2023). Definition of MTSS. Available at: https://www.cde.ca.gov/ci/cr/ri/mtsscomprti2.asp

Center for Safe & Resilient Schools and Workplaces. (2023). What we do. Available at: https://traumaawareschools.org/index.php/our-services/

Center on MTSS. (n.d.). Essential components of MTSS. Available at: https://mtss4success.org/essential-components

Chafouleas, S. M., Johnson, A. H., Overstreet, S., and Santos, N. M. (2016). Toward a blueprint for trauma-informed service delivery in schools. Sch. Ment. Heal. 8, 144–162. doi: 10.1007/s12310-015-9166-8

Chafouleas, S. M., Pickens, I., and Gherardi, S. A. (2021). Adverse childhood experiences (ACEs): translation into action in K12 education settings. Sch. Ment. Heal. 13, 213–224. doi: 10.1007/s12310-021-09427-9

Cohen, C. E., and Barron, I. G. (2021). Trauma-informed high schools: a systematic narrative review of the literature. Sch. Ment. Heal. 13, 225–234. doi: 10.1007/s12310-021-09432-y

Consolidated Framework for Implementation Research. (n.d.). Evaluation design. Available at: https://cfirguide.org/evaluation-design/

Cook, C. R., Lyon, A. R., Locke, J., Waltz, T., and Powell, B. J. (2019). Adapting a compilation of implementation strategies to advance school-based implementation research and practice. Prev. Sci. 20, 914–935. doi: 10.1007/s11121-019-01017-1

Corteselli, K. A., Hollinsaid, N. L., Harmon, S. L., Bonadio, F. T., Westine, M., Weisz, J. R., et al. (2020). School counselor perspectives on implementing a modular treatment for youth. Adolescent Mental Health 5, 271–287. doi: 10.1080/23794925.2020.1765434

Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., and Stetler, C. (2012). Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med. Care 50, 217–226. doi: 10.1097/MLR.0b013e3182408812

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., and Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement. Sci. 4:50. doi: 10.1186/1748-5908-4-50

Damschroder, L. J., Reardon, C. M., Widerquist, M. A. O., and Lowery, J. (2022a). The updated consolidated framework for implementation research based on user feedback. Implement. Sci. 17:75. doi: 10.1186/s13012-022-01245-0

Damschroder, L. J., Reardon, C. M., Widerquist, M. A. O., and Lowery, J. (2022b). Conceptualizing outcomes for use with the consolidated framework for implementation research (CFIR): the CFIR outcomes addendum. Implement. Sci. 17:7. doi: 10.1186/s13012-021-01181-5

Eisman, A. B., Palinkas, L. A., Brown, S., Lundahl, L., and Kilbourne, A. M. (2022). A mixed methods investigation of implementation determinants for a school-based universal prevention intervention. Implement. Res. Pract. 3, 263348952211249–263348952211214. doi: 10.1177/26334895221124962

Felitti, V. J., Anda, R. F., Nordenberg, D., Williamson, D. F., Spitz, A. M., Edwards, V., et al. (1998). Relationship of childhood abuse and household dysfunction to many of the leading causes of death in adults: the adverse childhood experiences (ACE) study. Am. J. Prev. Med. 14, 245–258. doi: 10.1016/S0749-3797(98)00017-8

Filter, K. J., Ebsen, S., and Dibos, R. (2013). School psychology crossroads in America: discrepancies between actual and preferred discrete practices and barriers to preferred practice. Int. J. Special Educ. 28, 88–100

Forman, S. G., Shapiro, E. S., Codding, R. S., Gonzales, J. E., Reddy, L. A., Rosenfield, S. A., et al. (2013). Implementation science and school psychology. School Psychol. 28, 77–100. doi: 10.1037/spq0000019

Glasgow, R. E., Harden, S. M., Gaglio, B., Rabin, B., Smith, M. L., Porter, G. C., et al. (2019). RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front. Public Health 7:64. doi: 10.3389/fpubh.2019.00064

Hagermoser Sanetti, L. M., and Collier-Meek, M. A. (2019). Increasing implementation science literacy to address the research-to-practice gap in school psychology. J. Sch. Psychol. 76, 33–47. doi: 10.1016/j.jsp.2019.07.008

Hudson, K. G., Lawton, R., and Hugh-Jones, S. (2020). Factors affecting the implementation of a whole school mindfulness program: a qualitative study using the consolidated framework for implementation research. BMC Health Serv. Res. 20:133. doi: 10.1186/s12913-020-4942-z

Leeman, J., Wiecha, J. L., Vu, M., Blitstein, J. L., Allgood, S., Lee, S., et al. (2018). School health implementation tools: a mixed methods evaluation of factors influencing their use. Implement. Sci. 13. doi: 10.1186/s13012-018-0738-5

Leung, E., Wanner, K. J., Senter, L., Brown, A., and Middleton, D. (2020). What will it take? Using an implementation research framework to identify facilitators and barriers in implementing a school-based referral system for sexual health services. BMC Health Serv. Res. 20:292. doi: 10.1186/s12913-020-05147-z

Liu, S. R., Kia-Keating, M., Nylund-Gibson, K., and Barnett, M. L. (2020). Co-occurring youth profiles of adverse childhood experiences and protective factors: associations with health, resilience, and racial disparities. Am. J. Community Psychol. 65, 173–186. doi: 10.1002/ajcp.12387

Lyon, A. R., Cook, C. R., Locke, J., Davis, C., Powell, B. J., and Waltz, T. J. (2019). Importance and feasibility of an adapted set of implementation strategies in schools. J. Sch. Psychol. 76, 66–77. doi: 10.1016/j.jsp.2019.07.014

Maynard, B. R., Farina, A., Dell, N. A., and Kelly, M. S. (2019). Effects of trauma-informed approaches in schools: a systematic review. Campbell Syst. Rev. 15:e1018. doi: 10.1002/cl2.1018

Moullin, J. C., Dickson, K. S., Stadnick, N. A., Albers, B., Nilsen, P., Broder-Fingert, S., et al. (2020). Ten recommendations for using implementation frameworks in research and practice. Implement. Sci. Commun. 1:42. doi: 10.1186/s43058-020-00023-7

National Association of School Psychologists. (2020). NASP 2020 Domains of Practice. Available at: https://www.nasponline.org/standards-and-certification/nasp-2020-professional-standards-adopted/nasp-2020-domains-of-practice

National Center for School Mental Health. (2020). Trauma responsive schools. Available at: https://www.theshapesystem.com/trauma/

National Child Traumatic Stress Network-a. (n.d.). Effects. Available at: https://www.nctsn.org/what-is-child-trauma/trauma-types/complex-trauma/effects

National Child Traumatic Stress Network-b (n.d.). Trauma-Informed Schools for Children in K-12: A System Framework. Available at: https://www.nctsn.org/sites/default/files/resources/fact-sheet/trauma_informed_schools_for_children_in_k-12_a_systems_framework.pdf

Orchowski, L. M., Oesterle, D. W., Zong, Z. Y., Bogen, K. W., Elwy, A. R., Berkowitz, A. D., et al. (2022). Implementing school-wide sexual assault prevention in middle schools: a qualitative analysis of school stakeholder perspectives. J. Community Psychol. 51, 1314–1334. doi: 10.1002/jcop.22974

Overstreet, S., and Chafouleas, S. M. (2016). Trauma-informed schools: introduction to the special issue. Sch. Ment. Heal. 8, 1–6. doi: 10.1007/s12310-016-9184-1

Piper, K. N., Brown, L. L., Tamler, I., Kalokhe, A. S., and Sales, J. M. (2021). Application of the consolidated framework for implementation research to facilitate delivery of trauma-informed HIV care. Ethn. Dis. 31, 109–118. doi: 10.18865/ed.31.1.109

Powell, B. J., Waltz, T. J., Chinman, M. J., Damschroder, L. J., Smith, J. L., Matthieu, M. N., et al. (2015). A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement. Sci. 10:21. doi: 10.1186/s13012-015-0209-1

Proctor, E. K., Landsverk, J., Aarons, G., Chambers, D., Glisson, C., and Mittman, B. (2009). Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Admin. Pol. Ment. Health 36, 24–34. doi: 10.1007/s10488-008-0197-4

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., et al. (2011). Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm. Policy Ment. Health Ment. Health Serv. Res. 38, 65–76. doi: 10.1007/s10488-010-0319-7

Robey, N., Margolies, S., Sutherland, L., Rupp, C., Black, C., Hill, T., et al. (2021). Understanding staff- and system-level contextual factors relevant to trauma-informed care implementation. Psychol. Trauma Theory Res. Pract. Policy 13, 249–257. doi: 10.1037/tra0000948

Rudd, B. N., Davis, M., and Beidas, R. S. (2020). Integrating implementation science in clinical research to maximize public health impact: a call for the reporting and alignment of implementation strategy use with implementation outcomes in clinical research. Implement. Sci. 15, 103–111. doi: 10.1186/s13012-020-01060-5

Society for Implementation Research Collaboration. (n.d.). Instrument review project. Available at: https://societyforimplementationresearchcollaboration.org/sirc-instrument-project/

Substance Abuse and Mental Health Services Administration. (2014). SAMHSA’s concept of trauma and guidance for a trauma-informed approach. Available at: https://store.samhsa.gov/product/samhsas-concept-trauma-and-guidance-trauma-informed-approach/sma14-4884

Substance Abuse and Mental Health Services Administration & The National Child Traumatic Stress Network. (2022). Trauma and violence. SAMHSA. Available at: https://www.samhsa.gov/trauma-violence#:~:text=SAMHSA%20describes%20individual%20trauma%20as,physical%2C%20social%2C%20emotional%2C%20or

University of California, San Francisco. (n.d.). HEARTS Program Overview. Available at: https://hearts.ucsf.edu/program-overview

Waltz, T. J., Powell, B. J., Fernández, M. E., Abadie, B., and Damschroder, L. J. (2019). Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement. Sci. 14:42. doi: 10.1186/s13012-019-0892-4

Wassink-de Stigter, R., Kooijmans, R., Asselman, M. W., Offerman, E. C. P., Nelen, W., and Helmond, P. (2022). Facilitators and barriers in the implementation of trauma-informed approaches in schools: a scoping review. Sch. Ment. Heal. 14, 470–484. doi: 10.1007/s12310-021-09496-w

Wendt, J., Scheller, D. A., Fletcher-Mors, M., Meshkovska, B., Luszczynska, A., Lien, N., et al. (2023). Barriers and facilitators to adoption of physical activity policies in elementary schools from the perspective of principals: an application of the consolidated framework for implementation research – a cross-sectional study. Front. Public Health 11:935292. doi: 10.3389/fpubh.2023.935292

Wittich, C., Wogenrich, C., Overstreet, S., Baker, C. N., and The New Orleans Trauma-Informed Schools Learning Collaborative (2020). Barriers and facilitators of the implementation of trauma-informed schools. Res. Pract. Schools 7, 1–16

Keywords: CFIR, trauma informed practices, implementation science, trauma informed schools, consolidated framework for implementation research

Citation: Mullin AC, Sharkey JD and Barnett M (2024) Advancing trauma informed practices in schools using the Consolidated Framework for Implementation Research. Front. Educ. 9:1346933. doi: 10.3389/feduc.2024.1346933

Edited by:

Michael R. Hass, Chapman University, United States

Reviewed by:

Christopher Kearney, University of Nevada, Las Vegas, United States

Elizabeth Connors, Yale University, United States

Copyright © 2024 Mullin, Sharkey and Barnett. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alice C. Mullin, alicemullin@ucsb.edu

†ORCID: Alice C. Mullin, https://orcid.org/0000-0002-3713-6142

Jill D. Sharkey, https://orcid.org/0000-0003-4658-2811

Miya Barnett, https://orcid.org/0000-0002-2359-9998
