ORIGINAL RESEARCH article

Front. Educ., 26 February 2024

Sec. Assessment, Testing and Applied Measurement

Volume 9 - 2024 | https://doi.org/10.3389/feduc.2024.1332170

Introduction: Self-regulated learning (SRL), as the self-directed and goal-oriented control of one’s learning process, is an important ability for academic success. Even at preschool age, when its development is at a very early stage, SRL helps to predict later learning outcomes. Valid test instruments are needed to identify preschoolers who require SRL support and help them to start school successfully.

Methods: The present study aimed to provide an adequate SRL test instrument for preschoolers by revising and optimizing an existing strategy knowledge test and validating the revised version, the SRL Strategy Knowledge Test, in a sample of n = 104 German preschoolers (Mage = 5;11 years; 48.1% girls). For the validation, we used measures of (1) SRL and related constructs, (2) psychomotor development, and (3) academic competence, to determine three types of validity: (a) convergent, (b) divergent, and (c) criterion. All the correlation analyses controlled for child intelligence.

Results: The results showed that the test is of moderate difficulty and sufficiently reliable (Cronbach’s α = 0.74), can generate normally distributed data, and has a one-factor structure. In line with our hypotheses, we found significant correlations for the convergent and criterion measures, and numerically smaller, nonsignificant correlations for the divergent measures. However, the correlations for the criterion measures were no longer significant when controlling for intelligence.

Discussion: The missing evidence for criterion validity when controlling for intelligence may have been due to limitations in the measures used to examine criterion validity. The SRL Strategy Knowledge Test can be used in practice to diagnose the need for SRL support and in future studies and interventions on SRL development.

When children start school, the demands on their goal-oriented behavior change: for example, children have to maintain their attention in class and study for exams in order to achieve good academic results. Self-regulated learning (SRL) as the ability to plan, perform, and reflect on one’s cognitions and behavior in a self-directed manner in order to achieve learning goals (Zimmerman, 2000) can help to master these demands successfully. As meta-analyses have shown, SRL is of great importance for academic results such as school grades, mathematical reasoning, or reading competence (Dent and Koenka, 2016; Ergen and Kanadli, 2017; Widana, 2022). Its importance is also illustrated by the meta-analytical finding that learners can increase their academic skills through interventions that foster SRL (Dignath et al., 2008; Guo, 2022). Even before school entry, early self-regulatory abilities such as SRL can predict academic competencies both cross-sectionally and longitudinally (e.g., Howse et al., 2003; Willoughby et al., 2011; Sasser et al., 2015; Woodward et al., 2017). Against this background, it would be desirable to be able to identify those children whose SRL skills are weakly developed at preschool age. They could then be offered appropriate support even before they start school, which would enable them to successfully cope with school requirements from the moment they enter school; for example, they would find it easier to do their homework. In turn, these successful experiences could prevent problems such as low self-esteem and motivational difficulties (e.g., Deci and Ryan, 2008; Wang and Veugelers, 2008). Reliable and valid measurement instruments are needed to assess preschool SRL, diagnose a possible need for supporting SRL skills in children, and evaluate the effectiveness of SRL support programs (e.g., Lange et al., 1989; Whitebread et al., 2009). 
As part of a multi-method approach, both indirect procedures, which capture observable SRL behaviors, and direct procedures, which capture internal processes such as preschoolers’ knowledge of SRL strategies, should be used (Rovers et al., 2019). Specifics in preschoolers’ cognitive and motivational processes present challenges for the construction of tests that capture preschoolers’ internal SRL processes; for example, classic measures such as self-report questionnaires cannot be used because young children cannot yet assess how frequently they use certain SRL strategies (Jacob et al., 2021). Therefore, the present study aimed to revise an existing measure of preschoolers’ knowledge of SRL strategies, which has previously been identified as having potential for further development (Jacob et al., 2019), and to analyze its psychometric properties.

The theoretical basis for Jacob et al.’s (2019) strategy knowledge test instrument is Zimmerman’s (2000) social cognitive SRL model. Zimmerman (2000) describes an ideal process of SRL in his work, and since the strategies used in this process can be learned, the model has been widely used to design effective SRL interventions in past studies (e.g., Becker, 2013; Jansen et al., 2020) and provides a sound basis for diagnosing the possible need for SRL support. Zimmerman (2000) assumes three successive and cyclical phases that interact reciprocally through feedback loops: a pre-actional forethought phase, an actional performance phase, and a post-actional self-reflection phase. In the forethought phase, the learner sets their learning goals, plans the course of action to achieve these goals, and motivates themselves to work toward the goals by activating their self-efficacy expectations and clarifying the intrinsic value of the activity. During the performance phase, the learner employs volitional strategies that serve to maintain attention and monitors whether the actions taken are goal-directed. After completing an action, in the self-reflection phase, the learner evaluates to what extent the previously set goals have been achieved and identifies what might have led to the (non-)achievement of the goals; this evaluation leads to an emotional reaction, such as satisfaction or disappointment. Conclusions for adapting future learning situations are drawn from the evaluation, which closes the circle before the next forethought phase begins (thus creating a feedback loop; Zimmerman, 2000).

Zimmerman and Moylan (2009) extended this initial version of the model by describing in more detail what processes interact during the phases of learning. However, the basic structure of the phases is retained in the revision. Panadero and Alonso-Tapia (2014) mention as a limitation of the (original and revised) framework that it pays too little attention to the role of emotions during the forethought phase, such as anxiety. As the phased nature of the model provides a good basis for designing SRL training and enables a clear visualization of the learning process (Panadero, 2017), it is nevertheless widely used in current research on SRL promotion (e.g., Ateş Akdeniz, 2023; Lee et al., 2023).

The strategies used in the three phases of the learning process engage cognitive, metacognitive, and motivational processes (Boekaerts, 1999). Because these processes mature into adolescence and, partially, into adulthood (e.g., Somerville and Casey, 2010), preschool-aged SRL is still at an early stage of development. Therefore, the following section explains in more detail how SRL develops in preschool age and depends on related precursor abilities.

Even though preschoolers do not learn by reading texts and memorizing content and formulas as do older students, they already exhibit behaviors that can be mapped to Zimmerman’s (2000) SRL model. For example, regarding the forethought phase, they plan how they will proceed before they begin an action (e.g., Hudson and Fivush, 1991; Moffett et al., 2018); regarding the performance phase, they observe their own behavior and check whether it is goal-directed (e.g., Welsh, 1991; Lambert, 2001), and as strategies for the reflection phase, they evaluate their learning outcome and show emotional reactions to this evaluation after they complete an action (Stipek et al., 1992).

However, since there is still little neuronal differentiation in preschoolers’ brains (e.g., Wiebe et al., 2008), the phases within the SRL process and the components belonging to SRL (cognition, metacognition, motivation) do not emerge as distinct factors in this age group (Dörr and Perels, 2018; Jacob et al., 2019).

The extent to which children can regulate their learning behavior depends on how far the development of certain precursor skills has progressed (Jacob et al., 2021). Executive functions (EFs) as higher-order processes that enable task-related control of lower-order cognitive processes, for example, by suppressing task-irrelevant behavioral impulses (Miyake et al., 2000; Spiegel et al., 2021), can be regarded as such a precursor skill (Davis et al., 2021). Metacognition–cognition about cognitions, such as knowledge about memory processes (Schneider, 2008; Stanton et al., 2021)–also influences SRL development (e.g., Çetin, 2017). Whether and to what extent intelligence, another competence that is important for academic success (e.g., Ridgell and Lounsbury, 2004; Kiss et al., 2014), plays a role in the development of SRL is not yet clear. To date, there are only a few studies on the relationship between the two constructs and these have produced inconsistent findings (Diseth, 2002; Howse et al., 2003; Zuffianò et al., 2013). The only study with a sample of preschoolers known to the authors of this paper found a significant cross-sectional correlation (Howse et al., 2003). The definition of intelligence as a basic cognitive ability that enables the acquisition of knowledge and other cognitive skills (e.g., Cattell, 1987) likewise suggests that intelligence influences SRL development.

As the role of EFs and metacognition as SRL precursor skills is more theoretically and empirically founded than the role of intelligence, these constructs are discussed in more detail below. EFs are composed of a variety of processes; for example, planning, impulse control, and attention control processes (Doebel, 2020). These processes can be divided into “hot” EFs, which are important in tasks with emotional content (e.g., delay of gratification tasks), and “cool” EFs, which are relevant in decontextualized and emotionally neutral tasks (e.g., the Wisconsin Card Sorting Test; Zelazo and Carlson, 2012). Both EF facets are of relevance to SRL. “Hot” EFs are important for SRL because learning usually involves delayed emotional–motivational consequences, such as verbal feedback or school grades, while short-term reinforcing activities, such as playing with friends, must be postponed. “Cool” EFs are relevant to SRL because the application of SRL strategies inherently involves rather complex, emotionally neutral tasks. For example, successful completion of the SRL process requires maintaining learning goals and tasks, as well as directing attention away from distracting factors and toward the learning activity (Davis et al., 2021). In both “hot” and “cool” EFs, significant developmental gains are evident during early childhood (e.g., Zelazo and Müller, 2011; Zelazo and Carlson, 2012). Since, as described above, both types of EFs are instrumental in regulating one’s learning behavior in a goal-directed manner, many authors assume them to be the developmental basis of SRL (e.g., Sasser et al., 2015; Rutherford et al., 2018). Empirical evidence for executive functioning as a precursor ability to SRL is provided by Davis et al. (2021); these authors compared different prediction models between EFs and SRL across the transition from kindergarten to elementary school and showed that EFs predicted future SRL, whereas SRL did not predict future EFs.

The importance of metacognitive abilities for SRL has already been described by Boekaerts (1999). Metacognition includes both declarative and procedural competences (Roebers and Feurer, 2016). Declarative metacognition refers to one’s knowledge about thoughts, memories, and learning processes; accordingly, the term metacognitive knowledge is used to describe this competence (Schneider, 2008). Procedural metacognition, on the other hand, refers to processes of regulating one’s cognitions, such as monitoring and controlling one’s memory processes. The term metacognitive skills is used to describe this competence (Bryce et al., 2015). While metacognitive knowledge is necessary to plan one’s learning process before an action, metacognitive skills are especially important for monitoring and adjusting the learning process during an action and evaluating it after an action. In order to make adjustments for future learning actions that result from this evaluation, metacognitive knowledge is again required (Cera et al., 2013). Several studies (e.g., Sperling et al., 2004; Isaacson and Fujita, 2006; Çetin, 2017) have found significant relationships between SRL and learners’ metacognition, indicating that successful completion of the SRL cycle is enabled by metacognitive competences. Previous research on metacognitive competences has shown that preschoolers already have basic metacognitive knowledge that they continue to build as they enter school (Waters and Schneider, 2010). Similarly, preschoolers also engage in initial behaviors that can be categorized as metacognitive skills, but these improve significantly with age (Waters and Schneider, 2010).

Follmer and Sperling (2016) focused on the interplay of EFs, metacognition, and SRL. In a sample of university students, they found that the association between EFs (measured using self-report and performance data) and self-reported SRL was mediated by self-reported metacognitive competences. This finding suggests that EFs may form the developmental basis for metacognitive competences, which in turn influence the development of SRL skills. Since there is considerable interindividual variance in precursor abilities at preschool age (e.g., Schoemaker et al., 2012; Escolano-Pérez et al., 2019), considerable interindividual heterogeneity is also evident in preschool-age SRL (Jacob et al., 2021; Grüneisen et al., 2023), which means that some preschoolers will have pronounced deficits in SRL. Because of the importance of SRL to academic success, it would be desirable to identify preschoolers with SRL deficits early on, so they can be provided with SRL support before they enter school. This would make it much easier for them to start school. To this end, reliable and valid measures of preschool SRL are needed so that SRL support needs can be diagnosed and the effectiveness of interventions can be evaluated. Therefore, this project aimed to provide a valid SRL measurement tool that captures internal SRL processes. With this in mind, we describe below the state of current research on preschool SRL measurement.

In general, a differentiation can be made between online and offline methods for the assessment of SRL (Rovers et al., 2019). Online methods assess SRL while the learner is working on a task; an example is the think-aloud protocol. In contrast, offline methods assess anticipated or retrospective SRL (as expected before or exhibited after learning). Examples of offline methods are self-report questionnaires and learning diaries, which are commonly used for older cohorts, such as elementary school, high school, or college students (Roth et al., 2016). However, they cannot be used with preschoolers for several reasons: on the one hand, the items in these assessment methods cannot be read by preschool-age students, since their reading skills are not yet developed or are only rudimentary (Lonigan et al., 2000); on the other hand, immature memory processes make it difficult for preschoolers to recall (the frequency of) employed SRL strategies (Maylor and Logie, 2010). In addition, preschoolers need motivational incentives, such as a playful test character, for sufficient test compliance (Stephenson and Hanley, 2010).

To deal with these obstacles, previous research on preschool SRL has primarily relied on external assessment methods in which observers or caregivers assess and report SRL-relevant behaviors in preschool-age children (Lange et al., 1989; Bronson, 1994; McDermott et al., 2002; Whitebread et al., 2009; Dörrenbächer and Perels, 2018). These measurement methods provide a good way to measure the frequency of preschoolers’ externally observable SRL strategy use. However, because many SRL strategies are not externally visible, such as recalling past experiences of success to enhance self-efficacy beliefs, these measures can only partially map the SRL process. In addition, knowledge about SRL strategies that have not yet been integrated into child behavior cannot be assessed using indirect measures. To take a holistic and multi-method approach, therefore, measurement instruments that can record these inner processes should be used. To enable the assessment of preschoolers’ knowledge of SRL strategies, Jacob et al. (2019) developed a scenario-based knowledge test that was based on a metacognitive knowledge test that has already been used successfully with young children (Lockl et al., 2016). This scenario-based knowledge test was revised for the current study and is described in more detail below.

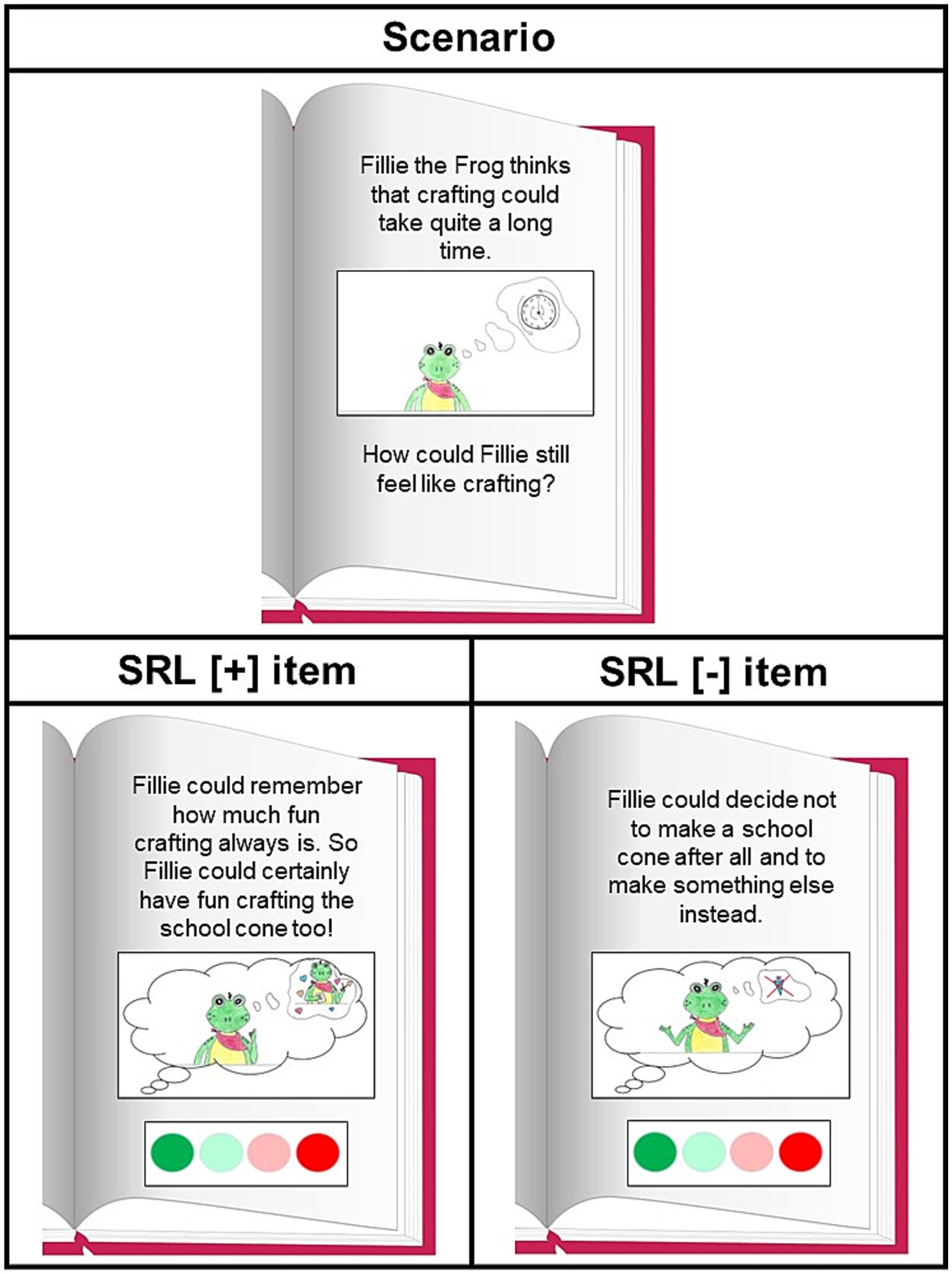

In Jacob et al.’s (2019) strategy knowledge test, preschoolers are read a story in which the protagonist encounters problem scenarios that can be more easily solved using SRL strategies. To aid understanding, the problem scenarios and the items are visualized with drawings. For each scenario, children are given two possible solutions (items): one that uses an SRL strategy (SRL [+] item) and one that does not use an SRL strategy (SRL [−] item). The children’s task is to rate the solutions/items as either a “bad idea” or “great idea” using a two-point response scale (a sad or a happy smiley face), and their performance is determined by the correspondence of their ratings to the SRL relevance of the items. In their reliability analysis, Jacob et al. (2019) found a satisfactory internal consistency of α = 0.72, but their version of the test contained only 11 of the original 24 items. This was because the item difficulty and discriminatory power of many of the items were too low. The present study addressed this issue by revising Jacob et al.’s (2019) strategy knowledge test in several ways to allow for greater variance in children’s responses and thereby increase the item difficulty and discriminatory power.

The aims of the present study arose from discussions about the importance of SRL for school success (e.g., Sasser et al., 2015; Woodward et al., 2017). There is a need for valid SRL diagnostic instruments that can be used in preschool to identify children who require SRL development support before school entry. Against this background, the present study aimed to revise an existing strategy knowledge test for preschoolers (Jacob et al., 2019) to increase its reliability and validity and to test the revised version psychometrically. The reliability and validity of the revised test were examined according to the standards jointly developed by the American Educational Research Association (AERA), the American Psychological Association (APA), and the National Council on Measurement in Education (NCME) (American Educational Research Association, 2014). To achieve this goal, the validity analysis involved several steps:

1. We first conducted confirmatory factor analysis (CFA) to determine whether the test has a one-factor structure, as has previously been found for an SRL external rating scale validated by Dörr and Perels (2018).

2. Next, correlations between the revised test and other measurement instruments on preschoolers’ SRL and its precursor abilities were calculated to derive statements on convergent validity. We expected to find significant and positive correlations between the revised SRL test and the convergent validity measures.

3. To determine divergent validity, additional psychomotor tests were used. We expected lower and nonsignificant correlations between the revised SRL test and the divergent validity measures.

4. Due to the importance of SRL for academic success, we calculated correlations between the revised SRL test and measures of academic competence to determine the criterion validity. We expected significant and positive correlations between the revised SRL test and the academic competence measures.
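The correlation analyses in steps 2 to 4 are reported as controlling for child intelligence. The source does not state which procedure was used; one common option is a first-order partial correlation, sketched below under that assumption. The function and variable names are illustrative and not taken from the study.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equally long samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)


def partial_corr(x: Sequence[float], y: Sequence[float], z: Sequence[float]) -> float:
    """Correlation of x and y with the linear influence of z removed.

    Assumed roles (hypothetical): x = SRL test score, y = validity measure,
    z = intelligence score.
    """
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
```

If the covariate explains most of the shared variance between the two measures, the partial correlation can be much smaller than the zero-order correlation, which is the pattern to watch for in the criterion validity analysis.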

The design and hypotheses adopted for the present study have been preregistered and can be found using the following link: https://aspredicted.org/mz5fs.pdf.

The conduct of the study was consistent with ethical research standards. Parents were informed about the study objectives and the testing methods to be used before giving their informed consent for their child to participate in the study. As a second level of consent, the children participated in the study voluntarily and could stop the tests at any time without any disadvantage to them. The data collection also included a parent survey; completion of the survey as a whole, or the single items within it, was voluntary.

Data collection was anonymous: numerical codes were assigned to the participants so that the collected data could not be linked to their personal data. As a thank you for their participation in the study, the children received a small present.

We planned the sample size needed for this study based on a review article by Anthoine et al. (2014), who compared 114 validation studies in terms of their sample size. They found that approximately 90% of the studies had a sample size of at least 100 participants. To be in line with this common practice, we planned to include N = 100 preschoolers in our data collection.

We promoted our study via a press report on the university website, a newspaper article, and the distribution of flyers in kindergartens and sports clubs in order to reach children from a wide range of social classes. N = 106 German children registered to take part in the study. Children were in their last year of kindergarten, as this period is considered to be preschool age in Germany. Parents of all the children provided written informed consent. Of these 106 preschoolers, n = 104 children (Mage = 5;11 years; age range: 5;1 years to 6;8 years; 48.1% female, 51.9% male) completed the revised SRL Strategy Knowledge Test and were therefore included as the final sample for our data analyses. The native language of 86.5% of the preschoolers was German. The remaining children spoke other native languages at home: Arabic (2.9%), English (1.9%), Turkish (1.9%), Russian (1.9%), Bulgarian (1.0%), and Romanian (1.0%) (2.9% missing data on native language). The children had normal or corrected-to-normal vision; 3.6% of the children had dyschromatopsia. The children had no known developmental delays.

In addition to the preschoolers themselves, one parent of each child was also surveyed. N = 83 parents participated in the parent survey. The parent sample was composed of individuals with different levels of vocational education (3.6% no vocational degree, 37.3% vocational apprenticeship degree, 47% university degree, 10.8% doctorate, 1.2% missing data on vocational degree). The distribution of vocational qualifications in the sample indicates that university degrees (including completed doctorates) were slightly overrepresented compared to the general German population, in which 52.3% of 30 to 34-year-olds have a university degree (Statistisches Bundesamt, 2024).

The preschoolers participated in two sessions each so that their attentional capacities were not exceeded. The first test session lasted an average of 32 min, and the second test session lasted an average of 36 min. The interval between the two test sessions averaged 1.54 weeks. To ensure that the children were able to work on the test items with as little distraction as possible, the tests took place in a quiet room and each child was tested individually by the respective test administrator. In addition to the child testing, one parent for each child received a parent questionnaire.

A total of eight test administrators worked on the study: one doctoral student and seven psychology students. All the test administrators received extensive training on the test administration and test protocol prior to their first testing session. Standardized test administration and recording were also ensured by providing the administrators with a manual containing the test instructions and protocol specifications.

Table 1 provides an overview of the test instruments used in this study and the number of participants who completed each measure. If a child or parent did not complete a task or questionnaire, they were excluded from the corresponding analysis for validation.

The SRL Strategy Knowledge Test used in this study was developed by making the following changes to Jacob et al.’s (2019) strategy knowledge test: (1) We reworded or removed items that proved to have little discriminatory power. (2) To capture a wider range of preschool SRL strategies, we constructed additional problem scenarios in which previously unrecorded SRL strategies could be used. (3) For each of these scenarios, we generated two new solutions/items, one that described an SRL strategy (SRL [+] item) and one that described an SRL-irrelevant behavior (SRL [−] item).

(4) In addition to revising the test content, we also implemented a visual redesign. To ensure that the test’s visual design was appropriate for preschoolers’ perceptual and processing abilities, we based the visual revision on the results of an expert survey. N = 38 German-speaking experts in the fields of child development and child education participated in this survey (70.3% researchers, 10.8% practitioners, and 18.9% people working in research and practice). The results of the survey led to the following changes in visual test design: the male and smiling protagonist in the previous test version was changed to a gender-neutral protagonist without a mouth. Since a smiling face is experienced by children as reinforcement, the absence of a smiling mouth was expected to make children feel less inclined to judge the protagonist’s ideas as “great.” Furthermore, we formulated all the items in the subjunctive mood and presented the visualizations of them in thought bubbles to make it clear to the children that they were ideas and that their judgments on them could help the protagonist decide whether the ideas should be implemented.

(5) The children were asked to rate the usefulness of the items and the response scale was expanded from a two-point to a four-point format, as results of the expert survey indicated that preschoolers can differentiate between four answer options. The naming of the response options was based on the response scale of a convergent test instrument on metacognitive knowledge (Lockl et al., 2016): the negative pole of the scale was named “not so great idea,” and the positive pole was named “really great idea.” The visual appearance of the response scale was also revised based on the expert survey. The response scale originally consisted of one happy and one sad smiley face. The revised response scale consisted of four colored circles, from a bright red circle (negative pole) to a pale red circle to a pale green circle to a bright green circle (positive pole). The assignment of the colors to the meanings of the circles was expected to be familiar to the children from their everyday kindergarten life, in which they work with traffic light systems to reinforce desired behavior and sanction undesired behavior. Additionally, the meaning of the colors was explained to the children verbally to ensure that they understood the response scale.

The resulting SRL Strategy Knowledge Test consisted of 14 problem scenarios, each of which corresponded to one of 14 SRL strategies for solving the problem and had two corresponding test items. The scenarios were embedded in the story of Fillie the Frog who wanted to make a gift for their friend Malie the Seagull. The SRL strategies were mapped to Zimmerman’s (2000) three cyclical phases: Forethought phase: 1. Goal setting, 2. Using prior knowledge, 3. Planning the action sequence, 4. Enhancing self-efficacy beliefs, and 5. Activating task interest. Performance phase: 6. Proceeding step by step, 7. Dealing with distraction, 8. Self-monitoring, 9. Dealing with mistakes, 10. Taking breaks, and 11. Self-praise. Self-reflection phase: 12. Self-evaluation, 13. Self-satisfaction, and 14. Self-response. In addition to the SRL [+] items, which involved applying the stated strategies, the SRL [−] items presented ways of dealing with the scenarios that did not help in achieving the goals, and accordingly, did not represent SRL-related behavior.

The story and the scenarios were read to the children and the associated items were visualized using pictures. An example scenario for the activating task interest strategy with associated items is shown in Figure 1, and a list of all the SRL Strategy Knowledge Test scenarios and items is provided in Appendix A.

Figure 1. Example scenario for the activating the task interest strategy and the associated SRL [+] and SRL [−] items. SRL, Self-regulated learning.

The scoring of the SRL Strategy Knowledge Test was analogous to that of other strategy knowledge tests in that options for action were given for the scenarios using pair comparisons (Händel et al., 2013; Maag Merki et al., 2013; Lockl et al., 2016; Dörrenbächer-Ulrich et al., accepted). If a child rated the SRL [+] item on a scenario as being better than the SRL [−] item, the child received one point. If the child rated the SRL [+] item as being as good as or worse than the SRL [−] item, the child received zero points. With a total of 14 scenarios, a point range from 0 to 14 points was thus possible, with 14 points representing the maximum performance.
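The pair-comparison scoring rule can be illustrated with a minimal sketch. It assumes that each rating on the four-point scale is coded as an integer from 1 (negative pole) to 4 (positive pole); this coding and the function name are assumptions made for illustration, not details reported by the authors.

```python
from typing import Sequence, Tuple

N_SCENARIOS = 14  # number of scenarios in the revised test


def score_srl_test(ratings: Sequence[Tuple[int, int]]) -> int:
    """Return the total score (0 to 14).

    ratings holds one (srl_plus, srl_minus) pair per scenario, each rating
    coded 1..4 (assumed coding). A point is given only when the SRL [+]
    item is rated strictly better than the SRL [-] item.
    """
    if len(ratings) != N_SCENARIOS:
        raise ValueError("expected one rating pair per scenario")
    return sum(1 for plus, minus in ratings if plus > minus)


# Hypothetical child: one clear preference, one tie, one reversed rating,
# then eleven scenarios with the SRL [+] item rated higher.
example = [(4, 1), (3, 3), (2, 4)] + [(4, 2)] * 11
print(score_srl_test(example))  # 12
```

Because ties earn zero points, a child who rates every idea as "really great" scores 0 rather than 14, so indiscriminate approval is not rewarded.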

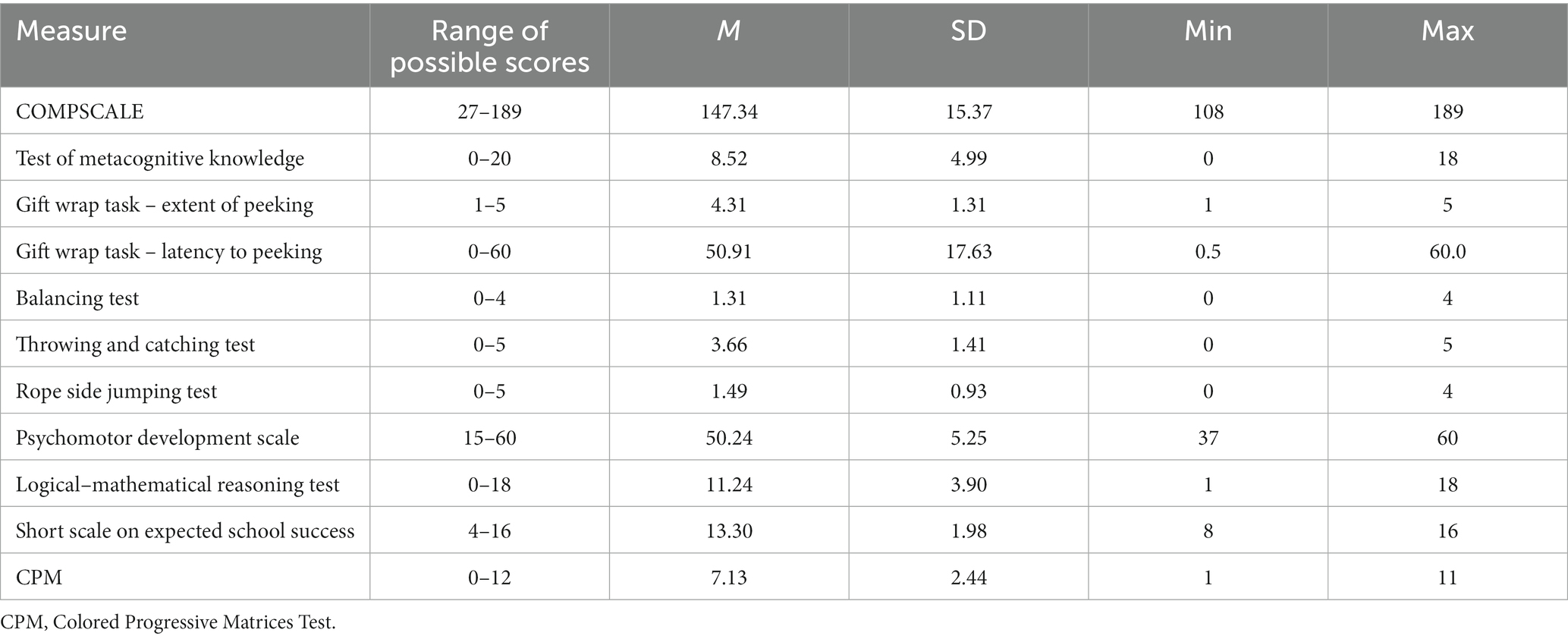

For convergent validity, parental ratings of the children’s SRL-related behaviors were collected using a German translation of the COMPSCALE (Instrumental Competence Scale for Young Children; Lange et al., 1989). The COMPSCALE consists of 27 items (six negatively polarized), each describing one behavior that can be assigned to SRL, e.g., “The child is confident in his or her ability to deal effectively with the environment” (positively polarized item) or “The child is easily distractible in task settings” (negatively polarized item). Parents were asked to indicate their level of agreement with the items using a seven-point response scale ranging from 1 (strongly disagree) to 7 (strongly agree). With a total of 27 items, a total range from 27 to 189 points was possible. The COMPSCALE achieved a reliability of Cronbach’s α = 0.85 (McDonald’s ω = 0.86) for the present sample. Lange et al. (1989) found a significant correlation of r = 0.41 with performance in a learning task and a significant correlation of r = 0.24 with performance in a problem-solving task.
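As an illustration of how a COMPSCALE total in the 27 to 189 range can be formed, the sketch below assumes that the six negatively polarized items are reverse-coded (8 minus the rating) before summing. The text does not spell out this step, and the item positions used here are placeholders, not the actual COMPSCALE item numbers.

```python
from typing import Iterable, Sequence

N_ITEMS = 27
SCALE_MIN, SCALE_MAX = 1, 7

# Placeholder positions (0-based) of the six negatively polarized items;
# the real positions are not given in the text.
HYPOTHETICAL_NEGATIVE_ITEMS = {0, 1, 2, 3, 4, 5}


def compscale_total(ratings: Sequence[int], negative_items: Iterable[int]) -> int:
    """Sum 27 ratings after reverse-coding negatively polarized items."""
    if len(ratings) != N_ITEMS:
        raise ValueError("expected 27 item ratings")
    negative = set(negative_items)
    total = 0
    for index, rating in enumerate(ratings):
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError("ratings must lie between 1 and 7")
        # Reverse-code so that higher values always mean more SRL behavior.
        total += (SCALE_MIN + SCALE_MAX - rating) if index in negative else rating
    return total
```

With this coding, the minimum total is 27 × 1 = 27 and the maximum is 27 × 7 = 189, matching the range stated above.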

Given the importance of metacognitive abilities for children’s SRL, we used a second convergent validity measure: Lockl et al.’s (2016) test of metacognitive knowledge. Like the test instrument that was being validated, this test is a scenario-based knowledge test. Children are given 10 problem situations, each of which can be solved by using metacognitive knowledge, such as knowledge of how to remember things better. For each scenario, three suggested solutions (items) are read out, the usefulness of which is evaluated by the child participant using a three-point response scale visualized using one to three stars. An example scenario is that a girl needs her gym bag the following day and wants to find a way to not forget to take it with her. The proposed solutions/items are to (1) hang the bag on the front door, (2) ask her little brother to remind her, or (3) think hard about the bag before falling asleep.

The items on the scenarios were first rated by a number of experts (Lockl et al., 2016), so the test scoring depends on the correspondence between children’s judgments and the expert ratings. If a child judges an item on a scenario to be more useful than another item that was judged to be more useful by at least 75% of the experts, they receive one point. If the usefulness rating is the same or worse, they receive zero points. Since a total of 20 items were judged as superior to other items in the same scenario by at least 75% of the experts, the children’s performance can range from 0 to 20 points. For our sample, we were able to find an internal consistency of Cronbach’s α = 0.86 (McDonald’s ω = 0.84). Since Lockl et al. (2016) did not examine any correlations between the measurement instrument and other measurement tools, no data-based statement on validity can be made.
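The expert-anchored scoring rule described above can be sketched as follows. This is an illustration only: the item labels, the example ratings, and the choice of which pairs count as expert-agreed are hypothetical and are not taken from Lockl et al. (2016).

```python
def score_expert_pairs(ratings, expert_pairs):
    """Count the expert-agreed pairs that the child orders the same way.

    ratings: dict mapping (scenario, item) to the child's star rating (1-3).
    expert_pairs: list of (scenario, better_item, worse_item) tuples, one per
        comparison on which at least 75% of the experts agreed.
    """
    points = 0
    for scenario, better, worse in expert_pairs:
        # One point only if the child rates the expert-preferred item strictly
        # higher; equal or lower ratings earn zero points.
        if ratings[(scenario, better)] > ratings[(scenario, worse)]:
            points += 1
    return points


# Hypothetical example: one scenario with items A, B and C, and two
# expert-agreed comparisons (A over B, A over C).
pairs = [("scenario_1", "A", "B"), ("scenario_1", "A", "C")]
child = {("scenario_1", "A"): 3, ("scenario_1", "B"): 2, ("scenario_1", "C"): 3}
print(score_expert_pairs(child, pairs))
```

In this toy case the child earns a point for rating A above B but none for the A versus C comparison, because the two ratings are equal. With 20 expert-agreed pairs in the full test, the same rule gives the 0 to 20 range.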

To further determine convergent validity, because of the role of hot EFs as precursor skills for SRL, we also used a third measure: an adaptation of the gift wrap task (Kochanska et al., 2000; Merz et al., 2017). The children received a small gift as a thank-you for their participation in the study and were told that it still needed to be wrapped. The investigator did this at a table next to the study table and the children were instructed not to look at the investigator while the gift was being wrapped. The gift was wrapped for 60 s and the children were judged by two independent, previously trained raters on two measures. First, the extent of peeking was assessed using a five-point scale (1 = turns around, 2 = turns around slightly, 3 = peeks over shoulder, 4 = turns head only slightly to peek, 5 = does not peek). In addition, the latency to peeking was assessed in seconds (possible range: 0 to 60 s). With ICC = 0.92 for the extent of peeking and ICC = 0.97 for the latency to peeking, a high level of agreement among observer ratings can be assumed (Greguras and Robie, 1998). Merz et al. (2017) found a significant correlation of r = 0.39 between the gift wrap task and another task on delay of gratification. The validity analysis for the revised strategy knowledge test included the mean of the two raters’ values for each gift wrap task measure: (1) the mean for extent of peeking, and (2) the mean for latency to peeking.

To obtain divergent validity evidence for the SRL Strategy Knowledge Test, we recorded preschoolers’ gross motor skills as a construct-unrelated skill. For this purpose, we used adaptations of three subtests of the Psychomotor Test Battery of the IDS-2 Developmental Scales (Grob and Hagmann-von Arx, 2018). (1) For the Balancing Test, in the first part of the task, each child was told to walk 10 steps on a rope lying on the ground and not to leave a gap between their feet. If they succeeded, they received 2 points. If they stepped next to the rope or left a gap between their feet, they received 1 point. If they stepped next to the rope and left a gap, they received no point. If a child scored at least 1 point in the first part of the task, they were to perform the task again in the second part with their eyes closed. The scoring here was analogous to the first part of the task. A total of 0 to 4 points were therefore possible in this test. (2) In the Throwing and Catching Test, a juggling ball was thrown to each child four times, and they were asked to throw it back to the experimenter each time. The number of catches was scored from 0 to 3 (0 for zero or one catch, 1 for two catches, 2 for three catches, and 3 for four catches) and the accuracy of throwing was scored from 0 to 2 (0 for mostly uncoordinated throwing, 1 for mostly coordinated throwing, and 2 for coordinated throwing). Thus, overall, a child could score 0 to 5 points on this test. (3) In the Rope Side Jumping Test, each child was instructed to jump for 15 s as many times as possible with both legs from the right or left side of a rope lying on the ground and back again. The child received 0 to 5 points depending on the number of jumps performed correctly (0 points for zero to six correct jumps, 1 point for seven to 12 correct jumps, 2 points for 13 to 18 correct jumps, 3 points for 19 to 24 correct jumps, 4 points for 25 to 30 correct jumps, and 5 points for more than 30 correct jumps). 
Since a scale constructed from the three tasks was not sufficiently reliable (Cronbach’s α = 0.36; McDonald’s ω = 0.53), the three individual scores for the tasks were included in the validity analysis. However, because Grob and Hagmann-von Arx (2018) reported a reliability of Cronbach’s α = 0.87 for the entire Psychomotor Test Battery, which also includes fine motor and visuomotor tasks, the individual measures appear to be substantially related to the other motor skills measures.
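The point rules for the three gross motor tasks can be written down compactly. The sketch below only restates the scoring bands given in the task descriptions. The function names and the boolean encoding of balancing errors are hypothetical, not part of the IDS-2 materials.

```python
from bisect import bisect_left

# Upper bounds of the jump-count bands worth 0, 1, 2, 3 and 4 points;
# more than 30 correct jumps earns 5 points.
JUMP_BAND_UPPER_BOUNDS = [6, 12, 18, 24, 30]


def rope_jump_points(correct_jumps):
    """Map the number of correct jumps in 15 s to 0-5 points."""
    return bisect_left(JUMP_BAND_UPPER_BOUNDS, correct_jumps)


def throw_catch_points(catches, throwing_accuracy):
    """Catches (0-4) give 0-3 points; the accuracy code (0-2) is added."""
    if not 0 <= catches <= 4 or throwing_accuracy not in (0, 1, 2):
        raise ValueError("catches must be 0-4 and throwing_accuracy 0-2")
    return max(0, catches - 1) + throwing_accuracy


def balance_part_points(stepped_off_rope, left_gap):
    """2 points for an error-free walk, 1 for one kind of error, 0 for both."""
    return 2 - int(stepped_off_rope) - int(left_gap)


def balancing_points(eyes_open, eyes_closed=None):
    """Each argument is a (stepped_off_rope, left_gap) pair of booleans.

    The eyes-closed part only counts if the first part earned at least 1 point.
    """
    first = balance_part_points(*eyes_open)
    if first == 0 or eyes_closed is None:
        return first
    return first + balance_part_points(*eyes_closed)


print(rope_jump_points(17), throw_catch_points(3, 1), balancing_points((False, False), (False, True)))
```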

As another measure of divergent validity, we constructed an External Rating Scale for Psychomotor Development, which parents answered as part of the parent survey. We used the psychomotor tests from the IDS-2 (Grob and Hagmann-von Arx, 2018) as a basis for item construction, so that questions on gross motor, fine motor, and visuomotor development were included. For each subtest (or for each subtest part in the case of multi-part subtests), we selected an activity that parents could observe in their children’s everyday lives and specified the ability to perform this everyday activity as an item. For example, the first part of the subtest Drawing Figures (visuomotor skills) was translated into the item “The child can draw simple geometric shapes (e.g., a circle).” The responses to these items were collected using a four-point scale ranging from 1 (strongly disagree) to 4 (strongly agree). In total, the scale consisted of 15 items, so a total score from 15 to 60 was possible. For the present sample, we found a satisfactory reliability of Cronbach’s α = 0.82 (McDonald’s ω = 0.82). An overview of the psychomotor items can be found in Appendix B.

Because SRL is predictive of academic achievement and grades are not yet available in German preschooling to assess academic achievement, we used an adaptation of the Logical–Mathematical Reasoning Test from the IDS-2 (Grob and Hagmann-von Arx, 2018) as a measure of criterion validity. The children performed seven tasks (each with one to three subtasks), which served to assess the various basic mathematical competencies that are already present at preschool age: 1. Counting skills, 2. Understanding of ordinal numbers, 3. Understanding of quantities, 4. Recognizing digits, 5. Recognizing invariance, 6. Mental addition skills, and 7. Solving simple equations. For example, we recorded the ability to recognize digits by showing the children digits and having them give the experimenter the associated number of marbles. The preschoolers received one point for each correctly solved subtask. Since the adaptation of the test consisted of 18 subtasks, the total number of points ranged from 0 to 18 (Cronbach’s α = 0.87; McDonald’s ω = 0.86 for the present sample). Grob and Hagmann-von Arx (2018) achieved Cronbach’s α = 0.97 for the total test battery on preschool academic skills, which also measured precursor skills to reading. Accordingly, the test used here shows strong links to other academic competencies.

Because academic success is evident in many other subjects besides mathematics, we constructed a Short Scale on Expected School Success for parents that served to provide a more holistic measure of expected school performance. For this purpose, we generated four statements (two of them negatively polarized) describing the prospective successful mastery of school requirements (e.g., “The child will be able to achieve good grades in school.”), which we assessed on a four-point scale ranging from 1 (strongly disagree) to 4 (strongly agree). In total, therefore, a score range from 4 to 16 was possible. The test had a satisfactory reliability (Cronbach’s α = 0.78; McDonald’s ω = 0.79) despite the small number of items. The scale is presented in Appendix C.

To control for the general intellectual abilities of the preschoolers in the validity analyses, we assessed reasoning with Set AB of the Colored Progressive Matrices Test (CPM; Raven et al., 1990). Here, the children were given 12 incomplete patterns (items) in succession. For each item, the children were asked to select from a set of six parts the one that correctly completes the pattern. For each correctly solved item, a child received one point, giving a potential points range from 0 to 12. Using the present sample, we achieved an acceptable reliability (Cronbach’s α = 0.60; McDonald’s ω = 0.64) for Set AB. In a study by Haile et al. (2016), which compared child scores on the CPM to other measures, significant correlations ranging from r = 0.32 to r = 0.64 were found between the CPM and various subtests of the K-ABC II Intelligence Test Battery (Singer et al., 2012).

For the data analysis, we used IBM SPSS statistical software, version 27. For reliability analysis, we calculated difficulties and discriminatory power for each pairwise comparison and Cronbach’s α as a measure of internal consistency for the entire test instrument. We used the add-on SPSS2LAVAAN (Busching, 2016) to conduct our confirmatory factor analysis. For the validity analysis, we calculated bivariate Pearson correlations between the sum scores on the SRL Strategy Knowledge Test and the convergent, divergent, and criterion validity measures. To control for the influence of intelligence, we also calculated partial correlations using the CPM score as an additional variable.
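To make the logic of the partial correlations concrete, the following sketch computes a first-order partial correlation from three Pearson correlations using the standard formula. It is not the SPSS procedure used in the study, and the toy data are constructed for illustration, not taken from the sample. Here `x` stands in for a test score, `y` for a validity measure, and `z` for the control variable.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def partial_correlation(x, y, z):
    """Correlation of x and y after removing the linear influence of z."""
    r_xy = pearson(x, y)
    r_xz = pearson(x, z)
    r_yz = pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Toy data (hypothetical): x and y each equal z plus an unrelated component,
# so all of their shared variance runs through z.
z = [-3, -1, 1, 3, -3, -1, 1, 3]
x = [-2, -2, 0, 4, -2, -2, 0, 4]
y = [-2, 0, 2, 4, -4, -2, 0, 2]

r_zero_order = pearson(x, y)
r_partial = partial_correlation(x, y, z)
print(round(r_zero_order, 3), round(abs(r_partial), 3))
```

In this constructed case, a substantial zero-order correlation drops to zero once `z` is partialled out, which is the pattern a control variable produces when it accounts for all of the shared variance.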

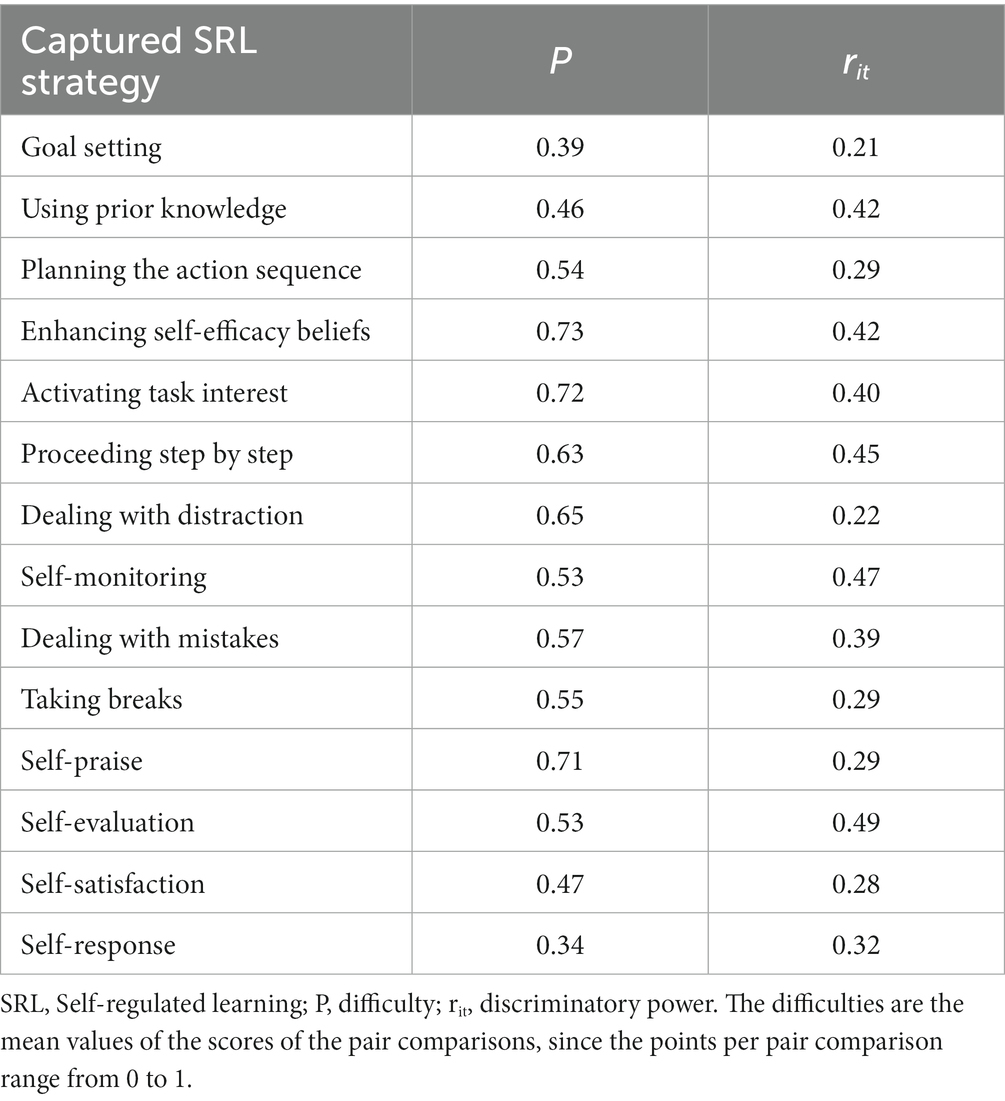

The difficulties and discriminatory power of the pair comparisons for each scenario are shown in Table 2. The analysis of the individual pair comparisons showed that the difficulties of all the pairwise comparisons fell within the range of 0.20 to 0.80, which is considered adequate for sufficient differentiation (Mummendey and Grau, 2014). The discriminatory power was also considered to be acceptable to good.

Table 2. Difficulties and discriminatory power of the pairwise comparisons for the scenarios, ordered by SRL strategy as captured by the scenarios.

For the entire test, we found a sufficient internal consistency of Cronbach’s α = 0.74 (McDonald’s ω = 0.74). The distribution of sum scores had a skewness of −0.27 (SE = 0.24) and a kurtosis of −0.46 (SE = 0.47). With Z-values of −1.13 for skewness and −0.98 for kurtosis, a normal distribution for the test values was assumed (Kim, 2013). The sum scores ranged from 0 to 14 points and thus covered the entire spectrum of possible scores. The average test performance was M = 7.83 points (SD = 3.25). This again reflected a moderate test difficulty, which allowed good differentiation between high and low test performance. The assumption of moderate test difficulty was also supported by the fact that only 1% of the participants achieved the maximum possible score of 14 and 1.9% of the participants achieved the minimum possible score of 0. Ceiling and floor effects, which are assumed to be present when more than 15% of participants achieve the maximum or minimum score (Terwee et al., 2007), can thus be ruled out.
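The Z-values reported above follow from dividing each statistic by its standard error, as this short check shows. The cutoff of 3.29 is an assumption: it is the criterion commonly attributed to Kim (2013) for medium-sized samples, and the text does not state which cutoff was applied. The 15% limit is the one cited from Terwee et al. (2007).

```python
def z_value(statistic, standard_error):
    """Z-value of a skewness or kurtosis estimate: statistic / standard error."""
    return statistic / standard_error


# Assumed cutoff for medium-sized samples; not stated in the text itself.
Z_CUTOFF = 3.29
# Floor or ceiling effects are assumed when more than 15% score at an extreme.
FLOOR_CEILING_LIMIT = 15.0

z_skew = z_value(-0.27, 0.24)   # reported skewness and its SE
z_kurt = z_value(-0.46, 0.47)   # reported kurtosis and its SE
normality_plausible = abs(z_skew) < Z_CUTOFF and abs(z_kurt) < Z_CUTOFF

share_at_max = 1.0   # percent of children with the maximum score of 14
share_at_min = 1.9   # percent of children with the minimum score of 0
no_floor_or_ceiling = (share_at_max <= FLOOR_CEILING_LIMIT
                       and share_at_min <= FLOOR_CEILING_LIMIT)

print(z_skew, z_kurt, normality_plausible, no_floor_or_ceiling)
```

Both Z-values are also well below the stricter 1.96 criterion, so the conclusion does not hinge on the assumed cutoff.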

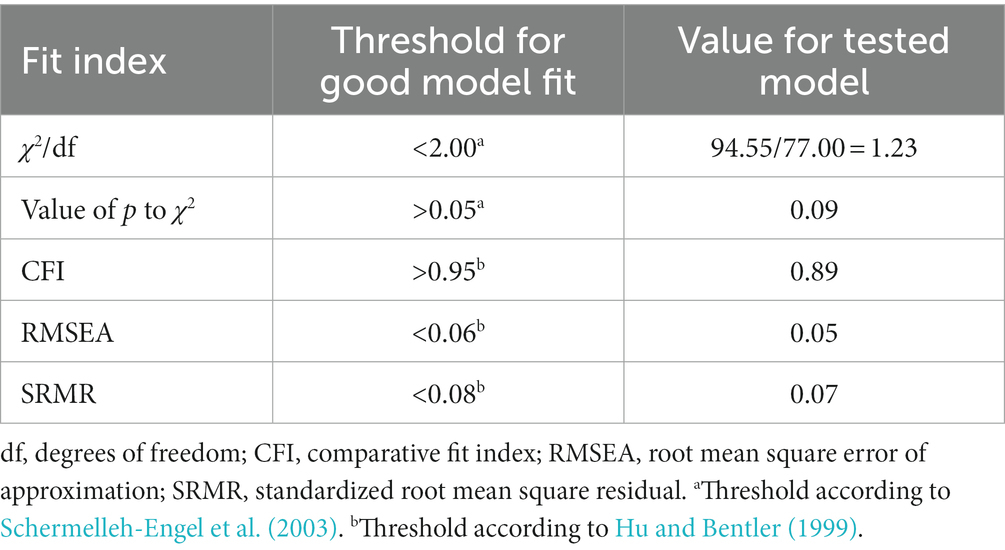

Table 3 shows the global fit indexes of the tested one-factor model. All the indexes, except for the CFI (comparative fit index), supported the assumed one-factor structure of the SRL test.

Table 3. Global fit indexes of the confirmatory factor analysis testing for a one-factor structure of the SRL strategy knowledge test.

Table 4 provides an overview of the descriptive statistics for all the variables included in the convergent, divergent, and criterion validity analyses.

Table 4. Ranges of possible scores, means, standard deviations, minima, and maxima of the measures used for the validity analysis.

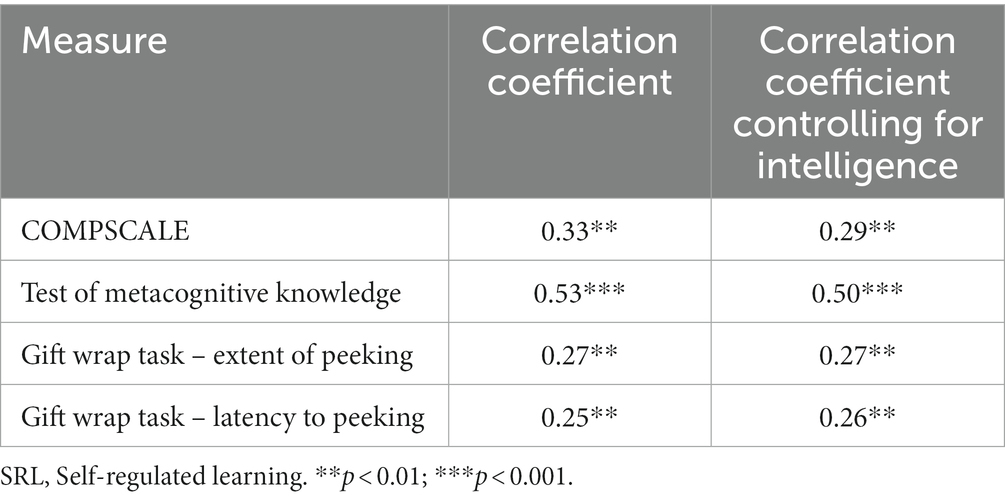

We calculated Pearson correlations between the SRL Strategy Knowledge Test and the COMPSCALE, the test of metacognitive knowledge, and the extent of peeking and latency to peeking during the gift wrap task to determine convergent validity. Table 5 presents the results of this analysis. Consistent with the hypothesis, the SRL Strategy Knowledge Test showed positive and significant correlations with all convergent validity measures. Furthermore, when the CPM score was used to control for the possible influence of child cognitive ability, the partial correlations remained significant for all the convergent measures (all ps ≤ 0.008).

Table 5. Bivariate correlations between the SRL strategy knowledge test to be validated and the measures of convergent validity.

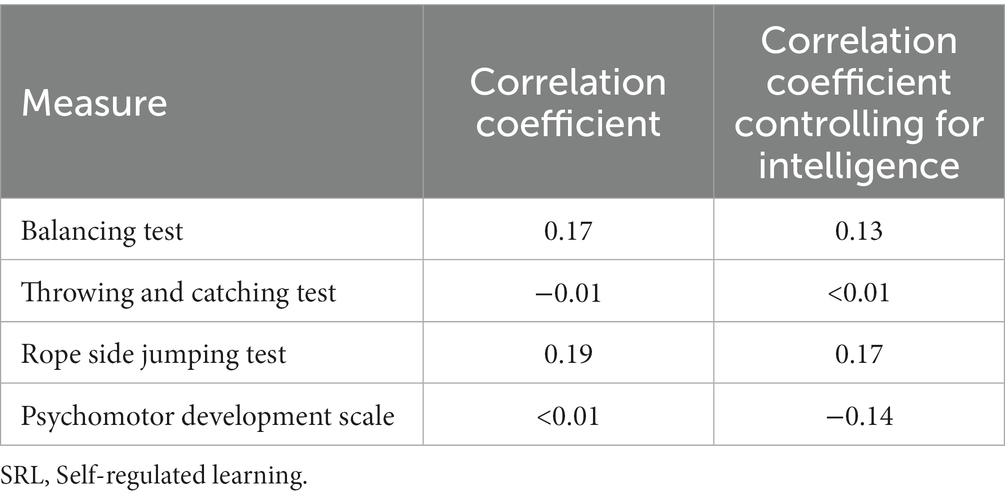

We calculated Pearson correlations between the SRL Strategy Knowledge Test and the Balancing Test, the Throwing and Catching Test, the Rope Side Jumping Test, and the Psychomotor Development Scale to determine divergent validity. The results of this analysis are shown in Table 6. Consistent with expectations, the correlations for the divergent validity measures were numerically lower than those for the convergent validity measures and not significant. The partial correlations using the CPM score as a control were also not significant (all ps ≥ 0.092).

Table 6. Bivariate correlations between the SRL strategy knowledge test to be validated and the measures of divergent validity.

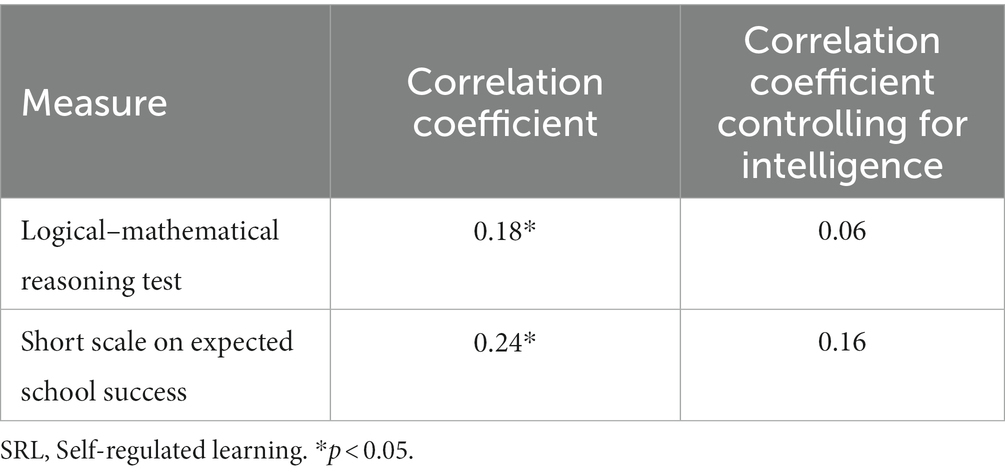

We calculated Pearson correlations between the SRL Strategy Knowledge Test and the Logical–Mathematical Reasoning Test as well as the Short Scale on Expected School Success to determine criterion validity. The results of this analysis are presented in Table 7. Consistent with the hypothesis, positive and significant correlations were found between the SRL Strategy Knowledge Test and the measures for criterion validity. However, the correlations were no longer significant when controlling for CPM (all ps ≥ 0.085).

Table 7. Bivariate correlations between the SRL strategy knowledge test to be validated and the measures of criterion validity.

The present study aimed to provide a valid measurement instrument to directly assess preschool SRL using the SRL Strategy Knowledge Test. The need for such direct assessment has arisen because previous research has mainly focused on indirect measurement methods using observer or caregiver ratings (e.g., Lange et al., 1989; McDermott et al., 2002; Whitebread et al., 2009), which do not allow for the capture of internal processes, such as strategy knowledge. Therefore, the strategy knowledge test presented by Jacob et al. (2019) was revised in terms of content and visual appearance to capture a broader range of strategies at play during the SRL process (Zimmerman, 2000) and increase the test difficulty. The resulting SRL Strategy Knowledge Test was then intensively tested based on a validation sample of preschoolers in order to derive data-based statements on reliability and validity. We found evidence for satisfactory difficulty and reliability as well as for the assumed one-factor structure for SRL in preschool-age children. In line with our hypotheses, we found that the revised SRL Strategy Knowledge Test correlated significantly with the convergent and criterion validity measures but not significantly with the divergent validity measures. However, when controlling for intelligence, all the correlations with the criterion measures were no longer significant.

The reliability analysis showed that the test had medium difficulty both at the level of individual pairwise comparisons and the total score level. Thus, a differentiation between high- and low-level proficiency using the SRL Strategy Knowledge Test is possible with regard to both knowledge of single SRL strategies and SRL strategy knowledge as a whole (Mummendey and Grau, 2014). The discriminatory power of the pairwise comparisons was also sufficient to good, resulting in satisfactory internal consistency for the entire test instrument. Thus, the revised SRL Strategy Knowledge Test represents a reliable test instrument with a difficulty appropriate for differentiating among various ability levels.

The results of the confirmatory factor analysis largely argue for the one-factor structure of the strategy knowledge test, which we assumed because previous studies on SRL assessment instruments for preschoolers have also found evidence for a one-factor structure (Dörr and Perels, 2018; Jacob et al., 2019). Only one of the five global fit indexes (the CFI) did not indicate a good model fit. Therefore, as the majority of the global fit indexes indicated a good model fit, we assumed that the value was below the threshold for CFI results because of the restricted sample size. As Hu and Bentler (1999) note, the CFI criterion may lead to the over-rejection of true-population models when the sample size is smaller than 250, which was the case in this study. A replication of this study with a larger sample size may provide clearer results on the factorial structure of the SRL Strategy Knowledge Test. Overall, the results of the present study together with the findings of previous studies (Dörr and Perels, 2018; Jacob et al., 2019) suggest that the low neuronal differentiation in preschool age also manifests itself in SRL; therefore, SRL seems to be a unidimensional construct at this age and differentiation into its subcomponents–cognition, metacognition, and motivation (Boekaerts, 1999; Panadero, 2017)–presumably occurs at a later stage of development.

Concerning the correlational validity analysis, we found significant correlations between the SRL Strategy Knowledge Test and the convergent measures for SRL and its related constructs, namely EFs and metacognitive abilities as SRL precursor skills (Boekaerts, 1999; Follmer and Sperling, 2016; Davis et al., 2021). Even when controlling for child intelligence, significant correlations were found for all the measures. As expected, numerically smaller and nonsignificant correlations were found for the divergent measures, regardless of whether intelligence was included as a control variable. Thus, the SRL Strategy Knowledge Test can be regarded as construct-valid; it measures children’s self-regulatory competencies without explaining variance in construct-irrelevant abilities.

Regarding criterion validity, we also found supportive evidence in terms of significant correlations when considering only the validation measures (Logical–Mathematical Reasoning Test, parental ratings of expected school success). However, when controlling for intelligence, no significant correlations were found. Thus, without considering children’s general cognitive abilities, the SRL test shows significant associations with academic competence, consistent with the findings of previous academic competence studies and those that have used external rating scales to assess SRL (Howse et al., 2003; Sasser et al., 2015). However, while Howse et al. (2003) and Sasser et al. (2015) found evidence for this relationship even when controlling for cognitive ability, this finding could not be replicated in the present study. This may be due to different educational systems in the study countries as well as differences in study design. The present study was conducted in Germany, where preschool learning differs greatly in terms of learning goals and content from learning in elementary school and preschool learning in the United States, where the previous studies were conducted. In Germany, the focus is on learning socio-emotional skills in preschool (Köck, 2009). However, the acquisition of written language and mathematical skills, which are taught in a structured manner in preschools in the United States, is not targeted until elementary school in Germany. The mathematical abilities measured by the Logical–Mathematical Reasoning Test are therefore still at a very basal level in preschool children in Germany and are relatively unaffected by their learning behavior, so a self-regulated approach to learning may have only a small effect on mathematical abilities.

It is likely that parental judgments of expected school success are also shaped by children’s mathematical abilities (as well as their preschool writing skills, which are also still unaffected by school learning), as children will be graded in these performance domains during future school attendance. If this is the case, parental judgments also relate to skills for which there are still few learning opportunities that children can control in a self-directed way. This may explain why we could not find a significant relationship between the SRL Strategy Knowledge Test and parental judgments of expected school success when controlling for intelligence. A longitudinal design could allow an additional investigation of mathematical (as well as writing) skills in first grade, when children have their first opportunity to use their learning-related (including SRL) behaviors in class and during homework. Potentially, a longitudinal study design would show a significant relationship between the SRL Strategy Knowledge Test and academic skills even when controlling for intelligence. In this respect, the present study also differs from previous studies that have used longitudinal designs that span several months (Howse et al., 2003) to several years (Sasser et al., 2015) between data collection points.

As mentioned above, the study design can be considered as needing improvement in terms of criterion validity. A longitudinal approach, in addition to providing a more in-depth look at the interplay between SRL, intelligence, and academic competence, would also allow for the determination of predictive rather than exclusively concurrent relationships.

Furthermore, the use of parent ratings to assess children’s expected school success can be classed as a limitation. Parents usually interact with only a small number of children in their everyday lives, which impedes normative comparisons needed to assess their child’s capabilities in relation to other children’s (Lange et al., 1989). Judgments of children’s expected school success that rely more on normative comparisons might result from kindergarten teacher ratings instead of parent ratings, as in their working life, kindergarten teachers interact with a greater number of children.

In terms of convergent validity, it should be noted that we only measured the hot component of executive functioning; no measure of cool EFs was used. As SRL involves both types of EF components (O’Toole et al., 2020), it would be desirable to determine whether the SRL Strategy Knowledge Test assesses aspects of SRL that are related to cool EFs in addition to those related to hot EFs. Further research using measures of cool EFs in addition to measures of hot EFs is needed to draw more definitive conclusions about this.

The present study provides a valid test instrument for identifying preschoolers’ knowledge about SRL strategies and shows that it is possible to assess preschoolers’ internal SRL processes despite this age group’s metacognitive and motivational peculiarities (Maylor and Logie, 2010; Stephenson and Hanley, 2010) and restricted reading abilities (Lonigan et al., 2000). As the SRL Strategy Knowledge Test generates normally distributed data and its difficulty level is moderate, it enables differentiation between children with high and low SRL strategy knowledge. Therefore, the test appears to be appropriate for assessing whether a child needs support to acquire SRL strategies before they enter school. In order to be able to determine a distribution-dependent value from a child’s individual test score, a standardization study should be carried out based on a larger and more representative sample in terms of parental vocational education (American Educational Research Association, 2014). To diagnose the need for SRL support in a more holistic way, children’s SRL-related behavior in addition to their strategy knowledge should be assessed. Combining the SRL Strategy Knowledge Test with a caregiver’s rating, for example, using the COMPSCALE (Lange et al., 1989), can provide information on what strategies should be taught from the bottom up and what strategies solely need encouragement to be used.

In addition to its relevance for diagnostic practice, a multi-method approach to assessing preschoolers’ SRL is also of importance for future research. Therefore, combining the SRL Strategy Knowledge Test with measures for preschoolers’ SRL-related behaviors would allow for the investigation of how strongly preschoolers’ SRL strategy knowledge and their actual use of these strategies are related. Furthermore, these measures could be used together multiple times during the preschool year to gain deeper insights into preschool SRL development. To ensure that any increased scores are not exclusively due to practice effects, a study on retest effects with intervals of only a few days between testing and retesting should precede the examination of preschool SRL development. An initial approach to multi-method preschool SRL assessment was undertaken by Silva Moreira et al. (2022), who combined observational and interview data. However, as interviewing requires the verbalization of strategies, this procedure again leads to assessing only the internal processes that children can already verbalize based on their limited metacognitive capacities (Maylor and Logie, 2010).

Due to the importance of EFs for the development of SRL (e.g., Follmer and Sperling, 2016), an interesting question for future research is to what extent hot EFs, compared to cool EFs, contribute to preschoolers’ SRL strategy knowledge. Initial assumptions on this can be drawn from research on learning-related behaviors that contain SRL behaviors, such as maintaining work on tasks in the face of difficulties, and also behaviors typical of attention deficit hyperactivity disorder (Brock et al., 2009; O’Toole et al., 2020). The results of these studies suggest that hot EFs do not explain any variance in SRL behaviors over and above cool EFs. However, whether these findings on SRL behaviors also apply to knowledge about SRL strategies remains to be examined in future studies.

Finally, the relationship between SRL and intelligence, as well as their interplay for predicting academic competence, needs to be investigated in more detail, as the results of the present study suggest that the shared variance between children’s SRL strategy knowledge and academic competence can be completely explained by their reasoning abilities, developed as part of their fluid intelligence. Based on the assumption that these fluid capacities are used to gain and connect knowledge (Cattell, 1987), one could suppose that fluid intelligence enables the acquisition of SRL strategies, which in turn facilitates the acquisition of academic abilities. In addition to reasoning abilities, which were recorded in the present study, it is conceivable that further components of intelligence, as postulated in the Cattell-Horn-Carroll theory (McGrew, 2005), enable the acquisition of SRL strategies. For example, a high memory capacity could make it easier to memorize and remember SRL strategies. Assuming that these cognitive abilities enable not only the use of SRL strategies, but also the acquisition and memorization of the learning content itself, SRL could be regarded as a partial mediator between intelligence and academic competence. However, previous research on the three constructs is scarce and has led to heterogeneous findings (Diseth, 2002; Howse et al., 2003; Zuffianò et al., 2013). Furthermore, the authors of the present study are not aware of any study that has specifically tested the above-described mediation model. Therefore, the relationship between the constructs should be targeted in future research to gain a deeper insight into the interplay of the abilities important for academic success (e.g., Dent and Koenka, 2016; Kriegbaum et al., 2018). The resulting findings could then be used to draw conclusions about research questions for intervention studies aimed at mastering school demands.

The raw data supporting the conclusions of this article will be made available by the authors on request.

Ethical approval was not required for the study involving human samples in accordance with the local legislation and institutional requirements. Participants (or their parents) could withdraw their consent at any time during the study, without providing a reason for doing so. Collected data are stored and processed according to German regulations on data protection. Only data relevant to the study were collected and data collection was conducted or supervised by experienced researchers. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

LG: Conceptualization, Data curation, Investigation, Methodology, Project administration, Writing – original draft, Writing – review & editing. LD-U: Supervision, Writing – review & editing. EK: Conceptualization, Writing – review & editing. FP: Conceptualization, Resources, Supervision, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

We thank Emilia Göz, Tabea Helweg, Ronja Hilbig, Linda Lechner, Annika Paulus, Juliane Schneider, and Lisa-Marie Weber for their support in data collection. Special thanks go to Juliane Schneider for drawing the pictures for our test instrument and subject recruitment. Last but not least, we would like to thank all the preschoolers and their parents for participating in the study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1332170/full#supplementary-material

1. ^Since the present study was exclusively aimed at validating the test for research purposes and not at standardizing it for use in diagnostic practice, we did not refer to the American Educational Research Association et al. (2014) standards about test score interpretation (Cluster 1). This would have required a much larger sample than the one used here.

2. ^This sample was slightly larger than the target sample size mentioned in the preregistration (N = 100). We did not want to turn away anyone whose study application arrived on the same day, in order to maximize the likelihood that they would also be willing to apply for future studies. In addition, this way we could ensure that we had sufficient analyzable data on our test instrument, as the experimenters reported that some of the participating children refused to complete the SRL test.

3. ^Because the response scale of the revised SRL test contained the colors green and red, a t-test was used to determine whether performance on the test differed between children with and without dyschromatopsia. We found no significant differences, t(82) = −0.45, p = 0.653.

American Educational Research Association. (2014). Standards for educational and psychological testing: National Council on measurement in education. Washington, DC: American Educational Research Association, American Psychological Association, National Council on Measurement in Education.

Anthoine, E., Moret, L., Regnault, A., Sébille, V., and Hardouin, J. B. (2014). Sample size used to validate a scale: a review of publications on newly-developed patient reported outcomes measures. Health Qual. Life Outcomes 12:176. doi: 10.1186/s12955-014-0176-2

Ateş Akdeniz, A. (2023). Exploring the impact of self-regulated learning intervention on students' strategy use and performance in a design studio course. Int. J. Technol. Des. Educ. 33, 1923–1957. doi: 10.1007/s10798-022-09798-3

Becker, L. L. (2013). Self-regulated learning interventions in the introductory accounting course: an empirical study. Issues Account. Educ. 28, 435–460. doi: 10.2308/iace-50444

Boekaerts, M. (1999). Self-regulated learning: where we are today. Int. J. Educ. Res. 31, 445–457. doi: 10.1016/S0883-0355(99)00014-2

Brock, L. L., Rimm-Kaufman, S. E., Nathanson, L., and Grimm, K. J. (2009). The contributions of ‘hot’ and ‘cool’ executive function to children’s academic achievement, learning-related behaviors, and engagement in kindergarten. Early Child. Res. Q. 24, 337–349. doi: 10.1016/j.ecresq.2009.06.001

Bronson, M. B. (1994). The usefulness of an observational measure of young children’s social and mastery behaviors in early childhood classrooms. Early Child. Res. Q. 9, 19–43. doi: 10.1016/0885-2006(94)90027-2

Bryce, D., Whitebread, D., and Szűcs, D. (2015). The relationships among executive functions, metacognitive skills and educational achievement in 5 and 7 year-old children. Metacogn. Learn. 10, 181–198. doi: 10.1007/s11409-014-9120-4

Busching, R. (2016). Konfirmatorische Faktorenanalyse mit SPSS [confirmatory factor analysis using SPSS]. STATISTIK–verständlich. Available at: https://www.statistik-verstaendlich.de/2016/06/konfirmatorische-faktorenanalyse-spss/.

Cattell, R. B. (1987). Intelligence: Its structure, growth and action. Amsterdam, Netherlands: Elsevier.

Cera, R., Mancini, M., and Antonietti, A. (2013). Relationships between metacognition, self-efficacy and self-regulation in learning. J. Educ. Cultur. Psychol. Stud. 4, 115–141. doi: 10.7358/ecps-2013-007-cera

Çetin, B. (2017). Metacognition and self-regulated learning in predicting university students’ academic achievement in Turkey. J. Educ. Train. Stud. 5, 132–138. doi: 10.11114/jets.v5i4.2233

Davis, H., Valcan, D. S., and Pino-Pasternak, D. (2021). The relationship between executive functioning and self-regulated learning in Australian children. Br. J. Dev. Psychol. 39, 625–652. doi: 10.1111/bjdp.12391

Deci, E. L., and Ryan, R. M. (2008). Self-determination theory: a macrotheory of human motivation, development, and health. Can. Psychol. 49, 182–185. doi: 10.1037/a0012801

Dent, A. L., and Koenka, A. C. (2016). The relation between self-regulated learning and academic achievement across childhood and adolescence: a meta-analysis. Educ. Psychol. Rev. 28, 425–474. doi: 10.1007/s10648-015-9320-8

Dignath, C., Buettner, G., and Langfeldt, H.-P. (2008). How can primary school students learn self-regulated learning strategies most effectively?: a meta-analysis on self-regulation training programmes. Educ. Res. Rev. 3, 101–129. doi: 10.1016/j.edurev.2008.02.003

Diseth, Å. (2002). The relationship between intelligence, approaches to learning and academic achievement. Scand. J. Educ. Res. 46, 219–230. doi: 10.1080/00313830220142218

Doebel, S. (2020). Rethinking executive function and its development. Perspect. Psychol. Sci. 15, 942–956. doi: 10.1177/1745691620904771

Dörrenbächer, L., and Perels, F. (2018). “Measuring self-regulation in preschoolers: validation of two observational instruments” in Self-regulated learners: Strategies, performance and individual differences. ed. J. W. Stefaniak (Nova Science Publishers), 31–66.

Dörrenbächer-Ulrich, L., Sparfeldt, J., and Perels, F. (accepted). Knowing how to learn: development and validation of a strategy knowledge test on self-regulated learning for college students. Metacogn. Learn.

Dörr, L., and Perels, F. (2018). Multiperspektivische Erfassung der Selbstregulationsfähigkeit von Vorschulkindern [Multi-perspective assessment of self-regulatory ability in preschool children]. Frühe Bildung 7, 98–106. doi: 10.1026/2191-9186/a000359

Ergen, B., and Kanadli, S. (2017). The effect of self-regulated learning strategies on academic achievement: a meta-analysis study. Eurasian J. Educ. Res. 17, 55–74. doi: 10.14689/ejer.2017.69.4

Escolano-Pérez, E., Herrero-Nivela, M. L., and Anguera, M. T. (2019). Preschool metacognitive skill assessment in order to promote educational sensitive response from mixed-methods approach: complementarity of data analysis. Front. Psychol. 10:1298. doi: 10.3389/fpsyg.2019.01298

Follmer, D. J., and Sperling, R. A. (2016). The mediating role of metacognition in the relationship between executive function and self-regulated learning. Br. J. Educ. Psychol. 86, 559–575. doi: 10.1111/bjep.12123

Greguras, G. J., and Robie, C. (1998). A new look at within-source interrater reliability of 360-degree feedback ratings. J. Appl. Psychol. 83, 960–968. doi: 10.1037/0021-9010.83.6.960

Grob, A., and Hagmann-von Arx, P. (2018). Intelligence and Development Scales – 2 (IDS–2). Intelligenz- und Entwicklungsskalen für Kinder und Jugendliche [Intelligence and development scales for children and adolescents]. Göttingen: Hogrefe.

Guo, L. (2022). Using metacognitive prompts to enhance self-regulated learning and learning outcomes: a meta-analysis of experimental studies in computer-based learning environments. J. Comput. Assist. Learn. 38, 811–832. doi: 10.1111/jcal.12650

Grüneisen, L., Dörrenbächer-Ulrich, L., and Perels, F. (2023). Differential development and trainability of self-regulatory abilities among preschoolers. Acta Psychol. 232:103802. doi: 10.1016/j.actpsy.2022.103802

Haile, D., Gashaw, K., Nigatu, D., and Demelash, H. (2016). Cognitive function and associated factors among school age children in Goba town, South-East Ethiopia. Cogn. Dev. 40, 144–151. doi: 10.1016/j.cogdev.2016.09.002

Händel, M., Artelt, C., and Weinert, S. (2013). Assessing metacognitive knowledge: development and evaluation of a test instrument. J. Educ. Res. Online 5, 162–188. doi: 10.25656/01:8429

Howse, R. B., Calkins, S. D., Anastopoulos, A. D., Keane, S. P., and Shelton, T. L. (2003). Regulatory contributors to children’s kindergarten achievement. Early Educ. Dev. 14, 101–120. doi: 10.1207/s15566935eed1401_7

Hu, L., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Hudson, J. A., and Fivush, R. (1991). Planning in the preschool years: the emergence of plans from general event knowledge. Cogn. Dev. 6, 393–415. doi: 10.1016/0885-2014(91)90046-G

Jacob, L., Dörrenbächer, S., and Perels, F. (2019). A pilot study of the online assessment of self-regulated learning in preschool children: development of a direct, quantitative measurement tool. Int. Electron. J. Elem. Educ. 12, 115–126. doi: 10.26822/iejee.2019257655

Jacob, L., Dörrenbächer, S., and Perels, F. (2021). The influence of interindividual differences in precursor abilities for self-regulated learning in preschoolers. Early Child Dev. Care 191, 2364–2380. doi: 10.1080/03004430.2019.1705799

Isaacson, R., and Fujita, F. (2006). Metacognitive knowledge monitoring and self-regulated learning. J. Scholarsh. Teach. Learn. 6, 39–55.

Jansen, R. S., van Leeuwen, A., Janssen, J., Conijn, R., and Kester, L. (2020). Supporting learners’ self-regulated learning in massive open online courses. Comput. Educ. 146:103771. doi: 10.1016/j.compedu.2019.103771

Kim, H.-Y. (2013). Statistical notes for clinical researchers: assessing normal distribution (2) using skewness and kurtosis. Restor. Dent. Endod. 38, 52–54. doi: 10.5395/rde.2013.38.1.52

Kiss, M., Kotsis, Á., and Kun, A. (2014). The relationship between intelligence, emotional intelligence, personality styles and academic success. Bus. Educ. Accredit. 6, 23–34.

Kochanska, G., Murray, K. T., and Harlan, E. T. (2000). Effortful control in early childhood: continuity and change, antecedents, and implications for social development. Dev. Psychol. 36, 220–232. doi: 10.1037/0012-1649.36.2.220

Köck, P. (2009). Praxis der Beobachtung und Beratung: Eine Handreichung für den Erziehungs- und Unterrichtsalltag (1. Klasse/Vorschule) [practice of observation and counseling: A handout for everyday education and teaching (1st grade/preschool)]. Heidelberg: Auer Verlag.

Kriegbaum, K., Becker, N., and Spinath, B. (2018). The relative importance of intelligence and motivation as predictors of school achievement: a meta-analysis. Educ. Res. Rev. 25, 120–148. doi: 10.1016/j.edurev.2018.10.001

Lambert, E. B. (2001). Metacognitive problem solving in preschoolers. Australas. J. Early Childhood 26, 24–29. doi: 10.1177/183693910102600306

Lange, G., MacKinnon, C. E., and Nida, R. E. (1989). Knowledge, strategy, and motivational contributions to preschool children’s object recall. Dev. Psychol. 25, 772–779. doi: 10.1037/0012-1649.25.5.772

Lee, M., Lee, S. Y., Kim, J. E., and Lee, H. J. (2023). Domain-specific self-regulated learning interventions for elementary school students. Learn. Instr. 88:101810. doi: 10.1016/j.learninstruc.2023.101810

Lockl, K., Händel, M., Haberkorn, K., and Weinert, S. (2016). “Metacognitive knowledge in young children: development of a new test procedure for first graders” in Methodological issues of longitudinal surveys: The example of the National Educational Panel Study. eds. H.-P. Blossfeld, J. von Maurice, M. Bayer, and J. Skopek (Berlin: Springer Fachmedien), 465–484.

Lonigan, C. J., Burgess, S. R., and Anthony, J. L. (2000). Development of emergent literacy and early reading skills in preschool children: evidence from a latent-variable longitudinal study. Dev. Psychol. 36, 596–613. doi: 10.1037/0012-1649.36.5.596

Maag Merki, K., Ramseier, E., and Karlen, Y. (2013). Reliability and validity analyses of a newly developed test to assess learning strategy knowledge. J. Cogn. Educ. Psychol. 12, 391–408. doi: 10.1891/1945-8959.12.3.391

Maylor, E. A., and Logie, R. H. (2010). A large-scale comparison of prospective and retrospective memory development from childhood to middle age. Q. J. Exp. Psychol. 63, 442–451. doi: 10.1080/17470210903469872

McDermott, P. A., Leigh, N. M., and Perry, M. A. (2002). Development and validation of the preschool learning behaviors scale. Psychol. Sch. 39, 353–365. doi: 10.1002/pits.10036

McGrew, K. S. (2005). “The Cattell-Horn-Carroll theory of cognitive abilities: past, present, and future” in Contemporary intellectual assessment: Theories, tests, and issues. eds. D. P. Flanagan and P. L. Harrison (New York: The Guilford Press), 136–181.

Merz, E. C., Landry, S. H., Montroy, J. J., and Williams, J. M. (2017). Bidirectional associations between parental responsiveness and executive function during early childhood. Soc. Dev. 26, 591–609. doi: 10.1111/sode.12204

Miyake, A., Friedman, N. P., Emerson, M. J., Witzki, A. H., Howerter, A., and Wager, T. D. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: a latent variable analysis. Cogn. Psychol. 41, 49–100. doi: 10.1006/cogp.1999.0734

Moffett, L., Moll, H., and FitzGibbon, L. (2018). Future planning in preschool children. Dev. Psychol. 54, 866–874. doi: 10.1037/dev0000484

Mummendey, H. D., and Grau, I. (2014). Die Fragebogen-Methode: Grundlagen und Anwendung in Persönlichkeits-, Einstellungs- und Selbstkonzeptforschung [the questionnaire method: Basics and application in personality, attitude, and self-concept research]. 6th Edn. Göttingen: Hogrefe.

O’Toole, S. E., Monks, C. P., Tsermentseli, S., and Rix, K. (2020). The contribution of cool and hot executive function to academic achievement, learning-related behaviours, and classroom behaviour. Early Child Dev. Care 190, 806–821. doi: 10.1080/03004430.2018.1494595

Panadero, E. (2017). A review of self-regulated learning: six models and four directions for research. Front. Psychol. 8:422. doi: 10.3389/fpsyg.2017.00422

Panadero, E., and Alonso-Tapia, J. (2014). ¿Cómo autorregulan nuestros alumnos? Modelo de Zimmerman sobre estrategias de aprendizaje [how do students self-regulate? Review of Zimmerman’s cyclical model of self-regulated learning]. Anal. Psicol. 30, 450–462. doi: 10.6018/analesps.30.2.167221

Raven, J. C., Raven, J. E., and Court, J. H. (1990). Manual for Raven’s progressive matrices and vocabulary scales. Oxford: Oxford Psychologists Press.

Ridgell, S. D., and Lounsbury, J. W. (2004). Predicting academic success: general intelligence, “big five” personality traits, and work drive. Coll. Stud. J. 38, 607–619.

Roebers, C. M., and Feurer, E. (2016). Linking executive functions and procedural metacognition. Child Dev. Perspect. 10, 39–44. doi: 10.1111/cdep.12159

Roth, A., Ogrin, S., and Schmitz, B. (2016). Assessing self-regulated learning in higher education: a systematic literature review of self-report instruments. Educ. Assess. Eval. Account. 28, 225–250. doi: 10.1007/s11092-015-9229-2

Rovers, S. F. E., Clarebout, G., Savelberg, H. H. C. M., de Bruin, A. B. H., and van Merriënboer, J. J. G. (2019). Granularity matters: comparing different ways of measuring self-regulated learning. Metacogn. Learn. 14, 1–19. doi: 10.1007/s11409-019-09188-6

Rutherford, T., Buschkuehl, M., Jaeggi, S. M., and Farkas, G. (2018). Links between achievement, executive functions, and self-regulated learning. Appl. Cogn. Psychol. 32, 763–774. doi: 10.1002/acp.3462

Sasser, T. R., Bierman, K. L., and Heinrichs, B. (2015). Executive functioning and school adjustment: the mediational role of pre-kindergarten learning-related behaviors. Early Child. Res. Q. 30, 70–79. doi: 10.1016/j.ecresq.2014.09.001

Schermelleh-Engel, K., Moosbrugger, H., and Müller, H. (2003). Evaluating the fit of structural equation models: tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. Online 8, 23–74.

Schneider, W. (2008). The development of metacognitive knowledge in children and adolescents: major trends and implications for education. Mind Brain Educ. 2, 114–121. doi: 10.1111/j.1751-228X.2008.00041.x

Schoemaker, K., Bunte, T., Wiebe, S. A., Espy, K. A., Deković, M., and Matthys, W. (2012). Executive function deficits in preschool children with ADHD and DBD. J. Child Psychol. Psychiatry 53, 111–119. doi: 10.1111/j.1469-7610.2011.02468.x

Silva Moreira, J., Ferreira, P. C., and Veiga Simão, A. M. (2022). Dynamic assessment of self-regulated learning in preschool. Heliyon 8:e10035. doi: 10.1016/j.heliyon.2022.e10035

Singer, J. K., Lichtenberger, E. O., Kaufman, J. C., Kaufman, A. S., and Kaufman, N. L. (2012). “The Kaufman assessment battery for children–second edition and the Kaufman test of educational achievement—second edition” in Contemporary intellectual assessment: Theories, tests, and issues. eds. D. P. Flanagan and P. L. Harrison. 3rd ed (New York: The Guilford Press), 269–296.