- 1 Department of Pediatrics, Division of Developmental Behavioral Pediatrics, Coaching in Early Intervention Training and Mentorship Program, University of Louisville School of Medicine, Louisville, KY, United States

- 2 Occupational Therapy, Rush University, Chicago, IL, United States

- 3 College of Health Sciences, Occupational Therapy, University of Missouri, Columbia, MO, United States

Introduction: The translation and use of evidence-based practices (EBPs) within early intervention (EI) systems presents challenges. The Office of Special Education Programs (OSEP) has emphasized results-driven accountability to expand state accountability beyond compliance to include quality services that align with EBPs. OSEP’s results-driven accountability gave states the opportunity to design State Systemic Improvement Plans (SSIPs) to strengthen the quality of EI services by increasing the capacity of EI systems to implement, scale up, and sustain use of EBPs. Caregiver coaching is widely accepted as an EBP within EI settings, yet uptake of and fidelity to coaching practices remain limited. Widespread implementation of caregiver coaching is limited in part by a lack of measurement tools that operationalize behaviors consistent with coaching. In this study, we describe the development of the Kentucky Coaching Adherence Rubric-Revised (KCAR-R) and psychometric testing of the instrument.

Methods: We developed and tested the KCAR-R to measure fidelity of coaching practices within a statewide professional development program, the Coaching in Early Intervention Training and Mentorship Program. We define operational elements of the KCAR-R and rubric design elements related to: creators; users and uses; specificity; judgment complexity; evaluative criteria; quality levels; quality definitions; scoring strategies; presentation; explanation; quality processes; accompanying feedback information; secrecy; and exemplars. For psychometric testing of the KCAR-R, interrater reliability was analyzed using intraclass correlation coefficients across eight raters and 301 randomly selected video submissions, and internal consistency was evaluated using Cronbach’s alpha across 429 video submissions.

Results: Raters showed 0.987 agreement, indicating excellent interrater reliability. Internal consistency (Cronbach’s alpha) was 0.834 for the total scale, and item-level alpha-if-item-deleted values ranged from 0.860 to 0.882.

Discussion: Findings from this study showed that the KCAR-R operationalizes behaviors that exemplify caregiver coaching and may serve as a resource for other states or programs to document the quality and fidelity of evidence-based EI services. To influence EI provider practices at a systems level, we used implementation science to guide our work, and we provide examples of how EI systems seeking to create sustainable quality services may build on our approach.

1 Introduction

In early intervention (EI), as mandated by Part C of the Individuals with Disabilities Education Act (IDEA, 2004), translating evidence-based practices (EBPs) into everyday practice presents challenges. While caregiver coaching is a recommended practice for providing EI services (Division for Early Childhood, 2014), uptake of and fidelity to caregiver coaching in authentic practice settings, outside of research studies, are limited (Douglas et al., 2020; Romano and Schnurr, 2022). Fidelity to coaching is limited in part by a lack of standardized, psychometrically sound instruments that outline the core EI provider behaviors aligned with coaching. To address this gap in translating EBPs to everyday EI practice settings, we developed the Kentucky Coaching Adherence Rubric-Revised (KCAR-R), an adherence rubric of EI caregiver coaching practices, as part of a statewide professional development (PD) program. In this study, we describe the development of the KCAR-R using Dawson’s (2017) 14 design elements for rubrics and report initial psychometric testing of the instrument.

Caregiver coaching is a relationship-directed process that encompasses the ideas of family-centered, capacity-building practice (Kemp and Turnbull, 2014). Caregiver coaching emphasizes triadic interactions among the caregiver, provider, and child that facilitate active caregiver participation in EI sessions (Aranbarri et al., 2021; Ciupe and Salisbury, 2020; Douglas et al., 2020; Friedman et al., 2012; Rush and Shelden, 2020), which in turn enhance caregivers’ competence and confidence in supporting their child (Douglas et al., 2020; Jayaraman et al., 2015; Rush and Shelden, 2020; Salisbury et al., 2018; Salisbury and Copeland, 2013; Stewart and Applequist, 2019). Caregiver coaching also creates opportunities for caregivers to practice and learn when providers are not present (Mahoney and Mac Donald, 2007; Meadan et al., 2016). In addition to enhanced caregiver outcomes, research highlights positive outcomes for children (Ciupe and Salisbury, 2020; Douglas et al., 2020; Salisbury et al., 2018; Salisbury and Copeland, 2013). Given the evidence on the benefits of the caregiver coaching approach, the American Academy of Pediatrics has identified coaching as an EBP in EI (Adams et al., 2013).

Despite clear support for caregiver coaching in EI settings, there is no clear operational definition of caregiver coaching, nor an agreed measure of coaching fidelity. Kemp and Turnbull (2014) and Friedman et al. (2012) highlighted the lack of an accepted, universal definition of caregiver coaching and its components in EI. Aranbarri et al. (2021) emphasized that coaching is often mistakenly used interchangeably with the terms training and education, which refer, respectively, to the provider working with the child and to the provider discussing the interventions with caregivers. Kemp and Turnbull (2014) acknowledged that Rush and Shelden’s (2011) definition of coaching is frequently used in EI:

an adult learning strategy in which the coach promotes the learner’s ability to reflect on his or her actions as a means to determine the effectiveness of an action or practice and develop a plan for refinement and use of the action in immediate and future situations. (p. 8)

Rooted in trusting relationships and adult learning theory (Friedman et al., 2012; Marturana and Woods, 2012), caregiver coaching in EI is an interaction style or approach comprising several components aimed at building caregiver capacity. Salisbury and Copeland (2013) used the caregiver coaching strategies of targeted information sharing (S); observation and opportunities for caregiver practice with provider feedback (OO); problem solving and reflection (P); and review of the session (R), together abbreviated SOOPR, and used a checklist format to rate each characteristic as observed, partially observed, or not observed during EI visits. Pellecchia et al. (2022) identified comparable core elements of caregiver coaching, including use of authentic learning experiences, collaborative decision-making, demonstration, in vivo feedback, and reflection. Rush and Shelden (2020) described the practical use of five caregiver coaching characteristics: joint planning, observation, action/practice, reflection, and feedback. Clearly, there is much congruence among these scholars’ identified characteristics, strategies, and core elements of coaching used to support caregivers to help their children develop and learn; however, the literature continues to report the lack of a singular framework or operational definition of coaching (Seruya et al., 2022; Ward et al., 2020).

Reporting of EI provider training processes is inconsistent, and intervention fidelity is often not measured in these coaching studies. A recent systematic review of coaching practices used in EI noted that the extent to which interventionists adhered to coaching practices was poorly described, with only 2 of the 18 included studies evaluating therapist use of coaching (Ward et al., 2020). Similar findings had been reported in an earlier evidence-based review of coaching, in which only 3 of 27 studies reported implementation fidelity to a coaching framework (Artman-Meeker et al., 2015). Studies that do include a fidelity measure do so via direct observation and checklists (e.g., Family Guided Routines Based Intervention Key Indicators Checklist [Woods, 2018]) or rating scales (e.g., Coaching Practices Rating Scale [Rush and Shelden, 2011]) tied to their uniquely defined coaching framework, with variability in evaluative criteria (Tomeny et al., 2020; Ward et al., 2020). Additionally, these fidelity measures are often limited in their levels of measurement, rating observed coaching characteristics only as present, emerging, or not present.

Translating coaching models to practice has also been difficult, in part because of a lack of validated measures, and EI providers continue to deliver child-focused interventions (Bruder et al., 2021; Douglas et al., 2020; Romano and Schnurr, 2022; Sawyer and Campbell, 2017). Caregiver coaching is complex and requires a diverse skill set, including base knowledge of child development and of EBPs to address a range of developmental and family concerns (Division for Early Childhood, 2014; Friedman et al., 2012; Kemp and Turnbull, 2014). High-quality EI occurs when providers both support child-oriented skills and build caregiver capacity (Romano and Schnurr, 2022). Many EI providers value coaching but report feeling ill-equipped and recognize the need for specialized training (Douglas et al., 2020; Romano and Schnurr, 2022; Stewart and Applequist, 2019). Feelings of unpreparedness to implement coaching often stem from a lack of training as well as a lack of universally accepted standards and behaviors that align with a coaching model. Clearly, there is a need to identify and measure essential EI provider behaviors that align with the caregiver coaching model to shape the implementation of this EBP in both research and everyday practice. By developing and validating a coaching rubric, this study addresses this essential need in the field of EI.

Beyond research and practice needs, the Office of Special Education Programs (OSEP), in the Office of Special Education and Rehabilitative Services of the U.S. Department of Education (USDE), recently expanded state EI program accountability through Results-Driven Accountability (RDA; U.S. Department of Education, 2019), shifting the emphasis from compliance to a framework that focuses on results: quality services that improve child and family outcomes. OSEP’s RDA gave states the opportunity to design State Systemic Improvement Plans (SSIPs) to strengthen the quality of EI services by increasing the capacity of EI systems to implement, scale up, and sustain use of EBPs. The principles of implementation science (Fixsen et al., 2005) are embedded in the design of the SSIP process, and OSEP expects states to use these principles in planning and implementing improvement strategies. The new emphasis on RDA means that EI providers must use EBPs, such as caregiver coaching, to provide services to children and families, and must also show that the quality of services positively influences child and family outcomes. Because RDA also emphasizes systems change, behaviors that align with caregiver coaching need to be outlined and quantified so that EI systems can provide comprehensive training and EI providers can implement coaching with fidelity in everyday settings.

Implementation science focuses on translating evidence to practice using system-level components that support quality implementation, continuous improvement, and sustainability to improve outcomes (Metz et al., 2013). Active implementation frameworks (AIFs) are an evidence-based set of frameworks, synthesized from research findings, for organizational and systems change, program development, successful use of innovations, and intentional use of implementation components in practice (Fixsen et al., 2021). The active implementation formula for success holds that socially significant outcomes are achieved through effective practices and effective implementation teams operating within enabling contexts (National Implementation Research Network, University of North Carolina at Chapel Hill, 2022).
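The formula for success is commonly presented by the National Implementation Research Network in multiplicative form. The version below is a sketch that paraphrases the terms of the sentence above rather than quoting the source; read literally, the product implies that weakness in any one term limits outcomes regardless of the strength of the other two.

```latex
\text{Effective practices} \times \text{Effective implementation} \times \text{Enabling contexts}
  = \text{Socially significant outcomes}
```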

A well-operationalized innovation, practice, or model is fundamental to the transition to active implementation. Such usable innovations are teachable, learnable, doable, and assessable in practice, with fidelity measures that correspond to intended outcomes (Blase et al., 2018). Innovations can produce socially significant outcomes when they have clear descriptions, a coherent explanation of the essential functions that define the innovation, operational definitions of those essential functions, and a practical assessment of fidelity.

Implementation stages and implementation drivers support effective implementation of the innovation. Metz et al. (2013) defined the implementation stages (i.e., exploration, installation, initial implementation, full implementation) as the process of determining and operationalizing an innovation to improve outcomes. Although there is a natural progression through the stages in pursuit of intended outcomes, functions often overlap when transitioning to the next stage (National Implementation Research Network, University of North Carolina at Chapel Hill, 2022). All four stages are critical to the process, and sustainability, rather than functioning as a separate stage, is integrated into each of them. Implementation drivers ensure that competency, organizational, and leadership supports are developed around an innovation so that the resulting change is effective and sustainable (Fixsen et al., 2005; Metz et al., 2013).

Lastly, enabling contexts are critical to the success of the initiative and rely on strong implementation teams and improvement cycles. Implementation teams have knowledge and skills around usable innovations and use evidence-based strategies to support systems change in real-world settings, targeting fidelity, sustainability, and scaling up (Metz et al., 2013). They use improvement cycles (i.e., Plan-Do-Study-Act cycles, usability testing, and practice-policy feedback loops) to enhance system functioning, continuously refine procedures, address problems, and identify solutions to challenges associated with implementing innovations (National Implementation Research Network, University of North Carolina at Chapel Hill, 2022). When scaling up initiatives, implementation teams focus on developing a replicable, effective framework for all components needed to implement a sustainable innovation with fidelity.

Considered together, the absence of a clear operational definition, the lack of measurement of intervention fidelity, EI provider challenges with uptake of coaching practices, and state EI program accountability mandates highlight the need for systems change with a well-defined and manualized caregiver coaching approach to ensure EI providers reach levels of fidelity of implementation that are known to create positive child and family outcomes (Romano and Schnurr, 2022). Fidelity tools need to detail the specific coaching practices that should be used in each session, along with operational definitions of those practices, to ensure that each is discrete, observable, and measurable (Romano and Schnurr, 2022). Sensitive, valid, and reliable fidelity measurement of EBP use is needed to assess caregiver coaching (Meadan et al., 2023) and is central to assuring consistency in systems change.

Rubrics are common fidelity measurement tools used in authentic contexts to clarify learning expectations, facilitate learner self-assessment and provide performance feedback to learners (Firmansyah et al., 2020; Ford and Hewitt, 2020). They include specific criteria for rating dimensions of performance and typically describe levels of performance quality (Firmansyah et al., 2020; Ford and Hewitt, 2020). Dawson (2017) has proposed fourteen (14) design elements as common language for rubrics to support replicable research and practice. This framework provides a mechanism to clearly and concisely communicate what a particular rubric intervention entails, promotes sufficient detail required for replicable research, and may provide policy-makers the opportunity to identify and measure particular practices they are mandating (Dawson, 2017).

We have documented that caregiver coaching varies widely in key active ingredients, practice implementation, and fidelity measurement. There are challenges with systematically replicating the key components and behaviors of caregiver coaching (Kemp and Turnbull, 2014; Ward et al., 2020). The complex, individualized nature of caregiver coaching, focused on families’ priorities and routines, often does not lend itself to a consistent, reproducible procedure. However, framing an EI visit around the common caregiver coaching characteristics, implemented with fidelity, can provide some consistency in processes to evaluate the quality and effectiveness of EI provider behavior. Therefore, the purpose of this paper is to present a rubric that operationalizes a continuum of quality EI caregiver coaching practices for use in a statewide in-service PD program targeting workforce development. Specifically, we will:

1. Describe the development and field testing of an adherence rubric of early intervention caregiver coaching practices

2. Introduce the KCAR-R using Dawson’s (2017) 14 design elements, including initial psychometric properties of the KCAR-R (i.e., interrater agreement, internal consistency, construct validity)

3. Present how the KCAR-R is a central measure for systems change guided by implementation science principles

2 Method

2.1 Context and procedures

Extant research shows that high-quality PD is necessary to influence EI provider behavior change (Dunst et al., 2015), and RDA rejuvenated Comprehensive Systems of Professional Development (CSPD; Bruder, 2016; Kasprzak et al., 2019) as agents of systems change and workforce development (Tomchek and Wheeler, 2022). To improve the quality of EI services, in-service PD programs aim to close the research to practice gap by increasing EI providers’ capacity to implement a caregiver coaching model in their practice.

The University of Louisville School of Medicine Department of Pediatrics was contracted, as part of a larger SSIP, to develop PD based on adult learning principles to improve practice/intervention fidelity to caregiver coaching. The Coaching in Early Intervention Training and Mentorship Program (CEITMP; Coaching in Early Intervention Training and Mentorship Program, 2023) commenced in 2017 with a team including three experienced early interventionists (two of whom are the second and third authors), the program director (first author), who also served as a mentor, and a project coordinator who provided organizational and technical support to the team and providers. Two external consultants (including the last author) supplemented training and mentored the initial three PD specialists.

To transition into the PD specialist role, the experienced EI providers engaged in a series of multi-component learning activities. They began by reviewing literature on caregiver coaching in EI (i.e., Dunn et al., 2012; Dunn et al., 2018; Foster et al., 2013; Jayaraman et al., 2015; Rush et al., 2003; Rush and Shelden, 2011) and on mentoring (i.e., Fazel, 2013; Neuman and Cunningham, 2009; Rush and Shelden, 2011; Watson and Gatti, 2012). They completed eLearning modules on caregiver coaching; home and community visits in EI; using a primary coach approach; using activities, routines, and materials in the natural environment; and strengths (Dunn and Pope, 2017). Additionally, they participated in individual written and group verbal reflective activities; self-assessed caregiver coaching practices on their own recorded EI visits; received performance feedback; provided performance feedback on peers’ caregiver coaching skills; and engaged weekly in interactive, virtual small-group coaching sessions with mentors. Training and mentorship lasted 6 months and was considered complete once each PD specialist consistently demonstrated fidelity to caregiver coaching during ongoing EI services and reported confidence in mentoring.

We developed and implemented a multi-component, evidence-informed in-service PD program for credentialed EI providers targeting the adoption and sustained use of caregiver coaching with fidelity. Coaching in Early Intervention Training and Mentorship Program (2023) was built on research-based adult learning principles (Dunst and Trivette, 2012) and key characteristics of effective in-service PD (Dunst et al., 2015) that promote positive outcomes for providers and families, including: PD specialist illustration and introduction of caregiver coaching practices; collaborative teaming with monthly virtual meetings; job-embedded opportunities for providers to practice and self-reflect on caregiver coaching practices; and mentorship with performance feedback from dedicated PD specialists. The CEITMP spans 32 weeks and requires approximately 60 min per week, a duration and intensity intended to be reasonable for providers’ practice change. Lastly, the program has an ongoing maintenance component focused on follow-up support for continued fidelity to caregiver coaching. All ongoing EI service providers in Kentucky complete the PD as a requirement of their service provider agreement, and 329 providers had completed the CEITMP at the time of writing.

To support the success of the PD program, we developed a manualized caregiver coaching approach and carefully defined EI provider behaviors that aligned with coaching practices to ensure EI providers reach levels of intervention fidelity that are known to create positive child and family outcomes (Romano and Schnurr, 2022).

2.2 Fidelity measure development and field testing

We followed a standard process for measure development (DeVellis, 2003). First, Kentucky’s EI System selected caregiver coaching as the core EBP to be measured. We then used an iterative process to create a rubric, the KCAR-R, that reflects what quality caregiver coaching looks like in EI services. The initial PD specialist cohort, mentors, and consultants researched and contributed to the development of a caregiver coaching fidelity tool. The team used current guidance from the field (Division for Early Childhood, 2014; Kemp and Turnbull, 2014; Rush and Shelden, 2011; Workgroup on Principles and Practices in Natural Environments, OSEP TA Community of Practice: Part C Settings, 2008) to iteratively develop a fidelity measure reflecting key elements of caregiver coaching practices and the Early Intervention Provider Performance Standards (Kentucky Early Intervention Services, 2023).

We identified coaching items and operational definitions through both review of existing literature and iterative generation. Initially, coaching literature specific to use with caregivers in EI was reviewed. The team summarized common elements in the literature (Friedman et al., 2012; Kemp and Turnbull, 2014; McWilliam, 2010; Rush and Shelden, 2011) and identified joint planning at the beginning of the session, observation, action/practice, feedback, reflection, and joint planning at the end of the session.

The consultants developed the base language for the fidelity tool. To further define key coaching components and behaviors, the initial PD specialists uploaded coaching session videos weekly to allow consultant and mentor feedback for reflective discussion in weekly meetings. As the team identified essential performance elements that reflected quality coaching practices, they used these ideas to build the coaching indicators and quality considerations for the first draft of a rubric. Each week the team refined the rubric components and behaviors to provide more detail. Working drafts of the descriptors were used to review coaching videos and to refine practices.

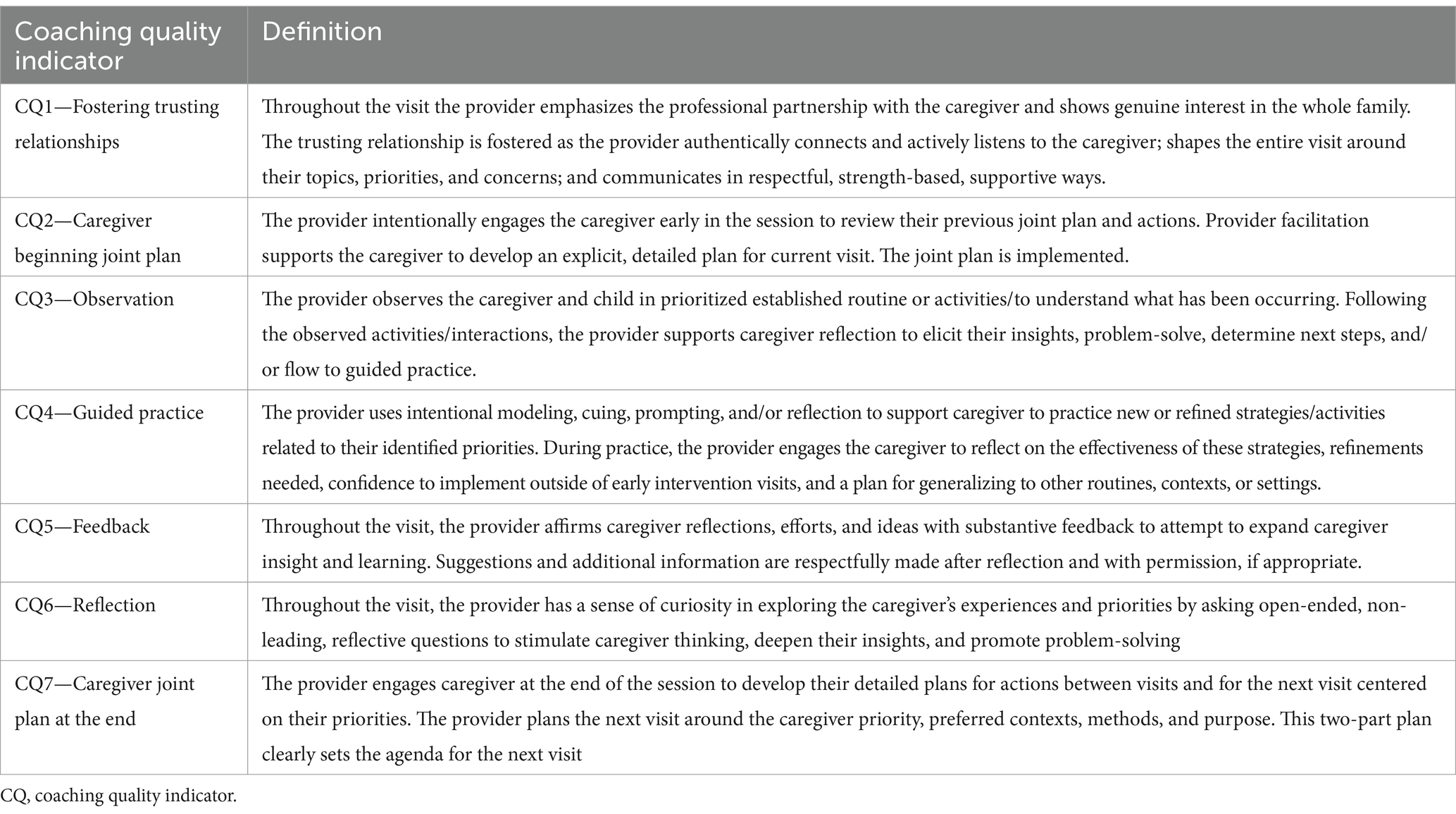

The CEITMP team expanded and further refined the rubric during field testing and while establishing reliability. The final KCAR-R consists of seven defined coaching quality indicators (CQs) reflecting key behaviors that EI providers use to coach caregivers of infants and toddlers with, or at risk of, developmental differences, with the aim of building caregivers’ confidence and competence to support their children’s learning and development. In addition to the six initial items, the iterative process resulted in a seventh CQ related to fostering trusting relationships as the foundation for the coaching interaction.

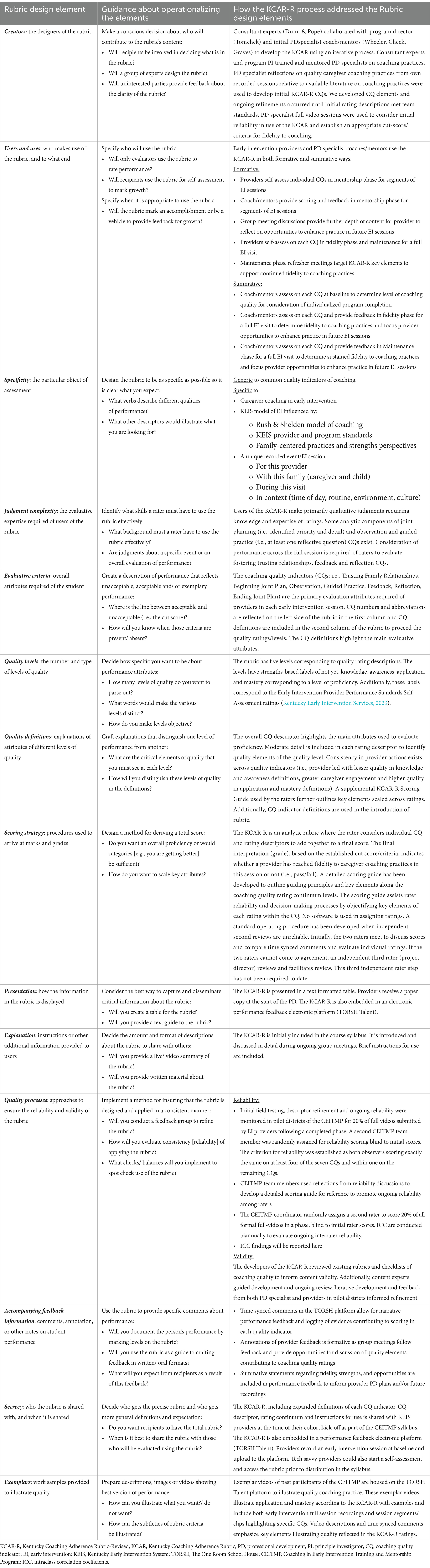

We summarize the fidelity tool’s qualities and development in Table 1 using Dawson’s (2017) 14 design elements, proposed as a common language for rubrics to support replicable research and practice. These design elements highlight not only developmental qualities (e.g., defining users, assessment focus, judgment complexity, quality levels and definitions) but also uses (e.g., exemplars, accompanying feedback) and evaluative aspects (e.g., scoring strategy, quality processes of reliability and validity). Table 1 also includes the considerations and decision points used within each element to guide our iterative process and to differentiate the KCAR-R from other coaching fidelity measures. The full KCAR-R, with its seven CQs, is provided in the Supplementary material; the detail in the rubric provides a mechanism to build confidence and competence across the EI provider network. The CQs are summarized in Table 2.

Table 1. Kentucky Coaching Adherence Rubric-Revised design elements (Dawson, 2017).

Each CQ features behavioral descriptors representing a continuum of EI provider caregiver coaching quality. Five levels of competence were developed for each CQ on a Likert-type scale: 0 = Not Yet; 1 = Knowledge; 2 = Awareness; 3 = Application; and 4 = Mastery. We intentionally aligned the ‘not yet’ category with the language of traditional child-directed therapy to highlight the changes we expected providers to make. These five levels allowed for precise quality measurement that is sensitive to changes in implementation. As previously noted, many existing fidelity checklists use a two- or three-category system (i.e., ‘present/absent’ or ‘present, emerging, absent’); however, such measures are of limited use for giving EI providers quality performance feedback that shows precisely how to improve their practices. Describing each incremental step toward quality improvement across five categories of competence allowed the KCAR-R to be used not only as a sensitive measure of quality services that could be linked to outcomes for families, but also as a feedback and learning tool for the PD program.

We sought review of the KCAR by EI experts from state leadership and by faculty members outside of Kentucky. No changes were made to the core CQs, although action/practice was renamed “guided practice.” We incorporated recommended refinements to CQ operational definitions and behavior rating descriptors. We then field tested the KCAR with provider-submitted video recordings of EI visits, collected on a designated schedule before, during, and after completion of the CEITMP. PD specialists from the CEITMP team used the KCAR-R to rate the quality of EI providers’ use of the defined set of caregiver coaching practices. While viewing entire video-recorded EI sessions, CEITMP team raters evaluated providers’ application of the seven CQs and assigned each CQ a score from 0 to 4. We assessed adherence to quality caregiver coaching practices by summing scores across all seven CQs for one entire EI visit, yielding a possible total score from 0 to 28.

We selected a cut score of 18 as indicative of fidelity to caregiver coaching, with the stipulation that no 0 (not yet) or 1 (knowledge) ratings were present among the seven CQ ratings. This reflects demonstration of more application- and/or mastery-level caregiver coaching skills than awareness-level skills, with no skills at the not yet or knowledge level. This cut score also acknowledges that providers may not have opportunities to demonstrate all CQs at mastery during any one visit.
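The scoring and cut-score rules described in the preceding paragraphs can be expressed as a short function. This is a minimal sketch, assuming each visit’s ratings arrive as a list of seven integers ordered CQ1 to CQ7; the names `KcarLevel`, `total_score`, and `meets_fidelity` are hypothetical and are not part of any CEITMP tooling.

```python
from enum import IntEnum
from typing import Sequence

NUM_CQS = 7        # seven coaching quality indicators (CQ1-CQ7)
FIDELITY_CUT = 18  # minimum total score indicating fidelity


class KcarLevel(IntEnum):
    """Five rating levels per CQ, as described in the text."""
    NOT_YET = 0
    KNOWLEDGE = 1
    AWARENESS = 2
    APPLICATION = 3
    MASTERY = 4


def total_score(ratings: Sequence[int]) -> int:
    """Sum the seven CQ ratings for one visit; the possible range is 0-28."""
    if len(ratings) != NUM_CQS:
        raise ValueError(f"expected {NUM_CQS} CQ ratings, got {len(ratings)}")
    for r in ratings:
        KcarLevel(r)  # raises ValueError for any rating outside 0-4
    return sum(ratings)


def meets_fidelity(ratings: Sequence[int]) -> bool:
    """Fidelity: total >= 18 AND no CQ rated Not Yet (0) or Knowledge (1)."""
    total = total_score(ratings)
    no_low_ratings = min(ratings) >= KcarLevel.AWARENESS
    return total >= FIDELITY_CUT and no_low_ratings


if __name__ == "__main__":
    print(meets_fidelity([3, 3, 2, 3, 2, 3, 2]))  # True: total 18, lowest rating 2
    print(meets_fidelity([4, 4, 4, 4, 4, 1, 4]))  # False: total 25, but one Knowledge rating
```

Checking the lowest rating separately from the total reflects the stipulation that reaching the cut score is not sufficient on its own: a high-scoring visit with a single Knowledge rating still falls short of fidelity.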

To support consistent scoring among fidelity raters, the CEITMP team refined the language of the KCAR-R and developed a scoring guide for PD specialist reference. The scoring guide expands individual CQ definitions and further outlines observable elements of each CQ rating descriptor. In addition to making rubric scoring criteria more objective, the guide supports PD program group discussions in which each CQ is examined in detail, and it serves as a manual for onboarding PD specialists. An example for CQ1, Fostering Trusting Relationships, can be found in the Supplementary material.

2.3 Participants

Study participants included members of the PD team, who served as raters, and the EI providers who received the training.

2.3.1 Raters

At the time of this manuscript, the cross-disciplinary CEITMP PD team comprised a physical therapist, an occupational therapist who serves as the program director, two speech-language pathologists, three developmental interventionists (one of whom is a Teacher for the Deaf and Hard of Hearing), and a coordinator with a behavioral health background. The raters had 15 to 30 years of experience as EI providers. All maintained the requisite licenses and certifications for their respective disciplines.

2.3.2 EI providers

Kentucky EI providers are independent contractors, subcontractors, or agency employees with vendor agreements with the Kentucky Cabinet for Health and Family Services to provide services to infants and toddlers eligible for the Kentucky Early Intervention System (KEIS), and to their families. This vendor agreement stipulates participation in the CEITMP by all ongoing cross-disciplinary service providers (i.e., developmental interventionists [DIs], occupational therapists [OTs], physical therapists [PTs], and speech-language pathologists [SLPs]). Providers deliver services across different regions of the state. Services are offered in environments natural to children and families via tele-intervention, face-to-face, or hybrid formats. The pool of active ongoing service providers in the KEIS fluctuates from year to year.

2.4 Reliability

During development and implementation of the KCAR and KCAR-R, the PD team conducts reliability checks on a randomly selected 20% of video submissions following each CEITMP phase (i.e., baseline, fidelity, maintenance). For each reliability check, the CEITMP coordinator randomly assigns a second rater who is blind to the initial scores. Based on field testing experiences, the team set the reliability criterion as both observers scoring exactly the same on at least four of the seven CQs and within one point on the remaining CQs.
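The sampling, rater-assignment, and agreement rules above can be sketched as follows. This is an illustrative sketch only, assuming submissions and raters are identified by simple IDs; the function names, the seeded random generator, and rounding the 20% sample to the nearest whole video are hypothetical choices. Blinding the second rater is a procedural safeguard that code alone does not enforce.

```python
import random
from typing import Hashable, List, Optional, Sequence, Tuple


def select_for_reliability(submission_ids: Sequence[Hashable],
                           fraction: float = 0.20,
                           seed: Optional[int] = None) -> List[Hashable]:
    """Randomly draw about 20% of a phase's submissions for a second rating."""
    rng = random.Random(seed)
    k = round(fraction * len(submission_ids))
    return rng.sample(list(submission_ids), k)


def assign_second_rater(first_rater: Hashable, raters: Sequence[Hashable],
                        seed: Optional[int] = None) -> Hashable:
    """Randomly pick a second rater other than the one who gave the initial scores."""
    rng = random.Random(seed)
    candidates = [r for r in raters if r != first_rater]
    if not candidates:
        raise ValueError("need at least one other rater")
    return rng.choice(candidates)


def meets_agreement_criterion(rater_a: Sequence[int], rater_b: Sequence[int],
                              min_exact: int = 4) -> bool:
    """Exact match on at least 4 of the 7 CQs and within one point on all others."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must score the same CQs")
    diffs = [abs(a - b) for a, b in zip(rater_a, rater_b)]
    exact = sum(d == 0 for d in diffs)
    return exact >= min_exact and all(d <= 1 for d in diffs)


def percent_agreement(pairs: Sequence[Tuple[Sequence[int], Sequence[int]]]) -> float:
    """Percentage of double-scored videos whose rating pair meets the criterion."""
    met = sum(meets_agreement_criterion(a, b) for a, b in pairs)
    return 100.0 * met / len(pairs)
```

For instance, ratings of 4, 3, 3, 2, 4, 3, 3 and 4, 3, 3, 2, 3, 2, 4 match exactly on four CQs and differ by one on the other three, so the pair meets the criterion; a single two-point disagreement fails it no matter how many other CQs match.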

2.5 Data analysis

The PD team conducted interrater reliability checks on n = 301 randomly selected video submissions across n = 8 raters. Interrater reliability was analyzed using intraclass correlation coefficients (ICCs; Shrout and Fleiss, 1979) at the item and scale levels, using a two-way random-effects model with 95% confidence intervals. ICCs from 0.4 to 0.6 were considered fair, those above 0.6 good, and those above 0.75 excellent (Fleiss, 1986). We analyzed all data using Statistical Package for the Social Sciences Version 28, 2022 (IBM Corp, 2021). Additionally, we calculated percent agreement for the established criterion (i.e., both observers scoring exactly the same on at least four of the seven CQs and within one point on the remaining CQs). We evaluated internal consistency on n = 429 video submissions at the scale and item levels using Cronbach’s alpha. Values from 0.7 to 0.8 were considered fair, those from 0.8 to 0.85 good, and those above 0.85 excellent (Tavakol and Dennick, 2011).
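For readers reproducing the reliability analysis outside SPSS, the two-way random-effects, absolute-agreement ICC of Shrout and Fleiss (1979) can be computed from a two-way ANOVA decomposition. This is a minimal pure-Python sketch, assuming a complete targets-by-raters matrix in which every video is scored by every rater; the function names are hypothetical, and equating `icc_2_1` and `icc_2_k` with SPSS’s single- and average-measures output is an assumption, not something stated in the text.

```python
from typing import Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def _mean_squares(x: Matrix) -> Tuple[int, int, float, float, float]:
    """Two-way ANOVA mean squares for an n-targets by k-raters matrix."""
    n, k = len(x), len(x[0])
    grand = sum(v for row in x for v in row) / (n * k)
    row_means = [sum(row) / k for row in x]
    col_means = [sum(row[j] for row in x) / n for j in range(k)]
    ss_total = sum((v - grand) ** 2 for row in x for v in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between targets (videos)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_err = ss_total - ss_rows - ss_cols                   # residual
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return n, k, msr, msc, mse


def icc_2_1(x: Matrix) -> float:
    """ICC(2,1): single-rater reliability, two-way random, absolute agreement."""
    n, k, msr, msc, mse = _mean_squares(x)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def icc_2_k(x: Matrix) -> float:
    """ICC(2,k): reliability of the k-rater mean, two-way random, absolute agreement."""
    n, k, msr, msc, mse = _mean_squares(x)
    return (msr - mse) / (msr + (msc - mse) / n)


def interpret_icc(icc: float) -> str:
    """Bands from the text (Fleiss, 1986); 'poor' below 0.4 is an assumed label."""
    if icc > 0.75:
        return "excellent"
    if icc > 0.6:
        return "good"
    if icc >= 0.4:
        return "fair"
    return "poor"


if __name__ == "__main__":
    # Example data: 6 targets rated by 4 judges (Shrout and Fleiss, 1979).
    data = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
            [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
    print(round(icc_2_1(data), 2), round(icc_2_k(data), 2))  # prints 0.29 0.62
```

Because the design described above pairs two of eight raters per video rather than having every rater score every video, a real analysis would need to handle that incomplete rater matrix, which this sketch does not attempt.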

3 Results

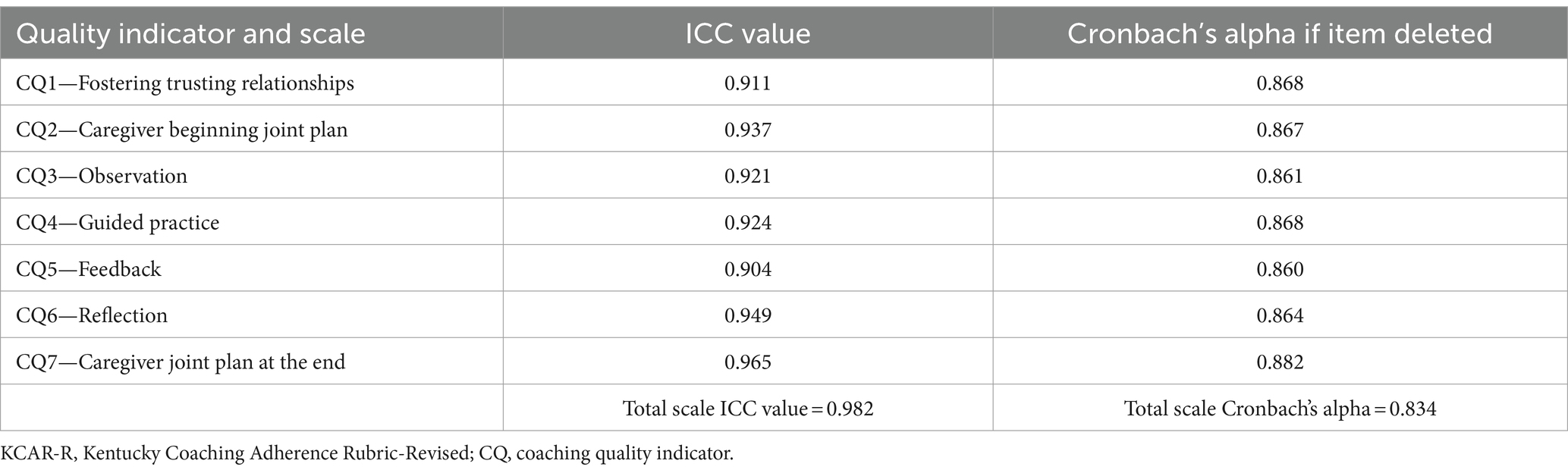

PD specialists had 0.987 agreement at the video level across all eight raters on 301 reliability checks (range = 0.90–0.987). Table 3 summarizes interrater reliability and internal consistency findings. Absolute-agreement ICCs, reported with 95% confidence intervals, were excellent for both the total score (0.982) and individual CQs (range 0.904–0.965). The overall internal consistency of the total scale yielded α = 0.834. The contribution of each item to the scale’s internal consistency was evaluated, yielding α-if-item-deleted coefficients ranging from 0.860 to 0.882.
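The α-if-item-deleted values reported above come from dropping one CQ at a time and recomputing alpha on the remaining items. A minimal sketch of both calculations follows, assuming a visits-by-items matrix of CQ ratings and sample variances throughout; the function names are hypothetical, and the sketch illustrates the standard formula rather than reproducing the SPSS procedure.

```python
from statistics import variance
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]  # rows = video submissions, columns = CQ ratings


def cronbach_alpha(scores: Matrix) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(scores: Matrix) -> List[float]:
    """Alpha recomputed with each item (column) dropped in turn; needs k >= 3."""
    k = len(scores[0])
    return [
        cronbach_alpha([[v for j, v in enumerate(row) if j != drop] for row in scores])
        for drop in range(k)
    ]
```

Applied to a 429-by-7 matrix of CQ ratings, `alpha_if_item_deleted` would return seven values, one per CQ, comparable in kind to the item-level coefficients in Table 3.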

4 Discussion

This study described the development and reliability of an adherence rubric for EI caregiver coaching practices. Findings demonstrated how a fidelity measure of coaching behaviors aligns with Dawson’s (2017) 14 design elements and can be scored reliably for EI providers from various disciplines in everyday practice. Internal consistency results suggest that the items on the KCAR-R are well aligned and that each item measures distinct behaviors associated with coaching practices. While EBPs are necessary to improve child and family outcomes in the EI system, there is limited research on how PD programs create sustainable ways to influence provider behavior.

In response to OSEP’s call for RDA to improve positive child and family outcomes, KEIS committed to transforming its organization to include accountability for quality services. Professionals often discuss quality EI services by describing the components they believe indicate excellence. Federal agencies such as OSEP and the USDE set requirements for services with an emphasis on compliance. With the shift to RDA, states and service agencies must now document not only compliance but also the quality of their services in promoting positive child and family outcomes. In this report, we have highlighted how KEIS moved beyond compliance to develop a reliable measure of exemplary, sustainable quality services. In this discussion, we address our third aim: to provide an overview of how we used implementation science to guide systems-level change and to offer examples of how EI systems seeking to create sustainable quality services may build upon our approach.

We describe how the creation and uptake of the fidelity rubric aligned with three elements commonly described within AIFs: (1) Usable Innovations; (2) Stages and Drivers for Effective Implementation; and (3) Teams and Improvement Cycles in Enabling Contexts.

Caregiver coaching, which builds caregivers’ capacity to help their children develop and learn, was adopted as the effective practice. We created a template to support providers in making decisions consistently and intentionally during their visits with families. Because these details are embedded in the rubric, each provider has a way to check their practices in real time. Using time-synced feedback in the TORSH Talent platform (i.e., an electronic performance feedback platform), providers can reflect on how to refine their coaching skills for subsequent visits. Our process also reflects the DEC RPs (2014), creating an easy way to report on statewide progress.

The KCAR-R operationalized behaviors that exemplify caregiver coaching for Kentucky’s SSIP and may serve as a resource for other states to document the quality and fidelity of caregiver coaching. The KCAR-R used five levels of competence for each quality indicator to allow precise quality measurement that is sensitive to changes in implementation. We have noted that many fidelity measures use a two- or three-category system. Such measures are limited in their ability to give EI providers feedback that shows precisely how to improve their practices. By describing each incremental step toward quality improvement across five categories of competence, we created a feedback and learning tool as well as a sensitive measure of quality services that could be linked to outcomes for families. We ensured that the ‘not yet’ category aligned with the language of current practices as a cue about the changes we expected providers to make. The resulting detailed operational definitions of caregiver coaching embedded within the KCAR-R illustrate how to integrate Routines Based Early Intervention (McWilliam, 2010), coaching (Rush and Shelden, 2020), and Family Guided Routines Based Interventions (Woods, 2023), as highlighted in the Early Intervention Caregiver Coaching Crosswalk (Early Intervention/Early Childhood Professional Development Community of Practice, 2023). In addition to these common coaching components, we also include a quality indicator about fostering a trusting relationship between families and providers (see Supplementary material).

In this section, we describe how the CEITMP evolved through four implementation stages (i.e., exploration, installation, initial implementation, full implementation).

The decision to adopt the innovation occurred during the exploration stage, which involved collaboration among leadership, experts, stakeholders, and purveyors to determine the feasibility of implementation and was based on assessed needs, evidence, and availability of resources. In the installation stage, KEIS focused on recruiting and training PD specialists for the CEITMP team; designing the CEITMP in-service PD around caregiver coaching; developing and field testing the KCAR-R fidelity measure; and establishing supporting procedures and policies, including embedding mandatory KEIS provider participation into service provider agreements. The pilot implementation of the CEITMP in three districts of different sizes during the initial implementation stage required managing change at all levels as the PD was launched. During this stage, the CEITMP used PD participant feedback for rapid-cycle problem-solving and decision-making, maintained consistent messaging and frequent communication with KEIS stakeholders, and established reliability on the KCAR-R. The CEITMP is now in full implementation, and the majority of CEITMP-trained KEIS providers are coaching caregivers with fidelity, demonstrating successful systems change focused on quality services. Embedding sustainability into each stage is crucial to success (Metz et al., 2013). Financial resources and supports for the CEITMP have been established to sustain fidelity to caregiver coaching long-term (Wheeler, 2023), and the program continues to rely on data to periodically inform decisions, continuously improve, and adapt as needed.

Implementation drivers are key components of AIFs; they promote the adoption and use of an innovation, leading to improved outcomes (National Implementation Research Network, University of North Carolina at Chapel Hill, 2022). To maximize the adoption and use of an innovation, implementation drivers must be integrated and compensatory. As part of its SSIP, Kentucky committed to deploying a program to train, evaluate, and monitor provider implementation within an effective, sustainable system. The competency drivers (selection, training, coaching, and fidelity) create processes that support change and ensure the high fidelity of innovations necessary for positive outcomes. For KEIS, these included the selection and training of the CEITMP implementation team and the development of the KCAR-R. Organization drivers (facilitative administration, systems intervention, and decision-support data systems) aim to form, support, and sustain accommodating environments for effective services; for KEIS, the state lead agency (SLA), SSIP, and CEITMP teams comprised these drivers. Leadership drivers support the competency and organization drivers by using technical or adaptive leadership strategies matched to the different types of challenges that arise during implementation. Technical leadership typically involves a single individual who uses traditional approaches to solve common problems, whereas adaptive leadership strategies are employed by a group working together to address complex and unclear problems whose equally involved solutions require time, collaboration, and testing to resolve. The leadership drivers for this initiative comprised the SLA, SSIP, and OSEP teams.

During the implementation phases, state and regional leaders had to commit resources to support the goals of a quality service system. Providers and district leaders increasingly recognized that adopting the EBP, caregiver coaching, was not a fad. As an example of their commitment to implementing caregiver coaching, statewide leaders required every provider in the EI system to achieve and sustain the quality service standards set by the project, as defined by the fidelity measure, the KCAR-R. The requirement to reach and maintain fidelity gave EI providers an incentive to take the process seriously and highlights the importance of creating and testing the rubric used to measure fidelity.

Implementation teams and improvement cycles are enabling contexts critical to success. The design of the CEITMP and the development of the KCAR-R, both centered on the quality EI services families deserve, were supported by various teams across all levels in the state within continuous process improvement cycles.

Implementation teams with knowledge of or skill in the innovation may comprise existing staff, external experts, new staff, intermediary supports, and groups outside the organization at different levels. KEIS SSIP implementation teams operate at three levels: (1) district teams comprising point of entry (POE) managers and staff, KEIS providers, and a CEITMP PD specialist; (2) SSIP teams that include POE managers, the CEITMP team, and the SLA team; and (3) state teams comprising the SLA team and the Interagency Coordinating Council (ICC)/key stakeholder group.

These teams use Plan, Do, Study, Act (PDSA) cycles to emphasize continuous quality improvement. The CEITMP coordinator responds to technical problems quickly, and the team modifies program procedures to reflect changes, with immediate effect when needed. Usability testing is a planned set of checks to assess the feasibility and significance of an innovation or of processes in need of improvement. Survey feedback from each cohort of providers is used to improve the CEITMP and clarify the KCAR-R. Practice-policy feedback loops involve executive leadership becoming aware of barriers, ensuring that policy allows for sustained implementation, and maintaining transparent processes. Monthly SSIP implementation meetings focus on collaborative problem-solving and on ensuring that program processes are transparent and align with KEIS policies. The improvement cycles were central to developing a replicable, effective framework to support KEIS providers in using, and maintaining fidelity to, caregiver coaching.

Our reliance on implementation science and active implementation frameworks has supported the development of a rubric for caregiver coaching practices that reflects recently cross-walked key caregiver coaching indicators (Early Intervention/Early Childhood Professional Development Community of Practice, 2023). The strengths of the KCAR-R highlight its versatility as a measure of intervention fidelity, a framework for ongoing EI visits, and a guide for self-reflection and feedback during PD programs targeting these practices. Nonetheless, the generalizability of these uses and of the current findings may be limited at this time because the PDS were all from one state EI program. Additionally, given the real-world context of Kentucky’s SSIP, the KCAR-R was refined concurrently with its use, and the reported findings relied on existing program data. We report reliability-focused findings here. Despite excellent reliability across eight different raters, other state programs may operationalize caregiver coaching differently. The high reliability is a direct result of the intensive onboarding process and reliability training for the PDS, and this extensive training may be seen as a limitation of the KCAR-R. Future studies using the KCAR-R can explore its use across EI practice settings (e.g., home, childcare centers, community) and coaching service delivery modes (i.e., in person and telehealth), as well as its sensitivity to change.

5 Summary/conclusion

In this paper, we outline the processes used to develop, implement, and evaluate an EI PD system, built on EBPs, that fosters accountability and high-quality outcomes for families. We used evidence-based caregiver coaching practices within EI recommended practices to design a PD and accountability system within the state of Kentucky. We provide a refined rubric that outlines five levels of performance for each of seven caregiver coaching quality indicators and serves as a framework for ongoing EI visits. Investigation of the rubric’s psychometric properties showed excellent interrater reliability and good internal consistency. The rubric describes a continuum of quality and can therefore serve as a model for organizations responding to calls for accountability for quality care.

Data availability statement

The datasets presented in this article are not readily available because data are restricted to use by the investigators as per the IRB agreement. Requests to access the datasets should be directed to scott.tomchek@louisville.edu.

Ethics statement

The studies involving humans were approved by University of Louisville Human Subjects Protections Office. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

ST: Writing – original draft, Writing – review & editing. SW: Writing – original draft, Writing – review & editing. CC: Writing – review & editing. LL: Writing – original draft, Writing – review & editing. WD: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This material is based on contracted work with the Kentucky Department for Public Health, Cabinet for Health and Family Services supported by the US Department of Education under Grant Number H181A220085.1.1.

Acknowledgments

The authors acknowledge the ongoing, unwavering support of the CEITMP from the KY DPH/CHFS. The authors also acknowledge Beth Graves (an initial PDS) and Ellen Pope (external consultant) for their contributions to the initial discussions that influenced the KCAR. We also acknowledge the rest of the CEITMP team (Denise Insley, Michele Magness, Lisa Simpson, Julie Leezer, Minda Kohner-Coogle) for their contributions to the refinement of the KCAR-R descriptors.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2024.1324118/full#supplementary-material

References

Adams, R. C., Tapia, C., and The Council on Children with Disabilities; Murphy, N. A., Norwood, K. W. Jr., et al. (2013). Early intervention, IDEA Part C services, and the medical home: collaboration for best practice and best outcomes. Pediatrics 132, e1073–e1088. doi: 10.1542/peds.2013-2305

Aranbarri, A., Stahmer, A. C., Talbott, M. R., Miller, M. E., Drahota, A., Pellecchia, M., et al. (2021). Examining US public early intervention for toddlers with autism: characterizing services and readiness for evidence-based practice implementation. Front. Psych. 12:786138. doi: 10.3389/fpsyt.2021.786138

Artman-Meeker, K., Fettig, A., Barton, E. E., Penney, A., and Zeng, S. (2015). Applying an evidence-based framework to the early childhood coaching literature. Top. Early Child. Spec. Educ. 35, 183–196. doi: 10.1177/0271121415595550

Blase, K. A., Fixsen, D. L., and Van Dyke, M. (2018). Developing usable innovations. Chapel Hill, NC: Active Implementation Research Network.

Bruder, M. B. (2016). “Personnel development practices in early childhood intervention” in Handbook of early childhood special education. eds. B. Reichow, B. Boyd, E. Barton, and S. Odom (Cham: Springer), 289–333.

Bruder, M. B., Gundler, D., Stayton, V., and Kemp, P. (2021). The early childhood personnel center: building capacity to improve outcomes for infants and young children with disabilities and their families. Infants Young Child. 34, 69–82. doi: 10.1097/IYC.0000000000000191

Ciupe, A., and Salisbury, C. (2020). Examining caregivers’ independence in early intervention home visit sessions. J. Early Interv. 42, 338–358. doi: 10.1177/1053815120902727

Coaching in Early Intervention Training and Mentorship Program. (2023). University of Louisville Department of Pediatrics. Available at: https://louisville.edu/medicine/departments/pediatrics/divisions/developmental-behavioral-genetics/coaching-in-early-training-and-mentorship-program (Accessed August 1, 2024).

Dawson, P. (2017). Assessment rubrics: towards clearer and more replicable design, research and practice. Assess. Eval. High. Educ. 42, 347–360. doi: 10.1080/02602938.2015.1111294

DeVellis, R. F. (2003). Scale development: theory and applications. 2nd Edn. Thousand Oaks, CA: Sage.

Division for Early Childhood. (2014). DEC recommended practices in early intervention/early childhood special education 2014. Division for Early Childhood of the Council for Exceptional Children. Available at: https://www.dec-sped.org/dec-recommended-practices (Accessed August 1, 2024).

Douglas, S. N., Meadan, H., and Kammes, R. (2020). Early interventionists’ caregiver coaching: a mixed methods approach exploring experiences and practices. Top. Early Child. Spec. Educ. 40, 84–96. doi: 10.1177/0271121419829899

Dunn, W., Cox, J., Foster, L., Mische-Lawson, L., and Tanquary, J. (2012). Impact of a contextual intervention on child participation and parent competence among children with autism spectrum disorders: a pretest–posttest repeated-measures design. Am. J. Occup. Ther. 66, 520–528. doi: 10.5014/ajot.2012.004119

Dunn, W., Little, L. M., Pope, E., and Wallisch, A. (2018). Establishing fidelity of occupational performance coaching. OTJR 38, 96–104. doi: 10.1177/1539449217724755

Dunn, W., and Pope, E. (2017). E-learning lessons. Dunn and Pope Strength Based Coaching. Available at: http://dunnpopecoaching.com/e-learning-lessons (Accessed October 11, 2022).

Dunst, C. J., Bruder, M. B., and Hamby, D. W. (2015). Metasynthesis of in-service professional development research: features associated with positive educator and student outcomes. Educ. Res. Rev. 10, 1731–1744. doi: 10.5897/ERR2015.2306

Dunst, C. J., and Trivette, C. M. (2012). “Meta-analysis of implementation” in Handbook of implementation science for psychology in education. eds. B. Kelly and D. F. Perkins (Cambridge, United Kingdom: Cambridge University Press).

Early Intervention/Early Childhood Professional Development Community of Practice (2023). Early intervention caregiver coaching crosswalk. Available at: http://eieconlinelearning.pbworks.com/w/page/141196335/Resources%20and%20Activities (Accessed October 10, 2023).

Fazel, P. (2013). Teacher-coach-student coaching model: a vehicle to improve efficiency of adult institution. Procedia Soc. Behav. Sci. 97, 384–391. doi: 10.1016/j.sbspro.2013.10.249

Firmansyah, R., Nahadi, N., and Firman, H. (2020). Development of performance assessment instruments to measure students’ scientific thinking skill in the quantitative analysis acetic acid levels. J. Educ. Sci. 4, 459–468. doi: 10.31258/jes.4.3

Fixsen, A. A., Aijaz, M., Fixsen, D. L., Burks, E., and Schultes, M. T. (2021). Implementation frameworks: an analysis. Chapel Hill, NC: Active Implementation Research Network.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., and Wallace, F. (2005). Implementation research: a synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231).

Ford, T. G., and Hewitt, K. (2020). Better integrating summative and formative goals in the design of next generation teacher evaluation systems. Educ. Policy Anal. Arch. 28:63. doi: 10.14507/epaa.28.5024

Foster, L., Dunn, W., and Lawson, L. M. (2013). Coaching mothers of children with autism: a qualitative study for occupational therapy practice. Phys. Occup. Ther. Pediatr. 33, 253–263. doi: 10.3109/01942638.2012.747581

Friedman, M., Woods, J., and Salisbury, C. (2012). Caregiver coaching strategies for early intervention providers: moving toward operational definitions. Infants Young Child. 25, 62–82. doi: 10.1097/IYC.0b013e31823d8f12

Jayaraman, G., Marvin, C., Knoche, L., and Bainter, S. (2015). Coaching conversations in early childhood programs. Infants Young Child. 28, 323–336. doi: 10.1097/IYC.0000000000000048

Kasprzak, C., Hebbeler, K., Spiker, D., McCullough, K., Lucas, A., Walsh, S., et al. (2019). A state system framework for high-quality early intervention and early childhood special education. Top. Early Child. Spec. Educ. 40, 97–109. doi: 10.1177/0271121419831766

Kemp, P., and Turnbull, A. P. (2014). Coaching with parents in early intervention: an interdisciplinary research synthesis. Infants Young Child. 27, 305–324. doi: 10.1097/IYC.0000000000000018

Kentucky Early Intervention Services. (2023). Early Intervention Provider Performance Standards. Available at: https://www.chfs.ky.gov/agencies/dph/dmch/ecdb/Pages/fsenrollment.aspx (Accessed August 1, 2024).

Mahoney, G., and Mac Donald, J. D. (2007). Autism and developmental delays in young children: the responsive teaching curriculum for parents and professionals. Austin, TX: Pro-ed.

Marturana, E. R., and Woods, J. J. (2012). Technology-supported performance-based feedback for early intervention home visiting. Top. Early Child. Spec. Educ. 32, 14–23. doi: 10.1177/0271121411434935

Meadan, H., Lee, J., Sands, M., Chung, M., and García-Grau, P. (2023). The Coaching Fidelity Scale (CFS). Infants Young Child. 36, 37–52. doi: 10.1097/IYC.0000000000000231

Meadan, H., Snodgrass, M. R., Meyer, L. E., Fisher, K. W., Chung, M. Y., and Halle, J. W. (2016). Internet-based parent-implemented intervention for young children with autism: a pilot study. J. Early Interv. 38, 3–23. doi: 10.1177/1053815116630327

Metz, A., Halle, T., Bartley, L., and Blasberg, A. (2013). “Implementation Science” in Applying implementation science in early childhood programs and systems. eds. T. Halle, A. Metz, and I. Martinez-Beck (Towson, MD: Brookes), 1–3.

National Implementation Research Network, University of North Carolina at Chapel Hill (2022). Active implementation frameworks. Available at: https://nirn.fpg.unc.edu/module-1 (Accessed August 1, 2024).

Neuman, S. B., and Cunningham, L. (2009). The impact of professional development and coaching on early language and literacy instructional practices. Am. Educ. Res. J. 46, 532–566. doi: 10.3102/0002831208328088

Pellecchia, M., Mandell, D. S., Beidas, R. S., Dunst, C. J., Tomczuk, L., Newman, J., et al. (2022). Parent coaching in early intervention for autism spectrum disorder: a brief report. J. Early Interv. 45, 185–197. doi: 10.1177/10538151221095860

Romano, M., and Schnurr, M. (2022). Mind the gap: strategies to bridge the research-to-practice divide in early intervention caregiver coaching practices. Top. Early Child. Spec. Educ. 42, 64–76. doi: 10.1177/0271121419899163

Rush, D. D., Shelden, M. L., and Hanft, B. E. (2003). Coaching families and colleagues: a process for collaboration in natural settings. Infants Young Child. 16, 33–47. doi: 10.1097/00001163-200301000-00005

Rush, D., and Shelden, M. L. (2020). The early childhood coaching handbook. 2nd Edn. Towson, MD: Brookes.

Salisbury, C., and Copeland, C. (2013). Progress of infants/toddlers with severe disabilities: perceived and measured change. Top. Early Child. Spec. Educ. 33, 68–77. doi: 10.1177/0271121412474104

Salisbury, C., Woods, J., Snyder, P., Moddelmog, K., Mawdsley, H., Romano, M., et al. (2018). Caregiver and provider experiences with coaching and embedded intervention. Top. Early Child. Spec. Educ. 38, 17–29. doi: 10.1177/0271121417708036

Sawyer, B. E., and Campbell, P. H. (2017). Teaching caregivers in early intervention. Infants Young Child. 30, 175–189. doi: 10.1097/IYC.0000000000000094

Seruya, F. M., Feit, E., Tirado, A., Ottomanelli, D., and Celio, M. (2022). Caregiver coaching in early intervention: a scoping review. Am. J. Occup. Ther. 76:7604205070. doi: 10.5014/ajot.2022.049143

Shrout, P. E., and Fleiss, J. L. (1979). Intraclass correlations: uses in assessing rater reliability. Psychol. Bull. 86, 420–428. doi: 10.1037/0033-2909.86.2.420

Stewart, S. L., and Applequist, K. (2019). Diverse families in early intervention: professionals’ views of coaching. J. Res. Child. Educ. 33, 242–256. doi: 10.1080/02568543.2019.1577777

Tavakol, M., and Dennick, R. (2011). Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2, 53–55. doi: 10.5116/ijme.4dfb.8dfd

Tomchek, S. D., and Wheeler, S. (2022). Using the EI/ECSE personnel preparation standards to inform in-service professional development in early intervention. Young Except. Child. 25, 146–157. doi: 10.1177/10962506221108952

Tomeny, K. R., McWilliam, R. A., and Tomeny, T. S. (2020). Caregiver-implemented intervention for young children with autism spectrum disorder: a systematic review of coaching components. Rev. J. Autism Dev. Disord. 7, 168–181. doi: 10.1007/s40489-019-00186-7

U.S. Department of Education. (2019). RDA: Results driven accountability. Available at: https://www2.ed.gov/about/offices/list/osers/osep/rda/index.html (Accessed August 1, 2024).

Ward, R., Reynolds, J. E., Pieterse, B., Elliott, C., Boyd, R., and Miller, L. (2020). Utilisation of coaching practices in early interventions in children at risk of developmental disability/delay: a systematic review. Disabil. Rehabil. 42, 2846–2867. doi: 10.1080/09638288.2019.1581846

Watson, C., and Gatti, S. N. (2012). Professional development through reflective consultation in early intervention. Infants Young Child. 25, 109–121. doi: 10.1097/IYC.0b013e31824c0685

Wheeler, S. J. (2023). Evidence-informed in-service professional development to support KEIS providers' sustained fidelity to caregiver coaching. Electronic theses and dissertations. Paper 4051. Available at: https://ir.library.louisville.edu/etd/4051 (Accessed August 1, 2024).

Woods, J. (2018). Family guided routines based intervention: key indicators manual. 3rd Edn. Unpublished manual. Tallahassee, FL, United States: Communication and Early Childhood Research and Practice Center, Florida State University.

Woods, J. (2023). Family guided routines based intervention. Available at: https://www.fgrbi.com

Workgroup on Principles and Practices in Natural Environments, OSEP TA Community of Practice: Part C Settings. (2008). Seven key principles: Looks like/doesn’t look like. Available at: https://www.ectacenter.org/~pdfs/topics/familites/Principles_LooksLike_DoesntLookLike3_11_08.pdf (Accessed August 1, 2024).

Keywords: coaching, fidelity measurement, part C early intervention, professional development, results driven accountability

Citation: Tomchek SD, Wheeler S, Cheek C, Little L and Dunn W (2024) Development, field testing, and initial validation of an adherence rubric for caregiver coaching. Front. Educ. 9:1324118. doi: 10.3389/feduc.2024.1324118

Edited by:

Angeliki Kallitsoglou, University of Exeter, United Kingdom

Reviewed by:

Ashley Johnson Harrison, University of Georgia, United States

Evdokia Pittas, University of Nicosia, Cyprus

Copyright © 2024 Tomchek, Wheeler, Cheek, Little and Dunn. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Scott D. Tomchek, scott.tomchek@louisville.edu
