- School of Biomedical Sciences, Faculty of Health, University of Plymouth, Plymouth, United Kingdom

Introduction: Online examinations are increasingly being incorporated into higher education. However, Biomedical Science students’ exam format preferences remain unexplored. This study aims to investigate the exam format preferences and attitudes of these students.

Methods: A self-reported survey of 31 questions on perceptions of online exams was used, organized into six dimensions: affective factors, validity, practicality, reliability, security, and pedagogy. Scores measured student attitudes toward online exams. Additionally, categorical questions examined attitudes toward open-book online exams (OBOEs), closed-book online exams (CBOEs), and paper-based exams (PBEs). Qualitative analysis was conducted using open-ended questions and a focus group of five participants. The questionnaire was distributed to undergraduates, and 146 students responded across six different programmes.

Results: The findings revealed that 57.5% of students preferred OBOEs, while only 19.9% preferred PBEs. OBOEs were perceived as more favorable in all six dimensions and superior in terms of reducing stress, ensuring fairness, allowing demonstration of understanding, and retaining information. Gender had no statistically significant influence on perception; however, programme had a statistically significant effect on responses. Qualitative data supported the main statistical analysis and identified a trade-off between better retention of information with PBEs, despite their stress, and better demonstration of understanding with OBOEs.

Discussion: Overall, OBOEs were viewed positively and well accepted, and they are anticipated to become a dominant examination format at the University of Plymouth (UoP). Institutions wishing to implement online exams should consider the perceived benefits these exams have over traditional exams. These findings contribute to the understanding of students’ perceptions of exam formats, which can inform exam design and application in higher education. Further research should explore the perceptions of students in other disciplines and identify ways to address any challenges associated with online exams.

Introduction

In an attempt to mitigate the spread of COVID-19 in 2020, non-essential social contact was severely limited during lockdowns. Academic staff were forced to adapt content for an online setting without adequate support or training (Müller et al., 2021). Within UK universities, in-person exams were difficult to implement, thus all educational aspects were modified to allow for remote distance learning and assessment (The Organisation for Economic Co-operation and Development, 2020).

A decade ago, it was predicted that paper examinations would be phased out in favor of online examinations. A 2013 news report found that many spokespersons for academic organizations predicted a full transition to online examinations by 2023 (BBC News, 2013), although they acknowledged technical and logistical “hurdles” to overcome.

Exams are an integral part of education. At the end of the learning process, it is vital to assess capabilities and knowledge, enabling students to make informed decisions on how to improve their learning strategies, identify weaknesses, and maximize learning.

Traditionally, exams have used a paper format that has remained largely unchanged for over a century (Cambridge Assessment, 2008). Based on our previous faculty experiences, examination methods can be classified into three types: open-book online exams (OBOEs), closed-book online exams (CBOEs), and paper-based exams (PBEs). OBOEs allow students to take the exam remotely from any location or device with full internet access. CBOEs are conducted on campus in a computer suite with an invigilator and no access to any resources. PBEs refer to the traditional and most common exam setting; they are taken on campus using pen and paper with an invigilator and no internet access. In most settings, exam questions can be a variation of multiple-choice, short-answer, or long-answer essay questions.

After the pandemic, most educational institutions returned to traditional paper exams. However, some institutions saw an advantage in replacing PBEs with OBOEs or CBOEs, which may have benefits over PBEs (Bena, 2023), and have already made the switch to online exams (Alsadoon, 2017). Following their lead, the School of Biomedical Sciences, Faculty of Health (FoH) at the University of Plymouth (UoP) plans to move most first-year assessments online.

Previous studies suggest that students typically prefer online exams to PBEs (Donovan et al., 2007; Dermo, 2009; Debuse and Lawley, 2016; Alsadoon, 2017; Afacan Adanır et al., 2020) because of their associated benefits. Advantages include students feeling more comfortable taking an online exam (Gokulkumari et al., 2022) and experiencing less anxiety and stress with OBOEs (Alsadoon, 2017), with no negative impact on academic performance (Woldeab and Brothen, 2019). Additionally, automated grading eliminates the need to decipher illegible handwriting, and results can be processed quickly and accurately (Alsadoon, 2017; Gokulkumari et al., 2022). Conducting an online exam could also allow for a greater range of question design (Boitshwarelo et al., 2017).

However, there are disadvantages. Online exams may not be appropriate for all subjects (Alsadoon, 2017). Medical students prefer CBOEs (Lim et al., 2006; Elsalem et al., 2021) and PBEs (Eurboonyanun et al., 2021) over OBOEs. A proportion of UK students are considered digitally disadvantaged (Office for Students, 2020; Joint Information Systems Committee, 2021) and may struggle with online exams due to lack of access to technology or resources. PBEs are less susceptible to technical issues or connectivity problems (The Organisation for Economic Co-operation and Development, 2020), and this format allows consistency during exams. OBOEs are perceived as making it easier to cheat and collude with others (Elmehdi and Ibrahem, 2019); however, proctoring can mitigate this.

Students’ attitudes toward exams can affect academic performance and engagement in the learning process. Students with negative perceptions of exams may be less motivated, which may lead to lower exam scores (Woldeab and Brothen, 2019). Understanding students’ perceptions of the benefits and limitations of online exams can provide valuable insights to improve the testing experience and enhance student learning. Student perceptions of their performance during an online exam should be explored, including satisfaction with facilities, environmental aspects, and potential future concerns regarding employability.

This study aims to analyze student perceptions of exams using a focus group and a questionnaire exploring six dimensions: how students feel during exams (affective factors), their appropriateness (validity), the challenges associated with them (practicality), their accuracy and reliability (reliability), whether they are a secure alternative (security), and whether online exams play a positive role in learning (pedagogy). As the School of Biomedical Sciences runs four undergraduate programmes, this study will also determine any differences in responses by age, gender, and programme.

Materials and methods

Ethical statement

This study was approved by the University of Plymouth Science and Engineering Human Ethics Committee. Questionnaire participants were asked to give their consent as a preliminary question and to confirm they were over the age of 18. Focus group participants gave written consent for their participation in the study. All data was anonymized.

Study design

The questionnaire consisted of 24 multiple-choice questions (MCQs) based on previous work by Dermo (2009); however, following a pilot study (see below), certain questions were modified or removed to meet the needs of the research question. Questions referring to MCQ-format exams were removed, as only a few FoH modules used them. Within the validity dimension, the item “Online exams tests my IT skills,” which Dermo reverse scored, was not reverse scored in this paper because reverse scoring severely impacted Cronbach’s alpha: scoring the item as worded increased alpha from −0.100 to 0.606. This is likely because students are much more comfortable using computers today than they were in 2009; Dermo equated not liking computers with liking paper exams. Dermo also looked only at “online” and “paper,” whereas this study explores three different exam modes, including “closed-book online.”

In the practicality dimension, we did not include the question “There are serious health and safety issues with online exams,” as Dermo concluded that health and safety were not a concern, and we agreed. However, within this dimension, we included how participants’ computer skills and resources would affect exams (e.g., “I have adequate computer knowledge to participate in online exams”). In the security dimension, “The technology used in online assessments is unreliable” was modified to “I am concerned that technical or internet problems will impact my performance in an online exam” for specificity. “The online exam system is vulnerable to hackers” was removed because hacking during an exam is unlikely to be a risk; a literature review found no published reports of such hacking. General security also included new questions (e.g., “Online assessment is just as secure as paper-based exams”).

In the pedagogy dimension, “Online assessment can do things paper-based exams can’t” was excluded as too vague; instead, specific questions were added about how pedagogy can be influenced by factors such as immediate feedback. “Online assessment is just a gimmick that does not really benefit learning” was changed to “I feel that online exams add value to my learning,” so that all questions in this dimension were worded positively toward online exams and ambiguous wording was removed.
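Reverse scoring changes how an item contributes to a dimension’s total, so it can move Cronbach’s alpha substantially. The minimal Python sketch below (standard library only) illustrates this effect using alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The responses are hypothetical, not study data, and the function names are illustrative; the study itself computed alpha in SPSS.

```python
from statistics import variance


def reverse_score(item, scale_min=1, scale_max=5):
    """Reverse a Likert item so that 1 <-> 5, 2 <-> 4 and 3 stays 3."""
    return [scale_min + scale_max - x for x in item]


def cronbach_alpha(items):
    """Cronbach's alpha for items given as lists of scores from the same respondents."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score per respondent across all items in the dimension.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)  # sample variances
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses from five students to three items of one dimension.
item_a = [1, 2, 3, 4, 5]
item_b = [2, 2, 3, 4, 5]
item_c = [1, 2, 4, 4, 5]  # answered in the same direction as items a and b

alpha_as_worded = cronbach_alpha([item_a, item_b, item_c])
alpha_reversed = cronbach_alpha([item_a, item_b, reverse_score(item_c)])
print(f"as worded: {alpha_as_worded:.3f}, item_c reverse scored: {alpha_reversed:.3f}")
```

Reverse scoring an item that students actually answer in the same direction as the rest of the dimension makes it pull against the total, which lowers alpha, possibly below zero. This is consistent with the improvement reported when the IT-skills item was scored as worded.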

For the focus group, qualitative, semi-structured interviewing sessions were created to collect relevant information (Supplementary Data 1). Unlike the questionnaire, the focus group did not differentiate online exams into OBOEs and CBOEs.

Questionnaire pilot study

The questionnaire was created using JISC software and piloted on 16 non-FoH students and staff. Further modifications were made based on qualitative feedback from this pilot study (Supplementary Data 2). Modifications included the removal of repetitive questions such as “I find open-book online exams more stressful than other formats,” which would have required a separate question for each exam format. These were replaced with questions such as “Which exam setting do you find most stressful?” offering four options: “Open-book online,” “Closed-book online,” “Paper-based exam,” and “All are equal.” This format was judged more likely to increase student participation, as too many questions can be off-putting.

Participant eligibility

Eligibility for participation in this study required that participants were:

– ≥ 18 years old.

– Students within the University of Plymouth’s School of Biomedical Sciences undergraduate teaching programmes (n = 654).

– For the focus group, experience in online and traditional examinations.

The School of Biomedical Sciences runs four undergraduate programmes: Biomedical Science (BMS), Human Biosciences, Clinical Physiology, Biomedical Science with Integrated Foundation year, and Nutrition, Exercise and Health. The foundation year is co-taught with the Bachelor of Medicine Bachelor of Surgery with Foundation year. For the focus group, one participant was from the MSc Biomedical Science programme, although they were a recent BMS graduate.

Participant recruitment

For the questionnaire (Supplementary Data 3), a QR code linking to the questionnaire was generated and shared with FoH students via social media platforms (Facebook and WhatsApp groups) and flyers. A follow-up message was sent 1 week before the survey closed. For the focus group, an email was sent to students inviting them to take part. For both the focus group and the questionnaire, students were also invited to participate by word of mouth from informed academics and participating students.

Focus group conceptual framework

The theoretical framework for this research was derived from “The Theory of Situated Learning.” The theory states that learning is a “fundamentally social process” and “a process of participation in communities of practice.” In the context of higher-education assessment, students within the UoP’s FoH represent a united community, as they all share a similar goal in their education. The student role is an example of legitimate peripheral participation, in which students progress to become experienced members of the University’s community of practice (Lave and Wenger, 1991). For this study, it is important to consider the implications of online and paper examinations in light of the Theory of Situated Learning, to assess whether online or paper exams best suit the context in which students collaborate and learn information on their specific modules.

Focus group data collection

Participants were given a choice of attending a 45-min interview in person or virtually via Zoom. Participants were allowed to raise any queries regarding the study and were given an information sheet to read prior to the interview. The interview used the informal conversation technique to create a non-interruptive, unbiased environment in which each participant could freely express opinions. The interview consisted of ten questions, and prompts were given when necessary (Supplementary Data 1). Whilst the interview was not explicitly organized into groups of questions, the questions were presented in a specific sequence. With consent, all interviews were recorded as an MP4 file using Zoom, and notes were also taken. All files were securely stored in line with GDPR guidelines.

Statistical analysis

Statistical analysis was conducted in SPSS Version 25. Internal consistency was assessed using Cronbach’s alpha, which is reported for each dimension. Descriptive statistics were used to report student demographics, examination preferences, and responses to questions. For each questionnaire item, we determined the median response on a 5-point Likert scale, where 1 is strongly disagree and 5 is strongly agree. The median represents the middle response to each item, and the lower (Q1) and upper (Q3) quartiles bound the middle 50% of responses, showing their spread. This approach captured both the central tendency and the dispersion of responses for each item. A Mann-Whitney U test was conducted to determine differences in perception of each dimension based on (i) gender and (ii) student programme. Associations between age and dimensions were measured using multiple linear regression analysis. P-values < 0.05 were considered statistically significant.
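The group comparison can be sketched as a minimal, standard-library Python version of the two-sided Mann-Whitney U test, assuming each group is a list of per-student mean scores for one dimension (e.g., BMS vs. Other). The function names and data are hypothetical, and the p-value uses the large-sample normal approximation with a tie correction; this variant is an assumption, so results may differ slightly from SPSS output.

```python
import math
from statistics import NormalDist


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the first in the run.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the tie-corrected normal approximation.

    Returns (U, p), where U is the smaller of U1 and U2. No continuity
    correction is applied.
    """
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)

    n = n1 + n2
    counts = {}
    for value in combined:
        counts[value] = counts.get(value, 0) + 1
    tie_term = sum(t**3 - t for t in counts.values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:  # every score identical: no evidence of a difference
        return u, 1.0
    z = (u - n1 * n2 / 2) / sigma
    return u, 2 * NormalDist().cdf(-abs(z))


# Hypothetical per-student mean scores for one dimension (not study data).
bms = [3.8, 4.2, 3.5, 4.0, 4.4, 3.9]
other = [3.1, 3.6, 3.3, 4.0, 2.9]
u_stat, p_value = mann_whitney_u(bms, other)
print(f"U = {u_stat}, p = {p_value:.3f}")
```

A rank-based test suits these data because the dimension scores are built from ordinal Likert responses and, as the normality testing in the results indicates, are not normally distributed.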

Qualitative analysis

The method of qualitative analysis we used was grounded theory. At the end of the questionnaire, an open-ended question asked: “Do you have any comments and feedback relating to this study?” Analysis of qualitative data identified common themes (e.g., attitudes surrounding OBOEs) and generated categories for coding (e.g., OBOEs positive, OBOEs negative).

For the focus group, qualitative analysis used direct quotations to determine how students perceive paper and online examinations and to identify potential similarities or differences in opinions and perceptions between participants.

Results: questionnaire

Student demographics

A total of 22% of students responded (n = 146), with an age range of 18–46 years (mean 21.4, SD = 5.3 years); 70% were female (n = 102). Most participants were Biomedical Science students (n = 94; 64.4%), while Bachelor of Medicine Bachelor of Surgery with Foundation Year had the fewest (n = 3; 2.1%). Year 1 students had the highest number of responses (n = 64; 43.8%), while Placement year had the lowest (n = 3; 2.1%; Table 1). Because Biomedical Science is the largest programme in the School, programmes were grouped into two categories: Biomedical Science (n = 94) and Other (n = 52). Other included Human Biosciences, Clinical Physiology, Biomedical Science with Integrated Foundation year, Bachelor of Medicine Bachelor of Surgery with Foundation year, and Nutrition, Exercise and Health. In total, 74% of respondents had taken an online exam before, either at college or university.

Student perceptions

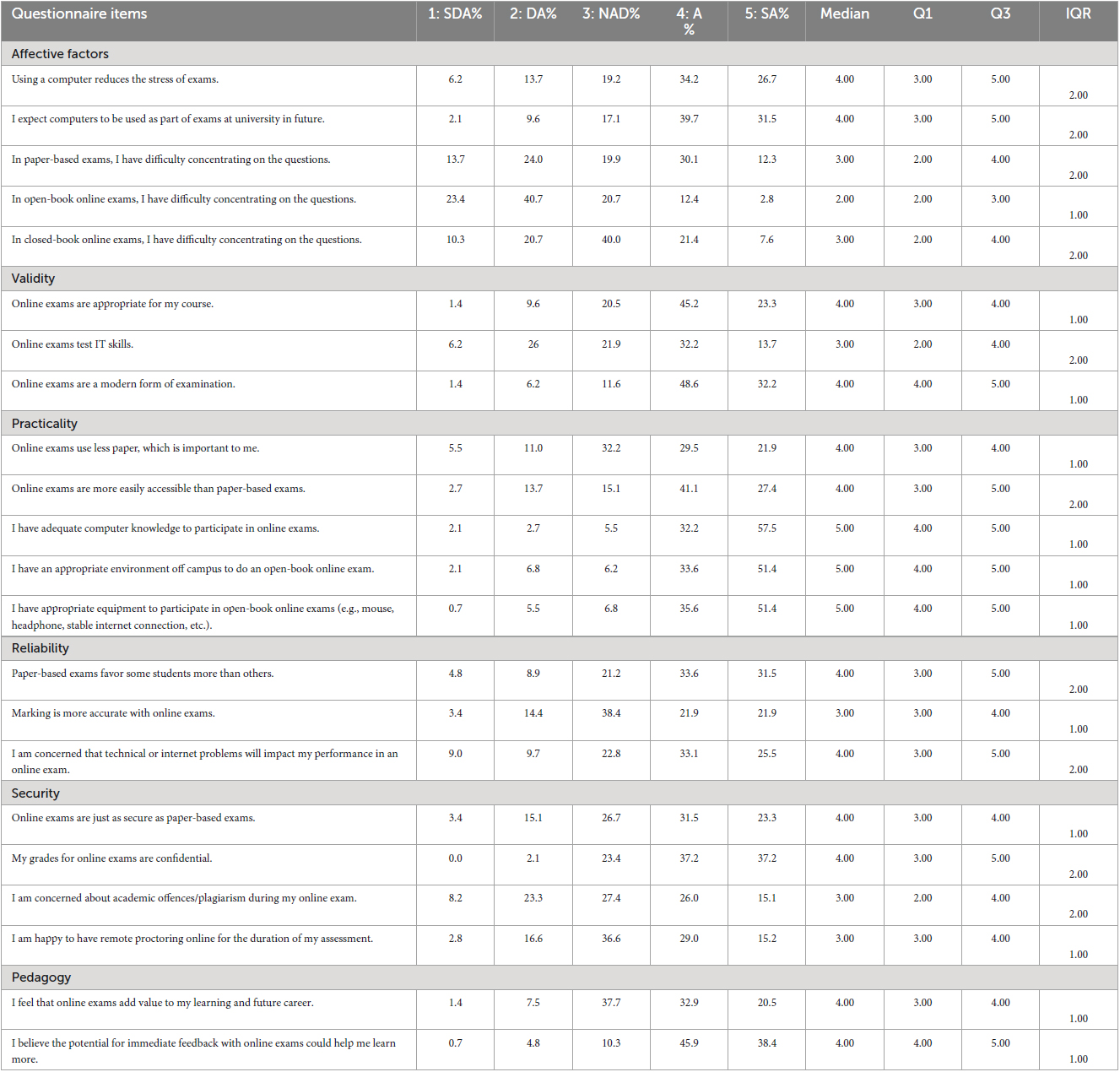

Table 2 shows the results of the Likert scale analysis. We considered a median score > 3.00 indicative of a positive attitude toward the item, a median score < 3.00 negative, and a median of 3.00 neutral.

Table 2. Percentage of participant responses (n = 146) with median, where SA: strongly agree; A: agree; NAD: neither agree nor disagree; DA: disagree; SDA: strongly disagree; Q1: lower quartile; Q3: upper quartile; IQR: interquartile range.
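The per-item summary in Table 2 and the median threshold rule used to read it can be sketched in a few lines of Python. This is an illustrative assumption rather than the authors’ SPSS procedure: `statistics.quantiles` with `method="inclusive"` is only one of several quartile conventions, so Q1 and Q3 may differ slightly from SPSS, and the responses shown are hypothetical.

```python
from statistics import median, quantiles


def summarize_item(responses):
    """Median, quartiles and attitude label for one 5-point Likert item.

    Scores run from 1 (strongly disagree) to 5 (strongly agree); a median
    above 3 is labelled positive, below 3 negative, and exactly 3 neutral.
    """
    med = median(responses)
    q1, _, q3 = quantiles(responses, n=4, method="inclusive")
    if med > 3:
        attitude = "positive"
    elif med < 3:
        attitude = "negative"
    else:
        attitude = "neutral"
    return {"median": med, "Q1": q1, "Q3": q3, "IQR": q3 - q1, "attitude": attitude}


# Hypothetical responses to one item (not study data).
print(summarize_item([4, 5, 4, 3, 2, 4, 5, 4]))
```

Reporting the median with Q1 and Q3, rather than a mean, keeps the summary on the ordinal scale of the responses.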

Affective factors

The majority of students (60.9%) agreed that using a computer reduces the stress of exams, and 71.2% expect computers to be used for exams at university. More students reported difficulty concentrating during PBEs (42.4%) than during OBOEs (15.2%) or CBOEs (29%).

Validity

Participants agreed that online examinations are appropriate for their course (68.5%) and that they are a modern form of examination (80.8%). Just under half of the surveyed students (45.9%) saw online examinations as a test of their IT skills.

Practicality

In terms of environmental sustainability, 51.4% of students thought the use of less paper was important. Most students felt that online exams are more accessible than PBEs (68.5%), and the large majority reported having adequate computer knowledge (89.7%). With regard to OBOEs, most felt they had the appropriate environment (85.0%) and equipment (87.0%) off campus.

Reliability

A total of 65.1% of students thought PBEs favored certain peers. Students believed that online exams were marked marginally more accurately than PBEs (43.8%) and were slightly concerned that technical difficulties would affect their exam performance (58.6%).

Security

Online exams were perceived to be slightly more secure than PBEs (54.8%), and students agreed that grades are more confidential when online (74.4%). A total of 41.1% of students were not overly concerned about academic offences or plagiarism during online exams, and 44.2% would not mind remote proctoring.

Pedagogy

A total of 53.4% of students felt that online exams add value to their learning and future careers, and a large majority (84.3%) agreed that immediate feedback enhanced learning.

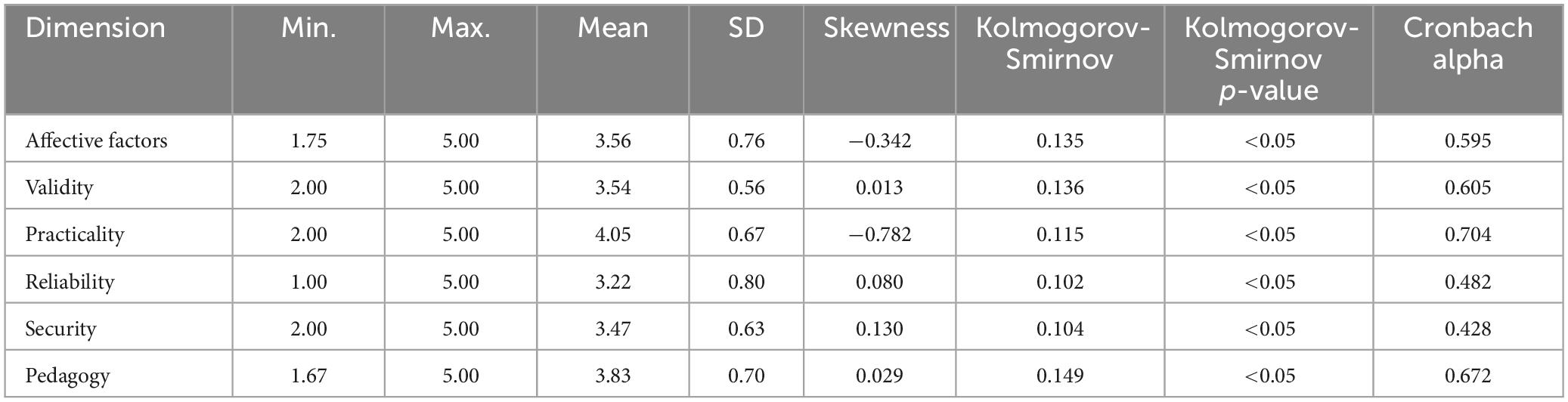

All dimensions

Data for questions under each dimension were collated (Table 3), and all dimensions had positive mean ratings favoring online exams, with practicality having the highest value (4.05). All dimensions appear approximately symmetric except practicality, which is moderately left-skewed (−0.782). The Kolmogorov-Smirnov test indicated that none of the sample distributions were normally distributed (p < 0.05). Cronbach’s coefficient alpha for the entire questionnaire showed an internal consistency of 0.874. Practicality had acceptable internal consistency (0.704), while the remaining dimensions were either barely acceptable or unacceptable.

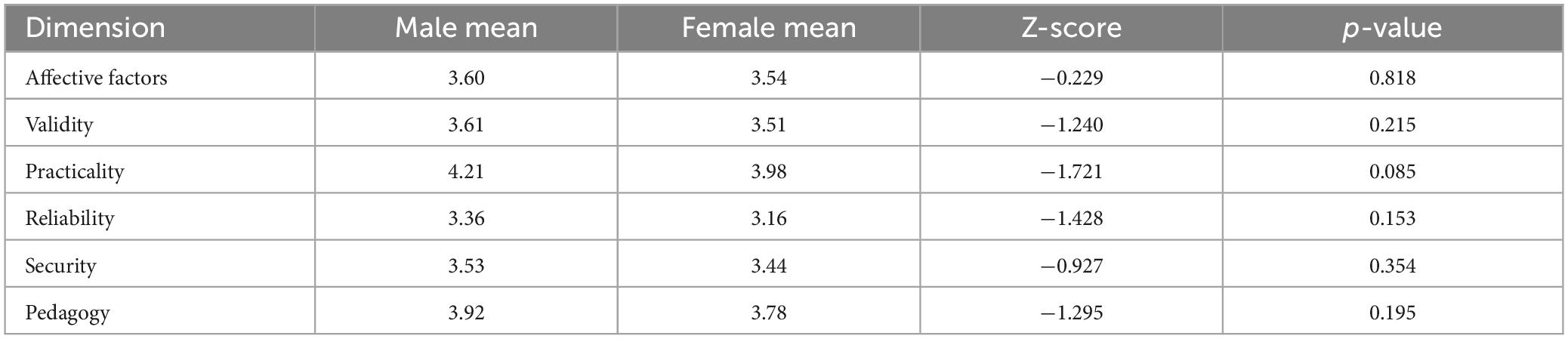

Gender differences

No statistically significant gender differences were found in mean scores for each dimension (Table 4). However, in terms of absolute values, males had a consistently higher mean for each dimension relative to females.

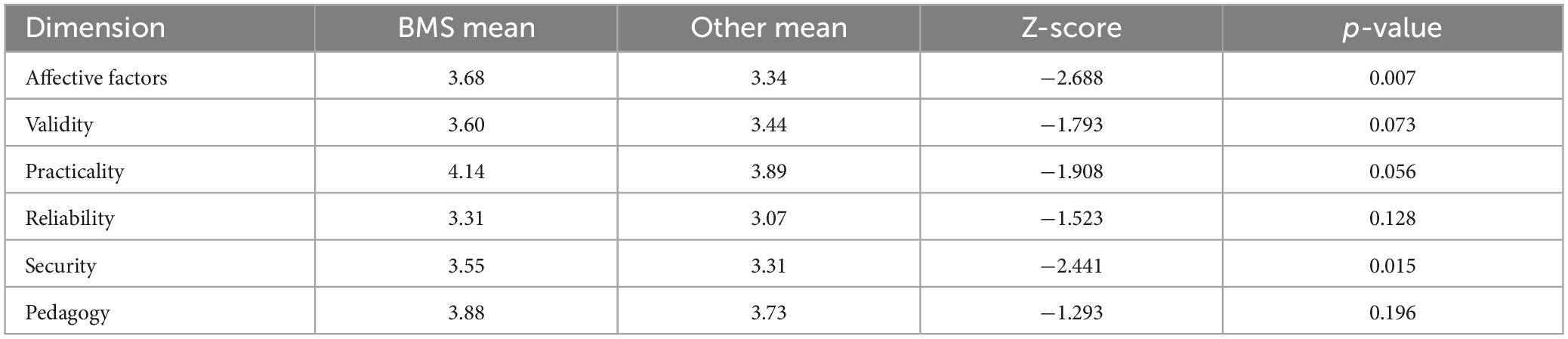

Programme differences

Programme had a statistically significant effect on perception in only two dimensions: affective factors (p = 0.007) and security (p = 0.015; Table 5). BMS students had more difficulty concentrating during PBEs than students in Other programmes [mean (SD) = 3.23 (1.24) vs. 2.67 (1.23); p = 0.011] but found it easier to concentrate during OBOEs [mean (SD) = 2.10 (0.93) vs. 2.67 (1.15); p = 0.004]. Although students on all courses agreed that their grades for online exams are confidential, BMS students felt more strongly than Other students [mean (SD) = 4.23 (0.81) vs. 3.87 (0.82); p = 0.011].

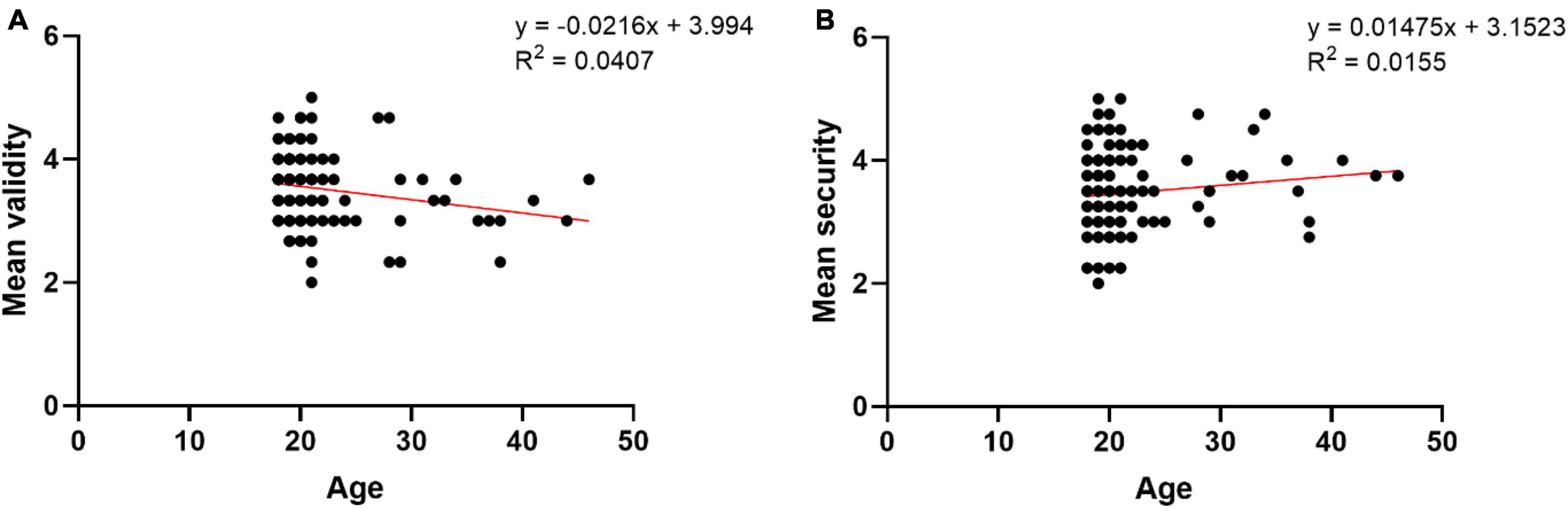

Age differences

Multiple regression analysis determined that there was a statistically significant relationship between age and two dimensions: validity (negative relationship, p = 0.001; Figure 1A) and security (positive relationship, p = 0.008; Figure 1B). BMS students viewed online exams more positively than Other students, while the latter were neutral as to whether online exams are a more valid and secure alternative form of examination.

Figure 1. Linear regression of two dimensions against age for (A) mean validity and (B) mean security.

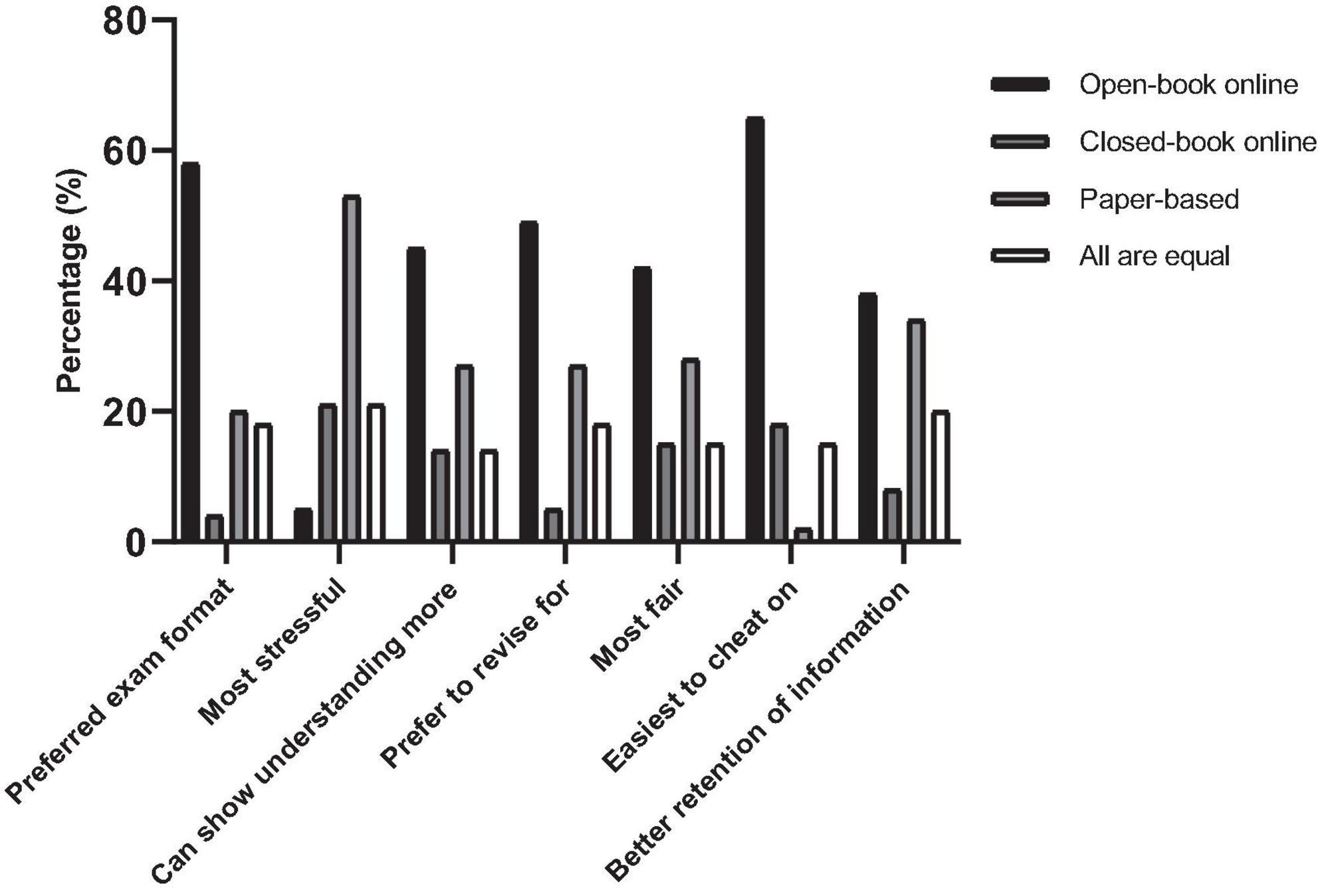

Examination preferences (non-Likert scale questions)

This study showed that most students preferred OBOEs (57.5%), while only a small number preferred CBOEs (4.1%; Figure 2). PBEs were perceived as the most stressful (53.4%), followed by CBOEs and “all are equal” (20.5%). Only 8% found OBOEs more stressful than other formats. OBOEs were perceived as allowing better demonstration of understanding (45.2%) than CBOEs (13.7%) and PBEs (26.7%). Half of respondents preferred to revise for OBOEs (49.3%), while CBOEs were the least preferred (5.5%). OBOEs were considered the fairest (41.8%), followed by PBEs (28.1%), with CBOEs and “all are equal” each at 15.1%. OBOEs were thought to promote better retention of information (38.4%), followed closely by PBEs (33.6%), then “all are equal” (19.9%) and CBOEs (8.2%). OBOEs were thought to be the easiest to cheat on (65.1%), followed by CBOEs (17.8%), “all are equal” (15.1%), and PBEs (2.1%).

Figure 2. Representation of the percentage of student responses for multiple-choice questions relating to preference of exam setting based on different aspects (n = 146).

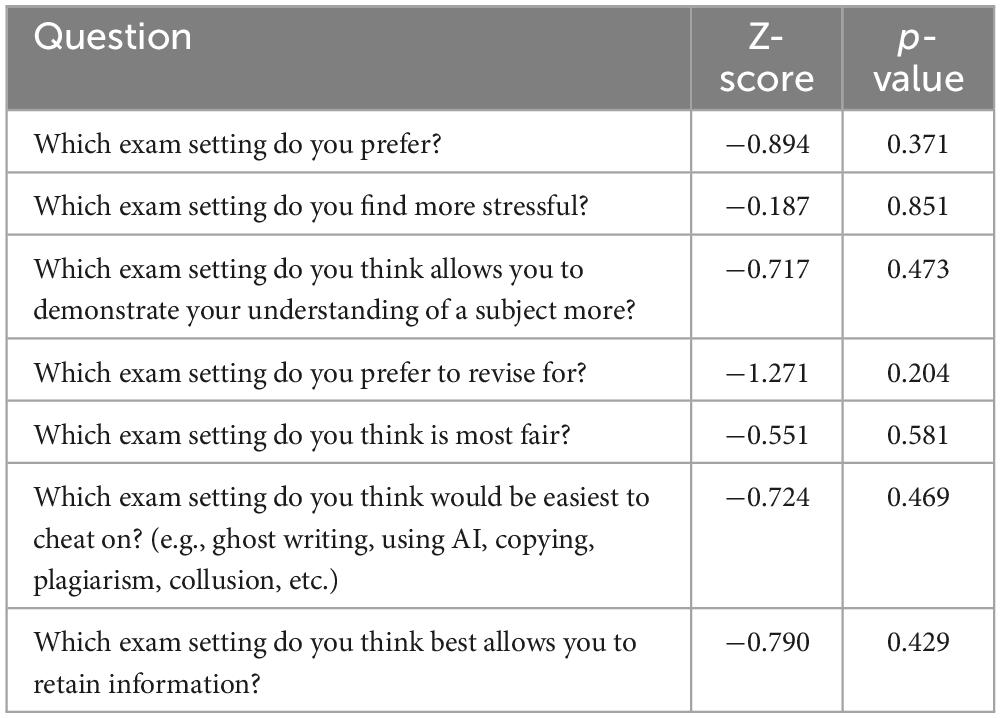

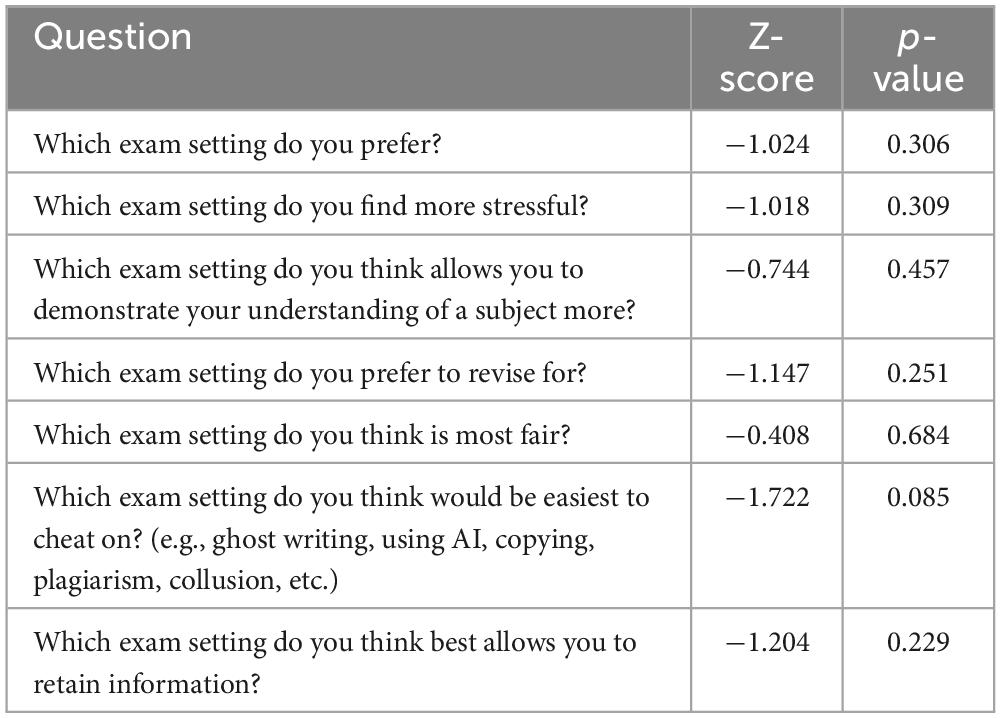

Gender differences

No statistically significant differences were found between gender and exam setting preference (Table 6). Supplementary Data 4 shows percentage responses by gender.

Programme differences

No statistically significant differences were found between programme and exam setting preference (Table 7). Supplementary Data 4 shows percentage responses by programme.

Questionnaire qualitative data—experiences and attitudes

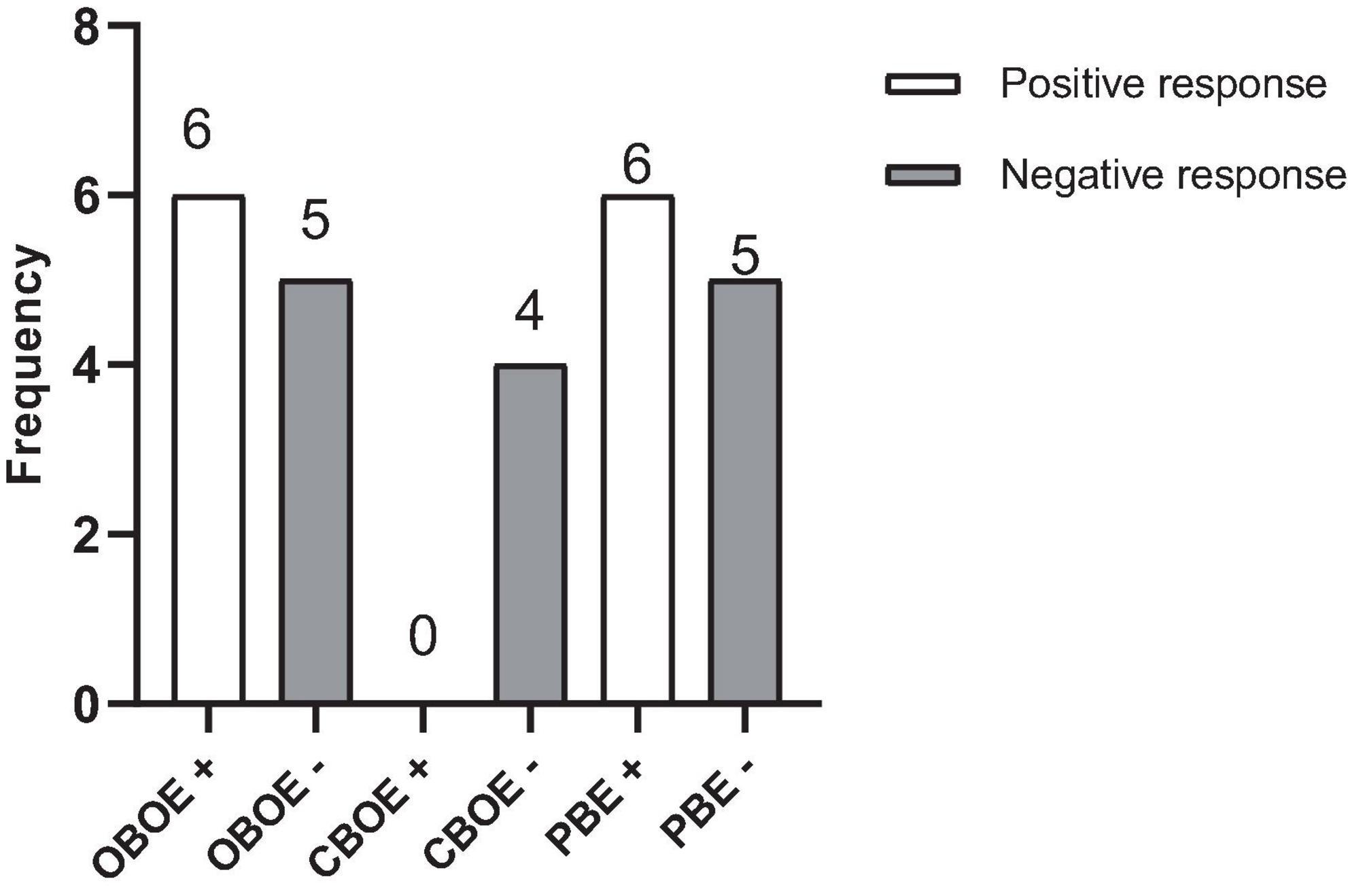

Students were encouraged to provide comments relating to the study via an open-ended question, to which 16 people responded (10.9%; Supplementary Data 5). Responses were organized into six codes covering positive and negative attitudes to OBOEs, CBOEs, and PBEs. Some responses had multiple codes, giving 27 codes in total from the 16 responses. OBOEs and PBEs received an even split of positive and negative responses (6 and 5, respectively), whereas CBOEs received 4 negative comments and no positive comments (Figure 3).

Figure 3. Positive and negative attitudes of the six codes and the frequency of either positive (+) or negative (−) response to an exam setting (n = 16).

Open-book online exams

Positive comments related to reduced anxiety, support for mental health issues, and the ability to demonstrate deeper understanding. One student noted: “… online open book exams provide more equal chances as you can differentiate between who understands the content and who doesn’t. It’s not … a memory test and reflects a working environment more than closed-book paper exams do.” OBOEs were seen as a closer reflection of a professional environment, particularly research. Conversely, negative comments cited increased stress, reduced information retention, a lack of incentive to prepare, and increased cheating. One participant noted: “I think both [OBOEs and PBEs] should be included in studies, I personally learned a lot more when preparing for the paper [based] exam.” They also explained that they “had to step back … and dive into papers … so I could be confident … this took me weeks. … But for the online exams I learned a great deal of the required material (within) the 48 h timer and ended up with good grades.” Although OBOEs required less effort and time to achieve good grades, students found that PBEs allowed deeper learning because they demanded more effort and time, as this participant described: “… months later I still remember the things I learned (from) the paper exam but have forgotten material used in the online exams.” Multiple concerns were expressed about how well information is retained after OBOEs.

Closed-book online exams

Students expressed no positive comments about CBOEs, which were seen as favoring those with better memory and not testing understanding. One student commented: “(CBOEs) do not make sense … Anything closed book favors people with better memory!” This was a recurring theme throughout the analysis, with students noting that this format did not differentiate between those who understand the content and those who have good recall. Additionally, some students with anxiety or panic disorders found it difficult to participate in CBOEs: “I suffer panic attacks in the environment of paper based and closed exams.” Interestingly, those who expressed negative opinions about CBOEs also held negative views of PBEs.

Paper-based exams

Students had mixed opinions about PBEs. Positive themes included longer retention of information, removal of the plagiarism risk, and the view that this format is better for learning. One student commented: “I much prefer paper (based) exams because they force me to revise harder.” Negative themes focused on PBEs’ emphasis on recall of information and on stress levels. One student noted: “Paper based exams are generally more stressful… they rely solely on a person’s capacity to retain lots of information and use it quickly - which not everyone can do easily.” Students were concerned that this format favors those with good working memory.

Overall, OBOEs were seen to provide an opportunity to demonstrate understanding and real-life skills (e.g., organization and presentation of information), but lacked an incentive to prepare. CBOEs received no positive responses. In general, there is a trade-off between OBOEs and PBEs, with some students preferring PBEs for the retention of information despite the stress, while others preferred OBOEs for their ability to demonstrate understanding.

Results: focus group

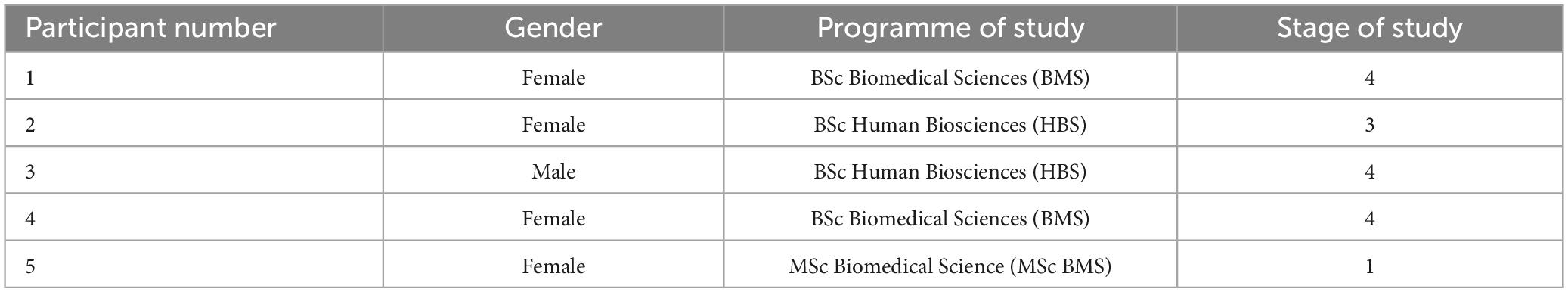

Details of the five students who participated in the focus group are given in Table 8.

Table 8. Description of demographic information and educational levels of the participants of the study (n = 5).

General thoughts on paper-based examination formats

When asked about PBEs, four participants felt that they were a good format for assessing content; however, they recognized that they often felt stressed by them because of the significant preparation needed to perform well.

“I think they’re good, but obviously you have to do a lot of preparation for them if you want to do well in them, lots of revision to do in the weeks leading up to the exam. I feel it can be quite a stressful procedure, considering we don’t know what’s in the exam.”

Participant 1

Whilst they recognized the stress of exam preparation, one participant felt significant confidence once inside the venue and was comfortable taking the exam because of the preparation they had done in the preceding weeks.

“Having a good, all-round, basis of the knowledge, I’m okay to go in and I feel confident.”

Participant 2

One participant believed that there is a growing stigma around arguing against paper-based exams due to how long they’ve been used in education. Despite this, the participant firmly believed that the use of PBEs is outdated, and that content can be better assessed with other exam formats.

“(Paper-based exams) have been used for a long time, so I think there is some stigma about going against them, but personally, I feel they are a little bit outdated as a method of examination.”

Participant 3

One participant thought that PBEs were not at all effective as a form of assessment in comparison to other methods, suggesting that the format promotes memorization over understanding of the content.

“From previous experience, I don’t think they (PBEs) are effective. It’s more about remembering PowerPoints and rewriting it in an exam, which is enough to pass.”

Participant 5

General thoughts on online examination formats

All participants perceived online examinations (OEs) to feel significantly different from PBEs, but they still felt that online examinations had limitations. All participants had limited experience of OEs, but they recalled previous experiences in which taking an exam on a computer was less satisfying than a traditional paper exam, for example:

“I’ve not really done many [online examinations], but I feel that online exams are sometimes less rewarding than paper exams because there seems to be a stigma of not needing to prepare as much for them. Because it’s computer-based people feel they have more time.”

Participant 2

All participants felt taking part in an OE was less stressful than PBEs. However, because of this, three participants felt that OEs were potentially less advantageous than PBEs. For example:

“I think they’re less stressful than the traditional paper exam, but I don’t necessarily think they’re advantageous over paper exams. I feel students wouldn’t prepare for them as well as they would a paper exam.”

Participant 1

“I didn’t feel as motivated to study for online-exams.”

Participant 4

One participant felt that OEs were more reflective of how the world works outside a university environment, making them more confident that they would have the experience with online technology needed to succeed after graduating.

“I feel more satisfied doing online examinations. I feel they are more reflective of the type of work that one would be doing in the real world, or an academic setting.”

Participant 3

Thoughts on exam preparation

Students varied in how they prepared for OEs and PBEs. One participant prepared similarly for both formats and did not feel the need to alter their preparation.

“I probably wouldn’t alter my exam preparation (for online exams), I think I’d just read through my notes, not testing my memory as such but I’d be more focused on understanding (the content).”

Participant 2

The remaining four participants altered their exam preparation. Before OEs, their revision shifted toward understanding the content, whereas PBEs involved a greater amount of work and a focus on memorizing content.

“To be honest, for a paper written exam I do a lot more work, I make sure I understand as much as I can. I take a lot of notes, although I always take notes, but in terms of reviewing my notes and revising, definitely I do a lot more for a paper-written exam.”

Participant 1

“For paper-based exams, I focused a lot more on memorization, I used flashcards and similar revision methods that emphasize memorizing key terms, key facts, and such, whereas with online examinations, it was a lot more about understanding material.”

Participant 3

One participant recognized that their peers struggled when the exam format was unclear in the lead-up to the exam, and that unclear instructions caused problems and stress for them.

“We had an issue this year, where the exam format was changed quite last minute which I think a couple of students got a bit confused about it.”

Participant 1

One participant emphasized the importance of having sufficient revision materials. Insufficient guidance and preparation materials reduced their confidence when sitting the examination.

“I really wanted to do a mock exam, as we had never done (paper-based exams) due to COVID, I hadn’t done an exam since GCSE, but they didn’t have any… When you don’t have access to past papers and things like that, that’s when I start to feel quite nervous… you can’t see how they mark questions, so when you get some 15- or 20-mark questions, I feel a bit in the dark.”

Participant 2

Conducting the exam

Participants recognized that OEs were easier to carry out than PBEs. Two participants felt that PBEs limited their ability to answer questions thoroughly because of difficulties with their handwriting.

“Personally, for my handwriting for it to be legible, I have to write quite slowly, so I find typing with a keyboard is much more to ‘the speed of my thoughts’ and is easier for me.”

Participant 3

“I can get hand cramps when doing a paper-based exam.”

Participant 4

However, one participant had concerns about the reliability of the infrastructure and logistics of sitting an OE.

“Online exams have things that could fail, you might not be able to access it. I’ve had it before where the exam is available online, but we haven’t been able to access it for hours. Extra time was given to us after, but it was still stressful.”

Participant 2

Comments on facilities and logistics

All participants felt that the facilities for both exam formats were acceptable; however, three participants had minor comments about the facilities provided.

“I think (paper-based exam) facilities are okay, I just wish there were more clocks around the room. It’s quite hard to see the big clock at the front especially if you’re sat at the back of the hall. It’s quite hard to see especially if you’re short sighted.”

Participant 1

“I do feel the university could prepare a bit more on the exam format, so people can practice on essays and exams.”

Participant 4

“Invigilators could be more understanding, so less whispering and looking over your shoulder.”

Participant 5

One participant recognized the potential of OEs to improve accessibility for students who require exam modifications due to illness or disability, despite having voiced concerns about the reliability of online exams.

“I’m a Type 1 (Diabetic). If I were to be able to do an exam at home, I would feel calmer. I had last year a bad experience with a paper exam… I had to leave the exam for 20 min to allow my blood-sugars to regulate and I missed out on exam time and (the invigilators) wouldn’t let me leave as they didn’t really understand. I felt bad and stressed out as I thought I was disturbing everyone else.”

Participant 2

Participant exam performance and academic feedback

Participants' views on their own exam performance varied considerably. Two participants said they performed better in PBEs. One of them also recalled that, during the COVID-19 pandemic, they performed much better in remote online exams with stricter time limits than in the remote 48-h open-book online format used by other modules.

“I performed better in paper-based exams than I did in online exams, whether that is due to the format or due to my own preparation, I’m not sure… I found that I did better on the time-limited online exam than I did 48 h exam. I think, if I am to speculate, that because I knew that it was time limited, I prepared more and therefore did better on it.”

Participant 3

Another participant said their performance was similar in OEs and PBEs; however, their sense of achievement was much greater after PBEs, because the preparation for the paper-based exam felt more worthwhile.

“To be honest I felt that I performed very similarly (in online exams) results wise, compared to previous grades… when I get a grade back, when I’ve done it in person I feel more accomplished than if I did it as an online-exam.”

Participant 1

Two participants said they performed better in OEs, owing to lower stress and the extra time allowed.

“I feel that I did better on online exams, because I felt I had more time to reflect and I don’t rush. I have more time to look back on my work. I feel less stressed as well which I think makes me perform better.”

Participant 2

“I did better with online exams than paper-based exams, as the extra time allowed a better grade.”

Participant 5

All participants, however, believed that feedback on online exams was more detailed and more useful for understanding how to improve. For example:

“I got a lot more feedback on the online examinations, I don’t think I received any feedback for paper-based examinations that I can remember.”

Participant 3

Two participants felt that online exams were marked more stringently than PBEs.

“I found that (online exams) were marked harsher. It is possible that (the different exam formats) is a factor (for the difference in marks). I don’t think it would be a very big factor as I found last year the paper examination and the online examination I did, the paper exam still had similar questions for the essay part, and then the MCQs after.”

Participant 3

“We were told in lectures leading up to the exams that examiners are more lenient to written papers than online exams.”

Participant 1

Employability concerns

Four participants had little concern that OEs would reduce their chances of employment after graduation. One participant felt that having sat both exam formats would demonstrate to employers their flexibility and ability to adapt to different environments.

“The two different styles of exam shows people that you are adaptable between the two exam formats, and you have the skills for both, so maybe in the future it will be a good thing that students had to sit both types of exam.”

Participant 1

One participant felt that OEs could affect their employability in the future.

“People undermine online exams, especially online exams at university.”

Participant 4

One participant recognized that OEs are still at an early stage of development, but felt that further development would bring them up to the current standard of PBEs, so that any difference in employer perceptions of exam format could become negligible.

“I don’t think that either format will affect my employability significantly. I feel that if in the future if online exams became the standard and we become, as a society, better at administering online exams that can really test knowledge and understanding, then I think everyone’s employability could improve.”

Participant 3

Discussing the use of more online exams in the future

Four participants expected examinations to move to online formats in the future, except for practical examinations.

“I feel in a few years they will be more online, nowadays everything is done online. Shopping is online, reading is online, textbooks are online as well, so I think they would go down the route of online too, unless it was a lab experiment or similar.”

Participant 2

One participant believed that, whilst the UK may adopt online examinations, OEs are unlikely to become the global standard because of the cost of installing computers.

“In the UK, online exams seem logical, however, all of my cousins in South Africa have never done an online exam.”

Participant 4

Two participants had no overall concerns about the security of online examinations; however, one participant was concerned that the recent proliferation of artificial intelligence could compromise the reliability of online exams in the future. The participant mentioned ChatGPT, software that generates detailed responses to questions (OpenAI, 2022).

“Being realistic, I think exams will remain paper-based for a long time, I’d personally love to see more online exams to keep up with standards outside of university, but unfortunately with things like ChatGPT artificial intelligence, it might be difficult to do online examinations that can be invigilated properly.”

Participant 3

All participants commented on the potential environmental benefits of OEs over PBEs. Participants recognized that OEs reduce paper waste; however, one participant was unsure whether the additional energy used to run computers outweighs the savings in paper.

“From an environmental point, it’s much less paper, its saving trees but you’re still using electricity.”

Participant 2

Discussion

During the COVID-19 pandemic, the higher education system shifted dramatically away from traditional paper-based assessments, and OBOEs became the primary mode of assessing academic progress and understanding. With the onset of a different examination format, it is important to examine student perceptions to determine whether this format is perceived as a reliable, valid, and secure alternative to PBEs, especially if institutions wish to continue using it. Most participants in the current study accepted the use of online examinations; however, they clearly indicated that further work is needed before these exams are used widely.

Our questionnaire found no significant differences by gender in exam preference or in any of the six dimensions. Previous findings support this (Dermo, 2009; Ilgaz and Afacan Adanır, 2020; Elsalem et al., 2021), although one study found that males have slightly more positive attitudes to online exams than females (Bahar and Asil, 2018). That study was conducted in a non-English-speaking country, which could partly explain the difference. There was no significant difference in exam-format preference between programmes; however, BMS students scored significantly higher than students from Other programmes in the affective factors (including reduced stress) and security dimensions. This is consistent with the focus group, in which participants felt that online exams were less stressful than PBEs because they were easier to complete.

Our regression analysis showed significant associations between age and both the validity and security dimensions. This differs from other research suggesting that age has no relation to exam perception (Dermo, 2009); however, perceptions could have changed over time, as these studies were conducted 14 years apart. Our results showed that older individuals were less confident in the validity of online exams and less concerned about their security; however, this result should be interpreted with caution because the number of older respondents was small.

Additional measures may therefore be needed to investigate and alleviate these concerns. However, for cost and logistical reasons, it might be difficult for institutions to offer multiple modes of examination to cater to different students.

Both the questionnaire and focus group data indicated that OBOEs were the preferred examination format because of their perceived fairness, lower stress, scope for demonstrating knowledge, and benefits for revision and information retention. However, the majority also acknowledged that this format is the easiest to cheat on, particularly given recent developments in artificial intelligence. OBOEs were viewed favorably in all six dimensions, with the highest positive score in the practicality dimension.

The qualitative questionnaire data were not fully in line with the quantitative data; responses were likely skewed toward people with stronger feelings about exams, and not everyone commented. Positive and negative comments about OBOEs and PBEs were almost equal in number, whereas the quantitative questionnaire data overwhelmingly favored OBOEs. In the focus group, most replies did not differentiate between OBOEs and CBOEs, although online exams were noted to be less stressful. The qualitative analysis also raised the possibility that OBOEs are perceived as better simply because they are easier, which the focus group noted too. This may reflect a bias toward the positive aspects of OBOEs, as PBEs are more difficult and time-consuming. However, the fact that PBEs are harder and demand more time does not mean they are superior.

Students who commented negatively about CBOEs also commented negatively about PBEs. This suggests that perceptions depend less on whether an exam is taken on a computer or in person than on whether relevant information is accessible and whether high-stress, time-pressured situations can be avoided. No perceived benefits of CBOEs were identified, in line with the quantitative data showing that CBOEs were the least preferred format across nearly all aspects. This may be because CBOEs are used less often in the FoH, so students' experience of them is limited.

Our research found that most students preferred OBOEs, in line with previous research showing that students are satisfied with them, accept their implementation, and prefer them (Donovan et al., 2007; Dermo, 2009; Debuse and Lawley, 2016; Alsadoon, 2017; Ilgaz and Afacan Adanır, 2020). Sánchez-Cabrero et al. (2021) found that 63.1% of students preferred to be evaluated remotely, whereas only 0.6% preferred on-site evaluation. One study has shown that medical students prefer OBOEs and that those who took them were the most satisfied (Bladt et al., 2022), although other work has shown that medical students prefer CBOEs or PBEs (Lim et al., 2006; Elsalem et al., 2021; Eurboonyanun et al., 2021). In our focus group, two students reported preferring typing to handwriting, consistent with previous reports that using a keyboard and mouse is more comfortable and quicker than handwriting (James, 2016).

CBOEs were the least preferred format, congruent with other research. Boevé et al. (2015) found that only 25% of students preferred CBOEs over PBEs, while another study found that only 37% chose to use a computer for their exams (Hochlehnert et al., 2011). Studies have shown that members of "Generation Z" (people born between 1997 and 2010) favor learning in digital formats over traditional paper formats (Hochlehnert et al., 2011; Szymkowiak et al., 2021); however, some data suggest that there is no significant difference in student performance between typed and handwritten exams (Mogey et al., 2010).

Our study found that OBOEs were perceived as the fairest format, followed by PBEs. This is contrary to studies that found PBEs fairer than computerized exams (Shraim, 2019), or found online exams equally fair (Sánchez-Cabrero et al., 2021). Our qualitative data suggest that OBOEs are fairer than PBEs because they reduce the emphasis on memorization and instead focus on comprehension and application of information, allowing a deeper demonstration of understanding. OBOEs may promote deeper learning, as students must analyze and synthesize a wide range of information. This approach mirrors real-world situations, where professionals have access to journals and textbooks and must critically analyze and reference relevant materials rather than memorize facts for a short period. Focus group participants likewise commented that OEs involve less memorization, are in step with wider societal change, and more closely resemble real-world situations.

Our study found that students believed OBOEs decrease exam stress, and the qualitative and focus group data supported this. This aligns with previous research indicating that remote or computer-based exams can reduce stress and anxiety, alleviate fatigue, and enhance comfort and enjoyment (Sánchez-Cabrero et al., 2021; Gokulkumari et al., 2022). A meta-analysis linked test anxiety to poorer academic performance, although causal inferences cannot be drawn from such correlations (von der Embse et al., 2018). Our results suggest that the longer time limit of OBOEs may give students opportunities to contemplate and refine their answers, whereas CBOEs and PBEs can be more stressful because of their shorter time limits.

A study at The Open University examined student data from the 2018 and 2022 academic years to evaluate the effect on performance of a switch to remote online examinations (Cross et al., 2022). Students felt less anxious about sitting a remote online exam, and their experience of revising for or sitting the exam was unaffected by the move online. Although our students similarly felt less stressed in OEs, our results differ in that the extent to which students altered their exam preparation varied. The comparison is useful, but The Open University is primarily a distance-learning institution, so its students are likely to be accustomed to online learning. Despite the positive perceptions, some of our students reported that using a computer increased their stress because of the risk of plagiarism. Similarly, students in another study felt more stressed when taking computer-based exams but still recognized their validity, practicality, security, and positive contribution to their future careers (Kundu and Bej, 2021).

Our study found that students find it easier to concentrate during OBOEs relative to CBOEs and PBEs, potentially due to the increased time allocation. This is consistent with previous research (Dermo, 2009); however, other studies found no difference in the ability to concentrate between computer- and paper-based exams (Shraim, 2019; Gokulkumari et al., 2022). The neutral response toward CBOEs suggests that students may have less experience with this format. Institutions considering CBOEs should take into account the computer room environment to avoid distractions (e.g., loud typing) that could affect concentration (Wibowo et al., 2016).

Biomedical Science students had slightly more difficulty concentrating in PBEs than students from Other programmes. Both groups had less difficulty concentrating in OBOEs, but BMS students found it much easier. These differences are consistent with previous literature suggesting that academic course can influence opinions (Afacan Adanır et al., 2020). Our data showed that students expected computers to be used for exams in the future, indicating that this is not only accepted but anticipated.

Students have previously perceived remote and on-site online exams as equally valid and fair assessment tools (Sánchez-Cabrero et al., 2021). Most of our students (80.8%) expected online exams to become increasingly dominant in the future. This aligns with a Spanish study in which 54.4% of respondents agreed with this statement (Sánchez-Cabrero et al., 2021). These results suggest growing acceptance of OEs among students. The adoption of computer-based assessment could allow more frequent examination, eliminate venue space limitations, reduce staff and equipment costs, and accommodate a growing student population. It could also enable students to apply feedback and monitor their progress (Sorensen, 2013). In our survey, 84.3% of respondents felt that the potential for immediate feedback enhances learning, and focus group participants reported that feedback from academics on online exams was more useful than feedback on PBEs, helping them understand in more depth how to improve.

These findings are consistent with previous research showing that online exams improved the consistency, quality, and constructiveness of feedback (Debuse and Lawley, 2016). The availability of immediate feedback has been noted as a significant benefit of online exams, as it enables personalized feedback that students can apply to future assessments and refer back to (Dermo, 2009; Alsadoon, 2017).

In the practicality dimension, most students indicated that OEs are more accessible. OBOEs eliminate the need to travel and are accessible to individuals with disabilities or mental health issues. This is especially beneficial because some disabilities may not be covered by exceptional circumstances, and some students feel stigma about reporting their health issues. The process of proving eligibility can also be costly and time-consuming. OBOEs alleviate these challenges, providing a more inclusive examination format.

Although 90% of our students felt comfortable with their computer knowledge, some students may require additional support. To bridge the gap in computer literacy, institutions could provide training programmes targeted at those with lower digital competencies. Although most students had suitable equipment and facilities for online exams, a small proportion lacked an adequate environment or equipment. Digital poverty is a widespread issue that should be addressed systematically (James, 2016; Joint Information Systems Committee, 2021), and universities can further promote digital support such as long-term laptop loans and quiet study spaces to improve digital inclusion, particularly for students who may be disadvantaged.

Some students believe that PBEs are biased toward those with better memory and ability to recall information; both our questionnaire and focus group data supported this. Online exams can require critical thinking to answer questions based on large amounts of information, thus possibly leveling the playing field. Additionally, online exams are perceived to be more accurate in marking due to automation, along with elimination of illegible handwriting and human error (Alsadoon, 2017; Kundu and Bej, 2021). Online essay questions allow for personalized feedback that is available anywhere at any time, eliminating the need for booking a session with faculty for feedback (Alsadoon, 2017).

Some of our students expressed concerns about technical issues during online exams. Disruptions such as loss of internet connection have been reported to affect academic performance negatively (James, 2016; Ilgaz and Afacan Adanır, 2020), and students should be made aware of these risks. Institutions do prioritize resolving technical issues, for example by allowing extra time, and regular upgrades to the digital learning environment (DLE) are necessary. Overall, our students found online exams as reliable as paper exams.

Students found online exam grades more secure and confidential than PBE grades, in line with previous work (Gokulkumari et al., 2022). Online exam submissions are stored behind secure, encrypted logins, whereas paper exams are stored physically and are therefore vulnerable to unauthorized access or destruction. Security could be enhanced further with fingerprint or facial recognition software or with exam management systems (Rashad et al., 2010; Yong-Sheng et al., 2015; Al-Hakeem and Abdulrahman, 2017). Our students perceived OBOEs as the easiest format to cheat on, consistent with some studies (Walsh et al., 2012) but not others (Alsadoon, 2017). Prior research indicates that students cheat regardless of location and format (Adzima, 2021), highlighting the need for cheating-prevention tactics across all exam formats. Proctoring was used for online exams in the FoH dentistry and medical programmes, as it can provide online exam security and reliability (Sánchez-Cabrero et al., 2021), although it can be costly. Proctoring can foster trust whilst deterring academic dishonesty and ensuring fair evaluation (Alessio et al., 2017), and our students were receptive to its implementation during online exams. However, cheating is not limited to online exams (Williams and Wong, 2007). Unique question design and higher-order thinking questions can reduce academic dishonesty (Williams and Wong, 2007), and specialized browsers, random question banks, and other tactics can also discourage cheating (Elmehdi and Ibrahem, 2019; Shraim, 2019). Rather than focusing on cheaters, it is more important to prioritize higher-quality learning outcomes for the majority (Williams and Wong, 2009).

Our students were not concerned about academic offences during online exams, suggesting that the UoP has provided sufficient information on avoiding plagiarism and that Turnitin, a plagiarism checker that notifies students prior to submission, has proven effective. Most students agreed that online exams added value to their learning and future careers, although focus group comments on employability were mixed.

Online exams enable a wider variety of questions that demand critical thinking and application of knowledge. Therefore, question design is a crucial aspect of exams. Exams that incorporate real-world problems and multi-media can offer an engaging experience and foster deeper learning among students (Williams and Wong, 2009; Alsadoon, 2017). However, it is important to provide additional training for examiners to effectively implement these techniques.

Focus group data suggest that the "sense of accomplishment" from completing an online exam can be underwhelming compared with completing a paper-based examination. This may reflect an association of formal examinations with PBEs, as at GCSE or A-Level. Academic institutions aiming to use online exams should design them to feel academically rewarding and emphasize that doing well in online exams, as with PBEs, reflects hard work.

The practicality dimension and focus group data both showed that students were concerned about the environmental impact of PBEs. To compare carbon footprints, we used published estimates of CO2 production for paper (Two Sides, 2021) and computer use (Energuide, 2023), assuming a typical 2-h exam and an estimated 20 sheets of paper per student. The carbon footprint of a PBE was approximately 60% higher than that of an online examination (0.0616 kg CO2 per student for 20 sheets vs. 0.0386 kg CO2 per student for 2 h of computer use). We also estimated that paper and storage for PBEs cost UK higher education institutions approximately £50 million/year. Whilst the price of desktops has increased due to the global semiconductor shortage, many academic establishments already have computer rooms that can accommodate large numbers of students. With proper management, there are potential long-term cost benefits, as desktop computers can last for years and are used not only for exams but by students and staff throughout the year. Online exams could also reduce the number of invigilators required, as only students with "modified-assessment-provisions" would need a separate room with an invigilator. Although not covered by our analysis, OEs could potentially reduce faculty assessment workloads. UoP is also working toward net zero by 2025, in line with the UK and EU goals of carbon neutrality by 2050 (Guterres, 2020). Moving from PBEs to online exams is better from an environmental perspective and could help us achieve these goals.
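The per-student comparison above can be checked with a few lines of arithmetic. This minimal sketch uses only the two CO2 totals stated in the text (0.0616 kg for 20 sheets of paper and 0.0386 kg for 2 h of computer use); the per-sheet and per-hour rates are simple derivations from those totals, and the cohort of 1,000 exam sittings is a hypothetical figure chosen purely for illustration, not a value from the study or the cited sources.

```python
"""Check the per-student CO2 comparison between paper-based and online exams.

Only the two per-student totals come from the text; all other values are
derived from them or (HYPOTHETICAL_SITTINGS) chosen for illustration.
"""

# Per-student CO2 totals stated in the text (kg CO2 for one 2-h exam).
PAPER_SHEETS = 20
PAPER_KG = 0.0616        # 20 sheets of paper per student
COMPUTER_HOURS = 2
COMPUTER_KG = 0.0386     # 2 h of computer use per student

# Hypothetical number of exam sittings, for illustration only.
HYPOTHETICAL_SITTINGS = 1_000


def percent_increase(value: float, baseline: float) -> float:
    """Relative increase of `value` over `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


paper_per_sheet = PAPER_KG / PAPER_SHEETS            # kg CO2 per sheet
computer_per_hour = COMPUTER_KG / COMPUTER_HOURS     # kg CO2 per hour of use
increase = percent_increase(PAPER_KG, COMPUTER_KG)   # PBE relative to online exam
saving_kg = (PAPER_KG - COMPUTER_KG) * HYPOTHETICAL_SITTINGS

if __name__ == "__main__":
    print(f"Paper:    {paper_per_sheet:.5f} kg CO2 per sheet")
    print(f"Computer: {computer_per_hour:.4f} kg CO2 per hour")
    print(f"PBE footprint vs. online exam: +{increase:.1f}%")
    print(f"Saving over {HYPOTHETICAL_SITTINGS} sittings: {saving_kg:.1f} kg CO2")
```

Running the script reports an increase of about 59.6%, which rounds to the 60% stated above; the relative difference is computed against the online exam as the baseline, matching the direction of the comparison in the text.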

Although several findings were statistically significant, they should be interpreted with caution because of several limitations. Firstly, our study was conducted on a specific population of UoP students and had a limited sample size. The focus group study planned to recruit eight to ten participants, as recommended (Krueger and Casey, 2008); however, several of those interested were unable to attend because of assessment deadlines. Four of the five focus group participants were female, but this was deemed acceptable as 60–70% of our cohort are female. Another potential bias was that four of the five focus group participants were from Stages 3 and 4, and only one was from Stage 1.

The number of responses varied greatly between programmes, suggesting that the responses captured could come from individuals who feel more or less strongly about the topic. Another limitation is that the scale lacked strong internal consistency. Although a pilot study was conducted to improve the clarity of the questions, the Cronbach alpha, which assesses the internal reliability of the questionnaire, could be improved by strengthening the weakest dimensions and increasing the number of relevant questions. A further source of bias could have been that most questions about online exams were positively worded. One reason for this was that the base questionnaire was published in 2009, well before COVID-19 (Dermo, 2009), when online exams were impractical and seemed a distant prospect.
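The standard definition of Cronbach's alpha makes explicit why adding relevant questions can raise it. For a dimension with $k$ items, writing $\sigma^2_{Y_i}$ for the variance of item $i$ and $\sigma^2_X$ for the variance of the total score (symbols introduced here for illustration only), and, in the standardized form, $\bar{r}$ for the mean inter-item correlation:

$$
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} \sigma^2_{Y_i}}{\sigma^2_X}\right),
\qquad
\alpha_{\text{std}} = \frac{k\,\bar{r}}{1 + (k-1)\,\bar{r}}.
$$

Holding $\bar{r}$ fixed, $\alpha_{\text{std}}$ increases with $k$: with a hypothetical $\bar{r} = 0.2$, five items give $1.0/1.8 \approx 0.56$, whereas ten items give $2.0/2.8 \approx 0.71$. The gain holds only if the added items correlate with the existing ones, which is why the questions must be relevant to the dimension.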

Conclusion and recommendations

Universities have traditionally been dominated by paper-based exams, but with the onset of the COVID-19 pandemic, they were forced to adopt open-book online exams. Our study of undergraduate Biomedical Science students shows that this new format is perceived as superior to both traditional paper-based and closed-book online exams. Students perceived open-book online exams as significantly reducing stress and as improving concentration, reliability, fairness, security, and value to learning compared with other formats. As a result, these exams are well accepted and anticipated to become the dominant method in the future. Despite this, limitations of online examinations, such as technical disruptions, still need to be worked through before they can gain widespread use among universities. Focus group participants reported variation in their performance in online exams and noted that online exams can feel less rewarding. Online examinations could also support national net-zero ambitions and potentially lower faculty workloads.

Responses suggest that students expect exams to move to an online format. Institutions making this move therefore need to work closely with students to ensure that these exams remain as rewarding as their paper-based equivalents, and to communicate clearly the support available to students. Clear information on the online exam format and the availability of practice exams should be priorities. This will help students understand how to prepare and what to expect, minimizing scepticism. Regular online mock exams will also allow institutions to test the network and fix potential problems, reducing the chance of errors during summative exams.

Several aspects of this study would benefit from further investigation, which would be useful for institutions wishing to adopt or maintain online exams. Firstly, additional questions should investigate how students prepare for online exams and how feedback could be improved. Secondly, grades should be compared between exam formats. Thirdly, it would be useful to investigate faculty perceptions of exam format and the support faculty received during and after the COVID-19 pandemic. Additionally, surveys should examine students' use of artificial intelligence, including in online exams. Future studies could also assess exam validity against an assessment of the competencies students need for their chosen profession.

By addressing these areas, institutions can better understand the benefits and limitations of online exams and make informed decisions about adopting these formats for their own assessments. Future studies of OEs and PBEs should recruit participants from a wider range of course stages and focus on post-pandemic analysis of exam formats, including participants both directly affected by the COVID-19 pandemic and those less affected.

Summary table

What is known about the subject?

• During the COVID-19 pandemic, paper-based exams were difficult to implement, so most UK institutions ran open-book online exams.

• After the pandemic, most institutions returned to paper exams. However, some institutions considered continuing with online exams advantageous.

• Students from other programmes typically preferred online exams to paper-based exams.

What this paper adds

• Novel findings showing that Biomedical Science students prefer open-book online exams to paper-based and closed-book online exams.

• Students perceived that open-book exams reduce stress and improve concentration, reliability, fairness, security, and value to learning.

• Focus group participants reported variation in their online exam performance and said that online exams do not feel rewarding.

Summary sentence

This work represents an advance in biomedical science because we have clearly shown that biomedical science students perceive open-book online exams to be preferable to paper-based and closed-book exam formats.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the University of Plymouth Science and Engineering Human Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

EW: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Writing—original draft, Writing—review and editing. WM: Conceptualization, Data curation, Investigation, Methodology, Validation, Writing—original draft, Writing—review and editing. KJ: Conceptualization, Methodology, Project administration, Resources, Supervision, Validation, Writing—original draft, Writing—review and editing. MS: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing—original draft.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1321206/full#supplementary-material

Data Sheet 1 = Supplementary Data 1

Table 1 = Supplementary Data 2

Table 2 = Supplementary Data 3

Table 3 = Supplementary Data 4

Table 4 = Supplementary Data 5

References

Adzima, K. (2021). Examining online cheating in higher education using traditional classroom cheating as a guide. Electron. J. Elearn. 18, 476–493. doi: 10.34190/jel.18.6.002

Afacan Adanır, D. G., İsmailova, A. P. D. R., Omuraliev, P. D. A., and Muhametjanova, A. P. D. G. (2020). Learners’ perceptions of online exams: a comparative study in Turkey and Kyrgyzstan. Int. Rev. Res. Open Dis. 21, 1–17. doi: 10.19173/irrodl.v21i3.4679

Alessio, H. M., Malay, N., Maurer, K., Bailer, A. J., and Rubin, B. (2017). Examining the effect of proctoring on online test scores. Online Learn. 21, 146–161.

Al-Hakeem, S. M., and Abdulrahman, S. M. (2017). Developing a new e-exam platform to enhance the university academic examinations: the Case of Lebanese French University. Int. J. Mod. Educ. Comput. Sci. 9, 9–16. doi: 10.5815/ijmecs.2017.05.02

Alsadoon, H. (2017). Students’ perceptions of e-assessment at Saudi Electronic University. Turkish Online J. Educ. Technol. 16, 147–153.

Bahar, M., and Asil, M. (2018). Attitude towards e-assessment: influence of gender, computer usage and level of education. Open Learn. J. Open Distance Elearn. 33, 221–237. doi: 10.1080/02680513.2018.1503529

BBC News (2013). Online tests to replace paper exams within a decade–Family and Education. London: BBC News.

Bladt, F., Khanal, P., Prabhu, A. M., Hauke, E., Kingsbury, M., and Saleh, S. N. (2022). Medical students’ perception of changes in assessments implemented during the COVID-19 pandemic. BMC Med. Educ. 22:844. doi: 10.1186/s12909-022-03787-9

Boevé, A. J., Meijer, R. R., Albers, C. J., Beetsma, Y., and Bosker, R. J. (2015). Introducing computer-based testing in high-stakes exams in higher education: results of a field experiment. PLoS One 10:e0143616. doi: 10.1371/journal.pone.0143616

Boitshwarelo, B., Reedy, A. K., and Billany, T. (2017). Envisioning the use of online tests in assessing twenty-first century learning: a literature review. Res. Pract. Technol. Enhanc. Learn. 12:16. doi: 10.1186/s41039-017-0055-7

Cambridge Assessment (2008). How have school exams changed over the past 150 years?. Cambridge: Cambridge University Press & Assessment.

Cross, S., Aristeidou, M., Rossade, K., Wood, C., and Brasher, A. (2022). “The impact of online exams on the quality of distance learners’ exam and exam revision experience? Perspectives from The Open University UK,” in Proceedings of the innovating higher education conference 2022, Athens.

Debuse, J. C., and Lawley, M. (2016). Benefits and drawbacks of computer-based assessment and feedback systems: student and educator perspectives. Br. J. Educ. Technol. 47, 294–301. doi: 10.1111/bjet.12232

Dermo, J. (2009). e-Assessment and the student learning experience: a survey of student perceptions of e-assessment. Br. J. Educ. Technol. 40, 203–214. doi: 10.1111/j.1467-8535.2008.00915.x

Donovan, J., Mader, C., and Shinsky, J. (2007). Online vs. traditional course evaluation formats: student perceptions. J. Interact. Online Learn. 6, 158–180.

Elmehdi, H. M., and Ibrahem, A. M. (2019). “Online summative assessment and its impact on students’ academic performance, perception and attitude towards online exams: University of Sharjah study case,” in Creative business and social innovations for a sustainable future. Advances in Science, Technology & Innovation, eds M. Mateev and P. Poutziouris (Cham: Springer), 211–218. doi: 10.1007/978-3-030-01662-3_24

Elsalem, L., Al-Azzam, N., Jum’ah, A. A., and Obeidat, N. (2021). Remote E-exams during Covid-19 pandemic: a cross-sectional study of students’ preferences and academic dishonesty in faculties of medical sciences. Ann. Med. Surg. (Lond.) 62, 326–333. doi: 10.1016/j.amsu.2021.01.054

Energuide (2023). How much power does a computer use? And how much CO2 does that represent?. Available online at: https://www.energuide.be/en/questions-answers/how-much-power-does-a-computer-use-and-how-much-co2-does-that-represent/54/ (accessed June 28, 2023)

Eurboonyanun, C., Wittayapairoch, J., Aphinives, P., Petrusa, E., Gee, D. W., and Phitayakorn, R. (2021). Adaptation to open-book online examination during the COVID-19 pandemic. J. Surg. Educ. 78, 737–739. doi: 10.1016/j.jsurg.2020.08.046

Gokulkumari, G., Al-Hussain, T., Akmal, S., and Singh, P. (2022). Analysis of E-Exam practices in higher education institutions of KSA: learners’ perspectives. Adv. Eng. Softw. 173:103195. doi: 10.1016/j.advengsoft.2022.103195

Guterres, A. (2020). Carbon neutrality by 2050: the world’s most urgent mission. New York, NY: United Nations.

Hochlehnert, A., Brass, K., Moeltner, A., and Juenger, J. (2011). Does medical students’ preference of test format (computer-based vs. paper-based) have an influence on performance? BMC Med. Educ. 11:89. doi: 10.1186/1472-6920-11-89

Ilgaz, H., and Afacan Adanır, G. (2020). Providing online exams for online learners: does it really matter for them? Edu. Inf. Technol. 25, 1255–1269. doi: 10.1007/s10639-019-10020-6

James, R. (2016). Tertiary student attitudes to invigilated, online summative examinations. Int. J. Educ. Technol. High. Educ. 13, 1–13. doi: 10.1186/s41239-016-0015-0

Joint Information Systems Committee (2021). Government action called for to lift HE students out of digital poverty. Bristol: Joint Information Systems Committee.

Krueger, R. A., and Casey, M. A. (2008). Focus groups: a practical guide for applied research, 4th Edn. New York, NY: SAGE.

Kundu, A., and Bej, T. (2021). Experiencing e-assessment during COVID-19: an analysis of Indian students’ perception. High. Educ. Eval. Dev. 15, 114–134. doi: 10.1108/heed-03-2021-0032

Lave, J., and Wenger, E. (1991). Situated learning: legitimate peripheral participation. Learning in doing: social, cognitive, and computational perspectives. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511815355

Lim, E. C., Ong, B. K., Wilder-Smith, E. P., and Seet, R. C. (2006). Computer-based versus pen-and-paper testing: students’ perception. Ann. Acad. Med. Singap. 35:599. doi: 10.47102/annals-acadmedsg.202328

Mogey, N., Paterson, J., Burk, J., and Purcell, M. (2010). Typing compared with handwriting for essay examinations at university: letting the students choose. Res. Learn. Technol. 18, 29–47. doi: 10.1080/09687761003657580

Müller, A. M., Goh, C., Lim, L. Z., and Gao, X. (2021). Covid-19 emergency elearning and beyond: experiences and perspectives of university educators. Edu. Sci. 11:19. doi: 10.3390/educsci11010019

Office for Students (2020). ‘Digital poverty’ risks leaving students behind. Available online at: https://www.officeforstudents.org.uk/news-blog-and-events/press-and-media/digital-poverty-risks-leaving-students-behind/ (accessed June 28, 2023)

Rashad, M. Z., Kandil, M. S., Hassan, A. E., and Zaher, M. A. (2010). An Arabic web-based exam management system. Int. J. Electr. Comput. Sci. 10, 48–55.

Sánchez-Cabrero, R., Casado-Pérez, J., Arigita-García, A., Zubiaurre-Ibáñez, E., Gil-Pareja, D., and Sánchez-Rico, A. (2021). E-Assessment in e-learning degrees: comparison vs. face-to-face assessment through perceived stress and academic performance in a longitudinal study. Appl. Sci. 11:7664. doi: 10.3390/app11167664

Shraim, K. (2019). Online examination practices in higher education institutions: learners’ perspectives. Turk. Online J. Distance Educ. 20, 185–196. doi: 10.17718/tojde.640588

Sorensen, E. (2013). Implementation and student perceptions of e-assessment in a Chemical Engineering module. Eur. J. Eng. Educ. 38, 172–185. doi: 10.1080/03043797.2012.760533

Szymkowiak, A., Melović, B., Dabić, M., Jeganathan, K., and Kundi, G. S. (2021). Information technology and Gen Z: the role of teachers, the internet, and technology in the education of young people. Technol. Soc. 65:101565. doi: 10.1016/j.techsoc.2021.101565

The Organisation for Economic Co-operation and Development (2020). Remote online exams in higher education during the COVID-19 crisis. OECD Education Policy Perspectives. Paris: OECD.

Two Sides (2021). Myth: paper production is a major cause of global greenhouse gas emissions. Daventry: Two Sides.

von der Embse, N., Jester, D., Roy, D., and Post, J. (2018). Test anxiety effects, predictors, and correlates: a 30-year meta-analytic review. J. Affect. Disord. 227, 483–493. doi: 10.1016/j.jad.2017.11.048

Walsh, L. L., Lichti, D. A., Zambrano-Varghese, C. M., Borgaonkar, A. D., Sodhi, J. S., Moon, S., et al. (2021). Why and how science students in the United States think their peers cheat more frequently online: perspectives during the COVID-19 pandemic. Int. J. Educ. Integr. 17, 1–18. doi: 10.1007/s40979-021-00089-3

Wibowo, S., Grandhi, S., Chugh, R., and Sawir, E. (2016). A pilot study of an electronic exam system at an Australian university. J. Educ. Technol. Syst. 45, 5–33. doi: 10.1177/0047239516646746

Williams, J. B., and Wong, A. (2007). “Closed book, invigilated exams vs open book, open web exams: an empirical analysis,” in Proceedings of the ICT: providing choices for learners and learning. Proceedings ascilite Singapore 2007, eds R. J. Atkinson, C. McBeath, S. K. A. Soong, and C. Cheers (Singapore: Centre for Educational Development, Nanyang Technological University), 1079–1083.

Williams, J. B., and Wong, A. (2009). The efficacy of final examinations: a comparative study of closed-book, invigilated exams and open-book, open-web exams. Br. J. Educ. Technol. 40, 227–236. doi: 10.1111/j.1467-8535.2008.00929.x

Woldeab, D., and Brothen, T. (2019). 21st century assessment: online proctoring, test anxiety, and student performance. Int. J. Distance Educ. Elearn. 34, 1–10.

Keywords: biomedical science, examinations, focus group, questionnaire, assessment, online exams, paper-based exams

Citation: Winters E, Mitchell WG, Jeremy KP and Subhan MMF (2024) Comparison of biomedical science students’ perceptions of online versus paper-based examinations. Front. Educ. 8:1321206. doi: 10.3389/feduc.2023.1321206

Received: 13 October 2023; Accepted: 08 December 2023;

Published: 08 January 2024.

Edited by:

Tom Crick, Swansea University, United Kingdom

Reviewed by:

Larisa-Loredana Dragolea, 1 Decembrie 1918 University, Romania

Nigel James Francis, Cardiff University, United Kingdom

Copyright © 2024 Winters, Mitchell, Jeremy and Subhan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mirza Mohammad Feisal Subhan, feisal.subhan@plymouth.ac.uk

Elizabeth Winters
