- ¹Media Department, Middlesex University Dubai, Dubai, United Arab Emirates

- ²Computer Engineering and Informatics, Middlesex University Dubai, Dubai, United Arab Emirates

This brief research report examines claims made across contemporary media channels that generative artificial intelligence can be used to develop educational materials, through an experiment to develop a new course for advertising, PR and branding professionals. A collaborative autoethnography is employed to examine, through the lens of the 2023 Gartner Hype Cycle for Emerging Technologies, the journey and unintended consequences experienced by a non-technology lecturer engaging with generative AI for the first time. The researchers were able to map lived experiences to stages of the Gartner model, presenting evidence that the model could have extended utility in the field of human resources to support technology integration projects. They also recorded several potential manifestations of symptoms related to the problematic use of the Internet (PUI). The findings contribute to ongoing public discourse regarding the introduction of artificial intelligence within education, with implications for policy development and governance, as well as for faculty and student wellbeing.

1 Introduction

The impetus for this brief research report arises from the pervasive public discourse surrounding generative A.I., which proclaims this groundbreaking technology as a universal solution for various needs and desires. The researchers therefore initiated this study to scrutinize the assertions made by “Edu-Influencers” prominent within an informal TikTok and Instagram Teacher-to-Teacher Online Marketplace of Ideas (TOMI; Shelton et al., 2020), who proclaim that generative A.I. applications can be used to develop innovative educational materials.

The research questions therefore follow the typical autoethnographic focus (Cooper and Lilyea, 2022) of exploring both a context, here generative A.I. within education (RQ1: “how a non-technology lecturer begins to employ generative artificial intelligence in the development of teaching and learning materials”), and the social issues surrounding it (RQ2: “what unintended/unexpected consequences may arise in the attempt?”).

1.1 Generative A.I. and education

In the realm of education, generative A.I. has garnered diverse evaluations, with early discussions highlighting concerns regarding its impact on assessment integrity (Ghapanchi, 2023; Terry, 2023; Weale, 2023), while others advocate for its integration as a study aid (UAE Ministry of Artificial Intelligence, Digital Economy and Remote Work Applications, 2023). Notably, social media influencers have been fervent in promoting the use of generative A.I. to facilitate course design as a supplementary source of income, exemplified by individuals such as AI Avalanche (2023) and Its Alan Ayoubi (2023). Through screen captures and concise videos, these “Edu-influencers” demonstrate how generative A.I., through specific prompts and commands, can generate educational materials on a given subject, even in the absence of prior knowledge on the part of the human author.

1.2 Generative A.I. and advertising, PR, and marketing communications

Another field experiencing the impact of A.I. is marketing communications and advertising. In 2018, the London-based Chartered Institute of Public Relations (CIPR; Valin, 2018) predicted that by 2023 over one-third of work tasks in this domain would involve A.I. or be completely transformed by it. Growing interest in the field is also evident in the CIPR’s 2020 recommendation to review education and training courses aimed at equipping professionals for the evolving work landscape (Gregory and Virmani, 2020). In its most recent review (Gregory et al., 2023), the CIPR reports that A.I. is supporting or augmenting just under 40% of PR roles.

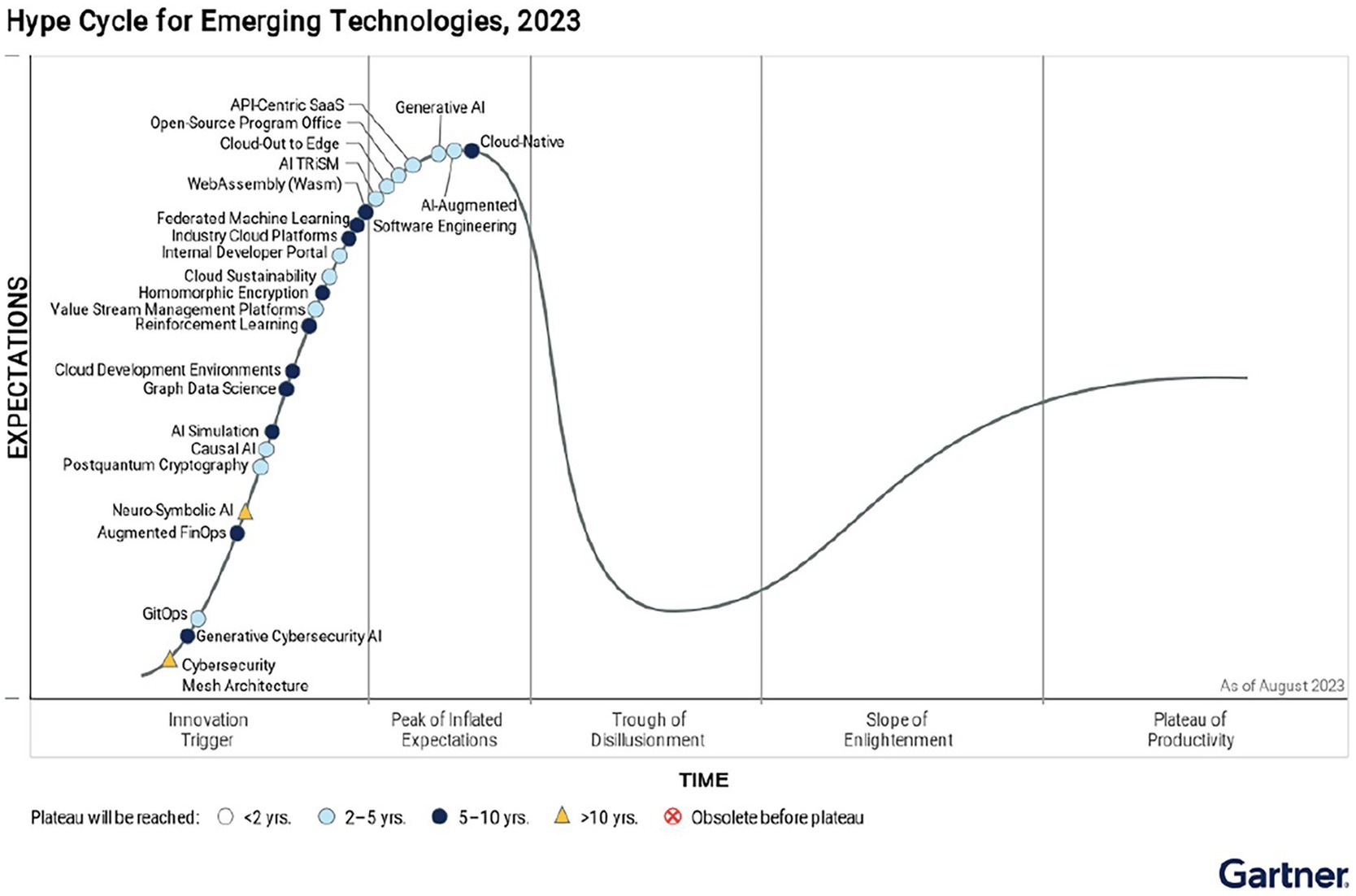

1.3 The Gartner Hype Cycle

Gartner Hype Cycles, as illustrated by the 2023 Gartner Hype Cycle for Emerging Technologies in Figure 1, provide a visual representation of the maturity and adoption of technologies and applications, showcasing their potential relevance to addressing business challenges and leveraging emerging opportunities. The methodology aims to offer insights into “how a technology or application will evolve over time” (Gartner, 2023), facilitating effective deployment in alignment with specific business objectives. The Hype Cycle comprises five phases: it begins with an innovation trigger that sparks the interest of early adopters and progresses over time until “mainstream adoption starts to take off” (Gartner, 2023). In August 2023, several months after the conclusion of our study, Gartner (Chandrasekaran and Davis, 2023) placed generative A.I. at the “Peak of Inflated Expectations” phase of the model.

Figure 1. Gartner’s Hype Cycle for Emerging Technologies, 2023 (Chandrasekaran and Davis, 2023).

1.4 Problematic use of the Internet

With nearly ubiquitous access to Internet services and billions of users worldwide, the impact of these technologies on health has garnered significant attention in academic research. While Problematic Use of the Internet (PUI) has not been officially recognized as a diagnosable medical condition akin to addiction, researchers recognize the importance of discussing potentially harmful behaviors that exhibit signs of addiction. These behaviors involve a lack of control over online engagement despite negative consequences that may impair various aspects of a person’s life. Researchers have also identified more specific conditions, such as problematic use of social media (PUSM), as well as differences in Internet usage patterns between mobile phones and laptops (Moretta et al., 2022).

2 Methodology

The advancements in Artificial Intelligence parallel the rapid adoption of e-learning during the Covid-19 pandemic, offering a unique opportunity to document lived experiences and present the “scholarly challenges” encountered during a transformative global phenomenon (Roy and Uekusa, 2020). A collaborative autoethnography, which introduces a lecturer’s own voice into public discourse, is presented to address both practical and social aspects and, in particular, a genuine need within the academic community to “foreground our own experiences” (Taber, 2010, p. 8).

2.1 Research participants

The study describes the experiences of Stephen King, an advertising lecturer with no prior experience with generative A.I. He is observed by Dr. Judhi Prasetyo, an award-winning lecturer focusing on robotics with a research pedigree in related fields. This collaborative autoethnography purposively involves colleagues from different faculties and with different levels of familiarity with the subject, to increase the relevance of the study and to bring a rich multi-disciplinary perspective to the findings and presentation of results.

2.2 Research design

An evocative autoethnographic narrative encapsulating the qualities of a “Confessional Tale” (Van Maanen, 1988, p. 73) is presented, constructed through interviews and dialogues between the researchers, along with a textual analysis of the implementing researcher’s journal. In doing so, the authors attempt to transcend the storytelling features of self-narrative in order to engage in a form of “cultural analysis and interpretation” (Chang, 2008, p. 43). The intent is to help other educators overcome the anxiety of adopting new technologies by offering a personal account of King’s journey.

The researchers engaged in weekly meetings conducted in a semi-structured manner, with Prasetyo adopting a pastoral role. The discussions were recorded and subsequently shared via a podcast (https://incongruent.buzzsprout.com/) to provide an immediate resource that contributes to the evolving discourse on this topic.

2.2.1 Boundaries

Given that institutional governance policies were evolving during the early months of 2023, and have continued to evolve up to the date of submission, the researchers’ affiliated institution granted approval for an autoethnography focused specifically on the development of a module addressing a specific theme. The research would span 3 weeks and aim to explore the use of current tools to create the following curriculum components:

• Design a module narrative

• Define module aims

• Establish learning outcomes

• Develop a syllabus

• Determine assessment methods

• Identify relevant readings

Furthermore, the study aimed to create course content encompassing lecture slides and seminar activities to support the module.

2.2.2 Data collection

The researchers took the opportunity to experiment with new A.I.-enabled tools to help document and report their journey. For example, the researchers used Descript to record podcasts; this premium A.I.-enhanced tool was identified during preliminary research as the most suitable for this study. Additionally, Microsoft OneNote was used to keep the journal, and experiments were conducted with its transcription tools.

2.2.3 Data analysis

The podcast transcriptions and sanitized journal entries underwent latent pattern content analysis, guided by patterns derived from the literature on the Gartner Hype Cycle and emerging discussions on problematic use of the Internet (PUI). This analysis approach aimed to identify underlying patterns and themes within the data related to the topics of interest.

2.3 Ethics

In conducting this autoethnography, the research team acknowledges the dual role of being both the originator of the content and the storyteller. To ensure ethical practices, the team has made every effort to present the study design and final conclusions in a balanced and academically rigorous manner, providing an honest account of the research journey. To address the many concerns outlined in the literature (Deitering, 2017; Roy and Uekusa, 2020), the study has been designed as a collaborative effort between two colleagues with a history of collegiality and cooperation. Constructive dialogical tensions and increased accountability are facilitated by the established respect between the researchers. In doing so, the team integrated a form of process consent, supported the care and well-being of the autoethnographer, shared, and ensured that the end output was unlikely to cause unintended harm. Further, while this (collaborative) self-reflective investigation aims to provide valuable insights and insider perspectives, the team is mindful of the potential for narcissism and self-indulgence and has made every effort to address these concerns.

3 Results

This section delves into the experiences of the researchers through the lens of the Gartner Hype Cycle.

3.1 Innovation Trigger

According to Gartner, this phase begins when a “breakthrough, public demonstration, product launch or other event sparks media and industry interest in a technology or other type of innovation” (Dawson et al., 2023, p. 4).

Stephen King [SK]: “[The TikTok environment] is a furor. It is a frenzy of online pseudo experts who are saying that you can use various apps to create courses.”

3.2 Climbing the Peak of Inflated Expectations

At this point, the researcher is in the “On the Rise” section of the Gartner Hype Cycle. Gartner describes this as a period in which the “excitement about, and expectations for, the innovation exceed the reality of its current capabilities” (Dawson et al., 2023, p. 4).

[SK] “There are just so many Apps – what do they all do? How to evaluate them? Are these Apps even relevant or are there better ones? Feeling overwhelmed…”

The first experiment relates to their existing work practice, using A.I. for a task on which they would usually spend significant time and effort.

[SK] “[I successfully] tested ChatGPT to rewrite the rubric text from an assessment: ‘Wow…!’ I tried again and I became excited and attempted with longer and more sophisticated prompts. Wow! This is so helpful!”

The experiment appears successful and, so encouraged, the researcher attempts more complex requests.

[SK] “Really following Alice down the rabbit hole now. I ask: ‘What A.I. tool should we use to create animations or videos?’ Then I tried a slide creator requesting it to create a ‘typical week’ of content. This is scary… but I will use it.”

Interestingly, the technology fails to impress in one of these trials, but the researcher is willing to forgive this and accept it as a partial success. They do not reflect on why the A.I. failed to fulfil earlier expectations.

[SK] “I also try uploading instruction text to create slides – less exciting, but still helpful.”

Instead, the early successes and increasing usage cause the researcher to express concern for their well-being.

[SK] “I’m becoming addicted – have to put this stuff away.”

They have entered an environment in which it is difficult, if not impossible, to disconnect, even “relapsing” into the study on rest days.

[SK] “I give up on rest-day as I spot a Metaverse event taking place in the evening via Facebook, and I feel I have to try it out.”

Eventually, sensitive content begins to seep unsolicited into social media feeds, causing the researcher to reflect upon alternative uses of the technology.

[SK] “Begin the day reading how Ukraine is using A.I. in war against Russia on Quora via my mobile phone in bed…. Wake to another A.I.-war story on Quora.”

Various A.I. interactions provide recommendations for using A.I. in other aspects of the researcher’s life, demonstrating a technological omniscience and building a feeling of being continuously observed.

[SK] “Another A.I.-service in my YouTube, this time a realtor. Before bed, YouTube sends me a link to an A.I. course offered in the US.”

This feeling eventually explodes into full-blown paranoia.

[SK] “But then I notice I’m becoming paranoid about what I’m viewing online – starting to think the computer is watching me and sending me content. I accidentally click on a strange link which takes me to a pseudo-religious community worshipping A.I. LinkedIn is now sending me A.I. posts, and some of my content is being followed by A.I. companies.”

The researcher continues the study with a new experiment. Interestingly, the researcher uses it to test the ability of the A.I. against their own personal outputs.

[SK] “Thought to use A.I. to summarise the abstract for an academic conference. A.I. does not count and the responses are lengthy and unusable. Using the word ‘succinct’ is something that the A.I. understands, and the resulting content is workable.”

Now that the researcher has confidence that the technology can reproduce basic text, a new experiment seeks to test the cognitive ability of the A.I. system. Can it read and understand materials given to it in a useful fashion?

[SK] “Wondering if A.I. can summarise academic readings to speed up analysis. This produced a 5-paragraph summary from a 7-page technical paper in about 10 min. It’s little more than an extended abstract therefore I still need to read the paper to find the specific ‘meat’ that is required for academic thinking.”

A second failure, but the researcher is not deterred. They are nearing the Peak of Inflated Expectations and see the innovation as a “panacea”, “with little regard for its suitability to their context” (Dawson et al., 2023, p. 9).

[SK] “Module narratives [were] the first [documents] I created. In a four-hour period, I was able to produce three of what I consider it to be very good module narratives. I’m very happy with it.”

At this stage it is possible to observe that the researcher has attained the peak.

[SK] “It was very good. And that did not take much time at all. I was enthusiastic. ‘THIS IS HUGE… I’M SHELL SHOCKED…. It is very clear that A.I. is going to enhance our work.’”

This one successful prototype, coming at the conclusion of a series of minor experiments, some of which failed, reveals a potential cognitive bias that colours all previous trials with a positive afterglow.

[SK] “Yes, my productivity improved when I used it. My enjoyment of writing has significantly improved. I’m going to really increase my, like productivity in that respect.”

The potentially fortunate success of this one initiative is considered so important that the researcher is willing to forgo additional research and take immediate steps to recommend transformative changes.

[SK] “At this point, I was banging down everyone’s doors. Saying, look, I have this transformative piece of software. And it’s going to change everything!”

Gartner explains that at the peak, innovations are adopted “without fully understanding the potential challenges and risks” (Dawson et al., 2023, p. 9). It is noteworthy, therefore, that the researcher begins to experiment with digital twinning technologies and makes their first investments.

[SK] “I got the premium version of Descript and that allowed me to clone my voice. I thought that was quite cool.”

They also begin incorporating A.I. into their personal life and hobbies, demonstrating an increasing obsession.

[SK] “I tried to create a comic using A.I. and Photoshop for a board game I play. It worked quite well. I used a Dall-E app on my mobile. The content I was creating was so beautiful. And I wanted to host it somewhere. The correct site for hosting required a certain amount of subscription. And so I paid for somewhere for the A.I. content to be seen.”

And this is where the researcher begins to crest the rise and “slide into the Trough of Disillusionment.”

3.3 Trough of Disillusionment

During this phase, the successes of earlier trials become fewer or less exciting. This may be because the number of experiments increases, or because the A.I. apps explored no longer live up to the researcher’s overinflated expectations. The first piece of evidence shows how the tool fails to meet the requirements of the task it was actually assigned.

[SK] “The first thing I used was a slide designer (see Figure 2) that gives you eight slides. One of those is a thank you slide, one of those is an agenda slide. It was giving me about three or four slides [per prompt]. It did not give me many slides at all.”

FIGURE 2

The researcher makes a second attempt using a different tool on a different A.I. platform.

[SK] “Now the weakness of this particular software or app is that … you cannot put in a prompt and ask it to just come up with a whole deck for you. You have to put in the content and then it will summarize it for you. And then it will lay it out across however many slides you want.”

Not willing to accept defeat, the researcher invests more money to help the experiment succeed.

[SK] “It only allows 500 characters on the basic model. So I’d have to keep going backwards and forwards. Plus it was taking two minutes to process everything every single time. It was taking a long time. So eventually I paid for the premium version.”

But it would continue to fail.

[SK] “The problem here was that the text on the slide was too little. So it’s the Goldilocks scenario. One gave us too much. Then the other gives us too little” (see Figure 3).

FIGURE 3

The researcher then attempts to rationalise the failure, looking for reasons within their own ‘human experience’ as to why successful outcomes are not being realised.

[SK] “I start to wonder, is my A.I. getting bored with the tasks we are giving it? The A.I. was not giving as rich results any more…”

Ultimately, they were prepared to ignore the evidence of their own eyes and promote positive elements, even though the overall result failed to meet the required standards.

[SK] “As I go through this copy-paste process, I’m getting bored and I can also see from initial skim-reading that the end result will not be as impressive as my expectations. However, it’s still impressive to have done 8 weeks of content even in draft state in about 45 min…”

But when the slides are shared with a colleague, reality becomes inescapable, and the software fails again.

[SK] “We went through some of those slides and we spotted a mistake. And the mistake could only have been spotted by [an expert]. I had absolutely no idea it wasn’t real.”

This leads the researcher to reflect on other negatives that had previously been ignored due to cognitive bias. Ultimately, the reality of the situation is appreciated as the researcher reaches the trough.

[SK] “So, then the next option, would be to do it as a human being. And to actually research it yourself. So the idea of just pressing a single button and computer will do everything does not apply. And I actually canceled my subscription.”

3.4 Slope of Enlightenment

At this stage, Gartner describes a period of reflection “when the real-world benefits of the innovation are predictable” (Dawson et al., 2023, p. 10). This period was artificially accelerated by the methodology of this study, which included regular mentor meetings with a participant who has significant expertise in the subject matter.

[SK] “I was very lucky that I had to take a breather. We had our discussion, which was very important. I had overestimated what was possible.”

This allows the researcher to reflect on their interactions with the technology and how their own frame of mind and motivations may have influenced the success of the project.

[SK] “When I’m asking it to produce based on no input, I’m not seeing the fact that it is my lack of input that is contributing to the failure of the machine to give me a good output. And then my expectations because of the early successes have been so amazing, when it does not achieve four or five times your expectations, your disappointment is four or five times magnified.”

Following this revelatory period and discussion, experimentation is perceived to have slowed. Journal entries are fewer in the final week and more rest days are recorded. This marks the transition from the trough to a new attempt to climb a “Slope of Enlightenment”.

[SK] “I had one last chance - Agent GPT. And so I use this to develop workshop activities. And that was actually quite helpful. I would just say, develop me a workshop related to chatbots. And it would come up with two activities, which would be awesome.”

In contrast to the earlier experiments, the researcher is more conservative in their evaluation and more in control of their emotions. This marks the ascent to the “Plateau of Productivity,” where “the real-world benefits of the technology are predictable and broadly acknowledged” (Dawson et al., 2023, p. 10).

[SK] “Again, the content is not there. Top line. “This is what you need to do. Introduce it at this level. Let them build. This step one, step two, step three, step four.” But it was, it’s helpful to create a top-line lesson plan for a workshop.”

3.5 Plateau of Productivity

This is where the buzz dissipates, and interest is limited. In this study, this was achieved in approximately 4 weeks from the first experiment. At this point the researcher is able to make a rational evaluation as to the impact of A.I. on their work. This is in contrast to the first experiments where the researcher attempted to test their own abilities against A.I. and determined that the A.I. output was superior.

[SK] “Although there is an element that it can save your productivity. Just flipping between screens, copying and pasting [is still inefficient]. And my typing speed is already quite good.”

Ultimately, and of concern to A.I. developers, the researcher has returned to his existing practice and reinforced his pre-A.I. behaviours.

[SK] “I’m even appreciating spelling mistakes in my work now. And saying this is proper human content. That’s my new excuse here, if there’s any mistakes in there. So this is deliberate. It is human generated it’s my real voice.”

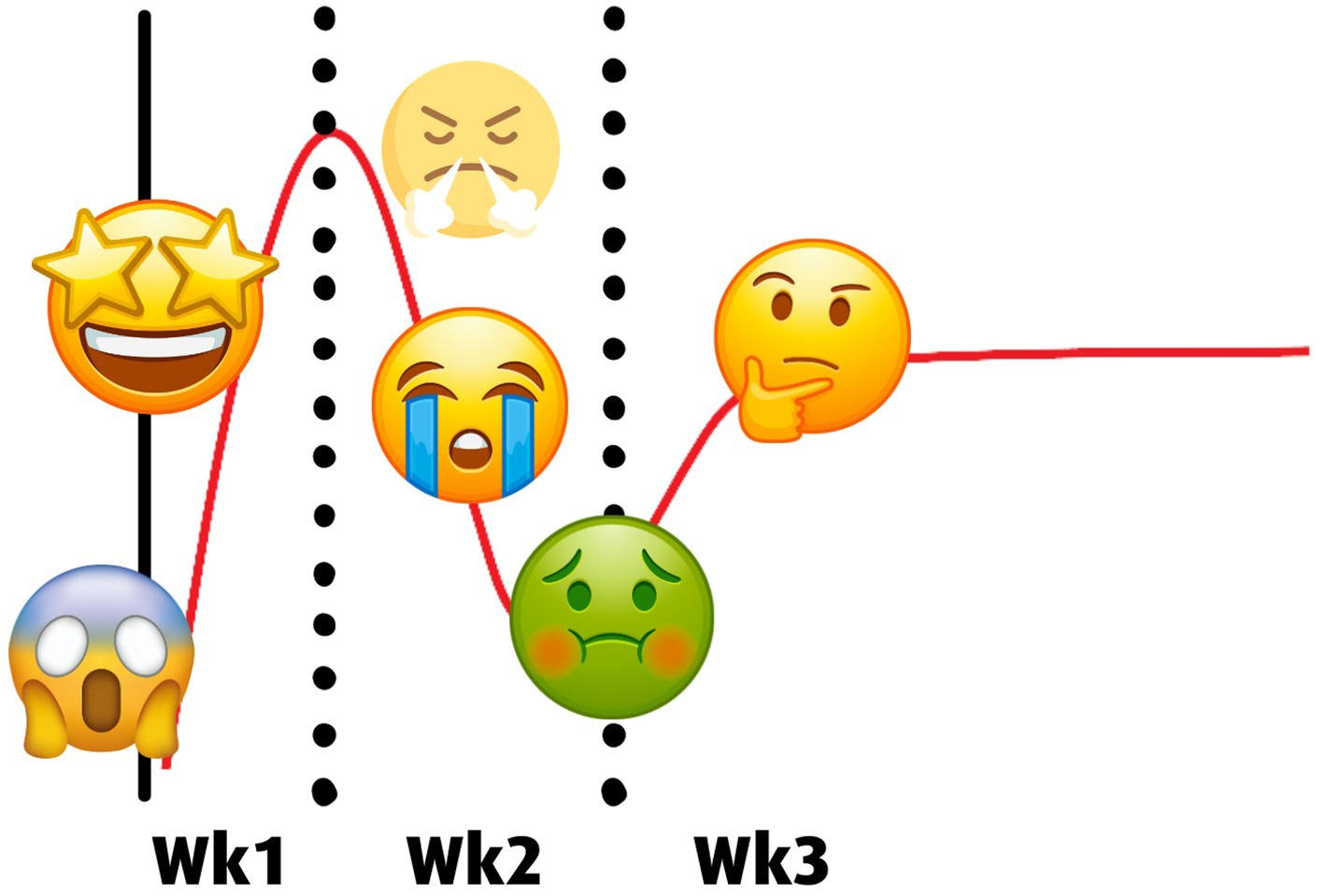

The Hype Cycle has concluded, and the emotional state of the participant has returned to a neutral or pre-study condition. Over the course of the study, they passed through a variety of emotional states: from fear, to joy, to anger and frustration, to sadness followed by disgust. Ultimately, though, the participant arrived at a stable position, neither excited by nor dismissive of the technology, as illustrated in Figure 4.

4 Discussion

The study presents an evocative narrative of the journey of “how a non-technology lecturer begins to employ generative artificial intelligence in the development of teaching and learning materials.” The progression from fear, through rapid experimentation, to lapse and ultimate recovery aligns with key stages of the Gartner model. These insights should help other educators attempting to integrate A.I. within their teaching strategies, as well as management teams planning the rollout of new technologies.

The researcher further reported several instances of behaviour that may be explained through the concept of problematic use of Internet-related technologies (PUI), including some that may be considered manifestations of addictive behaviour. In addition to the incidents described and highlighted within the main narrative, the researcher also identified a preoccupation with A.I., symptoms of irritability, anxiety, and sadness, unsuccessful attempts to control his behaviour, and continued excessive use despite awareness of growing problems. This unintended and unexpected consequence should alert educators and management teams to the potential impact on staff and student wellbeing.

It is acknowledged that the autoethnographic method requires researchers to take special care of their well-being, given that the methodology encourages the study team to undertake experiments and push themselves further than would be expected in a normal situation. This was certainly evident in this experiment, and the research protocol of weekly meetings and the diverse, relevant expertise of the team were key factors in the success of the study. The study team recommends that future studies familiarise themselves with the taxonomy of PUI symptoms. The researchers also acknowledge that, given the nature of this technology and the large investments being made, the limitations described in this report may change; this study should therefore be repeated after a suitable timeframe has passed.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material. Additional data can be found here: https://mdxac-my.sharepoint.com/:f:/g/personal/s_king_mdx_ac_ae/EnpLEGBwR85Pu4Z3mS189O0BZJZIfIz_C9o-FJMHS8xMKQ?e=weVKId. Further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Middlesex University Dubai Research Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

SK: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Validation, Visualization, Writing – original draft. JP: Formal analysis, Investigation, Methodology, Resources, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors express their gratitude to the organising committees of the 2nd International Conference on Technology, Innovation, and Sustainability in Business Management (ICTIS 2023), held at Middlesex University Dubai on 3–4 May, and the 5th AUE International Research Conference: Emerging Trends in Multi-Disciplinary Research, held at the American University in the Emirates on May 29–31, as well as the Public Relations and Communications Association (PRCA) MENA chapter, for inviting them to present preliminary findings. As may be expected in preparing an autoethnographic study on generative A.I., ChatGPT (May 3, 2023 version) was employed in editing this submission; however, all facts and references are accurate and have been verified by the corresponding author. GARTNER and HYPE CYCLE are registered trademarks of Gartner, Inc. and/or its affiliates and are used herein with permission. All rights reserved.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

AI Avalanche (2023). How to make an online course using ChatGPT [TikTok]. Available at: https://www.tiktok.com/@aiavalanche/video/7221098667170794758?is_from_webapp=1&sender_device=pc&web_id=7020378888799274498 (Accessed May 17, 2023).

Chandrasekaran, A., and Davis, M. (2023). Hype Cycle for Emerging Technologies, 2023. Gartner. Available at: https://www.gartner.com/en/documents/4597499 (Accessed November 26, 2023).

Cooper, R., and Lilyea, B. V. (2022). I’m interested in autoethnography, but how do I do it? Qual. Rep. 27, 197–208. doi: 10.46743/2160-3715/2022.5288

Dawson, P., Lowendahl, J., and Gaehtgens, F. (2023). Understanding Gartner’s Hype Cycles. Gartner. Available at: https://www.gartner.com/en/documents/4556699 (Accessed November 26, 2023).

Deitering, A. M. (2017). “Introduction: why autoethnography?” in The self as subject autoethnographic research into identity, culture, and academic librarianship. eds. A. M. Deitering and R. Stoddart (Chicago: Association of College and Research Libraries, a division of the American Library Association).

Gartner (2023). Gartner Hype Cycle. Available at: https://www.gartner.com/en/chat/gartner-hype-cycle.

Ghapanchi, A. (2023). How generative AI like ChatGPT is pushing assessment reform. Available at: https://www.timeshighereducation.com/campus/how-generative-ai-chatgpt-pushing-assessment-reform (Accessed July 22, 2023).

Gregory, A., and Virmani, S. (2020). The effects of AI on the Professions. Chartered Institute of Public Relations. Available at: https://www.cipr.co.uk/CIPR/Our_work/Policy/AI_in_PR_/AI_in_PR_guides.aspx (Accessed May 22, 2023).

Gregory, A., Virmani, S., and Valin Hon, J. (2023). Humans Needed More Than Ever. The world’s first comprehensive analysis of the use of AI in PR and its impact on public relations work. Chartered Institute of Public Relations. Available at: https://www.cipr.co.uk/CIPR/Our_work/Policy/AI_in_PR_/AI_in_PR_guides.aspx?WebsiteKey=0379ffac-bc76-433c-9a94-56a04331bf64 (Accessed October 1, 2023).

Its Alan Ayoubi (2023). Now You can create online courses using Ai [TikTok]. Available at: https://www.tiktok.com/@itsalanayoubi/video/7217155565145918726?is_from_webapp=1&sender_device=pc&web_id=7020378888799274498 (Accessed May 17, 2023).

Moretta, T., Buodo, G., Demetrovics, Z., and Potenza, M. (2022). Tracing 20 years of research on problematic use of the internet and social media: theoretical models, assessment tools, and an agenda for future work. Compr. Psychiatry 112:152286. doi: 10.1016/j.comppsych.2021.152286

Roy, R., and Uekusa, S. (2020). Collaborative autoethnography: “self-reflection” as a timely alternative research approach during the global pandemic. Qual. Res. J. 20, 383–392. doi: 10.1108/QRJ-06-2020-0054

Shelton, C., Schroeder, S., and Curcio, R. (2020). Instagramming their hearts out: what do edu-influencers share on Instagram? Contemp. Issues Tech. Teach. Educ. 20. Available at: https://citejournal.org/volume-20/issue-3-20/general/instagramming-their-hearts-out-what-do-edu-influencers-share-on-instagram

Taber, N. (2010). Institutional ethnography, autoethnography, and narrative: an argument for incorporating multiple methodologies. Qual. Res. 10, 5–25. doi: 10.1177/1468794109348680

Terry, O. K. (2023). I’m a student. You have no idea how much we’re using ChatGPT. The Chronicle of Higher Education, May 12. Available at: https://www.chronicle.com/article/im-a-student-you-have-no-idea-how-much-were-using-chatgpt?cid=gen_sign_in (Accessed May 17, 2023).

UAE Ministry of Artificial Intelligence, Digital Economy and Remote Work Applications (2023). 100 practical applications and use cases of generative AI. April 30. Available at: https://ai.gov.ae/wp-content/uploads/2023/04/406.-Generative-AI-Guide_ver1-EN.pdf (Accessed May 18, 2023).

Valin, J. (2018). AI: humans still needed. Chartered Institute of Public Relations, May 24. Available at: https://www.cipr.co.uk/CIPR/Our_work/Policy/AI_in_PR_/AI_in_PR_guides.aspx (Accessed May 17, 2023).

Weale, S. (2023). Lecturers urged to review assessments in UK amid concerns over new AI tool. The Guardian, January 13. Available at: https://www.theguardian.com/technology/2023/jan/13/end-of-the-essay-uk-lecturers-assessments-chatgpt-concerns-ai (Accessed May 17, 2023).

Keywords: generative A.I., problematic use of Internet, autoethnography, Gartner Technology Hype Cycle, curriculum design

Citation: King S and Prasetyo J (2023) Assessing generative A.I. through the lens of the 2023 Gartner Hype Cycle for Emerging Technologies: a collaborative autoethnography. Front. Educ. 8:1300391. doi: 10.3389/feduc.2023.1300391

Edited by:

Changsong Wang, Xiamen University, Malaysia

Reviewed by:

Iffat Aksar, Xiamen University, Malaysia

Rustono Farady Marta, Universitas Satya Negara Indonesia, Indonesia

Copyright © 2023 King and Prasetyo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stephen King, s.king@mdx.ac.ae

†These authors have contributed equally to this work and share first authorship
