
ORIGINAL RESEARCH article

Front. Educ., 02 October 2023

Sec. Assessment, Testing and Applied Measurement

Volume 8 - 2023 | https://doi.org/10.3389/feduc.2023.1259364

Background: In standardized residency training at some Chinese medical education institutions, we found that the current evaluation system falls short in accurately assessing residents’ professional skills in clinical practice. We therefore developed a list of Entrustable Professional Activities (EPAs) for orthopaedic residency training to explore a new evaluation system.

Methods: The EPAs were constructed in seven steps. Forty orthopaedic residents were randomly assigned to two groups of 20. The experimental group used the EPAs evaluation system, while the control group used the traditional Mini-Clinical Evaluation Exercise (Mini-CEX). At the end of the rotation, theoretical and practical tests were conducted to measure training effectiveness, and a survey assessed teaching satisfaction, knowledge mastery, and course engagement in both groups.

Results: The control group scored an average of 76.05 ± 10.58, while the experimental group achieved 83.30 ± 8.69 (p < 0.05) on the combined theoretical and practical test. Statistically significant differences were observed between the two groups concerning teaching satisfaction, knowledge mastery, and course engagement.

Conclusion: The application of EPAs in orthopaedic residency training yielded higher theoretical and practical test scores compared to the traditional formative evaluation system. It also enhanced teaching satisfaction, knowledge mastery, and course engagement. The EPAs present a potential model for national orthopaedic residency training.

Standardized residency training has become integral to postgraduate medical education. The education provided at the residency stage determines the career trajectory of young physicians (Ten Cate, 2017) and plays a critical role in developing highly skilled medical professionals and delivering high-quality medical services.

Unlike undergraduate education, standardized resident training requires extensive clinical practice and emphasizes the cultivation of competence, which has become the core challenge and focus of current research on medical education reform. The late 20th century marked the third generation of medical education reform, a key feature of which was the emphasis on competency-based medical education (CBME) (Pellegrini, 2006). CBME, an innovative educational practice, has been thoroughly examined and implemented in medical education reform in Europe and the United States and has profoundly influenced the theory and practice of medical education in China (Calhoun et al., 2011). CBME stipulates that the corresponding skills be acquired by the end of residency training (Holbrook and Kasales, 2020; Weller et al., 2020), and this principle is the cornerstone of standardized resident training.

As a teaching hospital, the teaching base of China-Japan Friendship Hospital has shouldered the clinical training responsibilities of numerous top-tier medical schools in China and has established a comprehensive evaluation system founded on CBME principles. One component of this system is the Mini-CEX, a traditional teaching and formative evaluation method. The Mini-CEX grew out of the clinical evaluation exercise (CEX), which the American Board of Internal Medicine introduced in 1972 to assess the clinical competence of residents, especially first-year residents. In the CEX, a resident completes the history taking, physical examination, diagnosis, and treatment of a hospitalized patient, and a clinical teacher grades the entire process, which takes about 2 h. Because the Mini-CEX is based on real patients, it can compensate for the limitations that “simulation” introduces in objective structured clinical examination (OSCE) teaching.

However, as medical education has evolved, the current system has revealed several problems. The delineation of competencies is becoming increasingly intricate (Ginsburg et al., 2010), and the evaluation content and assessment procedures are cumbersome and consume a significant portion of clinical working time (Leung, 2002). Furthermore, some researchers have argued that the individual components or abilities within each competency domain do not add up to the whole of practice (Lurie, 2012). Mastery of abilities in individual competency domains does not ensure the capability to integrate them across domains or to apply them appropriately to patient care. In addition, the ability to provide patient care in one context or clinical circumstance may not translate to other contexts and circumstances. Meanwhile, a focus on objective assessment of measurable abilities may divert attention from how learners actually care for their patients across a variety of clinical work. These authors argued that performance outcomes should be framed in the context of clinical care, recognizing that professional development requires integrating abilities across multiple competency domains and applying them within the health care environment (Brooks, 2009; Frank et al., 2010; Ten Cate, 2013).

To carry CBME from an abstract competency framework into routine clinical training, Professor Olle Ten Cate, a Dutch medical education expert, introduced the concept of Entrustable Professional Activities (EPAs) (Ten Cate, 2005). EPAs operationalize the outcomes of medical education as essential professional activities that are entrusted to a professional. They involve observing residents’ professional behavior in clinical tasks such as communication, preliminary diagnosis, and differential diagnosis; supervisors then grant a corresponding degree of trust, clarify the resident’s rights and responsibilities, and perform a comprehensive evaluation. Whereas traditional competency frameworks focus on qualities of the person, EPAs focus on qualities of the work to be completed. EPAs therefore ground outcomes in the tasks of physicians and offer an approach to CBME that better addresses concerns about integration across competency domains and context than previous CBME frameworks. By transforming abstract ability assessment into EPA-level evaluation of specific clinical tasks, this approach accounts for both resident learning and patient safety and facilitates the implementation of CBME.

In 2016, Dwyer and colleagues used EPAs to assess orthopaedic residents’ performance in managing ankle fractures, hip fractures, and total knee arthroplasty (Mulder et al., 2010). A group of seven orthopaedic surgeons, including Adam Watson from the University of Toronto, formulated a mandatory EPAs checklist for orthopaedic residency training in Ontario, Canada. This catalog of 49 EPAs provides an important reference point for other programs constructing and assessing competency-based orthopaedic surgery curricula (Ten Cate et al., 2015). Building on this previous research, we explored the application of an EPAs assessment system in orthopaedic residency training.

From 1 January 2022 to 2 December 2022, during the COVID-19 pandemic in China, postgraduate students undergoing standardized training at the orthopaedic teaching base were randomly assigned to an experimental group (Group A) or a control group (Group B), with 20 residents in each group. Training time was 40 h per week (8 h per day). General information, such as age, gender, and grade, was recorded. The study procedures were approved by the Ethics Committee of China-Japan Friendship Hospital, and all research procedures followed the relevant guidelines and conformed to the Declaration of Helsinki.
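The article states that residents were randomly assigned but does not describe the randomization procedure. The sketch below assumes simple (unstratified) randomization with a fixed seed so that the allocation can be reproduced; the function name `allocate`, the resident IDs, and the seed are hypothetical.

```python
import random

def allocate(resident_ids, seed):
    """Randomly split residents into two equal-sized groups (A and B).

    Assumes simple, unstratified randomization; the article does not
    specify the procedure actually used.
    """
    rng = random.Random(seed)       # private, seeded generator for reproducibility
    shuffled = list(resident_ids)   # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"A": shuffled[:half], "B": shuffled[half:]}

# Hypothetical IDs for the 40 participating residents
residents = [f"R{i:02d}" for i in range(1, 41)]
groups = allocate(residents, seed=2022)
print(len(groups["A"]), len(groups["B"]))  # 20 20
```

Stratifying by gender or grade before shuffling would be a natural variant if baseline balance were a concern; the sketch keeps to the plain random split the text describes.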

The Entrustable Professional Activities (EPAs) assessment system was implemented in Group A, while Group B was assessed with the Mini-Clinical Evaluation Exercise (Mini-CEX). Educators at the teaching base developed the teaching content and methods for osteonecrosis of the femoral head (ONFH), providing both theoretical instruction and practical guidance.

At the end of the rotation, a theoretical and practical test was held to evaluate the effectiveness of the training. In addition, a questionnaire survey designed to evaluate the effectiveness of EPAs was administered to both groups. The survey covered teaching satisfaction, knowledge mastery, and course engagement, and students scored each item based on their subjective perceptions. Each of the three categories contained five questions worth one point each, for a maximum of five points per category.
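A minimal sketch of the questionnaire scoring described above, assuming each item is scored 0 or 1 (the text says only that each question contributes one point). The category keys and the example responses are hypothetical.

```python
CATEGORIES = ("teaching_satisfaction", "knowledge_mastery", "course_engagement")
ITEMS_PER_CATEGORY = 5

def score_questionnaire(responses):
    """Return a 0-5 score for each of the three categories.

    `responses` maps each category to its five item scores; items are
    assumed to be scored 0 or 1, so the category maximum is 5 points.
    """
    scores = {}
    for category in CATEGORIES:
        items = responses[category]
        if len(items) != ITEMS_PER_CATEGORY:
            raise ValueError(f"{category}: expected {ITEMS_PER_CATEGORY} items, got {len(items)}")
        if any(item not in (0, 1) for item in items):
            raise ValueError(f"{category}: each item must be scored 0 or 1")
        scores[category] = sum(items)
    return scores

# Hypothetical answers from one resident
example = {
    "teaching_satisfaction": [1, 1, 1, 0, 1],
    "knowledge_mastery": [1, 0, 1, 0, 1],
    "course_engagement": [1, 1, 1, 1, 1],
}
print(score_questionnaire(example))
```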

EPAs emphasize the direct observation of residents’ clinical behaviors by supervisors in a clinical setting. These observations encompass workplace-based assessments (Wagner et al., 2018) and competency-based evaluations, with judgments assigned on a scale ranging from level 1 to 5 (Table 1) (El-Haddad et al., 2016).

The EPAs were developed by five academic staff members of the Education Department, all holding university teaching qualifications and responsible for university education reform and optimization. Following the methods reported by Ten Cate, El-Haddad, Wagner et al., the clinicians at our teaching base constructed the EPAs in the following steps (Ten Cate and Scheele, 2007; Wagner et al., 2018; O'Dowd et al., 2019).

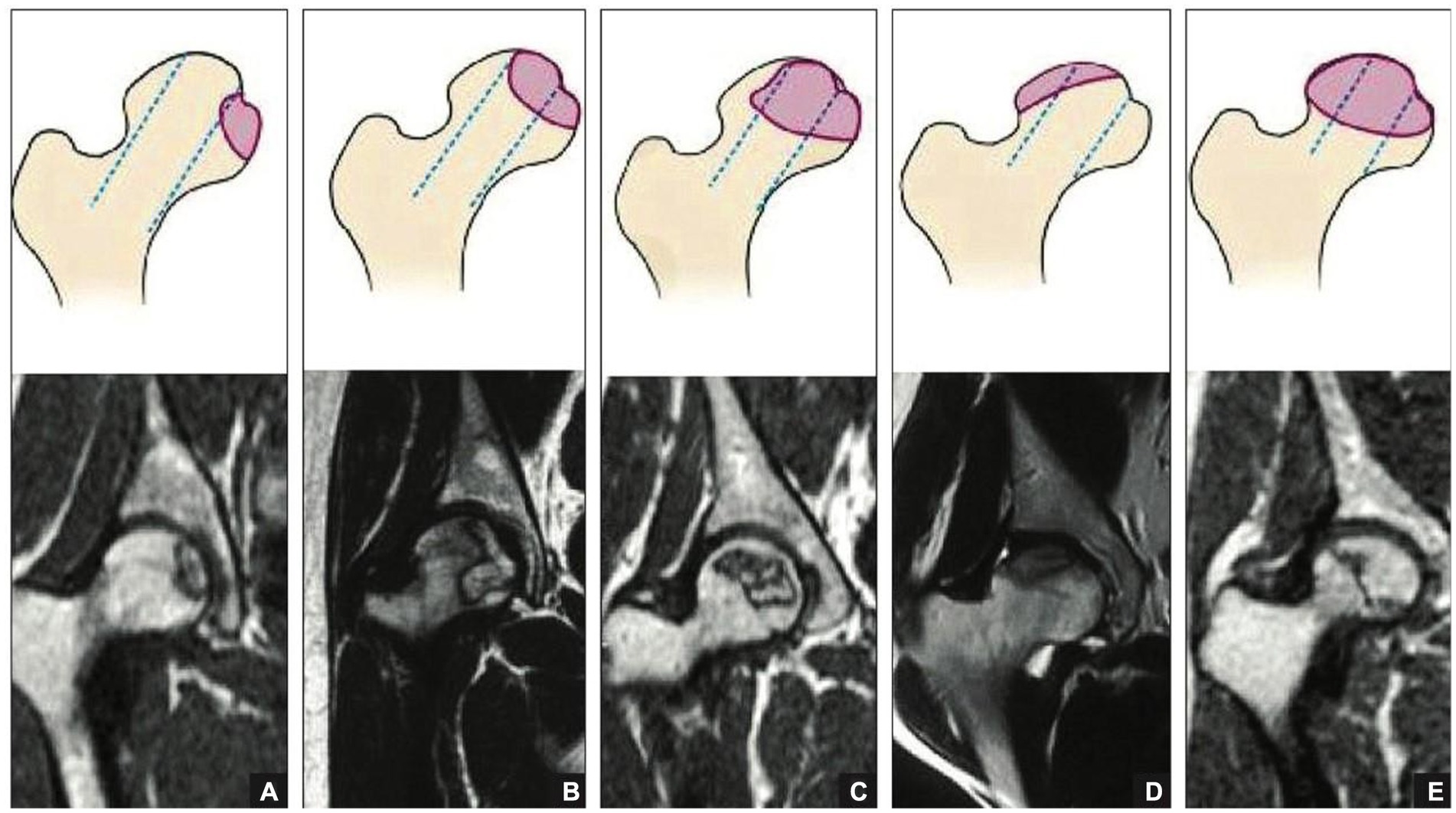

We took the “Principles of Diagnosis and Individualized Treatment of Osteonecrosis of the Femoral Head (ONFH)” as the EPA for training and evaluation. This fundamental and crucial professional activity affects the subsequent diagnostic and treatment process, so residents need to become proficient in it early in their training. However, there is currently no corresponding method for evaluating the quality and accuracy of ONFH diagnosis, Association Research Circulation Osseous (ARCO) Staging, the China-Japan Friendship Hospital Classification (CJFH Classification, Figure 1), and individualized treatment. We therefore selected the “Principles of Diagnosis and Individualized Treatment of ONFH” as a case study for developing EPAs at our orthopaedic teaching base.

Figure 1. Schematic diagrams (top) and magnetic resonance images (bottom) of the China-Japan Friendship Hospital classification of osteonecrosis of the femoral head based on the 3 pillars. Type M: Necrosis involves the medial pillar (A). Type C: Necrosis involves the medial and central pillars (B). Type L1: Necrosis involves all 3 pillars, but the lateral pillar is partially preserved (C). Type L2: Necrosis involves the entire lateral pillar and part of the central pillar (D). Type L3: Necrosis involves all 3 pillars, including the cortical bone and marrow (E).

During their initial rotation at our orthopaedic teaching base, postgraduate residents attended a 60-min theoretical training session on the “Principles of Diagnosis and Individualized Treatment of ONFH.” They then accompanied their instructors in the respective diagnosis and treatment groups for theoretical study and practical operation. Based on a literature review and reflection on practical teaching experience, we identified four factors influencing this EPA:

a. Learner factors, such as the level of professional knowledge mastered by residents, practical abilities, communication methods with instructors, and self-confidence levels.

b. Instructor factors, such as the instructor’s experience, observational skills, and assessment capabilities.

c. Task factors, such as the medical history, clinical manifestations, and imaging examinations of patients with ONFH, which influence the speed and accuracy of residents’ evaluation of the ARCO Staging and CJFH Classification.

d. System factors, where the quality of imaging data significantly impacts the quality of residents’ assessments of the ARCO Staging and CJFH Classification.

For residents, the progression of their capabilities can be represented by five distinct levels (Table 1). Throughout the standardized resident training, three instructors evaluated each resident’s EPA level every month. If the instructors assigned different levels, a final grade was determined through collective discussion among them. We aligned the EPAs with resident competencies and provided training corresponding to each EPA level.
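The monthly grading rule can be sketched as a small function. It assumes levels 1 to 5 from exactly three instructors. Because the article resolves disagreements by collective discussion rather than by a formula, the discussed outcome is passed in through a hypothetical `discussed_level` parameter instead of being computed; no averaging or majority vote is implied.

```python
def resolve_level(ratings, discussed_level=None):
    """Combine three instructors' EPA levels (1-5) for one resident in one month.

    If all three instructors agree, their level stands. Otherwise the
    final grade comes from collective discussion, supplied here through
    the hypothetical `discussed_level` argument.
    """
    if len(ratings) != 3 or any(r not in range(1, 6) for r in ratings):
        raise ValueError("expected three ratings between 1 and 5")
    if len(set(ratings)) == 1:
        return ratings[0]  # unanimous: no discussion needed
    if discussed_level is None:
        raise ValueError(f"ratings {ratings} disagree: collective discussion required")
    if discussed_level not in range(1, 6):
        raise ValueError("discussed level must be between 1 and 5")
    return discussed_level

print(resolve_level([3, 3, 3]))                     # 3
print(resolve_level([3, 4, 3], discussed_level=3))  # 3
```

Raising an error on disagreement, rather than silently picking a value, keeps the human discussion step explicit in any record-keeping built on this logic.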

Firstly, residents are expected to become proficient in ARCO Staging and CJFH Classification and to be able to perform differential diagnosis. Secondly, they should be able to communicate effectively with patients and their families and demonstrate medical professionalism by prioritizing the patients’ needs. Lastly, residents should be able to independently diagnose ONFH and develop individualized treatment plans.

After consultation with the clinical teaching doctors in our department, 13 evaluation criteria were defined for the EPAs (Table 2).

Subsequently, we developed an evaluation tool specifically for the diagnosis and individualized treatment of ONFH (a–e):

a. Mastery of ARCO Staging and CJFH Classification: Unfamiliar (Level 1), Familiar (Level 2), Able to decide on conservative treatment or arthroplasty (Level 3), Can develop appropriate treatment plans (Level 4), Expert in developing appropriate treatment plans and capable of instructing others, able to perform basic operations for hip conservative surgery (Level 5).

b. Ability to collect key medical history and perform physical examination: Can complete reliably and understandably (Level 1), Can complete effectively (Level 2), Can complete within limited time and in an emergency setting (Level 3), Able to analyze, diagnose, and prescribe appropriate treatment (Level 4), Can analyze, diagnose, and prescribe appropriate treatment for complex or rare cases (Level 5).

c. Differential diagnostic capabilities of imaging studies: None (Level 1), Can diagnose ONFH, but has poor differential diagnostic ability (Level 2), Can diagnose ONFH, but has slightly insufficient differential diagnostic ability (Level 3), Able to accurately evaluate ARCO Staging and CJFH Classification and possesses differential diagnostic ability (Level 4), Expert at accurately evaluating ARCO Staging and CJFH Classification and at differential diagnosis (Level 5).

d. Effective communication with patients and families: Has difficulty (Level 1), Able to answer questions (Level 2), Communicates proactively and appropriately (Level 3), Excellent communicator from a patient perspective (Level 4), Serves as an exemplary role model and communicates effectively (Level 5).

e. Code of conduct and responsibility: Lacks standard practice and a sense of responsibility (Level 1), Follows relatively standard procedures and has some sense of responsibility (Level 2), Adheres to standard procedures and has a definite sense of responsibility (Level 3), Exhibits standard practice and a strong sense of responsibility (Level 4), Consistently puts patient needs first, is conscious of being patient-friendly, and adheres to medical professionalism (Level 5).
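The five-domain tool (a–e) can be represented as a simple lookup, which makes it easy to list the domains in which a resident falls short of a chosen level and so to target training. This is an illustrative sketch: the function name `domains_below`, the shortened domain labels, the example ratings, and the default target of Level 4 are assumptions, not part of the published tool.

```python
# Domains a-e of the ONFH evaluation tool; each is rated on Levels 1-5.
DOMAINS = {
    "a": "Mastery of ARCO Staging and CJFH Classification",
    "b": "Collecting key medical history and performing physical examination",
    "c": "Differential diagnosis from imaging studies",
    "d": "Effective communication with patients and families",
    "e": "Code of conduct and responsibility",
}

def domains_below(ratings, target=4):
    """Return the domains rated below `target`, weakest first.

    `ratings` maps domain letters a-e to levels 1-5. The default target
    of Level 4 is an illustrative choice.
    """
    missing = set(DOMAINS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for domains: {sorted(missing)}")
    for domain, level in ratings.items():
        if domain not in DOMAINS or level not in range(1, 6):
            raise ValueError(f"invalid rating {domain}={level}")
    gaps = [(level, domain) for domain, level in ratings.items() if level < target]
    return [domain for level, domain in sorted(gaps)]

# Hypothetical ratings for one resident
ratings = {"a": 4, "b": 3, "c": 2, "d": 4, "e": 5}
for d in domains_below(ratings):  # prints domain c, then domain b
    print(d, DOMAINS[d], ratings[d])
```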

Through the analysis and discussion of feedback on training outcomes, instructors progressively refined and enhanced the evaluation criteria for each level of EPAs. During the process of regular evaluation, instructors provided appropriate oversight and support at each stage, enabling residents to smoothly progress to subsequent levels. The anticipated timeframe to reach the level of unsupervised clinical practice (Level 4 in Table 1) is set at 2 months.
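The 2-month expectation for reaching Level 4 can be checked against a resident’s monthly consensus levels. A minimal sketch, assuming one consensus level per month in chronological order; the function names and the example sequences are hypothetical.

```python
def months_to_level(monthly_levels, level=4):
    """Return the first month (1-based) in which `level` was reached, or None."""
    for month, achieved in enumerate(monthly_levels, start=1):
        if achieved >= level:
            return month
    return None

def on_track(monthly_levels, level=4, expected_months=2):
    """True if `level` was reached within `expected_months` (2 months in the text)."""
    month = months_to_level(monthly_levels, level)
    return month is not None and month <= expected_months

# Hypothetical monthly consensus levels for two residents
print(on_track([3, 4, 4]))  # True: Level 4 reached in month 2
print(on_track([2, 3, 4]))  # False: Level 4 reached only in month 3
```

Keeping the threshold and the timeframe as parameters lets the same check follow any later refinement of the level criteria.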

One month prior to the project implementation, relevant training was provided for each instructor. At the conclusion of the rotation, residents used a questionnaire to conduct a comprehensive assessment of the instructors’ teaching abilities and the teaching management of our center.

In this study, data are presented as mean ± standard deviation (SD) and were analyzed using SPSS 26.0 statistical software. The total theoretical and practical test scores and the residents’ subjective self-assessments of teaching satisfaction, knowledge mastery, and course engagement were compared between the two groups using the independent two-sample t-test. A p-value less than 0.05 was considered statistically significant.

Forty residents participated and completed the study. Group A consisted of 13 males and 7 females with an average age of 26.4 ± 1.7 years. Group B included 9 males and 11 females, with an average age of 26.3 ± 1.8 years. No significant difference was observed in age, gender, and grade between the two groups (p > 0.05).
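The article reports no significant between-group difference in gender (p > 0.05) without naming the test. Assuming a Pearson chi-square test without continuity correction, the comparison can be reproduced from the reported counts using only the standard library; for one degree of freedom the upper-tail probability equals erfc(sqrt(chi2 / 2)). A continuity-corrected test would give a smaller statistic and therefore a larger p-value. The function name is hypothetical.

```python
import math

def chi_square_2x2(table):
    """Pearson chi-square test (no continuity correction) for a 2x2 table.

    Returns (statistic, p_value); with 1 degree of freedom the
    upper-tail probability is erfc(sqrt(chi2 / 2)).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2, math.erfc(math.sqrt(chi2 / 2))

# Rows: Group A, Group B; columns: male, female (counts from the text)
chi2, p = chi_square_2x2([[13, 7], [9, 11]])
print(f"chi2 = {chi2:.3f}, p = {p:.3f}")
```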

The theoretical test comprised multiple-choice, short-answer, and case analysis questions, and the practical test assessed basic operative skills, dressing change, and physical examination. The total theoretical and practical test score was 83.30 ± 8.69 in Group A and 76.05 ± 10.58 in Group B (p = 0.023), a statistically significant difference between the two groups (Table 3).
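The reported p-value can be checked from the summary statistics alone. The sketch below assumes the pooled-variance (Student) form of the independent two-sample t-test; with equal group sizes the Welch standard error is identical and only the degrees of freedom differ. To stay within the Python standard library, the two-sided p-value is obtained by integrating the t density with Simpson’s rule; with SciPy available, `scipy.stats.ttest_ind_from_stats` performs the same test. The function names are hypothetical.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: 1 - 2 * P(0 <= T <= |t|), integrated with Simpson's rule."""
    h = abs(t) / steps  # `steps` must be even for Simpson's rule
    area = t_pdf(0, df) + t_pdf(abs(t), df)
    for k in range(1, steps):
        area += (4 if k % 2 else 2) * t_pdf(k * h, df)
    area *= h / 3
    return 1 - 2 * area

def pooled_t_test(m1, s1, n1, m2, s2, n2):
    """Student's independent two-sample t-test (equal variances) from means, SDs, and sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    return t, df, two_sided_p(t, df)

# Total test scores reported above: Group A vs. Group B (mean, SD, n)
t, df, p = pooled_t_test(83.30, 8.69, 20, 76.05, 10.58, 20)
print(f"t({df}) = {t:.2f}, p = {p:.3f}")
```

Run as written, this gives t ≈ 2.37 with 38 degrees of freedom and a p-value consistent with the reported p = 0.023.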

The questionnaire included items such as “The degree of participation in the admission of newly admitted patients” to gauge course engagement, “The degree of acceptance of the teacher’s teaching style” to gauge teaching satisfaction, and “The degree of mastery of common orthopaedic diseases” to gauge knowledge mastery. There were statistically significant differences in teaching satisfaction, knowledge mastery, and course engagement between the two groups, with residents in Group A scoring significantly higher than those in Group B.

Subsequently, we solicited feedback from the participating clinicians. “I can really feel that the residents’ clinical behavior in the EPAs group is becoming more and more reliable and they are improving faster than I could have imagined. Meanwhile, as a teacher, the new evaluation system is simpler and easier to use,” one teacher said. The teachers commended the new assessment system for its comprehensiveness, its alignment with actual clinical practice, and its easy-to-grasp evaluation standards, and they considered that it evaluated residents’ clinical competence objectively and accurately. By observing residents’ daily clinical behavior over time, EPAs reduced randomness in assessment and gave a realistic picture of their professional conduct. With EPAs, teaching physicians can also track residents’ progress and provide personalized training to those who underperform. For instance, if a resident’s basic surgical skills are markedly below average on the EPAs assessment, teaching physicians can conduct additional specialized assessment using direct observation of procedural skills (DOPS) to reinforce targeted training. In summary, the clinical teachers approved of the EPAs and agreed to further explore their application in clinical teaching.

EPAs have emerged as a favored approach to bridge the gap between the Accreditation Council for Graduate Medical Education (ACGME) competencies and their practical application across various tasks (Nousiainen et al., 2017). They provide the scaffolding for CBME, aligning the anticipated performance of residents and supervisors in procedural skills (Dwyer et al., 2016). When paired with objective competency assessments, EPAs enhance graduates’ capacity to deliver safe patient care (Watson et al., 2021). Successful completion of EPAs requires residents to demonstrate a specific level of competence or proficiency across a range of clinical skills (Chang et al., 2013). For instance, performing surgical procedures demands not only a comprehensive understanding of anatomy, instruments, and procedural technicalities but also non-technical skills such as effective patient communication (Carraccio and Burke, 2010; Wagner et al., 2018). Being both workplace-based and competency-based, EPAs emphasize direct observation of residents’ conduct by their supervisors during clinical practice. They facilitate a smoother transition from undergraduate to postgraduate and continuing education (El-Haddad et al., 2016). As a novel competency evaluation model, EPAs focus on the continuous improvement of crucial resident clinical behaviors that are observable, measurable, and executable, and supervisors can assess resident physicians at any time. Compared with other competency models, EPAs are more streamlined and user-friendly (Pangaro and Ten Cate, 2013). They evaluate competency levels based on the extent of completion and supervision of professional behaviors, thereby linking “critical” clinical behaviors to competencies (Wagner et al., 2018).
Prompt feedback post-evaluation can significantly bolster the core competencies of residents (Duijn et al., 2017), and receiving multi-source feedback from residents, clinical teachers, and patients can further augment the quality of EPAs and medical care (Browne et al., 2010).

In essence, the key distinction between EPAs and other assessment systems lies in their contextual application. EPAs do not require the creation of separate assessment items; instead, they are implemented directly within clinical work, thereby reducing scoring errors (Meyer et al., 2019). EPAs emphasize the holistic nature of residents’ medical behavior and its observability within the work environment, without being restricted to a specific evaluation timeframe (Pangaro and Ten Cate, 2013). Moreover, EPAs are designed to be easy to understand, with straightforward evaluation criteria, and they closely link residents’ clinical behavior with ability assessment (Beeson et al., 2014; Sharma et al., 2018). These features make EPAs an ideal tool for assessing residents’ competencies in a comprehensive and practical manner.

Because EPAs aim to be comprehensive, they may be less effective for first-year residents, who are relatively inexperienced in many clinical areas. For these residents, evaluation methods such as Mini-CEX, SOAP, and DOPS may be more effective in assessing and developing specific areas of expertise. In the second and third years, EPAs assessments may be more useful as a screening method to identify residents’ weaknesses and provide targeted training.

This study also has limitations. First, to prevent interaction between residents in the experimental and control groups, we assigned participants to different medical groups, asked them to follow their own evaluation content and assessment procedures, and instructed them not to interact with residents of the other group. Nevertheless, residents in different groups may still have communicated with one another, which may have influenced the results. Second, because the evaluation system is new to our teaching base, teachers differed in their mastery of it, which may have affected the accuracy of the results. Third, the study was conducted only within our orthopaedic teaching base, and the number of residents involved was relatively small because of the COVID-19 pandemic; the 13 evaluation criteria for orthopaedic resident EPAs therefore require further validation in larger, multi-center studies. How to improve training quality, cultivate more exceptional medical talent, and incorporate EPA assessments into the existing resident training system warrants further exploration.

In orthopaedic postgraduate residency training, the EPAs assessment system improved the total theoretical and practical test score compared with the traditional formative evaluation system and raised the levels of teaching satisfaction, knowledge mastery, and course engagement. EPAs may serve as a reference model for orthopaedic residency training nationwide.

The datasets analyzed in this study are available on reasonable request from the corresponding author. Requests to access these datasets should be directed to FG, gaofuqiang@bjmu.edu.cn.

The studies involving humans were approved by the Ethics Committee of China-Japan Friendship Hospital. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

RQ: Data curation, Methodology, Software, Writing – original draft. XY: Software, Writing – original draft, Data curation, Methodology. YL: Conceptualization, Funding acquisition, Resources, Supervision, Writing – review & editing. FG: Conceptualization, Funding acquisition, Writing – review & editing, Data curation, Software. WS: Project administration, Supervision, Writing – review & editing. ZL: Project administration, Supervision, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by the Education and Teaching Reform Research Project of Capital Medical University (2022JYY378), Peking University Health Science Center Medical Education Research Funding Project (2021YB18), National High Level Hospital Clinical Research Funding (2022-NHLHCRF-PY-20), Elite Medical Professionals project of China-Japan Friendship Hospital (NO.ZRJY2021-GG12), The Biomedical Translational Engineering Research Center of BUCT‑CJFH (No. RZ2020‑02), and 2021 Resident Doctor standardized training quality Improvement Project (Resident Training 2021023, 2021024).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Beeson, M. S., Warrington, S., Bradford-Saffles, A., and Hart, D. (2014). Entrustable professional activities: making sense of the emergency medicine milestones. J. Emerg. Med. 47, 441–452. doi: 10.1016/j.jemermed.2014.06.014

Brooks, M. A. (2009). Medical education and the tyranny of competency. Perspect. Biol. Med. 52, 90–102. doi: 10.1353/pbm.0.0068

Browne, K., Roseman, D., Shaller, D., and Edgman-Levitan, S. (2010). Analysis & commentary. Measuring patient experience as a strategy for improving primary care. Health Aff. 29, 921–925. doi: 10.1377/hlthaff.2010.0238

Calhoun, J. G., Spencer, H. C., and Buekens, P. (2011). Competencies for global heath graduate education. Infect. Dis. Clin. N. Am. 25, 575–592, viii. doi: 10.1016/j.idc.2011.02.015

Carraccio, C., and Burke, A. E. (2010). Beyond competencies and milestones: adding meaning through context. J. Grad. Med. Educ. 2, 419–422. doi: 10.4300/JGME-D-10-00127.1

Chang, A., Bowen, J. L., Buranosky, R. A., Frankel, R. M., Ghosh, N., Rosenblum, M. J., et al. (2013). Transforming primary care training--patient-centered medical home entrustable professional activities for internal medicine residents. J. Gen. Intern. Med. 28, 801–809. doi: 10.1007/s11606-012-2193-3

Duijn, C., Welink, L. S., Mandoki, M., ten Cate, O., Kremer, W. D. J., and Bok, H. G. J. (2017). Am I ready for it? Students' perceptions of meaningful feedback on entrustable professional activities. Perspect. Med. Educ. 6, 256–264. doi: 10.1007/s40037-017-0361-1

Dwyer, T., Wadey, V., Archibald, D., Kraemer, W., Shantz, J. S., Townley, J., et al. (2016). Cognitive and psychomotor Entrustable professional activities: can simulators help assess competency in trainees? Clin. Orthop. Relat. Res. 474, 926–934. doi: 10.1007/s11999-015-4553-x

El-Haddad, C., Damodaran, A., McNeil, H. P., and Hu, W. (2016). The ABCs of entrustable professional activities: an overview of 'entrustable professional activities' in medical education. Intern. Med. J. 46, 1006–1010. doi: 10.1111/imj.12914

Frank, J. R., Snell, L. S., Cate, O. T., Holmboe, E. S., Carraccio, C., Swing, S. R., et al. (2010). Competency-based medical education: theory to practice. Med. Teach. 32, 638–645. doi: 10.3109/0142159X.2010.501190

Ginsburg, S., McIlroy, J., Oulanova, O., Eva, K., and Regehr, G. (2010). Toward authentic clinical evaluation: pitfalls in the pursuit of competency. Acad. Med. 85, 780–786. doi: 10.1097/ACM.0b013e3181d73fb6

Holbrook, A. I., and Kasales, C. (2020). Advancing competency-based medical education through assessment and feedback in breast imaging. Acad. Radiol. 27, 442–446. doi: 10.1016/j.acra.2019.04.017

Leung, W. C. (2002). Competency based medical training: review. BMJ 325, 693–696. doi: 10.1136/bmj.325.7366.693

Lurie, S. J. (2012). History and practice of competency-based assessment. Med. Educ. 46, 49–57. doi: 10.1111/j.1365-2923.2011.04142.x

Meyer, E. G., Chen, H. C., Uijtdehaage, S., Durning, S. J., and Maggio, L. A. (2019). Scoping review of Entrustable professional activities in undergraduate medical education. Acad. Med. 94, 1040–1049. doi: 10.1097/ACM.0000000000002735

Mulder, H., Cate, O. T., Daalder, R., and Berkvens, J. (2010). Building a competency-based workplace curriculum around entrustable professional activities: the case of physician assistant training. Med. Teach. 32, e453–e459. doi: 10.3109/0142159X.2010.513719

Nousiainen, M., Incoll, I., Peabody, T., and Marsh, J. L. (2017). Can we agree on expectations and assessments of graduating residents?: 2016 AOA critical issues symposium. J. Bone Joint Surg. Am. 99:e56. doi: 10.2106/JBJS.16.01048

O'Dowd, E., Lydon, S., O'Connor, P., Madden, C., and Byrne, D. (2019). A systematic review of 7 years of research on entrustable professional activities in graduate medical education, 2011-2018. Med. Educ. 53, 234–249. doi: 10.1111/medu.13792

Pangaro, L., and Ten Cate, O. (2013). Frameworks for learner assessment in medicine: AMEE guide no. 78. Med. Teach. 35, e1197–e1210. doi: 10.3109/0142159X.2013.788789

Pellegrini, V. D. Jr. (2006). Mentoring during residency education: a unique challenge for the surgeon? Clin. Orthop. Relat. Res. 449, 143–148. doi: 10.1097/01.blo.0000224026.85732.fb

Sharma, P., Tanveer, N., and Goyal, A. (2018). A search for entrustable professional activities for the 1st year pathology postgraduate trainees. J. Lab. Physicians 10, 26–30. doi: 10.4103/JLP.JLP_51_17

Ten Cate, O. (2005). Entrustability of professional activities and competency-based training. Med. Educ. 39, 1176–1177. doi: 10.1111/j.1365-2929.2005.02341.x

Ten Cate, O. (2013). Competency-based education, entrustable professional activities, and the power of language. J. Grad. Med. Educ. 5, 6–7. doi: 10.4300/JGME-D-12-00381.1

Ten Cate, O. (2017). Competency-based postgraduate medical education: past, present and future. GMS J. Med. Educ. 34:Doc69. doi: 10.3205/zma001146

Ten Cate, O., Chen, H. C., Hoff, R. G., Peters, H., Bok, H., and van der Schaaf, M. (2015). Curriculum development for the workplace using Entrustable professional activities (EPAs): AMEE guide no. 99. Med. Teach. 37, 983–1002. doi: 10.3109/0142159X.2015.1060308

Ten Cate, O., and Scheele, F. (2007). Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad. Med. 82, 542–547. doi: 10.1097/ACM.0b013e31805559c7

Wagner, L. M., Dolansky, M. A., and Englander, R. (2018). Entrustable professional activities for quality and patient safety. Nurs. Outlook 66, 237–243. doi: 10.1016/j.outlook.2017.11.001

Wagner, J. P., Lewis, C. E., Tillou, A., Agopian, V. G., Quach, C., Donahue, T. R., et al. (2018). Use of Entrustable professional activities in the assessment of surgical resident competency. JAMA Surg. 153, 335–343. doi: 10.1001/jamasurg.2017.4547

Watson, A., Leroux, T., Ogilvie-Harris, D., Nousiainen, M., Ferguson, P. C., Murnahan, L., et al. (2021). Entrustable professional activities in Orthopaedics. JBJS Open Access 6:e20.00010. doi: 10.2106/JBJS.OA.20.00010

Keywords: Entrustable professional activities, medical education, orthopaedics, residency training, teaching reform exploration

Citation: Qu R, Yang X, Li Y, Gao F, Sun W and Li Z (2023) An application and exploration of entrustable professional activities in Chinese orthopaedic postgraduate residents training: a pilot study. Front. Educ. 8:1259364. doi: 10.3389/feduc.2023.1259364

Received: 15 July 2023; Accepted: 18 September 2023;

Published: 02 October 2023.

Edited by:

Raman Grover, Consultant, Vancouver, Canada

Reviewed by:

Rania Zaini, Umm Al-Qura University, Saudi Arabia

Copyright © 2023 Qu, Yang, Li, Gao, Sun and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ying Li, liying_elink@163.com; Fuqiang Gao, gaofuqiang@bjmu.edu.cn

†These authors have contributed equally to this work and share first authorship
