ORIGINAL RESEARCH article

Front. Educ., 13 October 2023

Sec. Digital Education

Volume 8 - 2023 | https://doi.org/10.3389/feduc.2023.1251163

This article is part of the Research Topic: Impact and implications of AI methods and tools for the future of education

In November 2022, the public release of ChatGPT, an artificial intelligence (AI)-based natural language model, marked a turning point in many sectors of human life, and education was no exception. We describe how ChatGPT was integrated in an undergraduate course for an International Relations program in a private Mexican university. Under an action research methodology, we introduced this novel instrument in a course on Future Studies. Students were evaluated on their ability to explain to ChatGPT several discipline-specific methods and to make the AI implement these methods step by step. After six such activities, the outcomes evidenced that the students not only learned how to use the new AI tool and deepened their understanding of prospective methods, but also strengthened three soft or transversal competencies: communication, critical thinking, and logical and methodical reasoning. These results are promising in the framework of Skills for Industry 4.0 and Education for Sustainable Development; moreover, they demonstrate how ChatGPT created an opportunity for the students to strengthen, and for the professor to assess, time-tested competencies. This is a call to action for faculty and educational institutions to incorporate AI in their instructional design, not only to prepare our graduates for professional environments where they will collaborate with these technologies but also to enhance the quality and relevance of higher education in the digital age. Therefore, this work contributes to the growing body of research on how AI can be used in higher education settings to enhance learning experiences and outcomes.

With ChatGPT1 now freely available to whoever wants to use it, certain classical tasks have suddenly become obsolete, if their completion can be fully or mostly delegated to this new Artificial Intelligence (AI) tool (Eloundou et al., 2023).

ChatGPT’s integration into higher education has been received by the academic community with both enthusiasm and caution. On the positive side, ChatGPT offers a range of benefits that can significantly enhance the learning experience not only in higher education, but even toward self-directed learning and life-long learning. Notably, it provides real-time feedback and guidance to assist students in staying on track and addressing challenges as they arise. Additionally, its accessibility across various platforms, such as websites, smartphone apps, and messaging services, allows learners to engage with the tool at their convenience, fostering flexible learning. Furthermore, ChatGPT provides personalized support tailored to each learner’s choices and goals and has the potential to enhance the use of open educational resources (Firat, 2023). This can be especially beneficial for self-directed learners and for learners who might not have access to traditional institutional education, therefore democratizing knowledge, making it more accessible to a broader audience.

Among the main concerns is the threat ChatGPT poses to traditional assessment methods. The tool’s sophisticated text-generating capabilities can produce essays, which raises questions about the integrity of student submissions and the validity of assessments, potentially undermining the very foundation of academic evaluation (Neumann et al., 2023; Rudolph et al., 2023). Additionally, ChatGPT can generate incorrect though seemingly relevant and accurate content, which can lead to a lack of critical thinking if students rely too heavily on the tool for answers without deeply engaging with the content (Rudolph et al., 2023). Another issue is its fast-paced improvement, which makes it increasingly challenging for educational institutions to monitor or regulate its use.

Faced with this new situation, it could be tempting to prevent the students from using ChatGPT or any other AI tool; nonetheless, this path leads nowhere. Not only is there no way to enforce such a prohibition, but even if there were one, it would not make much sense to oblige the students to stay away from a type of tool whose use is destined to gradually become generalized, for better or for worse.

Hence, the need to choose a different direction, where we educators recognize the new existing situation. In the worst case we must adapt to it, while in the best scenario we can even take advantage of it. Concretely, in the short term, it means getting rid of certain tasks that have traditionally been assigned to students and embracing new ones.

This article represents an attempt to address this growing challenge: now that AI tools are able to complete a multiplicity of tasks almost instantaneously, how can these new instruments be used to foster and improve teaching and learning processes, instead of undermining them?

Our quest for an answer took the form of action research, based on an experiment carried out within the framework of a university course. First, various ways of incorporating ChatGPT as a pedagogical resource were explored and tested. Then the observation of the first set of results allowed us to select one of these ways. Finally, the merits of such a strategy were identified: it not only improved the students’ technological literacy and understanding of key elements from the course itself, but also stimulated the acquisition of time-tested competencies. These competencies have proved to be useful in contexts that include and even transcend both the course and the ability to productively use this particular technological tool.

Consequently, this generates an apparent paradox: a vanguard instrument proves to be an effective way to practice and develop timeless competencies.

This research is decidedly grounded on an empirical basis, provided by an in-class experiment in April–May, 2023, at the early stages of the adoption of ChatGPT by university students. We evaluated several strategies for effectively integrating this emerging Artificial Intelligence (AI) tool as a teaching resource in the specific context of the lecture and eventually selected the one that, in our context, showed the most potential.

In March 2023, the Institute for the Future of Education (IFE) invited professors to conduct action research involving the integration of AI tools in teaching environments.

Action research is a collaborative, reflective process where educators engage in systematic inquiry to improve their teaching practices and the learning experiences of their students. It involves a cyclical process of identifying a problem, planning a change, implementing the change, and then reflecting on the results to inform further action (Davison et al., 2004; Meyer et al., 2018; Voldby and Klein-Døssing, 2020). This iterative process, as one of its defining features, allows for continuous improvement and adaptation of the methodology based on the findings and reflections from each cycle. And, above all, this research approach emphasizes the active involvement of educators in studying and improving their own practices and sharing their results with the academic community (Davison et al., 2004; Meyer et al., 2018; Voldby and Klein-Døssing, 2020).

In response to this call from IFE, action research was deployed with the aim of identifying how ChatGPT’s potential could be exploited at the university level. Like many other educators at that time, the first author of this article was puzzled and intrigued by this AI tool recently released by OpenAI. The IFE initiative provided a framework to push boundaries and move forward into this new world. An ongoing course on Future Studies represented an adequate setting for such an exploration. Moreover, exploring what tomorrow might hold with the help of a tool that seemed to come from the future appeared to be a stimulating and elegant way to proceed.

The basic research protocol laid down by the IFE required integrating the use of ChatGPT (Version 3.5) into at least four class activities or assignments, recording data throughout the whole process, and finally closely analyzing the results of the experiment. This document constitutes the product of such an effort, in pursuit of an answer to the research question stated above.

The experiment was to be conducted in the context of the English-taught course “Future Scenarios on the International Political Economy,” taught at the Tecnologico de Monterrey, Campus Puebla in the Spring semester of 2023. A total of 19 students were initially enrolled in the course, all of whom were specializing in International Relations (IR). Approximately one-third of the students were pursuing a dual degree in IR and Economics, while another third of them were pursuing a dual degree in IR and Law. The students in the latter two groups were in their sixth semester of undergraduate studies, while the remaining students, who were solely enrolled in IR, were in their fourth semester. As a result, all students fell within the range of 19–21 years old.

We adhered to the following ethical considerations to ensure the integrity of our research and the protection of our participants’ rights and privacy. All participants were over 18 years old, aligning with the definition of formal citizens under the Mexican legal framework. Prior to their involvement, each participant provided explicit consent to partake in the study. They were thoroughly informed about the research and confirmed their understanding of how their information would be used. It is paramount to note that all data gathered was treated with the utmost confidentiality, ensuring participant anonymity. Furthermore, the handling and storage of this data strictly adhered to the Privacy Policies set forth by the higher education institution overseeing the study.

On April 11th 2023, ChatGPT as a tool was introduced to the whole group. This initial session was meant to properly launch the experiment. First, some general and contextual information was provided about the software. In the meantime, the students without an OpenAI account2 signed up for one. Following that, the professor offered a range of recommendations regarding the most effective approaches for engaging with ChatGPT, including: (1) Formulate the right prompts, (2) Interact repeatedly with it until you get what you want/need, (3) Be creative in what you ask it to do, including by using personas,3 (4) Take its answers critically, and (5) Use it as a complement of your own effort.

Next, two exercises were successively applied: groups of students were tasked first to instruct the AI to build a SWOT (Strengths, Weaknesses, Opportunities, and Threats) matrix on a given famous individual, and then to generate several multiple-choice questions about another method known as the Problem Tree. Importantly, both methods had been presented in previous lessons, so the exercise was intended as a way to look again at the same contents through a different lens. A class discussion then took place on the outcomes generated in the process, with the aim of highlighting both mistakes and good practices.

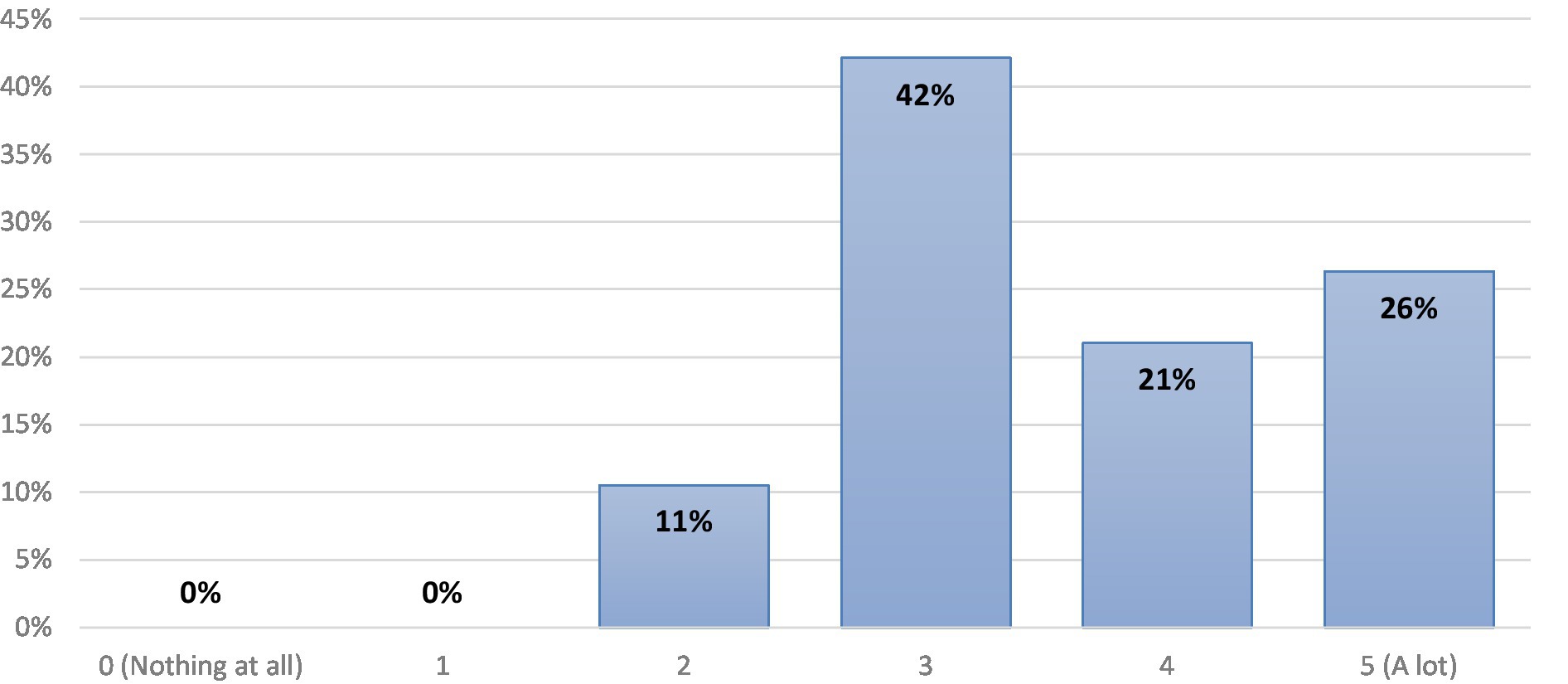

To close the launching session, all the students completed a survey regarding their own perceptions and experience (if any) about ChatGPT. The survey was designed in English, powered by Google Forms, and included seven multiple choice questions. One of the key questions sought to assess the level of prior knowledge each participant had about the software, with answers to be placed on a Likert scale from 0 (“Nothing at all”) to 5 (“Already a lot”). As depicted in Figure 1, there was a notable disparity in that regard, but none of the surveyed individuals reported being completely unaware of the tool, which had been released to the public half a year earlier. Among the respondents, 42% indicated possessing an intermediate level (Likert category: 3) of prior knowledge about it, 47% rated their familiarity as higher than that level, and only 11% positioned themselves below it.

Figure 1. Prior knowledge of ChatGPT by the students (n = 19), according to their answers to: “What did you know about ChatGPT prior to today’s [first] session?”

Further questions revealed that slightly more than half of the group had never used ChatGPT for academic purposes before. Moreover, almost half the students considered that the activities conducted that day represented an effective way to verify and consolidate their knowledge about the two abovementioned methods that were the basis for both exercises. A positive but moderate correlation (with a Pearson coefficient of 0.52) was found between having prior knowledge of the tool and perceiving the activities implemented in class that day as effective. Only one quarter of the group selected one of the two options indicating that they would be using this tool often or very often in the future, with almost 60% expressing uncertainty about it. These results underscore a relatively high level of initial skepticism right after this first contact in the context of this specific course.
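As a reminder of what a Pearson coefficient such as the 0.52 reported above measures, the following minimal sketch computes it from two lists of numeric answers. The function name, the numeric coding of the answers, and the sample values are assumptions made for illustration; they are not the survey data or the authors' actual procedure.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations, and sums of squared deviations for each sample.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Invented answers for illustration only; NOT the survey data from this study.
prior_knowledge = [1, 2, 3, 3, 4, 5]
perceived_effectiveness = [2, 1, 3, 4, 3, 5]
print(round(pearson(prior_knowledge, perceived_effectiveness), 2))
```

A coefficient near 1 would indicate that the two answers rise together almost linearly, whereas a value around 0.5 corresponds to the "positive but moderate" association described in the text.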

However, almost two-thirds of the surveyed individuals expressed confidence that ChatGPT would allow them to “learn more thanks to a more efficient use of [their] time,” while most of the remaining students selected the option about “learn[ing] differently, but probably in the same amount as before.”

If anything, the contrast between these positions (most students were unsure whether they would use the tool, yet they valued its utility in the learning process positively) reveals the mixed feelings toward such an innovative tool, at once impressive and error-prone, that had suddenly popped up in their lives as university students. The apparent inconsistency between the results can also be tentatively explained by the fear of being seen as embracing too openly the use of a tool whose compatibility with academic honesty and good practices was (and arguably still is) in question.

At this stage, the objectives of the research were that the students would: (1) acquire the ability to implement certain methods for exploring the future, using ChatGPT as an alternate and possibly effective teaching tool, and (2) develop new skills, which would enable them to take advantage of and become familiar with ChatGPT. Undoubtedly, as the first publicly available AI tool operating under natural language instructions, this software was opening the way to a new setting where AI would gradually be found in most aspects of our everyday lives. In other words, it was about making sure that they would develop “good habits” from the early stages of their adoption of ChatGPT, so they would be comfortable when using other AI tools in the longer run. Even if it can be anticipated that the next generations of such instruments will be much more sophisticated, being an “early adopter” of a given system is commonly understood as a long-term advantage (Tobbin and Adjei, 2012), even if such a system evolves and gets transformed over time.

This path leaves aside the assumption that the younger generations, for the sole merit of belonging to a particular age-range, are naturally able to smoothly and quickly adopt new technological tools and features. This purported quality, commonly expressed under the popular “digital natives” label, is severely questioned by research on the subject (Selwyn, 2009; Bennett and Maton, 2010; Margaryan et al., 2011). On the contrary, undergraduate students do not have an above-normal capacity to swiftly realize and learn how to properly use a new digital tool: Instead, they need to receive explanation and training, as any other person would.

For these two motives, there was a compelling rationale for integrating exercises involving the use of ChatGPT into the course. Guided practice would facilitate a better assimilation of how the discipline-specific methods operate, and how ChatGPT functionalities can be productively and meaningfully mobilized.

Prior to its implementation, the experiment aimed at testing the relative merits of three possible uses of ChatGPT in the context of this course, whose content consisted in teaching a series of methods for developing a prospective research project.

It was initially assumed that this tool could be utilized by the students to perform one or several of the following functions, understood as the fulfillment of a particular task:

• (i) to summarize/reformulate known information about methods,

• (ii) to practically implement the methods that had already been studied in class and/or

• (iii) to discover and learn about methods that they did not know yet.

These three functions were selected for their potential to diversify and enrich the process through which students would learn and understand the functioning of prospective methods.

As detailed in Table 1, six different activities were applied in the context of this experiment, throughout four successive assignments. Each activity was assessed under three criteria: the quality of the prompts, the effectiveness / productivity of the interaction with ChatGPT, and finally, the extent to which the achieved outcome was fulfilling the requirements. Each student was given specific written feedback on their performance in each area but, for the purpose of this experimentation, each activity was eventually given an assessment in terms of “Positive,” “Basic” or “Negative” for each of the three dimensions mentioned earlier. After being evaluated, each assignment was discussed in the classroom, in order to highlight both the good practices and the most common missteps.
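The per-activity percentages shown in the figures can be obtained by tallying the three-level ratings for each criterion. The sketch below illustrates that tallying under stated assumptions: the function name, the label constants, and the sample ratings are hypothetical and do not reproduce the authors' data or tooling.

```python
from collections import Counter

# The three assessment levels named in the text.
RATINGS = ("Positive", "Basic", "Negative")


def rating_shares(ratings):
    """Return the percentage of submissions at each level for one criterion."""
    if not ratings:
        raise ValueError("at least one rated submission is required")
    unknown = set(ratings) - set(RATINGS)
    if unknown:
        raise ValueError(f"unexpected rating labels: {sorted(unknown)}")
    counts = Counter(ratings)
    total = len(ratings)
    # Counter returns 0 for levels that never occur, so every level is reported.
    return {level: 100 * counts[level] / total for level in RATINGS}


# Hypothetical ratings for one criterion (e.g., prompt quality) in one activity.
example = ["Positive", "Basic", "Positive", "Negative"]
print(rating_shares(example))
```

Because the denominator is the number of submissions actually received, the same function accommodates the varying n reported across activities.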

Notably, not all the activities were characterized by the same level of complexity.4 This discrepancy was not intentional but rather an inevitable outcome of conducting this experiment within real-life conditions, specifically within the context of a course that pursued its own pedagogical objectives. Therefore, the evolution of the students’ success rate over time should be interpreted cautiously. However, even if the last task was the most challenging for being based on the most advanced prospective method, it was also the one where the performance of the students turned out to be the highest.

The deliverable consisted in a Word document, which included a link to a webpage where the whole interaction with ChatGPT would be shown. At the time of the experiment, the function to generate such a link was not embedded into the software, so we resorted to Share GPT, a Chrome extension specifically designed to that end.5

The rest of the same Word document served to display the student’s answers to three or four questions to guide their personal reflections about their experience when using ChatGPT. The purpose was to monitor the evolution of their thoughts about their ability to use the AI software and their perception of its utility in their learning process.

The first assignment consisted of two activities, both centered on already known Future Studies methods, with the aim of helping the students prepare for an imminent exam. Activity 1.1 consisted in having ChatGPT recapitulate the steps to be followed in the implementation process of either of two methods, thereby testing the function “to summarize/reformulate known information about methods” identified above as (i). Activity 1.2 required the students to instruct ChatGPT to apply another method, which related to function “to practically implement the methods that had already been studied in class” (ii). In both cases, the methods had been studied and implemented in previous sessions.

The results that were obtained through Activity 1.1 highlighted that ChatGPT was ill-suited to provide information about the content and functioning of any given method, because of its well-known intrinsic limitations in terms of factual veracity.

Furthermore, specialized webpages, which can easily be found via a classical web search, would provide complete and more reliable information on the matter, thereby reducing the utility of resorting to AI for that purpose. It is true that ChatGPT offers added value by providing explanations in terms that are better suited to the student and addressing follow-up questions on the same subject. However, this potential added value becomes moot when there exists so much uncertainty about the soundness of the information it delivers.

In contrast, Activity 1.2 delivered more encouraging results insofar as the observed limitations had to do with how the students used the tool, instead of being the consequence of intrinsic flaws from the tool itself.

The second assignment also comprised two activities, both of them based on the same prospective method. In this case, the students had not heard about this method beforehand, and Activity 2.1 consisted in having ChatGPT explain the method to them, which corresponds to function “to discover and learn about methods that they did not know yet,” defined earlier as (iii). The instructions contained a short description of it to help the students formulate their prompts. Activity 2.2 was designed to further test function (ii), so it was substantially similar to 1.2: once more, it consisted in implementing a method, in this case the one that had been discovered thanks to Activity 2.1.

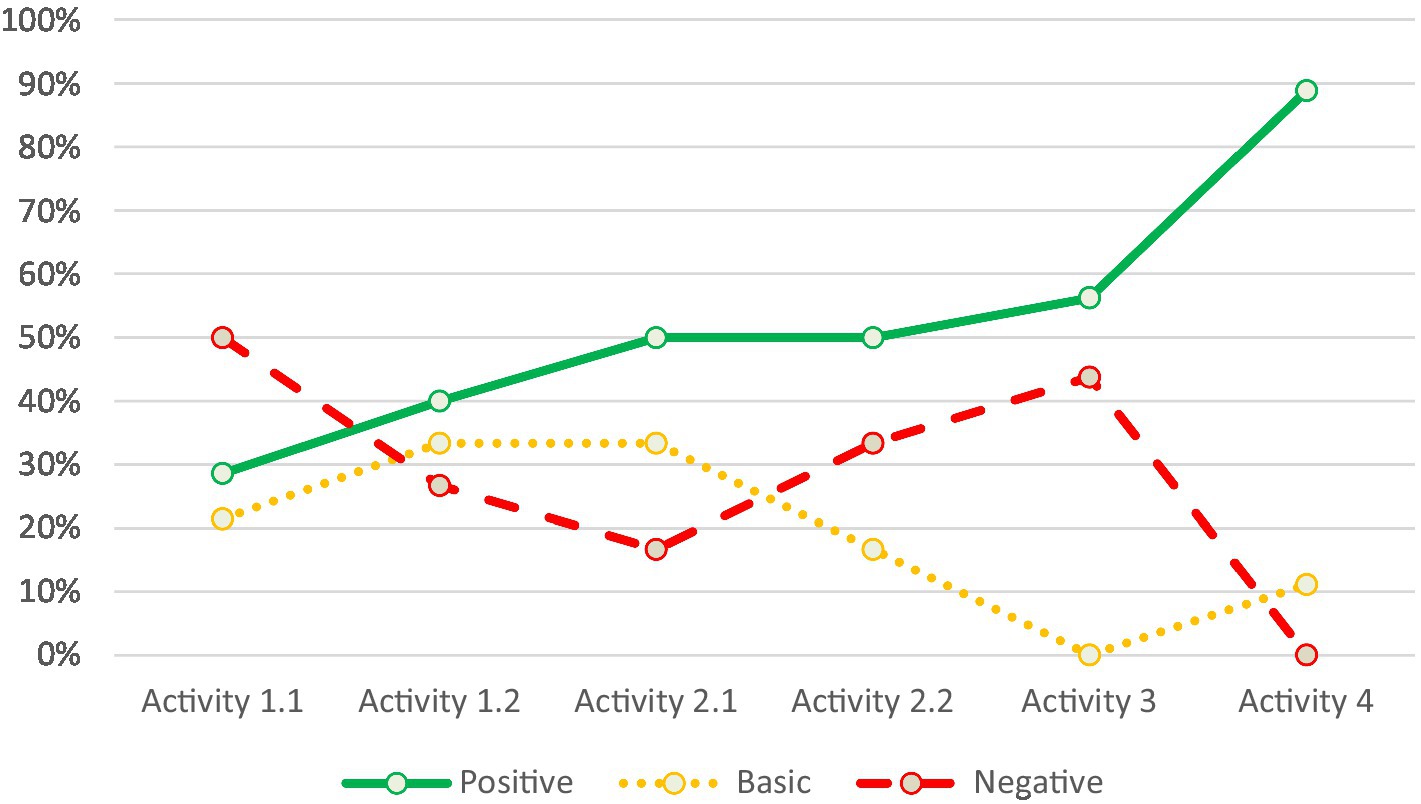

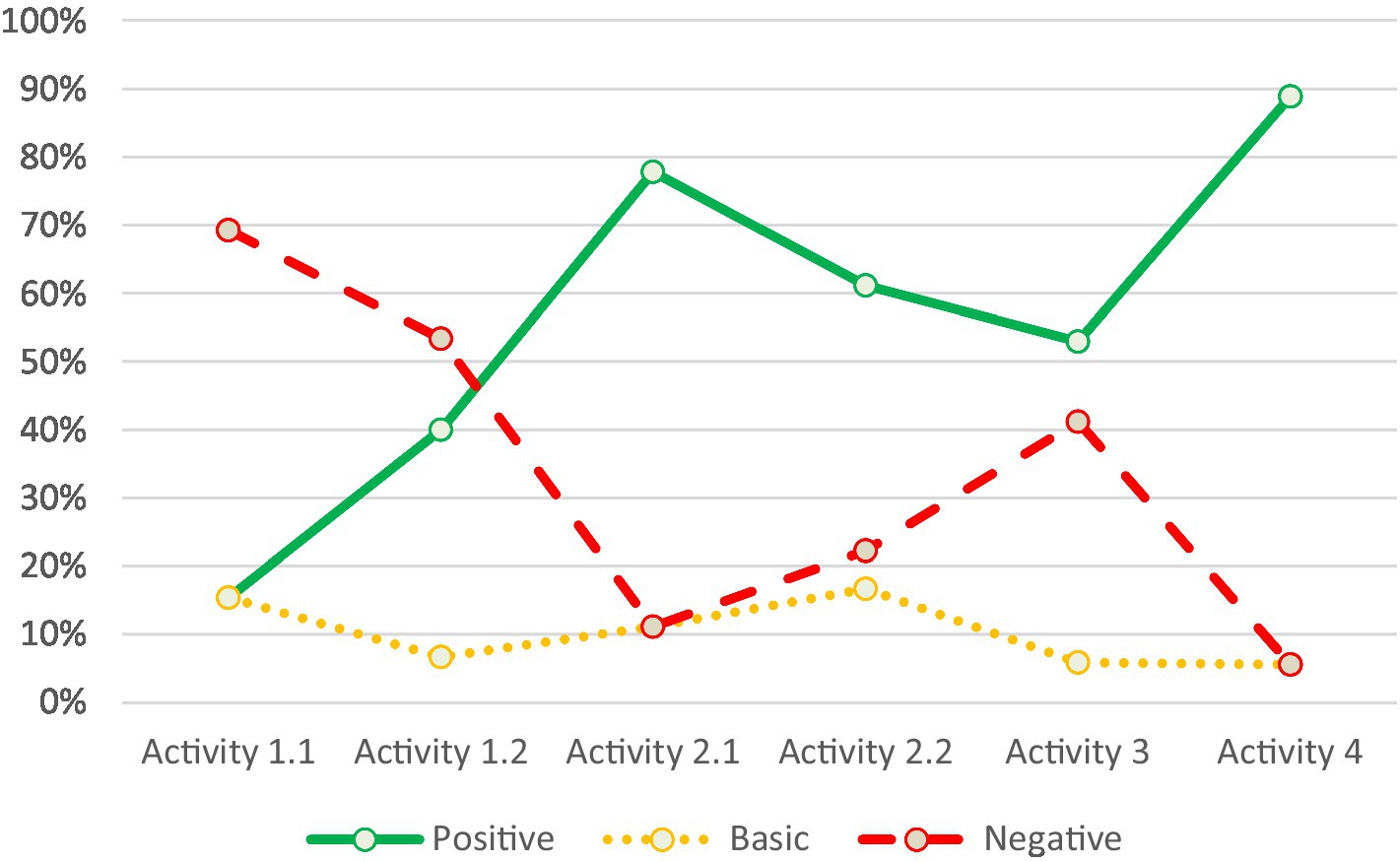

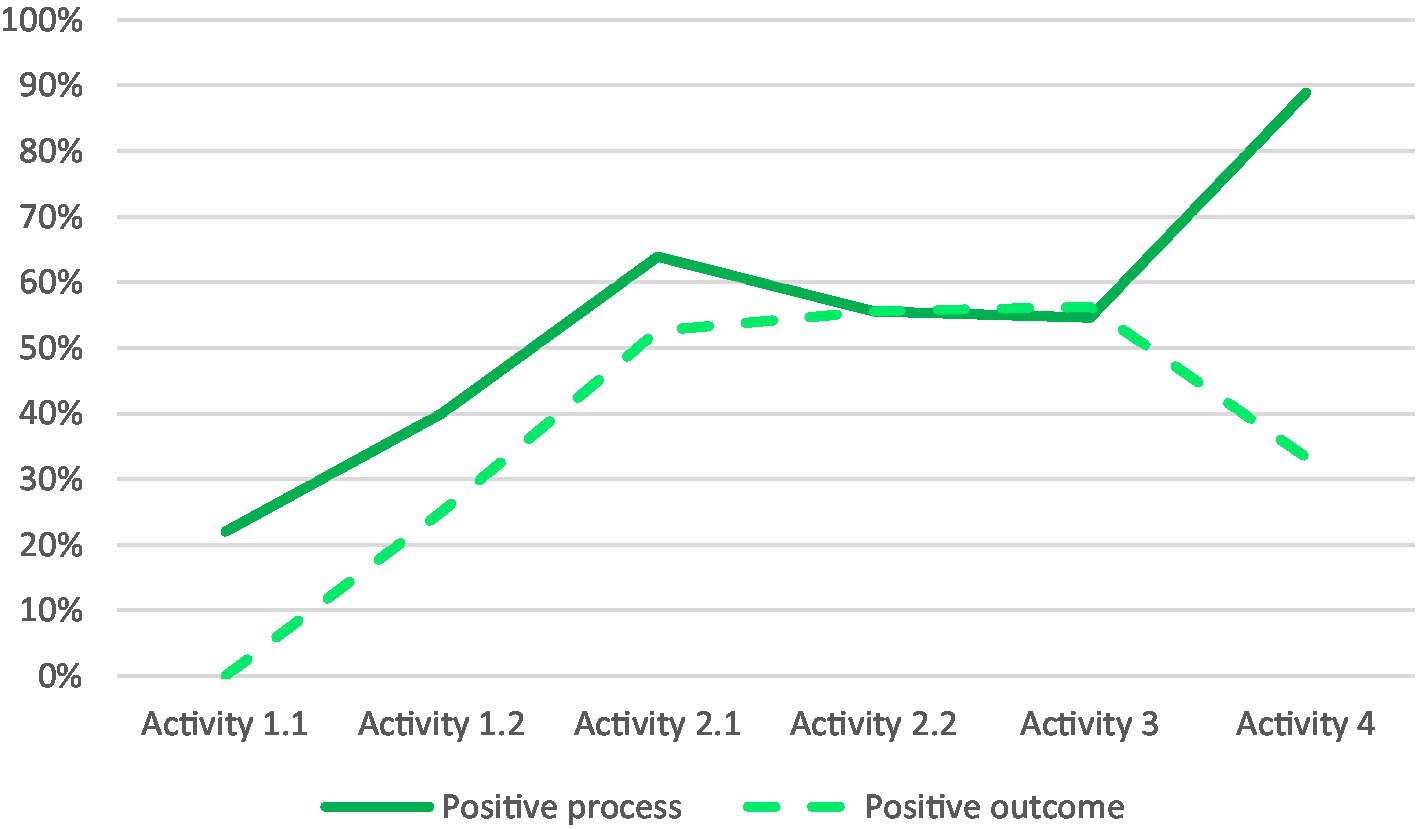

At first sight, Activity 2.1 appeared to have been completed in a satisfactory fashion, since all the process-related indicators went up (see Figures 2 and 3). However, two problems were detected. First, it was too simple for the students to formulate proper prompts, since they only had to include in their instructions the information that they had received about the method. Consequently, this specific task did not require them to mobilize any reflection, creativity or effort of any kind. Second, the available information did not prevent some (minor) errors from slipping into the generated answers, which exposed the students to inaccurate factual data about the method itself. In contrast, Activity 2.2 provided more interesting insights on how well the students were able to have ChatGPT follow their instructions and eventually produce the desired product.

Figure 2. Percentage of students and the quality of their prompts per learning activity. The prompts were assessed positively, negatively, or in-between, by the professor (n = between 14 and 18, since not all of the students delivered every required assignment).

Figure 3. Percentage of students and quality of their “conversation” with ChatGPT per learning activity. The interaction was assessed by the professor as positively, negatively, or in-between (n = between 13 and 18, since not all of the students delivered every required assignment).

The intermediate results obtained from these first four activities revealed that function (ii) “to implement the methods that had already been studied in class” was much more promising than the other two functions, (i) and (iii).

Consequently, the professor in charge of the experiment decided that, moving forward, the subsequent activities would no longer consist in the students using ChatGPT to summarize or discover methods, but exclusively in the students using it to implement such methods. Therefore, the next two tasks were designed to test and strengthen knowledge in a practical fashion, where each student would be giving instructions to ChatGPT about the steps to be followed in order to complete the full methodological process. In the context of this method-oriented course, it undoubtedly was a more promising way to take advantage of ChatGPT’s affordances.

From an overall perspective, the experiment highlighted that, despite a significant number of students rating their command of ChatGPT as decent or high, the learning curve proved to be steep: a total of six activities (in this case, distributed among four distinct installments) were indeed required for most students to attain a satisfactory level of proficiency with ChatGPT.

This observation supports the view that the belief in the existence of “digital natives” (young people portrayed as being “innate, talented users of digital technologies”; Selwyn, 2009, p. 364) is unfounded. Instead, a gradual adaptation and adoption process had to take place before most of the group proved to be able to make a fruitful use of the tool.

A prompt is defined as “a phrase or question that is used to stimulate a response from ChatGPT” (Morales-Chan, 2023, p. 1). This is, fundamentally, the human side of the interaction with AI. Consequently, the quality of the answer generated by the program heavily depends on the way the request was formulated in the first place (Morales-Chan, 2023, p. 2). This became so clear that, at the beginning of 2023, training courses and articles on prompt writing (also known as “prompt engineering”) became commonplace (see for instance an 18-h lesson offered on Coursera; White, 2023).

As shown in Figure 2, the quality of the prompts started from a low ground in the experiment, with less than a third of the students being able to properly explain to the machine what output they were expecting from it. At the beginning, even after taking an introductory session on the matter, a common mistake consisted in adopting the same pattern as when using a search engine, with a string of keywords loosely connected with one another.

At later stages of the experiment, full sentences were finally becoming the regular practice: in Activities 2.1, 2.2, and 3, a narrow majority of students wrote adequate prompts, but the rest of them still lacked clarity and/or specificity. Activity 4, which was the final one, eventually showed an uptick in progress in that respect, with 90% of the group properly communicating with the AI tool. The rest of the group achieved a level that was considered basic, and no one showed plain incapacity to perform this task (see Figure 2).

Therefore, the trial-and-error process, conducted throughout six successive activities interspersed with feedback, allowed for a significant improvement in the proportion of students capable of “communicating” through clear and specific prompts.

Unlike using search engines, engaging with ChatGPT involves a dynamic back-and-forth exchange between the user and the machine. Instead of a simple request and the presentation of a list of results, what unfolds is akin to a genuine interaction or conversation: this is precisely why the word “chat” was added to GPT, the latter referring to the underlying technology that powers it (Eloundou et al., 2023).

Familiar with digital tools that typically involve one-off queries (even if some of them might end up being repeated under different forms as part of the same research process), many students initially lacked the inclination to engage in a continuous exchange with ChatGPT. They did not readily recognize the opportunity, or even in certain cases the need, to follow a step-by-step interaction, to request more details, or even highlight mistakes and ask for rectifications.

Engaging with ChatGPT entails more than just writing additional prompts after the initial one: it also implies analyzing and understanding the generated answers and tailoring subsequent prompts accordingly. In the context of the activities based on function (ii) “to practically implement the methods that had already been studied in class,” it further assumes that the user possesses sufficient clarity about how the entire process can be broken down, and what outcomes are expected at each stage.

In line with the earlier observations regarding prompt writing, it also took some time before many of the students were able to conduct a meaningful and productive interaction with the tool. In particular, they were prone to simply accept what the program delivered to them and move on from there without further questioning the generated statements.

As shown in Figure 3, the quality of the interaction with the software showed an uneven but noticeable progress, starting below 20% and ending close to 90%. The ups and downs in-between can be explained by the varying levels of difficulty of the different methods: a more demanding method would require a longer and arguably more sophisticated discussion with ChatGPT, which raises the likelihood that students commit errors in the process. However, the fact that the last—and most challenging—method was the one where, on average, the best interactions were observed is compelling evidence that this point ended up being properly assimilated by most of the group.

After focusing on two dimensions based on the process of using ChatGPT (sections 3.1 and 3.2), the third criterion had to do with how effective this interaction had been, as revealed by the quality of the product that was eventually generated by ChatGPT when following each student’s instructions.

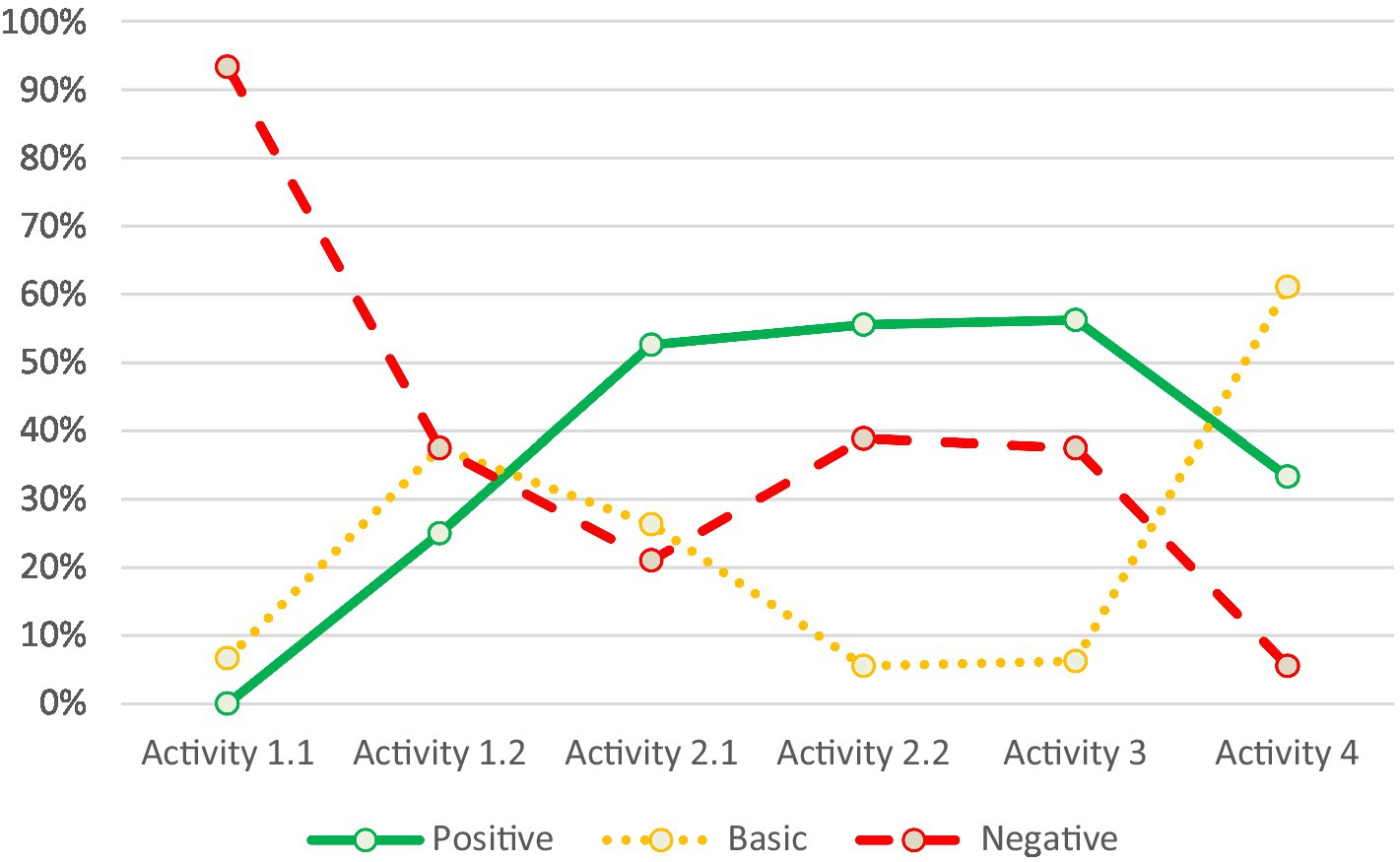

Figure 4 reveals that almost all the outcomes generated for Activity 1.1 were substandard. Regardless of which specific method they chose (they had to pick one, among two that had previously been studied in class), almost all the students gathered incomplete and/or false data about it. They proved unable to deal with the discrepancy between what they had learnt earlier in class on this subject, and what ChatGPT was—misleadingly—delivering to them.

Figure 4. Percentage of students and quality of the outcome generated by ChatGPT per learning activity. The outcomes were assessed by the professor as positively, negatively, or in-between (n = between 15 and 19, since not all of the students delivered every required assignment).

While the software generated answers with inaccurate and fragmented information about the method, it presented them with such apparent confidence that the students simply accepted this alternate—and fictitious—version of what the method consisted of.

The outcomes for Activity 1.2 (which, as a reminder, was delivered at the same time as Activity 1.1) were significantly better since close to 25% of the group got it right. Since the methods to be used in both parts of the first assignment (i.e., Activities 1.1 and 1.2) were equally challenging in terms of intrinsic complexity, the explanation for the gap between the observed performances in each case had to be found elsewhere, namely in the kind of exercise faced by the students. In this case, ChatGPT’s propensity to invent to fill in knowledge gaps was not at the fore, since the AI program was merely asked to implement a series of steps. Therefore, the quality of the eventual outcomes did not depend that much on ChatGPT’s being truthful and/or accurate, but primarily on each student’s capacity to properly use and “guide” the tool throughout the different phases that constitute each method. This observation stood as a confirmation that function (ii) was more promising than the other two, since the latter rely too heavily on ChatGPT’s changing capacity to stick to real information.6

In the class discussion that followed, further emphasis was made on the software’s limitations in terms of factual veracity, so the students were encouraged not to settle too easily with ChatGPT’s first answers.

The next three activities delivered better outcomes, with between 50 and 60% of the students managing to obtain satisfactory answers from the software (see Figure 4). Although they represent a majority, these percentages can still be seen as relatively low, all the more so since they did not show a significant increase over time. The last activity even showed a decline in the rate of positive outcomes, which can be explained by the more challenging nature of the exercise, based on the most complex method. Even if only one third of the students got it right, almost everyone else ended up obtaining a “basic” outcome, with only one of them reaching a final result assessed as “negative.” This latest observation allows us to relate the drop in the “positive” curve to the increased difficulty of the task and therefore to put the significance of such a break in the upward trend into perspective.

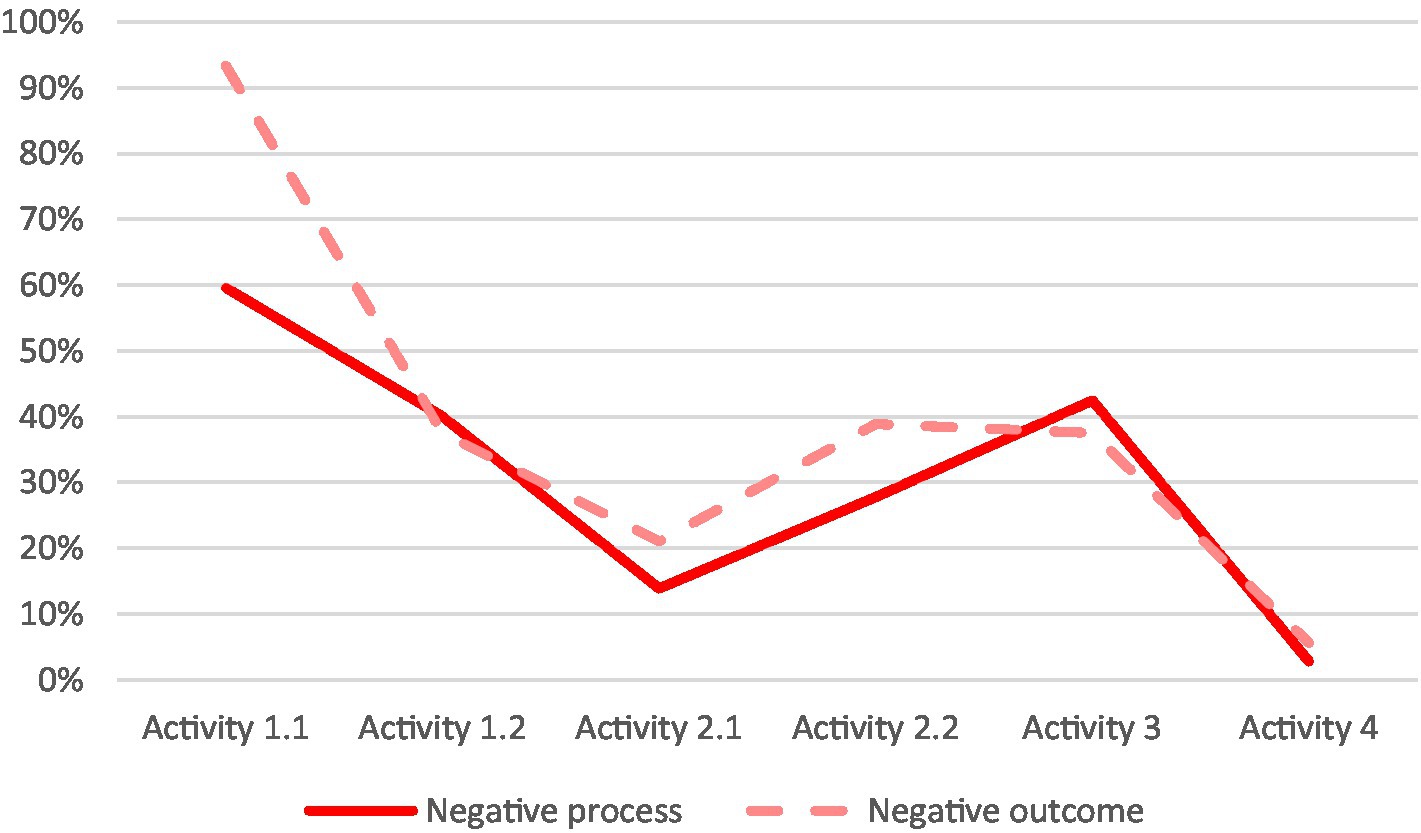

It is worth noting that a higher rate of “positive” assessments in terms of process usually translates into more “positive” assessments in terms of outcomes, and the same applies to negative assessments and outcomes. As illustrated by the proximity between the curves in Figures 5 and 6, the rates are closely correlated, except for the first two activities and the very last one. In the first two cases, which were both part of the first assignment, this can be explained by the fact that this was the first time the students were completing a ChatGPT-based homework and were only starting to navigate the learning curve.

Figure 5. Percentages of positive assessment of outcomes and positive assessments of the interactions with ChatGPT (calculated as the average rate of positive assessments of prompts and conversations with the tool) per learning activity (n = between 15 and 19, since not all of the students delivered every required assignment).

Figure 6. Percentages of negative assessment of outcomes and negative assessments of the interactions with ChatGPT (calculated as the average rate of negative assessments of prompts and conversations with the tool) per learning activity (n = between 15 and 19, since not all students delivered all the required assignments).

In the last case, the significant increase in the method’s complexity is an adequate explanatory factor: even though almost all the students (close to 90%) had performed very well in terms of process, this was no guarantee that the outcome would be satisfactory in the same proportions. Indeed, even if the prompts are formulated within the recommended parameters and the conversation is effectively sustained, the final result might still fall short of expectations if some confusion or misunderstanding persists in the students’ minds regarding how exactly the method is supposed to be operated.

However, this by no means indicates that the activity itself was a failure: on the contrary, it played a role as a powerful and straightforward indicator of which specific parts of the method had not been properly understood by the students.7 This new information offered the opportunity for a precisely targeted complementary explanation and/or exercise during the next session.

In addition to the activities themselves, each of the four assignments also required each student to provide a “final reflection” on their experience with ChatGPT.

As a confirmation of the “learning curve” that still had to be navigated in the first assignment, 87% of the students answered that it had been “hard” to complete it by using ChatGPT, while only 6% described the task as “easy,” and the same proportion provided a mixed answer8 (see Figure 7). The answers to the same question about the second assignment (on Activities 2.1 and 2.2) revealed a radical shift in perceptions, since this time only 11% selected “hard” and 61% “easy,” with the rest sitting on the fence.9

Figure 7. Self-assessment by the students of their ability to use ChatGPT throughout the assignments. For comparison, the black dots indicate how well, on average, the processes were conducted in each assignment, which involved calculating the average rate of positive assessments for both “prompts” and “conversations”. Assignment 1 considers activities 1.1 and 1.2, while Assignment 2 considers activities 2.1 and 2.2 (n = between 15 and 19, since not all students delivered all the required assignments).
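The process indicator used in Figures 5 to 7 (the average of the rates of positive assessments for “prompts” and “conversations”) and the recoding mentioned in footnote 9 can be illustrated with a minimal sketch. The function names, the rating labels other than “positive,” the “mixed” fallback label, and the sample data are hypothetical and are not taken from the study’s dataset.

```python
from statistics import mean

# Recoding of the final-reflection answers as described in footnote 9.
# The "mixed" fallback label is an assumption made for this sketch.
RECODE = {"hard": "low", "easy": "high"}


def recode(answer: str) -> str:
    """Map a 'hard'/'easy' answer to a 'low'/'high' ability level."""
    return RECODE.get(answer, "mixed")


def positive_rate(ratings: list[str]) -> float:
    """Percentage of ratings that are 'positive'."""
    return 100 * sum(r == "positive" for r in ratings) / len(ratings)


def process_rate(prompts: list[str], conversations: list[str]) -> float:
    """Average of the positive rates for prompts and for conversations."""
    return mean([positive_rate(prompts), positive_rate(conversations)])


# Hypothetical ratings for four students in one activity.
prompts = ["positive", "positive", "basic", "negative"]
conversations = ["positive", "basic", "basic", "negative"]

print(process_rate(prompts, conversations))
print([recode(a) for a in ["hard", "easy", "it depends"]])
```

Averaging the two rates gives prompts and conversations equal weight, which is one plausible reading of the figure captions.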

For the third assignment (Activity 3), the question was modified to focus on their self-assessed ability to use the tool,10 and close to 100% of the respondents considered that they had actually improved it, expressing confidence that they were now proficient in handling the tool. However, these numbers were much higher than the percentages of students who had managed to properly apply the processes. This gap reveals that many students (almost half of them) were taking for granted that they were good enough at using ChatGPT, when this was in fact not yet the case. In contrast, self-assessments and external evaluation became much more aligned with one another for the last assignment (Activity 4).

This transitory gap between actual performance and its perception was also noticeable in their answers to a question, asked as part of each of the four assignments, about the “techniques” that they had applied in their interaction with ChatGPT. From the very first assignment, the students consistently provided relevant and correct advice, describing good practices such as being specific in the requests, providing relevant information in the prompt itself, being patient and organized in the interaction, checking the accuracy of the generated contents, or signaling possible mistakes. However, except for the very last assignment, there was a notable delay between the correct verbalization of the need to proceed in a particular way and its actual implementation.

This last observation further reinforces the point that it is not enough to inform the students about how to proceed, or to hear or read them describe the process (Reese, 2011). It is much more fruitful to have them actually do it, which allows the professor to observe to what extent their own practice is consistent with what they state should be done. In fact, it has been shown that learning-by-doing plays a key role in digital literacy, since students learn how to use technology by using it (Tan and Kim, 2015).

Even though the experiment was conducted with two objectives in mind (teaching prospective methods through novel means and providing the students with an introduction to the use of ChatGPT), it turned out that this initiative produced another, unexpected, output.

Indeed, over the course of the experiment, the professor noted that, in order to properly execute the ChatGPT-based tasks, the students had to mobilize certain competencies that can be qualified as “classical” or “timeless,” since they have long been identified as key to any student’s education. During the feedback sessions, it soon became clear that the central conditions for reaching their objectives when interacting with ChatGPT did not have to do with ChatGPT-specific requirements, but with being able to mobilize three competencies with a much broader range and relevance: written expression, critical thinking, and methodical and logical reasoning. These competencies are identified as soft, transversal, or non-cognitive, to reflect that they are the basis for a successful performance within the professional and personal spheres (OECD, 2015).

Consequently, using ChatGPT appeared to bear another merit: not only would it contribute to the course’s own objectives in terms of content, and help students assimilate how to handle a new digital tool, but it would at the same time stimulate the development of competencies that are widely recognized as being essential for any (future) professional, especially (but of course not exclusively) in the field of social sciences.

Additionally, ChatGPT proved to be an effective indicator of the extent to which each student has assimilated each of these competencies, which is particularly useful from the educator’s perspective.

Given its capacity to generate text with correct grammar, spelling, and logic at a pace faster than it can be read out loud, ChatGPT may understandably be perceived as a potential threat to the development of writing skills if individuals start relying heavily on it for this task. However, ChatGPT also presents opportunities in this regard, since it necessitates the formulation of precise and clear prompts to obtain outputs that align with initial expectations. As a result, it can serve as a catalyst for enhancing the ability to articulate thoughts effectively and communicate intentions with clarity.

For the Tecnologico de Monterrey, communication as a competency refers to effectively using different languages, resources, and communication strategies according to the context, when interacting within various professional and personal networks, with different purposes or objectives (Olivares et al., 2021). It is a transversal competency that is relevant for successful performance within the professional and personal environment (OECD, 2015). It is understood as comprising language proficiency, presentation competency, capacity for dialogue, communication readiness, consensus orientation and openness toward criticism (Ehlers, 2020). Therefore, the focus is on information purposes as well as strategic communication skills in order to be able to successfully and appropriately deal with different contexts and situations. As a modality, written communication enables the student to express ideas, arguments, and emotions in writing, with linguistic correctness and taking contextual elements into account, both in the mother tongue and in an additional language (Olivares et al., 2021).

Before and throughout the experiment, several tips were provided to the students as to what effective prompts look like. At some point, it seemed that all of them could be subsumed into one, which consisted of writing as if you were communicating with a fellow human. While it appears to be straightforward on its face, this advice nevertheless proved to be of little effectiveness, since today’s students also tend to be just as evasive and vague in their written exchanges with their “fellow humans.” It was therefore necessary to specifically insist, in the feedback sessions, on what constituted a good prompt, as opposed to an ineffective one.

In doing so, it was particularly helpful to point at the patent connection between an inadequately worded prompt and the unsatisfactory nature of the output generated by ChatGPT, which instantly reveals that “something is wrong.” This feedback is similar to the one that a developer receives after making mistakes in their programming process: since their code is not working as intended, something must be corrected somewhere. Before the public release of ChatGPT, this was not something that could be highlighted so clearly for natural language. The closest equivalent would be a tutor showing the tutee, in a one-on-one interaction, that the sentences he or she has written are confusing or missing important points. By definition, such a process would be extremely hard to scale and would in any case be dependent on other parameters, such as the level of mutual understanding between the people involved. In contrast, ChatGPT offers promising prospects in this regard.

This is not to suggest that the students’ writing experience should henceforth be limited to the formulation of instructions to an AI machine. The point here is that the interaction with ChatGPT offers opportunities to develop and strengthen this skill, in such a way that it can afterwards be exploited in other contexts.

False or fake information is the well-known drawback of the trove of data available on the Internet. This risk is compounded on (and by) ChatGPT, first because it does not provide its own sources (whose reliability or lack thereof usually serves as a useful indicator for the cautious Internet user), and second because of its own self-confidence when generating outputs, which makes its statements appear sounder than they actually are.

Paradoxically, one of ChatGPT’s main flaws, namely its very flexible relationship with truth, turns out to be a useful quality in the educational context, since it represents a strong incentive for the students to double-check the generated outputs. Therefore, the mere fact of using it represents a powerfully illustrative case study of the importance of not blindly trusting the information that has been generated. On the contrary, with every use of ChatGPT, students are reminded that they must refrain from taking the veracity of ChatGPT’s answers for granted and must instead approach them with systematic skepticism, by applying their critical thinking. As shown by the easy and quick dissemination of disinformation,11 this reflex is not well established yet.

For the Tecnologico de Monterrey, a student with critical thinking evaluates the solidity of one’s own and others’ reasoning, based on the identification of fallacies and contradictions that allow forming a personal judgment in the face of a situation or problem (Olivares et al., 2021). Critical thinking allows questioning and changing perspectives in relation to existing identified facts (Ehlers, 2020); it is therefore related to other reasoning skills, like self-reflection and problem-solving competencies. Besides, it is a relevant competency in the education for sustainable development framework and it is essential for facing the threat of employment disruptions from automation and AI (PWC, 2020).

In the experiment, students played a leading role, which was intended to give them more confidence when it came to questioning the generated outcome: instead of simply querying for information and passively receiving (and accepting) it, they were placed in a situation where they were instructed to gradually implement a given method and received outputs in return. Since they had previously learnt this method in class, they were, at least theoretically, sufficiently equipped to critically examine and assess the value of the generated answers.

This context was designed as a means to test and practice critical thinking, defined as “skillful, responsible thinking that facilitates good judgment because it (1) relies upon criteria, (2) is self-correcting, and (3) is sensitive to context” (Lipman, 1988, p. 145). In the exercise, each student had to contrast what they had obtained with what they knew, first to identify whether there was a discrepancy between the two and, if so, to find out whether it was the result of flawed knowledge (or a wrongly worded prompt) on their side, or a misstep on ChatGPT’s.

As shown in the results section, critical thinking is the competency that students took the longest to assimilate, since the outcomes that most students had reached still contained undetected errors, which is the sign of a premature and undeserved acceptance of the responses provided by ChatGPT.

To be meaningful, the two competencies highlighted in sections 4.1 and 4.2 have to be combined with a third one, which is the ability to structure the process in such a way that the different steps eventually lead to the desired outcome. First, the possible detection of factual errors or omissions in the generated responses serves as an opportunity to engage in a constructive dialogue with the tool, which aims to highlight and address these inaccuracies, ultimately leading to their rectification.

Second, carefully worded prompts would be of little use if they were not inserted in a logically articulated framework. In fact, it can even be argued that being able to correctly organize ideas (or, in this case, the successive steps) is part of the written expression competency. In this article, they have been presented as separate to take due account of the fact that the described activities, designed around function (ii), fit neatly within a key feature of ChatGPT, which is its conversational dimension.

For the Tecnologico de Monterrey, the ability to solve problems and questions using logical and methodical reasoning in the analysis of clearly structured situations represents an incipient or basic level of “scientific thinking.” This competency involves using structured methods in the analysis of complex situations from disciplinary and multidisciplinary perspectives and incorporating evidence-based professional practice (Olivares et al., 2021). Scientific thinking involves higher-order reasoning skills like analysis, evaluation, and synthesis of information (Suciati et al., 2018). As mentioned before, this competency relates to the ability to understand and solve complex problems (Vázquez-Parra et al., 2022).

Indeed, implementing different prospective methods, as mandated in four of the six activities, demands a comprehensive understanding of how each of them breaks down into a series of successive steps. Since ChatGPT allows for a sustained interaction between the user and the program, in a way that mimics human interaction, it allows the students to organize a communication process on this matter. Importantly, it also grants the professor an opportunity to observe and assess how it has been sequenced and conducted.

This organized reasoning can be likened to an improved “self-explanation,” defined as “prompting students to explain concepts to themselves during initial learning” (MIT Teaching + Learning Lab, 2023), insofar as they are indeed describing the successive steps of a given method, with enough details for these to be implemented. Technically, they are not explaining it to anyone else, but they do obtain a response from the AI tool, in such a way that they can assess the accuracy and completeness of their own input, and eventually make (or request) adjustments in the following stages of the “conversation.” It triggers a process that has its own merits in comparison to pure self-explanation (where no external feedback is delivered) and to an explanation actually directed to another person. In addition to being contingent on the other person’s own availability and ability to deal with the issue at stake, this second scenario is unlikely to place the student in a leading role, but rather in a position where they intend to guide someone who actually knows more about the process and will judge or correct them in case of missteps.

It might appear that the description of this experiment only leaves a narrow margin of application for this specific use of ChatGPT, limited to courses dedicated to explaining how to use certain methods. This perception is not accurate, since this strategy can be transposed to any setting where students are expected to follow and implement a series of steps. The described exercise, which by no means forbids the implementation of additional, complementary activities where AI is not mobilized,12 fundamentally consists in tasking them with leading a process (with ChatGPT in the executing role) instead of merely following its steps.

Such logic can be applied in different contexts. For instance, students might be tasked with having ChatGPT write a story or an essay, with the specific instruction that the AI tool has to be guided step by step throughout the process. As in the case of the described experiment, the evaluation should not concentrate (at least not primarily) on the generated outcome, but rather on the way the student guided the whole process, including by requiring corrections and improvements along the way. Hence the importance of including evidence of the interaction sequence, as explained in section 2.5.

On a more general note, a key practical implication of this paper is that ChatGPT provides an opportunity for the professor to closely and directly observe how the student manages a complex process, instead of merely inferring from the outcome how well the process had been conducted. In order not to miss such an opportunity, the design and instructions of assignments should be adjusted with this extended target in mind.

We recognize that, as an exploratory action research on a novel and quickly evolving groundbreaking tool, our research has limitations, which could be addressed in future work.

Our results are based on the professor’s evaluation of students’ interactions with, and outcomes from, ChatGPT as well as the students’ perception of their own ability to use this AI. To provide more robust support for the findings, future studies should consider using an evaluation instrument that would not be based only on the specific observable skills when handling ChatGPT (prompt writing, quality of the interaction and generated outcome) but directly on the competencies that this exercise inadvertently made it possible to both test and foster (written expression, critical thinking, and logical and methodical reasoning).

Besides, the participants in this research were unfamiliar with the use of AI for learning applications. In the future, researchers will be dealing with students with more experience in the use of ChatGPT and other technological tools. This context is likely to require an adjustment to the instructions and expectations. For instance, most participants might be more comfortable with prompt writing, in comparison to the sample observed in the context of this experiment.

While our activities relied on using open access ChatGPT Version 3.5, other versions and even other apps and software have emerged since, offering more opportunities for supporting the learning process. So, future studies should analyze their own potential and/or actual integration into higher education.

It should also be noted that our research took place in the context of a private institution, where the students have access to computers, smartphones, and an internet connection in a higher proportion than most Latin American students. In this region, the digital divide has been shown to limit the population’s access to the internet and other information and communication technologies. Future research should consider collaborating with different universities to implement similar activities, in order to have a broader sample of Latin American students.

Finally, this longitudinal research was developed over a relatively short period: its temporal scope could be extended, either by replicating a similar experiment with a new cohort of students, or by applying a more advanced experiment to the same initial group of participants.

Initially geared toward the transmission (and hopefully the eventual acquisition) of techniques to handle a novel digital tool, this experiment ended up providing additional arguments for the continuing relevance of competencies whose importance had been emphasized long ago: written expression, critical thinking, and organized reasoning.

This unexpected finding serves as confirmation that, despite the continuous evolution of our tools toward increased sophistication, there are certain skills and competencies that remain as essential as ever. Rather than diminishing their significance, AI technology is, in fact, strengthening the case for persistently fostering their development.

The datasets presented in this article are not readily available because the participants agreed to participate under the condition of confidentiality and anonymity of their answers. Requests to access the datasets should be directed to bmichalon@tec.mx.

All participants were over 18 years old, aligning with the definition of formal citizens under the Mexican legal framework. Prior to their involvement, each participant provided explicit consent to partake in the study. They were thoroughly informed about the research and confirmed their understanding of how their information would be used. It is paramount to note that all data gathered was treated with the utmost confidentiality, ensuring participant anonymity. Furthermore, the handling and storage of this data strictly adhered to the Privacy Policies set forth by the higher education institution overseeing the study. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

BM and CC-Z contributed to the conception and design of the study, identified and adapted the references and the theoretical framework, and wrote the final manuscript. BM conducted the instructional design and its implementation, conducted the data collection and analysis as well as the visualization of the results, and wrote the first manuscript. All authors contributed to the article and approved the submitted version.

The authors would like to acknowledge the support of Writing Lab, Institute for the Future of Education, Tecnologico de Monterrey, Mexico in the production of this work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^While GPT stands for “Generative Pre-trained Transformer,” it has also been associated with “General Purpose Technology” (Eloundou et al., 2023).

3. ^Which means to instruct ChatGPT to play a particular role (expert, teacher, decision-maker, advisor, etc.) and/or to tailor its answer to a particular audience.

4. ^Table 1 integrates a column with an estimated difficulty score for each activity.

6. ^It has to be conceded, however, that function (i) (generating a summary of already-known information) offers a way to use to our advantage ChatGPT’s tendency to present its own creations as facts, as long as the students are instructed to detect mistakes in the generated answers and to have them rectified. Nonetheless, this option remains viable only when ChatGPT’s mistakes are confined to specific aspects of an otherwise well-understood method. In our experience, the program often resorted to fully inventing a given method that it did not know beforehand, starting from the scant elements that were provided to it. This adaptation strategy from the program renders the task of correcting it not only overwhelming but also of limited utility for learning purposes.

7. ^As evidenced by their incomplete or flawed instructions and/or their failure to identify inaccurate or contradictory elements in the generated answers.

8. ^The exact question was “How hard was it for you to get what you wanted from ChatGPT?”

9. ^For the purpose of building Figure 7, the answer “hard to complete it by using ChatGPT” was eventually recoded as a “low” capacity to use ChatGPT and, conversely, “easy” was recoded as a “high” ability.

10. ^“Do you feel that you have improved your capacity to get what you wanted from ChatGPT?”

11. ^Contrary to common perceptions, young people are not better protected against disinformation than older individuals (Pan et al., 2021).

12. ^Which indeed applied in the context of the prospective course, where students had to apply the methods themselves both before the experiment (while they were taught about them) and after it (by integrating them in their respective final prospective research projects).

Bennett, S., and Maton, K. (2010). Beyond the ‘digital natives’ debate: towards a more nuanced understanding of students’ technology experiences. J. Comput. Assist. Learn. 26, 321–331. doi: 10.1111/j.1365-2729.2010.00360.x

Davison, R., Martinsons, M. G., and Kock, N. (2004). Principles of canonical action research. Inf. Syst. J. 14, 65–86. doi: 10.1111/j.1365-2575.2004.00162.x

Ehlers, U. D. (2020). Future skills: the future of learning and higher education. Books on Demand. Available at: https://books.google.com.mx/books?id=lnTgDwAAQBAJ.

Eloundou, T., Manning, S., Mishkin, P., and Rock, D. (2023). GPTs are GPTs: an early look at the labor market impact potential of large language models. arXiv. doi: 10.48550/ARXIV.2303.10130

Firat, M. (2023). How Chat GPT Can Transform Autodidactic Experiences and Open Education? OSF Preprints. doi: 10.31219/osf.io/9ge8m

Lipman, M. (1988). “Critical thinking: what can it be?” in Contemporary issues in curriculum. eds. A. Ornstein and L. Behar (Boston, MA: Allyn & Bacon). Available at: https://eric.ed.gov/?id=ED352326.

Margaryan, A., Littlejohn, A., and Vojt, G. (2011). Are digital natives a myth or reality? University students’ use of digital technologies. Comput. Educ. 56, 429–440. doi: 10.1016/j.compedu.2010.09.004

Meyer, M. A., Hendricks, M., Newman, G. D., Masterson, J. H., Cooper, J. T., Sansom, G., et al. (2018). Participatory action research: tools for disaster resilience education. Int. J. Disast. Resil. Built Environ. 9, 402–419. doi: 10.1108/IJDRBE-02-2017-0015

MIT Teaching + Learning Lab. (2023). Help students retain, organize and integrate knowledge. Available at: https://tll.mit.edu/teaching-resources/how-to-teach/help-students-retain-organize-and-integrate-knowledge/.

Morales-Chan, M. Á. (2023). Explorando el potencial de Chat GPT: una clasificación de Prompts efectivos para la enseñanza. Paper GES. Available at: http://biblioteca.galileo.edu/tesario/handle/123456789/1348.

Neumann, M., Rauschenberger, M., and Schön, E.-M. (2023). “We need to talk about ChatGPT: the future of AI and higher education” in 2023 IEEE/ACM 5th International Workshop on Software Engineering Education for the Next Generation (SEENG) (Melbourne: IEEE), 29–32.

OECD (2015). Skills for Social Progress: The Power of Social and Emotional Skills. OECD Skills Studies, OECD Publishing, Paris. doi: 10.1787/9789264226159-en

Olivares, S. L. O., Islas, J. R. L., Garín, M. J. P., Chapa, J. A. R., Hernández, C. H. A., and Ortega, L. O. P. (2021). Modelo Educativo Tec21: retos para una vivencia que transforma. Tecnológico de Monterrey. Available at: https://books.google.com.mx/books?id=aF47EAAAQBAJ.

Pan, W., Liu, D., and Fang, J. (2021). An examination of factors contributing to the acceptance of online health misinformation. Front. Psychol. 12:630268. doi: 10.3389/fpsyg.2021.630268

PWC. (2020). Skills for industry curriculum guidelines 4.0: future proof education and training for manufacturing in Europe: final report. Luxembourg: Publications Office.

Reese, H. W. (2011). The learning-by-doing principle. Behav. Dev. Bull. 17, 1–19. doi: 10.1037/h0100597

Rudolph, J., Tan, S., and Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? J. Appl. Learn. Teach. 6, 342–363. doi: 10.37074/jalt.2023.6.1.9

Selwyn, N. (2009). The digital native – myth and reality. ASLIB Proc. 61, 364–379. doi: 10.1108/00012530910973776

Suciati, S., Ali, M., Anggraini, A., and Dermawan, Z. (2018). The profile of XI grade students’ scientific thinking abilities on scientific approach implementation. J. Pendidi. IPA Indon. 7, 341–346. doi: 10.15294/jpii.v7i3.15382

Tan, L., and Kim, B. (2015). “Learning by doing in the digital media age” in New media and learning in the 21st century. eds. T.-B. Lin, V. Chen, and C. S. Chai (Singapore: Springer Singapore), 181–197. Education Innovation Series.

Tobbin, P., and Adjei, J. (2012). Understanding the characteristics of early and late adopters of technology: the case of mobile money. Int. J. E-Serv. Mob. Appl. 4, 37–54. doi: 10.4018/jesma.2012040103

Vázquez-Parra, J. C., Castillo-Martínez, I. M., Ramírez-Montoya, M. S., and Millán, A. (2022). Development of the perception of achievement of complex thinking: a disciplinary approach in a Latin American student population. Educ. Sci. 12:289. doi: 10.3390/educsci12050289

Voldby, C. R., and Klein-Døssing, R. (2020). I thought we were supposed to learn how to become better coaches: developing coach education through action research. Educ. Act. Res. 28, 534–553. doi: 10.1080/09650792.2019.1605920

White, J. (2023). Prompt engineering for ChatGPT. Coursera. Available at: https://www.coursera.org/learn/prompt-engineering.

Keywords: soft competencies, artificial intelligence in education, critical thinking, communication, educational innovation, higher education, organized reasoning

Citation: Michalon B and Camacho-Zuñiga C (2023) ChatGPT, a brand-new tool to strengthen timeless competencies. Front. Educ. 8:1251163. doi: 10.3389/feduc.2023.1251163

Received: 01 July 2023; Accepted: 28 September 2023;

Published: 13 October 2023.

Edited by:

Julius Nganji, University of Toronto, Canada

Reviewed by:

Maria Rauschenberger, University of Applied Sciences Emden Leer, Germany

Copyright © 2023 Michalon and Camacho-Zuñiga. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Barthélémy Michalon, bmichalon@tec.mx; Claudia Camacho-Zuñiga, claudia.camacho@tec.mx

