- ¹The School of Education, Achva Academic College, Shikmim, Israel

- ²Department of Multi-Disciplinary Studies, University of Haifa, Haifa, Israel

Introduction: Simulation-based learning (SBL) is gradually being integrated into the field of teacher education. However, beyond specific content acquisition, comprehensive knowledge of SBL outcomes is limited. This research aimed to systematically develop a scale to measure SBL outcomes in teacher education.

Methods: A mixed-methods sequential exploratory design was implemented to develop the Simulation-based Learning Outcomes in Teacher Education (SLOTE) scale. Data were collected in two phases: a qualitative phase (N = 518) followed by a quantitative phase (N = 370).

Results: The qualitative analysis revealed three overarching themes: communication skills, collaborative-learning-related insights, and emotional self-awareness. The scale items were prepared based on key quotes from the qualitative data. To define, quantify, and validate the learning outcomes of SBL in teacher education, exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) were used to test the relationships among the 29 items of the scale.

Discussion: The study provides a theoretical conceptualization of SBL’s multifaceted learning outcomes in teacher education. These findings allow for a better understanding of the observers’ role in SBL, suggesting that enacting the simulation is not inherently more emotionally demanding than observing it; consequently, participants in both roles are apt to benefit equally from the experiential learning afforded by the SBL process. Theoretical and practical implications of using SBL in teacher education are discussed.

1. Introduction

Simulation-based learning (SBL) is a technique that simulates the conditions of the professional arena for the purpose of learning and practicing specific skills (Chernikova et al., 2020; Liu and McFarland, 2021), particularly skills for effective communication (Gaffney and Dannels, 2015). One of the models of SBL is the clinical model, which involves professional actors who play specific roles, simulating situations experienced in the professional field (Jack et al., 2014).

The simulation technique was developed in the military and medical fields, where the outcomes of SBL have been comprehensively documented using a variety of research scales (Hofmann et al., 2021). In the field of teacher education, however, the development of simulations began only two decades ago (Kaufman and Ireland, 2016; Theelen et al., 2019); consequently, the outcomes of SBL in this field have yet to be systematically addressed. Moreover, there is no validated scale for measuring these outcomes in the teacher-education arena, especially in the case of clinical simulations (Dotger, 2013).

The focus of the current study is on developing and validating a scale to measure the outcomes of clinical SBL workshops conducted with preservice and inservice teachers. To this end, we used a mixed-methods approach. This study is part of a broader effort to identify the theoretical and practical value of using simulations in the field of teacher education (Badiee and Kaufman, 2014; Dotger, 2015; Ferguson, 2017).

2. Theoretical background

SBL is an active learning experience, in which the learner acts out a professional scene, the focus of which is a problem or a conflict; this is followed by a reflective debriefing session (Manburg et al., 2017), during which the participants, guided by the simulation instructor, engage in a peer discussion of ways to improve their future performance in similar situations (Levin and Flavian, 2022).

Learning through simulations is grounded in constructivist learning theories (Orland-Barak and Maskit, 2017). Specifically, Vygotsky (1978) conceptualized learning as grounded in socially based experiences, inherently mediated by others, and collaborative in nature. In accordance with Vygotsky’s ideas of social constructivism, SBL imitates a social environment (Frei-Landau et al., 2022) in which participants engage in peer learning (Levin and Flavian, 2022). However, although SBL is anchored in a strong theoretical foundation (Ferguson, 2017), its practical learning outcomes have yet to be fully assessed, particularly in the teacher-education arena.

The current study is based on the clinical simulation model, in which scenarios are enacted in real time by a learner and a professional actor and are video-recorded. The goal of clinical SBL workshops is to deepen educators’ reflective abilities while strengthening their interpersonal skills in conflictual situations (Ran and Nahari, 2018). A clinical SBL workshop involves four consecutive generic stages: (1) An opening phase, in which the instructor introduces the workshop’s framework and activities. (2) A “simulated experience,” i.e., a five-minute scenario enacted by a professional actor who interacts in real time with a learner from the group (the actor responds according to predefined schemes written into the scenario). (3) A debriefing phase, led by the instructor after each enacted simulation. The core structure of the SBL debriefing is based on universal learning models (Kolb, 1984). During the debriefing, participants examine the facts (“what happened here?”), decipher the causes and motives behind the events (“why did this happen?”), and deduce general lessons to be learned from the experience (“what will we choose to do next time?”). The debriefing ends with the actor’s feedback to the workshop participants. (4) A summary phase, which concludes each workshop and in which participants are asked to share their insights and draw possible conclusions. Although all workshops comprise the same four generic stages, they differ in content (i.e., in their scenarios). In this study, we examined the outcomes of standardized four-hour SBL workshops held over five consecutive semesters at the Be simulation center at Achva Academic College. Each workshop included three scenarios and involved 15 participants.

The clinical simulation model was originally borrowed from the field of medical education (Hallinger and Wang, 2020). In 1963, Howard Barrows, a medical educator, began using local actors to prepare future physicians (Barrows and Abrahamson, 1964). Building on Barrows’ framework, Benjamin Dotger adapted the pedagogy of clinical simulations for use in teacher education (Dotger, 2013). Medical and educational clinical simulations differ in their goals, scope, and procedures, and these differences shape their learning outcomes. Clinical simulations in medicine are used to practice routine and extreme techniques in a protocol-driven manner, as well as to gain non-technical skills (Goolsarran et al., 2018). In medical simulations, learners perform tasks with a binary outcome of success or failure; hence, simulations in medicine are also used to test and evaluate learners’ performance and knowledge (Hofmann et al., 2021). Clinical simulation in teacher education, however, focuses mainly on soft skills used in routine conflictual situations. During the debriefing phase, the focus is on the elements that were present during the scenario, those that could have been used, and how each influenced what was achieved or could be achieved next time. In the fields of medicine and nursing, many comprehensive and validated scales have been developed: some assess the various simulation outcomes (Franklin et al., 2014), others examine attitudes toward the simulation experience (Sigalet et al., 2012; Pinar et al., 2014), and yet others measure participants’ satisfaction with the use of simulations (Chernikova et al., 2020). There is also abundant literature evaluating these simulation scales (Hofmann et al., 2021).

By contrast, in the field of teacher education, there is no single comprehensive scale that addresses the wide variety of simulation outcomes. Rather, studies examining the value of SBL have focused on the relationship between participation in a simulation and one specific, known variable, such as self-efficacy (De Coninck et al., 2020) or leadership (Shapira-Lishchinsky, 2015). To date, no research scale for examining the numerous SBL outcomes in a comprehensive and integrative manner has been devised, let alone validated. This absence is not surprising, given that SBL research in this field is still in its infancy (Theelen et al., 2019; Levin et al., 2023).

Whether simulations are conducted face-to-face or virtually (Tang et al., 2021; Frei-Landau and Levin, 2022), SBL-related research in teacher education currently focuses on three types of outcomes. The first type emphasizes practical outcomes, i.e., the acquisition of the practical behaviors and skills needed to perform professionally in this field (e.g., effectively managing conflicts that arise between a teacher and a student’s parent). Simulations were found to provide a context in which cognitive knowledge can be translated into operational knowledge (Theelen et al., 2019; Dalinger et al., 2020). Compared with traditional methods of study, SBL has greater potential for inducing behavioral changes and improving performance (Theelen et al., 2019; Cohen et al., 2020). When communication skills are the focus of practice, SBL was found to support the development of these skills as well (Dotger, 2015).

The second type of SBL outcome investigated in teacher-education research pertains to the domain of social cognition. Social cognition refers to the mental processes used to decipher and encode social cues; it involves processing information about other people and the self, as well as about social norms and practices (Fiske and Taylor, 1991). SBL was shown to be associated with the promotion of social skills in both interpersonal and team contexts (Shapira-Lishchinsky et al., 2016; Levin et al., 2023). SBL was also found to successfully cultivate learners’ reflective abilities (Levin, 2022), including their critical reflection skills (Tutticci et al., 2018; Codreanu et al., 2021). Furthermore, the collaborative aspect of SBL was found to foster a high level of collaboration and to promote critical thinking, with an emphasis on a supportive rather than a judgmental environment (Manburg et al., 2017).

The third type of SBL outcome examined in teacher-education research concerns the emotional process that SBL involves and its resulting emotional outcomes. Within this framework, studies have found that because SBL provides a safe environment for learning and making mistakes (Bradley and Kendall, 2014; Dieker et al., 2017), it may result in decreased anxiety and a more balanced emotional experience than practice conducted in the classroom (Levin and Flavian, 2022). This, in turn, enables balanced reflection on one’s practice, which increases preservice teachers’ sense of self-efficacy (Theelen et al., 2019). However, studies have also indicated that SBL can lead to negative emotional experiences and outcomes. For instance, during the SBL process, learners may experience negative feelings such as embarrassment due to peers’ critique or demotivation due to learning overload, emotions that can undermine their self-confidence (Bautista and Boone, 2015; Dalgarno et al., 2016; Frei-Landau and Levin, 2023). Given these seemingly contradictory findings, the efficacy of SBL in the field of teacher education requires further study.

To summarize, in the field of teacher education, existing knowledge about the outcomes of SBL is fragmented, and there is a need for a comprehensive scale that can provide an integrative description of the learning outcomes. To this end, the current study describes the process of formulating and validating a scale for comprehensively assessing SBL outcomes, based on SBL workshops conducted over five consecutive semesters with preservice and inservice teachers.

3. Materials and methods

The objective of the study was to systematically develop a scale to measure SBL outcomes in teacher education. Because the available knowledge about SBL outcomes in this field is limited, developing such an instrument could not begin with the traditional method of conducting a literature review. Instead, we determined that the most suitable way to gain insights into SBL’s possible outcomes was to examine the feedback participants reported immediately after an SBL workshop regarding the insights they had gained from the experience. Hence, a mixed-methods sequential exploratory design was implemented to develop the Simulation-based Learning Outcomes in Teacher Education (SLOTE) scale, according to recommended psychometric standards and criteria (Benson and Clark, 1982).

This mixed-methods sequential exploratory design consisted of a qualitative phase followed by a quantitative one (Creswell and Clark, 2017). Generally, mixed-methods designs are well suited to scale development (DeCuir-Gunby, 2008), as collecting both qualitative and quantitative data allows researchers to gain a broad as well as a deep understanding of the topic of study. Furthermore, a mixed-methods design maximizes the scale’s reliability, strength, and appropriateness (Leech and Onwuegbuzie, 2010).

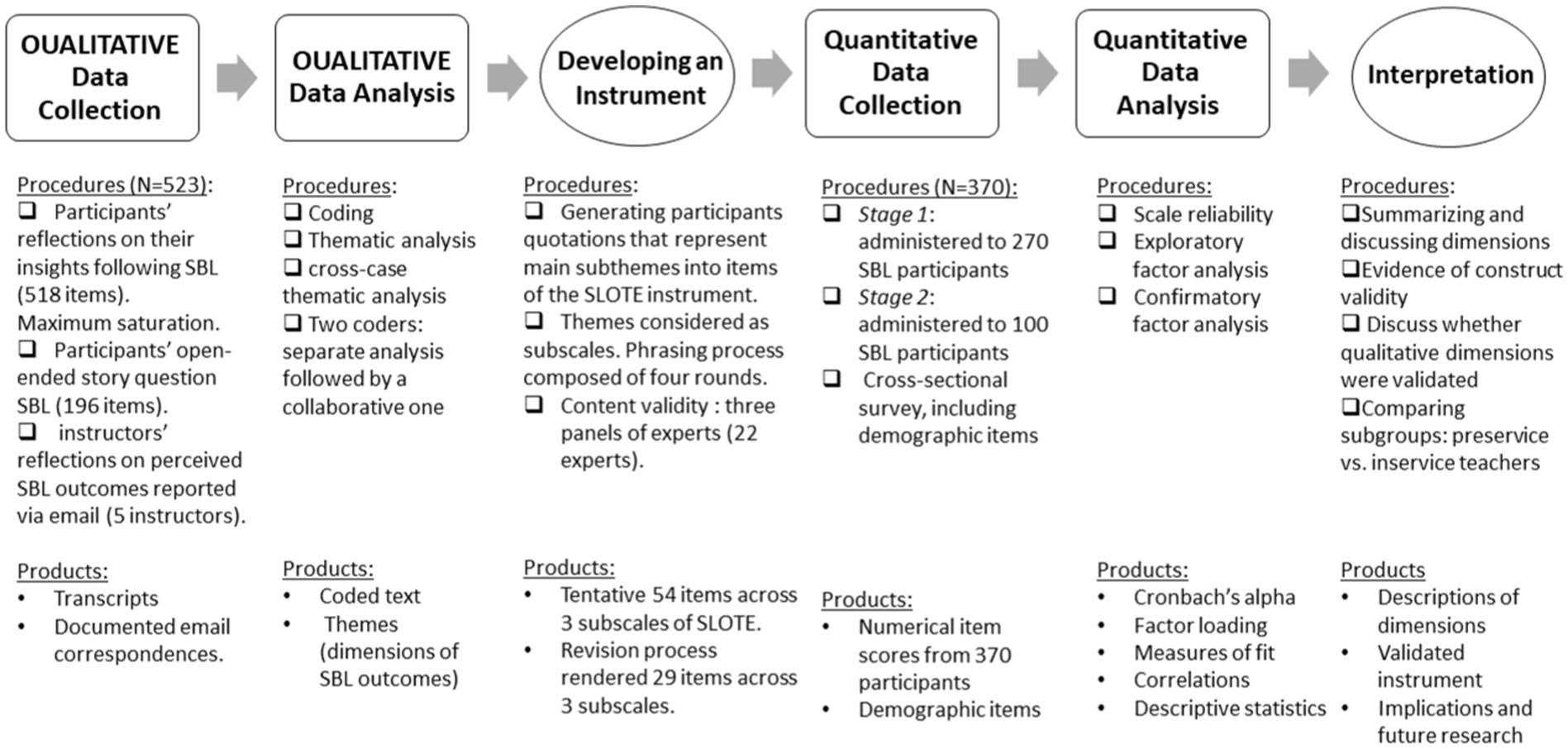

We first collected and analyzed qualitative data from 518 participants’ answers to open-ended questions presented as an end-of-workshop task. These data were collected in workshops conducted during the first two of the five consecutive semesters. Then, building on the exploratory results, we developed a quantitative survey scale measuring SBL outcomes. The quantitative phase involved systematic data collection using a postworkshop survey of SBL participants, to assess the overall prevalence of these SBL outcomes. Finally, a psychometric analysis of the scale was performed to interpret the results and gain a better understanding of the SBL outcomes. Figure 1 displays the full design procedure.

Figure 1. A visual model for the mixed-methods sequential exploratory design, used for the development and validation of the SLOTE scale (N = 888). SLOTE, Simulation-based learning outcomes in teacher education; SBL, Simulation-based learning.

The ethics committee of the college where the simulation center is located approved the entire study. Furthermore, every effort was made to meticulously maintain the rights, privacy, anonymity, and confidentiality of the participants throughout the research process, by upholding professional ethical standards. The participants were given a clear explanation of the purpose of the research, the framework in which it would be carried out, and the voluntary nature of their participation. Only then were they asked to indicate their agreement by signing an informed consent form.

The following sections describe the qualitative and quantitative phases utilized to empirically develop and validate the SLOTE scale.

3.1. The qualitative phase

In the first (qualitative) phase, we sought to gain insights into possible SBL outcomes by collecting participants’ unstructured responses to open-ended questions immediately after the SBL workshop. This phase was designed to explore the participants’ perspectives and to reveal basic patterns and themes (Braun and Clarke, 2006).

To increase the validity and reliability of this part of the study, we also sought input on participants’ SBL outcomes from the perspective of five veteran SBL instructors. Furthermore, we followed rigorous qualitative inquiry methods, including data triangulation, achieving saturation, member checking, and detailed reporting. To reduce bias as much as possible, we conducted an independent coding process that provided an indication of interrater reliability (Onwuegbuzie and Leech, 2007; Creswell and Clark, 2017).

3.1.1. Participants

3.1.1.1. Workshop participants

To gain a comprehensive understanding of SBL outcomes, we approached 720 participants recruited from 48 SBL workshops (each lasting 4 h and involving 15 participants) conducted during the first two of the five consecutive semesters at the simulation center. The recruits were informed that the two open-ended questions they were about to answer were designed to explore their experiences and perceptions upon completing the SBL workshop and that they were free to opt out. Of the 720, 632 agreed to participate by signing the informed-consent form. Of these, 518 submitted full responses to the first question and 196 responded in full to the second question (i.e., providing elaborate answers rather than a single word or sentence; see Section 3.1.2.1 for details). Of the 518 participants, 143 were preservice teachers (28%) and 375 were inservice teachers (72%). Most participants were women (85%), which is consistent with the known gender imbalance in education (Lassibille and Navarro-Gómez, 2020). We continued collecting qualitative data until data saturation was reached.

3.1.1.2. Veteran SBL instructors

In addition, we approached five veteran SBL instructors whom we considered SBL experts. According to Vogt et al. (2004), experts are members of the target population (in our case, teachers) who have accumulated direct and extensive experience with the relevant “construct” (in our case, the SBL workshop). To recruit these participants, we used purposeful sampling (Creswell, 2013), as we wished to include the most experienced instructors working at the simulation center.

3.1.2. Data collection

To provide a rich and deep understanding of SBL outcomes, we used multiple data sources, as described in the following sections.

3.1.2.1. Workshop participants’ responses to open-ended questions

This part of the qualitative data was collected using a multiple case-study approach (Yin, 2009), to enable a cross-case analysis of general SBL outcomes in the teacher-education arena.

Immediately after each workshop, participants were asked to answer two open-ended questions, in addition to providing demographic information. Question 1 (Insights gained): participants were asked to describe the insights they perceived they had gained during the workshop and to share relevant thoughts, emotions, etc. Question 2 (Sharing a story/situation): participants were asked to share a story from their personal or professional life that the workshop content brought to mind, and to describe how they managed the situation at the time and how they would consider managing it in light of what they learned in the SBL workshop. The aim of these two questions was to capture both explicit and implicit insights.

Of the 632 participants’ responses to Question 1, 85 were eliminated because they consisted only of a very brief assessment statement (e.g., “It was great”), and another 29 were eliminated due to the absence of any specific insights. Thus, 518 participants provided elaborate answers to Question 1, often including more than one insight. In response to Question 2, 196 of the initial 632 participants provided richly detailed stories, either noting what they would now do differently or stating that the workshop confirmed that they had handled the situation appropriately. We eliminated responses that consisted of a one-sentence story (287), those that failed to recall a story (117), and those that did not answer at all (32).
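The participant accounting reported in Sections 3.1.1.1 and 3.1.2.1 can be checked with simple arithmetic. The snippet below is only a bookkeeping sketch: the counts come from the text, while the category labels and variable names are our own.

```python
# Bookkeeping sketch for the qualitative-phase sample. Counts are taken from
# the text; the dictionary labels and variable names are illustrative only.
APPROACHED = 48 * 15  # 48 workshops x 15 participants each
CONSENTED = 632

q1_excluded = {"brief assessment only": 85, "no specific insight": 29}
q2_excluded = {"one-sentence story": 287, "no story recalled": 117, "no answer": 32}


def retained(total, exclusions):
    """Number of usable responses left after removing every exclusion category."""
    return total - sum(exclusions.values())


q1_usable = retained(CONSENTED, q1_excluded)
q2_usable = retained(CONSENTED, q2_excluded)
print(APPROACHED, CONSENTED, q1_usable, q2_usable)  # 720 632 518 196
```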

3.1.2.2. Reflections submitted by veteran SBL instructors

As part of the pursuit of theoretical validation, we asked five veteran SBL instructors to reflect on possible learning outcomes resulting from participation in SBL workshops. They sent us their written reflections via e-mail and, where needed, we followed up with a one-on-one telephone interview for further clarification.

3.1.3. Data analysis

The qualitative data were analyzed using Braun and Clarke’s (2006) six-phase thematic analysis procedure. First, we read and reread the data to immerse ourselves in them and become familiar with the participants’ inner experiences; each researcher then separately highlighted key statements that represented possible SBL outcomes. Second, using an inductive process, we generated initial codes across the entire data set. Codes refer to features of the data perceived as relevant and interesting for understanding the phenomenon; typically, they comprise a basic segment, such as words or sentences (Boyatzis, 1998). These codes were identified and marked by each researcher separately, followed by a collaborative discussion to identify the most frequent or significant codes. The third phase began after all the data had been coded: we sorted the codes into potential themes, comparing and contrasting them to identify patterns and main themes. Fourth, we reviewed and refined the themes in four rounds of collaborative discussion to achieve internal homogeneity and external heterogeneity (Braun and Clarke, 2006), and then reviewed the data to ensure that the themes were comprehensive and grounded in participants’ answers. Fifth, we “refined and defined” (Braun and Clarke, 2006, p. 93) the themes and subthemes by identifying the essence of each theme and assigning each a concise, punchy, and mutually exclusive name. Sixth, we identified exemplars that provide evidence of each theme and relate to the research question. Two of the researchers have professional training in SBL, and the third was familiar with SBL from previous studies; each researcher analyzed and coded the data independently.
Interrater reliability for the codes identified by the three researchers, measured with Cohen’s kappa, was 0.80 (Fleiss et al., 2013). Cases of disagreement were discussed until consensus was reached.
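Cohen’s kappa is defined for two coders, whereas three researchers coded the data here. The sketch below is a minimal illustration, not the study’s actual computation: it shows Cohen’s kappa for one pair of coders, the mean of pairwise kappas (often called Light’s kappa), and Fleiss’ kappa, which generalizes chance-corrected agreement to any fixed number of coders. It assumes each coder assigns exactly one code per unit; any codes passed to it in practice would be the researchers’ own, and none are reproduced here.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assign one code per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same code by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, from each coder's own marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (p_o - p_e) / (1 - p_e)


def light_kappa(*coders):
    """Mean of all pairwise Cohen's kappas (one option for three or more coders)."""
    pairs = list(combinations(coders, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)


def fleiss_kappa(units):
    """Fleiss' kappa; `units` holds one list of codes per unit, one code per coder."""
    n_units, n_raters = len(units), len(units[0])
    if n_raters < 2 or any(len(u) != n_raters for u in units):
        raise ValueError("every unit needs the same number (>= 2) of codes")
    category_totals = Counter()
    p_bar = 0.0
    for unit in units:
        counts = Counter(unit)
        category_totals.update(counts)
        # Proportion of agreeing coder pairs within this unit.
        p_bar += (sum(c * c for c in counts.values()) - n_raters) / (n_raters * (n_raters - 1))
    p_bar /= n_units
    # Chance agreement from marginal category shares pooled over all coders.
    total = n_units * n_raters
    p_e = sum((t / total) ** 2 for t in category_totals.values())
    return (p_bar - p_e) / (1 - p_e)
```

The variants differ mainly in the chance-agreement term: Cohen’s kappa uses each coder’s own marginals, whereas Fleiss’ kappa pools the marginals across coders, so the two need not give the same value on the same data.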

3.1.4. Qualitative findings

The qualitative analysis revealed three overarching themes: communication skills, collaborative-learning-related insights, and emotional self-awareness. The first theme concerns the communication skills acquired by participating in an SBL workshop. These skills, which involved both verbal and body language, included listening, empathy, and assertiveness, among others. The second theme highlights the cognitive insights gained during the collaborative process of SBL, namely, understanding the importance of group reflection and realizing that colleagues experience similar difficulties. The third theme refers to the emotional self-awareness gained by observing one’s emotional reactions to challenges encountered during SBL, such as self-doubt, shyness, and/or embarrassment, which may have resulted from performing in front of a group and/or having to contend with group members’ criticism. The qualitative themes and subthemes were then used to develop the SLOTE scale items.

3.2. The quantitative phase

Based on the findings from the qualitative phase, the quantitative phase took place during the following three semesters (i.e., the third, fourth, and fifth of the five consecutive semesters). During this time, the SLOTE scale was developed, tested, and validated.

3.2.1. Participants

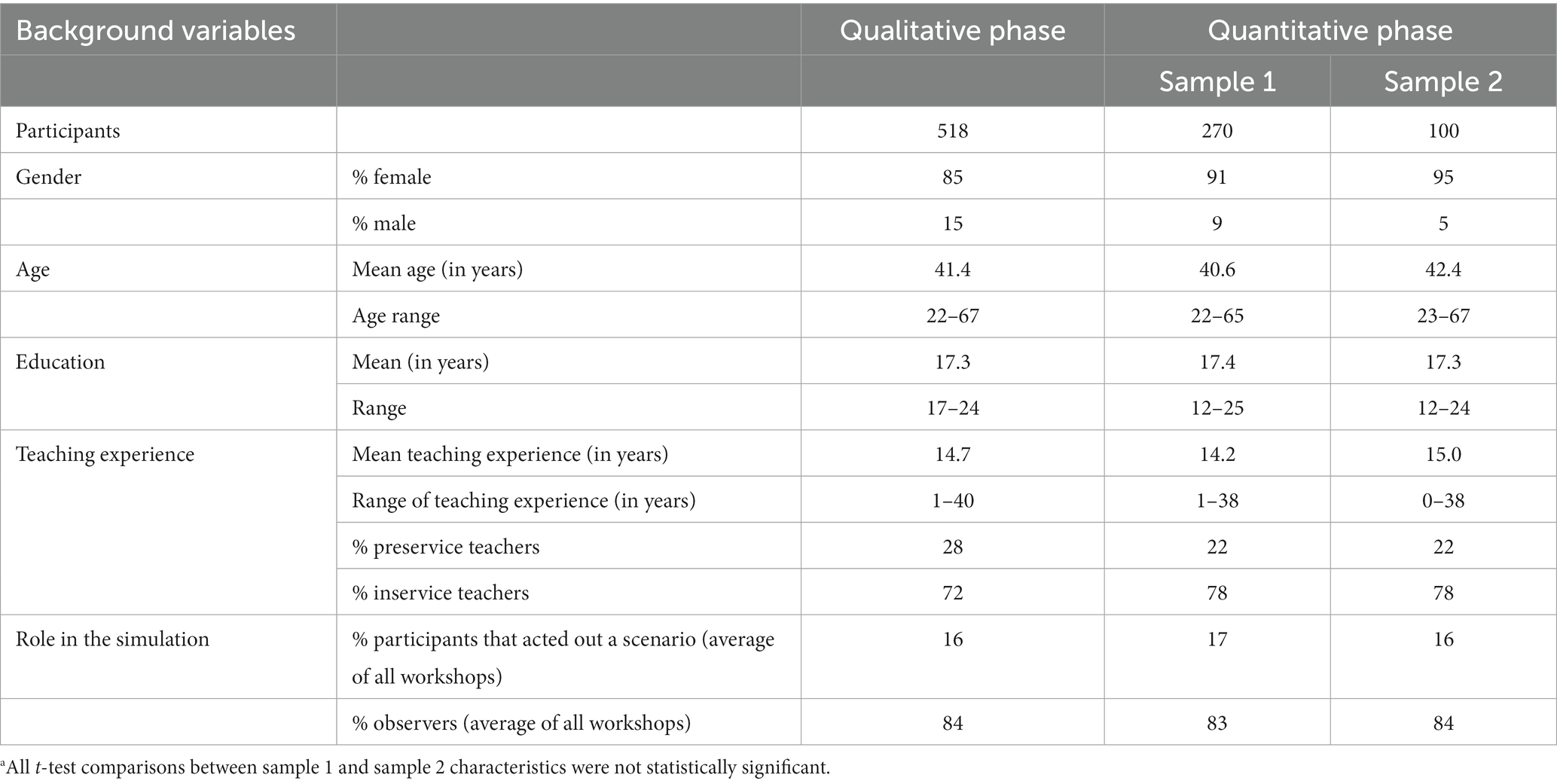

In the quantitative phase, 370 SBL participants agreed to complete a postworkshop survey (a questionnaire constructed based on the qualitative data) that assessed the overall prevalence of the SBL outcomes. Of the 370 participants, 78 were preservice teachers (21%) and 292 were inservice teachers (79%). Most participants were women (91%–95%). Demographic data of the participants in both the qualitative and the quantitative phases (N = 888) are presented in Table 1.

Table 1. Overview of participants’ background variables.ᵃ

To demonstrate the stability of results over time (Pohlmann, 2004), the quantitative sample was divided into two subsamples according to workshop date: 270 participants completed the survey after workshops held during the third and fourth of the five consecutive semesters, and 100 participants completed it after workshops held during the fifth semester. Independent-samples t-tests and chi-square tests confirmed that the two subsamples had similar background characteristics (see Table 1).
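The subsample-equivalence check can be sketched as follows, assuming that a continuous background variable (such as age) is compared with a pooled-variance t-test and a categorical one (such as preservice vs. inservice role) with a Pearson chi-square test. Table 1 is not reproduced here, so the choice of variables and any input data are hypothetical. The functions return only the test statistics and degrees of freedom; `scipy.stats.ttest_ind` and `scipy.stats.chi2_contingency` (with `correction=False`, to match the uncorrected statistic below) provide the corresponding p-values.

```python
import math
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Student's independent-samples t statistic (pooled variance) and its df."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / se, df


def pearson_chi2(table):
    """Pearson chi-square statistic (no continuity correction) and its df.

    `table` is a list of rows, e.g. subsample x category counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            chi2 += (observed - expected) ** 2 / expected
    return chi2, (len(table) - 1) * (len(col_totals) - 1)
```

A non-significant result on each variable is what “similar background characteristics” amounts to in practice; it indicates no detectable difference rather than proven equivalence.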

3.2.2. Scale development

To develop the SLOTE, a pool of 54 scale items was prepared based on key quotes from the qualitative data. Each item described a specific outcome representing one of the subthemes of the content areas identified earlier, and the three main themes became the three dimensions of the scale (Creswell and Clark, 2017). Respondents were asked to indicate on a 7-point Likert scale, ranging from not at all (1) to very much (7), the extent to which (according to their subjective experience) the SBL workshop had resulted in the specific outcome described in each of the 54 items. This type of scale tends to offer optimal statistical variance (Hinkin, 1995). Following Hinkin’s (1995) guidelines, items were kept as simple as possible.

3.2.3. Content validity

To examine content validity, we used Morais and Ogden’s (2011) content validity trial approach. To this end, a pretest of the 54-item questionnaire was administered to three groups acting as “panels of experts” (Hogan et al., 2001): SBL-trained instructors (n = 11), academic researchers of SBL (n = 5), and SBL actors (n = 6). All trial participants were asked to complete the survey and critique it in terms of quality and relevance; their feedback was then incorporated into the next version of the scale. To address concerns raised about the scale’s length, we revised it, retaining only the 29 most relevant items while maintaining the seven-point Likert-type scale.

3.2.4. Data collection

The participants were asked to indicate the extent of their agreement with statements representing SBL outcomes. The following are examples of workshop outcomes and the corresponding survey items. We assessed whether the workshop honed participants’ ability to develop a humbler view of others’ difficulties (e.g., “I realized that many educators experience difficulties similar to mine”), reflect on and accept criticism (e.g., “I realized that being the object of a peer’s criticism makes it more difficult to learn from the workshop experience”), cope with conflicts (e.g., “The workshop gave me tools for dealing with conflict with authority figures (managers, supervisors, etc.)”), overcome inhibitions such as embarrassment, shyness, and egotism (e.g., “I realized that keeping my feelings and thoughts pent up inside me simply is not worthwhile or effective”), improve their everyday conduct (e.g., “I learned how I should start a conversation”), and engage in professional reflection (e.g., “I realized that when I face any situation there are many details and aspects that I am not sufficiently aware of”). The final 29 items are presented in.

3.2.5. Data analysis

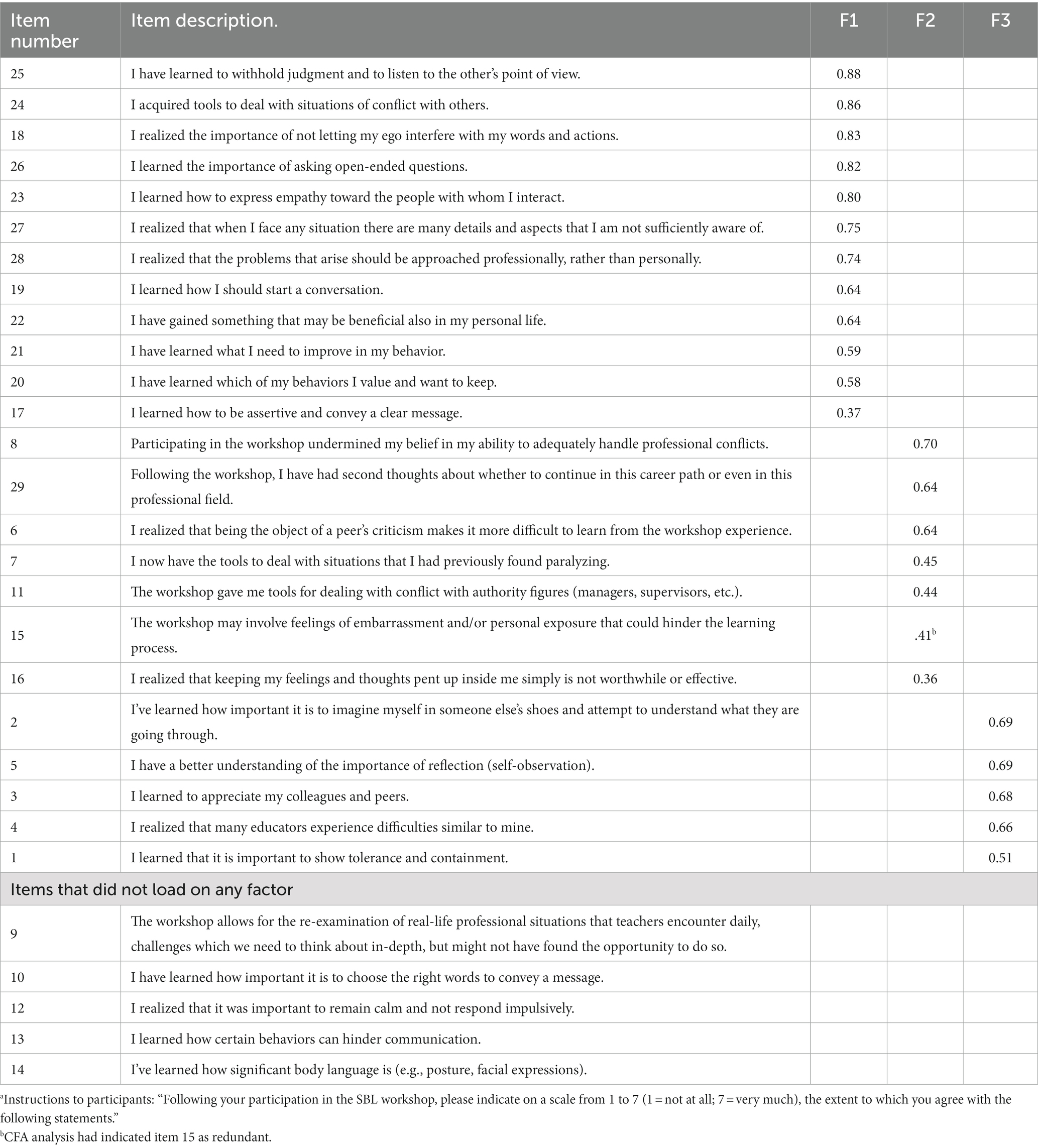

Following De Coninck et al. (2020), the scales were constructed and validated in four consecutive stages. The first stage focused on identifying the number of factors using exploratory factor analysis (EFA) on data from the first subsample, as in previous studies (Thompson and Mazer, 2012). Following Costello and Osborne’s (2005) recommendations for non-normally distributed items and correlated factors, principal-axis factoring (PAF) was used with direct oblimin (oblique) rotation, which allows factors to be correlated and produces estimates of the correlations among them. The Kaiser (1960) criterion was first applied to exclude factors with eigenvalues smaller than one, followed by scree plot analysis (Cattell, 1996), parallel analysis (Horn, 1965), and Velicer’s MAP test (O’Connor, 2000) to determine the number of factors. Finally, all items with loadings of 0.35 or less were excluded from further analysis, as were items with strong cross-loadings on more than one factor (Costello and Osborne, 2005). All EFA analyses were conducted using SPSS 25.0 and are summarized in Table 2.
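Of the factor-retention criteria named above, Kaiser’s rule and Horn’s parallel analysis are easy to sketch. The function below is a minimal NumPy illustration, not the SPSS procedure used in the study: it compares the eigenvalues of the observed item correlation matrix with the 95th percentile of eigenvalues obtained from random normal data of the same size. Two assumptions are worth flagging: it uses unreduced (principal-component) eigenvalues, as in Horn’s original formulation, whereas PAF-based variants use a reduced correlation matrix; and the number of iterations, the percentile, and the seed are arbitrary defaults. Velicer’s MAP test and the PAF extraction with oblimin rotation are not implemented here.

```python
import numpy as np


def parallel_analysis(data, n_iter=100, percentile=95, seed=0):
    """Kaiser criterion and Horn's parallel analysis on correlation eigenvalues.

    data: array of shape (respondents, items), e.g. 1-7 Likert scores.
    Returns (observed eigenvalues, random-data thresholds,
             factors retained by parallel analysis, factors retained by Kaiser).
    """
    data = np.asarray(data, dtype=float)
    n_obs, n_items = data.shape
    rng = np.random.default_rng(seed)

    def sorted_eigenvalues(x):
        # eigvalsh returns ascending eigenvalues of a symmetric matrix; reverse them.
        return np.linalg.eigvalsh(np.corrcoef(x, rowvar=False))[::-1]

    observed = sorted_eigenvalues(data)
    random_eigs = np.array(
        [sorted_eigenvalues(rng.standard_normal((n_obs, n_items))) for _ in range(n_iter)]
    )
    threshold = np.percentile(random_eigs, percentile, axis=0)

    # Parallel analysis: keep leading factors until one fails to beat chance.
    beats_chance = observed > threshold
    n_parallel = n_items if beats_chance.all() else int(np.argmin(beats_chance))
    # Kaiser criterion: count eigenvalues greater than one.
    n_kaiser = int(np.sum(observed > 1.0))
    return observed, threshold, n_parallel, n_kaiser
```

For the extraction and rotation step itself, the third-party `factor_analyzer` package offers `FactorAnalyzer(n_factors=3, method="principal", rotation="oblimin")`; we have not checked that its estimates reproduce SPSS’s iterated PAF output.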

Table 2. The validated version of the Simulation-based learning outcomes in teacher education (SLOTE) scaleᵃ: results of EFA indicating three factors (Subsample 1, n = 270).

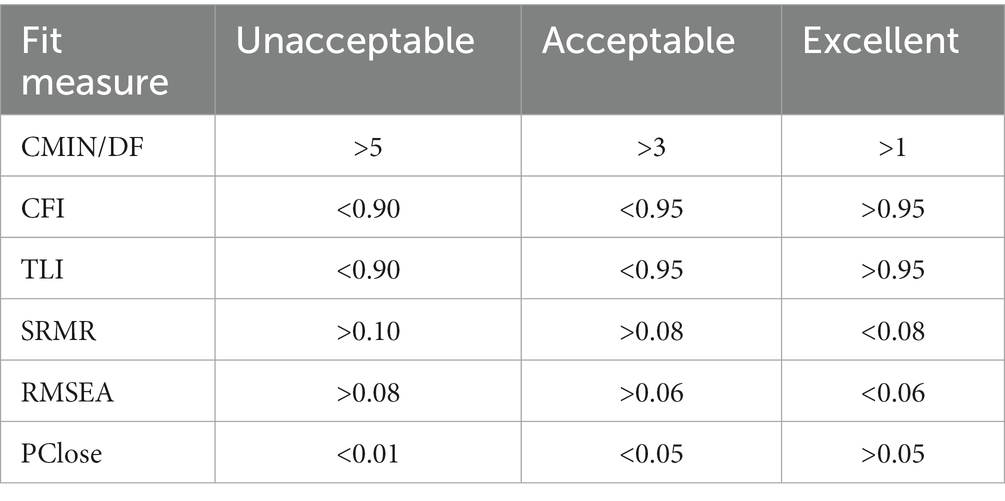

The second stage was to confirm the exploratory structure and test its stability using data from the second subsample (Pohlmann, 2004). A confirmatory factor analysis (CFA) was conducted using AMOS 24. CFA estimates whether a theoretical model fits the data, here using maximum-likelihood estimation (Awang, 2012), and it is common to use three sets of goodness-of-fit estimators: absolute fit (χ², SRMR, RMSEA, PClose), incremental fit (CFI), and parsimonious fit (χ²/df).
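For readers who want to recompute fit statistics from reported values, the standard formulas for χ²/df, RMSEA, CFI, and TLI are sketched below, together with a degrees-of-freedom count for a simple-structure CFA. These are textbook formulas, not AMOS’s internal code. Software differs in details (for example, some programs use N rather than N − 1 in RMSEA). CFI and TLI additionally require the baseline (independence) model’s χ² and df, which are not reported here. The df count assumes that each item loads on exactly one factor, that factors are freely correlated, and that one loading per factor is fixed to 1.

```python
import math


def chi2_df_ratio(chi2, df):
    """Parsimonious fit: chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """RMSEA point estimate for sample size n (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative fit index; *_b refers to the baseline (independence) model."""
    d_model = max(chi2_m - df_m, 0.0)
    d_base = max(chi2_b - df_b, d_model)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker-Lewis index (non-normed fit index)."""
    base_ratio = chi2_b / df_b
    return (base_ratio - chi2_m / df_m) / (base_ratio - 1.0)


def cfa_df(n_items, n_factors, n_residual_covs=0):
    """Degrees of freedom of a simple-structure CFA with correlated factors."""
    moments = n_items * (n_items + 1) // 2  # unique variances and covariances
    free_params = (
        (n_items - n_factors)                 # loadings not fixed to 1
        + n_items                             # residual (error) variances
        + n_factors                           # factor variances
        + n_factors * (n_factors - 1) // 2    # factor covariances
        + n_residual_covs                     # correlated residual pairs
    )
    return moments - free_params
```

For a hypothetical six-item, two-factor model, `cfa_df(6, 2)` gives 21 − 13 = 8 degrees of freedom; each correlated residual pair added to a model removes one more.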

Table 3 shows the commonly accepted cutoff criteria used to evaluate model fit.

Table 3. Recommended cutoff criteria of fit (Hu and Bentler, 1999).

The third stage of the analysis was to determine the internal consistency of the scales, using the common Cronbach’s α threshold of 0.70 (Taber, 2018). The fourth stage involved calculating the mean and standard deviation of each subscale and performing descriptive analyses to gain further insight into SBL aspects. First, an independent-samples t-test was carried out to ensure that the factor scores of the first and second subsamples were comparable. Next, data from the two subsamples were tested for differences by gender, teaching experience, and simulation role, using independent-samples t-tests. Data from the two subsamples were also used to examine the relations of age and experience with the factors, using simple correlations.
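Cronbach’s α and the subscale descriptives in the third and fourth stages can be sketched as follows. The function applies the usual formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores), with sample variances. It assumes complete responses (no missing data) and that a subscale score is the mean of its items; the text does not state how subscale scores were formed, so that choice is an assumption.

```python
from statistics import mean, stdev, variance


def cronbach_alpha(responses):
    """Cronbach's alpha; `responses` is a list of respondents, each a list of item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) and of respondents' total scores.
    item_vars = [variance(item) for item in zip(*responses)]
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def subscale_summary(responses):
    """Mean and SD of per-respondent subscale scores (the mean of the subscale's items)."""
    scores = [mean(r) for r in responses]
    return mean(scores), stdev(scores)
```

A subscale meeting the criterion above would satisfy `cronbach_alpha(items) >= 0.70`, where `items` holds only that subscale’s item responses.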

3.2.6. Quantitative results

To define, quantify, and validate the learning outcomes of SBL in teacher education, EFA and CFA were used to test the relationships among the 29 items of a self-report survey of SBL learning outcomes.

3.2.6.1. Exploratory factor analysis

EFA was conducted on the data of the first subsample to reveal the underlying structure of the 29 items in the proposed scale. The analysis yielded three factors accounting for 55.9% of the variance in the respondents’ scores, with only 24 items loading on this three-factor model. The factor structure was as follows: 12 items with factor loadings ranging from 0.37 to 0.88 loaded on factor 1 (communication skills); seven items with loadings ranging from 0.36 to 0.70 loaded on factor 2 (emotional self-awareness); and five items with loadings ranging from 0.51 to 0.69 loaded on factor 3 (collaborative-learning-related insights). Five items (9, 10, and 12–14) were deleted due to both cross-loading and low factor loadings. Results of the EFA indicating three factors are shown in Table 2.
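The item-retention rule applied to the EFA pattern matrix can be expressed as a small filter. In this sketch, an item is kept only if its largest absolute loading exceeds 0.35 (the cutoff stated in Section 3.2.5) and no other loading reaches a cross-loading cutoff. The text does not specify what counts as a “strong” cross-loading, so the 0.30 default is a hypothetical choice, as are any item labels and loadings passed to the function.

```python
def screen_items(loadings, min_loading=0.35, cross_cutoff=0.30):
    """Split items into retained ones (with their assigned factor) and dropped ones.

    loadings: dict mapping item id -> list of pattern loadings, one per factor.
    cross_cutoff is a hypothetical value; the source does not state one.
    """
    retained, dropped = {}, []
    for item, row in loadings.items():
        # Rank factors by absolute loading, largest first.
        ranked = sorted(range(len(row)), key=lambda j: abs(row[j]), reverse=True)
        primary = ranked[0]
        secondary = abs(row[ranked[1]]) if len(row) > 1 else 0.0
        if abs(row[primary]) <= min_loading or secondary >= cross_cutoff:
            dropped.append(item)
        else:
            retained[item] = primary  # zero-based index of the factor it loads on
    return retained, dropped
```

Because oblimin yields a pattern matrix whose factors are correlated, the rule is applied to pattern coefficients rather than to structure coefficients, which would mix in the factor correlations.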

Factor 1 comprises all items representing participants’ communication skills for managing and resolving conflicts. These items represent both interpersonal practices (e.g., asking open-ended questions, being assertive) and intrapersonal ones (e.g., improving one’s own behavior, withholding judgment while listening to someone else’s point of view). This factor represents an instrumental aspect of the simulation, reflecting a learning outcome related to individual skills and abilities. Thus, the first factor measures the extent to which SBL workshops contribute to developing and improving preservice and inservice teachers’ communication skills.

Factor 2 corresponds to the items that represent participants’ emotional self-awareness, reflecting how they contended with challenges inherent to the SBL process (e.g., feeling too embarrassed to perform in front of their peers).

Factor 3 corresponds to the items that represent professional collaborative-learning-related insights (e.g., understanding the importance of group reflection, realizing that colleagues experience similar difficulties). Hence, this factor may be considered a group-based learning outcome, as it measures the extent to which the simulation setup successfully exposes and elicits key situations and emotions shared by professionals in the field.
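The item-retention step in the EFA (assigning each item to one factor and deleting items with low loadings or cross-loadings) can be expressed as a simple rule over the loading matrix. The authors’ exact cutoffs are not stated here. The 0.32 thresholds below are hypothetical placeholders, and so are the item labels and loadings in the example.

```python
def screen_items(loadings, min_loading=0.32, cross_cutoff=0.32):
    """Assign each item to its strongest factor or flag it for deletion.

    loadings maps an item label to its loadings on each factor.
    The 0.32 defaults are hypothetical cutoffs, not the authors' stated rule.
    Returns (kept, dropped): kept maps item -> 0-based factor index,
    dropped maps item -> reason.
    """
    kept, dropped = {}, {}
    for item, row in loadings.items():
        # Factor indices ordered from strongest to weakest absolute loading
        ranked = sorted(range(len(row)), key=lambda k: abs(row[k]), reverse=True)
        primary = abs(row[ranked[0]])
        secondary = abs(row[ranked[1]]) if len(row) > 1 else 0.0
        if primary < min_loading:
            dropped[item] = "low loading"
        elif secondary >= cross_cutoff:
            dropped[item] = "cross-loading"
        else:
            kept[item] = ranked[0]
    return kept, dropped


# Hypothetical loadings on three factors
example = {
    "item_a": [0.88, 0.10, 0.05],
    "item_b": [0.40, 0.35, 0.02],
    "item_c": [0.20, 0.15, 0.25],
    "item_d": [0.05, 0.12, 0.69],
}
kept, dropped = screen_items(example)
print(kept)
print(dropped)
```

In practice, retention decisions also weigh item content. A rule like this only flags candidates for review.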

3.2.6.2. Confirmatory factor analysis

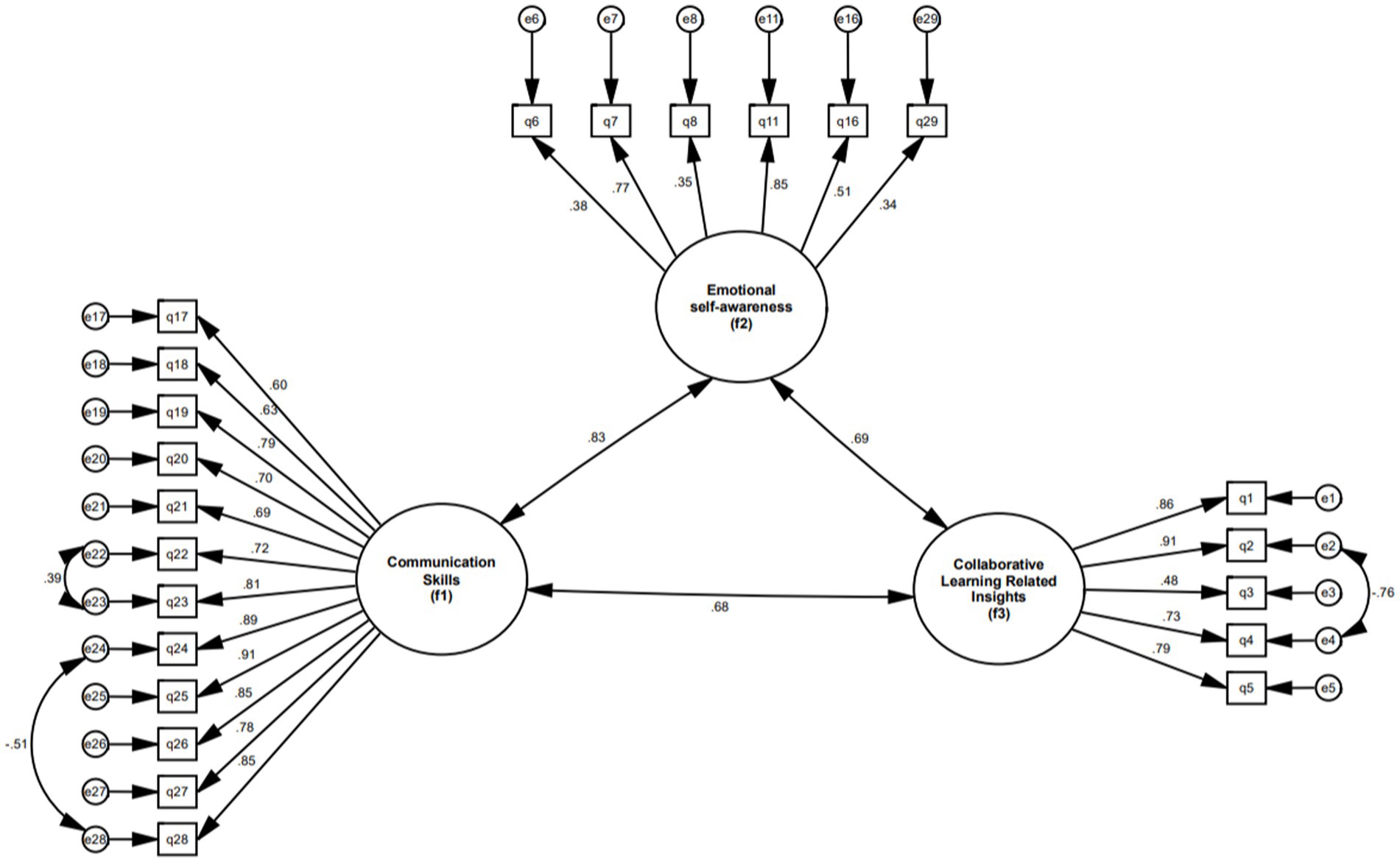

A CFA was conducted on the data of the second subsample, and it confirmed the three-factor structure. The final model includes a total of 23 items (item 15, which had loaded on factor 2 in the EFA, was excluded): 12 items related to communication skills (factor 1), six items related to participants’ emotional self-awareness (factor 2), and five items related to collaborative-learning-related insights (factor 3). The results of the CFA, including the pattern coefficients, are presented in Figure 2.

Results indicate a good fit between the hypothesized three-factor model and the observed data (χ2 = 317.181, df = 224, χ2/df ratio = 1.416, p ≤ 0.001). The goodness-of-fit estimates were CFI = 0.94, TLI = 0.93, SRMR = 0.07, and RMSEA = 0.06 (90% CI: 0.047 to 0.081), indicating good fit. All items loaded significantly onto the three latent factors: all coefficients were between 0.33 and 0.91 and differed from zero at the 0.01 significance level. The factors were highly correlated: communication skills with collaborative-learning-related insights (0.68, p < 0.001), communication skills with emotional self-awareness (0.83, p < 0.001), and emotional self-awareness with collaborative-learning-related insights (0.69, p < 0.001). These correlations suggest that the three factors are related but distinct dimensions. As Figure 2 illustrates, the residuals (labeled “e” in the figure) were allowed to correlate for three pairs of items: 2 and 4, 22 and 23, and 24 and 28.

Items 2 and 4 are related, as item 4 measures the degree to which a participant realizes that others experience difficulties similar to their own, from which one may derive the importance of understanding others from an empathic perspective (item 2). Likewise, item 22 and item 23 are related, as the former measures whether the SBL had a positive impact on participants’ personal life, and the latter measures the contribution of SBL to the ability of participants to express empathy toward others. In the third pairing, item 28 measures the degree to which participants realize they should face conflicts professionally and item 24 measures the extent to which SBL provided participants with professional tools to deal with conflicts. As all these items are clear indicators of their respective factors and because they reflect the intended features of the research design, the correlation of their residuals was deemed acceptable (De Coninck et al., 2020).
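As a consistency check on the reported χ2 statistics, the degrees of freedom of the final model can be counted from its specification: 23 items, three correlated factors, and three correlated residual pairs. The minimal sketch below assumes standard identification with factor variances fixed to 1. Fixing one loading per factor instead gives the same count. The helper name is hypothetical.

```python
def cfa_degrees_of_freedom(n_items, n_factors, n_correlated_residuals):
    """Degrees of freedom for a simple-structure CFA.

    Assumes each item loads on exactly one factor, factor variances are
    fixed to 1 (so all loadings are free), all factor covariances are free,
    and each item has one free residual variance.
    """
    observed_moments = n_items * (n_items + 1) // 2  # unique variances and covariances
    free_parameters = (
        n_items                                # factor loadings
        + n_items                              # residual variances
        + n_factors * (n_factors - 1) // 2     # factor covariances
        + n_correlated_residuals               # freed residual covariances
    )
    return observed_moments - free_parameters


# Final SLOTE model: 23 items, 3 factors, residual pairs 2-4, 22-23, 24-28
df = cfa_degrees_of_freedom(23, 3, 3)
print(df, round(317.181 / df, 3))  # prints: 224 1.416
```

Under these assumptions, 276 observed moments minus 52 free parameters gives df = 224. The value 317.181 / 224 ≈ 1.416 reproduces the χ2/df ratio reported above.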

3.2.6.3. Internal consistency

The internal consistency of the three factors was measured using Cronbach’s alpha coefficient on the complete data set (n = 370). The newly constructed subscales were reliable: Cronbach’s α was 0.95 for communication skills, 0.74 for emotional self-awareness, and 0.86 for collaborative-learning-related insights. All three coefficients exceed the 0.70 threshold, indicating adequate to high internal consistency among the items of each dimension and good reliability of the factors.
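For a subscale of k items, Cronbach’s α = k/(k − 1) · (1 − Σσ²ᵢ / σ²_X). Here σ²ᵢ is the variance of item i and σ²_X is the variance of respondents’ summed scores. The minimal sketch below assumes complete responses (no missing values) on a common numeric scale. The function name and toy data are hypothetical and are not the study’s responses.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: one list per respondent, each holding that respondent's
    k item scores (no missing values assumed).
    """
    k = len(responses[0])
    item_columns = list(zip(*responses))            # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_scores = [sum(row) for row in responses]  # each respondent's summed score
    return k / (k - 1) * (1 - item_variance_sum / variance(total_scores))


# Hypothetical responses: 4 respondents x 3 items
toy = [[5, 6, 5], [6, 6, 7], [3, 4, 3], [7, 7, 6]]
print(round(cronbach_alpha(toy), 2))
```

The sketch uses sample variances throughout. Because the ratio only needs a consistent choice, population variances would give the same α.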

3.2.6.4. Descriptive analyses

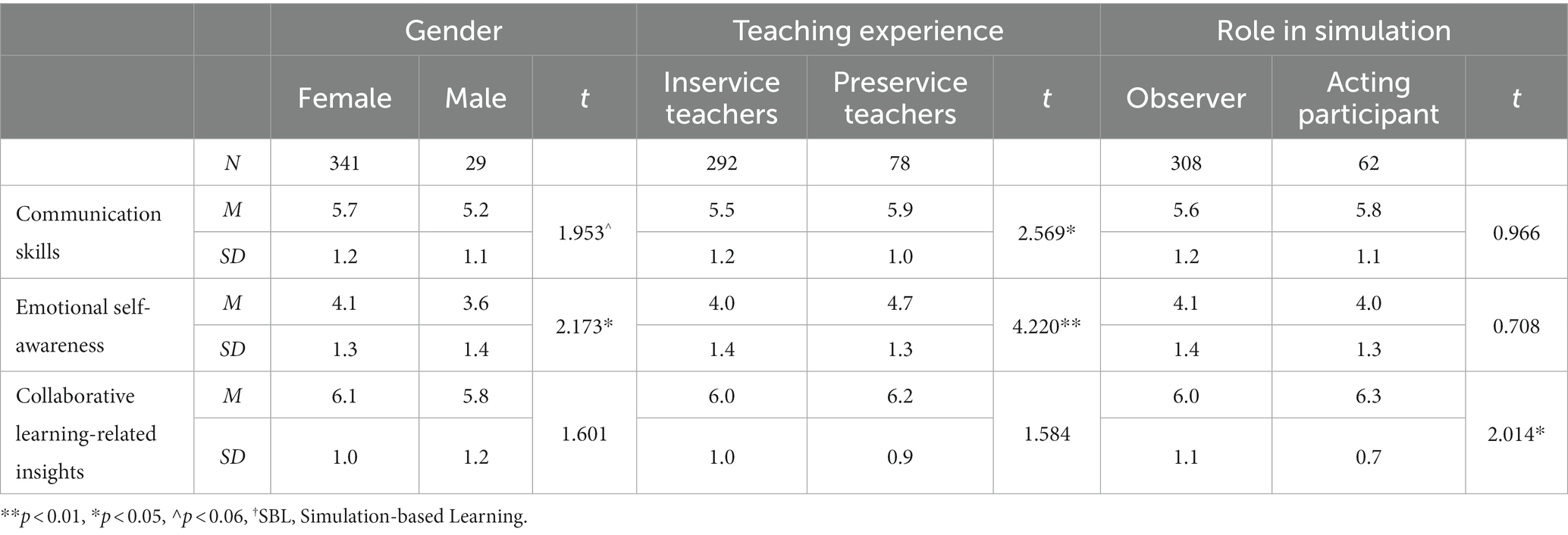

A summary of the participants’ descriptive statistics is presented in Table 4. Male participants’ mean score on emotional self-awareness (M = 3.6) was significantly lower than that of female participants (M = 4.1) [t(df = 368) = 2.173, p < 0.05]. Male participants’ mean score on communication skills (M = 5.2) was also lower than that of female participants (M = 5.7), although this difference was marginal and did not reach the 0.05 significance level [t(df = 368) = 1.953, p < 0.052]. Acting participants’ mean score on collaborative-learning-related insights (M = 6.3) was significantly higher than that of observers (M = 6.0) [t(df = 117) = 2.014, p < 0.05].

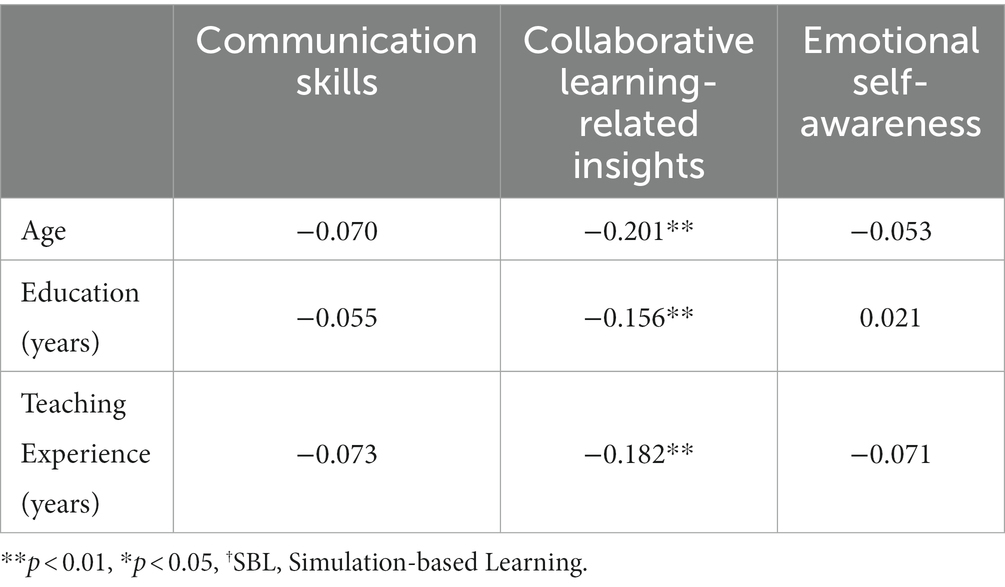

Additionally, inservice teachers’ mean score on communication skills acquired through the SBL experience (M = 5.5) was significantly lower than that of the preservice teacher participants (M = 5.9) [t(df = 368) = 2.569, p < 0.05], as was their mean emotional self-awareness score [M = 4.0 vs. M = 4.7, respectively; t(df = 368) = 4.220, p < 0.01]. Finally, Pearson correlations are presented in Table 5.
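The reported p-values can be checked against their t statistics and degrees of freedom. The sketch below is a standard-library illustration, not the authors’ procedure. It integrates the Student t density with Simpson’s rule, whereas a statistics package’s t distribution would normally be used instead. It assumes two-tailed tests, which the text does not state explicitly. Under that assumption, t(368) = 1.953 gives a p slightly above 0.05, while the other reported statistics fall below 0.05.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value P(|T| >= |t|) via Simpson's rule on [0, |t|].

    steps must be even for Simpson's rule.
    """
    h = abs(t) / steps
    total = t_pdf(0.0, df) + t_pdf(abs(t), df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # P(0 <= T <= |t|)
    return 2 * (0.5 - central)


# t statistics and degrees of freedom as reported in the descriptive analyses
for t, df in [(2.173, 368), (1.953, 368), (2.014, 117), (2.569, 368), (4.220, 368)]:
    print(f"t({df}) = {t}: two-tailed p = {two_tailed_p(t, df):.4f}")
```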

Table 5. Pearson’s correlations between participants’ perceptions regarding SBL† and age, education, and teaching experience.

Findings indicate significant negative correlations between participants’ emotional self-awareness and age (r = −0.201, p < 0.01), education level (r = −0.156, p < 0.01), and teaching experience (r = −0.182, p < 0.01).
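Each coefficient in Table 5 can be tested against zero with t = r · √((n − 2)/(1 − r²)), on df = n − 2. The minimal sketch below assumes that each correlation was computed on the full sample of n = 370, which the text does not state explicitly. The function names are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Reported r between emotional self-awareness and education level; n = 370 assumed
print(round(r_to_t(-0.156, 370), 2))
```

With r = −0.156 and n = 370, t ≈ −3.03. Its magnitude exceeds the two-tailed 0.01 critical value of about 2.59 for df = 368, in line with the reported p < 0.01.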

4. Discussion

The most significant contribution of this study lies in addressing SBL’s multifaceted learning outcomes as revealed through participants’ perceptions. By providing a validated tool for assessing these outcomes, this study enhances our ability to examine the use of SBL in teacher education both theoretically and practically. The SLOTE scale is a first in this subfield of teacher education, where the use of SBL is still being developed (Dotger, 2015; Theelen et al., 2019). In contrast to previous studies, which examined the effects of SBL in terms of a single outcome, such as teaching reading (Ferguson, 2017) or conflict management (Shapira-Lishchinsky, 2015), the current study’s innovation lies in conducting a comprehensive examination of the multifaceted simulation outcomes. Hence, the study contributes a theoretical conceptualization of SBL outcomes and examines these outcomes among preservice and inservice teachers. These outcomes are manifested in three dimensions: a cognitive dimension (communication skills), a behavioral dimension (collaborative-learning-related insights), and an emotional dimension (emotional self-awareness).

The findings related to the first factor of communication skills echo those of previous studies in teacher education, which found that SBL helps nurture communication skills (Dotger, 2015). The current study not only confirms this finding but also demonstrates that SBL participants, regardless of their age, teaching experience, or level of education, indicated the development of communication skills as an SBL outcome. This added facet underscores the importance of the simulation activity (Theelen et al., 2019; Dalinger et al., 2020). Moreover, the numeric scores indicate that preservice teachers benefitted more—in terms of the acquisition of communication skills—from the SBL experience than did their more veteran colleagues. This finding echoes those of earlier studies that examined the benefits of the simulation experience in the context of participants’ professional development stage, whether in the field of medicine (Elliott et al., 2011) or in a paramedical field such as speech therapy (Tavares, 2019). A possible explanation for this finding is related to the assumption that preservice teachers have had less experience in the field, as compared to veteran teachers who have had more opportunities to acquire communication strategies (Symeou et al., 2012).

The finding that communication-skills outcomes did not differ significantly between simulation observers and acting participants supports the view that meaningful learning occurs regardless of the SBL participant’s role (Frei-Landau et al., 2022). It also corresponds to findings from studies in the field of medicine that compared the perceived outcomes reported by observers vs. active participants, although those findings were not definitive (Bong et al., 2017; Zottmann et al., 2018). However, medical simulations are used to practice techniques as well as communication skills; consequently, every learner must actively experience a simulation (Goolsarran et al., 2018). Thus, the current finding provides an empirical basis for using SBL in teacher education in a manner that does not require every participant to take an active role in the simulation.

The outcome of collaborative learning is another relevant aspect of the SBL experience (Manburg et al., 2017; Levin and Flavian, 2022), which corresponds to the growing tendency in higher education programs to emphasize the development of collaborative learning skills (Curşeu et al., 2018). Furthermore, in the current findings, participants who acted in the simulations scored higher on collaborative-learning-related insights than did their counterparts who observed the simulations. This may be because the acting participants were involved in the workshop both actively, as actors in the simulation, and passively, as observers of simulations enacted by others, which by definition increased their opportunity to benefit from collaborative learning. Of note, there are various levels of collaborative learning (Luhrs and McAnally-Salas, 2016); hence, future studies ought to compare observers’ and acting participants’ SBL outcomes across these levels.

The study found that one of the major outcomes of SBL is emotional self-awareness, that is, awareness of the emotions elicited when contending with the challenges inherent in the SBL process, such as the critiquing element. This finding is in line with previous studies, which indicated that the SBL experience elicited negative as well as positive emotions among its participants (Bautista and Boone, 2015; Dalgarno et al., 2016). Not surprisingly, the preservice teachers in this study reported greater emotional self-awareness than did the experienced inservice teachers. This may be explained by earlier studies, which have shown that emotional self-awareness enables openness toward learning, which is greater when one is more professionally grounded and hence has also had more positive experiences (Govender and Grayson, 2008; Levin and Muchnik-Rozanov, 2023). An interesting finding was that the women mentioned the emergence of both positive and negative emotions in reaction to the challenges they encountered. This finding is in line with a previous study that found that women who participated in SBL reported positive emotions, such as relief and confidence, as well as negative emotions, such as stress and self-criticism (Levin and Muchnik-Rozanov, 2023). Moreover, the finding that the women in this study reported greater emotional self-awareness than did the men may suggest a relationship between emotions and gender in SBL. This may be related to the findings of a previous study, according to which women who reported participating in SBL less frequently also indicated lower levels of satisfaction and did not feel a sense of belonging (Engel et al., 2021).

A surprising finding was the absence of a significant difference between the observers and the active participants in terms of the outcome of emotional self-awareness. We expected to find that actively participating in the simulation would involve a sense of greater exposure and consequently would elicit more instances of emotional self-awareness, as was found in other studies concerning SBL in the field of teacher education (Bautista and Boone, 2015; Dalgarno et al., 2016). In the same vein, it was found that active participants in medical simulations were under a greater amount of stress than were the observers (Bong et al., 2017). The novelty of the current findings is that they suggest that active participation is not inherently more emotionally demanding and, consequently, participants in both roles are apt to benefit equally from the experiential learning afforded by the SBL process. Additional studies could compare the SBL experience as perceived by the simulation observers vs. the acting participants in relation to other SBL outcomes.

4.1. Study limitations

This study has several limitations. First, there is a gender imbalance in the sample, which may bias the results and limit the conclusions that can be drawn from this study. However, gender imbalance has been reported and is recognized as a characteristic of the field of teacher education (Lassibille and Navarro-Gómez, 2020); hence, we believe that these findings can be considered representative of the relevant populations. Second, the tools used, i.e., self-report questionnaires and interviews, are subject to social desirability bias. To minimize this effect, data were collected from several sources to enable triangulation. Third, this is a cross-sectional study; the outcomes of SBL should also be examined in long-term studies, as well as in the context of multiple SBL experiences. Fourth, because the scale was validated with a sample that participated in clinical simulations, the outcomes identified in the study may be relevant only to this type of simulation. We therefore call for further validation of the scale in relation to other SBL models used in teacher education, so that the tool can be applied across the field. Future studies should also investigate SBL outcomes with a focus on differences related to gender, teaching experience, and observer vs. acting-participant roles, particularly in relation to emotional self-awareness and collaborative-learning-related insights.

4.2. Conclusion

The study’s contribution lies in developing and validating a scale that assesses the learning outcomes of SBL in teacher education in an integrative manner. It thus advances both our empirical knowledge and our theoretical understanding of the benefits of learning through simulations, and adds to the research that assesses the simulation tool. The developed scale comprises three major factors, which together highlight the multifaceted qualities of the simulation tool: communication skills, emotional self-awareness, and collaborative-learning-related insights. Not only does this conceptualization provide, for the first time, a comprehensive scale that integratively describes the learning outcomes of simulation use in teacher education, but it also offers a preliminary understanding of the need to adapt SBL to target audiences to maximize its practical benefits. That is, the findings suggest that SBL is more beneficial to women and preservice teachers (compared with men and inservice teachers) and that its emotional self-awareness outcome decreases with teachers’ age and experience. Moreover, the conceptualization of the benefits of learning through simulations allows for a better understanding of the observers’ role in SBL. It suggests that enacting the simulation is not inherently more emotionally demanding and, consequently, that participants in both roles are likely to gain equally from the experiential learning afforded by the SBL process. For the SLOTE scale to be applicable to the entire field of teacher education, we urge its further validation with other SBL models used in this field.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Achva Academic College. The participants provided their written informed consent to participate in this study.

Author contributions

OL: conceptualization, data curation, funding acquisition, investigation, methodology, project administration, resources, validation, and writing. RF-L: conceptualization, formal analysis, funding acquisition, investigation, methodology, resources, validation, and writing. CG: conceptualization, statistical analysis, investigation, methodology, resources, validation, visualization, and writing. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Awang, Z. (2012). A handbook on SEM. Structural equation modeling. 2nd Edn. New York, NY; London: Guilford.

Badiee, F., and Kaufman, D. (2014). Effectiveness of an online simulation for teacher education. J. Technol. Teach. Educ. 22, 167–186.

Barrows, H. S., and Abrahamson, S. (1964). The programmed patient: a technique for appraising student performance in clinical neurology. Acad. Med. 39, 802–805.

Bautista, N. U., and Boone, W. J. (2015). Exploring the impact of TeachME™ lab virtual classroom teaching simulation on early childhood education majors' self-efficacy beliefs. J. Sci. Teach. Educ. 26, 237–262. doi: 10.1007/s10972-014-9418-8

Benson, J., and Clark, F. (1982). A guide for instrument development and validation. Am. J. Occup. Ther. 36, 789–800. doi: 10.5014/ajot.36.12.789

Bong, C. L., Lee, S., Ng, A. S. B., Allen, J. C., Lim, E. H. L., and Vidyarthi, A. (2017). The effects of active (hot-seat) versus observer roles during simulation-based training on stress levels and non-technical performance: a randomized trial. Adv. Simul. 2:7. doi: 10.1186/s41077-017-0040-7

Bradley, E. G., and Kendall, B. (2014). A review of computer simulations in teacher education. J. Educ. Technol. Syst. 43, 3–12. doi: 10.2190/ET.43.1.b

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Cattell, R. B. (1996). The scree test for the number of factors. Multivar. Behav. Res. 1, 245–276. doi: 10.1207/s15327906mbr0102_10

Chernikova, O., Heitzmann, N., Stadler, M., Holzberger, D., Seidel, T., and Fischer, F. (2020). Simulation-based learning in higher education: a meta-analysis. Rev. Educ. Res. 90, 499–541. doi: 10.3102/0034654320933544

Codreanu, E., Sommerhoff, D., Huber, S., Ufer, S., and Seidel, T. (2021). Exploring the process of preservice teachers’ diagnostic activities in a video-based simulation. Front Educ 6:626666. doi: 10.3389/feduc.2021.626666

Cohen, J., Wong, V., Krishnamachari, A., and Berlin, R. (2020). Teacher coaching in a simulated environment. Educ. Eval. Policy Anal. 42, 208–231. doi: 10.3102/0162373720906217

Costello, A. B., and Osborne, J. (2005). Best practices in exploratory factor analysis: four recommendations for getting the most from your analysis. Pract. Assess. Res. Eval. 10:7. doi: 10.7275/jyj1-4868

Creswell, J. W., and Clark, V. L. P. (2017). Designing and conducting mixed methods research. 3rd Edn. Thousand Oaks: Sage.

Curşeu, P. L., Chappin, M. M., and Jansen, R. J. (2018). Gender diversity and motivation in collaborative learning groups: the mediating role of group discussion quality. Soc. Psychol. Educ. 21, 289–302. doi: 10.1007/s11218-017-9419-5

Dalgarno, B., Gregory, S., Knox, V., and Reiners, T. (2016). Practicing teaching using virtual classroom role plays. Austral J Teach Educ 41, 126–154. doi: 10.14221/ajte.2016v41n1.8

Dalinger, T., Thomas, K. B., Stansberry, S., and Xiu, Y. (2020). A mixed reality simulation offers strategic practice for pre-service teachers. Comput. Educ. 144:103696. doi: 10.1016/j.compedu.2019.103696

De Coninck, K., Walker, J., Dotger, B., and Vanderlinde, R. (2020). Measuring student teachers’ self-efficacy beliefs about family-teacher communication: scale construction and validation. Stud. Educ. Eval. 64:100820. doi: 10.1016/j.stueduc.2019.100820

DeCuir-Gunby, J. T. (2008). “Designing mixed methods research in the social sciences: a racial identity scale development example” in Best practices in quantitative methods. ed. J. Osborne (Thousand Oaks: Sage), 125–136.

Dieker, L. A., Hughes, C. E., Hynes, M. C., and Straub, C. (2017). Using simulated virtual environments to improve teacher performance. School-University Partnerships 10, 62–81.

Dotger, B. H. (2013). I had no idea: Clinical simulations for teacher development. Charlotte: Information Age Publishing.

Dotger, B. H. (2015). Core pedagogy: individual uncertainty, shared practice, formative ethos. J. Teach. Educ. 66, 215–226. doi: 10.1177/0022487115570093

Elliott, S., Murrell, K., Harper, P., Stephens, T., and Pellowe, C. (2011). A comprehensive systematic review of the use of simulation in the continuing education and training of qualified medical, nursing, and midwifery staff. JBI Database System Rev. Implement. Rep. 9, 538–587. doi: 10.11124/01938924-201109170-00001

Engel, S., Mayersen, D., Pedersen, D., and Eidenfalk, J. (2021). The impact of gender on international relations simulations. J Polit Sci Educ 17, 595–613. doi: 10.1080/15512169.2019.1694532

Ferguson, K. (2017). Using a simulation to teach reading assessment to preservice teachers. Read. Teach. 70, 561–569. doi: 10.1002/trtr.1561

Fleiss, J. L., Levin, B., and Paik, M. C. (2013). Statistical methods for rates and proportions. 3rd Edn. New Jersey: John Wiley & Sons.

Franklin, A. E., Burns, P., and Lee, C. S. (2014). Psychometric testing on the NLN student satisfaction and self-confidence in learning, simulation design scale, and educational practices questionnaire using a sample of pre-licensure novice nurses. Nurse Educ. Today 34, 1298–1304. doi: 10.1016/j.nedt.2014.06.011

Frei-Landau, R., and Levin, O. (2022). The virtual Sim (HU) lation model: Conceptualization and implementation in the context of distant learning in teacher education. Teaching and Teacher Education 117:103798. doi: 10.1016/j.tate.2022.103798

Frei-Landau, R., and Levin, O. (2023). Simulation-based learning in teacher education: Using Maslow’s Hierarchy of needs to conceptualize instructors’ needs. Front. Psychol. 14:1149576. doi: 10.3389/fpsyg.2023.1149576

Frei-Landau, R., Orland-Barak, L., and Muchnik-Rozanov, Y. (2022). What’s in it for the observer? Mimetic aspects of learning through observation in simulation-based learning in teacher education. Teaching and Teacher Education 113:103682. doi: 10.1016/j.tate.2022.103682

Gaffney, A. L. H., and Dannels, D. P. (2015). Reclaiming affective learning. Commun. Educ. 64, 499–502. doi: 10.1080/03634523.2015.1058488

Goolsarran, N., Hamo, C. E., Lane, S., Frawley, S., and Lu, W. H. (2018). Effectiveness of an interprofessional patient safety team-based learning simulation experience on healthcare professional trainees. BMC Med. Educ. 18:192. doi: 10.1186/s12909-018-1301-4

Govender, I., and Grayson, D. J. (2008). Pre-service and in-service teachers’ experiences of learning to program in an object-oriented language. Comput. Educ. 51, 874–885. doi: 10.1016/j.compedu.2007.09.004

Hallinger, P., and Wang, R. (2020). The evolution of simulation-based learning across the disciplines, 1965–2018: a science map of the literature. Simul. Gaming 51, 9–32. doi: 10.1177/1046878119888246

Hinkin, T. R. (1995). A review of scale development practices in the study of organizations. J. Manag. 21, 967–988. doi: 10.1177/014920639502100509

Hofmann, R., Curran, S., and Dickens, S. (2021). Models and measures of learning outcomes for non-technical skills in simulation-based medical education. Stud. Educ. Eval. 71:101093. doi: 10.1016/j.stueduc.2021.101093

Hogan, S., Greenfield, D. B., Lee, A., and Schmidt, N. (2001). Development and validation of the Hogan grief reaction checklist. Death Stud. 25, 1–32. doi: 10.1080/074811801750058609

Horn, J. L. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika 30, 179–185. doi: 10.1007/BF02289447

Hu, L., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Modeling 6, 1–55. doi: 10.1080/10705519909540118

Jack, D., Gerolamo, A. M., Frederick, D., Szajna, A., and Muccitelli, J. (2014). Using a trained actor to model mental health nursing care. Clin. Simul. Nurs. 10, 515–520. doi: 10.1016/j.ecns.2014.06.003

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educ. Psychol. Meas. 20, 141–151. doi: 10.1177/001316446002000116

Kaufman, D., and Ireland, A. (2016). Enhancing teacher education with simulations. TechTrends 60, 260–267. doi: 10.1007/s11528-016-0049-0

Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. New Jersey: Prentice-Hall.

Lassibille, G., and Navarro-Gómez, M. L. (2020). Teachers’ job satisfaction and gender imbalance at school. Educ. Econ. 28, 567–586. doi: 10.1080/09645292.2020.1811839

Leech, N. L., and Onwuegbuzie, A. (2010). Guidelines for conducting and reporting mixed research in the field of counseling and beyond. J. Couns. Dev. 88, 61–69. doi: 10.1002/j.1556-6678.2010.tb00151.x

Levin, O. (2022). Reflective processes in clinical simulations from the perspective of the simulation actors. Reflective Practice 23, 635–650. doi: 10.1080/14623943.2022.2103106

Levin, O., and Flavian, H. (2022). Simulation-based learning in the context of peer learning from the perspective of preservice teachers: A case study. Eur. J. Teach. Educ. 45, 373–394. doi: 10.1080/02619768.2020.1827391

Levin, O., Frei-Landau, R., Flavian, H., and Miller, E. C. (2023). Creating authenticity in simulation-based learning scenarios in teacher education. Eur. J. Teach. Educ. 1–22. doi: 10.1080/02619768.2023.2175664

Levin, O., and Muchnik-Rozanov, Y. (2023). Professional development during simulation-based learning: Experiences and insights of preservice teachers. J. Educ. Teach. 49, 120–136. doi: 10.1080/02607476.2022.2048176

Liu, K., and McFarland, J. (2021). Examining the efficacy of fishbowl simulation in preparing to teach English learners in secondary schools. Front Educ 6:700051. doi: 10.3389/feduc.2021.700051

Luhrs, C., and McAnally-Salas, L. (2016). Collaboration levels in asynchronous discussion forums: a social network analysis approach. J. Interact. Online Learn. 14, 29–44.

Manburg, J., Moore, R., Griffin, D., and Seperson, M. (2017). Building reflective practice through an online diversity simulation in an undergraduate teacher education program. Contemp Iss Technol Teach Educ 17, 128–153.

Morais, D. B., and Ogden, A. C. (2011). Initial development and validation of the global citizenship scale. J. Stud. Int. Educ. 15, 445–466. doi: 10.1177/1028315310375308

O’connor, B. P. (2000). SPSS and SAS programs for determining the number of components using parallel analysis and Velicer’s MAP test. Behav. Res. Methods Instrum. Comput. 32, 396–402. doi: 10.3758/BF03200807

Onwuegbuzie, A. J., and Leech, N. L. (2007). Validity and qualitative research: an oxymoron? Qual. Quant. 41, 233–249. doi: 10.1007/s11135-006-9000-3

Orland-Barak, L., and Maskit, D. (2017). Methodologies of mediation in professional learning. Switzerland: Springer.

Pinar, G., Acar, G. B., and Kan, A. (2014). A study of reliability and validity an attitude scale towards simulation-based education. Arch Nurs Pract Care 2, 028–031. doi: 10.17352/2581-4265.000010

Pohlmann, J. T. (2004). Use and interpretation of factor analysis in the journal of educational research: 1992-2002. J. Educ. Res. 98, 14–23. doi: 10.3200/JOER.98.1.14-23

Ran, A., and Nahari, G. (2018). Simulations in education: Guidelines for new staff training in simulation centers. Tel Aviv: MOFET Institute (Hebrew).

Shapira-Lishchinsky, O. (2015). Simulation-based constructivist approach for education leaders. Educ Manage Admin Leadersh 43, 972–988. doi: 10.1177/1741143214543203

Shapira-Lishchinsky, O., Glanz, J., and Shaer, A. (2016). Team-based simulations: towards developing ethical guidelines among USA and Israeli teachers in Jewish schools. Relig. Educ. 111, 555–574. doi: 10.1080/00344087.2016.1085134

Sigalet, E., Donnon, T., and Grant, V. (2012). Undergraduate students’ perceptions of and attitudes toward a simulation-based interprofessional curriculum: the KidSIM questionnaire. Simul. Healthc. 7, 353–358. doi: 10.1097/SIH.0b013e318264499e

Symeou, L., Roussounidou, E., and Michaelides, M. (2012). "I feel much more confident now to talk with parents": an evaluation of in-service training on teacher-parent communication. Sch. Community J. 22, 65–87.

Taber, K. S. (2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi: 10.1007/s11165-016-9602-2

Tang, F. M. K., Lee, R. M. F., Szeto, R. H. L., Cheng, J. K. K., Choi, F. W. T., Cheung, J. C. T., et al. (2021). A simulation Design of Immersive Virtual Reality for animal handling training to biomedical sciences undergraduates. Front Educ 6:239. doi: 10.3389/feduc.2021.710354

Tavares, W. (2019). Roads less traveled: understanding the “why” in simulation as an integrated continuing professional development activity. Adv. Simul. 4:24. doi: 10.1186/s41077-019-0111-z

Theelen, H., van den Beemt, A., and Brok, P. D. (2019). Classroom simulations in teacher education to support preservice teachers’ interpersonal competence: a systematic literature review. Comput. Educ. 129, 14–26. doi: 10.1016/j.compedu.2018.10.015

Thompson, B., and Mazer, J. P. (2012). Development of the parental academic support scale: frequency, importance, and modes of communication. Commun. Educ. 61, 131–160. doi: 10.1080/03634523.2012.657207

Tutticci, N., Ryan, M., Coyer, F., and Lewis, P. A. (2018). Collaborative facilitation of debrief after high fidelity simulation and its implications for reflective thinking: student experiences. Stud. High. Educ. 43, 1654–1667. doi: 10.1080/03075079.2017.1281238

Vogt, D. S., King, D. W., and King, L. A. (2004). Focus groups in psychological assessment: enhancing content validity by consulting members of the target population. Psychol. Assess. 16, 231–243. doi: 10.1037/1040-3590.16.3.231

Keywords: simulation-based learning, teacher education, communication skills, social-emotional learning, collaborative learning, mixed methodology, SBL outcome scale

Citation: Levin O, Frei-Landau R and Goldberg C (2023) Development and validation of a scale to measure the simulation-based learning outcomes in teacher education. Front. Educ. 8:1116626. doi: 10.3389/feduc.2023.1116626

Edited by:

Merav Esther Hemi, Gordon College of Education, Israel

Reviewed by:

Ronen Kasperski, Gordon College of Education, Israel
Marina Milner-Bolotin, University of British Columbia, Canada

Copyright © 2023 Levin, Frei-Landau and Goldberg. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Orna Levin, orna_l@achva.ac.il
