- 1BIOCON, IU-ECOAQUA, Universidad de Las Palmas de Gran Canaria, Las Palmas de Gran Canaria, Spain

- 2Departamento de Ecología, Facultad de Ciencias, Universidad Católica de la Santísima Concepción, Concepción, Chile

- 3Photoelectrocatalysis for Environmental Applications (FEAM), Department of Chemistry, Institute of Environmental Studies and Natural Resources (i-UNAT), Universidad de Las Palmas de Gran Canaria, Las Palmas de Gran Canaria, Spain

- 4Christopher Ingold Laboratories, Department of Chemistry, UCL (University College London), London, United Kingdom

- 5Molecular Ecology Group (MEG), Water Research Institute (IRSA), National Research Council of Italy (CNR), Verbania, Italy

- 6Department of Biological and Environmental Sciences, University of Gothenburg, Gothenburg, Sweden

- 7Marine Environment Laboratories, International Atomic Energy Agency, Monaco, Monaco

The academic bottleneck

Academia is highly competitive, with universities producing far more PhD graduates than there are academic positions available worldwide (Gould, 2015). In the United States, for example, only 12.8% of PhD graduates attain academic positions (Larson et al., 2014). This bottleneck is narrowing further due to budget cuts in universities and research institutions (OECD, 2016).

As a result, it is common to see many researchers with very heterogeneous backgrounds applying for a single academic vacancy (Fernandes et al., 2020). This not only imposes an administrative load on the evaluation process but is also a frustrating exercise for applicants, who are compelled to design specific research and teaching programs for each application. An accurate description of the researcher's role is pivotal, and the contracting party (i.e., the university or research institute) needs to set specific requirements for the vacancy. Nevertheless, academic roles cover a wide range of activities. Some are common to every research institution, such as the production and dissemination of research outputs; attraction of funding; lecturing and supervision of students; and participation in outreach activities. Other requirements take into account the structure, flexibility, and idiosyncrasies of each institution. Even within the same institution, the requirements may vary depending on current needs or priorities, as well as the vacancy profile. Several suggestions have recently been made for outlining the requirements, needs, and priorities of the institution (Guimaraes et al., 2019), including approaches for fair comparison among candidates applying for a vacancy (Bradshaw et al., 2021). To our knowledge, none of them has been extensively implemented, though some institutions have developed procedures that take them into account, as is the case for the Résumé for Researchers by the Royal Society in the United Kingdom.

The temptation of metrics

Academic applications are usually evaluated by senior and highly experienced scientists with a background in research evaluation metrics. These evaluators may be tempted to base their evaluation criteria on standard indicators, such as journal impact factor, number of citations, H-index, m-index, i10-index (Paulus et al., 2015; Tregoning, 2018), or a combination of multiple citation indicators (Ioannidis et al., 2016). These standards have been repeatedly questioned because of their biases (Bornman and Werner, 2014; Cameron et al., 2016). The abuse and misuse of these indices are especially pronounced when they are used to rank individuals against their competitors (Chapman et al., 2019; McKiernan et al., 2019). Numerous scientists have raised their voices against the excessive influence of these metrics (Callaway, 2016), in line with Goodhart's law: “when a measure becomes the target, it ceases to be a good measure.” Some institutions are aware of the pitfalls of using journal impact factors as a surrogate for research quality. This awareness has followed declarations such as DORA (San Francisco Declaration on Research Assessment) (Raff, 2013), the HEFCE Metric Tide report (Wilsdon, 2015), and the Leiden Manifesto (Hicks et al., 2015), which address decision-making about science, specifically in the recruitment of researchers. Unfortunately, these institutions are still rare, and most evaluators fall back on classic evaluation metrics because they provide a number (= datum), which seems providential for ranking all candidates into a short list. Classic evaluations have increased the value placed on research at the expense of other academic responsibilities (e.g., teaching, outreach, and service), which often results in an incongruity between how faculty actually spend their time and what is considered pivotal in their evaluation (Schimanski and Alperin, 2018; Alperin et al., 2019).

Despite their practical use, classic evaluation metrics are most commonly misused by not being integrated into a “holistic” approach to curriculum evaluation, so that fundamental academic activities are often disregarded and conventionally treated as secondary to the professional profile. Recently, a number of new metrics, frameworks, and proposals have been developed to fill this gap (e.g., Mazumdar et al., 2015; Hutchins et al., 2016). Several issues are related to the hyper-specialization of researchers (Riera et al., 2018) and a lack of problem-solving (Ioannidis, 2016), mostly hidden away from society (the “Academia bubble”). Postdoctoral and early-career researchers with a long list of publications signed by an extensive number of authors are often disregarded because their specific role in their articles is considered “diluted” among numerous researchers (Shaffer, 2014; Teixeira da Silva and Dobránszki, 2015), despite the existence of rules for collaborative work among authors (Frassl et al., 2018). Honorary, predatory, and guest authorship is also a problem, one that is not limited to well-established researchers but also affects early-career ones (Elliott et al., 2016; Fong and Wilhite, 2017).

Overall, conventional criteria for curriculum evaluation are mostly focused on research outputs and may be appropriate for researchers following directional career pathways. These researchers will typically stick to their area of research after their PhD and count on support across top institutions. Their outputs usually consist of a steady rate of publications, including in top-tier international journals. On the other hand, the application of conventional criteria will likely be unfair for the evaluation of candidates with unconventional profiles, those from mid-/low-tier institutions (Way et al., 2019), those with non-established supervisors, or those from countries with no science funding programs. Li et al. (2019) showed the importance of co-authoring papers with top scientists to unlock the potential and shift the career trajectory of early-stage researchers.

Top-tier vs. network researchers

Two archetypical profiles, though not mutually exclusive, may be identified among researchers, with a wide range of intermediate profiles between them. We term them here (i) top-tier researchers and (ii) network researchers. Top-tier researchers are expected to be outstanding scientists from a traditional academic perspective, i.e., with an extensive number of scientific contributions in cutting-edge journals and a demonstrated track record of securing funding in national and international competitive calls. Most universities hunt for these researchers as they help to maintain or increase their status in national and international university rankings. These researchers are rewarded through an incentive system of promotions and full positions, which encourages candidates to focus almost exclusively on the production of scientific publications. Furthermore, since top-tier researchers are perceived as potential leaders in their respective fields, they are often awarded highly competitive fellowships and research funding. It is often (perhaps wrongly) assumed that these top-tier researchers will also excel in other aspects of academic life, such as teaching and science communication; engaging with industry; establishing networks; creating an environment that supports research; and engaging in outreach activities.

Beyond the few top-tier researchers, there is a pool of high-quality network researchers who can contribute toward raising the profile of academic institutions broadly across different areas. Network researchers can think outside the box, and they often engage with research outside their comfort zone, demonstrating unique skills for cross-linking studies and interdisciplinary collaboration. Crucially, they can also facilitate knowledge transfer activities beyond the lab scale, tackling challenges beyond the scientific phenomena that are usually the object of a scientific publication. With national cuts to research funding occurring worldwide, the role of network researchers is key to attracting funding from the private sector. Network researchers can also establish fruitful alliances with local and regional communities, influence policy, and carry out outreach activities to engage with a future generation of researchers and the general public. Despite these multiple skills, the expertise and skill set of network researchers are often neglected and susceptible to going unnoticed in conventional evaluation procedures.

Fit for purpose

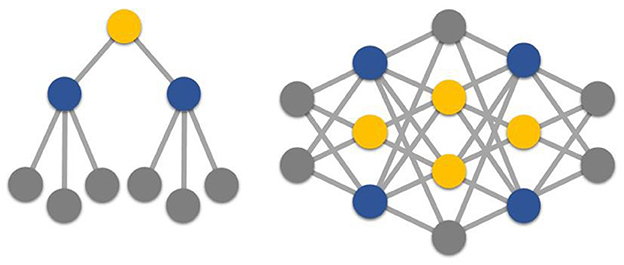

The heterogeneity of tasks and responsibilities within universities and research institutions is of utmost importance for potential candidates for new vacancies. Setting out a detailed description of the expected role in the vacancy post, as well as the strategic purpose of the recruitment, can serve as an efficient mechanism to decrease the stratification of academia, i.e., the difference between the “haves” and the “have nots” among academics (Fong and Wilhite, 2017). At first glance, top-tier leaders are recruited to bring prestige to institutions and to attract funding from national and international schemes, as they are conventionally established. Due to their feedback nature, however, selection processes can work as a filter for certain personalities (e.g., self-confident, strong-minded individuals, novelty seekers; Dupont et al., 2009) who will effectively create their research groups as the leading figure of a pyramidal system (Figure 1), with postdoctoral researchers and associate professors under their umbrella. In this group structure, independence and critical thinking among subordinates are often lacking, and the continuity of the group's research lines may be compromised if the leading professor moves to another institution or retires.

Figure 1. Two model arrangements of scientific groups: (Left) the conventional pyramidal system, with top-tier researchers as sole leaders; (Right) the network system, with network researchers integrated within the same research group.

The group structure of network researchers follows a horizontal system with no leading heads in the sense of traditional academic leadership (Figure 1), and the research direction typically spreads across different scientific areas. This interdisciplinary approach creates real opportunities for novel blue-skies research and flexibility in raising funding from different sources. With these targets in mind, most universities so far have used the strategy of creating research institutes comprising a number of groups, typically from different departments. In practice, however, the interaction between these groups is limited as they rarely share common projects and scientific objectives. Network researchers, by contrast, work toward the same targets, since these guarantee the successful progress of the group. In addition, the research is highly delocalized across different experts, which ensures the continuity of the main research lines within the institution. For this kind of researcher, other activities inherent to faculty work, such as funding, mentoring, outreach, and partnerships with communities, among others, should be taken into consideration by the committee when evaluating candidates for a position. On the other hand, the evaluation of these merits is a daunting task since they cannot be integrated into an easy-to-use index and are instead at the mercy of the reviewers' judgment. Indeed, the role of network researchers is not as transparent as that of top-tier profiles, and it could act as a Faraday cage that shields mediocre researchers from scrutiny. The role of experienced reviewers will then be crucial to ensure not only high-quality standards in the evaluation process (Chapman et al., 2019) but also fairness in the assessment of curricula for candidates with highly heterogeneous backgrounds.

We suggest that recognizing the duality of these profiles, i.e., top-tier and network researchers, is of utmost importance for committees to design an adequate evaluation approach. Top-tier researchers can be evaluated by conventional impact indicators, i.e., citations, H-index and variants, and normalized impact metrics (see Bornman and Werner, 2014). By contrast, network researchers may be evaluated by complementary metrics that consider unconventional aspects of the curriculum, such as outreach, engagement with the private sector, and collaboration networks. From our perspective, the aspects of the curriculum directly related to the specific purpose of the vacancy should be considered a priority by the committee members. For example, a research institution willing to strengthen its collaboration with industry should look for candidates with industrial patents, applied projects with enterprises, proven knowledge transfer skills, etc. These curriculum aspects may be the first tick-box criteria to be filled in by the committee members. Stating the main purpose of the vacancy clearly is key (e.g., “a top researcher is needed to lead our brand new laboratory on…” or “we expect the candidate to join coalition with the academic staff in the department to develop and maintain an interdisciplinary group”), and it will encourage researchers to carry out an adequate self-assessment of their respective profiles. This initial assessment will allow them to focus on realistic opportunities across the pool of vacancies offered by research institutions and also to strengthen their skill sets as researchers. It will also save time for the institution by facilitating the initial screening of candidates for a particular position.

Author contributions

RR wrote the first draft and amended the final version. RQ-C revised the first draft and included suggestions and amendments. AM revised the first draft and gave feedback. SD revised the final version and provided feedback and suggestions. All authors contributed to the article and approved the submitted version.

Acknowledgments

Carlos Melián (EAWAG, Switzerland) and J. C. Alperin (Simon Fraser University, Canada) are thanked for the fruitful discussion around this topic. RQ-C thanks the MEFP, Spain, for funding (Beatriz Galindo Program).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alperin, J. P., Nieves, C. M., Schimanski, L. A., Fischman, G. E., Niles, M. T., and McKiernan, E. C. (2019). Meta-research: how significant are the public dimensions of faculty work in review, promotion and tenure documents? eLife 8, e42254. doi: 10.7554/eLife.42254

Bornman, L., and Werner, M. (2014). How to evaluate individual researchers working in the natural and life sciences meaningfully? A proposal of methods based on percentiles of citations. Scientometrics 98, 487–509. doi: 10.1007/s11192-013-1161-y

Bradshaw, A. C. J., Chalker, M. J., Crabtree, A. S., Eijkelkamp, A. B., Long, A. J., Smith, J. R., et al. (2021). A fairer way to compare researchers at any career stage and in any discipline using open-access citation data. PLoS ONE 16, e0257141. doi: 10.1371/journal.pone.0257141

Callaway, E. (2016). Publishing elite turns against the impact factor. Nature 535, 210–211. doi: 10.1038/nature.2016.20224

Cameron, E. Z., White, A. M., and Gray, M. E. (2016). Solving the productivity and impact puzzle: do men outperform women, or are metrics biased? BioScience 66, 245–252. doi: 10.1093/biosci/biv173

Chapman, A. C., Bicca-Marques, C. J., Calvignac-Spencer, S., Fan, P., Fashing, J. P., Gogarten, J., et al. (2019). Games academics play and their consequences: how authorship, h-index and journal impact factors are shaping the future of academia. Proc. R. Soc. B 286, 20192047. doi: 10.1098/rspb.2019.2047

Dupont, S., Le Bon, O., and Linotte, S. (2009). Selective pressure in academic environment. Acta Psychiatr. Belg. 109, 25–28.

Elliott, C. K., Settles, H. I., Montgomery, M. G., Brassel, T. S., Cheruvelil, S. K., and Soranno, P. A. (2016). Honorary authorship practices in environmental science teams: structural and cultural factors and solutions. Account. Res. Policies Qual. Assur. 24, 80–98. doi: 10.1080/08989621.2016.1251320

Fernandes, D. J., Sarabipour, S., Smith, T. C., Niemi, M. N., Jadavji, M. N., Kozik, A. J., et al. (2020). Research culture: a survey-based analysis of the academic job market. eLife 9, e54097. doi: 10.7554/eLife.54097

Fong, E. A., and Wilhite, A. W. (2017). Authorship and citation manipulation in academic research. PLoS ONE 12, e0187394. doi: 10.1371/journal.pone.0187394

Frassl, M. A., Hamilton, D. P., Denfeld, B. A., de Eyto, E., Hampton, S. E., Keller, P. S., et al. (2018). Ten simple rules for collaboratively writing a multi-authored paper. PLoS Comput. Biol. 14, e1006508. doi: 10.1371/journal.pcbi.1006508

Guimaraes, M. H., Pohl, C., Bina, O., Varanda, M., et al. (2019). Who is doing inter- and transdisciplinary research, and why? An empirical study of motivations, attitudes, skills, and behaviours. Futures 112, 102441. doi: 10.1016/j.futures.2019.102441

Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., and Rafols, I. (2015). Bibliometrics: the Leiden Manifesto for research metrics. Nature 520, 429–431. doi: 10.1038/520429a

Hutchins, B. I., Yuan, X., Anderson, J. M., and Santangelo, G. M. (2016). Relative Citation Ratio (RCR): a new metric that uses citation rates to measure influence at the article level. PLoS Biol. 14, e1002541. doi: 10.1371/journal.pbio.1002541

Ioannidis, J. P., Klavans, R., and Boyack, K. W. (2016). Multiple citation indicators and their composite across scientific disciplines. PLoS Biol. 14, e1002501. doi: 10.1371/journal.pbio.1002501

Ioannidis, J. P. A. (2016). Why most clinical research is not useful. PLoS Med. 13, e1002049. doi: 10.1371/journal.pmed.1002049

Larson, C. R., Ghaffarzadegan, N., and Xue, Y. (2014). Too many PhD graduates or too few academic job openings: the basic reproductive number R0 in Academia. Syst. Res. Behav. Sci. 31, 745–750. doi: 10.1002/sres.2210

Li, W., Aste, T., Caccioli, F., and Livan, G. (2019). Early coauthorship with top scientists predicts success in academic careers. Nat. Commun. 10, 1–9. doi: 10.1038/s41467-019-13130-4

Mazumdar, M., Messinger, S., Finkelstein, M. D., Goldberg, D. J., Lindsell, J. C., Morton, S. C., et al. (2015). Evaluating academic scientists collaborating in team-based research: a proposed framework. Acad. Med. 90, 1302–1308. doi: 10.1097/ACM.0000000000000759

McKiernan, C. E., Schimanski, A. L., Nieves, M. C., Matthias, L., Niles, T. M., and Alperin, J. P. (2019). Use of the journal impact factor in academic review, promotion, and tenure evaluations. eLife 8, e47338. doi: 10.7554/eLife.47338

OECD (2016). OECD Science Technology and Innovation Outlook 2016. Paris: OECD Publishing. doi: 10.1787/sti_in_outlook-2016-en

Paulus, F. M., Rademacher, L., Schäfer, T. A. J., Müller-Pinzler, L., and Krach, S. (2015). Journal impact factor shapes scientists' reward signal in the prospect of publication. PLoS ONE 10, e0142537. doi: 10.1371/journal.pone.0142537

Riera, R., Rodríguez, R. A., Herrera, A. M., Delgado, J. D., and Fath, B. D. (2018). Endorheic currents in ecology: an example of the effects from scientific specialization and interdisciplinary isolation. Interdiscip. Sci. Rev. 43, 175–191. doi: 10.1080/03080188.2017.1371480

Schimanski, L. A., and Alperin, J. P. (2018). The evaluation of scholarship in academic promotion and tenure processes: Past, present, and future. F1000Res. 7.

Shaffer, E. (2014). Too many authors spoil the credit. Can. J. Gastroenterol. Hepatol. 28, 605. doi: 10.1155/2014/381676

Teixeira da Silva, J. A., and Dobránszki, J. (2015). Multiple authorship in scientific manuscripts: ethical challenges, ghost and guest/gift authorship, and the cultural/disciplinary perspective. Sci. Eng. Ethics 22, 1457–1472. doi: 10.1007/s11948-015-9716-3

Tregoning, J. (2018). How will you judge me if not by impact factor? Nature 558, 345. doi: 10.1038/d41586-018-05467-5

Way, S. F., Morgan, A. C., Larremore, D. B., and Clauset, A. (2019). Productivity, prominence, and the effects of the academic environment. Proc. Natl. Acad. Sci. U. S. A. 116, 10729–10733. doi: 10.1073/pnas.1817431116

Keywords: academia, researchers, selection process, unorthodox, diversity and inclusion

Citation: Riera R, Quesada-Cabrera R, Martínez A and Dupont S (2023) Embracing diversity during researcher evaluation in the academic scientific environment. Front. Educ. 8:1098319. doi: 10.3389/feduc.2023.1098319

Received: 18 November 2022; Accepted: 26 January 2023; Published: 16 February 2023.

Edited by: Shamsun Nahar, University of Dhaka, Bangladesh

Reviewed by: Shams Mohammad Abrar, Bangladesh Atomic Energy Commission, Bangladesh

Copyright © 2023 Riera, Quesada-Cabrera, Martínez and Dupont. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rodrigo Riera, rodrigo.riera@ulpgc.es
