
CURRICULUM, INSTRUCTION, AND PEDAGOGY article

Front. Educ., 16 September 2022

Sec. Digital Education

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.954044

This article is part of the Research Topic Re-inventing Project-Based Learning: Hackathons, Datathons, Devcamps as Learning Expeditions.

Our world’s complex challenges increase the need for those entering STEAM (Science, Technology, Engineering, Arts, and Math) disciplines to be able to creatively approach and collaboratively address wicked problems – complex problems with no “right” answer that span disciplines. Hackathons are environments that leverage problem-based learning practices so student teams can solve problems creatively and collaboratively by developing a solution to given challenges using engineering and computer science knowledge, skills, and abilities. The purpose of this paper is to offer a framework for interdisciplinary hackathon challenge development, as well as provide resources to aid interdisciplinary teams in better understanding the context and needs of a hackathon to evaluate and refine hackathon challenges. Three cohorts of interdisciplinary STEAM researchers were observed and interviewed as they collaboratively created a hackathon challenge incorporating all cohort-member disciplines for an online high school hackathon. The observation data and interview transcripts were analyzed using thematic analysis to distill the processes cohorts underwent and resources that were necessary for successfully creating a hackathon challenge. Through this research we found that the cohorts worked through four sequential stages as they collaborated to create a hackathon challenge. We detail the stages and offer them as a framework for future teams who seek to develop an interdisciplinary hackathon challenge. Additionally, we found that all cohorts lacked the knowledge and experience with hackathons to make fully informed decisions related to the challenge’s topic, scope, outcomes, etc. In response, this manuscript offers five hackathon quality considerations and three guiding principles for challenge developers to best meet the needs and goals of hackathon sponsors and participants.

Hackathons and coding contests are time-bound events where teams of participants from different backgrounds gather to build technology projects, learn from each other and from experts, and create innovative solutions that are often judged for prizes (Longmeier, 2021). While hacking can have negative connotations, especially related to security vulnerabilities, in these events it typically refers to modifying original lines of code or devices to create a workable prototype (either hardware or software). Hackathons are flexible venues that can accommodate a variety of outputs and outcomes, yet they are structured with specific challenge parameters that allow participants to demonstrate creativity when developing a product.

Hackathons were originally adopted at software companies to enable rapid prototyping and innovation (Raatikainen et al., 2013; Komssi et al., 2015) but quickly moved from industry into education (Gama et al., 2018a,b; Huppenkothen et al., 2018; Porras et al., 2018, 2019) and civic engagement (Carruthers, 2014; Johnson and Robinson, 2014; Lara and Lockwood, 2016; Enis, 2020). Some events focus on specific hardware or software for project outputs; others treat participant learning as the main outcome and therefore do not prescribe a specific topic. Most hackathons are built around one or several themes that help the newly formed teams narrow the scope of their potential outputs (Lapp et al., 2007; Möller et al., 2014; Trainer et al., 2016).

Hackathons can last anywhere from part of a day to a whole semester, although most run for 24–36 h. They are usually held in open spaces where participants can see what others are developing; some provide project mentors or community involvement to keep participants focused. Hackathons are an ideal venue for tackling wicked problems (i.e., problems that are complex, multidisciplinary, and have multiple correct answers), given that there are often several stakeholders with varying priorities, resulting in multiple possible outcomes. But how are hackathons organized and created so that they become venues for valuable learning experiences? This work begins to explore the creation of hackathons and hackathon challenges that promote problem-based learning experiences among participants.

Hackathons have been around for decades and were used frequently by software companies in the early 2000s. They have since gained popularity in libraries (Nandi and Mandernach, 2016; Longmeier, 2021), on campuses to supplement in-class learning (Horton et al., 2018), and in communities interested in promoting literacy (Vander Broek and Rodgers, 2015), project-based learning (La Place et al., 2017), and awareness of community resources such as data (Carruthers, 2014). When a conference on a specific topic draws experts in the field together, planners may append a hackathon to it, taking advantage of the opportunity to creatively brainstorm and iterate on topics of interest (Feder, 2021).

Early research in this area focused on case studies of specific events (whose durations ranged from a single day to an entire semester) (Ward et al., 2014, 2015; Ghouila et al., 2018). More recent research has examined hackathon outcomes and participant motivations for these tech-related events (Falk Olesen and Halskov, 2020). Researchers have examined how the theme affects product output (Medina Angarita and Nolte, 2019; Pe-Than et al., 2022). Specifically, when exploring goal alignment between hackathon planners and participants, researchers found that some goals were shared (such as networking and learning) whereas prize attainment was not (Medina Angarita and Nolte, 2019). Additionally, participants were more likely to achieve the goals of the hackathon when those goals were clearly communicated to them. Research has also focused on the team dynamics of these events (Trainer and Herbsleb, 2014; Richterich, 2017). Still other studies focus on participant motivations, either for specific themes or for the event overall (Kos, 2018). Hackathon participant surveys show that hackathons are excellent avenues for informal, peer-to-peer, and team-based learning (Nandi and Mandernach, 2016).

Among the various resources for successfully hosting a hackathon (McArthur et al., 2012; Nelson and Kashyap, 2014; Grant, 2017; Jansen-Dings et al., 2017; Tauberer, 2017; Byrne et al., 2018), the hackathon planning kit lays out twelve decisions for organizing a successful event (Nolte et al., 2020), one of which is selecting a theme. Event planners may hope to solve specific issues, iterate on prototypes, or test specific technologies, and these intended outcomes drive theme development. Hackathons are ideal for problem-based learning assignments because they are flexible in how they are deployed, yet constraints can easily be built in.

For work to be considered interdisciplinary, it must facilitate and leverage communication and collaboration across two or more academic disciplines (Jacobs and Frickel, 2009). Interdisciplinary work has greatly increased in popularity over the past century, leading to countless innovations, inventions, and insights. As the technical and social aspects of day-to-day life become more deeply intertwined (Rhoten, 2004), new and complex problems emerge, and the need for interdisciplinary work continues to grow. This is especially true in academia, where there have been recent pushes for more interdisciplinary research projects to increase creativity and innovation (Jacobs and Frickel, 2009; Holley and Brown, 2021). Despite this growing need for interdisciplinarity in research-based problem-solving and innovation, researchers have identified many barriers to such work, including a lack of the knowledge and skills necessary for interdisciplinary collaboration (Holley, 2009). To help students develop the knowledge, skills, and experiences they need to become the next generation of problem-solvers, educational tools and pedagogies that highlight the utility of interdisciplinary problem-solving [e.g., problem-based learning (Savery, 2006; Brassler and Dettmers, 2017), introducing students to wicked problems (Denning, 2009), etc.] can be used in formal classrooms as well as in informal learning environments such as hackathons.

Problem-based learning (PBL) is a learning strategy in which the driving force of the learning experience is a problem. This cannot be just any problem, because students should learn by “resolving complex, realistic problems under the guidance of faculty” (Allen et al., 2011, 21). PBL was first used in medical programs, where it was identified as a successful learning technique, and it grew in popularity and adoption through the 1980s and 1990s (Savery, 2006). After this initial success, PBL was adapted to a variety of contexts and learning levels, from elementary to higher education.

Central to a PBL experience is the problem and how students interact with it. PBL is learner-centered and driven by an ill-structured problem or set of problems that encourages free inquiry (Savery, 2006). Torp and Sage (2002) describe PBL as experiential learning that is organized around and focused on the investigation and resolution of a messy, real-world problem. Similarly, Hmelo-Silver (2004) described PBL as an instructional method in which students learn through facilitated problem solving centered on a complex problem that does not have a single correct answer. These definitions indicate that the problem guiding PBL needs to be complex enough that students can approach it in a variety of ways, considering real-world scenarios and generating solutions that create real-world value. Calls for student learning experiences to resemble “real world” and realistic experiences outside of classrooms have specifically highlighted PBL and its benefits (Bransford et al., 2000, 77).

Also important to PBL is its focus on collaboration and knowledge connections. In PBL, collaboration is central, both among students and within an environment where students must integrate knowledge to solve the problem. These qualities are reflected in Duch et al.’s (2001) description of the skills students should use and develop, which include the ability to find, evaluate, and use appropriate learning resources; to work cooperatively; to demonstrate effective communication skills; and to use content knowledge and intellectual skills to become continual learners.

Problem-based learning in science, technology, engineering, and mathematics (STEM) disciplines has gained traction in elementary schools through college-level courses (Elsayary et al., 2015). Research shows that PBL is a successful strategy in improving instruction and student learning outcomes in STEM settings (Akınoğlu and Özkardeş Tandoğan, 2007) and more specifically in engineering (Yadav et al., 2011) and computer science and programming spaces (Peng, 2010; Chang et al., 2020). Beyond learning gains, PBL positively impacts students’ learning experiences by improving attitudes and motivation toward STEM disciplines and future STEM careers and opportunities (LaForce et al., 2017; Sarı et al., 2018). One way in which PBL has found its way into STEM learning spaces is through hackathons.

Hackathons vary in structure, but those that center on a single challenge prompt most closely resemble PBL. For example, Lara and Lockwood (2016) applied a PBL model as a hackathon in which community partners posed specific challenges for participants to address. This hackathon combined significant peer-to-peer learning through student mentors with needs assessment, product development, and the building of communication skills for working with community partners. The qualities of this hackathon, as well as of many others in industry, education, and civic engagement, align well with the collaborative solving of ill-structured problems that distinguishes PBL as a learning approach.

The concept of wicked problems originated when complex social problems were being compared to technical scientific problems (Skaburskis, 2008). There are clear methods for determining the answer to a technical or scientific problem, but addressing social problems tends to involve multiple stakeholders, often with competing priorities. Early wicked problem literature treated these problems as belonging to the social sciences, for instance to public policy and government contexts (Peters, 2017). In the literature, wicked problems have distinguishing characteristics and are often contrasted with tame problems in technical and science fields (Ritchey, 2013). These characteristics include not being well-defined and having no clear point at which the problem is officially solved. Additionally, solutions to wicked problems cannot be assessed as “right or wrong” because they cannot be tested: each solution affects multiple stakeholders in many ways (Ritchey, 2013).

Scientists and engineers have been labeled as leaders in the next generation of the world’s problem solvers (Jablokow, 2007), and solving wicked problems calls for the interdisciplinary integration of knowledge and expertise from a variety of domains and specialties to develop and evaluate solutions (Denning, 2009). Educators of these future problem solvers in STEM fields have recognized this shift, leading to a noticeable increase in the consideration of wicked problems and how to introduce students to them in engineering and computer science spaces (Lönngren et al., 2019).

STEM students are often introduced to interdisciplinary, complex, ill-structured problem solving with many viable solutions through PBL in formal classroom settings and through hackathons in informal collaborative learning spaces. Given the fuzzy and flexible nature of wicked problems, hackathons and PBL approaches are excellent techniques for brainstorming solutions to them. Interdisciplinary PBL can help build the interdisciplinary skills needed for employability and development, as well as reflective behavior and interdisciplinary competence (Brassler and Dettmers, 2017). By leveraging the interdisciplinary nature of PBL and wicked problems, students engage in creative problem solving and can make connections between their own content knowledge and real-world solutions (e.g., Christy and Lima, 2007). Overall, these experiences in formal and informal learning environments engage students in the creative process of solving wicked problems and prepare them for future challenges and collaborations.

The purpose of this paper is to offer a framework for interdisciplinary hackathon challenge development, as well as provide resources to aid interdisciplinary teams in better understanding the context and needs of a hackathon to evaluate and refine their hackathon challenge. This article takes a unique perspective on furthering hackathons as learning expeditions, as it focuses not on the learning of hackathon participants but instead on the experiences, innovations, and learning of those who create hackathon challenges.

In this manuscript, we achieve this purpose by leveraging the accounts of participants within interdisciplinary STEAM cohorts paired with observations from the research project team. We explored how these cohorts navigated the challenge of creating a hackathon challenge that integrated elements of all participants’ unique disciplines – truly modeling a problem-based learning challenge, as well as the complex and interdisciplinary nature of a wicked problem.

We also offer quality considerations and guiding principles that we recommend for developing, evaluating, and refining a hackathon challenge to best serve the needs of both hackathon sponsors and hackathon participants. By exploring the experiences of those who develop hackathon challenges, identifying their needs, and providing resources to address those needs, this manuscript makes a unique contribution: clear practices and considerations that others creating hackathon challenges can leverage.

To better prepare researchers for science communication with the public, our combined research and evaluation team developed a program that included a series of individual and group public communication opportunities (Pelan et al., 2020). Participants filled out a questionnaire to apply for the program and were intentionally selected to represent a diverse faculty body as well as participants’ interests and experiences in interdisciplinary work. Once selected for the program, each participant was assigned a pseudonym to be used for all research reporting, including the present paper. Participants were also placed into four interdisciplinary cohorts. Each cohort included 3–5 individuals and reflected a broad topic. The broad topics (energy, space, movement, and elements) were used as a starting point for participants to bring their research domains together. After cohort formation, the program began with Portal-to-the-Public-like training (Selvakumar and Storksdieck, 2013) to develop basic skills around public science communication. Participants then used their newly developed skills to present their work in individual and collaborative ways across a variety of public settings (Desing, forthcoming).

One of the public venues for the collaborative portion of the work was a virtual hackathon for high school students, which is the focus of this manuscript. For this activity, the cohorts designed challenge prompts to be used in the hackathon. The goal of each cohort was to create a prompt relating all of its members’ disciplines to the assigned topic. Each cohort took a different approach to developing its hackathon challenge, and in the end, each developed a unique prompt that showcased the interdisciplinarity of its members. All four cohorts participated in this activity; however, the first cohort completed the exercise in person while the remaining three cohorts participated virtually. Consequently, we used the first cohort as a pilot to understand the needs of the cohorts in challenge making. This manuscript focuses on the experiences of the three virtual cohorts. The three interdisciplinary virtual cohorts and their members are summarized in Table 1.

The data that informed this manuscript came from two sets of qualitative data. The first data set was transcripts of interviews conducted with the cohort participants. Each cohort participant took part in two 15–30-min virtual interviews. The first interview took place before the hackathon event and asked how challenge development went within the cohort, including the challenges and successes of preparing for the hackathon. The second interview took place after the hackathon event and asked how the event went and how students worked with the challenges the cohorts developed. The second data set was observations of the meetings in which cohort participants met to develop the interdisciplinary hackathon challenge prompt. Members of the research project team (specifically the evaluation team and the event experts) attended these meetings. Their attendance served a dual purpose: they observed the interactions of the cohort participants but also participated in discussions by providing occasional guidance and feedback. Ciesielska et al. (2018) describe this form of data collection as direct partial-participant observation.

Data analysis began with a thematic analysis of the transcribed interviews. Thematic analysis is a qualitative data analysis technique for identifying salient ideas and meaningful patterns related to the research questions and topic of interest (Braun and Clarke, 2006). After reading the transcripts and focusing our thematic analysis on the experiences of interdisciplinary collaboration in creating a hackathon challenge, we identified four themes from the participants’ experiences and perceptions documented in the interview transcripts. Next, the evaluation team compared their documented observation data to the four themes. Using this observation data, the themes were further developed and refined, so that they represent both the participants’ accounts of their experiences and the observational perspective and expertise of project team members. Balancing participants’ self-reported data with accompanying research observations is a method of data triangulation commonly used to improve the quality and reliability of qualitative research and its results (Flick, 2007). What emerged from this analysis were four sequential themes that our cohort participants used when developing an interdisciplinary hackathon challenge.

The analysis described in the previous section yielded four sequential themes that our cohort participants followed when developing a hackathon challenge that centered on their cohort’s topic and related to all their disciplines. Because these themes were sequential, we present them here as stages of hackathon development. Based on (1) the salience of these stages and (2) their alignment with literature on small group work and team development (Tuckman, 1965), team problem-solving (Kozlowski et al., 2009; Super, 2020), curriculum development (Jonassen, 2008), and, more specifically, PBL problem development (Hung, 2006, 2016), we offer these stages as a useful process framework for others working within interdisciplinary groups to develop a hackathon challenge. The four stages that emerged through thematic analysis are illustrated in Figure 1 and are elaborated upon and connected with the literature in the sub-sections that follow.

In order to collaborate effectively, team members first needed to communicate their own discipline and specialty as well as understand the disciplines of other team members. Amy and Doug, both in their interviews prior to the hackathon event, spoke to how each of their cohorts went about this initial stage.

“We made concept maps of each other’s disciplines at one point. We had spent a lot of time telling each other what we do and then trying to repeat it back to each other and figure out where we were inspired and where we imagined cross-disciplinary insights.” – Amy (Movement cohort).

“Those moments, where each one of us introduced an idea that was like core to our practice which was accessible enough to the others, those were successful moments, you know. Those moments, where we introduce something that was core to our practice that kind of flew over the heads of the others or wasn’t accessible that’s where it was tricky, I feel so much of this entire endeavor is about sort of identifying for yourself in ways you haven’t thought about before, like what parts of what you do are sort of graspable and digestible by folks outside your immediate discipline.” – Doug (Elements cohort).

The project evaluation team’s observations confirmed the importance of this stage of development. Their observations also noted that this stage appeared to be more challenging for the “Arts” members of each STEAM cohort. While many of the STEM cohort participants relied on already-shared STEM language, cohort participants who research in the Arts struggled to understand and find shared language between the Arts and STEM fields. These observations align well with Pahl and Facer’s (2017) account of collaborative research teams needing to develop a common language to allow for collaboration and emergent ideas. This stage aligns with what Tuckman (1965) described as “forming.” Tuckman (1965) notes that while forming, team members are concerned with orienting themselves to one another by identifying and testing interpersonal and task boundaries and by establishing dependency relationships. As Doug noted in his quote, there were both successful and unsuccessful moments of identifying relationships and creating shared understanding. Kozlowski et al. (2009) call this early step in problem solving “problem diagnosis,” in which those doing the problem solving identify issues and expose weaknesses. The lack of understanding of each other’s disciplines was quickly identified as a barrier to persisting with the task that needed to be resolved. Super (2020) identifies a need for learning and continual improvement at this stage of team problem solving, and both Amy and Doug speak to the importance of learning and knowledge sharing for better communicating one’s own specialty and understanding others’. The learning and knowledge sharing within this stage was not immediate, though; as both Doug and Amy point out, it is time consuming and integral to the “entire endeavor” of developing an interdisciplinary hackathon challenge.

In the second stage, interdisciplinary cohort participants shifted their focus from communicating their own specialties and understanding others’ to identifying similarities and connections between seemingly disparate disciplines. This stage seemed to be difficult for each cohort. The quotes below from pre-hackathon interviews illustrate the difficulty of connecting various strands of STEAM specialties and developing a hackathon challenge with all disciplines represented.

“But finding these things that come together, I think it was listening and listening for these concepts and the other disciplines that resonate as opposed to jumping in and saying, ‘No, no, no. Mine’s about…’ Or, ‘My tribe says it this way.’ Instead listening and say, ‘Yeah, that’s the same. And maybe here’s where I think I could inject something a little different in order to elevate the discussion,’ as opposed to ‘Your discipline just doesn’t think about this as well as my discipline.’ I think that was really critical.” – Mitchell (Space cohort).

“I couldn’t figure out how to do it. I really couldn’t. I think I sat there in one or two meetings just thinking this is not going to happen. There’s no way that the four of our disciplines can work, but Amy, did it. Amy came up with this fantastic prompt, basically took their three disciplines data. What about data from […] planning? What about data from […] education? What about data from cancer research and Todd’s work has also.” – David (Movement cohort).

These quotes show that identifying bridges between the disciplines was not an easy task. Tuckman’s (1965) second phase of team development, storming, describes interpersonal relationship difficulties involved in team development. The “storming” within these cohorts is better described as interdisciplinary relationship difficulties. Given the innovation needed to create a challenge that integrated disparate disciplines, it is not surprising that the environment was complex and stressful, and that portions of the process did not run as smoothly as some had hoped (Kozlowski et al., 2009). Project evaluation team observations noted that every cohort struggled within this stage. Observations also revealed that the cohorts that struggled and stormed the most in this stage were those that did not spend much time working iteratively and instead aimed for broad, high-level connections. These observations align with Jonassen’s (2008) claim that instructional design is an open-ended and ill-structured problem that calls for iteration.

In his quote above, cohort participant Mitchell spoke to the need for more careful and critical listening, and for acceptance of differing perspectives, while the group was still functioning within the first stage of establishing communication and understanding. This need aligns with Super’s (2020) recommendation that leaders of problem-solving teams encourage collective rather than individual cognition, as well as with what Pahl and Facer (2017) describe as developing a language shared by all team members that allows for open communication and the emergence of new ideas that cross disciplinary boundaries. David and his cohort looked not only at the different disciplines’ research outputs and significance but also at the data all cohort participants use in their research, and they found connections across data sets. These strategies used by cohort participants, such as adjusting goals, changing approaches and strategies, and identifying and leveraging multiple perspectives when looking at how to solve a problem, mirror recommendations from the literature on team-based problem solving (Kozlowski et al., 2009; Super, 2020).

The third stage emerged when cohort participants started leveraging the connections and commonalities between their disciplines to create a high school hackathon challenge prompt that related to all the disciplines represented in the cohort as well as to the cohort’s theme. The most frequently used word when cohort participants spoke about working within this stage was “creative,” but that creativity appeared in many ways. An example is illustrated below.

“He identified that there was this common denominator in all of our research, which was climate change. That provided a nice anchor for us to adopt and use as something that the students themselves could latch on to. It’s a nice theme… So, then it was just a matter of refining.… We’re almost using “space” as more of a rhetorical tool to prompt students to think a little bit more creatively than they might otherwise.” – Mark (Space cohort).

As the cohort participants worked to turn the connections and similarities between their disciplines into a hackathon challenge prompt, the teams moved into Tuckman’s (1965) “norming” phase. In the norming phase, teams develop a cohesive goal and a working style in which roles and standards evolve and are accepted, and these standards and this working style lead to clear progress toward the goal. In most cases, the norming working style that proved useful and effective within this stage was brainstorming and creativity. Mark’s quote above illustrates how that working style emerged as the Space cohort identified climate change as a common denominator between their disciplines. While the cohorts’ themes were meant to be a starting point for their convergent thinking, the Space cohort leveraged creativity to redefine what “space” meant in the context of their challenge (i.e., space between people, emotionally or physically, rather than outer space).

Although teams began making strong forward progress toward a challenge at this point, the project evaluation team noted that a common sticking point within this stage was how much structure (or lack of structure) the prompt should have. The evaluation team observed that what helped teams most in deciding how much structure to provide was guidance from hackathon experts. After these consultations, cohort participants gained a better understanding of the context in which their challenge would function: hackathons. Sean’s quote, below, speaks to this need for hackathon context expertise.

“I think we’re maybe a bit stuck. Just because I think most of us, maybe haven’t been involved with a hackathon so kind of, I think, especially on Tuesday we had some more background on in in some previous projects what had been done, and I think that was definitely helpful” – Sean (Elements cohort).

While in this stage, cohort participants continued to embody qualities of good problem solving: working together to identify and explore potential paths forward to overcome a challenge (Kozlowski et al., 2009); planning and organizing themselves so that new ideas or perspectives could emerge (Super, 2020), as demonstrated in Mark’s quote; and approaching the task of creating this challenge as a learning opportunity (Kozlowski et al., 2009). The teams functioned well with regard to Tuckman’s (1965) phases of teaming and Super’s (2020) strategies for leaders in team development, and they navigated problem solving in ways aligned with the literature on task engagement and learning cycles (Kozlowski et al., 2009). Even so, guidance from event experts was still necessary for teams to complete the work within this theme in a way that was appropriately structured and scoped.

This final stage began once a team had decided on a broad topic or challenge prompt and turned to refining that prompt so that hackathon participants would choose to work on it and be able to do so in a meaningful way. At this point, the teams were “performing” according to Tuckman’s (1965) classification of team working phases, meaning that team members were flexible with one another and functioned well together to achieve their goal because group energy was channeled into the task. Doug’s reflection, below, illustrates what it was like for him to work within this stage with his fellow cohort participants.

“I’ve just been thinking a little bit about this idea of sort of like sacrificing personal research stakes or something in order to find collaborative sort of balance. There’s a sacrifice involved a bit, you have to kind of maybe let go of what would be in your classroom or your lecture or something maybe the most perfect idea. But the trade-off is that you get to something that three other people can support and so actually in a way it feels like ultimately you give a little in order to actually be sort of buoyed up by even more so I’ve just been thinking about that idea of like sort of sacrifice.” – Doug (Elements cohort).

Doug demonstrates personal flexibility in the name of group functionality and goal achievement. This reflection is also important because the literature tells us that some forms of interdisciplinary task-related conflict within small teams lead to higher levels of creativity in problem solving (Yong et al., 2014). While Doug’s quote reflects his “stage 4” experience in general, Mark’s quote below speaks more directly to how his cohort evaluated and then adjusted their prompts as ideas continued to develop.

“Something that we identified as an aspect that could provide some unique characteristics to the student projects. That was cooperation and games. We’ve moved a little bit away from both those themes, but they were helpful early. We’ve basically since broadened the challenge and provided the idea of a cooperation game as just one example of a way in which groups could get focused on climate change projects. But that was very helpful actually in getting us in spurring our own thinking and development, at least have that idea.” – Mark (Space cohort).

Mark notes how his cohort’s ideas ebbed and flowed as they worked on both the initial ideas for their challenge and its structure, and he speaks to the iterative, redesign-driven nature of developing these prompts. Participants in each cohort went through many iterations of turning connections between their disciplines into potential hackathon challenge prompts, which allowed them to reflect on improvements as well as on what would make the challenge appealing to participants. Jonassen (2008) argues that instructional design is not linear but is instead a process of iterative decision making. He likens it to building a model and then making decisions across successive iterations of implementation as additional model constraints are considered. Similarly, Kozlowski et al. (2009) echo this idea in their task engagement and learning cycle, stating that innovative teams need to engage in stages of reflection and repetition in a no-blame, learning-from-failure environment.

While these iterations of reflection, evaluation, and refinement were salient when we talked with cohort participants about creating their hackathon challenge prompts, just as salient was their need for contributions from experienced hackathon researchers and practitioners within this stage. This need is illustrated in Sean’s quote in the previous section, as well as in Mitchell’s quote below.

“I think we came together with a good pitch. I am not sure. I mean, I don’t know what this thing is, to be honest. I think we’ve crafted a pitch that helps convey our perspective and, hopefully, it will be attractive to more than one group. It’s unclear what’s a good pitch or what’s a bad pitch or what gets folks excited about our topic or not.” – Mitchell (Space cohort).

This research’s larger project team included two project event experts with many years of experience coordinating, facilitating, and researching hackathons. As cohort participants developed, evaluated, and refined their hackathon prompts, a project event expert also attended the meetings to give cohort participants context on the hackathon’s student participants, the general event structure and logistics, and how structured or open-ended challenge prompts should be. These project event experts filled what Super (2020) describes as a necessary leadership role on innovative teams that are learning as they problem solve and are reflecting on and refining their solution. Such leaders facilitate the team’s reflection on and evaluation of the strengths and weaknesses of its ideas and provide feedback when needed.

One important finding of this research was the clear need for, and benefit of, a resource that helps teams evaluate and revise their hackathon challenge. Because not everyone in the growing community of people who might develop hackathon challenges will have expertise with hackathon events, or even access to an event expert, we aimed to provide in this paper a resource of quality considerations for creating, evaluating, and refining a hackathon challenge. To do this, we turned back to our data as well as to the hackathon experts on our team. First, we thematically analyzed the participant interview transcripts again, this time focusing on evaluation considerations that appeared to be important and impactful when developing a hackathon challenge. The results revealed that, as cohort participants described their experiences working within stages three and four of interdisciplinary hackathon challenge development, five quality considerations appeared to drive their brainstorming, development, and revisions: topic of the challenge, audience considerations, communication of scope, outcomes (explicit/implicit), and resource considerations.

The topic-of-challenge consideration related not only to how the cohort members chose a topic that integrated all their disciplines, but also to how they aimed to choose a topic that would interest the hackathon participants. They did this by thinking about topical issues and challenges that would feel relevant to participants and be of broader consequence in the real world. The second consideration that emerged was defining the topic and outcomes with attention to the likely knowledge, skills, and abilities of the high-school-aged students in the hackathon audience. Without taking the audience into consideration, the challenge may be uninteresting to participants or too easy or too hard for them to enjoy. The third consideration was the scope of the problem. There was no right or wrong answer for how much freedom or guidance to give students in a hackathon challenge; instead, scope is something to reflect on iteratively and adjust accordingly. Scoping how much structure the challenge provides always presents a trade-off: give more information and students may hit fewer sticking points and be less confused, but they may also have less flexibility and creativity when designing and implementing solutions. The communication of scope is often guided by the fourth consideration, the outcomes of the hackathon. As backward curriculum design (McTighe and Thomas, 2003) tells us, many decisions about content and delivery need to be driven by the desired outcomes, and a similar principle applies in hackathon challenge design. Consider what the hackathon goals are, both implicit and explicit, for participants and facilitators, and make challenge design decisions accordingly. Finally, consider the resources that will be available to students as they address the challenge and work toward the goals set for them. The availability of resources such as data, access to equipment and networks, and content experts available for mentorship can affect a hackathon challenge’s success.

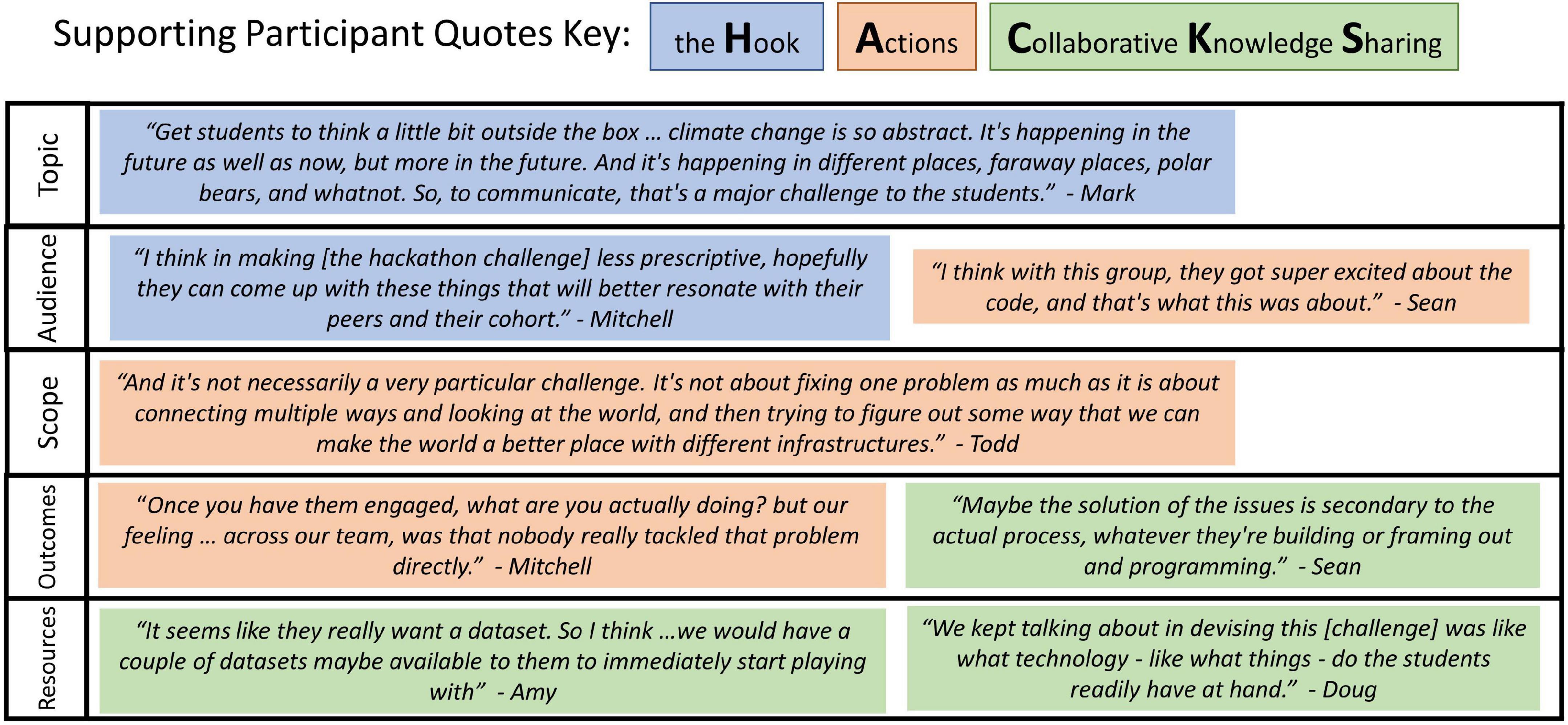

Because many of these considerations relate to one another and are flexible depending on the goals of the hackathon being designed, the hackathon experts on our team suggested we sort the considerations into three guiding principles that can help support the development of hackathon challenges: the hook, actions, and collaborative knowledge sharing. Figure 2 maps the five emergent evaluation considerations to the three guiding principles. Figure 3 provides supporting interview quotes illustrating how cohort participants found these emergent considerations important or impactful, and maps each quote to its related guiding principle. Below we elaborate on each guiding principle for anyone who, like our participants, needs hackathon expert content knowledge when designing a challenge.

Figure 3. Cohort participant quotes describing how they evaluated the success and quality of their hackathon challenges.

The “Hook” theme has two quality considerations: audience experience considerations and topic of challenge. In marketing terms, a hook is an angle that grabs attention, creates interest, and makes an audience receptive to your messages. Hackathon challenge designers need to make sure their hook grabs the attention of the hackathon audience. Ensure the topic will interest, and have value to, those participating in the hackathon: why would participants want to spend their time and energy addressing the challenge prompt you put forward? The topic must be understandable and exciting, and the challenge prompt related to that topic should strike a balance between too general and overly prescriptive. The topic should be relatable, so that participants are familiar with it and have enough context to serve as a basis for designing potential solutions to the given challenge. The challenge must be multidimensional enough to admit multiple solutions, but not so complex that participants are overwhelmed. It should not be so prescriptive that it rules out creative solutions, yet it should be clear and direct enough that a solution can be produced within the timeframe of the event. When balancing these aspects of a hackathon topic and challenge prompt, it is helpful to consider the zone of proximal development (ZPD), the space between what a learner can do alone and what they cannot yet do. The ZPD comprises skills or knowledge that a person has not individually mastered but can acquire with the aid or guidance of someone who has (Vygotsky, 1978). For the hook, we recommend setting a challenge whose outcome expectations fall within the audience’s ZPD, since such a challenge generates intrigue and interest and better hooks participants on the topic.

The “Action” theme has three quality considerations: audience considerations, communication of scope, and outcomes. Here, hackathon challenge developers should consider what they want hackathon participants to be doing (actions) and what they want the results of those actions to be (outcomes). We recommend determining both the challenge’s scope and its outcome expectations by considering the audience’s ZPD. This may take time and iteration, as the zone differs for high school students and college students, much as it differs between a novice hacker and an expert. Challenge creators will need to think about what skills the participants have, what mentorship is available, and what materials, resources, and data accompany the challenge in order to facilitate active learning as participants develop a solution. Communicate the scope in a way that guides participants’ actions in a productive direction but gives them autonomy to choose the direction of their problem solving. Participants should not be building a product to specification but instead solving a problem. Additionally, clearly describe the explicit outcomes you hope participants will achieve by completing your challenge; this gives them additional clarity and direction so they can plan their actions accordingly. It is also important at this step to determine who will own the product that is developed (the participant, the challenge provider, or both) and to communicate that clearly to participants (HackerEarth, 2022).

The “Collaborative Knowledge Sharing” theme has two quality considerations: outcomes and resource considerations. Important defining features of PBL are collaboration and the drawing and leveraging of connections between fields of knowledge (Duch et al., 2001; Torp and Sage, 2002; Savery, 2006). Collaborative knowledge sharing should also be a desired outcome of hackathons (Pe-Than and Herbsleb, 2019). Whether that outcome is explicit or implicit, the challenge should not only allow for but encourage collaboration with others and knowledge sharing across topic areas and fields of study. Participants will need to know the judging criteria if it is a competitive event, or the grading criteria if it is part of a course. The goals of the event provide the parameters that set the tone of your hackathon. Consider whether the goal is technical prowess and demonstrating specific skills or development with particular hardware or software; creating something that others can iterate on; developing a list of potential solutions; brainstorming and ideation, building diverse networks, and facilitating teamwork and communication; or creating a workable prototype, which makes the event more entrepreneurial in spirit. Additionally, if participants are expected to give a pitch or a video demonstration of their product, resources for developing these should also be provided at the event. Challenge developers will also want to treat the work products (what participants submit in response to challenges) as evidence of collaboration and knowledge sharing. Prompt developers may want the outcomes to focus on network building or collaboration, or on whether the work products address the problem. Creating a rubric for what will be judged or evaluated will help participants understand the full scope and goals of the challenge.

Both PBL and hackathons are grounded in collaborative problem solving, which becomes increasingly important as professionals across STEAM and social science disciplines work to address the world’s growing number of complex, wicked problems. Hackathons commonly function as learning environments that leverage PBL characteristics and practices to prompt students to design solutions collaboratively and creatively by integrating multiple domains of knowledge and expertise.

This research took a novel approach by studying the experiences of cohorts of STEAM researchers tasked with designing an interdisciplinary challenge prompt for high school hackathon participants, rather than studying the hackathon participants themselves. By using both interview and observation data to explore our interdisciplinary cohorts’ experiences as they developed hackathon challenges, we were able to make a unique contribution to the field that (1) offers a framework of helpful process stages for developing an interdisciplinary challenge and (2) provides resources that help identify and address knowledge gaps related to hackathon contexts and challenge considerations. Our goal is for this manuscript to provide others in the hackathon community with clear practices and considerations that can be leveraged when creating hackathon challenges.

The cohorts navigated developing an interdisciplinary challenge in four sequential phases that emerged through thematic analysis of the interview transcripts and observational data. The cohort participants’ experiences in the four phases aligned closely with the literature on curriculum development, teaming, and problem solving. Although these phases emerged from the data as primarily sequential, they also demonstrated a need for iteration within each phase to improve team communication and final deliverables. Also salient in the data was the cohort participants’ need for contextual (hackathon) expertise and experience when creating and refining their challenges. Making a challenge prompt “hackathon ready” was often more difficult for cohorts than the interdisciplinary nature of the task.

Hackathons are great opportunities for students to learn the complexities and interdisciplinary nature of addressing wicked problems. For high-quality hackathon challenges to emerge from multidisciplinary design teams, the hackathon environment and its target audience should be given considerable attention. In conclusion, for those creating hackathon challenges for discrete events or as a PBL assignment, hackathon challenges should hook participants with a topic of interest, set clear, actionable goals for participants to address, and provide a means for collaborative knowledge sharing to ensure the development of a workable solution.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Human Research Protection Program at the Ohio State University. The participants provided their written informed consent to participate in this study.

ML, JA, DH, and RK conceptualized the research project. CW, DH, JA, RK, and RP completed data collection and analysis. CW organized the authorship team’s writing efforts and was responsible for citations, formatting, submission, and led revisions of the manuscript. All authors contributed to writing, reviewing, and revising various sections of this manuscript.

This manuscript was based upon work supported by the National Science Foundation under Grant No. 1811119.

We acknowledge and thank the cohort participants of this research for their work and communication in developing creative challenges for virtual high school hackathons. We also thank Sathya Gopalakrishnan, Justin Reeves Meyer, and Charlene Brenner for their contributions and expertise as members of the project team in collecting and making sense of the data sets used in this manuscript. Finally, we acknowledge the National Science Foundation.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Akınoğlu, O., and Özkardeş Tandoğan, R. (2007). The effects of problem-based active learning in science education on students’ academic achievement, attitude and concept learning. Eur. J. Math. Sci. Technol. Educ. 3, 71–81. doi: 10.12973/ejmste/75375

Allen, D. E., Donham, R. S., and Bernhardt, S. A. (2011). Problem-based learning. New Dir. Teach. Learn. 2011, 21–29. doi: 10.1002/tl.465

Bransford, J. D., Brown, A. L., and Cocking, R. R. (eds) (2000). How People Learn. Washington, DC: National Academy Press.

Brassler, M., and Dettmers, J. (2017). How to enhance interdisciplinary competence—Interdisciplinary problem-based learning versus interdisciplinary project-based learning. Interdiscip. J. Probl. Based Learn. 11:12. doi: 10.7771/1541-5015.1686

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Byrne, J. R., Sullivan, K., and O’Sullivan, K. (2018). “Active learning of computer science using a hackathon-like pedagogical model,” in Proceedings of the Constructionism 2018, Vilnius, Lithuania.

Carruthers, A. (2014). Open data day hackathon 2014 at Edmonton Public Library. Partnership 9, 1–13. doi: 10.21083/partnership.v9i2.3121

Chang, C.-S., Chung, C.-H., and Chang, J. A. (2020). Influence of problem-based learning games on effective computer programming learning in higher education. Educ. Tech. Res. Dev. 68, 2615–2634. doi: 10.1007/s11423-020-09784-3

Christy, A., and Lima, M. (2007). Developing creativity and multidisciplinary approaches in teaching engineering problem-solving. Int. J. Eng. Educ. 23, 636–644.

Ciesielska, M., Boström, K. W., and Öhlander, M. (2018). “Observation methods,” in Qualitative Methodologies in Organization Studies, eds M. Ciesielska and D. Jemielniak (Cham: Springer International Publishing), 33–52. doi: 10.1007/978-3-319-65442-3_2

Denning, P. J. (2009). “Resolving wicked problems through collaboration,” in Handbook of Research on Socio-Technical Design and Social Networking Systems, eds A. de Moor and B. Whitworth (Hershey, PA: IGI Global), 715–730. doi: 10.4018/978-1-60566-264-0.ch047

Duch, B. J., Groh, S. E., and Allen, D. E. (2001). The Power of Problem-Based Learning: A Practical “How To” for Teaching Undergraduate Courses in Any Discipline. Sterling, VA: Stylus Publishing, LLC.

Elsayary, A., Forawi, S., and Mansour, N. (2015). “STEM education and problem-based learning,” in The Routledge International Handbook of Research on Teaching Thinking, eds J. C. Kaufman, L. Li, and R. Wegerif (Milton Park: Taylor & Francis).

Falk Olesen, J., and Halskov, K. (2020). “10 years of research with and on Hackathons,” in Proceedings of the 2020 ACM Designing Interactive Systems Conference, (New York, NY: ACM), 1073–1108. doi: 10.1145/3357236.3395543

Feder, T. (2021). Hackathons catch on for creativity, education, and networking. Phys. Today 74:23. doi: 10.1063/PT.3.4746

Flick, U. (2007). Managing Quality in Qualitative Research. London: SAGE Publications. doi: 10.4135/9781849209441

Gama, K., Alencar, B., Calegario, F., Neves, A., and Alessio, P. (2018a). A Hackathon Methodology for Undergraduate Course Projects. Piscataway, NJ: IEEE, 1–9.

Gama, K., Alencar Gonçalves, B., and Alessio, P. (2018b). “Hackathons in the formal learning process,” in Proceedings of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education, (New York, NY: ACM), 248–253. doi: 10.1145/3197091.3197138

Ghouila, A., Siwo, G. H., Entfellner, J.-B. D., Panji, S., Button-Simons, K. A., Davis, S. Z., et al. (2018). Hackathons as a means of accelerating scientific discoveries and knowledge transfer. Genome Res. 28, 759–765. doi: 10.1101/gr.228460.117

Grant, A. (2017). Hackathons: a Practical Guide, Insights from the Future Libraries Project Forge Hackathon. Dunfermline: Carnegie UK Trust.

HackerEarth (2022). The Complete Guide to Organizing a Successful Hackathon. HackerEarth. Available online at: https://www.hackerearth.com/community-hackathons/resources/e-books/guide-to-organize-hackathon/ (accessed May 21, 2022).

Hmelo-Silver, C. E. (2004). Problem-Based learning: what and how do students learn? Educ. Psychol. Rev. 16, 235–266. doi: 10.1023/B:EDPR.0000034022.16470.f3

Holley, K. A. (2009). Understanding interdisciplinary challenges and opportunities in higher education. ASHE High. Educ. Rep. 35, 1–131. doi: 10.1002/aehe.3502

Holley, K., and Brown, K. (eds) (2021). “Interdisciplinarity and the American public research university,” in Re-Envisioning the Public Research University, (Milton Park: Routledge). doi: 10.4324/9781315110523-8

Horton, P. A., Jordan, S. S., Weiner, S., and Lande, M. (2018). “Project-Based learning among engineering students during short-form Hackathon events,” in Proceedings of the American Society for Engineering Education Annual Conference & Exposition, Salt Lake City, Utah. doi: 10.18260/1-2-30901

Hung, W. (2006). The 3C3R model: a conceptual framework for designing problems in PBL. Interdiscip. J. Probl. Based Learn. 1, 55–77. doi: 10.7771/1541-5015.1006

Hung, W. (2016). All PBL starts here: the problem. Interdiscip. J. Probl. Based Learn. 10:2. doi: 10.7771/1541-5015.1604

Huppenkothen, D., Arendt, A., Hogg, D. W., Ram, K., VanderPlas, J. T., and Rokem, A. (2018). Hack weeks as a model for data science education and collaboration. Proc. Natl. Acad. Sci. U.S.A. 115, 8872–8877. doi: 10.1073/pnas.1717196115

Jablokow, K. W. (2007). Engineers as problem-solving leaders: embracing the humanities. IEEE Technol. Soc. Mag. 26, 29–35. doi: 10.1109/MTS.2007.911075

Jacobs, J. A., and Frickel, S. (2009). Interdisciplinarity: a critical assessment. Annu. Rev. Sociol. 35, 43–65. doi: 10.1146/annurev-soc-070308-115954

Jansen-Dings, I., van Dijk, D., and van Westen, R. (2017). Hacking Culture: A How to Guide for Hackathons in the Cultural Sector. Available online at: https://waag.org/sites/waag/files/media/publicaties/es-hacking-culture-single-pages-print.pdf (accessed March 26, 2021).

Johnson, P., and Robinson, P. (2014). Civic Hackathons: innovation, procurement, or civic engagement? Rev. Policy Res. 31, 349–357. doi: 10.1111/ropr.12074

Jonassen, D. H. (2008). Instructional design as design problem solving: an iterative process. Educ. Technol. 48, 21–26.

Komssi, M., Pichlis, D., Raatikainen, M., Kindström, K., and Järvinen, J. (2015). What are Hackathons for? IEEE Softw. 32, 60–67. doi: 10.1109/MS.2014.78

Kos, B. A. (2018). “The Collegiate Hackathon experience,” in Proceedings of the 2018 ACM Conference on International Computing Education Research, (New York, NY: ACM), 274–275. doi: 10.1145/3230977.3231022

Kozlowski, S. W. J., Watola, D. J., Jensen, J. M., Kim, B. H., and Botero, I. C. (2009). “Developing adaptive teams: a theory of dynamic team leadership,” in Team Effectiveness in Complex Organizations: Cross-Disciplinary Perspectives and Approaches, eds C. S. Burke, E. Salas, and G. F. Goodwin (New York, NY: Routledge/Taylor Francis Group), 113–155.

La Place, C., Jordan, S. S., Lande, M., and Weiner, S. (2017). Engineering Students Rapidly Learning at Hackathon Events. Columbus, OH: ASEE. doi: 10.18260/1-2-28260

LaForce, M., Noble, E., and Blackwell, C. (2017). Problem-Based Learning (PBL) and student interest in STEM careers: the roles of motivation and ability beliefs. Educ. Sci. 7:92. doi: 10.3390/educsci7040092

Lapp, H., Bala, S., Balhoff, J. P., Bouck, A., Goto, N., Holder, M., et al. (2007). The 2006 NESCent phyloinformatics hackathon: a field report. Evol. Bioinform. 3, 287–296. doi: 10.1177/117693430700300016

Lara, M., and Lockwood, K. (2016). Hackathons as community-based learning: a case study. TechTrends 60, 486–495. doi: 10.1007/s11528-016-0101-0

Longmeier, M. M. (2021). Hackathons and libraries: the evolving landscape 2014-2020. Inf. Technol. Libr. 40:4. doi: 10.6017/ital.v40i4.13389

Lönngren, J., Adawi, T., and Svanström, M. (2019). Scaffolding strategies in a rubric-based intervention to promote engineering students’ ability to address wicked problems. Eur. J. Eng. Educ. 44, 196–221. doi: 10.1080/03043797.2017.1404010

McArthur, K., Lainchbury, H., and Horn, D. (2012). Open Data Hackathon How to Guide v. 1.0. Available online at: https://docs.google.com/document/d/19IqaRKOLW6wDX5DJF2U2ZrQwo6vMzqLz51riqVL22oQ/edit (accessed March 26, 2021).

McTighe, J., and Thomas, R. S. (2003). Backward design for forward action. Educ. Leadersh. 60, 52–55.

Medina Angarita, M. A., and Nolte, A. (2019). “Does it matter why we hack? - Exploring the impact of goal alignment in hackathons,” in Proceedings of 17th European Conference on Computer-Supported Cooperative Work Exploratory Papers, Reports of the European Society for Socially Embedded Technologies, Salzburg, Austria. doi: 10.18420/ecscw2019_ep01

Möller, S., Afgan, E., Banck, M., Bonnal, R. J., Booth, T., Chilton, J., et al. (2014). Community-driven development for computational biology at Sprints, Hackathons and Codefests. BMC Bioinformatics 15:S7. doi: 10.1186/1471-2105-15-S14-S7

Nandi, A., and Mandernach, M. (2016). “Hackathons as an informal learning platform,” in Proceedings of the 47th ACM Technical Symposium on Computing Science Education, (New York, NY: ACM), 346–351. doi: 10.1145/2839509.2844590

Nelson, C., and Kashyap, N. (2014). GLAM Hack-in-a-Box: A Short Guide for Helping You Organize a GLAM Hackathon. Available online at: http://dpla.wpengine.com/wp-content/uploads/2018/01/DPLA_HackathonGuide_ForCommunityReps_9-4-14-1.pdf (accessed March 26, 2021).

Nolte, A., Pe-Than, E. P. P., Affia, A. O., Chaihirunkarn, C., Filippova, A., Kalyanasundaram, A., et al. (2020). How to organize a Hackathon - a planning kit. arXiv [Preprint]. doi: 10.3390/tropicalmed7010013

Pahl, K., and Facer, K. (eds) (2017). “Understanding collaborative research practices: a lexicon,” in Valuing Interdisciplinary Collaborative Research: Beyond Impact, (Bristol: Bristol University Press), 215–232. doi: 10.2307/j.ctt1t895tj.17

Pelan, R., Drayton, T., Kajfez, R., and Armstrong, J. (2020). “Convergent learning from divergent perspectives: an executive summary of the pilot study,” in Proceedings of the 2020 ASEE Virtual Annual Conference Content Access Proceedings (Virtual On line: ASEE Conferences), (Washington, DC: ASEE), 34333. doi: 10.18260/1-2-34333

Peng, W. (2010). “Practice and experience in the application of problem-based learning in computer programming course,” in Proceedings of the 2010 International Conference on Educational and Information Technology, (Chongqing, China: IEEE), 5607778. doi: 10.1109/ICEIT.2010.5607778

Peters, B. G. (2017). What is so wicked about wicked problems? A conceptual analysis and a research program. Policy Soc. 36, 385–396. doi: 10.1080/14494035.2017.1361633

Pe-Than, E. P. P., and Herbsleb, J. D. (2019). “Understanding Hackathons for science: collaboration, affordances, and outcomes,” in Proceedings of the iConference 2019: Information in Contemporary Society, (Cham: Springer), 27–37. doi: 10.1007/978-3-030-15742-5_3

Pe-Than, E. P. P., Nolte, A., Filippova, A., Bird, C., Scallen, S., and Herbsleb, J. (2022). Corporate hackathons, how and why? A multiple case study of motivation, projects proposal and selection, goal setting, coordination, and outcomes. Hum. Comput. Interact. 37, 281–313. doi: 10.1080/07370024.2020.1760869

Porras, J., Khakurel, J., Ikonen, J., Happonen, A., Knutas, A., Herala, A., et al. (2018). “Hackathons in software engineering education: lessons learned from a decade of events,” in Proceedings of ACM ICSE Conference, (Gothenburg: ACM), 1–8. doi: 10.1145/3194779.3194783

Porras, J., Knutas, A., Ikonen, J., Happonen, A., Khakurel, J., and Herala, A. (2019). “Code camps and hackathons in education - literature review and lessons learned,” in Proceedings of the 52nd Hawaii International Conference on System Sciences, Hawaii. doi: 10.24251/HICSS.2019.933

Raatikainen, M. (2013). “Industrial experiences of organizing a Hackathon to assess a device-centric cloud ecosystem,” in Proceedings of the 2013 Computer Software and Applications Conference, Kyoto, Japan. doi: 10.1109/COMPSAC.2013.130

Richterich, A. (2017). Hacking events: project development practices and technology use at hackathons. Convergence 25, 1000–1026. doi: 10.1177/1354856517709405

Sarı, U., Alıcı, M., and Şen, ÖF. (2018). The effect of STEM instruction on attitude, career perception and career interest in a problem-based learning environment and student opinions. Electron. J. Sci. Educ. 22, 1–20.

Savery, J. R. (2006). Overview of problem-based learning: definitions and distinctions. Interdiscip. J. Prob. Based Learn. 1:3. doi: 10.7771/1541-5015.1002

Selvakumar, M., and Storksdieck, M. (2013). Portal to the public: museum educators collaborating with scientists to engage museum visitors with current science. Curator Museum J. 56, 69–78. doi: 10.1111/cura.12007

Skaburskis, A. (2008). The origin of “Wicked Problems”. Plann. Theory Pract. 9, 277–280. doi: 10.1080/14649350802041654

Super, J. F. (2020). Building innovative teams: leadership strategies across the various stages of team development. Bus. Horiz. 63, 553–563. doi: 10.1016/j.bushor.2020.04.001

Tauberer, J. (2017). How to Run a Successful Hackathon. Available online at: https://hackathon.guide/ (accessed March 26, 2021).

Torp, L., and Sage, S. (2002). Problems as Possibilities: Problem-Based Learning for K-16 Education. Cheltenham, VIC: Hawker Brownlow Education.

Trainer, E. H., and Herbsleb, J. D. (2014). “Beyond code: prioritizing issues, sharing knowledge, and establishing identity at Hackathons for Science,” in Proceedings of the CSCW Workshop on Sharing, Re-use, and Circulation of Resources in Scientific Cooperative Work, (New York, NY: ACM).

Trainer, E. H., Kalyanasundaram, A., Chaihirunkarn, C., and Herbsleb, J. D. (2016). “How to Hackathon: Socio-technical Tradeoffs in Brief, Intensive Collocation,” in Proceedings of the 19th ACM Conference on Computer-Supported Cooperative Work & Social Computing (CSCW ’16), (New York, NY: ACM), 1118–1130. doi: 10.1145/2818048.2819946

Tuckman, B. W. (1965). Developmental sequence in small groups. Psychol. Bull. 63, 384–399. doi: 10.1037/h0022100

Vander Broek, J. L., and Rodgers, E. P. (2015). Better together: responsive community programming at the UM library. J. Libr. Adm. 55, 131–141. doi: 10.1080/01930826.2014.995558

Vygotsky, L. S. (1978). Mind in Society: The Development of Higher Psychological Processes. Cambridge, MA: Harvard University Press.

Ward, D., Hahn, J., and Mestre, L. (2014). Adventure code camp: library mobile design in the backcountry. Inf. Technol. Libr. 33, 45–52. doi: 10.6017/ital.v33i3.5480

Ward, D., Hahn, J., and Mestre, L. (2015). Designing mobile technology to enhance library space use: findings from an undergraduate student competition. J. Learn. Spaces 4, 30–40.

Yadav, A., Subedi, D., Lundeberg, M. A., and Bunting, C. F. (2011). Problem-based learning: influence on students’ learning in an electrical engineering course. J. Eng. Educ. 100, 253–280. doi: 10.1002/j.2168-9830.2011.tb00013.x

Keywords: hackathon challenge development, problem-based learning, interdisciplinary problem-solving, wicked problems, faculty development, hackathon based learning

Citation: Wallwey C, Longmeier MM, Hayde D, Armstrong J, Kajfez R and Pelan R (2022) Consider “HACKS” when designing hackathon challenges: Hook, action, collaborative knowledge sharing. Front. Educ. 7:954044. doi: 10.3389/feduc.2022.954044

Received: 26 May 2022; Accepted: 17 August 2022;

Published: 16 September 2022.

Edited by:

Alexander Nolte, University of Tartu, Estonia

Reviewed by:

Muhammet Usak, Kazan Federal University, Russia

Copyright © 2022 Wallwey, Longmeier, Hayde, Armstrong, Kajfez and Pelan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cassie Wallwey, cwallwey@vt.edu

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
