- Ontario Institute for Studies in Education, University of Toronto, Toronto, ON, Canada

Post-secondary education institutions with English as a medium of instruction have prioritized internationalization, and as a result, many universities have been experiencing rapid growth in numbers of international students who speak English as an additional language (EAL). While many EAL students are required to submit language test scores to satisfy university admission criteria, relatively little is known about how EAL students interpret admission criteria in relation to language demands post admission and what their language challenges are. This study, situated at a large Canadian university, integrated student and faculty member focus group data with data obtained from a domain analysis across three programs of study and a reading skills questionnaire. Findings suggest that many students and faculty members tend to misinterpret language test scores required for admission, resulting in surprise and frustration with the unexpected level of language demands in their programs. Also, students experience complex and challenging language demands in their program of study, which change over time. Recommendations for increased student awareness of language demands at the pre-admission stage and a more system-wide and discipline-based approach to language support post-admission are discussed.

Introduction

Many post-secondary education institutions using English as a medium of instruction increasingly identify internationalization as their top priority. As a result, many of these universities have been experiencing rapid growth in numbers of international students who speak English as an additional language (EAL). In the Canadian context where this study is situated, 341,964 international students were enrolled at Canadian post-secondary institutions in 2018/2019, which was a considerable increase from 228,924 in 2015/2016 (Statistics Canada, 2021). In addition to fulfilling university internationalization goals (Association of Universities and Colleges of Canada, 2014), international students make significant contributions to the Canadian economy, spending in excess of 15.5 billion Canadian dollars on tuition, accommodation, and discretionary spending in 2016 (Kunin, 2017). Canadian universities have been using international student revenues to compensate for reductions in government funding (Cudmore, 2005; Knight, 2008), making international students an important source of revenue for Canadian universities as domestic enrollment declines (Association of Universities and Colleges of Canada, 2014).

Although the increase in international student enrollment contributes cultural and linguistic diversity to campus life, research shows that international students do not perform as well as domestic students. In a United Kingdom study (Morrison et al., 2005), data collected centrally by the Higher Education Statistics Agency on the class of degree obtained by international undergraduate students showed that domestic students in general obtained higher classes of degrees (i.e., first or upper second class honors) than international students. This finding indicates a disparity in the academic experiences between domestic and international students. Research also suggests that one possible explanation for this disparity is that EAL students face significant challenges from academic work due to a lack of support for academic language demands (Fox, 2005; Guo and Chase, 2011). Most English medium of instruction universities require EAL students to demonstrate proof of English language proficiency (ELP) for admission. One of the most common proofs of ELP is a score from a standardized English language test, such as the Test of English as a Foreign Language (TOEFL), the International English Language Testing System (IELTS), or the Pearson Test of English (PTE). However, the extent to which these tests provide evidence for making inferences about EAL students’ ELP in discipline-specific post-secondary education contexts has been heatedly debated (Chapelle et al., 2008; Zheng and De Jong, 2011; Johnson and Tweedie, 2021). The characterization of the general ELP construct by standardized tests has created disparities between tested ELP and the real-life academic language demands that EAL students face upon admission (Guo and Chase, 2011; Guo and Guo, 2017; Pilcher and Richards, 2017).

Another widely used proof of ELP is institutional English-for-academic-purpose (EAP) courses for EAL students who are unable to achieve the required score on one of the recognized ELP tests. In addition to extra time and expenses associated with EAP programming, its generic approach to academic English has been criticized (Sheridan, 2011; Murray, 2016; Johnson and Tweedie, 2021). As well, it has been reported that students who enter university by taking courses in EAP pre-sessional programs have lower academic outcomes (Eddey and Baumann, 2011; Oliver et al., 2012).

While standardized EAP tests measure English language proficiency, the lack of contextual and discipline-specific constructs in these tests (Pilcher and Richards, 2017) creates a generalized view of ELP that may be at odds with the contextual and discipline-specific language required in academic settings as reported above. This situation may be problematic for universities that depend on these test scores to determine EAL student readiness to meet academic language demands. The inadequacy of the “one-size-fits-all” view of the general ELP construct used in standardized tests and EAP programming prompted the present study to closely examine critical questions of language requirements and EAL students’ preparedness to handle the academic language demands in their programs of study. The purpose of the study was to deepen our understanding of EAL students’ academic experiences with language demands based on their lived experiences as well as faculty members’ perspectives about EAL students’ academic language needs. Because faculty members teach students and grade their academic work, their perspective on student readiness to cope with the demands of academic work is important for understanding the appropriacy of language requirements. As such, it was deemed critical to explore the understandings of both students and faculty members to examine potential discrepancies in their perspectives and their impact on EAL student academic experience.

English language requirements for university admission

In English medium of instruction universities, international EAL students must provide proof of ELP as part of admission requirements. Admission requirements are varied but can be met typically through one of the following: (a) length of residence in the country in which the university is located and attendance at a school where English is the language of instruction, (b) attendance at a school that teaches in English in a country where the dominant language is English, (c) completion of an English-for-academic-purpose course or program, or (d) a score from a standardized English language test.

Although admission requirements based on length of residence and attendance at a school where English is the language of instruction are deemed to provide alternative options for applicants, little research exists on the performance of students admitted to a university based on different admission requirements. Fox’s (2005) four-year longitudinal study shows that EAL students whose admission was based on the residency requirement underperformed other EAL student groups and faced various challenges regardless of the number of years in an English-medium high school.

Still, the most common proof of ELP used by EAL applicants is a score from a standardized test such as the TOEFL, IELTS, PTE, Duolingo English Test, or other tests. While cut-off scores are commonly used to determine the adequacy of ELP, there remain significant concerns about the misuse of these tests, weak predictive validity of future academic performance, and a lack of information for test-takers, university faculty, and administration staff about what these standardized test scores mean in practice (Deakin, 1997; McDowell and Merrylees, 1998; Banerjee, 2003; Coleman et al., 2003; O’Loughlin, 2008). There are few training opportunities for university faculty and staff to determine the adequate level of language proficiency in a specific field of study (Coleman et al., 2003; Rea-Dickins et al., 2007), making the decision of what cut scores to adopt for admission problematic.

The choice of an appropriate cut score on a standardized language test for university admission is a critical consideration, and university cut scores are sometimes at odds with the recommendations of test developers. MacDonald (2019) reported that in the case of the IELTS test, 34 out of 35 Canadian universities surveyed adopted an overall band of 6.5 for direct admission for undergraduate study. IELTS test developers, on the other hand, recommend band 7.5 and above as acceptable for linguistically demanding programs and 7.0 and above as acceptable for less linguistically demanding programs (IELTS, 2019). This disparity between the cut scores recommended by test companies and those used by Canadian universities might impact the experience of international EAL students.

University test score users’ insufficient knowledge about standardized test scores and academic language demands is further complicated by the under-representation of the EAP construct underlying the aforementioned standardized language tests. Research converges in showing that standardized language tests used for admission do not represent the full range of language demands required in academic work (Weir et al., 2012; Brooks and Swain, 2014; Bruce and Hamp-Lyons, 2015; Pilcher and Richards, 2017). Brooks and Swain (2014) reported a mismatch between the TOEFL iBT speaking test and real-life academic learning contexts, which can potentially mislead EAL students to believe they are prepared for post-admission communicative tasks. This mismatch is not limited to speaking tests. Research on the IELTS reading test highlights its emphasis on basic textual comprehension and its lack of items measuring higher-order reading skills (Moore et al., 2012; Weir et al., 2012; Jang et al., 2019). Research in the target language domain has shown significant variation in reading skills between EAL students and students who speak English as a first language. Trenkic and Warmington (2019) compared reading skills of United Kingdom university students who spoke English as a first language and EAL students. Results showed that EAL students had a significantly smaller vocabulary range and comprehended less of what they read than students who spoke English as a first language. The authors suggested that, because of these differences, EAL students are at an academic disadvantage relative to first language students, especially when program learning tasks require students to read independently and then be assessed through writing (Trenkic and Warmington, 2019).

Other research also points to the discrepancy in the target language construct between general language tests and discipline-specific languages used across different programs of study (Hyland and Hamp-Lyons, 2002; Hyland, 2004; Fang and Schleppegrell, 2008; Moore et al., 2012; Pilcher and Richards, 2017). Yet, Rosenfeld et al. (2003) identified language tasks that are frequently used in post-secondary courses offered in North American universities and confirmed that the TOEFL iBT prototype tasks were consistent with stakeholder perspectives. Few studies have systematically investigated the perspectives of EAL students and faculty members on the relationship between language requirements for admission and actual language demands across different fields of study. One such study, O’Loughlin (2008), found that students and university staff (e.g., admissions officers and academic staff) in two programs of study lacked understanding about the relationship between IELTS test scores and language ability, the need for future student support, and student readiness for study. The present study builds on O’Loughlin (2008) by focusing on input from students and faculty members across three programs of study. It is important to seek input from stakeholders, such as students and faculty members, to fully understand academic language use across different disciplines (McNamara, 1996).

English as an additional language students’ experience with academic language demands

International EAL students encounter various challenges, including limited access to social interactions and difficulty participating in such interactions meaningfully (Pritchard and Skinner, 2002), a sense of isolation, financial burdens (Li and Kaye, 1998; Lloyd, 2003), overt and covert racism and stereotypes (Guo and Guo, 2017), and a lack of the academic language skills required for successful academic work (Robertson et al., 2000). Among these various factors, students’ experience with academic language demands required for successful coursework has been researched extensively (Fitzgerald, 1995; Flowerdew and Miller, 1995; Ferris and Tagg, 1996; Mulligan and Kirkpatrick, 2000; Mendelsohn, 2002; Parks and Raymond, 2004). For example, EAL students may not be familiar with classroom discourse patterns, such as questions, responses, and appraisal, known as an initiation-response-evaluation (IRE) classroom discourse pattern (Cazden, 1988) and lecture styles (Flowerdew and Miller, 1995; Mulligan and Kirkpatrick, 2000). Mulligan and Kirkpatrick (2000) surveyed first-year EAL students and discovered that fewer than one in 10 students could understand the lecture very well and that one in 25 did not understand the lecture at all. Mendelsohn (2002) reported that EAL students’ difficulty in understanding lectures in their first academic year has a negative impact on their academic performance.

Another challenge for EAL students is oral language communication. Ferris and Tagg (1996) surveyed 234 faculty members at four post-secondary institutions in the United States and asked them what they thought listening and speaking challenges were for EAL students. Results suggested that faculty members were concerned with EAL students’ willingness and ability to participate in class discussions and with their ability to ask or respond to questions. Other research has suggested that affective factors may impact EAL student willingness to participate in class discussions. For example, fear of making mistakes has been shown to negatively impact EAL student class participation (Jacob and Greggo, 2001). Parks and Raymond (2004) reported that while EAL students in a graduate program in a Canadian university were encouraged to interact with local students, their local peers did not always welcome EAL students due to their perceived lack of language ability.

Regarding reading comprehension challenges, research has suggested that EAL students take longer to read than first-language users (Fitzgerald, 1995), suggesting an extra burden placed on EAL students compared to first-language speakers. Other factors that may contribute to reading comprehension challenges are cultural; research suggests that lack of familiarity with background content is a factor in comprehension and schemata activation (Steffenson and Joag-Dev, 1979; Moje et al., 2000). Research has also suggested that university faculty members perceive EAL students as lacking critical reading and strong writing skills (Robertson et al., 2000).

In addition to academic uses of language, university study requires social language use. In a study that focused on the first six months of university study, results suggested that due to a lack of a local support network, EAL students experienced more difficulties than local students in adjusting to university life (Hechanova-Alampay et al., 2002). EAL students also expressed more stress and anxiety than local students (Ramsay et al., 1999) and felt they had to initiate social interaction with local students, had difficulty talking with faculty members, and had difficulty working on group projects with local students (Rajapaksa and Dundes, 2002).

Universities typically provide various types of language support (e.g., workshops, preparation courses, writing consultations) to address EAL students’ language challenges, and research generally converges on a need for program-specific support that is developed through the interdisciplinary collaboration of applied linguists and faculty in relevant disciplinary areas (Andrade, 2006; Hyatt and Brooks, 2009; Anderson, 2015). Regarding faculty members’ support of EAL students, some studies reveal that faculty members provide extra support to their students (Trice, 2003), while other studies conclude that faculty members feel support provision is not their responsibility (Gallagher and Haan, 2018), suggesting that faculty members hold conflicting perspectives on EAL student support.

While challenges that EAL students have with language demands have been well researched, many studies have focused on the perspectives of faculty, graduate contexts, EAP programming, and co-curricular staff, leaving the perspectives of post-secondary EAL students under-researched. As well, studies have lacked EAL students’ and their faculty members’ perceptions on the adequacy of ELP test criteria within particular programs of study. The present study seeks to address this gap by investigating the perspectives of post-secondary EAL students on their English language challenges and their interpretation of ELP test scores against language demands within three programs of study and across all years of undergraduate study. We elicited faculty member perspectives of EAL student readiness and experience with language challenges to deepen understanding of EAL student perspectives on the appropriacy of admission test criteria and student challenges with language demands. The study was guided by the following research questions:

1. What are EAL students’ perspectives about the extent to which English language tests used for university admission represent the target language demands?

2. What challenges do EAL students and faculty members identify as most challenging for EAL students’ academic success?

3. What strategies and resources do students and faculty members use to address EAL students’ challenges with language demands?

Materials and methods

Study context

The university where this study is situated accepts a variety of English language tests that are typically recognized by universities in Canada and other English-speaking countries; for example, Cambridge Assessment English, Canadian Academic English Language (CAEL) Test, Canadian Test of English for Scholars and Trainees (CanTEST), Duolingo English Test (DET), International English Language Testing System (IELTS) Academic, and the Test of English as a Foreign Language (TOEFL). At this university, cut scores for admission to all undergraduate programs across all faculties are the same. Taking the IELTS test as an example, an overall score of 6.5 with no band below 6.0 for any subsection (i.e., reading, writing, listening, speaking) is required for admission.

In 2017–2018, when this study was conducted, international student enrollment at this university was 19,187 students, which was 21.3% of total undergraduate and graduate enrollment (Planning and Budget Office, 2017-2018). Students attend this university from 166 countries and regions, and the top five countries for undergraduate students are China (65%), India (4%), the United States (3%), South Korea (3%), and Hong Kong (2%) (Planning and Budget Office, 2017-2018). In 2020–2021, the proportion of international students was 35.3% in the Faculty of Applied Science and Engineering and 41.3% in the Faculty of Arts and Science (Planning and Budget Office, 2017-2018). The three programs selected for this study were situated in these faculties, and the percentages indicate that significant numbers of international students were enrolled in these programs.

Study design

In this study, we examined the perspectives of EAL students and faculty members about academic language demands across three different disciplines of study and their responses to such challenges with an emphasis on reading skills. As part of a larger study investigating how score reporting could be enhanced on the reading section of a standardized English language test (Jang et al., 2019), the present study focused more specifically on EAL students’ perspectives about academic language demands based on their lived academic experience after admission as well as faculty members’ perspectives about EAL students’ academic language needs.

We employed a mixed-methods inquiry design in order to triangulate different perspectives elicited through both qualitative and quantitative data collection (Teddlie and Tashakkori, 2003; Greene, 2008). In triangulating different perspectives using qualitative and quantitative data, our intent was not just to seek convergence; instead, we sought nuanced insights into both converging and diverging perspectives from their lived experiences (Mathison, 1988; Greene, 2008).

Participants

A total of 37 EAL students and 16 faculty members across three programs (Commerce, Economics, and Engineering) were recruited to participate in the study from one of the largest universities in Canada. We chose to focus on Commerce, Economics, and Engineering programs as these programs tend to attract large numbers of EAL students as reported above.

The participant recruitment process began with the research team contacting leadership in the programs of focus to introduce the project. Once the units agreed to collaborate, the programs and principal investigator sent a joint letter of invitation to students and faculty members to participate in the project. All students who were registered in the programs of focus and had submitted English proficiency test scores for admission received an invitation to participate. Students were separated into either a first-year group or an upper-year group consisting of students in their second, third, or fourth year of their program. For students who indicated interest in participating in the project, a digital consent form was sent with more information about the project and detailed focus group instructions.

Among the 37 student participants, the majority (79%) were from mainland China. Other student participants included four students from India, two from Pakistan, two from Russia, and one from Taiwan. This student composition was reasonable given the sizable proportion of EAL students from mainland China in the international student population at this university. Ages ranged from 19 to 24 and the group was made up of 25 females and 12 males. No information was collected from students regarding their English language test scores, but all student participants had submitted a test score that met admission requirements. Faculty members who participated were mostly tenured faculty members.

Data collection

Focus groups

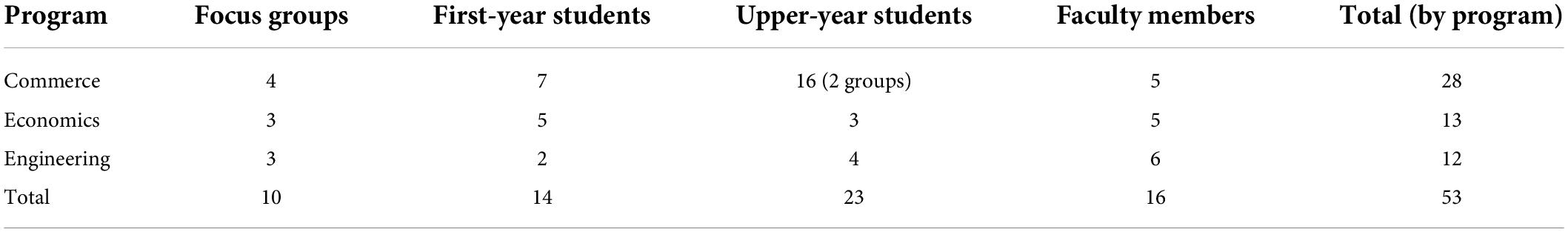

Each academic program had one faculty member focus group and two student focus groups (first-year and upper-year) except for the Commerce program which had three student focus groups (one first-year and two upper-year) due to strong student interest in participating in the study. Multiple focus group protocols tailored for different participant groups were developed separately (see Supplementary Appendix A). For first-year students, the protocol questions were aimed at understanding how EAL students perceived the language demands they faced in their program and how well they felt prepared for these language demands. Upper-year EAL students were asked, along with the aforementioned questions, to reflect on how they overcame any challenges they had and how their language ability to navigate university language demands grew over time. The protocol for faculty member focus groups included their general observation of EAL student language proficiency, their perceived preparedness of EAL students for their program, areas in which EAL students seemed to struggle the most, and EAL student progress in language development. Each focus group was video-recorded and lasted for 60–90 min. Table 1 shows the distribution of focus group participants by academic program.

Academic reading skill questionnaire

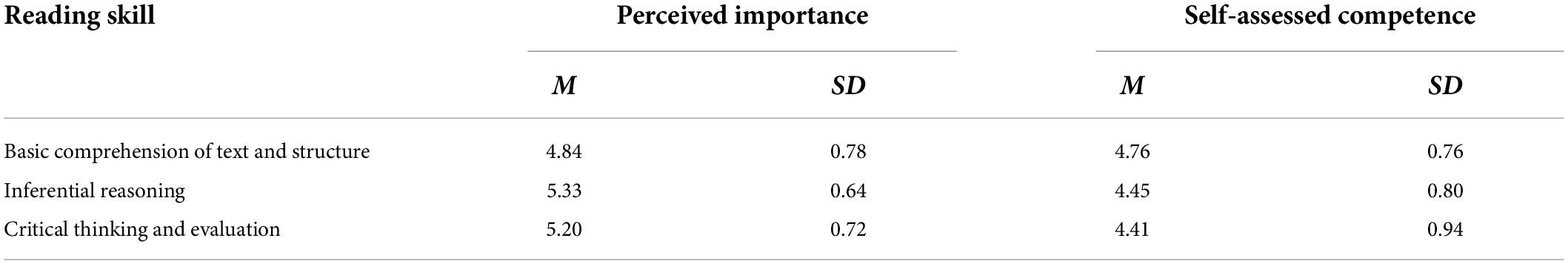

To further understand the challenges EAL students face in reading in their program of study in relation to RQ2, we asked student focus group participants to complete a questionnaire. We focused the questionnaire on academic reading skills to examine EAL students’ perceived importance of different reading skills for their academic work as well as their self-assessed competence in performing these skills. The skills included in the questionnaire were identified based on the relevant literature (e.g., Weir and Urquhart, 1998). A total of 10 items were intended to measure three specific reading skills on a six-point Likert scale: basic comprehension of text and structure (3 items), inferential reasoning (4 items), and critical thinking and evaluation (3 items) (see Supplementary Appendix B for the full list of items).

Course materials

In order to examine the language demands required in undergraduate courses (in addition to perceived language demands), we collected syllabi and reading materials from the faculty members who participated in focus groups. In each of the three programs, two courses were selected in each of the four program years (i.e., first year, second year, third year, and fourth year), leading to a total of 24 courses (2 courses × 4 program years × 3 programs of study) for which the course materials were evaluated. Within each course, the official course syllabus and the required readings for a typical week were analyzed to determine language demands and student assessment criteria.

Data analysis

Each data source was analyzed separately prior to integrative mixed-methods analyses. First, analysis of the focus group data employed an inductive thematic analysis in an iterative manner (Thomas, 2006). Data was first organized and analyzed in three groups: first-year EAL students, upper-year EAL students, and faculty members. The first round of inductive data analysis involved descriptive coding in order to capture emic perspectives expressed in participants’ own words. Subsequently, we categorized the descriptive codes by merging overlapping codes, clarified the underlying meanings of the descriptive codes, and compared resulting themes within and across the three groups as well as between EAL students and faculty members.

We analyzed the questionnaire data focusing on discrepancies in perceived importance and self-assessed competence across three reading skill categories: basic comprehension of text and structure, inferential reasoning, and critical thinking and evaluation. Due to the small sample size, no internal factor structure of the questionnaire data was tested in the present study. Instead, scores were calculated based on summed scores across items for each skill category. A one-way repeated measures ANOVA was conducted to compare means across the three categories (i.e., basic comprehension of text and structure, inferential reasoning, and critical thinking and evaluation) for perceived importance and self-assessed competence, separately. In the case of self-assessed competence, the assumption of sphericity was violated and, therefore, the Greenhouse–Geisser correction was used to interpret the results. The interpretations of hypothesis testing were based on a significance level of 0.05. All quantitative analysis was conducted using Stata 15.1 (StataCorp, 2017).
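The questionnaire analysis described above can be illustrated with a minimal sketch. The study itself used Stata 15.1; the Python below is not the authors' code, and the item-to-category mapping, function names, and example ratings are all hypothetical. It shows how summed category scores, the repeated measures F statistic, and the Greenhouse–Geisser epsilon (used to shrink the degrees of freedom when sphericity is violated) could be computed. The p-value step is omitted; it would come from the F distribution evaluated at the corrected degrees of freedom.

```python
from statistics import mean

# Hypothetical assignment of the 10 items to the three skill categories
# (3, 4, and 3 items as in the text; the item order is an assumption).
CATEGORY_ITEMS = {
    "basic_comprehension": [0, 1, 2],
    "inferential_reasoning": [3, 4, 5, 6],
    "critical_thinking": [7, 8, 9],
}


def summed_scores(responses):
    """Sum one student's 10 item ratings within each skill category."""
    return [sum(responses[i] for i in items) for items in CATEGORY_ITEMS.values()]


def rm_anova(scores):
    """One-way repeated measures ANOVA on an n-students x k-categories table.

    Returns (F, Greenhouse-Geisser epsilon, corrected df1, corrected df2).
    Assumes at least two students and a nonzero error sum of squares.
    """
    n, k = len(scores), len(scores[0])
    grand = mean(x for row in scores for x in row)
    col_means = [mean(row[j] for row in scores) for j in range(k)]
    row_means = [mean(row) for row in scores]

    # Partition the total sum of squares into category, student, and error parts.
    ss_cat = n * sum((m - grand) ** 2 for m in col_means)
    ss_subj = k * sum((m - grand) ** 2 for m in row_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_err = ss_total - ss_cat - ss_subj
    df_cat, df_err = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_cat / df_cat) / (ss_err / df_err)

    # Sample covariance matrix of the k category scores.
    cov = [
        [
            sum((row[a] - col_means[a]) * (row[b] - col_means[b]) for row in scores)
            / (n - 1)
            for b in range(k)
        ]
        for a in range(k)
    ]
    # Double-center the covariance matrix, then form the epsilon estimate.
    r = [mean(cov[a]) for a in range(k)]
    g = mean(r)
    centered = [[cov[a][b] - r[a] - r[b] + g for b in range(k)] for a in range(k)]
    trace = sum(centered[a][a] for a in range(k))
    sum_sq = sum(v * v for row in centered for v in row)
    eps = trace ** 2 / ((k - 1) * sum_sq)
    return f_stat, eps, eps * df_cat, eps * df_err


# Made-up category scores for four students (rows) and three categories (columns).
example = [[1, 2, 3], [2, 3, 5], [3, 5, 6], [2, 4, 6]]
f_stat, eps, df1, df2 = rm_anova(example)
print(f_stat, eps, df1, df2)
```

Epsilon ranges from 1/(k − 1) to 1, so with three categories the corrected numerator degrees of freedom fall between 1 and 2; a value of 1 means no correction is applied.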

We performed content analysis of the course materials. Faculty members from the three faculty focus groups provided samples of their course syllabi which totaled eight courses for each of the three programs of study. For each program, two courses represented each year of study. Our analytical scheme included five categories: overall reading requirements, modality and total number of assessments, volume of reading, text types (e.g., textbook, manual, journal article), and style and text genre. We performed this analysis per program and then developed a comparative matrix that included the five categories. Finally, we extracted quantitative information about the amount of overall reading requirements by text type and modality of evaluation criteria (e.g., written assignment, quiz, exam).

Results

RQ1. What are EAL students’ perspectives about the extent to which English language tests used for university admission represent the target language demands?

The first research question probed student perspectives about the extent to which the English language test represented the language demands in their program of study. We identified three inter-related themes concerning discrepancy between general academic and discipline-specific language demands, a higher level of complexity in target language demands, and an inflated view of language ability based on test scores.

Discrepancy between general language and discipline-specific language demands

International students who learn English as a foreign language have limited access to authentic linguistic input and resources. Instead, they devote a considerable amount of time to mastering study materials in order to achieve the passing scores on standardized tests accepted for admission purposes in post-secondary education. As a result, international students arrive on campus with a poor understanding of academic language demands specific to their program of study.

Most focus group students expressed surprise and frustration at the mismatch between the general language demand requirements on the language test and the amount and complexity of disciplinary language requirements, specifically discipline-specific vocabulary in their program of study. One Engineering student explained:

I’m in Engineering Science. And so, for us in the first two years we have to take many different courses in the Engineering field. For example, like biology. I need to take biology. And I don’t understand anything. I had lots of trouble because there are so many terms. I get lost with all those terms. (Engineering)

Students from the other disciplines also expressed their struggles with academic vocabulary. Two Commerce students reported that despite their satisfactory test scores, they found it challenging to tackle the sheer volume of technical vocabulary while reading texts. An Engineering faculty member corroborated these opinions, noting that students may recognize vocabulary, but “it does not always mean what they think it means” because of disciplinary usage.

In addition to technical vocabulary, students found that the texts they are expected to read and write were much longer than the reading passages and writing tasks used by standardized language tests. One Economics student explained: “before I came here, the longest passage (for writing) was 300 words. But for here, 30 pages! Oh, how can I do that?” One student also commented on differences between reading text structure on the test and that in academic readings, which impacted their ability to comprehend meaning in academic work:

For reading questions in IELTS, we know the specific questions we need to answer after reading it, and there are some keywords we can search in the paragraphs. But, for the reading in university, maybe the articles are not so structured like the readings in IELTS. So, we don’t know where to find the information at all. (Engineering)

An Economics student explained that reading comprehension on the reading test is focused more on local comprehension whereas reading comprehension in his courses requires more global comprehension: “Reading (on the test) is more like collecting information. Courses are asking for interpretation.” An Engineering student, surprised that language demands were much more difficult than she had expected after having achieved the language score required for admission, said that her advice to incoming students would be: “don’t be satisfied with your IELTS score.”

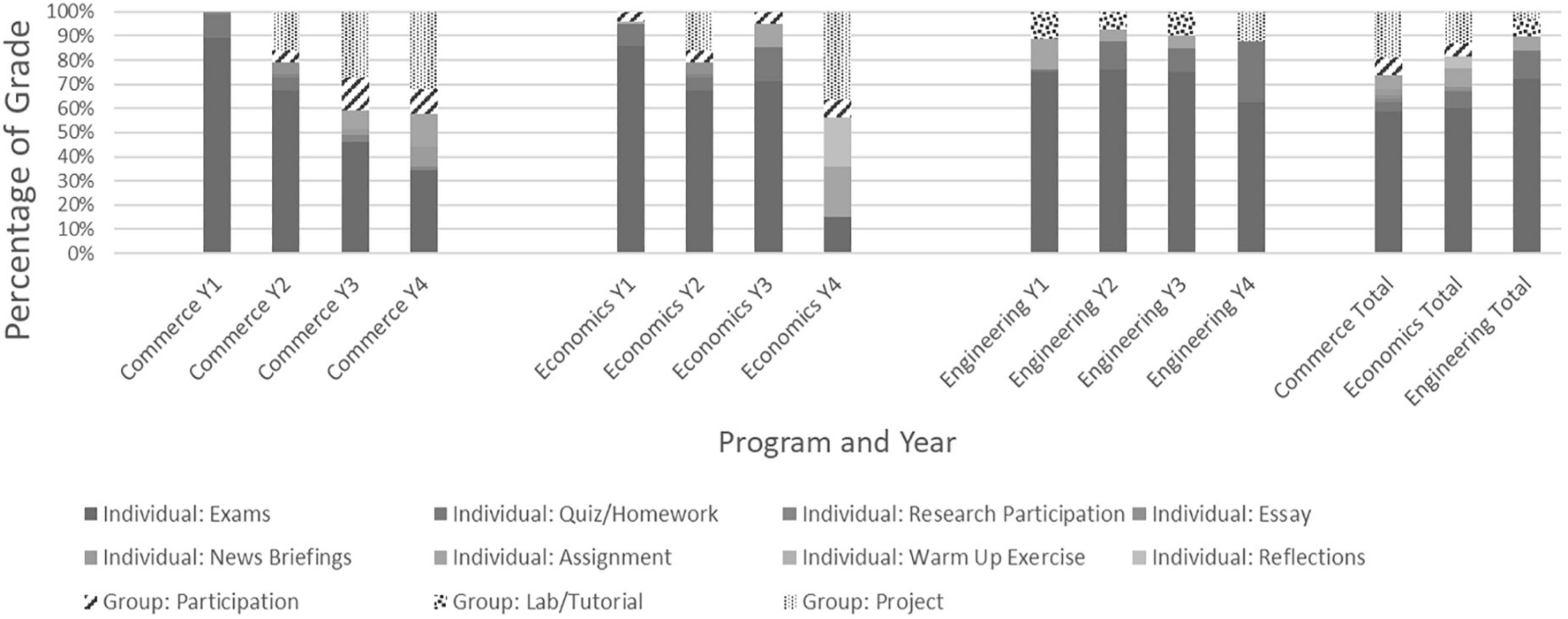

The content domain analysis revealed a heavy reading load for first-year students from all three programs with up to 12–16 textbook chapters per course. In addition to reading volume, the document analysis revealed that students are required to read critically and then demonstrate their comprehension of texts in written assignments. The analysis of Economics course syllabi showed that starting with second-year courses, students are required to complete writing assignments that represent 20% of the total grade, suggesting that demonstrating knowledge of course concepts through reading and writing is a substantial part of the course grade. Examination of Commerce course syllabi revealed a similar story for essay writing but with the addition of writing assignments in some third-year courses submitted as part of a group assignment that also included an oral presentation and was weighted at 35% of the total grade. Conversely, essay writing was not a requirement in any of the Engineering courses we examined.

Inflated view of own language ability based on test scores

Most students admitted that they had little knowledge of what the admission cut score meant for their preparedness for academic coursework. They tended to perceive that the level of language proficiency inferred from the cut score set for admission would be sufficient to handle academic and social language demands. This perspective was shared by faculty members, who believed that once students meet the university’s admission criteria, they should be prepared for academic work. In responding to the question about what the test scores said about their English language ability, students tended to associate their perceived English language ability with test scores for specific sub-sections of the test (i.e., reading, writing, listening, speaking). That is, they tended to have a higher level of self-assessed competence in language skills for which they received relatively higher scores, indicating students’ perceived “face validity” of the test. A second-year Engineering student described his experience with his writing score by stating that “after I came (to this university), personally I found the most difficult part is writing. Even though I got the highest (score) on writing, that’s the part I find the hardest.” Another Engineering student explained that while she achieved a high test score for reading, she was surprised to find academic reading requirements still challenging:

I did really well in listening and reading. It’s almost to a full mark. So, I was confident in reading, but, when I came here, umm so praxis course requires us to do some research to read a lot of documents. I feel like, for example, in two hours, my partner can complete a research, but I can only do like half of them. So, when I read a website or something I cannot know where is the focus. (Engineering)

An Economics student reported that even after achieving a high score on the language test, “it was not good enough for here.” Two Commerce students felt that the Speaking subtest was too narrow in focus and did not elicit their true speaking ability. Other Commerce students felt that the Speaking subtest score was impacted by topic familiarity and variation in raters’ grading severity. Conversely, two other Economics students felt that the language test in general was too basic and not hard enough although they did not elaborate on how the test could be made harder.

Many students reported that they had taken the language proficiency test several times before attaining the score required for admission, indicating inflated scores due to practice effects. Several Engineering and Commerce students took the test twice, while two Commerce students took the test three times and two Engineering students took the test four times. One Engineering student observed that practice effects increased their score, but they did not feel their proficiency had increased:

You know, the first time, I didn’t do good, because I didn’t really know the instructions, the way I should do it. And, the second time, I did better because I know how to do the test. But, actually, for my English level, I don’t think I improved. (Engineering)

In general, students reported that it was harder to achieve higher scores for speaking and writing than reading and listening on the language proficiency test. Many students explained they struggled to obtain the required score for writing. According to several Commerce students and one Economics student, the required listening score was easier to attain. A few Commerce students and one Engineering student felt that achieving the required reading score was easier than achieving the required speaking and writing scores because they could easily use test-taking strategies for receptive skill tests, which are mostly multiple-choice based. Another Engineering student added that test-taking strategies to attain a higher reading score include focusing on comprehending local rather than global meaning and added, “some people say that if you understand the paragraph, you’re doing it too slow.”

Despite achieving higher scores through test-taking strategies, students felt the higher scores did not represent increased proficiency and that the test score did not accurately measure their proficiency; one Engineering student explained:

And especially for IELTS reading, if you use the technique, you can get a high mark but it doesn’t really mean you really can read a passage and understand it fully. It just means you know how to do questions. (Engineering)

RQ2. What challenges do EAL students and faculty members identify as most challenging for EAL students’ academic success?

The second research question probed student and faculty member perspectives about challenges students have with language demands. We identified four inter-related themes concerning lack of oral language skills, lack of critical thinking and synthesis skills, struggle with cultural background knowledge, and a lessening of language challenges over time.

Lack of oral language skills

Students described difficulties speaking in class with both faculty members and local students. Several students stated that they were not adequately prepared for social language requirements as one Commerce student reported:

I felt awful…especially in terms of speaking, because we didn’t have enough practice, I felt that people didn’t understand me or they’d look at me like what am I talking about…which made it a lot easier to talk to international students rather than the native speakers. I make mistakes and they make mistakes, so we’re on the same wavelength. (Commerce)

An Economics faculty member reported that students were unable to comprehend course material in class, requested repetition (but that there was no time to repeat course material), and tried to transcribe lectures rather than extract salient information. A Commerce faculty member commented that student oral presentation skills are lacking and that students are asked to make presentations without being taught how to present. Another Commerce faculty member observed that students are challenged in negotiating the complex language demands of real-world client communication, which is a program requirement, and provided an example of such complex language use: “real-world survival (looking at families orphaned, dying of AIDS) therefore requires sensitivity, oral language, willingness to go into the field, high communication skills in unfamiliar context.”

Examination of course syllabi revealed that oral language skills are indispensable to complete academic work. Student group assignments, consisting of projects or labs that require peer-to-peer communication, are required in all years of the Engineering program. In Commerce courses, group assignments mostly begin in second year and are weighted as high as 35% of the total grade in some courses. In Economics, on the other hand, group assignments tended to be assigned in fourth-year courses and carried a much higher weight (as high as 48% of the total course grade).

Lack of critical thinking and synthesis skills

Another critical issue with EAL students’ academic language proficiency for coursework has to do with their perceived lack of higher-order reasoning skills. Engineering and Economics faculty members expressed that students often read for the wrong purpose and focused more on literal meanings of texts or on sentence-level meaning rather than global-level interpretation. Engineering faculty members mentioned that students tended to rely on mathematical modeling and emulating rather than using critical reasoning and, as a result, students were not able to interpret what they had read and had difficulty explaining conceptual relationships. An Economics faculty member said that students found understanding implicit information in texts challenging and had difficulty comparing texts with different points of view:

And so when I ask them for feedback, last year, when I did this really simple, I thought, really simple press article that had two views, you need to pick one, and say why you preferred one? A lot of them, gave me feedback where they said it was hard. (Economics)

Domain analysis results suggest that as students progress through their program, there is a stronger requirement to apply critical reasoning skills in coursework. Decreased textbook utilization in favor of journal articles and other primary sources in upper years corresponds to the need for students to develop critical analytic reasoning beyond textual comprehension. This observation was further complemented by faculty member focus group discussions. Two Economics faculty members mentioned that the increased utilization of academic journal articles as course readings coincides with the expectation that students can critically review and synthesize those articles. Similarly, a Commerce faculty member noted that the utilization of case studies in upper years corresponds to an expectation for students to critically analyze the case studies and apply course concepts to them.

Although many faculty members stated that challenges in applying critical reasoning skills were universal regardless of students’ EAL status, some acknowledged the additional challenges faced by EAL students because of their relatively low language proficiency. Some faculty members also attributed a higher level of challenges to cultural differences related to educational background. For example, Economics faculty members reported that EAL students tend to be afraid of challenging faculty members and to not think critically about course concepts learned in lectures because they were not accustomed to doing so in their previous education experience.

Corroborating the focus group and domain analysis results, our analysis of the reading demands questionnaire suggests that students tend to perceive higher-order reading skills as more important in their academic work than basic comprehension skills, and yet they feel less competent in those more valuable skills. Table 2 includes the means and standard deviations of the three reading skills resulting from the questionnaire.

The results of a one-way repeated measures ANOVA suggest that there were statistically significant differences in the level of perceived importance among the three reading skills, F(2,66) = 12.34, p < 0.001, and the effect size of this ANOVA model was large, η² = 0.27. The post hoc pairwise comparisons using the Bonferroni test indicated that the level of perceived importance of the basic comprehension skill was significantly lower than both that of the inferential reasoning skill (t = 4.81, p < 0.001) and that of the critical thinking skill (t = 3.48, p = 0.003). However, the difference between the levels of perceived importance of the inferential reasoning skill and the critical thinking skill was not statistically significant (t = −1.33, p = 0.565).

To compare the level of self-assessed competence in the three reading skills, we conducted another one-way repeated measures ANOVA, which yielded a statistically significant result, F(2,66) = 4.33, p = 0.028. The effect size of this ANOVA model was medium to large, η² = 0.12. Again, post hoc pairwise comparisons were used to locate the differences. The post hoc tests revealed a statistically significant difference between the levels of self-assessed competence in the basic comprehension skill and in the critical thinking skill (t = −2.68, p = 0.028). However, neither the difference between the basic comprehension skill and the inferential reasoning skill (t = −2.40, p = 0.058) nor the difference between the inferential reasoning skill and the critical thinking skill (t = −0.28, p = 1.000) was found to be statistically significant, although the significance value of the former approaches the pre-determined alpha level.
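As a reading aid, the reported effect sizes can be checked against the reported F statistics. The sketch below assumes that the η² values are partial eta squared, which can be recovered as (F × df_effect) / (F × df_effect + df_error), and that the degrees of freedom are reported without a sphericity correction; neither assumption is stated in the text. The function names are hypothetical and introduced only for illustration.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Assumption: the reported effect size is partial eta squared.
    """
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


def implied_sample_size(df_effect: int, df_error: int) -> int:
    """Number of respondents implied by a one-way repeated measures design.

    With k conditions and n respondents, df_effect = k - 1 and
    df_error = (k - 1) * (n - 1), so n = df_error / df_effect + 1.
    Assumption: degrees of freedom are uncorrected.
    """
    return df_error // df_effect + 1


# Values taken from the two ANOVAs reported above, both F(2, 66).
importance = partial_eta_squared(12.34, 2, 66)  # perceived importance
competence = partial_eta_squared(4.33, 2, 66)   # self-assessed competence

print(f"perceived importance:     {importance:.2f}")
print(f"self-assessed competence: {competence:.2f}")
print(f"implied respondents:      {implied_sample_size(2, 66)}")
```

Under these assumptions the recovered values round to 0.27 and 0.12, matching the effect sizes reported for the two models, and the error degrees of freedom imply 34 questionnaire respondents.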

In sum, the results from the two ANOVA tests and post hoc comparisons suggest that students tend to struggle with the higher-order reading skills such as critical thinking and inferential reasoning while these skills are perceived as more important than basic comprehension skills in their academic work.

Struggle with cultural background knowledge

Students discussed significant challenges they experience with lectures and oral discussions due to cultural references in language use and idiomatic expressions used within and outside of coursework. Students expressed surprise at how background knowledge of Canadian or North American culture is necessary for better communication and felt unprepared and at a disadvantage to participate in class and complete assignments:

I think we lack Canadian background knowledge, making it harder to relate to some textbooks and instructors’ examples. (Commerce)

When you talk about different companies, native peers already know them, but I didn’t know this company … Case-based courses based on Canadian and North American cases…. (Commerce)

An Economics student explained that he was also not prepared for local language use by saying “I was not prepared particularly for local examples or jargon” while an Engineering student explained that her frustration with basic small talk was due to lack of comprehension of colloquial language:

Conversations were very hard to continue. I could only make basic small talk. I didn’t understand jokes or slang. (Engineering)

An Economics student also explained that while he received a satisfactory score on the listening test, he was surprised to find that lecture comprehension was still challenging due to local content which he described as local language use (e.g., product brands):

Even I got satisfied marking on IELTS test, I still feel the knowledge is not enough for here. In the lecture, the professor usually use something really familiar with you because you are local people. Use some words or some examples, but I’m so “what’s that? What’s that?” So, I search Google for that maybe a local team, local brand. The name of coffee and I think, some nouns, they are difficult to remember. (Economics)

Commerce and Economics faculty members also reported that students have challenges with cultural differences and struggle with course content due to a lack of cultural knowledge. In echoing student frustration with comprehending jokes and slang, a Commerce faculty member felt that students struggle with the vernacular, resulting in students not understanding or misunderstanding the faculty member’s humor. One faculty member mentioned how he takes into consideration the impact of cultural factors when designing course materials:

When you said the example of having them solve a problem for a client, those are the types of test questions I’d love to ask. But I do worry that they will differentially impact based on both language and cultural standards. (Economics)

Lessening of language challenges over time

Results from the upper-year student and faculty member focus groups and domain analysis revealed that student challenges with language lessened over time and that language demands decreased for them as they moved into the third and fourth years of their program. This change was partly because of smaller class sizes and the more interactive nature of their classes that involved discussions and presentations. Students felt that in their third and fourth years, they had acquired relevant vocabulary and non-verbal language use, which helped enhance their confidence, and found it easier to socialize. However, Engineering students expressed that critical reading demands increased significantly in the third and fourth years of their program and did not feel that the reading demands in their first and second year adequately prepared them.

Faculty members’ views on student language improvement varied. While many faculty members felt student language improved over time, some felt that many students’ English did not improve sufficiently. One Economics faculty member commented on students’ limited language ability: “I still see a number of students in March or April and I am astounded that they got in and I’m astounded at how limited their English still is.” Other faculty members noticed that even in upper-year courses, some students were reluctant to participate in class discussions and did not seem to improve. An Economics faculty member commented on lack of student participation in a third-year course: “I think there’s ones that they really, they just find it hard. They’re not going to get better.” Another faculty member acknowledged that many students’ language improved over time but, nevertheless, he was surprised at the number of students who graduate without having improved:

And I also share this view that many of them improved over time. But what really surprises me is the number of them who never improved at all and they graduate anyway. (Economics)

Results from the domain analysis align with student and most faculty member views that student language challenges lessen over time while learning tasks become more interactive and group-based in upper-year courses. Examination of student evaluation criteria in course syllabi revealed differences across the programs as well as across the years of study within each program. Figure 1 shows a general tendency in course assessment to move from independent assessment in the early years of a program to more group-based assessment in the upper years. This is especially noticeable in the Commerce program but less so in the Engineering program. First-year Commerce students’ grades are predominantly derived from more traditional exams and quizzes, emphasizing independent work. Group assignments are introduced in second year, suggesting the additional requirement of productive language skills that emphasize communication, though the majority of course credit still involves exams and quizzes. Among the Economics courses investigated, evaluations somewhat mirrored Commerce, where independent exams are prominent in the first year, with more diverse assessment types being included in upper years (e.g., tutorial participation, problem sets, group projects). Like Commerce, not all fourth-year Economics courses used final exams as evaluations; one course utilized writing assignments and reflections as the main evaluation criteria. On the other hand, Engineering courses maintained similar evaluation criteria across all four years, with most assessments involving midterms and final exams (occasionally making up 100% of the course grade) and a smaller proportion of grades being based on assignments, quizzes, projects, and tutorials. However, labs in Engineering courses involved more hands-on tasks requiring teamwork, emphasizing the importance of knowledge demonstration and application in addition to memorization and expository knowledge.

RQ3. What strategies and resources do students and faculty members use to address EAL students’ challenges with language demands?

The third research question probed how students and faculty members responded to student challenges with language demands. We identified four themes concerning student utilization of learning aids, student selection of courses and learning tasks, faculty member simplification of language, and a perception from faculty members that no specific strategies or resources are needed for EAL students.

Students utilizing learning aids

Students reported that challenges with university language demands mean academic work takes them longer to complete and that they struggle to find the extra time. As a result, students described a variety of coping strategies to handle these challenges. Students reported using online videos and online translation tools to help them understand reading texts; however, students described pros and cons about the efficacy of translation tools, especially concerning accuracy and language skill development. In commenting on how some students avoid certain types of learning tasks, one Engineering faculty member wondered to what extent students could understand translation of course concepts, as students may not have had the linguistic resources in their first language to fully translate discipline-specific language and concepts.

Students reported accessing university supports to overcome challenges with language demands. While some students reported successes through university supports such as writing centers, students also reported frustrations when they attempted to access the university’s academic supports. Students felt university supports were constrained by wait times and tutors’ lack of disciplinary understanding of assignments; for example, significant amounts of appointment time were taken up explaining assignment requirements to support staff. Students also complained that access to teaching assistants was limited because of the volume of students and the relative lack of time the teaching assistants had available. Conversely, some faculty members felt that students did not attend office hours enough with faculty members or teaching assistants and did not take enough advantage of university supports like writing centers and drop-in workshops to build their language skills.

Students being selective of courses and learning tasks

Some faculty members felt that students avoided courses or learning tasks that students perceived to have heavier language demands. One Economics faculty member noticed how students tend to select courses that emphasize quantitative skills while another commented on lack of student participation in class discussion:

I definitely have selection into and out of my courses. Students who believe their comparative advantages in quantitative skills tend not to take my course. So I know that my third year course is lighter on international students than the average third year course. (Economics)

Even though there are quite a bit of participation marks, they seem to disproportionately check out. They won’t come. Or they, you know, they, they don’t, they just don’t do anything. … So I think I’m sort of disappointed because I think they’re not going to get any better. (Economics)

Some faculty members had a negative perception of student motivation. For example, some Engineering faculty members noticed that some students did not believe they needed to improve their language skills because they intended to practice engineering in their country of origin. These faculty members felt that these students tended to not spend the time they needed to improve their language skills and instead focused more on technical skills:

I think we’re making some assumptions and goals of these students to learn English. And so when we offer strategies like, they should try to read in other genres and ways to kind of adjust to the work they’re not that interested in that because their goal is to get through the program so they can practice in their own language. So there’s multiple groups of students within that cohort as well in terms of motivation. (Engineering)

Let’s say this course requires a lot of writing, a lot of presentation within those 3 months. I’m just not going to get that good at it and why don’t I just concentrate on the other courses where they don’t need to make any presentation. (Engineering)

However, one Engineering faculty member felt that courses even with a technical skill focus did require communication skills and that students were not making informed choices:

I also think that they don’t believe that they actually need the language to do some of their technical courses and they do. (Engineering)

Faculty simplifying language

Some faculty members recognized student language challenges and made accommodations in their teaching practice. For example, some faculty members wrote exam questions in simple language, allowed dictionary use during exams, placed more emphasis on content rather than grammatical accuracy when grading, provided instructions for participating in class discussions, provided student feedback on specific writing skills (e.g., a positioning statement or formula justification), and provided lecture videos and slides to support student lecture listening skills. Other faculty members mentioned being sensitive to cultural language and background when writing exam questions.

Some Commerce faculty members scaffolded students’ professional oral communication needs by providing more frequent in-class oral presentation opportunities to build student confidence and provide feedback for students. Also, a Commerce faculty member mentioned providing first-year students with scripts with sample discussion questions to help them facilitate class discussion, noting that students were challenged by cross-cultural communication but that with feedback their skills improved. Another Commerce faculty member mentioned that presentation feedback should be integrated across all courses so students could see their improvement over time and that students could benefit from a pre-sessional program where they could build their confidence in handling business communication. In the Engineering program, faculty members mentioned that project poster sessions were one opportunity where students could learn project-based client communication through question-and-answer sessions.

While many faculty members mentioned various ways in which they altered their teaching practice to adjust for EAL student language challenges, one faculty member wondered how much accommodation could be made while maintaining course integrity:

And, you know, it is a legitimate question how far the institution ought to go to accommodate these difficulties. I don’t, I don’t have the answer. (Economics)

No specific strategies or resources needed

The third research question was concerned with the strategies and resources that students and faculty members use to lessen the challenges EAL students have with language demands. While the fourth theme that emerged from the data was not a strategy or resource, it is worth noting that some faculty members felt that concerning certain challenges, no specific strategy or resource was necessary for international students. Some faculty member participants felt that a specific response to some EAL student language challenges was not necessary because, they observed, all students struggle with developing a critical perspective, using the language of the discipline, interpreting academic articles, summarizing readings, and writing effectively, and this was especially apparent in first year. An Economics faculty member commented that academic reading was challenging for both first and second language speakers:

But on that kind of preparatory reading which I think is especially crucial in the third- and fourth-year courses, I don’t identify that as an international student issue at all. Plenty of domestic students or native speakers are not understanding how to read, and are not doing it or trying it sufficiently. So for that kind of reading, I think we have a challenge for all of our students and I don’t, I wouldn’t single out international students. (Economics)

Discussion

Earlier studies lack EAL student perceptions on the adequacy of English language test criteria to meet university language demands within particular programs of study. The present study addressed this gap by investigating the perspectives of post-secondary EAL students on their English language challenges and their interpretation of English language test scores against language demands within three programs of study and across all years of undergraduate study. This study also examined how faculty members in different programs of study perceive the language challenges EAL students experience and how EAL students and faculty members respond to these challenges. The results of the study show the mismatch that EAL students perceive between admission criteria required on English language tests and actual language demands. The results also deepen understanding of post-secondary language requirements and suggest that program-specific language demands are not static across program years but change over time. These findings have implications for language proficiency requirements for university admission as well as post-admission support programming.

Our study sought to understand EAL students’ perspectives on the extent to which academic English language tests used for university admission represent target language demands in specific programs of study. Our results suggest that both EAL students and faculty members may have undue confidence about test scores and their ability to predict academic success, resulting in tensions regarding language preparedness for EAL students and faculty members. To avoid these tensions, a better understanding of the relationship between the constructs measured by English language tests and the language skills required in academic work is needed. Previous research has called for better language test score interpretation (McDowell and Merrylees, 1998; Rea-Dickins et al., 2007; Baker et al., 2014) and the results of this study add the perspectives of EAL students and faculty members. EAL students in this study reported that despite achieving a higher score on the language test after repeated attempts, they felt their language skill had not improved, and they still reported challenges with language demands in their program of study. This result suggests that due to practice effects, students are being admitted with scores above their actual English proficiency level and have not yet achieved the threshold of language required for academic work. This finding aligns with previous studies that point out practice effects inflate test scores (Hu and Trenkic, 2021; Trenkic and Hu, 2021).

EAL students in this study also reported that they were surprised how difficult both oral and written communication was for them despite meeting the admission criteria. Research has shown that test scores for productive skills (i.e., speaking, writing) are more strongly correlated with first-year grade point average than scores on receptive skills (i.e., reading, listening) (Ginther and Yan, 2018). Other research has shown that students who have a wide discrepancy in their productive and receptive scores do not perform as well academically (Bridgeman et al., 2016). This research suggests that productive skills are important for student academic success, and our results call for careful attention to the selection of cut scores for productive skills for admission as well as tailored support for students in these skill areas post admission.

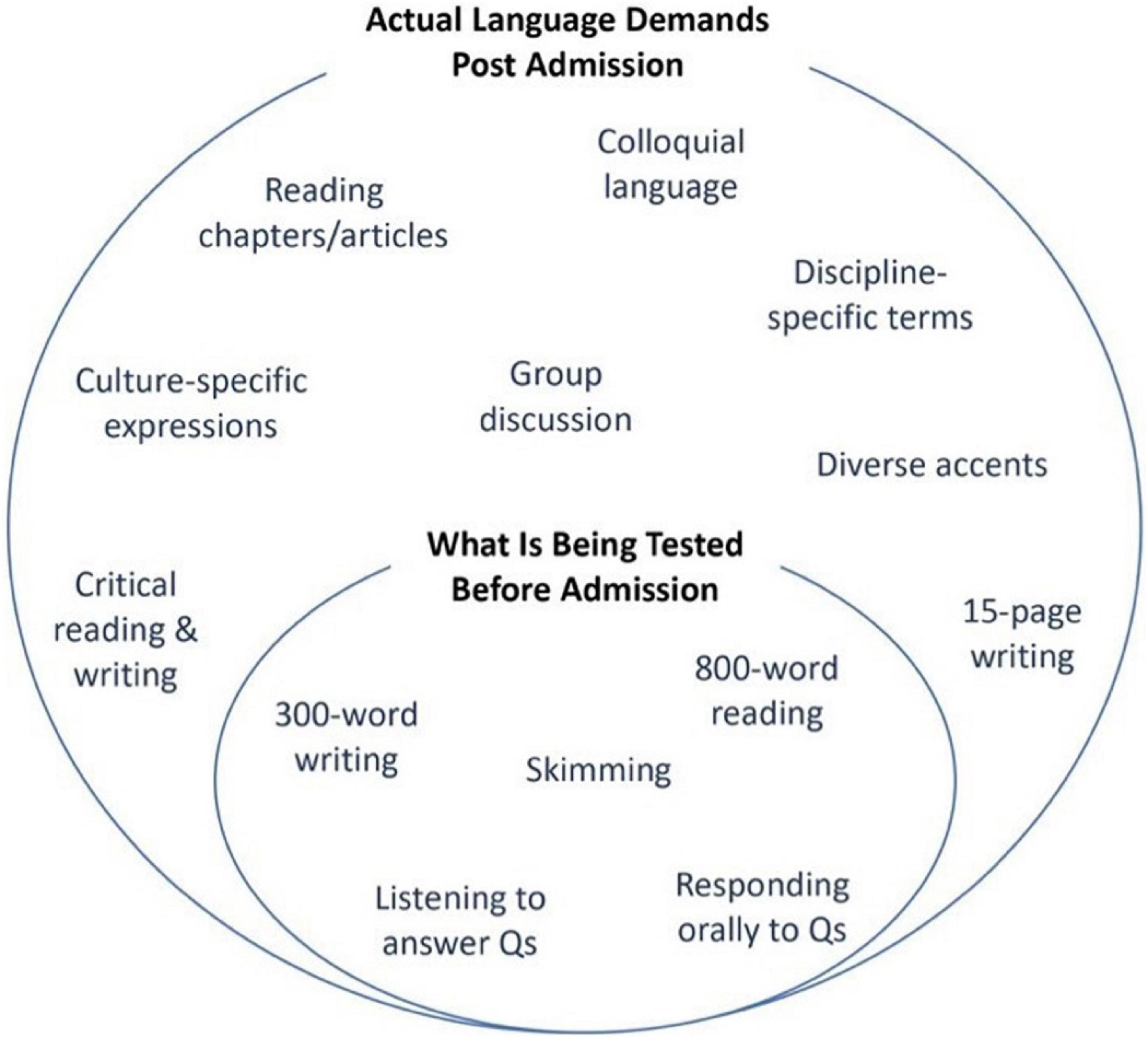

This study also sought to understand the language challenges that EAL students and faculty members identify for EAL students. EAL students and faculty members reported that EAL students had concerning challenges with academic oral communication and critical reading skills. Our domain analysis findings confirmed that these skills were required in academic work. These findings are important as they contribute to the understanding of what language skills are required across and within programs and show the limitations of admission criteria as measured on English language tests. Previous studies have suggested that constructs measured by English language tests may not represent the full range of language demands required in academic work. For example, while Brooks and Swain (2014) found an overlap between constructs on the TOEFL iBT and in real-life academic speaking contexts, findings also suggested lack of overlap with the extrapolation inference argument on the test. In another study, Moore et al. (2012) investigated the suitability of items on the reading section of the IELTS test to the requirements of real-life reading tasks; findings suggested the test lacked items that were more interpretive and global (e.g., extract meaning from multiple sources), consistent with the findings of the current study. Since the students who had satisfied admission language criteria were still reporting challenges with language, the results suggest a discrepancy between the skills measured by the language tests used for admission and actual language demands in university settings. Furthermore, these results suggest that post-admission support needs to be developed to address these critical gaps. More studies could contribute to the understanding of the relationship between admission criteria and actual language demands, and Figure 2 suggests possible areas of investigation. With deepened understanding of admission criteria as represented in English language tests and program-specific language demands, universities can better inform incoming students of language demands in pre-arrival programming and plan post-admission support that addresses specific program language demands.

A theme under the research question related to student challenges with language demands was student struggle with cultural background knowledge embedded in learning materials and instructions. Previous research suggests international students face additional burdens due to loss of social networks, discrimination, acculturation, language challenges, and a lack of internationalization in the curriculum (da Silva and Ravindran, 2016; Guo and Guo, 2017; Sohn and Spiliotopoulos, 2021) that privileges Euro-centric perspectives (Guo and Guo, 2017). Our results suggest that both EAL students and faculty members identify cultural content as a source of tension for EAL students. Considering the large number of EAL students admitted to English-medium universities to meet university goals of internationalization and globalization, we recommend that universities address questions of equitable curriculum and student fairness.

Previous research on university language requirements has tended to focus on preparedness for first-year study (Flowerdew and Miller, 1995; Mulligan and Kirkpatrick, 2000; Mendelsohn, 2002). A novel finding of our study is that university academic work appears to change from more independent academic work in the early years of study to more group-based learning tasks in the upper years, with expectations of highly developed communication skills for real-world interaction. This change in the demands of academic work suggests that language preparedness for first-year study is not sufficient for upper-year students and that support for student language development needs to change as academic language demands become more complex and challenging over time. While most students in the study reported that language challenges lessened in their third and fourth years, faculty members reported that they noticed students who were still struggling with language skills in the upper years, suggesting that some students were still lacking language skills key to academic success. While students in the focus groups seemed largely successful in meeting these language needs, future studies could investigate the behavior of students who were not.

Our study investigated how EAL students and faculty members respond to EAL students’ challenges with language demands. Some students turn to co-curricular support to overcome language challenges, and our results suggest that some co-curricular support may be problematic as students report a lack of disciplinary focus and limited access. This situation seems to run counter to current literature, which emphasizes a more disciplinary focus to language support provided at the course level (Hyland, 2002; Andrade, 2006; Moore et al., 2012; Anderson, 2015; Pilcher and Richards, 2017). Our results also show that faculty member support of EAL students is varied and independent, a finding that aligns with other studies (Trice, 2003; Gallagher and Haan, 2018) and suggests a lack of consistency and fairness for students. To provide consistent, positive student experience and reduce extra burdens placed on faculty members, we recommend that faculty members be supported by better program policies regarding EAL student support. As reported in the literature, EAL student support that involves collaboration between applied linguists and disciplinary instructors improves EAL student experience, reduces the burden on faculty members of independent support provision (Hyatt and Brooks, 2009; Anderson, 2015), and results in positive interdisciplinary collaborations (Zappa-Hollman, 2018). Our study results suggest that while some faculty members are independently providing discipline-specific support, higher-level coordination is needed to ensure fairness and consistency in student experience.

One limitation of the present study is related to self-selection bias. Most students in our upper-year student focus groups reported that language and adjustment challenges lessened as they entered their upper years. This finding does not align with the Roessingh and Douglas (2012) study, in which quantitative measures of EAL student academic performance such as GPA and academic standing were used; their results suggested that EAL students did not perform as well on these measures as students who spoke English as a first language. Students who chose to participate in our focus groups may be strong academic performers and may not represent the academic behavior of all EAL students. As well, a study conducted by Trenkic and Warmington (2019) found minimal language gains over an academic year, a finding indicating that the gap between speakers of English as a first language and EAL students does not easily narrow as students move through their program. It is also possible that students in the focus groups may have been hesitant to report challenges in front of their peers and the researchers. For a fuller picture of EAL student language behavior, future studies could investigate the experiences of a group of EAL students with a wider range of academic performance.

Another methodological limitation is that the generalizability of the reading skill questionnaire results is limited by the small sample size; further studies with larger samples would help establish the replicability of our results. Also, the courses, programs, and experiences of university support we examined were limited to those of the study participants and do not necessarily represent the full range of courses, programs, and support offered at this university. Future studies that examine language needs in a wider range of courses and the impact of a wider range of co-curricular support models would deepen understanding of needs and contribute to the development of a systemic support framework for students.

Conclusion

The study findings suggest that even after meeting the English language requirement for university admission through English language tests, EAL students experience concerning challenges with oral language demands in and out of classrooms with faculty members and other students, and have challenges performing critical reading and writing tasks. Some of these challenges are related to unfamiliarity with discipline-specific vocabulary and a lack of cultural background knowledge. In general, critical reading and writing tasks that represent the complexity of university academic work, cultural language, and discipline-specific language are elements of language that are not targeted in most English language tests. As a result of language challenges, EAL students avoid courses and learning tasks within courses that they perceive as challenging, a behavior which ultimately limits their engagement with their program of study. EAL students are surprised at these challenges and feel a lack of effective support from the university in overcoming them. Faculty member perspectives tend to corroborate student perspectives, but variation exists among faculty member responses to student challenges, suggesting that students receive varying degrees of support in their courses.

If universities continue to admit large numbers of EAL students using the current admission criteria without adequate support, critical questions need to be addressed. Is the language level with which students were admitted sufficient for academic success? How can we identify students most at risk post admission? What types of language support programs can best support these students? Answers to these questions are critical for universities so they can inform EAL students at admission of language expectations and develop post-admission support for EAL students that recognizes continuous language learning within specific disciplinary contexts.

Data availability statement

The data analyzed in this study was obtained from a third party and permission to access datasets is required. Requests to access these datasets should be directed to BR, bruce.russell@utoronto.ca.

Ethics statement

The studies involving human participants were reviewed and approved by the Research Oversight and Compliance Office – Human Research Ethics Program, University of Toronto (Protocol Reference #34352). The participants provided their written informed consent to participate in this study.

Author contributions

BR was responsible for writing all drafts of the manuscript, coordinating the writing of the co-authors, and leading the submission. CB analyzed the data from the document analysis (one of the data sources) and wrote about this in the results section, contributed to the figure, and reviewed all drafts of the manuscript. HK analyzed the Academic Reading Questionnaire data and wrote about this analysis in the results section, contributed to the figure, and reviewed all drafts of the manuscript. EJ was the authors’ Ph.D. supervisor and provided extensive feedback to the other authors on all sections of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by the IELTS Partners: British Council, Cambridge Assessment English and IDP: IELTS Australia. The grant was awarded in 2016.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.934692/full#supplementary-material

References

Anderson, T. (2015). Seeking internationalization: the state of Canadian higher education. Can. J. High. Educ. 45, 166–187.

Andrade, M. S. (2006). International students in English-speaking universities: adjustment factors. J. Res. Int. Educ. 5, 131–154. doi: 10.1177/1475240906065589

Association of Universities and Colleges of Canada (2014). Canada’s Universities in the World AUCC Internationalization Survey. Available online at: https://www.univcan.ca/wp-content/uploads/2015/07/internationalization-survey-2014.pdf [accessed on July 25, 2022].

Baker, B. A., Tsushima, R., and Wang, S. (2014). Investigating language assessment literacy: collaboration between assessment specialists and Canadian university admissions officers. Lang. Learn. High. Educ. 4, 137–157. doi: 10.1515/cercles-2014-0009

Banerjee, J. V. (2003). Interpreting and Using Proficiency Test Scores. Doctoral Dissertation. Lancaster: Lancaster University.

Bridgeman, B., Cho, Y., and DiPietro, S. (2016). Predicting grades from an English language assessment: the importance of peeling the onion. Lang. Test. 33, 307–318.

Brooks, L., and Swain, M. (2014). Contextualizing performances: comparing performances during TOEFL iBT and real-life academic speaking activities. Lang. Assess. Q. 11, 353–373. doi: 10.1080/15434303.2014.947532

Bruce, E., and Hamp-Lyons, L. (2015). Opposing tensions of local and international standards for EAP writing programmes: Who are we assessing for? J. Engl. Acad. Purposes 18, 64–77. doi: 10.1016/j.jeap.2015.03.003

Cazden, C. B. (1988). Classroom Discourse: The Language of Teaching and Learning. Portsmouth, NH: Heinemann.

Chapelle, C. A., Enright, M. K., and Jamieson, J. M. (2008). Building a Validity Argument for the Test of English as a Foreign Language. London: Routledge.

Coleman, D., Starfield, S., and Hagan, A. (2003). The attitudes of IELTS stakeholders: student and staff perceptions of IELTS in Australian, UK and Chinese tertiary institutions. IELTS Res. Rep. 1, 161–235.

Cudmore, G. (2005). Globalization, internationalization, and the recruitment of international students in higher education, and in the Ontario colleges of applied arts and technology. Can. J. High. Educ. 35, 37–60.

da Silva, T. L., and Ravindran, A. V. (2016). Contributors to academic failure in postsecondary education: a review and a Canadian context. Int. J. Non Commun. Dis. 1, 9–17.

Eddey, P. H., and Baumann, C. (2011). Language proficiency and academic achievement in postgraduate business degrees. Int. Educ. J. 10, 34–46.

Fang, Z., and Schleppegrell, M. J. (2008). Reading in Secondary Content Areas. Ann Arbor, MI: University of Michigan Press.

Ferris, D., and Tagg, T. (1996). Academic listening/speaking tasks for ESL students: problems, suggestions, and implications. TESOL Q. 30, 297–320. doi: 10.2307/3588145