ORIGINAL RESEARCH article

Front. Educ., 23 March 2022

Sec. Digital Education

Volume 7 - 2022 | https://doi.org/10.3389/feduc.2022.856918

This article is part of the Research Topic: Digital Technology in Physical Education - Pedagogical Approaches

The purpose of this study was to examine the nature and quality of interactions between 24 students (9 male, 15 female) in an Alberta elementary physical education class using video-modeling and three different peer-to-peer (P2P) evaluation methods. Nature of interaction was determined by the duration of interaction (total, on-task time, off-task time, neutral), the type of comments (positive, constructive, negative), and quality of interaction by the category of feedback (4 categories) from both the evaluators and performers. This study compared structured paper evaluation (SP), unstructured video evaluation using the video feature on iPads (UV), and structured video evaluation using a prototype app on the iPad (SV). The SV condition provided statistically significant results for evaluator on-task, evaluator off-task, and performer on-task, along with increased positive comments from evaluators. The SP condition had significantly more depth of feedback. This study concludes that the use of SV to deliver feedback in a P2P learning environment has the potential to improve the nature of feedback during peer evaluations.

Studies indicate that incorporating quality characteristics in physical education (PE) supports students’ comprehensive development across various domains, including physical and motor skills (Emmanouel et al., 1992; Eather et al., 2013), academic performance (Rasberry et al., 2011; Marques et al., 2017), cognitive function (Ardoy et al., 2014), self-perception (Goni and Zulaika, 2000), self-determination and motivation (Shen et al., 2009; Wallhead et al., 2014; Cuevas et al., 2016), self-efficacy and enjoyment (Dishman et al., 2004, 2005), and social interactions and reciprocal relationships (Wallhead et al., 2013; Garn and Wallhead, 2015). Globally, there is a shift to holistic PE that incorporates social and cultural factors (Lynch, 2019). The emergence of holistic PE in curriculums can help countries meet some of the United Nations’ Sustainable Development Goals such as quality education and gender equality (Baena-Morales et al., 2021). Quality PE fosters a healthy lifestyle and enjoyment in students, and they can learn social and interactive skills (National Association for Sport and Physical Education, 2013). Social interaction plays an important role in the students’ skill-learning process outside of their comfort zone, and social activities can shape their learning process (Koekoek and Knoppers, 2015).

Recent studies have also demonstrated young people’s general acceptance of applications related to health and learning settings (Casey et al., 2017; Goodyear et al., 2019; Lee and Gao, 2020). For this reason, teachers should consider using applications and tablets (e.g., iPads) to help students achieve PE learning goals. They are also encouraged to use a selection of apps for efficient classroom management or for emphasizing other learning domains (Lee and Gao, 2020). Casey et al. (2017) have argued that with the appropriate pedagogical considerations, digital technology can offer potential benefits to students’ learning and performance. Recently, there has been a rise in literature exploring app-integrated PE in which various apps are used on the iPad in PE to facilitate the teaching and learning process (Watterson, 2012; Zhu and Dragon, 2016; Krause et al., 2020; Thacker et al., 2021). As part of this growing trend, tablets have become popular in PE, as they provide a portable multimedia platform to download a host of applications to accomplish a variety of tasks (Weir and Connor, 2009; McManis and Gunnewig, 2012; Koekoek et al., 2018).

When technology is used, it is critical that it be accompanied by appropriately scaffolded lesson plans that keep students on-task and engage them in higher levels of thinking and performance (Ward et al., 2005; Yelland and Masters, 2007; Mosston and Ashworth, 2008; Metzler, 2011; Koehler et al., 2013). To realize these benefits, applications need to be carefully constructed to adhere to a pedagogical curriculum (Lee and Gao, 2020; Yu, 2020). Since it is necessary to develop curriculum programming for PE (Wallhead et al., 2021), effective use of technology, specifically through tablet computers, may offer a way to provide scaffolded content knowledge in a PE classroom (Gubacs-Collins and Juniu, 2009; Leight et al., 2009; Sinelnikov, 2012; Sun, 2012; O’Loughlin et al., 2013; Palao et al., 2015; Zhu and Dragon, 2016).

It has been shown that the traditional teaching methods in PE limit the student to the reproduction of observed behavior (Pritchard et al., 2008; Moy et al., 2014). Observed behavior involves the teacher modeling a skill followed by the students attempting to replicate the movement and practicing privately while receiving feedback individually from the teacher (Mosston and Ashworth, 2008). As a kind of instructional technology, video-modeling (VM) encompasses the individual watching a video of visual and auditory processes, which is modeled by a peer, a sibling, an instructor, an athlete, or the self, and then, similarly, performing the sequence of skills (Mechling, 2005; Bellini and Akullian, 2007). Video-modeling is a unique feature that is made possible through a combination of multiple technologies such as iPads, video cameras, and health-related apps and wearable devices (Aldi et al., 2016). This feature can reinforce observational learning through video analysis (Koekoek et al., 2019; Lee and Gao, 2020; Thacker et al., 2021). It is also easier to keep track of activities, and monitoring and evaluation can be facilitated by technology (Jeong and Hmelo-Silver, 2016), which is a suitable apparatus for improving students’ understanding and assessment of their movements while promoting their shared understanding (Koekoek et al., 2019; Yu, 2020; Thacker et al., 2021).

Further, watching VM could result in decreasing mental effort by focusing attention on the relevant stimuli (Charlop-Christy et al., 2000) and improving learning achievements (Kant et al., 2017). It can also facilitate retention of the skill elements and is attractive for children of all ages (Charlop-Christy et al., 2000). VM has been reported as an effective method for teaching, and for the acquisition of fundamental movement skills (Obrusnikova and Rattigan, 2016; Obrusnikova and Cavalier, 2018; Thacker et al., 2021), locomotor skills (Bulca et al., 2020), sporting skills such as throwing the discus and hammer (Maryam et al., 2009), and gymnastic skills (Boyer et al., 2009; Bouazizi et al., 2014) as well. It has also helped enhance positive social interactions in a natural setting focused on free play, and social and emotional development (Green et al., 2017). Another study reported improved on-task behavior after an intervention including self-monitoring and VM to math tasks (King et al., 2014).

Collaborative learning is another advantage provided by technology (Resta and Laferrière, 2007; Jeong and Hmelo-Silver, 2016; Bodsworth and Goodyear, 2017), which has the potential to foster peer-to-peer interactions (Resta and Laferrière, 2007). Peer-to-peer (P2P) learning occurs when peers are directly involved in the teaching and evaluation process, increasing student engagement, collaboration, and responsiveness (Byra and Marks, 1993; Topping and Ehly, 1998; Jenkinson et al., 2014; Kelly and Katz, 2016; Thacker et al., 2021). P2P learning is already being adopted in many situations due to increased engagement, improved performance, and constructive behaviors (Chai and Koh, 2017). Overall, technology may improve peer learning (Li and Gao, 2015) and provide support for PE, developing positive social interactions among students (Krause et al., 2020).

Video-modeling can also shape the aggregate and nonspecific feedback and make them specific and related to the task (Jeong and Hmelo-Silver, 2016). Enriching the quality of how feedback is provided can play a critical role in fostering effective peer evaluation and peer learning (Zher et al., 2016). High-quality constructive feedback could also help improve the learning process (Li et al., 2010; Waggoner Denton, 2018) and strengthen social interactions between peers (Lee and Gao, 2020).

Finding an effective combination of collaborative technology and scaffolding pedagogy through peer learning is important (Goodyear et al., 2014; Fernandez-Rio et al., 2017). The P2P learning model, as an effective procedure for collaborative learning, can be enhanced through pedagogical strategies and scaffolding while fostering social interactions (Jeong and Hmelo-Silver, 2016). In addition, there are still key issues regarding the suitability of technology and software for young learners that must be addressed. Measuring the quality of interaction between students offers a pathway to understanding more effective teaching and learning strategies in a P2P environment, which could also lead to improving collaborative performance and evaluation (Johnson and Ward, 2001; Jenkinson et al., 2014).

Using VM, the purpose of this study was to examine the nature and quality of interactions between elementary students in three different P2P evaluation methods and conditions. The nature and quality of the peer interaction, not necessarily the performance of the skill, was of interest. To assess student interactions, we compared three different evaluation methods and conditions: Structured Paper, Unstructured Video, and Structured Video. Nature (the duration and comments’ type) and quality of interaction (we developed four categories related to the specificity of feedback) were determined by videotaping the conversations between peers and systematically assessing them based on oral transcripts.

A class was recruited from a private southern Alberta elementary school. Twenty-four fourth-, fifth-, and sixth-grade students (9 male, 15 female) participated in the study (Table 1). An even number of participants was necessary for the study protocol; therefore, one eighth-grade female student was recruited as a substitute whenever a student was absent on a testing day, since absences made the numbers uneven. Parental consent and student assent were obtained.

Each participant was randomly assigned a partner and then placed into one of three rotations: A, B, or C (see Table 2). Although in different orders, all pairs completed the P2P evaluations in the three conditions: structured paper-based evaluation (SP), unstructured video evaluation (UV), and structured video evaluation (SV) using a prototype mobile application as described below.

The SP evaluation condition required students to use only the author-developed marking guide to assess their peer’s performance (see Supplementary Appendix A). Students in the UV evaluation condition assessed their partner using the video taken of the performance and relied on recalling the movement criteria previously presented during the teacher demonstration to provide feedback. Finally, the SV evaluation condition allowed pairs to assess each other using video playback guided by the prototype application on the iPad. For a complete copy of the trial’s lesson plan, see Supplementary Appendix B.

The prototype mobile application, called Move Improve® (MI), was used to facilitate structured video feedback and peer-evaluation among student pairs in the SV condition. MI is a video performance analysis tool designed to improve an individual’s ability to perform several skills (see Figure 1 for a screen image). In the demonstration videos, select skills are broken down into components, so that users can compare the movements in the pre-recorded demonstration video to their recorded performance to better understand their mistakes and strengths. MI can be used to evaluate individual and peer performance.

To use MI, users launch the app, select either individual or peer evaluation, pick the skill to be evaluated, and start recording their performance. Afterwards, they begin the evaluation phase. Each stage of the evaluation details a different part of the skill to assess. In-app movement cues (e.g., “Did your partner keep ball lower than waist level?”) are provided to guide the evaluators to accurately rate performers on a “yes,” “partial,” or “no” scale based on what they see the performers achieve in the video playback. Since the app displays a standard set of cues, all students in this study were provided with the same prompts, so they assessed their peers using the same criteria.

Three days prior to the start of the study, the researchers visited the participating classes to explain the study, answer students’ questions, and obtain written assent. The researchers outlined the study procedure and gave the participants a tutorial on how to use MI on the iPad. The tutorial strictly focused on technical training for using the app. No training was provided on how to offer feedback.

The study occurred during three regular PE classes over 3 consecutive days. Each class was 30 min in length and included all participating grades. To ensure standardized instruction during the study, the research team, together with the PE teacher, created a mini lesson plan for three basketball dribbling techniques. Each day, students focused on a different basketball technique: the 1st day was stationary dribbling with the dominant hand; the 2nd day was stationary dribbling with the non-dominant hand; and the 3rd day was running while dribbling (see Table 2).

On the 1st day, all participants (grades 4, 5, and 6 PE class, n = 24) were randomly paired up and then assigned to either the A, B, or C rotations (see Table 2). There were four pairs for each rotation. The participants remained with the same partner and within the same rotational cohort (A, B, or C) throughout the 3 days of the study. To distinguish each cohort, students in rotations A, B, and C wore blue, yellow, and red colored vests, respectively, for the duration of the study. The school supplied 25 size-six basketballs.
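The pairing and rotation step described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure the researchers actually ran: the function name `assign_pairs_and_rotations`, the student identifiers, and the seed are all hypothetical, and the order of conditions within each rotation (Table 2) is not modeled.

```python
import random


def assign_pairs_and_rotations(student_ids, rotations=("A", "B", "C"), seed=None):
    """Randomly pair students, then deal the pairs evenly across rotations.

    Hypothetical helper for illustration; the study's own randomization
    procedure is not described in detail.
    """
    if len(student_ids) % 2 != 0:
        raise ValueError("An even number of students is required to form pairs.")

    rng = random.Random(seed)
    shuffled = list(student_ids)
    rng.shuffle(shuffled)

    # Consecutive students in the shuffled list become partners.
    pairs = [(shuffled[i], shuffled[i + 1]) for i in range(0, len(shuffled), 2)]
    if len(pairs) % len(rotations) != 0:
        raise ValueError("Pairs cannot be split evenly across rotations.")

    # Deal pairs round-robin so every rotation receives the same number.
    assignment = {rotation: [] for rotation in rotations}
    for index, pair in enumerate(pairs):
        assignment[rotations[index % len(rotations)]].append(pair)
    return assignment


# 24 placeholder identifiers stand in for the participating students.
students = [f"S{n:02d}" for n in range(1, 25)]
assignment = assign_pairs_and_rotations(students, seed=2022)
```

With 24 students this yields 12 pairs, four in each of the A, B, and C rotations, matching the allocation reported in the text.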

Six evaluation stations (two for each condition) were set up outside of the gymnasium. At the SP condition stations, only the paper evaluation sheet was provided (Supplementary Appendix A). At the UV and SV condition stations, students referred to the provided iPads (four in total, two per condition) to assess their peer’s performance. MI was downloaded onto the two iPads designated for the SV stations. Six video camcorders were provided by the University of Calgary’s Sport Technology Research Laboratory (STRL) for use at the six evaluation stations to record interactions. Additionally, STRL supplied the four iPads.

Each study day began with the teacher instructing and demonstrating the basketball dribbling technique of that day for 3 min followed by approximately 6 min of practice time for all students (see Supplementary Appendix B).

After practicing, and because of the limited number of evaluation stations, students were divided into two groups (Group 1, n = 12, six pairs; Group 2, n = 12, six pairs). Group 1 used the evaluation stations first while Group 2 stayed in the gymnasium to practice non-dribbling basketball skills with the teacher.

Before going to the evaluation stations, one student from each pair in Group 1 performed the dribbling technique of the day in the gym while their partner observed. If pairs were in either the UV or SV evaluation conditions, they were provided with an iPad to record their partner’s performance. After the skill performances, Group 1 went to the evaluation stations and the evaluator student began to assess their partner’s performance and provide feedback using either the SP, UV, or SV methods. After completing the assessments, Group 1 switched roles with Group 2, and pairs in Group 2 began the same performance and evaluation process as Group 1.

When Group 2 completed their assessments, they once again alternated roles with Group 1, but this time, students who initially performed first became the evaluator and vice versa. To allow for all students to perform and evaluate, there was a total of four evaluation sessions, two for each group, per day. A new basketball dribbling skill was subsequently performed during the 2nd and 3rd days of the study, following the same alternating process between Groups 1 and 2.

Students in the SV condition used tablet devices loaded with MI to collect data (Figure 1). Outside of the gym, each evaluation station consisted of a video camera to record interactions between the evaluator and the performer. Research assistants were present at each station to record the peer evaluations. They did not provide any educational prompts or interact with the participants unless students encountered problems understanding the task.

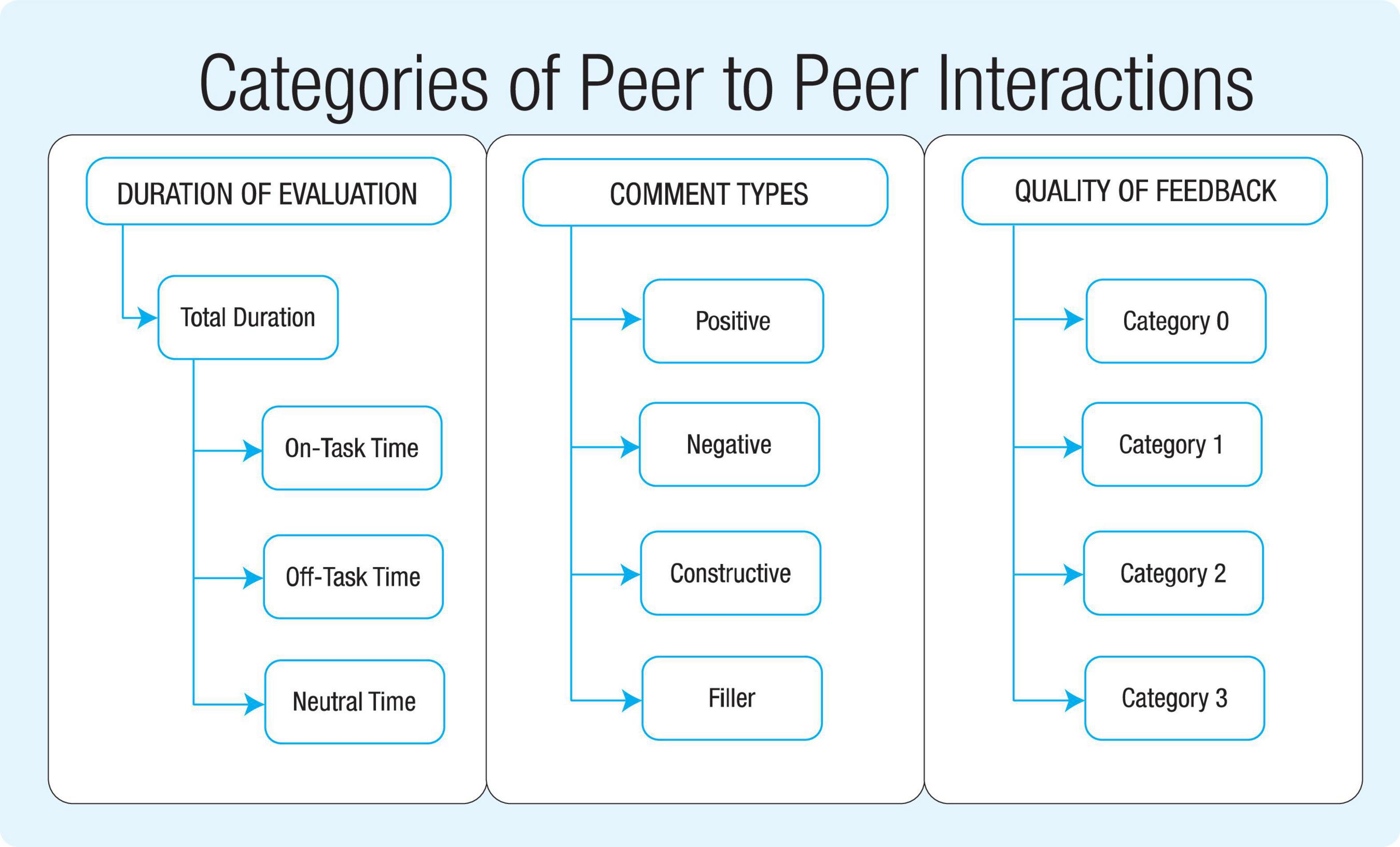

The quality of interaction coding scheme (Figure 2) was adapted from Sluijsmans et al.’s (2002) rating form. Sluijsmans et al.’s (2002) quality of interaction coding scheme included time of interaction (total duration of interaction, on-task time, off-task time, and neutral time), type of comments that occurred during the interactions (positive, constructive, and negative comments), and the quality of feedback (Category 0, Category 1, Category 2, and Category 3).

Figure 2. Coding scheme used to evaluate peer evaluation and discussion interactions between students.

Operational definitions were created prior to the study. Total duration was defined as the point at which participants began the evaluation process until the participants indicated they had completed their evaluations. Introductions and explanations to the participants were excluded from the calculation of total duration. Visual and audio cues from the video were used to determine on-task time, off-task time, and neutral time.

The on-task time criterion was time spent focused on the evaluation. It included relevant dialog between the participants and their partners focused on using the tool in their condition, and listening to their partners’ feedback. The off-task time criterion was time spent on distractions. It included irrelevant dialog, looking elsewhere (away from partner, at a wall, or at outside distractions), and playing with their hands or the ball. The neutral time criterion was defined as time not relevant to the evaluations. It included listening to instructions from the research assistant, technical difficulties present during evaluations, or excess camera time. Filler comments, which were a part of neutral time, were defined as regular sentences or regular parts of speech unrelated to basketball dribbling (e.g., if students repeated the questions out loud, those were considered filler). During coding, a single sentence was considered one comment. Transcripts of videos were produced and coded to determine the type of comment. The number of each type of comment was counted and recorded from each evaluation.
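As an illustration of how coded time segments could be aggregated per interaction, the sketch below sums on-task, off-task, and neutral time. It rests on assumptions that are not stated in the text: that durations are recorded in seconds, that total duration equals the sum of the three categories, and that a coder has already labeled each segment. The names `CodedSegment` and `summarize_time` are hypothetical.

```python
from dataclasses import dataclass

# The three time categories named in the operational definitions.
VALID_LABELS = ("on_task", "off_task", "neutral")


@dataclass
class CodedSegment:
    label: str      # one of VALID_LABELS, assigned by a human coder
    seconds: float  # assumed unit; the text does not state the unit


def summarize_time(segments):
    """Sum coded segment durations per category and overall."""
    totals = {label: 0.0 for label in VALID_LABELS}
    for segment in segments:
        if segment.label not in totals:
            raise ValueError(f"Unknown time category: {segment.label!r}")
        totals[segment.label] += segment.seconds
    # Assumption: total duration is the sum of the three categories.
    totals["total"] = sum(totals[label] for label in VALID_LABELS)
    return totals


# Made-up example values, not data from the study.
example = [
    CodedSegment("on_task", 25.0),
    CodedSegment("neutral", 10.0),
    CodedSegment("on_task", 15.0),
    CodedSegment("off_task", 5.0),
]
totals = summarize_time(example)
```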

The types of feedback can be classified in many ways depending on the purpose and setting: evaluative feedback refers to judgment, corrective feedback tells the learner what to do or what not to do, and there is a differentiation between general vs. specific feedback, and positive vs. negative feedback (Rink, 2014). We employed Rink’s system in the current study; however, it was simplified to suit young children. Positive comments included non-specific encouraging and affirming statements, while negative comments were identified as disparaging or unfavorable phrases. Rink’s evaluative and corrective feedback categories were treated as one category, constructive comments, which referred to specific feedback on areas for improvement.

To determine the quality of feedback, specific categories were established. Quality comments were defined as Non-Specific Feedback (Category 0), General Feedback referring to task at hand (Category 1), Feedback Referring to Skill but with no specific feedback to improve (Category 2), and Feedback Referring to Skill with specific area to improve (Category 3; see Supplementary Appendix C). To see examples of the rating of feedback evaluations for each condition, plus a definition of terms, refer to the Data Analysis section.
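The four quality categories lend themselves to a simple tally once each comment has been assigned a code by a human rater. The sketch below is one possible representation; the enum member names and the function `tally_quality` are hypothetical, and the input codes are invented for illustration.

```python
from collections import Counter
from enum import IntEnum


class FeedbackQuality(IntEnum):
    """Quality categories as defined in the text (member names are illustrative)."""
    NON_SPECIFIC = 0            # Category 0: non-specific feedback
    GENERAL_TASK = 1            # Category 1: general feedback on the task at hand
    SKILL_NO_IMPROVEMENT = 2    # Category 2: refers to skill, no specific improvement
    SKILL_WITH_IMPROVEMENT = 3  # Category 3: refers to skill, specific area to improve


def tally_quality(coded_comments):
    """Count comments per category; categories with no comments are reported as 0."""
    counts = Counter(FeedbackQuality(code) for code in coded_comments)
    return {category: counts.get(category, 0) for category in FeedbackQuality}


# Invented codes for one evaluator's comments in a single interaction.
quality_counts = tally_quality([0, 2, 2, 3])
```

Passing a code outside 0 to 3 raises a `ValueError`, which guards against data-entry mistakes in the coding sheet.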

A research assistant was trained in the coding protocol to assess the reliability of the coding (Palao et al., 2015). Nine video files were randomly selected across the 3-day study period and peer evaluation conditions to determine inter-rater reliability. The inter-rater correlation coefficient (Cronbach’s alpha) was 0.97 for duration and 0.93 for comments. Two months after the last coding session, the files were re-assessed to determine intra-rater reliability. The intra-rater correlation coefficient (Cronbach’s alpha) was 0.99 for duration and 0.95 for comments.
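For readers unfamiliar with the statistic, Cronbach’s alpha for two or more raters can be computed from the variance of each rater’s scores and the variance of the summed scores. The sketch below uses the standard formula with sample variances; it is not the authors’ SPSS procedure, the function name `cronbach_alpha` is hypothetical, and the ratings are invented.

```python
from statistics import variance


def cronbach_alpha(ratings_by_rater):
    """Cronbach's alpha, treating each rater as an 'item'.

    ratings_by_rater: list of equal-length lists, one per rater, where
    position j in every list refers to the same coded video file.
    """
    k = len(ratings_by_rater)
    if k < 2:
        raise ValueError("At least two raters are required.")
    n = len(ratings_by_rater[0])
    if any(len(ratings) != n for ratings in ratings_by_rater):
        raise ValueError("All raters must score the same number of files.")

    # Sum of each rater's sample variance.
    item_variance_sum = sum(variance(ratings) for ratings in ratings_by_rater)
    # Sample variance of the per-file totals across raters.
    totals = [sum(values) for values in zip(*ratings_by_rater)]
    total_variance = variance(totals)

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Invented on-task durations for five files scored by two raters.
rater_a = [50, 62, 47, 80, 55]
rater_b = [52, 60, 45, 83, 57]
alpha = cronbach_alpha([rater_a, rater_b])
```

When two raters agree perfectly the function returns 1; values close to 1, such as those reported above, indicate high consistency between coding passes.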

The independent variable in this longitudinal study was peer evaluation and discussion assignment (structured paper, unstructured video, and structured video). The dependent variables were: (1) the nature of interaction including the time of interaction (total duration of interaction, on-task time, off-task time, and neutral time); (2) the types of comments (positive, constructive, and negative) between peers (evaluator and performer); and (3) the quality of feedback (Category 0, Category 1, Category 2, and Category 3) given by the evaluator. A Generalized Estimating Equation (GEE, under the GENLIN procedure of SPSS version 22.0) was employed to account for the correlated and unbalanced nature of the data, which included some missing values. Condition effects were tested, and means and standard errors for each of the three peer evaluation and discussion conditions were estimated (i.e., Estimated Marginal Means), using a GEE modeling approach for total duration of interaction, on-task time, off-task time, and for positive, constructive, and negative comments provided by the evaluator.
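For orientation, the general form of a GEE is given below. This is the textbook estimating equation, not a specification taken from the study: the text does not report the link function, the response distribution, or the working correlation structure used, and treating each student (or pair) as cluster $i$ is an assumption.

```latex
% General GEE estimating equation for K clusters (e.g., students or pairs)
\sum_{i=1}^{K} D_i^{\top} V_i^{-1} \bigl( y_i - \mu_i(\beta) \bigr) = 0,
\qquad
D_i = \frac{\partial \mu_i}{\partial \beta},
\qquad
V_i = \phi \, A_i^{1/2} R(\alpha) A_i^{1/2}
```

Here $y_i$ is the vector of repeated outcomes for cluster $i$ (for example, on-task time under each condition), $\mu_i(\beta)$ is its modeled mean, $A_i$ is a diagonal matrix of variance functions, $R(\alpha)$ is the working correlation matrix, and $\phi$ is a scale parameter. Because each cluster contributes whatever observations it has, the approach accommodates the missing interactions noted in the text.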

To determine the quality of feedback, each transcript was initially coded for the type of comments (positive, constructive, negative) from both the evaluator and performer. Once the types of comments were established, comments were further coded for quality. For examples of types and categories of comments, see Supplementary Appendix D.

Sixty-two interactions were collected over 3 days of data collection: 20 from the SP group, 24 from UV group, and 18 from SV group. Four files were lost from the SV group due to technical errors during data transfer.

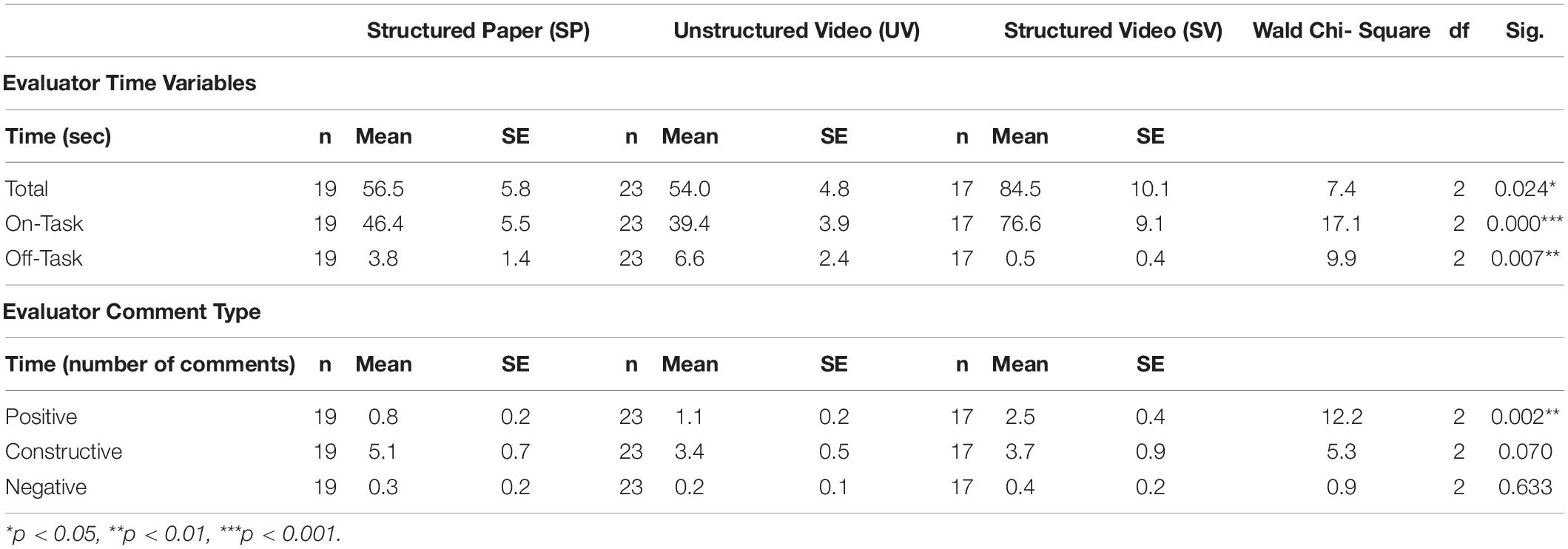

Table 3 displays the Estimated Marginal Mean and Standard Error of variables for evaluators by the duration of interaction and comment type. The GEE analysis revealed statistically significant condition differences for total time (p < 0.05), on-task time (p < 0.001), off-task time (p < 0.01), and positive comments (p < 0.01) for the evaluator role (Table 3).

Table 3. Estimated marginal means and standard errors of variables for evaluators by engagement time and comment type.

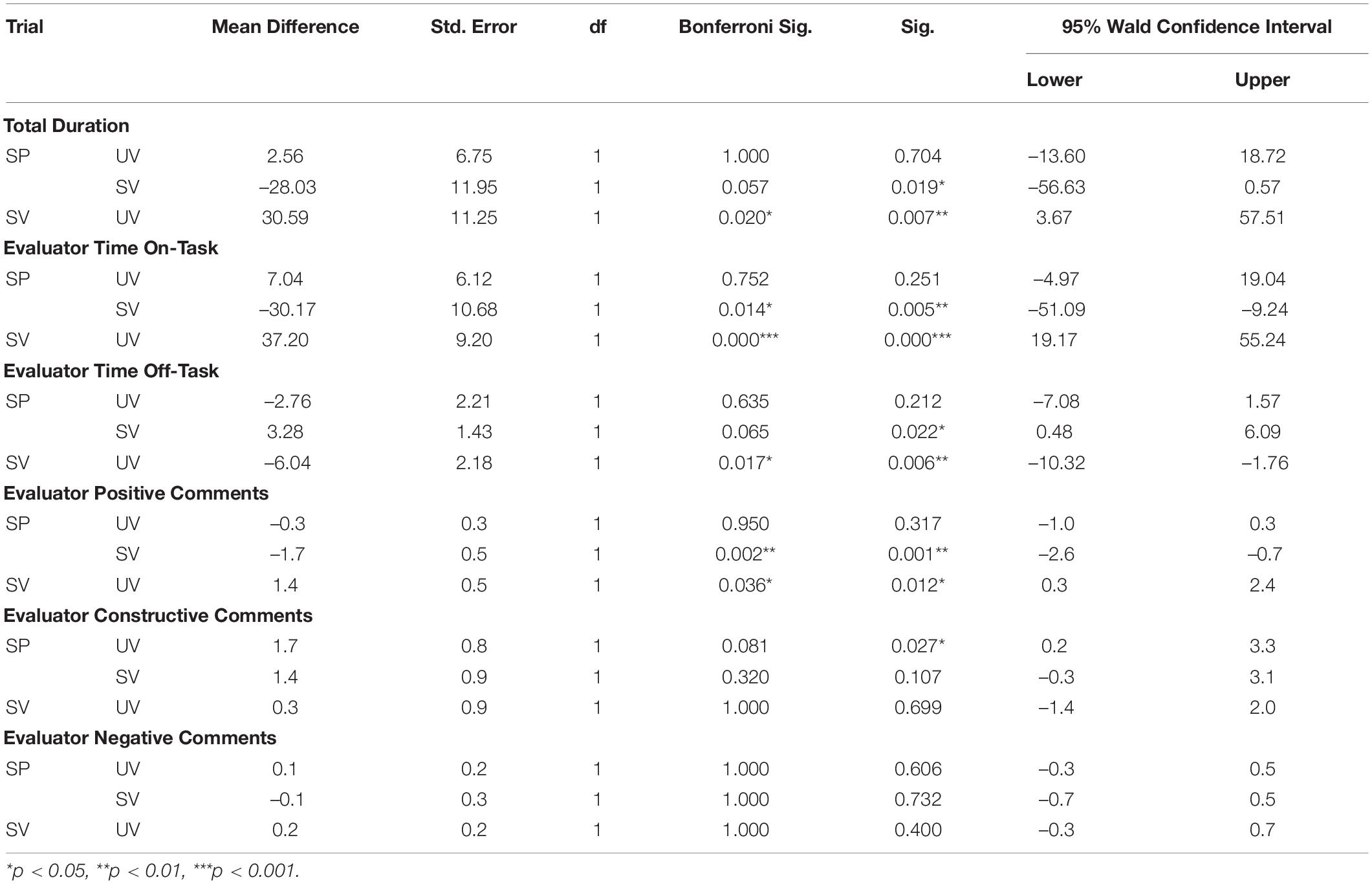

Table 4 provides the pairwise comparison analysis for total duration, evaluator on-task time, evaluator off-task time, and evaluator comment type by evaluation condition. Pairwise comparison further revealed that for the total duration, significant differences were seen between the SP group (56.5 ± 5.8) and SV group (84.5 ± 10.1), and between the UV group (54.0 ± 4.8) and SV group (84.5 ± 10.1). For evaluator on-task time, significant differences were seen between the SP group (46.4 ± 5.5) and SV group (76.6 ± 9.1), and between the UV group (39.4 ± 3.9) and SV group (76.6 ± 9.1). For evaluator off-task time, significant differences were only seen between the UV group (6.6 ± 2.4) and the SV group (0.5 ± 0.4). Regarding evaluator comment types, significant differences in positive comments were seen between the SP group (0.8 ± 0.2) and SV group (2.5 ± 0.4), and between the UV group (1.1 ± 0.2) and SV group (2.5 ± 0.4). Evaluators in the SV group gave more positive feedback overall.

Table 4. Between-group pairwise comparison of evaluator duration, on-task, off-task variables, and evaluator comments.

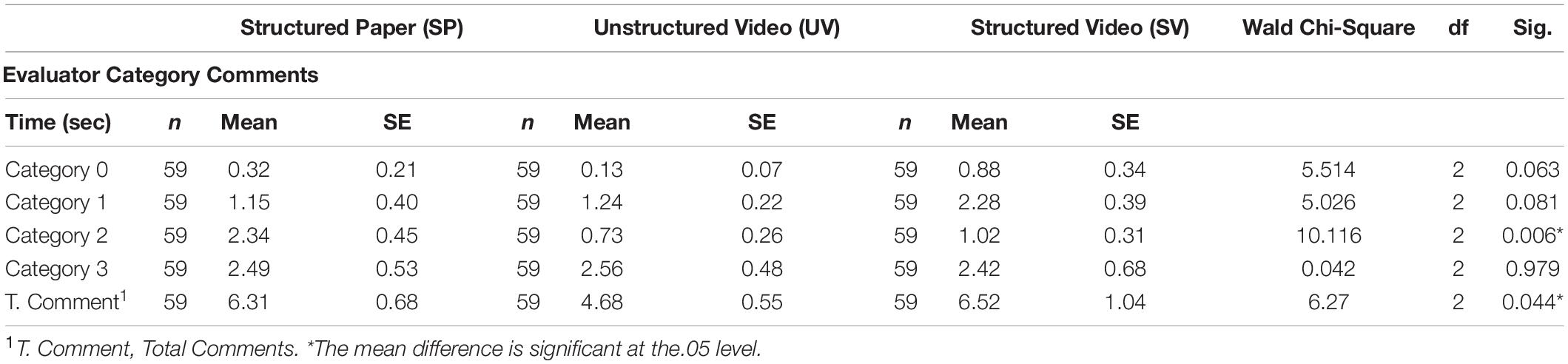

Table 5 displays the Estimated Marginal Mean and Standard Error of variables for evaluators and performers by quality of feedback recorded. GEE analysis revealed statistically significant condition differences for evaluator comments in Category 2 (p < 0.01) and in total comments (p < 0.05). Category 0 and Category 1 showed trends (p < 0.10) and there were no differences in Category 3 level comments.

Table 5. Estimated marginal means and standard error of evaluator and performer quality feedback and significance by peer evaluation condition.

Table 6 shows the pairwise comparison analysis for quality of evaluator comment rating between groups. Pairwise comparison further revealed a significant difference in Category 2 feedback between the SP group (2.34 ± 0.45) and both the SV (1.02 ± 0.31) and UV (0.76 ± 0.26) groups. In total feedback, there was a significant difference between the SP (6.31 ± 0.68) and UV groups (4.68 ± 0.55).

The results of the performers were also analyzed using the same methods as the evaluators. For performer engagement time and comment types, significant differences were found only for time on-task (p < 0.01) between the UV (36.4 ± 3.9) and SV group (71.4 ± 8.9), and positive comments (p < 0.05) between the UV (0.5 ± 0.1) and SV group (1.7 ± 0.2). No other significant differences were found between the total time, time off-task, and negative and constructive comments. In terms of the quality of feedback, pairwise comparison further revealed a significant difference in Category 2 feedback for performers (p < 0.05) between the students in the SP (0.92 ± 0.32) and UV groups (0.18 ± 0.10).

The purpose of this study was to examine the nature and quality of interactions between peers in an elementary PE class using VM in a peer-evaluation setting with three different P2P evaluation methods. Nature of interaction was determined by the duration of interaction (total time, on-task time, off-task time, neutral), and the type of comments (positive, constructive, negative). The quality of interaction was measured by the four categories of feedback from both the evaluators and performers. The evaluators in the SV condition (video playback with structured evaluation) spent significantly more time on-task while interacting with their partners and spent less time off-task compared to the SP and the UV conditions. Students (both evaluators and performers) were observed following the instructions and reviewing the video of their performance under the SV condition. Further, they provided more positive feedback to their peers than under the SP and UV conditions. Similarly, participants in the performer role spent more time on-task in the SV condition compared to the other conditions. When provided with video feedback and playback options, the students had an objective way to evaluate their partners’ performances instead of solely relying on their memory of them. There was statistically significantly less off-task time for the SV condition compared to the SP or the UV feedback conditions. When in the SV condition, students spent more time on-task and increased their time interacting with their peers compared with the other evaluation methods. While there was an increase in positive feedback with the use of SV, no difference was observed in the frequency of constructive and negative comments between the three conditions for both the evaluator and performer roles. When examining the quality of the positive feedback given, there was more Category 2 feedback given by the SP group compared to the other two conditions (Table 6). There were no other significant differences observed when examining the quality of the feedback between the three conditions.

To our knowledge, this is the first study that examined the nature and quality of social interactions between peers based on a tablet-based intervention providing P2P learning along with VM, specifically while learning a basketball skill in three different evaluation conditions. Compared to the SP and UV conditions, the SV group increased on-task time behavior and had more positive feedback during discussions between peers, which shows that students are more likely to encourage their peers when there is video playback and structured assessments. This finding is in line with Law and Baer’s (2020) study which reported that by facilitating peer assessment using a structured software program, students improved their ability to provide quality feedback regarding their classmates’ writing skills.

Bartimote-Aufflick et al. (2016) noted that when students’ psychological needs are met, they become more actively involved in the learning process. According to their review of 64 studies, pedagogical strategies that could help students meet their needs include using videos, structured learning activities, and task analysis. Structured peer evaluation using technology, in combination with access to video recall, could lead to more engagement in task performance while decreasing the frequency of unrelated or disruptive behaviors (King et al., 2014). According to Obrusnikova and Rattigan (2016), using the VM technique improved fundamental movement performance through increasing the students’ attention and motivation to the skill complexity and production. Furthermore, when students provide peer feedback, it makes them think more critically about the assessment criteria, engaging them in the process for a longer period of time, and ultimately, sustaining that process (Zher et al., 2016). This is likely the case because cooperative learning and peer evaluation foster positive experiences and skills such as enjoyment, connection, teamwork, and creativity (Fernandez-Rio et al., 2017).

Another possible reason for the significant improvement in positive comments and the extended on-task time in the SV group could be the use of rating criteria for peer assessment. This observation aligns with previous research showing that explicit rating criteria make the peer evaluation process more fruitful (Li and Gao, 2015), leading to students reaching more learning goals. In general, integrating technology in peer assessment seems to be more beneficial in the learning process compared to paper-based peer evaluation. This is supported by Li et al.’s (2010) study which reported that computer-mediated peer evaluation was associated with greater learning achievement compared to paper-based peer evaluation. The efficacy of technology-based feedback on students’ motivation and learning has been well-documented (Roure et al., 2019).

One limitation of the present study was the omission of skill-learning analysis. Thacker et al. (2021) reported that when using a structured approach in P2P learning and evaluation, peers improved their own performance. Therefore, integrating structure and technology to peer feedback and assessments seems to enhance performance; however, in our study, because we did not measure learning outcomes, it is difficult to quantify how the SP, UV, and SV evaluation conditions affected performance. Future studies should measure performance outcomes comparing SP, UV, and SV evaluation conditions.

The incorporation of technology into PE pedagogy has been growing in recent years (Wallhead et al., 2014; Koekoek et al., 2018). More specifically, since various kinds of VM have been effective in improving physical and sport skills (Boyer et al., 2009; Maryam et al., 2009; Reynolds, 2013; Bouazizi et al., 2014; Obrusnikova and Rattigan, 2016; Obrusnikova and Cavalier, 2018; Bulca et al., 2020), future studies should consider how the learning of physical and sport skills is affected by different evaluation conditions and by social interaction more generally.

Another limitation of the current study is how the results were affected by students occupying both the evaluator and performer roles. When students assume either the role of the assessor or assessee in a peer evaluation, they each contribute differently to the learning process and therefore alter the feedback quality (Li et al., 2010); future in-depth research is needed to discover the effects of role (assessor or assessee) on quality of feedback and learning process.

Additionally, unfamiliarity with learning and using technology devices has been reported as one of the barriers to collaborative learning (Bodsworth and Goodyear, 2017). Because of the limited number of training sessions in this study, students likely had varying degrees of confidence in using iPads, which would in turn have affected their evaluations. Future studies should include adequate training to ensure that all students feel competent and comfortable using technological applications.

Previous studies reported positive outcomes related to students’ basic psychological needs, such as relatedness and competence, in PE (Li et al., 2010). When these basic psychological needs are met, students’ motivation for autonomous learning improves. Therefore, examining related psychological characteristics may be of significant importance (Wallhead et al., 2014). Social intimacy can be considered a vital factor when learning new skills and in determining the quality of interactions during peer learning and evaluation. This is relevant to the current study, in which grade 4 was represented only by male students and all the older students were female. This gender imbalance in the sample would have affected the level of social intimacy between pairs and, therefore, the nature and quality of the interactions. Students’ preferences for choosing their partners should therefore be taken into consideration in future research to adjust for their level of social comfort. By choosing their own partners, students can place the learning challenges inside or outside of their comfort zone (Koekoek and Knoppers, 2015).

The findings of this research show the potential of structured feedback with VM to promote positive feedback during the peer-learning process and to enhance the quality of interactions between students in peer-assessed performance analysis. One of the goals of physical education involves psychomotor performance, which can be effectively captured by digital video. While digital video is beneficial in the PE classroom, providing structure for evaluation, such as the prototype app used in this study, can further enhance engagement. Designing digital video tools that incorporate structure and provide feedback in both self-evaluation and peer evaluation can increase participant engagement and help meet knowledge outcomes.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

The studies involving human participants were reviewed and approved by the Conjoint Health Research Ethics Board, University of Calgary. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

NS was the lead author, participated in the design, led the data collection, managed the data, interpreted the audio recordings, prepared the first draft of the study including the tables, and contributed significantly to each draft (six revisions). HL participated in the study design, had a major role in the data collection, and provided feedback on the drafts. VB helped to organize the study, assisted in the data collection, edited the first draft, and conducted the initial literature review. TF was the team statistician, analyzed the data, provided advice and feedback on the results, and assisted with the study design. HR reviewed the literature for both the introduction and discussion, expanded the study focus, added creatively to the discussion, and provided feedback on the drafts. LK developed the application, designed the study, participated in the data collection, edited the drafts, and supervised the team. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2022.856918/full#supplementary-material

Aldi, C., Crigler, A., Kates-McElrath, K., Long, B., Smith, H., Rehak, K., et al. (2016). Examining the effects of video modeling and prompts to teach activities of daily living skills. Behav. Anal. Pract. 9, 384–388. doi: 10.1007/s40617-016-0127-y

Ardoy, D. N., Fernandez-Rodriguez, J. M., Jimenez-Pavon, D., Castillo, R., Ruiz, J. R., and Ortega, F. B. (2014). A physical education trial improves adolescents’ cognitive performance and academic achievement: the EDUFIT study. Scand. J. Med. Sci. Sports 24, e52–e61. doi: 10.1111/sms.12093

Baena-Morales, S., Jerez-Mayorga, D., Delgado-Floody, P., and Martínez-Martínez, J. (2021). Sustainable development goals and physical education: a proposal for practice-based models. Int. J. Environ. Res. Public Health 18:2129. doi: 10.3390/ijerph18042129

Bartimote-Aufflick, K., Bridgeman, A., Walker, R., Sharma, M., and Smith, L. (2016). The study, evaluation, and improvement of university student self-efficacy. Stud. High. Educ. 41, 1918–1942. doi: 10.1080/03075079.2014.999319

Bellini, S., and Akullian, J. (2007). A meta-analysis of video modeling and video self-modeling interventions for children and adolescents with autism spectrum disorders. Except. Child. 73, 264–287. doi: 10.1177/001440290707300301

Bodsworth, H., and Goodyear, V. A. (2017). Barriers and facilitators to using digital technologies in the cooperative learning model in physical education. Phys. Educ. Sport Pedag. 22, 563–579. doi: 10.1080/17408989.2017.1294672

Bouazizi, M., Azaiez, F., and Boudhiba, D. (2014). Effects of learning by video modeling on gymnastic performances among Tunisian students in the second year of secondary level. IOSR J. Sports Phys. Educ. 1, 5–8. doi: 10.9790/6737-0150508

Boyer, E., Miltenberger, R. G., Batsche, C., and Fogel, V. (2009). Video modeling by experts with video feedback to enhance gymnastics skills. J. Appl. Behav. Anal. 42, 855–860. doi: 10.1901/jaba.2009.42-855

Bulca, Y., Ozdurak, R. H., and Demirhan, G. (2020). The effects of digital physical exercise videos on the locomotor skill learning of pre-school children. Eur. Early Child. Educ. Res. J. 28, 231–241. doi: 10.1080/1350293X.2020.1716475

Byra, M., and Marks, M. C. (1993). The effect of two pairing techniques on specific feedback and comfort levels of learners in the reciprocal style of teaching. J. Teach. Phys. Educ. 12, 286–300. doi: 10.1123/jtpe.12.3.286

Casey, A., Goodyear, V. A., and Armour, K. M. (2017). Rethinking the relationship between pedagogy, technology and learning in health and physical education. Sport Educ. Soc. 22, 288–304. doi: 10.1080/13573322.2016.1226792

Chai, C. S., and Koh, J. H. L. (2017). Changing teachers’ TPACK and design beliefs through the Scaffolded TPACK Lesson Design Model (STLDM). Learn. Res. Pract. 3, 114–129. doi: 10.1080/23735082.2017.1360506

Charlop-Christy, M. H., Le, L., and Freeman, K. A. (2000). A comparison of video modeling with in vivo modeling for teaching children with autism. J. Autism Dev. Disord. 30, 537–552. doi: 10.1023/a:1005635326276

Cuevas, R., García López, L., and Olivares, J. (2016). Sport education model and self-determination theory: an intervention in secondary school children. Kinesiology 48, 30–38. doi: 10.26582/k.48.1.15

Dishman, R. K., Motl, R. W., Saunders, R., Felton, G., Ward, D. S., Dowda, M., et al. (2004). Self-efficacy partially mediates the effect of a school-based physical-activity intervention among adolescent girls. Prevent. Med. 38, 628–636. doi: 10.1016/j.ypmed.2003.12.007

Dishman, R. K., Motl, R. W., Saunders, R., Felton, G., Ward, D. S., Dowda, M., et al. (2005). Enjoyment mediates effects of a school-based physical-activity intervention. Med. Sci. Sports Exerc. 37, 478–487. doi: 10.1249/01.mss.0000155391.62733.a7

Eather, N., Morgan, P. J., and Lubans, D. R. (2013). Improving the fitness and physical activity levels of primary school children: results of the Fit-4-Fun group randomized controlled trial. Prevent. Med. 56, 12–19. doi: 10.1016/j.ypmed.2012.10.019

Emmanouel, C., Zervas, Y., and Vagenas, G. (1992). Effects of four physical education teaching methods on development of motor skill, self-concept, and social attitudes of fifth-grade children. Percept. Motor Skills 74(3 Pt 2), 1151–1167. doi: 10.2466/pms.1992.74.3c.1151

Fernandez-Rio, J., Sanz, N., Fernandez-Cando, J., and Santos, L. (2017). Impact of a sustained Cooperative Learning intervention on student motivation. Phys. Educ. Sport Pedag. 22, 89–105. doi: 10.1080/17408989.2015.1123238

Garn, A., and Wallhead, T. (2015). Social goals and basic psychological needs in high school physical education. Sport Exercise Perform. Psychol. 4, 88–99. doi: 10.1037/spy0000029

Goni, A., and Zulaika, L. (2000). Relationships between physical education classes and the enhancement of fifth grade pupils’ self-concept. Percept. Motor Skills 91, 246–250. doi: 10.2466/pms.2000.91.1.246

Goodyear, V. A., Casey, A., and Kirk, D. (2014). Hiding behind the camera: social learning within the cooperative learning model to engage girls in physical education. Sport Educ. Soc. 19, 712–734. doi: 10.1080/13573322.2012.707124

Goodyear, V. A., Kerner, C., and Quennerstedt, M. (2019). Young people’s uses of wearable healthy lifestyle technologies; surveillance, self-surveillance and resistance. Sport Educ. Soc. 24, 212–225. doi: 10.1080/13573322.2017.1375907

Green, V. A., Prior, T., Smart, E., Boelema, T., Drysdale, H., Harcourt, S., et al. (2017). The use of individualized video modeling to enhance positive peer interactions in three preschool children. Educ. Treat. Child. 40, 353–378. doi: 10.1353/etc.2017.0015

Gubacs-Collins, K., and Juniu, S. (2009). The mobile gymnasium. J. Phys. Educ. Recreat. Dance 80, 24–31. doi: 10.1080/07303084.2009.10598279

Jenkinson, K. A., Naughton, G., and Benson, A. C. (2014). Peer-assisted learning in school physical education, sport and physical activity programmes: a systematic review. Phys. Educ. Sport Pedag. 19, 253–277. doi: 10.1080/17408989.2012.754004

Jeong, H., and Hmelo-Silver, C. E. (2016). Seven affordances of computer-supported collaborative learning: how to support collaborative learning? How can technologies help? Educ. Psychol. 51, 247–265. doi: 10.1080/00461520.2016.1158654

Johnson, M., and Ward, P. (2001). Effects of classwide peer tutoring on correct performance of striking skills in 3rd grade physical education. J. Teach. Phys. Educ. 20, 247–263. doi: 10.1123/jtpe.20.3.247

Kant, J. M., Scheiter, K., and Oschatz, K. (2017). How to sequence video modeling examples and inquiry tasks to foster scientific reasoning. Learn. Instr. 52, 46–58. doi: 10.1016/j.learninstruc.2017.04.005

Kelly, P., and Katz, L. (2016). Comparing peer-to-peer and individual learning: teaching basic computer skills to disadvantaged adults. Int. J. Adult Vocat. Educ. Technol. 7, 1–15. doi: 10.4018/IJAVET.2016100101

King, B., Radley, K., Jenson, W., Clark, E., and O’Neill, R. (2014). Utilization of video modeling combined with self-monitoring to increase rates of on-task behavior. Behav. Intervent. 29, 125–144. doi: 10.1002/bin.1379

Koehler, M. J., Mishra, P., and Cain, W. (2013). What is technological pedagogical content knowledge (TPACK)? J. Educ. 193, 13–19. doi: 10.1177/002205741319300303

Koekoek, J., and Knoppers, A. (2015). The role of perceptions of friendships and peers in learning skills in physical education. Phys. Educ. Sport Pedag. 20, 231–249. doi: 10.1080/17408989.2013.837432

Koekoek, J., van der Kamp, J., Walinga, W., and van Hilvoorde, I. (2019). Exploring students’ perceptions of video-guided debates in a game-based basketball setting. Phys. Educ. Sport Pedag. 24, 519–533. doi: 10.1080/17408989.2019.1635107

Koekoek, J., van der Mars, H., van der Kamp, J., Walinga, W., and van Hilvoorde, I. (2018). Aligning digital video technology with game pedagogy in physical education. J. Phys. Educ. Recreat. Dance 89, 12–22. doi: 10.1080/07303084.2017.1390504

Krause, J. M., O’Neil, K., and Jones, E. (2020). Technology in physical education teacher education: a call to action. Quest 72, 241–259. doi: 10.1080/00336297.2019.1685553

Law, S., and Baer, A. (2020). Using technology and structured peer reviews to enhance students’ writing. Act. Learn. High. Educ. 21, 23–38. doi: 10.1177/1469787417740994

Lee, J. E., and Gao, Z. (2020). Effects of the iPad and mobile application-integrated physical education on children’s physical activity and psychosocial beliefs. Phys. Educ. Sport Pedag. 25, 567–584. doi: 10.1080/17408989.2020.1761953

Leight, J., Banville, D., and Polifko, M. F. (2009). Using digital video recorders in physical education. J. Phys. Educ. Recreat. Dance 80, 17–21. doi: 10.1080/07303084.2009.10598262

Li, L., and Gao, F. (2015). The effect of peer assessment on project performance of students at different learning levels. Assess. Eval. High. Educ. 41, 1–16. doi: 10.1080/02602938.2015.1048185

Li, L., Liu, X., and Steckelberg, A. L. (2010). Assessor or assessee: how student learning improves by giving and receiving peer feedback. Br. J. Educ. Technol. 41, 525–536. doi: 10.1111/j.1467-8535.2009.00968.x

Lynch, T. (2019). Physical Education and Wellbeing: Global and Holistic Approaches to Child Health. Cham: Palgrave Macmillan.

Marques, A., Gómez, F., Martins, J., Catunda, R., and Sarmento, H. (2017). Association between physical education, school-based physical activity, and academic performance: a systematic review. Retos 31, 316–320. doi: 10.47197/retos.v0i31.53509

Maryam, C., Yaghoob, M., Darush, N., and Mojtaba, I. (2009). The comparison of effect of video-modeling and verbal instruction on the performance in throwing the discus and hammer. Procedia Soc. Behav. Sci. 1, 2782–2785. doi: 10.1016/j.sbspro.2009.01.493

McManis, L. D., and Gunnewig, S. B. (2012). Finding the education in educational technology with early learners. Young Child. 67, 14–24.

Mechling, L. (2005). The effect of instructor-created video programs to teach students with disabilities: a literature review. J. Spec. Educ. Technol. 20, 25–36. doi: 10.1177/016264340502000203

Metzler, M. W. (2011). Instructional Models for Physical Education, 3rd Edn. Scottsdale, AZ: Holcomb Hathaway.

Mosston, M., and Ashworth, S. (2008). Teaching Physical Education, 1st Edn. Spectrum Institute for Teaching and Learning. Available online at: https://spectrumofteachingstyles.org/assets/files/book/Teaching_Physical_Edu_1st_Online.pdf.

Moy, B., Renshaw, I., and Davids, K. (2014). Variations in acculturation and Australian physical education teacher education students’ receptiveness to an alternative pedagogical approach to games teaching. Phys. Educ. Sport Pedag. 19, 349–369. doi: 10.1080/17408989.2013.780591

National Association for Sport and Physical Education (2013). NASPE Resource brief: Quality Physical Education. Available online at: https://files.eric.ed.gov/fulltext/ED541490.pdf.

Obrusnikova, I., and Cavalier, A. (2018). An evaluation of videomodeling on fundamental motor skill performance of preschool children. Early Child. Educ. J. 46, 287–299. doi: 10.1007/s10643-017-0861-y

Obrusnikova, I., and Rattigan, P. (2016). Using video-based modeling to promote acquisition of fundamental motor skills. J. Phys. Educ. Recreat. Dance 87, 24–29. doi: 10.1080/07303084.2016.1141728

O’Loughlin, J., Chróinín, D. N., and O’Grady, D. (2013). Digital video: the impact on children’s learning experiences in primary physical education. Eur. Phys. Educ. Rev. 19, 165–182. doi: 10.1177/1356336x13486050

Palao, J. M., Hastie, P. A., Cruz, P. G., and Ortega, E. (2015). The impact of video technology on student performance in physical education. Technol. Pedag. Educ. 24, 51–63. doi: 10.1080/1475939X.2013.813404

Pritchard, T., Hawkins, A., Wiegand, R., and Metzler, J. N. (2008). Effects of two instructional approaches on skill development, knowledge, and game performance. Meas. Phys. Educ. Exerc. Sci. 12, 219–236. doi: 10.1080/10913670802349774

Rasberry, C. N., Lee, S. M., Robin, L., Laris, B. A., Russell, L. A., Coyle, K. K., et al. (2011). The association between school-based physical activity, including physical education, and academic performance: a systematic review of the literature. Prevent. Med. 52(Suppl. 1) S10–S20. doi: 10.1016/j.ypmed.2011.01.027

Resta, P., and Laferrière, T. (2007). Technology in support of collaborative learning. Educ. Psychol. Rev. 19, 65–83. doi: 10.1007/s10648-007-9042-7

Reynolds, C. E. (2013). The Use of Video Modeling Plus Video Feedback to Improve Boxing Skills. Master’s thesis. Tampa, FL: University of South Florida.

Roure, C., Méard, J., Lentillon-Kaestner, V., Flamme, X., Devillers, Y., and Dupont, J.-P. (2019). The effects of video feedback on students’ situational interest in gymnastics. Technol. Pedagogy Educ. 28, 563–574. doi: 10.1080/1475939X.2019.1682652

Shen, B., McCaughtry, N., Martin, J., and Fahlman, M. (2009). Effects of teacher autonomy support and students’ autonomous motivation on learning in physical education. Res. Q. Exerc. Sport 80, 44–53. doi: 10.1080/02701367.2009.10599528

Sinelnikov, O. A. (2012). Using the iPad in a sport education season. J. Phys. Educ. Recreat. Dance 83, 39–45. doi: 10.1080/07303084.2012.10598710

Sluijsmans, D. M. A., Brand-Gruwel, S., and van Merriënboer, J. J. G. (2002). Peer assessment training in teacher education: effects on performance and perceptions. Assess. Eval. High. Educ. 27, 443–454. doi: 10.1080/0260293022000009311

Sun, H. (2012). Exergaming impact on physical activity and interest in elementary school children. Res. Q. Exerc. Sport 83, 212–220. doi: 10.1080/02701367.2012.10599852

Thacker, A., Ho, J., Khawaja, A., and Katz, L. (2021). Peer-to-peer learning: the impact of order of performance on learning fundamental movement skills through video analysis with middle school children. J. Teach. Phys. Educ. 1–11. doi: 10.1123/jtpe.2021-0021 [Epub ahead of print].

Waggoner Denton, A. (2018). Improving the quality of constructive peer feedback. Coll. Teach. 66, 22–23. doi: 10.1080/87567555.2017.1349075

Wallhead, T. L., Garn, A., and Vidoni, C. (2014). Effect of a sport education program on motivation for physical education and leisure-time physical activity. Res. Q. Exerc. Sport 84, 478–487. doi: 10.1080/02701367.2014.961051

Wallhead, T. L., Garn, A. C., and Vidoni, C. (2013). Sport education and social goals in physical education: relationships with enjoyment, relatedness, and leisure-time physical activity. Phys. Educ. Sport Pedagogy 18, 427–441. doi: 10.1080/17408989.2012.690377

Wallhead, T. L., Hastie, P. A., Harvey, S., and Pill, S. (2021). Academics’ perspectives on the future of sport education. Phys. Educ. Sport Pedagogy 26, 533–548. doi: 10.1080/17408989.2020.1823960

Ward, P., and Lee, M.-A. (2005). Peer-assisted learning in physical education: a review of theory and research. J. Teach. Phys. Educ. 24, 205–225. doi: 10.1123/jtpe.24.3.205

Watterson, T. A. (2012). Changes in Attitudes and Behaviors Toward Physical Activity, Nutrition, and Social Support for Middle School Students Using the AFIT App as a Suppliment to Instruction in a Physical Education Class. Doctoral dissertation. Tampa, FL: University of South Florida.

Weir, T., and Connor, S. (2009). The use of digital video in physical education. Technol. Pedagogy Educ. 18, 155–171. doi: 10.1080/14759390902992642

Yelland, N., and Masters, J. (2007). Rethinking scaffolding in the information age. Comput. Educ. 48, 362–382. doi: 10.1016/j.compedu.2005.01.010

Yu, H. (2020). The Effects of Mobile App Technology on Technique and Game Performance in Physical Education. Doctoral dissertation. Tempe, AZ: Arizona State University.

Zher, N. H., Hussein, R. M. R., and Saat, R. M. (2016). Enhancing feedback via peer learning in large classrooms. Malays. Online J. Educ. Technol. 4, 1–16.

Keywords: nature and quality of interactions, peer to peer learning, video-modeling, tablets, structured feedback

Citation: San NC, Lee HS, Bucholtz V, Fung T, Rafiei Milajerdi H and Katz L (2022) Nature and Quality of Interactions Between Elementary School Children Using Video-Modeling and Peer-to-Peer Evaluation With and Without Structured Video Feedback. Front. Educ. 7:856918. doi: 10.3389/feduc.2022.856918

Received: 17 January 2022; Accepted: 28 February 2022;

Published: 23 March 2022.

Edited by:

Ove Østerlie, Norwegian University of Science and Technology, Norway

Reviewed by:

Salvador Baena Morales, University of Alicante, Spain

Copyright © 2022 San, Lee, Bucholtz, Fung, Rafiei Milajerdi and Katz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Larry Katz, katz@ucalgary.ca
